Abstract

Background:

In ambulatory care settings, physicians largely rely on clinical guidelines and guideline-based clinical decision support (CDS) systems to make decisions on hypertension treatment. However, current clinical evidence, which is the knowledge base of clinical guidelines, is insufficient to support definitive optimal treatment.

Objective:

The goal of this study is to test the feasibility of using deep learning predictive models to identify optimal hypertension treatment pathways for individual patients, based on empirical data available from an electronic health record database.

Materials and Methods:

This study used data on 245,499 unique patients who were initially diagnosed with essential hypertension and received anti-hypertensive treatment from January 1, 2001 to December 31, 2010 in ambulatory care settings. We used recurrent neural networks (RNN), including long short-term memory (LSTM) and bi-directional LSTM (Bi-LSTM), to create risk-adapted models that predict the probability of reaching blood pressure (BP) control targets under different BP treatment regimens. The training, validation, and test sets were split in a 6:2:2 ratio, and the samples for each set were independently and randomly drawn from individual years in the corresponding proportions.

Results:

The LSTM models achieved high accuracy when predicting individual probability of reaching BP goals on different treatments: for systolic BP (< 140 mmHg), diastolic BP (< 90 mmHg), and both systolic BP and diastolic BP (< 140/90 mmHg), F1-scores were 0.928, 0.960, and 0.913, respectively.

Conclusions:

The results demonstrated the potential of using predictive models to select optimal hypertension treatment pathways. Along with clinical guidelines and guideline-based CDS systems, the LSTM models could be used as a powerful decision-support tool to form risk-adapted, personalized strategies for hypertension treatment plans, especially for difficult-to-treat patients.

Keywords: hypertension treatment pathways, clinical decision support, deep learning, recurrent neural networks, long short-term memory

1. Introduction

Cardiovascular disease (CVD) remains the leading cause of death in the United States, while hypertension acts as a major contributing risk factor [1]. Hypertension is a highly prevalent health condition that affects about 103.3 million or 45.6% of the adult population in the United States [2] and 1.3 billion or 31% of adults worldwide [3]. Uncontrolled high blood pressure (BP) is closely associated with many severe diseases, including myocardial infarction, stroke, renal failure, and other long-term cardiovascular and renal consequences [1].

Hypertension is the most common modifiable risk factor for CVD [4,5]. If BP is lowered to normal levels, life expectancies can be significantly improved [6,7]. Physical activity, exercise, and healthy diets play an important role in BP management [8]. However, when these lifestyle changes alone cannot lower BP to a target level, medication treatment is needed to reach BP goals.

Many hypertension patients experience uncontrolled BP while taking medications, and some of them are difficult to treat [9,10]. Currently, physicians make treatment decisions based on their domain knowledge, clinical guidelines, and patient health conditions. However, human knowledge is often limited by personal experience, and the current clinical guidelines do not always provide definitive optimal treatment recommendations [2,11,12]. In addition, current clinical evidence has not yet established whether first-line therapies are more effective than thiazide and thiazide-like diuretics (HCTZ) in lowering BP. In fact, research has shown that no single class of first-line therapies, i.e., angiotensin-converting enzyme inhibitors (ACEI), angiotensin-receptor blockers (ARB), calcium channel blockers (CCB), or beta blockers, achieves significantly better outcomes than HCTZ in all situations [13]. For some conditions, the evidence-based guidelines offer multiple options for physicians to choose from, which invites variable interpretations. Moreover, the presence of certain symptoms may warrant prescribing one particular medication over others [2,11,12]; if such pre-existing conditions are neglected in medical decision-making, adverse patient outcomes may occur. Further, for many difficult-to-treat cases, treatment recommendations in the guidelines can be complex and hard to follow [2,14].

In the past two decades, many guideline-based clinical decision support (CDS) systems have been developed to facilitate and improve hypertension treatment. Studies have shown that CDS systems can significantly improve BP outcomes, lower cost, and reduce cardiovascular morbidity and mortality [15-25]. However, existing CDS systems are inherently limited because they were all developed based on the clinical guidelines, whose knowledge base is largely empirical evidence derived from population-level data and thus insufficient to support definitive optimal treatment regimens [13].

This study uses a deep learning technique, namely recurrent neural networks (RNN), to determine personalized optimal hypertension treatment regimens by identifying complex patterns in empirical data available in electronic health records (EHR) [26-31]. While deep learning techniques have been used to investigate risk factors in the hypertension domain [30-35], they have not yet been used to determine personalized treatment. With this study, we aim to establish the feasibility of using RNN-based predictive models to identify optimal hypertension treatment pathways for individual patients. Specifically, we use LSTM because it supports a masking layer for handling missing data and has a dedicated “memory cell” that retains information over long periods of time.

2. Materials and Methods

2.1. Related work

Deep learning methods have been increasingly applied to EHR-based predictive models because of their ability to learn complex patterns from medical data. Several studies are particularly relevant to our work. Using ICU patient records, Lipton et al. [27] applied long short-term memory (LSTM) models to predict clinical diagnosis patterns from multivariate time series. Choi et al. [26] also used LSTM to predict diagnoses and medication orders at the next visit, as well as the time to the next visit, using discrete medical codes extracted from longitudinal patient visit records. In another study, Choi et al. [29] used recurrent neural network models for early detection of heart failure onset. Ge et al. [36] adopted the deep learning architecture proposed by Choi et al. [37] to predict post-stroke pneumonia within 7 and 14 days of hospital stay, using data collected during hospitalization. Using both LSTM and gated recurrent units (GRU), Koshimizu et al. [38] predicted blood pressure variability over 4 weeks from time-series data.

Most of these studies used a regular time-series approach and handled missing data with imputation. For example, Lipton et al. [27] imputed missing values with forward- and backward-filling or with a clinically normal value defined by clinical experts. In the Koshimizu et al. study [38], missing values were interpolated from values on previous dates or from median values in the context data. The Choi et al. study [29] handled irregularity differently: visits were temporally ordered, and an auxiliary duration feature was added to indicate the time gap between events. None of these studies fully leveraged publicly available medical terminologies to standardize the feature engineering procedure for generalizability across electronic medical records. In fact, Choi et al. [29] noted that their model performance would benefit from incorporating well-established medical terminologies and medical ontologies. Our study improves upon these previous studies by using well-established medical terminologies and medical ontologies. In addition, we address missing values with a modified temporal matrix representation approach [39,40] and a masking layer in the neural network. We chose a recurrent neural network (RNN) architecture for our study because recurrent layers natively support masking layers, on which our handling of missing data relies. We elaborate on this point in Section 2.5.

Among RNN architectures, LSTM and GRU have been shown to outperform the standard RNN, although it is unclear which of the two is superior [41]. In fact, LSTM and GRU achieved nearly identical performance in the Koshimizu et al. study [38]. We adopted LSTM over GRU because its dedicated “memory cell” and additional gate give it an advantage in modeling long-distance relations, although it comes with a more complex network structure and a higher computing cost than GRU [41].

2.2. Data Sources

The data source for this study was the GE Centricity Electronic Medical Record research database, a de-identified research database composed of data submitted by consortium members in the United States. The database represents a variety of practice types in ambulatory care settings, ranging from solo practitioners to community clinics, academic medical centers, and large integrated delivery networks.

As of 2012, the database contained longitudinal ambulatory electronic health data for more than 17 million unique patients from 45 states, with a mean follow-up duration of slightly over 2 years [42]. The database contains de-identified patient demographics, vital signs, ICD-9-CM diagnosis and procedure codes, prescriptions, medication list, problem list, laboratory tests, and chief complaints.

2.3. Cohort Identification and Sample Size

This study included patients who met the following inclusion criteria:

- Had an initial diagnosis of essential hypertension (ICD-9-CM 401.xx) between 01/01/2001 and 12/31/2010;

- Were prescribed at least one anti-hypertensive agent on the date of diagnosis;

- Were at least 18 years old at the time of diagnosis;

- Had ≥ 12 months of pre-diagnosis history and ≥ 24 months of post-diagnosis follow-up. The 12-month pre-diagnosis period was used to identify and exclude patients with non-essential hypertension (ICD-9-CM 402.xx-405.xx) and to establish the baseline record; the 24-month post-diagnosis period ensured that all patients had sufficient follow-up medical records;

- Had race/ethnicity information reported at least once in the data set.
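The criteria above amount to a per-patient filter. A minimal Python sketch, assuming a hypothetical per-patient summary record (the `Patient` fields are our own illustrative names, not the GE Centricity schema; the study itself used PostgreSQL for data processing):

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass
class Patient:
    # Hypothetical per-patient summary; field names are illustrative only.
    patient_id: str
    first_htn_dx_date: date          # first essential hypertension diagnosis (ICD-9-CM 401.xx)
    age_at_dx: int
    antihtn_rx_on_dx_date: bool      # >= 1 anti-hypertensive agent prescribed on the diagnosis date
    months_before_dx: int            # length of record history before diagnosis
    months_after_dx: int             # length of follow-up after diagnosis
    nonessential_htn_pre_dx: bool    # any ICD-9-CM 402.xx-405.xx code in the pre-diagnosis window
    race_ethnicity: Optional[str]    # None if never reported


def meets_inclusion(p: Patient) -> bool:
    """Return True if the patient satisfies every inclusion criterion."""
    return (
        date(2001, 1, 1) <= p.first_htn_dx_date <= date(2010, 12, 31)
        and p.antihtn_rx_on_dx_date
        and p.age_at_dx >= 18
        and p.months_before_dx >= 12
        and p.months_after_dx >= 24
        and not p.nonessential_htn_pre_dx
        and p.race_ethnicity is not None
    )


def select_cohort(patients: List[Patient]) -> List[Patient]:
    """Keep only the patients who meet all inclusion criteria."""
    return [p for p in patients if meets_inclusion(p)]
```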

A total of 245,499 patients met the inclusion criteria. Samples were split by patient into training, validation, and test sets in a 6:2:2 ratio. The patients in each set were independently and randomly drawn from individual years in the corresponding proportions (See Supplementary material Tables S1 and S2).
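One plausible reading of the year-by-year 6:2:2 split is a stratified shuffle within each year, applied to patient IDs so that all of a patient's records land in a single set. The sketch below is a hypothetical illustration (the function name and seed are ours), not the authors' code:

```python
import random
from collections import defaultdict
from typing import Dict, List, Tuple


def split_by_year(patient_years: Dict[str, int],
                  ratios: Tuple[float, float, float] = (0.6, 0.2, 0.2),
                  seed: int = 42) -> Tuple[List[str], List[str], List[str]]:
    """Split patient IDs into train/validation/test sets (6:2:2 by default).

    Patients are shuffled and split separately within each year, so every set
    keeps the same per-year proportions. Splitting by patient rather than by
    monthly record keeps all of one patient's records in a single set.
    """
    rng = random.Random(seed)
    by_year: Dict[int, List[str]] = defaultdict(list)
    for pid, year in patient_years.items():
        by_year[year].append(pid)

    train: List[str] = []
    val: List[str] = []
    test: List[str] = []
    for year in sorted(by_year):
        ids = sorted(by_year[year])    # sort first so the shuffle is reproducible
        rng.shuffle(ids)
        n_train = round(len(ids) * ratios[0])
        n_val = round(len(ids) * ratios[1])
        train += ids[:n_train]
        val += ids[n_train:n_train + n_val]
        test += ids[n_train + n_val:]  # remainder goes to the test set
    return train, val, test
```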

2.4. Data Processing

Features were extracted and constructed from demographics, vital signs, lifestyle, ICD-9-CM, laboratory values, medications, and chief complaints (See Supplementary material Fig. S1).

2.4.1. Demographics, lifestyle, and vital signs

Features in this category include race, gender, age, geographic region (West, Midwest, South, and Northeast), smoking status, physical activity level, systolic blood pressure (SBP), diastolic blood pressure (DBP), height, weight, and body mass index. Continuous features were scaled to the range 0 to 1 using min-max scaling.
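Min-max scaling maps each continuous feature value x to (x − min) / (max − min). A minimal sketch; fitting the minimum and maximum on the training set only is our assumption (a common practice to avoid leaking test-set information), since the text does not say which data were used:

```python
from typing import List, Sequence, Tuple


def fit_min_max(train_values: Sequence[float]) -> Tuple[float, float]:
    """Learn the minimum and maximum of one continuous feature."""
    return min(train_values), max(train_values)


def min_max_scale(values: Sequence[float], lo: float, hi: float) -> List[float]:
    """Map values linearly so that lo -> 0.0 and hi -> 1.0."""
    span = hi - lo
    if span == 0:
        return [0.0 for _ in values]   # constant feature: nothing to scale
    return [(v - lo) / span for v in values]


lo, hi = fit_min_max([100.0, 140.0, 180.0])     # e.g. training-set SBP values in mmHg
print(min_max_scale([100.0, 140.0, 180.0], lo, hi))  # [0.0, 0.5, 1.0]
```

scikit-learn's `MinMaxScaler` (in `sklearn.preprocessing`) applies the same transform. Values outside the fitted range map outside [0, 1] unless they are clipped.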

2.4.2. Laboratory values

The clinical guidelines and the literature have identified laboratory test results that are associated with hypertension treatment and BP outcomes [2], including LDL, HDL, HbA1c, triglycerides, blood glucose, blood urea nitrogen (BUN), carbon dioxide, serum creatinine, chloride, potassium, sodium, thyroid stimulating hormone (TSH), urine dipstick test (bilirubin & ketones), red blood cell, white blood cell (leukocyte), hematocrit (HCT), mean corpuscular volume (MCV), blood hemoglobin, and platelets.

We categorized BUN, CO2, serum chloride, serum potassium, serum sodium, blood hemoglobin, leukocytes, and TSH into three categories: low, normal, or high. All other laboratory values were categorized into two groups: normal or abnormal. In addition, we added corresponding features to indicate whether each of these lab tests was performed at the time of the visit.
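The three-level and two-level lab encodings, together with the "test performed" indicator, can be sketched as follows. The reference ranges are hypothetical placeholders for illustration, not the cut-offs used in the study, and encoding a missing result as all-zero indicators is our design choice:

```python
from typing import Dict, Optional, Tuple

# Hypothetical reference ranges (low, high) for illustration only; real cut-offs
# depend on the laboratory, units, and patient characteristics.
REFERENCE_RANGES: Dict[str, Tuple[float, float]] = {
    "potassium": (3.5, 5.0),   # mmol/L, coded low/normal/high
    "sodium": (135.0, 145.0),  # mmol/L, coded low/normal/high
    "hba1c": (4.0, 5.6),       # %, coded normal/abnormal
}
THREE_LEVEL = {"potassium", "sodium"}


def encode_lab(name: str, value: Optional[float]) -> Dict[str, int]:
    """Encode one lab result as indicator features plus a 'performed' flag.

    A missing result (value is None) sets '<name>_performed' to 0 and leaves
    all category indicators at 0.
    """
    lo, hi = REFERENCE_RANGES[name]
    flags = {f"{name}_performed": int(value is not None)}
    if name in THREE_LEVEL:
        for level in ("low", "normal", "high"):
            flags[f"{name}_{level}"] = 0
        if value is not None:
            level = "low" if value < lo else "high" if value > hi else "normal"
            flags[f"{name}_{level}"] = 1
    else:
        # Two-group labs use a single normal (0) / abnormal (1) indicator.
        flags[f"{name}_abnormal"] = int(value is not None and not (lo <= value <= hi))
    return flags
```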

2.4.3. ICD-9-CM codes

Unlike other studies that used ICD-9-CM diagnosis codes directly [26,27], we mapped ICD-9-CM diagnosis codes to the multi-level Clinical Classifications Software (CCS) indexes [43] and constructed 135 features at the second level (See Supplementary material Table S3).

2.4.4. Other (non-anti-hypertensive) medications

Medical terminologies, including NDF-RT/MED-RT, RxNorm, and UMLS, were used for medication mapping. Leveraging the linkages among these terminologies, we aggregated medications other than anti-hypertensive agents into the top level of the VA national drug classes (defined in NDF-RT/MED-RT) (See Supplementary material Table S4).

2.4.5. Anti-hypertensive medications

Using RxNorm and UMLS, we mapped anti-hypertensive medications into 10 drug classes: ACEI, ARB, CCB, beta blockers, HCTZ, alpha blockers, loop diuretics, potassium-sparing diuretics, centrally acting alpha-agonists, and vasodilators. Some of these classes contain more than a dozen agents (See Supplementary material Table S5, Monotherapy panel).

When a fixed-dose combination therapy (i.e., a medicine that combines two or more active ingredients in a single dosage form) was prescribed, we assigned it to two or more drug classes based on its ingredients. We also captured dosage information to indicate whether each prescribed agent was at its peak dose.
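A minimal sketch of splitting a fixed-dose combination into its ingredient classes and flagging peak doses. The ingredient-to-class table and peak-dose values below are hypothetical examples for illustration (the study derived class membership from RxNorm and UMLS), not clinical reference values:

```python
from typing import Dict, List, Tuple

# Hypothetical lookup tables for illustration only (not clinical reference values).
INGREDIENT_CLASS: Dict[str, str] = {
    "lisinopril": "ACEI",
    "losartan": "ARB",
    "amlodipine": "CCB",
    "hydrochlorothiazide": "HCTZ",
}
PEAK_DAILY_DOSE_MG: Dict[str, float] = {
    "lisinopril": 40.0,
    "losartan": 100.0,
    "amlodipine": 10.0,
    "hydrochlorothiazide": 50.0,
}


def classify_prescription(ingredients: List[Tuple[str, float]]) -> Dict[str, bool]:
    """Map a prescription to {drug class: at_peak_dose}.

    `ingredients` lists (ingredient, daily dose in mg). A fixed-dose
    combination simply has several entries, so it contributes one class per
    ingredient; a class is flagged as peak-dose if any of its ingredients is.
    """
    classes: Dict[str, bool] = {}
    for name, dose_mg in ingredients:
        drug_class = INGREDIENT_CLASS[name]
        at_peak = dose_mg >= PEAK_DAILY_DOSE_MG[name]
        classes[drug_class] = classes.get(drug_class, False) or at_peak
    return classes


# A losartan/hydrochlorothiazide 100/25 mg combination maps to two classes.
print(classify_prescription([("losartan", 100.0), ("hydrochlorothiazide", 25.0)]))
```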

2.4.6. Major adverse events

Following the SPRINT trial protocol [44], we captured five major adverse events that were associated with hypertension treatment, namely hypotension, syncope, electrolyte abnormalities, acute kidney injury, and injurious falls. ICD-9-CM codes, lab test values, and the coded text from chief complaints were used to identify these adverse events.

2.4.7. Outcomes of endpoint

The outcomes of this study were whether patients achieved the SBP goal (< 140 mmHg), the DBP goal (< 90 mmHg), or both SBP and DBP goals (< 140/90 mmHg), the targets for primary prevention of CVD in adults [2], after receiving anti-hypertensive treatment. We predicted the probability of goal achievement under each potential treatment regimen to identify personalized optimal hypertension treatment pathways.
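The three binary outcome labels follow directly from the thresholds. A minimal sketch, assuming a single representative SBP/DBP reading per month (the text does not specify how multiple readings within a month were combined):

```python
from typing import Dict


def bp_goal_labels(sbp_mmhg: float, dbp_mmhg: float) -> Dict[str, int]:
    """Binary outcomes: SBP < 140, DBP < 90, and both (< 140/90 mmHg)."""
    sbp_goal = int(sbp_mmhg < 140)
    dbp_goal = int(dbp_mmhg < 90)
    return {"sbp_goal": sbp_goal, "dbp_goal": dbp_goal, "both_goals": sbp_goal & dbp_goal}


print(bp_goal_labels(138, 92))  # {'sbp_goal': 1, 'dbp_goal': 0, 'both_goals': 0}
```

The strict inequalities mean a reading of exactly 140 mmHg systolic does not count as reaching the SBP goal.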

2.4.8. Monthly record generation

American Heart Association (AHA) guidelines recommend that patients on BP-lowering medication should be reassessed in one month and followed up for reassessment in 3 to 6 months [2]. However, this does not always happen. In order to address the irregularity of patient visits in data preparation, we adopted the following discretization approach using a sliding window:

Features were constructed at encounter level at the time of the office visits.

The 12-month pre-diagnosis period was collapsed into a single baseline record.

The post-diagnosis period was discretized into up to 24 monthly records so that each record covered the visit(s) in the period of a 30-day window.

The outcome was whether a certain BP goal was achieved in the month after receiving certain treatment.

After this step, every patient had a baseline record and up to 24 monthly follow-up records. Note that patients may receive multiple prescriptions within a 30-day window, where a later prescription could be a therapy change or an add-on therapy. For simplicity, in such cases we aggregated the prescriptions into one treatment regimen. Likewise, medical conditions were aggregated when multiple encounters occurred within the same monthly window.
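The windowing step for prescriptions can be sketched as below. Anchoring the 30-day windows at the diagnosis date and taking the union of drug classes within a window are our assumptions; the function name and record layout are hypothetical:

```python
from collections import defaultdict
from datetime import date
from typing import Dict, Iterable, Set, Tuple


def monthly_regimens(dx_date: date,
                     visits: Iterable[Tuple[date, Set[str]]],
                     n_months: int = 24) -> Dict[int, Set[str]]:
    """Aggregate post-diagnosis prescriptions into 30-day windows.

    Window k (1..n_months) covers days 30*(k-1) through 30*k - 1 after the
    diagnosis date. All drug classes prescribed within one window are merged
    into a single regimen. Pre-diagnosis visits are skipped here because they
    belong to the single baseline record.
    """
    windows: Dict[int, Set[str]] = defaultdict(set)
    for visit_date, drug_classes in visits:
        day = (visit_date - dx_date).days
        if day < 0:
            continue
        window = day // 30 + 1
        if window <= n_months:
            windows[window] |= set(drug_classes)
    return dict(windows)
```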

2.4.9. Model inputs assembly

The past 12 months of medical history were used to determine optimal treatment pathways. We used 3-dimensional tensors (patients, time-steps, features) for predictions. Suppose $t$ is the time of the current visit; then each tensor has a total of 13 time-steps, $t-12, t-11, \ldots, t$.

The time-step data (visits by month) were constructed as follows:

$x_t$ is the feature vector at time $t$, when a patient received treatment, and $y_t$ is the BP outcome at time $t$. The elements $x_{t,1}, x_{t,2}, \ldots, x_{t,n}$ of vector $x_t$ are the features at time-step $t$, where $n$ is the total number of features.
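Assembling one input sequence for month t then amounts to collecting months t−12 through t and filling gaps with the padding value. A minimal sketch, assuming monthly feature rows are stored in a dict keyed by month index (with 0 for the baseline record); the names are hypothetical:

```python
from typing import Dict, List

PAD = -1.0  # padding value for months without data


def assemble_sequence(monthly: Dict[int, List[float]], t: int,
                      n_features: int, lookback: int = 12) -> List[List[float]]:
    """Return the (lookback + 1, n_features) input for a prediction at month t.

    Rows correspond to months t-lookback, ..., t in order; any month missing
    from `monthly` is filled with PAD so every sequence has the same length.
    """
    sequence = []
    for month in range(t - lookback, t + 1):
        row = monthly.get(month)
        sequence.append(list(row) if row is not None else [PAD] * n_features)
    return sequence
```

Stacking the sequences of many patients gives the 3-dimensional (patients, 13, features) input described above.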

2.5. Recurrent Neural Network (RNN) Development

RNNs maintain a hidden state that carries information across time-steps, which allows them to process sequence data and model temporal dependencies over relatively long time ranges. RNNs have outperformed human experts on certain tasks and are regarded as the state-of-the-art approach to temporal sequence analysis [45,46]. RNNs, especially long short-term memory (LSTM) [47] and gated recurrent units [48], have become popular for time-series analysis and temporal reasoning [35,49]. LSTM has been highly successful in many applications, such as unconstrained handwriting recognition [50], speech recognition [51,52], handwriting generation [51], machine translation, image captioning [53-55], and parsing [56]. Recently, RNNs have also been explored in clinical domains [26-30,35].

In this study, we used two RNN algorithms, LSTM and Bi-LSTM, to predict BP goal achievement under candidate hypertension treatment regimens and thereby identify optimal regimens.

2.5.1. LSTM algorithm

LSTM is an RNN architecture explicitly designed to learn long-term dependencies. LSTM can retain information over long periods and regulate how much information is kept in the network, making it suitable for developing CDS systems in the medical domain, including hypertension management. Fully connected LSTM handles temporal correlation in time-series data effectively through an input gate $i_t$, an output gate $o_t$, a forget gate $f_t$, and a cell state $c_t$. The cell state functions as a conveyor belt that carries information through the entire sequence with only gate-controlled changes. LSTM adds information to, or removes it from, the cell state under the regulation of the three gates.
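For reference, the standard LSTM update with a forget gate is given below. Here $\sigma$ is the logistic sigmoid, $\odot$ is element-wise multiplication, $h_t$ is the hidden state, and $W_*$, $U_*$, $b_*$ are learned weights and biases; this notation is ours and may differ in detail from the authors' formulation:

```latex
\begin{aligned}
f_t &= \sigma\left(W_f x_t + U_f h_{t-1} + b_f\right) \\
i_t &= \sigma\left(W_i x_t + U_i h_{t-1} + b_i\right) \\
o_t &= \sigma\left(W_o x_t + U_o h_{t-1} + b_o\right) \\
\tilde{c}_t &= \tanh\left(W_c x_t + U_c h_{t-1} + b_c\right) \\
c_t &= f_t \odot c_{t-1} + i_t \odot \tilde{c}_t \\
h_t &= o_t \odot \tanh\left(c_t\right)
\end{aligned}
```

The forget gate $f_t$ scales down the previous cell state, the input gate $i_t$ controls how much of the candidate $\tilde{c}_t$ is written, and the output gate $o_t$ controls how much of the cell state is exposed as the hidden state $h_t$.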

2.5.2. Bi-LSTM algorithm

Bi-LSTM is a variant of LSTM that combines two independent LSTM networks. One network receives the input sequence in normal time order, while the other receives it in reverse time order. The outputs of the two networks are concatenated at each time-step. Bi-LSTM thus obtains both backward and forward information about the sequence at every time-step, which can lead to better performance than a unidirectional LSTM in certain situations [57-59].
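To make the standard LSTM gate equations and the bidirectional combination concrete, here is a minimal, dependency-free Python sketch of a single-layer LSTM forward pass and a Bi-LSTM built from two such layers. Weights are random and untrained, and the class and function names are hypothetical; it only illustrates the data flow, not the study's trained Keras models:

```python
import math
import random
from typing import Dict, List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


class LSTMLayer:
    """Single-layer LSTM with random (untrained) weights, for illustration."""

    def __init__(self, n_in: int, n_hidden: int, seed: int = 0):
        rng = random.Random(seed)

        def matrix(rows: int, cols: int) -> Matrix:
            return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]

        # One (W, U, b) triple per gate: forget, input, output, and candidate cell.
        self.params: Dict[str, Tuple[Matrix, Matrix, Vector]] = {
            g: (matrix(n_hidden, n_in), matrix(n_hidden, n_hidden), [0.0] * n_hidden)
            for g in ("f", "i", "o", "c")
        }
        self.n_hidden = n_hidden

    def _preactivation(self, gate: str, x: Vector, h: Vector) -> Vector:
        """Compute W x + U h + b for one gate."""
        W, U, b = self.params[gate]
        return [sum(w * xi for w, xi in zip(W[r], x))
                + sum(u * hi for u, hi in zip(U[r], h)) + b[r]
                for r in range(self.n_hidden)]

    def step(self, x: Vector, h: Vector, c: Vector) -> Tuple[Vector, Vector]:
        f = [sigmoid(z) for z in self._preactivation("f", x, h)]        # forget gate
        i = [sigmoid(z) for z in self._preactivation("i", x, h)]        # input gate
        o = [sigmoid(z) for z in self._preactivation("o", x, h)]        # output gate
        c_hat = [math.tanh(z) for z in self._preactivation("c", x, h)]  # candidate cell state
        c_new = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c, i, c_hat)]
        h_new = [ok * math.tanh(ck) for ok, ck in zip(o, c_new)]
        return h_new, c_new

    def run(self, xs: List[Vector]) -> List[Vector]:
        """Return the hidden state after each time-step."""
        h, c = [0.0] * self.n_hidden, [0.0] * self.n_hidden
        outputs = []
        for x in xs:
            h, c = self.step(x, h, c)
            outputs.append(h)
        return outputs


def bi_lstm(forward: LSTMLayer, backward: LSTMLayer, xs: List[Vector]) -> List[Vector]:
    """Concatenate forward outputs with time-aligned outputs of the reversed pass."""
    fwd = forward.run(xs)
    bwd = backward.run(xs[::-1])[::-1]   # run on reversed input, then restore time order
    return [hf + hb for hf, hb in zip(fwd, bwd)]
```

Each output row has twice the hidden size, since it concatenates the forward and backward states for that time-step.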

2.5.3. RNN architecture

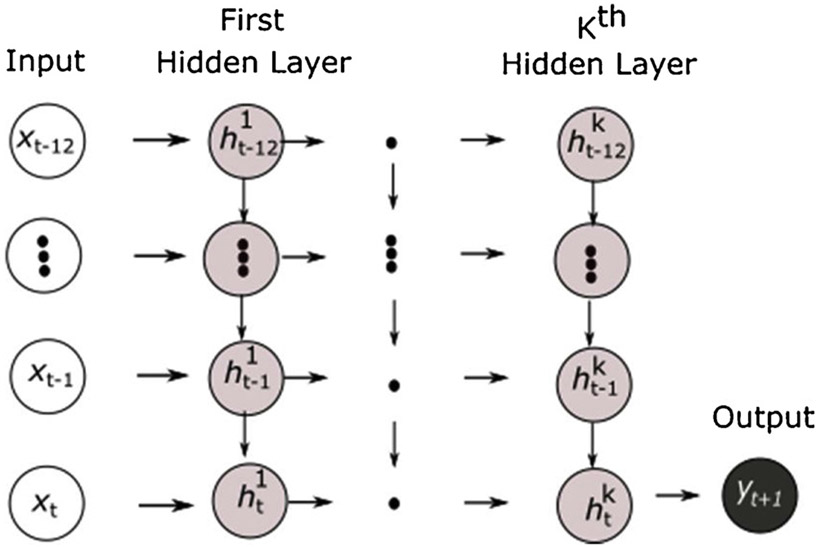

As described earlier, the past 12 monthly medical records were used for model training, which resulted in a total of 13 time-steps for patients who had recorded BP outcomes after receiving treatment. As illustrated in Fig. 1, the RNNs were used to model a fixed-length sequence of 13 inputs with a single target at the very end. The networks had recurrent connections between hidden units, read an entire sequence from time $t-12$ to time $t$, and then produced a single output at time $t$.

Fig. 1.

Recurrent neural network with a single output.

$y_t$ is the BP outcome (i.e., SBP goal achievement, DBP goal achievement, or both SBP and DBP goal achievement) at time $t$, and $x_t$ is a feature vector that represents all features at time $t$. $h$ denotes the hidden layers with recurrent hidden units. $h^{(1)}_{t-12}$ is the hidden unit in the first hidden layer at time-step $t-12$, and $h^{(1)}_{t}$ is the hidden unit in the first hidden layer at time $t$. Similarly, $h^{(k)}_{t-12}$ is the hidden unit of the $k$-th hidden layer at time $t-12$, and $h^{(k)}_{t}$ is the hidden unit in the $k$-th hidden layer at time $t$.

2.5.4. Padding and Masking

RNNs can process variable-length sequences, but the inputs within a training batch must have a uniform length. To make all sequences the same length, we padded all features with “−1.0” (an arbitrary value with no medical meaning) at time-steps where data were not available. We then added a masking layer so that the LSTM would skip those padded time-steps during modeling.
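The masking rule can be sketched in plain Python. Keras' `Masking` layer skips a time-step when every feature at that step equals `mask_value`; the sketch below mimics that rule, and a masked step simply carries the previous recurrent state forward. The function names are hypothetical:

```python
from typing import Callable, List, Sequence, TypeVar

PAD = -1.0
State = TypeVar("State")


def compute_mask(sequence: Sequence[Sequence[float]], mask_value: float = PAD) -> List[bool]:
    """True for time-steps that carry data. A step is masked only when every
    feature equals mask_value, the same rule used by Keras' Masking layer."""
    return [not all(v == mask_value for v in row) for row in sequence]


def masked_run(step: Callable[[Sequence[float], State], State], init_state: State,
               sequence: Sequence[Sequence[float]], mask: Sequence[bool]) -> State:
    """Apply a recurrent update step(x, state) -> state, skipping masked steps.

    At a masked step the previous state is carried forward unchanged, so the
    padded months have no influence on the final state.
    """
    state = init_state
    for x, keep in zip(sequence, mask):
        if keep:
            state = step(x, state)
    return state
```

Because min-max-scaled features lie in [0, 1] and indicator features are 0 or 1, a row in which every feature equals −1.0 is very unlikely to arise from real data, which is what makes −1.0 a safe padding value.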

2.5.5. Model Training

Our samples were split by patient into training, validation, and test sets in a 6:2:2 ratio. We trained the models on the training set and tuned them on the validation set; the training and validation results are reported in Table 1. After model tuning, we evaluated the final models on the held-out test set to obtain an unbiased estimate of performance (See Table 2).

Table 1.

Training and Validation Accuracies.

| Outcome | LSTM (Training) | LSTM (Validation) | Bi-LSTM (Training) | Bi-LSTM (Validation) |
|---|---|---|---|---|
| Achieving DBP goal | 0.937 | 0.937 | 0.937 | 0.937 |
| Achieving SBP goal | 0.903 | 0.903 | 0.903 | 0.903 |
| Achieving both SBP and DBP goals | 0.895 | 0.897 | 0.895 | 0.897 |

Table 2.

LSTM and Bi-LSTM Performance Metrics on Test set.

| Model / Outcome | Precision | Recall | F1-score | Cohen’s Kappa | ROC AUC |
|---|---|---|---|---|---|
| LSTM | | | | | |
| Achieving DBP goal | 0.963 | 0.960 | 0.961 | 0.788 | 0.896 |
| Achieving SBP goal | 0.931 | 0.925 | 0.928 | 0.783 | 0.893 |
| Achieving both SBP & DBP goals | 0.919 | 0.913 | 0.916 | 0.781 | 0.891 |
| Bi-LSTM | | | | | |
| Achieving DBP goal | 0.963 | 0.960 | 0.961 | 0.787 | 0.896 |
| Achieving SBP goal | 0.931 | 0.925 | 0.928 | 0.783 | 0.893 |
| Achieving both SBP & DBP goals | 0.912 | 0.915 | 0.913 | 0.773 | 0.886 |
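The test-set metrics in Table 2 can be computed from a binary confusion matrix. A minimal sketch for the positive class; the text does not state whether the reported values are positive-class or class-averaged, and scikit-learn's `precision_score`, `recall_score`, `f1_score`, and `cohen_kappa_score` provide equivalent functions with averaging options:

```python
from typing import Dict, Sequence


def f1_from(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall) if precision + recall else 0.0


def binary_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Precision, recall, F1, and Cohen's kappa for the positive class (label 1)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    n = len(pairs)

    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0

    # Cohen's kappa: observed agreement corrected for agreement expected by chance.
    p_observed = (tp + tn) / n
    p_expected = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / (n * n)
    kappa = (p_observed - p_expected) / (1 - p_expected) if p_expected != 1 else 0.0
    return {"precision": precision, "recall": recall,
            "f1": f1_from(precision, recall), "kappa": kappa}


# Consistency check against the LSTM "Achieving DBP goal" row of Table 2.
print(round(f1_from(0.963, 0.960), 3))  # 0.961
```

The harmonic mean of the reported precision and recall reproduces each F1-score in Table 2 to three decimals, which is a quick way to check the table for transcription errors.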

2.5.6. Hyperparameter Tuning

Grid search [60] was used to find the best hyperparameters, including optimizer, activation functions, hidden layers, number of neurons in hidden layers, number of epochs, L1/L2 regularization, dropout rates, batch size, learning rate, and momentum.

Seven different optimizers were explored, including RMSprop, Adam, AdaDelta, SGD, AdaGrad, Adamax, and Nadam. AdaDelta was chosen [61] because it performed slightly better than its counterparts. The hyperbolic tangent function (tanh) was used as the activation function for the hidden units, and a sigmoid was used for the output units. Since AdaDelta (an extension of AdaGrad) automatically adapts learning rates based on a moving window of gradient updates, no learning rate tuning was needed. Four hidden layers with a mini-batch size of 64 were selected because they were sufficient to achieve the desired accuracies. To avoid overfitting, an L2 kernel regularizer of 0.01 was applied in the models. In addition, a dropout layer with a drop rate of 0.2 was added after each hidden layer (See Supplementary material Figs. S2 and S3).
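Exhaustive grid search can be sketched with `itertools.product`. The grid below is hypothetical: apart from the seven optimizers and the selected values noted in comments (four hidden layers, batch size 64, L2 of 0.01, dropout of 0.2), the candidate values are not reported in the text, and `evaluate` stands in for training a model and returning its validation accuracy:

```python
import itertools
from typing import Any, Callable, Dict, List, Optional, Tuple

# Hypothetical search space. Only the optimizer list and the values marked
# "selected" come from the text; the other candidates are placeholders.
GRID: Dict[str, List[Any]] = {
    "optimizer": ["RMSprop", "Adam", "AdaDelta", "SGD", "AdaGrad", "Adamax", "Nadam"],
    "hidden_layers": [2, 3, 4],      # selected: 4
    "batch_size": [32, 64, 128],     # selected: 64
    "l2": [0.001, 0.01],             # selected: 0.01
    "dropout": [0.1, 0.2, 0.3],      # selected: 0.2
}


def grid_search(evaluate: Callable[[Dict[str, Any]], float],
                grid: Dict[str, List[Any]]) -> Tuple[Optional[Dict[str, Any]], float]:
    """Evaluate every combination in `grid`; return the best one and its score.

    `evaluate` should train a model with the given settings and return its
    validation accuracy (a stand-in in this sketch).
    """
    keys = list(grid)
    best_params: Optional[Dict[str, Any]] = None
    best_score = float("-inf")
    for values in itertools.product(*(grid[k] for k in keys)):
        params = dict(zip(keys, values))
        score = evaluate(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score
```

The number of trials is the product of the grid sizes (7 × 3 × 3 × 2 × 3 = 378 here), which is why exhaustive grid search becomes expensive quickly as hyperparameters are added.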

2.6. Treatment Pathways

2.6.1. Treatment pathway

Because patients may receive different prescriptions at each visit and their visit frequencies vary, treatment regimens can be complicated. To identify the optimal treatment pathways, we made the following assumptions, consistent with AHA guidelines, to simplify treatment regimens (See Supplementary Material “Treatment Scenarios” for details). We assume a patient can have up to three office visits, with about a 1-month gap in between for therapy change or dosage titration. In addition, at each visit, a patient may receive up to three anti-hypertensive agents/classes from the 10 drug classes. Under these assumptions about visits and drug combinations, the following scenarios of prescriptions and visits are possible:

For a one-visit scenario, a patient could receive one of 175 possible regimens;

For a two-visit scenario, a patient could receive one of 810 possible regimens; and

For a three-visit scenario, a patient could receive one of 720 regimens.

Thus, the three visit scenarios yield 1,705 treatment regimens in total. Further, because the clinical guidelines recommend against prescribing ACEI and ARB concurrently, we excluded the 50 combinations in which ACEI and ARB appeared concurrently, leaving a final pre-determined set of 1,655 treatment regimens.
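The one-visit count can be checked by enumeration: choosing 1, 2, or 3 of the 10 drug classes gives C(10,1) + C(10,2) + C(10,3) = 10 + 45 + 120 = 175 regimens, 9 of which contain both ACEI and ARB. The two- and three-visit counts depend on the scenario rules in the Supplementary Material, so this sketch takes them from the text rather than recomputing them; the short class labels are our own:

```python
from itertools import combinations
from math import comb
from typing import FrozenSet, List

# The 10 anti-hypertensive drug classes (short labels are ours).
CLASSES = ["ACEI", "ARB", "CCB", "Beta blocker", "HCTZ", "Alpha blocker",
           "Loop diuretic", "K-sparing diuretic", "Central alpha-agonist", "Vasodilator"]


def one_visit_regimens(classes: List[str], max_classes: int = 3) -> List[FrozenSet[str]]:
    """All regimens of 1..max_classes distinct drug classes prescribed at one visit."""
    return [frozenset(c) for k in range(1, max_classes + 1) for c in combinations(classes, k)]


one_visit = one_visit_regimens(CLASSES)
with_acei_and_arb = [r for r in one_visit if {"ACEI", "ARB"} <= r]

# Counts reported in the text; the two- and three-visit totals are not recomputed here.
TOTAL = 175 + 810 + 720      # one-, two-, and three-visit scenarios
FINAL = TOTAL - 50           # after excluding concurrent ACEI + ARB combinations

print(len(one_visit), comb(10, 1) + comb(10, 2) + comb(10, 3))  # 175 175
print(len(with_acei_and_arb))                                    # 9
print(TOTAL, FINAL)                                              # 1705 1655
```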

2.6.2. Tensor Creation

The LSTM models take as input the 12 consecutive monthly records immediately preceding the visit, but it is highly unlikely that a patient has data for all 12 months. We therefore padded the vectors with “−1.0” for months in which data were not available. We then attached each of the 1,655 pre-determined treatment regimens to the patient’s records and predicted the probability of achieving the BP goals under each regimen to identify the optimal treatment pathways.
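Scoring the pre-determined regimens for one patient can be sketched as building one 13-step sequence per regimen and ranking the regimens by predicted probability. In this hypothetical sketch, `predict` stands in for a trained model, and representing the current month as the patient's current features followed by the regimen encoding is our assumption:

```python
from typing import Callable, Dict, List, Tuple

Sequence2D = List[List[float]]


def rank_regimens(history: Sequence2D,
                  current_features: List[float],
                  regimens: Dict[str, List[float]],
                  predict: Callable[[Sequence2D], float],
                  top_k: int = 5) -> List[Tuple[str, float]]:
    """Score each candidate regimen for one patient and return the top_k.

    history: the 12 padded monthly rows preceding the visit.
    current_features: the patient's non-treatment features at the visit month.
    regimens: regimen name -> treatment encoding for the visit month.
    predict: model returning P(BP goal achieved) for one 13-step sequence.
    """
    scored = []
    for name, treatment in regimens.items():
        sequence = history + [current_features + treatment]   # 12 past months + current month
        scored.append((name, predict(sequence)))
    scored.sort(key=lambda item: item[1], reverse=True)       # highest probability first
    return scored[:top_k]
```

With Keras, one would typically stack all 1,655 sequences into a single (1655, 13, n_features) array and call `model.predict` once rather than scoring them in a Python loop.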

The analyses were conducted on a high-performance PC with a 6-core (12-thread) Intel i7-8750H CPU, 32 GB RAM, and an NVIDIA GeForce GTX 1060 GPU (6 GB memory). TensorFlow (v1.13.1) and Keras (v2.2.4) on Python (v3.7.3) were used for deep learning model training. PostgreSQL server (v10.5) and scikit-learn (v0.20.3) were used for data processing, and the latter was also used to compute model performance metrics.

3. Results

3.1. Training and Validation Accuracies

The LSTM and Bi-LSTM models reached peak performance at different epochs. The performance of the LSTM models peaked at the 15th epoch, after which they started to overfit; the Bi-LSTM models peaked at the 11th epoch. Table 1 shows the training and validation accuracies of the LSTM and Bi-LSTM models for achieving the DBP goal, the SBP goal, and both SBP and DBP goals. Overall, the models for DBP goal achievement have higher accuracies than those for the SBP goal alone and for both goals. In addition, Bi-LSTM and LSTM perform equally well in terms of training and validation accuracies for all three outcomes.

3.2. Performance metrics

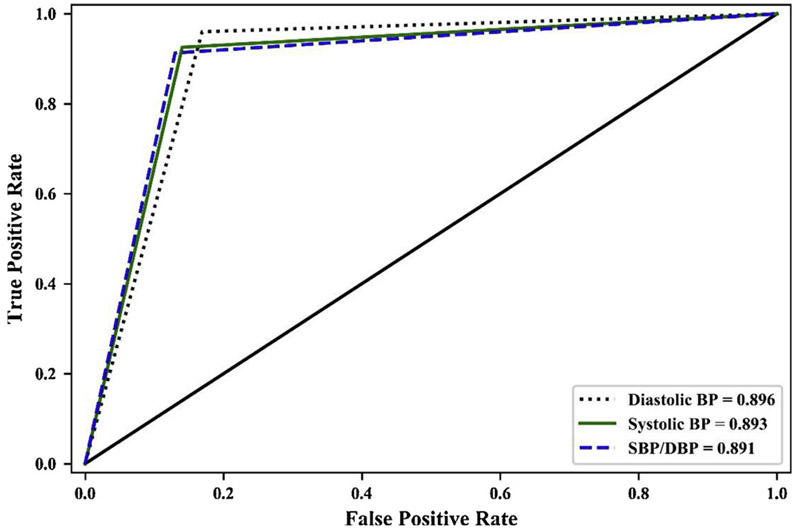

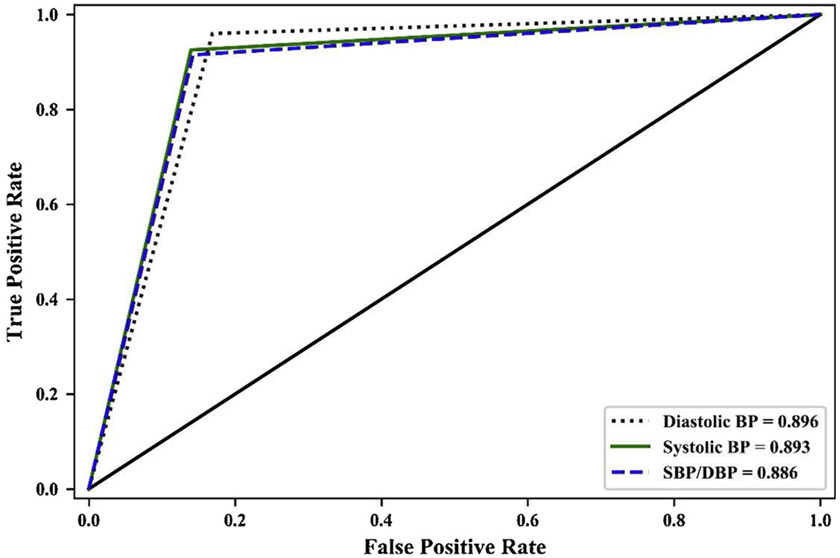

As shown in Table 2, all LSTM and Bi-LSTM models achieved high precision, recall, and F1-scores. All areas under the ROC curve (AUC) of the LSTM and Bi-LSTM models were close to 0.90, which is considered excellent discrimination, close to outstanding [62]. The Cohen’s Kappa coefficients were close to 0.8, indicating substantial agreement between the observed and predicted goal achievement outcomes in all LSTM and Bi-LSTM models [63]. ROC curves of the LSTM and Bi-LSTM models for achieving the three BP goals are shown in Figs. 2 and 3. The ROC curves of LSTM and Bi-LSTM were similar, apart from trivial differences for achieving both SBP and DBP goals.

Fig. 2.

ROC Curves of Achieving BP Goals (LSTM).

Fig. 3.

ROC Curves of Achieving BP Goals (Bi-LSTM).

4. Discussion

This study used LSTM and Bi-LSTM models to determine optimal BP treatment regimens, with the goal of establishing the feasibility of developing predictive models that personalize optimal hypertension treatment pathways using historical patient medical records. Both models were derived from clinical EHR data of a large population and built to identify the hypertension treatment pathways that achieve the best BP outcomes. We used LSTM and Bi-LSTM because they can take patients’ temporal clinical features into account. The clinical history included diagnosis codes, laboratory test results, medication prescriptions, and coded text of chief complaints. CCS indexes, which cover both ICD-9-CM and ICD-10-CM, were used to aggregate diagnoses. Because medical conditions are often highly correlated, they pose a challenge for traditional statistical modeling approaches, which often require clinical expertise to address. One advantage of LSTM and Bi-LSTM is that they can model inter-variable and temporal interactions without extensive feature engineering.

The LSTM and Bi-LSTM predictive models achieved equally high accuracies for all BP outcomes, suggesting their potential for determining optimal treatment regimens and informing risk-adapted, personalized strategies for hypertension treatment. We favored LSTM over Bi-LSTM because LSTM models are more parsimonious and less computationally expensive. Our LSTM models can predict the probability of achieving the desired BP outcomes if a patient is prescribed each of the 1,655 possible treatment regimens. Based on these predictions, the LSTM models can identify optimal treatment pathways for the patient, which can assist physicians’ clinical decision making, mitigate major adverse events, increase early prevention and detection of hypertension-associated risks, empower patient awareness, and encourage lifestyle modification.

This study has several limitations. First, the study population is akin to the subjects in an intention-to-treat (ITT) analysis. No information was available to address noncompliance [64], protocol deviations, withdrawal (in our case, patients who stopped taking the prescribed medications), or anything else that happened after prescription. However, because of this similarity to ITT analysis [65], we believe that this study’s estimates of treatment effect are conservative. In addition, because our focus was a pragmatic evaluation of the benefit of drug regimen changes toward different treatment pathways, noncompliance was not a major issue in this study.

Second, data completeness and data quality are limiting factors, even though the data used for model training were carefully prepared, cleaned, and integrated by the commercial data provider. In this study, we did not attempt to impute any missing data. Instead, we applied a set of strict inclusion requirements to reduce incompleteness, and used padding and masking to let our LSTM models skip time-steps where data were not available. Consequently, the exclusion of incomplete records may compromise model prediction performance. In addition, the external validity and generalizability of the GE Centricity EHR data may be limited because the database is not necessarily representative of the US population.

Third, within a certain hypertensive drug class, there are still many agents to choose from (See Supplementary material Table S5), which may have slightly different effects on individual patients. The differences in the effects of agents within one class, however, were not modeled in this research.

Fourth, medication-induced adverse events are associated with compliance and therapy changes. In this study, we captured only five major adverse events. Other, less severe adverse events not included in the models might bias the predictions.

Finally, although deep neural networks are very successful at prediction, they act as a black box [66] and do not provide information about how a particular decision is made. To address this problem, researchers have explored different interpretability methods in a variety of domains. Attention mechanisms [67], layer-wise relevance propagation [68,69], and local interpretable model-agnostic explanations [70] are among the most studied methods. We plan to apply these approaches to increase interpretability in future studies.

5. Conclusion

To the best of our knowledge, this research is the first comprehensive exploration of using deep learning techniques and EHR data to build temporal models for predicting optimal hypertension treatment pathways. Compared with other deep learning approaches, LSTM has the advantages of handling missing data via a masking layer, learning long-term dependencies, and modeling inter-variable and temporal interactions. The LSTM models achieved high accuracies. The predictive models are flexible with respect to the assumed number of drug classes and visit scenarios, so health care providers can personalize the optimal treatment pathways for patients. Along with clinical guidelines and guideline-based CDS systems, the LSTM predictive models could be used as a powerful decision-support tool to form risk-adapted, personalized strategies for hypertension treatment plans, especially for difficult-to-treat patients.

Supplementary Material

Funding

This research did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors. Declarations of interest for XY, QZ, DB, MC, and BB: none.

Footnotes

Declaration of Competing Interest

JCF is partially funded by NIH award UL1TR001067 from the National Center for Advancing Translational Sciences of the National Institutes of Health. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health.

Appendix A. Supplementary data

Supplementary material related to this article can be found, in the online version, at https://doi.org/10.1016/j.ijmedinf.2020.104122.

References

- [1].Benjamin EJ, Muntner P, Alonso A, Bittencourt MS, Callaway CW, Carson AP, Chamberlain AM, Chang AR, Cheng S, Das SR, Delling FN, Djousse L, Elkind MSV, Ferguson JF, Fornage M, Jordan LC, Khan SS, Kissela BM, Knutson KL, Kwan TW, Lackland DT, Lewis TT, Lichtman JH, Longenecker CT, Loop MS, Lutsey PL, Martin SS, Matsushita K, Moran AE, Mussolino ME, O’Flaherty M, Pandey A, Perak AM, Rosamond WD, Roth GA, Sampson UKA, Satou GM, Schroeder EB, Shah SH, Spartano NL, Stokes A, Tirschwell DL, Tsao CW, Turakhia MP, VanWagner LB, Wilkins JT, Wong SS, Virani SS; American Heart Association Council on Epidemiology and Prevention Statistics Committee and Stroke Statistics Subcommittee, Heart Disease and Stroke Statistics-2019 Update: A Report From the American Heart Association, Circulation 139 (2019) e56–e528.

- [2].Whelton PK, Carey RM, Aronow WS, Casey DE Jr., Collins KJ, Dennison Himmelfarb C, DePalma SM, Gidding S, Jamerson KA, Jones DW, MacLaughlin EJ, Muntner P, Ovbiagele B, Smith SC Jr., Spencer CC, Stafford RS, Taler SJ, Thomas RJ, Williams KA Sr., Williamson JD, Wright JT Jr., 2017 Guideline for the Prevention, Detection, Evaluation, and Management of High Blood Pressure in Adults: A Report of the American College of Cardiology/American Heart Association Task Force on Clinical Practice Guidelines, J Am Coll Cardiol 71 (2018) e127–e248.

- [3].Bloch MJ, Worldwide prevalence of hypertension exceeds 1.3 billion, J Am Soc Hypertens 10 (2016) 753–754.

- [4].Fryar CD, Hirsch R, Eberhardt MS, Yoon SS, Wright JD, Hypertension, high serum total cholesterol, and diabetes: racial and ethnic prevalence differences in U.S. adults, 1999-2006, NCHS Data Brief (2010) 1–8.

- [5].Lopez AD, Disease Control Priorities Project, Global burden of disease and risk factors, Oxford University Press; World Bank, New York, NY; Washington, DC, 2006.

- [6].Danaei G, Rimm EB, Oza S, Kulkarni SC, Murray CJ, Ezzati M, The promise of prevention: the effects of four preventable risk factors on national life expectancy and life expectancy disparities by race and county in the United States, PLoS Med 7 (2010) e1000248.

- [7].Franco OH, Peeters A, Bonneux L, de Laet C, Blood pressure in adulthood and life expectancy with cardiovascular disease in men and women: life course analysis, Hypertension 46 (2005) 280–286.

- [8].Lopes S, Mesquita-Bastos J, Alves AJ, Ribeiro F, Exercise as a tool for hypertension and resistant hypertension management: current insights, Integr Blood Press Control 11 (2018) 65–71.

- [9].Egan BM, Li J, Hutchison FN, Ferdinand KC, Hypertension in the United States, 1999 to 2012: progress toward Healthy People 2020 goals, Circulation 130 (2014) 1692–1699.

- [10].Persell SD, Prevalence of resistant hypertension in the United States, 2003-2008, Hypertension 57 (2011) 1076–1080.

- [11].Chobanian AV, Bakris GL, Black HR, Cushman WC, Green LA, Izzo JL Jr., Jones DW, Materson BJ, Oparil S, Wright JT Jr., Roccella EJ; National Heart, Lung, and Blood Institute Joint National Committee on Prevention, Detection, Evaluation, and Treatment of High Blood Pressure; National High Blood Pressure Education Program Coordinating Committee, The Seventh Report of the Joint National Committee on Prevention, Detection, Evaluation, and Treatment of High Blood Pressure: the JNC 7 report, JAMA 289 (2003) 2560–2572.

- [12].James PA, Oparil S, Carter BL, Cushman WC, Dennison-Himmelfarb C, Handler J, Lackland DT, LeFevre ML, MacKenzie TD, Ogedegbe O, Smith SC Jr., Svetkey LP, Taler SJ, Townsend RR, Wright JT Jr., Narva AS, Ortiz E, 2014 evidence-based guideline for the management of high blood pressure in adults: report from the panel members appointed to the Eighth Joint National Committee (JNC 8), JAMA 311 (2014) 507–520.

- [13].Reboussin DM, Allen NB, Griswold ME, Guallar E, Hong Y, Lackland DT, Miller ER 3rd, Polonsky T, Thompson-Paul AM, Vupputuri S, Systematic Review for the 2017 ACC/AHA/AAPA/ABC/ACPM/AGS/APhA/ASH/ASPC/NMA/PCNA Guideline for the Prevention, Detection, Evaluation, and Management of High Blood Pressure in Adults: A Report of the American College of Cardiology/American Heart Association Task Force on Clinical Practice Guidelines, J Am Coll Cardiol 71 (2018) 2176–2198.

- [14].Nguyen Q, Dominguez J, Nguyen L, Gullapalli N, Hypertension management: an update, Am Health Drug Benefits 3 (2010) 47–56.

- [15].Montgomery AA, Fahey T, Peters TJ, MacIntosh C, Sharp DJ, Evaluation of computer based clinical decision support system and risk chart for management of hypertension in primary care: randomised controlled trial, BMJ 320 (2000) 686–690.

- [16].Garg AX, Adhikari NK, McDonald H, Rosas-Arellano MP, Devereaux PJ, Beyene J, Sam J, Haynes RB, Effects of computerized clinical decision support systems on practitioner performance and patient outcomes: a systematic review, JAMA 293 (2005) 1223–1238.

- [17].Shojania KG, Jennings A, Mayhew A, Ramsay CR, Eccles MP, Grimshaw J, The effects of on-screen, point of care computer reminders on processes and outcomes of care, Cochrane Database Syst Rev (2009) CD001096.

- [18].Persson M, Mjorndal T, Carlberg B, Bohlin J, Lindholm LH, Evaluation of a computer-based decision support system for treatment of hypertension with drugs: retrospective, nonintervention testing of cost and guideline adherence, J Intern Med 247 (2000) 87–93.

- [19].Roumie CL, Elasy TA, Greevy R, Griffin MR, Liu X, Stone WJ, Wallston KA, Dittus RS, Alvarez V, Cobb J, Speroff T, Improving blood pressure control through provider education, provider alerts, and patient education: a cluster randomized trial, Ann Intern Med 145 (2006) 165–175.

- [20].Hicks LS, Sequist TD, Ayanian JZ, Shaykevich S, Fairchild DG, Orav EJ, Bates DW, Impact of computerized decision support on blood pressure management and control: a randomized controlled trial, J Gen Intern Med 23 (2008) 429–441.

- [21].Rinfret S, Lussier MT, Peirce A, Duhamel F, Cossette S, Lalonde L, Tremblay C, Guertin MC, LeLorier J, Turgeon J, Hamet P, LOYAL Study Investigators, The impact of a multidisciplinary information technology-supported program on blood pressure control in primary care, Circ Cardiovasc Qual Outcomes 2 (2009) 170–177.

- [22].Anchala R, Kaptoge S, Pant H, Di Angelantonio E, Franco OH, Prabhakaran D, Evaluation of effectiveness and cost-effectiveness of a clinical decision support system in managing hypertension in resource constrained primary health care settings: results from a cluster randomized trial, J Am Heart Assoc 4 (2015) e001213.

- [23].Bosworth HB, Olsen MK, Dudley T, Orr M, Goldstein MK, Datta SK, McCant E, Gentry P, Simel DL, Oddone EZ, Patient education and provider decision support to control blood pressure in primary care: a cluster randomized trial, Am Heart J 157 (2009) 450–456.

- [24].Shelley D, Tseng TY, Matthews AG, Wu D, Ferrari P, Cohen A, Millery M, Ogedegbe O, Farrell L, Kopal H, Technology-driven intervention to improve hypertension outcomes in community health centers, Am J Manag Care 17 (2011) SP103–SP110.

- [25].Samal L, Linder JA, Lipsitz SR, Hicks LS, Electronic health records, clinical decision support, and blood pressure control, Am J Manag Care 17 (2011) 626–632.

- [26].Choi E, Bahadori MT, Schuetz A, Stewart WF, Sun J, Doctor AI: Predicting Clinical Events via Recurrent Neural Networks, JMLR Workshop Conf Proc 56 (2016) 301–318.

- [27].Lipton ZC, Kale DC, Elkan C, Wetzel R, Learning to Diagnose with LSTM Recurrent Neural Networks (2015).

- [28].Xu Y, Biswal S, Deshpande SR, Maher KO, Sun J, RAIM: Recurrent Attentive and Intensive Model of Multimodal Patient Monitoring Data (2018).

- [29].Choi E, Schuetz A, Stewart WF, Sun J, Using recurrent neural network models for early detection of heart failure onset, J Am Med Inform Assoc 24 (2017) 361–370.

- [30].LaFreniere D, Zulkernine F, Barber D, Martin K, Using machine learning to predict hypertension from a clinical dataset, 2016 IEEE Symposium Series on Computational Intelligence (SSCI) (2016).

- [31].Elshawi R, Al-Mallah MH, Sakr S, On the interpretability of machine learning-based model for predicting hypertension, BMC Med Inform Decis Mak 19 (2019) 146.

- [32].Sakr S, Elshawi R, Ahmed A, Qureshi WT, Brawner C, Keteyian S, Blaha MJ, Al-Mallah MH, Using machine learning on cardiorespiratory fitness data for predicting hypertension: The Henry Ford Exercise Testing (FIT) Project, PLoS One 13 (2018) e0195344.

- [33].Zhou SH, Nie SF, Wang CJ, Wei S, Xu YH, Li XH, Song EM, The application of artificial neural networks to predict individual risk of essential hypertension, Zhonghua Liu Xing Bing Xue Za Zhi 29 (2008) 614–617.

- [34].Krittanawong C, Bomback AS, Baber U, Bangalore S, Messerli FH, Wilson Tang WH, Future Direction for Using Artificial Intelligence to Predict and Manage Hypertension, Curr Hypertens Rep 20 (2018) 75.

- [35].Li X, Wu S, Wang L, Blood Pressure Prediction via Recurrent Models with Contextual Layer, Proceedings of the 26th International Conference on World Wide Web, International World Wide Web Conferences Steering Committee, Perth, Australia, 2017, pp. 685–693.

- [36].Ge Y, Wang Q, Wang L, Wu H, Peng C, Wang J, Xu Y, Xiong G, Zhang Y, Yi Y, Predicting post-stroke pneumonia using deep neural network approaches, Int J Med Inform 132 (2019) 103986.

- [37].Choi E, Bahadori MT, Kulas JA, Schuetz A, Stewart WF, Sun J, RETAIN: An Interpretable Predictive Model for Healthcare using Reverse Time Attention Mechanism, arXiv e-prints (2016).

- [38].Koshimizu H, Kojima R, Kario K, Okuno Y, Prediction of blood pressure variability using deep neural networks, Int J Med Inform 136 (2020) 104067.

- [39].Wang F, Lee N, Hu J, Sun J, Ebadollahi S, Towards heterogeneous temporal clinical event pattern discovery: A convolutional approach, 18th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (2012).

- [40].Cheng Y, Wang F, Zhang P, Hu J, Risk Prediction with Electronic Health Records: A Deep Learning Approach, 2016 SIAM International Conference on Data Mining (2016).

- [41].Chung J, Gulcehre C, Cho K, Bengio Y, Empirical Evaluation of Gated Recurrent Neural Networks on Sequence Modeling, arXiv e-prints (2014).

- [42].Unni S, White K, Goodman M, Ye X, Mavros P, Bash LD, Brixner D, Hypertension control and antihypertensive therapy in patients with chronic kidney disease, Am J Hypertens 28 (2015) 814–822.

- [43].Elixhauser A, Steiner C, Palmer L, Clinical Classifications Software (CCS), U.S. Agency for Healthcare Research and Quality, 2015.

- [44].SPRINT Research Group, Wright JT Jr., Williamson JD, Whelton PK, Snyder JK, Sink KM, Rocco MV, Reboussin DM, Rahman M, Oparil S, Lewis CE, Kimmel PL, Johnson KC, Goff DC Jr., Fine LJ, Cutler JA, Cushman WC, Cheung AK, Ambrosius WT, A Randomized Trial of Intensive versus Standard Blood-Pressure Control, N Engl J Med 373 (2015) 2103–2116.

- [45].Young T, Hazarika D, Poria S, Cambria E, Recent Trends in Deep Learning Based Natural Language Processing, arXiv e-prints (2017).

- [46].Bychkov D, Linder N, Turkki R, Nordling S, Kovanen PE, Verrill C, Walliander M, Lundin M, Haglund C, Lundin J, Deep learning based tissue analysis predicts outcome in colorectal cancer, Sci Rep 8 (2018) 3395.

- [47].Hochreiter S, Schmidhuber J, Long short-term memory, Neural Comput 9 (1997) 1735–1780.

- [48].Cho K, van Merriënboer B, Gulcehre C, Bahdanau D, Bougares F, Schwenk H, Bengio Y, Learning Phrase Representations using RNN Encoder-Decoder for Statistical Machine Translation, arXiv (2014).

- [49].Sutskever I, Vinyals O, Le QV, Sequence to Sequence Learning with Neural Networks, arXiv e-prints (2014).

- [50].Graves A, Schmidhuber J, Offline handwriting recognition with multi-dimensional recurrent neural networks, Proceedings of the 21st International Conference on Neural Information Processing Systems, Curran Associates Inc., Vancouver, British Columbia, Canada, 2008, pp. 545–552.

- [51].Graves A, Generating Sequences With Recurrent Neural Networks, arXiv e-prints (2013).

- [52].Graves A, Jaitly N, Towards end-to-end speech recognition with recurrent neural networks, Proceedings of the 31st International Conference on International Conference on Machine Learning - Volume 32, JMLR.org, Beijing, China, 2014, pp. II-1764–II-1772.

- [53].Kiros R, Salakhutdinov R, Zemel RS, Unifying Visual-Semantic Embeddings with Multimodal Neural Language Models, arXiv e-prints (2014).

- [54].Xu K, Ba J, Kiros R, Cho K, Courville A, Salakhutdinov R, Zemel R, Bengio Y, Show, Attend and Tell: Neural Image Caption Generation with Visual Attention, arXiv e-prints (2015).

- [55].Vinyals O, Toshev A, Bengio S, Erhan D, Show and Tell: A Neural Image Caption Generator, arXiv e-prints (2014).

- [56].Vinyals O, Kaiser L, Koo T, Petrov S, Sutskever I, Hinton G, Grammar as a Foreign Language, arXiv e-prints (2014).

- [57].Graves A, Schmidhuber J, Framewise phoneme classification with bidirectional LSTM and other neural network architectures, Neural Netw 18 (2005) 602–610.

- [58].Graves A, Jaitly N, Mohamed A, Hybrid speech recognition with Deep Bidirectional LSTM, 2013 IEEE Workshop on Automatic Speech Recognition and Understanding (2013) 273–278.

- [59].Cui Z, Ke R, Wang Y, Deep Bidirectional and Unidirectional LSTM Recurrent Neural Network for Network-wide Traffic Speed Prediction, arXiv e-prints (2018).

- [60].Qiujun H, Jingli M, Yong L, An improved grid search algorithm of SVR parameters optimization, 2012 IEEE 14th International Conference on Communication Technology (2012) 1022–1026.

- [61].Zeiler MD, ADADELTA: An Adaptive Learning Rate Method, arXiv e-prints (2012).

- [62].Mandrekar JN, Receiver operating characteristic curve in diagnostic test assessment, J Thorac Oncol 5 (2010) 1315–1316.

- [63].McHugh ML, Interrater reliability: the kappa statistic, Biochem Med (Zagreb) 22 (2012) 276–282.

- [64].Burnier M, Egan BM, Adherence in Hypertension, Circ Res 124 (2019) 1124–1140.

- [65].Gupta SK, Intention-to-treat concept: A review, Perspect Clin Res 2 (2011) 109–112.

- [66].Zhang Z, Beck MW, Winkler DA, Huang B, Sibanda W, Goyal H, written on behalf of AME Big-Data Clinical Trial Collaborative Group, Opening the black box of neural networks: methods for interpreting neural network models in clinical applications, Ann Transl Med 6 (2018) 216.

- [67].Bahdanau D, Cho K, Bengio Y, Neural Machine Translation by Jointly Learning to Align and Translate, arXiv e-prints (2014).

- [68].Bach S, Binder A, Montavon G, Klauschen F, Muller KR, Samek W, On Pixel-Wise Explanations for Non-Linear Classifier Decisions by Layer-Wise Relevance Propagation, PLoS One 10 (2015) e0130140.

- [69].Binder A, Montavon G, Bach S, Müller K-R, Samek W, Layer-wise Relevance Propagation for Neural Networks with Local Renormalization Layers, arXiv e-prints (2016).

- [70].Ribeiro MT, Singh S, Guestrin C, "Why Should I Trust You?": Explaining the Predictions of Any Classifier, arXiv e-prints (2016).
