Abstract

Behavioral neuroscience faces two conflicting demands: long-duration recordings from large neural populations and unimpeded animal behavior. To meet this challenge, we developed ONIX, an open-source data acquisition system with high data throughput (2 GB/s) and low closed-loop latencies (<1 ms) that uses a novel 0.3 mm thin tether to minimize behavioral impact. Head position and rotation are tracked in 3D and used to drive active commutation without torque measurements. ONIX can acquire from combinations of passive electrodes, Neuropixels probes, head-mounted microscopes, cameras, 3D-trackers, and other data sources. We used ONIX to perform uninterrupted, long (~7 hours) neural recordings in mice as they traversed complex 3-dimensional terrain. ONIX allowed exploration with mobility similar to that of non-implanted animals, in contrast to conventional tethered systems, which restricted movement. By combining long recordings with full mobility, our technology will enable new progress on questions that require high-quality neural recordings during ethologically grounded behaviors.

There is a growing recognition that, to maximize their explanatory power, neural recordings must be conducted during normal animal behavior. From the recent discovery that motor actions can dominate the activity of brain regions that were believed to be predominantly sensory1,2, to findings of different learning strategies between head-fixed and freely moving subjects3, mounting evidence indicates that free behavior transforms the function of the nervous system. These observations are leading towards a consensus that learning3,4, social interactions5,6, sensory processing7,8, and cognition9,10 are best addressed in animals that are engaged in naturalistic behavior.

In recent years, remarkable progress has been made on methods for tracking and quantifying animal behavior11–42. Parallel advances in recording technologies have enabled electrophysiology43,44, optical imaging45–47, and actuation of neural ensembles48 in mobile animals. Still, applying these technologies, which are often bulky and require tethers, to study naturalistic behavior remains a major challenge. In larger animals like rats49, primates19, or even bats50, wireless systems are available. However, in mice, which are the predominant animal model system in neuroscience, recordings are limited by the weight of recording devices. For example, a 6 g wireless logger can achieve only a 70-minute recording51, and the weight of its batteries limits movement beyond slow locomotion, requiring that experiments be designed around the head torque imposed by the recording device51. Therefore, current technologies for mice and similarly sized species allow neither unencumbered motion nor recordings during behavior that unfolds over long periods, limiting our ability to capture neural activity during ethologically relevant behaviors.

To address this need, we developed an open-source multi-instrument hardware standard and API (Open Neuro Interface, ‘ONI’, Suppl. Figs 1,2). We then used ONI to implement a recording system called ‘ONIX’ – a modular and extendable data acquisition and behavior tracking system that greatly reduces the conflict between large-scale neural recordings and their impact on mouse behavior. The system uses a micro-coaxial tether (~0.31 mm diameter, 0.37 g/m) that is far thinner and lighter than widely used options (e.g. 3 mm diameter, 6.35 g/m, Fig. 1c) and exerts minimal force on the animal’s head (Fig. 1d). The tether simultaneously supplies power and transmits data (150 MB/s, equivalent to 2500 channels of spike-band electrophysiology data) to and from sensors and actuators. ONIX includes modular, miniaturized headstages (Figs. 1 & 4, Suppl. Fig. 3) for passive electrical recording probes, tetrode drives44 (via Intan RHD and RHS chips), and Neuropixels43 (1.0, 2.0 probes, and BGA-packaged chips). In addition to neural activity, these headstages record 6-degrees-of-freedom head pose at ~30 Hz via on-board sensors with sub-degree and sub-millimeter resolution (90% of jitter < 0.02 mm at 2 m distance, Suppl. Fig. 8) using a consumer-grade 3D-tracking system (HTC Vive). Real-time tracking permits the system to measure the rotation of an animal and automatically untwist the tether via a small motor (Fig. 1b, Suppl. Fig. 5) without requiring torque measurements. This approach removes the behavioral impact and time limits typically associated with tethered recordings and provides experimenters with the ability to monitor neural activity and behavior for arbitrarily long sessions in complex environments (Figs. 2 & 3).
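The channel-count equivalence quoted for the tether can be checked with a short back-of-the-envelope calculation. The sketch below assumes a 30 kHz sampling rate and 16-bit (2-byte) samples per channel, and treats 1 MB as 10^6 bytes; none of these values is stated in the text, protocol overhead is ignored, and the function name is hypothetical.

```python
def spike_band_channels(link_bytes_per_s, sample_rate_hz=30_000, bytes_per_sample=2):
    """Number of channels a link could carry, ignoring any protocol overhead.

    sample_rate_hz and bytes_per_sample are assumed values for spike-band
    electrophysiology, not figures taken from the text.
    """
    bytes_per_channel_per_s = sample_rate_hz * bytes_per_sample
    return link_bytes_per_s // bytes_per_channel_per_s


# 150 MB/s tether bandwidth, with 1 MB taken as 10**6 bytes (assumption).
tether_bytes_per_s = 150 * 10**6
print(spike_band_channels(tether_bytes_per_s))  # 2500
```

Under these assumptions the result matches the 2500-channel figure quoted above; a different sample width or sampling rate would change it proportionally.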

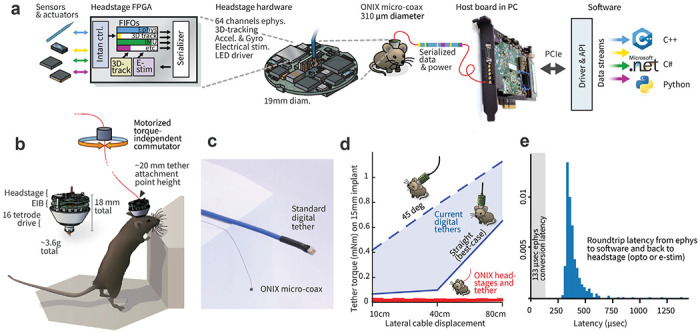

Figure 1: ONIX, a unified open-source platform for unencumbered freely moving recordings.

a, Simplified block diagram of the ONI (open neuro interface), illustrated via the tetrode headstage: multiple devices all communicate with the host PC over a single micro-coax cable via a serialization protocol, making it possible to design small multi-function headstages. b, The integrated inertial measurement unit and 3D-tracking redundantly measure animal rotation, which drives the motorized commutator without the need to measure tether torque. Small drive implants44 enable low-profile implants (~20 mm total height). c, The ONIX micro-coax, a 0.31 mm thin tether of up to 12 m length, compared to standard 12-wire digital tethers. d, Torque exerted on an animal’s head by tethers. Current tethers allow full mobility only in small arenas and in situations when the tether does not pull on the implant, while the ONIX micro-coax applies negligible torque. e, Performance of ONIX: With the 64 channel headstage, a 99.9% worst case closed-loop latency, from neural voltage reading, to host PC, and back to the headstage (e.g. to trigger an LED) of <1 ms can be achieved on Windows 10 (see also Suppl. Figs 6 & 7).
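The rotation-driven commutation in panel b can be illustrated with a minimal sketch. It assumes the tracking system reports head yaw wrapped to a single turn, accumulates the unwrapped rotation, and asks the motor to follow once the tether twist exceeds a dead band. The class name, the dead-band value, and the follow-up rule are all hypothetical; this is not the ONIX commutator firmware or its API.

```python
import math


def yaw_step(prev_yaw, new_yaw):
    """Smallest signed yaw change (radians) between two wrapped samples."""
    delta = new_yaw - prev_yaw
    return (delta + math.pi) % (2 * math.pi) - math.pi


class CommutatorFollower:
    """Tracks accumulated head rotation and the rotation applied by the motor."""

    def __init__(self, deadband_turns=0.25):  # dead band is an assumed value
        self.deadband_turns = deadband_turns
        self.head_turns = 0.0    # unwrapped head rotation, in turns
        self.motor_turns = 0.0   # rotation already commanded to the motor
        self.prev_yaw = None

    def update(self, yaw_rad):
        """Consume one yaw sample; return the motor command in turns (0.0 if none)."""
        if self.prev_yaw is not None:
            self.head_turns += yaw_step(self.prev_yaw, yaw_rad) / (2 * math.pi)
        self.prev_yaw = yaw_rad
        twist = self.head_turns - self.motor_turns
        if abs(twist) < self.deadband_turns:
            return 0.0
        self.motor_turns += twist  # untwist the tether completely
        return twist


# Simulate an animal turning two full circles in 10-degree steps.
follower = CommutatorFollower()
for i in range(73):
    wrapped = (math.radians(10 * i) + math.pi) % (2 * math.pi) - math.pi
    follower.update(wrapped)
print(round(follower.head_turns, 6))  # 2.0
```

Because the command is derived from measured rotation alone, no torque sensor appears anywhere in the loop, which is the property the caption highlights.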

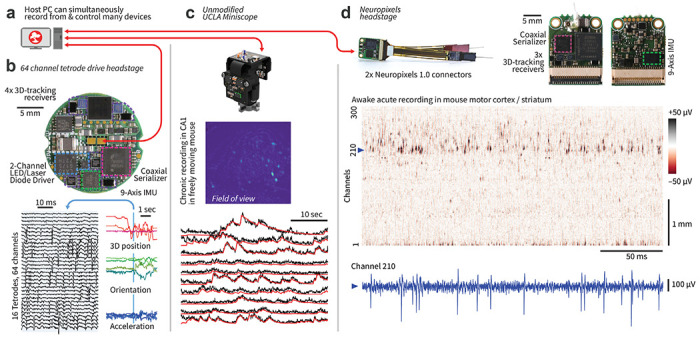

Figure 4: ONIX is compatible with existing and future recording technologies.

a, ONIX, together with Bonsai, can simultaneously record from and synchronize multiple data sources, such as tetrode headstages, Neuropixels headstages, and/or UCLA Miniscopes. b, Top: a 64 channel extracellular headstage, as used in Figs. 1–3, with 3D-tracking, electrical stimulator, dual-channel LED driver, and IMU (bottom side; not shown). Bottom: example neural recording and corresponding 3D pose traces collected from the headstage. c, ONIX is compatible with existing UCLA Miniscopes (versions 3 and 4)45,55. Middle: Maximum projection after background removal of an example recording in mouse CA1. Bottom: Background-corrected fluorescence traces (black) and CNMF output (via Minian57, red) of 10 example neurons. d, An ONIX headstage for use with 2 Neuropixels probes, and IMU to enable torque-free commutator use for long-term freely behaving recordings. A voltage heat map shows a segment from a head-fixed recording. A voltage time-series from the channel indicated by the dotted line is shown in blue.

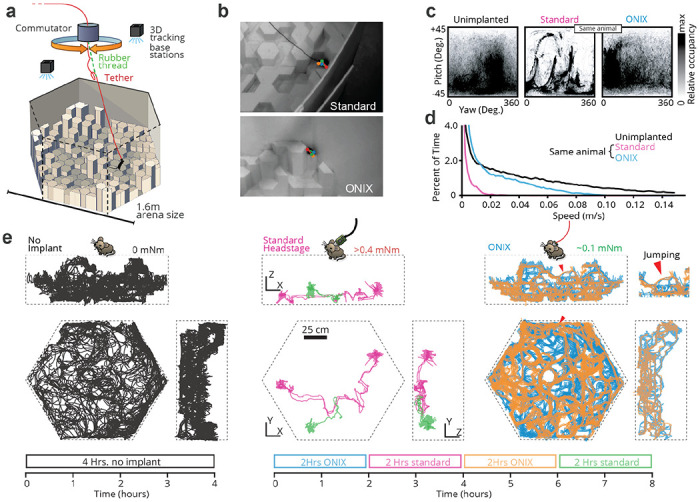

Figure 2: Unrestricted naturalistic locomotion behavior with ONIX.

a, Overview of experiment: Mice freely explored a 3D arena made of styrofoam pieces of varying heights. b, Unimplanted mice and mice with a standard tether (top) or ONIX micro-coax (bottom) were tracked in 3D using multicamera markerless pose estimation31. c, Head yaw and pitch occupancies over the course of a recording. Standard tethering significantly reduces freedom of head movement relative to unimplanted mice, whereas ONIX does not. d, Speed distributions over the course of a recording. Standard implants significantly reduce running speed, while ONIX results in only a modest speed reduction relative to unimplanted mice. e, 2D projection of mouse trajectories over the course of a recording session. ONIX retains the same spontaneous exploration behavior as unimplanted mice, while standard headstages and cables greatly reduce locomotion.

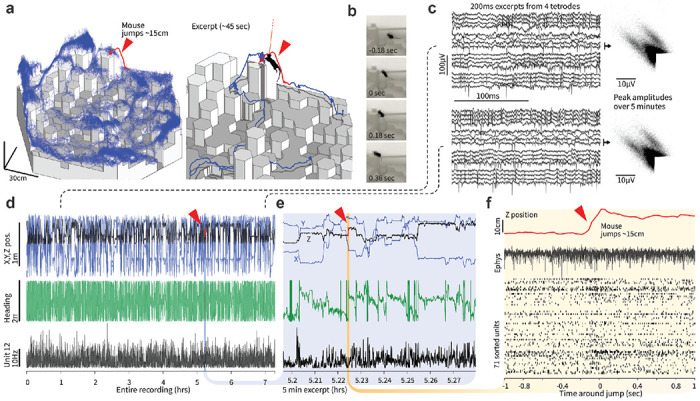

Figure 3: Stable long-term recordings during naturalistic locomotion.

a, Position of one 3D-tracking sensor on the headstage during a 7.3 hour-long ONIX recording during which the mouse was free to explore the 3D arena. Red trace and excerpt show one of multiple instances of the mouse spontaneously jumping from a lower to a higher tile. b, Video frames of the jump (the tether is too thin to be visible at this magnification), see supplementary video 1. c, Raw voltages and spike peak amplitudes from the beginning (left) and end (right) of the recording. d, 3D-position, heading, and smoothed firing rate of entire recording. e, Same data as in d, for excerpt around jump. f, Z-position, raw voltage trace example, and sorted spikes from 71 neurons during the jump.

ONIX is capable of sub-millisecond closed-loop neural stimulation on a standard (non real-time) operating system (Fig. 1e, Suppl. Figs. 4 & 6). This level of latency is otherwise only achievable on specialized operating systems52 or hardware53. Further, its hardware-agnostic, open-source C API allows scientists to more easily develop, share, and replicate algorithms for such experiments in commonly used programming languages such as C++, C#, Bonsai54, Python, etc., without being tied to specific hardware.
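A tail-latency figure such as a "99.9% worst case" can be computed from a set of measured round-trip times with a nearest-rank percentile. The sketch below is one plausible reading of that statistic, not the authors' analysis code; the latency values are synthetic and chosen only to show that a few slow outliers can exist while the 99.9th percentile stays below 1 ms.

```python
def percentile_nearest_rank(samples, numerator, denominator):
    """Nearest-rank percentile for the fraction numerator/denominator.

    Returns the smallest sample such that at least that fraction of all
    samples is less than or equal to it. Integer arithmetic avoids
    floating-point rounding when computing the rank.
    """
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    rank = (len(ordered) * numerator + denominator - 1) // denominator  # ceiling
    return ordered[max(rank, 1) - 1]


# Synthetic closed-loop latencies in milliseconds (illustrative only).
latencies_ms = [0.3] * 9985 + [0.8] * 10 + [2.5] * 5

p999 = percentile_nearest_rank(latencies_ms, 999, 1000)
print(p999, max(latencies_ms))  # 0.8 2.5
```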

To demonstrate ONIX’s ability to perform rich, uninterrupted studies of freely moving mice, we performed ~8-hour recordings while mice explored a 3D arena. The arena measured 1.5 x 1.5 x 0.5 m and was constructed from hexagonal blocks of styrofoam, cut to different heights, giving mice the opportunity to run, climb, and jump (Figs. 2,3, Supplementary video 1). We exposed naive animals implanted with micro-drives44 to this unfamiliar environment without behavioral shaping or human intervention. We compared the mouse behavior achievable with the ONIX system to that achievable with a typical, modern acquisition system (Fig. 2c–e), by attaching a standard tether (Intan “SPI Cable”, 1.8 mm diameter), counterweighted with an elastic band to eliminate tether weight, alongside the micro-tether. This allowed use of the ONIX headstage for position measurements and commutation/untwisting, effectively adding torque-free commutation to the SPI tether, while imposing the mechanical effect of the weight of a traditional tether on the mouse. The tethers were switched every 2 hours over an 8-hour recording session (Fig. 2e). Except for tether exchanges, no experimenter was present. Even with our zero-torque commutation, the additional forces imposed by the standard tether (head-torque >0.4 mNm, measured in a separate experiment, Fig. 1d) had a substantial deleterious effect on exploratory behavior and freedom of head movement (Fig. 2c–e, quantified via entropy of the spatial occupancy and head position distributions, median entropy 4.21 vs. 0.287 bits for spatial occupancy, and 0.75 vs. 0.50 bits for heading, P<0.0001, Wilcoxon rank sum test, see Methods). When only the micro-coax tether was used (head-torque ≈ 0.1 mNm), the animal resumed free exploration of the arena (Fig. 2e). Some digital tethers, for example the twisted pairs used with Neuropixels43, would fall in between the standard SPI cables used here and the ONIX micro-coax in terms of weight and flexibility, but they would lack zero-torque commutation, impacting behavior and limiting recording duration.
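The occupancy-entropy measure used above can be sketched as the Shannon entropy of a binned position histogram: an animal confined to one spot yields an entropy near zero, while one that visits many locations evenly yields a higher value. The bin size, the use of raw (unnormalized) entropy, and the function name are assumptions made for illustration; the binning and any normalization actually used are defined in the Methods, which are not reproduced here, so the absolute values will not match those reported.

```python
import math
from collections import Counter


def occupancy_entropy_bits(positions, bin_size):
    """Shannon entropy (bits) of a 2D occupancy histogram.

    positions: iterable of (x, y) coordinates in the same units as bin_size.
    """
    counts = Counter(
        (math.floor(x / bin_size), math.floor(y / bin_size)) for x, y in positions
    )
    total = sum(counts.values())
    if total == 0:
        raise ValueError("positions must not be empty")
    return -sum((c / total) * math.log2(c / total) for c in counts.values())


# An animal that stays in one bin vs. one that visits four bins equally often.
stationary = [(0.05, 0.05)] * 100
exploring = [(0.05, 0.05), (0.15, 0.05), (0.05, 0.15), (0.15, 0.15)] * 25

print(occupancy_entropy_bits(stationary, bin_size=0.1) == 0)  # True
print(occupancy_entropy_bits(exploring, bin_size=0.1))        # 2.0
```

The same function applies to heading if the angle is binned in one dimension instead of two.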

To compare the behavior achieved with ONIX to an implant-free condition, we used 5 synchronized cameras for marker-less 3D tracking31 of non-implanted mice (Fig. 2e, left). The degree of arena exploration and the head orientation distributions of ONIX vs. non-implanted mice were statistically indistinguishable, with 4 hours of data per condition yielding largely overlapping confidence intervals (one mouse per experiment, quantified via entropy of the occupancy distributions, 95% confidence intervals for spatial occupancy: [0.208,0.369] non-implanted vs. [0.224,0.383] ONIX, and confidence intervals for heading: [0.479, 0.536] non-implanted vs. [0.474, 0.533] ONIX, see Methods). The median and maximum running speeds of the implanted mice were reduced by a factor of ~2 compared to animals with no implant. However, the ONIX micro-coax provided a ~12x increase in median running speed and a ~2x increase in maximum speed compared to the standard tether (Fig. 2d).
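The phrase "largely overlapping" can be made concrete by computing how much of the narrower interval is shared between the two conditions. The sketch below uses the confidence intervals quoted above; the overlap-fraction measure and the function names are illustrative choices, not the statistic used in the Methods.

```python
def interval_overlap(a, b):
    """Length of the intersection of two closed intervals (0.0 if disjoint)."""
    low = max(a[0], b[0])
    high = min(a[1], b[1])
    return max(0.0, high - low)


def overlap_fraction(a, b):
    """Shared length as a fraction of the narrower of the two intervals."""
    narrower = min(a[1] - a[0], b[1] - b[0])
    return interval_overlap(a, b) / narrower


# 95% confidence intervals for entropy, as reported in the text.
spatial_non_implanted, spatial_onix = (0.208, 0.369), (0.224, 0.383)
heading_non_implanted, heading_onix = (0.479, 0.536), (0.474, 0.533)

print(f"{overlap_fraction(spatial_non_implanted, spatial_onix):.2f}")  # 0.91
print(f"{overlap_fraction(heading_non_implanted, heading_onix):.2f}")  # 0.95
```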

To demonstrate the utility of long recordings without behavioral disruption, we conducted a 7.3-hour recording with a tetrode drive implant44 in retrosplenial cortex in the 3D arena (Fig. 3). Mice spontaneously jumped to heights of >10 cm (Fig. 3a,b), allowing us to observe neural activity during jumps (Fig. 3f). This behavior was absent in mice with the heavier tether.

Finally, we demonstrate the ONI standard’s flexibility by using the same ONIX system to acquire from and control two additional widely-used, third-party devices: UCLA Miniscopes45,55 (Fig. 4c, Suppl. Fig. 5) and Neuropixels probes43 (Fig. 4d). These recordings can also be performed simultaneously if needed. By using the Bonsai software54 for data acquisition, we also demonstrate integration of synchronized multi-camera tracking (Fig. 2, Suppl. Fig. 5). Bonsai enables the integration of real-time processing tools such as animal tracking via real-time DLC56 or SLEAP24, enabling experiments that react to animal behavior with high precision.

For developers, the ONI hardware standard and API streamline the development of new probe and sensor technologies into headstages that integrate immediately with existing technologies, which lowers the barrier for individual labs to create custom instruments designed for specific experiments. Similarly, ONI simplifies the development of completely new data acquisition systems by providing a scalable, easy-to-use interface for communication between software and FPGA firmware, and ensures interoperability between these systems.

In sum, our system provides a probe-agnostic open-source interface for use in neuroscience. It allows, for the first time, long and high-bandwidth recordings in mice and similarly sized animals during naturalistic behaviors comparable to those of non-implanted animals. This ability will accelerate progress in many areas of research, such as motor learning58, sensory processing during natural behaviors8, social behaviors59, play9, and cognitive aspects of spatial behaviors60,3,61,62, by allowing unimpaired motor behavior, reducing animal fatigue over time, and enabling navigation in large environments.

Acknowledgements:

We thank the Open Ephys Production Site team for beta-testing, Emily J. Dennis and Antonin Blot for additional system testing, Joaquim Alves da Silva and Hanne Stensola for testing of the 3D-tracking system, Andrew Bahle for help with testing the Neuropixels headstage, and Albert Lee and Emily J. Dennis for comments on the manuscript. Funding Sources - Jonathan P. Newman: National Institute of General Medical Sciences T32GM007753 (E.H.S.T), the Center for Brains, Minds and Machines (CBMM) at MIT, funded by NSF STC award CCF-1231216, and NIH 1R44NS127725-01 to Open Ephys Inc. Jie Zhang: NIH 1R21EY028381. Takato Honda: Picower Fellowship, JPB Foundation and Picower Institute at MIT, Osamu Hayaishi Memorial Scholarship for Study Abroad, Uehara Memorial Foundation Overseas Fellowship, and Japan Society for the Promotion of Science (JSPS) Overseas Fellowship. Marie-Sophie H. van der Goes: Mathworks Graduate Fellowship. Mark T Harnett: NIH R01NS106031 and R21NS103098, was a Klingenstein-Simons Fellow, a Vallee Foundation Scholar, and a McKnight Scholar. Matthew A. Wilson: National Science Foundation STC award CCF-1231216, and NIH TR01-GM10498. Jakob Voigts: NIH 1K99NS118112-01 and Simons Center for the Social Brain at MIT postdoctoral fellowship.

Footnotes

Code and design file availability: The full ONI specification is available at https://github.com/open-ephys/ONI. Design documents for the described ONIX hardware are available as follows -- Host interface: https://github.com/open-ephys/onix-fmc-host, Breakout board: https://github.com/open-ephys/onix-breakout, 64-Channel Intan headstage: https://github.com/open-ephys/onix-headstage-64, and Neuropixels headstage: https://github.com/open-ephys/onix-headstage-neuropix1. Software, along with extensive hardware and API documentation, is available at https://open-ephys.github.io/onix-docs. Firmware can be made available upon request as configurable IP blocks. Unless otherwise specified in the respective repositories, all material is distributed under the Creative Commons CC BY-NC-SA 4.0 license and is therefore free to adapt and to share for non-commercial purposes, with appropriate attribution and under the same license.

Conflict of interest statement: The authors declare the following competing interests: JPN is president and JV and JHS are board members of Open Ephys Inc., a public benefit workers cooperative in Atlanta, GA. FC is the founder of, and ACL and FC are, and AHL was, employed at the Open Ephys Production Site in Lisbon, Portugal. GL is director of NeuroGEARS Ltd. The remaining authors have no conflicts of interest to declare.

Bibliography:

- 1. Niell C. M. & Stryker M. P. Modulation of Visual Responses by Behavioral State in Mouse Visual Cortex. Neuron 65, 472–479 (2010).

- 2. Stringer C. et al. Spontaneous behaviors drive multidimensional, brainwide activity. 13 (2019).

- 3. Rosenberg M., Zhang T., Perona P. & Meister M. Mice in a labyrinth exhibit rapid learning, sudden insight, and efficient exploration. eLife 10, e66175 (2021).

- 4. Meister M. Learning, fast and slow. ArXiv220502075 Q-Bio (2022).

- 5. Clemens A. M., Wang H. & Brecht M. The lateral septum mediates kinship behavior in the rat. Nat. Commun. 11, 3161 (2020).

- 6. Marlin B. J., Mitre M., D’amour J. A., Chao M. V. & Froemke R. C. Oxytocin enables maternal behaviour by balancing cortical inhibition. Nature 520, 499–504 (2015).

- 7. Gire D. H., Kapoor V., Arrighi-Allisan A., Seminara A. & Murthy V. N. Mice develop efficient strategies for foraging and navigation using complex natural stimuli. Curr. Biol. CB 26, 1261–1273 (2016).

- 8. Michaiel A. M., Abe E. T. T. & Niell C. M. Dynamics of gaze control during prey capture in freely moving mice. eLife 9, e57458 (2020).

- 9. Reinhold A. S., Sanguinetti-Scheck J. I., Hartmann K. & Brecht M. Behavioral and neural correlates of hide-and-seek in rats. Science 365, 1180–1183 (2019).

- 10. Tervo D. G. R. et al. The anterior cingulate cortex directs exploration of alternative strategies. Neuron 109, 1876–1887.e6 (2021).

- 11. Bagi B., Brecht M. & Sanguinetti-Scheck J. I. Unsupervised discovery of behaviorally relevant brain states in rats playing hide-and-seek. Curr. Biol. 0, (2022).

- 12. Branson K., Robie A. A., Bender J., Perona P. & Dickinson M. H. High-throughput ethomics in large groups of Drosophila. Nat. Methods 6, 451–457 (2009).

- 13. Calhoun A. J., Pillow J. W. & Murthy M. Unsupervised identification of the internal states that shape natural behavior. Nat. Neurosci. 22, 2040–2049 (2019).

- 14. Egnor S. E. R. & Branson K. Computational Analysis of Behavior. Annu. Rev. Neurosci. 39, 217–236 (2016).

- 15. Hsu A. I. & Yttri E. A. B-SOiD, an open-source unsupervised algorithm for identification and fast prediction of behaviors. Nat. Commun. 12, 5188 (2021).

- 16. JARVIS - Markerless Motion Capture Toolbox. https://jarvis-mocap.github.io/jarvis-docs/.

- 17. Kabra M., Robie A. A., Rivera-Alba M., Branson S. & Branson K. JAABA: interactive machine learning for automatic annotation of animal behavior. Nat. Methods 10, 64–67 (2013).

- 18. Mathis A. et al. DeepLabCut: markerless pose estimation of user-defined body parts with deep learning. Nat. Neurosci. 21, 1281–1289 (2018).

- 19. Nourizonoz A. et al. EthoLoop: automated closed-loop neuroethology in naturalistic environments. Nat. Methods 1–8 (2020) doi: 10.1038/s41592-020-0961-2.

- 20. Pereira T. D., Shaevitz J. W. & Murthy M. Quantifying behavior to understand the brain. Nat. Neurosci. 1–13 (2020) doi: 10.1038/s41593-020-00734-z.

- 21. Pereira T. D. et al. Fast animal pose estimation using deep neural networks. Nat. Methods 16, 117–125 (2019).

- 22. Wiltschko A. B. et al. Mapping Sub-Second Structure in Mouse Behavior. Neuron 88, 1121–1135 (2015).

- 23. Yi D., Musall S., Churchland A., Padilla-Coreano N. & Saxena S. Disentangled multi-subject and social behavioral representations through a constrained subspace variational autoencoder (CS-VAE). 2022.09.01.506091 Preprint at 10.1101/2022.09.01.506091 (2022).

- 24. Pereira T. D. et al. SLEAP: A deep learning system for multi-animal pose tracking. Nat. Methods 19, 486–495 (2022).

- 25. Lauer J. et al. Multi-animal pose estimation, identification and tracking with DeepLabCut. Nat. Methods 19, 496–504 (2022).

- 26. Pérez-Escudero A., Vicente-Page J., Hinz R. C., Arganda S. & de Polavieja G. G. idTracker: tracking individuals in a group by automatic identification of unmarked animals. Nat. Methods 11, 743–748 (2014).

- 27. Romero-Ferrero F., Bergomi M. G., Hinz R. C., Heras F. J. H. & de Polavieja G. G. idtracker.ai: tracking all individuals in small or large collectives of unmarked animals. Nat. Methods 16, 179–182 (2019).

- 28. de Chaumont F. et al. Real-time analysis of the behaviour of groups of mice via a depth-sensing camera and machine learning. Nat. Biomed. Eng. 3, 930–942 (2019).

- 29. Gosztolai A. et al. LiftPose3D, a deep learning-based approach for transforming two-dimensional to three-dimensional poses in laboratory animals. Nat. Methods 18, 975–981 (2021).

- 30. Günel S. et al. DeepFly3D, a deep learning-based approach for 3D limb and appendage tracking in tethered, adult Drosophila. eLife 8, e48571 (2019).

- 31. Karashchuk P. et al. Anipose: A toolkit for robust markerless 3D pose estimation. Cell Rep. 36, 109730 (2021).

- 32. Dunn T. W. et al. Geometric deep learning enables 3D kinematic profiling across species and environments. Nat. Methods 18, 564–573 (2021).

- 33. Bala P. C. et al. Automated markerless pose estimation in freely moving macaques with OpenMonkeyStudio. Nat. Commun. 11, 4560 (2020).

- 34. Schneider A. et al. 3D pose estimation enables virtual head fixation in freely moving rats. Neuron 110, 2080–2093.e10 (2022).

- 35. Berman G. J., Choi D. M., Bialek W. & Shaevitz J. W. Mapping the stereotyped behaviour of freely moving fruit flies. J. R. Soc. Interface 11, 20140672 (2014).

- 36. Batty E. et al. BehaveNet: nonlinear embedding and Bayesian neural decoding of behavioral videos. in Advances in Neural Information Processing Systems vol. 32 (Curran Associates, Inc., 2019).

- 37. Nilsson S. R. et al. Simple Behavioral Analysis (SimBA) – an open source toolkit for computer classification of complex social behaviors in experimental animals. 2020.04.19.049452 Preprint at 10.1101/2020.04.19.049452 (2020).

- 38. Shi C. et al. Learning Disentangled Behavior Embeddings. in Advances in Neural Information Processing Systems vol. 34 22562–22573 (Curran Associates, Inc., 2021).

- 39. Brattoli B. et al. Unsupervised behaviour analysis and magnification (uBAM) using deep learning. Nat. Mach. Intell. 3, 495–506 (2021).

- 40. Luxem K. et al. Open-Source Tools for Behavioral Video Analysis: Setup, Methods, and Development. ArXiv220402842 Cs Q-Bio (2022).

- 41. Marshall J. D., Li T., Wu J. H. & Dunn T. W. Leaving flatland: Advances in 3D behavioral measurement. Curr. Opin. Neurobiol. 73, 102522 (2022).

- 42. Shemesh Y. & Chen A. A paradigm shift in translational psychiatry through rodent neuroethology. Mol. Psychiatry 1–11 (2023) doi: 10.1038/s41380-022-01913-z.

- 43. Steinmetz N. A. et al. Neuropixels 2.0: A miniaturized high-density probe for stable, long-term brain recordings. Science 372, (2021).

- 44. Voigts J., Newman J. P., Wilson M. A. & Harnett M. T. An easy-to-assemble, robust, and lightweight drive implant for chronic tetrode recordings in freely moving animals. J. Neural Eng. (2020) doi: 10.1088/1741-2552/ab77f9.

- 45. Aharoni D., Khakh B. S., Silva A. J. & Golshani P. All the light that we can see: a new era in miniaturized microscopy. Nat. Methods 16, 11 (2019).

- 46. Helmchen F., Fee M. S., Tank D. W. & Denk W. A miniature head-mounted two-photon microscope. high-resolution brain imaging in freely moving animals. Neuron 31, 903–912 (2001).

- 47. Klioutchnikov A. et al. A three-photon head-mounted microscope for imaging all layers of visual cortex in freely moving mice. 2022.04.21.489051 Preprint at 10.1101/2022.04.21.489051 (2022).

- 48. Tsai H.-C. et al. Phasic firing in dopaminergic neurons is sufficient for behavioral conditioning. Science 324, 1080–1084 (2009).

- 49. Widloski J. & Foster D. J. Flexible rerouting of hippocampal replay sequences around changing barriers in the absence of global place field remapping. Neuron (2022) doi: 10.1016/j.neuron.2022.02.002.

- 50. Yartsev M. M. & Ulanovsky N. Representation of Three-Dimensional Space in the Hippocampus of Flying Bats. Science 340, 367–372 (2013).

- 51. Padilla-Coreano N. et al. Cortical ensembles orchestrate social competition through hypothalamic outputs. Nature 603, 667–671 (2022).

- 52. Patel Y. A. et al. Hard real-time closed-loop electrophysiology with the Real-Time experiment Interface (RTXI). PLOS Comput. Biol. 13, e1005430 (2017).

- 53. Müller J., Bakkum D. & Hierlemann A. Sub-millisecond closed-loop feedback stimulation between arbitrary sets of individual neurons. Front. Neural Circuits 6, (2013).

- 54. Lopes G. et al. Bonsai: an event-based framework for processing and controlling data streams. Front. Neuroinformatics 9, (2015).

- 55. Cai D. J. et al. A shared neural ensemble links distinct contextual memories encoded close in time. Nature 534, 115–118 (2016).

- 56. Kane G. A., Lopes G., Saunders J. L., Mathis A. & Mathis M. W. Real-time, low-latency closed-loop feedback using markerless posture tracking. eLife 9, e61909 (2020).

- 57. Dong Z. et al. Minian, an open-source miniscope analysis pipeline. eLife 11, e70661 (2022).

- 58. Sauerbrei B. A. et al. Cortical pattern generation during dexterous movement is input-driven. Nature 577, 386–391 (2020).

- 59. Lee W., Yang E. & Curley J. P. Foraging dynamics are associated with social status and context in mouse social hierarchies. PeerJ 6, e5617 (2018).

- 60. Stopka P. & Macdonald D. W. Way-marking behaviour: an aid to spatial navigation in the wood mouse (Apodemus sylvaticus). BMC Ecol. 3, 3 (2003).

- 61. Voigts J. et al. Spatial reasoning via recurrent neural dynamics in mouse retrosplenial cortex. 2022.04.12.488024 Preprint at 10.1101/2022.04.12.488024 (2022).

- 62. Dennis E. J. et al. Systems Neuroscience of Natural Behaviors in Rodents. J. Neurosci. 41, 911–919 (2021).
