Abstract

Ultrasound computed tomography (USCT) is an emerging imaging modality that holds great promise for breast imaging. Full-waveform inversion (FWI)-based image reconstruction methods incorporate accurate wave physics to produce high spatial resolution quantitative images of speed of sound or other acoustic properties of the breast tissues from USCT measurement data. However, the high computational cost of FWI reconstruction represents a significant barrier to its widespread application in a clinical setting. The research reported here investigates the use of a convolutional neural network (CNN) to learn a mapping from USCT waveform data to speed of sound estimates. The CNN was trained using a supervised approach with a task-informed loss function that aims to preserve features of the image that are relevant to the detection of lesions. A large set of anatomically and physiologically realistic numerical breast phantoms (NBPs) and corresponding simulated USCT measurements was employed during training. Once trained, the CNN can perform real-time FWI image reconstruction from USCT waveform data. The performance of the proposed method was assessed and compared against FWI using a hold-out sample of 41 NBPs and corresponding USCT data. Accuracy was measured using relative mean square error (RMSE), structural similarity index measure (SSIM), and lesion detection performance (Dice score). This numerical experiment demonstrates that a supervised learning model can achieve accuracy comparable to FWI in terms of RMSE and SSIM, and better task performance, while significantly reducing computational time.

Index Terms: Ultrasound Computed Tomography, Convolutional Neural Networks, Data-Driven Image Reconstruction, Task-Informed Image Reconstruction, Computer-Simulation Study

I. Introduction

Ultrasound computed tomography (USCT) is an emerging medical imaging technology that can provide high-resolution estimates of tissue acoustic properties by utilizing ultrasound and tomographic principles. Image formation in USCT is based on the interaction of acoustic wave signals with biological tissues. Quantitative reconstructions of a tissue’s acoustic properties from USCT data can then be achieved via a variety of computational methods [1], providing high-resolution images of breast tissue acoustic properties of significant diagnostic value for breast cancer [2]–[4].

Full waveform inversion (FWI) is an image reconstruction method that estimates high-resolution maps of breast tissue acoustic properties from measurements of pressure distributions. FWI models the propagation of an ultrasound signal in biological tissues by numerically solving the wave equation. By incorporating accurate wave physics in the implementation of the imaging operator, FWI allows for superior accuracy and resolution compared to geometric reconstruction methods for USCT, such as bent-ray methods [5]–[10]. However, this comes at the cost of a significantly higher computational burden. A single 3D reconstruction can take hours or days to compute and requires a high-performance, possibly GPU-accelerated, computer [11], [12]. This computational expense is a limiting factor for the widespread application of FWI in a clinical setting, where fast reconstruction methods are highly desired. Furthermore, the need for a powerful computer increases the cost of USCT and prevents its adoption in developing areas.

This work proposes a learned FWI method utilizing convolutional neural networks (CNNs) for accelerated reconstructions. Neural networks have demonstrated the ability to construct inverse mappings of nonlinear imaging operators [13], [14], including some promising methods for USCT reconstruction [15]–[19]. A key contribution of this work is to acknowledge and leverage the fact that USCT is often used for specified diagnostic tasks, such as tumor detection and localization. To this aim, a novel loss function is proposed that includes task-specific information using a model of tumor segmentation provided by a U-Net numerical observer [20]. Specifically, the proposed loss function consists of a weighted sum of the commonly-used mean square error loss in the image domain and a novel task-informed objective based on features extracted by the U-Net observer. A second contribution of this work is the use of source encoding, a common technique used in FWI to reduce computational cost, within a learned reconstruction method. By exploiting redundancies in data, source encoding can reduce complexity of the CNN architecture and accelerate training of the learned FWI method. It is also worth noting that the proposed method is developed with the use of anatomically realistic training and testing sets that are relevant to breast imaging [21], [22].

Four simulation studies are performed to demonstrate the feasibility of the proposed method. The first study assesses the role of source encoding as a means of data reduction. Source encoding involves the application of linear combinations of sources and corresponding measurement data to reduce the overall size and complexity of the learned FWI network. This study compares different source encoding methods and assesses the resulting accuracy of the learned reconstruction methods after a fixed number of training epochs. The second study assesses the role of incorporating task-specific information into a learned reconstruction method. Here, the specific task chosen is tumor detection and localization. Task information is incorporated into training with a task-informed objective function, which includes a term based on features extracted from a U-Net observer. In this study, multiple learned reconstruction methods are trained using a sequence of loss functions that place increasing emphasis on the task-informed loss. The third study assesses the robustness of the learned reconstruction with respect to noise. In this study, the learned reconstruction methods constructed in the second study are reassessed using measurements with a higher level of added noise than they were trained on. The fourth study assesses the generalizability of the learned reconstruction method. In this study, the training and testing sets are drawn from distinct distributions and the accuracy of the trained CNN is assessed for the reconstruction of populations that are underrepresented in the training set.

The remainder of this paper is structured as follows. In Section II, the USCT imaging operator in its continuous and discretized form as well as the full waveform inversion (FWI) method are reviewed. This section also provides a brief discussion on task-based assessment of image quality and its role in USCT. In Section III, a solution method utilizing a specific CNN architecture, InversionNet [23], is presented with particular emphasis on the implementation of source encoding and the proposed task-informed loss function. In Section IV, the design of four numerical studies is presented: the first study compares multiple methods of source encoding for use with InversionNet; the second study assesses the use of a task-informed loss function with varying weights on task information; the third study assesses the proposed method's robustness to measurement noise; the fourth study analyzes the ability of the proposed method to reconstruct new, unseen objects from a population that is underrepresented in the training set. In Section V, the results of the numerical studies are presented. Section VI presents the conclusions drawn from these results and discusses future extensions.

II. Background

A. Imaging Operator

USCT data are formed by measuring acoustic signals resulting from a series of ultrasonic excitation pulses emitted from multiple tomographic views surrounding the object to be imaged. The ultrasound waves generated by these excitation pulses interact with the object according to the tissues' acoustic properties. The reflected and scattered ultrasound waves then propagate outside of the object, where they are recorded by a set of receiving transducers surrounding the object, often with water as a coupling medium between the object and the measurement surface.

In this work, the USCT imaging operator is modeled by solving the acoustic wave equation. This work assumes a non-lossy medium with homogeneous density [24] and a spatially varying speed of sound $c(\mathbf{r})$, where $\mathbf{r}$ is a point in the spatial domain. For pressure measurements collected on an aperture $\Omega$ surrounding the object, the relationship between the measurements $g_i$ and the speed of sound $c$ can be expressed as a continuous-to-continuous (C-C) imaging operator $\mathcal{H}^c$ defined as

$$g_i(\mathbf{r}, t) = \mathcal{H}^c s_i(\mathbf{r}, t) = p_i(\mathbf{r}, t)\big|_{\mathbf{r} \in \Omega} \tag{1}$$

for $i = 1, \dots, I$ and $t \in [0, T]$, where $T$ denotes the acquisition time for a single shot, $I$ denotes the total number of emitting transducers, and $s_i$ and $p_i$ denote the excitation pulse and the acoustic pressure field generated when the $i$-th emitter is fired. Above, the notation $\mathcal{H}^c$ is used to underline the dependence of the imaging operator on the medium speed of sound $c$. Under the assumption of a non-lossy propagation medium with homogeneous density, the acoustic pressure field $p_i$ satisfies the wave equation

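$$\frac{1}{c^2(\mathbf{r})} \frac{\partial^2 p_i(\mathbf{r}, t)}{\partial t^2} - \Delta p_i(\mathbf{r}, t) = s_i(\mathbf{r}, t) \tag{2}$$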

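Assuming that idealized point-like transducers are distributed along the measurement aperture at locations $\boldsymbol{\xi}_j$, $j = 1, \dots, J$, the sampling operator $\mathcal{M}$ mapping the pressure $p_i$ to the vector $\mathcal{M} p_i \in \mathbb{R}^{JK}$ is defined as

$$\mathcal{M} p_i = \big[\, p_i(\boldsymbol{\xi}_j, k\,\delta t) \,\big]_{j = 1, \dots, J;\; k = 1, \dots, K} \tag{3}$$

where $\delta t$ is the sampling interval and $K$ is the number of pressure samples measured over the acquisition interval $[0, T]$.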

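Using the continuous-to-discrete imaging operator $\mathcal{M}\mathcal{H}^c$, the USCT data acquisition process is modeled as

$$\mathbf{g}_i = \mathcal{M}\mathcal{H}^c s_i + \mathbf{n}_i, \quad i = 1, \dots, I \tag{4}$$

where $\mathbf{n}_i$ is additive noise [25].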

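Finally, with the introduction of a Cartesian grid consisting of $N$ pixels, the imaging operator can be approximated with an analogous discrete-to-discrete (D-D) imaging operator. Denoting the center of the $n$-th pixel with $\mathbf{r}_n$, the finite-dimensional vectors $\mathbf{c} \in \mathbb{R}^{N}$ and $\mathbf{s}_i \in \mathbb{R}^{NK}$ are defined as

$$[\mathbf{c}]_n = c(\mathbf{r}_n), \qquad [\mathbf{s}_i]_{n,k} = s_i(\mathbf{r}_n, k\,\delta t) \tag{5}$$

With the above notation, the D-D USCT model is given by

$$\mathbf{g}_i = \mathbf{M}\mathbf{H}^{\mathbf{c}}\mathbf{s}_i + \mathbf{n}_i \tag{6}$$

where $\mathbf{M}$ is the discrete counterpart of the sampling operator $\mathcal{M}$ defined via nearest neighbor interpolation of transducer coordinates to the pixel centers of the Cartesian grid, $\mathbf{H}^{\mathbf{c}}$ stems from a finite difference approximation of the C-C imaging operator $\mathcal{H}^c$, and $\mathbf{n}_i$ represents measurement noise.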

B. Full Waveform Inversion

Full waveform inversion (FWI) [26], a method widely used in the geophysics community, produces a high-resolution reconstruction of the speed of sound map from pressure traces. FWI utilizes the D-D imaging model in Eq. (6) and seeks a speed of sound estimate $\hat{\mathbf{c}}$ such that

$$\hat{\mathbf{c}} = \arg\min_{\mathbf{c}} \frac{1}{2} \sum_{i=1}^{I} \left\| \mathbf{g}_i - \mathbf{M}\mathbf{H}^{\mathbf{c}}\mathbf{s}_i \right\|_2^2 \tag{7}$$

This discrete optimization problem can be solved using a gradient-based method to update estimates of $\mathbf{c}$. However, each evaluation of the objective function and its gradient requires the solution of forward and adjoint wave equations for each of the $I$ sources. Source encoding is a technique that, by leveraging the linearity of the imaging operator with respect to the excitation pulse $\mathbf{s}_i$, allows for a drastic reduction in the computational cost [5], [11], [27]. In the source encoding method, the deterministic minimization problem in Eq. (7) is reformulated as the stochastic optimization problem

$$\hat{\mathbf{c}} = \arg\min_{\mathbf{c}} \; \mathbb{E}_{\mathbf{w} \sim \mathcal{W}} \, \frac{1}{2} \left\| \mathbf{g}^{\mathbf{w}} - \mathbf{M}\mathbf{H}^{\mathbf{c}}\mathbf{s}^{\mathbf{w}} \right\|_2^2 \tag{8}$$

where $\mathbf{w} \in \mathbb{R}^{I}$ is the stochastic encoding vector sampled according to the distribution $\mathcal{W}$ with zero mean and identity covariance matrix, and $\mathbf{g}^{\mathbf{w}} = \sum_{i=1}^{I} w_i \mathbf{g}_i$ and $\mathbf{s}^{\mathbf{w}} = \sum_{i=1}^{I} w_i \mathbf{s}_i$ denote the superimposed (encoded) measurement data and excitation source, respectively. Previous studies have used a Rademacher or a normal distribution to sample the independent identically distributed components of the encoding vector. The stochastic optimization problem in Eq. (8) is then often solved using stochastic gradient descent, thus requiring the solution of only one forward and one adjoint wave equation per iteration. However, even when acceleration techniques, such as Nesterov [28] or momentum [29], or modern stochastic optimization methods, such as Adam [30], are applied, convergence is still slow and the reconstruction of an individual image may take several minutes or hours.
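The two properties that source encoding relies on can be checked numerically. The sketch below is a toy illustration and not the FWI solver used in this work: a random matrix `A` stands in for the linear map from the excitation to the data at a fixed speed of sound, the residuals `R` are random placeholders, and all array sizes are hypothetical. It checks that encoding the data equals simulating the encoded source, and that with Rademacher weights the encoded misfit averages to the full multi-source misfit.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes: number of sources, source dimension, data dimension.
I, n_src, n_data = 8, 20, 12
A = rng.standard_normal((n_data, n_src))  # stand-in for s -> M H^c s at fixed c
S = rng.standard_normal((I, n_src))       # one excitation vector per row
G = S @ A.T                               # noiseless data, one row per source


def encode(w, X):
    """Superimpose the rows of X with the weights in w (source encoding)."""
    return w @ X


# Linearity: simulate-then-encode equals encode-then-simulate.
w = rng.choice([-1.0, 1.0], size=I)       # Rademacher encoding vector
g_w = encode(w, G)
s_w = encode(w, S)
linearity_gap = np.max(np.abs(g_w - A @ s_w))

# Unbiasedness: E_w ||sum_i w_i r_i||^2 = sum_i ||r_i||^2 when E[w w^T] = I.
R = rng.standard_normal((I, n_data))      # placeholder residuals, one per source
full_misfit = np.sum(R ** 2)
W = rng.choice([-1.0, 1.0], size=(20000, I))
encoded_misfit = np.mean(np.sum((W @ R) ** 2, axis=1))
rel_err = abs(encoded_misfit - full_misfit) / full_misfit
```

The Monte Carlo average only approximates the expectation, so `rel_err` is small but not zero; a single encoded misfit is a noisy estimate of the full one, which is why the encoded problem is solved with stochastic gradient methods.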

C. Task-Based Assessment of Image Quality

In biomedical imaging, the reconstructed image is often utilized to inform a specific task, with the image itself being of secondary interest. Such tasks can include, but are not limited to, classification, segmentation, registration, detection, and decision planning. However, in many cases, metrics of task performance may not be directly correlated to physical metrics of image quality [31], [32], such as the mean square error or the structural similarity index. Furthermore, designing an image reconstruction strategy focusing purely on image quality may have the unintended consequence of reducing task performance [33]. For improved task performance, task-based information must be included in the design of an image reconstruction strategy [34], [35]. These tasks are often automated using numerical observers, which are mathematical models that identify the task-relevant features in an image and estimate the resulting task outcome [36]. Examples of numerical observers include Luenberger observers and Kalman filters for control tasks [37], library and model-based dose estimation for treatment planning tasks [38], and Hotelling observers (or channelized versions) and machine learning based observers for signal detection tasks [39]. With a numerical observer, task-based assessment of large sets of images can be done quickly and used to design a reconstruction method for optimal task performance.

III. Method

This section presents the main contribution of this work: the development of a task-aware learned USCT reconstruction method utilizing CNNs. Once fully trained, this CNN acts as an inverse mapping from the set of pressure traces to the corresponding speed of sound map. The specific CNN architecture used here is InversionNet [23], which was originally developed for FWI of seismic waveform data in geophysics. Both seismic and USCT imaging problems are focused on reconstructing speed of sound based on acoustic wave models but have a few key differences. First, measurements in seismic imaging are sparse and expensive to acquire while USCT measurements can be quite dense. This work explores utilizing source encoding for reducing data complexity in USCT data and accelerating training. Second, seismic and USCT have very different image priors. A learned USCT reconstruction method then requires application-relevant training and testing sets. This work utilizes medically realistic, stochastically generated acoustic breast phantoms to construct the training and testing sets [22]. Third, the end goal for seismic imaging in geophysics is often to understand geological structures based on acoustic properties, whereas USCT imaging is often focused on a medically specific task with structural information being a secondary concern. This work then explores utilizing a task-based objective function to train a learned reconstruction method tailored for tumor/lesion detection and localization.

A. Learned FWI via InversionNet

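InversionNet utilizes an end-to-end trained encoder-decoder structure. In this scheme, pressure traces are encoded to a high-dimensional latent space and then decoded to the space of images. Specifically, the input to InversionNet is a 3-D tensor $\mathbf{G} \in \mathbb{R}^{I \times J \times K}$, where $[\mathbf{G}]_{i,j,k}$ corresponds to the measurement data from the $i$-th source, $j$-th receiver, and $k$-th time sample, and its output is a 2-D tensor $\hat{\mathbf{c}} \in \mathbb{R}^{N_x \times N_x}$ (with $N = N_x^2$) corresponding to pixel values of speed of sound estimates over the field of view.

The parameters $\boldsymbol{\theta}$ of the InversionNet $\mathcal{N}_{\boldsymbol{\theta}}$ are then trained by minimizing the loss function

$$\mathcal{L}(\boldsymbol{\theta}) = \frac{1}{M} \sum_{m=1}^{M} \left\| \mathbf{c}_m - \mathcal{N}_{\boldsymbol{\theta}}(\mathbf{G}_m) \right\|_2^2 \tag{9}$$

where $\{(\mathbf{c}_m, \mathbf{G}_m)\}_{m=1}^{M}$ are data pairs consisting of the speed of sound maps and corresponding USCT measurements. Here the $\ell^2$ norm is implemented in the training loss; however, other choices of loss are possible [23].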

B. Source Encoding

USCT data often contain redundancies and have a large memory footprint, because measurements are collected at multiple receivers over a long sampling period while multiple sources are excited sequentially, one at a time. Furthermore, InversionNet was originally designed for seismic reconstruction in geophysics, in which measurements are collected with sparse spatial coverage. Nevertheless, InversionNet achieves satisfactory performance on these sparse measurements. This observation, together with the success of source encoding methods in accelerating FWI reconstruction of USCT data, is the key motivation to explore methods to reduce the dimensionality of the measurement data provided as input to InversionNet.

This work proposes utilizing a fixed source encoding approach to reduce the dimensionality of the data. Exploiting redundancies in the data not only reduces the memory footprint of InversionNet, but also has the potential to improve the performance of the learned method [40].

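As described in Section II, source encoding, originally proposed for accelerated FWI in geophysics problems [27], exploits the linearity of the imaging operator with respect to the source term. Given an encoding vector $\mathbf{w} \in \mathbb{R}^{I}$, the superimposition of multiple sources in the D-D USCT model in Eq. (6) gives

$$\mathbf{g}^{\mathbf{w}} = \mathbf{M}\mathbf{H}^{\mathbf{c}}\mathbf{s}^{\mathbf{w}} + \mathbf{n}^{\mathbf{w}} \tag{10}$$

where $\mathbf{g}^{\mathbf{w}} = \sum_{i=1}^{I} w_i \mathbf{g}_i$, $\mathbf{s}^{\mathbf{w}} = \sum_{i=1}^{I} w_i \mathbf{s}_i$, and $\mathbf{n}^{\mathbf{w}} = \sum_{i=1}^{I} w_i \mathbf{n}_i$ denote the superimposed (encoded) measurement data, excitation pulse, and measurement noise, respectively. By use of $L$ independent source encoding vectors $\mathbf{w}_1, \dots, \mathbf{w}_L$, the input $\mathbf{G} \in \mathbb{R}^{I \times J \times K}$ can be reduced to a smaller tensor $\mathbf{W} \cdot \mathbf{G} \in \mathbb{R}^{L \times J \times K}$, where $\mathbf{W} \in \mathbb{R}^{L \times I}$ is the encoding matrix with entries $[\mathbf{W}]_{l,i} = [\mathbf{w}_l]_i$. Above, $\cdot$ denotes the matrix-tensor multiplication defined by contraction of the last index of the matrix $\mathbf{W}$ with the first index of the 3-D tensor $\mathbf{G}$. That is, the entries of $\mathbf{W} \cdot \mathbf{G}$ are given by

$$[\mathbf{W} \cdot \mathbf{G}]_{l,j,k} = \sum_{i=1}^{I} [\mathbf{W}]_{l,i} \, [\mathbf{G}]_{i,j,k} \tag{11}$$

for $l = 1, \dots, L$, $j = 1, \dots, J$, and $k = 1, \dots, K$.

For a given (fixed) encoding matrix $\mathbf{W}$, InversionNet, denoted by $\mathcal{N}_{\boldsymbol{\theta}}$ with trainable parameters $\boldsymbol{\theta}$, is then trained on the data pairs $\{(\mathbf{c}_m, \mathbf{G}_m)\}_{m=1}^{M}$ by solving the minimization problem

$$\min_{\boldsymbol{\theta}} \; \frac{1}{M} \sum_{m=1}^{M} \left\| \mathbf{c}_m - \mathcal{N}_{\boldsymbol{\theta}}(\mathbf{W} \cdot \mathbf{G}_m) \right\|_2^2$$

An instance of InversionNet with a learned source encoder is also considered and was trained by solving the minimization problem

$$\min_{\boldsymbol{\theta}, \mathbf{W}} \; \frac{1}{M} \sum_{m=1}^{M} \left\| \mathbf{c}_m - \mathcal{N}_{\boldsymbol{\theta}}(\mathbf{W} \cdot \mathbf{G}_m) \right\|_2^2$$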
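The matrix-tensor product used for source encoding is a single index contraction, which can be written with `numpy.einsum`. The sketch below is illustrative only: the measurement tensor is random, the receiver and time dimensions are reduced from the values in this study to keep the example light, and the choice to keep sources 0, 4, 8, ... in the subsampling matrix is an assumption about which channels are retained.

```python
import numpy as np

rng = np.random.default_rng(0)

# Sources and encoded channels as in this study; J and K are reduced
# (hypothetical) sizes so the example stays small.
I, L = 64, 16
J, K = 32, 40
G = rng.standard_normal((I, J, K))        # placeholder measurement tensor


def encode(W, G):
    """Contract the last index of W (L x I) with the first index of G (I x J x K)."""
    return np.einsum("li,ijk->ljk", W, G)


# Fixed random encoding: entries drawn once from a standard normal distribution.
W_random = rng.standard_normal((L, I))

# Subsampling written as an encoding matrix: row l selects source 4*l.
W_subsample = np.zeros((L, I))
W_subsample[np.arange(L), 4 * np.arange(L)] = 1.0

G_random = encode(W_random, G)
G_subsample = encode(W_subsample, G)
n_encoder_weights = W_random.size         # 16 * 64 = 1024
```

Writing subsampling as a 0/1 encoding matrix makes it directly comparable with the random and learned encoders: all three map 64 channels to 16 and differ only in the entries of the matrix. An encoder of this shape has 1,024 entries, which equals the difference in trainable parameters between the Learned and Random instances reported in Study 1.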

C. Task-Informed Training

Medical imaging is typically performed for some specific diagnostic task. A learned reconstruction method should therefore be assessed and optimized with respect to this task. In particular, it is desirable to develop learned reconstruction methods that can preserve task-relevant information in the reconstructed images. This can be achieved by utilizing a task-informed loss function during training. To this aim, the following supervised training problem is considered

$$\min_{\boldsymbol{\theta}} \; \frac{1}{M} \sum_{m=1}^{M} \left( \left\| \mathbf{c}_m - \mathcal{N}_{\boldsymbol{\theta}}(\mathbf{G}_m) \right\|_2^2 + \lambda \left\| \mathcal{F}(\mathbf{c}_m) - \mathcal{F}\big(\mathcal{N}_{\boldsymbol{\theta}}(\mathbf{G}_m)\big) \right\|_2^2 \right) \tag{12}$$

where the first term is the MSE loss and the second term is the task-informed loss. Above, $\mathcal{N}_{\boldsymbol{\theta}}$ denotes InversionNet with trainable parameters $\boldsymbol{\theta}$, $\lambda \geq 0$ is a weighting factor balancing the trade-off between the two losses, and $\mathcal{F}$ is a differentiable, possibly nonlinear, map that extracts the task-relevant features of the image. The map $\mathcal{F}$ can take a variety of forms depending on the task and should be designed based on the numerical observer used. For example, $\mathcal{F}$ could be a linear mapping, such as the template of a Hotelling observer [41] or a projector onto the linear subspace spanned by the channels of a channelized Hotelling observer [42], [43], or a more general nonlinear mapping between an image and task-relevant features, possibly learned by a CNN-based approximation of the ideal observer [39].

Setting the task weighting factor $\lambda$ to 0 recovers the original supervised training problem and creates an image reconstruction strategy focused only on physical metrics of image quality. Increasing $\lambda$ places a greater emphasis on task performance. Letting $\lambda \to \infty$ (which means the MSE component is discarded) creates an image reconstruction strategy that is driven only by the task.

However, even though image quality and task performance are not directly linked [33], [34], there is still an indirect relationship. Therefore, an image reconstruction strategy with the proper weighting between MSE and task information has the potential to outperform, both in terms of image quality and task performance, reconstruction strategies that are informed only by image quality or only by task information. This means that, for a properly chosen task, there is a value of $\lambda$ that maximizes both image quality and task performance. It should be noted that $\mathcal{F}$ may have a null space, and that the nonlinearity of $\mathcal{F}$ may lead to a harder optimization problem for large values of $\lambda$. In practice, this issue can be alleviated by training with successively increasing values of $\lambda$. This training process allows the MSE component to first drive the solution towards a good local minimum in terms of physical metrics of image quality and then progressively incorporate more task information.
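The structure of the task-informed loss can be sketched in a few lines. This is a minimal NumPy illustration under stated assumptions, not the training code of this work: `toy_features` is a hypothetical stand-in for the U-Net feature extractor (horizontal finite differences followed by a ReLU), the images are random, and the list of weights is an arbitrary increasing schedule rather than the values used in the study.

```python
import numpy as np


def loss_terms(c_true, c_pred, features):
    """Return the batch-averaged MSE term and feature-mismatch (task) term."""
    batch = c_true.shape[0]
    mse = np.mean(np.sum((c_true - c_pred).reshape(batch, -1) ** 2, axis=1))
    diff = (features(c_true) - features(c_pred)).reshape(batch, -1)
    task = np.mean(np.sum(diff ** 2, axis=1))
    return mse, task


def task_informed_loss(c_true, c_pred, features, lam):
    """Weighted sum of the MSE term and lam times the task term."""
    mse, task = loss_terms(c_true, c_pred, features)
    return mse + lam * task


def toy_features(c):
    """Hypothetical feature map: horizontal differences passed through a ReLU."""
    return np.maximum(np.diff(c, axis=2), 0.0)


rng = np.random.default_rng(0)
c_true = rng.uniform(1.4, 1.6, size=(4, 16, 16))   # placeholder images
c_pred = c_true + 0.01 * rng.standard_normal(c_true.shape)

weights = [0.0, 0.1, 1.0, 10.0]                    # hypothetical schedule
losses = [task_informed_loss(c_true, c_pred, toy_features, lam) for lam in weights]

# A constant offset is invisible to this feature map: the task term
# (numerically) vanishes while the MSE term does not.
mse_shift, task_shift = loss_terms(c_true, c_true + 0.05, toy_features)
```

The last two lines illustrate the null-space remark: a feature map can be blind to some image errors, so a task-only loss cannot correct them, which is one reason to keep the MSE term active while the task weight is increased.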

IV. Numerical Studies

A. Construction of the Training and Testing Sets

The objects used in this study were anatomically realistic numerical breast phantoms (NBPs) to which spatially varying speeds of sound were stochastically assigned within feasible ranges. These NBPs were developed and constructed by Li et al. [22] using tools adapted from the Virtual Imaging Clinical Trial for Regulatory Evaluation (VICTRE) project at the US Food and Drug Administration [21] for use in USCT virtual imaging studies. Examples of these NBPs are available from [44]. In particular, the generated NBPs are stratified based on the four different levels of breast density (percentage of fibroglandular tissue) defined according to the American College of Radiology's (ACR) Breast Imaging Reporting and Data System (BI-RADS) [45]: A) almost entirely fatty breasts, B) breasts with scattered fibroglandular density, C) breasts with heterogeneous density, and D) extremely dense breasts. The breast size and percentage of fibroglandular tissue of each NBP are randomly chosen within the physiological range for each breast density type. Then, anatomically realistic breast tissue structures are stochastically generated by use of the VICTRE tools, and the speed of sound maps corresponding to physiological variations in breast tissues are stochastically generated [22]. Four NBPs, one from each of the BI-RADS categories, are shown in Fig. 1. The training set consisted of 1,353 NBPs while the testing set consisted of 41 NBPs.

Fig. 1.

Four examples of the anatomically realistic numerical breast phantoms (NBPs), one from each of BI-RADS breast density types, used to train and evaluate the proposed learned FWI method. NBPs present clinically relevant variability in size, tissue composition and structures, and speed-of-sound maps. From left to right: Type A (almost all fatty), Type B (scattered fibroglandular density), Type C (heterogeneous density), and Type D (extremely dense).

B. Definition of the Virtual Imaging System

The measurement geometry consisted of a circular transducer array [5], [46] of radius $R = 110.4$ mm along which 256 transducers (shown in blue and red) were equispaced and acted as receivers. Every fourth transducer (shown in red), 64 in total, also acted as a transmitter and emitted an excitation pulse in sequence. The $i$-th excitation pulse was placed at the location of the $i$-th emitter, and its temporal profile was determined by the central frequency $f_0$, a time shift, and a parameter that controls the signal width. Measurements were collected by firing one transmitter at a time and recording data at every receiver. This was repeated for each transmitter and resulted in multi-channel measurements.
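As an illustration of a pulse described by a central frequency, a time shift, and a width parameter, the sketch below assumes a Gaussian-windowed sinusoid. The functional form, the time shift `T_SHIFT`, and the width `SIGMA` are hypothetical choices made for this example and should not be read as the pulse used in this study; `F0`, `DT`, and `K` follow Table I.

```python
import math

F0 = 0.5e6          # central frequency from Table I [Hz]
DT = 0.2e-6         # time step from Table I [s]
K = 640             # number of time samples from Table I
T_SHIFT = 6.0e-6    # hypothetical time shift [s]
SIGMA = 2.0e-6      # hypothetical width parameter [s]


def pulse(t, f0=F0, t_shift=T_SHIFT, sigma=SIGMA):
    """Gaussian-windowed sinusoid (assumed form, for illustration only)."""
    envelope = math.exp(-((t - t_shift) ** 2) / (2.0 * sigma ** 2))
    return envelope * math.sin(2.0 * math.pi * f0 * (t - t_shift))


# Sample the temporal profile on the acquisition grid.
samples = [pulse(k * DT) for k in range(K)]
peak_amplitude = max(abs(s) for s in samples)
```

With these hypothetical values the pulse occupies only the first few tens of microseconds of the 128 μs acquisition window, leaving the remainder for the wave to cross the imaging region.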

To numerically simulate the pressure field generated by each transmitter, the wave equation in Eq. (2) was solved using a finite difference scheme (4th order in space and 2nd order in time). A spatial grid of $N_x \times N_x$ pixels ($N = N_x^2$ in Eq. (5)), with $N_x = 360$ and grid spacing $\delta x = 0.6$ mm, and a temporal grid with $K = 640$ samples and time step $\delta t = 0.2$ μs were employed for the discretization. Absorbing boundary conditions were implemented to prevent wave reflections at the boundaries of the computational domain [47]. Electronic noise was modeled as additive white Gaussian noise with a standard deviation of $4.3 \times 10^{-6}$, corresponding to an SNR of 30 dB. The imaging system parameters are summarized in Table I.
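A finite difference scheme of this type (4th order in space, 2nd order in time) can be sketched compactly. The NumPy example below is a simplified illustration and not the solver used in this work: it assumes a homogeneous medium at 1500 m/s, a reduced 101 × 101 grid, a hypothetical Gaussian-windowed source wavelet, and it omits absorbing boundary conditions (the two-cell border is simply held at zero, so waves would reflect there).

```python
import numpy as np


def laplacian4(p, dx):
    """Fourth-order central-difference Laplacian; the two-cell border stays zero."""
    lap = np.zeros_like(p)
    c0, c1, c2 = -5.0 / 2.0, 4.0 / 3.0, -1.0 / 12.0
    lap[2:-2, 2:-2] = (
        2.0 * c0 * p[2:-2, 2:-2]
        + c1 * (p[1:-3, 2:-2] + p[3:-1, 2:-2] + p[2:-2, 1:-3] + p[2:-2, 3:-1])
        + c2 * (p[:-4, 2:-2] + p[4:, 2:-2] + p[2:-2, :-4] + p[2:-2, 4:])
    ) / dx ** 2
    return lap


def simulate(c, src_idx, wavelet, dx, dt):
    """Leapfrog update of (1/c^2) p_tt - laplacian(p) = s for a point source."""
    p_prev = np.zeros_like(c)
    p = np.zeros_like(c)
    for s_k in wavelet:
        src = np.zeros_like(c)
        src[src_idx] = s_k / dx ** 2      # point source scaled by the cell area
        p_next = 2.0 * p - p_prev + (c * dt) ** 2 * (laplacian4(p, dx) + src)
        p_prev, p = p, p_next
    return p


dx, dt = 0.6e-3, 0.2e-6                   # grid spacing [m] and time step [s], Table I
n, n_steps = 101, 100                     # reduced (hypothetical) grid and duration
c = np.full((n, n), 1500.0)               # assumed homogeneous speed of sound [m/s]

t = np.arange(n_steps) * dt
wavelet = np.exp(-((t - 6e-6) ** 2) / (2 * (2e-6) ** 2)) * np.sin(
    2 * np.pi * 0.5e6 * (t - 6e-6)
)                                         # hypothetical source wavelet

cfl = c.max() * dt / dx                   # 0.5 for these values
p_final = simulate(c, (n // 2, n // 2), wavelet, dx, dt)
```

For this stencil in two dimensions the leapfrog update is stable for a CFL number up to roughly 0.61, so both the value computed here and the 0.53 listed in Table I fall inside the stable range.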

TABLE I.

Virtual imaging system and discretization parameters

| Ultrasound system | | Discretization | |
|---|---|---|---|
| Number of receivers $J$ | 256 | Grid size $N_x$ | 360 |
| Number of transmitters $I$ | 64 | Grid spacing $\delta x$ | 0.6 mm |
| Transducer radius $R$ | 110.4 mm | Points per wavelength | 5.0 |
| Pulse frequency $f_0$ | 0.5 MHz | Number of time steps $K$ | 640 |
| Sampling frequency | 5 MHz | Time step $\delta t$ | 0.2 μs |
| Acquisition time (per source) | 128 μs | CFL number | 0.53 |
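Several entries of Table I are linked by simple arithmetic, which the short check below reproduces. The speed of sound in water, `C_WATER` = 1.5 mm/μs, is an assumption introduced here; it is not listed in the table.

```python
F0_MHZ = 0.5        # pulse frequency [MHz]
FS_MHZ = 5.0        # sampling frequency [MHz]
DX_MM = 0.6         # grid spacing [mm]
DT_US = 0.2         # time step [us]
K = 640             # number of time steps
C_WATER = 1.5       # assumed speed of sound in water [mm/us]

acquisition_time_us = K * DT_US                       # 640 * 0.2 = 128 us
sampling_interval_us = 1.0 / FS_MHZ                   # 1 / 5 MHz = 0.2 us
points_per_wavelength = (C_WATER / F0_MHZ) / DX_MM    # 3 mm / 0.6 mm = 5
cfl_water = C_WATER * DT_US / DX_MM                   # 0.5
c_for_tabulated_cfl = 0.53 * DX_MM / DT_US            # 1.59 mm/us
```

The acquisition time, sampling interval, and points per wavelength match the table under the water assumption. The tabulated CFL number of 0.53 corresponds to a speed of about 1.59 mm/μs, which would be consistent with evaluating it at the largest speed of sound in the phantoms; this is an inference and is not stated in the table.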

C. Study Design

1). Study 1: Source encoding:

The first numerical study addressed the role of source encoding in training InversionNet, investigating its use as a method of data reduction to accelerate training. Four instances of InversionNet were compared: 1) the Reference instance (117,762,947 trainable parameters) was trained with no source encoding, utilizing all 64 measurement channels; 2) the Subsample instance (117,686,147 trainable parameters) was trained on data subsampled down to 16 measurement channels, i.e., keeping only every fourth measurement channel; 3) the Random instance (117,686,147 trainable parameters) was trained with a fixed, randomly chosen source encoding from 64 channels to 16 channels, with weights drawn from a standard normal distribution; 4) the Learned instance (117,687,171 trainable parameters) was trained while jointly learning the weights of a source encoding from 64 channels to 16 channels.

2). Study 2: Task-informed loss:

The second numerical study explored the impact of the task-informed loss function in training InversionNet, where the chosen task was tumor detection and localization. A numerical observer for this task was implemented by use of a U-Net, a specific neural network architecture developed for the segmentation of medical images [20]. The U-Net is a multilevel architecture consisting of a contractive path, which applies a sequence of convolutional and downsampling layers to halve the image size at each level, and an expansive path, which restores the output to its original size by use of transpose convolutional and upsampling layers. Skip connections are used to concatenate feature maps computed in the contractive path to the inputs of the corresponding layer in the expansive path. Specifically, the U-Net-based numerical observer was trained using the speed of sound maps as input and corresponding binary segmentation masks of tumor regions as outputs. More details can be found in Appendix A. The task-relevant feature maps were then constructed by extracting and concatenating all feature maps computed as an output of the convolutional layers at each level in the contractive path of the U-Net.

Six versions of InversionNet were trained in succession, i.e., each network was initialized using the final trained state of the previous version. These instances of InversionNet were trained with varying task weights $\lambda$ in the loss function in Eq. (12). The six selected task weights ranged from $\lambda = 0$, which corresponds to the original MSE loss function, to $\lambda = \infty$, which corresponds to a task-only informed loss. The InversionNet reconstructions were then compared on the testing set to a traditional FWI method that uses stochastic gradient descent with source encoding [5].

3). Study 3: Robustness w.r.t. noise:

The third numerical study explored the robustness of the learned reconstruction method to an increased amount of noise. In this study, the instances of InversionNet trained in the second study were used to reconstruct USCT data corrupted by i.i.d. additive noise with ten times the power of that used while training the network (an SNR of 20 dB instead of 30 dB). These reconstructions from measurements with increased noise were then compared to the results of the second numerical study for the same objects in the testing set.
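The relation between the two noise levels can be made concrete with a short sketch. It assumes the common definition SNR = 10 log10(signal power / noise power); the exact definition used in this work is not restated here, and the trace `g` is a synthetic placeholder rather than USCT data.

```python
import numpy as np


def noise_std_for_snr(signal, snr_db):
    """Noise standard deviation for a target SNR (assumed power-ratio definition)."""
    signal_power = np.mean(signal ** 2)
    return np.sqrt(signal_power / 10.0 ** (snr_db / 10.0))


def add_noise(signal, snr_db, rng):
    """Add i.i.d. white Gaussian noise at the requested SNR."""
    sigma = noise_std_for_snr(signal, snr_db)
    return signal + sigma * rng.standard_normal(signal.shape)


rng = np.random.default_rng(0)
g = np.sin(np.linspace(0.0, 40.0, 20000))        # placeholder trace

sigma_30 = noise_std_for_snr(g, 30.0)
sigma_20 = noise_std_for_snr(g, 20.0)
variance_ratio = (sigma_20 / sigma_30) ** 2      # ten times the noise power

noisy = add_noise(g, 20.0, rng)
measured_snr_db = 10.0 * np.log10(np.mean(g ** 2) / np.mean((noisy - g) ** 2))
```

Under this definition, a 10 dB drop in SNR means the noise variance grows by a factor of 10 while the noise standard deviation grows by a factor of about 3.16.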

4). Study 4: Generalizability w.r.t. underrepresented groups:

The fourth numerical study explored the generalizability of the proposed method when a group of objects is underrepresented in the training set. In particular, the anatomically realistic NBPs used to train and assess the method can be divided into four groups based on the corresponding BI-RADS breast density type. As shown in Fig. 1, NBPs from different groups (density types) exhibit large differences in size and tissue composition. For this study, a training set with 1,377 NBPs (approximately the same number of training examples as that used in the previous studies) was employed. However, the prevalence of type A (fatty breast) in the training set was only 6% (81 NBPs). An instance of InversionNet was then trained on this unbalanced training set as described in Study 2 and assessed using a testing set consisting of 81 NBPs from the underrepresented group.

D. Image Quality Assessment Criteria

Image quality was quantified in terms of ensemble average relative mean square error (RMSE) and ensemble average structural similarity index measure (SSIM) [48]. Task accuracy was quantified using a numerical observer. In particular, the area under the curve (AUC) of the receiver operating characteristic (ROC) and a Dice coefficient for correct tumor detection and localization (tumor-wise Dice coefficient) were employed. The ROC curve for each reconstruction method was constructed by plotting the tumor-wise true positive rate on the y-axis and the pixel-wise false positive rate on the x-axis for a range of thresholds. The AUC was then computed and used as a figure of merit to assess the proposed learned reconstruction method.

The ROC curves were also used to select a threshold for tumor detection in the computation of the tumor-wise Dice coefficient. In particular, the selected threshold parameter corresponds to the upper left corner of the ROC curve. The tumor-wise Dice coefficient was then computed as

$$\mathrm{Dice} = \frac{2\, N_{\mathrm{TP}}}{N_{\mathrm{det}} + N_{\mathrm{true}}} \tag{13}$$

where $N_{\mathrm{det}}$ is the number of detected tumors in the reconstructed image, $N_{\mathrm{true}}$ is the number of tumors present in the true image, and $N_{\mathrm{TP}}$ is the number of tumors that are correctly detected and localized by the numerical observer applied to the reconstructed image.
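A tumor-wise Dice coefficient of this kind, twice the number of correctly detected tumors divided by the sum of detected and true tumor counts, reduces to a one-line function. In the sketch below the function name, the argument names, and the convention for the empty case are choices made for this example.

```python
def tumor_wise_dice(n_correct, n_detected, n_true):
    """Twice the correctly detected tumors over detected plus true tumors."""
    if n_detected + n_true == 0:
        # Convention chosen here: no tumors present and none detected.
        return 1.0
    return 2.0 * n_correct / (n_detected + n_true)


# Example: 5 true tumors, 4 detections, 3 of which are correct.
example = tumor_wise_dice(n_correct=3, n_detected=4, n_true=5)   # 6 / 9
```

The score equals 1 only when every detection is correct and every true tumor is found; both false detections and missed tumors enlarge the denominator and lower it.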

V. Results and Discussion

Simulation of USCT measurements and image reconstruction using FWI with source encoding were implemented using Devito [49], a Python package for solving partial differential equations using optimized finite difference stencils. Each set of measurements was perturbed by additive white Gaussian noise with standard deviation $4.3 \times 10^{-6}$ (SNR = 30 dB) for the first, second, and fourth experiments, while the third experiment utilized measurements with ten times the noise power (SNR = 20 dB). The FWI reconstructions of the 41 test images used in the first three studies took approximately 6 hours on a workstation with two Intel Xeon Gold 5218 processors (16 cores, 32 threads, 2.3 GHz, 22 MB cache each), 384 GB of DDR4 2933 MHz memory, and one NVIDIA Titan RTX 24 GB graphics processing unit (GPU).

InversionNet was implemented in PyTorch, an open-source machine learning framework [50], and trained for 1,000 epochs with a batch size of 50 using the Adam optimizer [30]. At each training iteration, noise with a fixed SNR of 30 dB was added online as a form of data augmentation, which is known to improve training [51]. Training each instance of InversionNet took approximately 10 hours on an HPC node with 512 GB of memory and 4 NVIDIA Volta V100 graphics processing units (GPUs). Evaluation of an instance of InversionNet on the testing set (41 examples) took approximately 1 second.

A. Study 1: Source Encoding

1). Qualitative Assessment:

An example reconstruction of a type C NBP from each instance of InversionNet in the source encoding study is shown in Fig. 2. In this example, the instances of InversionNet utilizing all sources (Reference) or subsampling (Subsample) have the worst visual appearance (blurred edges, lack of details) while the other instances (Random, Learned) have better visual appearance (sharper edges, finer details). However, several hallucinated features (i.e. false structures that do not exist in the underlying object but are introduced by the reconstruction method [52]) appear in the images reconstructed by all instances. For example, the estimates appear to be hallucinating, or overestimating, the presence of a high speed of sound region in the middle of the zoomed region.

Fig. 2.

Study 1: Examples of speed of sound maps reconstructed by each instance of InversionNet in the source encoding study. From left to right are the object and its estimates reconstructed using the reference instance without source encoding, the instance with subsampling, the instance with random source encoding, and the instance with a learned source encoding. The bottom row is a zoomed-in feature for each image highlighting differences in image resolution and hallucinated features. One notable hallucinated feature is annotated with a red arrow.

2). Quantitative Assessment:

Ensemble average RMSE and SSIM figures of merit computed over the testing set are illustrated in Fig. 3 for each of the four trained instances of InversionNet. These results indicate that the Random instance achieves a statistically significantly lower ensemble average RMSE than the Reference (p-value 3.03e-03%) and accuracy comparable to the Subsample and Learned instances (p-values 23.7% and 76.5%, respectively). Similarly, the Random instance achieves a statistically significantly higher ensemble average SSIM than the Reference (p-value 0.214%) and SSIM comparable to the Subsample and Learned instances (p-values 45.5% and 72.4%, respectively).
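A comparison of this kind can be sketched as follows. The sketch makes two assumptions that are not stated in this section: the relative error is taken to be the ratio of Euclidean norms, and the p-value is obtained with a paired, two-sided sign-flip permutation test. The statistical test actually used in the study may differ, and the per-image values below are synthetic.

```python
import math
import random


def relative_rmse(estimate, truth):
    """Relative error ||estimate - truth||_2 / ||truth||_2 (assumed definition)."""
    num = math.sqrt(sum((e - t) ** 2 for e, t in zip(estimate, truth)))
    den = math.sqrt(sum(t ** 2 for t in truth))
    return num / den


def paired_permutation_pvalue(metric_a, metric_b, n_perm=10000, seed=0):
    """Two-sided sign-flip permutation test on paired per-image metrics."""
    rng = random.Random(seed)
    diffs = [a - b for a, b in zip(metric_a, metric_b)]
    observed = abs(sum(diffs))
    hits = 0
    for _ in range(n_perm):
        # Under the null hypothesis each paired difference is equally likely
        # to have either sign, so flip signs at random.
        permuted = sum(d if rng.random() < 0.5 else -d for d in diffs)
        if abs(permuted) >= observed:
            hits += 1
    # Add-one correction keeps the estimated p-value strictly positive.
    return (hits + 1) / (n_perm + 1)


# Synthetic per-image RMSEs for two hypothetical instances over 41 phantoms.
rng = random.Random(1)
rmse_reference = [0.020 + 0.004 * rng.random() for _ in range(41)]
rmse_random = [r - 0.002 + 0.001 * rng.random() for r in rmse_reference]

p_value = paired_permutation_pvalue(rmse_random, rmse_reference)
p_percent = 100.0 * p_value  # the text reports p-values in percent
```

A paired test is a natural choice here because every instance is evaluated on the same 41 test phantoms, so per-image differences remove the phantom-to-phantom variability.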

Fig. 3.

Study 1: Boxplots of RMSEs and SSIMs across the testing set for reconstructions in the source encoding study. The instances of InversionNet utilizing a fixed random source encoder (Random) and the learned encoder (Learned) demonstrate the lowest ensemble average RMSEs and highest ensemble average SSIMs.

3). Discussion:

While the Reference network has a larger number of trainable parameters than the Random network, and thus a larger representation power, it performs worse than the Random network. Similarly, the Learned network has a larger representation power but yields performance comparable to the Random network. This is possibly due to the fact that all networks were trained on the same number of training examples and for the same number of epochs. It is possible that, were a larger training set available and longer training times allowed, the Reference and Learned networks would eventually outperform the Random network.
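The idea of a fixed random source encoder can be illustrated with a minimal sketch. It assumes that encoding is a linear combination of the per-source data with weights drawn once and then frozen, here chosen as ±1 with equal probability; the weight distribution, the array sizes, and the function names are hypothetical and are not taken from the implementation used in this study.

```python
import random


def make_random_encoder(n_sources, n_encoded, seed=0):
    """Draw a fixed (non-trainable) n_encoded x n_sources matrix of +/-1 weights."""
    rng = random.Random(seed)
    return [[rng.choice((-1.0, 1.0)) for _ in range(n_sources)]
            for _ in range(n_encoded)]


def encode(encoder, data):
    """Combine per-source data into a smaller set of encoded measurements.

    `data[i]` is the flattened measurement vector recorded for source i.
    """
    n_samples = len(data[0])
    return [[sum(w * shot[k] for w, shot in zip(row, data))
             for k in range(n_samples)]
            for row in encoder]


# Hypothetical sizes: 8 sources, 5 samples per source, 2 encoded channels.
rng = random.Random(42)
data = [[rng.gauss(0.0, 1.0) for _ in range(5)] for _ in range(8)]
encoder = make_random_encoder(n_sources=8, n_encoded=2)
encoded = encode(encoder, data)  # 2 x 5: fewer input channels for the network
```

Because the encoder is drawn once and kept fixed, it contributes no trainable parameters, whereas a learned encoder of the same shape would add one parameter per matrix entry.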

B. Study 2: Task-informed Loss

1). Qualitative Assessment:

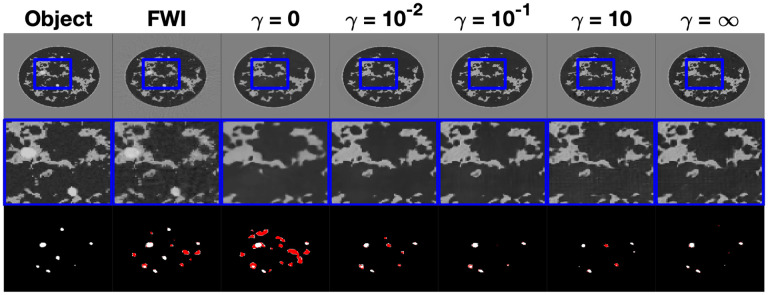

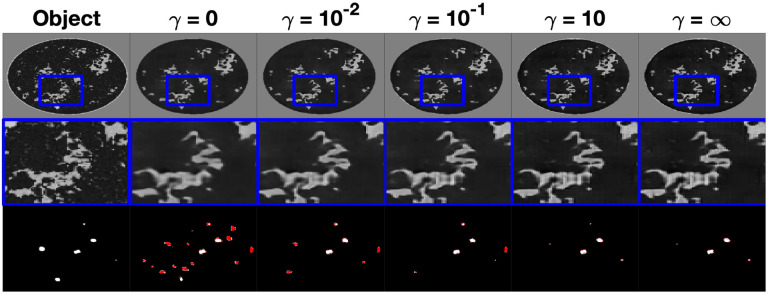

An example reconstruction of a type B NBP from the instances of InversionNet corresponding to and the FWI reconstruction in the task-informed experiment is shown in Fig. 4. The top row of this figure displays the reconstructed images with a window over a selected region of interest, the second row displays a zoomed-in image of the windowed region of interest, and the third row displays the resulting tumor segmentation based on the estimated speed of sound. Tumor segmentation was obtained by thresholding the output of the U-Net observer with a fixed threshold of 0.02, which was chosen based on a receiver operator characteristic (ROC) curve analysis. The tumor structures are shown in white for tumors that were correctly detected and localized and in red for hallucinated structures. In this example, there is a notable improvement in image quality as the task-informed weight increases and peaks at . For values of the task weight equal to or larger than 1, there is a stabilization in visual appearance. The FWI reconstruction exhibits high-frequency artifacts, which can be clearly seen in the zoomed portion. Tumor detection and localization improve as the task weight increases.
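The thresholding step and the resulting overlap measure can be sketched as follows. The sketch assumes a pixelwise comparison and the standard Dice definition 2|A ∩ B| / (|A| + |B|); whether the threshold is inclusive, and the small probability map used as input, are illustrative assumptions.

```python
def threshold_map(prob_map, threshold=0.02):
    """Binarize the observer output; 0.02 is the threshold quoted in the text."""
    return [[1 if p >= threshold else 0 for p in row] for row in prob_map]


def dice_coefficient(pred, truth):
    """Dice = 2 |A intersect B| / (|A| + |B|) for two binary masks."""
    inter = sum(p & t for pr, tr in zip(pred, truth) for p, t in zip(pr, tr))
    total = sum(map(sum, pred)) + sum(map(sum, truth))
    # Two empty masks agree perfectly by convention.
    return 1.0 if total == 0 else 2.0 * inter / total


def hallucinated_pixels(pred, truth):
    """Pixels flagged as tumor that are not tumor in the ground truth."""
    return [[p & (1 - t) for p, t in zip(pr, tr)]
            for pr, tr in zip(pred, truth)]


# Hypothetical 3 x 3 observer output and ground-truth tumor mask.
prob_map = [[0.01, 0.50, 0.03],
            [0.00, 0.90, 0.01],
            [0.05, 0.00, 0.00]]
truth = [[0, 1, 0],
         [0, 1, 0],
         [0, 0, 0]]

pred = threshold_map(prob_map)
dice = dice_coefficient(pred, truth)      # 2 * 2 / (4 + 2)
false_structures = hallucinated_pixels(pred, truth)
```

In this toy case both true tumor pixels are recovered, but two additional pixels exceed the threshold, which lowers the Dice coefficient to 2/3.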

Fig. 4.

Study 2: Examples of speed of sound maps reconstructed by FWI and various instances of InversionNet trained with increasing weight of the task-informed loss. From left to right are the object, the FWI reconstruction, and InversionNet reconstructions with and . The middle row is a zoomed-in feature for each image highlighting differences in image resolution and detected features. The bottom row is the resulting tumor segmentation with the true tumor material shown in white and the hallucinated tumor materials shown in red. Speed of sound estimates reconstructed using FWI and the instance of InversionNet without task-informed loss show a large number of hallucinated tumors, while instances of InversionNet trained with lead to accurate tumor segmentation masks.

2). Quantitative Assessment:

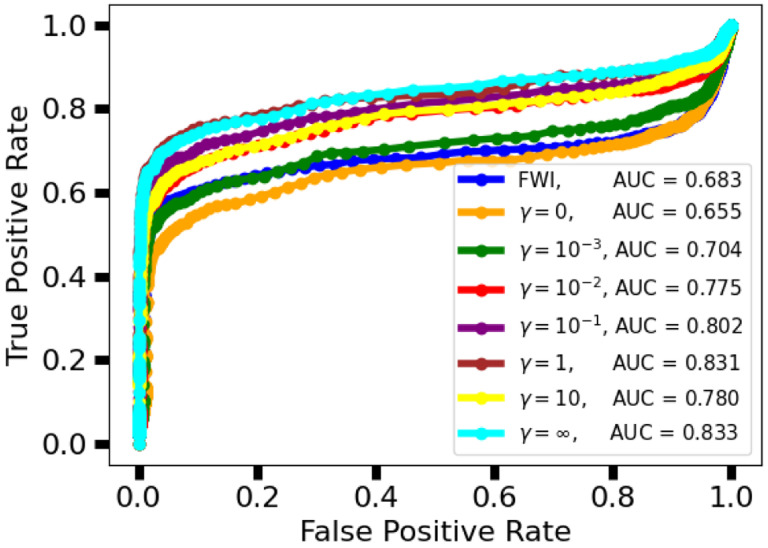

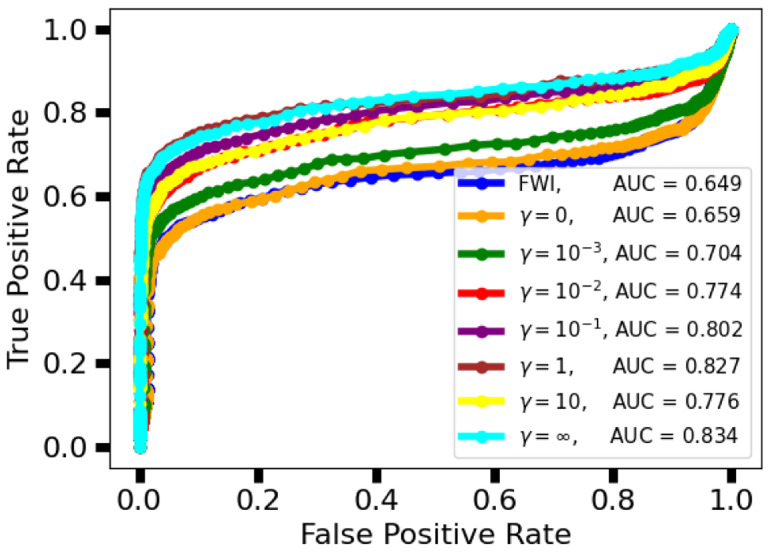

The ROC curves and their respective areas under the curve (AUC) are shown in Fig. 6. These ROC curves were used to select the threshold for tumor detection/localization. The selected threshold was 0.02, which corresponds to the upper left corner of the ROC curve. For , the learned reconstructions demonstrated a higher AUC than the FWI method, with AUC increasing alongside the task weight, with the exception of .
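A minimal sketch of an ROC analysis of this kind is given below. It assumes that the operating point nearest the upper left corner is the one minimizing the Euclidean distance to (0, 1) in the plane of false positive rate versus true positive rate, which is one common reading of that criterion; the scores and labels are synthetic.

```python
import math


def roc_curve(scores, labels):
    """Return (false positive rate, true positive rate, threshold) triples."""
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    points = [(0.0, 0.0, math.inf)]  # nothing is detected at an infinite threshold
    for t in sorted(set(scores), reverse=True):
        tp = sum(1 for s, y in zip(scores, labels) if s >= t and y == 1)
        fp = sum(1 for s, y in zip(scores, labels) if s >= t and y == 0)
        points.append((fp / n_neg, tp / n_pos, t))
    return points


def area_under_curve(points):
    """Trapezoidal area under the ROC curve."""
    return sum((x1 - x0) * (y0 + y1) / 2.0
               for (x0, y0, _), (x1, y1, _) in zip(points, points[1:]))


def closest_to_corner(points):
    """Threshold whose operating point is nearest to (FPR, TPR) = (0, 1)."""
    return min(points, key=lambda p: p[0] ** 2 + (1.0 - p[1]) ** 2)[2]


# Synthetic observer scores and ground-truth labels (1 = tumor present).
scores = [0.9, 0.8, 0.7, 0.6, 0.55, 0.4, 0.3, 0.1]
labels = [1, 1, 0, 1, 1, 0, 0, 0]

roc_points = roc_curve(scores, labels)
auc = area_under_curve(roc_points)
best_threshold = closest_to_corner(roc_points)
```

For these synthetic values, 14 of the 16 positive-negative pairs are ranked correctly, so the AUC is 0.875, and the operating point nearest the corner is reached at a threshold of 0.55.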

Fig. 6.

Study 2: Receiver operator characteristic (ROC) curve for FWI and various instances of the task-informed InversionNet. Higher values of the task-informed weight lead to higher AUC. Instances of InversionNet trained with outperform FWI in terms of AUC.

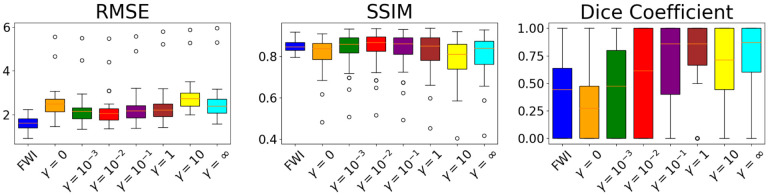

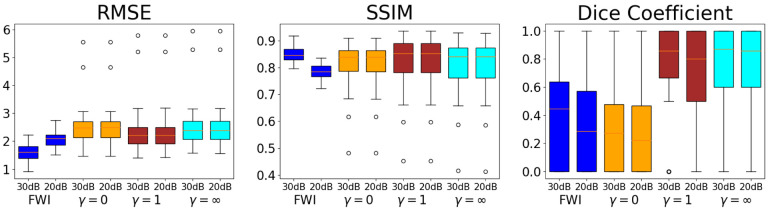

The RMSEs, SSIMs, and Dice coefficients for each of these reconstruction methods across the testing set are illustrated in Fig. 5. The learned reconstruction methods have a statistically significantly higher RMSE than the FWI method for all weights (p-values less than 0.001%).

Fig. 5.

Study 2: Boxplots of RMSEs, SSIMs, and Dice coefficients across the testing set for reconstructions from the task-informed study. Using the task-informed loss reduces RMSEs and increases the SSIMs and Dice coefficient. Best performance is achieved for the task-informed weight . The iterative FWI method outperforms InversionNet in terms of RMSE but underperforms in terms of SSIM. With the proper task-informed weight, InversionNet demonstrates better task performance than the iterative FWI method, as quantified by the Dice coefficient.

For certain task weights, there is no statistically significant difference between the learned reconstruction methods and the FWI method in terms of SSIM (p-values 32.6%, 57.3%, 25.1%, 12.0% for ), while the learned methods underperform with either no or a heavy weight on the task information (p-values 0.601%, 3.70e-02%, 1.68% for ). For low task weights, the learned reconstruction methods perform comparably to the FWI method in terms of Dice coefficient (p-values ≥ 11%) and perform statistically significantly better for higher task weights (p-values ≤ 0.215% for ).

3). Discussion:

Continuation over the task weight was needed in this study to ensure that InversionNet converged to a “good” local minimum of the task-informed loss function. Preliminary experiments showed that training a learned reconstruction with a large task weight and without a good initialization led to very poor image quality and task performance, most likely due to the nonlinearity introduced by the numerical observer. A possible limitation of this study is that no regularization was used in the FWI reconstruction. However, the use of densely sampled data, the absence of modeling error (discretization inverse crime), and the high signal-to-noise ratio mitigate this limitation.
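The role of continuation can be illustrated on a toy problem. The scalar objective below is purely hypothetical and is not the loss used to train InversionNet: a quadratic term stands in for an image-fidelity loss, and a nonconvex term with two minima stands in for a task term whose weight is increased step by step, each stage being warm-started from the previous solution.

```python
def loss_gradient(theta, weight):
    """Gradient of (theta - 0.8)**2 + weight * (theta**2 - 1)**2.

    Toy stand-in: the first term plays the role of an image-fidelity loss, the
    second a nonconvex task term with minima at theta = +1 and theta = -1.
    """
    return 2.0 * (theta - 0.8) + weight * 4.0 * theta * (theta ** 2 - 1.0)


def train(theta, weight, lr=0.005, n_steps=2000):
    """Plain gradient descent from the given starting point."""
    for _ in range(n_steps):
        theta -= lr * loss_gradient(theta, weight)
    return theta


def train_with_continuation(theta, weights):
    """Train for each weight in turn, warm-starting from the previous solution."""
    for weight in weights:
        theta = train(theta, weight)
    return theta


theta_init = -0.5
# Large weight from a cold start: descent is trapped near theta = -1.
theta_direct = train(theta_init, weight=10.0)
# Gradually increasing weight: descent first fits the fidelity term, then
# stays in the basin near theta = +1 as the task term is switched on.
theta_continued = train_with_continuation(theta_init, [0.0, 0.1, 1.0, 10.0])
```

In this toy setting, the cold start with a large weight ends in the poor minimum near -1, whereas the continuation schedule ends near +1, close to the minimizer of the fidelity term.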

C. Study 3: Robustness w.r.t. Noise

1). Assessment:

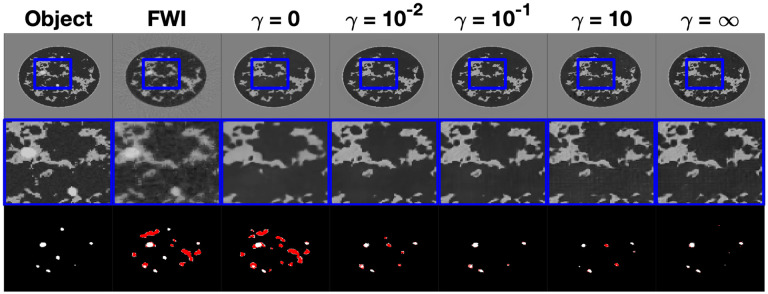

An example reconstruction of a type B NBP, the same one used in the previous study, from each instance of InversionNet in the robustness experiment is shown in Fig. 7. In this example, there is no noticeable drop in image quality or clear difference in tumor segmentation compared to the low-noise reconstructions in Section V-B. The ROC curve for each reconstruction is plotted in Fig. 9, with the corresponding AUC shown in the legend. The increased level of noise results in a very slight decrease in AUC for all reconstruction methods, thus demonstrating observer robustness with respect to noise. Furthermore, for , the learned reconstruction methods demonstrate a higher AUC than the FWI method. Figure 8 displays box plots of RMSEs, SSIMs, and Dice coefficients achieved by the proposed method with as well as by FWI. For the FWI reconstructions, a statistically significant increase in RMSE and decrease in SSIM was noted in the high-noise case compared to the low-noise case (Study 2) (p-values: and , respectively). No statistically significant change in RMSE and SSIM was noted for the learned reconstructions with (all p-values ≥ 95%). Moreover, for each reconstruction method (FWI, ), the reduction in Dice coefficient between the low-noise and high-noise reconstructions is statistically insignificant (p-values 44.1%, 84.1%, 75.1%, and 96.1%).

Fig. 7.

Study 3: Examples of speed of sound maps reconstructed using FWI and various instances of InversionNet in the robustness study. From left to right are the object, the FWI reconstruction, and InversionNet reconstructions with task weight parameter and . The middle row is a zoomed-in feature for each image highlighting differences in image resolution and detected features. There is no visually clear degradation in the quality of the reconstructions despite the increased level of measurement noise. The bottom row is the resulting tumor segmentation with the true tumor material shown in white and the hallucinated tumor materials shown in red. Comparing the visual appearance of reconstructed images and segmentation masks with those shown in Fig. 4 for the same object demonstrates the robustness of InversionNet with respect to measurement noise.

Fig. 9.

Study 3: Receiver operator characteristic (ROC) curve for task-informed, learned reconstruction methods with increased noise level. All instances of InversionNet achieved a higher AUC than FWI. Compared with Fig. 6, AUC for all methods decreases slightly with the higher level of noise.

Fig. 8.

Study 3: Boxplots of RMSEs, SSIMs, and Dice coefficients across the testing set for reconstructions from the robustness study. Each color corresponds to an instance of InversionNet trained with a specified task weighting and tested with two different levels of measurement noise. The increased level of noise results in a slight increase in RMSE and decrease in SSIM and Dice coefficient.

2). Discussion:

Performance of the learned reconstruction methods in terms of RMSE, SSIM, Dice coefficient, and AUC decreases only very slightly, and not statistically significantly, in the presence of increased noise, and the methods maintain their comparative performance with respect to the FWI method. This study shows that the proposed learned reconstruction methods are robust with respect to increased noise.

D. Study 4: Generalizability w.r.t. underrepresented groups

1). Assessment:

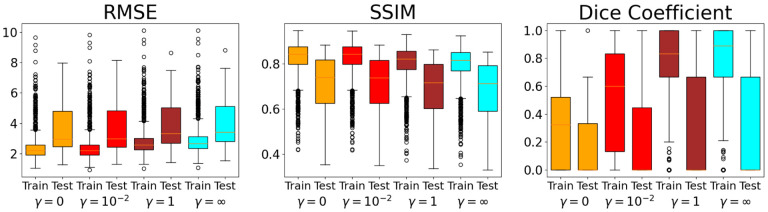

An example reconstruction from each instance of InversionNet in the generalization experiment is shown in Fig. 10. In these images, there is a drop in image quality compared to the other experiments, and the accuracy of the tumor segmentation decreases. The ROC curve for each reconstruction method is plotted in Fig. 12, with the corresponding AUC shown in the legend. This shows that the task-informed objective does not improve task performance for the underrepresented population. For a select few of these reconstruction methods for both the training and testing sets, the RMSEs, SSIMs, and Dice coefficients across the testing set are illustrated in Fig. 11. The boxplots display a significant decrease in image quality and task performance for underrepresented data.

Fig. 10.

Study 4: Examples of speed of sound maps reconstructed by each instance of InversionNet in the generalization study. From left to right are the object and InversionNet reconstructions with and . The middle row is a zoomed-in feature for each image highlighting differences in image resolution and reconstructed features. The bottom row is the resulting tumor segmentation with the true tumor material shown in white and the hallucinated tumor materials shown in red. Image quality and tumor detection noticeably decrease when the testing set consists of a population that is underrepresented in the training set, but they do not completely degrade and remain relatively accurate.

Fig. 12.

Study 4: Receiver operator characteristic (ROC) curve for task-informed, learned reconstruction methods on an underrepresented population. Compared to Fig. 6, this study displays a clear decrease in observer performance on an underrepresented population, with no improvement in AUC as the task weight increases.

Fig. 11.

Study 4: Boxplots of RMSEs, SSIMs, and Dice coefficients across the testing set for reconstructions from the generalization study. The testing set generated from an underrepresented group demonstrates an increase in RMSE and decrease in SSIM and Dice coefficient.

2). Discussion:

In addition to the experiments reported here, several instances of InversionNet were trained on a data set consisting of only type B, C, and D NBPs and then evaluated on an out-of-distribution testing set consisting only of type A NBPs. Results showed poor generalization performance in this case and are not reported here. This decrease in performance was expected, as it is known that deep learning methods that map between different input/output domains tend to perform worse, be more difficult to train, and generalize poorly compared to methods that map between inputs and outputs in the same domain [53]. This means that InversionNet is at a disadvantage in achieving accurate performance compared to other methods that combine a learned image (or data) translation/correction and an approximated physics-based mapping between the data and image domains [19], [54].

VI. Conclusion

This work presents a deep-learning image reconstruction method for ultrasound computed tomography (USCT). In particular, the InversionNet architecture, originally proposed for seismic imaging, was extended to produce quantitatively accurate speed of sound maps of breast tissues from simulated USCT data, without the computational burden of model-based iterative methods, such as full waveform inversion (FWI). The proposed deep learning image reconstruction method was illustrated in four virtual imaging studies using a large set of anatomically and physiologically realistic numerical breast phantoms.

The first study assessed different source encoding approaches for InversionNet. The source encoding study showed that random source encoding results in lower RMSE and higher SSIM compared to subsampling. Furthermore, the network trained using random source encoding performed better than the reference network (no source encoding) and the one with a learned source encoder. A possible reason for this is that all networks were trained on the same training set and for the same number of epochs. While it is expected that, given a sufficiently large training set and training time, using no source encoding or a trained encoder would eventually lead to better performance, this study demonstrates that a fixed random source encoder is able to reduce data complexity and simplify training, leading to improved accuracy when training data are limited.

The second study assessed the role of a task-informed loss function in training InversionNet and its effect on task performance. In this study, increasing the weight of the task-informed loss in the loss function used during training increased image quality both in terms of RMSE and SSIM until it was competitive with an FWI reconstruction despite being three orders of magnitude faster than FWI. Furthermore, networks trained with a task-informed objective demonstrated better task performance in terms of Dice coefficient compared to the FWI reconstruction. Broadly, this study shows that a learned reconstruction can be tailored for a specific task and that task information can improve image quality.

The third study assessed the robustness of the proposed method with respect to additive noise levels. In this study, the learned FWI networks trained in the second study were used to reconstruct unseen data that were corrupted using a ten times higher noise level. This study exhibited minimally diminished accuracy compared to the second study. These results demonstrate that the proposed method is robust with respect to measurement noise.

The fourth study assessed InversionNet’s ability to reconstruct out-of-distribution images. In this study, an instance of InversionNet was trained using a dataset in which BI-RADS type A (fatty breast) NBPs were severely underrepresented, with only one-fifth the number of examples of the other categories. The network was then tested on a testing set consisting of only BI-RADS type A NBPs and achieved only slightly diminished accuracy compared with that achieved in the second study. These results demonstrate that InversionNet is able to generalize to populations that are underrepresented in the training set. Nevertheless, the diminished accuracy on this underrepresented population highlights the need for a large, representative distribution of training images for highly accurate results.

In summary, this work established the feasibility of a CNN-based learned FWI reconstruction method for USCT data and demonstrated reduced computational burden and the ability to leverage task-specific information. Future work will include the application of these learned reconstruction methods to clinical data. For this application, several additional challenges will need to be addressed. Primarily, the forward model will be extended to a 3D wave physics model and a 3D ring array USCT imaging system [25]. Additionally, future work will address the lack, or rarity, of ground truth images by implementing a self-supervised training method [55].

ACKNOWLEDGMENTS

This work was co-funded by the Los Alamos National Laboratory (LANL) - the Center for Space and Earth Science and Laboratory Directed Research and Development program under project number 20200061DR. Luke Lozenski, Fu Li, Mark Anastasio, and Umberto Villa would like to acknowledge support from NIH under award number R01 EB028652.

Appendix A. Training a U-Net Observer

The numerical observer used in the tumor detection and localization task study utilized a U-Net architecture. Mathematically, the U-Net observer can be represented as an operator with trainable parameters that maps a speed of sound image to an image whose pixel values represent the probability that a tumor is present at that location. The U-Net observer was trained in a supervised manner with a pixelwise cross-entropy loss function that compares the observer output with the true tumor segmentation maps. A training set consisting of all 1,435 available speed of sound NBPs and corresponding tumor segmentation maps was used to train the U-Net observer. The area under the receiver operator characteristic curve was 0.941.
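A pixelwise cross-entropy of this kind can be sketched as follows. The sketch assumes the two-class (binary) form, in which each output pixel is a tumor probability and each target pixel is 0 or 1; the clamping constant and the small example arrays are illustrative choices, not details of the trained observer.

```python
import math


def pixelwise_cross_entropy(prob_map, truth_mask, eps=1e-12):
    """Mean binary cross-entropy between predicted probabilities and a 0/1 mask."""
    total, count = 0.0, 0
    for prob_row, truth_row in zip(prob_map, truth_mask):
        for p, y in zip(prob_row, truth_row):
            p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
            total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
            count += 1
    return total / count


# Hypothetical 2 x 2 ground truth and two candidate observer outputs.
truth = [[0, 1], [0, 0]]
uninformative = [[0.5, 0.5], [0.5, 0.5]]
confident = [[0.01, 0.99], [0.01, 0.01]]

loss_uninformative = pixelwise_cross_entropy(uninformative, truth)  # equals ln 2
loss_confident = pixelwise_cross_entropy(confident, truth)
```

An output of 0.5 everywhere gives a loss of ln 2 regardless of the mask, which provides a simple reference value: a useful observer should score well below it.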

Contributor Information

Luke Lozenski, Department of Electrical and Systems Engineering, Washington University in St. Louis, St. Louis, MO 63130, USA; Energy and Natural Resources Security Group, Los Alamos National Laboratory, Los Alamos, NM 87545, USA.

Hanchen Wang, Energy and Natural Resources Security Group, Los Alamos National Laboratory, Los Alamos, NM 87545, USA.

Fu Li, Department of Bioengineering, University of Illinois Urbana-Champaign, Urbana, IL 61801, USA.

Mark Anastasio, Department of Bioengineering, University of Illinois Urbana-Champaign, Urbana, IL 61801, USA.

Brendt Wohlberg, Applied Mathematics and Plasma Physics Group, Los Alamos National Laboratory, Los Alamos, NM 87545, USA.

Youzuo Lin (Member, IEEE), Energy and Natural Resources Security Group, Los Alamos National Laboratory, Los Alamos, NM 87545, USA.

Umberto Villa, Oden Institute for Computational Engineering and Sciences, University of Texas at Austin, Austin, TX 78712.

References

- [1] Koulountzios P., Rymarczyk T., and Soleimani M., “A triple-modality ultrasound computed tomography based on full-waveform data for industrial processes,” IEEE Sensors Journal, vol. 21, no. 18, pp. 20896–20909, 2021.

- [2] Duric N., Littrup P., Poulo L., Babkin A., Pevzner R., Holsapple E., Rama O., and Glide C., “Detection of breast cancer with ultrasound tomography: First results with the computed ultrasound risk evaluation (CURE) prototype,” Medical physics, vol. 34, no. 2, pp. 773–785, 2007.

- [3] Gemmeke H. and Ruiter N., “3d ultrasound computer tomography for medical imaging,” Nuclear Instruments and Methods in Physics Research Section A: Accelerators, Spectrometers, Detectors and Associated Equipment, vol. 580, no. 2, pp. 1057–1065, 2007, imaging 2006. [Online]. Available: https://www.sciencedirect.com/science/article/pii/S016890020701323X

- [4] Hopp T., Zapf M., Kretzek E., Henrich J., Tukalo A., Gemmeke H., Kaiser C., Knaudt J., and Ruiter N., “3d ultrasound computer tomography: update from a clinical study,” in Medical Imaging 2016: Ultrasonic Imaging and Tomography, vol. 9790. SPIE, 2016, pp. 75–83.

- [5] Wang K., Matthews T., Anis F., Li C., Duric N., and Anastasio M. A., “Waveform inversion with source encoding for breast sound speed reconstruction in ultrasound computed tomography,” IEEE transactions on ultrasonics, ferroelectrics, and frequency control, vol. 62, no. 3, pp. 475–493, 2015.

- [6] Guasch L., Calderón Agudo O., Tang M.-X., Nachev P., and Warner M., “Full-waveform inversion imaging of the human brain,” NPJ digital medicine, vol. 3, no. 1, p. 28, 2020.

- [7] Huang L., Lin Y., Zhang Z., Labyed Y., Tan S., Nguyen N. Q., Hanson K. M., Sandoval D., and Williamson M., “Breast ultrasound waveform tomography: Using both transmission and reflection data, and numerical virtual point sources,” in Medical Imaging 2014: Ultrasonic Imaging and Tomography, vol. 9040. SPIE, 2014, pp. 187–198.

- [8] Pratt R. G., Huang L., Duric N., and Littrup P., “Sound-speed and attenuation imaging of breast tissue using waveform tomography of transmission ultrasound data,” in Medical Imaging 2007: Physics of Medical Imaging, vol. 6510. SPIE, 2007, pp. 1523–1534.

- [9] Javaherian A. and Cox B., “Ray-based inversion accounting for scattering for biomedical ultrasound tomography,” Inverse Problems, vol. 37, no. 11, p. 115003, 2021.

- [10] Hormati A., Jovanović I., Roy O., and Vetterli M., “Robust ultrasound travel-time tomography using the bent ray model,” in Medical Imaging 2010: Ultrasonic Imaging, Tomography, and Therapy, vol. 7629. International Society for Optics and Photonics, 2010, p. 76290I.

- [11] Lucka F., Pérez-Liva M., Treeby B. E., and Cox B. T., “High resolution 3d ultrasonic breast imaging by time-domain full waveform inversion,” Inverse Problems, vol. 38, no. 2, p. 025008, 2021.

- [12] Zhang Z., Huang L., and Lin Y., “Efficient implementation of ultrasound waveform tomography using source encoding,” in Medical Imaging 2012: Ultrasonic Imaging, Tomography, and Therapy, vol. 8320. SPIE, 2012, pp. 22–31.

- [13] Wang G., Ye J. C., and De Man B., “Deep learning for tomographic image reconstruction,” Nature Machine Intelligence, vol. 2, no. 12, pp. 737–748, 2020.

- [14] Reader A. J., Corda G., Mehranian A., da Costa-Luis C., Ellis S., and Schnabel J. A., “Deep learning for pet image reconstruction,” IEEE Transactions on Radiation and Plasma Medical Sciences, vol. 5, no. 1, pp. 1–25, 2020.

- [15] Fan Y., Wang H., Gemmeke H., Hopp T., and Hesser J., “Model-data-driven image reconstruction with neural networks for ultrasound computed tomography breast imaging,” Neurocomputing, vol. 467, pp. 10–21, 2022. [Online]. Available: https://www.sciencedirect.com/science/article/pii/S092523122101393X

- [16] Donaldson R. W. and He J., “Instantaneous ultrasound computed tomography using deep convolutional neural networks,” in Health Monitoring of Structural and Biological Systems XV, vol. 11593. SPIE, 2021, pp. 396–405.

- [17] Prasad S. and Almekkawy M., “Dl-uct: A deep learning framework for ultrasound computed tomography,” in 2022 IEEE 19th International Symposium on Biomedical Imaging (ISBI), 2022, pp. 1–5.

- [18] Liu X. and Almekkawy M., “Ultrasound computed tomography using physical-informed neural network,” in 2021 IEEE International Ultrasonics Symposium (IUS), 2021, pp. 1–4.

- [19] Jeong G., Li F., Villa U., and Anastasio M. A., “A deep learning-based image reconstruction method for usct that employs multimodality inputs,” in Medical Imaging 2023: Ultrasonic Imaging and Tomography, vol. 12470. SPIE, 2023, pp. 105–110.

- [20] Ronneberger O., Fischer P., and Brox T., “U-net: Convolutional networks for biomedical image segmentation,” in Medical Image Computing and Computer-Assisted Intervention-MICCAI 2015: 18th International Conference, Munich, Germany, October 5–9, 2015, Proceedings, Part III 18. Springer, 2015, pp. 234–241.

- [21] Badano A., Graff C. G., Badal A., Sharma D., Zeng R., Samuelson F. W., Glick S. J., and Myers K. J., “Evaluation of digital breast tomosynthesis as replacement of full-field digital mammography using an in silico imaging trial,” JAMA network open, vol. 1, no. 7, pp. e185474–e185474, 2018.

- [22] Li F., Villa U., Park S., and Anastasio M. A., “3-D stochastic numerical breast phantoms for enabling virtual imaging trials of ultrasound computed tomography,” IEEE Transactions on Ultrasonics, Ferroelectrics, and Frequency Control, vol. 69, no. 1, pp. 135–146, 2022.

- [23] Wu Y. and Lin Y., “InversionNet: An efficient and accurate data-driven full waveform inversion,” IEEE Transactions on Computational Imaging, vol. 6, pp. 419–433, 2020.

- [24] Jensen J. A., “A model for the propagation and scattering of ultrasound in tissue,” The Journal of the Acoustical Society of America, vol. 89, no. 1, pp. 182–190, 1991.

- [25] Li F., Villa U., Duric N., and Anastasio M. A., “A forward model incorporating elevation-focused transducer properties for 3D full-waveform inversion in ultrasound computed tomography,” 2023. [Online]. Available: https://arxiv.org/abs/2301.07787

- [26] Virieux J. and Operto S., “An overview of full-waveform inversion in exploration geophysics,” Geophysics, vol. 74, no. 6, pp. WCC1–WCC26, 2009.

- [27] Krebs J. R., Anderson J. E., Hinkley D., Neelamani R., Lee S., Baumstein A., and Lacasse M.-D., “Fast full-wavefield seismic inversion using encoded sources,” Geophysics, vol. 74, no. 6, pp. WCC177–WCC188, 2009.

- [28] Nesterov Y. E., “A method of solving a convex programming problem with convergence rate O(1/k^2),” in Doklady Akademii Nauk, vol. 269, no. 3. Russian Academy of Sciences, 1983, pp. 543–547.

- [29] Polyak B. T., “Some methods of speeding up the convergence of iteration methods,” Ussr computational mathematics and mathematical physics, vol. 4, no. 5, pp. 1–17, 1964.

- [30] Kingma D. and Ba J., “Adam: A method for stochastic optimization,” arXiv e-prints, 2014.

- [31] Barrett H. H., Yao J., Rolland J. P., and Myers K. J., “Model observers for assessment of image quality,” Proceedings of the National Academy of Sciences, vol. 90, no. 21, pp. 9758–9765, 1993.

- [32] Christianson O., Chen J. J., Yang Z., Saiprasad G., Dima A., Filliben J. J., Peskin A., Trimble C., Siegel E. L., and Samei E., “An improved index of image quality for task-based performance of ct iterative reconstruction across three commercial implementations,” Radiology, vol. 275, no. 3, pp. 725–734, 2015.

- [33] Li K., Zhou W., Li H., and Anastasio M. A., “Assessing the impact of deep neural network-based image denoising on binary signal detection tasks,” IEEE transactions on medical imaging, vol. 40, no. 9, pp. 2295–2305, 2021.

- [34] Zhang X., Kelkar V. A., Granstedt J., Li H., and Anastasio M. A., “Impact of deep learning-based image super-resolution on binary signal detection,” Journal of Medical Imaging, vol. 8, no. 6, pp. 065501–065501, 2021.

- [35] Adler J., Lunz S., Verdier O., Schönlieb C.-B., and Öktem O., “Task adapted reconstruction for inverse problems,” Inverse Problems, vol. 38, no. 7, p. 075006, 2022.

- [36] He X. and Park S., “Model observers in medical imaging research,” Theranostics, vol. 3, no. 10, p. 774, 2013.

- [37] Luenberger D., “An introduction to observers,” IEEE Transactions on automatic control, vol. 16, no. 6, pp. 596–602, 1971.

- [38] Moore K. L., “Automated radiotherapy treatment planning,” in Seminars in radiation oncology, vol. 29, no. 3. Elsevier, 2019, pp. 209–218.

- [39] Li K., Zhou W., Li H., and Anastasio M. A., “Estimating task-based performance bounds for image reconstruction methods by use of learned-ideal observers,” in Medical Imaging 2023: Image Perception, Observer Performance, and Technology Assessment, vol. 12467. SPIE, 2023, pp. 120–125.

- [40] Chen J.-A., Niu W., Ren B., Wang Y., and Shen X., “Survey: Exploiting data redundancy for optimization of deep learning,” ACM Computing Surveys, vol. 55, no. 10, pp. 1–38, 2023.

- [41] Barrett H. H. and Myers K. J., Foundations of image science. John Wiley & Sons, 2013.

- [42] Yao J. and Barrett H. H., “Predicting human performance by a channelized hotelling observer model,” in Mathematical Methods in Medical Imaging, vol. 1768. SPIE, 1992, pp. 161–168.

- [43] Gifford H. C., King M. A., de Vries D. J., and Soares E. J., “Channelized hotelling and human observer correlation for lesion detection in hepatic spect imaging,” Journal of Nuclear Medicine, vol. 41, no. 3, pp. 514–521, 2000.

- [44] Li F., Villa U., Park S., and Anastasio M., “2D acoustic numerical breast phantoms and USCT measurement data: V1,” Harvard Dataverse, 2021.

- [45] American College of Radiology, D’Orsi C. J. et al., ACR BI-RADS Atlas: Breast Imaging Reporting and Data System; Mammography, Ultrasound, Magnetic Resonance Imaging, Follow-up and Outcome Monitoring, Data Dictionary. ACR, American College of Radiology, 2013.

- [46] Stotzka R., Wuerfel J., Mueller T. O., and Gemmeke H., “Medical imaging by ultrasound computer tomography,” in Medical Imaging 2002: Ultrasonic Imaging and Signal Processing, vol. 4687. SPIE, 2002, pp. 110–119.

- [47] Clayton R. and Engquist B., “Absorbing boundary conditions for acoustic and elastic wave equations,” Bulletin of the seismological society of America, vol. 67, no. 6, pp. 1529–1540, 1977.

- [48] Wang Z., Bovik A., Sheikh H., and Simoncelli E., “Image quality assessment: from error visibility to structural similarity,” IEEE Transactions on Image Processing, vol. 13, no. 4, pp. 600–612, 2004.

- [49] Kukreja N., Louboutin M., Vieira F., Luporini F., Lange M., and Gorman G., “Devito: Automated fast finite difference computation,” in 2016 Sixth International Workshop on Domain-Specific Languages and High-Level Frameworks for High Performance Computing (WOLFHPC). IEEE, 2016, pp. 11–19.

- [50] Paszke A. et al., “Pytorch: An imperative style, high-performance deep learning library,” in Advances in Neural Information Processing Systems 32. Curran Associates, Inc., 2019, pp. 8024–8035. [Online]. Available: http://papers.neurips.cc/paper/9015-pytorch-an-imperative-style-high-performance-deep-learning-library.pdf

- [51] Shorten C. and Khoshgoftaar T. M., “A survey on image data augmentation for deep learning,” Journal of big data, vol. 6, no. 1, pp. 1–48, 2019.

- [52] Bhadra S., Kelkar V. A., Brooks F. J., and Anastasio M. A., “On hallucinations in tomographic image reconstruction,” IEEE Transactions on Medical Imaging, vol. 40, no. 11, pp. 3249–3260, 2021.

- [53] Pang Y., Lin J., Qin T., and Chen Z., “Image-to-image translation: Methods and applications,” IEEE Transactions on Multimedia, vol. 24, pp. 3859–3881, 2021.

- [54] Feng Y., Chen Y., Feng S., Jin P., Liu Z., and Lin Y., “An intriguing property of geophysics inversion,” in International Conference on Machine Learning. PMLR, 2022, pp. 6434–6446.

- [55] Jin P., Zhang X., Chen Y., Huang S. X., Liu Z., and Lin Y., “Unsupervised learning of full-waveform inversion: Connecting CNN and partial differential equation in a loop,” in International Conference on Learning Representations (ICLR), 2022. [Online]. Available: https://openreview.net/forum?id=izvwgBic9q