Abstract

Purpose

A systematic review was conducted to examine the state of the literature on the use of ecologically valid virtual environments and related technologies to assess and rehabilitate people with Acquired Brain Injury (ABI).

Materials and methods

A literature search was performed following the PRISMA guidelines using the PubMed, Web of Science, ACM and IEEE databases. The focus was on assessment and intervention studies using ecologically valid virtual environments (VE). Studies were included if they involved individuals with ABI and simulated real-world environments or Activities of Daily Living (ADL).

Results

Seventy out of 363 studies were included in this review and were grouped and analyzed according to the type of environment simulated, comprising a total of 12 kitchens, 11 supermarkets, 10 shopping malls, 16 streets, 11 cities, and 10 other everyday life scenarios. These VE were mostly presented on computer screens, head-mounted displays (HMDs) and laptops, and patients interacted with them primarily via mouse, keyboard, and joystick. Twenty-five out of 70 studies had a non-experimental design.

Conclusion

Evidence about the clinical impact of ecologically valid VE is still modest, and further research with larger samples is needed. It is important to standardize neuropsychological and motor outcome measures to strengthen comparisons and conclusions across studies.

Systematic review registration

PROSPERO identifier CRD42022301560, https://www.crd.york.ac.uk/prospero/display_record.php?RecordID=301560.

Keywords: ecological validity, virtual reality, assessment, rehabilitation, acquired brain injury, activities of daily living

1. Introduction

Given the high prevalence of cognitive impairment, functional dependence and social isolation after acquired brain injury (ABI), particularly traumatic brain injury (TBI) (Robba and Citerio, 2023) and stroke (Tsao et al., 2023), finding effective motor and cognitive assessment and rehabilitation solutions has been a primary goal of many research studies in the field of health technologies (Bernhardt et al., 2019). Many daily activities, such as grocery shopping, involve getting to outdoor locations such as supermarkets or shopping malls. Street crossing and driving are demanding tasks that require multiple complex cognitive skills that are commonly impaired after ABI (Parsons, 2015). Although the goal of rehabilitation is to improve individuals’ independence in these activities, practicing them in real environments can be dangerous because of intrinsic hazards such as traffic or pedestrians, and is extremely resource-intensive in terms of staff and financial costs, resources that are scarce in most clinics (Bohil et al., 2011). These limitations have motivated the use of Virtual Reality (VR) to safely recreate different scenarios in the clinic (Rizzo et al., 2004).

Most ABI rehabilitation approaches rely on theoretically valid principles; however, their exercises and activities, such as those used in physiotherapy and occupational therapy, repetitive motor exercises, and paper-and-pencil cognitive exercises with static stimuli, are demotivating and lack ecological validity (Parsons, 2016). The issue of ecological validity was already being discussed in 1982, when Neisser argued that cognitive psychology experiments were conducted in artificial settings and employed measures with no counterparts in everyday life (Neisser, 1982). In contrast, Banaji and Crowder (1989) argued that ecological approaches lack the internal validity and experimental control needed for scientific progress. In 1996, Franzen and Wilhelm conceptualized ecological validity as having two aspects: veridicality, in which a person’s performance on a construct-driven measure should predict some feature(s) of the person’s everyday functioning, and verisimilitude, in which the requirements of a neuropsychological measure and the testing conditions should resemble the requirements found in a person’s ADLs (Franzen and Wilhelm, 1996). The search for a balance between everyday activities and laboratory control has a long history in clinical neuroscience (Parsons, 2015), and researchers have used different definitions and interpretations of the term ecological validity (Holleman et al., 2020).

A promising approach to improving the ecological validity of neuropsychological assessment and rehabilitation is the use of immersive and non-immersive VR systems (Rizzo et al., 2004; Parsons, 2015; Kourtesis and MacPherson, 2023). VR combines the control and rigor of laboratory measures with simulations that depict real-life situations, balancing naturalistic observation against the need to control key variables (Bohil et al., 2011). In recent years, VR-based methodologies have been developed to assess and improve cognitive (Aminov et al., 2018; Luca et al., 2018; Maggio et al., 2019) and motor (Laver et al., 2015, 2017) functions, via immersive (e.g., Head-Mounted Displays (HMDs), Cave Automatic Virtual Environments (CAVEs)) and non-immersive (e.g., 2D computer screens, tablets, mobile phones) technologies. Non-immersive VR requires the use of controllers, joysticks and keyboards, which can be challenging for individuals with no gaming or computer experience, namely older adults and clinical populations (Werner and Korczyn, 2012; Zygouris and Tsolaki, 2015; Parsons et al., 2018; Laver et al., 2017; Zaidi et al., 2018). On the other hand, immersive VR using naturalistic interactions seems to allow comparable performance between gamers and non-gamers (Zaidi et al., 2018).

Several critical elements, such as presence, time perception, plausibility and embodiment, collectively contribute to the ecological validity of VR-based assessment and rehabilitation programs. Presence comprises two illusions, usually referred to as Place Illusion (the illusion of being in the place depicted by the VE) and Plausibility (the illusion that the virtual events are really happening). Embodiment refers to the feeling of “owning” an avatar or virtual body. This aspect is particularly significant for patients with motor impairments, since an embodied experience enhances motor learning and fosters a stronger mind–body connection during rehabilitation sessions (Vourvopoulos et al., 2022). Presence and embodiment together are the key illusions of VR (Slater et al., 2022). Time perception is another important element: time in VR is perceived differently from time in the physical world, potentially altering the patient’s temporal experience. Understanding how time is perceived in VR is therefore crucial for designing effective assessment and rehabilitation protocols and for managing patient expectations (Mullen and Davidenko, 2021). Integrating these key elements can potentially enhance patient motivation and engagement, which might result in better adherence and improved outcomes.

According to Slater (1999), a VR system that allows individuals to turn their head in any direction and still receive visual information only from within the VE is more immersive than one in which they can only see the VE along one fixed direction. Accordingly, whereas in a non-immersive VR system the VE is displayed on a computer monitor and interaction is limited to a mouse, joystick or keyboard, in an immersive VR system (typically HMDs and CAVEs) the user is surrounded by a 3D representation of the real world and can use their own body for naturalistic interaction. This strong feeling of ‘being physically present’ in the VE allows one to respond realistically to virtual stimuli (Agrewal et al., 2020), activating the brain mechanisms that underlie sensorimotor integration and the cerebral networks that regulate attention (Vecchiato et al., 2015). Notwithstanding the technical and theoretical differences between immersive and non-immersive VR, both have advantages and disadvantages as bases for novel assessment and rehabilitation systems that improve and personalize treatment according to each patient’s needs.

One explanation for the growing interest in VR is its potential to incorporate motor and cognitive tasks within simulations of ADLs and to provide safe, controlled environments in which patients can rehabilitate ADLs. Among its limitations, VR may cause cybersickness symptoms such as nausea, dizziness, disorientation, and postural instability. However, recent reviews and meta-analyses suggest that these symptoms arise from inappropriate implementation of immersive VR hardware and software (Kourtesis et al., 2019; Saredakis et al., 2020). Another problem is that researchers and clinicians do not quantitatively assess cybersickness, even though it can affect cognitive performance (Nesbitt et al., 2017; Arafat et al., 2018; Mittelstaedt et al., 2019). Since ABI patients may be more susceptible to these symptoms (Spreij et al., 2020b), extra caution is warranted when developing VR-based assessment and intervention tools. Collaboration between clinicians, researchers, and technology developers is essential to produce VR-based tools that address the assessment and treatment needs of patients with ABI (Zanier et al., 2018).

In recent years, a number of reviews have analyzed the use of ecologically valid environments in cognitive and motor assessment and/or rehabilitation for multiple sclerosis (Weber et al., 2019), addictive disorders (Segawa et al., 2020), hearing problems (Keidser et al., 2020), mental health (Bell et al., 2022), language (Peeters, 2019) and neglect (Azouvi, 2017). Other recent reviews have provided overviews closer to the present field. Romero-Ayuso et al. (2021) conducted a review to determine which available tests assess executive functions with enough ecological validity to predict individuals’ functioning. The authors analyzed 76 studies and identified 110 tools to assess instrumental activities of daily living, such as menu preparation and shopping. Because they focused on executive function assessment, they found a predominance of tests based on the Multiple Errands Test paradigm (Romero-Ayuso et al., 2021). Corti et al. (2022) reviewed VR assessment tools for ABI described in scientific publications between 2010 and 2019. Through the analysis of 38 studies, they identified 16 different tools that assessed executive functions and prospective memory and another 15 that assessed visuo-spatial abilities. The authors found that about half of these tools delivered tasks that differ from everyday life activities, limiting the generalization of the assessment to real-world performance. Although the authors recognize the great potential of VR for ABI assessment, they recommend improving existing tools or developing new ones with greater ecological validity (Corti et al., 2022).

To the best of our knowledge, no review has analyzed the characteristics and clinical validation of ecologically valid daily life scenarios developed to assess and/or rehabilitate patients with ABI across both the cognitive and motor domains. This review therefore aims to examine the state of the literature on the use of ecologically valid virtual environments to assess and rehabilitate people with ABI. It focuses on (1) which virtual environments are most commonly used in ABI assessment and rehabilitation, (2) which technologies are used for presentation and interaction in these environments, and (3) how these virtual environments have been clinically validated with respect to their impact on ABI assessment and rehabilitation.

2. Methods

A systematic search of the existing literature was performed following the PRISMA guidelines using four digital databases: PubMed, Web of Science, IEEE and ACM. The search focused on assessment and intervention studies published in English, from 2000 to 2021, in peer-reviewed journals and conferences. The search targeted titles and abstracts using the following keywords and boolean logic: ‘virtual reality’ OR ‘virtual environment’ OR ‘immersive’ AND ‘stroke’ OR ‘traumatic brain injury’ OR ‘acquired brain injury’ AND ‘rehabilitation’ OR ‘assessment’ AND ‘simulated environments’ OR ‘activities daily living’ NOT ‘motor’ OR ‘mobility’ OR ‘limb’ OR ‘balance’ OR ‘gait’.
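The Boolean string above does not state how its AND, OR and NOT operators are grouped, and the searched databases do not all apply the same operator precedence. As an illustration only, the sketch below assumes the most likely reading, in which synonyms within each concept are OR-combined, the concepts are AND-combined, and the exclusion terms are OR-combined under a single NOT. The helper names `or_block` and `build_query` are hypothetical, and the database-specific field tags and syntax of PubMed, Web of Science, IEEE Xplore and the ACM Digital Library are not modelled.

```python
# Hypothetical sketch: assumes OR within each concept block, AND between
# blocks, and a single NOT over the OR-combined exclusion terms. This is an
# assumed reading of the published search string, not the authors' code.

def or_block(terms: list[str]) -> str:
    """Quote each term, join synonyms with OR, and wrap the group in parentheses."""
    return "(" + " OR ".join(f'"{t}"' for t in terms) + ")"


def build_query(include_blocks: list[list[str]], exclude_terms: list[str]) -> str:
    """AND together the inclusion blocks, then exclude records matching any exclusion term."""
    query = " AND ".join(or_block(block) for block in include_blocks)
    if exclude_terms:
        query += " NOT " + or_block(exclude_terms)
    return query


# Keyword groups taken from the search string reported in the Methods.
INCLUDE = [
    ["virtual reality", "virtual environment", "immersive"],
    ["stroke", "traumatic brain injury", "acquired brain injury"],
    ["rehabilitation", "assessment"],
    ["simulated environments", "activities daily living"],
]
EXCLUDE = ["motor", "mobility", "limb", "balance", "gait"]

if __name__ == "__main__":
    print(build_query(INCLUDE, EXCLUDE))
```

Writing the grouping out explicitly makes the intended logic reproducible across databases; whether the original search was run with exactly this grouping is not stated in the text.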

Articles of all types except reviews and editorials were included if they: (1) involved individuals of any age with stroke or TBI; (2) simulated real-world environments or ADLs; and (3) reported at least one outcome measure related to the clinical effects of the intervention. All simulated environments were considered, including 360° videos and serious games. We included non-immersive (e.g., computer screen and tablet), semi-immersive (e.g., wall projections and driving simulators), and fully immersive (e.g., HMD) systems. Additionally, there were no restrictions regarding the administration, frequency, duration, or intensity of assessment or intervention sessions.

Articles were excluded if they did not provide outcome data from objective clinical measures (such as cognitive and motor assessment instruments, questionnaires, or interviews), were not peer-reviewed, or were systematic reviews or meta-analyses. In addition, articles known to the authors to meet the inclusion criteria but not retrieved by the search above were added to the review. All of these were published in the International Conference on Disability, Virtual Reality & Associated Technologies, whose proceedings are not indexed.

Data from the included articles were extracted by two of the authors (ALF and JL). The inclusion of articles was discussed and reviewed with the remaining senior authors in two meetings (one in the screening phase and one in the eligibility phase). The general characteristics and results of the studies were extracted, namely the authors’ names and year of publication, study design, type of participants, targeted domains, type of interaction and display, type of VR assessment/rehabilitation task, outcome measures and main conclusions. These general characteristics are displayed in Tables 1–6.

Table 1.

Virtual reality-based technologies in a kitchen environment for assessment and rehabilitation of acquired brain injury.

| Study | Design | Participants | Purpose and targeted domains | Interaction, display and level of immersion | Assessment/Rehabilitation | Outcome Measures | Conclusions |
|---|---|---|---|---|---|---|---|
| Zhang et al. (2001) | Experimental | 30 TBI and 30 volunteers without brain injury | Assessment and Rehabilitation of Executive functions (information processing, problem solving, logical sequencing, and speed of responding) | HMD and touchscreen or computer cursor - Fully Immersive | Daily living tasks were tested and scored in participants using a computer-simulated virtual kitchen. Each subject was evaluated twice within 7 to 10 days. | Instruments: WAIS-R; WRAT; WCST; CVLT and FIM. Performance: score for each subtask. | A computer-generated VE represents a reproducible tool to assess selected cognitive functions and can be used as a supplement to traditional rehabilitation assessment in ABI. |
| Hilton et al. (2002) | Non-experimental | 7 stroke | Assessment of Executive functions and motor | Screen and a TUI - Semi-Immersive | Coffee preparation: the activity was simulated with toy equivalents and reproduced in the VE (sequential task). | Performance: appropriate responses; errors made and nature of errors. | The location of objects, the instructions given, the physical constraints, ineffective user responses, and the visual and auditory feedback were identified as important aspects when recreating kitchens in VR. |
| Zhang et al. (2003) | Non-experimental | 54 TBI | Assessment of Attention | Screen and mouse - Non-Immersive | Meal preparation in both a VR kitchen and an actual kitchen, twice over a 3-week period. | Instruments: WAIS-R. Performance: time and errors on task completion using VR; real kitchen performance and OT evaluation. | The VR system showed adequate reliability and validity as a method of assessment in persons with ABI. |
| Edmans et al. (2006) | Experimental | 50 stroke | Rehabilitation of Attention | Touch screen - Non-Immersive | Making a hot drink: the errors observed were compared for standardized task performance in the real world and in a VE. | Instruments: SAST; MMSE; Star Cancellation; RBMT; VOSPB; RPAB; TEA; Kimura Box; 10-Hole Peg Test; MI and BI. Performance: errors and task scores. | The results suggest that this VE may be a useful rehabilitation tool for patients undergoing stroke rehabilitation in hospital. However, it posed a different rehabilitation challenge from the task it was intended to simulate, so it might not be as effective a rehabilitation tool as intended. |
| O’Brien (2007) | Non-experimental | 3 stroke | Assessment and Rehabilitation of Levels of engagement | HMD and the investigator (who moved the VE following the participant's indications) - Fully Immersive | Participants were asked to perform simple navigation first in the most familiar kitchen and then in the most unfamiliar one. The process was then repeated using familiar and unfamiliar objects. | Instruments: MI and ITQ. Performance: GSR and Task-Specific Feedback Questionnaire. | Participants' home environments can be simulated for the purposes of post-stroke rehabilitation, but participants’ personal proclivities might affect successful use of the system. |
| Edmans et al. (2009) | Experimental | 13 stroke | Assessment and Rehabilitation of Attention | Touch screen - Non-Immersive | Intervention phase: prepare a hot drink in a virtual environment. Control phase: attention control training. | Instruments: Barthel ADL score. Performance: real-world hot drink score; real-world hot drink-making errors; virtual hot drink score. | Trend towards improvement over time in both real and virtual hot drink-making ability in both control and intervention phases. No significant differences in the improvements in real and virtual hot drink-making ability across control and intervention phases in the 13 cases. |
| Cao et al. (2010) | Experimental | 7 (4 TBI, 2 stroke and 1 meningoencephalitis) and 13 HC | Assessment of Executive functions | Desktop computer (screen + keyboard and mouse) - Non-Immersive | Using the TVK, after a familiarization session and recall, the assessment session consisted of preparing a black coffee in a 20-minute session. | Instruments: BDVO. Performance: time; number of errors; number of executed steps; omissions. | It is possible to computerize a daily life task (coffee preparation) in the TVK. Both ABI participants and HC were able to complete the complex virtual tasks. |
| Adams et al. (2015) | Non-experimental | 14 stroke | Assessment and Rehabilitation of Motor (upper-limb) | Desktop computer and a Kinect™ - Non-Immersive/Semi-Immersive | Over four visits, participants were asked to use their stroke-affected arm to practice a Meal Preparation activity that included 17 motor-scored sub-tasks. | Instruments: WMFT. Performance: motor score; shoulder flexion; shoulder abduction; shoulder rotation; forearm rotation. | The results support the criterion validity of VOTA measures as a means of tracking patient progress during a UE rehabilitation program that includes practice of virtual ADLs. |
| Besnard et al. (2016) | Experimental | 19 TBI and 24 HC | Assessment of Executive functions | Desktop computer (screen, keyboard and mouse) - Non-Immersive | Participants were asked to prepare a cup of coffee in a real context and in the virtual context (NI-VCT). | Instruments: TMT A and B; WCST; TOL; Stroop Test; BADS; Key Search Task; Zoo Map and MSET. Performance: time to completion in seconds; accomplishment score; total errors; omission errors; commission errors. | TBI participants performed worse than HC on both real and virtual tasks and on all tests of executive functions. Correlation analyses revealed that NI-VCT scores were related to real task scores. The virtual kitchen is a valid tool for IADL assessment in TBI. |
| Huang et al. (2017) | Non-experimental | 8 stroke | Rehabilitation of Motor (upper-limb) | Amadeo (hand rehabilitation robotic device) and Oculus Rift DK2 - Fully Immersive | 18 training sessions over six weeks using the Amadeo (the training protocol included 4 different interventions). A: passive mode training; B: assist-as-needed; C: 2D VR task-oriented training [(1) Flying bird VR game; (2) Spaceship VR game; (3) Transferring VE-simulated supermarket; (4) Transferring VE-kitchen and cooking scenario]; D: 3D VR task-oriented training using Oculus Rift DK2 (first-person view SpaceWar RGS). | Instruments: FMA, MAS. Performance: active range of motion (ROM) and force intensity of fingers. | All subacute stroke subjects who underwent the proposed rehabilitation approach showed improvement in their motor skills, as indicated by clinical evaluation with the FMA Hand Sub-section and MAS Hand Movement Score, as well as by kinematic characteristics (active ROM and output force intensity). |
| Triandafilou et al. (2018) | Non-experimental | 15 stroke | Rehabilitation of Arm movement | Laptop, Xbox Kinect Sensor (Microsoft Corp, Redmond, WA), pen mouse - Non-Immersive/Semi-Immersive | Virtual Environment for Rehabilitative Gaming Exercises (VERGE): 1) Ball Bump: players move their hands to contact a virtual ball and send it across the table. 2) Trajectory Trace: a user creates a 3D trajectory (black line) and then passes it to another user to erase by retracing. 3) Food Fight: players move their hands to grab food items that they throw at the other avatar. Control: Alice in Wonderland VR (AWVR) and a home exercise program (HEP). | Instruments: VERGE Survey. Hand displacement was measured using a commercial IMU system (Xsens 3D motion tracker system). | 85% of the subjects found VERGE an effective means of promoting arm movement. Arm displacement averaged 350 m for each VERGE training session. Arm displacement was not significantly less when using VERGE. Participants were split in their preference for VERGE, AWVR or HEP. Almost all subjects indicated willingness to perform the training for at least 2–3 days per week at home. |
| Thielbar et al. (2020) | Non-experimental | 20 stroke | Assessment and Rehabilitation of Arm movement | Laptop, Xbox Kinect Sensor (Microsoft Corp, Redmond, WA), pen mouse - Non-Immersive/Semi-Immersive | VERGE: 1) Ball Bump: players move their hands to contact a virtual ball and send it across the table. 2) Trajectory Trace: a user creates a 3D trajectory (black line) and then passes it to another user to erase by retracing. 3) Food Fight: players move their hands to grab food items that they throw at the other avatar. Control: AWVR and HEP. | Instruments: hand displacement was measured using a commercial IMU system (Xsens 3D motion tracker system). Performance: exercise time, exercise scores, total hand and shoulder movement. | Mean voluntary hand displacement, after accounting for trunk displacement, was greater than 350 m per therapy session for the VERGE system. Compliance with home-based therapy was 94% of scheduled sessions completed. Multiple players led to longer sessions and more arm movement. |

BADS, Behavioral Assessment of the Dysexecutive Syndrome; BDVO, Battery of Visual Decision of Objects; BI, Barthel ADL Index; CVLT, California Verbal Learning Test; FIM, Functional Independence Measure; FMA, Fugl–Meyer Assessment; ITQ, Immersive Tendencies Questionnaire; MAS, Motor Assessment Scale; MI, Motricity Index; MMSE, Mini Mental State Examination; MSET, Modified Six Elements Test; RBMT, Story Recall from Rivermead Behavioral Memory Test; RPAB, Rivermead Perceptual Assessment Battery; SAST, Sheffield Aphasia Screening Test; TEA, Test of Everyday Attention; TMT A and B, Trail-Making Test; TOL, Tower of London; TUI, Tangible User Interface; VOSPB, Visual Object and Space Perception Battery; WAIS-R, Wechsler Adult Intelligence Scale–Revised; WCST, Wisconsin Card Sorting Test; WMFT, Wolf Motor Function Test; WRAT, Wide Range Achievement Test-3rd Edition.

Table 2.

Virtual reality-based technologies in a supermarket environment for assessment and rehabilitation of acquired brain injury.

| Study | Design | Participants | Purpose and targeted domains | Interaction, display and level of immersion | Assessment/Rehabilitation | Outcome Measures | Conclusions |
|---|---|---|---|---|---|---|---|
| Josman et al. (2006) | Non-experimental | 26 stroke | Assessment and Rehabilitation of Executive Functions | Desktop computer (screen + keyboard + mouse) - Non-Immersive | VAPS: purchase seven items from a clearly defined list of products, then proceed to the cashier's desk and pay for them. | Instruments: MMSE and BADS. Performance: total trajectory, trajectory duration, number of items purchased, number of correct actions, number of incorrect actions, number of pauses, total duration of pauses, and time to pay. | Moderate relationships were found between performance within the VAPS and EF. The results support the use of the virtual supermarket as an EF assessment and training tool after stroke. |
| Kang et al. (2008) | Experimental | 20 stroke | Assessment of Executive Functions, Memory and Attention | HMD and Joystick - Fully Immersive | VR shopping simulation: select and place an item in the cart according to auditory and visual stimuli, respond to unexpected events such as dropping an item, and select all items in a specified category. | Instruments: K-MMSE, K-WAIS, R-KMT, K-FENT and MVPT. Performance: 1. Computer navigation interaction: total time, distance and collisions, number of selected items, interaction errors and performance index. 2. Memory and attention: immediate and delayed recognition, visual and auditory memory scores, attention reaction time, responsiveness, selected items, and attention index. 3. Executive functions: total time and distance, judgment and calculation scores and executive index. | There were significant differences in program performance between the stroke and control groups. In the stroke group, decreased perceptual and visuospatial functions might have seriously affected participants’ performance in the VR program. |
| Raspelli et al. (2012) | Experimental | 9 stroke, 10 young and 10 older HC | Assessment of Executive Functions | Screen and joypad - Non-Immersive | VR version of the Multiple Errands Test, based on NeuroVR software, as an assessment tool for executive functions. | Instruments: MMSE, BIT, Token Test, SCT, Stroop Test, IGT, DEX, TEA, STAI, BDI, ADL and IADL. Performance: errors, inefficiencies, rule breaks, strategies, interpretation failures, partial task failures. | The construct validity of a virtual version of the Multiple Errands Test has been demonstrated. |
| Yip and Man (2013) | Experimental | 37 ABI | Assessment and Rehabilitation of Prospective Memory | Screen and keyboard or joystick (participants chose their preferred input device) - Non-Immersive | VRPM: PM training program on everyday PM. Event-based and time-based PM training tasks and ongoing tasks were arranged to occur in a virtual convenience store. | Instruments: MMSE–CV, TONI-3, SADI-CV, CAMPROMPT–CV, HKLLT, FAB, WFT–CV, CTT, CIQ–CV. Performance: VR-based test on everyday PM tasks scores and real-life behavioral PM test score. | Significantly better changes were seen in both VR-based and real-life PM outcome measures, related to frontal lobe functions and semantic fluency. |
| Sorita et al. (2014) | Non-experimental | 33 TBI and 17 stroke | Assessment of Executive Functions (planning) | Wall screen, keyboard and mouse - Semi-Immersive | VAPS: participants had to purchase 7 items according to a shopping list. | Instruments: CIQ, CAMPROMPT, POI, WAIS-III, GB and TEA. Performance: total duration of pauses, total duration of movement, total number of item-selection errors. | Functional performance in the VAPS offers promising information on the impact of neuropsychological diseases on daily life. |
| Josman et al. (2014) | Experimental | 24 stroke and 24 HC | Rehabilitation of Action planning, executive functions and IADLs | Desktop computer (screen + keyboard + mouse) - Non-Immersive | VAPS: purchase 7 items from a clearly marked list of products, then proceed to the cashier’s desk and pay for them. | Instruments: BADS and OTDL-R. Performance: trajectory, trajectory duration, items purchased, correct actions, incorrect actions, number of pauses, duration of pauses and time to pay. | The VAPS showed adequate validity and an ability to predict IADL performance, supporting its use in cognitive stroke rehabilitation. |
| Yin et al. (2014) | Experimental | 23 stroke | Rehabilitation of Motor: upper-limbs | Sixense and laptop - Semi-Immersive | Virtual local supermarket: participants were instructed to pick a virtual fruit from a shelf and release it into a virtual basket as many times as possible within a two-minute trial. | Instruments: FMA, ARAT, MAL and FIM. | Although additional VR training was not superior to conventional therapy alone, this study demonstrates the feasibility of VR training in early stroke. |
| Ogourtsova et al. (2018) | Experimental | 27 stroke and 9 HC | Assessment of Unilateral Spatial Neglect | Joystick - Non-Immersive | EVENS consists of two (simple and complex) immersive 3D scenes depicting grocery shopping shelves, with object-detection and navigation tasks. | Instruments: Apples Test, Line Bisection Test, and Star Cancellation Test. Performance: detection time and maximal mediolateral deviation from an ideal navigation trajectory, represented by the most direct route from the start position to the respective target. | Navigation and detection abilities are affected by the environmental complexity of the VR scene in individuals with post-stroke USN, and these tasks can be employed for USN assessment. |
| Demers and Levin (2020) | Experimental | 22 stroke and 15 HC | Assessment of Upper Limbs | Large screen and Kinect II camera - Semi-Immersive | Grocery shopping task with two scenes representing aisles filled with typical supermarket items, used to determine whether reach-to-grasp movements made in a low-cost 2D VE were kinematically similar to those made in a physical environment (PE) in healthy subjects and subjects with stroke. | Instruments: CSI; FMA; MoCA; NSA. Performance: arm and trunk kinematics were recorded with an optoelectronic measurement system (23 markers; 120 Hz). Temporal and spatial characteristics of the endpoint trajectory and of arm and trunk movement patterns were compared. | Hand positioning at object contact time and trunk displacement were unaffected by the environment. Compared to PE, unilateral movements in VE were less smooth and time to peak velocity was prolonged. In HC, bilateral movements were simultaneous and symmetrical in both environments. In subjects with stroke, movements were less symmetrical in VE. |
| Cogné et al. (2018) | Experimental | 40 stroke | Assessment of Executive Functions | Keyboard, mouse and headphones - Non-Immersive | VAPS: purchase 7 items from a list of products, go to the supermarket checkout, pay for the collected items and leave the store in less than 30 minutes. There were 5 conditions: 1) without additional auditory stimuli; 2) beeping sounds occurring every 25 s; 3) sounds from living beings; 4) sounds from supermarket objects; and 5) names of other products not in the supermarket. | Instruments: MMSE; GREFEX battery; and Catherine Bergego Scale. Performance: number of products purchased; total time in seconds; and time of navigation. | The navigational performance of the 40 stroke patients decreased under the 4 conditions with non-contextual auditory stimuli, especially for those with dysexecutive disorders. |
| Spreij et al. (2020b) | Experimental | 88 stroke and 66 HC | Assessment of Executive Functions and Memory | HMD (Oculus Rift or HTC Vive), computer monitor and Xbox 360 controller - Fully Immersive | The VR task consisted of passing through the entry gates, finding three products from a shopping list, and passing through the cash registers to finish. A grocery list was randomly presented over three trials, and participants were asked to recall the products. There were two different shopping lists: (1) salt, matches, sprinkles; (2) hair wax, cookies, socks. | Instruments: 1 questionnaire regarding user experience and 1 questionnaire regarding preference. Performance: completion rate, total time needed to complete the VR task, and total number of products found that were presented on the shopping list. | Both stroke patients and HC reported an enhanced feeling of engagement, transportation, flow, and presence when tested with an HMD, but more negative side effects were experienced. The majority of stroke patients had no preference for one interface over the other, yet younger patients tended to prefer the HMD. |

ADL, Activities of Daily Living Test; ARAT, Action Research Arm Test; BADS, Behavioral Assessment of the Dysexecutive Syndrome; BDI, Beck Depression Inventory; BIT, Behavioral Inattention Test; CAMPROMPT, Cambridge Prospective Memory Test; CAMPROMPT–CV, Cambridge Prospective Memory Test – Chinese Version; CIQ, Community Integration Questionnaire; CIQ–CV, Chinese Version of the Community Integration Questionnaire; CSI, Composite Spasticity Index; CTT, Color Trails Test; DEX, Dysexecutive Questionnaire; EVENS, Ecological VR-based Evaluation of Neglect Symptoms; FAB, Frontal Assessment Battery; FIM, Functional Independence Measure; FMA, Fugl–Meyer Assessment for the upper extremity; GB, Grober Buschke; GREFEX, Groupe de réflexion pour l’évaluation des fonctions exécutives; HKLLT, Hong Kong List Learning Test; IADL, Instrumental Activities of Daily Living Test; IGT, Iowa Gambling Task; K-FENT, Kim’s Frontal Executive Function Neuropsychological Test; KMMSE, Korean Mini-Mental State Examination; MAL, Motor Activity Log; MCST, Modified Card Sorting Test; MMSE, Mini Mental State Examination; MMSE–CV, Chinese Version Mini Mental State Examination; MoCA, Montreal Cognitive Assessment; MVPT, Motor-Free Visual Perception Test; NSA, Nottingham Sensory Assessment; OTDL-R, Observed Tasks of Daily Living-Revised; POI, Perceptual Organization Index; R-KMT, Rey-Kim Memory Test; SADI-CV, Chinese Version of the Self-Awareness of Deficit Interview; SCT, Street’s Completion Test; STAI, State-Trait Anxiety Inventory; Stroop Test, Stroop Colour-Word Test; TEA, Test of Attentional Performance; TMT, Trail Making Test; TONI-3, Test of Nonverbal Intelligence – 3rd Edition; WAIS-III, Wechsler Adult Intelligence Scale – Third Edition (Working Memory Index); WFT–CV, Word Fluency Test – Chinese Version.

Table 3.

Virtual reality-based technologies in a shopping mall environment for assessment and rehabilitation.

| Study | Study design | Participants | Purpose and targeted domains | Interaction, display and level of immersion | Assessment/Rehabilitation | Outcome measures | Conclusions |
|---|---|---|---|---|---|---|---|
| Rand et al. (2007) | Experimental | 14 stroke and 93 HC from three age groups | Assessment and Rehabilitation of Upper Limbs and Executive Functions | GestureTek (IREX system) – Semi-Immersive | VMall four-item shopping task: the products are located in two different aisles on both the top and middle shelves. The list is written in large letters on a board. | Instruments: MMSE; FMA; SFQ; Borg’s scale of perceived exertion; SFQ-CHILD. Performance: time taken to shop for the four items; order in which the products were bought; and number and type of products bought by mistake. | VMall significantly differentiated between HC and stroke participants. The shopping task was challenging for the stroke participants, which has positive implications for treatment. |
| Rand et al. (2009a) | Non-experimental | 6 stroke | Rehabilitation of Upper Limbs and Executive Functions | GestureTek (IREX system) – Semi-Immersive | Large supermarket in which users navigate and select aisles and grocery products by waving the affected hand. A complex, everyday shopping task in which weak upper extremity and executive function deficits can be trained. | Instruments: MMSE; Star Cancellation; GDS. Performance: shopping time and number of mistakes. | VMall has great potential as an intervention tool for treating the weak upper extremity of individuals with stroke while providing opportunities to practice functional tasks. |
| Rand et al. (2009b) | Non-experimental | 4 stroke | Rehabilitation of Executive Functions | GestureTek (IREX system) – Semi-Immersive | VMall: virtual supermarket that encourages planning, multitasking, and problem solving while practicing an everyday shopping task. Products are virtually selected and placed in a shopping cart using upper-extremity movements. | Instruments: MET–HV and IADL questionnaire. Performance: total number of mistakes; rule-break mistakes; non-efficiency mistakes; and use-of-strategies mistakes. | VMall is a motivating and effective intervention tool for post-stroke rehabilitation of multitasking deficits during the performance of daily tasks. |
| Rand et al. (2009c) | Experimental | 9 stroke, 20 young HC and 20 older HC | Assessment of Executive Functions | GestureTek (IREX system) – Semi-Immersive | VMall: virtual supermarket that encourages planning, multitasking, and problem solving while practicing an everyday shopping task. Products are virtually selected and placed in a shopping cart using upper-extremity movements. | Instruments: MET–HV; BADS (Zoo Map); IADL questionnaire. Performance: total number of mistakes; rule-break mistakes; non-efficiency mistakes; and use-of-strategies mistakes. | The VMET differentiated between the two age groups of HC and between HC and post-stroke participants, demonstrating that it is sensitive to brain injury and ageing. Significant correlations were found between MET and VMET for both post-stroke and older HC. |
| Hadad et al. (2012) | Experimental | 5 stroke and 6 HC | Assessment and Rehabilitation of Executive Functions | Kinect (SeeMe system) – Semi-Immersive | VIS: a VE of a three-store mall; a store mock-up; and a cafeteria that served as the real-world shopping setting. | Instruments: MMSE; FIM; SFQ. Performance: time to complete the test and number of errors (buying the wrong item or not buying an item on the list). Modified version: budget handling. | The post-stroke group performed more slowly than the control group. Both groups took longer to complete the test in the VIS than in the store mock-up and the cafeteria. Performance in the VIS, the store mock-up and the cafeteria was correlated in the post-stroke group. The VIS may be used to assess and train the cognitive abilities involved in shopping. |
| Jacoby et al. (2013) | Experimental | 12 TBI | Assessment of Executive Functions | GestureTek (IREX system) – Semi-Immersive | VMall: a supermarket that enables training of motor and cognitive abilities during IADL practice and supports a variety of tasks. | Instruments: MET; EFPT. Performance: total score. | Results showed a trend towards an advantage of VR therapy over cognitive-retraining occupational therapy (OT) without VR, with greater improvement in IADLs. |
| Erez et al. (2013) | Experimental | 20 TBI children and 20 typically developing children | Assessment and Rehabilitation of Executive Functions | GestureTek (IREX system) – Semi-Immersive | VMall: a supermarket that enables training of motor, cognitive and EF abilities during IADL practice and supports a variety of tasks. | Instruments: SFQ-CHILD; Borg’s scale of perceived exertion; BADS (Zoo Map). Performance: time to complete; number of mistakes (items bought by mistake, additional items bought, or required items not bought); and sequence of bought products. | Good usability. Significant difference in performance between the two groups: mean shopping time and number of mistakes were higher for children with TBI. The poorer performance of children with TBI may be due to EF deficits. |
| Okahashi et al. (2013) | Experimental | 5 stroke, 5 TBI, 10 HC, 10 old and 10 young HC | Assessment of Executive Functions, Attention and Memory | Touch panel display: by touching the bottom of the screen, users could move in the virtual shopping mall, enter a shop and buy an item – Semi-Immersive | Shopping task in which users must buy four specific items: they search for the shops that sell each item and select the target item out of six items inside a shop. | Instruments: MMSE; RBMT; EMC; DEX; SDMT; SRT; Star and Letter Cancellation Task. Performance: bag use; list use; cue use; forward movement; reverse movement; correct purchases; total time; time in shops; time on road; and mean time per shop. | The VST can evaluate attention and everyday memory in participants with brain damage. VST completion time increased with age. |
| Canty et al. (2014) | Experimental | 30 TBI and 24 HC | Assessment of Prospective Memory | Keyboard, computer – Non-Immersive | VRST: simulates the graphical perspective of the participant’s avatar and requires the participant to purchase 12 items in a pre-specified order from a selection of 20 shops. Participants were instructed to complete 3 time-based and 3 event-based PM tasks. | Instruments: LDPMT; TMT A and B; HVLT-R; COWAT; LNS; HSCT; SPRS; IR; IL. Performance: cue detection. | The TBI group performed significantly more poorly than controls on event-based PM, as measured by the LDPMT, and on time- and event-based PM, as measured by the VRST. |
| Nir-Hadad et al. (2015) | Experimental | 19 stroke and 20 HC | Assessment of Executive Functions | Kinect (SeeMe system) – Semi-Immersive | Adapted Four-Item Shopping Task in the SeeMe VIS environment, with a supermarket, a toy store and a hardware store. | Instruments: SFQ; BADS (Zoo Map and Rule Shift Cards); EFPT (Telephone Use and Bill Payment); FIM; FMA; CDT. Performance: selected products; selection time; mistakes; total cost of purchased items; and distance traversed while shopping. | Good initial support for the validity of the Adapted Four-Item Shopping Task as an IADL assessment that requires the use of EF. |

BADS, Behavioral Assessment of the Dysexecutive Syndrome; Borg’s scale of perceived exertion; CDT, Clock Drawing Test; COWAT, Controlled Oral Word Association Task; DEX, Dysexecutive Questionnaire; EFPT, Executive Function Performance Test; EMC, Everyday Memory Checklist; FIM, Functional Independence Measure; FMA, Fugl–Meyer Motor Assessment; GDS, Geriatric Depression Scale; HSCT, Hayling Sentence Completion Test; HVLT-R, Hopkins Verbal Learning Test–Revised (Total Recall); IADL Performance, Instrumental Activities of Daily Living Performance; IADL questionnaire, Instrumental Activities of Daily Living questionnaire; IL, independent living; IR, interpersonal relationships; LDPMT, Lexical decision prospective memory task; LNS, Letter Number Sequencing; MET, Multiple Errands Test; MET–HV, Multiple Errands Test–Hospital Version; MET-SV, Multiple Errands Test–Simplified Version; MMSE, Mini-Mental State Examination; RBMT, Rivermead Behavioural Memory Test; SDMT, Symbol Digit Modalities Test; SFQ-CHILD, Short Feedback Questionnaire for children; SFQ, Short Feedback Questionnaire; SPRS, Sydney Psychosocial Reintegration Scale; SRT, Simple Reaction Time Task; TMT, Trail Making Test: Parts A and B.

Table 4.

Virtual reality-based technologies in a street environment for assessment and rehabilitation.

| Study | Study design | Participants | Purpose and targeted domains | Interaction, display and level of immersion | Assessment/Rehabilitation | Outcome measures | Conclusions |
|---|---|---|---|---|---|---|---|
| Naveh et al. (2000) | Experimental | 6 HC and 6 stroke with USN | Assessment and Rehabilitation of Attention, Street crossing consciousness | 15” CRT monitor or projection on a screen via video projector, keyboard and mouse - Semi-Immersive | The participant’s task was to start crossing the street when, in their opinion, it was safe to do so. The number of training sessions varied from 1 to 4, and each session lasted 30 to 60 minutes. | Instruments: BIT; MSC; ADL questionnaire. Performance: frequency, order and direction in which participants searched for oncoming vehicles; number of trials and total time to successfully complete each level; highest level successfully completed; time taken at each level of difficulty to cross the virtual street safely; number of accidents at each level; number of times looking to the left and to the right before crossing the street. | The performance of the patients was considerably more variable; they completed fewer levels and usually took more time to do so. The results indicate that the VR training could be beneficial to people who have difficulty with street crossing. |
| Wald et al. (2000) | Non-experimental | 28 TBI | Assessment of Perception (driving performance) | DriVR and HMD - Fully Immersive | Concurrent validity of the DriVR was examined by comparing DriVR measures to other indicators of driving ability: on-road, cognitive and visual-perceptual, and driving video tests. The entire driving assessment took place over 2 days within the same week. | Instruments: DPT II; DRI II; On-road Driving test. Performance: follow traffic event; shop road and opposite road; stop signs missed; driveway choice event; and avoid traffic event. | The DriVR appeared to be a useful adjunctive screening tool for assessing driving performance in persons with ABI. |
| Weiss et al. (2003) | Experimental | 6 stroke with USN and 6 HC | Assessment and Rehabilitation of Attention, Street crossing consciousness | Screen, keyboard and mouse - Non-Immersive | The participant’s task was to start crossing the street when, in their opinion, it was safe to do so. In the initial study, the number of training sessions varied from 1 to 4; in the intervention study, 12 training sessions were given over four weeks. In each configuration, difficulty was graded from level 1 to level 7. | Performance: frequency, order and direction of searching for oncoming vehicles; number of trials and total time taken to successfully complete each level; highest level successfully completed; time taken at each level to cross the virtual street safely; number of accidents at each level; number of times looking to the left and to the right before crossing the street. | This VE was suitable in both its cognitive and motor demands for the targeted population. The VR training is likely to benefit people who have difficulty crossing streets. |
| Katz et al. (2005) | Experimental | 19 stroke with USN | Assessment of Attention, street crossing consciousness | Computer desktop, keyboard and mouse - Non-Immersive | The experimental group received computer-based VR street-crossing training and the control group received computer-based visual scanning tasks, each totaling 9 hours over twelve sessions across four weeks. | Instruments: BIT; MSC; ADL checklist. Performance: number of looks to the left; number of looks to the right; and number of accidents. | Both groups improved similarly: on the USN measures, the VR group achieved results equal to those of the control group treated with conventional visual scanning tasks. |
| Titov and Knight (2005) | Non-experimental | 3 participants with neurological injuries | Assessment of Prospective memory | Touchscreen connected to a laptop - Non-Immersive | Virtual Street: a street that participants can ‘walk’ along, with distracting sounds (car horns, people talking, music, news reports) and animations such as people walking in the scene. Prospective memory can be tested by instructing participants to complete errands as they walk along. | Instruments: WMS-III Digit Span; NART Word Lists; WCST; F-A-S Verbal Fluency; Stroop Test. Performance: SMT and CMT. | The patient performed more poorly on the multi-tasking test than the matched control. The patients’ performance on the computer-based tests was consistent with their memory deficits. |
| Lloyd et al. (2006) | Non-experimental | 20 ABI and 14 HC | Assessment and Rehabilitation of Route Memory | PlayStation 2 games console and TV - Non-Immersive | Driv3r is based on the real-world town of Nice and contains a large network of streets and buildings, with drivers and pedestrians moving around. Study 1: HC were driven around the real streets and then had to give directions to the driver in the virtual streets, and vice versa, in counterbalanced order. Study 2: participants learned one route in an errorless way, watching the entire route correctly through to completion three times before attempting to call out directions themselves. They learned another route in an errorful way, being shown the correct route only once before making two practice attempts (during which errors typically occurred) at calling out the directions before the final ‘test’ trial. | Performance: number of wrong turns, number of errors, strategies used. | The first study demonstrated the ecological validity of a non-immersive virtual town, showing that performance therein correlated well with real-world route learning performance. The second study found that a rehabilitation strategy known as ‘errorless learning’ is more effective than traditional ‘trial-and-error’ methods for route learning tasks. |
| Kim et al. (2007) | Experimental | 10 stroke and 40 HC | Rehabilitation of Unilateral neglect | HMD and mouse - Fully Immersive | The training procedure consisted of completing missions while keeping the virtual avatar safe when crossing the street in a VE. | Instruments: MMSE; line bisection and cancellation test. Performance: deviation angle; reaction time; right and left reaction time; visual cue; auditory cue; mission failure rate. | The system was suitable for training patients with unilateral neglect. |
| Akinwuntan et al. (2009) | Experimental | 73 stroke | Rehabilitation of Cognitive training | Driving simulator - Semi-Immersive | Forty-two participants received simulator-based driving training, whereas 41 participants received cognitive training for 15 hours. | Instruments: CARA. | Simulator-based driving training improved driving ability, especially for well-educated and less disabled stroke patients. |
| Devos et al. (2009) | Experimental | 73 stroke | Rehabilitation of Cognitive training (driving retraining) | Driving simulator - Semi-Immersive | Forty-two participants received simulator-based driving training, whereas 41 participants received cognitive training for 15 hours. | Instruments: TRIP; CARA. | Contextual training in a driving simulator appeared to be superior to cognitive training in treating impaired on-road driving skills after stroke. |
| Lloyd et al. (2009) | Experimental | 20 ABI (8 TBI, 6 stroke, 6 other: brain tumor and cortical cysts) | Assessment and Rehabilitation of Learning (Route learning) | Screen and PS2 - Non-Immersive | For the demonstration trial and two learning trials, participants were shown around a route. As in the errorless condition, participants were asked to watch as the experimenter moved along the route and called out directions in the demonstration trial. For the test trial, participants were asked to call out the directions at each junction. | Instruments: ROCF; AMIPB (List Learning). Performance: wrong turns and errors made. | Route recall following errorless learning was significantly more accurate than recall after errorful learning, suggesting that the benefits of errorless over errorful learning in ABI rehabilitation extend beyond verbal learning tasks to route memorization. |
| Sorita et al. (2013) | Non-experimental | 27 TBI | Assessment of Learning (route learning) | Screen and joystick - Non-Immersive | Two immediate and one delayed route recall assessing procedural learning in spatial memory. The tasks were performed in two modalities: route learning within a real district (RE) and route learning within a virtual reproduction of the urban district (VE). | Instruments: GCS; TEA; GBT. Performance: sketch map test; map recognition test; scene arrangement test; VE design. | Routes learned within the VE transferred better to the real setting than routes learned directly in the RE. Therefore, VR might provide ecological rehabilitation scenarios to assess daily functioning. |
| Navarro et al. (2013) | Experimental | 32 stroke (15 non-USN and 17 USN) and 15 HC | Assessment of Arousal, Attention, Consciousness (Street crossing) | Screen, infrared tracking system and joystick - Non-Immersive | The assessment session consisted of two consecutive repetitions of a virtual street crossing. In each session, participants were asked to move from the starting point to a large department store and then come back as quickly and safely as possible. | Instruments: BIT; CPT-II; Stroop Test; CTT; BADS (Zoo Map and Key Search Test). Performance: time to complete the task; number of head turns, accidents and warning signs. | The performance of participants with neglect was significantly worse than that of participants without neglect and HC. |
| Park (2015) | Experimental | 30 stroke | Assessment of Incidence of driving errors | Driving simulator (GDS-300, Gridspace) - Semi-Immersive | Virtual on-road course with road traffic rules. The test course simulated driving in downtown Seoul and on the highway and was designed to resemble actual driving, incorporating various buildings, moving cars, traffic signals, and road signs. | Performance: failure to use seat belt; exceeding the speed limit; turn signal errors; dropping out of the course; crossing the center line; accidents; brake reaction time; and total error score. | Patients with left- and right-hemisphere lesions differed in driving skills such as frequency of center line crossing, turn signal errors, accidents, brake reaction time, total driving errors and test failure rate. |
| Ettenhofer et al. (2019) | Experimental | 17 TBI | Assessment and Rehabilitation of Dual processing, attention, working memory, and response inhibition | General Simulation Driver Guidance System: 8-foot circular frame supporting a curved screen and a driving console analogous to that of a typical automobile - Semi-Immersive | 1) Brief review of training and progress; 2) practice of cognitive skills through cognitive driving scenarios; 3) practice of composite driving while performing working memory or visual attention tasks; and 4) open-ended race-track course to promote engagement. | Instruments: WAIS-III (Digit Span, Symbol Search, Coding); TMT A and B; Letter and Animal Fluency; CVLT-II; Grooved Pegboard; NSI; PTSD Checklist-Civilian; BDI-II; SWLS; SF-36 (physical and mental); ESS and FSS. Performance: VR Tactical and Operational Driving Quotient. | The NeuroDRIVE intervention enabled significant improvements in working memory and selective attention. |
| Spreij et al. (2020a) | Experimental | 138 stroke and 21 HC | Assessment of Visuospatial Neglect | Large screen and steering wheel - Semi-Immersive | Participants were instructed to use the steering wheel to maintain the starting position at the center of the right lane, in line with Dutch road traffic regulations. Participants needed to adjust their position continuously. | Instruments: Shape Cancellation Task; Catherine Bergego Scale. Performance: average position on the road and average standard deviation of the position, as an indication of the magnitude of sway. | Patients with left-sided VSN and with recovered VSN deviated more in their position on the road than patients without VSN, and the deviation was larger in patients with more severe VSN. Regarding diagnostic accuracy, 29% of recovered VSN patients and 6% of patients without VSN showed abnormal performance on the simulated driving task. Sensitivity was 52% for left-sided VSN. |
| Wagner et al. (2021) | Non-experimental | 18 stroke (9 USN and 9 no USN) | Assessment of Unilateral Spatial Neglect | iVRoad, HTC Vive controller - Fully Immersive | The task consists of dropping a letter in a mailbox on the way to work. To do so, the user first has to safely cross two roads and the square in between, and then return to the starting position to continue on their way to work. | Instruments: IPQ; USEQ; SSQ. Performance: error rate; decision time (elapsed time between task onset and start of road crossing by button press); head direction ratio (ratio between the time patients looked left and right before deciding to cross the street). | Parameters and conditions for distinguishing patients with and without USN were identified: decision time was a very good measure, and error rate and head direction ratio were also reliable measures of separation. |

ADL checklist, Activities of daily living checklist; AMIPB, Adult Memory and Information Processing battery; BADS, Behavioral Assessment of the Dysexecutive Syndrome; BDI-II, Beck Depression Inventory-II; BIT, Behavioral Inattention Test; CARA, Center for Fitness to Drive Evaluation and Car Adaptations; CMT, Complex Multi-tasking Test; COWAT, Controlled Oral Word Association Task; CPT-II, Conner’s Continuous Performance Test-II; CTT, Color Trails Test; CVLT-II, California Verbal Learning Test II; D-KEFS, Delis–Kaplan Executive Function System; DPT-II, Driver Performance Test II; DRI-II, Driver Risk Index II; ESS, Epworth Sleepiness Scale; FAS, FAS Verbal Fluency Test; FSS, Fatigue Severity Scale; GBT, Grober and Buschke’s test; GCS, Glasgow Coma Scale; IPQ, Igroup Presence Questionnaire; MRMTDS, Money Road Map Test of Direction Sense; MSC, Mesulam Symbol Cancellation test; NART, National Adult Reading Test; NSI, Neurobehavioral Symptom Inventory; ROCF, Rey Osterrieth Complex Figure Task; SMT, Simple Multi-tasking Test; SSQ, Simulator Sickness Questionnaire; SWLS, Satisfaction with Life Scale; TEA, Test for Assessment of Attention; TMT, Trail Making Test; TRIP, Test Ride for Investigating Practical fitness to drive; USEQ, User Satisfaction Evaluation Questionnaire; VSN, Visuospatial Neglect; WAIS, Wechsler Adult Intelligence Scale; WCST, Wisconsin Card Sorting Test; WMS III, Wechsler Memory Scale III.

Table 5.

Virtual reality-based technologies in a city environment for assessment and rehabilitation of acquired brain injury.

| Methods/Study design | Participants | Domain | Interaction and display/Instrumentation | Assessment/Treatment | Measures | Conclusions | |

|---|---|---|---|---|---|---|---|

| Gamito et al. (2011a,b) | Non-experimental | 1 TBI | Working Memory and Attention. | HMD, keyboard and mouse. | Small town populated with digital robots. The town comprised several buildings arranged in eight squared blocks, along with a 2-room apartment and a mini-market, where the participant was able to move freely around and to grab objects. | Instruments: PASAT. Performance: Completion time of each task as an indicator of task performance speed. |

Satisfactory level of performance after some practice, with an average time for each task of 5 min. These data revealed a significant increase in working memory and attention levels, suggesting an improvement on patient cognitive function. |

| Gamito et al. (2011a,b) | Non-experimental | 2 stroke | Memory and Attention. | HMD, keyboard and mouse. | Activities of daily living such as: morning hygiene, taking the breakfast, finding the way to the minimarket and buying several items from a shopping list. | Instruments: WMS-III; TP; PQ; Immersion and Cybersickness. Performance: Completion time of each task as an indicator of task performance speed. |

Increase in memory and attention/concentration skills, which can suggest a higher level of executive functioning after the VR training. |

| Jovanovski et al. (2012) | Experimental | 11 stroke, 2 TBI and 30 HC | Evaluate the ecological validity of the MCT and other standardized executive function tests. | Joystick and computer screen | The MCT consists of a post office, drug store, stationery store, coffee shop, grocery store, optometrist’s office, doctor’s office, restaurant and pub, bank, dry cleaner, pet store, and the participant’s home. | Instruments: WTAR; TOMM; COWAT; WCST; BADS-MSE; TMT A and B; WAIS-III (Block Design, and Digit Span); JLO; ROCF; CVLT-II; WMS-III (Logical Memory); BDI-II and BAI. Performance: Completion Time; number of Tasks Completed; and Task Repetitions. Qualitative errors: Task Failures, Task Repetitions, or Inefficiencies. |

VR technology, designed with ecological validity in mind, are valuable tools for neuropsychologists attempting to predict real-world functioning in participants with ABI. |

| Gamito et al. (2014) | Non-experimental | 17 stroke | Memory and attention | HMD, desktop screenplay, keyboard and mouse. | Small town with a 2-room apartment and a minimarket in the vicinity. | Instruments: WMS-III; ROCF; TP. Performance: a therapist assessed the session outcome. |

Increased working memory and sustained attention from initial to final assessment regardless of the VR device used. |

| Vourvopoulos et al. (2014) | Non-experimental | 7 stroke, 2 TBI and 1 MCI | Visuospatial orientation, Attention and Executive functions. | Joystick and computer screen | Simulations of several activities of daily living (supermarket, post-office, pharmacy and bank) within a city. | Instruments: MMSE; SIS and SUS. Performance: Total score and execution time. |

Strong correlation between the Reh@City performance and the MMSE score. High usability scores (M = 77%). |

| Gamito et al. (2015) | Experimental | 20 stroke | Memory and Attention | HMD, keyboard and mouse | The VR scenario comprised several daily life activities: buying several items, finding the way to the minimarket, finding a virtual character dressed in yellow, recognizing outdoor advertisements and retaining digits. | Instruments: WMS-III; ROCF; TP. | Significant improvements in attention and memory functions in the intervention group, but not in the controls. |
| Faria et al. (2016) | Experimental | 18 stroke | Global cognitive functioning | Joystick and computer screen | Reh@City v1.0: an immersive three-dimensional environment with streets, sidewalks, commercial buildings, parks and moving cars. Participants must complete common ADLs in four places: supermarket, post office, bank and pharmacy. | Instruments: ACE; TMT A and B; WAIS-III (Picture Arrangement); SIS 3.0; SUS. Performance: total score and execution time. | Within-group analysis revealed significant improvements in global cognitive functioning, attention, memory, visuospatial abilities, executive functions, emotion and overall recovery in the VR group. The control group improved only in self-reported memory and social participation. Between-group analysis showed significantly greater improvements in global cognitive functioning, attention and executive functions with VR than with conventional therapy. |
| Claessen et al. (2016) | Experimental | 68 stroke and 44 HC | Spatial navigation | Not reported | Each route contained 11 decision points. In the testing phase, eight subtasks were used to assess participants’ knowledge of the studied route. | Instruments: NART; CORSI; TMT A and B; WAIS-III (Digit Span). Performance: scene recognition. | Moderate overlap of total scores between the virtual and real-world navigation tests indicates that virtual testing of navigation ability is a valid alternative to navigation tests that rely on real-world route exposure. |
| Faria et al. (2019) | Experimental | 31 stroke | Global cognitive functioning | Customized handle with a tracking pattern | Reh@City v2.0: an immersive three-dimensional environment with streets, sidewalks, commercial buildings, parks and moving cars. Participants must complete common ADLs in eight places: supermarket, post office, bank, pharmacy, fashion store, kiosk, home and park. | Instruments: MoCA. Performance: total score and execution time. | Both groups performed at the same level, and the training method had no effect on overall performance. However, Reh@City enabled more intensive training, which may translate into greater cognitive improvements. |
| Faria et al. (2020) | Experimental | 36 stroke | Global cognitive functioning | Customized handle with a tracking pattern | Reh@City v2.0: an immersive three-dimensional environment with streets, sidewalks, commercial buildings, parks and moving cars. Participants must complete common ADLs in eight places: supermarket, post office, bank, pharmacy, fashion store, kiosk, home and park. | Instruments: MoCA; TMT A and B; WMS-III Verbal Paired Associates; WAIS-III Digit Symbol Coding, Symbol Search, Digit Span and Vocabulary subtests; PRECiS. | Within groups, Reh@City v2.0 improved general cognitive functioning, attention, visuospatial ability and executive functions, and these improvements generalized to verbal memory, processing speed and self-perceived cognitive deficits. The TG improved only in MoCA orientation, processing speed and verbal memory. Between groups, Reh@City v2.0 was superior in MoCA general cognitive functioning, visuospatial ability and executive functions. |
| Oliveira et al. (2020) | Experimental | 30 stroke | Global cognitive functioning | Mouse and computer screen | Virtual city with indoor tasks such as using a toothbrush, following the steps of a recipe to bake a cake, or watching the news on television and recalling pieces of this information in later tasks. Outdoor tasks consist of buying products in the grocery store or performing visual search tasks in a virtual art gallery. | Instruments: MoCA; FAB; WMS-III; CTT. | Improvements in the assessed cognitive domains were found after 6 to 10 treatment sessions. In-depth analysis of reliable change scores suggested larger treatment effects on global cognition. |

ACE, Addenbrooke’s Cognitive Examination; BADS-MSE, Behavioral Assessment of the Dysexecutive Syndrome–Modified Six Elements Test; BAI, Beck Anxiety Inventory; BDI-II, Beck Depression Inventory-Second Edition; CORSI, Corsi Block-Tapping Task; COWAT, Controlled Oral Word Association Test; CTT, Color Trails Test; CVLT-II, California Verbal Learning Test-Second Edition; Cybersickness, Simulator Sickness Questionnaire; FAB, Frontal Assessment Battery; HC, healthy controls; HMD, head-mounted display; Immersion, Immersion Tendencies Questionnaire; JLO, Judgment of Line Orientation; MMSE, Mini-Mental State Examination; MoCA, Montreal Cognitive Assessment; NART, National Adult Reading Test; PASAT, Paced Auditory Serial Addition Task; PQ, Presence Questionnaire; PRECiS, Patient-Reported Evaluation of Cognitive State; ROCF, Rey–Osterrieth Complex Figure Test; SIS, Stroke Impact Scale 3.0; SUS, System Usability Scale; TMT A and B, Trail Making Test Parts A and B; TOMM, Test of Memory Malingering; TP, Toulouse–Piéron; WAIS-III, Wechsler Adult Intelligence Scale-Third Edition; WCST, Wisconsin Card Sorting Test; WMS-III, Wechsler Memory Scale-Third Edition; WTAR, Wechsler Test of Adult Reading.

Table 6.

Virtual reality-based technologies in other everyday life environments for assessment and rehabilitation of acquired brain injury.

| Study | Methods/Study design | Participants | Domain | Interaction and display/Instrumentation | Assessment/Treatment | Measures | Conclusions |
|---|---|---|---|---|---|---|---|
| Matheis et al. (2007) | Experimental | 20 TBI and 20 HC | Learning and Memory | Dell laptop + 5th Dimension Technologies (5DT) 800 Series HMD | The participant had to learn 16 target items, depicted among numerous office distracters (e.g., computer, file cabinet), over 12 sequential exposures to the VR Office environment. | Instruments: WASI; WAIS (Digit Span and Digit Symbol-Coding subtests); TMT A and B; WCST; BNT; HVOT; COWAT; CVLT; BVMT-R; m-SSQ. Performance: initial list acquisition, short-term recall (30 min) and long-term recall (24 h). | VR memory testing accurately distinguished the TBI group from controls. TBI participants without memory impairment acquired targets at the same rate as HC. Performance on the VR Office was related to a standard measure of memory, supporting the construct validity of the task. |
| Fong et al. (2010) | Non-experimental | 12 intracerebral hemorrhage, 6 stroke, 5 TBI and 1 brain tumor | Cognition | 27″ touch monitor reproducing the actual dimensions of a real ATM | VR-ATM | Instruments: MMSE; COGNISTAT. Performance: success or failure in using the VR-ATM and the real ATM (cash withdrawals, money transfers and electronic payments); average reaction time; percentage of incorrect responses; number of cues needed; and time spent. | Sensitivity was 100% for cash withdrawals and 83.3% for money transfers; specificity was 83% and 75%, respectively. For cash withdrawals, average reaction time was shorter in the VR group than in the conventional program group. For money transfers, there were no between-group differences in average reaction time or accuracy, although the VR-ATM group improved. |
| Renison et al. (2012) | Experimental | 30 TBI and 30 HC | Executive Functions | Xbox- and PlayStation-compatible handset | The VLT models the dimensions and contents of two rooms in the Library at Epworth Hospital. Participants perform several tasks associated with the day-to-day running of the library. | Instruments: BADS Zoo Map and MSET; WTAR; WMS-III; WAIS-III (Digit Span); WCST; BSAT; DEX. Performance: task analysis, strategy generation and regulation, prospective working memory, interference and dual-task management, response inhibition, time-based prospective memory and event-based prospective memory task scores. | Performance on the VLT and the RLT was correlated, indicating that VR performance is similar to real-world performance. The TBI group performed significantly worse than the control group on the VLT and the MSET, but the other four EF measures failed to differentiate the groups. Both the MSET and the VLT predicted everyday EF, suggesting that both are ecologically valid tools for EF assessment. The VLT has the advantage of providing objective measurement of individual EF components. |
| Krch et al. (2013) | Non-experimental | 7 TBI, 5 MS and 7 HC | Executive Functions | Computer and mouse | Assessim Office (AO): respond to emails; decide whether to accept or reject real estate offers based on specific criteria; print the real estate offers that meet specific criteria; retrieve printed offers from the printer and deliver them to a file box on the participant’s desk; and ensure that the conference room projector light remains on. | Instruments: WAIS-III; D-KEFS; WASI. Performance: emails correctly replied to; correct decisions on real estate offers; declined offers incorrectly printed; printed offers delivered to file box; projector light missed; redundant clicks. | The AO was well tolerated by the TBI and MS samples. The clinical samples’ performance on the AO was distinct from, and poorer than, that of HC across all AO tasks. Relationships between AO task performance and EF tests were more often significant within the TBI group than within the MS group. |
| Fluet et al. (2013) | Experimental | 30 stroke | Upper limbs | Computer and CyberGlove; subjects interacted with the VE with haptic guidance provided by a robot | VE activities: Reach/Touch; Placing Cups; Hammer Task; Blood Cell; Plasma Pong; Hummingbird Hunt; Piano Trainer; and Space Pong. | Instruments: UEFMA; WMFT; JTHF. Performance: simulation and real activity performance. | Both groups improved on the UEFMA, WMFT and JTHF, and UEFMA gains were maintained at follow-up. There were no between-group differences at any of the three measurement times and no significant group × time interactions. |
| Gerber et al. (2014) | Non-experimental | 19 TBI | Engagement in computer-based simulations of functional tasks | Computer and a haptic device: Phantom® Omni™ | Interactive virtual scenes in 3D space: removing tools from a workbench, composing three-letter words, manipulating utensils to prepare a sandwich, and tool use. | Instruments: WMFT; BPS; NSI; PPT. Performance: Tool use: grabbing the tool; tool interaction with nail or screw; movement of nail or screw; and success in completing the task. Making a sandwich: grabbing an object (piece of bread or knife); moving bread; touching the jar cover; touching peanut butter, jelly or bread with the knife. Spelling: grabbing and releasing a letter; placing a letter on the grid; and completing a three-letter word. | Participants reported being engaged when using haptic devices to interact with 3D VEs. Haptic devices can capture objective data on motor and cognitive performance. |
| Gilboa et al. (2017) | Experimental | 29 children and adolescents with ABI and 30 HC | Executive Functions | Computer and mouse | JEF-C: the participant has to plan, set up and run their own party by completing a series of tasks. | Instruments: WASI; BADS-C; BRIEF questionnaire (parents). Performance in 8 cognitive constructs: planning; prioritization; selective, adaptive and creative thinking; and action-, time- and event-based prospective memory. | Patients performed significantly worse than controls on most JEF-C subscales and on the total score, with 41.4% of participants with ABI classified as having severe executive dysfunction. |
| Cho and Lee (2019) | Experimental | 42 stroke | Cognitive functioning | HMD developed by Company S | Fishing: the user catches fish using the upper extremities. Picture matching: the user flips cards to find matching pairs; the initial screen has 8 cards, and the user can turn or look back to see all the cards. To check a card’s picture, the user places a hand on the card as if reaching out to touch it. RehaCom (computerized cognitive training). | Instruments: CNT; LOTCA; FIM. | Immersive VR training may be an affordable approach for recovering cognitive function and ADL performance in patients with acute stroke. |
| Qiu et al. (2020) | Non-experimental | 15 stroke | Upper extremities | Laptop and Leap Motion Controller (with arm supports) | HoVRS: 5 games (Maze, Wrist Flying, Finger Flying, Car, Fruit Catch) targeting different movement patterns (elbow-shoulder, wrist, hand, whole arm). | Instruments: UEFMA. Performance: six hand and arm kinematic outcomes (HOR, WPR, HRR, HOA, WPA, HRA). | Subjects spent 13.5 h using the system at home and showed an increase of 5.2 points on the UEFMA, exceeding the minimal clinically important difference of 4.25. They also improved on six measures of hand kinematics. |
| Lorentz et al. (2021) | Non-experimental | 21 stroke, 6 TBI, 6 degenerative disease, 1 encephalitis and 1 brain tumor | Attention and Working Memory | Oculus Quest with two OLED displays and two Touch controllers | VR Traveller: each scenario is set in a different location and challenges alertness, selective attention, visual scanning and working memory. For instance, the New York City module primarily trains tonic alertness: patients are asked to press a response key as soon as a skyscraper lights up. At higher levels, visual distractions (streets, cars and traffic lights) are added to place an additional load on patients’ selective attention. | Instruments: UEQ; a self-constructed feasibility questionnaire; VRSQ. | Patients rated the acceptability and feasibility of the VR training positively. |

BADS, Behavioral Assessment of the Dysexecutive Syndrome; BADS-C, Behavioral Assessment of the Dysexecutive Syndrome for Children; BPS, Boredom Propensity Scale; BSAT, Brixton Spatial Anticipation Test; BNT, Boston Naming Test; BRIEF, Behavior Rating Inventory of Executive Function; BVMT-R, Brief Visuospatial Memory Test-Revised; CNT, Computerized Neurocognitive Function Test; COGNISTAT, Neurobehavioral Cognitive Status Examination; COWAT, Controlled Oral Word Association Test; CVLT, California Verbal Learning Test; DEX, Dysexecutive Questionnaire; D-KEFS, Delis–Kaplan Executive Function System; EF, executive functions; FIM, Functional Independence Measure; HC, healthy controls; HMD, head-mounted display; HoVRS, Home-based Virtual Rehabilitation System; HVOT, Hooper Visual Organization Test; JEF-C, Jansari assessment of Executive Functions for Children; JTHF, Jebsen Test of Hand Function; LOTCA, Loewenstein Occupational Therapy Cognitive Assessment; MMSE, Mini-Mental State Examination; MS, multiple sclerosis; MSET, Modified Six Elements Test; m-SSQ, modified Simulator Sickness Questionnaire; NSI, Neuropsychological Symptom Inventory; PPT, Purdue Pegboard Test; RLT, Real Library Task; TBI, traumatic brain injury; TMT, Trail Making Test; UEFMA, Upper Extremity Fugl-Meyer Assessment; UEQ, User Experience Questionnaire; VLT, Virtual Library Task; VRSQ, Virtual Reality Sickness Questionnaire; WAIS-III, Wechsler Adult Intelligence Scale-Third Edition; WASI, Wechsler Abbreviated Scale of Intelligence; WCST, Wisconsin Card Sorting Test; WMFT, Wolf Motor Function Test; WMS-III, Wechsler Memory Scale-Third Edition; WTAR, Wechsler Test of Adult Reading.

3. Results

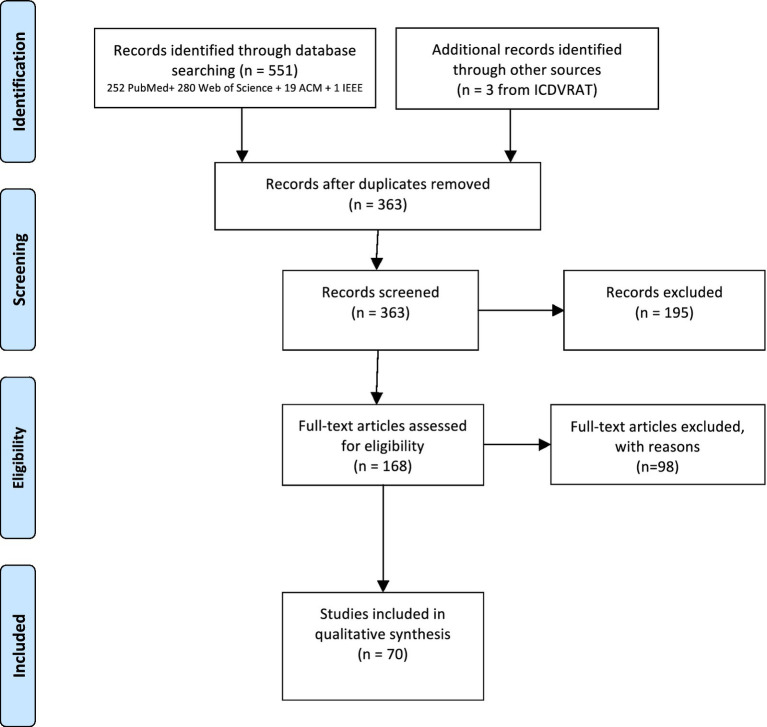

The results of the different phases of the systematic review are depicted in the PRISMA flow diagram (Figure 1). A total of 551 papers were identified through database searching, of which 363 remained after duplicate removal. In the first screening, based on titles and abstracts, 195 papers were excluded, mainly because of study type (review articles, theoretical articles, and studies describing tools without clinical validation). The remaining 168 full-text articles were assessed for eligibility, and 98 of them were excluded because they did not involve VR, did not include participants with ABI, did not describe rehabilitation or assessment studies, or did not include simulated environments or ADL. Accordingly, 70 articles were included for analysis in this systematic review. Of these 70 studies, 45 had an experimental design and 25 a non-experimental design. Because of the small number of participants per trial and the heterogeneity of outcome measures (136 different cognitive, functional, motor, emotional, cybersickness, immersion and engagement assessment instruments and questionnaires), it was not possible to perform a meta-analysis.

Figure 1.
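The screening counts reported above form a simple subtraction chain, and checking that each stage follows from the previous one is a quick way to confirm the flow diagram is internally consistent. The sketch below uses only the numbers stated in the text; the helper `prisma_stages` and the variable names are hypothetical, introduced purely for illustration.

```python
# Counts as reported in the PRISMA flow of this review.
identified = 551               # records from database searching
after_dedup = 363              # records left after duplicate removal
excluded_title_abstract = 195  # excluded at title/abstract screening
excluded_full_text = 98        # excluded at full-text eligibility
experimental, non_experimental = 45, 25


def prisma_stages(after_dedup, excluded_screen, excluded_full):
    """Hypothetical helper: derive (full texts assessed, studies included)
    by subtracting each stage's exclusions from the previous stage."""
    full_text = after_dedup - excluded_screen
    included = full_text - excluded_full
    return full_text, included


full_text, included = prisma_stages(
    after_dedup, excluded_title_abstract, excluded_full_text
)
duplicates_removed = identified - after_dedup

print(f"Duplicates removed: {duplicates_removed}")   # 188
print(f"Full texts assessed: {full_text}")           # 168
print(f"Studies included: {included}")               # 70
# The design split should account for every included study.
print(f"Design split matches: {experimental + non_experimental == included}")  # True
```

The derived values (168 full texts, 70 included studies, and a 45/25 design split summing to 70) agree with the figures stated in the paragraph.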