Abstract

Background and Aims

Endoscopic ultrasonography‐guided fine‐needle aspiration/biopsy (EUS‐FNA/B) is considered a first‐line procedure for the pathological diagnosis of pancreatic cancer owing to its high accuracy and low complication rate. The number of new cases of pancreatic ductal adenocarcinoma (PDAC) is increasing, and its accurate pathological diagnosis poses a challenge for cytopathologists. Our aim was to develop a hyperspectral imaging (HSI)‐based convolutional neural network (CNN) algorithm to aid in the diagnosis of pancreatic EUS‐FNA cytology specimens.

Methods

HSI images were captured from pancreatic EUS‐FNA cytological specimens of benign pancreatic tissue (n = 33) and PDAC (n = 39), prepared using a liquid‐based cytology method. A CNN was developed and its diagnostic performance evaluated, and Attribution Guided Factorization Visualization (AGF‐Visualization) was used to visualize the regions containing the classification features the model relied on most.

Results

A total of 1913 HSI images were obtained. Our ResNet18‐SimSiam model achieved an accuracy of 0.9204, sensitivity of 0.9310 and specificity of 0.9123 (area under the curve of 0.9625) when trained on HSI images for the differentiation of PDAC cytological specimens from benign pancreatic cells. AGF‐Visualization confirmed that the diagnoses were based on the features of tumor cell nuclei.

Conclusions

An HSI‐based model was developed to diagnose PDAC in cytological specimens obtained by EUS‐guided sampling. Under the supervision of experienced cytopathologists, the deep learning model was trained in multiple consecutive stages. Its high diagnostic performance suggests that it could be of value to cytologists when diagnosing PDAC.

Keywords: artificial intelligence, deep learning, endoscopic ultrasound‐guided fine‐needle aspiration, neural network models, pancreatic ductal carcinoma

Accurate pathological diagnosis of pancreatic ductal adenocarcinoma (PDAC) poses a challenge for cytopathologists. Therefore, we aimed to develop a hyperspectral imaging (HSI)‐based convolutional neural network (CNN) algorithm to aid in the diagnosis of pancreatic cytology specimens obtained using endoscopic ultrasonography‐guided fine‐needle aspiration/biopsy (EUS‐FNA/B). A total of 1913 HSI images were obtained from 33 benign pancreatic tissue samples and 39 PDAC specimens, and the model was trained on these images.

1. INTRODUCTION

Pancreatic ductal adenocarcinoma (PDAC) is a significant cause of cancer‐related mortality globally, with a poor prognosis and an increasing incidence such that it is likely to become the second leading cause of cancer death within 10 years. 1 , 2 Making an accurate pathological diagnosis of PDAC is essential for follow‐up management and treatment. Endoscopic ultrasonography‐guided fine‐needle aspiration/biopsy (EUS‐FNA/B) is considered to be a first‐line procedure for the pathological diagnosis of pancreatic cancer owing to its high accuracy. EUS‐FNA/B reportedly has a diagnostic sensitivity of 85%–92% and a specificity of 96%–98% for PDAC. 3 , 4 Moreover, it has been proven to be a feasible and safe technique with a complication rate of less than 1%. 5 , 6

The cytologic atypia and architectural distortion of PDAC resemble those seen in chronic pancreatitis; in particular, atypical ductal epithelial cells can cause confusion. 7 A common concern is that PDAC may be misinterpreted as reactive epithelial changes of pancreatitis. Crowded architecture, a high nuclear‐to‐cytoplasmic ratio, irregular nuclear membranes, prominent nucleoli, and vacuolated cytoplasm support a specific diagnosis of pancreatic cancer. 8 However, prominent nucleoli can be present in both the reactive ductal epithelium of pancreatitis and PDAC, so this feature alone cannot distinguish the two diseases. 9 In addition, necrosis strongly supports a diagnosis of PDAC. However, smears from pancreatic pseudocysts associated with pancreatitis often contain necrosis and inflammatory debris, which breaks this rule and makes them difficult for cytopathologists to differentiate from PDAC. Therefore, an accurate cytopathological diagnosis of PDAC is challenging for general or inexperienced pathologists.

Artificial intelligence (AI) based on deep learning models has been shown to assist in the diagnosis of cervical, thyroid, and pancreatic cancer and has potential for facilitating clinical diagnostic applications. 10 , 11 , 12 Compared with pathologists, deep learning models require a shorter training period and are more objective. The evaluation of pancreatic lesions using deep learning models has been reported to yield highly accurate diagnoses. 12 , 13 However, all previous studies identified pancreatic diseases with deep learning based on cytomorphological features under conventional optical microscopy. In 2017, Momeni‐Boroujeni et al. reported the use of a multilayer perceptron neural network (MNN) to classify pancreatic specimens obtained by EUS‐FNA as benign or malignant; this is the earliest retrievable study to use FNA/FNB samples for such cytological analysis. 12 The model achieved 77% accuracy for atypical cases, suggesting that more information might be required to reduce uncertainty about the likelihood of carcinoma. In 2022, Lin et al. reported an AI model designed to replace manual rapid on‐site cytopathological evaluation during EUS‐FNA. 14 It performed well in detecting cancer cells, with an accuracy of 83.4% in the internal validation dataset and a similar result (88.7%) in the external validation dataset. Such a model could accelerate slide evaluation and reduce waiting times for endoscopists.

Hyperspectral imaging (HSI) is an emerging optical diagnostic technology that incorporates spectroscopy. An HSI camera measures the interaction between tissue and light and thereby captures spectral features that conventional imaging modalities cannot, providing additional information for identification and differentiation. 15 The spectral information reflects the chemical composition and content of different substances; these change as disease develops, altering the optical and pathological properties of the tissue, and the changes can be converted into quantitative diagnostic information. However, HSI data cannot be interpreted directly by clinicians and usually require computational algorithms, especially machine learning models. In recent years, HSI has been used to assist in the diagnosis, micro‐environmental monitoring, and margin assessment of solid tumors by exploiting their composition‐specific spectral features. 16 , 17 HSI has also achieved promising results in identifying diseases at the cytological level. 18 , 19 For example, a study applying an HSI model to distinguish blood cells reported a classification accuracy of 97.65%. 18 However, no published study has compared the classification accuracy of an AI model on RGB images and HSI images at the same time, so it remains unclear whether the spectral information obtained by HSI offers a greater benefit for disease recognition.

To date, the HSI features of PDAC cells obtained by EUS‐FNA/B and their value in the diagnosis of PDAC have not been reported. In this article, we developed an HSI‐based convolutional neural network (CNN) algorithm and compared its classification accuracy on RGB images and HSI images. Our aim was to improve the diagnosis of pancreatic EUS‐FNA/B cytology specimens prepared using a liquid‐based cytology (LBC) method and to extend the knowledge available to pathologists.

2. MATERIALS AND METHODS

2.1. Case selection

To construct the deep learning model and evaluate its diagnostic performance, we queried the archives of the Department of Cytology at a single medical center (Ruijin Hospital) for pancreatic FNA specimens accessioned between January 2020 and January 2022. Pancreatic FNA specimens accessioned between November 2022 and March 2023 were also collected as additional test cases to assess the generalization ability of the model. The complete demographic data of all cases are displayed in Appendix S1. According to the guidelines for pancreaticobiliary cytology from the Papanicolaou Society of Cytopathology, all cases with LBC‐prepared slides categorized as “negative for malignancy,” “atypical,” “suspicious for malignancy,” or “positive for malignancy” were included. 20 For statistical purposes, suspicious FNA diagnoses were classified as positive for malignancy. The LBC‐prepared slides of all selected specimens were reviewed by a second, independent cytopathologist. The cancer group comprised patients with PDAC confirmed histologically on resected specimens. The benign group comprised cases of pseudotumoral chronic pancreatitis or autoimmune pancreatitis that had a histological diagnosis and a follow‐up period of 6 months. The exclusion criteria were (1) nondiagnostic EUS‐FNA/B specimens, (2) cases without histological confirmation, and (3) regions of interest (ROIs) covering fewer than eight microscopic fields of view. Overall, 72 patients with histological diagnoses were analyzed: 60 cases (32 PDAC and 28 benign) were used for training, validation, and testing of the CNN algorithm, and 12 cases (7 PDAC and 5 benign) formed an additional test set. The study protocol was approved by the ethics committee of Shanghai Jiao Tong University School of Medicine (No. (2022) Linlun‐213th) and was conducted in accordance with the World Medical Association Declaration of Helsinki. Written consent was obtained from each participant before enrollment into this study.

2.2. Image acquisition and preprocessing

The hematoxylin–eosin‐stained LBC slides were scanned with an Olympus DP73 camera (Olympus Corporation), and the images were analyzed by two pathologists with more than 10 years of professional experience. Following the Papanicolaou Society of Cytopathology guidelines proposed by Pitman et al., the pathologists classified cases as PDAC or benign and manually delineated the ROIs on these images for subsequent hyperspectral image acquisition. 20 The scanned slides were then imported into QuPath (v.0.3.0, University of Edinburgh) for review 21 ; the ROIs were annotated by the pathologists (GLL and ZBY) and exported to local drives.

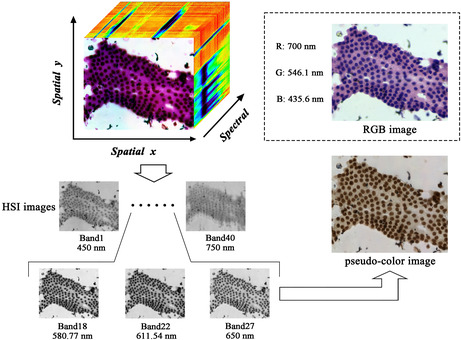

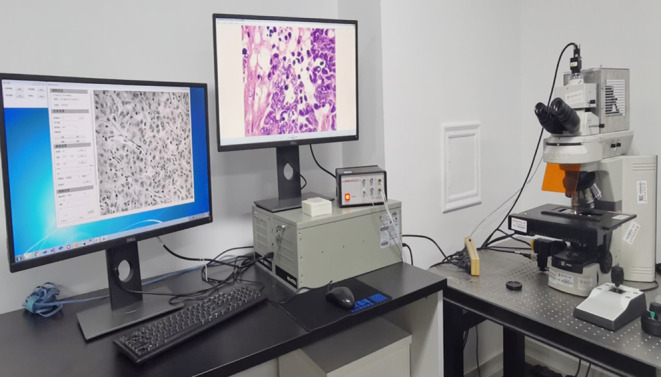

The labeled slides were then imaged with a custom‐built medical hyperspectral imaging (MHSI) system at the Shanghai Key Laboratory of Multidimensional Information Processing; the MHSI system is shown in Figure 1. 22 The system is based on an optical microscope equipped with a color charge‐coupled device (CCD) camera from Omron, an acousto‐optic tunable filter (AOTF) from Brimrose, and a grayscale complementary metal‐oxide‐semiconductor (CMOS) camera from Omron installed in the upper part of the system. The hyperspectral data cover the spectral range of 450–750 nm in 40 bands (i.e., the intensity of light transmitted through the sample at 450, 457.5, 465 nm, etc.), with a spectral resolution of 7.5 nm. Before image collection, the MHSI system underwent spatial and spectral calibration. During image collection, the sample is placed on the stage and a halogen light source is mounted at the base, so the whole system images transmitted light. Switching between color and hyperspectral acquisition is controlled by a movable reflector. When the reflector is pulled out of the optical path, light travels straight upward into the color CCD camera to produce color images; when the reflector is inserted into the optical path, light is reflected through the AOTF into the grayscale camera to produce hyperspectral image data. Figure 2 displays a microscopic HSI data cube together with the RGB image and the pseudo‐color image of the same field of view. Compared with the RGB image, the microscopic HSI data cube contains additional spectral information that may help in diagnosing PDAC.
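To make the band indexing concrete, the sketch below maps band indices to nominal centre wavelengths. It assumes a uniform grid that starts at 450 nm and steps by 7.5 nm, as the examples 450, 457.5, 465 nm suggest; the function names are hypothetical, and the true mapping should come from the system's spectral calibration.

```python
# Nominal band grid, ASSUMED uniform: 450 nm start, 7.5 nm step, 40 bands.
START_NM = 450.0
STEP_NM = 7.5
N_BANDS = 40


def band_centres(start=START_NM, step=STEP_NM, n=N_BANDS):
    """Return the nominal centre wavelength (nm) of each band, indexed from 0."""
    return [start + i * step for i in range(n)]


def bands_in_window(lo_nm, hi_nm, centres=None):
    """Return the 0-based indices of bands whose centre lies in [lo_nm, hi_nm]."""
    if centres is None:
        centres = band_centres()
    return [i for i, wl in enumerate(centres) if lo_nm <= wl <= hi_nm]


centres = band_centres()
print(centres[:3], centres[-1])  # [450.0, 457.5, 465.0] 742.5
window = bands_in_window(530, 620)
print(window[0], window[-1], len(window))  # 11 22 12
```

Because 7.5 nm is exactly representable in binary floating point, the grid values are exact, which keeps window selection free of rounding surprises.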

FIGURE 1.

The hardware configuration of MHSI system.

FIGURE 2.

Microscopic hyperspectral image data cube and RGB image of the same field of view. The collected hyperspectral data cube contains images in 40 bands; three bands (Band18, Band22, and Band27) were selected to generate the pseudo‐color HSI image. The hematoxylin–eosin‐stained RGB image likewise consists of three bands (R: red band; G: green band; B: blue band).
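A pseudo‐color image of the kind described in the caption can be sketched by stacking three bands of the cube as RGB channels. This minimal sketch assumes the band labels are 1‐based (so Band18/22/27 become indices 17/21/26), assumes they map to R, G and B in that order, and min‐max scales each channel independently; none of these choices is stated in the text.

```python
import numpy as np


def pseudo_colour(cube, bands=(17, 21, 26)):
    """Build an RGB-like composite from three bands of an HSI cube.

    cube  : array of shape (H, W, B) holding per-band intensities.
    bands : 0-based indices mapped to (R, G, B); the default ASSUMES that
            "Band18/22/27" are 1-based labels.
    Each selected band is min-max scaled to [0, 1] independently.
    """
    rgb = cube[..., list(bands)].astype(np.float64)
    lo = rgb.min(axis=(0, 1), keepdims=True)
    hi = rgb.max(axis=(0, 1), keepdims=True)
    return (rgb - lo) / np.maximum(hi - lo, 1e-12)  # guard against flat bands


# Synthetic 4 x 5 pixel, 40-band cube (random values, not study data).
rng = np.random.default_rng(0)
cube = rng.random((4, 5, 40))
img = pseudo_colour(cube)
print(img.shape)  # (4, 5, 3)
```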

Light transmission through the microscope optics and the detection sensitivity of the camera can introduce redundancy and noise into the hyperspectral images. We used principal component analysis to identify the components carrying the most information and used them as the reference band. We first manually outlined whole benign and PDAC cells in the HSI images and used a blank hyperspectral image as the reference image to eliminate electronic instrument noise. The nuclei of benign and PDAC cells were then extracted and segmented with a few‐shot GAN network to obtain the manual annotation masks and generate the characteristic spectra of benign and PDAC cells. 23 The spectral curves of benign and malignant cells differed markedly between 530 and 620 nm, and a total of 20 consecutive bands were selected for the segmentation task; the remaining bands were considered redundant and were excluded.
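The principal component analysis step can be illustrated with a short sketch that runs PCA on pixel spectra via a singular value decomposition and reports which bands load most heavily on the first component. This is a generic PCA under stated assumptions (spectra arranged as pixels × bands, synthetic data); the text does not specify how components were turned into a reference band, and the function names are hypothetical.

```python
import numpy as np


def pca_explained_variance(spectra, n_components=3):
    """PCA of pixel spectra via SVD.

    spectra : array (N_pixels, N_bands).
    Returns (components, explained_variance_ratio) for the leading components;
    components[k] holds the band loadings of principal component k.
    """
    x = np.asarray(spectra, dtype=np.float64)
    x = x - x.mean(axis=0)                        # centre each band
    _, s, vt = np.linalg.svd(x, full_matrices=False)
    var = s ** 2                                  # proportional to component variance
    ratio = var / var.sum()
    return vt[:n_components], ratio[:n_components]


def top_bands(component, k=5):
    """Indices of the k bands with the largest absolute loading."""
    return np.argsort(np.abs(component))[::-1][:k]


# Synthetic data: 200 pixels x 40 bands, with a shared signal in bands 11-22.
rng = np.random.default_rng(1)
spectra = 0.01 * rng.standard_normal((200, 40))
spectra[:, 11:23] += rng.standard_normal((200, 1))
comps, ratio = pca_explained_variance(spectra)
print(sorted(int(i) for i in top_bands(comps[0], k=12)))
# [11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22]
```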

2.3. Development and generalization ability of the CNN algorithm

We adapted the ResNet architecture so that it could be applied directly to both HSI and RGB images, with the aim of generalizing well across benign pancreatic tissue and PDAC. 24 The image dataset used to develop the CNN algorithm was obtained from 60 patients: 32 were categorized as PDAC and 28 as benign. To ensure reliable results and fair comparisons, we used separate training, validation, and test sets and applied data augmentation consisting of variations in brightness, saturation, and rotation to improve the performance of the CNN. Specifically, the preprocessed hyperspectral data were randomly divided into three datasets according to the distribution of cell types: 60% for training, 20% for validation, and 20% for testing. In addition, 12 cases collected from November 2022 to March 2023, including seven PDAC and five benign samples, formed an additional test set to assess the generalization ability of the model. To make the diagnostic performance reproducible, we fixed the random seed before training. During training, the augmented training set was used to fit the CNN model by minimizing the loss function. The validation set was used to estimate the generalization error of the model and to tune hyperparameters. Classification accuracy on the test set was taken as the performance of the classification algorithm. Finally, the accuracies of the CNN model on the additional test set and the test set were compared to evaluate its generalization. There was no overlap of patients between the different splits.
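A patient‐level split like the one described, stratified by class and reproducible through a fixed seed, can be sketched as follows. The seed value, patient IDs and function names are hypothetical; images are assigned afterwards through their patient, which is what keeps patients from appearing in more than one split.

```python
import random
from collections import defaultdict


def patient_level_split(patient_labels, fractions=(0.6, 0.2), seed=42):
    """Split patients (not images) into train/val/test, stratified by label.

    patient_labels : dict mapping a patient ID to its class label.
    fractions      : (train, val) fractions; the remainder goes to test.
    """
    rng = random.Random(seed)  # fixed seed -> the same split on every run
    by_label = defaultdict(list)
    for pid, label in sorted(patient_labels.items()):
        by_label[label].append(pid)
    splits = {"train": [], "val": [], "test": []}
    for label in sorted(by_label):
        pids = by_label[label]
        rng.shuffle(pids)
        n_train = round(fractions[0] * len(pids))
        n_val = round(fractions[1] * len(pids))
        splits["train"] += pids[:n_train]
        splits["val"] += pids[n_train:n_train + n_val]
        splits["test"] += pids[n_train + n_val:]
    return splits


def split_images(image_to_patient, splits):
    """Assign each image to the split of the patient it came from."""
    lookup = {pid: name for name, pids in splits.items() for pid in pids}
    return {img: lookup[pid] for img, pid in image_to_patient.items()}


# Hypothetical cohort: 32 PDAC and 28 benign patients.
labels = {f"P{i:02d}": ("PDAC" if i < 32 else "benign") for i in range(60)}
splits = patient_level_split(labels)
print({k: len(v) for k, v in splits.items()})  # {'train': 36, 'val': 12, 'test': 12}
```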

In general, the data were processed in two phases. In phase one, the blank HSI image was used as the reference image to eliminate noisy data. 25 The aim of this phase was to reduce the influence of electronic instrument noise so that the significant characteristic spectra of the cytopathological specimens could be obtained. The image‐processing equation was as follows:
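A common form of blank‐reference correction, assumed here for illustration and not necessarily identical to the study's equation, divides each band of the specimen cube by the same band of the blank image, optionally after subtracting a dark frame. The dark frame and the function name are assumptions introduced for this sketch.

```python
import numpy as np


def blank_reference_correction(sample, blank, dark=None, eps=1e-6):
    """Per-pixel, per-band transmittance relative to a blank reference cube.

    sample, blank : arrays of shape (H, W, B) acquired with identical settings.
    dark          : optional dark frame of the same shape (an ASSUMPTION; the
                    text mentions only the blank reference image).
    Returns sample / blank, or (sample - dark) / (blank - dark) if dark is given.
    """
    sample = np.asarray(sample, dtype=np.float64)
    blank = np.asarray(blank, dtype=np.float64)
    if dark is not None:
        dark = np.asarray(dark, dtype=np.float64)
        sample = sample - dark
        blank = blank - dark
    return sample / np.maximum(blank, eps)  # avoid division by ~0


rng = np.random.default_rng(0)
blank = rng.uniform(500.0, 1000.0, size=(2, 3, 40))  # synthetic blank-field intensities
dark = np.full_like(blank, 20.0)                       # synthetic constant dark level
sample = dark + 0.5 * (blank - dark)                   # specimen transmitting 50% everywhere
t = blank_reference_correction(sample, blank, dark)
print(np.allclose(t, 0.5))  # True
```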

The second phase involved designing architectures suited both to natural RGB images and to spatial‐spectral data cubes, which differ from natural images. A standard CNN was used as the backbone, with its first convolutional layer adjusted to the number of input channels. A multilayer perceptron then mapped the features extracted by the CNN to the final prediction. The initial learning rate was set to 1 × 10⁻⁵. Each model was trained for at most 200 epochs, and training was stopped when the validation loss no longer decreased.
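One way to adjust the first convolutional layer for multi‐band input, if one starts from RGB‐pretrained weights, is to tile the RGB kernels across the new channels and rescale them. This heuristic is an assumption for illustration (the text does not say how the layer was initialized), shown in NumPy on a weight tensor with the 64 × 3 × 7 × 7 shape of ResNet‐18's first convolution; the function name is hypothetical.

```python
import math
import numpy as np


def adapt_first_conv(weight_rgb, in_channels):
    """Expand a (out, 3, kh, kw) first-layer kernel to `in_channels` inputs.

    Heuristic, ASSUMED for illustration: tile the RGB kernels across the new
    channels and rescale by 3 / in_channels. The summed response to an input
    that is constant across channels is then preserved exactly whenever
    in_channels is a multiple of 3.
    """
    _, in_c, _, _ = weight_rgb.shape
    if in_c != 3:
        raise ValueError("expected RGB-pretrained weights with 3 input channels")
    reps = math.ceil(in_channels / 3)
    tiled = np.tile(weight_rgb, (1, reps, 1, 1))[:, :in_channels]
    return tiled * (3.0 / in_channels)


rng = np.random.default_rng(0)
w_rgb = rng.standard_normal((64, 3, 7, 7))  # shape of ResNet-18's first convolution
w_hsi = adapt_first_conv(w_rgb, 20)          # e.g. 20 selected spectral bands
print(w_hsi.shape)  # (64, 20, 7, 7)
```

In PyTorch the resulting array would replace the weights of a new `Conv2d` with the matching number of input channels; random initialization followed by self‐supervised pre‐training is an equally valid alternative.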

On this basis, the SimSiam algorithm was added to the HSI‐based CNN model to perform self‐supervised learning of image representations, with the aim of further improving training efficiency and generalization ability. SimSiam learns representations without negative sample pairs and has been reported to achieve high accuracy in combination with ResNet architectures. 26 Specifically, we adjusted the first convolutional layer of the ResNet architecture to accept multi‐channel input images and pre‐trained the whole architecture with the SimSiam algorithm to improve generalization. Our approach could, in principle, be adapted to almost all existing deep learning classification frameworks. All experiments were implemented in PyTorch (developed by Facebook's AI Research lab [FAIR]), an open‐source machine learning library for deep learning and related applications, and were run on a single NVIDIA GeForce RTX 3090 graphics processing unit (NVIDIA).
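The SimSiam objective mentioned above is a symmetric negative cosine similarity between one view's predictor output and the other view's projector output. The forward value can be sketched in NumPy as below; the stop‐gradient on the projector outputs only affects gradient flow in an autograd framework such as PyTorch, and the array shapes are illustrative assumptions.

```python
import numpy as np


def neg_cosine(p, z):
    """Mean negative cosine similarity between matching rows of p and z.

    In SimSiam, z is wrapped in a stop-gradient; that only changes how
    gradients flow in an autograd framework, not this forward value.
    """
    p = p / np.linalg.norm(p, axis=1, keepdims=True)
    z = z / np.linalg.norm(z, axis=1, keepdims=True)
    return -np.mean(np.sum(p * z, axis=1))


def simsiam_loss(p1, p2, z1, z2):
    """Symmetric SimSiam loss: 0.5 * D(p1, z2) + 0.5 * D(p2, z1).

    z1, z2 : projector outputs for two augmented views, shape (N, d)
    p1, p2 : predictor outputs computed from z1 and z2, shape (N, d)
    """
    return 0.5 * neg_cosine(p1, z2) + 0.5 * neg_cosine(p2, z1)


rng = np.random.default_rng(0)
z = rng.standard_normal((8, 16))
print(round(float(simsiam_loss(z, z, z, z)), 6))    # -1.0: perfectly aligned views
print(round(float(simsiam_loss(z, z, -z, -z)), 6))  # 1.0: opposite directions
```

The loss is bounded in [−1, 1], and its minimum of −1 is reached when the two branches agree in direction, which is why no negative pairs are needed.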

2.4. Explainability analysis

Attribution Guided Factorization Visualization (AGF‐Visualization) is a technique for visualizing the regions containing the classification features identified by deep learning models. 27 To investigate whether the model was learning the correct features, we used AGF‐Visualization to visualize potential pathological areas on the HSI images. AGF‐Visualization produced a class activation map for each HSI image from the final convolutional layer of the ResNet architecture; the map highlights discriminative regions for the class of interest, and the regions with the strongest activation are those the model relied on most to make its predictions. Specifically, we used gradients to weight the importance of each location in the feature map for the class of interest. This operation preserves fine‐grained details of the target cells while removing details in the background.
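The gradient‐weighting idea in the last two sentences can be illustrated with the simpler Grad‐CAM scheme. This is plainly Grad‐CAM, swapped in for illustration, and not the AGF method itself. The sketch assumes the final‐layer activations and the gradients of the class score have already been extracted from the network.

```python
import numpy as np


def gradcam_map(activations, gradients):
    """Grad-CAM-style localisation map for one convolutional layer.

    activations : (K, H, W) feature maps A_k from the last convolutional layer
    gradients   : (K, H, W) gradients of the class score with respect to A_k
    Returns an (H, W) map scaled to [0, 1]; upsampling to image size is omitted.
    """
    weights = gradients.mean(axis=(1, 2))             # alpha_k: spatially averaged gradient
    cam = np.tensordot(weights, activations, axes=1)  # sum_k alpha_k * A_k, shape (H, W)
    cam = np.maximum(cam, 0.0)                        # keep only positive evidence
    peak = cam.max()
    return cam / peak if peak > 0 else cam


# Toy example: channel 0 fires at the centre and supports the class;
# channel 1 fires in a corner but its gradient is negative.
acts = np.zeros((2, 3, 3))
acts[0, 1, 1] = 5.0
acts[1, 0, 0] = 5.0
grads = np.stack([np.ones((3, 3)), -np.ones((3, 3))])
cam = gradcam_map(acts, grads)
print(cam[1, 1], cam[0, 0])  # 1.0 0.0
```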

2.5. Statistical analysis

To evaluate the classification performance of the CNN model, the primary outcome measures were sensitivity, specificity, false‐negative rate (FNR), false‐positive rate (FPR), precision, recall, the receiver operating characteristic (ROC) curves, and the area under the ROC curve (AUC). Precision and recall were calculated at a selected decision threshold to indicate how many of the model's predictions were actually correct. ROC curves summarize classification performance across all threshold settings. The ROC curve is a probability curve, and the circle on each ROC curve marks the operating point at a threshold of 0.5; the area beneath the ROC curve is the AUC, which measures separability, with a larger AUC indicating better performance. All statistical analyses were performed with the scikit‐learn (sklearn) package in Python (version 3.11.3, Python Software Foundation).
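The ROC construction and the operating point at a threshold of 0.5 can be made explicit in plain Python. scikit‐learn provides `sklearn.metrics.roc_curve` and `roc_auc_score` for this; the hypothetical helpers below only show the underlying computation, with PDAC as the positive class and a toy set of scores.

```python
def operating_point(labels, scores, threshold=0.5):
    """(FPR, TPR) of the rule 'predict PDAC when score >= threshold'.

    labels : 1 for PDAC (positive) and 0 for benign; scores : predicted P(PDAC).
    """
    pos = sum(labels)
    neg = len(labels) - pos
    tp = sum(1 for y, s in zip(labels, scores) if y == 1 and s >= threshold)
    fp = sum(1 for y, s in zip(labels, scores) if y == 0 and s >= threshold)
    return fp / neg, tp / pos


def roc_points(labels, scores):
    """ROC curve as (FPR, TPR) points, sweeping the threshold over every distinct score."""
    thresholds = sorted(set(scores), reverse=True)
    return [(0.0, 0.0)] + [operating_point(labels, scores, t) for t in thresholds]


def auc_trapezoid(points):
    """Area under a piecewise-linear ROC curve by the trapezoidal rule."""
    pts = sorted(points)
    return sum((x1 - x0) * (y0 + y1) / 2 for (x0, y0), (x1, y1) in zip(pts, pts[1:]))


labels = [1, 1, 0, 1, 0, 0]
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.2]
auc = auc_trapezoid(roc_points(labels, scores))
print(round(auc, 4))                    # 0.8889
print(operating_point(labels, scores))  # the point a 0.5 threshold would mark
```

The trapezoidal AUC equals the probability that a randomly chosen PDAC image scores higher than a randomly chosen benign one (8 of the 9 pairs here).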

3. RESULTS

3.1. Study data

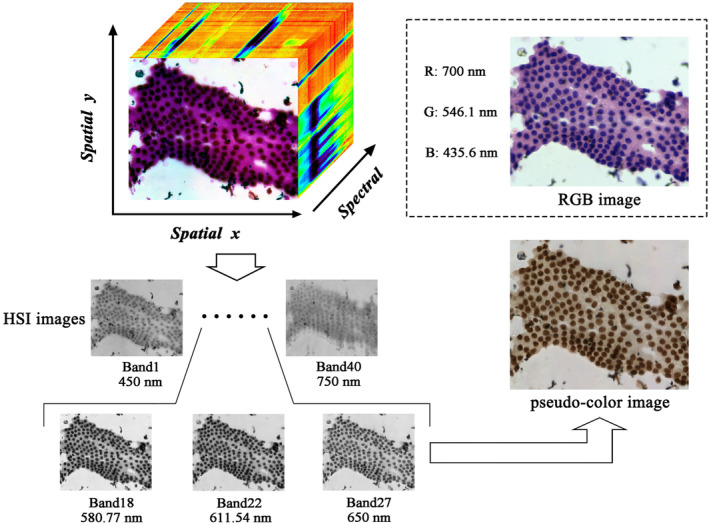

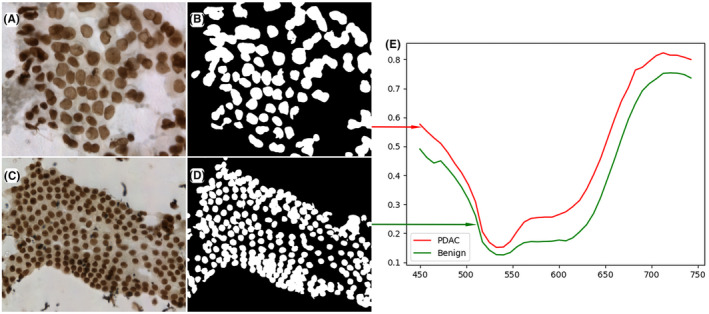

The aim of our study was to train a binary classification model to differentiate between benign pancreatic cells and PDAC cells. The study included 72 patients, whose baseline characteristics are presented in Table 1. The mean age was 60.2 ± 13.2 years, and 47 (65.3%) patients were male. The mean lesion size was 3.0 ± 1.1 cm, and most lesions were located in the pancreatic head–neck (65.3%). Cytopathological diagnoses were positive for malignancy in 40.3% of cases, suspicious for malignancy in 8.3%, negative in 38.9%, and atypical in the remaining 12.5%. A total of 1913 pairs of HSI and RGB images were obtained. The dataset used to develop the model consisted of 1025 effective image scenes, which were exported from the MHSI system in two formats: HSI images (512 × 612 pixels, TIFF format) and RGB images (2048 × 2448 pixels, JPG format). RGB and HSI images were matched on the same field of view for qualitative comparison. Figure 3 displays an example of a hematoxylin–eosin‐stained RGB image and its corresponding HSI image of typical cell clusters used for training. Because the number of images was limited, data augmentation (random cropping, flipping, rotation, scaling, and translation) was used to expand the dataset, yielding a total of 1719 HSI images and corresponding RGB images. Of these scenes, 890 belonged to PDAC cases and the remaining 829 to benign cases. In addition, 194 effective image scenes, including 101 PDAC images, were collected to assess the generalization ability of the model.
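For hyperspectral cubes, geometric augmentation must transform only the two spatial axes so that every band moves together and each pixel keeps its spectrum. The sketch below covers flips and right‐angle rotations only (cropping, scaling and translation are omitted); the function name is hypothetical, and the exact augmentation pipeline used in the study is not specified in the text.

```python
import numpy as np


def augment_cube(cube, rng):
    """Random flips and a random 90-degree rotation of an (H, W, B) HSI cube.

    Only the two spatial axes are transformed, so every band undergoes the same
    geometric change and each pixel keeps its full spectrum intact.
    rng : a numpy.random.Generator, e.g. np.random.default_rng(seed)
    """
    if rng.random() < 0.5:
        cube = cube[:, ::-1, :]            # horizontal flip
    if rng.random() < 0.5:
        cube = cube[::-1, :, :]            # vertical flip
    k = int(rng.integers(0, 4))            # rotate by 0, 90, 180 or 270 degrees
    return np.rot90(cube, k=k, axes=(0, 1)).copy()


rng = np.random.default_rng(0)
cube = rng.random((4, 6, 40))              # synthetic 4 x 6 pixel, 40-band cube
out = augment_cube(cube, rng)
print(out.shape)                           # (4, 6, 40) or (6, 4, 40), depending on the rotation
```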

TABLE 1.

Baseline characteristics of the training, validation, test, and additional test sets.

| Characteristic | Training set (n = 35) | Validation set (n = 13) | Test set (n = 12) | Additional test set (n = 12) | Total (n = 72) |
|---|---|---|---|---|---|
| Age (years), mean ± SD | 60.6 ± 14.1 | 62.6 ± 15.7 | 56.6 ± 12.1 | 59.9 ± 8.3 | 60.2 ± 13.2 |
| Sex, n (%) | | | | | |
| Male | 23 (65.7%) | 6 (46.2%) | 10 (83.3%) | 8 (66.7%) | 47 (65.3%) |
| Female | 12 (34.3%) | 7 (53.8%) | 2 (16.7%) | 4 (33.3%) | 25 (34.7%) |
| Size (cm), mean ± SD | 3.1 ± 1.2 | 3.3 ± 0.9 | 2.8 ± 1.1 | 2.7 ± 1.0 | 3.0 ± 1.1 |
| Location, n (%) | | | | | |
| Head/neck | 25 (71.4%) | 8 (61.5%) | 6 (50.0%) | 8 (66.7%) | 47 (65.3%) |
| Body/tail | 10 (28.6%) | 5 (38.5%) | 6 (50.0%) | 4 (33.3%) | 25 (34.7%) |
| Cytopathological diagnosis, n (%) | | | | | |
| Positive for malignancy | 16 (45.7%) | 5 (38.4%) | 4 (33.4%) | 4 (33.3%) | 29 (40.3%) |
| Suspicious for malignancy | 1 (2.9%) | 2 (15.4%) | 1 (8.3%) | 2 (16.7%) | 6 (8.3%) |
| Atypical | 4 (11.4%) | 2 (15.4%) | 1 (8.3%) | 2 (16.7%) | 9 (12.5%) |
| Negative for malignancy | 14 (40.0%) | 4 (30.8%) | 6 (50.0%) | 4 (33.3%) | 28 (38.9%) |
| Final diagnosis, n (%) | | | | | |
| PDAC | 19 (54.3%) | 8 (61.5%) | 5 (41.7%) | 7 (58.3%) | 39 (54.2%) |
| Benign | 16 (45.7%) | 5 (38.5%) | 7 (58.3%) | 5 (41.7%) | 33 (45.8%) |

Abbreviation: PDAC, pancreatic ductal adenocarcinoma.

FIGURE 3.

Comparison of hematoxylin–eosin‐stained RGB image and its corresponding HSI images of typical clusters of cells used for training. (A) Typical PDAC hematoxylin–eosin‐stained RGB image and (B) the corresponding HSI image of PDAC; (C) benign hematoxylin–eosin‐stained image and (D) its HSI image.

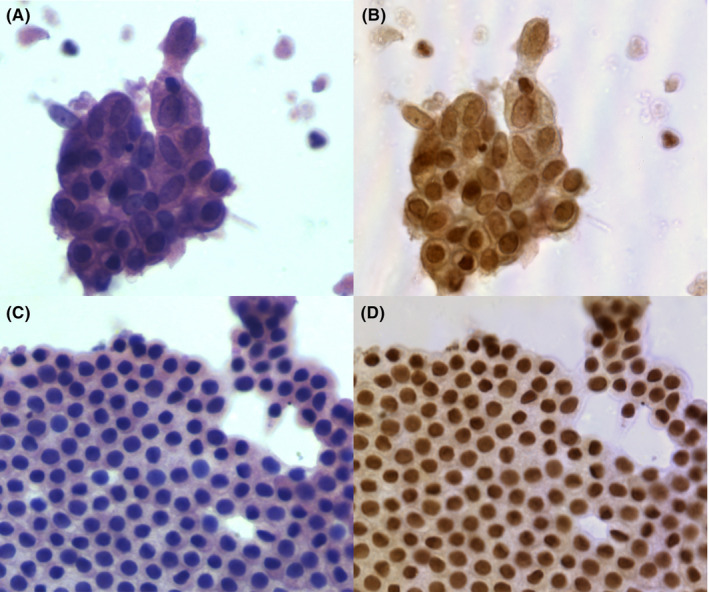

3.2. Spectrum features and differences between PDAC and benign cells

The manual annotation masks of benign pancreatic ductal/acinar cells and PDAC cells were generated by processing the pseudo‐color HSI images with a few‐shot GAN network, and the representative spectral features of benign and PDAC cells are shown in Figure 4. The red curve represents the transmittance spectrum of PDAC cells in the hyperspectral images, and the green curve represents that of benign cells. The typical spectra of PDAC cells and benign pancreatic ductal/acinar cells differ clearly in the wavelength range of 530–620 nm. This suggests that different types of pancreatic cells could be classified by identifying their spectral and spatial features.

FIGURE 4.

The representative spectrum features of benign pancreatic ductal/acinar cells and PDAC cells. (A) the pseudo‐color HSI images of PDAC cells; (B) the manual annotation masks of PDAC which are obtained by few‐shot GAN network; (C) the pseudo‐color HSI images of benign cells; (D) the manual annotation masks of benign; (E) The red curve represents the transmittance spectrum feature of PDAC in the hyperspectral images, and the spectrum feature of benign cells is the green curve.

3.3. Comparison of the RGB‐based CNN and the HSI‐based CNN

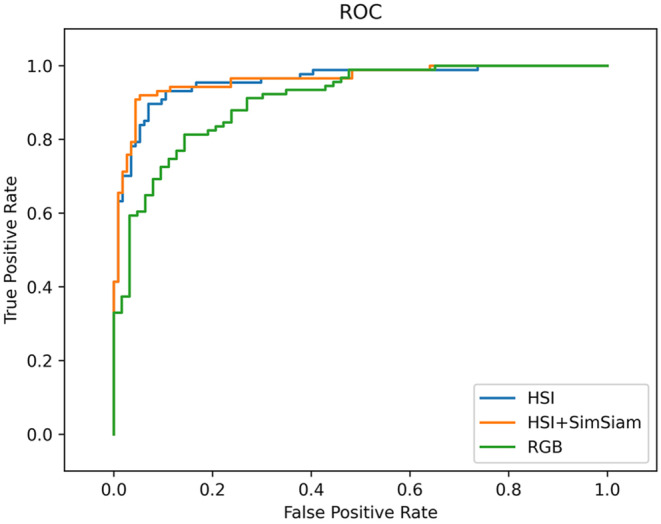

Using these image patches, we compared the performance of the RGB‐based CNN and the HSI‐based CNN model. Of the expanded dataset used to construct the model, 890 images were obtained from PDAC cases and 829 from benign cases. The HSI scenes and their matching RGB images were first separated into three independent datasets: 1341 scenes for the training set, 177 for the validation set, and 201 for the test set. With repeated input of the HSI and RGB data into the multilayer CNN, the training and validation accuracies of both CNN models improved. On the validation set, the accuracy of ResNet18‐RGB (91.72%) was lower than that of ResNet18‐HSI (93.22%) (Table S1). On the test set, ResNet18‐HSI achieved a higher accuracy (88.05%) than ResNet18‐RGB (82.47%). In addition, the sensitivity and specificity of ResNet18‐HSI were also higher than those of ResNet18‐RGB (Table 2). These results suggest that combining spectral with spatial information makes it easier for CNN models to distinguish PDAC from benign cells.

TABLE 2.

Diagnostic performance of the CNN model.

| Model | Accuracy (%) | Sensitivity (%) | Specificity (%) | Precision (%) | Recall (%) | FNR (%) | FPR (%) | AUC (%) |
|---|---|---|---|---|---|---|---|---|
| ResNet18 (RGB) | 82.47 | 89.01 | 73.02 | 82.65 | 89.01 | 10.99 | 26.98 | 90.79 |
| ResNet18 (HSI) | 88.05 | 94.25 | 83.33 | 81.18 | 94.25 | 5.75 | 16.67 | 95.91 |
| ResNet18 + SimSiam (HSI) | 92.04 | 93.10 | 91.23 | 89.01 | 93.10 | 6.90 | 8.77 | 96.25 |

Abbreviations: AUC, area under the ROC curves; FNR, false negative rate; FPR, false positive rate; ROC, receiver operating characteristic.

3.4. Diagnostic performance of the HSI‐based CNN model

We further applied the SimSiam algorithm to the ResNet18‐HSI model using the existing data augmentation sets, evaluated the diagnostic performance of the HSI‐based models, and calculated the corresponding metrics. Table 2 summarizes the metrics calculated for the CNN models. Overall, the ResNet18‐HSI‐SimSiam model performed better than the plain ResNet18‐HSI model. The accuracy of the ResNet18‐HSI‐SimSiam model was 92.04%, and its sensitivity, specificity, FNR, and FPR for differentiating benign cells from PDAC were 93.10%, 91.23%, 6.90%, and 8.77%, respectively. In contrast, the plain ResNet18‐HSI model had an overall accuracy of 88.05%, a lower specificity (83.33%), and a similar sensitivity (94.25%). We also used ROC curves to analyze the probability predictions of this binary classification. The ROC curve shows the trade‐off between the true positive rate and the FPR across probability thresholds; the upper‐left corner of the plot corresponds to a true positive rate of 1 and an FPR of 0. The AUC of the ResNet18‐HSI‐SimSiam model for the differential diagnosis of PDAC was 0.9625, compared with 0.9591 for ResNet18‐HSI. Figure 5 shows the ROC curves, and the confusion matrices are shown in Tables 3 and 4.

FIGURE 5.

Comparison of the receiver operating characteristic (ROC) curve of CNN model at the image level. Green curve represents ResNet18‐RGB with an area under the curve [AUC] of 0.9079, blue curve indicates ResNet18‐HSI with AUC of 0.9591, and orange curve is ResNet18‐HSI‐SimSiam model with an AUC‐ROC performance of 0.9625.

TABLE 3.

Confusion matrix for the true labels and predicted labels in ResNet18‐HSI.

| Actual / Predicted | PDAC | Benign | Total |
|---|---|---|---|
| PDAC | 82 | 5 | 87 |
| Benign | 19 | 95 | 114 |
| Total | 101 | 100 | 201 |

Abbreviation: PDAC, pancreatic ductal adenocarcinoma.

TABLE 4.

Confusion matrix for the true labels and predicted labels in ResNet18‐HSI‐SimSiam.

| Actual / Predicted | PDAC | Benign | Total |
|---|---|---|---|
| PDAC | 81 | 6 | 87 |
| Benign | 10 | 104 | 114 |
| Total | 91 | 110 | 201 |

Abbreviation: PDAC, pancreatic ductal adenocarcinoma.
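The metrics in Table 2 follow directly from a 2 × 2 confusion matrix. The minimal sketch below, with PDAC as the positive class and rows as actual labels, uses a hypothetical helper name and recovers the ResNet18 + SimSiam (HSI) row of Table 2 from the counts in Table 4.

```python
def binary_metrics(tp, fn, fp, tn):
    """Table 2 metrics from a 2 x 2 confusion matrix, with PDAC as the positive class."""
    return {
        "accuracy": (tp + tn) / (tp + fn + fp + tn),
        "sensitivity": tp / (tp + fn),  # identical to recall
        "specificity": tn / (tn + fp),
        "precision": tp / (tp + fp),
        "FNR": fn / (tp + fn),          # equals 1 - sensitivity
        "FPR": fp / (fp + tn),          # equals 1 - specificity
    }


# Counts from Table 4 (ResNet18-HSI-SimSiam); rows are actual labels.
m = binary_metrics(tp=81, fn=6, fp=10, tn=104)
print({k: round(100 * v, 2) for k, v in m.items()})
# {'accuracy': 92.04, 'sensitivity': 93.1, 'specificity': 91.23, 'precision': 89.01, 'FNR': 6.9, 'FPR': 8.77}
```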

In addition, nine atypical cases were included in our study, five of which were eventually confirmed as PDAC. The ResNet18‐HSI‐SimSiam model was also used to predict benign or malignant outcomes for the atypical cases in all test sets. Its accuracy on the images of the atypical cases was 83.02%, with both sensitivity and specificity greater than 80% (Tables S2 and S3).

3.5. Generalization ability of the CNN

To assess the generalization ability of the ResNet18‐HSI‐SimSiam model, we used it to classify the additional test set, for which a total of 194 images were collected. Overall, the diagnostic accuracy of the model was 92.27%, similar to the 92.04% accuracy on the test set. Notably, the AUC of the model on the additional test set was 0.9683, comparable to the AUC of 0.9625 on the test set (Tables S2 and S4). Therefore, the ResNet18‐HSI‐SimSiam model showed generalizable and robust performance on cases collected in a later period.

3.6. Computing performance of the CNN

Our ResNet18‐HSI‐SimSiam model could process the validation and test sets with an average speed of 0.144 s/frame. This translates to an approximate read rate of 6.94 frames per second.

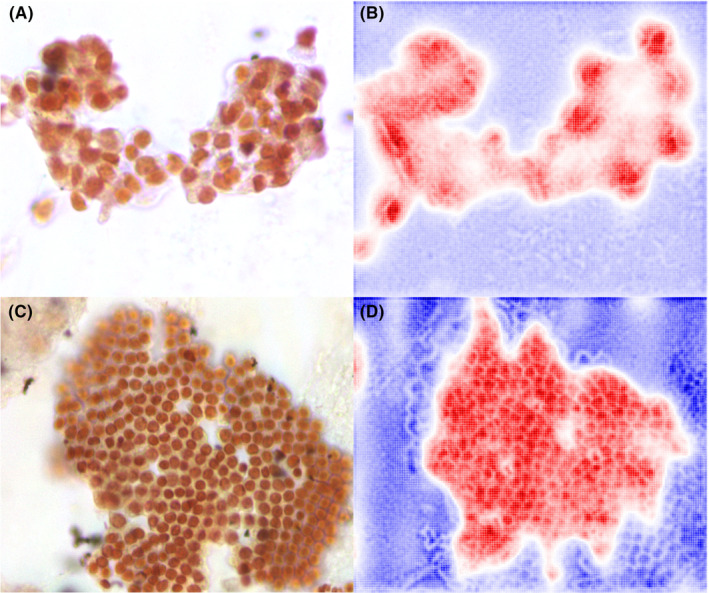

3.7. Explainability of the model

Representative class activation maps generated by AGF‐Visualization are shown in Figure 6. In both benign and PDAC cells, the nuclei showed the highest level of activation, indicating that nuclear regions were important for the model to make correct predictions. We therefore concluded that the model's classifications are based on morphological features rather than technical features.

FIGURE 6.

Visualization of two cases using AGF‐Visualization on HSI‐based model. (A) original HSI image of PDAC case; (B) class activation map using the AGF‐Visualization for PDAC case; (C) the HSI image of benign case and (D) class activation map of benign case. The high intensity area (red color) reflects the area of interest to our model.

4. DISCUSSION

Accurate pathological diagnosis of pancreatic specimens requires experienced cytopathologists; moreover, the rising incidence of pancreatic cancer is increasing the workload of pathologists. 28 However, cytopathologists are currently in short supply, and very few are proficient in pancreatic cytopathology, which poses a challenge for the cytological diagnosis of pancreatic cancer. We therefore developed a ResNet‐18 architecture with SimSiam that uses HSI images to diagnose pancreatic EUS‐FNA specimens prepared with an LBC method, and the model performed well (AUC = 0.9625). AGF‐Visualization indicated that the diagnoses were based on the features of tumor cell nuclei.

In recent years, LBC has become increasingly popular in cytological diagnosis because, compared with conventional smear cytology, it reduces debris/lubricant contamination and the presence of blood. 29 , 30 , 31 LBC slides have a cleaner background and preserve the cytomorphological features of the cells from the specimen; they may therefore allow better discrimination of fine cellular details, more efficient cytological evaluation, and easier AI recognition. 32 Under conventional optical microscopy, cytology specimens of PDAC show abnormal cell structure and arrangement. At the cellular level, abnormalities specifically include nuclear enlargement; the nuclei in ductal epithelial cell clusters vary in size and might show three‐ to fourfold variability. In addition, the major criteria for PDAC include nuclear crowding or overlapping, irregular nuclear membranes, and irregular chromatin, and the minor criteria include nuclear enlargement, single epithelial cells, necrosis, and an increased number of cells in mitosis. 8 According to the guidelines for pancreaticobiliary cytology from the Papanicolaou Society of Cytopathology, two or more major criteria, or one major criterion with three minor criteria, should be met to establish a diagnosis of PDAC, which poses a challenge to cytopathologists. 8 , 20
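The counting rule described above can be stated precisely as a small function. This is a sketch for illustration only and not a diagnostic tool: the feature labels are hypothetical strings chosen to match the criteria listed in the text, and "three minor criteria" is read as "at least three".

```python
# Hypothetical feature labels, following the major and minor criteria listed above.
MAJOR = {"nuclear crowding/overlapping", "irregular nuclear membranes", "irregular chromatin"}
MINOR = {"nuclear enlargement", "single epithelial cells", "necrosis", "increased mitoses"}


def meets_pdac_criteria(observed):
    """True if the observed features satisfy the stated count rule.

    Rule as described in the text: at least two major criteria, or one
    major criterion together with (at least) three minor criteria.
    Illustrative only; this is not a diagnostic tool.
    """
    observed = set(observed)
    n_major = len(MAJOR & observed)
    n_minor = len(MINOR & observed)
    return n_major >= 2 or (n_major == 1 and n_minor >= 3)


print(meets_pdac_criteria({"irregular nuclear membranes", "necrosis", "single epithelial cells"}))  # False
```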

Owing to improvements in deep‐learning technology and computing power in recent years, AI has made remarkable progress in recognizing complex cytopathological images, and some technologies have matured into commercial products. 33 A few studies have reported the application of AI to the cytopathological diagnosis of pancreatic cancer; however, such investigations are limited in number, and most were based on traditional optical imaging technology, which collects and processes information in the spatial dimensions. 12 , 13 A retrospective study of 75 pancreatic FNA specimens evaluated the ability of a CNN based on traditional optical microscopy to distinguish benign from malignant pancreatic cytology specimens. 12 It demonstrated significant differences between benign and malignant cells in features such as contour, perimeter, and area. Using these features for binary classification, atypical cases could be categorized as benign or malignant with 77% accuracy, 12 which is suboptimal for clinical application. HSI captures information in the spectral dimension in addition to the spatial information of traditional optical imaging, and it has been shown to be beneficial for cytopathological diagnosis. 18 Our team previously designed an HSI‐based deep learning model to identify lymphoblasts in blood samples and demonstrated that the model can assist in the early diagnosis of acute lymphoblastic leukemia. 19 Compared with HSI, the spatial features provided by traditional optical images are insufficient to differentiate lymphocytes from lymphoblasts. Therefore, developing novel algorithms that make full use of the spatial‐spectral information in HSI data is important for cytopathological diagnosis.
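Why the extra spectral dimension can matter is easiest to see in a toy example: an RGB camera integrates light over a few broad channels, so two spectra with different shapes can produce identical RGB values while still differing band by band. The sketch below assumes idealized box‐shaped channel responses, a hypothetical 400–690 nm sampling grid, and synthetic spectra; it does not model the HSI microscope used in this study.

```python
import numpy as np

# Hypothetical sampling grid: 400-690 nm in 10 nm steps (30 bands).
wavelengths = np.arange(400, 700, 10)


def to_rgb(spectrum: np.ndarray) -> np.ndarray:
    """Collapse a spectrum into idealized box-shaped [blue, green, red] channel means (assumption)."""
    bands = [(400, 500), (500, 600), (600, 700)]  # blue, green, red
    return np.array([spectrum[(wavelengths >= lo) & (wavelengths < hi)].mean() for lo, hi in bands])


flat = np.full(wavelengths.shape, 0.5)
# A ripple with a 100 nm period averages to zero inside each 100 nm channel.
ripple = flat + 0.1 * np.sin(2 * np.pi * (wavelengths - 400) / 100)

print(to_rgb(flat), to_rgb(ripple))  # the two RGB triplets agree
print(np.abs(flat - ripple).max())   # yet the spectra themselves differ
```

An RGB classifier cannot separate these two inputs, whereas a model given all 30 bands can; real cellular spectra are of course far less contrived.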

Given these limitations, a CNN model that accurately distinguishes PDAC from non‐cancerous cases at the cytological level might help cytopathologists diagnose atypical pancreatic cancer cells. We therefore designed a proof‐of‐concept study to explore the potential of an HSI‐based AI model for the cytological diagnosis of PDAC. We first used HSI images to generate the transmittance curves of benign and PDAC cells. The two curves appear similar, the major difference seeming to be that the PDAC curve is shifted upward (Figure 4E). We hypothesized that this difference might be attributable to changes in the amount and arrangement of chromatin in PDAC cells. PDAC is a genetic and epigenetic disease with a complex genome, the most prevalent mechanism being polyploidy or whole‐genome doubling, which alters DNA content. 34 Previous literature has reported that changes in DNA content affect the spectral shape and are sufficient to distinguish cancer cells with high DNA content from normal cells. 35 In addition, epigenetic changes in PDAC, such as DNA methylation and histone post‐translational modification, increase genomic instability. 36 These changes could affect the absorbance of DNA or histones, leading to differences in the spectral curves. 36 , 37 , 38 From a cytomorphological perspective, the overlapping and crowded nuclei of PDAC cells and their irregular chromatin distribution could interfere with the reflected and transmitted light captured by the HSI microscope, which might also affect the spectral transmittance curve of PDAC cells. 39 Subsequently, we divided 1719 pairs of HSI and RGB images into training, validation, and test sets to construct the CNN model, and used the classification accuracy on the test set to indicate diagnostic performance.
We fixed the random seed before model training so that the diagnostic performance could be reproduced. As shown in Table 2, ResNet18‐RGB achieved an accuracy of 82.47%, lower than that of our CNN models trained on HSI images. Consistent results were obtained on the validation set, where diagnostic accuracy was higher with ResNet18‐HSI (Table S1). Therefore, our HSI model is superior to a model trained on the same images captured using traditional optical scanning. To further improve the training efficiency of the HSI model, we applied the SimSiam algorithm to ResNet18‐HSI. The final model achieved an accuracy of 92.04%, sensitivity of 93.10%, specificity of 91.23%, and an AUC of 0.9625, which is comparable to previous models for predicting malignant tumors. 10 , 40 Furthermore, we used an additional test set to assess the generalization ability of our ResNet18‐HSI‐SimSiam model; its diagnostic accuracy and AUC were comparable to those on the original test set (additional test set vs. test set: accuracy 92.27% vs. 92.04%; AUC 0.9683 vs. 0.9625).
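The reported metrics follow standard definitions, which the sketch below computes from labels and predicted scores, assuming 1 = PDAC and 0 = benign. The 0.5 decision threshold is an assumption (the threshold used in the study is not stated here), and AUC is computed as the probability that a randomly chosen positive outscores a randomly chosen negative, which equals the area under the empirical ROC curve.

```python
import numpy as np


def binary_metrics(y_true, y_score, threshold=0.5):
    """Accuracy, sensitivity, specificity, and ROC AUC for labels 1 = PDAC, 0 = benign."""
    y_true = np.asarray(y_true, dtype=int)
    y_score = np.asarray(y_score, dtype=float)
    y_pred = (y_score >= threshold).astype(int)  # assumed threshold
    tp = np.sum((y_pred == 1) & (y_true == 1))
    tn = np.sum((y_pred == 0) & (y_true == 0))
    fp = np.sum((y_pred == 1) & (y_true == 0))
    fn = np.sum((y_pred == 0) & (y_true == 1))
    # Pairwise (Mann-Whitney) AUC: ties between a positive and a negative count as 0.5.
    pos, neg = y_score[y_true == 1], y_score[y_true == 0]
    diff = pos[:, None] - neg[None, :]
    auc = (np.sum(diff > 0) + 0.5 * np.sum(diff == 0)) / (len(pos) * len(neg))
    return {
        "accuracy": float((tp + tn) / len(y_true)),
        "sensitivity": float(tp / (tp + fn)),  # true positive rate
        "specificity": float(tn / (tn + fp)),  # true negative rate
        "auc": float(auc),
    }


print(binary_metrics([1, 1, 0, 0], [0.9, 0.4, 0.6, 0.1]))
# accuracy, sensitivity, and specificity are 0.5 here; auc is 0.75
```

Unlike the other three metrics, AUC does not depend on the chosen threshold, which is why it is useful for comparing the RGB and HSI models.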

Our study has several strengths. First, to our knowledge, this is the first study to apply an HSI‐based CNN model to image analysis of pancreatic diseases at the cellular level. We found that the typical spectral features of benign pancreatic ductal/acinar cells and PDAC cells differ clearly in the wavelength range of 530–620 nm. Second, by comparing HSI images with RGB images captured by traditional optical scanning, we confirmed the superiority of the HSI‐based CNN model. This also supports the view that spectral features obtained by hyperspectral imaging are helpful for pathological classification and provide additional information for identification and differentiation. Third, our model offers high accuracy, sensitivity, and specificity. We conducted a patient‐based analysis using separate training, validation, and test sets and achieved good results, which supports the effectiveness of the prediction model. Fourth, our algorithm processes images efficiently, at a reading rate of about 6.94 frames per second. Our model could therefore help cytopathologists make more consistent diagnoses and reduce their workload. Overall, it could support medical centers that lack experience in diagnosing cytological specimens of pancreatic lesions obtained using EUS‐FNA.
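A comparison of mean transmittance curves, such as the benign versus PDAC curves and the 530–620 nm range discussed here, can be sketched as follows. This assumes that transmittance is computed per pixel as the ratio of sample intensity to a blank reference field and then averaged over cell pixels selected by a mask; the normalization, mask, band grid, and function names are illustrative assumptions, since the study's actual preprocessing is not described in this section.

```python
import numpy as np


def mean_transmittance(cube: np.ndarray, blank: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """Mean transmittance spectrum over masked pixels (assumed flat-field normalization).

    cube:  sample image, shape (H, W, B) with B spectral bands.
    blank: reference image of an empty field, same shape as cube.
    mask:  boolean array of shape (H, W) selecting cell pixels.
    """
    t = cube / np.clip(blank, 1e-12, None)  # per-pixel, per-band transmittance
    return t[mask].mean(axis=0)              # average over selected pixels -> shape (B,)


def mean_gap_in_range(wavelengths, curve_a, curve_b, lo=530, hi=620):
    """Average of curve_a - curve_b over bands with lo <= wavelength <= hi (nm)."""
    sel = (wavelengths >= lo) & (wavelengths <= hi)
    return float(np.mean(curve_a[sel] - curve_b[sel]))


# Synthetic example: hypothetical 10 nm band grid and uniform intensities.
wavelengths = np.arange(450, 701, 10)
blank = np.full((4, 4, wavelengths.size), 100.0)
mask = np.ones((4, 4), dtype=bool)
benign = mean_transmittance(np.full_like(blank, 40.0), blank, mask)
pdac = mean_transmittance(np.full_like(blank, 50.0), blank, mask)
print(mean_gap_in_range(wavelengths, pdac, benign))  # about 0.1 for these synthetic inputs
```

A positive gap corresponds to the upward shift of the PDAC curve described for Figure 4E; with real data the mask would come from cell segmentation rather than covering the whole field.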

However, our work also has several limitations. First, our model can only differentiate benign pancreatic cells from PDAC cells. It cannot identify other pancreatic diseases, such as pancreatic neuroendocrine tumors, pancreatoblastoma, solid pseudopapillary neoplasms, pancreatic acinar cell carcinoma, intrapancreatic metastases from other primary tumors, and other rare solid pancreatic lesions. In a follow‐up study, we aim to extend the model to identify more disease types. Second, the number of cases in our study was small. This limitation was partly offset by obtaining 1913 HSI and RGB images through data augmentation. Third, this was a single‐center retrospective study; a well‐designed multi‐center study is needed to further evaluate the technology, ensure that the collected data are representative, and strengthen the experimental evidence.
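Because augmented images derived from the same patient are highly correlated, splitting at the image level could place near‐duplicates in both the training and test sets and inflate the test accuracy. The study reports a patient‐based analysis; the sketch below shows one way to enforce it, assuming a hypothetical `patient_level_split` helper, hypothetical 70/15/15 patient fractions (the study's proportions are not given in this section), and a fixed seed for reproducibility.

```python
import numpy as np


def patient_level_split(patient_ids, fractions=(0.7, 0.15, 0.15), seed=0):
    """Assign every image to 'train', 'val', or 'test' so that all images of a patient share a split.

    patient_ids: one patient identifier per image (augmented copies included).
    fractions:   hypothetical train/val/test proportions of patients; the test
                 set receives whatever patients remain after train and val.
    Returns a list of split names aligned with patient_ids.
    """
    unique = sorted(set(patient_ids))
    rng = np.random.default_rng(seed)     # fixed seed -> reproducible split
    order = rng.permutation(len(unique))  # shuffled patient indices
    n_train = int(round(fractions[0] * len(unique)))
    n_val = int(round(fractions[1] * len(unique)))
    split_of = {}
    for rank, idx in enumerate(order):
        pid = unique[idx]
        if rank < n_train:
            split_of[pid] = "train"
        elif rank < n_train + n_val:
            split_of[pid] = "val"
        else:
            split_of[pid] = "test"
    return [split_of[pid] for pid in patient_ids]


# Example: 20 hypothetical patients with 3 augmented images each.
ids = [f"p{i}" for i in range(20) for _ in range(3)]
print(patient_level_split(ids, seed=42)[:6])
```

The same idea is available in scikit‐learn as group‐aware splitters, where the patient identifier is passed as the group label.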

In conclusion, we developed and validated an HSI‐based model for diagnosing cytological pancreatic EUS‐FNA/B specimens. Under the supervision of experienced pathologists, we performed multi‐staged consecutive in‐depth learning of the model. AGF‐Visualization allows human scrutiny to detect undesirable behavior in the AI, and the high diagnostic performance of our model could help pathologists identify PDAC and lay the foundation for further exploration of AI in this field.

AUTHOR CONTRIBUTIONS

Xianzheng Qin: Conceptualization (equal); data curation (equal); formal analysis (equal); investigation (equal); supervision (equal); validation (equal); writing – original draft (equal). Minmin Zhang: Conceptualization (equal); data curation (equal); formal analysis (equal); investigation (equal); supervision (equal); writing – original draft (equal). Chunhua Zhou: Conceptualization (equal); data curation (equal); formal analysis (equal); supervision (equal); writing – review and editing (equal). Taojing Ran: Data curation (equal); formal analysis (equal); supervision (equal); writing – original draft (equal). Yundi Pan: Data curation (equal); formal analysis (equal); supervision (equal); writing – original draft (equal). Yingjiao Deng: Data curation (equal); formal analysis (equal); methodology (supporting); software (equal); validation (equal); visualization (equal). Xingran Xie: Data curation (equal); formal analysis (equal); methodology (supporting); software (equal); validation (equal); visualization (equal). Yao Zhang: Data curation (equal); formal analysis (equal); supervision (equal). Tingting Gong: Formal analysis (supporting); supervision (equal); writing – review and editing (equal). Benyan Zhang: Formal analysis (equal); supervision (equal); validation (equal); writing – review and editing (equal). Ling Zhang: Formal analysis (equal); supervision (equal); writing – review and editing (equal). Yan Wang: Formal analysis (equal); methodology (equal); writing – review and editing (equal). Qingli Li: Formal analysis (equal); methodology (equal); writing – review and editing (equal). Dong Wang: Formal analysis (equal); supervision (equal); writing – review and editing (equal). Lili Gao: Conceptualization (equal); data curation (equal); formal analysis (equal); supervision (equal); writing – review and editing (equal).
Duowu Zou: Conceptualization (equal); data curation (equal); funding acquisition (equal); investigation (equal); project administration (lead); resources (equal); supervision (equal); writing – review and editing (equal).

FUNDING INFORMATION

This work was supported by grants from the Science and Technology Commission of Shanghai Municipality (No. 21S31903500 and No. 21Y11908100).

CONFLICT OF INTEREST STATEMENT

The authors made no disclosures.

ETHICS STATEMENT

This study protocol was approved by the ethics committee of Shanghai Jiao Tong University School of Medicine (No. (2022) Linlun‐213th) and was conducted in accordance with the World Medical Association Declaration of Helsinki. Written consent was obtained from each participant before enrollment in this study.

Supporting information

Table S1.

Table S2.

Table S3.

Table S4.

Appendix S1.

ACKNOWLEDGMENTS

The authors are grateful to the Science and Technology Commission of Shanghai Municipality for its financial support.

Qin X, Zhang M, Zhou C, et al. A deep learning model using hyperspectral image for EUS‐FNA cytology diagnosis in pancreatic ductal adenocarcinoma. Cancer Med. 2023;12:17005‐17017. doi: 10.1002/cam4.6335

Xianzheng Qin, Minmin Zhang, and Chunhua Zhou contributed equally to this article.

Contributor Information

Lili Gao, Email: gaolili_510@163.com.

Duowu Zou, Email: zdw_pi@163.com.

DATA AVAILABILITY STATEMENT

The datasets used and/or analyzed during the current study are available from the corresponding author on reasonable request.

REFERENCES

- 1. Rahib L, Smith BD, Aizenberg R, Rosenzweig AB, Fleshman JM, Matrisian LM. Projecting cancer incidence and deaths to 2030: the unexpected burden of thyroid, liver, and pancreas cancers in the United States. Cancer Res. 2014;74(11):2913‐2921.

- 2. Siegel RL, Miller KD, Jemal A. Cancer statistics, 2020. CA Cancer J Clin. 2020;70(1):7‐30.

- 3. Masuda S, Koizumi K, Shionoya K, et al. Comprehensive review on endoscopic ultrasound‐guided tissue acquisition techniques for solid pancreatic tumor. World J Gastroenterol. 2023;29(12):1863‐1874.

- 4. Chen G, Liu S, Zhao Y, Dai M, Zhang T. Diagnostic accuracy of endoscopic ultrasound‐guided fine‐needle aspiration for pancreatic cancer: a meta‐analysis. Pancreatology. 2013;13(3):298‐304.

- 5. Li DF, Wang JY, Yang MF, et al. Factors associated with diagnostic accuracy, technical success and adverse events of endoscopic ultrasound‐guided fine‐needle biopsy: a systematic review and meta‐analysis. J Gastroenterol Hepatol. 2020;35(8):1264‐1276.

- 6. Mizuide M, Ryozawa S, Fujita A, et al. Complications of endoscopic ultrasound‐guided fine needle aspiration: a narrative review. Diagnostics (Basel). 2020;10(11):964.

- 7. Kloppel G, Adsay NV. Chronic pancreatitis and the differential diagnosis versus pancreatic cancer. Arch Pathol Lab Med. 2009;133(3):382‐387.

- 8. Layfield LJ, Jarboe EA. Cytopathology of the pancreas: neoplastic and nonneoplastic entities. Ann Diagn Pathol. 2010;14(2):140‐151.

- 9. Jarboe EA, Layfield LJ. Cytologic features of pancreatic intraepithelial neoplasia and pancreatitis: potential pitfalls in the diagnosis of pancreatic ductal carcinoma. Diagn Cytopathol. 2011;39(8):575‐581.

- 10. Elliott Range DD, Dov D, Kovalsky SZ, Henao R, Carin L, Cohen J. Application of a machine learning algorithm to predict malignancy in thyroid cytopathology. Cancer Cytopathol. 2020;128(4):287‐295.

- 11. Wang CW, Liou YA, Lin YJ, et al. Artificial intelligence‐assisted fast screening cervical high grade squamous intraepithelial lesion and squamous cell carcinoma diagnosis and treatment planning. Sci Rep. 2021;11(1):16244.

- 12. Momeni‐Boroujeni A, Yousefi E, Somma J. Computer‐assisted cytologic diagnosis in pancreatic FNA: an application of neural networks to image analysis. Cancer Cytopathol. 2017;125(12):926‐933.

- 13. Naito Y, Tsuneki M, Fukushima N, et al. A deep learning model to detect pancreatic ductal adenocarcinoma on endoscopic ultrasound‐guided fine‐needle biopsy. Sci Rep. 2021;11(1):8454.

- 14. Lin R, Sheng LP, Han CQ, et al. Application of artificial intelligence to digital‐rapid on‐site cytopathology evaluation during endoscopic ultrasound‐guided fine needle aspiration: a proof‐of‐concept study. J Gastroenterol Hepatol. 2022;38:883‐887.

- 15. Halicek M, Fabelo H, Ortega S, Callico GM, Fei B. In‐vivo and ex‐vivo tissue analysis through hyperspectral imaging techniques: revealing the invisible features of cancer. Cancers (Basel). 2019;11(6):756.

- 16. Yoon J. Hyperspectral imaging for clinical applications. Biochip J. 2022;16(1):1‐12.

- 17. Berisha S, Lotfollahi M, Jahanipour J, et al. Deep learning for FTIR histology: leveraging spatial and spectral features with convolutional neural networks. Analyst. 2019;144(5):1642‐1653.

- 18. Huang Q, Li W, Zhang B, Li Q, Tao R, Lovell NH. Blood cell classification based on hyperspectral imaging with modulated Gabor and CNN. IEEE J Biomed Health Inform. 2020;24(1):160‐170.

- 19. Wang Q, Wang J, Zhou M, Li Q, Wang Y. Spectral‐spatial feature‐based neural network method for acute lymphoblastic leukemia cell identification via microscopic hyperspectral imaging technology. Biomed Opt Express. 2017;8(6):3017‐3028.

- 20. Pitman MB, Layfield LJ. Guidelines for pancreaticobiliary cytology from the Papanicolaou Society of Cytopathology: a review. Cancer Cytopathol. 2014;122(6):399‐411.

- 21. Bankhead P, Loughrey MB, Fernandez JA, et al. QuPath: open source software for digital pathology image analysis. Sci Rep. 2017;7(1):16878.

- 22. Gao Y, Zhou M, Li Q, Liu H, Zhang Y. AOTF based molecular hyperspectral imaging system and its image pre‐processing method. 2015 8th International Conference on Biomedical Engineering and Informatics (BMEI): 14–16 Oct 2015. Institute of Electrical and Electronics Engineers (IEEE); 2015:14‐18.

- 23. Yoo TK, Choi JY, Kim HK. Feasibility study to improve deep learning in OCT diagnosis of rare retinal diseases with few‐shot classification. Med Biol Eng Comput. 2021;59(2):401‐415.

- 24. He F, Liu T, Tao D. Why ResNet works? Residuals generalize. IEEE Trans Neural Netw Learn Syst. 2020;31(12):5349‐5362.

- 25. Wang Q, Sun L, Wang Y, et al. Identification of melanoma from hyperspectral pathology image using 3D convolutional networks. IEEE Trans Med Imaging. 2021;40(1):218‐227.

- 26. Chen X, He K. Exploring simple siamese representation learning. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2021;2021:15750‐15758.

- 27. Gur S, Ali A, Wolf L. Visualization of supervised and self‐supervised neural networks via attribution guided factorization. In: Proceedings of the AAAI Conference on Artificial Intelligence. 2021;2021:11545‐11554.

- 28. Khalaf N, El‐Serag HB, Abrams HR, Thrift AP. Burden of pancreatic cancer: from epidemiology to practice. Clin Gastroenterol Hepatol. 2021;19(5):876‐884.

- 29. Remmerbach TW, Pomjanski N, Bauer U, Neumann H. Liquid‐based versus conventional cytology of oral brush biopsies: a split‐sample pilot study. Clin Oral Investig. 2017;21(8):2493‐2498.

- 30. Jiang X, Yang T, Li Q, et al. Liquid‐based cytopathology test: a novel method for diagnosing pulmonary mucormycosis in bronchial brushing samples. Front Microbiol. 2018;9:2923.

- 31. Rossi ED, Zannoni GF, Moncelsi S, et al. Application of liquid‐based cytology to fine‐needle aspiration biopsies of the thyroid gland. Front Endocrinol (Lausanne). 2012;3:57.

- 32. Zhou W, Gao L, Wang SM, et al. Comparison of smear cytology and liquid‐based cytology in EUS‐guided FNA of pancreatic lesions: experience from a large tertiary center. Gastrointest Endosc. 2020;91(4):932‐942.

- 33. Landau MS, Pantanowitz L. Artificial intelligence in cytopathology: a review of the literature and overview of commercial landscape. J Am Soc Cytopathol. 2019;8(4):230‐241.

- 34. Hayashi A, Hong J, Iacobuzio‐Donahue CA. The pancreatic cancer genome revisited. Nat Rev Gastroenterol Hepatol. 2021;18(7):469‐481.

- 35. Lin S, Ke Z, Liu K, et al. Identification of DAPI‐stained normal, inflammatory, and carcinoma hepatic cells based on hyperspectral microscopy. Biomed Opt Express. 2022;13(4):2082‐2090.

- 36. Iguchi E, Safgren SL, Marks DL, Olson RL, Fernandez‐Zapico ME. Pancreatic cancer, a mis‐interpreter of the epigenetic language. Yale J Biol Med. 2016;89(4):575‐590.

- 37. Vidal Bde C, Ghiraldini FG, Mello ML. Changes in liver cell DNA methylation status in diabetic mice affect its FT‐IR characteristics. PLoS One. 2014;9(7):e102295.

- 38. Ansari NA, Chaudhary DK, Dash D. Modification of histone by glyoxal: recognition of glycated histone containing advanced glycation adducts by serum antibodies of type 1 diabetes patients. Glycobiology. 2018;28(4):207‐213.

- 39. Lu G, Fei B. Medical hyperspectral imaging: a review. J Biomed Opt. 2014;19(1):10901.

- 40. Holmstrom O, Linder N, Kaingu H, et al. Point‐of‐care digital cytology with artificial intelligence for cervical cancer screening in a resource‐limited setting. JAMA Netw Open. 2021;4(3):e211740.
