Abstract

Traction patterns of adherent cells provide important information on their interaction with the environment, on cell migration, and on tissue patterning and morphogenesis. Traction force microscopy is a method that reveals these traction patterns for adherent cells on engineered substrates with known constitutive elastic properties, using deformation information obtained from substrate images. Conventionally, the substrate deformation information is processed by numerical algorithms of varying complexity to give the corresponding traction field via the solution of an ill-posed inverse elastic problem. We explore the capabilities of a deep convolutional neural network as a computationally more efficient and robust approach to solve this inversion problem. We develop a general-purpose training process based on collections of circular force patches as synthetic training data, which can be subjected to different noise levels for additional robustness. The performance of our approach and its robustness against noise are systematically characterized for synthetic data, artificial cell models, and real cell images subjected to different noise levels. A comparison with state-of-the-art Bayesian Fourier transform traction cytometry reveals the improvements in precision, robustness, and speed achieved by our approach, which can accelerate traction force microscopy in practical applications.

Significance

Traction force microscopy is an important biophysical technique to gain quantitative information about forces exerted by adherent cells. It relies on solving an inverse problem to obtain cellular traction forces from image-based displacement information. We present a deep convolutional neural network as a computationally more efficient and robust approach to solve this ill-posed inversion problem. We systematically characterize the performance of our approach and its robustness against noise for synthetic data, artificial cell models, and real cell images subjected to different noise levels, and we compare both with state-of-the-art Bayesian Fourier transform traction cytometry. We demonstrate that machine learning can enhance robustness, precision, and speed in traction force microscopy.

Introduction

Many cellular processes are intrinsically connected to mechanical interactions of the cell with its surroundings. Mechanical surface forces control the shape of single cells or groups of cells in tissue patterns and morphogenesis (1). Forces alter cell behavior via mechanotransduction (2) and affect cell migration and adhesion. Gaining access to the forces (or tractions, i.e., forces per area) exerted by the cell during critical processes like migration or proliferation can give insight into the biophysical processes underlying force generation and aid the development of novel drugs and treatments, e.g., by identifying changes of cellular forces in diseased states. Altered cell behavior is associated with diseases (3) such as atherosclerosis (4), deafness (5), or tumor metastasis (6).

Traction force microscopy (TFM) is a modern method to measure tractions exerted by an adherent cell by deducing them from the cell-induced deformations of an engineered external substrate of known elastic properties (7,8,9). Beyond adherent cells, it has applications to a broader range of biological and physical systems where interfacial forces are of interest (10). TFM thus constitutes a classic inverse problem in elasticity, where tractions or forces are calculated from displacements for given material properties. This inverse problem turns out to be ill-posed, i.e., noise or slight changes in the displacement input data induce large deviations in the traction output data because of singular components of the elastic Green's tensor. This technical problem has been addressed by different regularization schemes developed over the last two decades (11,12,13,14,15). Recent studies show that machine learning (ML) can be an elegant alternative to numerical schemes when the inverse of an elastic or rheological boundary value problem is ill-posed. This has been explored for other classes of rheological inverse problems, such as pendant drop tensiometry (16). ML-aided traction force determination can thus provide an elegant way to improve TFM as a method, as recent studies have already begun to show (17,18). Still lacking, however, are a systematic investigation of ML-aided TFM with respect to an optimal general-purpose training set, which allows the machine to predict tractions accurately across many experimental situations, and a systematic investigation of its accuracy and its robustness to noise, which is present in any experimental realization.

The first implementation of TFM was achieved by Harris et al. in the early 1980s, in which thin silicone films wrinkle under compressive surface stresses exerted by the traction field of the cell (19). Because of the inherent nonlinearity of wrinkling and the resulting difficulty of solving the inverse elastic problem, this method has been superseded by linear elastic hydrogel marker-based TFM, introduced by Dembo et al. (20). Owing to its simplicity, the hydrogel marker-based approach is the most commonly used and most mature method. Alternative techniques and extensions include micro-needle deformations (21), force microscopy with molecular tension probes (22), and three-dimensional techniques (23). Wrinkling-based TFM has recently been re-explored with generative adversarial neural networks with promising results (18).

In this paper, we focus on the hydrogel marker-based technique and train a deep convolutional neural network (CNN) that can solve the inverse elastic problem reliably, giving fast and robust access to the traction pattern exerted by the cell onto a substrate. Specifically, we numerically solve the elastic forward problem: we prescribe generic traction fields and solve the governing elastic equations to generate the associated displacement fields. The "synthetic" displacement fields generated this way are used as training inputs for our NN, while the prescribed traction fields serve as the labels of our training set. This way, the network learns the mapping between displacement and traction fields and is able to generate traction fields for displacement fields never seen before, while still respecting the relevant governing elastic equations. Complete knowledge of the prescribed tractions for the synthetic training data enables a training process that directly minimizes deviations in the predicted tractions. This contrasts with conventional TFM techniques, which determine traction forces indirectly by minimizing deviations in the resulting displacement field. We use traction force distributions generated from collections of circular force patches as training data, which is a natural general choice for predicting generic force distributions in cell adhesion and should also cover other future applications. We show that the proper, "physics-informed" choice of training data and the inclusion of artificial noise are as important in the ML solution of the inverse problem as the proper choice of regularization is in conventional TFM techniques for achieving the best compromise between accuracy and robustness.

Materials and methods

Hydrogel marker-based TFM

The hydrogel marker approach to TFM can be described as follows. First, a cross-linked gel substrate, often a polydimethylsiloxane or polyacrylamide gel (24), is prepared. The cross-linked gel can be treated as an elastic substrate because its cross-link lifetimes are long compared with the duration of the imaging process (25).

Second, the substrate is coated with proteins prevalent in the extracellular matrix, such as collagen type I, gelatin, laminin, or fibronectin, allowing the cell to adhere to the substrate. Fluorescent marker beads embedded in the gel substrate aid the determination of cell-induced substrate deformations. The reference and stressed positions of the marker beads can be determined via various microscopy techniques, ranging from confocal to optical microscopy (19).

Third, the discrete displacement field is inferred from the marker bead positions using a particle-tracking velocimetry algorithm, a particle image velocimetry algorithm, or a CNN particle tracker (26).
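As an illustration of this step, the core of a particle image velocimetry estimate for a single interrogation window can be sketched as finding the integer-pixel shift that maximizes the cross-correlation between the reference and the stressed bead image. The sketch below computes that correlation with FFTs; it assumes periodic windows and shifts smaller than half the window size, and the function name `piv_window_shift` is hypothetical. Practical PIV software additionally uses overlapping windows, subpixel peak interpolation, and outlier filtering.

```python
import numpy as np


def piv_window_shift(ref, deformed):
    """Integer (dy, dx) shift that best maps the window `ref` onto `deformed`.

    Hypothetical helper: circular cross-correlation via FFTs, so windows are
    treated as periodic and shifts must be smaller than half the window size.
    """
    ref = ref - ref.mean()
    deformed = deformed - deformed.mean()
    # corr[s] = sum_x ref[x] * deformed[x + s]  (circular correlation)
    corr = np.fft.ifft2(np.conj(np.fft.fft2(ref)) * np.fft.fft2(deformed)).real
    dy, dx = np.unravel_index(np.argmax(corr), corr.shape)
    ny, nx = corr.shape
    # Map peak indices from [0, n) to signed shifts in [-n/2, n/2)
    if dy >= ny // 2:
        dy -= ny
    if dx >= nx // 2:
        dx -= nx
    return int(dy), int(dx)
```

For example, shifting a random bead-like image with `np.roll(img, shift=(3, -2), axis=(0, 1))` and passing both images to `piv_window_shift` recovers the shift `(3, -2)`; applying this window by window yields a discrete displacement field.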

The information about the displacement field, combined with the predetermined constitutive properties of the hydrogel substrate, gives access to the traction field of the cell via the inverse solution of the elastic deformation problem. For homogeneous, isotropic, and linear elastic solids, the displacement field satisfies the equations of equilibrium in the bulk (27)

$$(1-2\nu)\,\nabla^2\mathbf u + \nabla(\nabla\cdot\mathbf u) = 0, \tag{1}$$

while the force balance at the surface is modified to account for external tractions (forces per area applied to the surface)

$$\sigma_{ij}\,n_j = t_i, \tag{2}$$

where $\mathbf n$ is the surface normal vector, σ the stress tensor, and $\mathbf t$ the external traction.

The TFM gel substrate can be considered sufficiently thick to be modeled as an elastic half-space ($z \le 0$), bounded by the x-y plane, at which traction forces are applied. The displacements are a solution of the boundary problem Eqs. 1 and 2, which is given by the spatial convolution of the external traction field with the Green's tensor $G_{ij}$ over the surface S (27):

$$u_i(\mathbf x) = \int_S G_{ij}(\mathbf x - \mathbf x')\,t_j(\mathbf x')\,d^2x', \tag{3}$$

with summation over the repeated index j.

Up to this point both tractions and displacement are three-dimensional vectors. The full three-dimensional Green’s tensor is given in the supporting material (see reduction of the elastic Green’s tensor to two dimensions).

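In TFM, it can be assumed that adherent cells exert only in-plane surface tractions ($t_z = 0$), and we are interested in in-plane displacements only, because out-of-plane z-displacements are hard to quantify by microscopy. Moreover, out-of-plane displacements are small for incompressible materials (see supporting material). These assumptions make the problem in Eq. 3 quasi-two-dimensional in the plane $z = 0$, such that the Green's tensor is given by the 2 × 2 matrix (20)

$$G(\mathbf r) = \frac{1+\nu}{\pi E r^3}\begin{pmatrix}(1-\nu)r^2 + \nu x^2 & \nu x y\\ \nu x y & (1-\nu)r^2 + \nu y^2\end{pmatrix}, \tag{4}$$

where $\mathbf r = (x, y)$, $r = |\mathbf r|$, E is Young's modulus, and ν is Poisson's ratio of the substrate.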

It solves the elastic boundary problem for in-plane tractions and displacements if the tractions vanish at infinity.
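For reference, the in-plane Boussinesq kernel can be evaluated directly. This sketch assumes the standard form $G_{ij}(\mathbf r) = \frac{1+\nu}{\pi E}\left[\frac{(1-\nu)\delta_{ij}}{r} + \frac{\nu r_i r_j}{r^3}\right]$ for a tangential point load on the surface of a half-space with Young's modulus E and Poisson's ratio ν; the function name is hypothetical.

```python
import numpy as np


def boussinesq_green_2d(x, y, E, nu):
    """In-plane Green's tensor of an elastic half-space, shape (..., 2, 2).

    Hypothetical helper implementing
    G_ij = (1 + nu) / (pi E) * [(1 - nu) delta_ij / r + nu r_i r_j / r^3].
    The kernel diverges at r = 0, so the origin must be excluded.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    r = np.hypot(x, y)
    pref = (1.0 + nu) / (np.pi * E * r**3)
    G = np.empty(r.shape + (2, 2))
    G[..., 0, 0] = pref * ((1.0 - nu) * r**2 + nu * x**2)
    G[..., 1, 1] = pref * ((1.0 - nu) * r**2 + nu * y**2)
    G[..., 0, 1] = G[..., 1, 0] = pref * nu * x * y  # symmetric off-diagonal
    return G
```

The 1/r decay visible in this kernel makes the elastic response long-ranged: a localized traction spot displaces the substrate far beyond the spot itself.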

TFM is essentially a technique that provides a numerical solution of the inverse elastic problem, i.e., recovering the traction field from Eq. 3 by deconvolving the surface integral on its right-hand side. This can be done in real space (11,12,20) or in Fourier space (28).

Employing the convolution theorem for the Fourier transform of a convolutional integral, the deconvolution problem encountered in Eq. 3 can equivalently be stated as performing two Fourier transforms and one inverse Fourier transform

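$$\tilde{\mathbf u}(\mathbf k) = \tilde G(\mathbf k)\,\tilde{\mathbf t}(\mathbf k), \tag{5}$$

$$\mathbf t(\mathbf x) = \mathcal F^{-1}\!\left[\tilde G^{-1}(\mathbf k)\,\tilde{\mathbf u}(\mathbf k)\right](\mathbf x), \tag{6}$$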

which is named Fourier transform traction cytometry (FTTC) (28).

Common iterative techniques used for numerical deconvolution can become unstable when subjected to noisy data, which is why conventional approaches to the ill-posed inverse elastic problem rely on regularization techniques (e.g., Tikhonov (L2) or Lasso (L1) regularization) coupled with iterative minimization schemes (11,12,13,14,29). This applies to both real-space and Fourier-space methods. These methods minimize deviations in the resulting displacement field subject to suitable regularization constraints on the traction forces. Regularization improves stability, but accuracy may suffer. The optimal choice of regularization parameters is important but subjective. In Bayesian FTTC (BFTTC), the regularization parameters need not be picked manually and heuristically; instead, they are inferred from probability theory, making BFTTC an easy-to-use and objective FTTC method (15,30).

The shortcomings of most conventional approaches are the systematic underestimation and edge smoothing of the reconstructed traction field caused by the regularization (31), as well as the considerable computational effort required by the iterative deconvolution techniques and transformations involved.

A recent trend in many fields, including the natural sciences, has shown the capabilities of ML-based approaches in such ill-posed and ill-conditioned scenarios (32,33), often outperforming complex algorithms by orders of magnitude in computing time and precision, and thus allowing for new and more accessible workflows with reduced computational overhead. ML-based approaches to TFM (17) and wrinkle force microscopy (18) have recently been discussed and find that deep CNNs can perform the deconvolution of Eq. 3 by learning the mapping from strain-space to surface traction-space in training. The existing NN approaches show a promising proof-of-concept that we want to extend further in this article by systematic studies of accuracy and robustness to noise. While regularization is particularly important in conventional TFM approaches for accuracy and stability, accuracy and robustness to noise of deep CNNs crucially depend on the choice of training data.

Physics-informed ML methods have also been applied to directly solve general partial differential equations with boundary conditions, such as Eqs. 1 and 2, which underlie TFM (34,35,36). In TFM, the elastic problem can be solved analytically up to the point that the Green's tensor (Eq. 4) is known exactly, but its proper inversion is difficult. We aim to solve this inversion problem by deep CNNs with a physics-informed choice of training data and learning metric.

ML: The inverse problem

If we want to teach a machine to solve an inverse problem, counterintuitively, we do not need to know how to solve the inverse problem itself. We only need to know how to solve the corresponding forward problem, i.e., we need to formulate the learning task for the network precisely and provide sample data that characterize the problem well enough. In training, the machine detects correlations in the data and uses them to approximate arbitrary nonlinear mappings from input space to output space. This is one of the groundbreaking traits of deep learning, which, in combination with hardware acceleration, makes it possible to solve problems that were previously infeasible or intractable (16,32,33).

Thus, we are interested in formulating the learning task in the most precise way. A first step to this is a solid understanding of the forward problem and all involved quantities including the proper, physics-informed choice of training data. Since we generate our training data numerically, the second step is a robust numerical framework, which outputs physically correct data. The third step is to find a sensible data representation, which contains all the relevant information. The final step is to find a network architecture with enough capacity for the problem, to train it, and to evaluate its performance and robustness. We address these points in the following and finally compare our network with state-of-the-art approaches.

Understanding the forward problem for traction patches

The forward problem we are trying to solve involves cell tractions on elastic substrates. Thus, the accurate interpretation of cell-characteristic deformations of the substrate is essential to the performance of our NN. Cells exert forces onto the substrate via focal adhesion complexes with sizes in the range and tractions in the range (37). Forces are generated by tensing actomyosin stress fibers that attach to the focal adhesions and therefore have a well-defined direction over a focal adhesion complex. Consequently, typical cellular traction patterns consist of localized patches, which can comprise single or several focal adhesion complexes and are anchored to the substrate at positions $\mathbf x_i$. Within these patches, tractions have a well-defined in-plane angle $\alpha_i$ with the x axis, resulting in a traction pattern

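$$\mathbf t(\mathbf x) = \sum_{i=1}^{n} \mathbf t_i(\mathbf x), \tag{7}$$

where $\mathbf t_i(\mathbf x)$ is the traction exerted by patch i.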

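These tractions are applied to circular patches of variable radius $r_i$ centered at the anchored nodes (see Fig. 1), such that

$$\mathbf t_i(\mathbf x) = t_i\begin{pmatrix}\cos\alpha_i\\ \sin\alpha_i\end{pmatrix}\Theta\big(r_i - |\mathbf x - \mathbf x_i|\big), \tag{8}$$

where $t_i$ is the traction magnitude of patch i and Θ is the Heaviside step function.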

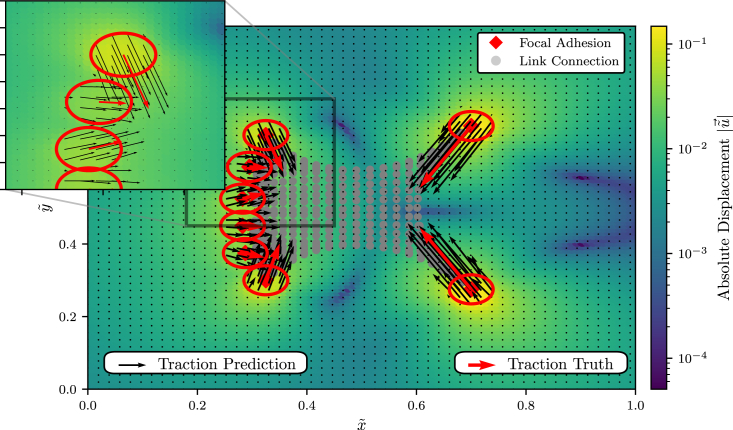

Figure 1.

We model a cell with circular focal adhesion points. The model cell perturbs the elastic substrate it rests on by generating tractions at the focal adhesion spots (red circles), resulting in the color-coded displacement field. The tractions are generated based on a contractile network model (see text). Red arrows are the "true" average tractions generated by the cell model over the red circles, while black arrows indicate the local tractions that the network predicts at the discrete grid points.

We model typical traction patterns as a linear superposition of traction patches Eq. 7 localized at different anchoring points. Within linear elasticity, the resulting displacement pattern is also a linear superposition of all the displacement patterns caused by all traction patches i.

For a single traction patch, we solve the forward elastic problem by exploiting the convolution theorem

$$\tilde{\mathbf u}_i(\mathbf k) = \tilde G(\mathbf k)\,\tilde{\mathbf t}_i(\mathbf k), \tag{9}$$

where the Fourier transform of the Green's kernel, expressed in the polar coordinates ρ = |k| and φ of the wave vector k, is known (28),

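$$\tilde G(\rho,\varphi) = \frac{2(1+\nu)}{E\rho}\begin{pmatrix}1-\nu\cos^2\varphi & -\nu\cos\varphi\sin\varphi\\ -\nu\cos\varphi\sin\varphi & 1-\nu\sin^2\varphi\end{pmatrix}, \tag{10}$$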

and the Fourier transform of the traction spot is given by the Hankel transform

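$$\tilde{\mathbf t}_i(\rho) = t_i\begin{pmatrix}\cos\alpha_i\\ \sin\alpha_i\end{pmatrix} 2\pi\int_0^{r_i} r\,J_0(\rho r)\,dr = t_i\begin{pmatrix}\cos\alpha_i\\ \sin\alpha_i\end{pmatrix}\frac{2\pi r_i}{\rho}\,J_1(\rho r_i), \tag{11}$$

where $J_0$ and $J_1$ are Bessel functions of the first kind and coordinates are centered at the anchoring point of the patch.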
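The closed form of the Hankel transform of a uniform disk can be checked numerically. The sketch below compares $2\pi\int_0^{R} r J_0(\rho r)\,dr$ with $2\pi R J_1(\rho R)/\rho$ using only NumPy; instead of a special-function library, it evaluates $J_n$ from Bessel's integral $J_n(x) = \frac{1}{\pi}\int_0^{\pi}\cos(n\tau - x\sin\tau)\,d\tau$ with the trapezoidal rule. All function names are hypothetical.

```python
import numpy as np


def trapezoid(y, x):
    """Trapezoidal rule along the last axis of y."""
    return np.sum(0.5 * (y[..., 1:] + y[..., :-1]) * np.diff(x), axis=-1)


def bessel_j(order, x, num=801):
    """Bessel function of the first kind J_order(x) from Bessel's integral."""
    tau = np.linspace(0.0, np.pi, num)
    integrand = np.cos(order * tau - np.multiply.outer(x, np.sin(tau)))
    return trapezoid(integrand, tau) / np.pi


def disk_hankel_numeric(rho, R, num=801):
    """2 pi * int_0^R r J_0(rho r) dr: Fourier transform of a unit disk of radius R."""
    r = np.linspace(0.0, R, num)
    return 2.0 * np.pi * trapezoid(r * bessel_j(0, rho * r), r)


def disk_hankel_closed(rho, R):
    """Closed form 2 pi R J_1(rho R) / rho."""
    return 2.0 * np.pi * R * bessel_j(1, rho * R) / rho
```

Since $J_1(x) \approx x/2$ for small x, the closed form tends to $\pi R^2$ as ρ → 0: the k = 0 Fourier mode of a patch carries its area, and hence its total force.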

The Fourier-transformed displacement field is now accessible and can be converted back to real space by the inverse Fourier transform $\mathbf u_i(\mathbf x) = \mathcal F^{-1}[\tilde{\mathbf u}_i](\mathbf x)$. This inverse transform can be performed analytically in polar coordinates centered at the corresponding anchored node, with a scaled radial component and an angle θ with the x axis,

| (12) |

| (13) |

where the functions appearing in Eqs. 12 and 13 describe the geometric dependence of the displacement field; they are obtained by explicitly evaluating the inverse Fourier transforms in the appendix (see Eqs. 19, 20, 21, and 22). Strictly speaking, this analytical solution of the forward elastic problem for a single traction patch anchored at $\mathbf x_i$ is valid on an infinite substrate. We neglect finite-size effects in the following and use this analytical solution also on finite substrates. The solution for many traction patches anchored at different points is obtained by linear superposition.

Numerically solving the forward problem

We consider a square substrate of size $L \times L$, in which displacements are analyzed (the total substrate size can be larger). Typical sizes are in the range . We use the size L to nondimensionalize all length scales: , , and , such that the substrate in which displacements are observed always has unit size. Typical focal adhesion patch sizes are in the range of several (15,37); in dimensionless units, we take as typical value. The above dimensionless coordinate remains unchanged by nondimensionalization.

Furthermore, we use the elastic constant E as the traction scale: $\bar{\mathbf t} = \mathbf t/E$. Typical hydrogel substrate elastic moduli of (15) and tractions in the range up to (37) imply typical dimensionless tractions up to . We note that this choice of typical dimensionless tractions does not limit our approach to substrates of stiffness ; rather, it sets an upper bound for the dimensional tractions we allow on a substrate of given stiffness. For example, a substrate of stiffness would allow dimensional tractions, corresponding to our upper bound , of . The dimensionless quantities and dimensionless equations are identical for a substrate with stiffness and tractions of and a substrate with stiffness and tractions of . In addition, we show in the supporting material (Fig. S5) that our approach is able to correctly predict dimensionless tractions of up to , increasing the available range of dimensional tractions to .
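The scaling argument can be spelled out in a few lines. In this sketch, lengths (positions and displacements) are divided by the window size L and tractions by Young's modulus E, as described above; the function names are hypothetical, and the numbers below are illustrative only, not the values used in this work.

```python
def nondimensionalize(x, u, t, L, E):
    """Scale lengths (position x, displacement u) by L and tractions t by E."""
    return x / L, u / L, t / E


def max_dimensional_traction(t_bar_max, E):
    """Largest dimensional traction allowed by a dimensionless bound on a gel of modulus E."""
    return t_bar_max * E


# Illustrative values only (SI units): a tenfold stiffer gel with tenfold
# larger tractions yields the same dimensionless problem, because in linear
# elasticity the displacement scales with t / E.
soft = nondimensionalize(x=20e-6, u=0.5e-6, t=100.0, L=100e-6, E=1e3)
stiff = nondimensionalize(x=20e-6, u=0.5e-6, t=1000.0, L=100e-6, E=1e4)
```

Here `soft` and `stiff` are identical tuples, which is why a network trained on dimensionless data can be applied to substrates of different stiffness as long as the dimensionless tractions stay within the trained range.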

We create a square grid on which we discretize the solution of Eqs. 12 and 13 for a supplied traction patch with direction $\alpha_i$, and we superpose the individual patch solutions for all anchored nodes to obtain the full displacement field for n circular traction patches of variable radius $r_i$.

We discretize both displacement and traction fields on the same square grid. While generating the displacements in Eqs. 12 and 13 on a discrete grid is simple, the discretization of the traction field needs to be performed with great care. A naive approach to discretizing the circular traction patches onto a square pixel grid would be the direct discretization of Eq. 7, i.e., checking whether the center point of a square grid segment is contained in the circular traction patch of radius $r_i$ and center point $\mathbf x_i$. If it is, the grid segment is assigned the traction of the circular patch. This naive discretization suffers from a critical artifact: it is not force conserving, i.e., it does not conserve the total traction force exerted by the patch, which is given by the area-integrated tractions. The reason is that the number of covered segment centers, multiplied by the segment area, generally differs from the patch area $\pi r_i^2$, in particular for patches that are only a few grid spacings wide. This violates the fundamental physical requirement of force balance.

In the supporting material (see discretization of traction patches), we discuss improvements and present an exactly force-conserving traction discretization procedure by calculating the exact overlap area of each square grid segment (with side lengths ) and the circular traction spots. Then we assign a corresponding fraction of the traction to each square grid segment. In the supporting material (Fig. S1), we also quantify the accuracy gain by the force-conserving traction discretization method by computing the errors in the displacement field of a large circular patch that is discretized.
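The difference between the naive and a force-conserving discretization can be illustrated in a few lines. The supporting material computes exact circle-square overlap areas; as a simpler stand-in, this sketch estimates each cell's overlap fraction by supersampling the cell with an s × s subgrid, which conserves the total force only up to a small sampling error. The unit-square domain, grid size, patch parameters, and function name are hypothetical choices for illustration.

```python
import numpy as np


def discretize_patch(n, center, radius, traction, supersample=1):
    """Traction field (n, n, 2) on the unit square for one circular patch.

    Hypothetical helper. supersample=1 reproduces the naive cell-center test;
    larger values weight each cell by the estimated fraction of its area
    covered by the patch (a supersampling approximation of the exact overlap).
    """
    a = 1.0 / n  # grid spacing on the unit square
    s = supersample
    offsets = (np.arange(s) + 0.5) / s  # subcell centers in units of a
    coords = (np.arange(n)[:, None] + offsets[None, :]).ravel() * a
    X, Y = np.meshgrid(coords, coords, indexing="xy")  # axis 0: y, axis 1: x
    inside = (X - center[0]) ** 2 + (Y - center[1]) ** 2 <= radius**2
    # Average the inside indicator over the s x s subcells of each grid cell
    frac = inside.reshape(n, s, n, s).mean(axis=(1, 3))
    return frac[..., None] * np.asarray(traction, dtype=float)
```

With n = 32 and a patch of radius 2.1 grid spacings centered on a grid line, the cell-center test captures 12 cells, whereas the patch area corresponds to about 13.9 cells, so the naive discretization underestimates the total force by roughly 13%; the supersampled version stays within a small sampling error of $\pi r^2 t$.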

We want to emphasize the relevance of these findings to our approach: because the NN is trained with the discretized traction fields and we are ultimately interested in an accurate discretized traction prediction, the discrete traction fields supplied as ground-truth labels for training must be as accurate as possible.

Generating arbitrary traction fields via superposition

Because of the underlying linearity of the elastic problem at hand we are able to construct displacements for arbitrary traction patterns via superpositions of the circular traction patch solutions. While this might seem obvious, it has far-reaching implications for our approach and implies that a solver with the ability to reconstruct traction fields constructed from circular traction patches will also be able to reconstruct arbitrary traction fields if the solver preserves the linearity of the elastic problem.

Because we present our NNs with arbitrary superpositions of circular traction spots, discretized to a finite grid, they are trained to exploit the linearity of the problem explicitly, and we thus expect the networks to be able to solve the more general problem of predicting arbitrary superpositions of traction patches. In a sense, generating superpositions of the analytical circular traction patch solutions is an optimization we employ to reduce the computational effort of generating displacement fields for training, while retaining the relevant properties of the problem, as we will show.

Another implication of this observation is that we can check the predicted discretized traction fields for consistency with a supplied displacement field by constructing a superposition of displacement fields for circular traction spots with radius $R = a/\sqrt{\pi}$ for each grid point, where a is the distance between grid points. This choice gives each spot the area $\pi R^2 = a^2$ of one grid cell and thus ensures conservation of the total traction force. We implement this method along with our solver to generate the displacement fields from arbitrary superpositions of circular traction patches.
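The radius used for this consistency check follows from requiring that a disk carry the same force as the grid cell it represents, $\pi R^2 t = a^2 t$, i.e., $R = a/\sqrt{\pi} \approx 0.564\,a$. A minimal check, with a hypothetical helper name and an illustrative grid spacing:

```python
import math


def equal_area_radius(a):
    """Radius of a disk whose area equals that of a square grid cell of side a."""
    return a / math.sqrt(math.pi)


a = 0.01  # illustrative grid spacing in units of L
R = equal_area_radius(a)
# Equal areas mean a uniform traction t over the disk exerts the force t * a**2
assert math.isclose(math.pi * R**2, a**2)
```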

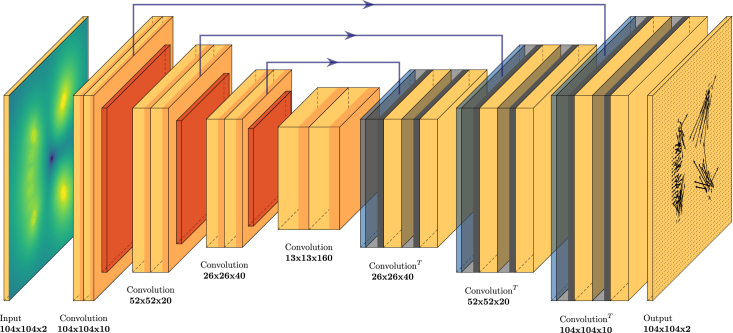

Architecture of the deep CNN

We employ a Unet structure (38) consisting of an encoder, which extracts and compresses the relevant information from the high-dimensional input displacement field into a lower-dimensional representation. From this compressed representation, a decoder inflates the dimensionality again, such that the network finally outputs a representation of the traction field, as shown in Fig. 2. The motivation for this choice is the conceptual similarity between image processing tasks such as segmentation, which involves local classification of an image, and the assignment of local "traction labels" to each grid point of the "displacement image." Furthermore, the elastic problem has long-range interactions, where a localized traction spot causes large-scale displacements. The layered structure of a Unet is well suited to this problem, as the high-resolution layers process short-range information and the increasingly low-resolution layers handle longer-range correlations. Finally, compressing and reinflating the dimensionality can lose spatial precision; we therefore use skip connections to provide the upsampling layers with additional spatial information. In addition, skip connections have been shown to improve generalization and training stability when used in combination with batch normalization, which is also used in our networks (39).

Figure 2.

The network we employ is a Unet convolutional neural network with a discretized displacement field as input and a discretized traction field as output. The mapping from input to output is learned in training by adapting the parameters of the convolutional and transposed convolutional layers of the network. Eventually, the network is able to reconstruct the traction field for displacement fields never seen before. We do not enforce a strict bottleneck; rather, we allow skip connections from the encoding process to the decoding process (blue arrows). The skip connections thus offer a way for the network to steer the decoding process with selected information gathered during encoding, increasing the capacity of the network.

Our network (as shown in Fig. 2) is a fully convolutional NN, where the encoding part is a stack of convolutional blocks and max pooling layers, while the symmetric decoding part consists of transposed convolutional layers, skip connections, and convolutional blocks. Each convolutional block consists of two convolutional layers with one Dropout layer and LeakyReLU activation functions, which introduce nonlinearity. The last layer, in contrast, uses a linear activation function, so that the output can take any real value, as required for the components of a traction field. The encoding part uses kernels of size 3 with stride 1, while the decoding part uses kernels of size 4 with stride 2 to avoid checkerboard artifacts that would otherwise degrade performance.
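The remark on kernel and stride sizes can be checked with simple counting. In a transposed convolution with stride s and kernel size k, each output pixel receives contributions from a number of input pixels that depends on its position; if k is not divisible by s, this number alternates between neighboring pixels, which produces checkerboard patterns. The sketch below counts these contributions in one dimension, a simplification of the two-dimensional layers; the function names are hypothetical.

```python
import numpy as np


def transposed_conv_overlap(n_in, kernel, stride):
    """Number of kernel taps landing on each output pixel of a 1D transposed convolution."""
    n_out = (n_in - 1) * stride + kernel  # output length before any padding/cropping
    counts = np.zeros(n_out, dtype=int)
    for i in range(n_in):
        # Input pixel i is spread over output pixels [i*stride, i*stride + kernel)
        counts[i * stride : i * stride + kernel] += 1
    return counts


def interior(counts, kernel):
    """Drop the borders, where fewer input pixels overlap."""
    return counts[kernel:-kernel]
```

With kernel 4 and stride 2 every interior output pixel receives exactly two contributions, whereas kernel 3 and stride 2 alternate between one and two. Assuming a padding of 1 per side (not stated in the text), the output length is (n − 1)·2 + 4 − 2 = 2n, so each decoder stage exactly doubles the resolution and undoes one max pooling step.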

Training data sampling and the training process

To train our NN, we choose a grid that holds the discrete representation of the dimensionless displacement and traction fields. We later show that our networks still work on arbitrary grid sizes (with proper scaling of the input) because they are fully convolutional. The training data are generated by numerically solving the explicit forward problem in dimensionless form, as outlined in the section Numerically solving the forward problem.

The traction distribution is generated by a random number (uniformly sampled in ) of traction spots , where the radius is drawn uniformly in the range with a random center point . The traction magnitude is uniformly distributed in the range and the polar angle is uniformly distributed in .
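The sampling procedure can be sketched as follows. Because the numerical ranges are not reproduced here, they are left as required arguments; the assumptions that centers are drawn anywhere on the dimensionless unit square and angles in [0, 2π) are made for this sketch only, and the function name and the values in the usage example are hypothetical.

```python
import numpy as np


def sample_patches(rng, n_range, r_range, t_range):
    """Draw parameters for one random traction pattern of circular patches.

    n_range: inclusive integer bounds for the number of patches.
    r_range, t_range: bounds for the uniformly drawn radius and magnitude.
    Returns an array with columns (x_c, y_c, radius, magnitude, angle).
    """
    n = rng.integers(n_range[0], n_range[1] + 1)  # number of patches
    centers = rng.uniform(0.0, 1.0, size=(n, 2))  # assumed: anywhere on the unit square
    radii = rng.uniform(*r_range, size=n)
    magnitudes = rng.uniform(*t_range, size=n)
    angles = rng.uniform(0.0, 2.0 * np.pi, size=n)  # assumed range for alpha_i
    return np.column_stack([centers, radii, magnitudes, angles])


# Placeholder ranges for illustration only
params = sample_patches(np.random.default_rng(3), (1, 5), (0.01, 0.05), (0.0, 0.1))
```

Each row of `params` defines one patch of Eq. 8; discretizing all rows onto the grid and superposing the corresponding displacement solutions yields one training pair.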

While traction values and patch sizes are typical for adherent cells, our training data are more general in the sense that other important characteristics of cellular force patterns, such as the occurrence of force dipoles at the ends of stress fibers, are not contained in our training data. This makes our approach more general than that of (17), where training was performed on traction patterns typical for migrating cells. In combination with nondimensionalization, this will allow us to adapt the training process easily to other applications of TFM in interfacial physics (10). Below, we demonstrate the ability of the CNN to generalize from our patch-based training set to artificial and real cell data. As a convenient model to generate realistic cell traction data artificially, we use the contractile network model of (40).

We expect an NN trained with noisy data to also perform better when confronted with noisy data. To test this hypothesis, we add different levels of background noise to the displacement field in our training data. To evaluate the effects on robustness we train two types of NN:

- A network is trained with a low level of background noise: to each dimensionless training displacement field value, spatially uncorrelated Gaussian noise is added with a variance that is of the average variance of the dimensionless displacement field over all training samples,

| (14) |

where the average is taken over all training samples.

- A network is trained with a high level of background noise, with a variance that is of the average variance of the dimensionless displacement field over all training samples,

| (15) |

We want to emphasize that we use uniform Gaussian noise for the training. The assumption of uniform Gaussian noise is used as the central assumption in BFTTC to evaluate the likelihood. The training data can easily be adapted to contain different types of noise if there is a concrete experimental motivation to do so.
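A sketch of this noise model: spatially uncorrelated Gaussian noise whose variance is a fixed fraction of the displacement variance averaged over all training samples. The fraction is a free parameter here because the values for the low- and high-noise networks are not reproduced; computing each sample's variance over all grid points and both components is an assumption of this sketch, and the function name is hypothetical.

```python
import numpy as np


def add_background_noise(u_batch, fraction, rng):
    """Add white Gaussian noise to displacement fields u_batch of shape (M, n, n, 2).

    The noise variance is `fraction` times the per-sample displacement variance
    (taken over grid points and components), averaged over all M samples.
    """
    mean_var = np.mean(np.var(u_batch, axis=(1, 2, 3)))
    sigma = np.sqrt(fraction * mean_var)
    return u_batch + rng.normal(0.0, sigma, size=u_batch.shape)
```

Other noise types, e.g., spatially correlated noise, could be substituted in this function if an experimental setup motivates them.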

As loss and performance metric, we use the mean-square error (MSE) between the predicted tractions and the corresponding traction labels, averaged over M training batches,

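$$\mathrm{MSE} = \frac{1}{M}\sum_{m=1}^{M}\left\langle \left(\mathbf t^{\mathrm{pred}}_m - \mathbf t^{\mathrm{true}}_m\right)^2\right\rangle, \tag{16}$$

where the angle brackets denote the average over all grid points and traction components.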
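For concreteness, a NumPy version of this loss, assuming that the average runs over samples, grid points, and both traction components (the exact normalization used in training may differ by a constant factor); the function name is hypothetical.

```python
import numpy as np


def traction_mse(t_pred, t_true):
    """Mean-square traction error over samples, grid points, and components.

    Both arrays have shape (M, n, n, 2).
    """
    t_pred = np.asarray(t_pred, dtype=float)
    t_true = np.asarray(t_true, dtype=float)
    if t_pred.shape != t_true.shape:
        raise ValueError("prediction and label shapes differ")
    return float(np.mean((t_pred - t_true) ** 2))
```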

We train in batches of 50 samples by backpropagation using the Adadelta algorithm. Each epoch consists of 900 training batches, i.e., 45,000 samples.

An objective function based on the traction residual, i.e., on the deviation from the tractions that generate the displacements, is the proper physics-informed error metric, since we are interested in correct traction forces in TFM. Training for correct traction forces is enabled by synthetic training data based on traction patches, for which we know the true tractions. Alternatively, one could use the residual between the input displacement field and the displacement field generated from the predicted traction field as a training metric, but this approach has an obvious problem: to perform backpropagation during training, we would have to compute the displacement field for every network prediction in each training step, which slows down training by several orders of magnitude (in a first approximation by a factor of ). Implicitly, conventional TFM techniques such as the BFTTC algorithm follow this strategy, as they minimize deviations in the resulting displacement field (subject to additional regularization) (15). Therefore, we expect networks trained according to this strategy to perform qualitatively similarly to the BFTTC algorithm. We investigate the resulting differences in the accuracy of traction and displacement predictions in detail in the section Displacement versus traction error.

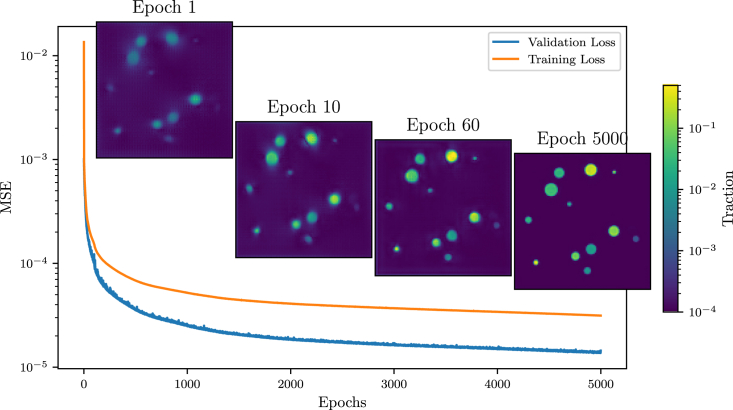

During training, we evaluate the MSE loss (Eq. 16) for the training data and a validation MSE for unseen displacement data of the same type. The validation and training errors in Fig. 3 show steady learning and generalization of the model without overfitting. We note that a validation loss lower than the training loss is common when using dropout layers, which are active during training but inactive during inference. In total, training is performed for 5000 epochs, an arbitrary limit chosen to truncate the power-law tail of the learning curve; the training took on an NVIDIA Quadro RTX 8000 GPU, with the main learning progress occurring in the first . Each epoch consists of 50,000 randomly generated traction patch distributions, of which 45,000 samples are used for training and 5000 for validation.

Figure 3.

Training and validation MSE during training of the neural network. The network already achieves a rough traction reconstruction after the first epoch. After 10 epochs, the reconstruction becomes significantly sharper, and sharpness increases further in the following epochs. We stop the training process after 5000 epochs because longer training yields diminishing returns.

Sampling the hyperparameter space of networks

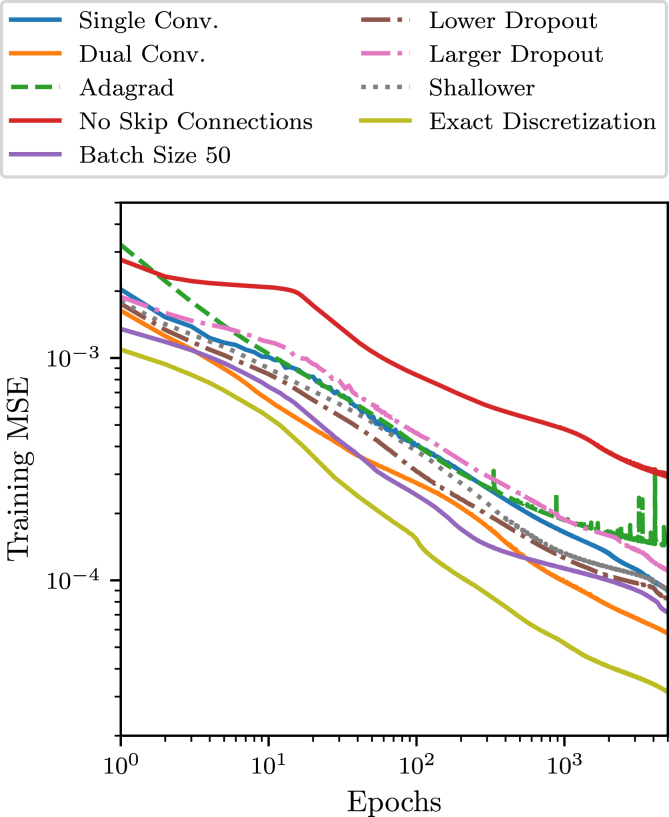

We sample the hyperparameter space to identify which network traits are important for performance, i.e., we train a number of different networks on the same data for the same duration and compare their learning progress. The findings of this sampling are summarized in Fig. 4, where the baseline (solid blue, label “Single Conv.”) is a network with a single convolutional layer per block, skip connections, a dropout of , and a batch size of 132, trained with the Adadelta optimizer. For this figure we use data generated with the “naive” traction patch discretization and switch to the force-conserving method for one of the trials.

Figure 4.

Learning curves of different networks obtained by sweeping the hyperparameter space, changing one property at a time, to search for well-performing networks (see text). The learning processes generally follow a power law; we cut off training at 5000 epochs. To see this figure in color, go online.

In Fig. 4 we vary only one parameter at a time: we disable the skip connections, vary the batch size, change the optimizer, change the dropout rate, change the number of convolutional layers in a block, reduce the network depth, or change the traction discretization method. This allows us to understand the hyperparameter space and its implications for predictive performance.

1. The baseline configuration Single Conv. (solid blue), with a single convolutional layer per block and trained with the Adadelta optimizer, performs better than the same network trained with the Adagrad optimizer (“Adagrad,” dashed green).

2. Training performance improves when using two convolutional layers per block (“Dual Conv.,” solid orange), but, because of the increased complexity, training and inference are computationally significantly more expensive.

3. The network without skip connections (“No Skip Connections,” solid red) performs significantly worse than all other networks.

4. A lower batch size of 50 (“Batch Size 50,” solid purple) improves learning.

5. Changing the dropout affects training as one would expect: a larger dropout decreases and a lower dropout increases training precision (“Larger/Lower Dropout,” dash-dotted magenta/brown).

6. With a shallower network obtained by removing one encoder and one decoder block (“Shallower,” dotted gray), learning is initially faster but seems to plateau earlier before improving again.

7. Finally, using the exact force-conserving discretization for the traction grid (“Exact Discretization,” solid yellow) drastically improves training performance, supporting our above claim that conserving the force balance exactly is of great importance.
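To illustrate why the discretization of a traction patch matters, the sketch below compares a naive rasterization of a circular patch, which assigns the full traction to every pixel whose center lies inside the disc, with an area-weighted rasterization, which assigns each pixel the fraction of its area covered by the disc. The area fractions are only approximated by supersampling here; this is not a reproduction of the exact force-conserving scheme used for the training data, and all function names are hypothetical. The total force (traction times covered area, with unit pixel area) is compared with the exact value πR²t.

```python
import math

def patch_weights(n, cx, cy, radius, sub=1):
    """Covered fraction of each pixel of an n x n grid by a disc of given center and radius.

    Pixel (i, j) spans [i, i + 1] x [j, j + 1]. The fraction is estimated from sub x sub
    sample points per pixel; sub=1 tests only the pixel center (naive rasterization).
    """
    weights = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            hits = 0
            for a in range(sub):
                for b in range(sub):
                    x = i + (a + 0.5) / sub
                    y = j + (b + 0.5) / sub
                    if (x - cx) ** 2 + (y - cy) ** 2 <= radius ** 2:
                        hits += 1
            weights[i][j] = hits / sub ** 2
    return weights

def total_force(weights, traction):
    """Total force of a uniform traction: traction times covered area (unit pixel area)."""
    return traction * sum(sum(row) for row in weights)

n, center, radius, t = 16, 8.0, 2.3, 1.0
exact = t * math.pi * radius ** 2
naive = total_force(patch_weights(n, center, center, radius, sub=1), t)
weighted = total_force(patch_weights(n, center, center, radius, sub=16), t)
print(f"exact {exact:.3f}, naive {naive:.3f}, area-weighted {weighted:.3f}")
```

For this patch the naive rasterization covers 16 pixels and underestimates the exact total force (about 16.619) by roughly 4%, whereas the supersampled weights come much closer; a naive scheme therefore does not conserve the force of the patch.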

For all network variants, the training progress shown in Figs. 3 and 4 follows a power law with exponents .
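A power-law exponent of a learning curve can be estimated by a straight-line fit in log-log coordinates. The sketch below does this with ordinary least squares on a synthetic curve with a known exponent; it is not fitted to the data of Figs. 3 and 4, the exponents reported there are not reproduced, and the function name is hypothetical.

```python
import math

def fit_power_law(epochs, losses):
    """Fit loss ~ a * epoch**(-alpha) by least squares on log(loss) versus log(epoch)."""
    xs = [math.log(e) for e in epochs]
    ys = [math.log(v) for v in losses]
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    a = math.exp(my - slope * mx)
    return a, -slope  # prefactor and (positive) decay exponent

# Synthetic learning curve with a known exponent, for illustration only
epochs = list(range(1, 5001, 50))
losses = [0.5 * e ** -0.3 for e in epochs]
a, alpha = fit_power_law(epochs, losses)
print(f"a = {a:.3f}, alpha = {alpha:.3f}")
```

On real learning curves, the fit is usually restricted to the tail after the initial transient, since the first epochs rarely follow the asymptotic power law.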

We finally settle on a network architecture with one convolutional layer per block, skip connections, a dropout of , and a batch size of 50, trained with the Adadelta optimizer. This architecture was also used for the training process shown in Fig. 3 and for all results shown in the following. The entire network is implemented using the Keras Python API (41).
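The one-at-a-time sweep of Fig. 4 can be organized as a list of configurations that each differ from the baseline in exactly one entry. The sketch below is a hypothetical bookkeeping helper, not part of the authors' code: the dictionary keys are illustrative names, only values stated in the text are used, and the dropout variants are omitted because their values are not given here.

```python
from typing import Any, Dict, List, Tuple

Config = Dict[str, Any]

def one_at_a_time(baseline: Config, changes: List[Tuple[str, str, Any]]) -> List[Tuple[str, Config]]:
    """Return the baseline run plus one run per change, each differing from the baseline in one key."""
    runs = [("Single Conv.", dict(baseline))]
    for label, key, value in changes:
        if key not in baseline:
            raise KeyError(f"unknown hyperparameter: {key}")
        variant = dict(baseline)  # copy so the baseline stays untouched
        variant[key] = value
        runs.append((label, variant))
    return runs

# Hypothetical key names; values follow the baseline described for Fig. 4
baseline = {
    "conv_layers_per_block": 1,
    "skip_connections": True,
    "batch_size": 132,
    "optimizer": "Adadelta",
    "removed_encoder_decoder_blocks": 0,
    "discretization": "naive",
}
changes = [
    ("Adagrad", "optimizer", "Adagrad"),
    ("Dual Conv.", "conv_layers_per_block", 2),
    ("No Skip Connections", "skip_connections", False),
    ("Batch Size 50", "batch_size", 50),
    ("Shallower", "removed_encoder_decoder_blocks", 1),
    ("Exact Discretization", "discretization", "force-conserving"),
]
for label, cfg in one_at_a_time(baseline, changes):
    print(label, cfg)
```

Keeping every variant one edit away from the baseline is what makes the curves in Fig. 4 attributable to a single property.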

BFTTC algorithm

To evaluate the performance of our CNN in comparison with conventional TFM methods, we use the BFTTC algorithm as a reference. The algorithm is described in (15,30) and has been made publicly available by the authors at https://github.com/CellMicroMechanics/Easy-to-use_TFM_package.

Results

We analyze the performance of our ML approach using a set of error metrics and compare it with the BFTTC algorithm (15,30) as a state-of-the-art conventional TFM method. Importantly, we want to discriminate between background noise and signal while also evaluating the precision of magnitude and angle reconstruction, to infer whether our network generalizes to data it has never seen before. We do this first for synthetic displacement data, which are generated in the same way as the training data and contain an additional, varying level of noise. Subsequently, we evaluate the performance of the CNN with the same error metrics on artificial cell data and on completely random traction fields and, finally, we apply the CNN to real cell data.

Evaluation metrics and application to synthetic patch-based data

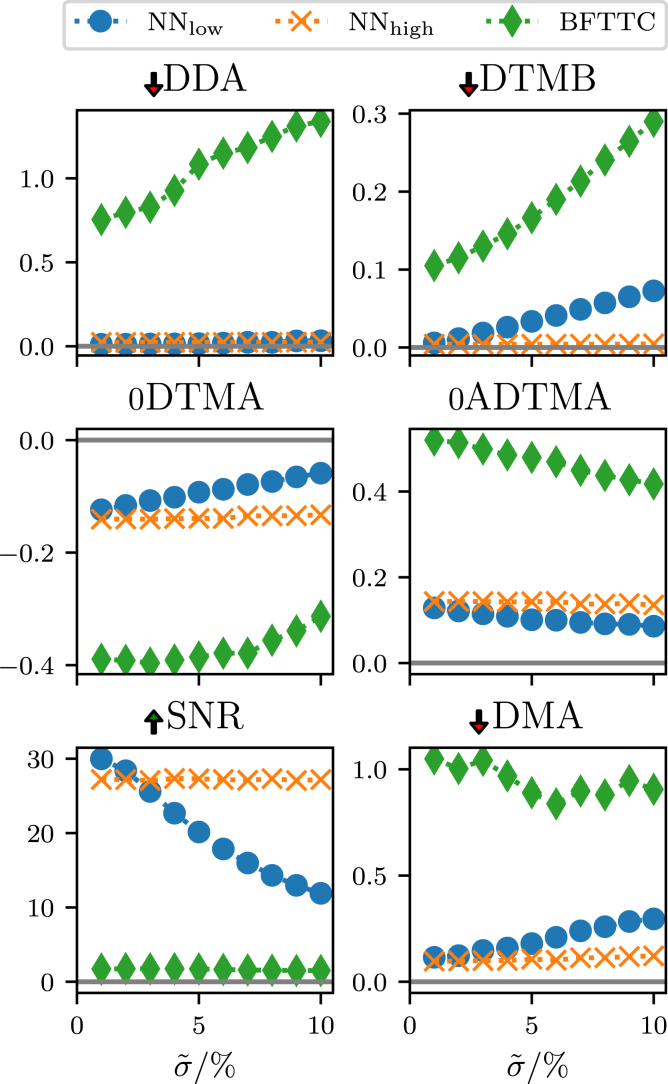

We employ six evaluation metrics, four of which have already been introduced by Huang et al. (15) or earlier, to better distinguish between noise and signal, as well as between bias and resolution. Precise definitions of all evaluation metrics are given in the appendix. In Fig. 5 we evaluate these metrics for varying noise levels during training of the network, and in Fig. 6 we evaluate them for varying noise levels on our synthetic patch-based data.

Figure 5.

We evaluate the final low-noise network by computing six precision metrics at intermediate points during training, to quantify the performance of the traction reconstruction as training progresses. This evaluation is performed with new data drawn from the same distribution and problem class as the training data. Predictive precision improves drastically in the first 100 epochs, after which the metrics improve much more slowly. For the metrics DDA, DTMB, and DMA a lower score is better (as indicated by the red arrow), while the SNR is better for larger values. Finally, the DTMA and ADTMA scores are better when they are closer to zero. To see this figure in color, go online.

Figure 6.

The comparison of our networks (trained with low-noise background) and (trained with high-noise background) with a state-of-the-art conventional BFTTC approach shows the precision across the six evaluation metrics (see text) for varying noise levels on synthetic patch-based data. This test is performed with an ensemble of traction spots randomly chosen in count, size, magnitude, and orientation, testing our networks' performance on data similar to the training data. The arrows next to the metric name indicate whether higher or lower is better; “0” indicates that the metric has a sign and a value of zero is optimal. Both of our networks outperform the BFTTC method in most metrics. With the high-noise network, we trade low-noise fidelity for improved noise-handling capabilities. To see this figure in color, go online.

The noise applied to the displacement field data is uncorrelated between pixels and randomly chosen from a Gaussian distribution centered around zero, with standard deviation σ. Let the dimensionless displacement field standard deviation be , then we define our noise levels , such that is the relative noise applied to the displacement field. In the following considerations we vary between and .
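A minimal sketch of this noise model, assuming the displacement components are pooled into one flat list and σ is set to the noise level times their population standard deviation. The function name is hypothetical, and the noise levels used in the paper are not reproduced here.

```python
import math
import random
import statistics

def add_relative_noise(u, level, rng):
    """Add uncorrelated zero-mean Gaussian noise with sigma = level * std(u) to each component."""
    sigma = level * statistics.pstdev(u)
    return [x + rng.gauss(0.0, sigma) for x in u]

rng = random.Random(0)
u = [math.sin(0.01 * k) for k in range(20000)]  # stand-in displacement components
noisy = add_relative_noise(u, 0.1, rng)
residual = [b - a for a, b in zip(u, noisy)]
ratio = statistics.pstdev(residual) / statistics.pstdev(u)
print(ratio)  # close to the noise level 0.1
```

Because σ is tied to the spread of the displacement field itself, the same noise level means the same relative corruption for weak and strong cells.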

We pass the exact noise standard deviation to the BFTTC method for the noise evaluations, such that the BFTTC method has optimal conditions. Our networks do not get any additional information about the standard deviation of the noise floor.

First, we introduce a measure to more precisely quantify the orientation resolution via the deviation of traction direction at adhesions (DDA)

| (17) |

between predicted and true traction angles γ (see Eq. 28 in the appendix for a more precise definition of the average); measures the periodic distance between two angles α and β. A small DDA indicates precise traction direction reconstruction. For both of our networks, the direction reconstruction is more precise than the BFTTC method across the range of tested noise levels.
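The periodic angle distance and a plain average over traction vectors can be sketched as follows. This is a simplified, hypothetical version: it averages over all given vector pairs, whereas Eq. 28 specifies the exact averaging over patches and samples, which is not reproduced here.

```python
import math

def periodic_distance(alpha, beta):
    """Smallest absolute difference between two angles in radians, in [0, pi]."""
    d = math.fmod(abs(alpha - beta), 2 * math.pi)
    return min(d, 2 * math.pi - d)

def dda(pred, true):
    """Mean periodic deviation between directions of predicted and true traction vectors (tx, ty)."""
    devs = [
        periodic_distance(math.atan2(py, px), math.atan2(ty, tx))
        for (px, py), (tx, ty) in zip(pred, true)
    ]
    return sum(devs) / len(devs)

print(periodic_distance(0.1, 2 * math.pi - 0.1))  # wraps around: about 0.2, not 2*pi - 0.2
print(dda([(1.0, 0.0), (0.0, 1.0)], [(1.0, 0.0), (0.0, -1.0)]))  # (0 + pi) / 2
```

The wrap-around matters: a plain difference of angles would report directions just above and below the x axis as almost a full turn apart.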

Second, we evaluate the deviation of traction magnitude in the background (DTMB) (15), which quantifies how accurately the traction magnitude is reconstructed in the background (see Eq. 24 in the appendix); thus, if no background noise floor that is not associated with any focal adhesion point is predicted, the DTMB score will be zero. Both of our NNs have a much lower DTMB score in than the BFTTC method in the limit , which should manifest in a much less noisy traction force reconstruction. While the low-noise network again departs linearly from that score, the high-noise network again stays comparatively constant. During training, we see that gains in low-noise precision come at the cost of robustness, as evident from the increasing slope in Fig. 5. The high-noise network again does not show this tendency.
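Because the true tractions vanish in the background, a DTMB-type score only has to look at the predicted background magnitudes. The sketch below normalizes their mean by the mean true magnitude inside the patches; this normalization is an assumption modeled on Huang et al. (15), not a transcription of Eq. 24, and the function name is hypothetical.

```python
def dtmb(pred_background_mags, true_patch_mags):
    """Mean predicted background traction magnitude relative to the mean true magnitude in patches."""
    mean_bg = sum(pred_background_mags) / len(pred_background_mags)
    mean_patch = sum(true_patch_mags) / len(true_patch_mags)
    return mean_bg / mean_patch

print(dtmb([0.0, 0.0, 0.0], [1.0, 2.0]))  # no background noise floor: 0.0
print(dtmb([0.05, 0.15], [1.0, 1.0]))     # background at about 10% of the patch level
```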

Third, we discuss the deviation of traction magnitude at adhesions (DTMA) (13,15), which evaluates the precision of traction magnitude reconstruction at the focal adhesion points (see Eq. 23 in the appendix); the DTMA is zero for a perfect reconstruction, negative for an underestimation, and positive for an overestimation of traction magnitudes. During training (see Fig. 5), this quantity consistently improves, but the gain in low-noise precision comes with an increasing slope of the DTMA as a function of background noise. This is first evidence that this network might perform poorly on high-noise experimental data. We do not see this increase in slope for the high-noise network (see Fig. 6). In Fig. 6, we see similar DTMA scores for all approaches in the limit , with a systematic underprediction of tractions. For increasing noise floors, both the BFTTC and the low-noise network () depart from this common score and start to overestimate tractions: the DTMA score of the low-noise network increases linearly with the noise floor , while that of the BFTTC method increases faster than linearly. The high-noise network (), in contrast, retains a comparatively constant DTMA score and always underpredicts the traction magnitude.

Fourth, we introduce the absolute deviation of traction magnitude at adhesions (ADTMA), which is similar to the DTMA but evaluates absolute deviations; it captures the actual reliability of reconstructions more precisely than the DTMA, since alternating under- and overpredictions do not cancel out in this score. We again see the same qualitative behavior as in most of the other metrics: both networks are more precise in the low-noise region, but the low-noise network seems to perform best at a noise level of , while the high-noise network is robust against increases in background noise.
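The difference between DTMA and ADTMA is easiest to see on a toy example in which one patch is overpredicted and another underpredicted by the same relative amount. The per-patch relative deviation of mean magnitudes used below is a simplified assumption, not a transcription of Eqs. 23 and 27, and the function names are hypothetical.

```python
def patch_deviations(pred_patches, true_patches):
    """Relative deviation of the mean predicted magnitude from the mean true magnitude, per patch."""
    devs = []
    for pred, true in zip(pred_patches, true_patches):
        mean_pred = sum(pred) / len(pred)
        mean_true = sum(true) / len(true)
        devs.append((mean_pred - mean_true) / mean_true)
    return devs

def dtma(pred_patches, true_patches):
    """Signed average: over- and underpredictions can cancel."""
    d = patch_deviations(pred_patches, true_patches)
    return sum(d) / len(d)

def adtma(pred_patches, true_patches):
    """Absolute average: over- and underpredictions add up."""
    d = patch_deviations(pred_patches, true_patches)
    return sum(abs(x) for x in d) / len(d)

true = [[1.0, 1.0], [1.0, 1.0]]
pred = [[1.2, 1.2], [0.8, 0.8]]  # +20% in one patch, -20% in the other
print(dtma(pred, true))   # about 0: the deviations cancel
print(adtma(pred, true))  # about 0.2
```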

Fifth, the signal/noise ratio (SNR) (15) also gives insight into the noise floor of predictions (see Eq. 25 in the appendix): it is high for a precise distinction between background noise and actual focal-adhesion-induced deformation and goes to zero for an increasingly noisy reconstruction. Both of our networks have a consistently higher SNR than the BFTTC method, supporting the expectation that the networks yield a less noisy reconstruction overall. The SNR of the low-noise network decays quickly with increasing noise levels, while the high-noise network is more resilient against increases in the noise floor.
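One common form of such a ratio, following the general idea in Huang et al. (15), divides the mean predicted magnitude in the patches by the standard deviation of the predicted magnitudes in the background. The sketch assumes this form; the exact Eq. 25 is not reproduced here, and the function name is hypothetical.

```python
import math
import statistics

def snr(pred_patch_mags, pred_background_mags):
    """Mean predicted patch magnitude divided by the std of predicted background magnitudes."""
    noise = statistics.pstdev(pred_background_mags)
    if noise == 0.0:
        return math.inf  # perfectly flat background: no measurable noise floor
    return (sum(pred_patch_mags) / len(pred_patch_mags)) / noise

print(snr([1.0, 1.0, 1.0], [0.0, 0.2, 0.0, 0.2]))  # background std 0.1, SNR about 10
print(snr([1.0], [0.1, 0.1]))                       # inf
```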

Finally, the deviation of the maximum traction at adhesions (DMA) (15) gives more detailed insight into the consistency of high-amplitude tractions within a focal adhesion point (see Eq. 26 in the appendix). A perfect reconstruction would yield a DMA score of zero, while underpredictions give negative scores and overpredictions positive scores. We again observe that both of our networks give similar scores for low-noise scenarios, which are both lower than the score of the BFTTC method. The low-noise network again departs linearly from this common value, while the DMA score of the high-noise network stays at a consistent level.
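A corresponding sketch for the maximum-based score compares, per patch, the largest predicted magnitude with the true patch magnitude; since the artificial tractions are constant within a patch, the true maximum equals that constant. The relative normalization and the function name are assumptions, not Eq. 26 verbatim.

```python
def dma(pred_patches, true_patches):
    """Mean relative deviation of the per-patch maximum predicted magnitude from the true maximum."""
    devs = [(max(p) - max(t)) / max(t) for p, t in zip(pred_patches, true_patches)]
    return sum(devs) / len(devs)

print(dma([[0.5, 0.9]], [[1.0, 1.0]]))  # peak underpredicted: about -0.1
print(dma([[1.3, 1.0]], [[1.0, 1.0]]))  # peak overpredicted: about +0.3
```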

Across all six measures, we observe the following trends for the network trained with a low level of background noise compared with the network trained with a high level of background noise: performs better than both the BFTTC standard and on low-noise data, because it is trained on low-noise data. Its performance deteriorates, however, at higher noise levels , where it drops below and also below the BFTTC standard. finds a better compromise between robustness and accuracy, such that it outperforms the BFTTC standard across all noise levels. Remarkably, the performance of only deteriorates above noise levels .

Tractions of artificial cells

To test the ability of the CNN to specialize from our general patch-based training set to realistic cell data, we first test the model on artificial cell data. The advantage of artificial cell data is that the “true” tractions are precisely known. A convenient model to generate realistic cell traction data artificially is the contractile network model (40). In this model, the stress fibers are active cable links with specific nodes anchored to the substrate at positions . To construct a typical cell shape with lamellipodium and tail focal adhesions, the traction at each anchor point is obtained by minimizing the total energy of the cable network. These tractions are then applied to circular patches of radius at an angle given by the stress fiber orientation at the anchored nodes (see Fig. 1).

In addition to Fig. 6, we want to evaluate the aforementioned metrics (DDA, ADTMA, DTMB, DMA, DTMA, and SNR) on a displacement field generated by an artificial cell as shown in Fig. 1. Again, we add Gaussian noise to the displacement field with varying noise levels and evaluate the behavior of our networks , , and the BFTTC method in Fig. 7. We average all our results over 10 artificial cells.

Figure 7.

We compare our networks and with the BFTTC method for data generated from an artificial cell model using the same six evaluation metrics for varying noise levels as in Fig. 6. Since the method of generating the data is now different from the training process, we expect to see the generalization potential of our networks more clearly than in Fig. 6. The BFTTC method works considerably worse on this data compared with Fig. 6. In addition, we see an amplified underestimation of the traction magnitudes for the BFTTC method. With respect to noise, the high-noise network seems to give the best compromise between precision and regularization of the output traction fields. To see this figure in color, go online.

We see qualitatively similar results to Fig. 6 in the SNR and DTMB metrics, while the absolute performance in those metrics is better (higher SNR and lower DTMB scores) for the artificial cell data. This is likely due to the lower total number of traction patches and the equal radii of all traction patches. Both networks are able to reconstruct the traction fields more reliably and with greater precision. This is true across all observed metrics.

The artificial cell data show a clear tendency toward traction magnitude underestimation (DTMA and ADTMA) for all approaches. Since the cable network generates tractions in a strongly bounded range, the traction spots tend to lie in close proximity to each other, which can increase the smearing of traction spots that should appear sharply separated.

Reconstruction of random traction fields

To test whether our networks have indeed learned to exploit the linearity of the problem and have learned a general solution, we probe them on entirely random traction fields. These are generated from spatially uncorrelated Gaussian noise, which is subsequently convolved with a proximity filter with a characteristic correlation length of in dimensionless units of the image size. We then compute the corresponding displacement field and pass it to our high-noise network and to the BFTTC method for reconstruction.
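A minimal sketch of such a correlated random field, assuming the proximity filter is a Gaussian kernel (the filter type is not specified here), periodic boundaries, and a filter width given in pixels. The correlation length used in the paper is not reproduced, the computation of the displacement field is omitted, and all names are hypothetical.

```python
import math
import random

def gaussian_kernel(length):
    """1D Gaussian kernel with standard deviation `length` (pixels), truncated at 3 sigma, summing to 1."""
    radius = max(1, int(3 * length))
    k = [math.exp(-0.5 * (i / length) ** 2) for i in range(-radius, radius + 1)]
    total = sum(k)
    return [v / total for v in k]

def smooth_periodic(field, kernel):
    """Separable 2D convolution of a square field with periodic boundaries (rows, then columns)."""
    n = len(field)
    r = len(kernel) // 2
    rows = [[sum(kernel[m + r] * field[i][(j + m) % n] for m in range(-r, r + 1))
             for j in range(n)] for i in range(n)]
    return [[sum(kernel[m + r] * rows[(i + m) % n][j] for m in range(-r, r + 1))
             for j in range(n)] for i in range(n)]

def random_traction_component(n, length, rng):
    """White Gaussian noise on an n x n grid, smoothed by a Gaussian kernel of width `length` (pixels)."""
    white = [[rng.gauss(0.0, 1.0) for _ in range(n)] for _ in range(n)]
    return smooth_periodic(white, gaussian_kernel(length))

rng = random.Random(1)
tx = random_traction_component(32, 2.0, rng)  # call again for the y component
flat = [v for row in tx for v in row]
mean = sum(flat) / len(flat)
var = sum((v - mean) ** 2 for v in flat) / len(flat)
neighbor = sum(tx[i][j] * tx[i][(j + 1) % 32] for i in range(32) for j in range(32)) / 1024
print(f"variance {var:.4f} (white noise: 1.0), mean neighbor product {neighbor:.4f}")
```

Smoothing lowers the pointwise variance and makes neighboring pixels positively correlated, which is what distinguishes these fields from both white noise and the circular patches of the training set.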

The advantage of this reconstruction setting is that we have the exact ground truth for tractions while not using traction fields that are similar to the training data. As detailed in the supporting material (Fig. S3), our high-noise network can reconstruct the random traction field and associated displacements with higher accuracy than the BFTTC method.

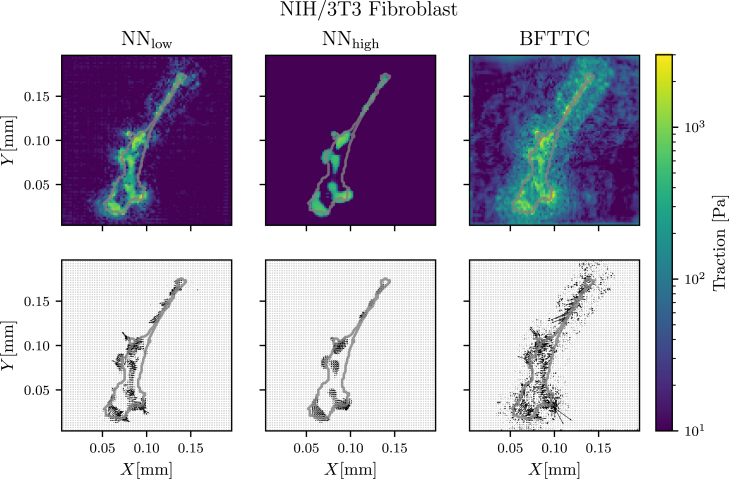

Tractions of real cells

Finally, we want to test our ML approach on real cell images. Of course, we do not have access to the true traction field for those images; however, we can qualitatively compare the results obtained from our ML approach with those obtained by the well-tested BFTTC method. The example cell results shown in Fig. 8 are for an NIH/3T3 (National Institutes of Health 3T3 cultivated) fibroblast on a substrate with length and elastic modulus (the data were made available in (17)). Additional results for all 14 cells provided in (17) are similar and are given in the supporting material (Figs. S8–S21).

Figure 8.

A comparison of traction reconstruction for a real fibroblast between our networks and and the BFTTC method (top and middle rows) reveals qualitative differences in reconstruction. Although we do not have the “true” traction field at hand for a quantitative evaluation of precision across the methods, we see compatible results across the board, while both networks have a significantly reduced noise floor. The top row shows traction magnitude reconstruction, while the center row shows angle reconstruction. To see this figure in color, go online.

It is apparent that the network trained with low noise reconstructs a traction field that is similar to that of the BFTTC method, while the noise in the vicinity of the cell is significantly reduced. The network trained with a high noise floor gives a more regular traction pattern and cuts off lower-amplitude tractions.

The reason for these results is that the network trained with low noise exhibits an SNR superior both to BFTTC and to the network trained with high noise levels if noise in the experimental data is low (see Figs. 6 and 7); for artificial cell data, the DTMA and ADTMA of the network trained with low noise are also superior for low experimental noise levels (see Fig. 7). The noise level in these experimental data appears to be low. We can thus infer that the tractions reconstructed by the high-noise network systematically underpredict the real tractions for this particular data set.

As expected from our previous analysis, the resistance to additional noise is much better for the network that saw high noise levels during training, and the low-noise network fails completely when subjected to very high noise. The robustness of the high-noise network is highlighted in Fig. 9, where the cell data are superposed with significant background noise of . Both our low-noise network and the BFTTC method produce a noisy traction field in this case, while the high-noise network displays a traction pattern similar to that obtained without added noise. The noise in the BFTTC reconstruction is already significantly reduced because we pass the exact standard deviation of the applied noise to the method. In the supporting material (Fig. S7), we perform an explicit sensitivity test by varying the regularization parameter manually to show that the BFTTC method does indeed provide a traction reconstruction that is optimal with respect to the constraints of the method itself.

Figure 9.

Adding noise to the fibroblast displacement field shows strong noise robustness of our network , which has been trained for high-noise scenarios, in traction reconstruction (top and middle row). The low-noise network fails to compensate for the high noise and the BFTTC method yields qualitatively similar results to the high-noise network but exhibits strong noise artifacts in the reconstructed traction fields. The BFTTC method has an unfair advantage, since we pass the exact standard deviation of the perfectly uniform Gaussian noise to it for its regularization, while our networks do not get this information. The bottom row displays the displacement field computed from the reconstructed traction fields. To see this figure in color, go online.

Overall, the results from the high-noise network and the BFTTC method are qualitatively similar in the high-noise scenario, while the high-noise network reconstruction is more regular, fits the circular focal adhesion point model more consistently, and stays invariant over a wide range of background noise levels. Apparently, the noise the network sees in training directly controls the regularization of the reconstruction, and it would be possible to create intermediate networks with higher sensitivity but lower noise invariance.

Finally, we compute the residual error between the experimental (already noisy) input displacement field and the displacement field obtained by solving the forward problem for the reconstructed traction field. We do this for all 14 cells provided in (17) (see supporting material, Figs. S8–S21). For the low-noise network we achieve a mean , while the high-noise network performs slightly better at an . The BFTTC method performs significantly better with an average . While the residual errors are low for all approaches, we conclude that the BFTTC method reconstructs the input displacement field more accurately. This seems surprising in light of the higher background traction noise outside the cell shape that BFTTC clearly produces. The reasons are discussed in more detail in the next section.

We can additionally quantify the contribution of tractions that lie outside the cell contour, which can be considered unphysical. For this, we remove all tractions inside the contour from the full traction field, leaving only the tractions outside the cell contour. The root mean square of these outside tractions is a metric that quantifies physical consistency. We perform this analysis for the cell in Fig. 8 and find that the mean background traction for the BFTTC method is , while it is for and for . Since the cell segmentation is only an approximation, this metric is only a proxy for physical consistency.
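The background metric can be sketched as a masked root mean square. The sketch assumes a boolean cell mask from segmentation and averages only over pixels outside the mask; whether the averaging runs over outside pixels only or over the whole field is not stated here, and the function name is hypothetical.

```python
import math

def rms_outside(magnitudes, inside_mask):
    """Root mean square of traction magnitudes at pixels outside the (boolean) cell mask."""
    outside = [
        m
        for row_m, row_in in zip(magnitudes, inside_mask)
        for m, inside in zip(row_m, row_in)
        if not inside
    ]
    if not outside:
        return 0.0
    return math.sqrt(sum(m * m for m in outside) / len(outside))

mags = [[3.0, 4.0], [10.0, 10.0]]
mask = [[False, False], [True, True]]  # bottom row lies inside the cell
print(rms_outside(mags, mask))  # sqrt((9 + 16) / 2), about 3.536
```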

We conclude that the high-noise network is the better choice for displacement data whose noise level is unknown or possibly inhomogeneous. If the experimental error is high, or the displacement field reconstruction is imprecise, the high-noise network provides a robust way of extracting the traction field, while conventional methods are plagued by high background noise in the reconstructed traction fields.

Evaluating a traction field with our networks takes , while evaluating an image with the BFTTC method takes (with size ). This is a speedup of more than three orders of magnitude. Because of the nondimensionalization, it is only necessary to train one network for a large range of experimental realizations. Thus, when the same network is reused for many experiments, the long training time is eventually outweighed by the large performance advantage at inference.
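Whether the training cost pays off is simple arithmetic: with training time T, per-image time t_c for the conventional method, and t_n for the network, the network route is cheaper once T + n t_n < n t_c, i.e., for n > T/(t_c − t_n). The hypothetical helper below computes the smallest such n; the timings in the usage line are placeholders, not the measured values, and reusing an already trained network corresponds to T = 0.

```python
import math

def break_even_images(training_time, t_conventional, t_network):
    """Smallest number of images n with training_time + n * t_network < n * t_conventional."""
    saving = t_conventional - t_network
    if saving <= 0:
        return math.inf  # the network is never cheaper per image
    return math.floor(training_time / saving) + 1

# Hypothetical timings in seconds, for illustration only
print(break_even_images(training_time=1000.0, t_conventional=10.0, t_network=0.0))  # 101
```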

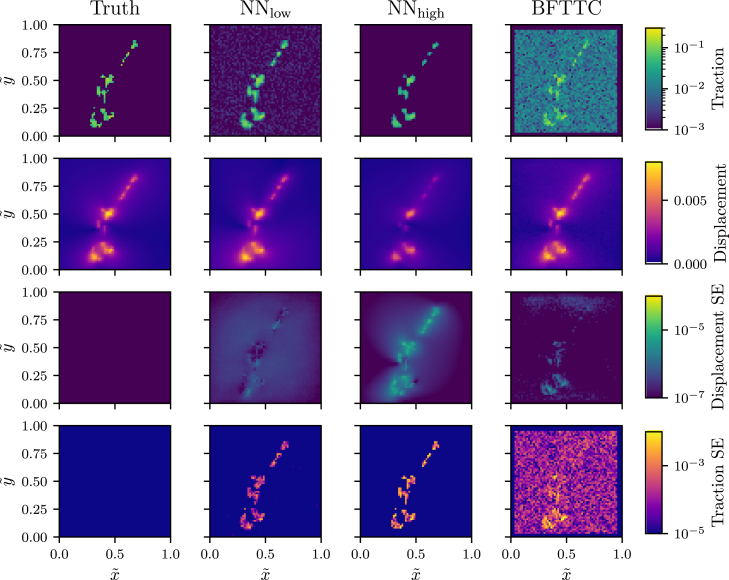

Displacement versus traction error

We train our NNs for correct tractions by using the MSE of tractions from Eq. 16 as objective function. This is only possible with synthetic data, where the “truth” for tractions is known. Within this approach we optimize accuracy in tractions but concede errors in the displacement that are not of primary interest in TFM.

Alternatively, one could base training on the MSE of displacements with the drawback of slowing down the training process by orders of magnitude but with the advantage that training could also be performed with actual experimental data. Networks trained with displacement-based metrics will minimize the displacement error to obtain correct tractions, which is a more indirect approach. The BFTTC algorithm also minimizes the deviations in displacements to determine an optimal traction field (15). We show that this causes deviations in tractions by applying both strategies to situations where we know the true tractions.

Fig. 10 shows a comparison for a synthetic traction field that we generate from the NIH/3T3 traction data (see Fig. 8) by suppressing tractions outside the cell shape (which we regard as artifacts). This provides the traction truth for comparison. The corresponding displacement field is computed, and spatially uncorrelated low-amplitude Gaussian noise is added. This displacement field is analyzed by the low- and high-noise networks and the BFTTC algorithm. Fig. 10 clearly shows that, on the one hand, both low- and high-noise networks give a significantly better traction reconstruction, in particular outside the cell shape, where the BFTTC method tends to generate background traction noise. On the other hand, this background traction noise obviously enables the BFTTC method to lower the displacement errors, in particular inside the cell shape. As we are interested in correct traction reconstruction in TFM, this comparison clearly pinpoints the advantages of the CNN reconstruction when trained with the traction MSE as objective function.

Figure 10.

A comparison of traction and displacement errors between our networks and and the BFTTC method for a synthetic fibroblast-like traction field and the corresponding calculated displacements reveals the different reconstruction characteristics. The two top rows show the reconstructed traction and displacement fields compared with the “truth” (first column), which is at hand in this comparison. The two bottom rows display the squared error calculated between the “true” and reconstructed displacement and traction fields. We see that both NNs give better traction reconstruction with reduced noise outside the cell compared with BFTTC, while displacement errors are slightly higher. To see this figure in color, go online.

Input resolution scaling

So far we used a fixed size of for input images. Since our networks are exclusively composed of input-size-independent layers, we are able to feed arbitrary input sizes (i.e., arbitrary image resolutions) to the networks.

It is, however, important to realize that we provide no spatial information to the network apart from the size of the input array. Because the total input size is not visible to our convolutional layers, the network has no means to adapt to changes of the input size N. It is possible to circumvent this limitation by scaling the input displacements properly, such that the input is locally equivalent to that of a grid. A local section of a image of a substrate of length L corresponds to a section of smaller length , such that the dimensionless displacement for a resolution corresponds to a larger dimensionless displacement for a section of the same substrate. In other words, the displacement scale must be coupled to the pixel scale, since our networks directly operate on the pixel level with dimensionless displacements. The scale factor for the input displacements for an image of size is thus . We show in the supporting material (Fig. S6) that this is sufficient to make the networks usable for arbitrary resolutions. We compare the performance of our networks with the BFTTC method on grids and find that our networks provide superior precision and speed. In particular, the SNR metric further improves with increasing resolution.
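Assuming, as the text suggests, that dimensionless displacements are measured in units of the substrate length L, the scaling follows from a one-line argument: a window of N_0 pixels (the training resolution, whose value is not restated here) in an N-pixel image of a substrate of length L spans the length L N_0/N, so re-expressing a displacement u/L in units of that window length multiplies it by N/N_0. The helper below applies this factor; its name and arguments are hypothetical.

```python
def rescale_displacements(u, n, n_train):
    """Rescale dimensionless displacements of an n x n image for a network trained on n_train x n_train inputs.

    Assumes u is measured in units of the full substrate length L; each n_train-pixel window
    spans L * n_train / n, so expressing u in units of that window multiplies it by n / n_train.
    """
    factor = n / n_train
    return [x * factor for x in u]

print(rescale_displacements([0.01, -0.02], n=128, n_train=64))  # doubled: [0.02, -0.04]
```

A finer image of the same substrate thus yields larger rescaled displacements, matching the statement that a higher resolution corresponds to a larger dimensionless displacement per section.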

Discussion

We present an ML approach to TFM via a deep convolutional NN trained on a general set of synthetic displacement-traction data derived from the analytic solution of the elastic forward problem for random ensembles of circular traction patches. This follows the general strategy that NNs trained with easy-to-generate data of representative forward solutions can serve as a regressor to solve the inverse problem with high accuracy and robustness.

Our approach to TFM uses synthetic training data derived from superpositions of known and representative traction patches. This allows us to employ an objective training function that directly measures traction errors, in contrast to conventional TFM approaches such as BFTTC, where the tractions are adjusted to match the displacement field (possibly subject to additional regularizing constraints on the tractions), such that low displacement errors are the implicit objective. We show that a force-conserving discretization is crucial for high-performance networks and find a significant enhancement of the robustness of the NN if the training data are subjected to an appropriate level of additional noise.

In conventional TFM approaches the inverse elastic problem is ill posed and the suitable choice of regularization in the inversion procedure is crucial and has been a topic of active research over the last 20 years. ML approaches circumvent the need for explicit regularization and provide an implicit regularization by a proper choice of the network architecture, i.e., convolutional NNs for TFM, and after proper training. Our work shows that the suitable choice of physics-informed training data and, moreover, the suitable choice of noise on the training data governs the applicability of the NN and the compromise between accuracy and robustness in ML approaches, somewhat analogous to the role of the regularization procedure in conventional TFM approaches.

We employ a sufficiently general patch-based training set and show that this allows the CNN to successfully specialize to artificial cell data and real cell data. Moreover, training with an additional background noise that is of the average variance of the dimensionless displacement field (the network Eq. 15), gives a robustness against noise in the NN performance that is superior to state-of-the-art conventional TFM approaches without significantly compromising accuracy. We can systematically back these claims by characterizing both the NN performance and the performance of state-of-the-art conventional TFM (the BFTTC method) via six error metrics both for the patch-based training set (Fig. 6) and the artificial cell data set (Fig. 7), which are two data sets where we can compare the prediction to the true traction labels. We also test the NN performance on random traction fields (see supporting material, Fig. S3) and traction fields derived from real cell data (Fig. 10). Whenever the true tractions are known, we find that our NNs, which were trained to minimize traction errors, give more accurate traction reconstruction with a reduced background traction noise outside the cell shape, although the NNs tend to concede higher errors in the corresponding displacement fields.

For real cell data, we find that an NN trained with low noise () gives the best performance if the experimental data are of high quality with low noise levels (see Fig. 8). For noisy experimental data, on the other hand, the NN trained with high levels of noise () clearly performs best (see Fig. 9). This suggests that it might be beneficial to first employ the high-noise network on experimental data and only switch to the low-noise network if the background noise level is below of the displacement standard deviation.
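The suggested workflow amounts to a simple decision rule once the background noise has been estimated, for example from displacements measured far from the cell (an assumption; how to estimate the noise is not specified here). The threshold stated in the text is not reproduced, so it appears as a parameter, and the function is a hypothetical helper.

```python
def choose_network(noise_std, displacement_std, threshold):
    """Pick a network from the relative noise level of the displacement data.

    threshold is the relative noise below which the low-noise network is preferred;
    its value is a free parameter here.
    """
    if displacement_std <= 0:
        raise ValueError("displacement_std must be positive")
    relative_noise = noise_std / displacement_std
    return "low-noise" if relative_noise < threshold else "high-noise"

# Hypothetical numbers for illustration only
print(choose_network(noise_std=0.02, displacement_std=1.0, threshold=0.05))  # low-noise
print(choose_network(noise_std=0.2, displacement_std=1.0, threshold=0.05))   # high-noise
```

Defaulting to the high-noise network whenever the estimate is at or above the threshold reflects its robustness when the noise level is uncertain.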

Overall, we provide a computationally efficient way to accelerate TFM as a method and improve on both the accuracy and the noise resilience of conventional approaches, while reducing the computational complexity, and thus the execution time, by multiple orders of magnitude compared with state-of-the-art conventional approaches. It is apparent from our analysis that ML approaches have the potential to shift the paradigm in solving inverse problems away from conventional iterative methods toward educated regressors trained on solutions of a well-understood forward problem that is numerically simple to solve.

We make all NNs discussed in this work freely available for further use in TFM. We use a grid for the displacement data, but show that our networks are able to handle arbitrary displacement data resolutions. Experimental data can easily be adapted to comply with the network input shape by properly scaling the displacements or, alternatively, by interpolating or downsampling to a grid, which will, however, decrease the traction resolution.

By using nondimensionalized units, the NNs made available with this work are widely applicable across different problems and can also be easily adapted further, for example, to problems where typical tractions are not limited to the traction ranges discussed here, by repeating the training process. In the supporting material (Fig. S5), we show that the present networks are able to generalize to larger dimensionless traction magnitudes than trained for, with ranging up to 1.5, without retraining. Another potential problem to be addressed in future work is the effect of spatial noise correlations, for example, from optical aberration or from the displacement-tracking routine that is applied to generate the displacement input data. In the supporting material (Fig. S4), we consider uncorrelated Gaussian noise with a standard deviation that decreases with the distance from the image center and find a robust performance of the high-noise network. Robustness to noise with genuine spatial correlations over characteristic distances significantly larger than the pixel size will presumably require retraining of the networks. All necessary routines to retrain an NN to new traction levels, new characteristic patch sizes, or other noise levels are made freely available with this work at https://gitlab.tu-dortmund.de/cmt/kierfeld/mltfm. This also makes it easy to adapt the training to other types of noise correlations.

Details of the solution of the forward problem for traction patches

For the solution of the forward problem for a traction patch of size , we obtained the functions in Eqs. 12 and 13. They are defined as

| (18) |

with

By definition, .

The remaining integrals in the functions can be performed analytically:

| (19) |

with the complete elliptic integrals and ;

| (20) |

| (21) |

| (22) |

Definitions of evaluation metrics

We employ six evaluation metrics (see Figs. 6 and 7). Their definitions are based on a comparison of the traction predictions in sample s with the true tractions , which are known for the artificial data consisting of random circular traction patches. We evaluate all six metrics by averaging over samples; the sample average is denoted by . All traction vectors in patch i () are indexed by v. All traction vectors outside patches are considered to belong to the background b and are indexed by w. For completeness, we give the precise definitions of all six evaluation metrics:
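The notation above presupposes artificial traction fields made of circular patches with constant traction inside each patch and zero traction in the background. A minimal sketch of how such a field and the corresponding patch and background masks could be generated is given below; the function name, its parameters, the uniform sampling of radii, magnitudes, and directions, and the rejection of overlapping patches are illustrative assumptions rather than the published training-data generator.

```python
import numpy as np


def random_patch_tractions(n, n_patches, r_min, r_max, t_max, rng, max_tries=1000):
    """Piecewise-constant traction field (n, n, 2) built from non-overlapping
    circular patches, plus one boolean mask per patch and a background mask.

    All parameter names and sampling ranges are hypothetical.
    """
    yy, xx = np.indices((n, n))
    t = np.zeros((n, n, 2))
    occupied = np.zeros((n, n), dtype=bool)
    masks = []
    tries = 0
    while len(masks) < n_patches and tries < max_tries:
        tries += 1
        r = rng.uniform(r_min, r_max)
        cx, cy = rng.uniform(r, n - 1 - r, size=2)
        mask = (xx - cx) ** 2 + (yy - cy) ** 2 <= r ** 2
        if not mask.any() or (mask & occupied).any():
            continue  # reject empty or overlapping patches
        magnitude = rng.uniform(0.0, t_max)
        angle = rng.uniform(0.0, 2 * np.pi)
        # Constant traction vector inside the patch.
        t[mask] = magnitude * np.array([np.cos(angle), np.sin(angle)])
        occupied |= mask
        masks.append(mask)
    background = ~occupied  # traction vanishes exactly here
    return t, masks, background
```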

1. DTMA (15):

| (23) |

Note that because artificial traction data are piecewise constant in traction patches.

2. DTMB (15):

| (24) |

Note that because artificial traction data vanish exactly outside patches.

3. SNR (15):

| (25) |

where std is the standard deviation.

4. DMA (15):

| (26) |

Note that because artificial traction data are piecewise constant in traction patches.

5. ADTMA:

| (27) |

6. DDA:

| (28) |

where measures the periodic distance between two angles α and β.
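The following sketch implements commonly used forms of five of these metrics (DTMA, DTMB, SNR, DMA, and DDA) for a single sample, together with the periodic distance between two angles. The function names are hypothetical, and the normalizations (for example, normalizing DTMB by the mean true traction magnitude in all patches and computing SNR from traction magnitudes) are assumptions based on common TFM conventions that may differ from Eqs. 23–28.

```python
import numpy as np


def periodic_angle_distance(alpha, beta):
    """Distance between two angles on the circle, returned in [0, pi]."""
    return np.abs((np.asarray(alpha) - beta + np.pi) % (2 * np.pi) - np.pi)


def tfm_metrics(t_pred, t_true, masks, background):
    """Per-sample metrics for predicted vs. true tractions of shape (n, n, 2).

    masks: list of boolean (n, n) arrays, one per traction patch.
    background: boolean (n, n) array of pixels outside all patches.
    Normalizations are assumptions; average the returned values over samples.
    """
    mag_p = np.linalg.norm(t_pred, axis=-1)
    mag_t = np.linalg.norm(t_true, axis=-1)
    ang_p = np.arctan2(t_pred[..., 1], t_pred[..., 0])
    ang_t = np.arctan2(t_true[..., 1], t_true[..., 0])
    in_patches = np.any(masks, axis=0)

    # Relative deviation of the mean traction magnitude, averaged over patches.
    dtma = np.mean([(mag_p[m].mean() - mag_t[m].mean()) / mag_t[m].mean() for m in masks])
    # Mean background magnitude relative to the mean true magnitude in patches.
    dtmb = mag_p[background].mean() / mag_t[in_patches].mean()
    # Mean predicted magnitude in patches over the background fluctuations.
    snr = mag_p[in_patches].mean() / mag_p[background].std()
    # Relative deviation of the maximum traction magnitude, averaged over patches.
    dma = np.mean([(mag_p[m].max() - mag_t[m].max()) / mag_t[m].max() for m in masks])
    # Mean angular deviation of traction directions inside patches.
    dda = np.mean([periodic_angle_distance(ang_p[m], ang_t[m]).mean() for m in masks])
    return {"DTMA": dtma, "DTMB": dtmb, "SNR": snr, "DMA": dma, "DDA": dda}
```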

Author contributions

F.S.K., L.M., and J.K. developed the theoretical and computational model. F.S.K. and L.M. performed all computations, construction and training of neural networks, and data analysis. F.S.K. and J.K. wrote the original draft. F.S.K., L.M., and J.K. reviewed and edited the manuscript.

Acknowledgments

F.S.K. acknowledges support by the German Academic Scholarship Foundation.

Declaration of interests

The authors declare no competing interests.

Editor: Timo Betz.

Footnotes

Supporting material can be found online at https://doi.org/10.1016/j.bpj.2023.07.025.

Supporting citations

References (11,17,27) appear in the supporting material.


References

1. Lecuit T., Lenne P.F. Cell surface mechanics and the control of cell shape, tissue patterns and morphogenesis. Nat. Rev. Mol. Cell Biol. 2007;8:633–644. doi: 10.1038/nrm2222.

2. Vogel V., Sheetz M. Local force and geometry sensing regulate cell functions. Nat. Rev. Mol. Cell Biol. 2006;7:265–275. doi: 10.1038/nrm1890.

3. Jaalouk D.E., Lammerding J. Mechanotransduction gone awry. Nat. Rev. Mol. Cell Biol. 2009;10:63–73. doi: 10.1038/nrm2597.

4. Hahn C., Schwartz M.A. Mechanotransduction in vascular physiology and atherogenesis. Nat. Rev. Mol. Cell Biol. 2009;10:53–62. doi: 10.1038/nrm2596.

5. Vollrath M.A., Kwan K.Y., Corey D.P. The micromachinery of mechanotransduction in hair cells. Annu. Rev. Neurosci. 2007;30:339–365. doi: 10.1146/annurev.neuro.29.051605.112917.

6. Yu H., Mouw J.K., Weaver V.M. Forcing form and function: biomechanical regulation of tumor evolution. Trends Cell Biol. 2011;21:47–56. doi: 10.1016/j.tcb.2010.08.015.

7. Plotnikov S.V., Sabass B., et al. Waterman C.M. High-Resolution Traction Force Microscopy. In: Waters J.C., Wittman T., editors. Quantitative Imaging in Cell Biology. Vol. 123 of Methods in Cell Biology. Academic Press; 2014:367–394.

8. Schwarz U.S., Soiné J.R.D. Traction force microscopy on soft elastic substrates: A guide to recent computational advances. Biochim. Biophys. Acta. 2015;1853:3095–3104. doi: 10.1016/j.bbamcr.2015.05.028.

9. Lekka M., Gnanachandran K., et al. Zemła J. Traction force microscopy – Measuring the forces exerted by cells. Micron. 2021;150. doi: 10.1016/j.micron.2021.103138.

10. Style R.W., Boltyanskiy R., et al. Dufresne E.R. Traction force microscopy in physics and biology. Soft Matter. 2014;10:4047–4055. doi: 10.1039/c4sm00264d.

11. Dembo M., Wang Y.L. Stresses at the cell-to-substrate interface during locomotion of fibroblasts. Biophys. J. 1999;76:2307–2316. doi: 10.1016/S0006-3495(99)77386-8.

12. Schwarz U.S., Balaban N.Q., et al. Safran S.A. Calculation of Forces at Focal Adhesions from Elastic Substrate Data: The Effect of Localized Force and the Need for Regularization. Biophys. J. 2002;83:1380–1394. doi: 10.1016/S0006-3495(02)73909-X.

13. Sabass B., Gardel M.L., et al. Schwarz U.S. High resolution traction force microscopy based on experimental and computational advances. Biophys. J. 2008;94:207–220. doi: 10.1529/biophysj.107.113670.

14. Kulkarni A.H., Ghosh P., et al. Gundiah N. Traction cytometry: regularization in the Fourier approach and comparisons with finite element method. Soft Matter. 2018;14:4687–4695. doi: 10.1039/c7sm02214j.

15. Huang Y., Schell C., et al. Sabass B. Traction force microscopy with optimized regularization and automated Bayesian parameter selection for comparing cells. Sci. Rep. 2019;9:539. doi: 10.1038/s41598-018-36896-x.

16. Kratz F.S., Kierfeld J. Pendant drop tensiometry: A machine learning approach. J. Chem. Phys. 2020;153. doi: 10.1063/5.0018814.

17. Wang Y.-l., Lin Y.-C. Traction force microscopy by deep learning. Biophys. J. 2021;120:3079–3090. doi: 10.1016/j.bpj.2021.06.011.

18. Li H., Matsunaga D., et al. Deguchi S. Wrinkle force microscopy: a machine learning based approach to predict cell mechanics from images. Commun. Biol. 2022;5:361. doi: 10.1038/s42003-022-03288-x.

19. Harris A.K., Wild P., Stopak D. Silicone rubber substrata: a new wrinkle in the study of cell locomotion. Science. 1980;208:177–179. doi: 10.1126/science.6987736.

20. Dembo M., Oliver T., et al. Jacobson K. Imaging the traction stresses exerted by locomoting cells with the elastic substratum method. Biophys. J. 1996;70:2008–2022. doi: 10.1016/S0006-3495(96)79767-9.

21. Tan J.L., Tien J., et al. Chen C.S. Cells lying on a bed of microneedles: An approach to isolate mechanical force. Proc. Natl. Acad. Sci. USA. 2003;100:1484–1489. doi: 10.1073/pnas.0235407100.

22. Brockman J.M., Blanchard A.T., et al. Salaita K. Mapping the 3D orientation of piconewton integrin traction forces. Nat. Methods. 2018;15:115–118. doi: 10.1038/nmeth.4536.

23. Steinwachs J., Metzner C., et al. Fabry B. Three-dimensional force microscopy of cells in biopolymer networks. Nat. Methods. 2016;13:171–176. doi: 10.1038/nmeth.3685.

24. Beningo K.A., Wang Y.-L. Flexible substrata for the detection of cellular traction forces. Trends Cell Biol. 2002;12:79–84. doi: 10.1016/s0962-8924(01)02205-x.

25. Schwarz U.S., Safran S.A. Physics of adherent cells. Rev. Mod. Phys. 2013;85:1327–1381.

26. Newby J.M., Schaefer A.M., et al. Lai S.K. Convolutional neural networks automate detection for tracking of submicron-scale particles in 2D and 3D. Proc. Natl. Acad. Sci. USA. 2018;115:9026–9031. doi: 10.1073/pnas.1804420115.

27. Landau L.D., Lifshitz E.M., et al. Pitaevskiĭ L.P. Theory of Elasticity. Course of Theoretical Physics. Pergamon Press; 1986.

28. Butler J.P., Tolić-Nørrelykke I.M., et al. Fredberg J.J. Traction fields, moments, and strain energy that cells exert on their surroundings. Am. J. Physiol. Cell Physiol. 2002;282:C595–C605. doi: 10.1152/ajpcell.00270.2001.

29. Han S.J., Oak Y., et al. Danuser G. Traction microscopy to identify force modulation in subresolution adhesions. Nat. Methods. 2015;12:653–656. doi: 10.1038/nmeth.3430.

30. Huang Y., Gompper G., Sabass B. A Bayesian traction force microscopy method with automated denoising in a user-friendly software package. Comput. Phys. Commun. 2020;256.

31. Soiné J.R.D., Hersch N., et al. Schwarz U.S. Measuring cellular traction forces on non-planar substrates. Interface Focus. 2016;6. doi: 10.1098/rsfs.2016.0024.

32. Adler J., Öktem O. Solving ill-posed inverse problems using iterative deep neural networks. Inverse Probl. 2017;33.

33. Xu L., Ren J., et al. Jia J. Deep convolutional neural network for image deconvolution. Adv. Neural Inf. Process. Syst. 2014;2:1790–1798.

34. Raissi M., Perdikaris P., Karniadakis G. Physics-informed neural networks: A deep learning framework for solving forward and inverse problems involving nonlinear partial differential equations. J. Comput. Phys. 2019;378:686–707.

35. Bar-Sinai Y., Hoyer S., et al. Brenner M.P. Learning data-driven discretizations for partial differential equations. Proc. Natl. Acad. Sci. USA. 2019;116:15344–15349. doi: 10.1073/pnas.1814058116.

36. Karniadakis G.E., Kevrekidis I.G., et al. Yang L. Physics-informed machine learning. Nat. Rev. Phys. 2021;3:422–440.

37. Balaban N.Q., Schwarz U.S., et al. Geiger B. Force and focal adhesion assembly: a close relationship studied using elastic micropatterned substrates. Nat. Cell Biol. 2001;3:466–472. doi: 10.1038/35074532.

38. Ronneberger O., Fischer P., Brox T. U-Net: Convolutional Networks for Biomedical Image Segmentation. Lect. Notes Comput. Sci. 2015;9351:234–241.

39. Furusho Y., Ikeda K. Theoretical analysis of skip connections and batch normalization from generalization and optimization perspectives. APSIPA Trans. Signal Inf. Process. 2020;9:e9.

40. Guthardt Torres P., Bischofs I.B., Schwarz U.S. Contractile network models for adherent cells. Phys. Rev. E. 2012;85. doi: 10.1103/PhysRevE.85.011913.

41. Chollet F., et al. Keras. 2015. https://keras.io
