Abstract

Background:

Simulations are a critical component of anesthesia education, and ways to broaden their delivery and accessibility should be studied. The primary aim was to characterize anesthesiology resident, fellow, and faculty experience with augmented reality (AR) simulations. The secondary aim was to explore the feasibility of quantifying performance using integrated eye-tracking technology.

Methods:

This was a prospective, mixed-methods study using qualitative thematic analysis of user feedback and quantitative analysis of gaze patterns. The study was conducted at a large academic medical center in Northern California. Participants included 7 anesthesiology residents, 6 cardiac anesthesiology fellows, and 5 cardiac anesthesiology attendings. Each subject participated in an AR simulation involving resuscitation of a patient with pericardial tamponade. Postsimulation interviews elicited user feedback, and eye-tracking data were analyzed for gaze duration and latency.

Results:

Thematic analysis revealed 5 domains of user experience: global assessment, spectrum of immersion, comparative assessment, operational potential, and human-technology interface. Participants reported a positive learning experience and cited AR technology’s portability, flexibility, and cost-efficiency as qualities that may expand access to simulation training. Exploratory analyses of gaze patterns suggested that trainees had longer gaze durations on vital signs and longer gaze latency to malignant arrhythmias than attendings. Limitations of the study include lack of a control group and underpowered statistical analyses of gaze data.

Conclusions:

This study suggests positive user perception of AR as a novel modality for medical simulation training. AR technology may increase exposure to simulation education and offer eye-tracking analyses of learner performance.

Keywords: Medical education, simulation, augmented reality, behavioral skills, qualitative methods

Introduction

Recent technological advances have accelerated the development of immersive modalities in medical education.1-3 Augmented reality (AR) integrates holographic content within the natural world, creating an interactive, mixed-reality experience. AR has predominantly been used for surgical skill training and has similarly been adopted in anesthesiology education for procedural learning,1 but it has yet to be leveraged for teaching communication and nontechnical skills in anesthesiology. As such, availability and use of immersive technologies in anesthesiology education continue to lag behind traditional hardware-based modalities, such as mannequins, task trainers, and ultrasound simulators.4 Because AR has the potential to deliver simulation experiences beyond procedural learning and without the time, space, and cost constraints of traditional in situ simulations, its feasibility and effectiveness warrant continued investigation. Additionally, AR can incorporate eye-tracking capabilities, a methodology that has been well established in training, assessment, and feedback practices in clinical settings and serves as a useful way to determine proficiency in both technical and nontechnical skills.5-9

The Chariot AR Medical Simulator (Stanford Chariot Program, Stanford, CA) allows instructors to illuminate holographic patients, monitors, and other medical equipment to create lifelike environments with minimal setup. Participants view the AR arrangement through the Magic Leap 1 (ML1) headset (Magic Leap, Inc., Plantation, FL). Holograms are controlled via holographic menus visible only to instructors, allowing the simulation to be modulated to participants’ training levels. Integrated eye-tracking software provides immediate access to gaze patterns after conclusion of the simulation. This provides a novel, dynamic, tailored learning experience.

The goal of this study was to explore anesthesiology trainee and faculty experiences with AR simulations. The primary aim sought to understand user perceptions through thematic analyses of qualitative interviews. The secondary aim explored the feasibility of using the ML1’s integrated eye-tracking software for quantitative analyses. We hypothesized that eye-tracking data could be obtained and analyzed to provide objective metrics of participant performance.

Materials and Methods

Study Design and Researcher Characteristics

The primary aim used a prospective, qualitative thematic analysis that adhered to the Standards for Reporting Qualitative Research guidelines.10 There are several different methods of qualitative analyses, including thematic, grounded theory, and phenomenological. Whereas phenomenology is a more theoretically informed methodology of a lived experience and how that experience relates to the participants’ own world and grounded theory seeks to develop explanatory theories of social processes, a thematic analysis was performed on interview texts to produce a representation of themes and patterns of the participants’ experiences. Volunteer participants were cardiothoracic anesthesiology faculty and trainee physicians.

Context

This study was performed at a large academic medical center in Northern California and was conducted in small groups, with 1 participant and 3 study personnel in each group. Data collection occurred between March and April 2021.

Sampling Strategy

Eligible participants included cardiothoracic anesthesia residents, fellows, and faculty. Research personnel used purposive sampling common in qualitative studies to recruit eligible volunteers that represented various stages of training.11 These volunteers possessed a broad spectrum of experiences with clinical simulation and management of intraoperative cardiac arrests. Recruitment concluded after thematic saturation was reached, defined as a point at which no new information was obtained from further interviews.10 Participants with a history of motion sickness or seizures were excluded.

Human Subject Ethical Approval

The Stanford University Institutional Review Board approved this study (Clinical Trials Identifier NCT04376255, phase 1, protocol 55657). Informed consent was obtained before participation, and participants were permitted to withdraw from the study at any point or decline to answer any questions during the interview without study exclusion.

Data Collection Methods

After participants completed a demographic survey (Table 1), qualitative data were collected during the postsimulation interview. Transcripts were generated using video recordings with an audio transcription service (Otter.ai, Los Altos, CA) and edited for accuracy by research personnel.

Table 1.

Participant Demographics

| Characteristic | Value |
|---|---|
| Age, mean ± SD, y | 33.3 ± 4.0 |
| Sex (n) | |
| Male | 10 |
| Female | 8 |
| Race, n (%) | |
| American Indian or Alaskan Native | 1 (5.6) |
| Asian | 6 (33.3) |
| Black or African American | 1 (5.6) |
| Native Hawaiian or Other Pacific | 0 |
| White | 10 (56) |
| Prefer not to answer | 1 (5.6) |
| Ethnicity, n (%) | |
| Hispanic or Latino | 2 (11.1) |
| Not Hispanic or Latino | 15 (83.3) |
| Prefer not to answer | 1 (5.6) |
| Level of training, n (%) | |
| PGY1 | 0 |
| PGY2 | 6 (33.3) |
| PGY3 | 1 (5.6) |
| PGY4 | 0 |
| PGY5 | 3 (16.7) |
| PGY6 | 2 (11.1) |
| PGY7 | 1 (5.6) |
| Attending or faculty | 5 (27.8) |
| Previous exposure to any AR, n (%) | |
| Yes | 9 (50.0) |
| No | 9 (50.0) |
| Experience initiating resuscitative efforts on a person, n (%) | |
| Yes | 17 (94) |
| No | 1 (6) |
| Experience initiating resuscitative efforts on a mannequin, n (%) | |
| Yes | 18 (100) |
| No | 0 |
| Received training on effective communication skills during resuscitation, n (%) | |
| Yes | 17 (94) |
| No | 1 (6) |

Abbreviations: AR, augmented reality; PGY, postgraduate year; SD, standard deviation.

Gaze data were collected via ML1 headsets using previously validated eye-tracking software.5 Latency to gaze of ventricular fibrillation was calculated from the timestamps at which subjects looked at the rhythm after it was presented during the simulation. Gaze durations of other vital signs were tracked by the Chariot AR Medical Simulator software and downloaded at the conclusion of the simulation.
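The latency calculation described above can be illustrated with a minimal Python sketch. It assumes that gaze events are available as timestamps in seconds from the start of the simulation; the function name `latency_to_first_gaze` and the example timestamps are hypothetical and are not taken from the study software or its data.

```python
from typing import Optional, Sequence


def latency_to_first_gaze(rhythm_onset_s: float,
                          gaze_times_s: Sequence[float]) -> Optional[float]:
    """Seconds from rhythm onset to the first registered gaze at or after onset.

    Returns None when no gaze was registered after the rhythm appeared,
    which mirrors participants for whom no latency could be computed.
    """
    later = [t for t in gaze_times_s if t >= rhythm_onset_s]
    if not later:
        return None
    return min(later) - rhythm_onset_s


# Hypothetical timestamps (illustrative only, not study data).
onset = 300.0
gazes_on_rhythm = [120.5, 295.0, 303.2, 310.8]
latency = latency_to_first_gaze(onset, gazes_on_rhythm)
print(latency)
```

Gazes logged before the rhythm was presented are ignored, so only the first look after onset contributes to the latency.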

Data Collection Instruments

Demographic questionnaires were completed and stored on a REDCap database.12,13 A semistructured, postsimulation interview guide developed and piloted in a previous qualitative AR study involving medical students was used to obtain qualitative data.14 The guide contained 6 questions, accompanied by additional follow-up questions that prompted the research personnel to elicit more information if necessary (Table 2). Interviews were conducted by the same study investigator to maintain consistency. This investigator was an anesthesia faculty member who did not have active supervisory roles with participants at the time of interviews. Questions assessed the participants’ opinions and attitudes toward the effectiveness of AR simulations.

Table 2.

Postsimulation Interview Questions for Qualitative Thematic Analysis

| Question Purpose | Questions and Notes | Probing Questions | Prompts |
|---|---|---|---|
| Attitudes and opinions | Can you please tell me about your simulation experience? | | |
| Attitudes and opinions | How did this simulation experience compare with your previous experiences with in-person medical simulation? How did it compare with real-life codes? | | |
| Perceptions | Please describe the advantages and limitations of learning with this tool compared with in-person simulations. | | |
| Perceptions | What is your opinion of the best way to teach the skills needed for medical crises beyond experiencing them firsthand? | | |
| Attitudes and opinions | Tell me about the teamwork you experienced during the simulation. | | |
| Summation question | Is there anything that you would like to say about in-person or augmented reality simulation that I have not already asked? | | |

Eye-tracking software was developed using Unity (Unity Technologies, San Francisco, CA) and translated raw eye direction data into meaningful, intentional gazes. False-positive and false-negative gazes were filtered using a minimum threshold duration trigger of 500 ms, and correction of natural binocular saccadic movement and drift was achieved by adjusting the algorithm to log positions resulting from smooth interpolation over the minimum threshold duration. This filter threshold was selected based on previous development and testing of integrated eye-tracking software on ML1.5
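As a rough illustration of the minimum-duration trigger, the following Python sketch groups consecutive raw samples that fall on the same target and keeps only runs lasting at least 500 ms. The sample format (timestamp in milliseconds plus a target label), the names `Gaze` and `detect_gazes`, and the example data are assumptions made for illustration. The sketch does not reproduce the Unity implementation and omits the smoothing used to correct for saccades and drift.

```python
from typing import List, NamedTuple, Optional, Sequence, Tuple

MIN_DWELL_MS = 500  # minimum threshold duration reported in the text


class Gaze(NamedTuple):
    target: str
    start_ms: int
    duration_ms: int


def detect_gazes(samples: Sequence[Tuple[int, Optional[str]]],
                 min_dwell_ms: int = MIN_DWELL_MS) -> List[Gaze]:
    """Turn raw (timestamp_ms, target) samples into intentional gazes.

    A run is a stretch of consecutive samples on the same target. A run ends
    at the timestamp of the first sample that leaves the target (or at the
    last sample). Runs shorter than min_dwell_ms are discarded.
    """
    gazes: List[Gaze] = []
    run_target: Optional[str] = None
    run_start = 0
    last_t = 0
    for t, target in samples:
        if target != run_target:
            if run_target is not None and t - run_start >= min_dwell_ms:
                gazes.append(Gaze(run_target, run_start, t - run_start))
            run_target, run_start = target, t
        last_t = t
    # Close the final run, if any.
    if run_target is not None and last_t - run_start >= min_dwell_ms:
        gazes.append(Gaze(run_target, run_start, last_t - run_start))
    return gazes


# Hypothetical samples at 100 ms intervals (illustrative only).
samples = [(0, "ECG"), (100, "ECG"), (200, "BP"), (300, "BP"), (400, "BP"),
           (500, "BP"), (600, "BP"), (700, "BP"), (800, None),
           (900, "HR"), (1000, "HR")]
gazes = detect_gazes(samples)
print(gazes)
```

In this toy input only the 600 ms dwell on the blood pressure target passes the threshold; the brief glances at the other targets are filtered out, which is the intended effect of a dwell trigger.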

Simulation Design and Delivery

Holograms in the simulation created an interactive cardiac arrest scenario for participants to manage. A holographic patient and gurney were overlaid on a real cardiopulmonary resuscitation chest task trainer (Figure 1). A holographic vital sign monitor was adjusted by the instructor via an on-screen menu (Figure 2). Three research assistants acted as additional personnel in directed roles, such as chest compressor, drug administrator, and airway manager. The research assistants were instructed to follow the participant’s directives without offering additional verbal or behavioral cues to maintain standardization of the experience. The instructor offered guidance to facilitate progression of the simulation at the request of the participant with no restriction on frequency or nature of the requests. However, progression of the simulation proceeded according to the timeline of the simulation sequence irrespective of the guidance provided.

Figure 1.

Real-world and holographic asset overlay as seen through Magic Leap during simulation.

Figure 2.

Open interface controller as seen through instructor headset.

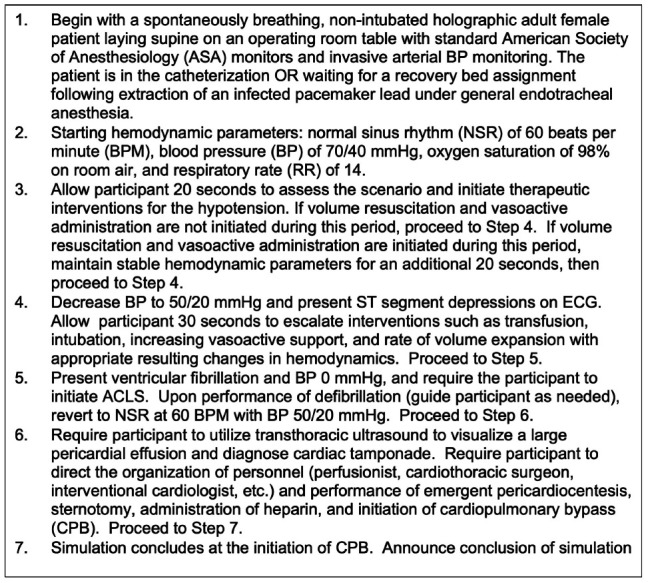

Before the simulation, the instructor reviewed the goals, which were to use effective communication and clinical decision-making skills to manage a postoperative crisis. Participants then entered the AR environment following a brief technical orientation and were instructed to integrate tactile aspects of the simulation within the holographic operating room (OR) environment (eg, task trainer and ultrasound machine) while suspending disbelief of the surrounding physical space (eg, photocopier and desks in the postanesthesia care unit where the simulation was being conducted). The simulation consisted of a patient with cardiac tamponade after pacemaker lead extraction. The instructor adhered to a detailed sequence of events to ensure a consistent experience across study subjects (Figure 3). After conclusion of the 10-minute simulation, participants removed their AR headsets and proceeded to the interview.

Figure 3.

Detailed description of simulation sequence.

Throughout the simulation, audio communications were conducted through integrated microphones and speakers in the ML1. The instructor illuminated holographic assets throughout the simulation, including defibrillator pads, a code cart, and blood products in response to participants’ verbal directives. Ambient OR noise was played through ML1 speakers during the simulation and included background conversations, doors opening and closing, and pulse oximetry.

Data Processing and Analysis

Qualitative analysis was performed based on the Standards for Reporting Qualitative Research guidelines, in which iterative text coding identifies themes; these themes were then verified through a member-checking process. Three study investigators separately analyzed the interview transcripts to generate initial codes, which were revised until a consensus was reached. Codes were words or short phrases that summarized a portion of the text and allowed for synthesis or comparison.10 The investigators then evaluated connections between the codes to establish preliminary themes, which were then compared for similarities and redundancies and finalized. Supporting statements from the transcripts illustrated the themes.

An exploratory approach was used to compare differences in gaze duration of vital signs and latency to gaze of ventricular fibrillation between trainees and attendings. Gaze data were stratified by participant training level and processed by Mann-Whitney U tests to derive P values due to small sample sizes and nonnormal data. All statistical analyses were performed using R version 4.2.3.

Results

Participants

Eighteen subjects—7 residents and 6 fellows (collectively “trainees”) and 5 faculty attendings—participated (Table 1). The average session was 30 minutes, with 10 minutes each devoted to the orientation, simulation, and postsimulation interview. All participants had previously received Advanced Cardiac Life Support training and had initiated cardiopulmonary resuscitation on mannequins. All but one participant had previously initiated cardiopulmonary resuscitation on a real patient and received training on effective communication skills during resuscitation.

Thematic Analysis

Thematic analysis revealed 5 topics: global assessment, spectrum of immersion, comparative assessment, operational potential, and human-technology interface. Summative statements supporting each identified theme were described during the thematic analysis. There were no differences between trainees and faculty in their perceptions across the 5 themes.

Global Assessment

Participants uniformly reported a positive experience from the simulation, describing it as “impressive, highly valuable, helpful, fun, and realistic.” When asked to comment on the overall experience, most participants (n = 15) focused on either the technological qualities (n = 9) or emotional effects (n = 6) of the simulation. Participants focusing on the technological aspects of the simulation reported that the mixed-reality environment was “dynamic,” “high-quality,” and “impressively realistic,” although at times limited by the headset’s field of vision and creation of a “tunnel vision” effect. Subjects focusing on the emotional effects of the simulation commented on the excitement and stress response they felt (“my heart is still racing,” “I felt like I was in a real-life scenario,” and “it was a lot of fun”). All participants felt that the simulation prepared them for similar real-life scenarios and expressed interest in participating in more AR simulations.

Spectrum of Immersion

Twelve of 18 participants—5 attendings and 7 trainees—found the AR simulation experience to be highly immersive, commenting that it was “super real” and that they “felt like [they] were in the real scenario.” They discussed specific features of the simulation that added to the realism, including dynamic changes to vital signs and the realistic behavior of the holographic patient and other objects. Six participants reported feeling less immersed due to difficulty integrating the mixed-reality environment into a realistic experience, commenting that they had “trouble with the concept of time” in the simulation and “did not know which real objects were part of the [simulation].” However, the same 6 participants also perceived the AR simulation as “at least as immersive” as traditional in situ simulation due to similar challenges in creating artificial in situ clinical environments.

Comparative Assessment

The AR simulation experience compared favorably with traditional in situ simulation. Most subjects (n = 14) perceived the AR simulation experience to be either comparable (n = 9) or superior (n = 5) to traditional in-person simulations, while the remaining subjects (n = 4) noted a preference for in situ simulation due to the technology’s learning curve and mixed-reality format. Participants that favored AR simulation described it as “a lot more fun” and “way more real” than in situ simulation, citing the holographic assets’ movements (eg, seeing the patient’s chest rise with respirations and convulsing in response to defibrillation) and ambient OR noises as specific factors that contributed to their positive experience. Conversely, those preferring in situ simulation described AR as “slightly confusing” and “more distracting” than traditional simulation due to rapid changes in holographic assets (eg, sudden appearance of blood products on command) and difficulty suspending disbelief of the surrounding environment. There was no difference in age between participants who preferred in situ simulation and those who preferred AR. Thirteen subjects reported a stress response that was similar to or greater than mannequin-based simulations, noting that the ability to treat the holographic patient with both real-world and holographic assets made the AR simulation more lifelike than traditional simulation.

Operational Potential

Participants identified multiple advantages of the AR technology that would increase access to simulation education. All participants noted that the AR simulation was “simple to set-up” and “required far less equipment and space” than other high-fidelity simulations. Eight subjects (3 faculty, 3 residents, and 2 fellows) specifically commented on the portability of AR technology and its advantage over fixed, center-based simulations (“you can do this anywhere in the hospital, in the hallway, in the ICU, or in the classroom”). Several participants (n = 9) also noted that the nonalgorithmic, open-interface format of AR simulations conferred an educational advantage over modalities that rely on predetermined software algorithms. Four subjects (1 resident, 2 fellows, and 1 faculty) commented on the utility of remote-capable, interactive learning modalities during the ongoing pandemic, although this functionality was not specifically featured in this study.

Human-Technology Interface

Overall, participants felt that the technology was easy to use, but several (n = 7) identified an adjustment learning curve that could be addressed with a more thorough orientation. They reported that greater familiarity with the technology would have facilitated their overall interactions within the mixed-reality environment. Nearly half of the participants (n = 8) reported the interface between holographic and real-world objects to be “clearly accessible” and “readable” and that the quality of holographic images was “good” or “excellent.” Two participants reported difficulty tracking time during the simulation due to the mixed-reality environment, stating that “in an in-person simulation, you would wait for an action to be done, but in the AR environment, the changes happen instantaneously with the push of a button, so when you mix the two, the concept of time seemed a little bit off.” These participants suggested delaying the appearance of specific holograms to simulate instances where resources had to be obtained outside of the OR (eg, blood products and rapid infusers).

Four participants commented on difficulty integrating the real-life and holographic entities during the simulation, noting that they had “tunnel vision…only focused on the holograms” and “forgot that the real-life people and equipment were also part of [the simulation].” All four of the participants had no previous experience with AR and suggested that additional experience with the technology may mitigate future challenges. Participants also suggested that additional holographic components, such as a timer, holographic ultrasound machine, and echocardiographic images, may improve the fidelity of the experience. One participant experienced a mild headache from the AR headset and the bright lights during the simulation, which resolved immediately after removing the headset.

Eye-Tracking Analysis

An exploratory approach examined the eye-tracking data collected from faculty and trainees as a secondary outcome. Regarding gaze duration, 2 of 5 faculty members had capturable gaze duration data for blood pressure, respiratory rate, and electrocardiogram, and 1 of 5 faculty had capturable gaze duration data for heart rate. Twelve trainees met gaze threshold criteria for blood pressure and respiratory rate, 10 met threshold for electrocardiogram, and 6 met threshold for heart rate. Attendings had shorter mean gaze durations for all vital signs than trainees (Figure 4). On average, trainees spent 5.8 seconds longer fixated on electrocardiogram rhythms than faculty (P = .1). The difference in mean gaze duration was more pronounced for blood pressure, with trainees spending approximately 14.4 seconds longer fixated on blood pressure than faculty (P = .1). With respect to respiratory rate gazes, trainees had an average gaze duration 3.5 seconds longer than faculty (P = .6).

Figure 4.

Gaze duration of vital signs by participant training level.

Abbreviations: BP, blood pressure; ECG, electrocardiogram; HR, heart rate; RR, respiratory rate.

Regarding time to gaze at ventricular fibrillation after initiation of the rhythm, 10 of the 18 participants registered a gaze (8 trainees and 2 faculty). The mean times to gaze for trainees and attendings were 9.1 ± 9.7 seconds and 3.0 ± 1.4 seconds, respectively (P = .8). No additional data points were collected to infer identification of the arrhythmia from the gaze event.

Discussion

This study identified experiential themes related to the use of an AR medical simulator for cardiovascular anesthesiology training and explored the potential application of integrated gaze tracking. Thematic analysis suggested that user satisfaction with AR simulations was comparable to, and in specific instances even higher than, in-person simulation across the 5 core themes of user experience. Global assessment of AR simulation was universally positive, and most participants reported a highly immersive learning experience. Participants who did not report a highly immersive experience or preferred in situ simulations still rated AR simulation fidelity on par with in situ simulations and cited characteristics that limit the realism of both modalities, such as the accelerated flow of time and the need to distinguish between assets that were part of the simulation and ones that were excluded. These barriers may be addressed by implementing time delays and additional site preparation to mimic the temporal and environmental characteristics of real clinical scenarios more closely. The majority of participants found the AR equipment and software easy to use but believed a more thorough technical orientation before the simulation would shorten the technology’s learning curve. Overall, the qualitative results support the utility of the AR software for cardiac anesthesia simulation training.

These results enhance current simulation education paradigms by improving experiential fidelity. Highly immersive and emotionally charged AR simulation experiences may contribute to improved clinical performance because high-fidelity simulations have been associated with superior advanced cardiovascular life support performance.15,16 Additionally, high-fidelity simulations are an important factor in maintaining psychological safety for learners by permitting learners to make mistakes without patient harm.17 Beyond an effective immersive learning experience, participants reported operational advantages of AR that may expand the accessibility of simulation education, such as its portability, minimal set-up time, and cost-efficiency. These key components may also promote increased participation frequency, scheduling flexibility, and use in remote or resource-limited locations.18

Beyond the qualitative assessment of AR simulations, quantitative biometric analysis was performed by leveraging the gaze-tracking feature within the ML1. Gaze data were registered for between 7 and 14 of the 18 subjects depending on the vital sign of interest, corresponding to a capture rate of 39% to 78%. Consequently, analysis of the secondary aim was underpowered, indicating that further refinement in the hardware and software is needed to reliably use gaze-tracking data. For the participants with registered gazes, the data may inform assessment of mastery. Gaze patterns in this study suggest that faculty fixated on vital signs for shorter durations than trainees, which is consistent with previous studies that demonstrate pediatric intensive care unit and emergency department faculty attending to focal points for shorter time intervals than trainees.19 This finding may reflect more efficient decision-making by experienced faculty physicians than by trainees.
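The capture-rate range quoted above can be reproduced from the per-vital-sign counts reported in the Eye-Tracking Analysis section. The short Python sketch below does that arithmetic; the variable names are chosen for illustration.

```python
TOTAL_PARTICIPANTS = 18

# Participants with capturable gaze duration data, as reported in the Results.
faculty = {"BP": 2, "RR": 2, "ECG": 2, "HR": 1}
trainees = {"BP": 12, "RR": 12, "ECG": 10, "HR": 6}

# Combine both groups, then express each count as a percentage of all 18.
captured = {sign: faculty[sign] + trainees[sign] for sign in faculty}
rates = {sign: round(100 * n / TOTAL_PARTICIPANTS) for sign, n in captured.items()}

for sign in captured:
    print(f"{sign}: {captured[sign]}/{TOTAL_PARTICIPANTS} = {rates[sign]}%")
```

Heart rate has the lowest capture (7 of 18, 39%), and blood pressure and respiratory rate have the highest (14 of 18, 78%).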

There were several limitations. First, the aforementioned variable gaze capture rate in this study was inconsistent with previous dedicated testing of the software, which demonstrated over 80% reliable gaze capture.5 This may be attributed to threshold limits for gaze capture, lack of gaze discrimination between different vital signs on the holographic monitor, or the inability to control for peripheral gazes. Although the duration of vital sign gazes and latency to gaze fixation of ventricular fibrillation were not captured for all participants, these findings may be used to further optimize the software as the technology evolves. Second, the qualitative thematic analysis method used to analyze the primary outcome does not provide a control group, which limits the ability to determine whether the comparative narrative offered by the participants was biased by their exposure to the intervention, although this approach allows for greater discovery and nuance of the user experience. Third, the participants were selected from a single cardiac anesthesiology division, so it cannot be determined whether the qualitative themes are directly translatable to other institutions. However, the participants had heterogeneous training backgrounds, and we have no reason to believe that their learning styles differ from those of participants at similar institutions.

Conclusion

Mixed-reality technologies hold remarkable potential in the delivery of effective, portable, and cost-effective simulation training. This study suggests positive user perception and acceptance of AR as a novel modality for medical simulation training. Future studies will compare AR with in-person simulation with a control group and optimize gaze-tracking capabilities for quantitative biometric analysis and feedback.

Acknowledgments

We acknowledge Maria Menendez, Ahtziri Fonseca, and Kenny Roy, who served as research assistants and consultants in the design, implementation, and support of the study protocol.

Funding Statement

Funding: None

Footnotes

Conflicts of interest: None

References

- 1. Tang KS, Cheng DL, Mi E, Greenberg PB. Augmented reality in medical education: a systematic review. Can Med Educ J. 2020;11(1):e81–96. doi: 10.36834/cmej.61705.

- 2. Chaballout B, Molloy M, Vaughn J, et al. Feasibility of augmented reality in clinical simulations: using Google Glass with manikins. JMIR Med Educ. 2016;2(1):e2. doi: 10.2196/mededu.5159.

- 3. Remtulla R. The present and future applications of technology in adapting medical education amidst the COVID-19 pandemic. JMIR Med Educ. 2020;6(2):e20190. doi: 10.2196/20190.

- 4. Chen MJ, Ambardekar A, Martinelli SM, et al. Defining and addressing anesthesiology needs in simulation-based medical education. J Educ Perioper Med. 2022;24(2):1–15. doi: 10.46374/volxxiv_issue2_mitchell.

- 5. Caruso T, Hess O, Roy K, et al. Integrated eye tracking on Magic Leap One during augmented reality medical simulation: a technical report. BMJ Simul Technol Enhanc Learn. 2021;7(5):431–4. doi: 10.1136/bmjstel-2020-000782.

- 6. Chen H-E, Bhide RR, Pepley DF, et al. Can eye tracking be used to predict performance improvements in simulated medical training? A case study in central venous catheterization. Proc Int Symp Hum Factors Ergon Healthc. 2019;8(1):110–4. doi: 10.1177/2327857919081025.

- 7. Ashraf H, Sodergren MH, Merali N, et al. Eye-tracking technology in medical education: a systematic review. Med Teach. 2018;40(1):62–9. doi: 10.1080/0142159X.2017.1391373.

- 8. Desvergez A, Winer A, Gouyon JB, Descoins M. An observational study using eye tracking to assess resident and senior anesthetists’ situation awareness and visual perception in postpartum hemorrhage high fidelity simulation. PLoS One. 2019;14(8):e0221515. doi: 10.1371/journal.pone.0221515.

- 9. Krois W, Reck-Burneo CA, Gröpel P, et al. Joint attention in a laparoscopic simulation-based training: a pilot study on camera work, gaze behavior, and surgical performance in laparoscopic surgery. J Laparoendosc Adv Surg Tech A. 2020;30(5):564–8. doi: 10.1089/lap.2019.0736.

- 10. O’Brien BC, Harris IB, Beckman TJ, et al. Standards for reporting qualitative research: a synthesis of recommendations. Acad Med. 2014;89(9):1245–51. doi: 10.1097/ACM.0000000000000388.

- 11. Jowsey T, Deng C, Weller J. General-purpose thematic analysis: a useful qualitative method for anaesthesia research. BJA Educ. 2021;21(12):472–8. doi: 10.1016/j.bjae.2021.07.006.

- 12. Harris PA, Taylor R, Thielke R, et al. Research electronic data capture (REDCap)—a metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform. 2009;42(2):377–81. doi: 10.1016/j.jbi.2008.08.010.

- 13. Harris PA, Taylor R, Minor BL, et al. The REDCap consortium: building an international community of software platform partners. J Biomed Inform. 2019;95:103208. doi: 10.1016/j.jbi.2019.103208.

- 14. Hess O, Qian J, Bruce J, et al. Communication skills training using remote augmented reality medical simulation: a feasibility and acceptability qualitative study. Med Sci Educ. 2022;32(5):1005–14. doi: 10.1007/s40670-022-01598-7.

- 15. Demaria S, Jr, Bryson EO, Mooney TJ, et al. Adding emotional stressors to training in simulated cardiopulmonary arrest enhances participant performance. Med Educ. 2010;44(10):1006–15. doi: 10.1111/j.1365-2923.2010.03775.x.

- 16. Price JW, Price JR, Pratt DD, et al. High-fidelity simulation in anesthesiology training: a survey of Canadian anesthesiology residents’ simulator experience. Can J Anaesth. 2010;57(2):134–42. doi: 10.1007/s12630-009-9224-5.

- 17. Madireddy S, Rufa EP. StatPearls. Treasure Island, FL: StatPearls Publishing; 2021. Maintaining confidentiality and psychological safety in medical simulation.

- 18. Andreatta P. Healthcare simulation in resource-limited regions and global health applications. Simul Healthc. 2017;12(3):135–8. doi: 10.1097/SIH.0000000000000220.

- 19. McNaughten B, Hart C, Gallagher S, et al. Clinicians’ gaze behaviour in simulated paediatric emergencies. Arch Dis Child. 2018;103(12):1146–9. doi: 10.1136/archdischild-2017-314119.