Abstract

Objective

To identify the risk of acute respiratory distress syndrome (ARDS) and in-hospital mortality using long short-term memory (LSTM) framework in a mechanically ventilated (MV) non-COVID-19 cohort and a COVID-19 cohort.

Methods

We included MV ICU patients between 2017 and 2018 and reviewed patient records for ARDS and death. Using active learning, we enriched this cohort with MV patients from 2016 to 2019 (MV non-COVID-19, n=3905). We collected a second validation cohort of hospitalised patients with COVID-19 in 2020 (COVID+, n=5672). We trained an LSTM model using 132 structured features on the MV non-COVID-19 training cohort and validated on the MV non-COVID-19 validation and COVID-19 cohorts.

Results

Applying the LSTM model (score cut-off 0.9) to the MV non-COVID-19 validation cohort yielded a sensitivity of 86% and a specificity of 57%. The model identified the risk of ARDS 10 hours before ARDS presentation and 9.4 days before death. In the COVID-19 cohort, the model's sensitivity (70%) was lower and its specificity (84%) higher than in the MV non-COVID-19 cohort. For the COVID+ cohort and the MV COVID+ subcohort, the model identified the risk of in-hospital mortality 2.4 days and 1.54 days before death, respectively.

Discussion

Our LSTM algorithm identified the risk of ARDS or death accurately and in a timely manner in MV non-COVID-19 and COVID+ patients. By alerting clinicians to the risk of ARDS or death, we can improve the implementation of evidence-based ARDS management and facilitate goals-of-care discussions in high-risk patients.

Conclusion

Using the LSTM algorithm in hospitalised patients identifies the risk of ARDS or death.

Keywords: Decision Support Systems, Clinical; COVID-19; Critical Care Outcomes; Medical Informatics

WHAT IS ALREADY KNOWN ON THIS TOPIC

Acute respiratory distress syndrome (ARDS) is commonly under-recognised in clinical settings, which can lead to delays in evidence-based management.

WHAT THIS STUDY ADDS

A long short-term memory algorithm trained on mechanically ventilated patients can identify the risk of ARDS development or in-hospital mortality from structured electronic health record data, without chest X-ray analysis. SARS-CoV-2 infection is associated with a high incidence of ARDS. The model, trained on mechanically ventilated non-COVID-19 patients, performed well in COVID-19 patients, with a number needed to evaluate of 1.82 to identify 1 patient at risk of ARDS or death in the hospital.

HOW THIS STUDY MIGHT AFFECT RESEARCH, PRACTICE OR POLICY

Early identification of the risk of ARDS, regardless of COVID-19 status, can improve compliance with evidence-based management and allow prognostication.

Introduction

Acute respiratory distress syndrome (ARDS) affects nearly a quarter of all patients with acute respiratory failure requiring mechanical ventilation and contributes to high morbidity and mortality in critically ill patients.1 ARDS is consistently under-recognised, leading to delays in implementing evidence-based best practices, such as lung-protective ventilation strategies.2 3 The onset of the COVID-19 pandemic overwhelmed the healthcare system in the USA, and patients with severe to critical SARS-CoV-2 infection had a high incidence of ARDS and high mortality. This was especially true early in the pandemic, before early corticosteroids and other immunosuppressants were adopted for treatment.4 5 An electronic health record (EHR)-based decision support system that accurately identifies patients with ARDS can improve the management and escalation of care for these critically ill patients.6 Different machine learning techniques, such as L2-regularised logistic regression, artificial neural networks and XGBoost gradient-boosted tree models, have leveraged EHR data to identify or predict ARDS, with robust discrimination reported.7–9 In one such study, Zeiberg et al applied L2-regularised logistic regression to structured EHR data from the first 7 days of hospitalisation in a single-centre population, with the gold-standard ARDS diagnosis established by a two-physician chart review. Despite the rarity of ARDS (2.5%) in the testing cohort, the area under the receiver operating characteristic curve (AUROC) was 0.81.7 Other investigations used the Medical Information Mart for Intensive Care (MIMIC) databases and relied on diverse data sources, such as free-text entries, diagnostic codes and radiographic reports, for both the diagnosis and prediction of ARDS.10 11

We aimed to train a deep learning model using a long short-term memory (LSTM) framework and active learning on a historical dataset from a mechanically ventilated (MV) non-COVID-19 cohort to identify patients at risk of ARDS or in-hospital mortality. We validated the model on an MV non-COVID-19 cohort, a COVID+ cohort and a subgroup of MV COVID+ patients.

Materials and methods

The study was conducted at Montefiore Medical Center, encompassing three hospital sites.

Cohort assembly

MV non-COVID-19 cohorts

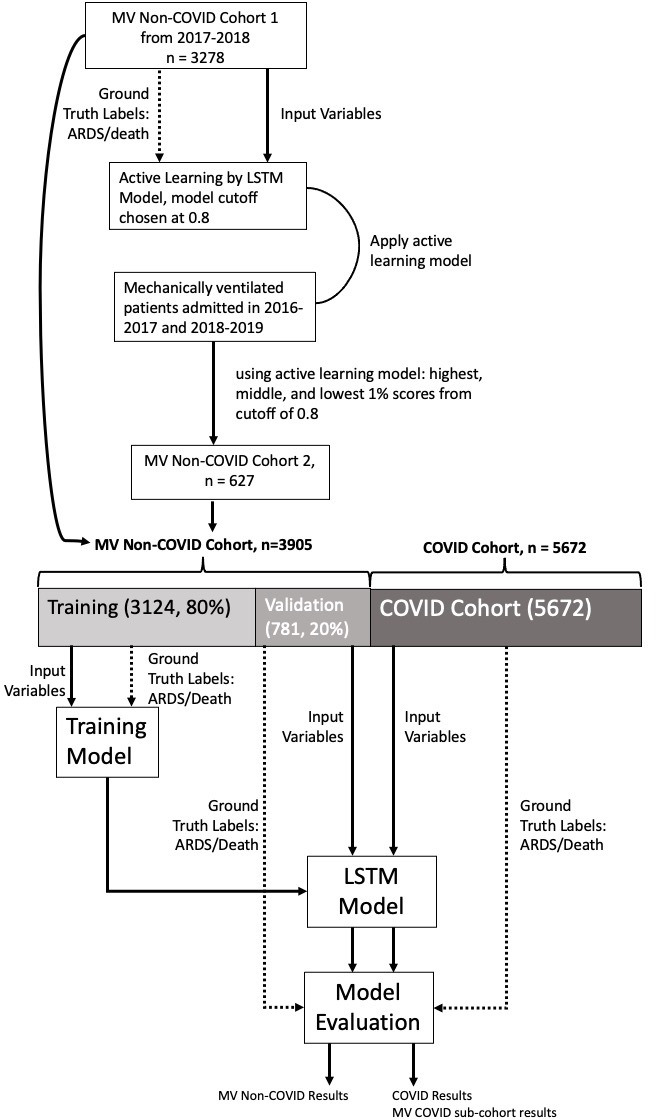

MV non-COVID-19 cohort 1 covered the period from 1 January 2017 to 31 August 2018 (figure 1). We included mechanically ventilated adults older than 18 years in the ICU. Each patient's chart was reviewed for ARDS.

Figure 1.

Cohort assembly and model training. ARDS, acute respiratory distress syndrome; LSTM, long short-term memory; MV, mechanically ventilated.

Ground truth labelling: ARDS gold-standard identification

We defined ARDS using the Berlin criteria: hypoxaemia (arterial oxygen tension (PaO2) to fractional inspired oxygen (FiO2) ratio (PFR) ≤300 with positive end-expiratory pressure ≥5 cmH2O), bilateral infiltrates on chest radiographs by independent review, and the presence of ARDS risk factors (sepsis, shock, pancreatitis, aspiration, pneumonia, drug overdose and trauma/burn), with respiratory failure not solely due to heart failure.12 We used the first date and time of PFR ≤300 with bilateral infiltrates confirmed within 24 hours as the time of ARDS presentation (ToP of ARDS).
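The timing rule above can be expressed as a short Python function. This is a minimal sketch, not the study's labelling code: the hourly record layout, the name `time_of_ards_presentation`, the use of positive end-expiratory pressure (PEEP) as the ventilation criterion and the choice to apply the 24-hour window on either side of the PFR measurement are all assumptions. The chart-review judgements (a risk factor is present and respiratory failure is not solely due to heart failure) are collapsed into one hypothetical boolean flag.

```python
from datetime import datetime, timedelta
from typing import List, Optional, Tuple

# Hypothetical hourly record: (timestamp, PaO2/FiO2 ratio, PEEP in cmH2O)
Measurement = Tuple[datetime, float, float]


def time_of_ards_presentation(
    measurements: List[Measurement],
    bilateral_cxr_times: List[datetime],
    has_risk_factor: bool,
    window: timedelta = timedelta(hours=24),
) -> Optional[datetime]:
    """Earliest time meeting a simplified Berlin-style rule, or None.

    Assumptions: the window is applied on both sides of the PFR measurement,
    and the chart-review criteria are collapsed into `has_risk_factor`.
    """
    if not has_risk_factor:
        return None
    for t, pfr, peep in sorted(measurements):
        # Hypoxaemia criterion: PFR <= 300 while on PEEP >= 5 cmH2O
        if pfr <= 300 and peep >= 5:
            # Bilateral infiltrates must be confirmed on a radiograph within the window
            if any(abs(cxr - t) <= window for cxr in bilateral_cxr_times):
                return t
    return None


t0 = datetime(2018, 1, 1, 8)
hourly = [
    (t0, 320.0, 5.0),
    (t0 + timedelta(hours=2), 250.0, 8.0),
    (t0 + timedelta(hours=5), 180.0, 10.0),
]
print(time_of_ards_presentation(hourly, [t0 + timedelta(hours=20)], True))
```

In the usage example, the first record fails the PFR threshold, so the function returns the second record's time, which lies 18 hours before a radiograph read as bilateral infiltrates.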

Active learning

We used the ‘active learning’ technique to add adult MV patients from July 2016 to December 2016 and from September 2018 to December 2019 (AL cohort).13 A preliminary recurrent neural network based on the LSTM architecture was trained on MV non-COVID-19 cohort 1 and then applied to the AL cohort. We used pool-based sampling with uncertainty sampling to select records from the AL cohort for clinician review and labelling.13 Uncertainty sampling selects patients whose scores lie very close to the cut-off, that is, those about whom the model is least confident. We chose a cut-off of 0.80 and selected all records with a score between 0.75 and 0.85. We created MV non-COVID-19 cohort 2 from the records with the highest 1% and lowest 1% of scores, together with these intermediate-score records. This allowed us to enrich MV non-COVID-19 cohort 2 with patients who had ARDS or died in the hospital.
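The selection step can be sketched as below, assuming the preliminary model's scores for the AL cohort are available as a mapping from a hypothetical record ID to score. The 0.75–0.85 uncertainty band and the 1% tails come from the text; how the size of the 1% tails was rounded is not reported, so rounding up is an assumption.

```python
import math
from typing import Dict, Set


def select_for_review(
    scores: Dict[str, float],
    low: float = 0.75,
    high: float = 0.85,
    tail_fraction: float = 0.01,
) -> Set[str]:
    """Pool-based selection of unlabelled records for clinician review.

    Combines uncertainty sampling (scores near the 0.80 cut-off) with the
    highest- and lowest-scoring tails of the pool.
    """
    ranked = sorted(scores, key=scores.get)             # record IDs, ascending score
    k = max(1, math.ceil(tail_fraction * len(ranked)))  # size of each 1% tail (rounded up)
    lowest = set(ranked[:k])
    highest = set(ranked[-k:])
    uncertain = {rid for rid, s in scores.items() if low <= s <= high}
    return lowest | highest | uncertain


pool = {f"r{i}": i / 200 for i in range(200)}  # hypothetical preliminary-model scores
selected = select_for_review(pool)
print(len(selected))
```

With this hypothetical pool of 200 evenly spaced scores, the function returns the two lowest-scoring records, the two highest-scoring records and the 21 records scoring between 0.75 and 0.85.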

COVID-19 validation cohort

We included in the COVID-19 cohort all hospitalised adult patients, with or without mechanical ventilation, who had a positive SARS-CoV-2 transcription-mediated amplification assay from 1 March 2020 to 17 April 2020.

Training and validation cohort splitting

MV non-COVID-19 cohorts 1 and 2 were combined to form the MV non-COVID-19 cohort. We randomly selected 80% of patients (MV non-COVID-19 training cohort) for training and internal validation, that is, to learn model parameters and select hyperparameters. The trained model was then validated separately on the remaining 20% of the MV non-COVID-19 cohort (MV non-COVID-19 validation cohort), the COVID-19 cohort and the MV COVID-19 subcohort (figure 1).
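A patient-level 80/20 split, in which all of a patient's hourly records stay on one side, might look like the sketch below. The seed, the shuffling method and rounding to the nearest integer are illustrative assumptions; applied to 3905 patients, this rounding gives 3124 training and 781 validation patients, the cohort sizes in table 1.

```python
import random
from typing import List, Sequence, Tuple


def split_patients(
    patient_ids: Sequence[str], train_fraction: float = 0.8, seed: int = 0
) -> Tuple[List[str], List[str]]:
    """Random patient-level split, so no patient appears in both sets."""
    ids = sorted(set(patient_ids))  # de-duplicate and fix the order before shuffling
    rng = random.Random(seed)       # seeded for reproducibility (illustrative choice)
    rng.shuffle(ids)
    n_train = round(train_fraction * len(ids))
    return ids[:n_train], ids[n_train:]


train_ids, validation_ids = split_patients([f"patient{i}" for i in range(3905)])
print(len(train_ids), len(validation_ids))
```

Splitting on patient identifiers rather than on hourly rows avoids leakage, because hourly snapshots from the same admission are strongly correlated.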

EHR data collection and processing

Clinical data were collected through automated abstraction of EHR data. Raw EHR data for each admission were abstracted, sampled and validated (online supplemental table 2).


Sampling

Raw longitudinal EHR data were sampled every hour. Because different variables were recorded at different times and with different frequencies, sampling was necessary to aggregate the longitudinal data into hourly snapshots. If a variable was recorded multiple times within 1 hour, we computed the minimum and maximum of all recorded measurements; if it was recorded exactly once, the minimum and maximum were the same. If it was not recorded at all within the 1-hour window, we considered it ‘missing’.
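The hourly aggregation can be sketched in plain Python, assuming raw readings arrive as hypothetical `(timestamp, variable, value)` tuples. Each reading is floored to the start of its hour, and each hour keeps the minimum and maximum of its readings; hours without readings are simply absent.

```python
from collections import defaultdict
from datetime import datetime
from typing import Dict, Iterable, List, Tuple

Reading = Tuple[datetime, str, float]  # (timestamp, variable name, value)


def hourly_min_max(
    readings: Iterable[Reading],
) -> Dict[Tuple[datetime, str], Tuple[float, float]]:
    """Aggregate irregular readings into hourly (minimum, maximum) snapshots.

    An (hour, variable) pair with no readings is absent from the result,
    i.e. it is 'missing'; a single reading gives minimum == maximum.
    """
    buckets: Dict[Tuple[datetime, str], List[float]] = defaultdict(list)
    for t, name, value in readings:
        hour = t.replace(minute=0, second=0, microsecond=0)  # floor to the start of the hour
        buckets[(hour, name)].append(value)
    return {key: (min(vals), max(vals)) for key, vals in buckets.items()}


readings = [
    (datetime(2020, 3, 1, 8, 10), "lactate", 2.1),
    (datetime(2020, 3, 1, 8, 50), "lactate", 3.4),
    (datetime(2020, 3, 1, 9, 5), "lactate", 2.8),
]
snapshots = hourly_min_max(readings)
```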

Data validation

Data validation was performed by range checking (online supplemental table 2). If the recorded measure was outside the valid range, we discarded it and treated it as a missing value.
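A range check of this kind reduces to a lookup and a comparison. The variable names and bounds below are hypothetical placeholders, not the ranges in online supplemental table 2, and passing through variables without a listed range unchanged is an assumption.

```python
from typing import Dict, Optional, Tuple

# Hypothetical plausibility ranges for illustration only; the study's ranges
# are listed in its online supplemental table 2.
VALID_RANGES: Dict[str, Tuple[float, float]] = {
    "heart_rate": (0.0, 300.0),
    "lactate": (0.0, 30.0),
}


def validate(
    name: str, value: float, ranges: Dict[str, Tuple[float, float]] = VALID_RANGES
) -> Optional[float]:
    """Return the value if it lies within its valid range; otherwise None (treated as missing).

    Variables without a listed range pass through unchanged (an assumption).
    """
    bounds = ranges.get(name)
    if bounds is None:
        return value
    low, high = bounds
    return value if low <= value <= high else None


print(validate("heart_rate", 80.0), validate("heart_rate", 900.0))
```

Returning `None` lets out-of-range values flow into the same missing-data handling as values that were never recorded.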

Missing data

Missing data were handled by ‘forward imputation’, in which the most recent observed value fills the missing value. If no earlier value was available for imputation, we used normal values: the lower bound of the normal range as the minimum and the upper bound as the maximum for those timestamps. Each timestamp is represented by a feature vector of size 132.
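Forward imputation with a normal-range fallback can be sketched for a single variable as follows, assuming each hour holds a `(minimum, maximum)` pair, or `None` when missing. The function name and data layout are illustrative.

```python
from typing import List, Optional, Tuple

Pair = Tuple[float, float]  # (hourly minimum, hourly maximum)


def impute(series: List[Optional[Pair]], normal_range: Pair) -> List[Pair]:
    """Forward-fill missing hourly (min, max) pairs.

    Before the first observation, fall back to the normal range, using its
    lower bound as the minimum and its upper bound as the maximum.
    """
    filled: List[Pair] = []
    last: Optional[Pair] = None
    for value in series:
        if value is not None:
            last = value  # most recent observation carries forward
        filled.append(last if last is not None else normal_range)
    return filled


print(impute([None, (2.0, 3.0), None, (1.5, 1.5)], normal_range=(0.5, 2.2)))
```

The first hour takes the normal range because nothing has been observed yet; the third hour reuses the second hour's pair.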

Model training

An LSTM network is a type of recurrent neural network that can capture the temporal information in sequential data.14 We used EHR data from the previous 12 hours as the network input to train a model that generates a predictive score for every patient at every hour. The network consisted of an LSTM layer with 10 units, followed by a dropout layer with a 50% probability of keeping each unit.15 The network ended with a linear layer and a sigmoid activation function that outputs a score between 0 and 1, interpreted as the probability of developing ARDS or in-hospital mortality.
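The architecture described above can be illustrated with a NumPy forward pass. This is a sketch under stated assumptions, not the authors' implementation: the weights are random placeholders rather than trained values, the input window is assumed to be 12 hourly snapshots of 132 features, the network's 10 LSTM filters are interpreted as 10 hidden units, and the class name `TinyLSTMScorer` is hypothetical. Dropout is omitted because it is active only during training.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


class TinyLSTMScorer:
    """Single-layer LSTM (10 units) followed by a linear layer and a sigmoid.

    Weights are random placeholders; a real model would be trained, with
    dropout (keep probability 0.5) applied to the LSTM output during training.
    """

    def __init__(self, n_features=132, n_units=10, seed=0):
        rng = np.random.default_rng(seed)
        scale = 1.0 / np.sqrt(n_features + n_units)
        # Stacked weights for the input, forget, candidate and output gates
        self.W = rng.normal(0.0, scale, size=(4 * n_units, n_features + n_units))
        self.b = np.zeros(4 * n_units)
        self.w_out = rng.normal(0.0, scale, size=n_units)
        self.b_out = 0.0
        self.n_units = n_units

    def score(self, window):
        """window: array of shape (hours, n_features), e.g. 12 hourly snapshots."""
        n = self.n_units
        h = np.zeros(n)  # hidden state
        c = np.zeros(n)  # cell state
        for x_t in window:
            z = self.W @ np.concatenate([x_t, h]) + self.b
            i = sigmoid(z[:n])             # input gate
            f = sigmoid(z[n:2 * n])        # forget gate
            g = np.tanh(z[2 * n:3 * n])    # candidate cell state
            o = sigmoid(z[3 * n:])         # output gate
            c = f * c + i * g
            h = o * np.tanh(c)
        # Dropout acts as the identity at inference time, so it is not applied here
        return float(sigmoid(self.w_out @ h + self.b_out))


model = TinyLSTMScorer()
window = np.random.default_rng(1).normal(size=(12, 132))
print(model.score(window))
```

Scoring a patient at every hour amounts to sliding this 12-hour window forward one hour at a time and calling `score` on each window.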

Model evaluation

We applied the model hourly to the MV non-COVID-19 validation cohort and the COVID-19 cohort; the score at each timestamp indicates the probability of ARDS development or death. For each cohort, we calculated the AUROC, as well as the sensitivity, specificity, positive predictive value (PPV), negative predictive value (NPV) and F1 score at different risk thresholds (cut-offs). We selected the cut-off with the highest F1 score and used it to generate the confusion matrix. The warning time is the first time the score exceeds this predefined cut-off; the model was run until the score exceeded the cut-off or the patient was discharged. We evaluated model timeliness in four outcome groups (ARDS and death, ARDS without death, death without ARDS, and neither ARDS nor death) by comparing the warning time with the actual ToP of ARDS or time of death.
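The cut-off selection and warning-time logic might be sketched as follows. Assumptions: a patient counts as flagged if any hourly score exceeds the cut-off before discharge, the number needed to evaluate (NNE) is taken as 1/PPV, and all function names and the toy data are hypothetical. Lead times are returned as alert hour minus event hour, so negative values mean the warning came first.

```python
from typing import Dict, List, Optional, Sequence, Tuple

Patient = Tuple[Sequence[float], bool]  # (hourly scores until discharge, had ARDS or died)


def first_alert_hour(scores: Sequence[float], cutoff: float) -> Optional[int]:
    """Hour index at which the score first exceeds the cut-off, or None if it never does."""
    for hour, s in enumerate(scores):
        if s > cutoff:
            return hour
    return None


def metrics_at_cutoff(patients: List[Patient], cutoff: float) -> Dict[str, float]:
    """Patient-level diagnostics; a patient is flagged if any hourly score exceeds the cut-off."""
    tp = fp = tn = fn = 0
    for scores, outcome in patients:
        flagged = first_alert_hour(scores, cutoff) is not None
        if flagged and outcome:
            tp += 1
        elif flagged:
            fp += 1
        elif outcome:
            fn += 1
        else:
            tn += 1
    sens = tp / (tp + fn) if tp + fn else 0.0
    spec = tn / (tn + fp) if tn + fp else 0.0
    ppv = tp / (tp + fp) if tp + fp else 0.0
    npv = tn / (tn + fn) if tn + fn else 0.0
    f1 = 2 * ppv * sens / (ppv + sens) if ppv + sens else 0.0
    nne = 1 / ppv if ppv else float("inf")  # number needed to evaluate
    return {"sensitivity": sens, "specificity": spec, "ppv": ppv, "npv": npv, "f1": f1, "nne": nne}


def best_cutoff(patients: List[Patient], candidates: Sequence[float]) -> float:
    """Candidate cut-off with the highest F1 score (the first one wins on ties)."""
    return max(candidates, key=lambda c: metrics_at_cutoff(patients, c)["f1"])


def lead_time_hours(alert_hour: int, event_hour: int) -> int:
    """Warning time relative to the event; negative means the alert came first."""
    return alert_hour - event_hour


patients = [
    ([0.1, 0.95, 0.2], True), ([0.5, 0.92], True), ([0.1, 0.3], True),
    ([0.2, 0.91], False), ([0.1, 0.2], False), ([0.3, 0.4], False),
]
print(best_cutoff(patients, [0.25, 0.9, 0.95]), metrics_at_cutoff(patients, 0.9))
```

Selecting on F1 balances sensitivity against PPV, which is why the NNE (the inverse of PPV) is reported alongside it.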

Feature importance

Feature importance identifies the subset of features most relevant to the model's predictions. We used local interpretable model-agnostic explanations (LIME)16 to determine the importance of each variable to the model. LIME feature importance values were computed for 200 randomly sampled patients in each cohort, and their absolute values were then averaged across all samples.
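For illustration, a simplified LIME-style local surrogate can be written directly in NumPy. This is not the `lime` package or its API: it perturbs one instance with Gaussian noise (assuming standardised features), weights the perturbed neighbours with an exponential distance kernel, fits a weighted ridge regression and returns the per-feature slopes. Averaging the absolute slopes over sampled patients gives a global ranking similar in spirit to the procedure described above. The function names and the toy linear model are hypothetical.

```python
import numpy as np


def local_surrogate_weights(predict, x, n_samples=500, kernel_width=None, seed=0, ridge=1e-3):
    """Per-feature slopes of a weighted linear model fitted around instance x."""
    rng = np.random.default_rng(seed)
    d = x.shape[0]
    if kernel_width is None:
        kernel_width = 0.75 * np.sqrt(d)  # heuristic width (assumption)
    noise = rng.normal(size=(n_samples, d))  # Gaussian perturbations around x
    y = predict(x + noise)                   # model scores for the neighbours
    dist = np.linalg.norm(noise, axis=1)
    w = np.exp(-(dist ** 2) / kernel_width ** 2)      # closer neighbours weigh more
    A = np.hstack([np.ones((n_samples, 1)), noise])   # intercept + offsets from x
    AW = A * w[:, None]
    # Weighted ridge regression: (A^T W A + ridge I) c = A^T W y
    coef = np.linalg.solve(A.T @ AW + ridge * np.eye(d + 1), AW.T @ y)
    return coef[1:]  # drop the intercept


def mean_abs_importance(predict, X, **kwargs):
    """Average absolute local weights over the sampled instances (rows of X)."""
    return np.mean([np.abs(local_surrogate_weights(predict, x, **kwargs)) for x in X], axis=0)


beta = np.array([3.0, 0.0, -1.0])


def linear_model(Z):
    # Toy "model" with known slopes, so the recovered importances can be checked
    return Z @ beta


importance = mean_abs_importance(linear_model, np.array([[0.0, 0.0, 0.0], [1.0, 2.0, 3.0]]))
print(importance)
```

As the limitations discussion notes, averaging absolute values shows how much the model relies on each feature but discards the direction of its effect.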

Results

Cohort description

MV non-COVID-19 cohort 1 included 3278 patients (online supplemental table 1 and figure 1). MV non-COVID-19 cohort 2, derived from active learning, consisted of 627 patients (online supplemental table 1). We combined MV non-COVID-19 cohorts 1 and 2 to create the MV non-COVID-19 cohort (n=3905, table 1). The COVID-19 cohort included 5672 patients (table 1). Online supplemental table 3 shows the descriptive statistics of all variables in the MV non-COVID-19 and COVID-19 cohorts.

Table 1.

Cohorts characteristics

| Variables | MV non-COVID-19 cohort | MV non-COVID-19 (training) cohort | MV non-COVID-19 (validation) cohort | COVID-19 cohort | MV COVID-19 subcohort |
|---|---|---|---|---|---|
| n | 3905 | 3124 | 781 | 5672 | 803 |
| Age, years, mean±SD | 65.0±14.7 | 65.0±14.8 | 65.3±14.4 | 60.8±17.2 | 62.1±13.9 |
| Gender | | | | | |
| Male, n (%) | 1741 (44.6) | 1437 (46) | 328 (42) | 2665 (47) | 319 (40) |
| Female, n (%) | 2164 (55.4) | 1686 (54) | 452 (58) | 3006 (53) | 484 (60) |
| Race or ethnicity | | | | | |
| White, n (%) | 1015 (26) | 749 (24) | 249 (32) | 623 (11) | 177 (22) |
| Black, n (%) | 1718 (44) | 1405 (45) | 320 (41) | 2495 (44) | 345 (43) |
| Other, n (%) | 1171 (30) | 968 (31) | 210 (27) | 2552 (45) | 281 (35) |
| ARDS determination | | | | | |
| PaO2/FiO2 ratio ≤300, n (%) | 3211 (82.2) | 2579 (82.6) | 632 (80.9) | 617 (10.9) | 617 (77) |
| CXR interpretation | | | | | |
| Yes (consistent with ARDS), n (%) | 1333 (34.1) | 35.4 (35.4) | 260 (33.3) | 565 (10) | 565 (82) |
| Indeterminate, n (%) | 313 (8.0) | 7.1 (7.1) | 60 (7.7) | 18 (0.3) | 18 (2.2) |
| No (not consistent with ARDS), n (%) | 2259 (57.8) | 57.6 (57.6) | 461 (59) | 34 (0.6) | 34 (4.2) |
| Risk factors | | | | | |
| Aspiration, n (%) | 407 (10.4) | 10.3 (10.3) | 86 (11) | | |
| Shock, n (%) | 1520 (38.9) | 39.2 (39.2) | 299 (38.3) | | |
| Pneumonia, n (%) | 1530 (39.2) | 39.8 (39.8) | 288 (36.9) | 5672 (100) | 803 (100) |
| Sepsis, n (%) | 1885 (48.3) | 48.8 (48.8) | 362 (46.4) | | |
| Pancreatitis, n (%) | 42 (1.1) | 1.1 (1.1) | 9 (1.2) | | |
| Burn, n (%) | 3 (0.1) | 3 (0.1) | 0 (0) | | |
| Overdose, n (%) | 98 (2.5) | 2.5 (2.5) | 21 (2.7) | | |
| Transfusion, n (%) | 1191 (30.5) | 30.7 (30.7) | 232 (29.7) | | |
| Congestive heart failure, n (%) | 914 (23.4) | 23.6 (23.6) | 178 (22.8) | | |
| Presence of at least one risk factor, n (%) | 2739 (70.1) | 70.6 (70.6) | 362 (46.4) | 5672 (100) | 803 (100) |
| Clinical outcomes | | | | | |
| Mechanically ventilated, n (%) | 3905 (100) | 3124 (100) | 781 (100) | 803 (14.2) | 803 (100) |
| ARDS, n (%) | 1646 (42.2) | 1326 (42.4) | 320 (41.0) | 583 (10.3) | 583 (72.6) |
| In-hospital mortality, n (%) | 1033 (26.5) | 848 (27.1) | 185 (23.7) | 907 (16.0) | 418 (52.1) |
| ARDS or in-hospital mortality, n (%) | 2044 (52.3) | 1655 (53.0) | 389 (49.8) | 1235 (21.9) | 746 (92.9) |

ARDS, acute respiratory distress syndrome; CXR, chest X-ray; FiO2, fractional inspired oxygen; MV, mechanically ventilated; PaO2, arterial oxygen tension.

Model diagnostics

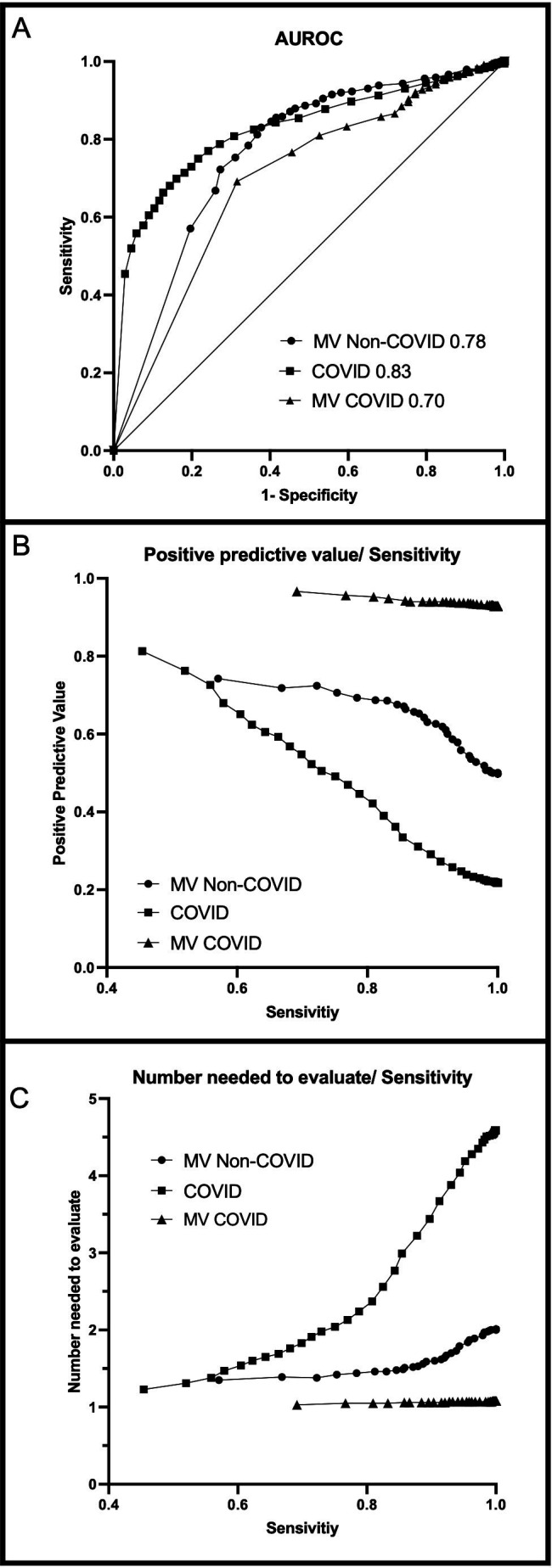

MV non-COVID-19 validation cohort

Based on the highest F1 score, we chose a model score cut-off of 0.90. The model diagnostics are presented in table 2 and figure 2. Times are reported relative to the event, with negative values indicating a warning before the event. The model warned of patient risk at a median of 10 hours (IQR −75 to 4) before ARDS and 225 hours, or about 9.4 days (IQR −461 to −101 hours), before death in the hospital (table 3). In ARDS survivors, most patients had their ARDS risk identified before intubation and before ARDS diagnosis (table 3). For ARDS non-survivors, the model warned 1 hour (IQR −38 to 9) before intubation, 20 hours (IQR −115 to 0.3) before ARDS and 314 hours (IQR −589 to −128) before death (table 3).

Table 2.

Model diagnostics

| TREAT-ECARDS model diagnostics | MV non-COVID-19 validation cohort | COVID-19 cohort | MV COVID-19 subcohort |
|---|---|---|---|
| Sensitivity | 0.86 | 0.70 | 0.92 |
| Specificity | 0.57 | 0.84 | 0.23 |
| Positive predictive value | 0.66 | 0.55 | 0.94 |
| Negative predictive value | 0.80 | 0.91 | 0.17 |
| AUROC | 0.78 | 0.83 | 0.70 |
| F1 score | 0.75 | 0.61 | 0.93 |
| Number needed to evaluate | 1.52 | 1.82 | 1.06 |

AUROC, area under the receiver operating characteristic curve; MV, mechanically ventilated.
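The derived rows of table 2 can be cross-checked with a little arithmetic, assuming the standard definitions NNE = 1/PPV and F1 = harmonic mean of sensitivity and PPV. Recomputed from the rounded published values, both agree with the reported figures to within about 0.01, which is consistent with rounding of the inputs.

```python
# Values transcribed from table 2 (rounded as published)
table2 = {
    "MV non-COVID-19 validation": {"sensitivity": 0.86, "ppv": 0.66, "f1": 0.75, "nne": 1.52},
    "COVID-19": {"sensitivity": 0.70, "ppv": 0.55, "f1": 0.61, "nne": 1.82},
    "MV COVID-19": {"sensitivity": 0.92, "ppv": 0.94, "f1": 0.93, "nne": 1.06},
}


def nne_from_ppv(ppv: float) -> float:
    """Number needed to evaluate, assuming the definition NNE = 1 / PPV."""
    return 1.0 / ppv


def f1_from(sens: float, ppv: float) -> float:
    """F1 score: harmonic mean of sensitivity (recall) and PPV (precision)."""
    return 2 * sens * ppv / (sens + ppv)


for cohort, row in table2.items():
    print(
        f"{cohort}: NNE={nne_from_ppv(row['ppv']):.2f} (reported {row['nne']}), "
        f"F1={f1_from(row['sensitivity'], row['ppv']):.3f} (reported {row['f1']})"
    )
```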

Figure 2.

Model diagnostics: AUROC, PPV versus sensitivity and NNE versus sensitivity. AUROC, area under the receiver operating characteristic curve; MV, mechanically ventilated; NNE, number needed to evaluate; PPV, positive predictive value.

Table 3.

Timeliness of model

| Cohort (n) | Correctly identified, n (%) | Time from intubation, median (IQR), hours | Before intubation, n (%) | After intubation, n (%) | Time from ARDS label, median (IQR), hours | Before ARDS, n (%) | After ARDS, n (%) | Time from death, median (IQR), hours |
|---|---|---|---|---|---|---|---|---|
| MV non-COVID-19 validation cohort | | | | | | | | |
| ARDS (204) | 166 (81.4) | 0 (−12.8 to 26.0) | 87 (52.4) | 79 (47.6) | 0 (−43.8 to 12.0) | 115 (69.3) | 51 (30.7) | |
| Death (69) | 60 (87) | | | | | | | −225.5 (−461.3 to −101.3) |
| ARDS and death (116) | 108 (93.1) | −1 (−38.8 to 9.3) | 68 (63.0) | 40 (37.0) | −20 (−115.5 to 0.3) | 81 (75.0) | 27 (25.0) | −314 (−588.5 to −127.8) |
| ARDS or death (389) | 274 (70.4) | 1 (−17.8 to 15.0) | 155 (56.6) | 119 (43.4) | −10.0 (−75.5 to 4.0) | 196 (71.5) | 78 (28.5) | −225.5 (−461.3 to −101.3) |
| No ARDS or death (392) | 223 (56.9) | | | | | | | |
| COVID-19 cohort | | | | | | | | |
| ARDS (328) | 318 (97) | 3 (−8.8 to 11.0) | 136 (42.8) | 182 (57.2) | 0 (−16.0 to 9.0) | 141 (44.3) | 128 (40.3) | |
| Death (652) | 308 (47.2) | | | | | | | −58 (−112 to −20) |
| ARDS and death (255) | 237 (92.9) | 4 (−1 to 18) | 86 (36.3) | 156 (65.8) | 0 (−12 to 10) | 125 (52.7) | 112 (47.3) | −112 (−211.3 to −52) |
| ARDS or death (1235) | 555 (44.9) | 3 (−3.5 to 13.0) | 222 (40.0) | 333 (60.0) | 0 (−14.0 to 10.0) | 266 (47.9) | 240 (43.2) | −58 (−112 to −20) |
| No ARDS or death (4437) | 3724 (83.9) | | | | | | | |
| MV COVID-19 subcohort | | | | | | | | |
| ARDS (328) | 318 (97) | 3 (−8.8 to 11.0) | 136 (42.8) | 182 (57.2) | 0 (−16.0 to 9.0) | 141 (44.3) | 128 (40.3) | |
| Death (163) | 128 (78.5) | | | | | | | −37 (−87 to −11) |
| ARDS and death (255) | 237 (92.9) | 4 (−1 to 18) | 86 (36.3) | 156 (65.8) | 0 (−12 to 10) | 125 (52.7) | 112 (47.3) | −112 (−211.3 to −52) |
| ARDS or death (746) | 555 (74.4) | 3 (−3.5 to 13.0) | 222 (40.0) | 333 (60.0) | 0 (−14.0 to 10.0) | 266 (47.9) | 240 (43.2) | −37 (−87 to −11) |
| No ARDS or death (57) | 13 (22.8) | | | | | | | |

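ARDS, acute respiratory distress syndrome; MV, mechanically ventilated. Times are relative to the event; negative values indicate that the model's warning preceded the event.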

COVID-19 cohort and MV COVID-19 subcohort

Using the same cut-off of 0.9, we applied the model to the COVID-19 cohort and the MV COVID-19 subcohort. The model diagnostics are presented in table 2 and figure 2. In the COVID-19 cohort, the PPV was lower and more patients needed to be evaluated than in the MV non-COVID-19 validation cohort. In the MV COVID-19 subcohort, where the prevalence of ARDS and in-hospital mortality was high, the PPV was much higher and the number needed to evaluate much lower than in the MV non-COVID-19 validation cohort.

In the COVID-19 cohort, the model warned that the patient was likely to have ARDS or in-hospital mortality at a median of 3 hours after intubation and at the ToP of ARDS (table 3). Among non-survivors, the model warned a median of 2.4 days (IQR 0.83–4.7 days) before in-hospital death in COVID-19 patients and 1.54 days (IQR 0.46–3.6 days) before in-hospital death in MV COVID-19 patients (table 3).

Feature importance

For both the MV non-COVID-19 and COVID-19 cohorts, we randomly selected 200 encounters from each cohort and performed LIME (online supplemental figure 1). The top contributors were similar in the MV non-COVID-19 and COVID-19 cohorts. Lactate level was the most important variable for discriminating the clinical outcome. The model consistently used lactate, age, cryoprecipitate transfusion, dopamine, bicarbonate level and epinephrine as important input variables (online supplemental figure 1).

Discussion

From a cohort of pre-COVID-19 pandemic patients on mechanical ventilation, we developed and validated an LSTM model to identify patients at risk of ARDS or in-hospital mortality. This model was successfully integrated into the EHR and identified patients at risk of ARDS or in-hospital mortality among all adults hospitalised with or without COVID-19 infection, regardless of mechanical ventilation status. The model was also able to warn well before ARDS or death in both the MV non-COVID-19 and COVID-19 cohorts. This timeliness allows clinicians to modify management and implement evidence-based practices promptly.

To our knowledge, this is the first use of an LSTM network to identify the risk of ARDS and in-hospital mortality. The LSTM is a recurrent neural network whose feedback connections capture temporal aspects such as sequences and trends. This approach suits this study because past events and the progression of a patient's status are often valuable in determining the probability of ARDS or death. It mirrors the management of critically ill patients, in which physiological observations at each time point, and their progression or regression over time, inform subsequent decisions. The approach is therefore well suited to monitoring dynamically changing patients and identifying those progressing to ARDS or in-hospital mortality. LSTM models have been used to predict heart failure, transfusion needs in the ICU and mortality in the neonatal ICU, all with better predictive utility than traditional logistic regression models.17–19 We chose the composite of ARDS or in-hospital mortality as our patient-centred outcome of interest, instead of ARDS or in-hospital mortality alone as in previous ARDS prediction studies.6 7 20 Identifying the risk of ARDS or in-hospital mortality has real clinical implications for patient management and mitigates the ambiguity that can exist in the clinical diagnosis of ARDS owing to shifting diagnostic criteria.7 8 20–22

This cohort is one of the largest single-centre ARDS gold-standard cohorts, validated by manual chart review and active learning. We did not rely on ICD-10 diagnosis codes or radiology reports to identify ARDS. Instead, we followed the Berlin criteria, using PFR, independent review of chest X-rays for bilateral infiltrates, and ARDS risk factors documented in the patients' charts. Our model's AUROC (0.70–0.83 across cohorts) was similar to those of previously reported models using other machine learning methods, which ranged from 0.71 to 0.90.7 9–11 21 Like Zeiberg et al, we did not use chest X-ray interpretation as an input variable.7 Other large-scale ARDS identification studies used natural language processing of radiology reports and diagnostic codes; in clinical settings, this approach would delay ARDS recognition and rely heavily on clinician decisions.9 11 Using chest radiographs to diagnose ARDS has its limitations, as studies show high interobserver variability despite training.12 23 In addition, radiology report turnaround times can range from 15 min to 26 hours, depending on the study location, availability of staff and hospital resources.24 25 This reliance on chest radiograph interpretation may delay ARDS diagnosis.

Despite the different clinical characteristics of the study cohorts (MV non-COVID-19 patients versus largely non-MV COVID-19 patients), the features important for risk identification were broadly consistent between cohorts: lactate, age, cryoprecipitate transfusion, dopamine, bicarbonate level and epinephrine. LIME can directly associate model features with increased or decreased risk of ARDS or death for an individual patient.26 27 We randomly sampled 200 patients in each cohort and averaged the absolute LIME values to understand which features were generally used. This does not provide a clinical explanation of why features relate to higher or lower scores; instead, it sheds light on the features the model needs as input to predict a score accurately, whether they add to or subtract from the risk. Norepinephrine was the most commonly used vasopressor in both cohorts; intriguingly, it did not contribute substantially to the model. Instead, the model relied on vasopressors such as dopamine and epinephrine to discriminate the outcome of ARDS and/or in-hospital mortality. Oxygen support devices were also not deemed important on average; we postulate that this is because our gold-standard labelling required mechanical ventilation for ARDS identification, making oxygen support devices less important for discrimination.

In clinical practice, ARDS is underdiagnosed, which leads to greater exposure to harmful management, such as high tidal volume ventilation, and to delayed implementation of helpful evidence-based practices.2 3 28–31 We used continuous data at 1-hour intervals starting at hospital admission to identify the early risk of an adverse outcome. Indeed, in the non-COVID-19 cohort, we identified ARDS risk hours before intubation and at the ToP of ARDS. Most patients (56.5%) in the MV non-COVID-19 cohort were identified before ARDS diagnosis, as were 43% of patients in the COVID+ cohort. Implemented and delivered as a clinical decision support system, this early recognition would allow clinicians to initiate treatment, such as low tidal volume ventilation (LTVV), as early as possible, when it may have a greater positive impact on outcomes.3

Furthermore, the model identified the risk of in-hospital mortality 9 days in advance in the non-COVID-19 cohort and 2 days in advance in the COVID-19 cohort. This has significant implications for triaging patients during surges in demand. In the MV non-COVID-19 cohort, ventilator and ICU resource allocation were not a concern; there, early identification of the risk of death would alert the clinician to implement aggressive management and allow the treating physician to consider early palliative care interventions and conversations. During a high-volume surge of respiratory illness, such as the onset of the COVID-19 pandemic, when the incidence of ARDS and death is high, identifying adverse outcomes days in advance could help clinicians make necessary triage decisions for resource allocation.32–34

Our study has some limitations. First, our cohorts were drawn from a single centre in the Bronx, and the patients' characteristics may not be generalisable to other centres and populations. However, our medical centre consists of three hospitals, ranging from community and academic hospitals to a tertiary transplant centre, thus spanning a wide spectrum of disease severity. In addition, we validated the algorithm in the COVID-19 cohort regardless of the type of respiratory support, demonstrating consistent model performance across different cohorts. Second, although we determined feature importance using LIME on 200 samples from each cohort, we were unable to discern the direction of association with the risk of ARDS or death; that is, we cannot tell whether individual variables increase or decrease that risk, despite their importance to the overall model. However, the consistency of the features used to determine risk across the validation cohorts is reassuring. Ultimately, the variables included in the model are known to be clinically associated with ARDS or death; therefore, the direction of their influence on risk assessment is less germane. The strength of our study lies in the predictive nature of this algorithm and the timeliness of its predictions. Using longitudinal data from admission allowed the LSTM model to learn from the progression of the patient's clinical status over time. The model also showed similar diagnostic performance in patients with different clinical characteristics.

In conclusion, our LSTM model identified the risk of ARDS and in-hospital mortality in patients with or without COVID-19, regardless of mechanical ventilation status. The model identified at-risk patients early, which may allow management changes to be implemented early.

Acknowledgments

The authors thank Mr. Daniel Ceusters for his administrative assistance for this project.

Footnotes

Contributors: J-TC: data collection and monitoring, data analysis and manuscript preparation. RM: data analysis and manuscript preparation. BBA: data collection and data analysis. MNG: idea and project generation, data analysis, manuscript review. PM: idea and project generation, data analysis, manuscript review. PM is the guarantor of the overall content.

Funding: This study was supported by the Agency for Healthcare Research and Quality (AHRQ), grant number R18HS026188, and the National Institutes of Health/National Center for Advancing Translational Sciences (NCATS) Einstein-Montefiore CTSA, grant number 1UM1TR004400.

Competing interests: None declared.

Provenance and peer review: Not commissioned; externally peer reviewed.

Supplemental material: This content has been supplied by the author(s). It has not been vetted by BMJ Publishing Group Limited (BMJ) and may not have been peer-reviewed. Any opinions or recommendations discussed are solely those of the author(s) and are not endorsed by BMJ. BMJ disclaims all liability and responsibility arising from any reliance placed on the content. Where the content includes any translated material, BMJ does not warrant the accuracy and reliability of the translations (including but not limited to local regulations, clinical guidelines, terminology, drug names and drug dosages), and is not responsible for any error and/or omissions arising from translation and adaptation or otherwise.

Data availability statement

Data sharing not applicable as no datasets were generated and/or analysed for this study. All data relevant to the study are included in the article or uploaded as supplementary information.

Ethics statements

Patient consent for publication

Not applicable.

Ethics approval

This study was approved by the Albert Einstein College of Medicine Institutional Review Board.

References

- 1.Cartin-Ceba R, Kojicic M, Li G, et al. Epidemiology of critical care syndromes, organ failures, and life-support interventions in a suburban US community. Chest 2011;140:1447–55. 10.1378/chest.11-1197 [DOI] [PubMed] [Google Scholar]

- 2.Bellani G, Laffey JG, Pham T, et al. Epidemiology, patterns of care, and mortality for patients with acute respiratory distress syndrome in intensive care units in 50 countries. JAMA 2016;315:788–800. 10.1001/jama.2016.0291 [DOI] [PubMed] [Google Scholar]

- 3.Needham DM, Yang T, Dinglas VD, et al. Timing of low tidal volume ventilation and intensive care unit mortality in acute respiratory distress syndrome. A prospective cohort study. Am J Respir Crit Care Med 2015;191:177–85. 10.1164/rccm.201409-1598OC [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Berlin DA, Gulick RM, Martinez FJ. Severe COVID-19. N Engl J Med 2020;383:2451–60. 10.1056/NEJMcp2009575 [DOI] [PubMed] [Google Scholar]

- 5.Richardson S, Hirsch JS, Narasimhan M, et al. Presenting characteristics, Comorbidities, and outcomes among 5700 patients hospitalized with COVID-19 in the New York City area. JAMA 2020;323:2052–9. 10.1001/jama.2020.6775 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Wayne MT, Valley TS, Cooke CR, et al. “Electronic “Sniffer” systems to identify the acute respiratory distress syndrome”. Ann Am Thorac Soc 2019;16:488–95. 10.1513/AnnalsATS.201810-715OC [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Zeiberg D, Prahlad T, Nallamothu BK, et al. Machine learning for patient risk stratification for acute respiratory distress syndrome. PLoS One 2019;14:e0214465. 10.1371/journal.pone.0214465 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Wong A-KI, Cheung PC, Kamaleswaran R, et al. Machine learning methods to predict acute respiratory failure and acute respiratory distress syndrome. Front Big Data 2020;3:579774. 10.3389/fdata.2020.579774 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Le S, Pellegrini E, Green-Saxena A, et al. Supervised machine learning for the early prediction of acute respiratory distress syndrome (ARDS). J Crit Care 2020;60:96–102. 10.1016/j.jcrc.2020.07.019 [DOI] [PubMed] [Google Scholar]

- 10.Taoum A, Mourad-Chehade F, Amoud H. Early-warning of ARDS using novelty detection and data fusion. Comput Biol Med 2018;102:191–9. 10.1016/j.compbiomed.2018.09.030 [DOI] [PubMed] [Google Scholar]

- 11.Apostolova E, Uppal A, Galarraga JE, et al. Towards reliable ARDS clinical decision support: ARDS patient analytics with free-text and structured EMR data. AMIA Annu Symp Proc 2019;2019:228–37. [PMC free article] [PubMed] [Google Scholar]

- 12.Sjoding MW, Hofer TP, Co I, et al. Interobserver reliability of the Berlin ARDS definition and strategies to improve the reliability of ARDS diagnosis. Chest 2018;153:361–7. 10.1016/j.chest.2017.11.037 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13. Settles B. Active learning literature survey. Computer Sciences Technical Report 1648. University of Wisconsin–Madison; 2009.

- 14. Hochreiter S, Schmidhuber J. Long short-term memory. Neural Comput 1997;9:1735–80. 10.1162/neco.1997.9.8.1735

- 15. Srivastava N, Hinton G, Krizhevsky A, et al. Dropout: A simple way to prevent neural networks from overfitting. J Mach Learn Res 2014;15:1929–58.

- 16. Ribeiro MT, Singh S, Guestrin C. "Why should I trust you?": explaining the predictions of any classifier. Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining; San Francisco, California, USA: Association for Computing Machinery, 2016:1135–44. 10.1145/2939672.2939778

- 17. Shung D, Huang J, Castro E, et al. Neural network predicts need for red blood cell transfusion for patients with acute gastrointestinal bleeding admitted to the intensive care unit. Sci Rep 2021;11:8827. 10.1038/s41598-021-88226-3

- 18. Hagan R, Gillan CJ, Spence I, et al. Comparing regression and neural network techniques for personalized predictive analytics to promote lung protective ventilation in intensive care units. Comput Biol Med 2020;126:104030. 10.1016/j.compbiomed.2020.104030

- 19. Maheshwari S, Agarwal A, Shukla A, et al. A comprehensive evaluation for the prediction of mortality in intensive care units with LSTM networks: patients with cardiovascular disease. Biomed Tech (Berl) 2020;65:435–46. 10.1515/bmt-2018-0206

- 20. Ding X-F, Li J-B, Liang H-Y, et al. Predictive model for acute respiratory distress syndrome events in ICU patients in China using machine learning algorithms: a secondary analysis of a cohort study. J Transl Med 2019;17:326. 10.1186/s12967-019-2075-0

- 21. Fei Y, Gao K, Li WQ, et al. Prediction and evaluation of the severity of acute respiratory distress syndrome following severe acute pancreatitis using an artificial neural network algorithm model. HPB (Oxford) 2019;21:891–7. 10.1016/j.hpb.2018.11.009

- 22. Sinha P, Delucchi KL, McAuley DF, et al. Development and validation of parsimonious algorithms to classify acute respiratory distress syndrome phenotypes: a secondary analysis of randomised controlled trials. Lancet Respir Med 2020;8:247–57. 10.1016/S2213-2600(19)30369-8

- 23. Goddard SL, Rubenfeld GD, Manoharan V, et al. The randomized educational acute respiratory distress syndrome diagnosis study: A trial to improve the radiographic diagnosis of acute respiratory distress syndrome. Crit Care Med 2018;46:743–8. 10.1097/CCM.0000000000003000

- 24. Towbin AJ, Iyer SB, Brown J, et al. Practice policy and quality initiatives: decreasing variability in turnaround time for radiographic studies from the emergency department. Radiographics 2013;33:361–71. 10.1148/rg.332125738

- 25. Chan KT, Carroll T, Linnau KF, et al. Expectations among academic clinicians of inpatient imaging turnaround time: does it correlate with satisfaction? Acad Radiol 2015;22:1449–56. 10.1016/j.acra.2015.06.019

- 26. Ribeiro MT, Singh S, Guestrin C. "Why should I trust you?": explaining the predictions of any classifier. 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (KDD ’16); New York, NY, USA: Association for Computing Machinery, 2016. 10.1145/2939672.2939778

- 27. Elshawi R, Al-Mallah MH, Sakr S. On the interpretability of machine learning-based model for predicting hypertension. BMC Med Inform Decis Mak 2019;19:146. 10.1186/s12911-019-0874-0

- 28. Weiss CH, Baker DW, Weiner S, et al. Low tidal volume ventilation use in acute respiratory distress syndrome. Crit Care Med 2016;44:1515–22. 10.1097/CCM.0000000000001710

- 29. Qadir N, Bartz RR, Cooter ML, et al. Variation in early management practices in moderate-to-severe ARDS in the United States: the severe ARDS: generating evidence study. Chest 2021;160:1304–15. 10.1016/j.chest.2021.05.047

- 30. Duggal A, Rezoagli E, Pham T, et al. Patterns of use of adjunctive therapies in patients with early moderate to severe ARDS: insights from the LUNG SAFE study. Chest 2020;157:1497–505. 10.1016/j.chest.2020.01.041

- 31. Brower RG, Matthay MA, Morris A, et al. Ventilation with lower tidal volumes as compared with traditional tidal volumes for acute lung injury and the acute respiratory distress syndrome. N Engl J Med 2000;342:1301–8. 10.1056/NEJM200005043421801

- 32. Laventhal N, Basak R, Dell ML, et al. The ethics of creating a resource allocation strategy during the COVID-19 pandemic. Pediatrics 2020;146:e20201243. 10.1542/peds.2020-1243

- 33. Emanuel EJ, Persad G, Upshur R, et al. Fair allocation of scarce medical resources in the time of COVID-19. N Engl J Med 2020;382:2049–55. 10.1056/NEJMsb2005114

- 34. Aliberti MJR, Szlejf C, Avelino-Silva VI, et al. COVID-19 is not over and age is not enough: using frailty for prognostication in hospitalized patients. J Am Geriatr Soc 2021;69:1116–27. 10.1111/jgs.17146. Available: https://onlinelibrary.wiley.com/toc/15325415/69/5

Supplementary Materials

bmjhci-2023-100782supp001.pdf (PDF, 156.8 KB)

Data Availability Statement

Data sharing is not applicable as no datasets were generated and/or analysed for this study. All data relevant to the study are included in the article or uploaded as supplementary information.