Abstract

In this paper we introduce a discrete-time, continuous state-space, stationary Markov process , where has a two-parameter Weibull distribution, the 's are dependent, and there is a positive probability that . The motivation came from gold price data in which there are several instances for which . Hence, the existing methods cannot be used to analyze these data. We derive different properties of the proposed Weibull process. The joint cumulative distribution function of and has a very convenient copula structure, so different dependence properties and dependence measures can be obtained. Since the maximum likelihood estimators cannot be obtained in explicit forms, we propose a simple profile likelihood method to compute them. We use this model to analyze two synthetic data sets and one gold price data set from the Indian market, and the proposed model is observed to fit the gold price data quite well.

Keywords: Weibull distribution, exponential distribution, maximum likelihood estimators, minification process, maximum likelihood predictor

AMS Subject Classifications: 62F10, 62F03, 62H12

1. Introduction

The aim of this paper is to introduce a new discrete-time, continuous state-space Markov Weibull process , which is flexible and has certain distinct features that make it useful in practice in different areas. The marginals are non-negative two-parameter Weibull distributions and the s are dependent. It is a lag-1 process based on a minimization approach, and with a positive probability. Therefore, if a non-negative time series is positively skewed and there is a positive probability that consecutive values are equal, the proposed model can be used quite effectively to analyze it.

The motivation for this work came when we were trying to analyze gold price data from the Indian market for December 2020 and January 2021. The data appear to come from a positive-valued lag-1 stationary process, and there are several instances for which , which cannot be ignored. Several positive-valued stationary processes are available in the literature, for example, the exponential process by Tavares [14], the Weibull and gamma processes by Sim [13], the logistic process by Arnold [1], the Pareto process by Arnold and Hallet [2], the semi-Pareto process by Pillai [12] and the generalized Weibull process by Jayakumar and Girish Babu [6]; see also Yeh et al. [15], Arnold and Robertson [3], Jose et al. [7] and the references cited therein. However, none of these can be applied in this case, as in all of them .

We provide different properties of the proposed process . The Weibull process has one shape parameter and two scale parameters. If the shape parameter is one, it becomes a stationary exponential process. Generating samples from is quite straightforward, so simulation experiments can be performed conveniently. The joint distribution of and has a very convenient copula structure; therefore, several dependence properties and dependence measures can be computed easily. We also provide a characterization of the process. The marginals and the joint PDF can take a variety of shapes. The autocovariance and autocorrelation functions are not available in convenient forms; however, we provide the necessary expressions in Appendix 2 for completeness.

The maximum likelihood estimators (MLEs) cannot be obtained in explicit form, nor can they be computed in a routine manner. Based on a re-parameterization and the profile likelihood method, the MLEs can be obtained. The parametric bootstrap method can be used to compute confidence intervals of the unknown parameters, and we propose a goodness of fit test based on the parametric bootstrap approach. We analyze two synthetic data sets and one gold price data set covering two months of the Indian market. The proposed model fits the gold price data set quite well and can be used effectively to analyze gold prices in the Indian market. Although the proposed process is a lag-1 process, it can be extended to a lag-q process, and we indicate how this can be done.

The major difference between the existing literature and the present manuscript lies in the construction of the process: the proposed model allows ties between consecutive observations, which the existing models do not. Moreover, we provide a detailed inference procedure and show with real data examples how it can be implemented in practice. This is the main contribution of the present manuscript.

The rest of the paper is organized as follows. In Section 2 we define the Weibull process and derive its different properties. The maximum likelihood estimators are discussed in Section 3. In Section 4 a goodness of fit test is proposed based on the parametric bootstrap approach. The analyses of three data sets are presented in Section 5. Finally, we conclude the paper in Section 6.

2. Weibull process and its properties

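We will use the following notation in this paper. A Weibull random variable with shape parameter α > 0 and scale parameter λ > 0 has the following probability density function (PDF):

$$f_{WE}(x;\alpha,\lambda) = \alpha\lambda x^{\alpha-1} e^{-\lambda x^{\alpha}}, \quad x>0, \qquad (1)$$

and it will be denoted by WE(α, λ). It has the following cumulative distribution function and hazard function, respectively, for x>0:

$$F_{WE}(x;\alpha,\lambda) = 1 - e^{-\lambda x^{\alpha}}, \qquad h_{WE}(x;\alpha,\lambda) = \alpha\lambda x^{\alpha-1}.$$

The mean and variance of WE(α, λ) are

$$E(X) = \lambda^{-1/\alpha}\,\Gamma\left(1+\frac{1}{\alpha}\right), \qquad V(X) = \lambda^{-2/\alpha}\left[\Gamma\left(1+\frac{2}{\alpha}\right) - \Gamma^{2}\left(1+\frac{1}{\alpha}\right)\right], \qquad (2)$$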

respectively. A uniform random variable on (0,1) will be denoted by . Now, we are in a position to define the Weibull process.
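A small numerical companion to these definitions is sketched below. It assumes the rate parameterization f(x) = αλx^{α−1}e^{−λx^α} (if the scale entered differently, λ would have to be transformed accordingly), and the function names are illustrative only. A quick sanity check is that the hazard equals the density divided by the survival function.

```python
import math

# Sketch assuming the rate parameterization f(x) = alpha*lam*x^(alpha-1)*exp(-lam*x^alpha).
def weibull_pdf(x: float, alpha: float, lam: float) -> float:
    """Density of WE(alpha, lam) at x > 0."""
    return alpha * lam * x ** (alpha - 1) * math.exp(-lam * x ** alpha)

def weibull_cdf(x: float, alpha: float, lam: float) -> float:
    """Distribution function 1 - exp(-lam * x^alpha)."""
    return 1.0 - math.exp(-lam * x ** alpha)

def weibull_hazard(x: float, alpha: float, lam: float) -> float:
    """Hazard function alpha * lam * x^(alpha - 1)."""
    return alpha * lam * x ** (alpha - 1)

def weibull_mean_var(alpha: float, lam: float) -> tuple:
    """Mean and variance of WE(alpha, lam) via the gamma function."""
    g1 = math.gamma(1.0 + 1.0 / alpha)
    g2 = math.gamma(1.0 + 2.0 / alpha)
    mean = g1 / lam ** (1.0 / alpha)
    var = (g2 - g1 ** 2) / lam ** (2.0 / alpha)
    return mean, var
```

With α = 1 the distribution reduces to the exponential with rate λ, so `weibull_mean_var(1.0, 2.0)` gives mean 0.5 and variance 0.25.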

Definition 2.1

Suppose are independent and identically distributed (i.i.d.) random variables. For , and , let us define a new sequence of random variables , where

| (3) |

Then the sequence of random variables is called a Weibull process.

A Weibull process as defined in (3) will be denoted by WEP . Here α is the shape parameter and and are the scale parameters. A location parameter can easily be incorporated into the model, although we do not pursue this here. The name Weibull process comes from the following result.

Theorem 2.1

If is as defined in (3), then

(i) is a stationary process.

(ii) follows WE .

Proof.

Part (i) follows from the definition. To prove Part (ii), note that

The following result characterizes the Weibull process.

Theorem 2.2

Let WE , and let the s be i.i.d. random variables with an absolutely continuous distribution function on (0,1). Then the process as defined in (3) is a strictly stationary Markov process if and only if .

Proof.

The ‘if’ part is trivial. To prove the ‘only if’ part, we assume , and , for x>0. Therefore, for ,

If we write , then for 0<y<1,

Now we present the joint distribution of and , for .

Theorem 2.3

If satisfies (3), then the joint survival function of and , is

| (4) |

where .

Proof.

The proof is quite simple and is omitted.

The above theorem indicates that and are dependent for m = 1 and independent if m>1; this makes the process a lag-1 process. The joint distribution function of and will help to develop the dependence properties of the Weibull process, so we study it in more detail. The joint survival function of and can be written explicitly as

| (5) |

Therefore, if , then

| (6) |

It may be mentioned that (6) is the joint survival function of the Marshall–Olkin bivariate Weibull distribution, whose properties have been well studied in the literature; see for example Kundu and Dey [8], Kundu and Gupta [10] and the references cited therein. It can easily be seen that the , for , has the following survival copula

| (7) |

The corresponding copula density function becomes

| (8) |

Based on the copula density function, Spearman's ρ and Kendall's τ can be obtained as

respectively.
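The expressions above are population quantities. Their sample counterparts, computed from the consecutive pairs (x_i, x_{i+1}) of an observed series, can be compared with the model-based values after fitting. A minimal sketch with a hypothetical helper name, using SciPy's `spearmanr` and `kendalltau` (the latter returns tau-b by default, which adjusts for the ties this process produces):

```python
import numpy as np
from scipy.stats import kendalltau, spearmanr

def lag1_rank_correlations(x):
    """Sample Spearman's rho and Kendall's tau between x[i] and x[i+1]."""
    x = np.asarray(x, dtype=float)
    lead, lag = x[:-1], x[1:]
    rho = spearmanr(lead, lag)[0]   # first element is the correlation estimate
    tau = kendalltau(lead, lag)[0]  # tau-b, tie-adjusted
    return float(rho), float(tau)
```

For a strictly increasing series both estimates equal one; for data from the fitted process they should be close to the values given by the formulas above, up to sampling error.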

We will introduce the following regions, which will be used later.

Here, , and it may be noted that the curve C has the parametric form , for , where . The following results are needed for further development.

Theorem 2.4

If satisfies (3), then the joint survival function of and can be written as

| (9) |

here ,

and can be obtained by subtraction, i.e.

(10)

Proof.

See in Appendix.

Now we provide the joint probability density function (PDF) of and ; because of the Markov property, it will be useful for computing the joint PDF of . Since the joint distribution (survival) function is not absolutely continuous, the joint PDF does not exist with respect to the two-dimensional Lebesgue measure. In this case, we need to choose the dominating measure differently, similarly to Bemis et al. [4]. Here, the dominating measure is the two-dimensional Lebesgue measure on , together with the one-dimensional Lebesgue measure on the curve C. With respect to this dominating measure, the joint PDF of and , for and , can be written as follows.

Theorem 2.5

If satisfies (3), then the joint PDF of and is

| (11) |

where

Proof.

See in Appendix.

The following conditional PDF will be useful for prediction purposes. The conditional PDF of given can be written as follows:

| (12) |

When α = 1, it becomes an exponential process. When , then the joint PDF of and for a Weibull process is

The conditional PDF of given can be written as

It follows that both for the Weibull and exponential processes,

The autocovariance and autocorrelation functions of a Weibull process cannot be obtained in convenient forms. For the exponential process, however, they can be obtained in explicit forms. All the necessary expressions are provided in Appendix 2 for completeness.

The joint PDF of can be obtained as

| (13) |

| (14) |

| (15) |

| (16) |

Note that (13) is obtained by conditioning, (14) by using the Markov property, (15) by using the conditional density function, and the last step by simple algebra.

We now discuss stopping times. Stopping times have been discussed quite extensively in the time series literature; see for example Christensen [5], Novikov and Shiryaev [11], and the references cited therein. Let L>0 be a fixed real number, and define a new random variable N such that for ,

Then clearly, N is a stopping time. Now for , we first obtain

The probability generating function of N can be obtained as

Using the probability generating function, different moments and other properties can be easily derived.
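For reference, the standard identities that turn a probability generating function into moments and point probabilities are recorded below, writing G_N(s) = E(s^N) for the probability generating function of N (this symbol is our notation and may differ from the one used above). The derivatives at s = 1 are left-hand limits and are assumed finite.

$$\mathrm{E}(N) = G_N'(1), \qquad \mathrm{Var}(N) = G_N''(1) + G_N'(1) - \left\{G_N'(1)\right\}^2, \qquad P(N = k) = \frac{G_N^{(k)}(0)}{k!}, \quad k = 1, 2, \ldots$$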

3. Maximum likelihood estimators

In this section we consider the maximum likelihood estimators of the unknown parameters of a Weibull process based on a sample of size n, namely . We consider two cases separately.

Case 1: =

In this case it is assumed that . Our problem is to estimate α and λ based on . We use the following notation:

The number of elements in , and are denoted by , and , respectively. Based on the joint PDF (16), the log-likelihood function can be written as

| (17) |

where

It is immediate that for any α, . Hence, for a given α, the MLE of λ, say can be obtained as

| (18) |

and the MLE of α, say can be obtained by maximizing

| (19) |

Once is obtained, the MLE of λ, say , can be obtained as . Due to the complicated nature of , it is difficult to prove that it is a unimodal function. However, in our data analysis is observed to be unimodal, as will be shown later. In the case of the exponential process, the MLE of λ can be obtained as

| (20) |
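The profile strategy of Case 1 (fix α, solve for λ in closed form, then maximize over α alone) can be sketched for the simpler model of an i.i.d. WE(α, λ) sample, which is the model the lag-2 subsequences are checked against in Section 5.3. This is not the process log-likelihood (17), whose index sets are not reproduced here. The sketch assumes the rate parameterization f(x) = αλx^{α−1}e^{−λx^α}, under which λ̂(α) = n / Σ x_i^α; the function name and the search interval for α are illustrative choices.

```python
import numpy as np
from scipy.optimize import minimize_scalar

def weibull_profile_mle(x, alpha_bounds=(0.05, 20.0)):
    """Profile-likelihood MLE of (alpha, lambda) for an i.i.d. WE(alpha, lambda) sample."""
    x = np.asarray(x, dtype=float)
    n = x.size
    sum_log_x = np.log(x).sum()

    def lam_hat(alpha):
        # closed-form maximizer of the log-likelihood in lambda for fixed alpha
        return n / np.sum(x ** alpha)

    def neg_profile(alpha):
        # negative profile log-likelihood: the lambda terms collapse to n*log(lam_hat) - n
        return -(n * np.log(alpha) + n * np.log(lam_hat(alpha))
                 + (alpha - 1.0) * sum_log_x - n)

    res = minimize_scalar(neg_profile, bounds=alpha_bounds, method="bounded")
    alpha_hat = float(res.x)
    return alpha_hat, float(lam_hat(alpha_hat)), float(-res.fun)
```

A bounded one-dimensional search of this kind is adequate when, as reported for the data sets below, the profile function is unimodal; replacing `neg_profile` by the negative of (19) would give the estimator described above.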

Case 2:

Here we consider the case when and are arbitrary, and we use the following notation

and , and . Here, β is the same as defined before. Based on the data vector , the log-likelihood function of , and α becomes

| (21) |

It is not trivial to maximize (21) directly. Hence, we re-parameterize, using the transformed parameters , where and based on the transformed parameters, the log-likelihood function can be written as

| (22) |

where

We propose to use the profile likelihood method to maximize (22). For fixed γ and α (β is then also fixed), we first maximize (22) with respect to ; the maximizer, say , can be obtained in explicit form as

The MLEs of γ and α, say and , respectively, can be obtained by maximizing . Finally, the MLE of can be obtained as . We will denote this as . Due to the complicated nature of the function , it is difficult to prove that it has a unique maximum. However, in our data analysis the contour plot shows that is a unimodal function. In both cases we suggest the parametric bootstrap method to construct confidence intervals of the unknown parameters; it can be easily implemented in practice. In the case of the exponential process, the MLE of for a given γ can be obtained as

and the MLE of γ can be obtained by maximizing . It is a one-dimensional optimization problem.
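The two-dimensional maximization over (γ, α), which is also how the MLEs are computed in Section 5, can be carried out by a simple grid search. The sketch below assumes a hypothetical callable `profile_loglik(gamma, alpha)` that evaluates the profile log-likelihood with the scale parameter already profiled out; the grids are user choices. It also returns the full matrix of values so that a contour plot can be used to inspect unimodality.

```python
import numpy as np

def grid_search_2d(profile_loglik, gamma_grid, alpha_grid):
    """Maximize profile_loglik(gamma, alpha) over a rectangular grid.

    Returns (gamma_hat, alpha_hat, max_value, values), where values[i, j]
    is profile_loglik(gamma_grid[i], alpha_grid[j]).
    """
    values = np.array([[profile_loglik(g, a) for a in alpha_grid]
                       for g in gamma_grid])
    # nanargmax skips grid points where the likelihood is undefined
    i, j = np.unravel_index(np.nanargmax(values), values.shape)
    return float(gamma_grid[i]), float(alpha_grid[j]), float(values[i, j]), values
```

In practice one would refine the grid around the maximizer, or start a local optimizer from it, since the grid spacing limits the accuracy of the estimates.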

4. Goodness of fit

In this section, we provide a goodness of fit test to check whether a given data set comes from a Weibull process. Suppose is a sample from a stationary sequence . We want to test the following null hypothesis

Let us use the following notation. We denote as the ordered , similarly, as the ordered , and as their ordered expected values under . Here, depend on , but we do not make this explicit. We use the following statistic for the goodness of fit test.

It is expected that if is true, then should be small. Hence, we use the following test criterion for a given level of significance

where is such that

Note that also depends on , but we do not make it explicit for brevity. It is difficult to obtain theoretically, even for large n. Hence, we propose to use the parametric bootstrap technique to approximate from a given observed sample.

Hence, if , we reject the null hypothesis at the β% level of significance; otherwise we do not reject the null hypothesis.
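The bootstrap approximation of the critical value can equivalently be expressed as a bootstrap p value. The sketch below assumes callables `fit`, `simulate` and `statistic` (hypothetical names). The exact statistic of this section, based on the ordered observations and their expected values under the null hypothesis, is not reproduced here, so the example plugs in an illustrative root-mean-square distance between the ordered data and the expected order statistics under an i.i.d. exponential null, for which those expectations have a closed form. Parameters are re-estimated on every bootstrap sample because they were estimated from the data.

```python
import numpy as np

def bootstrap_gof_pvalue(x, fit, simulate, statistic, B=1000, seed=None):
    """Parametric bootstrap p value; large statistic values indicate poor fit.

    fit(x) -> estimate; simulate(theta, n, rng) -> sample of size n under H0;
    statistic(x, theta) -> scalar.
    """
    rng = np.random.default_rng(seed)
    x = np.asarray(x, dtype=float)
    theta_hat = fit(x)
    t_obs = statistic(x, theta_hat)
    t_boot = np.empty(B)
    for b in range(B):
        xb = simulate(theta_hat, x.size, rng)
        t_boot[b] = statistic(xb, fit(xb))  # refit on each bootstrap sample
    p_value = (1 + np.sum(t_boot >= t_obs)) / (B + 1)
    return float(t_obs), float(p_value), t_boot

# Hypothetical i.i.d. exponential null, used only to exercise the function.
def exp_fit(x):
    return 1.0 / np.mean(x)  # MLE of the rate

def exp_simulate(lam, n, rng):
    return rng.exponential(1.0 / lam, size=n)

def exp_order_stat_distance(x, lam):
    n = len(x)
    # E[X_(i)] = (1/lam) * sum_{j=1}^{i} 1/(n - j + 1) for an exponential sample
    expected = np.cumsum(1.0 / np.arange(n, 0, -1)) / lam
    return float(np.sqrt(np.mean((np.sort(x) - expected) ** 2)) * lam)
```

Rejecting when the p value falls below the chosen significance level corresponds to comparing the observed statistic with the bootstrap critical value.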

5. Data analysis

In this section we analyze three data sets: two synthetic data sets and one real gold price data set covering two months of the Indian market. The main purpose of these analyses is to see how the proposed MLEs work in practice and how well the proposed model fits real data.

5.1. Synthetic data set 1:

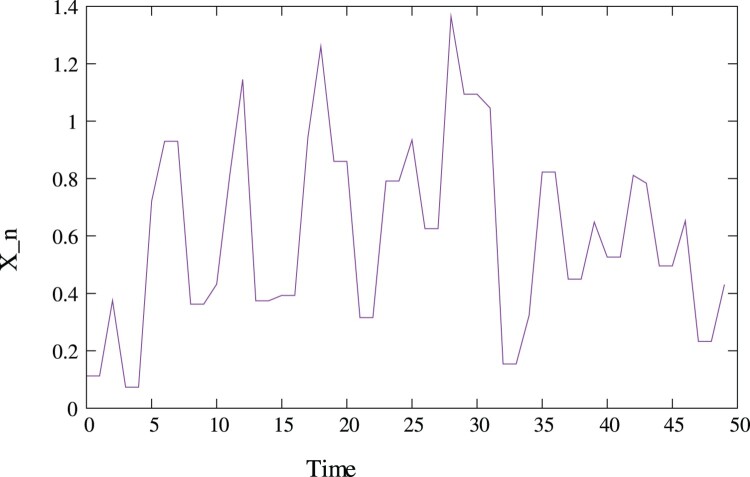

We first analyze a synthetic data set generated using the following model specification: α = 2.0, , n = 50. The data set (Data Set 1) is presented in Figure 1.

Figure 1.

Synthetic data set with α = 2.0, .

In this case, = 17, = 18 and = 14. We compute the MLEs of the unknown parameters under the assumption ; this involves solving a one-dimensional optimization problem. The profile log-likelihood function of α is presented in Figure 2.

Figure 2.

The profile log-likelihood of the synthetic data set.

It is a unimodal function. By maximizing the profile log-likelihood, we obtain the MLEs of α and λ as = 1.912 and = 1.068. The associated 95% confidence intervals are (1.714, 2.106) and (0.878, 1.245), respectively.

5.2. Synthetic data set 2:

We next analyze a second synthetic data set generated using the following model specification: α = 3.0, = 0.15, = 0.04 and n = 75. The data set (Data Set 2) is presented in Figure 3.

Figure 3.

Synthetic data set with α = 3.0, = 0.15 and = 0.04.

We compute the MLEs of the unknown parameters using a two-dimensional grid search. The MLEs of α, and are = 3.344, = 0.154 and = 0.029, and the associated 95% bootstrap confidence intervals are (2.876, 3.954), (0.137, 0.173) and (0.019, 0.047), respectively.

5.3. Gold price data

In this section, we analyze the gold price data in India for the two-month period from 1 December 2020 to 31 January 2021. The data represent the price of one gram of gold in Indian rupees and are presented in Figure 4. Here n = 62, and the minimum, maximum and median values are 4280, 4580 and 4355, respectively. There are 29, 22 and 10 cases for which , and , respectively. We performed the runs test on the entire data set ; there are 28 runs, and the associated p value is less than 0.001. Hence, we reject the null hypothesis that the observations are independently distributed.

Figure 4.

Gold price data in India (rupees/gram) from 1 December 2020 to 31 January 2021.
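Tallying consecutive pairs by comparison is a natural first step when preparing data for this model. The sketch below uses a hypothetical helper name and assumes (the defining conditions are not reproduced here) that the three cases reported above are increases, decreases and ties between consecutive observations. The counts n_1, n_2, n_3 of Section 3 appear to be of this kind, since they sum to n − 1 in both examples: 17 + 18 + 14 = 49 for n = 50, and 29 + 22 + 10 = 61 for n = 62. Exact equality is meaningful here because the prices are recorded in whole rupees.

```python
def count_consecutive_patterns(x):
    """Count increases, ties and decreases between consecutive observations."""
    pairs = list(zip(x[:-1], x[1:]))
    return {
        "increase": sum(a < b for a, b in pairs),
        "tie": sum(a == b for a, b in pairs),
        "decrease": sum(a > b for a, b in pairs),
    }
```

For example, `count_consecutive_patterns([1, 2, 2, 1, 3])` gives two increases, one tie and one decrease, four pairs in total for five observations.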

We plot the autocorrelation function (ACF) and the partial autocorrelation function (PACF) of the gold price data in Figures 5 and 6, respectively. It is clear from the ACF and PACF that although and are correlated, they are uncorrelated given , for .

Figure 5.

Autocorrelation function of the gold price data.

Figure 6.

Partial autocorrelation function of the gold price data.
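The sample ACF and PACF shown in Figures 5 and 6 can be computed as sketched below: the ACF uses the usual divide-by-n estimator, and the PACF is obtained from it with the Durbin–Levinson recursion. The function names are illustrative; library routines such as `acf` and `pacf` in `statsmodels.tsa.stattools` offer several estimator variants and may give slightly different values.

```python
import numpy as np

def sample_acf(x, max_lag):
    """Sample autocorrelations r_0, ..., r_max_lag (divide-by-n estimator)."""
    x = np.asarray(x, dtype=float)
    n = x.size
    d = x - x.mean()
    denom = np.dot(d, d)
    return np.array([np.dot(d[: n - k], d[k:]) / denom for k in range(max_lag + 1)])

def sample_pacf(x, max_lag):
    """Sample partial autocorrelations via the Durbin-Levinson recursion."""
    r = sample_acf(x, max_lag)
    pacf = np.empty(max_lag + 1)
    pacf[0] = 1.0
    phi = np.array([])  # coefficients phi_{k-1,1}, ..., phi_{k-1,k-1}
    for k in range(1, max_lag + 1):
        num = r[k] - np.dot(phi, r[k - 1:0:-1])   # r_k - sum_j phi_{k-1,j} r_{k-j}
        den = 1.0 - np.dot(phi, r[1:k])           # 1 - sum_j phi_{k-1,j} r_j
        phi_kk = num / den
        phi = np.append(phi - phi_kk * phi[::-1], phi_kk)
        pacf[k] = phi_kk
    return pacf
```

Approximate 95% bands of ±1.96/√n, with n = 62 here, are the usual reference lines for judging which sample values differ noticeably from zero.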

We performed runs tests on the two lag-1 series. The numbers of runs are 16 and 17, and the associated p values are 0.07 and 0.18, respectively. We also performed runs tests on the three lag-2 series. The numbers of runs are 12, 12 and 11, and the associated p values are 0.56, 0.56 and 0.25, respectively. Hence, based on the p values, we cannot reject the null hypothesis that lag-2 observations are independently distributed. We fitted the Weibull distribution to each of the three lag-2 series; the Kolmogorov–Smirnov distances, with the associated p values in brackets, are 0.1453 (0.7920), 0.2163 (0.3066) and 0.2227 (0.2743). Based on these p values, we cannot reject the null hypothesis that the lag-2 observations come from an i.i.d. Weibull distribution.
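The runs tests above can be sketched as follows. The text does not state which variant was used, so this assumes the Wald–Wolfowitz runs test about the median with a two-sided normal approximation, dropping observations equal to the median (one common convention; others exist and give slightly different p values). It also assumes that the "lag-1 series" are the two interleaved subsequences taking every second observation and the "lag-2 series" the three taking every third. Both function names are hypothetical.

```python
import math
import numpy as np

def runs_test_median(x):
    """Wald-Wolfowitz runs test about the median (two-sided, normal approximation).

    Returns (number_of_runs, z, p_value).
    """
    x = np.asarray(x, dtype=float)
    med = np.median(x)
    signs = x[x != med] > med  # True above the median, False below
    n1 = int(signs.sum())
    n2 = int(signs.size - n1)
    if n1 == 0 or n2 == 0:
        raise ValueError("need observations on both sides of the median")
    runs = 1 + int(np.sum(signs[1:] != signs[:-1]))
    n = n1 + n2
    mean = 2.0 * n1 * n2 / n + 1.0
    var = 2.0 * n1 * n2 * (2.0 * n1 * n2 - n) / (n ** 2 * (n - 1))
    z = (runs - mean) / math.sqrt(var)
    p_value = math.erfc(abs(z) / math.sqrt(2.0))  # two-sided normal p value
    return runs, z, p_value

def interleaved_subseries(x, k):
    """Split x into k interleaved subsequences x[j], x[j + k], x[j + 2k], ..."""
    return [list(x[j::k]) for j in range(k)]
```

Under these assumptions, the lag-2 tests would correspond to applying `runs_test_median` to each element of `interleaved_subseries(prices, 3)`, where `prices` is the observed series.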

Now we compute the MLEs of the unknown parameters under the assumption . In this case, reasonable estimates of α and λ can be obtained in explicit forms without solving any optimization problem. For a Weibull distribution, approximate MLEs of the unknown parameters can be obtained in explicit forms by expanding the log-likelihood function using a first-order Taylor series expansion; see for example Kundu and Gupta [9]. Using this approach, we obtain estimates of α and λ from the odd sequence as well as from the even sequence of the data. Averaging these two sets of estimates gives estimates of α and λ of 2.9878 and 0.1154, respectively.

Next, we obtain the MLEs of α and λ by maximizing the log-likelihood function. The profile log-likelihood function of α is plotted in Figure 7. By maximizing it, we obtain the MLE of α as 2.6640 and the MLE of λ as 0.0729, and the associated log-likelihood value is −116.9681. Based on parametric bootstrapping, the associated 95% confidence intervals of α and λ are (2.1375, 3.1231) and (0.0548, 0.0976), respectively.

Figure 7.

The profile log-likelihood function of α.
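A minimal percentile-bootstrap sketch of the parametric bootstrap confidence intervals reported in this section follows. The callables `simulate` and `estimate` are hypothetical placeholders: for the Weibull process they would generate a path from (3) at the fitted parameters and recompute the MLEs. The percentile method itself is an assumption, since the text does not state which bootstrap interval was used.

```python
import numpy as np

def parametric_bootstrap_ci(theta_hat, simulate, estimate, n, B=1000,
                            level=0.95, seed=None):
    """Percentile parametric bootstrap interval(s).

    simulate(theta, n, rng) -> sample of size n from the fitted model;
    estimate(sample) -> scalar or 1-D array of parameter estimates.
    """
    rng = np.random.default_rng(seed)
    draws = np.array([estimate(simulate(theta_hat, n, rng)) for _ in range(B)])
    tail = 1.0 - level
    # column-wise quantiles, so vector-valued estimators give one interval each
    lower, upper = np.quantile(draws, [tail / 2.0, 1.0 - tail / 2.0], axis=0)
    return lower, upper
```

As a simple check, with an i.i.d. exponential model of rate 2, `simulate` drawing `rng.exponential(0.5, size=n)` and `estimate` returning `1 / mean`, the interval from n = 200 observations should bracket the true rate.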

Further, we calculate the MLEs of the unknown parameters when . We maximize the profile log-likelihood of γ and α, i.e. , with respect to γ and α using the grid search method, and the MLEs are = 3.2238, = 0.0917 and = 0.0192, with corresponding log-likelihood value −115.9317. The associated 95% confidence intervals of α, and are (2.6213, 3.8231), (0.0529, 0.1412) and (0.0123, 0.0204), respectively.

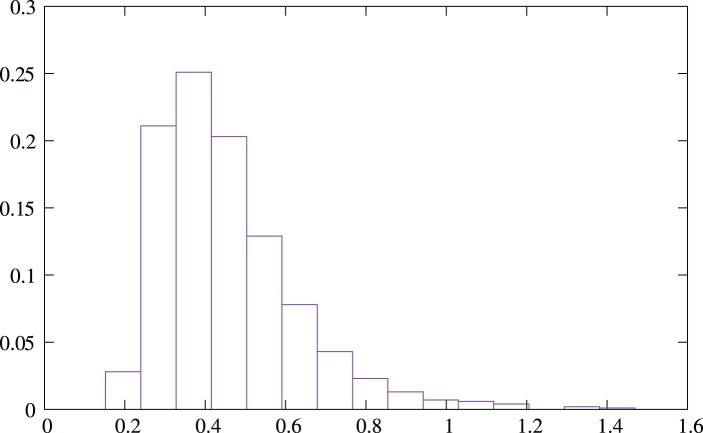

Based on the BIC model selection criterion, we prefer the model WEP( ) over WEP . Next, we check whether each of the two models fits the data, using the bootstrap method proposed in Section 4 with B = 1000. The histograms of the generated when and when are provided in Figures 8 and 9, respectively.
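The BIC comparison can be reproduced from the two maximized log-likelihood values reported above, using BIC = −2 ln L + k ln n with n = 62, k = 2 for the model with equal scale parameters (α and the common λ) and k = 3 for the general model (α, λ_0, λ_1). The helper name is illustrative.

```python
import math

def bic(loglik: float, n_params: int, n_obs: int) -> float:
    """Bayesian information criterion: -2 log L + k log n (smaller is better)."""
    return -2.0 * loglik + n_params * math.log(n_obs)

n_obs = 62  # length of the gold price series
bic_equal_scales = bic(-116.9681, 2, n_obs)  # parameters: alpha, common lambda
bic_general = bic(-115.9317, 3, n_obs)       # parameters: alpha, lambda_0, lambda_1
print(f"{bic_equal_scales:.2f} {bic_general:.2f}")  # prints 242.19 244.24
```

The extra scale parameter raises the log-likelihood by about 1.04, which is less than the additional penalty ln 62 / 2 ≈ 2.06 on the log-likelihood scale, so the model with equal scale parameters has the smaller BIC.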

Figure 8.

Histogram of the generated test statistics when .

Figure 9.

Histogram of the generated test statistics when .

The test statistic for the model WEP( ) is 0.1685, and the associated p value is greater than 0.90, so this model appears to provide a good fit for the data set. The test statistic for the model WEP( ) is 0.6291, and the associated p value is less than 0.05; hence, this model does not provide a good fit for the data set.

6. Conclusions

In this paper, we have proposed a new discrete-time, continuous state-space stochastic process based on the Weibull distribution. The distinct feature of the proposed process is that there is a positive probability that , for some n. Hence, this model can be used quite effectively when ties occur at two consecutive time points. We have studied different properties of the proposed process and provided inference procedures for the unknown parameters.

Note that the proposed stochastic process is a lag-1 process, but it can easily be extended to a lag-q process as follows. Suppose are i.i.d. uniform random variables, and . Then

is a lag-q stationary Weibull process. It can easily be checked that there is a positive probability that , for some n, and for . It also has a convenient copula structure. It would be interesting to develop different properties of this process and classical inference for it; more work is needed in this direction.

Acknowledgements

The author would like to thank two anonymous reviewers for their constructive suggestions, which have helped to improve the paper significantly.

Appendices.

Appendix 1. Proofs

Proof of Theorem 2.4.

Note that p and can be obtained from as follows:

and

Now, from

where

Since

Using this p, can be obtained by simple integration, and after that can be obtained by subtraction.

Alternatively, a simple probabilistic argument also can be given as follows. Suppose A is the following event

then

Moreover,

and

The rest can be obtained by subtraction.

Proof of Theorem 2.5.

We need to show that for all ,

here for , , , and . It has already been shown in Theorem 2.4 that

hence, the result is proved if we can show

Since and ,

where . Recall that

Hence, the result follows.

Appendix 2. Autocovariance and autocorrelation functions

In this section, we provide the expressions needed for the autocovariance and autocorrelation functions of the Weibull process, mainly for completeness. First, we calculate . If denotes the indicator function of the set A, then

Now

If we denote and as incomplete gamma functions, then

We have already given the mean and variance of a Weibull random variable in (2). Based on the above expressions, the autocovariance and autocorrelation functions can be obtained. In the case of the exponential process, i.e. when α = 1, the above expressions can be obtained in explicit forms. For example

Since

the autocovariance and autocorrelation can be obtained in explicit forms.

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

- 1. Arnold B.C., Logistic process involving Markovian minimization, Commun. Stat. – Theor. Meth. 22 (1993), pp. 1699–1707.

- 2. Arnold B.C. and Hallet T.J., A characterization of the Pareto process among stationary processes of the form , Stat. Probab. Lett. 8 (1989), pp. 377–380.

- 3. Arnold B.C. and Robertson C.A., Autoregressive logistic processes, J. Appl. Probab. 26 (1989), pp. 524–531.

- 4. Bemis B., Bain L.J., and Higgins J.J., Estimation and hypothesis testing for the parameters of a bivariate exponential distribution, J. Am. Stat. Assoc. 67 (1972), pp. 927–929.

- 5. Christensen S., Phase-type distributions and optimal stopping for autoregressive processes, J. Appl. Probab. 49 (2012), pp. 22–39.

- 6. Jayakumar K. and Girish Babu M., Some generalizations of Weibull distribution and related processes, J. Stat. Theor. Appl. 14 (2015), pp. 425–434.

- 7. Jose K.K., Ristić M.M., and Joseph A., Marshall-Olkin bivariate Weibull distributions and processes, Stat. Pap. 52 (2011), pp. 789–798.

- 8. Kundu D. and Dey A.K., Estimating the parameters of the Marshall Olkin bivariate Weibull distribution by EM Algorithm, Comput. Stat. Data Anal. 53 (2009), pp. 956–965.

- 9. Kundu D. and Gupta R.D., Estimation of P[Y<X] for Weibull distributions, IEEE Trans. Reliab. 55 (2006), pp. 270–280.

- 10. Kundu D. and Gupta A., Bayes estimation for the Marshall-Olkin bivariate Weibull distribution, Comput. Stat. Data Anal. 57 (2013), pp. 271–281.

- 11. Novikov A. and Shiryaev A., On solution of the optimal stopping problem for processes with independent increments, Stochastics. 79 (2007), pp. 393–406.

- 12. Pillai R.N., Semi-Pareto processes, J. Appl. Probab. 28 (1991), pp. 461–465.

- 13. Sim C.H., Simulation of Weibull and gamma autoregressive stationary processes, Commun. Stat. – Simul. Comput. 15 (1986), pp. 1141–1146.

- 14. Tavares L.V., An exponential Markovian stationary process, J. Appl. Probab. 17 (1980), pp. 1117–1120.

- 15. Yeh H.C., Arnold B.C., and Robertson C.A., Pareto process, J. Appl. Probab. 25 (1988), pp. 291–301.