Summary

In low- and middle-income countries (LMICs), the fields of medicine and public health grapple with numerous challenges that continue to hinder patients' access to healthcare services. ChatGPT, a publicly accessible chatbot, has emerged as a potential tool in aiding public health efforts in LMICs. This viewpoint details the potential benefits of employing ChatGPT in LMICs to improve medicine and public health across a broad spectrum of domains, including health literacy, screening, triaging, remote healthcare support, mental health support, multilingual capabilities, healthcare communication and documentation, medical training and education, and support for healthcare professionals. We also share potential concerns and limitations associated with the use of ChatGPT and provide a balanced discussion of the opportunities and challenges of using ChatGPT in LMICs.

Keywords: Large language model, ChatGPT, Public health, Global health, Low to middle income countries, Equity

Introduction

“Can you do this for me?”

Most would wish for a personal assistant, ready at beck and call to tackle everything from mundane tasks to complex decisions. The evolution of artificial intelligence (AI) chatbots has potentially transformed this fantasy into tangible reality. Worldwide, individuals ranging from students to business executives are increasingly leveraging AI chatbots for diverse purposes, from assisting with homework and crafting professional emails to seeking medical guidance.

Although many use AI chatbots to complete menial tasks, they have considerable potential for improving access to information, facilitating timely communication, enhancing self-care management, and providing contextually relevant support to patients. The World Health Organization (WHO) reports that at least half of the world's 7.3 billion people lack access to the essential health services they need.1 As a result, the life expectancy gap between countries with the most and least expansive health coverage is 21 years.1 Low- and middle-income countries (LMICs) often face complex public health challenges related to limited resources and practice limitations.1 An example of this is the COVID-19 pandemic, with LMICs and their marginalized populations disproportionately affected as a result of existing health disparities.2

ChatGPT, a large language model (LLM) developed by OpenAI, is a publicly accessible AI chatbot. It is built on the Generative Pre-trained Transformer (GPT) architecture, with GPT-3.5 and GPT-4 as its backbones.3 These models have been trained on a vast dataset, enabling them to engage in dynamic conversations across many languages. Despite being trained on data dated to September 2021, ChatGPT can integrate real-time or knowledge-based information through plugins, and GPT-4 can now process both image and text input.3 ChatGPT possesses the potential to impact a substantial portion of the global population, including those in LMICs. This reach creates opportunities for advancing public health within these regions.4 After the release of ChatGPT, several similar LLMs emerged. These include Google's Bard, Microsoft's Bing, Baidu's Ernie, Anthropic's Claude, Alibaba's Tongyi Qianwen, and many others. The potential of AI in medical care and public health programs has spurred a wealth of discussions, with a particular focus on the possible benefits for LMICs.5 Nevertheless, despite AI tools demonstrating impressive performance in research settings, their translation into practical real-world applications, especially in LMICs, has been restricted.6,7 This is primarily due to the challenges associated with integrating such tools into existing infrastructures, and the need for specific hardware and trained personnel to effectively leverage these technologies. In contrast, ChatGPT is a publicly available service that requires minimal bandwidth, thus opening up the potential to reach a vast proportion of the global population, potentially effecting transformative change in medicine and public health.
As the user base of ChatGPT expands and competitive alternatives emerge on the global stage, the currently high computational cost of ChatGPT might decrease, enhancing its affordability and accessibility, particularly in LMICs.8 This potential sets ChatGPT apart from its predecessors in medical AI services.

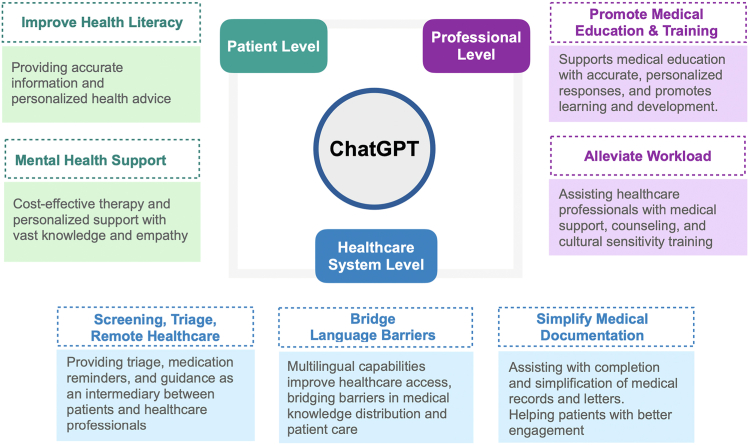

In this viewpoint, we aim to discuss the potential advantages and drawbacks of employing ChatGPT to advance healthcare in LMICs by examining its potential impact on three distinct levels: patients, healthcare professionals, and healthcare systems (Fig. 1). The insights shared herein reflect the authors' perspectives and judgements based on current evidence.

Fig. 1.

The impact of ChatGPT on medicine and public health in low- and middle-income countries.

Patient level

Improving health literacy

Health literacy is crucial for making informed health decisions and reducing risks. However, in LMICs, obstacles such as inaccessible or inaccurate information, language differences, and cultural customs often contribute to low health literacy. As a result, health education programs must be tailored to meet the specific needs of each population because approaches used in developed countries may not be directly applicable to LMICs.

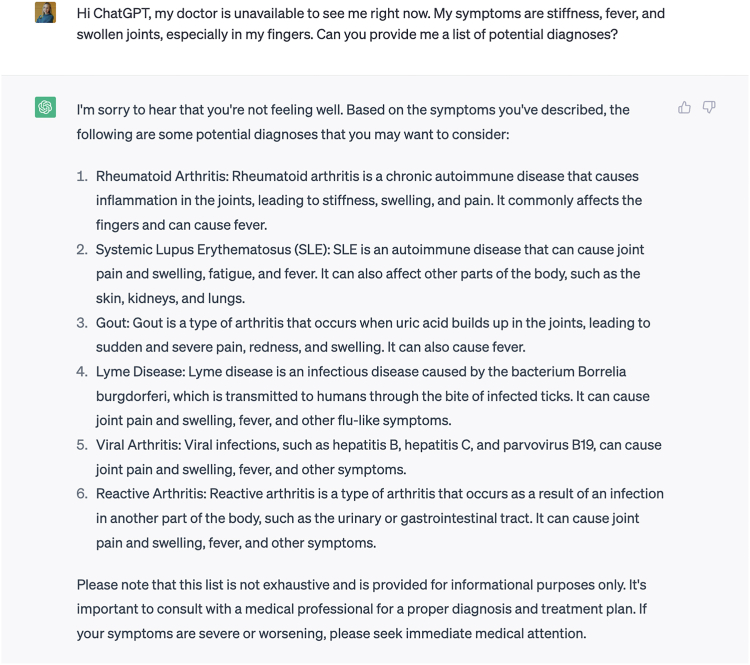

ChatGPT has significant potential to increase health literacy, particularly in LMICs where healthcare access is often limited and qualified physicians are scarce.9 First, ChatGPT can provide reasonably reliable disease information, including potential diagnoses and practical management suggestions relevant to patients' everyday lives. Table 1 contains results from a collection of studies on ChatGPT diagnosing different medical conditions.10, 11, 12 These examples suggest ChatGPT's effectiveness in providing accurate advice on treating a wide range of conditions, from common ailments such as the common cold and cough13 to more serious conditions like hypertension and diabetes mellitus.14 ChatGPT even provided adequate, prompt advice for treating emergencies like acute organophosphate poisoning, and chronic conditions like cirrhosis and hepatocellular carcinoma.15 Fig. 2 shows a discussion between ChatGPT and one of the authors (HMS) about providing a potential diagnosis.

Table 1.

Summary of studies evaluating ChatGPT's performance in various medical domains.

| Medical domain | Study details | Performance metrics | Findings | Reference |
|---|---|---|---|---|
| Diabetes | Differentiate ChatGPT-generated and human answers with 10 questions about diabetes. | 59.5% of participants could identify ChatGPT-generated answers with a binary assessment. | The strongest correlation is with previous ChatGPT use (p = 0.003) among all factors. Linguistic features might hold a higher predictive value than the content itself. | Hulman et al., 202314 |
| Microbiology | Answers to 96 first- and second-order questions based on the competency-based medical education (CBME) curriculum. | Mean score was 4.07 (±0.32)/5, which had an accuracy rate of 80%. | ChatGPT has the potential to be an effective tool for automated question-answering in microbiology. | Das et al., 202310 |
| Medical responses | Answers to 284 medical questions across 17 specialties. | Largely correct responses with mean accuracy score of 4.8/6 and mean completeness score of 2.5/3. | Substantial improvements were observed in 34 of 36 questions scored 1–2. ChatGPT generated largely accurate information although with important limitations. | Johnson et al., 202311 |
| Cancer | Comparison with NCI's answers about 13 questions on a cancer-related web page. | 96.9% overall agreement on the accuracy of cancer information. | There were few noticeable differences in the number of words or the readability of the answers from NCI and ChatGPT. | Johnson et al., 202316 |
| Cirrhosis and hepatocellular carcinoma (HCC) | Performance comparison between ChatGPT and physicians or trainees in 164 questions. | An overall accuracy of 76.9% in quality measures. | ChatGPT demonstrated strong knowledge of cirrhosis and HCC, but lacked comprehensiveness in diagnosis and preventive medicine. | Yeo et al., 202315 |
| Prostate cancer | Comparison between five state-of-the-art large language models in providing information on 22 common prostate questions. | ChatGPT had the highest accuracy rate and comprehensiveness score among all five large language models, and had satisfactory patient readability. | Large language models with internet-connected datasets were not superior to ChatGPT. The paid version of ChatGPT did not show superiority over the free version. | Zhu et al., 202317 |
| Toxicology | Response to a case of acute organophosphate poisoning. | Subjective assessment. | ChatGPT fared well in answering and offered good explanations of the underlying reasoning. | Abdel-Messih et al., 202318 |
| Shoulder Impingement Syndrome (SIS) | Analyzed the ability to provide information on SIS. | Subjective assessment. | ChatGPT could provide useful medical information and treatment options for patients with SIS, including symptoms, similar diseases, orthopedic tests, and exercise recommendations. However, potential biases and inappropriate information must be taken into account. | Kim J hee, 202219 |
| Infection | Antimicrobial advice in eight hypothetical infection scenario-based questions. | Subjective assessment of appropriateness, consistency, safety, and antimicrobial stewardship implications. | ChatGPT exhibited deficiencies in situational awareness, inference, and consistency in clinical practice. | Howard et al., 202320 |
| Ophthalmology | Tested the accuracy on two question banks used for the high-stakes Ophthalmic Knowledge Assessment Program (OKAP) exam. | 55.8% and 42.7% accuracy in the two 260-question simulated exams. | Performance of ChatGPT varied across ophthalmic subspecialties. Domain-specific pre-training might improve its performance. | Antaki et al., 202321 |
| Neuropathic pain | Tested the performance on 50 pairs of causal relationships in neuropathic pain diagnosis. | ChatGPT tended to make false negative mistakes, and showed high precision and low recall from the binary assessments of true/false. | ChatGPT lacked consistency and stability in the context of neuropathic pain diagnosis. Using large language models' causal claims as causal discovery results requires caution because of fundamental differences in the tasks and biases in causal benchmarks. | Tu et al., 202322 |

Fig. 2.

ChatGPT provides potential diagnoses when presented with a list of symptoms.

ChatGPT can improve the quality of life for people living with disabilities or chronic illnesses. Be My Eyes, a mobile app aimed at people with blindness or visual impairment, has integrated OpenAI's GPT-4 in its Virtual Volunteer tool.23 ChatGPT can potentially offer a more cost-effective care service for users with visual impairment in LMICs, including India, Pakistan, the Philippines, Iran, and Ghana.24 Through its image-to-text interpretation capabilities, it can further empower these individuals with greater independence.

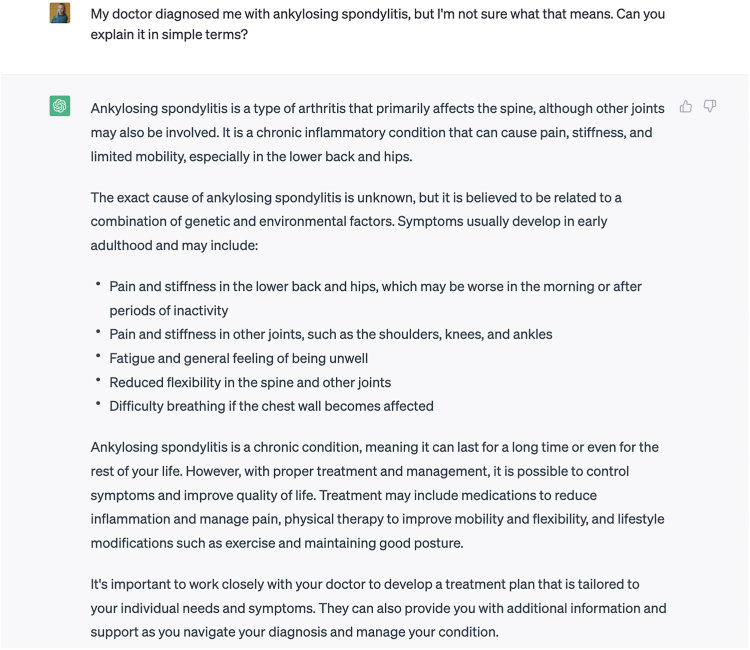

Medical records laden with intricate terminology can be perplexing for patients, potentially leading to misunderstandings. ChatGPT offers a promising solution by making healthcare knowledge more digestible.17 Fig. 3 illustrates an example conversation between ChatGPT and one of the authors (HMS), in which ChatGPT explains a diagnosis of ankylosing spondylitis in simple terms. A study conducted in Delhi, India, highlighted the efficacy of chatbots in delivering culturally attuned breastfeeding education, with chatbots addressing 88% of participants' inquiries.25 By accessing the chatbot, patients are able to learn and ask questions about sensitive information in a private environment. For instance, given its advanced capabilities, ChatGPT can potentially offer precise and user-friendly information on maternal and child health to expectant mothers and new parents. This exemplifies how ChatGPT can enhance health literacy in a secure, anonymous manner. Nonetheless, it is imperative for patients to consult with their physicians for definitive advice before making any major health decisions. Physicians, as licensed experts, possess the requisite training to provide medical counsel, whereas ChatGPT disseminates information based on its training data, which may not always be current or accurate.

Fig. 3.

ChatGPT simplifies complex medical terminology.

Mental health support

Inequities in mental health care are a prevalent issue in LMICs.26 According to a WHO report, around 76%–85% of patients with serious mental disorders did not receive any treatment in these countries.27 The National Mental Health Survey of India reported a treatment gap of 83% for any mental disorder.28 In Cambodia, an LMIC in Southeast Asia, the WHO reports the fewest mental health service providers relative to other nations in the Western Pacific Region.29 This shortfall stems from inadequate training of mental health professionals to manage and treat severe mental illness, further exacerbated by resource constraints.

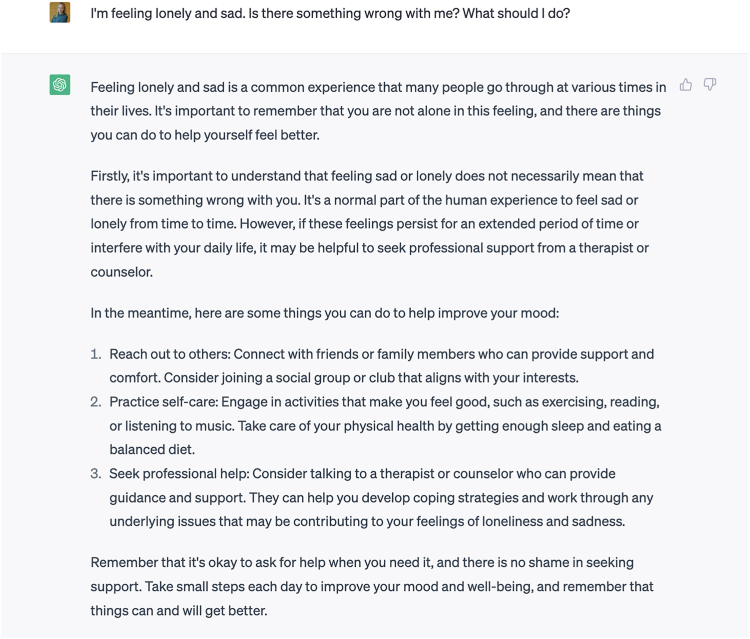

ChatGPT and similar applications are emerging as viable alternatives for reducing the gap in mental health services. With the ability to produce human-like responses, ChatGPT potentially offers companionship, support, and even therapy to individuals grappling with psychological challenges. Notably, ChatGPT is not only well-versed in psychiatric conditions but also exhibits a semblance of “empathy” in its responses. Research has demonstrated that ChatGPT provided high-quality answers to patient queries in an online forum. ChatGPT's responses were perceived as markedly more empathetic than those of physicians, with the frequency of empathetic or highly empathetic responses being 9.8 times greater for ChatGPT than for medical professionals.30 Additionally, certain iterations of ChatGPT are freely accessible from virtually any location, enabling patients to confide their personal experiences and feelings without the potential unease of an in-person consultation. Consequently, ChatGPT, when viewed as a mental health resource, holds promise in reducing or even eliminating financial and logistical barriers to care.

A few chatbots have already been developed for mental health purposes. For example, Shim is a smartphone app that engages users in dialogues on positive psychology, providing tailored responses based on pre-written dialogues and user inputs.31 The Cambodian Ministry of Women's Affairs created an app with a chatbot to assist women and girls in reporting violence or abuse and offer timely and vital counseling support.32 However, these chatbots are often considered shallow and confusing by users.33 ChatGPT, an advanced instance of NLP embodied in an LLM, was trained on an extensive dataset. This training enables it to produce a broader spectrum of responses tailored to user preferences, such as tone and response length. In contrast, older chatbots are often confined to specific contexts or predetermined response templates, limiting their utility.34 One of ChatGPT's notable features is its ability to continuously adapt to user interactions.3 As a result, it has the potential to offer more personalized emotional advice and support. Fig. 4 shows a discussion between the GPT-3.5 version of ChatGPT and one of the authors (HMS), simulating mental health symptoms. ChatGPT was able to respond to user queries and offer strategies to improve mental health.

Fig. 4.

ChatGPT provides empathetic support when presented with mental health symptoms.

Despite these potential benefits, it is important to recognize that ChatGPT, like any tool, is not without limitations, especially when deployed in sensitive domains such as mental health support. An unfortunate incident in Belgium, involving an interaction between an adult male and an AI chatbot on the Chai app, underscores the potential implications if AI chatbots do not adhere strictly to necessary safety protocols.35 This incident serves as a stark reminder of the utmost importance of these safeguards. ChatGPT employs safety mechanisms to prohibit the generation of content that promotes self-harm or suicide.36 If such content is detected, ChatGPT promptly alerts the user that the content violates its policy and encourages the individual to seek support from trusted sources. Nevertheless, as the aforementioned case demonstrates, the possibility of manipulating prompts to circumvent these safety protocols remains a pressing concern.37 Therefore, it is crucial that ChatGPT and similar chatbots are continually monitored and updated based on the latest research and findings in mental health and AI ethics. This will require a multidisciplinary approach, bringing together regulators and experts in AI, mental health, ethics, and other relevant fields to ensure that these systems are as safe and effective as possible.

Healthcare professional level

Promoting medical education and training

Medical training in LMICs has continuously encountered barriers and challenges in the effort to produce well-trained physicians. In a study in Pakistan,38 only 56% of physicians met an acceptable diagnostic standard for viral diarrhea, and only 35% met the acceptable standard for treatment. A study conducted to evaluate pediatric care in Papua New Guinea found that the majority of physicians and other team members (69%) only checked for two out of four examination criteria for pneumonia cases.39 Challenges for medical education in LMICs can be attributed to insufficient financial and technological resources, underdeveloped education models (i.e., teacher-centered approach instead of student-centered approach), and a lack of updated medical information.40 Below, we outline the capability of ChatGPT to complement medical teaching, particularly in LMICs.

ChatGPT, particularly with the GPT-4 engine, can serve as a valuable supplementary tool for medical education in resource-limited settings. ChatGPT performed well on medical examinations such as the United States Medical Licensing Examination (USMLE)41,42 and the Korean general surgery board exam.43 A recent study evaluated ChatGPT's potential in medical question answering, finding it provided logical, informative responses, equivalent to a third-year medical student's passing score.44 The performance of ChatGPT on the Medical College Admission Test (MCAT) demonstrated its ability to accurately answer standardized test questions while offering sufficient, logical explanations.45

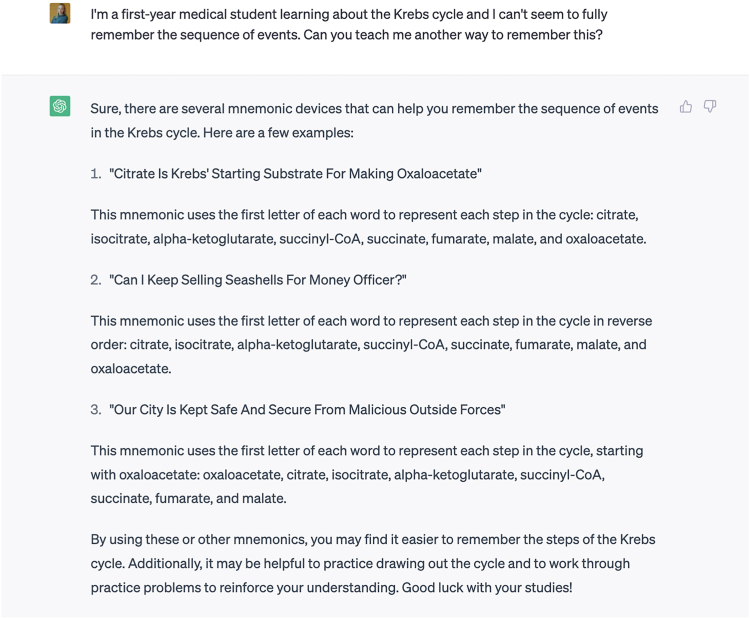

Kung et al. conducted a study that demonstrated ChatGPT's capability to produce comprehensive reasoning and valid clinical insights during the USMLE, suggesting its potential to assist medical students in education.42 ChatGPT demonstrated the capability to generate answers and explanations with a notable concordance of 94.6% across all USMLE questions.42 Such advancements potentially enable human learners to more effectively understand the logical sequence and inherent relational directionality present in the explanations offered by ChatGPT. In addition, ChatGPT allows medical students to ask questions about specific medical concepts and receive accurate and personalized responses to help them better structure their knowledge around each concept. Fig. 5 shows an interaction between ChatGPT and one of the authors (HMS), in which ChatGPT furnishes the user with mnemonic devices for remembering the Krebs Cycle. This holds promising potential, especially in LMICs where traditional medical education resources may be scarce. Furthermore, ChatGPT can offer suggestions to medical educators regarding course outlines and structure, further augmenting the medical education process.

Fig. 5.

ChatGPT provides learning strategies.

ChatGPT can revolutionize medical education by simulating case reports, offering a dynamic, case-based learning approach. This is invaluable when access to real patient data is limited due to consent or confidentiality concerns. ChatGPT's ability to generate realistic yet fictionalized patient interactions not only ensures diverse clinical exposure but also upholds ethical standards. By integrating this tool into medical curricula, students can engage with simulated cases in real time, fostering interactive learning and critical thinking. Leveraging ChatGPT as a pedagogical tool may represent a paradigm shift in blended learning. Finally, medical training and education are lifelong processes for healthcare professionals, and keeping up with the latest research, techniques, and guidelines can be challenging, especially in LMICs. Through plugins, ChatGPT can provide instant access to relevant up-to-date medical information and resources for healthcare professionals, making it easier to support their ongoing learning and development, improve their skills and knowledge, and therefore provide better care to patients.46

Alleviating workload of healthcare professionals

Healthcare professionals in LMICs are often confronted with overwhelming work pressure that stems from a complex interplay of factors. The patient load in these regions is typically high, a situation exacerbated by a chronic shortage of healthcare providers. Resources are often limited, which makes patient care even more challenging. Additionally, the lack of sufficient institutional and professional support, including professional development opportunities, compounds these challenges. Together, these pressures potentially compromise the quality of care and the well-being of healthcare providers themselves. In this context, tools like ChatGPT may present opportunities to alleviate some of these pressures.

For instance, ChatGPT can assist in answering primary-care-level medical queries, offering comprehensive treatment strategies and recommendations for healthcare professionals.47, 48, 49 It can potentially assist health professionals in differential diagnosis, evaluating risks, formulating personalized treatment plans, and diagnosing complex conditions by providing relevant information. In a recent study by Balas et al.,50 ChatGPT was found to have potential value in diagnosing ophthalmic conditions. For provisional diagnosis, the correct diagnosis was identified in 9 of 10 cases. For differential diagnosis, the correct answer appeared among the listed differentials in all 10 cases.

Despite this promise, ChatGPT and similar LLMs must be deployed judiciously and with careful consideration of the unique challenges inherent to LMICs. A salient issue in many LMICs is the pronounced shortage of physicians.51 This scarcity means that the responsibility of patient care often rests heavily on a limited number of healthcare professionals. Introducing ChatGPT into this healthcare landscape presents both potential challenges and benefits. On one hand, there is a risk that the already limited number of physicians might face an increased workload. This could arise if patients, after consulting with ChatGPT, require medical intervention due to following potentially incorrect advice. On the other hand, ChatGPT could serve as a valuable tool in alleviating some of the pressure on these physicians. By offering prompt solutions to routine medical inquiries, ChatGPT might reduce the number of standard consultations, enabling physicians to dedicate more time to complex cases.

Healthcare systems level

Screening, triage and remote healthcare support

The lack of access to healthcare is a major issue for LMICs, with several contributing causes. These include physician shortages, inadequate healthcare infrastructure, and extensive travel distances to healthcare facilities, particularly in regions lacking specialists. In areas with limited healthcare access, such as remote regions, it is crucial to provide basic information that can help individuals identify symptoms and triage health concerns. In such settings, technology emerges as a useful assistive tool. Telemedicine services and AI-assisted triage and screening tasks have shown great promise in addressing these healthcare gaps in LMICs.52, 53, 54

ChatGPT can serve as a valuable bridge between patients and healthcare professionals, aiding in primary health assessments, appointment scheduling, triage recommendations, medication reminders, and follow-up care. This integration can be realized by incorporating ChatGPT services into existing systems via its APIs or plugins.55 Through interactive conversations and targeted questioning, ChatGPT can gather patient medical histories and offer insights into the urgency and type of medical attention needed. By utilizing plugins, ChatGPT can actively monitor patient conditions, send medication reminders, schedule check-ups, and assess if urgent care is necessary. Furthermore, the insights provided by ChatGPT can be invaluable for physicians, enhancing their diagnostic and treatment strategies.

ChatGPT also presents capabilities that could enhance telemedicine, potentially broadening the scope of remote medical services. Utilizing its API, ChatGPT can potentially be integrated into telemedicine platforms, offering timely, evidence-based insights to aid clinical decision-making. For example, when users engage with a telemedicine platform, ChatGPT can streamline the initial patient surveys, collecting health histories and capturing essential symptom data. This information can be systematically organized and relayed to physicians for subsequent consultation, whether virtual or in-person. Moreover, during these consultations, ChatGPT can support practitioners by accessing pertinent medical literature, guidelines, and drug information. The reliability and effectiveness of ChatGPT as a virtual medical assistant have been tested in various fields (Table 1),12 including prostate cancer,17 cardiovascular disease,56 infection,20 ophthalmology,21 and neuropathic pain.22 ChatGPT can also provide drug information such as side effects, interactions, contraindications, dosage, administration guidelines, storage requirements, and potential alternatives. However, it is important to acknowledge that, in its current version, ChatGPT's responses cannot replace medical advice from a doctor or other healthcare professional. Hence, patients should not blindly follow ChatGPT's advice without proper direction from their doctor. Finally, by acting as a virtual assistant, ChatGPT can promote remote healthcare management through appointment booking, treatment facilitation, and health information management.
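As a rough illustration of how intake data might be "systematically organized and relayed to physicians", the following minimal Python sketch structures self-reported answers into a record and renders a short summary. It does not call ChatGPT or any real telemedicine API. All class, field, and function names (`Symptom`, `IntakeRecord`, `summarize_for_physician`) are hypothetical, and the ordering by self-reported severity is an assumption made for readability, not a clinical triage rule.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Symptom:
    name: str
    duration_days: int
    severity: int  # self-reported, 1 (mild) to 10 (severe); hypothetical scale


@dataclass
class IntakeRecord:
    # Hypothetical schema for a pre-consultation survey; not from the source.
    patient_id: str
    chief_complaint: str
    symptoms: List[Symptom] = field(default_factory=list)
    history: List[str] = field(default_factory=list)
    medications: List[str] = field(default_factory=list)
    allergies: List[str] = field(default_factory=list)


def summarize_for_physician(record: IntakeRecord) -> str:
    """Render the record as plain text, listing the most severe symptom first."""
    lines = [
        f"Patient: {record.patient_id}",
        f"Chief complaint: {record.chief_complaint}",
        "Symptoms (self-reported):",
    ]
    # Sorting is a display choice only; it is not a triage decision.
    for s in sorted(record.symptoms, key=lambda s: s.severity, reverse=True):
        lines.append(f"  - {s.name}: {s.duration_days} day(s), severity {s.severity}/10")
    lines.append("History: " + (", ".join(record.history) or "none reported"))
    lines.append("Medications: " + (", ".join(record.medications) or "none reported"))
    lines.append("Allergies: " + (", ".join(record.allergies) or "none reported"))
    return "\n".join(lines)


if __name__ == "__main__":
    demo = IntakeRecord(
        patient_id="demo-001",
        chief_complaint="persistent cough",
        symptoms=[Symptom("cough", 5, 4), Symptom("fever", 2, 7)],
        history=["asthma"],
    )
    print(summarize_for_physician(demo))
```

In a deployment of this kind, the conversational model would only help populate such a record; the physician reviewing the summary remains responsible for any clinical judgement.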

Simplifying medical documentation

In LMICs, medical professionals often encounter challenging circumstances such as inadequate resources, insufficient manpower, and deficient infrastructure. The documentation of medical records is particularly time-consuming and resource-intensive. ChatGPT has the potential to assist with administrative tasks such as writing clinical letters, discharge summaries, clinical vignettes, and simplified reports. ChatGPT has been shown to create clinical letters with naturalness and readability comparable to human-generated letters.57 ChatGPT can also help fine-tune language and style by suggesting appropriate vocabulary, grammar, and sentence structures. Discharge summaries, frequently sidelined due to time limitations and pressing clinical duties, can be drafted by ChatGPT. This not only eases the burden on junior doctors but also bolsters patient safety during transitions in care.58 Nevertheless, users should remain vigilant, refraining from entering sensitive or private data into ChatGPT and always verifying its outputs for accuracy. A recent study suggested that most radiologists agree that ChatGPT-generated simplified reports are complete and safe for patients, allowing for better understanding and preparation for healthcare interactions.59

Bridging language barriers in medical knowledge distribution and patient care

English is the predominant language used in medicine,60,61 on the internet62 and in academic publishing.63 Among 80 LMICs, 27 countries' official languages include English, while 25 countries' official languages include other high-resource languages (>1% of data in the CommonCrawl), 12 countries' official languages include medium-resource languages (>0.1%), and 16 countries' official languages are low-resource (<0.1%).64,65 In many LMICs, English is taught as an academic subject yet is not commonly spoken, and some LMICs might not include English in their curricula at all. This linguistic gap can hinder physicians in accessing current and accurate medical data, predominantly available in English. Patients, too, might struggle to find pertinent health details due to language barriers. The linguistic tapestry of many LMICs is rich; for instance, India recognizes 22 distinct official languages. In such multilingual contexts, physicians often rely on interpreters or translation services to bridge communication gaps with patients.66 ChatGPT's exceptional multilingual capabilities, enabled by the GPT-4 backbone,67 can address the intricacies of language diversity and enhance communication. These capabilities offer potential benefits in two significant ways.

Firstly, ChatGPT's multilingual capabilities enable it to deliver informed responses across all supported languages, even if not directly trained in a specific language. In certain NLP tasks, ChatGPT demonstrated comparable or superior performance for some low-resource languages relative to high- and medium-resource ones. This suggests that ChatGPT's efficacy is not necessarily diminished for low-resource languages prevalent in LMICs.68 Such capability may be invaluable for physicians and patients seeking medical and public health insights in native languages other than English. Access to dependable medical information in native languages can empower both healthcare providers and recipients, fostering improved health outcomes.

Secondly, ChatGPT offers high-quality translations,67 enhancing the readability and accuracy of translated information. If the tool is harnessed responsibly, physicians can gain a deeper understanding of intricate medical scenarios and communicate more effectively with linguistically diverse patients. Patients will also be able to develop a clearer understanding of their health conditions and treatment options, and become more engaged in their care, ultimately resulting in better health outcomes. The continued evolution and deployment of ChatGPT and other LLMs are poised to significantly bridge the global health chasm, championing universal access to health information and services.
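The resource tiers cited earlier in this section (high-resource above 1% of CommonCrawl data, medium-resource above 0.1%, low-resource below 0.1%) can be expressed as a small classification rule. The sketch below is illustrative only: the function name `resource_tier` and the dictionary `LMIC_COUNTS` are hypothetical, the example shares passed to the function are made-up inputs rather than measured CommonCrawl figures, and treating a share of exactly 0.1% as low-resource is an assumption, since the text does not specify that boundary. The country counts are the ones reported above.

```python
def resource_tier(commoncrawl_share_percent: float) -> str:
    """Classify a language by its share of CommonCrawl data, in percent."""
    if commoncrawl_share_percent < 0:
        raise ValueError("share must be non-negative")
    if commoncrawl_share_percent > 1.0:
        return "high"
    if commoncrawl_share_percent > 0.1:
        return "medium"
    # Assumption: a share of exactly 0.1% falls in the low-resource tier.
    return "low"


# Country counts as reported in the text (80 LMICs in total).
LMIC_COUNTS = {
    "english": 27,      # official languages include English
    "other_high": 25,   # include another high-resource language
    "medium": 12,       # include a medium-resource language
    "low": 16,          # official languages are all low-resource
}

total = sum(LMIC_COUNTS.values())
low_share = LMIC_COUNTS["low"] / total  # fraction of LMICs in the low tier

if __name__ == "__main__":
    # Illustrative shares only; these are not real CommonCrawl statistics.
    for share in (5.0, 0.5, 0.05):
        print(share, resource_tier(share))
    print(f"{total} LMICs, {low_share:.0%} with only low-resource official languages")
```

The four reported counts sum to the 80 LMICs mentioned, and the low-resource group corresponds to one in five of those countries.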

Potential concerns, limitations, proposed approaches, and roadmap for implementation

While the potential of ChatGPT to enhance healthcare in LMICs is considerable, it is also crucial to recognize its limitations and potential concerns. In particular, there is a concern that the application of ChatGPT in LMICs with lower literacy levels and limited digital literacy may not directly benefit the intended population, despite good intentions. On this note, ensuring data accuracy, addressing misinformation and biases, comprehending the relevance of context, adhering to ethical guidelines, mitigating overreliance, and promoting equal access to technology should be considered holistically to effectively integrate ChatGPT into medicine and public health settings. Furthermore, validation of ChatGPT-based interventions in community and clinical settings should be further explored, and the legal and infrastructure requirements for implementing ChatGPT and similar services in LMICs should be assessed.

ChatGPT's reproducibility and reliability

As an LLM, ChatGPT statistically predicts words based on their precedence in previously seen texts without truly comprehending the knowledge generated or the prompts provided.69 Its reliability depends on the accuracy and quality of its training data, which remain undisclosed and likely have a limited focus on medicine and public health. These undisclosed data may contain inaccuracies, leading to the absorption of misinformation, imbalanced content, and biases during the training process.69 Due to these factors, ChatGPT can occasionally yield inconsistent answers and may not fully capture the intricacies of medical sciences.70 Moreover, when tasked with recognizing unanswerable questions, ChatGPT's accuracy stood at a mere 27.8%. This suggests an inclination to offer speculative responses rather than decline such queries, a critical shortcoming in the medical field.71 Its output is also influenced by its assigned role or perspective (such as “you are a helpful assistant”) in conversations. In more severe instances, it may deliver potentially harmful responses if the prompt is malicious.48 It is also vulnerable to adversarial attacks, where even an alteration of a single character can significantly impact its response.71 Thus, responses generated by ChatGPT in the medical context cannot be entirely trusted and ought to be cross-checked by a trained physician. However, in LMICs, the scarcity of specialized medical training might amplify these flaws and biases, as physicians may struggle to identify inaccurate information. Further research and development are needed to enhance the reliability of ChatGPT in the medical domain, ensuring its effective application in LMICs.

In addition to ChatGPT, there are domain-specific LLMs like Google's Med-PaLM and medAlpaca, which are fine-tuned specifically for medical question answering. Research suggests that while GPT-4 has not been tailored specifically for medical knowledge, it surpasses Med-PaLM in the medical domain.41 Yet, with the introduction of the Med-PaLM 2 model, its performance either matches or even exceeds that of the general-purpose GPT-4 in certain medical question-answering scenarios.72 The continuous advancement of domain-specific LLMs bodes well for the future, promising to enhance the precision and dependability of LLM-generated responses, which holds significant potential for patient-focused applications.

Traditionally, individuals turned to internet searches for health information, requiring discernment to assess the accuracy of online content. ChatGPT, while facing similar challenges, has been shown to provide answers that are either superior to or on par with Google search results in cardiovascular areas.73 While it can offer valuable information and guidance for common diseases and public health related topics, the extent to which it can function autonomously, i.e., whether its use should be limited to generic medical queries or extend to more advanced, patient-centric contexts, remains to be determined. Currently, in response to standard medical questions in English, ChatGPT using the GPT-4 engine often prefaces with “I am not a doctor, but...”. While this disclaimer emphasizes ChatGPT's non-medical nature, it might not deter all from exclusively depending on its guidance. Rigorous evaluation of ChatGPT's medical accuracy and safety is crucial to guide its use and help users discern its advice. Enhancing its accuracy requires not only diverse data, particularly from LMICs, but also a more structured, context-aware approach to health queries to prevent potentially misleading or harmful responses.

Disproportionate proficiency in language and social context

Although multilingual, ChatGPT's training data are primarily English-based and fine-tuned with prompts mostly in English,74,75 leading to a disparity in language proficiency and potentially inferior performance in non-English languages,68 which are often spoken in LMICs. This potential limitation ought to be highlighted. Furthermore, a recent report indicated that ChatGPT's responses tend to align more closely with Western culture and may adapt less effectively to other cultural contexts.76 This could indicate that ChatGPT may lack cultural and religious appropriateness when implemented in LMICs. Thus, ChatGPT's limited understanding of complex social and cultural aspects relevant to medicine and public health could impede its applications in LMICs. One example where cultural consideration is especially important is in the realm of medical education. Students must be able to care for patients while considering cultural context and customs. ChatGPT's Western-typical responses may impede this important education if ChatGPT is used to create curricula in an LMIC. Cultural variations are also especially relevant in the discussion of mental health. The presentation of mental health symptoms is known to vary across cultures, and may therefore require culture-specific sensitivity when devising management and treatment plans for patients.77 The vast cultural diversity across LMIC regions may exacerbate this challenge. While evaluation tasks exist to gauge cultural differences in LLMs, there is a notable scarcity of studies that delve into cultural and religious appropriateness within healthcare. This gap underscores the need to infuse patient perspectives and localized contexts into the next phase of LLM development and refinement. Embracing a patient- and population-centric methodology could address this disparity, fostering tailored care in LMIC environments.78 Furthermore, adherence to ethical norms and best practices in digital safety and privacy is paramount. This would further ensure safety and contextual relevance in LMICs.

Infrastructure and accessibility challenges

The computational power demanded by ChatGPT and similar LLMs currently necessitates dedicated servers and internet connections, which poses challenges for adoption in LMICs due to infrastructure limitations and insufficient internet access or electronic devices. According to a report from the Alliance for Affordable Internet (A4AI), basic internet access is only available to half of the population in LMICs, and of those, only 10% have what is referred to as “meaningful connectivity” or a decent internet connection.79 However, the ongoing development of LLMs and innovative internet solutions hold promise for mitigating these challenges in the near future.

Recent advancements, such as Stanford's Alpaca model fine-tuned from Meta's LLaMA, demonstrate the feasibility of operating LLMs on mobile devices, potentially enabling offline use in rural areas with limited connectivity. Additionally, creative internet solutions like SpaceX's satellite-based Starlink system and the continued exploration of balloon technology for internet provision, such as Project Loon pioneered by Google, can help bridge the connectivity gap in remote regions.80

Through partnerships with NGOs and alternative internet providers, it is plausible that advanced LLMs like ChatGPT will become more universally accessible and available, regardless of geographical location.

Implementation issues of ChatGPT in LMICs

The potential integration of ChatGPT into the medical landscape of LMICs necessitates a thorough examination of liability and ethics. Key questions arise: Who bears responsibility for adverse outcomes stemming from ChatGPT's advice? How would medical malpractice involving ChatGPT be addressed? How is user data safeguarded? A recent viewpoint by Harrer offers a potential regulatory blueprint, emphasizing the formation of AI ethics committees with decisive authority and advocating for collaboration among developers, providers, patients, and other stakeholders.69

User privacy and data protection represent a critical issue that requires careful attention. It is essential to adhere to strict data protection guidelines and ethical standards, and to maintain transparency in communication with users. According to OpenAI, users' conversations, including any personal data they enter, are shared with the company by default and may be used to improve its models.81 Research has demonstrated that, despite ChatGPT's dialog safety measures against revealing personal information, certain prompt engineering methods, such as a combination of jailbreak and chain-of-thought prompts, can compromise its safety defence mechanisms.82 When analyzing health-related data with ChatGPT, there is a risk of inadvertently violating patient privacy, particularly when dealing with sensitive personal health information. To mitigate this risk, it is crucial to ensure that such applications follow strict data protection guidelines and adhere to privacy regulations. In addition, users must be aware of what information is appropriate to input into ChatGPT. Transparency is also vital in building trust and maintaining ethical standards. Many jurisdictions, including the European Union, the United States, and China, have started discussions on the need to regulate services like ChatGPT.83 Recently, Italy took a significant step by temporarily banning ChatGPT, highlighting the need to address ethical concerns related to generative AI at an international level. However, many LMICs, particularly in South Asia and Southeast Asia, lack the legal provisions and enforcement mechanisms to counter data breaches and cybercrime, potentially complicating the deployment of ChatGPT in LMICs. Consequently, while ChatGPT's potential is evident, its practical implementation in LMICs is shrouded in uncertainties, attributed to sparse legal backing for e-health solutions, health system limitations, and a slower digital transition pace in LMICs.

ChatGPT's current limitation in offering evidence-based advice contrasts with the rigorous standards of clinical practice. Physicians typically provide recommendations after thorough patient evaluations, a nuance ChatGPT might miss in its broad response spectrum. Additionally, cultural and social beliefs can impede AI adoption. Research indicates a patient preference for in-person consultations over remote alternatives, even if more costly. While ChatGPT and similar digital health tools are not intended to supplant face-to-face interactions, they serve as valuable adjuncts. The COVID-19 pandemic exemplified this, with a surge in digital health technology adoption, both in direct pandemic response and broader health service delivery during lockdowns. While traditional medical examinations remain irreplaceable, emerging evidence suggests potential benefits of remote health services, especially for underserved groups.84,85 Nevertheless, rigorous oversight and monitoring are crucial to prevent misinformation from LLM chatbots like ChatGPT.

Overreliance on ChatGPT could stifle critical thinking and decision making

Excessive reliance on ChatGPT-generated insights and recommendations might result in a decline in critical thinking and decision-making abilities among healthcare professionals. In fact, studies have shown that incorrect AI predictions could cause clinicians to doubt their own initial judgment, regardless of their experience, especially when they lacked confidence in their judgment in the first place.86 This could be exacerbated in LMICs where healthcare professionals generally have limited access to specialized and high-quality training and resources, making them more susceptible to the influence of AI-generated information and potentially compromising the quality of care delivered to patients. It is essential to strike a balance between leveraging AI technology and maintaining human expertise, ensuring that professionals continue to exercise their judgment and expertise even when supplemented with AI-generated information.78

Furthermore, ChatGPT may not have a complete understanding of the intricacies associated with various medicine and public health issues. Overreliance on AI-generated information may also inadvertently give rise to oversimplified solutions for complex medical topics. Such oversimplified solutions are unlikely to be sufficient or effective in addressing multifaceted public health challenges.

Taken together, user education plays a critical role in ensuring that users understand ChatGPT and the role it can play in their healthcare or health education. It is important to prioritize user education programs that explain the working principles of ChatGPT in simple and accessible language. Moreover, it is essential to emphasize the role of ChatGPT as an augmenting tool rather than a replacement for human decision-making. By emphasizing the importance of user education, one can better ensure that ChatGPT is used responsibly and ethically in healthcare.

Roadmap and future considerations

For the effective implementation of ChatGPT and similar LLMs in LMICs, a deep understanding of both barriers and potential solutions is paramount. Given the rapid evolution of LLMs and the potential of ChatGPT in healthcare, we propose the following roadmap:

ChatGPT's initial applications in LMICs can be multifaceted. It can generate culturally sensitive health messages, broadening its reach to wider audiences. Additionally, it can potentially serve as an administrative assistant, supporting health professionals in tasks such as drafting clinical letters and creating discharge summaries. Furthermore, as detailed above, ChatGPT can also significantly influence medical training in LMICs.

As we progress, fine-tuning, customization, and the subsequent evaluation of LLMs become paramount. LLMs should be adapted to resonate with local languages and cultures in LMICs, ensuring cultural appropriateness. Research should be conducted to assess the utility of ChatGPT in diverse settings, especially in aiding clinical decision-making. Concurrently, there is a need to develop robust regulations for its application in complex scenarios.

In light of the considerable potential for LLMs like ChatGPT in healthcare, particularly in LMICs, there are several key considerations to ponder for the future. User induction and education will be central to ChatGPT's success. Initiatives should be launched to test ChatGPT's practicality in varied healthcare contexts within LMICs. These efforts should be complemented with capacity-building initiatives and public awareness campaigns, ensuring that both professionals and the public are well-informed. In addition, monitoring and research will be the backbone of this roadmap. Frameworks should be established to rigorously assess ChatGPT's efficacy, safety, and overall impact, all while considering the unique contexts of LMICs. This evaluation should encompass observational studies, randomized trials, and user feedback. On the regulatory front, there is a pressing need for comprehensive oversight. Regulatory blueprints addressing liability, while promoting innovation and safeguarding patient well-being, should be crafted. These discussions should actively involve healthcare bodies, tech companies, and legal experts.

It is also crucial to recognize that the challenges of healthcare accessibility are not exclusive to LMICs. The strategies we have proposed have the potential to benefit healthcare systems universally, and may be applicable to underserved regions of high-income countries. Lastly, before deploying ChatGPT in LMIC healthcare settings, a comprehensive strategic plan should be mutually agreed upon by governing bodies and healthcare institutions. This plan should ensure data privacy and security, establish clear ethical guidelines, and prepare for potential risks or challenges that may arise from the use of this technology. By adopting these measures, we may be better positioned to improve patient care, support healthcare providers, and work towards a more balanced and efficient healthcare ecosystem.

Conclusion

Despite the inherent challenges arising from its training data constraints and potential inaccuracies, ChatGPT holds promise as a valuable tool in LMICs where access to quality healthcare is limited. ChatGPT stands to make promising contributions to medicine and public health by facilitating more immediate access to essential medical knowledge and education. This encompasses domains like health literacy, screening, triage, remote assistance, mental health, healthcare communication, documentation, and broader medical training. With the growing availability of function-enhancing plugins, ChatGPT's capabilities could potentially be augmented by incorporating more up-to-date and specialized medical information. Nonetheless, when contemplating its adoption in LMICs, a nuanced approach is essential, factoring in ethical considerations, cultural relevance, existing infrastructural constraints, prevalent low literacy rates, and limited digital proficiency. To optimize ChatGPT's utility in LMICs, it is crucial to institute suitable user onboarding and relevant educational initiatives. These initiatives can help set realistic expectations regarding ChatGPT's capabilities while fostering responsible and ethical use of this technology in LMICs.

Contributors

Conceptualisation: XW, HS, ST, JC, YXW, TYW, YCT, KC.

Literature review: XW, HS, YL, ST, JC, YXW, YCT, KC.

Project administration: XW, YL.

Supervision: XW, JC, YXW, TYW, YCT, KC.

Writing—original draft: XW, HS, YL, YXW.

Writing—review & editing: XW, HS, YL, BXT, KS, AGA, YQ, ST, JC, YXW, TYW, YCT, KC.

Declaration of interests

YQ received payment from Asia Pacific Medical Technology Association for presentation.

TYW received editorial support and medical writing from ApotheCom; study funding and article processing charges from Bayer AG, Leverkusen, Germany; funding of editorial support and medical writing from Bayer Consumer Care AG, Basel, Switzerland; study funding from Regeneron Pharmaceuticals, Inc; and consulting fees from Aldropika Therapeutics, Bayer, Boehringer Ingelheim, Genentech, Iveric Bio, Novartis, Oxurion, Plano, Roche, Sanofi and Shanghai Henlius. He is also an inventor, patent-holder and a cofounder of start-up companies EyRiS and Visre.

KC received funding from the National Institutes of Health, book royalties from Wolters Kluwer and Elsevier, and a research grant from Sonex to study carpal tunnel outcomes.

Acknowledgements

This research did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors.

Contributor Information

Yih-Chung Tham, Email: thamyc@nus.edu.sg.

Kevin C. Chung, Email: kecchung@med.umich.edu.

References

- 1. World Health Organization. World Health Organization; Budapest, Hungary: 1998. Improving health care in developing countries using quality assurance: report of a World Health Organization pre-ISQUA joint review meeting; pp. 5–6.

- 2. World Health Organization. 2021. COVID-19 and the social determinants of health and health equity: evidence brief.

- 3. OpenAI. 2023. GPT-4 technical report. http://arxiv.org/abs/2303.08774

- 4. Eloundou T., Manning S., Mishkin P., Rock D. 2023. GPTs are GPTs: an early look at the labor market impact potential of large language models. http://arxiv.org/abs/2303.10130

- 5. Schwalbe N., Wahl B. Artificial intelligence and the future of global health. Lancet. 2020;395:1579–1586. doi: 10.1016/S0140-6736(20)30226-9.

- 6. van de Sande D., Van Genderen M.E., Smit J.M., et al. Developing, implementing and governing artificial intelligence in medicine: a step-by-step approach to prevent an artificial intelligence winter. BMJ Health Care Inform. 2022;29 doi: 10.1136/bmjhci-2021-100495.

- 7. Ciecierski-Holmes T., Singh R., Axt M., Brenner S., Barteit S. Artificial intelligence for strengthening healthcare systems in low- and middle-income countries: a systematic scoping review. NPJ Digit Med. 2022;5:162. doi: 10.1038/s41746-022-00700-y.

- 8. Chen L., Zaharia M., Zou J. 2023. FrugalGPT: how to use large language models while reducing cost and improving performance.

- 9. Guo J., Li B. The application of medical artificial intelligence technology in rural areas of developing countries. Health Equity. 2018;2:174–181. doi: 10.1089/heq.2018.0037.

- 10. Das D., Kumar N., Longjam L.A., et al. Assessing the capability of ChatGPT in answering first- and second-order knowledge questions on microbiology as per competency-based medical education curriculum. Cureus. 2023;15(3) doi: 10.7759/cureus.36034.

- 11. Johnson D., Goodman R., Patrinely J., et al. Assessing the accuracy and reliability of AI-generated medical responses: an evaluation of the chat-GPT model. Research Square (preprint) 2023 doi: 10.21203/rs.3.rs-2566942/v1.

- 12. Iftikhar L., Iftikhar M.F., Hanif M.I. 2023. DocGPT: impact of ChatGPT-3 on health services as a virtual doctor.

- 13. Hossain M.M., Krishna Pillai S., Dansy S.E., Bilong A.A. Mr Dr. Health-assistant chatbot. Int J Artif Intell. 2021;8:58–73.

- 14. Hulman A., Dollerup O.L., Mortensen J.F., et al. ChatGPT- versus human-generated answers to frequently asked questions about diabetes: a Turing test-inspired survey among employees of a Danish diabetes center. PLoS One. 2023;18(8) doi: 10.1371/journal.pone.0290773.

- 15. Yeo Y.H., Samaan J.S., Ng W.H., et al. Assessing the performance of ChatGPT in answering questions regarding cirrhosis and hepatocellular carcinoma. Clin Mol Hepatol. 2023;29(3):721–732. doi: 10.3350/cmh.2023.0089.

- 16. Johnson S.B., King A.J., Warner E.L., Aneja S., Kann B.H., Bylund C.L. Using ChatGPT to evaluate cancer myths and misconceptions: artificial intelligence and cancer information. JNCI Cancer Spectrum. 2023;7:pkad015. doi: 10.1093/jncics/pkad015.

- 17. Zhu L., Mou W., Chen R. 2023. Can the ChatGPT and other large language models with internet-connected database solve the questions and concerns of patient with prostate cancer? 2023.03.06.23286827.

- 18. Abdel-Messih M.S., Boulos M.N.K. ChatGPT in clinical toxicology. JMIR Med Education. 2023;9 doi: 10.2196/46876.

- 19. Kim J. Search for medical information and treatment options for musculoskeletal disorders through an artificial intelligence Chatbot: focusing on shoulder impingement syndrome. medRxiv. 2022 doi: 10.1101/2022.12.16.22283512.

- 20. Howard A., Hope W., Gerada A. ChatGPT and antimicrobial advice: the end of the consulting infection doctor? Lancet Infect Dis. 2023;23(4):405–406. doi: 10.1016/S1473-3099(23)00113-5.

- 21. Antaki F., Touma S., Milad D., El-Khoury J., Duval R. Evaluating the performance of ChatGPT in ophthalmology: an analysis of its successes and shortcomings. Ophthalmol Sci. 2023;3(4):100324. doi: 10.1101/2023.01.22.23284882.

- 22. Tu R., Ma C., Zhang C. 2023. Causal-discovery performance of ChatGPT in the context of neuropathic pain diagnosis.

- 23. Be my eyes. https://openai.com/customer-stories/be-my-eyes

- 24. Community stories. https://www.bemyeyes.com/community-stories

- 25. Yadav D., Malik P., Dabas K., Singh P. Feedpal: understanding opportunities for chatbots in breastfeeding education of women in India. Proc ACM Hum-Comput Interact. 2019;3:1–30.

- 26. Parry S.J., Wilkinson E. Mental health services in Cambodia: an overview. BJPsych Int. 2020;17:29–31. doi: 10.1192/bji.2019.24.

- 27. Demyttenaere K., Bruffaerts R., Posada-Villa J., et al. Prevalence, severity, and unmet need for treatment of mental disorders in the World Health Organization World Mental Health Surveys. JAMA. 2004;291:2581–2590. doi: 10.1001/jama.291.21.2581.

- 28. Singh O. Artificial intelligence in the era of ChatGPT - opportunities and challenges in mental health care. Indian J Psychiatry. 2023;65:297. doi: 10.4103/indianjpsychiatry.indianjpsychiatry_112_23.

- 29. World Health Organization. 2021. Mental health atlas 2020.

- 30. Ayers J.W., Poliak A., Dredze M., et al. Comparing physician and artificial intelligence chatbot responses to patient questions posted to a public social media forum. JAMA Intern Med. 2023;183:589. doi: 10.1001/jamainternmed.2023.1838.

- 31. A fully automated conversational agent for promoting mental well-being: a pilot RCT using mixed methods. Internet Interv. 2017;10:39–46. doi: 10.1016/j.invent.2017.10.002.

- 32. Kimmarita L. Ministry to launch app, chatbot to help counter gender violence. https://phnompenhpost.com/national/ministry-launch-app-chatbot-help-counter-gender-violence

- 33. Abd-Alrazaq A.A., Alajlani M., Ali N., Denecke K., Bewick B.M., Househ M. Perceptions and opinions of patients about mental health chatbots: scoping review. J Med Internet Res. 2021;23 doi: 10.2196/17828.

- 34. Oh K.-J., Lee D., Ko B., Choi H.-J. 2017 18th IEEE international conference on mobile data management (MDM) IEEE; Daejeon, South Korea: 2017. A chatbot for psychiatric counseling in mental healthcare service based on emotional dialogue analysis and sentence generation; pp. 371–375.

- 35. ‘He would still be here’: man dies by suicide after talking with AI chatbot, widow says. https://www.vice.com/en/article/pkadgm/man-dies-by-suicide-after-talking-with-ai-chatbot-widow-says

- 36. Usage policies. https://openai.com/policies/usage-policies

- 37. Xiang C. The amateurs jailbreaking GPT say they’re preventing a closed-source AI dystopia. Vice. 2023 https://www.vice.com/en/article/5d9z55/jailbreak-gpt-openai-closed-source

- 38. Thaver I.H., Harpham T., McPake B., Garner P. Private practitioners in the slums of Karachi: what quality of care do they offer? Soc Sci Med. 1998;46:1441–1449. doi: 10.1016/s0277-9536(97)10134-4.

- 39. Beracochea E., Dickson R., Freeman P., Thomason J. Case management quality assessment in rural areas of Papua New Guinea. Trop Doct. 1995;25:69–74. doi: 10.1177/004947559502500207.

- 40. Al-Shamsi M. Addressing the physicians' shortage in developing countries by accelerating and reforming the medical education: is it possible? J Adv Med Educ Prof. 2017;5:210–219.

- 41. Nori H., King N., McKinney S.M., Carignan D., Horvitz E. 2023. Capabilities of GPT-4 on medical challenge problems. http://arxiv.org/abs/2303.13375

- 42. Kung T.H., Cheatham M., Medenilla A., et al. Performance of ChatGPT on USMLE: potential for AI-assisted medical education using large language models. PLoS Digital Health. 2023;2 doi: 10.1371/journal.pdig.0000198.

- 43. Oh N., Choi G.-S., Lee W.Y. ChatGPT goes to operating room: evaluating GPT-4 performance and future direction of surgical education and training in the era of large language models. Ann Surg Treat Res. 2023;104(5):269–273. doi: 10.4174/astr.2023.104.5.269.

- 44. Gilson A., Safranek C.W., Huang T., et al. How does ChatGPT perform on the United States medical licensing examination? The implications of large language models for medical education and knowledge assessment. JMIR Med Educ. 2023;9 doi: 10.2196/45312.

- 45. Bommineni V.L., Bhagwagar S., Balcarcel D., Davazitkos C., Boyer D. 2023. Performance of ChatGPT on the MCAT: the road to personalized and equitable premedical learning. 2023.03.05.23286533.

- 46. Moons P., Van Bulck L. ChatGPT: can artificial intelligence language models be of value for cardiovascular nurses and allied health professionals. Eur J Cardiovasc Nurs. 2023 doi: 10.1093/eurjcn/zvad022.

- 47. Sallam M. ChatGPT utility in healthcare education, research, and practice: systematic review on the promising perspectives and valid concerns. Healthcare. 2023;11:887. doi: 10.3390/healthcare11060887.

- 48.Cascella M., Montomoli J., Bellini V., Bignami E. Evaluating the feasibility of ChatGPT in healthcare: an analysis of multiple clinical and research scenarios. J Med Syst. 2023;47:33. doi: 10.1007/s10916-023-01925-4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 49.Rao A., Kim J., Kamineni M., Pang M., Lie W., Succi M.D. Evaluating ChatGPT as an adjunct for radiologic decision-making. medRxiv. 2023 doi: 10.1016/j.jacr.2023.05.003. 2023.02.02.23285399. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 50.Balas M., Ing E.B. Conversational AI models for ophthalmic diagnosis: comparison of ChatGPT and the isabel pro differential diagnosis generator. JFO Open Ophthalmology. 2023;1 [Google Scholar]

- 51.Saluja S., Rudolfson N., Massenburg B.B., Meara J.G., Shrime M.G. The impact of physician migration on mortality in low and middle-income countries: an economic modelling study. BMJ Glob Health. 2020;5 doi: 10.1136/bmjgh-2019-001535. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 52.Ting D.S., Gunasekeran D.V., Wickham L., Wong T.Y. Next generation telemedicine platforms to screen and triage. Br J Ophthalmol. 2020;104:299–300. doi: 10.1136/bjophthalmol-2019-315066. [DOI] [PubMed] [Google Scholar]

- 53.Garzon-Chavez D., Romero-Alvarez D., Bonifaz M., et al. Adapting for the COVID-19 pandemic in Ecuador, a characterization of hospital strategies and patients. PLoS One. 2021;16 doi: 10.1371/journal.pone.0251295. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 54.Love S.M., Berg W.A., Podilchuk C., et al. Palpable breast lump triage by minimally trained operators in Mexico using computer-assisted diagnosis and low-cost ultrasound. JGO. 2018;4:1–9. doi: 10.1200/JGO.17.00222. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 55.Dwivedi Y.K., Pandey N., Currie W., Micu A. Leveraging ChatGPT and other generative artificial intelligence (AI)-based applications in the hospitality and tourism industry: practices, challenges and research agenda. IJCHM. 2023 doi: 10.1108/IJCHM-05-2023-0686. [DOI] [Google Scholar]

- 56.Sarraju A., Bruemmer D., Van Iterson E., Cho L., Rodriguez F., Laffin L. Appropriateness of cardiovascular disease prevention recommendations obtained from a popular online chat-based artificial intelligence model. JAMA. 2023;329(10):842–844. doi: 10.1001/jama.2023.1044. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 57.Ali S.R., Dobbs T.D., Hutchings H.A., Whitaker I.S. Using ChatGPT to write patient clinic letters. Lancet Digital Health. 2023;5(4):e179–e181. doi: 10.1016/S2589-7500(23)00048-1. [DOI] [PubMed] [Google Scholar]

- 58.Patel S.B., Lam K. ChatGPT: the future of discharge summaries? Lancet Digital Health. 2023;5:e107–e108. doi: 10.1016/S2589-7500(23)00021-3. [DOI] [PubMed] [Google Scholar]

- 59.Jeblick K., Schachtner B., Dexl J., et al. 2022. ChatGPT makes medicine easy to swallow: an exploratory case study on simplified radiology reports. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 60.Chan S.M.H., Mamat N.H., Nadarajah V.D. Mind your language: the importance of English language skills in an International Medical Programme (IMP) BMC Med Educ. 2022;22:405. doi: 10.1186/s12909-022-03481-w. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 61. Maher J. The development of English as an international language of medicine. Applied Linguistics. 1986;7:206–218.

- 62. Historical yearly trends in the usage statistics of content languages for websites. 2023. https://w3techs.com/technologies/history_overview/content_language/ms/y

- 63. Di Bitetti M.S., Ferreras J.A. Publish (in English) or perish: the effect on citation rate of using languages other than English in scientific publications. Ambio. 2017;46:121–127. doi: 10.1007/s13280-016-0820-7.

- 64. World Bank country and lending groups – World Bank Data Help Desk. https://datahelpdesk.worldbank.org/knowledgebase/articles/906519-world-bank-country-and-lending-groups

- 65. List of official languages by country and territory. Wikipedia; 2023. https://en.wikipedia.org/w/index.php?title=List_of_official_languages_by_country_and_territory&oldid=1169893098

- 66. Al Shamsi H., Almutairi A.G., Al Mashrafi S., Al Kalbani T. Implications of language barriers for healthcare: a systematic review. Oman Med J. 2020;35:e122. doi: 10.5001/omj.2020.40.

- 67. Jiao W., Wang W., Huang J., Wang X., Tu Z. Is ChatGPT a good translator? Yes with GPT-4 as the engine. arXiv. 2023 doi: 10.48550/ARXIV.2301.08745.

- 68. Lai V.D., Ngo N.T., Veyseh A.P.B., et al. 2023. ChatGPT beyond English: towards a comprehensive evaluation of large language models in multilingual learning. http://arxiv.org/abs/2304.05613

- 69. Harrer S. Attention is not all you need: the complicated case of ethically using large language models in healthcare and medicine. eBioMedicine. 2023;90 doi: 10.1016/j.ebiom.2023.104512.

- 70. Dahmen J., Kayaalp M., Ollivier M., et al. Artificial intelligence bot ChatGPT in medical research: the potential game changer as a double-edged sword. Knee Surg Sports Traumatol Arthrosc. 2023;31:1–3. doi: 10.1007/s00167-023-07355-6.

- 71. Shen X., Chen Z., Backes M., Zhang Y. 2023. In ChatGPT we trust? Measuring and characterizing the reliability of ChatGPT. http://arxiv.org/abs/2304.08979

- 72. Singhal K., Tu T., Gottweis J., et al. 2023. Towards expert-level medical question answering with large language models. http://arxiv.org/abs/2305.09617

- 73. Van Bulck L., Moons P. What if your patient switches from Dr. Google to Dr. ChatGPT? A vignette-based survey of the trustworthiness, value, and danger of ChatGPT-generated responses to health questions. Eur J Cardiovasc Nurs. 2023 doi: 10.1093/eurjcn/zvad038.

- 74. Ouyang L., Wu J., Jiang X., et al. 2022. Training language models to follow instructions with human feedback. http://arxiv.org/abs/2203.02155

- 75. Brown T.B., Mann B., Ryder N., et al. 2020. Language models are few-shot learners. http://arxiv.org/abs/2005.14165

- 76. Cao Y., Zhou L., Lee S., Cabello L., Chen M., Hershcovich D. 2023. Assessing cross-cultural alignment between ChatGPT and human societies: an empirical study. http://arxiv.org/abs/2303.17466

- 77. Haroz E.E., Bolton P., Gross A., Chan K.S., Michalopoulos L., Bass J. Depression symptoms across cultures: an IRT analysis of standard depression symptoms using data from eight countries. Soc Psychiatry Psychiatr Epidemiol. 2016;51:981–991. doi: 10.1007/s00127-016-1218-3.

- 78. McCradden M.D., Kirsch R.E. Patient wisdom should be incorporated into health AI to avoid algorithmic paternalism. Nat Med. 2023;29:765–766. doi: 10.1038/s41591-023-02224-8.

- 79. Alliance for Affordable Internet. 2020. Meaningful connectivity: a new target to raise the bar for internet access. https://a4ai.org/wp-content/uploads/2021/02/Meaningful-Connectivity_Public-.pdf

- 80. Satellites beat balloons in race for flying internet. BBC News; 2021. https://www.bbc.com/news/technology-55770141

- 81. How your data is used to improve model performance | OpenAI Help Center. https://help.openai.com/en/articles/5722486-how-your-data-is-used-to-improve-model-performance

- 82. Li H., Guo D., Fan W., Xu M., Song Y. 2023. Multi-step jailbreaking privacy attacks on ChatGPT. http://arxiv.org/abs/2304.05197

- 83. China mandates security reviews for AI services like ChatGPT - Bloomberg. https://www.bloomberg.com/news/articles/2023-04-11/china-to-mandate-security-reviews-for-new-chatgpt-like-services

- 84. Mgbako O., Miller E.H., Santoro A.F., et al. COVID-19, telemedicine, and patient empowerment in HIV care and research. AIDS Behav. 2020;24:1990–1993. doi: 10.1007/s10461-020-02926-x.

- 85. Cao B., Bao H., Oppong E., et al. Digital health for sexually transmitted infection and HIV services: a global scoping review. Curr Opin Infect Dis. 2020;33:44–50. doi: 10.1097/QCO.0000000000000619.

- 86. Tschandl P., Rinner C., Apalla Z., et al. Human–computer collaboration for skin cancer recognition. Nat Med. 2020;26:1229–1234. doi: 10.1038/s41591-020-0942-0.