Highlights

- Relationships across intelligence, cognition and neurocognitive disorders are complex.
- The way these constructs are conceptualized leads to various measurement approaches.
- Advanced data fitting models offered new conceptual and methodological frameworks.

Keywords: Intelligence; Cognition; Dementia; Executive functions; Structural equation model

Abstract

The study of intelligence's role in the development of major neurocognitive disorders (MND) is influenced by the approaches used to conceptualize and measure these constructs. In the field of cognitive impairment, a single ‘intelligence’ test is commonly used to estimate intelligence. Although this is a practical compromise between feasibility and construct coverage, the variance of such tests is only partially explained by general intelligence, and some tools (e.g., lexical tasks for premorbid intelligence) have inherent limitations. Alternatively, factorial models allow intelligence to be actually measured, rather than estimated, as a latent factor underlying all mental abilities. Royall and colleagues used structural equation modeling to decompose Spearman's general intelligence factor g into δ (variance shared across cognitive and functional measures) and g’ (variance shared across cognitive measures only). The authors defined δ as the ‘cognitive correlates of functional status’, and thus as a ‘phenotype for all cause dementia’. Compared with g’, δ explained only a small proportion of the variance in cognitive measures, but it showed higher accuracy in dementia case-finding. From a methodological perspective, given the ‘indifference’ of g to its indicators, further studies are needed to identify the minimal set of tools necessary to extract g, and to test non-cognitive variables as measures of δ. From a clinical perspective, general intelligence seems to influence MND presence and severity more than domain-specific cognitive abilities do. Given δ's ‘blindness’ to etiology, its association with biomarkers and its contribution to differential diagnosis might be limited. Classical neuropsychological approaches based on patterns of performance on cognitive tests remain fundamental for differential diagnosis.

1. Introduction

Intelligence is a complex construct whose role in the development of major neurocognitive disorders (MND) is intriguing, both in terms of its potentially ‘protective’ effect against age-related cognitive impairment and in terms of its relationship with domain-specific cognitive functions, which are typically evaluated through formal neuropsychological assessment in both clinical and research settings.

The study of intelligence's role in neurocognitive disorders is influenced by the approaches used to conceptualize and measure these multifaceted constructs. The purpose of this personal view is to discuss both usual clinical and research paradigms and advanced conceptual and statistical approaches focused on the association between intelligence and neurocognitive disorders.

2. Cognitive reserve, premorbid intelligence and neurocognitive disorders

Some important studies linked intelligence scores in childhood, adolescence and young adulthood with subsequent MND occurrence, but a recent systematic review concluded that the overall evidence is inconclusive [1], [2], [3]. The Lothian Birth Cohort (LBC) and Aberdeen Birth Cohort (ABC) studies, both derived from the Scottish Mental Surveys, explored the association between intelligence estimates obtained in 11-year-old schoolchildren and MND risk in later life [4], [5], [6], [7]. The main results showed that lower childhood mental ability was associated with an increased risk of MND, with some preliminary data suggesting differential effects across MND subtypes (i.e., stronger associations for vascular dementia, VaD, than for Alzheimer's disease, AD) and sexes (i.e., a dose-response association in women but not in men) [4,5]. The landmark Nun Study analyzed idea density and grammatical complexity, considered proxy measures of intelligence, in autobiographies written by novices at age 22, and found that low linguistic ability in early life was associated with poorer cognitive function and a higher incidence of AD later in life [8]. In a systematic review, the two studies that evaluated the effect of premorbid intelligence on the likelihood of developing MND yielded a combined OR = 0.58 (95% CI 0.44–0.77) for individuals with high versus low pre-dementia intelligence quotient (IQ), corresponding to 42% lower odds [9].
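
The pooled estimate above can be unpacked with a few lines of arithmetic. The sketch below (function names are illustrative, not taken from the cited review) converts the odds ratio into a relative reduction in odds and, assuming the reported 95% CI is a Wald interval symmetric on the log scale, recovers the standard error of log(OR). Note that 1 − OR is a reduction in odds, which approximates a reduction in risk only when the outcome is rare.

```python
import math


def odds_reduction(odds_ratio: float) -> float:
    """Relative reduction in odds implied by an odds ratio below 1."""
    return 1.0 - odds_ratio


def log_or_se_from_ci(lower: float, upper: float, z: float = 1.96) -> float:
    """Standard error of log(OR), assuming a Wald CI symmetric on the log scale."""
    return (math.log(upper) - math.log(lower)) / (2 * z)


# Values reported in the text for the pooled estimate [9]
pooled_or, ci_low, ci_high = 0.58, 0.44, 0.77

reduction = odds_reduction(pooled_or)
se_log_or = log_or_se_from_ci(ci_low, ci_high)
# The CI midpoint on the log scale should reproduce the point estimate
implied_or = math.exp((math.log(ci_low) + math.log(ci_high)) / 2)

print(f"reduction in odds: {reduction:.0%}")            # 42%
print(f"SE of log(OR): {se_log_or:.3f}")                # 0.143
print(f"OR implied by CI midpoint: {implied_or:.2f}")   # 0.58
```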

These studies support the cognitive reserve (CR) hypothesis, according to which some people's brains may be more resilient to aging and neurodegeneration than others', and ‘premorbid’ intelligence may be a protective factor because of the brain's ability to tolerate more damage and/or to compensate for damage through more efficient cognitive processing [10,11]. The construct of CR has been proposed to account for interindividual differences in susceptibility to age-related brain changes and pathology: individuals with high cognitive reserve would cope better with the same amount of pathology than individuals with low cognitive reserve [10]. Previous studies found that some life experiences seem to be associated with resilience against age- or pathology-related cognitive decline [10,11]. Overall, some sociodemographic (e.g., educational attainment, occupational status, literacy) and behavioral (e.g., engagement in cognitively stimulating, leisure, physical, and social activities) characteristics seem to have the potential to contribute to CR, but the mechanisms underlying these interactions need to be further elucidated [11]. Furthermore, these features have frequently been used as proxies for CR, which limits our ability to explore their role as risk/protective, mediating, or confounding factors.
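
The CR hypothesis is often operationalized as moderation: pathology lowers cognition less steeply when reserve is high, which appears as a positive pathology × reserve interaction in a regression model. The toy simulation below is a sketch under entirely assumed parameters (all variables and coefficients are hypothetical), fitted by ordinary least squares with NumPy, showing how the simple slopes of pathology differ between low- and high-reserve individuals.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 500

# Hypothetical data: pathology burden in [0, 1] and a standardized CR proxy
pathology = rng.uniform(0.0, 1.0, n)
reserve = rng.normal(0.0, 1.0, n)

# Assumed generating model: each unit of pathology costs less cognition when reserve is high
cognition = (0.5 - 2.0 * pathology + 0.3 * reserve
             + 0.8 * pathology * reserve + rng.normal(0.0, 0.5, n))

# Ordinary least squares with a pathology x reserve (moderation) term
X = np.column_stack([np.ones(n), pathology, reserve, pathology * reserve])
beta, *_ = np.linalg.lstsq(X, cognition, rcond=None)
b0, b_path, b_res, b_int = beta

# Simple slopes: effect of pathology at reserve = -1 and +1 (about ±1 SD here)
slope_low = b_path + b_int * (-1.0)
slope_high = b_path + b_int * (+1.0)
print(f"interaction: {b_int:.2f}")
print(f"pathology slope, low reserve: {slope_low:.2f}; high reserve: {slope_high:.2f}")
```

A shallower (less negative) slope at high reserve is the pattern the CR hypothesis predicts; with real data, the proxy chosen for reserve largely determines what such an interaction can be taken to mean.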

3. Common measures of intelligence in studies on neurocognitive disorders

Among the various approaches developed to estimate premorbid intelligence, those based on demographic regression equations, irregular word reading tasks and lexical decision-making tasks are the most common [12]. Regression-equation methods estimate premorbid intelligence from socio-demographic characteristics; although these variables are certainly correlated with intelligence, the extent of their actual overlap needs further exploration [12]. Irregular word reading tasks (e.g., the National Adult Reading Test, NART) and lexical decision-making tasks are based on language abilities (i.e., pronouncing irregularly spelled words and distinguishing real words from pseudo-words, respectively) that are thought to be relatively unaffected by cognitive decline [13]. Studies on healthy subjects have shown a strong association between scores on these tasks and general intelligence [12]. Results from a recent study based on moderated hierarchical regression models suggested that verbal premorbid intelligence (measured with the NART) should be preferred as a CR proxy over other common socio-demographic proxies [14]. Despite an intriguing similarity between premorbid and crystallized intelligence (i.e., knowledge-based abilities dependent on education and experience) [15], some findings suggest that disease severity influences verbal premorbid intelligence estimated with lexical tasks in clinical populations, and caution against the risk of underestimating prior cognitive functioning in people living with MND [12].
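
The regression-equation approach can be sketched in two steps: derive coefficients in a healthy normative sample, then apply them to a patient's demographic profile. Everything below is simulated and hypothetical (variables, coefficients, occupational coding); it is not a published equation. The residual standard deviation shows how much variance in measured intelligence the demographic predictors leave unexplained, which is the overlap issue raised above.

```python
import numpy as np

rng = np.random.default_rng(1)
n = 300

# Hypothetical healthy normative sample (all values simulated)
education = rng.integers(5, 21, n).astype(float)    # years of schooling
occupation = rng.integers(1, 6, n).astype(float)    # hypothetical 1-5 occupational level
measured_iq = 70 + 1.5 * education + 3.0 * occupation + rng.normal(0.0, 10.0, n)

# Derive the regression equation in the normative sample
X = np.column_stack([np.ones(n), education, occupation])
coef, *_ = np.linalg.lstsq(X, measured_iq, rcond=None)
residual_sd = float(np.std(measured_iq - X @ coef, ddof=X.shape[1]))


def estimate_premorbid_iq(years_education: float, occupation_level: float) -> float:
    """Apply the normative equation to a patient's demographic profile."""
    return float(coef @ np.array([1.0, years_education, occupation_level]))


estimate = estimate_premorbid_iq(12, 3)
print(f"estimated premorbid IQ: {estimate:.1f}")
print(f"residual SD left unexplained by demographics: {residual_sd:.1f}")
```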

Furthermore, the relationship between graphemes (letters) and phonemes (sounds) varies across languages [16]. In some languages (e.g., Spanish), the phonological forms of words are transparently represented in the orthography and the grapheme–phoneme relationship is consistent, whereas in others (e.g., English) the correspondence between spelling and sound is not transparent [16]. In opaque languages, reading irregular words relies on familiarity rather than on pronunciation rules. As a result, adapting irregular word reading tasks to languages with high grapheme–phoneme consistency, which contain few irregular words, poses particular challenges, and premorbid intelligence estimates obtained with these tasks may vary as a function of the grapheme–phoneme consistency of a given language [17]. Finally, the use of these tasks in patients with specific language deficits (e.g., learning disorders, aphasia, or language variants of MND) has several limitations [12].

Intelligence can also be assessed with a range of specific tools. The Wechsler intelligence batteries, which include the Wechsler Adult Intelligence Scale (WAIS) and the Wechsler Intelligence Scale for Children (WISC), are the most widely used intelligence assessments and are also frequently applied in neuropsychological evaluations [18], [19], [20], [21], [22], [23], [24], [25]. Besides providing an estimate of general intelligence, Wechsler's batteries introduced a distinction between verbal and performance intelligence, which are closely related to the crystallized and fluid (i.e., the ability to solve novel problems through reasoning) components of intelligence, respectively [15]. However, a longstanding criticism is that these batteries place disproportionate emphasis on measures of the crystallized component, and that their administration is time-consuming and expensive. A practical compromise is the use of a single ‘intelligence’ test. Raven's Progressive Matrices (RPM) and the Culture Fair Test (CFT) are widely used as measures of intelligence and, since they use non-verbal materials, they are often considered closely related to both the fluid and the performance components of intelligence [26,27]. Visuo-spatial reasoning tests such as the RPM and CFT are also assumed to be relatively unbiased by cultural differences (‘culture-free’), because they involve no verbal content and are based on basic visual forms [28]. Despite its popularity, this assumption of culture-fairness is now debated, and several studies have shown multiple cultural differences in the perception, manipulation, and conceptualization of visuo-spatial materials [29].

Among the cognitive tests commonly used in clinical practice, previous studies have pointed out that some executive function (EF) tests (e.g., the Wisconsin Card Sorting Test and Verbal Fluency) are closely linked to fluid intelligence, and that the executive dysfunction observed in some clinical conditions can be interpreted as reflecting a decrease in fluid intelligence [30]. A recent meta-analysis of the relationships between the Wisconsin Card Sorting Test and intelligence found that the overall shared variance was modest: just one third of the test's variability could be accounted for by variability in common indicators of intelligence [31]. Studies on the genetic associations between EF and general intelligence suggest that they are overlapping but separable at the genetic variant and molecular pathway levels, and that, in adulthood, common EF abilities are distinct from intelligence, which instead seems to be associated with EF abilities specific to working memory updating [32,33]. Overall, research has shown that executive functions only partially correspond to the psychometric concept of intelligence, and their relationship remains controversial. On the other hand, executive functions broadly encompass the cognitive skills responsible for planning, initiating, sequencing, and monitoring complex goal-directed behavior, and are a major determinant of problem behavior and disability. This may explain the relatively robust associations between executive function measures and functional outcomes [34].
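
Shared variance between two measures is the square of their correlation. As a quick worked check (helper names are ours, for illustration only), one third of shared variance corresponds to a correlation of about .58, so even a fairly high correlation leaves most of a test's variance unaccounted for.

```python
import math


def shared_variance(r: float) -> float:
    """Proportion of variance two measures share, given their correlation r."""
    return r ** 2


def correlation_for(proportion: float) -> float:
    """Correlation magnitude needed to share a given proportion of variance."""
    return math.sqrt(proportion)


print(f"{correlation_for(1 / 3):.2f}")   # 0.58: r needed for one third shared variance
print(f"{shared_variance(0.5):.2f}")     # 0.25: r = .5 leaves three quarters unshared
```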

4. Factorial models of intelligence

Among theoretical models of intelligence, Spearman's g model and the Cattell–Horn–Carroll model have been widely applied in the neurosciences. Spearman identified a general intelligence factor, named g: a single common factor constituted by the variance shared by all the tests in a cognitive battery, based on the postulate that all cognitive measures are influenced by intelligence [35]. Spearman's g model is bi-factor, as it considers two levels: g, which explains the variance common to all measured variables, and specific independent factors that account for the residual variance in each measured variable [36]. The Cattell–Horn–Carroll model builds on the former but includes three ascending levels of factors: highly specialized task-specific abilities, broad cognitive abilities (e.g., fluid and crystallized intelligence), and general intelligence g, which affects all other abilities [37]. The Cattell–Horn–Carroll model is hierarchical, as it introduces an intermediate level of group factors (i.e., primary cognitive abilities) between g and the specific factors [36]. Exploratory factor analyses of this hierarchical model showed that the group factors were positively intercorrelated, and thus do not reflect distinct (orthogonal) abilities [38], [39], [40]. A confirmatory factor analysis of Carroll's datasets further showed that the hierarchical model extracted many intercorrelated group factors whose contribution beyond that of g was scarce [41]. The relatively poor fit of the hierarchical model to empirical data supports the bi-factor model, which also satisfies the parsimony criterion, and thus the existence of a general intelligence factor manifesting in all cognitive measures [41,42].
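
A single common factor can be made concrete by writing out the correlations it implies. The sketch below uses hypothetical loadings and omits group factors entirely; it shows that one factor reproduces a positive manifold (all tests correlate positively), with each test's correlation with another equal to the product of their g loadings, while leaving each test its own specific variance.

```python
import numpy as np

# Hypothetical standardized g loadings for four cognitive tests
g_loadings = np.array([0.8, 0.7, 0.6, 0.5])

# With one common factor and uncorrelated specific factors, the implied
# correlation between tests i and j is the product of their g loadings
implied = np.outer(g_loadings, g_loadings)
np.fill_diagonal(implied, 1.0)          # standardized tests have unit variance

variance_from_g = g_loadings ** 2       # communality of each test
specific_variance = 1.0 - variance_from_g

print(np.round(implied, 2))
print("variance explained by g:", np.round(variance_from_g, 2))
print("specific variance:", np.round(specific_variance, 2))
```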

5. Advanced conceptual and statistical approaches to intelligence and neurocognitive disorders

Overall, in the field of cognitive impairment, a single ‘intelligence’ test is commonly used to estimate intelligence. Although this is a practical compromise between feasibility and construct coverage, the validity of an intelligence test rests on its loading on general intelligence and, as with any other measured mental ability, its variance is only partially explained by this factor. Alternatively, factorial models allow intelligence to be actually measured as a latent factor underlying all mental abilities, and advanced statistical approaches such as structural equation modeling (SEM) have the potential to increase the informativeness and complexity of factorial approaches.

From a statistical point of view, SEM allows the representation of various aspects of a complex phenomenon. On the one hand, SEM includes both observed and latent variables, the latter representing underlying constructs that are thought to exist but cannot be measured directly. On the other hand, these models postulate causal paths linking latent variables to observed ones, and can help disentangle interaction and mediation effects. SEM is not only of methodological value: it also provides a conceptual framework that hypothesizes and describes the multifaceted and multidimensional nature of phenomena.
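
A full latent-variable SEM requires dedicated software, but the logic of a mediation path can be sketched with observed variables only. The example below is a simplified path analysis on simulated data (variable names and coefficients are hypothetical), estimated with separate least-squares regressions rather than a joint SEM fit: the indirect effect is the product of the x → m and m → y paths, and with OLS the total effect equals the direct plus the indirect effect.

```python
import numpy as np

rng = np.random.default_rng(2)
n = 1000

# Simulated observed variables (hypothetical): predictor x, mediator m, outcome y
x = rng.normal(size=n)
m = 0.6 * x + rng.normal(scale=0.8, size=n)
y = 0.5 * m + 0.1 * x + rng.normal(scale=0.8, size=n)


def ols(predictors, outcome):
    """Least-squares coefficients; an intercept column is prepended."""
    X = np.column_stack([np.ones(len(outcome)), *predictors])
    coef, *_ = np.linalg.lstsq(X, outcome, rcond=None)
    return coef


a = ols([x], m)[1]                # path x -> m
_, direct, b = ols([x, m], y)     # direct path x -> y and path m -> y
indirect = a * b
total = ols([x], y)[1]            # total effect of x on y

print(f"a={a:.2f} b={b:.2f} indirect={indirect:.2f} direct={direct:.2f} total={total:.2f}")
```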

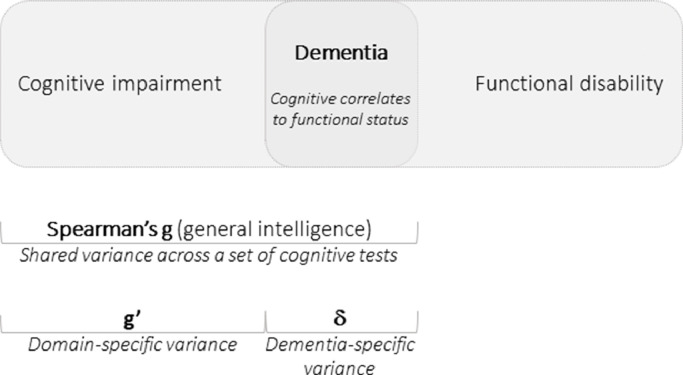

Approximately one decade ago, Royall and colleagues proposed a new conceptualization of dementia as a latent variable (δ), which the authors defined as the ‘cognitive correlates of functional status’ and which has been proposed as a ‘phenotype for all cause dementia’ [43,44]. Specifically, Royall and colleagues used SEM to decompose Spearman's g into the variance shared across cognitive and functional measures (δ) and the variance shared across cognitive measures only (g’) (Fig. 1) [43,44]. As δ takes into account a core feature of MND (i.e., the association between functional and cognitive decline), it represents the ‘dementia-specific variance’ of cognitive performance and is thus considered relevant to MND diagnosis [43,44]. Conversely, g’ is a strictly cognitive construct aligned with the traditional view of MND as domain-specific. Specifically, g’ represents the ‘domain-specific variance’ of cognitive performance, and it is considered irrelevant to MND diagnosis because it lacks an association with disability [43,44].

Fig. 1.

Model of the dementing process based on the decomposition of Spearman's g latent factor into dementia-specific (δ) and domain-specific (g’) variance of cognitive performance.
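
To make the δ/g’ decomposition concrete, the sketch below writes out the covariance structure implied by a model with two orthogonal, unit-variance factors: cognitive tests load on both, while a functional indicator (here a generic IADL score) loads on δ only. Indicator names and loadings are hypothetical and chosen for illustration; estimating such a model from data would require SEM software. Because the functional measure loads only on δ, its covariance with every test is carried entirely by the δ loadings, which is what ties δ to disability.

```python
import numpy as np

# Hypothetical indicators and standardized loadings on two orthogonal factors
indicators = ["memory", "fluency", "matrices", "trails", "IADL"]
delta = np.array([0.35, 0.30, 0.45, 0.40, 0.60])     # dementia-specific factor (δ)
g_prime = np.array([0.60, 0.55, 0.50, 0.45, 0.00])   # cognition-only factor (g'); IADL fixed at 0

# Implied covariance of standardized indicators: Sigma = L L^T + Psi
L = np.column_stack([delta, g_prime])
common = L @ L.T
uniqueness = 1.0 - np.diag(common)
sigma = common + np.diag(uniqueness)

iadl = indicators.index("IADL")
tests = [i for i in range(len(indicators)) if i != iadl]

# The functional indicator covaries with each test only through δ
cov_with_iadl = sigma[tests, iadl]
for i, cov in zip(tests, cov_with_iadl):
    print(f"{indicators[i]:>8}: cov with IADL={cov:.3f}  "
          f"var from δ={delta[i] ** 2:.3f}  var from g'={g_prime[i] ** 2:.3f}")
```

With these assumed loadings, δ accounts for less of each test's variance than g’, mirroring the pattern reported below, even though δ alone links the tests to the functional measure.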

δ has been validated in both healthy and clinical samples using assessment methods ranging from small to comprehensive test batteries [43], [44], [45], [46], [47], [48], [49], [50]. Previous studies by Royall and colleagues consistently showed that δ explained a very small proportion of the total variance in a set of cognitive measures, and this evidence raises critical questions about the real impact of cognitive performance on MND diagnosis [43,44]. On the other hand, δ's variance proved more clinically useful than that of g’: δ showed high accuracy in discriminating between persons with and without MND in samples with multiple etiologies or varying degrees of severity [43], [44], [45], [46], [47], [48], [49], [50]. Furthermore, δ was associated with MND severity, as measured by the Clinical Dementia Rating (CDR) scale, in both cross-sectional and longitudinal analyses [43], [44], [45], [48]. Finally, δ was most strongly related to nonverbal measures, which are known to show the strongest associations with functional outcomes, whereas g’ was most strongly related to verbal measures [43].
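
Discriminative accuracy of this kind is commonly summarized by the area under the ROC curve (AUC). A minimal sketch, assuming (hypothetically) that lower d-scores indicate greater impairment and using made-up scores, computes the AUC through its pairwise (Mann–Whitney) interpretation: the probability that a randomly chosen case scores lower than a randomly chosen control.

```python
import numpy as np


def auc_lower_is_case(case_scores, control_scores) -> float:
    """AUC as P(case score < control score), counting ties as one half.

    Assumes lower scores indicate greater impairment (hypothetical convention).
    """
    cases = np.asarray(case_scores, dtype=float)[:, None]
    controls = np.asarray(control_scores, dtype=float)[None, :]
    wins = np.sum(cases < controls) + 0.5 * np.sum(cases == controls)
    return float(wins / (cases.size * controls.size))


# Hypothetical d-scores for persons with and without MND
mnd = [-1.2, -0.8, -0.3, 0.1]
no_mnd = [-0.2, 0.3, 0.5, 0.9]
print(auc_lower_is_case(mnd, no_mnd))   # 0.9375 (15 of 16 pairs correctly ordered)
```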

This approach has clinical and methodological implications. On the one hand, it introduces a new conceptualization of the role of intelligence and cognitive functions in the development of neurocognitive disorders. On the other hand, it differs from the classical methods used to estimate these constructs.

From a clinical point of view, general intelligence seems to influence the presence and severity of MND much more than domain-specific cognitive abilities do. However, recent studies showed that δ appears ‘blind’ to MND etiology [50,51]. John and colleagues found that, while domain-specific variance (g’, residual to δ) was able to distinguish the most common MND subtypes (i.e., AD, VaD, dementia with Lewy bodies, and frontotemporal dementia), δ scores were not. Accordingly, the burden of pathological mechanisms involving specific brain structures is likely to influence domain-specific factors (e.g., memory or executive functions) [51]. These findings suggest that the latent variable δ is a measure of MND severity that is useful for an accurate diagnosis, while the patterns of performance across cognitive tests provide disease characterizations that are useful in differentiating between MND subtypes (differential diagnosis) [51].

If, as proposed by Royall and colleagues, ‘δ represents a phenotype for the dementia syndrome itself, regardless of etiology’, its association with biomarkers is likely to be limited and to vary with sample characteristics. Given that intelligence could be conceptualized as a measure of the global efficiency of brain networks, Royall and colleagues hypothesized that connectivity could represent a potential biomarker of the ‘dementia phenotype’ δ. In line with this, an association was found between δ and the Default Mode Network (DMN) in AD cohorts [52,53]. The DMN's potential association with AD pathology seems to be supported by its involvement in both autobiographical tasks and amyloid-β deposition in pre-clinical phases [52]. Further research is needed to verify whether other neural networks and/or imaging biomarkers may reflect the relative contribution of different underlying pathologies to δ. In a recent study, Royall and Palmer investigated the effects of neuroimaging biomarkers of ischemic cerebrovascular disease (ICVD) on two latent variables derived from performance on executive measures (EF and dEF, representing ‘executive-specific homologs’ of g’ and δ, respectively). They found that neuroimaging biomarkers of ICVD were associated with both EF and dEF, but that the latent domain-specific EF factor was related to disability only via general intelligence (dEF). The authors therefore concluded that the associations of ICVD biomarkers with EF were irrelevant to functional status, and thus to the association between ICVD and MND. They also argued that g, being ‘indifferent’ to its indicators and ‘agnostic’ to etiology, is unlikely to be localized as a discrete brain function within specific lobes or circuits, and that the ‘dementia-specific variance’ in executive measures may be independent of the structures and functions involved in frontal networks.
On the other hand, some variance in executive measures was associated with ICVD biomarkers independently of g/δ, which could lead to the hypothesis that a portion of domain-specific EF variance is related to frontal structures and functions. As the authors also discussed in a previous study on executive functions and intelligence, the evidence might suggest that the variance of executive measures reflects both frontal functions (which may load on localizable factors) and ‘pure’ executive functions (which may load on general intelligence, and thus be somewhat indistinguishable from it) [54].

From a methodological point of view, the use of Spearman's g construct within an SEM approach also seems to have the potential to refine our measurement of intelligence and of its multifaceted role in dementing processes.

As previously discussed, premorbid intelligence is usually ‘estimated’ from verbal tasks (irregular word reading and lexical decision-making), whereas the approach proposed by Royall and colleagues actually measures intelligence as a latent factor underlying all cognitive abilities, in line with Spearman's original conception. Within this paradigm, a single intelligence test can only provide an ‘estimate’ of g, because this latent variable has to be derived from a battery rather than from a single measure, and the variance of a single test has multiple sources and is only partially explained by g. Studies on healthy subjects have shown an association between scores on the above-mentioned lexical tasks and general intelligence [22]. In light of previous findings from Royall and colleagues, this association could be expected to involve mainly g’, whose link with verbal measures has already been shown and whose similarity to crystallized intelligence is intriguing. The extent to which premorbid intelligence estimates based on lexical tasks are explained by g’ and/or represent a crystallized component of intelligence needs to be further explored.
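
The point that g should be derived from a battery rather than a single test can be illustrated under a single-factor model: a unit-weighted composite of several tests correlates more strongly with g than any of its components. The sketch below assumes hypothetical loadings and uncorrelated specific factors; the covariance of the composite with g is the sum of the loadings, and its variance is the sum of all implied correlations.

```python
import numpy as np


def composite_g_correlation(loadings) -> float:
    """Correlation between g and an unweighted sum of standardized tests,
    assuming a single-factor model with uncorrelated specific factors."""
    lam = np.asarray(loadings, dtype=float)
    implied = np.outer(lam, lam)
    np.fill_diagonal(implied, 1.0)
    # cov(sum, g) = sum of loadings; var(sum) = sum of every implied correlation
    return float(lam.sum() / np.sqrt(implied.sum()))


battery = [0.7, 0.6, 0.6, 0.5, 0.5, 0.4]   # hypothetical g loadings
print(f"best single test: r = {max(battery):.2f}")                         # 0.70
print(f"six-test composite: r = {composite_g_correlation(battery):.2f}")   # 0.85
```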

The use of Spearman's g construct to measure intelligence has further methodological implications, in terms of both the range and type of variables from which it can be derived and its potential to yield δ as a global ‘dementia phenotype’ score for each individual.

Because Spearman's g contributes to every cognitive measure, δ can potentially be constructed from any battery that contains both cognitive and functional measures. To understand the real impact that δ could have on our approach to MND diagnosis, further studies are needed on both the minimal set of tools required to extract δ and the impact that the characteristics of a specific cognitive battery might have on δ's psychometric properties. Once δ is available as a latent factor, a composite ‘d-score’ can be derived for each individual by multiplying the score of each observed indicator by its weight and summing the products. The resulting ‘d-score’ is a continuous measure of the ‘dementia phenotype’ across the MND spectrum, and it could be used as a measure of severity and to determine clinically relevant thresholds that support clinicians' decision-making.
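
The d-score computation amounts to a weighted sum of standardized indicator scores. How the weights are obtained is not detailed here; the sketch below assumes, as one common option, regression-method (Thurstone) factor-score weights, w = Σ⁻¹λ_δ, computed from a hypothetical δ/g’ model with orthogonal, unit-variance factors. Loadings, indicator order, and the patient profile are invented for illustration, and all indicators are assumed to be scored so that lower values mean worse performance.

```python
import numpy as np

# Hypothetical standardized loadings on orthogonal factors δ and g'
# (order: memory, fluency, matrices, trails, IADL)
delta = np.array([0.35, 0.30, 0.45, 0.40, 0.60])
g_prime = np.array([0.60, 0.55, 0.50, 0.45, 0.00])

L = np.column_stack([delta, g_prime])
sigma = L @ L.T
np.fill_diagonal(sigma, 1.0)   # standardized indicators: communality + uniqueness = 1

# Regression-method factor-score weights for δ: solve Sigma @ w = lambda_delta
weights = np.linalg.solve(sigma, delta)


def d_score(z_scores) -> float:
    """Multiply each standardized indicator by its weight and sum the products."""
    return float(np.dot(weights, np.asarray(z_scores, dtype=float)))


patient = [-1.5, -1.0, -1.2, -1.4, -1.8]   # hypothetical z-scores
print("weights:", np.round(weights, 3))
print(f"d-score: {d_score(patient):.2f}")
```

In this assumed model the functional indicator receives the largest weight, because it is the only indicator free of g’ variance; any clinical threshold on such a score would have to be derived empirically in a reference sample.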

Given the assumption that g is ‘empirically indifferent’ to its measured variables, as it encompasses all cognitive abilities, Royall and colleagues propose conceptualizing intelligence as an intrinsic general property of brain integration that encompasses several quantifiable variables [49,55]. In this view, g may also manifest in non-cognitive domains, and behavioral and autonomic variables could be tested within the models. In a recent study on behavioral and psychological symptoms of dementia (BPSD), Royall and Palmer found that δ was associated with both single and total BPSD burden, suggesting the existence of a ‘dementia-specific behavioral profile’ related to general intelligence and likely independent of specific etiologic mechanisms [55]. Given the growing interest in, and emerging evidence on, non-cognitive symptoms that may represent early and non-invasive markers of MND, further studies should also verify the association of δ with other sensory or motor changes [56].

6. Conclusions

Several issues remain unresolved in the complex relationships among intelligence, cognitive functions, and neurocognitive disorders. The way we conceptualize intelligence determines both how we measure it and the approach we use to study its association with other constructs, and the same is true for neurocognitive disorders.

In clinical neuropsychology, the cognitive contribution to neurocognitive disorders is traditionally evaluated by analyzing patterns of impaired performance on cognitive tests. This method uses the unique variance of each cognitive test to classify performance. On the other hand, the variance shared across tests has been used to extract composite measures or latent constructs representing, for example, specific cognitive domains (e.g., memory, executive functions). Royall and colleagues extended the latter approach to a new statistical conceptualization of dementia as a ‘state of functional incapacity engendered by cognitive declines’ [55]. Available evidence seems to support a dissociation between these approaches in terms of clinical utility: models based on extracting composite/latent constructs seem most useful for determining the presence and severity of cognitive impairment, while models based on analyzing patterns of performance on cognitive tests seem to contribute most to differential diagnosis [51].

As accuracy is important both in MND case-finding and in differential diagnosis, both approaches are fundamental to clinical decision-making. Advanced modeling methods based on data fitting, such as SEM, allow a fine-grained examination and comparison of these different scenarios.

Author declaration

Intellectual property

I confirm that I have given due consideration to the protection of intellectual property associated with this work and that there are no impediments to publication, including the timing of publication, with respect to intellectual property. In so doing I confirm that I have followed the regulations of our institutions concerning intellectual property.

Research ethics

Not applicable.

Authorship

The authors meet the ICMJE criteria.

Funding

None.

Declaration of Competing Interest

The authors declare that there is no conflict of interest.

References

- 1.Yeo R.-A., Arden R., Jung R.E. Alzheimer's disease and intelligence. Curr. Alzheimer Res. 2011;8(4):345–353. doi: 10.2174/156720511795745276. [DOI] [PubMed] [Google Scholar]

- 2.Russ T.C. Intelligence, cognitive reserve, and dementia: time for intervention? JAMA Netw. Open. 2018;1(5) doi: 10.1001/jamanetworkopen.2018.1724. [DOI] [PubMed] [Google Scholar]

- 3.Rodriguez F.S., Lachmann T. Systematic review on the impact of intelligence on cognitive decline and dementia risk. Front. Psychiatry. 2020;11:658. doi: 10.3389/fpsyt.2020.00658. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Deary I.J., Whalley L.J., Starr J.M. American Psychological Association; Washington, DC: 2009. A Lifetime of Intelligence: Follow-up Studies of the Scottish Mental Surveys of 1932 and 1947. [DOI] [Google Scholar]

- 5.McGurn B., Deary I.J., Starr J.M. Childhood cognitive ability and risk of late-onset Alzheimer and vascular dementia. Neurology. 2008;71(14):1051–1056. doi: 10.1212/01.wnl.0000319692.20283.10. [DOI] [PubMed] [Google Scholar]

- 6.Taylor A.M., Pattie A., Deary I.J. Cohort Profile update: the Lothian birth cohorts of 1921 and 1936. Int. J. Epidemiol. 2018 doi: 10.1093/ije/dyy022. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 7.Whalley L.J., Starr J.M., Athawes R., Hunter D., Pattie A., Deary I.J. Childhood mental ability and dementia. Neurology. 2000;55(10):1455–1459. doi: 10.1212/WNL.55.10.1455. [DOI] [PubMed] [Google Scholar]

- 8.Snowdon D.A., Kemper S.J., Mortimer J.A., Greiner L.H., Wekstein D.R., Markesbery W.R. Linguistic ability in early life and cognitive function and Alzheimer's disease in late life. Findings from the Nun Study. JAMA. 1996;275(7):528–532. [PubMed] [Google Scholar]

- 9.Valenzuela M.J., Sachdev P. Brain reserve and dementia: a systematic review. Psychol. Med. 2006;36(4):441–454. doi: 10.1017/S003329170500626. [DOI] [PubMed] [Google Scholar]

- 10.Stern Y. Cognitive reserve in ageing and Alzheimer's disease. Lancet Neurol. 2012;11(11):1006–1012. doi: 10.1016/S1474-4422(12)70191-6. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Stern Y. What is cognitive reserve? Theory and research application of the reserve concept. J. Int. Neuropsychol. Soc. 2002;8(3):448–460. [PubMed] [Google Scholar]

- 12.Nelson H.E. NFER-Nelson; Windsor, UK: 1982. The National Adult Reading Test (NART): Test Manual. [Google Scholar]

- 13.Overman M.J., Leeworthy S., Welsh T.J. Estimating premorbid intelligence in people living with dementia: a systematic review. Int. Psychogeriatr. 2021;33(11):1145–1159. doi: 10.1017/S1041610221000302. [DOI] [PubMed] [Google Scholar]

- 14.Boyle R., Knight S.P., De Looze C., et al. Verbal intelligence is a more robust cross-sectional measure of cognitive reserve than level of education in healthy older adults. Alzheimers Res. Ther. 2021;13(1):128. doi: 10.1186/s13195-021-00870-z. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Cattell R.B. Some theoretical issues in adult intelligence testing. Psychol. Bull. 1941;38:592. [Google Scholar]

- 16.Borleffs E., Maassen B.A.M., Lyytinen H., Zwarts F. Measuring orthographic transparency and morphological-syllabic complexity in alphabetic orthographies: a narrative review. Read Writ. 2017;30(8):1617–1638. doi: 10.1007/s11145-017-9741-5. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Schrauf R.W., Weintraub S., Navarro E. Is adaptation of the word accentuation test of premorbid intelligence necessary for use among older, Spanish-speaking immigrants in the United States? [published correction appears in J Int Neuropsychol Soc. 2008 Mar;14(2):349] J. Int. Neuropsychol. Soc. 2006;12(3):391–399. doi: 10.1017/s1355617706060462. [DOI] [PubMed] [Google Scholar]

- 18.Wechsler D. The Psychological Corporation; New York, NY, USA: 1955. Wechsler Adult Intelligence Scale: Manual. [Google Scholar]

- 19.Wechsler D. The Psychological Corporation; New York, NY, USA: 1981. Wechsler Adult Intelligence Scale–Revised. [Google Scholar]

- 20.Wechsler D. The Psychological Corporation; San Antonio, TX, USA: 1997. Wechsler Adult Intelligence Scale (WAIS-III): Administration and Scoring Manual. [Google Scholar]

- 21.Wechsler D. The Psychological Corporation; San Antonio, TX, USA: 2008. Wechsler Adult Intelligence Scale (WAIS–IV) [Google Scholar]

- 22.Wechsler D. The Psychological Corporation; New York, NY, USA: 1949. Wechsler Intelligence Scale For Children (WISC): Manual. [Google Scholar]

- 23.Wechsler D. The Psychological Corporation; New York, NY, USA: 1974. Manual of the Wechsler Intelligence Scale for Children–Revised. [Google Scholar]

- 24.Wechsler D. The Psychological Corporation; San Antonio, TX, USA: 1991. WISC-III Wechsler Intelligence Scale for Children: Manual. [Google Scholar]

- 25.Wechsler D. The Psychological Corporation; San Antonio, TX, USA: 2003. Wechsler Intelligence Scale for Children (WISC-IV) [Google Scholar]

- 26.Raven J.C., Court J., Raven J. H.K. Lewis; London, UK: 1976. Manual for Raven's Progressive Matrices.

- 27.Cattell R.B., Cattell A.K. Institute for Personality and Ability Testing; Champaign, IL, USA: 1973. Measuring Intelligence with the Culture Fair Tests.

- 28.Duncan J. Yale University Press; London, UK: 2010. How Intelligence Happens.

- 29.Gonthier C. Cross-cultural differences in visuo-spatial processing and the culture-fairness of visuo-spatial intelligence tests: an integrative review and a model for matrices tasks. Cogn. Res. Princ. Implic. 2022;7(1):11. doi: 10.1186/s41235-021-00350-w.

- 30.Roca M., Manes F., Chade A., et al. The relationship between executive functions and fluid intelligence in Parkinson's disease. Psychol. Med. 2012;42(11):2445–2452. doi: 10.1017/S0033291712000451.

- 31.Kopp B., Maldonado N., Scheffels J.F., Hendel M., Lange F. A meta-analysis of relationships between measures of Wisconsin card sorting and intelligence. Brain Sci. 2019;9(12):349. doi: 10.3390/brainsci9120349. Published 2019 Nov 29.

- 32.Gustavson D.E., Reynolds C.A., Corley R.P., Wadsworth S.J., Hewitt J.K., Friedman N.P. Genetic associations between executive functions and intelligence: a combined twin and adoption study. J. Exp. Psychol. Gen. 2022;151(8):1745–1761. doi: 10.1037/xge0001168.

- 33.Ciobanu L.G., Stankov L., Schubert K.O., et al. General intelligence and executive functioning are overlapping but separable at genetic and molecular pathway levels: an analytical review of existing GWAS findings. PLoS One. 2022;17(10):e0272368. doi: 10.1371/journal.pone.0272368. Published 2022 Oct 17.

- 34.Royall D.R., Lauterbach E.C., Kaufer D., et al. The cognitive correlates of functional status: a review from the Committee on Research of the American Neuropsychiatric Association. J. Neuropsychiatry Clin. Neurosci. 2007;19(3):249–265. doi: 10.1176/jnp.2007.19.3.249.

- 35.Spearman C. General intelligence, objectively determined and measured. Am. J. Psychol. 1904;15:201–293.

- 36.Beaujean A.A. John Carroll's views on intelligence: bi-factor vs. higher-order models. J. Intell. 2015;3(4):121–136. doi: 10.3390/jintelligence3040121.

- 37.McGrew K.S. CHC theory and the human cognitive abilities project: standing on the shoulders of the giants of psychometric intelligence research. Intelligence. 2009;37:1–10.

- 38.Carroll J.B. Cambridge University Press; Cambridge: 1993. Human Cognitive Abilities: A Survey of Factor-Analytic Studies.

- 39.Gustafsson J.E., Undheim J.O. In: Handbook of Educational Psychology. Berliner D.C., Calfee R.C., editors. Macmillan; New York, NY: 1996. Individual differences in cognitive functions.

- 40.Flanagan D.P., Dixon S.G. In: Encyclopedia of Special Education. Reynolds C.R., Vannest K.J., Fletcher-Janzen E., editors. 2014. The Cattell-Horn-Carroll theory of cognitive abilities.

- 41.Benson N.F., Beaujean A.A., McGill R.J., Dombrowski S.C. Revisiting Carroll's survey of factor-analytic studies: implications for the clinical assessment of intelligence. Psychol. Assess. 2018;30(8):1028–1038. doi: 10.1037/pas0000556.

- 42.Cole J.C., Randall M.K. Comparing the cognitive ability models of Spearman, Horn and Cattell and Carroll. J. Psychoeduc. Assess. 2003;21(2):160–179. doi: 10.1177/073428290302100204.

- 43.Royall D.R., Palmer R.F. Getting Past "g": testing a new model of dementing processes in persons without dementia. J. Neuropsychiatry Clin. Neurosci. 2012;24(1):37–46. doi: 10.1176/appi.neuropsych.11040078.

- 44.Royall D.R., Palmer R.F., O'Bryant S.E., Texas Alzheimer's Research and Care Consortium. Validation of a latent variable representing the dementing process. J. Alzheimers Dis. 2012;30(3):639–649. doi: 10.3233/JAD-2012-120055.

- 45.Royall D.R. Welcome back to your future: the assessment of dementia by the latent variable "δ". J. Alzheimers Dis. 2016;49(2):515–519. doi: 10.3233/JAD-150249.

- 46.Royall D.R., Palmer R.F., Markides K.S. Exportation and validation of latent constructs for dementia case finding in a Mexican American population-based cohort. J. Gerontol. B. 2017;72(6):947–955. doi: 10.1093/geronb/gbw004.

- 47.Royall D.R., Matsuoka T., Palmer R.F., et al. Greater than the sum of its parts: δ Improves upon a battery's diagnostic performance. Neuropsychology. 2015;29(5):683–692. doi: 10.1037/neu0000153.

- 48.Palmer R.F., Royall D.R. Future dementia severity is almost entirely explained by the latent variable δ’s intercept and slope. J. Alzheimers Dis. 2016;49:521–529. doi: 10.3233/JAD-150254.

- 49.Royall D.R., Palmer R.F. δ Scores identify subsets of "mild cognitive impairment" with variable conversion risks. J. Alzheimers Dis. 2019;70(1):199–210. doi: 10.3233/JAD-190266.

- 50.Gavett B.E., Vudy V., Jeffrey M., John S.E., Gurnani A.S., Adams J.W. The δ latent dementia phenotype in the uniform data set: cross-validation and extension. Neuropsychology. 2015;29(3):344–352. doi: 10.1037/neu0000128.

- 51.John S.E., Gurnani A.S., Bussell C., Saurman J.L., Griffin J.W., Gavett B.E. The effectiveness and unique contribution of neuropsychological tests and the δ latent phenotype in the differential diagnosis of dementia in the uniform data set. Neuropsychology. 2016;30(8):946–960. doi: 10.1037/neu0000315.

- 52.Royall D.R., Palmer R.F., Vidoni E.D., Honea R.A., Burns J.M. The default mode network and related right hemisphere structures may be the key substrates of dementia. J. Alzheimers Dis. 2012;32:467–478. doi: 10.3233/JAD-2012-120424.

- 53.Royall D.R., Palmer R.F., Vidoni E.D., Honea R.A. The default mode network may be the key substrate of depression related cognitive changes. J. Alzheimers Dis. 2013;34:547–559. doi: 10.3233/JAD-121639.

- 54.Royall D.R., Palmer R.F. "Executive functions" cannot be distinguished from general intelligence: two variations on a single theme within a symphony of latent variance. Front. Behav. Neurosci. 2014;9:1–10. doi: 10.3389/fnbeh.2014.00369.

- 55.Royall D.R., Palmer R.F. δ scores predict multiple neuropsychiatric symptoms. Int. J. Geriatr. Psychiatry. 2020;35(11):1341–1348. doi: 10.1002/gps.5371.

- 56.Montero-Odasso M., Pieruccini-Faria F., Ismail Z., et al. CCCDTD5 recommendations on early non cognitive markers of dementia: a Canadian consensus. Alzheimers Dement. (N. Y.). 2020;6(1):e12068. doi: 10.1002/trc2.12068. Published 2020 Oct 17.