Abstract

Successful goal-directed actions require constant fine-tuning of the motor system. This fine-tuning is thought to rely on an implicit adaptation process that is driven by sensory prediction errors (e.g., where you see your hand after reaching vs. where you expected it to be). Individuals with low vision experience challenges with visuomotor control, but whether low vision disrupts motor adaptation is unknown. To explore this question, we assessed individuals with low vision and matched controls with normal vision on a visuomotor task designed to isolate implicit adaptation. We found that low vision was associated with attenuated implicit adaptation only for small visual errors, but not for large visual errors. This result highlights important constraints underlying how low-fidelity visual information is processed by the sensorimotor system to enable successful implicit adaptation.

INTRODUCTION

Our ability to enact successful goal-directed actions derives from multiple learning processes (McDougle et al., 2016; Bond & Taylor, 2015; Haith, Huberdeau, & Krakauer, 2015; McDougle, Bond, & Taylor, 2015; Taylor, Krakauer, & Ivry, 2014; Taylor & Ivry, 2011; Keisler & Shadmehr, 2010). Among these processes, implicit motor adaptation is key for ensuring that the sensorimotor system remains well-calibrated in response to changes in the body (e.g., muscle fatigue) and in the environment (e.g., a heavy jacket). This adaptive process is driven by a mismatch between the predicted sensory feedback generated by the cerebellum and the actual sensory feedback arriving from the periphery—that is, sensory prediction error (Kim, Avraham, & Ivry, 2021; Shadmehr, Smith, & Krakauer, 2010).

Uncertainty in the sensory feedback has been shown to reduce the system’s sensitivity to the feedback signal (i.e., learning rate) and, as such, reduces the extent of implicit adaptation (Ferrea, Franke, Morel, & Gail, 2022; Shyr & Joshi, 2021; Samad, Chung, & Shams, 2015; van Beers, 2012; Wei & Körding, 2010; Burge, Ernst, & Banks, 2008; van Beers, Wolpert, & Haggard, 2002). This phenomenon can be accounted for by an optimal integration model. According to this model, the learning rate reflects a Bayes optimal weighting between the sensory feedback and feedforward prediction (Kawato, Ohmae, Hoang, & Sanger, 2021; Shadmehr et al., 2010; Wei & Körding, 2010; Burge et al., 2008; Körding & Wolpert, 2004; Ito, 1986; Albus, 1971; Marr, 1969). When sensory noise is high, the model stipulates that this integration process lowers the weight given to the feedback signal, reduces the strength of the resultant error, and, as such, attenuates implicit adaptation for all error sizes.

Recent work has discovered an unappreciated constraint on this error integration process (Tsay, Avraham, et al., 2021). Uncertain visual feedback was found to attenuate adaptation only when visual sensory prediction errors were small, but not when they were large. However, sensory feedback noise in that study was introduced through a relatively coarse and unnatural extrinsic manipulation of the environment (i.e., a dispersed cloud of visual feedback). In the current study, we sought to examine how implicit motor adaptation is affected by sensory uncertainty arising from intrinsic noise within the neural circuitry conveying sensory feedback. Understanding how sensory uncertainty affects implicit adaptation under a broad range of circumstances serves to constrain our computational and neural models of sensorimotor learning.

We used a Web-based visuomotor rotation task to assess implicit adaptation in individuals with diverse forms of visual impairments—that is, low vision because of reduced visual acuity, reduced contrast sensitivity, or restricted visual field. Although prior work has shown that low vision is associated with impaired motor control (Cheong, Ling, & Shehab, 2022; Lenoble, Corveleyn, Tran, Rouland, & Boucart, 2019; Endo et al., 2016; Verghese, Tyson, Ghahghaei, & Fletcher, 2016; Pardhan, Gonzalez-Alvarez, & Subramanian, 2012; Timmis & Pardhan, 2012; Kotecha, O’Leary, Melmoth, Grant, & Crabb, 2009; Jacko et al., 2000), the effect of low vision on motor learning has not been investigated. We hypothesized that low vision—a heterogeneous set of visual impairments—would also attenuate implicit adaptation for small, but not large, visual errors similar to the effect of extrinsic visual noise. Our results support this hypothesis, providing converging evidence for how low-fidelity visual information is processed by the sensorimotor circuitry to enable successful implicit adaptation.

METHODS

Ethics Statement

All participants gave written informed consent in accordance with policies approved by the institutional review board (protocol number: 2016-02-8439). Participation in the study was in exchange for monetary compensation.

Participants

Individuals with impaired visual function that interferes with the activities of daily life (i.e., low vision) were recruited through Meredith Morgan Eye Center and via word of mouth. Potential participants were screened using an on-line survey and were excluded if they did not have a clinical diagnosis related to low vision (e.g., macular degeneration, glaucoma, Stargardt’s disease), or if their self-reported visual acuity (i.e., “Recall your visual acuity results from your clinician-administered eye exam within the last year”) in their best-seeing eye was better than 20/30 (i.e., 0.2 logMAR). Participants also reported if their low vision was related to peripheral and/or central vision, if it was present since birth or acquired later in life (denoted hereafter as early versus late onset), and if they had difficulty seeing road signs; specifically, participants were prompted with a Likert scale from 1 (road signs are very blurry) to 7 (road signs are very clear). This functional measure correlates negatively with visual acuity (R = −.5, p = .04).

In addition, participants responded to five survey questions about whether their low vision condition affected their day-to-day function. The questions were stated as follows: (1) Do you use any mobility or navigational aids? (2) Do you have difficulty detecting an edge of a step? (3) Do you have difficulty pouring water into a cup? (4) Do you have difficulty walking up and down stairs? (5) Do you have difficulty detecting obstacles? Using these self-report responses, we calculated a “visual impairment index” by tallying the number of “yes” responses and dividing this number by five (i.e., the number of questions). A higher number denotes greater visual impairment (max = 1; min = 0; Table 1).
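The index described above is a simple proportion. A minimal sketch follows, assuming each participant's answers are stored as five booleans (the function name and data layout are hypothetical, not taken from the study's materials):

```python
def visual_impairment_index(responses):
    """Fraction of 'yes' answers across the five daily-function questions."""
    if len(responses) != 5:
        raise ValueError("expected answers to exactly five questions")
    return sum(1 for answer in responses if answer) / 5


# Hypothetical participant who answered "yes" to four of the five questions.
example_index = visual_impairment_index([True, True, False, True, True])
print(example_index)  # 0.8
```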

Table 1.

Participants’ Responses to Questions about Their Low Vision

| # | Age | Hand | Etiology | Visual Acuity in Better Eye | Diff. Seeing Road Signs | Peripheral Visual Field | Central Visual Field | Low Vision Onset | Visual Impairment Index |
|---|---|---|---|---|---|---|---|---|---|
| 1 | 31 | R | Retinitis pigmentosa | 0.3 | 2 | Impaired | Intact | Late | 0.8 |
| 2 | 34 | R | Achromatopsia | 0.9 | 1 | Intact | Intact | Early | 0.6 |
| 3 | 60 | A | Cerebral visual impairment | 0.4 | 3 | Impaired | Intact | Late | 1 |
| 4 | 60 | R | Infection | 1.7 | 1 | Intact | Impaired | Late | 0.6 |
| 5 | 86 | R | Glaucoma | 1.0 | 2 | Impaired | Intact | Late | 1 |
| 6 | 70 | R | Macular degeneration | 0.9 | 2 | Intact | Impaired | Late | 0.2 |
| 7 | 34 | R | Pseudotumor cerebri | 1.3 | 2 | Impaired | Impaired | Late | 1 |
| 8 | 61 | A | Rieger syndrome | 0.2 | 2 | Impaired | Intact | Late | 1 |
| 9 | 26 | R | Genetic | 0.9 | 3 | Intact | Intact | Late | 0.8 |
| 10 | 60 | R | Infection | 1.2 | 2 | Intact | Impaired | Early | 0.6 |
| 11 | 55 | R | Stargardt disease | 1.0 | 1 | Impaired | Impaired | Late | 0.8 |
| 12 | 86 | R | Glaucoma | 1.0 | 1 | Impaired | Intact | Late | 0.6 |
| 13 | 29 | R | Glaucoma | 0.7 | 3 | Impaired | Intact | Late | 1 |
| 14 | 59 | R | Diabetic retinopathy | 1.2 | 2 | Impaired | Intact | Late | 0.8 |
| 15 | 31 | R | Glaucoma | 1.7 | 1 | Impaired | Intact | Early | 0 |
| 16 | 57 | A | Glaucoma | 1.0 | 5 | Intact | Intact | Early | 1 |
| 17 | 24 | R | Albinism | 1.2 | 1 | Impaired | Impaired | Early | 0.4 |
| 18 | 78 | R | Macular degeneration | 0.6 | 4 | Intact | Impaired | Late | 1 |
| 19 | 24 | R | Optic nerve atrophy | 0.8 | 1 | Intact | Intact | Late | 0.2 |
| 20 | 28 | R | Nystagmus | 0.5 | 4 | Intact | Intact | Early | 0.2 |

Age is reported in years, and handedness is reported as right (R) or ambidextrous (A) (no participants in this group were left-handed). Self-reports of visual acuity of the better seeing eye (logMAR) and peripheral/central visual field loss are provided. Difficulty with reading road signs is self-reported on a scale from 1 to 7. Low vision onset was self-reported as early onset, late onset, or unknown (no one responded unknown). Visual impairment scores denoted the degree to which low vision impacted activities of daily living (1 = most severe impairment; 0 = least severe impairment).

To our knowledge, this is the first study to examine sensorimotor learning in individuals with low vision. Thus, the sample size was determined based on similar neuropsychological studies examining sensorimotor learning in different patient groups (e.g., cerebellar degeneration, Parkinson’s disease; Tsay, Najafi, Schuck, Wang, & Ivry, 2022; Tsay, Schuck, & Ivry, 2022; Tseng, Diedrichsen, Krakauer, Shadmehr, & Bastian, 2007). Each participant completed two sessions that were spaced at least 24 hr apart to minimize any savings or interference (Avraham, Morehead, Kim, & Ivry, 2021; Lerner et al., 2020; Krakauer, Ghez, & Ghilardi, 2005). This amounted to a total of 40 on-line test sessions, with each session lasting approximately 45 min. Note that none of the participants with low vision reported using special devices to augment their vision during the experiment.

We also recruited 20 matched control participants via Prolific, a Web site for online participant recruitment, to match the low vision group based on age, sex, handedness, and years of education. All control participants completed two sessions, which amounted to 40 on-line test sessions, each lasting approximately 45 min. Participants on Prolific have been vetted through a screening procedure to ensure data quality. Two sessions from the control data were incomplete because of technical difficulties and thus not included in the analyses.

By design, the low vision and control groups did not differ significantly in age, t(36) = −0.6, p = .58, μ = −3.3, [−15.4, 8.8], D = 0.2; control mean = 46.4, SD = 16.4 years; low vision mean = 49.7, SD = 21.1 years; handedness, χ²(1) = 0, p = .93; both groups = 17 right-handers and three ambidextrous individuals; sex, χ²(1) = 6.1, p = .05; control = 14 female and six male participants, low vision = 11 female and eight male participants, one declined to specify; or years of education, t(38) = −0.1, p = .89, μ = −0.1, [−1.5, 1.3], D = 0; control = 17.0 ± 2.2, low vision = 17.1 ± 2.3 (Table 1). As expected, the low vision group reported significantly more visual impairments compared with the control group based on their self-reported difficulty with reading road signs, t(25) = 16.0, p < .001, μ = 4.6, [4.0, 5.1], D = 5.1; control = 6.7 ± 0.5, low vision = 2.2 ± 1.2.

The participants with low vision completed the task with the experimenter on the phone, and thus, available to provide instructions and monitor performance. The control participants completed the task on their own, accessing the Web site at their convenience.

Apparatus

Participants used their own computer to access a Web page hosted on Google Firebase. The task was created using the OnPoint platform (Tsay, Lee, Ivry, & Avraham, 2021), and the task progression was controlled by JavaScript code running locally in the participant’s Web browser. The size and position of stimuli were scaled based on each participant’s screen size/resolution (height = 920 ± 180 px, width = 1618 ± 433 px), which was automatically detected. As such, any differences in screen size and screen magnification were accounted for between individuals. For ease of exposition, the stimulus parameters reported below reflect the average screen resolution in our participant population. Importantly, before starting the experiment, the experimenter verified that participants were seated at a comfortable distance away from the screen (20–30 in.) and were able to comfortably see the various visual stimuli on the screen (e.g., the blue target and the white feedback cursor). In our prior validation work using this on-line interface and procedure, the exact movement and the exact device used did not impact measures of performance or learning on visuomotor adaptation tasks (Tsay, Lee, et al., 2021). We note that, unlike our laboratory-based setup in which we occlude vision of the reaching hand, this was not possible with our on-line testing protocol. That being said, we have found that measures of implicit and explicit adaptation are similar between in-person and on-line settings (Tsay, Lee, et al., 2021). Moreover, based on our informal observations, participants remain focused on the screen during the experiment (to see the target and how well they are doing), so vision of the hand would be limited to the periphery.

Reaching Task Stimuli and General Procedure

During the task, the participant performed small reaching movements by moving their computer cursor with their trackpad or mouse. The participant’s mouse or trackpad sensitivity (gain) was not modified, but rather left at the setting the participant was familiar with. On each trial, participants made a center-out planar movement from the center of the workspace to a visual target. A white annulus (1% of screen height: 0.24 cm in diameter) indicated the start location at the center of the screen, and a red circle (1% of screen height: 0.24 cm in diameter) indicated the target location. The radial distance of the target from the start location was 8 cm (40% of screen height). The target could appear at one of three directions from the center. Measuring angles counterclockwise and defining rightward as 0°, these directions were: 30° (upper right quadrant), 150° (upper left quadrant), and 270° (straight down). Within each experiment, triads of trials (i.e., a cycle) consisted of one trial to each of the three targets. The order in which the three targets were presented was randomized within each cycle. Note that participants with color vision deficits could still do the task because position information also indicated the difference between the start location and target location.
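The target layout and cycle structure described above can be sketched as follows. This is an illustrative reconstruction, not the OnPoint implementation: the function names, the fixed random seed, and the y-up coordinate frame are assumptions made for the example.

```python
import math
import random

TARGET_ANGLES_DEG = (30, 150, 270)  # counterclockwise, with rightward as 0 deg
TARGET_DISTANCE_CM = 8.0            # radial distance of the target from the start


def target_position(angle_deg, distance=TARGET_DISTANCE_CM):
    """Return (x, y) of a target relative to the start location, with y pointing up.

    Browser coordinates usually have y pointing down, so a display layer
    would need to flip the sign of y (an assumption about the display code).
    """
    theta = math.radians(angle_deg)
    return distance * math.cos(theta), distance * math.sin(theta)


def make_target_sequence(n_cycles, rng):
    """Each cycle visits all three targets once, in a freshly shuffled order."""
    sequence = []
    for _ in range(n_cycles):
        cycle = list(TARGET_ANGLES_DEG)
        rng.shuffle(cycle)
        sequence.extend(cycle)
    return sequence


rng = random.Random(0)                    # fixed seed, for illustration only
sequence = make_target_sequence(75, rng)  # 75 cycles of 3 trials = 225 trials
```

Shuffling within each triad, rather than across the whole session, keeps the three targets equally represented in every cycle, which is what allows the data to be averaged by cycle.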

At the beginning of each trial, participants moved their cursor to the start location at the center of their screen. Cursor position feedback, indicated by a white dot (0.6% of screen height: 0.1 cm in diameter), was provided when the cursor was within 2 cm of the start location (10% of screen height). After maintaining the cursor in the start location for 500 msec, the target appeared at one of three locations (see above). Participants were instructed to move rapidly to the target, attempting to “slice” through the target. If the movement was not completed within 400 msec, the message “too slow” was displayed in red 20 pt. Times New Roman font at the center of the screen for 400 msec.

Feedback during this movement phase could take one of the following forms: veridical feedback, no-feedback, or rotated noncontingent (“clamped”) feedback. During veridical feedback trials, the movement direction of the cursor was veridical with respect to the movement direction of the hand. That is, the cursor moved with their hand as would be expected for a normal computer cursor. During no-feedback trials, the cursor was extinguished as soon as the hand left the start annulus and remained off for the entire reach. During rotated clamped feedback trials, the cursor moved along a fixed trajectory relative to the position of the target—a manipulation shown to isolate implicit motor adaptation (Tsay, Parvin, & Ivry, 2020; R. Morehead, Taylor, Parvin, & Ivry, 2017). The clamp was temporally contingent on the participant’s movement, matching the radial distance of the hand from the center circle, but noncontingent on the movement in terms of its angular offset relative to the visual target. The fixed angular offset (with respect to the target) was either 3° or 30° (see below). The participant was instructed to “ignore the visual feedback and reach directly to the target.”

For all feedback trials, the radial position of the cursor corresponded to that of the hand up to 8 cm (the radial distance of the target), at which point the cursor position was frozen for 50 msec, before disappearing. After completing a trial, participants moved the cursor back to the starting location. The visual cursor remained invisible until the participant moved within 2 cm of the start location, at which point the cursor appeared without any rotation.
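The clamped feedback rule described above (cursor distance follows the hand, cursor direction is fixed relative to the target) can be sketched as below. The function name and the sign convention (positive clamp = counterclockwise) are assumptions for illustration; the actual task code may differ.

```python
import math


def clamped_cursor_position(hand_x, hand_y, target_angle_deg, clamp_deg,
                            target_distance=8.0):
    """Cursor position under clamped feedback (relative to the start, y up).

    The cursor's radial distance matches the hand's, capped at the target
    distance where the cursor freezes. Its direction is always the target
    direction plus the clamp offset, whatever direction the hand moves in.
    """
    radius = min(math.hypot(hand_x, hand_y), target_distance)
    theta = math.radians(target_angle_deg + clamp_deg)
    return radius * math.cos(theta), radius * math.sin(theta)


# Two hand positions at the same distance but in different directions
# produce the same cursor position: feedback is not contingent on hand angle.
cursor_a = clamped_cursor_position(4.0, 0.0, target_angle_deg=30, clamp_deg=3)
cursor_b = clamped_cursor_position(0.0, 4.0, target_angle_deg=30, clamp_deg=3)
```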

The Impact of Low Vision on Implicit Motor Adaptation for Small and Large Errors

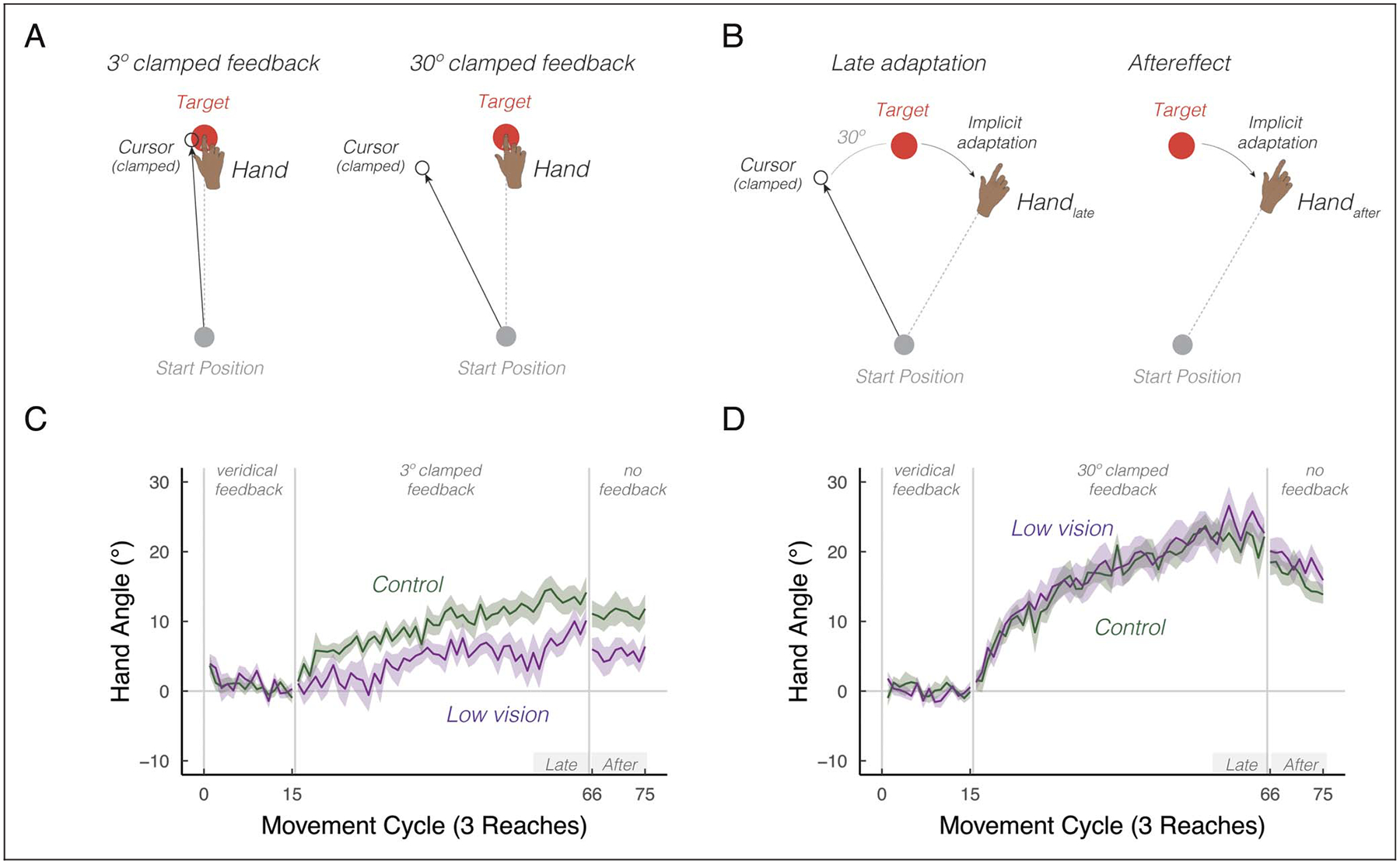

Participants with low vision and control participants (n = 20 per group) were tested in two sessions, with clamped feedback used to induce implicit adaptation. Numerous studies have observed that the degree of implicit adaptation saturates for visual errors greater than 5° (Hayashi, Kato, & Nozaki, 2020; Kim, Morehead, Parvin, Moazzezi, & Ivry, 2018); thus, we examined implicit adaptation in response to 3° errors (an error before the saturation zone) and 30° errors (an error within the saturation zone; Figure 1A). The session order and direction (clockwise or counterclockwise) of the clamped rotation were counterbalanced across individuals. Each session consisted of 75 cycles (225 trials total), distributed across three blocks: baseline veridical feedback block (15 cycles), rotated clamped feedback (50 cycles), and a no-feedback aftereffect block (10 cycles).

Figure 1.

Intrinsic visual feedback uncertainty attenuates implicit adaptation in response to small, but not large errors. (A) Schematic of the clamped feedback task. The sensory prediction error—the difference between the predicted visual feedback location (i.e., the target, red circle) and visual feedback location (i.e., the cursor, white circle)—can either be small (3°) or large (30°). The cursor feedback follows a constant trajectory rotated relative to the target, independent of the angular position of the participant’s hand. The rotation size remains invariant throughout the rotation block. The participant was instructed to move directly to the target and ignore the visual feedback. A robust aftereffect is observed when the visual cursor is removed during the no feedback block, implying that the clamp-induced adaptation is implicit. Note that participants reached toward three targets: 30° (upper right quadrant), 150° (upper left quadrant), and 270° (straight-down). Only one target is shown in the schematic for ease of illustration. (B) We defined two summary measures of learning: late adaptation (handlate) and aftereffect (handafter). Late adaptation reflects the average hand angle relative to the target at the end of the clamped feedback block. Aftereffect reflects the average hand angle during the subsequent no-feedback block. (C–D) Mean time courses of hand angle for 3° (C) and 30° (D) visual clamped feedback, for both the low vision (dark magenta) and matched control (green) groups. Hand angle is presented relative to the baseline hand angle (i.e., last five cycles of the veridical feedback block). Shaded region denotes SEM. Gray horizontal bars labeled Late and After indicate late and aftereffect phases of the experiment.

Before the baseline block, the instruction “Move directly to the target as fast and accurately as you can” appeared on the screen. Before the clamped feedback block, the instructions were modified to read: “The white cursor will no longer be under your control. Please ignore the white cursor and continue to aim directly towards the target.” To clarify the invariant nature of the clamped feedback, three demonstration trials were provided. On all three trials, the target appeared directly above the start location on the screen (90° position), and the participant was told to reach to the left (Demo 1), to the right (Demo 2), and downward (Demo 3). On all three of these demonstration trials, the cursor moved in a straight line, 90° offset from the target. In this way, the participant could see that the spatial trajectory of the cursor was unrelated to their own reach direction. Before the no-feedback aftereffect block, the participants were reminded to “Move directly to the target as fast and accurately as you can.”

Attention and Instruction Checks

It is difficult in on-line studies to verify that participants fully attend to the task. To address this issue, we sporadically instructed participants to make specific keypresses: “Press the letter ‘b’ to proceed.” If participants did not press the correct key, the experiment was terminated. These attention checks were randomly introduced within the first 50 trials of the experiment. We also wanted to verify that the participants understood the clamped rotation manipulation. To this end, we included one instruction check after the three demonstration trials: “Identify the correct statement. Press ‘a’: I will aim away from the target and ignore the white dot. Press ‘b’: I will aim directly towards the target location and ignore the white dot.” The experiment was terminated if participants did not press the correct key (i.e., press “b”). Note that no participants in either group were excluded based on these attention and instruction checks.

Data Analysis

The primary dependent variable of reach performance was hand angle, defined as the angle of the participant’s movement location relative to the target when movement amplitude reached an 8-cm radial distance from the start position. Specifically, we measured the angle between a line connecting the start position to the target and a line connecting the start position to the position the participant moved to. Given that there is little generalization of learning between target locations spaced more than 120° apart (Morehead et al., 2017; Krakauer et al., 2005), the data are graphed by cycles. For visualization purposes, the hand angles were flipped for blocks in which the clamp was counterclockwise with respect to the target.
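A minimal sketch of the hand angle computation described above, assuming hand positions are expressed relative to the start location with y pointing up. The function names and the sign convention (positive = counterclockwise of the target) are illustrative assumptions.

```python
import math


def hand_angle_deg(hand_x, hand_y, target_angle_deg):
    """Signed angle (deg) between the start-to-hand and start-to-target lines.

    Positive values mean the hand ended counterclockwise of the target.
    The result is wrapped to the interval [-180, 180).
    """
    hand_direction = math.degrees(math.atan2(hand_y, hand_x))
    return (hand_direction - target_angle_deg + 180.0) % 360.0 - 180.0


def flip_for_counterclockwise_clamp(angle_deg, clamp_deg):
    """Flip the sign for counterclockwise clamps (assumed positive here),
    so that adaptation away from the clamp is positive in both directions."""
    return -angle_deg if clamp_deg > 0 else angle_deg


# Hand crosses the 8-cm radius 10 deg counterclockwise of the 270-deg target.
theta = math.radians(280)
example_angle = hand_angle_deg(8 * math.cos(theta), 8 * math.sin(theta), 270)
```

The wrap-around step matters for the 270° target, where `atan2` returns negative directions that would otherwise yield angles near −350° instead of +10°.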

Outlier responses were defined as trials in which the hand angle deviated by more than 3 SDs from a moving five-trial window or if the hand angle was greater than 90° from the target (median percent of trials removed per participant ± interquartile range (IQR): control = 0.1 ± 1.0%, low vision = 0.1 ± 1.0%).
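One way to implement this outlier rule is sketched below. The text does not specify how the moving window is constructed, so this example makes two assumptions: the window is centered on the trial, and the trial under test is compared against the mean and SD of its neighbours only. The second assumption is needed because a point cannot lie more than 3 SDs from the mean of a five-point sample that includes it.

```python
import statistics


def find_outliers(hand_angles, window=5, n_sd=3.0, max_abs_angle=90.0):
    """Return a list of booleans marking outlier trials."""
    half = window // 2
    flags = []
    for i, angle in enumerate(hand_angles):
        # Rule 1: hand angle more than 90 deg away from the target.
        if abs(angle) > max_abs_angle:
            flags.append(True)
            continue
        # Rule 2: more than n_sd SDs from the neighbouring trials in the window.
        lo, hi = max(0, i - half), min(len(hand_angles), i + half + 1)
        neighbours = [hand_angles[j] for j in range(lo, hi) if j != i]
        if len(neighbours) < 2:
            flags.append(False)
            continue
        centre = statistics.mean(neighbours)
        spread = statistics.stdev(neighbours)
        flags.append(spread > 0 and abs(angle - centre) > n_sd * spread)
    return flags


example_flags = find_outliers([1.0, 2.0, 1.5, 40.0, 2.5, 1.0, 2.0, 120.0])
```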

The hand angle data were baseline corrected on an individual basis to account for idiosyncratic angular biases in reaching to the three target locations (Morehead & Ivry, 2015; Vindras, Desmurget, Prablanc, & Viviani, 1998). These biases were estimated based on heading angles during the last five veridical-feedback baseline cycles (Trials 31–45), with these bias measures then subtracted from the data for each cycle. We defined two summary measures of learning: late adaptation and aftereffect (Figure 1B). Late adaptation was defined as the mean hand angle over the last 10 movement cycles of the rotation block (Trials 166–195). The aftereffect was operationalized as the mean angle over all movement cycles of the no-feedback aftereffect block (Trials 196–225).
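The baseline correction and the two summary measures can be sketched as follows, using the trial ranges given above. Estimating a separate bias for each target is our reading of "biases in reaching to the three target locations"; the function name, the data layout, and the use of `None` for removed outliers are assumptions for the example.

```python
from statistics import mean

# 0-indexed ranges corresponding to the trial numbers given in the text.
BASELINE = range(30, 45)       # Trials 31-45: last five baseline cycles
LATE = range(165, 195)         # Trials 166-195: last 10 clamp cycles
AFTEREFFECT = range(195, 225)  # Trials 196-225: no-feedback block


def summarize_session(hand_angles, targets):
    """Return (late_adaptation, aftereffect) after per-target baseline correction.

    hand_angles: 225 hand angles in degrees, with None marking removed outliers.
    targets: the target direction (deg) on each trial.
    """
    biases = {}
    for target in set(targets):
        baseline = [hand_angles[i] for i in BASELINE
                    if targets[i] == target and hand_angles[i] is not None]
        biases[target] = mean(baseline)
    corrected = [None if a is None else a - biases[t]
                 for a, t in zip(hand_angles, targets)]
    late = mean(corrected[i] for i in LATE if corrected[i] is not None)
    after = mean(corrected[i] for i in AFTEREFFECT if corrected[i] is not None)
    return late, after


# Synthetic session: fixed per-target biases, 10 deg of adaptation during the
# clamp block, and 8 deg remaining during the no-feedback block.
targets = [30, 150, 270] * 75
bias = {30: 2.0, 150: -1.0, 270: 0.5}
shift = [0.0] * 45 + [10.0] * 150 + [8.0] * 30
hand_angles = [bias[t] + s for t, s in zip(targets, shift)]
late_adaptation, aftereffect = summarize_session(hand_angles, targets)
```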

These data were submitted to a linear mixed effects model, with hand angle measures as the dependent variable. We included experiment phase (late adaptation, aftereffect), group (low vision or control), and error size (3°, 30°) as fixed effects and participant ID as a random effect. A priori, we hypothesized that the low vision group would differ from the controls in their response to the small errors.

We employed F tests with the Satterthwaite method to evaluate whether the coefficients obtained from the linear mixed effects model were statistically significant (R functions: lmer, lmerTest, anova). Pairwise post hoc t tests (two-tailed) were used to compare hand angle measures between the low vision and control groups (R function: emmeans). The p values were adjusted for multiple comparisons using the Tukey method. The degrees of freedom were also adjusted when the variances between groups were not equal. Ninety-five percent confidence intervals for group comparisons (t tests) obtained from the linear mixed effects model are reported in square brackets. Standard effect size measures are also provided (D for between-participants comparisons; Dz for within-participant comparisons; partial eta squared, ηp², for between-subjects ANOVA; Lakens, 2013).
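For readers unfamiliar with the two t-test effect sizes, the textbook definitions summarized by Lakens (2013) can be sketched as below. The study's values were produced in R, and the text does not say exactly how they were computed, so treat this only as an illustration of the standard formulas; the function names are hypothetical.

```python
from math import sqrt
from statistics import mean, stdev


def cohens_d(group_a, group_b):
    """Between-participants effect size: mean difference over the pooled SD."""
    n_a, n_b = len(group_a), len(group_b)
    pooled_variance = ((n_a - 1) * stdev(group_a) ** 2
                       + (n_b - 1) * stdev(group_b) ** 2) / (n_a + n_b - 2)
    return (mean(group_a) - mean(group_b)) / sqrt(pooled_variance)


def cohens_dz(condition_1, condition_2):
    """Within-participant effect size: mean of paired differences over their SD."""
    differences = [x - y for x, y in zip(condition_1, condition_2)]
    return mean(differences) / stdev(differences)
```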

RESULTS

Consistent with numerous prior studies, participants in both groups showed a gradual change in hand angle in the opposite direction of the clamped feedback, trending toward an asymptotic level (Figure 1C–D; Tsay, Haith, Ivry, & Kim, 2022; Kim, Parvin, & Ivry, 2019; Morehead et al., 2017). Late adaptation was significant in all four conditions, indicating robust implicit adaptation generated by the clamped feedback, regardless of error size or participant vision level (3° controls: t(17) = 7.8, p < .001, μ = 13.4°, [9.9°, 17.1°], D = 1.8; 30° controls: t(17) = 13.9, p < .001, μ = 21.6°, [18.4°, 24.9°], D = 3.1; 3° low vision: t(19) = 5.6, p < .001, μ = 7.5°, [4.7°, 10.2°], D = 1.3; 30° low vision: t(19) = 10.0, p < .001, μ = 23.8°, [18.8°, 28.8°], D = 2.2). The main effect of Phase was not significant, F(1, 110) = 1.0, p = .32, indicating that implicit adaptation exhibited minimal decay back to baseline when visual feedback was removed. Comparing Figure 1C with Figure 1D, the learning functions were higher when the error was 30° than when the error was 3°, F(1, 112) = 21.6, p < .001, corroborating previous reports showing that implicit adaptation increases with the size of the error (Kim et al., 2018; Marko, Haith, Harran, & Shadmehr, 2012).

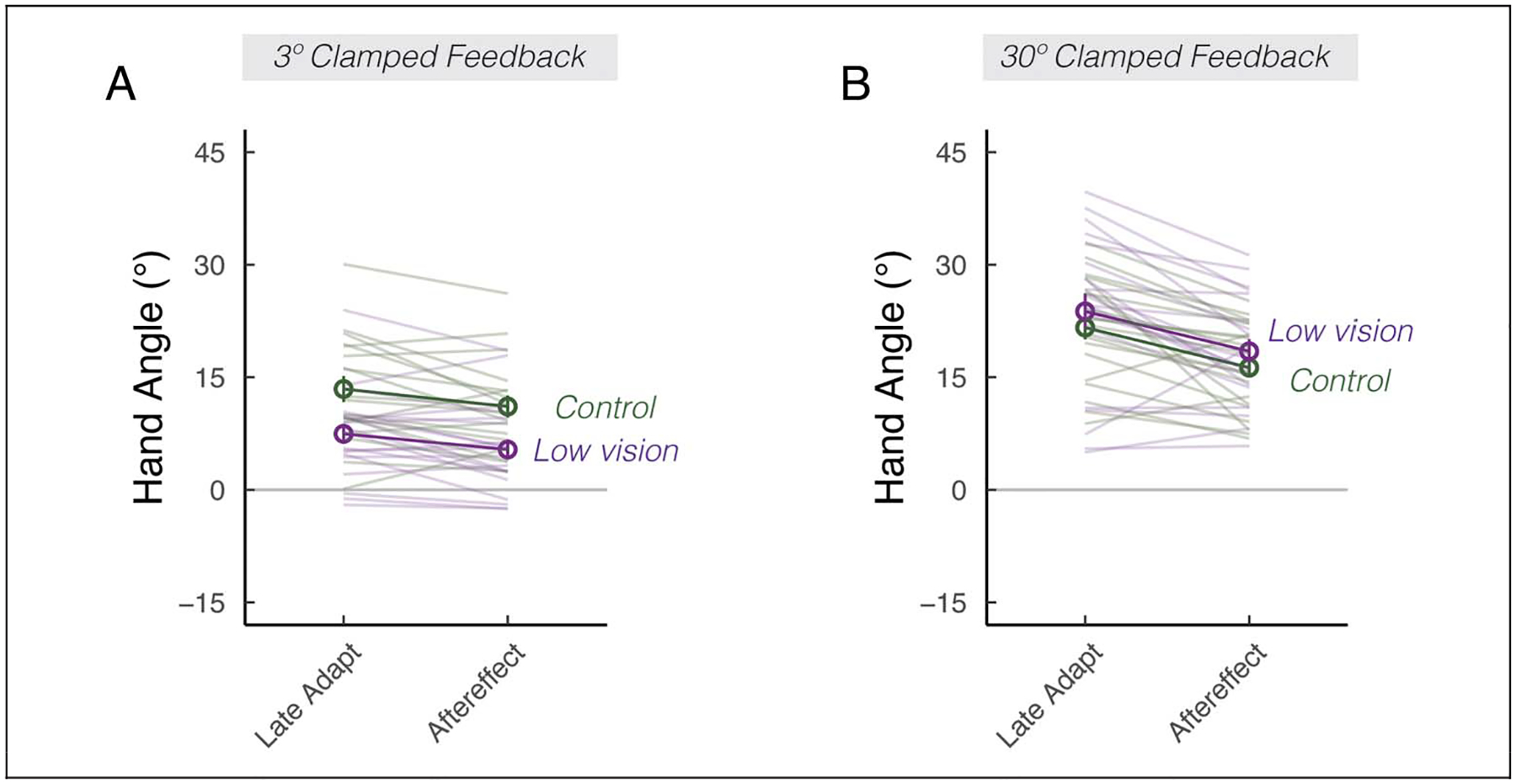

We next turned to our main question, asking how low vision impacts implicit adaptation in response to sensory prediction errors. There was a significant interaction between Group and Error Size, F(1, 111) = 10.5, p = .002: Whereas the learning functions of the two groups were indistinguishable in response to a 30° error (Figures 1D and 2B), the learning function in the low vision group was attenuated compared with controls in response to a 3° error (Figures 1C and 2A). This assessment was confirmed by post hoc t tests, revealing that low vision was associated with attenuated implicit adaptation in response to the small error, t(60) = −3.0, p = .02, μ = −5.8, [−9.6, −1.9], D = 0.9, but not the large error, t(57) = 1.1, p = .67, μ = 2.2, [−1.6, 5.9], D = 0.2. Together, these results underscore a previously unappreciated impairment in implicit adaptation associated with low vision, but only when the size of the visual error was small.

Figure 2.

Visual uncertainty attenuates implicit adaptation in response to small, but not large errors. Mean hand angles ± SEM during the late phase of the clamped-feedback block, and during the no-feedback aftereffect block, for 3° (A) and 30° (B) clamped rotation sessions. Thin lines denote individual participants.

Session Order Effect

Although the session order (3° or 30°) was fully counterbalanced across participants, one potential concern in a within-participant design of learning is that there may be an effect of transfer or interference between sessions (Avraham et al., 2021; Lerner et al., 2020; Krakauer et al., 2005). For instance, experiencing a 30° clamped feedback in the first session may interfere with learning in the second session, resulting in attenuated learning. We did not observe a significant Session Order effect on the extent of motor aftereffects, although the effect was marginal, F(1, 36) = 3.7, p = .06. The key interaction between Group and Error Size remained significant even when Session Order was entered into the model as a covariate, F(1, 36) = 10.5, p = .003, driven by a selective attenuating effect of low vision on small errors, t(71) = 2.9, p = .03, μ = −5.8, [−11.1, −0.5], D = 1.1, but not large errors, t(11) = 2.1, p = .66, μ = 2.3, [−2.9, 7.5], D = 0.5.

Kinematic Effects

There were no group differences in movement time (MT), that is, the time between the start of the movement (i.e., 1 cm from the center) and end of the movement (i.e., 8 cm from the center; MT: t(34) = 0.6, p = .58, μ = 17.5, [−46.9, 81.9], D = 0.2; median MT ± IQR, low vision = 140.0 ± 106.5 msec; control = 106.4 ± 163.9 msec). In contrast, RT, the interval between target presentation and the start of movement, was, on average, longer in the low vision group than in the control group (RT: t(32) = 3.3, p = .002, μ = 116.8, [47.6, 185.9], D = 1.1; median RT ± IQR, low vision = 425.0 ± 206.5 msec; control = 317.0 ± 133.6 msec). On an individual level, RTs did not significantly correlate with the degree of visual acuity (R = −.1, p = .75), ability to see road signs (R = −.3, p = .14), or visual impairment indices (R = .3, p = .18) in the low vision group. These findings are consistent with the notion that the group of individuals with low vision was impaired in their ability to visually detect targets relative to the controls, but that the ways in which low vision can impact target acquisition in a visuomotor task like ours are multifaceted and not necessarily predictable from low-dimensional measures of visual function. The group-level RT difference prompted us to include RT as a covariate in our analyses. We found that implicit adaptation was not significantly modulated by RT (main effect of RT: F(1, 95) = 1.2, p = .27; 3° aftereffect correlated with baseline RT: R = .01, p = .96; 30° aftereffect correlated with baseline RT: R = .00, p = .99). The interaction between Group and Error Size remained significant, F(1, 112) = 11.4, p = .001, with the low vision group exhibiting attenuated implicit adaptation in response to small errors, t(60) = −2.4, p = .04, μ = −5.0, [0.9, 9.1], D = 0.9, but not large errors, t(60) = −1.4, p = .47, μ = −3.1, [−7.2, 1.1], D = 0.2.

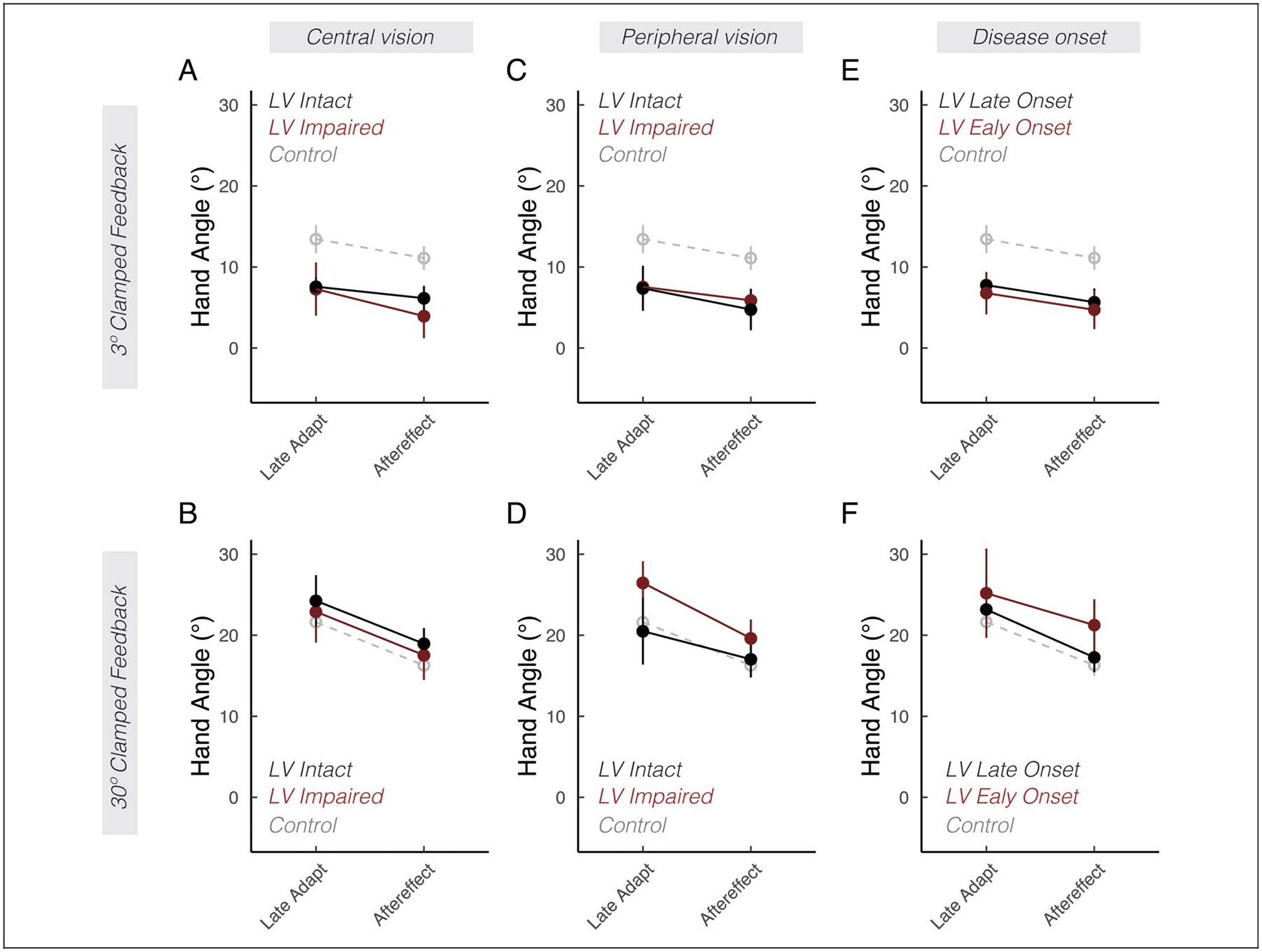

Subgroup Analysis

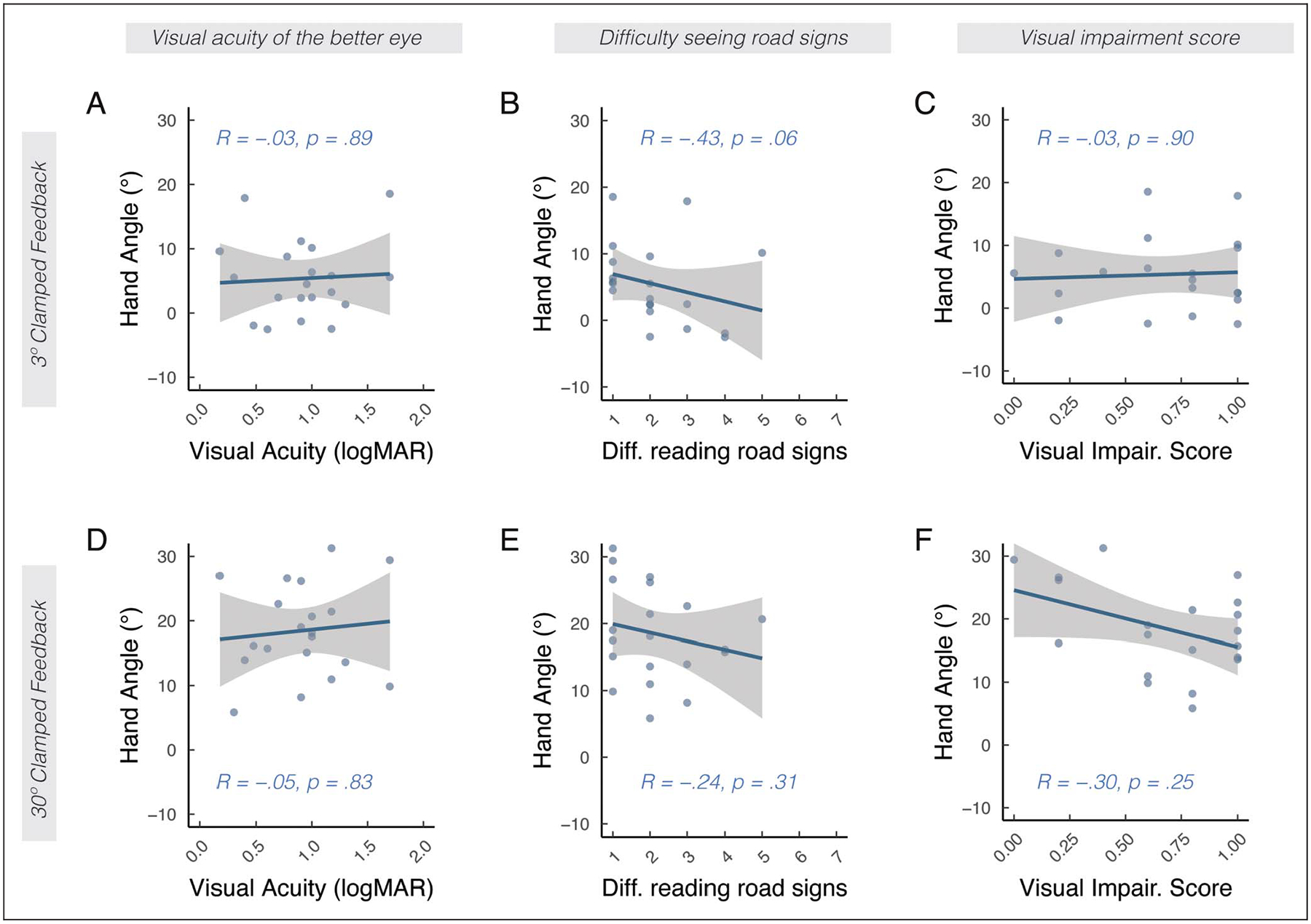

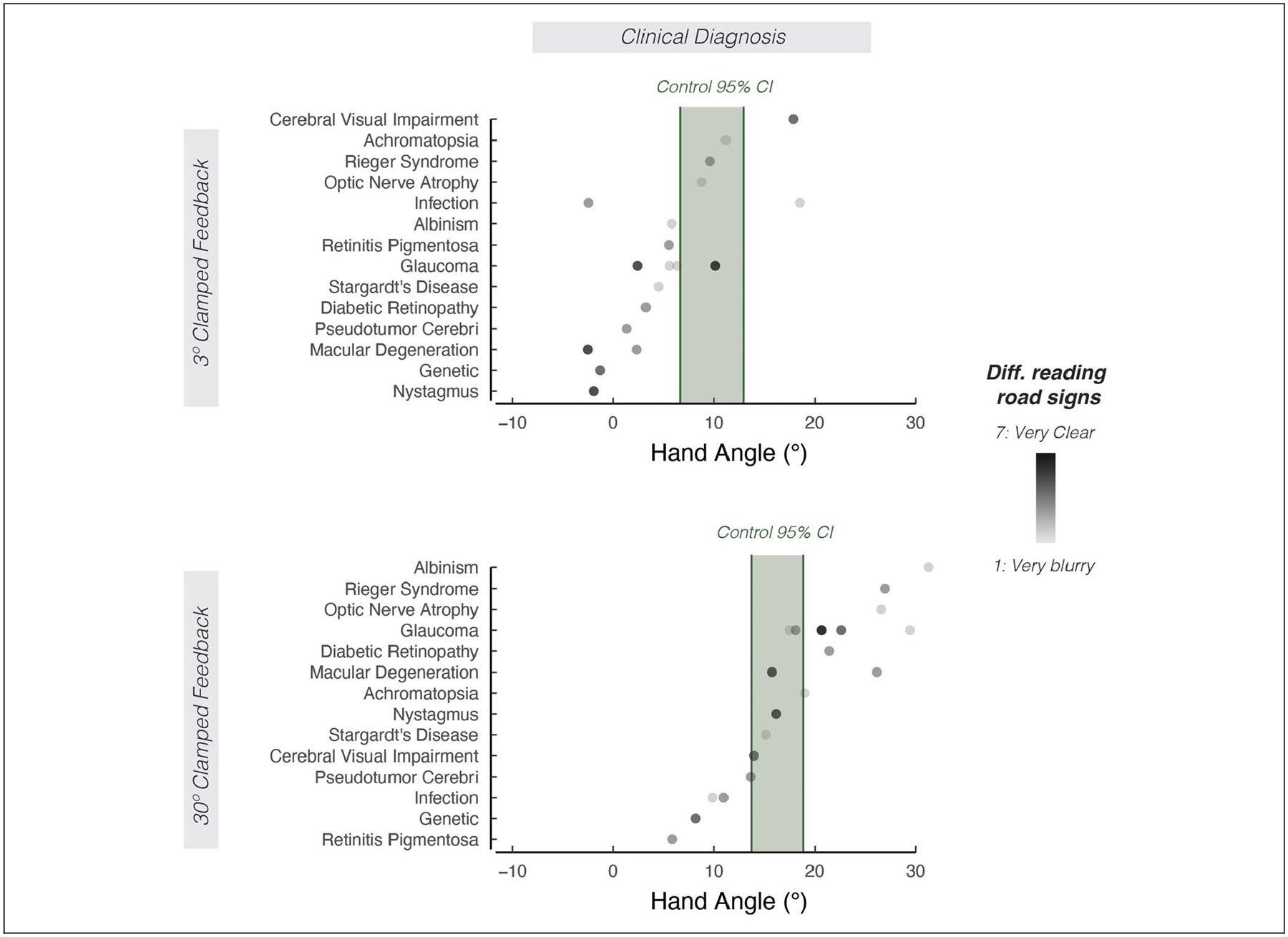

We also explored whether various subgroups of the participants with low vision exhibited differences in implicit adaptation. As shown in Figure 3, there were no appreciable differences between participants with and without central vision loss (Figure 3A and B; F(1, 18) = 0.3, p = .62), with and without peripheral vision loss (Figure 3C and D; F(1, 18) = 1.0, p = .32), or early versus late onset of low vision (Figure 3E and F; F(1, 17) = 0.7, p = .51). Furthermore, there was no appreciable association between the extent of implicit adaptation and participants’ self-reports of visual acuity (Figure 4A and D), ability to perceive road signs (Figure 4B and E), visual impairment scores (Figure 4C and F), or clinical diagnoses (Figure 5). In summary, we did not identify additional features among individuals in the low vision group that impacted implicit adaptation.

Figure 3.

Low vision subgroup analyses. Mean hand angles ± SEM during late adaptation and aftereffect phases. Each column divides the low vision (LV) group based on a different performance or clinical variable: central vision loss (A, B), peripheral vision loss (C, D), or disease onset (E, F). The control group is shown in gray dashed lines.

Figure 4.

The association between visual acuity and visual function and motor aftereffects. Correlation between visual acuity of the less impaired eye and motor aftereffects (A, D). Correlation between how clearly participants report seeing road signs (1 = very blurry; 7 = very clear) and motor aftereffects (B, E). Correlation between participant’s visual impairment index and motor aftereffects (C, F). The solid line indicates the regression line, and the shaded region indicates SEM. The Spearman correlation is noted by R.

Figure 5.

The effect of clinical diagnosis on motor aftereffects. Mean aftereffects sorted by clinical diagnoses involving low vision. Shading of the dot indicates how well participants report seeing road signs (light shading = road signs are very blurry; dark shading = road signs are very clear). The 95% confidence interval for the control group is indicated by the green shaded region.

DISCUSSION

Low vision can cause difficulty in discriminating the position of visual objects (Timmis & Pardhan, 2012; Massof & Fletcher, 2001). This impairment impacts motor performance, resulting in slower and less accurate goal-directed movements (Cheong et al., 2022; Lenoble et al., 2019; Endo et al., 2016; Verghese et al., 2016; Pardhan et al., 2012; Timmis & Pardhan, 2012; Kotecha et al., 2009; Jacko et al., 2000). Here, we asked how low vision impacts motor learning using a visuomotor adaptation task that isolates implicit adaptation. The results revealed that low vision was associated with attenuated implicit adaptation when the sensory prediction error was small, but not when the error was large. The error size by intrinsic visual uncertainty interaction converges with a recent in-laboratory study, in which sensory uncertainty was artificially increased using different cursor patterns (Tsay, Avraham, et al., 2021). Together, these results point to a strong convergence between the effect of extrinsic uncertainty in the visual stimulus (e.g., a foggy day) and intrinsic uncertainty induced by low vision (e.g., damage to or pathology of the visual system).

Potential Neural Learning Mechanisms that May Give Rise to the Error Size by Visual Uncertainty Interaction

An optimal integration hypothesis posits that intrinsic uncertainty induced by low vision would be associated with decreased sensitivity to errors and attenuate implicit adaptation for all error sizes. Therefore, an optimal integration hypothesis cannot account for our results. That being said, this error by uncertainty interaction can be explained by a modified Bayesian perspective, which posits that the nervous system performs causal inference (Hong, Badde, & Landy, 2021; Shams & Beierholm, 2010; Wei & Körding, 2009): Small errors, attributed to a miscalibrated movement (e.g., not reaching far enough to retrieve a glass of water because of muscle fatigue), are “relevant” and thus require implicit adaptation to nullify these sensorimotor errors; the weight given to these small errors will fall off with increasing uncertainty. On the other hand, large errors are more likely attributed to “irrelevant” external sources from the environment (e.g., a missed basketball shot because of a sudden gust of wind) and will therefore get discounted by the sensorimotor system. Paradoxically, the weight given to these large errors will increase with uncertainty, because uncertainty can obscure the attribution of large errors to an external source. As such, the causal inference model predicts a crossover point, where implicit adaptation will be higher for small certain errors (compared with small uncertain errors) but be lower for large certain errors (compared with large uncertain errors; Wei & Körding, 2009).
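The contrast between the two accounts can be made concrete with a toy simulation. The sketch below is illustrative only: the Gaussian source distributions, the function names, and every parameter value (`sigma_pred`, `sigma_rel`, `sigma_irr`, `p_rel`, and the two visual noise levels) are assumptions chosen for illustration, not quantities fitted to our data or taken from Wei and Körding (2009). It shows that a pure reliability-weighted update shrinks with visual noise at every error size, whereas a simple causal inference update shrinks for a small error but grows for a large one.

```python
import math


def gaussian_pdf(x, var):
    """Density of a zero-mean Gaussian with variance `var`, evaluated at x."""
    return math.exp(-x * x / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)


def optimal_integration_update(error, sigma_vis, sigma_pred=5.0):
    """Reliability-weighted update: the observed error is scaled by the
    weight on vision, which falls as visual noise (sigma_vis) grows.
    sigma_pred is a hypothetical uncertainty of the feedforward prediction."""
    weight = sigma_pred ** 2 / (sigma_pred ** 2 + sigma_vis ** 2)
    return weight * error


def causal_inference_update(error, sigma_vis, sigma_rel=5.0,
                            sigma_irr=30.0, p_rel=0.5):
    """Toy causal inference update (all parameters are assumptions).
    Relevant (self-caused) errors come from a narrow Gaussian and irrelevant
    (external) errors from a broad one; both are blurred by visual noise."""
    var_rel = sigma_rel ** 2 + sigma_vis ** 2
    var_irr = sigma_irr ** 2 + sigma_vis ** 2
    like_rel = p_rel * gaussian_pdf(error, var_rel)
    like_irr = (1.0 - p_rel) * gaussian_pdf(error, var_irr)
    posterior_rel = like_rel / (like_rel + like_irr)
    # Shrink the observed error toward zero given the narrow relevant prior.
    gain = sigma_rel ** 2 / var_rel
    return posterior_rel * gain * error


LOW_NOISE, HIGH_NOISE = 1.0, 8.0  # hypothetical visual noise levels (deg)

for err in (3.0, 30.0):
    print(
        f"error={err:>4.0f} deg | optimal integration: "
        f"{optimal_integration_update(err, LOW_NOISE):.2f} vs "
        f"{optimal_integration_update(err, HIGH_NOISE):.2f} | causal inference: "
        f"{causal_inference_update(err, LOW_NOISE):.4f} vs "
        f"{causal_inference_update(err, HIGH_NOISE):.4f}"
    )
```

Under these assumed parameters, the causal inference update reproduces the qualitative crossover described above. Note that this toy model predicts a reversal for large errors, which is a stronger pattern than the absence of a group difference at 30° in our data.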

A recent theory of implicit adaptation proposes an alternative possibility: The kinesthetic re-alignment hypothesis centers on the notion that implicit adaptation is driven to reduce a kinesthetic error, the mismatch between the perceived and desired position of the hand, rather than a visual error (Tsay, Kim, Haith, & Ivry, 2022). Note that in the original exposition of this model (Tsay, Kim, et al., 2022), we used the phrase “proprioceptive re-alignment.” However, moving forward, we will adopt the term “kinesthetic re-alignment” given that this better captures the idea that the perceived position of the hand is an integrated signal composed of multisensory inputs from vision and peripheral proprioceptive afferents, as well as predictive information from efferent signals (Proske & Gandevia, 2012). According to the kinesthetic re-alignment hypothesis, visual uncertainty indirectly affects implicit adaptation by influencing the magnitude of the kinesthetic shift, that is, the degree to which visual feedback recalibrates (biases) the perceived position of the hand (Cressman & Henriques, 2011). When the visual error is small, visual uncertainty attenuates the size of kinesthetic shifts and, therefore, attenuates the degree of implicit adaptation. When the visual error is larger than ~10°, kinesthetic shifts saturate and are therefore invariant to uncertainty (Tsay, Kim, Parvin, Stover, & Ivry, 2021; ’t Hart, Ruttle, & Henriques, 2020; Tsay et al., 2020). As such, visual uncertainty has no impact on implicit adaptation when the visual error is large. The mechanism driving kinesthetic shifts (i.e., the extent to which vision biases/attracts the perceived hand position) remains an active area for research. Some suggest that these shifts are because of mechanisms like causal inference (Hong et al., 2021; Wei & Körding, 2009), whereas others hypothesize that these mechanisms may follow a simple, fixed ratio rule (Zaidel, Turner, & Angelaki, 2011). 
Our data motivate future studies to directly evaluate the impact of visual uncertainty on kinesthetic shifts and probe the neural correlates that support this learning process.
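The logic of the kinesthetic re-alignment account can also be sketched in a few lines. This is a deliberately simplified illustration and not the published model's equations: the reliability-weighted form of the shift, the function name, the ceiling `max_shift`, and the noise values are all assumptions. The only properties it is meant to capture are the two stated above, namely that the shift scales with the visual weight for small errors and saturates for large ones.

```python
import math


def kinesthetic_shift(visual_error, sigma_vis, sigma_prop=10.0, max_shift=5.0):
    """Toy kinesthetic shift (deg) of the perceived hand toward the cursor.

    Assumptions (hypothetical, for illustration only):
      - the pull toward vision is weighted by relative reliability, so it
        weakens as visual noise (sigma_vis) grows;
      - the shift cannot exceed a fixed ceiling, max_shift.
    """
    visual_weight = sigma_prop ** 2 / (sigma_prop ** 2 + sigma_vis ** 2)
    magnitude = min(visual_weight * abs(visual_error), max_shift)
    return math.copysign(magnitude, visual_error)


LOW_NOISE, HIGH_NOISE = 2.0, 8.0  # hypothetical visual noise levels (deg)

for err in (3.0, 30.0):
    low = kinesthetic_shift(err, LOW_NOISE)
    high = kinesthetic_shift(err, HIGH_NOISE)
    print(f"error={err:>4.0f} deg | shift, low noise: {low:.2f} | high noise: {high:.2f}")
```

If implicit adaptation acts to cancel this shift, then in this sketch visual uncertainty reduces the drive to adapt for the 3° error but leaves it unchanged for the 30° error, because both noise levels reach the assumed ceiling.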

Importantly, visual uncertainty was characterized rather coarsely in the current study via recalling the results of a recent clinician-administered eye exam and via self-reporting how low vision impacted ability to carry out activities of daily living. We acknowledge that without more fine-grained psychophysical tests of visual acuity, contrast sensitivity, and visual field loss, it is challenging to evaluate quantitative differences in visual uncertainty between the two study groups, or to examine potential effects of individual differences. Thus, it is possible that the effects observed between our two groups derive from other differences between them that do not reflect different levels of visual function. For example, the two groups may have viewed their screens at slightly different distances or with differing levels of brightness. To mitigate this concern, we have tried our best to standardize our setup. That is, we verified that participants all viewed the screen at a similar, comfortable distance and were all able to see the visual stimuli without using any compensatory viewing strategies. In addition, we note that possible setup differences would likely result in main effects between groups (e.g., participants with low vision paying less attention to the visual feedback, and therefore attenuating adaptation for all visual error sizes; Parvin, Dang, Stover, Ivry, & Morehead, 2022), rather than result in an interaction between error size and visual uncertainty. Detecting this interaction in a diverse sample of people with low vision who have performed the task in a naturalistic environment in fact highlights the robustness of our results.

The Impact of Low Vision on Sensorimotor Control and Learning

Previous work has shown that individuals with low vision move slower and make more errors when performing goal-directed movements (Verghese et al., 2016; Pardhan et al., 2012; Timmis & Pardhan, 2012; Pardhan, Gonzalez-Alvarez, & Subramanian, 2011; Kotecha et al., 2009). Although these deficits are observed in people with both central and peripheral vision loss, reductions in central vision appear to be the key limiting factor (Pardhan et al., 2011, 2012). Central vision loss, which can result in lower acuity and contrast sensitivity, likely worsens the ability to precisely locate the intended visual target as well as respond to the sensory predictions conveying motor performance, an impairment that would be especially pronounced when the target and error are small (Legge, Parish, Luebker, & Wurm, 1990; Tomkinson, 1974). Interestingly, in our Web-based studies, we did not observe strong associations between subjective measures of visual ability and implicit adaptation (see Figures 3–4). That being said, we readily acknowledge that our Web-based approach (adopted to continue research during the global pandemic) offers preliminary evidence for the impact of low vision on sensorimotor learning. We opted to recruit a diverse cohort of low vision participants, one that is largely representative of the diversity inherent to low vision. By administering a more detailed psychophysical battery, future follow-up studies would be able to home in on how different visual impairments (e.g., contrast sensitivity, color sensitivity) may jointly impact the extent of implicit adaptation.

From a practical perspective, our results provide the first characterization of how low vision affects not only motor performance, but also motor learning. Specifically, when the sensory inputs to the sensorimotor system cannot be clearly disambiguated because of low vision (i.e., small and uncertain visual errors), the extent of implicit adaptation is attenuated. However, when visual errors are clearly disambiguated despite having low fidelity (i.e., large and uncertain errors), the extent of implicit adaptation is not impacted by low vision. This dissociation underscores how the underlying learning mechanism per se is not compromised by low vision and may be exploited to enhance motor outcomes during clinical rehabilitation (Tsay & Winstein, 2020). For example, clinicians and practitioners could use nonvisual feedback (e.g., auditory or tactile) to enhance the saliency and possibly reduce localization uncertainty of small visual error signals (Endo et al., 2016; Patel, Park, Bonato, Chan, & Rodgers, 2012). Moreover, rehabilitative specialists could provide explicit instructions to highlight the presence of small errors, such that individuals may learn to rely more on explicit re-aiming strategies to compensate for these errors (Merabet, Connors, Halko, & Sánchez, 2012). Future work could examine which of these techniques is most effective to enhance motor learning when errors are small.

Funding Information

Jonathan S. Tsay, National Institute of Neurological Disorders and Stroke (https://dx.doi.org/10.13039/100000065), grant number: 1F31NS120448; Richard B. Ivry, National Institute of Neurological Disorders and Stroke (https://dx.doi.org/10.13039/100000065), grant number: R35NS116883-01; Emily A. Cooper, NSF, grant number: 2041726. The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

Diversity in Citation Practices

Retrospective analysis of the citations in every article published in this journal from 2010 to 2021 reveals a persistent pattern of gender imbalance: Although the proportions of authorship teams (categorized by estimated gender identification of first author/last author) publishing in the Journal of Cognitive Neuroscience (JoCN) during this period were M(an)/M = .407, W(oman)/M = .32, M/W = .115, and W/W = .159, the comparable proportions for the articles that these authorship teams cited were M/M = .549, W/M = .257, M/W = .109, and W/W = .085 (Postle and Fulvio, JoCN, 34:1, pp. 1–3). Consequently, JoCN encourages all authors to consider gender balance explicitly when selecting which articles to cite and gives them the opportunity to report their article’s gender citation balance.

Data Statement Availability

Raw data and code can be accessed at https://datadryad.org/stash/share/thhAGiZyDdcHQMqQYa6iwLITFNUpxwJbOqWnvmNHqsU.

REFERENCES

- Albus JS (1971). A theory of cerebellar function. Mathematical Biosciences, 10, 25–61. 10.1016/0025-5564(71)90051-4

- Avraham G, Morehead R, Kim HE, & Ivry RB (2021). Reexposure to a sensorimotor perturbation produces opposite effects on explicit and implicit learning processes. PLoS Biology, 19, e3001147. 10.1371/journal.pbio.3001147

- Bond KM, & Taylor JA (2015). Flexible explicit but rigid implicit learning in a visuomotor adaptation task. Journal of Neurophysiology, 113, 3836–3849. 10.1152/jn.00009.2015

- Burge J, Ernst MO, & Banks MS (2008). The statistical determinants of adaptation rate in human reaching. Journal of Vision, 8, 20.1–20.19. 10.1167/8.4.20

- Cheong Y, Ling C, & Shehab R (2022). An empirical comparison between the effects of normal and low vision on kinematics of a mouse-mediated pointing movement. International Journal of Human–Computer Interaction, 38, 562–572. 10.1080/10447318.2021.1952802

- Cressman EK, & Henriques DYP (2011). Motor adaptation and proprioceptive recalibration. Progress in Brain Research, 191, 91–99. 10.1016/B978-0-444-53752-2.00011-4

- Endo T, Kanda H, Hirota M, Morimoto T, Nishida K, & Fujikado T (2016). False reaching movements in localization test and effect of auditory feedback in simulated ultra-low vision subjects and patients with retinitis pigmentosa. Graefe’s Archive for Clinical and Experimental Ophthalmology, 254, 947–956. 10.1007/s00417-015-3253-2

- Ferrea E, Franke J, Morel P, & Gail A (2022). Statistical determinants of visuomotor adaptation in a virtual reality three-dimensional environment. Scientific Reports, 12, 10198. 10.1038/s41598-022-13866-y

- Haith AM, Huberdeau DM, & Krakauer JW (2015). The influence of movement preparation time on the expression of visuomotor learning and savings. Journal of Neuroscience, 35, 5109–5117. 10.1523/JNEUROSCI.3869-14.2015

- Hayashi T, Kato Y, & Nozaki D (2020). Divisively normalized integration of multisensory error information develops motor memories specific to vision and proprioception. Journal of Neuroscience, 40, 1560–1570. 10.1523/JNEUROSCI.1745-19.2019

- Hong F, Badde S, & Landy MS (2021). Causal inference regulates audiovisual spatial recalibration via its influence on audiovisual perception. PLoS Computational Biology, 17, e1008877. 10.1371/journal.pcbi.1008877

- Ito M (1986). Long-term depression as a memory process in the cerebellum. Neuroscience Research, 3, 531–539. 10.1016/0168-0102(86)90052-0

- Jacko JA, Barreto AB, Marmet GJ, Chu JYM, Bautsch HS, Scott IU, et al. (2000). Low vision: The role of visual acuity in the efficiency of cursor movement. In Proceedings of the fourth international ACM conference on assistive technologies (pp. 1–8).

- Kawato M, Ohmae S, Hoang H, & Sanger T (2021). 50 years since the Marr, Ito, and Albus models of the cerebellum. Neuroscience, 462, 151–174. 10.1016/j.neuroscience.2020.06.019

- Keisler A, & Shadmehr R (2010). A shared resource between declarative memory and motor memory. Journal of Neuroscience, 30, 14817–14823. 10.1523/JNEUROSCI.4160-10.2010

- Kim HE, Avraham G, & Ivry RB (2021). The psychology of reaching: Action selection, movement implementation, and sensorimotor learning. Annual Review of Psychology, 72, 61–95. 10.1146/annurev-psych-010419-051053

- Kim HE, Morehead R, Parvin DE, Moazzezi R, & Ivry RB (2018). Invariant errors reveal limitations in motor correction rather than constraints on error sensitivity. Communications Biology, 1, 19. 10.1038/s42003-018-0021-y

- Kim HE, Parvin DE, & Ivry RB (2019). The influence of task outcome on implicit motor learning. eLife, 8, e39882. 10.7554/eLife.39882

- Körding KP, & Wolpert DM (2004). Bayesian integration in sensorimotor learning. Nature, 427, 244–247. 10.1038/nature02169

- Kotecha A, O’Leary N, Melmoth D, Grant S, & Crabb DP (2009). The functional consequences of glaucoma for eye-hand coordination. Investigative Ophthalmology & Visual Science, 50, 203–213. 10.1167/iovs.08-2496

- Krakauer J, Ghez C, & Ghilardi MF (2005). Adaptation to visuomotor transformations: Consolidation, interference, and forgetting. Journal of Neuroscience, 25, 473–478. 10.1523/JNEUROSCI.4218-04.2005

- Lakens D (2013). Calculating and reporting effect sizes to facilitate cumulative science: A practical primer for t tests and ANOVAs. Frontiers in Psychology, 4, 863. 10.3389/fpsyg.2013.00863

- Legge GE, Parish DH, Luebker A, & Wurm LH (1990). Psychophysics of reading. XI. Comparing color contrast and luminance contrast. Journal of the Optical Society of America, 7, 2002–2010. 10.1364/josaa.7.002002

- Lenoble Q, Corveleyn X, Tran THC, Rouland J-F, & Boucart M (2019). Can I reach it? A study in age-related macular degeneration and glaucoma patients. Visual Cognition, 27, 732–739. 10.1080/13506285.2019.1661319

- Lerner G, Albert S, Caffaro PA, Villalta JI, Jacobacci F, Shadmehr R, et al. (2020). The origins of anterograde interference in visuomotor adaptation. Cerebral Cortex, 30, 4000–4010. 10.1093/cercor/bhaa016

- Marko MK, Haith AM, Harran MD, & Shadmehr R (2012). Sensitivity to prediction error in reach adaptation. Journal of Neurophysiology, 108, 1752–1763. 10.1152/jn.00177.2012

- Marr D (1969). A theory of cerebellar cortex. Journal of Physiology, 202, 437–470. 10.1113/jphysiol.1969.sp008820

- Massof RW, & Fletcher DC (2001). Evaluation of the NEI visual functioning questionnaire as an interval measure of visual ability in low vision. Vision Research, 41, 397–413. 10.1016/S0042-6989(00)00249-2

- McDougle SD, Boggess MJ, Crossley MJ, Parvin D, Ivry RB, & Taylor JA (2016). Credit assignment in movement-dependent reinforcement learning. Proceedings of the National Academy of Sciences, U.S.A., 113, 6797–6802. 10.1073/pnas.1523669113

- McDougle SD, Bond KM, & Taylor JA (2015). Explicit and implicit processes constitute the fast and slow processes of sensorimotor learning. Journal of Neuroscience, 35, 9568–9579. 10.1523/JNEUROSCI.5061-14.2015

- Merabet LB, Connors EC, Halko MA, & Sánchez J (2012). Teaching the blind to find their way by playing video games. PLoS One, 7, e44958. 10.1371/journal.pone.0044958

- Morehead JR, & Ivry R (2015). Intrinsic biases systematically affect visuomotor adaptation experiments. Neural Control of Movement. https://ivrylab.berkeley.edu/uploads/4/1/1/5/41152143/morehead_ncm2015.pdf

- Morehead R, Taylor JA, Parvin DE, & Ivry RB (2017). Characteristics of implicit sensorimotor adaptation revealed by task-irrelevant clamped feedback. Journal of Cognitive Neuroscience, 29, 1061–1074. 10.1162/jocn_a_01108

- Pardhan S, Gonzalez-Alvarez C, & Subramanian A (2011). How does the presence and duration of central visual impairment affect reaching and grasping movements? Ophthalmic & Physiological Optics, 31, 233–239. 10.1111/j.1475-1313.2010.00819.x

- Pardhan S, Gonzalez-Alvarez C, & Subramanian A (2012). Target contrast affects reaching and grasping in the visually impaired subjects. Optometry and Vision Science, 89, 426–434. 10.1097/OPX.0b013e31824c1b89

- Parvin DE, Dang KV, Stover AR, Ivry RB, & Morehead JR (2022). Implicit adaptation is modulated by the relevance of feedback. bioRxiv. 10.1101/2022.01.19.476924

- Patel S, Park H, Bonato P, Chan L, & Rodgers M (2012). A review of wearable sensors and systems with application in rehabilitation. Journal of Neuroengineering and Rehabilitation, 9, 21. 10.1186/1743-0003-9-21

- Proske U, & Gandevia SC (2012). The proprioceptive senses: Their roles in signaling body shape, body position and movement, and muscle force. Physiological Reviews, 92, 1651–1697. 10.1152/physrev.00048.2011

- Samad M, Chung AJ, & Shams L (2015). Perception of body ownership is driven by Bayesian sensory inference. PLoS One, 10, e0117178. 10.1371/journal.pone.0117178

- Shadmehr R, Smith MA, & Krakauer J (2010). Error correction, sensory prediction, and adaptation in motor control. Annual Review of Neuroscience, 33, 89–108. 10.1146/annurev-neuro-060909-153135

- Shams L, & Beierholm UR (2010). Causal inference in perception. Trends in Cognitive Sciences, 14, 425–432. 10.1016/j.tics.2010.07.001

- Shyr MC, & Joshi SS (2021). Validation of the Bayesian sensory uncertainty model of motor adaptation with a remote experimental paradigm. In 2021 IEEE 2nd international conference on human-machine systems (ICHMS) (pp. 1–6).

- Taylor JA, & Ivry RB (2011). Flexible cognitive strategies during motor learning. PLoS Computational Biology, 7, e1001096. 10.1371/journal.pcbi.1001096

- Taylor JA, Krakauer JW, & Ivry RB (2014). Explicit and implicit contributions to learning in a sensorimotor adaptation task. Journal of Neuroscience, 34, 3023–3032. 10.1523/JNEUROSCI.3619-13.2014

- ’t Hart BM, Ruttle JE, & Henriques DYP (2020). Proprioceptive recalibration generalizes relative to hand position. https://deniseh.lab.yorku.ca/files/2020/05/tHart_SfN_2019.pdf?x64373

- Timmis MA, & Pardhan S (2012). The effect of central visual impairment on manual prehension when tasked with transporting-to-place an object accurately to a new location. Investigative Ophthalmology & Visual Science, 53, 2812–2822. 10.1167/iovs.11-8860

- Tomkinson CR (1974). Accurate assessment of visual acuity in low vision patients. Optometry and Vision, 51, 321–324. 10.1097/00006324-197405000-00004

- Tsay JS, Avraham G, Kim HE, Parvin DE, Wang Z, & Ivry RB (2021). The effect of visual uncertainty on implicit motor adaptation. Journal of Neurophysiology, 125, 12–22. 10.1152/jn.00493.2020

- Tsay JS, Haith AM, Ivry RB, & Kim HE (2022). Interactions between sensory prediction error and task error during implicit motor learning. PLoS Computational Biology, 18, e1010005. 10.1371/journal.pcbi.1010005

- Tsay JS, Kim H, Haith AM, & Ivry RB (2022). Understanding implicit sensorimotor adaptation as a process of proprioceptive re-alignment. eLife, 11, e76639. 10.7554/eLife.76639

- Tsay JS, Kim HE, Parvin DE, Stover AR, & Ivry RB (2021). Individual differences in proprioception predict the extent of implicit sensorimotor adaptation. Journal of Neurophysiology, 125, 1307–1321. 10.1152/jn.00585.2020

- Tsay JS, Lee A, Ivry RB, & Avraham G (2021). Moving outside the lab: The viability of conducting sensorimotor learning studies online. Neurons, Behavior, Data Analysis, and Theory. 10.51628/001c.26985

- Tsay JS, Najafi T, Schuck L, Wang T, & Ivry RB (2022). Implicit sensorimotor adaptation is preserved in Parkinson’s disease. Brain Communications, 4, fcac303. 10.1093/braincomms/fcac303

- Tsay JS, Parvin DE, & Ivry RB (2020). Continuous reports of sensed hand position during sensorimotor adaptation. Journal of Neurophysiology, 124, 1122–1130. 10.1152/jn.00242.2020

- Tsay JS, Schuck L, & Ivry RB (2022). Cerebellar degeneration impairs strategy discovery but not strategy recall. Cerebellum. 10.1007/s12311-022-01500-6

- Tsay JS, & Winstein CJ (2020). Five features to look for in early-phase clinical intervention studies. Neurorehabilitation and Neural Repair, 35, 3–9. 10.1177/1545968320975439

- Tseng Y-W, Diedrichsen J, Krakauer JW, Shadmehr R, & Bastian AJ (2007). Sensory prediction errors drive cerebellum-dependent adaptation of reaching. Journal of Neurophysiology, 98, 54–62. 10.1152/jn.00266.2007

- van Beers RJ (2012). How does our motor system determine its learning rate? PLoS One, 7, e49373. 10.1371/journal.pone.0049373

- van Beers RJ, Wolpert DM, & Haggard P (2002). When feeling is more important than seeing in sensorimotor adaptation. Current Biology, 12, 834–837. 10.1016/S0960-9822(02)00836-9

- Verghese P, Tyson TL, Ghahghaei S, & Fletcher DC (2016). Depth perception and grasp in central field loss. Investigative Ophthalmology & Visual Science, 57, 1476–1487. 10.1167/iovs.15-18336

- Vindras P, Desmurget M, Prablanc C, & Viviani P (1998). Pointing errors reflect biases in the perception of the initial hand position. Journal of Neurophysiology, 79, 3290–3294. 10.1152/jn.1998.79.6.3290

- Wei K, & Körding K (2009). Relevance of error: What drives motor adaptation? Journal of Neurophysiology, 101, 655–664. 10.1152/jn.90545.2008

- Wei K, & Körding K (2010). Uncertainty of feedback and state estimation determines the speed of motor adaptation. Frontiers in Computational Neuroscience, 4, 11. 10.3389/fncom.2010.00011

- Zaidel A, Turner AH, & Angelaki DE (2011). Multisensory calibration is independent of cue reliability. Journal of Neuroscience, 31, 13949–13962. 10.1523/JNEUROSCI.2732-11.2011
