Abstract

People express their own emotions and perceive others’ emotions via a variety of channels, including facial movements, body gestures, vocal prosody, and language. Studying these channels of affective behavior offers insight into both the experience and perception of emotion. Prior research has predominantly focused on studying individual channels of affective behavior in isolation using tightly controlled, non-naturalistic experiments. This approach limits our understanding of emotion in more naturalistic contexts where different channels of information tend to interact. Traditional methods struggle to address this limitation: manually annotating behavior is time-consuming, making it infeasible to do at large scale; manually selecting and manipulating stimuli based on hypotheses may neglect unanticipated features, potentially generating biased conclusions; and common linear modeling approaches cannot fully capture the complex, nonlinear, and interactive nature of real-life affective processes. In this methodology review, we describe how deep learning can be applied to address these challenges to advance a more naturalistic affective science. First, we describe current practices in affective research and explain why existing methods face challenges in revealing a more naturalistic understanding of emotion. Second, we introduce deep learning approaches and explain how they can be applied to tackle three main challenges: quantifying naturalistic behaviors, selecting and manipulating naturalistic stimuli, and modeling naturalistic affective processes. Finally, we describe the limitations of these deep learning methods, and how these limitations might be avoided or mitigated. By detailing the promise and the peril of deep learning, this review aims to pave the way for a more naturalistic affective science.

Keywords: Deep learning, Affective science, Person perception, Generalizability, Cognitive modeling

Humans express and recognize emotions using multiple channels in contextually flexible ways (Cowen & Keltner, 2021; Kret et al., 2020; Neal & Chartrand, 2011; Niedenthal et al., 2009; Nummenmaa et al., 2014). These channels include facial movements (Coles et al., 2019; Ekman, 1993; Namba et al., 2022; Wood et al., 2016), body language (C. Ferrari et al., 2022; Poyo Solanas et al., 2020; Reed et al., 2020; Wallbott, 1998), and the tone and content of speech (Bachorowski & Owren, 1995; Beukeboom, 2009; Hawk et al., 2009; Ponsot et al., 2018). Context – both the physical and human environment – also plays a key role (Greenaway et al., 2018; Ngo & Isaacowitz, 2015; Whitesell & Harter, 1996).

Prior research focusing on each individual channel of affective information has advanced a mechanistic understanding of emotion. However, this approach limits generalizability to real-world contexts where different channels of information naturally interact (Yarkoni, 2022). This relatively non-naturalistic tradition of affective research stems in part from technical barriers related to analyzing emotion in more naturalistic contexts. Here, we introduce how deep learning could be applied to overcome these barriers. By understanding its promises and being mindful of its limitations, researchers may use deep learning to advance a more naturalistic affective science.

Current Practices in Affective Research

Before we introduce deep learning applications for affective research, we discuss current practices and challenges. We focus on three topics: quantifying behavior, optimizing stimuli, and modeling affective processes.

Researchers commonly quantify behavior through manual annotation. For instance, they annotate the activation of facial muscles (e.g., cheek raiser) on participants’ faces using the Facial Action Coding System (Ekman & Friesen, 1978; Girard et al., 2013; Kilbride & Yarczower, 1983). Others manually measure the joint angles of mannequin figures and point-light displays to study body language (Atkinson et al., 2004; Coulson, 2004; Roether et al., 2009; Thoresen et al., 2012).

However, manual annotation is time-consuming (Cohn et al., 2007). This limits the quantity and frequency of annotation. For instance, it would be infeasible to annotate every frame in a large set of videos. As a result, prior research has disproportionately used small samples of static, artificial stimuli (Aviezer et al., 2008, 2012; Benitez-Quiroz et al., 2018; Cowen et al., 2021; McHugh et al., 2010).

Existing research also uses computational tools for quantifying behaviors. For instance, computational models of faces and facial expressions help reveal diagnostic features that people use to infer emotions from faces (Blanz & Vetter, 1999; Jack & Schyns, 2017; Martinez, 2017). Using digital equipment, researchers measure vocal features of speech such as amplitude and frequency (Scherer, 1995, 2003). Models that link these vocal features to emotions further enable researchers to manipulate emotional vocalizations during conversations in real time (Arias et al., 2021). Researchers also investigate the emotional content of speech by computing the frequencies of emotion-laden words (e.g., words that commonly express happiness) to perform sentiment analysis (Crossley et al., 2017; Pennebaker & Francis, 1999).
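To make the word-frequency approach concrete, the following is a minimal Python sketch. It assumes a hypothetical toy lexicon (not a validated dictionary), and the function name `emotion_word_rates` is ours for illustration only.

```python
import re
from collections import Counter

# Hypothetical toy lexicon; real studies rely on validated dictionaries.
POSITIVE = {"happy", "joy", "glad", "love"}
NEGATIVE = {"sad", "angry", "afraid", "hate"}


def emotion_word_rates(text):
    """Return the proportion of tokens that are positive or negative words."""
    tokens = re.findall(r"[a-z']+", text.lower())
    total = len(tokens)
    if total == 0:
        return {"positive": 0.0, "negative": 0.0}
    counts = Counter(tokens)
    positive = sum(counts[word] for word in POSITIVE)
    negative = sum(counts[word] for word in NEGATIVE)
    return {"positive": positive / total, "negative": negative / total}


rates = emotion_word_rates("I was so happy to see her, but sad when she left.")

# A pure word count ignores negation: "not happy" still counts as positive.
negated = emotion_word_rates("not happy")
```

The second call illustrates one reason such theory-driven counts can mislead: the word list has no access to the surrounding context of each word.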

These computational tools work well with highly controlled stimuli (e.g., high-quality audiovisual recordings). However, they struggle with naturalistic stimuli, such as real-world conversations where speakers talk over each other in noisy environments. More generally, many of these computational models are based on theory-driven features (e.g., facial/vocal features, or words that researchers think might be associated with emotions), which could miss important emotional features that researchers do not anticipate.

The challenge of quantifying behaviors leads to the further challenge of optimizing naturalistic stimuli that portray these behaviors. Representative sampling is necessary to make inferences from samples to populations. This is widely understood by psychologists, and the field is making increasing efforts to recruit participants from more diverse populations (Barrett, 2020; Henrich et al., 2010; Rad et al., 2018). However, it is less widely appreciated that the need for representative sampling also applies to stimuli (Brunswik, 1955).

The lack of tools for quantifying behavior in naturalistic stimuli (e.g., video recording of participants’ emotional responses in conversations) makes it difficult to systematically select and manipulate stimuli. As a result, much prior research relies on manual selection (e.g., selecting recordings based on basic emotions) and manual manipulation (e.g., changing the joint angles of point-light displays based on hypotheses). These methods introduce researchers’ preconceived beliefs into experimental designs, and may lead to conclusions that favor those beliefs.

The technical barriers to quantifying behavior and optimizing stimuli contribute to a third challenge: modeling naturalistic affective processes. The mind integrates different channels of affective information in complex, context-dependent ways. For instance, these integrations may be nonlinear (e.g., when paired with a high-pitched vocalization, both wide and squinting eyes could signal frustration). Different subsets of information streams may be integrated at different stages (e.g., identity and context first, and then with facial expressions). Common linear modeling approaches cannot fully capture these complex processes.
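A small worked example may help show why an additive linear model misses interactive effects. The sketch below uses hypothetical data: two cues coded as -1/+1 (standing in for, say, eye aperture and vocal pitch) and an outcome that depends only on their combination. The helper `r_squared` is our own illustrative function.

```python
import numpy as np

# Two binary cues coded -1/+1 (hypothetical stand-ins for two channels).
cues = np.array([[-1, -1], [-1, 1], [1, -1], [1, 1]], dtype=float)

# The outcome depends only on the combination of cues (a pure interaction).
outcome = cues[:, 0] * cues[:, 1]


def r_squared(predictors, y):
    """Fit ordinary least squares with an intercept and return R^2."""
    design = np.column_stack([np.ones(len(predictors)), predictors])
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    residual = y - design @ beta
    centered = y - y.mean()
    return 1.0 - (residual @ residual) / (centered @ centered)


# Additive model: each cue contributes independently.
r2_additive = r_squared(cues, outcome)

# Same model plus a hand-specified interaction term.
with_interaction = np.column_stack([cues, cues[:, 0] * cues[:, 1]])
r2_interaction = r_squared(with_interaction, outcome)

print(f"additive R^2 = {r2_additive:.2f}, with interaction R^2 = {r2_interaction:.2f}")
```

In this toy case the additive model explains none of the variance, whereas the model with the interaction term fits perfectly. The catch is that the researcher had to know which interaction to specify in advance.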

The Promise of Deep Learning for Affective Research

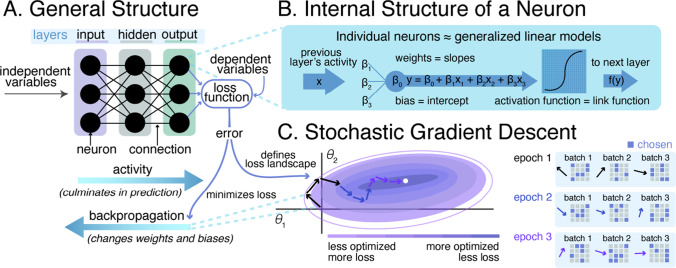

Machine learning is an umbrella term for the practice of training computer algorithms to discover patterns in and make predictions about data (Table 1). Deep learning is a subset of machine learning based on deep neural networks (DNNs) (Rumelhart et al., 1986). DNNs consist of networks of artificial neurons, roughly akin to biological neurons, and connections between them, roughly akin to synapses. By optimizing the strength of these connections (i.e., the weights) during training, the model learns a mapping from inputs to outputs that minimizes prediction error (Fig. 1). A wide variety of DNN architectures are used for solving different computational problems (Table 1).
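The training process described here can be sketched in a few lines of NumPy. This is a minimal illustration under our own assumptions, not a recommended implementation: the data are a toy XOR problem, the network has one hidden layer of eight tanh units, and for simplicity it uses full-batch gradient descent rather than the stochastic variant.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy data: XOR, a mapping that no purely linear model can learn.
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([[0], [1], [1], [0]], dtype=float)

# Weights (connections) and biases for one hidden layer of 8 tanh units.
W1 = rng.normal(0.0, 0.5, size=(2, 8))
b1 = np.zeros(8)
W2 = rng.normal(0.0, 0.5, size=(8, 1))
b2 = np.zeros(1)

learning_rate = 0.05
losses = []
for step in range(3000):
    # Forward pass: inputs -> hidden activations -> prediction.
    hidden = np.tanh(X @ W1 + b1)
    pred = hidden @ W2 + b2
    error = pred - y
    losses.append(float(np.mean(error ** 2)))  # mean squared prediction error

    # Backward pass (backpropagation): chain rule from the loss to each weight.
    grad_pred = 2.0 * error / len(X)
    grad_W2 = hidden.T @ grad_pred
    grad_b2 = grad_pred.sum(axis=0)
    grad_hidden = grad_pred @ W2.T
    grad_pre = grad_hidden * (1.0 - hidden ** 2)  # derivative of tanh
    grad_W1 = X.T @ grad_pre
    grad_b1 = grad_pre.sum(axis=0)

    # Gradient descent step: nudge every weight in the direction that reduces loss.
    W1 -= learning_rate * grad_W1
    b1 -= learning_rate * grad_b1
    W2 -= learning_rate * grad_W2
    b2 -= learning_rate * grad_b2

print(f"loss before training: {losses[0]:.3f}, after: {losses[-1]:.3f}")
```

In practice, researchers would typically rely on a deep learning framework that computes these gradients automatically. The explicit version is shown only to make the weight-update logic visible.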

Table 1.

Comparing deep learning with other machine learning methods

| Goal | Examples of Deep Learning Methods | Examples of Other Machine Learning Methods |
|---|---|---|
| Regression (Linear) | Single-layer Perceptron with Linear Activations | Linear Regression, Ridge, Lasso |
| Regression (Nonlinear) | Multilayer Perceptron with Nonlinear Activations | Generalized Linear Model, Polynomial Regression |
| Regression (Time series, Sequences) | Long Short-term Memory Network, Transformer | Autoregressive models, Hidden Markov Model |
| Classification | Convolutional Neural Network | Support Vector Machine, Random Forest |
| Dimension Reduction (Linear) | Autoencoder with Linear Activations | Principal Component Analysis, Exploratory Factor Analysis |
| Dimension Reduction (Nonlinear) | Autoencoder with Nonlinear Activations, Self-supervised Model | T-distributed Stochastic Neighbor Embedding, Uniform Manifold Approximation and Projection |
| Clustering | N/A (deep learning can facilitate clustering but does not itself return categorical outputs) | K-Means, Hierarchical Clustering, Gaussian Mixture Model |
| Cognitive Models | Spiking Network | Drift Diffusion Model |
| Agentic Models | Deep reinforcement learning | Reinforcement learning |

Examples represent common use cases; they are neither exclusive nor exhaustive

Fig. 1.

The structure and training process of a DNN. A. The basic components of a DNN. B. The computations performed inside a neuron. C. The training process for minimizing loss (prediction error) using stochastic gradient descent via backpropagation

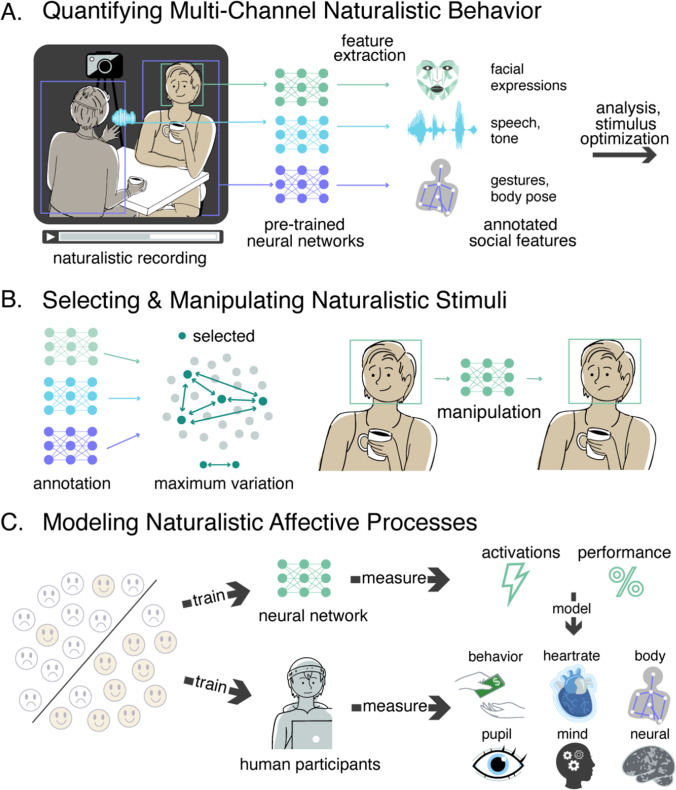

Here, we describe how DNNs could help address the challenges of behavior quantification, stimuli optimization, and cognitive modeling (Fig. 2). First, many pre-trained DNN models can be readily applied to quantify different channels of affective behavior and do not require any additional model training (Table 2). These models have four distinct advantages over manual annotations and existing computational models.

Fig. 2.

Applications of DNNs for advancing naturalistic affective research. A. DNNs provide a more scalable way to quantify behavior of study participants and stimulus targets in naturalistic contexts. B. DNN-based quantifications can support better experimentation by facilitating naturalistic stimulus selection and manipulation. C. DNNs are capable of capturing interactive and nonlinear effects, ideal for modeling cognitive/neural mechanisms underlying the subjective experience, physiological responses, and the recognition and expressions of emotions

Table 2.

Examples of pre-trained DNN models and DNN architectures for quantifying naturalistic behavior

| Modality | Application | Citation |
|---|---|---|
| Facial Expression | Emotion Labelling | (Cheong et al., 2021); (Wasi et al., 2023); (Xue et al., 2022); (Wen et al., 2023); (Vo et al., 2020); (Wang, Peng, et al., 2020) |
| Facial Expression | Action Unit Annotation | (Cheong et al., 2023); (Savchenko, 2022); (Luo et al., 2022); (Pumarola et al., 2018a) |
| Body Pose | Pose Estimation | (Kocabas et al., 2021); (Xu, Zhang, et al., 2022); (Liu et al., 2022); (Cai et al., 2020); (Sun et al., 2019); (Xiao et al., 2018) |
| Speech | Speech Separation | (Lutati et al., 2023); (Lutati et al., 2022b); (Subakan et al., 2021); (Luo et al., 2020); (Luo & Mesgarani, 2019) |
| Speech | Speech-to-Text | (Bain et al., 2023); (Gállego et al., 2021); (Conneau et al., 2021); (Wang, Tang, et al., 2020); (Park et al., 2019) |
| Text | Text Embedding | (He et al., 2021); (Gao et al., 2021); (Lan et al., 2020); (Reimers & Gurevych, 2019); (Cer et al., 2018) |
| Text | Sentiment Analysis | (Heinsen, 2022); (Sun et al., 2020); (Heinsen, 2020); (Peters et al., 2018) |

First, many pre-trained DNNs are efficient to use. For instance, some face annotation DNNs can quantify action units, facial key points, and head poses across thousands of video frames in a few minutes (Baltrusaitis et al., 2018; Benitez-Quiroz et al., 2016). This speed advantage creates new possibilities. For instance, using these tools, researchers could predict participants’ subjective experience of emotions in real time from behavioral quantifications of video recordings (Li et al., 2021) and use these predictions to time exactly when to introduce experimental manipulations (Fig. 2A).

Second, using pre-trained DNNs reduces costs. For instance, to study body language of real people in social interactions, traditionally researchers need to acquire expensive devices such as motion capture suits or camera systems (Hart et al., 2018; Zane et al., 2019). In comparison, pre-trained DNNs can annotate body poses and joint positions based on ordinary video recordings (Kocabas et al., 2020, 2021; Rempe et al., 2021). These pre-trained and lightweight DNN models provide accessible alternatives to a broader range of researchers.

Third, pre-trained DNNs for behavioral quantification are well suited for complex, real-world contexts. For instance, many pre-trained DNNs are available for naturalistic speech analysis, including the separation of overlapping speech sources, the conversion of speech to text, and the quantification of this text in terms of its meaning (Chernykh & Prikhodko, 2018; Lutati et al., 2022a; C. Wang et al., 2022). They offer researchers more powerful tools to investigate how people communicate emotions in real-world conversations and large text corpora across cultures and languages (Ghosal et al., 2019; Poria et al., 2019; Thornton et al., 2022).

Fourth, pre-trained DNNs for behavioral quantification are flexible to use. For instance, there is a range of pre-trained DNNs for quantifying contexts (physical and human environment). At one extreme, researchers can combine multiple models to quantify different elements in the context, such as the interacting partners’ behaviors and the objects present (Bhat et al., 2020). At the other extreme, researchers can extract a global description of the scene (Krishna et al., 2017). The ability to quantify context at these different operational levels makes it possible to study the effects of context on emotion recognition, emotion expression, and the subjective, physiological, and neural components of emotion more precisely.

Applying deep learning to quantify behavior benefits stimulus optimization efforts as well (Fig. 2B). Imagine a case in which researchers are investigating how storytelling evokes emotional experiences. Selecting storytelling videos that are representative of the diverse storytelling that people encounter in daily life will facilitate a more generalizable conclusion (Fig. 2B, left). The multi-channel behavioral quantifications from DNNs can help achieve this goal. Specifically, researchers could first scrape a large number of real-world storytelling videos from the internet; then quantify multi-channel information in each video (e.g., face, body, speech, context) using deep learning models; and finally, apply the maximum variation sampling procedure (Patton, 1990) to select a subset of stimuli from every part of the psychological space.
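The selection step can be sketched as follows. This is one possible way to operationalize maximum variation sampling, assuming each stimulus has already been reduced to a numeric feature vector: a greedy "farthest point" heuristic that repeatedly adds the stimulus most distant from those already chosen. The function name, the heuristic, and the toy features are our assumptions, not a prescribed procedure.

```python
import math


def max_variation_sample(features, k):
    """Greedily pick k items that are spread far apart in feature space."""
    n = len(features)
    if not 0 < k <= n:
        raise ValueError("k must be between 1 and the number of items")

    # Start from the item farthest from the centroid of all items.
    dims = len(features[0])
    centroid = [sum(f[d] for f in features) / n for d in range(dims)]
    first = max(range(n), key=lambda i: math.dist(features[i], centroid))
    chosen = [first]

    # Track each item's distance to its nearest already-chosen item.
    min_dist = [math.dist(f, features[first]) for f in features]
    min_dist[first] = -1.0  # mark as chosen so it is never picked again

    while len(chosen) < k:
        nxt = max(range(n), key=lambda i: min_dist[i])
        chosen.append(nxt)
        min_dist = [min(m, math.dist(f, features[nxt]))
                    for m, f in zip(min_dist, features)]
        min_dist[nxt] = -1.0
    return chosen


# Hypothetical 2-D features for six videos: four near-duplicates and two outliers.
video_features = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1),
                  (5.0, 5.0), (5.0, 0.0), (0.1, 0.1)]
selected = max_variation_sample(video_features, k=3)
```

With these toy features, the procedure selects one video from the dense cluster and both outliers, rather than three near-duplicates. Real feature vectors would usually need to be standardized first so that no single channel dominates the distances.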

To better understand the causal relation between different channels of information and affective responses, researchers may wish to manipulate stimuli beyond selecting them (Fig. 2B, right). Deep learning models can also manipulate naturalistic stimuli realistically and in real time (Xu, Hong, et al., 2022). For instance, researchers could manipulate the facial expressions of participants as they speak to each other over a video call and measure how one participant’s manipulated facial expressions influence the other partner’s subjective experience of emotions or physiological responses. This would allow for controlled experiments on naturalistic conversations through the medium of the conversation itself, rather than imposing an external intrusion upon it (e.g., prompts to change conversation topics).

Researchers can also use deep learning to achieve experimental control over naturalistic stimuli by synthesizing novel stimuli that never existed in the real world (Balakrishnan et al., 2018; Daube et al., 2021; Guo et al., 2023; Liu et al., 2021; Masood et al., 2023; Pumarola et al., 2018b; Ren & Wang, 2022; Roebel & Bous, 2022; Schyns et al., 2023; Wang et al., 2018; Yu et al., 2019). These tools can generate high-quality, realistic images, audio, and video with any combination of features that researchers might be interested in, some even in real time. This can provide an unprecedented level of control to researchers while still retaining naturalism in the stimuli.

Finally, deep learning could advance a computational understanding of naturalistic affective processes in the mind and brain (Fig. 2C). Many researchers have already applied deep learning to cognitive modeling, such as how information is represented in the visual cortex (Cichy & Kaiser, 2019; Dobs et al., 2022; Khaligh-Razavi & Kriegeskorte, 2014; Kohoutová et al., 2020; Konkle & Alvarez, 2022; Mehta et al., 2020; Perconti & Plebe, 2020; Richards et al., 2019; Saxe et al., 2021; Su et al., 2020).

Research has started applying deep learning to model affective cognition (Kragel et al., 2019; Thornton et al., 2023). Three qualities of DNNs make them a promising avenue for advancing a naturalistic understanding of affective processes. First, by virtue of their nonlinear activation functions and multi-layered structure (Fig. 1), DNN models excel at discovering complex interactions among both observable variables (e.g., affective behaviors) and latent variables. Given the importance of latent variables (e.g., emotions) and the complex interactions between behaviors and contexts, this feature is essential for building realistic cognitive models of affective processing.

Second, DNN models can predict multidimensional dependent variables in a single integrated model. Unlike common regression-based models, which typically have scalar outputs, the dependent variables in DNN models can be scalars, vectors, or multidimensional arrays. Moreover, DNN models can capitalize on the structure of the data, modeling both spatial relationships (e.g., via convolutions) and temporal relationships (e.g., via recurrence). Although one can find these individual pieces in other bespoke statistical models (Table 1), arguably nothing rivals the flexibility of deep learning at combining them into a single computationally efficient package. Since both the inputs (others’ naturalistic behavior) and outputs of affective processes (subjective, physiological, and neural components of emotions) are frequently multidimensional and complexly structured in time and space, this flexibility makes deep learning useful for affective modeling.

Third, DNN models provide a useful framework for simulating causal effects. For instance, to understand how different types of affective behaviors (e.g., face and voice) interact to express emotions, one can manipulate a DNN’s architecture so that different cues are allowed to interact in different layers of the models. These manipulations are impossible to do in the human brain as one cannot simply rewire it at will. Deep learning can also be embedded within embodied agents (Arulkumaran et al., 2017) so that researchers can use them to study how affective processes shape action and decision-making as agents learn to causally manipulate their environment.
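The idea of manipulating where cues are allowed to interact can be illustrated with two toy architectures. The sketch below is hypothetical throughout: the weights are random and untrained, the layer sizes are arbitrary, and "face" and "voice" are just random vectors. In the early-fusion network, the cues are combined before the nonlinearity. In the late-fusion network, each cue is processed separately and the results are summed.

```python
import numpy as np

rng = np.random.default_rng(0)
n_face, n_voice, n_hidden = 3, 2, 4

# Early fusion: face and voice features enter the same hidden layer.
W_early = rng.normal(size=(n_face + n_voice, n_hidden))
v_early = rng.normal(size=n_hidden)

# Late fusion: separate hidden layers whose outputs are simply added.
W_face = rng.normal(size=(n_face, n_hidden))
v_face = rng.normal(size=n_hidden)
W_voice = rng.normal(size=(n_voice, n_hidden))
v_voice = rng.normal(size=n_hidden)


def early_fusion(face, voice):
    joint = np.concatenate([face, voice])
    return float(np.tanh(joint @ W_early) @ v_early)


def late_fusion(face, voice):
    face_part = np.tanh(face @ W_face) @ v_face
    voice_part = np.tanh(voice @ W_voice) @ v_voice
    return float(face_part + voice_part)


def interaction_contrast(model, face_a, face_b, voice_a, voice_b):
    """2x2 interaction: zero whenever face and voice effects are purely additive."""
    return (model(face_a, voice_a) - model(face_a, voice_b)
            - model(face_b, voice_a) + model(face_b, voice_b))


face_a, face_b = rng.normal(size=n_face), rng.normal(size=n_face)
voice_a, voice_b = rng.normal(size=n_voice), rng.normal(size=n_voice)

late = interaction_contrast(late_fusion, face_a, face_b, voice_a, voice_b)
early = interaction_contrast(early_fusion, face_a, face_b, voice_a, voice_b)
print(f"late-fusion interaction: {late:.6f}, early-fusion interaction: {early:.6f}")
```

By construction, the late-fusion network cannot express a face-by-voice interaction, whereas the early-fusion network can. Comparing how well such variants fit human data is one way to ask at which stage cues are integrated.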

Limitations of Deep Learning for Affective Research

Despite its promise, deep learning is not a magic box. Understanding the limitations of DNNs will help affective scientists use them effectively. Here, we describe the limitations of DNNs for behavior quantification, stimuli optimization, and cognitive modeling.

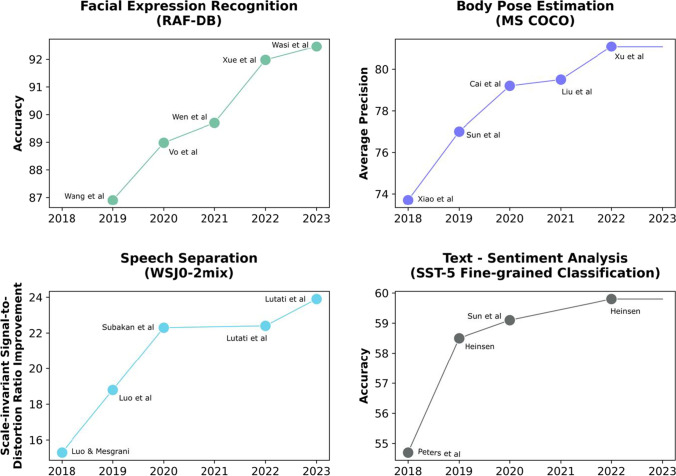

First, although the accuracy of pre-trained DNNs for annotating affective behavior is relatively high, many of them have yet to achieve human-level accuracy. For instance, the accuracy of estimating three-dimensional facial expressions and body movements is constrained by the two-dimensional inputs that these models are trained on (images and videos). However, given the fast pace of deep learning improvements (Fig. 3), there is reason to be optimistic about improvement in this regard.

Fig. 3.

Improvements of DNNs for behavioral quantification over time. Title indicates the behavior channel and the corresponding benchmark dataset that the models were evaluated on. X-axis indicates the year the models were published. Y-axis indicates the metric for measuring model performance. Data reflect benchmarks reported on paperswithcode.com (Papers with Code, n.d.)

Second, the benefits of using pre-trained DNNs for behavioral annotation vary with the context. For instance, many of these models annotate only a subset of the behavioral features that researchers might be interested in, such as only 20 out of 46 facial action units. The performance of these models may also be significantly reduced in certain situations. For instance, DNN audio source separation may fail when the quality of audio recordings is low. These models also struggle to generalize. For instance, a facial expression classification model that performs well in the conditions it was trained on (e.g., frontal, well-lit, adult faces) may perform poorly when applied to different conditions (Cohn et al., 2019). Careful accuracy and bias auditing should be part of any study relying on deep learning as an objective quantification tool.
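A basic audit can be as simple as comparing model output against human annotations separately for each condition or group of interest. The following sketch assumes hypothetical labels and a hypothetical grouping variable (viewing angle). The function name `accuracy_by_group` is ours.

```python
from collections import defaultdict


def accuracy_by_group(y_true, y_pred, groups):
    """Return the proportion of correct predictions within each group."""
    hits = defaultdict(int)
    totals = defaultdict(int)
    for truth, prediction, group in zip(y_true, y_pred, groups):
        totals[group] += 1
        hits[group] += int(truth == prediction)
    return {group: hits[group] / totals[group] for group in totals}


# Hypothetical human annotations and model outputs for eight video frames.
human = ["happy", "angry", "happy", "neutral", "happy", "angry", "neutral", "happy"]
model = ["happy", "angry", "happy", "neutral", "angry", "angry", "angry", "happy"]
view = ["frontal"] * 4 + ["profile"] * 4

audit = accuracy_by_group(human, model, view)
gap = max(audit.values()) - min(audit.values())
print(audit, f"largest accuracy gap: {gap:.2f}")
```

The same function can be applied with demographic groups in place of viewing angle. Beyond overall accuracy, it is also worth inspecting which labels are confused with which, since systematic confusions (here, misreading profile faces as angry) matter more than random ones.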

Third, DNNs are susceptible to social biases. These biases may result from the composition of the training dataset (e.g., having more samples for certain ethnicities than others), the bias of the humans who provided the training labels (e.g., stereotyped associations), and/or the architecture of the algorithm itself (Mehrabi et al., 2021; Shankar et al., 2017). For instance, some algorithms wrongly assign more negative emotions to Black men’s faces than White men’s faces, reflecting a stereotype widespread in the US (Kim et al., 2021; Rhue, 2018; Schmitz et al., 2022).

Since stimulus optimization uses outputs from pre-trained DNNs, the above limitations of the quantification models can carry through to influence stimulus selection. For instance, if a voice quantification model has been trained disproportionately on male rather than female speech, it may represent male voices as more distinct from each other than female voices. Applying maximum variation sampling based on these quantifications might thus lead to over-sampling of male speech.

Maximum variation sampling is also susceptible to class imbalance (Van Calster et al., 2019). For instance, if the initial stimulus set has significantly more positive valence stories than negative ones, then positive stories will be selected more frequently. However, both issues with stimulus selection can be mitigated with stratified maximum variation sampling (e.g., applying the procedure to male and female speech separately, or positive and negative stories separately) (Lin et al., 2021, 2022; Lin & Thornton, 2023).
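The effect of stratification can be shown with a toy example. The sketch below assumes a compact greedy farthest-point heuristic as a stand-in for the sampling procedure, and hypothetical one-dimensional voice features in which male voices are spread widely and female voices are compressed. The function names and data are ours for illustration.

```python
import math
from collections import defaultdict


def farthest_points(points, k):
    """Greedy max-min selection; returns positions within `points`."""
    chosen = [0]  # arbitrary start: the first item
    while len(chosen) < min(k, len(points)):
        remaining = [i for i in range(len(points)) if i not in chosen]
        chosen.append(max(
            remaining,
            key=lambda i: min(math.dist(points[i], points[j]) for j in chosen),
        ))
    return chosen


def stratified_max_variation(points, strata, k_per_stratum):
    """Run the selection separately within each stratum."""
    members_by_label = defaultdict(list)
    for index, label in enumerate(strata):
        members_by_label[label].append(index)
    selected = {}
    for label, members in members_by_label.items():
        local = farthest_points([points[i] for i in members], k_per_stratum)
        selected[label] = [members[i] for i in local]
    return selected


# Hypothetical features: male voices spread out, female voices compressed.
voices = [(0.0,), (4.0,), (8.0,), (10.0,), (10.2,), (10.4,)]
speaker_sex = ["male"] * 3 + ["female"] * 3

unstratified = farthest_points(voices, 4)
stratified = stratified_max_variation(voices, speaker_sex, k_per_stratum=2)
```

Here the unstratified selection of four voices picks three male voices and one female voice, while the stratified version returns two of each. Stratification equalizes representation across groups, but it does not repair the compressed representation within the under-trained group.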

While some applications of deep learning are approaching maturity (i.e., achieving high-level accuracy), such as behavior quantification (Fig. 3), others are just emerging, such as manipulating and synthesizing realistic stimuli in real time. At present, applying DNNs to manipulate and synthesize stimuli is most reliable for features that the models have been exposed to (Hosseini et al., 2017; Papernot et al., 2016). For instance, if an algorithm has never been trained on the motion of jumping, its synthesis of people jumping with joy is unlikely to look realistic.

When applying DNNs for affective modeling, in addition to the overall level of accuracy, researchers should carefully consider the types of errors that DNNs make, which may be systematically different from those of humans. Researchers should also be cautious about equating deep learning models with the human mind and brain. Correlations between human performance and DNNs do not indicate that the two systems share similar causal mechanisms (Bowers et al., 2022; Schyns et al., 2022).

Finally, researchers should also distinguish between the inherent versus current limitations of DNNs. For instance, many existing DNN models are trained on aggregate-level data and thus cannot represent individual differences in affective processes. However, with the proper inputs (e.g., individual-level perceptions with individual difference measures), DNNs could in principle model individual differences in affective processes.

Besides the limitations highlighted for each of the three applications above, we have summarized the most prominent limitations of deep learning in general, alongside potential mitigation strategies for each of them (Table 3).

Table 3.

Limitations of deep learning

| Limitation | Explanation | Mitigation Strategies |
|---|---|---|
| Social bias | Worse, or systematically different, performance for marginalized groups; Reflects bias in dataset composition, annotation, or algorithm construction | Perform bias audits; Retrain model with less problematic data or algorithm; Critically consider goals and (mis)uses of algorithms |
| Causal Inference | Model performance may reflect causal or confounding relationships in data, and model cannot distinguish them | Continue using normal causal identification strategies (e.g., experiments, instruments) |
| Interpretability | Large number of parameters and nonlinear relationships render models opaque/inexplainable in human terms | Visualize units’ “receptive fields”; “lesion” parts of model or augment data to reveal function |
| Costly to train | Large, high-quality training datasets can be expensive to collect/create; Training large models can require expensive hardware and incur large electricity costs | Use pretrained models; Use smaller “distilled” models that offer similar performance with fewer parameters; Share costs with other researchers |
| Performance | Most existing models still perform worse than human gold standard; The types of errors made by models may be very different from those made by humans | Wait for state-of-art to improve; tolerate scale vs. accuracy tradeoff; examine error patterns |
| Generalization | Model performance generally degrades under “distribution shift” – i.e., models can interpolate within the examples they have been trained on, but often fail to extrapolate to new regions of the feature/task space; Versions of the same model trained on the same data with different random seeds can generalize very differently | Audit performance on own data; Fine-tune pretrained models to improve generalization to specific use case; Avoid deploying models to cases far beyond their training set; Stress test different versions of the same model |
| Symbolic Reasoning | Models cannot generically solve non-differentiable or symbolic problems, and unsupervised clustering; Large models can memorize specific symbol patterns but cannot generalize rules | Use symbolic AI; Use hybrid deep learning-symbolic AI systems; Avoid non-differentiable problems; Audit for memorization |
| Feedback | Models are feed-forward only, meaning they cannot model feedback processes that occur in the brain; Limits ability to model temporal dynamics | Use non-feed-forward ANNs (e.g., spiking networks); Model longer timescales (e.g., time course of learning) |
| Technical skills | Relatively high level of programming proficiency; acquisition of many skills specific to deep learning | Create and use open learning resources (e.g., Jupyter Books); Amend graduate curriculum |

Conclusion

In this review, we have provided a brief introduction to how deep learning could be applied to tackle challenges in affective science. We focused on three main applications: behavior quantification, stimuli optimization, and affective modeling. These applications can advance naturalistic research on the verbal and nonverbal expressions of emotions, the recognition of emotions, and the subjective, physiological, and neural components of affective experiences. We encourage interested readers to explore other works that provide detailed primers on how to use these tools to their fullest (Pang et al., 2020; Thomas et al., 2022; Urban & Gates, 2021; Yang & Molano-Mazón, 2021). With deep learning tools in hand, they will stand poised to substantially expand our understanding of emotion in more naturalistic contexts.

Additional Information

Funding

Not applicable

Conflict of Interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Availability of data and material

Not applicable

Code availability

Not applicable

Authors' contributions

Not applicable

Ethics approval

Not applicable

Consent to participate

Not applicable

Consent for publication

Not applicable

Footnotes

Landry S. Bulls, Lindsey J. Tepfer and Amisha D. Vyas contributed equally to this work.

References

- Arias P, Rachman L, Liuni M, Aucouturier J-J. Beyond correlation: Acoustic transformation methods for the experimental study of emotional voice and speech. Emotion Review. 2021;13(1):12–24. doi: 10.1177/1754073920934544. [DOI] [Google Scholar]

- Arulkumaran K, Deisenroth MP, Brundage M, Bharath AA. Deep reinforcement learning: A brief survey. IEEE Signal Processing Magazine. 2017;34(6):26–38. doi: 10.1109/MSP.2017.2743240. [DOI] [Google Scholar]

- Atkinson AP, Dittrich WH, Gemmell AJ, Young AW. Emotion perception from dynamic and static body expressions in point-light and full-light displays. Perception. 2004;33(6):717–746. doi: 10.1068/p5096. [DOI] [PubMed] [Google Scholar]

- Aviezer H, Hassin RR, Ryan J, Grady C, Susskind J, Anderson A, Moscovitch M, Bentin S. Angry, disgusted, or afraid?: Studies on the malleability of emotion perception. Psychological Science. 2008;19(7):724–732. doi: 10.1111/j.1467-9280.2008.02148.x. [DOI] [PubMed] [Google Scholar]

- Aviezer H, Trope Y, Todorov A. Body cues, not facial expressions, discriminate between intense positive and negative emotions. Science. 2012;338(6111):1225–1229. doi: 10.1126/science.1224313. [DOI] [PubMed] [Google Scholar]

- Bachorowski J-A, Owren MJ. Vocal expression of emotion: Acoustic properties of speech are associated with emotional intensity and context. Psychological Science. 1995;6(4):219–224. doi: 10.1111/j.1467-9280.1995.tb00596.x. [DOI] [Google Scholar]

- Bain, M., Huh, J., Han, T., & Zisserman, A. (2023). WhisperX: Time-accurate speech transcription of long-form audio (arXiv:2303.00747). arXiv. 10.48550/arXiv.2303.00747

- Balakrishnan, G., Zhao, A., Dalca, A. V., Durand, F., & Guttag, J. (2018). Synthesizing images of humans in unseen poses (arXiv:1804.07739; version 1). arXiv. http://arxiv.org/abs/1804.07739

- Baltrusaitis, T., Zadeh, A., Lim, Y. C., & Morency, L.-P. (2018). OpenFace 2.0: Facial behavior analysis toolkit. 2018 13th IEEE international conference on Automatic Face & Gesture Recognition (FG 2018), 59–66. 10.1109/FG.2018.00019

- Barrett HC. Towards a cognitive science of the human: Cross-cultural approaches and their urgency. Trends in Cognitive Sciences. 2020;24(8):620–638. doi: 10.1016/j.tics.2020.05.007. [DOI] [PubMed] [Google Scholar]

- Benitez-Quiroz, C. F., Srinivasan, R., & Martinez, A. M. (2016). EmotioNet: An accurate, real-time algorithm for the automatic annotation of a million facial expressions in the wild. 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 5562–5570. 10.1109/CVPR.2016.600

- Benitez-Quiroz CF, Srinivasan R, Martinez AM. Facial color is an efficient mechanism to visually transmit emotion. Proceedings of the National Academy of Sciences. 2018;115(14):3581–3586. doi: 10.1073/pnas.1716084115.

- Beukeboom CJ. When words feel right: How affective expressions of listeners change a speaker’s language use. European Journal of Social Psychology. 2009;39(5):747–756. doi: 10.1002/ejsp.572.

- Bhat G, Danelljan M, Van Gool L, Timofte R. Know your surroundings: Exploiting scene information for object tracking. In: Vedaldi A, Bischof H, Brox T, Frahm J-M, editors. Computer vision – ECCV 2020 (Vol. 12368, pp. 205–221). Springer International Publishing; 2020.

- Blanz, V., & Vetter, T. (1999). A morphable model for the synthesis of 3D faces. Proceedings of the 26th Annual Conference on Computer Graphics and Interactive Techniques - SIGGRAPH ‘99, 187–194. 10.1145/311535.311556

- Bowers, J. S., Malhotra, G., Dujmović, M., Montero, M. L., Tsvetkov, C., Biscione, V., Puebla, G., Adolfi, F., Hummel, J. E., Heaton, R. F., Evans, B. D., Mitchell, J., & Blything, R. (2022). Deep problems with neural network models of human vision. Behavioral and Brain Sciences, 1–74. 10.1017/S0140525X22002813

- Brunswik E. Representative design and probabilistic theory in a functional psychology. Psychological Review. 1955;62:193–217. doi: 10.1037/h0047470.

- Cai Y, Wang Z, Luo Z, Yin B, Du A, Wang H, Zhang X, Zhou X, Zhou E, Sun J. Learning delicate local representations for multi-person pose estimation. In: Vedaldi A, Bischof H, Brox T, Frahm J-M, editors. Computer vision – ECCV 2020 (Vol. 12348, pp. 455–472). Springer International Publishing; 2020.

- Cer, D., Yang, Y., Kong, S., Hua, N., Limtiaco, N., John, R. S., Constant, N., Guajardo-Cespedes, M., Yuan, S., Tar, C., Sung, Y.-H., Strope, B., & Kurzweil, R. (2018). Universal sentence encoder (arXiv:1803.11175). arXiv. 10.48550/arXiv.1803.11175

- Cheong, J. H., Jolly, E., Xie, T., Byrne, S., Kenney, M., & Chang, L. J. (2021). Py-feat: Python facial expression analysis toolbox (arXiv:2104.03509). arXiv. http://arxiv.org/abs/2104.03509

- Cheong, J. H., Jolly, E., Xie, T., Byrne, S., Kenney, M., & Chang, L. J. (2023). Py-feat: Python facial expression analysis toolbox (arXiv:2104.03509). arXiv. 10.48550/arXiv.2104.03509

- Chernykh, V., & Prikhodko, P. (2018). Emotion recognition from speech with recurrent neural networks (arXiv:1701.08071; version 2). arXiv. http://arxiv.org/abs/1701.08071

- Cichy RM, Kaiser D. Deep neural networks as scientific models. Trends in Cognitive Sciences. 2019;23(4):305–317. doi: 10.1016/j.tics.2019.01.009.

- Cohn JF, Ambadar Z, Ekman P. Handbook of emotion elicitation and assessment. Oxford University Press; 2007. Observer-based measurement of facial expression with the facial action coding system; pp. 203–221.

- Cohn JF, Ertugrul IO, Chu WS, Girard JM, Hammal Z. Multimodal behavior analysis in the wild: Advances and challenges. Academic Press; 2019. Affective facial computing: Generalizability across domains; pp. 407–441.

- Coles NA, Larsen JT, Lench HC. A meta-analysis of the facial feedback literature: Effects of facial feedback on emotional experience are small and variable. Psychological Bulletin. 2019;145(6):610–651. doi: 10.1037/bul0000194.

- Conneau, A., Baevski, A., Collobert, R., Mohamed, A., & Auli, M. (2021). Unsupervised cross-lingual representation learning for speech recognition. Interspeech 2021, 2426–2430. 10.21437/Interspeech.2021-329

- Coulson M. Attributing emotion to static body postures: Recognition accuracy, confusions, and viewpoint dependence. Journal of Nonverbal Behavior. 2004;28:117–139. doi: 10.1023/B:JONB.0000023655.25550.be.

- Cowen AS, Keltner D. Semantic space theory: A computational approach to emotion. Trends in Cognitive Sciences. 2021;25(2):124–136. doi: 10.1016/j.tics.2020.11.004.

- Cowen AS, Keltner D, Schroff F, Jou B, Adam H, Prasad G. Sixteen facial expressions occur in similar contexts worldwide. Nature. 2021;589(7841):251–257. doi: 10.1038/s41586-020-3037-7.

- Crossley SA, Kyle K, McNamara DS. Sentiment analysis and social cognition engine (SEANCE): An automatic tool for sentiment, social cognition, and social-order analysis. Behavior Research Methods. 2017;49(3):803–821. doi: 10.3758/s13428-016-0743-z.

- Daube C, Xu T, Zhan J, Webb A, Ince RAA, Garrod OGB, Schyns PG. Grounding deep neural network predictions of human categorization behavior in understandable functional features: The case of face identity. Patterns. 2021;2(10):100348. doi: 10.1016/j.patter.2021.100348.

- Dobs K, Martinez J, Kell AJE, Kanwisher N. Brain-like functional specialization emerges spontaneously in deep neural networks. Science Advances. 2022;8(11):eabl8913. doi: 10.1126/sciadv.abl8913.

- Ekman, P., & Friesen, W. V. (1978). Facial action coding system. Environmental Psychology & Nonverbal Behavior.

- Ekman P. Facial expression and emotion. American Psychologist. 1993;48(4):384–392. doi: 10.1037//0003-066x.48.4.384.

- Ferrari C, Ciricugno A, Urgesi C, Cattaneo Z. Cerebellar contribution to emotional body language perception: A TMS study. Social Cognitive and Affective Neuroscience. 2022;17(1):81–90. doi: 10.1093/scan/nsz074.

- Gállego, G. I., Tsiamas, I., Escolano, C., Fonollosa, J. A. R., & Costa-jussà, M. R. (2021). End-to-end speech translation with pre-trained models and adapters: UPC at IWSLT 2021. Proceedings of the 18th International Conference on Spoken Language Translation (IWSLT 2021), 110–119. 10.18653/v1/2021.iwslt-1.11

- Gao, T., Yao, X., & Chen, D. (2021). SimCSE: Simple contrastive learning of sentence Embeddings. Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing, 6894–6910. 10.18653/v1/2021.emnlp-main.552

- Ghosal, D., Majumder, N., Poria, S., Chhaya, N., & Gelbukh, A. (2019). DialogueGCN: A graph convolutional neural network for emotion recognition in conversation (arXiv:1908.11540). arXiv. http://arxiv.org/abs/1908.11540

- Girard, J. M., Cohn, J. F., Mahoor, M. H., Mavadati, S., & Rosenwald, D. P. (2013). Social risk and depression: Evidence from manual and automatic facial expression analysis. Proceedings of the ... International Conference on Automatic Face and Gesture Recognition, 1–8. 10.1109/FG.2013.6553748

- Greenaway KH, Kalokerinos EK, Williams LA. Context is everything (in emotion research). Social and Personality Psychology Compass. 2018;12(6):e12393. doi: 10.1111/spc3.12393.

- Guo, Z., Leng, Y., Wu, Y., Zhao, S., & Tan, X. (2023). PromptTTS: Controllable text-to-speech with text descriptions. ICASSP 2023–2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 1–5. 10.1109/ICASSP49357.2023.10096285

- Hart, T. B., Struiksma, M. E., van Boxtel, A., & van Berkum, J. J. A. (2018). Emotion in stories: Facial EMG evidence for both mental simulation and moral evaluation. Frontiers in Psychology, 9, 613. 10.3389/fpsyg.2018.00613

- Hawk ST, van Kleef GA, Fischer AH, van der Schalk J. “Worth a thousand words”: Absolute and relative decoding of nonlinguistic affect vocalizations. Emotion. 2009;9:293–305. doi: 10.1037/a0015178.

- He, P., Liu, X., Gao, J., & Chen, W. (2021). DeBERTa: Decoding-enhanced BERT with disentangled attention (arXiv:2006.03654). arXiv. 10.48550/arXiv.2006.03654

- Heinsen, F. A. (2020). An algorithm for routing capsules in all domains (arXiv:1911.00792). arXiv. 10.48550/arXiv.1911.00792

- Heinsen, F. A. (2022). An algorithm for routing vectors in sequences (arXiv:2211.11754). arXiv. 10.48550/arXiv.2211.11754

- Henrich J, Heine SJ, Norenzayan A. The weirdest people in the world? Behavioral and Brain Sciences. 2010;33(2–3):61–83. doi: 10.1017/S0140525X0999152X.

- Hosseini, H., Xiao, B., Jaiswal, M., & Poovendran, R. (2017). On the limitation of convolutional neural networks in recognizing negative images. 2017 16th IEEE international conference on machine learning and applications (ICMLA), 352–358. 10.1109/ICMLA.2017.0-136

- Jack RE, Schyns PG. Toward a social psychophysics of face communication. Annual Review of Psychology. 2017;68(1):269–297. doi: 10.1146/annurev-psych-010416-044242.

- Khaligh-Razavi S-M, Kriegeskorte N. Deep supervised, but not unsupervised, models may explain IT cortical representation. PLoS Computational Biology. 2014;10(11):e1003915. doi: 10.1371/journal.pcbi.1003915.

- Kilbride JE, Yarczower M. Ethnic bias in the recognition of facial expressions. Journal of Nonverbal Behavior. 1983;8(1):27–41. doi: 10.1007/BF00986328.

- Kim, E., Bryant, D., Srikanth, D., & Howard, A. (2021). Age bias in emotion detection: An analysis of facial emotion recognition performance on young, middle-aged, and older adults. Proceedings of the 2021 AAAI/ACM Conference on AI, Ethics, and Society, 638–644. 10.1145/3461702.3462609

- Kocabas, M., Athanasiou, N., & Black, M. J. (2020). VIBE: Video inference for human body pose and shape estimation (arXiv:1912.05656). arXiv. 10.48550/arXiv.1912.05656

- Kocabas, M., Huang, C.-H. P., Hilliges, O., & Black, M. J. (2021). PARE: Part attention Regressor for 3D human body estimation. IEEE/CVF International Conference on Computer Vision (ICCV), 11107–11117. 10.1109/ICCV48922.2021.01094

- Kohoutová L, Heo J, Cha S, Lee S, Moon T, Wager TD, Woo C-W. Toward a unified framework for interpreting machine-learning models in neuroimaging. Nature Protocols. 2020;15(4):1399–1435. doi: 10.1038/s41596-019-0289-5.

- Konkle T, Alvarez GA. A self-supervised domain-general learning framework for human ventral stream representation. Nature Communications. 2022;13(1):491. doi: 10.1038/s41467-022-28091-4.

- Kragel, P. A., Reddan, M. C., LaBar, K. S., & Wager, T. D. (2019). Emotion schemas are embedded in the human visual system. Science Advances, 5(7), eaaw4358.

- Kret ME, Prochazkova E, Sterck EHM, Clay Z. Emotional expressions in human and non-human great apes. Neuroscience & Biobehavioral Reviews. 2020;115:378–395. doi: 10.1016/j.neubiorev.2020.01.027.

- Krishna, R., Hata, K., Ren, F., Fei-Fei, L., & Niebles, J. C. (2017). Dense-captioning events in videos. IEEE International Conference on Computer Vision (ICCV), 706–715. 10.1109/ICCV.2017.83

- Lan Z, Chen M, Goodman S, Gimpel K, Sharma P, Soricut R. ALBERT: A lite BERT for self-supervised learning of language representations. 2020.

- Li Q, Liu YQ, Peng YQ, Liu C, Shi J, Yan F, Zhang Q. Real-time facial emotion recognition using lightweight convolution neural network. Journal of Physics: Conference Series. 2021;1827(1):012130. doi: 10.1088/1742-6596/1827/1/012130.

- Lin C, Keles U, Adolphs R. Four dimensions characterize attributions from faces using a representative set of English trait words. Nature Communications. 2021;12(1):5168. doi: 10.1038/s41467-021-25500-y.

- Lin, C., Keles, U., Thornton, M. A., & Adolphs, R. (2022). How trait impressions of faces shape subsequent mental state inferences [registered report stage 1 protocol]. Nature Human Behaviour. 10.6084/m9.figshare.19664316.v1

- Lin, C., & Thornton, M. A. (2023). Evidence for bidirectional causation between trait and mental state inferences. Journal of Experimental Social Psychology, 108, 104495. 10.31234/osf.io/ysn3w

- Liu H, Liu F, Fan X, Huang D. Polarized self-attention: Towards high-quality pixel-wise mapping. Neurocomputing. 2022;506:158–167. doi: 10.1016/j.neucom.2022.07.054.

- Liu M-Y, Huang X, Yu J, Wang T-C, Mallya A. Generative adversarial networks for image and video synthesis: Algorithms and applications. Proceedings of the IEEE. 2021;109(5):839–862. doi: 10.1109/JPROC.2021.3049196.

- Luo, C., Song, S., Xie, W., Shen, L., & Gunes, H. (2022). Learning multi-dimensional edge feature-based AU relation graph for facial action unit recognition. Proceedings of the Thirty-first International Joint Conference on Artificial Intelligence, 1239–1246. 10.24963/ijcai.2022/173

- Luo, Y., Chen, Z., & Yoshioka, T. (2020). Dual-path RNN: Efficient long sequence modeling for time-domain Single-Channel speech separation. ICASSP 2020–2020 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 46–50. 10.1109/ICASSP40776.2020.9054266

- Luo Y, Mesgarani N. Conv-TasNet: Surpassing Ideal Time–Frequency Magnitude Masking for Speech Separation. IEEE/ACM Transactions on Audio, Speech, and Language Processing. 2019;27(8):1256–1266. doi: 10.1109/TASLP.2019.2915167.

- Lutati, S., Nachmani, E., & Wolf, L. (2022a). SepIt: Approaching a Single Channel speech separation bound (arXiv:2205.11801; version 3). arXiv. http://arxiv.org/abs/2205.11801

- Lutati, S., Nachmani, E., & Wolf, L. (2022b). SepIt: Approaching a Single Channel speech separation bound. Interspeech 2022, 5323–5327. 10.21437/Interspeech.2022-149

- Lutati, S., Nachmani, E., & Wolf, L. (2023). Separate and diffuse: Using a Pretrained diffusion model for improving source separation (arXiv:2301.10752). arXiv. 10.48550/arXiv.2301.10752

- Martinez AM. Computational models of face perception. Current Directions in Psychological Science. 2017;26(3):263–269. doi: 10.1177/0963721417698535.

- Masood M, Nawaz M, Malik KM, Javed A, Irtaza A, Malik H. Deepfakes generation and detection: State-of-the-art, open challenges, countermeasures, and way forward. Applied Intelligence. 2023;53(4):3974–4026. doi: 10.1007/s10489-022-03766-z.

- McHugh JE, McDonnell R, O’Sullivan C, Newell FN. Perceiving emotion in crowds: The role of dynamic body postures on the perception of emotion in crowded scenes. Experimental Brain Research. 2010;204(3):361–372. doi: 10.1007/s00221-009-2037-5.

- Mehrabi N, Morstatter F, Saxena N, Lerman K, Galstyan A. A survey on bias and fairness in machine learning. ACM Computing Surveys. 2021;54(6):1–35. doi: 10.1145/3457607.

- Mehta Y, Majumder N, Gelbukh A, Cambria E. Recent trends in deep learning based personality detection. Artificial Intelligence Review. 2020;53(4):2313–2339. doi: 10.1007/s10462-019-09770-z.

- Namba S, Sato W, Nakamura K, Watanabe K. Computational process of sharing emotion: An authentic information perspective. Frontiers in Psychology. 2022;13:849499. doi: 10.3389/fpsyg.2022.849499.

- Neal DT, Chartrand TL. Embodied emotion perception: Amplifying and dampening facial feedback modulates emotion perception accuracy. Social Psychological and Personality Science. 2011;2(6):673–678. doi: 10.1177/1948550611406138.

- Ngo N, Isaacowitz DM. Use of context in emotion perception: The role of top-down control, cue type, and perceiver’s age. Emotion. 2015;15(3):292–302. doi: 10.1037/emo0000062.

- Niedenthal PM, Winkielman P, Mondillon L, Vermeulen N. Embodiment of emotion concepts. Journal of Personality and Social Psychology. 2009;96(6):1120–1136. doi: 10.1037/a0015574.

- Nummenmaa L, Glerean E, Hari R, Hietanen JK. Bodily maps of emotions. Proceedings of the National Academy of Sciences. 2014;111(2):646–651. doi: 10.1073/pnas.1321664111.

- Pang B, Nijkamp E, Wu YN. Deep learning with TensorFlow: A review. Journal of Educational and Behavioral Statistics. 2020;45(2):227–248. doi: 10.3102/1076998619872761.

- Papernot, N., McDaniel, P., Jha, S., Fredrikson, M., Celik, Z. B., & Swami, A. (2016). The limitations of deep learning in adversarial settings. 2016 IEEE European Symposium on Security and Privacy (EuroS&P), 372–387. 10.1109/EuroSP.2016.36

- Papers with Code. (n.d.). Retrieved June 26, 2023, from https://paperswithcode.com/

- Park, D. S., Chan, W., Zhang, Y., Chiu, C.-C., Zoph, B., Cubuk, E. D., & Le, Q. V. (2019). SpecAugment: A simple data augmentation method for automatic speech recognition. Interspeech 2019, 2613–2617. 10.21437/Interspeech.2019-2680

- Patton MQ. Qualitative evaluation and research methods, 2nd ed (p. 532). Sage Publications, Inc; 1990.

- Pennebaker J, Francis M. Linguistic inquiry and word count. Lawrence Erlbaum Associates, Incorporated; 1999.

- Perconti P, Plebe A. Deep learning and cognitive science. Cognition. 2020;203:104365. doi: 10.1016/j.cognition.2020.104365.

- Peters, M. E., Neumann, M., Iyyer, M., Gardner, M., Clark, C., Lee, K., & Zettlemoyer, L. (2018). Deep contextualized word representations. Proceedings of the 2018 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, volume 1 (long papers), 2227–2237. 10.18653/v1/N18-1202

- Ponsot E, Burred JJ, Belin P, Aucouturier J-J. Cracking the social code of speech prosody using reverse correlation. Proceedings of the National Academy of Sciences. 2018;115(15):3972–3977. doi: 10.1073/pnas.1716090115.

- Poria, S., Majumder, N., Mihalcea, R., & Hovy, E. (2019). Emotion recognition in conversation: Research challenges, datasets, and recent advances. IEEE Access, 7, 100943–100953. 10.1109/ACCESS.2019.2929050

- Poyo Solanas M, Vaessen MJ, de Gelder B. The role of computational and subjective features in emotional body expressions. Scientific Reports. 2020;10(1):6202. doi: 10.1038/s41598-020-63125-1.

- Pumarola A, Agudo A, Martinez AM, Sanfeliu A, Moreno-Noguer F. GANimation: Anatomically-aware facial animation from a single image. In: Ferrari V, Hebert M, Sminchisescu C, Weiss Y, editors. Computer vision – ECCV 2018. Springer International Publishing; 2018. pp. 835–851.

- Pumarola, A., Agudo, A., Martinez, A. M., Sanfeliu, A., & Moreno-Noguer, F. (2018b). GANimation: Anatomically-aware facial animation from a single image. European Conference on Computer Vision, 818–833. https://openaccess.thecvf.com/content_ECCV_2018/html/Albert_Pumarola_Anatomically_Coherent_Facial_ECCV_2018_paper.html

- Rad MS, Martingano AJ, Ginges J. Toward a psychology of Homo sapiens: Making psychological science more representative of the human population. Proceedings of the National Academy of Sciences. 2018;115(45):11401–11405. doi: 10.1073/pnas.1721165115.

- Reed, C. L., Moody, E. J., Mgrublian, K., Assaad, S., Schey, A., & McIntosh, D. N. (2020). Body matters in emotion: Restricted body movement and posture affect expression and recognition of status-related emotions. Frontiers in Psychology, 11. https://www.frontiersin.org/articles/10.3389/fpsyg.2020.01961

- Reimers, N., & Gurevych, I. (2019). Sentence-BERT: Sentence Embeddings using Siamese BERT-networks. Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP), 3982–3992. 10.18653/v1/D19-1410

- Rempe, D., Birdal, T., Hertzmann, A., Yang, J., Sridhar, S., & Guibas, L. J. (2021). HuMoR: 3D human motion model for robust pose estimation (arXiv:2105.04668). arXiv. 10.48550/arXiv.2105.04668

- Ren, X., & Wang, X. (2022). Look outside the room: Synthesizing a consistent long-term 3D scene video from a single image (arXiv:2203.09457; version 1). arXiv. http://arxiv.org/abs/2203.09457

- Rhue, L. (2018). Racial influence on automated perceptions of emotions (SSRN scholarly paper 3281765). 10.2139/ssrn.3281765

- Richards BA, Lillicrap TP, Beaudoin P, Bengio Y, Bogacz R, Christensen A, Clopath C, Costa RP, de Berker A, Ganguli S, Gillon CJ, Hafner D, Kepecs A, Kriegeskorte N, Latham P, Lindsay GW, Miller KD, Naud R, Pack CC, et al. A deep learning framework for neuroscience. Nature Neuroscience. 2019;22(11):1761–1770. doi: 10.1038/s41593-019-0520-2.

- Roebel A, Bous F. Neural Vocoding for singing and speaking voices with the multi-band excited WaveNet. Information. 2022;13(3):103. doi: 10.3390/info13030103.

- Roether, C., Omlor, L., Christensen, A., & Giese, M. (2009). Critical features for the perception of emotion from gait. Journal of Vision, 9(15) https://jov.arvojournals.org/article.aspx?articleid=2204009

- Rumelhart DE, Hinton GE, Williams RJ. Learning representations by back-propagating errors. Nature. 1986;323(6088):533–536. doi: 10.1038/323533a0.

- Savchenko, A. V. (2022). Frame-level prediction of facial expressions, valence, arousal and action units for Mobile devices (arXiv:2203.13436). arXiv. 10.48550/arXiv.2203.13436

- Saxe A, Nelli S, Summerfield C. If deep learning is the answer, what is the question? Nature Reviews Neuroscience. 2021;22(1):55–67. doi: 10.1038/s41583-020-00395-8.

- Scherer KR. Expression of emotion in voice and music. Journal of Voice. 1995;9(3):235–248. doi: 10.1016/S0892-1997(05)80231-0.

- Scherer KR. Vocal communication of emotion: A review of research paradigms. Speech Communication. 2003;40(1):227–256. doi: 10.1016/S0167-6393(02)00084-5.

- Schmitz, M., Ahmed, R., & Cao, J. (2022). Bias and fairness on multimodal emotion detection algorithms.

- Schyns PG, Snoek L, Daube C. Degrees of algorithmic equivalence between the brain and its DNN models. Trends in Cognitive Sciences. 2022;26(12):1090–1102. doi: 10.1016/j.tics.2022.09.003.

- Schyns PG, Snoek L, Daube C. Stimulus models test hypotheses in brains and DNNs. Trends in Cognitive Sciences. 2023;27(3):216–217. doi: 10.1016/j.tics.2022.12.003.

- Shankar, S., Halpern, Y., Breck, E., Atwood, J., Wilson, J., & Sculley, D. (2017). No Classification without Representation: Assessing Geodiversity Issues in Open Data Sets for the Developing World (arXiv:1711.08536). arXiv. 10.48550/arXiv.1711.08536

- Su C, Xu Z, Pathak J, Wang F. Deep learning in mental health outcome research: A scoping review. Translational Psychiatry. 2020;10(1):116. doi: 10.1038/s41398-020-0780-3.

- Subakan, C., Ravanelli, M., Cornell, S., Bronzi, M., & Zhong, J. (2021). Attention is all you need in speech separation. ICASSP 2021–2021 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), 21–25. 10.1109/ICASSP39728.2021.9413901

- Sun, K., Xiao, B., Liu, D., & Wang, J. (2019). Deep high-resolution representation learning for human pose estimation. 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 5686–5696. 10.1109/CVPR.2019.00584

- Sun, Z., Fan, C., Han, Q., Sun, X., Meng, Y., Wu, F., & Li, J. (2020). Self-explaining structures improve NLP models (arXiv:2012.01786). arXiv. 10.48550/arXiv.2012.01786

- Thomas AW, Ré C, Poldrack RA. Interpreting mental state decoding with deep learning models. Trends in Cognitive Sciences. 2022;26(11):972–986. doi: 10.1016/j.tics.2022.07.003.

- Thoresen JC, Vuong QC, Atkinson AP. First impressions: Gait cues drive reliable trait judgements. Cognition. 2012;124(3):261–271. doi: 10.1016/j.cognition.2012.05.018.

- Thornton, M. A., Rmus, M., Vyas, A. D., & Tamir, D. I. (2023). Transition dynamics shape mental state concepts. Journal of Experimental Psychology: General. 10.1037/xge0001405

- Thornton, M. A., Wolf, S., Reilly, B. J., Slingerland, E. G., & Tamir, D. I. (2022). The 3d mind model characterizes how people understand mental states across modern and historical cultures. Affective Science, 3(1). 10.1007/s42761-021-00089-z

- Urban, C., & Gates, K. (2021). Deep learning: A primer for psychologists. Psychological Methods, 26(6). 10.1037/met0000374

- Van Calster B, McLernon DJ, van Smeden M, Wynants L, Steyerberg EW, Bossuyt P, Collins GS, Macaskill P, Moons KGM, Vickers AJ, on behalf of Topic Group ‘Evaluating diagnostic tests and prediction models’ of the STRATOS initiative. Calibration: The Achilles heel of predictive analytics. BMC Medicine. 2019;17(1):230. doi: 10.1186/s12916-019-1466-7.

- Vo T-H, Lee G-S, Yang H-J, Kim S-H. Pyramid with super resolution for in-the-wild facial expression recognition. IEEE Access. 2020;8:131988–132001. doi: 10.1109/ACCESS.2020.3010018.

- Wallbott, H. G. (1998). Bodily expression of emotion. European Journal of Social Psychology, 28(6), 879–896.

- Wang, C., Tang, Y., Ma, X., Wu, A., Okhonko, D., & Pino, J. (2020). Fairseq S2T: Fast speech-to-text modeling with Fairseq. Proceedings of the 1st Conference of the Asia-Pacific Chapter of the Association for Computational Linguistics and the 10th International Joint Conference on Natural Language Processing: System Demonstrations, 33–39. https://aclanthology.org/2020.aacl-demo.6

- Wang, C., Tang, Y., Ma, X., Wu, A., Popuri, S., Okhonko, D., & Pino, J. (2022). Fairseq S2T: Fast speech-to-text modeling with fairseq (arXiv:2010.05171; version 2). arXiv. http://arxiv.org/abs/2010.05171

- Wang K, Peng X, Yang J, Meng D, Qiao Y. Region attention networks for pose and occlusion robust facial expression recognition. IEEE Transactions on Image Processing. 2020;29:4057–4069. doi: 10.1109/TIP.2019.2956143.

- Wang, T.-C., Liu, M.-Y., Zhu, J.-Y., Liu, G., Tao, A., Kautz, J., & Catanzaro, B. (2018). Video-to-video synthesis (arXiv:1808.06601; version 2). arXiv. http://arxiv.org/abs/1808.06601

- Wasi, A. T., Šerbetar, K., Islam, R., Rafi, T. H., & Chae, D.-K. (2023). ARBEx: Attentive feature extraction with reliability balancing for robust facial expression learning (arXiv:2305.01486). arXiv. 10.48550/arXiv.2305.01486

- Wen, Z., Lin, W., Wang, T., & Xu, G. (2023). Distract your attention: Multi-head cross attention network for facial expression recognition. Biomimetics, 8(2), 199. 10.3390/biomimetics8020199

- Whitesell NR, Harter S. The interpersonal context of emotion: Anger with close friends and classmates. Child Development. 1996;67(4):1345–1359. doi: 10.1111/j.1467-8624.1996.tb01800.x.

- Wood A, Rychlowska M, Korb S, Niedenthal P. Fashioning the face: Sensorimotor simulation contributes to facial expression recognition. Trends in Cognitive Sciences. 2016;20(3):227–240. doi: 10.1016/j.tics.2015.12.010.

- Xiao B, Wu H, Wei Y. Simple baselines for human pose estimation and tracking. In: Ferrari V, Hebert M, Sminchisescu C, Weiss Y, editors. Computer vision – ECCV 2018 (Vol. 11210, pp. 472–487). Springer International Publishing; 2018.

- Xu, Y., Zhang, J., Zhang, Q., & Tao, D. (2022). ViTPose: Simple Vision Transformer Baselines for Human Pose Estimation (arXiv:2204.12484). arXiv. 10.48550/arXiv.2204.12484

- Xu, Z., Hong, Z., Ding, C., Zhu, Z., Han, J., Liu, J., & Ding, E. (2022). MobileFaceSwap: A lightweight framework for video face swapping (arXiv:2201.03808; version 1). arXiv. http://arxiv.org/abs/2201.03808

- Xue, F., Wang, Q., Tan, Z., Ma, Z., & Guo, G. (2022). Vision transformer with attentive pooling for robust facial expression recognition. IEEE Transactions on Affective Computing, 1–13. 10.1109/TAFFC.2022.3226473

- Yang GR, Molano-Mazón M. Towards the next generation of recurrent network models for cognitive neuroscience. Current Opinion in Neurobiology. 2021;70:182–192. doi: 10.1016/j.conb.2021.10.015.

- Yarkoni T. The generalizability crisis. Behavioral and Brain Sciences. 2022;45:e1. doi: 10.1017/S0140525X20001685.

- Yu, C., Lu, H., Hu, N., Yu, M., Weng, C., Xu, K., Liu, P., Tuo, D., Kang, S., Lei, G., Su, D., & Yu, D. (2019). DurIAN: Duration informed attention network for multimodal synthesis (arXiv:1909.01700; version 2). arXiv. http://arxiv.org/abs/1909.01700

- Zane E, Yang Z, Pozzan L, Guha T, Narayanan S, Grossman RB. Motion-capture patterns of voluntarily mimicked dynamic facial expressions in children and adolescents with and without ASD. Journal of Autism and Developmental Disorders. 2019;49(3):1062–1079. doi: 10.1007/s10803-018-3811-7.