Abstract

Deep learning-based image reconstruction approaches have demonstrated impressive empirical performance in many imaging modalities. These approaches usually require a large amount of high-quality paired training data, which is often not available in medical imaging. To circumvent this issue we develop a novel unsupervised knowledge-transfer paradigm for learned reconstruction within a Bayesian framework. The proposed approach learns a reconstruction network in two phases. The first phase trains a reconstruction network with a set of ordered pairs comprising of ground truth images of ellipses and the corresponding simulated measurement data. The second phase fine-tunes the pretrained network to more realistic measurement data without supervision. By construction, the framework is capable of delivering predictive uncertainty information over the reconstructed image. We present extensive experimental results on low-dose and sparse-view computed tomography showing that the approach is competitive with several state-of-the-art supervised and unsupervised reconstruction techniques. Moreover, for test data distributed differently from the training data, the proposed framework can significantly improve reconstruction quality not only visually, but also quantitatively in terms of PSNR and SSIM, when compared with learned methods trained on the synthetic dataset only.

Keywords: unsupervised learning, pretraining, image reconstruction, Bayesian deep learning, computed tomography

1. Introduction

In this work we develop a novel unsupervised knowledge-transfer (UKT) framework for image reconstruction. The reconstruction of an image is often formulated through a (linear) inverse problem

where y ∈ Y is a corrupted measurement, δy is the additive noise, x ∈ X is the image to be recovered, and the data acquisition is described by a linear forward map A : X → Y, where X and Y are suitable finite-dimensional vector spaces.

In the past few years, deep learning (DL)-based image reconstruction techniques have demonstrated remarkable empirical results, often substantially outperforming more conventional methods in terms of both image quality and computational efficiency [7, 44]. In DL-based approaches, image reconstruction can be phrased as the problem of finding a deep neural network (DNN) F θ : Y → X such that F θ (y) ≈ x, where the neural network F θ is parametrised by a parameter vector θ. In supervised learning the optimal parameter vector θ* is learned from a set of ordered pairs of ground truth images and the corresponding (noisy) measurement data by minimising a suitable loss

where ℓ(F θ (y n ), x n ) measures the discrepancy between the network prediction F θ (y n ) and the corresponding ground truth image x n , and is often taken to be the mean squared error. Supervised learning has been established as a powerful tool to improve reconstruction quality and speed, rapidly becoming a workhorse in several imaging applications [56].

In order to deliver competitive performance, supervised learning may require many ordered pairs (x n , y n ), n = 1, …, N, which are unfortunately often not available in medical imaging applications since clean ground truth images are either too costly or impossible to collect. Meanwhile, reconstruction methods learned in a scarce-data regime often fail to generalise on instances which belong to a different data distribution [12, 48]. Moreover, even small deviations from the training data distribution can potentially lead to severe reconstruction artefacts (i.e., supervised models can exhibit poor performance even for a small distributional shift). This behaviour is further exacerbated by the presence of structural changes such as rare pathologies; thereby significantly degrading the performance of supervisedly learned reconstruction methods [5]. To make matters worse, such forms of deviation from the training data distribution are ubiquitous in medical imaging, owing to factors such as the change in acquisition protocols. For example, in magnetic resonance imaging (MRI), these factors include echo time, repetition time, flip angle, and inherent hardware variations in the used scanner [31]; in computed tomography (CT), they include the choice of view angles, acquisition time per view, and source-target separation.

Therefore, there is an imperative need to develop learned image reconstruction techniques that do not rely on a large amount of high-quality ordered pairs of training data. In a recent review [56], this issue has been identified as one of the key challenges in the next generation of learned reconstruction techniques. To address this outstanding challenge, in this work we develop a novel UKT strategy to transfer acquired ‘reconstructive knowledge’ across different datasets using the Bayesian framework. It comprises of two phases. The first phase is supervised and is tasked with pretraining a DNN reconstructor on data pairs of ground truth images and corresponding measurement data (which can be either simulated or experimentally collected). The goal of this step is to capture inductive biases of the given reconstruction task using simulated or experimental data. The second phase is unsupervised. It fine-tunes the reconstructor learned in the first phase on clinically-realistic measurement data, using a novel regularised Bayesian loss. This fine-tunes the network to the target reconstruction task while maintaining the prior knowledge learned in the first step. Note that unlike supervised or semi-supervised learning, the proposed framework does not assume any ground truth data from the target domain, and hence it is an unsupervised learning method. Extensive numerical experiments with low-dose and sparse-view CT on two datasets, i.e., FoamFanB [47] and LoDoFanB [37], indicate that the proposed approach is competitive with state-of-the-art methods both quantitatively and qualitatively, and that test-time adaptation can significantly boost performance.

In summary, the development of an UKT framework for learned image reconstruction, and its validation on clinically realistic simulated measurement data, represent the main contributions of this work. To the best of our knowledge, this is the first work to propose Bayesian UKT for test-time adaptation of a learned image reconstruction method. Furthermore, the use of the Bayesian framework allows capturing predictive uncertainty of the obtained reconstructions. Our framework has the following distinct features: (i) adapting to unseen measurement data without the need for ground truth images; (ii) leveraging reconstructive properties learned in the supervised phase for effective feature representation; (iii) providing uncertainty estimates on the reconstructed images. These features make the framework very attractive for performing learned reconstruction without ordered pairs from the target domain, as confirmed by the extensive numerical experiments in section 4. The Bayesian nature of the framework is noteworthy in the emerging field of scalable uncertainty quantification for image reconstruction, where the heavy computational cost is often deemed as one of the major hurdles [9]. In contrast, the approach presented in this work is highly scalable, by building upon recent advances in variational inference [23], and hence holds significant potential for medical image reconstruction.

1.1. Related work

The lack of (a sufficient amount of) reference training data has only recently motivated the development of deep learning-based image reconstruction approaches that do not require ground truth images. We identify two main groups of current learned approaches: test-time adaptation, and unsupervised approaches.

Test-time adaptation focuses on learning under differing training and testing distributions. It often consists of fine-tuning a pretrained DNN for a single datum at a time, or for a small set of test instances. In [18, 26] this paradigm is used for MRI reconstruction, where reconstructive properties acquired by a network that has been pretrained on a task for which a large dataset is available, are transferred to a different task where the supervised data is scarce (but still available). The proposed approach extends the aforementioned work from the supervised target reconstruction task to an unsupervised one. In the context of object recognition, Sun et al [51] propose to adapt only a part of a convolutional neural network (CNN) according to a self-supervised loss defined on the given test image to address distributional shift. The model is then trained via multi-task learning, where shared features are learned jointly over supervised and self-supervised data. Gilton et al [25] adapt a pretrained image reconstruction network to reconstruct images from a perturbed forward model using only a small collection of measurements, by enforcing the data fidelity while penalising the deviation of the network parameters from the parameters of the pretrained model. Conceptually speaking, our study is complementary to these studies. The proposed approach can be interpreted as conducting unsupervised test-time adaptation for distributional shift of the image data, but within a Bayesian framework. Furthermore, the use of the Bayesian framework brings several distinct advantages: (i) it allows deriving the training loss in a principled manner; (ii) it can boost reconstructive performance; (iii) it simultaneously delivers the predictive uncertainty information associated with the reconstructions.

Meanwhile, deep image prior (DIP) is a representative unsupervised image reconstruction method, which achieves sample-specific performance using DNNs to describe the mapping from latent variables to high-quality images [53]. During inference the network architecture acts as a regulariser for reconstruction [8, 21]. Similarly, Zhang et al [60] use a U-Net model as the reconstruction network and propose to adapt the model through backpropagation by updating the parameters of a pretrained U-Net under the guidance of data fidelity for each individual test data y, with no supervision, and showcase the approach on under-sampled MRI reconstruction. Despite strong performance, it suffers from slow convergence (often requiring thousands of iterations), and the need for a well-timed early stopping, otherwise the network may overfit to the noise in the data. The latter issue has motivated the use of an additional stabiliser [8].

Test time adaptation and DIP represent only two approaches that are most closely related to the present work. In recent years, there have been significant advances in unsupervised biomedical imaging reconstruction techniques and we refer interested readers to a recent review [4] on other approaches and references therein, which discusses many promising unsupervised methods.

The rest of the paper is structured as follows. In section 2 we describe the setting and discuss deep unrolled DL. In section 3 we develop the proposed two-phase UKT paradigm. In section 4 we present experimental results for low-dose and sparse-view CT, including several supervised and unsupervised benchmarks, and discuss the results obtained with the two-phase learning paradigm. In section 5 we add some concluding remarks.

2. Preliminaries

In this section we describe the fundamentals of how unrolled networks are used for image reconstruction. We then describe the Bayesian approach for DNNs, based on which we shall develop the proposed UKT strategy.

2.1. Unrolled networks

Unrolling is a popular paradigm for constructing a network F θ for image reconstruction. The idea is to mimic well-established iterative optimisation algorithms, e.g., (proximal) gradient descent, alternating direction method of multipliers, and primal-dual hybrid gradient method. Namely, unrolled methods use an iterative procedure to reconstruct an image x from the measurement y by combining analytical model components (e.g., the forward map A and its adjoint A ⊤) with data-driven components that are parameterised by the network parameters θ and learned from the training data. The unrolled nature of the network allows seamlessly integrating the underlying physics of the data acquisition process into the design of the network F θ , which can enable the development of high-performance reconstructors from reasonably sized training datasets [41]. More specifically, given an initial guess x 0 (e.g., the filtered back-projection (FBP) in CT reconstruction), we recursively compute iterates

with

being the gradient of the data fidelity term, where K ⩾ 1 is the total number of iterations, is the sub-network used at the kth iteration, and θ k is the corresponding weight vector. The overall iterative process can then be written as

where x K is the final reconstruction, and F θ is the overall network, with parameters θ = (θ 1, …, θ K ), constructed as a concatenation of sub-networks . In practice, the parameters θ k of each sub-network can be shared across different blocks (i.e., θ 1 =…= θ K ), a procedure known as weight-tying or weight-sharing. This allows to reduce the total number of trainable parameters, so as to facilitate the training process. By slightly abusing the notation, we denote the shared parameter by θ. In this work, we only consider the case of weights shared across the blocks, but the proposed framework extends straightforwardly to the general case.

2.2. Bayesian neural networks

We briefly describe Bayesian neural networks (BNNs), in which network parameters θ are treated as random variables and are learned through a Bayesian framework so as to facilitate uncertainty quantification of the network prediction. Bayesian learning provides a principled yet flexible framework for knowledge integration, and allows quantifying predictive uncertainties associated with a particular point estimate [9, 23]. Bayesian learning is ideally suited for deriving a proper training loss for combining the knowledge across different ‘domains’, to which the framework proposed in section 3 belongs. Nonetheless, the use of BNNs for medical imaging is still not widespread due to the associated computational challenge.

In a BNN, by placing a prior distribution p(θ) over the network parameters θ (which is commonly taken to be the standard Gaussian distribution), and by combining it with a likelihood function of the data using Bayes’ formula, we obtain a posterior distribution over the parameters θ, given the data

where is the normalising constant. The likelihood is fully specified upon properly modelling the data noise statistics and the data generation process (e.g., forward operator A). The posterior distribution represents the complete Bayesian solution of the learning task.

The posterior is often computationally intractable, since the computation of the normalising constant Z involves a high-dimensional integral. To circumvent this computational issue, we adopt variational inference (VI) [30], which employs the Kullback–Leibler (KL) divergence to construct an approximating distribution q(θ). Recall that the KL divergence KL[q(θ)‖p(θ)] between q and p is defined by

VI looks for an easy-to-compute approximate posterior distribution q ψ(θ) parametrised by variational parameters ψ. The approximation q ψ (θ) is most commonly taken from a variational family consisting of products of independent Gaussians

where the notation denotes a Gaussian distribution with mean μ j and variance , are the variational parameters, and D is the total number of parameters in F θ . In the literature this is commonly known as the mean field approximation. VI constructs an approximation within the family by

Given a learned approximate posterior , the predictive distribution of the target image x for a new query measurement y q is given by

A point estimate of the image x can then be obtained via Monte Carlo (MC) sampling as

with T MC samples θ t , with t = 1, …, T, distributed according to .

When the network densities are shared across the iterates, we have

with the superscript ⊗K denoting the K-fold product, and the overall iterative process reads

Note that the standard mean field approximation doubles the number of trainable parameters, which brings significant computational challenges. In practice, the training of fully Bayesian models is often non-trivial, and the performance of the resulting network is often inferior to non-Bayesian networks [45]. BNNs are thus still not widely used in learned image reconstruction [9]. To make our approach competitive with non-Bayesian methods, while retaining the benefits of Bayesian modelling, we can adopt the strategy of being Bayesian only a little bit [11, 19]. That is, we use VI only on a subset of the parameters θ, and use point estimates for the remaining parameters (or equivalently, a Dirac distribution). This can reduce the number of trainable parameters, and hence greatly facilitate the training process, while maintaining the Bayesian nature of the learning algorithm.

Remark 2.1.

Apart from VI there are other approximate inference schemes, such as MC dropout [24] and Laplace approximation [19, 40]. MC dropout has been widely used for modelling uncertainty, and has also found application in the medical imaging community (e.g., segmentation [52]), due to its computational efficiency and easy implementation, but its approximation accuracy tends to be inferior to VI. For example, MC dropout tends to severely underestimate predictive uncertainty [35]. Laplace approximation [40] has started to attract renewed interest, but has not been explored within medical image reconstruction so far, since the computational cost of approximating the Hessian of the loss with respect to the network parameters θ is often prohibitively high in the context of image reconstruction and a scalable and yet accurate approximation of the Hessian is still under development.

Remark 2.2.

In light of the decoder-encoder structure of the U-Net that is used below (cf figure 1(b) for a schematic illustration), the idea of ‘being Bayesian a little bit’ resembles a hybridisation of an autoencoder [55] and a variational autoencoder [34], which are used for the decoder and encoder parts, respectively. However, there is a major difference between the two approaches: the formulation we employ for image reconstruction is conditional on the measurement, whereas the standard autoencoder and variational autoencoder formulations are unsupervised in that they access only samples of images.

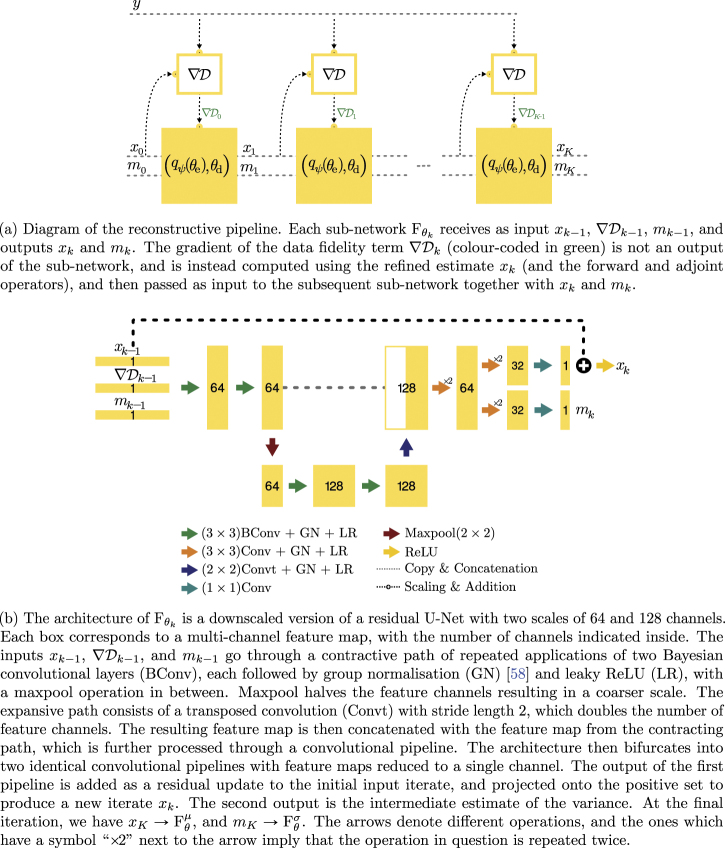

Figure 1.

(a) Schematic illustration of the overall iterative reconstructive process, and (b) the architecture of each sub-network.

3. Two-phase learning

In this section we describe our novel two-phase UKT strategy aimed at addressing the challenges associated with the lack of sufficient supervised training data in the target reconstruction task. We systematically develop the learning strategy within a Bayesian framework with a sub-network being a downscaled version of a residual U-Net [50] (cf figure 1(b) for a schematic illustration), which is a popular choice in learned image reconstruction [29], and will be used in the experiments in section 4. The network adopts a multi-scale encoder-decoder structure consisting of an encoding component and a decoding component, whose parameters are denoted respectively by and , and θ = (θ e, θ d). In the derivation of the proposed UKT framework below, we use VI only on the network parameters θ e of the encoder component, which can be interpreted as choosing an approximate posterior for the encoder . The decoder parameters θ d remain deterministic, and are treated as point-estimates. The adaptation of the UKT framework to other network architectures is straightforward.

Now we briefly describe the two phases of the proposed learning strategy. The first phase is supervised, and employs a given training dataset where each pair consists of a ground truth image and the corresponding (noisy) measurement datum , which can be either simulated or experimentally collected (if available). The goal of this phase is to pretrain a reconstruction network F θ by learning the (approximate) posterior distribution for the parameters θ e of the encoder, and the optimal deterministic parameters of the decoder, in order to assist the unsupervised phase. Specifically, we aim to achieve two objectives: (i) identify a sensible region for the network parameters; (ii) learn robust representations that are not prone to overfitting. Ideally, to facilitate the reconstruction quality this phase should mimic the setting of the target reconstruction task as close as possible in terms of the geometry of image acquisition (e.g., size of images and distribution of image features), and the noise statistics (e.g., distribution and noise level). This phase would allow learning adequate inductive biases and task-specific priors so as to enable successful subsequent unsupervised learned image reconstruction.

The second phase is unsupervised, and has access to a dataset which consists of only a few measurements (e.g., clinically-realistic CT sinograms), but with no access to corresponding ground truth images. Moreover, the distribution of the measurement data in may differ significantly from that in . The aim of this phase is to fine-tune the parameters θ of the reconstruction network F θ so that it performs well on the data from the target domain. This is achieved by initialising the parameters (ψ, θ d) of the reconstruction network F θ to the optimal configuration found in the first phase, and then minimising a novel loss function, which we shall derive below in the Bayesian framework. Through this phase we address the need for adaptivity to the target reconstruction task due to a potential distributional shift of the data and effectively use the inductive bias to assist the reconstruction of the target task.

3.1. Pretraining via supervised learning

In this first phase, we have access to a training dataset of ordered pairs (which can be either simulated or experimentally collected), and we employ the Bayesian framework described in section 2.2 to find the optimal distribution for the parameters θ e of the encoder, which approximates the true posterior and the optimal decoder parameters . To construct the posterior , we first set the prior p(θ e) over the encoder parameters θ e to the standard Gaussian , which is a standard practice in the Bayesian DL community. Following the heteroscedastic noise model [43], the likelihood is set to

with and . Analogously, note that . Note that the network F θ has two outputs: the mean , and the variance . Here denotes the initial guess used by the learned reconstruction method for the nth training pair . For example, in CT reconstruction, we customarily take to be the FBP. We refer readers to figure 1(a) for a schematic illustration, where x k and m k denote the mean and variance estimates at the kth iteration, respectively. Up to an additive constant independent of the arguments, we can write

The minimisation of KL divergence in (2.1) can be recast as the minimisation of the following loss over the admissible set

where β > 0 is a regularisation parameter. This loss coincides with the negative value of the evidence lower bound (ELBO) in VI (when β = 1). Upon expanding the terms, fixing the prior at , and ignoring additive constants independent of θ d and q ψ (θ e), we can rewrite the loss as (recall that D e denotes the dimensionality of the encoder parameter θ e)

where the vector refers to variational parameters of the approximate distribution q ψ (θ e), where μ j and are respectively the mean and the variance of the jth component of the encoder parameters θ e. Note that the term affects only the encoder parameters θ e, whereas the decoder parameters θ d are treated deterministically (without any explicit penalty). In order to minimise the loss with respect to the variational parameters ψ, we need to compute the gradient of the loss with respect to ψ. This can be done efficiently using the local reparametrisation trick [33], which employs a deterministic dependence of the ELBO with respect to ψ.

The combination of the unrolled network with Bayesian neural networks allows quantifying the uncertainty over the reconstructed image by unrolling methods, and we have termed the resulting approach (when trained in a greedy manner) as Bayesian deep gradient descent (BDGD) in prior works [10, 11]. BDGD provides natural means to quantify not only the predictive uncertainty associated with a given reconstruction, but also to disentangle the sources from which the predictive uncertainty arises. Uncertainty is typically categorised into aleatoric and epistemic uncertainties [9, 32]. Epistemic uncertainty arises from the uncertainty over the network parameters, and is captured by the posterior q ψ (θ e) [13, 32]. Aleatoric uncertainty is instead caused by the randomness in the data acquisition process. To account for this, in the loss (3.1) we employ a heteroscedastic noise model [43], which sets the likelihood to be a Gaussian distribution, with both its mean and variance predicted by the network F θ . Accordingly, we adjust the network architecture by bifurcating the decoder output. Namely, sub-network outputs are used to update the estimate x k , whilst the intermediate term m k , which embodies a form of ‘information transmission’, is given by . At the final iteration m K provides an estimate of the variance component of the likelihood; see figure 1(a) again for a schematic illustration on the overall workflow of the network F θ .

Following [20], we can decompose the (entry-wise) predictive variance Var[x] into a sum of aleatoric (ΔA[y q ]) and epistemic (ΔE[y q ]) uncertainties using the law of total variance as follows

Upon denoting the initial guesses for the mean and the variance for a query data y q by x q,0 and m q,0, respectively, and abbreviating as , and as , we estimate ΔA[y q ] and ΔE[y q ] by T ⩾ 1 MC samples as

where all the operations on vectors are understood entry-wise.

Remark 3.1.

There are at least two alternative loss functions that can be derived from the Bayesian loss (3.2). The first option is to set the parameters θ as fully deterministic, which gives rise to the following non-Bayesian loss

Note that this loss does not penalise the decoder parameters θ d, as in the Bayesian formulation, whereas it penalises the encoder parameters θ e by the standard weight decay, which corresponds directly to the standard Gaussian prior on the encoder parameters θ e. The presence of the log-determinant is due to heteroscedastic noise modelling [43], and accordingly the network F θ has two outputs, one for the mean and the other for the variance. The second option is to fix the output noise variance as (with known σ) in the heteroscedastic noise modelling. This leads to the following loss

This is essentially identical to the loss in (1.1) (modulo weight decay), which is arguably the most popular loss for obtaining supervised end-to-end DL-based image reconstruction algorithms.

3.2. Unsupervised knowledge-transfer

In the second phase we use the Bayesian framework to integrate the knowledge learned in the first phase to new imaging data for which we do not have access to paired training data but only to noisy observations. Note that the knowledge of the trained network (on the supervised data ) is encoded indirectly in the posterior distribution and in the optimal parameters . The goal of the second phase is to approximate the true posterior , and to find the updated optimal decoder parameters given the measurement data and the supervised data from the first phase. This can be achieved as follows. By Bayes’ formula, the posterior distribution is given by

Here is the likelihood at test-time (i.e., the likelihood of the measurement data from the target reconstruction task), and the normalising constant is the marginal likelihood of the total observed data . We approximate the posterior (from the supervised phase) by the estimated optimal posterior , which is learned in the first phase, thus encapsulating the ‘proxy’ knowledge we have acquired from the supervised dataset . An approximation to the true posterior for the combined data can then be obtained using VI as

where the objective function is given by

The approximate posterior over the supervised dataset is by construction used as a prior in the second phase. It remains to construct the likelihood for the unsupervised dataset . For any measurement datum , the likelihood p(y u|θ e) is set to

Upon letting , we have

Note that unlike in (3.2), this likelihood would exert no influence on the component of the network output F θ (arising from the heteroscedastic modelling). To address this, we shall, inspired by the bias variance decomposition, replace the log-likelihood log p(y u|θ e) with a suitable modification. For , using the standard bias-variance decomposition, we obtain

By the definition of , the first term can be rewritten as . Meanwhile, for a random vector w with mean and covariance Cov(w), we have

Since is a zero mean random vector with covariance , we have

Consequently,

This will be used in the loss function in the modified log-likelihood. In practice, the term can be approximated using randomised trace estimators (e.g., the Hutchinson’s estimator [15]). The computation of the optimal variational parameters ψ* and the optimal decoder parameter by minimising the negative value of the ELBO proceeds analogously to the supervised phase, but with the key changes outlined above.

In addition to enforcing data fidelity, we also include a regularisation term to the loss in (3.3),

whereas a regulariser we take the total variation seminorm TV(u) = ‖∇u‖1, and γ > 0 is the regularisation parameter. This incorporates prior knowledge over expected images by penalising unlikely or undesirable solutions. TV is widely used in image reconstruction, due to its edge-preserving properties [14], and has also been applied to learned reconstruction [8, 16]. Intuitively, without the TV term, optimising the loss is akin to minimising the fidelity, and thus the training process is prone to overfitting, especially when the neural network is over-parameterised, necessitating the use of early stopping (which also has a regularising effect). The numerical experiments indicate that incorporating this term can help stabilise the training process and lead to improved reconstructions, which agrees with earlier observations [8, 16]. In summary, the loss at the second phase reads

which upon expansion, relabelling, and the aforementioned modifications, leads to the loss

Since q ψ (θ e) and are constructed as the products of independent Gaussians (i.e., mean field approximation), the term has a closed-form expression given by

where refers to variational parameters of the approximate distribution q ψ (θ e), where μ j and σ j are the mean and the variance of the jth component of θ e, and and are the optimal variational parameters learned in the first phase (and thus fixed during the second phase). Note that the loss in (3.4) represents only one possibility for unsupervised knowledge transfer, and there are alternatives. In the appendix A, we derive an alternative training loss, by constructing the likelihood p(y u|θ e) differently, which also allows interpreting the loss as an approximate Bayesian loss.

It is instructive to interpret the terms in the loss in the lens of more familiar variational regularisation [22, 28]. The first term in (3.2) enforces data fidelity, which encourages the learned network F θ to be close to the right-inverse of A (i.e., the action of the forward map A on the output of is close to the measurement data y u). The second term, , controls the growth of the variance component, and along with the first term arises naturally when performing approximate VI (with a Gaussian likelihood) on the posterior distribution ; see the appendix A for further discussions. Note that this term does not appear if one considers only the usual maximum a posteriori (MAP) estimator to the posterior distribution . The third term, the TV regulariser, plays a crucial role in stabilising the learning process [8]. The fourth term forces the posterior q ψ (θ e) to be close to the prior of the unsupervised phase (which is the posterior obtained during the supervised phase). These properties together give rise to a highly flexible UKT paradigm: the adaptation can be done individually for each query image datum (which is natural for streaming data) or for a whole batch of measurement data. The regularisation parameters γ > 0 and β > 0 control the strength of the related penalty terms. In practice, it is important to choose the regularisation parameters β and γ suitably, as in any inverse technique. In our experiments β and γ are chosen on a validation set.

Remark 3.2.

The loss in (3.4) can be viewed as a generalisation of the more conventional non-Bayesian approaches for domain adaptation

where is the optimal encoder network parameter learned at the supervised phase. This loss encourages the network output to be close to piece-wise constant, and meanwhile, the corresponding network should not deviate too much from . Due to the use of the Bayesian framework, the UKT loss (3.4) involves extra terms that are related to the variance of the parameters. Formally, the loss in (3.5) can also be obtained by considering the MAP estimator of the posterior distribution , concurring with the well-known connection between the MAP estimator and the posterior distribution. Nonetheless, even if considering the loss (3.5) alone, the Bayesian framework elucidates the standing assumptions for obtaining the loss. The loss in (3.5) is closely connected to the loss

which also penalises the deviation of decoder parameters θ d from the pretrained parameters . This is essentially the training loss employed in [25]. The other major difference between (3.5) and (3.6) lies in use of the TV penalty on the network output .

It is also worth noting that affects the loss in (3.4) only via the term , controlling the covariance of the estimate, but not via the data fidelity term. This is due to the simplified derivation of the loss. The genuine Bayesian loss in (A.1) to be derived in the appendix does incorporate into noise covariance and consequently it does enter into the noise weighting matrix in the data fidelity. See the appendix A for further discussions.

4. Experiments and results

In this section we present numerical experiments on simulated data to showcase the performance of the proposed UKT framework.

4.1. Experimental settings

First we describe the experimental setting, including datasets, data generation, benchmark methods and training details.

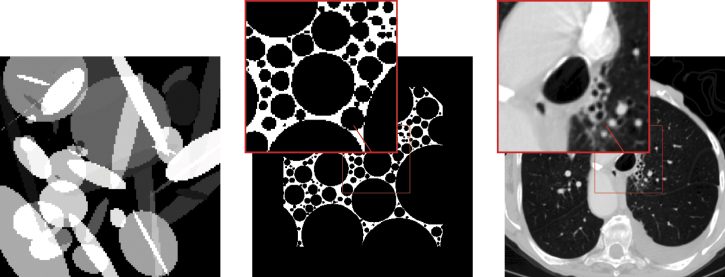

Datasets. In the experiments we use three datasets: Ellipses, FoamFanB and LoDoFanB. The Ellipses dataset consists of random phantoms of overlapping ellipses, and is commonly used for inverse problems in imaging [2]. The intensity of the background is taken to be 0, the intensity of each ellipse is taken randomly between 0.1 and 1, and the intensities are added up in regions where ellipses overlap. The phantoms are of size 128 × 128; see figure 2 for a representative phantom. The training set contains 32 000 pairs of phantoms and sinograms, while the test set consists of 128 pairs. This dataset is used for the training of all the methods that involve supervised training. The FoamFanB dataset is constructed using a cylindrical foam phantom containing 100 000 randomly-placed non-overlapping bubbles. The phantom consists of 100 slices of size 1024 × 1024 and is generated with the open-source foam_ct_phantom package [47]. Analytic projection images of the phantoms were also computed using the package. Each slice is then cropped into four 256 × 256 square sections, which are zero padded with 50 pixels in all four directions. Out of the resulting 400 slices, we randomly retain half; see figure 2 for a representative slice. The intensity of the pixels are either 0 or 0.5, which allows retaining finer structures in the images. The LoDoFanB dataset [37] is more medically realistic, and consists of 223 human chest CTs, in which the (original) slices from the LIDC/IDRI Database [6] have been pre-processed, and the resulting images are of size 362 × 362; see figure 2 for a representative slice. The FoamFanB and LoDoFanB datasets are used in the unsupervised phase, where we assume to know only the sinograms. The ground truth images are only used to evaluate the performance of all the studied methods, unless otherwise specified.

Figure 2.

Representative ground truth images from Ellipses (left), FoamFanB (middle) and LoDoFanB (right) datasets. The window of the LoDoFanB dataset is set to a Hounsfield unit (HU) range ≈ [−1000, 400].

Data generation. For the forward map A, taken to be the Radon transform, we employ a two-dimensional fan-beam geometry with 600 angles for the low-dose CT setting, and 100 angles for the sparse-view CT setting. Source-to-axis and axis-to-detector distances are both set to 500 mm. For both datasets we apply a corruption process given by , where is the mean number of photons per pixel and is fixed at 8000 (corresponding to low-dose CT), and is the attenuation coefficient, set to 0.2. We linearise the forward model by applying the transformation −log(⋅)/μ. We can then use as the data fidelity term since post-log measurements of low-dose CT approximately follow a Gaussian distribution [38, 57].

Benchmark methods. We compare the proposed BDGD + UKT approach with several unsupervised and supervised benchmarks. Unsupervised methods include FBP (using a Hann filter with a low-pass cut-off 0.6), (isotropic) TV regularisation, and DIP + TV [8]. Supervised methods include U-Net based post-processing (FBP + U-Net) [17], two learned iterative schemes: learned gradient descent (LGD) [2] and learned primal dual (LPD) [3], and BDGD (i.e., without UKT) [10, 11]. U-Net is widely used for post-processing (e.g., denoising and artefact removal), including FBPs [29], and our implementation follows [8] using a slightly down-scaled version of the standard U-Net. LGD and LPD are widely used, with the latter often seen as the standard benchmark for supervised deep tomographic reconstruction. BDGD exhibits competitive performance while being a Bayesian method [10, 11].

Training, hyper-parameters, and implementation. All supervised methods are first trained on the Ellipses dataset, and then tested on Ellipses, FoamFanB and LoDoFanB datasets separately. Unless otherwise stated, the learned models are not adapted to the FoamFanB and LoDoFanB datasets, but perform reconstruction directly on a given sinogram. The methods were implemented in PyTorch, and trained on a GeForce GTX 1080 Titan GPU. All operator-related components (e.g., forward operator, adjoint, and FBP) are implemented using the Operator Discretisation Library [1] with the astra_gpu backend [54].

For all the unsupervised methods (FBP, TV, DIP + TV), the hyperparameters (frequency scaling in FBP and regularisation parameter in TV and DIP + TV) are selected to maximise the PSNR on a subset of the dataset consisting of 5 images. DIP + TV adopts a U-Net architecture proposed in [8] (accessible in the DIVal library [36]): a five-scale U-Net without skip connections for the Ellipses dataset, and a six-scale U-Net with skip connections only at the last two scales for FoamFanB and LoDoFanB datasets. For both architectures the number of channels is set to 128 at every scale. In table 1 we report the number of parameters used for the LoDoFanB dataset.

Table 1.

Reconstruction methods used in this work. For each method, the number of learnable parameters is indicated, as well as approximate runtime for both low-dose CT and sparse-view CT on the LoDoFanB dataset, is reported.

| Methods | Parameters | Runtime | |

|---|---|---|---|

| Unsupervised | FBP | 1 | 38 ms/7 ms |

| TV | 1 | 20 s/10 s | |

| DIP + TV | 2.9 × 106 | 20 min/18 min | |

| Supervised | FBP + U-Net | 6.1 × 105 | 5 ms |

| LGD | 1.3 × 105 | 89 ms/34 ms | |

| LPD | 2.5 × 105 | 180 ms/55 ms | |

| BDGD | 8.8 × 105 | 7 s/6 s | |

| BDGD + UKT | 8.8 × 105 | 7 s/6 s | |

All learned reconstruction methods were trained until convergence on the Ellipses dataset. FBP + U-Net implements a down-sized U-Net architecture with four scales and skip connections at each scale. LGD is implemented as in [3], where the parameters of the reconstructor are not shared across the iterates, and we use K = 10 unrolled iterations. LPD follows the implementation in [3]. We train FBP + U-Net, LGD and LPD by minimising the loss in (1.1) using the Adam optimiser and a learning rate schedule according to cosine annealing [39]. All models are trained for 30 epochs. BDGD uses a multi-scale convolutional architecture (cf figure 1), with K = 3 unrolled iterations. Furthermore, the UKT phase is initialised with parameters , which are obtained at the end of the supervised training on the Ellipses dataset. For the FoamFanB dataset, the regularisation parameter γ is set to 5 × 10−5 for the low-dose setting and to 1 × 10−4 for the sparse-view setting. Analogously, for the LoDoFanB dataset, the regularisation parameter γ is set to 1 × 10−4 for the low-dose setting and to 5 × 10−4 for the sparse-view setting. On both datasets, β is set to 1 × 10−4 for both settings. T = 10 MC samples are used to reconstruct the point estimate, and to compute the associated uncertainty estimates. A Pytorch implementation of the proposed approach is publicly available at https://github.com/rb876/unsupervised_knowledge_transfer to reproduce the numerical experiments.

4.2. Experimental results

In table 2 we report PSNR and SSIM values for the studied datasets. We observe that unsupervised methods give higher PSNR and SSIM values on FoamFanB and LoDoFanB datasets than on the Ellipses dataset, with FBP on FoamFanB being the exception. The converse is true for supervised methods. Moreover, TV and DIP + TV outperform supervised reconstruction methods in both low-dose and sparse-view CT settings for FoamFanB and LoDoFanB datasets. The results for BDGD + UKT and BDGD indicate that adapting the parameters on the given dataset allows achieving a noticeable improvement in reconstruction quality in both low-dose and sparse-view CT settings. Note also that BDGD + UKT outperforms all supervised reconstruction methods, while performing on par with DIP + TV (but the corresponding computation time is only a small fraction of that for the latter). This last observation is not surprising, since the test data (FoamFanB and LoDoFanB) are distributed differently from the synthetic training data (Ellipses). As a result, the performance of supervised reconstruction methods deteriorates significantly.

Table 2.

Comparison of reconstruction methods for the Ellipses, FoamFanB, and LoDoFanB datasets by average PSNR and SSIM. All supervised methods are trained on the Ellipses dataset. Learned models are then tested on the FoamFanB and LoDoFanB datasets. In the table, the two best performing methods are highlighted in bold case.

| Low-dose CT | Sparse-view CT | |||||||

|---|---|---|---|---|---|---|---|---|

| Methods | Ellipses | FoamFanB | LoDoFanB | Ellipses | FoamFanB | LoDoFanB | ||

| Unsupervised | FBP | 28.50/0.844 | 20.73/0.629 | 33.01/0.842 | 26.74/0.718 | 16.34/0.174 | 29.10/0.593 | |

| TV | 33.41/0.878 | 36.39/0.939 | 36.55/0.869 | 30.98/0.869 | 27.53/0.832 | 34.74/0.833 | ||

| DIP + TV | 34.53/0.957 | 38.42/0.997 | 39.32/0.896 | 32.02/0.931 | 31.99/0.987 | 36.80/0.866 | ||

| Supervised | FBP + U-Net | 37.05/0.970 | 30.26/0.723 | 32.13/0.820 | 32.13/0.936 | 20.09/0.347 | 27.22/0.694 | |

| LGD | 40.73/0.985 | 31.37/0.909 | 33.42/0.862 | 33.72/0.952 | 22.86/0.687 | 28.49/0.507 | ||

| LPD | 44.27/0.994 | 28.09/0.918 | 33.21/0.866 | 36.19/0.970 | 24.86/0.886 | 34.60/0.838 | ||

| BDGD | 43.60/0.994 | 30.72/0.974 | 35.91/0.877 | 35.36/0.971 | 19.44/0.406 | 34.16/0.824 | ||

| BDGD + UKT | — | 40.72/0.997 | 38.40/0.899 | — | 30.07/0.966 | 35.67/0.855 | ||

Table 1 reports also the approximate runtime for all the methods under consideration. All learned methods (i.e., LGD, LPD, BDGD) require multiple calls of the forward operator A, and thus they are slower at test time than the methods that do not (e.g., FBP + U-Net, which only post-processes the FBP reconstruction). In addition, BDGD and BDGD + UKT use 10 MC samples to obtain a single reconstruction, leading to a slightly longer reconstruction time of approximately 7 s per image. However, all learned methods are found to be significantly faster than the TV reconstruction. Meanwhile, DIP + TV is much slower than TV taking approximately 20 min to reconstruct a single instance of the LoDoFanB dataset. The runtimes for the FoamFanB dataset were almost identical and are thus not included.

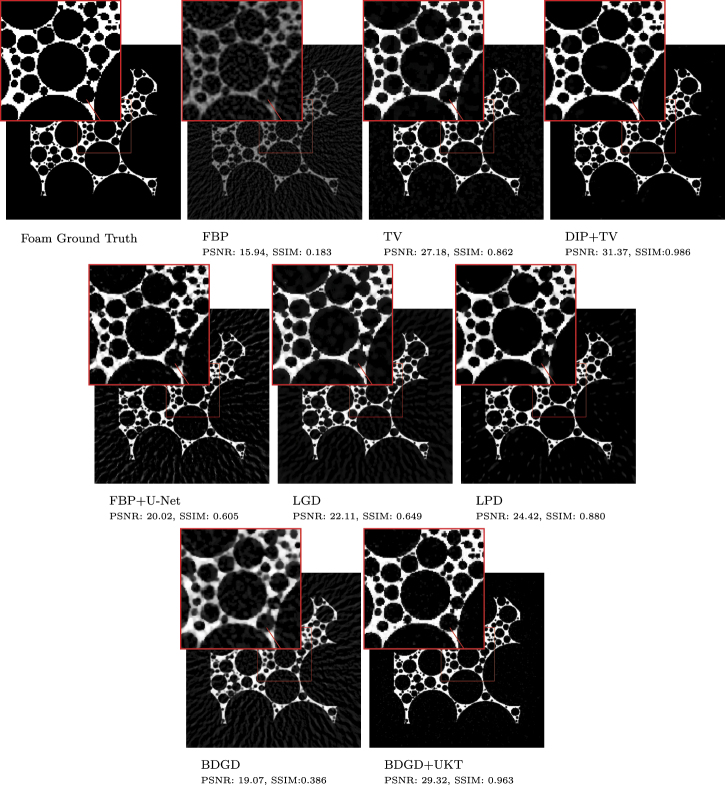

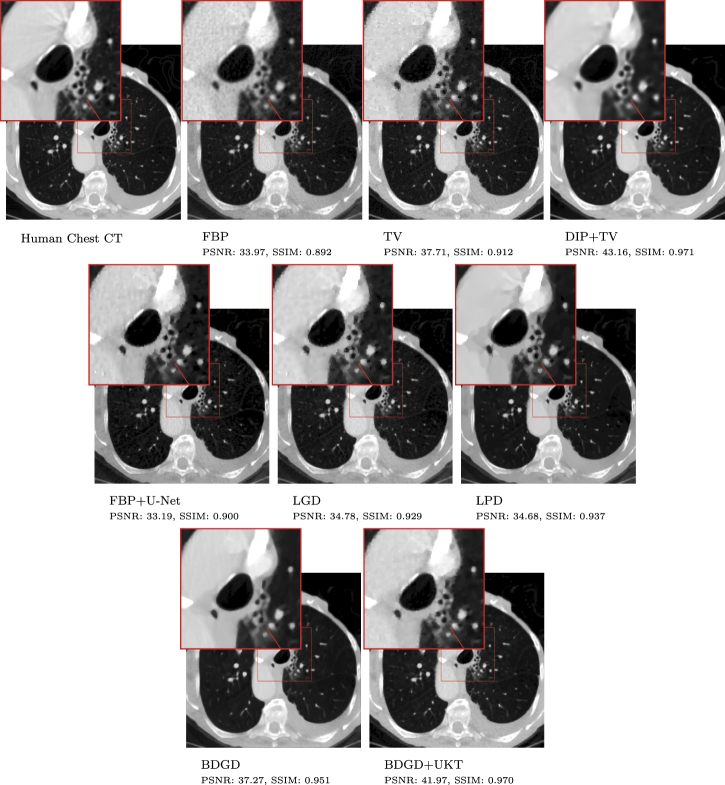

Example reconstructed images are shown in figures 3 and 4, for the sparse-view FoamFanB and the low-dose LoDoFanB CT settings, respectively. We observe that BDGD + UKT significantly reduces background noise in the reconstructions while faithfully capturing finer details, particularly in the low-dose setting. Overall, DIP + TV and BDGD + UKT produce reconstructions with similar properties. However, DIP + TV, LPD and BDGD + UKT tend to suffer from slight over-smoothing. Meanwhile, TV reconstruction suffers from patchy artefacts, which is a well-known drawback of the TV penalty [14], and also retains more background noise.

Figure 3.

Sparse-view reconstruction of the FoamFanB dataset along with a zoomed-in region indicated by a small square.

Figure 4.

Low-dose human chest CT reconstruction within the LoDoFanB dataset along with a zoomed-in region indicated by a small square. The window is set to a HU range of ≈[−1000, 400].

The sparse-view setting in figure 3 is numerically more challenging and the reconstructions are susceptible to streak artefacts, which are especially pronounced in the FBP but are still discernible in reconstructions obtained by other methods. Nonetheless, best performing methods (DIP + TV and BDGD + UKT) can achieve an excellent compromise between smoothing and the removal of streak artefacts. Interestingly, in figure 4, the learned methods, including BDGD + UKT, suffer from some undesirable over-smoothing inside the lung cavity.

BDGD + UKT is good at recovering fine structures that are present in the FoamFanB data, which are poorly reconstructed by BDGD. For example, in the last row of figure 3, the smaller circles are smoothed out and thus not discernible in BDGD reconstructions, but they are well reconstructed with BDGD + UKT. Similarly, figure 4 shows that BDGD + UKT better captures fine details in the human torso; for example the zoomed-in region shows an improvement over the overly-smoothed reconstruction produced by BDGD. These observations clearly indicate that the unsupervised fine-tuning is highly beneficial in improving the quality of the reconstructed image.

We further evaluate the learned methods by first pretraining them on the Ellipses dataset, and then fine-tuning them on one half of the LoDoFanB dataset but with ground truth data included. The remaining half of the LoBaFanB dataset is used for testing. We thus operate under the assumption that we have access to only one half of the ground truth images from the LoDoFanB dataset. This is intended to benchmark the reconstructive properties of the unsupervised fine-tuning against a more popular supervised adaptation, and may serve as the ‘upper-bound’ on the reconstructive performance of the proposed method. The quantitative results of this controlled setting are presented in tables 3 and 4. The notation SKT stands for the supervised knowledge-transfer: the fine-tuning is conducted via (3.2) on one half of the LoDoFanB dataset including ground truth data. Unsupervised (U)-BDGD refers to BDGD trained via (3.4) by completely omitting the pretraining in the first phase. It is observed that U-BDGD shows subpar reconstructive properties only for the low-dose CT setting, but surprisingly, it matches the performance obtained by BDGD + UKT for the sparse-view CT setting. However, we observe that pretraining helps to considerably speed up the convergence of BDGD + UKT. It takes only a few epochs to converge, whilst U-BDGD leads to a more unstable and lengthy learning (up to 100 epochs). This behaviour is also observed with the fine-tuning of other learned benchmark methods. This indicates the need of an adaptation phase, in the presence of distributional shift, and the beneficial effect of pretraining. Moreover, table 3 shows that using supervised data pairs from the target domain to adapt the network to the target task can significantly improve the reconstructive properties of all the learned methods. Nonetheless, the degree of improvement depends strongly on both the used method and the problem setting. The proposed BDGD + UKT approach dramatically improves the performance and mitigates the performance drop due to the distributional shift. Table 4 also shows the influence of the TV in the fine-tuning stage. Setting γ = 0 leads to overfitting to the noise after 10 epochs (i.e., approx. 2000 gradient updates), and even with careful early stopping the performance is still subpar when compared with the approach employing TV regularisation. Therefore, the TV term plays an important role in the proposed framework.

Table 3.

‘Upper-bounds’ obtained via supervised fine-tuning on LoDoFanB.

| Methods | Low-Dose CT | Sparse-View CT |

|---|---|---|

| FBP + U-Net | 36.05/0.879 | 34.47/0.828 |

| LGD | 38.33/0.894 | 36.00/0.855 |

| LPD | 39.85/0.914 | 37.59/0.876 |

| BDGD + SKT | 40.14/0.909 | 37.71/0.877 |

Table 4.

Comparison between BDGD + UKT and UL.

| Methods | Low-doseCT | Sparse-view CT |

|---|---|---|

| BDGD + UKT | 38.33/0.895 | 35.67/0.853 |

| UKT w/o TV | 27.65/0.549 | 22.86/0.354 |

| U-BDGD | 36.64/0.870 | 35.68/0.852 |

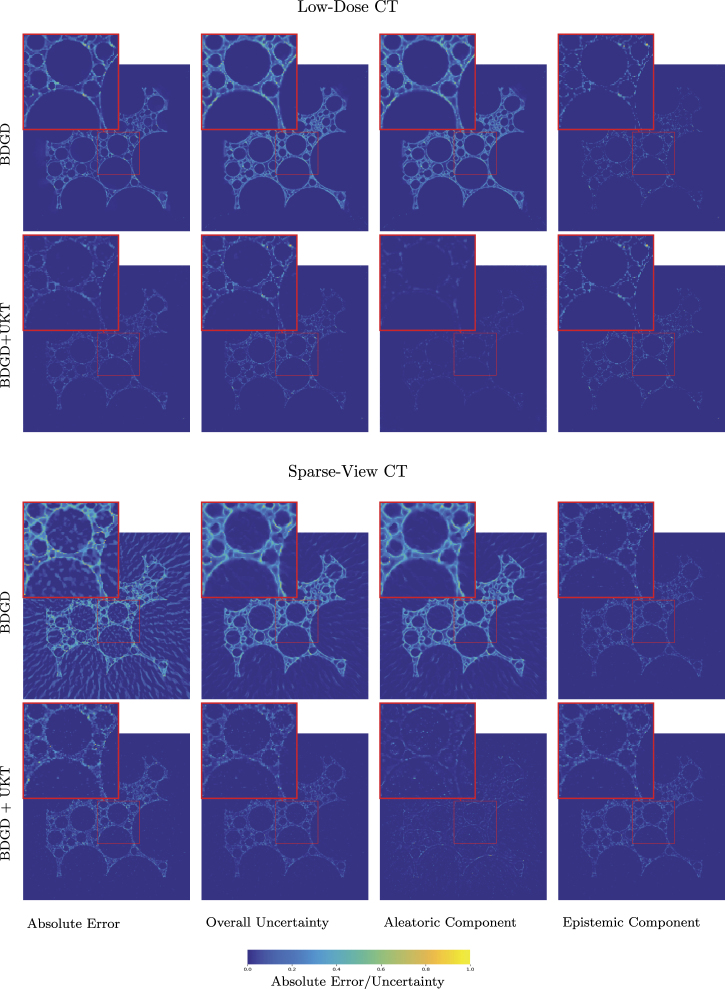

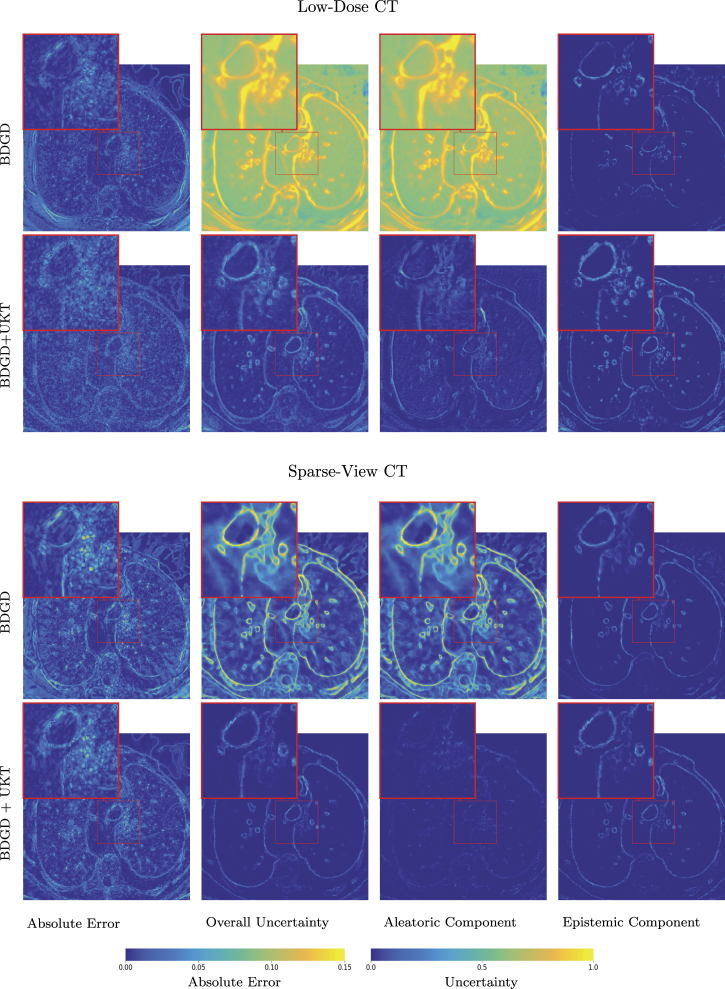

It is worth noting that BDGD + UKT also provides useful predictive uncertainty information on the reconstructions. In figures 5 and 6, we present the uncertainty estimates along with pixel-wise errors for the FoamFanB and LoDoFanB CT settings, respectively. The overall predictive uncertainty largely concentrates around the edges: the reconstruction of sharp edges exhibits a higher degree of uncertainty. This agrees well with the intuition that edges are more challenging to accurately resolve than smooth regions, and thus are more prone to reconstruction errors. Note that aleatoric and epistemic uncertainties have different sources, one is due to inherent data noise, and the other due to the model uncertainty, arising from the lack of a sufficient amount of training data. To ascertain the sources, we apply the decomposition (3.1). Interestingly, we observe that in both the low-dose and the sparse-view CT settings, epistemic uncertainty appears to be dominating within the (overall) predictive uncertainty. Nonetheless, the two types of uncertainty share a similar shape, and in either case, the overall shape closely resembles the pixel-wise error, indicating that the uncertainty estimate can potentially be used as an error indicator, concurring with existing empirical measurement data [52]. It is also instructive to compare the uncertainty estimates obtained by BDGD and BDGD + UKT. Figures 5 and 6 show that the estimates obtained by BDGD result in larger magnitudes, with the aleatoric component overshadowing the epistemic one. Visually, the unsupervised adaptation phase ameliorates the epistemic estimate: the pixel-wise predictive epistemic uncertainty obtained with BDGD + UKT is better at capturing the edges of the anatomical structures present in the reconstructed image.

Figure 5.

Qualitative uncertainty analysis on the FoamFanB dataset. The pixel-wise absolute reconstruction error, (max-min normalised across low-dose and sparse-view CT settings) pixel-wise predictive uncertainty, and its decomposition into the aleatoric and epistemic constituent components for low-dose and sparse-view CT obtained by BDGD and BDGD + UKT.

Figure 6.

Qualitative uncertainty analysis on the LoDoFanB dataset. The pixel-wise absolute reconstruction error, (max-min normalised across low-dose and sparse-view CT settings) pixel-wise predictive uncertainty, and its decomposition into the aleatoric and epistemic constituent components for low-dose and sparse-view CT obtained by BDGD and BDGD + UKT.

4.3. Discussion

The experimental results in tables 2 and 4 have several implications for image reconstruction. First, they show that while supervised iterative methods (FBP + U-Net, LGD, and LPD) can deliver impressive results when trained and tested on imaging datasets that come from the same distribution, but fail when applied directly to data from a different distribution. Specifically, on the Ellipses dataset they vastly outperform the traditional FBP and TV, but on the LoDoFanB dataset the difference between learned methods and FBP nearly vanishes (particularly in the low-dose setting), and the standard TV actually outperforms the supervised methods. This behaviour might be due to a form of bias-variance trade-off, where training with a large dataset allows improving the performance in the supervised case, but which has a negative effect on the generalisation property. The performance degrades significantly when the distribution of the testing measurement data deviates from that of the training data. This results in a loss of flexibility, and underwhelming performance, when reconstructing an image of a different type. Thus, adjusting the training regiment, or further adapting the network parameters to data from a different distribution, can be beneficial for improving the reconstruction quality. The results in table 3 indicate that all learned methods, including BDGD + UKT, can benefit greatly from the supervised data from the target domain.

Overall, the results show that Bayesian neural networks with VI can deliver strong performance that is competitive with deterministic reconstruction networks, when equipped with the strategy of being Bayesian only a little bit. This can be first observed on the Ellipses dataset. Table 1 shows that BDGD performs on par with (or often better than) all the unsupervised and supervised methods under consideration, which is in agreement with previous experimental findings [10]. The results also show the potential of the Bayesian UKT framework for medical image reconstruction in the more challenging setting where ground truth images are not available. Namely, adapting the model through the described framework allows achieving a significant performance boost on both the FoamFanB and LoDoFanB datasets. Moreover, BDGD + UKT shows roughly the same performance as DIP + TV, while being significantly faster in terms of runtime, cf table 1. This observation is consistent with existing studies using pretraining in other contexts [27, 49]. Indeed, all the learned methods are significantly faster than TV and DIP + TV reconstructions. In addition, BDGD + UKT can deliver uncertainty estimates on the reconstructions, with their sources quantified into aleatoric and epistemic ones. It is observed that for the studied settings, the epistemic uncertainty dominates the aleatoric one, and both uncertainty estimates correlate well with the pixel-wise error of the reconstructions. Nonetheless, the calibration of these estimates remains to be validated, like nearly all DL-based uncertainty quantification techniques [9].

The extensive experimental results indicate that UKT shows great promise in the unsupervised setting. The results clearly show the need for adapting data-driven approaches to structural changes in the data, its distribution and size, and for incorporating the insights observed in the available supervised data to update the reconstruction model [42, 46]. Though only conducted on labelling tasks, recent studies show that transfer learning through pretraining exhibits good results when the difference between data distributions is small [59]. Moreover, one needs to ensure that pretraining does not result in overfitting the data from the first task. Both requirements seem to be satisfied in the studied setting. Further investigation is needed to examine how does the performance of a reconstruction network change with respect to the size and type of data that the pretraining dataset consists of, as well as with respect to changes in the physical setting (e.g., forward operators and noise statistics).

5. Concluding remarks

The use of a full Bayesian treatment for learned medical image reconstruction methods is still largely under development, due to the associated training challenges [9]. The proposed BDGD + UKT is very promising in the following aspects: (i) it is easy to train due to the adoption of the strategy being Bayesian only a little bit; (ii) the performance of the obtained point estimates is competitive with benchmark methods; (iii) it also delivers predictive uncertainty. In particular, the numerical results indicate that the predictive uncertainty can be visually used as a reliable error indicator. In this work we have presented a novel two-phase learning framework, termed UKT, for addressing the lack of a sufficiently large amount of paired training data in learned image reconstruction techniques. The framework consists of two learning phases, both within a Bayesian framework. It first pretrains a learned iterative reconstructor on (simulated) ordered pairs and then at test-time, it fine-tunes the model to realise sample-wise adaptation using only noisy clinically realistic measurements. Extensive experiments on low-dose and sparse-view CT reconstructions show that the approach is indeed very promising. It can achieve competitive performance with several state-of-the-art supervised and unsupervised approaches both qualitatively and quantitatively.

Acknowledgments

The authors are grateful to two anonymous referees for their constructive comments and Johannes Leuschner (University of Bremen) for helpful discussions.

Appendix A. Alternative loss for unsupervised knowledge-transfer

The derivation of the training loss (3.4) is not very principled in the sense that the trace term does not arise naturally from the likelihood function p(y u|θ e) using Bayes’ rule. One can though derive alternative losses by slightly modifying the construction of the likelihood p(y u|θ e). Below we give one such construction based on hierarchical modelling. For any measurement datum , corresponding to the unknown image x u, the likelihood p(y u|x u) is set to

where σ 2 dictates the strength of the measurement noise. The likelihood p(y u|x u) can be obtained under the standard assumption that the noise corruption to the exact data Ax u (for the unknown image x u) follows a Gaussian distribution with zero mean and variance σ 2 I. Meanwhile, under the heteroscedastic noise modelling, the unknown image x u (which in turn depends on the network parameter θ) is assumed to follow a Gaussian distribution

with the mean and covariance being the two outputs of the neural network F θ . Consequently, assuming that the data noise and the unknown image x u are independent, combining the last two identities using Bayes’ rule leads to

Upon letting , we then have

In addition to enforcing data fidelity, we may also include the total variation penalty into the loss (to stabilise the training process). Finally, after expanding, relabelling, and ignoring the constant terms (in θ), we obtain the following alternative loss at the second phase

Note that the term has a closed-form expression.

The loss in (A.1) differs from that in (3.4) only in the construction of the likelihood p(y u|θ e). However, the former is computationally less convenient, due to the presence of the factor in the data consistency term, as well as the log-determinant . Indeed, in view of the following well-known matrix directional derivative formulas

for any symmetric positive definite X, and admissible direction H, the gradient evaluation requires solving multiple linear systems, with the matrices given only implicitly. This can be computationally demanding for large-scale image restoration tasks such as CT reconstruction. In practice, the derivative of the log-determinant can be efficiently approximated using randomised trace estimators (e.g., the Hutchinson’s estimator [15], which again involves multiple linear solves).

The next result shows that the loss in (3.4) is actually a computationally more tractable approximation to the genuine Bayesian loss in (A.1), under the condition (i.e., the matrix has a small operator norm). This result provides a more principled Bayesian interpretation of the loss (3.4).

Proposition A.1.

Under the condition , the loss in (3.4) is an approximation to the Bayesian loss in (A.1).

Proof.

Let be the residual. It follows directly from the preceding matrix derivative formulas that

Note that the approximation is good under the given conditions. Then substituting these approximations into (A.1), ignoring the constant term and relabelling, we obtain the loss in (3.4). This shows the desired assertion. □

Footnotes

The work of RB is substantially supported by the i4health PhD studentship (UK EPSRC EP/S021930/1) and from The Alan Turing Institute (UK EPSRC EP/N510129/1), and that of ZK, SA and BJ by UK EPSRC EP/T000864/1, and that of SA and BJ also by UK EPSRC EP/V026259/1. AH acknowledges funding by Academy of Finland Projects 336796, 334817, 338408.

Contributor Information

Riccardo Barbano, Email: riccardo.barbano.19@ucl.ac.uk.

Željko Kereta, Email: z.kereta@ucl.ac.uk.

Andreas Hauptmann, Email: Andreas.Hauptmann@oulu.fi.

Simon R Arridge, Email: s.arridge@ucl.ac.uk.

Bangti Jin, Email: bangti.jin@gmail.com.

Data availability statement

The data that support the findings of this study are openly available and stated in the main text.

References

- 1.Adler J, Kohr H, Öktem O. Operator discretization library (ODL) 2017 Software available from https://github.com/odlgroup/odl .

- 2.Adler J, Öktem O. Solving ill-posed inverse problems using iterative deep neural networks. Inverse Problems. 2017;33:124007. doi: 10.1088/1361-6420/aa9581. [DOI] [Google Scholar]

- 3.Adler J, Öktem O. Learned primal-dual reconstruction. IEEE Trans. Med. Imaging. 2018;37:1322–32. doi: 10.1109/tmi.2018.2799231. [DOI] [PubMed] [Google Scholar]

- 4.Akçakaya M, Yaman B, Chung H, Ye J C. Unsupervised deep learning methods for biological image reconstruction and enhancement: an overview from a signal processing perspective. IEEE Signal Process. Mag. 2022;39:28–44. doi: 10.1109/msp.2021.3119273. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Antun V, Renna F, Poon C, Adcock B, Hansen A C. On instabilities of deep learning in image reconstruction and the potential costs of AI. Proc. Natl Acad. Sci. USA. 2020;117:30088–95. doi: 10.1073/pnas.1907377117. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Armato S G, III, et al. Lung image database consortium: developing a resource for the medical imaging research community. Radiology. 2004;232:739–48. doi: 10.1148/radiol.2323032035. [DOI] [PubMed] [Google Scholar]

- 7.Arridge S, Maass P, Öktem O, Schönlieb C-B. Solving inverse problems using data-driven models. Acta Numer. 2019;28:1–174. doi: 10.1017/s0962492919000059. [DOI] [Google Scholar]

- 8.Baguer D O, Leuschner J, Schmidt M. Computed tomography reconstruction using deep image prior and learned reconstruction methods. Inverse Problems. 2020;36:094004. doi: 10.1088/1361-6420/aba415. [DOI] [Google Scholar]

- 9.Barbano R, Arridge S, Jin B, Tanno R. Uncertainty quantification in medical image synthesis. In: Burgos N, Svoboda D, editors. Biomedical Image Synthesis and Simulation: Methods and Applications. Amsterdam: Elsevier; 2022. pp. pp 601–41. [Google Scholar]

- 10.Barbano R, Kereta Ž, Zhang C, Hauptmann A, Arridge S, Jin B. Quantifying sources of uncertainty in deep learning-based image reconstruction. NeurIPS 2020 Workshop on Deep Learning and Inverse Problems.2020. [Google Scholar]

- 11.Barbano R, Zhang C, Arridge S, Jin B. Quantifying model-uncertainty in inverse problems via Bayesian deep gradient descent. Int. Conf. Pattern Recognition; 2021. pp. 1392–9. [Google Scholar]

- 12.Bickel S. 2008. Learning under differing training and test distributions. PhD Thesis. [Google Scholar]

- 13.Blundell C, Cornebise J, Kavukcuoglu K, Wierstra D. Weight uncertainty in neural networks. Int. Conf. Machine Learning; 2015. pp. 1613–22. [Google Scholar]

- 14.Bredies K, Holler M. Higher-order total variation approaches and generalisations. Inverse Problems. 2020;36:123001. doi: 10.1088/1361-6420/ab8f80. [DOI] [Google Scholar]

- 15.Bujanovic Z, Kressner D. Norm and trace estimation with random rank-one vectors. SIAM J. Matrix Anal. Appl. 2021;42:202–23. doi: 10.1137/20m1331718. [DOI] [Google Scholar]

- 16.Cascarano P, Sebastiani A, Comes M C, Franchini G, Porta F. Combining weighted total variation and deep image prior for natural and medical image restoration via ADMM. 2020 (arXiv: 2009.11380)

- 17.Chen H, Zhang Y, Kalra M K, Lin F, Chen Y, Liao P, Zhou J, Wang G. Low-dose CT with a residual encoder-decoder convolutional neural network. IEEE Trans. Med. Imaging. 2017;36:2524–35. doi: 10.1109/tmi.2017.2715284. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Dar S U H, Özbey M, Çatlı A B, Çukur T. A transfer-learning approach for accelerated MRI using deep neural networks. Magn. Reson. Med. 2020;84:663–85. doi: 10.1002/mrm.28148. [DOI] [PubMed] [Google Scholar]

- 19.Daxberger E, Nalisnick E, Allingham J U, Antorán J, Hernández-Lobato J M. Bayesian deep learning via subnetwork inference. Proc. 38th Int. Conf. Machine Learning, PMLR; 2021. pp. 2510–21. [Google Scholar]

- 20.Depeweg S, Hernandez-Lobato J-M, Doshi-Velez F, Udluft S. Decomposition of uncertainty in Bayesian deep learning for efficient and risk-sensitive learning. Int. Conf. Machine Learning; 2018. pp. 1184–93. [Google Scholar]

- 21.Dittmer S, Kluth T, Maass P, Baguer D O. Regularization by architecture: a deep prior approach for inverse problems. J. Math. Imaging Vis. 2020;62:456–70. doi: 10.1007/s10851-019-00923-x. [DOI] [Google Scholar]

- 22.Engl H W, Hanke M, Neubauer A. Regularization of Inverse Problems (Mathematics and its Applications vol 375) Dordrecht: Kluwer; 1996. [Google Scholar]

- 23.Gal Y. 2016. Uncertainty in deep learning. PhD Thesis. [Google Scholar]

- 24.Gal Y, Ghahramani Z. Dropout as a Bayesian approximation: representing model uncertainty in deep learning. Proc. 33rd Int. Conf. Machine Learning, PMLR; 2016. pp. 1050–9. [Google Scholar]

- 25.Gilton D, Ongie G, Willett R. Model adaptation in biomedical image reconstruction. Int. Symp. Biomedical Imaging; 2021. pp. 1223–6. [Google Scholar]

- 26.Han Y, Yoo J, Kim H H, Shin H J, Sung K, Ye J C. Deep learning with domain adaptation for accelerated projection-reconstruction MR. Magn. Reson. Med. 1998;80:1189–205. doi: 10.1002/mrm.27106. [DOI] [PubMed] [Google Scholar]

- 27.He K, Girshick R, Dollar P. Rethinking imagenet pre-training. Proc. IEEE/CVF Int. Conf. Computer Vision (ICCV); 2019. pp. 4918–27. [Google Scholar]

- 28.Ito K, Jin B. Inverse Problems: Tikhonov Theory and Algorithms. Singapore: World Scientific; 2015. [Google Scholar]

- 29.Jin K H, McCann M T, Froustey E, Unser M. Deep convolutional neural network for inverse problems in imaging. IEEE Trans. Image Process. 2017;26:4509–22. doi: 10.1109/tip.2017.2713099. [DOI] [PubMed] [Google Scholar]

- 30.Jordan M I, Ghahramani Z, Jaakkola T S, Saul L K. An introduction to variational methods for graphical models. Mach. Learn. 1999;37:183–233. doi: 10.1023/a:1007665907178. [DOI] [Google Scholar]

- 31.Karani N, Erdil E, Chaitanya K, Konukoglu E. Test-time adaptable neural networks for robust medical image segmentation. Med. Image Anal. 2021;68:101907. doi: 10.1016/j.media.2020.101907. [DOI] [PubMed] [Google Scholar]

- 32.Kendall A, Gal Y. What uncertainties do we need in Bayesian deep learning for computer vision?. Neural Information Processing Systems; 2017. pp. 5580–90. [Google Scholar]

- 33.Kingma D P, Salimans T, Welling M. Variational dropout and the local reparameterization trick. Neural Information Processing Systems; 2015. pp. 2575–83. [Google Scholar]

- 34.Kingma D P, Welling M. Auto-encoding variational Bayes. 2013 (arXiv: 1312.6114)

- 35.Laves M-H, Ihler S, Fast J F, Kahrs L A, Ortmaier T. Recalibration of aleatoric and epistemic regression uncertainty in medical imaging. 2021 (arXiv: 2104.12376)

- 36.Leuschner J, Baltazar M, Erzmann D. Deep inversion validation library. 2019 Software available from https://github.com/jleuschn/dival .

- 37.Leuschner J, Schmidt M, Baguer D O, Maass P. LoDoPaB-CT, a benchmark dataset for low-dose computed tomography reconstruction. Sci. Data. 2021;8:109. doi: 10.1038/s41597-021-00893-z. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38.Li T, Li X, Wang J, Wen J, Lu H, Hsieh J, Liang Z. Nonlinear sinogram smoothing for low-dose x-ray CT. IEEE Trans. Nucl. Sci. 2004;51:2505–13. doi: 10.1109/tns.2004.834824. [DOI] [Google Scholar]

- 39.Loshchilov I, Hutter F. SGDR: stochastic gradient descent with warm restarts. Int. Conf. Learning Representations.2017. [Google Scholar]

- 40.Mackay D J C. Pasadena, California: 1992. Bayesian methods for adaptive models. PhD Thesis. UMI Order No. GAX92-32200. [Google Scholar]

- 41.Monga V, Li Y, Eldar Y C. Algorithm unrolling: interpretable, efficient deep learning for signal and image processing. IEEE Signal Process. Mag. 2021;38:18–44. doi: 10.1109/msp.2020.3016905. [DOI] [Google Scholar]

- 42.Mundt M, Hong Y W, Pliushch I, Ramesh V. A wholistic view of continual learning with deep neural networks: forgotten lessons and the bridge to active and open world learning. 2020 doi: 10.1016/j.neunet.2023.01.014. (arXiv: 2009.01797) [DOI] [PubMed]

- 43.Nix D A, Weigend A S. Estimating the mean and variance of the target probability distribution. Int. Conf. Neural Networks; Piscataway, NJ: IEEE; 1994. pp. 55–60. [Google Scholar]

- 44.Ongie G, Jalal A, Metzler C A, Baraniuk R G, Dimakis A G, Willett R. Deep learning techniques for inverse problems in imaging. IEEE J. Sel. Areas Inf. Theory. 2020;1:39–56. doi: 10.1109/jsait.2020.2991563. [DOI] [Google Scholar]

- 45.Osawa K, Swaroop S, Khan M E E, Jain A, Eschenhagen R, Turner R E, Yokota R. Practical deep learning with Bayesian principles. Neural Information Processing Systems.2019. [Google Scholar]

- 46.Parisi G I, Kemker R, Part J L, Kanan C, Wermter S. Continual lifelong learning with neural networks: a review. Neural Netw. 2019;113:54–71. doi: 10.1016/j.neunet.2019.01.012. [DOI] [PubMed] [Google Scholar]

- 47.Pelt D, Batenburg K, Sethian J. Improving tomographic reconstruction from limited data using mixed-scale dense convolutional neural networks. J. Imaging. 2018;4:128. doi: 10.3390/jimaging4110128. [DOI] [Google Scholar]

- 48.Quiñonero-Candela J, Sugiyama M, Lawrence N D, Schwaighofer A, editors. Dataset Shift in Machine Learning. Cambridge, MA: MIT Press; 2009. [Google Scholar]

- 49.Raghu M, Zhang C, Kleinberg J, Transfusion S B. Understanding transfer learning for medical imaging. Proc. 33rd Int. Conf. Neural Information Processing Systems; 2019. pp. 3347–57. [Google Scholar]

- 50.Ronneberger O, Fischer P, Brox T. U-Net: convolutional networks for biomedical image segmentation. Int. Conf. Medical Image Computing and Computer Assisted Intervention; Berlin: Springer; 2015. pp. 234–41. [Google Scholar]

- 51.Sun Y, Wang X, Liu Z, Miller J, Efros A, Hardt M. Test-time training with self-supervision for generalization under distribution shifts. Int. Conf. Machine Learning; 2020. pp. 9229–48. [Google Scholar]

- 52.Tanno R, Worrall D E, Ghosh A, Kaden E, Sotiropoulos S N, Criminisi A, Alexander D C. Bayesian image quality transfer with CNNs: exploring uncertainty in dMRI super-resolution. Int. Conf. Medical Image Computing and Computer-Assisted Intervention; Berlin: Springer; 2017. pp. 611–9. [Google Scholar]

- 53.Ulyanov D, Vedaldi A, Lempitsky V. Deep image prior. IEEE Conf. Computer Vision and Pattern Recognition; 2018. pp. 9446–54. [Google Scholar]

- 54.Van Aarle W, Palenstijn W J, De Beenhouwer J, Altantzis T, Bals S, Batenburg K J, Sijbers J. The ASTRA toolbox: a platform for advanced algorithm development in electron tomography. Ultramicroscopy. 2015;157:35–47. doi: 10.1016/j.ultramic.2015.05.002. [DOI] [PubMed] [Google Scholar]

- 55.Vincent P, Larochelle H, Lajoie I, Bengio Y, Manzagol P-A. Stacked denoising autoencoders: learning useful representations in a deep network with a local denoising criterion. J. Mach. Learn. Res. 2010;11:3371–408. doi: 10.5555/1756006.1953039. [DOI] [Google Scholar]

- 56.Wang G, Ye J C, De Man B. Deep learning for tomographic image reconstruction. Nat. Mach. Intell. 2020;2:737–48. doi: 10.1038/s42256-020-00273-z. [DOI] [Google Scholar]

- 57.Wang J, Li T, Lu H, Liang Z. Noise reduction for low-dose single-slice helical CT sinograms. IEEE Trans. Nucl. Sci. 2006;53:1230–7. doi: 10.1109/tns.2006.874955. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 58.Wu Y, He K. Group normalization. European Conf. Computer Vision; 2018. pp. 3–19. [Google Scholar]

- 59.Yang X, He X, Liang Y, Yang Y, Zhang S, Xie P. Transfer learning or self-supervised learning? A tale of two pretraining paradigms. 2020 (arXiv: 2007.04234)

- 60.Zhang J, Liu Z, Zhang S, Zhang H, Spincemaille P, Nguyen T D, Sabuncu M R, Wang Y. Fidelity imposed network edit (FINE) for solving ill-posed image reconstruction. NeuroImage. 2020;211:116579. doi: 10.1016/j.neuroimage.2020.116579. [DOI] [PMC free article] [PubMed] [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Data Availability Statement

The data that support the findings of this study are openly available and stated in the main text.