Abstract

Eyeblinks and other large artifacts can create two major problems in event-related potential (ERP) research, namely confounds and increased noise. Here, we developed a method for assessing the effectiveness of artifact correction and rejection methods at minimizing these two problems. We then used this method to assess a common artifact minimization approach, in which independent component analysis (ICA) is used to correct ocular artifacts, and artifact rejection is used to reject trials with extreme values resulting from other sources (e.g., movement artifacts). This approach was applied to data from five common ERP components (P3b, N400, N170, mismatch negativity, and error-related negativity). Four common scoring methods (mean amplitude, peak amplitude, peak latency, and 50% area latency) were examined for each component. We found that eyeblinks differed systematically across experimental conditions for several of the components. We also found that artifact correction was reasonably effective at minimizing these confounds, although it did not usually eliminate them completely. In addition, we found that the rejection of trials with extreme voltage values was effective at reducing noise, with the benefits of eliminating these trials outweighing the reduced number of trials available for averaging. For researchers who are analyzing similar ERP components and participant populations, this combination of artifact correction and rejection approaches should minimize artifact-related confounds and lead to improved data quality. Researchers who are analyzing other components or participant populations can use the method developed in this study to determine which artifact minimization approaches are effective in their data.

Keywords: Electroencephalogram (EEG), independent component analysis (ICA), ERP CORE, signal-to-noise ratio (SNR), standardized measurement error (SME)

1. Introduction

Eyeblinks generate large artifacts in electroencephalographic (EEG) recordings, typically exceeding 200 μV at the frontal pole (Fp1 and Fp2) and 30 μV at the vertex (Cz; see Lins et al., 1993 for normative data). Large artifacts can also occur anywhere on the scalp as a result of movements, skin potentials, muscle contractions, and idiosyncratic events (see Luck, 2014 for an overview of common artifacts in ERP experiments). Consequently, almost all event-related potential (ERP) studies employ an artifact rejection and/or artifact correction approach to deal with these artifacts, and several guidelines for publishing ERP and time-frequency studies indicate that this is essential (Duncan et al., 2009; Keil et al., 2014, 2022; Picton et al., 2000).

The present study examines the effectiveness of a common approach to dealing with eyeblinks and other artifacts that produce extreme values, in which artifacts with stable scalp distributions are corrected using independent component analysis (ICA; Chaumon et al., 2015; Jung, Makeig, Humphries, et al., 2000; Jung, Makeig, Westerfield, et al., 2000) and any trials that have extreme voltage deflections in any channel in the corrected data are also excluded from the averaged ERPs (artifact rejection; Islam et al., 2016; Nolan et al., 2010). This general approach is used very widely: in the first 10 issues of the 2023 volume of Psychophysiology, there were at least 18 papers that used some variant of this general approach (see Addante et al., 2023; Arnau et al., 2023; Bruchmann et al., 2023; Chen & Chen, 2023; Fan et al., 2023; Hubbard et al., 2023; Lin et al., 2023; Liu et al., 2023; Morales et al., 2023; Nguyen et al., 2023; Nicolaisen-Sobesky et al., 2023; Paraskevoudi & SanMiguel, 2023; Ringer et al., 2023; Schmuck et al., 2023; Sun et al., 2023; Tao et al., 2023; Wood et al., 2023; Zheng et al., 2023).

A careful assessment of this combined correction-rejection approach is particularly relevant at this moment because the usefulness of extensive EEG preprocessing has been questioned (Delorme, 2023) and because a new metric of data quality is now available that is directly related to effect sizes and statistical power (Luck et al., 2021). The rejection of trials containing artifacts may be particularly problematic because it will decrease the number of trials included in the averages, making the averaged ERP waveforms noisier. The new metric of data quality takes into account both the single trial noise level and the number of trials being averaged together, making it possible to determine whether the benefit of eliminating noisy trials outweighs the cost of having fewer trials.

The goal of the present investigation was to assess the effectiveness of an artifact minimization approach that is widely used and easy to implement using both open-source and commercial analysis packages. If this approach works well, researchers can keep using it, avoiding the considerable work required to implement more complex approaches that might have only marginal benefits. If it works poorly, however, this would establish a need for developing and implementing better approaches. We did not attempt to answer the question of which approach to artifact minimization is best, which would be difficult given the sheer number of available correction and rejection approaches. We did, however, aim to provide a well-justified and straightforward method for assessing the effectiveness of different artifact minimization approaches. Researchers could apply this method to their own data to determine whether their current artifact minimization approach is effective (because, as we will show, the effectiveness of an approach depends on the nature of the data). Methodologists could use this method to assess the effectiveness of new or improved artifact minimization approaches.

1.1. Goals of Artifact Correction and Rejection

To evaluate an approach to rejecting or correcting artifacts, it is essential to begin by carefully defining the goals of rejection and correction. Previous examinations of ICA-based artifact correction with real data have focused primarily on theoretical goals, such as minimizing mutual information and obtaining independent components with dipolar scalp maps (Delorme et al., 2012; Hoffmann & Falkenstein, 2008; Klug & Gramann, 2021; Winkler et al., 2015). Here, we consider the narrower goal of assessing whether two or more conditions or groups truly differ from each other in the amplitude or latency of a specific ERP component. There are two main ways in which artifacts can interfere with this goal (Luck, 2014, 2022). First, and most importantly, artifacts can be a potential confound. For example, if participants blink more in one condition than in another, the electrooculographic (EOG) voltage produced by the blinks may create a difference in the ERP waveforms between the conditions. This difference may then be interpreted as a difference in EEG activity between conditions rather than as a difference in EOG activity, leading to an incorrect scientific conclusion.

Second, artifacts may be a source of uncontrolled variance that decreases statistical power and causes a true effect not to be statistically significant. For example, a few random trials with a ±200 μV movement artifact in each participant’s data could be enough to add substantial error variance and prevent a true effect from being statistically significant. This would also lead to an incorrect scientific conclusion (or, more precisely, a failure to provide sufficient evidence for the correct conclusion). The present study addresses both of these issues, examining whether artifact correction and rejection help to minimize consistent but artifactual differences between conditions (i.e., reduce potential confounds) and help to minimize uncontrolled variance (i.e., increase statistical power). Note that although some prior studies of the effectiveness of artifact correction have assessed uncontrolled variance or statistical power (e.g., Delorme, 2023; Klug & Gramann, 2021; Mennes et al., 2010), previous research has largely neglected the possibility that artifacts are a confound. It is unlikely that any artifact correction method will be perfect, and it is essential to assess whether any residual artifactual signals create meaningful confounds in a given study.

EOG signals are also sometimes used for a third purpose in studies with visual stimuli, namely ensuring that the eyes are open and pointed in the appropriate direction when the stimulus is presented (Luck, 2014, 2022). For example, even when ICA is used to correct for EOG artifacts resulting from blinks, it can still be useful to reject trials on which the eyes are closed at the time of the stimulus. This issue arises in a fairly large number of studies, so we will consider it in the present analyses. In addition, when lateralized stimuli are used, EOG signals can be used to demonstrate that the eyes did not deviate systematically from fixation and change the sensory input. This is a less common issue that requires special analytic approaches (Luck, 2022; Woodman & Luck, 2003), so we will not consider it in the present analyses.

1.2. The Present Study

Most previous studies of the effectiveness of artifact minimization approaches have assessed data from a single study, often with a relatively small number of participants. This makes it difficult to know whether the results would generalize to other studies. In the present study, we evaluated the effectiveness of artifact correction and rejection across a broad range of experimental paradigms with a reasonably large sample size. Specifically, we used the publicly available ERP CORE (Compendium of Open Resources and Experiments; Kappenman et al., 2021), which includes data from 40 young adults who performed six standardized paradigms that yielded seven commonly-studied ERP components: P3b, N400, N170, N2pc, mismatch negativity (MMN), error-related negativity (ERN), and lateralized readiness potential (LRP). We did not analyze the N2pc and LRP data, because ocular artifacts create very different issues for these components than for most ERP components1. For the other five components, we asked (a) whether eyeblinks differed across experimental conditions and were therefore a potential confound, (b) whether ICA effectively minimized this confound, (c) whether ICA decreased or increased the data quality, and (d) whether the rejection of trials with extreme values increased the data quality even though it reduced the number of trials included in the averaged ERP waveforms. We also examined the effects of rejecting trials with blinks that interfered with the ability to see the stimuli.

We did not examine the effectiveness of rejecting trials in which a blink occurred at any time in the epoch, which was the standard approach prior to the widespread adoption of blink correction methods. When that approach was standard, it was also typical to ask participants to minimize blinking or to blink during the intertrial interval. In the ERP CORE tasks, by contrast, participants were instructed that they could blink immediately after responding, which was typically within the epoch. This is not a situation in which researchers would typically reject trials with a blink at any point in the epoch, so examining the effectiveness of this type of artifact rejection approach using the ERP CORE data would not be a fair test of the approach.

Blink-related confounds are a potential problem in a large proportion of ERP studies, so they are the focus of the present investigation. Our method can also be directly applied to vertical eye movements, which have very similar scalp distributions to blinks. Other kinds of confounds may be of concern in other experimental paradigms or participant populations, and our method could be modified for those other confounds.

By examining data from five different ERP components, the present investigation could draw relatively general conclusions about the effectiveness of the common approach of combining ICA-based correction for blinks with artifact rejection to eliminate trials with extreme voltage deflections. Our results may not generalize to all experimental paradigms and participant populations, but they will still be of value for a relatively large number of ERP studies. Another major goal of the present study was to develop and assess a rigorous yet easy-to-implement method for assessing the effectiveness of artifact correction and rejection. This method could be utilized in future research to assess the effectiveness of alternative artifact minimization approaches and could be applied to other experimental paradigms and participant populations. Moreover, we sought to test the proposal that artifact correction and rejection have little or no value (Delorme, 2023).

Note that the present study focuses on conventional averaged ERPs. However, it would be straightforward to adapt our assessment method to other EEG analysis approaches, such as time-frequency analysis and multivariate pattern analysis.

1.3. Quantifying Systematic Confounds

In the ERP CORE data, blinks are the main artifacts that are likely to differ systematically across experimental conditions and create a confound. We assessed the possibility that blinks were a confound by examining the bipolar vertical electrooculogram (VEOG) signal prior to artifact correction. The eyeblink artifact appears to be primarily caused by the eyelid sliding across the cornea2 (Lins et al., 1993), which creates a large positive deflection above the eyes and a smaller negative deflection below the eyes. We computed the bipolar VEOG as the voltage above the eyes (which is positive during a blink) minus the voltage below the eyes (which is negative during a blink). A positive value minus a negative value creates a large positive value, so this bipolar derivation effectively magnifies the blink-related activity. In addition, most EEG activity is similar at electrodes under and over the eyes, so this difference subtracts out most (but not all) neural signals. Thus, the bipolar VEOG channel provides a large and relatively pure index of blink activity.

We used this channel to determine the proportion of trials that contained blinks in each of the two conditions that were used to define a given component (e.g., face trials and car trials for the N170, error trials and correct trials for the ERN). If the proportion differs across conditions, then the blinks are a potential confound. Even if the number of blinks is equal across groups or conditions, the timing of the blinks might differ, and this could also be a significant confound. For example, if participants blink earlier on correct trials than on error trials in an ERN experiment, this will create an early negative voltage followed by a later positive voltage at frontal electrode sites in an error-minus-correct difference waveform. This pattern could be mistaken for an ERN followed by an error positivity (Pe). Indeed, this is exactly what we observed in our flankers paradigm. We assessed differences in the timing of blinks by comparing the averaged bipolar VEOG waveforms across conditions.

To determine the effectiveness of ICA-based artifact correction at minimizing blink-related confounds, we reconstructed the bipolar VEOG signal after artifact correction. If the corrected bipolar VEOG signals are nearly identical across conditions, then it is reasonable to conclude that the blink correction was effective in minimizing blink-related confounds. By contrast, a significant difference in bipolar VEOG between conditions after correction suggests that the correction was not completely successful. However, even if the blink-related activity has been perfectly eliminated, there could be differences between conditions in the bipolar VEOG signal as a result of volume-conducted ERP activity. We used semipartial correlations to determine whether any residual activity in the corrected bipolar VEOG signal reflected a failure of artifact correction or instead reflected volume-conducted voltages from the ERP component of interest.

1.4. Quantifying Data Quality

To determine whether artifact correction and rejection improved the data quality, we used a newly developed metric of data quality called the standardized measurement error (SME; Luck et al., 2021; Zhang & Luck, 2023). The SME estimates the standard error of measurement for a given amplitude or latency score from an averaged ERP waveform. It takes into account the fact that a given type of noise will have different effects on data quality depending on the method used to score a component’s amplitude or latency. For example, peak amplitude scores are much more distorted by high-frequency noise than are mean amplitude measures. In addition, when baseline correction is applied, low-frequency noise will have a larger effect on amplitudes at long latencies than at short latencies. The SME therefore estimates the measurement error for a particular score. A separate SME value is obtained for each participant, and the values can then be aggregated across participants by taking the root mean square of the individual-participant values (the RMS(SME)). The resulting RMS(SME) is directly related to the effect size for a difference between conditions or groups, which in turn is directly related to statistical power (see Luck et al., 2021, for details). Finally, because SME values represent the measurement error for amplitude or latency scores obtained from averaged ERP waveforms, they naturally reflect the combined effects of the single-trial noise level and the number of trials being averaged together. Thus, the SME provides an excellent means of determining whether the benefit of rejecting trials with extreme values (i.e., eliminating large artifactual voltages) outweighs the reduction in the number of trials being averaged together.
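The aggregation step described above can be written compactly. This is a sketch in our own notation, not a formula quoted from the cited papers: $N$ is the number of participants and $\mathrm{SME}_i$ is the SME value obtained for participant $i$ for a given score.

```latex
\mathrm{RMS(SME)} = \sqrt{\frac{1}{N}\sum_{i=1}^{N}\mathrm{SME}_i^{2}}
```

Because the values are squared before averaging, participants with unusually noisy data contribute more to the RMS(SME) than they would to a simple mean.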

2. Method

The data analysis procedures were implemented with MATLAB 2021b (MathWorks Inc), using EEGLAB Toolbox v2023.0 (Delorme & Makeig, 2004) combined with ERPLAB Toolbox v9.20 (Lopez-Calderon & Luck, 2014). The data and scripts are available at https://osf.io/vpb79/. The present analyses can also be conducted without scripting by using the EEGLAB and ERPLAB graphical user interfaces. Thus, it should be straightforward for other investigators to apply our method to their own data.

2.1. ERP CORE Data and Preprocessing

We assessed the effects of artifact correction and artifact rejection on ERP data quality using the ERP CORE dataset (Kappenman et al., 2021), which can be found at https://doi.org/10.18115/D5JW4R. Comprehensive information concerning the participants, paradigms, recording techniques, and analysis protocols can be obtained from the original paper. The following section provides a concise summary of the participants, recording techniques, and preprocessing procedures. Additionally, each specific ERP paradigm is briefly described in its corresponding section within the Results section.

The ERP CORE dataset contains data from 40 neurotypical college students (25 women, 15 men), who were recruited from the University of California, Davis community. All 40 participants were included in the present analyses, regardless of the number of artifacts or behavioral errors present. The only exception is that one participant was excluded from the ERN analyses because this participant had only two usable error trials.

The EEG was recorded using a Biosemi ActiveTwo recording system (Biosemi B.V., Amsterdam) with DC coupling, active electrodes, an antialiasing filter (fifth-order sinc filter with a half-power cutoff at 204.8 Hz), and a sampling rate of 1024 Hz. Single-ended signals were recorded from 30 scalp sites (FP1, F3, F7, FC3, C3, C5, P3, P7, P9, PO7, PO3, O1, Oz, Pz, CPz, FP2, Fz, F4, F8, FC4, FCz, Cz, C4, C6, P4, P8, P10, PO8, PO4, O2) along with horizontal and vertical electrooculogram electrodes (HEOG left, HEOG right, VEOG lower).

Several preprocessing steps had already been carried out on the data within the ERP CORE resource. Stimulus event codes were adjusted to account for the intrinsic delay of the video monitor, and the data were resampled at 256 Hz using an antialiasing filter set at 115 Hz. Following this, the data were referenced to the average of P9 and P10 electrodes, which are electrically similar to the left and right mastoids but tend to be more stable. The one exception was the N170 paradigm, where Cz was used as the reference prior to averaging, and then the data were re-referenced to the average of all scalp sites after averaging. For all components, we also created a bipolar horizontal electrooculogram (HEOG-bipolar) channel as HEOG-left minus HEOG-right, and we created a bipolar vertical electrooculogram (VEOG-bipolar) as FP2 minus VEOG-lower3. In addition, a noncausal Butterworth high-pass filter with a half-amplitude cutoff at 0.1 Hz and a low-pass filter at 30 Hz (a roll-off of 12 dB/octave for both filters) were applied to the continuous EEG data.

Note that in all of the ERP CORE paradigms except the MMN task, participants were instructed to avoid blinking between the time of the stimulus and the time of the behavioral response but were free to blink after responding. Instructing participants to suppress blinks altogether is known to require cognitive effort (Lerner et al., 2009) and can impact ERPs (Ochoa & Polich, 2000). However, merely delaying the blinks until after the response seems to require less effort (although we know of no formal demonstrations of this). Instructions to delay blinks can be advantageous because they minimize the number of trials with blinks that might interfere with seeing the stimuli and reduce the possibility of differences in blink activity between conditions prior to the response. However, this procedure may also cause the blinks to be concentrated in the late part of the epoch. Differences in blinking across conditions may then be present late in the epoch, creating EOG confounds that might be mistaken for late ERP effects. The overall benefit of instructions to delay blinking will depend on the nature of a given study.

2.2. General artifact correction and rejection methods

Independent component analysis (ICA) was used for artifact correction. We used the EEGLAB runica() routine, which implements the infomax algorithm. This is probably the most widely used ICA algorithm for artifact correction (because it is the default in EEGLAB), and it is also one of the best-performing algorithms (Delorme et al., 2012). We used the default parameters, because our goal was to evaluate the effectiveness of a widely used approach rather than to determine the optimal approach.

The original ICA-based correction performed on the ERP CORE data did not follow recent recommendations for optimization (Dimigen, 2020; Klug & Gramann, 2021; Luck, 2022; Winkler et al., 2015). We therefore conducted a new ICA decomposition, in which we created a parallel dataset for each participant that was optimized for the decomposition. In this parallel dataset, we first applied a noncausal Butterworth band-pass filter with half-amplitude cutoffs at 1 Hz and 30 Hz and a roll-off of 12 dB/octave. Although this relatively narrow bandpass significantly distorts the time course of the ERP waveform (Zhang et al., 2023a, 2023b), it does not change the scalp distributions, so it does not interfere with the ICA decomposition process. However, it minimizes idiosyncratic noise that would otherwise degrade the decomposition. The data were then resampled at 100 Hz to increase the speed of the decomposition. Finally, we deleted break periods and time periods with biologically implausible outlier voltages from the continuous EEG, because these periods also contain idiosyncratic noise that would otherwise degrade the decomposition. This was achieved using the ERPLAB pop_erplabDeleteTimeSegments() function to delete break periods (defined as periods of at least 2 seconds without an event code) and the ERPLAB pop_continuousartdet() function to delete periods in which the peak-to-peak amplitude within a specific window length exceeded a threshold. The window length and threshold were set individually via visual inspection in the original ERP CORE resource, with a window length between 500 and 2000 ms and a threshold between 350 and 750 μV.

We included all EEG and EOG electrodes in the ICA decomposition except for the bipolar channels and any “bad” channels that required interpolation (as identified in the original ERP CORE dataset). Independent components (ICs) that represented blink artifacts were identified using ICLabel, an automatic IC classification system that was trained on a large number of datasets with manually labeled ICs (Pion-Tonachini et al., 2019). ICLabel assigns a probability that a given IC reflects a specific artifact. We classified an IC as reflecting blinks if ICLabel gave it a probability of at least 0.9 as reflecting blink activity. ICs with a lower probability are by definition more ambiguous, so we visually assessed the time-course match between these ICs and the VEOG-bipolar signal. A good match was occasionally observed for these ICs, and we also classified these ICs as reflecting blinks4. This semi-automatic approach to IC classification is quite common, but it involves the subjective judgment of experts and is therefore difficult to reproduce exactly. We therefore repeated all analyses using fully automatic classification (i.e., without visual inspection), once with a probability threshold of 0.9 and once with a probability threshold of 0.8. All three approaches yielded identical patterns of statistical significance for the analyses reported in this paper, except for one that is noted in the Results section. The specific results shown below came from the semi-automatic approach. Table 1 summarizes the number of ICs that were classified as reflecting blinks with each of these approaches.

Table 1.

Mean number of blink-related independent components identified by three methods for each ERP component (range in parentheses)

| ERP component | Fully automatic classification (probability = 0.8-1.0) | Fully automatic classification (probability = 0.9-1.0) | Semi-automatic classification (probability = 0.9-1.0 plus visual inspection) |
|---|---|---|---|
| N400 | 1.40 (0-3) | 1.35 (0-3) | 1.48 (1-3) |
| ERN | 1.35 (1-2) | 1.13 (0-2) | 1.33 (1-2) |
| P3b | 1.10 (0-2) | 1.05 (0-2) | 1.13 (1-2) |
| MMN | 1.35 (0-2) | 1.18 (0-2) | 1.48 (1-2) |
| N170 | 1.38 (0-3) | 1.20 (0-2) | 1.33 (0-3) |

After the decomposition was performed, we transferred the component weights back to the original dataset that was not heavily filtered. The EEG was then reconstructed from the non-blink ICs. This procedure made it possible to obtain ICA weights using heavily filtered data that were not degraded by idiosyncratic noise, but the ICA weights were then applied to the original data so that the time course of the ERPs would not be distorted by the filtering.
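The weight-transfer and reconstruction step can be summarized in matrix form. This is a sketch in our own notation rather than a description of the EEGLAB internals: $W$ is the unmixing matrix estimated from the heavily filtered parallel dataset, $X$ is the original lightly filtered data (channels by time points), $A$ is the corresponding mixing matrix (the inverse of $W$, or its pseudoinverse if $W$ is not square), and $B$ is the set of ICs classified as blinks.

```latex
S = W X, \qquad A = W^{-1}, \qquad
X_{\text{corrected}} = \sum_{k \notin B} a_k \, s_k^{\top}
```

Here $a_k$ is column $k$ of $A$ (the scalp map of IC $k$) and $s_k^{\top}$ is row $k$ of $S$ (its time course). Only the scalp maps are inherited from the heavily filtered data. The time courses are recomputed from the original data, which is why the ERP waveforms are not distorted by the 1 Hz high-pass filter.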

Artifact detection and rejection were performed after artifact correction and after the data were epoched and baseline-corrected using the time periods shown in Table 2. Every epoch from every corrected EEG channel was subjected to two algorithms that detected somewhat different kinds of extreme values. We first applied ERPLAB’s simple voltage threshold algorithm with a threshold of 200 μV, which flagged any epochs in which the baseline-corrected voltage exceeded the threshold at any time point during the epoch. We then applied ERPLAB’s moving window peak-to-peak algorithm with a threshold of 100 μV, which computed the peak-to-peak amplitude within overlapping 200-ms windows across a given epoch. An epoch was flagged if the peak-to-peak amplitude within any window exceeded the threshold. These specific thresholds were chosen on the basis of an exploratory analysis comparing different threshold combinations, in which we found that these thresholds tended to yield the greatest overall reduction in noise. Details of this exploratory analysis can be found in the supplementary materials (Figures S2–S6). The epochs that were flagged for artifacts were then excluded when the averaged ERPs were computed. All channels were excluded for a given epoch if an artifact was flagged in any channel.
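The logic of the two detection algorithms can be illustrated with a minimal Python sketch. This is not the ERPLAB implementation: the function names are hypothetical, and the 100-ms window step is an assumed value that is not specified in the text. The thresholds and the 200-ms window width follow the description above.

```python
def exceeds_voltage_threshold(epoch_uv, threshold_uv=200.0):
    """Simple voltage threshold: True if any sample lies beyond +/- threshold."""
    return any(abs(v) > threshold_uv for v in epoch_uv)


def exceeds_peak_to_peak(epoch_uv, srate_hz, window_ms=200.0,
                         step_ms=100.0, threshold_uv=100.0):
    """Moving window peak-to-peak: True if (max - min) within any window
    exceeds the threshold. step_ms is an assumed value, not from the text."""
    win = max(2, round(window_ms * srate_hz / 1000.0))
    step = max(1, round(step_ms * srate_hz / 1000.0))
    last_start = max(0, len(epoch_uv) - win)
    starts = list(range(0, last_start + 1, step))
    if starts[-1] != last_start:
        starts.append(last_start)  # make sure the end of the epoch is covered
    for s in starts:
        segment = epoch_uv[s:s + win]
        if max(segment) - min(segment) > threshold_uv:
            return True
    return False


def reject_epoch(channels_uv, srate_hz):
    """Reject the whole epoch if either test flags any channel.
    channels_uv maps channel name -> list of baseline-corrected samples."""
    return any(
        exceeds_voltage_threshold(x) or exceeds_peak_to_peak(x, srate_hz)
        for x in channels_uv.values()
    )
```

Passing only the measurement channel in `channels_uv` would restrict detection to that channel, which is the kind of variation that a researcher might want to compare when applying this method to their own data.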

Table 2.

Epoch window, baseline period, electrode site, and measurement window used for each ERP component.

| Parameter | N400 | ERN | P3b | MMN | N170 |
|---|---|---|---|---|---|
| Epoch (ms) | −200 to 800 | −600 to 400 | −200 to 800 | −200 to 800 | −200 to 800 |
| Baseline Period (ms) | −200 to 0 | −400 to −200 | −200 to 0 | −200 to 0 | −200 to 0 |
| Measurement Channel | CPz | FCz | Pz | FCz | PO8 |
| Measurement Window (ms) | 300 to 500 | 0 to 100 | 300 to 600 | 125 to 225 | 110 to 150 |

Because the ICA-corrected data were used for the artifact detection process, blinks did not create extreme values and did not cause an epoch to be flagged for rejection. However, we wanted to count the number of trials with blinks in each condition to determine if blink rates differed across conditions. This was accomplished by applying ERPLAB’s step function routine to the uncorrected VEOG-bipolar channel. This routine slid a moving window across the epoch, and the absolute value of the difference between the mean voltage in the first half and second half of each window was computed (see Luck, 2014 for a detailed description). The largest of these values for a given epoch was then compared with a threshold. We used a window width of 200 ms, a step size of 10 ms, and a threshold of 100 μV.
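A minimal Python sketch of the step function logic described above is given here for illustration. It is not the ERPLAB routine: the function names are hypothetical, and details such as how the window is split when it contains an odd number of samples are our assumptions.

```python
def step_function_max(signal_uv, srate_hz, window_ms=200.0, step_ms=10.0):
    """Slide a window across the signal and return the largest absolute
    difference between the mean of its first half and its second half."""
    half = max(1, round(window_ms * srate_hz / 1000.0) // 2)
    step = max(1, round(step_ms * srate_hz / 1000.0))
    largest = 0.0
    for start in range(0, len(signal_uv) - 2 * half + 1, step):
        first = signal_uv[start:start + half]
        second = signal_uv[start + half:start + 2 * half]
        diff = abs(sum(second) / half - sum(first) / half)
        largest = max(largest, diff)
    return largest


def contains_blink(veog_bipolar_uv, srate_hz, threshold_uv=100.0):
    """Flag an epoch when the largest step exceeds the threshold."""
    return step_function_max(veog_bipolar_uv, srate_hz) > threshold_uv
```

Applying `contains_blink` to a slice of the epoch rather than the whole epoch would restrict blink detection to a limited time range.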

In addition, one of our analyses excluded trials in which a blink occurred near the time of the stimulus, which prevents the perception of that stimulus. This was accomplished using the step function but limiting the time period to −200 to +200 ms.

2.3. Specific artifact correction and rejection approaches

We compared five different approaches to combining artifact correction with artifact rejection. In our baseline approach, labeled “None”, no artifact correction or rejection was applied. We simply epoched and baseline-corrected the EEG using the time windows specified in Table 2, and then averaged all the EEG epochs for a given condition except those with incorrect behavioral responses.

For the second approach, labeled “ICA”, we used the ICA approach described in the previous section to correct for blinks and then averaged all the EEG epochs except those with incorrect behavioral responses. No epochs were rejected because of EEG or EOG artifacts.

The third approach, labeled “ICA+EV1”, was identical to the ICA approach except that epochs with extreme values (EVs) in any channel (as defined in the preceding section) were excluded during averaging. Note that the extreme values were assessed after artifact correction, so blinks did not lead to rejection.

The fourth approach, labeled “ICA+EV1+Blink”, was identical to the ICA+EV1 approach except that the averages also excluded epochs with blink activity around the time of the stimulus (as defined in the previous section). This excludes trials in which a blink would have prevented the perception of a visual stimulus.

The fifth approach, labeled “ICA+EV2”, was identical to the ICA+EV1 approach except that epochs were rejected only if extreme values were present in the measurement channel for a given component (listed in Table 2). By contrast, the ICA+EV1 approach excludes trials with extreme values in any channel. By narrowing extreme value detection to the measurement channel, we aimed to retain more trials.

We also explored two additional approaches in which channels exhibiting extreme values during an epoch were interpolated for that epoch rather than being rejected. This is not a common approach, and it did not work as well as rejection, so the results are described in the supplementary materials (see Figure S1).

2.4. Assessment of data quality

We computed the data quality separately for each of the five artifact minimization approaches across four scoring methods: mean amplitude, peak amplitude, 50% area latency, and peak latency. To estimate the noise, we focused on the difference waves of the specific components under investigation. In the case of the P3b component, for example, we computed a rare-minus-frequent difference wave, scored the amplitudes and latencies from this difference wave, and obtained the data quality for these scores. All analyses for a given component were restricted to the maximal channel for that component, as listed in Table 2.

Mean amplitude was defined as the mean voltage within the measurement window listed in Table 2. Peak amplitude was determined as the maximum positive voltage (for the P3b component) or the maximum negative voltage (for other components) within the measurement window, whereas peak latency was defined as the latency at which the peak amplitude occurred. To measure the 50% area latency, we computed the area bounded by the zero voltage line and the ERP difference wave during the measurement period (i.e., the area on the positive side of the zero line for the P3b and the area on the negative side of the zero line for the N170, MMN, N400, and ERN) and located the time point that divided this area into equal halves. To enhance temporal precision, we upsampled the waveforms by a factor of 10 using spline interpolation before scoring the latencies (see Luck, 2014 for the rationale for upsampling and a more detailed description of the 50% area latency measure).
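The four scoring methods can be illustrated with a short Python sketch. This is a simplified illustration under stated assumptions, not the scoring code used in the study: the function names are hypothetical, the spline upsampling step is omitted, the area is approximated with trapezoids, and the 50% point is located by linear interpolation within a segment. The `polarity` argument is +1 for a positive-going component (e.g., the P3b) and −1 for a negative-going component.

```python
def window_samples(times, volts, start, end):
    """Return (time, voltage) pairs inside the measurement window (inclusive)."""
    return [(t, v) for t, v in zip(times, volts) if start <= t <= end]


def mean_amplitude(times, volts, start, end):
    samples = window_samples(times, volts, start, end)
    return sum(v for _, v in samples) / len(samples)


def peak(times, volts, start, end, polarity):
    """Return (latency, amplitude) of the most positive (polarity=+1)
    or most negative (polarity=-1) sample in the window."""
    samples = window_samples(times, volts, start, end)
    return max(samples, key=lambda s: polarity * s[1])


def area_latency_50(times, volts, start, end, polarity):
    """Time point that splits the one-sided area under the wave in half."""
    samples = window_samples(times, volts, start, end)
    # Keep only the area on the chosen side of the zero line.
    rect = [max(polarity * v, 0.0) for _, v in samples]
    # Trapezoidal area of each segment between adjacent samples.
    seg = [(rect[i] + rect[i + 1]) / 2 * (samples[i + 1][0] - samples[i][0])
           for i in range(len(rect) - 1)]
    total = sum(seg)
    if total == 0:
        return None  # no area on the requested side of zero
    half, running = total / 2, 0.0
    for i, area in enumerate(seg):
        if running + area >= half:
            frac = (half - running) / area  # approximate linear interpolation
            t0, t1 = samples[i][0], samples[i + 1][0]
            return t0 + frac * (t1 - t0)
        running += area
    return samples[-1][0]  # guard against floating-point round-off


# Toy negative-going difference wave (arbitrary units).
times = [0, 1, 2, 3, 4]
volts = [0.0, -1.0, -2.0, -1.0, 0.0]
peak_latency, peak_amplitude = peak(times, volts, 0, 4, polarity=-1)
```

For this symmetric toy waveform, the peak latency and the 50% area latency both fall at the center of the window.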

SME values were obtained individually from each participant for each score. For mean amplitude scores, we first computed the analytic SME (aSME) value for each parent waveform (e.g., the rare and frequent waveforms for the P3b component). Specifically, the mean amplitude score (i.e., the mean voltage across the measurement window) was obtained for each epoch for a given participant in a given condition (using the same epochs that are used for averaging), and the aSME was computed as the standard deviation of these scores divided by the square root of the number of epochs. We then computed the SME of the difference between waveforms as $\mathrm{SME}_{A-B} = \sqrt{\mathrm{SME}_A^2 + \mathrm{SME}_B^2}$, where $\mathrm{SME}_{A-B}$ is the SME of the difference between conditions A and B, and $\mathrm{SME}_A$ and $\mathrm{SME}_B$ are the SMEs of the two individual conditions (see Zhang et al., 2023a for details).
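As a minimal sketch of the analytic SME calculation, the following Python code computes the aSME from single-trial mean amplitude scores and combines the SMEs of two conditions. Two points are assumptions of this sketch: the standard deviation is the sample standard deviation (n − 1 denominator), and the two condition SMEs are combined in quadrature, which treats the measurement error in the two conditions as independent. The function names are hypothetical.

```python
import math
import statistics


def analytic_sme(single_trial_scores):
    """aSME: SD of single-trial mean-amplitude scores / sqrt(number of epochs).

    Uses the sample SD (n - 1 denominator); this is an assumption of the sketch.
    """
    n = len(single_trial_scores)
    return statistics.stdev(single_trial_scores) / math.sqrt(n)


def sme_of_difference(sme_a, sme_b):
    """SME of an A-minus-B difference, assuming independent errors in A and B."""
    return math.sqrt(sme_a ** 2 + sme_b ** 2)


# Toy single-trial mean amplitudes (microvolts) for two conditions.
rare = [4.0, 6.5, 5.0, 7.5, 3.0, 6.0]
frequent = [1.0, 2.5, 1.5, 2.0, 0.5, 1.5]
sme_diff = sme_of_difference(analytic_sme(rare), analytic_sme(frequent))
```

Because the two values are combined in quadrature, the SME of the difference is never smaller than the larger of the two single-condition SMEs.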

This approach is not valid for other scoring methods (e.g., peak amplitude, peak latency, 50% area latency). For these scores, we instead employed bootstrapping to estimate the SME (bootstrapped SME or bSME) directly from the relevant difference wave. The bootstrapping process is explained in detail in Luck et al. (2021) and requires simple scripting (example bSME scripts available at https://doi.org/10.18115/D58G91). In our study, we used 1,000 bootstrap iterations for each bSME value.
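The general logic of the bootstrapped SME can be sketched in a few lines of Python. This is an illustration of the idea rather than the scripts referenced above: epochs are resampled with replacement within each condition, averaged, and subtracted to form a difference wave, and the score is taken from that wave. The SD of the scores across iterations serves as the bSME. The function names, the `score_fn` callback, and the fixed random seed are assumptions of this sketch.

```python
import random
import statistics


def average_waveform(epochs):
    """Point-by-point average of a list of equal-length epochs."""
    n = len(epochs)
    return [sum(column) / n for column in zip(*epochs)]


def bootstrapped_sme(epochs_a, epochs_b, score_fn, n_iter=1000, seed=0):
    """SD across bootstrap iterations of a score from the A-minus-B wave."""
    rng = random.Random(seed)
    scores = []
    for _ in range(n_iter):
        # Resample epochs with replacement, separately for each condition.
        sample_a = rng.choices(epochs_a, k=len(epochs_a))
        sample_b = rng.choices(epochs_b, k=len(epochs_b))
        avg_a = average_waveform(sample_a)
        avg_b = average_waveform(sample_b)
        difference = [a - b for a, b in zip(avg_a, avg_b)]
        scores.append(score_fn(difference))
    return statistics.stdev(scores)


# Toy data: 10 epochs per condition, 3 time points each; score = peak voltage.
noise = random.Random(1)
epochs_a = [[noise.gauss(2.0, 1.0) for _ in range(3)] for _ in range(10)]
epochs_b = [[noise.gauss(0.0, 1.0) for _ in range(3)] for _ in range(10)]
bsme_peak = bootstrapped_sme(epochs_a, epochs_b, score_fn=max, n_iter=200)
```

Any scoring function that takes a waveform and returns a single number (e.g., peak latency or 50% area latency) could be passed as `score_fn`.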

To obtain an aggregate measure of data quality across participants, we computed the root mean square (RMS) of the single-participant SME values (the RMS(SME)). This was computed by summing the squared single-participant SME values, dividing this sum by the number of participants, and then taking the square root. Note that we used the RMS across participants rather than the mean across participants because the RMS is more directly related to effect sizes and statistical power (Luck et al., 2021). Bootstrapping (with 10,000 iterations) was used to obtain the standard error of the RMS(SME) values.
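The aggregation step can be sketched as follows in Python. The RMS calculation follows the description above directly. The standard-error function assumes that the bootstrap resamples participants with replacement and takes the SD of the resulting RMS values, which is one plausible reading of the procedure rather than a confirmed detail. The function names are hypothetical.

```python
import math
import random
import statistics


def rms(values):
    """Square root of the mean of the squared values."""
    return math.sqrt(sum(v * v for v in values) / len(values))


def rms_bootstrap_se(sme_values, n_iter=10000, seed=0):
    """Bootstrap SE of RMS(SME), resampling participants with replacement.

    Resampling at the participant level is an assumption of this sketch.
    """
    rng = random.Random(seed)
    n = len(sme_values)
    boot = [rms(rng.choices(sme_values, k=n)) for _ in range(n_iter)]
    return statistics.stdev(boot)


# Toy single-participant SME values (microvolts).
sme_values = [0.8, 1.1, 0.9, 2.5, 1.0, 1.3]
rms_sme = rms(sme_values)
rms_sme_se = rms_bootstrap_se(sme_values, n_iter=1000)
```

Note that the RMS weights participants with large SME values more heavily than the arithmetic mean does, so a single noisy participant (2.5 in the toy data) raises the RMS(SME) more than it would raise the mean.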

3. Results

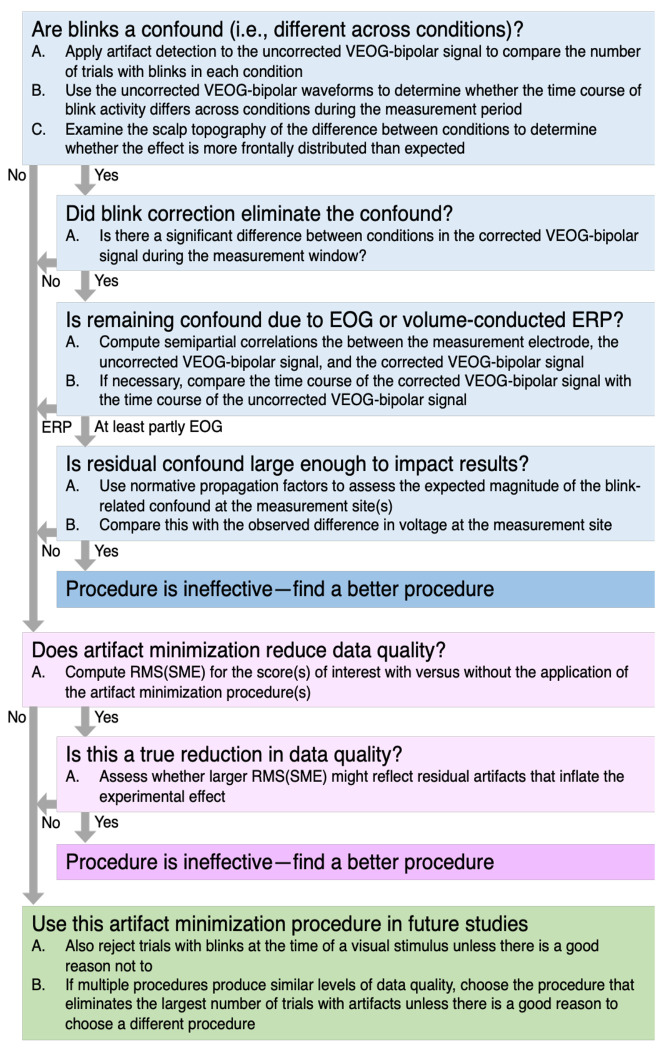

Each of the following sections presents the results for one of the five ERP components. The first section uses the N400 data to exemplify in detail our general method for assessing the effectiveness of artifact correction and rejection (see flowchart in Figure 1). This is followed by briefer sections on the ERN, P3b, MMN, and N170 components.

Figure 1.

Summary of the present method for assessing the effectiveness of an artifact minimization approach for a given dataset.

3.1. Applying our general method to the N400 component

As shown in Figure 2a, the N400 component was elicited by means of a word-pair judgment paradigm. Each trial consisted of a red prime word followed by a green target word. Participants were tasked with indicating whether the target word was semantically related (p = 0.5, 60 trials) or unrelated (p = 0.5, 60 trials) to the preceding prime word by pressing one of two buttons. The N400 was measured from the unrelated-minus-related difference wave at the CPz electrode site.

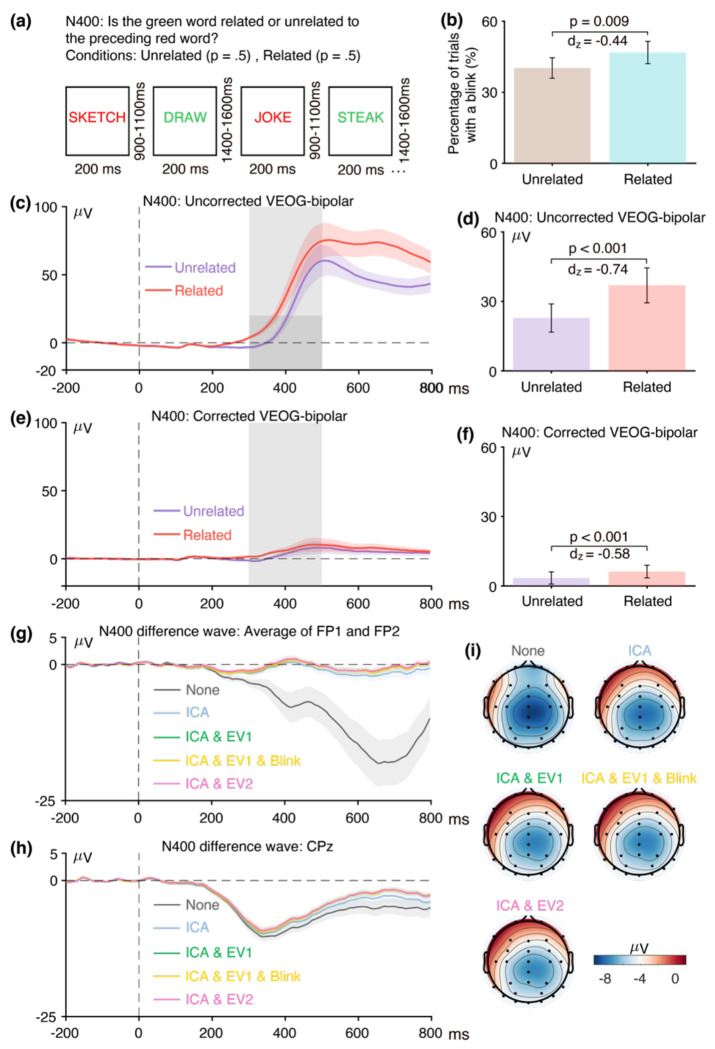

Figure 2.

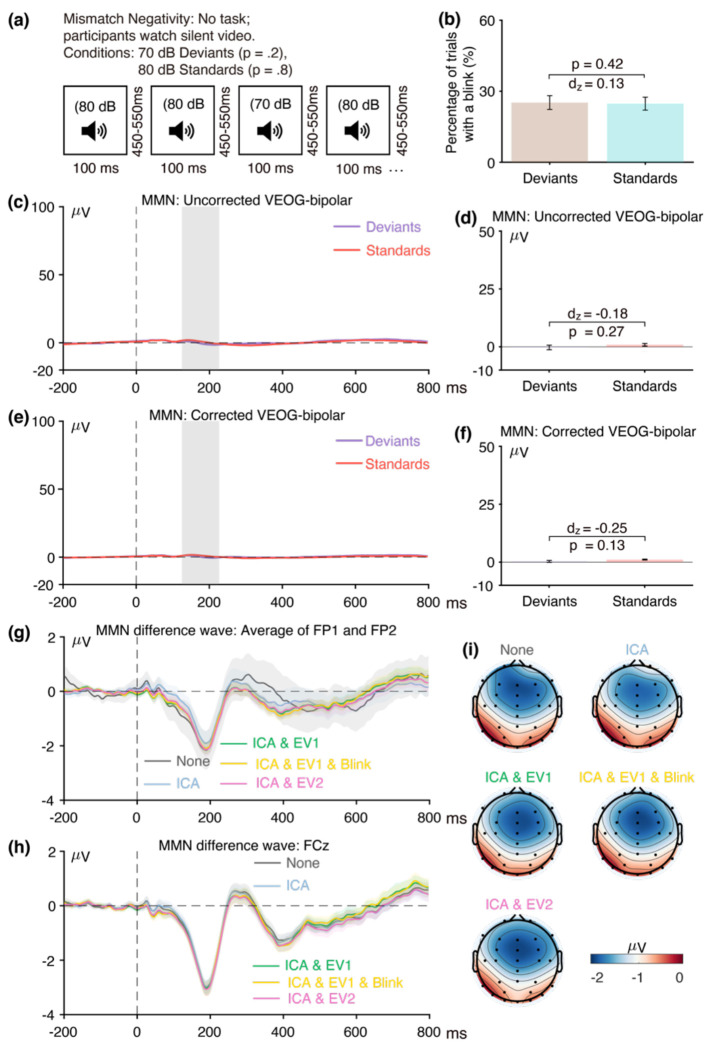

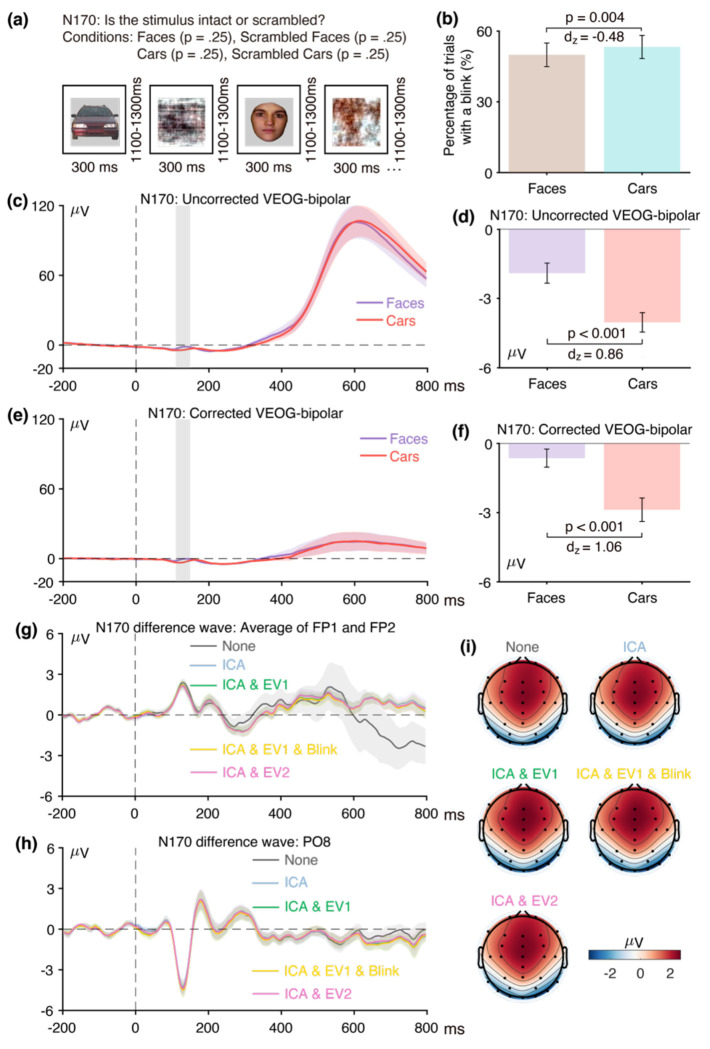

(a) N400 word pair judgment paradigm. (b) Percentage of trials with a blink for the parent waves, measured from the uncorrected VEOG-bipolar channel. (c) Grand average ERP waveforms for semantically unrelated and semantically related targets in the uncorrected VEOG-bipolar channel. (d) Mean amplitudes from the uncorrected VEOG-bipolar channel during the N400 measurement window for the unrelated and related targets. (e) Grand average ERP waveforms for unrelated and related targets in the corrected VEOG-bipolar channel. (f) Mean amplitudes from the corrected VEOG-bipolar channel during the N400 measurement window for the unrelated and related targets. (g) Grand average ERP difference waves (unrelated minus related) for the five artifact minimization approaches at the FP1 and FP2 electrode sites. (h) Grand average difference waves for the five artifact minimization approaches at CPz. (i) Scalp maps of the mean amplitude measured from 300–500 ms in the grand average difference wave. Error bars show the standard error of the mean. The VEOG-bipolar signals were computed as upper minus lower.

3.1.1. Assessment of eyeblink confounds in the N400 data

As shown in Figure 1, the first general goal of our method is to determine whether eyeblinks differed across conditions and were therefore a potential confound. The first step toward this goal is to examine the percentage of trials on which a blink occurred for the two conditions, as determined from the uncorrected VEOG-bipolar signal. As shown in Figure 2b, blinks were significantly more common for the semantically related targets than for the semantically unrelated targets (t(39) = −2.77, p = 0.009, Cohen’s dz = −0.44). Thus, blink-related activity is a confound that could distort measurements of N400 activity.

As illustrated in Figure 2c, the next step is to examine the time course of the blink activity in the averaged ERPs from the uncorrected VEOG-bipolar channel (defined as the voltage above the eyes minus the voltage below the eyes). A very large deflection (>50 μV) was present, and it was larger for the semantically related targets than for the semantically unrelated targets from approximately 250 ms after stimulus onset through the end of the epoch. We measured the mean amplitude of this blink-related activity during the time window of the N400 component (300–500 ms). Consistent with the difference in blink frequency across conditions, we found that the mean amplitude was significantly greater for the semantically related targets than for the semantically unrelated targets (see Figure 2d; t(39) = −4.66, p < 0.001, Cohen’s dz = −0.74). Note that this effect size of 0.74 was even greater than the effect size of 0.44 for the proportion of trials with blinks. In general, comparing the voltages across conditions (which is based on a continuous variable) is more sensitive than comparing the proportion of blinks (which is based on a categorical variable). In addition, the time course of the blink activity might differ across conditions even if the proportion of blinks does not differ.
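For readers who want to reproduce this kind of comparison, the following Python sketch computes a paired t statistic and Cohen's dz from per-participant values in two conditions, using the standard relationship t = dz × √n for paired designs. The function names and the toy data are hypothetical, and the sketch does not compute p values.

```python
import math
import statistics


def paired_t_and_dz(cond_a, cond_b):
    """Paired t statistic, Cohen's dz, and degrees of freedom for A minus B."""
    diffs = [a - b for a, b in zip(cond_a, cond_b)]
    n = len(diffs)
    dz = statistics.mean(diffs) / statistics.stdev(diffs)  # sample SD of diffs
    t = dz * math.sqrt(n)
    return t, dz, n - 1


def dz_from_t(t, n):
    """Recover Cohen's dz from a paired t statistic and the sample size."""
    return t / math.sqrt(n)


# Toy per-participant VEOG mean amplitudes (microvolts) in two conditions.
unrelated = [10.0, 12.0, 9.0, 15.0, 11.0]
related = [14.0, 13.0, 12.0, 19.0, 12.0]
t_value, dz_value, df = paired_t_and_dz(unrelated, related)
```

With n = 40 participants, as implied by the t(39) values reported above, `dz_from_t(-2.77, 40)` and `dz_from_t(-4.66, 40)` give approximately −0.44 and −0.74, respectively.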

The next step of our procedure is to examine how the blink confound, if present, propagates to the EEG electrodes. This is assessed by isolating the experimental effect with a difference wave, plotting this difference wave at key scalp electrodes, and making a scalp map of the difference wave during the measurement window. This is illustrated in panels g, h, and i of Figure 2, which show the unrelated-minus-related N400 difference wave for both the original data and the data following application of our artifact minimization approaches. At the FP1 and FP2 channels, where blink-related activity should be maximal, a very large negative deflection can be seen beginning at approximately 200 ms when no artifact correction was performed. This negativity presumably reflects the greater blink-related EOG activity observed for related targets than for unrelated targets in the uncorrected VEOG-bipolar signal. The voltage deflection in this N400 difference wave was negative-going because the difference wave was computed by subtracting the related trials (which had a larger positive VEOG deflection) from the unrelated trials (which had a smaller positive VEOG deflection).

Panel h shows the corresponding data from the CPz channel, which is the a priori measurement site for the N400. The difference wave was again more negative when no artifact correction was performed than when our artifact minimization procedures were performed. However, the difference between the waveforms with and without artifact minimization was much smaller at CPz than at FP1 and FP2, reflecting the fact that only a small percentage of the blink-related activity propagates to CPz. The blink-related activity that was present without artifact minimization can also be observed in the scalp maps of the unrelated-minus-related difference (panel i). Specifically, the topography of the difference was more frontal when blink correction was not performed.

Together, these results demonstrate that blink-related activity was greater for semantically related targets than for semantically unrelated targets and was therefore a potential confound in comparing these two conditions. If this activity is not reduced to negligible levels, any differences in apparent ERP activity between the semantically related and unrelated targets after approximately 200 ms could reflect differences in blinking rather than differences in the N400 component. Thus, some method is necessary to minimize the blink-related artifacts.

3.1.2. Effectiveness of ICA at minimizing eyeblink confounds in the N400 data

Given that a significant confound was present in the uncorrected VEOG-bipolar signal, the next step is to determine whether this confound was eliminated in the ICA-corrected data. This involves using the ICA-corrected data to create a corrected VEOG-bipolar channel (computed as the corrected FP2 channel minus the corrected VEOG-lower channel). As shown in Figure 2e, most of the blink activity was removed, and most of the difference in blink-related activity between semantically related and semantically unrelated targets was also eliminated. However, the corrected VEOG-bipolar signal was still 2.79 μV greater for related targets than for unrelated targets during the N400 measurement window (t(39) = −3.70, p < 0.001, Cohen’s dz = −0.58). This raises the possibility that the ICA-based correction did not fully eliminate the confounding EOG activity.

However, it is also possible that the difference between conditions in the corrected VEOG-bipolar waveform does not reflect blink activity but instead reflects volume-conducted N400 activity. That is, if the N400 activity is larger (more negative) above the eyes than below the eyes, then this will add a negative voltage to the above-minus-below subtraction used to create the VEOG-bipolar channel. And because the N400 was larger for unrelated targets than for related targets, this added voltage in the VEOG-bipolar channel should be larger (more negative) for unrelated targets than for related targets. This is exactly what was observed, with a more negative VEOG-bipolar voltage for the unrelated targets than for the related targets (see Figure 2e). Thus, it is not obvious whether the remaining difference between related and unrelated targets in the corrected VEOG-bipolar channel reflects volume-conducted N400 activity or eyeblink activity that was not fully eliminated by ICA.

To assess this possibility, we used semipartial correlations to test for the presence of volume-conducted ERP activity in the corrected VEOG-bipolar signal<sup>5</sup>. Specifically, we computed the correlation between the corrected unrelated-minus-related difference voltage at the CPz electrode and the corrected unrelated-minus-related difference voltage in the VEOG-bipolar channel after partialing out variance explained by the unrelated-minus-related difference voltage in the uncorrected VEOG-bipolar channel. This approach is based on the assumption that the voltage at CPz and the voltage in the uncorrected VEOG-bipolar signal will share some variance due to the propagation of EOG and ERP activity between them, and the simple correlation between CPz and the corrected VEOG-bipolar signal could reflect residual EOG activity that propagated to CPz. However, if we partial out variance due to the uncorrected VEOG-bipolar signal (which is dominated by true EOG activity), then any remaining correlation between CPz and the corrected VEOG-bipolar signal should almost entirely reflect the propagation of the N400 to the VEOG-bipolar channel<sup>6</sup>. Because we are mainly interested in activity that might confound the N400 effect, we used the mean voltage during the N400 measurement window (see Table 2), measured from the unrelated-minus-related difference wave. We did not find a significant semipartial correlation between the corrected CPz signal and the corrected VEOG-bipolar signal (r(38) = −0.026, p = .876), providing no evidence that the difference between related and unrelated trials in the corrected VEOG-bipolar signal could be explained by volume-conducted N400 activity.

An analogous approach can be used to assess whether the corrected VEOG-bipolar signal contains residual EOG activity. In this approach, the correlation between the uncorrected and corrected VEOG-bipolar signals is computed after partialing out variance explained by the measurement electrode (again using the mean voltage of the difference wave during the measurement period). In the N400 data, we found a significant semipartial correlation between the uncorrected and corrected VEOG-bipolar signals (r(38) = .397, p = .012). This correlation provides evidence that at least some of the variance in the unrelated-minus-related difference wave in the corrected VEOG-bipolar signal could be explained by eyeblink activity, indicating that ICA did not completely eliminate the confounding effects of eyeblinks. Consistent with this conclusion, the corrected VEOG-bipolar signal contained a noticeable deflection for both the unrelated and related trials that approximately matched the blink-related deflection in the uncorrected VEOG-bipolar signal.
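The two semipartial correlations described above can be sketched in Python as follows. A semipartial correlation removes the variance explained by a control variable from only one of the two variables being correlated. Which variable is residualized is not specified in the text, so this sketch assumes that the control variable is regressed out of the second argument. The function names and toy values are hypothetical, and significance testing is omitted.

```python
import math


def pearson(x, y):
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def residualize(x, z):
    """Residuals of x after simple linear regression of x on z."""
    n = len(x)
    mx, mz = sum(x) / n, sum(z) / n
    slope = (sum((a - mx) * (b - mz) for a, b in zip(x, z))
             / sum((b - mz) ** 2 for b in z))
    return [(a - mx) - slope * (b - mz) for a, b in zip(x, z)]


def semipartial(y, x, control):
    """Correlation of y with the part of x not explained by control."""
    return pearson(y, residualize(x, control))


# Toy per-participant difference scores (microvolts).
cpz = [-8.0, -6.5, -9.0, -7.0, -10.0, -5.5]
veog_corrected = [-2.0, -3.5, -1.0, -4.0, -2.5, -3.0]
veog_uncorrected = [-20.0, -35.0, -12.0, -41.0, -22.0, -30.0]

# Test for volume-conducted ERP activity: control for the uncorrected VEOG.
sr_erp = semipartial(cpz, veog_corrected, veog_uncorrected)
# Test for residual EOG activity: control for the measurement electrode.
sr_eog = semipartial(veog_uncorrected, veog_corrected, cpz)
```

The residual-based calculation is equivalent to the closed-form expression $(r_{yx} - r_{yz} r_{xz}) / \sqrt{1 - r_{xz}^2}$, where z is the control variable.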

Note that the lack of a significant semipartial correlation between the uncorrected and corrected VEOG-bipolar signals cannot be used as evidence that ICA was effective at minimizing blink-related confounds. This is because the blink-related activity may be responsible for a large portion of the shared variance that is being partialed out<sup>7</sup>. We will discuss this in more detail in the case of the ERN.

When there is reason to believe that ICA was not completely effective at removing the confound in the corrected VEOG-bipolar signal, the next step is to determine whether the residual confound was large enough to have a meaningful impact when propagated to the measurement electrode. That is, once the 2.79 μV unrelated-minus-related difference that was measured in the corrected VEOG-bipolar channel propagates to the scalp electrodes, how much would it impact the unrelated-minus-related N400 effect measured from the CPz channel? The normative values provided by Lins et al. (1993) indicate that we would expect a propagated voltage of approximately 19% at Fz, approximately 10% at Cz, and approximately 5% at Pz. Propagation values are unavailable for CPz but are presumably between those at Cz and Pz. The 2.79 μV effect in the corrected bipolar VEOG channel would therefore be expected to produce an effect of approximately 0.53 μV at Fz, 0.28 μV at Cz, and 0.14 μV at Pz, with a value somewhere between 0.14 and 0.28 μV at CPz. This would be less than 4% of the measured difference between related and unrelated trials of −8.11 μV in the N400 measured at CPz. Thus, although ICA did not completely eliminate the confounding eyeblink activity at CPz, the residual activity was negligible for the purposes of this specific study.
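The propagation arithmetic in this paragraph can be reproduced with a few lines of Python. The numerical values are taken from the text above; the variable names are hypothetical, and using the Cz value as an upper bound for CPz follows from the assumption stated above that propagation to CPz lies between the values for Cz and Pz.

```python
# Residual unrelated-minus-related difference in the corrected VEOG-bipolar
# channel (microvolts) and approximate propagation factors from the text.
residual_veog_uv = 2.79
propagation = {"Fz": 0.19, "Cz": 0.10, "Pz": 0.05}

# Expected size of the propagated confound at each site (microvolts).
expected_uv = {site: residual_veog_uv * factor
               for site, factor in propagation.items()}

# CPz is assumed to lie between Pz and Cz, so Cz gives an upper bound.
cpz_lower_uv, cpz_upper_uv = expected_uv["Pz"], expected_uv["Cz"]

# Upper-bound confound as a percentage of the measured N400 effect at CPz.
n400_effect_uv = 8.11  # absolute value of the -8.11 microvolt difference
percent_of_effect = 100 * cpz_upper_uv / n400_effect_uv

for site, value in expected_uv.items():
    print(f"{site}: {value:.2f} microvolts")
print(f"Upper bound at CPz: {percent_of_effect:.1f}% of the N400 effect")
```

The same calculation can be repeated with the residual difference and effect size from any other dataset to judge whether a residual confound is negligible at the measurement site.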

However, the confounding effects on N400 measurements might be larger in other paradigms and participant populations, and even a small confound in the measurement electrode might be problematic for answering some scientific questions (e.g., when the confound is large relative to the size of the effect at the measurement electrode). The present results therefore indicate that researchers should be very cautious about ocular confounds in N400 studies if they are using ICA-based artifact correction. In addition, researchers should assess whether confounds are present in their own data and consider applying our method (as summarized in Figure 1) for assessing whether the confound was reduced to negligible levels.

3.1.3. Alternative ICA decomposition of the N400 data

We also considered the possibility that the residual activity in the corrected VEOG-bipolar channel was a result of the specific ICA decomposition approach used in the present analyses, which involved aggressive filtering of low frequencies and the use of the automated ICLabel routine to determine which ICs represented artifacts (along with manual confirmation). To assess this possibility, we performed the same analysis using the corrected data from the ERP CORE resource, in which the ICA decomposition did not involve aggressive high-pass filtering and the artifact-related ICs were selected “by eye” on the basis of their scalp maps and time courses.

We again found a statistically significant difference of 4.01 μV between related and unrelated targets during the N400 latency window in the corrected VEOG-bipolar channel (t(39) = 6.00, p < 0.001, Cohen’s dz = 0.95). We also found a significant semipartial correlation between the uncorrected VEOG-bipolar channel and the corrected VEOG-bipolar channel (r(38) = 0.397, p = 0.012), whereas the semipartial correlation between the CPz channel and the corrected VEOG-bipolar channel was again not significant (r(38) = −0.024, p = 0.876). Thus, the apparent failure of ICA to completely eliminate blink-related confounds generalized to a different ICA decomposition. Of course, ICA might be more effective with different parameters, and there may be other artifact correction approaches that are more effective (e.g., second-order blind identification or source-space reconstruction; Jonmohamadi et al., 2014; Joyce et al., 2004). However, the goal of the present study was to determine whether the commonly used approach implemented here is effective, not to answer the much more challenging question of which correction approach is best.

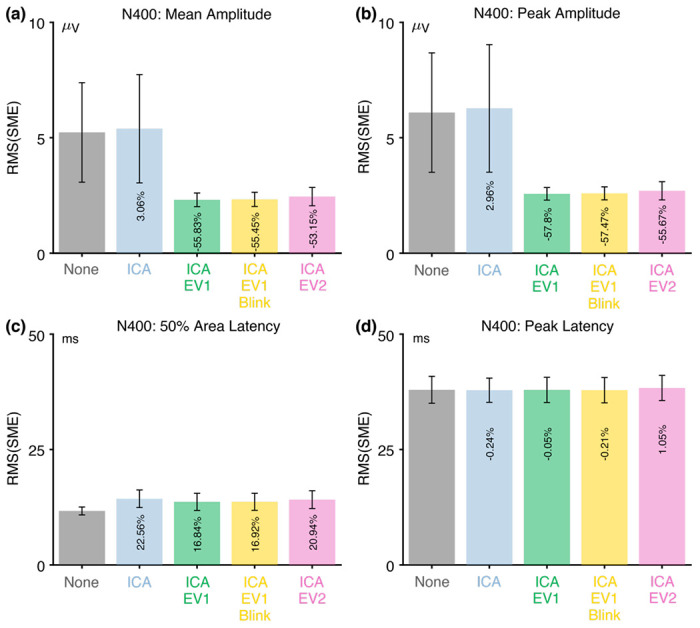

3.1.4. Assessment of data quality in the N400 data

Once it has been demonstrated that artifact correction has reduced any blink-related confounds to negligible levels, the next step is to assess whether the combination of correction and rejection increases or decreases the data quality (see Figure 1). In this section, we therefore evaluate the effectiveness of our artifact correction and rejection approaches in reducing the RMS(SME).

Figure 3 shows the RMS(SME) values at the a priori measurement site (CPz) for each combination of the four scoring methods and the five artifact minimization approaches. Interestingly, the ICA-only method did not lead to lower RMS(SME) values (i.e., did not lead to improved data quality). This is presumably because such a small proportion of blink activity is propagated to the CPz measurement site that blinks do not meaningfully increase trial-to-trial variability at this site. This result is consistent with Delorme (2023), who found that ICA-based artifact correction did not increase the ability to detect significant effects in several datasets.

Figure 3.

Root mean square of the standardized measurement error (RMS(SME)) from the N400 experiment for four different scoring methods and five different artifact minimization approaches. Smaller RMS(SME) values indicate higher data quality (less noise). Error bars show the standard error of the RMS(SME) values.

However, the three approaches that included rejection of trials with extreme values led to much better data quality for the two amplitude scoring methods compared to no artifact minimization and compared to the ICA-only approach. Indeed, rejecting trials with extreme values reduced the RMS(SME) by more than 50%. In other words, although artifact rejection reduced the number of trials, the net effect was still an improvement in data quality. For peak latency, the RMS(SME) was similar across all five approaches.

For 50% area latency, the RMS(SME) was actually slightly worse (larger) for all of the approaches that included ICA-based correction compared to the data with no artifact minimization. However, this may be a consequence of the fact that the blink-related activity increased the size of the unrelated-minus-related difference wave (as shown in Figure 2h). In other words, if the difference wave is larger as the result of greater blink activity for related targets than for unrelated targets, then the 50% area latency can be measured more consistently. However, the resulting latency values will be distorted by the blink-related activity, so the values might be misleading even if they are more consistent. Thus, although the no-minimization approach might appear to be advantageous for scoring the 50% area latency, this advantage is illusory. This illustrates the importance of assessing confounds in addition to data quality.

Table 3 shows the percentage of trials that were excluded because of artifacts for the three approaches that involved artifact rejection (ICA+EV1, ICA+EV1+Blink, ICA+EV2). There were very few trials with blinks at the time of the stimulus in the N400 experiment, so the percentage of rejected trials was only slightly greater for the ICA+EV1+Blink approach than for the ICA+EV1 approach. More trials were rejected for these approaches than for the ICA+EV2 approach, in which trials were rejected only if the extreme values occurred in the measurement channel (CPz). However, fewer than 5% of trials were rejected for any of these approaches. In addition, these three approaches led to similar RMS(SME) values.

Table 3.

Percentage of rejected trials for each condition of each ERP component (in %).

| Approach | N400: Unrelated | N400: Related | ERN: Incorrect | ERN: Correct | P3b: Target | P3b: Nontarget | MMN: Deviants | MMN: Standards | N170: Faces | N170: Cars |
|---|---|---|---|---|---|---|---|---|---|---|
| ICA & EV1 | 2.35 ± 0.71 | 3.31 ± 1.15 | 2.24 ± 0.83 | 2.51 ± 0.82 | 3.32 ± 0.97 | 3.02 ± 0.86 | 4.78 ± 1.45 | 4.69 ± 1.51 | 3.17 ± 1.29 | 3.70 ± 1.34 |
| ICA & EV1 & Blink | 2.62 ± 0.73 | 3.49 ± 1.19 | 2.24 ± 0.83 | 2.51 ± 0.82 | 3.53 ± 0.98 | 3.20 ± 0.87 | 15.05 ± 1.99 | 14.51 ± 1.94 | 3.62 ± 1.31 | 3.96 ± 1.37 |
| ICA & EV2 | 0.74 ± 0.40 | 0.86 ± 0.61 | 0.36 ± 0.36 | 0.54 ± 0.27 | 0.86 ± 0.33 | 0.58 ± 0.26 | 1.25 ± 0.85 | 1.26 ± 0.83 | 1.66 ± 0.95 | 1.39 ± 0.68 |

Participants in the original ERP CORE study were excluded from the final analyses if more than 25% of trials were rejected because of extreme values. The effects of excluding participants are complicated because exclusion impacts the degrees of freedom as well as the data quality, so we did not exclude participants with excessive rejection in the main analyses of the present study. However, because excluding participants with large numbers of artifacts is a common procedure, we have provided supplementary analyses showing the RMS(SME) values when these participants were excluded (see supplementary Table S1 and Figures S7–S11). One participant exceeded our threshold for exclusion for the EV1 approach in the N400 paradigm. As shown in Figure S7, excluding this participant further improved the RMS(SME) values for the amplitude scores, with little impact on the RMS(SME) values for the latency scores.

3.1.5. Recommendations for the N400

For studies like the ERP CORE N400 experiment, the present results indicate that blinks are a potential confound that can contribute to differences between conditions, even in the central and parietal channels where the N400 is typically scored. Thus, some method for minimizing the impact of blink-related activity (e.g., artifact correction or rejection) is essential in N400 experiments.

ICA-based blink correction substantially reduced the blink artifact in the present analyses, but a statistically significant artifactual difference between conditions remained in the corrected VEOG-bipolar channel. After propagating to the central and parietal channels, the residual artifactual difference between conditions was negligible (less than half a microvolt). However, an effect of this size might still be large enough to produce an incorrect interpretation of the results in some studies, and larger blink artifacts may be present in other paradigms or participant populations. We therefore recommend that all N400 studies quantify the difference between conditions in the corrected VEOG-bipolar signal. If the difference is significant, the propagation factors provided by Lins et al. (1993) can be used to determine whether the residual EOG activity is large enough to meaningfully impact the results in the channels where the N400 is being measured.

We also found that excluding trials with extreme values improved the data quality for the amplitude scores, with the reduction in number of trials being outweighed by the reduction in noise. There were no meaningful differences in data quality between the three rejection approaches examined here. We therefore recommend the EV1 approach to extreme values, which ensures that the data are clean in all channels (which may be important for scalp maps and other analyses). However, there may be studies in which it is advantageous to reject trials only when the extreme values occur in the measurement channel. In addition, we recommend rejecting trials with blinks at the time of the stimulus that might interfere with the perception of the stimulus unless there is a good reason not to. Thus, we recommend the ICA+EV1+Blink approach for studies like the ERP CORE N400 experiment.

These recommendations, along with a summary of the key N400 results, are provided in Table 4.

Table 4.

Summary of results and recommendations.

| Component | Significant confound in VEOG-bipolar signal: before correction | Significant confound in VEOG-bipolar signal: after correction | Significant semipartial correlation with corrected VEOG: uncorrected VEOG | Significant semipartial correlation with corrected VEOG: measured channel | Recommendation for artifact minimization |
|---|---|---|---|---|---|
| N400 | ✓ | ✓ | ✓ | × | ICA + EV1 + Blink |
| ERN | ✓ | ✓ | × | ✓ | ICA + EV1* + Blink |
| P3b | ✓ | × | ✓ | × | ICA + EV1 + Blink |
| MMN | × | × | ✓ | × | ICA* + EV1 |
| N170 | ✓ | ✓ | × | ✓ | ICA + EV1 + Blink |

ICA: Correction of blinks using independent component analysis; EV1: Rejection of trials with extreme values in any channel after artifact correction; Blink: Rejection of trials with blinks within ±200 ms of stimulus onset.

*: Optional.

3.1.6. When to assess the effectiveness of artifact minimization

Ideally, the effectiveness of artifact minimization would be assessed in an a priori manner. That is, it would be applied to one or more previous datasets, and the results would then be used to determine which minimization approach to use in future datasets. It is potentially problematic to use a given dataset to determine the best artifact minimization approach and then use that approach to analyze the same dataset. However, it is not clear how this post hoc approach could actually bias the results and increase the Type I error rate, because our method for assessing artifact minimization approaches does not depend on the extent to which differences in brain activity are present. On the other hand, using the same dataset twice in this manner might lead to non-obvious biases. Thus, it would be safest to use previous datasets to determine whether a given artifact minimization approach is adequate and then use this approach in an a priori manner for future studies.

3.2. The error-related negativity (ERN)

As illustrated in Figure 4a, we used a flankers paradigm to elicit the ERN. Each display consisted of a central arrow accompanied by four flanking arrows, which pointed in the same direction (p = .5) or opposite direction (p = .5) as the central arrow. Participants were tasked with determining whether the central arrow pointed leftward (p = .5, 200 trials) or rightward (p = .5, 200 trials) and responded by pressing a button with the corresponding hand. On average, participants made correct responses on 352.1 ± 36.6 trials and incorrect responses on 42.0 ± 22.6 trials (except one participant, who had only 2 incorrect responses and was excluded from all analyses). The ERN was measured from the incorrect-minus-correct difference wave at the FCz electrode site.

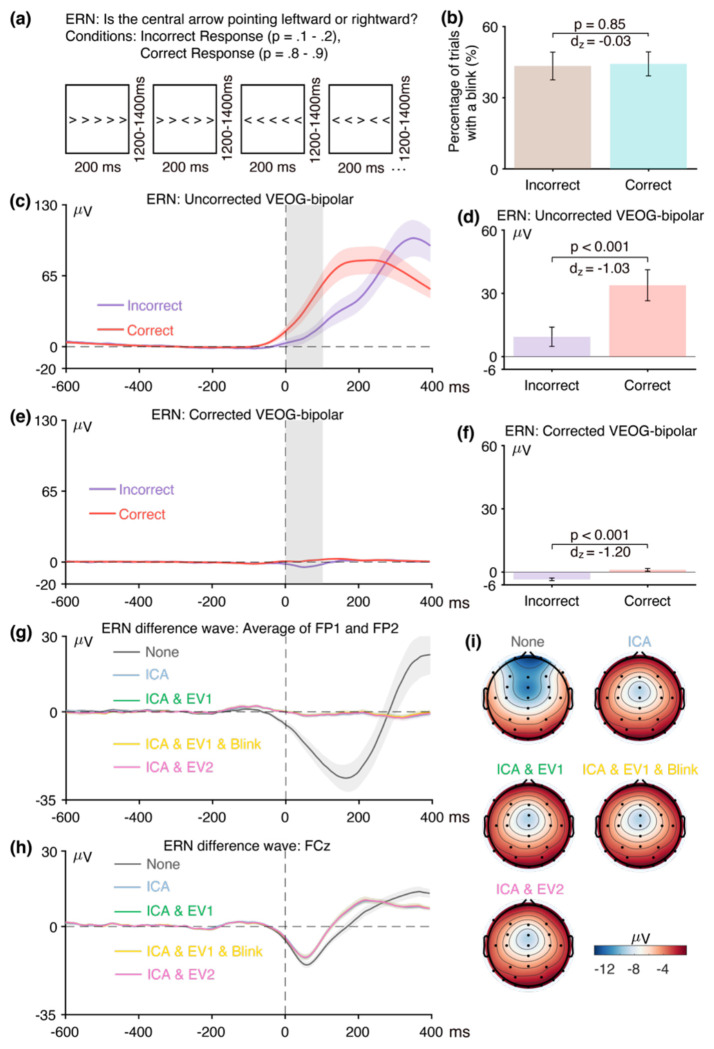

Figure 4.

(a) Flankers task used to elicit the error-related negativity (ERN). (b) Percentage of trials with a blink for the parent waves, measured from the uncorrected VEOG-bipolar channel. (c) Grand average ERP waveforms for the incorrect and correct conditions in the uncorrected VEOG-bipolar channel. (d) Mean amplitudes from the uncorrected VEOG-bipolar channel during the ERN measurement window for incorrect and correct trials. (e) Grand average ERP waveforms for the incorrect and correct conditions in the corrected VEOG-bipolar channel. (f) Mean amplitudes from the corrected VEOG-bipolar channel during the ERN measurement window for the incorrect and correct trials. (g) Grand average ERP difference waves (incorrect minus correct) for the five artifact minimization approaches at the FP1 and FP2 electrode sites. (h) Grand average difference waves for the five artifact minimization approaches at FCz. (i) Scalp maps of the mean amplitude measured from 0–100 ms in the grand average difference wave. Error bars show the standard error of the mean. The VEOG-bipolar signals were computed as upper minus lower. Note that the ERN data were response-locked rather than stimulus-locked, so time zero is the time of the response.

3.2.1. Assessment of eyeblink confounds in the ERN data

The first step was to determine whether eyeblinks differed across conditions and were therefore a potential confound. Figure 4b shows the percentage of trials on which a blink occurred for the incorrect versus correct responses, as determined from the uncorrected VEOG-bipolar channel. Blinks were approximately equally likely on both correct trials and error trials (t(38) = −0.19, p = 0.85, Cohen’s dz = −0.03).

Figure 4c shows the grand average waveforms from the uncorrected VEOG-bipolar channel (upper minus lower). A very large deflection (>50 μV) was present, beginning just before the time of the response. This deflection was larger for correct responses than for incorrect responses from approximately −50 ms to 300 ms, and was then larger for incorrect responses than for correct responses until the end of the epoch. It was significantly more positive for correct responses than for incorrect responses during the ERN measurement window (0–100 ms, Figure 4d; t(38) = −6.42, p < 0.001, Cohen’s dz = −1.03). Thus, even though the likelihood of a blink did not differ between incorrect and correct trials, the time course differed, creating a potential confound during the ERN measurement window. This demonstrates the importance of examining the time course of blink activity in averaged EOG waveforms and not just the likelihood of blinks (see Stern et al., 1984).

Panels g, h, and i of Figure 4 show how the blink confound—as assessed in the incorrect-minus-correct difference wave—propagated to the scalp ERPs. At the FP1 and FP2 channels, where blink-related activity should be maximal (panel g), the uncorrected waveform shows a large negative deflection followed by a large positive deflection. This is exactly what would be expected from the uncorrected VEOG-bipolar signal (panel c), in which the voltage was initially more positive for the correct trials and then became more positive for the incorrect trials. That is, the initial greater positive voltage for the correct trials relative to the incorrect trials created an initial negative voltage when the correct trials were subtracted from the incorrect trials in the difference wave; the subsequent greater positive voltage for the incorrect trials relative to correct trials in the VEOG created a late positive voltage in the incorrect-minus-correct difference wave. Note that the pattern produced by the blink activity in the difference wave at FP1 and FP2 is qualitatively similar to the pattern expected from true brain activity on the basis of prior research, consisting of an initial negative ERN followed by a later positive Pe. These deflections were greatly reduced in the waveforms after ICA-based blink correction was performed.

Panel h shows the corresponding data from the ERN measurement site, FCz. When artifact correction was performed, the typical pattern of an ERN followed by a Pe was observed, with a transition from negative to positive at approximately 130 ms. Both the negative and positive peaks were larger without artifact correction, and the negative-positive transition was shifted to approximately 200 ms. This is exactly what would be expected if true ERN and Pe deflections were present in the corrected data, with a volume-conducted EOG artifact summing with these effects in the uncorrected data. The residual artifact was quite large at FCz, both because the difference in blink activity between correct and incorrect trials was large and because FCz is fairly close to the source of the artifact. The blink-related activity that was present without artifact minimization can also be observed in the scalp maps of the incorrect-minus-correct difference (Figure 4i). Specifically, the topography of the difference was more frontal when the blink-related activity was not minimized.

Together, these results demonstrate that blinks are a very worrisome potential confound in ERN experiments. Specifically, the eyeblink confound would be expected to produce a more negative voltage for error trials than for correct trials at FCz immediately after the response (just like the ERN) followed by a more positive voltage for error trials than for correct trials (just like the Pe). Thus, if eyeblink confounds are not completely eliminated, they could easily masquerade as, or artificially augment, the ERN and Pe effects. Consequently, additional work is needed to be certain that the ERN and Pe effects observed after correction are not volume-conducted EOG artifacts.

3.2.2. Effectiveness of ICA at minimizing eyeblink confounds in the ERN data

Figure 4e shows the grand average waveforms for the corrected VEOG-bipolar channel. Most of the blink activity was eliminated, and most of the difference in blink-related activity between the incorrect and correct trials was also eliminated. However, there was still a 4.51 μV difference between the incorrect and correct trials in the corrected VEOG-bipolar channel during the ERN measurement window, which was statistically significant (t(38) = −7.49, p < 0.001, Cohen’s dz = −1.20). This raises the possibility that the ICA-based correction did not fully eliminate the confounding EOG activity, just as was observed in the N400 data.

However, this effect may instead reflect volume-conducted ERN activity. That is, if the difference in ERN voltage between incorrect and correct trials is larger (more negative) above the eyes than below the eyes, then this will appear as a more negative voltage for incorrect trials than for correct trials in the VEOG-bipolar channel (which is computed as upper minus lower). We used our semipartial correlation approach to determine whether the difference between the incorrect and correct trials in the corrected VEOG-bipolar channel reflects a failure of correction or volume-conducted ERN activity. That is, we quantified the extent to which variance in the corrected VEOG-bipolar channel can be uniquely explained by variance in the uncorrected VEOG-bipolar channel (which primarily contains blink activity) and by variance in the FCz channel (which primarily contains ERN activity). The correlations were computed using the voltage from 0–100 ms measured from the single-participant incorrect-minus-correct difference waves.
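
One common way to compute a semipartial (part) correlation of this kind is from the three pairwise Pearson correlations. The sketch below assumes that standard formula; the function and argument names are hypothetical, and it is not necessarily the exact procedure used for the analyses reported here.

```python
import math

def pearson_r(x, y):
    """Pearson correlation between two equal-length lists of scores."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def semipartial_r(y, x1, x2):
    """Correlation between y and the part of x1 that x2 cannot linearly predict.

    y  : scores from the corrected VEOG-bipolar channel (one per participant)
    x1 : predictor of interest (e.g., FCz scores)
    x2 : predictor whose shared variance is removed from x1
         (e.g., uncorrected VEOG-bipolar scores)
    """
    r_y1 = pearson_r(y, x1)
    r_y2 = pearson_r(y, x2)
    r_12 = pearson_r(x1, x2)
    return (r_y1 - r_y2 * r_12) / math.sqrt(1.0 - r_12 ** 2)
```

Calling `semipartial_r(corrected_veog, fcz, uncorrected_veog)` gives the ERN-specific term; swapping the last two arguments gives the blink-specific term.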

We found that the semipartial correlation between the FCz channel and the corrected VEOG-bipolar channel was substantial and statistically significant (r(37) = 0.43, p = 0.007). This indicates that at least some of the difference between incorrect and correct trials in the corrected VEOG-bipolar signal reflects volume-propagated ERN activity. By contrast, the semipartial correlation between the uncorrected and corrected VEOG-bipolar signals during the ERN measurement window was relatively small and not statistically significant (r(37) = 0.248, p = 0.134).

However, the lack of a significant semipartial correlation between the uncorrected and corrected VEOG-bipolar channels is not sufficient to conclude that there is no residual EOG activity in the corrected data. First, this would rely on accepting the null hypothesis. Second, some of the blink activity will produce shared variance between the uncorrected VEOG-bipolar signal and the signal at the measurement electrode. This shared blink activity is not captured by the semipartial correlation. To take an extreme example, imagine that FP2 was used as the measurement electrode. If the activity at FP2, which is largely blink activity, was partialed out from the correlation between the uncorrected and corrected VEOG-bipolar signals, this would remove almost all of the blink-related variance. The remaining correlation would therefore provide little information about whether similar blink activity was present in the uncorrected and corrected VEOG-bipolar signals. Thus, whereas the presence of a significant semipartial correlation between the uncorrected and corrected VEOG-bipolar signals provides good reason to believe that residual EOG activity is present after correction, the absence of a significant correlation is not sufficient to conclude that correction was successful.

To provide additional evidence about the presence of residual EOG activity after correction, we examined the time course of the corrected and uncorrected VEOG-bipolar signals. The ICA blink correction procedure uses the same unmixing and mixing matrices at all time points, and the scalp distribution of the true blink activity is also constant across time points. As a result, any residual EOG activity in the corrected VEOG-bipolar signal should have approximately the same time course as the uncorrected VEOG-bipolar signal. If the difference between conditions in the corrected VEOG-bipolar signal is limited to the time at which the experimental effect is clearly present in the corrected data from the measurement electrode, whereas the difference between conditions in the uncorrected VEOG-bipolar signal extends more broadly, this provides good evidence that the correction procedure was successful and little or no residual EOG activity was present to confound the comparison of the conditions.

In the ERN data, for example, the difference between correct and incorrect trials in the corrected VEOG-bipolar signal was limited to the time period of the ERN component (ca. 0–150 ms; see Figure 4e). By contrast, there were large differences between correct and incorrect trials in the uncorrected VEOG-bipolar signal between 300 and 400 ms. If residual EOG activity were present in the corrected VEOG-bipolar signal, it would have been visible in this late period. We can therefore conclude that our implementation of ICA-based artifact correction was successful at minimizing the confounding blink activity for the ERN in this particular study.
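
The logic of this time-course check can be illustrated with a toy linear model. In the sketch below, all waveform values are made up for illustration and are not taken from the present data, and `residual_fraction` is a hypothetical parameter for the proportion of blink activity that survives correction. The model also assumes that the brain-related difference is already expressed in the units in which it appears in the VEOG-bipolar channel.

```python
# Toy incorrect-minus-correct difference waves sampled at a few latencies (ms).
times_ms = [0, 50, 100, 200, 300, 350, 400]
blink_diff = [-20.0, -30.0, -25.0, -5.0, 15.0, 25.0, 20.0]  # hypothetical blink difference (uV)
brain_diff = [-2.0, -5.0, -3.0, 0.0, 0.0, 0.0, 0.0]         # hypothetical ERN-like difference (uV)

def veog_difference(blink_diff, brain_diff, residual_fraction):
    """Condition difference in the VEOG-bipolar channel under a linear model.

    residual_fraction = 1.0 means no correction; 0.0 means perfect correction.
    The same fraction applies at every time point because the mixing and
    unmixing weights do not change over time.
    """
    return [residual_fraction * b + e for b, e in zip(blink_diff, brain_diff)]

uncorrected = veog_difference(blink_diff, brain_diff, 1.0)
perfect = veog_difference(blink_diff, brain_diff, 0.0)
partial = veog_difference(blink_diff, brain_diff, 0.2)

# Indices of the late window in which only blink activity differs.
late = [i for i, t in enumerate(times_ms) if 300 <= t <= 400]
```

In this toy model, perfect correction leaves no condition difference in the late window, whereas incomplete correction leaves a scaled copy of the uncorrected blink difference there. That scaled copy is the signature the time-course check looks for.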

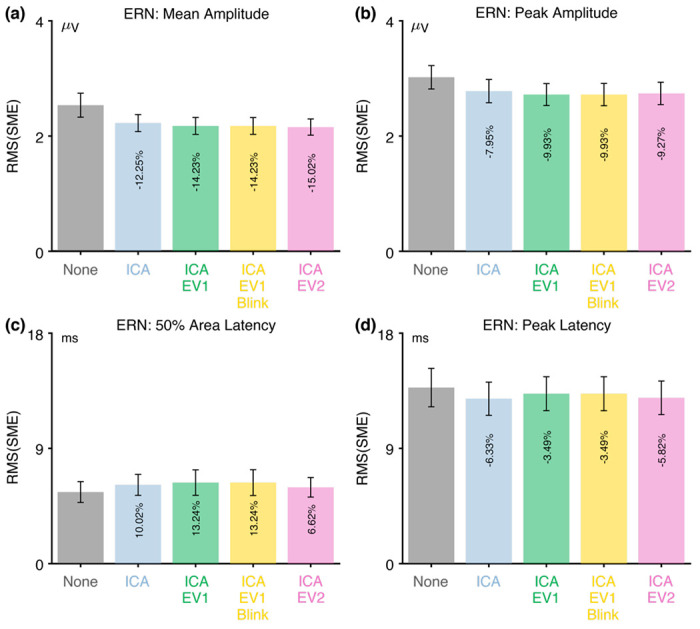

3.2.3. Assessment of data quality in the ERN data

Figure 5 shows the RMS(SME) values at the a priori measurement site (FCz) for each combination of the four scoring methods and the five artifact minimization approaches for ERN. For the two amplitude scoring methods, the RMS(SME) values were slightly better (smaller) for the four artifact minimization approaches (all of which included ICA-based blink correction) than when no artifact minimization was performed. Excluding trials with extreme values reduced the RMS(SME) slightly relative to the ICA-only approach. These results are again consistent with the finding of Delorme (2023) that ICA-based artifact correction did not increase the ability to detect significant effects.

Figure 5.

Root mean square of the standardized measurement error (RMS(SME)) from the ERN experiment for four different scoring methods and five different artifact minimization approaches. Smaller RMS(SME) values indicate higher data quality (less noise). Error bars show the standard error of the RMS(SME) values.

There was very little impact of any of the artifact minimization approaches for peak latency. For 50% area latency, by contrast, the RMS(SME) was actually slightly better (smaller) for the no-minimization approach than for the other approaches. This may be a consequence of the fact that the blink-related activity increased the size of the incorrect-minus-correct difference wave (as shown in Figure 4h): if the difference wave is larger, then the 50% area latency can be measured more consistently. However, the resulting latency values will be distorted by the blink-related activity, so the values might be misleading even if they are measured more precisely.

Table 3 shows that fewer than 3% of trials were rejected for any of the three approaches that involved artifact rejection (ICA+EV1, ICA+EV1+Blinks, ICA+EV2).
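
For readers who want to compute comparable data quality values, the sketch below shows the simplest case: the analytic SME for a mean amplitude score from a single averaged waveform (the standard deviation of the single-trial scores divided by the square root of the number of trials), aggregated across participants as a root mean square. This is an assumption-laden illustration with hypothetical function names. SME values for difference waves and for peak or latency scores generally require bootstrapping, which is not shown.

```python
import math
import statistics

def analytic_sme(single_trial_scores):
    """Analytic SME for a mean amplitude score: SD across trials / sqrt(N trials)."""
    n_trials = len(single_trial_scores)
    return statistics.stdev(single_trial_scores) / math.sqrt(n_trials)

def rms(values):
    """Root mean square of a list of per-participant SME values."""
    return math.sqrt(sum(v * v for v in values) / len(values))

def rms_sme(scores_by_participant):
    """RMS(SME) across participants, given one list of single-trial scores each."""
    return rms([analytic_sme(scores) for scores in scores_by_participant])
```

The root mean square is used instead of a simple mean so that participants with very noisy data contribute in proportion to their squared error.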

3.2.4. Recommendations for the ERN

For studies like the ERP CORE ERN experiment, the present results indicate that blinks are a particularly worrisome confound, because they may produce the same negative-positive sequence of voltages on the scalp as the ERN and Pe components. Thus, significant care is necessary to make sure that the artifact minimization procedure is effective. For example, if ERN activity is compared across groups, and the ERN appears to be larger in one group than in another, it would be essential to demonstrate that this is not a consequence of differences in blink-related activity.

Fortunately, we found that ICA-based blink correction did an excellent job of eliminating blink-related confounds. Although there was a substantial and statistically significant difference between correct trials and error trials in the corrected VEOG-bipolar signal, this difference appeared to primarily reflect volume-conducted ERN activity rather than uncorrected blink activity. However, ICA may not work this well in all datasets. For example, ICA may not remove blink-related confounds as well in studies with different numbers of electrodes, a shorter period of data, noisier data, and so on. We would therefore recommend using ICA-based blink correction in ERN experiments but carefully assessing its effectiveness rather than simply assuming that it completely eliminated blink-related confounds.

If evidence of a confound remains, the propagation factors provided by Lins et al. (1993) can be used to determine whether the residual EOG activity is large enough to meaningfully impact the results in the channels where the ERN is being measured.
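
As a rough sketch of this check, the expected contribution at the measurement channel can be approximated as the residual VEOG difference multiplied by a propagation factor. The function name is hypothetical, and no propagation value is assumed here: the factor for a given channel and EOG derivation must be taken from Lins et al. (1993).

```python
def propagated_artifact_uv(residual_veog_uv, propagation_factor):
    """Approximate artifact voltage (uV) at a scalp channel.

    residual_veog_uv   : condition difference remaining in the corrected
                         VEOG channel, in microvolts
    propagation_factor : fraction of the VEOG voltage reaching the channel
                         of interest (to be looked up, not assumed here)
    """
    return residual_veog_uv * propagation_factor
```

For example, with purely illustrative placeholder inputs of a 2.0 μV residual and a factor of 0.1, the estimated artifact at the measurement channel would be 0.2 μV. This estimate can then be compared with the size of the experimental effect.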

We found that ICA-based artifact correction produced a modest improvement in data quality for amplitude scores. However, excluding trials with extreme values had minimal additional impact, indicating that the reduction in the number of trials produced by artifact rejection was approximately equally balanced by the reduction in noise. Given that the rejection of trials with extreme values did not hurt the data quality, and that it is presumably better not to include trials with extreme values if there is no cost for excluding them, we recommend rejecting trials with extreme values in datasets like the ERP CORE ERN experiment. However, very few trials were rejected in the present dataset, and rejection might have a larger effect in noisier datasets. In such datasets, it would be worthwhile to compute RMS(SME) values with and without rejection to determine whether the reduction in the number of trials resulting from artifact rejection is outweighed by the reduction in noise. In any case, trials in which the eyes were closed at the time of the stimulus should ordinarily be excluded. These recommendations, along with a summary of the key ERN results, are provided in Table 4.
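
The trade-off between fewer trials and less noise can be made concrete for the simplest case. Under the assumption that data quality is indexed by the analytic SME for mean amplitude (SD across trials divided by the square root of the number of trials), rejection improves the SME only if the trial-to-trial SD falls by more than the factor computed below. The function name is hypothetical.

```python
import math

def breakeven_sd_ratio(n_total, n_rejected):
    """SD ratio (after/before rejection) at which the analytic SME is unchanged.

    If the SD of the retained trials divided by the SD of all trials is
    smaller than this value, rejection improves the SME; if it is larger,
    the loss of trials outweighs the reduction in noise.
    """
    n_kept = n_total - n_rejected
    return math.sqrt(n_kept / n_total)
```

For instance, `breakeven_sd_ratio(100, 3)` is approximately 0.985, so when 3% of trials are rejected, the trial-to-trial SD needs to drop by only about 1.5% for rejection to break even.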

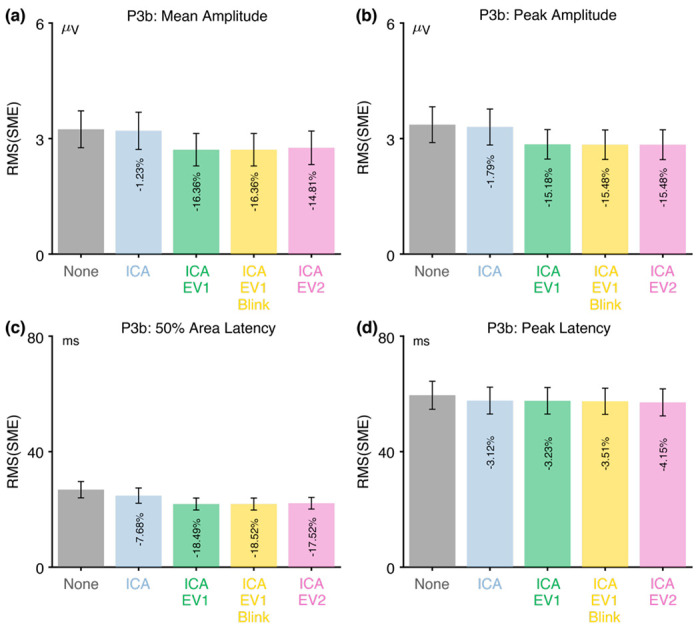

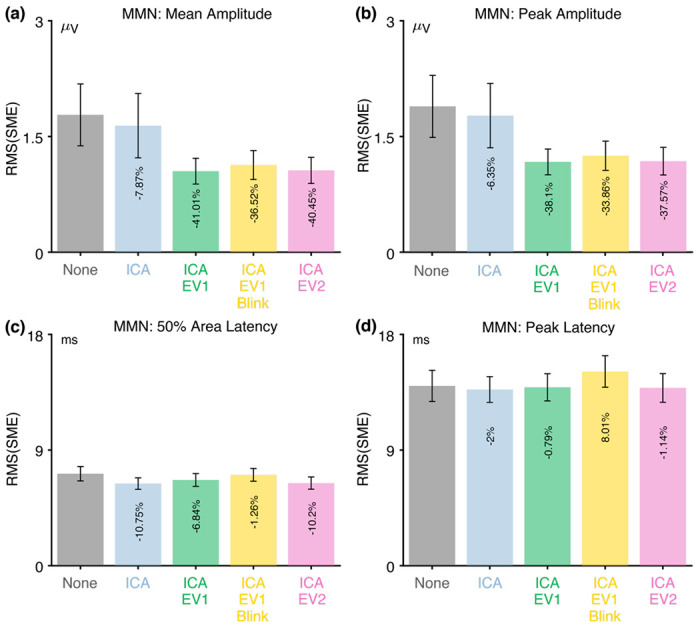

3.3. The P3b component

Figure 6a illustrates the active visual oddball task that was used to elicit the P3b component. Participants were presented with a random sequence of five letters (A, B, C, D, and E), each with an equal probability (0.2). In every block, one specific letter was assigned as the target, and participants were instructed to press a designated button when the target letter appeared and a different button for any nontarget letter. For example, if C was defined as the target, participants were instructed to press the target button when C appeared and the nontarget button for the letters A, B, D, or E. Each letter served as the target in one block of trials. Over the experiment, each participant encountered 40 target trials and 160 nontarget trials. The P3b was measured from the target-minus-nontarget difference wave at the Pz electrode site.
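
The trial structure described above can be sketched as follows. The sketch assumes five equal-length blocks of 40 trials in which each letter appears exactly eight times; these block details are assumptions chosen to reproduce the stated totals (40 targets and 160 nontargets) and are not taken from the task description. All names are hypothetical.

```python
import random

LETTERS = ["A", "B", "C", "D", "E"]

def make_block(target, n_per_letter=8, rng=random):
    """One block: each letter n_per_letter times, in shuffled order.

    Returns a list of (letter, is_target) tuples.
    """
    letters = LETTERS * n_per_letter
    rng.shuffle(letters)
    return [(letter, letter == target) for letter in letters]

def make_experiment(seed=0):
    """Five blocks, with each letter serving as the target in exactly one block."""
    rng = random.Random(seed)
    targets = LETTERS[:]
    rng.shuffle(targets)  # randomize which letter is the target in which block
    return [make_block(target, rng=rng) for target in targets]
```

Because every letter is the target in exactly one block, the target and nontarget categories contain the same physical stimuli across the experiment. Only their task-defined probability differs.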

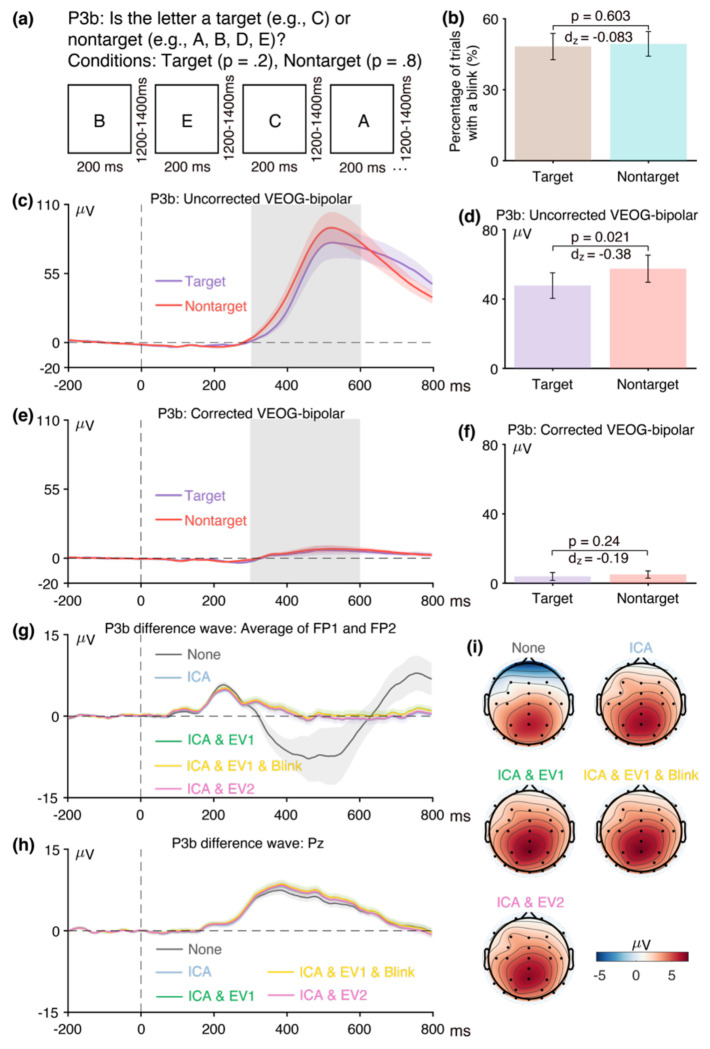

Figure 6.

(a) Active visual oddball paradigm used to elicit the P3b component. (b) Percentage of trials with a blink for the parent waves, measured from the uncorrected VEOG-bipolar channel. (c) Grand average ERP waveforms for targets and nontargets in the uncorrected VEOG-bipolar channel. (d) Mean amplitudes from the uncorrected VEOG-bipolar channel during the P3b measurement window for targets and nontargets. (e) Grand average ERP waveforms for targets and nontargets in the corrected VEOG-bipolar channel. (f) Mean amplitudes from the corrected VEOG-bipolar channel during the P3b measurement window for targets and nontargets. (g) Grand average ERP difference waves (target minus nontarget) for the five artifact minimization approaches at the FP1 and FP2 electrode sites. (h) Grand average difference waves for the five artifact minimization approaches at Pz. (i) Scalp maps of the mean amplitude measured from 300–600 ms in the grand average difference wave. Error bars show the standard error of the mean. The VEOG-bipolar signals were computed as upper minus lower.

3.3.1. Assessment of eyeblink confounds in the P3b data