Abstract

Isocitrate dehydrogenase (IDH) mutation status has emerged as an important prognostic marker in gliomas. This study sought to develop deep learning networks for non-invasive IDH classification using T2w MR images while comparing their performance to a multi-contrast network. Methods: Multi-contrast brain tumor MRI and genomic data were obtained from The Cancer Imaging Archive (TCIA) and The Erasmus Glioma Database (EGD). Two separate 2D networks were developed using nnU-Net, a T2w-image-only network (T2-net) and a multi-contrast network (MC-net). Each network was separately trained using TCIA (227 subjects) or TCIA + EGD data (683 subjects combined). The networks were trained to classify IDH mutation status and implement single-label tumor segmentation simultaneously. The trained networks were tested on over 1100 held-out datasets including 360 cases from UT Southwestern Medical Center, 136 cases from New York University, 175 cases from the University of Wisconsin–Madison, 456 cases from EGD (for the TCIA-trained network), and 495 cases from the University of California, San Francisco public database. A receiver operating characteristic curve (ROC) was drawn to calculate the AUC value to determine classifier performance. Results: T2-net trained on TCIA and TCIA + EGD datasets achieved an overall accuracy of 85.4% and 87.6% with AUCs of 0.86 and 0.89, respectively. MC-net trained on TCIA and TCIA + EGD datasets achieved an overall accuracy of 91.0% and 92.8% with AUCs of 0.94 and 0.96, respectively. We developed reliable, high-performing deep learning algorithms for IDH classification using both a T2-image-only and a multi-contrast approach. The networks were tested on more than 1100 subjects from diverse databases, making this the largest study on image-based IDH classification to date.

Keywords: nnU-Net, deep learning, IDH, U-net, brain tumor, MRI, CNN, gliomas

1. Introduction

The World Health Organization (WHO) revised glioma classification in 2016 with the observation that tumors with isocitrate dehydrogenase (IDH) mutation have a better prognosis than those with wildtype IDH [1]. IDH-mutated tumors also respond differently to therapy than wildtype tumors, so different diagnostic and therapeutic approaches are necessary for IDH-mutated and wildtype gliomas. Currently, the only way to conclusively detect an IDH-mutated glioma involves immunohistochemistry or gene sequencing on a tissue sample obtained either via a biopsy or surgical removal. However, information from the TCGA indicates that a mere 35% of biopsy samples have sufficient tumor content to allow for accurate molecular characterization [2]. Therefore, developing a robust and reliable non-invasive approach would be beneficial for these patients.

MR spectroscopy has the potential to identify IDH mutations. The mutant IDH enzyme produces the oncometabolite 2-HG [3]. MR spectroscopic methods have been developed to detect 2-HG non-invasively in brain tumors [4,5,6,7]. However, in a clinical setting, the spectral imaging data can be difficult to interpret due to artifacts, patient motion, poor shimming, small voxel sizes, non-ideal tumor location, or the presence of hemorrhage or calcification. Even when the spectra are of good quality, the false-positive rate of this technique is over 20%, making reliable clinical implementation difficult [8].

IDH wildtype gliomas are typically treated with more aggressive regimens than IDH mutant gliomas. Specific chemotherapeutic interventions are more effective in IDH-mutated gliomas (e.g., temozolomide) [9,10,11,12,13]. Furthermore, surgical resection of non-enhancing tumor volume in grade 3–4 IDH-mutated tumors has been shown to improve survival [14]. However, the determination of IDH mutation status is currently achieved through direct tissue sampling, which can be difficult to achieve. The amount of tumor tissue in biopsy samples may not always be sufficient for a complete molecular characterization [2]. Therefore, developing a robust and reliable non-invasive approach would be beneficial for these patients.

Recent research has demonstrated that deep learning is more effective than conventional machine learning techniques for predicting the genetic and molecular biology of tumors when using MRI [15,16,17]. However, these techniques are not yet suitable for clinical use as they rely on labor-intensive tumor pre-segmentation, significant pre-processing, or the use of multi-contrast acquisitions that frequently get disrupted by patient movement during lengthy scan times. Furthermore, the existing approaches utilize a 2D (slice-wise) classification method known to be susceptible to data leakage problems [18,19]. Two-dimensional slice-wise models, when applied to cross-sectional imaging data, are specifically prone to data leakage as randomized data division might happen across all subjects. This can lead to the creation of training, validation, and test sets where neighboring slices from the same subject are found in distinct data subsets. Since these adjacent slices often contain a great deal of shared information, this method can artificially enhance accuracy levels by introducing bias into the testing phase. Our own work with 2D IDH models has shown a boost of over 6% in classification accuracy when data leakage is permitted [20]. Another limitation of these previous studies is their lack of evaluation of large, true external held-out datasets, limiting the generalizability of their results.

This study sought to develop highly accurate, fully automated, and reliable deep learning algorithms for IDH classification. This study’s evaluation of large, diverse, and true external held-out datasets represents an important milestone in the journey towards clinical translation.

2. Materials and Methods

2.1. Datasets

2.1.1. Training Data

Multi-parametric brain MRI data and genomic information of glioma patients were obtained from the publicly available TCIA (The Cancer Imaging Archive)/TCGA (The Cancer Genome Atlas) databases and The Erasmus Glioma Database (EGD) [21,22,23]. Subjects were screened for the availability of IDH mutation status and multi-contrast MR images. Only pre-operative studies were included in the final dataset. The TCIA database consists of 227 subjects (94 IDH mutated, 133 IDH wildtype), and the EGD database consists of 456 subjects (150 IDH mutated, 306 IDH wildtype). The combination of TCIA and EGD provided 683 subjects with multi-contrast MR images and ground truth IDH status. Ground truth IDH status of the TCIA and EGD datasets was determined using the gold-standard Sanger method and next-generation sequencing, respectively [22,24,25,26]. The subject IDs used for training and their corresponding IDH statuses are listed in Table S1 of the supplementary data.

2.1.2. Testing Data

External testing data were obtained from 5 institutions, including 2 publicly available datasets. The public data were obtained from the University of California, San Francisco (UCSF) Preoperative Diffuse Glioma MRI dataset [27] with 495 subjects and the previously mentioned EGD [22] with 456 subjects. The collaborator/in-house datasets were obtained from three institutions, the UT Southwestern Medical Center (UTSW) with 360 subjects, New York University (NYU) with 136 subjects, and the University of Wisconsin–Madison (UWM) with 175 subjects (Table 1). Subjects were screened for the availability of IDH mutation status and multi-contrast MR images. Only pre-operative studies were included in the final dataset. IDH mutation status was determined either by immunohistochemistry or next-generation sequencing when available.

Table 1.

IDH status of all testing datasets included in this study.

| | UTSW | NYU | UWM | EGD | UCSF | Total |
|---|---|---|---|---|---|---|
| Mutated | 104 | 23 | 19 | 150 | 103 | 399 |
| Wildtype | 256 | 113 | 156 | 306 | 392 | 1223 |
| Total | 360 | 136 | 175 | 456 | 495 | 1622 |

2.2. Pre-Processing

Multi-contrast native space images, including pre-contrast and post-contrast T1-weighted, T2-weighted, and T2-weighted fluid attenuation inversion recovery (FLAIR), from TCIA, UTSW, NYU, and UWM were pre-processed using the FeTS platform (version 002) [28], which co-registers the images, brings them to SRI24 template space [29], and performs skull-stripping and multi-class brain tumor segmentation. The UCSF data were already pre-processed (co-registered in SRI24 template space and skull-stripped) [27]. The EGD data were also pre-processed (co-registered to MNI152 space), with the data skull-stripped using Advanced Normalization Tools (ANTS) [22,30]. Additionally, all datasets were (a) N4 bias corrected and (b) intensity normalized to zero-mean and unit variance [31,32].

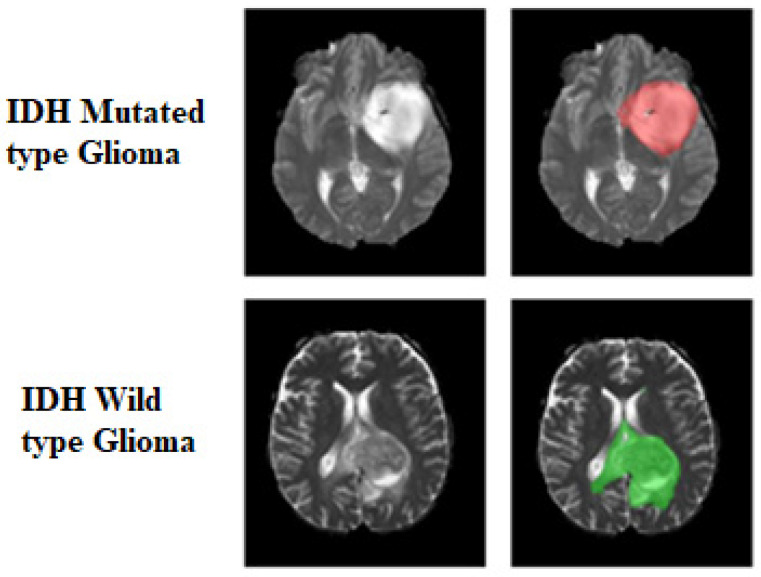

Multi-label FeTS tumor segmentations were combined to generate the whole-tumor masks. The ground truth tumor masks in IDH-mutated cases were marked with 1s, while the ground truth tumor masks for IDH wildtype were labeled with 2s (Figure 1). These tumor masks were used as the ground truth for tumor segmentation in the training step.

Figure 1.

Ground truth tumor masks. The green voxels represent IDH wildtype (values of 2). The red voxels represent IDH mutated (values of 1). The ground truth labels have the same mutation status for all voxels in each tumor.
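The labeling scheme described above can be sketched in a few lines. This is a minimal illustration, not the authors' code: the function name `make_idh_ground_truth` is hypothetical, and it assumes that every non-zero value in the multi-label FeTS segmentation belongs to the tumor.

```python
import numpy as np


def make_idh_ground_truth(multi_label_seg: np.ndarray, idh_mutated: bool) -> np.ndarray:
    """Collapse a multi-label tumor segmentation into a whole-tumor mask and
    stamp every tumor voxel with the subject's IDH label.

    Assumption: any non-zero label in the input is tumor.
    Output values: 0 = background, 1 = IDH mutated, 2 = IDH wildtype.
    """
    whole_tumor = multi_label_seg > 0
    label = 1 if idh_mutated else 2
    return np.where(whole_tumor, label, 0).astype(np.uint8)


# Toy 2x2 example with three different tumor sub-region labels.
seg = np.array([[0, 1], [2, 4]])
mutated_mask = make_idh_ground_truth(seg, idh_mutated=True)
wildtype_mask = make_idh_ground_truth(seg, idh_mutated=False)
```

Because the subject-level IDH status is copied to every tumor voxel, the same mask serves as the target for both segmentation and classification.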

2.3. Network Details

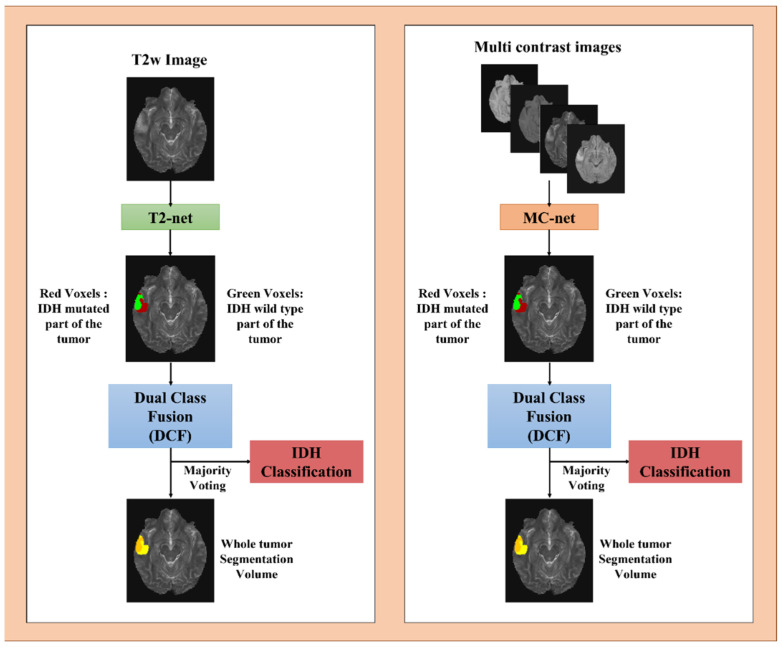

Two separate 2D networks were designed using the nnU-Net package. These were a T2w-image-only network (T2-net) and a multi-contrast network (MC-net) (Figure 2). Each network was trained on TCIA data only (227 subjects) and a combination of TCIA + EGD datasets (683 subjects combined) separately. The nnU-Net’s trainer class nnU-NetTrainerV2_DA5, representing advanced data augmentation steps, was used. The nnU-Net’s inbuilt five-fold cross-validation (CV) training-only procedure was implemented for each network. Generic nnU-Nets were trained to perform voxel-wise dual-class segmentation of the whole tumor, with Class 1 representing IDH mutated and Class 2 representing IDH wildtype. Generic nnU-Nets were selected as they reuse all the generated feature maps, such that each layer in the design has a direct supervision signal [33].

Figure 2.

Overview of voxel-wise classification of IDH mutation status. Volumes were combined through dual-class fusion to remove false positives and create a tumor segmentation volume. Majority voting was applied across the voxels to predict the overall IDH mutation status.

2.4. Network Implementation and Cross-Validation

The networks were implemented using PyTorch [34] and nnU-Net packages [35]. To generalize the performance of the networks, nnU-Net’s inbuilt cross-validation (CV) training-only procedure was utilized. A 5-fold CV procedure was implemented for training each network. The subjects were randomly shuffled, and 80% of the data were used for training, and 20% of the data were used for in-training validation (model optimization). Data shuffling and splitting were implemented at the subject level to avoid the problem of data leakage [18,19]. During each fold of the cross-validation procedure, the subjects were reshuffled to generate the training and in-training validation datasets. It is important to note that each fold in the cross-validation process signifies a new training phase with a unique combination of the dataset. The in-training validation set aids in enhancing network performance throughout training. The in-training validation dataset is used by the algorithm to assess its performance after every training cycle and to update the model’s hyperparameters (as detailed in Supplementary Table S2). It is not considered a true held-out dataset since the algorithm modifies its performance based on the results from the in-training validation dataset during each round. Once the algorithm has finished all the training folds, it is then evaluated on the actual held-out external datasets to gauge its performance.
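A subject-level split of the kind described above can be sketched as follows. This is an illustrative sketch, not nnU-Net's internal splitting code: the helper names `subject_level_folds` and `split_slices` are hypothetical, and the folds here form a standard partition of the subjects (each subject is in the validation set exactly once), which is one common reading of a 5-fold procedure.

```python
import random


def subject_level_folds(subject_ids, n_folds=5, seed=0):
    """Return a list of (train_subjects, val_subjects) pairs.

    Splitting is done on subject IDs, never on individual slices, so all
    slices of a subject end up on the same side of the split.
    """
    ids = sorted(set(subject_ids))
    random.Random(seed).shuffle(ids)
    folds = []
    for k in range(n_folds):
        val = set(ids[k::n_folds])  # roughly 20% of subjects when n_folds=5
        train = set(ids) - val
        folds.append((train, val))
    return folds


def split_slices(slices, train_subjects):
    """Partition (subject_id, slice_index) pairs according to a subject split."""
    train = [s for s in slices if s[0] in train_subjects]
    val = [s for s in slices if s[0] not in train_subjects]
    return train, val


# Example with 227 hypothetical subject IDs (the size of the TCIA training set).
ids = [f"S{i:03d}" for i in range(227)]
folds = subject_level_folds(ids)
```

Splitting slices directly with a random shuffle would place neighboring slices of one subject in both sets, which is the leakage pattern the subject-level split avoids.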

2.5. Testing Procedure

Testing data included all the external datasets for the TCIA-trained networks (EGD, UTSW, NYU, UWM, and UCSF), representing a total of 1622 subjects, and all external datasets without the EGD dataset for the TCIA + EGD-trained networks (total of 1166 subjects). Output segmentations of the five trained models from each fold of the CV procedure were ensembled to obtain the final two-class segmentation volume. A dual-class fusion (DCF) technique was developed to merge the two-class segmentation. The two classes were combined, and the largest connected component was extracted using a 3D connected component algorithm in MATLAB. This combination of classes yielded a whole-tumor segmentation map (Figure 2). Majority voting over the voxel-wise classes resulted in an IDH classification for each subject. The networks were run on NVIDIA Tesla A100 GPUs, and the developed IDH classification procedure was completely automated. A tumor segmentation map is a natural output of the voxel-wise classification approach.
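The ensembling, dual-class fusion, and majority-voting steps can be sketched in Python. The study used MATLAB for the connected-component step, so this is a stand-in built on `scipy.ndimage.label`, not the authors' implementation. Several details are assumptions: fold outputs are averaged as class probabilities, connectivity is SciPy's default face connectivity, and a tie in the vote is resolved as wildtype. The function names are hypothetical.

```python
import numpy as np
from scipy import ndimage


def ensemble_folds(fold_probs: np.ndarray) -> np.ndarray:
    """Average class probabilities over folds and take the arg-max label.

    fold_probs has shape (folds, classes, Z, Y, X); class 0 is background.
    Assumption: probability averaging is the ensembling rule.
    """
    return np.mean(fold_probs, axis=0).argmax(axis=0)


def dual_class_fusion(pred: np.ndarray) -> np.ndarray:
    """Fuse classes 1 and 2, keep the largest 3D connected component, and
    return the original class labels inside that component only."""
    fused = pred > 0
    labeled, n_components = ndimage.label(fused)  # default: face connectivity
    if n_components == 0:
        return np.zeros_like(pred)
    sizes = np.bincount(labeled.ravel())[1:]  # skip the background count
    largest = 1 + int(np.argmax(sizes))
    return np.where(labeled == largest, pred, 0)


def classify_subject(pred: np.ndarray):
    """Majority vote over tumor voxels: 1 = IDH mutated, 2 = IDH wildtype."""
    cleaned = dual_class_fusion(pred)
    n_mut = int(np.count_nonzero(cleaned == 1))
    n_wt = int(np.count_nonzero(cleaned == 2))
    if n_mut + n_wt == 0:
        return None, 0.0  # no tumor voxels predicted
    frac_mut = n_mut / (n_mut + n_wt)
    # Assumption: a tie (exactly 50%) is called wildtype.
    return ("mutated" if frac_mut > 0.5 else "wildtype"), frac_mut


# Toy volume: a 3x3x3 tumor (9 mutated voxels, 18 wildtype) plus one isolated
# false-positive voxel far from the tumor.
vol = np.zeros((8, 8, 8), dtype=np.uint8)
vol[1:4, 1:4, 1:4] = 2
vol[1, 1:4, 1:4] = 1
vol[7, 7, 7] = 1
status, frac = classify_subject(vol)
```

In this toy volume the isolated voxel is discarded by the fusion step, and the remaining tumor is called wildtype because only one third of its voxels vote mutated.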

2.6. Statistical Analysis

The performance of both T2-net and MC-net was statistically analyzed using MATLAB (2019a) and R programming 2022. The accuracy of these two networks was determined by a majority-voting process with a voxel-wise cutoff of 50%. This threshold was then utilized to compute the subject-level accuracy, sensitivity, and specificity. A receiver operating characteristic curve (ROC curve) was also calculated for the ensembled results of each combination of the network, training approach, and trainer class. The ROC methodology can be found in the supplementary data. The dice similarity coefficient was used to compare the spatial overlap between the network’s segmentation and the ground truth.
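For reference, the subject-level metrics and the Dice coefficient named above follow their standard definitions. The sketch below is plain Python rather than the MATLAB/R code used in the study, and it assumes that IDH mutated is treated as the positive class. The function names are illustrative.

```python
def subject_metrics(y_true, y_pred):
    """Accuracy, sensitivity, and specificity from subject-level calls.

    y_true, y_pred: sequences of booleans, True = IDH mutated.
    Assumption: IDH mutated is the positive class.
    """
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t and p)
    tn = sum(1 for t, p in pairs if not t and not p)
    fp = sum(1 for t, p in pairs if not t and p)
    fn = sum(1 for t, p in pairs if t and not p)
    return {
        "accuracy": (tp + tn) / len(pairs),
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
    }


def dice(pred_mask, true_mask):
    """Dice similarity coefficient for two flattened binary masks."""
    intersection = sum(1 for p, t in zip(pred_mask, true_mask) if p and t)
    total = sum(1 for p in pred_mask if p) + sum(1 for t in true_mask if t)
    return 2 * intersection / total if total else 1.0


# Five hypothetical subjects: two mutated, three wildtype.
metrics = subject_metrics(
    y_true=[True, True, False, False, False],
    y_pred=[True, False, False, False, True],
)
overlap = dice([1, 1, 0, 0], [1, 0, 1, 0])
```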

3. Results

3.1. T2-Net

Table 2 presents the testing results for T2-net. The network trained on the combined dataset of TCIA + EGD achieved the best accuracies across all institutions, with an overall accuracy of 87.6% and an overall AUC of 0.8931. It also achieved a mean dice score of 0.85 ± 0.15.

Table 2.

T2-net IDH classification results.

| Network Type | Training Group | Metrics | UTSW | NYU | UWM | EGD | UCSF | Overall | Overall AUC |
|---|---|---|---|---|---|---|---|---|---|
| T2-net | TCIA | Accuracy | 83.9 | 77.9 | 85.1 | 85.3 | 88.7 | 85.4 | 0.8686 |
| T2-net | TCIA | Sensitivity | 75.0 | 60.9 | 78.9 | 76.7 | 76.7 | 75.4 | |
| T2-net | TCIA | Specificity | 87.5 | 81.4 | 85.9 | 89.5 | 91.8 | 88.6 | |
| T2-net | TCIA | Dice Score | 0.83 ± 0.18 | 0.87 ± 0.14 | 0.84 ± 0.16 | 0.73 ± 0.19 | 0.82 ± 0.16 | 0.80 ± 0.18 | |
| T2-net | TCIA + EGD | Accuracy | 86.1 | 81.6 | 89.7 | - | 89.5 | 87.6 | 0.8931 |
| T2-net | TCIA + EGD | Sensitivity | 81.7 | 60.9 | 84.2 | - | 70.9 | 75.5 | |
| T2-net | TCIA + EGD | Specificity | 87.9 | 85.8 | 90.4 | - | 94.4 | 90.8 | |
| T2-net | TCIA + EGD | Dice Score | 0.85 ± 0.15 | 0.88 ± 0.13 | 0.85 ± 0.15 | - | 0.83 ± 0.15 | 0.85 ± 0.15 | |
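As a quick arithmetic check, the overall T2-net accuracies appear consistent with pooling all test subjects, that is, weighting each site's accuracy by its subject count from Table 1. The sketch below assumes that pooling rule, which the text does not state explicitly. It uses the rounded per-site values, so it can only reproduce the overall figure to one decimal place.

```python
# Subject counts per test site (Table 1).
site_sizes = {"UTSW": 360, "NYU": 136, "UWM": 175, "EGD": 456, "UCSF": 495}

# Per-site T2-net accuracies in percent (Table 2).
t2_tcia_acc = {"UTSW": 83.9, "NYU": 77.9, "UWM": 85.1, "EGD": 85.3, "UCSF": 88.7}
t2_combined_acc = {"UTSW": 86.1, "NYU": 81.6, "UWM": 89.7, "UCSF": 89.5}


def pooled_accuracy(acc_by_site, sizes):
    """Subject-weighted mean of per-site accuracies (assumed pooling rule)."""
    total = sum(sizes[site] for site in acc_by_site)
    return sum(acc_by_site[site] * sizes[site] for site in acc_by_site) / total


print(round(pooled_accuracy(t2_tcia_acc, site_sizes), 1))      # 85.4
print(round(pooled_accuracy(t2_combined_acc, site_sizes), 1))  # 87.6
```

The TCIA + EGD network is pooled over 1166 subjects because EGD was part of its training data and is excluded from its test set.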

3.2. MC-Net

Table 3 presents the testing results for MC-net. The network trained on the combined dataset of TCIA + EGD achieved the best accuracies across all institutions, with an overall accuracy of 92.8% and an overall AUC of 0.9646, and outperformed the T2-only network. The MC-net achieved a mean dice score of 0.89 ± 0.13.

Table 3.

MC-net IDH classification results.

| Network Type | Training Group | Metrics | UTSW | NYU | UWM | EGD | UCSF | Overall | Overall AUC |
|---|---|---|---|---|---|---|---|---|---|
| MC-net | TCIA | Accuracy | 87.2 | 91.9 | 87.4 | 92.3 | 93.5 | 91.0 | 0.9448 |
| MC-net | TCIA | Sensitivity | 73.1 | 78.3 | 84.2 | 86.0 | 89.3 | 83.0 | |
| MC-net | TCIA | Specificity | 93.0 | 94.7 | 87.8 | 95.4 | 94.5 | 93.6 | |
| MC-net | TCIA | Dice Score | 0.90 ± 0.13 | 0.92 ± 0.10 | 0.91 ± 0.11 | 0.77 ± 0.17 | 0.87 ± 0.14 | 0.86 ± 0.15 | |
| MC-net | TCIA + EGD | Accuracy | 90.0 | 92.6 | 92.6 | - | 94.9 | 92.8 | 0.9646 |
| MC-net | TCIA + EGD | Sensitivity | 80.8 | 79.3 | 68.4 | - | 88.3 | 82.3 | |
| MC-net | TCIA + EGD | Specificity | 93.8 | 96.5 | 95.5 | - | 96.7 | 95.6 | |
| MC-net | TCIA + EGD | Dice Score | 0.90 ± 0.13 | 0.92 ± 0.13 | 0.92 ± 0.07 | - | 0.87 ± 0.13 | 0.89 ± 0.13 | |

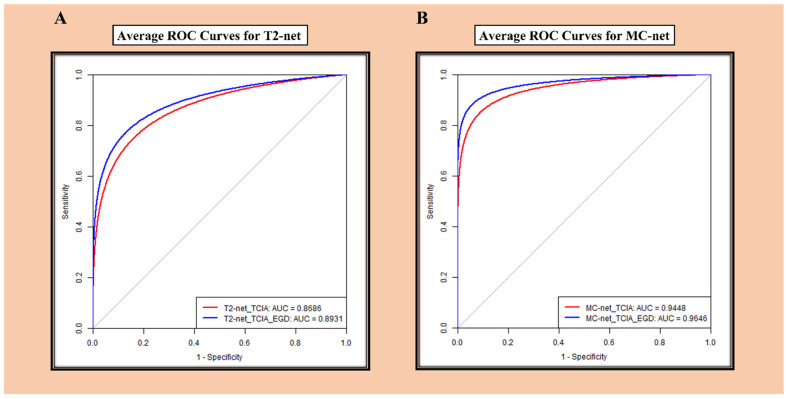

3.3. ROC Analysis

ROC curves for the average accuracy of T2-net and MC-net are provided in Figure 3. MC-net demonstrated better performance than T2-net.

Figure 3.

(A) ROC analysis for T2-net. (B) ROC analysis for MC-net.
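The study's ROC methodology is given in its supplementary data and is not reproduced here. As a generic illustration only, an AUC can be computed from one continuous score per subject. The sketch below assumes that score is the fraction of tumor voxels voted IDH mutated, and it uses the rank-based definition of AUC (the probability that a randomly chosen mutated subject scores higher than a randomly chosen wildtype subject). The function name `roc_auc` is illustrative.

```python
def roc_auc(scores, labels):
    """Area under the ROC curve via pairwise comparison.

    scores: one value per subject (assumed: fraction of voxels voted mutated).
    labels: booleans, True = IDH mutated (positive class).
    Tied positive/negative pairs count as half a win.
    """
    pos = [s for s, is_pos in zip(scores, labels) if is_pos]
    neg = [s for s, is_pos in zip(scores, labels) if not is_pos]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative subject")
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in pos
        for n in neg
    )
    return wins / (len(pos) * len(neg))


# Five hypothetical subjects: two mutated, three wildtype.
auc = roc_auc(
    scores=[0.9, 0.8, 0.4, 0.3, 0.2],
    labels=[True, False, True, False, False],
)
```

Unlike accuracy at the 50% voting cutoff, this quantity summarizes performance over all possible cutoffs on the voxel fraction.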

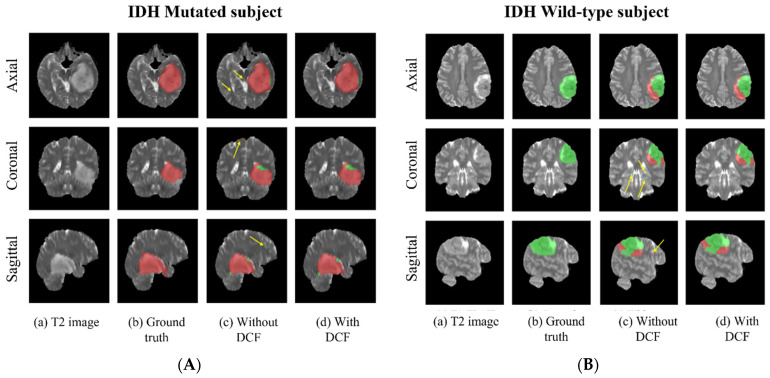

3.4. Voxel-Wise Classification

Since these networks are voxel-wise classifiers, they provide simultaneous tumor segmentation. Figure 4A,B shows examples of the voxel-wise classification for an IDH-wildtype and an IDH-mutated case using the T2-net. The DCF procedure successfully eliminated false positives. The average voxel-wise accuracy for each network and mutation status was also calculated. In general, all the models exhibited higher voxel-wise accuracies for the wildtype class compared to for the mutated class (Table 4).

Figure 4.

(A) Example voxel-wise segmentation for an IDH-mutated and IDH-wildtype tumor. T2 image (a). Ground truth segmentation (b). Voxel-wise predictions without DCF (c) and after DCF (d). Yellow arrows indicate false positives. Red voxels depict the IDH-mutated class, and green voxels depict the IDH-wildtype class (B).

Table 4.

Mean voxel-wise accuracies for each network.

| Network Type | Training Group | IDH Type | UTSW | NYU | UWM | EGD | UCSF | Overall |
|---|---|---|---|---|---|---|---|---|
| T2-net | TCIA | Mutant | 71.05 | 56.95 | 76.40 | 74.97 | 73.49 | 72.60 |
| T2-net | TCIA | Wildtype | 84.05 | 80.59 | 85.20 | 87.07 | 88.71 | 86.13 |
| T2-net | TCIA + EGD | Mutant | 78.48 | 62.10 | 81.94 | - | 70.18 | 73.79 |
| T2-net | TCIA + EGD | Wildtype | 84.63 | 83.03 | 87.65 | - | 91.99 | 88.09 |
| MC-net | TCIA | Mutant | 71.17 | 69.43 | 81.11 | 81.57 | 84.92 | 79.00 |
| MC-net | TCIA | Wildtype | 90.57 | 91.37 | 85.26 | 93.11 | 91.18 | 90.80 |
| MC-net | TCIA + EGD | Mutant | 80.01 | 73.64 | 69.65 | - | 85.68 | 80.98 |
| MC-net | TCIA + EGD | Wildtype | 91.71 | 94.15 | 93.33 | - | 93.50 | 93.05 |

3.5. Training and Segmentation Times

Each cross-validation procedure took approximately 5 days to train. The trained networks took only one minute to segment the whole tumor, implement DCF, and predict the IDH mutation status for each subject.

4. Discussion

We developed and evaluated MRI-based deep learning algorithms for IDH classification in gliomas. Two separate 2D networks were developed using the nnU-Net package, a T2w-image-only network (T2-net) and a multi-contrast network (MC-net). The performance of T2-net and MC-net was compared across different datasets, revealing the potential of T2-net for future improvements and the advantages of our networks over existing IDH classification algorithms.

The overall accuracy of the T2-net trained on the combined dataset of TCIA + EGD was 87.6%, with an AUC of 0.8931, while the MC-net trained on the same dataset achieved an overall accuracy of 92.8% and an AUC of 0.9646. The MC-net outperformed the T2-net across all institutions, suggesting that using multi-contrast MR images enhances the performance of the algorithm (Table 2 and Table 3).

Despite the superior performance of MC-net, the T2-net demonstrated a high overall accuracy and AUC, indicating the potential of T2-net in future applications. Our results suggest that T2w images alone have the potential to achieve performance similar to that of multi-contrast images. The T2-net’s performance may be improved further by incorporating additional data, refining the network architecture, and optimizing hyperparameters.

The ability to use only T2-weighted images offers several advantages over multi-contrast MR images. T2w MR images are widely available and are a part of routine clinical practice, making them more accessible and practical in a clinical setting. This accessibility ensures that the T2-net can be applied to a larger patient population. A single T2w MR sequence requires a shorter acquisition time than a multi-contrast protocol, reducing the likelihood of motion artifacts and improving patient comfort. Relying on a single MR image simplifies the pre-processing and prediction pipeline, accelerating the implementation of T2-net in clinical settings, where acquiring multi-contrast MR images may not always be possible. The time taken by T2-net to segment the entire tumor, execute the DCF procedure, and predict the IDH mutation status for a subject is under one minute. All these advantages of T2-net make clinical translation much more straightforward.

Our T2-net and MC-net exhibited excellent performance in comparison to several existing machine learning algorithms. Zhang et al. achieved 80% IDH classification accuracy using a radiomics approach with an SVM model and multi-modal MRI features [15] and 86% classification accuracy employing multi-modal MRI, clinical features, and a random forest algorithm [16]. Zhou et al. reached an AUC of 0.91 by incorporating MR feature extraction and a random forest algorithm [36]. Li et al. attained an AUC of 0.95 by applying deep-learning-based radiomics (DLR) to multi-modal MR images [37]. Choi et al. developed a complex hybrid approach based on 2D tumor images, radiomic features, 3D tumor shape, and loci guided by a tumor segmentation CNN, yet only achieved 78% IDH classification accuracy on the TCIA dataset [38]. Matsui et al. developed a multi-modality model to diagnose the molecular subtype and to predict the three-group classification directly, achieving a classification accuracy of only 68.7% for the test dataset [39]. Karami et al. developed DL-based models using conventional MRI and multi-shell diffusion MRI to achieve a classification accuracy of 75% [40]. Pasquini et al. developed a convolutional neural network (CNN) on multiparametric MRI, achieving a classification accuracy of 67% using T2w images alone [41]. In contrast, our T2-net reached an overall IDH classification accuracy of 87.6%, while the MC-net attained an overall IDH classification accuracy of 92.8%. Our networks demonstrated competitive performance when compared to the algorithm developed by Chang et al. (accuracy of 94%) using the TCIA database [17]. However, Chang et al.’s algorithm uses a 2D (slice-wise) classification approach, which is prone to data leakage problems [17]. In contrast, our algorithms employ subject-level data splitting, which mitigates the data leakage issue commonly found in slice-wise methodologies [18,19]. Our work also overcomes the limitations of previous studies by assessing the performance on large, true external held-out datasets, yielding more reliable accuracy estimates.

Several factors may contribute to the superior performance of our networks compared to previously published machine learning algorithms. The primary reason is the employment of simple nnU-Nets, in contrast to the hybrid and more complex networks reported earlier. Simple U-Nets are easy to use and train, with excellent generalizability and interpretability and a reduced risk of overfitting [42]. Additionally, the nnU-Net architecture is advantageous as it automatically configures itself, including pre-processing, network architecture, training, and post-processing [35]. The nnU-Nets carry information from each preceding layer to the subsequent ones, making these networks more trainable and helping to minimize overfitting [35,43]. The nnU-Nets were designed to leverage information from MR images and differentiate imaging features between brain tumors and artifacts and are equipped to handle variations in tumor location, size, and appearance using our pre-processing and data augmentation strategies. It is worth noting that approximately 10% of the TCIA dataset used for training had some kind of visible motion or artifact. Despite these complexities, the network was able to effectively learn and generalize, indicating its ability to handle such challenges. The dual-class fusion (DCF) stage also aids in effectively discarding false positives and enhances segmentation accuracy by omitting unrelated voxels that are not connected to the tumor. Additionally, the networks require minimal pre-processing and do not necessitate the extraction of pre-engineered features from the images or histopathological data [44].

The trained nnU-Nets are voxel-wise classifiers, giving a classification for each individual voxel in the image. This provides simultaneous single-label tumor segmentation (e.g., the combination of voxels classified as IDH mutated and non-mutated forms the overall tumor segmentation). These networks showed impressive performance in whole-tumor segmentation, with T2-net yielding dice coefficients of 0.85 and the multi-contrast MC-net yielding 0.89. These coefficients align with top performers from various BraTS tumor segmentation challenges [43,45,46,47].

These voxel-wise classifiers lead to classifications of parts of each tumor as either IDH mutated or IDH wildtype. This reflects the known heterogeneity in genetic expression that can occur in gliomas, resulting in diverse tumor biology [17,48]. In clinical practice, immunohistochemistry (IHC) evaluations are mainly used to detect (using monoclonal antibodies) prevalent IDH mutations such as IDH1-R132H. Different cutoff values have been proposed for determining the IDH status using IHC methods. While some advocate staining of more than 10% of tumor cells to confer IDH positivity, others suggest that one strongly stained tumor cell is sufficient [49]. Heterogeneity of staining with IHC has also been reported, with up to 46% of subjects showing partial uptake [50]. In 2011, Preusser et al. reported that IDH1-R132H expression may occur in only a fraction of tumor cells [51]. Heterogeneity of the sample can also affect the sensitivity of genetic testing [52]. IDH heterogeneity and reported false negativity in some gliomas have been explained by monoallelic gene expression, wherein only one allele of a gene is expressed even though both alleles are present. According to Horbinski, sequencing may not always be adequate to identify tumors that are functionally IDH1/2 mutated [51,53]. Although heterogeneity of IDH status has been reported in histochemical and genomic evaluations of gliomas, we do not claim that the deep learning networks in this study are able to detect such heterogeneity in IDH mutation status. Rather, the mixed classification results likely reflect the morphologic heterogeneity of the IDH mutation status within a given tumor. Regardless, the accuracies achieved indicate that this voxel-wise approach outperforms other methods.

Although IHC methods are routinely used in the clinic, several exome sequencing studies have demonstrated that traditional IDH1 antibody testing using IHC methods fails to detect up to 15% of IDH-mutated gliomas [25,26]. There are several molecular methods that can be used to determine IDH mutation status from tissue. The current gold standard is the whole-genome Sanger DNA sequencing method. Next-generation sequencing (NGS) methods such as whole-exome sequencing (WES) and NGS cancer gene panels can also be used to determine IDH mutation status. However, these molecular methods can be limited by the amount of time, cost, and volume of tumor tissue required for testing [54].

The study employed minimal data pre-processing. As for the T2-only network, high-quality T2-weighted images are commonly obtained during clinical brain tumor diagnostic evaluations and are generally resistant to patient motion effects, allowing for acquisition within two minutes. On modern MRI scanners, high-quality T2w images can even be obtained in the presence of patient motion [55]. As such, the ability to solely use T2w images presents a significant advantage for clinical translation. This method was inspired by similar approaches used for identifying other genetic statuses [56].

Our pre-processing steps preserve the original image information without needing any region of interest or pre-segmentation of the tumor. Prior deep learning algorithms for MRI-based IDH classification often relied on explicit tumor pre-segmentation, whether manual or through a separate deep learning network [17,38]. These pre-segmentation steps add complexity and, in the case of manual procedures, hinder the development of a robust automated clinical workflow. Our network uniquely carries out simultaneous tumor segmentation, a natural output of the voxel-wise classification process.

In reviewing the performance on the various external datasets, we noticed that the UTSW dataset tended to yield lower accuracy for the MC-net than the other held-out datasets. Approximately half of the UTSW data used in this study represent MR data acquired at other nearby regional imaging centers, from which patients were referred to UTSW for surgical management with their outside imaging data sent to our PACS. The remainder of the UTSW subject imaging data were acquired on our own scanners using our standardized clinical brain tumor protocols (across a variety of vendors and platforms). When separating the UTSW data into external imaging centers and internal UTSW imaging data, there was a large difference in IDH classification accuracy, with the internal UTSW data providing 95.3% accuracy and the external referral data providing only 87.7% accuracy. This represents a potentially interesting area of additional inquiry in terms of the performance of molecular classification algorithms related to image acquisition parameters, image quality, field strength, vendor, and other factors that may be important when considering clinical implementation.

5. Future Work

Deep learning models usually require substantial amounts of data to train effectively. Training on a large dataset allows the networks to learn the underlying patterns and relationships in the data, improving their ability to generalize to unseen examples. The training subjects used in this study were only from the TCIA and EGD databases. While the TCIA dataset is relatively small (227 subjects) compared to the sample sizes typically required for deep learning, when combined with the EGD dataset, it provided nearly 700 subjects for training. Despite this caveat, the data are representative of real-world clinical experience, with multi-parametric MR images from multiple institutions with diverse acquisition parameters and imaging vendor platforms. Additionally, the evaluations were performed on over 1100 subjects with true held-out and geographically diverse datasets.

As seen in our study, the performance of both T2-net and MC-net improved when trained on the combined dataset of TCIA + EGD compared to TCIA alone. This indicates that adding more training data can help to improve the performance of the algorithms. A larger and more diverse training dataset can lead to better generalization and robustness in the model, allowing it to perform well on various external datasets. This is particularly important for deep learning algorithms, as they tend to require large amounts of data to learn the underlying patterns and features necessary for accurate classification. Although our results show promise for clinical translation, our algorithms’ performance needs to be replicated on additional independent datasets with variable acquisition parameters and imaging vendor platforms.

6. Conclusions

We developed two MRI-based deep learning networks for IDH classification of gliomas, a T2w-image-only network (T2-net) and a multi-contrast network (MC-net), with high accuracy. The developed networks were tested on over 1100 true held-out subjects from diverse databases, making this the largest study on non-invasive, image-based IDH prediction to date. The MC-net outperformed the T2-net in terms of accuracy, sensitivity, specificity, AUC, and dice coefficient. However, T2-net has the advantage of using only T2w MR images, which are more widely available and have faster acquisition times. This makes the T2-net a promising tool for clinical settings where multi-contrast MR images may not be readily available. Our results support the potential integration of T2-net and MC-net in clinical practice to provide non-invasive, accurate, and rapid IDH mutation prediction.

7. Importance of the Study

The identification of isocitrate dehydrogenase (IDH) mutation status as a marker for treatment and prognosis is one of the most significant recent findings in brain glioma biology. Gliomas with the mutated form of the gene have a more favorable prognosis and respond better to treatment than those with the non-mutated or wildtype form. Currently, the only reliable method to establish IDH mutation status requires obtaining glioma tissue through an invasive brain biopsy or open surgical resection. The ability to determine IDH status non-invasively carries substantial importance in determining therapy and predicting prognosis. Two MRI-based deep learning networks were developed for IDH classification: a T2w-image-only network (T2-net) and a multi-contrast network (MC-net). The high IDH classification accuracy of the T2-net marks an important milestone in the journey towards clinical translation.

Acknowledgments

Yin Xi: Department of Radiology, UT Southwestern Medical Center, Dallas, TX, USA.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/bioengineering10091045/s1; Table S1. Training subjects and corresponding IDH status; Table S2. Hyperparameters used to develop the MC-net.

Author Contributions

Experimental design (C.G.B.Y., B.C.W., N.C.D.T., A.J.M. and J.A.M.); Implementation (C.G.B.Y., B.C.W., N.C.D.T., A.J.M. and J.A.M.); Data analysis and interpretation (C.G.B.Y., B.C.W., N.C.D.T., J.M.H., D.D.R., N.S., K.J.H., T.R.P., B.F., M.D.L., R.J., R.J.B., M.C.P., A.J.M. and J.A.M.); Manuscript writing (C.G.B.Y., B.C.W., N.C.D.T., J.M.H., D.D.R., N.S., K.J.H., T.R.P., B.F., M.D.L., R.J., R.J.B., M.C.P., A.J.M. and J.A.M.). All authors have read and agreed to the published version of the manuscript.

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki and approved by the Institutional Review Board of the University of Texas Southwestern Medical Center (STU-2020-1184).

Informed Consent Statement

Not applicable.

Data Availability Statement

The results shown here are in whole or part based upon data generated by the TCGA Research Network: http://cancergenome.nih.gov/: TCGA-GBM (DOI: 10.7937/K9/TCIA.2016.RNYFUYE9), TCGA-LGG (DOI: 10.7937/K9/TCIA.2016.L4LTD3TK) and Ivy GAP (DOI: 10.7937/K9/TCIA.2016.XLwaN6nL). Results were also derived from the publicly available UCSF-PDGM (DOI: 10.7937/tcia.bdgf-8v37) and EGD (https://xnat.bmia.nl/data/archive/projects/egd) datasets. Data supporting the findings of this study that are not already included in the article or supplementary materials may be available from the authors upon reasonable request. Some data may be restricted due to privacy and/or ethical restrictions.

Conflicts of Interest

The authors declare no conflict of interest.

Funding Statement

This work was supported by NIH/NCI R01CA260705 (J.A.M.) and NIH/NCI U01CA207091 (A.J.M. and J.A.M.).

References

- 1. Louis D.N., Perry A., Reifenberger G., Von Deimling A., Figarella-Branger D., Cavenee W.K., Ohgaki H., Wiestler O.D., Kleihues P., Ellison D.W. The 2016 World Health Organization classification of tumors of the central nervous system: A summary. Acta Neuropathol. 2016;131:803–820. doi: 10.1007/s00401-016-1545-1.

- 2. The Cancer Genome Atlas Research Network. Comprehensive genomic characterization defines human glioblastoma genes and core pathways. Nature. 2008;455:1061–1068. doi: 10.1038/nature07385.

- 3. Yan H., Parsons D.W., Jin G., McLendon R., Rasheed B.A., Yuan W., Kos I., Batinic-Haberle I., Jones S., Riggins G.J. IDH1 and IDH2 mutations in gliomas. N. Engl. J. Med. 2009;360:765–773. doi: 10.1056/NEJMoa0808710.

- 4. Pope W.B., Prins R.M., Thomas M.A., Nagarajan R., Yen K.E., Bittinger M.A., Salamon N., Chou A.P., Yong W.H., Soto H. Non-invasive detection of 2-hydroxyglutarate and other metabolites in IDH1 mutant glioma patients using magnetic resonance spectroscopy. J. Neuro-Oncol. 2012;107:197–205. doi: 10.1007/s11060-011-0737-8.

- 5. Choi C., Ganji S.K., DeBerardinis R.J., Hatanpaa K.J., Rakheja D., Kovacs Z., Yang X.-L., Mashimo T., Raisanen J.M., Marin-Valencia I. 2-hydroxyglutarate detection by magnetic resonance spectroscopy in IDH-mutated patients with gliomas. Nat. Med. 2012;18:624–629. doi: 10.1038/nm.2682.

- 6. De la Fuente M.I., Young R.J., Rubel J., Rosenblum M., Tisnado J., Briggs S., Arevalo-Perez J., Cross J.R., Campos C., Straley K. Integration of 2-hydroxyglutarate-proton magnetic resonance spectroscopy into clinical practice for disease monitoring in isocitrate dehydrogenase-mutant glioma. Neuro-Oncol. 2015;18:283–290. doi: 10.1093/neuonc/nov307.

- 7. Tietze A., Choi C., Mickey B., Maher E.A., Parm Ulhøi B., Sangill R., Lassen-Ramshad Y., Lukacova S., Østergaard L., von Oettingen G. Noninvasive assessment of isocitrate dehydrogenase mutation status in cerebral gliomas by magnetic resonance spectroscopy in a clinical setting. J. Neurosurg. 2017;128:391–398. doi: 10.3171/2016.10.JNS161793.

- 8. Suh C.H., Kim H.S., Paik W., Choi C., Ryu K.H., Kim D., Woo D.C., Park J.E., Jung S.C., Choi C.G., et al. False-Positive Measurement at 2-Hydroxyglutarate MR Spectroscopy in Isocitrate Dehydrogenase Wild-Type Glioblastoma: A Multifactorial Analysis. Radiology. 2019;291:752–762. doi: 10.1148/radiol.2019182200.

- 9. SongTao Q., Lei Y., Si G., YanQing D., HuiXia H., XueLin Z., LanXiao W., Fei Y. IDH mutations predict longer survival and response to temozolomide in secondary glioblastoma. Cancer Sci. 2012;103:269–273. doi: 10.1111/j.1349-7006.2011.02134.x.

- 10. Okita Y., Narita Y., Miyakita Y., Ohno M., Matsushita Y., Fukushima S., Sumi M., Ichimura K., Kayama T., Shibui S. IDH1/2 mutation is a prognostic marker for survival and predicts response to chemotherapy for grade II gliomas concomitantly treated with radiation therapy. Int. J. Oncol. 2012;41:1325–1336. doi: 10.3892/ijo.2012.1564.

- 11. Mohrenz I.V., Antonietti P., Pusch S., Capper D., Balss J., Voigt S., Weissert S., Mukrowsky A., Frank J., Senft C., et al. Isocitrate dehydrogenase 1 mutant R132H sensitizes glioma cells to BCNU-induced oxidative stress and cell death. Apoptosis. 2013;18:1416–1425. doi: 10.1007/s10495-013-0877-8.

- 12. Molenaar R.J., Botman D., Smits M.A., Hira V.V., van Lith S.A., Stap J., Henneman P., Khurshed M., Lenting K., Mul A.N., et al. Radioprotection of IDH1-Mutated Cancer Cells by the IDH1-Mutant Inhibitor AGI-5198. Cancer Res. 2015;75:4790–4802. doi: 10.1158/0008-5472.CAN-14-3603.

- 13. Sulkowski P.L., Corso C.D., Robinson N.D., Scanlon S.E., Purshouse K.R., Bai H., Liu Y., Sundaram R.K., Hegan D.C., Fons N.R., et al. 2-Hydroxyglutarate produced by neomorphic IDH mutations suppresses homologous recombination and induces PARP inhibitor sensitivity. Sci. Transl. Med. 2017;9:eaal2463. doi: 10.1126/scitranslmed.aal2463.

- 14. Beiko J., Suki D., Hess K.R., Fox B.D., Cheung V., Cabral M., Shonka N., Gilbert M.R., Sawaya R., Prabhu S.S., et al. IDH1 mutant malignant astrocytomas are more amenable to surgical resection and have a survival benefit associated with maximal surgical resection. Neuro-Oncol. 2014;16:81–91. doi: 10.1093/neuonc/not159.

- 15. Zhang X., Tian Q., Wang L., Liu Y., Li B., Liang Z., Gao P., Zheng K., Zhao B., Lu H. Radiomics strategy for molecular subtype stratification of lower-grade glioma: Detecting IDH and TP53 mutations based on multimodal MRI. J. Magn. Reson. Imaging. 2018;48:916–926. doi: 10.1002/jmri.25960.

- 16. Zhang B., Chang K., Ramkissoon S., Tanguturi S., Bi W.L., Reardon D.A., Ligon K.L., Alexander B.M., Wen P.Y., Huang R.Y. Multimodal MRI features predict isocitrate dehydrogenase genotype in high-grade gliomas. Neuro-Oncol. 2017;19:109–117. doi: 10.1093/neuonc/now121.

- 17. Chang P., Grinband J., Weinberg B.D., Bardis M., Khy M., Cadena G., Su M.Y., Cha S., Filippi C.G., Bota D., et al. Deep-Learning Convolutional Neural Networks Accurately Classify Genetic Mutations in Gliomas. AJNR Am. J. Neuroradiol. 2018;39:1201–1207. doi: 10.3174/ajnr.A5667.

- 18. Wegmayr V., Aitharaju S., Buhmann J. Classification of brain MRI with big data and deep 3D convolutional neural networks. In: Petrick N., Mori K., editors. Medical Imaging 2018: Computer-Aided Diagnosis. Volume 1057501. SPIE; Bellingham, WA, USA: 2018.

- 19. Feng X., Yang J., Lipton Z.C., Small S.A., Provenzano F.A., Alzheimer’s Disease Neuroimaging Initiative. Deep Learning on MRI Affirms the Prominence of the Hippocampal Formation in Alzheimer’s Disease Classification. bioRxiv. 2018;2018:456277. doi: 10.1101/456277.

- 20. Nalawade S., Murugesan G.K., Vejdani-Jahromi M., Fisicaro R.A., Bangalore Yogananda C.G., Wagner B., Mickey B., Maher E., Pinho M.C., Fei B., et al. Classification of brain tumor isocitrate dehydrogenase status using MRI and deep learning. J. Med. Imaging. 2019;6:046003. doi: 10.1117/1.JMI.6.4.046003.

- 21. Clark K., Vendt B., Smith K., Freymann J., Kirby J., Koppel P., Moore S., Phillips S., Maffitt D., Pringle M., et al. The Cancer Imaging Archive (TCIA): Maintaining and operating a public information repository. J. Digit. Imaging. 2013;26:1045–1057. doi: 10.1007/s10278-013-9622-7.

- 22. Van der Voort S.R., Incekara F., Wijnenga M.M.J., Kapsas G., Gahrmann R., Schouten J.W., Dubbink H.J., Vincent A.J.P.E., van den Bent M.J., French P.J., et al. The Erasmus Glioma Database (EGD): Structural MRI Scans, WHO 2016 Subtypes, and Segmentations of 774 Patients with Glioma. Data Brief. 2021;37:107191. doi: 10.1016/j.dib.2021.107191.

- 23. Ceccarelli M., Barthel F.P., Malta T.M., Sabedot T.S., Salama S.R., Murray B.A., Morozova O., Newton Y., Radenbaugh A., Pagnotta S.M., et al. Molecular Profiling Reveals Biologically Discrete Subsets and Pathways of Progression in Diffuse Glioma. Cell. 2016;164:550–563. doi: 10.1016/j.cell.2015.12.028.

- 24. Dubbink H.J., Atmodimedjo P.N., Kros J.M., French P.J., Sanson M., Idbaih A., Wesseling P., Enting R., Spliet W., Tijssen C., et al. Molecular classification of anaplastic oligodendroglioma using next-generation sequencing: A report of the prospective randomized EORTC Brain Tumor Group 26951 phase III trial. Neuro-Oncol. 2016;18:388–400. doi: 10.1093/neuonc/nov182.

- 25. Cryan J.B., Haidar S., Ramkissoon L.A., Bi W.L., Knoff D.S., Schultz N., Abedalthagafi M., Brown L., Wen P.Y., Reardon D.A., et al. Clinical multiplexed exome sequencing distinguishes adult oligodendroglial neoplasms from astrocytic and mixed lineage gliomas. Oncotarget. 2014;5:8083–8092. doi: 10.18632/oncotarget.2342.

- 26. Gutman D.A., Dunn W.D., Jr., Grossmann P., Cooper L.A., Holder C.A., Ligon K.L., Alexander B.M., Aerts H.J. Somatic mutations associated with MRI-derived volumetric features in glioblastoma. Neuroradiology. 2015;57:1227–1237. doi: 10.1007/s00234-015-1576-7.

- 27. Calabrese E., Villanueva-Meyer J.E., Rudie J.D., Rauschecker A.M., Baid U., Bakas S., Cha S., Mongan J.T., Hess C.P. The University of California San Francisco Preoperative Diffuse Glioma MRI Dataset. Radiol. Artif. Intell. 2022;4:e220058. doi: 10.1148/ryai.220058.

- 28. Sheller M.J., Edwards B., Reina G.A., Martin J., Pati S., Kotrotsou A., Milchenko M., Xu W., Marcus D., Colen R.R., et al. Federated learning in medicine: Facilitating multi-institutional collaborations without sharing patient data. Sci. Rep. 2020;10:12598. doi: 10.1038/s41598-020-69250-1.

- 29. Rohlfing T., Zahr N.M., Sullivan E.V., Pfefferbaum A. The SRI24 multichannel atlas of normal adult human brain structure. Hum. Brain Mapp. 2010;31:798–819. doi: 10.1002/hbm.20906.

- 30. Avants B.B., Tustison N.J., Song G., Cook P.A., Klein A., Gee J.C. A reproducible evaluation of ANTs similarity metric performance in brain image registration. Neuroimage. 2011;54:2033–2044. doi: 10.1016/j.neuroimage.2010.09.025.

- 31. Tustison N.J., Cook P.A., Klein A., Song G., Das S.R., Duda J.T., Kandel B.M., van Strien N., Stone J.R., Gee J.C., et al. Large-scale evaluation of ANTs and FreeSurfer cortical thickness measurements. Neuroimage. 2014;99:166–179. doi: 10.1016/j.neuroimage.2014.05.044.

- 32. Tustison N.J., Avants B.B., Cook P.A., Zheng Y., Egan A., Yushkevich P.A., Gee J.C. N4ITK: Improved N3 bias correction. IEEE Trans. Med. Imaging. 2010;29:1310–1320. doi: 10.1109/TMI.2010.2046908.

- 33. Jegou S., Drozdzal M., Vazquez D., Romero A., Bengio Y. The One Hundred Layers Tiramisu: Fully Convolutional DenseNets for Semantic Segmentation; Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops; Honolulu, HI, USA. 21–26 July 2017.

- 34. Paszke A., Gross S., Massa F., Lerer A., Bradbury J., Chanan G., Killeen T., Lin Z., Gimelshein N., Antiga L. Pytorch: An imperative style, high-performance deep learning library. Adv. Neural Inf. Process. Syst. 2019;32.

- 35. Isensee F., Jaeger P.F., Kohl S.A.A., Petersen J., Maier-Hein K.H. nnU-Net: A self-configuring method for deep learning-based biomedical image segmentation. Nat. Methods. 2021;18:203–211. doi: 10.1038/s41592-020-01008-z.

- 36. Zhou H., Chang K., Bai H.X., Xiao B., Su C., Bi W.L., Zhang P.J., Senders J.T., Vallieres M., Kavouridis V.K., et al. Machine learning reveals multimodal MRI patterns predictive of isocitrate dehydrogenase and 1p/19q status in diffuse low- and high-grade gliomas. J. Neurooncol. 2019;142:299–307. doi: 10.1007/s11060-019-03096-0.

- 37. Li Z., Wang Y., Yu J., Guo Y., Cao W. Deep Learning based Radiomics (DLR) and its usage in noninvasive IDH1 prediction for low grade glioma. Sci. Rep. 2017;7:5467. doi: 10.1038/s41598-017-05848-2.

- 38. Choi Y.S., Bae S., Chang J.H., Kang S.G., Kim S.H., Kim J., Rim T.H., Choi S.H., Jain R., Lee S.K. Fully automated hybrid approach to predict the IDH mutation status of gliomas via deep learning and radiomics. Neuro-Oncol. 2021;23:304–313. doi: 10.1093/neuonc/noaa177.

- 39. Matsui Y., Maruyama T., Nitta M., Saito T., Tsuzuki S., Tamura M., Kusuda K., Fukuya Y., Asano H., Kawamata T. Prediction of lower-grade glioma molecular subtypes using deep learning. J. Neuro-Oncol. 2020;146:321–327. doi: 10.1007/s11060-019-03376-9.

- 40. Karami G., Pascuzzo R., Figini M., Del Gratta C., Zhang H., Bizzi A. Combining Multi-Shell Diffusion with Conventional MRI Improves Molecular Diagnosis of Diffuse Gliomas with Deep Learning. Cancers. 2023;15:482. doi: 10.3390/cancers15020482.

- 41. Pasquini L., Napolitano A., Tagliente E., Dellepiane F., Lucignani M., Vidiri A., Ranazzi G., Stoppacciaro A., Moltoni G., Nicolai M., et al. Deep Learning Can Differentiate IDH-Mutant from IDH-Wild GBM. J. Pers. Med. 2021;11:290. doi: 10.3390/jpm11040290.

- 42. Simpson A.L., Antonelli M., Bakas S., Bilello M., Farahani K., Van Ginneken B., Kopp-Schneider A., Landman B.A., Litjens G., Menze B. A large annotated medical image dataset for the development and evaluation of segmentation algorithms. arXiv. 2019. arXiv:1902.09063.

- 43. Wang G., Li W., Ourselin S., Vercauteren T. Automatic Brain Tumor Segmentation using Cascaded Anisotropic Convolutional Neural Networks. arXiv. 2017. arXiv:1709.00382. doi: 10.3389/fncom.2019.00056.

- 44. Delfanti R.L., Piccioni D.E., Handwerker J., Bahrami N., Krishnan A., Karunamuni R., Hattangadi-Gluth J.A., Seibert T.M., Srikant A., Jones K.A., et al. Imaging correlates for the 2016 update on WHO classification of grade II/III gliomas: Implications for IDH, 1p/19q and ATRX status. J. Neurooncol. 2017;135:601–609. doi: 10.1007/s11060-017-2613-7.

- 45. Ghaffari M., Sowmya A., Oliver R. Automated Brain Tumor Segmentation Using Multimodal Brain Scans: A Survey Based on Models Submitted to the BraTS 2012–2018 Challenges. IEEE Rev. Biomed. Eng. 2020;13:156–168. doi: 10.1109/RBME.2019.2946868.

- 46. Bakas S., Reyes M., Jakab A., Bauer S., Rempfler M., Crimi A., Shinohara R.T., Berger C., Ha S.M., Rozycki M. Identifying the best machine learning algorithms for brain tumor segmentation, progression assessment, and overall survival prediction in the BRATS challenge. arXiv. 2018. arXiv:1811.02629.

- 47. Baid U., Ghodasara S., Mohan S., Bilello M., Calabrese E., Colak E., Farahani K., Kalpathy-Cramer J., Kitamura F.C., Pati S. The RSNA-ASNR-MICCAI BraTS 2021 benchmark on brain tumor segmentation and radiogenomic classification. arXiv. 2021. arXiv:2107.02314.

- 48. Pusch S., Sahm F., Meyer J., Mittelbronn M., Hartmann C., von Deimling A. Glioma IDH1 mutation patterns off the beaten track. Neuropathol. Appl. Neurobiol. 2011;37:428–430. doi: 10.1111/j.1365-2990.2010.01127.x.

- 49. Lee D., Suh Y.L., Kang S.Y., Park T.I., Jeong J.Y., Kim S.H. IDH1 mutations in oligodendroglial tumors: Comparative analysis of direct sequencing, pyrosequencing, immunohistochemistry, nested PCR and PNA-mediated clamping PCR. Brain Pathol. 2013;23:285–293. doi: 10.1111/bpa.12000.

- 50. Agarwal S., Sharma M.C., Jha P., Pathak P., Suri V., Sarkar C., Chosdol K., Suri A., Kale S.S., Mahapatra A.K., et al. Comparative study of IDH1 mutations in gliomas by immunohistochemistry and DNA sequencing. Neuro-Oncol. 2013;15:718–726. doi: 10.1093/neuonc/not015.

- 51. Preusser M., Wohrer A., Stary S., Hoftberger R., Streubel B., Hainfellner J.A. Value and limitations of immunohistochemistry and gene sequencing for detection of the IDH1-R132H mutation in diffuse glioma biopsy specimens. J. Neuropathol. Exp. Neurol. 2011;70:715–723. doi: 10.1097/NEN.0b013e31822713f0.

- 52. Tanboon J., Williams E.A., Louis D.N. The Diagnostic Use of Immunohistochemical Surrogates for Signature Molecular Genetic Alterations in Gliomas. J. Neuropathol. Exp. Neurol. 2015;75:4–18. doi: 10.1093/jnen/nlv009.

- 53. Horbinski C. What do we know about IDH1/2 mutations so far, and how do we use it? Acta Neuropathol. 2013;125:621–636. doi: 10.1007/s00401-013-1106-9.

- 54. Wall J.D., Tang L.F., Zerbe B., Kvale M.N., Kwok P.Y., Schaefer C., Risch N. Estimating genotype error rates from high-coverage next-generation sequence data. Genome Res. 2014;24:1734–1739. doi: 10.1101/gr.168393.113.

- 55. Nyberg E., Sandhu G.S., Jesberger J., Blackham K.A., Hsu D.P., Griswold M.A., Sunshine J.L. Comparison of brain MR images at 1.5T using BLADE and rectilinear techniques for patients who move during data acquisition. AJNR Am. J. Neuroradiol. 2012;33:77–82. doi: 10.3174/ajnr.A2737.

- 56. Korfiatis P., Kline T.L., Coufalova L., Lachance D.H., Parney I.F., Carter R.E., Buckner J.C., Erickson B.J. MRI texture features as biomarkers to predict MGMT methylation status in glioblastomas. Med. Phys. 2016;43:2835–2844. doi: 10.1118/1.4948668.
