Abstract

Clinical decision support (CDS) systems powered by predictive models have the potential to improve the accuracy and efficiency of clinical decision-making. However, without sufficient validation, these systems can mislead clinicians and harm patients. This is especially true for CDS systems used by opioid prescribers and dispensers, where a flawed prediction can directly harm patients. To prevent these harms, regulators and researchers have proposed guidance for validating predictive models and CDS systems. However, this guidance is not universally followed and is not required by law. We call on CDS developers, deployers, and users to hold these systems to higher standards of clinical and technical validation. We provide a case study on two CDS systems deployed on a national scale in the United States for predicting a patient’s risk of adverse opioid-related events: the Stratification Tool for Opioid Risk Mitigation (STORM), used by the Veterans Health Administration, and NarxCare, a commercial system.

Keywords: clinical decision support, artificial intelligence, predictive modeling, algorithmic safety, opioid use disorder

INTRODUCTION

Clinical decision support (CDS) systems have played a role in medical decision-making for decades, and a wide variety of CDS systems are in use today.1,2 Many CDS systems are based on predictive models that estimate the risk of an adverse outcome, including complex machine learning methods such as artificial neural networks.3,4

Despite their growing use among US healthcare providers and health insurance companies, the impact of CDS systems on patient outcomes or costs is difficult to measure, and there is conflicting evidence of their benefits to both patients and providers.2,5

We argue that technical validation and clinical validation are especially important for preventing harm. Technical validation addresses whether a CDS system meets its technical specifications, such as prediction accuracy and software reliability; clinical validation addresses whether the system yields its intended impact on patients and providers. Researchers and regulators have proposed guidance for both, including instructions for developing, implementing, and evaluating predictive models.6,7 The US Food and Drug Administration (FDA) has provided high-level guidance for the development and evaluation of Software as a Medical Device (SaMD), which includes CDS systems.8,9 While the FDA has not yet enforced this guidance, it has identified some CDS systems for future regulation. These include one of the two prediction models we use as exemplars, which influences opioid prescription and dispensation decisions.10

Despite abundant guidance, many proposed and deployed CDS systems have not been technically and clinically validated by their developers or deployers and cannot be externally validated by others because there is insufficient information about their design and use.11 Furthermore, some systems are concealed as intellectual property or use “black-box” models that are not easily interpreted.12 This is concerning from a health equity perspective in that CDS systems have been found to discriminate against certain subgroups of patients.13–20

CDS systems and the opioid epidemic

In 2021, there were 106 699 drug overdose deaths in the United States, most of which involved an opioid.21 In response to the opioid epidemic, many CDS systems have been proposed for estimating a patient’s risk of overdose, though few have been validated.22 We focus on two such systems: the Stratification Tool for Opioid Risk Mitigation (STORM), used by the Veterans Health Administration (VHA) and the Defense Health System, and NarxCare, a commercial system. Both systems are deployed on a national scale in the United States, and both use models that aim to predict risk of adverse opioid-related outcomes.

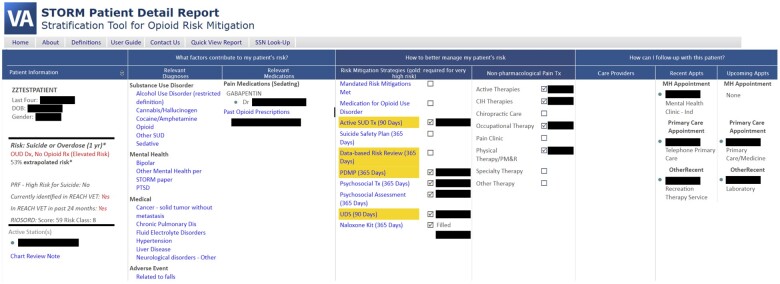

NarxCare. In the early 2000s, US federal and state government agencies began surveilling controlled substance prescriptions in response to the accelerating opioid epidemic. Federal agencies encouraged states to adopt Prescription Drug Monitoring Programs (PDMPs), enabled by prescription databases of controlled substance prescription data. PDMP databases were made available to prescribers, dispensers, and law enforcement and allowed patient-level queries of prescription drug-seeking behaviors.24 One product of these efforts is the platform now known as NarxCare, which is maintained by Bamboo Health (https://bamboohealth.com, last accessed June 21, 2023).24,25 NarxCare’s interface (Figure 1) presents aggregated PDMP data, and risk scores based on these data, geared toward opioid prescribers and dispensers (https://bamboohealth.com/solutions/narxcare/, last accessed June 21, 2023). We focus on the NarxCare Overdose Risk Score (ORS), a number between 0 and 999 that indicates a patient’s risk of unintentional overdose death.26

Figure 1.

An illustration of the NarxCare interface based on NarxCare marketing materials.23 Top panels show various risk scores and risk factors. The Overdose Risk Score (center panel) estimates the patient’s risk of accidental death due to opioid overdose. Lower panels summarize the patient’s Prescription Drug Monitoring Program data.

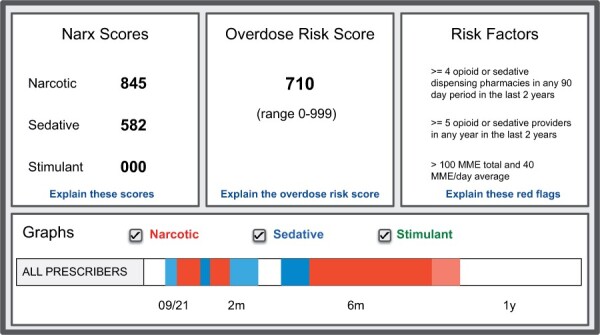

STORM is a model developed by the VHA for predicting risk of suicide- or overdose-related events. The VHA patient population has an especially high risk of opioid-related adverse outcomes,27,28 and STORM was developed as part of the VHA Opioid Safety Initiative (https://www.va.gov/PAINMANAGEMENT/Opioid_Safety, last accessed June 21, 2023). The STORM dashboard (Figure 2) displays a patient’s estimated risk score (a percentage between 0% and 100%), risk factors identified by the model, information to coordinate with other care providers, and patient-tailored clinical guideline recommendations for risk mitigation strategies to be considered in the patient’s treatment plan.

Figure 2.

The STORM dashboard presents patient information, including a risk score and risk factors (left columns), suggested risk mitigation strategies (middle), and treatment history and other provider information to facilitate care coordination (right). STORM: Stratification Tool for Opioid Risk Mitigation.

Although STORM and NarxCare have a similar function, their approaches to validation differ markedly. Evaluations of NarxCare-ORS focus only on technical validation: they evaluate predictive performance for a narrow patient cohort and do not address the system’s impact on patients or providers. In contrast, STORM has been validated from both a technical and a clinical perspective.

We are not the first to raise concerns about NarxCare or other CDS systems that are poorly validated. Oliva25 warns that using NarxCare and PDMP data to guide prescribing and dispensing decisions can harm patients with complex pain conditions, uninsured or underinsured patients, and those with co-morbidities or disabilities. The California Society of Addiction Medicine raised concerns about NarxCare’s transparency and insufficient validation.29 Kilby30 argues that, in general, predictive models related to opioid use do not align with the goal of improving patient care. In a WIRED article, Szalavitz31 reports on individual patient experiences and instances of harm associated with NarxCare, suggesting that it may pose safety and effectiveness issues.

We cannot determine how, or if, NarxCare can be fixed. Rather, we intend this case study as a call to action for CDS developers, deployers, and users to hold CDS systems to higher standards of validation.

VALIDATING CDS SYSTEMS AND PREDICTIVE MODELS

We separate the validation process into two steps: (1) defining a system’s purpose and intended impact and (2) evaluating it from a clinical and technical perspective.

Purpose and intended impact

To validate a CDS system, we first need to answer two questions: (1) which healthcare decisions is this tool meant to influence, and how? and (2) what is the intended impact on patients and providers? FDA SaMD guidance suggests that CDS developers answer these questions in a definition statement,8 which underpins the entire SaMD review framework. This statement tells regulators how to evaluate a tool’s performance, its intended use, and the severity of potential impacts on patients.

NarxCare-ORS: purpose and impact

Public documents provide conflicting definitions of NarxCare’s purpose. Marketing materials state that ORS scores are only intended for “raising awareness,” and not as a basis for clinical decisions—suggesting that NarxCare and ORS are not intended for CDS.23 Yet, NarxCare user guides provide explicit recommendations for how ORS scores should be interpreted and used for clinical decision-making.32 Furthermore, no documentation defines the clinical or operational outcomes that NarxCare is meant to impact—such as overdose rate, naloxone dispensation and prescription, or referrals to pain or addiction specialists.

This ambiguity is problematic: the manufacturer states that NarxCare is not intended for CDS; yet, NarxCare is currently used by (and marketed toward) many care providers across the United States (https://bamboohealth.com, last accessed June 21, 2023). Furthermore, PDMP data directly influence clinicians’ treatment decisions,33,34 so we should expect that PDMP-derived ORS scores will have a similar influence on clinicians.

STORM: purpose and impact

The STORM developers state that STORM risk scores are intended to help providers prioritize patients for case review and intervention.35 In a clinical study protocol, Chinman et al36 state that providers should conduct case reviews for patients categorized in the top tier of risk scores and describe several key outcomes for validation—including clinical outcomes (overdose- and suicide-related events, accidents) and implementation outcomes (number of case reviews and opioid mitigation strategies).
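The prioritization step described above can be illustrated with a minimal Python sketch. It is not the STORM implementation: the source does not say how the top tier is defined, so the `fraction` cutoff, the `top_tier` function, and the patient records below are all hypothetical.

```python
import math

def top_tier(patients, fraction=0.01):
    """Return the highest-risk `fraction` of patients, ranked by risk score.

    `fraction` is a hypothetical cutoff chosen for illustration only.
    """
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")
    ranked = sorted(patients, key=lambda p: p["risk"], reverse=True)
    k = max(1, math.ceil(len(ranked) * fraction))
    return ranked[:k]

# Made-up patients with risk scores expressed as percentages.
patients = [
    {"id": "p1", "risk": 2.0},
    {"id": "p2", "risk": 14.5},
    {"id": "p3", "risk": 0.4},
    {"id": "p4", "risk": 31.0},
    {"id": "p5", "risk": 7.2},
]

# Flag the top 40% of this toy cohort for case review.
flagged = [p["id"] for p in top_tier(patients, fraction=0.4)]
```

A percentile cutoff such as this one fixes the case-review workload in advance, whereas an absolute risk threshold would let the workload vary with the population; which is appropriate depends on the system's stated purpose.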

Technical and clinical evaluations

Technical evaluation addresses questions of the underlying technology. One aspect of technical evaluation is software reliability, IT integration, and compliance with stated requirements.9,37,38 Another aspect assesses the generalizability of predictive models and identifies potential disparities in performance for patient subgroups and historically marginalized groups.39–41 These evaluations are typically conducted prior to deployment, often using simulations.42
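As a rough illustration of the subgroup analysis described above, the following Python sketch computes the area under the ROC curve (AUC) separately for each patient subgroup. The choice of AUC as the metric, the function names, and the toy records are assumptions made for illustration; they are not taken from any of the evaluations discussed here.

```python
from collections import defaultdict

def auc(labels, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly.

    Ties count as half a correct pair. Returns None when a group lacks
    either outcome class, because AUC is undefined in that case.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        return None
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

def auc_by_subgroup(records):
    """records: iterable of (subgroup, label, score) tuples."""
    grouped = defaultdict(lambda: ([], []))
    for group, label, score in records:
        grouped[group][0].append(label)
        grouped[group][1].append(score)
    return {g: auc(ys, ss) for g, (ys, ss) in grouped.items()}

# Made-up data: the model separates outcomes well in group A, poorly in B.
records = [
    ("A", 1, 0.9), ("A", 0, 0.2), ("A", 1, 0.7), ("A", 0, 0.4),
    ("B", 1, 0.3), ("B", 0, 0.6), ("B", 1, 0.8), ("B", 0, 0.5),
]
results = auc_by_subgroup(records)
```

In this toy example the pooled AUC would hide the gap between groups A and B, which is why reporting a single overall figure is not enough to rule out performance disparities.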

Clinical evaluation assesses a system’s impact on patients and providers. This includes measuring health and operational outcomes and determining whether the system is used correctly according to the manufacturer’s instructions.9 Unlike technical evaluation, clinical evaluation usually requires a trial or pilot study.
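One simple quantity such a trial might report is the difference in adverse-event rates between patients whose providers used the system and those whose providers did not. The Python sketch below computes that risk difference with a 95% Wald confidence interval. The counts are invented, and the calculation assumes independent patients; it ignores clustering by site, which a real cluster-randomized evaluation would need to account for.

```python
import math

def risk_difference(events_a, n_a, events_b, n_b, z=1.96):
    """Difference in event rates (arm A minus arm B) with a Wald interval.

    Assumes independent observations; z=1.96 gives an approximate 95% CI.
    """
    p_a, p_b = events_a / n_a, events_b / n_b
    diff = p_a - p_b
    se = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    return diff, (diff - z * se, diff + z * se)

# Hypothetical counts: 30 events among 1000 patients with the CDS system,
# 45 events among 1000 patients without it.
diff, (low, high) = risk_difference(30, 1000, 45, 1000)
```

With these made-up counts the interval spans zero, so the apparent reduction in events would not by itself demonstrate a benefit.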

NarxCare-ORS: evaluation

Three public studies of NarxCare-ORS use a small cohort of patients from Ohio, Indiana, and Michigan; they find that NarxCare-ORS is somewhat predictive of opioid-related outcomes and is comparable with simpler “red flag” methods of risk assessment.43–45 Other studies find that ORS scores are predictive of non-opioid-related outcomes that fall outside NarxCare’s intended use.46–48

These evaluations are insufficient for several key reasons. First, these studies consider a narrow cohort: they include a subset of patients in Ohio, Michigan, and Indiana. While ORS scores are somewhat predictive of opioid-related outcomes for these patients, this finding does not necessarily generalize to other states. This is troubling because NarxCare is reportedly used by 44 of the 54 PDMPs in the United States and in all 50 US states (https://bamboohealth.com/audience/state-governments, last accessed June 21, 2023). In other words, NarxCare-ORS has not been technically validated for the vast majority of patients who are scored by this tool. Second, there has been no subgroup analysis of ORS scores. The predictive utility of ORS may vary depending on demographics, insurance status, and access to healthcare.39

Third, and most importantly, NarxCare-ORS has never been clinically evaluated. For NarxCare-ORS, a clinical evaluation might measure impacts on health indicators such as opioid overdose rate, mortality, and naloxone use—and provider-related indicators such as adherence to manufacturer guidelines, number and type of prescriptions, and patient referrals.

STORM: evaluation

STORM has undergone one technical evaluation and several clinical and implementation evaluations. An initial technical evaluation was conducted by STORM developers using a cohort of 1 135 601 patients, including almost all VHA patients who had an opioid prescription in 2010.35 Unlike the NarxCare-ORS evaluations, this study uses a cohort that is nationally representative of the patients STORM is intended for: all VHA patients who received an opioid prescription from the VA.

STORM clinical evaluations used a strong randomized evaluation design to measure the impact on opioid-related health outcomes,49,50 and they also examined strategies for integrating STORM into clinical workflows51 and oversight of STORM use.52,53 These studies examined the clinical impact of using the STORM CDS system to target a prevention program, including its effect on clinical outcomes and changes in clinical process and practice.

DISCUSSION

In the context of the opioid epidemic, an improperly validated CDS system can have severe consequences. For example, if the NarxCare model leads a pharmacist to believe that a patient’s risk of overdose is higher than their actual risk, the pharmacist may decline to fill the patient’s prescription, disrupting their treatment plan. This can leave the patient frustrated and with potentially disabling pain due to poor model design choices such as those described in the gray literature.31 A model can also suggest that a patient’s risk is lower than their actual risk and miss an opportunity to prevent opioid misuse or a lethal overdose. Model errors like these are impossible to avoid in practice. Furthermore, data in healthcare settings are collected for the purpose of supporting administrative billing and regulatory reporting. They are a collection of observations about a patient when they are sick, not healthy, and are often plagued by missing values, selection bias, confounding, irregular sampling, and temporal drift, making it difficult to draw generalizable conclusions.54 There are also widely known challenges with the prediction of rare events.
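One of the rare-event challenges can be made concrete with a short worked calculation. The Python sketch below applies Bayes' rule to show how the positive predictive value (the share of flagged patients who actually experience the event) collapses when the event is rare. The sensitivity, specificity, and prevalence values are illustrative assumptions, not figures reported for NarxCare or STORM.

```python
def positive_predictive_value(sensitivity, specificity, prevalence):
    """P(event | flagged), computed with Bayes' rule."""
    true_pos = sensitivity * prevalence
    false_pos = (1.0 - specificity) * (1.0 - prevalence)
    return true_pos / (true_pos + false_pos)

# Same hypothetical classifier (90% sensitivity, 90% specificity)
# applied to a rare event and to a common one.
ppv_rare = positive_predictive_value(0.90, 0.90, prevalence=0.001)
ppv_common = positive_predictive_value(0.90, 0.90, prevalence=0.20)
```

Under these assumptions, fewer than 1 in 100 patients flagged for the rare event would actually experience it, compared with roughly 7 in 10 for the common event, even though the classifier itself is unchanged.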

Improper use of predictive models can be just as harmful as deploying faulty models. PDMP data influence prescription decisions in a variety of ways: some providers use these data as a justification to refuse prescription or treatment, or even discharge patients from a practice.33,34 We should expect that some clinicians will use NarxCare-ORS—a PDMP-derived risk score—to refuse treatment for certain patients. And manufacturer guidance here is contradictory: marketing materials state that ORS should not influence prescription decisions,23 while user guides recommend specific prescriber actions corresponding to ORS score ranges.32

In contrast, STORM’s intended use and intended outcomes were defined and validated during development and evaluation.35,36,49–53 While both STORM and NarxCare include risk scores, STORM also recommends risk mitigation strategies based on clinical guidelines and provides information to facilitate care coordination.

While it is useful to contrast NarxCare-ORS with STORM, both systems have limitations. The STORM model has only been updated once (to a 2014–2015 cohort) since its original development on a 2010–2011 cohort35 and should be re-evaluated and updated using more recent data. Furthermore, the developers have identified subgroup disparities in STORM indicating worse predictive performance for veterans who are older, non-white, and female55,56; ongoing work aims to mitigate these disparities. There is no public subgroup analysis of NarxCare, though performance disparities can potentially harm marginalized groups.25

Regulators and researchers provide guidance for CDS system validation, but this guidance is not universally followed by CDS developers and users and is not enforced by regulators. For this reason, healthcare providers in the United States cannot assume that CDS systems have been validated, and healthcare systems should establish oversight procedures to independently ensure that these tools satisfy basic requirements of safety and quality. Some academic healthcare systems have started this effort, including Duke Medical Center (https://aihealth.duke.edu/algorithm-based-clinical-decision-support-abcds/, last accessed June 21, 2023), University of Wisconsin Health,57 and Stanford Medicine.58 These programs can serve as an example for others, though it is important to acknowledge that each provider is different: there is no “one-size-fits-all” approach to algorithmic governance.

The current environment also poses challenges to CDS developers, as both governance standards and FDA SaMD guidance continue to evolve. There are no formal mechanisms to enforce requirements on SaMD applications, and current guidance does not explicitly define requirements (such as criteria for transparency or validation). For this reason, CDS developers face enormous uncertainty about what these regulations will require, and when and how they will be enforced.

As standards for algorithmic governance in healthcare begin to take shape, we encourage the creators, deployers, and users of CDS systems to focus not only on their technical capabilities but also on their purpose, intended use, and most importantly, their impact on patients.

ACKNOWLEDGMENTS

The authors thank Jodie Trafton for feedback on the manuscript and discussions about the development of STORM.

Contributor Information

Duncan C McElfresh, Department of Health Policy, Stanford University, Stanford, California, USA; Program Evaluation Resource Center, Office of Mental Health and Suicide Prevention, US Department of Veterans Affairs, Menlo Park, California, USA.

Lucia Chen, Department of Health Policy, Stanford University, Stanford, California, USA.

Elizabeth Oliva, Program Evaluation Resource Center, Office of Mental Health and Suicide Prevention, US Department of Veterans Affairs, Menlo Park, California, USA.

Vilija Joyce, Program Evaluation Resource Center, Office of Mental Health and Suicide Prevention, US Department of Veterans Affairs, Menlo Park, California, USA; Health Economics Resource Center, US Department of Veterans Affairs, Menlo Park, California, USA.

Sherri Rose, Department of Health Policy, Stanford University, Stanford, California, USA.

Suzanne Tamang, Program Evaluation Resource Center, Office of Mental Health and Suicide Prevention, US Department of Veterans Affairs, Menlo Park, California, USA; Department of Medicine, Stanford University, Stanford, California, USA.

FUNDING

DCM, EO, VJ, and ST were supported by the VA Center for Innovation to Implementation (Ci2i). LC and SR were supported by an NIH Director’s Pioneer Award DP1LM014278 and LC was also supported by a Stanford HAI Hoffman-Yee Research Grant.

AUTHOR CONTRIBUTIONS

All authors contributed to formative discussions and manuscript editing. DCM led the manuscript preparation.

CONFLICT OF INTEREST STATEMENT

None declared.

DATA AVAILABILITY

No data were generated or analysed in support of this research.

REFERENCES

- 1. Kawamoto K, Houlihan CA, Balas EA, Lobach DF. Improving clinical practice using clinical decision support systems: a systematic review of trials to identify features critical to success. BMJ 2005; 330 (7494): 765.

- 2. Bright TJ, Wong A, Dhurjati R, et al. Effect of clinical decision-support systems. Ann Intern Med 2012; 157 (1): 29–43.

- 3. Lisboa PJ, Taktak AF. The use of artificial neural networks in decision support in cancer: a systematic review. Neural Networks 2006; 19 (4): 408–15.

- 4. Artetxe A, Beristain A, Graña M. Predictive models for hospital readmission risk: a systematic review of methods. Comput Methods Programs Biomed 2018; 164: 49–64.

- 5. Sutton RT, Pincock D, Baumgart DC, Sadowski DC, Fedorak RN, Kroeker KI. An overview of clinical decision support systems: benefits, risks, and strategies for success. NPJ Digit Med 2020; 3 (1): 17.

- 6. de Hond AAH, Leeuwenberg AM, Hooft L, et al. Guidelines and quality criteria for artificial intelligence-based prediction models in healthcare: a scoping review. NPJ Digit Med 2022; 5 (1): 2.

- 7. Lu JH, Callahan A, Patel BS, et al. Assessment of adherence to reporting guidelines by commonly used clinical prediction models from a single vendor: a systematic review. JAMA Netw Open 2022; 5 (8): e2227779.

- 8. International Medical Device Regulators Forum, Software as a Medical Device (SaMD) Working Group. “Software as a medical device”: possible framework for risk categorization and corresponding considerations. September, 2014. https://www.imdrf.org/sites/default/files/docs/imdrf/final/technical/imdrf-tech-140918-samd-framework-risk-categorization-141013.pdf. Accessed June 21, 2023.

- 9. U.S. Department of Health and Human Services, Food and Drug Administration. Software as a medical device (SAMD): clinical evaluation. guidance for industry and food and drug administration staff. December, 2017. https://www.fda.gov/media/100714/download. Accessed June 21, 2023.

- 10. U.S. Food and Drug Administration. Clinical decision support software: guidance for industry and food and drug administration staff. September, 2022. https://www.fda.gov/media/109618/download. Accessed June 21, 2023.

- 11. Yang C, Kors JA, Ioannou S, et al. Trends in the conduct and reporting of clinical prediction model development and validation: a systematic review. J Am Med Inform Assoc 2022; 29 (5): 983–9.

- 12. Rudin C. Stop explaining black box machine learning models for high stakes decisions and use interpretable models instead. Nat Mach Intell 2019; 1 (5): 206–15.

- 13. Lacy ME, Wellenius GA, Carnethon MR, et al. Racial differences in the performance of existing risk prediction models for incident type 2 diabetes: the CARDIA study. Diabetes Care 2016; 39 (2): 285–91.

- 14. Chen IY, Szolovits P, Ghassemi M. Can AI help reduce disparities in general medical and mental health care? AMA J Ethics 2019; 21 (2): 167–79.

- 15. Reeves M, Bhat HS, Goldman-Mellor S. Resampling to address inequities in predictive modeling of suicide deaths. BMJ Health Care Inform 2022; 29 (1): e100456.

- 16. Obermeyer Z, Powers B, Vogeli C, Mullainathan S. Dissecting racial bias in an algorithm used to manage the health of populations. Science 2019; 366 (6464): 447–53.

- 17. Sjoding MW, Dickson RP, Iwashyna TJ, Gay SE, Valley TS. Racial bias in pulse oximetry measurement. N Engl J Med 2020; 383 (25): 2477–8.

- 18. Delgado C, Baweja M, Crews DC, et al. A unifying approach for GFR estimation: recommendations of the NKF-ASN task force on reassessing the inclusion of race in diagnosing kidney disease. Am J Kidney Dis 2022; 79 (2): 268–88.e1.

- 19. Dhillon RK, McLernon DJ, Smith PP, et al. Predicting the chance of live birth for women undergoing IVF: a novel pretreatment counselling tool. Hum Reprod 2016; 31 (1): 84–92.

- 20. Collins GS, Altman DG. Predicting the 10 year risk of cardiovascular disease in the United Kingdom: independent and external validation of an updated version of QRISK2. BMJ 2012; 344: e4181.

- 21. Spencer MR, Minino AM, Warner M. Drug overdose deaths in the United States, 2001–2021. December, 2022. doi: 10.15620/cdc:122556. Accessed June 21, 2023.

- 22. Tseregounis IE, Henry SG. Assessing opioid overdose risk: a review of clinical prediction models utilizing patient-level data. Transl Res 2021; 234: 74–87.

- 23. Appriss Health. About NarxCare: for patients and their families. Appriss Health, 2019. https://apprisshealth.com/wp-content/uploads/sites/2/2019/03/HLTH_Patient-Information-Sheet_FINAL.pdf. Accessed June 21, 2023.

- 24. Sacco LN, Duff JH, Sarata AK. Prescription drug monitoring programs. May, 2018. https://sgp.fas.org/crs/misc/R42593.pdf. Accessed June 21, 2023.

- 25. Oliva J. Dosing discrimination: regulating PDMP risk scores. 110 California Law Review 2021; 47. doi: 10.2139/ssrn.3768774.

- 26. Whalen K, Sitkovits K. Risk scoring in the PDMP to identify at-risk patients. Appriss Health, 2020. https://go.bamboohealth.com/rs/228-ZPQ-393/images/AH-NarxCare-White-Paper.pdf. Accessed June 21, 2023.

- 27. Baser O, Xie L, Mardekian J, Schaaf D, Wang L, Joshi AV. Prevalence of diagnosed opioid abuse and its economic burden in the Veterans Health Administration. Pain Pract 2014; 14 (5): 437–45.

- 28. Bohnert ASB, Ilgen MA, Galea S, McCarthy JF, Blow FC. Accidental poisoning mortality among patients in the Department of Veterans Affairs Health System. Med Care 2011; 49 (4): 393–6.

- 29. Miotto KA. Re: Appriss NarxCare/bamboo health PMP clearinghouse implementation in California letter to Austin Weaver, Manager of California Department of Justice CURES Program. California Society of Addiction Medicine. March, 2022. https://csam-asam.org/wp-content/uploads/2022-03-28-CSAM-President-Letter-to-CURES-DOJ-re-NarxCare.pdf. Accessed June 21, 2023.

- 30. Kilby AE. Algorithmic fairness in predicting opioid use disorder using machine learning. In: Proceedings of the 2021 ACM Conference on Fairness, Accountability, and Transparency, FAccT ’21; New York, NY, USA. Association for Computing Machinery, 2021: 272. doi: 10.1145/3442188.3445891.

- 31. Szalavitz M. The pain was unbearable. so why did doctors turn her away? Wired Magazine. August, 2021. https://www.wired.com/story/opioid-drug-addiction-algorithm-chronic-pain/. Accessed June 21, 2023.

- 32. Appriss Health. Requestor user support manual. Arizona Prescription Drug Monitoring Program, 2022. https://pharmacypmp.az.gov/sites/default/files/2022-03/AZ%20PMP%20AWARxE%20User%20Support%20Manual_v2.5.pdf. Accessed June 21, 2023.

- 33. Picco L, Lam T, Haines S, Nielsen S. How prescription drug monitoring programs influence clinical decision-making: a mixed methods systematic review and meta-analysis. Drug Alcohol Depend 2021; 228: 109090.

- 34. Picco L, Sanfilippo P, Xia T, Lam T, Nielsen S. How do patient, pharmacist and medication characteristics and prescription drug monitoring program alerts influence pharmacists’ decisions to dispense opioids? A randomised controlled factorial experiment. Int J Drug Policy 2022; 109: 103856.

- 35. Oliva EM, Bowe T, Tavakoli S, et al. Development and applications of the Veterans Health Administration’s Stratification Tool for Opioid Risk Mitigation (STORM) to improve opioid safety and prevent overdose and suicide. Psychol Serv 2017; 14 (1): 34–49.

- 36. Chinman M, Gellad WF, McCarthy S, et al. Protocol for evaluating the nationwide implementation of the VA Stratification Tool for Opioid Risk Management (STORM). Implement Sci 2019; 14 (1): 5.

- 37. International Organization for Standardization. ISO 13485:2016: medical devices –quality management systems – requirements for regulatory purposes. Standard, ISO, Geneva, CH, 2016. https://www.iso.org/standard/59752.html. Accessed June 21, 2023.

- 38. Global Harmonization Task Force International Medical Device Regulators Forum. Quality management system – medical devices – guidance on corrective action and preventive action and related QMS processes. Standard, IMDRF, 2010. https://www.imdrf.org/documents/ghtf-final-documents/ghtf-study-group-3-quality-systems. Accessed June 21, 2023.

- 39. Chen IY, Pierson E, Rose S, Joshi S, Ferryman K, Ghassemi M. Ethical machine learning in healthcare. Annu Rev Biomed Data Sci 2021; 4 (1): 123–44.

- 40. Stevens LM, Mortazavi BJ, Deo RC, Curtis L, Kao DP. Recommendations for reporting machine learning analyses in clinical research. Circ Cardiovasc Qual Outcomes 2020; 13 (10): e006556.

- 41. Mehrabi N, Morstatter F, Saxena N, Lerman K, Galstyan A. A survey on bias and fairness in machine learning. ACM Comput Surv 2022; 54 (6): 1–35.

- 42. Wiens J, Saria S, Sendak M, et al. Do no harm: a roadmap for responsible machine learning for health care. Nat Med 2019; 25 (9): 1337–40.

- 43. Huizenga JE, Breneman BC, Patel VR, Raz A, Speights DB. NARxCHECK score as a predictor of unintentional overdose death. Appriss Health, 2016. https://apprisshealth.com/wp-content/uploads/sites/2/2017/02/NARxCHECK-Score-as-a-Predictor.pdf. Accessed June 21, 2023.

- 44. Cochran G, Brown J, Yu Z, et al. Validation and threshold identification of a prescription drug monitoring program clinical opioid risk metric with the WHO Alcohol, Smoking, and Substance Involvement Screening Test. Drug Alcohol Depend 2021; 228: 109067.

- 45. Bannon MJ, Lapansie AR, Jaster AM, Saad MH, Lenders J, Schmidt CJ. Opioid deaths involving concurrent benzodiazepine use: assessing risk factors through the analysis of prescription drug monitoring data and postmortem toxicology. Drug Alcohol Depend 2021; 225: 108854.

- 46. Emara AK, Grits D, Klika AK, et al. NarxCare scores greater than 300 are associated with adverse outcomes after primary THA. Clin Orthop Relat Res 2021; 479 (9): 1957–67.

- 47. Emara AK, Santana D, Grits D, et al. Exploration of overdose risk score and postoperative complications and health care use after total knee arthroplasty. JAMA Netw Open 2021; 4 (6): e2113977.

- 48. Yang P, Bonham AJ, Carlin AM, Finks JF, Ghaferi AA, Varban OA. Patient characteristics and outcomes among bariatric surgery patients with high narcotic overdose scores. Surg Endosc 2022; 36 (12): 9313–20.

- 49. Strombotne KL, Legler A, Minegishi T, et al. Effect of a predictive analytics-targeted program in patients on opioids: a stepped-wedge cluster randomized controlled trial. J Gen Intern Med 2023; 38 (2): 375–81.

- 50. Auty SG, Barr KD, Frakt AB, Garrido MM, Strombotne KL. Effect of a Veterans Health Administration mandate to case review patients with opioid prescriptions on mortality among patients with opioid use disorder: a secondary analysis of the STORM randomized control trial. Addiction 2023; 118 (5): 870–9.

- 51. Rogal SS, Chinman M, Gellad WF, et al. Tracking implementation strategies in the randomized rollout of a Veterans Affairs national opioid risk management initiative. Implement Sci 2020; 15 (1): 48.

- 52. Minegishi T, Garrido MM, Lewis ET, et al. Randomized policy evaluation of the Veterans Health Administration Stratification Tool for Opioid Risk Mitigation (STORM). J Gen Intern Med 2022; 37 (14): 3746–50.

- 53. Minegishi T, Frakt AB, Garrido MM, et al. Randomized program evaluation of the Veterans Health Administration Stratification Tool for Opioid Risk Mitigation (STORM): a research and clinical operations partnership to examine effectiveness. Subst Abus 2019; 40 (1): 14–9.

- 54. Hyung-Jin Y, Ho LC. Medical big data: promise and challenges. Kidney Res Clin Pract 2017; 36 (1): 3–11.

- 55. Tamang S. Using big data to improve outcomes: data science at the VA. AcademyHealth Workshop: Understanding and Eliminating Bias in HSR Methods. April, 2021. https://academyhealth.org/events/2021-07/workshop-understanding-and-eliminating-bias-hsr-methods. Accessed June 21, 2023.

- 56. Halamka J, Might M, Tamang S. Real-world applications of AI to support health equity. National Academy of Medicine Workshop: Channeling the Potential of Artificial Intelligence to Advance Health Equity. August, 2021. https://nam.edu/event/channeling-the-potential-of-ai-to-advance-health-equity/. Accessed June 21, 2023.

- 57. Liao F, Adelaine S, Afshar M, Patterson BW. Governance of clinical AI applications to facilitate safe and equitable deployment in a large health system: key elements and early successes. Front Digit Health 2022; 4: 931439. doi: 10.3389/fdgth.2022.931439.

- 58. Armitage H. Researchers create guide for fair and equitable AI in health care. October, 2022. https://scopeblog.stanford.edu/2022/10/03/researchers-create-guide-for-fair-and-equitable-ai-in-health-care/. Accessed June 21, 2023.
