Abstract

To monitor adherence to diets and to design and evaluate nutritional interventions, it is essential to obtain objective knowledge about eating behavior. In most research, measures of eating behavior are based on self-reporting, such as 24-h recalls, food records (food diaries) and food frequency questionnaires. Self-reporting is prone to inaccuracies due to faulty and subjective recall and other biases. Recording behavior in daily life using nonobtrusive technology could help overcome these limitations. Here, we provide an up-to-date systematic overview encompassing all (close-to) publicly or commercially available technologies to automatically record eating behavior in real-life settings. A total of 1328 studies were screened and, after applying defined inclusion and exclusion criteria, 122 studies were included for in-depth evaluation. Technologies in these studies were categorized by the type of eating behavior they measure and the type of sensor technology they use. In general, we found that relatively simple sensors are often used. Depending on the purpose, these are mainly motion sensors, microphones, weight sensors and photo cameras. While several of these technologies are commercially available, there is still a lack of publicly available algorithms needed to process and interpret the resulting data. We argue that future work should focus on developing robust algorithms and validating these technologies in real-life settings. Combining technologies (e.g., prompting individuals for self-reports at sensed, opportune moments) is a promising route toward ecologically valid studies of eating behavior.

Keywords: eating, drinking, daily life, real life, sensors, technology, behavior

1. Introduction

As stated by the World Health Organization (WHO) “Nutrition is coming to the fore as a major modifiable determinant of chronic disease, with scientific evidence increasingly supporting the view that alterations in diet have strong effects, both positive and negative, on health throughout life” [1]. It is therefore of key importance to find efficient and solid methodologies to study eating behavior and food intake in order to help reduce potential long-term health problems caused by unhealthy diets. Past research on eating behaviors and attitudes relies intensively on self-reporting tools, such as 24-h recalls, food records (food diaries) and food frequency questionnaires (FFQ; [2,3,4]). However, there is an increasing understanding of the limitations of this classical approach to studying eating behaviors and attitudes. One of the major limitations of this approach is that self-reporting tools rely on participants’ recall, which may be inaccurate or biased (especially when studying the actual amount of food or liquid intake [5]). Recall biases can be caused by demand characteristics, which are cues that may indicate the study aims to participants, leading them to change their behaviors or responses based on what they think the research is about [6], or more generally by the desire to comply with social norms and expectations when it comes to food intake [7,8]. Additionally, the majority of the studies investigating eating behavior are performed in the lab, which does not allow for a realistic replication of the many influences on eating behavior that occur in real life (e.g., [9]). To overcome these limitations, it is crucial to examine eating behavior and the effect of interventions in daily life, at home or at institutions such as schools and hospitals. In contrast to lab research settings, humans typically behave naturally in these settings. 
It is also important that testing real-life eating behaviors in naturalistic settings relies on implicit, nonobtrusive measures [10] that are objective and able to overcome potential biases.

There is growing interest in identifying technologies able to improve the quality and validity of data collected to advance nutrition science. Such technologies should enable eating behavior to be measured passively (i.e., without requiring action or mental effort on the part of the users), objectively and reliably in realistic contexts. To maximize the efficiency of real-life measurement, it is vital to develop technologies that capture eating behavior patterns in a low-cost, unobtrusive and easy-to-analyze way. For real-world practicality, the technologies should be comfortable and acceptable so that they can be used in naturalistic settings for extended periods while respecting the users’ privacy.

To gain insight into the state of the art in this field, we performed a search for published papers using technologies to measure eating behavior in real-life settings. In addition to papers describing specific systems and technologies, this search returned many review papers, some of which contained systematic reviews.

Evaluating these systematic reviews, we found that an up-to-date overview encompassing all (close-to) available technologies to automatically record eating behavior in real-life settings is still missing. To fill this gap, we here provide such an overview, categorized by what type of eating behavior they measure and which type of sensor technology they use. We indicate to what extent these technologies are readily available for use. With this review, we aim to (1) help researchers identify the most suitable technology to measure eating behavior in real life and to provide a basis for determining the next steps in (2) research on measuring eating behavior in real life and (3) technology development.

2. Methods and Procedure

2.1. Literature Review

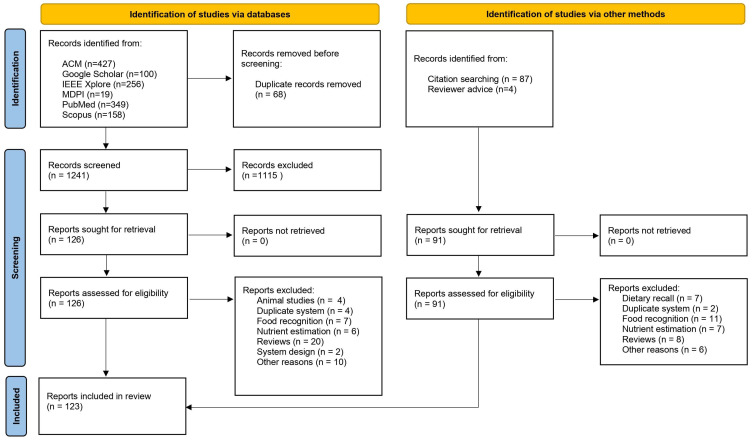

Our literature search reporting adheres to the Preferred Reporting Items for Systematic reviews and Meta-Analyses (PRISMA) checklist [11,12]. The PRISMA guidelines ensure that the literature is reviewed in a standard and systematic manner. The process comprises four phases: identification, screening, eligibility and inclusion. The PRISMA flow diagram for the search and the inclusion/exclusion of records and reports in this study is shown in Figure 1.

Figure 1.

PRISMA flow diagram describing the procedure used to select records and reports for inclusion in this review.

2.2. Eligibility Criteria

Our literature search aimed to identify mature and practical (i.e., not too complex or restrictive) technologies that can be used to unobtrusively assess food or drink intake in real-life conditions. The inclusion criterion was a sensor-based approach to the detection of eating or drinking. Studies not describing a sensor-based device to detect eating or drinking were excluded.

2.3. Information Sources and Search

The literature search included two stages: an initial automatic search of online databases and a manual search based on the reference lists from the papers selected from the previous search stage (using a snowballing approach [13,14]). In addition, papers recommended by a reviewer were added.

The initial search was conducted on 24 February 2023 across the ACM Digital Library, Google Scholar (first 100 results), IEEE Xplore, MDPI, PubMed and Scopus (Elsevier) databases. The results were date-limited from 2018 to date (i.e., 24 February 2023). As this field is rapidly advancing and sensor-based technologies evaluated in earlier papers are likely to have been further developed, we initially limited our search to the past 5 years.

Our broad search strategy was to identify papers that included terms in their title, abstract or keywords related to food and drink, eating or drinking activities and the assessment of the amount consumed.

The search was performed using equivalents of the following Boolean search string (where * represents a wildcard): “(beverage OR drink OR food OR meal) AND (consum* OR chew* OR eating OR ingest* OR intake OR swallow*) AND (portion OR serving OR size OR volume OR mass OR weigh*) AND (assess OR detect OR monitor OR measur*)”. Some search terms were truncated in order to include all variations of the word.
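As an illustration of how this search string behaves, the following minimal Python sketch applies the same four AND-ed groups of OR-ed terms to a title or abstract. It is not the query engine of any of the databases searched; the function names `term_to_regex` and `matches_search_string` are hypothetical, and real databases may handle stemming and field restrictions differently.

```python
import re

# Term groups copied from the search string; a trailing * is a wildcard.
TERM_GROUPS = [
    ["beverage", "drink", "food", "meal"],
    ["consum*", "chew*", "eating", "ingest*", "intake", "swallow*"],
    ["portion", "serving", "size", "volume", "mass", "weigh*"],
    ["assess", "detect", "monitor", "measur*"],
]


def term_to_regex(term):
    """Translate one search term into a regular expression (hypothetical helper)."""
    if term.endswith("*"):
        # Wildcard: match the stem followed by any word characters.
        return r"\b" + re.escape(term[:-1]) + r"\w*"
    # No wildcard: match the whole word only.
    return r"\b" + re.escape(term) + r"\b"


# One compiled pattern per group: terms within a group are OR-ed.
GROUP_PATTERNS = [
    re.compile("|".join(term_to_regex(t) for t in group), re.IGNORECASE)
    for group in TERM_GROUPS
]


def matches_search_string(text):
    """Return True if the text contains at least one term from every group (AND)."""
    return all(pattern.search(text) for pattern in GROUP_PATTERNS)
```

In this sketch, a non-truncated term such as "detect" does not match "detecting", which shows why several terms in the search string were truncated.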

The records retrieved in the initial search across the six databases and the results of manual bibliography searches were imported into EndNote 20 (www.endnote.com) and duplicates were removed.

2.4. Screening Strategy

Figure 1 presents an overview of the screening strategy. In the first round, the titles and abstracts returned (n = 1241, after the elimination of 68 duplicates) were reviewed against the eligibility criterion. If the title and/or abstract mentioned a sensor-based approach to the detection of eating or drinking, the paper was retained for further assessment in the full-text screening stage. Papers that did not describe a sensor-based device to detect eating or drinking were excluded. Full-text screening was conducted on the remaining articles (n = 126), leading to a final sample of 73 included papers from the initial automatic search. Papers focusing on animal studies (n = 4), food recognition (n = 7), nutrient estimation (n = 6), system design (n = 2) or other nonrelated topics (n = 10) were excluded. While review papers (n = 20) were also excluded from our technology overview (Table 1), they were evaluated (Table A1 and Table A2 in Appendix A) and used to define the scope of this study. Additional papers were identified by manual search via the reference lists of full texts that were screened (n = 91). Full-text screening of these additional papers led to a final sample of 49 included papers from the manual search. Papers about dietary recall (n = 7), food recognition (n = 11) or nutrient estimation (n = 7), papers describing systems already described in papers from the initial automatic search (n = 2) and papers on other nonrelated topics (n = 6) were excluded. Again, review papers (n = 8) were excluded from our technology overview (Table 1) but evaluated and used to define the scope of this study. The total number of papers included in this review amounts to 122.

All screening rounds were conducted by two of the authors. Each record was reviewed by two reviewers to decide its eligibility based on the title and abstract of each study, taking into consideration the exclusion criteria. When a record was rejected by one reviewer and accepted by the other, it was further evaluated by all authors and kept for eligibility when a majority voted in favor.
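The decision rule described above can be summarized in a short Python sketch. This is only an illustration of the stated procedure; the function name `screen_record` and the representation of votes as booleans are assumptions made for the example.

```python
def screen_record(reviewer_a, reviewer_b, all_author_votes=None):
    """Decide whether a record is kept for eligibility (hypothetical helper).

    reviewer_a, reviewer_b: True if the reviewer accepts the record.
    all_author_votes: list of booleans, only needed when the reviewers disagree.
    """
    if reviewer_a == reviewer_b:
        # Both reviewers agree: their shared decision stands.
        return reviewer_a
    if all_author_votes is None:
        raise ValueError("Reviewers disagree: votes from all authors are required.")
    # Disagreement: keep the record only if a strict majority votes in favor.
    in_favor = sum(1 for vote in all_author_votes if vote)
    return in_favor > len(all_author_votes) / 2
```

A tie among the authors is treated here as a rejection, since the text requires a majority in favor; this handling of ties is an assumption.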

2.5. Reporting

We evaluated and summarized the review papers that our search returned in two tables (Appendix A). Table A1 includes systematic reviews, while Table A2 includes nonsystematic reviews. We defined systematic reviews as reviews following the PRISMA methodology. For all reviews, we reported the year of publication and the general scope of the review. For systematic reviews, we also reported the years of inclusion, the number of papers included and the specific requirements for inclusion.

We summarized the core information about the devices and technologies for measuring eating and drinking behaviors from our search results in Table 1. This table categorizes the studies retrieved in our literature search in terms of their measurement objectives, target measures, the devices and algorithms that were used as well as their (commercial or public) availability and the way they were applied (method). In the column “Objective”, the purposes of the measurements are described. The three objectives we distinguish are “Eating/drinking activity detection”, “Bite/chewing/swallowing detection” and “Portion size estimation”. Note that the second and third objectives can be considered as subcategories of the first; technologies are included in the first if they could not be grouped under the second or third objective. The objectives are further specified in the column “Measurement target”. In the column “Device”, we itemize the measurement tools or sensors used in the different systems. For each type of device, one or more representative papers were selected, bearing in mind the technology readiness level (TRL [15]), the off-the-shelf availability of the device and algorithm that were used, the year of publication (recent) and the number of times the paper was cited. The minimum TRL was 2, and the paper with the highest TRL among papers using similar techniques was selected as the representative paper. A concise description of each representative example is given in the “Method” column. The commercial availability of the example devices and algorithms is indicated in the “Off-the-shelf device” and “Ready-to-use algorithm” columns. Lastly, other studies using similar systems are listed in the “Similar papers” column. Systems combining devices for several different measurement targets can appear in different table rows. To indicate this, they are labeled with successive letters for each row they appear in (e.g., 1a and 1b).

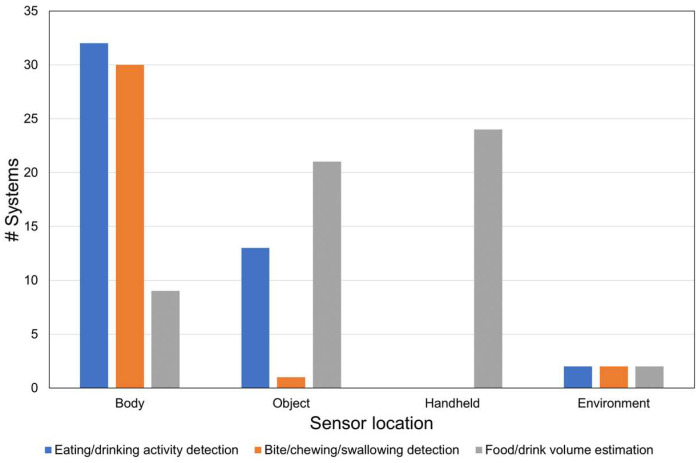

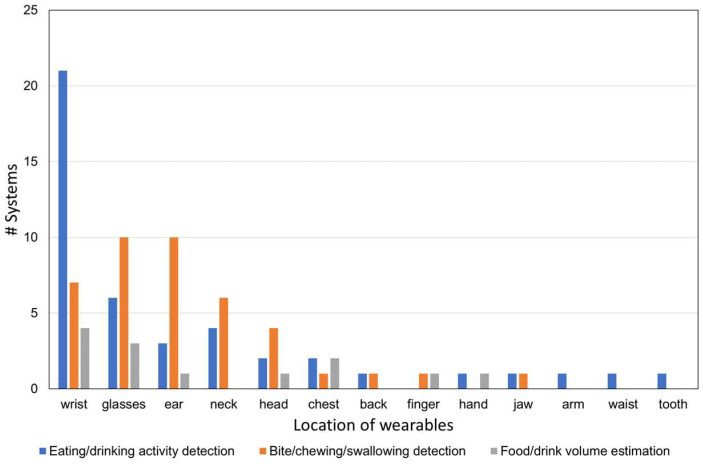

For each of the three objectives, we counted the number of papers that described sensors that are designed (1) to be attached to the body, (2) to be attached to an object, (3) to be placed in the environment or (4) to be held in the hand. Sensors attached to the body were further subdivided by body location. The results are visualized using bar graphs.
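The tallying step behind the bar graphs can be sketched as follows. The records below are toy placeholders and are not data from this review; the function name `count_placements` and the placement labels are likewise assumptions made for the illustration.

```python
from collections import Counter

# Toy placeholder records (objective, sensor placement); NOT data from this review.
records = [
    ("Eating/drinking activity detection", "body"),
    ("Eating/drinking activity detection", "body"),
    ("Eating/drinking activity detection", "object"),
    ("Portion size estimation", "handheld"),
    ("Portion size estimation", "object"),
]


def count_placements(records):
    """Count, per objective, how many papers use each sensor placement."""
    counts = {}
    for objective, placement in records:
        counts.setdefault(objective, Counter())[placement] += 1
    return counts


counts = count_placements(records)
```

Each per-objective `Counter` can then be passed to a plotting library to draw one group of bars per objective.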

3. Results

Table 1 summarizes the core information of devices and technologies for measuring eating and drinking behaviors from our search results.

3.1. Eating and Drinking Activity Detection

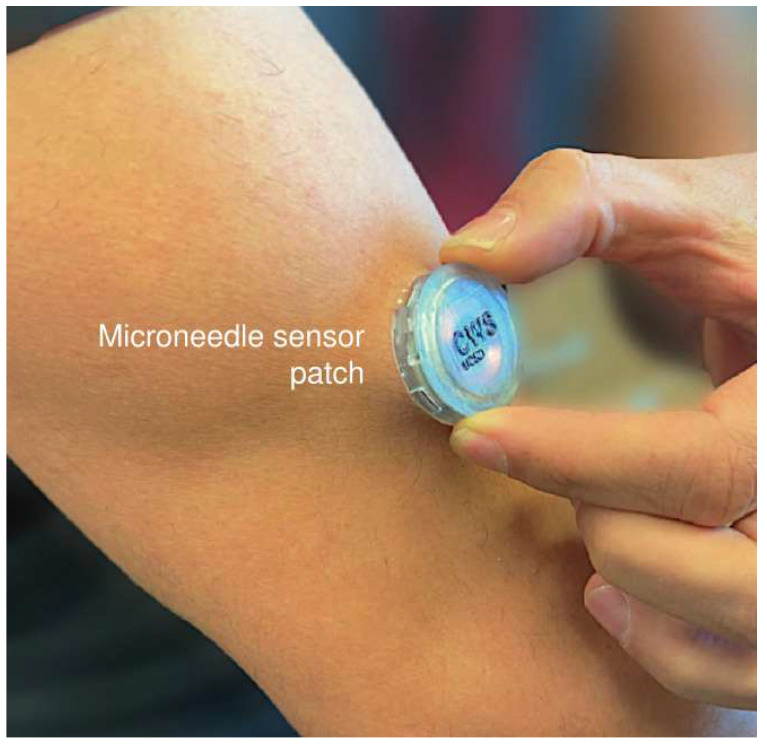

For “eating/drinking activity detection”, many systems have been reported that measure eating- and drinking-related motions. In particular, many papers reported measuring these actions using motion sensors such as inertial sensor modules (i.e., inertial measurement units or IMUs). IMUs typically consist of various sensors such as an accelerometer, gyroscope and magnetometer. These sensors are embedded in smartphones and wearable devices such as smartwatches. In [16], researchers collected IMU signals with off-the-shelf smartwatches to identify hand-based eating- and drinking-related activities. In this case, participants wore smartwatches on their preferred wrists. Other studies have employed IMUs worn on the wrist, upper arm, head, neck and combinations thereof [17,18,19,20]. IMUs worn on the wrist or upper arm can collect movement data relatively unobtrusively during natural eating activities such as lifting food or bringing utensils to the mouth. Recent research has also improved IMUs that are attached to the head or neck, combining sensors with glasses or necklaces so that they are less bulky and less noticeable to the wearer. Besides IMUs, proximity sensors, piezoelectric sensors and radar sensors are also used to detect hand-to-mouth gestures or jawbone movements [21,22,23]. Pressure sensors are used to measure eating activity as well. For instance, in [24], eating activities and the amount of consumed food are measured by a pressure-sensitive tablecloth and tray. These devices provide information on food-intake-related actions such as cutting, scooping and stirring, identify the plate or container on which the action is executed and allow the tracking of weight changes of plates and containers. Microphones, RGB-D cameras and video cameras are also used to detect eating- and drinking-related motions. 
In [25], eating actions are detected by a ready-to-use algorithm as the 3D overlap of the mouth and food, using RGB-D images taken with a commercially available smartphone. These imaging techniques have the advantage of being less physically intrusive than wearable motion sensors, but they still restrict subjects’ natural eating behavior as the face must be clearly visible to the camera. Ear-worn sensors can measure in-body glucose levels [26] and tooth-mounted dielectric sensors can measure impedance changes in the mouth signaling the presence of food [27]. Although these latter methods can directly detect eating activity, the associated devices and data processing algorithms are still in the research phase. In addition, ref. [28] reports a wearable array of microneedles for the wireless and continuous real-time sensing of two metabolites (lactate and glucose, or alcohol and glucose) in the interstitial fluid (Figure 2). This could be useful for continuous, quantitative, real-time monitoring of food and alcohol intake. Further development of the system is needed in several respects, such as battery life and advanced calibration algorithms.

Figure 2.

A microneedle-based wearable sensor system (Reprinted with permission from Ref. [28]. 2023, Springer Nature).

3.2. Bite, Chewing or Swallowing Detection

In the “bite/chewing/swallowing detection” category, we grouped studies in which the number of bites (bite count), bite weight and chewing or swallowing actions are measured. Motion sensors and video are used to count bites. For instance, OpenPose, an off-the-shelf pose estimation software, has been used to count bites from videos [29]. To assess bite weight, weight sensors and acoustic sensors have been used [30,31]. In [30], the bite weight measurement also provides an estimate of the full portion.

Chewing or swallowing is the most well-studied eating- and drinking-related activity, as reflected by the number of papers focusing on such activities (31 papers). Motion sensors and microphones are frequently employed for this purpose. For instance, in [32], a gyroscope is used for chewing detection, an accelerometer for swallowing detection and a proximity sensor to detect hand-to-mouth gestures. Microphones are typically used to register chewing and swallowing sounds. In most cases, commercially available microphones are applied, while the applied detection algorithms are custom-made. Video, electroglottograph (EGG) and electromyography (EMG) devices are also used to detect chewing and swallowing. EGG detects the variations in electrical impedance caused by the passage of food during swallowing, while EMG in these studies monitors the activation of the masseter and temporalis muscles to record chewing strokes. The advantages of EGG and EMG are that they can directly detect swallowing and chewing while eating and are not, or are less, affected by other body movements compared to motion sensors. However, EMG devices are not wireless and EGG sensors need to be worn on the neck, which is not optimal for use in everyday eating situations.

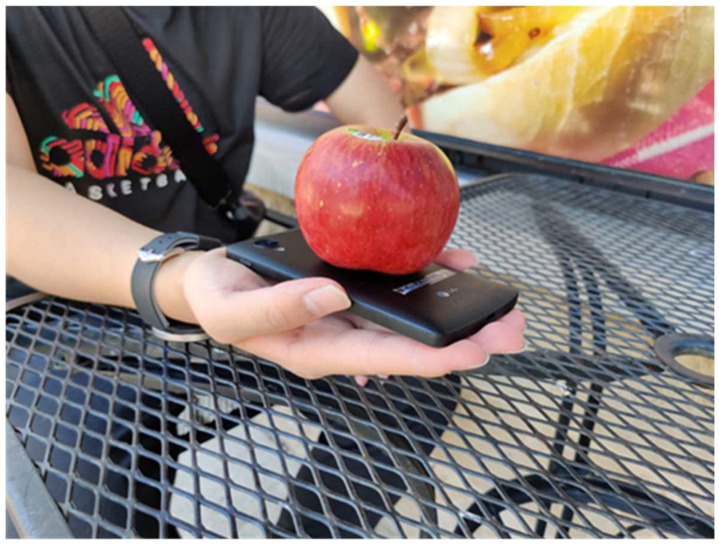

3.3. Portion Size Estimation

Portion size is estimated mainly by using weight sensors and food image analysis. With weight sensors, the amount of food consumed is calculated by comparing the weights of plates before and after eating. An open-source system consisting of a wireless pocket-sized kitchen scale connected to a mobile application has been reported in [33]. As shown in Figure 3, a system turning an everyday smartphone into a weighing scale is also available [34]. The relative intensity of the vibrations generated by the smartphone’s vibration motor, measured with its built-in accelerometer, is used to estimate the weight of food that is placed on the smartphone. Off-the-shelf smartphone cameras are typically used for volume estimation from food images. Several studies also use RGB-D images to obtain more accurate volume estimates from information on the height of the target food. For image-based approaches, AI-based algorithms are often employed to calculate portion size. Some studies made prototype systems applicable to real-life situations. In [35], acoustic data from a microphone were collected along with food images to measure the distance from the camera to the food. This enables the food in the image to be scaled to its actual size without training images or reference objects. In other cases, however, image processing mostly uses a reference object for comparing the food size. Besides image analysis, in [36], researchers took a 360-degree scanned video obtained with a laser module and a diffraction lens and applied their volume estimation algorithm to the data. In addition to the above devices, a method to estimate portion size using EMG has been reported [37]. In this study, EMG embedded in an armband device detects different signal patterns depending on the weight that a user is holding.

Figure 3.

VibroScale (reproduced from [34] with permission).
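The weight-based approach described above amounts to a before-and-after comparison per plate. The following Python sketch illustrates this under the assumption that each plate is weighed once before and once after the meal; the function name `consumed_weight_g` and the plate labels are hypothetical and do not correspond to any of the reviewed systems.

```python
def consumed_weight_g(before_g, after_g):
    """Per-plate consumed weight: weight before the meal minus weight after.

    before_g, after_g: dicts mapping a plate label to its weight in grams.
    The plate's own weight cancels out because the same plate is weighed twice.
    """
    consumed = {}
    for plate, start in before_g.items():
        end = after_g[plate]
        if end > start:
            # A weight gain suggests a refill or a measurement error.
            raise ValueError(f"{plate}: weight increased from {start} g to {end} g")
        consumed[plate] = start - end
    return consumed
```

For example, `consumed_weight_g({"pasta": 350.0}, {"pasta": 90.0})` yields 260.0 g consumed from the pasta plate. Refills during the meal would violate the single before-and-after assumption and are flagged as an error here.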

For estimating portion size in drinks, several kinds of sensors have been tested. An IMU in a smartwatch was used to estimate drink intake volume from sip duration [38]. Also, in [39], liquid sensors such as a capacitive sensor and a conductivity sensor were used to monitor the filling levels in a cup. Some research groups developed so-called smart fridges that automatically register food items and quantities. In [40], image analysis of a thermal image taken by an infrared (IR) sensor embedded in a fridge provides an estimation of a drink volume. Another study proposed a system called the Playful Bottle system [41], which consists of a smartphone attached to a common drinking mug. Drinking motions such as picking up the mug, tilting it back and placing it on the desk are detected by the phone’s accelerometer. After the drinking action is completed and the water line becomes steady, the phone’s camera captures an image of the amount of liquid in the mug (Figure 4).

Figure 4.

Playful Bottle system (reproduced from [41] with permission).

3.4. Sensor Location

Figure 5 indicates where sensors are typically located per objective. Sensor locations are classified as attached to the body (e.g., ear, neck, head, glasses), embedded in objects (e.g., plates, cutlery), placed in the environment (e.g., distant camera, magnetic trackers) or handheld. For eating/drinking activity detection, sensors are mostly worn on the body, followed by sensors embedded in objects. Body-worn sensors are also used for bite/chewing/swallowing detection. For portion size estimation, on the other hand, object-embedded and handheld sensors are mainly chosen, depending on the measurement target. Figure 6 shows the locations of wearable body sensors used in the reviewed studies. Sensors attached to the wrist are most frequently used (32 cases), followed by sensors embedded in glasses (19 cases) and sensors attached to the ear (14 cases).

Figure 5.

Sensor placement per objective.

Figure 6.

Locations of wearables on the human body.

Table 1.

Summary of core information of devices and technologies for measuring eating and drinking behaviors. The commercial availability of the example devices and algorithms is indicated by “Y” (yes) and “N” (no). Letters (a, b, c) following the reference numbers indicate systems that combine devices for several different measurement targets and therefore appear in more than one table row.

| Objective | Measurement Target | Device | Representative Paper | Method | Off-the-Shelf Device | Ready-to-Use Algorithm | Similar Papers |
|---|---|---|---|---|---|---|---|
| Eating/drinking activity detection | eating/drinking motion | motion sensor | [16] | eating and drinking detection from smartwatch IMU signal | Y | N | [42] a, [43] a, [44,45,46], [47] a, [41] a, [21,48], [49] a, [22,50,51], [52] a, [38] a, [53] a, [17,18,54,55], [37] a, [56], [19] a, [57,58,59], [60] a, [61], [62] a, [63], [20] a, [64] a, [42,65,66,67,68] |
| | | | [23] | detecting eating and drinking gestures from FMCW radar signal | N | N | |
| | | | [24] a | eating activities and amount consumed measured by pressure-sensitive tablecloth and tray | N | N | |
| | | microphone | [47] b | eating detection from fused inertial-acoustic sensing using smartwatch with embedded IMU and microphone | Y | N | [26] a, [69], [60] b |
| | | RGB-D image | [25] a | eating action detected from smartphone RGB-D image as 3D overlap between mouth and food | Y | Y | |
| | | video | [70] | eating detection from cap-mounted video camera | Y | N | [55] a |
| | liquid level | liquid sensor | [71] | capacitive liquid level sensor | N | N | [72] |
| | impedance change in mouth | dielectric sensor | [27] | RF coupled tooth-mounted dielectric sensor measures impedance changes due to food in mouth | N | N | |
| | in-body glucose level | glucose sensor | [26] b | glucose level measured by ear-worn sensor | N | N | [28] a |
| | in-body alcohol level | microneedle sensor | [28] b | alcohol level measured by microneedle sensor on the upper arm | N | N | |
| | user identification | PPG (photoplethysmography) sensor | [53] b | sensors on water bottle to identify the user from heart rate | N | N | |
| Bite/chewing/swallowing detection | bites (count) | motion sensor | [73] | a gyroscope mounted on a finger to detect motions of picking up food and delivering it to the mouth | Y | N | [74,75] |
| | | video | [29] | bite count by video analysis using OpenPose pose estimation software | Y | Y | |
| | bite weight | weight sensor | [30] a | plate-type base station with embedded weight sensors to measure amount and location of bites | N | N | [55] a |
| | | acoustic sensor | [31] | commercial earbuds, estimation model based on nonaudio and audio features | Y | N | |
| | chewing/swallowing | motion sensor | [32] a | chewing detection from gyroscope, swallowing detection from accelerometer, hand-to-mouth gestures from proximity sensor | Y | Y | [76,77,78,79], [49] b, [80,81], [82] a, [83], [84] a, [85,86,87], [62] b, [20] b |
| | | microphone | [88] | wearable microphone with minicomputer to detect chewing/swallowing sounds | Y | N | [43] b, [89,90,91], [82] b, [19] b, [92], [84] b, [93] |
| | | video | [94] | classification of facial action units related to chewing from video | Y | N | [55] b |
| | | EGG | [95] | swallowing detected by larynx-mounted EGG device | Y | N | |
| | | EMG | [96] | eyeglasses equipped with EMG to monitor temporalis muscles’ activity | N | N | [43] c, [97] |
| Portion size estimation | portion size food | motion sensor | [34] | acceleration sensor of smartphone, measuring vibration intensity | Y | Y | [98] a |
| | | weight sensor | [33] | wireless pocket-sized kitchen scale connected to app | Y | Y | [99,100,101,102,103], [55] b, [104], [30] b, [98] b, [105], [106] a, [107], [64] b, [24] b |
| | | image | [108] | AI-based system to calculate food leftovers | Y | Y | [32] b, [35,109,110,111,112,113,114,115,116], [117] b, [106] b, [106,118,119,120,121,122,123] |
| | | | [35] | measuring the distance from the camera to the food using smartphone images combined with microphone data | Y | N | [124] |
| | | | [125] | RGB-D image and AI-based system to estimate consumed food volume using before- and after-meal images | Y | Y | [25] b, [126,127,128,129,130,131,132] |
| | | laser | [36] | 360-degree scanned video; the system design includes a volume estimation algorithm and a hardware add-on that consists of a laser module and a diffraction lens | N | N | [133] |
| | | EMG | [37] b | weight of food consumed from EMG data | N | N | |
| | portion size drink | motion sensor | [38] b | volume from sip duration from IMU in smartwatch | Y | N | [42] b, [52] b |
| | | infrared (IR) sensor | [40] | thermal image by IR sensor embedded in smart fridge | N | N | |
| | | liquid sensor | [39] | capacitive sensor, conductivity sensor, flow sensor, pressure sensor, force sensors embedded in different mug prototypes | N | N | |
| | | image | [41] b | smartphone camera attached to mug | N | N | [134] |

4. Discussion

This systematic review provides an up-to-date overview of all (close-to) available technologies to automatically record eating behavior in real life. Technologies included in this review should enable eating behavior to be measured passively (i.e., without users’ active input), objectively and reliably in realistic contexts to avoid reliance on subjective user recall. We performed our review in order to help researchers identify the most suitable technology to measure eating behavior in real-life settings and to provide a basis for determining the next steps in both technology development and the measurement of eating behavior in real life. In total, 1328 studies were screened and 122 studies were included after the application of objective inclusion and exclusion criteria. A total of 26 studies contained more than one technology. We found that relatively simple sensors are often used to measure eating behaviors. Motion sensors are commonly used for eating/drinking activity detection and bite/chewing/swallowing detection; in addition, microphones are often used in studies focusing on chewing/swallowing. These sensors are usually attached to the body, in particular to the wrist for eating/drinking activity detection and to areas close to the face for bite/chewing/swallowing detection. For portion size estimation, weight sensors and images from photo cameras are mostly used.

Concerning the next steps in technology development, the information from the “Off-the-shelf device” and “Ready-to-use algorithm” columns in the technology overview table indicates which devices and algorithms are not ready for use yet and would benefit from further development. The category “portion size estimation” seems the most mature with respect to off-the-shelf availability and ready-to-use algorithms. Overall, what is mostly missing is ready-to-use algorithms. It is an enormous challenge to build fixed algorithms that accurately recognize eating behavior under varying conditions of sensor noise, types of food and individuals’ behavior and appearance. By algorithms, we typically mean machine learning or AI algorithms. These are trained using annotated (correctly labeled) data and only work well in conditions that are similar to the ones they were trained in. In most reviewed studies, demonstrations of algorithms are limited to controlled conditions and a small number of participants. Therefore, these algorithms still need to be tested and evaluated for accuracy and generalizability outside the laboratory, such as in homes, restaurants and hospitals.

When it comes to real-life studies, the obtrusiveness of the devices is an important factor. Devices should interfere as little as possible with the natural behavior of participants. Devices worn on the body with wires connected to a battery or other devices may restrict eating motions and constantly remind participants that they are being recorded. Wireless devices are preferable from that perspective but, at the same time, battery life may be a limitation for long-term studies. Devices such as tray-embedded sensors and cameras that are not attached to the participant’s body are advantageous in terms of both obtrusiveness and battery life.

Although video cameras can provide holistic data on participants’ eating behaviors, they raise privacy concerns. When a camera is used to film the course of a meal, the data reveal the participant’s physical characteristics and enable the identification of the participant. Also, when the experiments are performed at home, participants cannot avoid showing their private environment. Ideally, data should be collected anonymously unless identifying information is needed for a specific purpose, such as clinical data collection. This could be achieved by storing only features extracted from the camera data rather than the images themselves, though this precludes later validation and improvement of the feature extraction [135]. Systems using weight sensors do not raise the privacy issues that camera images of the face do. Ref. [106] used a weight sensor in combination with a camera pointing downward at the scales to keep track of the consumption of various types of seasonings.

For future research, we think it will be powerful to combine methods and sensor technologies. While most studies rely on a single type of technology, there are successful examples of combinations that illustrate a number of ways in which system and data quality can be improved. For instance, a novel and robust device called SnackBox [136] consists of three containers for different types of snacks mounted on weight sensors (Figure 7) and can be used to monitor snacking behavior at home. It can be connected to wearables and smartphones, thereby allowing for contextualized interpretation of signals recorded from the participant and for targeted ecological momentary assessment (EMA [137]). With EMA, individuals are prompted to report current behavior and experiences in their natural environment, which avoids reliance on memory. For instance, when the SnackBox detects snacking behavior, EMA can assess the individual’s current mood state through a short questionnaire. This affords the collection of more detailed and more accurate information compared to asking for this information at a later moment in time. Combining different sensor technologies can also have other benefits. Some studies used a motion detector or an audio sensor as a switch to turn on other devices such as a chewing counter or a camera [62,91]. These systems are useful for collecting data only during meals, thereby limiting superfluous data collection that is undesirable from the point of view of privacy and of the battery life of devices that are worn the whole day. In a study imitating a restaurant setting, a system consisting of custom-made table-embedded weight sensors and passive RFID (radio-frequency identification) antennas was used [99]. This system detects the weight change in the food served on the table and recognizes what the food is using RFID tags, thereby complementing the information that would have been obtained by using either sensor alone and facilitating the interpretation of the data. 
Other studies used an IMU in combination with a microphone to detect eating behaviors [60,82]. It was concluded that the acoustic sensor in combination with motion-related sensors improved the detection accuracy significantly compared to motion-related sensors alone.
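
The idea of using a weight-sensing device to trigger EMA prompts can be illustrated with a minimal Python sketch. It is not the SnackBox implementation [136]: the reading format `(time_s, container, weight_g)`, the function names, the 5 g drop threshold and the 30 min minimum gap between prompts are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass
from typing import Dict, Iterable, List, Tuple


@dataclass
class SnackEvent:
    time_s: float         # time of the reading at which the drop was seen
    container: int        # index of the container that lost weight
    grams_removed: float  # weight difference to the previous reading


def detect_snack_events(
    readings: Iterable[Tuple[float, int, float]],
    min_drop_g: float = 5.0,  # hypothetical threshold, chosen for illustration
) -> List[SnackEvent]:
    """Flag a snack event when a container's weight drops by at least
    min_drop_g between two consecutive readings of that container.
    Readings are (time_s, container, weight_g) tuples sorted by time."""
    last_weight: Dict[int, float] = {}
    events: List[SnackEvent] = []
    for time_s, container, weight_g in readings:
        previous = last_weight.get(container)
        if previous is not None and previous - weight_g >= min_drop_g:
            events.append(SnackEvent(time_s, container, previous - weight_g))
        last_weight[container] = weight_g
    return events


def ema_prompt_times(
    events: Iterable[SnackEvent],
    min_gap_s: float = 1800.0,  # hypothetical: at most one prompt per 30 min
) -> List[float]:
    """Return the times at which an EMA questionnaire would be sent,
    skipping events that follow a previous prompt too closely."""
    prompts: List[float] = []
    for event in events:
        if not prompts or event.time_s - prompts[-1] >= min_gap_s:
            prompts.append(event.time_s)
    return prompts


# Invented example readings: container 0 loses weight twice, container 1
# only shows a 1 g fluctuation that stays below the threshold.
example_readings = [
    (0.0, 0, 100.0),
    (10.0, 0, 100.2),
    (20.0, 0, 88.0),
    (30.0, 1, 50.0),
    (40.0, 1, 49.0),
    (2000.0, 0, 70.0),
]
example_events = detect_snack_events(example_readings)
example_prompts = ema_prompt_times(example_events)
```

Comparing consecutive readings is a deliberate simplification; a real system would likely need to filter sensor noise and to handle refilling of the containers. The minimum gap between prompts reflects the trade-off between capturing each snacking moment and limiting participant burden.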

Figure 7.

SnackBox (with permission from OnePlanet).

Besides investing in research on combining methods and sensor technologies, research applying and validating these technologies in out-of-the-lab studies is essential. The generalizability of findings from lab to real-life studies should be examined, as should generalizability across situations, user groups and user experience. These studies will lead to further improvements and/or insight into the contexts in which the technology can or cannot be used.

The current review has some limitations. First, we did not include a measure of accuracy or reliability of the technologies in our table. Some of the reviews listed in our table of reviews (Table A1 in Appendix A, e.g., [138,139]) required the presence of evaluation metrics indicating the performance of the technologies (e.g., accuracy, sensitivity and precision) as an inclusion criterion. We decided not to apply this inclusion criterion because, in our case, such measures are hard to compare across studies. Also, whether accuracy is “good” very much depends on the specific research question and study design. Second, our classification of whether an algorithm is ready-to-use could not be based on information provided directly in the papers and should be regarded as a somewhat subjective estimate by the authors of this review.
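
As an illustration of why such metrics are hard to compare and why “good” accuracy depends on the study design, the following Python sketch computes accuracy, sensitivity, precision and F1-score from counts of correctly and incorrectly classified time windows. The function name and all counts are invented for illustration and are not taken from any of the reviewed studies.

```python
from typing import Dict


def detection_metrics(tp: int, fp: int, fn: int, tn: int) -> Dict[str, float]:
    """Compute standard detection metrics from confusion-matrix counts.
    tp/fn: eating windows detected/missed; fp/tn: non-eating windows
    flagged/correctly ignored. Undefined ratios are reported as 0.0."""
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total if total else 0.0
    sensitivity = tp / (tp + fn) if (tp + fn) else 0.0  # also called recall
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    denom = precision + sensitivity
    f1 = 2 * precision * sensitivity / denom if denom else 0.0
    return {
        "accuracy": accuracy,
        "sensitivity": sensitivity,
        "precision": precision,
        "f1": f1,
    }


# Hypothetical day of 1000 time windows, of which only 50 contain eating.
# A detector that never reports eating still reaches high accuracy ...
never_fires = detection_metrics(tp=0, fp=0, fn=50, tn=950)
# ... while a detector that finds 40 of the 50 eating windows, at the cost
# of 60 false alarms, has slightly lower accuracy but far higher sensitivity.
useful_detector = detection_metrics(tp=40, fp=60, fn=10, tn=890)
```

In this invented example, the detector that never fires reaches an accuracy of 0.95 with a sensitivity of 0, whereas the second detector reaches an accuracy of 0.93 with a sensitivity of 0.8 and a precision of 0.4. Which of these figures matters most depends on whether missed eating events or false alarms are more costly for the research question at hand.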

In conclusion, there are some promising devices for measuring eating behavior in naturalistic settings. However, it will take some time before some of these devices and algorithms become commercially available, owing to a lack of examples from large numbers of test users and under various conditions. Until then, research inside and outside the lab needs to be carried out using custom-made devices and algorithms and/or combinations of existing devices. Combining different technologies is recommended as it can lead to multimodal datasets covering different aspects of eating behavior (e.g., when people are eating and at what rate), dietary intake (e.g., what people are eating and how much) and contextual factors (e.g., why people are eating and with whom). We expect this to result in a much fuller understanding of individual eating patterns and dynamics, in real time and in context, which can be used to develop adaptive, personalized interventions. For instance, physiological measures reflecting food-elicited attention and arousal have been shown to be positively associated with food neophobia (the hesitance to try novel food) [140] in controlled settings [141,142]. Measuring these in naturalistic circumstances together with eating behavior and possible interventions (e.g., based on distracting attention from food) could increase our understanding of food neophobia and inspire methods to stimulate the consumption of novel, healthy food.

New technologies measuring individual eating behaviors will be beneficial not only in consumer behavior studies but also in the food and medical industries. New insights into eating patterns and traits discovered using these technologies may help to clarify how food products are used by a wide range of consumers or provide guidance for improving patients’ diets.

Appendix A

Table A1.

Systematic review papers that were evaluated and used to define the scope of this study (see Section 1). Listed for all systematic reviews are the year of publication, the focus of the review, the years of inclusion, the number of papers included and the specific requirements for inclusion. Text in italic represents literal quotes.

| Reference | Year of Publication | Focus of Review | Years of Inclusion | Number of Papers Included | Specific Requirements for Inclusion |
|---|---|---|---|---|---|
| [143] | 2020 | In this review paper [they] provide an overview about automatic food intake monitoring, by focusing on technical aspects and Computer Vision works which solve the main involved tasks (i.e., classification, recognitions, segmentation, etc.). | 2010–2020 | 23 papers that present systems for automatic food intake monitoring + 46 papers that address Computer Vision tasks related to food images analysis | Method should apply computer vision techniques. |
| [138] | 2020 | [This] scoping review was conducted in order to: 1. catalog the current use of wearable devices and sensors that automatically detect eating activity (dietary intake and/or eating behavior) specifically in free-living research settings; 2. and identify the sample size, sensor types, ground-truth measures, eating outcomes, and evaluation metrics used to evaluate these sensors. | Prior to 22 December 2019 | 33 | I—description of any wearable device or sensor (i.e., worn on the body) that was used to automatically (i.e., no required actions by the user) detect any form of eating (e.g., content of food consumed, quantity of food consumed, eating event, etc.). Proxies for “eating” measures, such as glucose levels or energy expenditure, were not included. II—“In-field” (non-lab) testing of the sensor(s), in which eating and activities were performed at-will with no restrictions (i.e., what, where, with whom, when, and how the user ate could not be restricted). III—At least one evaluation metric (e.g., Accuracy, Sensitivity, Precision, F1-score) that indicated the performance of the sensor on detecting its respective form of eating. |
| [144] | 2019 | The goal of this review was to identify unique technology-based tools for dietary intake assessment, including smartphone applications, those that captured digital images of foods and beverages for the purpose of dietary intake assessment, and dietary assessment tools available from the Web or that were accessed from a personal computer (PC). | January 2011–September 2017 | 43 | (1) publications were in English, (2) articles were published from January 2011 to September 2017, and (3) sufficient information was available to evaluate tool features, functions, and uses. |
| [145] | 2017 | This article reviews the most relevant and recent researches on automatic diet monitoring, discussing their strengths and weaknesses. In particular, the article reviews two approaches to this problem, accounting for most of the work in the area. The first approach is based on image analysis and aims at extracting information about food content automatically from food images. The second one relies on wearable sensors and has the detection of eating behaviours as its main goal. | Not specified | Not specified | n/a |
| [146] | 2019 | The aim of this review is to synthesise research to date that utilises upper limb motion tracking sensors, either individually or in combination with other technologies (e.g., cameras, microphones), to objectively assess eating behaviour. | 2005–2018 | 69 | (1) used at least one wearable motion sensor, (2) that was mounted to the wrist, lower arm, or upper arm (referred to as the upper limb in this review), (3) for eating behaviour assessment or human activity detection, where one of the classified activities is eating or drinking. We explicitly also included studies that additionally employed other sensors on other parts of the body (e.g., cameras, microphones, scales). |
| [147] | 2022 | This paper consists of a systematic review of sensors and machine learning approaches for detecting food intake episodes. […] The main questions of this systematic review were as follows: (RQ1) What sensors can be used to access food intake moments effectively? (RQ2) What can be done to integrate such sensors into daily lives seamlessly? (RQ3) What processing must be done to achieve good accuracy? | 2010–2021 | 30 | (1) research work that performs food intake detection; (2) research work that uses sensors to detect food with the help of sensors; (3) research work that presents some processing of food detection to propose diet; (4) research work that use wearable biosensors to detect food intake; (5) research work that use the methodology of deep learning, Support Vector Machines or Convolutional Neural Networks related to food intake; (6) research work that is not directly related to image processing techniques; (7) research work that is original; (8) papers published between 2010 and 2021; and (9) papers written in English |
| [148] | 2021 | This article presents a comprehensive review of the use of sensor methodologies for portion size estimation. […] Three research questions were chosen to guide this systematic review: RQ1) What are the available state-of-the-art SB-FPSE methodologies? […] RQ2) What methods are employed for portion size estimation from sensor data and how accurate are these methods? […] RQ3) Which sensor modalities are more suitable for use in the free-living conditions? | Since 2000 | 67 | Articles published in peer-reviewed venues; […] Papers that describe methods for estimation of portion size; FPSE methods that are either automatic or semi-automatic; written in English. |
| [135] | 2022 | [They] reviewed the current methods to automatically detect eating behavior events from video recordings. | 2010–2021 | 13 | Original research articles […] published in the English language and containing findings on video analysis for human eating behavior from January 2010 to December 2021. […] Conference papers were included. […] Articles concerning non-human studies were excluded. We excluded research articles on eating behavior with video electroencephalogram monitoring, verbal interaction analysis, or sensors, as well as research studies not focusing on automated measures as they are beyond the scope of video analysis. |
| [139] | 2022 | The aim of this study was to identify and collate sensor-based technologies that are feasible for dietitians to use to assist with performing dietary assessments in real-world practice settings. | 2016–2021 | 54 | Any scientific paper published between January 2016 and December 2021 that used sensor-based devices to passively detect and record the initiation of eating in real-time. Studies were further excluded during the full text screening stage if they did not evaluate device performance or if the same research group conducted a more recent study describing a device that superseded previous studies of the same device. Studies evaluating a device that did not have the capacity to detect and record the start time of food intake, did not use sensors, were not applicable for use in free-living settings, or were discontinued at the time of the search were also excluded. |
| [149] | 2021 | This paper reviews the most recent solutions to automatic fluid intake monitoring both commercially and in the literature. The available technologies are divided into four categories: wearables, surfaces with embedded sensors, vision- and environmental-based solutions, and smart containers. | 2010–2020 | 115 | Papers that did not study liquid intake and only studied food intake or other unrelated activities were excluded. Since this review is focused on the elderly population, in the wearable section, we only included literature that used wristbands and textile technology which could be easily worn without affecting the normal daily activity of the subjects. We have excluded devices that were not watch/band or textile based such as throat and ear microphones or ear inertial devices as they are not practical for everyday use. […] Although this review is focused on the elderly population, studies that used adult subjects were not excluded, as there are too few that only used seniors. |
| [150] | 2022 | The purpose of this review is to analyze the effectiveness of mHealth and wearable sensors to manage Alcohol Use Disorders, compared with the outcomes of the same conditions under traditional, face-to-face (in person) treatment. | 2012–2022 | 25 | Articles for analysis were published in the last 10 years in peer-reviewed academic journals, and published in the English language. They must include participants who are adults (18 years of age or older). Preferred methods were true experiments (RCT, etc.), but quasi-experimental, non-experimental, and qualitative studies were also accepted. Other systematic reviews were not accepted so as not to confound the results. Works that did not mention wearable sensors or mHealth to treat AUD were excluded. Studies with participants under age 18 were excluded. Studies that did not report results were excluded. |
| [151] | 2023 | This paper reviews the existing work […] on vision-based intake (food and fluid) monitoring methods to assess the size and scope of the available literature and identify the current challenges and research gaps. | Not specified | 253 | (1) at least one kind of vision-based technology (e.g., RGB-D camera or wearable camera) was used in the paper; (2) eating or drinking activities or both identified in the paper; (3) the paper used human participants data; (4) at least one of the evaluation criteria (e.g., F1-score) was used for assessing the performance of the design |

Table A2.

Nonsystematic review papers that were evaluated and used to define the scope of this study (see Section 1). Listed for all nonsystematic reviews are the year of publication and the focus of the review. Text in italic represents literal quotes.

| Reference | Year of Publication | Focus of Review |
|---|---|---|
| [152] | 2019 | A group of 30 experts gathered to discuss the state of evidence with regard to monitoring calorie intake and eating behaviors […] characterized into 3 domains: (1) image-based sensing (e.g, wearable and smartphone-based cameras combined with machine learning algorithms); (2) eating action unit (EAU) sensors (eg, to measure feeding gesture and chewing rate); and (3) biochemical measures (e.g, serum and plasma metabolite concentrations). They discussed how each domain functions, provided examples of promising solutions, and highlighted potential challenges and opportunities in each domain. |
| [153] | 2022 | This paper concerns the validity of new consumer research technologies, as applied in a food behaviour context. Therefore, [they] introduce three validity criteria based on psychological theory concerning biases resulting from the awareness a consumer has of a measurement situation. […] The three criteria addressing validity are: 1. Reflection: the research method requires the ‘person(a)’ of the consumer, i.e., he/she needs to think about his-/herself or his/her behaviour, 2. Awareness: the method requires the consumer to know he or she is being tested, 3. Informed: the method requires the consumer to know the underlying research question. |
| [154] | 2022 | They present a high-level overview of [their] recent work on intake monitoring using a smartwatch, as well as methods using an in-ear microphone. […] [This paper’s] goal is to inform researchers and users of intake monitoring methods regarding (i) the development of new methods based on commercially available devices, (ii) what to expect in terms of effectiveness, and (iii) how these methods can be used in research as well as in practical applications. |
| [155] | 2021 | A review of the state of the art of wearable sensors and methodologies proposed for monitoring ingestive behavior in humans |
| [156] | 2017 | This article evaluates the potential of various approaches to dietary monitoring with respect to convenience, accuracy, and applicability to real-world environments. [They] emphasize the application of technology and sensor-based solutions to the health-monitoring domain, and [they] evaluate various form factors to provide a comprehensive survey of the prior art in the field. |
| [157] | 2022 | The original ultimate goal of the studies reviewed in this paper was to use the laboratory test meal, measured with the UEM [universal eating monitor], to translate animal models of ingestion to humans for the study of the physiological controls of food intake under standardized conditions. |
| [158] | 2022 | This paper describes many food weight detection systems which includes sensor systems consisting of a load cell, manual food waste method, wearable sensors. |
| [159] | 2018 | This paper summarizes recent technological advancements, such as remote sensing devices, digital photography, and multisensor devices, which have the potential to improve the assessment of dietary intake and physical activity in free-living adults. |
| [160] | 2022 | Focusing on non-invasive solutions, we categorised identified technologies according to five study domains: (1) detecting food-related emotions, (2) monitoring food choices, (3) detecting eating actions, (4) identifying the type of food consumed, and (5) estimating the amount of food consumed. Additionally, [they] considered technologies not yet applied in the targeted research disciplines but worth considering in future research. |
| [161] | 2020 | In this article [they] describe how wrist-worn wearables, on-body cameras, and body-mounted biosensors can be used to capture data about when, what, and how much people eat and drink. [They] illustrate how these new techniques can be integrated to provide complete solutions for the passive, objective assessment of a wide range of traditional dietary factors, as well as novel measures of eating architecture, within person variation in intakes, and food/nutrient combinations within meals. |
| [162] | 2021 | This survey discusses the best-performing methodologies that have been developed so far for automatic food recognition and volume estimation. |
| [163] | 2020 | This paper reviews various novel digital methods for food volume estimation and explores the potential for adopting such technology in the Southeast Asian context. |
| [164] | 2017 | This paper presents a meticulous review of the latest sensing platforms and data analytic approaches to solve the challenges of food-intake monitoring, ranging from ear-based chewing and swallowing detection systems that capture eating gestures to wearable cameras that identify food types and caloric content through image processing techniques. This paper focuses on the comparison of different technologies and approaches that relate to user comfort, body location, and applications for medical research. |
| [165] | 2020 | In this survey, a wide range of chewing activity detection explored to outline the sensing design, classification methods, performances, chewing parameters, chewing data analysis as well as the challenges and limitations associated with them. |
| [166] | 2021 | Recent advances in digital nutrition technology, including calories counting mobile apps and wearable motion tracking devices, lack the ability of monitoring nutrition at the molecular level. The realization of effective precision nutrition requires synergy from different sensor modalities in order to make timely reliable predictions and efficient feedback. This work reviews key opportunities and challenges toward the successful realization of effective wearable and mobile nutrition monitoring platforms. |

Author Contributions

Conceptualization, H.H., P.P., A.T. and A.-M.B.; Data curation, H.H. and A.T.; Formal analysis, H.H. and A.T.; Funding acquisition, A.-M.B.; Investigation, H.H., P.P. and A.T.; Methodology, A.T.; Supervision, A.-M.B.; Visualization, A.T.; Writing—original draft, H.H., P.P., A.T. and A.-M.B.; Writing—review and editing, H.H., P.P., A.T., A.-M.B. and G.C. All authors have read and agreed to the published version of the manuscript.

Conflicts of Interest

This study was funded by Kikkoman Europe R&D Laboratory B.V. Haruka Hiraguchi is employed by Kikkoman Europe R&D Laboratory B.V. and reports no potential conflicts with the study. All other authors declare no conflict of interest.

Funding Statement

This study was funded by Kikkoman Europe R&D Laboratory B.V.

Footnotes

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

References

- 1. World Health Organization. Diet, Nutrition, and the Prevention of Chronic Diseases: Report of a Joint WHO/FAO Expert Consultation. Volume 916. World Health Organization; Geneva, Switzerland: 2003.

- 2. Magarey A., Watson J., Golley R.K., Burrows T., Sutherland R., McNaughton S.A., Denney-Wilson E., Campbell K., Collins C. Assessing dietary intake in children and adolescents: Considerations and recommendations for obesity research. Int. J. Pediatr. Obes. 2011;6:2–11. doi: 10.3109/17477161003728469.

- 3. Shim J.-S., Oh K., Kim H.C. Dietary assessment methods in epidemiologic studies. Epidemiol. Health. 2014;36:e2014009. doi: 10.4178/epih/e2014009.

- 4. Thompson F.E., Subar A.F., Loria C.M., Reedy J.L., Baranowski T. Need for technological innovation in dietary assessment. J. Am. Diet. Assoc. 2010;110:48–51. doi: 10.1016/j.jada.2009.10.008.

- 5. Almiron-Roig E., Solis-Trapala I., Dodd J., Jebb S.A. Estimating food portions. Influence of unit number, meal type and energy density. Appetite. 2013;71:95–103. doi: 10.1016/j.appet.2013.07.012.

- 6. Sharpe D., Whelton W.J. Frightened by an old scarecrow: The remarkable resilience of demand characteristics. Rev. Gen. Psychol. 2016;20:349–368. doi: 10.1037/gpr0000087.

- 7. Nix E., Wengreen H.J. Social approval bias in self-reported fruit and vegetable intake after presentation of a normative message in college students. Appetite. 2017;116:552–558. doi: 10.1016/j.appet.2017.05.045.

- 8. Robinson E., Kersbergen I., Brunstrom J.M., Field M. I’m watching you. Awareness that food consumption is being monitored is a demand characteristic in eating-behaviour experiments. Appetite. 2014;83:19–25. doi: 10.1016/j.appet.2014.07.029.

- 9. O’Connor D.B., Jones F., Conner M., McMillan B., Ferguson E. Effects of daily hassles and eating style on eating behavior. Health Psychol. 2008;27:S20–S31. doi: 10.1037/0278-6133.27.1.S20.

- 10. Spruijt-Metz D., Wen C.K.F., Bell B.M., Intille S., Huang J.S., Baranowski T. Advances and controversies in diet and physical activity measurement in youth. Am. J. Prev. Med. 2018;55:e81–e91. doi: 10.1016/j.amepre.2018.06.012.

- 11. Page M.J., Moher D., Bossuyt P.M., Boutron I., Hoffmann T.C., Mulrow C.D., Shamseer L., Tetzlaff J.M., Akl E.A., Brennan S.E., et al. PRISMA 2020 explanation and elaboration: Updated guidance and exemplars for reporting systematic reviews. BMJ. 2021;372:n160. doi: 10.1136/bmj.n160.

- 12. Moher D., Liberati A., Tetzlaff J., Altman D.G. Preferred reporting items for systematic reviews and meta-analyses: The PRISMA statement. Ann. Intern. Med. 2009;151:264–269. doi: 10.7326/0003-4819-151-4-200908180-00135.

- 13. Wohlin C., Kalinowski M., Romero Felizardo K., Mendes E. Successful combination of database search and snowballing for identification of primary studies in systematic literature studies. Inf. Softw. Technol. 2022;147:106908. doi: 10.1016/j.infsof.2022.106908.

- 14. Wohlin C. Guidelines for snowballing in systematic literature studies and a replication in software engineering; Proceedings of the 18th International Conference on Evaluation and Assessment in Software Engineering; London, UK. 13–14 May 2014; p. 38.

- 15. EU. Horizon 2020—Work Programme 2014–2015: General Annexes. EU; Brussels, Belgium: 2014. Technology readiness levels (TRL).

- 16. Diamantidou E., Giakoumis D., Votis K., Tzovaras D., Likothanassis S. Comparing deep learning and human crafted features for recognising hand activities of daily living from wearables; Proceedings of the 2022 23rd IEEE International Conference on Mobile Data Management (MDM); Paphos, Cyprus. 6–9 June 2022; pp. 381–384.

- 17. Kyritsis K., Diou C., Delopoulos A. A data driven end-to-end approach for in-the-wild monitoring of eating behavior using smartwatches. IEEE J. Biomed. Health Inform. 2021;25:22–34. doi: 10.1109/JBHI.2020.2984907.

- 18. Kyritsis K., Tatli C.L., Diou C., Delopoulos A. Automated analysis of in meal eating behavior using a commercial wristband IMU sensor; Proceedings of the 2017 39th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC); Jeju, Republic of Korea. 11–15 July 2017; pp. 2843–2846.

- 19. Mirtchouk M., Lustig D., Smith A., Ching I., Zheng M., Kleinberg S. Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies. Volume 1. Association for Computing Machinery; New York, NY, USA: 2017. Recognizing eating from body-worn sensors: Combining free-living and laboratory data; p. 85.

- 20. Zhang S., Zhao Y., Nguyen D.T., Xu R., Sen S., Hester J., Alshurafa N. Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies. Volume 4. Association for Computing Machinery; New York, NY, USA: 2020. NeckSense: A multi-sensor necklace for detecting eating activities in free-living conditions; p. 72.

- 21. Chun K.S., Bhattacharya S., Thomaz E. Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies. Volume 2. Association for Computing Machinery; New York, NY, USA: 2018. Detecting eating episodes by tracking jawbone movements with a non-contact wearable sensor; p. 4.

- 22. Farooq M., Doulah A., Parton J., McCrory M.A., Higgins J.A., Sazonov E. Validation of sensor-based food intake detection by multicamera video observation in an unconstrained environment. Nutrients. 2019;11:609. doi: 10.3390/nu11030609.

- 23. Wang C., Kumar T.S., De Raedt W., Camps G., Hallez H., Vanrumste B. Eat-Radar: Continuous fine-grained eating gesture detection using FMCW radar and 3D temporal convolutional network. arXiv. 2022. doi: 10.48550/arXiv.2211.04253.

- 24.Zhou B., Cheng J., Sundholm M., Reiss A., Huang W., Amft O., Lukowicz P. Smart table surface: A novel approach to pervasive dining monitoring; Proceedings of the 2015 IEEE International Conference on Pervasive Computing and Communications (PerCom); St. Louis, MO, USA. 23–27 March 2015; pp. 155–162. [DOI] [Google Scholar]

- 25.Adachi K., Yanai K. DepthGrillCam: A mobile application for real-time eating action recording using RGB-D images; Proceedings of the 7th International Workshop on Multimedia Assisted Dietary Management; Lisboa, Portugal. 10 October 2022; pp. 55–59. [DOI] [Google Scholar]

- 26.Rosa B.G., Anastasova-Ivanova S., Lo B., Yang G.Z. Towards a fully automatic food intake recognition system using acoustic, image capturing and glucose measurements; Proceedings of the 16th International Conference on Wearable and Implantable Body Sensor Networks, BSN 2019; Chicago, IL, USA. 19–22 May 2019; Proceedings C7-8771070. [DOI] [Google Scholar]

- 27.Tseng P., Napier B., Garbarini L., Kaplan D.L., Omenetto F.G. Functional, RF-trilayer sensors for tooth-mounted, wireless monitoring of the oral cavity and food consumption. Adv. Mater. 2018;30:1703257. doi: 10.1002/adma.201703257. [DOI] [PubMed] [Google Scholar]

- 28.Tehrani F., Teymourian H., Wuerstle B., Kavner J., Patel R., Furmidge A., Aghavali R., Hosseini-Toudeshki H., Brown C., Zhang F., et al. An integrated wearable microneedle array for the continuous monitoring of multiple biomarkers in interstitial fluid. Nat. Biomed. Eng. 2022;6:1214–1224. doi: 10.1038/s41551-022-00887-1. [DOI] [PubMed] [Google Scholar]

- 29.Qiu J., Lo F.P.W., Lo B. Assessing individual dietary intake in food sharing scenarios with a 360 camera and deep learning; Proceedings of the 2019 IEEE 16th International Conference on Wearable and Implantable Body Sensor Networks (BSN); Chicago, IL, USA. 19–22 May 2019; pp. 1–4. [DOI] [Google Scholar]

- 30.Mertes G., Ding L., Chen W., Hallez H., Jia J., Vanrumste B. Measuring and localizing individual bites using a sensor augmented plate during unrestricted eating for the aging population. IEEE J. Biomed. Health Inform. 2020;24:1509–1518. doi: 10.1109/JBHI.2019.2932011. [DOI] [PubMed] [Google Scholar]

- 31.Papapanagiotou V., Ganotakis S., Delopoulos A. Bite-weight estimation using commercial ear buds; Proceedings of the 43rd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC); Guadalajara, Jalisco, Mexico. 1–5 November 2021; pp. 7182–7185. [DOI] [PubMed] [Google Scholar]

- 32.Bedri A., Li D., Khurana R., Bhuwalka K., Goel M. FitByte: Automatic diet monitoring in unconstrained situations using multimodal sensing on eyeglasses; Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems; Honolulu, HI, USA. 25–30 April 2020; pp. 1–12. [DOI] [Google Scholar]

- 33.Biasizzo A., Koroušić Seljak B., Valenčič E., Pavlin M., Santo Zarnik M., Blažica B., O’Kelly D., Papa G. An open-source approach to solving the problem of accurate food-intake monitoring. IEEE Access. 2021;9:162835–162846. doi: 10.1109/ACCESS.2021.3128995. [DOI] [Google Scholar]

- 34.Zhang S., Xu Q., Sen S., Alshurafa N. Adjunct Proceedings of the 2020 ACM International Joint Conference on Pervasive and Ubiquitous Computing and Proceedings of the 2020 ACM International Symposium on Wearable Computers, Virtual Event, Mexico, 12–17 September 2020. Association for Computing Machinery; New York, NY, USA: VibroScale: Turning your smartphone into a weighing scale; pp. 176–179. [DOI] [Google Scholar]

- 35.Gao J., Tan W., Ma L., Wang Y., Tang W. MUSEFood: Multi-Sensor-Based Food Volume Estimation on Smartphones; Proceedings of the 2019 IEEE SmartWorld, Ubiquitous Intelligence & Computing, Advanced & Trusted Computing, Scalable Computing & Communications, Cloud & Big Data Computing, Internet of People and Smart City Innovation (SmartWorld/SCALCOM/UIC/ATC/CBDCom/IOP/SCI); Leicester, UK. 19–23 August 2019; pp. 899–906. [DOI] [Google Scholar]

- 36.Makhsous S., Mohammad H.M., Schenk J.M., Mamishev A.V., Kristal A.R. A novel mobile structured light system in food 3d reconstruction and volume estimation. Sensors. 2019;19:564. doi: 10.3390/s19030564. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37.Lee J., Paudyal P., Banerjee A., Gupta S.K.S. FIT-EVE&ADAM: Estimation of velocity & energy for automated diet activity monitoring; Proceedings of the 2017 16th IEEE International Conference on Machine Learning and Applications (ICMLA); Cancun, Mexico. 18–21 December 2017; pp. 1071–1074. [Google Scholar]

- 38.Hamatani T., Elhamshary M., Uchiyama A., Higashino T. Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies. Volume 2. Association for Computing Machinery; New York, NY, USA: 2018. FluidMeter: Gauging the human daily fluid intake using smartwatches; p. 113. [DOI] [Google Scholar]

- 39.Kreutzer J.F., Deist J., Hein C.M., Lueth T.C. Sensor systems for monitoring fluid intake indirectly and directly; Proceedings of the 2016 IEEE 13th International Conference on Wearable and Implantable Body Sensor Networks (BSN); San Francisco, CA, USA. 14–17 June 2016; pp. 1–6.

- 40.Sharma A., Misra A., Subramaniam V., Lee Y. SmrtFridge: IoT-based, user interaction-driven food item & quantity sensing; Proceedings of the 17th Conference on Embedded Networked Sensor Systems; New York, NY, USA. 10–13 November 2019; pp. 245–257.

- 41.Chiu M.-C., Chang S.-P., Chang Y.-C., Chu H.-H., Chen C.C.-H., Hsiao F.-H., Ko J.-C. Playful bottle: A mobile social persuasion system to motivate healthy water intake; Proceedings of the 11th International Conference on Ubiquitous Computing; Orlando, FL, USA. 30 September–3 October 2009; pp. 185–194.

- 42.Amft O., Bannach D., Pirkl G., Kreil M., Lukowicz P. Towards wearable sensing-based assessment of fluid intake; Proceedings of the 8th IEEE International Conference on Pervasive Computing and Communications Workshops (PERCOM Workshops); Mannheim, Germany. 29 March–2 April 2010; pp. 298–303.

- 43.Amft O., Tröster G. On-body sensing solutions for automatic dietary monitoring. IEEE Pervasive Comput. 2009;8:62–70. doi: 10.1109/MPRV.2009.32.

- 44.Ortega Anderez D., Lotfi A., Langensiepen C. A hierarchical approach in food and drink intake recognition using wearable inertial sensors; Proceedings of the 11th PErvasive Technologies Related to Assistive Environments Conference; Corfu, Greece. 26–29 June 2018; pp. 552–557.

- 45.Ortega Anderez D., Lotfi A., Pourabdollah A. Temporal convolution neural network for food and drink intake recognition; Proceedings of the 12th ACM International Conference on PErvasive Technologies Related to Assistive Environments; Rhodes, Greece. 5–7 June 2019; pp. 580–586.

- 46.Bai Y., Jia W., Mao Z.H., Sun M. Automatic eating detection using a proximity sensor; Proceedings of the 40th Annual Northeast Bioengineering Conference (NEBEC); Boston, MA, USA. 25–27 April 2014; pp. 1–2.

- 47.Bhattacharya S., Adaimi R., Thomaz E. Leveraging sound and wrist motion to detect activities of daily living with commodity smartwatches; Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies. Volume 6. Association for Computing Machinery; New York, NY, USA: 2022. p. 42.

- 48.Chun K.S., Sanders A.B., Adaimi R., Streeper N., Conroy D.E., Thomaz E. Towards a generalizable method for detecting fluid intake with wrist-mounted sensors and adaptive segmentation; Proceedings of the 24th International Conference on Intelligent User Interfaces; Marina del Rey, CA, USA. 16–20 March 2019; pp. 80–85.

- 49.Doulah A., Ghosh T., Hossain D., Imtiaz M.H., Sazonov E. “Automatic Ingestion Monitor Version 2”—A novel wearable device for automatic food intake detection and passive capture of food images. IEEE J. Biomed. Health Inform. 2021;25:568–576. doi: 10.1109/JBHI.2020.2995473.

- 50.Du B., Lu C.X., Kan X., Wu K., Luo M., Hou J., Li K., Kanhere S., Shen Y., Wen H. HydraDoctor: Real-time liquids intake monitoring by collaborative sensing; Proceedings of the 20th International Conference on Distributed Computing and Networking; Bangalore, India. 4–7 January 2019; pp. 213–217.

- 51.Gomes D., Sousa I. Real-time drink trigger detection in free-living conditions using inertial sensors. Sensors. 2019;19:2145. doi: 10.3390/s19092145.

- 52.Griffith H., Shi Y., Biswas S. A container-attachable inertial sensor for real-time hydration tracking. Sensors. 2019;19:4008. doi: 10.3390/s19184008.

- 53.Jovanov E., Nallathimmareddygari V.R., Pryor J.E. SmartStuff: A case study of a smart water bottle; Proceedings of the 38th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC); Orlando, FL, USA. 16–20 August 2016; pp. 6307–6310.

- 54.Kadomura A., Li C.-Y., Tsukada K., Chu H.-H., Siio I. Persuasive technology to improve eating behavior using a sensor-embedded fork; Proceedings of the 2014 ACM International Joint Conference on Pervasive and Ubiquitous Computing; Seattle, WA, USA. 13–17 September 2014; pp. 319–329.

- 55.Lasschuijt M.P., Brouwer-Brolsma E., Mars M., Siebelink E., Feskens E., de Graaf K., Camps G. Concept development and use of an automated food intake and eating behavior assessment method. J. Vis. Exp. 2021;168:e62144. doi: 10.3791/62144.

- 56.Lee J., Paudyal P., Banerjee A., Gupta S.K.S. A user-adaptive modeling for eating action identification from wristband time series. ACM Trans. Interact. Intell. Syst. 2019;9:22. doi: 10.1145/3300149.

- 57.Rahman S.A., Merck C., Huang Y., Kleinberg S. Unintrusive eating recognition using Google Glass; Proceedings of the 9th International Conference on Pervasive Computing Technologies for Healthcare (PervasiveHealth); Istanbul, Turkey. 20–23 May 2015; pp. 108–111.

- 58.Schiboni G., Amft O. Sparse natural gesture spotting in free living to monitor drinking with wrist-worn inertial sensors; Proceedings of the 2018 ACM International Symposium on Wearable Computers; Singapore. 8–12 October 2018; pp. 140–147.

- 59.Sen S., Subbaraju V., Misra A., Balan R., Lee Y. Annapurna: An automated smartwatch-based eating detection and food journaling system. Pervasive Mob. Comput. 2020;68:101259. doi: 10.1016/j.pmcj.2020.101259.

- 60.Staab S., Bröning L., Luderschmidt J., Martin L. Performance comparison of finger and wrist motion tracking to detect bites during food consumption; Proceedings of the 2022 ACM Conference on Information Technology for Social Good; Limassol, Cyprus. 7–9 September 2022; pp. 198–204.

- 61.Wellnitz A., Wolff J.-P., Haubelt C., Kirste T. Fluid intake recognition using inertial sensors; Proceedings of the 6th International Workshop on Sensor-based Activity Recognition and Interaction; Rostock, Germany. 16–17 September 2019; p. 4.

- 62.Ye X., Chen G., Cao Y. Automatic eating detection using head-mount and wrist-worn accelerometers; Proceedings of the 17th International Conference on E-health Networking, Application & Services (HealthCom); Boston, MA, USA. 14–17 October 2015; pp. 578–581.

- 63.Zhang R., Zhang J., Gade N., Cao P., Kim S., Yan J., Zhang C. EatingTrak: Detecting fine-grained eating moments in the wild using a wrist-mounted IMU; Proceedings of the ACM on Human-Computer Interaction. Volume 6. Association for Computing Machinery; New York, NY, USA: 2022. p. 214.

- 64.Zhang Z., Zheng H., Rempel S., Hong K., Han T., Sakamoto Y., Irani P. A smart utensil for detecting food pick-up gesture and amount while eating; Proceedings of the 11th Augmented Human International Conference; Winnipeg, MB, Canada. 27–29 May 2020; p. 2.

- 65.Amemiya H., Yamagishi Y., Kaneda S. Automatic recording of meal patterns using conductive chopsticks; Proceedings of the 2013 IEEE 2nd Global Conference on Consumer Electronics (GCCE); Tokyo, Japan. 1–4 October 2013; pp. 350–351.

- 66.Nakamura Y., Arakawa Y., Kanehira T., Fujiwara M., Yasumoto K. SenStick: Comprehensive sensing platform with an ultra tiny all-in-one sensor board for IoT research. J. Sens. 2017;2017:6308302. doi: 10.1155/2017/6308302.

- 67.Zuckerman O., Gal T., Keren-Capelovitch T., Karsovsky T., Gal-Oz A., Weiss P.L.T. DataSpoon: Overcoming design challenges in tangible and embedded assistive technologies; Proceedings of the TEI ’16: Tenth International Conference on Tangible, Embedded, and Embodied Interaction; Eindhoven, The Netherlands. 14–17 February 2016; pp. 30–37.

- 68.Liu K.C., Hsieh C.Y., Huang H.Y., Chiu L.T., Hsu S.J.P., Chan C.T. Drinking event detection and episode identification using 3D-printed smart cup. IEEE Sens. J. 2020;20:13743–13751. doi: 10.1109/JSEN.2020.3004051.

- 69.Shin J., Lee S., Lee S.-J. Accurate eating detection on a daily wearable necklace (demo); Proceedings of the 17th Annual International Conference on Mobile Systems, Applications, and Services; Seoul, Republic of Korea. 12–21 June 2019; pp. 649–650.

- 70.Bi S., Kotz D. Eating detection with a head-mounted video camera; Proceedings of the 2022 IEEE 10th International Conference on Healthcare Informatics (ICHI); Rochester, MN, USA. 11–14 June 2022; pp. 60–66.

- 71.Kaner G., Genç H.U., Dinçer S.B., Erdoğan D., Coşkun A. GROW: A smart bottle that uses its surface as an ambient display to motivate daily water intake; Proceedings of the Extended Abstracts of the 2018 CHI Conference on Human Factors in Computing Systems; Montreal, QC, Canada. 21–26 April 2018; p. LBW077.

- 72.Lester J., Tan D., Patel S., Brush A.J.B. Automatic classification of daily fluid intake; Proceedings of the 2010 4th International Conference on Pervasive Computing Technologies for Healthcare; Munich, Germany. 22–25 March 2010; pp. 1–8.

- 73.Lin B., Hoover A. A comparison of finger and wrist motion tracking to detect bites during food consumption; Proceedings of the 2019 IEEE 16th International Conference on Wearable and Implantable Body Sensor Networks (BSN); Chicago, IL, USA. 19–22 May 2019; pp. 1–4.

- 74.Dong Y., Hoover A., Scisco J., Muth E. A new method for measuring meal intake in humans via automated wrist motion tracking. Appl. Psychophysiol. Biofeedback. 2012;37:205–215. doi: 10.1007/s10484-012-9194-1.

- 75.Jasper P.W., James M.T., Hoover A.W., Muth E.R. Effects of bite count feedback from a wearable device and goal setting on consumption in young adults. J. Acad. Nutr. Diet. 2016;116:1785–1793. doi: 10.1016/j.jand.2016.05.004.

- 76.Arun A., Bhadra S. An accelerometer based eyeglass to monitor food intake in free-living and lab environment; Proceedings of the 2020 IEEE SENSORS; Rotterdam, The Netherlands. 25–28 October 2020; pp. 1–4.

- 77.Bedri A., Li R., Haynes M., Kosaraju R.P., Grover I., Prioleau T., Beh M.Y., Goel M., Starner T., Abowd G. EarBit: Using wearable sensors to detect eating episodes in unconstrained environments; Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies. Volume 1. Association for Computing Machinery; New York, NY, USA: 2017. p. 37.

- 78.Cheng J., Zhou B., Kunze K., Rheinländer C.C., Wille S., Wehn N., Weppner J., Lukowicz P. Activity recognition and nutrition monitoring in every day situations with a textile capacitive neckband; Proceedings of the 2013 ACM Conference on Pervasive and Ubiquitous Computing Adjunct Publication; Zurich, Switzerland. 8–12 September 2013; pp. 155–158.

- 79.Chun K.S., Jeong H., Adaimi R., Thomaz E. Eating episode detection with jawbone-mounted inertial sensing; Proceedings of the 2020 42nd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC); Montreal, QC, Canada. 20–24 July 2020; pp. 4361–4364.

- 80.Ghosh T., Hossain D., Imtiaz M., McCrory M.A., Sazonov E. Implementing real-time food intake detection in a wearable system using accelerometer; Proceedings of the 2020 IEEE-EMBS Conference on Biomedical Engineering and Sciences (IECBES); Langkawi, Malaysia. 1–3 March 2021; pp. 439–443.

- 81.Kalantarian H., Alshurafa N., Le T., Sarrafzadeh M. Monitoring eating habits using a piezoelectric sensor-based necklace. Comput. Biol. Med. 2015;58:46–55. doi: 10.1016/j.compbiomed.2015.01.005.

- 82.Lotfi R., Tzanetakis G., Eskicioglu R., Irani P. A comparison between audio and IMU data to detect chewing events based on an earable device; Proceedings of the 11th Augmented Human International Conference; Winnipeg, MB, Canada. 27–29 May 2020; p. 11.

- 83.Bin Morshed M., Haresamudram H.K., Bandaru D., Abowd G.D., Ploetz T. A personalized approach for developing a snacking detection system using earbuds in a semi-naturalistic setting; Proceedings of the 2022 ACM International Symposium on Wearable Computers; Cambridge, UK. 11–15 September 2022; pp. 11–16.

- 84.Sazonov E., Schuckers S., Lopez-Meyer P., Makeyev O., Sazonova N., Melanson E.L., Neuman M. Non-invasive monitoring of chewing and swallowing for objective quantification of ingestive behavior. Physiol. Meas. 2008;29:525–541. doi: 10.1088/0967-3334/29/5/001.

- 85.Sazonov E.S., Fontana J.M. A sensor system for automatic detection of food intake through non-invasive monitoring of chewing. IEEE Sens. J. 2012;12:1340–1348. doi: 10.1109/JSEN.2011.2172411.