Key Points

Question

Do representative sampling strategies used by national surgical quality improvement programs adequately assess hospital performance relative to universal review?

Findings

In this comparative effectiveness study of 113 US Department of Veterans Affairs hospitals, 502 953 sampled surgical cases were compared with 1 703 140 overall surgical cases in hospital-level analysis. Sampling identified less than half of hospitals with higher-than-expected perioperative mortality.

Meaning

These findings suggest that sampling may not adequately represent overall surgical program performance or provide stakeholders with the data necessary to inform local quality and performance improvement.

This comparative effectiveness study evaluates whether sampling strategies used by national surgical quality improvement programs provide hospitals with representative data on their overall quality and safety, as measured by 30-day mortality.

Abstract

Importance

Representative surgical case sampling, rather than universal review, is used by US Department of Veterans Affairs (VA) and private-sector national surgical quality improvement (QI) programs to assess program performance and to inform local QI and performance improvement efforts. However, it is unclear whether case sampling is robust for identifying hospitals with safety or quality concerns.

Objective

To evaluate whether the sampling strategy used by several national surgical QI programs provides hospitals with data that are representative of their overall quality and safety, as measured by 30-day mortality.

Design, Setting, and Participants

This comparative effectiveness study was a national, hospital-level analysis of data from adult patients (aged ≥18 years) who underwent noncardiac surgery at a VA hospital between January 1, 2016, and September 30, 2020. Data were obtained from the VA Surgical Quality Improvement Program (representative sample) and the VA Corporate Data Warehouse surgical domain (100% of surgical cases). Data analysis was performed from July 1 to December 21, 2022.

Main Outcomes and Measures

The primary outcome was postoperative 30-day mortality. Quarterly, risk-adjusted, 30-day mortality observed-to-expected (O-E) ratios were calculated separately for each hospital using the sample and universal review cohorts. Outlier hospitals (ie, those with higher-than-expected mortality) were identified using an O-E ratio significantly greater than 1.0.

Results

In this study of data from 113 US Department of Veterans Affairs hospitals, the sample cohort comprised 502 953 surgical cases and the universal review cohort comprised 1 703 140. Most patients in both the representative sample and the universal review cohorts were men (90.2% vs 91.1%) and White (74.7% vs 74.5%). Overall, 30-day mortality was 0.8% and 0.6% for the sample and universal review cohorts, respectively (P < .001). Over 2145 hospital quarters of data, hospitals were identified as an outlier in 11.7% of quarters with sampling and in 13.2% with universal review. Average hospital quarterly 30-day mortality rates were 0.4%, 0.8%, and 0.9% for outlier hospitals identified using the sample only, universal review only, and both data sources concurrently, respectively. For nonsampled cases, average hospital quarterly 30-day mortality rates were 1.0% at outlier hospitals and 0.5% at nonoutliers. Among outlier hospital quarters identified with the sample, 47.4% were concurrently identified with universal review; among those identified with universal review, 42.1% were concurrently identified with the sample.

Conclusions and Relevance

In this national, hospital-level study, sampling strategies employed by national surgical QI programs identified less than half of hospitals with higher-than-expected perioperative mortality. These findings suggest that sampling may not adequately represent overall surgical program performance or provide stakeholders with the data necessary to inform QI efforts.

Introduction

In 1985, amid scrutiny of the state of health care for veterans, Congress passed legislation mandating that the US Department of Veterans Affairs (VA) establish a quality assurance program to evaluate VA medical and surgical care.1 For surgery, this led to the creation and implementation of what is now the Veterans Affairs Surgical Quality Improvement Program (VASQIP). Although the VASQIP’s initial purpose was quality assurance to meet its congressional mandate, its current purpose is to facilitate quality and performance improvement locally and across the health system. In 2000, the US Institute of Medicine released To Err Is Human: Building a Safer Health System, which advocated for improving the safety and value of health care in the US.2 In large part because of the success of the VASQIP, the American College of Surgeons (ACS) developed the National Surgical Quality Improvement Program (NSQIP), a voluntary, national surgical quality improvement (QI) program with infrastructure, data collection, and analytic strategies similar to those of the VASQIP.3

Both the VASQIP and the ACS-NSQIP provide robust data that are abstracted from the health record by trained local clinical personnel. This data collection process is labor intensive, and data accuracy and reliability must be ensured. For these reasons, data are collected only from a systematic, representative sample of all surgical cases, most of which are higher-complexity, higher-risk procedures. Given these nuances in data collection, the types of cases assessed, and improvements in surgical outcomes over time, it is unclear whether data from a sample of higher-risk surgical cases truly represent the overall performance of a surgical program. Furthermore, although the VASQIP and the ACS-NSQIP have been associated with improvements in the quality and safety of surgical care, recent data suggest that national improvements in surgical outcomes may be independent of participation in these types of programs.4,5,6,7

Compared with the clinician-abstracted sample of cases captured by the VASQIP, the VA Corporate Data Warehouse (CDW) provides electronic health record (EHR) data from all surgical cases performed at every VA hospital.8 Having data from both the VASQIP and the CDW is unique to the VA health system, which makes it well suited for comparing the robustness of case sampling with universal review. In fact, the VA may be the only US health system with the data necessary to address this question. The primary aim of this study was to evaluate whether the sampling strategy used by national surgical QI programs provides hospital surgical programs with data that are representative of their overall quality and safety, as measured by 30-day mortality.

Methods

Data Sources

For this comparative effectiveness study, we conducted a national, hospital-level analysis of patients who underwent noncardiac surgery at a VA hospital between January 1, 2016, and September 30, 2020. Two data sources were used: (1) the VASQIP, which is a representative sample of cases across the VA; and (2) the CDW, which provides data for 100% of cases across the VA. The Baylor College of Medicine Institutional Review Board and the Michael E. DeBakey VA Medical Center Research and Development Committee reviewed and approved the use of these deidentified data and thus waived informed consent. The study followed the Strengthening the Reporting of Observational Studies in Epidemiology (STROBE) reporting guideline.

Representative Sample

The VASQIP is a mandatory, national surgical QI program for all VA hospitals and was implemented in 1991.6 Cases eligible for sampling are determined by the VA National Surgery Office (NSO) and have greater-than-minimal associated mortality and morbidity rates.9 Case identification occurs on an 8-day cycle (to ensure that cases performed over the course of a week are included). Data collection is performed by trained surgical quality nurses embedded at each hospital, who use established protocols and definitions to abstract a standardized set of variables from a sample of eligible cases.10 Each year, the VASQIP captures a systematic sample of approximately 25% of cases performed across VA hospitals. After the data are collected, the NSO analyzes them and provides quarterly feedback to each hospital in the form of risk-adjusted observed-to-expected (O-E) ratios. The reliability of VASQIP data has been demonstrated previously.11
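The text states the 8-day cycle without describing its mechanics. One way to see how such a cycle spreads case identification across the week is offered here as an illustrative reading, not the NSO's documented procedure. Because 8 days is one day more than a week, each cycle starts one weekday later than the previous one, so 7 consecutive cycles begin on all 7 weekdays. The Python sketch below checks this arithmetic. The start date and the `cycle_start_weekdays` helper are hypothetical.

```python
from datetime import date, timedelta

CYCLE_LENGTH_DAYS = 8  # cycle length stated in the text
WEEKDAYS = ("Monday", "Tuesday", "Wednesday", "Thursday",
            "Friday", "Saturday", "Sunday")


def cycle_start_weekdays(first_start: date, n_cycles: int) -> list[str]:
    """Return the weekday on which each of n_cycles consecutive cycles begins.

    Hypothetical helper: because 8 = 7 + 1, each cycle starts one weekday
    later than the cycle before it.
    """
    starts = (first_start + timedelta(days=i * CYCLE_LENGTH_DAYS)
              for i in range(n_cycles))
    return [WEEKDAYS[d.weekday()] for d in starts]  # weekday(): Monday == 0


# Hypothetical first cycle starting Monday, January 4, 2016.
print(cycle_start_weekdays(date(2016, 1, 4), 7))
# ['Monday', 'Tuesday', 'Wednesday', 'Thursday', 'Friday', 'Saturday', 'Sunday']
```

The eighth cycle starts on a Monday again, so the pattern repeats every 56 days.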

Universal Review

The VA CDW is a national, relational database derived directly from the EHR. The CDW data are automatically extracted and updated nightly, available in an organized and structured format, and composed of multiple domains that include demographic information as well as clinical, administrative, laboratory, and pharmacy data from each encounter. In addition, data from the surgical domain include comprehensive information for every surgical case performed across all VA hospitals. Care provided to each patient at each individual VA hospital can be ascertained using a unique hospital identifier.

Study Population

Patients aged 18 years or older who underwent noncardiac surgery at a VA hospital were included. We abstracted CDW data on self-reported race and ethnicity (American Indian or Alaska Native, Asian, Black, Native Hawaiian or Other Pacific Islander, or White) to examine the distribution of race in both data sources.

To identify the VASQIP sample within the CDW, cases were linked using a combination of scrambled patient Social Security numbers and date of operation. For patients who underwent more than 1 operation in a 30-day interval, only the first procedure was included in the analysis. All patient and procedural information and model covariates were uniformly ascertained from the CDW (ie, VASQIP data elements were not used). This ensured identical risk adjustment (ie, risk adjustment using EHR data was not compared with risk adjustment using hand-abstracted data). The CDW sources were structured variables; International Classification of Diseases, Ninth Revision and International Statistical Classification of Diseases, Tenth Revision codes; and pharmacy data (eTable in Supplement 1). A small proportion of patients without a recorded date of operation or with missing covariate data (n = 24 626) were excluded.
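The linkage and first-operation rules can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the authors' code. Cases are hypothetical `(scrambled_id, operation_date)` pairs. The 30-day rule is read as keeping an operation only if it falls more than 30 days after the patient's most recently retained operation. The text does not specify how the window was anchored, so that choice is an assumption. The helper names are hypothetical.

```python
from collections import defaultdict
from datetime import date

WINDOW_DAYS = 30  # interval stated in the text


def first_operations(cases):
    """Keep each patient's first operation and drop any later operation within
    WINDOW_DAYS of the most recently retained one (assumed windowing rule)."""
    dates_by_patient = defaultdict(list)
    for patient_id, op_date in cases:
        dates_by_patient[patient_id].append(op_date)

    kept = []
    for patient_id, dates in dates_by_patient.items():
        last_kept = None
        for op_date in sorted(dates):
            if last_kept is None or (op_date - last_kept).days > WINDOW_DAYS:
                kept.append((patient_id, op_date))
                last_kept = op_date
    return sorted(kept)


def flag_sampled(cdw_cases, sample_cases):
    """Mark each CDW case as sampled when its (scrambled ID, date) pair
    also appears in the sample extract."""
    sample_keys = set(sample_cases)
    return [(pid, d, (pid, d) in sample_keys) for pid, d in cdw_cases]


# Hypothetical records: patient A1 has 3 operations, B2 has 1.
cdw = [("A1", date(2016, 1, 5)), ("A1", date(2016, 1, 20)),
       ("A1", date(2016, 3, 1)), ("B2", date(2016, 2, 10))]
sample = [("A1", date(2016, 1, 5)), ("B2", date(2016, 2, 10))]

for row in flag_sampled(first_operations(cdw), sample):
    print(row)
```

The January 20 operation is dropped because it falls 15 days after January 5. The March 1 operation is kept. Only the January 5 and February 10 cases are flagged as sampled.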

Statistical Analysis

Postoperative 30-day mortality was the primary outcome. Hospital-specific, quarterly O-E ratios were calculated, similar to the approach the VA NSO uses to identify hospitals with quality or safety concerns. Expected mortality was derived from postestimation predictions of a multivariable logistic regression model. Model covariates were selected nonparsimoniously based on prior work.12,13 They included age, sex, American Society of Anesthesiologists (ASA) classification, emergency procedure, history of congestive heart failure, history of severe chronic obstructive pulmonary disease, history of neurologic event, history of diabetes, history of dialysis, surgical complexity, and surgical specialty. For surgical case complexity, the NSO assigned a category (outpatient, inpatient standard, inpatient intermediate, or inpatient complex) to each Current Procedural Terminology code. Robust SEs were used. For each hospital, the predicted probabilities of death for all cases in the quarter were summed and divided by the number of cases performed to obtain the expected mortality. The O-E ratio was calculated by dividing the observed mortality by the expected mortality. Hospitals with potential quality or safety concerns were defined as those whose O-E ratio had a 95% CI lower bound greater than 1.0, indicating higher-than-expected perioperative mortality.9
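The O-E calculation and outlier rule described above can be sketched as follows. The text does not state how the 95% CI of the O-E ratio was obtained (the models used robust SEs). This sketch therefore assumes a common approximation for observed-to-expected ratios: a log-scale interval with SE(log O-E) ≈ 1/√O, where O is the observed death count. The per-case predicted probabilities are taken as given from an already-fitted risk model. The function names are hypothetical.

```python
import math

Z_95 = 1.959964  # two-sided 95% standard normal quantile


def oe_ratio_with_ci(observed_deaths, predicted_probs):
    """Return (O-E ratio, lower 95% bound, upper 95% bound) for one hospital-quarter.

    predicted_probs: per-case predicted probabilities of 30-day death.
    Expected deaths are their sum. Dividing observed and expected deaths by the
    same case count leaves the ratio unchanged, so O-E = observed / expected deaths.
    """
    expected_deaths = sum(predicted_probs)
    oe = observed_deaths / expected_deaths
    if observed_deaths == 0:
        # Log-scale interval is undefined; a hospital with no deaths cannot be a high outlier.
        return oe, 0.0, math.nan
    # Assumed CI method: Poisson count on the log scale, SE(log O-E) ~= 1 / sqrt(O).
    half_width = Z_95 / math.sqrt(observed_deaths)
    return oe, oe * math.exp(-half_width), oe * math.exp(half_width)


def is_high_outlier(lower_bound):
    """Outlier definition from the text: 95% CI lower bound above 1.0."""
    return lower_bound > 1.0


# Hypothetical hospital-quarter: 200 cases, each with a 1% predicted risk (2 expected deaths).
probs = [0.01] * 200
for deaths in (3, 8):
    oe, lo, hi = oe_ratio_with_ci(deaths, probs)
    print(f"O={deaths}: O-E={oe:.2f}, 95% CI {lo:.2f}-{hi:.2f}, outlier={is_high_outlier(lo)}")
```

With 3 deaths (O-E 1.5), the lower bound falls below 1.0, so the hospital is not flagged. With 8 deaths (O-E 4.0), it is flagged. This illustrates how few events a single hospital-quarter contains, which leaves wide intervals.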

Differences in baseline patient characteristics and outlier detection were assessed with standard descriptive statistics. The Pearson correlation coefficient was used to evaluate the correlation between O-E ratios calculated using the sample and universal review cohorts. To assess the reliability of our risk adjustment, we compared hospital outlier identification using CDW covariates with identification using VASQIP covariates (ie, the hand-abstracted data) within the VASQIP sample. Across all quarters of data, concurrent identification of hospital outliers was 78.6%. To assess the robustness of our findings, we performed the following sensitivity analyses:

1. comparing all VASQIP-eligible cases (n = 571 083) with universal review;
2. excluding all outpatient cases (n = 1 405 668) from the universal review cohort because of their low associated mortality rate (0.4%);
3. comparing reliability-adjusted O-E ratios for the sample with universal review14,15; and
4. comparing random samples of 75% of cases (n = 1 277 669) and 50% of cases (n = 832 396) from the CDW with universal review.

P < .05 was considered significant. All analyses were performed using Stata, version 17 (StataCorp LLC). Data analysis was performed from July 1 to December 21, 2022.
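For readers unfamiliar with the correlation step, the Pearson coefficient for paired hospital-quarter O-E ratios is computed as shown below. This is a plain-Python sketch with hypothetical values, not the authors' Stata code. Python 3.10+ also provides `statistics.correlation`, which returns the same coefficient.

```python
import math


def pearson_r(x, y):
    """Sample Pearson correlation between paired values (eg, each hospital-quarter's
    O-E ratio from the sample and from universal review)."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equal-length sequences of at least 2 values")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))  # co-deviation
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


# Hypothetical paired O-E ratios for 4 hospital-quarters.
sample_oe = [1.0, 2.0, 3.0, 4.0]
universal_oe = [1.0, 3.0, 2.0, 4.0]
print(round(pearson_r(sample_oe, universal_oe), 3))  # 0.8
```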

Results

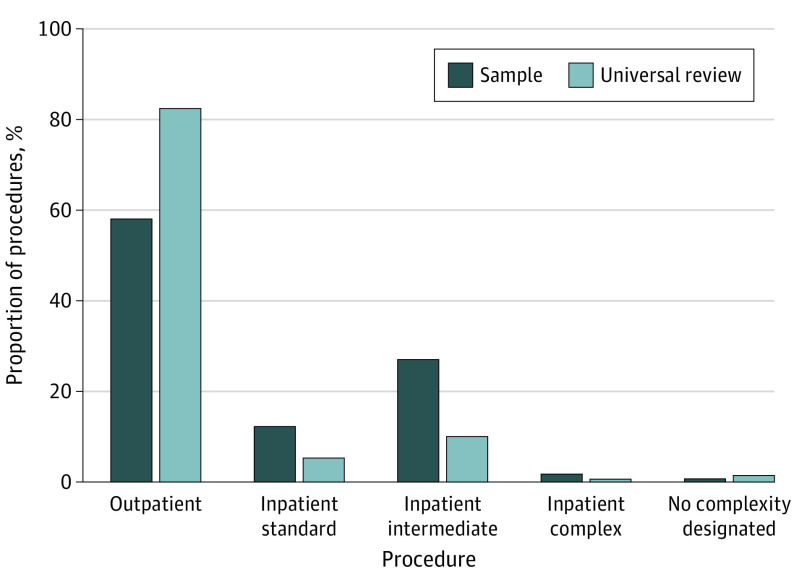

Overall, 1 703 140 cases were performed across 113 VA hospitals for 1 109 722 unique patients during the study period. Of these, 502 953 cases (performed for 419 254 unique patients) were included in the representative sample. The mean (SD) age was 62.7 (13.5) years in the representative sample cohort and 64.1 (13.4) years in the universal review cohort (P < .001). In the representative sample cohort, 90.2% were men and 9.8% were women; in the universal review cohort, 91.1% were men and 8.9% were women. Self-reported race and ethnicity in the sample vs universal review cohorts were American Indian or Alaska Native or Native Hawaiian or Other Pacific Islander (2.0% in both), Asian (0.6% in both), Black (18.0% vs 18.4%), and White (74.7% vs 74.5%). Race and ethnicity data were missing for 4.8% vs 4.6%. Outpatient procedures accounted for 58.1% of cases in the sample (Figure 1) compared with 82.5% with universal review (P < .001). The Table provides additional characteristics for patients in both cohorts.

Figure 1. Procedure Types Captured in the Representative Sample vs Universal Review Cohorts.

Table. Patient Demographic and Clinical Characteristics.

| Characteristic | Representative sample cohort (n = 502 953) | Universal review cohort (n = 1 703 140) |
|---|---|---|
| Age, y, mean (SD) | 62.7 (13.5) | 64.1 (13.4) |
| Sex | | |
| Men | 454 560 (90.2) | 1 552 192 (91.1) |
| Women | 49 393 (9.8) | 150 948 (8.9) |
| Race and ethnicity | | |
| American Indian or Alaska Native or Native Hawaiian or Other Pacific Islander | 9949 (2.0) | 33 686 (2.0) |
| Asian | 2855 (0.6) | 10 512 (0.6) |
| Black | 90 452 (18.0) | 313 146 (18.4) |
| White | 375 551 (74.7) | 1 268 040 (74.5) |
| Missing | 24 146 (4.8) | 77 756 (4.6) |
| Marital status | | |
| Married | 251 969 (50.1) | 874 360 (51.3) |
| ASA class | | |
| 1 | 9634 (1.9) | 31 617 (1.9) |
| 2 | 125 639 (25.0) | 445 698 (26.2) |
| 3 | 323 520 (64.3) | 1 089 112 (64.0) |
| 4 | 43 535 (8.7) | 135 483 (8.0) |
| 5 | 606 (0.1) | 1009 (0.1) |
| Missing | 221 (0) | 221 (0) |
| Preoperative clinical condition | | |
| CHF | 14 519 (2.9) | 52 242 (3.1) |
| COPD | 178 122 (35.4) | 626 834 (36.8) |
| Stroke | 92 811 (18.5) | 323 463 (19.0) |
| Diabetes | 212 901 (42.3) | 802 555 (47.1) |
| Dialysis | 8360 (1.7) | 42 292 (2.5) |
| Procedural data | | |
| Emergency case | 26 771 (5.3) | 50 568 (3.0) |
| Surgical specialty | | |
| General | 144 778 (28.8) | 308 232 (18.1) |
| Orthopedics | 142 072 (28.3) | 199 566 (11.7) |
| Genitourinary | 73 665 (14.7) | 256 863 (15.1) |
| Vascular | 37 349 (7.4) | 87 564 (5.1) |
| Neurosurgery or spine | 31 441 (6.3) | 66 415 (3.9) |
| Otolaryngology | 18 014 (3.6) | 90 916 (5.3) |
| Plastics, hand, skin, or facial | 13 370 (2.7) | 192 860 (11.3) |
| Eye | 72 (0.1) | 307 443 (18.1) |
| Other | 38 716 (7.7) | 171 965 (10.1) |
| Missing | 3476 (0.7) | 21 316 (1.3) |
| Procedural complexity | | |
| Outpatient | 292 366 (58.1) | 1 405 668 (82.5) |
| Standard inpatient | 61 792 (12.3) | 90 390 (5.3) |
| Intermediate inpatient | 136 425 (27.1) | 171 098 (10.1) |
| Complex inpatient | 8819 (1.8) | 10 790 (0.6) |
| Not in complexity matrix | 75 (0) | 3978 (0.2) |
| Missing | 3476 (0.7) | 21 316 (1.3) |

Abbreviations: ASA, American Society of Anesthesiologists classification; CHF, congestive heart failure; COPD, chronic obstructive pulmonary disease.

Unless stated otherwise, values are presented as No. (%) of cases.

Other specialty types included amputation, breast, foot, gynecology, pain, thoracic, or transplant.

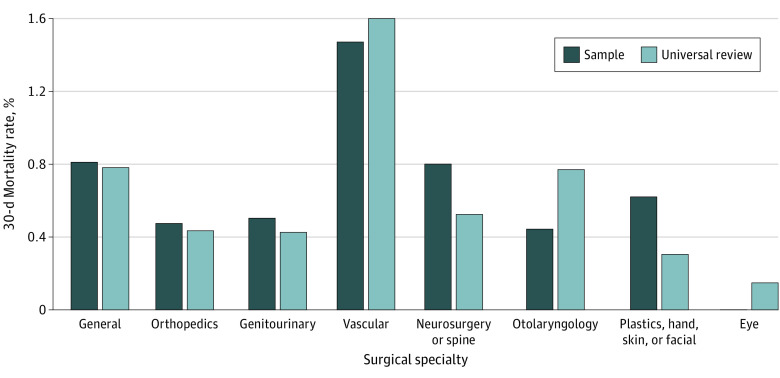

The 30-day mortality rate was 0.8% in the sample cohort compared with 0.6% in the universal review cohort (P < .001). Among cases excluded from sampling, the 30-day mortality rate was 0.5%. Across VA hospitals, the median mortality rate for excluded cases was 0.4% (IQR, 0.3%-0.6%). Mortality rates for outpatient and inpatient standard procedures were higher with universal review than with sampling (outpatient: 0.4% vs 0.3%, P < .001; inpatient standard: 1.8% vs 1.5%, P < .001). By comparison, mortality rates did not differ significantly for inpatient intermediate (1.4% vs 1.4%, P = .65) or inpatient complex (2.6% vs 2.7%, P = .78) procedures. When stratified by surgical specialty (Figure 2), mortality rates were higher with universal review than with sampling for eye (0.2% vs 0%, P < .001) and otolaryngology (0.8% vs 0.5%, P < .001) procedures. For outpatient procedures, mortality rates were higher with universal review than with sampling for most specialties (P < .001 for all):

- general (0.5% vs 0.2%)
- vascular (1.8% vs 1.5%)
- neurosurgery or spine (0.1% vs 0%)
- otolaryngology (0.4% vs 0.3%)
- eye (0.2% vs 0%)
- other types (0.5% vs 0.4%)
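The sample is a subset of all cases. The universal review mortality rate should therefore be close to a case-weighted average of the sampled and excluded rates. The short Python check below uses the published case counts and the rounded rates (0.8% and 0.5%), so agreement is only approximate. It assumes that "cases excluded from sampling" means every universal review case not linked to the VASQIP sample.

```python
n_universal = 1_703_140  # all cases (universal review)
n_sample = 502_953  # cases in the representative sample
n_excluded = n_universal - n_sample  # cases not sampled

rate_sample = 0.008  # reported (rounded) 30-day mortality, sample
rate_excluded = 0.005  # reported (rounded) 30-day mortality, excluded cases

# Case-weighted average of the two mutually exclusive groups.
pooled = (n_sample * rate_sample + n_excluded * rate_excluded) / n_universal
print(f"excluded cases: {n_excluded:,}; pooled rate: {pooled:.2%}")
# excluded cases: 1,200,187; pooled rate: 0.59%
```

The pooled 0.59% is consistent with the reported 0.6% for universal review. This also shows how the larger number of lower-mortality excluded cases pulls the overall rate below the sample rate.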

Figure 2. Thirty-Day Mortality Rates by Surgical Specialty in the Representative Sample vs Universal Review Cohorts.

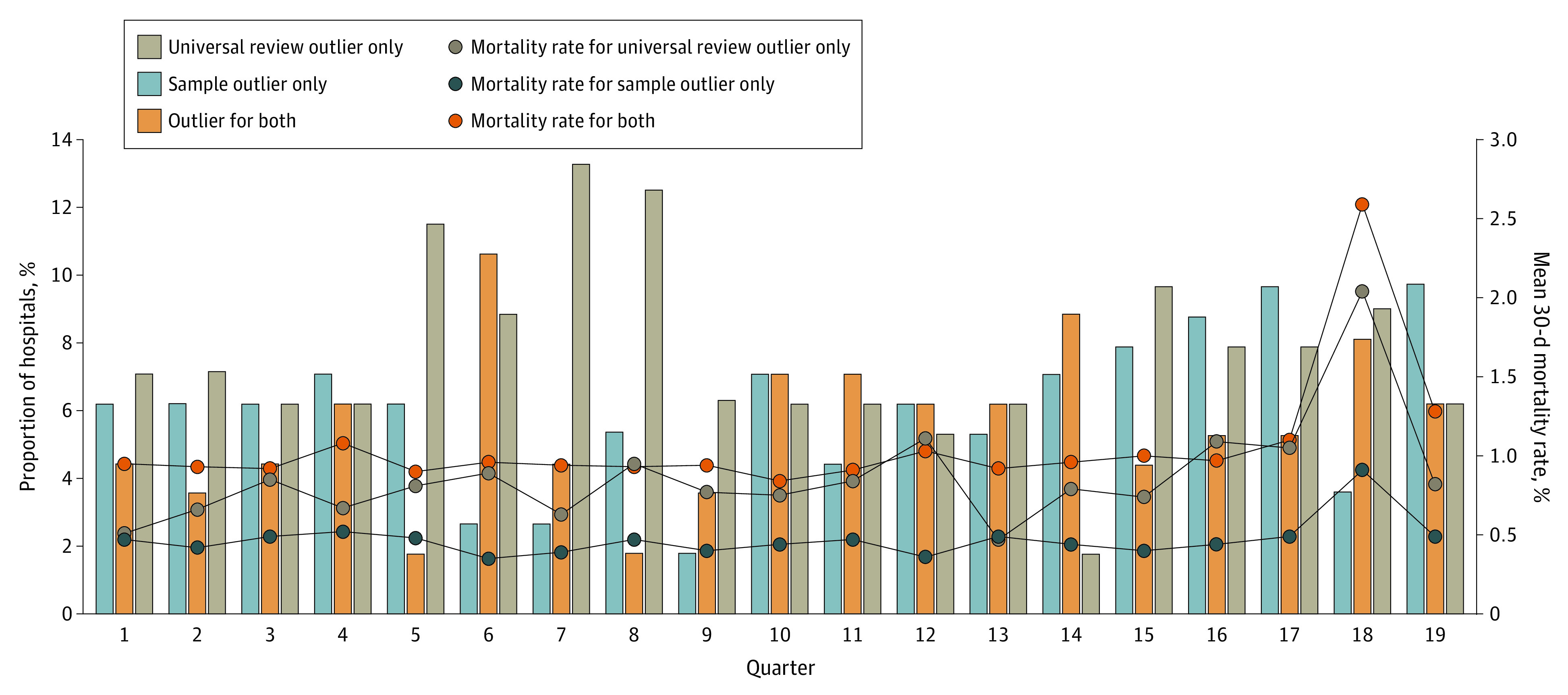

Over 2145 hospital quarters of data, a hospital was identified as an outlier in 11.7% of quarters using the sample vs 13.2% of quarters using universal review. In quarters in which a hospital was an outlier using the sample, it was concurrently identified as an outlier with universal review 47.4% of the time. Conversely, hospitals identified as outliers with universal review were concurrently identified with the sample 42.1% of the time. Figure 3 displays the proportion of hospitals in each quarter identified as an outlier using universal review only, the sample only, or both data sources. The average quarterly proportion of hospitals concurrently identified as an outlier was 5.6% (28.7% among outlier hospitals identified with either data source). Average quarterly 30-day mortality rates were 0.4%, 0.8%, and 0.9% for outlier hospitals identified using the sample only, universal review only, and both data sources concurrently, respectively. Among cases excluded from the VASQIP, average quarterly 30-day mortality rates were 1.0% at outlier hospitals and 0.5% at nonoutliers.
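The three concordance figures in this paragraph differ only in their denominators: quarters flagged by the sample, quarters flagged by universal review, or quarters flagged by either. The Python sketch below makes that explicit. Its counts are approximate back-calculations from the reported percentages (2145 × 11.7% ≈ 251 sample outliers; 2145 × 13.2% ≈ 283 universal review outliers; 251 × 47.4% ≈ 119 flagged by both). They are not the study's underlying data. The `concordance` helper is hypothetical.

```python
def concordance(both, sample_only, universal_only):
    """Concordance of outlier flags across hospital-quarters.

    both: quarters flagged by both sources; sample_only / universal_only:
    quarters flagged by only one source.
    """
    flagged_by_sample = both + sample_only
    flagged_by_universal = both + universal_only
    flagged_by_either = both + sample_only + universal_only
    return {
        "universal_given_sample": both / flagged_by_sample,
        "sample_given_universal": both / flagged_by_universal,
        "both_among_either": both / flagged_by_either,
    }


# Approximate counts back-calculated from the reported percentages (see lead-in).
for name, value in concordance(both=119, sample_only=132, universal_only=164).items():
    print(f"{name}: {value:.1%}")
```

This prints 47.4%, 42.0%, and 28.7%, close to the reported 47.4%, 42.1%, and 28.7%. The small gap reflects rounding of the published percentages. The reported 28.7% is also an average of quarterly proportions rather than a pooled ratio.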

Figure 3. Quarterly Hospital Outlier Identification and Mortality Rates Identified From the Representative Sample vs Universal Review Cohorts.

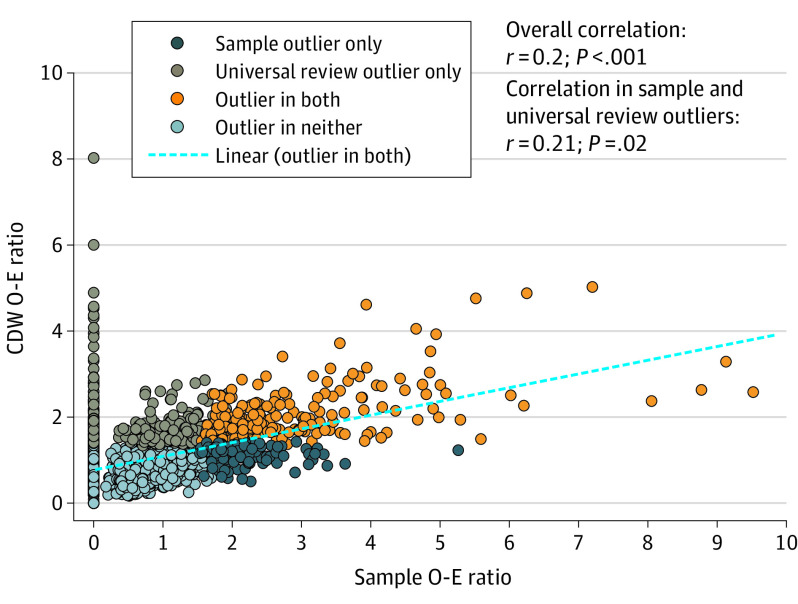

The correlation between O-E ratios from the sample and universal review cohorts was 0.20 (Figure 4). Among outlier hospitals, the correlation was similarly low (r = 0.21). Using hospital outlier identification with the sample as the reference standard, the sensitivity, specificity, positive predictive value, and negative predictive value of outlier identification by universal review were 57.7%, 85.2%, 43.7%, and 91.0%, respectively. Using universal review as the reference standard, the corresponding values for outlier identification with the sample were 43.7%, 91.0%, 57.7%, and 85.2%, respectively.
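The two sets of values above mirror each other because swapping the reference standard turns false positives into false negatives and vice versa. As a result, sensitivity and positive predictive value trade places, as do specificity and negative predictive value. The Python sketch below demonstrates this with hypothetical hospital-quarter counts (not study data). The `diagnostic_metrics` helper is illustrative.

```python
def diagnostic_metrics(tp, fp, fn, tn):
    """Sensitivity, specificity, PPV, and NPV of a test against a reference standard."""
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "ppv": tp / (tp + fp),
        "npv": tn / (tn + fn),
    }


# Hypothetical hospital-quarter counts (not study data).
both, sample_only, universal_only, neither = 30, 20, 40, 410

# Universal review as reference, sample as test: sample-only flags are false positives.
sample_vs_universal = diagnostic_metrics(tp=both, fp=sample_only, fn=universal_only, tn=neither)
# Sample as reference, universal review as test: the discordant cells swap roles.
universal_vs_sample = diagnostic_metrics(tp=both, fp=universal_only, fn=sample_only, tn=neither)

for key in sample_vs_universal:
    print(f"{key}: {sample_vs_universal[key]:.3f} vs {universal_vs_sample[key]:.3f}")
# sensitivity: 0.429 vs 0.600
# specificity: 0.953 vs 0.911
# ppv: 0.600 vs 0.429
# npv: 0.911 vs 0.953
```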

Figure 4. Observed-to-Expected (O-E) Ratios Derived From the Representative Sample and Universal Review Cohorts.

CDW indicates US Department of Veterans Affairs Corporate Data Warehouse.

In sensitivity analyses, the findings were similar when all eligible cases were compared with universal review. When outpatient procedures were excluded, hospitals were identified as outliers in a similar proportion of quarters in both cohorts (14.4%), but concurrent identification improved. The average quarterly proportion of hospitals concurrently identified was 10.2% overall and 55.1% among outlier hospitals. Hospitals identified as an outlier using the sample were concurrently identified with universal review 72.2% of the time, and outliers identified with universal review were identified in the sample 70.9% of the time. When reliability-adjusted O-E ratios were used for the sample, concurrent outlier identification decreased. Hospitals identified as an outlier using the sample were concurrently identified with universal review 30.6% of the time, and outliers identified with universal review were identified using the sample 26.5% of the time. When a random sample of 75% of cases was evaluated, concurrent identification occurred 72.1% of the time; for a 50% random sample, it occurred 55.8% of the time.
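Reliability adjustment is cited (references 14 and 15) but not specified in the text. A common form is empirical Bayes shrinkage. It weights each hospital's estimate by its reliability, signal variance / (signal variance + noise variance), and pulls the remainder toward the overall mean, taken here as an O-E ratio of 1.0. The Python sketch below assumes this form. It also assumes a Poisson approximation for the noise variance of the O-E ratio (1 / expected deaths) and a hypothetical between-hospital signal variance. Low-volume hospitals are shrunk most strongly toward 1.0. This is one plausible reason why fewer sample-based outliers coincided with universal review after adjustment.

```python
def reliability_adjusted_oe(oe, expected_deaths, signal_variance, target=1.0):
    """Shrink a hospital's O-E ratio toward `target` in proportion to its unreliability.

    Assumptions (not from the text): the noise variance of the O-E ratio is
    approximated as 1 / expected_deaths (Poisson), and `signal_variance` is the
    between-hospital variance of true O-E ratios, supplied by the analyst.
    """
    noise_variance = 1.0 / expected_deaths
    reliability = signal_variance / (signal_variance + noise_variance)
    return reliability * oe + (1.0 - reliability) * target, reliability


# Two hypothetical hospitals with the same crude O-E ratio of 2.0.
for label, expected in (("low volume", 4.0), ("high volume", 100.0)):
    adjusted, rel = reliability_adjusted_oe(2.0, expected, signal_variance=0.05)
    print(f"{label}: reliability={rel:.2f}, adjusted O-E={adjusted:.2f}")
# low volume: reliability=0.17, adjusted O-E=1.17
# high volume: reliability=0.83, adjusted O-E=1.83
```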

Discussion

It is unclear whether current sampling strategies used by national surgical QI programs, such as the VASQIP and the ACS-NSQIP, accurately and adequately inform QI efforts. To our knowledge, this is the first study to comprehensively compare the robustness of systematic sampling with universal review for the contemporary assessment of surgical program outcomes. Furthermore, because the ACS-NSQIP program format is similar to that of the VASQIP, findings from the VA national program may be generalizable to similar private-sector surgical and nonsurgical QI programs. Our study supports 2 important conclusions. First, these findings suggest that, compared with universal review, case sampling identifies fewer than half of hospitals with higher-than-expected perioperative mortality. Second, the higher mortality rates among outliers identified with universal review suggest that case sampling underestimates hospitals’ perioperative mortality rates. Taken together, these findings suggest that current sampling strategies may not adequately represent a hospital’s overall surgical program performance.

Although the reliability of clinical registry data is not in question,11 our study addresses a previously unanswered question about how national QI programs assess contemporary hospital surgical programs. Specifically, it asks whether current representative case-sampling strategies adequately characterize a surgical program’s overall performance. When information from sampled cases was compared with universal review, fewer than half of hospitals were concurrently detected as having higher-than-expected perioperative mortality. This is concerning because missed or discordant detection suggests potential misclassification: a hospital may be identified as having acceptable performance (not identified as an outlier) when, in fact, it has a quality or safety concern. Such misclassification could expose patients to suboptimal care processes or outcomes. Differences in mortality rates and outlier detection are ultimately barriers to hospitals working actively to improve surgical quality. They also prevent health systems from having the data necessary to ensure the safety of surgical care. Misclassified hospitals may not be aware of ongoing performance issues. There could therefore be a substantial delay in recognizing the need to critically evaluate outcomes and care processes and in implementing corrective measures. The end result of missed or delayed detection is exposure of patients to potentially avoidable harm. We believe that the only way to ensure hospitals have a clear and accurate picture of the quality and safety of surgical care is to assess data from a greater proportion of the surgical cases performed (optimally, all of them).

In this study, mortality rates among hospital outliers identified only with universal review were twice as high as those among outliers identified only with case sampling (0.8% vs 0.4%). Most cases captured with universal review were outpatient procedures, whereas the sample included a larger share of higher-risk cases. This suggests that some deaths go unaccounted for by sampling. Some of these deaths are particularly concerning because they occur after lower-risk procedures (eg, outpatient procedures that would typically be expected to have a low mortality rate). The reasons for this observation are likely multifactorial. They include hospitals’ processes for preoperative patient workup, patient selection for surgery, and/or choice of the appropriate care setting for an operation. Outpatient surgery centers are increasingly used, even for higher-risk cases. Adverse events occurring in these traditionally “low-risk” sites of service may therefore be overlooked by current case sampling.16,17 Alternatively, the data captured using current sampling methodology may describe a specific subset of cases performed by a surgical program that does not broadly generalize to overall program performance. For example, certain cases are purposely excluded from sampling because they are known to be higher risk (eg, tracheostomy, aborted cases, or surgeries performed concurrently by multiple different surgical teams). The low correlation in O-E ratios further supports this possibility. Even more importantly, among outlier hospitals, the mortality rate for cases excluded from sampling (1.0%) was more than twice as high as the rate at outlier hospitals identified using only sampled cases (0.4%). This suggests that excluded cases may largely explain our findings. Sampling strategies may need to be modified to capture more comprehensively the spectrum of procedures performed at a given hospital.

Alternatively, different data collection strategies that capture information from a greater proportion of cases may need to be considered. For example, applying data science methods (eg, machine learning and natural language processing) to repositories of EHR data, such as the CDW, could enable more comprehensive case review within national surgical QI programs.18 Other types of data (eg, administrative data) are problematic for ascertaining certain variables (eg, postoperative complications) and can result in inconsistent or inaccurate identification of hospitals.19,20,21 Hiring enough personnel to collect data from every case at every hospital would be logistically and financially challenging. Hybrid models using multiple data sources (eg, administrative codes for risk adjustment covariates and hand-abstracted outcomes) could be one approach for reducing the data collection burden. They could also increase the number of cases included in a performance evaluation.22,23 In fact, our comparison of risk adjustment using CDW vs VASQIP covariates suggests that this could be a promising approach that merits further exploration. Developing, evaluating, and integrating such strategies could (1) enhance the efficiency of existing surgical and nonsurgical QI programs by leveraging passively collected data and (2) allow hospitals to shift from reacting to data that suggest problematic outcomes or care processes toward more proactive quality and performance improvement, with existing personnel refocused on such efforts.

Limitations

This study has several limitations. For risk adjustment, our data were derived solely from the VA CDW. Therefore, the extent to which differences between structured EHR data and clinician-abstracted data may have influenced our findings is unclear. However, outlier identification was highly concordant (78.6%) when risk adjustment with EHR-derived data was compared with risk adjustment using VASQIP covariates. To our knowledge, the VA is also the only source of data available to address this question. Furthermore, our study population includes only veterans at VA hospitals, most of whom are men. Therefore, procedures more commonly performed in women (eg, breast or gynecological surgeries) may be underrepresented in the VA cohort. However, the focus of our study was program-level sampling methodology (as opposed to comparing procedure mix or patient and/or procedural outcomes). We believe this largely mitigates any threat to external validity introduced by the skewed sex distribution among veterans. Although the ACS-NSQIP is modeled after the VASQIP (ie, similar variables and analytic framework), some key differences between them are notable. The VASQIP is mandatory for all VA hospitals, whereas ACS-NSQIP participation is voluntary. In addition, for hospitals that elect to participate, the ACS-NSQIP offers different participation options, including a procedure-targeted option that collects additional procedure-specific variables.24 However, even in these modules, case sampling still occurs.25 Despite these differences, the ACS-NSQIP uses case sampling similar to that of the VASQIP in most of its participation options. We therefore believe our results remain largely generalizable to programs that use this type of sampling methodology.

Information on cause-specific mortality is not available, which limits evaluation of the reasons for variation in hospital outcomes and of specific factors that may have been associated with perioperative death. Limited information is available about the facility where each operation occurred. We therefore could not ascertain the extent to which hospital factors or differences in facility-specific structure and care processes contributed to our findings. Finally, our study examined 30-day mortality because the VA NSO focuses on this outcome to identify hospitals with potential quality or safety concerns. Because national QI programs capture other outcomes, future research may evaluate sampling strategies for assessing these other outcomes.

Conclusions

In this comparative effectiveness study, sampling strategies used by national surgical QI programs identified less than half of hospitals with higher-than-expected perioperative mortality. If these programs underestimate perioperative mortality rates, then health systems may not have the information they need to ensure that hospitals are providing safe surgical care and stakeholders may not have the data they need to drive local QI and performance improvement activities. As the quality and safety of surgical care continues to improve, it will be critical to continually reassess national QI and performance improvement efforts to ensure they remain robust and efficient and provide useful data to prevent avoidable patient harm.

eTable. Covariates Derived From the US Department of Veterans Affairs Corporate Data Warehouse and Used for Risk Adjustment

Data Sharing Statement

References

- 1.US Congress . H.R. 505—99th Congress (1985-1986): Veterans’ Administration Health-Care Amendments of 1985. 1985. Accessed December 1, 2022. https://www.congress.gov/bill/99th-congress/house-bill/505

- 2.Institute of Medicine Committee on Quality of Health Care in America . In: Cohn L, Corrigan J, Donaldson M, eds. To Err Is Human: Building a Safer Health System. National Academies Press; 2000. [PubMed] [Google Scholar]

- 3.Birkmeyer JD, Shahian DM, Dimick JB, et al. Blueprint for a new American College of Surgeons National Surgical Quality Improvement Program. J Am Coll Surg. 2008;207(5):777-782. doi: 10.1016/j.jamcollsurg.2008.07.018 [DOI] [PubMed] [Google Scholar]

- 4.Osborne NH, Nicholas LH, Ryan AM, Thumma JR, Dimick JB. Association of hospital participation in a quality reporting program with surgical outcomes and expenditures for Medicare beneficiaries. JAMA. 2015;313(5):496-504. doi: 10.1001/jama.2015.25 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Etzioni DA, Wasif N, Dueck AC, et al. Association of hospital participation in a surgical outcomes monitoring program with inpatient complications and mortality. JAMA. 2015;313(5):505-511. doi: 10.1001/jama.2015.90 [DOI] [PubMed] [Google Scholar]

6. Khuri SF, Daley J, Henderson WG. The comparative assessment and improvement of quality of surgical care in the Department of Veterans Affairs. Arch Surg. 2002;137(1):20-27. doi:10.1001/archsurg.137.1.20

7. Hall BL, Hamilton BH, Richards K, Bilimoria KY, Cohen ME, Ko CY. Does surgical quality improve in the American College of Surgeons National Surgical Quality Improvement Program: an evaluation of all participating hospitals. Ann Surg. 2009;250(3):363-376. doi:10.1097/SLA.0b013e3181b4148f

8. US Department of Veterans Affairs. VA Information Resource Center (VIReC) Resource Guide: VA Corporate Data Warehouse. 2012. Accessed July 31, 2023. https://www.virec.research.va.gov

9. Khuri SF, Daley J, Henderson W, et al; National VA Surgical Quality Improvement Program. The Department of Veterans Affairs’ NSQIP: the first national, validated, outcome-based, risk-adjusted, and peer-controlled program for the measurement and enhancement of the quality of surgical care. Ann Surg. 1998;228(4):491-507. doi:10.1097/00000658-199810000-00006

10. Massarweh NN, Kaji AH, Itani KMF. Practical guide to surgical data sets: Veterans Affairs Surgical Quality Improvement Program (VASQIP). JAMA Surg. 2018;153(8):768-769. doi:10.1001/jamasurg.2018.0504

11. Davis CL, Pierce JR, Henderson W, et al. Assessment of the reliability of data collected for the Department of Veterans Affairs national surgical quality improvement program. J Am Coll Surg. 2007;204(4):550-560. doi:10.1016/j.jamcollsurg.2007.01.012

12. Massarweh NN, Anaya DA, Kougias P, Bakaeen FG, Awad SS, Berger DH. Variation and impact of multiple complications on failure to rescue after inpatient surgery. Ann Surg. 2017;266(1):59-65. doi:10.1097/SLA.0000000000001917

13. Massarweh NN, Kougias P, Wilson MA. Complications and failure to rescue after inpatient noncardiac surgery in the Veterans Affairs health system. JAMA Surg. 2016;151(12):1157-1165. doi:10.1001/jamasurg.2016.2920

14. Dimick JB, Ghaferi AA, Osborne NH, Ko CY, Hall BL. Reliability adjustment for reporting hospital outcomes with surgery. Ann Surg. 2012;255(4):703-707. doi:10.1097/SLA.0b013e31824b46ff

15. Wakeam E, Hyder JA. Reliability of reliability adjustment for quality improvement and value-based payment. Anesthesiology. 2016;124(1):16-18. doi:10.1097/ALN.0000000000000845

16. Torabi SJ, Patel RA, Birkenbeuel J, Nie J, Kasle DA, Manes RP. Ambulatory surgery centers: a 2012 to 2018 analysis on growth in number of centers, utilization, Medicare services, and Medicare reimbursements. Surgery. 2022;172(1):2-8. doi:10.1016/j.surg.2021.11.033

17. Zenilman ME. Managing unknowns in the ambulatory surgery centers. Surgery. 2022;172(1):9-10. doi:10.1016/j.surg.2021.12.039

18. Swiecicki A, Li N, O’Donnell J, et al. Deep learning-based algorithm for assessment of knee osteoarthritis severity in radiographs matches performance of radiologists. Comput Biol Med. 2021;133:104334. doi:10.1016/j.compbiomed.2021.104334

19. Rosen AK, Itani KMF, Cevasco M, et al. Validating the patient safety indicators in the Veterans Health Administration: do they accurately identify true safety events? Med Care. 2012;50(1):74-85. doi:10.1097/MLR.0b013e3182293edf

20. Mull HJ, Borzecki AM, Loveland S, et al. Detecting adverse events in surgery: comparing events detected by the Veterans Health Administration Surgical Quality Improvement Program and the Patient Safety Indicators. Am J Surg. 2014;207(4):584-595. doi:10.1016/j.amjsurg.2013.08.031

21. Lawson EH, Zingmond DS, Hall BL, Louie R, Brook RH, Ko CY. Comparison between clinical registry and Medicare claims data on the classification of hospital quality of surgical care. Ann Surg. 2015;261(2):290-296. doi:10.1097/SLA.0000000000000707

22. Lawson EH, Louie R, Zingmond DS, et al. Using both clinical registry and administrative claims data to measure risk-adjusted surgical outcomes. Ann Surg. 2016;263(1):50-57. doi:10.1097/SLA.0000000000001031

23. Silva GC, Jiang L, Gutman R, et al. Mortality trends for veterans hospitalized with heart failure and pneumonia using claims-based vs clinical risk-adjustment variables. JAMA Intern Med. 2020;180(3):347-355. doi:10.1001/jamainternmed.2019.5970

24. Cohen ME, Ko CY, Bilimoria KY, et al. Optimizing ACS NSQIP modeling for evaluation of surgical quality and risk: patient risk adjustment, procedure mix adjustment, shrinkage adjustment, and surgical focus. J Am Coll Surg. 2013;217(2):336-346.e1. doi:10.1016/j.jamcollsurg.2013.02.027

25. American College of Surgeons. National Surgical Quality Improvement Program: User Guide for the 2021 ACS NSQIP Participant Use Data File (PUF). 2022. Accessed June 7, 2023. https://www.facs.org/media/tjcd1biq/nsqip_puf_userguide_2021_20221102120632.pdf
