Abstract

Tissue clearing has become a powerful technique for studying anatomy and morphology at scales ranging from entire organisms to subcellular features. With the recent proliferation of tissue-clearing methods and imaging options, it can be challenging to determine the best clearing protocol for a particular tissue and experimental question. The fact that so many clearing protocols exist suggests there is no one-size-fits-all approach to tissue clearing and imaging. Even in cases where a basic level of clearing has been achieved, there are many factors to consider, including signal retention, staining (labeling), uniformity of transparency, image acquisition and analysis. Despite reviews citing features of clearing protocols, it is often unknown a priori whether a protocol will work for a given experiment, and thus some optimization is required by the end user. In addition, the capabilities of available imaging setups often dictate how the sample needs to be prepared. After imaging, careful evaluation of volumetric image data is required for each combination of clearing protocol, tissue type, biological marker, imaging modality and biological question. Rather than providing a direct comparison of the many clearing methods and applications available, in this tutorial we address common pitfalls and provide guidelines for designing, optimizing and imaging in a successful tissue-clearing experiment with a focus on light-sheet fluorescence microscopy (LSFM).

Optical imaging-based approaches in biomedical research including immunohistochemistry and histology have been limited to small samples in part by the poor penetration of light into tissue. Tissue clearing is the process of changing the optical properties of tissue to improve the propagation of light through the tissue, thereby increasing the penetration depth for imaging. Most tissue-clearing protocols reduce the lipid and water content of the tissue and replace them with a medium that has a higher refractive index (RI), more closely approximating that of the remaining cellular content1,2. The result is a tissue with a more homogeneous RI and reduced light scatter that makes it amenable to in toto imaging without the need for mechanical sectioning. Tissue clearing has enabled many recent advances in neuroscience3-7, stem cell biology8,9, cancer biology10-12 and other fields of research13-15 where it is necessary to resolve subcellular features across large, intact tissue samples.

Effective tissue clearing with organic solvents has been around for over 100 years16. However, the difficulty in modern tissue clearing is not necessarily improving light transmission to render the tissue transparent by eye, but rather maintaining antigenicity, fluorophore integrity and tissue morphology within the now-transparent tissue. Even when this can be accomplished, imaging and quantifying the large volume of data remains challenging despite recent advances in microscopy and analysis tools.

Modern clearing protocols3,17 can be grouped by how they remove cellular content and homogenize the RI. While all techniques produce some improvement in transparency, the degree of transparency and their effects on tissue structure vary.

Organic solvent (hydrophobic) clearing protocols use dehydration for lipid removal and organic solvents with high RI (~1.52–1.56) for RI matching18-21.

Hydrogel-based clearing protocols use detergents for lipid removal and aqueous solutions of moderate RI (~1.45–1.50) for RI matching1,22,23.

Hydrophilic clearing passively removes lipid content with detergents or amino alcohols and uses hydrophilic RI matching solutions (RI ~1.37–1.52)24-32.

The fundamental biochemistry underlying each protocol imparts a unique set of chemical and structural features to the tissue, with wide-ranging effects on everything from tissue size to epitope fidelity to autofluorescence (AF).

The overall process of clearing initially opaque tissue can be divided into several major steps: fixation, decolorization (pigment removal), labeling (or label retention), delipidation (lipid removal) and RI matching33. In recent years, there has been a proliferation of novel and modified clearing protocols. Each protocol has a varied combination of approaches to these major steps, and thus each has a unique set of strengths and weaknesses owing to its biochemical mechanisms of action. Recent reviews highlight these features as pros and cons associated with each protocol, but notably no single ‘best’ solution has emerged3,17,26,34-40. The large number of published clearing protocols suggests that there is no one-size-fits-all approach to tissue clearing, as there are many trade-offs for each protocol and the optimal solution depends heavily on the scientific question at hand.

Clearing of fixed tissue is generally a beneficial approach to any scientific question where it is necessary to image with cellular resolution deeper than a few hundred microns, which is the typical depth (i.e., mean free path) to which unscattered ‘ballistic’ photons can penetrate tissue at visible wavelengths40. Clearing is typically required when the features of interest span several millimeters or even centimeters, such as neuronal or vascular networks, or when a large amount of spatially heterogeneous tissue needs to be sampled adequately to identify hallmarks of disease, such as histopathology and/or in high-throughput approaches. In addition, dense or pigment-rich tissues, which are small but have poor light penetration, will also benefit from clearing. Without clearing, these problematic samples are often addressed by physical sectioning41 or multiphoton imaging42. Tissue sections can be analyzed sparsely as in the case of slide-based histology, but this results in loss of context of the surrounding tissue and reduced throughput. Alternatively, the tissue can be reconstructed into a 3D volume after serial sectioning. However, these block-face or serial section techniques are generally destructive to the tissue and require complicated sectioning, reconstruction and analysis41,43. Multiphoton approaches are effective at increasing imaging depth, especially in live samples, but the imaging depth is still limited to ~1 mm in uncleared tissue. Whole-mount sample clearing avoids these drawbacks by keeping the sample intact to facilitate imaging and reconstruction while providing several millimeters of imaging depth.

However, whole-mount sample preparation and tissue clearing introduce many unique challenges not seen in traditional slide-based microscopy. During sample preparation, one can no longer assume uniform fixation and antibody penetration as in a thin-sliced or small tissue sample. During imaging, one can no longer simply rotate an objective turret to try a new lens as we now face challenges associated with RI matching, working distance limitations and chemical compatibility in addition to resolution and magnification. However, there are also similarities to traditional sample preparation such as the importance of fixation, minimizing AF, and labeling. Moreover, there are similarities to traditional imaging including photobleaching and the trade-offs between signal-to-noise ratio (SNR), resolution, imaging time and cost44 (Fig. 1).

Fig. 1 ∣. Considerations in a tissue-clearing experiment utilizing a light-sheet microscope.

The process of tissue clearing involves many steps and many interacting variables throughout the protocol, from fixation to clearing to imaging to data analysis. Many of these variables come together as the sample is being imaged, and the efficiency of the clearing, labeling and imaging protocols interact to reveal the details of the biological sample.

In this tutorial, we present a series of considerations and suggestions collected from biologists, chemists and microscopists for designing and optimizing a tissue-clearing protocol, with a focus on organic solvent and hydrogel-based methods. For each topic, we provide guidance for evaluating and troubleshooting common problems. We do not present a rigorous comparison or ranking of methods as each protocol will perform differently across a range of tissues, molecular probes and scientific questions. Additionally, the method of imaging and evaluation criteria provide even greater variability to any comparative study. Such comparative studies17,45-48 can provide valuable insights but should not be the sole reason for using a protocol. We argue that this type of comparison is best left to the end user, and here we aim to facilitate this process by giving guidance for the selection, execution and evaluation of a clearing protocol. This tutorial assumes some familiarity with the chemical and procedural concepts of tissue clearing, and we recommend the following reviews: refs. 1,3,34,36,40.

Challenges of tissue clearing, a protocol optimization example

We have had experience with a multitude of samples and clearing protocols and found that, even with an ultimately perfectly transparent tissue, there are other considerations to ensure an overall successful outcome of a tissue-clearing experiment. To illustrate this point, we here review one collaboration where throughout the clearing optimization process each attempt produced a cleared tissue but still yielded unsatisfactory results. The goal of this experiment was to image and segment vasculature and associated blood–brain barrier structures throughout the entire adult zebrafish brain.

Attempt 1:

The sample was cleared using the CLARITY technique22,49. The collaborating lab was equipped with an electrophoretic tissue-clearing chamber, custom-built light-sheet microscope and a high numerical aperture (NA) immersion lens (10×/0.6 NA, 8 mm working distance [WD]) at the cost of tens of thousands of dollars. The sample was rendered transparent after clearing, but a high AF signal obscured the weak endogenous GFP reporter. Despite the use of bespoke clearing lenses and established clearing protocols, the several terabytes of images collected contained no useful data.

Attempt 2:

The protocol was modified to include glycine washes, which effectively reduced AF. Still, the GFP reporter was determined to have insufficient signal intensity after clearing to resolve the features of interest (blood vessels with a diameter of ~3 μm) at depths >2 mm. While further optimization of the clearing conditions (pH, temperature, detergent concentration, etc.) may have improved fluorophore stability, low expression levels from the endogenous promoter suggested that the signal would require signal amplification.

Attempt 3:

Since antibodies were needed to boost signal and transparency was not perfect throughout the entire sample, the decision was made to try using an organic solvent, ethyl cinnamate21, for clearing (most organic solvent methods quench endogenous fluorescence, thus requiring antibody use). This modification rendered a highly transparent sample with good SNR, but without specialized organic solvent dipping lenses, imaging was limited to available air lenses. Although a focused image could be produced when imaging into the organic solvent, using an air lens with the high RI solvent resulted in one of the two fluorophore channels being out of focus, an effect known as axial chromatic aberration.

Attempt 4:

To correct the chromatic aberration without purchasing additional solvent-compatible lenses, the detection objective was motorized to allow for a calibrated wavelength-specific focal shift to adequately image the two colors in the same focal plane. This approach resulted in the desired dataset, but still leaves the daunting task of storage, registration, segmentation and quantification of the data for a large number of samples.

In this example, it was fortunate that antibodies were available to distinguish the proteins of interest, the antibodies uniformly penetrated the tissue and the lateral resolution obtained with an air lens was sufficient for the biological question. Had this not been the case, the experiment would have ultimately failed. The complexity of tissue clearing comes not only from the number of considerations but also the interplay between them. As the optimization example illustrates, it was not until the clearing and AF quenching reached a certain efficacy that the weak signal was revealed. Furthermore, the choice to use the more effective organic solvent clearing protocol limited achievable resolution on the imaging system because of available lenses and sample shrinkage.

In addition to studying the literature to find the ideal clearing protocol for an application, this example demonstrates that it is also necessary to test and optimize a protocol. This may involve modifying the steps related to the clearing, signal, imaging or even the sample at each iteration. To facilitate this process, we have compiled a list of considerations for a successful tissue-clearing experiment.

Searching the literature

Sample size and composition

Sample integrity

Signal intensity and uniformity

Transparency

RI matching

AF

Chemical compatibility

Imaging

Data handling

These considerations are discussed in detail in the following sections. The order of discussion is arbitrary as each experiment requires the user to prioritize these features based on the experimental question and available resources.

Considerations for designing a tissue-clearing experiment

Tissue clearing in the literature

The first stage in developing a tissue-clearing strategy usually is searching the literature for a protocol with proven results for a similar tissue and experimental question. However, it can be challenging to find all relevant information on how a protocol will perform for a specific tissue and biological marker and whether the resulting transparency and image quality will be sufficient for a given analysis. Because of the numerous causal relationships between the variables in a clearing experiment, it becomes difficult to determine whether a given protocol will produce similar results for a unique set of variables or how changing even one variable might affect the outcome (Fig. 2). For example, high AF in some tissues can dramatically affect the ability to resolve certain features, requiring a new labeling and imaging strategy even when the clearing itself is effective. Similarly, what works on a thin tissue slice might not transfer to the whole organ, and a labeling strategy for a small-molecule dye might not work for an antibody.

Fig. 2 ∣. Key interactions between variables in a tissue-clearing experiment requiring trade-offs.

Ribbon connections, arrows and source colors indicate cause-effect relationships between features of a tissue-clearing experiment. Connection size indicates relative strength of the relationship for a generic example application, but this particular graph is neither absolute nor quantitative. Similarly, the features listed are not comprehensive but represent a minimal set of interacting parameters to be considered for most tissue-clearing experiments and highlight the interrelated nature of the clearing and imaging processes. Example: both tissue size and image resolution will have large effects on data size, but changing tissue size will also affect many other experimental variables.

When a well-matched case cannot be found in the literature, one can generalize their case to similar key experimental features such as tissue size, labeling strategy and required resolution. Most cleared tissue samples will be large, but grouping them into either (i) disc-like slices (e.g., thick sections) or (ii) spherical specimens (e.g., brain or liver) can help guide the clearing and sample mounting. Within each of those two geometric categories, there are several subcategories, which depend on what needs to be labeled, to inform fixation and labeling strategies that ensure the epitopes remain intact and accessible:

Tissue structures with small-molecule agents (e.g., nuclear labels)

Molecular probes with small agents (e.g., peptides to aptamers)

In situ labeling of RNA (e.g., RNA-FISH)

Molecular probes with large agents (e.g., antibodies)

Endogenous fluorescent proteins

Finally, categorizing what level of resolution is needed and what tools are available for image quantification will guide the imaging and analysis:

Subnuclear resolution to quantify RNA/DNA probes or to see fine structures such as dendritic spines or synaptic boutons in neurons

Nuclear resolution to quantify cells and classify cell types based on nuclear morphology

Cellular resolution to segment larger structures such as cortical layers, glands, vessels, collagen, etc.

The use of a clearing protocol that has been successfully applied in experiments that fit within these general categories will have the best chance of success as its design features should already take into account many of the experimental considerations outlined below.

To properly evaluate the performance of a clearing protocol, the ideal publication will address each of the variables in Fig. 2 and perhaps others. However, often only the transparency and signal retention are well described, quantified or even mentioned in the publication; thus, some testing and optimization is left to the end user. One commonly reported metric to qualitatively demonstrate the transparency is imaging the sample on top of a grid, pattern or graphic. When done correctly, this can provide some insight into the overall efficacy of the protocol, especially during the optimization phase. However, the reality is that these images do not yield any quantitative measure of the transparency or optical homogeneity. Thus, they do not reveal enough information to make an informed decision about the compatibility of a protocol for a given experiment. In the zebrafish optimization example, such a metric fails to provide information about AF, signal strength, antibody penetration, RI matching and achievable resolution (see section on ‘transparency’ for use and interpretation of this grid-image metric).

Sample size and composition

The final size of the sample (after clearing-induced shrinkage or expansion) will affect all downstream aspects of the experiment, from protocol duration to lens working distance. Generally, a larger sample is harder to stain, clear and image. In addition, inhomogeneous tissues require different treatment such as a decalcification50 step for some bone structures, adipose removal51 or a depigmentation52 step, and this in turn can have a large impact on transgenic signal, morphology, nucleotide preservation, etc., and can therefore complicate the clearing process. While it is unlikely that one can alter the sample entirely, certain modifications such as physical trimming or coarse slicing to reduce the size or homogenize the sample composition by removing dissimilar tissues such as bone, muscle or fat can greatly facilitate the clearing and the imaging. Similarly, using a shrinkage-prone clearing protocol such as those involving organic solvents can be favorable for fitting the sample into the physical constraints of the microscope or the optimal field of view (FOV) of a lens, because the size of the tissue and resolution both dramatically increase the data volume (see Box 1 for an example). Conversely, some hydrogel-based methods can be modified to expand the sample and provide increased resolution53,54. Even without intentional shrinkage or expansion, all methods will likely induce some size changes or deformations, making direct alignment with multiple datasets or imaging modalities difficult without applying some computational image transformations55.

Box 1 ∣. Sample size and data size example.

To properly resolve blood vessels with a diameter of 3 μm, one would need minimally two pixels (per Nyquist sampling) but ideally at least three pixels to resolve a vessel, thus 1 μm/pixel.

To image a small, but complete, adult zebrafish brain approximated as a 4 mm diameter sphere placed in a cubic ROI, at 1 μm/pixel one would require 4,000 × 4,000 pixels per optical section. This lateral sampling can be accomplished with a rather low magnification (~6×) and NA (0.1). For sparse features such as large blood vessels, an axial resolution of 4 μm might be sufficient, resulting in a total dataset size of 32 GB per channel (at 16 bit depth).

To achieve isotropic resolution, one would need to image 4,000 z-planes (at increased NA for a point-scanning system or using axial tiling for a light-sheet system), resulting in a 128 GB dataset.

A 2× isotropic increase in magnification or tissue size would result in 8× the volumetric data and increase the data size to ~1 TB/sample and channel.

This simple example shows that doubling the resolution and insisting on isotropic resolution throughout results in a 32× increase in data size and acquisition time compared with the first case.
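The arithmetic in this box can be reproduced with a short calculation. The sketch below is only an illustration: the function names are hypothetical, the ROI is assumed to be a cube enclosing the sample, and sizes are reported in decimal gigabytes (1 GB = 10^9 bytes) for a single channel at 16 bit depth.

```python
import math


def pixel_size_um(feature_um, pixels_per_feature=3):
    """Pixel size that places the requested number of pixels across a feature."""
    return feature_um / pixels_per_feature


def dataset_size_gb(side_mm, xy_um, z_um, bytes_per_voxel=2):
    """Single-channel size (decimal GB) of a cubic ROI with the given sampling."""
    side_um = side_mm * 1000
    nx = ny = math.ceil(side_um / xy_um)   # pixels per optical section, per axis
    nz = math.ceil(side_um / z_um)         # number of optical sections
    return nx * ny * nz * bytes_per_voxel / 1e9


# The four cases described in the box (4 mm cubic ROI, 16 bit voxels)
print(pixel_size_um(3))                   # 3 um vessel, three pixels across
print(dataset_size_gb(4, 1.0, 4.0))       # 1 um lateral, 4 um axial sampling
print(dataset_size_gb(4, 1.0, 1.0))       # isotropic 1 um sampling
print(dataset_size_gb(4, 0.5, 0.5))       # 2x finer isotropic sampling
```

Running the same function with the dimensions and sampling of a planned experiment, multiplied by the number of channels and samples, gives a quick estimate of storage needs before any tissue is cleared.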

Large-sample, high-resolution imaging is often the goal when pursuing tissue clearing, so reducing sample size might not be practical. To this end, many recent advances in tissue-clearing protocols have sought to improve the clearing, staining and imaging of large samples (defined here as samples with approximate dimensions of 10 × 10 × 10 mm³, but occasionally larger)56,57. Depending on tissue composition and antibody-antigen binding kinetics, large samples generally require longer antibody incubations and longer clearing incubations, especially when passive diffusion is the only force driving these reactions. Examples of recent attempts to reduce clearing and antibody incubation time and increase the uniformity of staining come in the form of novel chemicals for fixation58,59 and/or clearing20,26,57,60,61, tissue modeling2, electrophoresis22,62, centrifugation63, modified pH/detergent concentration58,64, microwaves65 and perfusion56,66. However, these approaches often come with trade-offs, including more complicated or costly protocols, impacts on sample integrity (see ‘sample integrity’ below), and novel chemicals and fixations that might adversely affect epitope–antibody interactions not directly tested in the primary literature (see ‘signal intensity and uniformity’ below). Before committing to any one of these approaches, one must ensure that it is necessary for the clearing and/or staining and that it will not adversely affect the signal or sample integrity.

Sample integrity

The integrity of the sample is often forgotten in tissue clearing as the sample is indeed dead, but there are many considerations for the structure of the tissue and signal over an often weeks-long protocol. Retention of proteins, antigens and macromolecules, as well as overall tissue shape and stability, are required and should be considered elements of cleared sample integrity. Since all of these elements are affected to varying degrees in each tissue type by the fixation, staining and clearing protocols, it is beyond the scope of any article to examine the effects of each protocol on all aspects of sample integrity. In lieu of such a complete survey, a good understanding of the chemical principles for each protocol, tissue and epitope is essential. User groups and online forums documenting protocols and user experiences beyond the primary literature are a growing resource for this information (e.g., https://forum.claritytechniques.org/, https://idisco.info/, https://discotechnologies.org, http://www.chunglabresources.com/, https://forum.microlist.org). However, many of these resources would benefit from better curation and thus do not always provide the best troubleshooting information as a result of user error, unrealistic expectations and different evaluation criteria. The best resource is likely a direct collaboration with someone using the protocol successfully who can provide not only the final protocol but the logic for each step and guidance through the troubleshooting process.

No matter which protocol is used, proper fixation is necessary for preserving the tissue. This is especially true in clearing where greater chemical, physical and temporal stresses are imposed on the tissue downstream of fixation than for short-term staining and imaging experiments. However, overfixation introduces its own problems, including antigen masking, increased AF, reduced antibody penetration and precluding some postprocessing such as H&E staining. Conversely, underfixation reduces tissue, protein and epitope stability and can preclude other postprocessing steps that require a stronger fixation such as antibody multiplexing59.

By tuning the aggressiveness of fixation and clearing, most protocols can be adapted to favor sample integrity versus speed and clearing efficiency. In the hydrogel-based protocols, a polymerized hydrogel or tissue gel matrix stabilizes the tissue, allowing harsher clearing treatments while minimizing protein loss and distortion. Thus, the degree of fixation, density of the hydrogel and strength of the detergent can all be tuned to balance sample integrity and effective clearing1,59,66-68. Similarly, modified organic solvent protocols allow a tunable spectrum between ‘best clearing’ and ‘best fluorescence preservation’69. The vast number of variations for organic solvent, hydrogel and other protocols argues strongly in favor of the need for personalized optimization.

Signal intensity and uniformity

One of the most contentious features of many clearing protocols is the retention of endogenous transgenic fluorescent protein expression versus the requirement to use antibodies and conjugated dyes. Though this retention may be explicitly stated as a binary ‘yes’ or ‘no’, the strength of the fluorescent transgene’s promoter, the brightness of the fluorophore, the amount of AF or background, the exact variation of clearing protocol and the size of the feature of interest all contribute to the fluorescence signal intensity and dramatically alter its retention potential.

Even the clearing protocols that retain fluorescence only do so partially, as some degree of protein loss and fluorophore quenching during the fixation and clearing process is inevitable. Thus, it is always advantageous to have high expression of the protein of interest. For example, publications reporting clearing results using the Thy-1 promoter70 represent a best-case scenario of high expression levels in a sparse population of neurons. Similarly, high-expression transgenes, especially those driven by viral promoters, also have a high potential for fluorescence retention66,71,72. A more difficult case is ‘rainbow’ constructs73 where expression levels might be too low for sufficient signal retention after clearing and specific antibodies to discriminate related fluorophores do not currently exist. Hydrogel-based methods have demonstrated some success in imaging such reporter lines74, and adaptations to organic solvent-based clearing protocols are continually evolving to improve fluorescence retention, often by modified dehydration, temperature and pH conditions19,21,75. However, one must use caution when evaluating the fluorescence retention capabilities of a protocol on any reporter line other than that which will be used in the given experiment.

In most cases, one will get a brighter, more robust signal using antibodies, or more generally, any secondary molecule that can amplify the signal with a highly stable fluorescent dye. Benefits of this approach include a choice of wavelength (to mitigate AF and absorption), increased brightness, reduced bleaching and compatibility with a wider range of clearing protocols. Drawbacks include cost and speed, as antibodies are often used in high concentrations for large tissues and are slow to obtain uniform label penetration. Passive diffusion of large molecules such as antibodies in large tissues is inherently slow at ~100 μm–1 mm per day, depending on tissue type, fixation, antibody type (e.g., monoclonal, polyclonal) and density of the antigen2. When possible, reducing the size of the antibody fragment can increase diffusion efficiency76. A high antigen density can be troublesome when peripheral epitopes absorb the antibody faster than it can diffuse into the tissue, resulting in nonuniform staining patterns regardless of incubation time.
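The quoted penetration rates can be turned into a rough planning estimate for incubation time. The sketch below is a deliberately crude illustration, not a validated model: the function name is hypothetical, and it assumes the ~100 μm–1 mm per day range can be extrapolated linearly to the deepest point of the sample. Because diffusion typically slows with depth and dense antigen can deplete the antibody near the surface, the results are better read as optimistic bounds.

```python
def incubation_days(depth_mm, slow_mm_per_day=0.1, fast_mm_per_day=1.0):
    """Return (fastest, slowest) days for a label to reach depth_mm.

    Assumption: linear extrapolation of the passive-diffusion range
    quoted in the text (0.1 to 1 mm per day); real penetration is
    usually slower at depth, so treat both numbers as lower bounds.
    """
    if depth_mm < 0:
        raise ValueError("depth_mm must be non-negative")
    return depth_mm / fast_mm_per_day, depth_mm / slow_mm_per_day


# Deepest point of a 10 x 10 x 10 mm sample stained from all sides is ~5 mm in
fastest, slowest = incubation_days(5.0)
print(f"roughly {fastest:.0f} to {slowest:.0f} days")
```

Even this simple estimate makes clear why trimming the sample or using smaller probes can shorten a staining protocol by weeks.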

Since the antigen–antibody binding kinetics and diffusion kinetics are often unknown a priori, and each tissue-clearing protocol includes several steps that might not be unique to the clearing itself (e.g., fixation), it is important to evaluate antigenicity and signal retention after each step of the protocol. This evaluation allows one to isolate which step(s) might contribute to loss of signal when optimizing a protocol rather than faulting the clearing protocol itself. This can be done by simply obtaining a cross-sectional slice of tissue and checking for uniform staining throughout the depth of the tissue under a wide-field or confocal microscope.

For example, when adapting an immunostaining protocol to work with a tissue-clearing protocol, it can be useful to rigorously test antibody staining at several steps of the protocol.

Establish a baseline by testing the antibody against fixed tissue

Test the antibody against fixed tissue in the buffers used throughout the clearing protocol

Test the antibody against cleared tissue in the buffers used on cleared tissue

If one is trying hydrogel embedding, refixation or active labeling, controls are required for those conditions as well

When antibody staining is problematic, some of the approaches to speed lipid removal during clearing (e.g., electrophoresis) can also speed up and homogenize antibody penetration by taking advantage of the antibodies' slight negative charge62. Smaller, covalently bound dyes and antibody fragments such as nanobodies have also been employed to provide bright multicolor signal with efficient dye diffusion into thick tissues56,77. Another approach pioneered in the SWITCH protocol seeks to temporarily inhibit antibody-antigen binding at a restrictive detergent concentration to allow uniform antibody penetration before switching to a permissive detergent concentration to permit antibody binding58,64. Each of these approaches requires additional reagents, equipment and sample preparation and should be carefully evaluated to ensure they are both necessary and sufficient to improve staining. There are also many time-tested approaches to improving antibody staining that are not specific to clearing protocols, such as reducing fixation time, use of detergents and DMSO, enzymatic digestion, freeze–thaw cycles and dehydration–rehydration cycles78,79.

Transparency

In addition to RI matching (see ‘RI matching’ below), which is generally the final step of a clearing protocol, upstream sample preparation also contributes to the transparency of the tissue. Depending on the protocol used, fixation, dehydration, incubation times and temperatures, delipidation and other parameters discussed previously can influence the overall transparency of a sample by affecting its chemical structure and integrity and, therefore, how it will react in a given RI-matched solution.

Improving light penetration to increase imaging depth does not necessarily mean that the entire tissue becomes perfectly transparent—in fact, this is often not necessary or possible in large tissues, at least without extended protocol duration or damage to the tissue. Any remaining proteins and pigments might impart a slight yellow-brown color to a cleared tissue that does not necessarily inhibit imaging and may indeed be beneficial as it represents good protein retention (consider the color of BSA protein solutions). The best way to determine overall transparency and RI matching is to have a series of fluorescent images from increasing depth into the tissue. Knowing the change in signal versus depth is the most instructive metric as it informs signal strength, antibody penetration, clearing efficiency, RI matching, AF and many other factors that will contribute to a poor SNR and poor images. Transparency, clearing efficiency and antibody penetration can also be evaluated from a thick tissue section obtained from a large cleared sample.
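One way to quantify the change in signal versus depth is to reduce an image stack to a mean intensity per optical section and summarize the decay with a single number. The sketch below is a minimal example under stated assumptions: the function names are hypothetical, the stack is a NumPy array ordered (z, y, x) with background already subtracted, and the signal is assumed to fall off as a single exponential, which real samples with uneven labeling or residual AF may not follow.

```python
import numpy as np


def signal_vs_depth(stack, z_step_um):
    """Mean intensity of each optical section and the depth of that section."""
    stack = np.asarray(stack, dtype=float)
    profile = stack.reshape(stack.shape[0], -1).mean(axis=1)
    depth_um = np.arange(stack.shape[0]) * z_step_um
    return depth_um, profile


def attenuation_length_um(depth_um, profile):
    """Depth over which the signal drops to 1/e (log-linear least-squares fit).

    Assumes strictly positive intensities; returns infinity if the
    signal does not decrease with depth.
    """
    slope, _intercept = np.polyfit(depth_um, np.log(profile), 1)
    return float("inf") if slope >= 0 else -1.0 / slope


# Synthetic stack: 50 sections, 10 um apart, decaying with a 500 um length
z = np.arange(50) * 10.0
stack = 1000.0 * np.exp(-z / 500.0)[:, None, None] * np.ones((50, 4, 4))
depth, profile = signal_vs_depth(stack, z_step_um=10.0)
print(attenuation_length_um(depth, profile))
```

Comparing this value between protocol variants, or between a labeled channel and an AF channel in the same sample, can help separate poor label penetration from poor clearing or RI matching.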

Most clearing protocols do not yield a transparent sample until after the final step, making transparency a difficult metric to use throughout the clearing protocol. However, when optimizing a protocol, it can be helpful to evaluate the outcome using a simple metric for transparency. When fluorescent volumetric imaging is not practical, the most common approach is to take an image of the tissue overlaid on a graphic or grid pattern. To make the overlaid transmission image a more effective tool, one should ideally use a tissue size relevant for the experiment that includes any heterogeneity that may be found throughout the sample and overlay it on a grid-like test pattern with a line thickness that provides sufficient dynamic range across the tissue. When imaging the tissue overlaid on the grid, the camera should be directly above and the sample should be fully submerged in the clearing medium. Submersion avoids reflections and refraction at curved surfaces in the light path, which are caused by an RI mismatch at the air–tissue boundary and which obscure the true transparency and introduce distortions in the image.
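One hedged way to turn the grid overlay into a number is to compare the contrast of the grid lines seen through the tissue with the contrast of the bare grid. The sketch below uses Michelson contrast on a line profile drawn across the grid. The choice of Michelson contrast, the function names and the example intensities are assumptions made for illustration, and the result depends on illumination, exposure and grid line thickness, so it is only useful for comparing samples photographed under identical conditions.

```python
def michelson_contrast(profile):
    """Michelson contrast (max - min) / (max + min) of an intensity line profile."""
    brightest, darkest = max(profile), min(profile)
    if brightest + darkest == 0:
        return 0.0
    return (brightest - darkest) / (brightest + darkest)


def relative_grid_contrast(profile_through_tissue, profile_bare_grid):
    """Grid contrast seen through the tissue as a fraction of the bare-grid contrast."""
    reference = michelson_contrast(profile_bare_grid)
    if reference == 0:
        raise ValueError("bare-grid profile has no contrast")
    return michelson_contrast(profile_through_tissue) / reference


# Hypothetical 8-bit intensities sampled along a line crossing several grid lines.
bare_grid = [10, 200, 10, 200]
through_tissue = [80, 120, 80, 120]
score = relative_grid_contrast(through_tissue, bare_grid)
```

A score near 1 means the grid is seen almost as clearly as without tissue, and a score near 0 means the grid lines are no longer distinguishable.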

In the absence of a grid or imaging data, the simplest visual assessment is as follows:

If one cannot see through the sample, one will only have marginal success with in toto imaging, if any

If the sample is transparent but has a glassy appearance when submerged in clearing medium, bulk RI matching is off

If one cannot see the surface of sample features by refraction or reflection, index matching worked, but there might be local index variations

Used correctly, a simple transparency metric can still provide useful feedback to compare and optimize protocols, but even in a perfectly transparent tissue, microscopic RI inconsistencies can remain, and there are many other parameters to be optimized to produce a high-quality dataset.

RI matching

RI matching is often cited as the primary mechanism of tissue clearing26,40. It is important to note, however, that it is actually ‘RI approximating’ rather than ‘matching’. Whether it is small amounts of lipid, collagen, water or protein, there are still multiple structures within the tissue that can refract, absorb or scatter light to some degree. This is further complicated when imaging through diverse, intact tissues such as bone and brain, where RI matching must be effective across multiple tissue types and any imperfections sum with increasing depth to degrade the image. When imaging at depths of several millimeters, these mismatches require depth-dependent refocusing to maintain image quality, regardless of the RI-matching capabilities of the microscope objectives, and this becomes substantially more difficult at high NA80,81. However, at depths shallower than 300 μm, which are typical when imaging thin sections or live samples, these effects are generally negligible (at low to moderate NA). As a result, RI matching is not often considered for most biological samples, with the exception of some mounting media82 and oil/glycerol immersion lenses.

Besides overall poor transparency, RI mismatches can be identified by evaluating the uniformity of the SNR of the fluorescent signal across images at the same depth within the tissue or in an XZ reslice. Image blurring or doubling in certain regions of the image might indicate that the light paths do not have consistent RI. In the case of light-sheet imaging, the RI uniformity along the illumination path must also be considered81,83.

The best clearing comes from a homogeneous RI throughout a tissue that originally contained varied refractive indices from water, lipids, carbohydrates and proteins. Since proteins and their associated epitopes or fluorophores are often the focus of experimental inquiry, one can generally remove water and lipids, leaving dehydrated proteins that have an RI between 1.50 and 1.56. Thus, organic solvents and other RI-matching media with similarly high RIs generally yield the best RI approximation. However, reducing lipids and water has implications for probe choice and fluorophore retention.

AF and decolorization

Even if a tissue is completely transparent and perfectly RI matched, there might still be pigments and other agents that autofluoresce or absorb light52. This AF generally comes from two sources: endogenous sources, such as extracellular matrix, heme, lipofuscin and melanin, and exogenous reactions formed chemically during the fixation or clearing protocol34. To evaluate the AF contribution, it is necessary to image an unstained sample in all relevant wavelengths as a negative control. An AF signal can look highly specific in structures such as insufficiently perfused vasculature. Often, an AF signal yields enough information about tissue morphology that it can be used for atlas alignment in mouse brain tissue55.

Techniques to reduce endogenous AF signals have been developed for years84-86 in a range of tissue types, and many of these solutions are compatible with tissue clearing when excess AF exists, though there are trade-offs. Incubation in hydrogen peroxide (H2O2) at elevated temperature is highly effective at decolorizing heme and pigments, but this harsh treatment will quench fluorescence and can alter epitopes20,21. An amino alcohol commonly known as Quadrol (N,N,N′,N′-Tetrakis(2-hydroxypropyl)ethylenediamine) used in the CUBIC protocol has also been used as a heme decolorizing agent25, but has limited effect on melanin-based AF52. Other agents, including N-butyldiethanolamine, 1-methylimidazole26, N-methyldiethanolamine57 and m-xylylenediamine87 have been shown to decolorize heme. Copper(II) sulfate and Sudan Black B efficiently reduced lipofuscin AF without substantial interference of other fluorophores in sectioned tissues88 and have been demonstrated to be compatible with hydrogel-based clearing66, but Sudan Black increases AF in the red spectrum89. One of the most effective methods (when applicable) for reducing heme-based AF is efficient perfusion of the animal to remove all the blood prior to fixation90.

Pigments present in some animal tissues (e.g., melanin) and many lower vertebrates and insects (e.g., pterins and ommochromes) also limit the potential for in toto imaging because of light absorption. H2O2 can be effective at reducing pigments, given the caveats noted previously. THEED (2,2′,2″,2‴-(ethylenedinitrilo)tetraethanol) and acetone have been shown to reduce pigmentation in Drosophila52,91 as well as reduce melanin and heme.

Exogenous AF induced by the fixation or clearing such as Schiff bases from aldehyde fixation can be reduced with H2O2 bleaching, sodium borohydride92 or photobleaching with high-intensity light93. Sodium sulfite has been used to combat the Maillard reaction, which causes tissues to turn brown when exposed to high heat64. Regardless of the cause, if AF cannot be removed or avoided and antibodies are available to the protein or fluorophore of interest, one can shift the signal to a longer wavelength where there is typically less AF signal94.

Chemical compatibility

As mentioned previously, every chemical used in the protocol must be compatible with the tissue, epitopes, fluorescent signal, antibodies and anything else it encounters. This extends beyond sample preparation and into imaging as well. Because of the optical and chemical balance of RI matching, the sample must generally remain in the final RI-matched solution of the clearing protocol to maintain transparency (see Box 2 protocols for exceptions). This means that one must also determine whether the available microscope objective lenses and sample mounting apparatus are compatible with the tissue-clearing chemicals.

Box 2 ∣. Protocol outlines and useful tips.

In this Box, we provide protocol outlines for tissue clearing using organic solvents (A), hydrogel embedding (B) or hydrophilic chemicals (C). These protocol outlines are not meant to be followed directly or replace those published in the primary literature. The steps have been generalized to highlight the basic steps common across many clearing protocols. Critical parameters such as concentrations and times are omitted or generalized as they are highly dependent on specific tissue types and sizes.

- Organic solvents basic protocol outline (cost: low):

- Fix sample as necessary depending on size and type. Cross-linking, such as aldehyde fixation, is compatible with most clearing protocols unless otherwise noted in their methods. Tip: organic solvent protocols can be reversed, allowing additional labeling and imaging after clearing131.

- Decolorize (optional): chemicals discussed in the AF and decolorization section of the text or UV light can be used to remove pigment or heme causing increased AF. Use of H2O2 will quench endogenous fluorescence and can alter epitopes.

- Stain with antibodies. Tip: current best practice is to preconjugate primary + secondary F(ab) OR use a conjugated primary antibody. Solution must be refreshed during staining. For additional tips on staining, see iDISCO protocol20. Tip (optional): embed sample in 2% (wt/vol) agarose after fixation and staining. Embedding and trimming the agarose block has multiple benefits for sample handling: samples are easier to mount and orient, small structures are preserved, and the sample is easier to visualize once transparent.

- Delipidate by dehydrating in a graded alcohol series, 0–100%. Tip: increasing the number of steps in the series to dehydrate samples slowly can reduce shrinkage and distortion of the sample.

- RI match in organic solvent for ~1 d. Tip: many organic solvents have been used in tissue clearing with good results, but CAUTION: they are all very corrosive to plastics and adhesives and should be handled with care.

- Image. Tip: UV curing or cyanoacrylate glues can be used to adhere samples for mounting. A list of organic solvent compatible materials is available in Glaser et al.105. Tip: a 3D printable glass-slide chamber of variable thickness allows imaging of these samples on traditional confocal microscopes without the need for specialized lenses. One such slide-compatible mounting scheme is seen here: https://idisco.info/idisco-protocol/.

- Hydrogel embedding basic protocol outline (cost: moderate-high):

- Fix in presence of acrylamide monomer solution at 4 °C. Tip: glutaraldehyde (GA) fixation increases tissue stability for the high temperature and low pH conditions of the SWITCH protocol64, but GA is associated with increased AF and might require antibody validation64. Increasing the paraformaldehyde (PFA) concentration in the monomer solution prior to polymerization can increase the tissue-gel crosslinking to improve stability of fine structures and limit anisotropic expansion of the hydrogel, but this less porous hydrogel reduces the efficiency of antibody penetration and clearing66. See SHIELD59/E-flash58 protocols for other fixation options.

- Decolorize (optional): chemicals discussed in the AF and decolorization section of the text or UV light can be used to remove pigment or heme causing increased AF. Use of H2O2 will quench endogenous fluorescence and can alter epitopes.

- Polymerize acrylamide at 37 °C under nitrogen gas. Tip: DIY degassing setup66.

- Delipidate by incubating in detergent, ~1–15 d. Tip: electrophoretic pressure will increase the speed of clearing but reduce the amount of fluorescence retained if temperature or current becomes too high62.

- Stain: current best practice is to preconjugate primary + secondary F(ab) OR use conjugated primary. Tip: active staining (e.g., electrophoresis) might increase uniformity and speed of antibody penetration.

- RI match by immersion in RI matching solution.

- Image. Tip: samples are often flexible and require immobilization to avoid motion artifacts.

- Hydrophilic basic protocol outline (cost: low):

- Fix sample as necessary depending on size and type.

- Decolorize (optional): H2O2, Quadrol or UV light can be used to remove pigment or heme causing increased AF. Use of H2O2 will quench endogenous fluorescence and can alter epitopes.

- Stain: optional. Tip: most hydrophilic protocols are compatible with lipophilic dyes such as DiI, often used for retrograde tracing.

- RI match in alcohol or sugar solution for ~1–30 d.

- Image.

This point is particularly important when using organic solvents. The high degree of transparency achieved with organic solvents comes with trade-offs for imaging as solvents are capable of dissolving glues and plastics, including those used in the assembly of most objective lenses. They are also toxic to humans, possibly requiring additional safeguards such as secondary containment vessels, vent hoods and biosafety protocols. While organic solvents require extra caution, the imaging complications alone should not preclude considering their usage. There are ways to efficiently use air lenses for imaging cleared tissue in sealed glass chambers83,95,96, and there are moderate-high NA lenses that are resistant to many organic solvents (ASI/Special Optics, multi-immersion lenses83). See ‘imaging cleared tissue’ section ‘lens choice’ for a more detailed discussion of this topic and Box 2 ‘organic solvents’ for tips on handling and imaging samples in organic solvents.

Imaging cleared tissue

One critical aspect of tissue clearing is that the method of clearing has a direct impact on how the sample can be imaged. Therefore, a method that offers the best transparency might not offer the best image quality if that clearing method is incompatible with available high-resolution dipping lenses or retention of the signal (fluorescence or epitope). Conversely, access to the best optical systems will not produce better results if the clearing and RI matching are not perfect. The imaging-clearing codependency is apparent in the many ways that both inefficient clearing and image acquisition artifacts can contribute to defects in the final images (Fig. 3).

Fig. 3 ∣. Artifacts in cleared tissues imaged on light-sheet microscopes.

Image artifacts can result from poor sample preparation, poor clearing and/or limitations of the imaging system. a, Optimal imaging conditions for a well-cleared sample imaged at high axial and lateral resolution resulting in uniform image quality. Images were acquired in sequential planes in the ‘XY’ orientation. The ‘XZ’ orientation represents a resliced image volume postacquisition. Image pictograms are provided to aid in identifying key image features or artifacts in the real images. Scale bar = 1 mm. Aberrations are shown in the orientation(s) where the aberration is most prominent. b, Insufficient clearing results in decreasing image quality with depth. For single-sided illumination, there is a single quality gradient following the light-sheet penetration from right to left in this image. c, RI mismatches can result in generally blurred image due to loss of focus/contrast or image doubling when multiple illumination angles misalign. d, Poor antibody penetration resulting in a ring-like pattern, with most signal near the periphery. e, AF from blood cells (heme) results in high background and bright blood vessels—especially in cases of poor perfusion. f, Sample movement between images can cause misalignment of channels (pictured) or image tiles. g, Image striping can occur when pigments or other absorbing materials block orthogonal illumination light in the sample and light-sheet destriping methods cannot overcome it. The sample was imaged with single-sided illumination from the left. h, Physical distortions such as broken blood vessels (pictured) or nonuniform size changes are common artifacts for some tissue structures. i, Air bubbles from insufficient degassing or mounting will refract light, causing blurring or shadows in the image plane in the case of light-sheet illumination. The sample was imaged with single-sided illumination from the left. j, When viewing the data in the XZ resliced orientation, bubbles appear as distortions. 
k, Poor axial resolution results in decreased contrast from inclusion of out-of-focus light in a thick optical section. l, Poor light penetration and increased AF at shorter wavelengths are inherent biophysical properties of the light-sample interaction that can be minimized with proper choice of fluorophores. The sample is bright on both sides from dual-sided illumination. m, Spherical aberrations result when there are imperfections in the optical path and cause a halo effect103 or edge blurring. n, Tile patterning can result from FOV tiling when there is nonuniform image quality across the FOV, insufficient overlap or computational tiling artifacts. o, Repeated exposure of a region of tissue to excitation light eventually reduces contrast in that plane (image depicts simulated bleaching). p, When viewing the orthogonal XZ orientation, the bleached plane appears as a line.

Cleared tissue imaging is typically done in two phases, with the first being the optimization phase where the goal is to evaluate the transparency and signal quality in the cleared sample to optimize a clearing protocol. The second phase occurs when the tissue has been ‘optimally’ cleared and is ready for high-resolution imaging, which can involve multiple samples and generate large amounts of data. This distinction is important while developing a clearing protocol, as many of the noted features of successful tissue clearing (such as low AF, uniform labeling and high transparency) can be evaluated at a rather low magnification and resolution, saving valuable time, imaging resources and computational resources for the most valuable samples.

Collecting a lower resolution dataset is also very important as it can better reveal the image quality than the higher resolution data. This may sound counterintuitive, but higher resolution lenses, which collect light from a shallow depth of field over a large angle, are not as tolerant of nonideal conditions, such as imperfect RI matching, and as a result might produce blurry images. Conversely, a lower resolution system often produces high-quality images but with slightly less detail. When lower-level detail is sufficient to perform the analysis and answer the biological question, it is then advantageous to use the lower resolution, smaller dataset.

Despite advances in imaging technologies for large-tissue imaging18,83,96-98, the most widely available device for imaging cleared tissue is likely an available confocal or two-photon microscope, especially during the protocol optimization phase. Regardless of which imaging modality is chosen, the first question is often which lens to use.

Lens choice

Large cleared tissues often demand objective lenses with a long WD and high resolution. Unfortunately, there is generally a trade-off in resolution versus WD imposed by the physical angle required for the lens to collect light from the sample known as the NA of the lens. Increased NA and magnification also come at a cost of increased imaging time, sensitivity to RI mismatches and data size. One should begin using the smallest sample size and lowest magnification possible since doubling the resolution results in eight times the volume of data (Box 1). This facilitates not only data handling but also the staining and clearing process.

Previously, samples in organic solvents proved difficult to image because of chemical compatibility issues with objective lenses. However, recent solutions for imaging into organic solvents have been offered by use of wavelength-dependent refocusing96, RI-matched and chemically compatible ‘bath’ for the dipping lens99, chemically compatible front elements with concentric curvature around the focus (ASI/Special Optics)83,100 and dipping caps with correction optics20,101. Covering the front lens with a thin silicone rubber sheet is also an option to protect the objective from damage102. Together, these provide many commercial and do-it-yourself options for high-resolution imaging in organic solvents (Fig. 4).

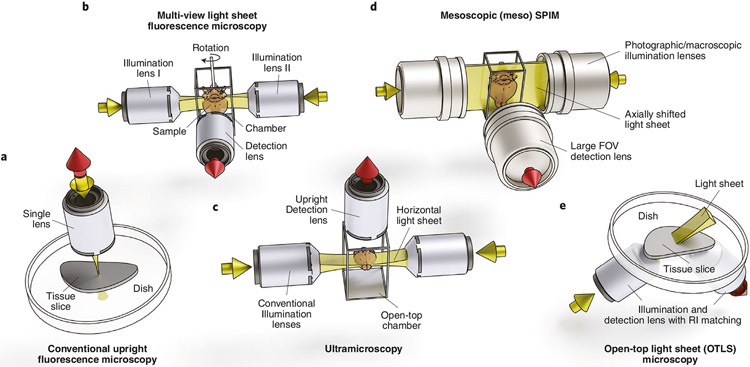

Fig. 4 ∣. Fluorescence microscopy imaging system geometries and features of microscopes commonly used for imaging cleared tissue.

a, Conventional point-scanning confocal or multiphoton: point-scanning methods require long acquisition times for large tissues. b, Multiview horizontal light sheet: this geometry allows rotation of the sample for multiview illumination and detection and requires movement of the sample for tiling and z-scanning. c, Ultramicroscopy: this configuration mounts the sample from below, which precludes sample rotation for multiview acquisitions. d, Mesoscopic horizontal light sheet—DIY: mesospim.org96 presents an oversized version of this configuration, and samples up to 50 × 50 × 100 mm can be accommodated with a large FOV. e, Open-top light sheet: this orientation (along with the inverted V-orientation, diSPIM130) allows unconstrained sample size in two dimensions for large, flat samples and high-throughput applications. All system geometries are amenable to various magnifications, resolutions and solvent compatibilities, depending on lens choice.

During the protocol optimization phase, the simplest options are often air lenses as they are generally inexpensive, have long WD, low magnification (desirable for large FOV) and are agnostic to the clearing medium used as they do not come into contact. However, a typical commercially available air lens is not optically designed for looking into a high-RI medium unless it has been designed specifically for tissue clearing. When imaging into clearing medium with an air lens, chromatic aberrations can cause color shifts, and spherical aberrations reduce the effective resolution and contrast considerably103. Chromatic aberrations are only noticeable when imaging multiple wavelengths and are not necessarily a problem during the protocol optimization phase.

When a well-optimized protocol is achieved and the highest possible resolution is required, dedicated clearing lenses can offer increased resolution and image quality. However, considering the limitations of RI approximation discussed earlier, large tissues are still RI inhomogeneous and not perfectly clear, and thus, resolution inevitably decreases with imaging depth. This is especially true with larger samples and at higher NA, and at some point resolution will fall off because of insufficient clearing or RI matching, so only a fraction of the theoretical resolution or imaging depth may be realized. And even when the lens is used with the appropriate medium and in a perfectly cleared tissue, most optical systems fail to achieve their full resolution because of low magnification and large pixel size104 (undersampling). As a result, one can reasonably expect to achieve ~1 μm lateral resolution over a 1–4 mm FOV on most imaging systems (assume ~5×/0.2 NA air lens). If the sample exceeds this FOV or requires further magnification, image tiling and zooming will be required. This, in turn, can add complications to the imaging, such as subtle sample movements, bleaching and image-stitching artifacts (Fig. 3). A list of lenses and specifications such as magnification, NA and immersion type that are potentially suitable for imaging cleared tissue is available in Glaser et al.105.
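The undersampling argument above can be checked with simple arithmetic. The sketch below compares the diffraction-limited (Abbe) lateral resolution of a lens with the pixel size projected onto the sample. The 0.5 μm emission wavelength, the 6.5 μm camera pixel and the 2,048-pixel sensor width are assumed example values, not specifications taken from the text, and the function names are hypothetical.

```python
def abbe_lateral_resolution_um(wavelength_um, na):
    """Diffraction-limited lateral resolution, d = wavelength / (2 * NA)."""
    return wavelength_um / (2.0 * na)


def pixel_size_at_sample_um(camera_pixel_um, magnification):
    """Size of one camera pixel projected back onto the sample."""
    return camera_pixel_um / magnification


def is_nyquist_sampled(camera_pixel_um, magnification, wavelength_um, na):
    """True if at least two pixels span the diffraction-limited resolution."""
    pixel = pixel_size_at_sample_um(camera_pixel_um, magnification)
    return pixel <= abbe_lateral_resolution_um(wavelength_um, na) / 2.0


def field_of_view_mm(sensor_pixels, camera_pixel_um, magnification):
    """Width of the FOV along one sensor axis, in millimeters."""
    return sensor_pixels * camera_pixel_um / magnification / 1000.0


# Assumed example: 5x/0.2 NA air lens, 0.5 um emission, 6.5 um camera pixels.
optical_limit = abbe_lateral_resolution_um(0.5, 0.2)   # 1.25 um
pixel = pixel_size_at_sample_um(6.5, 5)                # 1.3 um
sampled = is_nyquist_sampled(6.5, 5, 0.5, 0.2)         # False: undersampled
fov = field_of_view_mm(2048, 6.5, 5)                   # about 2.7 mm
```

With these assumed values the optical limit of the lens is close to 1 μm and the FOV falls within the 1–4 mm range, but the projected pixel is larger than half the optical limit, so the camera rather than the lens would limit the recorded resolution.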

Modality choice

While it may be sufficient to optimize protocols on point-scanning systems with the noted workarounds and caveats (see Box 2 for tips on imaging), they are relatively slow and can require several hours to image a large sample. To increase axial resolution in a confocal point-scanning system, it is necessary to choose a high-NA detection lens and reduce the pinhole size, if possible. As better axial resolution also leads to improved lateral resolution, doing so increases the number of images required to cover the sample. This also results in increased light exposure to the sample and consequently increased bleaching; by the time a tissue section 5 mm deep has been imaged on a point-scanning system with 5 μm z-steps, it will have been exposed to excitation light 1,000 times. To avoid out-of-focus photobleaching and to achieve deeper imaging, a nonlinear imaging modality such as two-photon microscopy can be selected. However, like confocal microscopes, most multiphoton instruments scan a single excitation spot across the sample, which results in long acquisition times.
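The exposure count quoted above follows directly from the stack geometry, as the following sketch shows. It also includes an idealized light-sheet comparison in which a plane is illuminated only while it lies within the sheet. The function names and the 10 μm sheet thickness are assumptions for illustration, and the model ignores the variation of excitation intensity with defocus and scattering.

```python
import math


def planes_in_stack(depth_um, z_step_um):
    """Number of z-planes needed to cover the imaged depth."""
    return math.ceil(depth_um / z_step_um)


def exposures_per_plane_epi(depth_um, z_step_um):
    """Epi-illumination (e.g., confocal): excitation light passes through the
    whole depth for every plane acquired, so each plane is exposed once per
    plane in the stack."""
    return planes_in_stack(depth_um, z_step_um)


def exposures_per_plane_light_sheet(sheet_thickness_um, z_step_um):
    """Idealized light sheet: a plane is exposed only while inside the sheet."""
    return max(1, math.ceil(sheet_thickness_um / z_step_um))


confocal_exposures = exposures_per_plane_epi(5000, 5)        # 5 mm deep, 5 um steps
light_sheet_exposures = exposures_per_plane_light_sheet(10, 5)  # assumed 10 um sheet
```

Under these assumptions each plane receives 1,000 exposures in the point-scanning case and 2 in the light-sheet case.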

To speed up imaging and to decouple the lateral and axial resolution, light-sheet fluorescence microscopy (LSFM)18,97,98,104 is an attractive option. For this and many other reasons, LSFM is becoming the preferred method for imaging large cleared tissues, but it does offer unique challenges (see ‘light-sheet imaging of cleared tissues’ below). Optical projection tomography (OPT) is another cleared tissue imaging modality that is fast, simple and generally inexpensive to implement; however, there are currently no commercially available options. OPT offers relatively lower resolution than point scanning or LSFM, but can be efficient for quickly evaluating clearing efficiency and has been used for imaging cleared tissue for years106,107.

Light-sheet imaging of cleared tissues

LSFM is faster than point-scanning acquisition, induces less photobleaching and offers flexible sample mounting and orientation, which is helpful in avoiding regions that may not clear well in whole-mount samples (e.g., eye, bone, etc.). However, no perfect system exists—especially considering the broad range of tissue sizes and clearing types. The variations of light-sheet systems for cleared tissue depend largely on sample size, sample orientation and lens choices. A review of light-sheet concepts is recommended as a supplemental text to this section on light-sheet imaging104.

In addition to the detection objective lens choice considerations noted above, LSFM also requires choices to be made for the illumination lens(es). The choices will depend on tissue size (i.e., working distance), axial resolution (i.e., NA) and chemical compatibility (i.e., dipping versus air). However, the NA requirements for illumination lenses are relaxed compared with the detection path. A new problem is presented by the fact that a beam (or sheet) of light does not have a constant thickness along its propagation axis108,109. When the light sheet is tightly focused, it provides a thin optical section and resulting high axial resolution. The usable FOV, however, is decreased to a narrow region around the light-sheet waist. When this well-illuminated area becomes narrower than the detection FOV, the sheet (or the sample) needs to be moved along the direction of propagation in a discrete or swept manner110,111. Various implementations of this technique have been employed in different systems96,100,112,113, but the end result is uniform axial resolution across the FOV at the cost of increased complexity, imaging time and photobleaching. In most systems, this parameter is tunable such that one can find a balance in these trade-offs. It is important to note that the same RI mismatches that affect the detection path also affect the illumination path, and in both cases, the effects are exacerbated with NA. As a result, thin-sheet sectioning might not be effective deep in tissues with poor RI matching.
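The trade-off between sheet thickness and usable FOV can be sketched with the standard Gaussian beam relations, assuming an ideal Gaussian light sheet in a homogeneous medium. Here the waist is the 1/e² beam radius, the usable FOV is taken as twice the Rayleigh range (the length over which the radius stays within √2 of the waist), and the 0.488 μm wavelength and medium RI of 1.52 are assumed example values. Real sheets in imperfectly matched tissue will perform worse than this idealization.

```python
import math


def rayleigh_range_um(waist_um, wavelength_um, refractive_index=1.0):
    """z_R = pi * n * w0^2 / lambda0 (w0: 1/e^2 radius, lambda0: vacuum wavelength)."""
    return math.pi * refractive_index * waist_um ** 2 / wavelength_um


def beam_radius_um(z_um, waist_um, wavelength_um, refractive_index=1.0):
    """Beam radius w(z) = w0 * sqrt(1 + (z / z_R)^2) at distance z from the waist."""
    z_r = rayleigh_range_um(waist_um, wavelength_um, refractive_index)
    return waist_um * math.sqrt(1.0 + (z_um / z_r) ** 2)


def usable_fov_um(waist_um, wavelength_um, refractive_index=1.0):
    """Confocal parameter 2 * z_R, used here as the well-illuminated FOV."""
    return 2.0 * rayleigh_range_um(waist_um, wavelength_um, refractive_index)


# Assumed example: 0.488 um excitation in a medium of RI 1.52.
for waist in (2.0, 5.0, 10.0):
    fov = usable_fov_um(waist, 0.488, 1.52)
    print(f"waist {waist} um -> usable FOV {fov:.0f} um")
```

Because the usable FOV scales with the square of the waist, halving the sheet thickness reduces the well-illuminated region fourfold, which is why thin sheets must be tiled or swept to cover a large detection FOV.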

Over the past decade, many advances in LSFM imaging technology have resulted from efforts aimed at engineering more capable light-sheet illumination schemes. Pivoting the light sheet in a multidirectional selective plane illumination microscopy (mSPIM)114 or generating the light sheet by scanning a Gaussian beam in a digitally scanned light-sheet microscope (DSLM)115 led to a reduction in shadowing artifacts compared with static light sheets. A DSLM setup is also well suited to accommodate modifications aimed at increasing the optical sectioning capabilities of the instrument in thick scattering specimens, for example by incoherent structured illumination116, by using a slit for the rejection of out-of-focus light in a confocal light-sheet microscope117 or by synchronizing the scanned beam with the rolling shutter of the camera118. Apart from Gaussian illumination, other beam types can be used as well, for example, Bessel beams119,120, Airy beams121 and lattice light sheets122,123. While these more elaborate illumination schemes might achieve some suppression of scattered light or improved axial resolution across larger FOVs, they perform best when carefully matched and tuned to the properties of the sample. To improve light-sheet imaging deep inside tissue, especially where RI mismatches still exist, adaptive optics approaches can be utilized at the cost of an even more complex setup81,124. Before considering any of these approaches for imaging cleared tissue, all options to achieve better sample quality should be exhausted.

Another consideration in choosing an LSFM system design is sample mounting. To generate optical sections through the tissue, it is necessary to either move the sample through the light sheet or move the light sheet through the sample. Large, deformable samples moving through the often viscous clearing medium can suffer from motion artifacts if not properly mounted and constrained. This movement can be reduced by placing the sample in a secondary, RI-matched vessel such as a cuvette, or embedding the sample in a gel prior to clearing and retaining this protective gel throughout the protocol. The ideal imaging conditions would have nothing between the sample and the detection lens except perfectly matched clearing medium. Therefore, these secondary vessels can cause problems such as RI mismatches or reflections near the vessel wall96.

The sample mounting scheme also determines the possible orientation(s) sampled during imaging (Fig. 4). Samples can be placed flat against gravity and imaged from below or above in a fixed orientation. There are light-sheet microscope configurations that offer unconstrained lateral movement for samples that are large in two dimensions or otherwise difficult to mount in other orientations83 (Fig. 4). Alternatively, samples can be suspended from above to allow rotation about a vertical axis for imaging at various angles97. As most clearing protocols do not result in a perfectly transparent tissue, the ability to illuminate and image from multiple angles improves sample coverage and image quality but requires additional data processing and system complexity114,125. Likewise, clearing does not eliminate all absorption by the tissue. As a result, shadow artifacts occur: any sample feature absorbing or blocking the excitation light sheet will cast a shadow across the FOV. In addition to alternative light sheet generation schemes (mSPIM114, DSLM115), alternating light sheets from two directions can reduce such striping artifacts and improve overall depth penetration, sectioning and contrast114. The perfect overlap of illumination light sheets, however, can be affected by local RI variations and result in doubling of sample features in the axial direction96.

Data handling

The final data size depends on sample size, imaging resolution, number of fluorophore channels and acquisition parameters such as stitching and tiling. For example, a whole mouse brain can require anywhere from ~10 GB to well over 1 TB of storage space. In addition to the sample size and resolution effects on data size (Box 1), there are other data size considerations as well. If multiple wavelengths and multiple views are used in some of the advanced light-sheet techniques, the raw data size can easily increase to several terabytes per sample.
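The data sizes quoted above can be reproduced with a back-of-the-envelope calculation. The sketch below assumes a hypothetical 10 × 8 × 6 mm bounding box for a mouse brain, 16-bit voxels and no tile overlap or compression. These values and the function name are illustrative assumptions, not measurements from the text.

```python
import math


def dataset_size_bytes(extent_um, voxel_um, channels=1, bytes_per_voxel=2):
    """Raw size of a volume: voxel count x channels x bytes per voxel.

    extent_um and voxel_um are (x, y, z) tuples in micrometers.
    """
    voxels = 1
    for length, step in zip(extent_um, voxel_um):
        voxels *= math.ceil(length / step)
    return voxels * channels * bytes_per_voxel


BRAIN_BOX_UM = (10000, 8000, 6000)  # hypothetical bounding box, not a measured value

for voxel in (5.0, 2.5, 1.0):
    size_gb = dataset_size_bytes(BRAIN_BOX_UM, (voxel, voxel, voxel)) / 1e9
    print(f"{voxel} um isotropic voxels: {size_gb:.1f} GB per channel")
```

Under these assumptions the raw size is about 7.7 GB, 61 GB and 960 GB per channel, respectively. Halving the voxel size in all three dimensions multiplies the data volume by eight, and each additional channel or view multiplies it again.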

In addition to simply writing and storing the data, data processing is also necessary to reconstruct and render it. Processes such as tile-stitching and dynamic-range blending can be accomplished reasonably efficiently as they generally only require a few images to be loaded into memory126. 3D processing such as stack alignment and stitching, segmentation and multiview fusion, which might require loading the entire dataset into memory, become more difficult125. Even at a modest 128 GB dataset size, this exceeds the RAM of many desktop computers, so processing requires advanced hardware and software solutions. Rendering large datasets is also problematic since image dimensions greater than 4,000 pixels cannot be rendered on most GPUs, and thus rendering hardware might require downsampling and/or multiresolution hierarchical file formats, potentially negating some benefits of increasing resolution. Since preprocessing and rendering steps are often required, several free software packages such as BigStitcher and ClearMap55,127 provide a modular framework integrating several tools for large image volume preprocessing, curation and analysis.

Finally, after storage, reconstruction and rendering needs are met, the difficult task of image analysis begins. Many of the tools currently used for segmenting and quantifying image features are optimized for 2D image data and generally do not perform as well on 3D datasets. In these cases, tissue clearing still provides a way to generate many 2D slices, with less need for isotropic resolution and volumetric reconstruction. These big-data problems are being addressed with advances in hardware and software across many fields, but one must be cautious about generating large volumes of data and ensure that sufficient resources are available to analyze and store them. For further advice on this topic, we suggest the guide by Andreev and Koo128.

Concluding remarks

Considering all the experimental variables from tissue type to data analysis, it is fair to assume that no two experiments will be identical. Thus, it is unlikely that any two clearing and imaging workflows will be alike. Nevertheless, a lab working with a certain sample and aiming to reveal specific features should be able to find a single protocol or a small subset of protocols that works for them. We hope this guide encourages such labs to undertake this process.

The difficulty of this optimization process is exacerbated by lengthy clearing protocols, which delay the imaging and analysis by days or weeks, as well as by the limited availability of imaging systems compatible with a variety of clearing protocols. Additionally, the optimization process is variable and nonlinear. For example, one may optimize the transparency after attaining a bright, specific signal in an uncleared sample, or one may start with a known effective clearing technique and then optimize the signal. This process is iterative, and if one determines that clearing or imaging is degrading the signal, it might be necessary to reoptimize the signal or adapt the clearing or imaging protocol.

With the recent proliferation of clearing method publications, searching the literature for the ideal protocol has become challenging because of the overwhelming number of approaches. There is often a desire to promote each new protocol as superior to other methods, resulting in some bias. Such bias may not always be intentional: the many steps and variations of a protocol make it difficult for any single lab to faithfully reproduce and optimize multiple clearing protocols to the same degree for comparison. As such, this ‘user error’ can make competing protocols appear less effective than the protocol developed and optimized in the comparing lab. These ‘user error’ effects are most prevalent when methods sections do not include sufficient troubleshooting or optimization steps to faithfully reproduce the results.

We suggest that clearing protocols be viewed as complementary rather than competitive, and that multiple approaches may need to be used to tackle a single experimental question. The process of sharing, testing and optimizing these protocols on a variety of tissue types to fully understand their efficacy will require input from the scientific community as a whole, as no single study will be comprehensive enough to encompass all possible clearing and imaging protocols, genetic markers, tissue types, etc. A repository of protocols, positive as well as negative results, experiences and image data would provide a resource for searching tissue-clearing protocols and evaluating outcomes. A repository could also provide a forum to disseminate information in real time rather than waiting for publications and individual trial and error to provide new insight.

Because tissue clearing is a new field of research, few standards are available. Specifically, standards for evaluating and reporting transparency and fluorescence retention in tissue-clearing protocols are lacking. For example, clearing publications could include (i) quantification of how the clearing and labeling change with increasing depth into the tissue, (ii) quantification of fluorescence retention over time (weeks to months) to illustrate the decay rate of the fluorescence or (iii) a measure of compatibility with immunostaining. These suggested metrics certainly have limitations, and further standardization of the fluorescence signal, epitope stability, imaging and image analysis would also be required, but we propose this as a starting point for the discussion of developing a community standard.
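As one possible way to report metric (ii), the sketch below fits a decay rate to repeated intensity measurements of the same sample. It assumes a single-exponential decay, I(t) = I0·exp(−kt), which is a modeling assumption made here for illustration and not a standard proposed in this tutorial. Real samples may decay with more than one component, and the function name and the measurements shown are hypothetical.

```python
import math

def fit_exponential_decay(times, intensities):
    """Least-squares fit of ln(I) = ln(I0) - k * t.

    Returns (I0, k), where k is the decay rate in inverse time units.
    All intensities must be positive (background-subtracted means).
    """
    logs = [math.log(value) for value in intensities]
    n = len(times)
    mean_t = sum(times) / n
    mean_y = sum(logs) / n
    sxx = sum((t - mean_t) ** 2 for t in times)
    sxy = sum((t - mean_t) * (y - mean_y) for t, y in zip(times, logs))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_t
    return math.exp(intercept), -slope

# Hypothetical mean intensities (arbitrary units) measured on these days.
days = [0, 7, 14, 28, 56]
signal = [1000.0, 710.0, 495.0, 250.0, 60.0]

i0, k = fit_exponential_decay(days, signal)
half_life = math.log(2) / k
print(f"I0 = {i0:.0f} a.u., k = {k:.3f} per day, half-life = {half_life:.1f} days")
```

Reporting a fitted rate or half-life together with the imaging settings used at each time point would let readers compare retention between protocols more directly than a single before-and-after image pair.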

It is important to realize that, when beginning a tissue-clearing experiment, it is unlikely there will be a ‘plug and play’ solution available. This means that some experimentation and optimization by the end user are necessary, and by deciding to adopt tissue clearing as a method, one is joining an active field of research, working to fully understand and harness the power of tissue clearing129.

Acknowledgements

We thank J. Liu, A. Glaser, P. Ariel, D. Richardson, F. Helmchen, R. Power and the Huisken lab for their comments and discussion on the manuscript. Illustrations for Figs. 1 and 3 were done by M. Neufeld (madyrose.com). The Circos133 data visualization tool was used to generate Fig. 2. Blender (www.blender.org) was used for rendering Fig. 4. Brain tissue samples displayed in Fig. 3 were provided by L. Egolf, D. Kirschenbaum, F. Catto, P. Perin, K. Le Corf and A. Frick. We also thank the UW-Madison tissue clearing group and all those who provided inspiration, insight and samples: M. Taylor, R. Sullivan, E. Dent, R. Taylor, K. Chan, R. Salamon and N. Iyer. We acknowledge funding by the Morgridge Institute for Research and the NIH (R01OD026219).

Related links

Key references using this protocol

- Tainaka K. et al. Annu. Rev. Cell Dev. Biol 32, 713–741 (2016) (https://www.annualreviews.org/doi/abs/10.1146/annurev-cellbio-111315-125001) introduces the key strategies for clearing tissue and provides an overview of protocols developed up to 2016.

- Gradinaru V. et al. Annu. Rev. Biophys 47, 355–376 (2018) (https://www.annualreviews.org/doi/10.1146/annurev-biophys-070317-032905) provides an introduction to the principles underlying hydrogel-based tissue processing techniques.

- The Mesoscale Selective Plane Illumination Initiative (mesoSPIM) (http://www.mesospim.org) is an open-hardware project aimed at making instructions and software to set up and operate versatile light-sheet microscopes for large cleared samples more accessible.

- Jonkman J. et al. Nat. Protoc 15, 1585–1611 (2020) (https://www.nature.com/articles/s41596-020-0313-9) is an excellent resource for optimizing fluorescent imaging experiments relevant to many imaging modalities.

- The Confocal Microscopy List (http://confocal-microscopy-list.588098.n2.nabble.com/) has, since the early 1990s, been a go-to resource for microscopy and imaging-related questions.

- Microforum (https://forum.microlist.org/) was recently established by Jennifer Waters and Talley Lambert. Currently, most questions are on hardware, fluorophores and other practical aspects of microscopy.

- TeraStitcher (https://abria.github.io/TeraStitcher/) is a stitching tool for large microscopy datasets developed by Alessandro Bria, Giulio Iannello and Roberto Valenti.

- Napari (https://github.com/napari/napari) is a rapidly evolving multidimensional image viewer, written in Python, that is especially well suited for microscopy datasets. The development is spearheaded by the Chan-Zuckerberg Biohub.

- BigStitcher (https://imagej.net/BigStitcher) is a stitching toolbox integrated into the ImageJ/Fiji ecosystem capable of stitching and fusing large multiview light-sheet datasets. The BigStitcher software was developed in the laboratory of Stephan Preibisch.

Footnotes

Competing interests

The authors declare no competing interests.

Reporting Summary

Further information on research design is available in the Nature Research Reporting Summary linked to this article.

Supplementary information

The online version contains supplementary material available at https://doi.org/10.1038/s41596-021-00502-8.

References

- 1. Gradinaru V, Treweek J, Overton K & Deisseroth K. Hydrogel-tissue chemistry: principles and applications. Annu. Rev. Biophys 47, 355–376 (2018).

- 2. Susaki EA et al. Versatile whole-organ/body staining and imaging based on electrolyte-gel properties of biological tissues. Nat. Commun 11, 1982 (2020).

- 3. Ueda HR et al. Tissue clearing and its applications in neuroscience. Nat. Rev. Neurosci 21, 61–79 (2020).

- 4. Ertürk A et al. Three-dimensional imaging of the unsectioned adult spinal cord to assess axon regeneration and glial responses after injury. Nat. Med 18, 166–171 (2012).

- 5. Lerner TN et al. Intact-brain analyses reveal distinct information carried by SNc dopamine subcircuits. Cell 162, 635–647 (2015).

- 6. Adhikari A et al. Basomedial amygdala mediates top-down control of anxiety and fear. Nature 527, 179–185 (2015).

- 7. Belle M et al. Tridimensional visualization and analysis of early human development. Cell 169, 161–173.e12 (2017).

- 8. Acar M et al. Deep imaging of bone marrow shows non-dividing stem cells are mainly perisinusoidal. Nature 526, 126–130 (2015).

- 9. Chen JY et al. Hoxb5 marks long-term haematopoietic stem cells and reveals a homogenous perivascular niche. Nature 530, 223–227 (2016).

- 10. Oshimori N, Oristian D & Fuchs E. TGF-β promotes heterogeneity and drug resistance in squamous cell carcinoma. Cell 160, 963–976 (2015).

- 11. von Neubeck B et al. An inhibitory antibody targeting carbonic anhydrase XII abrogates chemoresistance and significantly reduces lung metastases in an orthotopic breast cancer model in vivo. Int. J. Cancer 143, 2065–2075 (2018).

- 12. Tanaka N et al. Publisher Correction: whole-tissue biopsy phenotyping of three-dimensional tumours reveals patterns of cancer heterogeneity. Nat. Biomed. Eng 1, 1 (2018).

- 13. Henning Y, Osadnik C & Malkemper EP. EyeCi: optical clearing and imaging of immunolabeled mouse eyes using light-sheet fluorescence microscopy. Exp. Eye Res 180, 137–145 (2019).

- 14. Johnson SB, Schmitz HM & Santi PA. TSLIM imaging and a morphometric analysis of the mouse spiral ganglion. Hear. Res 278, 34–42 (2011).

- 15. Yang B et al. Single-cell phenotyping within transparent intact tissue through whole-body clearing. Cell 158, 945–958 (2014).

- 16. Spalteholz W. Über das Durchsichtigmachen von menschlichen und tierischen Präparaten (Leipzig: S. Hierzel). Leipzig (1914).

- 17. Costantini I, Cicchi R, Silvestri L, Vanzi F & Pavone FS. In-vivo and ex-vivo optical clearing methods for biological tissues: review. Biomed. Opt. Express 10, 5251 (2019).

- 18. Dodt HU et al. Ultramicroscopy: three-dimensional visualization of neuronal networks in the whole mouse brain. Nat. Methods 4, 331–336 (2007).

- 19. Ertürk A et al. Three-dimensional imaging of solvent-cleared organs using 3DISCO. Nat. Protoc 7, 1983–1995 (2012).

- 20. Buenrostro JD, Giresi PG, Zaba LC, Chang HY & Greenleaf WJ. Transposition of native chromatin for fast and sensitive epigenomic profiling of open chromatin, DNA-binding proteins and nucleosome position. Nat. Methods 10, 1213–1218 (2013).

- 21. Masselink W et al. Broad applicability of a streamlined ethyl cinnamate-based clearing procedure. Development 146, dev166884 (2019).

- 22. Chung K & Deisseroth K. CLARITY for mapping the nervous system. Nat. Methods 10, 508–513 (2013).

- 23. Du H, Hou P, Zhang W & Li Q. Advances in CLARITY based tissue clearing and imaging (review). Exp. Ther. Med 16, 1567–1576 (2018).

- 24. Hama H et al. Scale: a chemical approach for fluorescence imaging and reconstruction of transparent mouse brain. Nat. Neurosci 14, 1481–1488 (2011).

- 25. Tainaka K et al. Whole-body imaging with single-cell resolution by tissue decolorization. Cell 159, 911–924 (2014).

- 26. Tainaka K et al. Chemical landscape for tissue clearing based on hydrophilic reagents. Cell Rep 24, 2196–2210.e9 (2018).

- 27. Ke MT, Fujimoto S & Imai T. SeeDB: a simple and morphology-preserving optical clearing agent for neuronal circuit reconstruction. Nat. Neurosci 16, 1154–1161 (2013).

- 28. Hou B et al. Scalable and DiI-compatible optical clearance of the mammalian brain. Front. Neuroanat 9, (2015).

- 29. Aoyagi Y, Kawakami R, Osanai H, Hibi T & Nemoto T. A rapid optical clearing protocol using 2,2′-thiodiethanol for microscopic observation of fixed mouse brain. PLoS ONE 10, e0116280 (2015).

- 30. Lai HM et al. Next generation histology methods for three-dimensional imaging of fresh and archival human brain tissues. Nat. Commun 9, 1066 (2018).