Abstract

The dominant theoretical framework to account for reinforcement learning in the brain is temporal difference (TD) reinforcement learning. The TD framework predicts that some neuronal elements should represent the reward prediction error (RPE), which means they signal the difference between the expected future rewards and the actual rewards. The prominence of the TD theory arises from the observation that firing properties of dopaminergic neurons in the ventral tegmental area appear similar to those of RPE model-neurons in TD learning. Previous implementations of TD learning assume a fixed temporal basis for each stimulus that might eventually predict a reward. Here we show that such a fixed temporal basis is implausible and that certain predictions of TD learning are inconsistent with experiments. We propose instead an alternative theoretical framework, coined FLEX (Flexibly Learned Errors in Expected Reward). In FLEX, feature specific representations of time are learned, allowing for neural representations of stimuli to adjust their timing and relation to rewards in an online manner. In FLEX dopamine acts as an instructive signal which helps build temporal models of the environment. FLEX is a general theoretical framework that has many possible biophysical implementations. In order to show that FLEX is a feasible approach, we present a specific biophysically plausible model which implements the principles of FLEX. We show that this implementation can account for various reinforcement learning paradigms, and that its results and predictions are consistent with a preponderance of both existing and reanalyzed experimental data.

The term reinforcement learning is used in machine learning1, in behavioral science2 and in neurobiology3, to denote learning on the basis of rewards or punishment. One type of reinforcement learning is temporal difference (TD) learning, which was designed for machine learning purposes. It has the normative goal of estimating future rewards when rewards can be delayed in time with respect to the actions or cues that engendered these rewards1.

One of the variables in TD algorithms is called reward prediction error (RPE). It is the difference between the predicted value at the current state and the sum of the actual reward and the discounted predicted value at the next state. The concept of TD learning became prominent in neuroscience once it was demonstrated that firing patterns of dopaminergic neurons in the ventral tegmental area (VTA) during reinforcement learning resemble RPE4–6.

Implementations of TD using computer algorithms are straightforward but are more complex when they are mapped onto plausible neural machinery7–9. Current implementations of neural TD assume a set of temporal basis-functions9,10. These basis functions are activated by external cues. For this assumption to hold, each possible external cue must activate a separate set of basis-functions, and these basis-functions must tile all possible learnable intervals between stimulus and reward.

In this paper we demonstrate that 1) these assumptions are implausible at a fundamental conceptual level, and 2) predictions of such algorithms are inconsistent with various established experimental results. Instead, we propose that temporal basis functions used by the brain are themselves learned. We call this theoretical framework Flexibly Learned Errors in Expected Reward, or FLEX for short. We also propose a biophysically plausible implementation of FLEX, as a proof-of-concept model. We show that key predictions of this model are consistent with actual experimental results but are inconsistent with some key predictions of the TD theory.

Results

TD learning with a fixed feature specific temporal-basis

The original TD learning algorithms assumed that agents can be in a set of discrete labeled states (s) which are stored in memory. The goal of TD is to learn a value function such that each state becomes associated with a unique value that estimates future discounted rewards. Learning is driven by the difference between values at two subsequent states, and hence such algorithms are called temporal difference algorithms. Mathematically this is captured by the update rule $V(s) \leftarrow V(s) + \alpha \left[ r(s') + \gamma V(s') - V(s) \right]$, where $s'$ is the next state, $r(s')$ is the reward in the next state, $\gamma$ is an optional discount factor, and $\alpha$ is the learning rate.

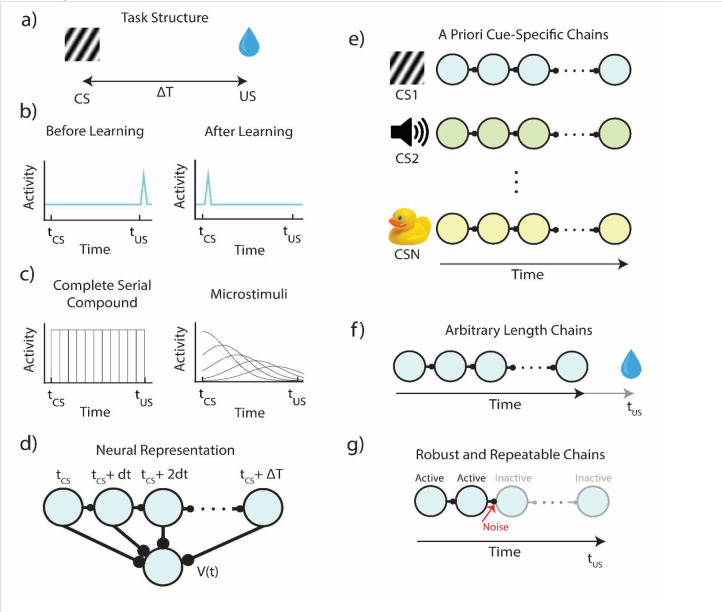

The term in the brackets on the right-hand side of the equation is called the RPE. It represents the difference between the estimated value at the current state and the estimated discounted value at the next state plus the actual reward at the next state. If RPE is zero for every state, the value function no longer changes, and learning reaches a stable state. In experiments that linked RPE to the firing patterns of dopaminergic neurons in VTA, a transient conditioned stimulus (CS) is presented to a naïve animal followed by a delayed reward (also called unconditioned stimulus or US, Figure 1a). It was found that VTA neurons initially respond at the time of reward, but once the association between stimulus and reward is learned, neurons stop firing at the time of the reward and start firing at the time of the stimulus (Figure 1b). This response pattern is what one would expect from TD learning if VTA neurons represent RPE5.
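As a minimal illustration of the update rule and of the resulting RPE pattern, the following Python sketch runs tabular TD(0) on a single-cue trace conditioning trial in which every time step after the CS is its own state. The trial length, CS and US times, learning rate, and the choice to hold the value of the pre-CS background at zero (because the CS arrives unpredictably) are all assumptions made for illustration, not parameters taken from the experiments.

```python
import numpy as np

def simulate_td_csc(n_trials=500, n_steps=20, cs_step=5, us_step=15,
                    alpha=0.1, gamma=1.0):
    """Tabular TD(0) where each time step after the CS is a separate state.

    Returns an array rpe[trial, t] holding the RPE registered on arrival at step t.
    """
    # w[k] is the learned value of the state k steps after CS onset
    w = np.zeros(n_steps - cs_step)
    rpe = np.zeros((n_trials, n_steps))

    def value(t):
        # Assumption: the background before the CS (and after the trial) has value 0
        if t < cs_step or t >= n_steps:
            return 0.0
        return w[t - cs_step]

    for trial in range(n_trials):
        for t in range(n_steps - 1):
            reward = 1.0 if t + 1 == us_step else 0.0
            # RPE: reward plus discounted next value, minus current value
            delta = reward + gamma * value(t + 1) - value(t)
            rpe[trial, t + 1] = delta
            if t >= cs_step:  # only post-CS states are learned
                w[t - cs_step] += alpha * delta
    return rpe

rpe = simulate_td_csc()
print("first trial: RPE at CS, US =", rpe[0, 5], rpe[0, 15])
print("last trial:  RPE at CS, US =", rpe[-1, 5], rpe[-1, 15])
```

Under these assumptions the RPE sits at the US on the first trial and at the CS after training, which is the qualitative pattern sketched in Figure 1b.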

Figure 1 – Structure and Assumptions of Temporal Bases for Temporal Difference Learning.

a) Diagram of a simple trace conditioning task. A conditioned stimulus (CS) such as a visual grating is paired, after a delay ΔT, with an unconditioned stimulus (US) such as a water reward. b) According to the canonical view, neurons in VTA respond only to the US before training, and only to the CS after training. c) In order to represent the delay period, models generally assume neural “microstates” which span the time in between cue and reward. In the simplest case of the complete serial compound (left) the microstimuli do not overlap, and each one uniquely represents a different interval. In general, though (e.g.: microstimuli, right), these microstates can overlap with each other and decay over time. d) A weighted sum of these microstates determines the learned value function V(t). e) An agent does not know a priori which cue will subsequently be paired with reward. In turn, microstate TD models implicitly assume that all N unique cues or experiences in an environment each have their own independent chain of microstates before learning. f) Rewards delivered after the end of a particular cue-specific chain cannot be paired with the cue in question. The chosen length of the chain therefore determines the temporal window of possible associations. g) Microstate chains are assumed to be reliable and robust, but realistic levels of neural noise, drift, and variability can interrupt their propagation, thereby disrupting their ability to associate cue and reward.

Learning algorithms similar to TD have been very successful in machine learning11,12. In such implementations the state (s) could, for example, represent the state of the chess board, or the coordinates in space in a navigational task. Each of these states could be associated with a value. The state space in such examples might be very large, but the values of all these different states could be feasibly stored in a computer’s memory. In some cases, a similar formulation seems feasible for a biological system as well. For example, consider a 2-D navigation problem, where each state is a location in space. One could imagine that each state would be represented by the set of hippocampal place cells activated in this location13, and that another set of neurons would encode the value function, while a third population of neurons (the “RPE” neurons) would compare the value at the current and subsequent state. On its face, this seems to be a reasonable assumption.

However, in contrast to cases where a discrete set of states might have straightforward biological implementation, there are many cases in which this machine learning inspired algorithm cannot be implemented simply in biological machinery. For example, in experiments where reward is delivered with a temporal delay with respect to the stimulus offset (Figure 1), an additional assumption of a preexisting temporal basis is required8.

Why is it implausible to assume a fixed temporal-basis in the brain?

Consider the simple canonical example of Figure 1. In the time interval between the stimulus and the reward, the animal does not change its location, nor does its sensory environment change in any predictable manner. The only thing that changes consistently within this interval is time itself. Hence, in order to mark the states between stimulus and reward, the brain must have some internal representation of time, an internal clock which tracks the time since the start of the stimulus. Note however that before the conditioning starts, the animal has no way of knowing that this specific sensory stimulus has unique significance and therefore each specific stimulus must a priori be assigned its own specific temporal representation.

This is the main hurdle of implementing TD in a biophysically realistic manner: figuring out how to represent the temporal basis upon which the association between cue and reward occurs (Figure 1a). Previous attempts were based on the assumption that there is a fixed cue-specific temporal basis, an assumption which has previously been termed “a useful fiction”8. The specific implementations include the commonly utilized tapped delay lines5,14,15 (the so-called complete serial compound), which are neurons triggered by the sensory cue but active only at a specific delay, or alternatively, a set of cue-specific neuronal responses which are also delayed but have a broader temporal support which increases with an increasing delay (the so-called “microstimuli”)8,10 (Figure 1c).

For this class of temporal representations, the delay time between cue and reward is tiled by a chain of neurons, with each neuron representing a cue-specific time (sometimes referred to as a “microstate”) (Figure 1c). In the simple case of the complete serial compound, the temporal basis is simply a set of neurons that have non-overlapping responses that start responding at the cue-relative times $t_1, t_2, \ldots, t_n$. The learned value function (and in turn the RPE) assigned to a given cue at time t is then given by a learned weighted sum of the activations of these microstates at time t (Figure 1d).
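The two kinds of fixed basis described above, and the value function as a weighted sum over them, can be sketched as follows. This is an illustrative sketch only: the function names are hypothetical, and the microstimulus form (Gaussian bumps applied to an exponentially decaying memory trace) loosely follows the microstimuli models cited above, with decay and width parameters chosen arbitrarily.

```python
import numpy as np

def csc_basis(n_states, n_steps):
    """Complete serial compound: microstate i is active only at cue-relative step i."""
    basis = np.zeros((n_states, n_steps))
    idx = np.arange(min(n_states, n_steps))
    basis[idx, idx] = 1.0
    return basis

def microstimuli_basis(n_states, n_steps, decay=0.985, sigma=0.08):
    """Overlapping microstimuli: Gaussian bumps read out from a decaying trace.

    Later microstimuli peak later, are broader in time, and are weaker.
    """
    trace = decay ** np.arange(n_steps)                 # memory trace, 1 at cue onset
    centers = 1.0 - np.arange(n_states) / n_states      # trace heights each unit prefers
    bumps = np.exp(-(trace[None, :] - centers[:, None]) ** 2 / (2 * sigma ** 2))
    return bumps * trace[None, :]

def value_function(weights, basis):
    """V(t) as a learned weighted sum of microstate activations at time t."""
    return weights @ basis

# A chain of 3 microstates cannot assign value beyond cue-relative step 2
short_chain = csc_basis(n_states=3, n_steps=6)
print(value_function(np.ones(3), short_chain))
```

The last two lines illustrate the limitation depicted in Figure 1f: with a chain shorter than the trial, the value function is necessarily zero after the final microstate, so a later reward cannot be associated with the cue.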

We argue that the conception of a fixed cue-dependent temporal basis makes biologically unrealistic assumptions. First, since one does not know a priori whether presentation of a cue will be followed by a reward, these models assume implicitly that every single environmental cue (or combination of environmental cues) must trigger its own sequence of neural microstates, prior to potential cue-reward pairing (Figure 1e). Further, since one does not know a priori when presentation of a cue may or may not be followed by a reward, these models also assume that microstate sequences are arbitrarily long to account for all possible (or a range of possible) cue-reward delays (Figure 1f). Finally, these microstates are assumed to be reliably reactivated upon subsequent presentations of the cue, e.g., a neuron that represents $t_1$ must always represent $t_1$ across trials, sessions, days, etc. However, implementations of models that generate a chain-like structure of activity can be fragile to biologically relevant factors such as noise, neural dropout, and neural drift, all of which suggest that the assumption of reliability is problematic as well (Figure 1g). The totality of these observations implies that, on the basis of first principles, it is hard to justify the idea of the fixed feature-specific temporal basis, a mechanism which is required for current supposedly biophysical implementations of TD learning.

Although a fixed set of basis-functions for every possible stimulus is untenable, one could assume that it is possible to replace this assumption with a single set of fixed, universal basis-functions. An example of a mechanism that can generate such general basis functions is a fixed recurrent neural network (RNN). Instead of the firing of an individual neuron representing a particular time, here the entire network state can be thought of as a representation of a cue-specific time. This setup is illustrated in Figure 2a.

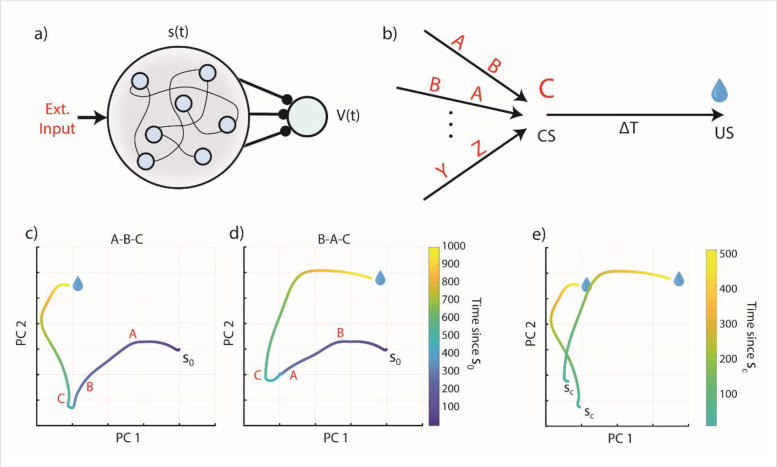

Figure 2 – A fixed RNN as a basis function generator.

a) Schematic of a fixed recurrent neural network as a temporal basis. The network receives external inputs and generates states s(t), which act as a basis for learning the value function V(t). Compare to Figure 1c. b) Schematic of the task protocol. Every presentation of C is followed by a reward at a fixed delay of 1000ms. However, any combination or sequence of irrelevant stimuli may precede the conditioned stimulus C (they might also come after the CS, e.g. A,C,B). c) Network activity, plotted along its first two principal components, for a given initial state s0 and a sequence of presented stimuli A-B-C (red letter is displayed at the time of a given stimulus’ presentation). d) Same as c) but for input sequence B-A-C. e) Overlay of the A-B-C and B-A-C network trajectories, starting from the state at the time of the presentation of C (state sc). The trajectory of network activity differs in these two cases, so the RNN state does not provide a consistent temporal basis that tracks the time since the presentation of stimulus C.

To understand the consequences of this setup, we assume a simple environment in which one specific stimulus (denoted as stimulus C) is always followed 1000ms later by a reward; this stimulus is the CS. However, it is reasonable to assume for the natural world that this stimulus exists among many other stimuli that do not predict a reward. For simplicity we consider 3 stimuli, A, B and C, which can appear in any possible order, as shown in Figure 2b, but in which stimulus C always predicts a reward with a delay of 1000ms.

We simulated the responses of such a fixed RNN to different stimulus combinations (see Methods). The complex RNN activity can be viewed as a projection to a subspace spanned by the first two principal components of the data. In Figure 2c,d we show a projection of the RNN response for two different sequences, A-B-C and B-A-C respectively, aligned to the time of reward. In Figure 2e we show the two trajectories side by side in the same subspace, starting with the presentation of stimulus C.

What these results show is that every time stimulus C appears, it generates a different temporal response in the RNN depending on the preceding stimuli. These temporal patterns can also be changed by a subsequent stimulus that may appear between the CS and US. These results mean that such a fixed RNN cannot serve as a universal basis function because its responses are not repeatable.
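The order dependence described above can be reproduced in a toy setting. The sketch below is not the simulation from the Methods; it assumes a generic random rate network with tanh units, random input vectors for stimuli A, B and C, and arbitrary pulse and gap durations (all hypothetical choices), and compares the network trajectory following stimulus C for the two sequences.

```python
import numpy as np

def make_fixed_rnn(n_units=100, gain=1.5, seed=0):
    """Random fixed recurrent weights and one random input vector per stimulus."""
    rng = np.random.default_rng(seed)
    W = gain * rng.standard_normal((n_units, n_units)) / np.sqrt(n_units)
    inputs = {name: rng.standard_normal(n_units) for name in "ABC"}
    return W, inputs

def trajectory_after_last_stimulus(W, inputs, sequence, tau=20.0,
                                   pulse_steps=20, gap_steps=40, tail_steps=200):
    """Present the stimuli in order; return the states from the onset of the last one."""
    x = np.zeros(W.shape[0])
    tail = []
    for i, name in enumerate(sequence):
        last = i == len(sequence) - 1
        n_steps = pulse_steps + (tail_steps if last else gap_steps)
        for step in range(n_steps):
            drive = inputs[name] if step < pulse_steps else 0.0
            # Euler step of tau * dx/dt = -x + W tanh(x) + input
            x = x + (-x + W @ np.tanh(x) + drive) / tau
            if last:
                tail.append(x.copy())
    return np.array(tail)

W, inputs = make_fixed_rnn()
abc = trajectory_after_last_stimulus(W, inputs, "ABC")
bac = trajectory_after_last_stimulus(W, inputs, "BAC")
# Distance between the two post-C trajectories at each time step
distance = np.linalg.norm(abc - bac, axis=1)
print("distance at C onset:", distance[0], " mean over the delay:", distance.mean())
```

Because the weights and inputs are identical across the two runs, any nonzero distance reflects only the order of the preceding stimuli, which is the sense in which the state after C is not a repeatable clock.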

There are potential workarounds, such as forcing the network states representing the time since stimulus C to be the same across trials. This is equivalent to learning the weights of the network such that all possible “distractor” cues pass through the network’s null space. This means that stimulus C resets the network and erases its memory, but that other stimuli have no effect on the network. Generally, one would have to train the RNN to reproduce a given dynamical pattern representing C->reward, while also being invariant to noise or task-irrelevant dimensions, the latter of which can be arbitrarily high-dimensional and would have to be sampled over during training.

However, this approach requires a priori knowledge that C is the conditioned stimulus (since C->reward is the dynamical pattern we want to preserve) and that the other stimuli are nuisance stimuli. This leaves us with quite a conundrum. In the prospective view of temporal associations assumed by TD, to learn that C is associated with reward, we require a steady and repeatable labeled temporal basis (i.e. the network tracks the time since stimulus C). However, to train an RNN to robustly produce this basis, we need to have previously learned that C is associated with reward, and that the other stimuli are not. As such, these modifications to the RNN, while mathematically convenient, are based on unreasonable assumptions.

Models of TD learning with a fixed temporal-basis are inconsistent with data

Apart from being based on unrealistic assumptions, models of TD learning also make predictions that are inconsistent with experimental data. In recent years, several experiments presented evidence of neurons with temporal response profiles that resemble temporal basis functions16–21, as depicted schematically in Figure 3a. While there is indeed evidence of sequential activity in the brain spanning the delay between cues and rewards (such as in the striatum and hippocampus), these sequences are generally observed after association learning between a stimulus and a delayed reward16,19,20. Some of these experiments have further shown that if the interval between stimulus and reward is extended, the response profiles either remap19, or stretch to fill out the new extended interval16, as depicted in Figure 3a. The fact that these sequences are observed after training and that the temporal response profiles are modified when the interval is changed supports the notion of plastic stimulus-specific basis functions, rather than of a fixed set of basis functions for each possible stimulus. Mechanistically, these results suggest that the naïve network might generate generic temporal response profiles to novel stimuli before learning, resulting from the network’s initial connectivity.

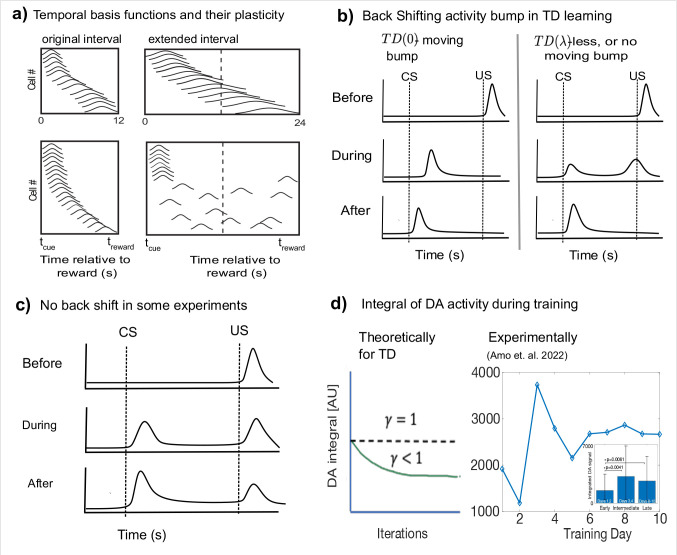

Figure 3 – Certain features of experimental results run counter to predictions of TD.

a) Putative temporal basis functions observed in experiments16,19 after training are plastic, shown here schematically. If, after training, the interval between CS and US is scaled, the basis-functions also change. Recordings in striatum16 show these basis-functions scale with the modified interval (top), while in recordings from hippocampus19 (bottom), they are observed to redistribute to fill up the new interval. b) According to the theory, RPE neuron activity during learning exhibits a backward moving bump (left). For TD($\lambda$) the bump no longer appears (right). c) A schematic depiction of experiments where there is no backward shifting bump22,24. d) The integral of DA neuron activity according to TD theory (left) should be constant over training (for $\gamma = 1$) or decreasing monotonically (for $\gamma < 1$). We reanalyzed existing experimental data (right) and found that the integral can transiently and significantly increase. The inset shows the mean and standard errors for early (1–2), intermediate (3–4) and late (8–10) days. (Data from Amo et al. (2022)23,25, see Supplemental Figure 1).

In the canonical version of TD learning (TD(0)), RPE neurons exhibit a bump of activity that moves back in time from the US to the CS during the learning process (Figure 3b, left). Subsequent versions of TD learning, called TD($\lambda$), which speed up learning by the use of a memory trace, have a much smaller, or no noticeable moving bump (Figure 3b, center and right), depending on the length of the memory trace, denoted by $\lambda$. Most published experiments have not shown VTA neuron responses during the learning process. In one prominent example by Pan et al. in which VTA neurons are observed over the learning process22 (depicted schematically in Figure 3c), no moving bump is observed, prompting the authors to deduce that such memory traces exist. In a more recent paper by Amo et al. a moving bump is reported23. In contrast, in another recently published paper no moving bump is observed24. Taken together, these different results suggest that at least in some cases a moving bump is not observed. However, since a moving bump is not predicted in TD($\lambda$) for sufficiently large $\lambda$, these results do not invalidate the TD framework in general, but rather suggest that in some cases at least the TD(0) variant is inconsistent with the data22.

While the moving bump prediction is parameter dependent, another prediction common to all TD variants is that the integrated RPE, obtained by summing response magnitudes over the whole trial duration, does not exceed the initial US response on the first trial. This prediction is robust because the normative basis of TD is to evaluate expected reward or discounted expected reward. In versions of TD where non-discounted reward is evaluated ($\gamma = 1$) the integral of RPE activity should remain constant throughout learning. Commonly TD estimates discounted reward ($\gamma < 1$), where the discount means that rewards that come with a small delay are worth more than rewards that arrive with a large delay. With discounted rewards the integral of RPE activity will decrease with learning and become smaller than the initial US response. In contrast, we reanalyzed data from a recent experiment (Amo et al. 2022)23,25 and found that the integrated response can transiently increase over the course of learning (Figure 3d).
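The prediction about the integrated RPE can be checked numerically in the simplest setting. The sketch below sums the RPE over each trial for tabular TD(0) with one state per time step; the trial layout, learning rate, and the convention that the pre-CS background keeps a value of zero are illustrative assumptions, and the check covers only this variant rather than every form of TD. For an undiscounted learner the within-trial sum telescopes to the delivered reward, so it stays constant across trials; with discounting it shrinks as the learned values grow.

```python
import numpy as np

def integrated_rpe_per_trial(gamma, n_trials=300, n_steps=20, cs_step=5,
                             us_step=15, alpha=0.1):
    """Sum of the RPE over each trial for tabular TD(0) with one state per step."""
    # values[t] is the value of time step t; entries before the CS and the
    # terminal entry values[n_steps] are never updated and stay at zero
    values = np.zeros(n_steps + 1)
    totals = np.zeros(n_trials)
    for trial in range(n_trials):
        for t in range(n_steps):
            reward = 1.0 if t + 1 == us_step else 0.0
            delta = reward + gamma * values[t + 1] - values[t]
            totals[trial] += delta
            if t >= cs_step:  # assumption: the unpredictable pre-CS period is not learned
                values[t] += alpha * delta
    return totals

undiscounted = integrated_rpe_per_trial(gamma=1.0)
discounted = integrated_rpe_per_trial(gamma=0.9)
print("gamma = 1.0: first and last trial totals:", undiscounted[0], undiscounted[-1])
print("gamma = 0.9: first and last trial totals:", discounted[0], discounted[-1])
```

In neither case does the per-trial total rise above its first-trial value, which is why a transient increase in the integrated dopamine response is informative.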

An additional prediction of TD learning which holds across many variants is that when learning converges, and if a reward is always delivered (100% reward delivery schedule), the response of RPE neurons at the time of reward is zero. Even in the case where a small number of rewards are omitted (e.g. 10%), TD predicts that the response of RPE neurons at the time of reward is very small, much smaller than at the time of the stimulus. This seems to be indeed the case for several example neurons shown in the original experiments of dopaminergic VTA neurons5. However, additional data obtained more recently indicates this might not always be the case and that significant response to reward persists throughout training22,23,26. This discrepancy between TD and the neural data is observed both for experiments in which responses throughout learning are presented22,23 as well as in experiments that only show results after training26.

In experimental approaches affording large ensembles of DA neurons to be simultaneously recorded, a diversity of responses has been reported. Some DA neurons are observed to become fully unresponsive at time of reward, while others exhibit a robust response at time of reward that is no weaker than the initial US response of these cells. This is clearly exhibited by one class of dopaminergic cells (type I) that Cohen et al.26 recorded in VTA. This diversity implies that TD is inconsistent with the results of some of the recorded neurons, but it is possible that TD does apply in some sense to the whole population. One complicating factor is that in most experiments we have no way of ascertaining that learning has reached its final state.

The original conception of TD is clean, elegant, and based on a simple normative foundation of estimating expected rewards. Over the years, various experimental results that do not fully conform with the predictions of TD have been interpreted as consistent with theory by making ad-hoc modifications27,28. Such modifications might include an assumption of different variants of the learning rule for each neuron, such that each dopaminergic neuron no longer represents RPE27, or an assumption of additional inputs such that even when the expectation of reward is learned and fully expected dopaminergic neurons still respond at the time of reward28.

The various modifications that are added to account for new data, and which diverge from a straightforward implementation of TD, raise the subtle question: when does TD stop being TD? We propose that changes to TD in which its normative basis of maximizing future $\gamma$-discounted reward no longer holds are no longer part of the TD framework. The types of modifications added to theory to account for the experimental data are quite common in science in general. Scientific theories that no longer account for the data are often repeatedly modified before there is eventually a paradigm shift, and they are reluctantly abandoned29.

Towards this end, a recent paper has also shown that dopamine release, as recorded with photometry, seems to be inconsistent with RPE24. This paper has shown many experimental results that are at odds with those expected from an RPE, and specifically, these experiments show that dopamine release at least partially represents the retrospective probability of the stimulus given reward. Other work has suggested that dopamine signaling is more consistent with direct learning of behavioral policy than a value-based RPE30.

The FLEX theory of reinforcement learning: A theoretical framework based on a plastic temporal basis.

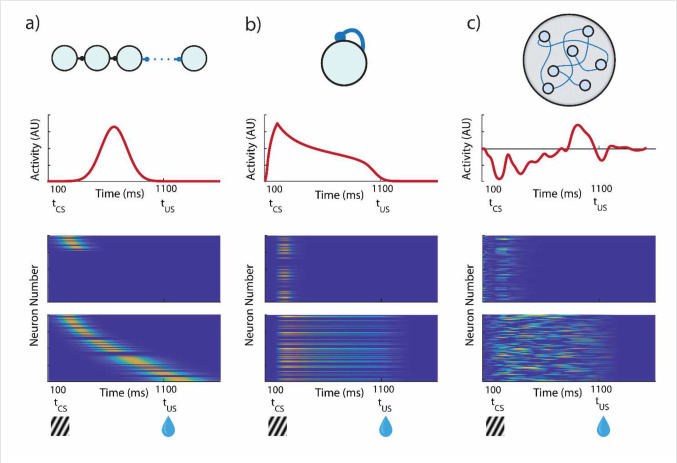

The FLEX theory assumes that there is a plastic (as opposed to fixed) temporal basis that evolves alongside the changing response of reward dependent neurons (such as DA neurons in VTA). The theory in general is agnostic about the functional form of the temporal basis, and several possible examples are shown in Figure 4 (top, schematic; middle, characteristic single unit activity; bottom, population activity before and after learning). Synfire chains (Figure 4a), homogeneous recurrent networks (Figure 4b), and heterogeneous recurrent networks (Figure 4c) could all plausibly support the temporal basis. Before learning, these basis-functions do not exist, though some neurons do respond transiently to the CS. Over learning, such basis-functions develop (Figure 4, bottom) in response to the rewarded stimulus, and not to unrewarded stimuli. In FLEX, we do not need a separate, predeveloped basis for every possible stimulus that spans an arbitrary amount of time. Instead, basis functions only form in the process of learning, develop only to stimuli which are tied to reward, and only span the relevant temporal interval for the behavior.

Figure 4 – Potential Architectures for a Flexible Temporal Basis.

Three example networks which could implement the FLEX theory. Top, network architecture. Middle, activity of one example neuron in the network. Bottom, network activity before and after training. Each network initially only has transient responses to stimuli, modifying plastic connections (in blue) during association to develop a specific temporal basis to reward-predictive stimuli. a) Synfire chains could support a FLEX model, if the chain could recruit more members during learning, exclusive to reward-predictive stimuli. b) A population of neurons with homogeneous recurrent connections has a characteristic decay time that is related to the strength of the weights. The cue-relative time can then be read out by the mean level of activity in the network. c) A population of neurons with heterogeneous and large recurrent connections (liquid state machine) can represent cue-relative time by treating the activity vector at time t as the “microstate” representing time t (as opposed to b) where only mean activity is used).
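The relation between recurrent weight strength and decay time mentioned for panel b can be made concrete with the simplest possible stand-in. The sketch below assumes a single linear rate unit with self-excitation of strength w, governed by tau * dr/dt = -r + w * r, which for w < 1 decays with an effective time constant of tau / (1 - w). This linear unit, the function name, and the parameter values are assumptions chosen for illustration; they are not the spiking network used in the model.

```python
import numpy as np

def decay_time(w, tau=10.0, dt=0.1):
    """Time (ms) for a linear rate unit with recurrent weight w < 1 to fall to 1/e."""
    threshold = np.exp(-1.0)
    r, t = 1.0, 0.0
    while r > threshold:
        # Euler step of tau * dr/dt = -r + w * r
        r += dt / tau * (-r + w * r)
        t += dt
    return t

for w in (0.0, 0.5, 0.9):
    print(f"w = {w}: decay time ~ {decay_time(w):.1f} ms, tau / (1 - w) = {10.0 / (1 - w):.1f} ms")
```

Strengthening the recurrent weight stretches the decay, so in this simplified picture learning the weights is a way of learning the interval that the activity spans.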

In the following, we demonstrate that such a framework can be implemented in a biophysically plausible model, and that such a model not only agrees with many existing experimental observations, but also can reconcile seemingly incongruent results pertaining to sequential conditioning. The aim of this model is to show that the FLEX theoretical framework is possible and plausible given the available data, not to claim that this implementation is a perfectly validated model of reinforcement learning in the brain. Previous models concerning hippocampus and prefrontal cortex have also considered cue memories with adaptive durations, but not explicitly in the context of challenging the fundamental idea of a fixed temporal basis31,32.

A biophysically plausible implementation of FLEX, proof-of-concept

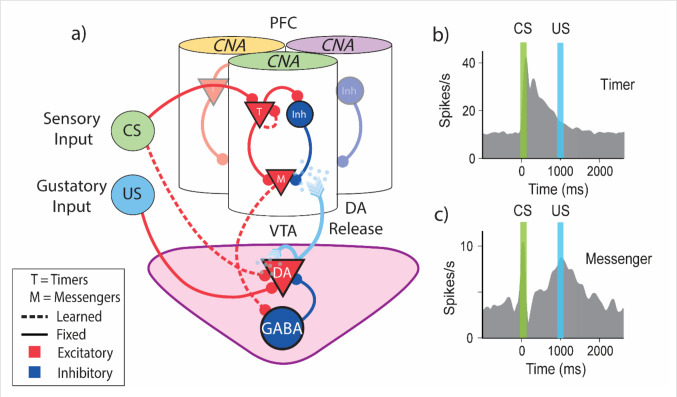

Here we present a biophysically plausible proof-of-concept model that implements FLEX. This model is motivated by previous experimental results17,18 and previous theoretical work in our lab33–37. The network’s full architecture (visualized in Figure 5a) consists of two separate modules, a basis function module, and a reward module, here mapped onto distinct brain areas. We treat the reward module as an analogue of the VTA and the basis-function module akin to a cortical region such as the mPFC or OFC (although other cortical or subcortical regions, notably striatum16,38 might support temporal basis functions). All cells in these regions are modeled as spiking integrate and fire neurons (see Methods).

Figure 5 – Biophysically Inspired Architecture Allows for Flexible Encoding of Time.

a) Diagram of model architecture. CNAs (visualized here as columns) located in PFC are selective to certain sensory stimuli (indicated here by color atop the column) via fixed excitatory inputs (CS). VTA DA neurons receive fixed input from naturally positive valence stimuli, such as food or water reward (US). DA neuron firing releases dopamine, which acts as a learning signal for both PFC and VTA. Solid lines indicate fixed connections, while dotted lines indicate learned connections. b,c) Schematic representation of data adapted from Liu et al. 201542. b) Timers learn to characteristically decay at the time of cue-predicted reward. c) Messengers learn to have a firing peak at the time of cue-predicted reward.

We assume that within our basis function module are sub-populations of neurons tuned to certain external inputs, visualized in Figure 5a as a set of discrete “columns”, each responding to a specific stimulus. Within each column there are both excitatory and inhibitory cells, with a connectivity structure that includes both plastic (dashed lines, Figure 5a) and fixed synaptic connections (solid lines, Figure 5a). The VTA is composed of dopaminergic (DA) and inhibitory GABAergic cells. The VTA neurons have a background firing rate of ~5 Hz, and the DA neurons have preexisting inputs from “intrinsically rewarding” stimuli (such as a water reward). The plastic and fixed connections between the modules and from both the CS and US to these modules are also depicted in Figure 5a.

The model’s structure is motivated by observations of distinct classes of temporally-sensitive cell responses that evolve during trace conditioning experiments in medial prefrontal cortex (mPFC), orbitofrontal cortex (OFC), and primary visual cortex (V1)17,18,36,39,40. The architecture described above allows us to incorporate these observed cell classes into our basis-function module (Figure 5b). The first class of neurons (“Timers”) are feature-specific and learn to maintain persistently elevated activity that spans the delay period between cue and reward, eventually decaying at the time of reward (real or expected). The second class, the “Messengers”, have an activity profile that peaks at the time of real or expected reward. This set of cells forms what has been coined a “Core Neural Architecture” (CNA)35, a potentially canonical package of neural temporal representations. A number of previous studies have shown these cell classes within the CNA to be a robust phenomenon experimentally18,36,39–43, and computational work has demonstrated that the CNA can be used to learn and recall single temporal intervals35, as well as Markovian and non-Markovian sequences34,44. For simplicity, our model treats connections between populations within a single CNA as fixed (previous work has shown that such a construction is robust to perturbation of these weights34,35).

Learning in the model is dictated by the interaction of eligibility traces and dopaminergic reinforcement. We use a previously established two-trace learning rule34,36,37,45 (TTL), which assumes two Hebbian-activated eligibility traces, one associated with LTP and one associated with LTD (see Methods). We use this rule because it solves the temporal credit assignment problem inherent in trace conditioning, because it reaches stable fixed points, and because such traces have been experimentally observed in trace conditioning tasks36. In theory, other methods capable of solving the temporal credit assignment problem (such as a rule with a single eligibility trace33) could also be used to facilitate learning in FLEX, but owing to its functionality and experimental support, we choose to utilize TTL for this work. See the Methods section for details of the implementation of TTL used here.
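To give a feel for how two competing eligibility traces can convert a delayed reinforcement signal into a signed weight change, the following sketch implements a generic two-trace rule. It is not the implementation from the Methods: the saturating trace dynamics, the time constants, the saturation levels, and the learning rate are all hypothetical values chosen so that the LTP-associated trace is larger but decays faster than the LTD-associated trace.

```python
import numpy as np

def two_trace_weight_change(hebbian, dopamine, dt=1.0, tau_p=200.0, tau_d=800.0,
                            max_p=1.0, max_d=0.6, lr=0.01):
    """Net weight change for one synapse under a generic two-trace rule.

    hebbian:  Hebbian drive (pre/post coactivity) at each time step, >= 0
    dopamine: reinforcement signal at each time step
    """
    trace_p = 0.0  # LTP-associated eligibility trace
    trace_d = 0.0  # LTD-associated eligibility trace
    dw = 0.0
    for h, d in zip(hebbian, dopamine):
        # Each trace is driven toward its saturation level by Hebbian activity
        # and decays back to zero with its own time constant
        trace_p += dt / tau_p * (-trace_p + h * (max_p - trace_p))
        trace_d += dt / tau_d * (-trace_d + h * (max_d - trace_d))
        # Reinforcement converts the difference between the traces into plasticity
        dw += dt * lr * d * (trace_p - trace_d)
    return dw

n_steps = 2000
hebbian = np.zeros(n_steps)
hebbian[:500] = 10.0                       # coactivity during the first 500 steps
early = np.zeros(n_steps); early[500:550] = 1.0     # reinforcement right after activity ends
late = np.zeros(n_steps); late[1500:1550] = 1.0     # reinforcement long after activity ends
print("early reinforcement:", two_trace_weight_change(hebbian, early))
print("late reinforcement: ", two_trace_weight_change(hebbian, late))
```

With these illustrative parameters, reinforcement arriving soon after the activity ends potentiates the synapse, while reinforcement arriving much later depresses it; the crossover between the two regimes is what allows a rule of this kind to settle at a fixed point.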

We now use this implementation of FLEX to simulate several experimental paradigms, showing that it can account for reported results. Importantly, some of the predictions of the model are categorically different from those produced by TD, which allows us to distinguish between the two theories based on experimental evidence.

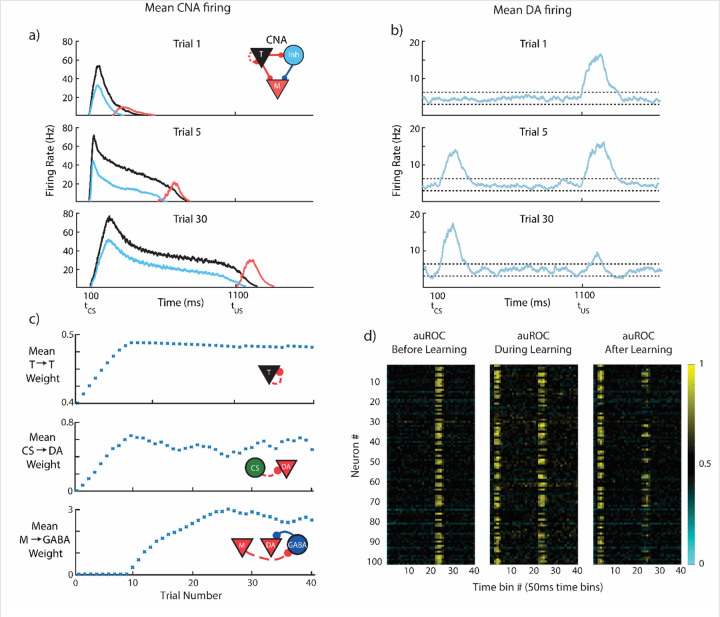

CS-evoked and US-evoked Dopamine Responses Evolve on Different Timescales

First, we test FLEX on a basic trace conditioning task, where a single conditioned stimulus is presented, followed by an unconditioned stimulus at a fixed delay of one second (Figure 6a). The evolution of FLEX over training is mediated by reinforcement learning (via TTL) in three sets of weights: Timer → Timer, Messenger → VTA GABA neurons, and CS → VTA DA neurons. These learned connections encode the feature-specific cue-reward delay, the temporally specific suppression of US-evoked dopamine, and the emergence of CS-evoked dopamine, respectively.

Figure 6 – CS-evoked and US-evoked Model Dopamine Responses Evolve on Different Timescales.

The model is trained for 40 trials while being presented with a CS at 100ms and a reward at 1100ms. a) Mean firing rates for the CNA (see inset for colors), for three different stages of learning. b) Mean firing rate over all DA neurons taken at the same three stages of learning. Firing above or below thresholds (dotted lines) evokes positive or negative dopaminergic reinforcement in PFC. c) Evolution of mean synaptic weights over the course of learning. Top, middle, and bottom, mean strength of T→T, CS→DA, and M→GABA synapses, respectively. d) Area under receiver operating characteristic (auROC, see Methods) for all VTA neurons in our model for 15 trials before (left, US only), 15 trials during (middle, CS+US), and 15 trials after (right, CS+US) learning. Values above and below 0.5 indicate firing rates above and below the baseline distribution.

Upon presentation of the cue, cue neurons (CS) and feature-specific Timers are excited, producing Hebbian-activated eligibility traces at their CS→DA and T→T synapses, respectively. When the reward is subsequently presented one second later, the excess dopamine it triggers acts as a reinforcement signal for these eligibility traces (we model this signal as a function of the DA neuron firing rate, see Methods), causing both the cue neurons’ feed-forward connections and the Timers’ recurrent connections to increase (Figure 6b).

Over repeated trials of this cue-reward pairing, the Timers’ recurrent connections continue to increase until they approach their fixed points, which corresponds to the Timers’ persistent firing duration increasing until it spans the delay between CS and US (Supplemental Figure 2 and Methods). These mature Timers then provide a feature-specific representation of the expected cue-reward delay.

The increase of feed-forward connections from the CS to the DA neurons (Figure 6c) causes the model to develop a CS-evoked dopamine response. Again, this feed-forward learning uses dopamine release at the time of the US as the reinforcement signal to convert the Hebbian-activated CS → DA eligibility traces into synaptic changes (Supplemental Figure 3). The emergence of excess dopamine at the time of the CS owing to these potentiated connections also acts to maintain them at a non-zero fixed point, so CS-evoked dopamine persists long after US-evoked dopamine has been suppressed to baseline (see Methods).

As the Timer population modifies its timing to span the delay period, the Messengers are “dragged along”, since, owing to the dynamics of the Messengers’ inputs (T and Inh), the Messengers themselves selectively fire at the end of the Timers’ firing envelope. Eventually, the Messengers overlap with tonic background activity of VTA GABAergic neurons at the time of the US (Figure 6b). When combined with the dopamine release at the time of the US, this overlap triggers Hebbian learning at the Messenger → VTA GABA synapses (see Figure 6c, Methods), which indirectly suppresses the DA neurons. Because of the temporal specificity of the Messengers, this learned inhibition of the DA neurons (through excitation of the VTA GABAergic neurons) is effectively restricted to a short time window around the US and acts to suppress DA neural activity at the time of the US back towards baseline.

As a result of these processes, our model recaptures the traditional picture of DA neuron activity before and after learning a trace conditioning task (Figure 6b). While the classical single neuron results of Schultz and others suggested that DA neurons are almost completely lacking excess firing at the time of expected reward5, more recent calcium imaging studies have revealed that a complete suppression of the US response is not universal. Rather, many optogenetically identified dopamine neurons maintain a response to the US and show varying development of a response to the CS22,23,26. This diversity is also exhibited in our implementation of FLEX (Figure 6d) due to the connectivity structure which is based on sparse random projections from the CS to the VTA and from the US to VTA.

During trace conditioning in FLEX, the inhibition of the US-evoked dopamine response (via M→GABA learning) occurs only after the Timers have learned the delay period (since M and GABA firing must overlap to trigger learning), giving the potentiation of the CS response time to occur first. At intermediate learning stages (e.g. trial 5, Figure 6a,b), the CS-evoked dopamine response (or equivalently, the CS → DA weights) already exhibits significant potentiation while the US-evoked dopamine response (or equivalently, the inverse of the M → GABA weights) has only been slightly depressed. While this phenomenon has been occasionally observed in certain experimental paradigms22,46–48, it has not been widely commented on – in FLEX, this is a fundamental property of the dynamics of learning (in particular, very early learning).

If an expected reward is omitted in FLEX, the resulting DA neuron firing will be inhibited at that time, demonstrating the characteristic dopamine “dip” seen in experiments (Supplemental Figure 4)5. This phenomenon occurs in our model because the previous balance between excitation and inhibition (from the US and GABA neurons, respectively) is disrupted when the US is not presented. The remaining input at the time of the expected US is therefore largely inhibitory, resulting in a transient drop in firing rates. If the CS is consistently presented without being paired with the US, the association between cue and reward is unlearned, since the consistent negative dopaminergic reinforcement at the time of the US causes depression of CS→DA weights (Supplemental Figure 4).

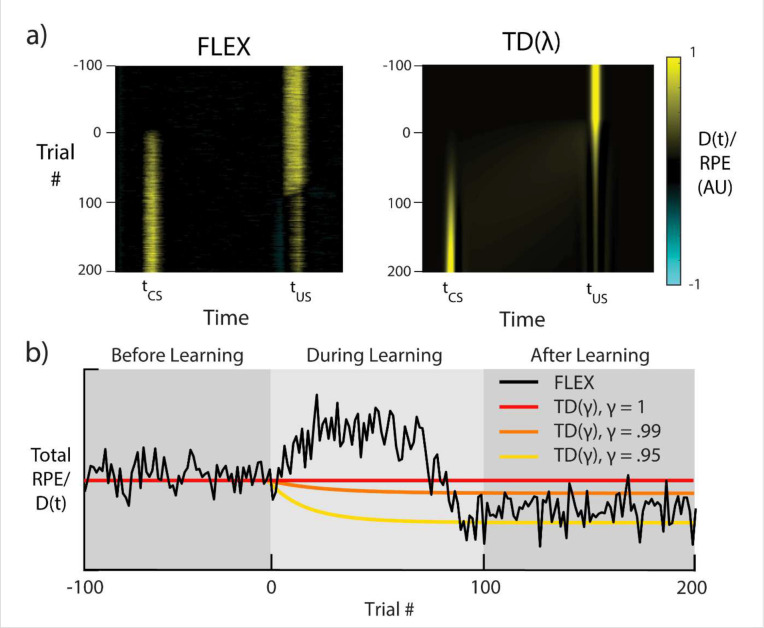

Dynamics of FLEX Diverge from those of TD During Conditioning

FLEX’s property that the evolution of the CS responses can occur independently of (and before) depression of the US response underlies a much more fundamental and general departure of our model from TD-based models. In our model, DA activity does not “travel” backwards over trials1,5 as in TD(0), nor is DA activity transferred from one time to the other in an equal and opposite manner as in TD(λ)7,22. This is because our DA activity is not a strict RPE signal. Instead, while the DA neural firing in FLEX may resemble RPE following successful learning, the DA neural firing and RPE are not equivalent, as evidenced during the learning period.

To demonstrate this, we compare the putative DA responses in FLEX to the RPEs in TD(λ) (Figure 7a), training on the previously described trace conditioning task (see Figure 6a). We set the parameters of our model to match those in earlier work22 and approximate the cue and reward as Gaussians centered at the times of the CS and the US, respectively. In TD models, by definition, the integral of the RPE over the course of the trial is always less than or equal to the original total RPE provided by the initial unexpected presentation of reward. In other words, the error in reward expectation cannot be larger than the initial reward. In both TD(0) and TD(λ), this quantity of “integrated RPE” is conserved; for versions of TD with a temporal discounting factor $\gamma$ (which acts such that a reward with value $r$ presented $n$ timesteps in the future is only worth $\gamma^n r$, where $\gamma \le 1$), this quantity decreases as learning progresses.
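The conservation argument can be checked numerically with a minimal sketch. It assumes tabular TD(0) on a tapped-delay-line state representation, one state per timestep between cue and reward, with the pre-cue value fixed at zero because the cue is unpredictable. The function name, the number of timesteps, the learning rate and the reward size are illustrative assumptions, not the parameters used for Figure 7.

```python
import numpy as np

def integrated_rpe(gamma, n_trials=200, n_steps=10, reward=1.0, alpha=0.1):
    """Per-trial sum of TD(0) errors for one cue followed by one reward.

    Assumption: tapped-delay-line states 0..n_steps-1 follow the cue,
    and V[n_steps] is a terminal state whose value stays at zero.
    """
    V = np.zeros(n_steps + 1)
    totals = []
    for _ in range(n_trials):
        # RPE at cue onset: the pre-cue state has value 0 and carries no reward.
        deltas = [gamma * V[0]]
        for t in range(n_steps):
            r = reward if t == n_steps - 1 else 0.0
            delta = r + gamma * V[t + 1] - V[t]
            V[t] += alpha * delta
            deltas.append(delta)
        totals.append(sum(deltas))
    return np.array(totals)

undiscounted = integrated_rpe(gamma=1.0)  # stays at the reward magnitude
discounted = integrated_rpe(gamma=0.9)    # shrinks as values are learned
```

With `gamma=1.0` the per-trial sum telescopes to the reward magnitude on every trial, while with `gamma<1` it starts at the reward magnitude and falls as the value estimates grow. In this sketch the sum never exceeds the initial reward, which is the bound that the FLEX dopamine signal is reported to violate during training.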

Figure 7 – FLEX Model Dynamics Diverge from those of TD Learning.

Dynamics of both TD(λ) and FLEX when trained with the same conditioning protocol as shown in Figure 6. a) Dopaminergic release for FLEX (left), and RPE for TD(λ) (right), over the course of training. b) Sum total dopaminergic reinforcement during a given trial of training in FLEX (black), and sum total RPE in three instances of TD(λ) with three different values of the discounting parameter $\gamma$ (red, orange, and yellow, respectively). Each point on a line in b) is the sum of the corresponding row in a). Shaded areas indicate functionally different stages of learning in FLEX. The learning rate in our model is reduced in this example, so as to allow direct comparison with TD(λ). 100 trials of US-only presentation (before learning) are included for comparison with subsequent stages.

In FLEX, by contrast, integrated dopaminergic release during a given trial can be greater than that evoked by the original unexpected US (see Figure 7b), and therefore during training the DA signal in FLEX diverges from a reward prediction error. This property has not been explicitly investigated, and most published experiments do not provide continuous data during the training phase. However, to test our prediction, we re-analyzed recently published data which does cover the training phase23, and found that there is indeed a significant transient increase in the dopamine release during training (Figure 3d and Supplemental Figure 1). Another recent publication found that initial DA response to the US was uncorrelated with the final DA response to the CS30, which also supports the idea that integrated dopamine release is not conserved.

FLEX Unifies Sequential Conditioning Results

Standard trace conditioning experiments with multiple cues (CS1→CS2→US) have generally reported the so-called “serial transfer of activation” – that dopamine neurons learn to fire at the time of the earliest reward-predictive cue, “transferring” their initial activation at the time of the US back to the time of the first conditioned stimulus49,50. However, other results have shown that the DA neural responses at the times of the first and second conditioned stimuli ($t_{CS1}$ and $t_{CS2}$, respectively) evolve together, with both CS1 and CS2 predictive of the reward22,24. Surprisingly, FLEX can reconcile these seemingly contradictory results. In Figure 8 we show simulations of sequential conditioning using FLEX. In early training we observe an emerging response to both CS1 and CS2, as well as to the US (Figure 8b,ii). Later on, the response to the US is suppressed (Figure 8b,iii). During late training (Figure 8b,iv) the response to CS2 is suppressed and all activation is transferred to the earliest predictive stimulus, CS1. These evolving dynamics can be compared to the different experimental results. Early training is similar to the results of Pan et al. (2005)22 and Jeong et al. (2022)24, as seen in Figure 8c, while the late training results are similar to the results of Schultz (1993)49 as seen in Figure 8d.

Figure 8 – FLEX model Reconciles Differing Experimental Phenomena Observed during Sequential Conditioning.

Results from “sequential conditioning”, where sequential neutral stimuli CS1 and CS2 are paired with delayed reward US. a) Visualization of protocol. In this example, the US is presented starting at 1500ms, CS1 is presented starting at 100ms, and CS2 is presented starting at 800ms. b) Mean firing rates over all DA neurons, for four distinctive stages in learning – initialization (i), acquisition (ii), reward depression (iii), and serial transfer of activation (iv). c, d) Schematic illustrations of experimental results from recorded dopamine neurons, labeled with the matching stage of learning in our model. c) DA neuron firing before (top), during (middle) and after (bottom) training, wherein two cues (0s and 4s) were followed by a single reward (6s). Adapted from Pan et al. 200522. d) DA neuron firing after training wherein two cues (instruction, trigger) were followed by a single reward. Adapted from Schultz et al. 199349.

After training, in FLEX, both sequential cues still affect the dopamine release at the time of reward, as removal of either cue results in a partial recovery of the dopamine response at the time of the US (Supplemental Figure 5). This is the case even in late training in FLEX, when there is a positive dopamine response only to the first cue – our model predicts that removal of the second cue will result in a positive dopamine response at the time of expected reward. In contrast, the RPE hypothesis would posit that after extended training, the value function would eventually be maximized following the first cue, and therefore removal of the subsequent cue would not change dopamine release at the time of the US.

FLEX is also capable of replicating results of a different set of sequential learning paradigms (Supplemental Figure 6). In these protocols, the network is initially trained on a standard trace conditioning task with a single CS. Once the cue-reward association is learned completely, a second cue is inserted in between the initial cue and the reward, and learning is repeated. As in experiments, this intermediate cue on its own does not become reward predictive, a phenomenon called “blocking”51–53. However, if reward magnitude is increased or additional dopamine is introduced to the system, a response to the intermediate CS (CS2) emerges, a phenomenon termed “unblocking”54,55. Each of these phenomena can be replicated in FLEX (Supplemental Figure 6).

Discussion

TD has established itself as one of the most successful models of neural function to date, as its predictions regarding RPE have, to a large extent, matched experimental results. However, two key factors make it reasonable to consider alternatives to TD as a modeling framework for how midbrain dopamine neurons could learn RPE. First, attempts at biologically plausible implementations of TD have previously assumed that even before learning, each possible cue triggers a separate chain of neurons which tile an arbitrary period of time relative to the cue start. The a priori existence of such an immense set of fixed, arbitrarily long cue-dependent basis functions is both implausible and inconsistent with experimental evidence. Second, different conditioning paradigms have revealed dopamine dynamics that are incompatible with the predictions of models based on the TD framework22,24,56,57.

To overcome these problems, we suggest that the temporal basis itself is not fixed, but instead plastic, and is learned only for specific cues that lead to reward. We call this theoretical framework FLEX. We also presented a biophysically plausible implementation of FLEX, and we have shown that it can generate flexible basis functions, and that it produces dopamine cell dynamics that are consistent with experimental results. Our implementation should be seen as a proof-of-concept model. It shows that FLEX can be implemented with biophysical components, and that such an implementation is consistent with much of the data. It does not show that the specific details of this implementation (including the brain regions in which the temporal basis is developed, the specific dynamics of the temporal basis functions, and the learning rules used) are those used by the brain.

One of the appealing aspects of TD learning is that it arises from the simple normative assumption that the brain needs to estimate future expected reward. Does FLEX have a similar normative basis? In the final state of learning, the responses of DA neurons in FLEX do resemble RPE neurons in TD; however, in FLEX there is no analog for the value neurons assumed in TD. Unlike in TD, activity of FLEX DA neurons in response to the cue represents an association with future expected rewards, independent of a valuation.

Instead, the goal of FLEX is to learn the association between cue and reward, develop the temporal basis functions that span the period between the two, and transfer DA signaling from the time of the reward to the time of the cue. These basis functions could then be used as a mechanistic foundation for brain activities, including the timing of actions. In essence, DA in FLEX acts to create internal models of associations and timings. Recent experimental evidence30 which suggests that DA correlates more with the direct learning of behavioral policies rather than value-encoded prediction errors is broadly consistent with this view of dopamine’s function within the FLEX framework.

Another recent publication24 has questioned the claim that DA neurons indeed represent RPE, instead hypothesizing that DA release is also dependent on retrospective probabilities24,58. The design of most historical experiments cannot distinguish between these competing hypotheses. In this recent research project, a set of new experiments were designed specifically to test these competing hypotheses, and the results obtained are inconsistent with the common interpretation that DA neurons simply represent RPE24. More generally, while the idea that the brain does and should estimate economic value seems intuitive, it has been recently questioned59. This challenge to the prevailing normative view is motivated by behavioral experimental results which instead suggest that a heuristic process, which does not faithfully represent value, often guides decisions.

Recent papers have questioned the common view of the response of DA neurons in the brain and their relation to value estimation9,24,30,59. Here we survey additional problems with implementations of TD algorithms in neuronal machinery, and propose an alternative theoretical formulation, FLEX, along with a computational implementation of this theory. The fundamental difference from previous work is that FLEX postulates that the temporal basis functions necessary for learning are themselves learned, and that neuromodulator activity in the brain is an instructive signal for learning these basis functions. Our computational implementation makes predictions that are different from those of TD and are consistent with many experimental results. Further, we tested a unique prediction of FLEX (that integrated DA release across a trial can change over learning) by re-analyzing experimental data, showing that the data were consistent with FLEX but not the TD framework.

Methods

Network Dynamics

The membrane dynamics for each neuron are described by the following equations:

Equation 1 - Membrane Dynamics

Equation 2 - Synaptic Activation Dynamics

The membrane potential and synaptic activation of neuron $i$ are denoted $v_i$ and $s_i$, respectively. $g$ refers to the conductance, and $E$ to the reversal potentials indicated by the appropriate subscript, where leak, excitatory, and inhibitory are indicated by subscripts $L$, $E$, and $I$, respectively. $\sigma$ is a mean zero noise term. The neuron spikes upon crossing its membrane threshold potential $v_{th}$, after which it enters a refractory period $t_{ref}$. The synaptic activation $s_i$ is incremented each time the neuron spikes, and decays exponentially when there is no spike.

The conductance is the sum over incoming connections of the product of each synaptic weight and the synaptic activation of its presynaptic neuron:

Equation 3 - Conductance

$W^{\alpha}_{ij}$ are the connection strengths from neuron $j$ to neuron $i$, where the superscript $\alpha$ can either indicate $E$ (excitatory) or $I$ (inhibitory). A firing rate estimate $r_i$ for each neuron is calculated as an exponential filter of the spikes, with a time constant $\tau_r$.

Equation 4 - Firing Rate
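The verbal description of Equations 1–4 can be illustrated with a minimal single-neuron sketch. It assumes a standard conductance-based leaky integrate-and-fire form with forward-Euler integration, a saturating synaptic increment `rho * (1 - s)` on each spike, and constant input conductances. Every numerical value, the function name and the increment rule are assumptions chosen for illustration, not the parameters or exact equations of the model.

```python
import numpy as np

def simulate_lif(g_exc, g_inh=0.0, t_total=200.0, dt=0.1, noise_sd=0.0, seed=0):
    """Euler simulation of one conductance-based LIF neuron.

    Units (all illustrative assumptions): ms, mV, nF, uS.
    Returns spike times, final synaptic activation s, and the final
    exponentially filtered firing-rate estimate r (spikes per ms).
    """
    C, g_L = 0.2, 0.01                      # capacitance, leak conductance
    E_L, E_E, E_I = -60.0, 0.0, -70.0       # reversal potentials
    v_th, v_reset, t_ref = -50.0, -60.0, 2.0
    tau_s, rho = 80.0, 0.1                  # synaptic decay and spike increment
    tau_r = 50.0                            # firing-rate filter time constant
    rng = np.random.default_rng(seed)

    v, s, r = E_L, 0.0, 0.0
    refractory_left = 0.0
    spike_times = []
    for step in range(int(round(t_total / dt))):
        spiked = False
        if refractory_left > 0.0:
            refractory_left -= dt           # membrane is clamped while refractory
        else:
            current = g_L * (E_L - v) + g_exc * (E_E - v) + g_inh * (E_I - v)
            v += dt / C * current + noise_sd * np.sqrt(dt) * rng.standard_normal()
            if v >= v_th:
                spiked = True
                v = v_reset
                refractory_left = t_ref
                spike_times.append(step * dt)
        # Synaptic activation decays and jumps by rho * (1 - s) at a spike.
        s += -dt / tau_s * s + (rho * (1.0 - s) if spiked else 0.0)
        # Firing-rate estimate: exponential filter of the spike train.
        r += -dt / tau_r * r + (1.0 / tau_r if spiked else 0.0)
    return np.array(spike_times), s, r

driven_spikes, driven_s, driven_r = simulate_lif(g_exc=0.005)
silent_spikes, _, _ = simulate_lif(g_exc=0.0)
```

With these placeholder values the driven neuron fires repeatedly and the undriven neuron stays at rest. In a network, `g_exc` and `g_inh` would instead be recomputed at every step as weighted sums of presynaptic activations, as the conductance equation describes.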

Fixed RNN

For the network in Figure 2, the dynamics of the units in the RNN are described by the equation below:

Equation 5 - RNN Dynamics

Here, the unit activities are the firing rates of the units in the RNN. The recurrent weights of the RNN are each drawn from a normal distribution whose scale is set by the “gain” of the network60, and the activation function is sigmoidal. Each of the inputs given to the network is a unique, normally distributed projection of a 100 ms step function.

Dopaminergic Two-Trace Learning (dTTL)

Rather than using temporal difference learning, FLEX uses a previously established learning rule based on competitive eligibility traces, known as “two-trace learning” or TTL34,36,37. However, we replace the general reinforcement signal $R(t)$ of previous implementations by a dopaminergic reinforcement $D(t)$. We repeat here the description of $D(t)$ from the main text:

Equation 6 – Dopamine reinforcement

Note that the dopaminergic reinforcement can be both positive and negative, even though actual neuron firing (and the subsequent release of dopamine neurotransmitter) can itself only be positive. The bipolar nature of $D(t)$ implicitly assumes that background tonic levels of dopamine do not modify weights, and that changes in synaptic efficacies are a result of a departure (positive or negative) from this background level. The neutral region around the baseline firing rate provides robustness to small fluctuations in firing rates, which are inherent in spiking networks.
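A bipolar reinforcement signal with a neutral region can be sketched as a thresholded function of the DA firing rate. The piecewise form below, the function name and the threshold value are assumptions made for illustration. Only the ~5 Hz baseline and the existence of a threshold θ come from the text.

```python
import numpy as np

def dopamine_reinforcement(rate_hz, baseline_hz=5.0, theta_hz=1.0):
    """Signed reinforcement from DA firing rate (hypothetical form).

    Rates within +/- theta_hz of baseline give zero reinforcement.
    Rates outside that band give the signed distance to the band edge.
    """
    rate = np.asarray(rate_hz, dtype=float)
    above = np.maximum(rate - (baseline_hz + theta_hz), 0.0)
    below = np.minimum(rate - (baseline_hz - theta_hz), 0.0)
    return above + below

example = dopamine_reinforcement([0.0, 4.5, 5.0, 5.5, 12.0])
```

Small fluctuations around baseline (4.5 to 5.5 Hz here) produce no weight change, while a burst or a pause produces positive or negative reinforcement, respectively.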

The eligibility traces, which are synapse specific, act as long-lasting markers of Hebbian activity. The two traces are separated into LTP- and LTD-associated varieties via distinct dynamics, which are described in the equations below.

Equation 7 - LTP Trace Dynamics

Equation 8 - LTD Trace Dynamics

Here, $T^{a}_{ij}$ (where $a \in \{p, d\}$) is the LTP ($p$ superscript) or LTD ($d$ superscript) eligibility trace located at the synapse between the j-th presynaptic cell and the i-th postsynaptic cell. The Hebbian activity, $H_{ij}$, is a simple multiplication $r_i r_j$ for application of this rule in VTA, where $r_j$ and $r_i$ are the time-averaged firing rates at the pre- and post-synaptic cells. Experimentally, the “Hebbian” terms which impact LTP and LTD trace generation are complex36, but in VTA we approximate them with the simple multiplication $r_i r_j$. For synapses in PFC, we alter $H_{ij}$ so as to restrict PFC trace generation for large positive RPEs. The alteration of $H_{ij}$ by large positive RPEs is inspired by recent experimental work showing that large positive RPEs act as event boundaries and disrupt across-boundary (but not within-boundary) associations and timing61. Functionally, this altered $H_{ij}$ biases Timers in PFC towards encoding a single delay period (cue to cue or cue to reward) and disrupts their ability to encode across-boundary delays.

The LTP and LTD traces activate (via activation constant $\eta^{a}$), saturate (at a level $T^{a}_{max}$) and decay (with time constant $\tau^{a}$) at different rates. $D(t)$ binds to these eligibility traces, converting them into changes in synaptic weights. This conversion into synaptic changes is “competitive”, being determined by the difference between the LTP and LTD traces, each multiplied by $D(t)$:

Equation 9 - Weight Update

where $\eta$ is the learning rate.
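The competition between the two traces can be illustrated with a minimal single-synapse sketch. It assumes each trace follows first-order dynamics with activation, saturation and decay, and that the weight change is the reinforcement multiplied by the difference between the LTP and LTD traces. All parameter values are hypothetical, chosen only so that the LTD trace saturates higher and decays faster than the LTP trace.

```python
import numpy as np

# Hypothetical trace parameters (times in ms).
LTP = dict(eta=10.0, t_max=1.0, tau=2000.0)
LTD = dict(eta=10.0, t_max=1.5, tau=500.0)

def step_trace(trace, hebb, eta, t_max, tau, dt):
    """One Euler step of tau * dT/dt = -T + eta * H * (T_max - T)."""
    return trace + (dt / tau) * (-trace + eta * hebb * (t_max - trace))

def weight_change(hebb, dopamine, dt=1.0, lr=1e-3):
    """Integrate lr * D(t) * (T_p - T_d) over one trial."""
    t_p = t_d = 0.0
    dw = 0.0
    for h, d in zip(hebb, dopamine):
        t_p = step_trace(t_p, h, dt=dt, **LTP)
        t_d = step_trace(t_d, h, dt=dt, **LTD)
        dw += lr * d * (t_p - t_d) * dt
    return dw

t = np.arange(0.0, 1200.0, 1.0)
dopamine = np.where((t >= 1000) & (t < 1050), 1.0, 0.0)   # reward-evoked pulse
short_activity = np.where(t < 200, 1.0, 0.0)              # ends well before reward
long_activity = np.where(t < 1050, 1.0, 0.0)              # persists through reward

dw_short = weight_change(short_activity, dopamine)   # LTP trace wins
dw_long = weight_change(long_activity, dopamine)     # LTD trace wins
```

Under these assumptions, activity that ends long before the reward leaves the slower-decaying LTP trace dominant, so positive reinforcement potentiates the synapse. Activity that persists through the reward leaves the higher-saturating LTD trace dominant, so the same reinforcement depresses it. A fixed point lies between these two regimes, where the traces are equal when dopamine arrives.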

The above eligibility trace learning rules, with the inclusion of dopamine, are referred to as dopaminergic “two-trace” learning or dTTL. This rule is pertinent not only because it can solve the temporal credit assignment problem, allowing the network to associate events distal in time, but also because dTTL is supported by recent experiments which have found eligibility traces in multiple brain regions36,62–65. Notably, in such experiments, the eligibility traces in prefrontal cortex were found to convert into positive synaptic changes via delayed application of dopamine36, which is the main assumption behind dTTL.

For simplicity and to reduce computational time, in the simulations shown, M→GABA connections are learned via a simple dopamine-modulated Hebbian rule. Since these connections are responsible for inhibiting the DA neurons at the time of reward, this learning rule imposes its own fixed point by suppressing $D(t)$ down to 0. For any appropriate selection of feed-forward learning parameters in dTTL (Equation 9), the fixed point $D(t) = 0$ is reached well before the trace-crossing fixed point $T^{p} = T^{d}$. This is because, by construction, the function of M→GABA learning is to suppress $D(t)$ down to zero. Therefore, the fixed point $T^{p} = T^{d}$ needs to be placed (via choosing trace parameters) beyond the fixed point $D(t) = 0$. Functionally, then, both rules act to potentiate M→GABA connections monotonically until $D(t) = 0$. As a result, the dopamine-modulated Hebbian rule is in practice equivalent to dTTL in this case.

Network Architecture

Our network architecture consists of two regions, VTA and PFC, each of which consists of subpopulations of leaky integrate-and-fire neurons. Both fixed and learned connections exist between certain populations to facilitate the functionality of our model.

To model VTA, we include 100 dopaminergic and 100 GABAergic neurons, both of which receive tonic noisy input to establish baseline firing rates of ~5Hz. Naturally appetitive stimuli, such as food or water, are assumed to have fixed connections to DA neurons via the gustatory system. Dopamine release is determined by DA neuron firing above or below a threshold θ, and dopamine release acts as reinforcement for all learned connections in the model.

Our model of PFC is comprised of different feature-specific ‘columns’. Within each column there is a CNA microcircuit, with each subpopulation (Timers, Inhibitory, Messengers) consisting of 100 LIF neurons. Previous work has shown that these subpopulations can emerge from randomly distributed connections35, and further that a single mean field neuron can well approximate the activity of each of these subpopulations of spiking neurons66.

The two-trace learning rule we utilize for our model is described in further detail in previous work34,36,37,45. However, we will attempt to clarify how it functions below. As a first approximation, the traces effectively act such that when they interact with dopamine above baseline, the learning rule will favor potentiation, and when they encounter dopamine below baseline, the learning rule will favor depression. Formally, the rule has fixed points which depend on both the dynamics of the traces and the dopamine release $D(t)$:

Equation 10 – Fixed Point

A trivial fixed point exists when $D(t) = 0$ for all $t$. Another simple fixed point exists in the limit that $D(t) \to \delta(t - t_{R})$, where $t_{R}$ is the time of reward, as Equation 10 then reduces to $T^{p}(t_{R}) = T^{d}(t_{R})$. In this case, the weights have reached their fixed point when the traces cross at the time of reward. In practice, the true fixed points of the model are a combination of these two factors (suppression of dopamine and crossing dynamics of the traces). In reality, $D(t)$ is not a delta function (and may have multiple peaks during the trial), so to truly calculate the fixed points, one must use Equation 10 as a whole. However, the delta approximation used above gives a functional intuition for the dynamics of learning in the model.
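As a hedged illustration of this intuition, the following derivation assumes that the weight update has the competitive form described for Equation 9 (learning rate $\eta$, reinforcement $D(t)$, traces $T^{p}_{ij}$ and $T^{d}_{ij}$) and that the net change over a trial is its time integral. The symbols $D_0$, $t_R$ and $T_{\mathrm{trial}}$ are notational assumptions introduced here, not taken from the original equations.

```latex
\begin{align}
\Delta W_{ij}
  &= \eta \int_0^{T_{\mathrm{trial}}} D(t)\,
     \bigl[T^{p}_{ij}(t) - T^{d}_{ij}(t)\bigr]\,dt = 0
  && \text{(fixed-point condition)} \\
D(t) = 0 \;\; \forall t
  &\;\Rightarrow\; \Delta W_{ij} = 0
  && \text{(trivial fixed point)} \\
D(t) = D_0\,\delta(t - t_R)
  &\;\Rightarrow\; \Delta W_{ij}
   = \eta D_0 \bigl[T^{p}_{ij}(t_R) - T^{d}_{ij}(t_R)\bigr] = 0
   \;\Rightarrow\; T^{p}_{ij}(t_R) = T^{d}_{ij}(t_R)
  && \text{(traces cross at reward)}
\end{align}
```

When $D(t)$ has separate CS-evoked and US-evoked components, the same integral splits into one term per component, and the fixed point is reached when the two terms cancel.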

Supplemental Figure 2 and Supplemental Figure 3 demonstrate examples of fixed points for recurrent and feed-forward learning, respectively. Note that in these examples the two “bumps” of excess dopamine (CS-evoked and US-evoked) are the only instances of non-zero $D(t)$. As such, we can take the integral in Equation 10 and split it into two parts:

Equation 11 - Two-part Fixed Point

For recurrent learning, the dynamics evolve as follows. At the beginning, only the integral over the US-evoked dopamine exists, as $D(t)$ is initially zero over the CS-evoked window (Trial 1 in Supplemental Figure 2). As a result, the learning rule evolves to approach the fixed point mediated by the US-evoked dopamine (Trial 20 in Supplemental Figure 2). After the recurrent weights have reached this fixed point and the Timer neurons encode the cue-reward delay (Trial 30 in Supplemental Figure 2), M→GABA learning acts to suppress the US-evoked $D(t)$ down to zero as well (Trial 40 in Supplemental Figure 2). Note again that we make the assumption that trace generation in PFC is inhibited during large positive RPEs. This acts to encourage the Timers to encode a single “duration” (whether cue-cue or cue-reward). In line with our assumption, experimental evidence has shown these large positive RPEs act as event boundaries and disrupt across-boundary (but not within-boundary) reports of timing61.

For feed-forward learning, the weights initially evolve identically to the recurrent weights (Trial 1 in Supplemental Figure 3). Again, only the integral over the US-evoked dopamine exists, so the feed-forward weights evolve according to that term alone. However, the potentiation of these feed-forward CS→DA weights soon causes release of CS-evoked dopamine, and therefore we must consider both integrals to explain the learning dynamics (Trial 5 in Supplemental Figure 3). This stage of learning is harder to intuit, but an intermediate fixed point is reached when the positive weight change produced by the traces’ overlap with US-evoked dopamine is equal and opposite to the negative weight change produced by the traces’ overlap with CS-evoked dopamine (Trial 20 in Supplemental Figure 3). Finally, after US-evoked dopamine has been suppressed to baseline, the feed-forward weights reach a final fixed point where positive and negative contributions to the weight change over the course of the CS offset each other (Trial 50 in Supplemental Figure 3).

The measure of “area under receiver operating characteristic” (auROC) is used throughout this paper, for the purpose of making direct comparison to the many calcium imaging results that use auROC as a measure of statistical significance. Following the methods of Cohen et al. (2012)26, time is tiled into 50ms bins. For a single neuron, within each 50ms bin, the distribution of spike counts for 25 trials of baseline spontaneous firing (no external stimuli) is compared to the distribution of spike counts during the same time bin for 25 trials of the learning phase in question. For example, in Figure 6d, left, the baseline distributions of spike counts are compared to the distributions of spike counts when US only is presented. ROC is calculated for each bin by sliding the criterion from zero to the max spike count within the bin, and then plotting P(active>criterion) versus P(baseline>criterion). The area under this curve is then a measure of discriminability between the two distributions, with an auROC of 1 demonstrating a maximally discriminable increase in spikes compared to baseline, 0 demonstrating a maximally discriminable decrease of spikes compared to baseline, and 0.5 demonstrating an inability to discriminate between the two distributions.
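The per-bin auROC computation described above can be sketched as follows. This is a minimal illustration, not the analysis code used for the figures. The function name is hypothetical, and the criterion sweep starts at −1 rather than 0 so that the curve includes the (1, 1) endpoint, which is an implementation assumption.

```python
import numpy as np

def auroc(baseline_counts, active_counts):
    """Area under the ROC curve for spike counts in one time bin.

    Sweeps an integer criterion and plots P(active > criterion)
    against P(baseline > criterion), then integrates by trapezoids.
    """
    baseline = np.asarray(baseline_counts)
    active = np.asarray(active_counts)
    max_count = int(max(baseline.max(), active.max()))
    criteria = np.arange(-1, max_count + 1)
    p_baseline = np.array([(baseline > c).mean() for c in criteria])
    p_active = np.array([(active > c).mean() for c in criteria])
    # Both probabilities fall as the criterion rises. Reverse them so that
    # the x-axis, P(baseline > criterion), runs from 0 to 1.
    x, y = p_baseline[::-1], p_active[::-1]
    return float(np.sum(0.5 * (y[1:] + y[:-1]) * np.diff(x)))

increase = auroc([0, 0, 1, 1], [3, 3, 4, 4])    # active clearly above baseline
decrease = auroc([3, 3, 4, 4], [0, 0, 1, 1])    # active clearly below baseline
no_change = auroc([0, 1, 2], [0, 1, 2])         # identical distributions
```

Applying this function to each 50ms bin of each neuron gives one auROC value per neuron per bin, the quantity shown in Figure 6d.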

Data from Amo et al. 2022 was used for Supplemental Figure 1 (refs. 23,25). The data from 7 animals (437–440, 444–446) is shown here. This data is already z-scored as described by Amo et al. On every training day only the first 40 trials are used, unless there was a smaller number of rewarded trials, in which case that number was used. For every animal and every day, the integral from the time of the CS to the end of the trial is calculated and averaged over all trials in that day. An example of the integral for one animal and one day is shown in Supplemental Figure 1a. These are the data points in Supplemental Figure 1b, with each animal represented by a different color. The blue line is the average over animals. The bar graphs in Supplemental Figure 1c represent the average over all animals in early (day 1–2), intermediate (day 3–4) and late (day 8–10) periods. Wilcoxon rank sum tests find that intermediate is significantly higher than early and that late is significantly higher than early. Late is not significantly lower than intermediate. We also find that late is significantly higher than early if we take the average per animal over the early and intermediate days. MATLAB code that carries out this analysis is posted on https://github.com/ianconehed/FLEX and will also be posted on ModelDB together with the code for FLEX.
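The per-day integration step can be sketched in Python as below. This is not the posted MATLAB analysis. The function name, the array layout (trials × time samples) and the synthetic input are assumptions for illustration, and the rank sum tests are omitted.

```python
import numpy as np

def mean_integrated_response(traces, times, t_cs, max_trials=40):
    """Average over trials of the integral from CS onset to trial end.

    traces: array of shape (n_trials, n_samples), z-scored signal.
    times:  array of shape (n_samples,), sample times in seconds.
    Only the first max_trials trials are used, or fewer if fewer exist.
    """
    traces = np.asarray(traces, dtype=float)[:max_trials]
    times = np.asarray(times, dtype=float)
    window = times >= t_cs
    y, x = traces[:, window], times[window]
    # Trapezoidal integration along the time axis, one value per trial.
    per_trial = np.sum(0.5 * (y[:, 1:] + y[:, :-1]) * np.diff(x), axis=1)
    return float(per_trial.mean())

# Synthetic check: a constant unit signal integrates to the window length.
times = np.arange(401) * 0.01            # 0 to 4 s in 10 ms steps
traces = np.ones((60, times.size))
traces[40:] = 100.0                      # trials beyond the first 40 are ignored
value = mean_integrated_response(traces, times, t_cs=0.995)
```

Computing this value for each animal and each training day gives the data points that are then grouped into early, intermediate and late periods.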

Acknowledgements

This work was supported by Biotechnology and Biological Sciences Research Council (BBSRC) Grants BB/N013956/1 and BB/N019008/1, Wellcome Trust 200790/Z/16/Z, Simons Foundation Engineering and Physical Sciences Research Council (EPSRC) EP/R035806/1, National Institute of Biomedical Imaging and Bioengineering 1R01EB022891-01 and Office of Naval Research N00014-16-R-BA01.

Footnotes

Competing Interests

The authors declare no competing interests.

Code Availability

Code for both simulations and analysis is posted on: https://github.com/ianconehed/FLEX and will be available on ModelDB upon the publication of the paper.

References

- 1. Sutton R. S. & Barto A. G. Reinforcement Learning, second edition: An Introduction. (MIT Press, 2018).

- 2. Glickman S. E. & Schiff B. B. A biological theory of reinforcement. Psychol. Rev. 74, 81–109 (1967).

- 3. Lee D., Seo H. & Jung M. W. Neural Basis of Reinforcement Learning and Decision Making. Annu. Rev. Neurosci. 35, 287–308 (2012).

- 4. A Model of How the Basal Ganglia Generate and Use Neural Signals That Predict Reinforcement. in Models of Information Processing in the Basal Ganglia (eds. Houk J. C., Davis J. L. & Beiser D. G.) (The MIT Press, 1994). doi: 10.7551/mitpress/4708.003.0020.

- 5. Schultz W., Dayan P. & Montague P. R. A Neural Substrate of Prediction and Reward. Science 275, 1593–1599 (1997).

- 6. Montague P. R., Dayan P. & Sejnowski T. J. A framework for mesencephalic dopamine systems based on predictive Hebbian learning. J. Neurosci. 16, 1936–1947 (1996).

- 7. Sutton R. S. Learning to predict by the methods of temporal differences. Mach. Learn. 3, 9–44 (1988).

- 8. Ludvig E. A., Sutton R. S. & Kehoe E. J. Evaluating the TD model of classical conditioning. Learn. Behav. 40, 305–319 (2012).

- 9. Namboodiri V. M. K. How do real animals account for the passage of time during associative learning? Behav. Neurosci. 136, 383–391 (2022).

- 10. Ludvig E. A., Sutton R. S. & Kehoe E. J. Stimulus Representation and the Timing of Reward-Prediction Errors in Models of the Dopamine System. Neural Comput. 20, 3034–3054 (2008).

- 11. Tesauro G. TD-Gammon, a self-teaching backgammon program, achieves master-level play. Neural Comput. 6, 215–219 (1994).

- 12. Arulkumaran K., Deisenroth M. P., Brundage M. & Bharath A. A. Deep Reinforcement Learning: A Brief Survey. IEEE Signal Process. Mag. 34, 26–38 (2017).

- 13. Foster D. J., Morris R. G. & Dayan P. A model of hippocampally dependent navigation, using the temporal difference learning rule. Hippocampus 10, 1–16 (2000).

- 14. Montague P., Dayan P. & Sejnowski T. A framework for mesencephalic dopamine systems based on predictive Hebbian learning. J. Neurosci. 16, 1936–1947 (1996).

- 15. Schultz W. Behavioral Theories and the Neurophysiology of Reward. Annu. Rev. Psychol. 57, 87–115 (2006).

- 16. Mello G. B. M., Soares S. & Paton J. J. A Scalable Population Code for Time in the Striatum. Curr. Biol. 25, 1113–1122 (2015).

- 17. Namboodiri V. M. K. et al. Single-cell activity tracking reveals that orbitofrontal neurons acquire and maintain a long-term memory to guide behavioral adaptation. Nat. Neurosci. 22, 1110–1121 (2019).

- 18. Kim E., Bari B. A. & Cohen J. Y. Subthreshold basis for reward-predictive persistent activity in mouse prefrontal cortex. Cell Rep. 35, 109082 (2021).

- 19. Taxidis J. et al. Differential Emergence and Stability of Sensory and Temporal Representations in Context-Specific Hippocampal Sequences. Neuron 108, 984–998.e9 (2020).

- 20. MacDonald C. J., Lepage K. Q., Eden U. T. & Eichenbaum H. Hippocampal “Time Cells” Bridge the Gap in Memory for Discontiguous Events. Neuron 71, 737–749 (2011).

- 21. Parker N. F. et al. Choice-selective sequences dominate in cortical relative to thalamic inputs to NAc to support reinforcement learning. Cell Rep. 39, 110756 (2022).

- 22. Pan W.-X. Dopamine Cells Respond to Predicted Events during Classical Conditioning: Evidence for Eligibility Traces in the Reward-Learning Network. J. Neurosci. 25, 6235–6242 (2005).

- 23. Amo R. et al. A gradual temporal shift of dopamine responses mirrors the progression of temporal difference error in machine learning. Nat. Neurosci. 25, 1082–1092 (2022).

- 24. Jeong H. et al. Mesolimbic dopamine release conveys causal associations. Science 0, eabq6740 (2022); 24. Amo R., Matias S., Uchida N. & Watabe-Uchida M. Population and single dopamine neuron activity during classical conditioning. Dryad, Dataset (2022) doi: 10.5061/DRYAD.HHMGQNKJW.

- 25. xxx.

- 26. Cohen J. Y., Haesler S., Vong L., Lowell B. B. & Uchida N. Neuron-type-specific signals for reward and punishment in the ventral tegmental area. Nature 482, 85–88 (2012).

- 27. Dabney W. et al. A distributional code for value in dopamine-based reinforcement learning. Nature 577, 671–675 (2020).

- 28. Mikhael J. G., Kim H. R., Uchida N. & Gershman S. J. The role of state uncertainty in the dynamics of dopamine. Curr. Biol. 32, 1077–1087 (2022).

- 29. Kuhn T. The structure of scientific revolutions. vol. 111 (University of Chicago Press, 1970).

- 30. Coddington L. T., Lindo S. E. & Dudman J. T. Mesolimbic dopamine adapts the rate of learning from action. Nature 614, 294–302 (2023).

- 31. Wang J. X. et al. Prefrontal cortex as a meta-reinforcement learning system. Nat. Neurosci. 21, 860–868 (2018).

- 32. O’Reilly R. C., Frank M. J., Hazy T. E. & Watz B. PVLV: The Primary Value and Learned Value Pavlovian Learning Algorithm. Behav. Neurosci. 121, 31–49 (2007).

- 33. Gavornik J. P., Shuler M. G. H., Loewenstein Y., Bear M. F. & Shouval H. Z. Learning reward timing in cortex through reward dependent expression of synaptic plasticity. Proc. Natl. Acad. Sci. 106, 6826–6831 (2009).

- 34. Cone I. & Shouval H. Z. Learning precise spatiotemporal sequences via biophysically realistic learning rules in a modular, spiking network. eLife 10, e63751 (2021).

- 35. Huertas M. A., Hussain Shuler M. G. & Shouval H. Z. A Simple Network Architecture Accounts for Diverse Reward Time Responses in Primary Visual Cortex. J. Neurosci. 35, 12659–12672 (2015).

- 36. He K. et al. Distinct Eligibility Traces for LTP and LTD in Cortical Synapses. Neuron 88, 528–538 (2015).

- 37. Huertas M. A., Schwettmann S. E. & Shouval H. Z. The Role of Multiple Neuromodulators in Reinforcement Learning That Is Based on Competition between Eligibility Traces. Front. Synaptic Neurosci. 8, (2016).

- 38. Aosaki T. et al. Responses of tonically active neurons in the primate’s striatum undergo systematic changes during behavioral sensorimotor conditioning. J. Neurosci. 14, 3969–3984 (1994).

- 39. Chubykin A. A., Roach E. B., Bear M. F. & Shuler M. G. H. A Cholinergic Mechanism for Reward Timing within Primary Visual Cortex. Neuron 77, 723–735 (2013).

- 40. Shuler M. G. Reward Timing in the Primary Visual Cortex. Science 311, 1606–1609 (2006).

- 41. Namboodiri V. M. K., Huertas M. A., Monk K. J., Shouval H. Z. & Hussain Shuler M. G. Visually Cued Action Timing in the Primary Visual Cortex. Neuron 86, 319–330 (2015).

- 42. Liu C.-H., Coleman J. E., Davoudi H., Zhang K. & Hussain Shuler M. G. Selective Activation of a Putative Reinforcement Signal Conditions Cued Interval Timing in Primary Visual Cortex. Curr. Biol. 25, 1551–1561 (2015).

- 43. Monk K. J., Allard S. & Hussain Shuler M. G. Reward Timing and Its Expression by Inhibitory Interneurons in the Mouse Primary Visual Cortex. Cereb. Cortex 30, 4662–4676 (2020).

- 44. Zajzon B., Duarte R. & Morrison A. Towards reproducible models of sequence learning: replication and analysis of a modular spiking network with reward-based learning. bioRxiv 2023.01.18.524604 (2023) doi: 10.1101/2023.01.18.524604.

- 45. Cone I. & Shouval H. Z. Behavioral Time Scale Plasticity of Place Fields: Mathematical Analysis. Front. Comput. Neurosci. 15, (2021).

- 46. Ljungberg T., Apicella P. & Schultz W. Responses of monkey dopamine neurons during learning of behavioral reactions. J. Neurophysiol. 67, 145–163 (1992).

- 47. Clark J. J., Collins A. L., Sanford C. A. & Phillips P. E. M. Dopamine Encoding of Pavlovian Incentive Stimuli Diminishes with Extended Training. J. Neurosci. 33, 3526–3532 (2013).

- 48. Coddington L. T. & Dudman J. T. The timing of action determines reward prediction signals in identified midbrain dopamine neurons. Nat. Neurosci. 21, 1563–1573 (2018).

- 49. Schultz W., Apicella P. & Ljungberg T. Responses of monkey dopamine neurons to reward and conditioned stimuli during successive steps of learning a delayed response task. J. Neurosci. 13, 900–913 (1993).

- 50. Schultz W. Predictive Reward Signal of Dopamine Neurons. J. Neurophysiol. 80, 1–27 (1998).

- 51. Kamin L. J. Predictability, surprise, attention, and conditioning. in Punishment and Aversive Behavior (eds. Campbell B. & Church R. M.) 279–296 (1969).

- 52. Mackintosh N. J. & Turner C. Blocking as a function of novelty of CS and predictability of UCS. Q. J. Exp. Psychol. 23, 359–366 (1971).

- 53. Waelti P., Dickinson A. & Schultz W. Dopamine responses comply with basic assumptions of formal learning theory. Nature 412, 43–48 (2001).

- 54. Holland P. C. Unblocking in Pavlovian appetitive conditioning. J. Exp. Psychol. Anim. Behav. Process. 10, 476–497 (1984).

- 55. Steinberg E. E. et al. A causal link between prediction errors, dopamine neurons and learning. Nat. Neurosci. 16, 966–973 (2013).

- 56. Starkweather C. K., Babayan B. M., Uchida N. & Gershman S. J. Dopamine reward prediction errors reflect hidden-state inference across time. Nat. Neurosci. 20, 581–589 (2017).

- 57. Gardner M. P. H., Schoenbaum G. & Gershman S. J. Rethinking dopamine as generalized prediction error. Proc. R. Soc. B Biol. Sci. 285, 20181645 (2018).

- 58. Namboodiri V. M. K. & Stuber G. D. The learning of prospective and retrospective cognitive maps within neural circuits. Neuron 109, 3552–3575 (2021).

- 59. Hayden B. Y. & Niv Y. The case against economic values in the orbitofrontal cortex (or anywhere else in the brain). Behav. Neurosci. 135, 192 (2021).

- 60. Rajan K., Abbott L. F. & Sompolinsky H. Stimulus-Dependent Suppression of Chaos in Recurrent Neural Networks. Phys. Rev. E 82, 011903 (2010).

- 61. Rouhani N., Norman K. A., Niv Y. & Bornstein A. M. Reward prediction errors create event boundaries in memory. Cognition 203, 104269 (2020).

- 62. Yagishita S. et al. A critical time window for dopamine actions on the structural plasticity of dendritic spines. Science 345, 1616–1620 (2014).

- 63. Hong S. Z. et al. Norepinephrine potentiates and serotonin depresses visual cortical responses by transforming eligibility traces. Nat. Commun. 13, 3202 (2022).

- 64. Gerstner W., Lehmann M., Liakoni V., Corneil D. & Brea J. Eligibility Traces and Plasticity on Behavioral Time Scales: Experimental Support of NeoHebbian Three-Factor Learning Rules. Front. Neural Circuits 12, (2018).

- 65. Brzosko Z., Schultz W. & Paulsen O. Retroactive modulation of spike timing-dependent plasticity by dopamine. eLife 4, e09685 (2015).

- 66. Gavornik J. P. & Shouval H. Z. A network of spiking neurons that can represent interval timing: mean field analysis. J. Comput. Neurosci. 30, 501–513 (2011).
