Abstract

Purpose:

The purpose of this study was to assess the relationship and comparability of cepstral and spectral measures of voice obtained from a high-cost “flat” microphone and precision sound level meter (SLM) vs. high-end and entry level models of commonly and currently used smartphones (iPhone i12 and iSE; Samsung s21 and s9 smartphones). Device comparisons were also conducted in different settings (sound-treated booth vs. typical “quiet” office room) and at different mouth-to-microphone distances (15 and 30 cm).

Methods:

The SLM and smartphone devices were used to record a series of speech and vowel samples from a prerecorded diverse set of 24 speakers representing a wide range of sex, age, fundamental frequency (F0), and voice quality types. Recordings were analyzed for the following measures: smoothed cepstral peak prominence (CPP in dB); the low vs high spectral ratio (L/H Ratio in dB); and the Cepstral Spectral Index of Dysphonia (CSID).

Results:

A strong device effect was observed for L/H Ratio (dB) in both vowel and sentence contexts and for CSID in the sentence context. In contrast, device had a weak effect on CPP (dB), regardless of context. Recording distance had a small-to-moderate effect on measures of CPP and CSID but a negligible effect on L/H Ratio. With the exception of L/H Ratio in the vowel context, setting had a strong effect on all three measures. While these effects resulted in significant differences between measures obtained with SLM vs. smartphone devices, the intercorrelations of the measurements were extremely strong (r’s > 0.90), indicating that all devices were able to capture the range of voice characteristics represented in the voice sample corpus. Regression modeling showed that acoustic measurements obtained from smartphone recordings could be successfully converted to comparable measurements obtained by a “gold standard” (precision SLM recordings conducted in a sound-treated booth at 15 cm) with small degrees of error.

Conclusions:

These findings indicate that a variety of commonly available modern smartphones can be used to collect high quality voice recordings usable for informative acoustic analysis. While device, setting, and distance can have significant effects on acoustic measurements, these effects are predictable and can be accounted for using regression modeling.

Keywords: smartphones, frequency response, voice evaluation, cepstral analysis, spectral analysis

Introduction

With COVID-19 pandemic-related changes in care delivery, there is growing interest in the potential for collecting information about vocal function via smartphones. While specialty clinical space and equipment can be scarce and expensive, smartphones are ever more available to both clinicians and patients. The ability to remotely collect acoustic data for voice analysis would enhance research efforts and facilitate more user-friendly approaches to clinical care. However, adoption of such technologies in these areas remains limited.

Prior literature on smartphone voice recordings has been heterogeneous, making it difficult to clarify the circumstances under which smartphones can reasonably be used to collect voice recordings for research and/or clinical purposes1. Many studies have used sound-treated or sound-proofed booths2, which offer low-noise data collection but may limit translation to the typical environments of most voice users. In other cases, studies have used non-dysphonic speakers3, which may make it difficult to predict how findings generalize to patients with voice disorders seen in clinics or to dysphonic research participants. Some studies also incorporated external microphones4, adding another variable. Furthermore, the spoken tasks themselves varied, including sustained vowels and connected speech, sometimes with background noise or other modifications. Prior studies have also used a wide variety of devices, including early to more recent iPhones, Samsung smartphones, and those from other vendors such as Google and Nokia2,3,5–7.

Several studies have concluded that both iPhone and Android phones can produce recordings suitable for acoustic analysis of pathologic voice, particularly for fundamental frequency, jitter, shimmer, noise-to-harmonic ratio (NHR), cepstral peak prominence (CPP), and the Acoustic Voice Quality Index (AVQI)3,4,6–9, although there may be greater divergence with dysphonic voice samples than non-dysphonic voice samples9. However, others have indicated that, while fundamental frequency measurements appear reliable, other acoustic measures had limited robustness to recording conditions and device type, and therefore should be used with caution2,5,10,11.

There is still a lack of clear consensus on the validity and reliability of acoustic measures when obtained via smartphone recordings. With new devices becoming increasingly available and microphone and smartphone technology constantly improving, limitations of prior generations of devices may or may not remain applicable. Using a corpus of voice samples representing a broad range of ages and severity (and etiologies) of dysphonia, the objective of this study was to determine the robustness of key spectral and cepstral acoustic measures recorded using both entry-level and high-end models of very commonly used modern smartphone brands as compared to measurements obtained from research-quality microphone and sound level meter (SLM) recordings. In addition, to enhance the potential practical applicability of our findings, we examined device recordings collected in two acoustic settings (sound-treated booth and a quiet office room), and at two different mouth-to-phone distances (15 and 30 cm).

We hypothesized that correlations between measures across the recording devices would be very high (r’s > 0.8), and, given anticipated differences in frequency response curves across devices, that cepstral measures would be more robust to device type than L/H ratio. Further, we hypothesized that increased mouth-to-microphone distance and the quiet room condition would have a detrimental effect on acoustic measures by introducing increased environmental noise vs. a shorter mouth-to-microphone distance and the sound-treated booth condition, respectively. On an exploratory basis, we also sought to determine whether knowledge of device type (SLM vs. smartphone) might allow prediction of the degree to which the use of a particular device might influence a given acoustic measure when the recording distance and setting were known.

Methodology

Selection of Smartphone Devices

In this study, four popular smartphones were used as recording devices: (1) the Apple iPhone 12 (i12), (2) the Apple iPhone SE (iSE; 2nd generation), (3) the Samsung Galaxy S21, and (4) the Samsung Galaxy S9. The smartphones were selected to represent the most popular manufacturers in the US, based on recent sales and estimated use data12–15. The entry-level and highest-end devices of each manufacturer (Apple iPhone SE and 12 and Samsung Galaxy S9 and S21) were identified at study launch. Devices were purchased new, directly from the manufacturer, to minimize the risk of differences related to prior use or refurbishment. As these devices are subject to extensive quality control during manufacturing, one device of each model was used. The rationale for selecting the entry-level and highest-end devices was to assess possible differences in acoustic signal recordings available to the typical consumer at that time.

Smartphone Frequency Response

To further describe the characteristics of the selected devices, smartphone frequency response was assessed using a methodology similar to that of Awan et al.16. All of these smartphones use capacitive MEMS (microelectromechanical systems) condenser microphones. Each smartphone was assessed using pink noise as a reference signal to measure frequency response. A pink noise waveform (downloaded from https://www.audiocheck.net/testtones_pinknoise.php) was output via a PC-compatible computer running Reaper v. 6.38 digital audio workstation software (Reaper, 2022) on Windows 10 (Microsoft Corp., Redmond, WA USA). The computer audio output used a Behringer UMC202 audio interface (Music Tribe, Makati, Philippines) connected to the mouth simulator input of a GRAS 45BM KEMAR head-and-torso (HAT) model (GRAS Sound & Vibration USA, Beaverton, OR USA), used to simulate and standardize the recording sound field. Pink noise was produced at an intensity equivalent to 67 dB C at 30 cm and 73 dB C at 15 cm, as measured using a Model 377A13 Precision Condenser Microphone (PCB Piezotronics, Depew, NY USA) and a Larson Davis Model 824 Precision Sound Level Meter (SLM) & Real Time Analyzer (Larson Davis, a PCB Piezotronics division, Depew, NY USA). Our goal was to address measures of voice quality used in typical vowel and speech conditions; therefore, we used a signal intensity consistent with normative expectations of 65–70 dB C for speaking situations, and smartphone positioning consistent with typical microphone positioning and handling comfort. We chose not to examine microphone positioning against the ear, as might be typical for a phone call, since (a) the emitted pressure wave at such close microphone distances could have produced noise artifacts that would have affected our analyses, and (b) in potential applications involving guided voice recording, most users would likely be viewing instructions on the screen while recording.
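The 6 dB difference between the two calibration levels (67 dB C at 30 cm vs. 73 dB C at 15 cm) is what the inverse-square law predicts for halving the source distance. A minimal check, assuming idealized free-field, point-source propagation (only an approximation for a mouth simulator in a room); the helper name is our own:

```python
import math

def spl_at_distance(spl_ref_db: float, r_ref_cm: float, r_new_cm: float) -> float:
    """Free-field point-source estimate of sound pressure level at a new distance."""
    # Sound pressure falls as 1/r, so the level changes by 20*log10(r_ref / r_new) dB.
    return spl_ref_db + 20.0 * math.log10(r_ref_cm / r_new_cm)

# 67 dB C measured at 30 cm -> predicted level at 15 cm
predicted_15cm = spl_at_distance(67.0, 30.0, 15.0)
print(f"{predicted_15cm:.2f} dB")  # 73.02 dB
```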

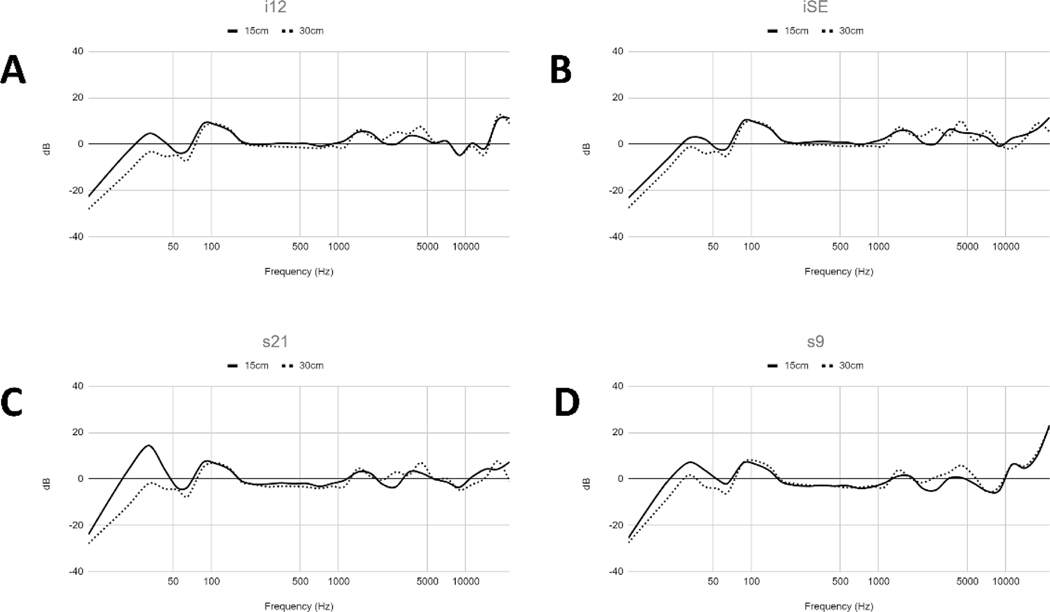

Frequency responses for all four tested smartphones (see Fig. 1) were remarkably similar, consistent with available information that these phones use the same MEMS microphones. For sound booth recording at the 15 cm distance, all phones showed regions of low-frequency emphasis at ≈ 30 Hz and between ≈ 85 and 140 Hz. While the low-frequency emphasis between 85–140 Hz was consistent regardless of recording distance, the emphasis at ≈ 30 Hz was negligible at the 30 cm distance. All phones showed considerable roll-off below 30 Hz. Response was relatively flat (+/− 3 dB) up to approx. 15000 Hz, except for peaks observed in all phones at ≈ 1450 and 4500 Hz. All phones showed increased sensitivity near the Nyquist frequency (22.05 kHz for the 44.1 kHz sampling rate).

Figure 1.

Frequency response characteristics for the tested smartphone devices.
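A comparison like the one in Fig. 1 can be approximated by dividing a device's averaged power spectrum of the pink-noise playback by that of a flat reference microphone recording the same playback. The NumPy sketch below is a hypothetical illustration of that ratio method (Hann-windowed, 50%-overlapping segments in the style of Welch's method); it is not the exact procedure of Awan et al., and the function names and segment length are our own choices.

```python
import numpy as np

def averaged_power_spectrum(x, fs, nperseg=4096):
    """Average |FFT|^2 over Hann-windowed segments with 50% overlap (Welch-style)."""
    x = np.asarray(x, dtype=float)
    if len(x) < nperseg:
        raise ValueError("signal shorter than one segment")
    window = np.hanning(nperseg)
    step = nperseg // 2
    spectra = [np.abs(np.fft.rfft(window * x[s:s + nperseg])) ** 2
               for s in range(0, len(x) - nperseg + 1, step)]
    freqs = np.fft.rfftfreq(nperseg, d=1.0 / fs)
    return freqs, np.mean(spectra, axis=0)

def relative_response_db(device, reference, fs, nperseg=4096):
    """Device level (dB) relative to a flat reference mic recording the same noise."""
    freqs, p_dev = averaged_power_spectrum(device, fs, nperseg)
    _, p_ref = averaged_power_spectrum(reference, fs, nperseg)
    tiny = np.finfo(float).tiny  # avoids division by, or log of, exact zeros
    return freqs, 10.0 * np.log10((p_dev + tiny) / (p_ref + tiny))
```

A device that simply doubled the reference signal would show a flat +6.02 dB curve; real microphones show frequency-dependent deviations such as the low-frequency emphasis and peaks described above. The recordings need not be time-aligned, because only averaged power spectra are compared.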

Voice Sample Recording

The smartphone devices were used to record voice samples via the KEMAR HAT model and mouth simulator using the same signal chain described for the smartphone frequency response testing. As in Awan et al.16, the corpus of voice samples consisted of twenty-four (24) 2–3 s duration vowels (sustained /a/) and 24 speech samples of “We were away a year ago.” The selected voice samples represented a variety of quality deviations (e.g., breathy, rough) and the range of vocal severity from normal to severe. Each set of 24 samples included 12 females and 12 males, and also encompassed child through elderly voice (8 adults + 4 children in each sex category; Age Range = 4 to 70 yrs.). All vowel and speech samples had been initially recorded using a Yamaha Audiogram 6 USB audio interface (Yamaha Corp. of America, Buena Park, CA USA) and Praat software17 on a PC-compatible computer at 44.1 kHz, 16 bits using a Sennheiser MD21 microphone (Sennheiser Electronics Corp., Old Lyme, CT USA) at a 15 cm mouth-to-microphone distance in a sound treated room. For the purposes of this study, samples were normalized for amplitude and compiled into two separate .wav files (one .wav file combining vowel samples and a second .wav file combining sentence samples). In each compilation, individual vowel or sentence samples were separated by a 1 second period of silence.
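The text does not specify how samples were "normalized for amplitude." The sketch below assumes simple peak normalization to a hypothetical target of 0.5 of full scale, then concatenates the samples with 1 s of silence between them (not after the last one). Function names and the target value are illustrative assumptions.

```python
import numpy as np

def peak_normalize(x, target_peak=0.5):
    """Scale a float signal so its absolute peak equals target_peak (assumed rule)."""
    x = np.asarray(x, dtype=float)
    peak = np.max(np.abs(x))
    return x if peak == 0 else x * (target_peak / peak)

def compile_samples(samples, fs=44100, gap_s=1.0, target_peak=0.5):
    """Normalize each sample and join them, separated by gap_s seconds of silence."""
    gap = np.zeros(int(round(gap_s * fs)))
    pieces = []
    for i, s in enumerate(samples):
        if i > 0:
            pieces.append(gap)  # silence only between samples
        pieces.append(peak_normalize(s, target_peak))
    return np.concatenate(pieces)
```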

Setting and Distance

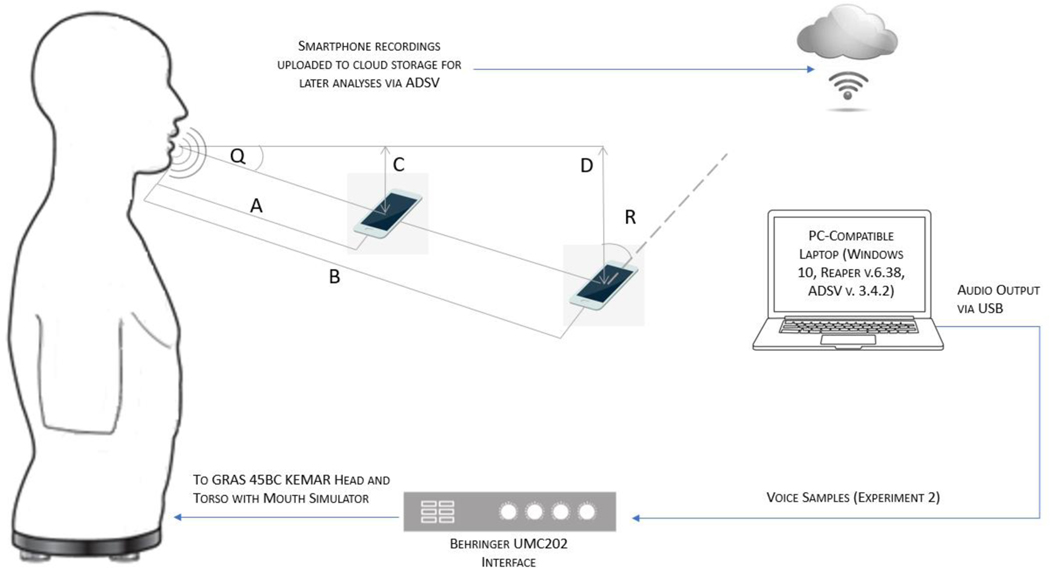

The vowel and speech .wav files were played via the KEMAR HAT and mouth simulator at an intensity equivalent to 67 dB C at 30 cm (consistent with the frequently reported 65–70 dB C comfortable speaking intensity). Recordings were made with all smartphones in two settings: a sound-treated booth and a “quiet” room typical of an office location. The sound-treated booth [ANSI S3.1–1999 (R 2008)] measured 6.5 ft/1.98 m × 6 ft/1.83 m × 6 ft/1.83 m and included a chair (used for placement of KEMAR) and a small desk behind the KEMAR. The background noise level in the sound-treated booth was measured at 45.8 dBC (31 dBA/54.3 dBF). The quiet room measured 14 ft/4.27 m × 9 ft/2.74 m × 9 ft/2.74 m and included a medium-sized desk and a chair to mimic an office setting. The KEMAR was placed on the chair, and the desk was placed approximately 5 ft/1.52 m from the mouthpiece of KEMAR to minimize reflections. The background noise level of the “quiet” room/office setting was 52.3 dBC (38.6 dBA/53.8 dBF). In addition, within each setting the smartphones were positioned at two mouth-to-microphone distances: 15 cm and 30 cm. These distances were selected to simulate a reasonable viewing distance for reading standardized passages from a smartphone device. Smartphones were positioned at a 45-degree angle, approximately 7.5 cm (at the 15 cm mouth-to-microphone distance) and 15 cm (at the 30 cm mouth-to-microphone distance) below mouth level, to represent a handheld position that would allow the screen to be read comfortably. The SLM microphone was placed in a similar position to the smartphones. Figure 2 provides an illustration of the voice sample playback, recording setup, and smartphone placement in relation to the KEMAR HAT model.

Figure 2.

Schematic of the voice sample playback and smartphone recording setup. Smartphone recordings were uploaded to cloud storage and later analyzed using the Analysis of Dysphonia in Speech and Voice program (ADSV v. 3.4.2). Smartphone positioning in relation to the KEMAR head and torso (HAT) model: A = 15 cm distance; B = 30 cm distance; C = 7.5 cm; D = 15 cm; Q = 30 degrees; R = 45 degrees. For clarity of illustration, distances are not to scale.
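Two quick calculations follow from the setup description, under stated assumptions. First, subtracting the overall background noise level from the signal level gives a crude broadband signal-to-noise margin for each setting and distance; this ignores spectral shape and assumes the 15 cm voice level follows the same 6 dB step as the pink-noise calibration (73 dB C). Second, if 15 and 30 cm are straight-line mouth-to-microphone distances and 7.5 and 15 cm are vertical drops below the mouth, both placements lie on the same line 30 degrees below horizontal, which would be consistent with angle Q in Figure 2. Both readings are our assumptions.

```python
import math

# Reported C-weighted levels (dB C); the 15 cm signal level is an assumption.
SIGNAL_DB_C = {"15 cm": 73.0, "30 cm": 67.0}
NOISE_DB_C = {"booth": 45.8, "quiet room": 52.3}
RADIUS_AND_DROP_CM = {"15 cm": (15.0, 7.5), "30 cm": (30.0, 15.0)}

def snr_margin_db(signal_db, noise_db):
    """Crude broadband margin: difference of overall weighted levels, in dB."""
    return round(signal_db - noise_db, 1)

def depression_angle_deg(mouth_to_mic_cm, drop_cm):
    """Angle below horizontal of a straight mouth-to-microphone line with this drop."""
    return math.degrees(math.asin(drop_cm / mouth_to_mic_cm))

for setting, noise in NOISE_DB_C.items():
    for dist, signal in SIGNAL_DB_C.items():
        print(f"{setting:>10}, {dist}: ~{snr_margin_db(signal, noise)} dB")
for dist, (r, drop) in RADIUS_AND_DROP_CM.items():
    print(f"{dist}: {depression_angle_deg(r, drop):.1f} degrees below horizontal")
```

Under these assumptions the margin drops from about 27.2 dB (booth, 15 cm) to about 14.7 dB (quiet room, 30 cm), in line with the hypothesis that the quiet room and the longer distance would introduce relatively more environmental noise.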

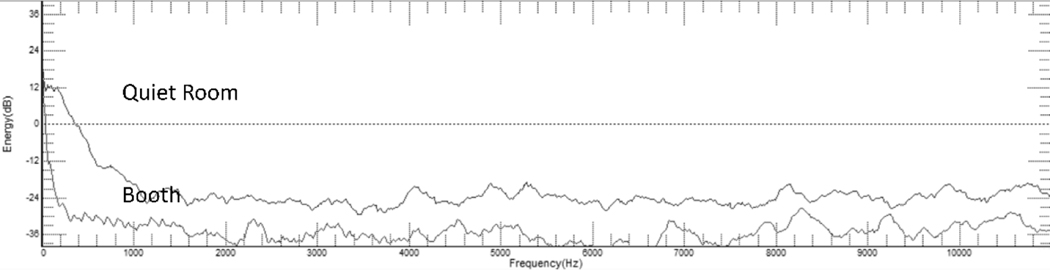

The long-term average spectrum (LTAS) characteristics of the sound booth vs. quiet room were analyzed from 3 second recordings of the background noise in each setting (see Fig. 3). The mean spectral amplitude was observed to be considerably greater in the quiet room vs. the booth. In addition, the quiet room spectra showed an increased spectral mean frequency and decreased skewness, particularly due to increased spectral energy in the 0–1000 Hz range vs. the sound booth.

Figure 3.

Background noise spectra obtained using the SLM in the sound-treated booth vs. quiet room.
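The spectral mean frequency and skewness mentioned above are commonly defined as the first moment and the standardized third moment of a power spectrum treated as a distribution over frequency. The software used to derive them for Fig. 3 is not specified, so the NumPy sketch below assumes that moment-based definition and accepts any long-term average power spectrum as input.

```python
import numpy as np

def spectral_moments(freqs, power):
    """Spectral mean (Hz) and skewness of a power spectrum treated as a distribution."""
    freqs = np.asarray(freqs, dtype=float)
    p = np.asarray(power, dtype=float)
    p = p / p.sum()                            # normalize to unit area
    mean = np.sum(freqs * p)                   # first moment: spectral mean
    var = np.sum((freqs - mean) ** 2 * p)      # second central moment
    skew = np.sum((freqs - mean) ** 3 * p) / var ** 1.5
    return mean, skew
```

Under this definition, a spectrum symmetric about 1000 Hz has a mean of 1000 Hz and zero skewness, while one concentrated at low frequencies with a long high-frequency tail is positively skewed.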

Smartphone Recording Application

We found it difficult to identify a commercial mobile audio recording app that met all of our needs: 1) ability to run on both iOS and Android, 2) support for 44.1 kHz uncompressed recording18, 3) flexible data storage capabilities, 4) potential for HIPAA-compliant data security, and 5) transparency of data handling and storage. Therefore, we developed our own software. A custom app was developed for use with each phone to (a) record at 44.1 kHz with 16 bits of resolution18, (b) save to uncompressed .wav format, and (c) automatically upload recorded signals to secured cloud storage, from which they could later be retrieved for analysis. The app was created using Flutter, an open-source framework developed by Google for building multi-platform applications, which allows a mobile app to be developed for both iOS and Android from the same code base. Using Flutter, we developed a prototype mobile application that met our design needs and performed multiple test recordings to confirm the desired recording characteristics. The Flutter source code for the application with the basic recording functionality has been released on GitHub as open source under the MIT license (https://github.com/kelvinlim/flutter_recorder).
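The released app is written in Flutter (Dart). As a separate, hypothetical check on uploaded files, the Python sketch below uses the standard-library `wave` module, which reads only uncompressed PCM data, to confirm the 44.1 kHz, 16-bit format. The function name and pass/fail rule are our own.

```python
import io
import wave

def check_recording_format(path_or_file, expected_rate=44100, expected_bits=16):
    """Return (ok, details) for a .wav file; non-PCM or malformed files fail."""
    try:
        with wave.open(path_or_file, "rb") as wf:
            details = {
                "rate": wf.getframerate(),
                "sample_width_bits": 8 * wf.getsampwidth(),
                "channels": wf.getnchannels(),
                "compression": wf.getcomptype(),  # 'NONE' for uncompressed PCM
            }
    except (wave.Error, EOFError) as exc:
        return False, {"error": str(exc)}
    ok = (details["rate"] == expected_rate
          and details["sample_width_bits"] == expected_bits
          and details["compression"] == "NONE")
    return ok, details
```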

Input recording levels were preset on each phone, with no automatic gain control, noise reduction, or equalization applied during recording. As a reference, signals were also recorded via the Model 377A13 Precision Condenser Microphone (rated at +/−2 dB from 40–20000 Hz) and Larson Davis Model 824 Precision SLM, and captured at 44.1 kHz, 16 bits using the Behringer interface. Recordings via the SLM microphone were obtained with signal gain set to peak at approximately −18 dBFS and using flat response (consistent with test recordings from the various smartphones).
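For reference, a peak of −18 dBFS corresponds to about 12.6% of digital full scale. A small sketch for 16-bit samples, assuming full scale is taken as 32768 (some tools use 32767, a negligible difference); the helper name is our own:

```python
import numpy as np

def peak_dbfs(samples_int16):
    """Peak level of 16-bit PCM samples in dB relative to full scale (32768)."""
    x = np.asarray(samples_int16, dtype=np.int64)  # int64 avoids abs(-32768) overflow
    peak = np.max(np.abs(x))
    return float("-inf") if peak == 0 else 20.0 * np.log10(peak / 32768.0)

target_linear = 10 ** (-18 / 20)       # about 0.1259 of full scale
print(round(target_linear * 32768))    # 4125
```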

Voice Sample Analysis

The various vowel and speech sample recordings were analyzed using the Analysis of Dysphonia in Speech and Voice program (ADSV v. 3.4.2, Pentax Medical, Montvale, NJ) for spectral and cepstral measures of voice that have been demonstrated to be robust measures of typical and disordered voice quality in both vowel and speech contexts18–20. In addition, spectral and cepstral measures have been demonstrated to be valid indices of vocal severity and of pre- vs. post-treatment change21–24.

As per the manufacturer’s recommendations, signal equalization was applied to all recordings prior to ADSV analyses to account for the characteristics of the HAT mouth speaker. Vowel and speech samples were analyzed using the sustained vowel and all-voiced sentence protocols within the ADSV program, respectively. When opening a .wav file for analysis, the ADSV program automatically downsamples 44.1 kHz recordings to 22.05 kHz, resulting in a spectral frequency limit of ≈ 11 kHz. For each vowel sample, a central 1-second portion of the waveform was isolated for analysis; analysis of a central, stable 1–2 second portion is typical for vowels, with onsets and offsets excluded because of expected variation in vocal F0 and sound level. For sentence analysis, cursors were placed ≈ 5 ms before and after the production of the all-voiced sentence. Cursor locations marking the start and end of the analyzed portion of each vowel and sentence sample were matched to ensure that analysis regions were consistent across recordings.
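ADSV performs downsampling and segment selection internally, and the analysis windows were set with cursors. The sketch below only illustrates the arithmetic behind the ≈ 11 kHz limit (the Nyquist frequency of a 22.05 kHz sampling rate) and a symmetric central-window selection, which is a simplification of choosing a stable portion by eye. Helper names are hypothetical.

```python
import numpy as np

def central_segment(x, fs, duration_s=1.0):
    """Return the central duration_s seconds of a signal, trimming onset and offset equally."""
    n = int(round(duration_s * fs))
    if n > len(x):
        raise ValueError("signal shorter than requested segment")
    start = (len(x) - n) // 2
    return x[start:start + n]

def nyquist_hz(fs):
    """Highest frequency representable at sampling rate fs."""
    return fs / 2.0

print(nyquist_hz(44100), nyquist_hz(22050))  # 22050.0 11025.0
```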

The following measures were computed using the ADSV software for each vowel and speech sample recorded using the various test devices: Cepstral Peak Prominence (CPP in dB: the relative amplitude of the smoothed cepstral peak25, generally representative of the dominant rahmonic in the voice signal); the L/H Ratio in dB (a ratio of low- to high-frequency spectral energy using a 4000 Hz cut-off, calculated as a measure of spectral tilt)20,25–27; and the Cepstral Spectral Index of Dysphonia (CSID: a multivariate estimate of the 100-point severity scale used in auditory-perceptual evaluation scales such as the Consensus Auditory Perceptual Evaluation of Voice (CAPE-V)28, weighted towards the CPP but also including L/H Ratio, CPP standard deviation, and L/H Ratio standard deviation). These measures were selected as measures of voice quality used in more typical vocal intensity conditions (hence the use of a signal intensity consistent with normative expectations for speaking situations). Default ADSV analysis settings were used, and a complete description of the various analysis algorithms used in the ADSV has been previously reported20,29–31. Measures of fundamental frequency (F0) were not evaluated, since previous studies have demonstrated that measures of vocal F0 are robust to factors such as recording system/device and environmental noise5,16,32.
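ADSV's smoothed CPP and L/H Ratio algorithms are described in the cited work and are not reproduced here. As a rough illustration of what the two measures quantify, the NumPy sketch below computes an unsmoothed, single-frame CPP (height of the dB cepstral peak above a linear regression line, searched within a plausible F0-period range) and an L/H ratio as summed spectral power below vs. above 4 kHz. The function names, 60–300 Hz search range, Hann window, and summed-power definition are our assumptions.

```python
import numpy as np

def cepstral_peak_prominence(frame, fs, f0_min=60.0, f0_max=300.0):
    """Unsmoothed single-frame CPP in dB and the F0 implied by the peak quefrency.

    Simplified sketch: ADSV additionally smooths cepstra across time and quefrency.
    """
    x = np.asarray(frame, dtype=float) * np.hanning(len(frame))
    log_spec_db = 20.0 * np.log10(np.abs(np.fft.fft(x)) + 1e-12)
    ceps_db = 20.0 * np.log10(np.abs(np.fft.ifft(log_spec_db)) + 1e-12)
    lo, hi = int(np.ceil(fs / f0_max)), int(np.floor(fs / f0_min))
    if hi >= len(x) // 2:
        raise ValueError("frame too short for the requested F0 range")
    q = np.arange(lo, hi + 1) / fs                 # quefrency in seconds
    c = ceps_db[lo:hi + 1]
    peak = int(np.argmax(c))                       # dominant rahmonic
    slope, intercept = np.polyfit(q, c, 1)         # regression line through cepstrum
    cpp_db = c[peak] - (slope * q[peak] + intercept)
    return cpp_db, 1.0 / q[peak]

def low_high_ratio_db(x, fs, cutoff_hz=4000.0):
    """Summed spectral power below vs. at/above cutoff_hz (up to Nyquist), in dB."""
    power = np.abs(np.fft.rfft(np.asarray(x, dtype=float))) ** 2
    freqs = np.fft.rfftfreq(len(x), d=1.0 / fs)
    return 10.0 * np.log10(power[freqs < cutoff_hz].sum() / power[freqs >= cutoff_hz].sum())
```

A strongly periodic signal yields a prominent cepstral peak at the quefrency of its F0 period (5 ms for 200 Hz), whereas noise yields a much smaller prominence. A signal dominated by energy below 4 kHz gives a positive L/H ratio.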

Statistical Analysis

Statistical analyses were computed using JASP (v. 0.16.4)33 and R (v. 4.2.2)34. Statistical analysis methods included ANOVA and effect size calculations, correlation, and regression analyses; specifics are included with the relevant results to facilitate interpretation. In the event of violations of the assumption of sphericity, the F results for repeated measures used the Greenhouse-Geisser correction. In total, 960 voice samples were recorded (5 devices [4 smartphones + SLM Precision Condenser Microphone] × 2 sample types [vowel; sentence] × 2 settings [booth; quiet room] × 2 distances [15 cm; 30 cm] × 24 voice samples).
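The Greenhouse-Geisser correction multiplies both degrees of freedom of a repeated-measures F test by an estimate ε of departure from sphericity, which lies between 1/(k − 1) and 1 for a factor with k levels. As a consistency check, the corrected device df reported in the Results for vowel CPP, (1.215, 27.943), correspond to ε ≈ 0.304 with k = 5 devices and n = 24 speakers. A minimal NumPy sketch for a single within-subject factor (our own illustration, not the JASP code):

```python
import numpy as np

def gg_epsilon_from_cov(s):
    """Greenhouse-Geisser epsilon from a k x k covariance matrix of the factor levels."""
    s = np.asarray(s, dtype=float)
    k = s.shape[0]
    h = np.eye(k) - np.ones((k, k)) / k   # centering matrix
    sc = h @ s @ h                        # double-centered covariance
    return np.trace(sc) ** 2 / ((k - 1) * np.trace(sc @ sc))

def greenhouse_geisser_epsilon(data):
    """data: (n_subjects, k_levels) array of one measure across the factor's levels."""
    return gg_epsilon_from_cov(np.cov(np.asarray(data, dtype=float), rowvar=False))

def corrected_df(epsilon, k_levels, n_subjects):
    """Corrected numerator and denominator degrees of freedom."""
    return epsilon * (k_levels - 1), epsilon * (k_levels - 1) * (n_subjects - 1)
```

A spherical covariance (for example, the identity matrix) gives ε = 1 and leaves the df unchanged; all variability along a single contrast gives the lower bound 1/(k − 1).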

Results

Exploratory Analyses & Visual Description

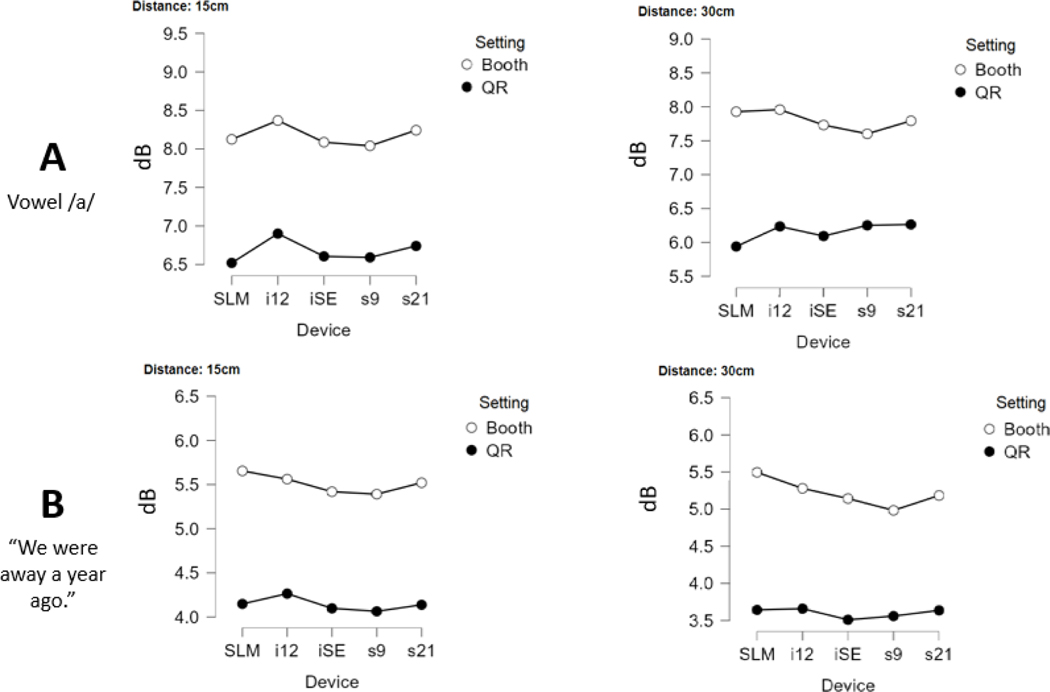

Cepstral Peak Prominence (CPP)

For analysis of both vowel and sentence contexts, three-way repeated measures ANOVAs were computed (5 levels of Device [SLM, i12, iSE, s21, s9]; 2 levels of Setting [Booth vs. Quiet Room]; 2 levels of Distance [15 vs. 30 cm]). For each ANOVA, effect size was indexed using eta squared (η²). Interpretation of effect size strength was based on the work of Cohen35, who proposed that a small effect is ≥ .01, a moderate effect is ≥ .06, and a strong effect is ≥ .14. In the vowel context, the main effect of device was marginally significant with a small effect (F Greenhouse-Geisser (1.215, 27.943) = 4.309, p = 0.04, η² = 0.012). The main effect of setting was strong and highly significant (F(1, 23) = 336.049, p < .001, η² = 0.752), and a significant main effect of distance with a moderate effect size was also observed (F(1, 23) = 75.765, p < .001, η² = 0.06). Similar results were observed for the sentence context, with significant main effects of setting (F(1, 23) = 338.21, p < .001, η² = 0.756) and distance (F(1, 23) = 68.729, p < .001, η² = 0.059). The main effect of device was nonsignificant in the sentence context at the traditional p < .05 alpha level (F Greenhouse-Geisser (1.227, 28.217) = 3.80, p = 0.054, η² = 0.011). Figure 4 summarizes the effects of recording setting and microphone distance on CPP across the tested devices in (A) vowel and (B) sentence contexts.

Figure 4.

Mean CPP (Cepstral Peak Prominence) in (A) vowel and (B) sentence contexts as a function of setting (Booth vs. Quiet Room [QR]) and microphone distance (15 vs. 30 cm).
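Eta squared is the share of the total sum of squares attributable to an effect, and the labels used throughout the Results follow the Cohen thresholds quoted above. A minimal sketch of that mapping (helper names are our own; "negligible" is used for values below .01, as in the text):

```python
def eta_squared(ss_effect, ss_total):
    """Classical eta squared: proportion of total sum of squares due to an effect."""
    return ss_effect / ss_total

def cohen_label(eta2):
    """Label an eta-squared value with Cohen's thresholds (.01, .06, .14)."""
    if eta2 >= 0.14:
        return "strong"
    if eta2 >= 0.06:
        return "moderate"
    if eta2 >= 0.01:
        return "small"
    return "negligible"

# Vowel-context CPP effects reported above
for name, value in [("device", 0.012), ("setting", 0.752), ("distance", 0.06)]:
    print(name, cohen_label(value))  # device small / setting strong / distance moderate
```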

Correlation analyses were also conducted to determine the presence and strength of relationships between acoustic measures obtained using the different devices. For this analysis, we considered measures obtained using the SLM in the sound-treated booth at a distance of 15 cm as the comparative “standard”. For CPP, all correlations between the various smartphones and the SLM standard were highly significant (p < .001) and very strong (r’s > .90) (see Table A1 in the Appendix), regardless of vowel or sentence context. In addition, an intraclass correlation (ICC(3,1)) was computed to assess interdevice reliability between the tested smartphones and the SLM standard (booth, 15 cm). An ICC of 0.98 (95% CI: 0.966 to 0.99) was observed for CPP in the vowel context, and an ICC of 0.973 (95% CI: 0.955 to 0.986) for the sentence context.
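In the Shrout and Fleiss scheme, ICC(3,1) (two-way mixed model, consistency, single measure) is computed from two-way ANOVA mean squares. The NumPy sketch below is our own illustration, not the JASP implementation; it treats voice samples as targets and devices as "raters," and shows why a constant offset between devices does not lower this consistency index.

```python
import numpy as np

def icc_3_1(ratings):
    """ICC(3,1): two-way mixed, consistency, single measure (Shrout & Fleiss).

    ratings: (n_targets, k_raters) array, e.g., voice samples x recording devices.
    """
    y = np.asarray(ratings, dtype=float)
    n, k = y.shape
    grand = y.mean()
    ss_rows = k * np.sum((y.mean(axis=1) - grand) ** 2)   # between targets
    ss_cols = n * np.sum((y.mean(axis=0) - grand) ** 2)   # between raters (devices)
    ss_err = np.sum((y - grand) ** 2) - ss_rows - ss_cols
    ms_rows = ss_rows / (n - 1)
    ms_err = ss_err / ((n - 1) * (k - 1))
    return (ms_rows - ms_err) / (ms_rows + (k - 1) * ms_err)
```

Because device offsets are absorbed by the column (rater) term, identical measurements shifted by a constant per device give ICC(3,1) = 1; only inconsistency in the rank and spacing of samples across devices lowers it.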

Low/High Spectral Ratio (L/H Ratio)

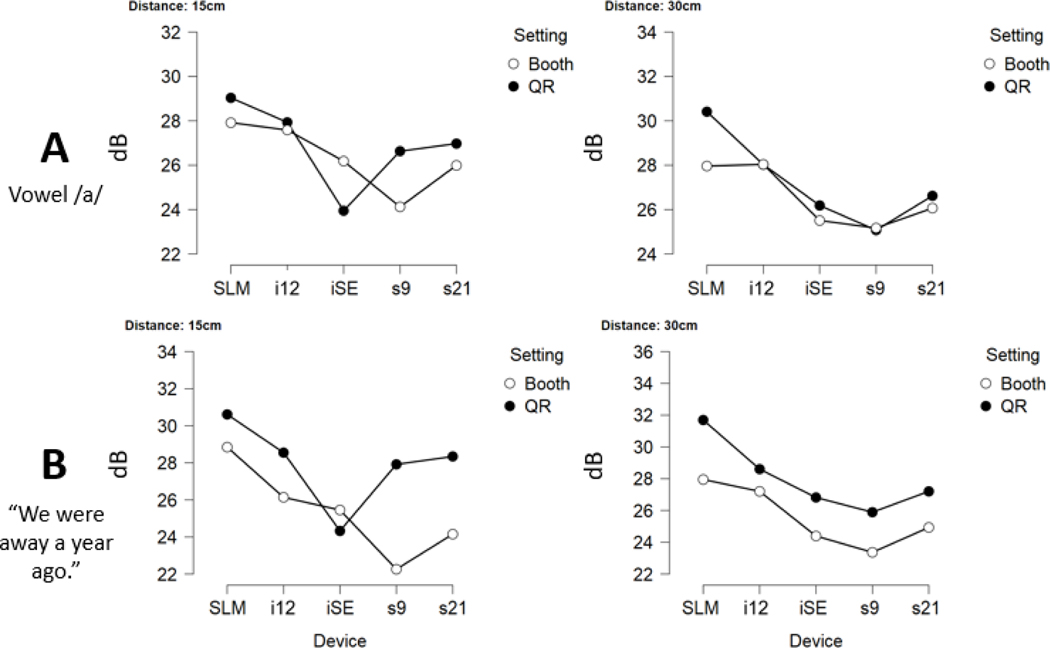

In contrast to the CPP results, for L/H Ratio the main effect of device was large and highly significant in both vowel (F Greenhouse-Geisser (1.552, 35.687) = 26.793, p < .001, η² = 0.377) and sentence contexts (F Greenhouse-Geisser (1.537, 35.358) = 81.192, p < .001, η² = 0.390). The main effect of setting was small in the vowel context (F(1, 23) = 8.578, p = .008, η² = 0.019), whereas the effect of setting on L/H Ratio was strong and highly significant in the sentence context (F(1, 23) = 31.185, p < .001, η² = 0.194). The main effect of distance was negligible in both vowel (F(1, 23) = 3.237, p = .085, η² = 0.004) and sentence contexts (F(1, 23) = 1.395, p = 0.25, η² < .001). Figure 5 shows mean L/H Ratio values for each tested smartphone and the SLM in (A) vowel and (B) sentence contexts at different settings and distances.

Figure 5.

Mean L/H Ratio (low/high spectral ratio using a 4 kHz cutoff) in (A) vowel and (B) sentence contexts as a function of setting (Booth vs. Quiet Room [QR]) and microphone distance (15 vs. 30 cm).

For L/H Ratio, all correlations between the various smartphones and the SLM standard were again very strong (r’s > .90, p < 0.001) in both vowel and sentence contexts (see Table A1). Strong interdevice reliability between the tested smartphones and the SLM standard (booth, 15 cm) for measures of L/H Ratio was demonstrated in both vowel (ICC = 0.933 [95% CI: 0.891 to 0.965]) and sentence contexts (ICC = 0.89 [95% CI: 0.826 to 0.942]).

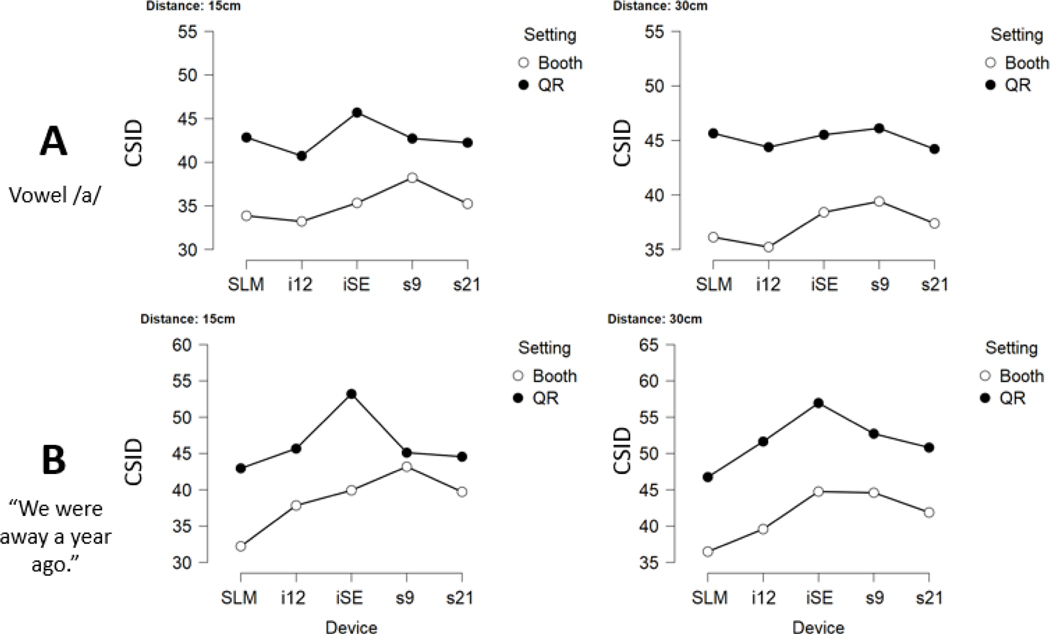

Cepstral Spectral Index of Dysphonia (CSID)

Since the CSID is produced via a multiple regression formula that includes both the CPP and L/H ratio, the results mirrored some of the previously reported findings. Vowel context results were similar to those reported for CPP: the main effect of device was small-to-moderate (F Greenhouse-Geisser (2.047, 47.087) = 5.788, p = .005, η² = 0.042), the main effect of setting was large and highly significant (F(1, 23) = 107.803, p < .001, η² = 0.460), and the main effect of distance was small-to-moderate (F(1, 23) = 25.773, p < .001, η² = 0.038). In the sentence context, the main effect of device was large and highly significant (F Greenhouse-Geisser (2.20, 50.592) = 45.863, p < .001, η² = 0.180), similar to results for L/H Ratio. However, as observed for CPP, the main effect of setting on the CSID was also large and highly significant (F(1, 23) = 82.631, p < .001, η² = 0.396), and distance had a moderate effect on the CSID (F(1, 23) = 122.286, p < .001, η² = 0.085). Figure 6 shows mean CSID values for each tested smartphone and the SLM in (A) vowel and (B) sentence contexts in different settings and at different distances.

Figure 6.

Mean CSID (Cepstral Spectral Index of Dysphonia) in (A) vowel and (B) sentence contexts as a function of setting (Booth vs. Quiet Room [QR]) and microphone distance (15 vs. 30 cm).

As previously reported for both CPP and L/H Ratio, all correlations between the various smartphones and the SLM standard for CSID were very strong (r’s > .90, p < 0.001) in both vowel and sentence contexts (see Table A1). ICCs for interdevice reliability of CSID were again strong in both vowel (ICC = 0.970 [95% CI: 0.95 to 0.985]) and sentence contexts (ICC = 0.968 [95% CI: 0.946 to 0.984]).

Mixed Effects Linear Regression Models

As the CPP, L/H ratio, and CSID were computed for each subject using each device, setting, and distance, the dependence across these measures can be taken into account by using a mixed-effects linear regression model. For each measurement and each choice of vowel/sentence, the general model is as follows:

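$$
Y_{ij} = \beta_0 + \beta_1 \mathbb{1}\{\text{device}_{ij} = \text{i12}\} + \beta_2 \mathbb{1}\{\text{device}_{ij} = \text{iSE}\} + \beta_3 \mathbb{1}\{\text{device}_{ij} = \text{s21}\} + \beta_4 \mathbb{1}\{\text{device}_{ij} = \text{s9}\} + \beta_5 \mathbb{1}\{\text{setting}_{ij} = \text{quiet room}\} + \beta_6 \mathbb{1}\{\text{distance}_{ij} = 30\,\text{cm}\} + u_i + \varepsilon_{ij} \tag{1}
$$

where $Y_{ij}$ represents the measurement of either the CPP, L/H ratio, or CSID (either vowel or sentence) for subject $i$ under recording condition $j$ (a combination of device, setting, and distance), the coefficients $\beta_0, \dots, \beta_6$ are the intercept and fixed effects, $u_i \sim N(0, \sigma_u^2)$ is the random intercept for subject $i$, which is assumed to be normally distributed with mean zero, and $\varepsilon_{ij} \sim N(0, \sigma^2)$ is the residual error for measurement $j$ of subject $i$, which is also assumed to be normally distributed with mean zero. The indicator variable $\mathbb{1}\{\cdot\}$ returns 1 when the condition inside is true and 0 when it is false. The model is formulated so that the baseline is device = SLM, setting = booth, and distance = 15 cm; when any of these variables is changed, the corresponding coefficient describes how much the measurement changes on average. For example, $\beta_6$ quantifies how much the measurement changes on average when the distance is increased from 15 cm to 30 cm and all other variables are held fixed.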

We also consider a simplified model, which only accounts for whether the device is SLM or a phone, but not which model of phone:

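$$
Y_{ij} = \beta_0 + \beta_1 \mathbb{1}\{\text{device}_{ij} \neq \text{SLM}\} + \beta_2 \mathbb{1}\{\text{setting}_{ij} = \text{quiet room}\} + \beta_3 \mathbb{1}\{\text{distance}_{ij} = 30\,\text{cm}\} + u_i + \varepsilon_{ij} \tag{2}
$$

where, in this model, $\beta_1$ represents the average change in the measurement when the device is changed from the SLM to an arbitrary phone. The other terms in the model have the same interpretation as in the full model.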

For each of the models considered, diagnostic plots (residual and Q-Q plots) indicated no violations of the model assumptions of homoscedasticity (i.e., equal error variance across subjects, regardless of severity) and normality of errors.
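Because every recording of a given speaker shares the same random intercept, converting a measurement to the baseline (SLM, booth, 15 cm) amounts to subtracting the fixed-effect coefficients for the device, setting, and distance actually used. The sketch below illustrates that arithmetic with made-up coefficient values (`EXAMPLE_COEFS` is hypothetical); the fitted estimates are those in Tables A2 to A4 and differ by measure and context. For the simplified model, the four phone entries would share a single "phone" coefficient.

```python
# Hypothetical fixed-effect estimates for illustration only (not the fitted values).
EXAMPLE_COEFS = {
    "device": {"SLM": 0.0, "i12": 0.1, "iSE": -0.1, "s21": 0.05, "s9": 0.2},
    "setting": {"booth": 0.0, "quiet room": -2.0},
    "distance": {"15 cm": 0.0, "30 cm": -0.5},
}

def _shift(device, setting, distance, coefs):
    """Sum of the fixed effects for one recording condition (0 at the baseline)."""
    return (coefs["device"][device] + coefs["setting"][setting]
            + coefs["distance"][distance])

def to_standard(measurement, device, setting, distance, coefs=EXAMPLE_COEFS):
    """Estimate the SLM / booth / 15 cm value from a measurement in another condition."""
    return measurement - _shift(device, setting, distance, coefs)

def from_standard(baseline_value, device, setting, distance, coefs=EXAMPLE_COEFS):
    """Expected measurement in a given condition for a known baseline value."""
    return baseline_value + _shift(device, setting, distance, coefs)
```

The converted value is a point estimate; its typical error is on the order of the model's residual standard error.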

Regression Model: CPP

The mixed effects model was fit to the CPP vowel and sentence measurements separately, and the estimates and p-values are found in Table A2 (see Appendix). Note that the largest effect size is due to the setting, and the second largest is due to the distance. The effect of the device is substantially smaller than these other two effects, even though some of the estimates are statistically significant. The standard deviation attributable to subjects (the random intercept) is 2.392 dB and 1.986 dB for the vowel and sentence contexts, respectively; these values quantify the variability among the speakers used in this study. In contrast, the residual standard error (i.e., within-sample predictive error) is only 0.394 dB and 0.396 dB for CPP in the vowel and sentence contexts, respectively. This residual error is relatively small, being only 16.5% and 20% of the subject standard deviation, respectively. Furthermore, the R² values conditional on the subject are > 0.97. Together, these values indicate that one can accurately convert between any of the tested smartphone devices, settings, and distances using the fitted regression formula, with relatively small within-sample predictive error.
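The text does not state which conditional R² definition was used. Assuming the common Nakagawa and Schielzeth form for a random-intercept model, (σ_f² + σ_u²) / (σ_f² + σ_u² + σ²), where σ_f² is the variance explained by the fixed effects, setting σ_f² = 0 gives a lower bound from the two standard deviations reported for vowel CPP alone:

```python
def conditional_r2(var_fixed, var_subject, var_residual):
    """Nakagawa-Schielzeth conditional R^2 for a random-intercept model (assumed form)."""
    return (var_fixed + var_subject) / (var_fixed + var_subject + var_residual)

sd_subject, sd_residual = 2.392, 0.394   # vowel CPP values reported above (dB)
print(round(sd_residual / sd_subject, 3))                              # 0.165
print(round(conditional_r2(0.0, sd_subject**2, sd_residual**2), 3))   # 0.974
```

Since adding a nonnegative σ_f² to both numerator and denominator can only raise the ratio, the subject and residual variation alone already place the vowel-context value above 0.97.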

A natural question concerns smartphone devices not included in this study. Assuming that a new device has properties similar to those tested here, Equation (2) can be used as a general predictive model. In this case, the residual standard error increases only slightly, from 0.394 dB to 0.403 dB and from 0.369 dB to 0.374 dB for the vowel and sentence contexts, respectively. Furthermore, the R² values decrease only slightly, to 0.975 and 0.970. Since these changes in residual error are small, we conclude that, even if the smartphone model is not specified, one can still accurately convert a CPP measurement to the baseline of device = SLM, distance = 15 cm, and setting = booth (i.e., to the “standard”).

Regression Model: L/H Ratio

The mixed effects model was fit to the L/H ratio vowel and sentence measurements separately, and the estimates and p-values are found in Table A3 (see Appendix). In this case, the effect of smartphone device is larger than that of distance. The relatively low residual error (1.815 dB and 1.913 dB) and large R² values (0.928 and 0.888) indicate that measurements of L/H ratio can still be accurately converted, although with greater error than observed for CPP. Note that in this model the effect of distance is small and not statistically significant, indicating that recording distance (15 vs. 30 cm) can be changed without compromising the accuracy of the L/H ratio.

When the device variables in the model were reduced to a single indicator of whether the device is a smartphone, the residual standard errors increased to 2.049 dB and 2.143 dB, and R² was reduced to 0.908 and 0.860 for the vowel and sentence contexts, respectively.

Regression Model: CSID

The mixed effects model was fit to the CSID vowel and sentence measurements separately, and the estimates and p-values are found in Table A4 (see Appendix). In this case, the effect of the smartphone devices is very strong in the sentence context but relatively weak in the vowel context. The relatively low residual error (4.013 and 4.318) and large R² values (0.967 and 0.960) indicate that measurements of CSID can still be accurately converted.

When the device variables in the model were reduced to a single indicator of whether the device is a smartphone, the residual standard errors increased to 4.169 and 4.675, and R² was reduced to 0.964 and 0.954 for the vowel and sentence contexts, respectively. Note that in this model the effect of the smartphone is small and not statistically significant for the vowel measurements, indicating that, on average, the change in CSID incurred by switching from the SLM to a phone is negligible. For sentence measures, on the other hand, the effect of phone is both much larger and highly significant.

Discussion

The use of smartphones has become ubiquitous, and they provide users not just with a communication device but with an extremely powerful computational device that has substantial audio recording and playback capabilities. The primary objective of this study was to assess the ability of four popular modern smartphone models (iPhone SE and 12; Samsung s9 and s21) to obtain voice recordings that retain key acoustic features used to describe voice quality across typical and disordered voices. While design differences between the tested smartphones and a high-quality sound level meter do produce differences in absolute measurements, the results of this study show that measurements of cepstral peak prominence (CPP), low-to-high spectral ratio (L/H ratio), and the Cepstral Spectral Index of Dysphonia (CSID) correlate strongly (r’s > .90) with those collected with a high-quality SLM, regardless of mouth-to-microphone distance (15 vs. 30 cm) or setting (sound-treated booth vs. quiet room). Because the smartphone and SLM recordings retain substantially the same acoustic information, the tested devices are similarly able to provide key voice quality measures across the span of severity, speaker sex, and speaker age. In turn, because the measures from these smartphone devices are highly related to those obtained via a “gold standard” research-quality SLM, the results of this study indicate that differences in absolute measurement may be corrected via regression models with relatively little error.

Effect of Device

The tested smartphones had variable effects on the acoustic measures assessed in this study. The use of a smartphone was observed to have a statistically significant but small effect on measures of CPP. This result is encouraging for the use of smartphones to obtain measures related to voice quality, since cepstral measures such as CPP have been reported as highly robust measures for the characterization of typical and disordered voice quality in both vowel and speech contexts.19 The mixed effects regression model (see Appendix, Table A2) shows that the overall effect of device on CPP is small and, depending upon the smartphone, ranges from −0.007 dB to +0.238 dB in vowels and from −0.044 to −0.236 dB in the sentence context, relative to a high-quality SLM used in a sound-treated booth at 15 cm. Though some of these smartphone CPP differences from the standard were statistically significant, the effect sizes were small. While differences from the SLM standard were quite variable in the vowel context, all smartphone recordings showed a reduction in mean CPP in the sentence context. Although the cepstral peak reflects the fundamental frequency (a relatively low-frequency periodicity that appears at a relatively high quefrency in the cepstrum), its relative amplitude may be affected by both harmonic and inharmonic energy from throughout the spectrum. Since the frequency response of the tested smartphones was observed to accentuate spectral energy in both low (approx. 50–140 Hz) and mid-to-high frequency regions (various accentuation “ripples” in the range of 1000–10000 Hz), it is possible that inharmonic energy in occasional intersyllabic pauses and in the stop plosive portion of /g/ in the sentence “We were away a year ago” resulted in the observed minor reduction in mean CPP.
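To make the role of harmonic versus inharmonic energy more concrete, the sketch below computes a simplified, single-frame cepstral peak prominence in Python with NumPy. It is not the smoothed CPP algorithm implemented in ADSV: the Hann window, the dB power spectrum, the 60–330 Hz F0 search range, and a regression baseline fitted only over that search range are all assumptions made for illustration. The function name `cepstral_peak_prominence` is hypothetical. CPP is taken as the height (in dB) of the largest cepstral peak above the regression line at that peak's quefrency.

```python
import numpy as np


def cepstral_peak_prominence(frame, fs, f0_min=60.0, f0_max=330.0):
    """Simplified, unsmoothed CPP for one frame; returns (cpp_db, peak_quefrency_s)."""
    windowed = frame * np.hanning(len(frame))
    power = np.abs(np.fft.rfft(windowed)) ** 2
    log_spec_db = 10.0 * np.log10(power + 1e-12)  # small floor avoids log(0)
    # Real cepstrum of the dB spectrum: index i corresponds to a quefrency of i / fs seconds
    cepstrum = np.fft.irfft(log_spec_db, n=len(frame))
    cep_db = 20.0 * np.log10(np.abs(cepstrum) + 1e-12)
    quefrency = np.arange(len(cepstrum)) / fs
    # Search only quefrencies that correspond to plausible F0 values
    lo, hi = int(fs / f0_max), int(fs / f0_min)
    q, c = quefrency[lo:hi + 1], cep_db[lo:hi + 1]
    peak = int(np.argmax(c))
    # Linear regression baseline over the searched range (an assumption; implementations vary)
    slope, intercept = np.polyfit(q, c, 1)
    cpp_db = c[peak] - (slope * q[peak] + intercept)
    return float(cpp_db), float(q[peak])


fs = 16000
rng = np.random.default_rng(0)
pulses = np.zeros(2048)
pulses[::80] = 1.0                          # idealized 200 Hz pulse train (5 ms period)
pulses += 1e-3 * rng.standard_normal(2048)  # a trace of noise so no spectral bin is empty
noise = rng.standard_normal(2048)           # purely inharmonic signal
print(cepstral_peak_prominence(pulses, fs, f0_min=150.0))
print(cepstral_peak_prominence(noise, fs, f0_min=150.0))
```

Raising `f0_min` to 150 Hz in the demo keeps the idealized pulse train's rahmonics (at 10 and 15 ms) outside the search range. The periodic frame yields a strong peak at 5 ms, while the noise frame yields a much lower CPP: inharmonic energy fills in the valleys between harmonics in the log spectrum and weakens the cepstral peak relative to its baseline.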

The aforementioned frequency response differences between the tested smartphones and the SLM standard had a large effect on the measurement of L/H ratio, consistent with the results of a study by Awan et al.16 that examined the effect of microphone frequency response on selected spectral-based acoustic measures such as L/H ratio. In all conditions, the smartphones tested produced lower mean L/H ratios than the SLM standard (see Figure 5). Though all smartphones tested showed a region of low frequency emphasis between approx. 85–140 Hz, these same devices also tended to show multiple regions of higher frequency emphasis that may have resulted in greater spectral amplitude above 4 kHz and, thus, lower L/H ratio values. The MEMS microphones used in these smartphones are recessed within the phone housings and are situated between (a) a sound inlet opening and front chamber and (b) a back chamber. The sensitivity of most MEMS microphones increases at higher frequencies due to the interaction between the air in the sound inlet and the air in the front chamber of the microphone, which forms a high frequency Helmholtz resonator36 that may be responsible for the increase in spectral energy above 4 kHz and the reduced L/H ratios relative to the SLM standard. It should be noted that, because the ADSV program automatically downsamples the 44.1 kHz recordings to 22.05 kHz, the spectral analysis range extends only up to approx. 11 kHz; therefore, any spectral gain in the smartphone recordings above 11 kHz would not have affected the L/H ratios computed in this study. The overall effect of smartphone device on L/H ratio was large in both vowel and sentence contexts, with changes in mean L/H ratio relative to the SLM standard ranging from −0.934 to −3.581 dB in the vowel context and from −2.152 to −4.917 dB in the sentence context.
Though these differences in mean L/H ratio between the smartphones tested and the SLM standard are significant and substantial, the L/H ratio values obtained by these smartphones were, again, observed to correlate significantly and strongly (r’s > 0.90) with the SLM standard. Accordingly, the predictive regression model computed for L/H ratio indicates that L/H ratio values measured using smartphone devices such as those used here may be converted to “standard” SLM values with relatively low residual error (< 2 dB) in both vowel and sentence contexts. Further studies of intrasubject test-retest variability in measures such as L/H ratio are needed to provide a reference against which this potential conversion error may be gauged.
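The two spectral points made above (extra energy above 4 kHz lowers the L/H ratio, and energy above the ~11 kHz analysis limit has no effect) can be illustrated with a simplified sketch. It assumes the L/H ratio is the summed spectral energy below a 4 kHz cutoff divided by the summed energy above it, in dB; ADSV's actual framing, averaging, and band definitions are not described here and may differ. The `max_hz` parameter is a hypothetical stand-in for the effect of ADSV's downsampling, and the test tones are synthetic.

```python
import numpy as np


def lh_ratio_db(signal, fs, cutoff_hz=4000.0, max_hz=None):
    """Ratio (dB) of summed spectral energy below vs. above cutoff_hz.

    max_hz limits the analysis band (hypothetical stand-in for downsampling).
    """
    power = np.abs(np.fft.rfft(signal * np.hanning(len(signal)))) ** 2
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / fs)
    top = fs / 2.0 if max_hz is None else max_hz
    low = power[(freqs > 0) & (freqs < cutoff_hz)].sum()  # DC bin excluded
    high = power[(freqs >= cutoff_hz) & (freqs <= top)].sum()
    return float(10.0 * np.log10(low / high))


fs = 44100
t = np.arange(fs) / fs  # 1 s of signal gives 1 Hz bins, so all tones are bin-aligned
voice = np.sin(2 * np.pi * 500 * t) + 0.1 * np.sin(2 * np.pi * 6000 * t)
emphasized = np.sin(2 * np.pi * 500 * t) + 0.2 * np.sin(2 * np.pi * 6000 * t)  # +6 dB above 4 kHz
with_ultra_hf = voice + 0.1 * np.sin(2 * np.pi * 15000 * t)

print(lh_ratio_db(voice, fs))                        # about 20 dB
print(lh_ratio_db(emphasized, fs))                   # about 14 dB
print(lh_ratio_db(with_ultra_hf, fs, max_hz=11025))  # about 20 dB: the 15 kHz tone is ignored
print(lh_ratio_db(with_ultra_hf, fs))                # about 17 dB when analyzed up to 22.05 kHz
```

A 6 dB boost above the cutoff lowers this toy L/H ratio by about 6 dB, mirroring the direction of the smartphone effect, while energy outside the analysis band changes nothing.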

The CSID is a mathematical approximation of the 100-point CAPE-V scale (though it is not computationally bounded by 0 and 100 end points). Since the CSID is produced via a multivariate model that combines CPP, L/H ratio, and the standard deviations of these respective measures, it was expected that CSID measures obtained via the tested smartphones would have characteristics consistent with those previously described for CPP and L/H ratio. Indeed, the correlations between CSID measures obtained via smartphone and those obtained via SLM were of similar strength to those reported for CPP (see Appendix, Table A1), and the effect of device was highly significant and large in the sentence context (as observed for L/H ratio) but only small-to-moderate in the vowel context. In the vowel context, the device effect on CSID was minimal, with the predicted change in CSID ranging from only −1.234 to +1.990 severity points. In contrast, the device effect in the sentence context was much stronger and produced a positive change (i.e., an increased estimate of severity) of 4.083 to 9.110 severity points. However, as observed with the previous measures, the extremely strong correlations between the smartphone devices and the standard SLM for CSID show that the range of severity information contained within the voice sample corpus was retained and highly reliable, regardless of any differences in absolute CSID measurement. The predictive model for CSID showed that CSID values measured using these smartphone devices may be converted to “standard” SLM values with relatively low residual error (< 5 severity points) in both vowel and sentence contexts. This result may be put into context by comparison with the variability of severity ratings (e.g., CAPE-V overall severity ratings) across listeners (i.e., interjudge reliability).
Karnell et al.37 reported interjudge mean differences in CAPE-V overall severity ratings between experienced judges as large as 3.5 to 8.2 severity points, while Helou et al.38 reported differences of 1.8 to 4.0 scale points, depending upon the level of experience of the listener. In a study of pediatric voice, Kelchner et al.39 reported mean interjudge differences in ratings of overall severity from 0.9 to 7.2 scale points. Examples of intraclass correlations (ICCs)38–42 for the interjudge reliability of CAPE-V overall severity ratings from experienced speech-language pathology raters range from 0.67 to 0.88. In contrast, the inter-device ICC for the CSID measure of vocal severity in this study was 0.970 (95% CI: 0.95 to 0.985) and 0.968 (95% CI: 0.946 to 0.984) for the vowel and sentence contexts, respectively.
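The section does not state which ICC form was used for the inter-device comparison, so the sketch below shows two common Shrout and Fleiss forms computed from a two-way ANOVA decomposition: ICC(2,1), absolute agreement, and ICC(3,1), consistency. The function name `icc_two_way` and the toy data are hypothetical. The example illustrates why a constant device offset (like those estimated in Tables A2 to A4) lowers absolute agreement but not consistency, which is the same property that makes regression-based conversion effective.

```python
import numpy as np


def icc_two_way(ratings):
    """Return (ICC(2,1) absolute agreement, ICC(3,1) consistency).

    ratings: array of shape (n_subjects, k_devices_or_raters).
    """
    x = np.asarray(ratings, dtype=float)
    n, k = x.shape
    grand = x.mean()
    ss_rows = k * ((x.mean(axis=1) - grand) ** 2).sum()  # between-subject variation
    ss_cols = n * ((x.mean(axis=0) - grand) ** 2).sum()  # systematic device offsets
    ss_total = ((x - grand) ** 2).sum()
    ss_err = ss_total - ss_rows - ss_cols
    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_err = ss_err / ((n - 1) * (k - 1))
    agreement = (ms_rows - ms_err) / (
        ms_rows + (k - 1) * ms_err + k * (ms_cols - ms_err) / n
    )
    consistency = (ms_rows - ms_err) / (ms_rows + (k - 1) * ms_err)
    return float(agreement), float(consistency)


base = np.array([10.0, 20.0, 30.0, 40.0, 50.0])  # hypothetical CSID values for 5 speakers
offset_device = np.column_stack([base, base + 5.0])  # second device reads 5 points higher
agreement, consistency = icc_two_way(offset_device)
print(round(agreement, 3), round(consistency, 3))  # 0.952 1.0
```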

Effect of Distance and Setting

While the primary focus of this study was the effect of smartphone device on selected acoustic measures, our methodology also confirmed important effects of environmental factors on voice measurements. In the case of distance, moving the microphone (smartphone or SLM) from 15 to 30 cm resulted in significant effects of moderate strength on measures of CPP and CSID. As the distance from the source increases, the signal-to-noise ratio (SNR) is expected to decrease; this is reflected in a decrease of approx. 0.4 dB in CPP (in both vowel and sentence contexts; see Appendix, Table A2) and a corresponding increase in computed CSID scores of approx. 2 severity units in vowels and 4 severity units in the sentence context (see Appendix, Table A4). Close mouth-to-microphone distances (e.g., 2.5 to 10 cm)16,18 have been recommended to reduce the effect of external noise and increase SNR. In this study, we chose somewhat larger distances to simulate a hand-held position in which a subject/patient could read target text from the smartphone during a recording. These results confirm that closer mouth-to-microphone distances are preferable, and the 15 cm distance may provide a good balance between a readable distance from the smartphone and signal quality.
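The expected SNR penalty of doubling the distance can be estimated from the inverse square law. Assuming a point source in a free field and a background noise level at the microphone that does not change with microphone position (both idealizations; room reflections will make the actual drop smaller), the voice level, and hence the SNR, falls by about 6 dB when moving from 15 to 30 cm:

```latex
\Delta L = 20\log_{10}\!\left(\frac{r_1}{r_2}\right)
         = 20\log_{10}\!\left(\frac{15\ \text{cm}}{30\ \text{cm}}\right)
         \approx -6.0\ \text{dB}
```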

While mouth-to-microphone distance is relatively easy to control, clinicians may have less control over the recording setting. Notably, the recording environment had strong effects on all acoustic measures reported in this study. The quiet room setting used in this study was responsible for an approx. 1.5 dB reduction in CPP in both vowel and sentence contexts, and for an increase in CSID of approx. 7 (vowel) to 9 (sentence) severity units. Setting also significantly affected L/H ratio, though the effect was much greater in sentences than in vowels: an increase of approx. 0.6 dB in vowels but approx. 2.5 dB in the sentence context. Interestingly, while the quiet room setting reduced cepstral peak values, the same setting increased L/H ratio. This increase can be explained by a review of the spectral characteristics of background noise recordings in the sound-treated booth vs. our quiet room (see Fig. 3). The mean spectral amplitude was considerably greater in the quiet room than in the booth, and spectral skewness was decreased (particularly due to increased spectral energy in the 0–1000 Hz range in the quiet room spectra). In addition, the low frequency resonance of the smartphones (see Fig. 1) may have increased sensitivity to low frequency noise (e.g., 60 Hz hum; heating/air conditioning vibrations) in the quiet room environment, resulting in a broader low frequency peak in the response curve than observed in the sound-treated booth. This increase in low frequency spectral energy may have been responsible for the higher L/H ratios observed in the quiet room vs. the booth. The effect of setting may have been comparatively greater in the sentence than in the vowel context because pauses and occasional halting speech allowed portions of the sentence recordings to be more heavily influenced by background noise than the continuously voiced vowel productions.
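The pause explanation above can be illustrated with a toy frame-based sketch. It assumes, as a hypothesis, that the L/H ratio is computed per frame and averaged over all frames, including pauses; this is consistent with the CSID drawing on standard deviations of the measures, but ADSV's exact frame selection is not described here. The tones, amplitudes, and the tenfold hum difference between "booth" and "quiet room" are invented for illustration, and the helper names are hypothetical.

```python
import numpy as np

FS = 16000
FRAME = 1600  # 100 ms frames give 10 Hz bins, so every tone below is bin-aligned


def tone(freq_hz, amp):
    t = np.arange(FRAME) / FS
    return amp * np.sin(2 * np.pi * freq_hz * t)


def frame_lh_db(frame, cutoff_hz=4000.0):
    """Per-frame L/H ratio: summed energy below vs. above cutoff_hz, in dB."""
    power = np.abs(np.fft.rfft(frame * np.hanning(FRAME))) ** 2
    freqs = np.fft.rfftfreq(FRAME, d=1.0 / FS)
    low = power[(freqs > 0) & (freqs < cutoff_hz)].sum()
    high = power[freqs >= cutoff_hz].sum()
    return 10.0 * np.log10(low / high)


def mean_lh_db(hum_amp, with_pauses, n_frames=10):
    """Mean per-frame L/H of a toy recording; odd frames are pauses when with_pauses is True."""
    noise = tone(60, hum_amp) + tone(6000, 0.001)  # hypothetical room noise: hum plus weak HF energy
    voiced = tone(250, 1.0) + tone(5000, 0.1)      # toy voiced frame with an L/H of about 20 dB
    frames = [noise if (with_pauses and i % 2) else voiced + noise for i in range(n_frames)]
    return float(np.mean([frame_lh_db(f) for f in frames]))


booth_hum, room_hum = 0.005, 0.05  # assumed: the quiet room has 10x (20 dB) more hum
vowel_shift = mean_lh_db(room_hum, False) - mean_lh_db(booth_hum, False)
sentence_shift = mean_lh_db(room_hum, True) - mean_lh_db(booth_hum, True)
print(f"vowel: {vowel_shift:+.2f} dB, sentence: {sentence_shift:+.2f} dB")
```

In this toy setup, the extra hum barely moves the vowel mean (roughly 0.01 dB) because voiced energy dominates every frame, but it shifts the sentence mean by roughly 10 dB because the pause frames are noise-only. Only the direction of the effect, not its magnitude, is meant to parallel the reported results.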

Despite these complexities, our regression models showed that distance and setting had highly predictable effects on the voice measurements reported in this study; these effects could therefore be accounted for and adjusted to approximate measurements collected using a standard SLM at 15 cm in a sound-treated booth.
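Under the additive structure of the mixed effects models, a measurement can be mapped back to the reference condition (SLM, booth, 15 cm) by subtracting the fixed-effect offsets for its recording conditions. The sketch below does this for CPP using the full-model estimates from Appendix Table A2. It assumes no interactions between device, setting, and distance (none are listed in the tables) and that the speaker random effect is shared across conditions and therefore cancels; `CPP_EFFECTS` and `to_reference_cpp` are hypothetical names, and this is not necessarily the exact conversion procedure used by the authors.

```python
# Full-model fixed-effect estimates for CPP (dB), copied from Appendix Table A2.
# The reference condition (all offsets zero) is the SLM in the booth at 15 cm.
CPP_EFFECTS = {
    "vowel": {
        "quiet_room": -1.573,
        "distance_30cm": -0.443,
        "device": {"SLM": 0.0, "i12": 0.238, "iSE": 0.001, "s21": 0.132, "s9": -0.007},
    },
    "sentence": {
        "quiet_room": -1.490,
        "distance_30cm": -0.417,
        "device": {"SLM": 0.0, "i12": -0.044, "iSE": -0.193, "s21": -0.116, "s9": -0.236},
    },
}


def to_reference_cpp(cpp_db, context, device, quiet_room, distance_cm):
    """Hypothetical helper: remove additive condition offsets from a CPP value."""
    effects = CPP_EFFECTS[context]
    offset = effects["device"][device]
    if quiet_room:
        offset += effects["quiet_room"]
    if distance_cm == 30:
        offset += effects["distance_30cm"]
    elif distance_cm != 15:
        raise ValueError("the models were fit only at 15 and 30 cm")
    return cpp_db - offset


# An iPhone SE vowel recording made in a quiet room at 30 cm:
print(round(to_reference_cpp(8.0, "vowel", "iSE", quiet_room=True, distance_cm=30), 3))  # 10.015
```

Refusing distances other than 15 and 30 cm is a deliberate design choice: the models say nothing about conditions outside those that were tested.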

Limitations and Future Study

Limitations of the study relate to its design and to voice samples, smartphones, and settings that may differ substantially from those included here. First, our frequency response measures may have been affected by room modes, which can cause both peaks and nulls in frequency response. Though the frequency response results provided herein are perhaps more representative of typical real-world microphone performance, they may differ somewhat from what would be observed in an anechoic chamber. Second, the acoustic analysis findings reported herein may be less predictive for voice samples that are more severely dysphonic than those included. However, given the wide range of severity within our samples, this is unlikely to occur in most clinical or research samples. Third, it was not practical to test every available smartphone vendor and model; however, because we tested both basic and higher-end devices, and because many smartphone models share common components (such as MEMS microphones), it may be reasonable to suppose that many other models would perform comparably. Fourth, the quiet room used in this study was a typical office room that is likely similar, but not necessarily acoustically identical, to other quiet rooms. Therefore, the specific effects of setting described herein may differ in other “quiet room” settings. Nonetheless, our findings show that recordings collected in a comparable setting should be interpretable for intersubject differences in voice quality and intrasubject voice change over time. As shown in this study, the impact of individual room characteristics can be assessed by including a brief silence at the start of recordings, and the results of this study are likely to be most applicable to rooms that are acoustically similar. Finally, the recording app designed for this study relied on the built-in signal amplification level for each recording.
While the recorded signals from the various smartphones clearly retained the key acoustic characteristics of the voice samples used in this study, the recorded signal level of approximately −18 dBFS was less desirable than a −12 dBFS level, which provides a much stronger signal while retaining ample headroom to avoid clipping. We recommend that apps developed or used for voice recording allow the input gain to be adjusted so that signals peak at or near −12 dBFS.
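The two practical suggestions above, a brief leading silence for characterizing the room and a target peak level of about −12 dBFS, can be checked automatically after each recording. The sketch below is a minimal illustration under stated assumptions: levels are referenced to a full-scale sample value of 1.0 (dBFS conventions differ, e.g., for RMS of a full-scale sine), the first `silence_s` seconds are assumed to contain only room noise, and the SNR estimate is rough because the voiced portion also contains noise. The helper names are hypothetical and the test signal is synthetic.

```python
import numpy as np


def level_db(amplitude):
    """Level in dB relative to digital full scale (a sample value of 1.0)."""
    return float(20.0 * np.log10(amplitude))


def recording_levels(samples, fs, silence_s=1.0, target_peak_dbfs=-12.0):
    """Hypothetical helper: noise floor from a leading silence, peak level, and gain advice."""
    x = np.asarray(samples, dtype=float)
    n_silence = int(round(silence_s * fs))
    noise_rms = np.sqrt(np.mean(x[:n_silence] ** 2))
    voice_rms = np.sqrt(np.mean(x[n_silence:] ** 2))  # includes noise, so the SNR is approximate
    peak_dbfs = level_db(np.max(np.abs(x)))
    return {
        "noise_floor_dbfs": level_db(noise_rms),
        "peak_dbfs": peak_dbfs,
        "approx_snr_db": level_db(voice_rms) - level_db(noise_rms),
        "gain_to_target_db": target_peak_dbfs - peak_dbfs,
    }


# Synthetic check: 1 s of low-level "room noise" followed by a tone peaking at -18 dBFS.
fs = 16000
t = np.arange(fs) / fs
room = 0.001 * np.sin(2 * np.pi * 100 * t)
voice = 10 ** (-18 / 20) * np.sin(2 * np.pi * 200 * t)
levels = recording_levels(np.concatenate([room, voice]), fs)
print(levels)
```

For the synthetic recording, the suggested gain is about +6 dB, i.e., the difference between the −18 dBFS level observed in this study and the recommended −12 dBFS.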

Future work could apply similar methodology to other smartphone vendors and models. However, because continued technological advancement and the commonality of smartphone components across manufacturers may make the relevant differences between devices smaller over time, we expect that such studies may have diminishing returns. Similarly, additional potential sources of variability, such as vertical and horizontal angles of smartphone positioning, smartphone cases, and extended phone use (“wear and tear”), warrant further assessment, as does the effect of potential advances in microphone sensitivity and directionality. In addition, though the smartphone positioning and mouth-to-microphone distances used in this study were selected to approximate a commonly used phone-reading position, typical phone positioning, with the microphone in close proximity to the mouth, should also be assessed. Finally, this study focused on a select sample of spectral and cepstral measures computed using the ADSV program. While a previous study has demonstrated that measures of CPP computed using ADSV correlate strongly with similar measures in the Praat audio signal analysis program43, further study using Praat to examine the reliability of spectral and more traditional sustained vowel perturbation measures obtained via smartphone recordings would be beneficial. Future study that expands these results to a wider array of acoustic measures used in the voice literature will be of great value.

Conclusions

While device design differences between smartphones and a high-cost “flat” microphone included in a precision sound level meter (SLM) system resulted in variability in spectral and cepstral-based measures of voice, the intercorrelations of the measurements were extremely strong (r’s > 0.90), indicating that all devices were able to capture the wide range of voice characteristics represented in the voice sample corpus. Therefore, the results of this study support the robustness of a variety of acoustic measures collected using smartphone recordings across a diverse range of dysphonia severity and demographics. These findings suggest that the previously reported inadequacy of smartphone microphones for research-quality voice recordings1 may be circumvented by the selection of newer devices. Our findings also offer the possibility of interpreting measurements more meaningfully by quantitatively accounting for specific aspects of the recording conditions, such as microphone distance, setting, and ambient noise characteristics, previously identified as key factors influencing the consistency of measures across different conditions.5 It is hoped that the measures from this paper can be used to make more informed decisions regarding the use of smartphone voice recordings in a variety of conditions, and thereby extend their use to more commonly available settings such as quiet office rooms. Overall, we hope that these findings, in combination with prior literature and the free availability and transparency of the basic code for the voice recording app used in this study, will allow more widespread use of acoustic analyses of voice by supporting the strategic use of smartphones for voice recording.

Acknowledgments

Dr. S. Misono is supported by funding from the National Institutes of Health (UL1TR002494 and K23DC016335), American College of Surgeons, and the Triological Society.

Dr. J. A. Awan is supported by NSF award number SES-2150615 to Purdue University.

The content is solely the responsibility of the authors and does not necessarily represent the official views of the funding organizations.

Disclosures:

Dr. S. N. Awan licenses the algorithms that form the basis of the Analysis of Dysphonia in Speech and Voice (ADSV) program to PENTAX Medical (Montvale, NJ). Dr. Awan is supported by funding from the National Institute on Deafness and Other Communication Disorders (2R01DC009029-13A1).

Appendix

Table A1.

Mean Pearson’s r correlations across smartphones vs. the sound level meter standard (SLM, Booth, at 15cm).

| Dependent Variable | Across Setting and Distance | Booth 15 cm | Booth 30 cm | Quiet Room 15 cm | Quiet Room 30 cm |
|---|---|---|---|---|---|
| CPP Vowel | 0.969 (0.018), range 0.943–0.993 | 0.981 (0.003), range 0.977–0.985 | 0.989 (0.003), range 0.986–0.993 | 0.950 (0.005), range 0.944–0.956 | 0.957 (0.011), range 0.943–0.969 |
| CPP Sentence | 0.955 (0.021), range 0.920–0.980 | 0.972 (0.002), range 0.970–0.974 | 0.976 (0.004), range 0.970–0.980 | 0.931 (0.015), range 0.920–0.954 | 0.942 (0.011), range 0.935–0.959 |
| L/H Ratio Vowel | 0.950 (0.020), range 0.908–0.979 | 0.957 (0.018), range 0.941–0.978 | 0.938 (0.033), range 0.908–0.979 | 0.951 (0.008), range 0.941–0.959 | 0.956 (0.017), range 0.940–0.977 |
| L/H Ratio Sentence | 0.942 (0.023), range 0.892–0.970 | 0.952 (0.015), range 0.938–0.966 | 0.938 (0.020), range 0.918–0.965 | 0.930 (0.035), range 0.892–0.962 | 0.950 (0.018), range 0.931–0.970 |
| CSID Vowel | 0.971 (0.012), range 0.935–0.989 | 0.981 (0.008), range 0.971–0.989 | 0.976 (0.007), range 0.969–0.983 | 0.968 (0.004), range 0.962–0.973 | 0.960 (0.017), range 0.935–0.974 |
| CSID Sentence | 0.969 (0.006), range 0.959–0.981 | 0.971 (0.004), range 0.968–0.977 | 0.968 (0.005), range 0.962–0.973 | 0.968 (0.006), range 0.963–0.976 | 0.971 (0.011), range 0.959–0.981 |

Standard deviations (in parentheses) and correlation ranges are also provided. All correlations are significant at p < .001.

CPP: Cepstral Peak Prominence; L/H Ratio: Low vs. High Spectral Ratio (4 kHz cutoff); CSID: Cepstral Spectral Index of Dysphonia

Table A2.

Estimates and R² values for models (1) and (2) applied to CPP vowel and sentence measurements.

| Effect | Vowel: Full Model Estimate | Vowel: Simplified Model Estimate | Sentence: Full Model Estimate | Sentence: Simplified Model Estimate |
|---|---|---|---|---|
| Intercept | 8.136*** | 8.136*** | 5.689*** | 5.689*** |
| Setting=QR | −1.573*** | −1.573*** | −1.490*** | −1.490*** |
| Distance=30cm | −0.443*** | −0.443*** | −0.417*** | −0.417*** |
| Device=any smartphone | — | 0.091* | — | −0.147*** |
| Device=i12 | 0.238*** | — | −0.044 | — |
| Device=iSE | 0.001 | — | −0.193*** | — |
| Device=s21 | 0.132* | — | −0.116* | — |
| Device=s9 | −0.007 | — | −0.236*** | — |
| Random effect std | 2.392 | 2.392 | 1.986 | 1.986 |
| Residual std | 0.394 | 0.4032 | 0.369 | 0.3739 |
| R² | 0.976 | 0.975 | 0.971 | 0.970 |

All estimates and standard deviations are in dB. Significance of the parameter estimates: * p < .05; ** p < .01; *** p < .001.

QR: Quiet Room; std = standard deviation

Table A3.

The model estimates and R² values for models (1) and (2) applied to L/H ratio vowel and sentence measurements.

| Effect | Vowel: Full Model Estimate | Vowel: Simplified Model Estimate | Sentence: Full Model Estimate | Sentence: Simplified Model Estimate |
|---|---|---|---|---|
| Intercept | 28.379*** | 28.380*** | 28.438*** | 28.438*** |
| Setting=QR | 0.630*** | 0.630*** | 2.528*** | 2.528*** |
| Distance=30cm | 0.274 | 0.274 | 0.146 | 0.146 |
| Device=any smartphone | — | −2.577*** | — | −3.804*** |
| Device=i12 | −0.934*** | — | −2.152*** | — |
| Device=iSE | −3.375*** | — | −4.530*** | — |
| Device=s21 | −2.418*** | — | −3.618*** | — |
| Device=s9 | −3.581*** | — | −4.917*** | — |
| Random effect std | 6.365 | 6.362 | 4.923 | 4.918 |
| Residual std | 1.815 | 2.049 | 1.913 | 2.143 |
| R² | 0.928 | 0.908 | 0.888 | 0.860 |

All estimates and standard deviations are in dB. Significance of the parameter estimates: * p < .05; ** p < .01; *** p < .001.

QR: Quiet Room; std = standard deviation

Table A4.

The model estimates and R² values for models (1) and (2) applied to CSID vowel and sentence measurements.

| Effect | Vowel: Full Model Estimate | Vowel: Simplified Model Estimate | Sentence: Full Model Estimate | Sentence: Simplified Model Estimate |
|---|---|---|---|---|
| Intercept | 34.626*** | 34.626*** | 32.995*** | 32.994*** |
| Setting=QR | 7.766*** | 7.766*** | 9.022*** | 9.022*** |
| Distance=30cm | 2.234*** | 2.234*** | 4.178*** | 4.178*** |
| Device=any smartphone | — | 0.631 | — | 6.159*** |
| Device=i12 | −1.234* | — | 4.083*** | — |
| Device=iSE | 1.618** | — | 9.110*** | — |
| Device=s21 | 0.152 | — | 4.641*** | — |
| Device=s9 | 1.990*** | — | 6.803*** | — |
| Random effect std | 21.323 | 21.322 | 20.576 | 20.572 |
| Residual std | 4.013 | 4.169 | 4.318 | 4.675 |
| R² | 0.967 | 0.964 | 0.960 | 0.954 |

Significance of the parameter estimates: * p < .05; ** p < .01; *** p < .001.

QR: Quiet Room; std = standard deviation

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

References

- 1.Petrizzo D, Popolo PS. Smartphone Use in Clinical Voice Recording and Acoustic Analysis: A Literature Review. J Voice. 2021;35(3):499.e23–499.e28. doi: 10.1016/J.JVOICE.2019.10.006 [DOI] [PubMed] [Google Scholar]

- 2.Jannetts S, Schaeffler F, Beck J, Cowen S. Assessing voice health using smartphones: bias and random error of acoustic voice parameters captured by different smartphone types. Int J Lang Commun Disord. 2019;54(2):292–305. doi: 10.1111/1460-6984.12457 [DOI] [PubMed] [Google Scholar]

- 3.Grillo EU, Brosious JN, Sorrell SL, Anand S. Influence of Smartphones and Software on Acoustic Voice Measures. Int J Telerehabil. 2016;8(2):9–14. doi: 10.5195/ijt.2016.6202 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Kim GH, Kang DH, Lee YY, et al. Recording Quality of Smartphone for Acoustic Analysis. Journal of Clinical Otolaryngology Head and Neck Surgery. 2016;27(2):286–294. doi: 10.35420/JCOHNS.2016.27.2.286 [DOI] [Google Scholar]

- 5.Maryn Y, Ysenbaert F, Zarowski A, Vanspauwen R. Mobile Communication Devices, Ambient Noise, and Acoustic Voice Measures. J Voice. 2017;31(2):248.e11–248.e23. doi: 10.1016/J.JVOICE.2016.07.023 [DOI] [PubMed] [Google Scholar]

- 6.Guan Y, Li B. Usability and Practicality of Speech Recording by Mobile Phones for Phonetic Analysis. 2021 12th International Symposium on Chinese Spoken Language Processing, ISCSLP; 2021. Published online January 24, 2021. doi: 10.1109/ISCSLP49672.2021.9362082 [DOI] [Google Scholar]

- 7.Zhang C, Jepson K, Lohfink G, Arvaniti A. Comparing acoustic analyses of speech data collected remotely. J Acoust Soc Am. 2021;149(6):3910–3916. doi: 10.1121/10.0005132 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Uloza V, Ulozaitė-Stanienė N, Petrauskas T, Kregždytė R. Accuracy of Acoustic Voice Quality Index Captured With a Smartphone – Measurements With Added Ambient Noise. Journal of Voice. Published online 2021. doi: 10.1016/j.jvoice.2021.01.025 [DOI] [PubMed] [Google Scholar]

- 9.Ulozaite-Staniene N, Petrauskas T, Šaferis V, Uloza V. Exploring the feasibility of the combination of acoustic voice quality index and glottal function index for voice pathology screening. Eur Arch Otorhinolaryngol. 2019;276(6):1737–1745. doi: 10.1007/S00405-019-05433-5 [DOI] [PubMed] [Google Scholar]

- 10.Marsano-Cornejo MJ, Roco-Videla Á. Comparison of the acoustic parameters obtained with different smartphones and a professional microphone. Acta Otorrinolaringol Esp. 2021;73(1):51–55. doi: 10.1016/J.OTORRI.2020.08.006 [DOI] [PubMed] [Google Scholar]

- 11.Ge C, Xiong Y, Mok P. How reliable are phonetic data collected remotely? Comparison of recording devices and environments on acoustic measurements. Published online 2021. doi: 10.21437/Interspeech.2021-1122 [DOI] [Google Scholar]

- 12.State by State: The Most Popular Android Phones in the US | PCMag. Accessed September 6, 2022. https://www.pcmag.com/news/state-by-state-the-most-popular-android-phones-in-the-us

- 13.These are the most popular iOS and Android devices in North America by active use - PhoneArena. Accessed September 6, 2022. https://www.phonearena.com/news/most-popular-active-ios-android-devices-north-america-market-report_id132703

- 14.US Smartphone Market Grows 19% YoY in Q1 2021. Accessed September 6, 2022. https://www.counterpointresearch.com/us-smartphone-market-q1-2021/

- 15.• US smartphone market share by vendor 2016–2022 | Statista. Accessed September 6, 2022. https://www.statista.com/statistics/620805/smartphone-sales-market-share-in-the-us-by-vendor/

- 16.Awan SN, Shaikh MA, Desjardins M, Feinstein H, Abbott KV. The Effect of Microphone Frequency Response on Spectral and Cepstral Measures of Voice: An Examination of Low-Cost Electret Headset Microphones. Am J Speech Lang Pathol. 2022;31(2):959–973. doi: 10.1044/2021_AJSLP-21-00156 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Boersma P, Weenink D. Praat. Published online 2016. http://www.fon.hum.uva.nl/praat/

- 18.Patel RR, Awan S, Barkmeier-kraemer J, et al. Recommended Protocols for Instrumental Assessment of Voice: American Speech-Language-Hearing Association Expert Panel to Develop a Protocol for Instrumental Assessment of Vocal Function. Am J Speech Lang Pathol. 2018;27(August):887–905. [DOI] [PubMed] [Google Scholar]

- 19.Maryn Y, Roy N, de Bodt M, van Cauwenberge P, Corthals P. Acoustic measurement of overall voice quality: a meta-analysis. J Acoust Soc Am. 2009;126(5):2619–2634. doi: 10.1121/1.3224706 [DOI] [PubMed] [Google Scholar]

- 20.Awan SN, Roy N, Jetté ME, Meltzner GS, Hillman RE. Quantifying dysphonia severity using a spectral/cepstral-based acoustic index: Comparisons with auditory-perceptual judgements from the CAPE-V. Clin Linguist Phon. 2010;24(9). doi: 10.3109/02699206.2010.492446 [DOI] [PubMed] [Google Scholar]

- 21.Gillespie AI, Gartner-Schmidt J, Lewandowski A, Awan SN. An Examination of Pre- and Posttreatment Acoustic Versus Auditory Perceptual Analyses of Voice Across Four Common Voice Disorders. Journal of Voice. Published online 2017. doi: 10.1016/j.jvoice.2017.04.018 [DOI] [PubMed] [Google Scholar]

- 22.Awan S, Helou L, Stojadinovic A, Solomon N. Tracking voice change after thyroidectomy: application of spectral/cepstral analyses. Clin Linguist Phon. 2011;25(4):302–320. doi: 10.3109/02699206.2010.535646 [DOI] [PubMed] [Google Scholar]

- 23.Alharbi GG, Cannito MP, Buder EH, Awan SN. Spectral/Cepstral Analyses of Phonation in Parkinson’s Disease before and after Voice Treatment: A Preliminary Study. Folia Phoniatrica et Logopaedica. 2019;71(5–6). doi: 10.1159/000495837 [DOI] [PubMed] [Google Scholar]

- 24.Awan S, Roy N. Outcomes measurement in voice disorders: application of an acoustic index of dysphonia severity. J Speech Lang Hear Res. 2009;52(2):482–499. doi: 10.1044/1092-4388(2009/08-0034) [DOI] [PubMed] [Google Scholar]

- 25.Hillenbrand J, Houde R. Acoustic correlates of breathy vocal quality: dysphonic voices and continuous speech. J Speech Hear Res. 1996;39(2):311–321. http://www.ncbi.nlm.nih.gov/pubmed/8729919 [DOI] [PubMed] [Google Scholar]

- 26.Awan S, Roy N. Acoustic prediction of voice type in women with functional dysphonia. J Voice. 2005;19(2):268–282. doi: 10.1016/j.jvoice.2004.03.005 [DOI] [PubMed] [Google Scholar]

- 27.Hillenbrand J, Cleveland R, Erickson RL. Acoustic correlates of breathy vocal quality. J Speech Hear Res. 1994;37(4):769–778. http://www.ncbi.nlm.nih.gov/pubmed/7967562 [DOI] [PubMed] [Google Scholar]

- 28.Kempster GB, Gerratt BR, Verdolini Abbott K, Barkmeier-Kraemer J, Hillman RE. Consensus auditory-perceptual evaluation of voice: development of a standardized clinical protocol. American journal of speech-language pathology / American Speech-Language-Hearing Association. 2009;18(2):124–132. doi: 10.1044/1058-0360(2008/08-0017) [DOI] [PubMed] [Google Scholar]

- 29.Awan S Analysis of Dysphonia in Speech and Voice (ADSV): An Application Guide. KayPENTAX, Inc./Pentax Medical, Inc.; 2011. [Google Scholar]

- 30.Awan SN, Solomon NP, Helou LB, Stojadinovic A. Spectral-cepstral estimation of dysphoria severity: External validation. Annals of Otology, Rhinology and Laryngology. 2013;122(1). [DOI] [PubMed] [Google Scholar]

- 31.Awan S, Roy N, Cohen S. Exploring the relationship between spectral and cepstral measures of voice and the voice handicap index (VHI). Journal of Voice. 2014;28(4):430–439. doi: 10.1016/j.jvoice.2013.12.008 [DOI] [PubMed] [Google Scholar]

- 32.Cavalcanti JC, Englert M, Oliveira M, Constantini AC. Microphone and Audio Compression Effects on Acoustic Voice Analysis: A Pilot Study. Journal of Voice. Published online 2021. doi: 10.1016/j.jvoice.2020.12.005 [DOI] [PubMed] [Google Scholar]

- 33. JASP Team. JASP. Published online 2022. https://jasp-stats.org/

- 34. R Core Team. R: A language and environment for statistical computing. Published online 2020. https://www.r-project.org/

- 35. Cohen J. Statistical Power Analysis for the Behavioral Sciences. 2nd ed. L. Erlbaum Associates; 1988.

- 36. Widder J, Morcelli A. Basic principles of MEMS microphones. EDN. Published 2014. Accessed September 6, 2022. https://www.edn.com/basic-principles-of-mems-microphones/

- 37. Karnell MP, Melton SD, Childes JM, Coleman TC, Dailey SA, Hoffman HT. Reliability of clinician-based (GRBAS and CAPE-V) and patient-based (V-RQOL and IPVI) documentation of voice disorders. J Voice. 2007;21(5):576–590. doi: 10.1016/j.jvoice.2006.05.001

- 38. Helou LB, Solomon NP, Henry LR, Coppit GL, Howard RS, Stojadinovic A. The role of listener experience on Consensus Auditory-perceptual Evaluation of Voice (CAPE-V) ratings of postthyroidectomy voice. Am J Speech Lang Pathol. 2010;19(3):248–258. doi: 10.1044/1058-0360(2010/09-0012)

- 39. Kelchner LN, Brehm SB, Weinrich B, et al. Perceptual evaluation of severe pediatric voice disorders: rater reliability using the Consensus Auditory Perceptual Evaluation of Voice. J Voice. 2010;24(4):441–449. doi: 10.1016/j.jvoice.2008.09.004

- 40. Majd NS, Khoddami SM, Drinnan M, Kamali M, Amiri-Shavaki Y, Fallahian N. Validity and rater reliability of Persian version of the Consensus Auditory Perceptual Evaluation of Voice. Auditory and Vestibular Research. 2014;23(3):65–74. Accessed August 14, 2022. https://avr.tums.ac.ir/index.php/avr/article/view/245

- 41. Ertan-Schlüter E, Demirhan E, Ünsal EM, Tadıhan-Özkan E. The Turkish version of the consensus auditory-perceptual evaluation of voice (CAPE-V): a reliability and validity study. J Voice. 2020;34(6):965.e13–965.e22. doi: 10.1016/j.jvoice.2019.05.014

- 42. Zraick RI, Kempster GB, Connor NP, et al. Establishing validity of the Consensus Auditory-Perceptual Evaluation of Voice (CAPE-V). Am J Speech Lang Pathol. 2011;20(1):14–22. doi: 10.1044/1058-0360(2010/09-0105)

- 43. Watts CR, Awan SN, Maryn Y. A comparison of cepstral peak prominence measures from two acoustic analysis programs. J Voice. 2017;31(3). doi: 10.1016/j.jvoice.2016.09.012