Abstract

Precise segmentation of the nucleus is vital for computer-aided diagnosis (CAD) in cervical cytology. Automated delineation of the cervical nucleus is notoriously challenging because of clumped cells, color variation, noise, and fuzzy boundaries. Owing to its standout performance in medical image analysis, deep learning has attracted more attention than other techniques. We propose a deep learning model, C-UNet (Cervical-UNet), to segment cervical nuclei from overlapped, fuzzy, and blurred cervical cell smear images. Cross-scale features integration based on a bi-directional feature pyramid network (BiFPN) and a wide context unit are used in the encoder of the classic UNet architecture to learn spatial and local features. The improved network has two inter-connected decoders that mutually optimize and integrate these features to produce segmentation masks. Each component of the proposed C-UNet is extensively evaluated to judge its effectiveness on a complex cervical cell dataset. Different data augmentation techniques were employed to enhance the proposed model’s training. Experimental results show that the proposed model outperformed extant models, i.e., CGAN (Conditional Generative Adversarial Network), DeepLabv3, Mask-RCNN (Mask Region-Based Convolutional Neural Network), and FCN (Fully Convolutional Network), on the dataset employed in this study and on the ISBI-2014 and ISBI-2015 (International Symposium on Biomedical Imaging) datasets. C-UNet achieved an object-level accuracy of 93%, pixel-level accuracy of 92.56%, object-level recall of 95.32%, pixel-level recall of 92.27%, Dice coefficient of 93.12%, and F1-score of 94.96% on the complex cervical image dataset.

Introduction

Cervical cancer occurs in the cervix, the narrow cylindrical passage of the lower uterus known as the neck of the womb. Cervical cancer is the world’s fourth most prevalent cancer, accounting for roughly 25000 fatalities among women per year [1]. This rate has been declining since 1950, which is attributed to the availability of different screening tests. Over the past four decades, histopathology imaging has been the gold standard in all forms of cancer investigation. Digital histopathology images are acquired through image capturing, tissue slicing, staining, and digitization, and the resulting images generally have high resolutions. A tissue slide often contains many nuclei with varying shapes, appearances, textures, and morphological features. The primary analytical procedure in digital histopathology is the segmentation of nuclei from cells, glands, and tissues. The segmented nuclei provide indicators that are critical for cancer diagnosis and prognosis. The simplified Papanicolaou (Pap) smear test, the ThinPrep cytology test (TCT), and liquid-based cytology (LBC) are the most commonly used screening methods to detect cervical cancer [2–4]. Cytological scans of the cervical smear are examined at 400x magnification under the microscope. At this magnification, pathologists have to examine multiple fields of view per scan, which is time-consuming and extremely susceptible to errors and observer bias. The process becomes more difficult due to cell clumps, yeast contamination, or bacteria masking by blood, mucus, and inflammation.

Several automated diagnostic methods have been designed to help cytologists examine Pap-stained vaginal smears; these are discussed in the related work section. A number of factors continue to pose a challenge to this task, including the presence of overlapping nuclei, superficial cells, poor contrast, spurious edges, poor staining, and cytoplasm. Therefore, more robust automatic screening systems are required to assist cytologists in determining cytopathy in cervical cells. A vital component in the cervical cytology pipeline is the precise segmentation and detection of the nucleus in cervical cells [5]. An increasing number of studies have focused on the delineation of cell clusters and nuclei. Watershed [6], morphological operations, thresholding [7], and active contour models [8] are a few of the many methods employed for the segmentation of nuclei or cellular mass. These techniques failed to delineate overlapping cell structures well.

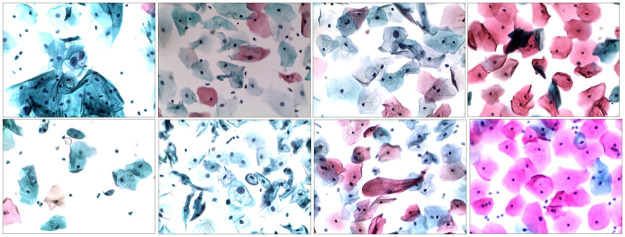

Recent studies [9] have shown some improvements in the isolation of clumped nuclei and cytoplasm from cervical cells. The datasets used in most of these studies for the delineation of nuclei include the liquid-based cytology (LBC) Pap smear dataset and the Herlev dataset for single-cell delineation, and the Shenzhen University (SZU), ISBI2014, and ISBI2015 datasets for multi-cell examination. The images in these datasets show isolated nuclei with apparent color variation and well-separated boundaries among cell constituents, which makes analysis easy. According to [10], despite good performance on those datasets, several of the approaches mentioned above failed to excel on their dataset, which is based on real-world clinical data with folded nuclei, cytoplasm, and color differences. Similarly, the majority of prior studies [11–14] focused on the segmentation of huddled cytoplasm, and only a few [15–17] concentrated on the delineation of nuclei from those datasets. Recently, deep neural networks [18–20] have surged in popularity for their standout performance in medical image analysis. Deep learning models can handle complex tasks if the datasets are large enough [21]. Presently, the available datasets possess less diversity of nuclei shape, appearance, and color than real-world clinical data. We have selected a challenging dataset consisting of 104 cervical cell images of size 1024 x 768 based on LBC screening. Fig 1 shows a few examples of cervical images with variations in shape, texture, color, and appearance, which make the dataset more challenging. Furthermore, the repeated pooling and convolution operations across the network result in the loss of vital information required for the precise segmentation of nuclei.

Fig 1. Cervical cell images from the employed dataset.

To handle these issues, extract features effectively, and adapt to the heterogeneity of cervical cells, we suggest an improved UNet model with BiFPN that is suitable for segmenting various forms of cervical cell nuclei. The contributions of this study are as follows:

We have designed an optimized network based on UNet architecture, namely C-UNet, for precisely segmenting nuclei from cervical images.

C-UNet incorporates a cross-scale features integration (CSFI) module that merges the features extracted by the network into the final feature map using a cross-scale weighted integration scheme. This fusion adaptively combines features of different spatial resolutions and domains based on their significance.

C-UNet uses two interconnected decoders for boundary detection and semantic segmentation of cervical nuclei.

To evaluate the usefulness of each part of C-UNet, multiple experiments were performed.

The remainder of this manuscript is structured as follows: related work is illustrated in section 2, section 3 describes the architecture of the suggested model, followed by results and discussion in section 4, and the conclusion is provided in section 5.

Related work

A slew of successful cytological nuclei segmentation methods have been developed during the previous two decades. Prior techniques for segmenting cytological nuclei can be categorized as handcrafted and deep learning techniques.

Handcrafted methods

Traditional methods for cytological nuclei segmentation are based on edge enhancement [22], thresholding [17, 23–25], clustering [8, 9, 26, 27], morphological features, and marker-controlled watershed [28, 29]. [22] provides a cervical nucleus and cytoplasm detector based on edge enhancement. Depth equalization was employed to enhance edges, but the method failed to segment blurry contours. [17] developed a model for locating and segmenting nuclei in cytological images. A randomized Hough transform with prior knowledge is used to locate nuclei, and a level-set segmentation method is used to separate nuclei from cell regions. Otsu thresholding, a median filter, and the Canny edge detector were employed during the preprocessing stage. Such techniques are not applicable to the splitting of crowded cell structures.

[6] proposed thresholding for separating cell regions and multiscale hierarchal method based on circularity and homogeneity segmented regions. A binary classifier was used to distinguish cytoplasm from the nucleus. The ranking of the cells was determined by linearizing the leaves of a binary tree created using hierarchical clustering. Cases with irregular sizes and shapes were poorly handled. [25] designed an automated method to segment nuclei from cervical cells. They employed V-channel to enhance the contrast between cytoplasm and nuclei. Two features, roundness and shape factor, were used to validate the segmentation of clumped nuclei. Concave-point algorithms were applied to segment multi-clumped nuclei. To remove noise and non-uniform illumination, median filter and adaptive thresholding were used. Zhao et al. [27] addressed the problem of cervical cell segmentation via Markov Random Field (MRF) based on superpixel. A gap search method was applied to minimize time complexity. Sahe et al. [9] utilized a superpixel merging approach for accurate segmentation of crowded cervical nuclei. The superpixel is obtained through the statistical region merging (SRM) technique through the pair-wise regional control threshold of SLIC (Simple Linear Iterative Clustering) superpixel. This technique could not resolve the under-segmentation issue and failed to tackle the heterogeneity of nucleus size and shape.

Morphological analysis and thresholding-based approaches have been used for the crisp segmentation of cervical nuclei. [28] introduced an iterative thresholding technique to segment nuclei on the basis of the size of the nucleus, solidity, and intensity. [23] employed local thresholding to segregate cervical nuclei on the basis of properties within a window of a radius of 15 pixels. In [24], traditional Otsu thresholding was integrated with contrast-limited adaptive histogram and anisotropic filtering to detect nuclei. Thresholding-based methods do not work well for intractable cases. In [7, 30], morphological features were used to locate the centroid of the nucleus, which is subsequently employed to determine the boundaries of the nucleus. [30] used centroid to determine markers for watershed transform, and [7] utilized them to find radial profiles. These techniques were unable to segment varying shape cellular structures. In [31], an adaptive local graph-cut approach for cervical cell delineation was applied with a blend of area information, texture, intensity, and boundary. Graph-based method’s performance degrades in the case of touching objects.

Watershed is a prevailing image segmentation technique that has been around for a long time. Several studies [12, 32] have determined that marker-controlled watershed segmentation is effective. [12] developed a multi-pass watershed algorithm with a barrier-based watershed transform for cervical cell segmentation. The first pass finds the nucleus on a gradient-based edge map; the second pass segregates touching, isolated, and clumped cells; and the third pass estimates the cell shape in the clumped clusters. These methods are susceptible to noise and need pre- and post-processing, which is quite cumbersome.

These approaches have the apparent flaw of not being able to adequately split cervical nuclei since they frequently rely on an incomplete set of low-level hand-crafted features. Additionally, these features lack structural information and yield below-par segmentation results. Thus, different approaches need various pre- and post-processing steps for various types of nuclei to enhance the segmentation quality. However, such a lengthy pipeline and intricate process flow is often unstable. The entire segmentation procedure might fail if errors occur in the intermediate phases.

Deep activated features based methods

Deep learning-based models are one of the most recent advances in numerous applications and are widely employed in cytological image analysis. [33] integrated a superpixel with a Convolutional neural network (CNN) for precise segmentation of nuclei and cytoplasm from cytological images. A trimmed mean filter was used to remove noise from input images. CNN was used to learn 15 features from superpixels. Coarse nucleus segmentation was performed to decrease the clustering of inflammatory cells. The nuclei segmentation accuracy was noted as 94.50%. Coarse segmentation becomes inconsistent for cases when the receptive field is larger than the nuclei. In [34], a fully convolutional network (FCN) was employed to separate the nucleus region, and a graph-based method was used to obtain finer segmentation. They recorded an accuracy of 94.50% on a dataset comprising 1400 images. FCN employs single-scale features that produce inconsistent results for complex cases. Song et al. [35] investigated a multiscale convolutional network (MSCN) with graph partitioning via superpixel for cervical nuclei and cytoplasm segmentation. The results demonstrated that MSCN, graph partitioning, and superpixel effectively delineate cervical cells. Superpixel segmentation has shown an increase of 2.06% for the nucleus and 5.06% for the cytoplasm compared to raw pixels segmentation. However, the employed method cannot detect isolated and touching nuclei in the same process. Phoulady et al. [36] used an iterative thresholding method with CNN for the cervical nucleus delineation model. They trained the model using nuclei patches of size 75 x 75 pixels. This method achieved a recall, precision, and F-score of 86%, 89%, and 87% on a complex CERVIX93 dataset. This method is not able to isolate touching cellular nuclei boundaries. Liu et al. [37] designed a model based on Mask-Regional Convolutional Neural Network (Mask-RCNN) and Locally Fully Connected Conditional Random Field (LFCCRF) models. 
The Mask-RCNN incorporates multiscale feature maps to perform accurate nuclei segmentation, and LFCCRF uses abundant spatial information to refine the nucleus boundary further. Experimental results on the Herlev Pap smear dataset show superior performance compared to most of the prior studies. Conditional Random Field based methods employ second-order statistics, whereas higher-order statistics are more beneficial for image segmentation. In [38], two functional extensions were introduced in Faster RCNN, i.e., a global context-aware function to improve spatial correlation and a deformable convolution function in the last three layers of the FPN (feature pyramid network) to enhance scalability. Experiments have shown a reasonable improvement of 6–9% in mAP (mean average precision) on the cervical images dataset. RCNN is not able to delineate nuclei with varying aspect ratios and spatial locations.

Presently, there are few models developed expressly for the detection of cervical cancer [39–41]. Tan et al. [39] deployed a Faster RCNN for the identification of cancer cells in TCT images and obtained an AUC of 0.67. Zhang et al. [42] investigated the R-FCN architecture for cancer cell detection in LBC images. This architecture focuses on detecting the abnormal region instead of abnormal nuclei. The performance was evaluated using a novel notion termed hit degree, which defines how close each ground truth and detected region are to each other. Li et al. [43] introduced a Faster RCNN to identify and classify abnormal cells in cervical smear images scanned at 20x. In [44], the authors presented a generative adversarial network (GAN) to segment both overlapping and single-cell images. The proposed GAN used structural information of the whole image and the probability distribution of cell morphology for segmentation. Compared to other state-of-the-art models, results show good performance on poor-contrast and highly overlapping cells. The model produced DC (dice coefficient) and FNRo (false negative rate) values of 94.35% and 7.9% for single cells, and 89.9% and 6.4% for clumped cells. GAN-based models are very difficult to train and unsuitable for small datasets. Chen et al. [45] used a two-stage Mask-RCNN to segment cervical cytoplasm and nuclei. ISBI-2014 and ISBI-2015 were used to evaluate the model, which showed improved performance for segmenting cervical cellular masses. Yang et al. [46] applied a deep learning model based on a modified UNet. They used ResNet-34 as an encoder block combined with Gating Context-Aware Pooling layers to extract and refine learned features. The modified decoder uses a global attention layer to build long-range dependencies. The proposed model was trained and tested on a private dataset, namely the ClusteredCell dataset. 
Results show significant improvement in the performance of their model compared to state-of-the-art (SOTA) models. [47] employed a UNet with embedded local and global attention layers. These multi-attention layers enhance the network’s capability to extract and utilize features for segmentation of the cervical cytoplasm and nucleus. The Herlev dataset was used to evaluate model performance, and the model recorded better segmentation scores than SOTA models. [48] designed a deep learning-based model for the segmentation of nuclei from multiple datasets. Tissue-specific features were extracted from histopathological images using BiFPN. Post-processing steps are required to further improve the output. Excellent segmentation results were recorded compared to other benchmark networks. [49] developed a three-phase cervical cell segmentation method. In the first phase, a CNN is used for coarse segmentation of cellular masses; the second phase identifies the location of cytoplasm and nuclei with a random walker graph; the third phase refines the output of the previous phases through the Hungarian algorithm. A DSC score of 97.2% was noted for the ISBI-2014 dataset. [50] proposed a framework based on an adversarial paradigm for spotting cervical cells. They used RCNN to construct the encoder and decoder and a fine-tuned (FSAE) autoencoder to optimize parameters. Analysis of the results shows an improvement in the performance of the proposed framework. [51] proposed Triple-UNet and exploited Hematoxylin staining to predict cellular nuclei. They subtracted boundaries from the segmentation map to split clumped nuclei. The drawback of this technique is that such subtraction of instance boundaries may reduce segmentation accuracy. [52] designed a model, StarDist, to estimate the centroid probability map and the distance from each foreground pixel to its corresponding instance boundary. Each polygon map corresponds to one nuclear instance. 
This technique only predicts polygons based on the characteristics of the centroid pixel, which lacks contextual information for large nuclei and therefore lowers segmentation accuracy.

Material and method

C-UNet’s architecture

Overview

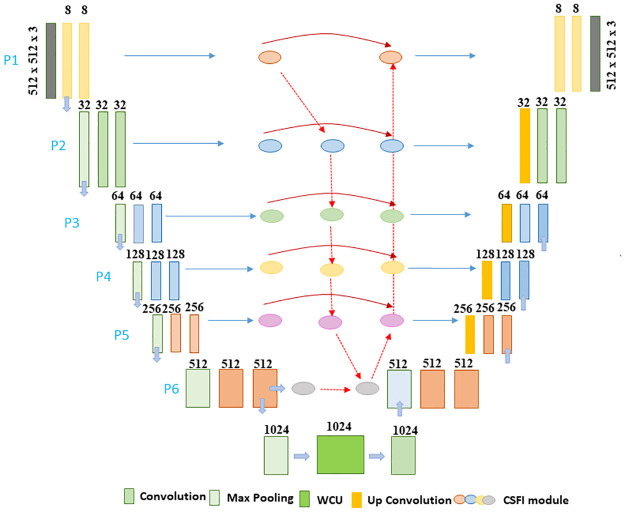

The complete structure of C-UNet is presented in Fig 2. It comprises three modules, i.e., the encoder, the cross-scale features integration (CSFI) module, and the interlinked decoders. The encoder learns the nuclei features, which are passed on to the CSFI module to generate a rich and precise feature representation. This enables the inter-connected decoders to create valid and reliable activations for each nucleus.

Fig 2. Structure of the proposed C-UNet model.

UNet architecture

We propose a cervical cell segmentation architecture based on U-Net, composed of a multiscale feature extractor encoder and inter-connected decoders. The network takes a 512 x 512 x 3 image as input and outputs a 512 x 512 mask. The encoder has the classic architecture of a convolutional network. Each level comprises two successive 3 x 3 convolutions with same padding, followed by a Rectified Linear Unit (ReLU) activation function and a 2 x 2 max pooling with a stride of 2 for downsampling. With each downsampling, the number of feature channels is doubled. The encoder has seven levels. The features at the sixth level are fed into the CSFI unit and the WCU simultaneously, and their output is fed into the interlinked decoders. For regularization of the model, a dropout layer with a factor of 0.5 is used. Each level in the decoder consists of an upsampling of the feature map followed by a 2 x 2 convolution, which halves the number of feature channels. The upsampled features are integrated with the corresponding features from the feature network. The integration is followed by two 3 x 3 convolutions with same padding, each followed by the ReLU function. In the last layer, the resulting 512 x 512 x 64 feature map goes through two 3 x 3 convolutions with ReLU, followed by a final 1 x 1 convolution and the sigmoid function.
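As a rough sanity check on the encoder geometry described above, the sketch below tabulates the feature-map size at each level. It rests on assumptions that the text does not state explicitly: the first level is taken to have 64 channels (inferred from the 512 x 512 x 64 feature map mentioned for the last layer), channel doubling is taken to continue uncapped through all seven levels, and one 2 x 2 pooling is assumed between consecutive levels. The helper name `encoder_shapes` is hypothetical.

```python
def encoder_shapes(input_size=512, base_channels=64, levels=7):
    """Return (height, width, channels) for each encoder level.

    Assumes 'same' padding, so convolutions keep the spatial size, and one
    2 x 2 max pooling with stride 2 between consecutive levels.
    """
    shapes = []
    size, channels = input_size, base_channels
    for _ in range(levels):
        shapes.append((size, size, channels))
        size //= 2       # 2 x 2 pooling with stride 2 halves height and width
        channels *= 2    # the number of feature channels doubles per level
    return shapes


# Print the assumed schedule for a 512 x 512 input.
for level, shape in enumerate(encoder_shapes(), start=1):
    print(f"level {level}: {shape}")
```

Under these assumptions the deepest level is only 8 x 8 pixels wide, which illustrates why repeated pooling can lose the fine detail needed for small nuclei.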

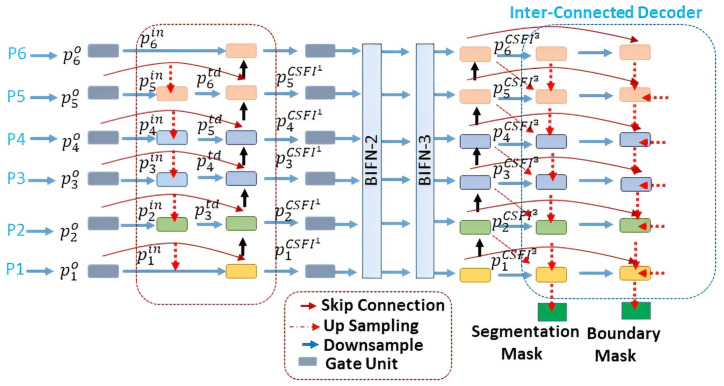

Cross-scale features integration (CSFI) module

We use the UNet model to extract features from input images at different levels with the help of convolution layers. To reduce the noise response and computational overhead and to concentrate on specific features, we employ a gating mechanism. The gating unit suppresses the response of insignificant regions and focuses on nuclei features. It is composed of a 1 x 1 convolution, batch normalization, sigmoid activation, and a dropout function. The CSFI comprises multiple bottom-up and top-down pathways based on insight from BiFPN [53], as shown in Fig 3. These pathways merge low- and high-level features efficiently. The outputs from multiple levels of the UNet are used as input to the BiFPN model for feature integration. The BiFPN model outputs features on seven different levels ranging from p1 to p7.

Fig 3. Cross-scale feature integration module, inter-connected decoders, and their essential components.

Formally, we are given a set of multi-level input features at the ith level, together with an intermediate feature set on the top-down path. The objective is to aggregate these multiscale features into a fused output. We have employed a weighted integration mechanism to fuse features of various resolutions. The integration mechanism is mathematically shown in Eq 1.

| $O = \sum_{i} \dfrac{v_i}{\epsilon + \sum_{j} v_j} \cdot I_i$ | (1) |

Here $I_i$ denotes the ith input feature map, $O$ the fused output, and $v_i$ an additional learnable weight. ReLU is applied to each $v_i$ to ensure that the weight is greater than or equal to zero, and $\epsilon$ is set to 0.0001 to avoid numerical instability. This allows the network to re-weight the relevance of each channel of the input representation.
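The weighted integration described above can be sketched in a few lines of plain Python. This is a minimal illustration of BiFPN-style normalised fusion, assuming that all feature maps have already been resized to the same shape and flattened to lists of floats; the function name `fuse` and the flat-list representation are illustrative choices, not part of the original model code.

```python
def fuse(feature_maps, weights, eps=1e-4):
    """Weighted fusion of same-sized feature maps (flat lists of floats).

    Each raw weight is passed through ReLU so it is non-negative, then the
    weights are normalised by their sum plus eps (0.0001 in the text).
    """
    if len(feature_maps) != len(weights):
        raise ValueError("need one weight per feature map")
    relu_w = [max(0.0, w) for w in weights]      # ReLU keeps weights >= 0
    total = eps + sum(relu_w)                    # eps avoids division by zero
    fused = [0.0] * len(feature_maps[0])
    for fmap, w in zip(feature_maps, relu_w):
        for k, value in enumerate(fmap):
            fused[k] += (w / total) * value
    return fused


# Two inputs with equal weights give (almost exactly) their average.
print(fuse([[1.0, 2.0], [3.0, 4.0]], [1.0, 1.0]))
```

A negative raw weight is clipped to zero by the ReLU, so the corresponding feature map simply drops out of the sum. A feature map that matters to the output can be given a larger weight during training.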

As illustrated in Fig 3, the intermediate fused feature maps are calculated using Eqs 2 and 3 as follows:

| (2) |

Where represents intermediate feature maps of ith level on the top-down path, vi shows the weighting vector, is the input features vector. Intuitively, vi shows the significance of the features map . If is crucial to the output, then vi will be assigned a bigger value during training.

| (3) |

indicates the output of the CSFI module, Rb symbolizes bilinear interpolation to resize feature maps before adding at pyramid level l and i indicates a series of CSFI modules, i.e., 3 in our case.

Decoder

The features extracted by the CSFI module are passed into the decoder. The decoder module jointly integrates multiple-scale features from the CSFIs and generates precise segmentation and boundary masks. After resizing by bilinear interpolation Rb, the optimized feature maps from the ith CSFI module are passed through the feature integration unit. This iterative procedure is performed until the final masks at the (L-1) pyramid level are generated. For instance, the segmentation and boundary maps at the 3rd level are computed as shown in Eqs 4 and 5 below:

| (4) |

Where symbolizes feature maps at 3rd level. represents features maps obtained at the 3rd pyramid level, shows reweighted features maps of 3rd CSFI module, are the features obtained after filtration through the gating block.

| (5) |

indicates boundary detection maps at 3rd level. A refinement unit is incorporated to refine feature maps further. It comprises two convolutional layers of kernel size 3 x 3 and 1 x 1, respectively.

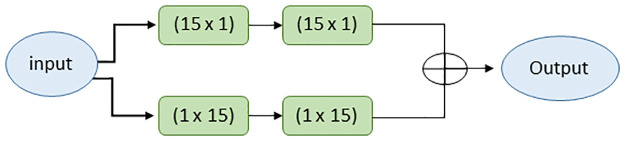

Wide Context Unit (WCU)

WCU, like the CSFI module, learns the contextual information and performs feature accumulation at the transition level, enabling improved reconstruction of segmented nuclei. The WCU consists of two parallel connections of two convolutional layers with different combinations, and the output from them is added up to an output vector, as shown in Fig 4. The convolution layers in the first connection have filter sizes of N x 1 and 1 x N, respectively. Similarly, convolution layers in the second connection have filters of dimensions 1 x N and N x 1.

Fig 4. Structure of WCU.
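To make the two-branch layout of the WCU concrete, the sketch below applies it to a single-channel image in plain Python. It is a simplified illustration under several assumptions: N is odd (N = 3 in the example, since the text does not give a value), there are no activations or normalisation layers, and both branches share the same two kernels, whereas in a trained network each convolution would have its own learned weights. The function names are hypothetical.

```python
def conv2d_same(image, kernel):
    """Cross-correlate a 2D list with a 2D kernel using zero 'same' padding."""
    rows, cols = len(image), len(image[0])
    k_rows, k_cols = len(kernel), len(kernel[0])
    pad_r, pad_c = k_rows // 2, k_cols // 2
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            acc = 0.0
            for i in range(k_rows):
                for j in range(k_cols):
                    rr, cc = r + i - pad_r, c + j - pad_c
                    if 0 <= rr < rows and 0 <= cc < cols:
                        acc += kernel[i][j] * image[rr][cc]
            out[r][c] = acc
    return out


def wide_context_unit(image, col_kernel, row_kernel):
    """Single-channel WCU sketch: two factorised branches whose outputs are added.

    col_kernel is N x 1 (a list of N one-element rows);
    row_kernel is 1 x N (one row of N elements).
    """
    # Branch 1: N x 1 convolution followed by 1 x N convolution.
    branch_a = conv2d_same(conv2d_same(image, col_kernel), row_kernel)
    # Branch 2: 1 x N convolution followed by N x 1 convolution.
    branch_b = conv2d_same(conv2d_same(image, row_kernel), col_kernel)
    return [[a + b for a, b in zip(row_a, row_b)]
            for row_a, row_b in zip(branch_a, branch_b)]


impulse = [[0, 0, 0], [0, 1, 0], [0, 0, 0]]
column = [[1.0], [1.0], [1.0]]   # 3 x 1 kernel
row = [[1.0, 1.0, 1.0]]          # 1 x 3 kernel
print(wide_context_unit(impulse, column, row))
```

Each N x 1 and 1 x N pair covers an N x N neighbourhood with 2N weights instead of N squared, which is one common motivation for factorised kernels when a wide receptive field is wanted.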

Loss functions

The loss function or cost function is used to evaluate the prediction of the network. The lower the cost function value, the higher the model performance. We have employed multiple loss functions to evaluate the performance of the proposed model.

Baseline module loss

We have used a weighted summation of three loss functions, i.e., dice loss, binary cross-entropy, and focal loss, for efficient convergence and speedy training of the suggested model. The combined loss is expressed in Eq 6 below:

| (6) |

Where $Loss_{Dice}$ represents the dice loss, $Loss_{BCE}$ indicates the binary cross-entropy loss, and $Loss_{ft}$ denotes the focal loss. $w_{seg}$ is the hyper-parameter that regulates the dice and binary cross-entropy losses.

The individual losses are expressed in Eqs 7, 8, and 9 below:

| (7) |

| (8) |

| (9) |

Where P symbolizes the total number of pixels, c indicates classes, and represents the smoothness constant. ρic and Lic shows probability of ith pixel of class and ground-truth label. α, β, and ∝ denotes hyper-parameters in the focal loss function.
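For orientation, the three loss terms can be sketched for the binary (nucleus versus background) case as follows. These are the commonly used textbook forms of the Dice, binary cross-entropy, and focal losses, written under the assumption of flat lists of per-pixel probabilities and 0/1 labels; they are not necessarily identical to the exact formulation or hyper-parameters used for C-UNet, and the weighting that combines them is deliberately not reproduced here. The defaults `alpha=0.25` and `gamma=2.0` are conventional placeholder values, not values taken from this study.

```python
import math


def dice_loss(probs, labels, smooth=1e-3):
    """1 - Dice overlap between predicted probabilities and binary labels."""
    intersection = sum(p * y for p, y in zip(probs, labels))
    denominator = sum(probs) + sum(labels)
    return 1.0 - (2.0 * intersection + smooth) / (denominator + smooth)


def bce_loss(probs, labels, eps=1e-7):
    """Mean binary cross-entropy; probabilities are clipped to avoid log(0)."""
    total = 0.0
    for p, y in zip(probs, labels):
        p = min(max(p, eps), 1.0 - eps)
        total -= y * math.log(p) + (1 - y) * math.log(1.0 - p)
    return total / len(probs)


def focal_loss(probs, labels, alpha=0.25, gamma=2.0, eps=1e-7):
    """Mean binary focal loss: cross-entropy down-weighted on easy pixels."""
    total = 0.0
    for p, y in zip(probs, labels):
        p = min(max(p, eps), 1.0 - eps)
        p_t = p if y == 1 else 1.0 - p              # probability of the true class
        alpha_t = alpha if y == 1 else 1.0 - alpha  # class-balancing factor
        total -= alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)
    return total / len(probs)


probs, labels = [0.9, 0.2, 0.7, 0.1], [1, 0, 1, 0]
print(dice_loss(probs, labels), bce_loss(probs, labels), focal_loss(probs, labels))
```

The smoothing constant keeps the Dice term defined when both the prediction and the ground truth are empty, and the focal term shrinks the contribution of pixels that are already classified confidently.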

Boundary detection loss

We have employed a combination of combo loss and focal loss, which is mathematically expressed in Eq 10 as

| (10) |

Where $Loss_{bou}$ represents the boundary loss and $Loss_{com}$ denotes the combo loss, which is mathematically expressed in Eq 11.

| (11) |

Where P is the number of pixels, and ρic and Lic denote the predicted probability of the ith pixel for class c and the corresponding ground-truth label. α, β, and ∝ represent the focal loss hyper-parameters.

Experimental setup

Datasets

Many cases in the clinical environment have cervical images with overlapped and self-folded nuclei, blurred contours, different sizes and shapes of nuclei, and color similarity with the cytoplasm. In addition, some cervical scans have impurities, i.e., stains, illumination, and focus variabilities. To meet practical requirements, the system should handle such cases properly. In deep learning, the size of the labeled data is critical for satisfactory performance. Building and annotating cervical cytology images properly requires time and medical expertise. For this research, we have used the cervical cytology image dataset published by [54], which contains complex cases from the real-world clinical environment. Figs 1, 6, and 7 show some samples from the dataset. It is composed of 104 LCT cervical images of size 1024 x 768. Each instance has a ground truth marked very carefully by an experienced pathologist. The images were acquired with an Olympus BX51 microscope with an adequate pixel size of 0.32 μm x 0.32 μm and 200x magnification.

To validate the performance of C-UNet, two standard datasets (ISBI2014 and ISBI2015) were also used in this study. The ISBI2014 dataset consists of 45 training images and 900 test images generated synthetically with different cell counts and overlap ratios. The ISBI2015 dataset contains 17 samples, each consisting of 20 Extended Depth of Field (EDF) images from multiple focal planes in a field of view. Each image comprises 40 cervical cells with various cell numbers, overlap ratios, texture, and contrast. We used 8 images for training and 9 for testing. Table 1 shows the number of images before and after data augmentation, and the impact of data augmentation on model performance is reflected in Table 6.

Table 1. Total number of images used in this study before and after augmentation.

| Dataset | No. of images | No. of images after augmentation |
|---|---|---|
| LCT dataset used in this study | 104 | 640 |
| ISBI-2014 | 45 | 445 |
| ISBI-2015 | 34 | 350 |

Preprocessing

We applied data augmentation, boundary enclosing, and stain normalization to obtain better performance.

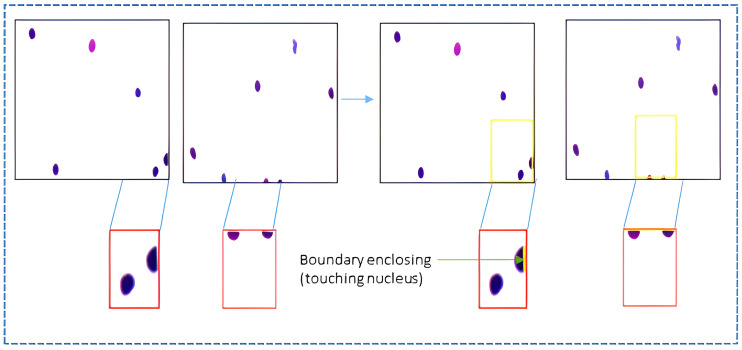

Boundary enclosing

We have performed boundary enclosing of the nuclei at the edges of the image and patches of the cervical cells, as presented in Fig 5. The open boundaries at the edges bring inconsistency during training and may escalate further with data augmentation, i.e., scaling, rotation, resizing, cropping, and transformation. Boundary enclosing assists the model in identifying the nonexistent and fuzzy boundaries around the edge of cervical cell images.

Fig 5. Boundary enclosing of the cervical nuclei.

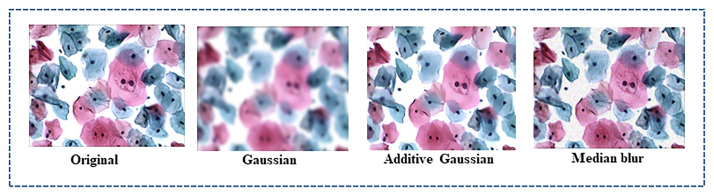

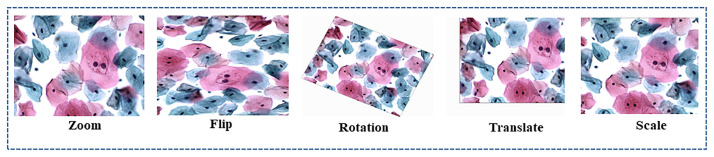

Data augmentation

To reduce generalization error and enhance the model’s performance, we performed data augmentation to produce new instances in the cervical image dataset. The total number of images after data augmentation is 640. Augmentation helps prevent overfitting and regularizes model training over long runs. We employed two types of data augmentation for the cervical cell dataset. Geometrical transformations include resizing, vertical and horizontal flipping, rescaling, image translation, and elastic transformation. Noise and intensity transformations include blur, Gaussian, and additive Gaussian noise, along with hue, saturation, and contrast adjustments. Examples are depicted in Figs 6 and 7. The impact of augmentation on C-UNet performance is shown in Table 6.
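A few of the listed transformations can be sketched in plain Python on images stored as lists of rows. This is only an illustration, under the assumption of single-channel 8-bit images and a hypothetical noise level `sigma`; the actual augmentation pipeline, library, and parameter ranges used in the study are not specified in the text. The point the sketch makes is that geometric transforms are applied identically to the image and its mask, while noise is added to the image only.

```python
import random


def flip_horizontal(image):
    """Mirror a 2D image (list of rows) left to right."""
    return [list(reversed(row)) for row in image]


def flip_vertical(image):
    """Mirror a 2D image top to bottom."""
    return [list(row) for row in reversed(image)]


def add_gaussian_noise(image, sigma=10.0, seed=None):
    """Add zero-mean Gaussian noise and clip to the 8-bit range [0, 255]."""
    rng = random.Random(seed)
    return [[min(255.0, max(0.0, px + rng.gauss(0.0, sigma))) for px in row]
            for row in image]


def augment_pair(image, mask, seed=None):
    """Apply the same random flips to image and mask; noise only to the image."""
    rng = random.Random(seed)
    if rng.random() < 0.5:
        image, mask = flip_horizontal(image), flip_horizontal(mask)
    if rng.random() < 0.5:
        image, mask = flip_vertical(image), flip_vertical(mask)
    return add_gaussian_noise(image, seed=seed), mask


image = [[10, 20], [30, 40]]
mask = [[0, 1], [1, 0]]
noisy_image, new_mask = augment_pair(image, mask, seed=0)
print(noisy_image, new_mask)
```

Keeping the mask noise-free matters because the ground-truth labels must stay binary, whereas the flips must be shared so that each labeled pixel still lines up with the nucleus it annotates.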

Fig 6. Cervical images after the addition of different types of noise.

Fig 7. Different types of data augmentation for cervical images.

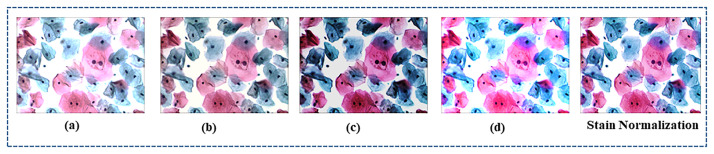

Stain normalization

Hematoxylin and eosin (H & E) staining is extensively used in pathology to discriminate nuclei from cytoplasmic and other cellular structures. Hematoxylin stains the nuclei of the cells, whereas eosin stains the cytoplasm and extracellular matrix. The staining shows color variation due to differences in scanner response functions, staining protocols, and stain manufacturers. These differences have undesirable effects on image interpretation in deep learning-based pathology because these methods mainly depend on the texture and color of H & E images. The performance of deep learning models can be significantly improved by normalizing H & E images [55]. Nevertheless, normalization techniques designed for traditional computer vision applications provide inadequate benefits in computational pathology. Numerous techniques have been developed for standardizing pathology images [56–58]. [56] introduced an unsupervised learning method for estimating stain density maps by partitioning pathology images into sparse density maps and then integrating them with the stain color basis of the target image, as shown in Fig 8(a). This technique changes only the color and keeps the structure of the original image. Our preliminary experiments suggested that sparse stain standardization performed better than standard normalization methods [57, 58] for cervical images, as shown in Fig 8.

Fig 8. Comparison of various image normalization approaches.

Fig 8(a) shows the normalization method employed by [56], and Figs 8(b) and 8(c) show the results of the techniques designed by [57, 58].

Training detail

Following standard evaluation practice, we split our cervical image dataset into training (80% of the dataset), validation (10%), and testing (10%) sets. Stochastic gradient descent (SGD) was used as the optimizer, with a learning rate of 0.001, a momentum of 0.9, and a weight decay of 5e-4. We used synchronized batch normalization (BN) with a decay rate of 0.99; BN helps the model converge earlier and reduces the risk of overfitting. The ReLU activation function was used throughout the network, and the last layer used a sigmoid activation function with softmax. The batch size was set to 10, the smoothing constant was set to 1e-3, and early stopping was used to counter overfitting. The loss functions and their impact are presented in Table 4. For training, the images were cropped to 256 × 256 × 3 for augmentation. For testing, the image size was set to 512 × 512 × 3. The model was trained on an Nvidia GeForce GTX 1080 GPU (graphics processing unit) with 12 GB of RAM. We first trained C-UNet on a large cell nuclei dataset [59] and then retrained it on our cervical cell dataset.
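To make the optimizer settings concrete, the sketch below writes out one SGD update with momentum and weight decay using the hyperparameters listed above. The exact form of the update (weight decay added to the gradient as an L2 term, velocity accumulated without dampening) is an assumption that follows a common convention; only the three hyperparameter values come from the text, and the toy objective is purely illustrative.

```python
import numpy as np

LEARNING_RATE = 0.001  # from the training details
MOMENTUM = 0.9         # from the training details
WEIGHT_DECAY = 5e-4    # from the training details


def sgd_step(param, grad, velocity,
             lr=LEARNING_RATE, momentum=MOMENTUM, weight_decay=WEIGHT_DECAY):
    """One SGD update with momentum; weight decay is folded into the gradient (assumed form)."""
    grad = grad + weight_decay * param
    velocity = momentum * velocity + grad
    param = param - lr * velocity
    return param, velocity


# Toy objective f(w) = 0.5 * ||w||^2, whose gradient is simply w.
w = np.array([1.0, -2.0])
v = np.zeros_like(w)
for _ in range(100):
    w, v = sgd_step(w, grad=w, velocity=v)
```

With a momentum of 0.9, the effective step size on a smooth objective is roughly ten times the nominal learning rate, which is one reason a small learning rate such as 0.001 can still train in a reasonable number of epochs.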

Evaluation measures

To assess the performance of the proposed model on the cervical image dataset, we employed accuracy, recall, the Dice coefficient, and the F1-score. For all of these measures, higher values indicate better results. Each criterion is described below:

Accuracy

Accuracy is the ratio of correctly classified pixels (nucleus and background) to the total number of pixels in a cervical cytology image. Mathematically, it is described in Eq 12.

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{12}$$

where TP, TN, FP, and FN denote the numbers of true positive, true negative, false positive, and false negative pixels, respectively.

Recall

Recall is the ratio of the number of cervical nucleus pixels correctly identified as positive to the total number of actual nucleus pixels in the cervical cell image. It is expressed in Eq 13 as

$$\text{Recall} = \frac{TP}{TP + FN} \tag{13}$$

Dice coefficient

The Dice coefficient is a statistical measure of the similarity between two sets, here the predicted and ground-truth segmentation masks. Mathematically, it is expressed in Eq 14.

$$\text{Dice} = \frac{2\,TP}{2\,TP + FP + FN} \tag{14}$$

F1-score

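The F1-score is the harmonic mean of precision and recall. The greater the F1 value, the better the overlap between the ground-truth and predicted cervical nuclei segmentation masks. It is described in Eq 15.

$$F_1 = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}}, \qquad \text{Precision} = \frac{TP}{TP + FP} \tag{15}$$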
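The pixel-level measures defined above can be computed directly from a predicted and a ground-truth binary mask. The following is a minimal NumPy sketch using the standard definitions in terms of TP, TN, FP, and FN; the function names and the toy masks are assumptions for illustration and do not reproduce our evaluation code.

```python
import numpy as np


def confusion_counts(pred, truth):
    """Count TP, TN, FP, FN pixels for two binary masks of equal shape."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    tp = int(np.sum(pred & truth))
    tn = int(np.sum(~pred & ~truth))
    fp = int(np.sum(pred & ~truth))
    fn = int(np.sum(~pred & truth))
    return tp, tn, fp, fn


def pixel_metrics(pred, truth):
    """Accuracy, recall, Dice coefficient, and F1-score at the pixel level."""
    tp, tn, fp, fn = confusion_counts(pred, truth)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    dice_denominator = 2 * tp + fp + fn
    pr_sum = precision + recall
    return {
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "recall": recall,
        "dice": 2 * tp / dice_denominator if dice_denominator else 1.0,
        "f1": 2 * precision * recall / pr_sum if pr_sum else 0.0,
    }


# Toy 4 x 4 masks: 1 marks a nucleus pixel, 0 marks background.
truth = np.array([[1, 1, 0, 0],
                  [1, 1, 0, 0],
                  [0, 0, 0, 0],
                  [0, 0, 0, 0]])
pred = np.array([[1, 1, 1, 0],
                 [1, 0, 0, 0],
                 [0, 0, 0, 0],
                 [0, 0, 0, 0]])
metrics = pixel_metrics(pred, truth)
```

For a single pair of binary masks, the Dice coefficient and the F1-score computed from the same pixel counts coincide; object-level variants of these measures are computed per nucleus and can therefore differ.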

Quantitative study and results

C-UNet combines several image processing and deep learning components; therefore, we analyzed each module of the network extensively to understand how each part contributes to the performance improvement. We performed five experiments under the same training and evaluation settings, and the reported results are the average over all experiments.

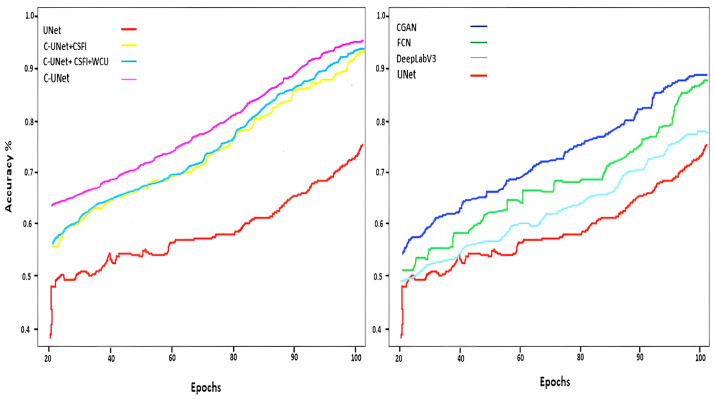

C-UNet module

We performed several experiments to explore the contribution of the different components of the C-UNet model. The impact of each component is shown in Table 2 and Fig 9. We started with the standard UNet model; its results are given in Table 2, row 1. In the second experiment, we incorporated the CSFI module into the baseline UNet and observed an improvement in the segmentation results. In the third experiment, we introduced a feature reweighting unit and observed a significant improvement in segmentation performance. This is consistent with our expectation that integrating features from various domains and spatial resolutions retains local and spatial information across the pyramid. In the fourth experiment, we incorporated the WCU, which integrates features at the transitional level and helps the model reconstruct segmented nuclei precisely, as shown in Table 2 and Fig 10. Fig 10 shows the original cervical cell images, the ground truth, and the prediction masks generated by the benchmark networks and the proposed C-UNet.

Table 2. Performance comparison with benchmark architectures for cervical nuclei segmentation with C-UNet.

| Method | Acc_o | Recall_o | Acc_p | Recall_p | Dice coefficient | F1-score |
|---|---|---|---|---|---|---|
| Standard UNet | 73.21% | 84.11% | 80.33% | 74.41% | 85.21% | 78.62% |
| C-UNet + CSFI | 92.31% | 94.32% | 91.02% | 89.92% | 90.59% | 93.98% |
| C-UNet + CSFI + WCU | 92.67% | 94.96% | 91.77% | 91.13% | 92.42% | 94.71% |
| C-UNet + CSFI + WCU + ID | 93.00% | 95.32% | 92.56% | 92.27% | 93.12% | 94.96% |
| DeepLabv3 | 88.02% | 86.11% | 77.09% | 84.21% | 82.23% | 88.17% |
| FCN | 88.23% | 91.33% | 88.27% | 83.03% | 90.49% | 84.52% |
| CGAN | 91.77% | 92.34% | 90.44% | 90.11% | 91.23% | 92.76% |
| Mask R-CNN | 72.66% | 82.81% | 80.21% | 75.42% | 85.24% | 78.58% |

Acc_o = object-level accuracy; Recall_o = object-level recall; Acc_p = pixel-level accuracy; Recall_p = pixel-level recall.

Fig 9. Model accuracy during training plotted against epochs.

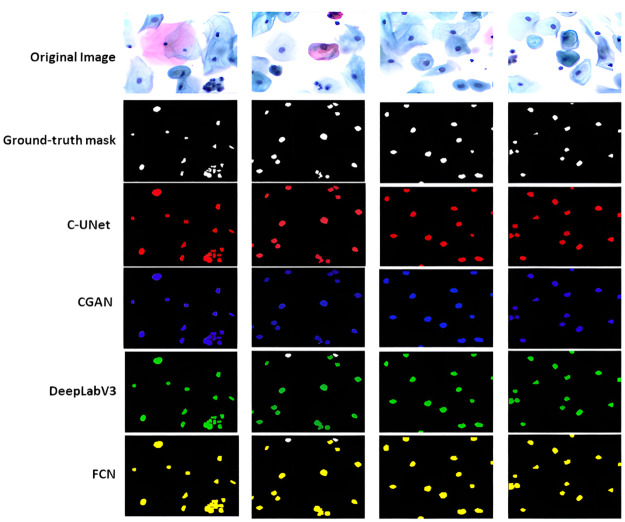

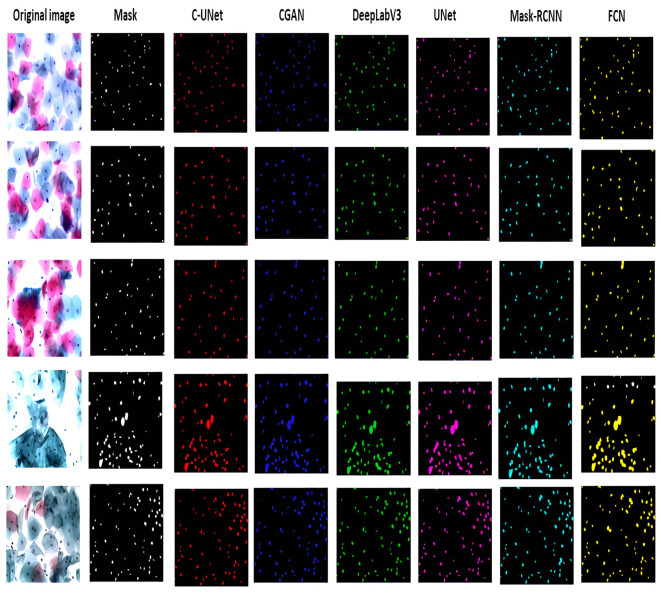

Fig 10. Shows cervical images and segmentation masks generated by state-of-the-art segmentation networks.

Comparison with state of the art

We compared the performance of the proposed C-UNet with state-of-the-art models used for similar tasks. To assess the accuracy of the models, a fivefold cross-validation scheme was adopted. The compared models were the Fully Convolutional Network (FCN) [60], CGAN [61], UNet [62], Mask R-CNN [63], and DeepLabv3 [64]. FCN used VGG [65] as its backbone for feature extraction and performed slightly worse than the other networks. Fig 11 shows that FCN suffers from boundary leakage caused by ambiguous boundary edges. UNet, whose U-shaped architecture learns both low-level and deep features, performed slightly better than FCN but over-segmented where weak and strong edges met at the junctions of clumped cervical cells. DeepLabv3 used ResNet [66] to learn features. It is better suited to segmenting images with larger objects; because cervical nuclei are small, this network did not perform well on this task. CGAN, a context-aware regression network that uses a gradient penalty for cervical nuclei identification, performed better than the other baseline networks but failed to separate cell contours from the boundary.

Fig 11. Shows cervical cell segmentation of state-of-the-art models.

The cases shown include clumped nuclei, self-folding, intercellular overlapping, blurred contours, and diverse nuclear shapes. As visualized in Fig 11, UNet detected nuclei in the cervical cells but failed to yield intact segmentations. DeepLabv3 performed better than the others but was unable to separate impurities from nuclei. CGAN was better suited than the others to segmenting cervical cell nuclei but failed to distinguish nuclei from cytoplasm when they had similar colors. The proposed C-UNet outperformed these models by segmenting background, nuclei, and impurities. Cervical smear images contain a large proportion of small nuclei, high variation in resolution, blurry boundaries, and few informative features. The information related to nuclei is often contained in the inclusion, and much of it is lost through repeated pooling and convolution operations. The C-UNet model uses three BiFPN layers, each with direct connection layers, to prevent the loss of significant feature information. C-UNet also uses interconnected decoders that merge boundary and semantic features to produce improved segmentation masks.

Comparison with existing methods on ISBI datasets

To compare the performance of the proposed C-UNet, we used the same evaluation metrics as prior studies on ISBI2014 and ISBI2015. The Dice similarity coefficient (DSC), FNRo (false-negative rate at the object level), FPRp (false-positive rate at the pixel level), and TPRp (true-positive rate at the pixel level) were used to measure the segmentation performance of the models in this section.
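The sketch below shows one way these four measures could be computed from per-object masks, using the DSC > 0.7 threshold stated in the caption of Table 3. The matching rule (each ground-truth object is paired with the prediction of highest DSC), the averaging of DSC, TPRp, and FPRp over matched objects only, and all function names are assumptions for illustration; the official ISBI evaluation scripts may differ in detail. Ground-truth masks are assumed to be non-empty and smaller than the image.

```python
import numpy as np

DSC_THRESHOLD = 0.7  # "good segmentation" cut-off from the caption of Table 3


def dice(a, b):
    """Dice similarity coefficient between two binary masks."""
    a, b = np.asarray(a, dtype=bool), np.asarray(b, dtype=bool)
    denominator = a.sum() + b.sum()
    return 2.0 * np.sum(a & b) / denominator if denominator else 1.0


def mean_or_nan(values):
    return float(np.mean(values)) if values else float("nan")


def isbi_style_scores(truth_masks, pred_masks, threshold=DSC_THRESHOLD):
    """DSC, TPRp, FPRp over matched objects, and FNRo over all ground-truth objects."""
    dscs, tprs, fprs, missed = [], [], [], 0
    for truth in truth_masks:
        truth = np.asarray(truth, dtype=bool)
        best = max(pred_masks, key=lambda p: dice(truth, p), default=None)
        score = dice(truth, best) if best is not None else 0.0
        if score <= threshold:
            missed += 1  # counted as an object-level false negative
            continue
        best = np.asarray(best, dtype=bool)
        dscs.append(score)
        tprs.append(np.sum(best & truth) / truth.sum())
        fprs.append(np.sum(best & ~truth) / np.sum(~truth))
    return {
        "DSC": mean_or_nan(dscs),
        "TPRp": mean_or_nan(tprs),
        "FPRp": mean_or_nan(fprs),
        "FNRo": missed / len(truth_masks),
    }


def square(top, left, size, shape=(6, 6)):
    """Helper that draws a filled square object on an empty mask."""
    m = np.zeros(shape, dtype=bool)
    m[top:top + size, left:left + size] = True
    return m


# Two ground-truth nuclei: the first is segmented well, the second poorly.
truth_masks = [square(0, 0, 3), square(4, 4, 2)]
good = square(0, 0, 3)
good[2, 2] = False
pred_masks = [good, square(4, 4, 1)]
scores = isbi_style_scores(truth_masks, pred_masks)
```

In this toy case the second nucleus reaches a DSC of only 0.4, so it counts towards FNRo and is excluded from the averaged DSC, TPRp, and FPRp.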

The nuclei segmentation performance of the existing methods and the proposed C-UNet on the ISBI datasets is listed in Table 3. The method of [12] used a three-pass fast watershed technique to segment the nucleus and cytoplasm from cervical cell scans. The method of [23] combined Voronoi diagrams and superpixels to segment cells into different parts. The authors of [28] developed a model for boundary approximation of clumped cells and enhanced the cell boundaries using the information in image stacks. The method of [67] used a joint optimization of multiple level set functions to distinguish cytoplasm and nuclei in clusters of clumped cervical cells. The authors of [14] developed a multiscale CNN model to distinguish cervical cell components. The method of [68] is based on superpixel partitioning and contour refinement for cervical cell segmentation. We selected these methods for a fair comparison because they report the best performance on the ISBI2014 and ISBI2015 datasets.

Table 3. Comparison of C-UNet with existing methods on the ISBI datasets using DSC (threshold > 0.7), TPRp, FNRo, and FPRp.

| Method | DSC (ISBI2014) | DSC (ISBI2015) | DSC (Dataset [44]) | TPRp (ISBI2014) | TPRp (ISBI2015) | TPRp (Dataset [44]) | FNRo (ISBI2014) | FNRo (ISBI2015) | FNRo (Dataset [44]) | FPRp (ISBI2014) | FPRp (ISBI2015) | FPRp (Dataset [44]) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| [12] | 0.89 ± 0.07 | 0.85 ± 0.07 | NA | 0.91 ± 0.09 | 0.95 ± 0.07 | NA | 0.27 ± 0.28 | 0.11 ± 0.17 | NA | 0.004 ± 0.005 | 0.004 ± 0.004 | NA |
| [23] | 0.87 ± 0.08 | - | NA | 0.90 ± 0.09 | - | NA | 0.14 ± 0.17 | - | NA | 0.005 ± 0.004 | - | NA |
| [28] | 0.85 ± 0.08† | † | NA | 0.94 ± 0.06† | † | NA | 0.16 ± 0.22† | † | NA | 0.005 ± 0.005† | † | NA |
| [67] | 0.88 ± N/A | - | NA | 0.92 ± N/A | - | NA | 0.21 ± N/A | - | NA | 0.001 ± N/A | - | NA |
| [14] | - | 0.89 ± N/A | NA | - | 0.92 ± N/A | NA | - | 0.26 ± N/A | NA | - | 0.002 ± N/A | NA |
| [68] | 0.90 ± 0.08 | 0.88 ± 0.09 | NA | 0.88 ± 0.10 | 0.88 ± 0.12 | NA | 0.14 ± 0.19 | 0.43 ± 0.17 | NA | 0.002 ± 0.003 | 0.001 ± 0.001 | NA |
| [69] | 0.93 ± 0.04 | 0.92 ± 0.05 | NA | 0.93 ± 0.05 | 0.93 ± 0.05 | NA | 0.91 ± 0.05 | 0.13 ± 0.15 | NA | 0.001 ± 0.002 | 0.001 ± 0.003 | NA |
| C-UNet | 0.94 ± 0.04 | 0.93 ± 0.04 | 0.92 ± 0.04 | 0.94 ± 0.05 | 0.94 ± 0.05 | 0.96 ± 0.05 | 0.92 ± 0.05 | 0.12 ± 0.14 | 0.062 ± 0.03 | 0.001 ± 0.002 | 0.001 ± 0.002 | 0.001 ± 0.002 |
| Standard UNet | NA | NA | 0.87 ± 0.05 | NA | NA | 0.90 ± 0.05 | NA | NA | 0.079 ± 0.03 | NA | NA | 0.003 ± 0.003 |
| DeepLabv3 | NA | NA | 0.90 ± 0.04 | NA | NA | 0.94 ± 0.05 | NA | NA | 0.69 ± 0.03 | NA | NA | 0.002 ± 0.002 |
| FCN | NA | NA | 0.89 ± 0.05 | NA | NA | 0.93 ± 0.05 | NA | NA | 0.70 ± 0.03 | NA | NA | 0.002 ± 0.002 |

† For [28], a single value is reported per metric without distinguishing ISBI2014 from ISBI2015.

Impact of loss functions on segmentation

To analyze the impact of different loss functions on the segmentation performance of C-UNet, we experimented with various loss combinations; the results are summarized in Table 4. DSC, FNRo (false-negative rate at the object level), FPRp (false-positive rate at the pixel level), and TPRp (true-positive rate at the pixel level) were used to measure the performance quantitatively. Table 4 shows that the proposed loss combination, with w_seg and w_bou both set to 0.4, yields better results than the other combinations.

Table 4. Impact of various loss combinations for cervical cell segmentation on the C-UNet.

| Segmentation loss | Boundary loss | DSC | FNRo | FPRp | TPRp |
|---|---|---|---|---|---|
| Focal | Focal | 0.76 ± 0.09 | 0.35 ± 0.17 | 0.002 ± 0.003 | 0.75 ± 0.07 |
| Dice + BCE | Dice + BCE | 0.90 ± 0.07 | 0.25 ± 0.16 | 0.001 ± 0.003 | 0.90 ± 0.06 |
| Dice + BCE | Combo | 0.89 ± 0.07 | 0.27 ± 0.17 | 0.001 ± 0.003 | 0.88 ± 0.08 |
| Focal Tversky | Focal Tversky | 0.79 ± 0.08 | 0.34 ± 0.18 | 0.002 ± 0.003 | 0.80 ± 0.07 |
| Focal Tversky + w_seg (Dice + BCE), with w_seg = 0.9 | FT + w_bou Combo, with w_bou = 0.9 | 0.92 ± 0.05 | 0.25 ± 0.15 | 0.001 ± 0.003 | 0.90 ± 0.06 |
| Focal Tversky + w_seg (Dice + BCE), with w_seg = 0.4 | FT + w_bou Combo, with w_bou = 0.4 | 0.93 ± 0.04 | 0.24 ± 0.14 | 0.001 ± 0.003 | 0.91 ± 0.06 |
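The best-performing segmentation loss in Table 4 has the form Focal Tversky + w_seg (Dice + BCE). The following minimal NumPy sketch shows how such a combination could be assembled for the segmentation branch. The Focal Tversky parameters `alpha`, `beta`, and `gamma`, the use of the 1e-3 smoothing constant inside the Dice and Tversky terms, and all function names are assumptions for illustration; the boundary branch (Focal Tversky + w_bou Combo) is not reproduced here.

```python
import numpy as np

SMOOTH = 1e-3  # smoothing constant; its use inside these terms is an assumption


def dice_loss(p, y, smooth=SMOOTH):
    """Soft Dice loss for predicted probabilities p and binary targets y."""
    intersection = np.sum(p * y)
    return 1.0 - (2.0 * intersection + smooth) / (np.sum(p) + np.sum(y) + smooth)


def bce_loss(p, y, tiny=1e-7):
    """Binary cross-entropy averaged over pixels."""
    p = np.clip(p, tiny, 1.0 - tiny)
    return float(-np.mean(y * np.log(p) + (1.0 - y) * np.log(1.0 - p)))


def focal_tversky_loss(p, y, alpha=0.7, beta=0.3, gamma=0.75, smooth=SMOOTH):
    """Focal Tversky loss; alpha, beta, and gamma are illustrative values."""
    tp = np.sum(p * y)
    fn = np.sum((1.0 - p) * y)
    fp = np.sum(p * (1.0 - y))
    tversky = (tp + smooth) / (tp + alpha * fn + beta * fp + smooth)
    return (1.0 - tversky) ** gamma


def segmentation_loss(p, y, w_seg=0.4):
    """Focal Tversky + w_seg * (Dice + BCE), following the row labels of Table 4."""
    return focal_tversky_loss(p, y) + w_seg * (dice_loss(p, y) + bce_loss(p, y))


# Toy example: a perfect prediction versus a fully inverted one.
y = np.zeros((8, 8))
y[2:5, 2:5] = 1.0
loss_perfect = segmentation_loss(y, y)
loss_inverted = segmentation_loss(1.0 - y, y)
```

The Focal Tversky term emphasizes hard, small structures such as nuclei, while the weighted Dice and BCE terms stabilize the overlap and per-pixel calibration; w_seg controls the balance between the two.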

Impact of resolution on segmentation

In deep learning, the input size and the dataset volume are critical for good results. The original size of the cervical images in the dataset is 1024 × 768. We experimented with patches of different resolutions, i.e., 256 × 256, 512 × 512, and 768 × 768, to find the size that best preserves spatial and contextual information. All experiments were conducted under identical conditions, except that the batch size was set to 9 for the 768 × 768 resolution to reduce computation cost. We observed the best performance with 512 × 512 patches, as shown in Table 5.

Table 5. Shows the segmentation results with different patch sizes.

| Resolution | Acc_o | Recall_o | Acc_p | Recall_p | Dice coefficient | F1-score |
|---|---|---|---|---|---|---|
| 768 × 768 | 89.26% | 90.23% | 89.22% | 90.02% | 89.03% | 89.62% |
| 512 × 512 | 93.00% | 95.32% | 92.56% | 92.27% | 93.12% | 94.96% |
| 256 × 256 | 90.09% | 91.42% | 89.66% | 89.86% | 88.12% | 90.03% |
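For illustration, square patches of the three sizes compared in Table 5 can be cut from a 1024 × 768 image as sketched below. Random cropping, the reading of 1024 × 768 as width × height, and the function name are assumptions for this example; the text does not specify how the patches were sampled.

```python
import numpy as np


def random_patch(image, mask, size, rng):
    """Crop the same random size x size window from an image and its mask."""
    height, width = mask.shape
    if size > height or size > width:
        raise ValueError("patch size exceeds image dimensions")
    top = int(rng.integers(0, height - size + 1))
    left = int(rng.integers(0, width - size + 1))
    window = (slice(top, top + size), slice(left, left + size))
    return image[window], mask[window]


# Blank stand-ins for one 1024 x 768 (width x height) image and its mask.
rng = np.random.default_rng(0)
image = np.zeros((768, 1024, 3), dtype=np.uint8)
mask = np.zeros((768, 1024), dtype=np.uint8)
patches = {size: random_patch(image, mask, size, rng) for size in (256, 512, 768)}
```

Larger patches carry more context per sample but increase memory use, which is consistent with the smaller batch size used for the 768 × 768 setting.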

Impact of data augmentation

Table 6. Shows the impact of data augmentation on the proposed network performance.

| Method | Augmentation | Acc_o | Recall_o | Acc_p | Recall_p | Dice coefficient | F1-score |
|---|---|---|---|---|---|---|---|
| C-UNet | Yes | 93.00% | 95.32% | 92.56% | 92.27% | 93.12% | 94.96% |
| C-UNet | No | 87.29% | 88.40% | 86.61% | 85.86% | 84.82% | 88.17% |

Conclusion

In this paper, we proposed a deep neural network based on the classic UNet architecture to improve nucleus segmentation in cervical smear images. We designed a cross-scale feature integration module to preserve spatial and local features according to their significance. We incorporated a wide context unit into the baseline UNet to extract contextual information and accumulate features at the transition level in order to enhance nucleus segmentation. The decoder is composed of two inter-connected decoders that generate segmentation and boundary masks. The proposed C-UNet was evaluated on the cervical smear image dataset and on the ISBI2014 and ISBI2015 datasets. To enhance the training of the proposed model, we employed stain normalization together with data augmentation and transformation techniques. The evaluation showed that the proposed model outperforms the extant models. Although C-UNet improves the segmentation of cervical nuclei, pathologist confirmation may still be needed in a practical setting. The network is computationally expensive because of its depth and inter-connected decoders. In the future, we will focus on reducing the computational overhead, investigating contrastive learning to enhance model performance, and generalizing the model to other applications.

Data Availability

DOI: 10.17632/jks43dkjj7.1 URL: https://data.mendeley.com/datasets/jks43dkjj7/1.

Funding Statement

The author(s) received no specific funding for this work.

References

- 1.World Health Organization. "International agency for research on cancer." (2019).

- 2.Safaeian Mahboobeh, Solomon Diane, and Castle Philip E. "Cervical cancer prevention—cervical screening: science in evolution." Obstetrics and gynecology clinics of North America 34, no. 4 (2007): 739–760. doi: 10.1016/j.ogc.2007.09.004 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Zhang Hong-xin, Song Yi-min, Li Su-hong, Yin Yu-hui, Gao Dong-ling, and Chen Kui-sheng. "Quantitative detection of screening for cervical lesions with ThinPrep Cytology Test." Clinical Oncology and Cancer Research 7, no. 5 (2010): 299–302. [Google Scholar]

- 4.Singh Vikrant Bhar, Gupta Nalini, Nijhawan Raje, Srinivasan Radhika, Suri Vanita, and Rajwanshi Arvind. "Liquid-based cytology versus conventional cytology for evaluation of cervical Pap smears: experience from the first 1000 split samples." Indian Journal of Pathology and Microbiology 58, no. 1 (2015): 17. doi: 10.4103/0377-4929.151157 [DOI] [PubMed] [Google Scholar]

- 5.Plissiti Marina E., and Nikou Christophoros. "A review of automated techniques for cervical cell image analysis and classification." Biomedical imaging and computational modeling in biomechanics (2013): 1–18. [Google Scholar]

- 6.Gençtav Aslı, Aksoy Selim, and Önder Sevgen. "Unsupervised segmentation and classification of cervical cell images." Pattern recognition 45, no. 12 (2012): 4151–4168. [Google Scholar]

- 7.Plissiti Marina E., Nikou Christophoros, and Charchanti Antonia. "Automated detection of cell nuclei in pap smear images using morphological reconstruction and clustering." IEEE Transactions on information technology in biomedicine 15, no. 2 (2010): 233–241. doi: 10.1109/TITB.2010.2087030 [DOI] [PubMed] [Google Scholar]

- 8.Li Kuan, Lu Zhi, Liu Wenyin, and Yin Jianping. "Cytoplasm and nucleus segmentation in cervical smear images using Radiating GVF Snake." Pattern recognition 45, no. 4 (2012): 1255–1264. [Google Scholar]

- 9.Saha Ratna, Bajger Mariusz, and Lee Gobert. "SRM superpixel merging framework for precise segmentation of cervical nucleus." In 2019 Digital Image Computing: Techniques and Applications (DICTA), pp. 1–8. IEEE, 2019. [Google Scholar]

- 10.Hoque Iram Tazim, Ibtehaz Nabil, Chakravarty Saumitra, Rahman M. Saifur, and Rahman M. Sohel. "A contour property based approach to segment nuclei in cervical cytology images." BMC Medical Imaging 21, no. 1 (2021): 1–12. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Phoulady, Hady Ahmady, Dmitry B. Goldgof, Lawrence O. Hall, and Peter R. Mouton. "A new approach to detect and segment overlapping cells in multi-layer cervical cell volume images." In 2016 IEEE 13th International Symposium on Biomedical Imaging (ISBI), pp. 201–204. IEEE, 2016.

- 12.Tareef Afaf, Song Yang, Huang Heng, Feng Dagan, Chen Mei, Wang Yue, and Cai Weidong. "Multi-pass fast watershed for accurate segmentation of overlapping cervical cells." IEEE transactions on medical imaging 37, no. 9 (2018): 2044–2059. doi: 10.1109/TMI.2018.2815013 [DOI] [PubMed] [Google Scholar]

- 13.Song Jie, Xiao Liang, and Lian Zhichao. "Contour-seed pairs learning-based framework for simultaneously detecting and segmenting various overlapping cells/nuclei in microscopy images." IEEE Transactions on Image Processing 27, no. 12 (2018): 5759–5774. doi: 10.1109/TIP.2018.2857001 [DOI] [PubMed] [Google Scholar]

- 14.Song Youyi, Tan Ee-Leng, Jiang Xudong, Cheng Jie-Zhi, Ni Dong, Chen Siping, Lei Baiying, and Wang Tianfu. "Accurate cervical cell segmentation from overlapping clumps in pap smear images." IEEE transactions on medical imaging 36, no. 1 (2016): 288–300. doi: 10.1109/TMI.2016.2606380 [DOI] [PubMed] [Google Scholar]

- 15.Al-Kofahi Yousef, Lassoued Wiem, Lee William, and Roysam Badrinath. "Improved automatic detection and segmentation of cell nuclei in histopathology images." IEEE Transactions on Biomedical Engineering 57, no. 4 (2009): 841–852. doi: 10.1109/TBME.2009.2035102 [DOI] [PubMed] [Google Scholar]

- 16.Ali Sahirzeeshan, and Madabhushi Anant. "An integrated region-, boundary-, shape-based active contour for multiple object overlap resolution in histological imagery." IEEE transactions on medical imaging 31, no. 7 (2012): 1448–1460. doi: 10.1109/TMI.2012.2190089 [DOI] [PubMed] [Google Scholar]

- 17.Bergmeir Christoph, Miguel García Silvente, and José Manuel Benítez. "Segmentation of cervical cell nuclei in high-resolution microscopic images: a new algorithm and a web-based software framework." Computer methods and programs in biomedicine 107, no. 3 (2012): 497–512. [DOI] [PubMed] [Google Scholar]

- 18.Rasheed Assad, Umar Arif Iqbal, Shirazi Syed Hamad, Khan Zakir, Nawaz Shah, and Shahzad Muhammad. "Automatic eczema classification in clinical images based on hybrid deep neural network." Computers in Biology and Medicine 147 (2022): 105807. doi: 10.1016/j.compbiomed.2022.105807 [DOI] [PubMed] [Google Scholar]

- 19.Liu Haoran, Liu Mingzhe, Li Dongfen, Zheng Wenfeng, Yin Lirong, and Wang Ruili. "Recent advances in pulse-coupled neural networks with applications in image processing." Electronics 11, no. 20 (2022): 3264. [Google Scholar]

- 20.Jin Kai, Yan Yan, Chen Menglu, Wang Jun, Pan Xiangji, Liu Xindi, et al. "Multimodal deep learning with feature level fusion for identification of choroidal neovascularization activity in age‐related macular degeneration." Acta Ophthalmologica 100, no. 2 (2022): e512–e520. doi: 10.1111/aos.14928 [DOI] [PubMed] [Google Scholar]

- 21.Li Chaoyang, Dong Mianxiong, Li Jian, Xu Gang, Chen Xiu-Bo, Liu Wen, et al. "Efficient Medical Big Data Management With Keyword-Searchable Encryption in Healthchain." IEEE Systems Journal (2022). [Google Scholar]

- 22.Ottman Noora, Ruokolainen Lasse, Suomalainen Alina, Sinkko Hanna, Karisola Piia, Lehtimäki Jenni, et al. "Soil exposure modifies the gut microbiota and supports immune tolerance in a mouse model." Journal of allergy and clinical immunology 143, no. 3 (2019): 1198–1206. doi: 10.1016/j.jaci.2018.06.024 [DOI] [PubMed] [Google Scholar]

- 23.Lu Zhi, Carneiro Gustavo, Bradley Andrew P., Ushizima Daniela, Nosrati Masoud S., Bianchi Andrea GC, et al. "Evaluation of three algorithms for the segmentation of overlapping cervical cells." IEEE journal of biomedical and health informatics 21, no. 2 (2016): 441–450. doi: 10.1109/JBHI.2016.2519686 [DOI] [PubMed] [Google Scholar]

- 24.Tareef, Afaf, Yang Song, Min-Zhao Lee, Dagan D. Feng, Mei Chen, and Weidong Cai. "Morphological filtering and hierarchical deformation for partially overlapping cell segmentation." In 2015 International Conference on Digital Image Computing: Techniques and Applications (DICTA), pp. 1–7. IEEE, 2015.

- 25.Zhang, Ling, Siping Chen, Tianfu Wang, Yan Chen, Shaoxiong Liu, and Minghua Li. "A practical segmentation method for automated screening of cervical cytology." In 2011 International Conference on Intelligent Computation and Bio-Medical Instrumentation, pp. 140–143. IEEE, 2011.

- 26.Tsai Meng-Husiun, Chan Yung-Kuan, Lin Zhe-Zheng, Yang-Mao Shys-Fan, and Huang Po-Chi. "Nucleus and cytoplast contour detector of cervical smear image." Pattern Recognition Letters 29, no. 9 (2008): 1441–1453. [Google Scholar]

- 27.Zhao Lili, Li Kuan, Wang Mao, Yin Jianping, Zhu En, Wu Chengkun, et al. "Automatic cytoplasm and nuclei segmentation for color cervical smear image using an efficient gap-search MRF." Computers in biology and medicine 71 (2016): 46–56. doi: 10.1016/j.compbiomed.2016.01.025 [DOI] [PubMed] [Google Scholar]

- 28.Phoulady Hady Ahmady, Goldgof Dmitry, Hall Lawrence O., and Mouton Peter R. "A framework for nucleus and overlapping cytoplasm segmentation in cervical cytology extended depth of field and volume images." Computerized Medical Imaging and Graphics 59 (2017): 38–49. doi: 10.1016/j.compmedimag.2017.06.007 [DOI] [PubMed] [Google Scholar]

- 29.Yang, Xiaodong, Houqiang Li, and Xiaobo Zhou. "Nuclei segmentation using marker-controlled watershed, tracking using mean-shift, and Kalman filter in time-lapse microscopy." IEEE Transactions on Circuits and Systems I: Regular Papers 53, no. 11 (2006): 2405–2414.

- 30.Plissiti Marina E., Nikou Christophoros, and Charchanti Antonia. "Combining shape, texture and intensity features for cell nuclei extraction in Pap smear images." Pattern Recognition Letters 32, no. 6 (2011): 838–853. [Google Scholar]

- 31.Zhang Ling, Kong Hui, Chin Chien Ting, Liu Shaoxiong, Chen Zhi, Wang Tianfu, et al. "Segmentation of cytoplasm and nuclei of abnormal cells in cervical cytology using global and local graph cuts." Computerized Medical Imaging and Graphics 38, no. 5 (2014): 369–380. doi: 10.1016/j.compmedimag.2014.02.001 [DOI] [PubMed] [Google Scholar]

- 32.Cheng Jierong, and Rajapakse Jagath C. "Segmentation of clustered nuclei with shape markers and marking function." IEEE Transactions on Biomedical Engineering 56, no. 3 (2008): 741–748. doi: 10.1109/TBME.2008.2008635 [DOI] [PubMed] [Google Scholar]

- 33.Song, Youyi, Ling Zhang, Siping Chen, Dong Ni, Baopu Li, Yongjing Zhou, et al. "A deep learning based framework for accurate segmentation of cervical cytoplasm and nuclei." In 2014 36th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, pp. 2903–2906. IEEE, 2014. [DOI] [PubMed]

- 34.Zhang, Ling, Milan Sonka, Le Lu, Ronald M. Summers, and Jianhua Yao. "Combining fully convolutional networks and graph-based approach for automated segmentation of cervical cell nuclei." In 2017 IEEE 14th international symposium on biomedical imaging (ISBI 2017), pp. 406–409. IEEE, 2017.

- 35.Song Youyi, Zhang Ling, Chen Siping, Ni Dong, Lei Baiying, and Wang Tianfu. "Accurate segmentation of cervical cytoplasm and nuclei based on multiscale convolutional network and graph partitioning." IEEE Transactions on Biomedical Engineering 62, no. 10 (2015): 2421–2433. doi: 10.1109/TBME.2015.2430895 [DOI] [PubMed] [Google Scholar]

- 36.Phoulady, Hady Ahmady, and Peter R. Mouton. "A new cervical cytology dataset for nucleus detection and image classification (Cervix93) and methods for cervical nucleus detection." arXiv preprint arXiv:1811.09651 (2018).

- 37.Liu Yiming, Zhang Pengcheng, Song Qingche, Li Andi, Zhang Peng, and Gui Zhiguo. "Automatic segmentation of cervical nuclei based on deep learning and a conditional random field." IEEE Access 6 (2018): 53709–53721. [Google Scholar]

- 38.Li Xia, Xu Zhenhao, Shen Xi, Zhou Yongxia, Xiao Binggang, and Li Tie-Qiang. "Detection of cervical cancer cells in whole slide images using deformable and global context aware faster RCNN-FPN." Current Oncology 28, no. 5 (2021): 3585–3601. doi: 10.3390/curroncol28050307 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39.Bhatt Anant R., Ganatra Amit, and Kotecha Ketan. "Cervical cancer detection in pap smear whole slide images using convnet with transfer learning and progressive resizing." PeerJ Computer Science 7 (2021): e348. doi: 10.7717/peerj-cs.348 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 40.Tan Xiangyu, Li Kexin, Zhang Jiucheng, Wang Wenzhe, Wu Bian, Wu Jian, et al. "Automatic model for cervical cancer screening based on convolutional neural network: a retrospective, multicohort, multicenter study." Cancer Cell International 21, no. 1 (2021): 1–10. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41.Meiquan Xu, Weixiu Zeng, Yanhua Sun, Junhui Wu, Tingting Wu, Yajie Yang, et al. "Cervical cytology intelligent diagnosis based on object detection technology." (2018). [Google Scholar]

- 42.Zhang Jianwei, He Junting, Chen Tianfu, Liu Zhenmei, and Chen Danni. "Abnormal region detection in cervical smear images based on fully convolutional network." IET Image Processing 13, no. 4 (2019): 583–590. [Google Scholar]

- 43.Li X., Xu Z., Shen X., Zhou Y., Xiao B. and Li T.Q., 2021. Detection of cervical cancer cells in whole slide images using deformable and global context aware faster RCNN-FPN. Current Oncology, 28(5), pp.3585–3601. doi: 10.3390/curroncol28050307 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 44.Huang Jinjie, Yang Guihua, Li Biao, He Yongjun, and Liang Yani. "Segmentation of cervical cell images based on generative adversarial networks." IEEE Access 9 (2021): 115415–115428. [Google Scholar]

- 45.Chen Jiajia, and Zhang Baocan. "Segmentation of overlapping cervical cells with mask region convolutional neural network." Computational and Mathematical Methods in Medicine 2021 (2021). doi: 10.1155/2021/3890988 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 46.Yang Guihua, Huang Jinjie, He Yongjun, Chen Yuanjian, Wang Tao, Jin Cong, et al. "GCP-Net: A Gating Context-Aware Pooling Network for Cervical Cell Nuclei Segmentation." Mobile Information Systems 2022 (2022). [Google Scholar]

- 47.Li Gang, Sun Chengjie, Xu Chuanyun, Zheng Yu, and Wang Keya. "Cervical Cell Segmentation Method Based on Global Dependency and Local Attention." Applied Sciences 12, no. 15 (2022): 7742. [Google Scholar]

- 48.Ilyas Talha, Mannan Zubaer Ibna, Khan Abbas, Azam Sami, Kim Hyongsuk, and De Boer Friso. "TSFD-Net: Tissue specific feature distillation network for nuclei segmentation and classification." Neural Networks 151 (2022): 1–15. doi: 10.1016/j.neunet.2022.02.020 [DOI] [PubMed] [Google Scholar]

- 49.Mahyari Tayebeh Lotfi, and Dansereau Richard M. "Multi‐layer random walker image segmentation for overlapped cervical cells using probabilistic deep learning methods." IET Image Processing (2022). [Google Scholar]

- 50.Elakkiya R., Sri Teja Kuppa Sai, Deborah L. Jegatha, Bisogni Carmen, and Medaglia Carlo. "Imaging based cervical cancer diagnostics using small object detection-generative adversarial networks." Multimedia Tools and Applications 81, no. 1 (2022): 191–207. [Google Scholar]

- 51.Zhao Bingchao, Chen Xin, Li Zhi, Yu Zhiwen, Yao Su, Yan Lixu, et al. "Triple U-net: Hematoxylin-aware nuclei segmentation with progressive dense feature aggregation." Medical Image Analysis 65 (2020): 101786. doi: 10.1016/j.media.2020.101786 [DOI] [PubMed] [Google Scholar]

- 52.Schmidt, Uwe, Martin Weigert, Coleman Broaddus, and Gene Myers. "Cell detection with star-convex polygons." In International Conference on Medical Image Computing and Computer-Assisted Intervention, pp. 265–273. Springer, Cham, 2018.

- 53.Tan, Mingxing, Ruoming Pang, and Quoc V. Le. "Efficientdet: Scalable and efficient object detection." In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, pp. 10781–10790. 2020.

- 54.Zhang Jianwei, Liu Zhenmei, Du Bohai, He Junting, Li Guanzhao, and Chen Danni. "Binary tree-like network with two-path fusion attention feature for cervical cell nucleus segmentation." Computers in Biology and Medicine 108 (2019): 223–233. doi: 10.1016/j.compbiomed.2019.03.011 [DOI] [PubMed] [Google Scholar]

- 55.Khan Adnan Mujahid, Rajpoot Nasir, Treanor Darren, and Magee Derek. "A nonlinear mapping approach to stain normalization in digital histopathology images using image-specific color deconvolution." IEEE transactions on Biomedical Engineering 61, no. 6 (2014): 1729–1738. doi: 10.1109/TBME.2014.2303294 [DOI] [PubMed] [Google Scholar]

- 56.Macenko, Marc, Marc Niethammer, James S. Marron, David Borland, John T. Woosley, Xiaojun Guan, et al. "A method for normalizing histology slides for quantitative analysis." In 2009 IEEE international symposium on biomedical imaging: from nano to macro, pp. 1107–1110. IEEE, 2009.

- 57.Janowczyk Andrew, Basavanhally Ajay, and Madabhushi Anant. "Stain normalization using sparse autoencoders (StaNoSA): application to digital pathology." Computerized Medical Imaging and Graphics 57 (2017): 50–61. doi: 10.1016/j.compmedimag.2016.05.003 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 58.Bejnordi Babak Ehteshami, Litjens Geert, Timofeeva Nadya, Irene Otte-Höller André Homeyer, Karssemeijer Nico, et al. "Stain specific standardization of whole-slide histopathological images." IEEE transactions on medical imaging 35, no. 2 (2015): 404–415. doi: 10.1109/TMI.2015.2476509 [DOI] [PubMed] [Google Scholar]

- 59.Kumar Neeraj, Verma Ruchika, Sharma Sanuj, Bhargava Surabhi, Vahadane Abhishek, and Sethi Amit. "A dataset and a technique for generalized nuclear segmentation for computational pathology." IEEE transactions on medical imaging 36, no. 7 (2017): 1550–1560. doi: 10.1109/TMI.2017.2677499 [DOI] [PubMed] [Google Scholar]

- 60.Chen, Liang-Chieh, George Papandreou, Iasonas Kokkinos, Kevin Murphy, and Alan L. Yuille. "Semantic image segmentation with deep convolutional nets and fully connected crfs." arXiv preprint arXiv:1412.7062 (2014). [DOI] [PubMed]

- 61.Mahmood Faisal, Borders Daniel, Chen Richard J., McKay Gregory N., Salimian Kevan J., Baras Alexander, et al. "Deep adversarial training for multi-organ nuclei segmentation in histopathology images." IEEE transactions on medical imaging 39, no. 11 (2019): 3257–3267. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 62.Ronneberger, Olaf, Philipp Fischer, and Thomas Brox. "U-net: Convolutional networks for biomedical image segmentation." In International Conference on Medical image computing and computer-assisted intervention, pp. 234–241. Springer, Cham, 2015.

- 63.He, Kaiming, Georgia Gkioxari, Piotr Dollár, and Ross Girshick. "Mask r-cnn." In Proceedings of the IEEE international conference on computer vision, pp. 2961–2969. 2017.

- 64.Chen, Liang-Chieh, Yukun Zhu, George Papandreou, Florian Schroff, and Hartwig Adam. "Encoder-decoder with atrous separable convolution for semantic image segmentation." In Proceedings of the European conference on computer vision (ECCV), pp. 801–818. 2018.

- 65.Simonyan, Karen, and Andrew Zisserman. "Very deep convolutional networks for large-scale image recognition." In 3rd International Conference on Learning Representations (ICLR 2015), Conference Track Proceedings. 2015.

- 66.He, Kaiming, Xiangyu Zhang, Shaoqing Ren, and Jian Sun. "Deep residual learning for image recognition." In Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 770–778. 2016.

- 67.Lu Zhi, Carneiro Gustavo, and Bradley Andrew P. "An improved joint optimization of multiple level set functions for the segmentation of overlapping cervical cells." IEEE Transactions on Image Processing 24, no. 4 (2015): 1261–1272. doi: 10.1109/TIP.2015.2389619

- 68.Lee, Hansang, and Junmo Kim. "Segmentation of overlapping cervical cells in microscopic images with superpixel partitioning and cell-wise contour refinement." In Proceedings of the IEEE conference on computer vision and pattern recognition workshops, pp. 63–69. 2016.

- 69.Wan Tao, Xu Shusong, Sang Chen, Jin Yulan, and Qin Zengchang. "Accurate segmentation of overlapping cells in cervical cytology with deep convolutional neural networks." Neurocomputing 365 (2019): 157–170.

Data Availability Statement

The data are available from Mendeley Data. DOI: 10.17632/jks43dkjj7.1; URL: https://data.mendeley.com/datasets/jks43dkjj7/1