Abstract

Objective Despite the benefits of the tailored drug–drug interaction (DDI) alerts and the broad dissemination strategy, the uptake of our tailored DDI alert algorithms that are enhanced with patient-specific and context-specific factors has been limited. The goal of the study was to examine barriers and health care system dynamics related to implementing tailored DDI alerts and identify the factors that would drive optimization and improvement of DDI alerts.

Methods We employed a qualitative research approach, conducting interviews with an interview guide based on Proctor's taxonomy of implementation outcomes and informed by the Theoretical Domains Framework. Participants included pharmacists with informatics roles within hospitals, chief medical informatics officers, and associate medical informatics directors/officers. Our data analysis was informed by grounded theory analysis techniques, and the reporting of open coding results was based on a modified version of the Safety-Related Electronic Health Record Research Reporting Framework.

Results Our analysis generated 15 barriers, and we mapped the interconnections of these barriers, which clustered around three entities (i.e., users, organizations, and technical stakeholders). Our findings revealed that misaligned interests regarding DDI alert performance and misaligned expectations regarding DDI alert optimizations among these entities within health care organizations could result in system inertia in implementing tailored DDI alerts.

Conclusion Health care organizations primarily determine the implementation and optimization of DDI alerts, and it is essential to identify and demonstrate value metrics that health care organizations prioritize to enable tailored DDI alert implementation. This could be achieved via a multifaceted approach, such as partnering with health care organizations that have the capacity to adopt tailored DDI alerts and identifying specialists who know users' needs, liaise with organizations and vendors, and facilitate technical stakeholders' work. In the future, researchers can adopt the systematic approach to study tailored DDI implementation problems from other system perspectives (e.g., the vendors' system).

Keywords: drug–drug interaction, clinical decision support systems, electronic health records, implementation science

Background and Significance

Drug–drug interactions (DDIs) are highly prevalent in the United States 1 2 and are associated with 5 to 14% of adverse events among inpatients, 3 4 most of which are preventable. Patients are exposed to potential DDIs throughout their health care journey, from outpatient encounters to the time of hospital admission, during hospitalization, and at discharge. 1 5 To mitigate risks, the Committee on the Quality of Health Care in America advocates using Health Information Technology (e.g., clinical decision support [CDS]) to identify possible errors, such as potential adverse drug interactions. 6 The Centers for Medicare and Medicaid Services also included DDI screening as part of the “meaningful use” criteria of electronic health records (EHRs) in light of the Health Information Technology for Economic and Clinical Health Act. 7 Recent systematic reviews 8 9 show that DDI alerts can reduce medication errors and potential adverse drug interactions. 10 However, despite the benefits, the overall performance and implementation of DDI alerts are less than satisfactory. 4

Alert fatigue has become a widely recognized symptom associated with the use of DDI decision support. 11 12 It can be attributed to two core problems of DDI alerts: low alert importance (i.e., clinical relevance) and high alert burden. 13 First, DDI alerts have low clinical relevance because they are generated from poorly tiered DDI knowledge bases that have inconsistent or inappropriate classifications of interaction severity. 12 14 For example, different knowledge base vendors use different approaches to classify interaction severity, 15 and studies show high variation in DDI alerts across institutions and EHRs. 2 14 Second, DDI alerts do not account for other factors such as patient-specific data and user knowledge and specialty. 13 The high alert burden (e.g., low signal-to-noise ratio and override rates as high as 96% 16 17 18 19 20 21 ) is due to (1) poor usability that results in low prescribing efficiency and alert effectiveness 22 ; and (2) limited consideration of the alert frequency, clinical workflow (e.g., DDI alerts are commonly interruptive 23 ), and clinical context. 13

To increase clinical relevance and reduce the volume of alerts, several studies have recommended improving DDI alert specificity through the inclusion of patient-specific and context-specific characteristics into DDI alerts. 4 24 25 26 27 With this goal, our research group (i.e., the Meaningful Drug Interaction Alerts team) consisting of pharmacists, clinicians, drug interaction experts, and medical informaticians used a systematic process 2 to develop and maintain a set of contextualized, evidence-based, patient-specific, and interoperable DDI alerts. 28 For example, we developed a tailored DDI alert for colchicine and inhibitors of both cytochrome P450 3A4 and P-glycoprotein that not only triggers when a patient is at risk for an adverse event from the relevant drug combinations but also uses data about the patient's kidney function in the EHRs to better communicate the risk of patient harm. 29 The tailored DDI alert algorithms are presented in logic flow diagrams and are hosted on a public-facing website 28 along with implementation guides and CDS artifacts. They were also disseminated through a series of educational webinars to pharmacy informaticists, information technology analysts, pharmacists, and clinicians, and via targeted outreach to health systems. Despite the broad dissemination strategy and the benefits of tailored DDI alerts, the uptake of our tailored DDI alert algorithms has been limited. Among 200 surveyed seminar participants, we received only two affirmative responses that their health care organizations implemented our tailored DDI alerts.
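The idea behind such a tailored alert can be sketched in a few lines of Python. This is only an illustration of the tailoring described above, not the team's published algorithm: the `PatientContext` fields, the function name, the message wording, and the `egfr_threshold` parameter are hypothetical, and no clinical cutoff is implied.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PatientContext:
    """Hypothetical minimal view of the EHR data the alert would read."""
    on_colchicine: bool
    on_cyp3a4_pgp_inhibitor: bool
    egfr: Optional[float]  # kidney function estimate; None if not on file


def tailored_colchicine_alert(patient: PatientContext,
                              egfr_threshold: float) -> Optional[str]:
    """Return an alert message, or None when the drug combination is absent.

    egfr_threshold is a caller-supplied placeholder, not a clinical
    recommendation.
    """
    # No alert unless both interacting drugs are present.
    if not (patient.on_colchicine and patient.on_cyp3a4_pgp_inhibitor):
        return None
    # Patient-specific tailoring: use kidney function to frame the message.
    if patient.egfr is None:
        return "Interaction present; kidney function not on file."
    if patient.egfr < egfr_threshold:
        return "Interaction present; kidney function below configured threshold."
    return "Interaction present; kidney function at or above configured threshold."
```

A generic, untailored alert would stop after the first check; the later branches are what make the message patient-specific.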

Objective

In this study, we aimed to understand the challenges with the adoption of tailored DDI alerts and identify factors that would increase the implementation of tailored DDI alerts. We sought to answer the following research questions:

What are the barriers to the implementation of tailored DDI alerts?

What are the inherent dynamics within health care organizations that limit DDI alert optimization?

Methods

In this study, we employed a qualitative research approach, specifically conducting interviews guided by Proctor's taxonomy of implementation outcomes 30 and the Theoretical Domains Framework (TDF). 31 Our data analysis was informed by grounded theory analysis techniques 32 and the reporting of open coding results was based on a modified version of the Safety-Related EHR Research (SAFER) reporting framework. 33 All procedures in this study were approved by the Institutional Review Boards at the University of Arizona and the University of Utah.

Theoretical Approach to Study Drug–Drug Interactions Clinical Decision Support Implementation

The Translating Research into Practice (TRIP) model 34 was initially used to guide the implementation of our tailored DDI alerts in clinical practice. TRIP emphasizes that adoption occurs as users within a social system analyze and accept innovation. Based on Rogers' diffusion of innovations, 35 the TRIP model inspired us to deliver a series of webinars as a communication strategy to disseminate the tailored DDI alerts. Yet adoption remained low, based on the survey of our seminar participants. When we encountered significant barriers to adoption that were not explained by TRIP and diffusion of innovations, we shifted our focus to sociotechnical systems theory 36 and theoretical foundations from learning health systems. 37 Specifically, we examined Proctor's taxonomy of implementation outcomes 30 and probed the TDF 31 to develop an interview guide (Supplementary Appendix A, available in the online version) for mapping barriers to DDI alert implementation.

Participants

We identified the following stakeholders as critical to the successful DDI alert implementation based on a previous study 38 : chief medical informatics officers (CMIOs), pharmacy informaticists, other clinical informaticists involved in the implementation and/or maintenance of DDI CDS, and information technology analysts. Participants were recruited through purposive snowball sampling. Each participant was compensated with a nominal value gift card, although two individuals declined compensation.

Data Collection

All interviews were led by a senior investigator (D.C.M.), along with two other researchers (V.S. and S.G.), beginning with an overview of the DDI CDS project and followed by questions from an interview guide. Interviews were structured to allow participants to think about and describe DDI CDS implementation aspects. Interviews were recorded with permission from participants. Each interview was approximately 60 minutes long. Interview recordings were transcribed using rev.com and otter.ai. The transcripts were proofread by researchers (T.Z. and V.S.) and archived with corresponding recordings.

Data Analysis

Our analysis was initially conducted by a researcher (T.Z.) who was not involved in the interviews or interview guide construction. This individual led the open coding to minimize potential bias from preconceived explanations and frameworks. 39 Transcripts were read several times and meaning units were tagged with codes annotated in Microsoft Word. Every set of initial codes generated from individual interviews was constantly compared with previously analyzed data, discussed and validated with another researcher (S.G.), triangulated with previous DDI alert performance and implementation studies, 4 14 40 41 and later categorized into subthemes. Three researchers (T.Z., S.G., and V.S.) then discussed and came to a consensus on the resulting subthemes (see Table 1 ). Furthermore, researchers (T.Z., S.G., and V.S.) also identified relationships and interconnections between subthemes and examined how these subthemes directly or collaterally impact the implementation of tailored DDI alerts. The reporting of barriers was based on a modified version of the SAFER Reporting 33 framework (see Supplementary Appendix B and Supplementary Appendix C, Supplementary Tables S1 – S8 , available in the online version).

Table 1. Theme and subthemes of DDI CDS implementation barriers.

| Themes | Subthemes |
|---|---|
| Hardware and software | Maintenance issues |
| | Technical adoption issues |
| | Security issues |
| | Proprietary issues |
| Clinical content | Pitfalls of algorithmizing DDI |
| Human–computer interaction | Usability issues |
| | Suboptimal CDS user experience |
| Workflow and communication | Insufficient training and education |
| People | Insufficient social capital and capacity |
| | Varied DDI alerts exposure among users |
| Internal organizational features | Organizational inertia |
| | Monetary inertia |
| | Lengthy governance process |
| External features, rules, and regulations | Legal barriers |
| Measurement and monitoring | Lack of actionable metrics |

Abbreviations: CDS, clinical decision support; DDI, drug–drug interaction.

Results

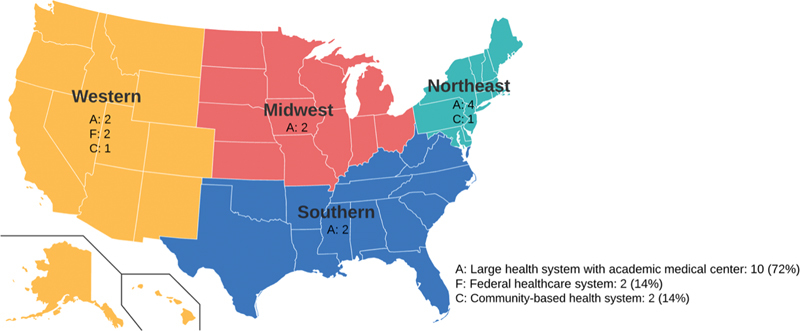

A total of 14 structured interviews were conducted online via Zoom with 17 participants (24% female and 76% male) from 14 different U.S. health systems, recruited through purposive snowball sampling as well as via contact lists from educational webinars related to DDI CDS (see Fig. 1 ). Interviews were conducted over a 4-month period in 2021. Of the 14 organizations, 10 represented large, integrated health systems including inpatient acute and ambulatory health care delivery. Participants' professional backgrounds included CMIOs (47%), pharmacy informaticists or informaticists (41%), and information technology analysts (12%).

Fig. 1.

Overview of participating health systems in the study.

System Barriers

The barriers codebook provided a comprehensive view of relevant DDI alert implementation issues related to 8 themes and 15 subthemes (see Table 2 ).

Table 2. Excerpt from barriers codebook.

| Themes | Subthemes | Barriers |
|---|---|---|
| Hardware and software | Maintenance issues | Hard to maintain; lots of maintenance |
| | Technical adoption issues | Requires lots of effort for setup and accuracy check; not sure how to implement a new DDI algorithm |
| | Security issues | Challenging to securely process and share data |
| | Proprietary issues | Challenging to import algorithms into the current system; interoperability issue; high dependency on the current system |
| Clinical content | Pitfalls of algorithmizing DDI | The rare delineation between drug interactions; challenging to categorize levels; not enough level of evidence; challenging to find a threshold |
| Human–computer interaction | Usability issues | Perception of the inability to modify the off-the-shelf drug warnings; high override rate; need more reasons in the dropdowns; DDI alerts don't provide alternative options; overuse of interruptive alerts; people lack tools to change DDI alerts |
| | Suboptimal CDS user experience | DDI alert is cumbersome, annoying, interruptive, and useless; DDI alert fatigue |
| Workflow and communication | Insufficient training and education | No training; training program is antiquated and not extensive; lots of self-training |
| People | Insufficient social capital and capacity | People like pharmacy coordinators and local champions who can drive DDI alerts implementation are occupied; challenging to find people with all clinical, operational, and technical knowledge |
| | Varied DDI alerts exposure among users | Immediate and severe alerts are fired to attendings and residents, while other alerts are fired to pharmacists; residents experience a lot more than attendings and see them much more annoying; DDI alerts overwhelm attendings |
| Internal organizational features | Organizational inertia | Low priority; limited resource; difficult to get through backlogs; pushback from risk management; safety events don't usually drive the implementation of DDI algorithm |
| | Monetary inertia | Cost prohibitive; difficult to justify cost benefit |
| | Lengthy governance process | The approval process is challenging and time-consuming |
| External features, rules, and regulations | Legal barriers | Rarely encountered legal barriers that need a legal team to review; the legal barrier is minimal; more conservative with implementation at top litigations areas |
| Measurement and monitoring | Lack of actionable metrics | Lack of value quality measure; lack of a systematic process of analyzing alerts and retooling them |

Abbreviations: CDS, clinical decision support; DDI, drug–drug interaction.

Hardware and Software

All participants in this study were from health systems that were using either Epic or Cerner EHR systems. Several issues were raised in the context of these systems including the proprietary nature of EHR software, technical adoption, maintenance, and security.

Proprietary issues : due to the proprietary interests of these information systems, there is limited ability to customize current DDI alerts or incorporate new/different DDI alerts. For example, one participant stated that “ implementing tailored DDI alert algorithms in Epic is technically feasible but [it] can't be done in a clean and easy way. ” While Epic and Cerner provide open platforms (e.g., in the medication alert realm, First Databank (FDB) has offered organizations the ability to develop best practice advisories (BPAs) via FDB AlertSpace, made available via the Epic App Orchard), third-party CDS is typically difficult to implement (e.g., “ interoperability is always a challenge,” “FDB can't accommodate some custom interaction warnings and we are reliant on FDB to update alert changes ”).

Technical adoption issues : programming a new DDI alert in the current information system and ensuring that it performs as intended is challenging because there are limited off-the-shelf or plug-and-play approaches to CDS customization (e.g., “ it requires lots of effort for setup and accuracy check[ing], and we are not sure how to implement a new DDI alert algorithm,” “the ease of implementation is sort of a huge hurdle ”). To implement any new CDS algorithms, participants indicated the need for (1) an implementation roadmap that initiates and streamlines the implementation process (e.g., “ use a process approach to provide suggestions on implementation ”); (2) a toolkit such as “ a scoring system with rules that someone could turbocharge into their system to fire BPAs… ” to “ make DDI alert algorithms turnkey in terms of initial implementation and maintenance ”; or (3) a prebuilt Application Programming Interface (API) that follows standards such as Health Level Seven Fast Healthcare Interoperability Resources (HL7 FHIR) and “ packages DDI algorithms for direct and seamless consumption into the EHR systems ” while minimizing the role of vendors in the process.

Maintenance issues : the implementation of customized DDI CDS often comes with additional requirements for maintenance and management (e.g., “ The maintenance part can be very difficult… especially with BPAs because of the maintenance of…value sets ”). To meet the management and maintenance requirements, outsourcing maintenance to an external third party could be a favorable option yet it incurs more cost. For example, one participant stated that “ If it is something that requires quite a bit of management and upkeep…[like] pulling new literature and evaluating, we'll have somebody do this for us. ”

Security issues : concerns about patient privacy and information security are a barrier (e.g., “ It is hard to securely process and share data. It's way easier for us to pull things in than it is for us to send things out for security reasons ”) because of additional safeguards that may need to be implemented and the need to have a technical review board involved in overseeing customization. For example, a participant shared that “ to connect with an external system, like a web service or something externally where we're sending data, it does have to go through our architectural review board where they assess security, privacy, [and] all those other kinds of things. ”

Clinical Content

Pitfalls of algorithmizing DDIs : based on our interviews, we found that the difficulty of developing rules-based DDI CDS can be due to (1) the multitude of drugs and DDIs (e.g., “ it's impossible for us to get down into the weeds ”); (2) the difficulty of finding universally agreeable thresholds to categorize DDI severity levels (e.g., “ it is hard to find a happy alert threshold…[with] agreement from clinical review and local clinical experts…with DDI CDS ”); and (3) the poorly defined delineation between clinical consequences of DDIs (e.g., “ there is rare delineation between drug interactions,” “not enough level of evidence…[for] what can be turned on and off ”). Furthermore, translating clinical guidelines into algorithms is challenging (e.g., “ the problem that's always been around when implementing CDS - how do you translate…clinical guidelines into actual code ”) and requires multifaceted expertise (e.g., experts who know DDI CDS development and implementation and also possess knowledge of DDIs) that was rare among the health systems that participated.

Human–Computer Interface

Suboptimal CDS user experience : multiple participants indicated that “ DDI alerts are the top complaint about CDS. ” They described DDI alerts as cumbersome, boring, interruptive, useless, and annoying. Despite suboptimal presentation, DDI alerts were still perceived as useful (e.g., “ DDI CDS may be helpful, but alerting may not be helpful ”). Participants also reported high override rates associated with DDI alerts that were difficult to reduce (e.g., “ We've done a lot of work to reduce the alert volume but have not seen much of a difference in our override ”).

Usability issues : usability issues associated with the current DDI alerts are manifested in how they alert as well as in the alert content they present. Some common complaints reported by participants were end-users' perceived difficulty of customizing alerts (e.g., modifying the off-the-shelf drug warnings), low transparency (e.g., absence of explanation of why the alert is triggered and what the risk factors are), and lack of actionable instruction (e.g., no alternative options provided). These problems could be attributed to low user involvement in the development process, leading to an interaction experience that is not user centered (e.g., “ DDI alerts makers don't understand users' needs and [alerts] are not usefully designed [to fit] into clinicians' decision-making process ”). Some characteristics of ideal DDI alerts identified by the participants are (1) providing explanations of why alerts are fired as well as a comprehensive ensemble of clinically relevant information (e.g., “ Make it as transparent as possible and pull in additional information, so they don't have to go searching through the chart ”); (2) suggesting direct, functional, actionable alternatives (e.g., “ make the alerts as actionable as possible and ensure the next steps are clear,” “make sure you're not just telling me what not to do, but make sure you're also telling me what a better option would be ”); (3) supporting feedback mechanisms for users' input (e.g., “ have a feedback button” or “have feedback tools that look for cranky comments ”); and (4) being well-integrated into the workflow so that they are less interruptive in general, yet purposefully interruptive when required (e.g., “ [An] alert is not always necessary, but if you are going to interrupt them in their workflow, then try to explain why it's so important that you interrupted them and what the risk factors are ”).

Workflow and Communication

Insufficient training and education : participants indicated that there was limited to no training and education about medication alerts to facilitate the adoption of DDI alerts (e.g., “ I doubt our training contains anything extensive ”). When training programs were available, participants described them as often antiquated and limited (e.g., “ training program based off of screenshots, modules and quizzes for walking you through workflows is antiquated,” “[for] general medication alerts [we have] almost no education…maybe for the pharmacy department on a small scale ”). A participant stated that “ [DDI alert users] have to provide a lot of self-training ” as a workaround solution.

People

Varied exposure to DDI alerts among users : DDI alerts appear to various end-users, ranging from trainees (e.g., new residents and fellows) to expert clinicians and pharmacists, whose extent of DDI alert exposure often conflicts with their user needs and interests (e.g., “ our residents might see them as much more annoying because they're experiencing [them] a lot more often,” “Attendings wanted to see them and believe that they're beneficial [to residents],” “EHR only sends immediate and severe [alerts] to clinicians for better attention. The other ones go to pharmacists. ”). Currently, providers receive an overwhelming amount of medication alerts, most of which are perceived as having little value and seldom read, because the current DDI alerts do not account for the level of clinical experience or job function (e.g., “ As a physician, I get so many alerts on all of the medicines I order and most of them are annoying. ”). One participant suggested that there is a need for “ stratifying about who should be receiving DDI alerts ” based on roles (e.g., pharmacists versus physicians, attending physicians versus residents) to provide more meaningful and personalized DDI alerts. Although providers have different needs and interests regarding DDI alerts compared with pharmacists (e.g., “ pharmacists want the [DDI] alerts for safety reasons and physicians want to reduce [DDI] alerts that they find annoying ”), clinicians are not well-represented in the DDI alert development or optimization process. For example, one participant stated that “ all the change that comes with any drug alerting is driven through pharmacy and not too many that come from physicians or nurses. ”
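The role-based stratification that this participant suggested could be expressed as a simple routing table. The sketch below is an illustration only: the role names, severity tiers, and the `ROUTING` mapping are assumptions loosely modeled on the quoted practice of sending severe alerts to clinicians and the remainder to pharmacists, not a configuration reported by any participating organization.

```python
from typing import Dict, Set

# Hypothetical routing table: which severity tiers each role receives.
ROUTING: Dict[str, Set[str]] = {
    "attending": {"severe"},
    "resident": {"severe"},
    "pharmacist": {"moderate", "minor"},
}


def should_alert(role: str, severity: str) -> bool:
    """Return True if an alert of this severity is routed to this role.

    Unknown roles receive no alerts in this sketch.
    """
    return severity in ROUTING.get(role, set())
```

Keeping the stratification in a table rather than in scattered conditionals would let an organization revise who sees what without touching the alert logic itself.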

Insufficient social capital and capacity : multiple leaders within health care organizations (e.g., medication safety officers, patient safety officers, and CMIOs) are generally supportive of CDS that improves patient outcomes and organizational outcomes (e.g., “ Pharmacy leadership and CEO office show encouragement to adopt advanced systems that improve CDS. ”). However, some participants reported a lack of expertise and knowledge in their organizations to analyze and implement tailored DDI alerts (e.g., “ We have rich data but few people know how to interpret it,” “It's hard to find local champions to implement something this complex,” “It's challenging to find clinical people that may have tech interests. ”). The few individuals who could drive changes to improve DDI alerts have limited time and capacity (e.g., “ It's hard to find people who can implement and drive change and actually have time,” “IT team is willing to work on things but no bandwidth to act on anything. ”).

Internal Organizational Features

Several internal organizational barriers to tailored DDI alerts implementation were identified by participants including monetary inertia, lengthy governance processes, and organizational inertia.

Monetary inertia : organizations are mindful of cost and are “ always looking at balancing capital and benefit, and trying to make sure that we're always allocating our funds to the most beneficial thing for our patients and their care. ” The benefit of implementing tailored DDI alerts should justify the cost; otherwise, implementation would be cost-prohibitive. A few participants indicated that, to date, there is no evidence of cost-benefit to justify implementing tailored DDI alerts (e.g., “ We've looked into alert space several times, but we haven't found the cost-benefit for it to justify the cost for right now ”).

Lengthy governance process : a lengthy and time-consuming CDS approval process can be a barrier to initiating the implementation of tailored DDI alerts. Participants indicated that the review process can take months due to the large volume of reviews (e.g., “ DDI [alert] algorithm build-review-finalization process is challenging and time consuming,” “review process can take up to 4 months ”).

Organizational inertia : health care organizations lack the momentum to implement tailored DDI alerts because such implementation is not prioritized (e.g., “ the challenge for most healthcare [is that] we have these strategic imperatives but DDI alerting is not a strategic imperative,” “There is a need [to tailor DDI alerts] but falls to lowest priority. ”). The lack of momentum can be attributed to several reasons: (1) different departments within health care organizations have conflicting interests regarding reducing DDI alerts (e.g., “ over alert[ing] makes people in the medication and patient safety world happy,” “we get a lot of pushback from risk management [for] turning off CDS, or we get mandates from quality safety and risk prevention ”); (2) changes to DDI alerts preferably originate at the user level to ensure clinical and operational buy-in, but implementation relies on support from leadership (e.g., “ It's very grassroots- bottom up. We'll get requests from prescribers, from people in pharmacy, from a bunch of different groups, and then we'll evaluate it as a group and make a decision ”); and (3) safety-related events do not usually drive the implementation of DDI alerts. Even though patient safety was emphasized as the core value of health care organizations in our interviews (e.g., “ patient safety is the organization's north star, any CDS tool that aligns with patient safety got full support from the leadership ”), it was perceived as a less compelling argument than productivity and efficiency. For example, one participant suggested, “ convince CMIOs or CDS committees from a productivity efficiency standpoint (e.g., EHR fatigue and resilience for clinicians) but not from a safety perspective. ”

External Rules and Regulations

Legal barriers : external legal or regulatory barriers to the implementation of CDS were reported to be minimal. One participant postulated that organizations in areas where past litigation has occurred may have a more conservative attitude towards tailored DDI alerts implementation; however, others did not report legal or regulatory barriers, given regulations like the federal “meaningful use” criteria that encourage CDS adoption (e.g., “ we get pushback from some regulatory body, but for the most part, we haven't involved legal and risk management,” “we hardly encountered legal barriers that need a legal team to review. ”).

Measurement and Monitoring

Lack of actionable metrics : according to participants, their health care organizations primarily use readily available metrics (e.g., acceptance rate, override rate, the volume of alerts, or ratio of alerts fired for individuals over a certain amount of time) to monitor alert performance, and this monitoring is “ mostly ad hoc analysis of DDI alerts for small tweaks ” (e.g., severity level change). Nevertheless, these metrics do not provide compelling arguments in terms of the productivity and efficiency that health care organizations prioritize when making decisions about tailored DDI alert implementation at the organizational level. Multiple participants suggested using value metrics that measure effectiveness, efficiency, and productivity (e.g., “ trying to have some data about the effectiveness of the intervention,” “structuring your return on investment (ROI) and making sure that the return on that investment is high enough ”).
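For concreteness, the readily available metrics mentioned above can be computed from an alert log along the following lines. This is a minimal sketch under stated assumptions: the log format (pairs of user identifier and outcome), the outcome labels "accepted" and "overridden", and the function name are hypothetical and do not describe any participating organization's reporting tools.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple


def alert_metrics(events: Iterable[Tuple[str, str]]) -> Dict[str, object]:
    """Summarize alert log events given as (user_id, outcome) pairs.

    outcome is assumed to be either "accepted" or "overridden".
    """
    events = list(events)
    total = len(events)
    outcomes = Counter(outcome for _, outcome in events)
    per_user = Counter(user for user, _ in events)
    return {
        "alert_volume": total,
        # Guard against an empty log to avoid division by zero.
        "override_rate": outcomes["overridden"] / total if total else 0.0,
        "acceptance_rate": outcomes["accepted"] / total if total else 0.0,
        "alerts_per_user": dict(per_user),
    }
```

Note that none of these figures captures productivity, efficiency, or return on investment, which is the gap participants described.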

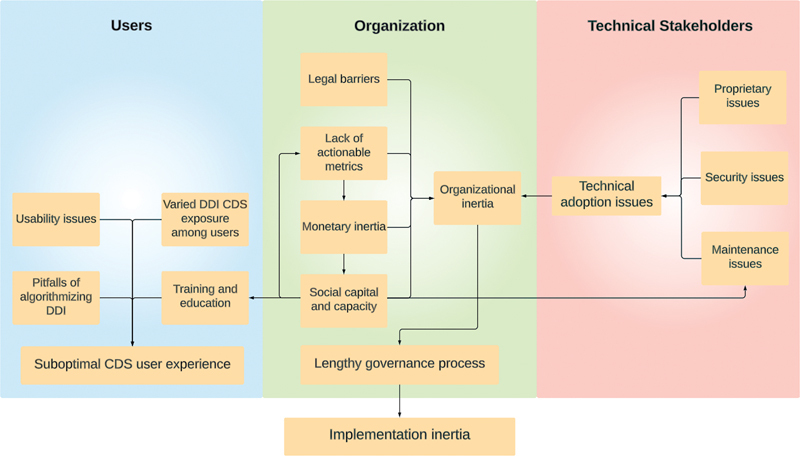

System Barriers

By mapping the interconnections between subthemes, we uncovered the system dynamics that emerge from the interaction of these barriers. The first cluster is about end-users (e.g., attending physicians, residents, and pharmacists). Suboptimal DDI alert user experience was attributed to proximal factors such as insufficient training, usability issues, and various levels of DDI alert exposure, as well as distal factors such as the pitfalls of algorithmizing DDIs. The second cluster (i.e., the organization) included multiple factors that would contribute to the organizational inertia of navigating through the lengthy governance processes to implement tailored DDI alerts (e.g., insufficient social capital and capacity, monetary inertia, lack of actionable metrics, and legal barriers). The last cluster centers on technical stakeholders (e.g., IT team, DDI alert developers, cybersecurity experts, and vendors), showing that technical adoption can be complicated by proprietary issues, security issues, and maintenance issues (see Fig. 2 ).

Fig. 2.

Coding paradigm of implementation barriers (users: residents, attendings, pharmacists; organization: administrative and the leadership; technical stakeholders: IT team, DDI CDS developers, cybersecurity experts, vendors).

At the organizational level, lengthy governance processes are the bottleneck of tailored DDI alert implementation. The lack of actionable value metrics limits the assignment of monetary value as well as the allocation of social capital and capacity, making it challenging to establish evidence (e.g., effectiveness, efficiency, and productivity) for implementing tailored DDI alerts. These barriers could form a vicious cycle that amplifies organizational inertia in prioritizing and navigating through the lengthy governance process. The issues associated with users were not found to directly cause implementation inertia; instead, they could drive the adoption of tailored DDI alerts if users were well represented at the organizational level, which is not currently the case. Technical barriers (e.g., proprietary issues, security issues, and maintenance issues) only become concerns to health care organizations when they lack value metrics that align with organizational interests to justify the effort to overcome these technical barriers.

Discussion

In this study, through interviews with 17 participants from diverse stakeholder groups, we identified 15 barriers to tailored DDI alert implementation. The findings provide a comprehensive view of the barriers and health care system dynamics that hinder the implementation of tailored DDI alerts. Some findings are consistent with previous studies. 4 40 For example, our study revealed nuanced perceptions of DDI alerts: although the interaction experience was suboptimal, the DDI alerts themselves were still found useful. Another study corroborated the usability issues uncovered in our study, such as lack of customizability and lack of actionable instructions. 40

Multiple barriers identified in our study have been focal areas of many DDI alert studies, and efforts have been dedicated to improving the experience (e.g., recommendations to improve the usability of DDI alerts, 23 recommendations for selecting DDI alerts, 2 recommendations for reducing alert burden, 11 and strategic recommendations for drug alert implementation and optimization 42 ). These studies focused on showing how to improve the drug alert experience and reduce alert fatigue, but mostly omitted discussion of the actuators (health care organizations) that make these improvements possible: why would health care organizations want to implement these recommendations to optimize DDI alerts? This question is important because our study suggests that, although technical adoption issues associated with the EHR vendors were the common complaints about implementation inertia, it is the health care organizations that primarily affect the implementation of DDI alerts, which is consistent with previous studies. 14 41 As health care organizations emphasize the impact of DDI alerts on costs, productivity, and efficiency, it is imperative to have value metrics that health care systems prioritize (e.g., higher ratings from external quality organizations) to gain momentum for implementing tailored DDI alerts. However, defining and quantifying these value metrics is challenging, and there are no agreed-upon value measures, 11 which presents opportunities for further studies.

Because our diverse participants included a higher percentage of organizational-level participants (e.g., CMIOs) than end-users, we were able to delve into organizational factors and unveil underlying mechanisms related to tailored DDI alert implementation inertia. Our study fills a research gap identified in a previous systematic review and gaps analysis: 43 existing studies examining factors that influence CDS implementation mostly focused on human and technological factors and much less on organizational factors, because their participants were mostly end-users such as physicians. Our results demonstrate that different entities (e.g., users, organizations, and technical stakeholders) within health care organizations have different foci of interest regarding DDI alert performance and different expectations regarding DDI alert optimization. These misaligned interests and expectations have resulted in health care organizations' inertia in implementing tailored DDI alerts at the system level. Future research can continue to use a systematic approach to study the problems associated with tailored DDI alert implementation from other system perspectives, such as that of the vendors.

Limitations

Our study has some limitations common to other qualitative studies, including reliance on a small sample of interview participants, which mostly included CMIOs, pharmacy informaticists, and information technology analysts. Therefore, the generalizability of our findings to other populations and to health care organizations using other EHR systems may be limited. For example, legal barriers were reported to be minimal at our participants' health care organizations; however, other organizations may perceive more legal barriers, as one participant postulated that organizations in areas where past litigation has occurred may have a more conservative attitude toward tailored DDI alert implementation. In addition, our study mostly focused on factors that affect the implementation of tailored DDI alerts that reduce alert volume and alert fatigue. It relies on the precondition that DDI alerts have already been implemented and that the health care systems are at a relatively higher stage of the CDS implementation process. 44 Therefore, our findings may not be generalizable to lower stages of the DDI alert implementation process. For example, risk management can be a facilitator in the process of DDI alert implementation because risk managers regard these alerts as an effective way to control risk; however, risk management might become a barrier to reducing alerts through tailored DDI alerts because risk managers prefer over-alerting. Another example is that many external regulations, such as the “meaningful use” criteria, provide incentives for CDS implementation and use but do not provide enough incentives to reduce alerts.

Conclusion

Health care organizations primarily determine the implementation and optimization of DDI alerts. To drive the uptake of tailored DDI alerts, it is imperative to identify and demonstrate value metrics that health care organizations prioritize, which differ from the most readily available metrics such as override or acceptance rates. This could be achieved via a multifaceted approach, such as partnering with health care organizations that have the ability and capacity to adopt tailored DDI alerts and identifying specialists who can connect bottom-up demands with top-down support by amplifying end-users' voices to health care leadership and convincing that leadership that tailored DDI alerts are of value.

Clinical Relevance Statement

It is widely recognized that excessive DDI alerts lead to alert fatigue, and there is a need to tailor DDI alerts by factoring in patient-specific and context-specific characteristics. Despite the benefits of tailored DDI alerts and the broad dissemination strategy, the uptake of our tailored DDI alerts has been limited thus far. This article examined the implementation barriers and system dynamics and proposed possible solutions from a system perspective that would potentially drive tailored DDI alerts implementation in the industry.

Multiple-Choice Questions

-

When implementing DDI alerts, especially tailored DDI alerts that factor in patient-specific and context-specific characteristics, which of the following entities has the most impact on implementation inertia?

a. EHR vendors

b. Health care organizations

c. End-users, such as pharmacists, physicians, and residents

d. Technical stakeholders

Correct Answer : The correct answer is option b. Although technical adoption issues related to EHR vendors are often raised as barriers to implementing tailored DDI alerts, our study shows that the implementation inertia is mostly caused by misaligned system dynamics within health care organizations.

-

Which of the following value metric(s) is/are important to health care organizations in their decisions about implementing tailored DDI alerts?

a. Efficiency

b. Effectiveness

c. Acceptance rate

d. A and B

Correct Answer : The correct answer is option d. Health care organizations prioritize efficiency, effectiveness, and productivity in their decisions rather than the most readily available metrics such as acceptance rate and override rate.

Funding Statement

Funding This project was supported by grant number R01HS025984 from the Agency for Healthcare Research and Quality. V.S. was supported in part by the National Science Foundation under grant number 1838745.

Conflict of Interest None declared.

Protection of Human and Animal Subjects

All procedures in this study were approved by the Institutional Review Boards at the University of Arizona and the University of Utah.

Note

The content is solely the responsibility of the authors and does not necessarily represent the official views of the Agency for Healthcare Research and Quality or the National Science Foundation.

Supplementary Material

References

- 1. Egger S S, Drewe J, Schlienger R G. Potential drug-drug interactions in the medication of medical patients at hospital discharge. Eur J Clin Pharmacol. 2003;58(11):773–778. doi: 10.1007/s00228-002-0557-z.

- 2. Tilson H, Hines L E, McEvoy G et al. Recommendations for selecting drug-drug interactions for clinical decision support. Am J Health Syst Pharm. 2016;73(08):576–585. doi: 10.2146/ajhp150565.

- 3. Magro L, Moretti U, Leone R. Epidemiology and characteristics of adverse drug reactions caused by drug-drug interactions. Expert Opin Drug Saf. 2012;11(01):83–94. doi: 10.1517/14740338.2012.631910.

- 4. Van De Sijpe G, Quintens C, Walgraeve K et al. Overall performance of a drug-drug interaction clinical decision support system: quantitative evaluation and end-user survey. BMC Med Inform Decis Mak. 2022;22(01):48. doi: 10.1186/s12911-022-01783-z.

- 5. Vonbach P, Dubied A, Krähenbühl S, Beer J H. Prevalence of drug-drug interactions at hospital entry and during hospital stay of patients in internal medicine. Eur J Intern Med. 2008;19(06):413–420. doi: 10.1016/j.ejim.2007.12.002.

- 6. Institute of Medicine (US) Committee on Quality of Health Care in America. Crossing the Quality Chasm: A New Health System for the 21st Century. Washington, DC: National Academies Press; 2001.

- 7. Blumenthal D, Tavenner M. The “meaningful use” regulation for electronic health records. N Engl J Med. 2010;363(06):501–504. doi: 10.1056/NEJMp1006114.

- 8. Vélez-Díaz-Pallarés M, Pérez-Menéndez-Conde C, Bermejo-Vicedo T. Systematic review of computerized prescriber order entry and clinical decision support. Am J Health Syst Pharm. 2018;75(23):1909–1921. doi: 10.2146/ajhp170870.

- 9. Roumeliotis N, Sniderman J, Adams-Webber T et al. Effect of electronic prescribing strategies on medication error and harm in hospital: a systematic review and meta-analysis. J Gen Intern Med. 2019;34(10):2210–2223. doi: 10.1007/s11606-019-05236-8.

- 10. Humphrey K E, Mirica M, Phansalkar S, Ozonoff A, Harper M B. Clinician perceptions of timing and presentation of drug-drug interaction alerts. Appl Clin Inform. 2020;11(03):487–496. doi: 10.1055/s-0040-1714276.

- 11. McGreevey J D, III, Mallozzi C P, Perkins R M, Shelov E, Schreiber R. Reducing alert burden in electronic health records: state of the art recommendations from four health systems. Appl Clin Inform. 2020;11(01):1–12. doi: 10.1055/s-0039-3402715.

- 12. Horn J R, Gumpper K F, Hardy J C, McDonnell P J, Phansalkar S, Reilly C. Clinical decision support for drug-drug interactions: improvement needed. Am J Health Syst Pharm. 2013;70(10):905–909. doi: 10.2146/ajhp120405.

- 13. Children's Hospital Association CDS working group. Harper M B, Longhurst C A, McGuire T L, Tarrago R, Desai B R, Patterson A. Core drug-drug interaction alerts for inclusion in pediatric electronic health records with computerized prescriber order entry. J Patient Saf. 2014;10(01):59–63. doi: 10.1097/PTS.0000000000000050.

- 14. McEvoy D S, Sittig D F, Hickman T T et al. Variation in high-priority drug-drug interaction alerts across institutions and electronic health records. J Am Med Inform Assoc. 2017;24(02):331–338. doi: 10.1093/jamia/ocw114.

- 15. Kesselheim A S, Cresswell K, Phansalkar S, Bates D W, Sheikh A. Clinical decision support systems could be modified to reduce 'alert fatigue' while still minimizing the risk of litigation. Health Aff (Millwood). 2011;30(12):2310–2317. doi: 10.1377/hlthaff.2010.1111.

- 16. van der Sijs H, Aarts J, Vulto A, Berg M. Overriding of drug safety alerts in computerized physician order entry. J Am Med Inform Assoc. 2006;13(02):138–147. doi: 10.1197/jamia.M1809.

- 17. Shah N R, Seger A C, Seger D L et al. Improving acceptance of computerized prescribing alerts in ambulatory care. J Am Med Inform Assoc. 2006;13(01):5–11. doi: 10.1197/jamia.M1868.

- 18. Payne T H, Nichol W P, Hoey P, Savarino J. Characteristics and override rates of order checks in a practitioner order entry system. Proc AMIA Symp. 2002:602–606.

- 19. Bryant A D, Fletcher G S, Payne T H. Drug interaction alert override rates in the meaningful use era: no evidence of progress. Appl Clin Inform. 2014;5(03):802–813. doi: 10.4338/ACI-2013-12-RA-0103.

- 20. Edrees H, Amato M G, Wong A, Seger D L, Bates D W. High-priority drug-drug interaction clinical decision support overrides in a newly implemented commercial computerized provider order-entry system: override appropriateness and adverse drug events. J Am Med Inform Assoc. 2020;27(06):893–900. doi: 10.1093/jamia/ocaa034.

- 21. Villa Zapata L, Subbian V, Boyce R D et al. Overriding drug-drug interaction alerts in clinical decision support systems: a scoping review. Stud Health Technol Inform. 2022;290:380–384. doi: 10.3233/SHTI220101.

- 22. Russ A L, Chen S, Melton B L et al. A novel design for drug-drug interaction alerts improves prescribing efficiency. Jt Comm J Qual Patient Saf. 2015;41(09):396–405. doi: 10.1016/s1553-7250(15)41051-7.

- 23. Payne T H, Hines L E, Chan R C et al. Recommendations to improve the usability of drug-drug interaction clinical decision support alerts. J Am Med Inform Assoc. 2015;22(06):1243–1250. doi: 10.1093/jamia/ocv011.

- 24. Tolley C L, Slight S P, Husband A K, Watson N, Bates D W. Improving medication-related clinical decision support. Am J Health Syst Pharm. 2018;75(04):239–246. doi: 10.2146/ajhp160830.

- 25. Muylle K M, Gentens K, Dupont A G, Cornu P. Evaluation of an optimized context-aware clinical decision support system for drug-drug interaction screening. Int J Med Inform. 2021;148:104393. doi: 10.1016/j.ijmedinf.2021.104393.

- 26. Horn J, Ueng S. The effect of patient-specific drug-drug interaction alerting on the frequency of alerts: a pilot study. Ann Pharmacother. 2019;53(11):1087–1092. doi: 10.1177/1060028019863419.

- 27. Chou E, Boyce R D, Balkan B et al. Designing and evaluating contextualized drug-drug interaction algorithms. JAMIA Open. 2021;4(01):ooab023. doi: 10.1093/jamiaopen/ooab023.

- 28. ddi-cds.org – Drug-Drug Interaction Clinical Decision Support. https://ddi-cds.org/

- 29. Drug-Drug Interactions with Colchicine – ddi-cds.org. https://ddi-cds.org/drug-drug-interactions-with-colchicine/

- 30. Proctor E, Silmere H, Raghavan R et al. Outcomes for implementation research: conceptual distinctions, measurement challenges, and research agenda. Adm Policy Ment Health. 2011;38(02):65–76. doi: 10.1007/s10488-010-0319-7.

- 31. Atkins L, Francis J, Islam R et al. A guide to using the Theoretical Domains Framework of behaviour change to investigate implementation problems. Implement Sci. 2017;12(01):77. doi: 10.1186/s13012-017-0605-9.

- 32. Creswell J W. Qualitative Inquiry & Research Design: Choosing Among Five Approaches. Thousand Oaks, CA: SAGE; 2013.

- 33. Singh H, Sittig D F. A sociotechnical framework for safety-related electronic health record research reporting: the safer reporting framework. Ann Intern Med. 2020;172(11):S92–S100. doi: 10.7326/M19-0879.

- 34. Titler M G, Everett L Q. Translating research into practice. Considerations for critical care investigators. Crit Care Nurs Clin North Am. 2001;13(04):587–604.

- 35. Rogers E M, Singhal A, Quinlan M M. Diffusion of innovations. In: An Integrated Approach to Communication Theory and Research. 3rd ed. Routledge; 2019:415–433.

- 36. Appelbaum S H. Socio-technical Systems. Manage Decis. 1997;35(06):452–463.

- 37. McLachlan S, Dube K, Johnson O et al. A framework for analysing learning health systems: are we removing the most impactful barriers? Learn Health Syst. 2019;3(04):e10189. doi: 10.1002/lrh2.10189.

- 38. Byrne C B, Sherry D S, Mercincavage L, Johnston D, Pan E, Schiff G. Key Lessons in Clinical Decision Support Implementation. Westat Tech Report; 2010.

- 39. Ramanadhan S, Revette A C, Lee R M, Aveling E L. Pragmatic approaches to analyzing qualitative data for implementation science: an introduction. Implement Sci Commun. 2021;2(01):70. doi: 10.1186/s43058-021-00174-1.

- 40. Jung S Y, Hwang H, Lee K et al. Barriers and facilitators to implementation of medication decision support systems in electronic medical records: mixed methods approach based on structural equation modeling and qualitative analysis. JMIR Med Inform. 2020;8(07):e18758. doi: 10.2196/18758.

- 41. Baysari M T, Van Dort B A, Stanceski K et al. Is evidence of effectiveness a driver for clinical decision support selection? A qualitative descriptive study of senior hospital staff. Int J Qual Health Care. 2023;35(01):1–6. doi: 10.1093/intqhc/mzad004.

- 42. Saiyed S M, Davis K R, Kaelber D C. Differences, opportunities, and strategies in drug alert optimization-experiences of two different integrated health care systems. Appl Clin Inform. 2019;10(05):777–782. doi: 10.1055/s-0039-1697596.

- 43. Kilsdonk E, Peute L W, Jaspers M WM. Factors influencing implementation success of guideline-based clinical decision support systems: a systematic review and gaps analysis. Int J Med Inform. 2017;98:56–64. doi: 10.1016/j.ijmedinf.2016.12.001.

- 44. Liberati E G, Ruggiero F, Galuppo L et al. What hinders the uptake of computerized decision support systems in hospitals? A qualitative study and framework for implementation. Implement Sci. 2017;12(01):113. doi: 10.1186/s13012-017-0644-2.

- 45. Anfara V A, Brown K M, Mangione T L. Qualitative analysis on stage: making the research process more public. Educ Res. 2002;31(07):28–38.

- 46. Devers K J. How will we know “good” qualitative research when we see it? Beginning the dialogue in health services research. Health Serv Res. 1999;34(5 Pt 2):1153–1188.

- 47. Creswell J W, Miller D L. Determining validity in qualitative inquiry. Theory Pract. 2000;39(03):124–130.

- 48. Patton M Q. Qualitative Research and Evaluation Methods: Theory and Practice. SAGE Publ Inc; 2015:832.

- 49. Patton M Q. Enhancing the quality and credibility of qualitative analysis. Health Serv Res. 1999;34(5 Pt 2):1189–1208.

- 50. Palinkas L A, Horwitz S M, Green C A, Wisdom J P, Duan N, Hoagwood K. Purposeful sampling for qualitative data collection and analysis in mixed method implementation research. Adm Policy Ment Health. 2015;42(05):533–544. doi: 10.1007/s10488-013-0528-y.

- 51. Gibbs L, Kealy M, Willis K, Green J, Welch N, Daly J. What have sampling and data collection got to do with good qualitative research? Aust N Z J Public Health. 2007;31(06):540–544. doi: 10.1111/j.1753-6405.2007.00140.x.

- 52. Neuman W L. Social research methods: qualitative and quantitative approaches. Teach Sociol. 2002;30(03):380.
