Abstract

This study introduces an approach to convex optimization problems, with a specific focus on applications in image and signal processing. The research develops a self-adaptive extra proximal algorithm that incorporates an inertial term, and analyzes its convergence rigorously. Numerical examples, including image deblurring and the reconstruction of a signal from only 10% of the original signal, illustrate the practical applicability of the algorithm. By introducing an inertial term within the extra proximal framework, the algorithm shows potential for faster convergence and improved optimization outcomes in image enhancement and signal reconstruction.

Keywords: Forward-backward algorithm, Signal & image processing, Convex minimization problem, Weak convergence, Inertial method

1. Introduction

Convex optimization theory is one of the most important tools for modeling and solving real-world problems. The theory can be applied in a variety of fields, such as machine learning, game theory, economics and control theory, among others; see Refs. [1–10] for more details.

Given a convex function that is not necessarily differentiable and a convex set, the standard convex optimization problem aims to solve

| (1.1) |

Over the years, researchers have derived various techniques for locating a solution of (1.1). For example, Newton methods, regula-falsi methods and others have been employed to minimize the objective function, but when the objective fails to be differentiable (smooth), the proximal point algorithm (PPA) introduced in Ref. [11] is the most prevalent and desirable technique. For any starting point, Martinet [11] recommends the following recursive rule for generating a sequence that finds a solution of (1.1):

| (1.2) |

Since the right-hand side of (1.2) minimizes a strongly convex function, it has exactly one solution, which defines the proximal operator:

| (1.3) |

which is commonly written as

| (1.4) |

for some .
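As a concrete illustration of the proximal operator, the sketch below evaluates it in closed form for the absolute-value penalty, where it reduces to soft-thresholding, and checks the result against a brute-force minimization of the defining objective. The function names `prox_l1` and `prox_bruteforce`, the symbol `lam` for the proximal parameter, and the choice of penalty are assumptions made for this example; they are not taken from the paper.

```python
import numpy as np

def prox_l1(v, lam):
    """Proximal operator of lam * ||.||_1: componentwise soft-thresholding."""
    return np.sign(v) * np.maximum(np.abs(v) - lam, 0.0)

def prox_bruteforce(v, lam, grid):
    """Scalar prox obtained by minimizing lam*|y| + 0.5*(y - v)^2 over a grid."""
    objective = lam * np.abs(grid) + 0.5 * (grid - v) ** 2
    return grid[np.argmin(objective)]

v = np.array([-2.0, -0.3, 0.0, 0.5, 3.0])
lam = 1.0  # hypothetical proximal parameter

closed_form = prox_l1(v, lam)
grid = np.linspace(-5.0, 5.0, 100001)
numeric = np.array([prox_bruteforce(vi, lam, grid) for vi in v])

# The two evaluations should agree up to the grid resolution.
max_gap = np.max(np.abs(closed_form - numeric))
print(closed_form, max_gap)
```

Entries whose magnitude is below `lam` are set to zero and the remaining entries are shrunk toward zero by `lam`, which is why this operator is associated with sparse solutions.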

Martinet showed that every weak cluster point of the sequence generated by (1.2) solves the optimization problem (1.1). The procedure has attracted the interest of many researchers because it is straightforward and can handle large-scale optimization problems. Hence, the theory has been extended in many ways; see, for example, Refs. [12–24] and the many references therein. The mathematical model of temperature-dependent flow of power-law nanofluid over a variable stretching Riga sheet was studied in Ref. [25], and Refs. [26–28] contain several other noteworthy studies. Priority-aware resource scheduling for UAV-mounted mobile edge computing networks was studied in Ref. [29], and Refs. [30–35] encompass various other significant investigations.

A strong link has been shown between several problems related to the fixed point problem, namely variational inequality and minimization problems. This allows the PPA to be used to solve these and other related problems. For example, a solution of the minimization problem (1.1) is also a zero of the subdifferential of the objective function, which in turn is a fixed point of the proximal operator, i.e.

| (1.5) |

Our objective in this work is to explore ways of solving minimization problems of the type stated in (1.1) whose objective splits into two parts, as in (1.6) below. By efficiently tackling such convex optimization problems, the proposed algorithm is relevant to tasks such as image processing, signal reconstruction and sparse signal recovery. The objective function in this problem is the sum of two functions defined on a real Hilbert space. The first is smooth and convex, whereas the second is lower semicontinuous, proper and convex, but not necessarily smooth or differentiable.

| (1.6) |

We can represent the collection of solutions of (1.6) as . It is evident that achieves the minimum value for if and only if

| (1.7) |

From the relationships in (1.5), the forward-backward algorithm (also known as the shrinkage thresholding algorithm) is defined as:

| (1.8) |

The value assigned to the step-size, denoted as , falls within the open interval between 0 and , where represents the Lipschitz constant of the gradient of . More insights on the method and its practical uses can be obtained by referring to Refs. [6,18–20,23,24,29], including the sources cited therein.
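The forward-backward iteration described above can be sketched on a small synthetic problem. This is a minimal illustration under stated assumptions, not the paper's experiment: the smooth part is taken to be a least-squares term, the nonsmooth part an l1 penalty, the step is fixed at the reciprocal of the Lipschitz constant, and the names `forward_backward`, `soft_threshold`, `lam` and the random test data are all hypothetical.

```python
import numpy as np

def soft_threshold(v, tau):
    """Proximal operator of tau * ||.||_1."""
    return np.sign(v) * np.maximum(np.abs(v) - tau, 0.0)

def forward_backward(A, b, lam, n_iter=500):
    """Minimize 0.5*||Ax - b||^2 + lam*||x||_1 by proximal-gradient steps."""
    lipschitz = np.linalg.norm(A, 2) ** 2   # Lipschitz constant of the gradient
    step = 1.0 / lipschitz                  # assumed fixed step size
    x = np.zeros(A.shape[1])
    history = []
    for _ in range(n_iter):
        grad = A.T @ (A @ x - b)                           # forward (gradient) step
        x = soft_threshold(x - step * grad, step * lam)    # backward (proximal) step
        history.append(0.5 * np.sum((A @ x - b) ** 2) + lam * np.sum(np.abs(x)))
    return x, np.array(history)

rng = np.random.default_rng(0)
A = rng.standard_normal((40, 100))
x_true = np.zeros(100)
x_true[[3, 30, 77]] = [1.5, -2.0, 1.0]
b = A @ x_true

x_hat, history = forward_backward(A, b, lam=0.1)
print(history[0], history[-1])
```

With this step size the objective values recorded in `history` do not increase from one iteration to the next, which gives a simple sanity check on an implementation.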

The inertial proximal scheme was proposed by F. Alvarez and H. Attouch in 2001 [36]. The method is inspired by the heavy ball with friction dynamical system, and given any two points , the sequence is defined using the iterative rule:

| (1.9) |

Further details about the scheme can be found in the original source [36].

After the convergence properties of the inertial proximal scheme were established, researchers put significant effort into improving the algorithm in various ways. For instance, A. Beck and M. Teboulle [23] developed the Fast Iterative Shrinkage-Thresholding Algorithm (FISTA) in 2009. To implement FISTA, two initial points are chosen and the initial extrapolation parameter is set to 1. Each iteration then consists of four updates, which make use of the step size, the extrapolation parameter and the proximal operator. The algorithm is given as:

| (1.10) |
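For readers who prefer code, the following is a minimal sketch of the standard FISTA update from Ref. [23], applied to an assumed least-squares-plus-l1 objective. The fixed step `1/L`, the zero starting point, the function name `fista` and the synthetic data are assumptions for illustration; the notation of (1.10) did not survive in this copy, so the variable names below are not the paper's.

```python
import numpy as np

def soft_threshold(v, tau):
    """Proximal operator of tau * ||.||_1."""
    return np.sign(v) * np.maximum(np.abs(v) - tau, 0.0)

def objective(A, b, lam, x):
    return 0.5 * np.sum((A @ x - b) ** 2) + lam * np.sum(np.abs(x))

def fista(A, b, lam, n_iter=300):
    """FISTA for 0.5*||Ax - b||^2 + lam*||x||_1 with a constant step 1/L."""
    lipschitz = np.linalg.norm(A, 2) ** 2
    x_prev = np.zeros(A.shape[1])
    y = x_prev.copy()
    t = 1.0                                   # extrapolation parameter starts at 1
    for _ in range(n_iter):
        grad = A.T @ (A @ y - b)
        x = soft_threshold(y - grad / lipschitz, lam / lipschitz)   # proximal-gradient step at y
        t_next = (1.0 + np.sqrt(1.0 + 4.0 * t * t)) / 2.0           # update of the parameter
        y = x + ((t - 1.0) / t_next) * (x - x_prev)                 # inertial extrapolation
        x_prev, t = x, t_next
    return x_prev

rng = np.random.default_rng(0)
A = rng.standard_normal((40, 100))
x_true = np.zeros(100)
x_true[[3, 30, 77]] = [1.5, -2.0, 1.0]
b = A @ x_true

x_fista = fista(A, b, lam=0.1)
start_value = objective(A, b, 0.1, np.zeros(100))
final_value = objective(A, b, 0.1, x_fista)
print(start_value, final_value)
```

The only difference from a plain forward-backward loop is that the gradient and proximal step are evaluated at the extrapolated point `y` rather than at the last iterate.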

In 2000, Tseng [10] introduced a modified forward-backward splitting method that uses a step-size line-search technique. This method can be defined as follows: given , , , and , we have:

| (1.11) |

Here, is the maximum value of that satisfies .
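A minimal sketch of a Tseng-type forward-backward-forward step with a backtracking line search is given below. The parameter names `sigma` (initial trial step), `theta` (shrink factor) and `delta` (acceptance constant), their default values, and the least-squares-plus-l1 test problem are assumptions made for this example, since the symbols in (1.11) did not survive in this copy.

```python
import numpy as np

def soft_threshold(v, tau):
    """Proximal operator of tau * ||.||_1."""
    return np.sign(v) * np.maximum(np.abs(v) - tau, 0.0)

def tseng_fbf(A, b, lam, sigma=1.0, theta=0.5, delta=0.9, n_iter=300):
    """Forward-backward-forward iteration with an Armijo-type step search."""
    grad = lambda z: A.T @ (A @ z - b)
    x = np.zeros(A.shape[1])
    for _ in range(n_iter):
        gx = grad(x)
        step = sigma
        while True:
            y = soft_threshold(x - step * gx, step * lam)   # forward-backward step
            gy = grad(y)
            # Accept the largest trial step in {sigma, sigma*theta, ...} that passes the test.
            if step * np.linalg.norm(gy - gx) <= delta * np.linalg.norm(y - x):
                break
            step *= theta
        x = y - step * (gy - gx)                            # extra forward correction
    return x

rng = np.random.default_rng(0)
A = rng.standard_normal((40, 100))
x_true = np.zeros(100)
x_true[[3, 30, 77]] = [1.5, -2.0, 1.0]
b = A @ x_true
lam = 0.1

x_tseng = tseng_fbf(A, b, lam)
value_at_zero = 0.5 * np.sum(b ** 2)
value_at_end = 0.5 * np.sum((A @ x_tseng - b) ** 2) + lam * np.sum(np.abs(x_tseng))
print(value_at_zero, value_at_end)
```

The line search removes the need to know the Lipschitz constant of the gradient in advance, at the cost of extra gradient evaluations per iteration.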

A novel modified forward-backward splitting method was recently introduced by Padcharoen et al. [37] in 2021. Their definition of the method is as follows: Let and be elements of . Then, we can compute

| (1.12) |

This method can be used to solve optimization problems effectively and efficiently.

Kesornprom S. and Cholamjiak P. introduced a new inertial forward-backward method for solving the problem given in (1.6) [22]. This method is a recent addition to the class of proximal type algorithms. For , the algorithm computes using the following steps:

| (1.13) |

Here, and are elements of , and and are positive constants. Additionally, , and is defined as follows:

The authors proved in Ref. [22] that the algorithm in (1.13) converges weakly to the solution of (1.6), which makes it an effective tool for solving this type of problem.

The results discussed above provided motivation for us to propose a modified inertial extra-proximal-gradient algorithm. This algorithm is designed to converge to the solution of a convex optimization problem given in (1.6). We then applied this algorithm to an image and signal reconstruction problem.

2. Preliminaries

This section presents a selection of well-known lemmas, propositions and definitions that are needed to prove the main theorem.

For any sequence , we define as its weak ω-limit set, that is, the set of points such that some subsequence of converges weakly to . We write ⇀ for weak convergence and → for strong convergence of to . Additionally, the subdifferential of is defined as

Lemma 2.1 (see Refs. [17,18]) In a real Hilbert space , the following statements hold:

J1 for every , .

J2 , .

J3 .

J4 , .

Lemma 2.2 (see Ref. [22]). Suppose that a sequence is quasi-Fejer convergent to a point within a nonempty set. Then, we can deduce the following:

I. The sequence is bounded.

II. If every weak accumulation point of the sequence lies in the set, then the sequence converges weakly to a point within the set.

Lemma 2.3 (see Ref. [22]). Suppose we have real, positive sequences , , and such that:

If the infinite sums and are both finite, then the limit of as approaches infinity exists.

Lemma 2.4 (see Ref. [22]). The subdifferential operator is maximal monotone. In addition, its graph is demiclosed; that is, if a sequence of pairs in the graph has first components that converge weakly and second components that converge strongly, then the pair of limits also belongs to the graph.

Lemma 2.5 (see Ref. [22]) Suppose we have real, positive sequences and such that:

Then, we have , where . Furthermore, if , then the sequence is bounded.

Definition 2.1 (see Ref. [22]) Consider a subset of such that . We define a sequence in to be quasi-Fejer convergent to a point in if and only if, for every , there exists a positive sequence satisfying , and for every . If the sequence is null, then we can conclude that is Fejer convergent to a point in .

Definition 2.2 (see Ref. [22]) Consider a nonlinear point-to-set operator. Its graph is the set

For every pair of points in its domain, the operator is said to be non-expansive if

If for any and belonging to a set , and and belonging to and respectively, the inequality holds, then the operator can be referred to as monotone.

An operator is maximal monotone if it is monotone and its graph is not properly contained in the graph of any other monotone operator.

is strongly monotone if we can find such that

If there exists a positive value such that for any and in set , the inequality holds, then is said to be strongly monotone.

The function is considered to be firmly non-expansive if, for any two points and in a set , the following inequality holds:

3. Convergence analysis

Our interest in this section is to show that Algorithm 3.1 converges weakly to a point in the solution set of (1.6). We also state and prove some new lemmas that assist in showing convergence. Throughout, we assume the following conditions.

C1 The solution set of the convex optimization problem (1.6) is nonempty; it is given by the set below:

C2 The two functions in the objective of (1.6) are convex, proper and lower semicontinuous.

C3 The smooth function in (1.6) is differentiable on the whole space, and its gradient is Lipschitz continuous there with a positive Lipschitz constant.

We now present a method for solving problem (1.6), which we call the "inertial extra forward-backward technique".

Algorithm 3.1 An Inertial Extra Forward-Backward Method (IEFBM)

Initialize-step: Let and .

Recursive Step: for , the following computation for is performed:

1st-step Compute the inertial term: .

2nd-step Compute the proximal gradient descent two times in a progressive manner:

3rd-step Compute step:

4th-step Stop when , otherwise set and return to the 1st-step.
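The overall shape of the steps above can be sketched in code, with a strong caveat: the formulas of Algorithm 3.1 did not survive in this copy, so the block below is only one possible reading (an inertial extrapolation followed by two successive proximal-gradient passes), not the authors' method. In particular, the fixed step `1/L` replaces the self-adaptive step rule of the 3rd-step, and the summable inertial weights `1/(n+1)^2`, the stopping tolerance, the function name and the test problem are all assumptions.

```python
import numpy as np

def soft_threshold(v, tau):
    """Proximal operator of tau * ||.||_1."""
    return np.sign(v) * np.maximum(np.abs(v) - tau, 0.0)

def inertial_two_pass_sketch(A, b, lam, n_iter=500, tol=1e-8):
    """Inertial step followed by two successive proximal-gradient passes."""
    grad = lambda z: A.T @ (A @ z - b)
    step = 1.0 / np.linalg.norm(A, 2) ** 2     # ASSUMPTION: fixed step, not the paper's rule
    x_prev = np.zeros(A.shape[1])
    x = x_prev.copy()
    for n in range(1, n_iter + 1):
        theta = 1.0 / (n + 1) ** 2             # ASSUMPTION: summable inertial weights
        w = x + theta * (x - x_prev)           # inertial term
        y = soft_threshold(w - step * grad(w), step * lam)   # first proximal-gradient pass
        z = soft_threshold(y - step * grad(y), step * lam)   # second proximal-gradient pass
        x_prev, x = x, z
        if np.linalg.norm(x - x_prev) <= tol:  # stop when successive iterates are close
            break
    return x

rng = np.random.default_rng(0)
A = rng.standard_normal((40, 100))
x_true = np.zeros(100)
x_true[[3, 30, 77]] = [1.5, -2.0, 1.0]
b = A @ x_true
lam = 0.1

x_sketch = inertial_two_pass_sketch(A, b, lam)
value_at_zero = 0.5 * np.sum(b ** 2)
value_at_end = 0.5 * np.sum((A @ x_sketch - b) ** 2) + lam * np.sum(np.abs(x_sketch))
print(value_at_zero, value_at_end)
```

The sketch is meant only to show how an inertial term and repeated proximal-gradient passes fit together in a loop; it makes no claim about the convergence guarantees proved in this section.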

Lemma 3.1 Suppose that the sequence is produced by Algorithm 3.1. Then

for in the solution set

Proof. Assuming that (1.6) has a solution, say we can proceed to deduce the following:

| (3.1) |

The following conclusions are drawn from the hypotheses of Algorithm 3.1:

Therefore,

| (3.2) |

Similarly,

| (3.3) |

it is easy to see that

| (3.4) |

Hence, substituting it into equation (3.3) we have,

| (3.5) |

Now, let us compute ,

| (3.6) |

Substituting (3.4), (3.5) and (3.6) into (3.1) we have,

| (3.7) |

From Algorithm (3.1) we have, Let then we have that

Since is an element of the solution set of (1.6), then . Therefore,

| (3.8) |

We obtain,

| (3.9) |

Similarly, since and if we let we can have that,

| (3.10) |

Hence, we have

| (3.11) |

Using (3.8), (3.10) we obtain,

| (3.12) |

Lemma 3.2 Let the sequence be generated by Algorithm 3.1. If , then the limit

exists for every point of the solution set.

Proof. Since is an element of the solution set, from Lemma 3.1 we obtain

Notice that

Hence, we obtain

| (3.13) |

Using Lemma 2.5, we derive that

where . Since , Lemma 2.5 implies that is bounded, that is, . Therefore, using (3.12) and Lemma 2.3, we can conclude that exists. This completes the proof.

Lemma 3.3 Let the sequence be generated by Algorithm 3.1. If , then

Proof.

| (3.14) |

it is enough to show that .

We obtain,

| (3.15) |

from Algorithm 3.1. Now, since is uniformly continuous,

From (3.15), we have

| (3.16) |

We also know that,

| (3.17) |

From the inertial step in algorithm 3.1, we have

Therefore

| (3.18) |

From (3.12) we have that

| (3.19) |

Since and exists by Lemma 3.2, we can conclude from (3.19) that

Lastly, from (3.4) we can easily see that

We have that,

| (3.20) |

hence, using the result of (3.20), (3.16) in (3.14) we conclude that

This completes the proof.

Theorem 3.1 Suppose Algorithm 3.1 produces the sequence . If the infinite series converges to a finite value, then the sequence weakly converges to an element that belongs to the set of solutions defined by the problem in (1.6).

Proof. Given that the sequence is bounded, we can extract a subsequence, denoted by , such that , where is a member of . By applying Lemma 3.3, it follows that for . Using Algorithm 3.1 we get that,

| (3.21) |

From equation (3.20), we can deduce that . As a result, it follows that weakly converges to . By applying Lemma 2.4 and taking the limit as approaches infinity in equation (3.21), while considering the maximal property of the operator and the uniform continuity of , we can establish that:

Thus, we can conclude that belongs to the solution set of the maximal operator , i.e., . Additionally, we observe from inequality (3.19) that the sequence is a quasi-Fejer sequence. Therefore, using Lemma 2.2, we can establish that the sequence weakly converges to a point, say in the solution set . This completes the proof.

4. Application of the proposed algorithm to image and signal reconstruction

Our objective in this section is to illustrate the application of the proposed algorithm to image restoration and signal reconstruction, and to exhibit its applicability, stability and efficacy. To show how our proposed algorithm fared, we compared it with the very recent scheme introduced by Suparat Kesornprom and Prasit Cholamjiak [22].

Our first problem is the restoration of an original image from a blurred image. We employ the peak signal-to-noise ratio (P.S.N.R), as presented by K. H. Thung and P. Raveendran [38], as a metric to gauge the efficacy of both methods in producing high-quality images. Given that is the original image and is the reconstructed image, the P.S.N.R is defined as follows:

Note that a greater P.S.N.R typically implies a better quality of reconstruction. This numerical experiment was conducted using JupyterLab (Python 3) on a machine with 8 GB of RAM and a Core i5 processor.
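Since the P.S.N.R formula itself did not survive in this copy, the sketch below uses the common textbook definition, ten times the base-10 logarithm of the squared peak value divided by the mean squared error. This is an assumption and may differ from the normalization used for the scores reported in this section; the function name `psnr`, the default `peak=255.0` and the toy arrays are likewise hypothetical.

```python
import numpy as np

def psnr(original, reconstructed, peak=255.0):
    """Peak signal-to-noise ratio in decibels (common definition, assumed here)."""
    original = np.asarray(original, dtype=float)
    reconstructed = np.asarray(reconstructed, dtype=float)
    mse = np.mean((original - reconstructed) ** 2)   # mean squared error
    if mse == 0.0:
        return float("inf")                          # identical images
    return 10.0 * np.log10(peak ** 2 / mse)

clean = np.full((4, 4), 100.0)
noisy = clean.copy()
noisy[0, 0] += 16.0          # one perturbed pixel, so the MSE is 256 / 16 = 16

value = psnr(clean, noisy)
print(value)
```

Under this definition the score depends on the chosen peak value, so scores are only comparable when the same peak and the same pixel scaling are used for every method.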

Every image is an array of pixels of dimension , and the value of each pixel is restricted to the range . The process of image deblurring or masking is expressed through the following system of linear equations:

| (4.1) |

The blurring matrix is denoted by , and the original image is represented by , which belongs to the space. The blurred or degraded image, also referred to as the observed image or measurement, is represented by in the space, and denotes the noise that has been incorporated into the image.

In order to obtain an estimate of the original image, it is necessary to solve the optimization problem (4.2) below:

| (4.2) |

where , and are the positive parameter, the ℓ1-norm, and the Euclidean norm, respectively. It is widely known that the ℓ1-norm is convex but not smooth, while the Euclidean norm term is both convex and differentiable (smooth).

The optimization problem in (4.2) can easily be written in the form of (1.6) by setting , and we shall use our newly introduced Algorithm 3.1 to restore the original image, or something very close to it, given a masked image in Fig. 1.
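To make the splitting concrete, the sketch below writes a small deblurring model as a smooth data-fit term plus an l1 penalty and checks the gradient of the smooth term by finite differences. The factor 1/2 in the data-fit term, the one-dimensional moving-average blur standing in for the blurring matrix, the noise level and all function names are assumptions for illustration, since the exact form of (4.2) is not legible in this copy.

```python
import numpy as np

def blur_matrix(n, width=3):
    """Hypothetical 1-D moving-average blur used as a stand-in blurring matrix."""
    A = np.zeros((n, n))
    half = width // 2
    for i in range(n):
        lo, hi = max(0, i - half), min(n, i + half + 1)
        A[i, lo:hi] = 1.0 / (hi - lo)
    return A

def smooth_part(x, A, b):
    """Data-fit term 0.5 * ||Ax - b||^2 (convex and differentiable)."""
    r = A @ x - b
    return 0.5 * (r @ r)

def smooth_grad(x, A, b):
    """Gradient of the data-fit term."""
    return A.T @ (A @ x - b)

def nonsmooth_part(x, lam):
    """Penalty lam * ||x||_1 (convex but not differentiable at zero)."""
    return lam * np.sum(np.abs(x))

rng = np.random.default_rng(1)
n = 32
A = blur_matrix(n)
x_true = np.zeros(n)
x_true[10:14] = 1.0
b = A @ x_true + 0.01 * rng.standard_normal(n)    # blurred observation plus noise

# Central finite-difference check of the gradient along a random direction.
x = rng.standard_normal(n)
d = rng.standard_normal(n)
eps = 1e-6
fd = (smooth_part(x + eps * d, A, b) - smooth_part(x - eps * d, A, b)) / (2 * eps)
an = smooth_grad(x, A, b) @ d
lipschitz = np.linalg.norm(A, 2) ** 2             # Lipschitz constant of the gradient
print(fd, an, lipschitz)
```

With these pieces in hand, any proximal-gradient-type method only needs `smooth_grad`, the soft-thresholding operator associated with `nonsmooth_part`, and a step size tied to `lipschitz`.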

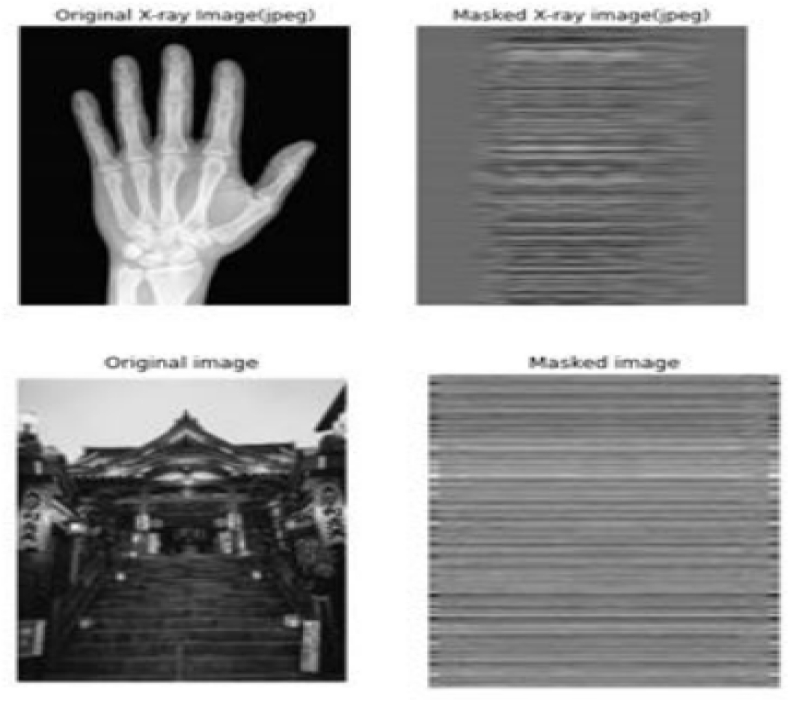

Fig. 1.

The images on the left are the original images (see Refs. [39,40]), while the images on the right are the masked images, with respective PSNR values of −65.9 and −60.2.

The algorithm is initialized with the following parameters:

where .

The computational result is documented in Table 1.

Table 1.

Extra-proximal gradient algorithm convergence table.

| Image type | Iterations | Algorithm 3.1 CPU time | Algorithm 3.1 P.S.N.R score | Algorithm 1.13 CPU time | Algorithm 1.13 P.S.N.R score |
|---|---|---|---|---|---|
| Bioimage | 20000 | 112.8 | −21.6 | 82.1 | −23.7 |
| Bioimage | 40000 | 224.7 | −19.2 | 160.8 | −21.6 |
| Bioimage | 60000 | 363.5 | −18.0 | 265.1 | −20.2 |
| Bioimage | 120000 | 720.3 | −16.6 | 497.9 | −18.0 |
| Bioimage | 200000 | 1184.9 | −15.4 | 958.2 | −16.6 |
| House.jpg | 20000 | 35 | −20.9 | 27.0 | −22.3 |
| House.jpg | 40000 | 71.1 | −20.2 | 55.0 | −20.9 |
| House.jpg | 60000 | 104.3 | −20.1 | 85.2 | −20.4 |
| House.jpg | 120000 | 218.4 | −19.9 | 183.4 | −20.1 |
| House.jpg | 200000 | 384.5 | −19.9 | 322.9 | −19.9 |

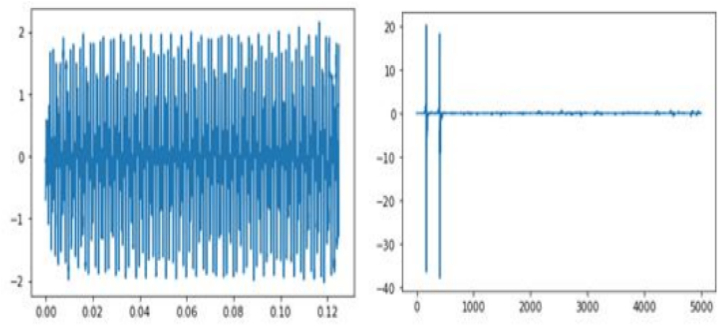

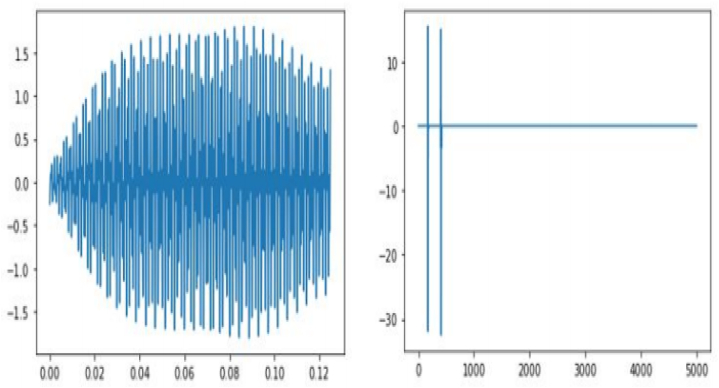

For the second example, we employ our algorithm to reconstruct a sinusoidal signal from a 10% sample.

We used the following initialization: where . Employing our proposed algorithm with the 10% sample from the original signal, the following results were observed after 40000 iterations. Table 1 shows the convergence of the extra-proximal gradient algorithm. The image on the left was obtained using algorithm 3.1, while the image on the right was produced by implementing algorithm 1.13 (see Fig. 2). The P.S.N.R and convergence plots obtained from the reconstructed image data are illustrated in Fig. 3. The graph on the left corresponds to the y, whereas the transformed graph on the right corresponds to the axis of yt (see Fig. 4). The graphical representation of the 10% sample data is displayed in Fig. 5. The signal reconstructed using algorithm (3.1), represented in both the time domain and the frequency domain, is displayed in Fig. 6.
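The following is a minimal sketch of how a signal can be reconstructed from a 10% sample by l1-regularized least squares. It is not the paper's experiment: the test signal (a sum of two cosine modes that is exactly sparse in a discrete cosine basis), the regularization weight, the iteration count and the use of a plain forward-backward loop instead of Algorithm 3.1 are all assumptions, and `dct_matrix` is a hypothetical helper that builds an orthonormal DCT-II matrix with NumPy only.

```python
import numpy as np

def dct_matrix(N):
    """Orthonormal DCT-II matrix C, so that coefficients = C @ signal."""
    n = np.arange(N)
    k = n.reshape(-1, 1)
    C = np.sqrt(2.0 / N) * np.cos(np.pi * (2 * n + 1) * k / (2.0 * N))
    C[0, :] /= np.sqrt(2.0)
    return C

def soft_threshold(v, tau):
    """Proximal operator of tau * ||.||_1."""
    return np.sign(v) * np.maximum(np.abs(v) - tau, 0.0)

rng = np.random.default_rng(0)
N = 400
C = dct_matrix(N)

coeffs_true = np.zeros(N)
coeffs_true[[12, 45]] = [1.0, 0.7]        # two active cosine modes (assumed test signal)
signal = C.T @ coeffs_true                # time-domain signal

idx = np.sort(rng.choice(N, size=N // 10, replace=False))   # keep 10% of the samples
A = C.T[idx, :]                           # maps coefficients to the observed samples
y = signal[idx]

lam = 1e-3                                # hypothetical regularization weight
step = 1.0 / np.linalg.norm(A, 2) ** 2
coeffs = np.zeros(N)
initial_objective = 0.5 * np.sum(y ** 2)  # objective value at the zero vector
for _ in range(3000):
    coeffs = soft_threshold(coeffs - step * (A.T @ (A @ coeffs - y)), step * lam)

final_objective = 0.5 * np.sum((A @ coeffs - y) ** 2) + lam * np.sum(np.abs(coeffs))
reconstruction = C.T @ coeffs             # back to the time domain
relative_error = np.linalg.norm(reconstruction - signal) / np.linalg.norm(signal)
print(final_objective, relative_error)
```

The unknown in this formulation is the vector of transform coefficients rather than the signal itself, which is what makes the l1 penalty meaningful: the signal is dense in time but sparse in the transform domain.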

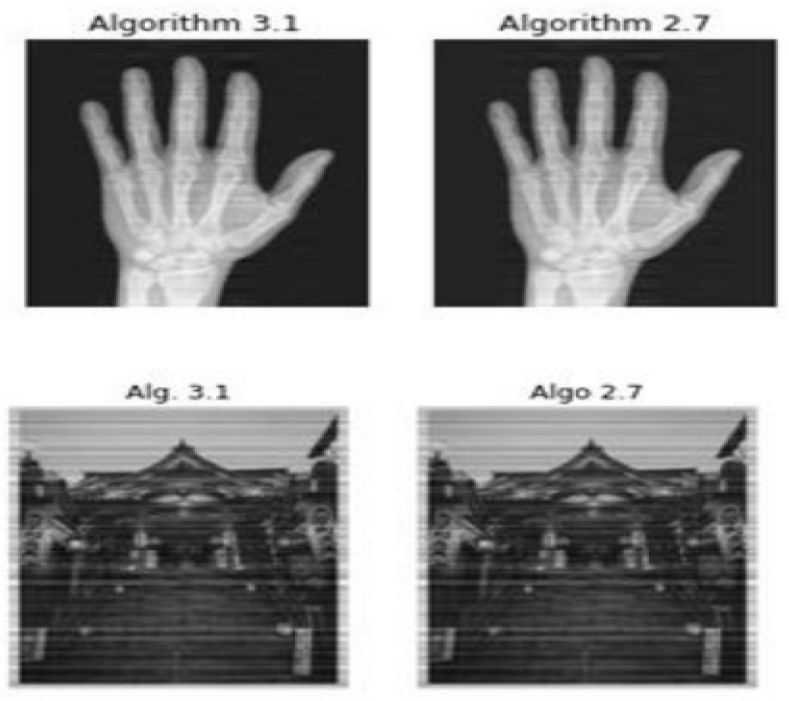

Fig. 2.

The image on the left was obtained using algorithm 3.1, while the image on the right was produced by implementing algorithm 1.13.

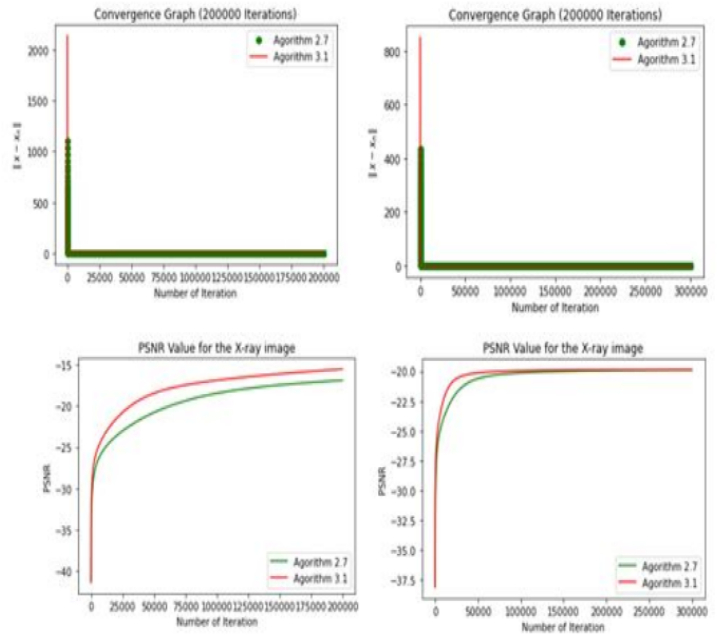

Fig. 3.

The P.S.N.R and convergence plot obtained from the reconstructed image data are illustrated in the figure. The plot on the left depicts the outcome obtained from algorithm 3.1, while the plot on the right exhibits the results produced by executing algorithm 1.13.

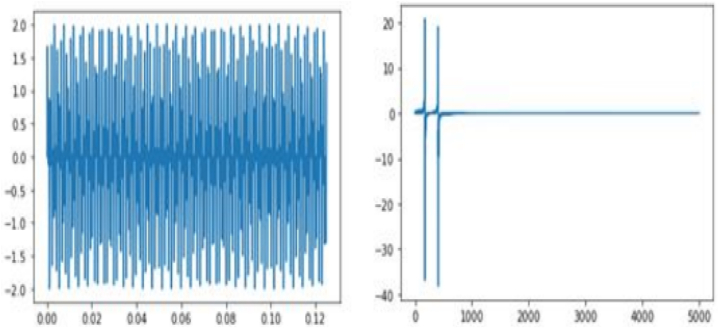

Fig. 4.

The graph on the left corresponds to the y, whereas the transformed graph on the right corresponds to the axis of yt.

Fig. 5.

A graphical representation of the 10% sample data.

Fig. 6.

The signal reconstructed using algorithm (3.1) can be represented uniquely in both the time domain and frequency domain.

The graphical representation of the signal reconstructed using cvxpy is displayed in Fig. 7.

Fig. 7.

The graphical representation of the reconstructed signal employing cvxpy.

5. Conclusions

The main purpose of this work is to introduce an inertial extra proximal forward-backward algorithm for solving a convex minimization problem, and to demonstrate analytically that the algorithm converges weakly to a solution of the problem. Employing the regularization model, we present two applications of our algorithm. Firstly, we use it to restore a blurred image. Secondly, we use it to reconstruct a basic signal from only 10% of the original signal. We also present a graphical representation of the resulting data. The introduced algorithm finds meaningful applications in various domains; for example, it can enhance the quality of medical images, aiding accurate diagnoses.

Author contribution statement

Joshua Olilima & Adesanmi Mogbademu: Conceived and designed the experiments; performed the experiments; Wrote the paper. M. Asif Memon & Adebowale Martins Obalalu: Analyzed and interpreted the data; Wrote the paper. Hudson Akewe & Jamel Seidu: Contributed reagents, materials, analysis tools or data; wrote the paper.

Data availability statement

No data was used for the research described in the article.

Declaration of competing interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

References

- 1. Khan S., Cholamjiak W., Kazmi K. An inertial forward-backward splitting method for solving combination of equilibrium problems and inclusion problems. Comp. Appl. Math. 2018;37:6283–6307. doi: 10.1007/s40314-018-0684-5.

- 2. Parikh Neal, Boyd Stephen. Proximal algorithms. Foundations and Trends in Optimization. 2013;1(3):123–231.

- 3. Moreau J.-J. Proximité et dualité dans un espace hilbertien. Bull. Soc. Math. France. 1965;93(2):273–299.

- 4. Gibali A., Thong D.V. Tseng type methods for solving inclusion problems and its applications. Calcolo. 2018;55:49. doi: 10.1007/s10092-018-0292-1.

- 5. Cholamjiak W., Cholamjiak P., Suantai S. An inertial forward-backward splitting method for solving inclusion problems in Hilbert spaces. J. Fixed Point Theory Appl. 2018;20:42. doi: 10.1007/s11784-018-0526-5.

- 6. Bauschke H., Bui M., Wang X. Applying FISTA to optimization problems (with or) without minimizers. Math. Program. 2020;184:349–381. doi: 10.1007/s10107-019-01415-x.

- 7. Yambangwai D., Khan S., Dutta H., Cholamjiak W. Image restoration by advanced parallel inertial forward backward splitting methods. Soft Comput. 2021;25:6029–6042. doi: 10.1007/s00500-021-05596-6.

- 8. Combettes P., Pesquet J.-C. Proximal splitting methods in signal processing. Fixed-Point Algorithms for Inverse Problems in Science and Engineering. 2011;49:185–212.

- 9. Shehu Y., Gibali A. New inertial relaxed method for solving split feasibilities. Optim. Lett. 2020. doi: 10.1007/s11590-020-01603-1.

- 10. Tseng P. A modified forward-backward splitting method for maximal monotone mappings. SIAM J. Control Optim. 2000;38:431–446. doi: 10.1137/S0363012998338806.

- 11. Martinet B. Détermination approchée d'un point fixe d'une application pseudo-contractante. C.R. Acad. Sci. Paris. 1972;274A:163–165.

- 12. Hieu Van D., Anh P., Muu L. Modified forward-backward splitting method for variational inclusions. 4OR-Q. J. Oper. Res. 2021;19:127–151. doi: 10.1007/s10288-02000440-3.

- 13. Iusem A., Svaiter B., Teboulle M. Entropy-like proximal methods in convex programming. Math. Oper. Res. 1994;19:790–814. doi: 10.1287/moor.19.4.790.

- 14. Wang F., Xu H. Weak and strong convergence of two algorithms for the split fixed point problem. Numer. Math. Theor. Meth. Appl. 2018;11:770–781. doi: 10.4208/nmtma.2018.s05.

- 15. Burachik R., Iusem A. Set-valued Mappings and Enlargements of Monotone Operators. Springer; Boston: 2008. Enlargements of monotone operators; pp. 161–220.

- 16. Tang Yan, Lin Honghua, Gibali Aviv, Cho Yeol Je. Convergence analysis and applications of the inertial algorithm solving inclusion problems. App. Num. Math. 2022;175:1–17. doi: 10.1016/j.apnum.2022.01.016.

- 17. Duan Peichao, Zhang Yiqun, Bu Qinxiong. New inertial proximal gradient methods for unconstrained convex optimization problems. J. Inequalities Appl. 2020:255. doi: 10.1186/s13660-020-02522-6.

- 18. Osilike M.O., Ofoedu E.U., Attah F.U. The Hybrid Steepest Descent Method for Solutions of Equilibrium Problems and Other Problems in Fixed Point Theory.

- 19. Shehu Y., Iyiola O.S. Weak convergence for variational inequalities with inertial-type method. Appl. Anal. 2020;101(1):192–216.

- 20. Duan P.C., Song M.M. General viscosity iterative approximation for solving unconstrained convex optimization problems. J. Inequal. Appl. 2015:334.

- 21. Guo Y.N., Cui W. Strong convergence and bounded perturbation resilience of a modified proximal gradient algorithm. J. Inequal. Appl. 2018;103. doi: 10.1186/s13660-018-1695-x.

- 22. Kesornprom S., Cholamjiak P. A modified inertial proximal gradient method for minimization problems and applications. AIMS Mathematics. 2022;7(5):8147–8161.

- 23. Beck A., Teboulle M. A fast iterative shrinkage-thresholding algorithm for linear inverse problems. SIAM J. Imaging Sci. 2009;2:183–202. doi: 10.1137/080716542.

- 24. Bello Cruz J., Nghia T. On the convergence of the forward-backward splitting method with linesearches. Optim. Methods Software. 2016;31:1209–1238. doi: 10.1080/10556788.2016.1214959.

- 25. Abbas N., Rehman K.U., Shatanawi W., Abodayeh K. Mathematical model of temperature-dependent flow of power-law nanofluid over a variable stretching Riga sheet. Waves Random Complex Media. 2022:1–8. Aug 18.

- 26. Shatnawi T.A., Abbas N., Shatanawi W. Comparative study of Casson hybrid nanofluid models with induced magnetic radiative flow over a vertical permeable exponentially stretching sheet. AIMS Math. 2022;7(12):20545–20564.

- 27. Nazir A., Abbas N., Shatanawi W. On stability analysis of a mathematical model of a society confronting with internal extremism. Int. J. Mod. Phys. B. 2023;37(7).

- 28. Shatnawi T.A., Abbas N., Shatanawi W. Mathematical analysis of unsteady stagnation point flow of radiative Casson hybrid nanofluid flow over a vertical Riga sheet. Mathematics. 2022;10(19):3573.

- 29. Zhou W., Fan L., Zhou F., Li F., Lei X., Xu W., Nallanathan A. Priority-aware resource scheduling for UAV-mounted mobile edge computing networks. IEEE Transactions on Vehicular Technology. 2023.

- 30. Chen L., Fan L., Lei X., Duong T.Q., Nallanathan A., Karagiannidis G.K. Relay-assisted federated edge learning: performance analysis and system optimization. IEEE Trans. Commun. 2023.

- 31. Zhou W., Xia J., Zhou F., Fan L., Lei X., Nallanathan A., Karagiannidis G.K. Profit maximization for cache-enabled vehicular mobile edge computing networks. IEEE Transactions on Vehicular Technology. 2023.

- 32. Zheng X., Zheng X., Zhu F., Xia J., Gao C., Cui T., Lai S. Intelligent computing for WPTMEC-aided multi-source data stream. EURASIP J. Appl. Signal Process. 2023;2023(1):1–17.

- 33. Zheng X., Zhu F., Xia J., Gao C., Cui T., Lai S. Intelligent computing for WPT-MEC-aided multi-source data stream. EURASIP J. Appl. Signal Process. 2023;2023(1):1–17.

- 34. Ling J., Xia J., Zhu F., Gao C., Lai S., Balasubramanian V. DQN-based resource allocation for NOMA-MEC-aided multi-source data stream. EURASIP J. Appl. Signal Process. 2023;2023(1):44.

- 35. He L., Tang X. Learning-based MIMO detection with dynamic spatial modulation. IEEE Transactions on Cognitive Communications and Networking. 2023;(99):1–12.

- 36. Alvarez F., Attouch H. An inertial proximal method for maximal monotone operators via discretization of a nonlinear oscillator with damping. Set-Valued Anal. 2001;9:3–11.

- 37. Padcharoen A., Kitkuan D., Kumam W., Kumam P. Tseng methods with inertial for solving inclusion problems and application to image deblurring and image recovery problems. Comput. Math. Method. 2021;3:1088. doi: 10.1002/cmm4.1088.

- 38. Thung K.H., Raveendran P. A survey of image quality measures. Proceeding of International Conference for Technical Postgraduates. 2009;14.

- 39. Students from PHED 301 in Sport Health and Physical Education Department at Vancouver Island University, Advance Anatomy, Pressbooks, https://pressbooks.bccampus.ca/advancedanatomy1sted/chapter/medical-imaging/.

- 40. Duong Viet Thong, Cholamjiak Prasit, Rassias Michael T., Cho Yeol Je. Strong convergence of inertial subgradient extragradient algorithm for solving pseudomonotone equilibrium problems. Optimization Letters. 2021;15.
