Abstract

The application of artificial intelligence (AI) based on deep learning in dental diagnostic imaging is increasing. Several popular deep learning tasks have been applied to dental diagnostic images.

Classification tasks are used to classify images as showing or not showing abnormal findings, or to evaluate the progression of a lesion based on imaging findings. Region (object) detection and segmentation tasks have been used for tooth identification on panoramic radiographs, which is useful for automatically creating a patient's dental chart. Deep learning methods can also be used to detect and evaluate anatomical structures of interest in images. Furthermore, generative AI that incorporates natural language processing can automatically draft written reports from diagnostic imaging findings.

Keywords: Deep learning, Dental imaging, Panoramic radiograph, Classification, Region detection, Segmentation

1. Introduction

Since the first decade of the 21st century, digital radiography and dental cone-beam computed tomography (CBCT) have become widespread in dentistry. As a result, research and development of computer-aided detection and diagnosis (CAD), which had previously advanced only slowly in medicine, gained momentum in various fields, including dentistry [1]. Possible purposes of CAD in dental radiology include the detection of radiopaque lesions in the maxillary sinus [2], calcifications in the carotid artery [3], and morphological changes in the mandibular cortical bone that suggest possible osteoporosis [4].

Early CAD systems detected specific X-ray findings to distinguish the diagnostic images of positive patients from those of negative patients, using programs designed on the basis of human knowledge and experience. The targeted pathological X-ray findings were characterised by changes in the size of certain organs, increased radiopacity of organs, and the disappearance of soft- or hard-tissue anatomical structures.

Since the early 2010s, machine learning and artificial intelligence (AI) have been increasingly applied in CAD. In conventional CAD systems, developers had to explicitly write methods and rules into computer programs. In contrast, machine learning and AI systems learn characteristic patterns and knowledge automatically from data; that is, they acquire the means to perform a task by learning from experience through data analysis and repeated trials.

Deep learning (DL) is a computing technology that has driven the development of AI applications. DL is a type of machine learning that uses neural networks. A neural network is a set of algorithms based on mathematical models that mimic the networks of neurones in the brain, and such networks have been studied widely since the 20th century.

DL has demonstrated its usefulness in image analysis and processing, natural language processing, predictive analytics, and machine system control, and AI is expected to be used in many dental applications (Fig. 1). In image analysis and processing, DL methods are applied to several distinct tasks; among these, classification, region (object) detection, and segmentation are often applied to diagnostic images. Among dental radiological modalities, the application of DL has been studied in intraoral radiography, panoramic radiography, and CBCT. In this review, we describe the application of AI in dental diagnostic imaging, mainly from the perspective of the different deep learning tasks.

Fig. 1.

Possible clinical applications of deep learning in dentistry.

2. The classification task

A classification task can be used to identify the tooth location shown on intraoral periapical radiographs. In dental practice, ten or more periapical radiographs covering all teeth are often taken per patient, and it is difficult to sort these images without occasionally confusing the maxilla with the mandible or the left side with the right.

Such tasks can benefit from AI using popular DL networks such as LeNet and ResNet, which recognise handwritten digits and letters with high accuracy [5]. In addition, with a DL application that provides a graphical user interface, dentists can employ deep learning without programming knowledge.
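At the output of a classification network such as LeNet or ResNet, the raw scores (logits) for each class are converted into probabilities, and the most probable class is reported. The minimal plain-Python sketch below shows only this final step; the six-region label set and the example scores are hypothetical and are not taken from [5].

```python
import math

# Hypothetical label set for periapical images (jaw x region).
# This six-way split is an illustrative assumption, not the scheme used in [5].
REGIONS = [
    "maxilla-right", "maxilla-anterior", "maxilla-left",
    "mandible-right", "mandible-anterior", "mandible-left",
]


def softmax(logits):
    """Convert raw network scores (logits) into class probabilities."""
    m = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict_region(logits):
    """Return the most probable region and its probability."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return REGIONS[best], probs[best]


# Example scores that a trained CNN might output for one image (made up).
label, p = predict_region([0.2, 0.1, 3.1, -1.0, 0.0, 0.4])
print(label, round(p, 2))
```

In a real system the logits would come from the trained network's final layer; the softmax and argmax steps are the same regardless of which architecture produces them.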

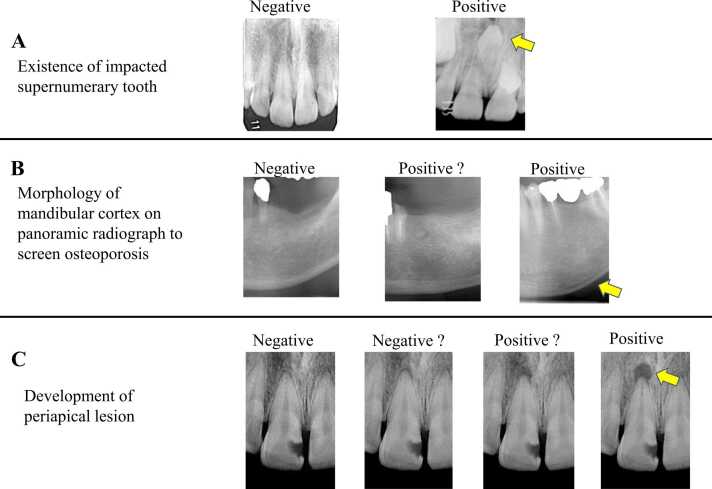

For supervised classification tasks, the accuracy of the ground-truth labels provided by humans is important. Fig. 2 shows examples of classification targets in dental radiographs. The classification criteria for identifying impacted supernumerary teeth are evident (Fig. 2A). In contrast, accurately classifying the morphology of the mandibular cortex, which can indicate possible osteoporosis, requires observation by experts (Fig. 2B). Furthermore, for periapical lesions, it is not easy to determine whether a particular lesion is a radicular cyst (Fig. 2C).

Fig. 2.

Example objectives for the classification task.
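Because supervised classification depends on the quality of the ground truth, one common way to check labels such as the mandibular cortex categories in Fig. 2B is to measure agreement between two expert annotators with Cohen's kappa. The sketch below is a plain-Python illustration; the three-category labels (C1 to C3) and the ratings are assumed for the example, and the source does not state which agreement measure, if any, was used.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa: agreement between two raters, corrected for chance."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(rater_a)
    # Observed agreement: fraction of images given the same label by both raters.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Expected agreement if each rater labelled at random with their own label frequencies.
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    labels = set(count_a) | set(count_b)
    expected = sum(count_a[c] * count_b[c] for c in labels) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical label throughout
    return (observed - expected) / (1 - expected)


# Hypothetical cortex-morphology ratings from two experts for eight radiographs.
expert_1 = ["C1", "C1", "C2", "C2", "C3", "C1", "C2", "C3"]
expert_2 = ["C1", "C2", "C2", "C2", "C3", "C1", "C1", "C3"]
print(round(cohens_kappa(expert_1, expert_2), 3))
```

Here the experts agree on 6 of 8 images (75%), but kappa is lower because part of that agreement would be expected by chance.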

Kohinata et al. [6] applied DL to classify subjects (patients) using panoramic radiographs. The patients were classified by several dental and physical characteristics, including age, sex, mixed or permanent dentition, number of teeth present, impacted wisdom tooth status, and prosthetic treatment status. Mixed versus permanent dentition and prosthetic treatment status were classified with an accuracy of over 90%, whereas the accuracies for the other items were relatively low. The original panoramic radiographs had a large matrix size of 3000 × 1500 pixels and had to be downsized before deep learning because higher resolutions demand heavy computation. However, the lower image resolution may have reduced the accuracy of the other classification categories.
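The cost of downsizing can be made concrete with a little arithmetic. The sketch below shrinks an image by block averaging, one simple way to reduce resolution (the resizing method used in [6] is not stated), and compares pixel counts for an assumed downsampling factor of 8. Each output pixel then averages 64 original pixels, so detail smaller than one block is blurred away.

```python
def downsample_mean(image, factor):
    """Shrink a grey-scale image by averaging non-overlapping factor x factor blocks.

    `image` is a list of equal-length rows; edge pixels that do not fill a
    complete block are discarded.
    """
    if factor < 1:
        raise ValueError("factor must be >= 1")
    h, w = len(image), len(image[0])
    out = []
    for y in range(0, h - factor + 1, factor):
        row = []
        for x in range(0, w - factor + 1, factor):
            block = [image[y + dy][x + dx] for dy in range(factor) for dx in range(factor)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out


# Pixel budget for a 3000 x 1500 panoramic image shrunk by an assumed factor of 8.
full_pixels = 3000 * 1500
reduced_pixels = (3000 // 8) * (1500 // 8)
print(full_pixels, reduced_pixels, round(full_pixels / reduced_pixels, 1))
```

With these assumed numbers, the network sees roughly 64 times fewer pixels than the original radiograph contains.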

Because DL is computationally demanding, it is difficult to make precise and detailed diagnoses from images covering a wide area. Therefore, cropping a region of interest (ROI) from the larger X-ray image and classifying only that region is preferred. Using this procedure, DL classification has been studied for various lesions and anatomical structures, including maxillary sinusitis [7], root morphology of the mandibular first molar [8], morphological changes in the temporomandibular joint [9], and aspiration of food and drink in videofluorographic (VF) images of swallowing [10].
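Cropping keeps the original resolution inside the ROI, so the same pixel budget covers a smaller area in finer detail. A minimal sketch, assuming the image is a list of rows and the ROI is given as a hypothetical (x_min, y_min, x_max, y_max) box with exclusive maxima:

```python
def crop_roi(image, box):
    """Crop a rectangular region of interest from a 2-D image.

    `box` is (x_min, y_min, x_max, y_max) in pixel coordinates; the max
    values are exclusive. Coordinates are clipped to the image bounds.
    """
    h, w = len(image), len(image[0])
    x0, y0, x1, y1 = box
    x0, x1 = max(0, x0), min(w, x1)
    y0, y1 = max(0, y0), min(h, y1)
    if x0 >= x1 or y0 >= y1:
        raise ValueError("ROI lies outside the image")
    return [row[x0:x1] for row in image[y0:y1]]


# Synthetic 10 x 20 "radiograph" whose pixel value encodes its position (y * 100 + x).
radiograph = [[y * 100 + x for x in range(20)] for y in range(10)]
sinus_roi = crop_roi(radiograph, (5, 2, 8, 4))  # hypothetical ROI coordinates
print(sinus_roi)
```

The cropped ROI would then be passed to the classifier instead of the whole image.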

3. The region (object) detection task

In the region detection task, a DL model learns from a large number of ROIs manually annotated by experts and can then automatically place rectangular ROIs (bounding boxes) on new target images.
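Whether an automatically placed box matches an expert's annotation is commonly judged by intersection over union (IoU), with 0.5 being a widely used threshold for counting a detection as correct. The sketch below computes IoU for boxes given as (x_min, y_min, x_max, y_max); the box coordinates are made up, and the source does not specify which evaluation criterion the cited studies used.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x_min, y_min, x_max, y_max)."""
    # Corners of the overlapping rectangle (may be empty).
    ix0, iy0 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix1, iy1 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0, ix1 - ix0) * max(0, iy1 - iy0)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


expert_box = (100, 100, 200, 200)     # hypothetical expert annotation
predicted_box = (120, 110, 220, 210)  # hypothetical model output
score = iou(expert_box, predicted_box)
print(round(score, 4), score >= 0.5)
```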

If the ultimate purpose is to classify radiographic findings as positive or negative, region detection or segmentation is needed to define appropriate ROIs in the X-ray image. A wide variety of lesions and anatomical structures have served as targets for region detection. On panoramic radiographs, these include the maxillary sinus [11], [12], temporomandibular joint [13], radiolucent lesions in the mandible [14], impacted supernumerary teeth in the maxillary incisor region [15], cleft alveolus [16], and sialoliths of the submandibular gland [17]. Computed tomography (CT) images have been used to detect cervical lymph nodes in patients with oral squamous cell carcinoma [18].

DL algorithms that automatically detect teeth on panoramic radiographs are considered a breakthrough in dental practice [19], [20], [21]. Each detected tooth is classified according to its pathological and treatment status, such as the presence of dental caries, eruption disorders, restorative, prosthetic, or endodontic treatment, and dental implants. This technique is useful not only for diagnosing a patient's dental disease but also for automatically obtaining complete information on the patient's dentition, which can also support postmortem personal identification after large-scale natural disasters. Because panoramic radiographs show a wide area of the maxillofacial region, preprocessing methods such as first extracting the dental arch area have been considered for efficient and accurate tooth detection [22]. Some of these techniques have already been deployed for practical use.
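Turning per-tooth detections into a dental chart is essentially a bookkeeping step. The sketch below assumes a hypothetical detector output in which each detection carries an FDI two-digit tooth number, a status label, and a confidence score; it keeps the most confident detection per tooth and marks teeth without a confident detection as "not detected". The keys, status labels, and threshold are illustrative assumptions, not the output format of [19] to [22].

```python
# All 32 permanent teeth in FDI two-digit notation (quadrant 1-4, tooth 1-8).
PERMANENT_TEETH = [q * 10 + t for q in range(1, 5) for t in range(1, 9)]


def build_dental_chart(detections, min_score=0.5):
    """Turn per-tooth detections into a chart {FDI number: status}.

    `detections` is a list of dicts with hypothetical keys 'fdi', 'status'
    and 'score'. Detections below `min_score` are ignored, and teeth with
    no remaining detection are marked 'not detected'.
    """
    chart = {tooth: "not detected" for tooth in PERMANENT_TEETH}
    best_score = {}
    for det in detections:
        tooth = det["fdi"]
        if tooth not in chart or det["score"] < min_score:
            continue
        # Keep only the most confident detection for each tooth number.
        if det["score"] > best_score.get(tooth, -1.0):
            best_score[tooth] = det["score"]
            chart[tooth] = det["status"]
    return chart


detections = [
    {"fdi": 11, "status": "sound", "score": 0.98},
    {"fdi": 36, "status": "crown", "score": 0.91},
    {"fdi": 36, "status": "caries", "score": 0.60},    # weaker duplicate, ignored
    {"fdi": 48, "status": "impacted", "score": 0.30},  # below the threshold
]
chart = build_dental_chart(detections)
print(chart[11], chart[36], chart[48])
```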

However, neighbouring teeth often overlap on panoramic radiographs, so the many closely spaced rectangular ROIs in the dentition area can make the output look complicated and confusing (Fig. 3A).

Fig. 3.

Automatic region detection and segmentation of teeth on panoramic radiographs.

4. The segmentation task

The segmentation task in deep learning divides an image into segments corresponding to target objects. In semantic segmentation, each pixel is assigned a class, so that target objects are distinguished from the background and from other object classes (typically displayed in different colours). In instance segmentation, individual instances of the same class of objects can also be identified and separated. Instance segmentation may therefore be suitable for identifying teeth on panoramic radiographs, as it gives a clear view of each tooth [23], [24] (Fig. 3B).
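The difference between the two outputs can be illustrated on a tiny binary mask. A semantic mask only says "tooth" or "background" for each pixel; splitting it into separate objects requires instance information. The sketch below uses 4-connected component labelling as a simple stand-in: it separates teeth only when they do not touch, which is precisely why overlapping teeth on panoramic radiographs call for a true instance segmentation model (such as Mask R-CNN) rather than this kind of post-processing. The mask is made up.

```python
from collections import deque


def label_instances(mask):
    """Split a binary semantic mask (1 = tooth, 0 = background) into
    instances by 4-connected component labelling.

    Returns a grid of the same shape in which each separate region has its
    own integer id (1, 2, ...), plus the number of instances found.
    """
    h, w = len(mask), len(mask[0])
    labels = [[0] * w for _ in range(h)]
    next_id = 0
    for sy in range(h):
        for sx in range(w):
            if mask[sy][sx] and not labels[sy][sx]:
                next_id += 1  # start a new instance and flood-fill it
                labels[sy][sx] = next_id
                queue = deque([(sy, sx)])
                while queue:
                    y, x = queue.popleft()
                    for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                        if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and not labels[ny][nx]:
                            labels[ny][nx] = next_id
                            queue.append((ny, nx))
    return labels, next_id


semantic_mask = [
    [1, 1, 0, 1, 1],
    [1, 1, 0, 1, 1],
    [0, 0, 0, 0, 0],
]
instance_labels, n_teeth = label_instances(semantic_mask)
print(n_teeth, instance_labels)
```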

Region detection and segmentation can be considered complementary techniques. When annotating data to prepare a training dataset, however, segmentation requires accurately tracing the outline of the target, whereas region detection only requires enclosing the target in a rough rectangle. The segmentation task is generally considered more suitable than region detection for extracting specific, detailed anatomical structures and lesions.
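The two annotation types carry different amounts of information. A traced outline determines its bounding box, but a box does not determine the outline, and for rounded or oblique structures much of the box is background. The sketch below compares the area of a hypothetical diamond-shaped outline (computed with the shoelace formula) with the area of its bounding box.

```python
def polygon_to_box(outline):
    """Bounding box (x_min, y_min, x_max, y_max) enclosing a traced outline."""
    xs = [x for x, _ in outline]
    ys = [y for _, y in outline]
    return min(xs), min(ys), max(xs), max(ys)


def polygon_area(outline):
    """Area enclosed by a closed outline of (x, y) vertices (shoelace formula)."""
    n = len(outline)
    twice_area = sum(
        outline[i][0] * outline[(i + 1) % n][1] - outline[(i + 1) % n][0] * outline[i][1]
        for i in range(n)
    )
    return abs(twice_area) / 2


def box_area(box):
    x0, y0, x1, y1 = box
    return (x1 - x0) * (y1 - y0)


# Hypothetical segmentation outline of a structure (vertex coordinates in pixels).
outline = [(2, 0), (4, 2), (2, 4), (0, 2)]
box = polygon_to_box(outline)
print(box, polygon_area(outline), box_area(box))
```

Here the rectangle annotation would cover twice the area of the structure itself, so half of it is background.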

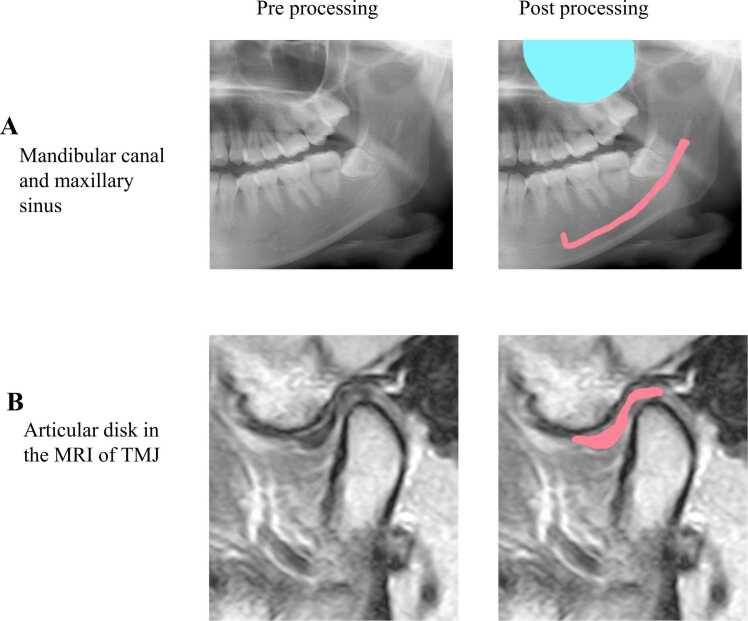

Fig. 4 shows sample segmentation images of anatomical structures. On panoramic radiographs, segmentation has been used to extract the mandibular canal and maxillary sinus [25], [26] (Fig. 4A). Segmentation of periapical lesions on panoramic radiographs has also been reported [27], [28].

Fig. 4.

Example segmentation images of anatomical structures.
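Segmentation output is often scored against an expert mask with the Dice similarity coefficient, twice the overlap divided by the total foreground of both masks. The minimal sketch below uses made-up 2 × 3 masks; the source does not state which metrics the cited studies reported.

```python
def dice(pred, truth):
    """Dice similarity coefficient between two binary masks of equal shape.

    Returns 1.0 when both masks are empty (a common convention).
    """
    overlap = total = 0
    for row_p, row_t in zip(pred, truth):
        for p, t in zip(row_p, row_t):
            p, t = bool(p), bool(t)
            overlap += p and t  # pixel marked in both masks
            total += p + t      # foreground pixels counted from each mask
    return 2 * overlap / total if total else 1.0


predicted_mask = [[1, 1, 0], [0, 1, 0]]  # hypothetical model output
expert_mask = [[1, 0, 0], [0, 1, 1]]     # hypothetical expert tracing
print(round(dice(predicted_mask, expert_mask), 3))
```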

For intraoral radiography, bitewing or periapical radiographs are preferred for segmenting dental caries. In deep learning segmentation, it is important to choose the target lesion or anatomical structure according to the image size and spatial resolution of the radiograph.
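A quick way to check whether a target is large enough for a given image is to estimate how many pixels it spans after any downsampling applied before the network. In the sketch below, the 0.1 mm pixel spacing, the lesion sizes, and the downsampling factors are all hypothetical values chosen for illustration.

```python
def lesion_extent_px(lesion_mm, pixel_mm, downsample=1):
    """Approximate number of pixels spanned by a lesion along one axis.

    lesion_mm:  physical size of the lesion (mm)
    pixel_mm:   pixel spacing of the radiograph (mm per pixel)
    downsample: factor by which the image is shrunk before entering the network
    """
    if pixel_mm <= 0 or downsample < 1:
        raise ValueError("pixel spacing must be positive and downsample >= 1")
    return lesion_mm / (pixel_mm * downsample)


# Hypothetical values: 0.1 mm pixels, a 0.5 mm small lesion and a 5 mm lesion.
for size_mm in (0.5, 5.0):
    for factor in (1, 8):
        px = lesion_extent_px(size_mm, 0.1, factor)
        print(f"{size_mm} mm lesion, downsample x{factor}: {px:.2f} px")
```

Under these assumptions a 0.5 mm lesion spans about 5 pixels at full resolution but well under one pixel after eight-fold downsampling, while a 5 mm lesion remains several pixels wide.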

Several studies have applied segmentation to improve the accuracy of classification tasks. Mori et al. [29] used classification to determine whether a periapical radiograph had been taken in an appropriate geometric position and reported that accuracy improved when the tooth that should be located in the centre of the image was segmented beforehand.
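One intuition for why a preliminary segmentation helps is that it makes the tooth's position explicit. The sketch below is a hypothetical rule-based check, not the method of Mori et al. [29], who used DL classification: it computes the centroid of a segmented tooth mask and tests whether it lies within an assumed tolerance of the image centre.

```python
def mask_centroid(mask):
    """Centroid (x, y) of the foreground pixels in a binary mask."""
    sum_x = sum_y = n = 0
    for y, row in enumerate(mask):
        for x, v in enumerate(row):
            if v:
                sum_x += x
                sum_y += y
                n += 1
    if n == 0:
        raise ValueError("mask is empty")
    return sum_x / n, sum_y / n


def is_centred(mask, tolerance=0.15):
    """Hypothetical positioning check: is the segmented tooth near the image centre?

    `tolerance` is the allowed offset as a fraction of image width and
    height (an assumed value, not taken from the literature).
    """
    h, w = len(mask), len(mask[0])
    cx, cy = mask_centroid(mask)
    dx = abs(cx - (w - 1) / 2) / w
    dy = abs(cy - (h - 1) / 2) / h
    return dx <= tolerance and dy <= tolerance


# Synthetic 10 x 10 masks: a tooth in the middle columns and one at the left edge.
centred = [[1 if 4 <= x <= 5 and 2 <= y <= 7 else 0 for x in range(10)] for y in range(10)]
off_centre = [[1 if x <= 1 and 2 <= y <= 7 else 0 for x in range(10)] for y in range(10)]
print(is_centred(centred), is_centred(off_centre))
```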

Beyond conventional radiography, image segmentation based on machine learning (including DL) has been explored for detecting tumorous lesions and metastatic lymph nodes in CT and MR images [30], [31], [32]. Nozawa et al. [33] reported segmentation of the temporomandibular joint (TMJ) disc on MR images (Fig. 4B), and Ariji et al. [34] reported segmentation of food and drink boluses in videofluorographic images of swallowing.

5. Generative AI

Generative AI is a category of AI techniques that create new content, such as text and images.

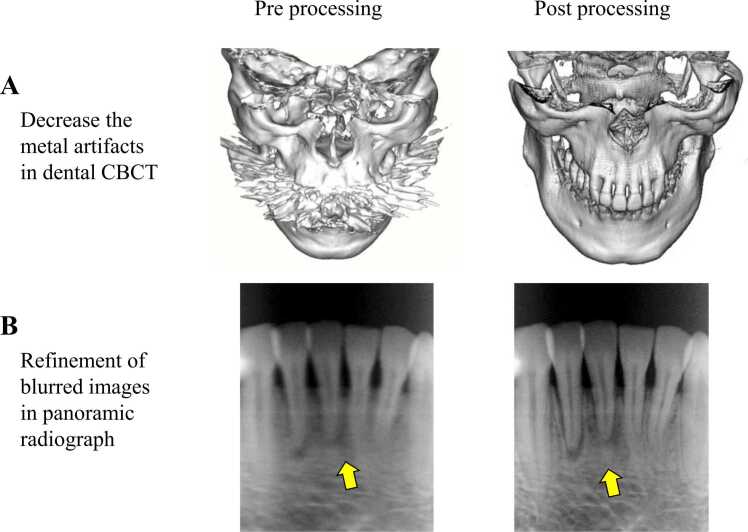

Generative AI relies on deep learning models. Generative adversarial networks (GANs), for example, train a generator network against a discriminator network that tries to distinguish generated images from real ones; in this way, GANs learn the underlying structures and characteristics of image datasets and generate new images that resemble the training data. Fig. 5 shows examples of image processing using generative AI.

Fig. 5.

Example uses of image processing by generative AI.
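The adversarial training of a GAN can be summarised by its two loss functions. Given the discriminator's output probabilities on real and generated images, the discriminator is trained to minimise a binary cross-entropy loss, and the generator (in the common "non-saturating" form) to make the discriminator label its outputs as real. The plain-Python sketch below only evaluates these losses for made-up discriminator outputs; real training computes them inside a DL framework and back-propagates, and the cited dental studies use variants (for example, the Wasserstein GAN in [36]).

```python
import math


def discriminator_loss(d_real, d_fake, eps=1e-12):
    """Binary cross-entropy loss for the discriminator.

    d_real: discriminator outputs (probabilities) on real training images
    d_fake: discriminator outputs on images produced by the generator
    """
    real_term = -sum(math.log(p + eps) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1 - p + eps) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake, eps=1e-12):
    """Non-saturating generator loss: low when the discriminator
    mistakes generated images for real ones."""
    return -sum(math.log(p + eps) for p in d_fake) / len(d_fake)


# Made-up discriminator outputs early in training: real images scored high,
# generated images scored low, so the generator loss is large.
print(round(discriminator_loss([0.9, 0.8], [0.1, 0.2]), 3))
print(round(generator_loss([0.1, 0.2]), 3))
```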

GANs have been reported to be useful for reducing factors in dental CBCT images that interfere with correct image interpretation and diagnosis (Fig. 5A). These factors include image noise from half-circle scans and artefacts from dental prostheses [35], [36], [37]. GANs have also been applied to refine the blurred images that appear in the incisor region of panoramic radiographs [38] (Fig. 5B).
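Image-quality improvements of this kind are often quantified with the peak signal-to-noise ratio (PSNR), which compares a processed image with a clean reference; note that it requires such a reference image. The sketch below uses tiny made-up 8-bit images, and the source does not state which metrics [35] to [38] reported.

```python
import math


def psnr(reference, test, max_value=255.0):
    """Peak signal-to-noise ratio (dB) between two equally sized grey-scale images."""
    diffs = [
        (r - t) ** 2
        for row_r, row_t in zip(reference, test)
        for r, t in zip(row_r, row_t)
    ]
    mse = sum(diffs) / len(diffs)  # mean squared error
    if mse == 0:
        return math.inf  # identical images
    return 10 * math.log10(max_value ** 2 / mse)


reference = [[100, 100], [100, 100]]  # hypothetical clean image
noisy = [[110, 90], [100, 100]]       # hypothetical noisy input
denoised = [[102, 98], [100, 100]]    # hypothetical output after denoising
print(round(psnr(reference, noisy), 2), round(psnr(reference, denoised), 2))
```

A higher PSNR after processing indicates that the output is closer to the reference.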

A valuable application of generative AI in dentistry is generating images of a patient's dentition and facial features as targeted by prosthetic or orthodontic treatment. Such outputs can help explain treatment to patients visually and can also serve as computer-aided design data for fabricating treatment devices and prostheses to be placed in the patient's oral cavity. In addition, generative AI that integrates natural language processing may facilitate communication between patients and medical staff and help in writing imaging findings and reports.

6. Perspective

Advances in computer and software technologies have supported the progress of deep learning. In the medical field, DL environments with easily understandable user interfaces have enabled doctors and dentists with limited programming knowledge to use these tools effectively [6].

When planning a feasibility study for detecting findings indicative of a lesion in medical images, it is necessary to decide which deep learning task to use. In one approach, the entire image is treated as input data and classified as positive or negative according to the presence or absence of the lesion. Alternatively, a region detection or segmentation task can first be applied to locate the target organs, which are then classified as showing positive or negative findings. The appropriate method depends on the disease or anomaly under consideration.
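The two approaches differ mainly in how the models are chained. The sketch below expresses both as functions that accept model callables; the toy detector and brightness-threshold classifier are hypothetical stand-ins for trained networks, used only to show how a finding confined to one region can be diluted in a whole-image decision.

```python
from typing import Callable, List, Sequence, Tuple

Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max), max exclusive
Image = List[List[float]]


def whole_image_strategy(image: Image, classify: Callable[[Image], bool]) -> bool:
    """Approach 1: classify the entire image as positive or negative."""
    return classify(image)


def detect_then_classify(
    image: Image,
    detect: Callable[[Image], Sequence[Box]],
    classify: Callable[[Image], bool],
) -> List[Tuple[Box, bool]]:
    """Approach 2: locate target regions first, then classify each cropped ROI."""
    results = []
    for box in detect(image):
        x0, y0, x1, y1 = box
        roi = [row[x0:x1] for row in image[y0:y1]]
        results.append((box, classify(roi)))
    return results


# Toy stand-ins for trained models (hypothetical, for illustration only).
def toy_detector(img: Image) -> List[Box]:
    return [(0, 0, 4, 4), (4, 0, 8, 4)]  # fixed left and right halves


def toy_classifier(roi: Image) -> bool:
    mean = sum(map(sum, roi)) / (len(roi) * len(roi[0]))
    return mean > 0.5  # "positive" if the region is bright on average


image = [[0.0] * 4 + [0.9] * 4 for _ in range(4)]  # finding confined to the right half
print(whole_image_strategy(image, toy_classifier))  # whole-image mean is 0.45
print(detect_then_classify(image, toy_detector, toy_classifier))
```

With these toy models, the whole-image approach returns negative, whereas the two-stage approach flags the right-hand region as positive.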

It is interesting and insightful to apply various deep learning techniques to datasets and compare their results. However, to obtain accurate results, a large number of high-quality diagnostic images with precise annotations are required as training images. This process of dataset creation and training requires considerable time and effort.

Recent generative AI techniques can help organise training datasets; however, their utility in dentistry is yet to be explored. There is still room for growth in deep learning and AI applications in dentistry. It is therefore important for the field to keep pace with the latest technological advances while pursuing fundamental clinical and research applications.

Scientific field of dental science

Radiology and imaging.

Conflict of interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

References

- 1. Katsumata A., Fujita H. Progress of computer-aided detection / diagnosis (CAD) in dentistry. Jpn Dent Sci Rev. 2014;50:63–68.

- 2. Hara T., Mori S., Kaneda T., Hayashi T., Katsumata A., Fujita H. Automated contralateral subtraction of dental panoramic radiographs for detecting abnormalities in paranasal sinus. SPIE Med Imaging. 2011;7963:79632R-1-6.

- 3. Sawagashira T., Hayashi T., Hara T., Katsumata A., Muramatsu C., Zhou X., Iida Y., Katagi K., Fujita H. An automatic detection method for carotid artery calcifications using top-hat filter on dental panoramic radiographs. IEICE Trans Inf Syst. 2013;E96-D(8):1878–1881. doi: 10.1109/IEMBS.2011.6091533.

- 4. Muramatsu C., Matsumoto T., Hayashi T., Hara T., Katsumata A., Zhou X., Iida Y., Matsuoka M., Wakisaka T., Fujita H. Automated measurement of mandibular cortical width on dental panoramic radiographs. Int J Comput Assist Radiol Surg. 2013;8:877–885. doi: 10.1007/s11548-012-0800-8.

- 5. Kitano T., Mori M., Nishiyama W., Kohinata K., Iida Y., Fujita H., Katsumata A. Classification of intraoral X-ray images using artificial intelligence. Dent Radiol. 2020;60(2):53–57.

- 6. Kohinata K., Kitano T., Nishiyama W., Mori M., Iida Y., Fujita H., Katsumata A. Deep learning for preliminary profiling of panoramic images. Oral Radiol. 2022;39(2):275–281. doi: 10.1007/s11282-022-00634-x.

- 7. Murata M., Ariji Y., Ohashi Y., Kawai T., Fukuda M., Funakoshi T., Kise Y., Nozawa M., Katsumata A., Fujita H., Ariji E. Deep-learning classification using convolutional neural network for evaluation of maxillary sinusitis on panoramic radiography. Oral Radiol. 2019;35(3):301–307. doi: 10.1007/s11282-018-0363-7.

- 8. Hiraiwa T., Ariji Y., Fukuda M., Kise Y., Nakata K., Katsumata A., Fujita H., Ariji E. A deep-learning artificial intelligence system for assessment of root morphology of the mandibular first molar on panoramic radiography. Dentomaxillofac Radiol. 2019;48(3). doi: 10.1259/dmfr.20180218.

- 9. Jung W., Lee K.E., Suh B.J., Seok H., Lee D.W. Deep learning for osteoarthritis classification in temporomandibular joint. Oral Dis. 2023;29(3):1050–1059. doi: 10.1111/odi.14056.

- 10. Iida Y., Näppi J., Kitano T., Hironaka T., Katsumata A., Yoshida H. Detection of aspiration from images of a videofluoroscopic swallowing study adopting deep learning. Oral Radiol. 2023;39:553–562. doi: 10.1007/s11282-023-00669-8.

- 11. Mori M., Ariji Y., Katsumata A., Kawai T., Araki K., Kobayashi K., Ariji E. A deep transfer learning approach for the detection and diagnosis of maxillary sinusitis on panoramic radiographs. Odontology. 2021;109(4):941–948. doi: 10.1007/s10266-021-00615-2.

- 12. Kuwana R., Ariji Y., Fukuda M., Kise Y., Nozawa M., Kuwada C., Muramatsu C., Katsumata A., Fujita H., Ariji E. Performance of deep learning object detection technology in the detection and diagnosis of maxillary sinus lesions on panoramic radiographs. Dentomaxillofac Radiol. 2021;50(1). doi: 10.1259/dmfr.20200171.

- 13. Choi E., Kim D., Lee J.Y., Park H.K. Artificial intelligence in detecting temporomandibular joint osteoarthritis on orthopantomogram. Sci Rep. 2021;11(1). doi: 10.1038/s41598-021-89742-y.

- 14. Ariji Y., Yanashita Y., Kutsuna S., Muramatsu C., Fukuda M., Kise Y., Nozawa M., Kuwada C., Fujita H., Katsumata A., Ariji E. Automatic detection and classification of radiolucent lesions in the mandible on panoramic radiographs using a deep learning object detection technique. Oral Surg Oral Med Oral Pathol Oral Radiol. 2019;128(4):424–430. doi: 10.1016/j.oooo.2019.05.014.

- 15. Kuwada C., Ariji Y., Fukuda M., Kise Y., Fujita H., Katsumata A., Ariji E. Deep learning systems for detecting and classifying the presence of impacted supernumerary teeth in the maxillary incisor region on panoramic radiographs. Oral Surg Oral Med Oral Pathol Oral Radiol. 2020;130(4):464–469. doi: 10.1016/j.oooo.2020.04.813.

- 16. Kuwada C., Ariji Y., Kise Y., Fukuda M., Ota J., Ohara H., Kojima N., Ariji E. Detection of unilateral and bilateral cleft alveolus on panoramic radiographs using a deep-learning system. Dentomaxillofac Radiol. 2022. doi: 10.1259/dmfr.20210436.

- 17. Ishibashi K., Ariji Y., Kuwada C., Kimura M., Hashimoto K., Umemura M., Nagao T., Ariji E. Efficacy of a deep leaning model created with the transfer learning method in detecting sialoliths of the submandibular gland on panoramic radiography. Oral Surg Oral Med Oral Pathol Oral Radiol. 2022;133(2):238–244. doi: 10.1016/j.oooo.2021.08.010.

- 18. Ariji Y., Fukuda M., Nozawa M., Kuwada C., Goto M., Ishibashi K., Nakayama A., Sugita Y., Nagao T., Ariji E. Automatic detection of cervical lymph nodes in patients with oral squamous cell carcinoma using a deep learning technique: a preliminary study. Oral Radiol. 2021;37(2):290–296. doi: 10.1007/s11282-020-00449-8.

- 19. Tuzoff D.V., Tuzova L.N., Bornstein M.M., Krasnov A.S., Kharchenko M.A., Nikolenko S.I., Sveshnikov M.M., Bednenko G.B. Tooth detection and numbering in panoramic radiographs using convolutional neural networks. Dentomaxillofac Radiol. 2019;48(4). doi: 10.1259/dmfr.20180051.

- 20. Morishita T., Muramatsu C., Seino Y., Takahashi R., Hayashi T., Nishiyama W., Zhou X., Hara T., Katsumata A., Fujita H. Tooth recognition of 32 tooth types by branched single shot multibox detector and integration processing in panoramic radiographs. J Med Imaging (Bellingham). 2022;9(3). doi: 10.1117/1.JMI.9.3.034503.

- 21. Mima Y., Nakayama R., Hizukuri A., Murata K. Tooth detection for each tooth type by application of faster R-CNNs to divided analysis areas of dental panoramic X-ray images. Radiol Phys Technol. 2022;15(2):170–176. doi: 10.1007/s12194-022-00659-1.

- 22. Muramatsu C., Morishita T., Takahashi R., Hayashi T., Nishiyama W., Ariji Y., Zhou X., Hara T., Katsumata A., Ariji E., Fujita H. Tooth detection and classification on panoramic radiographs for automatic dental chart filing: improved classification by multi-sized input data. Oral Radiol. 2021;37(1):13–19. doi: 10.1007/s11282-019-00418-w.

- 23. Umer F., Habib S., Adnan N. Application of deep learning in teeth identification tasks on panoramic radiographs. Dentomaxillofac Radiol. 2022;51(5). doi: 10.1259/dmfr.20210504.

- 24. Leite A.F., Gerven A.V., Willems H., Beznik T., Lahoud P., Gaêta-Araujo H., Vranckx M., Jacobs R. Artificial intelligence-driven novel tool for tooth detection and segmentation on panoramic radiographs. Clin Oral Invest. 2021;25:2257–2267. doi: 10.1007/s00784-020-03544-6.

- 25. Cha J.Y., Yoon H.I., Yeo I.S., Huh K.H., Han J.S. Panoptic segmentation on panoramic radiographs: Deep learning-based segmentation of various structures including maxillary sinus and mandibular canal. J Clin Med. 2021 Jun 11;10(12):2577. doi: 10.3390/jcm10122577.

- 26. Ariji Y., Mori M., Fukuda M., Katsumata A., Ariji E. Automatic visualization of the mandibular canal in relation to an impacted mandibular third molar on panoramic radiographs using deep learning segmentation and transfer learning techniques. Oral Surg Oral Med Oral Pathol Oral Radiol. 2022;134(6):749–757. doi: 10.1016/j.oooo.2022.05.014.

- 27. Bayrakdar I.S., Orhan K., Çelik Ö., Bilgir E., Sağlam H., Kaplan F.A., Görür S.A., Odabaş A., Aslan A.F., Różyło-Kalinowska I. A U-net approach to apical lesion segmentation on panoramic radiographs. Biomed Res Int. 2022;2022. doi: 10.1155/2022/7035367.

- 28. Song I.S., Shin H.K., Kang J.H., Kim J.E., Huh K.H., Yi W.J., Lee S.S., Heo M.S. Deep learning-based apical lesion segmentation from panoramic radiographs. Imaging Sci Dent. 2022;52(4):351–357. doi: 10.5624/isd.20220078.

- 29. Mori M., Ariji Y., Fukuda M., Kitano T., Funakoshi T., Nishiyama W., Kohinata K., Iida Y., Ariji E., Katsumata A. Performance of deep learning technology for evaluation of positioning quality in periapical radiography of the maxillary canine. Oral Radiol. 2022;38(1):147–154. doi: 10.1007/s11282-021-00538-2.

- 30. Deng W., Luo L., Lin X., Fang T., Liu D., Dan G., Chen H. Head and neck cancer tumor segmentation using support vector machine in dynamic contrast-enhanced MRI. Contrast Media Mol Imaging. 2017;2017:8612519. doi: 10.1155/2017/8612519.

- 31. Ariji Y., Kise Y., Fukuda M., Kuwada C., Ariji E. Segmentation of metastatic cervical lymph nodes from CT images of oral cancers using deep-learning technology. Dentomaxillofac Radiol. 2022;51(4). doi: 10.1259/dmfr.20210515.

- 32. Xu X., Xi L., Wei L., Wu L., Xu Y., Liu B., Li B., Liu K., Hou G., Lin H., Shao Z., Su K., Shang Z. Deep learning assisted contrast-enhanced CT-based diagnosis of cervical lymph node metastasis of oral cancer: a retrospective study of 1466 cases. Eur Radiol. 2023;33(6):4303–4312. doi: 10.1007/s00330-022-09355-5.

- 33. Nozawa M., Ito H., Ariji Y., Fukuda M., Igarashi C., Nishiyama M., Ogi N., Katsumata A., Kobayashi K., Ariji E. Automatic segmentation of the temporomandibular joint disc on magnetic resonance images using a deep learning technique. Dentomaxillofac Radiol. 2022;51(1). doi: 10.1259/dmfr.20210185.

- 34. Ariji Y., Gotoh M., Fukuda M., Watanabe S., Nagao T., Katsumata A., Ariji E. A preliminary deep learning study on automatic segmentation of contrast-enhanced bolus in videofluorography of swallowing. Sci Rep. 2022;12(1). doi: 10.1038/s41598-022-21530-8.

- 35. Hegazy M.A.A., Cho M.H., Lee S.Y. Half-scan artifact correction using generative adversarial network for dental CT. Comput Biol Med. 2021;132. doi: 10.1016/j.compbiomed.2021.104313.

- 36. Hu Z., Jiang C., Sun F., Zhang Q., Ge Y., Yang Y., Liu X., Zheng H., Liang D. Artifact correction in low-dose dental CT imaging using Wasserstein generative adversarial networks. Med Phys. 2019;46(4):1686–1696. doi: 10.1002/mp.13415.

- 37. Hegazy M.A.A., Cho M.H., Lee S.Y. Image denoising by transfer learning of generative adversarial network for dental CT. Biomed Phys Eng Express. 2020;6(5). doi: 10.1088/2057-1976/abb068.

- 38. Kim H.S., Ha E.G., Lee A., Choi Y.J., Jeon K.J., Han S.S., Lee C. Refinement of image quality in panoramic radiography using a generative adversarial network. Dentomaxillofac Radiol. 2023;52(5). doi: 10.1259/dmfr.20230007.