Abstract

Purpose:

To evaluate the cost-effectiveness of phacoemulsification simulation training with a virtual reality simulator and in a wet laboratory, based on operating theater performance.

Methods:

Residents were randomized to either a combination of virtual reality and wet laboratory phacoemulsification training or wet laboratory phacoemulsification training alone. A reference control group consisted of trainees who had wet laboratory training without phacoemulsification. All trainees were assessed on operating theater performance in 3 sequential cataract patients. International Council of Ophthalmology Surgical Competency Assessment Rubric—phacoemulsification (ICO OSCAR phaco) scores from 2 masked independent graders and cost data were used to determine the incremental cost-effectiveness ratio (ICER). A decision model was constructed to identify the most cost-effective simulation training strategy based on the willingness to pay (WTP) per ICO OSCAR phaco score gained.

Results:

Twenty-two trainees who performed phacoemulsification in 66 patients were analyzed. Trainees who had additional virtual reality simulation achieved higher mean ICO OSCAR phaco scores than trainees who had wet laboratory phacoemulsification only and controls (49.5 ± standard deviation [SD] 9.8 vs 39.0 ± 15.8 vs 32.5 ± 12.1, P < .001). Compared with the control group, the ICER per ICO OSCAR phaco score of wet laboratory phacoemulsification was US $13,473 for capital cost and $2209 for recurring cost. Compared with wet laboratory phacoemulsification, the ICER per ICO OSCAR phaco score of additional virtual reality simulator training was $23,778 for capital cost and $1879 for recurring cost. The threshold WTP values per ICO OSCAR phaco score for combined virtual reality simulator and wet laboratory phacoemulsification to be the most cost-effective were $22,500 for capital cost and $1850 for recurring cost.

Conclusions:

Combining a virtual reality simulator with wet laboratory phacoemulsification training is effective for skills transfer to the operating theater. Despite the high capital cost of the virtual reality simulator, its relatively low recurring cost makes it more favorable in terms of cost-effectiveness.

Keywords: cataract surgery, cost-effectiveness, education, phacoemulsification, simulation

1. Introduction

Evidence of enhanced surgical competency has driven the increasing uptake of simulation training.[1–3] Notably, a national survey by Ferris et al in the United Kingdom reported that the proportion of trainee cataract surgeons with access to simulation training increased from <10% in 2009 to about 80% in 2015.[2] However, information about the cost of cataract surgery training is scarce. Nandigam et al reported that the expenditure per resident cataract surgery simulation training program varied widely, from $4900 to $306,400.[4] Regarding the cost of cataract surgery training in the operating theater, Hosler et al estimated that an additional $8293 per year was spent for each resident.[5] Nonetheless, the cost entailed in achieving a given training outcome is unclear. Currently, no data are available to evaluate the cost-effectiveness of simulation training compared with no intervention or alternative training approaches in cataract surgery. As global training expenditure rises with the increasing demand for competent cataract surgeons and greater emphasis on technologically advanced simulators, cost must be carefully weighed against the effectiveness of training.[6,7] Stakeholders may be reluctant to invest in an effective training strategy when the return on investment is not readily apparent.[8–10]

There are various high-fidelity simulation training options for cataract surgery.[11] A commercially available virtual reality simulator provides task-specific, proficiency-based training in phacoemulsification cataract extraction and has been rigorously validated.[12–14] There is robust clinical evidence that proficiency-based training in the virtual reality simulator is associated with effective surgical skills transfer[3] and reduced intraoperative complication rates[2,15]; however, its cost-effectiveness has not yet been evaluated.[16–19]

Apart from virtual reality simulators, various wet laboratory phacoemulsification simulation models have been described.[11,20] Wet laboratory training provides tactile feedback, which is not yet available in commercially available virtual reality cataract surgery simulators.[14,21] Training centers may have an incentive to invest in a wet laboratory because its facilities can be shared across various surgical procedures.[22,23] However, implementing a wet laboratory skills course can be expensive, as it entails numerous recurrent expenditures and manpower.[4,22]

Combining the distinct advantages of virtual reality and wet laboratory training may provide the most comprehensive and effective simulation training.[3] The purpose of this study was to evaluate the cost-effectiveness of combined virtual reality and wet laboratory phacoemulsification training compared with wet laboratory phacoemulsification training alone on novice surgeons' performance in the operating theater. The cost data were obtained from all actual components of setting up and implementing our phacoemulsification training curriculum in practice. To enhance the generalizability of our cost-effectiveness analysis, we developed an economic model for sensitivity analysis across a range of willingness to pay (WTP) values.[24] WTP represents the amount a decision maker is prepared to pay to achieve 1 unit of the outcome variable. Using this economic model, the cost-effectiveness of simulation training strategies can be compared, and the optimal strategy can be identified for various scenarios and translated into practical recommendations for decision makers.

2. Methods

2.1. Participants

From March 2018 to June 2020, resident trainees were identified by the chiefs of service of all ophthalmology departments with residency programs in Hong Kong. All were invited to participate in a structured simulation cataract surgery training curriculum at the Chinese University of Hong Kong Jockey Club Ophthalmic Microsurgical Training Centre (CUHK JC OMTC). Participation in the curriculum was free of charge, and all expenses were supported by a philanthropic donation from the Hong Kong Jockey Club. All trainees and trainers participated during their off-duty hours and did not receive any compensation or incentives. At the beginning of the curriculum, trainees were invited to join the study voluntarily. Inclusion criteria were no prior ophthalmic microsurgical simulation training or operating theater phacoemulsification experience before enrollment, and provision of written informed consent.

2.2. Study design

The cost-effectiveness study was carried out according to the 4-step program effectiveness and cost generalization (PRECOG) model proposed by Tolsgaard et al, which consists of (1) gathering data on training outcomes; (2) assessing total costs; (3) calculating incremental cost-effectiveness ratios (ICERs); and (4) estimating the probability of cost-effectiveness.[9]

2.3. Simulation cataract surgery training modules, randomization and reference group

The first step was a randomized trial comparing phacoemulsification simulation training with a virtual reality simulator in addition to the wet laboratory versus training in the wet laboratory only. The original protocol of the randomized trial and the details of the curriculum are available (Supplemental Material, http://links.lww.com/MD/J745). This study was approved by the Research Ethics Committee of the Hong Kong Hospital Authority and adhered to the tenets of the Declaration of Helsinki. The trial registration number is ISRCTN15327117.

The standardized curriculum consisted of 3 modules. The first module comprised 6 hours of wet laboratory training in basic microsurgical skills, such as wound construction, use of the viscoelastic device, instrument handling when entering the anterior chamber, wound closure, and suture placement, supervised by fellowship-trained surgeons. All trainees were required to complete the first module before proceeding to the second module.

The second module consisted of performing all steps of phacoemulsification surgery with the Centurion Vision System (Alcon Laboratories, Inc., Texas, USA), including intraocular lens implantation, in synthetic model eyes (Kitaro WetLab, Frontier Vision Co., Ltd., Hyogo, Japan). Trainees received a total of 6 hours of supervised wet laboratory training in this module.

After completing the second module, the trainees were randomized into either Group A (Eyesi + Wet lab) or Group B (Wet lab). The randomization sequence was generated by an independent coordinator (BY), who was not involved in the trial protocol and had no access to data until all outcomes were collected, using Microsoft Excel (version 16, Microsoft Corp, Redmond, Washington, USA) with 1:1 allocation and random block sizes of 2, 4, and 6. The trainees received their group allocation via smartphone text message from the independent coordinator, and the allocations were concealed from all other study investigators.
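The allocation scheme described above, 1:1 allocation in blocks whose sizes are drawn at random from 2, 4, and 6, can be illustrated with the minimal Python sketch below. It is not the coordinator's Excel procedure; the function name `block_randomize`, the arm labels, and the seed are illustrative assumptions.

```python
import random


def block_randomize(n_participants, block_sizes=(2, 4, 6), arms=("A", "B"), seed=None):
    """Return a list of allocation blocks covering at least n_participants.

    Each block length is drawn at random from block_sizes (each size must be a
    multiple of the number of arms) and contains an equal number of each arm in
    shuffled order, so allocation is 1:1 at the end of every complete block.
    """
    rng = random.Random(seed)
    blocks = []
    allocated = 0
    while allocated < n_participants:
        size = rng.choice(block_sizes)
        block = list(arms) * (size // len(arms))  # equal count of each arm
        rng.shuffle(block)                        # random order within the block
        blocks.append(block)
        allocated += size
    return blocks


# Hypothetical usage: a sequence long enough for 20 trainees.
blocks = block_randomize(20, seed=2018)
sequence = [arm for block in blocks for arm in block]
print(sequence[:20])
```

Participants are assigned in sequence order, and any unused allocations in the final block are discarded. Varying the block size makes the next allocation harder to predict than a fixed block size would, while balance is still restored at the end of each block.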

Group A (Eyesi + Wet lab) trainees proceeded to the third module before the video-recorded operating room assessment of phacoemulsification surgery in patients. Group B (Wet lab) trainees proceeded directly to the operating room video assessment and were given the option to attend the third module after completing it.

The third module was proficiency-based virtual reality phacoemulsification simulation training (Eyesi Version 3.0, VRmagic, Mannheim, Germany). Trainees reserved training time slots through a secure online booking system to ensure that their identities and group allocations remained concealed from the investigators. At the start, each trainee received an on-site tutorial on operating the Eyesi and a printed instruction manual. Thereafter, trainees were allowed to practice in the simulator without time limits until they achieved a score of 600 out of 700. The training exercises, selected on the basis of validation studies, consisted of intracapsular navigation level 2, antitremor training level 4, intracapsular antitremor training level 2, forceps training level 4, bimanual training level 5, capsulorhexis training level 1, and phaco divide and conquer level 5.[3,12]

2.4. Operating room video assessment and masking

After completing the training modules according to their allocations, trainees performed their first 3 sequential video-recorded phacoemulsification cataract surgeries with intraocular lens implantation in patients under supervision. All supervisors were masked to the residents' study groups. All trainees were assessed on uncomplicated cataract eyes with Snellen best-corrected visual acuity >1/60 and Lens Opacities Classification System III (LOCS III) grading of nuclear color <4, nuclear opalescence <4, cortical (C) <4, and posterior subcapsular (P) <4. A research assistant observed the 3 sequential surgeries and recorded the surgical steps performed by the trainees without supervisor intervention. Total operation time and intraoperative complications, including errant capsulorhexis, posterior capsule rupture (PCR), and anterior vitrectomy, were also recorded.

2.5. Data anonymization, masking, and outcome measures

All visible identifiers were cropped and all sounds were removed from the videos so that patients, trainees, and supervisors were anonymized. All videos were uploaded in random sequence to a cloud storage platform (OneDrive, Microsoft, Washington, USA). Two graders (DN, OA) who were masked to the identity of the trainees independently assessed all videos and scored surgical competency using the International Council of Ophthalmology Surgical Competency Assessment Rubric—phacoemulsification (ICO OSCAR phaco).[25] The detailed skill requirements for achieving each level in each step of the ICO OSCAR phaco had been explained to all study participants during the instruction course. Both graders were also briefed on the ICO OSCAR phaco to ensure standardized assessment. The graders did not score the first item, "draping," because this step was not captured by the video camera. Global indices were not rated because all trainees were under supervision and some steps were performed by supervisors. Therefore, the graders scored 13 task-specific items, with a maximum total score of 65. After the grades were returned for data analysis, the scores of items performed by supervisors were set to 0.
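The scoring rule described above can be expressed as a short Python sketch. It assumes each of the 13 items is scored from 0 to 5 (consistent with a maximum total of 65) and, as a further assumption, that a trainee's outcome is the mean of the totals across graders and videos; the function names are hypothetical.

```python
from statistics import mean

N_ITEMS = 13        # task-specific items scored (draping and global indices excluded)
MAX_ITEM_SCORE = 5  # assumption: 13 items x 5 = maximum total of 65


def video_total(item_scores, by_supervisor):
    """Total ICO OSCAR phaco score for one video from one grader.

    item_scores: 13 grader scores; by_supervisor: 13 booleans marking items
    performed by the supervisor, which are set to 0 before summing.
    """
    if len(item_scores) != N_ITEMS or len(by_supervisor) != N_ITEMS:
        raise ValueError("expected 13 item scores and 13 supervisor flags")
    if any(not 0 <= s <= MAX_ITEM_SCORE for s in item_scores):
        raise ValueError("item score out of range")
    return sum(0 if sup else s for s, sup in zip(item_scores, by_supervisor))


def trainee_score(totals):
    """Assumed trainee-level outcome: mean of totals over all graders and videos."""
    return mean(totals)


# Hypothetical video: every item graded 5, last 2 items performed by the supervisor.
scores = [5] * 13
flags = [False] * 11 + [True, True]
print(video_total(scores, flags))  # 55
```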

2.6. Reference group

Trainees who completed the first module (fundamental wet laboratory training without phacoemulsification) but did not proceed to the second module and randomization were recruited as control subjects in Group C (Control) and proceeded directly to the operating room video assessment. These trainees did not proceed to the second module because of scheduling conflicts or, in a few cases, because the simulation facility was shut down during the COVID-19 pandemic in 2020.

2.7. Cost data collection

Cost data were collected according to the cost reporting framework for education research proposed by Levin, which comprises (1) all identified components associated with each type of simulation module at the CUHK JC OMTC; (2) the monetary value of each component; and (3) the sum of costs for the simulation modules, for comparison among alternative training strategies.[26] Two categories of cost data were reported: "capital cost," representing the expenses for the requisite facilities per training center, and "recurring cost," representing the recurrent expenditure for each trainee to participate in the simulation module. Actual expenses for warranty plans were considered the maintenance cost for the microscope, phacoemulsification system, and virtual reality simulator. The maintenance cost for wet laboratory equipment was the budget set aside for replacing damaged equipment.

2.8. Determining the incremental cost-effectiveness ratio

The incremental cost-effectiveness ratio (ICER) was calculated for the primary outcome of surgical competency. It was determined as the cost of the simulation module(s) for a trainee group minus that of the comparison group, divided by the difference in their outcomes (mean difference in total ICO OSCAR phaco scores), that is, ICER = ΔC/ΔE. The following comparisons were performed: Group A (Eyesi + Wet lab) versus Group B (Wet lab), Group A (Eyesi + Wet lab) versus Group C (Control), and Group B (Wet lab) versus Group C (Control).
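The ICER definition, ICER = (C_new − C_comparator) / (E_new − E_comparator), can be sketched in Python as follows, applied to the group costs in Table 3(C) and the group mean total scores reported in the Results. The function name `icer` is hypothetical. Because the published means are rounded to 1 decimal place, ratios computed this way reproduce the reported Group A vs Group C values but can differ somewhat from the other reported ICERs, which were presumably derived from unrounded scores.

```python
def icer(cost_new, cost_comparator, effect_new, effect_comparator):
    """Incremental cost-effectiveness ratio: extra cost per extra unit of effect."""
    delta_effect = effect_new - effect_comparator
    if delta_effect == 0:
        raise ValueError("ICER is undefined when mean effects are equal")
    return (cost_new - cost_comparator) / delta_effect


# Group costs from Table 3(C) (US dollars) and reported mean total scores.
capital = {"A": 437_897.76, "B": 188_705.77, "C": 102_359.62}
recurring = {"A": 39_818.31, "B": 20_127.41, "C": 5_722.94}
score = {"A": 49.5, "B": 39.0, "C": 32.5}

for new, comp in [("A", "B"), ("A", "C"), ("B", "C")]:
    cap = icer(capital[new], capital[comp], score[new], score[comp])
    rec = icer(recurring[new], recurring[comp], score[new], score[comp])
    print(f"{new} vs {comp}: capital {cap:,.2f}, recurring {rec:,.2f}")
```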

2.9. Estimating cost-effectiveness probability for different WTP values

WTP represents the amount that the stakeholder is prepared to pay to achieve the target outcome.[24] In health economics, a probabilistic model predicts the likelihood that an intervention is cost-effective across a range of WTP values.[27] In this study, the net benefit regression model was used to estimate the probability of cost-effectiveness at various WTP values.[28] The basic equation for each individual subject's net benefit (NB) was NB = WTP × E − C, where E represented the observed effect (mean ICO OSCAR phaco score) and C represented cost. Based on this equation, each subject's NB values at various WTP values were fitted into a general linear regression framework, NB = α + β(TX) + ε, where α was an intercept term, TX was an intervention dummy (e.g., Group A = 1 and Group B = 0), and ε was an error term. The coefficient estimate (β) represented the incremental net benefit (INB), that is, the amount by which the monetary value of the extra effect outweighed the extra cost. A positive INB indicated that the training module was cost-effective, and a negative INB indicated that it was not. Based on the variance in the distribution of effects and costs, a cost-effectiveness acceptability curve was generated to illustrate the probability of a simulation training strategy being cost-effective at various WTP values.[9,29]
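The net benefit regression for one pair of groups can be sketched in Python as below. This is a minimal illustration under stated assumptions, not the SPSS procedure used in the study: costs are treated as constant within each group (as in the group-level cost data), the OLS standard error uses the pooled residual variance, and each acceptability-curve point is taken as the normal-approximation probability that the INB is positive. The individual trainee scores are hypothetical values chosen only so that the group means equal the reported 49.5 and 39.0, and the function names are illustrative.

```python
import math
from statistics import NormalDist, mean


def net_benefit_regression(effects_tx, cost_tx, effects_ctrl, cost_ctrl, wtp):
    """Fit NB = alpha + beta*TX + e by OLS at one WTP value; return (beta, se).

    With a single 0/1 treatment dummy, the OLS slope beta equals the difference
    in mean NB between the groups (the INB), and its standard error uses the
    pooled residual variance.
    """
    nb_tx = [wtp * e - cost_tx for e in effects_tx]
    nb_ctrl = [wtp * e - cost_ctrl for e in effects_ctrl]
    n1, n0 = len(nb_tx), len(nb_ctrl)
    m1, m0 = mean(nb_tx), mean(nb_ctrl)
    beta = m1 - m0
    ss_resid = sum((x - m1) ** 2 for x in nb_tx) + sum((x - m0) ** 2 for x in nb_ctrl)
    se = math.sqrt(ss_resid / (n1 + n0 - 2) * (1 / n1 + 1 / n0))
    return beta, se


def prob_cost_effective(beta, se):
    """One acceptability-curve point: normal-approximation P(INB > 0)."""
    if se == 0:  # no variance in NB (e.g., WTP = 0 with constant group costs)
        return 1.0 if beta > 0 else (0.5 if beta == 0 else 0.0)
    return NormalDist().cdf(beta / se)


# Hypothetical trainee mean scores with Table 3(C) recurring costs (US dollars).
group_a = [52, 45, 60, 38, 55, 48, 50, 48]
group_b = [30, 45, 55, 20, 40, 38, 50, 34]
cost_a, cost_b = 39_818.31, 20_127.41

for wtp in (0, 1000, 1875, 2500, 5000):
    beta, se = net_benefit_regression(group_a, cost_a, group_b, cost_b, wtp)
    print(wtp, round(beta, 2), round(prob_cost_effective(beta, se), 3))
```

At a WTP equal to the ICER implied by these inputs, the INB is 0 and the estimated probability is 0.5, so in this sketch a pairwise acceptability curve crosses 50% at the ICER.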

2.10. Statistical analysis

The Pearson chi-square test was used to compare categorical variables; when any expected cell count was <5, the Fisher exact test was used. The Shapiro-Wilk test was used to assess the normality of data. For nonparametric data, differences between 2 groups were assessed by the Mann-Whitney U test. The Kruskal-Wallis test was used to compare surgical competency scores among the 3 trainee groups (A, B, and C). A P value < .05 was considered statistically significant. When a statistically significant difference was found, post hoc pairwise comparisons were performed with the Dunn-Bonferroni method. Generalizability theory was used to analyze the reliability of the ICO OSCAR phaco scores.[30] A fully crossed design was used to evaluate the variance of ratings across the 2 graders and 3 videos. A generalizability coefficient of 0.8 or above was considered acceptable. Statistical analyses, including cost-effectiveness analyses, were performed in SPSS version 24.0 (SPSS Inc., Chicago, IL, USA).
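The reliability analysis can be sketched as follows for a fully crossed trainees × graders × videos (p × r × v) design with one score per cell. This Python sketch estimates variance components from random-effects ANOVA mean squares and returns the relative generalizability coefficient. Whether the study reported the relative or the absolute coefficient is not stated, so the choice of coefficient, the truncation of negative components to 0, and the function name `g_coefficient` are assumptions.

```python
from itertools import product


def g_coefficient(scores):
    """Relative G coefficient for a fully crossed p x r x v design.

    scores[p][r][v] is the total score that grader r gave to video v of
    trainee p (one observation per cell; at least 2 levels of each facet).
    Negative variance-component estimates are truncated to 0.
    """
    n_p, n_r, n_v = len(scores), len(scores[0]), len(scores[0][0])
    P, R, V = range(n_p), range(n_r), range(n_v)
    x = scores

    grand = sum(x[p][r][v] for p, r, v in product(P, R, V)) / (n_p * n_r * n_v)
    m_p = [sum(x[p][r][v] for r, v in product(R, V)) / (n_r * n_v) for p in P]
    m_r = [sum(x[p][r][v] for p, v in product(P, V)) / (n_p * n_v) for r in R]
    m_v = [sum(x[p][r][v] for p, r in product(P, R)) / (n_p * n_r) for v in V]
    m_pr = [[sum(x[p][r][v] for v in V) / n_v for r in R] for p in P]
    m_pv = [[sum(x[p][r][v] for r in R) / n_r for v in V] for p in P]
    m_rv = [[sum(x[p][r][v] for p in P) / n_p for v in V] for r in R]

    # Sums of squares for the trainee effect and each trainee-related interaction.
    ss_p = n_r * n_v * sum((m_p[p] - grand) ** 2 for p in P)
    ss_pr = n_v * sum((m_pr[p][r] - m_p[p] - m_r[r] + grand) ** 2 for p, r in product(P, R))
    ss_pv = n_r * sum((m_pv[p][v] - m_p[p] - m_v[v] + grand) ** 2 for p, v in product(P, V))
    ss_prv = sum((x[p][r][v] - m_pr[p][r] - m_pv[p][v] - m_rv[r][v]
                  + m_p[p] + m_r[r] + m_v[v] - grand) ** 2
                 for p, r, v in product(P, R, V))

    ms_p = ss_p / (n_p - 1)
    ms_pr = ss_pr / ((n_p - 1) * (n_r - 1))
    ms_pv = ss_pv / ((n_p - 1) * (n_v - 1))
    ms_prv = ss_prv / ((n_p - 1) * (n_r - 1) * (n_v - 1))

    # Solve the expected mean squares of the random-effects model.
    var_prv = ms_prv
    var_pr = max((ms_pr - ms_prv) / n_v, 0.0)
    var_pv = max((ms_pv - ms_prv) / n_r, 0.0)
    var_p = max((ms_p - ms_pr - ms_pv + ms_prv) / (n_r * n_v), 0.0)

    # Relative error variance for a trainee's mean over n_r graders and n_v videos.
    rel_error = var_pr / n_r + var_pv / n_v + var_prv / (n_r * n_v)
    total = var_p + rel_error
    return var_p / total if total > 0 else 0.0


# Hypothetical scores: 3 trainees x 2 graders x 3 videos.
example = [
    [[50, 48, 52], [47, 49, 51]],
    [[35, 38, 33], [36, 40, 34]],
    [[42, 45, 41], [44, 43, 46]],
]
print(round(g_coefficient(example), 2))
```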

3. Results

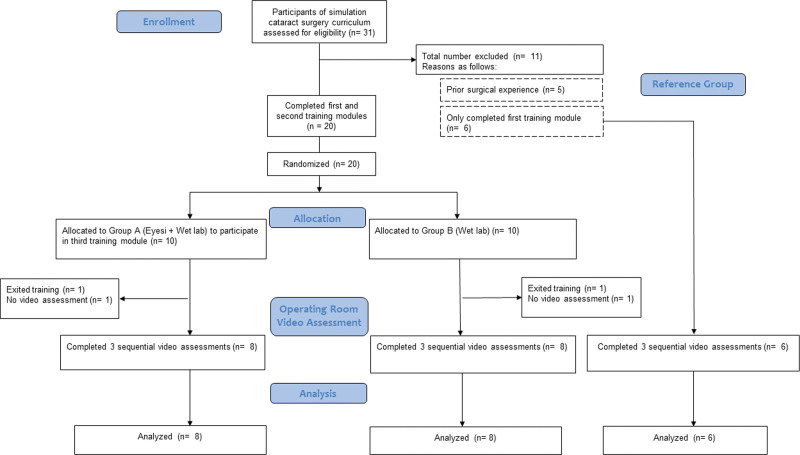

Of 31 ophthalmology residents who participated in the simulation cataract surgery training curriculum at the CUHK JC OMTC, 26 met the inclusion criteria and participated in the study. Ten trainees were randomized to Group A (Eyesi + Wet lab) and 10 to Group B (Wet lab). Six trainees who completed only the first module and did not proceed to randomization were recruited into reference Group C (Control). Four trainees did not have video recordings in the operating room and could not be assessed: 2 owing to technical problems during recording and 2 who withdrew from the study. The final analysis therefore consisted of 8 trainees in Group A, 8 in Group B, and 6 in Group C (Fig. 1).

Figure 1.

CONSORT flow diagram.

3.1. Trainees data

Data of the trainees included in the final analysis are listed in Table 1. The 3 groups were similar in age, gender ratio, time since graduation from medical school, and duration of ophthalmic residency training. All trainees were right-hand dominant. Group A trainees spent a mean of 148.13 ± standard deviation (SD) 57.60 minutes (range, 84 to 312 minutes) to reach the proficiency requirement in Eyesi.

Table 1.

Demographic and background data of participants in Groups A (Eyesi + Wet laboratory phacoemulsification with synthetic eyes + Wet laboratory with porcine eyes), B (Wet laboratory phacoemulsification with synthetic eyes + Wet laboratory with porcine eyes) and C (Wet laboratory with porcine eyes).

| Characteristic | Group A | Group B | Group C | P |
|---|---|---|---|---|
| Total number (n) | 8 | 8 | 6 | / |
| Gender, male:female | 3:5 | 2:6 | 4:2 | 0.42a |
| Age, mean ± SD (years) | 27.0 ± 3.3 | 28.4 ± 4.3 | 26.5 ± 0.7 | 0.31b |
| Right dominant hand | 8 | 8 | 6 | / |
| Time since graduation from medical school, mean ± SD (years) | 3.6 ± 1.2 | 3.8 ± 2.1 | 2.8 ± 0.8 | 0.32b |
| Duration of ophthalmic residency training, mean ± SD (years) | 2.1 ± 0.4 | 2.1 ± 0.4 | 1.7 ± 0.5 | 0.09b |

a Fisher exact test.

b Kruskal-Wallis test.

SD = standard deviation.

3.2. Cataract patients data

A total of 66 cataract patients were operated on by trainees during the video-recorded assessment (Table 2). Patients in the 3 groups did not differ significantly in age, gender, LOCS III cataract grading, or preoperative visual acuity.

Table 2.

Data of video-recorded cataract patients operated by trainees from Groups A (Eyesi + Wet laboratory phacoemulsification with synthetic eyes + Wet laboratory with porcine eyes), B (Wet laboratory phacoemulsification with synthetic eyes + Wet laboratory with porcine eyes), and C (Wet laboratory with porcine eyes).

| Characteristic | Group A | Group B | Group C | P |
|---|---|---|---|---|
| Total number (n) | 24 | 24 | 18 | / |
| Gender, male:female | 8:16 | 10:14 | 6:12 | 0.85a |
| Age, mean ± SD (years) | 75.3 ± 8.3 | 76.9 ± 6.2 | 76.6 ± 6.1 | 0.67b |
| LOCS III NO, mean ± SD | 2.6 ± 1.2 | 2.7 ± 1.1 | 3.0 ± 0.7 | 0.58b |
| LOCS III NC, mean ± SD | 2.6 ± 1.1 | 2.9 ± 1.2 | 2.5 ± 0.4 | 0.64b |
| LOCS III C, mean ± SD | 1.4 ± 0.7 | 2.0 ± 1.1 | 2.1 ± 0.9 | 0.60b |
| LOCS III P, mean ± SD | 1.0 ± 0.2 | 1.7 ± 1.1 | 1.3 ± 0.5 | 0.30b |
| Preop BCVA (LogMAR), mean ± SD | 0.63 ± 0.26 | 0.88 ± 0.53 | 0.64 ± 0.20 | 0.30b |

a Chi-square test.

b Kruskal-Wallis test.

BCVA = best-corrected visual acuity, C = cortical, LOCS III = Lens Opacities Classification System III, LogMAR = logarithm of the minimum angle of resolution, NC = nuclear color, NO = nuclear opalescence, P = posterior subcapsular, SD = standard deviation.

3.3. Primary outcome: operation room performance skills

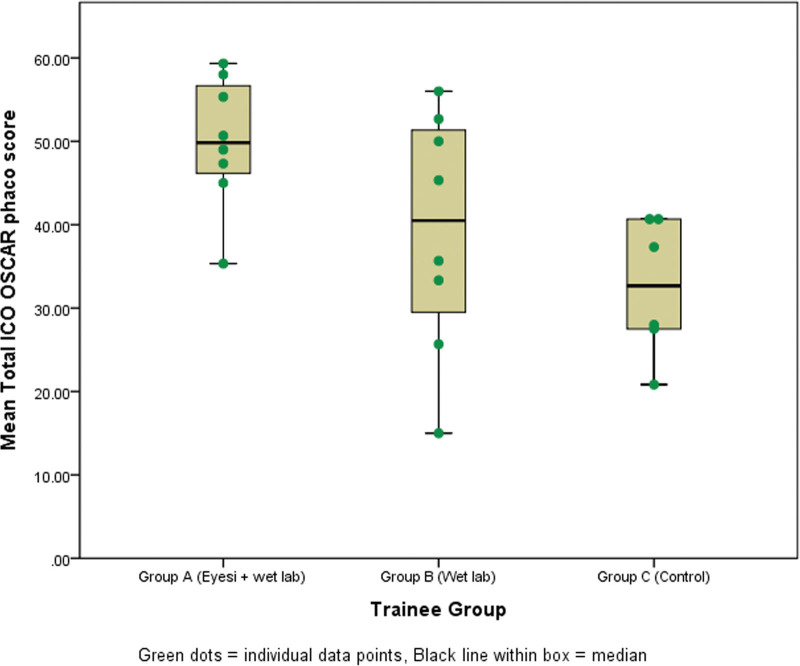

The generalizability coefficient for the performance assessments was 0.83, indicating acceptable reliability with 2 masked independent graders each assessing 3 surgical videos from each trainee. Results from the randomized trial indicated that trainees in Group A (Eyesi + Wet lab) had superior mean total ICO OSCAR phaco scores compared with Group B (Wet lab) (49.5 ± SD 9.8 vs 39.0 ± SD 15.8, P = .02). Both randomized groups had higher mean scores than Group C (Control), which had a mean score of 32.5 ± SD 12.1, although the difference was statistically significant only for A vs C (P < .001 for A vs C and P = .28 for B vs C) (Fig. 2).

Figure 2.

Box-and-whisker plot of the mean total ICO OSCAR phaco scores for surgical competency of trainee Groups A (Eyesi + Wet laboratory phacoemulsification with model eyes + Wet laboratory with porcine eyes), B (Wet laboratory phacoemulsification with model eyes + Wet laboratory with porcine eyes), and C (Wet laboratory with porcine eyes). Individual data points are shown as green dots. ICO OSCAR phaco = International Council of Ophthalmology Surgical Competency Assessment Rubric—phacoemulsification.

3.4. Other intraoperative outcomes

The 3 groups of trainees had no significant differences in intraoperative complication rates: errant capsulorhexis occurred in 2, 1, and 1 case(s); posterior capsule rupture (PCR) in 2, 2, and 2 cases; and anterior vitrectomy was performed in 1, 2, and 1 case(s) in operations by Groups A, B, and C, respectively. Group A trainees performed significantly more steps (out of 13) without supervisor intervention during phacoemulsification surgery than Groups B and C (11.5 ± SD 1.9 vs 9.5 ± SD 3.3 vs 9.1 ± SD 3.1, P = .006). There was no statistically significant difference in total operation time among Groups A, B, and C (34.75 ± SD 10.76 vs 38.91 ± SD 9.31 vs 39.92 ± SD 4.68 minutes, respectively; P = .10).

3.5. Cost data

Cost data (Table 3) for each type of cataract surgery simulation module in our curriculum, namely the first module (wet laboratory with porcine eyes), second module (wet laboratory phacoemulsification in synthetic eyes), and third module (virtual reality simulator training with Eyesi), are reported in the "capital cost" and "recurring cost" categories. The capital cost (per training center) and recurring cost (per annum per trainee) for Groups A (Eyesi + Wet laboratory with model eyes + Wet laboratory with porcine eyes), B (Wet laboratory with model eyes + Wet laboratory with porcine eyes), and C (Wet laboratory with porcine eyes) are summarized. Some items, such as the work station and microscope, were shared between the first and second modules; these shared items were therefore counted only once in the capital costs for Groups A and B.

Table 3.

Cost data in US dollars

Modules: first module, wet laboratory with porcine eye; second module, wet laboratory phacoemulsification with model eye; third module, EyeSi.

(A) Capital cost (per training center)

| Item | First module cost per unit | First module quantity | Second module cost per unit | Second module quantity | Third module cost per unit | Third module quantity |
|---|---|---|---|---|---|---|
| Work station table | 164.10 | 1 | 164.10 | 1 | / | 0 |
| Surgical stool | 1,499.68 | 2 | 1,499.68 | 2 | 1,499.68 | 1 |
| Ophthalmic operating microscope | 70,166.67 | 1 | 70,166.67 | 1 | / | 0 |
| Video camera for microscope | 24,629.49 | 1 | 24,629.49 | 1 | / | 0 |
| Phacoemulsification system | / | 0 | 85,256.41 | 1 | / | 0 |
| Intraocular equipment set | 4,400.00 | 1 | 4,400.00 | 1 | / | 0 |
| Kitaro Mannequin head (training kit + sclera) | / | 0 | 1,089.74 | 1 | / | 0 |
| EyeSi virtual reality simulator | / | 0 | / | 0 | 247,692.31 | 1 |
| Total capital cost | 102,359.62 | | 188,705.77 | | 249,191.99 | |

(B) Recurring cost (per annum per trainee)

| Item | First module cost per unit | First module quantity | Second module cost per unit | Second module quantity | Third module cost per unit | Third module quantity |
|---|---|---|---|---|---|---|
| Consumables | | | | | | |
| Porcine eye | 0.26 | 6 | / | 0 | / | 0 |
| Water absorbing table drape | 0.16 | 2 | 0.16 | 2 | / | 0 |
| Gloves | 0.10 | 6 | 0.10 | 6 | / | 0 |
| Viscoelastic (Healon) | 32.05 | 6 | 32.05 | 6 | / | 0 |
| 15° Sideport knife | 4.30 | 6 | 4.30 | 6 | / | 0 |
| Keratome (2.2 mm slit knife) | 4.30 | 6 | 4.30 | 6 | / | 0 |
| Syringes (3 mL syringe) | 0.12 | 6 | 0.12 | 6 | / | 0 |
| 27G needle | 0.07 | 6 | 0.07 | 6 | / | 0 |
| 27G cannula | 0.05 | 6 | 0.05 | 6 | / | 0 |
| Sutures (10-0 nylon) | 13.72 | 6 | 13.72 | 6 | / | 0 |
| Kitaro cornea part | / | 0 | 48.72 | 6 | / | 0 |
| Kitaro lens part | / | 0 | 14.10 | 6 | / | 0 |
| Phaco cassette (Centurion FMS) | / | 0 | 102.31 | 6 | / | 0 |
| Staff salary (per hour) | | | | | | |
| Administration & support (per hour) | 44.87 | 12 | 44.87 | 12 | / | 0 |
| Equipment maintenance (per annum) | | | | | | |
| Intraocular equipment set | 880.00 | 1 | 880.00 | 1 | / | 0 |
| Microscope maintenance | 3,974.36 | 1 | 3,974.36 | 1 | / | 0 |
| Phaco machine maintenance | / | 0 | 7,692.31 | 1 | / | 0 |
| EyeSi maintenance | / | 0 | / | 0 | 12,790.90 | 1 |
| EyeSi software upgrade | / | 0 | / | 0 | 6,900.00 | 1 |
| Total recurring cost (per annum) | 5,722.94 | | 14,404.47 | | 19,690.90 | |

(C) Training groups cost (per trainee)

| Cost | Group A (1st + 2nd + 3rd modules) | Group B (1st + 2nd modules) | Group C (1st module) |
|---|---|---|---|
| Capital cost* | 437,897.76 | 188,705.77 | 102,359.62 |
| Recurring cost (per annum) | 39,818.31 | 20,127.41 | 5,722.94 |

(A) The capital cost per training center for starting up cataract surgery simulation with the following modules: wet laboratory with porcine eyes, wet laboratory with synthetic eyes and virtual reality simulator (Eyesi). (B) The recurring cost per annum per trainee for implementing the simulation training modules. (C) Summary of capital and recurring costs for Groups A (Eyesi + Wet laboratory phacoemulsification with model eyes + Wet laboratory with porcine eyes), B (Wet laboratory phacoemulsification with model eyes + Wet laboratory with porcine eyes) and C (Wet laboratory with porcine eyes).

Repetitive items (shared between first and second modules) were excluded from calculations of capital costs for Groups A and B.
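The module and group totals in Table 3 follow from summing cost per unit × quantity. The Python sketch below, with line items transcribed from Table 3, reproduces them; it uses `decimal.Decimal` so that currency sums are exact, and the dictionary names and abbreviated item labels are illustrative.

```python
from decimal import Decimal


def module_total(items):
    """Sum of cost per unit x quantity over a module's line items (US dollars)."""
    return sum((Decimal(unit) * qty for unit, qty in items.values()), Decimal("0"))


# Capital cost per training center, Table 3(A): item -> (cost per unit, quantity).
CAPITAL_1 = {
    "work station table": ("164.10", 1),
    "surgical stool": ("1499.68", 2),
    "operating microscope": ("70166.67", 1),
    "microscope video camera": ("24629.49", 1),
    "intraocular equipment set": ("4400.00", 1),
}
CAPITAL_2 = {**CAPITAL_1,  # module 2 uses all module 1 items plus phaco-specific ones
             "phacoemulsification system": ("85256.41", 1),
             "Kitaro mannequin head": ("1089.74", 1)}
CAPITAL_3 = {"surgical stool": ("1499.68", 1), "EyeSi simulator": ("247692.31", 1)}

# Recurring cost per annum per trainee, Table 3(B).
SHARED = {
    "table drape": ("0.16", 2), "gloves": ("0.10", 6), "viscoelastic": ("32.05", 6),
    "sideport knife": ("4.30", 6), "keratome": ("4.30", 6), "3 mL syringe": ("0.12", 6),
    "27G needle": ("0.07", 6), "27G cannula": ("0.05", 6), "10-0 nylon": ("13.72", 6),
    "administration & support (h)": ("44.87", 12),
    "intraocular set maintenance": ("880.00", 1),
    "microscope maintenance": ("3974.36", 1),
}
RECURRING_1 = {**SHARED, "porcine eye": ("0.26", 6)}
RECURRING_2 = {**SHARED, "Kitaro cornea": ("48.72", 6), "Kitaro lens": ("14.10", 6),
               "phaco cassette": ("102.31", 6), "phaco machine maintenance": ("7692.31", 1)}
RECURRING_3 = {"EyeSi maintenance": ("12790.90", 1), "EyeSi software upgrade": ("6900.00", 1)}

cap = [module_total(m) for m in (CAPITAL_1, CAPITAL_2, CAPITAL_3)]
rec = [module_total(m) for m in (RECURRING_1, RECURRING_2, RECURRING_3)]

# Table 3(C): shared capital items are counted once (module 2 already contains
# module 1's items), whereas recurring module totals are added together.
group_capital = {"A": cap[1] + cap[2], "B": cap[1], "C": cap[0]}
group_recurring = {"A": sum(rec), "B": rec[0] + rec[1], "C": rec[0]}
print(group_capital, group_recurring)
```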

3.6. Cost-effectiveness analyses

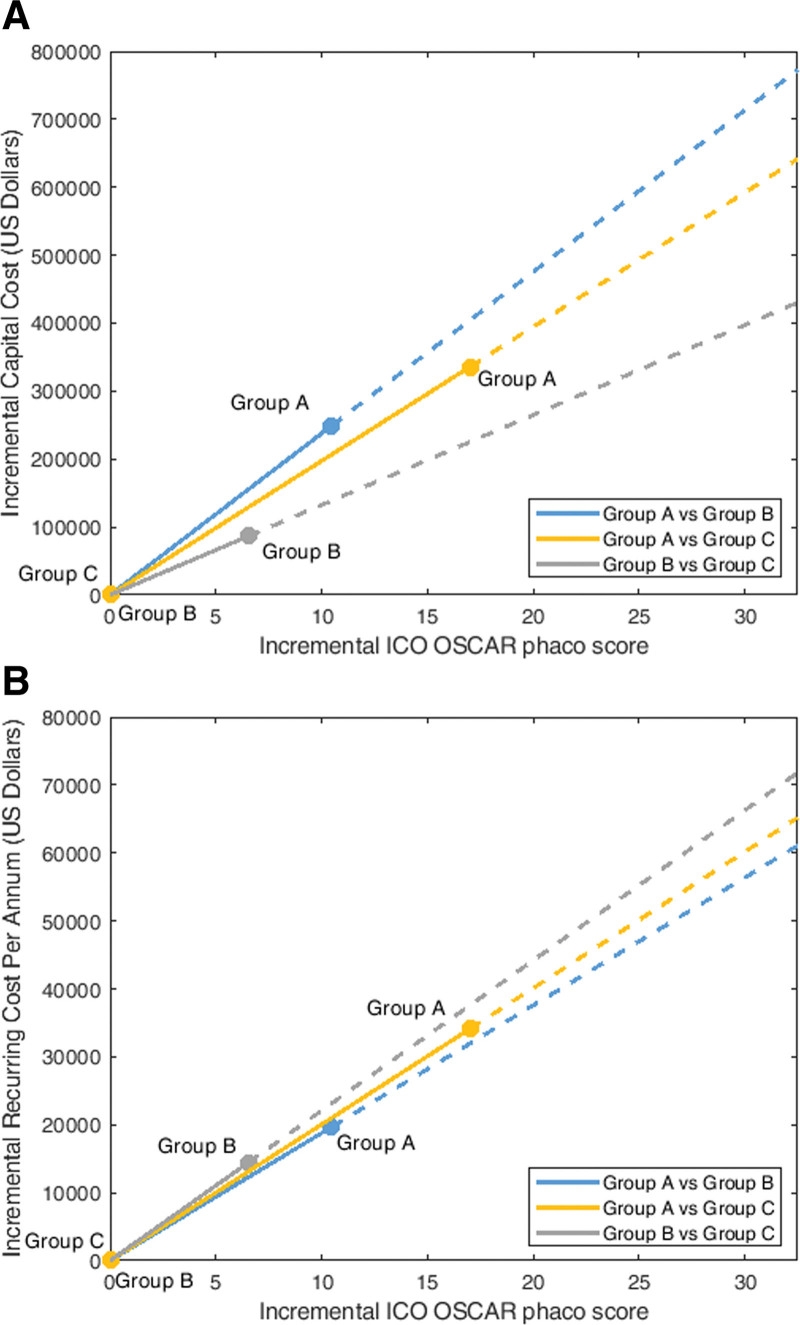

Cost-effectiveness analyses were performed from 2 perspectives: (i) capital cost and (ii) recurring cost. The ICER per ICO OSCAR phaco score of Group A (Eyesi + Wet lab) relative to Group B (Wet lab) was US $23,777.86 for capital cost and $1878.90 for recurring cost. The ICER of Group A (Eyesi + Wet lab) relative to Group C (Control) was $19,737.54 for capital cost and $2005.61 for recurring cost. The ICER of Group B (Wet lab) relative to Group C (Control) was $13,473.27 for capital cost and $2209.27 for recurring cost (Fig. 3A,B).

Figure 3.

(A) Cost-effectiveness plane with the y axis representing the incremental capital cost (US dollars) of our training center for requisite simulation facilities and the x axis representing the incremental ICO OSCAR phaco score in operating theater performance. The blue line illustrates the incremental cost-effectiveness ratio (ICER) of Group A (Eyesi + Wet laboratory phacoemulsification with synthetic eyes + Wet laboratory with porcine eyes) relative to Group B (Wet laboratory phacoemulsification with synthetic eyes + Wet laboratory with porcine eyes), the yellow line illustrates the ICER of Group A relative to Group C (Wet laboratory with porcine eyes), and the gray line illustrates the ICER of Group B relative to Group C. (B) Cost-effectiveness plane with the y axis representing the incremental recurring cost per annum (US dollars) of trainee participation in simulation module(s) and the x axis representing the incremental ICO OSCAR phaco score in operating theater performance. The blue line illustrates the ICER of Group A (Eyesi + Wet laboratory phacoemulsification with synthetic eyes + Wet laboratory with porcine eyes) relative to Group B (Wet laboratory phacoemulsification with synthetic eyes + Wet laboratory with porcine eyes), the yellow line illustrates the ICER of Group A relative to Group C (Wet laboratory with porcine eyes), and the gray line illustrates the ICER of Group B relative to Group C. ICER = incremental cost-effectiveness ratio, ICO OSCAR phaco = International Council of Ophthalmology Surgical Competency Assessment Rubric—phacoemulsification.

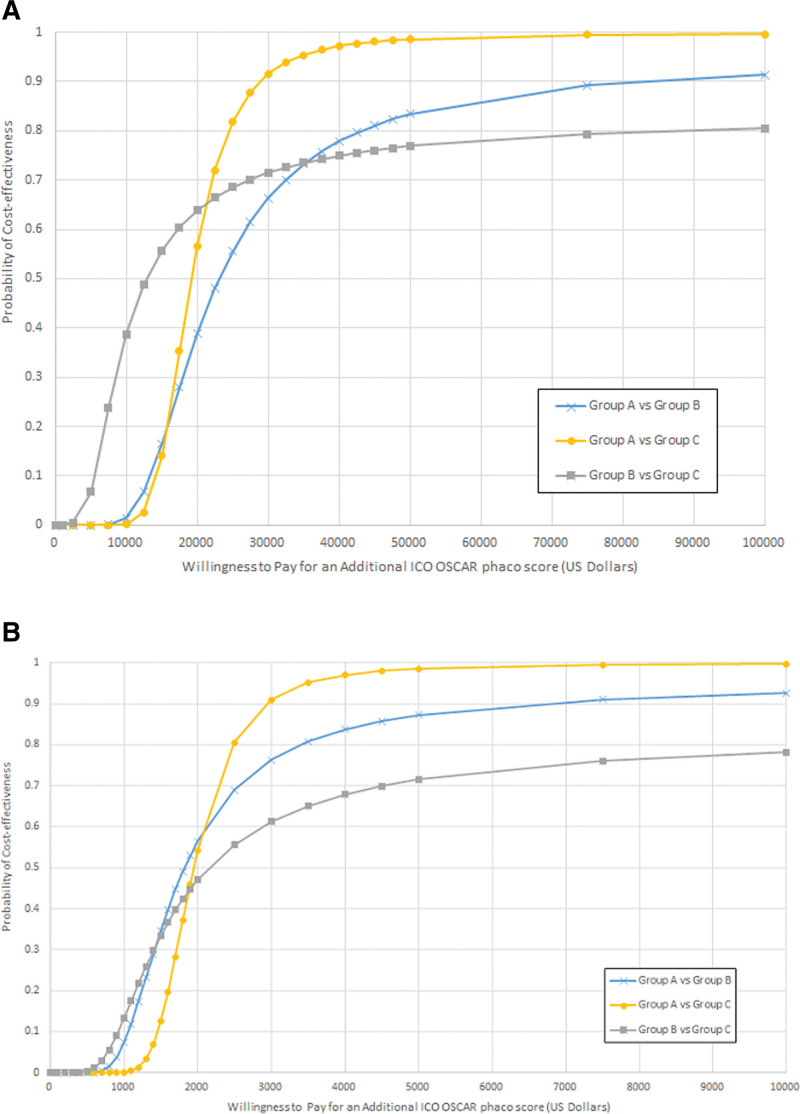

Based on the net benefit regression model, cost-effectiveness acceptability curves were constructed to compare training strategies for capital cost and recurring cost, respectively (Fig. 4A,B). The probability of cost-effectiveness for a presumed WTP value per additional ICO OSCAR phaco score gained in the operating theater can be estimated for the training strategies used in Groups A, B, and C when compared with one another. The practical scenarios and recommendations based on interpretations of Figure 4A,B are summarized in Table 4.

Figure 4.

(A) CEAC illustrating the probability that a cataract surgery simulation training strategy was cost-effective for the range of WTP values per ICO OSCAR phaco score in US dollars for capital cost. Groups A (Eyesi + Wet laboratory phacoemulsification with model eyes + Wet laboratory with porcine eyes), B (Wet laboratory phacoemulsification with model eyes + Wet laboratory with porcine eyes) and C (Wet laboratory with porcine eyes) are compared in pairs. (B) CEAC illustrating the probability that a cataract surgery simulation training strategy was cost-effective for the range of WTP values per ICO OSCAR phaco score in US dollars for recurring cost per annum. Groups A (Eyesi + Wet laboratory phacoemulsification with model eyes + Wet laboratory with porcine eyes), B (Wet laboratory phacoemulsification with model eyes + Wet laboratory with porcine eyes) and C (Wet laboratory with porcine eyes) are compared in pairs. CEAC = cost-effectiveness acceptability curve, ICO OSCAR phaco = International Council of Ophthalmology Surgical Competency Assessment Rubric—phacoemulsification, WTP = willingness to pay.

Table 4.

Practical scenarios and interpretations from cost-effectiveness acceptability curves (CEAC) when comparing cataract surgery simulation training strategies.

| Scenarios | Interpretations from CEAC |
|---|---|
| When only elementary wet laboratory facilities exist (Group C) and a decision is needed on whether to acquire wet laboratory training with phacoemulsification in model eyes (Group B) or both wet laboratory phacoemulsification and virtual reality simulator training (Group A). | If the WTP value per skill transfer score is below $22,500, wet laboratory phacoemulsification training without a virtual reality simulator would have a higher probability of cost-effectiveness (Fig. 4A, Group B vs C). However, if the WTP is above $22,500, the combination of virtual reality simulator and wet laboratory phacoemulsification training will always have a higher probability of cost-effectiveness (Fig. 4A, Group A vs C). |
| When a wet laboratory facility for phacoemulsification in model eyes is already available (Group B) and a decision is needed on whether to acquire a virtual reality simulator for additional training (Group A). | For a WTP value per skill transfer score of $25,000 and above (Fig. 4A, Group A vs B), the probability that acquiring a virtual reality simulator in addition to wet laboratory phacoemulsification training is cost-effective will be above 50%. |
| When all facilities are already available for implementing any of the 3 simulation training strategies. | If the WTP value per skill transfer score is less than $1400, wet laboratory phacoemulsification training without a virtual reality simulator will achieve a higher probability of cost-effectiveness (Fig. 4B, Group B vs C). If the WTP value per skill transfer score is slightly higher ($1850 and above), the combination of virtual reality simulator and wet laboratory phacoemulsification will achieve a higher probability of cost-effectiveness (Fig. 4B, Group A vs B and A vs C). |

CEAC = cost-effectiveness acceptability curves.

4. Discussion

This clinical trial-based cost-effectiveness evaluation applied the methodological approach advocated by education researchers.[9,10,31] The outcome of this interventional study, skills transfer to the operating theater, is a high-impact outcome as defined by the Kirkpatrick hierarchy.[11] Furthermore, this study provides empirical evidence on the costs of attaining effective surgical skills transfer in the operating theater.

The ICER indicates the incremental cost per unit of additional outcome effect observed in the clinical trial.[10] From the perspective of capital investment in setting up a new phacoemulsification surgery training facility, the ICER for gaining 1 ICO OSCAR phaco score point from the combination of virtual reality simulator and wet laboratory phacoemulsification training, relative to wet laboratory phacoemulsification training, was the highest (Fig. 3B). From the perspective of recurring cost, however, the ICER for the same comparison was the lowest (Fig. 4A). Although acquiring a virtual reality simulator unit entails a high capital cost, the simulator is more favorable toward cost-effectiveness when only recurring expenditures, such as hardware maintenance and software upgrades, are considered.
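The ICER comparison described above reduces to a ratio of differences, ICER = ΔC / ΔE. The sketch below is a minimal illustration, assuming each strategy is summarized by a total cost (USD) and a mean ICO OSCAR phaco score; the `Strategy` class, the `icer` function, and all numbers are hypothetical and are not the study's cost data or analysis code.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Strategy:
    """A training strategy summarized by total cost (USD) and mean effect
    (e.g., mean ICO OSCAR phaco score). All values used here are hypothetical."""
    name: str
    cost: float
    effect: float


def icer(new: Strategy, comparator: Strategy) -> float:
    """Incremental cost-effectiveness ratio: extra cost per extra unit of effect."""
    delta_cost = new.cost - comparator.cost
    delta_effect = new.effect - comparator.effect
    if delta_effect == 0:
        raise ValueError("ICER is undefined when the incremental effect is zero")
    return delta_cost / delta_effect


# Hypothetical illustration only (not the study's data).
reference = Strategy("reference wet lab", cost=10_000.0, effect=30.0)
wet_lab_phaco = Strategy("wet lab phaco", cost=40_000.0, effect=40.0)
combined = Strategy("simulator + wet lab phaco", cost=240_000.0, effect=50.0)

print(icer(wet_lab_phaco, reference))  # 30000 / 10 -> 3000.0
print(icer(combined, wet_lab_phaco))   # 200000 / 10 -> 20000.0
```

On its own, an ICER of 3000 USD per score point does not say whether that price is worth paying; a WTP benchmark is needed to make that judgment.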

The ICER quantifies the extra cost per extra unit of effect gained, but without a benchmark it does not indicate whether the extra cost is worthwhile.[32] A benchmark for the cost-effectiveness of cataract surgery training has not been established. To enhance the generalizability of our cost-effectiveness evaluation, we developed an economic model that compares the simulation training strategies according to their probability of cost-effectiveness across a range of WTP values.[9] The NB approach is a contemporary economic evaluation method that determines whether the NB of 1 simulation intervention surpasses that of another by taking into account the incremental cost and incremental effect over a range of WTP values.[28] Economists use such sensitivity analyses to interpret cost-effectiveness under various scenarios.[32] Stakeholders can therefore identify the most cost-effective strategy with the resources at hand, as well as the next best alternative if they prefer to use fewer resources.
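The net benefit reasoning in this paragraph can be sketched as follows. At a WTP value λ, NB = λ·E − C, and the strategy with the highest NB is preferred. For a pair of strategies, INB(λ) = λ·ΔE − ΔC, which is positive exactly when λ exceeds the ICER (for ΔE > 0). A CEAC point can be approximated parametrically as P(INB > 0) = Φ(INB / SE), assuming ΔE and ΔC are approximately bivariate normal. This is a minimal sketch of that normal approximation, not the authors' net benefit regression; the function names and all inputs are hypothetical.

```python
import math


def net_benefit(wtp: float, effect: float, cost: float) -> float:
    """NB = wtp * effect - cost. With deltas as inputs, this gives the INB."""
    return wtp * effect - cost


def best_strategy(wtp: float, strategies: dict[str, tuple[float, float]]) -> str:
    """Name of the strategy with the highest NB; values are (cost, effect)."""
    return max(strategies, key=lambda name: net_benefit(wtp, strategies[name][1], strategies[name][0]))


def prob_cost_effective(
    wtp: float,
    delta_effect: float,
    delta_cost: float,
    var_delta_effect: float,
    var_delta_cost: float,
    cov_delta: float = 0.0,
) -> float:
    """Normal-approximation CEAC point: P(INB > 0) at a given WTP.

    Var(INB) = wtp^2 * Var(dE) + Var(dC) - 2 * wtp * Cov(dE, dC).
    """
    inb = net_benefit(wtp, delta_effect, delta_cost)
    var_inb = wtp**2 * var_delta_effect + var_delta_cost - 2 * wtp * cov_delta
    if var_inb <= 0:
        return 1.0 if inb > 0 else 0.0  # no uncertainty: deterministic decision
    z = inb / math.sqrt(var_inb)
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))  # standard normal CDF


# Hypothetical (cost in USD, mean score) per strategy; not the study's data.
strategies = {"A": (240_000.0, 50.0), "B": (40_000.0, 40.0), "C": (10_000.0, 30.0)}
for wtp in (1_000, 10_000, 25_000):
    print(wtp, best_strategy(wtp, strategies))

# Hypothetical A vs B increments: dE = 10 (SE 4), dC = 200,000 USD (SE 20,000).
for wtp in (0, 10_000, 20_000, 30_000, 40_000):
    p = prob_cost_effective(wtp, 10.0, 200_000.0, 4.0**2, 20_000.0**2)
    print(f"WTP {wtp:>6}: P(cost-effective) = {p:.2f}")  # 0.50 at WTP = ICER = 20,000
```

Under this approximation, the CEAC for a pair of strategies crosses 0.5 at the ICER, because INB is zero there.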

Our study did not show that any simulation strategy reduced total operation time or intraoperative complication rates when novice trainees performed their first 3 phacoemulsification surgeries in the operating theater. The overall PCR rate in this study was 9.1% among trainees performing their initial 3 surgeries in the operating theater. In the randomized controlled trial conducted by Dean et al, the PCR rate in the simulation intervention group was 7.8%, significantly lower than in the control group without training intervention (26.6%).[1] Unlike our study, Dean et al reported PCR rates at the 1-year evaluation endpoint, and their intervention group had performed more cataract surgeries in patients than the control group (537 vs 203 surgeries, respectively).[1] In a clinical trial, Thomsen et al found that trainees who had already performed up to 75 phacoemulsification surgeries in patients still improved their surgical skills after virtual reality simulator training.[3] Persistent simulation practice, further accumulation of operating theater experience, and long-term surveillance of trainees’ performance are required to lower PCR rates. Future cost-benefit analyses will be able to determine whether cost savings from reduced patient complications ultimately recoup the investment in medical simulation training.

A limitation of this study is the relatively small sample size. The power calculation for cost-effectiveness analysis differs from that based on primary clinical outcomes.[33] Because of the large variability in the types of healthcare resources and cost measures, a much larger sample size is often required for an adequately powered economic evaluation.[34] Rather than testing a particular hypothesis concerning cost-effectiveness, health economists propose estimating cost-effectiveness as a pragmatic approach.[9,35] The annual intake of ophthalmology residents is very limited, and opportunities to conduct rigorous clinical trials in ophthalmic surgical trainees are scarce. Given the tremendous demand for training cataract surgeons and the rapidly increasing uptake of simulation worldwide, it is imperative to collect whatever evidence is available to inform the allocation of training resources, which is critical to the viability and sustainability of training programs.[19] Our economic evaluation estimated the cost and value of simulation training based on the likelihood that the combination of virtual reality simulation and wet laboratory phacoemulsification is more effective for skills transfer in the operating theater.
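The point about sample size can be made concrete with a commonly used net-benefit form of the sample size calculation for trial-based economic evaluations, assumed here with a two-sided test, equal allocation, and identical per-participant standard deviations of cost and effect in both arms: n per arm = 2(z₁₋α/₂ + z₁₋β)² · Var(NBᵢ) / INB², where Var(NBᵢ) = λ²σ_E² + σ_C² − 2λρσ_Eσ_C. Because Var(NBᵢ) contains the cost variance, highly variable costs inflate the required sample size even when the effect estimate is unchanged. The function name and all inputs below are hypothetical.

```python
import math
from statistics import NormalDist


def n_per_arm_net_benefit(
    wtp: float,
    delta_effect: float,
    delta_cost: float,
    sd_effect: float,
    sd_cost: float,
    rho: float = 0.0,
    alpha: float = 0.05,
    power: float = 0.80,
) -> int:
    """Participants per arm to detect INB = wtp * dE - dC (two-sided test).

    Assumes equal allocation and the same per-participant SDs of cost and
    effect in both arms; rho is the per-participant cost-effect correlation.
    """
    inb = wtp * delta_effect - delta_cost
    if inb == 0:
        raise ValueError("INB is zero at this WTP; no finite sample size exists")
    z = NormalDist().inv_cdf(1 - alpha / 2) + NormalDist().inv_cdf(power)
    # Variance of individual net benefit NB_i = wtp * E_i - C_i
    var_nb = wtp**2 * sd_effect**2 + sd_cost**2 - 2 * wtp * rho * sd_effect * sd_cost
    return math.ceil(2 * z**2 * var_nb / inb**2)


# Hypothetical inputs: same effect, two levels of cost variability.
print(n_per_arm_net_benefit(5_000, 10.0, 20_000.0, sd_effect=12.0, sd_cost=5_000.0))   # 64
print(n_per_arm_net_benefit(5_000, 10.0, 20_000.0, sd_effect=12.0, sd_cost=50_000.0))  # 107
```

In this hypothetical case, a tenfold increase in the cost standard deviation raises the required sample size from 64 to 107 per arm.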

A further limitation is that the reference group participants were not randomized but were selected from trainees who had completed part of the course, which could introduce selection bias. To minimize bias, trainees’ identities and allocations were concealed from the investigators and graders. Various models, including animal eyes and grape skin, have been common tools for preoperative cataract surgery training.[11] Including the reference group in this study provided more scenarios for cost-effectiveness comparison.

The cost-effectiveness of implementing virtual reality simulator training for cataract surgery has been debated for many years. Young et al estimated that a typical residency program in the United States would need 34 years to recoup the expense of an Eyesi unit, but acknowledged that this estimate could be oversimplified because only nonsupply costs (e.g., operating room time and staff salaries) were considered.[17] Lowry et al also performed a hypothetical cost analysis based on operating room time savings per resident and suggested that the expenditure for acquiring an Eyesi simulator can be offset over a few years through its use by a greater number of residents.[16] Intuitively, a higher number of participants in a simulation facility improves the efficiency of resource utilization. Economic evaluation by “break-even analysis” can identify the number of participants needed to generate effects or benefits that break even with the cost of setting up and running the facility.[31]
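Break-even analysis can be sketched as solving n × (monetized benefit per participant − variable cost per participant) ≥ fixed cost for the smallest integer n. This minimal sketch assumes that benefits can be expressed in currency (for example, as the value of operating room time saved, in the spirit of the analyses by Young et al and Lowry et al). The function names and figures are hypothetical and do not reproduce those authors' calculations.

```python
import math


def break_even_participants(
    fixed_cost: float,
    benefit_per_participant: float,
    variable_cost_per_participant: float,
) -> int:
    """Smallest n with n * (benefit - variable cost) >= fixed cost."""
    margin = benefit_per_participant - variable_cost_per_participant
    if margin <= 0:
        raise ValueError("never breaks even: per-participant margin is not positive")
    return math.ceil(fixed_cost / margin)


def years_to_break_even(participants_needed: int, participants_per_year: int) -> int:
    """Whole years of intake needed to reach the break-even number of participants."""
    return math.ceil(participants_needed / participants_per_year)


# Hypothetical figures for illustration only.
n = break_even_participants(fixed_cost=150_000, benefit_per_participant=6_000,
                            variable_cost_per_participant=1_000)
print(n)                           # 150000 / 5000 -> 30
print(years_to_break_even(n, 6))   # 30 / 6 -> 5
```

The same arithmetic shows why a larger annual intake shortens the payback period: doubling participants per year halves the number of years needed to reach break-even.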

Globally, expenditure on health professional education is estimated at $100 billion per year to meet the immense demand for training healthcare professionals.[6] Because resources are finite, cost-effectiveness analysis of medical training is highly important.[10] Each training center has unique cost considerations shaped by many circumstances, such as manpower, space rental, utilities, availability of donated equipment, fluctuating market values of equipment, instrument upgrades, and relationships with industry. Priorities also differ across the diverse cultures and teaching philosophies worldwide. We recommend our generalized approach to cost-effectiveness analysis, based on established education research methodology and contemporary economic concepts, as a framework for future economic evaluations of various strategies in ophthalmic microsurgical training. This enables stakeholders to make informed decisions and invest prudently to deliver the best possible training outcomes in cataract surgery.

Acknowledgments

We wish to thank The Hong Kong Jockey Club Charities Trust (https://charities.hkjc.com/charities/english/charities-trust/index.aspx), Ms. Ella Fung, CUHK JC OMTC Program Manager and Mr. William Yuen, CUHK JC OMTC Technician.

Author contributions

Conceptualization: Danny Siu-Chun Ng, Clement C. Tham.

Data curation: Danny Siu-Chun Ng, Alvin L. Young, Wilson W. K. Yip, Nai Man Lam, Kenneth K. Li, Simon T. Ko, Wai Ho Chan.

Formal analysis: Danny Siu-Chun Ng, Benjamin H. K. Yip, Orapan Aryasit, Shameema Sikder, John D. Ferris, Chi Pui Pang, Clement C. Tham.

Funding acquisition: Danny Siu-Chun Ng, Clement C. Tham.

Investigation: Danny Siu-Chun Ng, Benjamin H. K. Yip, Alvin L. Young, Wilson W. K. Yip, Nai Man Lam, Kenneth K. Li, Simon T. Ko, Wai Ho Chan, Orapan Aryasit, Shameema Sikder, John D. Ferris, Chi Pui Pang, Clement C. Tham.

Methodology: Danny Siu-Chun Ng, Clement C. Tham.

Project administration: Danny Siu-Chun Ng.

Resources: Danny Siu-Chun Ng, Alvin L. Young, Wilson W. K. Yip, Nai Man Lam, Kenneth K. Li, Simon T. Ko, Wai Ho Chan, Clement C. Tham.

Supervision: Danny Siu-Chun Ng, Chi Pui Pang, Clement C. Tham.

Visualization: Danny Siu-Chun Ng, Benjamin H. K. Yip.

Writing – original draft: Danny Siu-Chun Ng.

Writing – review & editing: Danny Siu-Chun Ng, Benjamin H. K. Yip, Alvin L. Young, Wilson W. K. Yip, Nai Man Lam, Kenneth K. Li, Simon T. Ko, Wai Ho Chan, Orapan Aryasit, Shameema Sikder, John D. Ferris, Chi Pui Pang, Clement C. Tham.

Supplementary Material

Abbreviations:

- BCVA = best-corrected visual acuity
- C = cortical
- CEAC = cost-effectiveness acceptability curve
- CUHK JC OMTC = Chinese University of Hong Kong Jockey Club Ophthalmic Microsurgical Training Centre
- HMRF = Health and Medical Research Fund
- ICER = incremental cost-effectiveness ratio
- ICO OSCAR phaco = International Council of Ophthalmology Surgical Competency Assessment Rubric—phacoemulsification
- INB = incremental net benefit
- LOCS III = Lens Opacities Classification System III
- LogMAR = logarithm of the minimum angle of resolution
- NB = net benefit
- NBR = net benefit regression
- NC = nuclear color
- NO = nuclear opalescence
- P = posterior subcapsular
- PCR = posterior capsular rupture
- PRECOG = programme effectiveness and cost generalization
- SD = standard deviation
- WTP = willingness to pay

This research was funded by the Health and Medical Research Fund (HMRF), Hong Kong (Ref.: 05162446). The sponsor or funding organization had no role in the design or conduct of this research.

This study was approved by institutional review boards and adhered to the tenets of the Declaration of Helsinki. (1) Approved 12/08/2019, New Territories West Cluster Research Ethics Committee, Hong Kong Hospital Authority (Ref: NTWC/REC/19070). (2) Approved 29/11/2017, Kowloon Central Cluster Research Ethics Committee/ Kowloon East Cluster Research Ethics Committee, Hong Kong Hospital Authority (Ref: KCC/KEC-2017-0175). (3) Approved 02/02/2018, New Territories East Cluster Research Ethics Committee, Hong Kong Hospital Authority (Ref: 2017.675). (4) Approved 25/02/2018, Hong Kong East Cluster Research Ethics Committee, Hong Kong Hospital Authority (Ref: HKECREC-2018-006).

The authors have no conflicts of interest to disclose.

The datasets generated and/or analyzed during the current study are available from the corresponding author on reasonable request.

How to cite this article: Ng DS, Yip BHK, Young AL, Yip WWK, Lam NM, Li KK, Ko ST, Chan WH, Aryasit O, Sikder S, Ferris JD, Pang CP, Tham CC. Cost-effectiveness of virtual reality and wet laboratory cataract surgery simulation. Medicine 2023;102:40(e35067).

Supplemental Digital Content is available for this article.

Contributor Information

Danny S. Ng, Email: dannyng@cuhk.edu.hk.

Benjamin H. K. Yip, Email: ywk806@ha.org.hk.

Alvin L. Young, Email: youngla@ha.org.hk.

Wilson W. K. Yip, Email: ywk806@ha.org.hk.

Nai M. Lam, Email: lnmz01@ha.org.hk.

Kenneth K. Li, Email: lkw856@ha.org.hk.

Simon T. Ko, Email: kotc@ha.org.hk.

Wai H. Chan, Email: chanwh6@ha.org.hk.

Orapan Aryasit, Email: all_or_none22781@hotmail.com.

Shameema Sikder, Email: ssikder1@jhmi.edu.

John D. Ferris, Email: johndferris@me.com.

Chi P. Pang, Email: cppang@cuhk.edu.hk.

References

- [1] Dean WH, Gichuhi S, Buchan JC, et al. Intense simulation-based surgical education for manual small-incision cataract surgery: the ophthalmic learning and improvement initiative in cataract surgery randomized clinical trial in Kenya, Tanzania, Uganda, and Zimbabwe. JAMA Ophthalmol. 2021;139:9–15.

- [2] Ferris JD, Donachie PH, Johnston RL, et al. Royal College of Ophthalmologists’ National Ophthalmology Database study of cataract surgery: report 6. The impact of EyeSi virtual reality training on complications rates of cataract surgery performed by first and second year trainees. Br J Ophthalmol. 2020;104:324–9.

- [3] Thomsen AS, Bach-Holm D, Kjaerbo H, et al. Operating room performance improves after proficiency-based virtual reality cataract surgery training. Ophthalmology. 2017;124:524–31.

- [4] Nandigam K, Soh J, Gensheimer WG, et al. Cost analysis of objective resident cataract surgery assessments. J Cataract Refract Surg. 2015;41:997–1003.

- [5] Hosler MR, Scott IU, Kunselman AR, et al. Impact of resident participation in cataract surgery on operative time and cost. Ophthalmology. 2012;119:95–8.

- [6] Frenk J, Chen L, Bhutta ZA, et al. Health professionals for a new century: transforming education to strengthen health systems in an interdependent world. Lancet. 2010;376:1923–58.

- [7] Resnikoff S, Lansingh VC, Washburn L, et al. Estimated number of ophthalmologists worldwide (International Council of Ophthalmology update): will we meet the needs? Br J Ophthalmol. 2020;104:588–92.

- [8] Brown CA, Belfield CR, Field SJ. Cost effectiveness of continuing professional development in health care: a critical review of the evidence. BMJ. 2002;324:652–5.

- [9] Tolsgaard MG, Tabor A, Madsen ME, et al. Linking quality of care and training costs: cost-effectiveness in health professions education. Med Educ. 2015;49:1263–71.

- [10] Walsh K, Levin H, Jaye P, et al. Cost analyses approaches in medical education: there are no simple solutions. Med Educ. 2013;47:962–8.

- [11] Thomsen AS, Subhi Y, Kiilgaard JF, et al. Update on simulation-based surgical training and assessment in ophthalmology: a systematic review. Ophthalmology. 2015;122:1111–1130.e1.

- [12] Thomsen AS, Kiilgaard JF, Kjaerbo H, et al. Simulation-based certification for cataract surgery. Acta Ophthalmol. 2015;93:416–21.

- [13] Saleh GM, Lamparter J, Sullivan PM, et al. The international forum of ophthalmic simulation: developing a virtual reality training curriculum for ophthalmology. Br J Ophthalmol. 2013;97:789–92.

- [14] Sikder S, Tuwairqi K, Al-Kahtani E, et al. Surgical simulators in cataract surgery training. Br J Ophthalmol. 2014;98:154–8.

- [15] McCannel CA, Reed DC, Goldman DR. Ophthalmic surgery simulator training improves resident performance of capsulorhexis in the operating room. Ophthalmology. 2013;120:2456–61.

- [16] Lowry EA, Porco TC, Naseri A. Cost analysis of virtual-reality phacoemulsification simulation in ophthalmology training programs. J Cataract Refract Surg. 2013;39:1616–7.

- [17] Young BK, Greenberg PB. Is virtual reality training for resident cataract surgeons cost effective? Graefes Arch Clin Exp Ophthalmol. 2013;251:2295–6.

- [18] la Cour M, Thomsen ASS, Alberti M, et al. Simulators in the training of surgeons: is it worth the investment in money and time? 2018 Jules Gonin lecture of the Retina Research Foundation. Graefes Arch Clin Exp Ophthalmol. 2019;257:877–81.

- [19] Chilibeck CM, McGhee CNJ. Virtual reality surgical simulators in ophthalmology: Are we nearly there? Clin Exp Ophthalmol. 2020;48:727–9.

- [20] Lee AG, Greenlee E, Oetting TA, et al. The Iowa ophthalmology wet laboratory curriculum for teaching and assessing cataract surgical competency. Ophthalmology. 2007;114:e21–26.

- [21] Daly MK, Gonzalez E, Siracuse-Lee D, et al. Efficacy of surgical simulator training versus traditional wet-lab training on operating room performance of ophthalmology residents during the capsulorhexis in cataract surgery. J Cataract Refract Surg. 2013;39:1734–41.

- [22] Henderson BA, Grimes KJ, Fintelmann RE, et al. Stepwise approach to establishing an ophthalmology wet laboratory. J Cataract Refract Surg. 2009;35:1121–8.

- [23] Mak ST, Lam CW, Ng DSC, et al. Oculoplastic surgical simulation using goat sockets. Orbit. 2022;41:292–296.

- [24] Hoch JS, Briggs AH, Willan AR. Something old, something new, something borrowed, something blue: a framework for the marriage of health econometrics and cost-effectiveness analysis. Health Econ. 2002;11:415–30.

- [25] Golnik C, Beaver H, Gauba V, et al. Development of a new valid, reliable, and internationally applicable assessment tool of residents’ competence in ophthalmic surgery (an American Ophthalmological Society thesis). Trans Am Ophthalmol Soc. 2013;111:24–33.

- [26] Levin HM, McEwan PJ. Cost-effectiveness analysis: methods and applications. 2nd ed. Thousand Oaks, CA: Sage. 2001.

- [27] van Hout BA, Al MJ, Gordon GS, et al. Costs, effects and C/E-ratios alongside a clinical trial. Health Econ. 1994;3:309–19.

- [28] Hoch JS, Hay A, Isaranuwatchai W, et al. Advantages of the net benefit regression framework for trial-based economic evaluations of cancer treatments: an example from the Canadian Cancer Trials Group CO.17 trial. BMC Cancer. 2019;19:552.

- [29] Barton GR, Briggs AH, Fenwick EA. Optimal cost-effectiveness decisions: the role of the cost-effectiveness acceptability curve (CEAC), the cost-effectiveness acceptability frontier (CEAF), and the expected value of perfection information (EVPI). Value Health. 2008;11:886–97.

- [30] Bilgic E, Watanabe Y, McKendy K, et al. Reliable assessment of operative performance. Am J Surg. 2016;211:426–30.

- [31] Maloney S, Haines T. Issues of cost-benefit and cost-effectiveness for simulation in health professions education. Adv Simul (Lond). 2016;1:13.

- [32] Paulden M. Calculating and interpreting ICERs and net benefit. PharmacoEcon. 2020;38:785–807.

- [33] Petrou S, Gray A. Economic evaluation alongside randomised controlled trials: design, conduct, analysis, and reporting. BMJ. 2011;342:d1548.

- [34] Glick HA. Sample size and power for cost-effectiveness analysis (part 1). PharmacoEcon. 2011;29:189–98.

- [35] Briggs A. Economic evaluation and clinical trials: size matters. BMJ. 2000;321:1362–3.