Abstract

This article concerns predictive modeling for spatio-temporal data as well as model interpretation using data information in space and time. We develop a novel approach based on supervised dimension reduction for such data in order to capture nonlinear mean structures without requiring a prespecified parametric model. In addition to prediction, this approach emphasizes the exploration of geometric information in the data. The method of Pairwise Directions Estimation (PDE) is implemented in our approach as a data-driven tool for finding spatial patterns and temporal trends. We highlight the benefit of the geometric information obtained from PDE, which aids in exploring data structures. We further enhance PDE, referring to the result as PDE+, by incorporating kriging to estimate the random effects not explained by the mean functions. Our proposal can not only increase prediction accuracy but also improve model interpretation. Two simulation examples are conducted and comparisons are made with several existing methods. The results demonstrate that the proposed PDE+ method is useful for exploring and interpreting the patterns and trends in spatio-temporal data. Illustrative applications to two real datasets are also presented.

Keywords: Covariates, dimension reduction, kriging, semi-parametric models, visualization, spatio-temporal data

1. Introduction

Complicated phenomena in spatio-temporal data pose substantial challenges that remain unresolved even in today's era of computing. Kriging, a method widely used for modeling such data, typically contains two components, namely a stationary Gaussian process and a mean function. Interestingly, most kriging approaches assume a very simple structure for the mean function. For example, data at n locations over T time points are assumed to be observed according to

where is a mean function, is a zero-mean Gaussian process with a covariance function , and is an additive white noise. The usual choice of is either a linear combination of ‘known’ covariates (i.e. universal kriging) or a constant that does not vary over space or time (i.e. ordinary kriging). As a result, most analyses focus on the covariance functions.

In Ref. [22], Martin and Simpson showed that ordinary kriging can yield poor predictions in the presence of strong trends. To explore the important spatial structures in spatio-temporal data, empirical orthogonal function (EOF) analysis, which is based on principal component analysis, is commonly used; see Refs. [7,9] for a review. Letting

| (1) |

EOFs formulate the spatio-temporal data approximately in the summation form as

| (2) |

(see Refs. [3,26]). This linear combination of inner products of temporal and spatial functions in EOFs is closely connected to many dimension reduction approaches for geostatistics in the literature. From the reduced-rank perspective, a low-rank model for spatial data at a particular time point is considered in a form similar to (2) as

| (3) |

where 's are unknown scalars and is either a fully known function or a parametric basis function depending on a few parameters (e.g. [2,6,29]). When several time points are involved, in (3) can be further assumed to be a time-varying random variable (see Refs. [8,10,27,28]). Some of these works assumed to be independent and identically distributed (i.i.d.) over time, while others linked and at equally spaced time points in an autoregressive way. The SpTimer package developed by Bakar and Sahu [1] uses the low-rank framework to implement hierarchical Bayesian modeling for space-time data. In contrast to the stochastic 's and parametric 's above, additive models provide a non-parametric perspective on (3). They use spline functions to represent and/or (e.g. [16,24]). The mgcv package based on Ref. [30] is a convenient tool for additive models.

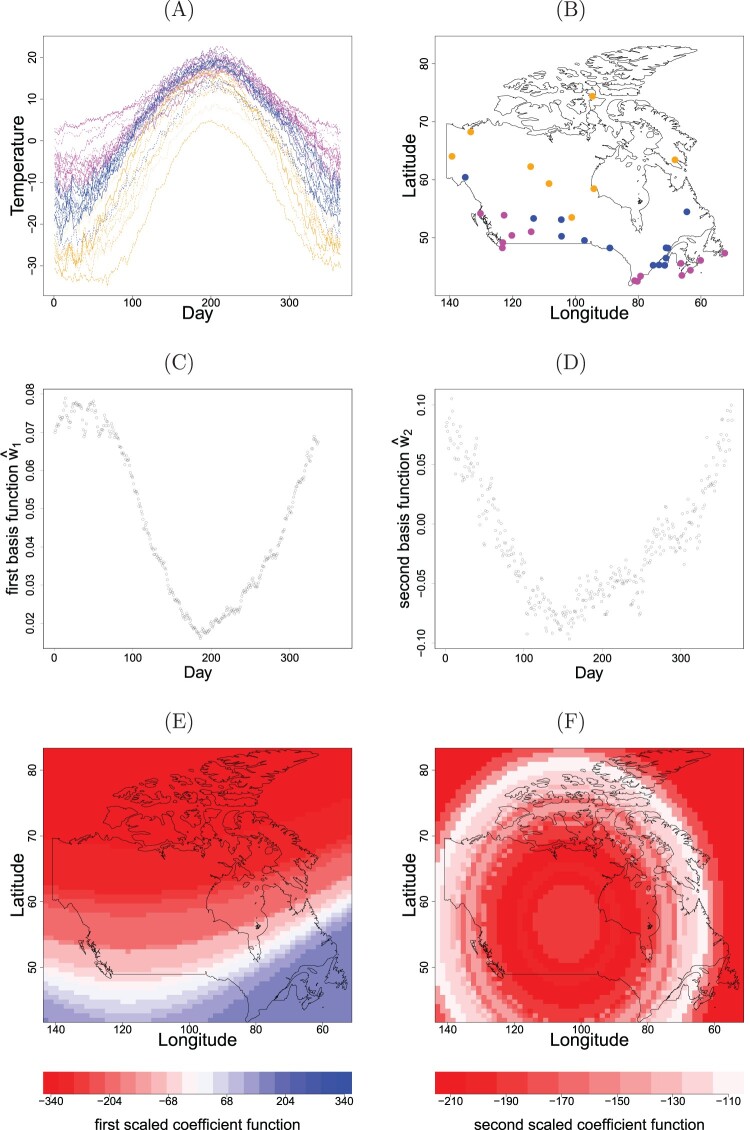

As a motivating example, we consider the average temperatures for each day of the year at 35 weather stations in Canada, shown in Figure 3(A,B). Figure 3(A) clearly shows a concave-down pattern for daily temperatures, which suggests a strong geometric structure in the data. Two immediate questions arise: are there any important time-related shapes hidden in those curves? Does the variation of the curves relate to spatial patterns across the locations of the stations? These questions prompted us to investigate whether the inner products of spatial and temporal functions aid in exploring and interpreting the data. The primary aim of the study is to understand how temperature cycles at stations vary with location and/or with time. In general, this aim is not easy to accomplish. The aforementioned dimension reduction approaches make the problem more tractable through different simplifications. For example, EOFs impose orthogonality on the estimated components, the low-rank methods assume certain parametric functional forms, and additive models use a large collection of smooth functions and rely on coefficient shrinkage to eliminate unneeded functions. In contrast, we relax those simplifications by extending a supervised dimension reduction approach to the analysis, without requiring pre-specified parametric functions.

Figure 3.

Plots of (A) the average daily temperatures over individual locations, (B) the spatial distribution of locations, (C) the first and (D) the second basis functions, and (E) the first and (F) the second scaled coefficient functions for Canadian Weather data.

Instead of directly taking in (1), we assume with a substantially different mean distinct from typical kriging. The Pairwise Directions Estimation (PDE; [20]) is incorporated in our proposed method to estimate the in a data-driven way. In this article, we focus on explicitly finding both and in order to detect potentially sophisticated mean structures in the spatio-temporal data.

Despite the recent flourishing of research on supervised dimension reduction (e.g. [4,17,20]), such time-dependent high-dimensional data remain challenging. We propose a novel approach that adaptively captures important spatial structures and temporal patterns in spatio-temporal data. Our main idea is to combine PDE and kriging, two quite flexible spatio-temporal modeling methods, into an ensemble learner, which not only increases the prediction accuracy of PDE but also gives a more comprehensive interpretation than typical kriging. The numerical results and real applications show that our proposed method not only gives explainable mean trends but may also produce more accurate predictions.

The remainder of the paper is organized as follows. Section 2 introduces our approach. We use two simulation examples to illustrate and evaluate our proposed method in Section 3. Section 4 applies our method to two real datasets, and Section 5 concludes with discussion on future work.

2. Proposed method

Consider a sequence of processes, for , defined on a d-dimensional spatial domain with . The processes are assumed to have a stationary spatio-temporal covariance function . The spatio-temporal random effects model of with a measurement error ε is considered in this article as

| (4) |

| (5) |

for , where , , is a zero-mean random effect with a covariance function for any pair of and , and is an additive white-noise uncorrelated with . We assume that and are mutually uncorrelated. More specifically, stands for , where is a spatial-only covariate vector, and we can represent the inner-product term in (5) in terms of the summation as

| (6) |

Here , is an unknown basis function changing gradually with time t, and is an unknown coefficient function standing for a certain spatial pattern. The function depends on a vector through an unknown vector of weights. We refer to as a variate. Hence, determining amounts to finding both and , which is a typical theme in supervised dimension reduction: a new variate is constructed instead of using directly.

For covariates over , are considered in our simulation examples and real data applications. We certainly can incorporate more elaborate and domain-specific covariates into , which may be useful for comprehending the spatio-temporal phenomenon.

Note that in the semi-parametric models of (4) and (5), only and are observed, while the other quantities are unknown. The aim of this study is to predict the process by reconstructing those unknown terms , and based on the observed data and , ; , as parsimoniously as possible. To estimate those terms in (5), we begin by finding and via PDE, proposed by Lue [20], and then estimate by applying the kriging method for spatio-temporal data (e.g. [7,25]). We briefly introduce the two key building blocks, PDE and kriging, and then propose our estimation method.

2.1. Pairwise directions estimation

Suppose that at n distinct locations, , for with a covariate vector are available. The time points t need not be equally spaced, but for simplicity we assume that they are. For ease of illustration, we denote , and . The rationale behind using PDE to approximate the inner-product term in (5) is to tentatively consider

| (7) |

which originally deals with multivariate response data via a “pairwise” model. After some algebra, (7) leads to the following model

| (8) |

for . In order to describe , we need to estimate a vector , a vector and a link function , all of which are unknown. Under (7), it is natural to find the solutions of and pairwise by minimizing . The estimation strategy is to find first and then estimate and iteratively. We allow , and to be determined by the data with the number κ as small as possible.

The initial estimate of , can be found by two methods that implement PDE efficiently: mrSIR and pe-mrPHD (for more details, see Refs. [18,19]). After initialization of , we use the adaptive MAVE estimation in Ref. [31] for the single-index case to obtain an initial estimate of by solving the minimization problem:

| (9) |

where , K is a kernel function and is the bandwidth of .

The iterative algorithm for estimating , and for in (8) via PDE proceeds in the following steps:

1. Initialize , , from mrSIR and/or pe-mrPHD, and then get as the minimizer of in (9). Compute the estimated basis functions , where is the sample covariance of (see Lemma of [20]).

2. At the τ-th iteration, fit a linear regression model to against , i.e. assuming

   | (10) |

   for , to obtain the estimated coefficients , , where the in the subscript denotes the iteration number.

3. Obtain the updated estimate from (9) by replacing with and then compute the updated basis functions , .

4. Repeat steps 2 and 3 until , for some given tolerance value Δ (e.g. ).

5. Use the final estimate in step 4 to obtain the final estimates and , , by solving the minimization problem:

   where and is the bandwidth of . We use to be the estimate of in (8).

In step 2 of the algorithm, obtained from the regression coefficients in (10), is a working estimate of at .
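The regression in step 2 is an ordinary least-squares fit carried out location by location. The Python sketch below illustrates it under stated assumptions: the basis functions are taken as already estimated (here two hypothetical curves `f1` and `f2`), the fit has no intercept, and the helper names `solve_linear` and `working_coefficients` are illustrative rather than part of any PDE implementation.

```python
import math

def solve_linear(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(m[i][c] * x[c] for c in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x

def working_coefficients(y, basis):
    """Least-squares coefficients of one location's series on the basis.

    y     : list of length T (observations at one location)
    basis : list of kappa lists, each of length T (basis values on the grid)
    Assumption: no intercept term in the regression.
    """
    k = len(basis)
    gram = [[sum(p * q for p, q in zip(basis[i], basis[j])) for j in range(k)]
            for i in range(k)]
    rhs = [sum(p * q for p, q in zip(basis[i], y)) for i in range(k)]
    return solve_linear(gram, rhs)

# Hypothetical example: T = 20 time points and kappa = 2 basis functions.
T = 20
grid = [t / (T - 1) for t in range(T)]
f1 = [math.sin(2 * math.pi * t) for t in grid]
f2 = [t ** 2 for t in grid]
y = [1.5 * a - 0.7 * b for a, b in zip(f1, f2)]  # noise-free series
coef = working_coefficients(y, [f1, f2])
```

With noise-free data the fit recovers the generating coefficients; with noisy data the same call gives the working estimates that the next iteration would refine.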

2.2. Function scaling estimation

The PDE captures the inner-product term, , of nonlinear mean structures in (5) without requiring a prespecified parametric model; namely, and found by PDE in step 5 are used to respectively estimate and in (6) for . In order to address the identifiability issue of functional estimation, we let the estimate be a normalized vector of unit norm, and then a scaling factor is introduced for prediction purposes. Under (5), we use to approximate via a linear fit. More specifically, are the estimated coefficients obtained from the linear regression of against for each . Then we refer to the jth scaled coefficient function as the estimates , . The scaling procedure not only improves the prediction accuracy but also gives an appropriate magnitude to the inner products. When calculating at a prediction location , the scaled coefficients , can be obtained by averaging over the k nearest neighbors around in the observed data (e.g. k = 3).
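The nearest-neighbor averaging used at a new location can be sketched as follows. This is a minimal illustration, assuming Euclidean distance between coordinate pairs; the function name `knn_scaled_coefficients` and the toy inputs are hypothetical.

```python
import math

def knn_scaled_coefficients(new_loc, locations, coefficients, k=3):
    """Average the scaled coefficients of the k nearest observed locations.

    new_loc      : (x, y) coordinates of the prediction location
    locations    : list of (x, y) coordinates of the observed locations
    coefficients : list of per-location coefficient lists (length kappa each)
    Assumption: Euclidean distance in the coordinate plane.
    """
    order = sorted(range(len(locations)),
                   key=lambda i: math.dist(new_loc, locations[i]))
    nearest = order[:k]
    kappa = len(coefficients[0])
    return [sum(coefficients[i][j] for i in nearest) / len(nearest)
            for j in range(kappa)]

# Toy data: three nearby stations and one distant station.
locations = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (5.0, 5.0)]
coefficients = [[1.0, 10.0], [2.0, 20.0], [3.0, 30.0], [100.0, 1000.0]]
estimate = knn_scaled_coefficients((0.2, 0.2), locations, coefficients, k=3)
```

The distant station is excluded, so the estimate is the average of the three nearby coefficient vectors.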

2.3. Incorporation of kriging

Since PDE does not take the random effects in (5) into account, we incorporate kriging to estimate this term for model prediction. The kriging method is dedicated to modeling the covariance function that measures the strength of dependency between any pairs of variables at and . In (5), and are unknown deterministic functions. When measurement errors are absent with , an equivalence exists between the variogram and the covariance function under the second-order stationary assumption of , i.e. is constant (assumed zero here) over and t, and only depends on and ; see Ref. [5] or [23]. Then the minimum-mean-squared-error linear prediction for at of interest can be found by under the joint normality and assuming the parameters to be known; see Ref. [5].

We consider the isotropic product-sum model in this article with covariance in the form of

| (11) |

for any pair of space-time points and with valid covariance functions and , respectively. We use exponential covariance functions for and in what follows, where both and contain two parameters for the marginal variance and the scale of or , and is an additional parameter for the spatio-temporal interaction. When the first two terms on the right-hand side in (11) vanish, the covariance function becomes a separable model. Although other non-separable covariance functions can be applied in this framework, we mainly focus on (11) due to its ease of understanding and acceptable flexibility.
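A product-sum covariance with exponential components can be sketched in a few lines of Python. The sketch assumes the common form C_s(h) + C_t(u) + k C_s(h) C_t(u), which matches the description above (two additive marginal terms plus an interaction term) but is not a transcription of (11); the function and parameter names are illustrative.

```python
import math

def exp_cov(dist, variance, scale):
    """Exponential covariance: variance * exp(-dist / scale)."""
    return variance * math.exp(-dist / scale)

def product_sum_cov(h, u, var_s, scale_s, var_t, scale_t, k):
    """Product-sum covariance at spatial lag h and temporal lag u.

    Assumed form: C_s(h) + C_t(u) + k * C_s(h) * C_t(u).
    """
    cs = exp_cov(h, var_s, scale_s)
    ct = exp_cov(u, var_t, scale_t)
    return cs + ct + k * cs * ct

# Hypothetical parameter values, chosen only for illustration.
value_at_origin = product_sum_cov(0.0, 0.0, 1.0, 2.0, 0.5, 3.0, 0.4)
value_at_lag = product_sum_cov(1.0, 1.0, 1.0, 2.0, 0.5, 3.0, 0.4)
```

Dropping the two additive terms leaves only the product k C_s(h) C_t(u), which is the separable special case mentioned in the text.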

In the presence of measurement errors as (4), the variation of comes from both and , and hence . We shall instead consider a variogram model corresponding to the following covariance function between and :

where is the indicator function, and . For approximation of , we need to calculate the empirical variogram, namely, the sample version of , for the detrended process . The estimation of parameters , , and is based on least-squares variogram fitting, which is the default method implemented in the package . After obtaining by PDE in Section 2.1, we apply kriging to estimate based on the residuals through using

with plug-in parameter estimates under the joint normality assumptions of and in (4) and (5). Here and are replaced respectively with and found by PDE in its steps 4 and 5 for . We perform one further iteration to obtain the final estimates for each component in (5); the algorithm is given in Section 2.4.

2.4. Enhanced PDE

We propose a new enhanced method of PDE, called PDE+, through incorporating the PDE with kriging for modeling spatio-temporal data. In what follows, , , , and are defined in a similar way as with respect to . The algorithm for PDE+ proceeds in the following two steps:

Step I. Apply PDE to the data for obtaining and , and then get the residuals from a linear fit of against for . Use kriging in Section 2.3 on to get an initial estimate of .

Step II. Let be the difference between and from step I, and then repeat step I once by treating the observed data as .

The final predictive model of PDE+ is defined as the one in step II. We iterate only once for computational efficiency. For prediction, we incorporate the function scaling estimation procedure in Section 2.2 when measuring the prediction error on the testing data. In this article, the dominant eigenvalue sequence and the scatterplots of on are used to determine the parameter κ in (7) in practice.

3. Numeric results

3.1. Simulation setups

We apply our proposed method to predictive modeling of spatio-temporal data through two simulated examples. Suppose that we observe data at location and time t. Let the spatial-only covariate vector be , where , generated from given spatial locations and be the coefficient function for unknown spatial structure shared across t. Under (5), we think of elements of and as unknown deterministic functions. In all simulations and real applications, we conduct a sample-splitting procedure by removing some spatial locations and some time points (20% removal). For comparison with our proposal, we consider several existing methods. To evaluate prediction accuracy, we compute four indices, including two prediction error criteria, namely the root integrated mean squared error (RIMSE) and the root prediction mean squared error (RPMSE), defined by

| (12) |

where is the number of locations in the testing data, denotes the estimate of , and . We also assess the model prediction based on the continuous ranked probability score (CRPS) and the interval score (Iscore) discussed in Ref. [12]. For a specific real value y, CRPS and Iscore are respectively defined as:

| (13) |

| (14) |

where is the cumulative distribution function of a probabilistic forecasting model and is its prediction interval. We report the averaged CRPS and Iscore over all observed y values for in what follows. For all four indices, smaller values indicate higher prediction accuracy.
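For readers who want to compute the two probabilistic scores, the following Python sketch uses their standard textbook forms. It assumes a Gaussian predictive distribution for the CRPS (for which a closed form is known) and a central (1 - alpha) prediction interval for the interval score; these choices, and the function names, are assumptions and may differ in detail from the definitions in (13) and (14).

```python
import math

def crps_gaussian(y, mu, sigma):
    """Closed-form CRPS of a N(mu, sigma^2) forecast at observation y."""
    z = (y - mu) / sigma
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))
    return sigma * (z * (2.0 * cdf - 1.0) + 2.0 * pdf - 1.0 / math.sqrt(math.pi))

def interval_score(y, lower, upper, alpha=0.05):
    """Interval score of a central (1 - alpha) prediction interval.

    The score is the interval width plus a penalty of 2 / alpha times the
    distance by which y falls outside the interval.
    """
    score = upper - lower
    if y < lower:
        score += (2.0 / alpha) * (lower - y)
    elif y > upper:
        score += (2.0 / alpha) * (y - upper)
    return score

crps_at_mean = crps_gaussian(0.0, 0.0, 1.0)
inside = interval_score(0.5, 0.0, 1.0)    # observation covered by the interval
outside = interval_score(-1.0, 0.0, 1.0)  # observation one unit below it
```

Both scores are negatively oriented, so averaging them over the testing observations gives summaries in which smaller values are better.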

The cross-validation estimates of RIMSE, RPMSE, CRPS and Iscore are collected over 100 replications for each example. Seven other methods to be compared, referred to as naive, SpTimer, kriging-P, kriging-N, mgcv, OKFD and WMWC, are briefly described below.

The naive method takes the simple predictor , i.e. the sample mean of all locations at time t. The SpTimer method uses a hierarchical Bayesian approach for space-time data. Its model is given by

where 's are zero-mean spatial random effects independent over time with an isotropic stationary Matérn covariance function. This method has been implemented in an R package named SpTimer. The kriging method typically treats the term in (5) as a constant μ. Ordinary kriging estimates by , where depend on the variogram under the constraint of , with all estimated covariance parameters plugged in. We refer to this typical kriging as parametric kriging, or kriging-P for short. Instead of using a parametric form, Ref. [32] recently proposed a non-parametric method to estimate the spatio-temporal mean function and covariance function. We refer to it as non-parametric kriging (kriging-N). If the assumptions of stationarity, constant mean, or isotropy fail to hold, such a non-parametric method is expected to perform better than kriging-P.
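The constrained weights of ordinary kriging can be illustrated with a small purely spatial example. The sketch below assumes an exponential covariance with known parameters and no nugget, and solves the usual augmented linear system in which a Lagrange multiplier enforces that the weights sum to one; the function names and the toy locations are hypothetical, and the spatio-temporal case used in the comparisons is not reproduced.

```python
import math

def solve_linear(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(m[i][c] * x[c] for c in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x

def ordinary_kriging_weights(points, target, variance=1.0, scale=1.0):
    """Ordinary kriging weights under an exponential covariance.

    Solves [[C, 1], [1', 0]] [w; lam] = [c0; 1], where C holds covariances
    between observed points and c0 covariances between points and target.
    """
    def cov(p, q):
        return variance * math.exp(-math.dist(p, q) / scale)

    n = len(points)
    a = [[cov(points[i], points[j]) for j in range(n)] + [1.0]
         for i in range(n)]
    a.append([1.0] * n + [0.0])
    b = [cov(p, target) for p in points] + [1.0]
    return solve_linear(a, b)[:n]  # drop the Lagrange multiplier

points = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
weights = ordinary_kriging_weights(points, (0.5, 0.5))  # centre of the square
at_data = ordinary_kriging_weights(points, (1.0, 0.0))  # an observed location
```

At the centre of the square the four weights are equal by symmetry, and at an observed location all weight goes to that observation, which reflects exact interpolation when no nugget is present.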

The mgcv method exploits additive models with tensor products. It assumes

| (15) |

where 's are constructed by superimposing several known basis functions and its noise terms are assumed to follow independently over space and time. With these higher-order interactions, the model tends to overfit, and hence some penalized approach is necessary. The widely used R package mgcv provides convenient ways to determine an appropriate penalty.

Some studies treat spatio-temporal data as a set of functional data, i.e. curves, at multiple locations, by specifying spatial correlations among curves [11,13–15]. The OKFD method used in Refs. [11,14] estimates a mean curve and a variance curve for all , followed by modeling the based on a spatially stationary covariance model . The OKFD was implemented in the R package geofd. In addition, Refs. [13,15] discussed an expansion of as , where the mean curve and the components of and are estimated based on weighted averages of quantities from all observed locations, without assuming spatial stationarity. This method is referred to as weighted-mean-weighted-component (WMWC).

Moreover, the original PDE method is also included in the comparisons. We can think of PDE+ as an ensemble learner combining both PDE and kriging. The comparisons among PDE, kriging, and PDE+ aid in numerically verifying that the proposed integration leads to better prediction of the data.

According to the algorithms of the above methods, the default values are used to set the tuning parameters. We emphasize the accuracy of prediction as measured by the indices in (12)–(14) and the discovery of interesting pattern structures. The strategy for determining the structural dimension κ in model (7) is based on the maximal eigenvalue ratio criterion (MERC) proposed by Luo et al. [21]. More specifically, they suggested selecting κ by , where is the estimated eigenvalue of the eigenvalue decomposition for mrSIR (or pe-mrPHD). For the two real data applications in the next section, the MERC selects two directions in both cases. In the simulations and practical applications that follow, PDE successfully estimates the effective dimension reduction directions.
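An eigenvalue-ratio rule of this kind can be sketched as follows. The code assumes a plain version of the idea, choosing the index that maximizes the ratio of consecutive eigenvalues sorted in decreasing order and skipping ratios with a near-zero denominator; the exact MERC formula of Ref. [21] may include modifications that are not reproduced here, and the function name is hypothetical.

```python
def max_eigenvalue_ratio(eigenvalues, eps=1e-12):
    """Return the 1-based index k maximizing lambda_k / lambda_{k+1}.

    Assumption: eigenvalues are sorted in decreasing order; ratios whose
    denominator is (near) zero are skipped rather than treated as infinite.
    """
    best_k, best_ratio = 1, float("-inf")
    for k in range(1, len(eigenvalues)):
        denom = eigenvalues[k]
        if denom <= eps:
            continue
        ratio = eigenvalues[k - 1] / denom
        if ratio > best_ratio:
            best_k, best_ratio = k, ratio
    return best_k

# Eigenvalues reported for pe-mrPHD on the Canadian weather data (Section 4.1).
kappa = max_eigenvalue_ratio([2.71, 2.35, 1.20, 0.81])
```

For these eigenvalues the largest ratio is between the second and third values, which agrees with the two directions reported for that dataset.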

3.2. Comparison of simulation results

In each simulation run, we set T = 20 and generate at n locations which consist of a learning set with size and a testing set with size . We also exclude learning data at randomly sampled time points for validation purposes. The location set is drawn from using simple random sampling. We set the number of slices for mrSIR to be 10 for all runs. We denote the absolute value of cosine angle between the estimate and the true vector as , , for evaluating the accuracy of directional estimation.

Example 3.1

Consider a process, , generated according to (5) with trigonometric and quadratic structures by setting

| (16) |

| (17) |

for , and being a zero-mean random effect with the covariance

Using (4), the data with over time and space are generated. Letting and , the coefficient functions 's can be reformulated as and , where . Both unknown vectors, and , are sufficient dimension reduction directions that need to be estimated.

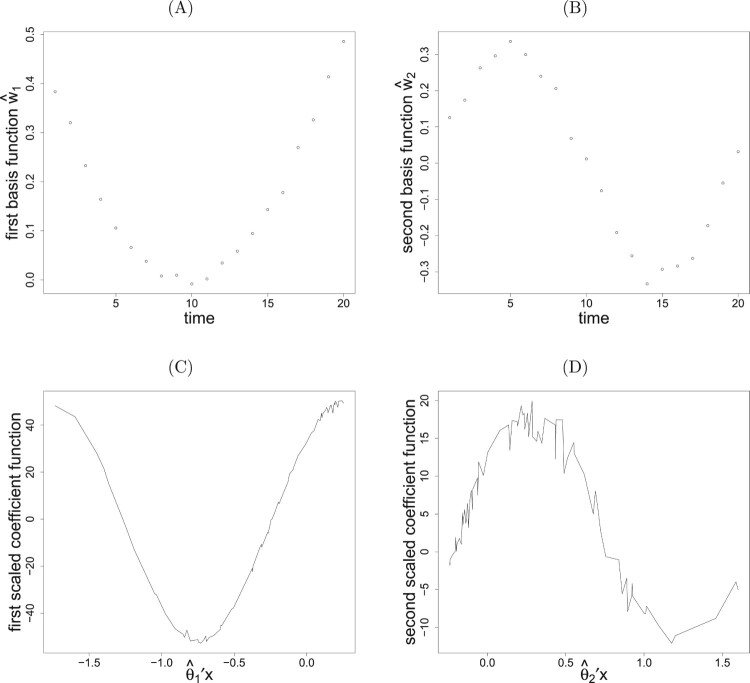

To illustrate the application of PDE+ via data visualization, we examine a single run. The data with n = 100 are generated according to models (16) and (17). We separately conduct the eigenvalue decompositions of mrSIR and pe-mrPHD for the learning data to obtain initial estimates 's. The modified eigenvalues (0.73, 0.31, 0, 0) found by mrSIR suggest one variate. One significant direction is also found by pe-mrPHD with the eigenvalues (2.24, 1.83, 0.68, 0.29). By choosing and taking the two initial estimates , we proceed with the proposed algorithm for dimension reduction for and , ; . After the iteration converges, two leading directions, (0.501, 0.506, 0.490, 0.502) and (-0.496, -0.496, 0.515, 0.492) , for variates are found along with and , which are consistently close to the theoretical vectors . The scatterplot of the first estimated basis function, shown in Figure 1(A), reveals a noticeable quadratic pattern. Figure 1(B) shows a clear sine pattern for the second estimated basis function. Figure 1(C,D) displays coefficient functions that are very close to the true trigonometric patterns.

Figure 1.

Plots of (A) the first and (B) the second basis functions with (C) the first and (D) the second scaled coefficient functions for a randomly selected simulation from model in (16) and (17).

Regarding sampling performance, the results based on 100 simulated replicates according to (16) and (17) are summarized in Table 1, which reports the mean and standard deviation of the four indices for all methods. PDE+ attains the smallest averaged values of all indices for this model, together with the best prediction interval, because it captures a clear signal of the functional patterns. For example, the mean RIMSE obtained by PDE+ is about 5.18, an improvement of about 27.6% over kriging-P, while the improvement with respect to RPMSE is larger, about 31.8%. Not surprisingly, PDE uses locally linear information via smoothing techniques to capture the functional forms of and , , which makes the remaining process better suited to typical kriging models. Although the original PDE and kriging-P obtain similar results in this setup, combining the strengths of PDE and kriging into PDE+ clearly improves prediction.
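The quoted percentages can be reproduced from the Table 1 means. The short Python check below suggests, as an inference from the numbers rather than a statement in the text, that the improvement is measured relative to the PDE+ value, i.e. as (kriging-P minus PDE+) divided by PDE+; the function name is illustrative.

```python
def relative_improvement(baseline, proposed):
    """Percentage by which the baseline error exceeds the proposed error."""
    return 100.0 * (baseline - proposed) / proposed

# Table 1 means: kriging-P versus PDE+.
rimse_gain = relative_improvement(6.61, 5.18)
rpmse_gain = relative_improvement(1.74, 1.32)
```

Dividing by the kriging-P value instead would give roughly 21.6% and 24.1%, so the choice of denominator matters when comparing these figures with other studies.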

Table 1.

Averaged values of four indices in the testing locations obtained by methods for data generated from models in (16) and (17) in 100 replicates (values given in parentheses are the corresponding standard errors).

| Index | PDE+ | PDE | naive | SpTimer | kriging-P | kriging-N | mgcv | OKFD | WMWC |
|---|---|---|---|---|---|---|---|---|---|
| RIMSE | 5.18 | 6.91 | 37.17 | 32.74 | 6.61 | 13.30 | 6.93 | 8.39 | 13.45 |
| | (1.21) | (2.67) | (3.33) | (3.57) | (1.31) | (2.03) | (1.13) | (8.50) | (4.28) |
| RPMSE | 1.32 | 1.79 | 8.91 | 8.79 | 1.74 | 3.27 | 1.71 | 2.07 | 3.11 |
| | (0.46) | (0.85) | (0.63) | (0.83) | (0.44) | (0.54) | (0.40) | (2.03) | (0.95) |
| CRPS | 0.05 | 0.10 | 5.57 | 5.43 | 0.17 | 1.06 | 0.25 | 0.25 | 0.22 |
| | (0.06) | (0.12) | (5.97) | (5.85) | (0.38) | (0.78) | (0.37) | (0.31) | (0.48) |
| Iscore | 0.19 | 0.25 | 4.35 | 4.31 | 0.22 | 0.37 | 0.25 | 0.33 | 0.48 |
| | (0.27) | (0.37) | (5.34) | (5.30) | (0.45) | (0.17) | (0.38) | (0.43) | (0.82) |

Both kriging-N and WMWC remove a non-constant mean function before modeling the complicated covariance structure, and they pay a price in estimation for doing so. OKFD is also at a disadvantage because it ignores the interaction between space and time in the correlation structure. The naive and SpTimer methods produce considerably larger prediction errors.

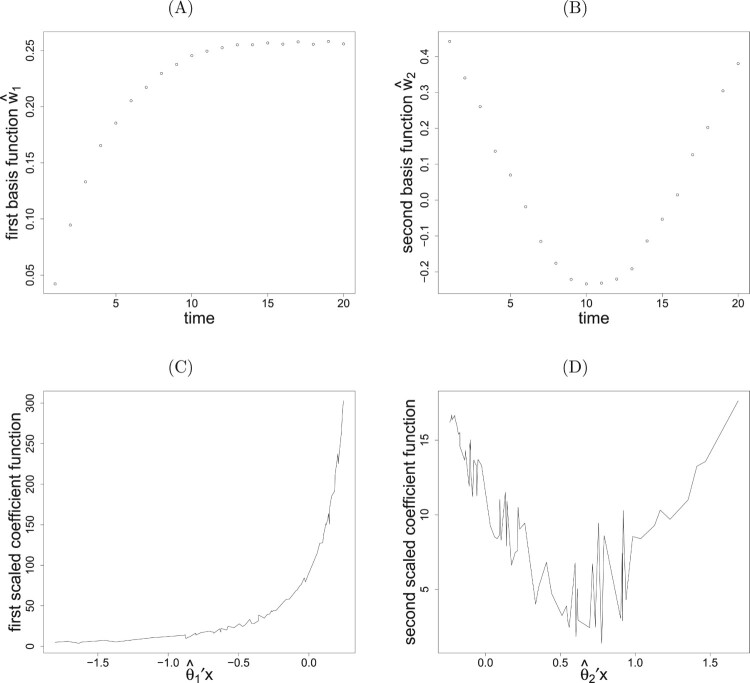

Example 3.2

Generate a process, , from (5) with setting

| (18) |

| (19) |

for , and being a zero-mean random effect with the covariance

We also generate over time and space. We need to estimate two sufficient dimension reduction vectors, and , as previously mentioned.

The data with n = 150 are generated according to models (18), (19) and (4) for a single run. Using the eigenvalue decomposition, the modified eigenvalues (1.50, 0.35, 0, 0) found by mrSIR suggest one variate, and another significant direction is found by pe-mrPHD with the eigenvalues (2.50, 1.93, 0.65, 0.50). Two leading directions for variates, (0.520, 0.480, 0.530, 0.467) and , are found by taking , after the iteration converges. The estimates along with and are consistently close to the theoretical vectors . The first two estimated basis functions, shown in Figure 2(A,B), reveal clear arctangent and quadratic patterns. Figure 2(C,D) displays estimated coefficient functions that are very close to the true functions.

Figure 2.

Plots of (A) the first and (B) the second basis functions with (C) the first and (D) the second scaled coefficient functions for a randomly selected simulation from model in (18) and (19).

The results based on 100 simulation runs according to (18) and (19) are summarized in Table 2. In terms of predictive accuracy, PDE+ attains the smallest averaged indices among all methods. The original PDE significantly outperforms kriging-P and kriging-N in this example. In contrast, the other methods perform worse because they fail to capture the substantial mean structure. The mean RIMSE obtained by PDE+ is about 5.83, an improvement of about 79% over kriging-P, while the improvement with respect to RPMSE is larger, about 116%. The naive and SpTimer methods again produce considerably larger prediction errors.

Table 2.

Averaged values of four indices in the testing locations obtained by methods for data generated from models in (18) and (19) in 100 replicates (values given in parentheses are the corresponding standard errors).

| Index | PDE+ | PDE | naive | SpTimer | kriging-P | kriging-N | mgcv | OKFD | WMWC |
|---|---|---|---|---|---|---|---|---|---|
| RIMSE | 5.83 | 6.43 | 65.38 | 61.05 | 10.44 | 17.57 | 13.55 | 10.44 | 12.10 |
| | (1.35) | (1.59) | (8.66) | (7.29) | (5.05) | (4.14) | (2.61) | (19.86) | (4.64) |
| RPMSE | 1.65 | 1.81 | 17.53 | 16.35 | 3.57 | 5.85 | 4.12 | 2.85 | 2.86 |
| | (0.53) | (0.63) | (2.49) | (2.43) | (1.91) | (1.67) | (0.78) | (5.24) | (1.06) |
| CRPS | 0.36 | 0.41 | 11.5 | 10.41 | 0.91 | 2.11 | 0.45 | 0.45 | 0.78 |
| | (0.30) | (0.44) | (10.11) | (9.28) | (1.66) | (3.40) | (0.60) | (0.64) | (0.30) |
| Iscore | 0.23 | 0.25 | 7.70 | 6.78 | 0.46 | 0.99 | 0.48 | 0.67 | 0.46 |
| | (0.15) | (0.26) | (10.39) | (9.52) | (0.89) | (2.75) | (0.44) | (0.52) | (0.11) |

4. Applications to real data

To illustrate the application of our approach to predictive modeling for empirical studies, we analyze two real datasets. In both datasets, longitudes and latitudes are available for the given spatial locations. Elevation is additionally available in the second dataset. We set the respective spatial-only covariate vectors to be and , where is the longitude, is the latitude, and m is the elevation.

4.1. Canadian weather data

We applied the proposed method to a dataset of average temperatures over the years 1960–1994 for each day of the year at 35 weather stations in Canada. The data are available in the fda package on Comprehensive R Archive Network (CRAN). The observations of interest are the average of temperatures measured on 35 location sites for 365 days.

The annual temperature cycle at 35 stations shown in Figure 3(A) indicates a clear concave-down pattern. The map of observation locations is shown in Figure 3(B). For illustration, we first standardize so that it is centered and scaled. We randomly partition into a learning set of size 30 and a testing set of size 5 for further comparison. We exclude learning data at 73 randomly sampled days for validation purposes. Details of a single analysis run are given below. We conduct the eigenvalue decomposition for the learning data to obtain initial estimates 's. Two significant directions are found by pe-mrPHD with the eigenvalues (2.71, 2.35, 1.20, 0.81). By choosing the bandwidths, and , we proceed with the proposed algorithm for dimension reduction for and , ; . After convergence, the two leading directions are and (0.314, 0.110, 0.900, 0.281) . This shows that latitude and squared longitude are the respective dominant effects for the first two variates.
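The partition described above (30 learning stations, 5 testing stations, and 73 of the 365 days held out) can be sketched as follows. The function name, the seed, and the use of Python's `random` module are assumptions for illustration; the text does not specify how the random partition was generated.

```python
import random

def split_locations_and_days(n_locations, n_days, n_test, n_holdout_days, seed=0):
    """Randomly split station indices and pick held-out day indices."""
    rng = random.Random(seed)
    stations = list(range(n_locations))
    rng.shuffle(stations)
    test_ids = sorted(stations[:n_test])
    learn_ids = sorted(stations[n_test:])
    holdout_days = sorted(rng.sample(range(n_days), n_holdout_days))
    return learn_ids, test_ids, holdout_days

learn_ids, test_ids, holdout_days = split_locations_and_days(35, 365, 5, 73)
```

Repeating the call with different seeds would give the kind of repeated random partitions over which the indices are averaged.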

According to (5), both basis functions and coefficient functions affect through the inner product form. Figure 3(C,D) displays the estimated basis functions, which reveal similar concave patterns. Figure 3(C) shows that is positive and has much higher values at the left and right sides along the horizontal axis (Day) (indicating the winter season), but lower values in the middle (indicating the summer season). The levels of function over spatial locations shown in Figure 3(E) reveal a decreasing trend as the latitude increases. This figure also shows a large portion of locations with negative values for . As a result, the influence of the first dominant component is consistent with the common understanding that the higher the latitude, the lower the temperature in winter, and the lower the latitude, the higher the temperature in summer. The shape of Figure 3(D) roughly contrasts the temperatures of summer and winter, since the two seasons have opposite signs of the function value for . Figure 3(F) displays the levels of over locations, indicating that the maximum of the absolute value of occurs around the continental areas. Note that is negative for all areas. Figure 3(F) indicates that the bright-red (continental) regions have greater temperature differences than the faint-red (coastal) regions. Consequently, the secondary component implies that continental areas tend to have lower temperatures in winter and higher temperatures in summer than the coastal regions. The colors in Figure 3(A,B) are based on the corresponding temperature difference. The pattern in Figure 3(F) can also be found in Figure 3(B). More specifically, Figure 3(A) implies that the temperature differences are greater in the brown curves (continental areas) than in the pink curves (coastal areas).

Regarding sampling performance, the results based on 100 sets of random data partitions, as previously mentioned, are summarized in Table 3. When a three-term interaction is included in (15) (i.e. the term), mgcv produces considerable errors, with respective mean values of 2487.9, 235.8, 7.3, and 14.7 for RIMSE, RPMSE, CRPS and Iscore. These large index values indicate heavy overfitting by mgcv. One should therefore be aware of the impact of constraints on model complexity, even though mgcv provides automatic penalty determination. The results for mgcv shown in Table 3 are obtained by excluding the term. Interestingly, PDE captures the sharp functional patterns for and , j = 1, 2, which are useful for improving model prediction. As expected, PDE+ attains the smallest averaged indices by integrating PDE for the dominant mean structure with kriging for estimating the random effect. From Table 3 and Figure 3, PDE+ indeed yields an interpretable predictive model in addition to improving the accuracy of model prediction.

Table 3.

Averaged values of the four indices at the testing locations obtained by each method for the Canadian Weather data over 100 validations (values in parentheses are the corresponding standard errors).

| Index | PDE+ | PDE | naive | SpTimer | kriging-P | kriging-N | mgcv | OKFD | WMWC |
|---|---|---|---|---|---|---|---|---|---|
| RIMSE | 50.21 | 101.93 | 112.67 | 96.64 | 55.58 | 54.72 | 174.77 | 238.75 | 191.72 |
| | (24.91) | (24.8) | (32.48) | (29.37) | (25.89) | (13.16) | (168.21) | (233.38) | (13.78) |
| RPMSE | 3.01 | 5.79 | 6.68 | 6.47 | 3.32 | 3.14 | 13.31 | 17.76 | 10.16 |
| | (1.88) | (1.63) | (2.06) | (2.09) | (1.47) | (0.82) | (15.77) | (19.54) | (0.73) |
| CRPS | 0.72 | 2.24 | 2.73 | 2.61 | 0.76 | 1.11 | 1.84 | 2.14 | 7.06 |
| | (0.59) | (1.51) | (3.22) | (3.23) | (0.86) | (1.01) | (1.99) | (1.23) | (4.12) |
| Iscore | 0.47 | 1.11 | 1.78 | 1.72 | 0.50 | 0.47 | 1.95 | 2.72 | 4.61 |
| | (0.31) | (0.93) | (2.99) | (2.98) | (0.48) | (0.39) | (1.69) | (1.38) | (4.01) |

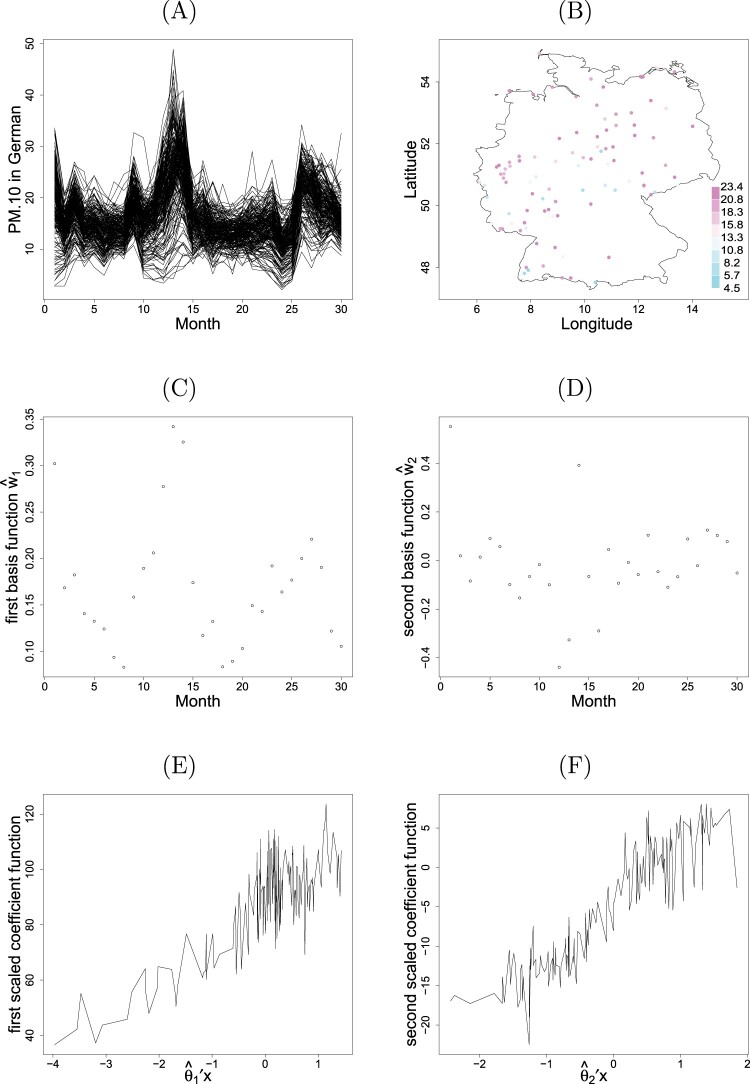

4.2. German PM data

We apply the proposed method to an air quality dataset obtained from the European Commission's Airbase and air quality e-reporting (AQER) repositories. The monthly averages of PM concentrations within Germany between January 2016 and June 2018 are considered. The R package saqgetr on CRAN was used to extract hourly PM averages, which were then aggregated into monthly averages at each background station. In total, 221 stations have complete monthly records over the 30 months considered. The monthly average concentrations for these 221 stations, shown in Figure 4(A), exhibit several peaks, occurring particularly at the beginning of each year.
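The aggregation step described above can be sketched as follows. This is a minimal Python illustration, not the R workflow based on saqgetr that was actually used: the record layout (station identifier, timestamp string, value) and all function names are assumptions introduced here for illustration.

```python
from collections import defaultdict


def month_range(year, month, count):
    """Return `count` consecutive month labels 'YYYY-MM' starting at year-month."""
    labels = []
    for _ in range(count):
        labels.append(f"{year:04d}-{month:02d}")
        month += 1
        if month > 12:
            year, month = year + 1, 1
    return labels


def monthly_means(records):
    """Average hourly values per (station, month).

    `records` is an iterable of (station_id, 'YYYY-MM-DD HH:MM', value);
    records whose value is None (missing) are skipped.
    """
    sums = defaultdict(lambda: [0.0, 0])
    for station, timestamp, value in records:
        if value is None:
            continue
        acc = sums[(station, timestamp[:7])]  # first 7 characters give 'YYYY-MM'
        acc[0] += value
        acc[1] += 1
    return {key: total / n for key, (total, n) in sums.items()}


def complete_stations(means, months):
    """Stations that have a monthly mean for every month in `months`."""
    seen = defaultdict(set)
    for station, month in means:
        seen[station].add(month)
    required = set(months)
    return sorted(s for s, have in seen.items() if required <= have)


# Hypothetical toy input: station "A" is complete, station "B" misses February.
toy = [
    ("A", "2016-01-01 00:00", 10.0),
    ("A", "2016-01-01 01:00", 20.0),
    ("A", "2016-02-01 00:00", 30.0),
    ("B", "2016-01-01 00:00", 5.0),
    ("B", "2016-02-01 00:00", None),
]
toy_means = monthly_means(toy)
toy_complete = complete_stations(toy_means, month_range(2016, 1, 2))
```

With the study period of this section, `month_range(2016, 1, 30)` would give the 30 month labels from January 2016 to June 2018 against which completeness is checked.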

Figure 4.

Plots of (A) the monthly averages of PM concentrations over individual locations, (B) the spatial distribution of locations, (C) the first and (D) the second basis functions, and (E) the first and (F) the second scaled coefficient functions for German PM data.

In addition to location information, this dataset also contains elevation values. We will demonstrate that the spatial covariates show two dominant effects in the PDE+ model. The map of station locations is shown in Figure 4(B), where the color gradients represent the average PM concentrations of the individual sites over the 30 months. For illustration, we first standardize the covariates, centering them and removing their scale, before further analysis. We randomly partition the 221 stations into a learning set of size 177 and a testing set of size 44. We also hold out the learning data at 6 randomly sampled months for validation purposes.
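The data preparation described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names, the use of the sample standard deviation for scaling, and the random seed are choices made here, not details taken from the article.

```python
import random


def standardize(column):
    """Center a covariate and scale it to unit sample standard deviation."""
    n = len(column)
    mean = sum(column) / n
    sd = (sum((v - mean) ** 2 for v in column) / (n - 1)) ** 0.5
    return [(v - mean) / sd for v in column]


def partition(n_stations=221, n_learn=177, n_months=30, n_holdout=6, seed=0):
    """Randomly split station indices and pick months held out for validation.

    Returns (learning stations, testing stations, held-out month indices).
    """
    rng = random.Random(seed)
    stations = list(range(n_stations))
    rng.shuffle(stations)
    learn = sorted(stations[:n_learn])
    test = sorted(stations[n_learn:])
    holdout_months = sorted(rng.sample(range(n_months), n_holdout))
    return learn, test, holdout_months


learn, test, holdout_months = partition()
```

Repeating `partition` with 100 different seeds would mimic the 100 random data partitions used to assess sampling performance.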

In a single analysis run, an eigenvalue decomposition is carried out on the learning data to obtain the initial estimates. Two significant directions are found by pe-mrPHD, with eigenvalues (0.68, 0.60, 0.21, 0.17, 0.07). After the PDE algorithm converges under the chosen bandwidths, two leading directions for the variates are obtained. They reveal one dominant effect each: elevation (with negative association) for the first variate, and a linear combination of longitude and latitude (with approximately equal weights) for the second variate.

Recall that both the basis functions and the coefficient functions enter the model through an inner product. Figure 4(C) displays the first estimated basis function, which has a discernible 'W' pattern reflecting the peaks of the data. It indicates that German PM concentrations are higher in winter than in summer. Figure 4(E) shows a linearly increasing trend of the first coefficient function along the first variate, which carries a large negative elevation effect. As a result, the influence of the first dominant component shows that the larger (smaller) the elevation, the smaller (larger) the value of PM. Figure 4(D) displays a nearly equally partitioned pattern fluctuating around zero on the vertical axis. Figure 4(F) exhibits a linearly increasing pattern along the second variate, which roughly represents a direction from northeast to southwest because longitude and latitude carry nearly equal weights in the second direction. Consequently, the influence of the second component illustrates that PM air pollution in Germany spreads widely over the areas from northeast to southwest, similar to the colored pattern shown in Figure 4(B). Compared with Figure 4(E), the magnitudes suggest that the second component plays a complementary role in its influence on PM.
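To make the sign reasoning in this paragraph concrete, the following Python sketch evaluates a mean of the inner-product type, assuming it is a sum over components of a basis function of time multiplied by a coefficient function of a linear combination of the covariates. The exact model is the one given in (5); every function and number below is hypothetical and chosen only to mimic the qualitative shapes described for Figure 4.

```python
import math


def variate(direction, covariates):
    """Linear combination of (standardized) covariates along one direction."""
    return sum(d * x for d, x in zip(direction, covariates))


def mean_value(t, covariates, components):
    """Sum over components of basis(t) * coef(direction . covariates)."""
    return sum(
        basis(t) * coef(variate(direction, covariates))
        for basis, direction, coef in components
    )


# Covariates are ordered as (longitude, latitude, elevation), all standardized.
# Component 1: positive basis peaking in winter (t = 0 is January), a direction
# loading negatively on elevation, and an increasing coefficient function.
# Component 2: basis fluctuating around zero, equal weights on longitude and
# latitude, and a smaller increasing coefficient function.
components = [
    (lambda t: 1.0 + 0.5 * math.cos(2.0 * math.pi * t / 12.0),
     (0.0, 0.0, -1.0),
     lambda u: 2.0 * u),
    (lambda t: 0.3 * math.sin(2.0 * math.pi * t / 12.0),
     (0.5 ** 0.5, 0.5 ** 0.5, 0.0),
     lambda u: 0.5 * u),
]

low_site = (0.0, 0.0, -1.0)   # one standard deviation below mean elevation
high_site = (0.0, 0.0, 1.0)   # one standard deviation above mean elevation
january_low = mean_value(0, low_site, components)
january_high = mean_value(0, high_site, components)
```

Because the first direction loads negatively on elevation while both the basis function and the slope of the coefficient function are positive, the low-elevation site receives the larger mean value, which matches the interpretation of the first component given above.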

The results on sampling performance, based on 100 random data partitions, are summarized in Table 4. To make the comparison compatible, kriging-P uses universal kriging with elevation as the covariate in the mean part. For methods without a natural way to include covariates (e.g. OKFD and naive), we simply subtract from the response the mean function obtained by a linear regression of the response on elevation. Again, PDE captures the interesting functional patterns of the basis and coefficient functions, j = 1, 2. In terms of predictive accuracy, PDE+ outperforms the other methods with the smallest averaged indices, as shown in Table 4. More importantly, PDE+ greatly helps in explaining the spatio-temporal trends, which is difficult for all the other methods.
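The adjustment applied to methods such as OKFD and naive can be sketched as an ordinary least-squares fit followed by subtraction of the fitted line. The Python code below is a minimal illustration with hypothetical function names; whether the regression is fitted once over all months or separately per month is not specified in this section, so the sketch treats a single vector of responses.

```python
def fit_line(x, y):
    """Ordinary least-squares intercept and slope for y regressed on x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((a - mean_x) ** 2 for a in x)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def remove_elevation_trend(elevation, response):
    """Residuals after subtracting the fitted linear elevation trend."""
    intercept, slope = fit_line(elevation, response)
    return [r - (intercept + slope * e) for e, r in zip(elevation, response)]


# Hypothetical values: concentrations that fall linearly with elevation.
elevation = [0.0, 100.0, 200.0, 300.0]
response = [20.0, 18.0, 16.0, 14.0]
intercept, slope = fit_line(elevation, response)
residuals = remove_elevation_trend(elevation, response)
```

The residuals are then passed to the method in place of the raw response, and the fitted elevation trend is added back when predictions are formed.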

Table 4.

Averaged values of the four indices at the testing locations obtained by each method for the German PM data over 100 validations (values in parentheses are the corresponding standard errors).

| Index | PDE+ | PDE | naive | SpTimer | kriging-P | kriging-N | mgcv | OKFD | WMWC |
|---|---|---|---|---|---|---|---|---|---|
| RIMSE | 17.28 | 27.64 | 19.17 | 18.32 | 17.68 | 24.78 | 18.95 | 90.19 | 90.79 |
| | (1.98) | (1.95) | (1.19) | (0.98) | (3.49) | (1.40) | (1.07) | (2.79) | (9.90) |
| RPMSE | 3.29 | 5.12 | 3.68 | 3.47 | 3.43 | 4.68 | 3.56 | 16.71 | 16.84 |
| | (0.38) | (0.37) | (0.21) | (0.24) | (0.7) | (0.25) | (0.20) | (0.49) | (1.78) |
| CRPS | 0.86 | 1.66 | 1.22 | 1.18 | 1.01 | 2.46 | 1.18 | 14.51 | 13.32 |
| | (0.55) | (0.7) | (1.31) | (1.24) | (0.88) | (2.67) | (0.99) | (2.68) | (5.32) |
| Iscore | 0.48 | 0.76 | 0.65 | 0.57 | 0.49 | 1.52 | 0.55 | 11.26 | 10.49 |
| | (0.29) | (0.39) | (1.13) | (1.09) | (0.55) | (2.38) | (0.66) | (2.91) | (5.34) |

5. Discussion

This article studies the exploration of the mean structure of spatio-temporal data via dimension reduction. Effective dimension reduction is a considerably more challenging statistical issue for spatio-temporal data, and it has received little attention in past decades. We focus on dimension reduction for the spatial covariates to increase the interpretability of the predictive model. Searching for the optimal linear combinations of the covariates is crucial for solving such a problem. Another important issue is to discover the linear or nonlinear association with the spatio-temporal variables of interest. PDE+ successfully achieves both goals. In simulations, the performance of PDE is comparable to that of kriging, while in real applications, kriging works better than PDE. Overall, PDE+, which combines PDE and kriging, outperforms kriging in all situations. All the numerical results show that the proposed method based on dimension reduction delivers interpretable and accurate predictions.

In contrast, few methods in the literature take a data-driven approach to estimating mean structures. Two exceptions investigated in the numerical examples are kriging-N and WMWC, which may trade stability for flexibility. The number of unknown parameters in the data-driven estimation of the mean function in (6) is relatively small compared with the number of available data points. For example, the basis function and the direction in the jth component have T and p parameters, respectively, while the coefficient function is merely a univariate smoother of the corresponding variate. Besides, the number of parameters in the covariance function of the random effect is usually fewer than a dozen. Using a smaller number of parameters in PDE+ not only prevents the model from overfitting but also makes it easier to interpret. Hence, we can think of PDE+ as a parsimonious ensemble learner that has the strong predictive ability of typical ensemble methods without being a hard-to-understand black box.

Acknowledgments

The authors thank Professor Hsin-Cheng Huang for useful comments that greatly improved the article. We would also like to thank the associate editor and the two anonymous reviewers for their helpful and thorough suggestions.

Appendix.

The code for implementing the PDE+ method of this article is available at https://github.com/hhlue/PDEplus. We also illustrate how to extract the components of a PDE+ model, and how to predict based on PDE+, through a simulation in Example 3.1. In addition, the dataset of monthly German PM concentrations used in Section 4.2 can be found on the same webpage.

Funding Statement

Lue's and Tzeng's research works were supported in part by grants from the Ministry of Science and Technology of Taiwan [grant numbers MOST107-2118-M-029-001 and MOST 107-2118-M-110-004-MY3], respectively.

Disclosure statement

No potential conflict of interest was reported by the author(s).

References

- 1. Bakar K.S. and Sahu S.K., sptimer: spatio-temporal bayesian modelling using R, J. Stat. Softw. 63 (2015), pp. 1–32.

- 2. Banerjee S., Gelfand A.E., Finley A.O., and Sang H., Gaussian predictive process models for large spatial data sets, J. R. Stat. Soc. Series B. Stat. Methodol. 70 (2008), pp. 825–848.

- 3. Braud I. and Obled C., On the use of empirical orthogonal function (EOF) analysis in the simulation of random fields, Stoch. Hydrol. Hydraul. 5 (1991), pp. 125–134.

- 4. Coudret R., Girard S., and Saracco J., A new sliced inverse regression method for multivariate response, Comput. Stat. Data. Anal. 77 (2014), pp. 285–299.

- 5. Cressie N., Statistics for Spatial Data, John Wiley & Sons, Hoboken, 2015.

- 6. Cressie N. and Johannesson G., Fixed rank kriging for very large spatial data sets, J. R. Stat. Soc. Series B. Stat. Methodol. 70 (2008), pp. 209–226.

- 7. Cressie N. and Wikle C.K., Statistics for Spatio-Temporal Data, John Wiley & Sons, Boca Raton, 2011.

- 8. Cressie N., Shi T., and Kang E.L., Fixed rank filtering for spatio-temporal data, J. Comput. Graph. Stat. 19 (2010), pp. 724–745.

- 9. Demšar U., Harris P., Brunsdon C., Fotheringham A.S., and McLoone S., Principal component analysis on spatial data: an overview, Ann. Assoc. Am. Geogr. 103 (2013), pp. 106–128.

- 10. Fassò A. and Cameletti M., A unified statistical approach for simulation, modeling, analysis and mapping of environmental data, Simulation 86 (2010), pp. 139–153.

- 11. Giraldo R., Delicado P., and Mateu J., geofd: Spatial Prediction for Function Value Data (2020). Available at https://CRAN.R-project.org/package=geofd, R package version 2.0.

- 12. Gneiting T. and Raftery A.E., Strictly proper scoring rules, prediction, and estimation, J. Am. Stat. Assoc. 102 (2007), pp. 359–378.

- 13. Gromenko O., Kokoszka P., Zhu L., and Sojka J., Estimation and testing for spatially indexed curves with application to ionospheric and magnetic field trends, Ann. Appl. Stat. 6 (2012), pp. 669–696.

- 14. Ignaccolo R., Mateu J., and Giraldo R., Kriging with external drift for functional data for air quality monitoring, Stoch. Environ. Res. Risk. Assess. 28 (2014), pp. 1171–1186.

- 15. Kokoszka P. and Reimherr M., Some recent developments in inference for geostatistical functional data, Rev. Colomb. Estad. 42 (2019), pp. 101–122.

- 16. Lee D.J. and Durbán M., P-spline anova-type interaction models for spatio-temporal smoothing, Stat. Model. 11 (2011), pp. 49–69.

- 17. Li K.C., Aragon Y., Shedden K., and Thomas Agnan C., Dimension reduction for multivariate response data, J. Am. Stat. Assoc. 98 (2003), pp. 99–109.

- 18. Lue H.H., Sliced inverse regression for multivariate response regression, J. Stat. Plan. Inference 139 (2009), pp. 2656–2664.

- 19. Lue H.H., On principal hessian directions for multivariate response regressions, Comput. Stat. 25 (2010), pp. 619–632.

- 20. Lue H.H., Pairwise directions estimation for multivariate response regression data, J. Stat. Comput. Simul. 89 (2019), pp. 776–794.

- 21. Luo R., Wang H., and Tsai C.L., Contour projected dimension reduction, Ann. Stat. 37 (2009), pp. 3743–3778.

- 22. Martin J.D. and Simpson T.W., Use of kriging models to approximate deterministic computer models, AIAA J. 43 (2005), pp. 853–863.

- 23. Pebesma E. and Heuvelink G., Spatio-temporal interpolation using gstat, R J. 8 (2016), pp. 204–218.

- 24. Sharples J. and Hutchinson M., Spatio-temporal analysis of climatic data using additive regression splines, in Proceedings of International Congress on Modelling and Simulation (MODSIM' 05). Citeseer, 2005, pp. 1695–1701.

- 25. Sherman M., Spatial Statistics and Spatio-temporal Data: covariance functions and directional properties, John Wiley & Sons, Chichester, 2011.

- 26. Thorson J.T., Cheng W., Hermann A.J., Ianelli J.N., Litzow M.A., O'Leary C.A., and Thompson G.G., Empirical orthogonal function regression: linking population biology to spatial varying environmental conditions using climate projections, Glob. Chang. Biol. 26 (2020), pp. 4638–4649.

- 27. Tzeng S. and Huang H.C., Resolution adaptive fixed rank kriging, Technometrics 60 (2018), pp. 198–208.

- 28. Wang W.T. and Huang H.C., Regularized principal component analysis for spatial data, J. Comput. Graph. Stat. 26 (2017), pp. 14–25.

- 29. Wikle C.K., Low-rank representations for spatial processes, Handb. Spat. Stat. 107 (2010), pp. 118.

- 30. Wood S.N., Generalized Additive Models: an introduction with R, CRC press, Boca Raton, 2017.

- 31. Xia Y., Tong H., Li W.K., and Zhu L.X., An adaptive estimation of dimension reduction space, J. R. Stat. Soc. Series B. Stat. Methodol. 64 (2002), pp. 363–410.

- 32. Yang K. and Qiu P., Nonparametric estimation of the spatio-temporal covariance structure, Stat. Med. 38 (2019), pp. 4555–4565.