Abstract

Efficient computation of optimal transport distance between distributions is of growing importance in data science. Sinkhorn-based methods are currently the state-of-the-art for such computations, but require $O(n^2)$ computations. In addition, Sinkhorn-based methods commonly use a Euclidean ground distance between datapoints. However, with the prevalence of manifold structured scientific data, it is often desirable to consider geodesic ground distance. Here, we tackle both issues by proposing Geodesic Sinkhorn, based on diffusing a heat kernel on a manifold graph. Notably, Geodesic Sinkhorn requires only $O(n \log n)$ computation, as we approximate the heat kernel with Chebyshev polynomials based on the sparse graph Laplacian. We apply our method to the computation of barycenters of several distributions of high dimensional single cell data from patient samples undergoing chemotherapy. In particular, we define the barycentric distance as the distance between two such barycenters. Using this definition, we identify an optimal transport distance and path associated with the effect of treatment on cellular data.

1. INTRODUCTION

Optimal Transport (OT) distances, or Wasserstein distances, are computed by lifting ground distances between points to distances between measures. This distance is computed relative to a ground distance on the support of the distributions, making it more informative than distances based only on a pointwise comparison of the densities. However, to compute the Wasserstein distance, one needs to find the optimal transport plan from the source distribution to a target distribution; this is a linear programming problem requiring $O(n^3 \log n)$ time for discrete distributions of size $n$ [1].

An efficient modification of the optimal transport problem is to consider entropy-regularized transportation. This formulation is solved with the Sinkhorn algorithm [2] by iteratively rescaling a Gaussian kernel based on the distance matrix. It is equivalent to the Schrödinger Bridge problem, for which similar algorithms were developed [3]–[5]. In the discrete case, it requires $O(n^2)$ operations for distributions of size $n$, since it relies on matrix-vector products. Furthermore, this formulation allows for fast computation of the discrete barycenter with fixed support (the average distribution w.r.t. the Sinkhorn distance). An important drawback of the Sinkhorn algorithm is the necessity of storing the pairwise distance matrix and multiplying it with a vector.

Additionally, the ground distance is commonly chosen as the Euclidean distance. The Euclidean distance is often sub-optimal for high-dimensional datasets over larger distances according to the manifold hypothesis, which says observations lie near a low dimensional (curved) manifold in high dimensional space [6]. For higher dimensional datasets assumed to be sampled from a lower dimensional manifold, using a distance closer to the manifold for OT has shown promising results [7]–[10].

In this work, we present Geodesic Sinkhorn, a Sinkhorn-based method for fast optimal transport with a heat-geodesic ground distance. Our method is based on the geometry of the dataset constructed from a common graph and uses the heat kernel on the graph to define a heat-geodesic distance. Key to this approach, we never need to construct or operate on an $n \times n$ distance matrix; we only use the sparse Laplacian matrix and sparse matrix-vector products. For sparse graphs, this can be used for $O(n \log n)$ computation of the Sinkhorn distance with a manifold ground distance, improving on the implementations based on dense matrices.

Improving on the state-of-the-art efficiency of Sinkhorn computation makes it possible to perform complex operations on large groups of datasets. In particular, we consider interpolating between datasets and show that using our heat-geodesic distance improves the interpolation accuracy compared to OT with Euclidean distance. The barycenter corresponds to the average distribution of a set of distributions. Our method allows for finer-grained barycenters on a data manifold, which motivates us to define a novel notion of dissimilarity between families of distributions called barycentric distance.

We apply the barycentric distance to single cell data from patient-derived cancer organoids (PDOs) to assess the effect of treatments (such as drugs and chemotherapy). Here we have one set of PDOs from control conditions, and another set that are treated. The treatment effect is thus the distance between these barycenters. In addition, we use Geodesic Sinkhorn’s barycenter to compare the effect from one family of distributions to another.

Our main contributions include: (1) a new method for computing optimal transport distances on a manifold, called Geodesic Sinkhorn, which is highly efficient in time and memory; and (2) the barycentric distance, a novel distance between families of distributions, together with a demonstration of its utility in deriving treatment effect from control and treated patient samples.

2. RELATED WORK

Geodesic Sinkhorn is related to prior work linking the entropy-regularized optimal transport problem on a triangular mesh with the heat operator [8], [11], but uses different graph filtering techniques. These approaches approximate the application of the heat kernel to a vector by discretizing the heat equation and solving systems of linear equations. This technique was used in different contexts, either with the cotangent Laplacian [8] or to learn a ground metric [12]. Solving these systems for each Sinkhorn iteration can be done efficiently with a sparse Cholesky decomposition. However, this method's efficiency depends mainly on the efficiency of the Cholesky decomposition, which can be slow depending on the sparsity pattern (it is $O(n^3)$ for a dense $n \times n$ matrix), and it necessitates solving one system of linear equations per sub-step of the backward Euler discretization in each Sinkhorn iteration.

3. PRELIMINARIES

In this section, we start by reviewing the basics of OT and the Wasserstein distance, as well as the Sinkhorn distance. Then we review two notions fundamental to our method: the heat equation on a graph and the Chebyshev approximation of the heat kernel.

3.1. Wasserstein Distance

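In the following, we assume that all distributions admit a density or a probability mass function, and we use the same notation for both. Let $\mu, \nu$ be two probability distributions on a measurable space $\mathcal{X}$ with metric $d(\cdot,\cdot)$, and let $\Pi(\mu,\nu)$ be the set of joint probability distributions $\pi$ on the space $\mathcal{X}\times\mathcal{X}$ where, for any measurable subset $\omega \subset \mathcal{X}$, $\pi(\omega \times \mathcal{X}) = \mu(\omega)$ and $\pi(\mathcal{X} \times \omega) = \nu(\omega)$. The $p$-Wasserstein distance is defined as:

$$W_p(\mu,\nu) := \left( \inf_{\pi \in \Pi(\mu,\nu)} \int_{\mathcal{X}\times\mathcal{X}} d(x,y)^p \, \pi(dx,dy) \right)^{1/p}. \tag{1}$$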

In the following, we consider $p = 2$. An exact algorithm based on linear programming can solve this problem in $O(n^3 \log n)$ time for discrete distributions of size $n$.

3.2. Sinkhorn Distances

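The Kullback-Leibler (KL) divergence between a coupling $\pi \in \Pi(\mu,\nu)$ and some strictly positive $K$ on $\mathcal{X}\times\mathcal{X}$ is defined as

$$\mathrm{KL}(\pi \,|\, K) := \int_{\mathcal{X}\times\mathcal{X}} \pi(x,y) \left[ \ln \frac{\pi(x,y)}{K(x,y)} - 1 \right] dx\,dy. \tag{2}$$

The Sinkhorn distance (see Footnotes) is a relaxation of equation 1 where the infimum is restricted to couplings in $\Pi(\mu,\nu)$ whose KL divergence from the product measure $\mu \otimes \nu$ is bounded by a constant. Introduced in [13], the optimization of this distance can be solved by considering the entropy-regularized transport

$$W_{2,\varepsilon}^2(\mu,\nu) := \inf_{\pi \in \Pi(\mu,\nu)} \int_{\mathcal{X}\times\mathcal{X}} d(x,y)^2 \, \pi(x,y) \, dx\,dy - \varepsilon H(\pi), \tag{3}$$

where we define the entropy of a coupling as $H(\pi) := -\int_{\mathcal{X}\times\mathcal{X}} \pi(x,y) \ln \pi(x,y) \, dx\,dy$, and $\varepsilon > 0$. This formulation converges to the Wasserstein distance as $\varepsilon \to 0$, and can be solved with the Sinkhorn algorithm with complexity of the order $O(n^2)$ for discrete distributions of size $n$ [13]. In the discrete case, the transport matrix admits the form $\pi = \mathrm{diag}(v) \, K \, \mathrm{diag}(w)$, where $v, w$ are vectors of size $n$. The Sinkhorn algorithm iteratively updates the vectors as $v \leftarrow \mu \oslash (K w)$ and $w \leftarrow \nu \oslash (K^T v)$, where $K := e^{-C/\varepsilon}$ is computed elementwise from the matrix $C$ of pairwise squared ground distances.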
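The dense Sinkhorn iterations described above can be sketched as follows. This is a minimal illustration rather than the authors' implementation: the function name `sinkhorn_plan`, the variable names `v` and `w` for the two scaling vectors, the toy data, and the regularization value `eps=0.5` are all assumptions made for the example.

```python
import numpy as np

def sinkhorn_plan(mu, nu, C, eps=0.5, n_iter=500):
    """Dense Sinkhorn iterations for entropy-regularized OT.

    mu, nu : strictly positive histograms of size n that sum to 1.
    C      : (n, n) matrix of pairwise squared ground distances.
    eps    : entropic regularization strength (illustrative default).
    """
    K = np.exp(-C / eps)        # dense Gibbs kernel: O(n^2) memory
    v = np.ones_like(mu)
    w = np.ones_like(nu)
    for _ in range(n_iter):
        v = mu / (K @ w)        # rescale to match the first marginal
        w = nu / (K.T @ v)      # rescale to match the second marginal
    return v[:, None] * K * w[None, :]   # diag(v) K diag(w)

# Toy data (hypothetical): 50 points in the unit square, squared Euclidean cost.
rng = np.random.default_rng(0)
x = rng.random((50, 2))
C = ((x[:, None, :] - x[None, :, :]) ** 2).sum(axis=-1)
mu = np.full(50, 1.0 / 50)
nu = rng.random(50)
nu /= nu.sum()
plan = sinkhorn_plan(mu, nu, C)
```

Each iteration costs two dense matrix-vector products, which is where the quadratic cost in time and memory comes from.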

Following [8], using the kernel $K_\varepsilon(x,y) := e^{-d(x,y)^2/\varepsilon}$ gives an alternative interpretation of the Sinkhorn distance as

$$W_{2,\varepsilon}^2(\mu,\nu) = \varepsilon \left[ 1 + \min_{\pi \in \Pi(\mu,\nu)} \mathrm{KL}(\pi \,|\, K_\varepsilon) \right]. \tag{4}$$

The problem in equation 3 is strictly convex and continuous, yielding a unique minimizer. In the discrete case, this leads to an algorithm for the entropy-regularized Wasserstein distance based on the Sinkhorn algorithm, enforcing the marginal constraints on the kernel while minimizing the distance as quantified by the KL divergence.

The underlying metric $d$ is generally unknown, thus the kernel $K_\varepsilon$ cannot be evaluated. The authors of [8] proposed to approximate $K_\varepsilon$ with the heat kernel $H_t$ on $\mathcal{X}$. According to Varadhan's formula [14], the geodesic distance on a manifold can be recovered from the heat transfer at small timescales as

$$d(x,y)^2 = \lim_{t \to 0} -4t \ln H_t(x,y). \tag{5}$$

This motivates the use of the heat-geodesic distance $d_t(x,y)^2 := -4t \ln H_t(x,y)$, with associated kernel $e^{-d_t(x,y)^2/4t} = H_t(x,y)$. Interestingly, Sinkhorn-based methods admit an efficient algorithm to solve the barycenter problem, which we present next.

3.3. Interpolation with discrete support

By constraining the support to a set $\mathcal{X}$ (or a graph), we can efficiently interpolate between more than two distributions. The barycenter problem [1], [8], [15] generalizes the notion of average between points to an average between distributions. For a set of distributions $\{\mu_i\}_{i=1}^m$ supported on $\mathcal{X}$, the objective is to find a distribution $\mu^*$ minimizing the average distance

$$\mu^* := \arg\min_{\mu \in \mathcal{P}(\mathcal{X})} \sum_{i=1}^m \alpha_i \, W_{2,\varepsilon}^2(\mu, \mu_i),$$

where $\mathcal{P}(\mathcal{X})$ denotes the space of probability distributions supported on $\mathcal{X}$, and $\alpha_i$ are non-negative weights. Finding the barycenter is a challenging optimization problem; however, the barycenter for Sinkhorn-based methods admits an efficient computation. It involves updating vectors $v_i, w_i$, which define a transport plan from $\mu_i$ to the barycenter $\mu^*$. The support of the barycenter is constrained to $\mathcal{X}$, and for most Sinkhorn-based methods the size of $\mathcal{X}$ needs to be small for computational reasons. Our method does not suffer from such a limitation. Hence, we can consider barycenters with greater expressivity, and interpolate between large sets of distributions.
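As an illustration of the fixed-support barycenter computation, the sketch below uses iterative Bregman projections, a standard scheme for entropic barycenters. It is an assumption that this matches the exact variant used in the paper; the function name `sinkhorn_barycenter`, the dense Gaussian kernel, and the one-dimensional toy data are hypothetical choices made for the example.

```python
import numpy as np

def sinkhorn_barycenter(mus, alphas, K, n_iter=500):
    """Fixed-support entropic barycenter by iterative Bregman projections.

    mus    : (m, n) array, one strictly positive histogram per row.
    alphas : (m,) non-negative weights summing to 1.
    K      : (n, n) symmetric positive kernel on the shared support.
    """
    w = np.ones_like(mus)
    for _ in range(n_iter):
        v = mus / (w @ K.T)                  # row i: mu_i / (K w_i)
        Ktv = v @ K                          # row i: K^T v_i
        bary = np.exp(alphas @ np.log(Ktv))  # weighted geometric mean
        w = bary / Ktv                       # match every plan to bary
    return bary

# Toy example: two mirrored bumps on a 1D grid, equal weights.
x = np.linspace(0.0, 1.0, 50)
K = np.exp(-(x[:, None] - x[None, :]) ** 2 / 0.05)
mu1 = np.exp(-0.5 * ((x - 0.25) / 0.05) ** 2)
mu1 /= mu1.sum()
mu2 = mu1[::-1].copy()
bary = sinkhorn_barycenter(np.stack([mu1, mu2]), np.array([0.5, 0.5]), K)
```

With equal weights, the barycenter of the two mirrored bumps is a single bump centered between them, unlike the pointwise average of the two histograms, which would stay bimodal. The kernel only enters through products with vectors, so a dense `K` is not required in principle.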

3.4. Heat Diffusion on a Graph

Consider an undirected graph $G = (V, E)$ with a set of $n$ vertices $V$ and a set of edges $E$, its weighted adjacency matrix $W \in \mathbb{R}^{n \times n}$ with non-negative edge weights $w_{ij}$, and the diagonal degree matrix $D$, where $D_{ii} = \sum_j w_{ij}$. We define the combinatorial Laplacian as $L := D - W$; for any function $f : V \to \mathbb{R}$ we have $f^T L f = \sum_{(i,j) \in E} w_{ij} (f_i - f_j)^2$. The combinatorial Laplacian is a symmetric positive semi-definite matrix, and has an eigendecomposition $L = \Psi \Lambda \Psi^T$ with orthonormal eigenvectors $\Psi$ and diagonal eigenvalue matrix $\Lambda$, such that $0 = \lambda_1 \leq \dots \leq \lambda_n$. The combinatorial Laplacian is a natural extension of the negative of the Laplacian operator to a graph. For a signal $f$ on $G$, the diffusion of $f$ on the graph evolves according to the heat equation

$$\partial_t f_t = -L f_t, \qquad f_0 = f.$$

The heat kernel solves this ODE; it is defined by the matrix exponential $H_t := e^{-tL}$. By orthogonality of the eigenvectors of $L$, we can write $H_t = \Psi e^{-t\Lambda} \Psi^T$ and $f_t = H_t f_0$. Computing $H_t$ by eigendecomposition would require $O(n^3)$ operations. Recall that, for the Sinkhorn algorithm, we are only concerned with the application of the heat operator to a signal $f$. For larger diffusion times, the heat kernel converges to the projection onto the eigenvectors associated with the lowest eigenvalues of the Laplacian; hence, intuitively, the heat kernel corresponds to a low-pass filter. In Geodesic Sinkhorn, we use Chebyshev polynomials [16], [17] to approximate the application of the heat operator to a signal. For a short timescale $t$, using the heat kernel amounts to using the geodesic distance as ground distance in the entropy-regularized OT formulation of equation 3.
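To make the low-pass behavior concrete, here is a small sketch on a toy path graph. The graph, the diffusion times, and the helper name `heat_kernel` are illustrative assumptions; the dense matrix exponential is used only because the graph is tiny.

```python
import numpy as np
from scipy.linalg import expm

# Toy path graph on 5 vertices with unit edge weights.
n = 5
W = np.zeros((n, n))
for i in range(n - 1):
    W[i, i + 1] = W[i + 1, i] = 1.0
D = np.diag(W.sum(axis=1))
L = D - W                                   # combinatorial Laplacian

def heat_kernel(L, t):
    """Dense heat kernel H_t = exp(-t L); O(n^3), small graphs only."""
    return expm(-t * L)

f0 = np.array([1.0, 0.0, 0.0, 0.0, 0.0])    # unit mass on the first vertex
f_short = heat_kernel(L, 0.1) @ f0          # still concentrated near vertex 0
f_long = heat_kernel(L, 100.0) @ f0         # close to the uniform signal
```

Diffusion preserves total mass because the constant vector lies in the null space of $L$. At short times the mass stays in a local neighborhood, while at long times only the constant (lowest-frequency) component survives.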

3.5. Chebyshev Polynomials

Polynomial sequences are often used to approximate functions or operators. With Chebyshev polynomials, we can approximate the application of the matrix exponential to a signal on the graph. An attractive property of Chebyshev polynomials is that the approximation error decays exponentially with the maximum degree $K$. They are defined by $T_0(x) = 1$, $T_1(x) = x$, and the recursive relation $T_k(x) = 2x T_{k-1}(x) - T_{k-2}(x)$ for $k \geq 2$. On [−1, 1] these polynomials are orthogonal w.r.t. the weight $1/\sqrt{1 - x^2}$, and can be used to express the operator $H_t$. Assuming the largest eigenvalue satisfies $\lambda_n \leq 2$, we can write

$$H_t = e^{-tL} = \sum_{k=0}^{\infty} c_{t,k} \, T_k(L - \mathrm{Id}),$$

where the scalar coefficients $c_{t,k}$ depend on time and can be evaluated with the Bessel function. The approximation of $H_t$ is based on the first $K$ terms of the series. It results in $K$ matrix-vector products, which can be efficient since, in general, $L$ is a sparse matrix. On a $k$-nearest neighbor graph, this can be , where is a regularization parameter. Chebyshev polynomials admit interesting theoretical properties and are known to converge faster than other polynomials [17], [18]. The choice of the parameter $K$ is related to the number of neighbors or the connectivity of the graph. For small diffusion time, hence only diffusing in a local neighborhood, the approximation is accurate even with a small $K$. As the diffusion time increases, $K$ has to increase in order to consider a larger neighborhood around a node. For OT, we consider small diffusion time, and we found that our results were stable for all $K$ greater than 10.
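The following sketch shows one way to apply the heat operator to a signal with a truncated Chebyshev expansion, using only sparse matrix-vector products. It is an illustration under stated assumptions, not the paper's code: the function name `cheb_heat` is hypothetical, the Laplacian is rescaled with a Gershgorin bound on its largest eigenvalue (instead of assuming a bound on the spectrum), and the coefficients come from the classical expansion of the exponential in modified Bessel functions of the first kind.

```python
import numpy as np
import scipy.sparse as sp
from scipy.sparse.linalg import expm_multiply
from scipy.special import ive

def cheb_heat(L, f, t, K=20):
    """Approximate exp(-t L) @ f with a degree-K Chebyshev expansion."""
    # Gershgorin bound: eigenvalues of L are at most twice the max degree.
    a = L.diagonal().max()                 # a = (eigenvalue bound) / 2

    def Lt(x):                             # (L / a - Id) @ x, spectrum in [-1, 1]
        return (L @ x) / a - x

    k = np.arange(K + 1)
    # exp(-t L) = sum_k c_k T_k(L / a - Id) with
    # c_k = 2 (-1)^k exp(-t a) I_k(t a), and c_0 halved.
    c = 2.0 * (-1.0) ** k * ive(k, t * a)  # ive(k, z) = I_k(z) * exp(-z), z > 0
    c[0] /= 2.0
    T_prev, T_curr = f, Lt(f)
    out = c[0] * T_prev + c[1] * T_curr
    for j in range(2, K + 1):
        T_prev, T_curr = T_curr, 2.0 * Lt(T_curr) - T_prev  # three-term recursion
        out = out + c[j] * T_curr
    return out

# Ring graph with 200 vertices: sparse Laplacian, two neighbors per node.
n = 200
idx = np.arange(n)
W = sp.coo_matrix((np.ones(n), (idx, (idx + 1) % n)), shape=(n, n))
W = (W + W.T).tocsr()
L = (sp.diags(np.asarray(W.sum(axis=1)).ravel()) - W).tocsr()

f = np.random.default_rng(0).random(n)
approx = cheb_heat(L, f, t=1.0, K=20)
exact = expm_multiply(-1.0 * L, f)         # reference value from SciPy
```

Each of the $K$ recursion steps costs one sparse product with $L$, so the dense heat kernel is never formed. For this small diffusion time the Bessel coefficients decay very quickly, which is consistent with the observation that a modest degree is sufficient.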

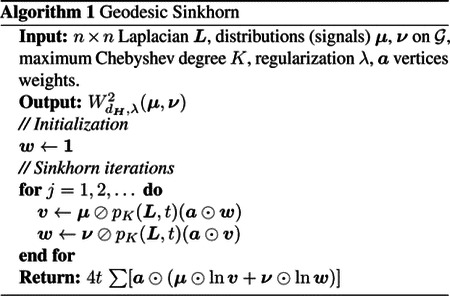

4. GEODESIC SINKHORN DISTANCES

We define the Geodesic Sinkhorn distance between any signals or distributions on a graph by the entropy-regularized OT with the heat kernel on the graph. This construction is also valid between any point cloud datasets. In that case, for datasets sampled from a set of distributions, we construct a common graph using an affinity kernel on the datasets and compare two samples by taking the distance between their indicator functions on the graph. We approximate the heat kernel with Chebyshev polynomials of order $K$. In Algorithm 1, we present the main steps to evaluate the Geodesic Sinkhorn distance. It is based upon Sinkhorn iterations [2], [13], where ⊘ and ⊙ denote respectively the elementwise division and multiplication. Note that, as opposed to the usual Sinkhorn algorithm, we never have to store a dense distance matrix, but only the usually sparse graph Laplacian.
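A minimal sketch of the idea, assuming the Sinkhorn scalings are updated with applications of the graph heat operator in place of a dense kernel. This is not a transcription of Algorithm 1: the function name `geodesic_sinkhorn_scalings` is hypothetical, SciPy's `expm_multiply` stands in for the Chebyshev filter to keep the example short, and the diffusion time `t=10.0` is chosen only so that the iterations converge quickly on the toy graph.

```python
import numpy as np
import scipy.sparse as sp
from scipy.sparse.linalg import expm_multiply

def geodesic_sinkhorn_scalings(L, mu, nu, t=10.0, n_iter=200):
    """Sinkhorn scalings with the graph heat operator as kernel.

    Only products H_t @ x are needed, so no dense n x n matrix is formed.
    expm_multiply is a stand-in here for the Chebyshev filter.
    """
    def heat(x):
        return expm_multiply(-t * L, x)

    v = np.ones_like(mu)
    w = np.ones_like(nu)
    for _ in range(n_iter):
        v = mu / heat(w)      # H_t is symmetric, so H_t^T = H_t
        w = nu / heat(v)
    return v, w, heat

# Ring graph with 20 vertices and two strictly positive distributions.
n = 20
idx = np.arange(n)
W = sp.coo_matrix((np.ones(n), (idx, (idx + 1) % n)), shape=(n, n))
W = (W + W.T).tocsr()
L = (sp.diags(np.asarray(W.sum(axis=1)).ravel()) - W).tocsr()
mu = 1.0 + np.cos(2 * np.pi * idx / n) ** 2
mu /= mu.sum()
nu = 1.0 + np.sin(2 * np.pi * idx / n) ** 2
nu /= nu.sum()

v, w, heat = geodesic_sinkhorn_scalings(L, mu, nu)
row_marginal = v * heat(w)    # first marginal of diag(v) H_t diag(w)
col_marginal = w * heat(v)    # second marginal
```

The marginals of the implicit plan are recovered with two more operator applications, so the transport plan itself never needs to be stored. The example uses strictly positive distributions; distributions with zeros (such as indicator functions) may need additional numerical care that this sketch does not address.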

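Definition 4.1. The Geodesic Sinkhorn distance between two distributions on a graph is the entropy-regularized OT distance of equation 4, with the kernel replaced by the Chebyshev approximation of the heat kernel on the graph.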

In the following proposition, we find the ground distance implicitly used in the optimal transport defined by Geodesic Sinkhorn. We use ≃ for the equivalence relation between distances.

Proposition 4.2. There exists a maximum Chebyshev polynomial degree such that the ground distance in Geodesic Sinkhorn is equivalent to the one based on the exact heat kernel.

In particular, the Wasserstein distances with these ground distances are equivalent.

Proof. Because the approximation error decreases exponentially in [17], we have that for any sufficiently small there exist such that . Choose such that this is true for all vertices . We define

and we have the equivalence between the distances since

and since the logarithm is a monotonic function. □

In [8], [12], using the Euler implicit discretization results in a ground cost of the form , where Id is the identity matrix, and can be seen as another approximation for the matrix exponential.

The efficiency of Geodesic Sinkhorn makes the barycenter more expressive, since it is possible to consider much larger graphs and thus a finer-grained support of the barycenter. This leads us to define a novel distance between families of distributions.

Definition 4.3. For two finite families of distributions and supported on , we define the barycentric distance between the families as

where are respectively the barycenters of and .

The previous definition is valid for any distances between distributions or barycenters. However, OT barycenters are known to be more informative than others [15]. We will further explore this comparison in our experiments. We use it to distinguish between two groups in a medical setting where a set of patients received a treatment (defining the family ), and another set acts as a control family . Following this idea, we define a notion of effect between two families.

Definition 4.4. For two family of distributions and supported on , define the Expected Barycenter Effect of as

where are respectively the barycenters of and , and the features and .

Note that we compute the average on the family of distributions instead of the average on their support, hence we evaluate their expectations in a closed form. This definition also extends to a conditional equivalent where families of distributions can be subdivided with discrete covariate variables. When the barycenters are computed with the total variation, this definition is equivalent to the naive Average Treatment Effect (ATE) [19], i.e., the difference of empirical means.

5. RESULTS

We demonstrate the accuracy and efficiency of the Geodesic Sinkhorn distance on two tasks: (1) Nearest-Wasserstein-neighbor calculation on simulated data with manifold structure similar to the setup of [10]; (2) A newly defined Barycentric distance between families of distributions computed to quantify the effect of a treatment on patient-derived organoids. In Appendix A.1, we present additional results on time series interpolation.

5.1. Nearest-Wasserstein-neighbor distributions

In this experiment, we compare our method with Sinkhorn [13] and LR Sinkhorn [20] (both algorithms with Euclidean and squared Euclidean ground distance), with DiffusionEMD [21], and with Sinkhorn with the Euler approximation of the heat filter. We created 15 Gaussian distributions sampled randomly on a swiss roll dataset, and sampled 10k observations from each distribution. We rotated the observations in 10 dimensions. We consider a k-nearest neighbors task on these distributions. We evaluate the methods against the ground truth, since we know the exact geodesic distance on the manifold. In Tab. 1, we report the average and standard deviation over 10 seeds of the Spearman and Pearson correlations to the ground truth, and the runtime in seconds with and without the computation of the graph. Our method is the most accurate while being much faster than other Sinkhorn-based methods.

Table 1.

KNN task for 15 distributions; the best score is highlighted in bold. Geodesic Sinkhorn is the most accurate, while being faster than other Sinkhorn-based methods.

| Method | SpearmanR | PearsonR | P@5 | Time (s), no graph | Time (s) |
|---|---|---|---|---|---|
| Diffusion EMD | 0.62±0.097 | 0.736±0.023 | 0.66±0.072 | 2.845±0.135 | 7.877±0.531 |
| Sinkhorn | 0.387±0.044 | 0.523±0.036 | 0.471±0.028 | 112.406±0.206 | 112.406±0.206 |
| Sinkhorn | 0.411±0.036 | 0.485±0.027 | 0.492±0.053 | 133.686±5.234 | 133.686±5.234 |
| LR Sinkhorn | −0.31±0.07 | −0.131±0.086 | 0.237±0.037 | 578.631±107.82 | 578.631±107.82 |
| LR Sinkhorn | 0.366±0.048 | 0.379±0.051 | 0.447±0.023 | 204.191±3.656 | 204.191±3.656 |
| Euler Sinkhorn | 0.776±0.061 | 0.718±0.009 | 0.728±0.072 | 449.752±42.985 | 455.059±43.083 |
| Geodesic Sinkhorn | 0.847±0.023 | 0.754±0.016 | 0.833±0.034 | 10.176±1.249 | 16.682±1.705 |

5.2. Barycentric distance

We test whether we can identify a linear treatment effect with the Expected Barycenter Effect (EBE). In this experiment, we create a control family of ten standard Gaussian distributions. The treatment group consists of nine Gaussian distributions centered at 5, and one outlier distribution whose mean varies across settings. For each distribution, we sample 500 observations, and reproduce the experiment over ten seeds. In Tab. 2, we report the EBE and its standard deviation with the Geodesic Sinkhorn, the Total Variation (TV) distance, and Sinkhorn. Since the TV only compares the mean, it is sensitive to the outlier, whereas our method can identify the true treatment effect.

Table 2.

Expected Barycenter Effect (EBE) with one outlier distribution centered at −60, −30, 0, or 5. Comparison using the barycenter from Sinkhorn, total variation, or Geodesic Sinkhorn. Values closer to the real treatment effect of 5 are better.

| Outlier | EBE Geo Sinkhorn | EBE Sinkhorn | EBE TV |
|---|---|---|---|
| −60 | 5.016±0.226 | −0.103±0.005 | −1.429±0.144 |
| −30 | 5.053±0.196 | 0.355±0.049 | 1.571±0.144 |
| 0 | 4.917±0.315 | 4.954±0.157 | 4.571±0.144 |
| No outlier | 5.059±0.159 | 5.054±0.16 | 5.071±0.144 |

5.3. Single-cell signaling data

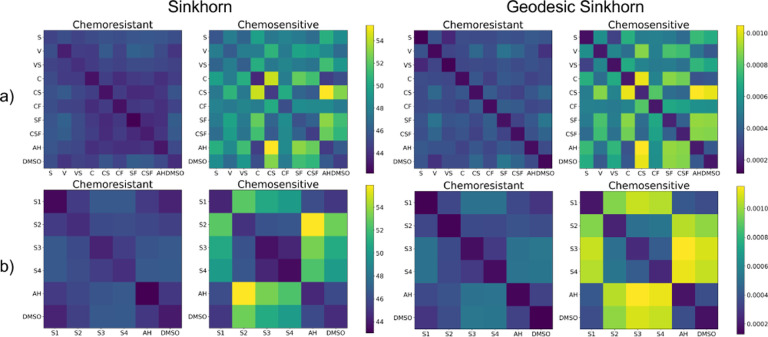

We use single-cell signaling data produced by mass cytometry (MC) for a screening study to compare the treatment effect of different chemotherapies on 10 colorectal cancer (CRC) patient-derived organoids (PDOs) [22]. These PDOs can be grouped into chemoresistant PDOs, which show little-to-no effect when treated with chemotherapies, and chemosensitive PDOs, which present strong shifts in their phenotypes upon treatment. The observations include single-cell information on the cell cycle and signaling changes upon treatment of PDOs with different CRC treatments at a range of concentrations. In Fig. 1, we present the barycentric distance matrices between treatments (a) and between four concentrations of treatment SN-38 (S) (b). In both cases, the control groups correspond to AH and DMSO, the two rightmost columns. We compare the distance matrices between Sinkhorn (left) and our method (right). Our method provides a finer distinction between treatments (Fig. 1 top) and concentrations (Fig. 1 bottom), especially for the chemosensitive group. As observed in [22], chemosensitive PDOs show little-to-no response to lower concentrations of SN-38 (S1), but their phenotype shifts very strongly upon treatment with higher concentrations (S2, S3, and S4) (Fig. 1 b). When comparing combinations of different treatments (Fig. 1 a), Geodesic Sinkhorn better resolves the difference between SN-38 (S) alone and in combination with Cetuximab (C), showing that S is the main agent creating the treatment effect and the combination with C does not result in a synergistic effect [22]. Note that we only consider the relative magnitude of the distances, since the two algorithms use different ground distances.

Fig. 1.

a) Barycentric distance matrices for the Sinkhorn algorithm (left) and our method Geodesic Sinkhorn (right). b) Barycentric distance matrices between doses of treatment SN-38, for four concentrations S1, S2, S3, S4. Control groups correspond to AH and DMSO. Geodesic Sinkhorn provides a clearer distinction between treatments and doses.

6. CONCLUSION

In this work, we considered the use of OT for graphs and large datasets in high dimensions potentially sampled from a lower dimensional manifold. We proposed Geodesic Sinkhorn, a fast implementation of the Sinkhorn algorithm using the graph Laplacian and Chebyshev polynomials. Our method is well adapted for large and high dimensional datasets as it is defined with a geodesic ground distance, which takes into account the underlying geometry of the data, and requires less computation time and less memory. On a synthetic dataset, we showed that Geodesic Sinkhorn is much faster than other Sinkhorn-based methods while being more accurate. With the Wasserstein barycenter, we defined the barycentric distance to compare entire families of distributions, and the expected barycenter effect, then applied both methods to a large PDO drug screen dataset.

Acknowledgments

This research was partially funded by ESP Mérite [G.H.], NSERC Discovery grant 03267 [G.W.], Canada CIFAR AI Chair [G.W.], NIH grant R01GM135929 [G.W., S.K.], Cancer Research UK (C60693 / A23783) [C.J.T.], the Rosetrees Trust (M872 / A2292) [C.J.T.], and the Yale-UCL Collaborative Student Exchange Programme.

A. APPENDIX

A.1. Time series Interpolation

To evaluate Geodesic Sinkhorn's performance on inferring dynamics, we test it on a time series interpolation task. In this setting the most used datasets are Embryoid Body [1] and WOT [2]. We curated ten scRNA-seq datasets: WOT [2], Clark [3], Embryoid Body [1], and seven more from the 2022 multimodal single-cell integration challenge to test our method. The observations are the gene expression of single cells from a distribution evolving through time. The Waddington-OT dataset (WOT) has 38 timepoints of a developing stem cell population over 18 days collected roughly every 6–12 hours. This is the most densely sampled dataset in time. The Embryoid Body dataset is a single-cell RNA-seq dataset of developing embryoid bodies from 0–30 days with 5 datasets collected over time. The Clark dataset contains 12 samples over 9 unique timepoints of a developing mouse retina. Finally, the NeurIPS 2022 data contains four donors with single-cell transcriptomic data collected over 4 timepoints for 3 donors with 10X-multiome and 4 combined cite-seq (in the publicly released training data), leading to 7 additional time series with 4 timepoints.

The goal is to interpolate the distribution between two timepoints. The number of timepoints in each dataset ranges from 4 to 40. Here, for a dataset with single-cell distributions over time, we compute the exact Euclidean 2-Wasserstein distance between the interpolated distribution at a given time and the ground truth distribution at that time. Since we interpolate between two distributions we used the McCann interpolant as its support is . We compare our Geodesic Sinkhorn interpolation with either the Sinkhorn McCann interpolant with Euclidean ground distance (Sinkhorn) or the Sinkhorn McCann interpolant with the Euler heat approximation (Sinkhorn Euler) in Tab. 3. Sinkhorn with Euler approximation ran out of memory on the Clark and WOT datasets. We see that across all 10 datasets the Geodesic Sinkhorn interpolation with the McCann interpolant outperforms all other methods, hence showcasing the importance of the heat-geodesic distance and our kernel approximation. We also compare Sinkhorn Euler and Geodesic Sinkhorn for different nearest neighbors graphs in Tab. 4 and Tab. 5, where Geodesic Sinkhorn outperforms Sinkhorn Euler on most datasets.

Table 3.

Time series interpolation task comparing the mean and standard deviation, across 5 seeds, of the 2-Wasserstein metric averaged across time for 10 single-cell time series datasets. Sinkhorn Euler ran out of memory on two datasets. Lower is better; the best performance on each dataset is in bold.

| Dataset | Sinkhorn | Sinkhorn Euler | Geo Sinkhorn (ours) |
|---|---|---|---|
| Cite Donor0 | 48.545 ± 0.057 | 46.254 ± 3.192 | 44.440 ± 0.108 |
| Cite Donor1 | 48.220 ± 0.055 | 45.897 ± 3.254 | 44.165 ± 0.103 |
| Cite Donor2 | 50.281 ± 0.016 | 47.773 ± 3.958 | 45.673 ± 0.092 |
| Cite Donor3 | 49.339 ± 0.081 | 46.565 ± 3.553 | 45.022 ± 0.146 |
| Clark | 13.500 ± 0.003 | – | 13.288 ± 0.008 |
| EB | 12.415 ± 0.008 | 12.298 ± 0.140 | 12.133 ± 0.011 |
| Multiome Donor0 | 56.648 ± 0.048 | 55.373 ± 7.234 | 53.431 ± 0.077 |
| Multiome Donor1 | 54.028 ± 0.126 | 52.396 ± 4.394 | 50.238 ± 0.022 |
| Multiome Donor2 | 58.798 ± 0.155 | 57.182 ± 5.511 | 55.041 ± 0.058 |
| WOT | 8.096 ± 0.003 | – | 7.397 ± 0.106 |

Table 4.

Time series interpolation task comparing mean of the 2-Wasserstein for a KNN graph with 50 neighbors. Lower is better, best score is bold.

| Dataset | Sinkhorn Euler | Geodesic Sinkhorn |
|---|---|---|
| Cite Donor0 | 48.507 | 46.850 |
| Cite Donor1 | 48.207 | 46.883 |
| Cite Donor2 | 50.383 | 49.176 |
| Cite Donor3 | 49.210 | 47.646 |
| Clark | 13.506 | 13.378 |
| EB | 12.409 | 12.394 |
| Multiome Donor0 | 56.676 | 55.095 |
| Multiome Donor1 | 54.028 | 53.952 |
| Multiome Donor2 | 58.821 | 57.187 |
| WOT | 8.070 | 8.279 |

Table 5.

Time series interpolation task comparing mean of the 2-Wasserstein for a KNN graph with 100 neighbors. Lower is better, best score is bold.

| Dataset | Sinkhorn Euler | Geodesic Sinkhorn |
|---|---|---|
| Cite Donor0 | 48.573 | 47.102 |
| Cite Donor1 | 48.197 | 47.373 |
| Cite Donor2 | 50.298 | 49.517 |
| Cite Donor3 | 49.290 | 48.433 |
| Clark | 13.500 | 13.396 |
| EB | 12.415 | 12.416 |
| Multiome Donor0 | 56.708 | 55.562 |
| Multiome Donor1 | 54.063 | 54.016 |
| Multiome Donor2 | 58.802 | 57.732 |
| WOT | 8.096 | 8.253 |

Footnotes

With a slight abuse of language we use the term distance, although the entropy-regularized formulation does not respect the identity of indiscernibles.

References

- [1] Peyré G. and Cuturi M., “Computational Optimal Transport,” arXiv, 2020.

- [2] Sinkhorn R. and Knopp P., “Concerning nonnegative matrices and doubly stochastic matrices,” Pacific Journal of Mathematics, 1967.

- [3] Fortet R., “Résolution d’un systeme d’équations de m. schrödinger,” J. Math. Pure Appl. IX, 1940.

- [4] Kullback S., “Probability densities with given marginals,” The Annals of Mathematical Statistics, 1968.

- [5] Knight P. A. and Ruiz D., “A fast algorithm for matrix balancing,” IMA Journal of Numerical Analysis, 2013.

- [6] Moon K. R., Stanley J. S., Burkhardt D., van Dijk D., Wolf G., and Krishnaswamy S., “Manifold learning-based methods for analyzing single-cell RNA-sequencing data,” Current Opinion in Systems Biology, 2018.

- [7] Huguet G., Magruder D. S., Tong A., et al., “Manifold interpolating optimal-transport flows for trajectory inference,” NeurIPS, 2022.

- [8] Solomon J., De Goes F., Peyré G., et al., “Convolutional wasserstein distances: Efficient optimal transportation on geometric domains,” ACM Transactions on Graphics (ToG), 2015.

- [9] Tong A., Huguet G., Shung D., et al., “Embedding signals on graphs with unbalanced diffusion earth mover’s distance,” in ICASSP, IEEE, 2022.

- [10] Tong A., Huguet G., Natik A., et al., “Diffusion earth mover’s distance and distribution embeddings,” in ICML, 2021.

- [11] Crane K., Weischedel C., and Wardetzky M., “Geodesics in heat: A new approach to computing distance based on heat flow,” ACM Transactions on Graphics, 2013.

- [12] Heitz M., Bonneel N., Coeurjolly D., Cuturi M., and Peyré G., “Ground metric learning on graphs,” J. of Math. Imaging and Vision, 2021.

- [13] Cuturi M., “Sinkhorn distances: Lightspeed computation of optimal transport,” NeurIPS, 2013.

- [14] Varadhan S. R. S., “On the behavior of the fundamental solution of the heat equation with variable coefficients,” Communications on Pure and Applied Mathematics, 1967.

- [15] Cuturi M. and Doucet A., “Fast computation of wasserstein barycenters,” in ICML, 2014.

- [16] Shuman D. I., Vandergheynst P., and Frossard P., “Chebyshev polynomial approximation for distributed signal processing,” in DOCSS, IEEE, 2011.

- [17] Marcotte S., Barbe A., Gribonval R., et al., “Fast multi-scale diffusion on graphs,” in ICASSP, IEEE, 2022.

- [18] Huang S.-G., Lyu I., Qiu A., and Chung M. K., “Fast polynomial approximation of heat kernel convolution on manifolds and its application to brain sulcal and gyral graph pattern analysis,” IEEE transactions on medical imaging, 2020.

- [19] Imbens G. W. and Rubin D. B., Causal inference in statistics, social, and biomedical sciences. Cambridge University Press, 2015.

- [20] Scetbon M., Cuturi M., and Peyré G., “Low-rank sinkhorn factorization,” in ICML, 2021.

- [21] Tong A., Huang J., Wolf G., Dijk D. V., and Krishnaswamy S., “TrajectoryNet: A Dynamic Optimal Transport Network for Modeling Cellular Dynamics,” in ICML, 2020.

- [22] Zapatero M. R., Tong A., Sufi J., et al., “Trellis single-cell screening reveals stromal regulation of patient-derived organoid drug responses,” bioRxiv, 2023.

References

- [1] Moon K. R., van Dijk D., Wang Z., et al., “Visualizing structure and transitions in high-dimensional biological data,” Nat Biotechnol, vol. 37, no. 12, pp. 1482–1492, 2019.

- [2] Schiebinger G., Shu J., Tabaka M., et al., “Optimal-Transport Analysis of Single-Cell Gene Expression Identifies Developmental Trajectories in Reprogramming,” Cell, vol. 176, no. 4, 928–943.e22, 2019.

- [3] Clark B. S., Stein-O’Brien G. L., Shiau F., et al., “Single-Cell RNA-Seq Analysis of Retinal Development Identifies NFI Factors as Regulating Mitotic Exit and Late-Born Cell Specification,” Neuron, vol. 102, no. 6, 1111–1126.e5, Jun. 2019, ISSN: 08966273.