Abstract

Biological systems with many components often exhibit seemingly critical behaviors, characterized by atypically large correlated fluctuations. Yet the underlying causes remain unclear. Here we define and examine two types of criticality. Intrinsic criticality arises from interactions within the system that are fine-tuned to a critical point. Extrinsic criticality, in contrast, emerges without fine tuning when observable degrees of freedom are coupled to unobserved fluctuating variables. We unify both types of criticality using the language of learning and information theory. We show that critical correlations, intrinsic or extrinsic, lead to diverging mutual information between two halves of the system and are a feature of learning problems, in which the unobserved fluctuations are inferred from the observable degrees of freedom. We argue that extrinsic criticality is equivalent to standard inference, whereas intrinsic criticality describes fractional learning, in which the amount to be learned depends on the system size. We show further that both types of criticality are on the same continuum, connected by a smooth crossover. In addition, we investigate the observability of Zipf’s law, a power-law rank-frequency distribution often used as an empirical signature of criticality. We find that Zipf’s law is a robust feature of extrinsic criticality but can be nontrivial to observe for some intrinsically critical systems, including critical mean-field models. We further demonstrate that models with global dynamics, such as oscillatory models, can produce observable Zipf’s law without relying on either external fluctuations or fine tuning. Our findings suggest that while possible in theory, fine tuning is not the only, nor the most likely, explanation for the apparent ubiquity of criticality in biological systems with many components. 
Our work offers an alternative interpretation in which criticality, specifically extrinsic criticality, results from the adaptation of collective behavior to external stimuli.

Life emerges from an intricate interplay among a large number of components, yet how it achieves such exquisite organization remains unexplained. Several aspects of this question fall within the domain of statistical physics, which studies the emergence of collective behaviors from interactions between microscopic degrees of freedom. Perhaps the greatest success of statistical physics is in describing spontaneous transitions between two phases of matter, such as liquid and gas. For a class of phase transitions, such as that between the ferromagnetic and paramagnetic phases, the transition point, known as the critical point, displays unique properties absent from either phase. Many of these properties are relevant to biological function: scale invariance allows scaling up without the need for redesign [1–3], insensitivity to microscopic details can form a basis for robust behaviors [4, 5], and strong correlations between components appear useful for effective information propagation [6–9].

A tantalizing question thus arises: does biology operate near a critical point [10, 11]? This idea has a long history; see, e.g., Ref. [12]. However, it is only recently that high-precision, simultaneous measurements of hundreds to thousands of components in biological systems have allowed quantitative empirical tests of the criticality hypothesis.

Modern quantitative biology experiments have indeed observed seemingly critical behaviors in many systems across scales, from amino acid sequences [13] to spatiotemporal dynamics of gene expression [4, 14] to firing patterns of neurons [15–20] to velocity fluctuations in bird flocks [21–23]. In these systems, correlations among the components and susceptibilities to perturbations often appear to diverge with the system size. Yet this ubiquity is somewhat surprising, not least because equilibrium statistical physics tells us that criticality requires hard-to-achieve fine tuning of models to a special point in their parameter space.

While biology may well be capable of fine tuning [24, 25], alternative explanations for the observed criticality exist [26–28]. Some signatures of criticality arise without fine tuning when observable degrees of freedom are coupled to one or more unobserved fluctuating variables [26, 27, 29, 30]. Provided that the number of fluctuating variables is relatively small and their fluctuations are sufficiently large, these latent fluctuations need not depend on the specifics of the observable degrees of freedom, such as the system size. In this case, criticality results from an extrinsic effect. Although this mechanism seems to differ from the fine-tuning explanation, the two are not entirely unrelated: interacting systems at criticality also generate large fluctuations. In fact, the usual definition of criticality describes not its mechanisms but rather the behavior of the observable degrees of freedom, such as diverging correlation length, scale invariance, and nonanalytic thermodynamic functions (see, e.g., Refs. [31–33]). Such properties can emerge intrinsically from interactions between components when model parameters are carefully chosen, as is often the case in statistical physics. However, this intrinsic mechanism is by no means the only one, nor is it a defining feature of criticality.

Here we introduce a new definition of criticality that spans both intrinsic and extrinsic mechanisms. Using the language of learning and information theory, we show that a unifying feature of both types of criticality is a divergence of mutual information between two halves of the system.¹ The rate of divergence depends on how the fluctuations scale with the system size and generally differs between intrinsic and extrinsic criticality. We show further that critical systems are equivalent to the problem of learning parameters from iid samples, with the fluctuating fields playing the role of the parameters and the system components that of the iid samples. This learning problem is characterized by diverging information between the parameters and samples. Through the learning-theoretic lens, we interpret intrinsic criticality as a fractional learning problem, in which only a fraction of the parameters is available for learning due to a sharpening of the a priori distribution as the system size grows. In contrast, extrinsic criticality has a fixed a priori distribution whose entropy, i.e., the information available to be learned, does not shrink with the system size.

In addition, we examine the observability of Zipf’s law (the inverse relationship between the rank and frequency of system states), which is commonly used as an empirical signature of criticality [10, 17]. While the correspondence between Zipf’s law and critical behaviors is expected in the thermodynamic limit [10], little is known about whether it remains robust under finite sample sizes and especially in finite systems, for which the definition of criticality becomes blurred. Our analyses reveal that the extrinsic mechanism is a likelier explanation of experimentally observed Zipfian behaviors than intrinsic criticality in typical models, such as critical mean-field models, which usually generate fluctuations that are too small. We show further that, when endowed with certain dynamics, intrinsically induced fluctuations can become large enough to support empirically observable Zipf-like distributions over a range of model parameters without the need for fine tuning.

We emphasize that the distinction between the two types of criticality is not sharp. Varying the system-size scaling of critical fluctuations leads to a smooth crossover between intrinsic and extrinsic criticality, with the latter corresponding to the limit in which the fluctuations are independent of the system size. Importantly, this crossover behavior means that intrinsically critical fluctuations that depend very weakly on the system size are empirically indistinguishable from extrinsically critical ones.

I. INFORMATION AND LEARNING–THEORETIC VIEW OF CRITICALITY

Large fluctuations are a defining feature of criticality.

Criticality is often characterized by nonanalytic behaviors of the derivatives of the thermodynamic free energy or by the divergence of correlations of an order parameter [31, Ch XIV]. Such definitions assume knowledge of the probabilistic model that governs the system and rely on quantities that are not readily accessible in biology experiments. As a result, they are hard to extend to living systems. Alternatively, one can attempt to define criticality directly using properties of the joint probability distribution of the system’s components, which can be estimated from data. Zipf’s law in the rank-ordered frequencies of the system states is one possibility [10]. This definition of criticality also describes the samples that encode maximum information about the unknown generative process at a fixed level of compression [41–44]. More generally, energy is an extensive quantity, and thus the central limit theorem ensures that the variance of energy fluctuations for a typical, weakly correlated statistical physics system of size $N$ scales as $N$. A faster growth, proportional to $N^{\alpha}$ with $\alpha > 1$, indicates correlated microscopic fluctuations that violate the iid assumption of the central limit theorem.² We use the presence of these atypically large fluctuations as a definition of criticality.

Divergent mutual information between subsystems is another signature of criticality.

Large correlated fluctuations allow predictions of the state of one part of the system, $\boldsymbol{\sigma}_A$, from measurements of its other part, $\boldsymbol{\sigma}_B$, with $\boldsymbol{\sigma} = (\boldsymbol{\sigma}_A, \boldsymbol{\sigma}_B)$ and $N_A + N_B = N$. Specifically, the mutual information between these parts reads [45]

$$I(\boldsymbol{\sigma}_A;\boldsymbol{\sigma}_B) = S(\boldsymbol{\sigma}_A) + S(\boldsymbol{\sigma}_B) - S(\boldsymbol{\sigma}), \tag{1}$$

where $S(\cdot)$ denotes the entropy of a random variable. At a continuous phase transition, whether equilibrium or not, this mutual information diverges logarithmically, $I(\boldsymbol{\sigma}_A;\boldsymbol{\sigma}_B) \propto \log N$ [34–40]. We take this divergence as another, equivalent definition of criticality. We will show that this definition provides a unifying way of treating extrinsic and intrinsic criticality (see Fig. 1). To isolate the effects of critical correlations from those due to geometry, we focus on non-spatial systems here and in what follows.
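For small systems, Eq. (1) can be evaluated directly by enumerating all $2^N$ configurations. The following Python sketch is our own illustration, not the authors' code. The function name and the `log_weight` callback, which returns an unnormalized log-probability for a configuration, are hypothetical. Because it enumerates every state, it is only practical for roughly $N \lesssim 20$ spins.

```python
import itertools

import numpy as np


def mutual_information_halves(log_weight, n_spins):
    """I(sigma_A; sigma_B) = S(sigma_A) + S(sigma_B) - S(sigma), Eq. (1), in nats.

    log_weight: hypothetical callback returning the unnormalized log-probability
    of a configuration given as a tuple of +/-1 spins. Half A holds the first
    n_spins // 2 spins and half B holds the rest.
    """
    n_a = n_spins // 2
    states = itertools.product((-1, 1), repeat=n_spins)
    log_w = np.array([log_weight(s) for s in states], dtype=float)
    p = np.exp(log_w - log_w.max())
    p /= p.sum()
    # itertools.product varies the last spin fastest, so rows index half A
    p = p.reshape(2 ** n_a, 2 ** (n_spins - n_a))

    def entropy(q):
        q = q[q > 0]
        return -np.sum(q * np.log(q))

    return entropy(p.sum(axis=1)) + entropy(p.sum(axis=0)) - entropy(p.ravel())


# Fully connected Ising model at T = T_c = 1 with E = -(1/2N) (sum_i sigma_i)^2
n = 12
print(mutual_information_halves(lambda s: sum(s) ** 2 / (2 * n), n))
```

As sanity checks, independent spins give zero information, and a distribution that puts all its weight on two perfectly correlated configurations (all up, all down) gives $\log 2$.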

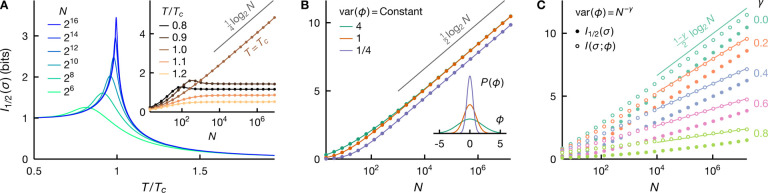

FIG. 1. Divergent information signifies criticality.

A: Intrinsic criticality in equilibrium generally requires fine tuning. We depict the mutual information between two equal halves of the system, $I(\boldsymbol{\sigma}_A;\boldsymbol{\sigma}_B)$ with $N_A = N_B = N/2$, for the fully connected Ising model as a function of temperature for a range of system sizes (see legend). We see that the information diverges with $N$ only at the critical temperature $T_c$. This divergence is logarithmic, with the asymptotic information given approximately by $\frac{1}{4}\log N$ (inset). B: Extrinsic criticality, on the other hand, emerges without fine tuning. For a system of noninteracting spins coupled to a common Gaussian fluctuating field (inset), the information always diverges with $N$. In contrast to the fully connected Ising model at criticality, the asymptotic information grows faster with $N$, as $\frac{1}{2}\log N$. C: The scaling exponent of the critical fluctuations, $\gamma$ [with $\mathrm{Var}(h) \propto N^{-\gamma}$], controls the information divergence rate. We illustrate the information between two halves of the system (filled circles) and between the system and the latent field (empty circles) for conditionally independent identical spins, Eq. (5), under a Gaussian fluctuating field for various values of $\gamma$ (see legend). The asymptotic scaling of the information is in good agreement with the expected logarithmic divergence (lines), Eq. (19). We also see that $I(\boldsymbol{\sigma}_A;\boldsymbol{\sigma}_B) \le I(\boldsymbol{\sigma};h)$, as expected from the data processing inequality for the Markov chain $\boldsymbol{\sigma}_A \to h \to \boldsymbol{\sigma}_B$.

Diverging information also characterizes statistical inference.

Generally, the information that $N$ iid samples encode about the parameters of a distribution grows logarithmically with the sample size in the limit $N \to \infty$ [35, 36]. Take, for example, a process that generates $N$ iid samples $\mathbf{x} = (x_1, \dots, x_N)$ from a probability distribution $p(x\mid\theta)$, where $\theta$ is an unknown, real-valued, and possibly multi-dimensional parameter, which one wants to estimate from $\mathbf{x}$. Given an a priori distribution $p(\theta)$, the joint marginal distribution of the samples is

$$P(\mathbf{x}) = \int d\theta\, p(\theta) \prod_{i=1}^{N} p(x_i \mid \theta). \tag{2}$$

If we divide the samples into two macroscopic groups, i.e., $\mathbf{x} = (\mathbf{x}_A, \mathbf{x}_B)$ with each group containing a finite fraction of the $N$ samples, then the mutual information between these groups reads [35] (see also Appendix A)

$$I(\mathbf{x}_A;\mathbf{x}_B) \simeq \frac{K}{2}\log N, \tag{3}$$

where $K$ denotes the dimensionality of the parameter $\theta$, or, more precisely, the dimensionality of the subset of the parameters that can be inferred from $\mathbf{x}$.³ Importantly, the information between the two halves exists only to the extent that both depend on $\theta$. In fact, this information is nearly the same as the information one has about the parameter having observed $\mathbf{x}$, i.e., $I(\mathbf{x}_A;\mathbf{x}_B) \approx I(\mathbf{x};\theta)$ [35] (see Fig. 1C). We emphasize that this logarithmic divergence arises without fine tuning and for a broad range of assumptions about $p(x\mid\theta)$ and $p(\theta)$.
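The logarithmic growth in Eq. (3) can be checked exactly in the simplest case, $K = 1$. We add this as an illustration of our own: $N$ coin flips $x_i \in \{0, 1\}$ with an unknown bias $\theta$ and a uniform prior. A sequence with $k$ heads then has marginal probability $k!\,(N-k)!/(N+1)!$, and the conditional entropy is $S(\mathbf{x}\mid\theta) = N/2$ nats, so $I(\mathbf{x};\theta)$ reduces to a finite sum. The function name is hypothetical. The asymptote in the comment is the standard large-$N$ result for this particular prior, not a formula from the text.

```python
import math


def bernoulli_learning_information(n):
    """Exact I(x; theta) in nats for n coin flips with a uniform prior on the bias theta.

    A sequence with k heads has probability 1 / ((n + 1) * C(n, k)), so
    S(x) = log(n + 1) + mean_k log C(n, k). S(x | theta) = n / 2 because the
    binary entropy averages to 1/2 nat over a uniform theta.
    """
    log_binoms = math.fsum(
        math.lgamma(n + 1) - math.lgamma(k + 1) - math.lgamma(n - k + 1)
        for k in range(n + 1)
    )
    entropy_x = math.log(n + 1) + log_binoms / (n + 1)
    return entropy_x - n / 2


for n in (10**2, 10**3, 10**4, 10**5):
    exact = bernoulli_learning_information(n)
    # large-N asymptote for this prior: (1/2) log(N / (2 pi e)) + 1, i.e. (K/2) log N + O(1)
    asymptotic = 0.5 * math.log(n / (2 * math.pi * math.e)) + 1.0
    print(n, round(exact, 4), round(asymptotic, 4))
```

Each tenfold increase in $N$ adds about $\frac{1}{2}\log 10$ nats, as Eq. (3) predicts for $K = 1$.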

Extrinsic criticality is equivalent to statistical learning.

We can interpret the information divergence in inference problems as a signature of criticality, imposed extrinsically by the unknown, fluctuating variable $\theta$. We consider a physical manifestation of an extrinsically critical system [26, 27] and write down the joint probability of $N$ noninteracting, identical binary spins in an external magnetic field $h$,

$$P(\boldsymbol{\sigma}\mid h) = \prod_{i=1}^{N} \frac{e^{h\sigma_i}}{2\cosh h}, \tag{4}$$

where $\boldsymbol{\sigma} = (\sigma_1, \dots, \sigma_N)$ and $\sigma_i \in \{-1, +1\}$. If the field is not constant but is a random variable that fluctuates across realizations of the system, then marginalizing over this field yields

$$P(\boldsymbol{\sigma}) = \int dh\, p(h) \prod_{i=1}^{N} \frac{e^{h\sigma_i}}{2\cosh h}, \tag{5}$$

where $p(h)$ is the marginal distribution of the fluctuating field. This equation takes exactly the same form as Eq. (2), with the magnetic field playing the role of the model parameter and the spins that of the iid samples. As a result, it follows immediately that $I(\boldsymbol{\sigma}_A;\boldsymbol{\sigma}_B) \simeq \frac{1}{2}\log N$ [35], where $\boldsymbol{\sigma}_A$ and $\boldsymbol{\sigma}_B$ denote two halves of the system, see Fig. 1B. In fact, we can derive this result by noticing that the a posteriori distribution, $P(h\mid\boldsymbol{\sigma})$, sharpens as $N$ grows, with the asymptotic variance decreasing as $1/N$. In other words, as our knowledge of the parameter improves with $N$, its a posteriori differential entropy decreases as $-\frac{1}{2}\log N$. Given that the a priori entropy $S(h)$ is finite and does not change with $N$, we obtain

$$I(\boldsymbol{\sigma}_A;\boldsymbol{\sigma}_B) \simeq I(\boldsymbol{\sigma};h) = S(h) - S(h\mid\boldsymbol{\sigma}) \simeq \frac{1}{2}\log N, \tag{6}$$

where the approximations hide terms of order $O(1)$.
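The spins in Eq. (5) are conditionally independent and identical, so the probability of a configuration depends only on its number $k$ of up-spins. The entropies in Eq. (1) therefore reduce to sums over $k$ with multiplicities $\binom{N}{k}$. The sketch below is our illustration, with hypothetical names, a unit-variance Gaussian $p(h)$, and an arbitrary quadrature grid. It uses this reduction to compute $I(\boldsymbol{\sigma}_A;\boldsymbol{\sigma}_B)$ exactly up to discretization and to check that its growth rate approaches $\frac{1}{2}$ per unit of $\log N$. It relies on the fact that each half is itself described by Eq. (5), with $N/2$ spins and the same $p(h)$.

```python
import numpy as np
from scipy.special import gammaln, logsumexp

H_GRID = np.linspace(-8.0, 8.0, 4001)      # quadrature grid for the latent field h
LOG_2COSH = np.logaddexp(H_GRID, -H_GRID)  # log(2 cosh h), overflow-safe


def log_prob_per_state(n, log_prior):
    """log P(sigma) of a single configuration with k up-spins, for k = 0..n (Eq. 5)."""
    return np.array([
        logsumexp(log_prior + (2 * k - n) * H_GRID - n * LOG_2COSH) for k in range(n + 1)
    ])


def entropy(n, log_prior):
    """Entropy (nats) of n conditionally independent spins sharing the field h."""
    k = np.arange(n + 1)
    log_mult = gammaln(n + 1) - gammaln(k + 1) - gammaln(n - k + 1)  # log C(n, k)
    log_q = log_prob_per_state(n, log_prior)
    return -np.sum(np.exp(log_mult + log_q) * log_q)


def info_between_halves(n, log_prior):
    """I(sigma_A; sigma_B) for two halves of n spins (n even)."""
    # given h the spins are independent, so each half is the same mixture with n / 2 spins
    return 2 * entropy(n // 2, log_prior) - entropy(n, log_prior)


# Gaussian field with unit variance, discretized and normalized on the grid
gaussian_prior = -0.5 * H_GRID ** 2
gaussian_prior -= logsumexp(gaussian_prior)

for n in (50, 100, 200, 400, 800):
    print(n, round(info_between_halves(n, gaussian_prior), 4))
```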

We can glean additional insights from a more traditional statistical mechanics argument. First we recast Eq. (5) as

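$$P(\boldsymbol{\sigma}) = \int dh\, p(h)\, e^{N[hm - \log(2\cosh h)]}, \tag{7}$$

where $m = \frac{1}{N}\sum_{i}\sigma_i$ is the magnetization. For large $N$, we evaluate the above integral using the saddle-point approximation, assuming that $p(h)$ satisfies certain technical conditions [26],

$$P(\boldsymbol{\sigma}) \approx p(h^*)\sqrt{\frac{2\pi}{N(1-m^2)}}\; e^{N[h^* m - \log(2\cosh h^*)]}, \tag{8}$$

where $h^* = \tanh^{-1} m$ denotes the saddle point. Defining the energy function as $E(\boldsymbol{\sigma}) = -\log P(\boldsymbol{\sigma})$ and recalling that the thermodynamic entropy is the logarithm of the density of states, i.e., $S(E) = \log\rho(E)$ with $\rho(E) = \sum_{\boldsymbol{\sigma}}\delta\big(E - E(\boldsymbol{\sigma})\big)$, we obtain to the leading order in $N$ [26]

$$S(E) - E \simeq \log p(h^*), \tag{9}$$

which does not grow with $N$. This equivalence between energy and entropy at all orders that grow with $N$ signifies a very strong form of criticality [10].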
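The saddle-point step, Eq. (8), can be checked against direct quadrature of Eq. (7). In this sketch we assume a Gaussian $p(h)$ (any smooth prior would do) and use the identity $h^* m - \log(2\cosh h^*) = -s(m)$, where $s(m)$ is the entropy (in nats) of a binary variable with mean $m$. The function names and grid settings are our own choices. The discrepancy should shrink roughly as $1/N$.

```python
import numpy as np
from scipy.special import logsumexp


def log_gaussian(h, sigma_h):
    return -0.5 * (h / sigma_h) ** 2 - np.log(np.sqrt(2 * np.pi) * sigma_h)


def log_p_exact(m, n, sigma_h=1.0):
    """log P(sigma) from Eq. (7) by direct quadrature, for a Gaussian p(h)."""
    h = np.linspace(-8.0 * sigma_h, 8.0 * sigma_h, 16001)
    dh = h[1] - h[0]
    integrand = log_gaussian(h, sigma_h) + n * (h * m - np.logaddexp(h, -h))
    return logsumexp(integrand) + np.log(dh)


def log_p_saddle(m, n, sigma_h=1.0):
    """Saddle-point approximation, Eq. (8), with h* = artanh(m)."""
    h_star = np.arctanh(m)
    exponent = h_star * m - np.logaddexp(h_star, -h_star)  # equals -s(m)
    return (log_gaussian(h_star, sigma_h)
            + 0.5 * np.log(2 * np.pi / (n * (1 - m ** 2)))
            + n * exponent)


for n in (100, 1000, 10000):
    print(n, log_p_exact(0.3, n) - log_p_saddle(0.3, n))
```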

To compute the mutual information, we write down the entropy of the system

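$$S(\boldsymbol{\sigma}) = -\sum_{\boldsymbol{\sigma}} P(\boldsymbol{\sigma})\log P(\boldsymbol{\sigma}) = \langle E(\boldsymbol{\sigma})\rangle. \tag{10}$$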

Using Eq. (9) and noting that , we have

| (11) |

where we drop the terms of order and smaller. As a result, the information between the two halves of the system reads

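$$I(\boldsymbol{\sigma}_A;\boldsymbol{\sigma}_B) = S(\boldsymbol{\sigma}_A) + S(\boldsymbol{\sigma}_B) - S(\boldsymbol{\sigma}) \simeq \frac{1}{2}\log N, \tag{12}$$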

which exhibits the same logarithmic divergence as one-parameter learning, Eq. (6).

The specifics of our calculations (conditional independence of spins, binary spins, etc.) are not important for the main result: an extrinsically varying parameter yields mutual information between two subparts of the system (surface interaction terms excluded) that grows as $\frac{1}{2}\log N$. This is a very specific form of criticality, mathematically equivalent to the problem of learning that parameter from observations of the system’s microstate. For higher-dimensional parameters, the calculation generalizes: the mutual information is $\frac{1}{2}\log N$ per inferable parameter component [35] (so long as the number of parameters is much smaller than $N$).

Intrinsic criticality is described by ‘fractional’ parameter learning.

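To illustrate the physics of intrinsic criticality, we consider a minimal model of $N$ fully connected identical Ising spins, defined by the energy function $E(\boldsymbol{\sigma}) = -\frac{1}{2N}\left(\sum_{i=1}^{N}\sigma_i\right)^2$. As usual, the joint probability distribution of the spins is $P(\boldsymbol{\sigma}) = e^{-\beta E(\boldsymbol{\sigma})}/Z$, where $\beta = 1/T$ denotes the inverse temperature. The partition function $Z$ guarantees proper normalization,

$$Z = \sum_{\boldsymbol{\sigma}} e^{\frac{\beta}{2N}\left(\sum_{i}\sigma_i\right)^2} = \sqrt{\frac{N}{2\pi\beta}}\sum_{\boldsymbol{\sigma}}\int dh\, e^{-\frac{N h^2}{2\beta} + h\sum_{i}\sigma_i}, \tag{13}$$

where the last equality follows from the Hubbard–Stratonovich transformation. We see that the system consists of fluctuating degrees of freedom that include the spins as well as a scalar field $h$, and the joint distribution of these variables reads

$$P(\boldsymbol{\sigma}, h) = \frac{1}{Z}\sqrt{\frac{N}{2\pi\beta}}\, e^{-\frac{N h^2}{2\beta} + h\sum_{i}\sigma_i}. \tag{14}$$

Marginalizing out the spins yields

$$p_N(h) = \frac{1}{Z}\sqrt{\frac{N}{2\pi\beta}}\, e^{-\frac{N h^2}{2\beta} + N\log(2\cosh h)}, \tag{15}$$

where the subscript $N$ emphasizes that this distribution is $N$-dependent. Now the distribution over the spin states is

$$P(\boldsymbol{\sigma}) = \int dh\, p_N(h) \prod_{i=1}^{N} \frac{e^{h\sigma_i}}{2\cosh h}, \tag{16}$$

which has the same form as the extrinsically critical model in Eq. (5), albeit with an a priori distribution that depends on $N$.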
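The Hubbard–Stratonovich step in Eq. (13) is easy to verify numerically for small $N$. This sketch uses hypothetical function names and an arbitrary grid, and assumes the energy $E(\boldsymbol{\sigma}) = -\frac{1}{2N}\left(\sum_i\sigma_i\right)^2$ with unit coupling. It computes $\log Z$ in two ways. The first sums over the number $k$ of up-spins, using $\sum_i \sigma_i = 2k - N$. The second uses the field representation, in which the spin sum is done analytically, $\sum_{\boldsymbol{\sigma}} e^{h\sum_i\sigma_i} = (2\cosh h)^N$.

```python
import numpy as np
from scipy.special import gammaln, logsumexp


def log_z_direct(n, beta):
    """log Z for E = -(1/2N) (sum_i sigma_i)^2, summing over k up-spins."""
    k = np.arange(n + 1)
    total_spin = 2 * k - n
    log_mult = gammaln(n + 1) - gammaln(k + 1) - gammaln(n - k + 1)
    return logsumexp(log_mult + beta * total_spin ** 2 / (2 * n))


def log_z_hubbard_stratonovich(n, beta):
    """log Z from the right-hand side of Eq. (13), integrating over h numerically."""
    h = np.linspace(-10.0, 10.0, 200001)
    dh = h[1] - h[0]
    log_integrand = -n * h ** 2 / (2 * beta) + n * np.logaddexp(h, -h)  # (2 cosh h)^N
    return 0.5 * np.log(n / (2 * np.pi * beta)) + logsumexp(log_integrand) + np.log(dh)


for beta in (0.5, 1.0, 1.5):
    print(beta, log_z_direct(30, beta), log_z_hubbard_stratonovich(30, beta))
```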

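Intrinsically induced fluctuations behave differently at and away from the critical point. To see this, we recall that $\log\cosh h \approx \frac{h^2}{2} - \frac{h^4}{12}$ for small $h$. Substituting this approximation into the exponent of Eq. (15) gives, for small $h$,

$$p_N(h) \propto \exp\left[-\frac{N}{2}\left(\frac{1}{\beta} - 1\right)h^2 - \frac{N h^4}{12}\right]. \tag{17}$$

We see that $\mathrm{Var}(h) \propto N^{-1}$ for $T > T_c$, but $\mathrm{Var}(h) \propto N^{-1/2}$ at $T = T_c$, where the quadratic term vanishes ($\beta = 1$). For $T < T_c$, we again have $\mathrm{Var}(h) \propto N^{-1}$ around each maximum of $p_N(h)$, with a prefactor that depends on the curvature there. This change in scaling behavior is what defines criticality: the fluctuation becomes atypically large only at the critical temperature $T_c$, with a variance that scales as $N^{-1/2}$ instead of $N^{-1}$ for this simple fully connected model.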
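The change in scaling described above can be seen by computing $\mathrm{Var}(h)$ under the full $p_N(h)$ of Eq. (15), without the small-$h$ expansion, and fitting an effective exponent between two system sizes. This is a minimal sketch with hypothetical names. The grid $|h| \le 1$ is adequate only for $T \ge T_c$ (with $T_c = 1$ for unit coupling), where $p_N(h)$ concentrates near $h = 0$.

```python
import numpy as np


def field_variance(n, beta):
    """Var(h) under p_N(h) of Eq. (15) on a fine grid; the mean vanishes by symmetry.

    The grid |h| <= 1 assumes T >= T_c, i.e. beta <= 1.
    """
    h = np.linspace(-1.0, 1.0, 400001)
    log_p = -n * h ** 2 / (2 * beta) + n * np.log(np.cosh(h))  # constant N log 2 dropped
    w = np.exp(log_p - log_p.max())
    return np.sum(w * h ** 2) / np.sum(w)


def variance_exponent(beta, n1=10**3, n2=10**5):
    """Effective exponent a in Var(h) ~ N^a between two system sizes."""
    return np.log(field_variance(n2, beta) / field_variance(n1, beta)) / np.log(n2 / n1)


print("T = T_c:  ", variance_exponent(beta=1.0))  # expected to approach -1/2
print("T = 2 T_c:", variance_exponent(beta=0.5))  # expected to approach -1
```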

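Divergent mutual information emerges only at the critical point, see Fig. 1A. The scaling of the variance of intrinsically induced fluctuations results in the asymptotic a priori differential entropy $S(h) \simeq -\frac{1}{4}\log N$ at $T = T_c$ and $S(h) \simeq -\frac{1}{2}\log N$ otherwise. From Eq. (14), we have $P(h\mid\boldsymbol{\sigma}) \propto e^{-N h^2/(2\beta) + h\sum_i\sigma_i}$, a Gaussian with variance $\beta/N$; hence the a posteriori differential entropy scales as $-\frac{1}{2}\log N$. We see that the logarithmic divergences in the a priori and a posteriori entropies cancel exactly away from criticality, leading to mutual information that does not grow with $N$. At criticality, on the other hand, we have [38]

$$I(\boldsymbol{\sigma}_A;\boldsymbol{\sigma}_B) \simeq I(\boldsymbol{\sigma};h) = S(h) - S(h\mid\boldsymbol{\sigma}) \simeq \frac{1}{4}\log N. \tag{18}$$

We emphasize that the prefactor here is 1/4 instead of 1/2; that is, we learn only half of a parameter in this intrinsically critical setting.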
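The exact computation used for the extrinsic model also applies to the fully connected Ising model through Eq. (16), with one change: both halves share the $N$-dependent prior $p_N(h)$ of Eq. (15). In this sketch (hypothetical names, arbitrary grid), we expect the growth rate of $I(\boldsymbol{\sigma}_A;\boldsymbol{\sigma}_B)$ at $T_c$ to approach $\frac{1}{4}$ per unit of $\log N$ only slowly, because finite-size corrections here decay as $N^{-1/2}$. Away from criticality, the information should saturate.

```python
import numpy as np
from scipy.special import gammaln, logsumexp

ISING_H = np.linspace(-3.0, 3.0, 6001)          # quadrature grid for h
ISING_LOG_2COSH = np.logaddexp(ISING_H, -ISING_H)


def ising_log_prior(n, beta):
    """log p_N(h) of Eq. (15), normalized on the grid (unit coupling, T_c = 1)."""
    log_p = -n * ISING_H ** 2 / (2 * beta) + n * ISING_LOG_2COSH
    return log_p - logsumexp(log_p)


def mixture_entropy(n_sub, log_prior):
    """Entropy (nats) of n_sub spins that share the latent field h with prior log_prior."""
    k = np.arange(n_sub + 1)
    log_mult = gammaln(n_sub + 1) - gammaln(k + 1) - gammaln(n_sub - k + 1)
    log_q = np.array([
        logsumexp(log_prior + (2 * j - n_sub) * ISING_H - n_sub * ISING_LOG_2COSH) for j in k
    ])
    return -np.sum(np.exp(log_mult + log_q) * log_q)


def ising_info_between_halves(n, beta):
    """I(sigma_A; sigma_B) for two halves of n fully connected spins (n even), via Eq. (16)."""
    log_prior = ising_log_prior(n, beta)  # the N-dependent prior is shared by both halves
    return 2 * mixture_entropy(n // 2, log_prior) - mixture_entropy(n, log_prior)


for beta in (0.5, 1.0):
    values = [ising_info_between_halves(n, beta) for n in (200, 400, 800, 1600)]
    print(f"beta = {beta}:", np.round(values, 4))
```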

Fractional learning is typical of intrinsically induced criticality.

In the above examples of intrinsic and extrinsic criticality, we see that the a posteriori differential entropy of the fluctuating field is asymptotically identical, i.e., $S(h\mid\boldsymbol{\sigma}) \simeq -\frac{1}{2}\log N$. The a priori entropy, in contrast, differs: $S(h)$ does not depend on $N$ for extrinsic fluctuations, whereas $S(h) \simeq -\frac{1}{4}\log N$ for the fully connected Ising model at criticality. As a result, the mutual information diverges at different rates [Eqs. (6) & (18)]. More generally, intrinsically induced fluctuations depend on the system size but need not take the same form as Eq. (15). For a unimodal fluctuating field with $\mathrm{Var}(h) \propto N^{-\gamma}$, the differential entropy reads $S(h) \simeq -\frac{\gamma}{2}\log N$. Therefore (see Appendix A),

$$I(\boldsymbol{\sigma}_A;\boldsymbol{\sigma}_B) \simeq \frac{1-\gamma}{2}\log N, \qquad 0 \le \gamma < 1. \tag{19}$$

Away from criticality, $\gamma = 1$, and the mutual information is finite: we cannot learn much about the field because, in noncritical thermodynamic systems, fluctuations are small and we already know almost everything a priori. In contrast, critical fluctuations are larger, $\gamma < 1$, so that observing the spins provides information about the specific realization of $h$, and hence about the other spins. However, the entropy of intrinsically induced critical fluctuations quite generally decreases with $N$, i.e., $\gamma > 0$. Effectively, only a fraction $1-\gamma$ of a parameter is then available for learning, and the information falls below its maximum possible value of $\frac{1}{2}\log N$. In Fig. 1C, we see that Eq. (19) agrees well with the asymptotic behavior of the mutual information for a range of $\gamma$.

Thus, we have shown that, at least for a simple model, intrinsic criticality can be viewed as a learning problem, where the underlying large fluctuations in the order parameter leave sufficient freedom to learn its specific realization from observations of the system state. The expression of the information as the difference between the a priori and a posteriori entropies, Eq. (6), shows that these results will generalize to other critical systems: critical exponents will govern the a priori differential entropy of the order parameter, while the a posteriori differential entropy remains approximately $-\frac{1}{2}\log N$. Similarly, for multi-dimensional order parameters, each dimension will contribute to the mutual information essentially independently. For correlated fluctuating parameters, the logarithmic divergence rate provides a measure of the effective dimensionality of the parameters [47]. Finally, the contributions of extrinsic and intrinsic criticality add up as well, so that each extrinsically or intrinsically critical field (i.e., with $N$-independent or $N$-dependent fluctuations) contributes $\frac{1}{2}$ or a smaller amount, respectively, to the coefficient in front of $\log N$ in the information.
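The arithmetic behind Eq. (19) can be illustrated with a family of quartic priors, $p_N(h) \propto \exp(-c N^{2\gamma} h^4)$, chosen here for convenience. It generalizes the critical form of Eq. (17), which corresponds to $\gamma = 1/2$. Rescaling $h$ shows that the a priori entropy falls as $-\frac{\gamma}{2}\log N$. Subtracting the a posteriori entropy, approximately $-\frac{1}{2}\log N$, leaves the coefficient $(1-\gamma)/2$. The code below is a sketch with hypothetical names: it checks the first statement numerically and encodes the second.

```python
import numpy as np


def quartic_prior_entropy(n, gamma, c=1.0):
    """Differential entropy (nats) of p_N(h) proportional to exp(-c N^(2 gamma) h^4).

    For this family Var(h) scales as N^(-gamma).
    """
    a = c * n ** (2 * gamma)
    width = a ** -0.25  # natural scale of h
    h = np.linspace(-6 * width, 6 * width, 200001)
    dh = h[1] - h[0]
    log_p = -a * h ** 4
    log_z = np.log(np.sum(np.exp(log_p)) * dh)
    p = np.exp(log_p - log_z)
    return -np.sum(p * (log_p - log_z)) * dh


def predicted_info_rate(gamma):
    """Coefficient of log N in Eq. (19): (1 - gamma) / 2 for 0 <= gamma < 1, zero otherwise."""
    return max(0.0, (1.0 - gamma) / 2.0)


for gamma in (0.0, 0.25, 0.5):
    slope = (quartic_prior_entropy(10**4, gamma) - quartic_prior_entropy(10**2, gamma)) / np.log(100)
    # prior entropy ~ -(gamma/2) log N; posterior entropy ~ -(1/2) log N
    print(gamma, round(slope, 4), round(slope + 0.5, 4), predicted_info_rate(gamma))
```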

II. SIGNATURE OF CRITICALITY IN FINITE SYSTEMS

In reality, we can only observe a finite number of components, and the analysis of asymptotic behaviors, while instructive, becomes less precise. To this end, we now turn to the observability of criticality in finite systems. Criticality admits a number of potentially observable signatures. We focus on the properties of the empirical joint distribution of the system components, which can be constructed directly from observational data and thus is readily usable in the context of living systems. A critical system is expected to exhibit Zipf’s law, i.e., an inverse relationship between ranks and frequencies of the system states [10].

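First we recap how Zipf behavior emerges from large fluctuations [26, 27] in the asymptotic limit. Consider the joint distribution of $N$ conditionally independent spins with magnetization $m = \frac{1}{N}\sum_i\sigma_i$,

$$P(\boldsymbol{\sigma}) = \int dh\, p(h)\, P(\boldsymbol{\sigma}\mid h), \qquad P(\boldsymbol{\sigma}\mid h) = e^{N[hm - \log(2\cosh h)]}. \tag{20}$$

We see that $\log P(\boldsymbol{\sigma}\mid h)$ is proportional to $N$, and thus $P(\boldsymbol{\sigma}\mid h)$ becomes a sharper function of $h$ as $N$ increases, with a characteristic width that scales as $N^{-1/2}$. This scaling sets a threshold above which fluctuations are critical; that is, critical fluctuations are characterized by $\mathrm{Var}(h) \propto N^{-\gamma}$ with $\gamma < 1$. As $N \to \infty$, a critical prior, $p(h)$, appears flat with respect to $P(\boldsymbol{\sigma}\mid h)$, which becomes infinitely sharp. Therefore, we can make the approximation,

$$P(\boldsymbol{\sigma}\mid h) \approx \sqrt{\frac{2\pi}{N(1-m^2)}}\, e^{-N s(m)}\, \delta(h - h^*), \tag{21}$$

where $s(m) = -\frac{1+m}{2}\log\frac{1+m}{2} - \frac{1-m}{2}\log\frac{1-m}{2}$ plays the role of the thermodynamic entropy and $h^* = \tanh^{-1} m$ is the maximum of $P(\boldsymbol{\sigma}\mid h)$, assuming only one exists. Substituting the above approximation into Eq. (20) yields

$$P(\boldsymbol{\sigma}) \approx p(h^*)\sqrt{\frac{2\pi}{N(1-m^2)}}\, e^{-N s(m)}, \tag{22}$$

which illustrates that the energy function depends on $\boldsymbol{\sigma}$ only through $m$, i.e., $E(\boldsymbol{\sigma}) = E(m)$. Importantly, the above equation signifies Zipf’s law via the equivalence between the extensive parts of the entropy and energy [10],

$$E(m) = -\log P(\boldsymbol{\sigma}) \simeq N s(m) - \log p(h^*), \qquad S(m) = \log\binom{N}{N(1+m)/2} \simeq N s(m). \tag{23}$$

For mean-field criticality, $-\log p_N(h) \simeq N h^4/12$ up to a constant [Eq. (17)], and the above cancellation holds when $h^*$ is adequately small. This condition is guaranteed for a typical realization of $h$, since $h \sim N^{-1/4}$. For extrinsic criticality, $-\log p(h^*) = O(1)$, and the entropy-energy equivalence need not rely on $h^*$ being small.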
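The entropy-energy argument can be checked on exact, infinite-sample rank-frequency data. This sketch is our own and uses hypothetical names, a unit-variance Gaussian $p(h)$, and an arbitrary choice of which levels count as the bulk. All states with $k$ up-spins share one probability, so each level occupies a contiguous block of ranks. We fit the slope of $\log(\text{frequency})$ against $\log(\text{rank})$ over the bulk of levels; Zipf’s law predicts a slope of $-1$.

```python
import numpy as np
from scipy.special import gammaln, logsumexp


def zipf_slope_extrinsic(n, sigma_h=1.0, bulk=(0.03, 0.45)):
    """Fitted slope of log(frequency) vs log(rank) for n identical spins under a Gaussian field.

    Uses exact (infinite-sample) state probabilities from Eq. (20). By symmetry we keep
    levels with k <= n/2 up-spins; states with fewer minority spins are more probable.
    """
    h = np.linspace(-8 * sigma_h, 8 * sigma_h, 8001)
    log_prior = -0.5 * (h / sigma_h) ** 2
    log_prior -= logsumexp(log_prior)
    log_2cosh = np.logaddexp(h, -h)
    ks = np.arange(n // 2 + 1)
    log_freq = np.array([logsumexp(log_prior + (2 * k - n) * h - n * log_2cosh) for k in ks])
    log_mult = gammaln(n + 1) - gammaln(ks + 1) - gammaln(n - ks + 1)
    # rank of the last state in level k: 2 * sum_{j <= k} C(n, j) (levels k and n - k tie)
    log_rank = np.log(2.0) + np.logaddexp.accumulate(log_mult)
    keep = (ks >= bulk[0] * n) & (ks <= bulk[1] * n)
    slope, _ = np.polyfit(log_rank[keep], log_freq[keep], 1)
    return slope


for n in (100, 300, 1000):
    print(n, round(zipf_slope_extrinsic(n), 3))
```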

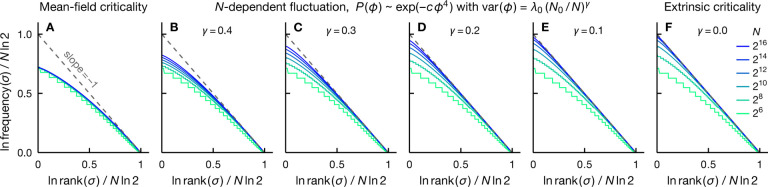

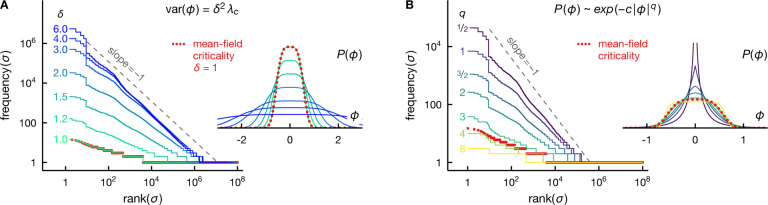

In Fig. 2, we depict exact (infinite-sample) rank-frequency plots for the fully connected Ising model at a range of temperatures. We see a clear deviation from power-law behavior at all temperatures. The rank-frequency plot at $T = T_c$ approaches Zipf scaling, but only in the tail region and only for an adequately large system (Fig. 2B). In a smaller system, Zipf’s law can appear more accurate at $T < T_c$ (Fig. 2A). This disconnect between Zipf behavior and criticality in mean-field models is likely to be even more visible under finite samples, which can only probe parts of the exact rank-frequency plots (see also Fig. 6). We emphasize that the number of observations required to resolve the tail of these rank-frequency plots would be experimentally impractical for both system sizes shown (Fig. 2A&B).

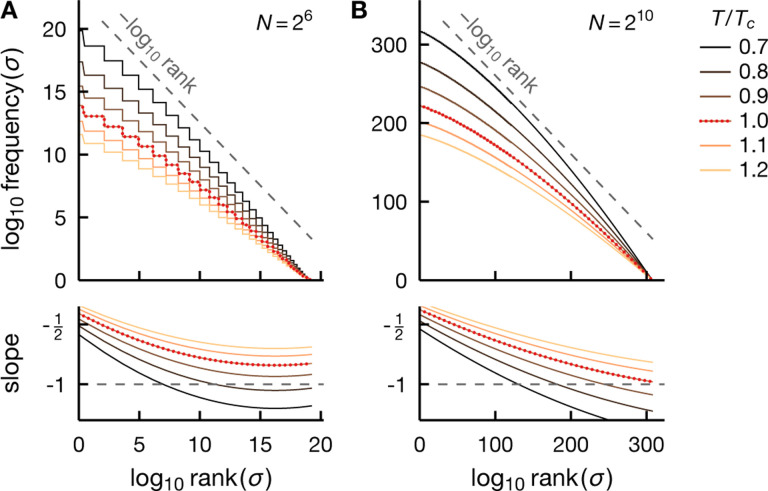

FIG. 2. Zipf’s law is an inaccurate description of critical mean-field systems.

We depict exact rank-ordered distributions for the fully connected Ising model [Eq. (13)] at various temperatures (see legend) for two system sizes (A: smaller, B: larger). Note that the axes correspond to log-rank and log-frequency. The slope of the smoothed log-log plot illustrates how close the models are to Zipf’s law (dashed). Here we obtain the approximate slope (bottom row) by fitting a cubic polynomial to the ‘knees’ of the rank-frequency log-log plot. We see that the rank-frequency plots deviate from power-law behavior at all temperatures, and this deviation does not appear to shrink as the system grows. Importantly, the critical model ($T = T_c$) exhibits Zipf scaling (dashed) only for the least frequent states in the tail region of the rank-ordered distributions, and only when the system is large enough (B). In fact, in a smaller system, the rank-frequency plot can appear more Zipf-like at $T < T_c$ (A); see also Fig. 6.

FIG. 6. Empirical Zipf behavior need not coincide with the critical point of the system.

A: We depict empirical rank-ordered distributions of the rank-one Ising model [Eqs. (26–27)] at various temperatures (see color legend in B). We see that the distribution is closest to Zipf’s law at an intermediate temperature, significantly lower than the critical temperature $T_c$. As an alternative, empirical definition of a critical temperature for finite systems, we consider the maximum of the specific heat or, equivalently, the energy variance. This definition yields a temperature below $T_c$ (see B), which is still significantly higher than the temperature that exhibits approximate Zipf behavior. B: The energy variance (solid, left axis) and mutual information (dashed, right axis) provide measures of correlations in the system. Both peak below $T_c$, at roughly the same temperature. C: The prior $p_N(h)$ changes from unimodal to bimodal at $T = T_c$, which indicates maximum correlations in the thermodynamic limit. However, in finite systems, $p_N(h)$ cannot be accurately described by its behavior near its maxima. We see that the specific heat peaks at a temperature where $p_N(h)$ is bimodal but retains significant density at $h = 0$. Here the results are for a system of 60 spins, $10^8$ realizations per model and [see Eq. (24)].

On the other hand, Fig. 3 shows that the rank-ordered distributions of identical spins under extrinsic fluctuations become more Zipf-like over the entire range of frequencies and ranks as the fluctuation variance increases and as the system grows. However, the rank-frequency plots approach Zipf’s law only when the system is sufficiently large (Fig. 3B).

FIG. 3. Zipf’s law emerges robustly for adequately large extrinsic fluctuations.

We show exact rank-ordered distributions for independent spins under a Gaussian fluctuating field of varying variance (see legend). We see that Zipf’s law becomes more accurate as the variance of the fluctuations and the system size increase. However, the rank-ordered distributions approach Zipf’s scaling over the entire range only when the system is adequately large (B). Here we obtain the approximate slope (bottom row) using the same method as in Fig. 2.

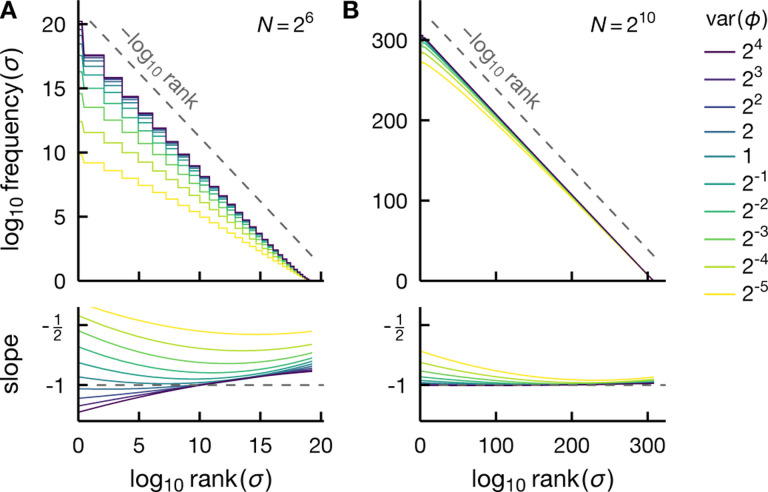

Although mean-field criticality results in a Zipf-like rank-frequency plot only in the hard-to-observe tail region, intrinsic criticality with a more general critical exponent, i.e., with $\mathrm{Var}(h) \propto N^{-\gamma}$ and $\gamma < 1/2$, can generate rank-ordered distributions that are much closer to Zipf’s law. In Fig. 4, we compare the system-size dependence of the rank-frequency plots for the fully connected Ising model at $T = T_c$ with that of several models with larger fluctuations. These include fluctuations potentially induced by intrinsic criticality of non-mean-field models as well as the limiting case of extrinsic criticality, $\gamma = 0$. Figure 4A shows that the critical mean-field model exhibits Zipf scaling only in the tail region of the rank-ordered distribution (see also Fig. 2), and the agreement with Zipf’s law neither improves nor degrades as the system grows. On the other hand, if the fluctuation variance decreases more slowly with $N$, i.e., $\gamma < 1/2$, Zipf’s law gradually becomes more accurate as $N$ increases, see Fig. 4B–F. This behavior implies that empirically observed Zipf behavior is likely to indicate either extrinsic criticality or intrinsic criticality of non-mean-field type, characterized by critical fluctuations that scale only weakly with the system size.

FIG. 4. Rank-ordered distributions exhibit a smooth crossover between intrinsic and extrinsic criticality.

Normalized exact rank-frequency plots for a range of system sizes (see legend in F) illustrate a gradual crossover from the fully connected Ising model at criticality (A) to spins under an extrinsic fluctuating field (F). This crossover is induced by varying the scaling exponent $\gamma$ of the fluctuation variance, $\mathrm{Var}(h) \propto N^{-\gamma}$. The exponent ranges from $\gamma = 1/2$ for mean-field criticality in A [see Eq. (15)], through $0 < \gamma < 1/2$ for non-mean-field intrinsic criticality in B–E (see label), to $\gamma = 0$ for extrinsic criticality in F. We see that the rank-frequency plots approach Zipf’s law (dashed) as the system grows, and do so faster for a smaller scaling exponent $\gamma$. For mean-field criticality, the agreement with Zipf scaling neither improves nor degrades with increasing $N$ (Panel A). In B–E, the prior over fluctuations takes the form $p_N(h) \propto e^{-c_N h^4}$ with $c_N \propto N^{2\gamma}$ [cf. Eq. (17)]. In all panels, the fluctuation variance at the smallest system size shown, $N_0$, is the same and equal to that of the critical mean-field case. That is, in B–F, we have $\mathrm{Var}(h) = \sigma_{\mathrm{MF}}^2(N_0)\,(N/N_0)^{-\gamma}$, with $\sigma_{\mathrm{MF}}^2(N_0)$ denoting the variance of critical mean-field fluctuations at $N_0$.

III. ZIPF’S LAW AS A SIGNATURE OF CRITICALITY UNDER FINITE SAMPLES

Experimental measurements are finite in not only the number of observable degrees of freedom but also the number of observations. We now turn to examine the behavior of rank-frequency plots constructed from finite samples.

In the following, we generalize our conditionally independent model, Eq. (5), to describe nonidentical spins,

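$$P(\boldsymbol{\sigma}) = \int dh\, p(h) \prod_{i=1}^{N} \frac{e^{J_i h\sigma_i}}{2\cosh(J_i h)}. \tag{24}$$

Here $J_i$ is the coupling strength between the spin $\sigma_i$ and the field $h$, which can differ from one spin to another. For convenience, we also define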

| (25) |

which is the characteristic width of at large , i.e., with . Following the argument in the preceding section, we expect Zipf behavior when .

Similarly, for mean-field criticality, we consider the rank-one Ising model, which generalizes the fully connected Ising model to nonidentical spins and is defined by the energy function,

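$$E(\boldsymbol{\sigma}) = -\frac{1}{2N}\sum_{i,j=1}^{N} J_{ij}\,\sigma_i\sigma_j, \tag{26}$$

where $J_{ij} = J_i J_j$ describes the pairwise interaction between spins $i$ and $j$. This model can be recast as a conditionally independent model, with the conditional distribution of the spins given by Eq. (24) and an a priori distribution that depends on both $\beta$ and $\{J_i\}$ (see Appendix B),

$$p_N(h) \propto \exp\left[-\frac{N h^2}{2\beta} + \sum_{i=1}^{N}\log\cosh(J_i h)\right]. \tag{27}$$

The thermodynamic critical temperature, $T_c = \frac{1}{N}\sum_{i} J_i^2$ (with $T = 1/\beta$), marks the point at which this distribution changes from unimodal to bimodal.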
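Expanding Eq. (27) around $h = 0$ gives a curvature of $\log p_N(h)$ equal to $-N/\beta + \sum_i J_i^2$, which changes sign at $T_c = \frac{1}{N}\sum_i J_i^2$. The sketch below counts local maxima of $p_N(h)$ on a grid to confirm this. The function names are hypothetical, and the couplings are illustrative values chosen by us, not those used for the figures.

```python
import numpy as np


def rank_one_log_prior(h, couplings, temperature):
    """Unnormalized log p_N(h) of Eq. (27), with beta = 1 / temperature."""
    n = len(couplings)
    return -n * temperature * h ** 2 / 2 + np.sum(np.log(np.cosh(np.outer(h, couplings))), axis=1)


def count_modes(couplings, temperature, h_max=3.0, n_grid=6001):
    """Number of strict local maxima of p_N(h) on a symmetric grid."""
    h = np.linspace(-h_max, h_max, n_grid)
    lp = rank_one_log_prior(h, couplings, temperature)
    mid = lp[1:-1]
    return int(np.sum((mid > lp[:-2]) & (mid > lp[2:])))


couplings = np.linspace(0.5, 1.5, 60)  # hypothetical nonidentical couplings J_i
t_c = np.mean(couplings ** 2)          # T_c = (1/N) sum_i J_i^2
for ratio in (0.8, 0.9, 1.1, 1.2):
    print(ratio, count_modes(couplings, ratio * t_c))
```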

Figure 5 illustrates that critical mean-field fluctuations are too small to generate an experimentally observable Zipf’s law. We consider a system of 60 conditionally independent spins under a number of a priori distributions $p(h)$ (see inset), including that induced by a rank-one Ising model at $T = T_c$ [Eq. (27)]. For each a priori distribution, we draw $10^8$ iid realizations of the system and construct an empirical rank-frequency plot. In Fig. 5A, we see that the rank-one Ising model at $T = T_c$ does not produce Zipf’s law. Yet if we make the fluctuations larger while fixing the shape (standardized moments) of the fluctuation prior, the resulting rank-frequency plot edges closer to Zipf scaling. However, the fluctuation variance is not the only factor that controls the behavior of the rank-frequency plot. In Fig. 5B, we see that at a fixed variance, an a priori distribution with heavier tails (larger standardized moments) produces a more Zipf-like rank-frequency plot. We emphasize that the resolution of these plots, especially in the tails, is limited by the number of samples. While we do not rule out the possibility that mean-field criticality may exhibit Zipf behavior in the tail region (see also Fig. 2), observing such behavior would require orders of magnitude more samples than $10^8$ and would therefore be experimentally impractical.
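The finite-sample procedure described above can be sketched as follows: draw $h$ from $p(h)$, draw each spin independently with $P(\sigma_i = +1\mid h) = e^{J_i h}/(2\cosh J_i h)$ as in Eq. (24), count distinct configurations, and sort the counts. The function name and the `sample_field` callback are hypothetical, and the example uses far fewer samples than the $10^8$ quoted in the text.

```python
import numpy as np


def empirical_rank_frequency(n_spins, n_samples, sample_field, couplings=None, seed=0):
    """Draw iid realizations of Eq. (24) and return (ranks, frequencies) of distinct states.

    sample_field(rng, size) is a hypothetical user-supplied sampler for the prior p(h).
    """
    rng = np.random.default_rng(seed)
    j = np.ones(n_spins) if couplings is None else np.asarray(couplings, dtype=float)
    h = sample_field(rng, n_samples)                    # one field value per realization
    p_up = 1.0 / (1.0 + np.exp(-2.0 * np.outer(h, j)))  # e^{J h} / (2 cosh J h)
    spins = rng.random((n_samples, n_spins)) < p_up     # True encodes sigma_i = +1
    _, counts = np.unique(spins, axis=0, return_counts=True)
    freqs = np.sort(counts)[::-1] / n_samples
    ranks = np.arange(1, len(freqs) + 1)
    return ranks, freqs


ranks, freqs = empirical_rank_frequency(
    n_spins=20, n_samples=100_000,
    sample_field=lambda rng, size: rng.normal(0.0, 1.0, size),
)
print(len(ranks), freqs[:5])
```

At $10^8$ realizations of 60 spins, holding all samples in memory at once is impractical. A batched variant that accumulates counts across batches would be needed at that scale.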

FIG. 5. Critical mean-field fluctuations are too small to support empirically observable Zipf behavior.

Panels A and B illustrate the effects of the width and of the tail structure of the a priori distribution (inset), respectively, on finite-sample rank-frequency plots. The red dotted curves correspond to the rank-one Ising model at criticality [Eqs. (26–27)]. In A, we scale critical mean-field fluctuations linearly, so that the fluctuation variance is a multiple of that of the rank-one Ising model at $T = T_c$, for various scaling coefficients (see legend). We see that critical mean-field fluctuations do not result in Zipf behavior. Increasing the fluctuation variance while maintaining the overall shape of the fluctuation prior produces rank-frequency plots that progressively appear closer to Zipf scaling (dashed). In B, we consider a family of fluctuating-field distributions $p(h)$ with a shape parameter and a scale parameter. We vary the probability in the tails of $p(h)$ with the shape parameter (see legend) and choose the scale parameter such that the fluctuation variance is fixed and equal to that of critical mean-field fluctuations (red dotted lines). Decreasing the shape parameter increases the probability of large $|h|$, i.e., puts more mass in the tails of $p(h)$, and improves the agreement between rank-frequency plots and Zipf’s law. Overall, while instructive for understanding critical behaviors, mean-field models are an unlikely candidate for explaining experimentally observed Zipf behavior. Here the results are for a system of 60 spins, $10^8$ realizations per model and [see Eq. (24)].

Extrapolating the asymptotic critical temperature to finite systems is, of course, somewhat dubious. In Fig. 6, we consider another frequently used empirical definition of criticality, which identifies the critical point with the maximum in the specific heat or, equivalently, in the energy variance. In the limit of infinite system size, this definition coincides with the thermodynamic critical temperature. For finite systems, however, the specific heat maximum occurs at a lower temperature (Fig. 6B). This temperature also roughly coincides with another possible empirical definition of criticality, namely the maximum of the mutual information, which indicates maximum correlations and learnability (Fig. 6B). In Fig. 6A, we see that lowering the temperature of the rank-one Ising model from the thermodynamic critical temperature to the specific heat maximum brings the system closer to, but still visibly different from, Zipf’s law. In fact, the closest agreement with Zipf’s law occurs at an even lower temperature. Two factors contribute to this intriguing temperature dependence. First, for mean-field criticality, Zipf scaling is expected only in the tail of the rank-frequency plot (see Fig. 2B), which requires a very large number of samples to resolve. Second, the correspondence between criticality and Zipf behavior is blurred in finite systems, where Zipf’s law tends to be more accurate at subcritical temperatures (see Fig. 2A). In sum, we demonstrate that for mean-field models, empirically observable Zipf behavior can be completely decoupled from the usual notion of criticality.
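The finite-size shift of the specific heat maximum can be checked exactly in the fully connected special case, since states can be grouped by magnetization. This sketch rests on assumptions not stated in the text: all weights equal to one and energy $E=-M^2/(2N)$ with $M=\sum_i s_i$, a normalization for which the thermodynamic critical temperature is $T_c=1$ (with $k_B=1$). The function name is hypothetical.

```python
import math
import numpy as np

def specific_heat_per_spin(n_spins, temperatures):
    """Exact C/N for E = -M^2 / (2N), M = sum of n_spins +/-1 spins (k_B = 1).

    States are grouped by the number k of up spins (M = 2k - N), each group
    having binomial(N, k) microstates, so the sum has only N + 1 terms.
    """
    k = np.arange(n_spins + 1)
    energy = -((2.0 * k - n_spins) ** 2) / (2.0 * n_spins)
    log_degeneracy = np.array([math.lgamma(n_spins + 1) - math.lgamma(j + 1)
                               - math.lgamma(n_spins - j + 1) for j in k])
    heat = []
    for t in temperatures:
        log_w = log_degeneracy - energy / t
        w = np.exp(log_w - log_w.max())    # Boltzmann weights, rescaled to avoid overflow
        w /= w.sum()
        mean_e = w @ energy
        var_e = w @ (energy - mean_e) ** 2
        heat.append(var_e / (t**2 * n_spins))  # C = Var(E) / T^2, per spin
    return np.array(heat)

temps = np.linspace(0.3, 2.0, 341)
for n in (60, 600, 6000):
    c = specific_heat_per_spin(n, temps)
    t_peak = temps[np.argmax(c)]
    print(f"N = {n:5d}: specific heat maximum at T = {t_peak:.3f} (T_c = 1 as N -> infinity)")
```

In this special case the maximum sits below $T_c$ and approaches it as the system grows, in line with Fig. 6B; the exact location for the weighted rank-one model of Eq. (26) will differ.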

Figure 6C illustrates the interaction-induced a priori distribution of the fluctuating field at various temperatures. At the thermodynamic critical temperature, this distribution is flat around its maximum at zero field. In the thermodynamic limit, this condition leads to non-Gaussian fluctuations, which break the central limit theorem and generate critical correlations. In finite systems, however, the field is not well described by fluctuations in the immediate vicinity of its most likely values. At the specific heat maximum, the a priori distribution retains a non-negligible density at zero field even though it is bimodal, with maxima at nonzero field values of opposite sign. This distribution results in a larger fluctuation than at the thermodynamic critical temperature, leading to a higher energy variance as well as a rank-ordered plot closer to Zipf’s law. As the temperature drops below the specific heat maximum, the most likely field values move further away from zero. Larger field magnitudes suppress the variability of the system: each spin aligns with the field with increasing probability, thereby decreasing the energy variance. At high temperatures, the a priori distribution becomes sharply peaked at zero field, resulting in more random systems and thus weaker correlations. Rank-ordered plots also reflect this competition: reduced variability leads to a rank-ordered plot that decays faster than Zipf’s law at low temperatures, whereas increased randomness yields a plot that appears flatter than Zipf’s law at high temperatures.
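The change of shape described above can be reproduced numerically in the fully connected special case, where the Hubbard–Stratonovich field has a closed-form a priori density. This sketch assumes unit weights and the normalization $E=-M^2/(2N)$ (so that $T_c=1$), for which $P(h)\propto\exp\{-N[h^2/(2\beta)-\ln\cosh h]\}$. For 60 spins it reports the most likely $|h|$, the standard deviation of $h$, and the density at $h=0$ relative to the peak. The names are hypothetical.

```python
import numpy as np

def field_prior(h, n_spins, beta):
    """Normalized a priori density of the Hubbard-Stratonovich field on a uniform grid.

    Assumes the fully connected case E = -M^2 / (2N), for which
    P(h) is proportional to exp(-N [h^2 / (2 beta) - ln cosh h]).
    """
    log_p = -n_spins * (h**2 / (2.0 * beta) - np.log(np.cosh(h)))
    p = np.exp(log_p - log_p.max())
    return p / (p.sum() * (h[1] - h[0]))

h = np.linspace(-4.0, 4.0, 8001)   # symmetric grid; the middle point is h = 0
dh = h[1] - h[0]
modes, widths, center_ratio = {}, {}, {}
for t in (0.6, 0.8, 1.0, 1.5):
    p = field_prior(h, n_spins=60, beta=1.0 / t)
    modes[t] = abs(h[np.argmax(p)])                 # most likely |h|
    widths[t] = np.sqrt(np.sum(h**2 * p) * dh)      # standard deviation (mean is 0 by symmetry)
    center_ratio[t] = p[len(h) // 2] / p.max()      # density at h = 0 relative to the peak
    print(f"T = {t}: mode |h| = {modes[t]:.2f}, std = {widths[t]:.2f}, "
          f"P(0)/max P = {center_ratio[t]:.3f}")
```

The distribution is unimodal at and above $T_c=1$ and becomes bimodal below it. The relative density at zero field falls as the temperature drops, while the overall width grows.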

Intrinsic fluctuations can lead to Zipf’s law without fine tuning.

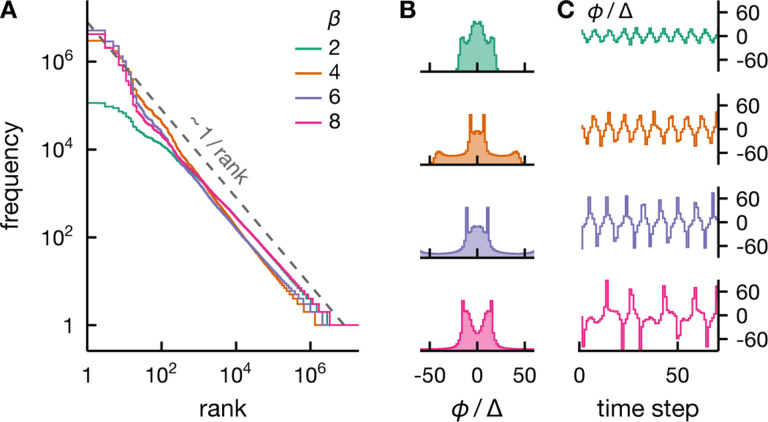

So far we have seen that, in the absence of external fluctuations, the empirical signatures of large correlated fluctuations, such as Zipf-like distributions, are hard to observe. While this statement holds for equilibrium systems, critically large intrinsic fluctuations can emerge generically when the system is endowed with certain dynamics. To illustrate this point, we consider a discrete-time, dynamical generalization of the conditionally independent spin model [Eq. (24)],

| (28) |

with and . Here the two indices label each spin and the time step, respectively. One parameter controls the stochasticity of the spins, much like the inverse temperature, and another couples states separated by two time steps. We illustrate the dynamics of the fluctuating field for various values of the stochasticity parameter and the corresponding a priori distributions in Fig. 7B&C, respectively. In Fig. 7A, we see that this model can result in Zipf behavior over a range of model parameters, demonstrating that fine tuning is not required for empirically observable Zipf behavior in the absence of external fields. Although the fluctuations are generated entirely internally, we can interpret this emergence of Zipf’s law as extrinsic criticality. To see this, we note that the model parameters, not the system size, control the amplitude of the oscillations and thus the fluctuation variance (Fig. 7B&C); the system size only determines the stochasticity of the dynamics. As a result, the a priori distribution depends only weakly on the system size and becomes completely independent of it in the asymptotic limit. This diminishing system-size dependence makes the resulting fluctuations indistinguishable from extrinsic ones.

FIG. 7. Zipf’s law can emerge from intrinsically induced fluctuations without fine tuning.

A: Rank-ordered distributions for a dynamical spin model, Eq. (28), display Zipf’s law for a range of effective inverse temperatures (see legend). B & C: Typical empirical distributions of the fluctuating field and its typical dynamics (same legend as A). The results shown are for a system of 60 spins, realizations per model and , and we set [see Eq. (28)].

IV. DISCUSSION

Here we introduced general definitions of criticality encompassing extrinsically and intrinsically critical systems, and we showed that information-theoretic and learning-theoretic considerations allow us to view all non-spatial critical systems on a similar footing. Namely, criticality leads to a logarithmic divergence in the information between two subsystems or between the system’s observable degrees of freedom and its fluctuating latent field. The coefficient in front of the divergence is a half-integer for systems with extrinsic criticality and takes other fractional values for intrinsic criticality. Both situations can be viewed as learning the latent parameter from measurements of the state of the system components, where the a priori variance of this parameter is either independent of the system size (extrinsic criticality) or decreases as the system size grows (intrinsic criticality).
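For extrinsic criticality with a single latent field, the half-integer coefficient can be checked numerically. The sketch below assumes unit weights, ±1 spins with $P(s_i=+1\mid h)=e^{h}/(2\cosh h)$, and a standard Gaussian prior on $h$ that does not depend on the system size, discretized on a grid. Because the number of up spins is a sufficient statistic for $h$, the mutual information reduces to a sum over $N+1$ values, and each fourfold increase in $N$ should add about $\tfrac12\log_2 4 = 1$ bit. The function name and grid settings are hypothetical.

```python
import math
import numpy as np

def field_spin_information(n_spins, field_std=1.0, grid_size=1601, h_max=6.0):
    """I(h; s) in bits for n conditionally independent +/-1 spins (illustrative sketch).

    The number k of up spins is a sufficient statistic for h, so
    I(h; s) = I(h; k) = H(k) - H(k | h), evaluated on a uniform grid of h values.
    """
    h = np.linspace(-h_max, h_max, grid_size)
    prior = np.exp(-h**2 / (2.0 * field_std**2))
    prior /= prior.sum()                                 # discretized Gaussian prior
    k = np.arange(n_spins + 1)
    log_binom = np.array([math.lgamma(n_spins + 1) - math.lgamma(j + 1)
                          - math.lgamma(n_spins - j + 1) for j in k])
    log_norm = np.logaddexp(h, -h)                       # ln(2 cosh h), overflow-safe
    log_pk = (log_binom + np.outer(h - log_norm, k)      # ln P(k | h), shape (grid, N + 1)
              + np.outer(-h - log_norm, n_spins - k))
    pk_given_h = np.exp(log_pk)
    cond_entropy = -np.sum(prior[:, None] * pk_given_h * log_pk) / math.log(2)
    pk = prior @ pk_given_h                              # marginal P(k)
    pk = pk[pk > 0]
    marg_entropy = -np.sum(pk * np.log2(pk))
    return marg_entropy - cond_entropy

info = {n: field_spin_information(n) for n in (100, 400, 1600)}
for n in (400, 1600):
    print(f"I(N={n}) - I(N={n // 4}) = {info[n] - info[n // 4]:.3f} bits")
```

Only the increments are meaningful here. The absolute value contains an $N$-independent constant set by the prior and by the per-spin Fisher information.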

We focused on the scenario in which a system is maximally critical when it has $\tfrac{1}{2}\log_2 N$ bits of mutual information per dimension of the order parameter, where $N$ is the number of spins; this is equivalent to learning from iid samples. Additional intrinsic couplings then reduce the a priori variance of the order parameter and hence the mutual information. However, in some biological systems the a priori parameter variance may increase with $N$ [48], so that more than $\tfrac{1}{2}\log_2 N$ bits are contributed to the mutual information per latent field.

One can imagine this happening in an optimally designed sensory system, where spins are coupled to the field so as to reduce the redundancy of the information they obtain about it. Investigating the properties of such systems from information-theoretic, learning, and statistical physics angles is clearly needed. Similarly, it is worth investigating systems in which the mutual information between macroscopic parts scales as a sublinear power of the system size (rather than logarithmically), which corresponds to an infinite number of latent fields with hierarchically smaller a priori variances [35].⁴ Finally, since all of our learning and information-theoretic arguments are asymptotic, and subleading corrections may not be negligibly small compared to the leading logarithmic term, these corrections are also worth investigating.

In addition, there is a striking similarity between the critical behavior of mutual information in classical systems and entanglement entropy, a quantum information-theoretic measure of correlations. At quantum critical points, long-ranged correlations lead to diverging entanglement entropy, violating the area law [51]. For infinite quantum critical spin chains, this divergence is logarithmic in the subsystem size with a universal prefactor that is related to the central charge of the corresponding conformal field theory [52–56]. It would be interesting to develop a learning-theoretic picture of quantum criticality and explore whether and how the central charge relates to the effective number of latent parameters.

We also investigated the observability of an empirical signature of criticality, namely Zipf’s law. While the correspondence between Zipf behavior and criticality is precise in the thermodynamic limit, whether it holds for a finite system depends on how critical the system is, i.e., how large the a priori variance is compared to the width of the conditional distribution. For extrinsic criticality, Zipf’s law emerges robustly under an adequately broad a priori fluctuation distribution. Intrinsic criticality, on the other hand, does not always induce large enough fluctuations to support Zipf behavior. In particular, mean-field critical fluctuations are too small to generate Zipf’s law even in the infinite-sample limit. Indeed, with finite samples, the closest agreement with Zipf’s law can occur at a temperature significantly lower than both the thermodynamic critical temperature and the specific heat maximum, an alternative, empirical definition of a critical point. This approximate Zipf behavior at intermediate temperatures results from the competition between order-promoting interactions and thermal noise, and is unlikely to be a signature of equilibrium intrinsic criticality in the usual sense.

Perhaps the disconnect between intrinsic criticality and Zipf behavior in finite systems is unsurprising, not least because of the blurred notion of criticality away from the thermodynamic limit. While using the specific heat maximum to indicate criticality is tempting, it requires assumptions on the probabilistic model that describes the data. Zipf behavior offers an alternative, model-free definition, but as we showed, it can be nontrivial to observe in intrinsically critical systems. We emphasize that no finite-system definition of criticality captures all of its thermodynamic signatures; for instance, finite-sample Zipf behavior does not correspond to maximum energy fluctuations or maximum correlations (see Fig. 6).

While our asymptotic analysis suggests that the correspondence between intrinsic criticality and Zipf behavior is more precise for larger systems, we focus on a relatively small system of 60 spins (Figs. 5–7) since it is more relevant to real measurements. In particular, a well-sampled rank-ordered plot becomes exceedingly difficult to achieve as the system size grows. For example, a rank-ordered distribution for the critical rank-one Ising model with 80 spins shows almost no structure even with $10^8$ samples (see Fig. 8). This loss of structure due to finite samples is less severe for extrinsic fluctuations, but the agreement with Zipf’s law degrades with increasing system size (Fig. 9), in contrast to the infinite-sample case, in which Zipf’s law becomes more accurate as the system grows (Fig. 4F).

FIG. 8. Subsampling does not make intrinsically critical systems appear more Zipf-like.

We depict the rank-ordered frequency for the rank-one Ising model, Eq. (26), at the critical temperature, for two system sizes (a & b) and a range of observed subsystem sizes (see legend). We see that limiting observation to a fraction of the full system does not result in more Zipf-like behavior (dashed). It does, however, lead to more structured rank-ordered distributions, especially when the system is large (b), since fewer observed spins require a smaller sample size to be well sampled. The results shown are for $10^8$ realizations per model and .

FIG. 9. Zipf’s law emerges robustly from large extrinsic fluctuations.

We display rank-ordered distributions for the conditionally independent model with spins for (left to right) under a Gaussian fluctuating field of different widths (see legend). When the fluctuation is large compared to the width of the conditional distribution [i.e., when , see Eq. (25)], the rank-ordered plots exhibit Zipf behavior (dashed). The empirical rank-ordered distribution displays meaningful structure only when constructed from adequate samples. Larger systems require more samples; for , the distribution is completely flat for even with $10^8$ samples (c). The results shown are for $10^8$ realizations per model and .

We further showed that some oscillatory systems can generate observable Zipf’s law without fine tuning or external fluctuations. We argued that the mechanism behind this behavior is mathematically equivalent to the extrinsic mechanism, since the scale of the collective dynamics (and hence the variance of the fluctuations once the time variable is integrated out) is often independent of the system size. Indeed, collective oscillations are common both in mathematical models (e.g., Refs. [57, 58]) and in biological systems (e.g., Refs. [59–61]). Our results suggest that models with a global dynamical variable that stays within a certain range could offer another plausible explanation for empirically observed Zipf’s law, without the need for extrinsic fluctuations.

Finally, we discuss how subsampling may affect the observability of Zipf behavior. Many experiments do not measure the system in its entirety, and what we can vary is the number of observed components rather than the system size. Perhaps the unobserved degrees of freedom could play the role of an extrinsic source of fluctuations for the observed ones, resulting in extrinsically induced criticality and thus making the observation of Zipf’s law more likely. However, our simple model of intrinsic criticality does not support this reasoning. Suppose we observe $n$ out of a total of $N$ spins. Intrinsic fluctuations quite generally become smaller with the total system size, i.e., their variance scales as $N^{-\gamma}$ with $\gamma > 0$, whereas the variance of the conditional distribution decreases with the number of observed spins, i.e., as $1/n$ [cf. Eq. (25)]. As a result, the relative fluctuation variance scales as $(n/N)\,N^{1-\gamma}$, with $\gamma < 1$ for critical systems. In other words, decreasing the observable fraction $n/N$ makes the fluctuation appear smaller and Zipf’s law less likely (even though the smaller number of degrees of freedom makes it easier to obtain better-sampled rank-frequency plots, see Fig. 8). This effect is even more acute when the system size far exceeds the number of observed components, e.g., in a recording of neural spikes in the brain.
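The subsampling argument can be written out as a one-line scaling estimate. The notation here is assumed for illustration and may differ from the paper's: $N$ is the total number of spins, $n$ the number observed, $\sigma_h^2 \propto N^{-\gamma}$ the a priori field variance, and $\sigma_{h\mid s}^2 \propto 1/n$ the variance of the conditional distribution of the field given the observed spins.

```latex
\frac{\sigma_h^2}{\sigma_{h\mid s}^2} \;\propto\; \frac{N^{-\gamma}}{n^{-1}}
  \;=\; \frac{n}{N}\, N^{1-\gamma},
\qquad 0 < \gamma < 1 \ \text{(critical)}, \qquad \gamma = 1 \ \text{(central limit theorem)}.
```

As a worked example, take $\gamma = 1/2$, the mean-field critical case in which the magnetization fluctuates on the scale $N^{-1/4}$, and $N = 10^4$. The relative variance is then proportional to $100$ when all spins are observed but only to $1$ when $n = 100$. At fixed $N$ the ratio is linear in $n$, so observing 1% of the spins shrinks the apparent fluctuation by a factor of 100.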

Real systems can of course be more complicated than our simple model. For example, in spatially extended systems, the order parameter could be a field in space and the number of inferable parameters, e.g., the Fourier components of the order parameter, can depend on how many spins we observe. Thus, the bits available to be learned can depend on the number of observed spins, even though the a priori variances of the parameters do not (as they are set by the size of the entire system). Investigations of criticality and its empirical signatures in this setting are in order.

We end by pointing out that many biological critical systems become more Zipf-like as they grow [10], which raises the question of why this happens. As pointed out in Ref. [26], consider a sensory system that is learning the state of the outside world (that is, one that responds differently to its different values); one would expect such a system to be constructed so as not to decrease the variability of the world as the system size grows. Such systems would always be critical, and specifically extrinsically critical, which may explain their ubiquity.

ACKNOWLEDGMENTS

We thank William Bialek, Stephanie Palmer, and Pankaj Mehta for valuable discussions. VN and DJS acknowledge support from the National Science Foundation, through the Center for the Physics of Biological Function (PHY-1734030). DJS is supported in part by the Simons Foundation and by the Sloan Foundation. IN was supported in part by the Simons Foundation Investigator grant and the NIH grants 1R01NS099375 and 2R01NS084844.

Appendix A: Mutual information in conditionally independent models

In this appendix, we consider conditionally independent spin models and derive the leading contribution to the mutual information between the spins and the fluctuating field and between two halves of the system in the many-spin limit.

First, we write down the probability distribution of the spins,

| (A1) |

where denotes the distribution of the fluctuating field . The conditional probability of each spin reads

| (A2) |

where parametrizes the influence of the fluctuating field on spin . For convenience, we introduce

| (A3) |

such that

| (A4) |

Therefore the full joint distribution can be written as

| (A5) |

We now consider the many-spin limit. We assume that the weights are independent of the system size (e.g., they are drawn from a fixed distribution) such that is intensive. For a smooth prior (i.e., ), the joint distribution [Eq. (A5)] is dominated by fluctuations around the minimum of ,

| (A6) |

where is the root of and we drop the superfluous dependence on from . We see that when conditioned on the spins, the fluctuation is Gaussian,

| (A7) |

As a result, we obtain the conditional differential entropy,

| (A8) |

We see that the logarithmic divergence is the leading contribution, since the last two terms do not grow with the system size. For extrinsic fluctuations, the a priori distribution, and thus its entropy, is independent of the system size; therefore, we obtain

| (A9) |

On the other hand, if the width of the a priori distribution shrinks as a constant times a negative power of the system size, its entropy diverges logarithmically and the mutual information reads

| (A10) |

We see that the decrease in information results from the fluctuation entropy, which decreases logarithmically with the system size.
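The two cases above can be summarized in a single scaling relation. This is a sketch in assumed notation. Take a scalar field whose a priori distribution has a fixed shape and a width $\sigma_N = c\,N^{-\gamma/2}$, so that $\gamma = 0$ is the extrinsic case. Take also a Gaussian conditional distribution whose variance is $1/(N a)$ with $a$ intensive. Up to terms that stay finite as $N \to \infty$,

```latex
I(\mathbf{s}; h) = H(h) - H(h \mid \mathbf{s})
  \approx \Big[\log \sigma_N + \text{const}\Big] - \tfrac{1}{2}\log\frac{2\pi e}{N a}
  = \frac{1-\gamma}{2}\,\log N + O(1).
```

Setting $\gamma = 0$ recovers the extrinsic result $\tfrac12 \log N$. The central-limit scaling of a noncritical system, $\gamma = 1$, leaves no divergence. The variance scaling of mean-field critical fluctuations, $\gamma = 1/2$, gives a coefficient of $1/4$.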

The same logarithmic divergence also emerges in the mutual information between two macroscopic halves of the system. To see this, we note that the entropy of the spins is given by

| (A11) |

Similarly, the entropy of each half of the system reads

| (A12) |

where and . When the subsystems are large and the spins in each half are randomly chosen, we have [see Eq. (A8)]

| (A13) |

We now write the mutual information between the two halves in terms of the above entropy,

| (A14) |

| (A15) |

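where we use the fact that and the property of conditional independence, . In the many-spin limit, the mutual information between the two halves is therefore smaller than that between all spins and the fluctuating field by one bit.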
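Under conditional independence, the information between two halves satisfies $I(\mathbf{s}_A;\mathbf{s}_B) = I(\mathbf{s}_A;h) + I(\mathbf{s}_B;h) - I(\mathbf{s};h)$ exactly. For equal halves, the leading $\tfrac12\log_2$ terms then give one bit less than $I(\mathbf{s};h)$. The sketch below checks both statements numerically under illustrative assumptions: unit weights, $P(s_i=+1\mid h)=e^{h}/(2\cosh h)$, a standard Gaussian prior on a grid, and magnetizations (numbers of up spins) as sufficient statistics. All function names are hypothetical.

```python
import math
import numpy as np

def magnetization_given_field(n_spins, h):
    """P(k up spins | h), shape (len(h), n_spins + 1), for P(s_i = +1 | h) = e^h / (2 cosh h)."""
    k = np.arange(n_spins + 1)
    log_binom = np.array([math.lgamma(n_spins + 1) - math.lgamma(j + 1)
                          - math.lgamma(n_spins - j + 1) for j in k])
    log_norm = np.logaddexp(h, -h)  # ln(2 cosh h)
    return np.exp(log_binom + np.outer(h - log_norm, k) + np.outer(-h - log_norm, n_spins - k))

def entropy_bits(p):
    """Shannon entropy in bits of a (possibly multidimensional) probability array."""
    p = np.asarray(p).ravel()
    p = p[p > 0]
    return -np.sum(p * np.log2(p))

def info_with_field(n_spins, h, prior):
    """I(s; h) = H(k) - H(k | h); k, the number of up spins, is sufficient for h."""
    cond = magnetization_given_field(n_spins, h)
    cond_entropy = sum(w * entropy_bits(row) for w, row in zip(prior, cond))
    return entropy_bits(prior @ cond) - cond_entropy

def info_between_halves(n_half, h, prior):
    """I(s_A; s_B) from the joint distribution of the two half-magnetizations."""
    cond = magnetization_given_field(n_half, h)
    joint = cond.T @ (prior[:, None] * cond)  # P(k_A, k_B) = sum_h P(h) P(k_A|h) P(k_B|h)
    return 2 * entropy_bits(joint.sum(axis=1)) - entropy_bits(joint)

h = np.linspace(-6.0, 6.0, 1601)
prior = np.exp(-h**2 / 2)
prior /= prior.sum()
n = 400
i_full = info_with_field(n, h, prior)
i_half = info_with_field(n // 2, h, prior)
i_ab = info_between_halves(n // 2, h, prior)
print(f"I(s;h) = {i_full:.3f}, I(A;B) = {i_ab:.3f}, 2 I(A;h) - I(s;h) = {2 * i_half - i_full:.3f}")
```

The first identity holds to floating-point precision even on the discretized grid. The one-bit gap is only asymptotic, so it carries small finite-size corrections at 400 spins.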

Appendix B: Rank-one Ising models

Here we provide an analysis of rank-one Ising models—those with pairwise interaction matrices of rank one—defined by the energy function,

| (B1) |

where denotes the state of the system, the spin at site , and the product describes the interaction between spins and . We note that the diagonal terms, in which a spin interacts with itself, only add an irrelevant constant because each spin squares to one. This model generalizes the fully connected Ising model, which corresponds to uniform interactions between all pairs of spins. As usual, the probability distribution of the system configuration is given by

| (B2) |

where we introduce the inverse temperature and the partition function .

Computing the partition function by directly summing over all possible spin states is generally analytically intractable. Instead, we trade this summation for an integral using the Hubbard–Stratonovich transformation,

| (B3) |

We see that the spins become noninteracting at the cost of introducing a new fluctuating field which correlates with the spins via the joint distribution,

| (B4) |

Summing out each spin variable from Eq. (B3) yields

| (B5) |

As a result, we can express various thermodynamic variables of the spins as integrals over a continuous field which are usually more convenient than summations over discrete spin states. In particular, the internal energy, entropy and heat capacity—U, S and C, respectively—read

| (B6) |

| (B7) |

| (B8) |

where and denote the mean and variance of .

In addition, we see that a rank-one Ising model is equivalent to a conditionally independent model with a fluctuating field induced by the intrinsic interactions between spins. The marginal distribution of this field is given by

| (B9) |

and thus we have

| (B10) |

We see again that conditioning on the fluctuating field removes the interactions between spins.

Recalling that $\ln\cosh x \approx x^2/2$ for small $x$, we see that the fluctuation distribution exhibits a structural transition at

| (B11) |

where it changes from unimodal at high temperatures to bimodal at low temperatures. In the thermodynamic limit, this point corresponds to the critical temperature of an order-disorder phase transition. In the disordered phase at high temperatures, the spins are mostly random. In the ordered phase at low temperatures, on the other hand, they mimic the pattern set by the signs of the weights.
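In the fully connected special case, the transition can be made explicit. As an illustrative sketch, assume all weights equal one and $E = -M^2/(2N)$ with $M = \sum_i s_i$, a normalization that keeps the constant diagonal terms. The Hubbard–Stratonovich identity and the sum over spins then give

```latex
e^{\beta M^2/(2N)} = \sqrt{\frac{N}{2\pi\beta}} \int dh\, e^{-N h^2/(2\beta) + h M},
\qquad
\sum_{\mathbf{s}} e^{h M} = (2\cosh h)^N,
\\[4pt]
P(h) \propto \exp\!\Big\{-N\Big[\frac{h^2}{2\beta} - \ln\cosh h\Big]\Big\}
     = \exp\!\Big\{-N\Big[\Big(\frac{1}{\beta} - 1\Big)\frac{h^2}{2} + \frac{h^4}{12} + O(h^6)\Big]\Big\}.
```

The quadratic coefficient changes sign at $\beta = 1$. The distribution is therefore unimodal for $\beta < 1$ and bimodal for $\beta > 1$, with maxima at the nonzero roots of $h = \beta \tanh h$. This is the familiar mean-field critical point, $T_c = 1$ in these units.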

Footnotes

Divergent information is a direct result of long-ranged correlations at critical points, at which the entire system becomes correlated. Indeed, it has proved a useful characterization of criticality. See, e.g., Refs. [34–40].

We assume here that the variance of the energy of each component, , does not depend on the system size. Our argument does not rely on this assumption. If , then the central limit theorem implies . Criticality then describes the scenarios in which the energy fluctuations grow faster (or decrease more slowly) than this rate.

We assume that the number of parameters is much smaller than the sample size . For , the information can grow linearly with [46].

References

- [1] West G. B., Brown J. H., and Enquist B. J., A general model for the origin of allometric scaling laws in biology, Science 276, 122 (1997).

- [2] Stevens C. F., An evolutionary scaling law for the primate visual system and its basis in cortical function, Nature 411, 193 (2001).

- [3] Lee S. and Stevens C. F., General design principle for scalable neural circuits in a vertebrate retina, Proceedings of the National Academy of Sciences 104, 12931 (2007).

- [4] Krotov D., Dubuis J. O., Gregor T., and Bialek W., Morphogenesis at criticality, Proceedings of the National Academy of Sciences 111, 3683 (2014).

- [5] Zhang W., Scerbo P., Delagrange M., Candat V., Mayr V., Vriz S., Distel M., Ducos B., and Bensimon D., Fgf8 dynamics and critical slowing down may account for the temperature independence of somitogenesis, Communications Biology 5, 113 (2022).

- [6] Beggs J. M., The criticality hypothesis: how local cortical networks might optimize information processing, Philosophical Transactions of the Royal Society A 366, 329 (2008).

- [7] Chialvo D. R., Emergent complex neural dynamics, Nature Physics 6, 744 (2010).

- [8] Toyoizumi T. and Abbott L. F., Beyond the edge of chaos: Amplification and temporal integration by recurrent networks in the chaotic regime, Phys. Rev. E 84, 051908 (2011).

- [9] Mathijssen A. J. T. M., Culver J., Bhamla M. S., and Prakash M., Collective intercellular communication through ultra-fast hydro-dynamic trigger waves, Nature 571, 560 (2019).

- [10] Mora T. and Bialek W., Are biological systems poised at criticality?, Journal of Statistical Physics 144, 268 (2011).

- [11] Beggs J. M., Addressing skepticism of the critical brain hypothesis, Frontiers in Computational Neuroscience 16, 703865 (2022).

- [12] Muñoz M. A., Colloquium: Criticality and dynamical scaling in living systems, Rev. Mod. Phys. 90, 031001 (2018).

- [13] Mora T., Walczak A. M., Bialek W., and Callan C. G., Maximum entropy models for antibody diversity, Proceedings of the National Academy of Sciences 107, 5405 (2010).

- [14] Nykter M., Price N. D., Aldana M., Ramsey S. A., Kauffman S. A., Hood L. E., Yli-Harja O., and Shmulevich I., Gene expression dynamics in the macrophage exhibit criticality, Proceedings of the National Academy of Sciences 105, 1897 (2008).

- [15] Beggs J. M. and Plenz D., Neuronal avalanches in neocortical circuits, Journal of Neuroscience 23, 11167 (2003).

- [16] Haimovici A., Tagliazucchi E., Balenzuela P., and Chialvo D. R., Brain organization into resting state networks emerges at criticality on a model of the human connectome, Phys. Rev. Lett. 110, 178101 (2013).

- [17] Tkačik G., Mora T., Marre O., Amodei D., Palmer S. E., Berry II M. J., and Bialek W., Thermodynamics and signatures of criticality in a network of neurons, Proceedings of the National Academy of Sciences 112, 11508 (2015).

- [18] Mora T., Deny S., and Marre O., Dynamical criticality in the collective activity of a population of retinal neurons, Phys. Rev. Lett. 114, 078105 (2015).

- [19] Kastner D. B., Baccus S. A., and Sharpee T. O., Critical and maximally informative encoding between neural populations in the retina, Proceedings of the National Academy of Sciences 112, 2533 (2015).

- [20] Meshulam L., Gauthier J. L., Brody C. D., Tank D. W., and Bialek W., Coarse graining, fixed points, and scaling in a large population of neurons, Phys. Rev. Lett. 123, 178103 (2019).

- [21] Cavagna A., Cimarelli A., Giardina I., Parisi G., Santagati R., Stefanini F., and Viale M., Scale-free correlations in starling flocks, Proceedings of the National Academy of Sciences 107, 11865 (2010).

- [22] Bialek W., Cavagna A., Giardina I., Mora T., Silvestri E., Viale M., and Walczak A. M., Statistical mechanics for natural flocks of birds, Proceedings of the National Academy of Sciences 109, 4786 (2012).

- [23] Bialek W., Cavagna A., Giardina I., Mora T., Pohl O., Silvestri E., Viale M., and Walczak A. M., Social interactions dominate speed control in poising natural flocks near criticality, Proceedings of the National Academy of Sciences 111, 7212 (2014).

- [24] Attanasi A., Cavagna A., Del Castello L., Giardina I., Melillo S., Parisi L., Pohl O., Rossaro B., Shen E., Silvestri E., and Viale M., Finite-size scaling as a way to probe near-criticality in natural swarms, Phys. Rev. Lett. 113, 238102 (2014).

- [25] Ma Z., Turrigiano G. G., Wessel R., and Hengen K. B., Cortical circuit dynamics are homeostatically tuned to criticality in vivo, Neuron 104, 655 (2019).

- [26] Schwab D. J., Nemenman I., and Mehta P., Zipf’s law and criticality in multivariate data without fine-tuning, Phys. Rev. Lett. 113, 068102 (2014).

- [27] Aitchison L., Corradi N., and Latham P. E., Zipf’s law arises naturally when there are underlying, unobserved variables, PLoS Computational Biology 12, e1005110 (2016).

- [28] Touboul J. and Destexhe A., Power-law statistics and universal scaling in the absence of criticality, Phys. Rev. E 95, 012413 (2017).

- [29] Morrell M. C., Sederberg A. J., and Nemenman I., Latent dynamical variables produce signatures of spatiotemporal criticality in large biological systems, Phys. Rev. Lett. 126, 118302 (2021).

- [30] Morrell M., Nemenman I., and Sederberg A. J., Neural criticality from effective latent variable, eLife 12, RP89337 (2023).

- [31] Landau L. D. and Lifshitz E. M., Statistical Physics, 3rd ed., Course of Theoretical Physics, Vol. 5 (Butterworth-Heinemann, Oxford, UK, 1980).

- [32] Goldenfeld N., Lectures On Phase Transitions And The Renormalization Group (CRC Press, 1992).

- [33] Cardy J., Scaling and Renormalization in Statistical Physics, Cambridge Lecture Notes in Physics (Cambridge University Press, Cambridge, UK, 1996).

- [34] Grassberger P., Toward a quantitative theory of self-generated complexity, International Journal of Theoretical Physics 25, 907 (1986).

- [35] Bialek W., Nemenman I., and Tishby N., Predictability, complexity, and learning, Neural Computation 13, 2409 (2001).

- [36] Bialek W., Nemenman I., and Tishby N., Complexity through nonextensivity, Physica A: Statistical Mechanics and its Applications 302, 89 (2001), Proc. Int. Workshop on Frontiers in the Physics of Complex Systems.

- [37] Tchernookov M. and Nemenman I., Predictive information in a nonequilibrium critical model, Journal of Statistical Physics 153, 442 (2013).

- [38] Wilms J., Vidal J., Verstraete F., and Dusuel S., Finite-temperature mutual information in a simple phase transition, Journal of Statistical Mechanics: Theory and Experiment 2012, P01023 (2012).

- [39] Lau H. W. and Grassberger P., Information theoretic aspects of the two-dimensional Ising model, Phys. Rev. E 87, 022128 (2013).

- [40] Cohen O., Rittenberg V., and Sadhu T., Shared information in classical mean-field models, Journal of Physics A: Mathematical and Theoretical 48, 055002 (2015).

- [41] Marsili M., Mastromatteo I., and Roudi Y., On sampling and modeling complex systems, Journal of Statistical Mechanics: Theory and Experiment 2013, P09003 (2013).

- [42] Cubero R. J., Jo J., Marsili M., Roudi Y., and Song J., Statistical criticality arises in most informative representations, Journal of Statistical Mechanics: Theory and Experiment 2019, 063402 (2019).

- [43] Duranthon O., Marsili M., and Xie R., Maximal relevance and optimal learning machines, Journal of Statistical Mechanics: Theory and Experiment 2021, 033409 (2021).

- [44] Marsili M. and Roudi Y., Quantifying relevance in learning and inference, Physics Reports 963, 1 (2022).

- [45] Cover T. M. and Thomas J. A., Elements of Information Theory, 2nd ed. (Wiley-Interscience, 2006).

- [46] Ngampruetikorn V. and Schwab D. J., Information bottleneck theory of high-dimensional regression: relevancy, efficiency and optimality, in Advances in Neural Information Processing Systems, Vol. 35, edited by Koyejo S., Mohamed S., Agarwal A., Belgrave D., Cho K., and Oh A. (Curran Associates, Inc., 2022) pp. 9784–9796.

- [47] Bialek W., On the dimensionality of behavior, Proceedings of the National Academy of Sciences 119, e2021860119 (2022).

- [48] Humplik J. and Tkačik G., Probabilistic models for neural populations that naturally capture global coupling and criticality, PLOS Computational Biology 13, 1 (2017).

- [49] Ngampruetikorn V. and Schwab D. J., Random-energy secret sharing via extreme synergy (2023), in preparation.

- [50] Bialek W., Palmer S. E., and Schwab D. J., What makes it possible to learn probability distributions in the natural world? (2020), arXiv:2008.12279 [cond-mat.stat-mech].

- [51] Eisert J., Cramer M., and Plenio M. B., Colloquium: Area laws for the entanglement entropy, Rev. Mod. Phys. 82, 277 (2010).

- [52] Holzhey C., Larsen F., and Wilczek F., Geometric and renormalized entropy in conformal field theory, Nuclear Physics B 424, 443 (1994).

- [53] Vidal G., Latorre J. I., Rico E., and Kitaev A., Entanglement in quantum critical phenomena, Phys. Rev. Lett. 90, 227902 (2003).

- [54] Calabrese P. and Cardy J., Entanglement entropy and quantum field theory, Journal of Statistical Mechanics: Theory and Experiment 2004, P06002 (2004).

- [55] Refael G. and Moore J. E., Entanglement entropy of random quantum critical points in one dimension, Phys. Rev. Lett. 93, 260602 (2004).

- [56] Ryu S. and Takayanagi T., Holographic derivation of entanglement entropy from the anti-de Sitter space/conformal field theory correspondence, Phys. Rev. Lett. 96, 181602 (2006).

- [57] Mirollo R. E. and Strogatz S. H., Synchronization of pulse-coupled biological oscillators, SIAM Journal on Applied Mathematics 50, 1645 (1990).

- [58] Acebrón J. A., Bonilla L. L., Pérez Vicente C. J., Ritort F., and Spigler R., The Kuramoto model: A simple paradigm for synchronization phenomena, Rev. Mod. Phys. 77, 137 (2005).

- [59] Buzsáki G. and Draguhn A., Neuronal oscillations in cortical networks, Science 304, 1926 (2004).

- [60] Gregor T., Fujimoto K., Masaki N., and Sawai S., The onset of collective behavior in social amoebae, Science 328, 1021 (2010).

- [61] Di Talia S. and Vergassola M., Waves in embryonic development, Annual Review of Biophysics 51, 327 (2022).