Abstract

The complex and challenging underwater environment leads to degradation in underwater images. Measuring the quality of underwater images is a significant step for subsequent image processing. Existing Image Quality Assessment (IQA) methods do not fully consider the characteristics of degradation in underwater images, which limits their performance in underwater image assessment. To address this problem, an Underwater IQA (UIQA) method based on color space multi-feature fusion is proposed. The proposed method converts underwater images from the RGB color space to the CIELab color space, which has a higher correlation with human subjective perception of underwater visual quality. It extracts histogram features, morphological features, and moment statistics from the luminance and color components and concatenates them to obtain fused features that better quantify the degradation in underwater image quality. After feature extraction, support vector regression (SVR) is employed to learn the relationship between the fused features and image quality scores, yielding the quality prediction model. Experimental results on the SAUD and UIED datasets show that the proposed method performs well in underwater image quality assessment. Performance comparisons on the LIVE, TID2013, LIVEMD, LIVEC, and SIQAD datasets demonstrate the applicability of the proposed method.

Subject terms: Computer science, Information technology, Image processing

Introduction

The underwater world is abundant in resources, and images obtained through underwater observation equipment are crucial for exploring the underwater world and exploiting its resources. In practical underwater applications, high-quality underwater images can provide sufficient and accurate information to facilitate the realization of underwater tasks. However, the underwater environment is complex and variable, and factors such as light scattering, absorption, and environmental noise can affect the quality of underwater images, leading to issues such as color shift, low contrast, and low definition1. When underwater images are affected by quality degradation, the application value of these images may be reduced2, which in turn can impact their performance in practical underwater application tasks. In recent years, numerous effective methods have been proposed for enhancing underwater images3–9. However, in the field of underwater image enhancement, there is currently no effective, robust, and widely accepted method for assessing the quality of underwater images. Therefore, it is of significant research importance to investigate an effective and robust UIQA method. Such an assessment method can help to measure the application value of underwater images and provide a credible and effective reference for the selection of original underwater images and underwater image enhancement methods in practical applications.

There are currently two categories of IQA methods: subjective IQA methods and objective IQA methods10. Subjective IQA methods rely on the subjective perception of the observer, which is time-consuming, costly, unstable, and dependent on expert knowledge11. As such, subjective methods are only suitable for small-scale image datasets, which hinders the efficient and large-scale quality assessment of underwater images. Therefore, objective IQA methods, which utilize computer-designed methods to automatically and accurately assess image quality, are more suitable for evaluating the quality of underwater images.

IQA methods can be classified according to the amount of reference information required, including Full-Reference Image Quality Assessment (FR-IQA), Reduced Reference Image Quality Assessment (RR-IQA), and No-Reference Image Quality Assessment (NR-IQA)12,13. The FR-IQA method requires all the reference information of the original image and compares the difference between the original image and the distorted image to obtain the quality of the distorted image. The RR-IQA method requires partial feature information of the original image as a reference, and the distorted IQA is achieved by calculating the difference between the extracted features of the original image and those of the distorted image. Due to the complexity and uniqueness of the underwater environment, it is often difficult to obtain clear reference images of underwater scenes. Therefore, the NR-IQA method, which requires no original image reference information, is the optimal choice for objective assessment of underwater image quality.

The NR-IQA method does not require a reference image and can assess the quality of distorted images based solely on their own features. Moorthy and Bovik14 proposed the Blind Image Quality Index (BIQI), based on a two-step framework model of natural scene statistics. The distortions in the image are first identified, and then the statistical features of specific distortion types are extracted. Next, the image authenticity and integrity are evaluated using a steerable pyramid with two scales and six directions, and a corresponding image quality score is calculated for each distortion type. Finally, the weighted average of the quality scores corresponding to different distortions is calculated to obtain the overall IQA result. Li et al.15 proposed a no-reference IQA method based on structural degradation to predict the visual quality of multi-distortion images. This method applies LBP operators to gradient images and computes gradient-weighted histograms to extract the structural features of images, effectively describing the image quality degradation due to multiple distortions. Liu et al.16 proposed a two-step framework IQA model called the Spatial-Spectral Entropy-based Quality Index (SSEQ). This model extracts quality-aware features from three scales of spatial entropy and spectral entropy, and obtains image quality scores by training the model with support vector regression. Gu et al.17 proposed the Blind Image Quality Measure of Enhanced Images (BIQME), which analyzes the contrast, sharpness, and brightness of images based on 17 features to assess image quality. Mittal et al.18 proposed a spatial domain-based no-reference quality assessment method, the Blind/Referenceless Image Spatial Quality Evaluator (BRISQUE), which uses the statistical properties of the empirical distribution of locally normalized luminance values to quantify image quality based on the morphological features of the image. Based on this approach, Mittal et al.19 introduced the unsupervised Natural Image Quality Evaluator (NIQE), which uses the statistical features of natural scenes and fits a multivariate Gaussian (MVG) model to the image. Zhang et al.20 extended NIQE to the Integrated Local NIQE (ILNIQE), which adds more image-related features, calculates the distance between the image patches and the Gaussian model, and finally integrates the image patch quality scores to obtain the overall image quality score.

In recent years, deep learning-based methods and techniques have been widely employed in the task of IQA. Kang et al.21 proposed a convolutional neural network-based IQA method, Convolutional Neural Networks for no-reference Image Quality Assessment (CNNIQA), which mainly contains one convolutional layer and two fully connected layers, integrating feature extraction and assessment model building into one network. Zhang et al.22 introduced a Deep Bilinear Convolutional Neural Network (DBCNN) for NR-IQA, which is applicable to both synthetic and real distorted images. The DBCNN consists of two convolutional neural networks, each dedicated to one distortion scenario. Features from both CNNs are bilinearly merged for the final quality prediction. Su et al.23 presented an adaptive hypernetwork architecture that first extracts image semantics and then establishes perceptual rules through hypernetwork adaptation; these rules are adopted by the quality prediction network to predict image quality. You et al.24 first applied the Transformer to the field of IQA and proposed the Transformer for Image Quality assessment (TRIQ) method. TRIQ uses an adaptive encoder to process images of different resolutions; the feature maps extracted by the convolutional neural network are used as the input to the Transformer encoder, and the image quality is then predicted by the MLP head. Yang et al.25 proposed the Multi-dimension Attention Network for No-Reference Image Quality Assessment (MANIQA). The method extracts features with a Vision Transformer (ViT), applies attention mechanisms in the channel and spatial dimensions using the Transposed Attention Block and the Scale Swin Transformer Block, and uses a two-branch structure for patch-weighted quality prediction to predict the final score based on the weight of each patch quality score.

In recent years, as the field of underwater image processing has continued to advance, a number of UIQA methods have been proposed. Yang et al.26 proposed the Underwater Color Image Quality Evaluation Metric (UCIQE), which extracts underwater image features that align more closely with human perception in the CIELab color space. The saturation, chromaticity, and contrast of underwater images are used as measurement components, which are subsequently combined linearly to develop a quality assessment method capable of measuring color shift, blurring, and low contrast of underwater images. Panetta et al.27 proposed an Underwater Image Quality Measure (UIQM) method based on the Human Visual System (HVS). The UIQM method considers various factors that impact the quality of underwater images by utilizing the Underwater Image Colorfulness Measure (UICM), Underwater Image Sharpness Measure (UISM), and Underwater Image Contrast Measure (UIConM). The method combines these three measurement components linearly to comprehensively characterize the quality of underwater images. In addition to the aforementioned methods, Yang et al.28 proposed a no-reference frequency domain-based UIQA method called FDUM. The FDUM method combines the spatial and frequency domains in the color metric, refines the contrast metric using the dark channel prior, and uses multiple linear regression to obtain weighting coefficients for the linear combination of the metric values in order to assess the quality of underwater images. Zheng et al.29 proposed an Underwater Image Fidelity (UIF) metric. The metric relies on statistical features of underwater images in the CIELab space and defines naturalness, sharpness, and structure indices. Saliency-based spatial pooling is used to determine the final quality score of the image.

While RGB-based IQA methods have demonstrated advantages in assessing the quality of natural scene images30,31, they do not perform well for underwater scenes due to the high correlation and poor uniformity of the RGB components, which deviate significantly from human visual perception29. Current methods for assessing the quality of underwater images inadequately use color space, which leads to poor feature extraction and an inaccurate representation of the relationship between underwater image quality and subjective perception. Presently, these methods primarily consider chromaticity, contrast, sharpness, saturation, and similar features, with relatively limited feature selection. As a result, they fail to recognize that the degradation of underwater image quality can be caused by a combination of several factors.

To address the issues with existing methods, this paper proposes a No-Reference Underwater Image Quality Assessment method based on Multi-feature Fusion in Color Space (NMFC). The NMFC method uses a color space transformation and extracts luminance histogram, Local Binary Pattern (LBP), moment statistic, and morphological features in the CIELab color space, representing underwater image information through feature fusion. Support Vector Regression (SVR) is subsequently used to learn the relationship between the fused features and underwater image quality, enabling the establishment of a model and the realization of accurate UIQA. Experimental results obtained using the SAUD32 and UIED29 underwater image quality assessment datasets demonstrate that the proposed method effectively and accurately assesses underwater image quality and exhibits high consistency with human subjective perception. The generality of the method is further demonstrated using several traditional image datasets, including LIVE33, TID201334, LIVEMD35, LIVEC36 and SIQAD37.

Methods

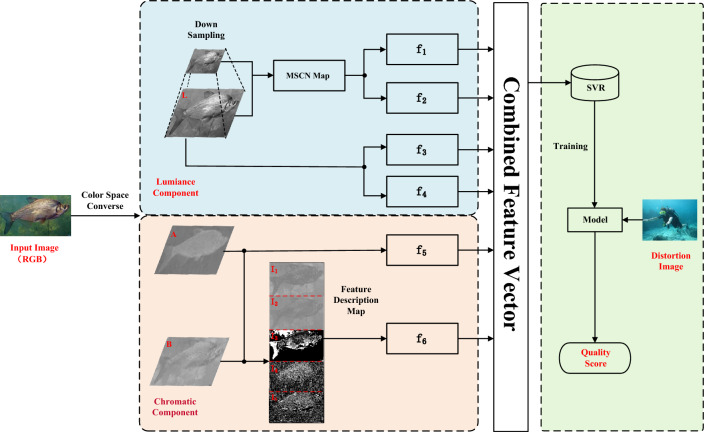

The proposed method, illustrated in Fig. 1, begins by converting underwater images from the RGB color space to the CIELab color space. In this color space, six quantified underwater image distortion features (detailed in Table 1) are extracted and fused to form a feature vector. Finally, SVR is used to learn the mapping between the feature vectors and the quality of underwater images, resulting in a model for UIQA.

Figure 1.

The detailed procedure of the proposed method.

Table 1.

Details of features extracted by this method.

| Feature ID | Feature description |
|---|---|
| LBP histogram feature | |
| Luminance component morphological feature | |
| Luminance component moment statistic feature | |
| Luminance histogram feature | |
| Chromatic component moment statistic feature | |
| Chromatic component morphological feature | |

Color space conversion

The CIELab color space38 is specifically designed to match human color perception and achieve perceptual uniformity. Consequently, in order to more effectively extract features, the proposed method converted the RGB underwater images to the CIELab color space. Specifically, the proposed method first transformed the images from the RGB color space to the XYZ color space, before subsequently converting them to the CIELab color space. Eq. (1) was used to accomplish this conversion, as detailed below.

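$$
\begin{bmatrix} X \\ Y \\ Z \end{bmatrix} =
\begin{bmatrix}
0.412453 & 0.357580 & 0.180423 \\
0.212671 & 0.715160 & 0.072169 \\
0.019334 & 0.119193 & 0.950227
\end{bmatrix}
\begin{bmatrix} R \\ G \\ B \end{bmatrix}
\tag{1}
$$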

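The equations for converting the XYZ color space to the CIELab color space are as follows:

$$
\begin{aligned}
L &= 116\, f(Y/Y_n) - 16 \\
A &= 500\,\big[f(X/X_n) - f(Y/Y_n)\big] \\
B &= 200\,\big[f(Y/Y_n) - f(Z/Z_n)\big]
\end{aligned}
\tag{2}
$$

$$
f(t) =
\begin{cases}
t^{1/3}, & t > (6/29)^3 \\
\dfrac{1}{3}\left(\dfrac{29}{6}\right)^2 t + \dfrac{4}{29}, & \text{otherwise}
\end{cases}
\tag{3}
$$

where $X_n$, $Y_n$, $Z_n$ are the CIE XYZ tristimulus values of the reference white point [0.9504, 1.0000, 1.0888], simulating noon daylight with a correlated color temperature of 6504 K.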
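The two-stage conversion can be sketched for a single pixel in plain Python. This is only an illustration under stated assumptions: the RGB-to-XYZ matrix is the common BT.709/D65 matrix (the paper's exact coefficients are not recoverable here), the input is taken to be linear RGB in [0, 1] with no gamma correction, and the names `rgb_to_lab`, `RGB_TO_XYZ`, and `WHITE_POINT` are hypothetical. The white point is the one quoted in the text.

```python
# Assumed BT.709 / D65 RGB-to-XYZ matrix (not confirmed by the paper).
RGB_TO_XYZ = (
    (0.412453, 0.357580, 0.180423),
    (0.212671, 0.715160, 0.072169),
    (0.019334, 0.119193, 0.950227),
)
# Reference white point quoted in the text.
WHITE_POINT = (0.9504, 1.0000, 1.0888)


def _f(t):
    """Piecewise cube-root mapping used by the CIELab definition."""
    delta = 6.0 / 29.0
    if t > delta ** 3:
        return t ** (1.0 / 3.0)
    return t / (3.0 * delta ** 2) + 4.0 / 29.0


def rgb_to_lab(r, g, b):
    """Convert one linear-RGB pixel in [0, 1] to (L, A, B)."""
    x, y, z = (m[0] * r + m[1] * g + m[2] * b for m in RGB_TO_XYZ)
    fx, fy, fz = (_f(v / w) for v, w in zip((x, y, z), WHITE_POINT))
    lum = 116.0 * fy - 16.0
    a = 500.0 * (fx - fy)
    b_chroma = 200.0 * (fy - fz)
    return lum, a, b_chroma
```

Under these assumptions a pure white pixel maps to a luminance of about 100 with near-zero chromatic components, and a black pixel maps to the origin.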

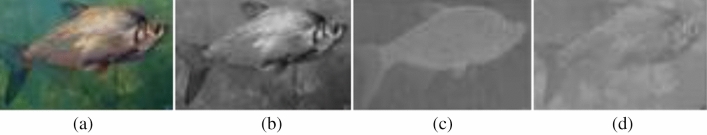

Figure 2b–d depict the image data corresponding to the L-component, A-component, and B-component of the original image Fig. 2a subsequent to the color space conversion.

Figure 2.

Examples of underwater images and the corresponding component images. (a) Raw image, (b) L-component image, (c) A-component image, (d) B-component image.

L component feature extraction

Underwater images are known to be impacted by the absorption and scattering of light by water, often resulting in insufficient brightness, low visibility, and low contrast, thereby leading to a degradation of underwater image quality. It is widely acknowledged that the luminance channel L component plays a crucial role in this regard. Therefore, there is a need to extract features that can effectively characterize changes in underwater image quality, with a particular emphasis on the L component.

L component luminance histograms

The luminance histogram features are calculated by Eq. (4) in three steps: (1) divide the image luminance values into k bins; (2) traverse the image pixels and count the number of pixels in each bin; (3) divide the number of pixels in each bin by the total number of pixels in the image to obtain the probability of each bin.

Applying Eq. (4) to the luminance component map yields the histogram feature of the underwater image.

$$
h(b) = \frac{1}{MN} \sum_{i=1}^{M} \sum_{j=1}^{N} \mathbb{1}\big(L(i,j) \in B_b\big)
\tag{4}
$$

where $b = 1, \dots, k$ indexes the bins, $M$ and $N$ are the height and width of the image, $L(i,j)$ is the pixel value, and $\mathbb{1}(\cdot)$ is the indicator function, which is 1 when $L(i,j)$ falls in bin $B_b$ and 0 otherwise.
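The three steps above can be sketched as a short function. The number of bins and the luminance range [0, 100] are assumptions chosen for illustration, since the paper's settings are not stated here, and `luminance_histogram` is a hypothetical name.

```python
def luminance_histogram(lum, n_bins=10, lo=0.0, hi=100.0):
    """Return normalised bin frequencies of a 2-D luminance map (list of rows)."""
    counts = [0] * n_bins
    total = 0
    width = (hi - lo) / n_bins
    for row in lum:
        for value in row:
            idx = int((value - lo) / width)
            # Clamp so that out-of-range values and value == hi land in an edge bin.
            idx = min(max(idx, 0), n_bins - 1)
            counts[idx] += 1
            total += 1
    # Step 3: turn counts into per-bin probabilities.
    return [c / total for c in counts]
```

The returned list sums to 1, so images of different resolutions yield directly comparable feature vectors.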

L component morphological parameters

Previous studies have suggested that performing nonlinear operations on images can help eliminate the correlation between pixels in the image39. Hence, to extract features from the underwater image I, this study computes the Mean Subtracted Contrast Normalized (MSCN) coefficients (Eq. 5) and extracts statistical features from them16. The extraction process is as follows.

For an underwater image I of size M × N, the MSCN coefficient $\hat{I}(i,j)$ is:

$$
\hat{I}(i,j) = \frac{I(i,j) - \mu(i,j)}{\sigma(i,j) + C}
\tag{5}
$$

where $\mu(i,j)$ is the result after Gaussian filtering, $\sigma(i,j)$ is the local standard deviation, and C is a constant set to 1 that prevents the denominator from being 0. The variables $\mu(i,j)$ and $\sigma(i,j)$ are defined as follows:

| 6 |

| 7 |

| 8 |

where w is the two-dimensional circularly symmetric Gaussian weighting function.
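The MSCN computation can be sketched in plain Python as follows. The 7 × 7 window, the Gaussian width of 7/6, and the border handling (weights renormalized over the valid part of the window) are assumptions borrowed from common BRISQUE-style implementations rather than values stated in this paper; `gaussian_window` and `mscn` are illustrative names.

```python
import math


def gaussian_window(half=3, sigma=7.0 / 6.0):
    """Unit-sum circularly symmetric Gaussian window of size (2*half+1)^2."""
    win = [[math.exp(-(k * k + l * l) / (2.0 * sigma * sigma))
            for l in range(-half, half + 1)]
           for k in range(-half, half + 1)]
    total = sum(sum(row) for row in win)
    return [[v / total for v in row] for row in win]


def mscn(img, half=3, sigma=7.0 / 6.0, c=1.0):
    """Mean-subtracted contrast-normalised coefficients of a 2-D list image."""
    height, width = len(img), len(img[0])
    win = gaussian_window(half, sigma)
    out = [[0.0] * width for _ in range(height)]
    for i in range(height):
        for j in range(width):
            taps = []  # (weight, pixel) pairs inside the image
            for k in range(-half, half + 1):
                for l in range(-half, half + 1):
                    y, x = i + k, j + l
                    if 0 <= y < height and 0 <= x < width:
                        taps.append((win[k + half][l + half], img[y][x]))
            wsum = sum(wt for wt, _ in taps)
            mean = sum(wt * v for wt, v in taps) / wsum
            var = sum(wt * (v - mean) ** 2 for wt, v in taps) / wsum
            out[i][j] = (img[i][j] - mean) / (math.sqrt(max(var, 0.0)) + c)
    return out
```

A flat region produces coefficients of zero, while a pixel brighter than its surroundings produces a positive coefficient, which is the decorrelating behaviour the text relies on.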

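The statistical characteristics are obtained by fitting the MSCN coefficients with the Asymmetric Generalized Gaussian Distribution (AGGD) model, which is shown in Eq. (9):

$$
f(x; \nu, \sigma_l^2, \sigma_r^2) =
\begin{cases}
\dfrac{\nu}{(\beta_l + \beta_r)\,\Gamma(1/\nu)} \exp\!\left(-\left(\dfrac{-x}{\beta_l}\right)^{\nu}\right), & x < 0 \\[2ex]
\dfrac{\nu}{(\beta_l + \beta_r)\,\Gamma(1/\nu)} \exp\!\left(-\left(\dfrac{x}{\beta_r}\right)^{\nu}\right), & x \ge 0
\end{cases}
\tag{9}
$$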

where,

| 10 |

| 11 |

| 12 |

| 13 |

is the gamma function;t is any one of the variables taking the value .

The AGGD is fitted to the MSCN coefficients using the moment-matching method40, which yields the shape parameter, the left and right scale parameters, and the mean. The skewness S and the kurtosis K41 of the MSCN image are calculated by Eqs. (14) and (15). The shape parameter, the left and right scale parameters, the mean, the skewness S, and the kurtosis K are taken as the morphological features of a given image.

| 14 |

| 15 |

The luminance component map I is also downsampled to obtain a lower-resolution map. The morphological features of I and of its downsampled map together form the luminance component morphological feature.
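A minimal sketch of AGGD fitting by moment matching is given below. It follows the estimator commonly used in BRISQUE-style code, which is an assumption about how the fit is carried out here; the search grid for the shape parameter (0.2 to 10 in steps of 0.001) and the name `fit_aggd` are likewise illustrative.

```python
import math


def fit_aggd(samples):
    """Estimate (shape, left scale, right scale, mean) of an AGGD by moment matching."""
    left = [x for x in samples if x < 0]
    right = [x for x in samples if x >= 0]
    sigma_l = math.sqrt(sum(x * x for x in left) / len(left))
    sigma_r = math.sqrt(sum(x * x for x in right) / len(right))
    gamma_hat = sigma_l / sigma_r
    n = len(samples)
    r_hat = (sum(abs(x) for x in samples) / n) ** 2 / (sum(x * x for x in samples) / n)
    big_r = r_hat * (gamma_hat ** 3 + 1) * (gamma_hat + 1) / (gamma_hat ** 2 + 1) ** 2

    def rho(nu):
        # Ratio of gamma functions that the normalised moment ratio should match.
        return math.gamma(2 / nu) ** 2 / (math.gamma(1 / nu) * math.gamma(3 / nu))

    grid = [0.2 + 0.001 * k for k in range(9801)]
    nu = min(grid, key=lambda v: (rho(v) - big_r) ** 2)
    scale = math.sqrt(math.gamma(1 / nu) / math.gamma(3 / nu))
    beta_l, beta_r = sigma_l * scale, sigma_r * scale
    eta = (beta_r - beta_l) * math.gamma(2 / nu) / math.gamma(1 / nu)
    return nu, sigma_l, sigma_r, eta
```

For symmetric Gaussian data the estimated shape parameter should be close to 2 and the two scale parameters close to each other, so departures from these values indicate heavier tails or asymmetry in the coefficient distribution.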

L component moment statistics

The moment statistics42 of the luminance component map, namely the mean (first-order moment), variance (second-order moment), skewness s (third-order moment), and kurtosis k (fourth-order moment), are calculated using Eqs. (16)–(19).

| 16 |

| 17 |

| 18 |

| 19 |

where $p_{i,j}$ denotes the $j$-th pixel of the $i$-th component of the image and $N$ is the number of pixels in the image.

The four moment statistics together form the luminance component moment statistic feature.
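The four moment statistics can be sketched as below. The population (1/N) normalization and the standardized forms of skewness and kurtosis are assumptions, since the exact normalization of Eqs. (16)–(19) is not recoverable from the text; `moment_statistics` is a hypothetical name.

```python
def moment_statistics(values):
    """Return (mean, variance, skewness, kurtosis) of a flat list of pixel values."""
    n = len(values)
    mean = sum(values) / n
    var = sum((v - mean) ** 2 for v in values) / n
    if var == 0:
        # A constant component has no spread, so higher moments are set to 0.
        return mean, 0.0, 0.0, 0.0
    std = var ** 0.5
    skew = sum(((v - mean) / std) ** 3 for v in values) / n
    kurt = sum(((v - mean) / std) ** 4 for v in values) / n
    return mean, var, skew, kurt
```

For the values 1 to 5, for example, this gives a mean of 3, a variance of 2, zero skewness, and a kurtosis of 1.7.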

L component LBP histograms

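The proposed method applies the rotation-invariant uniform LBP operator43 to the image I and its downsampled map. The rotation-invariant uniform LBP is defined as:

$$
LBP_{P,R}^{riu2}(i,j) =
\begin{cases}
\sum_{n=0}^{P-1} s(g_n - g_c), & U(LBP_{P,R}) \le 2 \\
P + 1, & \text{otherwise}
\end{cases}
\tag{20}
$$

$$
s(x) =
\begin{cases}
1, & x \ge 0 \\
0, & x < 0
\end{cases}
\tag{21}
$$

where $g_c$ is the pixel value at the center point (i, j), $g_n$ is the value of the $n$-th neighboring pixel, P is the number of neighbors, R is the radius of the neighborhood, and $U$ is the uniformity (pixel consistency) measure of the neighborhood of pixel (i, j), defined as:

$$
U(LBP_{P,R}) = \left| s(g_{P-1} - g_c) - s(g_0 - g_c) \right| + \sum_{n=1}^{P-1} \left| s(g_n - g_c) - s(g_{n-1} - g_c) \right|
\tag{22}
$$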

After processing the two maps with the rotation-invariant uniform LBP, the LBP histograms are built from the two resulting LBP maps by Eqs. (23) and (24):

| 23 |

| 24 |

The resulting LBP histogram feature can effectively perceive underwater image quality, so it is used as a luminance component feature.
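A minimal sketch of the rotation-invariant uniform LBP and its histogram is shown below for P = 8 and R = 1. It assumes the eight grid neighbors are used directly, without the circular interpolation of the original operator, that border pixels are skipped, and that the histogram is a plain normalized count; the paper's actual P, R, and weighting are not stated here, and all function names are illustrative.

```python
# Eight neighbours at radius 1, listed in circular order around the centre.
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_riu2(img):
    """Rotation-invariant uniform LBP codes for the interior pixels of a 2-D list."""
    height, width = len(img), len(img[0])
    p = len(OFFSETS)
    codes = []
    for i in range(1, height - 1):
        row = []
        for j in range(1, width - 1):
            bits = [1 if img[i + di][j + dj] >= img[i][j] else 0
                    for di, dj in OFFSETS]
            # Number of 0/1 transitions around the circle (index -1 wraps around).
            transitions = sum(bits[n] != bits[n - 1] for n in range(p))
            row.append(sum(bits) if transitions <= 2 else p + 1)
        codes.append(row)
    return codes


def lbp_histogram(codes, p=8):
    """Normalised histogram over the P + 2 possible riu2 codes."""
    hist = [0] * (p + 2)
    total = 0
    for row in codes:
        for code in row:
            hist[code] += 1
            total += 1
    return [h / total for h in hist]
```

Uniform patterns map to codes 0 to P according to how many neighbors are at least as bright as the center, and all non-uniform patterns share the single code P + 1, which keeps the histogram at P + 2 bins.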

AB component feature extraction

In order to quantify the degradation of underwater image quality resulting from color changes, our proposed method extracts features from the chromatic channels.

Chromatic feature maps

Inspired by the NUIQ method32, chromatic descriptor maps are built from the two chromatic components (A and B) to extract the chromatic component features of underwater images. The proposed method constructs five chromatic feature maps from the A and B components, including the AB difference map, the saturation map, the AB angle map, and the AB derivation angle map. These maps represent the color perception information of underwater images from different perspectives and effectively describe the features of the chromatic channels. The construction of the chromatic feature maps is defined in Eq. (25):

| 25 |

Where is the gradient along the horizontal and vertical directions. c is a small constant.

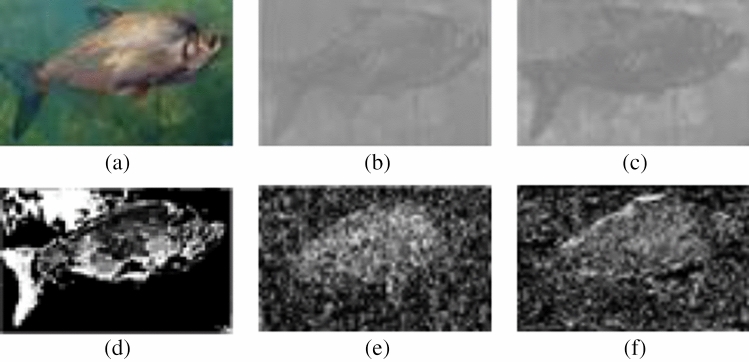

The five feature maps are illustrated in Fig. 3.

Figure 3.

Illustration of chromatic feature maps. (a) Pristine underwater image, (b–f) are five chromatic feature maps of (a).

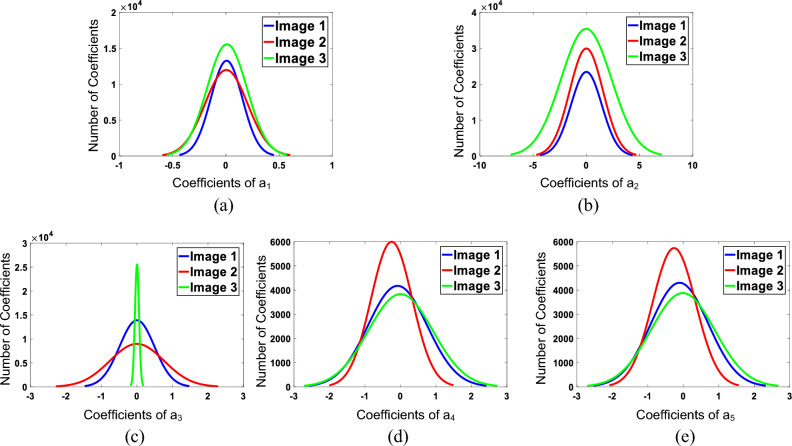

To confirm the effectiveness of our proposed chromatic feature maps, three underwater images with varying image quality (as shown in Fig. 4) were selected. The corresponding five chromatic feature maps were fitted with a normal distribution function, and the resulting curves are shown in Fig. 5.

Figure 4.

Examples of underwater images. (a) I1, (b) I2, (c) I3.

Figure 5.

Fitting curves of the chromatic feature maps for the underwater images shown in Fig. 4.

AB component morphological parameters

Each subfigure in Fig. 5 displays the fitted curves of one chromatic feature map for the three underwater images of different quality levels. The fitted curves have distinct shapes, highlighting the variations in the morphological features of the color components. This confirms that the five chromatic feature maps constructed in this study reflect the variations in underwater image quality. Consequently, it is reasonable to use the morphological parameters of the fitted curves of these chromatic feature maps as morphological features for the analysis of underwater image quality.

AGGD fitting (Eq. 9) is applied to each of the five chromatic feature maps to obtain the shape parameter, the left and right scale parameters, and the mean of the distribution. For each of the five chromatic feature maps, the skewness S and kurtosis K are calculated according to Eqs. (14) and (15). The parameters of the five feature maps are combined to form the chromatic component morphological feature.

AB component moment statistics

Similar to the luminance channel, moment statistics are also calculated in the chromatic channels.

Eqs. (16)–(19) are used to calculate the mean (first-order moment), variance (second-order moment), skewness s (third-order moment), and kurtosis k (fourth-order moment) of the A-component map and the B-component map, and the resulting moment vector is used as the chromatic component moment statistic feature.

Building image quality assessment model

Feature fusion exploits the complementarity of the chromatic and luminance features of underwater images and describes changes in image quality more effectively. After the two types of features are extracted, they are fused by concatenating them into a single fused feature vector. The remaining step is to build the IQA model. Considering the good generalization ability of SVR and its widespread use in IQA methods14,16,17, it is well suited to establishing the relationship between quality-related features and image scores. Therefore, in this study the LIBSVM toolkit44 was used to implement SVR with a Radial Basis Function (RBF) kernel. The mapping between the fused features and the subjective quality scores of underwater images was learned with SVR in order to obtain a UIQA model. To evaluate the quality of an underwater image, features are extracted from the image according to the method outlined in this paper, fused, and input into the UIQA model to obtain the quality score.
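For reference, the standard form of an RBF-kernel SVR predictor can be written as follows. The symbols are generic textbook notation rather than notation taken from this paper: $\mathbf{x}_i$ are the training feature vectors, $\alpha_i$ and $\alpha_i^*$ the dual coefficients, $b$ the bias, and $\gamma$ the kernel width. The hyperparameter values used in the experiments are not stated here.

$$
Q(\mathbf{x}) = \sum_{i=1}^{n} (\alpha_i - \alpha_i^*)\, K(\mathbf{x}_i, \mathbf{x}) + b,
\qquad
K(\mathbf{x}_i, \mathbf{x}) = \exp\!\left(-\gamma \lVert \mathbf{x}_i - \mathbf{x} \rVert^2\right)
$$

Here $\mathbf{x}$ would be the fused feature vector of a test image and $Q(\mathbf{x})$ its predicted quality score.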

Experimental results

Dataset and assessment metric

In order to analyze the performance of the proposed method, comparative experiments were conducted on publicly available image datasets: SAUD32, UIED29, LIVE33, LIVEMD35, LIVEC36, TID201334, and SIQAD37. Each dataset contains subjective IQA results for each image, given as a mean opinion score (MOS), a difference mean opinion score (DMOS), or a Bradley–Terry score (B–T score)45.

The UIED dataset29 includes 100 images of real underwater scenes: 16 coral images, 26 marine life images, 14 seabed rock images, 12 sculpture images, 10 wreck images, and 22 diver images. Typical images in the database are shown in Fig. 6, with resolutions ranging from 183 × 275 to 1350 × 1800. Ten representative underwater image enhancement algorithms were used to process the original images, yielding a dataset of 1000 images. The SAUD database32 is similar to the UIED database: it is also an underwater image quality evaluation dataset containing 100 images of real underwater scenes. However, different enhancement methods were selected for processing the underwater images in this dataset.

The LIVE33 database contains 29 distortion-free high-resolution reference images and 779 corresponding distorted images. These images have five distortion types: (1) 175 JPEG2000 Compression (JP2K) distorted images; (2) 169 JPEG Compression (JPEG) distorted images; (3) 145 Gaussian Blur (GB) distorted images; (4) 145 White Gaussian Noise (WN) images; and (5) 145 Fast Fading error (FF) distorted images. The LIVE database provides a DMOS for each image in the range [0, 100], with higher scores representing poorer image quality. The TID201334 database contains 25 reference images and their corresponding distorted images of 24 distortion types, each with five distortion levels, for a total of 3000 images, with MOS values in the range [0, 9] indicating perceptual quality. The LIVEMD35 database is a mixed-distortion image database containing two types of multiple distortion: blur mixed with JPEG compression, and Gaussian blur mixed with Gaussian white noise. Fifteen reference images were used to generate 450 distorted images across the two multiple-distortion scenarios, with 225 images of each type, and each image has a DMOS in the range [0, 100] as its subjective score. LIVE Challenge36 (LIVEC) is an authentic IQA database containing 1162 images. The images were captured by diverse photographers using different camera equipment, cover a wide range of real-world scenes, and suffer from complex real-world distortions. The MOS value of each image, in the range [0, 100], was obtained through an online crowdsourcing platform. SIQAD37 is a commonly used screen content image database that contains 20 reference images and 980 corresponding distorted images, with seven distortion levels for each distortion type. The database contains seven distortion types: GN, GB, Motion Blurring (MB), Contrast Change (CC), JPEG, JP2K, and Layer Segmentation based Compression (LSC). SIQAD gives a DMOS value for each image in the range [0, 100]. The specific information for each dataset is presented in Table 2.

Figure 6.

Typical underwater images in UIED database.

Table 2.

Detailed information of benchmark datasets.

| Dataset | Reference images | Distorted images | Distortion types | Subjective scores |
|---|---|---|---|---|
| SAUD | 100 | 1000 | 10 | B–T score |
| UIED | 100 | 1000 | 10 | MOS |
| LIVE | 29 | 779 | 5 | DMOS |
| SIQAD | 20 | 980 | 7 | DMOS |
| TID2013 | 25 | 3000 | 24 | MOS |
| LIVEMD | 15 | 450 | 2 | DMOS |
| LIVEC | – | 1162 | – | MOS |

Experiments on the SAUD and UIED image datasets verify the effectiveness of the proposed method for UIQA. The experiments carried out on LIVE, LIVEMD, LIVEC, TID2013, and SIQAD demonstrate the applicability of the proposed method to different types of image data.

To evaluate the performance of various objective IQA methods, it is necessary to compare the subjective assessment scores of images with the IQA results obtained by these methods. If an objective IQA method shows high agreement with the subjective assessment scores, it is more consistent with human subjective perception and has better performance. This paper uses three widely adopted metrics to measure the performance of IQA methods: the Spearman rank-order correlation coefficient (SROCC), the Kendall rank-order correlation coefficient (KROCC), and the Pearson linear correlation coefficient (PLCC). The SROCC and KROCC values measure the monotonicity between a method's assessment results and the subjective assessment scores, while the PLCC value measures the accuracy of the method. The closer the absolute values of these three metrics are to 1, the higher the agreement between the results of the objective quality assessment method and the subjective assessment scores, and the better the performance of the method.

Performance analysis of the method

To evaluate the effectiveness of the proposed NMFC method, it was compared with mainstream IQA methods on two datasets, SAUD and UIED. Seven existing NR-IQA methods were selected for comparison, namely BIQI14, SSEQ16, BRISQUE18, NIQE19, ILNIQE20, BIQME17, and NUIQ32, together with four classical UIQA methods, namely UIQM27, UCIQE26, FDUM28, and UIF29. Among these methods, NIQE and ILNIQE do not require subjective scores and are thus regarded as fully blind IQA methods, which do not require retraining. The remaining methods were retrained using the SAUD and UIED datasets. To ensure the fairness of the experiments, the authors' published source code was used and the score assessment models were trained using SVR in all experiments.

For dataset division, the images were randomly split into a training set and a test set containing 80% and 20% of the images, respectively, and the two sets were ensured to contain no duplicate images. The score assessment model was then trained using all the features extracted from the images in the training set, along with their corresponding subjective assessment scores. Once the model was obtained, it was used to evaluate the images in the test set and calculate the assessment metrics. To ensure the accuracy and reliability of the experimental results, this random split, training, and testing procedure was repeated 50 times for each method. The average value of each assessment metric over the 50 rounds was then taken as the overall assessment result of the corresponding IQA method.
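The evaluation protocol can be sketched as a repeated random hold-out loop. The function below is a hypothetical helper: `evaluate` stands for any user-supplied callable that trains a model on the training indices and returns one metric (for example SROCC) on the test indices, and the fixed seed is an assumption added only for reproducibility.

```python
import random


def repeated_holdout(n_images, evaluate, rounds=50, train_frac=0.8, seed=0):
    """Average a metric over repeated random train/test splits of image indices."""
    rng = random.Random(seed)
    scores = []
    for _ in range(rounds):
        indices = list(range(n_images))
        rng.shuffle(indices)
        cut = int(round(train_frac * n_images))
        # Disjoint by construction: every index appears in exactly one part.
        train, test = indices[:cut], indices[cut:]
        scores.append(evaluate(train, test))
    return sum(scores) / len(scores)
```

Averaging over many random splits reduces the dependence of the reported metric on any single partition of the dataset.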

Table 3 gives the overall performance comparison of the proposed method with the other methods on the SAUD and UIED datasets; the best and second-best values for each assessment metric on each dataset are shown in bold. The following conclusions can be drawn from Table 3. First, the proposed method outperforms the other IQA methods on both underwater image datasets. Its SROCC, KROCC, and PLCC values on SAUD and UIED are the highest, while the NUIQ method achieves the second-best values on both datasets. Compared with NUIQ, the SROCC, KROCC, and PLCC values of the proposed method are higher by 0.0463, 0.0443, and 0.0397 on the SAUD dataset and by 0.0215, 0.0188, and 0.0055 on the UIED dataset. Second, the UCIQE, UIQM, and FDUM algorithms perform poorly on the SAUD and UIED databases, as evidenced by their low SROCC, KROCC, and PLCC values. The UIF method underperforms specifically on the SAUD database, yet it ranks third in SROCC (0.6117), KROCC (0.4428), and PLCC (0.6316) on the UIED dataset. This discrepancy can be attributed to these methods' reliance on combining measurement component scores with weights to derive quality scores for underwater images. As described in the related work above, the limitation of such approaches lies in their dependence on weighting coefficients fitted to a specific database, which differ across datasets. This is also one of the factors that motivated the design of the proposed method. Lastly, several methods perform poorly, with SROCC and PLCC values below 0.5, far behind the proposed method.

According to the above conclusions, the proposed IQA method has certain advantages over the other IQA methods on different underwater image datasets, and its consistency with human subjective perception is higher than that of the other methods, so it can efficiently and accurately evaluate the quality of underwater images.

Table 3.

Performance comparison of the proposed method on two underwater image datasets.

| IQA method | SAUD SROCC | SAUD KROCC | SAUD PLCC | UIED SROCC | UIED KROCC | UIED PLCC |
|---|---|---|---|---|---|---|
| BRISQUE | 0.4934 | 0.3481 | 0.5180 | 0.3964 | 0.2741 | 0.4306 |
| BIQI | 0.4057 | 0.2820 | 0.4269 | 0.4438 | 0.3089 | 0.5196 |
| NIQE | 0.0626 | 0.0425 | 0.1814 | 0.2528 | 0.1702 | 0.2198 |
| ILNIQE | 0.2844 | 0.1929 | 0.2825 | 0.4196 | 0.2882 | 0.4066 |
| SSEQ | 0.5475 | 0.4249 | 0.5683 | 0.5161 | 0.3642 | 0.5577 |
| BIQME | 0.7271 | 0.5924 | 0.7483 | 0.5194 | 0.3681 | 0.6183 |
| UIQM | 0.0271 | 0.0179 | 0.0581 | 0.0839 | 0.0562 | 0.0560 |
| UCIQE | 0.2002 | 0.1312 | 0.4351 | 0.0591 | 0.0438 | 0.2465 |
| UIF | 0.1182 | 0.0709 | 0.2021 | 0.6117 | 0.4428 | 0.6316 |
| FDUM | 0.1469 | 0.0965 | 0.2819 | 0.0865 | 0.0620 | 0.1011 |
| NUIQ | **0.7891** | **0.6024** | **0.8004** | **0.6136** | **0.4459** | **0.6761** |
| NMFC | **0.8354** | **0.6467** | **0.8401** | **0.6351** | **0.4647** | **0.6816** |
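The three consistency metrics reported in Table 3 can be computed from predicted and subjective scores as in the sketch below. It is a minimal, standard-library illustration rather than the authors' code: the function names and the five example scores are made up, SROCC is computed as the Pearson correlation of average ranks, and KROCC uses the tau-b variant, which is an assumption since the paper does not state how ties are handled. Library routines such as `scipy.stats.spearmanr`, `scipy.stats.kendalltau`, and `scipy.stats.pearsonr` could be used instead.

```python
from statistics import mean


def plcc(x, y):
    """Pearson linear correlation coefficient."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    return cov / (sum((a - mx) ** 2 for a in x)
                  * sum((b - my) ** 2 for b in y)) ** 0.5


def average_ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        for k in range(i, j + 1):
            ranks[order[k]] = (i + j) / 2 + 1
        i = j + 1
    return ranks


def srocc(x, y):
    """Spearman rank-order correlation: Pearson correlation of the ranks."""
    return plcc(average_ranks(x), average_ranks(y))


def krocc(x, y):
    """Kendall rank-order correlation (tau-b), O(n^2) pair counting."""
    conc = disc = tie_x = tie_y = 0
    for i in range(len(x)):
        for j in range(i + 1, len(x)):
            dx, dy = x[i] - x[j], y[i] - y[j]
            if dx == 0 and dy == 0:
                continue  # tied in both: excluded from both denominators
            if dx == 0:
                tie_x += 1
            elif dy == 0:
                tie_y += 1
            elif dx * dy > 0:
                conc += 1
            else:
                disc += 1
    return (conc - disc) / ((conc + disc + tie_x) * (conc + disc + tie_y)) ** 0.5


# Made-up example: subjective scores and predicted quality for five images.
subjective = [20, 35, 50, 65, 80]
predicted = [0.2, 0.5, 0.4, 0.9, 0.8]
s, k, p = srocc(predicted, subjective), krocc(predicted, subjective), plcc(predicted, subjective)
print(f"SROCC={s:.4f} KROCC={k:.4f} PLCC={p:.4f}")
```

SROCC and KROCC depend only on the ordering of the scores, whereas PLCC also reflects how linear the relationship between predicted and subjective scores is.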

Intuitive comparison

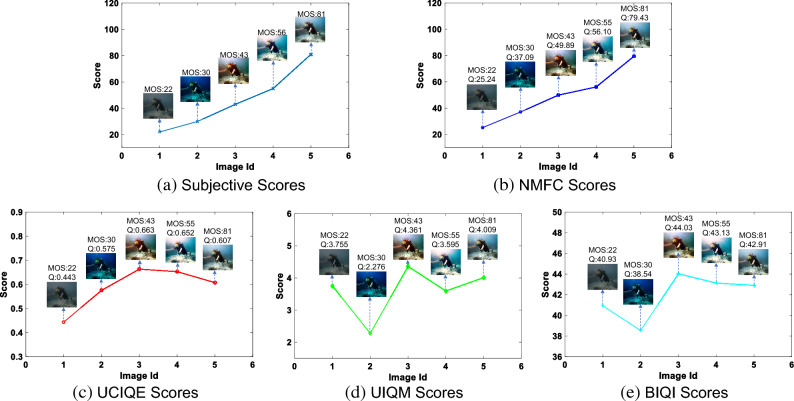

For a more intuitive comparison between the proposed method and other methods, Fig. 7 presents a series of underwater images with different subjective quality scores, arranged from left to right in ascending order of score. Several quality assessment methods are applied to these images, and the subjective quality scores together with the NMFC, UCIQE, UIQM, and BIQI values are plotted as line charts in Fig. 8 to compare the proposed method with the other IQA methods.

Figure 7.

(a–e) A collection of underwater images, each accompanied by its subjective score and arranged in ascending order of subjective score.

Figure 8.

Predicted quality values of different methods for the underwater images in Fig. 7. Q is the predicted quality score.

Fig. 8 shows that the traditional underwater image quality evaluation methods UCIQE and UIQM focus primarily on the color components of the image. As a result, images with rich colors (such as Fig. 7c) tend to receive higher scores, so an image of medium quality receives the highest score. The traditional in-air IQA method BIQI, in turn, is designed for natural scenes and does not take into account the factors that affect image quality in the underwater environment. Consequently, its performance is limited, and it cannot differentiate the quality of the underwater images. In contrast, the proposed NMFC method captures features from both the luminance and chromatic components that effectively describe the degradation of underwater image quality. Its scores align better with the subjective quality scores, and it therefore achieves superior performance.

Ablation experiments

To validate the choice of the CIELab color space for evaluating underwater image quality, ablation experiments were conducted on the SAUD and UIED databases using the CIELab, opponent, and YCbCr color spaces. The experimental results are presented in Table 4.

Table 4.

Performance comparison of the proposed method with different color spaces.

| Color space | SAUD SROCC | SAUD KROCC | SAUD PLCC | UIED SROCC | UIED KROCC | UIED PLCC |
|---|---|---|---|---|---|---|
| Opponent | 0.8303 | 0.6440 | 0.8387 | 0.6346 | 0.4635 | **0.6941** |
| YCbCr | 0.8239 | 0.6367 | 0.8328 | 0.6286 | 0.4598 | 0.6793 |
| CIELab | **0.8354** | **0.6467** | **0.8401** | **0.6351** | **0.4647** | 0.6816 |

The best values are in bold.

The following conclusions can be drawn from Table 4. First, on the SAUD database, the method using the CIELab color space outperforms the second-best method, which uses the Opponent color space, with SROCC, KROCC, and PLCC values higher by 0.0051, 0.0027, and 0.0014, respectively. Second, on the UIED database, the CIELab color space achieves the highest SROCC and KROCC values, while its PLCC value is 0.0125 lower than that of the Opponent color space. Moreover, the YCbCr color space yields the lowest SROCC, KROCC, and PLCC values of the three color spaces on both datasets. This suggests that the YCbCr color space captures color information less adequately, making it harder to depict image details and color variations and thus reducing performance in underwater image quality evaluation. In summary, the CIELab color space is overall the most effective of the three color spaces for underwater image quality assessment.
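For readers unfamiliar with the CIELab color space compared above, the following sketch converts a single 8-bit RGB pixel to CIELab. It assumes sRGB primaries and the D65 white point, which the paper does not specify, so it should be read as one common variant of the conversion and not necessarily the one used by the authors. The function name `srgb_to_lab` and the sample pixel are hypothetical.

```python
def srgb_to_lab(r, g, b):
    """Convert one 8-bit sRGB pixel to CIELab (assumes sRGB and D65 white)."""

    def linearize(c):
        # Undo the sRGB transfer function to obtain linear-light values.
        c /= 255.0
        return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

    rl, gl, bl = linearize(r), linearize(g), linearize(b)

    # Linear sRGB to CIE XYZ (D65).
    x = 0.4124564 * rl + 0.3575761 * gl + 0.1804375 * bl
    y = 0.2126729 * rl + 0.7151522 * gl + 0.0721750 * bl
    z = 0.0193339 * rl + 0.1191920 * gl + 0.9503041 * bl

    def f(t):
        # Cube root with a linear segment near zero, as in the CIELab definition.
        delta = 6 / 29
        return t ** (1 / 3) if t > delta ** 3 else t / (3 * delta ** 2) + 4 / 29

    # Normalize by the D65 reference white (Xn, Yn, Zn).
    fx, fy, fz = f(x / 0.95047), f(y / 1.0), f(z / 1.08883)
    lightness = 116 * fy - 16   # L: luminance component
    a = 500 * (fx - fy)         # a: green (-) to red (+)
    b_ = 200 * (fy - fz)        # b: blue (-) to yellow (+)
    return lightness, a, b_


white = srgb_to_lab(255, 255, 255)
black = srgb_to_lab(0, 0, 0)
teal = srgb_to_lab(30, 120, 140)  # made-up bluish-green pixel
print(white, black, teal)
```

The separation into one luminance channel (L) and two chromatic channels (a, b) is what allows luminance and color features to be extracted independently, as in the L-features and C-features of the ablation study.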

Furthermore, a series of ablation experiments was conducted on the SAUD dataset to demonstrate the effect of the multi-feature fusion strategy. The resulting consistency between subjective and objective assessments is reported in Table 5.

Table 5.

Performance of ablation experiments on the SAUD dataset.

| Features | SROCC | KROCC | PLCC |
|---|---|---|---|
| | 0.4503 | 0.3146 | 0.4753 |
| | 0.5155 | 0.3631 | 0.5595 |
| | 0.5634 | 0.3998 | 0.6096 |
| | 0.5339 | 0.3784 | 0.5802 |
| | 0.4903 | 0.3495 | 0.5208 |
| | 0.6147 | 0.4456 | 0.6442 |
| L-features | 0.7080 | 0.5238 | 0.7271 |
| C-features | 0.6468 | 0.4706 | 0.6788 |
| Overall | **0.8354** | **0.6467** | **0.8401** |

The best values are in bold.

As Table 5 shows, using more features improves performance over using a single feature, particularly for the methods that use only luminance or only chromatic features. Specifically, the SROCC, KROCC, and PLCC values of the method using luminance features are 0.7080, 0.5238, and 0.7271, respectively, which are 0.0933, 0.0782, and 0.0829 higher than those of the best-performing single-feature method, an improvement of approximately 15%. When all features are used, the proposed method reaches SROCC, KROCC, and PLCC values of 0.8354, 0.6467, and 0.8401, respectively. Compared with the methods using only luminance features and only chromatic features, SROCC increases by 0.1274 and 0.1886, KROCC by 0.1229 and 0.1761, and PLCC by 0.1130 and 0.1613, respectively. This analysis indicates that, by fusing luminance and color component features, the proposed NMFC method achieves the best performance on all assessment metrics and the highest agreement between subjective and objective assessments. The experimental results show the contribution of each individual feature and demonstrate the effectiveness of multi-feature fusion in UIQA.
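The relative gains of the luminance-feature method over the best single-feature method can be checked directly from the values in Table 5. The worked arithmetic below assumes that the figure of approximately 15 refers to a relative improvement in percent; under that reading it matches the SROCC gain most closely.

$$
\frac{0.7080 - 0.6147}{0.6147} \approx 0.152, \qquad
\frac{0.5238 - 0.4456}{0.4456} \approx 0.175, \qquad
\frac{0.7271 - 0.6442}{0.6442} \approx 0.129
$$

These correspond to relative improvements of about 15.2% in SROCC, 17.5% in KROCC, and 12.9% in PLCC.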

Sensitivity to image type

To verify the generalizability of the proposed method, we conduct comparison experiments on the singly distorted synthetic datasets LIVE and TID2013, the screen content dataset SIQAD, and the multiply distorted synthetic dataset LIVEMD, comparing the proposed NMFC method with classical IQA methods such as SSIM46, PSNR47, FSIM48, and VIF49.

Table 6 gives the overall assessment results of the proposed method and six classical image quality methods on the four datasets, with the two best results for each metric on each dataset in bold. According to Table 6, the proposed method achieves competitive results on all four datasets. First, on the SIQAD dataset, the proposed method achieves the best KROCC and PLCC values and the second-best SROCC value, slightly behind SSIM, and its SROCC and PLCC values are roughly 0.2 higher than those of the worst method. On the LIVE dataset it remains competitive: its SROCC, KROCC, and PLCC values are lower only than those of the FSIM and VIF methods, and the gap is small, at 0.027, 0.0422, and 0.02 below the best results on the three metrics, respectively. On the TID2013 dataset, the proposed method achieves the second-best SROCC, behind only the FSIM method, and ranks behind FSIM and VIF in KROCC and PLCC. However, the proposed method is a no-reference method, whereas FSIM requires reference information, so the proposed method has a wider scope of application. Although FSIM and VIF surpass the proposed method on certain datasets, their performance on the SIQAD dataset is weak, leaving a significant gap to the proposed method. Finally, on the LIVEMD dataset, the proposed method performs slightly below the VIF and BIQI methods. VIF is a full-reference algorithm that relies on reference information, while the proposed method operates without any reference information and still achieves comparable results: the maximum difference in any evaluation index between the two is less than 0.035. In addition, the SROCC, KROCC, and PLCC values of the proposed method are 0.0163, 0.0319, and 0.0223 lower than those of the no-reference method BIQI.
However, considering that the proposed method is specifically designed for underwater images, a certain gap in performance is still acceptable.

Table 6.

Performance comparisons of the proposed method on natural image datasets and screen content image datasets.

| Metric | Method | LIVE | TID2013 | SIQAD | LIVEMD |
|---|---|---|---|---|---|
| SROCC | SSIM | 0.9269 | 0.6370 | **0.7521** | 0.6786 |
| | PSNR | 0.8756 | 0.5531 | 0.5608 | 0.6771 |
| | FSIM | **0.9610** | **0.8015** | 0.5819 | 0.6835 |
| | VIF | **0.9639** | 0.6679 | 0.6314 | **0.8823** |
| | SSEQ | 0.9033 | 0.6162 | 0.7287 | 0.8667 |
| | BIQI | 0.8796 | 0.4939 | 0.7320 | **0.8905** |
| | NMFC | 0.9369 | **0.6735** | **0.7458** | 0.8742 |
| KROCC | SSIM | 0.7526 | 0.4636 | **0.5543** | 0.5006 |
| | PSNR | 0.6865 | 0.4027 | 0.4226 | 0.5003 |
| | FSIM | **0.8379** | **0.6289** | 0.4250 | 0.6727 |
| | VIF | **0.8282** | **0.5147** | 0.4577 | **0.6969** |
| | SSEQ | 0.7364 | 0.4490 | 0.5393 | 0.6832 |
| | BIQI | 0.7312 | 0.3493 | 0.5436 | **0.7174** |
| | NMFC | 0.7957 | 0.4940 | **0.5559** | 0.6855 |
| PLCC | SSIM | 0.9243 | 0.6524 | 0.7461 | 0.6198 |
| | PSNR | 0.8723 | 0.5734 | 0.5508 | 0.7716 |
| | FSIM | **0.9597** | **0.8589** | 0.5902 | 0.8359 |
| | VIF | **0.9604** | **0.7720** | 0.7066 | **0.9144** |
| | SSEQ | 0.9017 | 0.6943 | 0.7704 | 0.8805 |
| | BIQI | 0.8823 | 0.5935 | **0.7740** | **0.9052** |
| | NMFC | 0.9404 | 0.7191 | **0.7830** | 0.8829 |

To further demonstrate the generalizability of the proposed method, scatter plots are used to illustrate the correlation between its predicted quality scores and the subjective scores across various image categories, including underwater images, synthetic distortion images, screen content images, and real images in the wild. Fig. 9 shows the scatter plots of predicted scores versus subjective scores on the SAUD, LIVE, SIQAD, and LIVEC datasets. Fig. 9a–c show that the predictions of the proposed method align closely with the subjective evaluation results for underwater images, synthetic distortion images, and screen content images, with most samples gathering compactly around the linear correlation line. This demonstrates that the predictions are highly consistent with human subjective perception and that the proposed method can adapt to image quality assessment in these scenarios. However, on the real-scene dataset LIVEC, the SROCC, KROCC, and PLCC values are only 0.6435, 0.4599, and 0.6660, respectively, indicating weaker performance. Fig. 9d shows that the points are relatively dispersed and do not cluster tightly around the linear correlation line, which reflects a substantial gap between subjective and objective assessments. Further improvements are therefore necessary to enhance the performance of the proposed method in authentic settings.

Figure 9.

Scatter plots of the predicted quality index Q versus the subjective scores for the test set. The x-axis is the predicted quality index Q and the y-axis is the subjective score. The red line represents the ideal linear correlation line. (a) SAUD dataset. (b) LIVE dataset. (c) SIQAD dataset. (d) LIVEC dataset.

In summary, the proposed method is robust and can largely adapt to various distortion types and to changes in image characteristics across different scenarios, demonstrating a degree of generalizability.

Stability analysis

To verify the stability of the proposed NMFC method, performance comparisons are conducted on the SAUD and UIED datasets. Fig. 10 gives box plots of the assessment results of the proposed method and other IQA methods on the two datasets. The horizontal axis represents the IQA methods, and the vertical axis represents the objective assessment scores obtained by each method for the given set of images. The shape of each box describes the distribution of the data and reflects the assessment behavior of the corresponding IQA method. As shown in Fig. 10, on both the SAUD and UIED datasets the boxes of the proposed method are smaller than those of the other IQA methods on every assessment index. A smaller box indicates less fluctuation in the method's assessment of image quality. Moreover, the distance between the upper and lower edges of the boxes of the proposed method is small and there are no outliers, which demonstrates that the proposed method is more stable than the other IQA methods in assessing the quality of underwater images. In addition, the median score of the proposed method is higher than that of the other methods, indicating better assessment accuracy. Overall, the proposed method exhibits high stability and is effective in assessing the quality of underwater images.
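The quantities that a box plot summarizes (median, quartiles, box height, whiskers, and outliers) can be computed as in the sketch below. It is an illustration under stated assumptions: the per-round SROCC values are made up, the function name `box_stats` is hypothetical, and outliers are flagged with the common 1.5 × IQR rule, which the paper does not state explicitly.

```python
from statistics import quantiles


def box_stats(values):
    """Summary statistics behind one box of a box plot."""
    q1, med, q3 = quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1  # box height: smaller means less fluctuation
    low_fence, high_fence = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    inliers = [v for v in values if low_fence <= v <= high_fence]
    return {
        "median": med,
        "q1": q1,
        "q3": q3,
        "iqr": iqr,
        "whisker_low": min(inliers),
        "whisker_high": max(inliers),
        "outliers": [v for v in values if v < low_fence or v > high_fence],
    }


# Made-up per-round SROCC values for a stable and an unstable method.
stable = [0.82, 0.83, 0.83, 0.84, 0.84, 0.84, 0.85, 0.85, 0.86]
unstable = [0.30, 0.45, 0.50, 0.52, 0.55, 0.58, 0.60, 0.65, 0.95]

stable_box = box_stats(stable)
unstable_box = box_stats(unstable)
print(stable_box)
print(unstable_box)
```

In this toy example the stable method has a much smaller interquartile range, a higher median, and no outliers, which is the pattern the paragraph above attributes to the proposed method in Fig. 10.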

Figure 10.

Box plot of IQA methods on SAUD and UIED. (a–c) SAUD database. (d–f) UIED database.

Conclusion

In this paper, a UIQA method based on color space multi-feature fusion is proposed. The proposed method extracts morphological features, histogram features, moment statistics, and other features from the image after color space transformation and performs multi-feature fusion to assess the quality of underwater images. Experimental results demonstrate that the proposed method achieves high accuracy and robustness for UIQA and is consistent with human subjective perception. In addition, the method can meet the demands of both natural image and screen content IQA.

The method proposed in this paper relies on handcrafted features and overlooks the impact of complex real distortions on image quality in real scenes, which makes evaluating image quality in realistic environments challenging. Improving the performance of the method in such settings is the primary objective of future research. To address this issue, we will focus on semantically mining the feature maps proposed in this paper in combination with deep neural networks, aiming at a more efficient and effective approach to image quality assessment.

Acknowledgements

The authors are grateful for the financial support provided by the National Natural Science Foundation of China (Grant No. 62101268), the National Natural Science Foundation of China (Grant No. 82204770), the Youth Science Foundation of Jiangsu Province (Grant No. BK20210696), the Future Network Scientific Research Fund Project (Grant No. FNSRFP-2021-YB-24), and the Postgraduate Research & Practice Innovation Program of Jiangsu Province (SJCX230573).

Author contributions

Conceived and designed the experiments: X.Y. and T.C. Performed the experiments: X.Y. and T.C. Analyzed the data: T.C., N.L. Wrote and reviewed the paper: X.Y., T.C., N.L., T.W., G.J. Approved the final version of the paper: X.Y., T.C., G.J., T.W.

Data availability

Data supporting the results of this study are available from the corresponding author upon reasonable request, including source code and experimental results.

Competing interests

The authors declare no competing interests.

Footnotes

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

These authors contributed equally: Tianhai Chen and Xichen Yang.

Contributor Information

Xichen Yang, Email: xichen_yang@njnu.edu.cn.

Tianshu Wang, Email: wangtianshu@njucm.edu.cn.

References

- 1. Zhou J, Yang T, Zhang W. Underwater vision enhancement technologies: A comprehensive review, challenges, and recent trends. Appl. Intell. 2023;53:3594–3621. doi: 10.1007/s10489-022-03767-y.

- 2. Kang Y, et al. A perception-aware decomposition and fusion framework for underwater image enhancement. IEEE Trans. Circuits Syst. Video Technol. 2022;33:988–1002. doi: 10.1109/TCSVT.2022.3208100.

- 3. Fu X, Cao X. Underwater image enhancement with global-local networks and compressed-histogram equalization. Signal Process. Image Commun. 2020;86:115892. doi: 10.1016/j.image.2020.115892.

- 4. Zhou J, et al. Underwater camera: Improving visual perception via adaptive dark pixel prior and color correction. Int. J. Comput. Vis. 2023. doi: 10.1007/s11263-023-01853-3.

- 5. Zhou J, et al. Ugif-net: An efficient fully guided information flow network for underwater image enhancement. IEEE Trans. Geosci. Remote Sens. 2023;61:1–17. doi: 10.1109/TGRS.2023.3293912.

- 6. Zhou J, Sun J, Zhang W, Lin Z. Multi-view underwater image enhancement method via embedded fusion mechanism. Eng. Appl. Artif. Intell. 2023;121:105946. doi: 10.1016/j.engappai.2023.105946.

- 7. Zhou J, Pang L, Zhang D, Zhang W. Underwater image enhancement method via multi-interval subhistogram perspective equalization. IEEE J. Ocean. Eng. 2023;48:474–488. doi: 10.1109/JOE.2022.3223733.

- 8. Zhou J, Zhang D, Zhang W. Cross-view enhancement network for underwater images. Eng. Appl. Artif. Intell. 2023;121:105952. doi: 10.1016/j.engappai.2023.105952.

- 9. Zhang D, et al. Rex-net: A reflectance-guided underwater image enhancement network for extreme scenarios. Expert Syst. Appl. 2023;231:120842. doi: 10.1016/j.eswa.2023.120842.

- 10. Jiang G-Y, Huang D-J, Wang X, Yu M. Overview on image quality assessment methods. J. Electron. Inf. Technol. 2010;32:219–226. doi: 10.3724/SP.J.1146.2009.00091.

- 11. Wang Z. Review of no-reference image quality assessment. Acta Autom. Sin. 2015;41:1062–1079.

- 12. Zhang W, Ma K, Zhai G, Yang X. Uncertainty-aware blind image quality assessment in the laboratory and wild. IEEE Trans. Image Process. 2021;30:3474–3486. doi: 10.1109/TIP.2021.3061932.

- 13. Pan Z, et al. Vcrnet: Visual compensation restoration network for no-reference image quality assessment. IEEE Trans. Image Process. 2022;31:1613–1627. doi: 10.1109/TIP.2022.3144892.

- 14. Moorthy AK, Bovik AC. A two-step framework for constructing blind image quality indices. IEEE Signal Process. Lett. 2010;17:513–516. doi: 10.1109/LSP.2010.2043888.

- 15. Li Q, Lin W, Fang Y. No-reference quality assessment for multiply-distorted images in gradient domain. IEEE Signal Process. Lett. 2016;23:541–545. doi: 10.1109/LSP.2016.2537321.

- 16. Liu L, Liu B, Huang H, Bovik AC. No-reference image quality assessment based on spatial and spectral entropies. Signal Process. Image Commun. 2014;29:856–863. doi: 10.1016/j.image.2014.06.006.

- 17. Gu K, Tao D, Qiao J-F, Lin W. Learning a no-reference quality assessment model of enhanced images with big data. IEEE Trans. Neural Netw. Learn. Syst. 2017;29:1301–1313. doi: 10.1109/TNNLS.2017.2649101.

- 18. Mittal A, Moorthy AK, Bovik AC. No-reference image quality assessment in the spatial domain. IEEE Trans. Image Process. 2012;21:4695–4708. doi: 10.1109/TIP.2012.2214050.

- 19. Mittal A, Soundararajan R, Bovik AC. Making a “completely blind” image quality analyzer. IEEE Signal Process. Lett. 2012;20:209–212. doi: 10.1109/LSP.2012.2227726.

- 20. Zhang L, Zhang L, Bovik AC. A feature-enriched completely blind image quality evaluator. IEEE Trans. Image Process. 2015;24:2579–2591. doi: 10.1109/TIP.2015.2426416.

- 21. Kang, L., Ye, P., Li, Y. & Doermann, D. Convolutional neural networks for no-reference image quality assessment. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 1733–1740 (2014).

- 22. Zhang W, Ma K, Yan J, Deng D, Wang Z. Blind image quality assessment using a deep bilinear convolutional neural network. IEEE Trans. Circuits Syst. Video Technol. 2018;30:36–47. doi: 10.1109/TCSVT.2018.2886771.

- 23. Su, S. et al. Blindly assess image quality in the wild guided by a self-adaptive hyper network. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 3667–3676 (2020).

- 24. You, J. & Korhonen, J. Transformer for image quality assessment. In 2021 IEEE International Conference on Image Processing (ICIP). 1389–1393 (2021).

- 25. Yang, S. et al. Maniqa: Multi-dimension attention network for no-reference image quality assessment. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 1191–1200 (2022).

- 26. Yang M, Sowmya A. An underwater color image quality evaluation metric. IEEE Trans. Image Process. 2015;24:6062–6071. doi: 10.1109/TIP.2015.2491020.

- 27. Panetta K, Gao C, Agaian S. Human-visual-system-inspired underwater image quality measures. IEEE J. Ocean. Eng. 2016;41:541–551. doi: 10.1109/JOE.2015.2469915.

- 28. Yang N, et al. A reference-free underwater image quality assessment metric in frequency domain. Signal Process. Image Commun. 2021;94:116218. doi: 10.1016/j.image.2021.116218.

- 29. Zheng Y, Chen W, Lin R, Zhao T, Le Callet P. Uif: An objective quality assessment for underwater image enhancement. IEEE Trans. Image Process. 2022;31:5456–5468. doi: 10.1109/TIP.2022.3196815.

- 30. Sheikh HR, Bovik AC, De Veciana G. An information fidelity criterion for image quality assessment using natural scene statistics. IEEE Trans. Image Process. 2005;14:2117–2128. doi: 10.1109/TIP.2005.859389.

- 31. Wang Z, Chen J, Hoi SC. Deep learning for image super-resolution: A survey. IEEE Trans. Pattern Anal. Mach. Intell. 2020;43:3365–3387. doi: 10.1109/TPAMI.2020.2982166.

- 32. Jiang Q, Gu Y, Li C, Cong R, Shao F. Underwater image enhancement quality evaluation: Benchmark dataset and objective metric. IEEE Trans. Circuits Syst. Video Technol. 2022;32:5959–5974. doi: 10.1109/TCSVT.2022.3164918.

- 33. Sheikh, H. Live Image Quality Assessment Database Release 2. http://live.ece.utexas.edu/research/quality (2005).

- 34. Ponomarenko N, et al. Image database tid2013: Peculiarities, results and perspectives. Signal Process. Image Commun. 2015;30:57–77. doi: 10.1016/j.image.2014.10.009.

- 35. Jayaraman, D., Mittal, A., Moorthy, A. K. & Bovik, A. C. Objective quality assessment of multiply distorted images. In 2012 Conference Record of the Forty Sixth Asilomar Conference on Signals, Systems and Computers (ASILOMAR). 1693–1697. doi: 10.1109/ACSSC.2012.6489321 (2012).

- 36. Ghadiyaram D, Bovik AC. Massive online crowdsourced study of subjective and objective picture quality. IEEE Trans. Image Process. 2016;25:372–387. doi: 10.1109/TIP.2015.2500021.

- 37. Yang H, Fang Y, Lin W. Perceptual quality assessment of screen content images. IEEE Trans. Image Process. 2015;24:4408–4421. doi: 10.1109/TIP.2015.2465145.

- 38. Robertson AR. Historical development of CIE recommended color difference equations. Color Res. Appl. 1990;15:167–170. doi: 10.1002/col.5080150308.

- 39. Ruderman DL. The statistics of natural images. Network Comput. Neural Syst. 1994;5:517. doi: 10.1088/0954-898X_5_4_006.

- 40. Murching AM, Woods JW, Sharifi K, Leon-Garcia A. Comment on “Estimation of shape parameter for generalized gaussian distribution in subband decompositions of video”. IEEE Trans. Circuits Syst. Video Technol. 1995;5:570. doi: 10.1109/76.477073.

- 41. Joanes DN, Gill CA. Comparing measures of sample skewness and kurtosis. Statistician. 1998;47:183–189. doi: 10.1111/1467-9884.00122.

- 42. Stricker, M.A. & Orengo, M. Similarity of color images. In Electronic Imaging (1995).

- 43. Ojala T, Pietikainen M, Maenpaa T. Multiresolution gray-scale and rotation invariant texture classification with local binary patterns. IEEE Trans. Pattern Anal. Mach. Intell. 2002;24:971–987. doi: 10.1109/TPAMI.2002.1017623.

- 44. Chang, C.-C. & Lin, C.-J. Libsvm: A library for support vector machines. ACM Trans. Intell. Syst. Technol. 2 (2011).

- 45. Bradley RA, Terry ME. Rank analysis of incomplete block designs: I. The method of paired comparisons. Biometrika. 1952;39:324–345.

- 46. Wang Z, Bovik AC, Sheikh HR, Simoncelli EP. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004;13:600–612. doi: 10.1109/TIP.2003.819861.

- 47. Avcıbaş, I. S., Sankur, B. l. & Sayood, K. Statistical evaluation of image quality measures. J. Electron. Imaging 11, 206–223 (2002).

- 48. Zhang L, Zhang L, Mou X, Zhang D. Fsim: A feature similarity index for image quality assessment. IEEE Trans. Image Process. 2011;20:2378–2386. doi: 10.1109/TIP.2011.2109730.

- 49. Sheikh HR, Bovik AC. Image information and visual quality. IEEE Trans. Image Process. 2006;15:430–444. doi: 10.1109/TIP.2005.859378.
