Abstract

This article presents new teaching methodologies implemented in subjects in the Ground Engineering Area. Specifically, it focuses on a series of activities carried out due to the appearance of the COVID-19 pandemic that resulted in restrictions on class attendance. The new teaching methodologies brought about substantial changes in the way students learn and are assessed. For the practice sessions, a series of videos were prepared so students could attend and take part in the laboratory practices remotely. As regards the final theory exam, a comprehensive multiple-choice question bank was made available to students prior to the exam to consolidate the concepts seen in the master classes, which we call training and learning. We evaluated the impact of these new methodologies, implemented during two academic years, through the analysis of voluntary and anonymous student surveys and a series of indicators related to the results of the final exams. After analyzing the impact of the new teaching methodologies, we conclude that students are positive about the video experience for laboratory practices, but only as a complementary activity to in-person laboratory sessions. The students also stated that they would like the multiple-choice question bank to continue to be available in successive academic years. Improvements in the final grades of the theory exams demonstrate the success of this new teaching methodology.

Keywords: Question bank, Training and learning methodology, Online teaching, COVID-19, Pandemic, Laboratory practices, Videos, Online multiple-choice exam

1. Introduction and objectives

Bachelor's and Master's degrees in Engineering, Health, and Experimental Sciences, among other areas, include many subjects that are eminently practical [1,2]. Students learn much of the theoretical and practical content taught in the classroom through experimentation in laboratories. In laboratory teaching sessions, students can interact with equipment, materials, teachers, and other classmates in a different way than in master class sessions [3].

However, the appearance of the COVID-19 pandemic forced universities to propose new methodologies for teaching remotely [4], including the laboratory practices [5] typical of engineering studies. The closure of face-to-face universities led to the rapid adoption of distance education based on e-learning [6], although blended approaches had already started to become widespread before the pandemic [7]. For these methodologies to succeed, a careful design and a good selection of resources and learning materials are needed [8], always keeping in mind the benefits and the pedagogical pitfalls inherent to virtual tools [9–12]. The decrease in face-to-face experiences may put students at risk of losing contact with everyday life and with their peers [13], increasing demotivation. In this sense, we as teachers face the challenge of adapting and modifying our instructional methodologies to provide an attractive and quality learning experience [14–16].

In the field of Ground Engineering, which encompasses a multidisciplinary area including applied geology, the physics of porous media, and construction engineering [17,18], many subjects apply mechanical and descriptive knowledge of the ground (soil mechanics) to the design of foundations and retaining structures (geotechnics or geotechnical engineering). We chose subjects from the Ground Engineering Area of the Mining and Civil Engineering Department of the "Higher Technical School of Civil Engineering and Mining Engineering" (ETSICCPyM) at the Polytechnic University of Cartagena (UPCT) to implement the new methods described and analyzed in this study. These subjects are summarized in Table 1.

Table 1.

Ground Engineering Area subjects in which the new teaching methodologies were implemented.

| Identifier | Subject (degree acronym) | Degree (Bachelor/Master) | Academic year |
|---|---|---|---|
| 1 | Geotechnics (GIC)ᵃ | Bachelor's degree in Civil Engineering | 2020–21 |
| 1 | Soil and Rock Mechanics (GIC)ᵃ | Bachelor's degree in Civil Engineering | 2021–22 |
| 2 | Geotechnics and Foundations (MICCP) | Master's degree in Civil Engineering | 2020–21, 2021–22 |
| 3 | Geotechnics (GFA) | Bachelor's degree in Fundamentals of Architecture | 2021–22 |
| 4 | Advanced Soil Mechanics (MCIETAT) | Master's degree in Water and Ground Science & Technology | 2021–22 |

ᵃ It is the same subject with a different name in the 2021–22 academic year [19].

From the total of 23.5 ECTS these 4 subjects provide, the average percentage of credits corresponding to laboratory hours (also including computer lab practice) is 23.4%, which shows the highly practical approach of these subjects.

The purpose of this work is to present two teaching methods adopted in the activities of Ground Engineering subjects as a joint response to two problems: the restrictions on face-to-face classes caused by the COVID-19 pandemic [20–22] (which affected access to the laboratories during the academic years 2020–21 and 2021–22) and the low number of students who passed or had good grades in theoretical content assessments in previous courses. The measures consisted of replacing face-to-face attendance at laboratory practices with videos and creating a multiple-choice question bank to help students prepare for the final theory exam. Both activities were accessible on the teacher-student digital communication platform of the UPCT, which allowed them to be carried out online (Virtual Classroom, https://aulavirtual.upct.es/mod/book/view.php?id=122005).

In order to know the opinion and satisfaction of students with the methodologies implemented, voluntary and anonymous surveys were carried out during the academic years 2020-21 and 2021–22. Students rated (among other questions) their motivation for the new activities, whether they felt they were a good way to adapt teaching to the online conditions imposed by the pandemic, and whether the new teaching materials helped them to better understand the procedures and theoretical concepts. At the same time, students were free to leave comments expressing any complaints or suggestions for improvement. Additionally, the evaluation results of the final theory exams in the academic years 2018-19 and 2019–2020 (prior to the pandemic), and 2020-21 and 2021–22 (during the pandemic) have been compared to reflect the impact of the new methodologies on the academic performance of students.

It is interesting to note that similar experiences were carried out, also during the pandemic, for the emergency transfer from face-to-face teaching to distance learning of Chemistry Laboratory courses in the Bachelor's degree in Pharmacy [23], General Chemistry undergraduate courses for non-science majors [24], and courses in Mechanics of Structures [25], concluding that the adaptations, besides having been successful during the health crisis, have also resulted in new tools that could be very useful in the future [23].

The article is organized as follows. First, the new methodologies for remote teaching, the subjects involved, and the implementation of the activities in the daily lives of the students are presented and described. The following section describes how the materials and contents necessary to implement the new methodologies were prepared. Subsequently, the tools used by professors to assess student performance and gauge student satisfaction are presented, with discussion and analysis in the following section. Finally, the last section summarizes the main conclusions derived from this work.

2. Description of teaching methodologies

2.1. Laboratory practice videos

Unlike the alternatives promoted to teach master classes (live-streaming, with slides, and camera focused on the whiteboard) through specific applications and software [26], laboratory practice classes required intermittent camera focusing from different scenarios or parts of the Soil Mechanics Lab at different times to capture each detail and phase of the tests during the practice. This made broadcasting unfeasible through the means available to professors at the UPCT. Recording the practice sessions was proposed as a solution to this problem, and the professors of the subjects carried this out. During the 2020-21 academic year, students did not have access to the Computer Lab either (this was not the case in 2021–22), so computer practices were streamed and recorded to give students the opportunity to view the videos as many times as they wished.

A total of 8 videos were made of laboratory practices and 5 of computer practices (Table 2), which were uploaded to the UPCT multimedia site (UPCTmedia, https://media.upct.es/). URL links to the videos can be found in the Supplementary Material.

Table 2.

Recordings of laboratory and computer practices and the subjects in which they were used.

| Title of the video-practice | Subjects 2020–21 | Subjects 2021–22 | Length |
|---|---|---|---|
| Granulometric analysis (Soil Mechanics Lab) | 1 | 1, 3, 4 | 13 min |
| Atterberg Limits (Soil Mechanics Lab) | 1 | 1, 3, 4 | 37 min |
| Determination of density, porosity, and specific weight of the particles (Soil Mechanics Lab) | 1 | 1, 3, 4 | 7 min |
| Constant head permeability test (Soil Mechanics Lab) | 1 | 1, 4 | 11 min |
| Proctor test (Soil Mechanics Lab) | 1 | 1, 3, 4 | 30 min |
| Oedometer test (Soil Mechanics Lab) | 1 | 1, 4 | 32 min |
| Unconfined compression test (Soil Mechanics Lab) | 1 | 1, 4 | 28 min |
| Direct shear test (Soil Mechanics Lab) | 1 | 1, 3, 4 | 69 min |
| Soil consolidation (Computer Lab) | 1 | | 69 min |
| Seepage flow (Computer Lab) | 1 | | 92 min |
| Diaphragm walls and anchors (Computer Lab) | 2 | | 65 min |
| Shallow foundations (Computer Lab) | 2 | | 65 min |
| Deep foundations (Computer Lab) | 2 | | 92 min |

In the Soil Mechanics Lab videos, each recording began with an explanation of the test (the geomechanical or descriptive parameters determined: soil moisture, granulometry, plasticity, internal friction angle, oedometric modulus, etc.), its fields of application (soil classification, ground deformation, water flow, time-dependent settlement, etc.), and, depending on the case, some basic theoretical content to contextualize the practice, in addition to the standards guiding the test. Subsequently, the tests were carried out according to the procedures described in the standard and were shortened in the final edit of the recording. Finally, explanations were given on how to process the data to fill in the laboratory reports necessary to pass the practice activities.

Each recording (see Table 2 or the Supplementary Material of this paper) was uploaded to the UPCTmedia folder corresponding to the subject that each lecturer has in their profile. From this platform, the URL links were obtained, which were then posted in the Virtual Classroom (where professors post content, activity schedules, documents, exam results, data for practices, etc.).

As a complement to viewing the video, two activities were proposed. The first was a multiple-choice quiz with unlimited attempts, available in the Virtual Classroom. It displayed the number of correct answers, and the quiz had to be retaken as many times as necessary to answer all the questions correctly. It remained open for the week after the publication of the practice. The second activity relied on the practice transcript and the files (generally a spreadsheet) containing the data obtained during the test. Students used these to complete a laboratory report, which they had to upload to the platform.

Both viewing the video and the two activities associated with the practice were scheduled according to the general planning of the subject, which the students have available from the first day of class in the Virtual Classroom. Students could make specific queries using the Virtual Classroom communication chat, by email, or through a voluntary WhatsApp [27–29] group managed by the subject lecturers.

Since attending practices is mandatory, the complete and correct submission of the laboratory reports, completing the multiple-choice quizzes, and watching the videos provide elements for the teacher to determine whether students pass or not. Passing the laboratory practice part of the course is necessary before the rest of the subject activities can be assessed (individual projects, attending seminars, field trips, group work, multiple-choice theory exams, practical problem exams, etc.).

In the Computer Lab practices, also mandatory to pass the subject, students had to correctly complete the corresponding transcript, relying on the instructions given by the professor and consulting the videos created for this purpose (Table 2) as many times as they wanted. The deadline for this type of practice was the day of the final exam (first call). If the submission was later, it would be applied to the final call.

2.2. Multiple-choice question bank and theory exam

This methodology was implemented in the Bachelor's degree in Civil Engineering (GIC) and Master's degree in Civil Engineering (MICCP), in the subjects Geotechnics (renamed Soil and Rock Mechanics in the 2021–22 academic year) and Geotechnics and Foundations, subjects 1 and 2 in Table 1, respectively. For both subjects, the application period was the academic years 2020–21 and 2021–22.

The multiple-choice exams of the theoretical contents, which in June and September 2020 (second and third calls) were carried out online in the Virtual Classroom due to attendance restrictions brought about by the COVID-19 pandemic, have remained in the successive courses in an online format, although in the 2021-22 academic year they were taken in person in the Computer Lab, also using the Virtual Classroom.

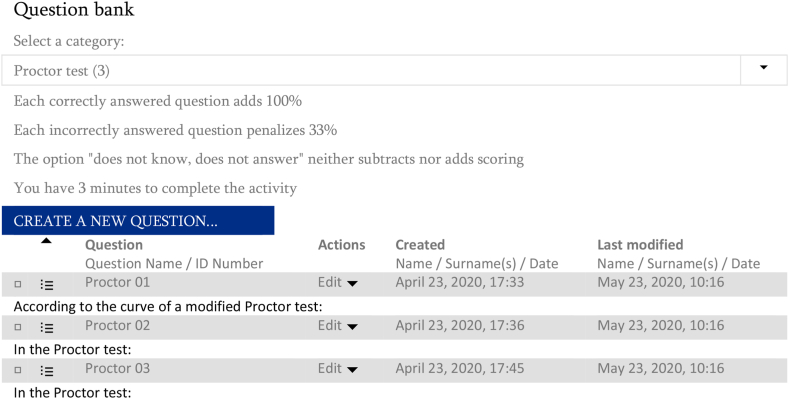

As an activity prior to taking the exam, the novelty of allowing students access to the set of multiple-choice questions of each teaching unit before the final exam was implemented in 2020–21. This question bank was made available to students at the end of each didactic unit and remained open for the rest of the academic year. Each unit contained between 10 and 40 questions (Fig. 1), depending on its contents.

Fig. 1.

Example of the Virtual Classroom question bank for the subject “Soil and Rock Mechanics” (Bachelor's degree in Civil Engineering), with the number of multiple-choice questions (and editing options) for each didactic unit.

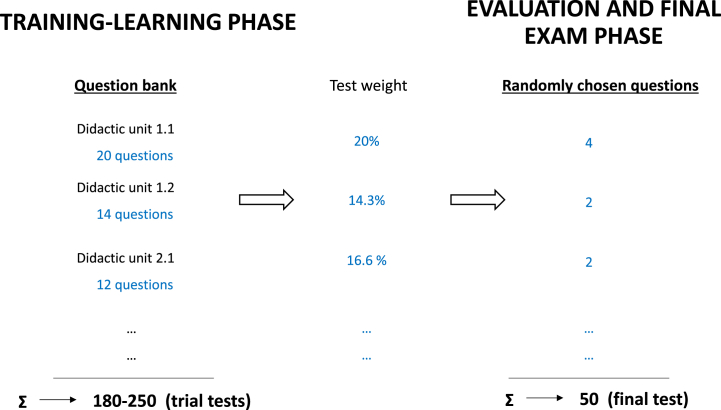

With this question bank, students were able to consolidate the theoretical contents in a training-learning phase by practicing with the questions from which an arbitrary selection would later be made to compose the final exam (assessment and final exam phase).

The new methodology was implemented with the idea of guiding students in the most relevant and important theoretical issues of each didactic unit. Previously, students were lost with so much documentation and were not able to synthesize the relevant information on their own. Each of the multiple-choice questions in the bank of a specific didactic unit had 5 possible answers: one correct (100%), three incorrect (−33.3%), and one "no answer," which did not count.

During the training-learning phase, students could access the complete quiz with all the questions as many times as they wished. At the end of a training-learning test, users could check their global scores (maximum 10) and which questions had been answered incorrectly, but not the correct answers, so they were forced to search for information and consult the bibliography to find them. The process of repeating the quizzes, together with the study of the teaching materials, reflection, and the application of critical thinking, contributed to achieving the learning objectives [30–32] and helped students prioritize the contents of each didactic unit based on their relevance, which is one of the objectives of this methodology.

The final multiple-choice theory exam, which counted for 20% of the final grade in each subject, consisted of 50 questions selected from all those generated for each didactic unit. When creating the exam with the Virtual Classroom tool, each professor decided how many questions from each didactic unit would be included. Each student took a different exam thanks to the "arbitrary selection" option, which helped to minimize the possibilities of cheating when the test was taken remotely [4,33–37].

The Virtual Classroom tool allows professors to copy the question bank from one academic year to another or transfer it to other subjects. Assessment tests can also be copied from one year to another. The application allows the contents of these two activities to be edited.

By way of illustration, Fig. 2 shows a flow chart of the implementation process of the teaching methodologies presented in this section (videos and question bank).

Fig. 2.

Implementation process flowchart of the new teaching methodologies. Panel A shows lab practice video activity. Panel B shows computer practice video activity. Panel C shows question bank and theory exam activities.

3. Developing materials and content for new teaching methodologies

In this section, we will describe how the multiple-choice activities were created and made available to the students in the Virtual Classroom, as well as the creation of the practice videos.

3.1. Multiple-choice question bank and evaluation quiz

The question bank is one of the many tools available in Virtual Classroom. It allows you to create questions of a wide variety of types, such as true or false, exact numerical result, and short answer questions, among others. In our case, we decided on multiple-choice questions, since they lead the student to reflect on the veracity (or not) and precision of a series of statements, which deal with fundamental concepts of the subject taught that the future engineer must master.

Fig. 3 shows an example with the basic functions available in the question bank. In addition, a help section on using the question bank is available at https://docs.moodle.org/402/en/Teacher_quick_guide.

Fig. 3.

Example of the question bank for the Proctor test laboratory practice (Virtual Classroom professor profile).

In this way, a total of between 180 and 250 questions per subject were created, which are made available to students in the training-learning phase, once each didactic unit is finished. The questions consist of a statement and 5 response options, with a limit to choose only one. The proportion each one counts in the score is: 100% for the correct answer, −33.3% for each of the 3 incorrect answers, and 0% for the "no answer" option. Thus, for every three incorrectly answered questions, one correctly answered question is subtracted.
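The scoring rule described above can be illustrated with a minimal sketch. The function name `quiz_score` and the example answer counts are hypothetical, and the sketch assumes that no floor is applied to negative totals; it is not the Virtual Classroom implementation, only the arithmetic stated in the text (100% per correct answer, one third subtracted per incorrect answer, nothing for "no answer").

```python
from fractions import Fraction

# Weights stated in the text; exact thirds avoid the rounding in "-33.3%".
WEIGHT_CORRECT = Fraction(1)
WEIGHT_INCORRECT = Fraction(-1, 3)
WEIGHT_NO_ANSWER = Fraction(0)


def quiz_score(n_correct, n_incorrect, n_no_answer, max_score=10):
    """Return the quiz mark on a 0..max_score scale (hypothetical helper).

    Assumption: negative totals are not clamped to zero.
    """
    n_questions = n_correct + n_incorrect + n_no_answer
    if n_questions == 0:
        raise ValueError("the quiz has no questions")
    raw = (
        n_correct * WEIGHT_CORRECT
        + n_incorrect * WEIGHT_INCORRECT
        + n_no_answer * WEIGHT_NO_ANSWER
    )
    # Scale the raw number of "net correct" questions to the maximum score.
    return float(raw * max_score / n_questions)


# Hypothetical 50-question attempt: 30 correct, 9 incorrect, 11 unanswered.
# The 9 incorrect answers cancel 3 correct ones, leaving 27 net correct.
example_mark = quiz_score(30, 9, 11)
```

With these hypothetical counts, the 27 net correct answers out of 50 scale to a mark of 5.4 out of 10.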

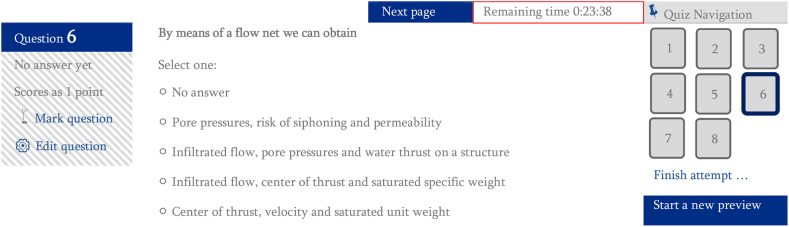

The final exam is designed with the quizzes tool of Virtual Classroom. In it, you can define numerous configuration options: timing (date and time of the exam), scores, review options, navigation options (go forward or back between questions), and the possibility of modifying the answer, among many other options. A certain number of questions from each didactic unit are loaded (in proportion to the relative importance of each unit) so that they add up to the total number required for the exam (50, as established in the teaching guide). The random selection option allows each student to take a different exam, since the tool arbitrarily compiles it from the question bank of each unit. Fig. 4 shows an example of what students see in the Virtual Classroom, while the flowchart in Fig. 5 describes the process from the training-learning phase to the final evaluation phase.

Fig. 4.

Example of what students see in the Virtual Classroom exam: questions, possible answers, remaining time, and navigation panel between the different questions.

Fig. 5.

Flowchart showing the shift from the training-learning phase to the final exam in the Virtual Classroom.

Once the final exam has been created, the maximum score is established (usually out of 10). The application performs the weighting to attribute the same value to each test-type question.
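As an illustration of the exam assembly described in this section, the following sketch draws a fixed number of questions at random from each didactic unit and gives every question the same weight. The bank contents, the per-unit quotas, and the names `build_exam` and `points_per_question` are hypothetical; the sketch does not reproduce the internal algorithm of the Virtual Classroom tool.

```python
import random


def build_exam(bank, per_unit, rng):
    """Draw per_unit[unit] distinct questions from each unit of the bank."""
    exam = []
    for unit, count in per_unit.items():
        questions = bank[unit]
        if count > len(questions):
            raise ValueError(f"not enough questions in {unit}")
        # Sampling without replacement: no question appears twice.
        exam.extend(rng.sample(questions, count))
    return exam


# Hypothetical bank: 5 didactic units with 30 questions each.
bank = {
    f"Unit {u}": [f"U{u}-Q{q}" for q in range(1, 31)] for u in range(1, 6)
}

# Hypothetical quotas chosen by the professor; they add up to 50 questions.
per_unit = {"Unit 1": 8, "Unit 2": 12, "Unit 3": 10, "Unit 4": 10, "Unit 5": 10}

# Each student gets an independent draw (a different seed per student here).
exam_student_a = build_exam(bank, per_unit, random.Random(1))
exam_student_b = build_exam(bank, per_unit, random.Random(2))

# Equal weighting: with a maximum score of 10, each question is worth 10/50.
max_score = 10
points_per_question = max_score / len(exam_student_a)
```

Keeping the quotas per unit fixed while randomizing the questions within each unit means that all students are examined on the same balance of content, even though their individual exams differ.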

3.2. Laboratory practice videos

Before starting the lab practice, pre-recording preparation is necessary, which takes a long time. Thus, the soil samples to be analyzed must be prepared, along with the necessary tools to carry out the test (including a transcript of the practice prepared by the lecturers). In addition, a laptop connected to a projector is used to project the practice transcript, the results (e.g., in a spreadsheet), supporting images, notes, etc. A whiteboard is also used to display information related to the object of the practice, its phases, results, relevant theoretical aspects, etc.

For the recording of the videos, at least two lecturers were necessary, who alternated in the explanations during the different parts of the practice. The recordings usually begin with an introductory presentation, after which the practice is carried out. Next, the results are interpreted, and, finally, how to complete the laboratory report is discussed.

For the editing of the recordings and the final assembly of the videos, the free software VideoPad [38] was used. Once the final video file is obtained, it is uploaded to the UPCTmedia platform, which automatically generates a URL link, so the material is available and the teaching team decides whether access is public or restricted.

URL links are posted in Virtual Classroom, together with the activities associated with the practice videos (multiple-choice quizzes and completed laboratory transcripts), as shown in Fig. 6.

Fig. 6.

Example of activities and tasks associated with the Proctor test practice in the Virtual Classroom: transcript, video URL link, deadline, laboratory report submission, and multiple-choice quiz.

In the videos of computer practices, a similar procedure was followed, although they did not require further editing since, in this case, the recording was made directly from the live-streamed broadcast (from the screen of the central computer in the Computer Lab).

4. Tools used to assess the new methodologies

In this section, two tools used to assess the success and achievements of the new methodologies will be presented. These are: i) voluntary student satisfaction surveys and ii) student performance indicators from the assessment results of the final theory exam.

4.1. Satisfaction surveys

The students enrolled in the subjects (Table 1) where the new teaching methodologies had been implemented were surveyed, anonymously and voluntarily, to receive their feedback through a series of questions with a 1–5 Likert scale [39,40] and with the possibility (optional) of giving their opinions or suggesting changes or improvements.

The surveys were carried out in two ways to allow students to choose the one they felt the most comfortable with: i) filling out a paper survey in the classroom or ii) in the Virtual Classroom. For both options, the students were duly informed that the surveys were completely voluntary and anonymous (they did not have to give their names if they completed them in class, and the Virtual Classroom has an option for anonymous surveys).

4.1.1. Survey preparation

Two independent surveys were created, one for the experience of the online laboratory practices and the other for the quizzes on didactic units and theory exams in the Virtual Classroom. The questions included in each survey are shown in Tables 1S and 2S of the Supplementary Material.

The first of the surveys aimed to capture students' perceptions of the online laboratory practice experience, which was composed of 6 Likert-type questions (with a score between 1 and 5) and a seventh multi-choice question (where students could mark up to 5 possible answers) (Table 1S of Supplementary Material). Table 3S of Supplementary Material shows the attributes that corresponded to the scores 1–5 in the Likert-type questions for this first survey, which also had a final section (or eighth question) where students could leave comments or suggestions if they wanted.

The questions were the same for all the subjects involved in this experience: subjects 1, 3, and 4 from Table 1, plus subject 2 for the academic year 2020–21 only. The Likert-type questions sought students’ assessment of the new teaching methodology of online practices, as well as their degree of satisfaction [41,42], while the multi-choice question was intended for students to point out what, in their opinions, were the advantages and disadvantages of the new methodology.

The second survey aimed to capture students' perceptions of the experience of the quizzes on the didactic units and the theory exam taken in the Virtual Classroom. It had a very similar structure to the first survey: 5 Likert-type questions with a score between 1 and 5. The questions in this survey were identical to those in the first survey except for question 1.4, which is specific to the online practice experience. A sixth multi-choice question was added where students could choose up to 5 answers (Table 2S of Supplementary Material). Table 1S of Supplementary Material shows the attributes that correspond to the scores 1–5 in the Likert-type questions for this second survey. A final question allowing students to leave a comment or suggestion if they wanted was also included.

4.1.2. Survey results

The voluntary surveys were given to the 120 students in the subjects and academic years in Table 1. Forty-five of them responded (37.5%). Therefore, the sample is considered to be sufficiently representative to reflect students’ perceptions of the new teaching methodologies we implemented. In this section, only the graphs with the average values (or number of students choosing an option) for the different questions (or options) of the surveys are shown, leaving their analysis for the Results and Discussion section.

The results of each of the two surveys have been grouped in three different ways: i) by subjects, ii) by academic year, and iii) by overall results.

The feedback and suggestions for improvement proposed by the students in the free comment question are collected in the Supplementary Material.

-

i)

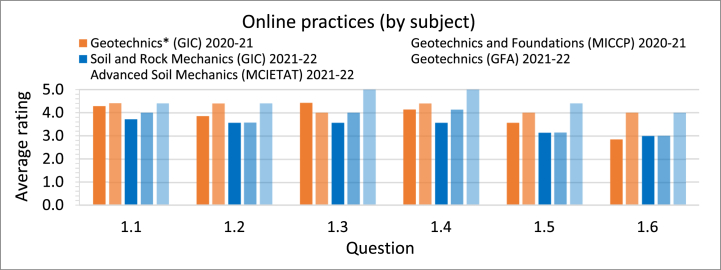

Survey results by subjects

Figs. 7 and 8 show the results of the survey for the five subjects that, to date, have employed online practices. Of these five subjects, two correspond to the academic year 2020–21 and three to 2021–22. Geotechnics (GIC) was the only subject that was taught using this method in both 2020–21 and 2021–22 (when it was renamed Soil and Rock Mechanics (GIC) [19]). Geotechnics and Foundations (MICCP) had online practices during the 2020–21 academic year but not in 2021–22, when this activity went back to face-to-face practices. Finally, Geotechnics (GFA) and Advanced Soil Mechanics (MCIETAT) were added to the experience in 2021–22, due to the positive reception of this methodology during the hardest year of the pandemic (2020–21).

Fig. 7.

Average ratings (by subject) of the Likert-type questions (online practice survey).

Fig. 8.

Number of students who chose each of the different options (by subject) in the multi-choice question (online practice survey).

Fig. 7 shows the average ratings for each of the 6 Likert-type questions in this survey, while Fig. 8 shows the number of students who chose each of the 5 options available in the multi-choice question.

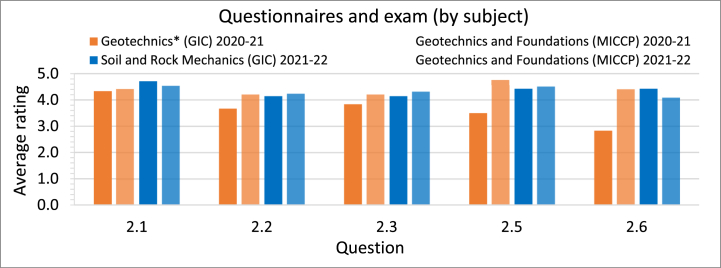

Figs. 9 and 10 show the results of the survey for the four subjects that, to date, have employed the quizzes on didactic units and the theory exam in the Virtual Classroom. In this case, they are the same two subjects for the 2020–21 and 2021–22 academic years: Geotechnics and Foundations (MICCP) and Geotechnics (GIC), the latter renamed Soil and Rock Mechanics (GIC) in 2021–22.

Fig. 9.

Average ratings (by subject) of the Likert-type questions (quizzes on didactic units and theory exam survey).

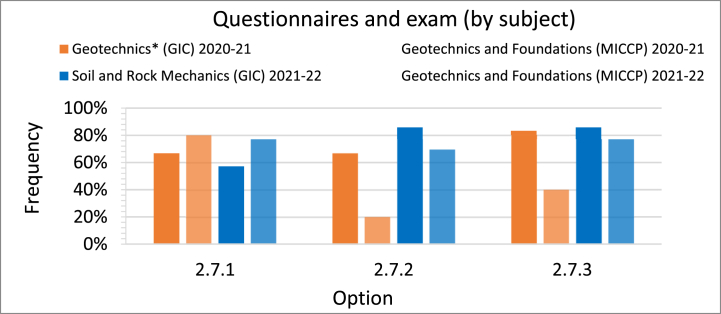

Fig. 10.

Number of students who chose each of the different options (by subject) in the multi-choice question (quizzes on didactic units and theory exam survey).

Fig. 9 shows the average ratings for each of the 5 Likert-type questions of this survey, while Fig. 10 shows the number of students who chose each of the 5 options available in the multi-choice question.

ii) Survey results by academic year and overall

This section presents the results grouped by academic year and the global results of the surveys. At this point, it is important to bear in mind that the global value differs from the arithmetic average of 2020-21 and 2021-22 since all the mean values obtained in this paper are weighted by the number of students participating in each subject or, where appropriate, academic year.
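A short sketch may help to show why the global value differs from the plain average of the two academic years. The ratings and respondent counts below are hypothetical (they are not survey data from this study), and `weighted_mean` is an illustrative helper name.

```python
def weighted_mean(means, counts):
    """Mean of group means, weighted by the number of respondents per group."""
    if len(means) != len(counts):
        raise ValueError("means and counts must have the same length")
    total = sum(counts)
    if total == 0:
        raise ValueError("no respondents")
    return sum(m * n for m, n in zip(means, counts)) / total


# Hypothetical average ratings for one Likert question in each academic year.
year_means = [4.0, 3.0]   # 2020-21, 2021-22
respondents = [10, 30]    # students answering in each year

global_rating = weighted_mean(year_means, respondents)
simple_average = sum(year_means) / len(year_means)
```

In this hypothetical case the weighted global rating is 3.25, whereas the unweighted average of the two years is 3.5, because the year with more respondents pulls the global value towards its own mean.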

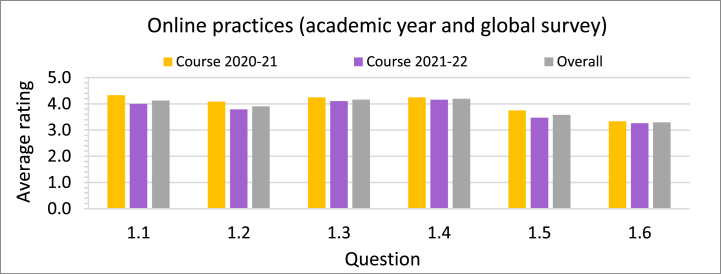

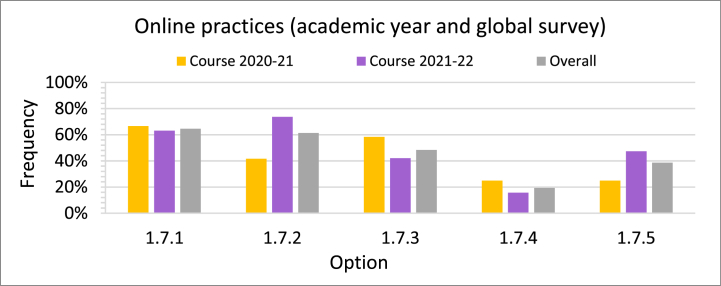

Figs. 11 and 12 show the results of the survey on the online practice experience for the academic years 2020–21 and 2021–22 and overall. Fig. 11 shows the average ratings for each of the 6 Likert-type questions of this survey, and Fig. 12 shows the number of students who chose each of the 5 options available in the multi-choice question.

Fig. 11.

Average ratings (by academic year and overall) of the Likert-type questions (online practice survey).

Fig. 12.

Number of students who chose each of the different options (by academic year and overall) in the multi-choice question (online practice survey).

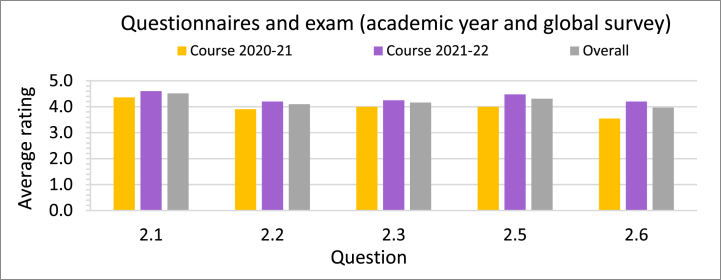

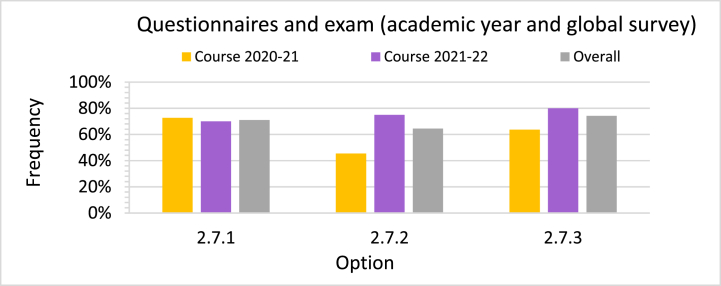

Figs. 13 and 14 show the results of the survey about the quizzes on didactic units and the theory exam in the Virtual Classroom for the academic years 2020–21 and 2021–22 and overall. Fig. 13 shows the average ratings for each of the 5 Likert-type questions, while Fig. 14 shows the number of students who chose each of the 5 options available in the multi-choice question.

Fig. 13.

Average ratings (by academic year and overall) of the Likert-type questions (quizzes on didactic units and theory exam survey).

Fig. 14.

Number of students who chose each of the different options (by academic year and overall) in the multi-choice question (quizzes on didactic units and theory exam survey).

Ethical approval

All the procedures carried out in this study with student surveys were conducted in accordance with the ethical standards of the UPCT Research Ethics Committee (https://www.upct.es/vicerrectoradoinvestigacion/es/etica/comite-de-etica-en-la-investigacion).

Informed consent was obtained from all students for the surveys.

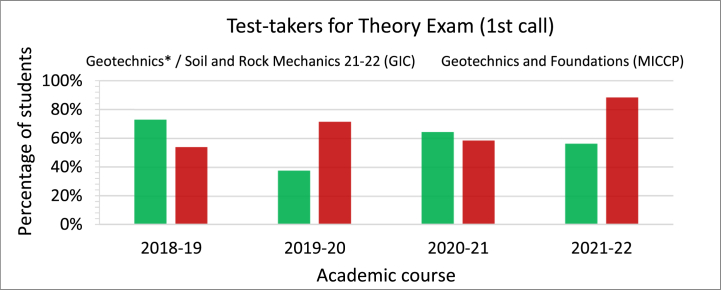

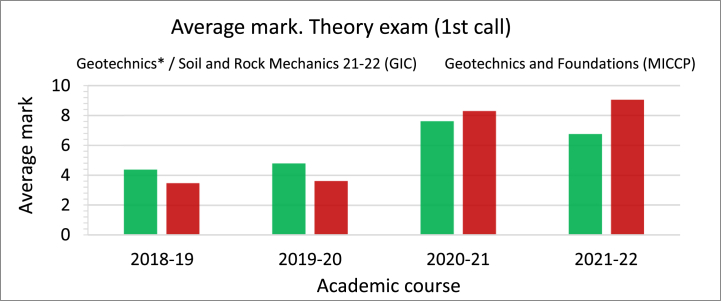

4.2. Impact of teaching methodologies on students’ grades: final theory exam indicators

In this section, a series of indicators of the influence of the new teaching methodologies on students’ grades will be shown. The analysis is included in the Results and Discussion section. This study has focused on the subjects Geotechnics and Foundations (MICCP) and Geotechnics (GIC), the latter renamed Soil and Rock Mechanics (GIC) in the 2021-22 academic year [19] since they are the only subjects to date that have implemented the new teaching methods for more than one academic year.

Before presenting the indicators related to the final theory exam, we offer a brief clarification about the assessment of online practices. This activity has always been assessed as pass or fail. Students who attend the practice sessions and submit the laboratory report correctly pass. With this pass, students are always given the highest grade for this activity, so in this case, it is not possible to think of indicators to analyze the effect of this methodology on the students' grades.

As for the impact of the quizzes on the didactic units and the theory exams in the Virtual Classroom, Figs. 15–18 show a series of indicators related to the final theory exam, since it is directly related to this part of the assessment of the subject. For this analysis, the last four academic years were chosen (two prior to the implementation of the new teaching methodology and two with the experience already implemented). In addition, to more clearly demonstrate the impact of the quizzes and exam preparation on the theory exams, the study was limited to the first call of the theory exam (January).

Fig. 15. Evolution of the percentage of students who took the theory exam in the first call.

Fig. 16. Evolution of the students who passed out of those who took the theory exam in the first call.

Fig. 17. Evolution of the students who passed out of those enrolled (first call of the theory exam).

Fig. 18. Average mark evolution for the theory exam (first call).

Thus, Fig. 15 shows the evolution of the percentage of students who took the theory exam in the first call for the two subjects, analyzed during the last four academic years.

The evolution of the percentage of students who passed out of the students who took the theory exam in the first call is shown in Fig. 16.

Fig. 17 shows the evolution of the percentage of students who passed the theory exam in the first call out of the total number of students enrolled.

Lastly, Fig. 18 shows the evolution of the average mark in the first call of the theory exam.
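To make the definitions of the four indicators concrete, the following minimal Python sketch computes them for a single cohort. It is only an illustration: the class and function names and the example marks are hypothetical and are not taken from the study's data. Two assumptions are made: the average mark is computed only over students who sat the exam, and a pass requires at least 4 points out of 10, the threshold stated later in the text.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Optional, Sequence

PASS_MARK = 4.0  # minimum mark (out of 10) to pass the theory exam, as stated in the text


@dataclass
class Indicators:
    took_pct: float                # Fig. 15: % of enrolled students who took the exam
    passed_of_took_pct: float      # Fig. 16: % of exam takers who passed
    passed_of_enrolled_pct: float  # Fig. 17: % of enrolled students who passed
    average_mark: float            # Fig. 18: mean mark (assumed: among exam takers)


def first_call_indicators(marks: Sequence[Optional[float]]) -> Indicators:
    """Compute the indicators; `marks` has one entry per enrolled student,
    with None meaning the student did not sit the first-call exam."""
    enrolled = len(marks)
    taken = [m for m in marks if m is not None]
    if enrolled == 0 or not taken:
        raise ValueError("need at least one enrolled student who took the exam")
    passed = sum(1 for m in taken if m >= PASS_MARK)
    return Indicators(
        took_pct=100 * len(taken) / enrolled,
        passed_of_took_pct=100 * passed / len(taken),
        passed_of_enrolled_pct=100 * passed / enrolled,
        average_mark=mean(taken),
    )


# Hypothetical cohort: 10 enrolled students, 8 of whom sat the exam.
example = first_call_indicators([7.5, 3.0, None, 8.0, 5.5, 9.0, None, 4.0, 6.0, 2.5])
print(example)
```

In this made-up cohort, 80% of enrolled students take the exam, 75% of those pass, and 60% of all enrolled students pass, which shows how the indicator in Fig. 17 combines those in Fig. 15 and Fig. 16.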

4.3. Limitations, strengths and future prospects

The sudden appearance of the COVID-19 pandemic, in the middle of the second semester of the 2020-21 academic year, disrupted the normal course of the classes, which had to be adapted to remote teaching on the fly, with no time margin. In addition, the restrictions affecting face-to-face attendance were fully extended to the 2021-22 academic year at the UPCT. As a result, the teaching team could not set up control groups, since all students had to take the subjects under the distance modalities implemented. Moreover, no student satisfaction surveys on previous teaching methodologies were conducted in the years prior to the pandemic, so this information is not available for comparison.

Nevertheless, the overall improvement in the performance indicators allows us to infer, to some extent, the effectiveness of the new activities implemented in the 2020-21 and 2021-22 academic years compared with 2018-19 and 2019-20, when they did not exist. The teaching team is aware, however, that this comparison only covers academic results (one way of measuring student learning) and not student satisfaction.

The teaching team is working on renewing, in the short to medium term, the materials used for both teaching methodologies (question bank and videos), and on implementing slight modifications: on the one hand, shortening and restructuring the videos (to help students maintain concentration and better assimilate the concepts taught [43,44]) and, on the other, introducing small intermediate evaluations throughout the semester that allow us to track the improvement in students' grades over the period. One modification is already in place: the laboratory practices are once again fully face-to-face, leaving the videos as reinforcement material. In addition, the monitoring and analysis of student satisfaction is a priority, and various options are being considered to allow the creation of control groups.

5. Results and discussion

This section analyzes the results of the surveys (Fig. 7, Fig. 8, Fig. 9, Fig. 10, Fig. 11, Fig. 12, Fig. 13, Fig. 14) and the evaluation indicators (Fig. 15, Fig. 16, Fig. 17, Fig. 18) presented in the previous section.

5.1. Analysis of survey results

5.1.1. Online practice activities

5.1.1.1. Overall surveys

The results of the Likert-type questions show an overall positive perception of the experience (Fig. 11). This way of complementing teaching and learning (question 1.1) received an average rating of 4.13 (the Likert-type questions were rated from 1 to 5). The question on the increase in students' motivation in the subject (question 1.2) obtained an overall rating of 3.90, while the statement that the methodology helps students better understand practice procedures (question 1.3) obtained 4.16. Especially important is the question on whether the methodology is a good adaptation to the attendance restrictions imposed by COVID-19 (question 1.4), since it received the highest score of the Likert-type questions in this survey, 4.19. Finally, on whether the students think it would be suitable to apply this methodology in other subjects of their Bachelor's or Master's degrees, and whether they would like this to happen (questions 1.5 and 1.6), the results also showed general acceptance, with 3.58 and 3.29, respectively, although these were the lowest ratings in this survey.

Concerning the multi-choice question (Fig. 12), the students considered that the new online practice methodology had more advantages (questions 1.7.1 and 1.7.2) than disadvantages (questions 1.7.3 and 1.7.4). Being able to watch the videos as many times as they wanted was an advantage for 65% of the students when it came to better understanding the concepts and procedures of the practices (question 1.7.1), and 61% of the students considered that it helped them to better complete the practice report (question 1.7.2). However, not being able to do the practice in the laboratory was considered a disadvantage by 48% of those surveyed when it came to understanding the concepts and fundamentals of the practices (question 1.7.3). This suggests that the practices should be carried out in person again in the future, as long as the health conditions imposed by COVID-19 (or of any other nature) allow. The videos of the practices will remain available in the Virtual Classroom as a content reinforcement activity and to compensate for justified absences from any of the face-to-face practices. Regarding question 1.7.4, only 19% of the students considered that not attending the laboratory had a negative impact when completing the practice report.

5.1.1.2. Surveys by academic year

The main point to add to the overall results of this activity is that students had a better perception in 2020–21 (the hardest year of the pandemic, with total cancellation of face-to-face classes) than in 2021–22 (the second year, with fewer restrictions on face-to-face teaching). While the overall trends were maintained, the average rating of the Likert-type questions was 4.00 for the 2020-21 academic year, falling to 3.80 in the 2021-22 academic year (Fig. 11).
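As a rough cross-check on the averages quoted above, the sketch below takes the six overall question averages reported for questions 1.1 to 1.6 and computes their unweighted mean, which would be expected to fall between the two yearly averages. This is only an illustrative calculation: the variable names are hypothetical, and the study's yearly averages may have been weighted by the number of respondents, which is not reproduced here.

```python
from statistics import mean

# Overall average ratings (scale 1 to 5) reported in the text for questions 1.1 to 1.6.
question_averages = {
    "1.1": 4.13,
    "1.2": 3.90,
    "1.3": 4.16,
    "1.4": 4.19,
    "1.5": 3.58,
    "1.6": 3.29,
}

# Yearly averages of the Likert-type questions reported in the text.
yearly_averages = {"2020-21": 4.00, "2021-22": 3.80}

# Unweighted mean across questions (an assumption; respondent counts are not used).
overall = mean(list(question_averages.values()))
low = min(yearly_averages.values())
high = max(yearly_averages.values())

print(f"Unweighted mean of the six questions: {overall:.3f}")
print(f"Lies between the yearly averages: {low <= overall <= high}")
```

The unweighted mean is about 3.875, which sits between the 3.80 and 4.00 reported for the two academic years, so the overall and per-year figures are mutually consistent under this simple assumption.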

In the analysis of the multi-choice question (advantages versus disadvantages), we observe trends similar to those already mentioned; that is, a slightly lower evaluation for the second year of the experience (Fig. 12). However, there is one notable exception: the perception that being able to watch the practice videos as many times as students wanted was helpful in completing the practice reports (question 1.7.2) increased considerably from the first year to the second (only 42% considered it so in 2020–21, but 74% did in 2021–22). This could be due to better adaptation to the new teaching technologies during the second year of the pandemic. Since the appearance of COVID-19 was so sudden and dramatic, students may have needed a period of adjustment to such drastic changes in such a short period of time.

5.1.1.3. Surveys by subject

In view of the average ratings of the Likert-type questions (Fig. 7), a better perception of the methodology is observed in the Master's than in the Bachelor's degree students. Thus, the highest average score was obtained in the Master's degree subject Advanced Soil Mechanics (MCIETAT), with 4.53, followed by another Master's degree subject, Geotechnics and Foundations (MICCP), with 4.20. After these were Bachelor's degree subjects. In third place was Geotechnics (GIC), with 3.86. In fourth position was Geotechnics (GFA), with 3.64. Finally, Soil and Rock Mechanics (GIC) closed the ranking with 3.43 (as it is the same subject as Geotechnics (GIC), these had a combined average of 3.64).

A certain heterogeneity among the subjects is observed (Fig. 8) in the number of students who valued the advantages or disadvantages of the methodology. In the 2020-21 academic year (the first coinciding with the pandemic), 100% of the students of the Bachelor's degree subject Geotechnics (GIC) stated that it was a disadvantage for their learning not to be able to attend the laboratory, while for the Master's subject Geotechnics and Foundations (MICCP), 100% of the students thought the online practice experience was positive.

With all the above, we conclude that Master's degree students adapted better to this methodology than Bachelor's degree students. The greater maturity of Master's students, coupled with the fact that they have a better command of the fundamental concepts needed to interpret and understand abstract representations, may explain this difference. On the other hand, undergraduate students, who have not yet mastered the basic concepts, may require more concrete and practical approaches, thus showing somewhat lower acceptance for this activity.

5.1.2. Question bank and theory exam in the Virtual Classroom

5.1.2.1. Overall surveys

This teaching methodology was rated even more highly by students than the online practices, based on the results of the Likert-type questions (Fig. 13) and the multi-choice question (Fig. 14). This way of complementing both teaching and learning (question 2.1) received an average score of 4.52, the highest of all the Likert-type questions (Fig. 13). The question related to students' motivation in the subject (question 2.2) had an overall rating of 4.10, while the statement that the methodology helped to better understand the procedures of the practice (question 2.3) was rated at 4.16. Finally, on whether the students thought it would be suitable to apply this methodology in other subjects of their Bachelor's or Master's degrees, and whether they would like this to happen (questions 2.5 and 2.6), the results also showed general acceptance, with 4.31 and 3.97, respectively. These values were substantially higher than those obtained for the online practice experience.

Regarding the multi-choice question (Fig. 14), the students considered that the new methodology of taking quizzes on didactic units and the theory exam in the Virtual Classroom had significant advantages and no disadvantages. Thus, i) being able to do the theory quizzes during the course was an advantage for 71% of the students when it came to better understanding the theoretical concepts (question 2.7.1), ii) they were an aid to focusing on the most important theoretical concepts (question 2.7.2) for 65% of the respondents, and iii) they were an advantage for 74% of the students when they prepared for the theory exam (question 2.7.3).

For all these reasons, we plan to maintain this methodology as it is today, whether or not teaching is face-to-face (as the health conditions related to COVID-19 or other circumstances may require), with a training and learning phase based on the question bank on the didactic units and a subsequent phase of final theory exam preparation. Both activities will be carried out using the Virtual Classroom tool.

5.1.2.2. Surveys by academic year

In general, the overall trends described above held for this experience, although the average ratings improved in the second year. Thus, the average rating of the Likert-type questions was 3.96 for the 2020-21 academic year, and it increased to 4.35 in 2021–22 (Fig. 13). Similarly, the number of students who valued the advantages of this methodology also increased in the second year of the experience (Fig. 14).

All this leads us to conclude that in addition to the success of the experience from the beginning (very good ratings; no disadvantages were observed on the part of the students), in the second year of COVID-19, the students were already beginning to assimilate the enormous changes that the pandemic had brought to their lives and, specifically for this study, to their learning in the university environment.

5.1.2.3. Surveys by subject

When we analyze the average ratings of the Likert-type questions (Fig. 9), a better perception of the methodology is again observed in the Master's than in the Bachelor's students. Averaged over the two academic years in which the new methodology was implemented, the Master's degree subject Geotechnics and Foundations (MICCP) scored 4.36, and the Bachelor's subject Geotechnics (GIC), renamed Soil and Rock Mechanics (GIC) in 2021–22, scored 4.00. The number of students who valued the advantages of this methodology increased in the second year of the experience (Fig. 10).

With all of the above, we observe better student adaptation to the teaching experience in the second year and a more positive perception by the Master's students.

5.2. Analysis of academic indicators

The analysis and comparison of the indicators related to the first call of the final theory exam provide objective evidence of the success of the quizzes on the didactic units and the theory exam in the Virtual Classroom.

Firstly, students' motivation in the subjects increased, as reflected in the larger share of students taking the exam in the first call (Fig. 15). In the Master's degree subject Geotechnics and Foundations (MICCP), this share reached 88.2% in the 2021-22 academic year, following the implementation of the new methodology in the 2020-21 academic year. In the Bachelor's degree subject Geotechnics (GIC), the average after the implementation (60.3%) was slightly higher than the average for the previous years (55.3%), and considerably higher than the value for the last year in which the new teaching experience was not carried out (37.5% in 2019–20).

An appreciable improvement was achieved in students' academic performance, as can be observed from the rates of students who passed the subjects, represented in Fig. 16 and Fig. 17. This is most notable in the Master's degree subject Geotechnics and Foundations (MICCP): in 2021–22, one hundred percent of the students who took the final exam passed, and 88.2% of the students who enrolled in the subject passed it. We should highlight that in this subject, the percentage of students who passed out of those who enrolled was only 23.1% for the 2018-19 academic year. In the Bachelor's degree subject Geotechnics (GIC), the improvement is also clear: the average percentage of students who passed out of those taking the final exam rose from 75.8% before the implementation of the experience to 88.9% after (and from 41.5% to 54.1% of students who passed out of those enrolled). It is important to note that the theory exam is passed with a minimum of 4 points out of 10.
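The three percentage indicators are linked by a simple identity: the share of enrolled students who pass equals the share who take the exam multiplied by the share of exam takers who pass. The short sketch below applies this identity to the figures quoted in the text as a consistency check. The function and variable names are illustrative, and because the Geotechnics (GIC) values are averages over two academic years, the product is only expected to approximate the reported value.

```python
def passed_of_enrolled(took_pct: float, passed_of_took_pct: float) -> float:
    """Percentage of enrolled students who passed, from the two component rates."""
    return took_pct * passed_of_took_pct / 100


# Geotechnics and Foundations (MICCP), 2021-22: 88.2% took the exam, 100% of them passed.
miccp = passed_of_enrolled(88.2, 100.0)

# Geotechnics (GIC): averages quoted in the text before and after implementation.
gic_before = passed_of_enrolled(55.3, 75.8)
gic_after = passed_of_enrolled(60.3, 88.9)

print(f"MICCP 2021-22: {miccp:.1f}% (reported: 88.2%)")
print(f"GIC before:    {gic_before:.1f}% (reported: 41.5%)")
print(f"GIC after:     {gic_after:.1f}% (reported: 54.1%)")
```

The products (about 41.9% and 53.6% for GIC) lie within roughly half a percentage point of the reported 41.5% and 54.1%, which is consistent with averaging yearly values rather than pooling all students.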

Finally, the improvement in students' academic performance is also corroborated by an increase in students' average marks in the final theory exam (Fig. 18). In the Bachelor's degree subject Geotechnics (GIC), the average mark after the implementation of the methodology was 7.15 points out of 10, whereas before the experience it was only 4.6. In the Master's degree subject Geotechnics and Foundations (MICCP), the increase is even more noticeable, going from an average mark of 3.60 out of 10 before implementation to 8.70 after.

6. Conclusions

As a consequence of the sudden appearance of the COVID-19 pandemic, the teaching team of the Ground Engineering Area (Mining and Civil Engineering Department) of the UPCT had to adapt the teaching methodologies of their subjects to remote conditions. To this end, a series of videos was used to teach the laboratory practices, and a question bank was created to help students reinforce and better understand the theoretical content taught in the online master classes.

Regarding videos for lab practices, the students overall gave more weight to the advantage of being able to watch the practice videos as many times as they wanted than to the disadvantage of not being able to attend the lab in person. However, the experience received higher ratings in 2020–21 (with total cancellation of face-to-face classes) than in 2021–22 (with slightly fewer restrictions), which highlights the importance students place on attending the lab in person. For this reason, laboratory classes have returned to being fully face-to-face in the current academic year (2022–23), while the practice videos, available in the UPCT Virtual Classroom, have been kept as reinforcement content.

On the other hand, a better perception of this methodology was observed among Master's students. Compared to these, undergraduate students have not yet mastered the fundamental concepts needed to interpret and understand abstract representations, so their adaptation to online teaching methodologies is not as good. In this sense, undergraduate students may require more concrete and practical approaches.

In relation to the question bank, students consider that the availability of theory quizzes during the course offers significant advantages in terms of focusing on the most important theoretical concepts, achieving the learning objectives and better preparing for the theory exam. Undoubtedly, this was one of the objectives of this methodology, which will be maintained in successive courses.

The perception of this methodology was very good among both Bachelor's and Master's students. However, the rating was once again higher for the latter group.

Finally, the analysis of the academic performance indicators shows an improvement in students' motivation for the subjects, reflected in the increased number of students taking the theory exam. At the same time, there has been an improvement in students’ academic performance, demonstrated by a higher percentage of students passing the theory test, as well as an increase in the average mark for this exam.

In summary, the adaptations in teaching and learning forced by the pandemic were not only effective during the health crisis but have also led to useful new approaches and tools, which represent a significant improvement in both teaching and learning for our students.

Declaration of competing interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Acknowledgements

We thank the Higher Technical School of Civil Engineering and Mining Engineering of the Polytechnic University of Cartagena for its support of the Teaching Innovation Project “Methodology for the implementation of on-line Soil Mechanics laboratory practices through the use of the Virtual Classroom and UPCT Media platforms in GIC, MICCP and MCieTAT degrees of the EICIM”, whose funds have made it possible to record and edit the practice videos.

Footnotes

Supplementary data to this article can be found online at https://doi.org/10.1016/j.heliyon.2023.e19742.


References

- 1. Gomes L.C., Ferreira C., Mergulhão F.J. Implementation of a practical teaching course on protein engineering. Biology. Mar. 2022;11(3):387. doi: 10.3390/biology11030387.

- 2. Shiferaw H.M. Evaluating practical teaching approach in civil engineering training among public universities of Ethiopia: students' view and opinion. African J Sci, Technol, Innov Dev. 2020;14(1):216–224. doi: 10.1080/20421338.2020.1821947.

- 3. Xu F.H., Zhu X.H., Wang S.H. Computational Social Science. CRC Press; 2021. Construction of the practical teaching system outside campus in local universities and colleges based on emerging engineering education; pp. 609–614.

- 4. Gamage K.A.A., de Silva E.K., Gunawardhana N. Online delivery and assessment during COVID-19: safeguarding academic integrity. Educ. Sci. Oct. 2020;10(11):301. doi: 10.3390/educsci10110301.

- 5. Gamage K.A., Wijesuriya D.I., Ekanayake S.Y., Rennie A.E., Lambert C.G., Gunawardhana N. Online delivery of teaching and laboratory practices: continuity of university programmes during COVID-19 pandemic. Educ. Sci. 2020;10(10):291. doi: 10.3390/educsci10100291.

- 6. Morgan H. Best practices for implementing remote learning during a pandemic. Clear. House A J. Educ. Strategies, Issues Ideas. May 2020;93(3):135–141. doi: 10.1080/00098655.2020.1751480.

- 7. Rodrigues H., Almeida F., Figueiredo V., Lopes S.L. Tracking e-learning through published papers: a systematic review. Comput. Educ. Jul. 2019;136:87–98. doi: 10.1016/j.compedu.2019.03.007.

- 8. Oliver R. Quality assurance and e-learning: blue skies and pragmatism. ALT-J Research in Learning Technology. Oct. 2016;13(3):173–187. doi: 10.1080/09687760500376389.

- 9. Crawford J., et al. COVID-19: 20 countries' higher education intra-period digital pedagogy responses. J Appl Learn Teach. Apr. 2020;3(1):1–20. doi: 10.37074/jalt.2020.3.1.7.

- 10. Lewis D.I. The pedagogical benefits and pitfalls of virtual tools for teaching and learning laboratory practices in the Biological Sciences. The Higher Education Academy: STEM. 2014:1–30.

- 11. KewalRamani A., et al. U.S. Department of Education, National Center for Education Statistics; Washington, DC, USA: 2018. Student Access to Digital Learning Resources outside of the Classroom.

- 12. Kreijns K., Kirschner P.A., Jochems W. Identifying the pitfalls for social interaction in computer-supported collaborative learning environments: a review of the research. Comput Human Behav. May 2003;19(3):335–353. doi: 10.1016/S0747-5632(02)00057-2.

- 13. Garrison D.R. Taylor and Francis; Abingdon, UK: 2011. E-Learning in the 21st Century: A Framework for Research and Practice.

- 14. Clark R.C., Mayer R.E. fourth ed. John Wiley & Sons, Inc.; Hoboken, NJ, USA: 2016. E-Learning and the Science of Instruction: Proven Guidelines for Consumers and Designers of Multimedia Learning.

- 15. Alhawsawi S., Jawhar S.S. Negotiating pedagogical positions in higher education during COVID-19 pandemic: teacher's narratives. Heliyon. 2021;7(6). doi: 10.1016/j.heliyon.2021.e07158.

- 16. Saha S.M., Pranty S.A., Rana M.J., Islam M.J., Hossain M.E. Teaching during a pandemic: do university teachers prefer online teaching? Heliyon. 2022;8(1). doi: 10.1016/j.heliyon.2021.e08663.

- 17. Matias A.F., Coelho R.C., Andrade J.S. Jr., Araújo N.A. Flow through time–evolving porous media: swelling and erosion. J. Comput. Sci. 2021;53. doi: 10.1016/j.jocs.2021.101360.

- 18. Martínez-Moreno E., Garcia-Ros G., Alhama I. A different approach to the network method: continuity equation in flow through porous media under retaining structures. Eng. Comput. May 2020;37(9):3269–3291. doi: 10.1108/EC-10-2019-0493.

- 19. BOE. Resolución de 12 de julio de 2021, de la Universidad Politécnica de Cartagena, por la que se publica el plan de estudios de Graduado o Graduada en Ingeniería Civil. Spain; 2021. pp. 90425–90428.

- 20. Ramos-Morcillo A.J., Leal-Costa C., Moral-García J.E., Ruzafa-Martínez M. Experiences of nursing students during the abrupt change from face-to-face to e-learning education during the first month of confinement due to COVID-19 in Spain. Int J Environ Res Public Health. Jul. 2020;17(15):5519. doi: 10.3390/ijerph17155519.

- 21. Mukhtar K., Javed K., Arooj M., Sethi A. Advantages, Limitations and Recommendations for online learning during COVID-19 pandemic era. Pak J Med Sci. 2020;36(COVID19-S4):S27. doi: 10.12669/PJMS.36.COVID19-S4.2785.

- 22. BOE. Real Decreto 463/2020, de 14 de marzo, por el que se declara el estado de alarma para la gestión de la situación de crisis sanitaria ocasionada por el COVID-19. Spain; 2020. pp. 25390–25400.

- 23. Díez-Pascual A.M., Jurado-Sánchez B. Remote teaching of Chemistry laboratory courses during COVID-19. J Chem Educ. 2022;99(5):1913–1922. doi: 10.1021/acs.jchemed.2c00022.

- 24. Njoki P.N. Remote teaching of general Chemistry for nonscience majors during COVID-19. J Chem Educ. 2020;97(9):3158–3162.

- 25. Rodríguez-Paz M.X., González-Mendivil J.A., Zárate-García J.A., Zamora-Hernández I., Nolazco-Flores J.A. A hybrid teaching model for engineering courses suitable for pandemic conditions. IEEE Revista Iberoamericana de Tecnologias del Aprendizaje. 2021;16(3):267–275.

- 26. Microsoft. 2017. Microsoft Teams.

- 27. Meta Platforms Inc; WhatsApp: 2009.

- 28. Maske S.S., Kamble P.H., Kataria S.K., Raichandani L., Dhankar R. Feasibility, effectiveness, and students' attitude toward using WhatsApp in histology teaching and learning. J. Educ. Health Promot. 2018;7:158. doi: 10.4103/jehp.jehp_30_18.

- 29. Oyewole B.K., Animasahun V.J., Chapman H.J. A survey on the effectiveness of WhatsApp for teaching doctors preparing for a licensing exam. PLoS One. 2020;15(4). doi: 10.1371/journal.pone.0231148.

- 30. Huang R., Spector J.M., Yang J. Educational Technology. Springer; Singapore: 2019. Linking learning objectives, pedagogies, and technologies; pp. 49–62.

- 31. Orlovic Lovren V., Maruna M., Stanarevic S. Reflections on the learning objectives for sustainable development in the higher education curricula – three cases from the University of Belgrade. Int. J. Sustain. High Educ. Mar. 2020;21(2):315–335. doi: 10.1108/IJSHE-09-2019-0260.

- 32. Osgood L., Bressan N. Proceedings of the Canadian Engineering Education Association (CEEA) Queen’s University Library; Jun. 2021. Integrating learning objectives in a multi-semester sustainable conservation design project for first-year students during a pandemic. Paper 084. 1-7.

- 33. Kamalov F., Sulieman H., Calonge D.S. Machine learning based approach to exam cheating detection. PLoS One. Aug. 2021;16(8). doi: 10.1371/journal.pone.0254340.

- 34. Noorbehbahani F., Mohammadi A., Aminazadeh M. A systematic review of research on cheating in online exams from 2010 to 2021. Educ. Inf. Technol. Mar. 2022:1–48. doi: 10.1007/s10639-022-10927-7.

- 35. Mortati J., Carmel E. Can we prevent a technological arms race in university student cheating? Computer (Long Beach Calif). Oct. 2021;54(10):90–94. doi: 10.1109/MC.2021.3099043.

- 36. Elsalem L., Al-Azzam N., Jum’ah A.A., Obeidat N., Sindiani A.M., Kheirallah K.A. Stress and behavioral changes with remote E-exams during the Covid-19 pandemic: a cross-sectional study among undergraduates of medical sciences. Annals of Medicine and Surgery. Dec. 2020;60:271–279. doi: 10.1016/j.amsu.2020.10.058.

- 37. Iglesias-Pradas S., Hernández-García Á., Chaparro-Peláez J., Prieto J.L. Emergency remote teaching and students' academic performance in higher education during the COVID-19 pandemic: a case study. Comput Human Behav. Jun. 2021;119. doi: 10.1016/j.chb.2021.106713.

- 38. VideoPad V.E. Jan. 2008. VideoPad Video Editor.

- 39. Albaum G. The Likert scale revisited. Int. J. Mark. Res. Jan. 2018;39(2):1–21. doi: 10.1177/147078539703900202.

- 40. Jebb A.T., Ng V., Tay L. A review of key Likert scale development advances: 1995–2019. Front Psychol. May 2021;12:1590. doi: 10.3389/fpsyg.2021.637547.

- 41. Rodríguez J.V., Sánchez-Pérez J.F., Castro-Rodríguez E., Serrano-Martínez J.L. Upct-Bloopbusters: a methodology of teaching physics and technology concepts using movie scenes and related experiments. The International Journal of Electrical Engineering & Education. Oct. 2020;0(0):1–15. doi: 10.1177/0020720920965872.

- 42. Satterthwaite J.D., Vahid Roudsari R. National Student Survey: reliability and prediction of overall satisfaction scores with special reference to UK Dental Schools. Eur. J. Dent. Educ. May 2020;24(2):252–258. doi: 10.1111/eje.12491.

- 43. Guo P.J., Kim J., Rubin R. Proceedings of the First ACM Conference on Learning@ Scale Conference. 2014. How video production affects student engagement: an empirical study of MOOC videos; pp. 41–50.

- 44. Fournier M. 2019. How Long Should Videos Be for E-Learning? https://www.learndash.com/how-long-should-videos-be-for-e-learning/

Associated Data
