Abstract

Accurately recognizing facial expressions is essential for effective social interactions. Non-human primates (NHPs) are widely used to study the neural mechanisms underpinning facial expression processing, yet it remains unclear how well monkeys can recognize the facial expressions of other species such as humans. In this study, we systematically investigated how monkeys process the facial expressions of conspecifics and humans using eye-tracking technology and two behavioral tasks, the temporal discrimination task (TDT) and the face scan task (FST). We found that monkeys showed prolonged subjective time perception in response to Negative monkey facial expressions, but longer reaction times in response to Negative human facial expressions. Monkey faces also reliably induced divergent pupil contraction across expressions, whereas human faces and scrambled monkey faces did not. Furthermore, viewing patterns in the FST indicated that monkeys showed a bias toward emotional expressions only when observing monkey faces. Finally, masking the eye region marginally decreased viewing of that region for monkey faces but not for human faces. By probing facial expression processing in monkeys, our study demonstrates that monkeys are more sensitive to the facial expressions of conspecifics than to those of humans, shedding new light on inter-species communication through facial expressions between NHPs and humans.

Keywords: Monkey, Facial expression, Time perception, Eye-tracking, Pupil size

INTRODUCTION

Being able to infer the affective states and intentions of others from their facial expressions is important for social cooperation and competence (Frijda, 2016). Both humans and monkeys can express emotion through facial expressions (Maréchal et al., 2017; Waller et al., 2020). However, it remains unclear whether humans and monkeys share the same behavioral strategies to recognize and convey facial expressions. Although monkeys and humans exhibit comparable strategies when observing Neutral faces of conspecifics and heterospecifics (Dahl et al., 2009; Shepherd et al., 2010), their eye-scanning patterns upon observing facial expressions are species-dependent (Guo et al., 2019), suggesting that different species may possess different strategies for recognizing facial expressions. Furthermore, individuals with limited exposure to monkeys are unable to accurately discriminate monkey facial expressions (Maréchal et al., 2017). Electrophysiological studies involving monkey subjects and neuroimaging studies involving both monkey and human subjects have revealed dissimilarities in the neural processing of facial expressions between the species (Sugase et al., 1999; Zhu et al., 2013). Additionally, evidence suggests the existence of own-species bias in face perception in both monkeys and humans (Sigala et al., 2011). Thus, an intriguing challenge is to understand how monkeys perceive facial expressions from their unique perspective. Notably, can monkeys accurately recognize specific emotions from human facial expressions?

Given the inherent challenges of applying language-dependent behavioral tasks designed for humans to other species, carefully designed behavioral paradigms are required to assess the capacity of monkeys to discriminate facial expressions. In humans, emotional faces can trigger autonomic nervous responses and affect the behavior of the perceiver. Notably, human subjects show prolonged time perception in response to high-arousal facial expressions (e.g., fear and anger) (Tipples, 2011; Tipples et al., 2015), suggesting that facial expression processing in monkeys may likewise be probed with a suitably designed time perception paradigm. Such a paradigm may also reveal whether facial expression processing is species-dependent.

In addition to behavior, it is also important to understand which facial features attract observer attention, as these may provide clues for decoding emotions from facial expressions (e.g., people may infer happiness from the muscle lines at the corners of the mouth) (Beaudry et al., 2014; Calvo & Nummenmaa, 2008; Dahl et al., 2009; Smith et al., 2005). Eye-tracking technology is a powerful tool for recording the eye movement trajectories of observers. Recent studies using this technology have demonstrated that human subjects prefer to look at specific inner features when recognizing emotional faces (e.g., longer gazes at the mouth region when viewing a happy face but longer gazes at the eye region when viewing an angry or sad face) (Beaudry et al., 2014; Calvo et al., 2013; Calvo & Nummenmaa, 2008; Eisenbarth & Alpers, 2011). In addition, eye-tracking technology can measure subtle changes in pupil size, a reliable index of the internal state of non-human primates (NHPs) (Kuraoka & Nakamura, 2022; Shepherd et al., 2010; Zhang et al., 2020). Therefore, using eye-tracking technology to determine which facial regions attract the attention of monkeys may yield valuable evidence regarding how monkeys process facial expressions.

In this study, we explored facial expression processing from the perspective of monkeys. A temporal discrimination task (TDT) was used to analyze how monkeys process the facial expressions of both humans and monkeys, and eye-tracking technology was used to study the viewing patterns of the monkeys while they observed facial expressions. We hypothesized that monkeys may be able to infer emotional states from monkey, but not human, facial expressions. If so, their interval timing behaviors should be differentially affected by conspecific and human faces, and their viewing patterns should differ according to the species and expression of the observed face. The findings of this study may provide new insights into potential communication between monkeys and humans via facial expressions and, more broadly, into non-verbal interactions.

MATERIALS AND METHODS

Animals

Three rhesus macaques (Macaca mulatta; RM07: 10.8 kg, 10.3 years old; RM50: 7.5 kg, 6.8 years old; RM29: 8.2 kg, 9 years old) were trained to perform the TDT, while six rhesus macaques (including the three that performed the TDT; 7.2–10.8 kg, 6.8–10.3 years old) were trained to perform the face scan task (FST). All monkeys were housed individually in an AAALAC-accredited NHP facility with controlled temperature (20–26 °C) and humidity (40%–70%). Each colony room was maintained on a 12:12 h light-dark cycle. All monkeys were surgically implanted with a titanium pillar for head fixation and were trained to sit quietly in a chair to perform behavioral tasks. All monkeys underwent at least four months of training before data collection and were thus familiar with the experimenters (three facility workers). All procedures were performed in strict compliance with the National Institutes of Health Guide for the Care and Use of Laboratory Animals and were approved by the Institutional Animal Care and Use Committee (IACUC) of the Shenzhen Institute of Advanced Technology of the Chinese Academy of Sciences, approval number: SIAT-IACUC-201123-NS-DJ-A1483.

Temporal discrimination task

The TDT was modified from previous rodent studies (Liu et al., 2019; Smith et al., 2011; Soares et al., 2016). Briefly, a fixation point was first presented at the center of the screen, and the subjects were required to fixate on it for 500 ms. A timing stimulus (e.g., a human face with a fearful expression) was then presented at the center of the screen for one of six durations (400, 670, 900, 1 100, 1 330, or 1 600 ms). These durations were chosen because they have been used effectively to reveal prolonged interval timing in human subjects (Smith et al., 2011). During stimulus presentation, the monkeys were required to maintain fixation within a window of 5° radius. After the timing stimulus was removed, a green dot and a red dot (located in the upper left and lower right of the monitor, respectively) were presented simultaneously, and the subjects were required to saccade to the red or green dot to indicate whether the stimulus had been presented for longer or shorter than 1 s (Figure 1A). The subjects received a juice reward for each correct response. Response time (RT) was defined as the latency between stimulus offset and a correct saccade to the target. If the subject broke fixation (including by blinking) or looked outside the fixation window during stimulus presentation, the trial was immediately terminated and labeled as uncompleted.
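The trial outcome rules above can be sketched as follows. This is a minimal illustration under stated assumptions: the `Trial` record, its field names, and the `score_trial` helper are hypothetical and are not the authors' task code. The 1 s criterion, the uncompleted-trial rule, and the RT definition follow the text; treating a missing choice saccade as uncompleted is an added assumption.

```python
from dataclasses import dataclass
from typing import Optional

CRITERION_MS = 1000  # long/short boundary (1 s)


@dataclass
class Trial:
    duration_ms: int                  # timing-stimulus duration (400 to 1600 ms)
    fixation_broken: bool             # fixation break or blink during the stimulus
    choice: Optional[str]             # "long", "short", or None (assumed: no saccade made)
    stimulus_offset_ms: float         # time at which the timing stimulus vanished
    saccade_time_ms: Optional[float]  # time of the choice saccade, if any


def score_trial(trial: Trial) -> dict:
    """Label one TDT trial and compute RT for correct responses only."""
    if trial.fixation_broken or trial.choice is None:
        # Uncompleted trials are excluded from later analysis
        return {"status": "uncompleted", "rt_ms": None}
    correct_choice = "long" if trial.duration_ms > CRITERION_MS else "short"
    if trial.choice != correct_choice:
        return {"status": "incorrect", "rt_ms": None}
    # RT: latency from stimulus offset to the correct saccade
    return {"status": "correct",
            "rt_ms": trial.saccade_time_ms - trial.stimulus_offset_ms}


print(score_trial(Trial(1330, False, "long", 2000.0, 2250.0)))
```

Incorrect trials still count toward the proportion of "long" choices (PL), which is why they are labeled rather than discarded.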

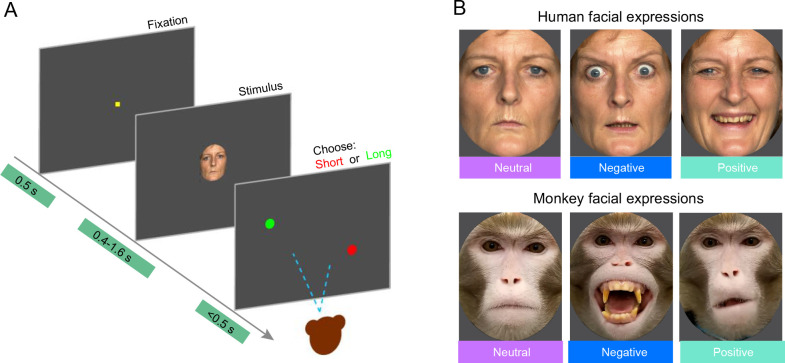

Figure 1.

Schematic of behavioral tasks and examples of facial stimuli

A: Schematic of TDT. B: Examples of human and monkey facial expressions used in TDT.

Three facial expression categories (Positive, Negative, and Neutral) were established for both monkey and human faces. For human faces, typical Fear (Negative), Happy (Positive), and Neutral expressions were chosen as visual stimuli based on previous studies (Angrilli et al., 1997; Calvo et al., 2013; Calvo & Nummenmaa, 2008; Gil & Droit-Volet, 2011; Yin et al., 2021). For monkey faces, the three categories were defined according to previous studies (Liu et al., 2015; Maréchal et al., 2017; Micheletta et al., 2015; Morozov et al., 2021). Specifically, the Negative category included facial expressions with raised eyebrows and an open mouth showing teeth (Threat, typically classified as “aggressive”); the Positive category included facial expressions with lip smacking (Lipsmack, classified as “friendly”); and the Neutral category included overall relaxed facial expressions (Neutral). We compared the responses of monkeys to human and monkey faces within the same categories.

During each TDT session, subjects were presented with three facial expressions (Negative, Positive, and Neutral) from a single individual (either human or monkey). Each condition was repeated 200 times in random order, resulting in 600 trials per session. All facial expression images were standardized to 320×400 pixels (8°×10°) on a gray background. To ensure uniformity across images of the same species, the average luminance of each image was adjusted using Photoshop (Adobe, v2017, USA). To measure luminance, the RGB values of each pixel were first read using the “imread” function in MATLAB (MathWorks, R2016a, USA) and then converted to YCbCr space using the MATLAB “rgb2ycbcr” function, where “Y” represents luminance and “Cb” and “Cr” represent chroma. All images of the same species were adjusted until there were no significant differences in luminance among them. Each monkey was required to complete 34 sessions using different sets of individuals: seven sets of unfamiliar human faces, seven sets of monkey faces, three sets of familiar human faces, three sets of monkey-like faces imitated by familiar experimenters, seven sets of scrambled unfamiliar human faces, and seven sets of scrambled monkey faces.
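A minimal sketch of the luminance measurement step, assuming 8-bit RGB input. To our understanding, MATLAB's `rgb2ycbcr` computes the Y channel of uint8 images with ITU-R BT.601 coefficients (Y runs from 16 for black to 235 for white), which the hypothetical `luma_bt601` helper reproduces in NumPy without MATLAB's final rounding. The text does not state whether the gray background was included in each image's mean, so the sketch averages over all pixels; the Photoshop adjustment itself is not modeled.

```python
import numpy as np


def luma_bt601(rgb: np.ndarray) -> np.ndarray:
    """Y (luminance) channel of YCbCr for an 8-bit RGB image of shape (H, W, 3)."""
    rgb = rgb.astype(np.float64)
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    # BT.601 scaling: black -> 16, white -> 235
    return 16.0 + (65.481 * r + 128.553 * g + 24.966 * b) / 255.0


def mean_luminance(images):
    """Mean Y value of each image, e.g., to compare images of the same species."""
    return [float(luma_bt601(img).mean()) for img in images]


gray = np.full((400, 320, 3), 128, dtype=np.uint8)  # a uniform gray 320x400 image
print(mean_luminance([gray]))
```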

Face stimuli were obtained from multiple sources. For unfamiliar human faces, six sets of publicly available chromatic emotional faces (2 sexes [male, female]×3 age groups [young, middle-aged, old]) from the FACES database in the Max Planck digital library (Ebner et al., 2010) and one set of grayscale faces of a young woman from the NimStim face database (Tottenham et al., 2009) were used.

For monkey faces, seven monkeys from the facility were photographed by the experimenters. The model and subject monkeys lived in the same colony room and had visual contact with each other. Facial expressions (Negative: e.g., Threat; Positive: e.g., Lipsmack; and Neutral) were generated in the laboratory while the monkey was restrained in a custom chair with its head fixed. Negative expressions were induced by a pole used for handling monkeys or by the approach of an unfamiliar facility worker; Positive expressions were induced by an encounter with a fellow monkey or an unexpected fruit reward; and Neutral expressions were recorded when the monkeys were relaxed. Photographs were taken while the monkeys were making a particular facial expression and facing the camera.

For the TDT with familiar faces, three sets of facial expressions were obtained from photographs of three facility workers who were in regular contact with the subject monkeys for 4–8 h every week and were responsible for their food and water. Although the workers normally wore a uniform, face mask, and hair cover, their eyes and parts of their faces were still visible to the subject monkeys. While being photographed, the workers were asked to display Negative, Positive, and Neutral expressions, similar to the models in the aforementioned databases, and then to imitate the Negative, Positive, and Neutral expressions of monkeys. All face images were taken with the workers looking straight ahead.

For the scrambled facial expressions, chromatic unfamiliar human and monkey facial expressions were used as raw images, which were divided into 10×10 pixel pieces that were then shuffled in random order. This procedure removed recognizable facial features while maintaining the mean luminance of each image.
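A minimal NumPy sketch of the block-scrambling procedure, assuming images stored as arrays with rows and columns in the first two axes. The `block_scramble` function and its optional `region` argument (a rectangle to scramble, as for the FST sub-region stimuli) are hypothetical; leaving pixels in incomplete edge tiles untouched is an assumption about how image borders were handled. Because tiles are only permuted, the set of pixel values, and therefore the mean luminance, is preserved exactly.

```python
import numpy as np


def block_scramble(img, block=10, region=None, rng=None):
    """Randomly permute non-overlapping block x block tiles of an image.

    region: optional (row0, row1, col0, col1); if given, only tiles inside this
    rectangle are shuffled (e.g., an eye region). Incomplete edge tiles are kept.
    """
    rng = np.random.default_rng(rng)
    out = img.copy()
    r0, r1, c0, c1 = region if region is not None else (0, img.shape[0], 0, img.shape[1])
    n_rows, n_cols = (r1 - r0) // block, (c1 - c0) // block

    def tile_slice(i, j):
        return (slice(r0 + i * block, r0 + (i + 1) * block),
                slice(c0 + j * block, c0 + (j + 1) * block))

    # Copy every complete tile, then write them back in a random order
    tiles = [img[tile_slice(i, j)].copy() for i in range(n_rows) for j in range(n_cols)]
    for k, src in enumerate(rng.permutation(len(tiles))):
        i, j = divmod(k, n_cols)
        out[tile_slice(i, j)] = tiles[src]
    return out


face = np.arange(400 * 320 * 3, dtype=np.int64).reshape(400, 320, 3) % 256
scrambled = block_scramble(face, block=10, rng=0)
print(face.mean(), scrambled.mean())  # identical means
```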

Face scan task

The FST procedure was modified from previous human studies (Nakakoga et al., 2020; Reisinger et al., 2020). In brief, each trial started with a 100 ms delay period, followed by the consecutive presentation of five scrambled-face images for 800 ms each to establish a baseline. Subsequently, a static face stimulus was presented for 4 000 ms. Each session used three face stimuli drawn from one of four stimulus sets: monkey, human, scrambled monkey, and scrambled human faces. Each session consisted of five practice trials and 210 test trials, and each monkey was required to complete four sessions. The intact face stimulus sets included Negative, Positive, and Neutral expressions from the same individual, whereas the scrambled face stimulus sets included the same Negative facial expression with a scrambled eye, nose, or mouth region. Both scrambled and intact face images were 800×1 000 pixels (20°×25°). During the task, the subjects were seated in a monkey chair facing the screen with their heads fixed. Once a trial was initiated, they were free to look anywhere on the screen and received a juice reward at the end of each trial.
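The FST trial timeline described above can be laid out as a list of timed events. This sketch is illustrative only: the event labels and the `fst_trial_events` helper are hypothetical, and the inter-trial interval and reward duration, which the text does not specify, are omitted.

```python
DELAY_MS = 100     # initial delay period
N_BASELINE = 5     # number of scrambled-face baseline images
BASELINE_MS = 800  # duration of each baseline image
FACE_MS = 4000     # static face stimulus


def fst_trial_events(face_label):
    """Return (onset_ms, offset_ms, label) tuples for one FST trial."""
    events = [(0, DELAY_MS, "delay")]
    t = DELAY_MS
    for k in range(N_BASELINE):
        events.append((t, t + BASELINE_MS, f"baseline_scrambled_{k + 1}"))
        t += BASELINE_MS
    events.append((t, t + FACE_MS, face_label))
    return events


events = fst_trial_events("monkey_negative")
for onset, offset, label in events:
    print(f"{onset:5d}-{offset:5d} ms  {label}")
```

With these durations the face appears 4 100 ms after trial onset, and the trial lasts 8 100 ms before the reward.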

In the FST, only one set of human faces (young male) and one set of monkey faces were used as face stimuli. To avoid any color-induced bias in the gaze patterns of the monkeys, all face stimuli used in the FST were converted to grayscale. For the FST with scrambled sub-regions, the eye, nose, or mouth region was replaced with a scrambled counterpart (using the same scrambling method as above). All face stimuli were cropped as tightly as possible to include only the face and exclude irrelevant information (e.g., the head post) in the photograph. All images were processed using Photoshop (v2017) or MATLAB (R2016a) with custom scripts.

Experimental environment

During the experiments, the monkeys sat quietly in a chair with their heads fixed, facing an LED monitor at a viewing distance of 57 cm (ASUS, 24 inches, 1920×1080 resolution, 144 Hz refresh rate). The subject’s gaze position and pupil area were continuously monitored and recorded using an eye-tracking system (EyeLink 1000 Plus, SR Research, Canada, 1 000 Hz sampling rate). Nine-point calibration of eye position was performed before the TDT and FST experiments. All experiments were performed in a dark, quiet environment.
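Stimulus sizes in degrees depend on the viewing distance and the monitor's physical pixel pitch. A small helper for the standard visual-angle conversion is sketched below; the function names are hypothetical, and the pixel pitch must be measured for the actual display because it is not reported here. At 57 cm, 1 cm on the screen subtends approximately 1°, which is why this distance is a common convention.

```python
import math


def visual_angle_deg(size_cm: float, distance_cm: float = 57.0) -> float:
    """Visual angle (degrees) of an extent of size_cm centered on the line of sight."""
    return math.degrees(2.0 * math.atan(size_cm / (2.0 * distance_cm)))


def pixels_to_deg(n_pixels: int, pixel_pitch_cm: float, distance_cm: float = 57.0) -> float:
    """Convert an on-screen extent in pixels to degrees, given the physical pixel pitch."""
    return visual_angle_deg(n_pixels * pixel_pitch_cm, distance_cm)


print(round(visual_angle_deg(1.0), 3))  # 1 cm viewed at 57 cm -> 1.005
```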

Data analysis

Curve fitting: For the TDT, interval timing behavior was assessed based on the proportion of “long” choices (PL) and the RT for each timing stimulus and duration. The relationship between PL and timing duration is typically sigmoidal. Thus, we fitted a cumulative Gaussian function (equation (1)) to the experimental data from the TDT sessions (Deane et al., 2017; Liu et al., 2019; McClure et al., 2005; Soares et al., 2016; Ward & Odum, 2007).

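$$F(t)=a+\frac{b}{\sigma\sqrt{2\pi}}\int_{-\infty}^{t}\exp\left(-\frac{(x-\mu)^{2}}{2\sigma^{2}}\right)dx \qquad (1)$$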

In this function, F(t) represents PL when the duration equals a given sample t; µ is the mean; and σ is the standard deviation (which determines the slope of the function at t=µ, with a smaller σ giving a steeper curve). The point of subjective equivalence (PSE) is the duration at which F(t)=0.5, i.e., the duration subjectively perceived as equal to 1.0 s, and was used to assess the effect of facial expression on time perception. The constants a and b represent the lower asymptote and the range of the function, respectively. The R² statistic was used to quantify goodness of fit.
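The fitted model and the PSE can be sketched with the Python standard library, assuming the form F(t) = a + b·Φ((t - µ)/σ) given in equation (1), where Φ is the standard normal cumulative distribution function. The helper names are hypothetical. Setting F(t) = 0.5 and solving gives PSE = µ + σ·Φ⁻¹((0.5 - a)/b), so the PSE equals µ only when a + b/2 = 0.5 (e.g., a = 0, b = 1). Estimating a, b, µ, and σ from the data would typically use a nonlinear least-squares routine such as `scipy.optimize.curve_fit`; that step is not shown, and the parameter values below are illustrative, not fitted data.

```python
from statistics import NormalDist


def psychometric(t, mu, sigma, a, b):
    """Equation (1): F(t) = a + b * Phi((t - mu) / sigma), the proportion of 'long' choices."""
    return a + b * NormalDist(mu, sigma).cdf(t)


def pse(mu, sigma, a, b, criterion=0.5):
    """Duration at which F(t) equals the criterion (0.5 gives the PSE)."""
    p = (criterion - a) / b
    if not 0.0 < p < 1.0:
        raise ValueError("criterion is outside the range of the fitted curve")
    return NormalDist(mu, sigma).inv_cdf(p)


def r_squared(observed, predicted):
    """Coefficient of determination of the fitted curve."""
    mean_obs = sum(observed) / len(observed)
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    return 1.0 - ss_res / ss_tot


durations = [0.4, 0.67, 0.9, 1.1, 1.33, 1.6]      # seconds
params = dict(mu=1.0, sigma=0.2, a=0.05, b=0.9)   # illustrative values only
predicted = [psychometric(t, **params) for t in durations]
print([round(p, 3) for p in predicted], round(pse(**params), 3))
```

A lower PSE means that a shorter physical duration is already judged equivalent to 1 s, i.e., time is subjectively lengthened.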

Statistical analysis: To quantify the viewing patterns of monkeys on faces in the TDT, we calculated a scanning index (SI) for each trial as SI = (maximum scan range in the horizontal direction)/(maximum scan range in the vertical direction). Because gaze was restricted to a fixation window of 5° radius, a small SI indicated that the subject scanned a narrow horizontal range relative to its vertical range, i.e., mostly up and down.
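A minimal sketch of the SI for one trial, assuming the "maximum scan range" is the difference between the largest and smallest gaze coordinates (in degrees) recorded during stimulus presentation. The function name is hypothetical, and returning NaN for a trial without vertical movement is an added convention.

```python
import numpy as np


def scanning_index(x_deg, y_deg):
    """SI = horizontal scan range / vertical scan range for one trial's gaze samples."""
    x = np.asarray(x_deg, dtype=float)
    y = np.asarray(y_deg, dtype=float)
    h_range = x.max() - x.min()  # maximum horizontal extent of gaze
    v_range = y.max() - y.min()  # maximum vertical extent of gaze
    if v_range == 0:
        # No vertical movement: SI is undefined for this trial
        return float("nan")
    return float(h_range / v_range)


# Gaze moving mostly up and down within the 5° window gives a small SI
print(scanning_index([0.0, 0.5, -0.5, 0.2], [-3.0, 3.0, 0.0, 1.5]))
```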

In the TDT, interval timing perception was analyzed for the three monkeys both together and individually. Uncompleted trials were excluded from data analysis. For each monkey in each session, PL, average RT, and SI were calculated for each facial expression at each timing duration. One-way analysis of variance (ANOVA) was used to assess the effects of facial expression on PSE. Two-way ANOVA (Facial expression×Duration) was used to analyze the effects of facial expression on average RT and SI, with Geisser-Greenhouse correction applied where the assumption of sphericity was violated. For significant interactions or main effects of facial expression, post-hoc analysis was performed using Tukey’s test. All data are expressed as mean±standard error of the mean (SEM). The significance level α was set to 0.05. To quantify pupil contraction in response to facial expressions in the TDT, the raw pupil change data were divided into 1 ms bins, and each bin was compared point by point using paired two-tailed t-tests (α set to 0.05/3≈0.017). All statistical analyses were conducted using Prism (v8, GraphPad) and SPSS (v25, IBM).
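The point-by-point pupil comparison can be sketched with `scipy.stats.ttest_rel`, assuming that each condition is supplied as an array of shape (sessions, bins) holding session-averaged pupil traces, with one 1 ms bin per sample at 1 000 Hz and column 0 at stimulus onset. Treating sessions as the pairing unit is an assumption consistent with df=6 (seven sessions) in Figure 4; the helper names and the synthetic data are hypothetical.

```python
import numpy as np
from scipy import stats


def significant_bins(cond_a, cond_b, alpha=0.05 / 3):
    """Paired two-tailed t-test at every 1 ms bin; returns a boolean mask of p < alpha.

    cond_a, cond_b: arrays of shape (n_sessions, n_bins), one row per session.
    """
    a = np.asarray(cond_a, dtype=float)
    b = np.asarray(cond_b, dtype=float)
    result = stats.ttest_rel(a, b, axis=0)  # one test per bin (column)
    return result.pvalue < alpha


def first_significant_bin(mask):
    """Index of the first significant bin (ms after onset), or None."""
    hits = np.flatnonzero(mask)
    return int(hits[0]) if hits.size else None


# Synthetic example: conditions diverge from bin 200 onward
offsets = np.array([1.0, -1.0, 1.0, -1.0, 1.0, -1.0, 0.0])[:, None]
negative = np.zeros((7, 300))
positive = np.zeros((7, 300)) + offsets
positive[:, 200:] += 5.0
mask = significant_bins(negative, positive)
print(first_significant_bin(mask))
```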

In the FST, we defined three regions of interest (ROIs), the eyes, nose, and mouth, for each facial expression using methods similar to those described in previous studies (Dal Monte et al., 2014; Reisinger et al., 2020). The positions and areas of the ROIs were identical across faces of the same species but differed between monkey and human faces due to species differences. Data from the six monkeys were analyzed as a group. Three-way ANOVA (Species×Facial expression×ROI) was performed to compare the viewing patterns of the monkeys on human and monkey faces. To quantify viewing of each ROI, the cumulative looking time at each ROI was first computed as a percentage of stimulus time and then normalized by the ROI area (in square visual degrees) to obtain the normalized looking percentage for each ROI (equation (2)). Two-way ANOVA (Facial expression×ROI) was performed to compare the normalized looking percentage. If the main or interaction effects reached significance (α=0.05) or marginal significance (α=0.06), Tukey’s post-hoc test was applied. Effect size was assessed using partial eta-squared (ηp²).

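$$P_{\mathrm{ROI}}=\frac{100\%\times T_{\mathrm{ROI}}/T_{\mathrm{stimulus}}}{A_{\mathrm{ROI}}} \qquad (2)$$

where $P_{\mathrm{ROI}}$ is the normalized looking percentage for an ROI, $T_{\mathrm{ROI}}$ is the cumulative looking time at that ROI, $T_{\mathrm{stimulus}}$ is the stimulus presentation time, and $A_{\mathrm{ROI}}$ is the ROI area in square visual degrees.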
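A minimal sketch of equation (2) for a single ROI, assuming gaze samples at 1 000 Hz (1 ms per sample), the 4 000 ms FST stimulus duration, and a rectangular ROI whose area is computed from its bounds. The actual ROIs may have had other shapes, and the function name is hypothetical.

```python
import numpy as np


def normalized_looking_percentage(gaze_x, gaze_y, roi, stimulus_ms=4000.0, sample_ms=1.0):
    """Equation (2) for one rectangular ROI given as (x_min, x_max, y_min, y_max) in degrees."""
    x = np.asarray(gaze_x, dtype=float)
    y = np.asarray(gaze_y, dtype=float)
    x_min, x_max, y_min, y_max = roi
    inside = (x >= x_min) & (x <= x_max) & (y >= y_min) & (y <= y_max)
    looking_ms = inside.sum() * sample_ms             # cumulative looking time in the ROI
    looking_pct = 100.0 * looking_ms / stimulus_ms    # percentage of stimulus time
    area_deg2 = (x_max - x_min) * (y_max - y_min)     # ROI area in square degrees
    return looking_pct / area_deg2


# 1 000 of 4 000 samples fall inside a 2x1 deg ROI: 25% / 2 deg^2
gx = np.concatenate([np.full(1000, 1.0), np.full(3000, 5.0)])
gy = np.full(4000, 0.5)
print(normalized_looking_percentage(gx, gy, roi=(0.0, 2.0, 0.0, 1.0)))  # 12.5
```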

RESULTS

Effects of facial expression on interval timing behaviors

We first analyzed the interval timing responses of the three monkeys to facial expressions of either humans or monkeys. As each experimental session involved only one individual (human or monkey) without intermixing species, we assessed the main effects of facial expression (Negative, Positive, and Neutral) on monkey behavior separately for human and monkey faces. Average PL for each facial expression in each session was well fitted by a sigmoidal function of timing duration (Figure 2A, B; goodness of fit: R²≥0.939, 0.919, and 0.962 for RM50, RM07, and RM29, respectively), from which the PSE was obtained for each session.

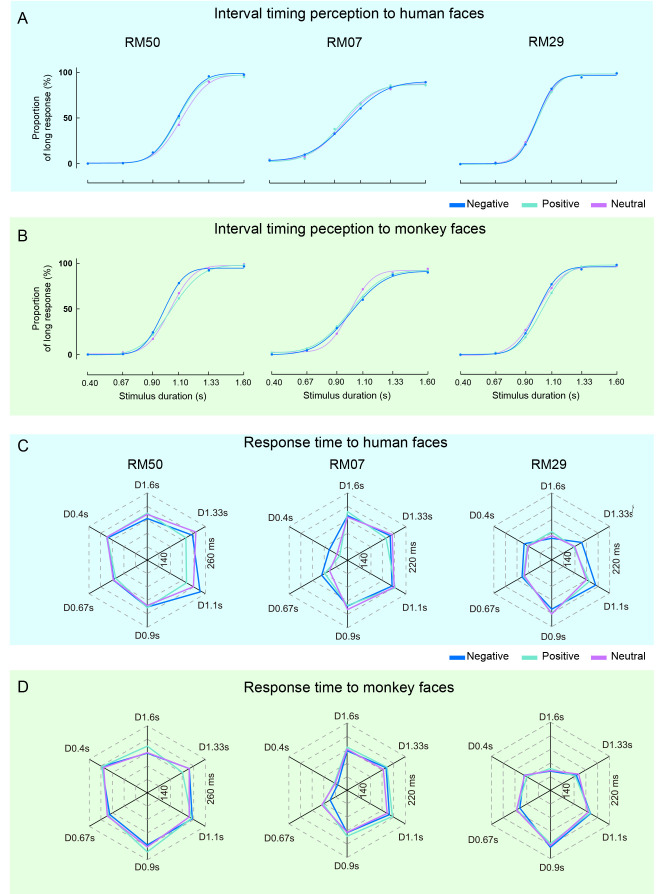

Figure 2.

Differential effects of human and monkey faces on monkey performance in TDT

A, B: Average proportion of “long” responses (PL) and fitted curves for three monkeys viewing human faces (A) and monkey faces (B) presented for 0.4–1.6 s, across all sessions. Blue, green, and magenta represent Negative, Positive, and Neutral expressions, respectively. C, D: Average RT under different conditions in TDT for three monkeys. Each direction of the hexagon denotes a stimulus duration (e.g., D0.4s denotes the 0.4 s condition). Color definitions are the same as in A, B.

Based on pooled data from all monkeys, PSE was significantly affected by monkey facial expressions (P=0.048, ηp²=0.434, one-way ANOVA, Table 1). Specifically, the PSE for Negative monkey facial expressions was lower than that for Positive monkey facial expressions (P=0.036, post-hoc test). Because a lower PSE means that a shorter physical duration was already judged equivalent to 1 s, this indicates that monkeys showed prolonged time perception in response to Negative monkey facial expressions. In contrast, we did not find the same effect for human faces (Table 1). Individual analyses showed that monkey facial expressions had a significant effect on the PSE of RM50 (P=0.005, ηp²=0.651) and RM29 (P=0.020, ηp²=0.542, one-way ANOVA, Table 1). Similarly, post-hoc analysis indicated that the PSE for Negative faces was lower than that for Positive faces (RM50: P=0.007; RM29: P=0.008) and Neutral faces (RM50: P=0.007). However, neither human nor monkey facial expressions affected the PSE of RM07 (Table 1).

Table 1. Results of one-way ANOVA for effects of faces on PSE.

| Subject | Human faces (F) | Human faces (P) | Monkey faces (F) | Monkey faces (P) | Faces of familiar people (F) | Faces of familiar people (P) | Faces of familiar people mimicking monkey expressions (F) | Faces of familiar people mimicking monkey expressions (P) |
|---|---|---|---|---|---|---|---|---|
| RM50 | F(1.196, 7.178)=1.578 | P=0.256 | F(1.524, 9.145)=11.220 | P=0.005 | F(1.035, 2.071)=3.707 | P=0.191 | F(1.018, 2.035)=0.410 | P=0.590 |
| RM07 | F(1.697, 10.18)=1.348 | P=0.297 | F(1.450, 8.700)=1.017 | P=0.374 | F(1.165, 2.329)=0.882 | P=0.455 | F(1.608, 3.216)=0.250 | P=0.752 |
| RM29 | F(1.508, 9.046)=0.013 | P=0.968 | F(1.433, 8.597)=7.098 | P=0.020 | F(1.421, 2.841)=0.212 | P=0.755 | F(1.086, 2.173)=0.875 | P=0.453 |
| Pooled data | F(1.715, 10.29)=0.553 | P=0.565 | F(1.726, 10.36)=4.295 | P=0.048 | F(1.002, 2.005)=1.175 | P=0.392 | F(1.002, 2.005)=0.722 | P=0.485 |

We then analyzed RT in the TDT via two-way ANOVA (Facial expression×Duration) for the three subjects (Figure 2C, D). For human faces, the pooled data showed a significant interaction effect (P=0.006, ηp²=0.096; Table 2). None of the three subjects individually displayed significant changes in RT in response to human faces; however, upon pooling the data, human facial expressions had a significant effect on RT (P=0.043, ηp²=0.026; Table 2), with RT to Negative human facial expressions longer than that to Positive expressions (P=0.035, post-hoc test). In contrast, although one monkey (RM07) appeared to be affected by monkey facial expressions (P=0.033, ηp²=0.093), the pooled data showed that RT was not affected by monkey faces (Table 2). These contrasting results (Tables 1, 2) suggest that, at the population level, human and monkey facial expressions influence different aspects (PSE or RT) of interval timing behavior in monkeys, indicating potential species-dependent effects on facial expression processing.

Table 2. Results of two-way ANOVA for effects of faces on RT.

Interaction denotes Facial expression×Duration.

| Subject | Human faces: Interaction (F) | Human faces: Interaction (P) | Human faces: Facial expression (F) | Human faces: Facial expression (P) | Monkey faces: Interaction (F) | Monkey faces: Interaction (P) | Monkey faces: Facial expression (F) | Monkey faces: Facial expression (P) |
|---|---|---|---|---|---|---|---|---|
| RM50 | F(10, 72)=1.786 | P=0.079 | F(1.734, 62.43)=2.292 | P=0.117 | F(10, 72)=0.995 | P=0.456 | F(1.504, 54.13)=0.273 | P=0.699 |
| RM07 | F(10, 72)=1.844 | P=0.068 | F(1.955, 70.37)=0.999 | P=0.372 | F(10, 72)=0.893 | P=0.544 | F(1.874, 67.46)=3.679 | P=0.033 |
| RM29 | F(10, 72)=1.766 | P=0.083 | F(1.580, 56.90)=1.90 | P=0.167 | F(10, 72)=0.4256 | P=0.930 | F(1.708, 61.48)=0.121 | P=0.856 |
| Pooled data | F(10, 240)=2.540 | P=0.006 | F(1.892, 227.1)=3.255 | P=0.043 | F(10, 240)=1.324 | P=0.218 | F(1.828, 219.4)=1.156 | P=0.313 |

| Subject | Faces of familiar people: Interaction (F) | Faces of familiar people: Interaction (P) | Faces of familiar people: Facial expression (F) | Faces of familiar people: Facial expression (P) | Familiar people mimicking monkey expressions: Interaction (F) | Familiar people mimicking monkey expressions: Interaction (P) | Familiar people mimicking monkey expressions: Facial expression (F) | Familiar people mimicking monkey expressions: Facial expression (P) |
|---|---|---|---|---|---|---|---|---|
| RM50 | F(10, 24)=0.769 | P=0.656 | F(1.974, 23.69)=1.182 | P=0.324 | F(10, 24)=0.314 | P=0.970 | F(1.480, 17.76)=0.686 | P=0.474 |
| RM07 | F(10, 24)=0.747 | P=0.675 | F(1.980, 23.76)=1.211 | P=0.315 | F(10, 24)=1.063 | P=0.426 | F(1.790, 21.48)=1.181 | P=0.321 |
| RM29 | F(10, 24)=1.808 | P=0.114 | F(1.733, 20.80)=2.339 | P=0.127 | F(10, 24)=0.906 | P=0.543 | F(1.578, 18.94)=0.660 | P=0.494 |
| Pooled data | F(10, 96)=1.273 | P=0.256 | F(1.953, 93.74)=1.478 | P=0.234 | F(10, 96)=1.273 | P=0.256 | F(1.953, 93.74)=1.478 | P=0.234 |

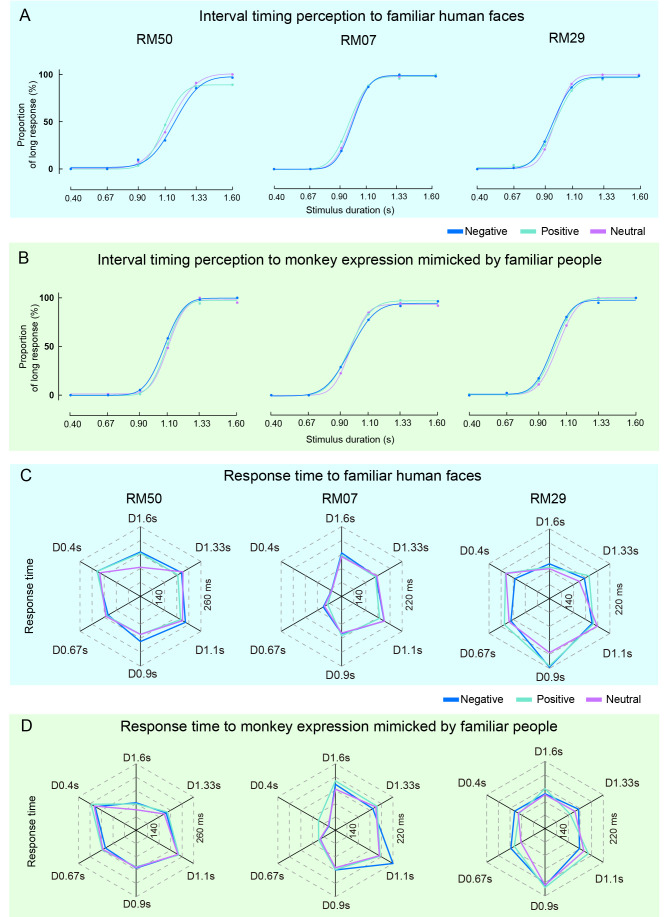

Influence of familiarity on interval timing behaviors

As familiarity with the identity of a face can influence the efficacy of expression discrimination (Rapcsak, 2019), the differential effects of facial expression on PSE and RT may have resulted from the subjects’ limited familiarity with the test faces. To rule out this possibility, we repeated the TDT using facial expressions from people in regular contact with the monkeys. First, the familiar people were asked to make facial expressions in the same way as the models from previous studies (Fear/Happy/Neutral). When these expressions were used as stimuli in the TDT, PLs again followed typical sigmoidal curves (Figure 3A), but the expressions of familiar people did not affect the PSE of the monkeys (Table 1). The same people were then asked to imitate expressions observed in monkeys (Threat/Lipsmack/Neutral), and these images were used as visual stimuli in the TDT. The resulting PL (Figure 3B) and PSE (Table 1) showed no significant differences among facial expressions for any monkey. Further analysis indicated that neither the expressions of familiar people nor the monkey expressions they imitated affected RT (Figure 3C, D; Table 2). In contrast to the results obtained with the corresponding expressions made by monkeys, these findings suggest that increasing familiarity with the human identities did not reproduce the effects observed with monkey faces.

Figure 3.

Interval timing behaviors of monkeys in response to familiar human faces and familiar humans mimicking monkey expressions in TDT

A, B: Average PLs and fitted curves of three monkeys for facial expressions of familiar humans (A) and familiar humans mimicking facial expressions of monkeys (B) in TDT. C, D: Average RTs of three monkeys to facial expressions of familiar humans (C) and familiar humans mimicking facial expressions of monkeys (D) in TDT.

Effects of facial expression on pupil size

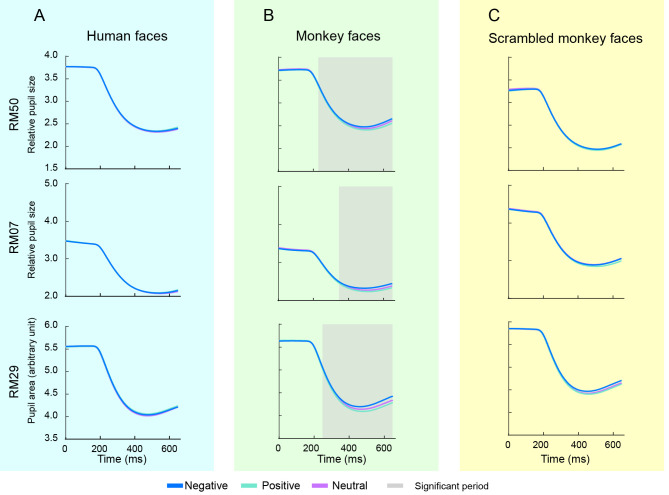

In addition to behavioral responses during the TDT, we also examined the effects of facial expressions on pupil size, a sensitive indicator of physiological state. Pupil area changes in response to the seven sets of human and monkey facial expressions in the TDT were analyzed separately. To exclude effects of luminance on pupil contraction, we balanced the overall luminance of the face images and confirmed that luminance did not differ significantly between human and monkey facial expressions. Average pupil areas across all sessions were plotted as a function of time for both human and monkey faces (Figure 4A, B). The pupil contracted rapidly about 200 ms after stimulus onset. Interestingly, monkey faces induced divergent contraction for different facial expressions (Figure 4B). Point-by-point pairwise comparisons indicated that human faces did not induce significant differences in pupil contraction among expressions (Figure 4A), whereas monkey faces induced a significant difference between Negative and Positive expressions (Figure 4B) in all three monkeys. These differences reached significance 227, 342, and 244 ms after stimulus onset for RM50, RM07, and RM29, respectively. To verify the effect of monkey facial expressions, we repeated the same experiment using scrambled monkey faces as a control. Although the scrambled monkey faces had the same luminance as the intact monkey faces, they did not induce the same effect on pupil contraction (Figure 4C). These results suggest that facial expression-induced pupil contraction may be species-dependent.

Figure 4.

Effects of facial expressions on pupil constriction in monkeys

A–C: Average pupil area changes in three monkeys after presentation of human facial expressions (A), monkey facial expressions (B), and scrambled monkey facial expressions (C) in TDT. Blue, green, and magenta represent Negative, Positive, and Neutral expressions, respectively. Gray zone indicates a significant difference between Negative and Positive facial expressions (P<0.05/3, paired t-test, df=6).

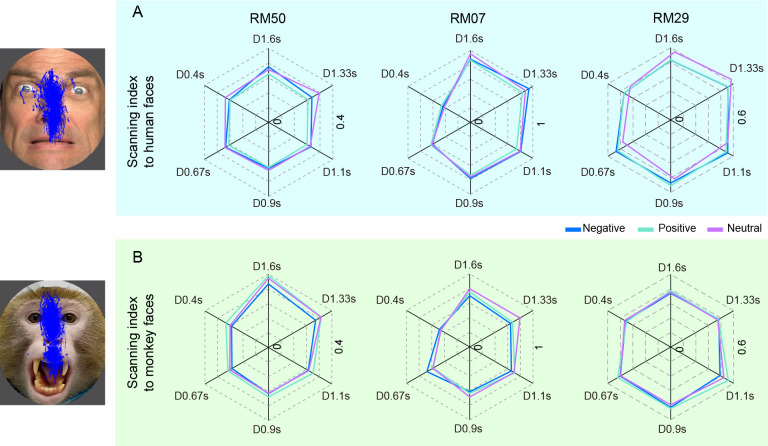

Viewing patterns in observing emotional faces

We next examined the gaze locations of monkeys viewing faces during the TDT and overlaid the viewing traces on the face stimuli (Figure 5). Notably, subjects RM50 and RM29 mostly scanned up and down over the face images. To quantify this viewing pattern, we calculated the SI (a value close to zero indicates that the monkey mostly scanned up and down within a narrow horizontal range; see Methods for details) for different facial expressions (Figure 5). For the pooled data of all monkeys, two-way ANOVA showed that human facial expressions did not affect the SI (Facial expression×Duration: F(10, 240)=0.658, P=0.763; Facial expression: F(1.798, 215.8)=2.231, P=0.115), whereas monkey facial expressions resulted in a lower SI for Negative than for Neutral expressions (Facial expression×Duration: F(10, 240)=1.765, P=0.068; Facial expression: F(1.950, 234)=3.904, P=0.022, ηp²=0.032; post-hoc test, P=0.047). For individual monkeys, two-way ANOVA showed that human facial expressions had a significant effect on the SI of RM50 (Facial expression×Duration: F(10, 72)=0.854, P=0.579; Facial expression: F(1.799, 64.75)=6.846, P=0.003, ηp²=0.160, Figure 5A), but not on the SI of RM07 or RM29. Post-hoc analysis indicated that the SI of RM50 was lower for Positive than for Neutral human expressions (P<0.001). Monkey facial expressions also affected the SI of RM50 (Facial expression×Duration: F(10, 72)=0.270, P=0.985; Facial expression: F(1.561, 56.18)=6.102, P=0.007, ηp²=0.145, Figure 5B). In contrast to human faces, post-hoc analysis showed that the SI was significantly lower for Negative than for Positive monkey expressions (P=0.009). These results suggest that monkeys observe human and monkey faces in different manners; in particular, monkeys prefer to scan up and down when viewing Negative expressions on monkey faces. These results are in line with the behavioral effects on PSE, RT, and pupil area induced by threatening facial expressions.

Figure 5.

Effects of facial expressions on scanning index (SI)

A: SI of three monkeys when observing human faces. B: SI of three monkeys when observing monkey faces. Blue, green, and magenta represent Negative, Positive, and Neutral expressions, respectively.

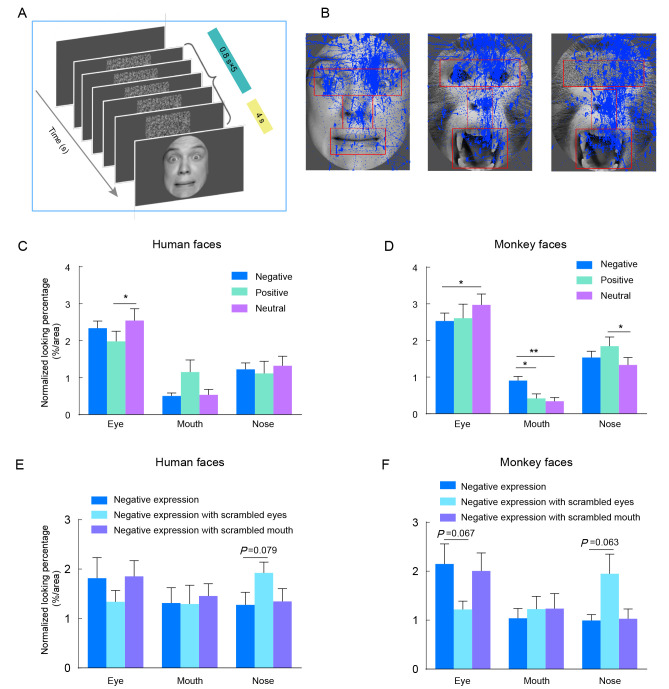

Scanning features on faces in FST

To further elucidate the viewing patterns of monkeys when observing emotional faces, we presented the facial stimuli at a larger scale and conducted the FST (Figure 6A). Figure 6B shows example stimuli: a human fear face, a monkey threat face, and a monkey threat face with scrambled eyes. Eye, nose, and mouth regions were defined separately for human and monkey faces and overlaid on the viewing traces (Figure 6B). The normalized looking percentage for each region was then plotted for human and monkey facial expressions (Figure 6C, D). Three-way ANOVA revealed no significant main effect of Species, indicating broadly similar viewing patterns for monkey and human faces, although the Species×Facial expression×ROI interaction was significant (Species×Facial expression×ROI: F(4, 40)=4.483, P=0.004, ηp²=0.310; Species: F(1, 10)=2.285, P=0.162). We then analyzed the effects of facial expression and ROI on relative gaze duration separately for human and monkey faces. As expected, two-way ANOVA showed that monkeys viewed the eye region longer than the other regions for both human faces (ROI×Facial expression: F(4, 30)=4.125, P=0.009, ηp²=0.135; ROI: F(2, 15)=14.35, P<0.001, ηp²=0.578; eye vs. mouth/nose, P<0.001) and monkey faces (ROI×Facial expression: F(4, 30)=8.955, P<0.001, ηp²=0.153; ROI: F(2, 15)=26.84, P<0.001, ηp²=0.752; eye vs. mouth/nose, P<0.001). Notably, monkeys also looked at the nose region longer than the mouth region for both human and monkey faces (Human faces: nose vs. mouth, P=0.046; Monkey faces: nose vs. mouth, P<0.001). Post-hoc analysis revealed that monkeys looked longer at the eye region of Neutral expressions than of Negative expressions for both human and monkey faces (Human: Negative vs. Neutral, P=0.02; Monkey: Negative vs. Neutral, P=0.012). Moreover, for monkey faces, Negative expressions attracted more viewing of the mouth and nose regions than the other two expressions. Specifically, Negative expressions resulted in markedly longer viewing of the mouth region than Neutral and Positive expressions (Negative vs. Positive, P=0.010; Negative vs. Neutral, P=0.003; Figure 6D).
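The ROI analysis above can be illustrated with a short Python sketch of how a normalized looking percentage might be computed from fixation data. It assumes fixations are available as (x, y, duration) tuples and that the eye, nose, and mouth ROIs are non-overlapping rectangles. The names `Rect` and `looking_percentage`, the rectangle format, and the choice to normalize by total fixation time within the three ROIs are all hypothetical; the study's actual normalization may differ (for example, by total face-viewing time or ROI area).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rect:
    """Axis-aligned ROI in screen pixels (hypothetical ROI format)."""
    x0: float
    y0: float
    x1: float
    y1: float

    def contains(self, x: float, y: float) -> bool:
        return self.x0 <= x <= self.x1 and self.y0 <= y <= self.y1


def looking_percentage(fixations, rois):
    """Percentage of on-ROI fixation time spent in each ROI.

    fixations: iterable of (x, y, duration_ms) tuples.
    rois: dict mapping an ROI name (e.g. "eye", "nose", "mouth") to a Rect.
    Fixations that fall outside every ROI are ignored.
    """
    totals = {name: 0.0 for name in rois}
    for x, y, duration in fixations:
        for name, rect in rois.items():
            if rect.contains(x, y):
                totals[name] += duration
                break  # assumes ROIs do not overlap; first match wins
    grand_total = sum(totals.values())
    if grand_total == 0:
        return {name: 0.0 for name in rois}
    # Normalize so the ROI percentages sum to 100 for this trial.
    return {name: 100.0 * t / grand_total for name, t in totals.items()}
```

Per-trial percentages computed this way could then be averaged per monkey, expression, and ROI before entering an ANOVA.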

Figure 6.

Influence of facial expressions on scanning patterns of monkeys in FST

A: Schematic of FST. B: Examples of facial stimuli and definitions of eye, nose, and mouth regions (marked in red) for a human face, a monkey face, and a monkey face with scrambled eyes. Blue traces show a monkey's viewing traces on the face. C, D: Normalized looking percentage for each face region and expression for Negative/Positive/Neutral human faces (C) and monkey faces (D). Blue, green, and magenta represent Negative, Positive, and Neutral expressions, respectively. E, F: Normalized looking percentage for each face region and expression for human faces (E) and monkey faces (F). Blue, light blue, and purple represent Negative expressions, Negative expressions with eyes scrambled, and Negative expressions with mouth scrambled, respectively. *: P<0.05; **: P<0.01, two-way ANOVA with Tukey post-hoc test.

To rule out the possibility that the preference for the eye and mouth regions was driven by low-level luminance features, we scrambled the eye and mouth regions and repeated the FST. Two-way ANOVA indicated that when the eye region was scrambled, the monkeys' preference for it diminished and they instead favored the nose region, for both human faces (ROI×Scrambled facial expression: F(4, 30)=4.528, P=0.006, ηp²=0.095; Negative face vs. Negative face with scrambled eyes, P=0.079; Figure 6E) and monkey faces (ROI×Scrambled facial expression: F(4, 30)=6.788, P<0.001, ηp²=0.228; Negative face vs. Negative face with scrambled eyes, P=0.063; Figure 6F). Scrambling the eye region of Negative monkey faces marginally shortened the time monkeys spent looking at the eye region (P=0.067), whereas no such effect was observed for human faces (P=0.236), suggesting that monkeys may be more sensitive to the eye region of conspecifics. Scrambling the mouth region had no significant effect on viewing patterns for either human or monkey faces.
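Scrambling a facial region so that its luminance is preserved while its features are destroyed can be done in several ways, and the procedure used here is not described in this section. As one hypothetical illustration, the sketch below block-scrambles a rectangular ROI of a 2-D grayscale image by randomly permuting equal-sized tiles. This leaves the set of pixel values inside the ROI, and therefore its mean luminance, unchanged. The function name `scramble_region`, the tile size, and the grayscale input are assumptions.

```python
import numpy as np


def scramble_region(img, top, left, height, width, tile=8, seed=0):
    """Return a copy of a 2-D grayscale image with one rectangular ROI block-scrambled.

    The ROI is cut into tile x tile blocks that are randomly permuted, so the
    multiset of pixel values (hence mean luminance) inside the ROI is preserved
    while local facial structure is destroyed. Any partial tile at the right or
    bottom edge of the ROI is left in place.
    """
    out = img.copy()
    rng = np.random.default_rng(seed)
    n_rows, n_cols = height // tile, width // tile
    # Basic slicing gives a view, so writing to `region` modifies `out`.
    region = out[top:top + n_rows * tile, left:left + n_cols * tile]
    # Split the region into a flat stack of (tile, tile) blocks.
    blocks = (region.reshape(n_rows, tile, n_cols, tile)
              .swapaxes(1, 2)
              .reshape(-1, tile, tile))
    blocks = blocks[rng.permutation(len(blocks))]
    # Reassemble the shuffled blocks into the original region layout.
    region[...] = (blocks.reshape(n_rows, n_cols, tile, tile)
                   .swapaxes(1, 2)
                   .reshape(n_rows * tile, n_cols * tile))
    return out
```

Because only tile positions change, a luminance-matched control of this kind isolates feature structure from overall brightness within the scrambled region.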

Collectively, these analyses provide insights into the viewing preferences of monkeys regarding different regions of emotional faces. Notably, the observation that monkeys prefer to look at the mouth region when viewing a threatening monkey face suggests that this region may be a key feature from which to extract cues of potential threat.

DISCUSSION

This study systematically investigated the perception of facial expressions from the perspective of monkeys using several behavioral tasks and eye-tracking technology. Our results showed that the facial expressions of monkeys, but not of humans, affected the time perception, RT, and pupil contraction of the monkeys. In addition, monkeys exhibited different viewing patterns for human and monkey faces, with greater sensitivity to the mouth region of conspecific expressions. These results indicate that monkeys are more proficient at perceiving expressions on conspecific faces than on human faces. The observed viewing patterns further suggest that a preference for the mouth region may underlie this bias toward conspecific expressions. Overall, our exploration of the sensitivity of monkeys to human and monkey facial expressions sheds new light on potential interspecies communication via facial expressions.

Efficacy of TDT and eye-tracking in reflecting arousal states

Subjective time perception can be influenced by high arousal states (Meck, 1983), and emotional faces can trigger heightened arousal (Bakker et al., 2014). Hence, we developed the TDT paradigm to probe how monkeys perceive facial expressions. The monkeys exhibited prolonged time perception (Table 1) in response to Negative monkey facial expressions, and pupil contraction was likewise modulated by monkey, but not human, expressions. Because pupil size is sensitive to changes in noradrenergic and cholinergic activity in the brain (Kahneman & Beatty, 1966; Reimer et al., 2016), it is thought to reflect arousal state, one of the factors that modulate time perception.
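Pupil responses of the kind discussed above are commonly summarized as a baseline-corrected change in pupil size after stimulus onset. The sketch below shows one subtractive baseline correction for a single trial and a per-condition average. The 200 ms baseline, the 0.5 to 1.5 s response window, the use of NaN for blink samples, and the function names are illustrative assumptions, not parameters reported in this study.

```python
import numpy as np


def baseline_corrected_change(trace, fs, onset_idx, baseline_s=0.2, window_s=(0.5, 1.5)):
    """Mean pupil size in a post-onset window minus the mean in a pre-onset baseline.

    trace: 1-D sequence of pupil-area samples; blink samples are assumed to be NaN.
    fs: sampling rate in Hz; onset_idx: sample index of stimulus onset.
    Negative return values indicate constriction relative to baseline.
    """
    trace = np.asarray(trace, dtype=float)
    b_start = onset_idx - int(round(baseline_s * fs))
    if b_start < 0:
        raise ValueError("trace does not contain a full baseline period")
    w_start = onset_idx + int(round(window_s[0] * fs))
    w_end = onset_idx + int(round(window_s[1] * fs))
    if w_end > len(trace):
        raise ValueError("trace does not cover the full response window")
    baseline = np.nanmean(trace[b_start:onset_idx])  # ignores NaN blink samples
    response = np.nanmean(trace[w_start:w_end])
    return float(response - baseline)


def condition_means(changes, labels):
    """Average baseline-corrected changes per condition label (e.g. 'Negative')."""
    means = {}
    for label in sorted(set(labels)):
        values = [c for c, lab in zip(changes, labels) if lab == label]
        means[label] = float(np.mean(values))
    return means
```

Subtractive correction keeps the result in the original pupil-area units; a divisive (percent-change) correction is a common alternative.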

Previous research has reported that high arousal can accelerate the internal clock, leading to overestimation of elapsed time (Meck, 1983). In NHPs, pupil size serves as a sensitive indicator of internal state (Kuraoka & Nakamura, 2022). Thus, the pupil contraction observed in this study suggests that Negative facial expressions of monkeys, but not of humans, induced high arousal states in the viewing monkeys, consistent with greater sensitivity to the emotional faces of conspecifics than to those of humans. Overall, our study highlights the efficacy of the TDT paradigm in probing facial expression processing in a non-verbal species. Furthermore, pupil area may offer a feasible physiological marker for measuring emotional states in NHPs.
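The internal-clock account cited above (Meck, 1983) can be made concrete with a minimal Poisson pacemaker-accumulator simulation. Pulses accumulate at a rate scaled by a hypothetical `clock_gain` (standing in for arousal), and a duration is classified as "long" when the pulse count exceeds a criterion learned at neutral arousal, placed at the count expected for the geometric mean of two anchor durations. The anchor durations, pulse rate, criterion rule, and function name are assumptions chosen for illustration, not the TDT parameters used in this study.

```python
import numpy as np


def p_long(durations_s, clock_gain=1.0, rate_hz=50.0, short_s=0.4, long_s=1.6,
           n_trials=20000, seed=0):
    """Simulated proportion of "long" responses for each stimulus duration.

    Pulses arrive as a Poisson process with rate rate_hz * clock_gain. The
    accumulated count is compared with a criterion fixed under neutral
    conditions (clock_gain = 1) at the count expected for the geometric mean
    of the short and long anchor durations.
    """
    rng = np.random.default_rng(seed)
    criterion = rate_hz * np.sqrt(short_s * long_s)
    durations = np.asarray(durations_s, dtype=float)
    # One row per duration, one column per simulated trial.
    counts = rng.poisson(rate_hz * clock_gain * durations[:, None],
                         size=(len(durations), n_trials))
    return (counts > criterion).mean(axis=1)
```

With these defaults, raising `clock_gain` above 1 shifts the simulated psychometric curve toward more "long" responses at intermediate durations, which is the overestimation of duration that the arousal account predicts.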

Differential sensitivity to human and monkey facial expressions

Nevertheless, the viewing patterns of monkeys shared certain features between human and monkey emotional faces. Notably, the monkeys preferred looking at the eye region regardless of emotion and species. This preference for eyes aligns with similar attentional biases observed in infants (Quinn & Tanaka, 2009), children (Yi et al., 2013), and adult rhesus monkeys (Goursaud & Bachevalier, 2020), suggesting that eye contact is a common cross-species communication strategy, with eyes providing important information for recognizing the identity or judging the intentions of others (Guo et al., 2003; Shepherd et al., 2010; Smith et al., 2005). Another shared feature was that monkeys preferred looking at emotional faces over Neutral faces, in line with previous findings in human subjects (Calvo & Nummenmaa, 2008; Jang et al., 2016; Leppänen et al., 2018; Priebe et al., 2021). This emotional bias suggests that both monkeys and humans are sensitive to facial expressions displayed by their conspecifics. Consistent with previous studies involving humans (Beaudry et al., 2014) and monkeys (Dahl et al., 2009), masking specific areas of the face disrupted the viewing patterns of monkeys. However, masking the eyes of Negative monkey faces, but not human faces, reduced gaze duration on the eyes, suggesting that monkeys may be more sensitive to conspecific eyes than to human eyes.

If monkeys are indeed less sensitive to human facial expressions, one potential reason may be that humans and monkeys use different facial expressions. For example, monkeys express Fear/Threat by baring their teeth with a wide-open mouth (Barat et al., 2018; Maréchal et al., 2017), whereas humans express fear mainly by widening their eyes, raising their brows and eyelids, and retracting their lips (Ebner et al., 2010; Ekman et al., 1983; Smith et al., 2005). As humans lack long canine teeth and do not signal threat in the same way as other predators, monkeys may be less sensitive to these human facial expressions. Consistently, in our study, the monkeys paid more attention to the mouth region of monkey faces than to that of human faces. Notably, the reverse also holds: without adequate training, humans are poor at describing the facial expressions of monkeys (Maréchal et al., 2017). Another reason may be familiarity, a factor known to influence facial expression processing (Herba et al., 2008; Wild-Wall et al., 2008). Given that the studied monkeys had less exposure to human faces than to those of fellow monkeys, and although the body postures of humans and monkeys evoke somewhat similar responses in the monkey brain (Taubert et al., 2022), our results suggest that achieving cross-species mutual understanding via facial expressions remains a long-term challenge.

Two-stage processing of facial expressions

As monkeys displayed some similar viewing preferences for human and monkey faces but distinct timing behaviors, our study suggests that the disparities in processing conspecific and human faces lie in perception and interpretation rather than in the use of visual fixation to gather information about emotional states. This finding is broadly consistent with the two-stage hypothesis proposed by Adolphs (2002), who suggested that the recognition of emotions first involves the construction of a simulation of the observed emotion in the perceiver, followed by the modulation of sensory cortices via top-down influences. According to this framework, observers (here, monkeys) adopt a comparable strategy to gather information from the faces of different species, and an internal mechanism, possibly involving the amygdala, interprets this information and generates different outputs to effectors, producing the observed behavioral and physiological differences. However, our data do not indicate where such an internal interpreter is located in the brain.

Limitations

This study has several limitations. Firstly, while we matched the broad categories of facial expressions (Positive/Negative/Neutral) between human and monkey faces, the specific expressions within each category were not identical between the two species. Secondly, our conclusions were based on a small sample of monkeys, which may limit the generalizability of the findings. Lastly, some visual stimuli, particularly monkeys displaying threatening facial expressions with an open mouth, may have contained sharp luminance contrast in the mouth region, which could have affected the pupil responses.

Conclusions and future directions

In conclusion, our study provides valuable insights into facial expression processing from the perspective of monkeys, revealing notable differences in how monkeys perceive human and conspecific expressions. Although both are primates, there appears to be a barrier between these two closely related species in terms of facial expression communication. As such, future studies involving both human and monkey faces should take these species differences into account when designing experiments.

Many questions remain for further investigation. One crucial direction would be to examine the neural mechanisms underlying these phenomena, notably where and how the interpretation of facial expressions differs in the brain. Another would be to explore whether these differences can be bridged to foster cross-species understanding.

COMPETING INTERESTS

The authors declare that they have no competing interests.

AUTHORS’ CONTRIBUTIONS

X.H.L. and J.D. designed the experiment; X.H.L., L.G., Z.T.Z., and P.K.Y. collected the data; X.H.L. and J.D. performed the analysis; X.H.L. and J.D. wrote the manuscript. All authors read and approved the final version of the manuscript.

Funding Statement

This work was supported by the National Natural Science Foundation of China (U20A2017), Guangdong Basic and Applied Basic Research Foundation (2022A1515010134, 2022A1515110598), Youth Innovation Promotion Association of Chinese Academy of Sciences (2017120), Shenzhen-Hong Kong Institute of Brain Science–Shenzhen Fundamental Research Institutions (NYKFKT2019009), and Shenzhen Technological Research Center for Primate Translational Medicine (F-2021-Z99-504979).

References

- Adolphs R. Neural systems for recognizing emotion. Current Opinion in Neurobiology. 2002;12(2):169–177. doi: 10.1016/S0959-4388(02)00301-X.

- Angrilli A, Cherubini P, Pavese A, et al. The influence of affective factors on time perception. Perception & Psychophysics. 1997;59(6):972–982. doi: 10.3758/bf03205512.

- Bakker I, van der Voordt T, Vink P, et al. Pleasure, arousal, dominance: Mehrabian and Russell revisited. Current Psychology. 2014;33(3):405–421. doi: 10.1007/s12144-014-9219-4.

- Barat E, Wirth S, Duhamel JR. Face cells in orbitofrontal cortex represent social categories. Proceedings of the National Academy of Sciences of the United States of America. 2018;115(47):E11158–E11167. doi: 10.1073/pnas.1806165115.

- Beaudry O, Roy-Charland A, Perron M, et al. Featural processing in recognition of emotional facial expressions. Cognition and Emotion. 2014;28(3):416–432. doi: 10.1080/02699931.2013.833500.

- Calvo MG, Gutiérrez-García A, Avero P, et al. Attentional mechanisms in judging genuine and fake smiles: eye-movement patterns. Emotion. 2013;13(4):792–802. doi: 10.1037/a0032317.

- Calvo MG, Nummenmaa L. Detection of emotional faces: salient physical features guide effective visual search. Journal of Experimental Psychology: General. 2008;137(3):471–494. doi: 10.1037/a0012771.

- Dahl CD, Wallraven C, Bülthoff HH, et al. Humans and macaques employ similar face-processing strategies. Current Biology. 2009;19(6):509–513. doi: 10.1016/j.cub.2009.01.061.

- Dal Monte O, Noble P, Costa VD, et al. Oxytocin enhances attention to the eye region in rhesus monkeys. Frontiers in Neuroscience. 2014;8:41. doi: 10.3389/fnins.2014.00041.

- Deane AR, Millar J, Bilkey DK, et al. Maternal immune activation in rats produces temporal perception impairments in adult offspring analogous to those observed in schizophrenia. PLoS One. 2017;12(11):e0187719. doi: 10.1371/journal.pone.0187719.

- Ebner NC, Riediger M, Lindenberger U. FACES—a database of facial expressions in young, middle-aged, and older women and men: development and validation. Behavior Research Methods. 2010;42(1):351–362. doi: 10.3758/BRM.42.1.351.

- Eisenbarth H, Alpers GW. Happy mouth and sad eyes: scanning emotional facial expressions. Emotion. 2011;11(4):860–865. doi: 10.1037/a0022758.

- Ekman P, Levenson RW, Friesen WV. Autonomic nervous system activity distinguishes among emotions. Science. 1983;221(4616):1208–1210. doi: 10.1126/science.6612338.

- Frijda NH. The evolutionary emergence of what we call "emotions". Cognition and Emotion. 2016;30(4):609–620. doi: 10.1080/02699931.2016.1145106.

- Gil S, Droit-Volet S. "Time flies in the presence of angry faces"… depending on the temporal task used! Acta Psychologica. 2011;136(3):354–362. doi: 10.1016/j.actpsy.2010.12.010.

- Goursaud APS, Bachevalier J. Altered face scanning and arousal after orbitofrontal cortex lesion in adult rhesus monkeys. Behavioral Neuroscience. 2020;134(1):45–58. doi: 10.1037/bne0000342.

- Guo K, Li ZH, Yan Y, et al. Viewing heterospecific facial expressions: an eye-tracking study of human and monkey viewers. Experimental Brain Research. 2019;237(8):2045–2059. doi: 10.1007/s00221-019-05574-3.

- Guo K, Robertson RG, Mahmoodi S, et al. How do monkeys view faces? —A study of eye movements. Experimental Brain Research. 2003;150(3):363–374. doi: 10.1007/s00221-003-1429-1.

- Herba CM, Benson P, Landau S, et al. Impact of familiarity upon children's developing facial expression recognition. Journal of Child Psychology and Psychiatry. 2008;49(2):201–210. doi: 10.1111/j.1469-7610.2007.01835.x.

- Jang SK, Kim S, Kim CY, et al. Attentional processing of emotional faces in schizophrenia: evidence from eye tracking. Journal of Abnormal Psychology. 2016;125(7):894–906. doi: 10.1037/abn0000198.

- Kahneman D, Beatty J. Pupil diameter and load on memory. Science. 1966;154(3756):1583–1585. doi: 10.1126/science.154.3756.1583.

- Kuraoka K, Nakamura K. Facial temperature and pupil size as indicators of internal state in primates. Neuroscience Research. 2022;175:25–37. doi: 10.1016/j.neures.2022.01.002.

- Leppänen JM, Cataldo JK, Enlow MB, et al. Early development of attention to threat-related facial expressions. PLoS One. 2018;13(5):e0197424. doi: 10.1371/journal.pone.0197424.

- Liu N, Hadj-Bouziane F, Jones KB, et al. Oxytocin modulates fMRI responses to facial expression in macaques. Proceedings of the National Academy of Sciences of the United States of America. 2015;112(24):E3123–E3130. doi: 10.1073/pnas.1508097112.

- Liu XH, Wang N, Wang JY, et al. Formalin-induced and neuropathic pain altered time estimation in a temporal bisection task in rats. Scientific Reports. 2019;9(1):18683. doi: 10.1038/s41598-019-55168-w.

- Maréchal L, Levy X, Meints K, et al. Experience-based human perception of facial expressions in Barbary macaques (Macaca sylvanus). PeerJ. 2017;5:e3413. doi: 10.7717/peerj.3413.

- McClure EA, Saulsgiver KA, Wynne CDL. Effects of D-amphetamine on temporal discrimination in pigeons. Behavioural Pharmacology. 2005;16(4):193–208. doi: 10.1097/01.fbp.0000171773.69292.bd.

- Meck WH. Selective adjustment of the speed of internal clock and memory processes. Journal of Experimental Psychology: Animal Behavior Processes. 1983;9(2):171–201. doi: 10.1037/0097-7403.9.2.171.

- Micheletta J, Whitehouse J, Parr LA, et al. Facial expression recognition in crested macaques (Macaca nigra). Animal Cognition. 2015;18(4):985–990. doi: 10.1007/s10071-015-0867-z.

- Morozov A, Parr LA, Gothard K, et al. Automatic recognition of macaque facial expressions for detection of affective states. eNeuro. 2021;8(6):ENEURO.0117–21.2021. doi: 10.1523/ENEURO.0117-21.2021.

- Nakakoga S, Higashi H, Muramatsu J, et al. Asymmetrical characteristics of emotional responses to pictures and sounds: evidence from pupillometry. PLoS One. 2020;15(4):e0230775. doi: 10.1371/journal.pone.0230775.

- Priebe JA, Horn-Hofmann C, Wolf D, et al. Attentional processing of pain faces and other emotional faces in chronic pain-an eye-tracking study. PLoS One. 2021;16(5):e0252398. doi: 10.1371/journal.pone.0252398.

- Quinn PC, Tanaka JW. Infants' processing of featural and configural information in the upper and lower halves of the face. Infancy. 2009;14(4):474–487. doi: 10.1080/15250000902994248.

- Rapcsak SZ. Face recognition. Current Neurology and Neuroscience Reports. 2019;19(7):41. doi: 10.1007/s11910-019-0960-9.

- Reimer J, McGinley MJ, Liu Y, et al. Pupil fluctuations track rapid changes in adrenergic and cholinergic activity in cortex. Nature Communications. 2016;7:13289. doi: 10.1038/ncomms13289.

- Reisinger DL, Shaffer RC, Horn PS, et al. Atypical social attention and emotional face processing in autism spectrum disorder: insights from face scanning and pupillometry. Frontiers in Integrative Neuroscience. 2020;13:76. doi: 10.3389/fnint.2019.00076.

- Shepherd SV, Steckenfinger SA, Hasson U, et al. Human-monkey gaze correlations reveal convergent and divergent patterns of movie viewing. Current Biology. 2010;20(7):649–656. doi: 10.1016/j.cub.2010.02.032.

- Sigala R, Logothetis NK, Rainer G. Own-species bias in the representations of monkey and human face categories in the primate temporal lobe. Journal of Neurophysiology. 2011;105(6):2740–2752. doi: 10.1152/jn.00882.2010.

- Smith ML, Cottrell GW, Gosselin F, et al. Transmitting and decoding facial expressions. Psychological Science. 2005;16(3):184–189. doi: 10.1111/j.0956-7976.2005.00801.x.

- Smith SD, McIver TA, Di Nella MSJ, et al. The effects of valence and arousal on the emotional modulation of time perception: evidence for multiple stages of processing. Emotion. 2011;11(6):1305–1313. doi: 10.1037/a0026145.

- Soares S, Atallah BV, Paton JJ. Midbrain dopamine neurons control judgment of time. Science. 2016;354(6317):1273–1277. doi: 10.1126/science.aah5234.

- Sugase Y, Yamane S, Ueno S, et al. Global and fine information coded by single neurons in the temporal visual cortex. Nature. 1999;400(6747):869–873. doi: 10.1038/23703.

- Taubert J, Japee S, Patterson A, et al. A broadly tuned network for affective body language in the macaque brain. Science Advances. 2022;8(47):eadd6865. doi: 10.1126/sciadv.add6865.

- Tipples J. When time stands still: fear-specific modulation of temporal bias due to threat. Emotion. 2011;11(1):74–80. doi: 10.1037/a0022015.

- Tipples J, Brattan V, Johnston P. Facial emotion modulates the neural mechanisms responsible for short interval time perception. Brain Topography. 2015;28(1):104–112. doi: 10.1007/s10548-013-0350-6.

- Tottenham N, Tanaka JW, Leon AC, et al. The NimStim set of facial expressions: judgments from untrained research participants. Psychiatry Research. 2009;168(3):242–249. doi: 10.1016/j.psychres.2008.05.006.

- Waller BM, Julle-Daniere E, Micheletta J. Measuring the evolution of facial ‘expression’ using multi-species FACS. Neuroscience & Biobehavioral Reviews. 2020;113:1–11. doi: 10.1016/j.neubiorev.2020.02.031.

- Ward RD, Odum AL. Disruption of temporal discrimination and the choose-short effect. Animal Learning & Behavior. 2007;35(1):60–70. doi: 10.3758/bf03196075.

- Wild-Wall N, Dimigen O, Sommer W. Interaction of facial expressions and familiarity: ERP evidence. Biological Psychology. 2008;77(2):138–149. doi: 10.1016/j.biopsycho.2007.10.001.

- Yi L, Fan YB, Quinn PC, et al. Abnormality in face scanning by children with autism spectrum disorder is limited to the eye region: evidence from multi-method analyses of eye tracking data. Journal of Vision. 2013;13(10):5. doi: 10.1167/13.10.5.

- Yin HZ, Cui XB, Bai YL, et al. The effects of angry expressions and fearful expressions on duration perception: an ERP study. Frontiers in Psychology. 2021;12:570497. doi: 10.3389/fpsyg.2021.570497.

- Zhang B, Zhou ZG, Zhou Y, et al. Increased attention to snake images in cynomolgus monkeys: an eye-tracking study. Zoological Research. 2020;41(1):32–38. doi: 10.24272/j.issn.2095-8137.2020.005.

- Zhu Q, Nelissen K, Van Den Stock J, et al. Dissimilar processing of emotional facial expressions in human and monkey temporal cortex. Neuroimage. 2013;66:402–411. doi: 10.1016/j.neuroimage.2012.10.083.