Summary

In the imaging process of conventional optical microscopy, the primary factor limiting resolution is the energy diffusion (spreading) of incident light, which is most directly described by the point spread function (PSF). Accurate calculation and measurement of the PSF are therefore essential for evaluating and enhancing imaging resolution. Various methods are currently available for obtaining PSFs. Each has advantages and disadvantages that suit it to different scenarios. To provide a comprehensive analysis, this study starts from the propagation properties of light in physical optics, classifies PSF-obtaining methods into four categories according to their acquisition principles, and analyzes the advantages and disadvantages of each. Finally, two PSF-obtaining methods are proposed, one based on mathematical modeling and one based on deep learning, and experimental results demonstrate their effectiveness. This study compares and analyzes these results and highlights their practical application to image deblurring.

Subject areas: Physics, Optics, Optical imaging

Graphical abstract


Introduction

Various types of microscopes, including fluorescence,1,2,3 electron,4,5 and scanning microscopes,6,7,8,9 are currently available for micro/nanoscale observation. However, conventional optical microscopes are nondestructive, fast, and capable of dynamic observation, so they remain important observation tools in biomedicine, microelectronics, chemistry, and precision instrument manufacturing.10,11,12

The first practical optical microscope was invented by Antonie van Leeuwenhoek in 1673, with a magnification of approximately 270×. This was the first time visible light and lenses were used to capture images of samples that are difficult to observe with the naked eye. However, diffraction of the incident light places an upper limit on the ability of an optical microscope to distinguish the details of an observed target. This limit is defined as the resolution of the microscope. Because resolution depends on how light is distributed, a series of studies have been conducted to calculate the resolution of optical microscopes. George Airy proposed a method to calculate the distribution of the bright and dark rings produced when incident light passes through a diffraction-limited system.13 Ernst Abbe calculated the resolution of optical microscopes from the numerical aperture of the lenses and the wavelength of the incident light.14 Building on Abbe’s theory, John Strutt (Lord Rayleigh) gave a quantitative relationship between the distribution of Airy disks and resolution. The Rayleigh criterion was then proposed to evaluate the resolution of optical microscopes quantitatively from the intensity distributions of adjacent diffraction disks.15 Fresnel expressed the wavefront intensity as a superposition of the waves from multiple vibrating points and established an analytical mathematical expression for diffraction by a circular hole.16 Building on Fresnel’s mathematical description of diffraction, Kirchhoff, Fraunhofer, and Sommerfeld examined the properties of the intensity distribution under different boundary conditions. Their work formed the basis of diffraction theory in physical optics.17,18

In sum, studying how energy diffusion caused by light diffraction affects imaging resolution in microscopy requires a quantitative relationship, grounded in diffraction theory, between diffraction-induced energy diffusion and the degree of blurring in complex optical systems. Accordingly, a series of studies based on the diffraction theories of physical optics have addressed this problem in recent years.

Diffraction theories in physical optics

Keller established the geometric theory of diffraction (GTD), formulating simple rules for calculating diffraction fields.19 Borovikov refined the GTD and established rules for distinguishing the regions in which geometric optics and diffraction fields, respectively, apply.20 The GTD connected the applicable ranges of geometric optics and diffraction fields through transition regions and provided models of wave propagation in these regions.21,22 Bouwkamp proposed several three-dimensional diffraction field expressions based on the Kirchhoff integral.23 Bouwkamp considered that the amplitude at any point in space originates from the interference created at that location by a converging spherical wave propagating along the optical axis. Building on this study, Born and Wolf established a three-dimensional diffraction field expression whose origin lies at the intersection of the optical axis and the aperture plane.24 Hopkins contributed to the expression of the three-dimensional diffraction field by introducing the defocus distance, that is, the difference between the distance from the aperture plane to the receiving plane and the distance from the spherical wave focus to the receiving plane.25 Li and Wolf improved Hopkins's three-dimensional diffraction field equation to obtain more accurate results for diffraction states with different Fresnel numbers.26 Meanwhile, Frieden and Pederson described the three-dimensional diffraction field of off-axis spherical waves.27,28 These researchers analyzed diffraction properties through experiments or simulations. However, their models for calculating the intensity distribution mostly consisted of complicated non-analytical functions, or even only approximate integral solutions. It is therefore difficult to analyze the diffraction properties of a practical diffraction system simply and rapidly.

To solve this problem, Wang proposed a three-dimensional diffraction field model for circular-hole Fresnel diffraction and derived expressions for divergent and convergent spherical wave models.29 Previous research had focused on divergent waves. Wang instead established a mathematical relationship between divergent and convergent waves and extended his method to calculate the intensity distribution of convergent waves, which provides an important theoretical basis for intensity analysis in imaging.

As diffraction theory continued to improve, it was also applied to optical imaging and gradually formed diffraction imaging theory, which is increasingly used to assess and calibrate practical optical systems. Duffieux was the first to propose an imaging theory based on diffraction.30 Blanchard used diffraction theory to design diffraction gratings and applied the improved gratings to camera lenses for aberration correction.31 These gratings were also applied to wavefront sensing systems. Based on the diffraction model reported by Frieden, Stefan gave a method for calculating the optical transfer function (OTF) of a large-aperture imaging system under different medium refractive indices. He then applied the OTF model to describe confocal microscopes and partially coherent imaging systems.32 Imaging applications of diffraction theory generally rely on two steps: measuring the degree of energy diffusion in images blurred by diffraction, and establishing an OTF. However, simple methods such as gratings struggle to describe the degree of energy diffusion accurately, and their results contain unknown aberrations of the systems.
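Because the OTF is the Fourier transform of the PSF, normalized to 1 at zero frequency, and the modulation transfer function (MTF) is its modulus, the link between the two can be sketched in a few lines of NumPy. The Gaussian test spot below is an assumed stand-in for a measured PSF, not a diffraction model. The function names `otf_from_psf` and `mtf_from_psf` are hypothetical.

```python
import numpy as np

def otf_from_psf(psf):
    """OTF of a sampled 2-D PSF, normalised so that OTF(0, 0) = 1.

    The PSF is assumed to be centred in the array. ifftshift moves that
    centre to index (0, 0) so the OTF carries no linear phase ramp.
    """
    psf = np.asarray(psf, dtype=float)
    otf = np.fft.fft2(np.fft.ifftshift(psf))
    return otf / otf[0, 0]

def mtf_from_psf(psf):
    """The MTF is the modulus of the OTF."""
    return np.abs(otf_from_psf(psf))

# Hypothetical example: a Gaussian blur spot (sigma = 2 pixels) on a 64 x 64 grid.
n = 64
y, x = np.mgrid[-n // 2:n // 2, -n // 2:n // 2]
psf = np.exp(-(x**2 + y**2) / (2 * 2.0**2))
mtf = mtf_from_psf(psf)
```

For a non-negative PSF the MTF never exceeds its zero-frequency value of 1, and a broader PSF makes the MTF fall off faster.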

Therefore, analyzing the mechanisms of light-intensity diffusion in optical imaging systems based on diffraction theory is necessary. In optical microscope systems, the mechanism of light-intensity diffusion is generally expressed as a point spread function (PSF). Therefore, the accurate acquisition of PSFs is important for the calibration and resolution enhancement of optical microscopes. Four main methods are currently available for obtaining more accurate PSFs of optical microscopes: mathematical, experimental, analytical, and deep learning.

Common methods of PSF acquisition

Mathematical methods

To develop an accurate mathematical model of the PSF, the two main factors in PSF formation must be analyzed: the light source and the microscope system. For the light source, Durnin proposed a model of the light intensity distribution of diffraction-free (Bessel) beams of different orders under different conditions.33,34 Sprangle compared how the light intensity distributions of Gaussian and Bessel beams vary with different parameters.35 Ruschin proposed a modified Bessel beam model with reduced diffraction and long-distance propagation.36 Overfelt compared the propagation capabilities and light intensity distributions of Bessel, Gaussian, and Bessel-Gaussian beams under single-hole Fresnel and Fraunhofer diffraction conditions.37 These studies established the propagation laws of different types of light sources when diffraction occurs.
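The different propagation behavior of the beam types compared above can be illustrated with the textbook paraxial formulas. A TEM00 Gaussian beam spreads with distance as w(z) = w0·sqrt(1 + (z/z_R)²), where z_R = π·w0²/λ. An ideal J0 Bessel beam keeps the same transverse profile J0(k_r·r)² at every z. The sketch below is a minimal illustration under those idealized assumptions (vacuum, infinite-aperture Bessel beam). The function names and example values are hypothetical.

```python
import numpy as np
from scipy.special import j0, jn_zeros

def rayleigh_range(w0, wavelength):
    """Rayleigh range z_R = pi * w0**2 / wavelength (vacuum, paraxial)."""
    return np.pi * w0**2 / wavelength

def gaussian_beam_radius(z, w0, wavelength):
    """1/e^2 radius of a TEM00 Gaussian beam at distance z from its waist."""
    return w0 * np.sqrt(1.0 + (z / rayleigh_range(w0, wavelength)) ** 2)

def bessel_intensity(r, k_r):
    """Transverse intensity of an ideal J0 Bessel beam (independent of z)."""
    return j0(k_r * r) ** 2

def bessel_core_radius(k_r):
    """Radius of the central lobe: first zero of J0 divided by k_r."""
    return jn_zeros(0, 1)[0] / k_r

# Hypothetical example values in SI units: 633 nm light, 1 mm waist.
w0, lam = 1e-3, 633e-9
z = np.array([0.0, 1.0, 5.0, 10.0])
print(gaussian_beam_radius(z, w0, lam))  # radius grows with z
print(bessel_core_radius(k_r=2e4))       # same at every z for the ideal beam
```

A real Bessel beam is generated through a finite aperture, so its diffraction-free range is limited. The modified and Bessel-Gaussian models cited above address this, and they are not reproduced here.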

For practical optical microscope systems, Gibson and Lanni summarized the existing models and proposed a three-dimensional convergent spherical wave diffraction field model with a more accurate representation of the defocus distance. They also provided a detailed PSF model for oil-immersion objective lenses.38 Agard collected and analyzed images of fluorescent objects using oil- and water-immersion objective lenses, confirming the PSF model of Gibson and Lanni.39 Lalaoui extended the work of Gibson and Lanni with an effective medium approximation, creating a PSF model with more accurate refractive index parameters and thus significantly improving the accuracy of PSF estimation.40 Török found that the conventional scalar diffraction field cannot accurately describe the propagation of electromagnetic waves when the diffraction field passes through two media with a large difference in refractive index. He therefore proposed a vector field model of circular hole diffraction based on the theory of Li and Wolf.41 Haeberlé combined Török's study with the diffraction field model of Gibson and Lanni and proposed a PSF model for high-numerical-aperture lenses.42 In addition, some researchers have built mathematical models that calculate PSFs theoretically by describing the response of the imaging system through the pupil function of the objective lens in Fourier optics.43,44,45
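The pupil-function approach mentioned at the end of the paragraph above can be sketched in its simplest scalar form. In this form the incoherent PSF is the squared modulus of the Fourier transform of the pupil function. The sketch assumes a circular aberration-free pupil with an optional paraxial defocus term W(ρ) = W20·ρ² (in waves). It omits vector effects and refractive-index mismatch, which the models cited above include. The function `pupil_psf` and its parameters are hypothetical.

```python
import numpy as np

def pupil_psf(n=256, pupil_radius=32, defocus_waves=0.0):
    """Scalar incoherent PSF |FFT(pupil)|^2 for a circular pupil.

    pupil_radius  : pupil radius in frequency-grid samples (sets the sampling).
    defocus_waves : defocus coefficient W20 in waves, W(rho) = W20 * rho**2
                    (a simple paraxial assumption).
    """
    fy, fx = np.mgrid[-n // 2:n // 2, -n // 2:n // 2]
    rho = np.hypot(fx, fy) / pupil_radius           # normalised pupil radius
    aperture = (rho <= 1.0).astype(float)
    pupil = aperture * np.exp(2j * np.pi * defocus_waves * rho**2)
    field = np.fft.fftshift(np.fft.fft2(np.fft.ifftshift(pupil)))
    psf = np.abs(field) ** 2
    return psf / psf.sum()                          # unit total energy

in_focus = pupil_psf()
defocused = pupil_psf(defocus_waves=0.5)
# Both PSFs carry the same energy, so the peak ratio is a Strehl-like measure.
strehl = defocused.max() / in_focus.max()
```

The in-focus case reproduces an Airy pattern centered on the grid. Adding defocus spreads the same energy over a wider area and lowers the peak, the defocus-dependent blurring that the models above describe more rigorously.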

Overall, the advantage of mathematical methods is that the PSFs of optical microscopes can be calculated rapidly and their trends predicted. However, the more complex the optical microscope system, the harder it is to construct the corresponding mathematical model.

Experimental methods

During actual microscopic observation, the PSF of an optical microscope system is not a regular point-like pattern and varies with spatial location. Experimental methods are therefore used to obtain the PSF of optical microscopes. Researchers generally measure the PSF using subresolution fluorescent microbeads embedded in optical cement or placed on tilted surfaces at different locations. This is particularly true for some self-built fluorescence imaging systems, which are mostly calibrated by measuring the morphology of images of fixed-size microbeads. Gibson and Lanni analyzed images of fluorescent microbeads collected with an oil-immersion objective lens and derived an expression for the corresponding PSF.46 However, this method poses two major challenges. First, the position of a microbead in optical cement is difficult to control precisely, which leads to inaccurate measurement results. Second, human handling of the optical cement can disturb its local refractive index, so that the refractive index of the optical medium becomes inconsistent and measurement errors result. Consequently, obtaining PSFs from fluorescent microbeads embedded at different depths in the observed samples is complicated. To solve this problem, Bruno proposed a method that finds PSFs in the observed images and then reconstructs the images.47 With the development of single-molecule localization and tracking techniques, researchers can rely on controlled fluorescent molecules to determine the PSF of optical microscope systems.48 The advantage of this approach is that PSFs obtained from single fluorescent molecules are much more accurate than those obtained from fluorescent microbeads. However, single-molecule localization and tracking are more susceptible to Brownian motion, which introduces observation lag and reduces the accuracy of the obtained PSFs.49
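A common first step when calibrating with subresolution beads, as described above, is to fit a simple model to an isolated bead image and report its center and full width at half maximum (FWHM). The sketch below fits a 2-D Gaussian with `scipy.optimize.curve_fit`. It assumes that a Gaussian adequately approximates the central lobe of the PSF and that the bead is well below the resolution limit. Neither assumption is part of the methods cited above, and the function names are hypothetical.

```python
import numpy as np
from scipy.optimize import curve_fit

def gaussian_2d(coords, amp, x0, y0, sigma, offset):
    """Isotropic 2-D Gaussian plus constant background, flattened for curve_fit."""
    x, y = coords
    g = amp * np.exp(-((x - x0) ** 2 + (y - y0) ** 2) / (2 * sigma**2)) + offset
    return g.ravel()

def fit_bead(image):
    """Return (x0, y0, fwhm) of a single bead image, in pixels."""
    image = np.asarray(image, dtype=float)
    ny, nx = image.shape
    y, x = np.mgrid[0:ny, 0:nx]
    iy, ix = np.unravel_index(np.argmax(image), image.shape)
    p0 = (image.max() - image.min(), ix, iy, 2.0, image.min())  # initial guess
    popt, _ = curve_fit(gaussian_2d, (x, y), image.ravel(), p0=p0)
    _, x0, y0, sigma, _ = popt
    fwhm = 2.0 * np.sqrt(2.0 * np.log(2.0)) * abs(sigma)
    return x0, y0, fwhm

# Hypothetical synthetic bead: sigma = 2.5 px at (20.3, 18.7) on a 0.1 background.
yy, xx = np.mgrid[0:40, 0:40]
bead = gaussian_2d((xx, yy), 1.0, 20.3, 18.7, 2.5, 0.1).reshape(40, 40)
x0, y0, fwhm = fit_bead(bead)
```

With real data, the measured width also includes the finite bead size and noise. The position of each bead in the cement sets the depth at which this width applies, which is why the positioning errors noted above matter.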

Knife-edge methods are another important approach to measuring the PSF of optical microscopes. They record the intensity profile across a well-defined edge in the microscopic image (the edge spread function) and differentiate it to obtain the line spread function, a one-dimensional projection of the PSF.50 For precise measurement, the knife edge in the observed image must be perpendicular or parallel to the sampling direction. Some knife-edge methods are robust to the angle of the knife edge, but several constraints still apply when large-angle knife edges are used for optical imaging.51 For example, selecting edges at different positions may lead to significant differences in the measured PSF.52
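The knife-edge principle can be sketched numerically. The edge spread function (ESF) is the intensity profile across the edge. Its derivative is the line spread function (LSF), whose width characterizes the PSF along that direction. The example assumes an ideal straight edge already aligned with the sampling direction and a Gaussian-shaped LSF with σ = 1, chosen only so the result can be checked. The function names are hypothetical.

```python
import numpy as np
from scipy.special import erf

def lsf_from_esf(esf, dx):
    """Differentiate an edge spread function and normalise the LSF to unit area."""
    lsf = np.gradient(np.asarray(esf, dtype=float), dx)
    return lsf / (lsf.sum() * dx)

def lsf_width(x, lsf, dx):
    """Standard deviation (second-moment width) of a unit-area LSF."""
    mean = np.sum(x * lsf) * dx
    return np.sqrt(np.sum((x - mean) ** 2 * lsf) * dx)

# Synthetic example (assumption): an ideal edge blurred by a Gaussian LSF, sigma = 1.
dx = 0.05
x = np.arange(-10.0, 10.0, dx)
sigma_true = 1.0
esf = 0.5 * (1.0 + erf(x / (np.sqrt(2.0) * sigma_true)))
lsf = lsf_from_esf(esf, dx)
sigma_est = lsf_width(x, lsf, dx)
```

Differentiation amplifies noise, so measured ESFs are usually smoothed or oversampled first. This is one reason why edge angle and edge position affect the result, as noted above.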

The PSFs obtained using these measurement methods provide features that incorporate aberrations in the entire optical system; thus, the effect of the entire optical system on the imaging can be described more accurately. However, the accuracy of these experimental methods is influenced by the characteristics of the observed samples, such as morphology, location, material, and luminous characteristics.

Analytical methods

Because both mathematical and experimental methods have evident disadvantages, researchers have devised analytical methods that obtain PSFs without requiring either complex mathematical modeling or the collection of a large number of samples.

Analytical methods estimate the PSF iteratively. Nagy and Leary proposed a sub-block two-dimensional convolution method based on the fast Fourier transform, together with linear interpolation of PSFs, to reduce the mosaic effect.53 Preza and Conchello applied the layered deconvolution method used in astronomical image processing to microscopic images and proposed a maximum likelihood-expectation maximization deconvolution algorithm.54 Maalouf used the Zernike moment expansion of measured depth-dependent PSFs as an initial condition, providing a theoretical basis for deconvolution.55 The measured PSFs were further optimized during deconvolution to approximate the true values. Kim extracted a rough PSF from images of the observed samples through intensity analysis and refined it iteratively with the Richardson-Lucy algorithm, which reduced computational complexity.56 Chen performed weighted averaging of measured images of fluorescent particles, proposed a method for estimating spatially varying PSFs, and used deconvolution to obtain more accurate PSFs.57
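The Richardson-Lucy iteration mentioned above is a multiplicative update, x ← x · [h ⋆ (y / (h ∗ x))], where ∗ is convolution with the PSF h and ⋆ is correlation. The sketch below is the standard non-blind version with a known PSF and circular (FFT) boundaries. The blind variants used to refine a rough PSF alternate this kind of update between the image and the PSF, and they are not shown. The helper names and the synthetic test scene are hypothetical.

```python
import numpy as np

def psf_to_otf(psf, shape):
    """Zero-pad a PSF to `shape` and shift its centre to (0, 0) before the FFT."""
    pad = np.zeros(shape)
    kh, kw = psf.shape
    pad[:kh, :kw] = psf
    pad = np.roll(pad, (-(kh // 2), -(kw // 2)), axis=(0, 1))
    return np.fft.fft2(pad)

def fft_convolve(img, otf):
    """Circular convolution of an image with a PSF given by its OTF."""
    return np.real(np.fft.ifft2(np.fft.fft2(img) * otf))

def richardson_lucy(blurred, psf, n_iter=50, eps=1e-12):
    """Non-blind Richardson-Lucy deconvolution with circular boundaries."""
    psf = psf / psf.sum()
    otf = psf_to_otf(psf, blurred.shape)
    estimate = blurred.copy()
    for _ in range(n_iter):
        ratio = blurred / (fft_convolve(estimate, otf) + eps)
        # Multiplying by conj(otf) correlates with the PSF (adjoint of the blur).
        estimate = estimate * fft_convolve(ratio, np.conj(otf))
    return estimate

# Synthetic example (assumption): a bright square on a dim background, Gaussian blur.
n = 64
truth = np.full((n, n), 0.1)
truth[20:44, 20:44] = 1.0
ky, kx = np.mgrid[-4:5, -4:5]
psf = np.exp(-(kx**2 + ky**2) / (2 * 1.5**2))
blurred = fft_convolve(truth, psf_to_otf(psf / psf.sum(), truth.shape))
restored = richardson_lucy(blurred, psf)
```

The update keeps the estimate non-negative and preserves the total image intensity. Each iteration costs a few FFTs, which illustrates why analytical methods are slower than evaluating a closed-form model.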

Analytical methods can approximate real PSFs more closely through iteration. Their interpolation techniques can also reduce the disturbance that human operations cause to the observation environment. However, because they involve blind deconvolution and interpolation, analytical methods consume more computation time and have poorer real-time performance than mathematical and experimental methods.

Deep learning methods

Deep learning has become an effective tool for complex tasks. Over the last ten years, a series of networks, including convolutional neural networks (CNNs) and deep CNNs, have been applied to image processing.58,59,60,61 In 2018, Herbel used a CNN to estimate the parameters of a PSF model for astronomical images.62 Elias used a CNN-based single-molecule localization technique to resolve densely overlapping PSFs over a large axial range. He extracted the optimal PSFs, output their spatial locations, and used the extracted PSFs for super-resolution reconstruction of microscopic images.63 Hershko used a CNN to extract and optimize PSFs at different wavelengths from microscope images.64 Vasu designed a CNN-based method for extracting image blur kernels and applied it effectively to nonblind image deblurring.65 CNNs and their improved variants have been widely used to estimate blur kernels and PSFs from images.

In 2014, Goodfellow proposed generative adversarial networks (GANs), based on the idea of letting two networks (a discriminator and a generator) compete with each other.66 Huang trained a GAN on low-resolution microscope image sets and used the generated PSFs to restore high-resolution images.67 Baniukiewicz trained a GAN on microscopic cell image sets and used the generated PSFs to predict the degree of blurring of images at different defocus depths.68 Lim used a cycle-consistent GAN (CycleGAN) to generate blur kernels for microscope images.69 Serin combined a CycleGAN with adaptive instance normalization (AdaIN) to transform blur kernels between different system conditions and applied the method to improve CT image quality.70 In 2022, Philip trained a GAN on microscope image sets with PSFs and effectively applied the generated PSFs to deblur microscope images of an embryo and of excised brain tissue.71

In addition to CNNs and GANs, other types of networks have been used for PSF extraction and research. In 2019, Gao proposed a back-propagation quantum neural network and applied it to PSF estimation in Gaussian degradation imaging systems.72 Gray used the DEEPLOOP toolbox to train on a large number of optical images to estimate and reconstruct PSFs.73 Yang trained a W-Net model on measured PSF image sets and used the generated PSFs to refocus defocused, blurred microscope images.74

In recent years, deep learning methods have developed rapidly thanks to the computing power of graphics processing units (GPUs). They allow researchers to avoid complex mathematical models of PSFs and to obtain better results. However, deep learning methods cannot provide analytical expressions for PSFs, and they require a large number of collected images as training datasets. Moreover, they are sensitive to changes in system parameters: even a small change in any camera parameter may require the datasets to be reacquired.

PSF measurement examples and results

In this study, we propose a PSF acquisition method based on an improved style-based GAN (StyleGAN) and a PSF modeling method based on the theoretical model in ref. 29.

First, the ideal image generation module based on the improved StyleGAN is trained on the collected Gaussian beam image sets. This produces a series of diffraction images of a Gaussian light source that contain Airy disks, in which the light intensity distributions of the Airy disks are closer to the theoretical distributions. Second, blur kernels are extracted from the centers of the generated ideal diffraction images at different depths and used in place of the PSFs. This avoids the noise introduced when PSFs are represented through parameter identification or curve fitting. Finally, the extracted blur kernels are used with the learnable convolutional half-quadratic splitting and convolutional preconditioned Richardson (LCHQS-CPCR) model to deblur images of microbeads located at different depths under visible-light illumination.

The PSF generation module based on the improved StyleGAN is illustrated in Figure 1.

Figure 1.

Ideal images generation module based on improved StyleGAN75

(A and B) (A) The schematic diagram of the ideal images generation module, (B) The structure of the generator in the ideal images generation module.

We input the collected images into the ideal image generation module and set the training length to kimg = 280. In StyleGAN, kimg measures training progress in thousands of real images shown to the discriminator. Figure 2 shows the training process for ideal Gaussian light-source diffraction images in the ideal image generation module: the generated images become closer to the real images as training proceeds. Figure 2A shows the images generated early in training (kimg = 10). Figure 2B shows the generated images at kimg = 40, whose color gradually approaches that of the real images. Figure 2C shows the generated images at kimg = 80, where the contours of the diffraction rings begin to form. Figure 2D shows the generated images at kimg = 120, where StyleGAN produced spurious colored band patterns around the diffraction rings. Figure 2E shows the generated images at kimg = 240. By learning from more samples, StyleGAN has corrected these incorrect patterns, and the diffraction rings at the centers of the images are clearly visible. Figure 2F shows the generated images at kimg = 280, where the diffraction rings have realistic textures and details and the Airy disks are regular in shape and clearly visible.

Figure 2.

Training process in ideal image generation module

(A–F) are the Gaussian light-source diffraction images generated by the generation module when kimg is 10, 40, 80, 120, 240, and 280, respectively.

The loss curves of the generator and discriminator networks during the training process are shown in Figure 3. Figure 3A shows that the loss of the discriminator gradually stabilizes when kimg = 150. In Figure 3B, the loss curve of the generator exhibits a downward trend, and the loss gradually stabilizes at kimg = 160.

Figure 3.

Loss curves of the generator network and discriminator network

(A and B) (A) is the loss curve of the discriminator network, (B) is the loss curve of the generator network.

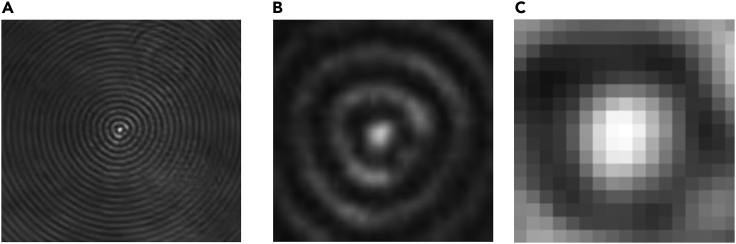

After obtaining the generated ideal diffraction images, we selected images at different depths from the image set, converted them to grayscale, and cropped the central regions containing the Airy disks as the blur kernels of the images. Figure 4A shows the grayscale image at Δd = 0 μm, where Δd is the defocus distance of the observed object. Figure 4B shows its central part, which includes the Airy disk. Figure 4C shows the central part of the Airy disk in Figure 4B extracted as the blurring kernel.
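The kernel-extraction step described above (convert to grayscale, locate the Airy disk, crop its center) can be sketched as follows. Several details here are assumptions rather than the authors' procedure: BT.601 luma weights for grayscale conversion, the brightest pixel as the disk center, a fixed 15 × 15 window, and subtraction of the window minimum followed by normalization to unit sum so the crop can act as a convolution kernel. The function name is hypothetical.

```python
import numpy as np

def extract_blur_kernel(image, half_size=7):
    """Crop a (2*half_size+1)^2 window centred on the intensity peak and
    normalise it to unit sum (hypothetical helper, see assumptions above)."""
    img = np.asarray(image, dtype=float)
    if img.ndim == 3:
        # ITU-R BT.601 luma weights, a common grayscale convention (assumption).
        gray = img[..., :3] @ np.array([0.299, 0.587, 0.114])
    else:
        gray = img
    cy, cx = np.unravel_index(np.argmax(gray), gray.shape)
    if (cy < half_size or cx < half_size
            or cy + half_size >= gray.shape[0] or cx + half_size >= gray.shape[1]):
        raise ValueError("intensity peak too close to the image border")
    kernel = gray[cy - half_size:cy + half_size + 1, cx - half_size:cx + half_size + 1]
    kernel = kernel - kernel.min()          # remove background offset (assumption)
    total = kernel.sum()
    if total <= 0:
        raise ValueError("window contains no signal above background")
    return kernel / total

# Hypothetical synthetic RGB diffraction image: a bright spot at row 30, column 25.
yy, xx = np.mgrid[0:64, 0:64]
spot = np.exp(-((xx - 25) ** 2 + (yy - 30) ** 2) / (2 * 2.0**2))
rgb = np.stack([spot, 0.8 * spot, 0.5 * spot], axis=-1)
kernel = extract_blur_kernel(rgb)
```

Normalizing to unit sum means that convolving with the kernel preserves total image intensity, which deconvolution methods usually expect of a blur kernel.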

Figure 4.

Extraction of the blurring kernel from a generated image

(A–C) (A) is the diffraction image of an ideal Gaussian light source, (B) is the central part of diffraction image (A) that contains the Airy disk, and (C) is the blurring kernel extracted from (B).

The second method for obtaining the PSF is the theoretical modeling method. Our theoretical PSF model is as follows:

| (Equation 1) |

where Jₙ is the nth-order Bessel function, ρ is the distance from a point on the imaging surface to the optical axis, e is the natural constant, Iₚ is the light intensity at a point on the imaging surface, and K is a set of constants. The remaining quantities are N = a²/(λδ); δ = b₀[1 − ξ²Δd/(f(b₀f + ξfΔd))]; C = ξ²Δd/(b₀f² + ξfΔd); ξ = b₀ − f; and Δd = d − d₀. Here a is the effective radius of the objective lens, λ is the wavelength of the light source, b₀ is the ideal image distance of the microscope, d is the object distance, d₀ is the ideal object distance of the microscope, and f is the focal length of the microscope.

To compare the two methods, we extracted the blurring kernels from the original image at Δd = 0 μm and from its corresponding generated image. We calculated their average intensity curves through the center of the intensity peak and compared them with our proposed theoretical model. Figure 5 shows the results. The intensity curve of the image generated by StyleGAN is closer to the theoretical PSF curve than that of the original image. It is also smoother and more symmetric about the optical axis.
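The profile comparison above can be made quantitative with two small helpers. The first extracts an intensity curve through the peak of a kernel. The second scores how symmetric that curve is about its center. Interpreting "average intensity curve through the center of the intensity peak" as the mean of the horizontal and vertical lines through the peak is an assumption. The RMS mirror-difference metric is likewise a hypothetical choice, not the authors' measure.

```python
import numpy as np

def central_profile(kernel):
    """Mean of the horizontal and vertical intensity lines through the peak,
    normalised to a maximum of 1 (assumes a square kernel)."""
    k = np.asarray(kernel, dtype=float)
    if k.shape[0] != k.shape[1]:
        raise ValueError("kernel must be square")
    cy, cx = np.unravel_index(np.argmax(k), k.shape)
    profile = 0.5 * (k[cy, :] + k[:, cx])
    return profile / profile.max()

def symmetry_error(profile):
    """RMS difference between a profile and its mirror image (0 = symmetric)."""
    p = np.asarray(profile, dtype=float)
    return float(np.sqrt(np.mean((p - p[::-1]) ** 2)))

# Hypothetical kernels: a symmetric spot and the same spot with a left-right tilt.
ky, kx = np.mgrid[0:15, 0:15]
sym_kernel = np.exp(-((kx - 7) ** 2 + (ky - 7) ** 2) / (2 * 2.0**2))
skewed_kernel = sym_kernel * (1.0 + 0.1 * np.arange(15))[None, :]
```

Applied to kernels cropped around their peak, a lower `symmetry_error` corresponds to the stronger symmetry about the optical axis described above.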

Figure 5.

The comparison between the generated image, the original image, and the theoretical PSF model we proposed, where x is the imaging plane coordinate

Once an accurate PSF model has been established, the resolution of the optical microscope can be evaluated with the Rayleigh criterion, as shown in Figure 6. By this criterion, the minimum resolvable distance is the separation between the centers of two adjacent light sources at which the summed intensity at their overlap position is 73.5% of the peak intensity of a single curve. Figure 6 shows that when the Rayleigh criterion is met, the distance between the two centers is 1100 nm. To further validate the intensity distribution model, we use a simplified formula for estimating the minimum resolution of a practical optical system:
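The 73.5% figure quoted above can be checked numerically for two ideal Airy patterns, I(v) = [2J₁(v)/v]². Placing the second pattern's center on the first zero of the first (v₁ ≈ 3.8317) and summing both intensities at the midpoint gives a ratio of about 0.735 relative to the single-pattern peak. The sketch assumes the lateral optical coordinate v = 2π·NA·r/λ, so the separation corresponds to r = v₁λ/(2π·NA) ≈ 0.61λ/NA. The function names are hypothetical.

```python
import numpy as np
from scipy.special import j1, jn_zeros

def airy_intensity(v):
    """Normalised Airy pattern [2*J1(v)/v]**2, using its limit 1 at v = 0."""
    v = np.atleast_1d(np.asarray(v, dtype=float))
    out = np.ones_like(v)
    nonzero = v != 0
    out[nonzero] = (2.0 * j1(v[nonzero]) / v[nonzero]) ** 2
    return out

def rayleigh_dip_ratio():
    """Summed intensity midway between two Airy patterns separated by the
    first zero of J1, relative to the peak of a single pattern."""
    v1 = jn_zeros(1, 1)[0]                      # about 3.8317
    midpoint = airy_intensity(v1 / 2) + airy_intensity(-v1 / 2)
    return float(midpoint[0] / airy_intensity(0.0)[0])

def rayleigh_distance(wavelength, na):
    """Rayleigh separation r = v1 * wavelength / (2*pi*NA), about 0.61*wavelength/NA."""
    return jn_zeros(1, 1)[0] * wavelength / (2.0 * np.pi * na)
```

The same midpoint test can be applied to any sampled PSF profile, for example one computed from the model above, by shifting and summing two copies until the dip reaches the chosen ratio.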

| (Equation 2) |

where ψmin is the resolution and NA is the numerical aperture of the objective lens. The resolution calculated with Equation 2 was 1159 nm, which is 59 nm (about 5%) larger than the 1100 nm obtained from our model. We therefore consider the proposed light intensity distribution model to be essentially accurate.

Figure 6.

Schematic diagram of resolution evaluation based on the Rayleigh criterion

Finally, the blur kernels extracted from the generated ideal diffraction images are used with the LCHQS-CPCR model to deblur images of microbeads captured at different depths under visible-light illumination. LCHQS-CPCR is an end-to-end model that carries out pre-training, extraction of image prior features, image deconvolution, and deblurring on its own. First, its deconvolution module deconvolves the input blurred images. Second, the network convolves the results of this initial deconvolution with horizontal and vertical gradient filters and denoises the resulting gradient images. Finally, the denoised gradient images are used in a further deconvolution step to obtain the clear images, and the gradients of the clear images serve as inputs to the convolutional layer in the next iteration. In our deblurring experiment, images of microbeads moving in solution were collected with the optical microscope. The microbeads were 10.08 μm in diameter, and Figure 7 shows the images at different depths. Figure 8 shows the results of deblurring with the LCHQS-CPCR model: the outlines of the microbeads become clearer, and the noise between the microbeads is effectively removed.
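LCHQS-CPCR replaces hand-designed steps with learned convolutional modules. The underlying half-quadratic splitting (HQS) idea can be illustrated with a classical, non-learned loop. That loop alternates between denoising horizontal and vertical image gradients (soft thresholding, i.e. a total-variation prior) and a closed-form deconvolution in the Fourier domain. The sketch below is that classical scheme under stated assumptions: circular boundaries, a known blur kernel, and hand-picked λ and β schedule. It is not the authors' network, and every name and parameter value is hypothetical.

```python
import numpy as np

def psf_to_otf(psf, shape):
    """Zero-pad a PSF to `shape` and shift its centre to (0, 0) before the FFT."""
    pad = np.zeros(shape)
    kh, kw = psf.shape
    pad[:kh, :kw] = psf
    pad = np.roll(pad, (-(kh // 2), -(kw // 2)), axis=(0, 1))
    return np.fft.fft2(pad)

def soft_threshold(v, t):
    return np.sign(v) * np.maximum(np.abs(v) - t, 0.0)

def hqs_tv_deblur(blurred, psf, lam=2000.0, beta=1.0, beta_max=256.0, rate=2.0):
    """Classical HQS for min_x lam/2*||k*x - y||^2 + ||grad x||_1 (anisotropic TV)."""
    shape = blurred.shape
    K = psf_to_otf(psf / psf.sum(), shape)
    Dx = psf_to_otf(np.array([[1.0, -1.0]]), shape)    # horizontal difference
    Dy = psf_to_otf(np.array([[1.0], [-1.0]]), shape)  # vertical difference
    data_num = lam * np.conj(K) * np.fft.fft2(blurred)
    data_den = lam * np.abs(K) ** 2
    grad_den = np.abs(Dx) ** 2 + np.abs(Dy) ** 2
    x = blurred.copy()
    while beta <= beta_max:
        X = np.fft.fft2(x)
        # w-step: denoise the horizontal and vertical gradients of the estimate.
        wx = soft_threshold(np.real(np.fft.ifft2(Dx * X)), 1.0 / beta)
        wy = soft_threshold(np.real(np.fft.ifft2(Dy * X)), 1.0 / beta)
        # x-step: closed-form deconvolution using the denoised gradients.
        num = data_num + beta * (np.conj(Dx) * np.fft.fft2(wx) + np.conj(Dy) * np.fft.fft2(wy))
        x = np.real(np.fft.ifft2(num / (data_den + beta * grad_den)))
        beta *= rate                                   # tighten the splitting
    return x

# Synthetic example (assumption): a bright square blurred by a Gaussian kernel.
n = 64
truth = np.zeros((n, n))
truth[20:44, 20:44] = 1.0
ky, kx = np.mgrid[-4:5, -4:5]
psf = np.exp(-(kx**2 + ky**2) / (2 * 1.5**2))
blurred = np.real(np.fft.ifft2(np.fft.fft2(truth) * psf_to_otf(psf / psf.sum(), truth.shape)))
restored = hqs_tv_deblur(blurred, psf)
```

In a learned variant such as the one described above, the fixed soft-thresholding and the fixed β schedule are the parts a network would replace with trained convolutional modules.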

Figure 7.

Original microbead images at different depths

(A–F) are the images of microbeads when Δd are 0 μm, 2 μm, 4 μm, 6 μm, 8 μm, and 10 μm, respectively.

Figure 8.

Clear images after reconstruction using our method

(A–F) are clear images of microbeads after reconstruction when Δd are 0 μm, 2 μm, 4 μm, 6 μm, 8 μm, and 10 μm, respectively.

Discussion

In summary, the PSF is an important quantity for characterizing image blurring in optical systems, and knowing it accurately is the most direct route to enhancing the resolution of microscopes. Accurate measurement of the PSF is therefore a crucial issue in optical microscopy. In this study, we presented the advantages and disadvantages of each of the four methods for obtaining PSFs. Because of these differences, the four methods are often applied in different scenarios. Mathematical methods based on spatial diffraction field theory were the first to be proposed and are the most traditional. However, as microscope technology has developed, hardware structures have become increasingly complex, which makes specific microscope systems harder to model accurately. Mathematical methods are therefore often applied to optical systems whose structures are relatively simple and easy to model. Experimental methods can capture the complete system aberrations, but obtaining an analytical expression of the PSF from them is challenging. Researchers therefore tend to select several well-established PSF models and fit each of them to the experimental results to determine the most accurate PSF representation. The analytical method builds on experiment and uses interpolation techniques to reduce the number of samples required and improve the accuracy of the results. Deep learning methods are highly general and represent a future trend, but they have high hardware requirements. For PSF measurement, combining and cross-validating multiple methods is also likely to become common. For example, the powerful generative ability of deep learning can produce PSF sets with specific properties that complement experimental methods. The speed and precision of mathematical methods can provide real-time validation of the accuracy of other methods.

Limitations of the study

Because PSF formation is complex and multiple system parameters are coupled in advanced optical imaging, researchers may not be able to acquire an accurate PSF with a single PSF-obtaining method. As a result, some composite PSF-obtaining methods used in practice are difficult to categorize accurately in this review.

Resource availability

Lead contact

Further information and requests for resources and reagents should be directed to and will be fulfilled by the lead contact, Weihan Hou (ihwhlr@126.com).

Materials availability

This study did not generate new unique reagents.

Data and code availability

Any additional information required to reanalyze the data reported in this paper is available from the lead contact upon request.

All data reported in this paper will be shared by the lead contact upon request.

This paper does not report original code.

Acknowledgments

This work was supported by the National Natural Science Foundation of China under Grant 61973059.

Author contributions

Y.W. and W.H. conceived and designed the experiments; W.H. performed the experiments; W.H. analyzed and interpreted the data; W.H. and Y.W. contributed materials, analysis tools, and data; W.H. wrote the paper.

Declaration of interests

The authors declare that they have no competing interests.

Appendix

The derivation process of Equation 1 is shown below.

In ref. 29, the distance r from the light source to a point on the spherical wavefront when Fresnel diffraction occurs is expressed as

| (Equation 3) |

where cosα and M can be expressed as,

| (Equation 4) |

where d is the distance from the light source to the circular hole; b is the distance from the circular hole to the receiving plane; a is the radius of the circular hole, ρ is the distance from a point on the receiving plane to the optical axis, q is the distance from any point on the wave front to the center of the wave front, and α is the angle between q and ρ. Introducing Equation 3 into the expression for the amplitude Ep of Fresnel diffraction in Equation 5 yields Equations 6 and 7,

| (Equation 5) |

| (Equation 6) |

| (Equation 7) |

where dΦ is the infinitesimal element of the spherical wave, k = 2π/λ is the wavenumber, λ is the wavelength of the light source, a is the effective radius of the lens, and Jₙ is the nth-order Bessel function.

In the process of lens imaging, the variation of object distance Δd and the variation of image distance Δb are related as follows,

| (Equation 8) |

where ξ = b₀ − f, f denotes the focal length, and b₀ is the ideal image distance. Substituting Equation 8 into Equation 3 yields Equation 9,

| (Equation 9) |

where,

| (Equation 10) |

Therefore, combining Equations 9 and 6 yields Equation 11,

| (Equation 11) |

where N = a²/(λδ) and C = ξ²Δd/(b₀f² + ξfΔd), and E₀ is given by

| (Equation 12) |

Then, light intensity Ip can be calculated as,

| (Equation 13) |
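The two parameters N and C defined after Equation 11 can be evaluated directly. The minimal sketch below does so; δ is simply passed in as a length, because its defining expression is not reproduced in this text, and the function names are hypothetical. Rescaling every length by a common factor leaves both N and C unchanged, a quick consistency check that each parameter is dimensionless.

```python
def fresnel_parameter_N(a, wavelength, delta):
    """N = a^2 / (lambda * delta); delta is treated as a given length."""
    return a**2 / (wavelength * delta)


def defocus_parameter_C(b0, f, delta_d):
    """C = xi^2 * delta_d / (b0 * f^2 + xi * f * delta_d), with xi = b0 - f."""
    xi = b0 - f
    return xi**2 * delta_d / (b0 * f**2 + xi * f * delta_d)


if __name__ == "__main__":
    # Illustrative values in metres
    print(fresnel_parameter_N(1e-3, 500e-9, 2.0), defocus_parameter_C(0.15, 0.10, 0.01))
    # Rescaling every length by the same factor s leaves both parameters unchanged
    s = 1e3
    print(fresnel_parameter_N(s * 1e-3, s * 500e-9, s * 2.0),
          defocus_parameter_C(s * 0.15, s * 0.10, s * 0.01))
```

C vanishes when Δd = 0, that is, when the object sits exactly at the position conjugate to the ideal image plane.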

From the properties of Bessel functions, Equations 14 and 15 hold:

(Equation 14)

(Equation 15)

The series of n-th order Bessel functions can be rewritten as

(Equation 16)

Using a property of orthogonal polynomials,29 we obtain

(Equation 17)

where e is Euler's number, the base of the natural logarithm.
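Equations 14 to 17 are not reproduced in this text. As an illustration of the kind of relation that ties a series of integer-order Bessel functions to the exponential, the sketch below numerically checks two standard identities with `scipy.special.jv`: the generating function exp[(x/2)(t − 1/t)] = Σ tⁿ Jn(x) (summed over all integers n) and the Neumann identity J0(x)² + 2 Σ_{n≥1} Jn(x)² = 1. Whether these are the exact identities used in Equations 14 to 17 is an assumption; the truncation order `n_max` is an illustrative choice.

```python
import numpy as np
from scipy.special import jv


def generating_function_series(x, t, n_max=60):
    """Truncated sum over n = -n_max..n_max of t**n * J_n(x).

    Negative orders use J_{-n}(x) = (-1)**n * J_n(x) for integer n.
    """
    n = np.arange(1, n_max + 1)
    jn = jv(n, x)
    negative_orders = (-1.0) ** n * t ** (-n)  # weight of J_n(x) coming from the J_{-n} term
    return float(jv(0, x) + np.sum(jn * (t**n + negative_orders)))


def generating_function_closed(x, t):
    """Closed form exp[(x / 2) * (t - 1 / t)]."""
    return float(np.exp(0.5 * x * (t - 1.0 / t)))


def neumann_sum(x, n_max=60):
    """J_0(x)^2 + 2 * sum_{n=1}^{n_max} J_n(x)^2, which should equal 1."""
    n = np.arange(1, n_max + 1)
    return float(jv(0, x) ** 2 + 2.0 * np.sum(jv(n, x) ** 2))


if __name__ == "__main__":
    for x, t in ((1.3, 0.7), (5.0, 1.5)):
        print(x, t, generating_function_series(x, t), generating_function_closed(x, t))
    print(neumann_sum(5.0))
```

Because Jn(x) decays faster than any power once n exceeds x, a modest truncation order already reproduces both identities to near machine precision for the arguments shown.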

Therefore, Equation 15 can be transformed into

(Equation 18)

Combining Equation 18 with Equation 13 yields Equation 19,

(Equation 19)

Replacing x and y in Equation 19 with the following parameters,

(Equation 20)

the light intensity Ip can be written as

(Equation 21)

Introducing a set of constants K into Equation 21 improves the accuracy of the simplification based on the orthogonal-polynomial property and yields Equation 1.

References

1. Lichtman J.W., Conchello J.A. Fluorescence microscopy. Nat. Methods. 2005;2:910–919. doi: 10.1038/nmeth817.

2. Cheng H., Tong S., Deng X., Li J., Qiu P., Wang K. In vivo deep-brain imaging of microglia enabled by 3-photon fluorescence microscopy. Opt. Lett. 2020;45:5271–5274. doi: 10.1364/OL.408329.

3. Mikulewitsch M., von Freyberg A., Fischer A. Confocal fluorescence microscopy for geometry parameter measurements of submerged micro-structures. Opt. Lett. 2019;44:1237–1240. doi: 10.1364/OL.44.001237.

4. Yan X., Liu C., Gadre C.A., Gu L., Aoki T., Lovejoy T.C., Dellby N., Krivanek O.L., Schlom D.G., Wu R., Pan X. Single-defect phonons imaged by electron microscopy. Nature. 2021;589:65–69. doi: 10.1038/s41586-020-03049-y.

5. Herbig M., Choi P., Raabe D. Combining structural and chemical information at the nanometer scale by correlative transmission electron microscopy and atom probe tomography. Ultramicroscopy. 2015;153:32–39. doi: 10.1016/j.ultramic.2015.02.003.

6. Zhou Q., Zhu X., Li H.F. Study on light intensity distribution of tapered-fiber in near-field scanning microscopy. Acta Physica Sinica (Chinese Edition). 2000;49:210–214.

7. Moison J.M., Abram I., Bensoussan M. Full mapping of optical noise in photonic devices: an evaluation by near-field scanning microscopy. Opt. Express. 2008;16:9513–9518. doi: 10.1364/oe.16.009513.

8. Farid S., Kasem M., Zedan A., Mohamed G., El-Hussein A. Exploring ATR Fourier transform IR spectroscopy with chemometric analysis and laser scanning microscopy in the investigation of forensic documents fraud. Opt. Laser Technol. 2021;135:106704.

9. Shuji. Fabrication Technique of Pencil-type Fibre Probes and Small Apertures for Photon Scanning Tunnelling Microscopy. Opt. Laser Technol. 1995.

10. Sutter P., Sutter E. Unconventional van der Waals heterostructures beyond stacking. iScience. 2021;24:103050. doi: 10.1016/j.isci.2021.103050.

11. Kumarasamy M., Sosnik A. Heterocellular Spheroids of the Neurovascular Blood-Brain Barrier as A Platform for Personalized Nanoneuromedicine. iScience. 2021;24:102183. doi: 10.1016/j.isci.2021.102183.

12. Yi X., So P. Three-dimensional super-resolution high-throughput imaging by structured illumination STED microscopy. Opt. Express. 2018;26:209–220. doi: 10.1364/OE.26.020920.

13. Rivolta C. Airy disk diffraction pattern: comparison of some values of f/No. and obscuration ratio. Appl. Opt. 1986;25:2404. doi: 10.1364/ao.25.002404.

14. Masters R.B. Ernst Abbe and the Foundation of Scientific Microscopes. Opt. Photon. News. 2007.

15. Zhou S., Jiang L. Modern description of Rayleigh's criterion. Phys. Rev. A. 2019;99:013808.

16. Burghard W. Die Widerlegung der Theorie der molécules puppiformes. Eine verschollene Abhandlung von Augustin Jean Fresnel. Centaurus. 1988;31.

17. Akhmanov S.A., Nikitin S.Y. Clarendon Press, Oxford University Press; 1997. Physical Optics.

18. White H.E. McGraw-Hill Book Co; 1937. Fundamentals of Physical Optics.

19. Keller J. Geometrical theory of diffraction. J. Opt. Soc. Am. 1962;52:116–130. doi: 10.1364/josa.52.000116.

20. Borovikov V.A., Kinber B.E. 1978. Geometrical Theory of Diffraction.

21. Borovikov V.A. IET Digital Library; 1994. GTD or Physical Optics Methods?

22. Borovikov V.A. Diffraction by polyhedral angle: Field in vicinity of singular ray. J. Acoust. Soc. Am. 2002;112:2415.

23. Bouwkamp C.J. Mathematics Research Group, Washington Square College of Arts & Science, New York University; 1953. Diffraction theory. A Critique of Some Recent Developments.

24. Born M., Clemmow P.C., Gabor D. Principles of optics. Math. Gaz. 1999;1:986–990.

25. Hopkins H.H. Oxford University Press; 1950. Wave Theory of Aberrations.

26. Li Y., Wolf E. Three-dimensional intensity distribution near the focus in systems of different Fresnel numbers. J. Opt. Soc. Am. A. 1984;1:801.

27. Frieden B.R. Optical Transfer of the Three-Dimensional Object. J. Opt. Soc. Am. 1967;57:56.

28. Frieden B.R. Image Recovery by Minimum Discrimination from a Template. Appl. Opt. 1989. doi: 10.1364/AO.28.001235.

29. Wang P., Xu Y., Wang W., Wang Z. Analytic expression for Fresnel diffraction. J. Opt. Soc. Am. A. 1998;15:684–688.

30. Duffieux P.M. Le théorème de Dirichlet et l'imagerie coherente. Appl. Opt. 1967;6. doi: 10.1364/AO.6.000323.

31. Blanchard P.M., Greenaway A.H. Simultaneous Multiplane Imaging with a Distorted Diffraction Grating. Appl. Opt. 1999;38:6692–6699. doi: 10.1364/ao.38.006692.

32. Schönle A., Hell S.W. Calculation of vectorial three-dimensional transfer functions in large-angle focusing systems. J. Opt. Soc. Am. A Opt. Image Sci. Vis. 2002;19:2121–2126. doi: 10.1364/josaa.19.002121.

33. Durnin J., Miceli J.J., Eberly J.H. Diffraction-free beams. Phys. Rev. Lett. 1987;58:1499–1501. doi: 10.1103/PhysRevLett.58.1499.

34. Durnin J. Exact solutions for nondiffracting beams. I. The scalar theory. J. Opt. Soc. Am. A. 1987;4:651–654.

35. Sprangle P., Hafizi B. Comment on nondiffracting beams. Phys. Rev. Lett. 1991;66:837. doi: 10.1103/PhysRevLett.66.837.

36. Ruschin S. Modified Bessel nondiffracting beams. J. Opt. Soc. Am. A. 1994;11:3224–3228.

37. Overfelt P.L., Kenney C.S. Comparison of the propagation characteristics of Bessel, Bessel–Gauss, and Gaussian beams diffracted by a circular aperture. J. Opt. Soc. Am. A. 1991;8:732–745.

38. Gibson S.F., Lanni F. Experimental test of an analytical model of aberration in an oil-immersion objective lens used in three-dimensional light microscopy. J. Opt. Soc. Am. A. 1992;9:154–166. doi: 10.1364/josaa.9.000154.

39. Hanser B.M., Gustafsson M.G.L., Agard D.A., Sedat J.W. Phase-retrieved pupil functions in wide-field fluorescence microscopy. J. Microsc. 2004;216:32–48. doi: 10.1111/j.0022-2720.2004.01393.x.

40. Lalaoui L. Axial Point Spread Function Modeling by Application of Effective Medium Approximations: Widefield Microscopy. Optik. 212.

41. Török P., Varga P., Laczik Z., Booker G.R. Electromagnetic diffraction of light focused through a planar interface between materials of mismatched refractive indices: an integral representation. J. Opt. Soc. Am. A. 1995;12:325–332.

42. Haeberlé O. Focusing of light through a stratified medium: a practical approach for computing microscope point spread functions. Part I: Conventional microscopy. Opt. Commun. 2003;216:55–63.

43. von Diezmann A., Lee M.Y., Lew M.D., Moerner W.E. Correcting field-dependent aberrations with nanoscale accuracy in three-dimensional single-molecule localization microscopy. Optica. 2015;2:985–993. doi: 10.1364/OPTICA.2.000985.

44. Beverage J.L., Shack R.V., Descour M.R. Measurement of the three-dimensional microscope point spread function using a Shack-Hartmann wavefront sensor. J. Microsc. 2002;205:61–75. doi: 10.1046/j.0022-2720.2001.00973.x.

45. Lee W.S., Lim G., Kim W.C., Choi G.J., Yi H.W., Park N.C. Investigation on improvement of lateral resolution of continuous wave STED microscopy by standing wave illumination. Opt. Express. 2018;26:9901–9919. doi: 10.1364/OE.26.009901.

46. Gibson S.F., Lanni F. Diffraction by a circular aperture as a model for three-dimensional optical microscopy. J. Opt. Soc. Am. A. 1989;6:1357–1367. doi: 10.1364/josaa.6.001357.

47. Colicchio B., Haeberlé O., Xu C., Dieterlen A., Jung G. Improvement of the LLS and MAP deconvolution algorithms by automatic determination of optimal regularization parameters and pre-filtering of original data. Opt. Commun. 2005;244:37–49.

48. von Diezmann L., Shechtman Y., Moerner W.E. Three-Dimensional Localization of Single Molecules for Super-Resolution Imaging and Single-Particle Tracking. Chem. Rev. 2017;117:7244–7275. doi: 10.1021/acs.chemrev.6b00629.

49. Small A., Stahlheber S. Fluorophore localization algorithms for super-resolution microscopy. Nat. Methods. 2014;11:267–279. doi: 10.1038/nmeth.2844.

50. McCormick B.H., Mayerich D.M. Three-Dimensional Imaging Using Knife-Edge Scanning Microscopy. Microsc. Microanal. 2004;10:1466–1467. doi: 10.1111/j.1365-2818.2008.02024.x.

51. Yang J., Zhang Z., Cheng Q. Resolution enhancement in micro-XRF using image restoration techniques. J. Anal. At. Spectrom. 2022;37:750–758.

52. Mehravar S., Cromey B., Kieu K. Characterization of multiphoton microscopes by non-linear knife-edge technique. Appl. Opt. 2020;59:G219. doi: 10.1364/AO.391881.

53. Nagy J.G., Plemmons R.J., Torgersen T.C. Iterative image restoration using approximate inverse preconditioning. IEEE Trans. Image Process. 1996;5:1151–1162. doi: 10.1109/83.502394.

54. McNally J.G., Preza C., Conchello J.A., Thomas L.J., Jr. Artifacts in computational optical-sectioning microscopy. J. Opt. Soc. Am. A. 1994;11:1056–1067. doi: 10.1364/josaa.11.001056.

55. Maalouf E., Colicchio B., Dieterlen A. Fluorescence microscopy three-dimensional depth variant point spread function interpolation using Zernike moments. J. Opt. Soc. Am. A. 2011;28:1864–1870.

56. Kim B., Naemura T. Blind Depth-variant Deconvolution of 3D Data in Wide-field Fluorescence Microscopy. Sci. Rep. 2015;5:9894. doi: 10.1038/srep09894.

57. Chen Y., Chen M., Zhu L., Wu J.Y., Du S., Li Y. Measure and model a 3-D space-variant PSF for fluorescence microscopy image deblurring. Opt. Express. 2018;26:14375. doi: 10.1364/OE.26.014375.

58. Jain V., Seung H.S. Natural image denoising with convolutional networks. Proc. NIPS. 2008:1–8.

59. Xie J., Xu L., Chen E. Image denoising and inpainting with deep neural networks. Proc. NIPS. 2012:341–349.

60. Burger H.C., Schuler C.J., Harmeling S. Image denoising with multi-layer perceptrons, part 1: comparison with existing algorithms and with bounds. Comput. Sci. 2012;38:1544–1582.

61. Zhang K., Zuo W., Chen Y., Meng D., Zhang L. Beyond a Gaussian denoiser: residual learning of deep CNN for image denoising. IEEE Trans. Image Process. 2017;26:3142–3155. doi: 10.1109/TIP.2017.2662206.

62. Herbel J., Kacprzak T., Amara A., Refregier A., Lucchi A. Fast Point Spread Function Modeling with Deep Learning. J. Cosmol. Astropart. Phys. 2018;2018:054.

63. Elias N. 2019. DeepSTORM3D: Dense Three-Dimensional Localization Microscopy and Point Spread Function Design by Deep Learning.

64. Gray M. HAL open science; 2022. DEEPLOOP: DEEP Learning for an Optimized Adaptive Optics Psf Estimation.

65. Vasu S., Maligireddy V.R., Rajagopalan A.N. 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). IEEE; 2018. Non-blind Deblurring: Handling Kernel Uncertainty with CNNs.

66. Goodfellow I.J., Pouget-Abadie J., Mirza M. Generative adversarial networks. Proc. NIPS. 2014:2672–2680.

67. Huang C.Y. 2018 IEEE International Ultrasonics Symposium (IUS). IEEE; 2018. Ultrasound Imaging Improved by the Context Encoder Reconstruction Generative Adversarial Network.

68. Baniukiewicz P., Lutton E.J., Collier S., Bretschneider T. Generative Adversarial Networks for Augmenting Training Data of Microscopic Cell Images. Front. Comput. Sci. 2019;1.

69. Lim S., Park H., Lee S.E., Chang S., Sim B., Ye J.C. CycleGAN with a Blur Kernel for Deconvolution Microscopy: Optimal Transport Geometry. IEEE Trans. Comput. Imaging. 2020;6:1127–1138.

70. Serin Y., Kim E.Y., Ye J.C. 2020. Continuous Conversion of CT Kernel Using Switchable CycleGAN with AdaIN.

71. Philip W. Experimentally Unsupervised Deconvolution for Light-Sheet Microscopy with Propagation-Invariant Beams. Light Sci. Appl. 2022. doi: 10.1038/s41377-022-00975-6.

72. Gao K. IEEE; 2010. PSF estimation for Gaussian image blur using back-propagation quantum neural network; pp. 1068–1073.

73. Hershko E., Weiss L.E., Michaeli T., Shechtman Y. Multicolor localization microscopy and point-spread-function engineering by deep learning. Opt. Express. 2019;27:6158–6183. doi: 10.1364/OE.27.006158.

74. Yang X. Deep-Learning-Based Virtual Refocusing of Images Using an Engineered Point-Spread Function. ACS Photonics. 2021.

75. Wei Y., Hou W. Blurring kernel extraction and super-resolution image reconstruction based on style generative adversarial networks. Opt. Express. 2021;29:44024–44044.
