Abstract

Background

Anxiety in university students can lead to poor academic performance and even dropout. The Adult Manifest Anxiety Scale–College version (AMAS-C) is a validated measure designed to assess the level and nature of anxiety in college students.

Objective

The aim of this study is to provide internet-based alternatives to the AMAS-C in the automated identification and prediction of anxiety in young university students. Two anxiety prediction methods, one based on facial emotion recognition and the other on text emotion recognition, are described and validated using the AMAS-C Test Anxiety, Lie and Total Anxiety scales as ground truth data.

Methods

The first method analyses the students' facial expressions while they complete a technical skills test, identifying the six basic emotions (anger, disgust, fear, happiness, sadness, surprise) and the neutral expression. The second method classifies the posts on the students' profiles on the social network Facebook as positive, negative or neutral. Both approaches aim to predict the presence of anxiety.

Results

Both methods achieved a high level of precision in predicting anxiety and proved to be effective in identifying anxiety disorders in relation to the AMAS-C validation tool. Text analysis-based prediction showed a slight advantage in terms of precision (86.84 %) in predicting anxiety compared to face analysis-based prediction (84.21 %).

Conclusions

The applications developed can help educators, psychologists or relevant institutions to identify at an early stage those students who are likely to fail academically at university due to an anxiety disorder.

Keywords: Anxiety, University students, Adult manifest anxiety scale–college version (AMAS-C), Facial expression analysis, Text sentiment analysis

Highlights

- This article introduces an Internet-based application to predict anxiety in university students.
- Students' facial expressions are analysed as they complete a technical skills test.
- Students' emotions are analysed in posts on their profile on the social network Facebook.
- The AMAS-C test is used to validate anxiety identification through text and facial emotion analysis.

1. Introduction

Anxiety is a physiological, behavioural and psychological response (Ozen et al., 2010). Everyone experiences it when facing activities such as meeting social obligations or deadlines, or driving in traffic (https://www.apa.org/topics/anxiety/). This anxiety helps us stay alert and focused in order to respond to dangerous or demanding situations (Lawson, 2006; Ozen et al., 2010). However, a person with an anxiety disorder tends to experience extreme fear and prolonged worry, which interfere with daily activities (Scanlon et al., 2007; Ran et al., 2016) and cause feelings of weakness and low self-esteem (Vanderlind et al., 2022; Yan and Horwitz, 2008; Spielberger and Vagg, 1995; Ratanasiripong et al., 2018; Beiter et al., 2015). According to Zambrano Verdesoto et al. (2018), the first year of university is critical, as up to one third of students drop out of higher education institutions during this year (Zeidner, 1998; Spielberger and Vagg, 1995; Eysenck, 2013). Anxiety is a determining factor in the academic performance of university students, especially in their first year (Tijerina González et al., 2019). Poor academic performance can cause students to drop subjects or, in the worst case, to drop out of university altogether.

In recent years, social networks have become an important part of the lives of young people aged 16 to 24 (Glover and Fritsch, 2018). Using social networks is now an everyday activity: they are not only a means of communication but also a source of information, and they allow individuals to express their opinions, feelings and thoughts on any subject. In addition, people with mental disorders often share their mental states or discuss mental health issues with others on these platforms by posting text messages, photos, videos and links (Guo et al., 2022; Zhang et al., 2022; Zhang et al., 2023). Given the popularity of social networks, their data could therefore be used to detect stress, depression and anxiety (Jia, 2018). A recent analysis concludes that facial expressions, images, emotional chatbots and text on social media platforms can effectively detect emotions and depression (Joshi and Kanoongo, 2022). Several studies on machine learning techniques for text emotion detection are worth mentioning. Ali et al. (2022) use machine learning techniques to classify psychotic disorders from Facebook status updates; a random forest classifier outperformed the competing classifiers. Benton et al. (2017) propose a multi-task learning method for predicting suicide risk and mental health problems. The authors used Twitter datasets to identify users with anxiety, bipolar disorder, depression, panic disorder, eating disorders or schizophrenia, together with a dataset describing people who had attempted suicide; a multi-task learning model that predicts all tasks performed significantly better than the other models. de Souza et al. (2022) predict depression on the social network Reddit; their method identifies the features with the highest SHapley Additive exPlanations (SHAP) values for a sample of test users.
Regarding sentiment analysis in text, some authors recommend focusing the analysis on three main sentiments: positive, neutral and negative (Patankar et al., 2016; Jamal et al., 2021; Avila et al., 2020; Esposito et al., 2020).

Moreover, some authors point out that recognising the primary or basic emotions (happiness, anger, fear, disgust, surprise, sadness) in facial expressions allows a better analysis of emotions because they are easy to recognise (Lozano-Monasor et al., 2014; Lozano-Monasor et al., 2017). Ekman (1993) argues that the link between emotions and facial expressions is so strong that each basic emotion has a distinctive facial expression. Facial recognition software, especially commercial software, is constantly evolving to include larger training databases and more natural (neither posed nor experimentally induced) emotional data (Harley, 2016). Guthier et al. (2016) note that facial expressions and emotions influence each other because the facial expression resulting from an emotion involves facial muscles that cannot be consciously controlled. For this reason, most vision-based approaches to emotion recognition focus on faces. Canal et al. (2022) recently published a comprehensive review of the most commonly used classical and neural-network strategies for interpreting and recognising facial expressions of emotion. Regarding machine learning techniques for facial emotion recognition, Florea et al. (2019) propose the Annealed Label Transfer method to predict anxiety/stress in children. Giannakakis et al. (2017) develop a framework for detecting and analysing stress/anxiety based on non-voluntary and semi-voluntary facial signals. Finally, Huang et al. (2016) predict social anxiety related to public speaking by using facial feature extraction to obtain 68 facial landmarks, with support vector machine classifiers identifying the facial expressions of public speaking anxiety.

In summary, previous work suggests that a young person's anxiety can be predicted from his or her activity on social networks and from facial emotion recognition. The aim of this paper is therefore to introduce an internet-based application that predicts anxiety in young university students using two methods: (i) identification of anxiety through the analysis of facial expressions and (ii) identification of anxiety through sentiment analysis of posts on the social network Facebook. For both methods, the Adult Manifest Anxiety Scale–College version (AMAS-C) is used as the validation tool, to assess whether the proposed facial and textual methods provide accurate predictions compared with this ground truth data.

2. Methods

2.1. The AMAS-C test

A well-known instrument for measuring anxiety is the Adult Manifest Anxiety Scale (AMAS) (Reynolds et al., 2003), which comprises three forms: (i) AMAS-A (for adults), (ii) AMAS-C (for college students) and (iii) AMAS-E (for older adults). The AMAS-C is thus designed to measure and assess anxiety in college students (Reynolds et al., 2003; González Ramírez et al., 2021). It contains 49 items divided into four anxiety scales and one validity scale. The first scale consists of 12 items on Worry/Oversensitivity (WOS), the second of 7 items on Social Concerns/Stress (SOC) and the third of 8 items on Physiological Anxiety (PHY). The fourth scale focuses on Test Anxiety and consists of 15 items. Finally, the Lie scale, used to check data validity, consists of 7 items. The AMAS-C also provides a Total Anxiety score, computed from the sum of the scores on the four anxiety scales above, excluding the Lie scale. For the three scales used in this work, the T-score ranges are ≤44 (1), 45–54 (2), 55–64 (3), 65–74 (4) and ≥75 (5), corresponding to the clinical ratings low, expected, mildly elevated, clinically significant and extreme, respectively. Because each range carries its own clinical meaning, these ratings give rise to the concept of a lag in prediction (Reynolds et al., 2003): a T-score is interpreted through the range into which it falls. This lag is therefore used to validate the predictions of this study. Even if a predicted T-score is not very close to the actual score, it is sufficient for it to fall within the same range to provide an accurate interpretation of the student's anxiety.
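The mapping from a T-score to its AMAS-C range and clinical rating can be sketched as follows. This is a minimal illustration, not the authors' code; it assumes that non-integer T-scores (such as the averaged predictions reported in the Results) are assigned by the lower bound of each range, so that 64.5 still falls in the 55–64 range. The function names are hypothetical.

```python
from bisect import bisect_right

# Exclusive upper bounds of ranges 1-4; any T-score >= 75 falls in range 5.
UPPER_BOUNDS = (45, 55, 65, 75)
RATINGS = ("low", "expected", "mildly elevated", "clinically significant", "extreme")


def t_score_range(t: float) -> int:
    """Return the AMAS-C range (1-5) that contains T-score t."""
    return bisect_right(UPPER_BOUNDS, t) + 1


def clinical_rating(t: float) -> str:
    """Return the clinical rating associated with T-score t."""
    return RATINGS[t_score_range(t) - 1]


for score in (40, 53, 64.5, 67, 76):
    print(score, t_score_range(score), clinical_rating(score))
```

`bisect_right` places a score equal to a bound (e.g. 45) in the higher range, which matches the integer bands ≤44 and 45–54.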

This paper uses the Total Anxiety, Test Anxiety and Lie scales of the AMAS-C. The Total Anxiety score is the starting point for assessing AMAS scores. This scale is designed to reflect a wide range of symptoms, including irrational worry and fear, a general feeling of apprehension or unease, and the manifestation of these symptoms in the interpersonal, physiological and cognitive domains. High Total Anxiety scores therefore reflect general problems associated with anxiety.

The Test Anxiety scale, in turn, is designed to measure anxiety associated with tests, test taking and performance evaluation. Elevated levels of test anxiety can lead to poor test performance despite adequate or even high knowledge of the subject matter. This scale captures the debilitating stress associated with test taking and the unproductive mental drain that so often affects people with test anxiety. For this reason, this scale is included in the T-score prediction. In the context of the present work, a correct or approximate prediction of the T-score, or of its interpretation, makes it possible to identify students who are more likely to fail academically at university for anxiety-related reasons.

Before any form of the AMAS is interpreted, however, it must be established that a valid and interpretable protocol has been obtained. This can be done by looking for invalid response patterns, by examining the responses, or by simple visual inspection. This matters because, if there is any reason to suspect that an AMAS protocol contains invalid responses, the results are likely to be uninterpretable. For this reason, each version of the AMAS includes a Lie scale designed to detect intentional distortions or response biases based on social desirability. The items on the Lie scale describe "ideal" behaviour that is not characteristic of any real individual: those who answer "yes" to "I am always nice" or "I never get angry" are clearly not describing realistic human behaviour at any age. This scale is therefore used to measure the truthfulness of participants' self-reports, based on the interpretation given by its T-score ranges.

2.2. Meaning Cloud for detecting emotions in text

The Meaning Cloud API performs detailed, multilingual sentiment analysis of text from a variety of sources (Chatzakou et al., 2017). It determines whether the supplied text expresses a positive, neutral or negative sentiment, or no detectable sentiment (Patankar et al., 2016; Jamal et al., 2021; Avila et al., 2020; Esposito et al., 2020). It does so by identifying individual sentences and evaluating the relationships between them, yielding an overall polarity value for the text as a whole (https://www.meaningcloud.com/products/sentiment-analysis). The main features of the API are:

- Overall sentiment: extracts the general opinion expressed in a tweet, post or review.
- Attribute-level sentiment: identifies the sentiment expressed towards an object or any of its qualities, analysing the sentiment of each sentence in detail.
- Opinion and fact identification: distinguishes between the expression of an objective fact and a subjective opinion.
- Irony detection: flags comments whose intended meaning is the opposite of what is literally said.
- Graded polarity: distinguishes very positive and very negative opinions, as well as the absence of sentiment.
- Agreement and disagreement: detects opposing views and contradictory or ambiguous messages.

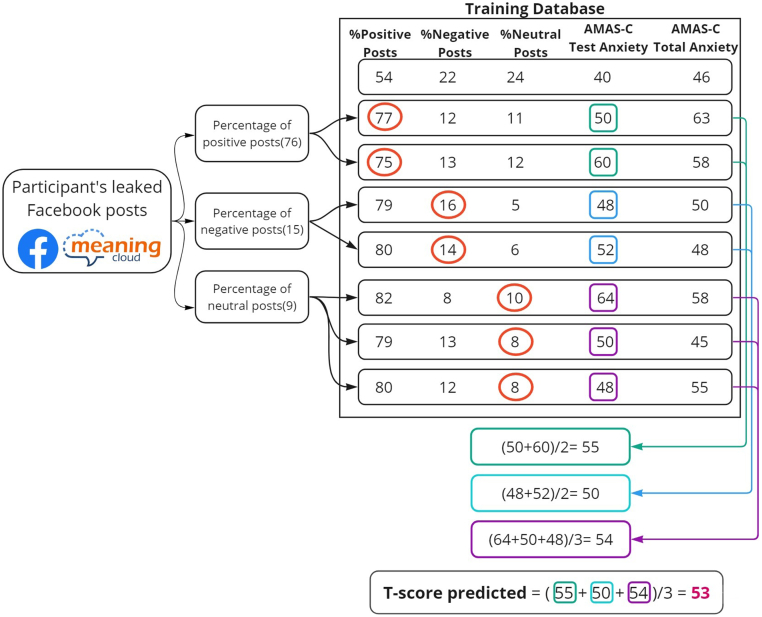

The prediction algorithm applied to each participant is based on Meaning Cloud sentiment analysis of their posts on the social network Facebook. Its inputs are the percentages of the participant's posts classified as positive, neutral and negative (see Fig. 1), and its outputs are two predicted T-scores, one for the Test Anxiety scale and one for the Total Anxiety scale.
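The input percentages could be derived from per-post sentiment labels along the lines of the sketch below. It assumes each post has already been labelled with one of MeaningCloud's polarity tags (`P+`, `P`, `NEU`, `N`, `N+` or `NONE`); grouping strong and ordinary polarities into three states and dropping posts without polarity (`NONE`) are assumptions of this sketch, chosen so that the three percentages add up to 100 % as in the paper's example.

```python
from collections import Counter

# Assumed grouping of MeaningCloud-style polarity tags into the paper's three states.
TAG_TO_STATE = {"P+": "positive", "P": "positive", "NEU": "neutral",
                "N": "negative", "N+": "negative"}
STATES = ("positive", "negative", "neutral")


def polarity_percentages(tags):
    """Percentage of positive, negative and neutral posts among posts with a polarity."""
    # Tags without a polarity (e.g. "NONE") are dropped before computing percentages.
    states = [TAG_TO_STATE[t] for t in tags if t in TAG_TO_STATE]
    if not states:
        raise ValueError("no posts with a detectable polarity")
    counts = Counter(states)
    return {s: 100 * counts[s] / len(states) for s in STATES}


print(polarity_percentages(["P", "P+", "N", "NEU", "NONE"]))
```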

Fig. 1.

Prediction algorithm using Facebook posts.

Posts that do not carry relevant information are discarded. Table 1 describes the exclusion criteria used.

Table 1.

Exclusion criteria for Facebook posts.

| # | Criterion | Reason |
|---|---|---|
| 1 | Posts about games, applications or other services provided by Facebook. | Posts created through these services do not truly reflect the feelings or thoughts of the participant. |
| 2 | Posts that contain a single link or multiple links. | A link or multiple links do not reflect a particular sentiment, or the context of the sentiment may be lost when parsing a URL. |
| 3 | Posts containing photos with no comments. | Sentiment analysis requires specific text to analyse, so if it does not exist, there is no material for sentiment analysis. |
| 4 | Posts containing only tags. | A name or list of names does not reflect a specific sentiment. |
| 5 | Posts containing only icons. | The sentiment analysis API does not recognise icons, so it does not reflect the sentiment or thoughts of the participant. |
| 6 | Posts containing only addresses. | These address-only posts do not reflect sentiment. |
| 7 | Posts that reflect spam (including criteria). | These posts reflect repetition and will affect the overall rating. |
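The criteria in Table 1 could be approximated with simple rules such as the ones below. This is a hedged sketch, not the authors' filter: the `Post` fields `via_app`, `tagged_names` and `place` are hypothetical stand-ins for metadata that would have to come from Facebook, and treating repeated text as spam is a simplification of criterion 7.

```python
import re
from dataclasses import dataclass, field

URL_RE = re.compile(r"https?://\S+|www\.\S+")


@dataclass
class Post:
    text: str = ""
    via_app: bool = False                              # hypothetical: created by a game/app (criterion 1)
    tagged_names: list = field(default_factory=list)   # hypothetical: tagged people (criterion 4)
    place: str = ""                                    # hypothetical: check-in address (criterion 6)


def residual_text(post: Post) -> str:
    """Text left after removing links, tagged names and the address."""
    text = URL_RE.sub(" ", post.text)
    for name in post.tagged_names:
        text = text.replace(name, " ")
    if post.place:
        text = text.replace(post.place, " ")
    return text.strip()


def filter_posts(posts):
    """Keep only posts that still carry analysable text after applying Table 1."""
    kept, seen = [], set()
    for post in posts:
        if post.via_app:                      # criterion 1
            continue
        rest = residual_text(post)
        # Criteria 2-6: only links, an uncaptioned photo, tags, icons or an address remain.
        if not any(ch.isalnum() for ch in rest):
            continue
        key = rest.casefold()
        if key in seen:                       # criterion 7: repeated text treated as spam
            continue
        seen.add(key)
        kept.append(post)
    return kept
```

Emoji are not alphanumeric characters in Python, so icon-only posts are dropped by the same check that removes empty or link-only posts.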

2.3. Technical skills testing and Morph AI for facial emotion recognition

Admission to higher education is often based primarily on prior achievement and entrance examination results. Today, all higher education institutions, especially computer science and engineering faculties, face challenges in the admissions process. Every university should strive to have an admissions system based on valid and reliable admissions criteria that selects candidates who are likely to succeed in their programmes (Mengash, 2020).

Other parameters commonly used to predict student performance at university are assessment results, quiz marks, laboratory work and examination marks. The test used in this work to support the university admission process covers verbal, numerical and logical reasoning skills in their various forms and consists of 10 questions, answered by first- and second-year undergraduate students. In addition to the aptitude questions, a time factor was added: a maximum response time was defined for each question in order to provoke stress and anxiety in the participants while they answer. The test is thus designed to provoke emotional states that can be inferred through facial analysis (Meiselman, 2016); more specifically, the technical skills test elicits emotional states that are captured by analysing the participant's facial gestures with machine vision. The participant must answer a total of 10 questions, each with a time limit. If a question is not answered within the allotted time, it is automatically skipped and the participant is presented with the next question.
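The skip-on-timeout rule can be simulated as follows. The sketch records, for each question, whether it was answered and how long the facial capture window lasted: the answer time, or the full time limit when the question times out. The 30-second limit used in the example is hypothetical, since the actual limit is not reported, and the function and class names are illustrative.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence


@dataclass
class QuestionOutcome:
    number: int
    answered: bool
    window_s: float  # seconds of facial data captured for this question


def run_timed_test(answer_times: Sequence[Optional[float]], limit_s: float) -> List[QuestionOutcome]:
    """answer_times[i] is the time needed for question i+1 (None if never answered).

    A question not answered within limit_s is skipped automatically, so its
    capture window is capped at limit_s.
    """
    outcomes = []
    for number, t in enumerate(answer_times, start=1):
        answered = t is not None and t <= limit_s
        outcomes.append(QuestionOutcome(number, answered, t if answered else limit_s))
    return outcomes


for outcome in run_timed_test([12.0, None, 45.0], limit_s=30.0):
    print(outcome)
```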

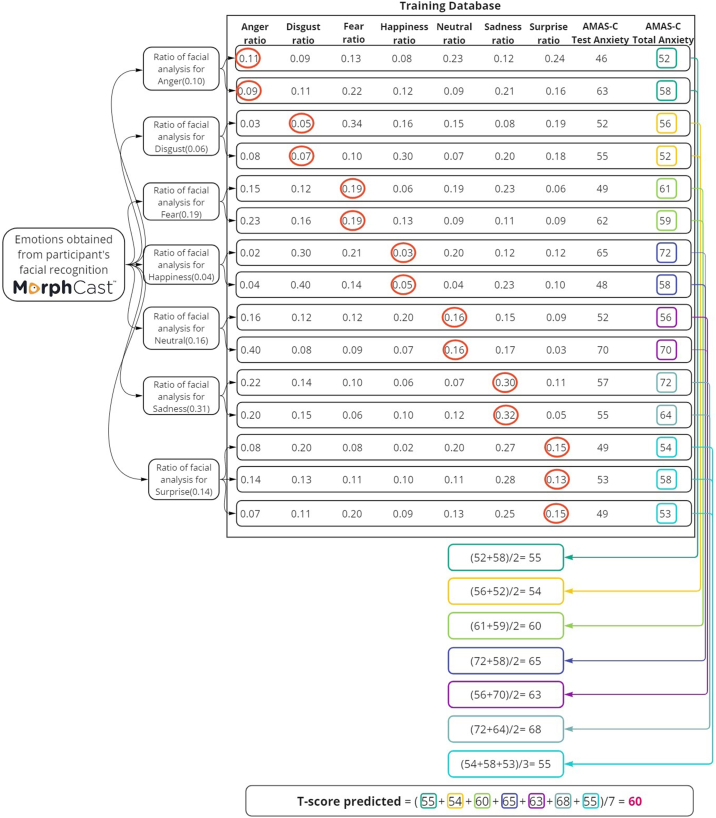

Emotion recognition by artificial vision is performed with the MorphCast tool (Harley, 2016). The MorphCast AI HTML5 SDK (https://www.morphcast.com) is a native JavaScript engine, based on deep neural networks, that can analyse facial expressions and features in any web page or application; its facial expression analysis technology can process more than 130 facial features. Each participant's identifier is linked to the results of the facial analysis of the induced emotions and to the participant's results on the technical skills test. The affective states studied are the six basic emotions (anger, disgust, fear, happiness, sadness, surprise) and the neutral expression. For each question, MorphCast analyses all the facial gestures shown until the question is answered or the maximum response time elapses. Fig. 2 shows the diagram of the prediction algorithm based on facial emotion analysis with the MorphCast tool.

Fig. 2.

Prediction algorithm using facial emotion analysis.

The facial analysis yields the average score of each of the seven emotions over the time taken to complete the technical skills test. From these averages, two predicted T-scores are obtained, one for the Test Anxiety scale and one for the Total Anxiety scale.
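Averaging the emotion scores over the test could look like the sketch below. The input format (one dict of emotion scores per captured frame) is hypothetical and is not MorphCast's actual event format; the final rescaling, so that the seven averages add up to 1 as in the example of Fig. 5, is also an assumption of this sketch.

```python
from statistics import mean

EMOTIONS = ("anger", "disgust", "fear", "happiness", "neutral", "sadness", "surprise")


def average_emotions(frames):
    """Average per-frame emotion scores over the whole test and rescale them to sum to 1.

    `frames` is a list of dicts mapping emotion name -> score for one captured frame;
    emotions missing from a frame count as 0 (an assumption of this sketch).
    """
    if not frames:
        raise ValueError("no frames captured")
    averages = {e: mean(frame.get(e, 0.0) for frame in frames) for e in EMOTIONS}
    total = sum(averages.values())
    if total == 0:
        raise ValueError("no emotion signal in the captured frames")
    return {e: value / total for e, value in averages.items()}


profile = average_emotions([{"fear": 0.6, "sadness": 0.4}, {"fear": 0.2, "neutral": 0.8}])
print({e: round(v, 2) for e, v in profile.items()})
```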

2.4. Participants

To determine the sample size from the known population size, the following formula for a finite population was used (see Eq. (1)):

$$n = \frac{N Z^2 p q}{e^2 (N - 1) + Z^2 p q} \tag{1}$$

where $n$ is the sample size, $Z$ is the value of the standard normal distribution that provides the desired level of confidence (a confidence level of 95 % corresponds to $Z = 1.96$), $p$ is the proportion of individuals with a given characteristic, $q = 1 - p$ is the proportion of individuals without this characteristic, $e$ is the margin of error, and $N$ is the population size.
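Eq. (1) can be evaluated directly, rounding up to the next whole participant. The defaults p = q = 0.5 and e = 0.05 below are common conservative choices assumed for illustration, because the text does not report the values used; the population sizes in the usage example are also illustrative, not the study's population.

```python
import math


def finite_population_sample_size(N: int, z: float = 1.96, p: float = 0.5, e: float = 0.05) -> int:
    """Sample size for a finite population of size N (Eq. (1)), rounded up."""
    q = 1 - p
    n = (N * z**2 * p * q) / (e**2 * (N - 1) + z**2 * p * q)
    return math.ceil(n)


print(finite_population_sample_size(1000))
```

As N grows, the result approaches the infinite-population value z²pq/e² ≈ 384.2, i.e. 385 participants.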

The formula gives a sample size of 120 students. Accordingly, 120 students enrolled in the first and second years of the Information Technology programme at the University of the Armed Forces ESPE were recruited for the evaluation. The students were between 18 and 24 years old. All participants signed an informed consent form after a careful explanation of the study and before the experiment was conducted. The collected data were stored anonymously in dissociated databases. First, all 120 students took the AMAS-C test. The sample was then divided into two groups, one for training and one for validation. Following the Pareto principle or 80/20 rule (Nemur, 2016), the "few out of many" ratio was applied, in which the results of a few students (20 %) are set against those of the majority (80 %). Thus, 80 % of the 120 students (96) formed the first group, used to train the prediction engine, and the remaining 20 % (24 students) were used for validation.
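The text does not state how students were assigned to the two groups. The sketch below assumes a random shuffle with a fixed, hypothetical seed so that the 96/24 split is reproducible; any other assignment rule would only change the shuffle step.

```python
import random


def split_80_20(participant_ids, seed=2019):
    """Shuffle participant IDs reproducibly and split them 80 % / 20 %."""
    ids = list(participant_ids)
    random.Random(seed).shuffle(ids)  # seed value is a hypothetical choice
    cut = len(ids) * 4 // 5           # 80 % of the sample, using integer arithmetic
    return ids[:cut], ids[cut:]


training_ids, validation_ids = split_80_20(range(1, 121))
print(len(training_ids), len(validation_ids))  # 96 24
```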

2.5. Description of the experiment

The Clinical Research Ethics Committee of the Complejo Hospitalario Universitario de Albacete approved this study on 24 September 2019, with code number 2019/07/073. The authors confirm that all procedures contributing to this work complied with the ethical standards of the relevant national and institutional committees on human experimentation and with the Helsinki Declaration of 1975, as revised in 2008.

The experiment was divided into three phases.

Phase 1. Administration of the AMAS-C test. In this phase, the scores on the AMAS-C scales (Total Anxiety, Test Anxiety and Lie) are obtained so that they can be compared with the predictions of the methods proposed in Phases 2 and 3. The aim is to determine which of the two methods yields scores closer to those of the AMAS-C test, which is widely accepted in psychology.

Fig. 3 describes the procedure for obtaining the T-scores of each scale from the AMAS-C test. The AMAS-C self-report questionnaire is administered to the 120 participants, and only the three values of interest are recorded: the T-scores for Test Anxiety, Total Anxiety and Lie. As explained above, 96 of these 120 participants form the training base and the remaining 24 form the validation set.

Fig. 3.

Registration process of the participants' T scores.

Once the T-scores of the three scales have been obtained from the AMAS-C test, they are stored in different databases as follows:

1. The records of the 96 training participants, with their Test Anxiety and Total Anxiety T-scores, are stored in one database.
2. The records of the same 96 participants, with their Test Anxiety, Total Anxiety and Lie T-scores, are stored in a second database filtered by the Lie T-score: participants with a Lie T-score ≥ 75 are not recorded in this database.
3. The records of the remaining 24 participants, with their Test Anxiety and Total Anxiety T-scores, are stored in the validation database.
4. The records of the same 24 participants, with their Test Anxiety, Total Anxiety and Lie T-scores, are stored in a second validation database with the same Lie filter applied: participants with a Lie T-score ≥ 75 are not included.
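The four record sets can be sketched as plain in-memory lists. For simplicity every record here keeps all three scores, whereas the unfiltered databases described above store only Test Anxiety and Total Anxiety; the `Record` type and `build_databases` function are hypothetical names, not part of the application.

```python
from dataclasses import dataclass

LIE_CUTOFF = 75  # Lie T-scores of 75 or more fall in the "extreme" range and are excluded


@dataclass(frozen=True)
class Record:
    participant_id: int
    test_anxiety: int
    total_anxiety: int
    lie: int


def lie_filtered(records):
    """Drop participants whose Lie T-score suggests an invalid protocol."""
    return [r for r in records if r.lie < LIE_CUTOFF]


def build_databases(training, validation):
    """Return the four record sets of items 1-4 above."""
    return {
        "training": list(training),
        "training_lie_filtered": lie_filtered(training),
        "validation": list(validation),
        "validation_lie_filtered": lie_filtered(validation),
    }
```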

Phase 2. Identification of anxiety by sentiment analysis of posts on the social network Facebook. This first method performs a textual analysis of the participants' Facebook posts. The phase is divided into four steps: (1) preprocessing, (2) learning to classify the posts as positive, negative or neutral, (3) evaluation against the scores obtained in the AMAS-C test (Phase 1) for the Test Anxiety and Total Anxiety scales, and (4) prediction for a new group of participants.

The procedure for predicting the T-scores of each of the 24 validation participants from their Facebook posts is described below, using Fig. 4 as an illustrative example.

- The records with the Test Anxiety, Total Anxiety and Lie scores obtained by administering the AMAS-C test are stored.
- All of the participants' Facebook posts are collected and submitted to the Meaning Cloud API.
- The collected posts are classified into three states (positive, negative and neutral). In the example of Fig. 4, the T-score is predicted for a user whose posts are 76 % positive, 15 % negative and 9 % neutral; together, these percentages cover 100 % of the textual content of the user's posts on Facebook.
- The percentage of each state (% of positive, % of negative and % of neutral posts) is compared with the corresponding percentages in the training base of the 96 participants from the learning phase. The training base also holds the AMAS-C scores (Test Anxiety and Total Anxiety) that these 96 students obtained in Phase 1. Fig. 4 shows how each of the three states is contrasted with every record of the training base.
- For each of the three percentages, the closest higher value and the closest lower value in the training base are selected.
- For each AMAS-C scale (Test Anxiety and Total Anxiety), the T-scores of the selected records are averaged separately for the positive, negative and neutral states. In the example, the participant's percentage of positive posts (76 %) is first looked up in every record. Since no record has exactly this value, the closest higher and lower values are selected: 77 % and 75 %, the only neighbouring values in the example training base. For the Test Anxiety scale, the T-scores of these two records are 50 and 60, whose mean is 55. Repeating the procedure for the negative and neutral posts gives 50 and 54, respectively.
- Finally, the three per-state T-scores of each scale are averaged to obtain the predicted T-score assigned to the participant. In Fig. 4, averaging 55, 50 and 54 gives a T-score of 53, the predicted Test Anxiety value for this participant.
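The nearest-neighbour averaging of Phase 2 can be sketched as follows. This is one interpretation of the steps above, not the authors' code: exact matches are used when they exist; otherwise the closest higher and lower values are averaged (using only one side if the other is missing), and records sharing a neighbour value are averaged first. The toy training base is hypothetical, built only so that the arithmetic reproduces the Fig. 4 example (55, 50 and 54, giving 53). Because the states are a parameter, the same sketch applies to the seven emotion proportions of Phase 3.

```python
from statistics import mean


def state_score(value, training, state, scale):
    """T-score on `scale` estimated from the training records closest to `value` for one state."""
    exact = [r[scale] for r in training if r[state] == value]
    if exact:
        return mean(exact)
    higher = [r[state] for r in training if r[state] > value]
    lower = [r[state] for r in training if r[state] < value]
    neighbours = []
    if higher:
        nearest = min(higher)
        neighbours.append(mean(r[scale] for r in training if r[state] == nearest))
    if lower:
        nearest = max(lower)
        neighbours.append(mean(r[scale] for r in training if r[state] == nearest))
    return mean(neighbours)


def predict_t_score(participant, training, states, scale):
    """Average the per-state estimates into one predicted T-score."""
    return mean(state_score(participant[s], training, s, scale) for s in states)


# Hypothetical training base: % of positive/negative/neutral posts and AMAS-C T-score.
TRAINING = [
    {"positive": 77, "negative": 12, "neutral": 11, "test_anxiety": 50},
    {"positive": 75, "negative": 17, "neutral": 8, "test_anxiety": 60},
    {"positive": 20, "negative": 16, "neutral": 64, "test_anxiety": 50},
    {"positive": 30, "negative": 14, "neutral": 56, "test_anxiety": 50},
    {"positive": 50, "negative": 40, "neutral": 10, "test_anxiety": 48},
]
STATES = ("positive", "negative", "neutral")
participant = {"positive": 76, "negative": 15, "neutral": 9}
print(predict_t_score(participant, TRAINING, STATES, "test_anxiety"))  # 53
```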

Phase 3. Identification of anxiety through facial expression analysis. In this phase, the second proposed method is applied by detecting emotions from facial expressions. This phase is also divided into four steps: (1) preprocessing, in which the participants take the technical skills test so that the emotions they display on each question are captured; (2) learning from the captured emotions, classified as anger, disgust, fear, happiness, sadness, surprise or neutral; (3) evaluation against the scores obtained in the AMAS-C test (Phase 1) for the Test Anxiety and Total Anxiety scales; and (4) prediction, in which new participants take the technical skills test while their emotions are extracted and stored in the background.

Fig. 4.

Example of Phase 2 detection and prediction of anxiety by analysing sentiments in publications on the social network Facebook.

Fig. 5 illustrates the facial recognition algorithm with an example, in the same way as Fig. 4 does for text analysis. Here the participant has the following emotion proportions, obtained with MorphCast AI: anger (0.10), disgust (0.06), fear (0.19), happiness (0.04), neutral (0.16), sadness (0.31) and surprise (0.14). Each of these emotions is treated as a state. The proportions add up to 1 across all the emotions extracted by facial recognition while the participant performs the skills test.

Fig. 5.

Example of Phase 3 detection and prediction of anxiety by analysing facial expressions.

2.6. Architecture

An architecture based on free software tools was implemented, as shown in Fig. 6. The application, called "Anxiety Sentiment Test", was developed with React (https://reactjs.org/), a JavaScript library for building user interfaces that is supported by Facebook. Because it is web-based, it runs on any mobile device, laptop or desktop computer with a browser and a camera.

Fig. 6.

“Anxiety Sentiment Test” architecture.

The application uses the Facebook API to log participants in and to obtain public data, such as their name, email address and a Facebook-provided ID, which is used to identify them and store their results. It also uses the Firebase SDK to interact with the Firestore database and store the results in the corresponding collections. The data come from the participants' interaction with the different sections of the web application:

1. 49-item form (AMAS-C test), which allows us to measure the participant's Test Anxiety and Total Anxiety, and to check the validity of the test using the Lie scale.
2. Analysis of Facebook posts using the sentiment analysis API provided by Meaning Cloud.
3. Analysis of emotions using the MorphCast HTML5 AI SDK.

These last two functions produce results that are analysed and processed to predict the participants' Test Anxiety and Total Anxiety. In a web application, the interface is one of the most relevant parts of the system, since it mediates between the participant's actions and the database. The main strengths of this framework are its versatility and its ease of communication with both JavaScript and the Firestore database. An intuitive, easy-to-use interface was designed following the Material Design guidelines developed by Google (https://material.io/design/introduction/#principles).

3. Results

This section presents the predictions obtained by each method and their errors. The results are also mapped to their respective AMAS-C ranges in order to determine the discrepancy between predictions based on sentiment analysis of text and those based on facial expressions. Two evaluations were carried out: (i) using the entire sample of the experiment and (ii) studying the precision of the data filtered by the AMAS-C Lie scale.

3.1. Results using the whole sample

Following the 80/20 rule described above, the predictions were obtained with 96 students in the learning phase and 24 students in the prediction phase. Table 2 shows the Test Anxiety results and Table 3 the Total Anxiety results for the 24 students (numbered 1 to 24). The columns of these tables are: (i) the student's identifier, (ii) the AMAS-C range of the student's score, (iii) the score obtained on the AMAS-C test, (iv) the prediction from textual analysis of the Facebook posts, (v) the prediction from facial emotion analysis, (vi) the absolute difference between the AMAS-C score and the text-analysis prediction, and (vii) the absolute difference between the AMAS-C score and the facial-analysis prediction.

Table 2.

Results of Test Anxiety prediction.

| ID | AMAS-C range | AMAS-C score (C) | Text analysis score (T) | Facial analysis score (F) | C-T | C-F |
|---|---|---|---|---|---|---|
| 20 | ≤44 | 40 | 65.83 | 65.17 | 25.83 | 25.17 |
| 6 | ≤44 | 42 | 61.60 | 61.92 | 19.60 | 19.92 |
| 11 | 45–54 | 45 | 62.50 | 64.86 | 17.50 | 19.86 |
| 7 | 45–54 | 53 | 61.00 | 63.79 | 8.00 | 10.79 |
| 9 | 45–54 | 53 | 62.57 | 64.50 | 9.57 | 11.50 |
| 12 | 45–54 | 53 | 67.80 | 67.07 | 14.80 | 14.07 |
| 17 | 45–54 | 53 | 62.42 | 60.64 | 9.42 | 7.64 |
| 5 | 55–64 | 56 | 60.83 | 64.21 | 4.83 | 8.21 |
| 14 | 55–64 | 56 | 61.11 | 66.29 | 5.11 | 10.29 |
| 19 | 55–64 | 56 | 57.00 | 67.64 | 1.00 | 11.64 |
| 1 | 55–64 | 59 | 64.50 | 60.71 | 5.50 | 1.71 |
| 3 | 55–64 | 62 | 59.00 | 65.50 | 3.00 | 3.50 |
| 4 | 55–64 | 62 | 65.86 | 62.57 | 3.85 | 0.57 |
| 13 | 65–74 | 65 | 61.83 | 62.71 | 3.17 | 2.29 |
| 16 | 65–74 | 65 | 61.67 | 65.50 | 3.33 | 0.50 |
| 8 | 65–74 | 67 | 60.83 | 63.93 | 6.17 | 3.07 |
| 10 | 65–74 | 67 | 63.17 | 65.07 | 3.83 | 1.93 |
| 18 | 65–74 | 67 | 61.33 | 59.14 | 5.67 | 7.86 |
| 22 | 65–74 | 67 | 62.17 | 65.14 | 4.83 | 1.86 |
| 24 | 65–74 | 67 | 66.00 | 60.93 | 1.00 | 6.07 |
| 15 | 65–74 | 70 | 67.40 | 60.00 | 2.60 | 10.00 |
| 21 | 65–74 | 70 | 64.50 | 68.71 | 5.50 | 1.29 |
| 23 | 65–74 | 70 | 65.50 | 61.57 | 4.50 | 8.43 |
| 2 | ≥75 | 76 | 56.33 | 64.85 | 19.67 | 11.15 |
| Mean difference | | | | | 7.85 | 8.30 |

Table 3.

Results of Total Anxiety prediction.

| ID | AMAS-C range | AMAS-C score (C) | Text analysis score (T) | Facial analysis score (F) | C-T | C-F |
|---|---|---|---|---|---|---|
| 6 | 45–54 | 45 | 58.40 | 60.85 | 13.40 | 15.85 |
| 20 | 45–54 | 48 | 65.17 | 58.23 | 17.17 | 10.23 |
| 7 | 45–54 | 48 | 59.00 | 61.00 | 10.57 | 13.36 |
| 14 | 45–54 | 49 | 60.00 | 66.00 | 10.79 | 16.57 |
| 11 | 45–54 | 53 | 59.83 | 65.86 | 6.83 | 12.86 |
| 3 | 45–54 | 53 | 55.67 | 58.79 | 2.67 | 5.79 |
| 4 | 45–54 | 53 | 66.43 | 60.57 | 13.43 | 7.57 |
| 12 | 55–64 | 60 | 68.80 | 62.79 | 8.80 | 2.79 |
| 22 | 55–64 | 60 | 60.67 | 68.86 | 0.67 | 8.86 |
| 9 | 55–64 | 63 | 64.14 | 61.50 | 1.14 | 1.50 |
| 1 | 55–64 | 63 | 66.00 | 58.86 | 3.00 | 4.14 |
| 24 | 55–64 | 63 | 61.50 | 57.57 | 1.50 | 5.43 |
| 17 | 55–64 | 64 | 61.47 | 57.36 | 2.53 | 6.64 |
| 19 | 55–64 | 64 | 59.33 | 60.71 | 4.67 | 3.29 |
| 18 | 55–64 | 64 | 54.33 | 53.21 | 9.67 | 10.79 |
| 8 | 65–74 | 65 | 58.83 | 65.50 | 6.17 | 0.50 |
| 16 | 65–74 | 68 | 59.67 | 64.93 | 8.33 | 3.07 |
| 15 | 65–74 | 68 | 64.80 | 57.43 | 3.20 | 10.57 |
| 13 | 65–74 | 71 | 55.50 | 60.14 | 15.50 | 10.86 |
| 23 | 65–74 | 71 | 65.17 | 60.93 | 5.83 | 10.07 |
| 5 | 65–74 | 73 | 58.17 | 62.43 | 14.83 | 10.57 |
| 10 | ≥75 | 75 | 62.00 | 61.21 | 13.00 | 13.79 |
| 21 | ≥75 | 75 | 58.83 | 66.43 | 16.17 | 8.57 |
| 2 | ≥75 | 81 | 51.17 | 62.23 | 29.83 | 18.77 |
| Mean difference | | | | | 9.15 | 8.85 |

Tables 2 and 3 show that the mean absolute error in T-score between the AMAS-C test result and the prediction by sentiment analysis of Facebook posts is 7.85 for Test Anxiety and 9.15 for Total Anxiety. For the prediction by facial emotion analysis, the corresponding errors are 8.30 and 8.85.
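To illustrate how the C-T and C-F columns and their means are obtained, the following Python sketch recomputes the Test Anxiety means of Table 2 from the listed scores. The scores are copied from Table 2; the function name `mean_abs_diff` and the tuple layout are illustrative choices, not part of the authors' application.

```python
"""Mean absolute difference between AMAS-C T-scores and predicted T-scores."""

# (C, T, F) for the 24 students of Table 2 (Test Anxiety), in table order.
TEST_ANXIETY = [
    (40, 65.83, 65.17), (42, 61.60, 61.92), (45, 62.50, 64.86),
    (53, 61.00, 63.79), (53, 62.57, 64.50), (53, 67.80, 67.07),
    (53, 62.42, 60.64), (56, 60.83, 64.21), (56, 61.11, 66.29),
    (56, 57.00, 67.64), (59, 64.50, 60.71), (62, 59.00, 65.50),
    (62, 65.86, 62.57), (65, 61.83, 62.71), (65, 61.67, 65.50),
    (67, 60.83, 63.93), (67, 63.17, 65.07), (67, 61.33, 59.14),
    (67, 62.17, 65.14), (67, 66.00, 60.93), (70, 67.40, 60.00),
    (70, 64.50, 68.71), (70, 65.50, 61.57), (76, 56.33, 64.85),
]


def mean_abs_diff(actual, predicted):
    """Mean of |actual - predicted| over paired scores."""
    if len(actual) != len(predicted) or not actual:
        raise ValueError("need two non-empty sequences of equal length")
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


# Unzip the rows into the AMAS-C, text and facial columns.
c, t, f = zip(*TEST_ANXIETY)
mae_text = mean_abs_diff(c, t)
mae_face = mean_abs_diff(c, f)
print(f"text: {mae_text:.2f}  facial: {mae_face:.3f}")
```

Running it gives a text-based mean of 7.85; the facial mean evaluates to 8.305, which Table 2 reports as 8.30.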

Taking Reynolds et al. (2003) as a reference, the lag is defined as the number of ranges separating the actual range from the predicted one: a lag of 0 means that the predicted range is the actual range, a lag of 1 means that the predicted range is one category away from the actual range, and so on. Table 4 shows the lag for each student on both the Test Anxiety and the Total Anxiety scales. C-R, T-R and F-R denote the AMAS-C, text analysis and facial analysis ranges, respectively; T-lag is the lag between C-R and T-R, and F-lag the lag between C-R and F-R. The average lag is 0.92 for the text analysis method (0.88 for Test Anxiety and 0.96 for Total Anxiety) and 0.90 for the face analysis method (0.88 and 0.92). Both approaches give comparable results, with a slight advantage for the facial emotion analysis method.
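The range and lag definitions can be sketched in Python as follows. The five ranges (≤44, 45–54, 55–64, 65–74, ≥75) are read off the AMAS-C range column of Tables 2 and 3. The paper does not state how fractional predicted scores are assigned to a range, so the cut points at 44.5, 54.5, 64.5 and 74.5 (with a score exactly on a cut point going to the lower range) are an assumption of this sketch, as are the names `CUT_POINTS`, `t_score_range` and `lag`.

```python
from bisect import bisect_left

# Assumed cut points between the five T-score ranges
# (1: <=44, 2: 45-54, 3: 55-64, 4: 65-74, 5: >=75). Placing them halfway
# between integer limits lets fractional predictions be binned too.
CUT_POINTS = (44.5, 54.5, 64.5, 74.5)


def t_score_range(score: float) -> int:
    """Return the AMAS-C range (1..5) containing a T-score."""
    # bisect_left puts a score equal to a cut point in the lower range.
    return bisect_left(CUT_POINTS, score) + 1


def lag(actual: float, predicted: float) -> int:
    """Number of range categories separating actual and predicted T-scores."""
    return abs(t_score_range(actual) - t_score_range(predicted))


# Student 2, Test Anxiety (Table 2): C = 76, T = 56.33, F = 64.85
print(lag(76, 56.33), lag(76, 64.85))
```

For student 2 it prints `2 1`, matching the T-lag and F-lag listed for that student in Table 4.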

Table 4.

Prediction results by range (R) and student (ID).

| ID | Test C-R | Test T-R | Test F-R | Test T-lag | Test F-lag | Total C-R | Total T-R | Total F-R | Total T-lag | Total F-lag |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 3 | 3 | 3 | 0 | 0 | 4 | 4 | 3 | 0 | 1 |
| 2 | 5 | 3 | 4 | 2 | 1 | 5 | 2 | 3 | 3 | 2 |
| 3 | 3 | 3 | 4 | 0 | 1 | 2 | 3 | 3 | 1 | 1 |
| 4 | 3 | 4 | 3 | 1 | 0 | 2 | 4 | 3 | 2 | 1 |
| 5 | 3 | 3 | 3 | 0 | 0 | 4 | 3 | 3 | 1 | 1 |
| 6 | 1 | 3 | 3 | 2 | 2 | 2 | 3 | 3 | 1 | 1 |
| 7 | 2 | 3 | 3 | 1 | 1 | 2 | 3 | 3 | 1 | 1 |
| 8 | 4 | 3 | 3 | 1 | 1 | 4 | 3 | 4 | 1 | 0 |
| 9 | 2 | 3 | 3 | 1 | 1 | 3 | 3 | 3 | 0 | 0 |
| 10 | 4 | 3 | 4 | 1 | 0 | 5 | 3 | 3 | 2 | 2 |
| 11 | 2 | 3 | 4 | 1 | 2 | 2 | 3 | 4 | 1 | 2 |
| 12 | 2 | 4 | 4 | 2 | 2 | 3 | 4 | 3 | 1 | 0 |
| 13 | 4 | 3 | 3 | 1 | 1 | 4 | 3 | 3 | 1 | 1 |
| 14 | 3 | 3 | 4 | 0 | 1 | 2 | 3 | 4 | 1 | 2 |
| 15 | 4 | 4 | 3 | 0 | 1 | 4 | 3 | 3 | 1 | 1 |
| 16 | 4 | 3 | 4 | 1 | 0 | 4 | 3 | 4 | 1 | 0 |
| 17 | 2 | 3 | 3 | 1 | 1 | 3 | 3 | 3 | 0 | 0 |
| 18 | 4 | 3 | 3 | 1 | 1 | 3 | 2 | 2 | 1 | 1 |
| 19 | 3 | 3 | 4 | 0 | 1 | 3 | 3 | 4 | 0 | 1 |
| 20 | 1 | 4 | 3 | 3 | 2 | 2 | 4 | 3 | 2 | 1 |
| 21 | 4 | 3 | 4 | 1 | 0 | 5 | 3 | 4 | 2 | 1 |
| 22 | 4 | 3 | 4 | 1 | 0 | 3 | 3 | 4 | 0 | 1 |
| 23 | 4 | 4 | 3 | 0 | 1 | 4 | 4 | 3 | 0 | 1 |
| 24 | 4 | 4 | 3 | 0 | 1 | 3 | 3 | 3 | 0 | 0 |
| Mean lag | | | | 0.88 | 0.88 | | | | 0.96 | 0.92 |

3.2. Results using the Lie scale

This prediction additionally uses the Lie scale of the AMAS-C test, which serves as a validity filter, discarding T-scores of 44 and 75. After this filtering, the sample was reduced to 99 participants. Applying the Pareto (80/20) rule to split the sample into training and validation sets gave 80 students for training and 19 students for validation. The results for the Test Anxiety and Total Anxiety scales are shown in Tables 5 and 6, respectively.
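The Pareto (80/20) split can be sketched in Python as below. The paper does not say whether the split was random or how the 80 % share was rounded; rounding the training size up reproduces both splits reported here (96/24 for 120 students and 80/19 for 99 students), but the seeded random shuffle and the function name `pareto_split` are assumptions of this sketch.

```python
import random


def pareto_split(ids, train_share_pct=80, seed=0):
    """Split ids into training and validation lists (80/20 by default).

    The training size is ceil(n * share / 100), computed with integer
    arithmetic to avoid floating-point rounding surprises.
    """
    ids = list(ids)
    n_train = -(-len(ids) * train_share_pct // 100)  # ceiling division
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    rng.shuffle(ids)
    return ids[:n_train], ids[n_train:]


for n in (120, 99):
    train, valid = pareto_split(range(1, n + 1))
    print(n, len(train), len(valid))
```

With rounding down instead, 99 students would give a 79/20 split, which does not match the sizes reported above.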

Table 5.

Results of Test Anxiety (filtered by the Lie scale).

| ID | AMAS-C range | AMAS-C score (C) | Text analysis score (T) | Facial analysis score (F) | C-T | C-F |
|---|---|---|---|---|---|---|
| 5 | ≤44 | 42 | 60.25 | 61.92 | 18.25 | 19.92 |
| 10 | 45–54 | 45 | 61.60 | 66.11 | 16.60 | 21.11 |
| 6 | 45–54 | 53 | 59.00 | 61.20 | 6.00 | 8.20 |
| 8 | 45–54 | 53 | 62.57 | 63.08 | 9.57 | 10.08 |
| 11 | 45–54 | 53 | 67.25 | 65.58 | 14.25 | 12.58 |
| 15 | 45–54 | 53 | 60.73 | 61.55 | 7.73 | 8.55 |
| 4 | 55–64 | 56 | 59.00 | 64.15 | 3.00 | 8.15 |
| 17 | 55–64 | 56 | 60.00 | 64.67 | 4.00 | 8.67 |
| 2 | 55–64 | 62 | 62.40 | 64.92 | 0.40 | 2.92 |
| 3 | 55–64 | 62 | 63.67 | 62.50 | 1.67 | 0.50 |
| 12 | 65–74 | 65 | 60.80 | 60.10 | 4.20 | 4.90 |
| 14 | 65–74 | 65 | 60.00 | 65.55 | 5.00 | 0.55 |
| 7 | 65–74 | 67 | 60.83 | 63.93 | 6.17 | 3.07 |
| 9 | 65–74 | 67 | 63.17 | 66.25 | 3.83 | 0.75 |
| 16 | 65–74 | 67 | 65.25 | 58.69 | 1.75 | 8.31 |
| 19 | 65–74 | 67 | 65.80 | 58.90 | 1.20 | 8.10 |
| 13 | 65–74 | 70 | 67.40 | 60.00 | 2.60 | 10.00 |
| 18 | 65–74 | 70 | 66.20 | 61.75 | 3.80 | 8.25 |
| 1 | ≥75 | 76 | 59.60 | 62.55 | 16.40 | 13.45 |
| Mean difference | | | | | 6.65 | 8.32 |

Table 6.

Results of Total Anxiety (filtered by the Lie scale).

| ID | AMAS-C range | AMAS-C score (C) | Text analysis score (T) | Facial analysis score (F) | C-T | C-F |
|---|---|---|---|---|---|---|
| 5 | 45–54 | 45 | 59.50 | 60.08 | 14.50 | 15.08 |
| 6 | 45–54 | 48 | 50.33 | 59.00 | 2.33 | 11.00 |
| 2 | 45–54 | 53 | 56.00 | 58.38 | 3.00 | 5.38 |
| 3 | 45–54 | 53 | 64.00 | 58.70 | 11.00 | 5.70 |
| 10 | 45–54 | 53 | 58.20 | 64.33 | 5.20 | 11.33 |
| 11 | 55–64 | 60 | 68.50 | 61.17 | 8.50 | 1.17 |
| 8 | 55–64 | 63 | 64.14 | 61.08 | 1.14 | 1.92 |
| 19 | 55–64 | 63 | 60.00 | 55.00 | 3.00 | 8.00 |
| 15 | 55–64 | 64 | 59.07 | 57.91 | 4.93 | 6.09 |
| 16 | 55–64 | 64 | 59.25 | 52.46 | 4.75 | 11.54 |
| 17 | 55–64 | 64 | 60.40 | 65.00 | 3.60 | 1.00 |
| 7 | 65–74 | 65 | 58.83 | 65.50 | 6.17 | 0.50 |
| 13 | 65–74 | 68 | 64.80 | 57.43 | 3.20 | 10.57 |
| 14 | 65–74 | 68 | 58.40 | 63.82 | 9.60 | 4.18 |
| 12 | 65–74 | 71 | 55.80 | 57.40 | 15.20 | 13.60 |
| 18 | 65–74 | 71 | 64.20 | 59.83 | 6.80 | 11.17 |
| 4 | 65–74 | 73 | 56.60 | 62.38 | 16.40 | 10.62 |
| 9 | ≥75 | 75 | 62.00 | 63.08 | 13.00 | 11.92 |
| 1 | ≥75 | 81 | 54.40 | 60.27 | 26.60 | 20.73 |
| Mean difference | | | | | 8.36 | 8.50 |

From these tables, the overall mean error in T-score (Test Anxiety and Total Anxiety combined) between the AMAS-C ground truth data and the prediction by sentiment analysis of Facebook posts is 7.51, whereas the error between the AMAS-C and the prediction by facial emotion analysis is 8.41. The results by AMAS-C range are presented in Table 7.
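Because Tables 5 and 6 cover the same 19 validation students, the overall error is the mean of 38 absolute differences, which equals the average of the two per-scale means. Writing $\bar{e}_T$ and $\bar{e}_F$ (notation introduced here) for the overall errors of the text-based and face-based predictions, and using the column sums of the C-T and C-F columns of Tables 5 and 6 (summed here from the listed values):

$$
\bar{e}_{T} = \frac{126.42 + 158.92}{38} = \frac{285.34}{38} \approx 7.51,
\qquad
\bar{e}_{F} = \frac{158.06 + 161.50}{38} = \frac{319.56}{38} \approx 8.41
$$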

Table 7.

Prediction results by range (R) and student (ID) (filtered by the Lie scale).

| ID | Test C-R | Test T-R | Test F-R | Test T-lag | Test F-lag | Total C-R | Total T-R | Total F-R | Total T-lag | Total F-lag |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 5 | 3 | 3 | 2 | 2 | 5 | 2 | 3 | 3 | 2 |
| 2 | 3 | 3 | 3 | 0 | 0 | 2 | 3 | 3 | 1 | 1 |
| 3 | 3 | 3 | 3 | 0 | 0 | 2 | 3 | 3 | 1 | 1 |
| 4 | 3 | 3 | 3 | 0 | 0 | 4 | 3 | 3 | 1 | 1 |
| 5 | 1 | 3 | 3 | 2 | 2 | 2 | 3 | 3 | 1 | 1 |
| 6 | 2 | 3 | 3 | 1 | 1 | 2 | 2 | 3 | 0 | 1 |
| 7 | 4 | 3 | 3 | 1 | 1 | 4 | 3 | 4 | 1 | 0 |
| 8 | 2 | 3 | 3 | 1 | 1 | 3 | 3 | 3 | 0 | 0 |
| 9 | 4 | 3 | 4 | 1 | 0 | 5 | 3 | 3 | 2 | 2 |
| 10 | 2 | 3 | 4 | 1 | 2 | 2 | 3 | 3 | 1 | 1 |
| 11 | 2 | 4 | 4 | 2 | 2 | 3 | 4 | 3 | 1 | 0 |
| 12 | 4 | 3 | 3 | 1 | 1 | 4 | 3 | 3 | 1 | 1 |
| 13 | 4 | 4 | 3 | 0 | 1 | 4 | 3 | 3 | 1 | 1 |
| 14 | 4 | 3 | 4 | 1 | 0 | 4 | 3 | 3 | 1 | 1 |
| 15 | 2 | 3 | 3 | 1 | 1 | 3 | 3 | 3 | 0 | 0 |
| 16 | 4 | 4 | 3 | 0 | 1 | 3 | 3 | 2 | 0 | 1 |
| 17 | 3 | 3 | 4 | 0 | 1 | 3 | 3 | 4 | 0 | 1 |
| 18 | 4 | 4 | 3 | 0 | 1 | 4 | 3 | 3 | 1 | 1 |
| 19 | 4 | 4 | 3 | 0 | 1 | 3 | 3 | 3 | 0 | 0 |
| Mean lag | | | | 0.74 | 0.95 | | | | 0.84 | 0.84 |

Table 7 shows that the average lag between the ground truth data and the prediction by sentiment analysis of Facebook posts is 0.79 (0.74 for Test Anxiety and 0.84 for Total Anxiety), whereas the average lag for the prediction by facial emotion analysis is 0.89 (0.95 and 0.84). The gap between the two methods is therefore wider than in the prediction without the Lie-scale filter. Thus, when the Lie scale is taken into account, the prediction method using textual analysis produces the best results.

3.3. Statistical analysis of results using the Lie scale

Of the two predictions above, the one adjusted by the Lie scale of the AMAS-C test gives the best results. For this reason, the statistical analysis in this section is based on the data obtained in this last prediction.

A prediction is considered correct if its lag with respect to the AMAS-C ground truth data is 0, and acceptable if its lag is 1. Table 8 shows, for the textual and the facial analysis, how many times each lag occurs and the corresponding percentage, pooled over the 19 validation students and the two scales (38 predictions per method).

Table 8.

Prediction of acceptable ranges.

| Lag | 3 | 2 | 1 | 0 |
|---|---|---|---|---|
| Lag instances (text analysis) | 1 | 4 | 19 | 14 |
| Percentage (text analysis) | 2.63 % | 10.53 % | 50.00 % | 36.84 % |
| Lag instances (facial analysis) | 0 | 6 | 22 | 10 |
| Percentage (facial analysis) | 0.00 % | 15.79 % | 57.89 % | 26.32 % |

Textual analysis therefore predicts the T-score range correctly (36.84 %) or acceptably (50.00 %) with a precision of 86.84 %, i.e., in 33 of 38 predictions. Facial analysis does so with a precision of 84.21 % (26.32 % correct and 57.89 % acceptable), i.e., in 32 of 38 predictions. This suggests that textual analysis allows a slightly more exact prediction of anxiety. The two methods are now examined separately for each scale.
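What the paper calls precision here is the share of predictions with a lag of at most 1 (correct or acceptable). The following Python sketch tallies the lag distribution of Table 8, the pooled precision, the mean lag and the per-scale precision of Tables 9 and 10 from the per-student lags copied from Table 7; the dictionary layout and the function name `acceptable_share` are illustrative, not the authors' code.

```python
from collections import Counter

# Per-student lags from Table 7 (students 1-19, in table order).
LAGS = {
    "text": {
        "Test Anxiety":  [2, 0, 0, 0, 2, 1, 1, 1, 1, 1, 2, 1, 0, 1, 1, 0, 0, 0, 0],
        "Total Anxiety": [3, 1, 1, 1, 1, 0, 1, 0, 2, 1, 1, 1, 1, 1, 0, 0, 0, 1, 0],
    },
    "facial": {
        "Test Anxiety":  [2, 0, 0, 0, 2, 1, 1, 1, 0, 2, 2, 1, 1, 0, 1, 1, 1, 1, 1],
        "Total Anxiety": [2, 1, 1, 1, 1, 1, 0, 0, 2, 1, 0, 1, 1, 1, 0, 1, 1, 1, 0],
    },
}


def acceptable_share(lags, max_lag=1):
    """Percentage of predictions whose lag is at most max_lag."""
    return 100 * sum(1 for g in lags if g <= max_lag) / len(lags)


for method, scales in LAGS.items():
    # Pool both scales: 19 students x 2 scales = 38 predictions per method.
    pooled = scales["Test Anxiety"] + scales["Total Anxiety"]
    counts = Counter(pooled)
    print(method,
          {g: counts[g] for g in (3, 2, 1, 0)},
          f"acceptable={acceptable_share(pooled):.2f}%",
          f"mean lag={sum(pooled) / len(pooled):.2f}")
    for scale, lags in scales.items():
        print(f"  {scale}: {acceptable_share(lags):.2f}%")
```

For text analysis this reports 33 of 38 predictions within one range (86.84 %) and a mean lag of 0.79; for facial analysis, 84.21 % and 0.89. The per-scale lines give 84.21 % and 89.47 % (text) and 78.95 % and 89.47 % (facial) for Test Anxiety and Total Anxiety, respectively.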

Table 9 shows the occurrences of correct or acceptable ranges (Test Anxiety and Total Anxiety) for the face analysis method. Facial analysis predicts the correct or acceptable range of the Total Anxiety scale with a precision of 89.47 %, whereas for the Test Anxiety scale the precision is 78.95 %.

Table 9.

Prediction of acceptable ranges using the facial analysis method.

| Lag | 3 | 2 | 1 | 0 |
|---|---|---|---|---|
| Lag instances (Test Anxiety) | 0 | 4 | 10 | 5 |
| Percentage (Test Anxiety) | 0.00 % | 21.05 % | 52.63 % | 26.32 % |
| Lag instances (Total Anxiety) | 0 | 2 | 12 | 5 |
| Percentage (Total Anxiety) | 0.00 % | 10.53 % | 63.16 % | 26.32 % |

Table 10 shows the same occurrences for the textual analysis method. Textual analysis predicts the correct or acceptable range of the Total Anxiety scale with a precision of 89.47 % (17 of 19 students), and that of the Test Anxiety scale with a precision of 84.21 % (16 of 19 students).

Table 10.

Prediction of acceptable ranges using the text analysis method.

| Lag | 3 | 2 | 1 | 0 |
|---|---|---|---|---|
| Lag instances (Test Anxiety) | 0 | 3 | 8 | 8 |
| Percentage (Test Anxiety) | 0.00 % | 15.79 % | 42.11 % | 42.11 % |
| Lag instances (Total Anxiety) | 1 | 1 | 11 | 6 |
| Percentage (Total Anxiety) | 5.26 % | 5.26 % | 57.89 % | 31.58 % |

As Table 8 shows, the prediction methods based on textual and facial analysis both achieve a high precision (86.84 % and 84.21 %, respectively) in predicting the T-score range correctly or acceptably over the two scales studied. Tables 9 and 10 further show that both methods are most precise on the Total Anxiety scale, where each predicts the correct or acceptable range for 17 of the 19 students (89.47 %). On the Test Anxiety scale, by contrast, face analysis is less precise (78.95 %) than the method based on textual recognition of emotions (84.21 %).

4. Discussion

This article presents an internet-based tool designed to predict anxiety levels among university students. Analysing the emotions expressed in Facebook posts made it possible to determine accurately the prevailing emotional tone of a text. Similarly, the facial emotion analysis tool made it possible to identify the basic emotions.

In this study, the exclusion criteria applied to the Facebook posts supported the sentiment analysis: for some participants, between 5 % and 25 % of their posts met the exclusion criteria, i.e., they did not represent the individual's own thoughts, opinions or feelings. Filtering out these posts avoided possible errors in the detection of null sentiments, and most of the remaining posts were analysed and classified correctly.

The results show that the developed application supports the early identification of anxiety in university students: both methods predict an individual's Total Anxiety range correctly or acceptably with a precision of 89.47 %. The application can therefore help teachers, psychologists or the competent bodies identify at an early stage those students who are likely to fail academically at university due to an anxiety disorder. The prediction method using text analysis was very slightly more precise (86.84 %) in predicting an individual's anxiety than the method using face analysis (84.21 %), but both methods would be valid for detecting anxiety disorders.

The AMAS-C was used as the validation tool against which the results of the two proposed prediction methods were compared, as it is a validated psychological instrument. The scales studied, both in the AMAS-C and in the predictions based on textual and facial analysis, were Total Anxiety and Test Anxiety. In addition, the Lie scale of the AMAS-C was used as a criterion for discriminating between valid and invalid test results. The Physiological Activity, Worry/Oversensitivity and Social Concerns/Stress scales were not included because they are not directly related to students' academic performance.

In terms of related work, several aspects of earlier studies are similar to the present research. Like the present work, these studies use machine learning for the automated prediction of mental health conditions. They explore different methods, such as analysing Facebook status updates to detect depression (Ali et al., 2022), predicting suicide risk and mental health problems from Twitter posts through multitask learning (Benton et al., 2017), and classifying anxiety, depression and their comorbidity from Reddit texts (de Souza et al., 2022). Regarding facial expression recognition, some works (Florea et al., 2019; Giannakakis et al., 2017; Huang et al., 2016) also address anxiety detection using machine learning techniques. However, all of these works train on existing datasets: either pre-processed text datasets, which provide textual features of the mental disorder, or facial datasets containing images of individuals with anxiety. In contrast, we pre-processed posts taken from the participants' own Facebook profiles and categorised them as positive, negative or neutral. For face recognition, we worked with live images captured while the participant was in a stressful situation. Our contribution focuses on the use of a specific test to detect anxiety in university students at an early stage. The aim is to provide an early anxiety detection tool that is as effective as the traditional AMAS-C test but quicker to apply, and to compare two methods (facial and textual) to determine which predicts anxiety more reliably.

The methods developed for detecting anxiety in university settings, through text analysis on the social network Facebook and through facial expression recognition, can provide information about students' emotions. However, they raise ethical challenges, such as concerns about student privacy. To address this, students must give informed consent before their data on the social network are accessed, and the information is handled internally within the application. Another challenge or potential limitation is that text interpretation can be complex, and algorithms must be able to distinguish genuine expressions of anxiety from other factors such as sarcasm or irony. In the case of facial expression analysis, interpretation can be influenced by cultural and personal differences. It is proposed to apply these techniques during regular academic activities at university (rather than during an applied knowledge test, as in this study), under the supervision of the authorities and psychologists, which partially addresses privacy concerns.

While the AMAS-C test is a more conventional tool for assessing anxiety, textual analysis of Facebook posts and the recognition of facial expressions offer several advantages. The AMAS-C relies on student self-report or interviews, which can be affected by bias, denial or lack of awareness. In contrast, textual and facial analysis could provide a less filtered insight into students' behaviours and emotions. Social media posts and facial expressions can reveal patterns that students may not recognise or acknowledge in a survey. In addition, these methods could enable earlier detection by identifying subtle changes in behaviour and emotion before the individuals themselves are aware of them.

Another advantage of the two proposed methods is that far more students could be assessed than with traditional methods such as the AMAS-C test. Some universities admit thousands of students, and typically only those who seek help on their own initiative, or whose anxiety is already severe and has been reported by a third party, are considered. Assessing anxiety conventionally would take much more time and might not even reach all students.

Finally, it is hoped that the proposed methods will make it possible to identify incoming students who may be at risk of academic failure due to anxiety, thus preventing them from dropping out of their studies due to lack of timely support from a competent professional.

5. Conclusions

This paper has described an Internet application that predicts anxiety in university students. Textual analysis of Facebook posts in terms of general sentiment (positive, negative and neutral) made it possible to identify the predominant sentiment of a text correctly. Similarly, the facial emotion analysis tool made it possible to detect the basic emotions (happiness, anger, fear, disgust, surprise, sadness). This is important because anxiety is a complex emotion that is related to the basic emotions. Furthermore, the growing use of cameras embedded in wearable devices supports the feasibility of the second type of analysis.

The results obtained show the significant potential of the developed application to address the problem of anxiety among university students. With a precision of 89.47 % in predicting an individual's Total Anxiety range correctly or acceptably, this tool could be instrumental in providing timely support to a group vulnerable to academic difficulties due to anxiety disorders. Comparing the two prediction methods shows a slight advantage for text analysis, with a precision of 86.84 % compared with 84.21 % for facial analysis. The crucial conclusion, however, is that both approaches offer valuable means of identifying anxiety disorders that can harm students' mental health and academic performance. These results represent not only a technological contribution but also a way for educational institutions to proactively improve their support systems and contribute to the overall well-being of their students.

To address possible limitations of the current proposal, future work could include:

- Extending the study to the remaining AMAS-C scales and predicting each of them with the modalities used in this work or additional ones, to determine which modality and scale allow the most accurate prediction.
- Adding further data sources for textual sentiment analysis, such as Twitter, Instagram or TikTok, and comparing the results.
- Enlarging the sample and including students from non-technical fields, to build a training base with diverse data across the ranges of the AMAS-C scales, and comparing the results.
- Performing the prediction with additional state-of-the-art deep learning models to determine which provide the most accurate predictions. Since this work developed an algorithm tailored to its data and needs, the results of those models could then be compared with those of the proposed algorithm and of other widely used algorithms.

Funding

Grants PID2020-115220RB-C21 and EQC2019-006063-P funded by MCIN/AEI/10.13039/501100011033 and by “ERDF A way to make Europe”. Grant 2022-GRIN-34436 funded by Universidad de Castilla-La Mancha and by “ERDF A way of making Europe”. This research was also supported by CIBERSAM, Instituto de Salud Carlos III, and Ministerio de Ciencia e Innovación. This work has been partially supported by Portuguese Fundação para a Ciência e a Tecnologia – FCT, I.P. under the project UIDB/04524/2020 and by Portuguese National funds through FITEC - Programa Interface, with reference CIT “INOV - INESC Inovação - Financiamento Base”.

Declaration of competing interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

References

- Ali M., Baqir A., Sherazi H.H.R., Hussain A., Alshehri A.H., Imran M.A. Machine learning based psychotic behaviors prediction from facebook status updates. Comput. Mater. Contin. 2022;72:2411–2472.

- Avila D., Altamirano A., Avila J., Guerrero G. 2020 15th Iberian Conference on Information Systems and Technologies. 1–5. IEEE; 2020. Anxiety detection using the AMAS-C test and feeling analysis on the Facebook social network.

- Beiter R., Nash R., McCrady M., Rhoades D., Linscomb M., Clarahan M., Sammut S. The prevalence and correlates of depression, anxiety, and stress in a sample of college students. J. Affect. Disord. 2015;173:90–96. doi: 10.1016/j.jad.2014.10.054.

- Benton A., Mitchell M., Hovy D. arXiv; 2017. Multi-task Learning for Mental Health Using Social Media Text. preprint arXiv:1712.03538.

- Canal F.Z., Müller T.R., Matias J.C., Scotton G.G., de Sa Junior A.R., Pozzebon E., Sobieranski A.C. A survey on facial emotion recognition techniques: a state-of-the-art literature review. Inf. Sci. 2022;582:593–617.

- Chatzakou D., Vakali A., Kafetsios K. Detecting variation of emotions in online activities. Expert Syst. Appl. 2017;89:318–332.

- Ekman P. Facial expression and emotion. Am. Psychol. 1993;48:384. doi: 10.1037//0003-066x.48.4.384.

- Esposito A., Raimo G., Maldonato M., Vogel C., Conson M., Cordasco G. 2020 11th IEEE International Conference on Cognitive Infocommunications. IEEE; 2020. Behavioral sentiment analysis of depressive states; pp. 209–214.

- Eysenck M.W. Psychology Press; 2013. Anxiety: The Cognitive Perspective.

- Florea C., Florea L., Badea M.S., Vertan C., Racoviteanu A. BMVC. 2019. Annealed label transfer for face expression recognition; p. 104.

- Giannakakis G., Pediaditis M., Manousos D., Kazantzaki E., Chiarugi F., Simos P.G., Marias K., Tsiknakis M. Stress and anxiety detection using facial cues from videos. Biomed. Signal Process. Control. 2017;31:89–101.

- Glover J., Fritsch S.L. Child and Adolescent Psychiatric Clinics. Vol. 27. 2018. #KidsAnxiety and social media: a review; pp. 171–182.

- González Ramírez M.L., Sánchez Yescas A.A., García Vázquez J.P., Padilla López L.A., Galindo Aldana G.M., Galarza del Angel J. 2021 Mexican International Conference on Computer Science (ENC) 2021. Validation study of the manifest anxiety scale in adults for smartphone; pp. 1–6.

- Guo T., Zhao W., Alrashoud M., Tolba A., Firmin S., Xia F. Multimodal educational data fusion for students’ mental health detection. IEEE Access. 2022;10:70370–70382.

- Guthier B., Dörner R., Martinez H.P. Entertainment Computing and Serious Games. Springer; 2016. Affective computing in games; pp. 402–441.

- Harley J.M. Emotions, Technology, Design, and Learning. 2016. Measuring emotions: a survey of cutting edge methodologies used in computer-based learning environment research; pp. 89–114.

- Huang F., Wen W., Liu G. 2016 9th International Symposium on Computational Intelligence and Design (ISCID) IEEE; 2016. Facial expression recognition of public speaking anxiety; pp. 237–241.

- Jamal N., Xianqiao C., Al-Turjman F., Ullah F. A deep learning–based approach for emotions classification in big corpus of imbalanced tweets. Trans. Asian Low-Resource Language Information Process. 2021;20:1–16.

- Jia J. Twenty-Seventh International Joint Conference on Artificial Intelligence. 2018. Mental health computing via harvesting social media data; pp. 5677–5681.

- Joshi M.L., Kanoongo N. Depression detection using emotional artificial intelligence and machine learning: a closer review. Mater. Today: Proc. 2022;58:217–226.

- Lawson D.J. The University of Maine; 2006. Test Anxiety: A Test of Attentional Bias.

- Lozano-Monasor E., López M.T., Fernández-Caballero A., Vigo-Bustos F. Ambient Assisted Living and Daily Activities. 147–154. Springer; 2014. Facial expression recognition from webcam based on active shape models and support vector machines.

- Lozano-Monasor E., López M., Vigo-Bustos F., Fernández-Caballero A. Facial expression recognition in ageing adults: from lab to ambient assisted living. J. Ambient. Intell. Humaniz. Comput. 2017;8:567–578.

- Meiselman H.L. Emotion Measurement. Elsevier; 2016. Emotion measurement: integrative summary; pp. 645–697.

- Mengash H.A. Using data mining techniques to predict student performance to support decision making in university admission systems. IEEE Access. 2020;8:55462–55470.

- Nemur L. Babelcube Inc.; 2016. Productividad: Consejos y Atajos de Productividad para personas ocupadas.

- Ozen S.N., Ercan I., Irgil E., Sigirli D. Anxiety prevalence and affecting factors among university students. Asia Pac. J. Public Health. 2010;22:127–133. doi: 10.1177/1010539509352803.

- Patankar A.J., Kulhalli K.V., Sirbi K. 2016 IEEE International Conference on Advances in Electronics, Communication and Computer Technology. IEEE; 2016. Emotweet: sentiment analysis tool for Twitter; pp. 157–159.

- Ran M.S., Mendez A.J., Leng L.L., Bansil B., Reyes N., Cordero G., Carreon C., Fausto M., Maminta L., Tang M. Predictors of mental health among college students in Guam: implications for counseling. J. Couns. Dev. 2016;94:344–355.

- Ratanasiripong P., China T., Toyama S. Mental health and well-being of university students in Okinawa. Educ. Res. Int. 2018;2018.

- Reynolds C., Richmond B., Lowe P.A. Western Psychological Service; 2003. The Adult Manifest Anxiety Scale: Manual.

- Scanlon L., Rowling L., Weber Z. ‘You don’t have like an identity…you are just lost in a crowd’: forming a student identity in the first-year transition to university. J. Youth Stud. 2007;10:223–241.

- de Souza V.B., Nobre J.C., Becker K. Dac stacking: a deep learning ensemble to classify anxiety, depression, and their comorbidity from reddit texts. IEEE J. Biomed. Health Inform. 2022;26:3303–3311. doi: 10.1109/JBHI.2022.3151589.

- Spielberger C.D., Vagg P.R. Taylor & Francis; 1995. Test Anxiety: A Transactional Process Model.

- Tijerina González L.Z., González Guevara E., Gómez Nava M., Cisneros Estala M.A., Rodríguez García K.Y., Ramos Peña E.G. Depresión, ansiedad y estrés en estudiantes de nuevo ingreso a la educación superior. Rev. Salud Públ. Nutr. 2019;17:41–47.

- Vanderlind W.M., Everaert J., Caballero C., Cohodes E.M., Gee D.G. Emotion and emotion preferences in daily life: the role of anxiety. Clin. Psychol. Sci. 2022;10:109–126. doi: 10.1177/21677026211009500.

- Yan J.X., Horwitz E.K. Learners’ perceptions of how anxiety interacts with personal and instructional factors to influence their achievement in English: a qualitative analysis of efl learners in China. Lang. Learn. 2008;58:151–183.

- Zambrano Verdesoto G.J., Rodríguez Mora K.G., Guevara Torres L.H. Análisis de la deserción estudiantil en las universidades del Ecuador y América Latina. Rev. Pertinencia Acad. 2018:1–28.

- Zeidner M. Springer Science & Business Media; 1998. Test Anxiety: The State of the Art.

- Zhang T., Schoene A.M., Ji S., Ananiadou S. Natural language processing applied to mental illness detection: a narrative review. NPJ Digit. Med. 2022;5:46. doi: 10.1038/s41746-022-00589-7.

- Zhang T., Yang K., Ji S., Ananiadou S. Emotion fusion for mental illness detection from social media: a survey. Information Fusion. 2023;92:231–246.