Abstract

Background

The recommended readability of online health education materials is at or below the sixth- to eighth-grade level. Nevertheless, more than a decade of research has demonstrated that most online education materials pertaining to orthopaedic surgery do not meet these recommendations. This repeated evidence of limited progress suggests that unaddressed barriers to improving readability remain, such as the added time and cost of writing easily readable materials on complex topics. Freely available artificial intelligence (AI) platforms might facilitate the conversion of patient education materials at scale, but to our knowledge, this has not been evaluated in orthopaedic surgery.

Questions/purposes

(1) Can a freely available AI dialogue platform rewrite orthopaedic patient education materials to reduce the required reading skill level from the high-school level to the sixth-grade level (which is approximately the median reading level in the United States)? (2) Were the converted materials accurate, and did they retain sufficient content detail to be informative as education materials for patients?

Methods

Descriptions of lumbar disc herniation, scoliosis, and spinal stenosis, as well as TKA and THA, were identified from educational materials published online by orthopaedic surgery specialty organizations and leading orthopaedic institutions. The descriptions were entered into an AI dialogue platform with the prompt “translate to fifth-grade reading level” to convert each text to a level at or below the sixth-grade reading level. The fifth-grade level was selected to account for potential variation in how the AI platform defines readability, given that there are several widely used methods for defining readability levels. The Flesch Reading Ease score and Flesch-Kincaid grade level were determined for each description before and after AI conversion. The time to convert was also recorded. Each education material and its respective conversion were reviewed for factual inaccuracies, and each conversion was reviewed for retention of sufficient detail for its intended use as a patient education document.

Results

As presented to the public, the current descriptions of herniated lumbar disc, scoliosis, and stenosis had median (range) Flesch-Kincaid grade levels of 9.5 (9.1 to 10.5), 12.6 (10.8 to 15), and 10.9 (8 to 13.6), respectively. After conversion by the AI dialogue platform, the median Flesch-Kincaid grade levels for herniated lumbar disc, scoliosis, and stenosis were 5.0 (3.3 to 8.2), 5.6 (4.1 to 7.3), and 6.9 (5 to 7.8), respectively. Similarly, descriptions of TKA and THA improved from 12.0 (11.2 to 13.5) to 6.3 (5.8 to 7.6) and from 11.6 (9.5 to 12.6) to 6.1 (5.4 to 7.1), respectively. The Flesch Reading Ease scores followed a similar trend. The median conversion time was 4.5 seconds per sentence for both spine conditions (range 3.3 to 4.9) and arthroplasty (range 3.5 to 4.8). On review, the converted materials still provided a sufficient level of nuance for patient education, and no factual errors or inaccuracies were identified.

Conclusion

We found that a freely available AI dialogue platform can improve the reading accessibility of orthopaedic surgery online patient education materials to recommended levels quickly and effectively. Professional organizations and practices should determine whether their patient education materials exceed current recommended reading levels by using widely available measurement tools, and then apply an AI dialogue platform to facilitate converting their materials to more accessible levels if needed. Additional research is needed to determine whether this technology can be applied to additional materials meant to inform patients, such as surgical consent documents or postoperative instructions, and whether the methods presented here are applicable to non–English language materials.

Introduction

The median reading grade level among adults in the United States is approximately the sixth- to eighth-grade level [7, 12]. For this reason, the American Medical Association recommends that patient education materials be written at a sixth-grade reading level [31]. Nevertheless, repeated research demonstrates that patient education materials across orthopaedic specialties routinely exceed this recommendation [2, 9, 16, 18, 20, 24, 28, 30]. Research has demonstrated that low literacy is associated with delays in seeking care, presenting with more advanced disease, higher use of emergency services, and increased risk of mortality [4, 5, 23]. To guide authors of patient education materials, organizations such as the National Institutes of Health and Centers for Disease Control and Prevention have published writing guides [6, 25].

Nevertheless, a gap remains between a large proportion of patient education materials in orthopaedics and current recommendations for readability. Generally, there are several challenges to ensuring readable patient materials. For example, patients’ literacy is often overestimated, and education materials are subject to revisions or updates that might not consider readability [11]. From a practical perspective, creating readable materials also requires additional time and cost because of the high level of detail required to limit complexity [8, 11, 25]. Although there are established software platforms to help writers measure the readability of education materials [16, 26], converting texts to a more readable format remains limited to manual rewriting. Artificial intelligence (AI) language modeling may be a means of augmenting the conversion process and thus reducing the obstacles to creating materials with recommended reading accessibility. Recently developed and freely available AI dialogue platforms, which are built on large language models, may offer a valuable opportunity to improve the readability of complex materials, such as patient education content in orthopaedic surgery [13].

Therefore, in this proof-of-concept study, we asked: (1) Can a freely available AI dialogue platform rewrite orthopaedic patient education materials to reduce the required reading skill level from the high-school level to the sixth-grade level (which is approximately the median reading level in the United States)? (2) Were the converted materials accurate, and did they retain sufficient content detail to be informative as education materials for patients?

Patients and Methods

Study Overview

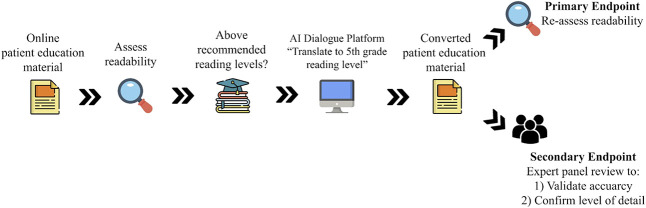

This experimental study investigated the capability of an AI dialogue platform to convert preexisting online patient education materials to recommended readability levels, and it evaluated whether conversion via AI maintained accurate and sufficient information for continued use as a patient education document (Fig. 1). To evaluate this, online patient education materials pertaining to two distinct orthopaedic subspecialties were collected, covering two common topic categories (descriptions of conditions and of treatments). The readability levels of the patient education materials were measured, and the materials were converted to a lower reading level using an AI dialogue platform. The readability of each converted material was then remeasured, and each conversion was reviewed for accuracy and detail.

Fig. 1.

Online patient education materials were identified and assessed for readability. Once it was demonstrated that these materials exceeded current recommended reading grade levels, the text was entered into a freely available artificial intelligence dialogue platform with the prompt to translate to the fifth-grade reading level. After conversion, the primary outcome was readability of the converted text. The secondary outcome was a review of all converted texts for retention of sufficient detail so it could continue to educate patients on the respective topics.

Materials Used

This study focused on patient education materials available online, given that the internet is a leading resource of health information for patients and their families [19, 20]. Patient education materials pertaining to spine surgery and adult reconstruction surgery were selected based on the authors’ expertise. We selected three common spine conditions: lumbar disc herniation, spinal stenosis, and scoliosis. We chose lumbar disc herniation and spinal stenosis because of the high incidence of these conditions, and we included scoliosis given the many previous studies examining the readability of scoliosis-related patient education materials [15-17, 19, 27, 32]. Online patient education materials were acquired from the American Association of Neurological Surgeons and three of the leading spine institutions in the United States (Massachusetts General Hospital, Emory Healthcare, and the Rothman Orthopaedic Institute). We also selected the patient education descriptions of TKA and THA, given that these are two of the most common major orthopaedic surgeries in the United States [1]. These materials were acquired from online resources available from the American Academy of Hip and Knee Surgeons, as well as three of the leading arthroplasty institutions in the United States (Massachusetts General Hospital, University of California at San Francisco, and the Rothman Orthopaedic Institute). We added these three institutions to the analysis to increase the variability of the education materials used for conversion. The institutions were selected by a single author (GK), who considered only each institution’s reputation in the respective orthopaedic surgery discipline. Materials already at or below current readability recommendations were to be excluded, but no material met this criterion.

Assessment of Reading Levels and Reading Ease

The descriptions of each selected spine condition and arthroplasty procedure were measured for readability using the Flesch Reading Ease score and the Flesch-Kincaid grade level formulas. These are the most commonly used readability measures in studies of this kind [29].
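For readers unfamiliar with these measures, both are linear functions of average sentence length (words per sentence) and average word length (syllables per word), using the standard published coefficients. The Python sketch below implements the two formulas. The regex-based counters (`count_syllables`, `text_counts`) are simplified heuristics introduced here for illustration only; they are an assumption, not the software used in this study, so their scores will not exactly match those of dedicated readability calculators.

```python
import re


def count_syllables(word: str) -> int:
    """Heuristic (assumption): count vowel groups, minus a silent final 'e'."""
    w = re.sub(r"[^a-z]", "", word.lower())
    if not w:
        return 0
    n = len(re.findall(r"[aeiouy]+", w))
    # Drop a silent trailing 'e' ("spine" -> 1) but keep "-le" endings ("table" -> 2).
    if w.endswith("e") and not w.endswith("le") and n > 1:
        n -= 1
    return max(n, 1)


def text_counts(text: str) -> tuple[int, int, int]:
    """Return (words, sentences, syllables) using simple regex rules (heuristic)."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    syllables = sum(count_syllables(w) for w in words)
    return len(words), len(sentences), syllables


def flesch_reading_ease(words: int, sentences: int, syllables: int) -> float:
    """Flesch Reading Ease: higher scores indicate easier text."""
    return 206.835 - 1.015 * (words / sentences) - 84.6 * (syllables / words)


def flesch_kincaid_grade(words: int, sentences: int, syllables: int) -> float:
    """Flesch-Kincaid grade level: approximate U.S. school grade."""
    return 0.39 * (words / sentences) + 11.8 * (syllables / words) - 15.59


# Example: the first two sentences of a converted scoliosis description (Table 1).
sample = "Scoliosis is when the spine is bent to the side. It is most common in kids and young teens."
w, s, syl = text_counts(sample)
print(f"Reading ease {flesch_reading_ease(w, s, syl):.1f}, grade {flesch_kincaid_grade(w, s, syl):.1f}")
```

Because syllable counting drives both scores, a heuristic like this one undercounts some medical terms (it finds three syllables in "scoliosis" rather than four), which is one reason different readability tools can report different scores for the same text.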

Conversion of Reading Materials Using AI and Re-evaluation

Next, each description was entered into the generative AI dialogue platform ChatGPT (https://chat.openai.com/chat; Version January 9, 2023, OpenAI), preceded by the prompt “translate to fifth-grade reading level.” We aimed to convert text to a level at or below the recommended sixth-grade reading level. We selected the fifth-grade reading level to account for potential variation in how the AI algorithm defines readability, given that numerous widely available measures of reading level exist [3]. The converted text was then reevaluated for readability, accuracy, and retention of detail.

Primary and Secondary Study Outcomes

Our primary study goal was to determine whether an AI dialogue platform could improve the readability of preexisting patient education materials. Readability was measured using the Flesch Reading Ease score and the Flesch-Kincaid grade level before and after conversion, and conversion time was recorded in seconds per sentence. A subanalysis evaluated conversion consistency by comparing four independent conversions of a subset of materials.

Our secondary study goal was to ensure that the converted materials maintained accuracy and the ability to inform. This was assessed through independent review by each author. Each original and converted text was evaluated for the accuracy of its information, and each converted text was evaluated for whether it preserved enough detail to remain useful for patient education.

Ethical Approval

The present study was exempt from institutional review because it did not involve human subjects research.

Statistical Analysis

Central tendency is represented by median and range.
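Each material was scored once per source institution (or once per repeated conversion), so each summary in Tables 2 to 4 is a median and range of four values. A minimal Python sketch of that summary follows, assuming one-decimal, round-half-up reporting; the article does not state its rounding rule or software, and `median_range` is a hypothetical helper. Values are converted to `Decimal` through `str()` so that medians such as 78.65 round as they would by hand rather than being affected by binary floating-point error.

```python
from decimal import Decimal, ROUND_HALF_UP
from statistics import median


def _one_decimal(x: Decimal) -> str:
    """Round half up to one decimal place (assumed reporting convention)."""
    return str(x.quantize(Decimal("0.1"), rounding=ROUND_HALF_UP))


def median_range(values) -> str:
    """Return 'median (min-max)' for a list of scores, e.g. '9.5 (9.1-10.5)'."""
    exact = [Decimal(str(v)) for v in values]  # str() avoids binary float noise
    return f"{_one_decimal(median(exact))} ({_one_decimal(min(exact))}-{_one_decimal(max(exact))})"


# Original Flesch-Kincaid grades for lumbar disc herniation (Table 2).
print(median_range([9.1, 10.5, 9.7, 9.3]))   # 9.5 (9.1-10.5)
# Converted THA reading ease across four conversions (Table 4).
print(median_range([77.8, 79.7, 79.5, 71.5]))  # 78.7 (71.5-79.7)
```

Whole-number values print with a trailing ".0" (for example, 15.0 rather than the tables' 15); otherwise the output follows the tables' median (range) format.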

Results

Lowering Grade Level and Improving Reading Ease

Conversion of the reading materials using a freely available AI-based dialogue platform lowered the reading grade level of every spine surgery and arthroplasty description, yielding median Flesch-Kincaid grade levels of 5.0 to 6.9, at or near the recommended sixth-grade level that we sought, and reading ease improved concurrently (Table 1).

Table 1.

Samples of patient education materials converted to improved readability by an artificial intelligence dialogue platform

| Original description | Converted description |
| --- | --- |
| Scoliosisᵃ | |
| Scoliosis is an abnormal lateral curvature of the spine. It is most often diagnosed in childhood or early adolescence. The spine’s normal curves occur at the cervical, thoracic, and lumbar regions in the so-called “sagittal” plane. These natural curves position the head over the pelvis and work as shock absorbers to distribute mechanical stress during movement. Scoliosis is often defined as spinal curvature in the “coronal” (frontal) plane. | Scoliosis is when the spine is bent to the side. It is most common in kids and young teens. The spine is supposed to have three curves in it, but with scoliosis, the curve is in the wrong place. It can make the spine look like a “C” or “S” shape. It is measured by how much the spine is bent but it is more than that. It is 3D problem which affects the different planes of the spine. The planes are the coronal plane (front and back), sagittal plane (right and left), and axial plane (up and down). |
| TKAᵇ | |
| The first step when making the decision about knee replacement is to meet with your surgeon to see if you are a candidate for total knee replacement surgery. Your surgeon will take your medical history, perform a physical examination, and x-ray your knee. Even if the pain is significant, and the x-rays show advanced arthritis of the joint, the first line of treatment is nearly always nonoperative. This includes weight loss if appropriate, an exercise regimen, medication, injections, or bracing. If the symptoms persist despite these measures, then you could consider surgery. | When you want to have surgery on your knee, the first thing you do is talk to a doctor. They will look at your health and take pictures of your knee. Even if your knee hurts a lot and the pictures show a problem, the doctor will usually first try other ways to help. This might be losing weight, doing exercises, taking medicine, getting shots, or wearing a brace. But if these ways don’t work and you still have pain, then you might think about surgery. |

Converted description refers to output provided by an artificial intelligence dialogue platform after a prompt to “translate to fifth-grade reading level”.

ᵃSample from the AANS website (https://www.aans.org/en/Patients/Neurosurgical-Conditions-and-Treatments).

ᵇSample from the AAHKS website (https://hipknee.aahks.org/total-hip-replacement/).

Information About Spinal Conditions

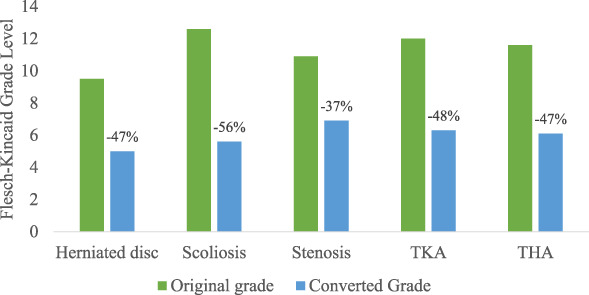

As published online by the American Association of Neurological Surgeons and three leading spine centers, the descriptions of lumbar disc herniation, scoliosis, and stenosis all exceeded current readability recommendations, with median (range) Flesch-Kincaid grade levels of 9.5 (9.1 to 10.5), 12.6 (10.8 to 15), and 10.9 (8 to 13.6), respectively (Table 2). The median Flesch Reading Ease scores for lumbar disc herniation, scoliosis, and stenosis were 59.7 (54.7 to 61.9), 45.1 (22.1 to 46.5), and 51.8 (41 to 64.5), respectively. After conversion to a lower reading grade level, the median Flesch-Kincaid grade levels for lumbar disc herniation, scoliosis, and stenosis improved to 5.0 (3.3 to 8.2), 5.6 (4.1 to 7.3), and 6.9 (5 to 7.8), respectively (Table 2). These represented 47%, 56%, and 37% reductions in grade level for disc herniation, scoliosis, and stenosis, respectively (Fig. 2). The median Flesch Reading Ease scores improved to 80.4 (74.8 to 93), 79 (71.9 to 91), and 75.5 (70.4 to 83.3) for lumbar disc herniation, scoliosis, and stenosis, respectively. The median conversion time was 4.5 seconds per sentence (range 3.3 to 4.9).

Table 2.

Readability of patient education materials about spinal conditions, before and after conversion by an artificial intelligence dialogue platform

| Readability | AANS | Rothman Orthopaedic Institute | Emory Healthcare | Massachusetts General Hospital | Median (range) |
| --- | --- | --- | --- | --- | --- |
| Lumbar disc herniation | | | | | |
| Original grade | 9.1 | 10.5 | 9.7 | 9.3 | 9.5 (9.1-10.5) |
| Original reading ease | 61.9 | 58.1 | 54.7 | 61.2 | 59.7 (54.7-61.9) |
| Converted grade | 3.3 | 4.5 | 5.5 | 8.2 | 5.0 (3.3-8.2) |
| Converted reading ease | 93 | 84.4 | 76.4 | 74.8 | 80.4 (74.8-93) |
| Scoliosis | | | | | |
| Original grade | 11.2 | 15 | 10.8 | 14 | 12.6 (10.8-15) |
| Original reading ease | 45.5 | 44.6 | 46.5 | 22.1 | 45.1 (22.1-46.5) |
| Converted grade | 4.1 | 6.1 | 5.1 | 7.3 | 5.6 (4.1-7.3) |
| Converted reading ease | 91 | 80.4 | 77.6 | 71.9 | 79.0 (71.9-91) |
| Stenosis | | | | | |
| Original grade | 10.5 | 13.6 | 11.2 | 8 | 10.9 (8-13.6) |
| Original reading ease | 52.7 | 41 | 50.9 | 64.5 | 51.8 (41-64.5) |
| Converted grade | 5 | 6.6 | 7.8 | 7.2 | 6.9 (5-7.8) |
| Converted reading ease | 83.3 | 79 | 70.4 | 72 | 75.5 (70.4-83.3) |

Grade and reading ease are represented as Flesch-Kincaid grade level (based on United States school grade level, with grade 12 or greater representing higher than secondary education level) and Flesch Reading Ease score (higher scores indicate material that is easier to read; range 0-100).

Fig. 2.

This bar graph represents the magnitude of difference in Flesch-Kincaid grade levels of patient education materials before and after conversion by an artificial intelligence dialogue platform.

Information About Arthroplasty

As published by the American Academy of Hip and Knee Surgeons and the three leading arthroplasty centers, the descriptions of TKA and THA all exceeded current readability recommendations, with median (range) Flesch-Kincaid grade levels of 12.0 (11.2 to 13.5) and 11.6 (9.5 to 12.6), respectively (Table 3). The median Flesch Reading Ease scores for TKA and THA were 44.3 (34.3 to 49.6) and 54.1 (41.8 to 58.1), respectively. After conversion, the median Flesch-Kincaid grade levels for TKA and THA improved to 6.3 (5.8 to 7.6) and 6.1 (5.4 to 7.1), respectively, representing 48% and 47% reductions (Fig. 2). The median Flesch Reading Ease scores improved to 76.0 (74.9 to 79.2) and 77.1 (73.7 to 82.2) for TKA and THA, respectively. The median conversion time was 4.5 seconds per sentence (range 3.5 to 4.8).
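The percentage reductions reported for the spine conditions and for arthroplasty (plotted in Fig. 2) are consistent with a simple relative change computed on the median Flesch-Kincaid grade levels, (before - after) / before × 100, rounded to the nearest whole percent. A minimal Python sketch, assuming that formula and round-half-up rounding (the article does not state either explicitly); `percent_reduction` is a hypothetical helper:

```python
from decimal import Decimal, ROUND_HALF_UP

# Median Flesch-Kincaid grade levels (before, after) from Tables 2 and 3.
MEDIAN_GRADES = {
    "Lumbar disc herniation": ("9.5", "5.0"),
    "Scoliosis": ("12.6", "5.6"),
    "Stenosis": ("10.9", "6.9"),
    "TKA": ("12.0", "6.3"),
    "THA": ("11.6", "6.1"),
}


def percent_reduction(before: str, after: str) -> int:
    """Relative reduction in grade level, rounded half up to a whole percent."""
    b, a = Decimal(before), Decimal(after)
    pct = (b - a) / b * 100
    return int(pct.quantize(Decimal("1"), rounding=ROUND_HALF_UP))


for topic, (before, after) in MEDIAN_GRADES.items():
    print(f"{topic}: {percent_reduction(before, after)}%")
```

Running it prints 47%, 56%, 37%, 48%, and 47%, matching the reported values. Decimal arithmetic is used because the TKA case lands exactly on 47.5%, where binary floating-point error could otherwise tip the rounding.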

Table 3.

Readability of patient education materials about arthroplasty procedures, before and after conversion by an artificial intelligence dialogue platform

| Readability | AAHKS | Rothman Orthopaedic Institute | Massachusetts General Hospital | University of California at San Francisco | Median (range) |
| --- | --- | --- | --- | --- | --- |
| TKA | | | | | |
| Original grade | 12.2 | 11.2 | 11.7 | 13.5 | 12.0 (11.2-13.5) |
| Original reading ease | 42.9 | 45.6 | 49.6 | 34.3 | 44.3 (34.3-49.6) |
| Converted grade | 6.5 | 7.6 | 5.8 | 6.1 | 6.3 (5.8-7.6) |
| Converted reading ease | 79.2 | 76.4 | 75.6 | 74.9 | 76.0 (74.9-79.2) |
| THA | | | | | |
| Original grade | 12.6 | 10.5 | 12.6 | 9.5 | 11.6 (9.5-12.6) |
| Original reading ease | 41.8 | 58.1 | 52.7 | 55.4 | 54.1 (41.8-58.1) |
| Converted grade | 7.1 | 5.4 | 5.6 | 6.6 | 6.1 (5.4-7.1) |
| Converted reading ease | 77.8 | 82.2 | 76.3 | 73.7 | 77.1 (73.7-82.2) |

Grade and reading ease are represented as Flesch-Kincaid grade level (based on United States school grade level, with grade 12 or greater representing higher than secondary education level) and Flesch Reading Ease score (higher scores indicate material that is easier to read; range 0-100).

Secondary Analysis of Consistency

Three additional conversions were performed using the American Association of Neurological Surgeons and American Academy of Hip and Knee Surgeons patient education materials to evaluate the consistency of conversion. Across the four conversions, the descriptions of lumbar disc herniation, scoliosis, and stenosis had consistent median (range) Flesch-Kincaid grade levels of 5.2 (3.3 to 5.8), 6.8 (4.1 to 6.8), and 5.4 (5 to 6) and median Flesch Reading Ease scores of 82.6 (81.2 to 93), 75.9 (73.2 to 91), and 82.3 (80.7 to 83.3), respectively (Table 4). Descriptions of TKA and THA were also converted consistently, with median Flesch-Kincaid grade levels of 7.4 (6.5 to 8.8) and 6.8 (6.3 to 7.4) and median Flesch Reading Ease scores of 71.7 (68 to 79.2) and 78.7 (71.5 to 79.7), respectively.

Table 4.

Sequential conversions of the same patient education materials to examine the consistency of conversions by an artificial intelligence dialogue platform

| | Conversion 1 | Conversion 2 | Conversion 3 | Conversion 4 | Median (range) |
| --- | --- | --- | --- | --- | --- |
| Spineᵃ | | | | | |
| Herniated lumbar disc | | | | | |
| Converted grade | 3.3 | 5.2 | 5.2 | 5.8 | 5.2 (3.3-5.8) |
| Converted reading ease | 93 | 82.5 | 82.6 | 81.2 | 82.6 (81.2-93) |
| Scoliosis | | | | | |
| Converted grade | 4.1 | 6.8 | 6.8 | 6.8 | 6.8 (4.1-6.8) |
| Converted reading ease | 91 | 73.2 | 73.2 | 78.6 | 75.9 (73.2-91) |
| Stenosis | | | | | |
| Converted grade | 5 | 5.6 | 5.1 | 6 | 5.4 (5-6) |
| Converted reading ease | 83.3 | 81.5 | 83.1 | 80.7 | 82.3 (80.7-83.3) |
| Arthroplastyᵇ | | | | | |
| TKA | | | | | |
| Converted grade | 6.5 | 6.8 | 8.8 | 7.9 | 7.4 (6.5-8.8) |
| Converted reading ease | 79.2 | 73.1 | 68 | 70.2 | 71.7 (68-79.2) |
| THA | | | | | |
| Converted grade | 7.1 | 6.3 | 6.4 | 7.4 | 6.8 (6.3-7.4) |
| Converted reading ease | 77.8 | 79.7 | 79.5 | 71.5 | 78.7 (71.5-79.7) |

Grade and reading ease are represented as Flesch-Kincaid Grade (based on United States school grade level, with grade 12 or greater representing higher than secondary education level) and Flesch Reading Ease scores (higher scores denote material that is easier to read; range 0 to 100).

ᵃFrom the AANS website (https://www.aans.org/en/Patients/Neurosurgical-Conditions-and-Treatments).

ᵇFrom the AAHKS website (https://hipknee.aahks.org/total-hip-replacement/).
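Because consistency in Table 4 is summarized only by medians and ranges, one simple way to read it is the spread between the highest and lowest Flesch-Kincaid grade level across the four conversions of each description. The Python sketch below computes that spread; treating the range width as the consistency measure is an assumption made here for illustration (the article reports no formal consistency statistic), and `grade_spread` is a hypothetical helper.

```python
from decimal import Decimal

# Flesch-Kincaid grade levels of conversions 1 to 4 (Table 4).
CONVERTED_GRADES = {
    "Herniated lumbar disc": ["3.3", "5.2", "5.2", "5.8"],
    "Scoliosis": ["4.1", "6.8", "6.8", "6.8"],
    "Stenosis": ["5", "5.6", "5.1", "6"],
    "TKA": ["6.5", "6.8", "8.8", "7.9"],
    "THA": ["7.1", "6.3", "6.4", "7.4"],
}


def grade_spread(values) -> Decimal:
    """Highest minus lowest grade level across repeated conversions."""
    grades = [Decimal(v) for v in values]  # exact decimal arithmetic
    return max(grades) - min(grades)


for topic, values in CONVERTED_GRADES.items():
    print(f"{topic}: spread {grade_spread(values)} grade levels")
```

For these data the spread is at most 2.7 grade levels (scoliosis), and the largest spreads come from the first conversion differing from the three repeats.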

Content Quality and Accuracy

After an independent review of each preexisting patient education material and the converted text, there were no identifiable factual errors or inaccuracies. Furthermore, each evaluator determined that sufficient detail for patient education was maintained in each converted text.

Discussion

Despite extensive evidence of deleterious health consequences associated with low health literacy [4, 5], there has been limited progress toward improving the readability of patient education materials in orthopaedic surgery [3, 20]. A potential barrier to improving the readability of patient education materials is the time and cost associated with following detailed writing guidelines [11]. The recent emergence of freely available AI dialogue platforms is a novel opportunity to convert preexisting patient education materials quickly and effectively to recommended readability levels. We demonstrated that converting preexisting patient education materials on topics regarding orthopaedic surgery to recommended readability levels is feasible, can be completed efficiently and consistently, and can maintain sufficient detail and accuracy.

Limitations

First, this was a proof-of-concept study, and it did not explore whether AI-converted information actually improves patient comprehension. However, the converted materials did retain the accuracy and sufficient detail of the original education materials. Next, although the conversions occurred rapidly, each generated text needed to be reviewed by a qualified individual to ensure the information was accurate and appropriate before publishing for public viewing. Of note, our study did not evaluate education materials designed specifically for legal or medicolegal purposes, such as surgical consent forms. Therefore, true time or cost savings remain unclear and would require further investigation, particularly for education documents with legal purposes. Furthermore, although the Flesch Reading Ease score and Flesch-Kincaid grade levels are the most widely used readability measures in healthcare, the validity of these tests is limited at higher reading levels such as those in secondary education and beyond [29]. Additional research on AI readability conversions might use alternative readability metrics to validate this method.

Lowering Grade Level and Improving Reading Ease

This study demonstrates that a freely available online AI dialogue platform can convert preexisting patient education materials written at high-school and college reading levels to text at or near the recommended sixth-grade reading level. Before this study, converting any form of text to easier readability required manual rewriting, often aided by guidelines such as those published by the National Institutes of Health [25]. Manual conversion, or attention to readability guidelines while creating new education materials, adds time and cost, which are undoubtedly barriers to making orthopaedic patient education materials more readable [11]. Furthermore, educational materials pertaining to orthopaedic surgery represent a unique challenge given that evidence suggests musculoskeletal health literacy is lower on average than health literacy in general [22]. Therefore, orthopaedic surgeons or practices can evaluate the readability of their current patient education materials and facilitate conversion to recommended readability levels through a freely available AI platform. Additional research is needed to determine whether platforms other than the one we used in our study are similarly effective. Furthermore, additional investigation is needed to determine whether AI dialogue platforms can be applied to other educational materials, such as postoperative or discharge instructions, and whether the methods presented here are applicable to non–English language materials.

Content Quality and Accuracy

Despite simplifying the text and making it easier to read for patients with lower reading levels, we believe the converted patient education content was accurate and sufficiently detailed to start a conversation in the typical office setting. This was an important goal of our study because a notable critique of generative AI dialogue software is that it may stray from factual information to fulfill a prompt or answer a question [14]. This again underscores the need for expert review of converted materials before publishing for public viewing. Our study focused on preexisting patient education materials and did not explore creating patient education materials without an original body of text. Presumably, the AI platform generates original text when converting materials, but it is possible that the platform draws directly on other sources. The concept of plagiarism by AI is currently under debate as the technology’s role continues to expand, particularly in fields such as writing and art [10, 21]. The conclusions of such debates may affect the range of applications for AI platforms in clinical practice. In the meantime, a prudent approach may be to add antiplagiarism software to the review process before disseminating AI-converted materials.

Conclusion

Our study found that a freely available AI dialogue platform can be used to improve the readability of patient education materials in a complex field such as orthopaedic surgery. Professional organizations and orthopaedic practices can easily assess the readability of their patient education materials, but improving the reading ease of such materials traditionally represents a more cumbersome process. Using a freely available AI dialogue platform is an opportunity to simplify the process of improving the reading ease of patient education materials. The application of AI in this manner can help reduce health literacy disparities by increasing the prevalence of education materials at the recommended reading levels. Future research is needed to determine whether similar methods can demonstrate the effectiveness of AI dialogue platforms using non–English language education materials or other materials designed to inform patients, such as postoperative or discharge instructions.

Footnotes

Each author certifies that there are no funding or commercial associations (consultancies, stock ownership, equity interest, patent/licensing arrangements, etc.) that might pose a conflict of interest in connection with the submitted article related to the author or any immediate family members.

All ICMJE Conflict of Interest Forms for authors and Clinical Orthopaedics and Related Research® editors and board members are on file with the publication and can be viewed on request.

Ethical approval was not sought for the present study.

Contributor Information

Raymond Y. Kim, Email: rkim@pennstatehealth.psu.edu.

John B. Weddle, Email: jweddle@pennstatehealth.psu.edu.

Jesse E. Bible, Email: jbible@pennstatehealth.psu.edu.

References

- 1.American Academy of Orthopaedic Surgeons. Orthopaedic practice in the U.S. 2018. Available at: https://www.aaos.org/globalassets/quality-and-practice-resources/census/2018-census.pdf. Accessed March 28, 2023.

- 2.Badarudeen S, Sabharwal S. Readability of patient education materials from the American Academy of Orthopaedic Surgeons and Pediatric Orthopaedic Society of North America web sites. J Bone Joint Surg Am. 2008;90:199-204. [DOI] [PubMed] [Google Scholar]

- 3.Badarudeen S, Sabharwal S. Assessing readability of patient education materials: current role in orthopaedics. Clin Orthop Relat Res. 2010;468:2572-2580. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Baker DW, Gazmararian JA, Williams MV, et al. Functional health literacy and the risk of hospital admission among Medicare managed care enrollees. Am J Public Health. 2002;92:1278-1283. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Baker DW, Parker RM, Williams MV, Clark WS, Nurss J. The relationship of patient reading ability to self-reported health and use of health services. Am J Public Health. 1997;87:1027-1030. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Centers for Disease Control and Prevention. Simply put: a guide for creating easy-to-understand materials. Available at: https://stacks.cdc.gov/view/cdc/11938. Accessed March 28, 2023.

- 7.Davis TC, Wolf MS. Health literacy: implications for family medicine. Fam Med. 2004;36:595-598. [PubMed] [Google Scholar]

- 8.Dickinson D, Raynor DK, Duman M. Patient information leaflets for medicines: using consumer testing to determine the most effective design. Patient Educ Couns. 2001;43:147-159. [DOI] [PubMed] [Google Scholar]

- 9.Eltorai AE, Sharma P, Wang J, Daniels AH. Most American Academy of Orthopaedic Surgeons' online patient education material exceeds average patient reading level. Clin Orthop Relat Res. 2015;473:1181-1186. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Frye BL. Should using an AI text generator to produce academic writing be plagiarism? Fordham Intellectual Property, Media, & Entertainment Law Journal. Available at: https://ssrn.com/abstract=4292283. Accessed March 28, 2023. [Google Scholar]

- 11.Gal I, Prigat A. Why organizations continue to create patient information leaflets with readability and usability problems: an exploratory study. Health Educ Res. 2005;20:485-493. [DOI] [PubMed] [Google Scholar]

- 12.Greenberg E, Jin Y. 2003. national assessment of adult literacy: public-use data file user's guide. Available at: https://nces.ed.gov/naal/pdf/2007464.pdf. Accessed March 28, 2023.

- 13.Griffith E, Metz C. A new area of A.I. booms, even amid the tech gloom. Available at: https://www.nytimes.com/2023/01/07/technology/generative-ai-chatgpt-investments.html. Accessed March 14, 2023.

- 14. Liebrenz M, Schleifer R, Buadze A, Bhugra D, Smith A. Generating scholarly content with ChatGPT: ethical challenges for medical publishing. The Lancet Digital Health. 2023;5:e105-e106.

- 15. Martin BI, Mirza SK, Spina N, Spiker WR, Lawrence B, Brodke DS. Trends in lumbar fusion procedure rates and associated hospital costs for degenerative spinal diseases in the United States, 2004 to 2015. Spine (Phila Pa 1976). 2019;44:369-376.

- 16. Michel C, Dijanic C, Abdelmalek G, et al. Readability assessment of patient educational materials for pediatric spinal deformity from top academic orthopedic institutions. Spine Deform. 2022;10:1315-1321.

- 17. Parenteau CS, Lau EC, Campbell IC, Courtney A. Prevalence of spine degeneration diagnosis by type, age, gender, and obesity using Medicare data. Sci Rep. 2021;11:5389.

- 18. Parsa A, Nazal M, Molenaars RJ, Agrawal RR, Martin SD. Evaluation of hip preservation-related patient education materials from leading orthopaedic academic centers in the United States and description of a novel video assessment tool. J Am Acad Orthop Surg Glob Res Rev. 2020;4:e20.00064.

- 19. Peterlein CD, Bosch M, Timmesfeld N, Fuchs-Winkelmann S. Parental internet search in the field of pediatric orthopedics. Eur J Pediatr. 2019;178:929-935.

- 20. Roberts H, Zhang D, Dyer GS. The readability of AAOS patient education materials: evaluating the progress since 2008. J Bone Joint Surg Am. 2016;98:e70.

- 21. Roose K. An A.I.-generated picture won an art prize. Artists aren't happy. Available at: https://www.nytimes.com/2022/09/02/technology/ai-artificial-intelligence-artists.html. Accessed March 14, 2023.

- 22. Rosenbaum AJ, Pauze D, Pauze D, et al. Health literacy in patients seeking orthopaedic care: results of the Literacy in Musculoskeletal Problems (LIMP) Project. Iowa Orthop J. 2015;35:187-192.

- 23. Rosenbaum AJ, Uhl RL, Rankin EA, Mulligan MT. Social and cultural barriers: understanding musculoskeletal health literacy: AOA critical issues. J Bone Joint Surg Am. 2016;98:607-615.

- 24. Ryu JH, Yi PH. Readability of spine-related patient education materials from leading orthopedic academic centers. Spine (Phila Pa 1976). 2016;41:E561-565.

- 25. Sheppard ED, Hyde Z, Florence MN, McGwin G, Kirchner JS, Ponce BA. Improving the readability of online foot and ankle patient education materials. Foot Ankle Int. 2014;35:1282-1286.

- 26. Stelzer JW, Wellington IJ, Trudeau MT, et al. Readability assessment of patient educational materials for shoulder arthroplasty from top academic orthopedic institutions. JSES Int. 2022;6:44-48.

- 27. Truumees D, Duncan A, Mayer EK, Geck M, Singh D, Truumees E. Cross sectional analysis of scoliosis-specific information on the internet: potential for patient confusion and misinformation. Spine Deform. 2020;8:1159-1167.

- 28. Vives M, Young L, Sabharwal S. Readability of spine-related patient education materials from subspecialty organization and spine practitioner websites. Spine (Phila Pa 1976). 2009;34:2826-2831.

- 29. Wang LW, Miller MJ, Schmitt MR, Wen FK. Assessing readability formula differences with written health information materials: application, results, and recommendations. Res Social Adm Pharm. 2013;9:503-516.

- 30. Wang SW, Capo JT, Orillaza N. Readability and comprehensibility of patient education material in hand-related web sites. J Hand Surg Am. 2009;34:1308-1315.

- 31. Weiss BD. Health Literacy: A Manual For Clinicians. American Medical Association Foundation and American Medical Association; 2003.

- 32. Yamaguchi S, Iwata K, Nishizumi K, Ito A, Ohtori S. Readability and quality of online patient materials in the websites of the Japanese Orthopaedic Association and related orthopaedic societies. J Orthop Sci. Published online June 8, 2022. DOI: 10.1016/j.jos.2022.05.003.