Highlights

Two artificial intelligence (AI)-based methods for protein structure prediction, AlphaFold 2 and RoseTTAFold, dramatically increase the quality of structural modeling from sequence, nearing experimental accuracy.

Protein language models encode the written language of proteins, allowing for more accurate annotations and predictions than homology-based methods.

Most model organisms, neglected disease pathogens, and proteins with curated annotations have models available with varying quality, aiding wet-laboratory experiments targeting single-question issues.

Ultrafast alignment tools can traverse the protein space by both sequence and structure to identify remote evolutionary relations that were previously out of reach for older and slower methods.

Preliminary analyses of predicted AlphaFold 2 3D-models from 21 model organisms suggest that the majority (>90%) of globular domains in proteins can be assigned to currently characterized domain evolutionary superfamilies.

Keywords: protein structure prediction, machine learning, AI, pLM, structure alignment, embeddings, AlphaFold2

Abstract

Breakthrough methods in machine learning (ML), protein structure prediction, and novel ultrafast structural aligners are revolutionizing structural biology. Obtaining accurate models of proteins and annotating their functions on a large scale is no longer limited by time and resources. The most recent method to be top ranked in the Critical Assessment of Structure Prediction (CASP), AlphaFold 2 (AF2), is capable of building structural models with an accuracy comparable to that of experimental structures. Annotations of 3D models are keeping pace with the deposition of the structures owing to advances in protein language models (pLMs) and structural aligners that help validate these transferred annotations. In this review we describe how recent developments in ML for protein science are making large-scale structural bioinformatics available to the general scientific community.

From protein sequence and structure to function through ML

The number of experimentally determined, high-resolution structures deposited in the Protein Data Bank (PDB) [1] has grown immensely since its beginning in 1976, enabling research into biological mechanisms, and in turn the development of novel therapeutics and industrial applications. This growth is, however, outpaced exponentially by that of known protein sequences, increasingly impacted by high-throughput metagenomic experiments that yield billions of entries per experiment. Closing the ever-increasing gap between protein sequence and annotations of structure and function is thus a desideratum in molecular and medical biology research.

Most proteins comprise two or more structural domains [2], that is, constituents with compact structures assumed to fold largely independently. Structural domains are often associated with specific functional roles [3], although functional sites can be formed from multiple domains [3]. These structural domains – often dubbed ‘folds’ – recur in nature [4], and have been estimated to be limited to a number in the order of thousands [5]. Folds resemble the shadows in Plato’s allegory of the cave: they are more an image, idea, or concept than the real object (Plato Politeia [6]); this image helps to map relations between proteins.

Various resources emerged to classify domain structures in evolutionary families and fold groups (e.g., SCOP [7], CATH [8], SCOPe [9], and ECOD [10]), and these have saturated at about 5000 structural families and about 1300 folds over the past decade, despite structural genomics initiatives targeting proteins likely to have new folds [11]. As increasingly powerful sequence profile methods [12–14] have identified structural families in completely sequenced organisms (complete proteomes), studies suggest that up to 70% of all domains resemble those already classified in SCOP or CATH [3,15–17]. Trivially, the distribution of family size follows some power law: most families/folds are small or species-specific, but a few hundred are very highly populated, tend to be universal across species, and have important functions [8]. In parallel, flexible or intrinsically disordered regions (IDRs) [18] making up 20–30% of all residues in a given proteome have been associated with protein function [19–21]. While structural domains can be thought of as the structural units of proteins, it is the millions of domain combinations that create the immense diversity of functional repertoires.

Since the details of function for most proteins in most organisms remain uncharacterized, understanding how domains evolve and combine to modify function would be a major step in our quest to understand and engineer biology. Protein structure data can provide a waymark, and exciting advances in structure prediction over the past year suggest that a landmark has been reached [22]. While structure prediction has steadily improved over time, thanks to the exponential growth in protein sequence data and covariation methods, this new era was kick-started by the remarkable performance of AlphaFold (AF) at CASP13 [23]. The method, though, was not made available to the scientific community, resulting in various groups trying to replicate the features behind its breakthrough performance. Methods that were previously state-of-the-art released new versions based on these advancements, such as RoseTTAFold [24] and PREFMD [25]. DeepMind’s AF2 outperformed others in CASP14 [26], and reports suggest that high-quality models can be comparable to crystallographic structures, with competing methods reproducing DeepMind’s results [24,27,28]. DeepMind has recently announced the availability of 214 million putative protein structures for the whole of UniProt, which are available through the AF Database [29] and 3D-Beacons platform at the European Bioinformatics Institute (EBI). The latter provides AF models and other models by other prediction methods [30]. This 1000-fold increase in structural data requires equally transformative developments in methods for processing and analyzing the mix of experimental and putative structure/sequence data, including methods reliably predicting aspects of function from sequence alone [31–33], and methods to quickly sift through putative structures [34].

In this review, we consider recent developments in deep learning, a branch of ML (see Glossary) operating on sequence and structure comparisons that enable highly sensitive detection of distant relationships between proteins. These will allow us to harness important insights on putative structure space, on domain combinations, and on the extent and role of disorder. One important observation from this review: no single modality has all the answers. Instead, protein sequence, evolutionary information, latent embeddings from pLMs, and structure information all play key roles in helping to uncover how proteins fold and act. Application of these tools has enabled rapid evolutionary classification of good-quality AF2 models (defined as an AF2 predicted Local Distance Difference Test (pLDDT) score ≥ 70 [22]) for 21 model organisms, including human (Homo sapiens), mouse (Mus musculus), rat (Rattus norvegicus), and thale cress (Arabidopsis thaliana) [35]. We review the insights derived from these studies and the future opportunities they bring for understanding the links between protein structural arrangements and their functions.

Sequence-based approaches to find homologs

Sequence similarity and evolutionary information provide gold-standard baselines

Comparing a query protein sequence against the growing sequence databases can reveal a goldmine of evolutionary information encoded in related (similar) sequences (Table 1). Closely related sequences with annotations of function and structure have successfully been used for homology-based inference (HBI), that is, the transfer of annotations from labeled to sequence-similar yet unlabeled proteins [36,37]. Beyond annotation transfer, evolutionary information condensed in multiple sequence alignments (MSAs) can serve for de novo protein function and structure prediction methods, which have ranked highly for decades in independent evaluations [37–40]. However, the runtime and parameter sensitivity of popular solutions to generate MSAs [12,13,41], in combination with selection-biased sequence datasets, create major bottlenecks: (i) slow runtime, and (ii) uninformative MSAs resulting from inappropriate default parameters, from difficult-to-align families (e.g., IDRs), or from lack of diversity for understudied or species-specific families. Uninformative MSAs affect the prediction quality even for AF2 [22,42,43]. While advances in computer hardware coupled with clever engineering overcame some of the speed limitations [14,44], the faster-than-Moore’s-law [45] growth of sequence databases demands alternative or complementary solutions.

Table 1.

Advantages and disadvantages of methods for homology detection

| Method | Advantages | Disadvantages |
|---|---|---|
| Homology-based inference (HBI) | • Highly reliable • Interpretable | • Computationally expensive • Sensitive to choice of databases and parameters |
| Embedding-based annotation transfer (EAT) | • Fast inference, that is, generation of embeddings • Data-driven feature learning and extraction → reduced human bias • Detection of distant homologs | • Computationally expensive pretraining (only has to be done once) • Choice of dataset, redundancy level, preprocessing is still human biased |
| Contrastive learning | • Specialization for specific use-case improves performance • Detection of distant homologs | • Pre-trained model is not generally applicable for identification of homologs for all aspects of protein function |
| Supervised learning | • Detection of distant homologs | • Difficult to extend to more classes • Requires enough data |
| Structure-based annotation transfer (SAT) | • Detection of very distant homologs • Highly interpretable since alignments can be interpreted visually using structure | • Computationally expensive • No standardized metrics of similarity [root mean square deviation (RMSD), TM-score returned by TM-align method] |

pLMs: deep learning learns protein grammar

One alternative to extracting evolutionary information directly is to use deep learning to encode the information contained in billions of known protein sequences. This is done by adapting so-called language models (LMs) from natural language processing (NLP) to learn aspects of the ‘grammar’ of the language of life as encoded in protein sequences [46–51]. Where traditional ML models are trained to learn from labeled data (i.e., data with annotations) (supervised training), pLMs implicitly learn data attributes, such as constraints (evolutionary, structural, or functional) shaping protein sequences (dubbed self-supervised learning). This can be achieved either by autoregression, that is, training on predicting the next token (the word in text, the residue in pLMs) given all previous tokens in a sequence, or via masked-language modeling (i.e., by training on reconstructing corrupted sequences from noncorrupted sequence context) [47,48,50]. Repeating this on billions of sequences forces the pLM to learn properties and statistical commonalities of the underlying protein language. The resulting solutions can be transferred to other tasks (transfer learning) to predict many different phenotypes [43,52–55] (Figure 1).
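The two self-supervised objectives described above can be illustrated with a minimal sketch in plain Python. It only shows how training examples might be derived from a raw sequence, not how a pLM is trained. The 15% masking rate, the `_` mask symbol, the example sequence, and the function names are illustrative assumptions rather than details of any particular pLM.

```python
import random

MASK_TOKEN = "_"  # assumed placeholder; real pLMs use a dedicated mask token


def autoregressive_examples(seq):
    """Return (context, next_residue) pairs: each residue is predicted from all previous ones."""
    return [(seq[:i], seq[i]) for i in range(1, len(seq))]


def masked_example(seq, mask_rate=0.15, seed=0):
    """Corrupt a non-empty sequence by masking residues.

    Returns the corrupted sequence and a {position: original_residue} map
    that a model would be trained to reconstruct.
    """
    rng = random.Random(seed)
    n_mask = max(1, round(len(seq) * mask_rate))
    positions = rng.sample(range(len(seq)), n_mask)
    corrupted = list(seq)
    targets = {}
    for pos in positions:
        targets[pos] = seq[pos]
        corrupted[pos] = MASK_TOKEN
    return "".join(corrupted), targets


if __name__ == "__main__":
    seq = "MKTAYIAKQRQISFVKSHFSRQ"  # arbitrary toy sequence
    print(autoregressive_examples(seq)[:3])
    print(masked_example(seq))
```

In both cases the labels come from the sequence itself, which is why no annotated data are needed.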

Figure 1.

Overview of embeddings applications in protein structure and function characterization.

Images were retrieved from Wikipedia (alpha helix and beta strand, binding sites), Creative Proteomics (cell structure), and bioRxiv (Transmembrane Regions - CASP13 Target T1008, Structure Prediction - PDB 1Q9F) with permission from the authors.

Technically, this transfer is achieved by extracting the hidden states of the pLM, referred to as embeddings. One key advantage of pLMs over evolutionary information is that the computation-heavy information extraction (learning the pLM) needs to be done only once during model training on efficient, high-performance computing centers. The extraction and use of the embeddings, by contrast, is done efficiently on consumer-grade hardware such as modern personal computers or even laptops.

pLMs improve the prediction of protein function

Since the introduction of the first general-purpose pLMs around 3 years ago [46,48,50,56], pLMs have been shown to astutely capture aspects of protein structure, function, and evolution just from the information contained in databases of raw sequences [32,43,47,48,50,52,53,57,58]. In analogy with HBI, which transfers annotations based on sequence similarity, embedding-based annotation transfer (EAT) captures more information by comparing proteins in embedding space rather than sequence space [59,60]. Without any domain-specific optimization, and without ever seeing any labeled data, simple EAT outperformed HBI by a large margin and ranked among the top ten methods for predicting the molecular function of a protein during Critical Assessment of Functional Annotation 4 (CAFA4) [31]. With added domain optimizations, EAT classified proteins according to the CATH classification [8,60] beyond what could be detected by advanced sequence profile methods [61]. The power of pLMs was confirmed as CATHe revealed distant evolutionary relationships that were not detected by sequence profile methods, yet were confirmed by structure comparison of AF2-predicted models.
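As a rough illustration of the EAT idea, the sketch below transfers the label of the nearest labeled protein in embedding space. It is a toy under stated assumptions: real embeddings have hundreds or thousands of dimensions, and the three-dimensional vectors, protein identifiers, labels, Euclidean metric, and optional distance cutoff here are all hypothetical choices rather than details from the cited methods.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def embedding_annotation_transfer(query_emb, lookup, max_distance=None):
    """Transfer the label of the closest labeled embedding to the query.

    lookup maps protein_id -> (embedding, label).
    Returns (protein_id, label, distance); label is None when the closest
    hit lies beyond max_distance (i.e., no transfer is made).
    """
    hit_id, (hit_emb, hit_label) = min(
        lookup.items(), key=lambda item: euclidean(query_emb, item[1][0])
    )
    dist = euclidean(query_emb, hit_emb)
    if max_distance is not None and dist > max_distance:
        return hit_id, None, dist
    return hit_id, hit_label, dist


if __name__ == "__main__":
    # Hypothetical lookup set of labeled proteins with toy embeddings.
    lookup = {
        "protA": ((1.0, 0.0, 0.0), "label_1"),
        "protB": ((0.0, 1.0, 0.0), "label_2"),
    }
    print(embedding_annotation_transfer((0.9, 0.1, 0.0), lookup))
```

The distance to the hit plays a role similar to sequence identity in HBI: the larger it is, the less trustworthy the transferred annotation.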

Leap in protein structure prediction combines ML with evolutionary information and hardware

Considered 2021’s method of the year [62], AF2 [22] combines advanced deep learning with evolutionary information from larger MSAs – obtained from the BFD with 2.1 billion sequences [63] or MGnify [64] with 2.4 billion sequences, as opposed to UniProt with 231 million [65] – and more potent computer hardware to make major advances in protein structure prediction, providing good quality models for at least 50% of the likely globular domains in UniProt sequences. All top structure prediction methods, including AF2 and RoseTTAFold, rely on evolutionary couplings (EV) [66] extracted from MSAs. These approaches detect residues that coevolve and are therefore likely to be in close spatial proximity. The preprocessing of this information has advanced crucially over the past decades: for example, through direct coupling analysis sharpening this signal [67,68]. Although the leap of AF2 required this foundation in 2021, future advances may build their models on a different foundation [43].
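The coevolution signal can be made concrete with a deliberately simple stand-in: mutual information between two MSA columns. This is not direct coupling analysis and not what AF2 or RoseTTAFold compute internally; it only sketches the idea that columns whose residues change together carry a shared signal. The toy alignments and function names are assumptions for illustration.

```python
import math
from collections import Counter


def column(msa, i):
    """Residues found at alignment column i, one per sequence."""
    return [seq[i] for seq in msa]


def mutual_information(msa, i, j):
    """Mutual information (in nats) between alignment columns i and j."""
    n = len(msa)
    col_i, col_j = column(msa, i), column(msa, j)
    count_i = Counter(col_i)
    count_j = Counter(col_j)
    count_ij = Counter(zip(col_i, col_j))
    mi = 0.0
    for (a, b), count in count_ij.items():
        p_ab = count / n
        mi += p_ab * math.log(p_ab / ((count_i[a] / n) * (count_j[b] / n)))
    return mi


if __name__ == "__main__":
    covarying = ["AK", "AK", "DE", "DE"]    # column 2 changes whenever column 1 does
    independent = ["AK", "AE", "DK", "DE"]  # no relationship between the columns
    print(mutual_information(covarying, 0, 1))
    print(mutual_information(independent, 0, 1))
```

Raw mutual information is confounded by indirect correlations and phylogeny, which is the kind of noise that methods such as direct coupling analysis are designed to reduce.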

Protein structure proxies function for distant homologs

As an alternative to HBI or EAT, structure-based annotation transfer (SAT) emerged. SAT more reliably captures distantly related proteins (Figure 2). With the recent breakthrough in protein structure prediction [22] solving structures computationally at near-X-ray quality [26], new possibilities to apply SAT at the proteome scale have arisen. A large new collection of in silico predicted structures is available through the AF Protein Structure Database (AFDB) [35], which has been analyzed through fold recognition algorithms to refine protein families and to discover novel protein folds. For instance, by mining structures with a widely used structural alignment tool (DALI) [69], a new member of the perforin/gasdermin (GSDM) pore-forming family in humans was identified in spite of having only 1% sequence identity with the GSDM family [70,71]. Furthermore, the expanded search to all proteomes, covering 356 000 predicted structures, discovered 16 novel perforin-like proteins [72].

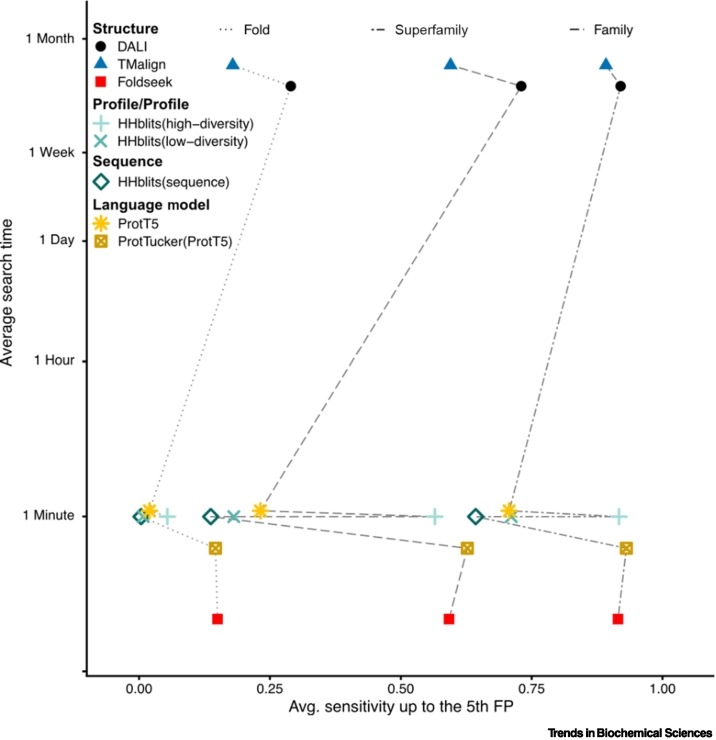

Figure 2.

Comparison of search sensitivity and speed for language models, sequence/profile–profile aligners, and structure aligners.

Average sensitivity up to the fifth false positive (x-axis) for family, superfamily, and fold measured on SCOP40e (version 2.01) [9], against average search time for a single query against 100 million proteins (y-axis). For each SCOP40e domain, we computed the fraction of detected true positives for family, superfamily, and fold up to the fifth false positive (FP) (= different fold), and plotted the average sensitivity over the domains (x-axis).
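The sensitivity measure described in this caption can be sketched as follows. The input format (a ranked list of booleans per query plus the number of true positives available in the database) and the function names are assumptions; how hits are labeled as true or false positives at the family, superfamily, or fold level is left to the benchmark.

```python
def sensitivity_up_to_nth_fp(ranked_is_tp, n_true, max_fp=5):
    """Fraction of true positives ranked before the max_fp-th false positive.

    ranked_is_tp: hits for one query, best first; True marks a true positive.
    n_true: number of true positives that exist in the database for the query.
    """
    if n_true == 0:
        return 0.0
    found = 0
    false_positives = 0
    for is_tp in ranked_is_tp:
        if is_tp:
            found += 1
        else:
            false_positives += 1
            if false_positives == max_fp:
                break
    return found / n_true


def average_sensitivity(per_query):
    """Average over queries; per_query is a list of (ranked_is_tp, n_true) tuples."""
    values = [sensitivity_up_to_nth_fp(ranked, n_true) for ranked, n_true in per_query]
    return sum(values) / len(values)


if __name__ == "__main__":
    T, F = True, False
    hits = [T, T, F, T, F, F, F, T, F, T]  # the fifth false positive is the ninth hit
    print(sensitivity_up_to_nth_fp(hits, n_true=5))
```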

Faster solutions for structure–structure alignments enable high-throughput analyses in seconds

Despite efforts to improve the speed and sensitivity of structural aligners, traditional approaches [69,73., 74., 75.] are too slow to cope with the rapidly increasing size of predicted structure databases [35,76] (Figure 2). Hence, novel ideas for structural comparison algorithms are emerging to accelerate run times. These methods gain in speed by representing structures in a compressed form (Table 2).

Table 2.

Advantages and disadvantages of methods for structure-based homology detection

| Approach | Tools | Advantages | Disadvantages | Representation | Similarity calculation | Alignment method |
|---|---|---|---|---|---|---|
| Structure fragments | Geometricus | • Fast structure similarity search tool • Accurate compared to other alignment-free techniques | • Global comparison only • Sensitivity is limited | Backbone encoded as fixed-sized fragments described as moment invariants | Vector distance similarity of Geometricus embedding vectors | Not available |
| Structure fragments | RUPEE | • Fast structure database search tool • Easy to use through webserver | • Global comparison only • Sensitivity is limited | Backbone encoded as fixed-sized fragments of backbone torsion angles | Jaccard similarity of torsion fragments or TM-score | TM-align [74] |
| Structure volume | BioZernike | • Protein chain and quaternary structure topology-free technique (avoids chain matching problem) • Both methods provide easy-to-use webserver | • Global comparison only • Searches similar surface shape, which is not sensitive | 3D Zernike descriptor of volume | Pretrained distance function to compare two volumes | Not available |
| Structure volume | 3D-AF-Surfer | • Protein chain and quaternary structure topology-free technique (avoids chain matching problem) • Both methods provide easy-to-use webserver | • Global comparison only • Searches similar surface shape, which is not sensitive | 3D Zernike descriptors of volume | Predicted probability of being in the same fold by neural network | CE [75] |
| Structural alphabets | Foldseek | • Fast and accurate structure alignment tool • Local or global alignment • Easy to use through webserver | • No quaternary structures comparison | 3Di alphabet that describes tertiary residue–residue interactions | E-value, LDDT, TM-score | Structural Smith–Waterman or TM-align |

One way to compress structural information is to break structures into fixed-size fragments. Geometricus [77] represents proteins as a bag of shape-mers: fixed-sized structural fragments described as moment invariants. It was used to cluster the AFDB and PDB using non-negative matrix factorization to identify novel groups of protein structures [78]. RUPEE [79] is another method that breaks structures into structural fragments. It discretizes protein structures by their backbone torsion angles and then compares the Jaccard similarity of bags of torsion fragments of the two structures. The top 8000 hits are then realigned by TM-align [74] in top-align mode.
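A fragment-based comparison of this kind might look like the sketch below. It is only loosely inspired by the description of RUPEE above and is not its actual encoding: the 60° bins, the fragment length of three residues, the use of sets instead of bags (multisets), and the idealized torsion angles are all simplifying assumptions.

```python
def discretize(angle, bin_width=60):
    """Map an angle in degrees from [-180, 180) to an integer bin index."""
    return int((angle + 180) % 360 // bin_width)


def torsion_fragments(torsions, k=3, bin_width=60):
    """Set of overlapping length-k fragments of discretized (phi, psi) pairs."""
    states = [
        (discretize(phi, bin_width), discretize(psi, bin_width))
        for phi, psi in torsions
    ]
    return {tuple(states[i:i + k]) for i in range(len(states) - k + 1)}


def jaccard(a, b):
    """Jaccard similarity of two sets: size of intersection over size of union."""
    if not a and not b:
        return 1.0
    return len(a & b) / len(a | b)


if __name__ == "__main__":
    helix = [(-60.0, -45.0)] * 6    # idealized alpha-helical torsions
    strand = [(-120.0, 130.0)] * 6  # idealized beta-strand torsions
    mixed = helix[:4] + strand[:2]
    print(jaccard(torsion_fragments(helix), torsion_fragments(strand)))
    print(jaccard(torsion_fragments(helix), torsion_fragments(mixed)))
```

Because the comparison reduces to set operations on precomputed fragments, it needs no alignment, which is what makes a fast first pass over a large database possible before realigning the top hits.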

Another category of tools represents tertiary structure as discretized volumes and compares these. BioZernike [80], for example, approximates volumes through 3D Zernike descriptors and compares these by a pretrained distance function. 3D-AF-Surfer [15] also applies 3D Zernike descriptors followed by a support vector machine (SVM) trained to calculate the probability of two structures being in the same fold. Results are ranked by the SVM scores, while individual hits can be realigned using combinatorial extension (CE) [75].

The fastest category of structural aligners represents structures as sequences of a discrete structural alphabet. Most of these alphabets discretize the backbone angles of the structure [81–83], albeit at a loss of information in the structured regions. Another type of structural discretization to a sequence was proposed by Foldseek [34]. It describes tertiary residue–residue interactions as a discrete alphabet and locally aligns the resulting structure sequences using the fast MMseqs2 algorithm [84]. Foldseek achieves the sensitivity of a state-of-the-art structural aligner like TM-align, while being at least 20 000 times faster.
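Once a structure is written as a string over a structural alphabet, standard sequence algorithms apply. The sketch below computes a Smith–Waterman local alignment score between two such strings. It is a textbook version under simplifying assumptions: a flat match/mismatch score and a linear gap penalty stand in for the substitution matrix and prefiltering that a tool like Foldseek would use, and the example strings are made-up letters, not real 3Di states.

```python
def smith_waterman_score(a, b, match=2, mismatch=-1, gap=-2):
    """Best local alignment score between strings a and b (linear gap penalty)."""
    prev = [0] * (len(b) + 1)
    best = 0
    for i in range(1, len(a) + 1):
        curr = [0] * (len(b) + 1)
        for j in range(1, len(b) + 1):
            diag = prev[j - 1] + (match if a[i - 1] == b[j - 1] else mismatch)
            # A local alignment may restart anywhere, hence the floor at 0.
            curr[j] = max(0, diag, prev[j] + gap, curr[j - 1] + gap)
            best = max(best, curr[j])
        prev = curr
    return best


if __name__ == "__main__":
    # Hypothetical structural-alphabet strings for a query and a target.
    print(smith_waterman_score("QQDVLCPWW", "DVLCP"))
    print(smith_waterman_score("DVLCP", "DVQLCP"))
```

Only two rows of the dynamic programming matrix are kept, since the score alone does not require a traceback.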

Sequence-based structural alignment tools are well equipped to handle the upcoming avalanche of predicted protein structures. Efficient storage of structure information and queries against these makes searches against hundreds of millions of structures feasible. Representing structures as sequences allows us to also adapt fast clustering algorithms like Linclust [84] to compare billions of structures within a day. We expect current tools to further increase in sensitivity to match or exceed the performance of DALI [69] (Figure 2).

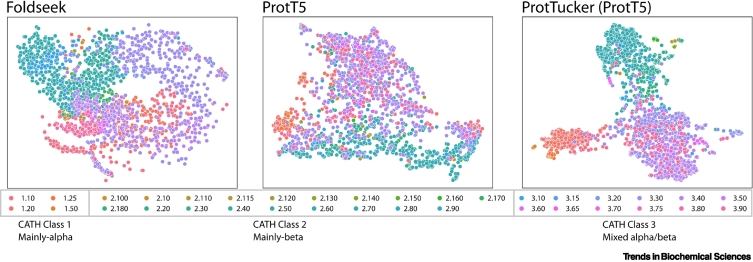

Embeddings from pLMs in combination with fast structural aligners (Foldseek) could be orthogonal in covering, classifying, and validating assignments in large swaths of protein fold space, as shown in Figure 3.

Figure 3.

Visual analysis of the structure space spanned by CATH domains expanded by AlphaFold 2 (AF2) models.

We showcase how distance in either structure (left) or embedding space (center and right) can be used to gain insight into large sets of proteins. Simply put, we used pairwise distance between proteins to summarize ~850 000 protein domains in a single 2D plot and colored them according to their CATH class and architecture. This exemplifies a general-purpose tool for breaking down the complexity of large sets of proteins and allows, for example, detection of large-scale relationships that would otherwise be hard to find, or detection of outliers. More specifically, ~850 000 domains were structurally aligned using Foldseek [34] (left) in an all-versus-all fashion, resulting in a distance matrix that uses the average pairwise bitscore between superfamilies as superfamily distances. The domain sequences were converted to embeddings using the ProtT5 (center) and ProtTucker (right) protein language models (pLMs). Similarly to the structural approach, the distance matrix between superfamilies was calculated using the average Euclidean distance between embeddings belonging to different superfamilies. Using different modalities (i.e., structure and sequence embeddings) for computing distances on the same set of proteins provides different, potentially orthogonal angles on the same problem, which can be helpful during hypothesis generation. The resulting distance matrices were used as precomputed inputs for uniform manifold approximation and projection (UMAP) [121] and plotted with seaborn [122].
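The embedding-based superfamily distances described in this caption can be sketched in a few lines of plain Python. The toy two-dimensional embeddings and superfamily names are hypothetical, and setting the diagonal to zero is an assumption made so that the matrix can serve as a precomputed distance input; the projection step itself (UMAP) is not shown.

```python
import math
from itertools import product


def euclidean(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def superfamily_distance(embs_a, embs_b):
    """Average Euclidean distance over all pairs of embeddings from two superfamilies."""
    pairs = list(product(embs_a, embs_b))
    return sum(euclidean(x, y) for x, y in pairs) / len(pairs)


def distance_matrix(superfamilies):
    """Return (names, matrix) with pairwise superfamily distances.

    superfamilies maps a superfamily name to a list of domain embeddings.
    The diagonal is set to 0.0 by assumption.
    """
    names = sorted(superfamilies)
    matrix = [
        [
            0.0 if a == b else superfamily_distance(superfamilies[a], superfamilies[b])
            for b in names
        ]
        for a in names
    ]
    return names, matrix


if __name__ == "__main__":
    toy = {
        "sf_A": [(0.0, 0.0)],
        "sf_B": [(3.0, 4.0), (6.0, 8.0)],
    }
    print(distance_matrix(toy))
```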

Application of sequence and structure approaches to analyses of the protein universe

Deep learning extends fold space

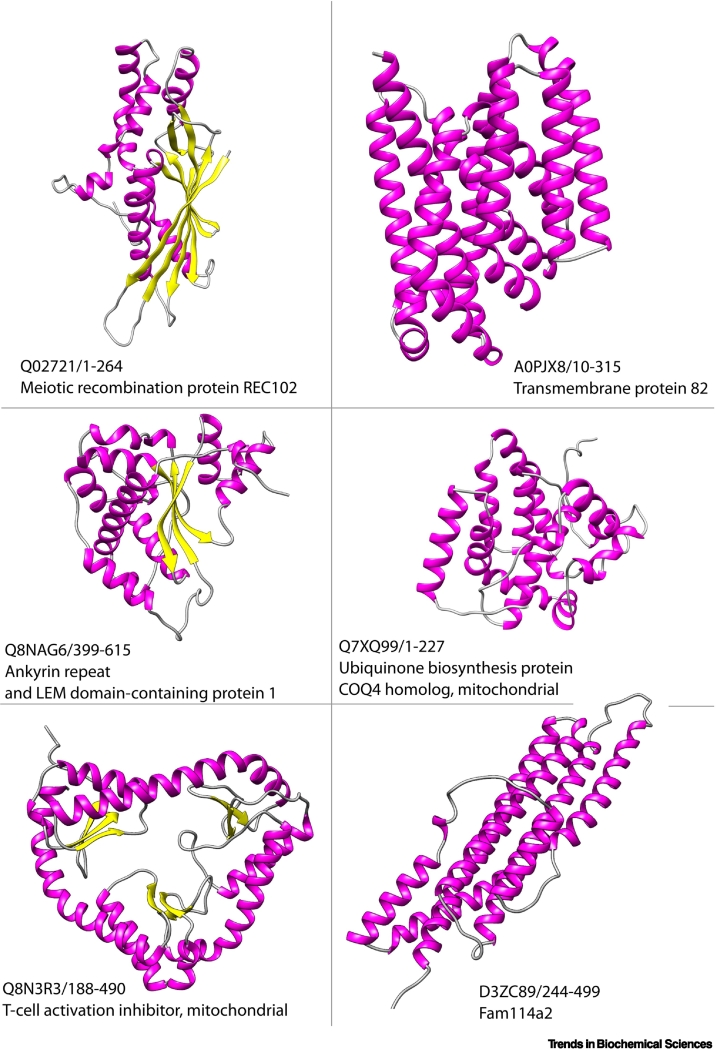

Following the release of AFDB [35], structural analyses using fast, deep-learning-based methods (e.g., Geometricus, 3DZD) [15,77] suggested a slight predominance of mainly-alpha structures compared to the PDB, and predicted the existence of hundreds to thousands more structural families in the dataset [15]. About 75% of the AF2 structures are of sufficient global quality (pLDDT scores of ≥70) for these studies, depending on the analyses. However, even in these well-predicted 3D models, at least 26% of residues were of low model quality [16]. Recent studies showed that nearly 6% of these low-quality residues are predicted to be disordered by sequence-based approaches [78,85]. It is also clear that AF2 struggles to predict domains from small, species-specific families [16], suggesting that covariation data are needed for good-quality models. Preliminary analyses [16] revealed some very unusual structural architectures in which common folds are connected by large unordered regions or combined in quite regular arrangements using helical scaffolds (see also Figure 4).

Figure 4.

New folds in CATH-AlphaFold 2 (AF2).

Examples of novel folds previously not encountered in CATH or Protein Data Bank (PDB). Structures are identified as novel folds if they have no significant structural similarity to domains or structures in the PDB using Foldseek as a comparison method. Each structure identifier is in the format UniProt_ID/start–stop with its current name in UniProt.

We recently analyzed the proportion of predicted AF2 structural domains in the 21 model organisms that could be assigned to known superfamilies in CATH [16]. Only good-quality models were analyzed according to a range of criteria (pLDDT ≥70, large proportions of ordered residues, characteristic packing of secondary structure). We used well-established hidden Markov model (HMM)-based protocols and a novel deep-learning method (CATHe [61] based on ProtT5 [47]) to detect domain regions in the AF2 models, and Foldseek comparisons gave rapid confirmation of matches to CATH relatives [34]. We found that, on average, 92% of domains could be mapped to 3253 CATH superfamilies (out of 5600). The proportion of residues in compact globular domains varies according to the organism, with well-studied model organisms having higher proportions of residues assigned to globular regions (ranging from 32% for Leishmania infantum to 76% for Escherichia coli).

By classifying good-quality AF2 models into CATH, we can expand the number of structurally characterized domains by ~67%, and our knowledge of fold groups in superfamilies (structurally similar relatives which can be well superposed) increases by ~36% to 14 859 [16]. As with other recent studies of AF2 models [15], we observe the greatest expansion in global fold groups for mainly-alpha proteins (2.5-fold). Less than 5% of CATH superfamilies (~250) are highly populated, accounting for 57.7% of all domains [8], and in these so-called MEGAfamilies AF2 considerably increases the structural diversity, with some superfamilies now identified as having more than 1000 different fold groups, suggesting considerable structural plasticity outside the common structural core.

Our analyses identified 2367 putative novel families [16]. However, detailed manual analyses of 618 human AF2 structures revealed problematic features in the models, and some very distant homologies, with only 25 superfamilies verified as novel, suggesting that the majority of domain superfamilies may already be known. It is even likely that, as we bring more relatives into the AF2 superfamilies, links between current CATH superfamilies will be established and the number of superfamilies reduced. Indeed, most of the 25 new superfamilies identified possess domain structures with very similar architectures to those in existing CATH superfamilies; mainly-alpha structures (both orthogonal and up–down bundles) were particularly common, as were small alpha–beta two-layer sandwiches and mainly-beta barrels.

Biological discoveries enabled by AF2 data

The availability of off-the-shelf solutions based on AF2 – both as a tool (ColabFold [42], AF2 [22], AF-Multimer [86]) and as a collection of precomputed models (AFDB [35]) – is akin to the introduction of next-generation sequencing in small research groups enabled by nanopore sequencing. Suddenly, work on almost every protein of interest in projects ranging from medical to environmental research is no longer held back by a lack of experimentally derived structures in the PDB.

Caveats

Although AF2 solves many challenging issues in structural modeling, its limitations have been rapidly identified by the community. All models have accompanying scores for each residue, indicating several aspects of the confidence of the prediction. For example, pLDDT gives the confidence for a particular residue, and the predicted aligned error reflects inter-residue distances and local structural environments. Other measures are also provided. Models scoring below an average pLDDT value of 70 and containing large portions with incorrectly oriented secondary structure segments are unsuitable for most biological applications and do not reach the quality of experimentally derived structures [22]. Most issues could be related to the nature of the MSA the model is built upon, as shallowness or gaps in the alignment often result in a poor model [22,87].
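In practice, the average-pLDDT criterion is easy to check. AF2 models in PDB format carry the per-residue pLDDT in the B-factor column, so a minimal sketch only needs to read that column from the C-alpha atoms. The function names, the returned dictionary, and the choice to count one score per C-alpha atom are assumptions for illustration; the 70 cutoff is the one used in the text.

```python
def ca_plddt(pdb_lines):
    """Per-residue pLDDT read from the B-factor column of C-alpha ATOM records."""
    scores = []
    for line in pdb_lines:
        # PDB fixed columns: atom name in 13-16, B-factor in 61-66 (1-based).
        if line.startswith("ATOM") and line[12:16].strip() == "CA":
            scores.append(float(line[60:66]))
    return scores


def summarize_model(pdb_lines, cutoff=70.0):
    """Mean pLDDT, fraction of residues at or above the cutoff, and a pass flag."""
    scores = ca_plddt(pdb_lines)
    if not scores:
        raise ValueError("no C-alpha atoms found")
    mean = sum(scores) / len(scores)
    confident = sum(1 for s in scores if s >= cutoff) / len(scores)
    return {
        "mean_plddt": mean,
        "fraction_confident": confident,
        "passes": mean >= cutoff,
    }
```

A model file could then be summarized with `summarize_model(open(path))`, where `path` is a hypothetical location of a downloaded model. Note that a passing average can still hide long low-confidence stretches, which is why the per-residue fraction is reported alongside it.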

Furthermore, overrepresentation of proteins with a particular folding state results in a model that is not representative of other alternative states [88]. Some models with low pLDDT point to IDRs that undergo disorder-to-order transition upon binding or are prone to fold-switching [89–91]. Other features that may be available for experimental structures are missing from AF2 models, such as ions, cofactors, ligands, and post-translational modifications (PTMs) [92].

While some effects of sequence variants are captured by AF2, others – in particular point mutations or single amino acid variants – remain elusive to AF2, partly because predictions constitute a family-averaged more than a sequence-specific solution due to the MSA underlying each prediction [78,93].

Small- and medium-scale applications of AF2

With these caveats and limitations taken into account, AF2 has enabled both small- and large-scale applications to biological questions. The sudden availability of a reliable model relieved many research groups from long-term structural characterization efforts, allowing for targeted answers in conformational studies [94,95], oligomerization prediction [96,97], drug channel conformations [98], and early-stage assembly of complexes in disease [99]. Predictions derived from AF2 models helped in validating experimentally derived structures and complexes [100], aided molecular replacement in X-ray crystallography [101], and replaced experimental characterization entirely where it failed in particularly tough cases [98]. Transmembrane proteins, in particular, are not easily solved by X-ray crystallography, so AF2 in combination with other techniques such as NMR is being used as an orthogonal validation for experiments where particular conformations of import channels were unclear [98].

Large-scale applications of AF2

Large-scale applications of AF2 and AF-Multimer are creating entirely novel resources (AFDB [35]), complementing or expanding already established ones (CATH [8], APPRIS [102], and Membranome [103]), or enabling more focused collections and analyses, such as the characterization of the ‘metallome’ by identifying all metal-binding sites across proteomes [104], or shining light on the human dark proteome [105], or improving genomic annotation of the human genome through comparison of the predicted structures of 140k isoforms [106]. Since AF has now also released models for neglected tropical diseases, this will progress research on these often underfunded or ignored diseases. The recent release of protein structure models for the whole of UniProt will also enable large-scale analyses across the Tree of Life such as evolutionary studies on domain archaeology, among others.

Unlocking new deep-learning venues

Thanks to the increase in high-quality structure predictions spawned by AF2, there is an increasing need for prediction methods to readily leverage 3D information. Instead of using representations that first map 3D structures to 2D (e.g., contact maps) or 1D (e.g., secondary structure) before feeding them to a predictor, networks can operate directly on 3D representations of macromolecules to make predictions about their properties. Using so-called ‘inductive bias’ when designing a network (i.e., incorporating domain knowledge directly into the architecture) avoids information loss during abstraction and enables the network to directly learn useful information from the raw 3D data itself. Recently, geometric deep-learning research [107], which focuses on methods handling complex representations like graphs, has seen a steady increase in adoption, accuracy, and potential opportunities [108]. Protein 3D structures are naturally fit for geometric deep-learning approaches, whether for supervised tasks, like the prediction of molecule binding [109], or unsupervised learning approaches, which could generate alternatives to learned representations from pLMs [110,111]. Geometric deep-learning approaches stand to benefit the most from large sets of putative 3D structures, potentially unlocking further opportunities for alternative, unsupervised protein representations derived from structure, or deep-learning-based potentials to substitute expensive molecular dynamics simulations for molecular docking.

A leveling effect

AF2 will help to eliminate an underlying bias in structural biology, which has tended to focus on drug discovery for human diseases, on model organisms, or on structures involved in pathogens. With its low footprint and cost compared to traditional experimental means of characterizing protein 3D structure, AF2 is constrained neither by access to expensive experimental instruments nor by beam time, which is usually prioritized for large consortia. This will enable groups across the world to work on their proteins of interest without geographical or economic limitations. In a similar fashion to nanopore sequencing, model building could be done in real time for issues that are time- or location-sensitive (e.g., emerging pandemics, neglected tropical diseases), or limited to an individual, potentially allowing a transition from whole-genome sequencing to whole-proteome modeling, drug binding, and efficacy profiling.

Concluding remarks and future perspectives

Computationally predicting protein properties with increasing accuracy using deep learning [22,53,57,58] remains crucial for building structures and assemblies that assist researchers in uncovering cellular machinery. Conveniently, the better these predictors become, the more interesting it is to hijack them to design new proteins that perform desired functions [112]. Recently, deep-learning approaches have emerged that ‘hallucinate’ new proteins [113], which systems like AF2 confirm may fold into plausible structures. These tools can generate new protein sequences from start to finish or, similarly to text autocompletion, conditioned on part of a given input sequence [112], all within milliseconds of runtime. Coupled with blazingly fast predictors [43,114,115], millions of potentially foldable, ML-generated sequences can be screened reliably in silico, saving energy, time, and resources, and requiring in vitro and in vivo experiments only at the most exciting stages of the experimental discovery process. Not all designs fold, and caution is needed; nevertheless, an approach similar to spell correction in NLP but trained on millions of protein sequences allowed researchers to evolve and optimize existing antibodies to better perform a desired activity [116]. Additionally, approaches that generate protein sequences from 3D structure (in some sense, the opposite direction of the classical folding problem) will become increasingly important in the post-AF2 era [117]. By selecting for sequence diversity conditioned on structure, new candidates for families may be found.
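The generate-then-screen workflow described above can be sketched as a simple filter. This is only a schematic under loud assumptions: `toy_generator` and `toy_confidence` are hypothetical placeholders that stand in for a generative pLM and a fast structure predictor, and they do not call or imitate any real model; the 0.5 threshold is likewise arbitrary.

```python
import random
from typing import Callable, Iterable, List, Tuple

AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"


def screen(
    candidates: Iterable[str],
    score: Callable[[str], float],
    threshold: float,
) -> List[Tuple[str, float]]:
    """Keep candidates whose predicted confidence reaches `threshold`,
    sorted from most to least confident."""
    scored = [(seq, score(seq)) for seq in candidates]
    kept = [(seq, conf) for seq, conf in scored if conf >= threshold]
    return sorted(kept, key=lambda item: item[1], reverse=True)


def toy_generator(n: int, length: int, seed: int = 0) -> List[str]:
    """Hypothetical stand-in for a generative model: random sequences."""
    rng = random.Random(seed)
    return ["".join(rng.choice(AMINO_ACIDS) for _ in range(length)) for _ in range(n)]


def toy_confidence(seq: str) -> float:
    """Hypothetical stand-in for a predictor's confidence in [0, 1].
    It returns the fraction of hydrophobic residues and is NOT a
    structure predictor."""
    return sum(seq.count(aa) for aa in "AILMFVW") / len(seq)


candidates = toy_generator(n=1000, length=50)
hits = screen(candidates, toy_confidence, threshold=0.5)
print(f"{len(hits)} of {len(candidates)} candidates passed the in silico filter")
```

In practice the two callables would be replaced by an actual sequence generator and an actual predictor, and only the sequences that pass the filter would move on to in vitro or in vivo validation.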

With booming putative structure databases, we see the emergence of analytical approaches leveraging a model similar to how UniProt’s mix of curated (SwissProt) and putative (TrEMBL) [65] sequence databases is being used. In part, we can build on years of advances in maintaining and searching sequence databases (e.g., to extract evolutionary relationships) to create tools that analyze structure databases instead, with performant tools already available [34]. However, mainstreaming structural analysis on billions of entries will require domain-specific infrastructure and tooling. Geometric deep learning may also assist this modality by bringing new unsupervised solutions similar to pLMs but trained on protein 3D structures instead [110]. With reliable solutions in this space, we expect practitioners to combine sequence and structural analysis.

Within the realm of ‘traditional’ pLMs, which learn information solely from large unlabeled protein sequence databases, there is still room for improvement, as highlighted by recent advances in NLP. For example, some approaches optimize the efficiency of LMs, especially on long sequences, either by modifying the existing attention mechanism [118] or by proposing a completely different solution that does not rely on the de facto standard (attention) [119]. Orthogonal to such architectural improvements, recent research highlights the importance of hyperparameter optimization [120], moving away from the constant increase in model size and instead suggesting that ‘smaller’ models (which still have billions of parameters) be trained on more samples. Taken together, these improvements hold the potential to further improve today’s sequence-based pLMs.

Ultimately, we see a plethora of effective and efficient ML tools operating on different modalities, each with unique strengths and weaknesses, becoming available at researchers’ fingertips. Further developments in structure- and sequence-based approaches are inevitably needed (see Outstanding questions), yet, even today, combining different ML and software solutions will bring researchers to an untapped world of novel mechanisms that await discovery.

Outstanding questions

The best structural models rely on quality and availability of protein structures found in nature. How can we further improve these deep-learning methods without relying on previous structural knowledge?

How much structural novelty is hidden in metagenomes? Are we close to discovering all ways nature can shape a protein?

Could protein modeling entirely replace experimentally derived structures?

How can we use these methods to engineer a highly efficient enzyme function never encountered in living organisms?

Speed, accuracy, and coverage of structure predictions and methods are skyrocketing. How can these tools be improved to better probe the dark universe of uncharacterized proteins?

Could future methods build complexes of protein–protein interactions and models quickly and accurately enough to be used for precision medicine?

Can we use embeddings-based methods to predict evolution in sequences?

Can today’s deep-learning structure-prediction methods capture dynamic changes in structure?

Acknowledgments

N.B. acknowledges funding from the Wellcome Trust Grant 221327/Z/20/Z. C.R. acknowledges funding from the BBSRC grant BB/T002735/1. M.S. and S.K. acknowledge support from the National Research Foundation of Korea (NRF), grants (2019R1-A6A1-A10073437, 2020M3-A9G7-103933, 2021-R1C1-C102065, and 2021-M3A9-I4021220), Samsung DS research fund program, and the Creative-Pioneering Researchers Program through Seoul National University. This work was additionally supported by the Bavarian Ministry of Education through funding to the TUM and by a grant from the Alexander von Humboldt foundation through the German Ministry for Research and Education (Bundesministerium für Bildung und Forschung, BMBF), by two grants from BMBF (031L0168117 and program ‘Software Campus 2.0 (TUM) 2.0’ 01IS17049) as well as by a grant from Deutsche Forschungsgemeinschaft (DFG-GZ: RO1320/4-1).

Declaration of interests

No interests are declared.

Glossary

- Embedding-based annotation transfer (EAT)

embedding-based annotation transfer applies the same logic as HBI but replaces sequence similarity (SIM) with similarity of embedding vectors (generalized sequences).

- Evolutionary couplings (EV)

pairs of residues coupled through coevolution. The adequate preprocessing of these signals (e.g., through direct coupling analysis) was one important milestone toward AF2.

- Evolutionary information

information compiled through the comparison of protein sequences and structures by grouping proteins into families connected through evolution. Typically, evolutionary information is compiled in so-called family profiles, or position-specific scoring matrices (PSSMs). The combination of evolutionary information and ML affected most breakthrough steps in protein structure prediction from 1992 to 2021.

- Homology-based inference (HBI)

inference at the base of most protein annotations. Assume a query Q without known phenotype and a protein K with experimentally known phenotype P; HBI then operates as follows: if the sequence similarity between Q and K exceeds some threshold T, we assume Q to have the same phenotype as K, that is, if SIM(Q,K) > T, then phenotype(Q) = phenotype(K) = P. How to measure SIM and what value to choose for T depend on the type of phenotype and have to be determined empirically for each type, for example, for 3D structure, for secondary structure, for molecular function in the Gene Ontology, or for binding particular classes of ligands. Incidentally, the evolutionary link in the word ‘homology’ is crucial, because protein design can generate protein pairs with similar sequences and dissimilar phenotypes.

- Machine learning (ML)

computational systems that aim to emulate human intelligence, usually by means of statistics and probability.

- Protein language models (pLMs) and embeddings

while language models (LMs) from natural language processing (NLP) learn to understand natural language from data, pLMs aim at understanding the language of life by implicitly capturing the evolutionary, functional, and structural constraints on protein sequences. Such constraints can be learned from large sets of unannotated protein sequences because the sequences that can be observed are not a random subset of all possible sequences. These constraints are captured in the connections between the ‘neurons’ of the trained pLMs, and can be written as vectors (rows of real-valued numbers) that are referred to as ‘the embeddings’.

- Structure-based annotation transfer (SAT)

implementing a logic similar to HBI, SAT expands beyond the evolutionary connection. Instead, the assumption is that similar shapes (3D coordinates) and feature descriptors (e.g., density of charged residues in a surface patch) imply some similarity in terms of other function-related phenotypes.
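The threshold-based transfer logic shared by the HBI and EAT glossary entries can be sketched in a few lines. The sketch below rests on several assumptions that are not part of the definitions: sequence identity over pre-aligned, equal-length sequences stands in for SIM, Euclidean distance stands in for embedding similarity, and the thresholds, labels, and toy data are purely illustrative. The function names `hbi` and `eat` are hypothetical.

```python
import numpy as np


def sequence_identity(a, b):
    """Fraction of identical positions. Assumes the two sequences are
    already aligned and of equal length (a simplification of SIM)."""
    if len(a) != len(b):
        raise ValueError("sequences must be aligned to equal length")
    return sum(x == y for x, y in zip(a, b)) / len(a)


def hbi(query, annotated, threshold):
    """Homology-based inference: transfer the phenotype of the most
    similar annotated protein K if SIM(Q, K) > T, otherwise return None."""
    best = max(annotated, key=lambda seq: sequence_identity(query, seq))
    if sequence_identity(query, best) > threshold:
        return annotated[best]
    return None


def eat(query_embedding, reference_embeddings, reference_labels, max_distance):
    """Embedding-based annotation transfer: same logic as HBI, but the
    nearest neighbor is found in embedding space. Euclidean distance is
    an assumption made for this sketch."""
    refs = np.asarray(reference_embeddings, dtype=float)
    distances = np.linalg.norm(refs - np.asarray(query_embedding, dtype=float), axis=1)
    nearest = int(np.argmin(distances))
    if distances[nearest] < max_distance:
        return reference_labels[nearest]
    return None


# Toy data: the sequences, labels, and 2D "embeddings" are made up.
annotated = {"MKTAYIAK": "kinase", "GGGSGGGS": "linker"}
hbi_hit = hbi("MKTAYIAR", annotated, threshold=0.8)   # 7 of 8 positions match
hbi_miss = hbi("MKTWWWWW", annotated, threshold=0.8)  # 3 of 8 positions match

reference_embeddings = [[0.0, 0.0], [1.0, 1.0]]
reference_labels = ["kinase", "linker"]
eat_hit = eat([0.1, 0.0], reference_embeddings, reference_labels, max_distance=0.5)
eat_miss = eat([5.0, 5.0], reference_embeddings, reference_labels, max_distance=0.5)
```

Returning `None` when no reference passes the threshold mirrors the point made in the HBI entry: the value of T has to be determined empirically for each phenotype, and a query without a sufficiently close reference is left unannotated.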

Resources

- i. www.wwpdb.org/

- ii. https://scop2.mrc-lmb.cam.ac.uk/

- iii. www.cathdb.info/

- iv. https://scop.berkeley.edu/

- v. http://prodata.swmed.edu/ecod/

- vi. www.deepmind.com/blog/putting-the-power-of-alphafold-into-the-worlds-hands

- vii. www.ebi.ac.uk/metagenomics/

- viii. www.uniprot.org/

- ix. www.alphafold.ebi.ac.uk/

- x. https://search.foldseek.com/

- xi. https://github.com/soedinglab/MMseqs2

- xii. https://github.com/sokrypton/ColabFold

References

- 1. wwPDB consortium Protein Data Bank: the single global archive for 3D macromolecular structure data. Nucleic Acids Res. 2019;47:D520–D528. doi: 10.1093/nar/gky949.

- 2. Liu J., Rost B. CHOP proteins into structural domain-like fragments. Proteins. 2004;55:678–688. doi: 10.1002/prot.20095.

- 3. Orengo C.A., Thornton J.M. Protein families and their evolution—a structural perspective. Annu. Rev. Biochem. 2005;74:867–900. doi: 10.1146/annurev.biochem.74.082803.133029.

- 4. Chothia C. Proteins. One thousand families for the molecular biologist. Nature. 1992;357:543–544. doi: 10.1038/357543a0.

- 5. Orengo C.A., et al. Protein superfamilies and domain superfolds. Nature. 1994;372:631–634. doi: 10.1038/372631a0.

- 6. Sweeney L., St Louis University ‘The Republic of Plato’, translated with notes and an interpretative essay by Allan Bloom. Mod. Sch. 1971;48:280–284.

- 7. Murzin A.G., et al. SCOP: a structural classification of proteins database for the investigation of sequences and structures. J. Mol. Biol. 1995;247:536–540. doi: 10.1006/jmbi.1995.0159.

- 8. Sillitoe I., et al. CATH: increased structural coverage of functional space. Nucleic Acids Res. 2021;49:D266–D273. doi: 10.1093/nar/gkaa1079.

- 9. Chandonia J.-M., et al. SCOPe: improvements to the structural classification of proteins – extended database to facilitate variant interpretation and machine learning. Nucleic Acids Res. 2022;50:D553–D559. doi: 10.1093/nar/gkab1054.

- 10. Cheng H., et al. ECOD: An evolutionary classification of protein domains. PLoS Comput. Biol. 2014;10 doi: 10.1371/journal.pcbi.1003926.

- 11. Dessailly B.H., et al. PSI-2: Structural genomics to cover protein domain family space. Structure. 2009;17:869–881. doi: 10.1016/j.str.2009.03.015.

- 12. Johnson L.S., et al. Hidden Markov model speed heuristic and iterative HMM search procedure. BMC Bioinforma. 2010;11:431. doi: 10.1186/1471-2105-11-431.

- 13. Remmert M., et al. HHblits: lightning-fast iterative protein sequence searching by HMM–HMM alignment. Nat. Methods. 2012;9:173–175. doi: 10.1038/nmeth.1818.

- 14. Mirdita M., et al. MMseqs2 desktop and local web server app for fast, interactive sequence searches. Bioinformatics. 2019;35:2856–2858. doi: 10.1093/bioinformatics/bty1057.

- 15. Aderinwale T., et al. Real-time structure search and structure classification for AlphaFold protein models. Commun. Biol. 2022;5:316. doi: 10.1038/s42003-022-03261-8.

- 16. Bordin N., et al. AlphaFold2 reveals commonalities and novelties in protein structure space for 21 model organisms. Commun. Biol. In press

- 17. Kolodny R., et al. On the universe of protein folds. Annu. Rev. Biophys. 2013;42:559–582. doi: 10.1146/annurev-biophys-083012-130432.

- 18. Dunker A.K., et al. What’s in a name? Why these proteins are intrinsically disordered: Why these proteins are intrinsically disordered. Intrinsically Disord. Proteins. 2013;1 doi: 10.4161/idp.24157.

- 19. Romero P., et al. Thousands of proteins likely to have long disordered regions. Pac. Symp. Biocomput. 1998;1998:437–448.

- 20. Schlessinger A., et al. Protein disorder – a breakthrough invention of evolution? Curr. Opin. Struct. Biol. 2011;21:412–418. doi: 10.1016/j.sbi.2011.03.014.

- 21. Kastano K., et al. Evolutionary study of disorder in protein sequences. Biomolecules. 2020;10:1413. doi: 10.3390/biom10101413.

- 22. Jumper J., et al. Highly accurate protein structure prediction with AlphaFold. Nature. 2021;596:583–589. doi: 10.1038/s41586-021-03819-2.

- 23. Kryshtafovych A., et al. Critical assessment of methods of protein structure prediction (CASP) – round XIII. Proteins Struct. Funct. Bioinforma. 2019;87:1011–1020. doi: 10.1002/prot.25823.

- 24. Baek M., et al. Accurate prediction of protein structures and interactions using a three-track neural network. Science. 2021;373:871–876. doi: 10.1126/science.abj8754.

- 25. Heo L., Feig M. High-accuracy protein structures by combining machine-learning with physics-based refinement. Proteins Struct. Funct. Bioinforma. 2020;88:637–642. doi: 10.1002/prot.25847.

- 26. Lupas A.N., et al. The breakthrough in protein structure prediction. Biochem. J. 2021;478:1885–1890. doi: 10.1042/BCJ20200963.

- 27. Ahdritz G., et al. OpenFold: Retraining AlphaFold2 yields new insights into its learning mechanisms and capacity for generalization. bioRxiv. 2022 doi: 10.1101/2022.11.20.517210. Published online November 24, 2022.

- 28. Sen N., et al. Characterizing and explaining the impact of disease-associated mutations in proteins without known structures or structural homologs. Brief. Bioinform. 2022;23 doi: 10.1093/bib/bbac187.

- 29. Tunyasuvunakool K., et al. Highly accurate protein structure prediction for the human proteome. Nature. 2021;596:590–596. doi: 10.1038/s41586-021-03828-1.

- 30. Humphreys I.R., et al. Computed structures of core eukaryotic protein complexes. Science. 2021;374 doi: 10.1126/science.abm4805.

- 31. Littmann M., et al. Embeddings from deep learning transfer GO annotations beyond homology. Sci. Rep. 2021;11:1160. doi: 10.1038/s41598-020-80786-0.

- 32. Littmann M., et al. Protein embeddings and deep learning predict binding residues for various ligand types. Sci. Rep. 2021;11:23916. doi: 10.1038/s41598-021-03431-4.

- 33. Zhao B., et al. DescribePROT: database of amino acid-level protein structure and function predictions. Nucleic Acids Res. 2021;49:D298–D308. doi: 10.1093/nar/gkaa931.

- 34. van Kempen M., et al. Foldseek: fast and accurate protein structure search. bioRxiv. 2022 doi: 10.1101/2022.02.07.479398. Published online September 20, 2022.

- 35. Varadi M., et al. AlphaFold Protein Structure Database: massively expanding the structural coverage of protein-sequence space with high-accuracy models. Nucleic Acids Res. 2022;50:D439–D444. doi: 10.1093/nar/gkab1061.

- 36. Hamp T., et al. Homology-based inference sets the bar high for protein function prediction. BMC Bioinforma. 2013;14:S7. doi: 10.1186/1471-2105-14-S3-S7.

- 37. Qiu J., et al. ProNA2020 predicts protein–DNA, protein–RNA, and protein–protein binding proteins and residues from sequence. J. Mol. Biol. 2020;432:2428–2443. doi: 10.1016/j.jmb.2020.02.026.

- 38. Cui Y., et al. Predicting protein-ligand binding residues with deep convolutional neural networks. BMC Bioinforma. 2019;20:93. doi: 10.1186/s12859-019-2672-1.

- 39. Rost B., Sander C. Prediction of protein secondary structure at better than 70% accuracy. J. Mol. Biol. 1993;232:584–599. doi: 10.1006/jmbi.1993.1413.

- 40. Hecht M., et al. Better prediction of functional effects for sequence variants. BMC Genomics. 2015;16:S1. doi: 10.1186/1471-2164-16-S8-S1.

- 41. Altschul S. Gapped BLAST and PSI-BLAST: a new generation of protein database search programs. Nucleic Acids Res. 1997;25:3389–3402. doi: 10.1093/nar/25.17.3389.

- 42. Mirdita M., et al. ColabFold: making protein folding accessible to all. Nat. Methods. 2022;19:679–682. doi: 10.1038/s41592-022-01488-1.

- 43. Weissenow K., et al. Protein language model embeddings for fast, accurate, alignment-free protein structure prediction. Structure. 2022;30:1169–1177.e4. doi: 10.1016/j.str.2022.05.001.

- 44. Buchfink B., et al. Sensitive protein alignments at tree-of-life scale using DIAMOND. Nat. Methods. 2021;18:366–368. doi: 10.1038/s41592-021-01101-x.

- 45. Moore G. Cramming more components onto integrated circuits. Electronics. 1965;38:82–85.

- 46. Bepler T., Berger B. Learning protein sequence embeddings using information from structure. arXiv. 2019 doi: 10.48550/arXiv.1902.08661. Published online October 16, 2019.

- 47. Elnaggar A., et al. ProtTrans: towards cracking the language of lifes code through self-supervised deep learning and high performance computing. IEEE Trans. Pattern Anal. Mach. Intell. 2022;44:7112–7127. doi: 10.1109/TPAMI.2021.3095381.

- 48. Heinzinger M., et al. Modeling aspects of the language of life through transfer-learning protein sequences. BMC Bioinforma. 2019;20:723. doi: 10.1186/s12859-019-3220-8.

- 49. Ofer D., et al. The language of proteins: NLP, machine learning & protein sequences. Comput. Struct. Biotechnol. J. 2021;19:1750–1758. doi: 10.1016/j.csbj.2021.03.022.

- 50. Rives A., et al. Biological structure and function emerge from scaling unsupervised learning to 250 million protein sequences. Proc. Natl. Acad. Sci. U. S. A. 2021;118 doi: 10.1073/pnas.2016239118.

- 51. Brandes N., et al. ProteinBERT: a universal deep-learning model of protein sequence and function. Bioinformatics. 2022;38:2102–2110. doi: 10.1093/bioinformatics/btac020.

- 52. Stärk H., et al. Light attention predicts protein location from the language of life. Bioinforma. Adv. 2021;1 doi: 10.1093/bioadv/vbab035.

- 53. Marquet C., et al. Embeddings from protein language models predict conservation and variant effects. Hum. Genet. 2022;141:1629–1647. doi: 10.1007/s00439-021-02411-y.

- 54. Villegas-Morcillo A., et al. Unsupervised protein embeddings outperform hand-crafted sequence and structure features at predicting molecular function. Bioinformatics. 2021;37:162–170. doi: 10.1093/bioinformatics/btaa701.

- 55. Thumuluri V., et al. DeepLoc 2.0: multi-label subcellular localization prediction using protein language models. Nucleic Acids Res. 2022;50:W228–W234. doi: 10.1093/nar/gkac278.

- 56. Alley E.C., et al. Unified rational protein engineering with sequence-based deep representation learning. Nat. Methods. 2019;16:1315–1322. doi: 10.1038/s41592-019-0598-1.

- 57. Seo S., et al. DeepFam: deep learning based alignment-free method for protein family modeling and prediction. Bioinformatics. 2018;34:i254–i262. doi: 10.1093/bioinformatics/bty275.

- 58. Vig J., et al. BERTology meets biology: interpreting attention in protein language models. arXiv. 2021 doi: 10.48550/arXiv.2006.15222. Published online March 28, 2021.

- 59. Littmann M., et al. Clustering FunFams using sequence embeddings improves EC purity. Bioinformatics. 2021;37:3449–3455. doi: 10.1093/bioinformatics/btab371.

- 60. Heinzinger M., et al. Contrastive learning on protein embeddings enlightens midnight zone. NAR Genomics Bioinforma. 2022;4 doi: 10.1093/nargab/lqac043.

- 61. Nallapareddy V., et al. CATHe: detection of remote homologues for CATH superfamilies using embeddings from protein language models. bioRxiv. 2022 doi: 10.1101/2022.03.10.483805. Published online March 13, 2022.

- 62. Marx V. Method of the year: protein structure prediction. Nat. Methods. 2022;19:5–10. doi: 10.1038/s41592-021-01359-1.

- 63. Steinegger M., et al. Protein-level assembly increases protein sequence recovery from metagenomic samples manyfold. Nat. Methods. 2019;16:603–606. doi: 10.1038/s41592-019-0437-4.

- 64. Mitchell A.L., et al. MGnify: the microbiome analysis resource in 2020. Nucleic Acids Res. 2020;48:D570–D578. doi: 10.1093/nar/gkz1035.

- 65. The UniProt Consortium, et al. UniProt: the universal protein knowledgebase in 2021. Nucleic Acids Res. 2021;49:D480–D489. doi: 10.1093/nar/gkaa1100.

- 66. Marks D.S., et al. Protein 3D structure computed from evolutionary sequence variation. PLoS One. 2011;6 doi: 10.1371/journal.pone.0028766.

- 67. Anishchenko I., et al. Origins of coevolution between residues distant in protein 3D structures. Proc. Natl. Acad. Sci. 2017;114:9122–9127. doi: 10.1073/pnas.1702664114.

- 68. Jones D.T., et al. PSICOV: precise structural contact prediction using sparse inverse covariance estimation on large multiple sequence alignments. Bioinformatics. 2011;28:184–190. doi: 10.1093/bioinformatics/btr638.

- 69. Holm L. In: Structural Bioinformatics. Gáspári Z., editor. Vol. 2112. Springer; 2020. Using Dali for protein structure comparison; pp. 29–42.

- 70. Ruan J., et al. Cryo-EM structure of the gasdermin A3 membrane pore. Nature. 2018;557:62–67. doi: 10.1038/s41586-018-0058-6.

- 71. Ding J., et al. Pore-forming activity and structural autoinhibition of the gasdermin family. Nature. 2016;535:111–116. doi: 10.1038/nature18590.

- 72. Bayly-Jones C., Whisstock J.C. Mining folded proteomes in the era of accurate structure prediction. PLoS Comput. Biol. 2022;18 doi: 10.1371/journal.pcbi.1009930.

- 73. Taylor W.R., Orengo C.A. Protein structure alignment. J. Mol. Biol. 1989;208:1–22. doi: 10.1016/0022-2836(89)90084-3.

- 74. Zhang Y. TM-align: a protein structure alignment algorithm based on the TM-score. Nucleic Acids Res. 2005;33:2302–2309. doi: 10.1093/nar/gki524.

- 75. Shindyalov I.N., Bourne P.E. Protein structure alignment by incremental combinatorial extension (CE) of the optimal path. Protein Eng. Des. Sel. 1998;11:739–747. doi: 10.1093/protein/11.9.739.

- 76. Waterhouse A., et al. SWISS-MODEL: homology modelling of protein structures and complexes. Nucleic Acids Res. 2018;46:W296–W303. doi: 10.1093/nar/gky427.

- 77. Durairaj J., et al. Geometricus represents protein structures as shape-mers derived from moment invariants. Bioinformatics. 2020;36:i718–i725. doi: 10.1093/bioinformatics/btaa839.

- 78. Akdel M., et al. A structural biology community assessment of AlphaFold 2 applications. Nat. Struct. Mol. Biol. 2022;29:1056–1067. doi: 10.1038/s41594-022-00849-w.

- 79. Ayoub R., Lee Y. RUPEE: A fast and accurate purely geometric protein structure search. PLoS One. 2019;14 doi: 10.1371/journal.pone.0213712.

- 80. Guzenko D., et al. Real time structural search of the Protein Data Bank. PLoS Comput. Biol. 2020;16 doi: 10.1371/journal.pcbi.1007970.

- 81. Yang J.-M. Protein structure database search and evolutionary classification. Nucleic Acids Res. 2006;34:3646–3659. doi: 10.1093/nar/gkl395.

- 82. de Brevern A.G., et al. Bayesian probabilistic approach for predicting backbone structures in terms of protein blocks. Proteins Struct. Funct. Genet. 2000;41:271–287. doi: 10.1002/1097-0134(20001115)41:3<271::aid-prot10>3.0.co;2-z.

- 83. Wang S., Zheng W.-M. CLePAPS: fast pair alignment of protein structures based on conformational letters. J. Bioinforma. Comput. Biol. 2008;6:347–366. doi: 10.1142/s0219720008003461.

- 84. Steinegger M., Söding J. Clustering huge protein sequence sets in linear time. Nat. Commun. 2018;9:2542. doi: 10.1038/s41467-018-04964-5.

- 85. Porta-Pardo E., et al. The structural coverage of the human proteome before and after AlphaFold. PLoS Comput. Biol. 2022;18 doi: 10.1371/journal.pcbi.1009818.

- 86. Evans R., et al. Protein complex prediction with AlphaFold-Multimer. bioRxiv. 2022 doi: 10.1101/2021.10.04.463034. Published online March 10, 2022.

- 87. Bondarenko V., et al. Structures of highly flexible intracellular domain of human α7 nicotinic acetylcholine receptor. Nat. Commun. 2022;13:793. doi: 10.1038/s41467-022-28400-x.

- 88. del Alamo D., et al. Sampling alternative conformational states of transporters and receptors with AlphaFold2. eLife. 2022;11 doi: 10.7554/eLife.75751.

- 89. Ruff K.M., Pappu R.V. AlphaFold and implications for intrinsically disordered proteins. J. Mol. Biol. 2021;433 doi: 10.1016/j.jmb.2021.167208.

- 90. Wilson C.J., et al. AlphaFold2: a role for disordered protein prediction? Int. J. Mol. Sci. 2022;23:4591. doi: 10.3390/ijms23094591.

- 91. Alderson T.R., et al. Systematic identification of conditionally folded intrinsically disordered regions by AlphaFold2. bioRxiv. 2022 doi: 10.1101/2022.02.18.481080. Published online February 18, 2022.

- 92. Perrakis A., Sixma T.K. AI revolutions in biology: the joys and perils of AlphaFold. EMBO Rep. 2021;22 doi: 10.15252/embr.202154046.

- 93. Schmidt A., et al. Predicting the pathogenicity of missense variants using features derived from AlphaFold2. bioRxiv. 2022 doi: 10.1101/2022.03.05.483091. Published online March 05, 2022.

- 94. Esposito L., et al. AlphaFold-predicted structures of KCTD proteins unravel previously undetected relationships among the members of the family. Biomolecules. 2021;11:1862. doi: 10.3390/biom11121862.

- 95. Saldaño T., et al. Impact of protein conformational diversity on AlphaFold predictions. Bioinformatics. 2022;38:2742–2748. doi: 10.1093/bioinformatics/btac202.

- 96. Santuz H., et al. Small oligomers of Aβ42 protein in the bulk solution with AlphaFold2. ACS Chem. Neurosci. 2022;13:711–713. doi: 10.1021/acschemneuro.2c00122.

- 97. Ivanov Y.D., et al. Prediction of monomeric and dimeric structures of CYP102A1 using AlphaFold2 and AlphaFold multimer and assessment of point mutation effect on the efficiency of intra- and interprotein electron transfer. Molecules. 2022;27:1386. doi: 10.3390/molecules27041386.

- 98. del Alamo D., et al. AlphaFold2 predicts the inward-facing conformation of the multidrug transporter LmrP. Proteins Struct. Funct. Bioinforma. 2021;89:1226–1228. doi: 10.1002/prot.26138.

- 99. Goulet A., Cambillau C. Structure and topology prediction of phage adhesion devices using AlphaFold2: the case of two Oenococcus oeni phages. Microorganisms. 2021;9:2151. doi: 10.3390/microorganisms9102151.

- 100. van Breugel M., et al. Structural validation and assessment of AlphaFold2 predictions for centrosomal and centriolar proteins and their complexes. Commun. Biol. 2022;5:312. doi: 10.1038/s42003-022-03269-0.

- 101.Millán C., et al. Assessing the utility of CASP14 models for molecular replacement. Proteins Struct. Funct. Bioinforma. 2021;89:1752–1769. doi: 10.1002/prot.26214. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 102.Rodriguez J.M., et al. APPRIS: selecting functionally important isoforms. Nucleic Acids Res. 2022;50:D54–D59. doi: 10.1093/nar/gkab1058. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 103.Lomize A.L., et al. Membranome 3.0: database of single-pass membrane proteins with AlphaFold models. Protein Sci. 2022;31 doi: 10.1002/pro.4318. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 104. Wehrspan Z.J., et al. Identification of iron-sulfur (Fe-S) cluster and zinc (Zn) binding sites within proteomes predicted by DeepMind’s AlphaFold2 program dramatically expands the metalloproteome. J. Mol. Biol. 2022;434. doi: 10.1016/j.jmb.2021.167377.

- 105. Binder J.L., et al. AlphaFold illuminates half of the dark human proteins. Curr. Opin. Struct. Biol. 2022;74. doi: 10.1016/j.sbi.2022.102372.

- 106. Sommer M.J., et al. Highly accurate isoform identification for the human transcriptome. bioRxiv. 2022. doi: 10.1101/2022.06.08.495354. Published online June 9, 2022.

- 107. Bronstein M.M., et al. Geometric deep learning: grids, groups, graphs, geodesics, and gauges. arXiv. 2021. doi: 10.48550/arXiv.2104.13478. Published online May 2, 2021.

- 108. Veličković P. Message passing all the way up. arXiv. 2022. doi: 10.48550/arXiv.2202.11097. Published online February 22, 2022.

- 109. Stärk H., et al. EquiBind: geometric deep learning for drug binding structure prediction. Proceedings of the 39th International Conference on Machine Learning. Vol. 162. 2022; pp. 20503–20521.

- 110. Zhang Z., et al. Protein representation learning by geometric structure pretraining. arXiv. 2022. doi: 10.48550/arXiv.2203.06125. Published online September 19, 2022.

- 111. Ingraham J., et al. Generative models for graph-based protein design. NIPS'19: Proceedings of the 33rd International Conference on Neural Information Processing Systems, Article No. 1417. Vancouver, Canada. 2019; pp. 15820–15831.

- 112. Ferruz N., et al. ProtGPT2 is a deep unsupervised language model for protein design. Nat. Commun. 2022;13:4348. doi: 10.1038/s41467-022-32007-7.

- 113. Anishchenko I., et al. De novo protein design by deep network hallucination. Nature. 2021;600:547–552. doi: 10.1038/s41586-021-04184-w.

- 114. Teufel F., et al. SignalP 6.0 predicts all five types of signal peptides using protein language models. Nat. Biotechnol. 2022;40:1023–1025. doi: 10.1038/s41587-021-01156-3.

- 115. Høie M.H., et al. NetSurfP-3.0: accurate and fast prediction of protein structural features by protein language models and deep learning. Nucleic Acids Res. 2022;50:W510–W515. doi: 10.1093/nar/gkac439.

- 116. Hie B.L., et al. Efficient evolution of human antibodies from general protein language models and sequence information alone. bioRxiv. 2022. doi: 10.1101/2022.04.10.487779. Published online September 6, 2022.

- 117. Hsu C., et al. Learning inverse folding from millions of predicted structures. bioRxiv. 2022. doi: 10.1101/2022.04.10.487779. Published online September 6, 2022.

- 118. Ma X., et al. Mega: moving average equipped gated attention. arXiv. 2022. doi: 10.48550/arXiv.2209.10655. Published online September 26, 2022.

- 119. Gu A., et al. Efficiently modeling long sequences with structured state spaces. arXiv. 2022. doi: 10.48550/arXiv.2111.00396. Published online August 5, 2022.

- 120. Hoffmann J., et al. Training compute-optimal large language models. arXiv. 2022. doi: 10.48550/arXiv.2203.15556. Published online March 29, 2022.

- 121. McInnes L., et al. UMAP: uniform manifold approximation and projection for dimension reduction. arXiv. 2018. doi: 10.48550/arXiv.1802.03426. Published online September 18, 2020.

- 122. Waskom M. Seaborn: statistical data visualization. J. Open Source Softw. 2021;6:3021.