Abstract

Prior research has found that iconicity facilitates sign production in picture-naming paradigms and has effects on ERP components. These findings may be explained by two separate hypotheses: (1) a task-specific hypothesis that suggests these effects occur because visual features of the iconic sign form can map onto the visual features of the pictures, and (2) a semantic feature hypothesis that suggests that the retrieval of iconic signs results in greater semantic activation due to the robust representation of sensory-motor semantic features compared to non-iconic signs. To test these two hypotheses, iconic and non-iconic American Sign Language (ASL) signs were elicited from deaf native/early signers using a picture-naming task and an English-to-ASL translation task, while electrophysiological recordings were made. Behavioral facilitation (faster response times) and reduced negativities were observed for iconic signs (both prior to and within the N400 time window), but only in the picture-naming task. No ERP or behavioral differences were found between iconic and non-iconic signs in the translation task. This pattern of results supports the task-specific hypothesis and provides evidence that iconicity only facilitates sign production when the eliciting stimulus and the form of the sign can visually overlap (a picture-sign alignment effect).

Keywords: Iconicity, ERPs, American Sign Language, N400, Picture-naming, Translation

Iconicity refers to the presence of a perceived relationship between a lexical item’s phonological form and its meaning. Historically, iconic words were considered to be a rare phenomenon (e.g., restricted to onomatopoeia; Assaneo et al., 2011) in otherwise arbitrary spoken languages. More recently, it has become apparent that iconicity is in fact far more widely employed in spoken languages, though its degree of use varies considerably among language families as well as individual languages (Dingemanse, 2017; Imai et al., 2015; Perniss et al., 2010; Perry et al., 2015). In contrast, sign languages consistently employ iconicity in a widespread manner throughout the lexicon (Novogrodsky and Meir, 2020; Oomen, 2021; Perlman et al., 2018; Trettenbrein et al., 2021). The frequent use of iconic mappings may be due to the visual three-dimensionality afforded sign languages by the use of visible articulators, which more easily map onto visually-salient features of real world objects and the manner in which they move (Taub, 2001).

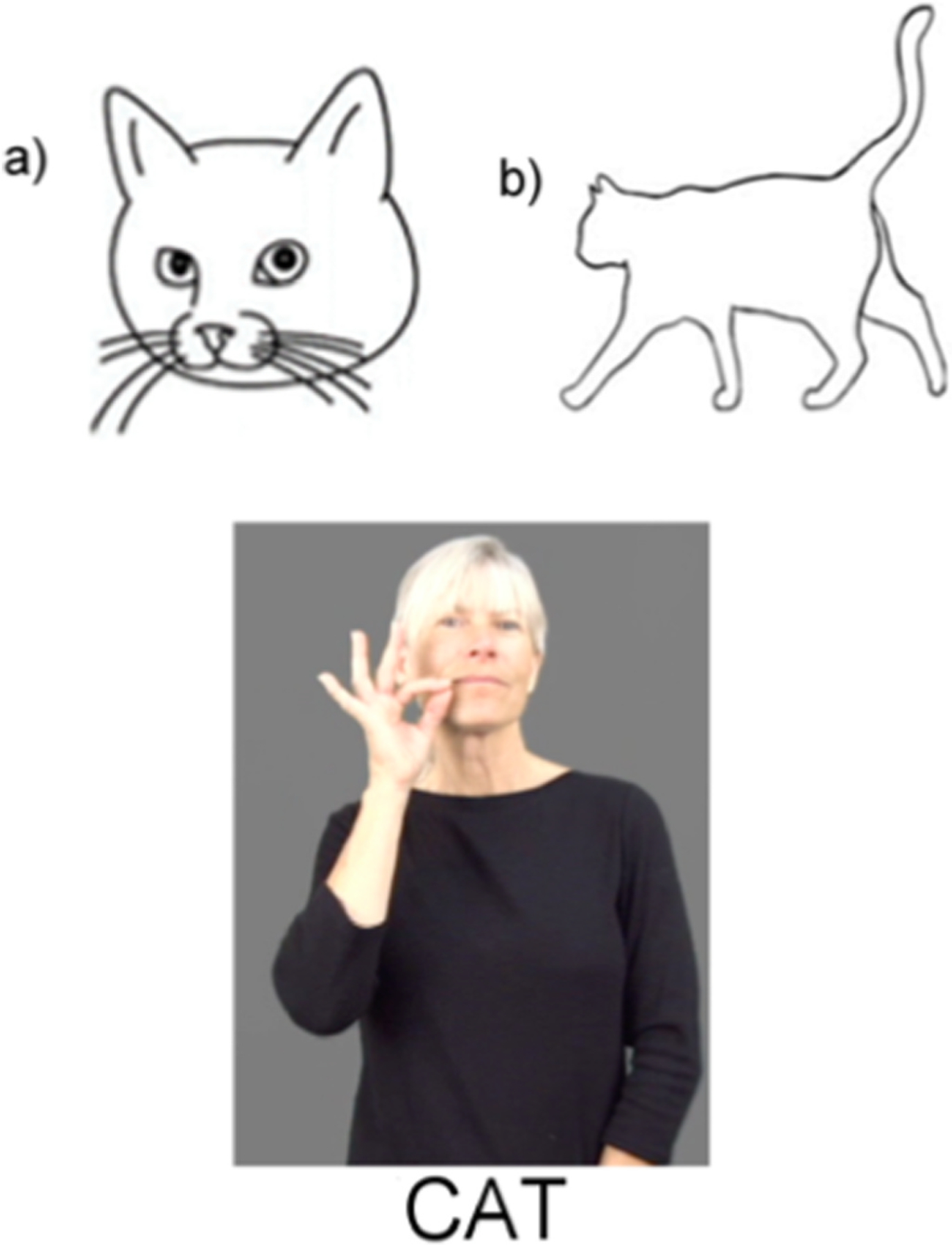

Iconicity can be characterized by analog mappings between imagistic representations, conceptual representations, and phonological form. Consider, for example, the iconic American Sign Language (ASL) sign for ‘cat’ (Fig. 1). The iconicity of this sign can be seen in the mapping between the phonological form of the sign (the whisker shape of the fingers, which are located at the signer’s face) and the representation of the shape of a cat’s whiskers and their location on the cat’s face. Vinson et al. (2015) found that pictures that elicited iconic British Sign Language (BSL) signs were named more quickly than those that elicited non-iconic BSL signs. Faster picture-naming for iconic signs has now been replicated across other signed languages, including Italian Sign Language (LIS; Navarrete et al., 2017; Pretato et al., 2018), ASL (McGarry et al., 2020; Sehyr and Emmorey, 2022), and Catalan Sign Language (LSC; Baus and Costa, 2015; Gimeno-Martínez and Baus, 2022).

Fig. 1.

Examples of a) aligned and b) less-aligned picture stimuli. For the aligned picture, the picture highlights a visual feature (whiskers) that maps onto the phonological form of the targeted sign (CAT), while this feature is minimized in the less-aligned picture (only the signer’s head maps to the head of the pictured cat).

We suggest that faster retrieval of iconic signs in picture-naming tasks could be explained by (at least) two different hypotheses: a task-specific hypothesis and a semantic feature hypothesis. The first hypothesis is that iconic signs are retrieved more quickly than non-iconic signs in picture-naming paradigms because visual elements of the picture can map onto visual elements of the phonological form of iconic signs. This visual mapping aids the retrieval of iconic signs by activating those phonological and/or semantic features that are represented in the picture. This activation is a type of lexical priming which reduces naming latencies for iconic compared to non-iconic signs, where no picture-sign mapping is possible. If this account is correct, then the recognition and comprehension of iconic signs should be facilitated by the degree of overlap between a picture-prime and a sign-target in a picture-sign matching task, with increased visual overlap resulting in increased facilitation for iconic signs. For example, a picture of a cat highlighting the cat’s whiskers (Fig. 1a) has a stronger overlap with the form of the ASL sign CAT than a picture of a cat highlighting the cat’s body and tail (Fig. 1b). Most of the pictures included as stimuli in picture-naming studies are likely to have at least some visual overlap with the targeted iconic sign, though the degree of this overlap varies. For example, for both pictures in Fig. 1, the head of the cat maps to the head of the signer, but the additional mapping of the whiskers to the handshape of the sign provides a stronger picture-sign overlap for Fig. 1a.

Sign comprehension studies using a picture-sign matching task have manipulated the amount of this visual overlap (‘alignment’) to investigate whether the degree of alignment between a picture and an iconic sign facilitates recognition (McGarry et al., 2021; Thompson et al., 2009; Vinson et al., 2015). Results from these experiments indicate that aligned picture-sign pairs (e.g., Fig. 1a) are responded to more quickly and accurately than less aligned pairs (e.g., Fig. 1b). These findings suggest that a high degree of overlap between visual features of the referent depicted by a picture and the phonological elements of an iconic sign enables participants to access the form and meaning of iconic signs more rapidly and efficiently compared to when the picture is less aligned with the form of the sign.

Support for this task-specific hypothesis can be found in a lexical decision experiment by Bosworth and Emmorey (2010). In this study, deaf ASL signers were asked to determine whether the target sign in a prime-target pair was a real sign, and the semantic relationship between the prime and target signs and the iconicity of the signs were manipulated. Participants demonstrated semantic priming through reduced response times (RTs) when the sign-prime and the sign-target were related in meaning. However, there was no difference in this facilitation effect when the prime sign was iconic, compared to when it was non-iconic. Further, RTs did not differ for iconic compared to non-iconic target signs. These findings support the task-specific hypothesis because iconicity did not facilitate lexical access during sign recognition when the task did not involve a mapping between a sign and a picture stimulus.

Under this account, the task is also important, not just the use of picture stimuli. An iconicity advantage should only emerge when the task taps into the mapping between the visual features of the pictures and the phonological form (or highlighted semantic features) of iconic signs. For example, Pretato et al. (2018) asked LIS signers to name object pictures either with a sign or using a demonstrative pronoun plus color phrase, referring to the previous location of the object (on the left or right). Response times were faster for pictures named with iconic than non-iconic signs, but there was no effect of iconicity in the demonstrative pronoun condition, in which there was no mapping between visual features of the pictures and the phonological form of the pronoun.

However, evidence against the task-specific hypothesis comes from the third condition of the Pretato et al. (2018) study in which signers were asked to name the color of a picture using the indefinite pronoun directed at the picture – in this condition, the picture became a task-irrelevant distractor. Nonetheless, an iconicity effect was found: RTs for producing the phrase ‘pronoun color’ were shorter for pictures that had iconic signs. Pretato et al. (2018) argued that this effect arises because iconic distractors can be excluded more rapidly from the response set than non-iconic signs as iconic signs are accessed more rapidly (Navarrete et al., 2017). But it was unclear why this account would not also apply to the demonstrative pronoun condition. An alternative explanation is that signers were more likely to covertly name the object before producing the indefinite pronoun which was directed at the picture (unlike the demonstrative pronoun), and covert name production may have led to the standard facilitation effect of iconicity in picture-naming.

In contrast to the task-specific hypothesis, the semantic feature hypothesis proposes that the facilitatory effect of iconicity is driven not by a mapping between the stimulus and sign, but rather by a more robust representation of perceptual-motor semantic features for iconic signs. Of course, some iconic signs do not depict sensory-motor semantic features of the referent (e.g., those with diagrammatic iconic mappings), but such iconic signs are typically not used in picture-naming studies. Navarrete et al. (2017) hypothesized that iconic signs are retrieved faster because they are activated more quickly and robustly than non-iconic signs due to the correspondence between the sign and the perceptual-motor features of the referent. This faster activation in turn cascades into faster exclusion of non-targeted signs, and therefore faster lexical retrieval and production of the targeted sign. If this account is correct, then iconic signs should be retrieved faster than non-iconic signs, regardless of the experimental paradigm.

This view of iconicity is somewhat similar to the concept of lexical concreteness. In sign languages, iconicity is correlated with concreteness, such that more iconic signs tend to be more concrete, but this relationship does not hold in spoken languages (Perlman et al., 2018). This asymmetry likely occurs because it is easier to depict perceptual and motoric semantic features of concrete objects and actions in the manual than in the vocal modality. Concreteness is known to facilitate response latencies and to also elicit larger frontal N400s for concrete items than abstract items in event-related potential (ERP) studies. The N400 is an ERP component that indexes lexical-semantic processing, and the larger N400 amplitude for concrete items is hypothesized to be due to the stronger and denser network of semantic links for concrete words arising from the neural activation of more visual and sensory-motor information, compared to abstract words (see Barber et al., 2013; Holcomb et al., 1999; Kounios and Holcomb, 1994; van Elk et al., 2010). If the existence of a mapping between form and meaning for iconic signs results in stronger coding of perceptual and action semantic features compared to non-iconic signs, then iconicity may give rise to behavioral and neural effects that are parallel to concreteness effects.

McGarry et al. (2020) used ERPs to explore the impact of iconicity on the N400 component during picture naming by deaf ASL signers. We found evidence consistent with both the task-specific and the semantic feature hypotheses. We observed increased N400 amplitude (greater negativity) for iconic signs compared to non-iconic signs, particularly over frontal sites, as found for concreteness effects. This finding is consistent with the semantic feature hypothesis, suggesting that iconicity may be functionally similar to concreteness by robustly activating the perceptual and motoric semantic features that are depicted in the sign. However, we also included an alignment manipulation for the iconic signs and found that response latencies for the aligned trials were significantly shorter than for the non-aligned trials. Additionally, N400 amplitudes were significantly smaller for the aligned trials, and reduced N400 amplitudes are often interpreted as evidence of priming (Kutas and Federmeier, 2011; Luck, 2014). This second finding is consistent with the task-specific hypothesis because the facilitatory effect of iconicity is modulated by the nature of the picture stimulus, i.e., behavioral and electrophysiological facilitation occurs when the iconic features of the to-be-produced sign map onto visual features of the picture.

Baus and Costa (2015) also found reduced negativities (indicative of facilitation) for iconic compared to non-iconic signs when hearing L2 signers named pictures in LSC. This iconicity effect was observed in an early (P1) time window (70–140 ms) and in the N400 time window (350–550 ms). However, the later N400 iconicity effect was also observed when the participants named the same pictures in Spanish or Catalan (their L1), suggesting that something other than sign iconicity was driving the effect. Baus and Costa (2015) hypothesized that the early sign-specific effect reflected early conceptual engagement in which semantic features were more strongly or more automatically activated for iconic than non-iconic signs.

In contrast, Gimeno-Martínez and Baus (2022) found a later iconicity effect (140–210 ms) when deaf LSC signers named pictures, and the polarity of the effect was the opposite of Baus and Costa (2015) and was the same as the overall iconicity effect found by McGarry et al. (2020); that is, iconic signs elicited greater negativity than non-iconic signs, paralleling concreteness effects (more negativity for concrete items), rather than patterning like facilitation effects (less negativity for easy-to-process items). Crucially, Gimeno-Martínez and Baus (2022) compared the behavioral and ERP iconicity effects for the same items presented in the picture-naming task and in a translation task in which signers saw written Spanish words and produced the LSC translations. Response times were faster for iconic than non-iconic signs for the picture-naming task, but not the translation task. Similarly, ERP effects of iconicity were only observed for the picture-naming task. These results provide strong support for the task-specific hypothesis, but results concerning the time course of the iconicity effect and the nature of the underlying neural response (indexed in part by the polarity of the effect) are mixed.

The present study uses the same two tasks (picture-naming and translation) to test the task-specific and semantic feature hypotheses, in an effort to replicate and extend the Gimeno-Martínez and Baus (2022) results with deaf ASL signers and with some important methodological differences. Neither Gimeno-Martínez and Baus (2022) nor Baus and Costa (2015) found sign-specific effects of iconicity in the N400 time window, in contrast to McGarry et al. (2020). One possible explanation for this difference is that participants were familiarized with all of the stimuli prior to the experiment in the two LSC studies, but not in the ASL study by McGarry et al. (2020). Repetition reduces the amplitude of the N400 such that repeated items elicit less negativity (repetition priming; Kutas and Federmeier, 2011). It is possible that picture and sign familiarization obscured effects of iconicity on the N400. In the present study participants were not familiarized with the stimuli, and therefore effects of iconicity on lexical access may be observed during the N400 window.

In addition, to maximize the relationship between the picture stimulus and the targeted sign, we chose to only present the aligned pictures from McGarry et al. (2020). This choice allowed us to maximize the potential for visual overlap within the picture-naming task, creating a larger contrast with the translation task. As a result, for the picture-naming task we expect to see faster response latencies and reduced (smaller) N400 amplitudes for iconic than non-iconic signs, consistent with the facilitatory effect found for aligned trials in McGarry et al. (2020). If this effect is best explained by the task-specific hypothesis, then there should be iconicity effects only in the picture-naming task, as only stimuli in this condition can visually map onto the targeted signs. If the semantic feature hypothesis is correct, then there should be reduced response latencies for iconic signs in both tasks and an increased N400 amplitude for iconic compared to non-iconic signs in both tasks. Finally, while our primary hypotheses relate to the N400 we also examined two earlier time-windows, as in Baus and Costa (2015) and Gimeno-Martínez and Baus (2022), to further investigate the time course of iconicity effects.

1. Methods

This study was pre-registered on the Open Science Framework (https://osf.io/4ufd3).

Participants.

Twenty-four deaf signers were included in the analyses (11 female; mean age = 32.4 years, SD = 7.21 years). Participants were born into deaf signing families (N = 18) or were exposed to ASL prior to the age of six (N = 6; mean age of exposure = 3 years). All participants had normal or corrected-to-normal vision, and had no history of any language, reading, or neurological disorders. Two participants were left-handed. Six additional participants were run but were excluded from the analyses due to an excessive number of artifacts in the ERP recording (more than 36% of total trials).

All participants received monetary compensation in return for participation. Informed consent was obtained from all participants in accordance with the Institutional Review Board at San Diego State University.

Materials.

Stimuli consisted of the aligned subset of digitized black on white line drawings from McGarry et al. (2020), as well as the printed English word referring to the same concept (e.g., a picture of a bird, and the printed word ‘bird’). A total of 88 different drawings and 88 English words were presented. Both the set of drawings and the words targeted the same 88 ASL signs. Half of the stimuli (44 pictures and their corresponding English words) were named or translated with iconic ASL signs, while the other 44 were named/translated with non-iconic signs. Descriptive statistics for the ASL signs are given in Table 1.

Table 1.

Descriptive statistics for the ASL signs.

| | Iconicity M (SD) | Frequency M (SD) | # of two-handed signs | PND M (SD) | AoA (in years) M (SD) |
|---|---|---|---|---|---|
| Iconic signs | 5.31 (0.94) | 3.90 (1.24) | 16 | 37.95 (27.02) | 4.67 (1.11) |
| Non-Iconic signs | 1.86 (0.39) | 4.17 (1.11) | 17 | 31.23 (28.25) | 4.66 (1.22) |

Note: PND = phonological neighborhood density, AoA = Age of Acquisition.

Iconicity ratings and subjective frequency ratings were retrieved from the ASL-LEX database (Caselli et al., 2017; Sehyr et al., 2021), and examples of all targeted ASL signs can be found in the ASL-LEX database (http://asl-lex.org). The ASL-LEX iconicity ratings were from hearing non-signers who judged the iconicity of ASL signs given their English translations on a 7-point scale (1 = not iconic at all, and 7 = very iconic). As in McGarry et al. (2020), signs were considered iconic if they received a rating of 3.7 or higher, while non-iconic signs were included if they received ratings of 2.5 or lower. The majority of iconic signs (89%; 39/44) were perceptually iconic (i.e., the form-meaning mapping involved perceptual features of the referent, such as the whiskers of a cat), and the remaining signs (11%; 5/44) were motorically iconic (i.e., the mapping involved motoric features, such as how a key is held and turned).
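The rating cutoffs described above can be illustrated with a minimal sketch. The function name `classify_sign` and the treatment of intermediate ratings (returned as `None`, i.e., not selected for either condition) are assumptions made for illustration; only the 7-point scale and the 3.7 and 2.5 cutoffs come from the text.

```python
from typing import Optional

ICONIC_MIN = 3.7      # ratings at or above this value count as iconic
NON_ICONIC_MAX = 2.5  # ratings at or below this value count as non-iconic


def classify_sign(iconicity_rating: float) -> Optional[str]:
    """Assign a sign to a condition from its mean iconicity rating (1-7 scale).

    Hypothetical helper: returns None for ratings that fall between the two
    cutoffs, on the assumption that such signs were not used as stimuli.
    """
    if not 1.0 <= iconicity_rating <= 7.0:
        raise ValueError("iconicity ratings are on a 1-7 scale")
    if iconicity_rating >= ICONIC_MIN:
        return "iconic"
    if iconicity_rating <= NON_ICONIC_MAX:
        return "non-iconic"
    return None
```

Applied to the condition means in Table 1, a rating of 5.31 falls in the iconic range and a rating of 1.86 falls in the non-iconic range.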

The iconic and non-iconic signs were matched for ASL frequency ratings from ASL-LEX, the number of two-handed signs, and for phonological neighborhood density (PND) using the Maximum PND measure from ASL-LEX. English age of acquisition (AoA) norms were used as a proxy for ASL AoA (Kuperman et al., 2012). Picture stimuli were matched for prototypicality (using ratings that were collected by McGarry et al., 2020) and image complexity as assessed through Matlab’s ‘entropy’ function (from McGarry et al., 2020). Based on the naming performance of participants in McGarry et al. (2020), the pictures used for the iconic and non-iconic conditions both had high name agreement (98.8% and 97.2%, respectively).

As noted above, we used the 44 aligned pictures with the greatest amount of structured overlap between picture and target sign from McGarry et al. (2020). To balance the number of stimuli, we randomly selected one picture for each of the non-iconic target signs. The order of picture presentation was counterbalanced across participants in two pseudorandomized lists. In the translation block, each participant saw 88 printed English words corresponding to the concepts in the picture-naming task in pseudorandomized order. The two lists were counter-balanced across participants.

Procedure.

Participants were asked to prepare for each trial by pressing and holding down the spacebar of a keyboard placed in their lap. When the spacebar was pressed, a fixation cross appeared in the center of the screen for 800 ms, followed by a 200 ms blank screen and then the to-be-named picture or to-be-translated English word (the stimulus). This stimulus was maintained on the screen for 300 ms, followed by a blank screen. The spacebar release marked the response onset, i.e., the beginning of sign production. Reaction times were calculated as the amount of time elapsed from when the stimulus appeared on screen to spacebar release. After signing (spacebar release), the blank screen disappeared and participants saw text asking them to press down the spacebar to move on to the next trial when ready. In this between-trial period, participants were able to take a break and blink, as the next trial would not begin until they replaced their hand on the keyboard. Participants were also provided with two self-timed breaks during the study, which gave them the opportunity to take a break for as long as they desired before resuming the study. Participants were instructed to name or translate each stimulus as quickly and accurately as possible. They were also asked to use minimal mouth and facial movements while signing to avoid artifacts associated with facial muscle movements in the ERPs. If a participant did not know what the picture represented or did not know the sign translation, they were instructed to respond with the sign DON’T-KNOW, thereby skipping the trial.

Each participant performed both tasks, which were blocked and counterbalanced.1 Before each block, participants were familiarized with the upcoming task by practicing on a set of 15 stimuli that were not included in the experiment list (either 15 pictures or 15 English words, which represented the same concept). Participants were not familiarized with the pictures or English words prior to the experiment.

EEG recording and analysis.

Participants wore an elastic cap (Electro-Cap) with 29 active electrodes (see Fig. 2 for an illustration of the electrode montage). An electrode placed on their left mastoid served as a reference during the recording and for analyses. An electrode on the right mastoid measured differential activity between the two mastoids in the event that the average mastoid activity needed to be re-referenced (which it did not). Recordings from electrodes located below the left eye and on the outer canthus of the right eye were used to identify and reject trials with blinks, horizontal eye movements, and other artifacts. Using saline gel (Electro-Gel), all mastoid, eye and scalp electrode impedances were maintained below 2.5 kΩ. EEG was amplified with SynAmpsRT amplifiers (Neuroscan-Compumedics) with a bandpass of DC to 100 Hz and was sampled continuously at 500 Hz.

Fig. 2.

Electrode montage. Shaded sites indicate the electrodes included in the analyses.

ERPs were time-locked offline to the onset of the picture or the English word. Averages were computed from −100 ms to 900 ms, with a baseline of −100 to 0 ms, from artifact-free single-trial EEG in each condition. Averaged ERPs were low-pass filtered at 15 Hz prior to analysis. Trials contaminated with movement and blink artifacts were excluded from analysis, as were trials with reaction times shorter than 300 ms or longer than 2.5 standard deviations above the individual participant’s mean RT.
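The reaction-time exclusion rule can be sketched as follows. This is an illustration only: the function name `trim_rts` is hypothetical, and the sketch assumes that the mean and (sample) standard deviation are computed once over all of a participant's trials before any trimming, which the text does not specify.

```python
from statistics import mean, stdev


def trim_rts(rts_ms, min_rt_ms=300.0, sd_cutoff=2.5):
    """Return the RTs of one participant that survive the exclusion rule.

    A trial is dropped if its RT is shorter than min_rt_ms or longer than
    the participant's mean RT plus sd_cutoff standard deviations.
    Assumption: mean and SD are taken over the untrimmed trials.
    """
    if len(rts_ms) < 2:
        raise ValueError("need at least two trials to estimate the SD")
    upper_ms = mean(rts_ms) + sd_cutoff * stdev(rts_ms)
    return [rt for rt in rts_ms if min_rt_ms <= rt <= upper_ms]


# Usage: twenty typical trials, one anticipatory response, one very slow response.
example_rts = [600.0] * 20 + [250.0, 3000.0]
kept = trim_rts(example_rts)  # both outlying trials are removed
```

Because the cutoff is computed per participant, a slow but consistent signer is not penalized relative to a fast one.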

Mean amplitude was calculated for the N400 window (300–600 ms after stimulus presentation). The selection of this window matched the N400 window from McGarry et al. (2020) and fit with a visual inspection of the grand means, which showed a negativity peaking around 400 ms after stimulus presentation. An omnibus ANOVA was conducted on the mean N400 with two levels of Iconicity (Iconic, Non-Iconic), two levels of Task (Translation, Picture-naming), three levels of Laterality (Left, Midline, Right) and five levels of Anteriority (FP, F, C, P, O). We also conducted follow-up analyses for each task separately. These ANOVAs included two levels of Iconicity, three of Laterality, and five of Anteriority to assess the effect of iconicity on sign production during the N400 window for each task.
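A minimal sketch of the mean-amplitude measure, assuming a single-channel waveform stored as a list of microvolt values that spans −100 to 900 ms at the 500 Hz sampling rate reported above (one sample every 2 ms). The function names, the half-open treatment of window boundaries, and the baseline subtraction inside the same function are illustrative assumptions, not a description of the analysis software used in the study.

```python
from statistics import mean


def sample_index(time_ms, epoch_start_ms=-100.0, srate_hz=500.0):
    """Convert a latency relative to stimulus onset into a sample index."""
    return round((time_ms - epoch_start_ms) * srate_hz / 1000.0)


def mean_amplitude(epoch_uv, win_start_ms, win_end_ms,
                   epoch_start_ms=-100.0, srate_hz=500.0):
    """Baseline-corrected mean amplitude in [win_start_ms, win_end_ms).

    The baseline is the mean of all samples before stimulus onset (0 ms).
    """
    onset = sample_index(0.0, epoch_start_ms, srate_hz)
    baseline = mean(epoch_uv[:onset])
    lo = sample_index(win_start_ms, epoch_start_ms, srate_hz)
    hi = sample_index(win_end_ms, epoch_start_ms, srate_hz)
    return mean(epoch_uv[lo:hi]) - baseline


# Usage: a toy waveform that sits at 1 µV except for a -3 µV deflection
# between 300 and 600 ms (samples 200-349 of a 500-sample epoch).
toy_epoch = [1.0] * 200 + [-3.0] * 150 + [1.0] * 150
n400 = mean_amplitude(toy_epoch, 300.0, 600.0)
```

The same function can be reused for the two earlier windows by changing the window boundaries.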

In addition, analyses were conducted for two earlier epochs (140–210 ms and 210–280 ms), using the same omnibus ANOVA as described above.2 These are the two early time windows from Gimeno-Martínez and Baus (2022) where iconicity effects were found.

2. Results

2.1. Behavioral analyses

To investigate whether the effect of iconicity on reaction times (RTs) and accuracy was different between the two tasks, we compared the 88 picture-naming trials with the 88 translation trials, paying particular attention to any interaction between iconicity and task. Unusually fast or slow trials were excluded from the analysis through the criteria described above (5% of the data). Trials with incorrect responses (fewer than 3 trials or 3% of all data on average) were excluded from the RT analyses. We used a linear mixed effects model with items and participants as random intercepts, and iconicity, task, word length, sign frequency, picture prototypicality, and picture complexity as fixed effects. A significant interaction in the response latencies between task and iconicity was found, t = 2.87, p < 0.001, demonstrating that the model containing the interaction is better able to explain the RT data than the model without the interaction.
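One way to write out the model described above is given below. This is a sketch under assumptions: the subscripts (participant p, item i, task t), the coefficient labels, and the normality assumptions on the random terms are introduced here for illustration, and the coding of the categorical predictors is not specified in the text.

```latex
\begin{aligned}
\mathrm{RT}_{pit} ={}& \beta_0
  + \beta_1\,\mathrm{Iconicity}_{i}
  + \beta_2\,\mathrm{Task}_{t}
  + \beta_3\,(\mathrm{Iconicity}_{i} \times \mathrm{Task}_{t}) \\
 & + \beta_4\,\mathrm{WordLength}_{i}
  + \beta_5\,\mathrm{Frequency}_{i}
  + \beta_6\,\mathrm{Prototypicality}_{i}
  + \beta_7\,\mathrm{Complexity}_{i} \\
 & + u_{p} + w_{i} + \varepsilon_{pit},
\qquad u_{p} \sim \mathcal{N}(0, \sigma_u^2),\;
       w_{i} \sim \mathcal{N}(0, \sigma_w^2),\;
       \varepsilon_{pit} \sim \mathcal{N}(0, \sigma^2)
\end{aligned}
```

Here u_p and w_i are the random intercepts for participants and items, and the interaction coefficient β3 is the term of interest: it captures how much the RT difference between iconic and non-iconic signs changes from one task to the other.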

For response accuracy, no interaction between task and iconicity was found, t = −1.056, p = 0.30.

Translation Task.

To statistically compare translation latencies between iconic and non-iconic signs, the LME analysis included items and participants as random intercepts, and iconicity, word length, and sign frequency as fixed effects. No effect of iconicity on RT was found, t = 0.115, p = 0.99; iconic signs (M = 584 ms, SD = 101 ms) had similar translation times as non-iconic signs (M = 580 ms, SD = 102 ms). There was also no effect of iconicity on response accuracy, t = 1.465, p = 0.181; the mean accuracy for iconic signs was 97% (SD = 2.0%) and mean accuracy was 98% (SD = 2.0%) for non-iconic signs.

Picture-naming task.

To statistically compare naming latencies between iconic and non-iconic signs, the LME analysis included items and participants as random intercepts, and iconicity, sign frequency, picture prototypicality, and picture complexity as fixed effects. An effect of iconicity was found, t = −2.131, p = 0.047. Pictures named with iconic signs (M = 681 ms, SD = 147 ms) had faster RTs than those named with non-iconic signs (M = 704 ms, SD = 157 ms). Similarly, we found that pictures in the iconic condition were named more accurately (M = 98%; SD = 2.2%) than those in the non-iconic condition (M = 95%; SD = 2.5%), t = 4.691, p = 0.002.

3. Electrophysiological analyses

We conducted ANOVAs for two early time windows (140–210 ms and 210–280 ms) and for the N400 window (300–600 ms).

For the earliest epoch (140–210 ms), there was a main effect of iconicity, F(1,23) = 6.16, p = 0.021, a three-way interaction between task, iconicity, and laterality, F(2,46) = 3.76, p = 0.044, as well as an interaction between task, iconicity, and posteriority, F(4,92) = 10.37, p = 0.001. These interactions indicate that task and iconicity interacted in a way that significantly influenced the ERP amplitude, and the size of this effect varied depending on the laterality and posteriority of the electrode.

The ANOVA for the 210–280 ms epoch revealed a main effect of task, F(1,23) = 4.43, p = 0.046, an interaction between task and iconicity, F(1,23) = 7.16, p = 0.014, and an interaction between task, iconicity, and posteriority, F(4,92) = 10.21, p < 0.001, again indicating that task and iconicity interacted to significantly affect the ERP amplitude and that the size of this effect varied depending on the posteriority/anteriority of the electrode.

Finally, the ANOVA examining the N400 time window (300–600 ms) revealed a main effect of iconicity, F(1,23) = 6.06, p = 0.022, and an interaction between task and iconicity, F(1,23) = 6.93, p = 0.015.

To better understand these interactions with task, we conducted separate follow-up analyses on the data from the translation and the picture-naming tasks.

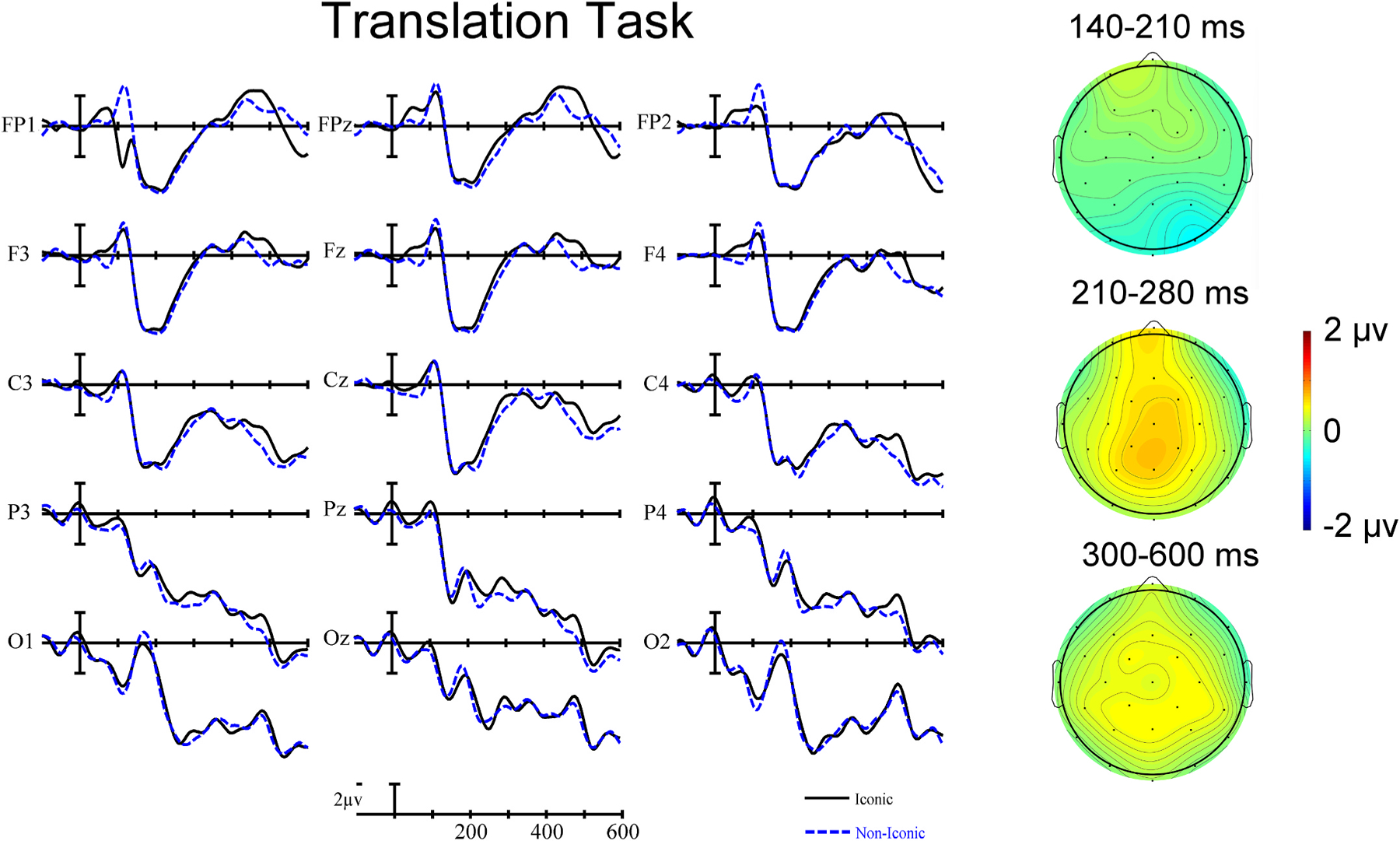

Translation task.

In the earliest (140–210 ms) epoch, there was no main effect of iconicity, F(1,23) = 0.14, p = 0.716, nor any interactions of iconicity with scalp distribution (all ps > .312). In the second (210–280 ms) epoch, there also was no main effect of iconicity, F(1,23) = 1.63, p = 0.215, nor any interactions of iconicity with scalp distribution (all ps > .312). Finally, in the N400 epoch, there was no main effect of iconicity, F(1,23) = 1.69, p = 0.20, nor any interactions of iconicity with scalp distribution (all ps > .735). Overall, these results indicate that for the translation task, iconicity does not modulate ERP amplitudes prior to or within the N400 time window (see Fig. 3).

Fig. 3.

EEG recordings and voltage maps from the English-ASL translation task. Voltage maps (Non-iconic minus Iconic conditions) are shown from 140 to 210 ms, 210–280 ms, and 300–600 ms after stimulus presentation. The scale used for voltage maps is ± 2 μV. Negative is plotted up.

Picture-naming task.

There was a significant main effect of iconicity in the 140–210 ms epoch, F(1,23) = 8.59, p = 0.008, indicating that the production of iconic signs when naming pictures was associated with broadly reduced negativity during this early time window compared to the production of non-iconic signs. Additionally, there were significant interactions between iconicity and laterality, F(2,46) = 4.6, p = 0.022, between iconicity and posteriority, F(4,92) = 12.64, p < 0.001, and there was a three-way interaction between iconicity, laterality, and posteriority, F(8, 184) = 2.57, p = 0.037. Iconic signs were associated with reduced negativity, and this effect was strongest at anterior, left-hemisphere sites (see Fig. 4).

Fig. 4.

EEG recordings and voltage maps from the Picture-Naming task. Voltage maps (Non-iconic minus Iconic conditions) are shown from 140 to 210 ms, 210–280 ms, and 300–600 ms after stimulus presentation. The scale used for voltage maps is ± 2 μV. Negative is plotted up.

In the second (210–280 ms) epoch, there was a main effect of iconicity, F(1,23) = 6.33, p = 0.019, and a significant interaction between iconicity and posteriority, F(4,92) = 13.18, p < 0.001, indicating that iconic signs were associated with reduced negativity, and that this effect was strongest at anterior sites (see Fig. 4).

Finally, in the N400 window, there was a significant main effect of iconicity, F(1,23) = 11.6, p = 0.002, again indicating that the production of iconic signs was associated with broadly reduced negativity compared to the production of non-iconic signs for the picture-naming task. Additionally, this iconicity effect was greater at anterior electrode sites, as evidenced by an iconicity by posteriority interaction, F(4,92) = 3.7, p = 0.044 (see Fig. 4).

4. Discussion

The primary goal of this study was to determine whether the facilitatory effect of iconicity found in picture-naming tasks was due to a task-specific effect or to a more global effect resulting from more robust activation of sensory-motor semantic features depicted by iconic signs. As noted, several picture-naming studies across different sign languages report reduced response latencies for iconic signs compared to non-iconic signs (Vinson et al., 2015; Navarrete et al., 2017; Pretato et al., 2018; McGarry et al., 2020; Gimeno-Martínez and Baus, 2022). In McGarry et al. (2020), we included ERPs to explore the nature and time course of this facilitation effect and found evidence that iconicity modulated the N400 amplitude. To determine whether the task-specific or semantic feature hypothesis best explains the behavioral and electrophysiological facilitation for iconic signs, the present study compared participants’ response latencies and ERP modulations during a picture-naming task to those from an English-to-ASL translation task. If the task-specific hypothesis is correct, and the effect of iconicity is driven by the visual alignment between the picture stimulus and the targeted sign, then iconicity effects should be observed only in the picture-naming task. If the facilitatory effects are instead due to more robust semantic activation for iconic signs regardless of task, then iconicity effects should occur in both the picture-naming and the translation tasks.

Consistent with the task-specific hypothesis and replicating Gimeno-Martínez and Baus (2022), we found behavioral and ERP iconicity effects only for the picture-naming task. Participants produced iconic signs more quickly and more accurately than non-iconic signs in the picture-naming task, with no RT or accuracy differences in the English-ASL translation task. In the present study, we included the most aligned pictures from McGarry et al. (2020), and so anticipated that we would find reduced negativities for iconic signs compared to non-iconic signs in the picture-naming condition. Indeed, this is the pattern we observed. In contrast, there was no evidence of a difference between iconic and non-iconic signs in the electrophysiological data from the translation task. This pattern of results suggests that the facilitatory effect of iconicity is confined to tasks that use stimuli that can map onto the sensory-motoric features encoded in the phonological form of iconic signs, such as pictures. Thus, rather than representing a task-general activation of signs with richer semantic encodings, effects of iconicity seem to arise when visual features of the stimuli used to elicit signs can be quickly matched with phonological and semantic features of iconic sign forms in the lexicon. When such a mapping is not possible, such as with printed words, no boost to lexical retrieval or production is conferred.

Paralleling the results of Gimeno-Martínez and Baus (2022) and Baus and Costa (2015), the iconicity effect in the picture-naming task emerged early, starting at 140 ms post-stimulus onset (although the direction of the effect was mixed across studies, see below). Unlike the LSC studies but parallel to McGarry et al. (2020), the iconicity effect was also found in the N400 window. We suggest that prior familiarization with the picture stimuli and the corresponding sign names may have obscured or reduced an iconicity effect within the N400 window in the LSC studies due to repetition priming at the lexical level. However, more research is needed to confirm this hypothesis and to characterize the nature of the iconicity effect within the N400 window, e.g., whether the effect is related to lexical-level phonological encoding or lexico-semantic activation.

The early effects of iconicity likely reflect engagement of the conceptual system during picture recognition, rather than later stages of lexical encoding (Baus and Costa, 2015). Specifically, the mapping between the semantic features depicted in the picture and in the iconic sign (e.g., ‘whiskers’ for CAT) may facilitate access to the conceptual representation as the first stage of picture naming, compared to non-iconic signs. This account is consistent with the sensitivity of the picture-specific N300 component to semantic feature processing (e.g., McPherson and Holcomb, 1999). Modulation of the N300 component has been associated with processing early visual semantic features and may reflect matching visual features of the picture to features of a stored visual or conceptual representation (Schendan and Kutas, 2002, 2003). Further, semantic picture priming effects shift from more anterior in the pre-N400 epoch to more centro-posterior in the N400 window (McPherson and Holcomb, 1999), as found here for iconicity effects (see Fig. 4).

The polarity of the iconicity effects we observed in the ERPs in all epochs (reduced negativity for iconic signs) was opposite to that observed by Gimeno-Martínez and Baus (2022) and by McGarry et al. (2020). McGarry et al. (2020) interpreted the increased negativity in the N400 time window for iconic signs as reflecting increased activation of perceptual-motor semantic features depicted in iconic signs (and emphasized by the picture), parallel to the increased negativity associated with concreteness effects. To our knowledge, Gimeno-Martínez and Baus did not manipulate the amount of structured visual alignment between pictures and the targeted LSC signs, and so likely used pictures with variable degrees of alignment. The polarity difference for the iconicity effects found in the present study compared to McGarry et al. (2020) and Gimeno-Martínez and Baus (2022) may be related to the difference in the picture stimuli. Specifically, when all of the pictures are strongly visually aligned with the targeted signs, the ERP priming conferred by the pictures (i.e., decreased negativity due to visual overlap with the sign form) may override the increased negativity associated with activation of the sensory-motor features depicted in the picture and the sign – thus the overall amount of neural activity is reduced. In contrast, when both aligned and non-aligned pictures are included, as in McGarry et al. (2020) and likely in Gimeno-Martínez and Baus (2022), then the effect of visual picture-sign alignment (decreased negativity) is not strong enough to override the increased negativity associated with activation of sensory-motor semantic features that overlap between the non-aligned pictures and iconic signs.

However, Baus and Costa (2015) used the same set of picture stimuli as Gimeno-Martínez and Baus (2022) and found reduced negativity for iconic signs, as found here. Thus, polarity differences cannot be attributed solely to differences in the picture stimulus sets. One possible explanation for the polarity difference between the two LSC studies lies in their different participants: hearing L2 signers (Baus and Costa, 2015) versus deaf early signers (Gimeno-Martínez and Baus, 2022). For the L2 signers, the priming effect (reduced negativity) from the mapping between the pictures and features of iconic signs may have obscured the effect of semantic feature activation (increased negativity), whereas the latter may be more apparent in deaf signers, who likely have more robust semantic representations of LSC signs. At this point, however, these hypotheses are speculative, and more research is needed to determine what factors affect the polarity of iconicity effects in picture-naming tasks.

Our results and those of Gimeno-Martínez and Baus (2022) clearly indicate that iconicity effects are task-dependent. Thus, it is important to consider how and why iconicity effects are impacted by task. For example, translation differs from picture-naming in part because deaf signers must first access their non-dominant language (English in our study) and then access the translation in their dominant language (ASL). Compared to directly accessing ASL in picture-naming tasks, the translation process itself could reduce the impact of sign iconicity, particularly if the translation process is not mediated by semantics, as has been suggested by Navarrete et al. (2015) for word-to-sign translations. Thus, one possible explanation for the lack of iconicity effects in the translation task is that semantic representations are not activated. However, recent results from Ortega and Ostarek (2021) suggest that even when perceptual and motor semantic features are activated by a task, iconicity does not impact performance. Specifically, a previous study by Ostarek and Huettig (2017) found that for speakers, hearing a word (e.g., “bottle”) activates visual features of an object (e.g., the shape of a bottle), which facilitates detection of a picture that has been suppressed from awareness. This result is interpreted as evidence for activation of perceptual (visual) semantic features within an embodiment account of lexical access (cf. Lewis and Poeppel, 2014). Ortega and Ostarek (2021) found a similar effect for NGT (Sign Language of the Netherlands), but crucially, the iconicity of the NGT signs did not modulate the facilitation effect. This result suggests that the iconicity effects observed in picture-naming may be linked to the phonological, rather than the semantic, features of iconic signs that are represented in the picture and mapped to the form of the sign to be produced.

The vast majority of the targeted iconic signs in this study were perceptually-iconic, meaning that the iconicity occurs through the mapping between phonological features of the sign and the visual features of the referent. Only a few of the iconic signs (5/44) used a motoric or pantomimic mapping, where the form of the sign resembles the way one would handle or manipulate the referent (see Caselli and Pyers, 2020 and Ortega et al., 2017, for a discussion of perceptually-iconic and pantomimic/motorically-iconic signs). It is possible that motorically-iconic signs are not retrieved faster in picture-naming tasks because the visual features of the referent that are depicted in the picture do not map directly to the form of the sign. For example, the iconic ASL sign KEY depicts how a key is held and turned, but the picture of a key does not show the hands or the turning movement of the key. Thus, pantomimic iconicity may not be salient in typical object pictures and thus might not yield the same facilitatory effects that are observed with perceptual iconicity. In addition, the semantic features depicted by motoric and perceptually iconic signs may be represented in different neural regions, leading to different EEG scalp distributions. For example, visual shape features depicted by perceptually iconic signs could be represented in ventral temporal cortex (the “what” pathway), while motoric features depicted by pantomimic signs could be represented in posterior parietal cortex (the “how” pathway; see Kravitz et al., 2011, for review). Further work targeting these two types of iconicity is clearly needed.

In sum, the results of the present study suggest that iconicity has a task-specific potential to facilitate sign production. In tasks with pictorial stimuli, the ability of iconic signs to map onto the visual or semantic features of the stimuli seems to prime those signs for production, resulting in behavioral facilitation. If all stimuli have strong visual alignment with the targeted iconic signs, this seems to result in an overall reduction of neural activity, perhaps overriding an increased neural activity generated by activation of the sensory-motoric semantic features encoded in iconic signs. When this mapping cannot occur, as in translation or lexical decision tasks, no benefit of iconicity can occur. Taken together, these findings suggest that iconicity does not always facilitate sign production, but is able to do so when relevant to the task at hand.

Acknowledgements

This work was supported by a grant from the National Institute on Deafness and Other Communication Disorders (R01 DC010997). We would like to thank Lucinda O’Grady Farnady for her help with the study and all of our participants, without whom this research would not have been possible.

Footnotes

Credit author statement

Meghan McGarry and Karen Emmorey conceptualized the study; Meghan McGarry and Katherine J. Midgley supervised participant recruitment and data collection; Meghan McGarry analyzed the data; Meghan McGarry wrote the original draft; Karen Emmorey, Katherine J. Midgley, and Phillip J. Holcomb wrote, reviewed, and edited the final version.

Initially, we planned to always present the translation block before the picture-naming block to maintain consistency across all participants (see pre-registration document). However, the results of the Gimeno-Martínez and Baus (2022) study became available during the data collection period, and their study counterbalanced task order. To have the best possible comparison between the two studies, we therefore decided to counterbalance our task order as well.

We did not originally intend to analyze these time windows, but we chose to include them after the publication of Gimeno-Martínez and Baus (2022).

Data availability

Data will be made available on request.

References

- Assaneo MF, Nichols JI, Trevisan MA, 2011. The anatomy of onomatopoeia. PLoS One 6 (12), e28317. 10.1371/journal.pone.0028317.

- Barber HA, Otten LJ, Kousta S-T, Vigliocco G, 2013. Concreteness in word processing: ERP and behavioral effects in a lexical decision task. Brain Lang. 125 (1), 47–53. 10.1016/j.bandl.2013.01.005.

- Baus C, Costa A, 2015. On the temporal dynamics of sign production: An ERP study in Catalan Sign Language (LSC). Brain Res. 1609, 40–53.

- Bosworth RG, Emmorey K, 2010. Effects of iconicity and semantic relatedness on lexical access in American Sign Language. J. Exp. Psychol. Learn. Mem. Cognit 36 (6), 1573–1581. 10.1037/a0020934.

- Caselli NK, Pyers JE, 2020. Degree and not type of iconicity affects sign language vocabulary acquisition. J. Exp. Psychol. Learn. Mem. Cognit 46 (1), 127–139. 10.1037/xlm0000713.

- Caselli NK, Sehyr ZS, Cohen-Goldberg AM, Emmorey K, 2017. ASL-LEX: a lexical database of American Sign Language. Behav. Res. Methods 49 (2), 784–801. 10.3758/s13428-016-0742-0.

- Dingemanse M, 2017. Expressiveness and system integration: on the typology of ideophones, with special reference to Siwu. STUF - Lang. Typol. Universals 70 (2), 363–385. 10.1515/stuf-2017-0018.

- Gimeno-Martínez M, Baus C, 2022. Iconicity in sign language production: task matters. Neuropsychologia 167, 108166. 10.1016/j.neuropsychologia.2022.108166.

- Holcomb PJ, Kounios J, Anderson JE, West WC, 1999. Dual-coding, context-availability, and concreteness effects in sentence comprehension: an electrophysiological investigation. J. Exp. Psychol. Learn. Mem. Cognit 25 (3), 721–742. 10.1037/0278-7393.25.3.721.

- Imai M, Miyazaki M, Yeung HH, Hidaka S, Kantartzis K, Okada H, Kita S, 2015. Sound symbolism facilitates word learning in 14-month-olds. PLoS One 10 (2), e0116494. 10.1371/journal.pone.0116494.

- Kounios J, Holcomb PJ, 1994. Concreteness effects in semantic processing: ERP evidence supporting dual-coding theory. J. Exp. Psychol. Learn. Mem. Cognit 20 (4), 804–823. 10.1037/0278-7393.20.4.804.

- Kravitz DJ, Saleem KS, Baker CI, Mishkin M, 2011. A new neural framework for visuospatial processing. Nat. Rev. Neurosci 12 (4), 217–230. 10.1038/nrn3008.

- Kuperman V, Stadthagen-Gonzalez H, Brysbaert M, 2012. Age-of-acquisition ratings for 30,000 English words. Behav. Res. Methods 44 (4), 978–990. 10.3758/s13428-012-0210-4.

- Kutas M, Federmeier KD, 2011. Thirty years and counting: finding meaning in the N400 component of the event-related brain potential (ERP). Annu. Rev. Psychol 62, 621–647. 10.1146/annurev.psych.093008.131123.

- Luck SJ, 2014. An Introduction to the Event-Related Potential Technique. MIT Press.

- McGarry ME, Massa N, Mott M, Midgley KJ, Holcomb PJ, Emmorey K, 2021. Matching pictures and signs: an ERP study of the effects of iconic structural alignment in American Sign Language. Neuropsychologia 162, 108051. 10.1016/j.neuropsychologia.2021.108051.

- McGarry ME, Mott M, Midgley KJ, Holcomb PJ, Emmorey K, 2020. Picture-naming in American Sign Language: an electrophysiological study of the effects of iconicity and structured alignment. Language, Cognition and Neuroscience. https://www.tandfonline.com/doi/abs/10.1080/23273798.2020.1804601.

- McPherson WB, Holcomb PJ, 1999. An electrophysiological investigation of semantic priming with pictures of real objects. Psychophysiology 36 (1), 53–65. 10.1017/S0048577299971196.

- Navarrete E, Caccaro A, Pavani F, Mahon BZ, Peressotti F, 2015. With or without semantic mediation: retrieval of lexical representations in sign production. J. Deaf Stud. Deaf Educ 20 (2), 163–171. 10.1093/deafed/enu045.

- Navarrete E, Peressotti F, Lerose L, Miozzo M, 2017. Activation cascading in sign production. J. Exp. Psychol. Learn. Mem. Cognit 43 (2), 302–318. 10.1037/xlm0000312.

- Novogrodsky R, Meir N, 2020. Age, frequency, and iconicity in early sign language acquisition: evidence from the Israeli Sign Language MacArthur–Bates Communicative Developmental Inventory. Appl. Psycholinguist 41 (4), 817–845. 10.1017/S0142716420000247.

- Oomen M, 2021. Iconicity as a mediator between verb semantics and morphosyntactic structure: a corpus-based study on verbs in German Sign Language. Sign Lang. Linguist 24 (1), 132–141. 10.1075/sll.00058.oom.

- Ortega G, Sümer B, Özyürek A, 2017. Type of iconicity matters in the vocabulary development of signing children. Dev. Psychol 53 (1), 89–99. 10.1037/dev0000161.

- Ortega G, Ostarek M, 2021. Evidence for visual simulation during sign language processing. J. Exp. Psychol. Gen 150 (10), 2158–2166. 10.1037/xge0001041.

- Ostarek M, Huettig F, 2017. Spoken words can make the invisible visible: testing the involvement of low-level visual representations in spoken word processing. J. Exp. Psychol. Hum. Percept. Perform 43 (3), 499–508. 10.1037/xhp0000313.

- Perlman M, Little H, Thompson B, Thompson RL, 2018. Iconicity in signed and spoken vocabulary: a comparison between American Sign Language, British Sign Language, English, and Spanish. Front. Psychol 9. 10.3389/fpsyg.2018.01433.

- Perniss P, Thompson RL, Vigliocco G, 2010. Iconicity as a general property of language: evidence from spoken and signed languages. Front. Psychol 1. 10.3389/fpsyg.2010.00227.

- Perry LK, Perlman M, Lupyan G, 2015. Iconicity in English and Spanish and its relation to lexical category and age of acquisition. PLoS One 10 (9), e0137147. 10.1371/journal.pone.0137147.

- Pretato E, Peressotti F, Bertone C, Navarrete E, 2018. The iconicity advantage in sign production: the case of bimodal bilinguals. Sec. Lang. Res 34 (4), 449–462. 10.1177/0267658317744009.

- Schendan HE, Kutas M, 2002. Neurophysiological evidence for two processing times for visual object identification. Neuropsychologia 40 (7), 931–945. 10.1016/S0028-3932(01)00176-2.

- Schendan HE, Kutas M, 2003. Time course of processes and representations supporting visual object identification and memory. J. Cognit. Neurosci 15 (1), 111–135. 10.1162/089892903321107864.

- Sehyr ZS, Caselli N, Cohen-Goldberg AM, Emmorey K, 2021. The ASL-LEX 2.0 project: a database of lexical and phonological properties for 2,723 signs in American Sign Language. J. Deaf Stud. Deaf Educ 26 (2), 263–277. 10.1093/deafed/enaa038.

- Sehyr Z, Emmorey K, 2022. The effects of multiple linguistic variables on picture naming in American Sign Language. Behav. Res. Methods 54 (5), 2502–2521. 10.3758/s13428-021-01751-x.

- Taub SF, 2001. Language from the Body: Iconicity and Metaphor in American Sign Language. Cambridge University Press.

- Thompson RL, Vinson DP, Vigliocco G, 2009. The link between form and meaning in American Sign Language: lexical processing effects. J. Exp. Psychol. Learn. Mem. Cognit 35 (2), 550–557. 10.1037/a0014547.

- Trettenbrein PC, Pendzich N-K, Cramer J-M, Steinbach M, Zaccarella E, 2021. Psycholinguistic norms for more than 300 lexical signs in German Sign Language (DGS). Behav. Res. Methods 53 (5), 1817–1832. 10.3758/s13428-020-01524-y.

- van Elk M, van Schie HT, Bekkering H, 2010. The N400-concreteness effect reflects the retrieval of semantic information during the preparation of meaningful actions. Biol. Psychol 85 (1), 134–142. 10.1016/j.biopsycho.2010.06.004.

- Vinson D, Thompson RL, Skinner R, Vigliocco G, 2015. A faster path between meaning and form? Iconicity facilitates sign recognition and production in British Sign Language. J. Mem. Lang 82 (Suppl. C), 56–85. 10.1016/j.jml.2015.03.002.
