Structured Abstract

Importance:

Use of non-steroidal anti-inflammatory drugs (NSAIDs) can reduce pain and has become a core strategy to decrease opioid use, but there are few data on whether electronic health record (EHR) systems can be used to encourage NSAID ordering when patients are admitted to the hospital.

Methods:

We performed a cluster randomized controlled trial of clinicians admitting adult patients to a health system over a 9-month period. Clinicians were randomized to one of two versions of the NSAID section of a standard admission orderset. Clinicians in the intervention arm were required to actively order or decline NSAIDs; clinicians in the control arm were shown the same NSAID options but were not required to respond. The primary outcome was NSAID ordering and administration by the end of the first full hospital day. Secondary outcomes included pain scores and opioid prescribing.

Results:

A total of 20,085 hospitalizations were included. Patients had a mean age of 58 years and a mean Charlson comorbidity score of 2.97; 50% were female and 56% were white. Overall, 52% of hospitalizations were admitted by a clinician randomized to the intervention arm. NSAIDs were ordered in 2,267 (22%) intervention and 2,093 (22%) control admissions (p=0.10). Similarly, there were no statistically significant differences in NSAID administration, pain scores or opioid prescribing. Average pain scores (0–10 scale) were 3.36 in the control group and 3.39 in the intervention group (p=0.46). There were no differences in clinical harms.

Conclusions and Relevance:

Requiring an active decision to order an NSAID at admission had no demonstrable impact on NSAID ordering. Multicomponent interventions, perhaps with stronger decision support, may be necessary to encourage NSAID ordering.

Introduction

The opioid epidemic has claimed hundreds of thousands of lives through overdose and has cost our healthcare system millions of dollars. As healthcare systems and clinicians attempt to moderate opioid prescribing, multimodal pain regimens have been promoted as one way to reduce opioid use while providing excellent pain control.1 Multimodal pain regimens include local and neuraxial adjuncts as well as non-opioid medications. One component of multimodal pain regimens is the use of non-steroidal anti-inflammatory drugs (NSAIDs) such as ibuprofen, celecoxib or ketorolac.1

NSAID prescribing nationwide, including in our local hospital system, has remained low despite quality improvement efforts to promote inpatient NSAID use.2–4 One reason may be concern that NSAIDs can cause postoperative or gastrointestinal bleeding and acute kidney injury.5 Clinical decision support delivered via the electronic health record (EHR) has been used to raise awareness of the costs of orders,6 to reduce ordering7 and to provide other types of computerized clinical decision support, and it might also be useful for nudging clinicians to use NSAIDs for pain control when admitting patients to the hospital.8,9

Our study leveraged the health system’s EHR-based standard admission orderset to encourage NSAID use, a care practice aligned with our institutional focus on opioid stewardship, by carrying out a randomized controlled trial comparing two alternative approaches to NSAID ordering for adult admissions. Importantly, poorly designed EHR builds that require excessive and redundant data entry can contribute to clinician burnout. It is therefore essential to ensure that any added clinician steps, such as the one evaluated in our study, are a clear value-add to patient care and are not adopted without rigorous study and validation.10 Our primary objective was to evaluate whether requiring a choice about NSAID ordering at admission increased ordering, and thereby administration, of NSAIDs by the end of the first full day of hospital admission. Our secondary objectives were to measure differences in pain scores and in opioid use and prescribing between the two groups.

Methods

Study design

We conducted a cluster randomized trial to assess the effectiveness and safety of an EHR-based intervention to encourage NSAID use for admitted patients, with the ultimate goal of increasing NSAID use without adding ineffective EHR burden. Clinicians were the unit of randomization, and outcomes were compared between hospitalizations admitted by clinicians randomized to the intervention arm and those admitted by clinicians randomized to the control arm of the admission orderset. Clinicians were randomized only if they used the pain section of the admission orderset. If a clinician admitted a patient with ‘no more than mild pain’, for whom no pain medications were offered, no NSAID option was presented and that encounter was excluded. Likewise, if a clinician used a pain orderset developed specifically for their service, no intervention was delivered and that encounter was excluded.
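The eligibility rule above can be written as a simple filter. The sketch below is illustrative only: the field names are hypothetical and are not drawn from the study's data model.

```python
from dataclasses import dataclass


@dataclass
class Encounter:
    encounter_id: str
    used_standard_orderset: bool      # admitted via the standard admission orderset
    used_service_pain_orderset: bool  # a service-specific pain orderset was used instead
    mild_or_no_pain_pathway: bool     # 'no more than mild pain' chosen: no pain meds offered


def is_eligible(enc: Encounter) -> bool:
    """True only if the NSAID section (and therefore the study intervention) was shown."""
    if not enc.used_standard_orderset or enc.used_service_pain_orderset:
        return False  # intervention never delivered
    if enc.mild_or_no_pain_pathway:
        return False  # no pain medications offered, so no NSAID option presented
    return True


cohort = [
    Encounter("e1", True, False, False),
    Encounter("e2", True, False, True),
    Encounter("e3", True, True, False),
]
included = [e.encounter_id for e in cohort if is_eligible(e)]  # ["e1"]
```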

Site and subjects

Our study was conducted across three hospital sites within a single academic hospital system (University of California, San Francisco (UCSF)). The health system is a quaternary care center with approximately 1,200 staffed beds. Our top diagnoses include sepsis, heart failure, respiratory infection, major joint replacements and major large or small bowel procedures.

All clinicians who admitted adult patients (≥18 years old) between November 12, 2020, and August 16, 2021, were eligible for randomization. We included all hospital encounters admitted using the standard admission orderset.

Our study was approved by the UCSF Institutional Review Board. This article followed Consolidated Standards of Reporting Trials (CONSORT) reporting guidelines for cluster randomized trials (eAppendix1).11

Randomization of clinicians

Clinicians were randomized to the intervention or control group the first time they interacted with the pain medication section of the admission orderset and remained in that group for the rest of the trial. Those randomized to the intervention arm received a required NSAID choice at every subsequent admission. Randomization was stratified by service (surgical versus non-surgical), so that clinicians in each group were randomized separately (eAppendix2).
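First-use, persistent, stratified assignment can be sketched as follows. The study does not report how arms were allocated within each stratum, so the permuted blocks of four used here are an assumption, as are the class and method names.

```python
from __future__ import annotations

import random


class ClinicianRandomizer:
    """Assign each clinician once, at first use of the pain section, within a service stratum.

    Assumption: permuted blocks of 4 (2 intervention, 2 control) within each stratum.
    """

    ARMS = ("intervention", "control")

    def __init__(self, seed: int = 0, block_size: int = 4):
        self._rng = random.Random(seed)
        self._block_size = block_size
        self._assignments: dict[str, str] = {}   # clinician_id -> arm, kept for the whole trial
        self._blocks: dict[str, list[str]] = {}  # stratum -> arms left in the current block

    def _next_arm(self, stratum: str) -> str:
        block = self._blocks.get(stratum)
        if not block:  # start a new balanced block for this stratum
            block = list(self.ARMS) * (self._block_size // 2)
            self._rng.shuffle(block)
            self._blocks[stratum] = block
        return block.pop()

    def arm_for(self, clinician_id: str, surgical: bool) -> str:
        """Randomize on the first call; every later call returns the stored arm."""
        if clinician_id not in self._assignments:
            stratum = "surgical" if surgical else "non_surgical"
            self._assignments[clinician_id] = self._next_arm(stratum)
        return self._assignments[clinician_id]
```

Because the assignment is stored on first use, a clinician who later admits patients to a different service keeps the original arm, mirroring the "remained in their randomized group" design.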

Description of the Intervention

Our institution uses a standard admission orderset for most adult hospital admissions. The orderset includes essential admission orders such as vital sign frequency, lab frequency, intravenous fluid options, tube and drain management, diet choices and venous thromboembolism prophylaxis. Our intervention was embedded in the pain management section. In collaboration with the UCSF Pain Committee, a group of clinicians including anesthesiologists, surgeons, hospital intensivists, pharmacists and nurses designed a new pain order panel to improve ordering of pain medication hospital-wide. The goal was to reduce opioid use while maintaining optimal analgesia by including around-the-clock oral (ibuprofen or celecoxib) or parenteral (ketorolac) NSAIDs as a multimodal option in conjunction with acetaminophen. Oral and parenteral opioids were additional as-needed (prn) options.

Clinicians randomized to the intervention arm were presented with a required choice about NSAIDs before they could complete the admission orderset. Every time they admitted a patient, they had to either click to order an NSAID or click to acknowledge that a contraindication to NSAIDs existed (eAppendix3). They did not need to specify the contraindication. Clinicians randomized to the control arm saw the same NSAID options but did not need to click on either to complete the admission orders. They could bypass the NSAID section.
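The difference between the two arms amounts to a single completion rule. The sketch below is hypothetical: it is not the EHR's actual build logic, and `NsaidChoice` and `can_sign_orderset` are invented names used only for illustration.

```python
from __future__ import annotations

from enum import Enum


class NsaidChoice(Enum):
    ORDERED = "ordered"
    CONTRAINDICATION_ACKNOWLEDGED = "contraindication_acknowledged"  # reason not required


def can_sign_orderset(arm: str, choice: NsaidChoice | None) -> bool:
    """Hypothetical completion rule for the NSAID section of the admission orderset."""
    if arm == "intervention":
        # Hard stop: the clinician must order an NSAID or acknowledge a contraindication.
        return choice is not None
    # Control arm: the same options are displayed, but the section can be bypassed.
    return True
```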

Both arms displayed a warning at the top of the NSAID section to alert clinicians to NSAID contraindications: “Celecoxib: Do not use in patients with a history of ischemic heart disease, stroke, recent CABG or heart failure. Ketorolac or Ibuprofen: Avoid in patients on therapeutic anticoagulant therapy, acute or chronic kidney disease (eGFR< 60), GI bleeding in last 6 months, most transplant patients, heart failure.”
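The warning was static advisory text, not an automated check. Purely to make its logic explicit, the sketch below encodes the quoted warnings as a rule. It is a hypothetical illustration rather than something the orderset computed, and it is not clinical guidance: the field names are invented, and "most transplant patients" is simplified to any transplant.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class PatientFlags:
    ischemic_heart_disease: bool = False
    stroke: bool = False
    recent_cabg: bool = False
    heart_failure: bool = False
    therapeutic_anticoagulation: bool = False
    acute_kidney_injury: bool = False
    egfr: float = 90.0  # mL/min/1.73 m^2; below 60 stands in for chronic kidney disease
    gi_bleed_last_6_months: bool = False
    transplant: bool = False  # simplification of "most transplant patients"


def flagged_nsaids(p: PatientFlags) -> set[str]:
    """Return the NSAIDs the quoted banner would warn against for this patient."""
    flagged: set[str] = set()
    if p.ischemic_heart_disease or p.stroke or p.recent_cabg or p.heart_failure:
        flagged.add("celecoxib")
    if (p.therapeutic_anticoagulation or p.acute_kidney_injury or p.egfr < 60
            or p.gi_bleed_last_6_months or p.transplant or p.heart_failure):
        flagged.update({"ketorolac", "ibuprofen"})
    return flagged
```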

Data sources

We used EHR data from Clarity, including administrative and billing data, to ensure accurate capture of clinical harms. We extracted all medication ordering activity from Clarity and administration data from the medication administration record. We also extracted discharge prescribing data from Clarity. We calculated the Charlson comorbidity score using previously developed code for administrative databases.12
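The Charlson score is a weighted sum of comorbidity categories. The sketch below uses the standard Charlson/Deyo category weights and takes already-mapped categories as input; the ICD-to-category mapping, which is the hard part in practice, is omitted. The category keys are hypothetical, and the rule that a severe form supersedes its milder form follows common implementations rather than anything stated in this study.

```python
from __future__ import annotations

CHARLSON_WEIGHTS = {
    "myocardial_infarction": 1, "congestive_heart_failure": 1,
    "peripheral_vascular_disease": 1, "cerebrovascular_disease": 1,
    "dementia": 1, "chronic_pulmonary_disease": 1, "rheumatic_disease": 1,
    "peptic_ulcer_disease": 1, "mild_liver_disease": 1,
    "diabetes_uncomplicated": 1, "diabetes_complicated": 2,
    "hemiplegia_paraplegia": 2, "renal_disease": 2, "malignancy": 2,
    "moderate_severe_liver_disease": 3, "metastatic_solid_tumor": 6, "aids_hiv": 6,
}

# Assumed hierarchy: when the severe form is present, the milder form is not counted.
HIERARCHY = {
    "moderate_severe_liver_disease": "mild_liver_disease",
    "diabetes_complicated": "diabetes_uncomplicated",
    "metastatic_solid_tumor": "malignancy",
}


def charlson_score(categories: set[str]) -> int:
    """Sum the weights of recognized categories after applying the hierarchy."""
    present = {c for c in categories if c in CHARLSON_WEIGHTS}
    for severe, mild in HIERARCHY.items():
        if severe in present:
            present.discard(mild)
    return sum(CHARLSON_WEIGHTS[c] for c in present)
```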

Pain scores at our institution are determined using the numeric rating scale (NRS), which is a self-reported scale with 0 being no pain and 10 being the worst possible pain.13,14 Scores are recorded by nurses and pulled from nursing flowsheets in the EHR.

Outcomes

Because encounters may begin before the admission order is placed and admissions can occur at any time of day, the time available for a medication to be ordered or administered within a given 24-hour period varies. For this reason, we focused most of our outcome measures on whether the event had occurred by the end of the first full hospital day, defined as the second midnight of admission. This ensured capture of a full hospital day: a patient admitted at 11pm would otherwise have only one hour in hospital day 1.
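The "second midnight" rule can be sketched with Python's standard `datetime` module. The function names are hypothetical. The sketch assumes naive local timestamps and counts an event only if it occurs strictly before the second midnight; that boundary convention is also an assumption.

```python
from __future__ import annotations

from datetime import datetime, time, timedelta


def end_of_first_full_day(admit: datetime) -> datetime:
    """Second midnight after admission, i.e. 00:00 two calendar days after the admit date."""
    return datetime.combine(admit.date() + timedelta(days=2), time.min)


def occurred_by_first_full_day(admit: datetime, event: datetime | None) -> bool:
    """True if the event happened before the end of the first full hospital day."""
    return event is not None and event < end_of_first_full_day(admit)


admit = datetime(2021, 3, 1, 23, 0)  # admitted at 11pm
cutoff = end_of_first_full_day(admit)  # midnight at the start of March 3
```

For the 11pm example in the text, the window therefore covers the remaining hour of the admission day plus all of the following calendar day.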

Our primary outcomes were placement of an NSAID order (specifically ibuprofen, celecoxib or ketorolac) and administration of one of these NSAIDs by the end of the first full hospital day. We also examined patients' highest and average pain scores by the end of the first full hospital day.
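Combining the first-full-hospital-day window with these outcome definitions gives one record per encounter. A minimal sketch, assuming hypothetical input shapes (lists of timestamp and drug-name pairs, and of timestamp and 0–10 score pairs). It counts every event recorded before the second midnight, and it treats the "average" pain score as the simple mean of recorded scores rather than a time-weighted mean; both choices are assumptions.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, time, timedelta
from statistics import mean

STUDY_NSAIDS = {"ibuprofen", "celecoxib", "ketorolac"}


@dataclass
class EncounterOutcomes:
    nsaid_ordered: bool
    nsaid_administered: bool
    highest_pain: float | None  # None if no pain score was recorded in the window
    average_pain: float | None


def summarize_first_full_day(admit, orders, administrations, pain_scores):
    """orders / administrations: (timestamp, drug_name); pain_scores: (timestamp, 0-10 NRS)."""
    cutoff = datetime.combine(admit.date() + timedelta(days=2), time.min)  # second midnight

    def any_study_nsaid(events):
        return any(t < cutoff and drug.lower() in STUDY_NSAIDS for t, drug in events)

    scores = [score for t, score in pain_scores if t < cutoff]
    return EncounterOutcomes(
        nsaid_ordered=any_study_nsaid(orders),
        nsaid_administered=any_study_nsaid(administrations),
        highest_pain=max(scores) if scores else None,
        average_pain=mean(scores) if scores else None,
    )
```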

To further examine whether the intervention resulted in less frequent use of opioids, we also studied total oral morphine equivalents (OME) during the 24-hour day before discharge, opioid prescribing at discharge, and the morphine equivalent daily dose (MEDD) of the discharge prescription. OME and MEDD are commonly used approximations that express doses of different opioids on a common, equianalgesic scale.15
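OME and MEDD both multiply an opioid dose by a drug-specific conversion factor. A minimal sketch follows. The factors are the oral conversion factors listed in the 2016 CDC opioid prescribing guideline, used here only for illustration; they may differ from those in the calculator cited above, and factors vary between sources and guideline versions. The function names are hypothetical.

```python
# Illustrative oral conversion factors (mg of drug -> mg oral morphine), CDC 2016 values.
# NOT necessarily the factors used in this study.
ORAL_MME_FACTOR = {
    "morphine": 1.0,
    "hydrocodone": 1.0,
    "oxycodone": 1.5,
    "hydromorphone": 4.0,
    "tramadol": 0.1,
}


def ome(drug: str, total_mg: float) -> float:
    """Convert a total oral dose of one opioid to oral morphine equivalents (mg)."""
    return total_mg * ORAL_MME_FACTOR[drug]


def medd(drug: str, mg_per_dose: float, max_doses_per_day: float) -> float:
    """Morphine equivalent daily dose of a prescription taken at its maximum daily frequency."""
    return ome(drug, mg_per_dose * max_doses_per_day)


# Day-before-discharge OME: sum over every opioid dose administered in that 24-hour day.
day_before_discharge = [("oxycodone", 10), ("morphine", 15)]
total_ome = sum(ome(drug, mg) for drug, mg in day_before_discharge)
```

For example, a discharge prescription for oxycodone 5 mg every 4 hours as needed allows at most 6 doses per day, giving an MEDD of 5 × 6 × 1.5 = 45.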

We analyzed three adverse events as potential clinical harms from NSAID use: in-hospital death, new gastrointestinal (GI) bleeding and new acute kidney injury (AKI). GI bleeding and AKI were considered new if the diagnosis was not present on admission. We identified clinical harms using Clarity (death) as well as the patient’s inpatient problem list and the coded diagnoses attached to the hospital account, which are entered by a medical coder within 2 weeks after discharge and defined by ICD-10 codes (eAppendix3). We also identified patients with documented contraindications to NSAIDs (chronic kidney disease, organ transplant, allergy, history of GI bleed) using historical coding, billing data and problem lists, in order to characterize and compare the proportion of patients with and without a documented contraindication to an NSAID.
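Classifying new harms reduces to checking each coded diagnosis against a code list and its present-on-admission (POA) flag. A minimal sketch, assuming a hypothetical record layout. The ICD-10 prefixes are illustrative examples (N17 for acute kidney failure; K92.0 to K92.2 for hematemesis, melena and unspecified GI hemorrhage), not the study's full list. Treating every POA value other than "Y" as "not present on admission" is an assumption, and problem-list data are not modeled.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class CodedDiagnosis:
    icd10: str  # e.g. "N17.9" or "N179"
    poa: str    # present-on-admission indicator, e.g. "Y", "N", "U", "W"


# Illustrative prefixes only (dots removed); not the study's full code list.
AKI_PREFIXES = ("N17",)                       # acute kidney failure
GI_BLEED_PREFIXES = ("K920", "K921", "K922")  # hematemesis, melena, GI hemorrhage unspecified


def new_harms(diagnoses: list[CodedDiagnosis], died_in_hospital: bool) -> set[str]:
    """Return the set of harms for one encounter."""
    harms = {"in_hospital_death"} if died_in_hospital else set()
    for dx in diagnoses:
        if dx.poa.upper() == "Y":
            continue  # present on admission, so not a new harm
        code = dx.icd10.upper().replace(".", "")
        if code.startswith(AKI_PREFIXES):
            harms.add("new_aki")
        if code.startswith(GI_BLEED_PREFIXES):
            harms.add("new_gi_bleed")
    return harms
```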

Statistical Analysis

Baseline characteristics were expressed as numbers and percentages for categorical variables and as means with a standard deviation (SD) for continuous variables. Differences between control and intervention baseline characteristics were compared by χ2 or t-test for categorical and continuous variables, respectively.

Because our unit of randomization was the ordering clinician but effects were measured at the encounter level, we first tested whether clinician groups differed in observable baseline characteristics; they did not (eAppendix5).

We then used mixed-effects linear and logistic regression models for continuous and dichotomous outcomes, respectively, clustering by admitting clinician, to analyze outcomes for each encounter exposed to the intervention through its admitting clinician. No other covariates were included in the models.

Specifically, we used mixed models with random intercepts to accommodate clustering by clinician, which induces an equicorrelated covariance structure among responses from the same clinician. Our only fixed effect was treatment arm, as is common in randomized clinical trials. Data analyses were performed from August 16, 2021, to October 25, 2022. Statistical significance was defined as p<0.05, and no adjustments were made for multiple testing. All analyses were performed using R version 4.0.5.
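For reference, the random-intercept models described above can be written as follows, where i indexes encounters, j indexes admitting clinicians and T_j is 1 if clinician j was randomized to the intervention arm. The notation is ours, not the authors'; β₁ is the arm effect whose test yields the reported p-values.

```latex
\begin{aligned}
&\text{Continuous outcome:} && Y_{ij} = \beta_0 + \beta_1 T_j + u_j + \varepsilon_{ij},
  \quad u_j \sim \mathcal{N}(0,\sigma_u^2),\ \varepsilon_{ij} \sim \mathcal{N}(0,\sigma_\varepsilon^2)\\
&\text{Dichotomous outcome:} && \operatorname{logit}\Pr(Y_{ij}=1 \mid u_j) = \beta_0 + \beta_1 T_j + u_j\\
&\text{Within-clinician correlation:} && \operatorname{Corr}(Y_{ij}, Y_{kj}) = \rho = \frac{\sigma_u^2}{\sigma_u^2 + \sigma_\varepsilon^2}, \quad i \neq k
\end{aligned}
```

Because the shared intercept u_j is the only source of dependence, every pair of encounters from the same clinician has the same correlation ρ, which is the equicorrelated (exchangeable) structure. For the logistic model, the analogous latent-scale correlation replaces σ_ε² with π²/3.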

Results

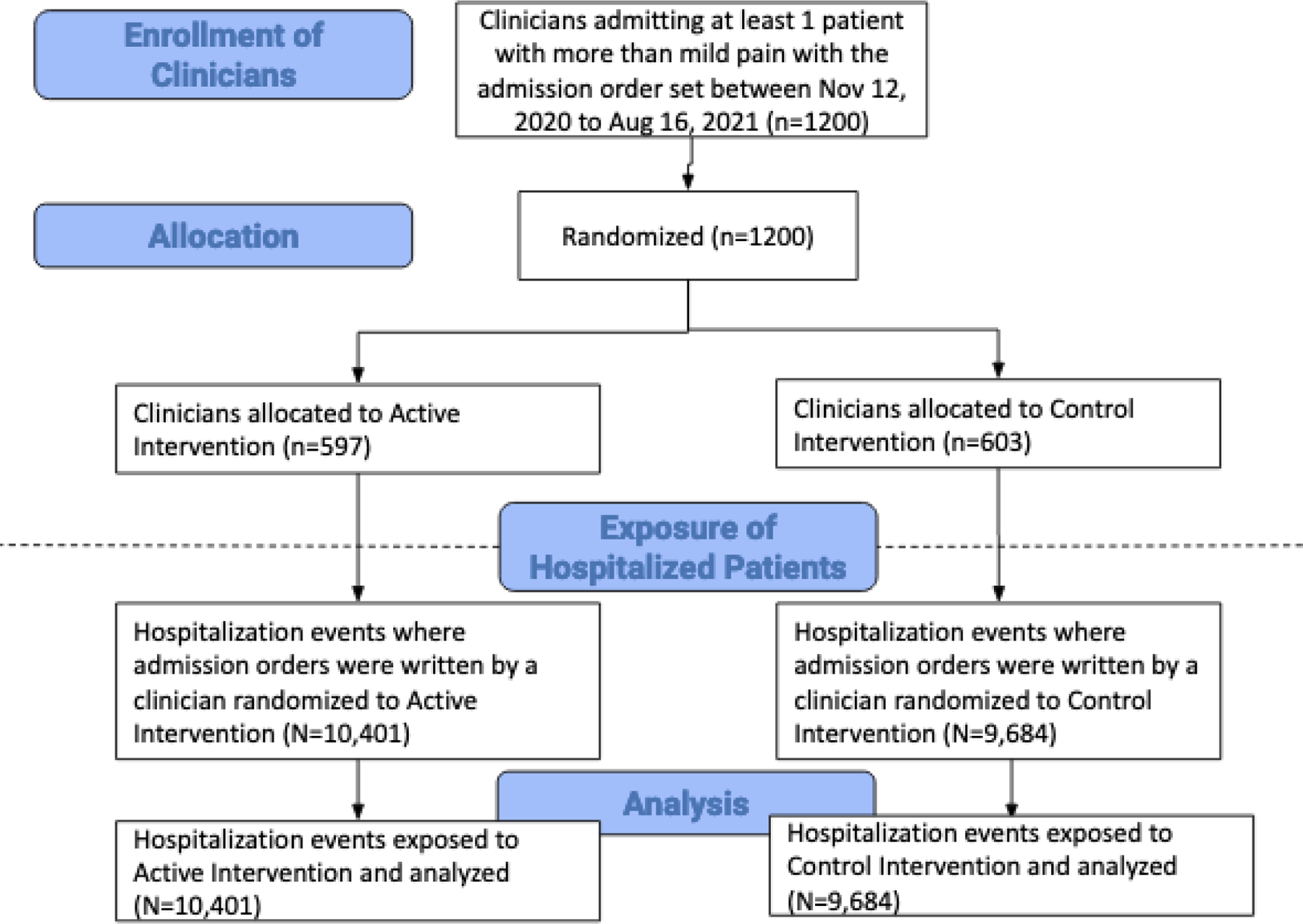

During the study period, from November 12, 2020, to August 16, 2021, 1,200 clinicians were randomized: 597 to the intervention group and 603 to the control group (eAppendix4). We anticipated 80% power to detect a 10% difference in NSAID ordering between the intervention and control groups with 270 encounters in each group, ignoring the design effect of clustering within clinician. With our much larger sample of nearly 10,000 encounters in each group, we were well powered to detect a small difference in NSAID ordering even after accounting for the design effect (eAppendix6). Overall, the clinician group was 57% female and 48% surgical, with 44% being residents and an average of 4.5 years at the institution. The mean number of admissions per clinician over the prior 6 months was 11, and the mean number of encounters per clinician during the study period was 18 (SD 27). Clinicians in the two groups were well matched, with no statistically significant differences in observable characteristics. We therefore proceeded with encounter-level analyses, accounting for clinician-level clustering.
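The power statement can be made concrete with the standard normal-approximation sample-size formula for two proportions and the design effect for clusters of unequal size, 1 + ((1 + CV²)m̄ − 1)ρ. The authors' exact assumptions are not reported, so this sketch does not attempt to reproduce the 270-encounter figure. The 18% baseline (the prior-period ordering rate reported in the Outcomes section) and the intracluster correlation of 0.05 are hypothetical inputs; the mean of 18 encounters per clinician (SD 27) comes from the text.

```python
import math
from statistics import NormalDist


def n_per_group(p1: float, p2: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Encounters per arm to compare two proportions (two-sided, ignoring clustering)."""
    z_a = NormalDist().inv_cdf(1 - alpha / 2)
    z_b = NormalDist().inv_cdf(power)
    p_bar = (p1 + p2) / 2
    numerator = (z_a * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_b * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / (p1 - p2) ** 2)


def design_effect(mean_cluster_size: float, icc: float, cv: float = 0.0) -> float:
    """Variance inflation from clustering; cv is the coefficient of variation of cluster sizes."""
    return 1 + ((1 + cv ** 2) * mean_cluster_size - 1) * icc


n = n_per_group(0.18, 0.28)                    # hypothetical: 18% baseline vs 28%
de = design_effect(18, icc=0.05, cv=27 / 18)   # icc=0.05 is hypothetical
effective_n = 10_000 / de                      # roughly the encounters per arm in the trial
```

Under these assumptions the effective sample size per arm remains several times larger than the unclustered requirement, which is the intuition behind the "well powered even with the design effect" statement.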

Encounter data

The total cohort of adult hospital admissions (‘encounters’) at UCSF hospitals during the study period that used the core admission orderset comprised 20,085 encounters, representing 13,384 unique patients (Figure 1). In total, 10,401 encounters (52%) were admitted by clinicians randomized to the intervention arm and 9,684 (48%) by clinicians randomized to the control arm. Across encounters, patients had a mean age of 58 years and a mean Charlson comorbidity score of 2.97; 50% were female, the majority (56%) were white and 89% reported English as their preferred language (Table 1). Sixty-five percent had a surgical procedure during their encounter. There were no statistically significant differences between the two arms.

Figure 1: CONSORT diagram.

Table 1: Characteristics of encounters exposed to the intervention, accounting for clustering at the clinician level.

| Characteristic | | Control Group (N=10,401) | Intervention Group (N=9,684) | p-value |
|---|---|---|---|---|
| Age at admission in years, mean (SD) | | 58.5 (16.8) | 58.3 (16.6) | 0.10 |
| Charlson Index, mean (SD) | | 2.96 (2.59) | 2.98 (2.63) | 0.30 |
| Gender, n (%) | Female | 5261 (50.6) | 4869 (50.3) | 0.74 |
| | Male | 5126 (49.3) | 4811 (49.7) | |
| | All others | 10 (0.1) | 3 (0.0) | |
| Race/ethnicity, n (%) | American Indian or Alaska Native | 64 (0.6) | 62 (0.6) | 0.91 |
| | Asian | 1530 (14.8) | 1357 (14.2) | |
| | Black or African American | 830 (8.1) | 757 (7.9) | |
| | Latinx | 1625 (15.8) | 1572 (16.4) | |
| | Multi-Race/Ethnicity | 210 (2.0) | 208 (2.2) | |
| | Native Hawaiian or Other Pacific Islander | 81 (0.8) | 62 (0.6) | |
| | Other | 229 (2.2) | 195 (2.0) | |
| | White or Caucasian | 5740 (55.7) | 5374 (56.1) | |
| Language, n (%) | Cantonese | 230 (2.2) | 219 (2.3) | 0.42 |
| | Mandarin | 77 (0.7) | 65 (0.7) | |
| | English | 9252 (89.1) | 8576 (88.6) | |
| | Russian | 88 (0.8) | 54 (0.6) | |
| | Spanish | 455 (4.4) | 502 (5.2) | |
| | All others | 286 (2.8) | 261 (2.7) | |
| Surgical procedure during encounter, n (%) | | 6885 (66.2) | 6254 (64.6) | 0.972 |
| Any contraindication to NSAIDs, n (%) | | 2742 (26.4) | 2610 (27.0) | 0.841 |

SD = Standard Deviation

P-values are from mixed models accounting for the cluster design. Because these mixed models were fit to continuous or dichotomous variables, the p-value for gender compares male vs female, for race/ethnicity compares white vs all others, and for language compares English vs all others.

Outcomes

NSAIDs were ordered by the end of the first full hospital day in 2,093 (22%) control encounters and 2,267 (22%) intervention encounters (p-value=0.10). NSAID ordering in both arms was higher than the overall ordering rate of 18% in the preceding 6-month period.

Similarly, there were no statistically significant differences in NSAID administration. Among secondary outcomes, there were no statistically significant differences in the highest or average pain score by the end of the first full hospital day, in whether an opioid was ordered at discharge, or in the MEDD of the discharge prescription when an opioid was ordered (Table 2). Total OME in the 24-hour day before discharge was higher in the intervention group (p-value=0.02).

Table 2: Outcomes for each included encounter, accounting for clustering at the clinician level.

| Outcome | Control Group (N=10,401) | Intervention Group (N=9,684) | p-value |
|---|---|---|---|
| NSAID outcomes | | | |
| NSAID ordered by end of first full hospital day (primary outcome), n (%) | 2267 (22) | 2093 (22) | 0.10 |
| NSAID administered by end of first full hospital day, n (%) | 1971 (19) | 1753 (18) | 0.46 |
| Pain and opioid outcomes | | | |
| Highest pain score during first full hospital day, mean (SD) | 5.14 (3.44) | 5.21 (3.45) | 0.30 |
| Average pain score during first full hospital day, mean (SD) | 3.36 (2.62) | 3.39 (2.61) | 0.46 |
| Total OME in 24-hour day before discharge, mean (SD) | 57.3 (164.0) | 65.4 (230.3) | 0.02 |
| Opioid ordered at discharge, n (%) | 6126 (59) | 5554 (57) | 0.40 |
| MEDD of discharge prescription, mean (SD) | 109 (203) | 123 (506) | 0.21 |
| Clinical harms | | | |
| In-hospital death, n (%) | 196 (1.9) | 187 (1.9) | 0.52 |
| New AKI, n (%) | 1151 (11.1) | 1112 (11.5) | 0.71 |
| New GI bleed, not present on admission, n (%) | 134 (1.3) | 125 (1.3) | 0.79 |

SD = Standard Deviation, NSAID = nonsteroidal anti-inflammatory drugs, OME = oral morphine equivalents, MEDD = morphine equivalent daily dose, AKI = acute kidney injury, GI = gastrointestinal

P-values account for clustering by admitting clinician. Note: the mean OME and MEDD include only patients who received an opioid. If no opioid was administered, or none was prescribed at discharge, that encounter was not included in calculating the mean.

Secondary analyses

To assess results at the patient level, we repeated the analysis including only each patient’s first encounter (n=13,384). Results were similar, with no statistically significant differences between the two groups for any outcome.
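Restricting to each patient's first encounter is a simple deduplication step. A sketch, assuming hypothetical dictionary keys (`patient_id`, `admit_time`, `encounter_id`); applied to the study cohort, a step like this would leave one encounter per unique patient.

```python
from __future__ import annotations

from datetime import datetime


def first_encounters(encounters: list[dict]) -> list[dict]:
    """Keep each patient's earliest encounter; ties on admit time are broken by encounter_id."""
    first: dict[str, dict] = {}
    for enc in sorted(encounters, key=lambda e: (e["admit_time"], e["encounter_id"])):
        first.setdefault(enc["patient_id"], enc)  # the first one seen per patient wins
    return list(first.values())


encounters = [
    {"encounter_id": "e2", "patient_id": "p1", "admit_time": datetime(2021, 2, 1)},
    {"encounter_id": "e1", "patient_id": "p1", "admit_time": datetime(2020, 12, 1)},
    {"encounter_id": "e3", "patient_id": "p2", "admit_time": datetime(2021, 1, 5)},
]
patient_level = first_encounters(encounters)  # keeps e1 (p1) and e3 (p2)
```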

Finally, we analyzed documented contraindications to NSAIDs. In our total cohort of 20,085 encounters, 27% of encounters had a contraindication to NSAIDs, with 26% in the intervention arm and 27% in the control arm.

Adverse Events

We also tracked adverse events that we considered potential clinical harms attributable to, or worsened by, NSAIDs: in-hospital death, new acute kidney injury (AKI) and new gastrointestinal (GI) bleeding. In-hospital death occurred in 196 (1.9%) encounters in the Intervention group versus 187 (1.9%) encounters in the Control group (clustered p-value = 0.52). A new GI bleed occurred in 134 (1.3%) encounters in the Intervention group and 125 (1.3%) encounters in the Control group (p-value = 0.79). New AKI occurred in 600 (8.6%) encounters in the Intervention group and 587 (9.1%) encounters in the Control group (p-value = 0.57) (Table 2).

Discussion

This cluster randomized controlled trial indicates that an EHR-based intervention for pain medication ordering, specifically requiring clinicians to actively opt in to or out of ordering NSAIDs, did not increase ordering or administration of NSAIDs in adult inpatients by the end of the first full hospital day.

Our finding of no difference between arms in NSAID ordering is consistent with the absence of differences in most other outcomes, such as pain scores, opioid ordering at discharge and MEDD of the discharge prescription. The lack of difference in these outcomes is likely due to similar levels of NSAID ordering in the two groups, which left no opportunity for this opioid-sparing medication to have an effect. There was also no difference in clinical harms between the two groups, likely because NSAID ordering was the same and because the event rates of these harms are low. Although the intervention was ineffective, our study’s large sample size provided the ability to detect even rare harms, which is important as we consider other methods for increasing NSAID use. While the ideal rate of NSAID ordering may differ between institutions, given that only about a quarter (27%) of our encounters had a documented contraindication to NSAIDs, it seems reasonable to assume that a rate closer to 50–75% would be appropriate, rather than the low rate we observed.

Our results are similar to those of other studies using the EHR to change clinician behavior.16–20 However, most prior medication studies focused on deprescribing or medication reconciliation rather than on encouraging the addition of a medication. EHR-based clinical decision support has been shown to reduce use of labs and imaging,6,21 whereas in our study we attempted to increase use of an opioid-sparing pain medication in order to decrease opioid use without adversely affecting pain scores. Perhaps the intervention was not strong enough, as it included a ‘hard stop’ but not a ‘default-on’ order. Other studies have found that preselected (‘default-on’) orders can change ordering behavior.22 Finally, alternative interventions to influence medication prescribing, such as displaying cost information or cost savings,23 have been similarly unsuccessful at changing clinician behavior.

It is possible that the timing of our intervention was not optimal for encouraging NSAID ordering and use. Practice culture is extremely difficult to change. Although increased bleeding with NSAIDs has been disproven for most surgical patients,5 surgeons may feel more comfortable waiting until postoperative day 1 to start an NSAID, in which case an intervention should target that day rather than the admission orderset, which is completed immediately after surgery. Additionally, concurrent work to raise awareness about opioid misuse and the need for multimodal pain control may have driven ordering behavior independently of the orderset.

Too often, EHR ordersets are added to clinician workflow with little evaluation. Adding hard stops or required tasks in the EHR can contribute to burnout, so it is important to justify hard stops by tracking outcomes carefully.24 One meta-analysis of 122 trials pooling 10,790 clinicians found that clinical decision support systems increased desired care by only 5.8%, with larger effects seen only in settings with low baseline performance and in pediatric populations.25 In our case, the admission orderset is used thousands of times a day by a diverse group of clinicians, and it is essential that only effective, evidence-based strategies be deployed. Importantly, the lessons learned from this trial informed practice: the “extra click” or hard stop prompting NSAID ordering was discontinued to improve efficiency and avoid contributing to burnout in clinicians’ EHR ordering and use.10 Given the poor performance of these systems, it is crucial that each addition to clinicians’ workflow be studied and removed if it does not create change at the patient level. Additionally, institutions should not attempt to drive clinical decision making through low-intensity interventions without careful study. As previously described, applying and studying these changes in the EHR is highly resource intensive.26

Our study had a number of limitations. First, we randomized at the clinician level to avoid contamination bias. Because we did not randomize at the patient level, an individual patient could be represented in both groups. However, we accounted for clustering by clinician in our analysis and were well powered to detect differences, and our patient-level analysis had similar findings. Second, some clinician groups continued to use their own admission ordersets, so these encounters were not included in the study. Some of these ordersets include NSAIDs as part of an enhanced recovery pathway; these patients may have been more likely to have NSAIDs ordered but were not represented in our findings. However, these groups may also have been more inclined to order NSAIDs at baseline, which would have limited the potential effect of the intervention. Third, because this was a single-center study, our findings may not generalize to other institutions with different workflows, patient populations or culture around pain medication. Fourth, only 40% of admitting clinicians in our study were attendings. Residents may feel less able to choose NSAIDs autonomously, so the intervention might exert more influence at a community hospital where more attendings place admission orders. Finally, our program occurred simultaneously with institution-level efforts to increase the use of non-opioid pain medication, which may have influenced clinicians to order NSAIDs whether or not they were in the intervention group.

Conclusion

This cluster randomized controlled trial of a single EHR intervention, a hard stop requiring clinicians to order or decline NSAIDs before completing an admission, with the goal of increasing uptake of NSAIDs for pain control in adult hospitalized patients, found no difference in NSAID ordering between the two groups. This opt-in intervention was not strong enough to change clinicians’ ordering practices. The intervention was therefore discontinued, and modified randomized quality improvement steps were undertaken.

Supplementary Material

Key Points.

Question:

Does presenting non-steroidal anti-inflammatory drug (NSAID) ordering as a required choice on a hospital-wide admission orderset for adults as part of an opioid-sparing pain management strategy increase prescribing of NSAIDs?

Findings:

This cluster randomized controlled trial of 20,085 hospital encounters found that requiring clinicians to actively select or decline NSAIDs at hospital admission did not increase NSAID ordering at admission.

Meaning:

A stronger intervention will be required to change clinician ordering to promote NSAID uptake.

Acknowledgements

We would like to acknowledge the Learning Health System team at UCSF, including Jennifer Creasman and Alina Goncharova. We would also like to thank the Pain Order Set Committee and Pain Committee at UCSF Health including specific members of the Order Set Committee, Kendall Gross and Jenifer Twiford.

Footnotes

Disclosures

Dr. Bongiovanni was funded by the Agency for Healthcare Research and Quality (AHRQ) (K12HS026383) and is now funded by the National Institute on Aging of the National Institutes of Health under Award Number K23AG073523 and the Robert Wood Johnson Foundation under the Award P0553126. The content is solely the responsibility of the authors and does not necessarily represent the official views of AHRQ, the National Institutes of Health or the Robert Wood Johnson Foundation.

Bibliography

- 1. Wick EC, Grant MC, Wu CL. Postoperative Multimodal Analgesia Pain Management With Nonopioid Analgesics and Techniques: A Review. JAMA Surg 2017;152(7):691. doi: 10.1001/jamasurg.2017.0898

- 2. Bongiovanni T, Hansen K, Lancaster E, O’Sullivan P, Hirose K, Wick E. Adopting best practices in post-operative analgesia prescribing in a safety-net hospital: Residents as a conduit to change. Am J Surg 2020;219(2):299–303. doi: 10.1016/j.amjsurg.2019.12.023

- 3. Lancaster E, Bongiovanni T, Lin J, Croci R, Wick E, Hirose K. Residents as Key Effectors of Change in Improving Opioid Prescribing Behavior. J Surg Educ 2019;76(6):e167–e172. doi: 10.1016/j.jsurg.2019.05.016

- 4. Lancaster E, Bongiovanni T, Wick E, Hirose K. Postoperative Pain Control: Barriers to Quality Improvement. J Am Coll Surg 2019;229(4, Supplement 1):S68. doi: 10.1016/j.jamcollsurg.2019.08.163

- 5. Bongiovanni T, Lancaster EM, Ledesma Y, et al. NSAIDs and Surgical Bleeding: What’s the Bottom Line? J Am Coll Surg 2020;231(4, Supplement 2):e30–e31. doi: 10.1016/j.jamcollsurg.2020.08.075

- 6. Silvestri MT, Xu X, Long T, et al. Impact of Cost Display on Ordering Patterns for Hospital Laboratory and Imaging Services. J Gen Intern Med 2018;33(8):1268–1275. doi: 10.1007/s11606-018-4495-6

- 7. A minimalist electronic health record-based intervention to reduce standing lab utilisation. Postgraduate Medical Journal. Accessed April 20, 2022. https://pmj-bmj-com.ucsf.idm.oclc.org/content/97/1144/97.long

- 8. Pletcher MJ, Flaherman V, Najafi N, et al. Randomized Controlled Trials of Electronic Health Record Interventions: Design, Conduct, and Reporting Considerations. Ann Intern Med 2020;172(11_Supplement):S85–S91. doi: 10.7326/M19-0877

- 9. Najafi N, Cucina R, Pierre B, Khanna R. Assessment of a Targeted Electronic Health Record Intervention to Reduce Telemetry Duration: A Cluster-Randomized Clinical Trial. JAMA Intern Med 2019;179(1):11–15. doi: 10.1001/jamainternmed.2018.5859

- 10. Kawamoto K, McDonald CJ. Designing, Conducting, and Reporting Clinical Decision Support Studies: Recommendations and Call to Action. Ann Intern Med 2020;172(11_Supplement):S101–S109. doi: 10.7326/M19-0875

- 11. CONSORT 2010 Statement: updated guidelines for reporting parallel group randomised trials. The EQUATOR Network. Accessed January 25, 2022. https://www.equator-network.org/reporting-guidelines/consort/

- 12. Deyo RA, Cherkin DC, Ciol MA. Adapting a clinical comorbidity index for use with ICD-9-CM administrative databases. J Clin Epidemiol 1992;45(6):613–619. doi: 10.1016/0895-4356(92)90133-8

- 13. Pain Assessment and Pain Scores. Pain Management Education at UCSF. Accessed June 23, 2022. https://pain.ucsf.edu/understanding-pain-pain-basics/pain-assessment-and-pain-scores

- 14. Scher C, Petti E, Meador L, Van Cleave JH, Liang E, Reid MC. Multidimensional Pain Assessment Tools for Ambulatory and Inpatient Nursing Practice. Pain Manag Nurs Off J Am Soc Pain Manag Nurses 2020;21(5):416–422. doi: 10.1016/j.pmn.2020.03.007

- 15. Opioid Conversion Calculator for Morphine Equivalents. Oregon Pain Guidance. Accessed September 8, 2020. https://www.oregonpainguidance.org/opioidmedcalculator/

- 16. McDonald EG, Wu PE, Rashidi B, et al. The MedSafer Study—Electronic Decision Support for Deprescribing in Hospitalized Older Adults: A Cluster Randomized Clinical Trial. JAMA Intern Med 2022;182(3):265. doi: 10.1001/jamainternmed.2021.7429

- 17. Tamblyn R, Abrahamowicz M, Buckeridge DL, et al. Effect of an Electronic Medication Reconciliation Intervention on Adverse Drug Events: A Cluster Randomized Trial. JAMA Netw Open 2019;2(9):e1910756. doi: 10.1001/jamanetworkopen.2019.10756

- 18. Mekonnen AB, Abebe TB, McLachlan AJ, Brien JE. Impact of electronic medication reconciliation interventions on medication discrepancies at hospital transitions: a systematic review and meta-analysis. BMC Med Inform Decis Mak 2016;16(1):1–14. doi: 10.1186/s12911-016-0353-9

- 19. Electronic tools to support medication reconciliation: a systematic review. Journal of the American Medical Informatics Association. Accessed June 24, 2022. https://academic-oup-com.ucsf.idm.oclc.org/jamia/article/24/1/227/2631462

- 20. van Doormaal JE, van den Bemt PMLA, Zaal RJ, et al. The Influence that Electronic Prescribing Has on Medication Errors and Preventable Adverse Drug Events: an Interrupted Time-series Study. J Am Med Inform Assoc 2009;16(6):816–825. doi: 10.1197/jamia.M3099

- 21. Bates DW, Kuperman GJ, Rittenberg E, et al. A randomized trial of a computer-based intervention to reduce utilization of redundant laboratory tests. Am J Med 1999;106(2):144–150. doi: 10.1016/S0002-9343(98)00410-0

- 22. Olson J, Hollenbeak C, Donaldson K, Abendroth T, Castellani W. Default settings of computerized physician order entry system order sets drive ordering habits. J Pathol Inform 2015;6(1):16. doi: 10.4103/2153-3539.153916

- 23. Khanal S, Schmidtke KA, Talat U, Sarwar A, Vlaev I. Implementation and Evaluation of Two Nudges in a Hospital’s Electronic Prescribing System to Optimise Cost-Effective Prescribing. Healthc Basel Switz 2022;10(7):1233. doi: 10.3390/healthcare10071233

- 24. Moy AJ, Schwartz JM, Chen R, et al. Measurement of clinical documentation burden among physicians and nurses using electronic health records: a scoping review. J Am Med Inform Assoc 2021;28(5):998–1008. doi: 10.1093/jamia/ocaa325

- 25. Kwan JL, Lo L, Ferguson J, et al. Computerised clinical decision support systems and absolute improvements in care: meta-analysis of controlled clinical trials. BMJ 2020;370:m3216. doi: 10.1136/bmj.m3216

- 26. Trinkley KE, Kroehl ME, Kahn MG, et al. Applying Clinical Decision Support Design Best Practices With the Practical Robust Implementation and Sustainability Model Versus Reliance on Commercially Available Clinical Decision Support Tools: Randomized Controlled Trial. JMIR Med Inform 2021;9(3):e24359. doi: 10.2196/24359
