Abstract

We used a dynamical systems perspective to understand decision-related neural activity, a fundamentally unresolved problem. This perspective posits that time-varying neural activity is described by a state equation with an initial condition and evolves in time by combining, at each time step, recurrent activity and inputs. We hypothesized various dynamical mechanisms of decisions, simulated them in models to derive predictions, and evaluated these predictions by examining firing rates of neurons in the dorsal premotor cortex (PMd) of monkeys performing a perceptual decision-making task. Prestimulus neural activity (i.e., the initial condition) predicted poststimulus neural trajectories and covaried with RT and the outcome of the previous trial, but not with choice. Poststimulus dynamics depended on both the sensory evidence and the initial condition, with easier stimuli and fast initial conditions leading to the fastest choice-related dynamics. Together, these results suggest that initial conditions combine with sensory evidence to induce decision-related dynamics in PMd.

Subject terms: Premotor cortex, Decision, Dynamical systems

It remains unclear why some decisions take longer than others even when the sensory inputs are similar. Here, the authors show that both initial neural state and sensory input combine in the premotor cortex to influence the speed and geometry of neural population activity during decisions.

Introduction

There are 10 minutes to make it to the airport, but your phone says you're still 12 minutes away. Seeing a yellow light in the distance, you quickly floor it. You get to the intersection only to realize you have run a red light. The sight of the lights results in patterns of neural activity that respectively lead you to respond quickly to your environment (i.e., speed up when you see the yellow) and process feedback (i.e., slow down after running the red). This process of discriminating sensory cues to arrive at a choice is termed perceptual decision-making1–5.

Research in invertebrates6,7, rodents8,9, monkeys10,11, and humans12,13 has attempted to understand the neural basis of perceptual decision-making. With a few exceptions14–16, these studies have focused on single neurons in decision-related brain regions10,11,17,18. However, the link between neural population dynamics in these brain areas and decision-making behavior, especially in reaction time (RT) tasks, is currently largely unclear. Here, we address this gap by using a dynamical systems approach19–22.

The dynamical systems approach21–23 posits that neural population activity (e.g., firing rates), X, is governed by a state equation of the following form:

$$\frac{dX}{dt} = F(X(t)) + U(t), \qquad X(0) = X_0 \qquad (1)$$

where F represents the recurrent dynamics (i.e., local synaptic input) in the region of interest and is usually considered fixed for a given brain area in a task. U is the input from neurons outside the region of interest and depends on various task contingencies (e.g., sensory evidence). X0 is the initial condition for these dynamics. In this framework, the dynamics on every trial depend on both the initial condition and the input, and lead to distinct behavior on every trial.
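To make the roles of the initial condition and the input concrete, the sketch below integrates the state equation with forward Euler for a deliberately simple, hypothetical one-dimensional system in which F(X) = 0.5 X (weak recurrent amplification) and U is a constant input standing in for sensory evidence. The functions `simulate` and `time_to_bound`, the bound, and all parameter values are illustrative assumptions, not the models used in this study. The example only shows the qualitative point that either a larger input or an initial condition closer to the bound shortens the time to reach that bound.

```python
import numpy as np

def simulate(F, u, x0, dt=1e-3, t_max=2.0):
    """Forward-Euler integration of dX/dt = F(X) + U(t) starting from X(0) = x0."""
    n_steps = int(round(t_max / dt))
    x = np.empty((n_steps + 1, np.size(x0)))
    x[0] = x0
    for k in range(n_steps):
        x[k + 1] = x[k] + dt * (F(x[k]) + u(k * dt))
    return x

def time_to_bound(x, bound, dt=1e-3):
    """First time at which the first state variable reaches `bound` (nan if never)."""
    idx = np.flatnonzero(x[:, 0] >= bound)
    return idx[0] * dt if idx.size else np.nan

# Hypothetical 1-D system: recurrent term F(X) = 0.5 X plus a constant input U.
F = lambda x: 0.5 * x
times = {}
for x0 in (0.0, 0.3):              # initial condition X0
    for strength in (0.5, 1.0):    # input U, e.g. weak vs strong sensory evidence
        traj = simulate(F, lambda t, s=strength: s, x0)
        times[(x0, strength)] = time_to_bound(traj, bound=1.0)
```

Because this toy system is linear, its time to bound has the closed form ln((1 + U/0.5) / (X0 + U/0.5)) / 0.5, which is a convenient check on the numerical integration.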

The dynamical systems approach helps link time-varying, heterogeneous activity of neural populations and behavior20,24,25. In a study of motor planning, position and velocity of the neural population dynamics relative to the mean trajectory at the time of the go cue (i.e., initial condition or X0) explained considerable variability in RTs20 (see Fig. 1a). Similarly, in studies of timing, the initial condition encoded the perceived time interval and predicted the speed of subsequent neural dynamics and the reproduced time interval26 (see Fig. 1b). In the same study, an input depending on a task contingency (gain) altered the speed of dynamics (Fig. 1b).

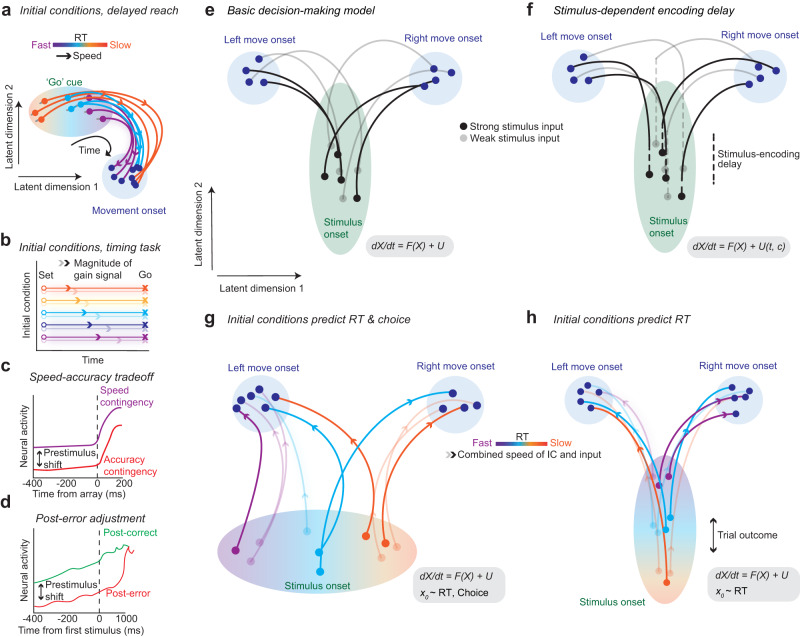

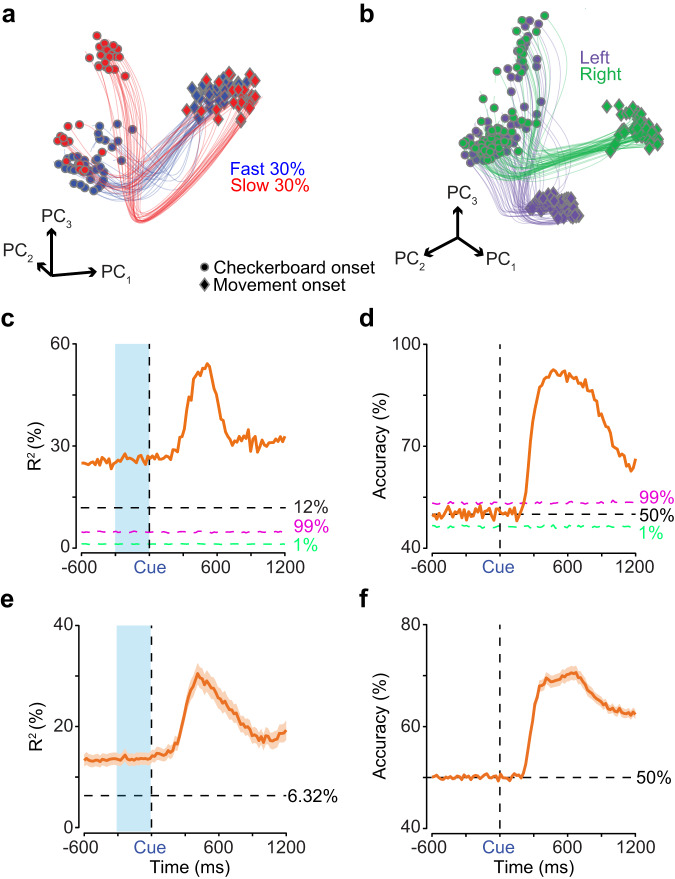

Fig. 1. Initial conditions and inputs predict subsequent neural dynamics and behavior.

a The initial condition hypothesis from delayed reach experiments20 posits that the position and velocity of a neural state at the time of the go cue (initial condition) negatively correlates with RT. b The neural population state at the end of a perceived time interval and a gain modifier actuates the initial conditions (Set, circles) determining the speed (arrows) of subsequent dynamics and therefore when an action is produced (Go, X’s)26. c, d Prestimulus neural activity differs for speed and accuracy contingencies for speed-accuracy tradeoff tasks58 or after correct and error trials33. e Basic decision-making model with no prestimulus effects, where only the strength of sensory evidence determines RT and choice. f Stimulus-dependent encoding delay. Decision-making takes longer as a function of how long it takes to visually process stimuli (dotted line). g Biased initial conditions predict both RT and choice (X0 ~ RT, choice) and combine with sensory evidence to lead to decisions. Initial neural states vary trial-to-trial, and are closer to the movement onset state for one choice (here left). Trial outcomes have no effect on initial conditions in this model as initial conditions largely reflect a reach bias. h Overall dynamics depend on both the initial conditions, which solely predict RT (X0 ~ RT), and the sensory evidence. The closer the initial condition is to a movement initiation state before checkerboard onset, the faster the velocity of the dynamics will be, leading to faster RTs. Previous outcomes shift these initial conditions such that the dynamics are either faster or slower. Current population state at stimulus onset/go cue (dots within an ellipse; e, f color matches stimulus strength; g, h color and opacity matches population state and stimulus strength respectively) evolves along trajectories of varying speed (color bars in (a) & (g/h); apply to (a), (b), (g), (h)) as set by initial conditions (a, g, h) and/or inputs (e–g). 
In (g) and (h) light/dark opacity of the arrowhead indicates speed of trajectory as a function of weak/strong stimulus input and initial condition (IC).

Here, we expanded on findings from motor planning and timing studies and investigated which dynamical system best described decision-related neural population activity in dorsal premotor cortex (PMd). To derive hypotheses about dynamics, we leveraged three results from prior studies. First, the rate at which choice-selective activity emerges depends on the strength of the sensory evidence (e.g., auditory pulses, random dot motion, static red-green checkerboards, etc.)8,10,18,27. Second, in studies of speed-accuracy tradeoff, prestimulus neural activity is different for fast vs. slow blocks16,28–31 (Fig. 1c). Finally, prestimulus firing rates are altered by the outcome of the previous trial32,33 (Fig. 1d). Based on these findings, we hypothesized four different dynamical mechanisms that could describe the data.

The simplest dynamical system assumes initial conditions do not covary with RT or choice and that neural dynamics and behavior are driven largely by the sensory evidence (Fig. 1e).

A second dynamical system assumes that the initial conditions do not vary, but that there are either systematic or random delays in sensory evidence processing34 that alter choice-related dynamics and behavior (Fig. 1f).

A third system assumes that initial conditions are biased towards one or another choice35, correlate with RT, and that poststimulus dynamics are influenced by both initial conditions and sensory evidence (Fig. 1g).

Finally, a fourth system assumes that initial conditions correlate with RT but not choice, and poststimulus dynamics depend on both sensory evidence and initial condition. Additionally, the changes in initial condition are in part due to the outcome of the previous trial (Fig. 1h).

We used these different candidate dynamical mechanisms to build recurrent neural networks with various constraints (Figs. S1a and S2) and simulate synthetic neural populations (Figs. S1b and S3). We analyzed these simulations of neural activity using dimensionality reduction, decoding, and regression analyses. These different dynamical mechanisms make distinct predictions about the principal component trajectories and whether prestimulus activity covaries with choice and RT. We used the predictions to analyze the firing rates of neurons recorded in PMd of monkeys performing a red-green RT perceptual decision-making task18.

Neural population dynamics in PMd had the following properties. First, state space trajectories were ordered pre- and poststimulus as a function of RT, with such effects observed within a stimulus difficulty. Subsequent KiNeT26 analysis of these trajectories suggested that faster RTs were associated with faster pre- and poststimulus dynamics as compared to slower RTs. Second, cross-validated single-trial analyses using tensor component analysis, dynamical systems models, reduced-rank regression, decoding, and regression further corroborated that the prestimulus neural state, that is, the initial condition, predicted RT but not the eventual choice. Third, poststimulus choice-related dynamics depended on both the initial condition and the sensory evidence, with choice-related signals emerging faster for easier compared to harder trials but also modulated by the initial condition. Finally, initial conditions and choice-related dynamics depended on the outcome of the previous trial, with pre- and poststimulus dynamics slower on trials following an error than on trials following a correct response.

Our results expand on the observations of ref. 20, that the prestimulus position and velocity of the neural trajectories in state space (i.e., initial conditions) are correlated with RT, as we demonstrate that 1) both inputs and initial conditions jointly control dynamics, and 2) that changes in the initial conditions are dependent upon previous outcomes. Together, the results suggest that decision-related activity in PMd is captured by a dynamical system (Fig. 1h) composed of initial conditions, that covary with RT and are dependent upon previous outcome, and inputs (i.e., sensory evidence) which combine to induce choice-related dynamics.

Results

Decision-making behavior is dependent on sensory evidence and internal state

We trained two macaque monkeys (O and T) to discriminate the dominant color of a central, static checkerboard composed of red and green squares (Fig. 2a). Fig. 2b depicts the trial timeline. The trial began when the monkey held the center target and fixated on the fixation cross. After a short randomized holding time (300–485 ms), a red and a green target appeared on either side of the central hold (target configurations were randomized). After an additional randomized target viewing time (400-1000 ms), the checkerboard appeared. The monkey’s task was to reach to, and touch the target corresponding to the dominant color of the checkerboard. While animals were performing the task, we measured the arm and eye movements of the monkeys. We identified RTs as the first time when hand speed exceeded 10% of maximum speed during a reach. If the monkey correctly performed a trial, he was rewarded with a drop of juice and a short inter-trial interval (ITI, 300 to 600 ms across sessions) whereas if he made an error it led to a longer timeout ITI (ranging from ~ 1500 ms to ~ 3500 ms). Using timeouts for errors encouraged animals to prioritize accuracy over speed.
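The RT criterion above (the first time hand speed exceeds 10% of its maximum during the reach) can be sketched as follows. This is a minimal illustration, assuming 3-D hand positions sampled at 1 kHz and a known checkerboard-onset time; the function name `reaction_time`, the sampling rate, and the minimum-jerk test reach are hypothetical choices for the example and are not taken from the study's pipeline.

```python
import numpy as np

def reaction_time(t, pos, cue_time, frac=0.10):
    """RT measured from cue_time: first post-cue sample where hand speed exceeds
    `frac` of the maximum post-cue speed (nan if never)."""
    vel = np.gradient(pos, t, axis=0)        # (n_samples, 3) hand velocity
    speed = np.linalg.norm(vel, axis=1)      # scalar hand speed
    post = t >= cue_time
    thresh = frac * speed[post].max()
    idx = np.flatnonzero(post & (speed > thresh))
    return t[idx[0]] - cue_time if idx.size else np.nan

# Hypothetical reach: 10 cm minimum-jerk movement starting 450 ms after the cue, lasting 300 ms.
t = np.arange(-0.2, 1.5, 0.001)                  # seconds, 1 kHz sampling
onset, duration, distance = 0.45, 0.30, 10.0
tau = np.clip((t - onset) / duration, 0.0, 1.0)
x = distance * (10 * tau**3 - 15 * tau**4 + 6 * tau**5)
pos = np.column_stack([x, np.zeros_like(x), np.zeros_like(x)])
rt = reaction_time(t, pos, cue_time=0.0)         # slightly later than the 0.45 s movement onset
```

A relative (10% of peak) threshold, rather than a fixed speed, makes the criterion insensitive to differences in overall reach vigor across trials.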

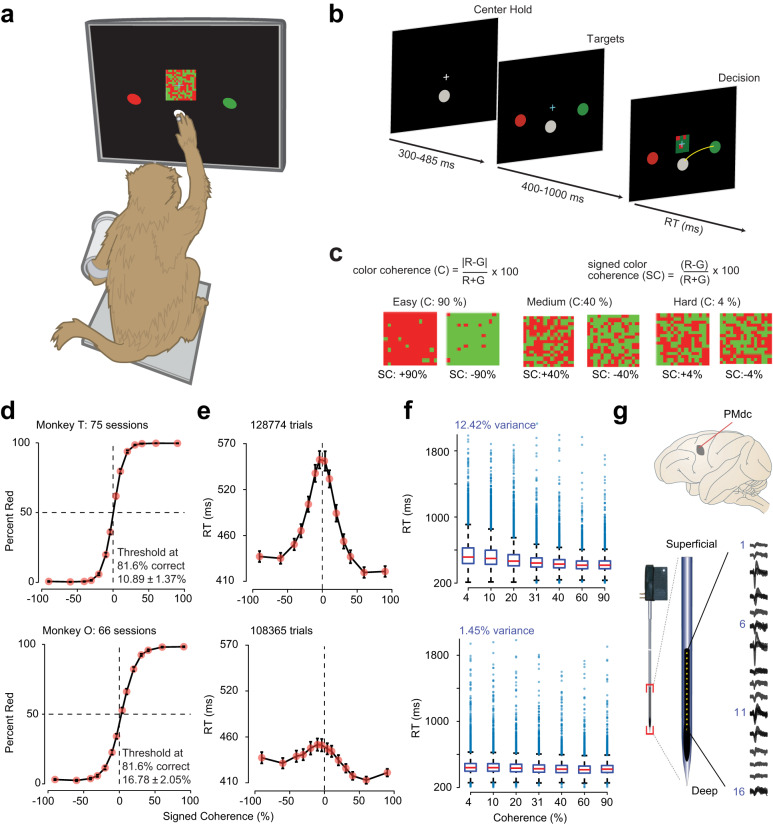

Fig. 2. Monkeys can discriminate red-green checkerboards and demonstrate rich variability in RTs between and within stimulus coherences.

a An illustration of the setup for the behavioral task. We loosely restrained the arm the monkey was not using with a plastic tube and cloth sling. A reflective infrared bead was taped on the middle digit of the active hand to measure hand position in 3D space and to mimic a touch screen. Eye position was tracked using an infrared reflective mirror placed in front of the monkey's nose. b Timeline of the discrimination task. c Examples of different stimuli used in the experiment parameterized by the color coherence of the checkerboard cue. Positive values of signed coherence (SC) denote more red (R) than green (G) squares and vice versa. d Psychometric curves, percent responded red, and (e) RTs (correct and incorrect trials) as a function of the percent SC of the checkerboard cue, over sessions of the two monkeys (T: 75 sessions; O: 66 sessions). Dark orange markers show measured data points along with 2 × SEM estimated over sessions (error bars lie within the marker for many data points). The black line segments are drawn between these measured data points to guide the eye. Discrimination thresholds, measured as the color coherence level at which the monkey made 81.6% correct choices, are also indicated. Thresholds were estimated using a fit based on the cumulative Weibull distribution function. f Standard box-and-whisker plots (i.e., center line is median, box limits are upper and lower quartiles, whiskers are 1.5x interquartile range, and outliers are plotted as blue circles) of RT as a function of unsigned checkerboard coherence (RTs from n = 128,774 (top) and n = 108,365 trials (bottom)). Note large RT variability within and across coherences. g The recording location, caudal PMd (PMdc), indicated on a macaque brain, adapted from89. Single and multi-units in PMdc were primarily recorded by a 16-electrode (150-μm interelectrode spacing) U-probe (Plexon, Inc., Dallas, TX, United States); example recording depicted.
Images in (a) and (g) are adapted from Chandrasekaran, C., Peixoto, D., Newsome, W.T. et al. Laminar differences in decision-related neural activity in dorsal premotor cortex. Nat Commun 8, 614 (2017). 10.1038/s41467-017-00715-0. Source data are provided as a Source Data file.

We used 14 levels of sensory evidence, referred to as signed color coherence (SC, Fig. 2c) because its sign depends on the dominant color of the checkerboard. Unsigned coherence (C, Fig. 2c) refers to the strength of the stimulus and is independent of the dominant color. Thus, there are 7 levels of C.
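The relationship between signed and unsigned coherence can be illustrated with a short sketch. We assume here, for illustration only, that signed coherence is computed as 100 × (R − G)/(R + G) from the counts of red (R) and green (G) squares, so that positive values mean more red; the checkerboard size of 200 squares and the red counts below are hypothetical. Mirroring each red-dominant board into its green-dominant counterpart yields 14 signed levels that collapse onto 7 unsigned levels.

```python
import numpy as np

def signed_coherence(n_red, n_green):
    """Signed color coherence in percent (assumed definition): positive = more red squares."""
    n_red = np.asarray(n_red, dtype=float)
    n_green = np.asarray(n_green, dtype=float)
    return 100.0 * (n_red - n_green) / (n_red + n_green)

def unsigned_coherence(sc):
    """Stimulus strength, independent of which color dominates."""
    return np.abs(sc)

# Hypothetical 200-square checkerboards: 7 red-dominant counts and their green-dominant mirrors.
n_total = 200
reds = np.array([110, 120, 130, 140, 150, 160, 170])
red_counts = np.concatenate([reds, n_total - reds])
sc = signed_coherence(red_counts, n_total - red_counts)   # 14 signed levels: ±10, ±20, ..., ±70
c = unsigned_coherence(sc)                                # 7 unsigned levels: 10, 20, ..., 70
```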

The behavioral performance of the monkeys depended on the signed coherence. In general, across all sessions, monkeys made more errors when discriminating stimuli with near equal combinations of red and green squares (Fig. 2d). We fit the proportion correct as a function of unsigned coherence using a Weibull distribution function to estimate slopes and psychometric thresholds (average R2; T: 0.99, 75 sessions; O: 0.98, 66 sessions; Threshold (α): Mean ± SD: T: 10.89 ± 1.37%, O: 16.78 ± 2.05%; slope (β): Mean ± SD over sessions, T: 1.26 ± 0.18, O: 1.10 ± 0.14).

As expected, monkeys were generally slower for more ambiguous checkerboards (Fig. 2e). However, per-monkey regressions using unsigned coherence (C) to predict RT explained only ~12.4% and ~1.5% of RT variability for monkeys T and O, respectively (Fig. 2f). These results suggest that while some RT variability is induced by differences in the sensory evidence, there is also an internal source of RT variability. Indeed, as the box plots in Fig. 2f show, a key feature of the monkeys' behavior is that RTs are quite variable within a coherence, including the easiest ones. In the subsequent sections, we investigated which dynamical mechanism (Fig. 1e–h) was most consistent with this RT variability and choice behavior.
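The "variance explained" figures above are the R² of a linear regression of RT on unsigned coherence. A minimal sketch of that computation, assuming an ordinary least-squares fit with an intercept, is shown below; the simulated trials are hypothetical and merely illustrate how a genuine coherence effect can still explain only a small fraction of RT variance when within-coherence variability is large.

```python
import numpy as np

def variance_explained(x, y):
    """R^2 of an ordinary least-squares fit y ~ b0 + b1 * x."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    design = np.column_stack([np.ones_like(x), x])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    resid = y - design @ coef
    return 1.0 - resid @ resid / np.sum((y - y.mean()) ** 2)

# Hypothetical trials: a modest coherence effect buried in large within-coherence RT variability.
rng = np.random.default_rng(0)
coh = rng.choice([5.0, 10.0, 20.0, 30.0, 45.0, 60.0, 90.0], size=5000)
rt = 550.0 - 1.0 * coh + rng.normal(0.0, 120.0, size=coh.size)   # ms
r2 = variance_explained(coh, rt)
```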

Single unit prestimulus firing rates covary with RT and poststimulus activity is input dependent

Our database for understanding the neural population dynamics underlying decision-making consists of 996 units (546 in T and 450 in O), including both single neurons and multi-units (801 single neurons), recorded from PMd (Fig. 2g) of the two monkeys over 141 sessions. We included units if they were well separated from noise and if their activity was modulated in at least one task epoch. A unit was categorized as a single neuron based on spike sorting combined with minimal inter-spike-interval (ISI) violations (≤ 1.5% of ISIs were ≤ 1.5 ms; median across single neurons: 0.28%).
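The ISI part of the single-neuron criterion can be written in a few lines. The sketch below covers only the ISI check (≤ 1.5% of ISIs at or below 1.5 ms), not the spike-sorting judgment it is combined with; the function names and example spike trains are hypothetical.

```python
import numpy as np

def isi_violation_fraction(spike_times_s, refractory_s=0.0015):
    """Fraction of inter-spike intervals at or below `refractory_s` seconds."""
    isi = np.diff(np.sort(np.asarray(spike_times_s, dtype=float)))
    return float(np.mean(isi <= refractory_s)) if isi.size else 0.0

def passes_isi_criterion(spike_times_s, max_fraction=0.015, refractory_s=0.0015):
    """ISI part of the single-neuron criterion: <= 1.5% of ISIs are <= 1.5 ms."""
    return isi_violation_fraction(spike_times_s, refractory_s) <= max_fraction

regular = np.arange(0.0, 10.0, 0.01)                       # hypothetical 100 Hz unit, no violations
contaminated = np.array([0.0, 0.001, 0.010, 0.020, 0.030])  # one ISI of 1 ms out of four
```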

Fig. 3 shows the smoothed (30 ms Gaussian) trial-averaged firing rates of six example units recorded in PMd aligned to checkerboard onset and organized either by coherence and choice, plotted until the median RT (Fig. 3a), or by RT and choice, plotted until the center of the RT bin (Fig. 3b). Many units showed classical ramp-like firing rates10,30,35–37 (see Fig. 3, top three rows). However, many others demonstrated complex, time-varying patterns of activity that included increases and decreases in firing rate that covaried with coherence, choice, and RT15,18,38,39 (Fig. 3, bottom three rows). Additionally, each of the neurons in Fig. 3b (albeit curated examples) demonstrated prestimulus firing rate covariation with RT, implying variable initial conditions that ultimately factor into RTs. These firing rate dynamics, and those of additional units (Fig. S4a, bottom two rows), were not an artifact of smoothing spike trains with a 30 ms Gaussian kernel; they were nearly identical when spike trains were filtered with a 15 ms Gaussian or a causal 50 ms boxcar kernel (Fig. S4b, c).
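For readers unfamiliar with the two kernel types, the sketch below converts 1 ms binned spike counts into firing rates with a non-causal Gaussian kernel and with a causal boxcar. It assumes that "30 ms Gaussian" refers to the kernel's standard deviation and truncates the kernel at ±4σ; both are illustrative assumptions. The causal boxcar uses only past and present bins, so it cannot smear post-stimulus activity back into the prestimulus period.

```python
import numpy as np

def gaussian_rate(counts, sigma_ms=30.0, dt_ms=1.0):
    """Non-causal Gaussian smoothing of binned spike counts; returns spikes/s."""
    half = int(np.ceil(4 * sigma_ms / dt_ms))
    lags = np.arange(-half, half + 1) * dt_ms
    kernel = np.exp(-0.5 * (lags / sigma_ms) ** 2)
    kernel /= kernel.sum() * (dt_ms / 1000.0)        # unit area in seconds -> output in spikes/s
    return np.convolve(counts, kernel, mode="same")

def causal_boxcar_rate(counts, width_ms=50.0, dt_ms=1.0):
    """Causal boxcar: the rate at bin i uses only bins i - n + 1 .. i; returns spikes/s."""
    n = int(round(width_ms / dt_ms))
    kernel = np.ones(n) / (n * dt_ms / 1000.0)
    return np.convolve(counts, kernel, mode="full")[: len(counts)]

counts = np.zeros(2000)
counts[::10] = 1.0          # hypothetical regular 100 Hz spike train in 1 ms bins
g = gaussian_rate(counts)
bx = causal_boxcar_rate(counts)
```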

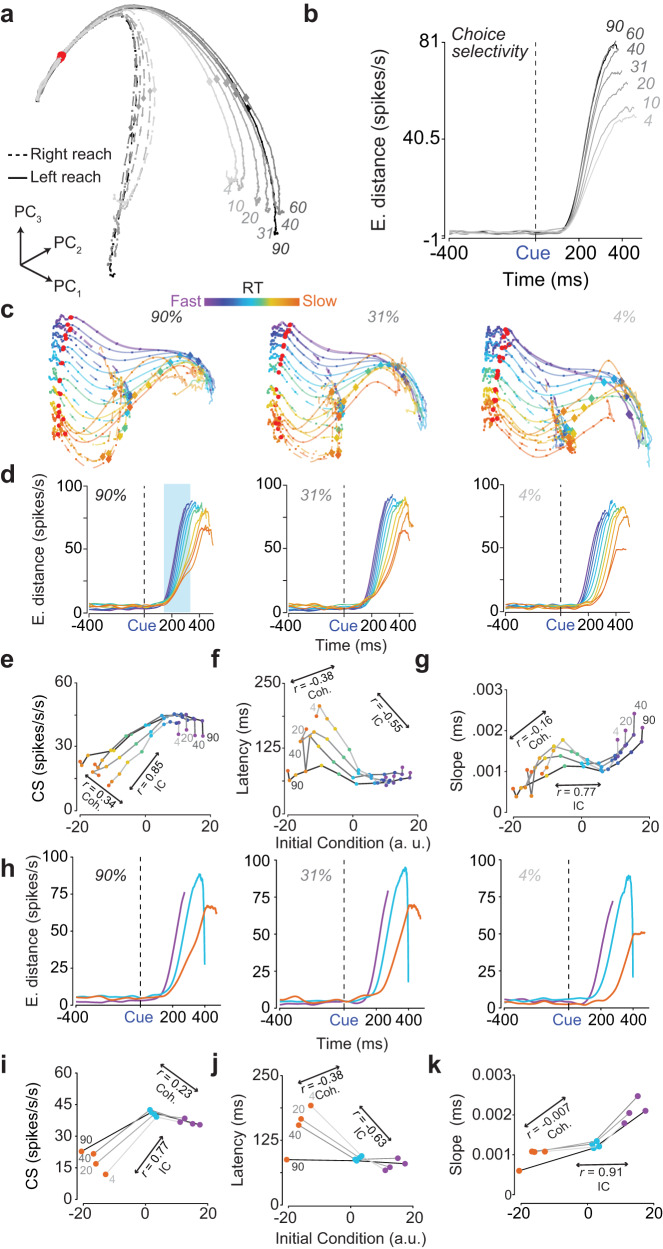

Fig. 3. Firing rates of a heterogeneous population of PMd neurons are modulated by the input (i.e., strength of the sensory evidence), and the initial condition (i.e., prestimulus firing rate) covaries with RT.

Firing rate across (a) 7 levels of color coherence (grayscale color bar with darker colors indicating easier coherences) and (b) 11 RT bins, from fast (violet) to slow (orange), for both action choices (right - dashed, left - solid) of 6 example units in PMd from monkeys T and O aligned to stimulus onset (Cue/vertical dashed black line). Firing rates are plotted until the median RT of each color coherence and until the midpoint of each RT bin (notice slightly different lengths of lines). Color-matched shading is SEM. In (a), firing rates tend to separate as a function of coherence, and in (b), the same neurons show prestimulus covariation as a function of RT (X0 ~ RT), and post-stimulus covariation with RT and choice. Source data are provided as a Source Data file.

These examples already suggest that decision-related PMd neural responses are more consistent with some dynamical hypotheses than others. First, when responses are organized by coherence and choice, choice-selective signals are largely absent before checkerboard onset, and the latency of choice-selective responses after checkerboard onset is only modestly affected by stimulus coherence. These results are inconsistent with the dynamical hypotheses outlined in Fig. 1e, g. Second, the prestimulus covariation with RT provides preliminary support for the dynamical mechanisms in Fig. 1g, h and is inconsistent with the hypothesis shown in Fig. 1f.

In the next sections, we use dimensionality reduction, cross-validated single-trial analyses, decoding, and regression methods to understand how RT and choice are represented in the shared, time-varying, and heterogeneous activity of these neurons and reject various dynamical hypotheses. To predict the results of these analyses for various dynamical models shown in Fig. 1e–h we first used two modeling approaches that we describe in the next section.

Different dynamical mechanisms predict distinct relationships between prestimulus activity with RT and choice

The single unit examples shown in Fig. 3 are consistent with the dynamical mechanisms in Fig. 1g, h in that prestimulus dynamics covary with RT. However, we need to ensure that such effects are also present at the level of the neural population. A common approach to analyze heterogeneous neural populations is to use a dimensionality reduction method such as principal components analysis (PCA) on trial-averaged firing rates organized by various variables of interest and visualize the associated state-space trajectories15,40. To derive predictions on how principal components (PCs) from neural data would appear for the various dynamical mechanisms outlined in Fig. 1e–h, we used two complementary approaches.

First, we trained recurrent neural network (RNN) models to perform the same task as our monkeys. RNN models used a ReLU nonlinearity, received noisy evidence for left and right choices, and output two decision variables for left and right choices (Fig. S1a). Additional details on the RNNs can be found in the methods (section "Recurrent neural network models of various dynamical hypotheses"). Second, we simulated a population of hypothetical neurons based on our previous work18, which used various metrics to comprehensively characterize the units analyzed in this study. The key observation from that study was that PMd contains a large fraction of neurons that increase their firing rate after stimulus onset and show strong covariation with RT and choice (i.e., increased neurons). Smaller fractions decreased their firing rate after stimulus onset (i.e., decreased) or were only active around movement onset (i.e., perimovement). Further details of how these neurons were modeled can be found in the methods (Section "Hypothetical synthetic neural populations") and in Fig. S1b.
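The general RNN architecture described above (ReLU units, two noisy evidence channels, two decision-variable readouts) can be sketched as an Euler-discretized rate network. This is only the forward dynamics of an untrained, randomly initialized network; the time constant, step size, weight scales, input noise level, and the `gain` parameter (included because one model variant altered the ReLU gain) are hypothetical, and training by gradient descent is omitted. It is not the network used in the study.

```python
import numpy as np

def relu(x, gain=1.0):
    """Rectified-linear rate function; `gain` scales its slope."""
    return gain * np.maximum(x, 0.0)

def run_rnn(W_rec, W_in, W_out, b, inputs, x0, dt=0.01, tau=0.05, gain=1.0):
    """Euler-discretized rate RNN: tau dx/dt = -x + W_rec relu(x) + W_in u + b.
    Returns the readout W_out relu(x) at every step (two decision variables here)."""
    x = np.array(x0, dtype=float)
    outputs = np.empty((inputs.shape[0], W_out.shape[0]))
    for k, u in enumerate(inputs):
        x = x + (dt / tau) * (-x + W_rec @ relu(x, gain) + W_in @ u + b)
        outputs[k] = W_out @ relu(x, gain)
    return outputs

def noisy_evidence(coherence, n_steps, noise_sd, rng):
    """Left/right input channels whose means differ by `coherence` in [-1, 1], plus Gaussian noise."""
    means = np.array([(1.0 + coherence) / 2.0, (1.0 - coherence) / 2.0])
    return means + noise_sd * rng.standard_normal((n_steps, 2))

# Untrained, randomly initialized network with hypothetical sizes and scales.
rng = np.random.default_rng(0)
n_units = 50
W_rec = 0.8 * rng.standard_normal((n_units, n_units)) / np.sqrt(n_units)
W_in = rng.standard_normal((n_units, 2))
W_out = rng.standard_normal((2, n_units)) / np.sqrt(n_units)
b = np.zeros(n_units)
u = noisy_evidence(0.3, n_steps=150, noise_sd=0.2, rng=rng)
dv = run_rnn(W_rec, W_in, W_out, b, u, x0=np.zeros(n_units))
```

In this formulation, an initial-condition hypothesis corresponds to changing `x0` or a prestimulus bias `b`, whereas an input hypothesis corresponds to changing `u`.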

After training the RNNs and building the synthetic populations, we performed PCA, decoding, and regression analyses on the firing rates of both types of models (Figs. S2 and S3). Classical decision-making models (Figs. S2a and S3a) and a delayed-input model (Fig. S2b), without any bias for one or another choice as in Fig. 1e, f, show little prestimulus covariation with choice or RT. In contrast, models (Figs. S2c and S3b) with a bias for one of the reaches as in Fig. 1g show a PCA structure where the PCs are biased for one choice over the other. Finally, to simulate the hypothesis shown in Fig. 1h, we used two approaches for the RNN: we either biased both left and right choice inputs before checkerboard onset or altered the gain of the ReLU function. In both RNN cases, we found that the prestimulus state covaries with RT. However, in neither of these RNNs (Fig. S2d, e) did we observe a strong and reliable prestimulus covariation with choice. Similarly, in a synthetic neural population where ~20% of neurons (Section "Hypothetical synthetic neural populations") had baseline firing modulation with RT (consistent with the results reported in18 and the results above), prestimulus population dynamics demonstrated covariation with RT but not choice (Fig. S3c).

These RNN models and synthetic neuron simulations suggest that PCA on trial-averaged neural responses should demonstrate distinct structure consistent with one or another hypothesis. We used these results to evaluate which of these dynamical hypotheses are most consistent with our neural data.

Principal component analysis reveals prestimulus population state covariation with RT

Informed by our modeling analyses, we next visualized which dynamical hypothesis was most consistent with our PMd data. We initially performed a PCA on trial-averaged firing rate activity (again smoothed with a 30 ms Gaussian) windowed about checkerboard onset, organized by overlapping RT bins (11 levels representing a spectrum from faster to slower RTs; 300–400 ms, 325–425 ms, ..., to 600-1000 ms), and both reach directions (Fig. 4a, b). For this analysis, we pooled all trials, including all stimulus coherences and both correct and incorrect trials, then sorted by and averaged within RT bin and choice. On average, we used 100 to 200 trials per RT bin for these analyses (Fig. S6b).
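The trial-averaging and PCA steps above can be sketched as follows, assuming each unit's smoothed single-trial rates are available as a (trials × time) array together with per-trial RTs and choices. Because units need not be simultaneously recorded, each unit is averaged over its own trials before the units are stacked into a pseudo-population. The bin edges, population size, and Poisson test data are hypothetical; overlapping bins simply mean that a trial can contribute to more than one bin.

```python
import numpy as np

def condition_average(rates, rt, choice, bins):
    """Trial-average one unit's rates (n_trials, n_time) within each RT bin and choice.
    Returns (n_bins, 2, n_time); a condition with no trials is left as NaN."""
    out = np.full((len(bins), 2, rates.shape[1]), np.nan)
    for i, (lo, hi) in enumerate(bins):
        for c in (0, 1):
            mask = (rt >= lo) & (rt < hi) & (choice == c)
            if mask.any():
                out[i, c] = rates[mask].mean(axis=0)
    return out

def pca_trial_averaged(avg):
    """PCA of condition-averaged rates avg (n_units, n_bins, 2, n_time) via SVD.
    Returns PC scores (n_bins, 2, n_time, n_components) and explained-variance ratios."""
    n_units = avg.shape[0]
    X = avg.reshape(n_units, -1).T          # rows: condition x time samples; columns: units
    X = X - X.mean(axis=0)                  # center each unit
    _, S, Vt = np.linalg.svd(X, full_matrices=False)
    scores = (X @ Vt.T).reshape(avg.shape[1:] + (-1,))
    return scores, S**2 / np.sum(S**2)

# Hypothetical pseudo-population: each unit has its own trials (units need not be simultaneously recorded).
rng = np.random.default_rng(1)
bins = [(300, 400), (350, 450), (400, 500), (450, 600)]   # overlapping RT bins in ms (illustrative)
per_unit = []
for _ in range(30):
    rt = rng.uniform(300, 600, 400)
    choice = rng.integers(0, 2, 400)
    rates = rng.poisson(20.0, (400, 60)).astype(float)
    per_unit.append(condition_average(rates, rt, choice, bins))
scores, evr = pca_trial_averaged(np.stack(per_unit))
```

Empty conditions would propagate NaNs into the SVD, so in practice such conditions must be dropped or the bins widened.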

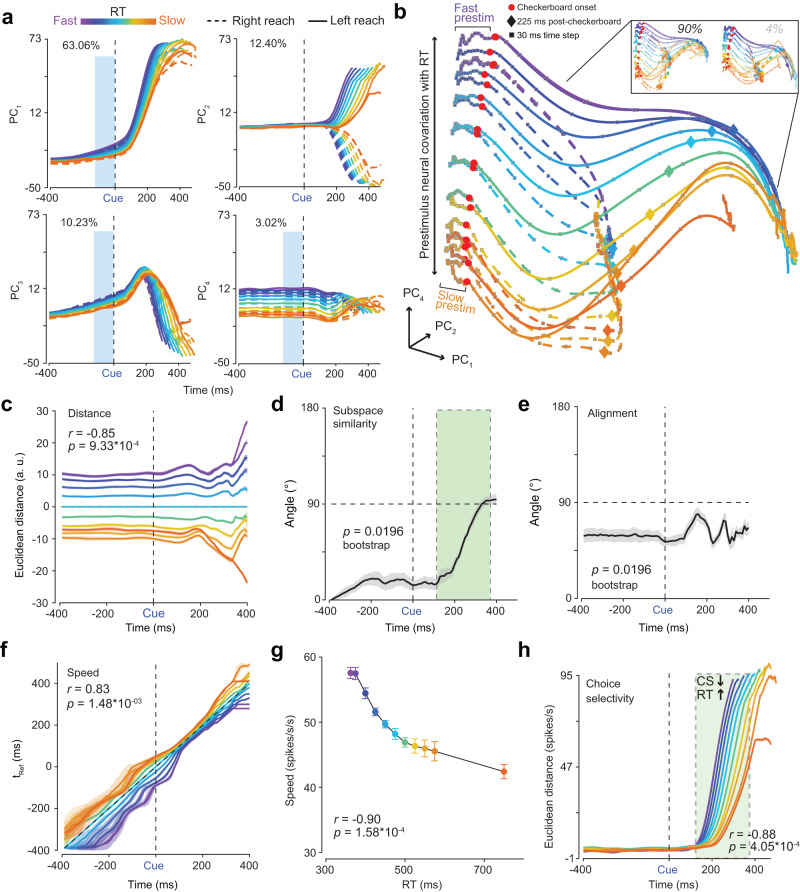

Fig. 4. Prestimulus population firing rates covary with RT.

a PCs 1–4 of trial-averaged firing rates organized across 11 RT bins and both reach directions, aligned to checkerboard onset. Percent variance explained is provided. Blue boxes highlight prestimulus covariation with RT. b State space trajectories of PCs 1, 2, and 4 aligned to checkerboard onset. Faster RT trajectories appear to move faster in the prestimulus period than slower RTs (fast/slow prestim, also see g). Inset: State space trajectories within a single stimulus coherence (shown for 90% and 4%). c KiNeT distance analysis showing consistent spatial organization of trajectories peristimulus that is correlated with RT. d The angle between the subspace vector at the first timepoint (-400 ms) and the subspace vector at each timepoint is largely consistent but increases as choice signals emerge (green box). e The average relative angle between adjacent trajectories at each timepoint was largely less than 90° in the prestimulus period but approached orthogonality as choice signals emerged poststimulus. f KiNeT Time to reference (tRef) analysis shows that trajectories for faster RTs reach similar points on the reference trajectory (cyan, middle trajectory) earlier than trajectories for slower RTs. g Average scalar speed for the prestimulus period (-400 to 0 ms epoch) as a function of RT bin. h Choice-selectivity signal measured as the Euclidean distance in the first six dimensions between the two reach directions for each RT bin aligned to checkerboard onset. The rate of choice selectivity (CS) is faster for faster RTs compared to slower RTs (green box). In (c) and (f) the x-axis is time on the reference trajectory. Black dashed lines track the reference trajectory. In (d) and (e) the black dashed horizontal line indicates 90°. Error bars are color-matched SEM (n = 50 bootstraps) in (c)–(h). Correlations in (c)–(h) were tested with two-sided t tests. p values in (d) and (e) were derived from one-sided bootstrap tests (n = 50, comparison to 90°). Cue, checkerboard onset; a.u., arbitrary units.
Source data are provided as a Source Data file.

To identify the number of relevant dimensions for describing these data, we used an approach developed in41 (see Section "Estimation of number of dimensions to explain the data" for details). Firing rates on every trial in PMd during this task can be thought of as a combination of signal (i.e., various task-related variables) and noise from sources outside the task, such as spiking noise. Trial averaging reduces this noise, but PCA on the trial averages nevertheless returns a principal component (PC) space that captures variance in firing rates due to both the signal and the residual noise (signal+noise PCA). Ideally, we would assess only the contributions of the signal to the PCA, but this is not possible for trial-averaged or non-simultaneously recorded data. To circumvent this issue and determine the number of signal-associated dimensions, the method developed in41 estimates the noise contributions by performing a PCA on the difference between single-trial estimates of firing rates, yielding a noise PCA. Components from the signal+noise PCA and the noise PCA were compared component by component, and only the signal+noise dimensions that explained significantly more variance than the corresponding noise dimensions were included in further analyses. This analysis yielded six PCs that explained > 90% of the variance in trial-averaged firing rates (Fig. S5a). Similar analyses using different smoothing filters (15 ms Gaussian and 50 ms causal boxcar filters) to derive firing rates from spikes yielded similar numbers of chosen dimensions and similar amounts of variance explained by the first 6 components (87.59% and 85.67%, respectively, Fig. S5b, c).

Fig. 4a plots the first four PCs obtained from this PCA. What is apparent in Fig. 4a is that the prestimulus state strongly covaries with RT but only modestly with choice. In particular, barring component 2, which appears to be most strongly associated with choice, PCs 1, 3, and 4 showed covariation between the prestimulus state and RT (Fig. 4a, highlighted with light blue rectangles)—consistent with the rich covariation between RT and prestimulus firing rates in the single neuron examples shown in Fig. 3b. Visualizing PCs 1, 2, and 4 in a state space plot further supported this observation (Fig. 4b). The axes in Fig. 4b are deliberately not equalized to better highlight prestimulus covariation with RT. A corresponding axis-equalized figure showing the same patterns is shown in Fig. S7.

Such covariation between the prestimulus neural state and RT was also not a result of pooling across stimulus difficulties; it was observed even within a single level of stimulus coherence (note the similarities between the state space trajectories in Fig. 4b and its inset). We discuss this further in Section "Inputs and initial conditions both contribute to the speed of poststimulus decision-related dynamics", where we analyze the joint effects of inputs and initial conditions. These analyses are also robust to whether they are performed with both multi-units and single units42 (996 units, Fig. 4) or solely with well-isolated single neurons (801 single neurons, Fig. S10a), and do not depend on the smoothing used to produce the firing rates (Fig. S11a).

In summary, before stimulus onset the PCA trajectories demonstrate a lawful organization with respect to RT and only a modest one with respect to choice (Fig. 4b). This structure was not an artifact of using overlapping RT bins: we observed very much the same structure when we used non-overlapping RT bins (Fig. 5a). These results are strongly consistent with the dynamical hypothesis proposed in Fig. 1h, weakly consistent with Fig. 1g, and inconsistent with the hypotheses in Fig. 1e, f. Additionally, direct comparison of these plots to the PCAs of RNNs (Fig. S2d, e) and synthetic neural populations (Fig. S3c) suggests that Fig. 1h is perhaps overall more consistent with the data than Fig. 1g.

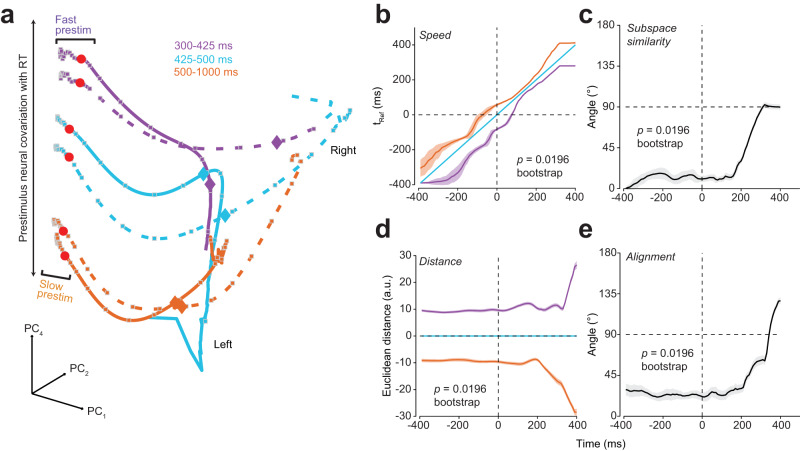

Fig. 5. Replication of PCA and KiNeT findings using non-overlapping RT bins.

a State space trajectories of the 1st, 2nd and 4th PCs (PC1,2,4) aligned to checkerboard onset (red dots). Prestimulus neural activity robustly separates as a function of RT bin. Diamonds and squares, color matched to their respective trajectories, indicate 225 ms post-checkerboard onset and 30 ms time steps, respectively. Faster RT trajectories appear to move faster in the prestimulus period than slower RTs (fast/slow prestim). Note that axes are deliberately not equalized to better visualize the fluctuations in the initial condition before checkerboard onset. b KiNeT Time to reference (tRef, relative time at which a trajectory reaches the closest point in Euclidean space to the reference trajectory) analysis shows that trajectories for faster RTs reach similar points on the reference trajectory (cyan, middle trajectory) earlier than trajectories for slower RTs. This result suggests that the dynamics for faster RTs are closer to a movement initiation state than those for slower RTs. c Angle between the subspace vector at each timepoint and the subspace vector at the first timepoint (-400 ms). The angle between subspace vectors is largely consistent, but the space rotates as choice signals emerge (~200 ms). d KiNeT distance analysis showing that trajectories are consistently spatially organized before and after stimulus onset. e Average relative angle between adjacent trajectories for each timepoint. The angles between adjacent trajectories were largely less than 90° in the prestimulus period but became orthogonal after choice signals emerged (~350 ms). Error bars are color-matched SEM (n = 50 bootstraps) in (b)–(e). p values in (b)–(e) were derived from one-sided 50-repetition bootstrap tests ((b) and (d) difference between fast and slow RT trajectories different from 0; (c) and (e) subspace and average relative angle different from 90°). Source data are provided as a Source Data file.

Position and velocity of initial condition correlate with poststimulus dynamics and RT

Hypotheses shown in Fig. 1g, h predict that poststimulus dynamics and behavior should depend upon the position and velocity of prestimulus neural trajectories in state space (i.e., initial conditions)21,22. Position is the instantaneous location of neural activity (i.e., the firing rates of neurons) in a high-dimensional state space, and velocity is a directional measure of how fast these positions change over time (i.e., the directional rate of change from one neural state to the next). In Fig. 4b, both the position and the velocity of the prestimulus state appear to covary with RT. For instance, prestimulus trajectories for the fastest RTs are 1) spatially separated from, and 2) appear to have covered more distance along the paths for movement initiation by the time of checkerboard onset than, the prestimulus trajectories for the slowest RTs (small squares, which denote 20 ms time steps, are more spread out for faster than for slower trajectories, Fig. 4b). In contrast, only a modest separation by choice occurs before stimulus onset.
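The position and velocity definitions above translate directly into code. In the minimal sketch below, position is a row of a trajectory in PC space, velocity is its finite-difference time derivative, and scalar speed is the norm of the velocity, averaged over a prestimulus window (here -400 to 0 ms, as in the speed panel of Fig. 4). The function name and the straight-line test trajectory are illustrative.

```python
import numpy as np

def trajectory_kinematics(traj, t, window=(-400.0, 0.0)):
    """traj: (n_time, k) state-space trajectory (e.g., top PCs); t: times in ms.
    Returns velocity (n_time, k), scalar speed (n_time,), and mean speed within `window`."""
    vel = np.gradient(traj, t, axis=0)      # directional rate of change of the neural state
    speed = np.linalg.norm(vel, axis=1)     # scalar speed (a.u. per ms)
    in_window = (t >= window[0]) & (t <= window[1])
    return vel, speed, speed[in_window].mean()

# Check on a straight-line trajectory moving at constant speed 5 a.u./ms.
t = np.arange(-400.0, 201.0, 10.0)
traj = np.outer(t, [3.0, 4.0])
vel, speed, prestim_speed = trajectory_kinematics(traj, t)
```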

We used the Kinematic analysis of Neural Trajectories (KiNeT26) approach to test these predictions more quantitatively. KiNeT measures the spatial ordering of trajectories and how each trajectory evolves in time, both with respect to a reference trajectory. For KiNeT analyses, we used the first six PCs (>90% of the variance), as these PCs were significantly different from noise principal components41. KiNeT analyses were first performed within a choice and then averaged across choices. Fig. S8 shows a visualization of the KiNeT analyses, and Section “Kinematic analysis of neural trajectories (KiNeT)” provides a detailed description of the KiNeT calculations.
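The dimensionality-reduction step can be sketched as follows, assuming trial-averaged firing rates are stacked into a matrix with one row per (condition, timepoint) pair and one column per neuron. This is a plain SVD-based PCA; the study's exact preprocessing and its comparison against noise PCs41 are not reproduced, and `project_onto_top_pcs` is a hypothetical helper.

```python
import numpy as np


def project_onto_top_pcs(rates, n_pcs=6):
    """Project mean-centered rates onto their leading principal components.

    rates : array (n_samples, n_neurons); rows are (condition, timepoint)
        pairs of trial-averaged firing rates.
    Returns the projected trajectories, the PC axes (n_pcs, n_neurons),
    and the fraction of total variance captured by those axes.
    """
    centered = rates - rates.mean(axis=0, keepdims=True)
    # Rows of vt are the principal axes, ordered by singular value.
    _, s, vt = np.linalg.svd(centered, full_matrices=False)
    frac_var = np.sum(s[:n_pcs] ** 2) / np.sum(s ** 2)
    scores = centered @ vt[:n_pcs].T
    return scores, vt[:n_pcs], frac_var


# Synthetic example: 40 "neurons" driven by 3 latent signals plus small noise.
rng = np.random.default_rng(0)
latents = rng.normal(size=(500, 3))
rates = latents @ rng.normal(size=(3, 40)) + 0.05 * rng.normal(size=(500, 40))
scores, axes, frac = project_onto_top_pcs(rates, n_pcs=6)
print(scores.shape, round(frac, 3))
```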

First, we used KiNeT to assess whether the position of the initial condition was related to RT, as predicted by the hypotheses shown in Fig. 1g, h. If the position of the initial condition covaries with RT, then we expect a lawful ordering of neural trajectories by RT bin; otherwise, the trajectories would lie on top of each other, indicating a lack of spatial organization. We therefore examined the spatial ordering of six-dimensional neural trajectories grouped by RT bin for each reach direction. We estimated the signed minimum Euclidean distance at each point of each trajectory relative to a reference trajectory (the middle RT bin, cyan, for that reach direction, Fig. 4c). Trajectories were 1) organized by RT, with trajectories for faster and slower RT bins on opposite sides of the reference trajectory, and 2) the relative ordering of the Euclidean distances with respect to the reference trajectory was lawfully related to RT (Fig. 4c), as measured by the correlation between the center of each of the 11 RT bins (e.g., RT bin 1: 325–400 ms, center = 362.5 ms) and the average signed Euclidean distance across 50 bootstraps, 90 ms before checkerboard onset (r9 = −0.85, p = 9.33 × 10−4). These data are consistent with the dynamical systems in Fig. 1g, h, in which the position of the initial condition correlates with RT.
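A minimal sketch of the signed-distance measure and its correlation with RT follows. KiNeT's exact sign convention is described in the Methods and is not reproduced here; as an assumption, this sketch signs each distance by projecting the offset onto a user-supplied ordering axis (for example, the direction from the slowest-RT to the fastest-RT trajectory). The trajectories, offsets, and all RT bin centers except the first (362.5 ms) are hypothetical.

```python
import numpy as np


def signed_min_distance(traj, ref, axis):
    """Signed distance from each reference point to its nearest trajectory point.

    traj, ref : arrays (n_timepoints, n_dims).
    axis : array (n_dims,); assumed ordering direction used to assign a sign
        (positive if the nearest point lies on the +axis side of ref).
    """
    # Pairwise distances: rows index reference timepoints, columns trajectory timepoints.
    d = np.linalg.norm(ref[:, None, :] - traj[None, :, :], axis=2)
    nearest = d.argmin(axis=1)
    offsets = traj[nearest] - ref
    sign = np.sign(offsets @ axis)
    return sign * d[np.arange(len(ref)), nearest]


# Hypothetical example: 11 straight trajectories offset along dimension 1,
# with faster bins (smaller RT) on the positive side of the reference.
t = np.linspace(0.0, 1.0, 50)
ref = np.column_stack([t, np.zeros_like(t)])
bin_centers = 362.5 + 25.0 * np.arange(11)       # hypothetical RT bin centers (ms)
offsets = np.linspace(0.5, -0.5, 11)             # position relative to the reference
mean_dist = []
for off in offsets:
    traj = ref + np.array([0.0, off])
    mean_dist.append(signed_min_distance(traj, ref, np.array([0.0, 1.0])).mean())
r = np.corrcoef(bin_centers, mean_dist)[0, 1]
print(np.round(mean_dist, 2), round(r, 3))
```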

Second, if the data are consistent with a dynamical system, then the relative ordering of trajectories by RT in the prestimulus period should predict the ordering of poststimulus trajectories. We tested this prediction by measuring subspace similarity (the subspace angle) and average alignment (Section “Kinematic analysis of neural trajectories (KiNeT)”). To calculate subspace similarity, which reflects the geometry of a subspace, we first estimated the vectors between adjacent trajectories at all timepoints. These vectors were averaged to derive an average inter-trajectory vector (the subspace vector, Fig. 4d) at each timepoint. We then measured how this average vector rotates over time by estimating the angle (the subspace angle) between it and the subspace vector at the first timepoint. Alignment measures the degree to which neural trajectories diverge from one another in state space, estimated as the average angle between the normalized vectors connecting pairs of adjacent trajectories at each timepoint. The null hypothesis is that the ordering of trajectories before stimulus onset is in no way predictive of the ordering of trajectories after stimulus onset. Under this null hypothesis, the subspace angle and alignment would be randomly distributed around 90° poststimulus. Alternatively, if prestimulus dynamics predict the ordering of poststimulus dynamics, the average subspace angle and alignment should remain largely constant from the prestimulus to the poststimulus period until choice and movement initiation signals begin to emerge for the fastest RTs (~300 ms).
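The two geometric measures can be sketched as below for trajectories that are already ordered by RT and sampled at common timepoints. As a simplification (an assumption, not KiNeT's full procedure), the inter-trajectory vectors here connect points at the same timepoint rather than points matched through the reference trajectory, and alignment is read as the mean angle between successive adjacent-pair vectors. Function names are hypothetical.

```python
import numpy as np


def _angle_deg(u, v):
    """Angle in degrees between vectors u and v (clipped for numerical safety)."""
    cos = np.dot(u, v) / (np.linalg.norm(u) * np.linalg.norm(v))
    return np.degrees(np.arccos(np.clip(cos, -1.0, 1.0)))


def subspace_angle_and_alignment(trajs):
    """trajs : array (n_traj, n_time, n_dims), ordered by RT bin.

    Returns, for every timepoint,
      subspace_angle : angle between the mean inter-trajectory vector
                       (the "subspace vector") and its value at timepoint 0;
      alignment      : mean angle between successive adjacent-pair vectors.
    """
    diffs = trajs[1:] - trajs[:-1]                  # (n_traj - 1, n_time, n_dims)
    unit = diffs / np.linalg.norm(diffs, axis=2, keepdims=True)
    subspace_vec = diffs.mean(axis=0)               # (n_time, n_dims)
    n_time = trajs.shape[1]
    subspace_angle = np.array(
        [_angle_deg(subspace_vec[t], subspace_vec[0]) for t in range(n_time)]
    )
    alignment = np.array(
        [
            np.mean([_angle_deg(unit[i, t], unit[i + 1, t]) for i in range(len(unit) - 1)])
            for t in range(n_time)
        ]
    )
    return subspace_angle, alignment


# Hypothetical case: parallel trajectories evenly spaced along a fixed direction.
t = np.linspace(0.0, 1.0, 30)
base = np.column_stack([t, t ** 2, np.zeros_like(t)])
trajs = np.stack([base + k * np.array([0.0, 0.0, 1.0]) for k in range(5)])
sub_angle, align = subspace_angle_and_alignment(trajs)
print(sub_angle.max(), align.max())
```

When the ordering is preserved, both angles stay near 0°; if the spacing direction later rotates into an unrelated direction, the subspace angle approaches 90°, which is the null value used in the text.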

Consistent with the alternative hypothesis, the subspace angle (Fig. 4d) between the first point in the prestimulus period and subsequent timepoints was < 90° before and after checkerboard onset and increased only when movement initiation began for the fastest RTs (50-iteration bootstrap test vs 90°, 90 ms before stimulus onset: p = 0.0196). Similarly, the alignment, measured as the angle between adjacent trajectories (Fig. 4e), was largely similar throughout the trial for each reach direction and began to change only after choice and movement initiation signals emerged (50-iteration bootstrap test vs 90°, 90 ms before stimulus onset: p = 0.0196), suggesting that the ordering of trajectories by RT was preserved well into the poststimulus period. These results indicate that the initial condition strongly predicted the poststimulus state and eventual RT, again consistent with the predictions of the dynamical systems approach.
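Many p values in this section equal 0.0196. That value is 1/51, the smallest p value a one-sided test with 50 bootstrap repetitions can return under the common (k + 1)/(B + 1) estimator, where k counts repetitions on the null side and B = 50. The text does not state which estimator was used, so the sketch below is an assumption; `bootstrap_p_value` is a hypothetical helper.

```python
import numpy as np


def bootstrap_p_value(boot_stats, null_value, alternative="less"):
    """One-sided bootstrap p value using the (k + 1) / (B + 1) convention.

    boot_stats : B bootstrap estimates of a statistic (e.g. a subspace angle).
    alternative : "less" tests whether the statistic is below null_value
        (e.g. angle < 90 degrees); "greater" tests whether it is above.
    """
    boot = np.asarray(boot_stats, dtype=float)
    if alternative == "less":
        k = np.sum(boot >= null_value)   # repetitions that fail to fall below the null
    elif alternative == "greater":
        k = np.sum(boot <= null_value)
    else:
        raise ValueError("alternative must be 'less' or 'greater'")
    return (k + 1) / (boot.size + 1)


# Hypothetical bootstrap angles, all well below 90 degrees.
rng = np.random.default_rng(1)
angles = rng.normal(loc=40.0, scale=5.0, size=50)
p = bootstrap_p_value(angles, 90.0, alternative="less")
print(round(p, 4))   # 0.0196, i.e. 1/51
```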

Third, we examined whether the velocity of the peristimulus dynamics was higher for faster RTs than for slower RTs. For this purpose, we used KiNeT to find the timepoint at which a trajectory is closest (minimum Euclidean distance) to each point on the reference trajectory, which we call Time to reference (tRef, Fig. 4f). Trajectories slower than the reference trajectory reach these closest points later in time (i.e., longer tRef), whereas trajectories faster than the reference trajectory reach them earlier (i.e., shorter tRef). Because trajectories are compared relative to a reference trajectory, tRef can be considered an indirect estimate of the velocity of the trajectory at each timepoint. Note that tRef was referred to as speed in the original KiNeT study26. Although a trajectory could reach the point closest to the reference trajectory later because of a slower speed, it could also do so for unrelated reasons, such as starting farther from movement onset in state space or taking a more meandering path through state space. All of these effects are consistent with a longer tRef and a slower velocity, but not necessarily with a slower speed.
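The idea behind tRef can be sketched as a nearest-point search in time: for each reference timepoint, find when the comparison trajectory passes closest to that reference state. This is a simplified sketch assuming both trajectories share a time base; KiNeT's full procedure is given in the Methods, and `time_to_reference` is a hypothetical name.

```python
import numpy as np


def time_to_reference(traj, ref, times):
    """For each reference timepoint, the time at which traj is closest to it.

    traj, ref : arrays (n_timepoints, n_dims) sampled at `times` (ms).
    Returns t_traj such that traj(t_traj[j]) is the point of traj nearest
    to ref(times[j]); t_traj - times < 0 means traj gets there earlier.
    """
    d = np.linalg.norm(ref[:, None, :] - traj[None, :, :], axis=2)
    return times[d.argmin(axis=1)]


def path(s):
    """Hypothetical curved path through a 2-D state space."""
    return np.column_stack([s, np.sin(s / 10.0)])


# A trajectory that follows the reference path 5 ms ahead of it.
times = np.arange(0.0, 50.0)
ref = path(times)
fast = path(times + 5.0)
t_fast = time_to_reference(fast, ref, times)
print(t_fast[10] - times[10])   # -5.0: the faster trajectory arrives 5 ms earlier
```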

KiNeT revealed that faster RTs involved faster pre- and poststimulus dynamics, whereas slower RTs involved slower dynamics, relative to the reference trajectory (the trajectory associated with the middle RT bin, cyan; Fig. 4f). There was also a positive correlation between RT bin center and average tRef as measured by KiNeT 90 ms before checkerboard onset (r9 = 0.83, p = 1.48 × 10−3, one-sided test against 0). Additionally, the overall scalar speed of trajectories in the prestimulus state in the first six dimensions (measured as the change in Euclidean distance over time and averaged over the 400 ms prestimulus period) covaried lawfully with RT (r9 = −0.90, p = 1.58 × 10−4; Fig. 4g). Thus, the velocity of the initial condition, relative to the reference trajectory, is higher for faster RTs than for slower RTs, consistent with the prediction of the initial condition hypothesis20.
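The scalar-speed measure described above (change in Euclidean distance per unit time, averaged over the prestimulus window) and its correlation with RT bin can be sketched as follows. The sampling interval, trajectories, and RT bin values are illustrative assumptions, and `mean_scalar_speed` is a hypothetical helper.

```python
import numpy as np


def mean_scalar_speed(traj, dt_ms):
    """Average speed (units per ms) of a trajectory (n_timepoints, n_dims)."""
    step_lengths = np.linalg.norm(np.diff(traj, axis=0), axis=1)
    return step_lengths.mean() / dt_ms


# Hypothetical prestimulus trajectories (-400 to 0 ms, 10 ms samples) for
# 11 RT bins, with faster-RT bins moving faster through a 6-D state space.
dt_ms = 10.0
t = np.arange(-400.0, 0.0 + dt_ms, dt_ms)
bin_centers = 362.5 + 25.0 * np.arange(11)          # hypothetical RT bin centers (ms)
true_speeds = np.linspace(0.05, 0.01, 11)           # units per ms, decreasing with RT
direction = np.array([0.6, 0.8, 0.0, 0.0, 0.0, 0.0])  # unit vector in 6-D
speeds = []
for v in true_speeds:
    traj = np.outer(v * (t - t[0]), direction)
    speeds.append(mean_scalar_speed(traj, dt_ms))
r = np.corrcoef(bin_centers, speeds)[0, 1]
print(np.round(speeds, 3), round(r, 3))
```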

One concern is that these correlations may be difficult to interpret because they use overlapping RT bins. We therefore repeated the KiNeT analysis with nonoverlapping bins and found the same pattern of results (Fig. 5b–e). In addition, all KiNeT results were replicated when we 1) used only single units (Fig. S10b–e) or 2) used different smoothing kernels (15 ms Gaussian or 50 ms boxcar, Fig. S11b–e). Collectively, these results establish that the initial condition in PMd correlates with RT and that the geometry and trial-averaged dynamics of these decision-related trajectories depend strongly on the position and velocity of the initial condition, consistent with the hypotheses shown in Fig. 1g, h and inconsistent with those shown in Fig. 1e, f.
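The two smoothing kernels can be sketched as convolutions of a 1 ms spike-count train. As assumptions, the 15 ms Gaussian is taken to have a 15 ms standard deviation truncated at ±4 SD, both kernels are normalized to unit sum, and edges are handled with `np.convolve(..., mode="same")`; the study's exact kernel conventions may differ.

```python
import numpy as np


def gaussian_kernel(sd_ms, dt_ms=1.0, n_sd=4.0):
    """Unit-sum Gaussian kernel with standard deviation sd_ms, truncated at n_sd SDs."""
    half = int(np.ceil(n_sd * sd_ms / dt_ms))
    x = np.arange(-half, half + 1) * dt_ms
    k = np.exp(-0.5 * (x / sd_ms) ** 2)
    return k / k.sum()


def boxcar_kernel(width_ms, dt_ms=1.0):
    """Unit-sum boxcar kernel spanning width_ms."""
    n = int(round(width_ms / dt_ms))
    return np.ones(n) / n


def smooth_rate(spike_counts, kernel, dt_ms=1.0):
    """Convolve per-bin spike counts with a unit-sum kernel; return spikes/s."""
    return np.convolve(spike_counts, kernel, mode="same") * (1000.0 / dt_ms)


# Hypothetical regular 100 Hz spike train (one spike every 10 ms) over 1 s.
spikes = np.zeros(1000)
spikes[::10] = 1.0
rate_gauss = smooth_rate(spikes, gaussian_kernel(15.0))
rate_box = smooth_rate(spikes, boxcar_kernel(50.0))
print(rate_gauss[500], rate_box[500])   # both close to 100 spikes/s away from the edges
```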

Cross-validated single-trial analyses corroborate PCA results that prestimulus neural activity predicts future neural activity and RT

Our PCA and KiNeT analyses of trial-averaged data (Fig. 4) strongly support the dynamical mechanisms in Fig. 1g, h, in which the prestimulus state correlates with RT, and suggest that prestimulus neural activity predicts future neural activity (Fig. 4d, e). However, it is unclear whether this is simply an effect of trial averaging or whether such effects would also be seen at the single-trial level. In this section, we use tensor component analysis (TCA), fits of a linear dynamical system (LDS), fits of a nonlinear dynamical system (LFADS), and reduced-rank regression to confirm that the prestimulus state predicts future neural activity and RT at the single-trial level.

We applied TCA43, a tensor decomposition technique that extends PCA to three-way data, to binned (50 ms) spiking activity from 600 ms before to 600 ms after checkerboard onset for all trials. TCA was performed for each of the 44 sessions (23 from monkey T and 21 from monkey O) containing V-probe data (~2–32 units) and returns three linked sets of low-dimensional factors describing neural activity: neuron factors (N), temporal factors (T), and trial factors (K) (Fig. S12a, b; Section “Tensor component analysis”). Regardless of the cross-validation method, either speckled holdout43 or a more conservative neuron holdout, the variance of neural activity explained in both the training and the test sets increased with the rank of the low-dimensional model (Fig. S12d). Thus, TCA provides a reasonable description of neural activity, especially considering that it was performed with small numbers of units.
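TCA fits a sum of rank-one components to the neurons × time × trials data tensor, that is, a CP (canonical polyadic) decomposition. The sketch below fits one by plain alternating least squares in NumPy. It is illustrative only: the published TCA method43 has its own fitting and cross-validation procedures, which are not reproduced here, and `cp_als` is a hypothetical function.

```python
import numpy as np


def cp_als(X, rank, n_iter=500, seed=0):
    """Rank-`rank` CP decomposition of a 3-way tensor by alternating least squares.

    X : array (n_neurons, n_timebins, n_trials).
    Returns neuron factors N, temporal factors T and trial factors K with
    X[i, j, k] ~= sum_r N[i, r] * T[j, r] * K[k, r].
    """
    rng = np.random.default_rng(seed)
    n_neurons, n_time, n_trials = X.shape
    N = rng.normal(size=(n_neurons, rank))
    T = rng.normal(size=(n_time, rank))
    K = rng.normal(size=(n_trials, rank))
    for _ in range(n_iter):
        # Each update is a linear least-squares solve with the other two factors fixed.
        N = np.einsum("ijk,jr,kr->ir", X, T, K) @ np.linalg.pinv((T.T @ T) * (K.T @ K))
        T = np.einsum("ijk,ir,kr->jr", X, N, K) @ np.linalg.pinv((N.T @ N) * (K.T @ K))
        K = np.einsum("ijk,ir,jr->kr", X, N, T) @ np.linalg.pinv((N.T @ N) * (T.T @ T))
    return N, T, K


def reconstruct(N, T, K):
    return np.einsum("ir,jr,kr->ijk", N, T, K)


def variance_explained(X, X_hat):
    return 1.0 - np.sum((X - X_hat) ** 2) / np.sum((X - X.mean()) ** 2)


# Synthetic tensor built from 2 components: 20 neurons, 24 time bins, 60 trials.
rng = np.random.default_rng(42)
X = reconstruct(rng.normal(size=(20, 2)), rng.normal(size=(24, 2)), rng.normal(size=(60, 2)))
N, T, K = cp_als(X, rank=2)
print(round(variance_explained(X, reconstruct(N, T, K)), 4))

# Per-trial low-dimensional activity profiles: temporal factors scaled by trial factors.
profiles = T[None, :, :] * K[:, None, :]   # (trials, time bins, components)
```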

We next visualized the low-dimensional activity profiles for fast and slow RT trials from a single session (Fig. S12c) by multiplying the temporal factors by the trial-specific factors (Fig. S12a, b). Consistent with our PCA results, prestimulus neural activity separated by RT (Fig. S12c). A regression analysis indicated that more than 25% of the variance in RT was explained by the rank-4 low-dimensional descriptions in the prestimulus period (Fig. S12e). This TCA therefore provides the first line of single-trial evidence that prestimulus neural activity in PMd correlates with RT, consistent with the hypotheses shown in Fig. 1g, h.

The core thesis of this study is that neural activity in PMd is well described by a dynamical system and that the initial condition (prestimulus neural activity) is strongly predictive of future neural activity and RT. The KiNeT subspace similarity and alignment analyses (Fig. 4d, e) provide indirect evidence that PMd activity is consistent with a dynamical system and that initial conditions are predictive of future neural activity and RT.

As a more direct test, we fit a simple low-dimensional autonomous linear dynamical system to binned single-trial firing rates (50 ms bins). We fit separate dynamical systems to the pre- and poststimulus periods of sessions with at least 10 units (31 sessions), fit left and right choices separately, and used leave-one-out cross-validation to assess the model as a function of the dimensionality of the dynamical system (Fig. S13). Firing rates at the current timepoint closely predicted firing rates 100 ms later (Fig. S13a). Furthermore, the LDSs were excellent models of the pre- and poststimulus neural dynamics and described neural data on held-out trials, with fits improving as model size increased (Fig. S13b). We then estimated the firing rates predicted by the LDS at each timepoint and investigated whether pre- and poststimulus firing rates predicted RT. Again, prestimulus firing rates strongly predicted RT, with prediction accuracy improving for dynamical systems of higher dimensionality (Fig. S13c).
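A minimal version of this test is sketched below, assuming single-trial rates have already been reduced to a low-dimensional space and binned at 50 ms, so that a 100 ms prediction corresponds to applying the dynamics matrix twice. The autonomous model x[t+1] ≈ A x[t] is fit by ordinary least squares and scored with leave-one-trial-out cross-validation; this is an illustrative choice, not necessarily the fitting procedure used in the study, and the function names are hypothetical.

```python
import numpy as np


def fit_lds(trials):
    """Least-squares fit of x[t+1] ~= A x[t] pooled over trials.

    trials : list of arrays (n_bins, n_dims).
    """
    X0 = np.vstack([tr[:-1] for tr in trials])
    X1 = np.vstack([tr[1:] for tr in trials])
    # Solve X0 @ A.T ~= X1 for A.T.
    A_T, *_ = np.linalg.lstsq(X0, X1, rcond=None)
    return A_T.T


def loo_prediction_r2(trials, steps_ahead=2):
    """Leave-one-trial-out R^2 for predicting the state `steps_ahead` bins later."""
    ss_res = ss_tot = 0.0
    for i in range(len(trials)):
        A = fit_lds(trials[:i] + trials[i + 1:])
        A_k = np.linalg.matrix_power(A, steps_ahead)
        x = trials[i]
        pred = x[:-steps_ahead] @ A_k.T
        target = x[steps_ahead:]
        ss_res += np.sum((target - pred) ** 2)
        ss_tot += np.sum((target - target.mean(axis=0)) ** 2)
    return 1.0 - ss_res / ss_tot


# Synthetic data from a damped rotation with small process noise (hypothetical).
rng = np.random.default_rng(0)
theta = 0.2
A_true = 0.95 * np.array([[np.cos(theta), -np.sin(theta)],
                          [np.sin(theta), np.cos(theta)]])
trials = []
for _ in range(30):
    x = np.empty((24, 2))
    x[0] = rng.normal(size=2)
    for t in range(1, 24):
        x[t] = A_true @ x[t - 1] + 0.01 * rng.normal(size=2)
    trials.append(x)
print(round(loo_prediction_r2(trials, steps_ahead=2), 3))
print(np.round(fit_lds(trials), 3))
```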

We also replicated these results using a nonlinear dynamical system fit, Latent Factor Analysis via Dynamical Systems (LFADS44, described further in Section “Latent Factors Analysis of Dynamical Systems (LFADS)”). Again, we could reliably predict the firing rates of neurons on trials held out from the fitting process (Fig. S14a, b), and pre- and poststimulus activity was correlated with RT (Fig. S14c). Together, these two analyses demonstrate that neural activity in PMd in this task is well modeled by a dynamical system in which prestimulus activity can be used to predict future neural activity and RT.

Finally, we used cross-validated reduced-rank regression45,46 as an alternative test of whether prestimulus neural activity predicted future neural activity (Fig. S15a). Consistent with our KiNeT analysis, the prestimulus state predicted neural activity better than a shuffle control in both the pre- and poststimulus epochs, both in a single session and averaged across multiple sessions (Fig. S15b, c). Additionally, the angles between the reduced-rank regression beta values at the first timepoint and at all other timepoints were stable from the pre- to the poststimulus period until choice signals emerged, especially compared with beta values from shuffled activity (Fig. S15d). This single-trial analysis therefore corroborates the subspace similarity and alignment analyses performed with the trial-averaged data.
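Reduced-rank regression constrains the coefficients mapping one set of activity (here, hypothetically, prestimulus rates X) to another (later rates Y) to have low rank. A common closed-form estimator, sketched below assuming identity error weighting, projects the ordinary least-squares fit onto the top right singular vectors of its fitted values. Shuffling the training targets across trials provides a control analogous to the one described above; names and data are hypothetical.

```python
import numpy as np


def reduced_rank_regression(X, Y, rank, ridge=0.0):
    """Rank-constrained coefficients B (n_x, n_y) minimizing ||Y - X B||^2."""
    n_x = X.shape[1]
    B_ols = np.linalg.solve(X.T @ X + ridge * np.eye(n_x), X.T @ Y)
    # Right singular vectors of the fitted values span the best rank-r output subspace.
    _, _, vt = np.linalg.svd(X @ B_ols, full_matrices=False)
    V = vt[:rank].T
    return B_ols @ V @ V.T


def r2(Y, Y_hat):
    return 1.0 - np.sum((Y - Y_hat) ** 2) / np.sum((Y - Y.mean(axis=0)) ** 2)


# Hypothetical data: 10 "prestimulus" units predict 8 "poststimulus" units via a rank-2 map.
rng = np.random.default_rng(0)
n_trials = 300
X = rng.normal(size=(n_trials, 10))
B_true = rng.normal(size=(10, 2)) @ rng.normal(size=(2, 8))
Y = X @ B_true + 0.5 * rng.normal(size=(n_trials, 8))
train, test = np.arange(200), np.arange(200, n_trials)

B = reduced_rank_regression(X[train], Y[train], rank=2)
B_shuf = reduced_rank_regression(X[train], Y[rng.permutation(train)], rank=2)
print(round(r2(Y[test], X[test] @ B), 3), round(r2(Y[test], X[test] @ B_shuf), 3))
```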

Altogether, these four cross-validated single-trial analyses again show that neural activity in PMd is consistent with a dynamical system in which prestimulus activity predicts future neural activity and RT (Fig. 1g, h).

Initial conditions do not predict eventual choice

The previous analyses demonstrated that initial conditions strongly covaried with RT, consistent with the hypotheses shown in Fig. 1g, h. However, does the initial condition also predict choice? If it does not, then the data rule out the dynamical hypothesis in Fig. 1g, at least for PMd in this task. To investigate this issue, we first examined how prestimulus and poststimulus states covaried with choice by measuring a choice-selectivity signal, defined as the Euclidean distance between the left and right choice trajectories in the first six dimensions at each timepoint. The choice-selectivity signal was largely flat during the prestimulus period and increased only after stimulus onset (Fig. 4h). We also found that slower RT trials had delayed and slower increases in the choice-selectivity signal compared with faster RT trials, a result consistent with the slower overall dynamics for slower RTs (Fig. 4h). Consistent with this observation, we found a negative correlation between the average choice-selectivity signal in the 125 to 375 ms period after checkerboard onset and the center of the RT bin (mean and 95% CI, r9 = −0.88, p = 4.05 × 10−4).
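The choice-selectivity signal is a per-timepoint Euclidean distance between condition-averaged left and right trajectories, summarized by its mean over the 125 to 375 ms window. A sketch, assuming both trajectories are arrays of shape (timepoints, 6) on a shared time axis in ms; the helper names and the synthetic trajectories are hypothetical.

```python
import numpy as np


def choice_selectivity(left, right):
    """CS(t): Euclidean distance between left- and right-choice states at each timepoint."""
    return np.linalg.norm(left - right, axis=1)


def window_mean(signal, times, start_ms=125.0, stop_ms=375.0):
    """Average of a time series over [start_ms, stop_ms]."""
    mask = (times >= start_ms) & (times <= stop_ms)
    return signal[mask].mean()


# Hypothetical 6-D trajectories that diverge linearly after 100 ms.
times = np.arange(-400.0, 501.0, 10.0)
ramp = np.clip(times - 100.0, 0.0, None) / 100.0
left = np.zeros((times.size, 6))
right = np.zeros((times.size, 6))
right[:, 0] = ramp
cs = choice_selectivity(left, right)
print(cs[times == 0.0], round(window_mean(cs, times), 3))
```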

To further discriminate between the hypotheses shown in Fig. 1g, h, we examined the initial condition and subsequent poststimulus dynamics using a combination of single-trial analysis, decoding, and regression. We first used the cross-validated LFADS44 approach to estimate single-trial dynamics in an orthogonalized latent space for left reaches and the easiest coherence in a single session (23 units). This analysis revealed that 1) the initial states of most slow RT trials are separated from those of fast RT trials, 2) the initial conditions of a minority of slow RT trials are mixed in with fast initial conditions, and 3) slower RT trajectories appear to be more curved (Fig. 6a). All of these observations are consistent with the results of the trial-averaged PCA reported in Fig. 4 and mirror the single-trial results from TCA (Fig. S12c). Most importantly, initial neural states related to left and right reach directions are mixed before stimulus onset (Fig. 6b), again consistent with the results of the trial-averaged PCA. These single-trial dynamics suggest that prestimulus spiking activity covaries with RT but not choice, even on single trials.

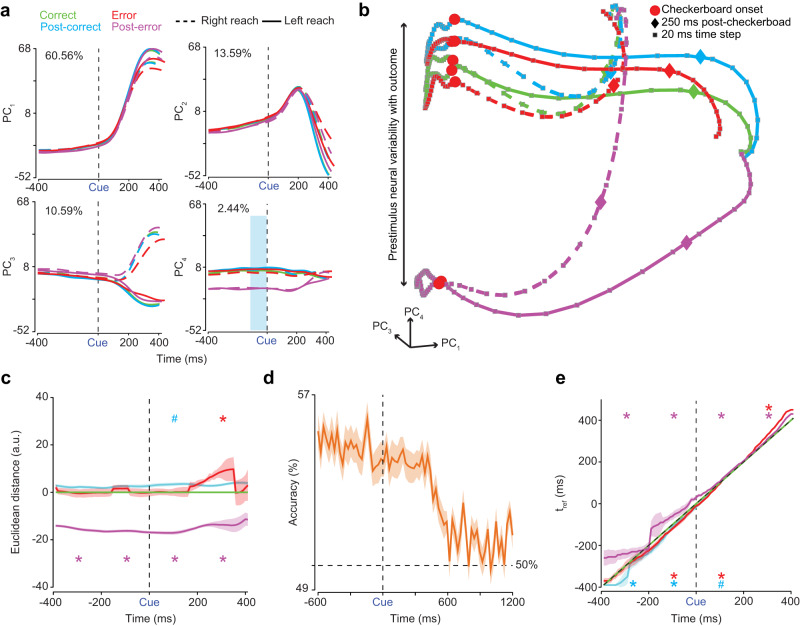

Fig. 6. Single-trial analysis, linear regression, and decoders reveal that initial conditions predict RT but not choice.

LFADS44 trajectories in the space of the first three orthogonalized factors (PC1,2,3), obtained via PCA on LFADS latents, plotted for (a) the fastest 30% of trials (blue) and the slowest 30% of trials (red) for left reaches and (b) for left (purple) and right (green) reaches, all for the easiest coherence from a single session (23 units). Each trajectory is plotted from 200 ms before checkerboard onset (dots) to movement onset (diamonds). c, d Variance explained (R²)/decoding accuracy from linear/logistic regressions of binned spiking activity (20 ms) and coherence to predict trial-matched RTs/eventual choice from all 23 units in the LFADS session shown in (a) and (b). Horizontal black dotted lines are the variance explained by a regression using stimulus coherence to predict RTs (12%) and 50% accuracy, respectively. The magenta and light green dotted lines are the 99th and 1st percentiles of R²/accuracy values calculated from an analysis of trial-shuffled spiking activity (500 repetitions) and RTs/choice. e, f R²/accuracy values, calculated as in (c) and (d), averaged across 51 sessions. Orange shaded area is SEM. e 6.32% is the average percentage of variance explained across the 51 sessions for both monkeys by regressions using stimulus coherence to predict RTs. The horizontal black dotted line in (f) denotes 50% accuracy. Blue highlight boxes in (c) and (e) denote prestimulus neural covariability with RT. Cue - Checkerboard onset. Source data are provided as a Source Data file.

Regression and decoding analyses of raw firing rates supported the observation from the LFADS visualization (Fig. 6a) that prestimulus spiking activity predicts RT. A linear regression with binned prestimulus spiking activity (20 ms causal nonoverlapping bins) and coherence as predictors explained ~25% of the variance in RT in the same session used for LFADS (Fig. 6c), significantly more than the 99th percentile of variance explained by a similar regression using trial-shuffled spiking activity instead. Identical linear regressions were performed for each of the 51 sessions, and R² values were averaged across sessions. Across these sessions (Fig. 6e), prestimulus spiking activity and coherence again explained significantly more RT variance than a shuffle control of spiking activity for 47 of 51 sessions (mean ± SD: 13.50 ± 8.57% vs. 4.70 ± 3.61%; one-tailed binomial test, p = 1.11 × 10−10, Fig. S16a).
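The regression and its shuffle control can be sketched as follows. Assumptions: ordinary least squares with an intercept, in-sample R² (the text does not say whether R² was cross-validated), and a shuffle that permutes spike counts across trials while keeping each trial's coherence and RT together. Data and function names are hypothetical.

```python
import numpy as np


def ols_r2(predictors, y):
    """In-sample R^2 of an ordinary least-squares fit with an intercept."""
    design = np.column_stack([np.ones(len(y)), predictors])
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    resid = y - design @ beta
    return 1.0 - resid @ resid / np.sum((y - y.mean()) ** 2)


def shuffled_r2_percentile(spikes, coherence, rt, n_shuffles=500, pct=99, seed=0):
    """pct-th percentile of R^2 when spike counts are permuted across trials."""
    rng = np.random.default_rng(seed)
    r2s = [
        ols_r2(np.column_stack([spikes[rng.permutation(len(rt))], coherence]), rt)
        for _ in range(n_shuffles)
    ]
    return np.percentile(r2s, pct)


# Hypothetical session: 400 trials, 10 units' prestimulus counts in one 20 ms bin.
rng = np.random.default_rng(3)
n_trials = 400
coherence = rng.choice([0.04, 0.31, 0.90], size=n_trials)
spikes = rng.poisson(2.0, size=(n_trials, 10)).astype(float)
rt = 500.0 - 80.0 * coherence + 15.0 * spikes[:, 0] + rng.normal(0.0, 30.0, n_trials)

r2_full = ols_r2(np.column_stack([spikes, coherence]), rt)
r2_coh = ols_r2(coherence[:, None], rt)
threshold = shuffled_r2_percentile(spikes, coherence, rt)
print(round(r2_full, 3), round(r2_coh, 3), round(threshold, 3))
```

Comparing `r2_full` against `r2_coh` mirrors the coherence-only comparison in the text, and `threshold` plays the role of the 99th-percentile shuffle line.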

Note that the prediction of RT by spiking activity was not an artifact of RT covarying with coherence. For instance, neural activity and coherence together explain ~25% of the variance in RT for the example session shown in Fig. 6a, b, whereas coherence alone explains only 12% (Fig. 6c). Similarly, on average across sessions, a linear regression with binned spiking activity and coherence as predictors explained significantly more variance in RT in all prestimulus bins than a linear regression with coherence as the sole predictor (only the last prestimulus bin is reported here: mean ± SD: 13.66 ± 8.9% vs. 6.32 ± 5.97%; Wilcoxon rank sum test comparing median R², p = 2.97 × 10−9, Fig. 6e). Therefore, nearly equal amounts of RT variance are explained by prestimulus neural spiking activity (~7%, i.e., 13.66% − 6.32%) and by the coherence of the upcoming stimulus (6.32%, Fig. 6e). These results essentially replicate those shown in Figs. S12e, S13c, and S14c.

In contrast, a logistic regression using binned spiking activity failed to predict choice above chance in the prestimulus period. Choice-decoding accuracy did not exceed the 99th percentile of accuracy from a logistic regression using trial-shuffled spiking activity until after stimulus presentation (Fig. 6d). Similar logistic regressions were built for each session, and accuracy was averaged across bins and sessions. The average prestimulus accuracy for predicting choice (Fig. 6f) was no better than chance or than the 99th percentile of the averaged prestimulus accuracy from similar logistic regressions built on trial-shuffled spiking activity (mean ± SD: 50.08 ± 0.51% vs. 50.00 ± 0.03%; only 1 of 51 sessions exceeded the shuffled data; one-tailed binomial test, p = 0.999, Fig. S16b).
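A choice decoder of this kind can be sketched as a cross-validated, L2-regularized logistic regression. To stay dependency-free, this sketch fits the model by plain gradient descent in NumPy rather than with a library solver; the regularization strength, learning rate, fold count, and synthetic data are all assumptions, not the study's settings.

```python
import numpy as np


def fit_logistic(X, y, l2=1e-2, lr=0.5, n_iter=2000):
    """L2-regularized logistic regression by gradient descent; y in {0, 1}."""
    design = np.column_stack([np.ones(len(y)), X])
    w = np.zeros(design.shape[1])
    for _ in range(n_iter):
        p = 1.0 / (1.0 + np.exp(-design @ w))
        penalty = l2 * np.concatenate([[0.0], w[1:]])   # do not penalize the intercept
        w -= lr * (design.T @ (p - y) / len(y) + penalty)
    return w


def cv_accuracy(X, y, n_folds=5, seed=0):
    """Cross-validated decoding accuracy with z-scoring fit on the training folds."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(len(y)), n_folds)
    n_correct = 0
    for k, test in enumerate(folds):
        train = np.concatenate([f for j, f in enumerate(folds) if j != k])
        mu, sd = X[train].mean(axis=0), X[train].std(axis=0) + 1e-9
        w = fit_logistic((X[train] - mu) / sd, y[train])
        logits = np.column_stack([np.ones(len(test)), (X[test] - mu) / sd]) @ w
        n_correct += np.sum((logits > 0) == (y[test] == 1))
    return n_correct / len(y)


# Hypothetical prestimulus counts that carry no choice information,
# and poststimulus counts where unit 0 tracks the choice.
rng = np.random.default_rng(7)
n_trials = 400
choice = rng.integers(0, 2, size=n_trials)
pre = rng.poisson(3.0, size=(n_trials, 10)).astype(float)
post = rng.poisson(3.0, size=(n_trials, 10)).astype(float)
post[:, 0] += 4.0 * choice
print(cv_accuracy(pre, choice), cv_accuracy(post, choice))
```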

We further explored whether there was a prestimulus choice bias for faster RT bins (which show an apparently larger prestimulus separation by choice, Fig. 4b) or for harder coherences, as prestimulus activity has been found to predict choice for harder coherences in previous experiments35. First, prestimulus spiking activity was no better than chance at predicting eventual choice even when trials were grouped by RT bin (Fig. S16c). We then refined this analysis by restricting the decoding to the hardest coherence and the fastest and slowest RT bins (Fig. S17b). Again, we found no relationship between prestimulus neural activity and choice for any of the RT bins. Results were similar when we restricted the trials to the easiest coherence (Fig. S17a). Second, we performed a simple regression analysis (50 ms causal bins stepped by 1 ms) to examine whether neural activity covaried with choice on a neuron-by-neuron basis for the fastest and slowest RTs at the hardest coherences, and compared the result with the percentage of neurons that covaried with RT (Fig. S17c). The percentage of neurons that covaried with choice before stimulus onset was largely at chance levels, whereas a modest (~5%) but significant proportion of neurons correlated with RT even before stimulus onset. Including all coherences in this regression did not change the conclusion: ~20% of neurons covaried with RT before stimulus onset, but only ~1% of units covaried with choice (Fig. S17d). Thus, we found further evidence that the neural population covaries with RT before stimulus onset but observed no prestimulus choice bias.

These results are a key line of evidence in support of the dynamical hypothesis outlined in Fig. 1h that initial conditions covary with RT but not choice and thus help reject Fig. 1g as a candidate model for our neural data. They also provide independent validation of the results from the analysis of the PC trajectories.

Inputs and initial conditions both contribute to the speed of poststimulus decision-related dynamics

Thus far we have shown that the initial conditions predict RT but not choice. Our monkeys clearly demonstrate choice behavior that depends on the sensory evidence and are generally slower for harder than for easier checkerboards. These behavioral results and the dynamical systems approach make two key predictions: 1) the sensory evidence (i.e., the input) should modulate the properties of the choice-selectivity signal after stimulus onset, and 2) the overall dynamics of the choice-selectivity signal should depend on both the sensory evidence and the initial conditions (Fig. S9).

To test the first prediction, we performed two analyses. First, we performed a PCA on firing rates of PMd neurons organized by stimulus coherence and choice. Figure 7a shows the state space trajectories for the first three components. In this space, activity separates faster for easier compared to harder coherences. Consistent with this visualization, choice selectivity increases faster for easier compared to harder coherences (Fig. 7b). However, there is little to no change in the latency of choice selectivity as a function of the stimulus coherence and thus firing rates in PMd are inconsistent with the hypothesis that there are stimulus-dependent encoding delays (Fig. 1f). These results suggest that poststimulus dynamics are at least in part controlled by the sensory input, consistent with the predictions of the dynamical systems hypothesis.

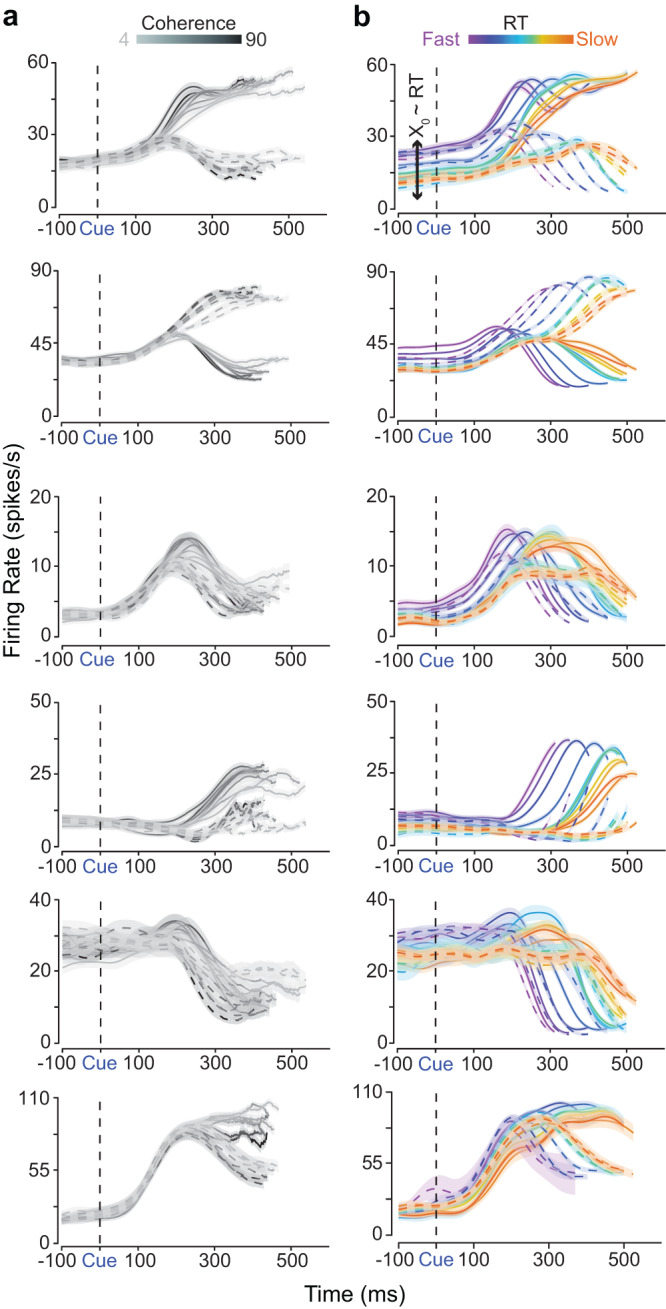

Fig. 7. Initial conditions and inputs determine speed of dynamics and ultimately choice and RT behavior.

a First three PCs (PC1,2,3) of firing rates aligned to checkerboard onset (red dots) and conditioned on stimulus coherence and choice. We observed strong poststimulus separation as a function of choice and coherence, but no observable prestimulus (−400 ms to 0 ms) separation. Color-matched diamonds and squares indicate 225 ms post-checkerboard onset and 20 ms time steps, respectively. b Choice-selectivity signal measured as the six-dimensional Euclidean distance (E. distance) between left and right reaches as a function of stimulus coherence. c State space trajectories of the 1st, 2nd and 4th PCs of PCAs conditioned on RT bin and action choice within three stimulus coherences (90%, 31%, and 4%). d Choice-selectivity signal for each of the three coherences shown in (c) as a function of RT bin. e Average choice-selectivity (CS) signal in the 125 to 375 ms period after checkerboard onset as a function of the initial condition (IC) within each coherence (Coh.). Easier coherences lead to higher choice-selectivity signals regardless of initial condition, but the magnitude of this signal depends on the initial condition as well as on the sensory evidence. f Latency of the choice-selectivity signal as a function of the initial condition (IC) for each stimulus coherence (Coh.). As expected from (d), the latency is largely flat for the easier coherences and for faster RT bins (regardless of coherence), but longer for the harder coherences. The downward bend in (e) and the upward bend in (f, g) for the fastest RT bin (300–400 ms, violet) are likely artifacts of fewer trials in this bin. g Slope of the choice-selectivity signal (m term in eq. 21) as a function of the initial condition (IC) and coherence (Coh.). Slopes depend strongly on the initial condition but only weakly on coherence. h Same as (d), but for non-overlapping bins. Note the similarity between (h) and (d). The downward bend in (h) emerges from truncation of firing rates at movement onset when averaging.
i–k Same as (e)–(g) but for non-overlapping bins. Again the choice selectivity depends on both initial condition (IC) and coherence (Coh.). Cue - Checkerboard onset. Source data are provided as a Source Data file.

To test the second prediction, that sensory evidence and initial conditions jointly affect the speed of poststimulus dynamics, we performed a PCA of PMd firing rates conditioned on RT and choice within a coherence. To obtain these trajectories, we first calculated trial-averaged firing rates for the 11 RT bins within each coherence. We then projected these firing rates into the first six dimensions of the PC space organized by choice and RT (Fig. 4a, b). This projection preserved more than 90% of the variance captured by the first six dimensions of the data organized by RT bin and choice within a coherence. Typically, the first six dimensions explained 75% of the total variance of the data for a given coherence. Consistent with the results in Fig. 4b, the prestimulus state again covaries with RT even within a stimulus difficulty (Fig. 7c).
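The variance-preservation check can be sketched as the ratio between the variance of the within-coherence data after projection onto the six axes from the RT-and-choice PCA and the variance captured by that data's own top six PCs. This is our reading of the measure, stated as an assumption; `variance_preserved` and the synthetic data are hypothetical.

```python
import numpy as np


def top_axes(data, k):
    """Top-k principal axes (n_neurons, k) of mean-centered data."""
    centered = data - data.mean(axis=0)
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return vt[:k].T


def variance_preserved(data, axes):
    """Variance of data projected onto orthonormal `axes`, relative to the
    variance captured by the same number of the data's own top PCs."""
    centered = data - data.mean(axis=0)
    k = axes.shape[1]
    s = np.linalg.svd(centered, compute_uv=False)
    return np.sum((centered @ axes) ** 2) / np.sum(s[:k] ** 2)


# Hypothetical: the "global" RT-by-choice data and a single-coherence subset
# share most of their latent structure.
rng = np.random.default_rng(5)
mixing = rng.normal(size=(6, 50))
global_data = rng.normal(size=(800, 6)) @ mixing + 0.1 * rng.normal(size=(800, 50))
coherence_data = rng.normal(size=(300, 6)) @ mixing + 0.1 * rng.normal(size=(300, 50))
axes = top_axes(global_data, 6)
print(round(variance_preserved(coherence_data, axes), 3))
```

By construction the ratio cannot exceed 1, because no six orthonormal axes capture more variance than the data's own top six PCs.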

To assess how inputs and initial conditions jointly influenced decision-related dynamics, we again computed the time-varying choice-selectivity signal (CS(t)) by computing the high-dimensional distance between left and right trajectories at each timepoint for each of the RT bins and coherences. Fig. 7d shows this choice-selectivity signal as a function of RT bin for the three different coherences shown in Fig. 7c. For the easiest coherence, the choice-selectivity signal starts ~ 100 ms after checkerboard onset and it increases faster (i.e., steeper slope) for faster RTs compared to slower RTs (Fig. 7d, left panel, blue highlight box). In contrast, for the hardest coherence, the choice-selectivity signal is more delayed for the slower RTs compared to the faster RTs, while the slope effect is much weaker (i.e., slope for fast RTs is similar to that of slow RTs, Fig. 7d, right panel). These plots suggest that inputs and initial conditions combine and alter the rate and latency of choice-related dynamics.

We quantified these patterns by first measuring the average choice-selectivity signal in the 250 ms period from 125 to 375 ms after checkerboard onset as a function of the initial condition for each of the 7 coherences. We estimated the initial condition by using a PCA to project the average six-dimensional location in state space in the −400 to −100 ms period before checkerboard onset for each of these conditions onto a one-dimensional axis (see “Initial condition as a function of RT and coherence”). As Fig. 7e shows, the average choice-selectivity signal is larger for easier coherences across the board, but it is also weaker or stronger depending on the initial condition. Furthermore, when coherence is fixed, the average choice-selectivity signal depends on the initial condition. A partial correlation analysis found that the average choice selectivity in this epoch depends on both the initial condition (50 bootstraps, mean and 99% confidence interval, r74 = 0.85 (0.846–0.854), p = 0.0196) and the sensory evidence (r74 = 0.341 (0.331–0.350), p = 0.0196). These results are key evidence that choice-selective, decision-related dynamics are controlled by both the initial condition and the sensory evidence.
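The partial correlation can be computed by regressing the control variable out of both variables and correlating the residuals. In the sketch below, the initial condition is also summarized as the first-PC score of each condition's mean prestimulus state, following the description above; the synthetic 77 conditions (11 RT bins × 7 coherences) are hypothetical. For a partial correlation with one control variable, the degrees of freedom are n − 3, which gives 74 for n = 77 and 18 for the 21 (3 × 7) non-overlapping-bin conditions, matching the subscripts reported in the text.

```python
import numpy as np


def partial_corr(x, y, control):
    """Correlation between x and y after linearly regressing `control` out of both."""
    design = np.column_stack([np.ones(len(x)), control])
    resid_x = x - design @ np.linalg.lstsq(design, x, rcond=None)[0]
    resid_y = y - design @ np.linalg.lstsq(design, y, rcond=None)[0]
    return np.corrcoef(resid_x, resid_y)[0, 1]


def initial_condition_axis(prestim_means):
    """1-D initial-condition score: first-PC projection of each condition's
    mean prestimulus state (rows = conditions, columns = 6 dimensions).
    The sign of a principal component is arbitrary."""
    centered = prestim_means - prestim_means.mean(axis=0)
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[0]


# Hypothetical 77 conditions = 11 RT bins x 7 coherences.
rng = np.random.default_rng(11)
rt_bin = np.repeat(np.arange(11), 7).astype(float)
coh = np.tile(np.linspace(0.0, 1.0, 7), 11)
prestim = np.outer(-rt_bin, rng.normal(size=6)) + 0.1 * rng.normal(size=(77, 6))
ic = initial_condition_axis(prestim)
ic *= np.sign(np.corrcoef(ic, -rt_bin)[0, 1])   # orient: larger = faster-RT state
cs = 0.8 * ic / ic.std() + 0.5 * coh / coh.std() + 0.3 * rng.normal(size=77)
print(round(partial_corr(cs, ic, coh), 3), round(partial_corr(cs, coh, ic), 3))
```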

Do the effects observed in Fig. 7 arise from changes in slope, latency, or both? To address this question, we fit the choice-selectivity signal (CS(t)) with a piecewise function (eq. 21) that has a latency and a slope parameter. Fig. 7f plots the latency of the choice-selectivity signal (tLatency) as a function of the sensory input and the initial condition. Latencies depend on both the initial condition and the sensory evidence: they are longest when the initial condition is in the slow RT state and the input is weak, and shorter for strong inputs or when the initial condition is in the fast RT state. Consistent with this joint dependence, a partial correlation analysis found that the latency of choice selectivity depends on both the initial condition (r74 = −0.55 (−0.59, −0.51), p = 0.0196) and stimulus coherence (r74 = −0.38 (−0.4, −0.36), p = 0.0196).
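Eq. 21 is not reproduced in this section. As an assumption, the sketch below uses a common form for such a model, a flat baseline followed by a linear ramp, CS(t) = b + m · max(0, t − tLatency), fit by a grid search over tLatency with linear least squares for b and m at each candidate. The parameter names mirror those in the text, but the functional form and fitting procedure are hypothetical.

```python
import numpy as np


def fit_latency_slope(t, cs, candidate_latencies):
    """Fit CS(t) = b + m * max(0, t - t_lat) by grid search over t_lat.

    For each candidate latency, baseline b and slope m are solved by linear
    least squares; the candidate with the smallest squared error is kept.
    Returns (t_lat, m, b).
    """
    t = np.asarray(t, dtype=float)
    best = None
    for t_lat in candidate_latencies:
        ramp = np.clip(t - t_lat, 0.0, None)
        design = np.column_stack([np.ones_like(t), ramp])
        (b, m), *_ = np.linalg.lstsq(design, cs, rcond=None)
        sse = np.sum((cs - design @ np.array([b, m])) ** 2)
        if best is None or sse < best[0]:
            best = (sse, t_lat, m, b)
    return best[1], best[2], best[3]


# Hypothetical noiseless CS(t): baseline 1.0, onset at 150 ms, slope 0.02 per ms.
t = np.arange(-100.0, 400.0, 5.0)
cs = 1.0 + 0.02 * np.clip(t - 150.0, 0.0, None)
t_lat, m, b = fit_latency_slope(t, cs, np.arange(0.0, 300.0, 5.0))
print(t_lat, round(m, 4), round(b, 4))   # recovers t_lat = 150, m = 0.02, b = 1.0
```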

Figure 7g plots the slope of the choice-selectivity signal (m) as a function of the sensory input and the initial condition. In contrast to latency, slope of the choice-selectivity signal was strongly dependent on the initial condition but only weakly modulated by coherence. A partial correlation analysis confirmed these observations. Slope was strongly correlated with initial condition (r74 = 0.77 (0.75, 0.79), p = 0.0196) but had a very weak relationship to sensory evidence (r74 = −0.16 (−0.18, −0.13), p = 0.0196).

Again, these effects were reaffirmed using non-overlapping bins. Figure 7h shows the choice-selectivity signals for the three non-overlapping bins considered in Fig. 5a. For the easiest coherence, activity increases faster for faster RTs than for slower RTs, but this does not appear to be the case for the harder coherences, consistent with the patterns observed in Fig. 7d–g. We computed the average choice selectivity for each of these three RT bins in the 125 to 375 ms period and again found that both initial condition and coherence affected it (mean and 99% confidence intervals, coherence: r = 0.225 (0.204, 0.241); initial condition: r = 0.77 (0.76, 0.78); p = 0.0196 for both; 50 bootstraps; Fig. 7i). Subsequent analyses of the latency and slope of these choice-selectivity signals (Fig. 7j, k) were also consistent with the conclusions from overlapping bins. Latency was strongly affected by the initial condition (r18 = −0.63 (−0.72, −0.53)) and modestly by coherence (r18 = −0.38 (−0.43, −0.33)). Slope was again strongly influenced by the initial condition (r18 = 0.91 (0.88, 0.93), p = 0.0196) but had almost no relationship to coherence (r18 = −0.007 (−0.099, 0.085), p = 0.46).

In summary, choice selectivity depends on both the initial conditions and the inputs. The initial condition exerts a strong independent effect on both slope and latency of this signal, whereas sensory evidence interacts with the initial condition in altering the latency of the signal. Collectively, these results strongly support a dynamical system for decision-making where both initial conditions and inputs together shape decision-related dynamics and behavior.

The outcome of the previous trial influences the initial condition

So far we have demonstrated that the initial condition, as estimated by prestimulus population spiking activity, explains RT variability and poststimulus dynamics in a decision-making task. However, why initial conditions fluctuate remains unclear. One potential source of prestimulus neural variation could be post-outcome adjustment, where RTs for trials following an error are typically slower or occasionally faster than RTs in trials following a correct response32,47,48.

We examined whether post-outcome adjustment was present in the behavior of our monkeys. We identified all error–correct (EC) sequences and compared them to correct–correct (CC) sequences. Most of the data (78%) came from sequences of the form CCEC. For each remaining EC sequence (22%), we used the nearest CC sequence, either before or after it, for comparison. We did not observe any error streaks (Fig. S18a). The associated RTs were aggregated across both monkeys and sessions.
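The sequence matching described above can be sketched as follows, assuming that each session's outcomes are coded as a string of 'C' (correct) and 'E' (error) characters in trial order. The helper `find_post_error_pairs` is hypothetical, and the rule of preferring the earlier CC pair when a preceding and a following pair are equally close is an assumption. For a CCEC sequence, the nearest non-overlapping CC pair is simply the one immediately preceding the error.

```python
def find_post_error_pairs(outcomes):
    """Pair each post-error correct trial with the post-correct trial of the nearest CC pair.

    outcomes: string of 'C' (correct) and 'E' (error), one character per trial.
    Returns a list of (post_error_index, post_correct_index) trial indices.
    """
    n = len(outcomes)
    # Index of the second trial of every correct-correct (CC) pair, i.e., post-correct trials.
    cc_second = [j for j in range(1, n) if outcomes[j - 1] == "C" and outcomes[j] == "C"]
    pairs = []
    for i in range(n - 1):
        if outcomes[i] == "E" and outcomes[i + 1] == "C":
            post_error = i + 1
            # CC pairs that do not overlap the EC pair: entirely before or entirely after it.
            before = [j for j in cc_second if j <= i - 1]
            after = [j for j in cc_second if j - 1 >= i + 2]
            candidates = before[-1:] + after[:1]
            if candidates:
                # min() keeps the first candidate on ties, so the preceding pair wins.
                best = min(candidates, key=lambda j: abs(j - post_error))
                pairs.append((post_error, best))
    return pairs
```

For example, `find_post_error_pairs("CCEC")` pairs the final post-error trial (index 3) with the second trial of the preceding CC pair (index 1).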

We found that correct trials following an error were significantly slower than correct trials following a correct trial (mean ± SD: 487 ± 129 ms vs. 446 ± 96 ms; Wilcoxon rank-sum test comparing median RTs, p = 2.23 × 10⁻³⁰⁸; Fig. S18b). Additionally, correct trials following a correct trial were modestly faster than the correct trial that preceded them (mean ± SD: 446 ± 96 ms vs. 451 ± 105 ms; Wilcoxon rank-sum test comparing median RTs, p = 1.81 × 10⁻⁴; Fig. S18b). Thus, a correct outcome on the previous trial was followed by a faster RT, whereas an error on the previous trial was followed by a slower RT.
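For readers unfamiliar with the test, the Wilcoxon rank-sum statistic can be sketched in pure Python using the large-sample normal approximation, with tied values given their average rank and no tie correction to the variance. This is an illustrative stand-in, not the authors' implementation; in practice a library routine such as `scipy.stats.ranksums` would typically be used. Note that with very large samples the normal-approximation p-value can approach the smallest normalized double-precision value (about 2.2 × 10⁻³⁰⁸), so values near that limit are best read as effectively zero.

```python
import math


def rank_sum_test(x, y):
    """Two-sided Wilcoxon rank-sum test (normal approximation, no tie correction).

    Returns (z, p) where z is the standardized rank sum of x.
    """
    pooled = sorted([(v, 0) for v in x] + [(v, 1) for v in y], key=lambda t: t[0])
    ranks = [0.0] * len(pooled)
    i = 0
    while i < len(pooled):
        # Find the block of tied values starting at position i.
        j = i
        while j + 1 < len(pooled) and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        avg_rank = (i + j) / 2 + 1  # average 1-based rank for the tied block
        for k in range(i, j + 1):
            ranks[k] = avg_rank
        i = j + 1
    n1, n2 = len(x), len(y)
    w = sum(r for r, (_, group) in zip(ranks, pooled) if group == 0)
    mean_w = n1 * (n1 + n2 + 1) / 2
    sd_w = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (w - mean_w) / sd_w
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p
```

Here a negative z indicates that values in `x` (for example, post-correct RTs) tend to be smaller than those in `y` (for example, post-error RTs).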

These outcome-dependent changes in RT were mirrored by corresponding shifts in the initial condition. A PCA of trial-averaged firing rates organized by trial outcome and choice revealed that prestimulus population firing rates covaried with the previous trial’s outcome. Post-error correct trials, hereafter post-error trials, showed the largest prestimulus difference in firing rates relative to other trial outcomes (Fig. 8a, b).

Fig. 8. Prestimulus neural activity covaries with the previous trial’s outcome.

a The first four PCs (PC1,2,3,4) of trial-averaged firing rates aligned to checkerboard onset (Cue & black dashed line) of all 996 neurons from monkeys T & O and all sessions, organized by choice (right - dashed lines, left - solid lines) and trial outcome (green - correct trial, cyan - correct trial following a correct trial, red - error trial, and magenta - correct trial following an error trial). The percentage of variance explained by each PC is shown at the top of each plot. b State space of the 1st, 3rd and 4th PCs (PC1,3,4) aligned to checkerboard onset (red dots). PCs are plotted from 400 ms before to 400 ms after checkerboard onset. Observe how neural activity separates as a function of outcome, but not choice, up to 400 ms before stimulus onset. Squares and diamonds, color-matched to their respective trajectories, indicate 20 ms time steps and 250 ms after checkerboard onset, respectively. c KiNeT distance analysis demonstrating that trajectories are spatially organized, with post-error trials furthest from other trial types peri-stimulus relative to a reference trajectory (green, middle trajectory). d Logistic regressions were built per session (51 total) from current-trial spiking activity to predict the outcome of the previous trial. The plot shows the average percent accuracy of these 51 logistic regressions peri-stimulus. The orange outline is SEM. e KiNeT time-to-reference (tRef) analysis reveals that prestimulus velocity is slower peri-stimulus for post-error trials than for the reference trajectory (green, middle trajectory). In (c) and (e), shaded regions, color-matched to their respective trajectories, are bootstrap SEM. The x-axis is labeled Time (ms); this should be understood as time on the reference trajectory. * - p = 0.0196 and # - p = 0.05. p values derived from one-sided 50-repetition bootstrap tests of differences between each outcome trajectory and the reference trajectory. a.u. - arbitrary units. Source data are provided as a Source Data file.

A KiNeT26 analysis using the first 6 PCs (again, 6 dimensions captured ~ 90% of the variance; Fig. S18c) further corroborated that prestimulus firing rates covary with the previous trial’s outcome. Peri-stimulus trajectories for post-error trials lay on the opposite side of the reference trajectory (correct trials) from all other trial types (Fig. 8c). The averaged, windowed (i.e., –400:–200 ms, –200:0 ms, 0:200 ms, 200:400 ms) post-error trajectory differed significantly from the reference trajectory (distance of 0) in all windows (50-repetition bootstrap test, p < 0.0196; Fig. 8c). Similarly, a decoder revealed that the current trial’s spiking activity predicts the previous trial’s outcome at above-chance levels from before stimulus onset until about the overall mean RT, ~ 450 ms (equal numbers of correct and error trials were used to train the decoder; Fig. 8d), suggesting that the previous trial’s outcome affects the current trial’s pre- and poststimulus population firing rates.
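The balanced decoding analysis can be illustrated with a minimal sketch for a single session and time bin. It assumes spike counts arranged as trials by neurons and a binary label for the previous trial’s outcome, balances the classes by subsampling the majority class, and fits a plain gradient-descent logistic regression with k-fold cross-validation. The function name, fold count, learning rate, and iteration count are all illustrative assumptions rather than the authors’ settings; repeating the call for each time bin and averaging across sessions would yield an accuracy time course.

```python
import numpy as np


def balanced_decoding_accuracy(X, y, n_folds=5, n_iter=500, lr=0.1, seed=0):
    """Cross-validated accuracy of a logistic-regression decoder with balanced classes.

    X: (n_trials, n_neurons) spike counts of the current trial in one time bin.
    y: (n_trials,) previous-trial outcome, 1 = error, 0 = correct.
    """
    rng = np.random.default_rng(seed)
    idx0, idx1 = np.flatnonzero(y == 0), np.flatnonzero(y == 1)
    m = min(len(idx0), len(idx1))
    # Subsample the majority class so both outcomes are equally represented (chance = 0.5).
    keep = np.concatenate([rng.choice(idx0, m, replace=False),
                           rng.choice(idx1, m, replace=False)])
    rng.shuffle(keep)
    Xb, yb = X[keep].astype(float), y[keep].astype(float)
    folds = np.array_split(np.arange(len(keep)), n_folds)
    correct = 0
    for test in folds:
        train = np.setdiff1d(np.arange(len(keep)), test)
        # Standardize using training-set statistics only.
        mu, sd = Xb[train].mean(axis=0), Xb[train].std(axis=0) + 1e-9
        Xtr = np.c_[np.ones(len(train)), (Xb[train] - mu) / sd]
        Xte = np.c_[np.ones(len(test)), (Xb[test] - mu) / sd]
        w = np.zeros(Xtr.shape[1])
        for _ in range(n_iter):
            p = 1.0 / (1.0 + np.exp(-Xtr @ w))
            w -= lr * Xtr.T @ (p - yb[train]) / len(train)  # mean cross-entropy gradient
        correct += np.sum((Xte @ w > 0) == (yb[test] == 1))
    return correct / len(keep)
```

Balancing matters because post-error trials are rare; without it, a decoder could exceed 50% accuracy simply by always predicting "previous trial correct".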

KiNeT analyses revealed that post-error trials also had significantly slower prestimulus trajectories than the speed reference trajectory in both prestimulus windows (i.e., –400:–200 ms and –200:0 ms; 50-repetition bootstrap test vs. reference trajectory: p = 0.0196; Fig. 8e), suggesting that errors or, similarly, infrequent outcomes47 result in slower population dynamics on the following trial. Additionally, trials that followed correct trials (errors generally followed correct trials) had slightly faster prestimulus dynamics than the speed reference trajectory. Post-correct trial dynamics were significantly faster in both prestimulus windows (50-repetition bootstrap test: p = 0.0196), whereas error-trial dynamics were significantly faster in the last prestimulus window (–200:0 ms; 50-repetition bootstrap test: p = 0.0196; Fig. 8e). Finally, error and post-error trials had significantly slower poststimulus trajectories than the speed reference trajectory in the last poststimulus window (200:400 ms; 50-repetition bootstrap test: p = 0.0196; Fig. 8e), consistent with their longer RTs. Altogether, these results complement the behavioral findings: the initial condition shifts as a function of the previous trial’s outcome, not only after errors. These results suggest that the slower or faster RTs after an error or a correct trial, respectively, are at least partially due to slower or faster prestimulus dynamics (see Fig. S18d for complementary findings in single trials). Results were nearly identical when we used CCE and ECC sequences (Fig. S19).
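The value p = 0.0196 recurs throughout because it is the smallest p-value a 50-repetition bootstrap test can return under a common convention. As a sketch, assuming the one-sided p-value is computed as (number of resampled differences on the wrong side of zero + 1) / (number of repetitions + 1), the floor is 1/51 ≈ 0.0196. Whether the original analysis used exactly this convention is an assumption; the helper name is hypothetical.

```python
def bootstrap_p_one_sided(boot_diffs):
    """One-sided bootstrap p-value for the alternative 'difference > 0'.

    Uses the (count + 1) / (n + 1) convention, so with 50 repetitions
    the smallest attainable p-value is 1 / 51.
    """
    n = len(boot_diffs)
    n_against = sum(1 for d in boot_diffs if d <= 0)
    return (n_against + 1) / (n + 1)
```

If every one of the 50 resampled differences is positive, the function returns 1/51, which rounds to 0.0196; detecting smaller p-values would require more repetitions.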

The single-trial dynamics organized by trial outcome also suggest that the neural state for post-error trials is separated from that of post-correct trials at movement onset (Fig. S18d). We reasoned that such differences would lead to differences in choice selectivity between trial outcomes before movement onset. Consistent with this reasoning, the six-dimensional Euclidean distance between left- and right-choice trajectories was largely flat until ~ 250 ms before movement onset, at which point it increased for all trial types (Fig. S18e). Post-error trials showed the strongest choice selectivity of all trial types from at least 250 ms before movement onset, with the difference between trial types peaking ~ 90 ms before movement onset (Fig. S18e).
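The choice-selectivity measure used here reduces to a per-time-bin Euclidean distance between two trajectories. A minimal sketch, assuming the left- and right-choice trajectories for one trial type are already projected onto the first six PCs and stored as (time, dimension) arrays, is shown below; both helper names are hypothetical.

```python
import numpy as np


def choice_distance(left, right):
    """Euclidean distance between left- and right-choice trajectories at each time bin.

    left, right: arrays of shape (n_times, n_dims), e.g., projections onto the first 6 PCs.
    """
    left, right = np.asarray(left, dtype=float), np.asarray(right, dtype=float)
    if left.shape != right.shape:
        raise ValueError("trajectories must share shape (n_times, n_dims)")
    return np.linalg.norm(left - right, axis=1)


def peak_difference_time(dist_a, dist_b, times):
    """Time at which distance curve a exceeds distance curve b by the largest amount."""
    diff = np.asarray(dist_a, dtype=float) - np.asarray(dist_b, dtype=float)
    return times[int(np.argmax(diff))]
```

Computing `choice_distance` separately for post-error and post-correct trials, with times aligned to movement onset, and passing the two curves to `peak_difference_time` would locate when their choice selectivity differs most.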

These findings are consistent with the dynamical systems approach and the hypothesis in Fig. 1h, as they demonstrate that the initial condition before stimulus onset depends on trial history and that pre- and poststimulus dynamics are slower after errors than after correct trials.

Changes in initial condition due to the outcome of the previous trial likely lead to RT-related changes in the initial condition

Our results in the previous section suggest that changes in RT are at least in part due to alterations in the initial condition due to the previous trial’s outcome. To further test this hypothesis, we performed two analyses.

First, we projected the firing rates organized by outcome and choice onto the RT subspace (defined using the first 6 principal components of the PCA in Fig. 4). If the subspaces defined by trial outcome and by RT overlap strongly (High Overlap in Fig. S20a), then the cross projection should reveal meaningful structure. In contrast, if the subspaces were independent or non-overlapping, the cross projection would be largely unstructured25 (Independent in Fig. S20a). Consistent with our hypothesis, projecting the firing rates organized by outcome and choice onto the RT subspace revealed structure nearly identical to that obtained by performing PCA directly on the firing rates organized by trial outcome and choice (Fig. S20b). We also performed the converse analysis, projecting firing rates organized by RT and choice onto the subspace defined by trial outcome and choice (Fig. S20c). Consistent with our hypothesis that changes in trial outcome lead to changes in RT, we again found structure nearly identical to that obtained by performing PCA on the firing rates organized by RT and choice (Fig. 4). These cross-projection analyses show that the subspaces identified by trial outcome and choice, and by RT and choice, overlap substantially.
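The cross projection can be sketched as follows, assuming that each dataset is a matrix of trial-averaged firing rates with rows for concatenated condition-by-time samples and columns for neurons. The helper names are hypothetical, and computing PCs via an SVD of the mean-centered matrix is one standard choice, not necessarily the authors’ exact procedure.

```python
import numpy as np


def top_pcs(X, k):
    """Top-k principal axes (as columns) of X with shape (n_samples, n_neurons)."""
    Xc = X - X.mean(axis=0)
    _, _, vt = np.linalg.svd(Xc, full_matrices=False)
    return vt[:k].T  # (n_neurons, k), orthonormal columns


def cross_project(X_source, X_target, k=6):
    """Project mean-centered X_source onto the top-k PCs of X_target."""
    W = top_pcs(X_target, k)
    return (X_source - X_source.mean(axis=0)) @ W
```

If the two subspaces are orthogonal, the cross projection collapses toward zero (the "Independent" case); if they coincide, it retains essentially all of the source variance (the "High Overlap" case).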

To quantify the strength of this overlap, we used a previously developed alignment index25. Briefly, the index computes the trace of the covariance of the RT-organized firing rates after projection onto the first six principal components of the outcome subspace (equivalently, the sum of its eigenvalues) and divides it by the sum of the eigenvalues from the PCA on firing rates organized by RT and choice (see “Subspace overlap analysis”). Thus the index, as used here, quantifies the fraction of variance in the RT space (Fig. 4a, b) that can be accounted for by the outcome subspace (Fig. 8a, b). This analysis revealed that ~ 77% of the total variance of the RT space was explained by the outcome subspace, suggesting that the previous trial’s outcome accounts for much of the prestimulus firing rate covariation with RT.
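A minimal sketch of an alignment index of this type is given below. It assumes X_a holds the RT-organized rates and X_b the outcome-organized rates (samples by neurons), and, as an assumption, normalizes by the sum of the top d eigenvalues of X_a’s covariance; normalizing by the full trace of that covariance would instead give a fraction of total variance. The function name is hypothetical.

```python
import numpy as np


def alignment_index(X_a, X_b, d=6):
    """Fraction of X_a's top-d variance captured by the top-d PCs of X_b.

    X_a, X_b: (n_samples, n_neurons) trial-averaged firing rates.
    Returns a value in [0, 1]; 1 means X_b's subspace fully captures X_a's dominant variance.
    """
    C_a = np.cov(X_a, rowvar=False)
    C_b = np.cov(X_b, rowvar=False)
    evals_a = np.linalg.eigvalsh(C_a)[::-1]  # eigenvalues of X_a's covariance, descending
    _, evecs_b = np.linalg.eigh(C_b)         # eigenvectors of X_b's covariance, ascending order
    D_b = evecs_b[:, ::-1][:, :d]            # top-d principal axes of X_b
    # Variance of X_a within X_b's subspace, relative to X_a's own top-d variance.
    return np.trace(D_b.T @ C_a @ D_b) / evals_a[:d].sum()
```

An index near 1 means the outcome subspace captures nearly all of the dominant RT-related variance, whereas an index near 0 means the two subspaces are close to orthogonal.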

Second, we applied dPCA49 to the population firing rates in the 600 ms before checkerboard onset, once organized by the previous trial’s outcome and choice and once organized by RT and choice. The axis that maximally separated activity by the previous trial’s outcome and the axis that maximally separated activity by RT overlapped significantly, with an angle of 47.8° between them. These results suggest that the previous trial’s outcome shifts prestimulus dynamics in a manner consistent with setting the speed of the dynamics and, therefore, the eventual RT.
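The angle between two axes is the arccosine of their normalized dot product. The sketch below assumes that the sign of each axis is arbitrary (as is typical for components from dimensionality reduction), so it folds the result into the 0° to 90° range; the helper name is hypothetical. For context, two random axes in a space of roughly 1000 neurons would typically be very close to 90° apart, so an angle well below 90° indicates considerable alignment.

```python
import math


def axis_angle_deg(u, v):
    """Angle in degrees between two axes, ignoring sign (result between 0 and 90)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    # Clamp to 1.0 to guard against rounding slightly above 1 before acos.
    cos_angle = min(1.0, abs(dot) / (norm_u * norm_v))
    return math.degrees(math.acos(cos_angle))
```

For example, `axis_angle_deg([1, 0], [1, 1])` returns 45 degrees, and flipping the sign of either axis leaves the result unchanged.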

Collectively, the previous trial’s outcome leads to different initial conditions, slower pre- and poststimulus dynamics after errors, and ultimately RT variability, all in line with the hypothesis in Fig. 1h.

Discussion

Our goal in this study was to identify the dynamical system that best described decision-related neural population activity in PMd. Inspired by studies of neural population dynamics related to motor planning and timing20–22,26, we investigated the neural population dynamics in PMd of monkeys performing a red-green RT decision-making task18,27. The prestimulus neural state in PMd, a proxy for the initial condition of the dynamical system, strongly predicted RT, but not choice. We observed these effects across and within stimulus difficulties and also on single trials. Furthermore, faster RT trials had faster neural dynamics and initial conditions distinct from those of slower RT trials. Additionally, poststimulus, choice-related dynamics were altered by the inputs, with easier checkerboards leading to faster dynamics than harder ones. Finally, these initial conditions and the behavior on a given trial depended on the previous trial’s outcome, with RTs and prestimulus trajectories slower on post-error than on post-correct trials. Together, our results suggest that decision-related neural population activity in PMd is well described by a dynamical system in which the choice-related dynamics (the output of the system) depend on initial conditions (influenced by trial outcome) and the sensory evidence (which solely determines the eventual choice).

At the highest level, these observations highlight how a dynamical systems approach (alternatively, computation through dynamics) can help link the time-varying activity of neural populations to behavior6,15,26,50–54. Regardless of species or brain region, an increasingly common finding is that neurons associated with cognition and motor control are often heterogeneous and demonstrate complex time-varying patterns of firing rates and mixed selectivity8,15,41,52,55. Simple models or indices, although attractive to define, are often insufficient to summarize the activity of these neural populations15,52,56, and even if one performs explicit model selection on single neurons using specialized models37, the results can be brittle because of the heterogeneity inherent in these brain regions56. The dynamical systems approach addresses this problem by using dimensionality reduction methods such as PCA and TCA, reduced-rank regression, decoding, RNN models, and optimization techniques to understand collective neuronal activity across brain regions and tasks, generally summarizing the trial-averaged dynamics of large population datasets in lower-dimensional subspaces14,15,41. Here, we demonstrated that >85% of the variance of the trial-averaged firing rates of nearly 1000 neurons in PMd during decisions could be explained in just a few (six) dimensions.
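The statement that a few dimensions explain most of the variance corresponds to a cumulative explained-variance calculation from PCA. A minimal sketch, assuming a samples-by-neurons matrix of trial-averaged rates and a hypothetical helper name, counts the smallest number of PCs whose cumulative variance fraction reaches a threshold.

```python
import numpy as np


def n_dims_for_variance(X, threshold=0.85):
    """Smallest number of PCs whose cumulative explained variance reaches threshold.

    X: (n_samples, n_neurons) trial-averaged firing rates.
    """
    Xc = X - X.mean(axis=0)
    s = np.linalg.svd(Xc, compute_uv=False)  # singular values, descending
    frac = np.cumsum(s**2) / np.sum(s**2)    # cumulative fraction of variance
    # First index where the cumulative fraction reaches the threshold, as a count of PCs.
    return int(np.searchsorted(frac, threshold) + 1)
```

Because the squared singular values of the centered matrix are proportional to the PCA eigenvalues, this ratio equals the cumulative explained-variance fraction reported by standard PCA routines.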