Abstract

A system for analysis of histopathology data within a pharmaceutical R&D environment has been developed with the intention of enabling interdisciplinary collaboration. State-of-the-art AI tools have been deployed as easy-to-use self-service modules within an open-source whole slide image viewing platform, so that non-data scientist users (e.g., clinicians) can utilize and evaluate pre-trained algorithms and retrieve quantitative results. The outputs of analysis are automatically cataloged in the database to track data provenance and can be viewed interactively on the slide as annotations or heatmaps. The system provides commonly used models for analysis of whole slide images, including segmentation, extraction of hand-engineered features for segmented regions, and slide-level classification using multi-instance learning, and new models can be added as needed. The source code that supports running inference with these models internally is backed by a robust CI/CD pipeline to ensure model versioning, thorough testing, and seamless deployment of the latest models. Examples of the use of this system in a pharmaceutical development workflow include glomeruli segmentation, enumeration of podocyte count from WT-1 immunohistochemistry, measurement of beta-1 integrin target engagement from immunofluorescence, digital glomerular phenotyping from periodic acid-Schiff histology, PD-L1 score prediction using multi-instance learning, and the deployment of the open-source Segment Anything model to speed up annotation.

Keywords: Visualization, Annotation, Model cataloging, Segmentation, Segment Anything

Introduction

Histological assessment provides essential insight into the phenotypic properties of the tissue microenvironment. Pharmaceutical research often relies on visual assessment of tissue morphologies, whether for characterization of in vivo experiments or as an enrolment criterion for a clinical trial.1,2 Automated computational analysis of histopathology data, particularly using rapidly developing AI technologies, can expedite tissue analysis workflows and provide more objective quantitation, reducing turnaround time and rater reliability concerns.3 However, the translational challenges of interdisciplinary collaboration between data scientists and biologists are a major hurdle for the realization of medical AI systems.4,5 Consequently, systems which allow pathologists and scientists to interface effectively with AI are essential for making the most of exciting new technologies.6

The ability to leverage histopathology images in pharmaceutical research and clinical trials relies on the ability to visualize and annotate these images.7 Over the past decade, the commercial sector has jumped at these opportunities to provide solutions for AI-assisted diagnosis of histological images. This can be seen in the growing number of startup companies which promise easy-to-use, off-the-shelf solutions for the development of AI algorithms on tissue sections.8 These tools are tailored for non-technical users, and the development and usage of pre-built AI models is abstracted behind easy-to-use interfaces. However, for a skilled computer science researcher, the proprietary and closed-source nature of these commercial tools is limiting. For example, in a current commercial solution, a user can select between U-net9 and Deeplab10 network architectures to perform whole slide image segmentation. However, the details of training hyperparameters, data input, and prediction aggregation strategies are not visible to the user, and state-of-the-art architectures take time to be supported.

Several open-source solutions for tissue analysis using AI have also been made available through recent academic research.11,12 These tools provide greater flexibility to skilled researchers because their source code can be reviewed and modified (the entire processing pipeline can be controlled). However, open-source software does not have the same level of robustness and support that paid software offers and may not be ideal for applications that require constant uptime and multiple stakeholders.

In pharmaceutical research, documentation and reproducibility are essential for successful regulatory approval.13 Pharmaceutical partnerships with histopathology AI vendors have proven successful; however, several challenges exist:

1. Solutions are needed not just for experts (e.g., pathologists and computer scientists) but for collaboration across pharmaceutical companies’ interdisciplinary teams. A solution where inference using trained models can easily be run through a user interface is essential for wide utility across the diverse teams working on drug development or clinical trial enrollment.

2. Commercial solutions are often tailored for diagnosis.14,15 Researchers in pharmaceutical companies require more customized solutions for different needs.

3. As pharmaceutical companies collect and generate massive amounts of data, a strategy for data governance is necessary,5 and AI software should be developed and deployed using DevOps practices such as continuous integration and deployment.16 Commercial solutions either do not provide the necessary flexibility or are extremely expensive.

The field of AI is rapidly developing, and pharmaceutical companies seeking shortened development and feedback cycles are starting to invest in internal data science talent. For example, the recently open-sourced foundational model Segment Anything17 has shown promise for medical image segmentation tasks.18,19 On the topic of AI in pharmaceutical research, Henstock (2019) said, “strategic investments in external technologies will be necessary, but the synergies of related problems allow them to be more efficiently solved internally”.5

Towards this goal, we have developed a unified platform for histopathology analysis using state-of-the-art AI technology which is tailored to pharmaceutical use cases. This software is built on the Digital Slide Archive,20 an open-source histopathology viewing platform. Our solution interfaces with existing data governance systems which are already in place. This system supports common preclinical research tasks such as tissue segmentation and feature extraction, as well as patient-level prediction using a state-of-the-art weakly supervised learning pipeline. Trained models for these tasks can be easily deployed for use within the viewer, allowing multidisciplinary stakeholders to utilize internally developed AI models. Additionally, after evaluation new state-of-the-art open-source models can be easily deployed on the system and made accessible to non-technical users. After inference using the deployed models is run, outputs such as segmentation predictions and attention heatmaps are automatically visualized interactively on the slide, and analysis metadata is associated with the slide.

Methods

A solution for histopathology data analysis requires a coalescence of data science, software design, user interface optimization, and especially in pharmaceutical research, careful data and code management. Fig. 1 outlines this solution. A description of efforts to develop a fully featured platform for pharmaceutical histology data has been broken down into the following sections: Histopathology viewer, Data governance & provenance, Deployable AI, and Automatic testing & codebase.

Fig. 1.

Overview of proposed system for histopathology analysis. The system is centered around a cloud-based histology viewer (DSA) which is run on AWS EC2. The DSA interface acts as a system for exploring the database and associated metadata, as well as tracking data provenance. HistomicsUI (a component of DSA) allows viewing and annotation of histopathology data by users. The DSA queries a series of external S3 buckets which store the data. Data queries and access pass through a system for data stewardship ensuring proper management and governance of data. External compute resources are connected to the system allowing data scientists to use annotations to create models using a shared codebase which is continuously improved. Trained models can be deployed and run through the DSA interface, where the results are automatically cataloged.

Histopathology viewer

Perhaps the primary component of a histopathology analysis platform is a viewer in which end-users can interact with the system (center panel of Fig. 1). Histopathology slides which are digitally scanned are known as whole slide images (WSIs). These images are often gigapixels in size and are compressed using specialized formats which require purpose-built software to view.21 WSI viewers allow users to quickly zoom and pan around these large images, which is essential for exploring tissue phenotypes. For convenient use, a viewer must be fast, intuitive, and ideally not require any local software installation or file downloads.

We have chosen to use the Digital Slide Archive (DSA),20 an open-source histopathology slide viewer created by Kitware Inc., for the user-facing component of our histopathology analysis platform. The DSA supports all the major WSI formats and is accessible through the web. Importantly, the DSA is licensed under the Apache License 2.0, which grants permission for commercial use, modification, distribution, patent use, and private use.22 Fig. 2 depicts the user interface of the DSA.

Fig. 2.

Panel A shows loading times for 10 WSIs by storage location. This reflects the time to open a slide and zoom into the maximum magnification level. Data stored in EC2 is located on the instance that hosts the DSA, whereas S3 data is stored in a remote S3 bucket. Both EC2 and S3 data are accessed via the DSA over the web. Data stored locally is opened via Aperio Imagescope on a user’s local computer. Panels B–D show examples of the user interface of the developed tool. Panel B depicts a folder of data in the system. Thumbnails of slides in this folder are pictured, and the metadata fields associated with each slide can be configured, searched, and sorted. Panel C depicts the HistomicsUI slide viewer, where users can interact with the data, annotate, or submit analysis jobs using deployed algorithms. Here, an algorithm for PD-L1 scoring using multi-instance learning is shown, but a model-agnostic version is also available using the internally developed codebase. Panel D depicts a deployed segmentation algorithm which can also be run through the user interface. The model parameters for this segmentation algorithm are user-selectable, which makes it reusable for multiple tissue types.

One of the most appealing aspects of the DSA is its ability to read data directly from external S3 buckets,23 which helps avoid redundant data copies and simplifies data management. In preliminary testing, image loading times using the DSA were comparable to those of the commonly used, locally installed software Aperio Imagescope. Fig. 2A shows the time for opening a WSI and zooming into the maximum magnification level. We note that while opening a slide directly from S3 is slower than opening a slide stored on the EC2 instance24 which hosts the DSA, it is still reasonably fast.

The HistomicsUI viewer which is integrated into the DSA platform allows user annotation of WSIs using polygon and brush tools. We have created a library for generating these annotations (which are stored in JSON25 format) automatically from the results of algorithmic inference. This includes tissue segmentation, which is represented as contours, multi-instance learning, where attention maps are visualized as heatmaps, and feature extraction, where tissue features can be associated with slides as metadata. Fig. 2C depicts the HistomicsUI viewer.
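As an illustration of how segmentation contours might be turned into a JSON annotation, the following is a minimal sketch and not the library described above. The function name `contours_to_annotation` is hypothetical, and the field names (`elements`, `polyline`, `points`, `closed`, `lineColor`) reflect our understanding of the DSA annotation schema; they should be checked against the DSA documentation before use.

```python
import json


def contours_to_annotation(contours, name, line_color="rgb(0,0,255)"):
    """Build an annotation document from polygon contours.

    contours: list of polygons, each a list of (x, y) pixel coordinates.
    Field names are assumed from the DSA annotation schema.
    """
    elements = []
    for polygon in contours:
        if len(polygon) < 3:
            continue  # skip degenerate shapes that cannot enclose a region
        elements.append({
            "type": "polyline",
            "closed": True,
            "lineColor": line_color,
            # points are stored as [x, y, z]; z is 0 for a 2D slide
            "points": [[float(x), float(y), 0.0] for x, y in polygon],
        })
    return {"name": name, "elements": elements}


doc = contours_to_annotation(
    [[(10, 10), (50, 10), (50, 40), (10, 40)], [(0, 0), (1, 1)]],
    name="glomeruli",
)
print(json.dumps(doc, indent=2))
```

In this sketch the second contour has only two points and is dropped, so the resulting document contains a single closed polygon.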

Data governance & provenance

Pharmaceutical data is often accompanied by data regulations which require a robust system for managing data access. We have programmatically linked the permissions model of the DSA to an existing internal system for data access requests. This means that when a user’s data request is granted, the S3 bucket holding the data is indexed in the DSA and the user is given access to view the data. The DSA is accessible via the web, secured behind a firewall, and login is accomplished via Single Sign On using a user’s enterprise credentials.

The provenance of data in the system is tracked and maintained from the time it is created. Results generated by analysis tasks are cataloged by the system as metadata, which records the user who ran the analysis, the model that was used, the time, and the code version, to ensure reproducibility and data governance.
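A provenance record of this kind can be pictured as a small immutable structure. The sketch below is an assumption for illustration only: the class `AnalysisRecord`, the helper `make_record`, and all field names are hypothetical and do not describe the schema used in the deployed system.

```python
from dataclasses import dataclass, asdict
from datetime import datetime, timezone


@dataclass(frozen=True)
class AnalysisRecord:
    """Hypothetical provenance fields attached to an analysis result."""
    user: str
    model_name: str
    model_version: str
    code_version: str
    run_at: str  # ISO 8601 timestamp in UTC


def make_record(user, model_name, model_version, code_version, now=None):
    """Return a plain dict suitable for storing as slide metadata."""
    now = now or datetime.now(timezone.utc)
    record = AnalysisRecord(
        user, model_name, model_version, code_version, now.isoformat()
    )
    return asdict(record)


record = make_record(
    "jdoe", "glomeruli-segmentation", "1.2.0", "abc123",
    now=datetime(2023, 1, 1, tzinfo=timezone.utc),
)
print(record)
```

Freezing the dataclass prevents a record from being altered after creation, which matches the intent of provenance tracking.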

Deployable AI

AI algorithms can be deployed and run through the DSA interface. This is accomplished by containerizing the algorithm with Docker; input parameters are captured in the user interface and passed to the code running inference. Thus far, we have deployed algorithms for multi-instance learning and segmentation of WSIs; examples of the interface for these algorithms are depicted in Fig. 2C and D, respectively. We have also deployed an algorithm for sub-compartment segmentation and feature extraction which uses thresholding to further stratify segmented regions. Deployment of these algorithms is accomplished by creating a model-agnostic container in which the model weights and parameters are user-selectable.
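To make the idea of passing user-selected parameters into containerized inference code concrete, here is a minimal sketch of a command-line entry point. Every flag name and default below is hypothetical, and the DSA's actual plugin mechanism for describing parameters may differ; the sketch only shows how a model-agnostic container could receive weights and settings as arguments rather than hard-coding them.

```python
import argparse


def parse_args(argv=None):
    """Parse the parameters a user would choose in the interface."""
    parser = argparse.ArgumentParser(
        description="Run WSI segmentation inference (illustrative only)"
    )
    parser.add_argument("--input-image", required=True,
                        help="path to the whole slide image")
    parser.add_argument("--model-weights", required=True,
                        help="path to the packaged model weights")
    parser.add_argument("--tile-size", type=int, default=512,
                        help="edge length of inference tiles in pixels")
    parser.add_argument("--magnification", type=float, default=20.0,
                        help="magnification at which tiles are read")
    return parser.parse_args(argv)


args = parse_args(["--input-image", "slide.svs",
                   "--model-weights", "glomeruli.pt"])
print(args.input_image, args.model_weights, args.tile_size, args.magnification)
```

Because the weights and tiling settings arrive as arguments, the same container image can serve different tissue types without being rebuilt.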

A simple-to-use script packages model weights with the associated hyperparameters needed to run inference and uploads this package to the DSA. The deployment of algorithms in the DSA is similar to prior work by Lutnick et al.,11 which describes the deployment of a WSI segmentation pipeline in the DSA; however, our segmentation pipeline properly captures holes inside segmented regions. To save on compute cost, our deployment uses on-demand hardware-accelerated compute to run AI analysis, with a lightweight EC2 instance (r5d.2xlarge) without a GPU serving the DSA. GPU-enabled workers are spun up dynamically as AI analysis jobs are submitted to the system and then shut down again when they are no longer needed. Currently, worker uptime is managed with a CronJob which monitors the job queue and starts workers when analysis jobs are waiting. This leads to a small initial delay caused by the worker instance start time, which we have found to average 86.4±14 s. However, once the worker is started, subsequent jobs are not subject to this delay. Currently, there is a single GPU-enabled worker provisioned (g3.4xlarge AWS instance), but our framework should be easily extensible to multiple worker nodes which can run jobs simultaneously to speed up processing during periods of high demand.
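The start and stop behavior described above can be summarized as a small decision function that a scheduled job might call on each run. This is a sketch under stated assumptions, not the deployed CronJob: the function name `plan_worker_action`, its inputs, and the 15-minute idle limit are all hypothetical.

```python
def plan_worker_action(queued_jobs, running_jobs, worker_is_up,
                       idle_minutes, idle_limit=15):
    """Decide whether the GPU worker should be started, stopped, or left alone.

    idle_limit is a hypothetical grace period that avoids stopping a worker
    between closely spaced job submissions.
    """
    if queued_jobs > 0 and not worker_is_up:
        return "start"  # jobs are waiting and no worker can take them
    if (worker_is_up and queued_jobs == 0 and running_jobs == 0
            and idle_minutes >= idle_limit):
        return "stop"   # worker has been idle long enough to shut down
    return "none"


print(plan_worker_action(queued_jobs=2, running_jobs=0,
                         worker_is_up=False, idle_minutes=0))
print(plan_worker_action(queued_jobs=0, running_jobs=0,
                         worker_is_up=True, idle_minutes=30))
```

Keeping the decision separate from the cloud calls that actually start or stop an instance makes the policy easy to unit test without provisioning hardware.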

Automatic testing & codebase

Application testing is essential in validating software functionality over time. The application is tested with the following objectives: quality assurance, defect identification, user satisfaction, and risk assessment and compliance. Application tests were developed and executed according to a test plan designed to verify that the application meets product requirements. An automated CI/CD pipeline was developed to test the codebase, as well as to containerize and deploy the AI algorithm to the DSA web interface. This pipeline first retrieves the most up-to-date version of the algorithm and executes unit tests and end-to-end tests. Since some of these tests involve GPU-accelerated inference, a custom solution was developed to allow automated calls to GPU EC2 instances after each software change or pull request. If the tests meet acceptance criteria, the algorithm is then containerized and deployed to the DSA interface, where it is available to the application user. This system design ensures that the codebase and model versioning are always up-to-date, valid, and tracked against cataloged results. New model versions are cataloged in the system as they become available, and the model version used is included in the results so that it can be tracked. In the future, we plan to implement a system for defining which models should run on given data locations. This will allow pre-specified models to be run automatically when new data is uploaded, which we believe will be useful for projects where new data is being continuously generated.

Concurrently, manual tests verifying the histopathology viewer web interface were developed and executed by a team of data scientists, other developers, and testers in accordance with the above testing objectives. Test data utilized for these tests originated from both private data sources and from The Cancer Genome Atlas (National Institutes of Health, National Cancer Institute).26

Results & use-cases

We have optimized and deployed several algorithms in the platform for easy use by non-technical stakeholders.

Segmentation

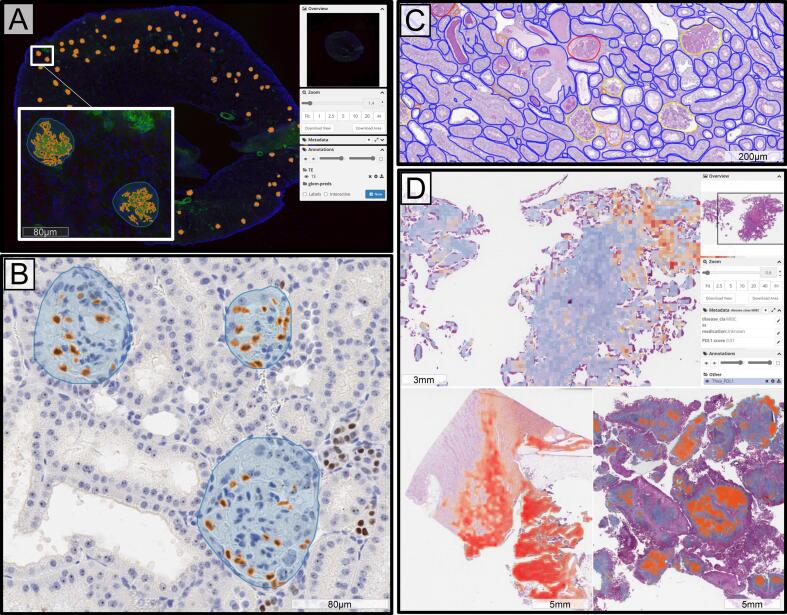

Examples of segmentation of glomeruli from immunofluorescence and immunohistochemistry-stained renal tissue sections can be seen in Fig. 3A and B, respectively. Further segmentation of glomerular sub-compartments is also depicted, using color deconvolution,27 Otsu’s thresholding,28 and optional bottleneck detection and splitting.29 Fig. 3A shows sub-compartment segmentation of β1 integrin, and Fig. 3B shows detected WT-1 positive podocyte nuclei, both within glomeruli regions. Fig. 3C shows panoptic segmentation of multiple compartments within the renal tissue. On slides containing whole mouse kidney sections scanned at 40× magnification with an average tissue area of 360±30 pixels², glomeruli segmentation took 290±14 s, and WT-1 detection and splitting took 11±1.6 s. On a holdout set of 6 slides, we observed a segmentation performance (Matthews correlation coefficient) of 0.88.
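For readers unfamiliar with the Matthews correlation coefficient, it is computed from the confusion matrix as (TP·TN − FP·FN) / √((TP+FP)(TP+FN)(TN+FP)(TN+FN)). The sketch below applies this standard formula to flattened binary labels; the function name and the toy data are illustrative and are not drawn from the holdout set reported above.

```python
import math


def matthews_corrcoef(truth, pred):
    """Matthews correlation coefficient for two binary label sequences."""
    tp = sum(1 for t, p in zip(truth, pred) if t and p)
    tn = sum(1 for t, p in zip(truth, pred) if not t and not p)
    fp = sum(1 for t, p in zip(truth, pred) if not t and p)
    fn = sum(1 for t, p in zip(truth, pred) if t and not p)
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    # By convention the score is 0 when any marginal count is empty
    return (tp * tn - fp * fn) / denom if denom else 0.0


truth = [1, 1, 1, 0, 0, 0, 0, 0]  # toy ground-truth pixel labels
pred = [1, 1, 0, 0, 0, 0, 0, 1]   # toy predicted pixel labels
mcc = matthews_corrcoef(truth, pred)
print(round(mcc, 4))
```

Unlike pixel accuracy, this metric stays informative when the foreground class (for example glomeruli) occupies only a small fraction of the slide.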

Fig. 3.

Examples of computationally produced annotations in the DSA. Panel A shows glomeruli segmentation (blue) and β1 integrin detection (orange) from immunofluorescence-stained kidney tissue. Panel B shows glomeruli segmentation (blue) and podocyte detection (orange) from renal tissue stained using Wilms’ tumor-1 immunohistochemistry. Panel C depicts multi-compartment instance segmentation of renal tissue, tubules (blue), glomeruli (yellow), sclerotic glomeruli (red), and arteries (orange). Panel D depicts various heatmaps of attention scores for a multi-instance learning network trained to predict PD-L1 score on H&E tissue sections.

Feature extraction

Features from segmented regions can be quantified, extracted, and exported to CSV (comma-separated values) files for further analysis, all within the DSA interface. Examples of this functionality in use include quantification of target engagement via β1 integrin positive area (Fig. 3A) and podocyte counting (Fig. 3B). The runtime for feature extraction depends on the number and complexity of the features being calculated. To streamline this process, we have created an easy-to-use framework where users can create and pass a function which calculates the desired features, and the calculation is parallelized. We note that at the time of writing, the segmentation and feature extraction tools described above have successfully been applied to more than 750 whole slide images from 10 different mouse studies by biologist stakeholders.
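The pattern of passing a user-defined feature function that is then applied to every region in parallel can be sketched as follows. This is an illustration only: `extract_features` and `polygon_area` are hypothetical names, regions are assumed to be lists of (x, y) vertices, and a thread pool is used for brevity where a process pool may suit CPU-heavy features better.

```python
from concurrent.futures import ThreadPoolExecutor


def polygon_area(points):
    """Example feature: polygon area via the shoelace formula."""
    total = 0.0
    n = len(points)
    for i in range(n):
        x0, y0 = points[i]
        x1, y1 = points[(i + 1) % n]  # wrap around to close the polygon
        total += x0 * y1 - x1 * y0
    return abs(total) / 2.0


def extract_features(regions, feature_fn, max_workers=4):
    """Apply a user-supplied feature function to each region in parallel.

    Results are returned in the same order as the input regions.
    """
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(feature_fn, regions))


regions = [
    [(0, 0), (4, 0), (4, 3), (0, 3)],  # 4 x 3 rectangle
    [(0, 0), (2, 0), (0, 2)],          # right triangle with legs of 2
]
areas = extract_features(regions, polygon_area)
print(areas)
```

Any function that accepts one region and returns a value could be passed in place of `polygon_area`, which is what keeps such a framework reusable across studies.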

Multi-instance learning

Prediction of slide-level labels via multi-instance learning30 can also be run within this system. Here, attention predictions are displayed as heatmaps on the slide so that experts can review which regions are being considered for the final prediction. Fig. 3D depicts several styles of heatmaps which can be generated by the system when performing a PD-L1 scoring task.31 On slides containing cancer biopsies scanned at 40×, multi-instance learning inference takes 21±0.8 s per gigapixel and has been tested on slides as large as 12 gigapixels in size. As with segmentation, we note that the multi-instance learning pipeline is model-agnostic, and the weights trained for PD-L1 scoring are easily replaced by another selection from a catalog of deployed models.
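The relationship between attention scores, the slide-level prediction, and the displayed heatmap can be illustrated with a simplified sketch of attention-based pooling. This is a generic textbook form, not the network deployed in the system: real attention-based models typically pool tile embeddings rather than scalar tile scores, and the function names here are hypothetical.

```python
import math


def attention_pool(tile_scores, attention_logits):
    """Softmax the attention logits and take a weighted average of tiles."""
    peak = max(attention_logits)
    exps = [math.exp(a - peak) for a in attention_logits]  # stable softmax
    total = sum(exps)
    weights = [e / total for e in exps]
    slide_score = sum(w * s for w, s in zip(weights, tile_scores))
    return slide_score, weights


def to_heatmap(weights):
    """Rescale attention weights to [0, 1] for display as a heatmap."""
    lo, hi = min(weights), max(weights)
    if hi == lo:
        return [0.0] * len(weights)  # uniform attention carries no contrast
    return [(w - lo) / (hi - lo) for w in weights]


score, weights = attention_pool([0.2, 0.9, 0.4], [0.0, 0.0, 0.0])
print(score, weights)
print(to_heatmap([0.1, 0.3, 0.5]))
```

Tiles with larger weights contribute more to the slide-level score, which is why rendering the weights on the slide shows which regions drove the prediction.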

AI-assisted annotation

We deployed the recently released foundational model for segmentation: Segment Anything17 in the system. This model was trained to segment structures from prompts and our preliminary testing of this model shows that it performs well in histology images without any further optimization. As several projects that include annotation of histology tissue are ongoing, we decided to utilize the capabilities of the Segment Anything model to decrease the workload of expert annotators. Fig. 4 shows several modes of pre-segmentation and segmentation of user input prompts utilizing the Segment Anything model that are currently deployed in our platform. A video showing the capabilities of the Segment Anything model running in our system is available in Supplemental Fig. 1. We believe we are the first to integrate this segmentation model into a WSI viewer.

Fig. 4.

Tools for speeding up annotation using zero-shot learning. We have deployed a foundational model for segmentation (Segment Anything) to speed up annotation of structures in WSIs. Panel A depicts using the Segment Anything model for pre-segmentation of the entire WSI. Note that the slide is automatically tiled to fit into memory, and the magnification of the tiles is user-selectable through the UI. Panel B shows the ability of a user to right-click and assign labels to detected contours from a pre-defined list which can be set at the folder level. Panel C shows the ability to run the Segment Anything model on user-defined regions of interest. This is similar to pre-segmentation of the entire WSI but is useful if the user only wants to annotate specific sections of the slide. Finally, panel D shows the ability to generate segmentations from user prompts. Here, a user roughly annotates structures of interest by placing a bounding box around them, which is converted to a segmentation boundary using the Segment Anything model.

On slides containing whole mouse kidney sections scanned at 40× magnification with an average tissue area of 360±30 pixels², pre-segmentation took 270±15 s, and segmentation from bounding box prompts took 10±0.8 s per prompt. In the future, we would like to pre-compute features using the encoder of this model and make this segmentation aid more interactive.
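Whole-slide pre-segmentation requires splitting the image into tiles that fit into memory. The sketch below shows one simple way to enumerate non-overlapping tile regions; the function name `tile_grid` and the tile size are hypothetical, and the deployed tiling (including any overlap or stitching of contours across tile borders) is not described here.

```python
def tile_grid(width, height, tile_size):
    """List (left, top, tile_width, tile_height) regions covering an image.

    Tiles on the right and bottom edges are clipped to the image bounds.
    """
    if tile_size <= 0:
        raise ValueError("tile_size must be positive")
    tiles = []
    for top in range(0, height, tile_size):
        for left in range(0, width, tile_size):
            tiles.append((
                left,
                top,
                min(tile_size, width - left),
                min(tile_size, height - top),
            ))
    return tiles


tiles = tile_grid(width=2500, height=1000, tile_size=1024)
for tile in tiles:
    print(tile)
```

Each region could then be read at the user-selected magnification and passed to the segmentation model one tile at a time, so that peak memory depends on the tile size rather than the slide size.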

Conclusion

Effectively utilizing histopathology tissue in pharmaceutical research requires customizable solutions for viewing and annotating this data. AI technologies have the potential to greatly impact WSI analysis workflows by multidisciplinary teams. Because the AI landscape moves quickly, internal investment in data science talent by pharmaceutical companies unlocks greater opportunities for integrating in-house AI and open-source foundational models into easily accessible interfaces. We have created a system for AI-assisted analysis of WSIs in a pharmaceutical setting.

This system is built on top of an open-source WSI viewer which has been integrated with data access systems which are already in use. AI algorithms can easily be deployed within this system for use by non-technical users, and outputs from analysis are visualized and stored in the system with maintained provenance. The codebase for training models and deploying them in this system is backed by a robust CI/CD pipeline, which tracks model versioning and ensures that deployed code is tested.

This system is actively being used to quantify pre-clinical drug development endpoints such as podocyte count and target engagement, as well as to make promising open-source foundational models accessible to non-technical end users. Additional AI capabilities, such as object detection, are being deployed in the system as they are needed. We are currently in the process of validating this system for GxP compliance32 and hope to utilize it for external deployment of AI algorithms in clinical trials.

The following are the supplementary data related to this article.

Supplementary Video

Conflict of Interest

The authors declare no conflicts of interest.

Acknowledgments

We would like to thank David Manthey and Jeff Baumes from Kitware Inc. for their efforts in development of the open-source DSA tool which underpins this work. We also thank Pratik Patel for leading the efforts to get this tool GxP certified, Fred Sommers for project managing our working relationship with Kitware, as well as Tommaso Mansi, Asha Mahesh, and Alex Li for their support in our Kitware relationship. We also thank Rajashree Rana, Nikolay Bukanov, and Thomas Natoli for their contributions to the mouse model dataset used to test this tool.

References

- 1. Ma C., et al. An international consensus to standardize integration of histopathology in ulcerative colitis clinical trials. Gastroenterology. 2021;160(7):2291–2302. doi: 10.1053/j.gastro.2021.02.035.

- 2. Wong E.T., et al. Outcomes and prognostic factors in recurrent glioma patients enrolled onto phase II clinical trials. J Clin Oncol. 1999;17(8):2572. doi: 10.1200/JCO.1999.17.8.2572.

- 3. Granter S.R., Beck A.H., Papke D.J., Jr. AlphaGo, deep learning, and the future of the human microscopist. Arch Pathol Lab Med. 2017;141(5):619–621. doi: 10.5858/arpa.2016-0471-ED.

- 4. Tizhoosh H.R., Pantanowitz L. Artificial intelligence and digital pathology: challenges and opportunities. J Pathol Inform. 2018;9(1):38. doi: 10.4103/jpi.jpi_53_18.

- 5. Henstock P.V. Artificial intelligence for pharma: time for internal investment. Trends Pharmacol Sci. 2019;40(8):543–546. doi: 10.1016/j.tips.2019.05.003.

- 6. Holzinger A., Muller H. Toward human–AI interfaces to support explainability and causability in medical AI. Computer. 2021;54(10):78–86.

- 7. Dimitriou N., Arandjelović O., Caie P.D. Deep learning for whole slide image analysis: an overview. Front Med. 2019;6:264. doi: 10.3389/fmed.2019.00264.

- 8. Mehrvar S., et al. Deep learning approaches and applications in toxicologic histopathology: current status and future perspectives. J Pathol Inform. 2021;12(1):42. doi: 10.4103/jpi.jpi_36_21.

- 9. Ronneberger O., Fischer P., Brox T. U-net: convolutional networks for biomedical image segmentation. International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer; 2015.

- 10. Chen L.-C., et al. Deeplab: semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs. IEEE Trans Pattern Anal Mach Intell. 2017;40(4):834–848. doi: 10.1109/TPAMI.2017.2699184.

- 11. Lutnick B., et al. A user-friendly tool for cloud-based whole slide image segmentation with examples from renal histopathology. Commun Med. 2022;2(1):1–15. doi: 10.1038/s43856-022-00138-z.

- 12. Miao R., et al. Quick Annotator: an open-source digital pathology based rapid image annotation tool. J Pathol Clin Res. 2021;7(6):542–547. doi: 10.1002/cjp2.229.

- 13. Ahuja S., Scypinski S. Handbook of Modern Pharmaceutical Analysis. Vol. 3. Academic Press; 2001.

- 14. FDA. Software Algorithm Device to Assist Users in Digital Pathology. 2021.

- 15. FDA. Sectra Digital Pathology Module. 2020.

- 16. Ebert C., et al. DevOps. IEEE Softw. 2016;33(3):94–100.

- 17. Kirillov A., et al. Segment anything. arXiv preprint. 2023. arXiv:2304.02643.

- 18. Deng R., et al. Segment anything model (SAM) for digital pathology: assess zero-shot segmentation on whole slide imaging. arXiv preprint. 2023. arXiv:2304.04155.

- 19. Ma J., Wang B. Segment anything in medical images. arXiv preprint. 2023. arXiv:2304.12306.

- 20. Gutman D.A., et al. The digital slide archive: a software platform for management, integration, and analysis of histology for cancer research. Cancer Res. 2017;77(21):e75–e78. doi: 10.1158/0008-5472.CAN-17-0629.

- 21. Farahani N., Parwani A.V., Pantanowitz L. Whole slide imaging in pathology: advantages, limitations, and emerging perspectives. Pathol Lab Med Int. 2015;7:23–33.

- 22. Sinclair A. License profile: Apache license, version 2.0. IFOSS L. Rev. 2010;2:107.

- 23. Palankar M.R., et al. Amazon S3 for science grids: a viable solution? Proceedings of the 2008 International Workshop on Data-Aware Distributed Computing. 2008.

- 24. Juve G., et al. Scientific workflow applications on Amazon EC2. 2009 5th IEEE International Conference on E-Science Workshops. IEEE; 2009.

- 25. Pezoa F., et al. Foundations of JSON schema. Proceedings of the 25th International Conference on World Wide Web. 2016.

- 26. Cancer Genome Atlas Research Network, et al. The cancer genome atlas pan-cancer analysis project. Nat Genet. 2013;45(10):1113–1120. doi: 10.1038/ng.2764.

- 27. Ruifrok A.C., Johnston D.A. Quantification of histochemical staining by color deconvolution. Anal Quant Cytol Histol. 2001;23(4):291–299.

- 28. Xu X., et al. Characteristic analysis of Otsu threshold and its applications. Pattern Recognit Lett. 2011;32(7):956–961.

- 29. Wang H., Zhang H., Ray N. Clump splitting via bottleneck detection. 2011 18th IEEE International Conference on Image Processing. IEEE; 2011.

- 30. Foulds J., Frank E. A review of multi-instance learning assumptions. Knowl Eng Rev. 2010;25(1):1–25.

- 31. Udall M., et al. PD-L1 diagnostic tests: a systematic literature review of scoring algorithms and test-validation metrics. Diagn Pathol. 2018;13(1):1–11. doi: 10.1186/s13000-018-0689-9.

- 32. Pharmaceutical Inspection Co-operation Scheme. Good practices for computerised systems in regulated “GxP” environments. Pharmaceutical Inspection Convention, PI. 2007.
