Abstract

The term “digital phenotype” refers to the digital footprint left by patient-environment interactions. It has potential for both research and clinical applications but challenges our conception of health care by opposing 2 distinct approaches to medicine: one centered on illness with the aim of classifying and curing disease, and the other centered on patients, their personal distress, and their lived experiences. In the context of mental health and psychiatry, the potential benefits of digital phenotyping include creating new avenues for treatment and enabling patients to take control of their own well-being. However, this comes at the cost of sacrificing the fundamental human element of psychotherapy, which is crucial to addressing patients’ distress. In this viewpoint paper, we discuss the advances rendered possible by digital phenotyping and highlight the risk that this technology may pose by partially excluding health care professionals from the diagnosis and therapeutic process, thereby foregoing an essential dimension of care. We conclude by setting out concrete recommendations on how to improve current digital phenotyping technology so that it can be harnessed to redefine mental health by empowering patients without alienating them.

Keywords: digital phenotype, empowerment, mental health, personalized medicine, psychiatry

Introduction

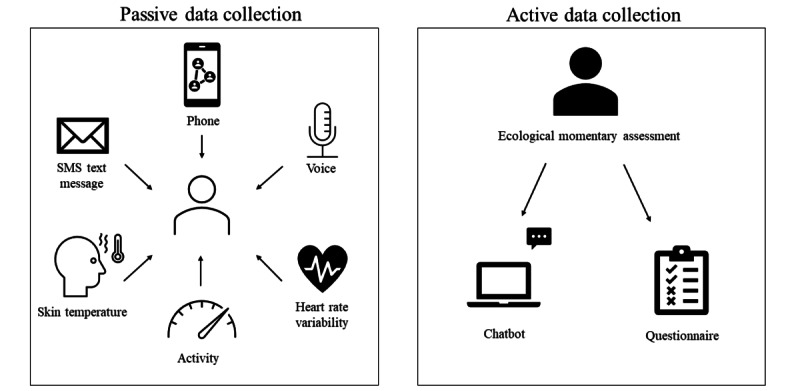

The emergence and rapid adoption of digital technologies in medicine have led to changes in medical practice and in the conception of health. One such technology is based on the notion of the digital phenotype, which first appeared in 2015 in Nature Biotechnology [1]. It builds on Dawkins’ concept of the extended phenotype, which stretches the phenotype (the set of observable characteristics or traits of an organism) to include the organism’s effects on its environment. Digital phenotyping (DP) refers to the collection of observable and measurable characteristics, traits, or behaviors of an individual, and has been defined as the “moment-by-moment quantification of the individual-level human phenotype in situ using data from personal digital devices” [2]. The data can be divided into active and passive subgroups. Active data require user engagement (eg, the completion of a questionnaire), while passive data are collected without user participation or notification.
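To make the active/passive distinction concrete, the following minimal Python sketch shows one way a DP pipeline might tag each observation by collection mode. The `Sample` record, its fields, and the source labels are hypothetical illustrations, not a schema taken from any existing DP platform.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Iterable, List, Literal, Tuple

Mode = Literal["active", "passive"]


@dataclass(frozen=True)
class Sample:
    """One hypothetical DP observation (all fields are illustrative assumptions)."""
    timestamp: datetime
    source: str   # eg, "mood_questionnaire" (active) or "accelerometer" (passive)
    value: float
    mode: Mode    # "active": requires user engagement; "passive": collected without it


def split_by_mode(samples: Iterable[Sample]) -> Tuple[List[Sample], List[Sample]]:
    """Partition samples into (active, passive) lists, preserving their order."""
    active: List[Sample] = []
    passive: List[Sample] = []
    for s in samples:
        (active if s.mode == "active" else passive).append(s)
    return active, passive


if __name__ == "__main__":
    t = datetime(2024, 1, 1, 9, 0)
    data = [
        Sample(t, "mood_questionnaire", 3.0, "active"),
        Sample(t, "accelerometer", 0.82, "passive"),
        Sample(t, "gps_distance_km", 1.4, "passive"),
    ]
    active, passive = split_by_mode(data)
    print(len(active), len(passive))  # 1 2
```

Keeping the collection mode on each record, rather than inferring it from the sensor name, makes it straightforward to analyze active and passive data separately or in combination.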

The use of passive data collection through sensors of all kinds represents a step change in the clinical observation of patients, as it gathers fine-grained information that can be more relevant to illness phenotypes than data collected only actively by the patient (eg, through ecological momentary assessment and chatbot interactions). Today, digital interfaces are proliferating as each individual interacts with a variety of connected objects, including wearables and smartphones equipped with a plethora of measurement tools. These devices can measure and store different types of data, including GPS location, proximity to other devices using Bluetooth, walking speed using an accelerometer, heart rate, oxygen level, electrical cardiac activity, sleep quality, perspiration using humidity sensors, tone of voice, activity on social networks, the lexical field of written sentences, etc. The collection of passive data has already led to some progress in various medical disciplines (eg, monitoring of cognitive function in cognitive impairment [3], Parkinson’s disease progression [4], cardiac electrophysiology [5], seizure detection [6], and glucose monitoring in diabetes [7]). DP serves the dual purpose of fulfilling clinical objectives and logistical aims. The clinical goals include improving health care professionals’ (HCPs’) ability to diagnose patients and select the most effective treatment options. Meanwhile, the logistical objectives involve managing health care systems to ensure optimal performance and efficiency. Nevertheless, DP may also constrain the role of HCPs, who already rely on clinical decision support systems, by structuring the profession in a top-down manner at the risk of subjugating and disqualifying their know-how. Furthermore, the collection of quantitative data may dispossess patients of their subjective distress [8].

We believe that the emergence of technologies such as DP in medicine underscores the fundamental differences between 2 complementary conceptions of health [9]: one centered on the illness, the other on the patient. Illness-centered medicine has its roots in the ancient Greek medical school of Knidos. Its aim is to cure illnesses, and it focuses on their diagnosis and classification. It can be related to the myth of Prometheus (ie, delaying or denying death) and corresponds to the objectification of the patient as a body or machine made up of organs and functional systems. Treatments involve invasive procedures (punctures, incisions, etc), the requirement to take medication, or, in the field of psychiatry, neurostimulation techniques such as electroconvulsive therapy. Behind this aggressive dimension of care [10] lies the idea of combating nature rather than seeking to improve coexistence with it. Patient-centered medicine derives from the Hippocratic tradition. Its aim is to care for patients by focusing on their own experience of their illness, as in palliative care, where human interactions are fundamental. This holistic (ie, whole person) approach to medicine focuses on prognosis and considers mental and social factors in order to improve individual patients’ quality of life. Throughout history, the conception of health has been pulled in these 2 opposite directions, depending on the patient’s or HCP’s point of view [10]. In the past decade, patient-centered medicine has made considerable progress. For example, clinical trials increasingly use patient-reported outcome measures, as these are now demanded by health authorities and regulatory agencies [11].

This paper examines how the implementation of DP in psychiatry could redefine mental health. This will be a challenging process owing to the plurality of concepts and approaches it involves. The World Health Organization defines health as a “state of complete physical, mental, and social well-being and not merely the absence of disease or infirmity” [12] and thus applies a normative approach based on a set of arbitrary conditions. Psychiatry has always claimed to be a clinical medicine. In contrast to other medical disciplines, it has no consensual biological markers and no gold standard to help HCPs (ie, psychiatrists, psychologists, nurses, social workers, and therapists) establish a diagnosis. The criteria used in psychiatry are clinical and mostly qualitative, stemming from the observation of bedridden patients, in accordance with the etymology of the word clinical (from the Greek klinê, meaning bed). To justify treatment, HCPs must thoroughly assess the functional impairment caused by the psychiatric illness in terms of the individual-environment interaction. Finally, what makes psychiatry so complex is that there are no standards either for the pathophysiological explanation of illnesses or for therapeutics. There is no international consensus on therapeutic guidelines, and there is huge interindividual variability in treatment response and tolerance, whether that treatment is pharmacological or psychotherapeutic. In other words, what is beneficial for one patient may not be for another. The same applies to pathophysiological models, which range from psychodynamics to neurobiology and from genetic predispositions to environmental factors. In psychiatry, being in poor health can even have secondary benefits [13].

Classifications have been drawn up to justify therapeutic interventions. These can be either categorical, such as the Diagnostic and Statistical Manual of Mental Disorders, Fifth Edition (DSM-5) [14] and the International Classification of Diseases, or dimensional, such as the Research Domain Criteria (RDoC) [15]. Both conceptions have issues [16]. Categorical classification reflects medical tradition but leaves some help-seekers without care because of arbitrary diagnostic thresholds. As they have low intrinsic validity, categorical illnesses also give rise to borderline forms and disorder spectrums with little temporal validity or therapeutic interest [17,18]. Dimensional classification considers all dimensions to be equally pathogenic, without taking account of their interplay and causal links (eg, some dimensions may be defense mechanisms from an evolutionary or psychodynamic perspective). It also depends on thresholds that must be well defined, since they are otherwise exposed to HCPs’ subjectivity. Finally, some dimensions are purely descriptive and do not consider functional impairment or therapeutic implications [19,20].

To delve into these matters, in this viewpoint paper, we begin by discussing the expectations related to the rise of a data-driven approach to mental health in the form of DP. Subsequently, we examine how its use threatens to dehumanize mental health. Lastly, we set out guidelines for ensuring that the implementation of DP in psychiatry fosters more patient-centered mental health.

Toward a Patient-Centered Mental Health

Emergence of Digital Phenotyping in Psychiatry

Data science emerged in psychiatry some years ago, drawing on all the digital information about mental health, individuals’ characteristics, and the factors involved in health care processes. These data range from theoretical variables of interest such as major life events, comorbid diagnoses, and stress factors to blood marker levels, clinical characteristics, and functional neuroimaging [21]. DP gives HCPs a new set of digital biomarkers, collected not only from wearables and smartphones but also from virtual reality devices [22] and gaming contexts [23], offering the opportunity to model mental health [24] and the extent of individual-environment interactions.

Machine learning (ML) offers powerful tools for exploring the high-dimensional, real-time data generated by DP. It offers the opportunity “to make sense” of these digital signs and of the mental health states they attempt to represent [25-27]. Some see in this technology the potential to better understand the neurobiological mechanisms underlying psychiatric illnesses [28] or to provide new transdiagnostic models for understanding symptoms, in line with the RDoC perspective [29]. In addition, ML could be used to deliver new predictive models. Artificial intelligence (AI) might be capable of “overcoming the trial and error-driven status quo in mental health care by supporting precise diagnoses, prognosis, and therapeutic choices” [30]. These systems may have the ability to predict risks, help HCPs make clinical decisions, increase the accuracy and speed of diagnosis, and facilitate the examination of health records. Integrating this data-driven approach into clinical practice could reduce the workload of HCPs. Such systems can already efficiently perform tasks such as diagnosing skin diseases and analyzing medical images (eg, in neurology, ophthalmology, cardiology, and gastroenterology) [31,32] and could soon be used in clinical decision support systems in psychiatry [33], making compulsory admissions more helpful [34]. DP therefore has a natural home in psychiatry [35,36], giving HCPs access to a new set of data based on individuals’ behavioral experiences and increasing their ability to classify and understand symptoms in their contextual and temporal dimensions, as well as the illnesses themselves [37,38]. Data reflecting emotions, energy levels, behavioral changes [39], and symptoms such as reduced sociability, mood, physical activity, and sleep [40], but also logorrhea, agitation, rumination, hallucinations, or suicidal thoughts, could constitute the digital signature of a pathology (Figure 1).
Given that psychiatry usually explains a mental illness and its issues in terms of the difficulty patients have interacting with their environment, it is only logical for DP to arouse such interest, especially as it could compensate for the absence of reliable biomarkers.

Figure 1.

Schematic representation of digital phenotyping.

Improved Psychiatric Care

In recent decades, the psychometric assessment of patients has involved self-report questionnaires (eg, the Patient Health Questionnaire for Depression [41]) and observer-rated scales (eg, the Hamilton Rating Scale for Depression [42]). As these scales are filled in either by the patient or the HCP, they necessarily have a degree of subjectivity. DP would enable phenomenological data to be collected, with the possibility of establishing ecologically valid psychometric assessments [37,43] (Figure 1). For instance, user-generated content on social media sites such as Reddit allows for the recognition of mental illness-related posts with good accuracy using a deep learning approach [44].

Various studies have already provided evidence for the use of DP for diagnostic purposes. For example, circadian rhythm, step counts, and heart rate variability have been correlated with the diagnosis of a mood disorder or mood episode [45-48]. Other correlations have been found between digital data and symptoms of schizophrenia [49-51], major depression [52-57], mood disorders [46,58,59], posttraumatic stress disorder [60,61], generalized anxiety disorder [62], suicidal thoughts [63,64], sleep disorders [65], addiction [66], and stress [53,67], as well as in the postpartum period [68,69], autism [70], and child and adolescent psychiatry [71,72]. Among other examples of the usefulness of DP for prediction or diagnosis in mental health, Instagram photos and Facebook language have been found to predict depression [73,74]; suicidal risk could be assessed from social media [75,76], with greater precision when DP integrates electronic health record data [77,78]; and automated analysis of free speech can measure relevant mental health changes in emergent psychosis [79] or speech incoherence in schizophrenia [80]. It is worth noting that clinical utility may derive from a combination of passive and active data rather than from passive data alone [81]. Overall, increased diagnostic accuracy could help avoid treatment delays or errors. For example, there is currently an average 8-year delay in the diagnosis of bipolar illness in France [82]. Concerning the choice of medication, phenotypic markers already play a major role (eg, the choice of antidepressant depends on whether depression is accompanied by insomnia or hypersomnia). DP could bring together more phenotypic markers than HCPs can collect and thus improve the choice of treatment, countering the effect of cognitive biases on decision-making [83].

Finally, DP could improve the follow-up of people with serious mental illnesses and optimize care between consultations, especially when accessing care facilities is difficult. It could also improve the assessment of treatment efficacy. Some researchers have hypothesized that heart rate variability is influenced by the progression of the patient’s depression and could be a new biomarker of treatment response [84,85]. High diagnostic confidence improves treatment compliance, and problems with compliance are a frequent source of relapse in mental illness [86]. DP could in turn provide useful tools for enhancing therapeutic education and improving the prediction of relapses [48,87-90]. Ultimately, DP could also be used to assess whether patients could benefit from psychotherapy [91], using parameters of proven efficacy [45], as part of an evidence-based medicine strategy.

Patient Empowerment

DP introduces new capacities to assess differences between what is normal and what is pathological. Canguilhem [92] identified normative capacity (ie, normativity) as a central condition for gauging to what extent a living person is in good health: “What characterizes health is the possibility to tolerate infractions of the usual norm and to set new norms for new situations.” Thus, all living beings live with their own norms based on their specific biological limits. Canguilhem [92] defines these norms as a mode of functioning in certain environmental conditions that allows individuals to have normal abilities and live normal lives. Unlike machines, living beings can define their norms according to environmental conditions in order to adapt in real time. The limits of these norms are inevitably tested over the course of individuals’ biological lives as they interact with their environment and pursue their life goals, and when a disability prevents them from meeting these goals, they feel ill. However, this experience of limits is subjective and entirely personal. When this normative capacity no longer allows an individual to adapt, thus triggering an illness, it is legitimate to intervene in order to restore the ability to set new life goals. Concerning psychiatric symptoms, DP could add a digital dimension to the notion of limits and to the production and assessment of norms. The physical pain of a broken leg is readily acknowledged, and seeking help is a common response. However, people often struggle to recognize psychic pain [93], depressive symptoms, or anxiety and find it much more difficult to perceive these conditions as abnormal and deserving of appropriate adaptations or treatment. DP could provide a novel and sensitive approach that empowers individuals to define their own digital mental health and establish their own digital norms.
In accordance with Canguilhem’s principles, the threshold between the normal and the pathological would become more nuanced [92,94]. Individuals would be able to perceive mental health as something more tangible, allowing them to determine for themselves which conditions contribute to their well-being independently of any scientific paradigms used by HCPs for evaluation and treatment. Consequently, individuals would have the opportunity to take charge of their mental health care, paving the way for a comprehensive mental health prevention system. From this perspective, it would be simpler to harness individual motivation for change and foster active participation in health strategies. In the United States, data-driven psychiatry is starting to emerge, with a close relationship among patients, HCPs, and DP [95]. There have been attempts at self-management, where patients are given greater autonomy with regard to technologies and the management of their symptoms [94]. For instance, some open science applications such as mindLAMP (which collects health data, produces easy-to-understand graphs, enables journaling of thoughts and reflections, and offers customized mental health interventions) allow some patients with depression or alcohol use disorder to rapidly develop emotional self-awareness, track thinking patterns in real time, gain insight into their progress, connect to the clinical team, engage with medication, or reinforce their confidence in psychotherapy [96]. Other studies have suggested that DP increases patients’ feelings of control over their symptoms [97]. The quantified-self approach could help scientists understand pathology better and deserves further exploration. In Western medicine, representations of disease and health are biased by cultural interpretation. Through the new language offered by self-evaluation data, close to a form of data-feeling, patients could free themselves from this bias.
Herein lies the idea of compensating for the subjectivity of clinical interviews. DP could offer a more precise and decisive view of the patient’s daily life, in which functional impairment, one of the major characteristics of mental illness, must not be neglected. DP is therefore a potential source of objectivity in mental illness [98], making it possible to flag daily abnormalities.

Toward a Personalized Psychiatry

The possibility of empowerment is consistent with psychiatry’s move toward a more personalized approach. Personalized psychiatry was initially conceived of as the tailoring of psychiatric practices to the patient’s situation based on the HCP’s assessment. However, it could also take the form of personalized requests from patients to HCPs, with a better comprehension of their mental disorders turning patients into self-researchers investigating their own illness. DP could create a unique network of macro (social and smart cities), meso (relation to environment and situations), micro (the person), and very micro (physiological mechanisms) data integrated into the patient’s daily life. DP would promote personalized psychiatry [99], doing away with the usual lengthy periods of observation in psychiatry. This corresponds to the concept of intelligent health, defined as the expansion of electronic health through the inclusion of built-in data analysis using new technologies, the extension of patient assessment to the patient’s environment and HCPs, and data mining to support decision-making [100]. HCPs and patients must be able to select the parameters that match their characteristics and situation in a move toward clinical augmentation. This added precision in clinical observation should lead to models of illnesses and treatments that are equally shared between HCPs and patients. It opens the way to patient-centered mental health, where the “symptom network” paradigm takes its place for a better phenotypic characterization of disorders and their evolution. The symptom network approach aims at a technologically augmented clinical and therapeutic relationship [101] that explores how symptoms influence one another, without seeking a single causal source, within a more consistent and transparent psychopathological framework [102].
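As an illustration of the symptom network idea, the following Python sketch represents symptoms as nodes of a directed, weighted graph and computes each node's out-strength (the sum of its outgoing edge weights). The symptom names and weights are made-up placeholders, not estimates from any study, and out-strength is only one simple descriptive index among many used in network analyses; it indicates how strongly a symptom is assumed to drive others, not that it is a root cause.

```python
# Hypothetical directed, weighted symptom network. An edge (a, b, w) means that
# changes in symptom a are assumed to influence symptom b with weight w.
# All weights are illustrative placeholders, not values estimated from data.
EDGES = [
    ("poor sleep", "fatigue", 0.6),
    ("fatigue", "low mood", 0.4),
    ("low mood", "rumination", 0.5),
    ("rumination", "poor sleep", 0.3),   # closes a feedback loop: no single root cause
    ("low mood", "social withdrawal", 0.4),
]


def out_strength(edges):
    """Return {symptom: sum of outgoing edge weights}; sinks get 0.0."""
    strength = {}
    for src, dst, w in edges:
        strength[src] = strength.get(src, 0.0) + w
        strength.setdefault(dst, 0.0)
    return strength


if __name__ == "__main__":
    s = out_strength(EDGES)
    for symptom, value in sorted(s.items(), key=lambda kv: -kv[1]):
        print(f"{symptom}: {value:.1f}")
```

With these placeholder weights, "low mood" has the highest out-strength, yet it also sits inside a loop (poor sleep, fatigue, low mood, rumination), which is the kind of mutual influence the network view describes instead of a single causal source.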

To conclude, we can see how DP could bring about major scientific progress in psychiatry toward patient-centered mental health. Nevertheless, this optimistic view of its potential uses or advantages needs to be tempered by practical issues. This quest for optimized performance could also have negative impacts (Textbox 1) on society that still need to be assessed.

Textbox 1. Positive and negative societal impacts of mental health as defined by digital phenotyping.

Positive impacts of patient-centered mental health

Precise, continuous, multidimensional psychometric assessment.

Deeper and more precise understanding of mental illnesses.

Faster and more accurate diagnosis of mental illnesses.

Better-adapted treatment based on each patient’s specificities, life history, and needs.

Better follow-up and prognosis of the mental illness over time and in changing conditions (eg, outside the hospital).

Patients are empowered to monitor the progression and root causes of their disease.

All in all: a precise, personalized (in the technical sense), patient-centered definition of mental health.

Negative impacts of dehumanized mental health

Nominalism and arbitrariness. It is unclear who or what decides the norms and standards beyond mere statistical means. Norms are disconnected from any lived experience.

Reproducing bias and systematic error in the understanding of mental illnesses: only relative objectivity.

Depriving patients of their agency, self-perception, and personal definition of what it means to be healthy and well.

Creation of arbitrary standards with a high risk of normativity, generating guilt and self-image issues.

Biopower: surveillance and privacy issues.

Alienation: patients experience their own lives in the second person, coming after the technology and the disease.

All in all: decentering of the human being, disappearance of the human dimension of mental health.

Risk of Dehumanizing Mental Health

Overview

Although DP introduces the possibility of individuals managing their own health data, increasing the amount of information available for them to assess their own health status, there might also be a process of reduction or simplification that would have massive consequences for patients’ self-determination. Moreover, as their health data would be more readily available to third parties, it might end up being used for other purposes besides improving their health.

Concerns With the Implementation of Digital Phenotyping in Psychiatry

The clinical interview is the moment when patients’ complaints are heard by HCPs and their distress is recognized. Their illness is discussed and diagnosed, and an appropriate treatment is prescribed. The subjective representations of individuals and HCPs are part of the decision-making system in psychiatry, shaping discussions about the individual’s state of health and illness. In striving to improve the individual’s current state of health, a shared objective guides the HCPs’ decisions. However, in light of the data yielded by DP analysis, the decision-making process in psychiatry might undergo profound transformations to align with the evolving understanding of mental health. Canguilhem [92] explained that the risk of nominalism is to reduce life to machine functioning. For example, a healthy individual may start to feel ill after seeing figures that deviate from the norm, while a help-seeker may be declared healthy by DP. The patient’s status may thus become disconnected from the felt experience [103], excluding self-construal, illness narratives, interpersonal dynamics, and social contexts, which are determinants of mental health [18]. The term data-driven (in society, marketing, health, etc) already exists and is used when decision-making is mainly based on data interpretation. The question of who creates the norms and indicators does not seem to be raised at present. By default, these conditions are set either by researchers on the basis of their study findings or by the designers of health applications (eg, walking 10,000 steps per day, limiting screen time to 4 hours per day, and eating certain types of food selected by an algorithm). For example, a marketing campaign by Yamasa Corporation claimed that walking 10,000 steps per day promotes well-being. However, its actual goal was to sell a step counter during the 1964 Tokyo Olympics.
The company called this device manpo-kei, which in Japanese literally means “10,000 steps meter.” A systematic review confirmed the association between walking and reduced all-cause mortality [104] and concluded that further benefits can be expected with every additional 1000 steps beyond 8000. The 10,000-step target is therefore arbitrary and should be reconsidered. This example shows that many recent applications continue to rely on approximations of scientific data [105,106]. At present, a multitude of applications rely on heterogeneous data collection and analysis methods that have not been harmonized [40,107]. Using norms set by DP could deprive individuals of the possibility of discussing which norms they should strive toward in order to achieve better well-being. Studies on DP in psychiatry remain of limited research scope [108], and none has yet been applied to clinical diagnosis and treatment or shown long-term improvements in mental health. Some applications, such as Instagram, are already suspected of damaging users’ mental health [109].
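The difference between a norm fixed by an application designer and a norm derived from the individual’s own history can be sketched in a few lines of Python. This is a hypothetical illustration, not a validated clinical rule: the 10,000-step target comes from the example above, while the personal rule (flagging a day only when it falls more than 2 standard deviations below the person’s own recent mean) and its cutoff are assumptions chosen for illustration. The cutoff is itself arbitrary, which underlines the question of who sets the norms.

```python
from statistics import mean, stdev

# Designer-set population target (the 10,000-step figure discussed above).
FIXED_TARGET = 10_000


def below_fixed_target(steps: int, target: int = FIXED_TARGET) -> bool:
    """Population-norm rule: flags any day below one fixed target."""
    return steps < target


def deviates_from_personal_baseline(history: list[int], steps: int,
                                    z_cutoff: float = 2.0) -> bool:
    """Individual-norm rule (hypothetical): flags a day only if it is unusually
    low relative to the person's own history, ie, z-score below -z_cutoff."""
    if len(history) < 2:
        raise ValueError("need at least 2 past days to estimate a baseline")
    mu = mean(history)
    sigma = stdev(history)  # sample standard deviation
    if sigma == 0:
        return steps < mu
    return (steps - mu) / sigma < -z_cutoff


if __name__ == "__main__":
    past_week = [6000, 6200, 5800, 6100, 5900, 6000, 6000]  # made-up example data
    print(below_fixed_target(6050))                            # True
    print(deviates_from_personal_baseline(past_week, 6050))    # False
    print(deviates_from_personal_baseline(past_week, 3500))    # True
```

For a person who habitually walks about 6000 steps a day, the fixed rule flags every day as deficient, whereas the personal rule only flags a marked departure from that person’s usual pattern.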

Froment [103] pointed out that, historically speaking, the term illness is not technical but undeniably lay and phenomenological. The science of medicine was built on a prescientific conception of illnesses but has gradually adopted concepts developed by HCPs and health authorities. If DP modifies these concepts, it could compromise patients’ quest to understand their mental health as well as the aim of the treatment. Beyond the very definition of mental health, there is an issue with the power of data over people’s freedom and way of life in society. This power could compromise the very possibility of a new kind of objectivity or even lead to the outright failure of any attempt to achieve objectivity through this technology.

Relative Objectivity

Datafication, which is defined as the tendency to overrepresent objects with digital data (eg, DP in psychiatry), has drawn criticism, especially regarding the capacity of data to represent parts of the real world [93,110-112]. For instance, several scales have been developed to quantify psychic pain, but they are very heterogeneous in content and can neither be compared nor substituted for one another [112]. Data power may therefore paradoxically deplete reality [113]. Each of the many steps between data collection, analysis by an algorithm, and implementation by patients or HCPs is a source of bias [114-119]. Algorithmic bias emerges when databases are built without exhaustive data or when data are sourced from patients with coexisting illnesses [120,121]. Additionally, ML algorithms give rise to the black box effect (ie, the opacity of the internal processes by which a system produces outputs from inputs) [122]. This concern arises when users (eg, HCPs or patients) lack knowledge about the inner workings of the system, rendering them unable to explain the outcomes of an analysis [123]. Studies exploring AI-based diagnosis take neither difficult cases nor HCPs’ clinical experience into account. AI makes mistakes in cases where HCPs perform poorly [124]. ML searches for statistical invariants, whereas clinical experience provides tacit data (ie, heuristics coupled with models or concepts, used unconsciously by HCPs and difficult to objectify) that algorithms struggle to consider, although such data are at the core of clinical practice in psychiatry. Objectivity is a cultural and historical construct that has elicited different approaches over time and across cultures [125]. There has been a tendency to quantify illnesses ever since Claude Bernard introduced the nominalist approach denounced by Canguilhem [92].
Some see self-monitoring as the instrumentalization of health, promoting the proliferation of digital tracking tools with spurious ethical claims [126]. Even more than other specialties, psychiatry may find itself alienated by so-called algorithmic governmentality and, paradoxically, adopt the fantasy of an illness-centered medicine that cures psychiatric illnesses. Algorithmic governmentality does away with direct human interaction. Rouvroy and Stiegler [112] and Rouvroy and Berns [127] introduced this notion, defining it as derivative: norms are produced from massive data sets, and regulation is favored over humans’ anticipatory ability. With DP, psychiatry could therefore end up being driven by unreflective, deterministic rules instead of by the desire to create greater freedom to achieve goals. The risk is that it depends less on the HCP’s experience and leaves less space for patients to express themselves [128]. DP may also lead us to a hostile world, driven by financial interests, where mental health becomes an unregulated market [129]. In addition, there is a risk of psychiatry becoming purely defensive [130], with medical tests or coercion used under legal constraints. Finally, although DP represents an opportunity for patients to express themselves through technologies, it may bring more anxiety-provoking modes of communication and hinder relationships [113], which are crucial to mental health care.

Alienation Instead of Empowerment

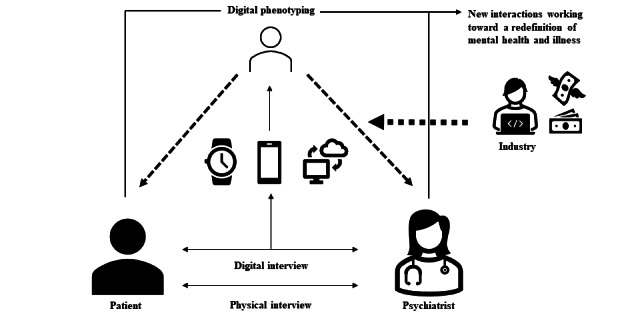

The opportunity for social and human progress through patient empowerment in psychiatry remains unclear. DP represents an instrument of biopower (a concept derived from Foucault [131,132]) for users, independently of its efficacy. The growing weight of digital data in decision-making risks dispossessing humans (HCPs and patients) of the tools for producing health data. It may create a dependence on production tools, as already observed in the world of scientific research. Stiegler [133] demonstrated how researchers’ daily lives have been changed by the arrival of more and more digital interfaces between the objects they observe and the data required to produce knowledge. Stiegler [133] claimed that scientists are thus deprived of their production, as they have to pay private industry for the right to use their own discoveries. DP could easily spark a similar process in the mental health industry [134], where the production of feelings becomes a patentable technique, introducing a third party into the relationship established by the clinical interview. Finally, DP risks defining mental health goals and dictating the means of attaining them (Figure 2). Illnesses may become political, part of a biopower seeking social control [135-137]. The term mental disorder used in the DSM-5 (instead of illness or disease) speaks for itself, the implicit meaning being that good mental health corresponds to a supposed mental order: illnesses render individuals unfit and remove their autonomy, such that they become dependent on the quantified self or on medical authorities (specialists) with increasingly narrow areas of expertise. Indeed, the current trend in psychiatry is to develop expert centers in which patients undergo a single clinical assessment, resulting in a fast diagnosis and therapeutic guidelines. This paternalistic psychiatric assessment has little to do with the patient’s own expertise, which only HCPs who provide follow-up and long-term care can fully know.
The risk is that HCPs relying on this data set will reach a diagnosis too early, interfering with the treatment process and compromising the follow-up needed to cure psychiatric illnesses.

Figure 2.

Conceptual illustration of the links created by the introduction of digital phenotyping into the health care relationship.

Ethical Issues

Patients whose intrinsic ability to normalize their environment is impaired are regarded as lying on the border between the normal and the pathological. DP could shift these borders by entering the patient-HCP relationship as a third party (Figure 2), or as a fourth party if the relationship includes a family member or trusted person [138]. This raises the issue of responsibility, which extends to the designers, managers, and analysts of DP tools, since technological tools are not autonomous. DP threatens to contravene the principles governing new technologies, namely neutrality, diversity, transparency, equity, loyalty, and overall comprehensibility.

Equity has been identified as one of the main ethical challenges of digital health [139]. Indeed, algorithms are built on complex models with user interfaces that are often difficult to understand. If users are not trained, DP is likely to be misused because of learning bias. HCPs and users could fall victim to the black box effect, which can hinder the traceability of the decision-making process. DP may well exacerbate existing inequalities in access to psychiatric care, penalizing patients from underprivileged backgrounds [94], those on the wrong side of the digital divide [140], or those exposed to the stigma [141] that widely affects the psychiatric population [142]. Studies on the use of health data also tend to show that such use is more acceptable to younger psychiatric patients [143] and that, in schizophrenia, adherence is predicted by a higher level of education [144]. There is therefore a risk that these technological advances will only benefit individuals who already enjoy easier access to psychiatric care.

In psychiatry, respect for privacy and confidentiality is also an essential part of the medical principle of patient autonomy, but health digitalization has brought about a paradigm shift. The sharing of information between different HCPs is now considered beneficial for patients, and professional integrity is replacing confidentiality [145,146]. Anonymization can easily be circumvented by cross-referencing data sets. Personal health information thus risks moving from the private to the public arena [139] or being extensively traded by private companies, particularly in psychiatry, where intimate data are of utmost importance for clinical practice. DP is indeed potentially intrusive in ways that would breach medical confidentiality, raising questions about patients' consent to share their health data. Hence, informed consent and data ownership are among the main issues [95,139,147].
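The point that anonymization can be bypassed by cross-referencing can be made concrete with a toy linkage example. The sketch below is purely illustrative: the records, the field names (`zip_code`, `birth_year`, `sex`, `night_phone_use_h`), and the "public register" are hypothetical. Removing names from a DP export does not protect patients when the remaining quasi-identifiers jointly single out one person in another data set.

```python
# Toy illustration with HYPOTHETICAL data: re-identifying "anonymized" DP
# records by joining them with a public data set on shared quasi-identifiers.

# DP export with names removed but quasi-identifiers kept.
anonymized_dp = [
    {"zip_code": "75013", "birth_year": 1990, "sex": "F", "night_phone_use_h": 3.5},
    {"zip_code": "75013", "birth_year": 1985, "sex": "M", "night_phone_use_h": 0.4},
    {"zip_code": "69002", "birth_year": 1990, "sex": "F", "night_phone_use_h": 1.1},
]

# Hypothetical public register listing names alongside the same fields.
public_register = [
    {"name": "Alice", "zip_code": "75013", "birth_year": 1990, "sex": "F"},
    {"name": "Bruno", "zip_code": "75013", "birth_year": 1985, "sex": "M"},
    {"name": "Chloe", "zip_code": "69002", "birth_year": 1990, "sex": "F"},
    {"name": "Denis", "zip_code": "69002", "birth_year": 1972, "sex": "M"},
]

QUASI_IDENTIFIERS = ("zip_code", "birth_year", "sex")


def key(record):
    """Tuple of quasi-identifier values used to cross-reference records."""
    return tuple(record[field] for field in QUASI_IDENTIFIERS)


def link(dp_records, register):
    """Return {name: dp_record} for every DP record matching exactly one person."""
    index = {}
    for person in register:
        index.setdefault(key(person), []).append(person["name"])
    linked = {}
    for record in dp_records:
        candidates = index.get(key(record), [])
        if len(candidates) == 1:  # a unique match amounts to re-identification
            linked[candidates[0]] = record
    return linked


reidentified = link(anonymized_dp, public_register)
for name, record in reidentified.items():
    print(name, record["night_phone_use_h"])
```

Coarsening the quasi-identifiers (eg, keeping only the birth decade) so that each combination matches several people is the intuition behind k-anonymity; it lowers the risk of linkage but does not eliminate it.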

Phenomenology currently lies at the core of mental health but risks being dislodged. Good health may carry a moral imperative, with pathologies being attributed either to alienation from the environment (nurture) or to biological predispositions (nature). When patients are freed of all responsibility in this way, failure ceases to exist and is replaced by illnesses, as has already happened with learning disorders, substance use disorders, and other deviances, ultimately leading to unease [148]. Indeed, DP could encourage nudging strategies, defined as "any aspect of the choice architecture that alters people's behavior in a predictable way without forbidding any options or significantly changing their economic incentives" [149]. Nudges could create artificial health but also artificial illnesses, compromising patients' autonomy [150,151] and agency [152]. Nudging could thus become a barrier to meeting patients' mental health needs while creating new ones. The drift toward normativity through the medicalization of society can already be observed in the cult of performance [153] and the illusion of omnipotence over illness and death. Based on the notion of personal development, the post-Freudian therapies that first emerged in the 1960s are intended to enable the immediate gratification of drives, thus increasing the isolation of the self [154]. Their ultimate aim is for individuals to achieve personal fulfillment by worshiping authenticity and assertiveness, thereby exacerbating the very symptoms these therapies claim to cure. By further increasing the emphasis on performance, DP could contribute to a loss of identification with generational continuity: individuals may become unable to gradually identify with the well-being and success of others rather than only their own and, fearing old age, unable to think about their posterity and about handing the baton to the next generation. The COVID-19 pandemic may be a good example of the counterhistorical health trade-off between generations: the sacrifice of liberty for the sake of health, with the collateral damage of poorer mental health among young people [155,156].

More and more digital mental health apps are available on web-based marketplaces. However, their design is seldom grounded in rigorous scientific research [105]. The popularity of these apps shows that there is consumer demand. The same need manifests itself when patients turn to complementary medicine because their physician fails to satisfy them. We can assume that through their use of these apps, individuals are not only responding to various marketing ploys but are also seeking to improve their daily lives. The COVID-19 pandemic undoubtedly fostered this change in the relationship between individuals and connected health, but there is a dearth of qualitative research on the place that connected objects now have in people's daily lives [118].

Recommendations for Implementing Digital Phenotyping in Psychiatry

Overview

DP gives psychiatry an opportunity to adopt more modern ways of helping patients. However, it raises crucial questions about the values underpinning the definition of mental health [157] and could profoundly undermine the "sacred trust" that patients place in their physician to understand their illness. Interdisciplinary collaboration in DP research is therefore necessary [25,158], bringing together expertise in psychiatry, computer science, data science, innovation, ethics, law, and the social sciences to ensure the development of robust and clinically meaningful DP tools [159]. Beyond this, further recommendations are needed to ensure a true revolution in mental health.

Empowerment of Patients and Health Care Professionals

Usability

Progress is needed to make DP tools adequately usable. This poses a considerable challenge owing to the properties of the data (ie, high volume, heterogeneity, noise, and sparseness) [33,108]. The Beiwe platform is one example of an effort to collect and rationalize passive smartphone data in order to phenotype psychiatric illnesses [2]. Patients must be able to choose which DP tool they use, which data can be collected and for what purpose, and they must retain the right to withdraw [139].
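One way to make the requirement that patients choose which data are collected, for what purpose, and can withdraw operational is to check every collected sample against a patient-held consent record before it is stored. The sketch below is a minimal illustration under stated assumptions: the `Consent` model, the stream names (`gps`, `accelerometer`, `keyboard`), and the purposes are hypothetical and do not describe Beiwe or any existing platform.

```python
from dataclasses import dataclass, field


@dataclass
class Consent:
    """Patient-defined scope of data collection (HYPOTHETICAL model)."""
    allowed: dict = field(default_factory=dict)  # stream name -> set of purposes
    withdrawn: bool = False

    def permits(self, stream, purpose):
        """True only if this stream is consented to for this exact purpose."""
        if self.withdrawn:
            return False
        return purpose in self.allowed.get(stream, set())

    def withdraw(self):
        """Right to withdraw: no sample is accepted after this call."""
        self.withdrawn = True


def collect(samples, consent):
    """Keep only the (stream, purpose, value) samples covered by consent."""
    return [s for s in samples if consent.permits(s[0], s[1])]


consent = Consent(allowed={"accelerometer": {"sleep_monitoring"},
                           "gps": {"research"}})
samples = [
    ("accelerometer", "sleep_monitoring", 0.02),
    ("gps", "sleep_monitoring", (48.85, 2.35)),  # purpose not consented
    ("keyboard", "research", "typing speed"),    # stream not consented
    ("gps", "research", (48.86, 2.34)),
]
print(len(collect(samples, consent)))  # 2
consent.withdraw()
print(len(collect(samples, consent)))  # 0
```

Binding consent to a (stream, purpose) pair rather than to a stream alone means that reusing a sample for a new purpose requires a new choice by the patient.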

Empathy of Care

DP could contribute to the empowerment of HCPs if its main objective is to facilitate their operational and administrative tasks and to support their clinical decision-making. At the very least, clinical decision support systems must always be subject to professional validation, and all the data used by the algorithm must be reported. AI-based data analysis should reduce the workload of HCPs and allow them to focus on the Hippocratic aspects of care, such that their relationships with their patients are more humanistic, empathic, and centered on their individuality in terms of history, daily life, and symptoms, thereby creating more room for psychotherapeutic approaches. It goes without saying that this can only happen if the number of HCPs is not reduced by public health policies.

Cooperation Between Patients and Health Care Professionals

Just as DP gives individuals new tools for understanding themselves and their behaviors, it opens up new prospects for sharing a common and practical definition of mental health. This definition should be shared, personalized, and evolving. The objective of DP should be to foster better cooperation among HCPs, patients, and their environment and to enhance understanding of their interplay.

Maintaining a Critical Mindset

Information on treatments introduces cognitive bias during the decision-making phase [83]. DP should be used as a debiasing strategy, not simply to add yet another layer of information for HCPs. Patients and HCPs should nevertheless weigh the risks of nudging that these technologies carry. DP constitutes only one of the data sets representing patients' mental health, the assessment of which should instead rest on sensitive, pragmatic, and rational reasoning shared by patients and HCPs over time.

Information

For Patients

To counter the black box effect and the dispossession of health data, some authors recommend using explainable AI to set out the reasoning behind algorithmic conclusions [160]. This would remove the obstacle that opaque algorithms may pose to patients' and HCPs' empowerment. However, few such models are currently available [160], although they promise to address ethics, security, and safety concerns [161]. In any case, information and education must be provided to help users understand DP, how it works, its limitations, and its potential failures.
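As an illustration of what "setting out the reasoning behind the conclusions" can mean in the simplest case, the sketch below decomposes a linear risk score into additive per-feature contributions (weight multiplied by the deviation from a reference value) and lists every input next to its contribution. The features, weights, and reference values are hypothetical, and this is not drawn from [160]; explainable AI methods for complex models (eg, SHAP or LIME) are considerably more involved.

```python
# HYPOTHETICAL linear model: weights and reference (eg, population mean) values.
WEIGHTS = {"sleep_hours": -0.30, "daily_steps_k": -0.10, "night_screen_h": 0.25}
REFERENCE = {"sleep_hours": 7.0, "daily_steps_k": 6.0, "night_screen_h": 1.0}
BASELINE_SCORE = 0.0  # score of a person exactly at the reference values


def explain(features):
    """Return the score and each feature's additive contribution to it."""
    contributions = {
        name: WEIGHTS[name] * (features[name] - REFERENCE[name])
        for name in WEIGHTS
    }
    score = BASELINE_SCORE + sum(contributions.values())
    return score, contributions


patient = {"sleep_hours": 5.0, "daily_steps_k": 2.0, "night_screen_h": 3.0}
score, contributions = explain(patient)

# Report every input and its contribution, largest effect first.
for name, value in sorted(contributions.items(), key=lambda kv: -abs(kv[1])):
    print(f"{name}: input={patient[name]}, contribution={value:+.2f}")
print(f"score={score:+.2f}")
```

Because the contributions add up exactly to the score and every input is listed, the output can be checked line by line by an HCP or a patient; for nonlinear models, such an exact additive decomposition is generally not available without approximation.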

For Health Care Providers

Data education must also be provided to HCPs. In France, medical students are not yet taught about the use of digital devices to improve patient health. Furthermore, psychiatry residents are known to mistrust digital culture [162]. This gap in training could be a major strategic error, as new generations of physicians will have to work in a global and competitive medical world.

For Designers

Finally, education must also be provided to the people who design and analyze DP applications. There is a lack of standards for building these tools, and their design needs to be aligned with patients' and HCPs' experiences.

Vigilance

Security

Progress must be made regarding the security of the data collected [139]; otherwise, this technology will never be acceptable [138]. This is a particular priority in psychiatry, where data security is a prerequisite for using data science. It is very much an ethical challenge: if we want this technology to uphold the fundamental principles of privacy, transparency, informed consent, accountability, and fairness [163], the security issue must be resolved within the next few years [147].

Equity

It is essential for DP to be available to the whole psychiatric population. The MindLogger platform for mobile mental health assessment is an example of such an initiative to democratize the development of mental health apps [164].

Data Rationalization and Research Priorities

Large-scale longitudinal data sets with standardized evaluation metrics are needed to assess the potential impact of these technologies. The costs of developing such tools need to be weighed against their proven and expected benefits. Studies are needed to analyze the qualitative and quantitative impact of an electronic health society on patients [165,166]; they must ensure that the definition of mental health is not based on an artificial boundary between the normal and the pathological and does not become distanced from the patient's experience. The ethical aspects of digital health research need to be considered in every study: equity, replicability, privacy, and efficacy [167]. In particular, qualitative studies must be conducted to define the tools' contents (content validity) before performing studies to validate these tools statistically and psychometrically (structural validity, internal validity, cross-cultural validity, measurement error and reliability, criterion validity) [93]. Transdisciplinary approaches, including phenomenology, must be adopted during this construction process so as not to lose sight of patient-reported outcomes. With regard to algorithms, machine learning, natural language processing, and expert systems are the most studied interventions [115]. To date, studies have focused on diagnosis, prognosis, risk management, or follow-up; they have been strongly biased, have not taken health care end users into account, and ultimately have not met users' needs, as shown by concerns about low engagement [143].
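Reliability, one of the psychometric properties listed above, is commonly quantified for repeated measurements with an intraclass correlation coefficient (ICC). The sketch below computes the two-way random-effects, absolute-agreement, single-measurement form, often written ICC(2,1), from an n × k table of scores, for example the same DP-derived measure for n patients recorded in k sessions. The sleep data are hypothetical, and this is only one of several ICC forms; which form suits a given validation study is a design decision.

```python
def icc_2_1(scores):
    """ICC(2,1): two-way random effects, absolute agreement, single measure.

    scores: list of n rows (subjects), each holding k measurements (sessions).
    """
    n, k = len(scores), len(scores[0])
    grand = sum(sum(row) for row in scores) / (n * k)
    row_means = [sum(row) / k for row in scores]
    col_means = [sum(row[j] for row in scores) / n for j in range(k)]

    # Two-way ANOVA sums of squares: subjects, sessions, residual error.
    ss_rows = k * sum((m - grand) ** 2 for m in row_means)
    ss_cols = n * sum((m - grand) ** 2 for m in col_means)
    ss_total = sum((x - grand) ** 2 for row in scores for x in row)
    ss_error = ss_total - ss_rows - ss_cols

    ms_rows = ss_rows / (n - 1)
    ms_cols = ss_cols / (k - 1)
    ms_error = ss_error / ((n - 1) * (k - 1))
    return (ms_rows - ms_error) / (
        ms_rows + (k - 1) * ms_error + k * (ms_cols - ms_error) / n
    )


# HYPOTHETICAL: weekly mean sleep estimates (hours) for 4 patients over 3 weeks.
sleep = [
    [6.5, 6.8, 6.4],
    [5.0, 5.3, 5.1],
    [7.9, 7.6, 8.0],
    [4.2, 4.6, 4.4],
]
print(round(icc_2_1(sleep), 3))
```

Values close to 1 indicate that differences between patients dominate session-to-session measurement error; a low value would signal that a DP measure is too noisy to track an individual over time.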

Auditability

An independent committee is needed to provide a legal framework for the marketing of DP tools based on recommendations for their construction, validation, consideration of users’ feedback, ethical considerations, and costs for society. Thus, DP could follow the established norms of quality and safety and finally be cost-effective and feasible [168].

Conclusions

At the turn of the 17th century, there was a move from prescientific and Hippocratic medicine to Promethean medicine as a result of scientific discoveries and technological advances in biology. The 20th century's paradigm consisted of using evidence-based medicine to bring about pragmatic progress. Medical practice continues to evolve; patients are once again regarded as experts in their own symptoms, and their preferences are given the same importance as external clinical data and medical experience. DP paves the way for a redefinition of mental health, making it more subjective while taking advantage of 21st-century technologies for preventive, predictive, effective, and personalized medicine. We noted a certain enthusiasm for these new technologies among the general public, but HCPs remain skeptical, wondering whether the progress touted by private companies is really relevant for all patients and all HCPs. A qualitative study comparing patients' and HCPs' perspectives on the implementation of DP in psychiatry is now needed. DP calls into question the validity of the risk-benefit ratio, offering another way of expressing and understanding illnesses. Thus, the challenge of DP will be to let patients access their own state of health, creating a new dimension of care in which the borders of mental health are extended rather than constrained by more digital interfaces and their pitfalls. Algorithmic governmentality should not be used to decide whether or not individuals deserve mental health care. To conclude, the priority should be to improve the abilities of patients to deal with their difficulties. DP has its place in psychiatry, fostering patients' empowerment in terms of their illnesses, their health, their own lives, and those of others.

Abbreviations

- AI

artificial intelligence

- DP

digital phenotyping

- DSM-5

Diagnostic and Statistical Manual of Mental Disorders, Fifth Edition

- HCP

health care professional

- RDoC

research domain criteria

Data Availability

Data sharing is not applicable to this article as no data sets were generated or analyzed during this study.

Footnotes

Authors' Contributions: AO, VA, RM, and SM contributed to the conceptualization of the manuscript. AO and VA wrote the original draft. VA, SM, and RM supervised the development of this study. All authors contributed to the review and editing of the manuscript. All authors have read and agreed to the submitted version of the manuscript.

Conflicts of Interest: FS is partially funded by Joy Ventures and Tiny Blue Dot Foundation. In the past years, FS founded and received compensation from BeSound SAS and Nested Minds LLC. SM has received speaker and consultant fees from Ethypharm, Bioserenity, and Angelini Pharma.

References

- 1. Jain SH, Powers BW, Hawkins JB, Brownstein JS. The digital phenotype. Nat Biotechnol. 2015;33(5):462–463. doi: 10.1038/nbt.3223.

- 2. Torous J, Kiang MV, Lorme J, Onnela JP. New tools for new research in psychiatry: a scalable and customizable platform to empower data driven smartphone research. JMIR Ment Health. 2016;3(2):e16. doi: 10.2196/mental.5165.

- 3. Piau A, Wild K, Mattek N, Kaye J. Current state of digital biomarker technologies for real-life, home-based monitoring of cognitive function for mild cognitive impairment to mild Alzheimer disease and implications for clinical care: systematic review. J Med Internet Res. 2019;21(8):e12785. doi: 10.2196/12785.

- 4. Diao JA, Raza MM, Venkatesh KP, Kvedar JC. Watching Parkinson's disease with wrist-based sensors. NPJ Digit Med. 2022;5(1):73. doi: 10.1038/s41746-022-00619-4.

- 5. Atreja A, Francis S, Kurra S, Kabra R. Digital medicine and evolution of remote patient monitoring in cardiac electrophysiology: a state-of-the-art perspective. Curr Treat Options Cardiovasc Med. 2019;21(12):92. doi: 10.1007/s11936-019-0787-3.

- 6. Poh MZ, Loddenkemper T, Reinsberger C, Swenson NC, Goyal S, Sabtala MC, Madsen JR, Picard RW. Convulsive seizure detection using a wrist-worn electrodermal activity and accelerometry biosensor. Epilepsia. 2012;53(5):e93–e97. doi: 10.1111/j.1528-1167.2012.03444.x.

- 7. Heinemann L, Freckmann G, Ehrmann D, Faber-Heinemann G, Guerra S, Waldenmaier D, Hermanns N. Real-time continuous glucose monitoring in adults with type 1 diabetes and impaired hypoglycaemia awareness or severe hypoglycaemia treated with multiple daily insulin injections (HypoDE): a multicentre, randomised controlled trial. Lancet. 2018;391(10128):1367–1377. doi: 10.1016/S0140-6736(18)30297-6.

- 8. Wittink H, Nicholas M, Kralik D, Verbunt J. Are we measuring what we need to measure? Clin J Pain. 2008;24(4):316–324. doi: 10.1097/AJP.0b013e31815c2e2a.

- 9. Berche P. Ideal medicine does not exist. Trib Santé. 2012;37(4):29. doi: 10.3917/seve.037.0029.

- 10. Boch AL. In: Médecine technique, médecine tragique: Le tragique, sens et destin de la médecine moderne. Supérieur DB, editor. Paris: Seli Arslan; 2009.

- 11. Germain N, Aballéa S, Smela-Lipinska B, Pisarczyk K, Keskes M, Toumi M. Patient-reported outcomes in randomized controlled trials for overactive bladder: a systematic literature review. Value Health. 2018;21:S114. doi: 10.1016/j.jval.2018.07.869.

- 12. Grad FP. The preamble of the constitution of the World Health Organization. Bull World Health Organ. 2002;80(12):981–984. https://europepmc.org/abstract/MED/12571728

- 13. Aybek S, Nicholson TR, Zelaya F, O'Daly OG, Craig TJ, David AS, Kanaan RA. Neural correlates of recall of life events in conversion disorder. JAMA Psychiatry. 2014;71(1):52–60. doi: 10.1001/jamapsychiatry.2013.2842.

- 14. American Psychiatric Association, DSM-5 Task Force. Diagnostic and Statistical Manual of Mental Disorders: DSM-5. Arlington, VA: American Psychiatric Association; 2013.

- 15. Demazeux S, Pidoux V. The RDoC project: the neuropsychiatric classification of tomorrow? Med Sci (Paris). 2015;31(8-9):792–796. doi: 10.1051/medsci/20153108019.

- 16. Livesley WJ. A framework for integrating dimensional and categorical classifications of personality disorder. J Pers Disord. 2007;21(2):199–224. doi: 10.1521/pedi.2007.21.2.199.

- 17. Insel T, Cuthbert B, Garvey M, Heinssen R, Pine DS, Quinn K, Sanislow C, Wang P. Research Domain Criteria (RDoC): toward a new classification framework for research on mental disorders. Am J Psychiatry. 2010;167(7):748–751. doi: 10.1176/appi.ajp.2010.09091379.

- 18. Gómez-Carrillo A, Paquin V, Dumas G, Kirmayer LJ. Restoring the missing person to personalized medicine and precision psychiatry. Front Neurosci. 2023;17:1041433. doi: 10.3389/fnins.2023.1041433.

- 19. Zimmerman M. Why hierarchical dimensional approaches to classification will fail to transform diagnosis in psychiatry. World Psychiatry. 2021;20(1):70–71. doi: 10.1002/wps.20815.

- 20. Kessler RC. The categorical versus dimensional assessment controversy in the sociology of mental illness. J Health Soc Behav. 2002;43(2):171–188.

- 21. Chekroud AM, Bondar J, Delgadillo J, Doherty G, Wasil A, Fokkema M, Cohen Z, Belgrave D, DeRubeis R, Iniesta R, Dwyer D, Choi K. The promise of machine learning in predicting treatment outcomes in psychiatry. World Psychiatry. 2021;20(2):154–170. doi: 10.1002/wps.20882.

- 22. Torous J, Bucci S, Bell IH, Kessing LV, Faurholt-Jepsen M, Whelan P, Carvalho AF, Keshavan M, Linardon J, Firth J. The growing field of digital psychiatry: current evidence and the future of apps, social media, chatbots, and virtual reality. World Psychiatry. 2021;20(3):318–335. doi: 10.1002/wps.20883.

- 23. Mandryk RL, Birk MV. The potential of game-based digital biomarkers for modeling mental health. JMIR Ment Health. 2019;6(4):e13485. doi: 10.2196/13485.

- 24. Raballo A. Digital phenotyping: an overarching framework to capture our extended mental states. Lancet Psychiatry. 2018;5(3):194–195. doi: 10.1016/S2215-0366(18)30054-3.

- 25. Smith KA, Blease C, Faurholt-Jepsen M, Firth J, Van Daele T, Moreno C, Carlbring P, Ebner-Priemer UW, Koutsouleris N, Riper H, Mouchabac S, Torous J, Cipriani A. Digital mental health: challenges and next steps. BMJ Ment Health. 2023;26(1):e300670. doi: 10.1136/bmjment-2023-300670.

- 26. Galatzer-Levy IR, Onnela JP. Machine learning and the digital measurement of psychological health. Annu Rev Clin Psychol. 2023;19(1):133–154. doi: 10.1146/annurev-clinpsy-080921-073212.

- 27. Choudhary S, Thomas N, Ellenberger J, Srinivasan G, Cohen R. A machine learning approach for detecting digital behavioral patterns of depression using nonintrusive smartphone data (complementary path to patient health questionnaire-9 assessment): prospective observational study. JMIR Form Res. 2022;6(5):e37736. doi: 10.2196/37736.

- 28. Montag C, Quintana DS. Digital phenotyping in molecular psychiatry-a missed opportunity? Mol Psychiatry. 2023;28(1):6–9. doi: 10.1038/s41380-022-01795-1.

- 29. Torous J, Onnela JP, Keshavan M. New dimensions and new tools to realize the potential of RDoC: digital phenotyping via smartphones and connected devices. Transl Psychiatry. 2017;7(3):e1053. doi: 10.1038/tp.2017.25.

- 30. Koutsouleris N, Hauser TU, Skvortsova V, De Choudhury M. From promise to practice: towards the realisation of AI-informed mental health care. Lancet Digit Health. 2022;4(11):e829–e840. doi: 10.1016/S2589-7500(22)00153-4.

- 31. Topol EJ. High-performance medicine: the convergence of human and artificial intelligence. Nat Med. 2019;25(1):44–56. doi: 10.1038/s41591-018-0300-7.

- 32. McKinney SM, Sieniek M, Godbole V, Godwin J, Antropova N, Ashrafian H, Back T, Chesus M, Corrado GS, Darzi A, Etemadi M, Garcia-Vicente F, Gilbert FJ, Halling-Brown M, Hassabis D, Jansen S, Karthikesalingam A, Kelly CJ, King D, Ledsam JR, Melnick D, Mostofi H, Peng L, Reicher JJ, Romera-Paredes B, Sidebottom R, Suleyman M, Tse D, Young KC, De Fauw J, Shetty S. International evaluation of an AI system for breast cancer screening. Nature. 2020;577(7788):89–94. doi: 10.1038/s41586-019-1799-6.

- 33. Arora A. Conceptualising artificial intelligence as a digital healthcare innovation: an introductory review. Med Devices (Auckl). 2020;13:223–230. doi: 10.2147/MDER.S262590.

- 34. Oliva F, Ostacoli L, Versino E, Pomeri AP, Furlan PM, Carletto S, Picci RL. Compulsory psychiatric admissions in an Italian urban setting: are they actually compliant to the need for treatment criteria or arranged for dangerous not clinical condition? Front Psychiatry. 2018;9:740. doi: 10.3389/fpsyt.2018.00740.

- 35. Prakash J, Chaudhury S, Chatterjee K. Digital phenotyping in psychiatry: when mental health goes binary. Ind Psychiatry J. 2021;30(2):191–192. doi: 10.4103/ipj.ipj_223_21.

- 36. Williamson S. Digital phenotyping in psychiatry. BJPsych Adv. 2023:1–2. doi: 10.1192/bja.2023.26.

- 37. Insel TR. Digital phenotyping: technology for a new science of behavior. JAMA. 2017;318(13):1215–1216. doi: 10.1001/jama.2017.11295.

- 38. Insel TR. Digital phenotyping: a global tool for psychiatry. World Psychiatry. 2018;17(3):276–277. doi: 10.1002/wps.20550.

- 39. Jagesar RR, Roozen MC, van der Heijden I, Ikani N, Tyborowska A, Penninx BWJH, Ruhe HG, Sommer IEC, Kas MJ, Vorstman JAS. Digital phenotyping and the COVID-19 pandemic: capturing behavioral change in patients with psychiatric disorders. Eur Neuropsychopharmacol. 2021;42:115–120. doi: 10.1016/j.euroneuro.2020.11.012.

- 40. Mendes JPM, Moura IR, Van de Ven P, Viana D, Silva FJS, Coutinho LR, Teixeira S, Rodrigues JJPC, Teles AS. Sensing apps and public data sets for digital phenotyping of mental health: systematic review. J Med Internet Res. 2022;24(2):e28735. doi: 10.2196/28735.

- 41. Kroenke K, Spitzer RL, Williams JB. The PHQ-9: validity of a brief depression severity measure. J Gen Intern Med. 2001;16(9):606–613. doi: 10.1046/j.1525-1497.2001.016009606.x.

- 42. Carrozzino D, Patierno C, Fava GA, Guidi J. The Hamilton rating scales for depression: a critical review of clinimetric properties of different versions. Psychother Psychosom. 2020;89(3):133–150. doi: 10.1159/000506879.

- 43. Nisenson M, Lin V, Gansner M. Digital phenotyping in child and adolescent psychiatry: a perspective. Harv Rev Psychiatry. 2021;29(6):401–408. doi: 10.1097/HRP.0000000000000310.

- 44. Gkotsis G, Oellrich A, Velupillai S, Liakata M, Hubbard TJP, Dobson RJB, Dutta R. Corrigendum: characterisation of mental health conditions in social media using informed deep learning. Sci Rep. 2017;7(1):46813. doi: 10.1038/srep46813.

- 45. Dahl H, Teller V, Moss D, Trujillo M. Countertransference examples of the syntactic expression of warded-off contents. Psychoanal Q. 1978;47(3):339–363.

- 46. Cho CH, Lee T, Kim MW, In HP, Kim L, Lee HJ. Mood prediction of patients with mood disorders by machine learning using passive digital phenotypes based on the circadian rhythm: prospective observational cohort study. J Med Internet Res. 2019;21(4):e11029. doi: 10.2196/11029.

- 47. Valenza G, Nardelli M, Lanatà A, Gentili C, Bertschy G, Kosel M, Scilingo EP. Predicting mood changes in bipolar disorder through heartbeat nonlinear dynamics. IEEE J Biomed Health Inform. 2016;20(4):1034–1043. doi: 10.1109/JBHI.2016.2554546.

- 48. Lee HJ, Cho CH, Lee T, Jeong J, Yeom JW, Kim S, Jeon S, Seo JY, Moon E, Baek JH, Park DY, Kim SJ, Ha TH, Cha B, Kang HJ, Ahn YM, Lee Y, Lee JB, Kim L. Prediction of impending mood episode recurrence using real-time digital phenotypes in major depression and bipolar disorders in South Korea: a prospective nationwide cohort study. Psychol Med. 2022:1–9. doi: 10.1017/S0033291722002847.

- 49. Strauss GP, Raugh IM, Zhang L, Luther L, Chapman HC, Allen DN, Kirkpatrick B, Cohen AS. Validation of accelerometry as a digital phenotyping measure of negative symptoms in schizophrenia. Schizophrenia (Heidelb). 2022;8(1):37. doi: 10.1038/s41537-022-00241-z.

- 50. Lakhtakia T, Bondre A, Chand PK, Chaturvedi N, Choudhary S, Currey D, Dutt S, Khan A, Kumar M, Gupta S, Nagendra S, Reddy PV, Rozatkar A, Scheuer L, Sen Y, Shrivastava R, Singh R, Thirthalli J, Tugnawat DK, Bhan A, Naslund JA, Patel V, Keshavan M, Mehta UM, Torous J. Smartphone digital phenotyping, surveys, and cognitive assessments for global mental health: initial data and clinical correlations from an international first episode psychosis study. Digit Health. 2022;8:20552076221133758. doi: 10.1177/20552076221133758.

- 51.Staples P, Torous J, Barnett I, Carlson K, Sandoval L, Keshavan M, Onnela JP. A comparison of passive and active estimates of sleep in a cohort with schizophrenia. NPJ Schizophr. 2017;3(1):37. doi: 10.1038/s41537-017-0038-0. doi: 10.1038/s41537-017-0038-0.10.1038/s41537-017-0038-0 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 52.Zulueta J, Piscitello A, Rasic M, Easter R, Babu P, Langenecker SA, McInnis M, Ajilore O, Nelson PC, Ryan K, Leow A. Predicting mood disturbance severity with mobile phone keystroke metadata: a BiAffect digital phenotyping study. J Med Internet Res. 2018;20(7):e241. doi: 10.2196/jmir.9775. https://www.jmir.org/2018/7/e241/

- 53.Thomée S, Härenstam A, Hagberg M. Mobile phone use and stress, sleep disturbances, and symptoms of depression among young adults--a prospective cohort study. BMC Public Health. 2011;11(1):66. doi: 10.1186/1471-2458-11-66. https://bmcpublichealth.biomedcentral.com/articles/10.1186/1471-2458-11-66

- 54.Zarate D, Stavropoulos V, Ball M, de Sena Collier G, Jacobson NC. Exploring the digital footprint of depression: a PRISMA systematic literature review of the empirical evidence. BMC Psychiatry. 2022;22(1):421. doi: 10.1186/s12888-022-04013-y. https://bmcpsychiatry.biomedcentral.com/articles/10.1186/s12888-022-04013-y

- 55.Taliaz D, Souery D. A new characterization of mental health disorders using digital behavioral data: evidence from major depressive disorder. J Clin Med. 2021;10(14):3109. doi: 10.3390/jcm10143109. https://www.mdpi.com/resolver?pii=jcm10143109

- 56.Ettore E, Müller P, Hinze J, Riemenschneider M, Benoit M, Giordana B, Hurlemann R, Postin D, Lecomte A, Musiol M, Lindsay H, Robert P, König A. Digital phenotyping for differential diagnosis of major depressive episode: narrative review. JMIR Ment Health. 2023;10:e37225. doi: 10.2196/37225. https://mental.jmir.org/2023//e37225/

- 57.Wahle F, Kowatsch T, Fleisch E, Rufer M, Weidt S. Mobile sensing and support for people with depression: a pilot trial in the wild. JMIR Mhealth Uhealth. 2016;4(3):e111. doi: 10.2196/mhealth.5960. https://mhealth.jmir.org/2016/3/e111/

- 58.Maatoug R, Oudin A, Adrien V, Saudreau B, Bonnot O, Millet B, Ferreri F, Mouchabac S, Bourla A. Digital phenotype of mood disorders: a conceptual and critical review. Front Psychiatry. 2022;13:895860. doi: 10.3389/fpsyt.2022.895860. https://europepmc.org/abstract/MED/35958638

- 59.Ebner-Priemer UW, Mühlbauer E, Neubauer AB, Hill H, Beier F, Santangelo PS, Ritter P, Kleindienst N, Bauer M, Schmiedek F, Severus E. Digital phenotyping: towards replicable findings with comprehensive assessments and integrative models in bipolar disorders. Int J Bipolar Disord. 2020;8(1):35. doi: 10.1186/s40345-020-00210-4. https://europepmc.org/abstract/MED/33211262

- 60.Bourla A, Mouchabac S, El Hage W, Ferreri F. e-PTSD: an overview on how new technologies can improve prediction and assessment of Posttraumatic Stress Disorder (PTSD). Eur J Psychotraumatol. 2018;9(Suppl 1):1424448. doi: 10.1080/20008198.2018.1424448. https://europepmc.org/abstract/MED/29441154

- 61.Schultebraucks K. Digital approaches for predicting posttraumatic stress and resilience: promises, challenges, and future directions. Eur Psychiatry. 2023;66(S1):S50. doi: 10.1192/j.eurpsy.2023.181. https://www.cambridge.org/core/journals/european-psychiatry/article/digital-approaches-for-predicting-posttraumatic-stress-and-resilience-promises-challenges-and-future-directions/EF0117495C8B41255C9889D8BCFA9824

- 62.Jacobson NC, Bhattacharya S. Digital biomarkers of anxiety disorder symptom changes: personalized deep learning models using smartphone sensors accurately predict anxiety symptoms from ecological momentary assessments. Behav Res Ther. 2022;149:104013. doi: 10.1016/j.brat.2021.104013. https://europepmc.org/abstract/MED/35030442

- 63.Wong QJ, Werner-Seidler A, Torok M, van Spijker B, Calear AL, Christensen H. Service use history of individuals enrolling in a web-based suicidal ideation treatment trial: analysis of baseline data. JMIR Ment Health. 2019;6(4):e11521. doi: 10.2196/11521. https://mental.jmir.org/2019/4/e11521/

- 64.Kleiman EM, Turner BJ, Fedor S, Beale EE, Picard RW, Huffman JC, Nock MK. Digital phenotyping of suicidal thoughts. Depress Anxiety. 2018;35(7):601–608. doi: 10.1002/da.22730.

- 65.Teo JX, Davila S, Yang C, Hii AA, Pua CJ, Yap J, Tan SY, Sahlén A, Chin CWL, Teh BT, Rozen SG, Cook SA, Yeo KK, Tan P, Lim WK. Digital phenotyping by consumer wearables identifies sleep-associated markers of cardiovascular disease risk and biological aging. Commun Biol. 2019;2:361. doi: 10.1038/s42003-019-0605-1.

- 66.Ferreri F, Bourla A, Mouchabac S, Karila L. e-addictology: an overview of new technologies for assessing and intervening in addictive behaviors. Front Psychiatry. 2018;9:51. doi: 10.3389/fpsyt.2018.00051. https://europepmc.org/abstract/MED/29545756

- 67.Goodday SM, Friend S. Unlocking stress and forecasting its consequences with digital technology. NPJ Digit Med. 2019;2:75. doi: 10.1038/s41746-019-0151-8.

- 68.Feldman N, Perret S. Digital mental health for postpartum women: perils, pitfalls, and promise. NPJ Digit Med. 2023;6(1):11. doi: 10.1038/s41746-023-00756-4.

- 69.Hahn L, Eickhoff SB, Habel U, Stickeler E, Schnakenberg P, Goecke TW, Stickel S, Franz M, Dukart J, Chechko N. Early identification of postpartum depression using demographic, clinical, and digital phenotyping. Transl Psychiatry. 2021;11(1):121. doi: 10.1038/s41398-021-01245-6.

- 70.Washington P, Park N, Srivastava P, Voss C, Kline A, Varma M, Tariq Q, Kalantarian H, Schwartz J, Patnaik R, Chrisman B, Stockham N, Paskov K, Haber N, Wall DP. Data-driven diagnostics and the potential of mobile artificial intelligence for digital therapeutic phenotyping in computational psychiatry. Biol Psychiatry Cogn Neurosci Neuroimaging. 2020;5(8):759–769. doi: 10.1016/j.bpsc.2019.11.015. https://europepmc.org/abstract/MED/32085921

- 71.Sequeira L, Battaglia M, Perrotta S, Merikangas K, Strauss J. Digital phenotyping with mobile and wearable devices: advanced symptom measurement in child and adolescent depression. J Am Acad Child Adolesc Psychiatry. 2019;58(9):841–845. doi: 10.1016/j.jaac.2019.04.011.

- 72.Currey D, Torous J. Digital phenotyping data to predict symptom improvement and mental health app personalization in college students: prospective validation of a predictive model. J Med Internet Res. 2023;25:e39258. doi: 10.2196/39258. https://www.jmir.org/2023//e39258/

- 73.Reece AG, Danforth CM. Instagram photos reveal predictive markers of depression. EPJ Data Sci. 2017;6(1):15. doi: 10.1140/epjds/s13688-017-0110-z. https://epjdatascience.springeropen.com/articles/10.1140/epjds/s13688-017-0110-z

- 74.Eichstaedt JC, Smith RJ, Merchant RM, Ungar LH, Crutchley P, Preoţiuc-Pietro D, Asch DA, Schwartz HA. Facebook language predicts depression in medical records. Proc Natl Acad Sci U S A. 2018;115(44):11203–11208. doi: 10.1073/pnas.1802331115. https://www.pnas.org/doi/abs/10.1073/pnas.1802331115?url_ver=Z39.88-2003&rfr_id=ori:rid:crossref.org&rfr_dat=cr_pub0pubmed

- 75.Cheng Q, Li TM, Kwok CL, Zhu T, Yip PS. Assessing suicide risk and emotional distress in Chinese social media: a text mining and machine learning study. J Med Internet Res. 2017;19(7):e243. doi: 10.2196/jmir.7276. https://www.jmir.org/2017/7/e243/

- 76.Won HH, Myung W, Song GY, Lee WH, Kim JW, Carroll BJ, Kim DK. Predicting national suicide numbers with social media data. PLoS One. 2013;8(4):e61809. doi: 10.1371/journal.pone.0061809. https://journals.plos.org/plosone/article?id=10.1371/journal.pone.0061809

- 77.Karmakar C, Luo W, Tran T, Berk M, Venkatesh S. Predicting risk of suicide attempt using history of physical illnesses from electronic medical records. JMIR Ment Health. 2016;3(3):e19. doi: 10.2196/mental.5475. https://mental.jmir.org/2016/3/e19/

- 78.Barak-Corren Y, Castro VM, Javitt S, Hoffnagle AG, Dai Y, Perlis RH, Nock MK, Smoller JW, Reis BY. Predicting suicidal behavior from longitudinal electronic health records. Am J Psychiatry. 2017;174(2):154–162. doi: 10.1176/appi.ajp.2016.16010077. https://ajp.psychiatryonline.org/doi/10.1176/appi.ajp.2016.16010077

- 79.Bedi G, Carrillo F, Cecchi GA, Slezak DF, Sigman M, Mota NB, Ribeiro S, Javitt DC, Copelli M, Corcoran CM. Automated analysis of free speech predicts psychosis onset in high-risk youths. NPJ Schizophr. 2015;1:15030. doi: 10.1038/npjschz.2015.30.

- 80.Elvevåg B, Foltz PW, Weinberger DR, Goldberg TE. Quantifying incoherence in speech: an automated methodology and novel application to schizophrenia. Schizophr Res. 2007;93(1–3):304–316. doi: 10.1016/j.schres.2007.03.001. https://europepmc.org/abstract/MED/17433866

- 81.Currey D, Torous J. Digital phenotyping correlations in larger mental health samples: analysis and replication. BJPsych Open. 2022;8(4):e106. doi: 10.1192/bjo.2022.507. https://europepmc.org/abstract/MED/35657687

- 82.Solmi M, Radua J, Olivola M, Croce E, Soardo L, de Pablo GS, Shin JI, Kirkbride JB, Jones P, Kim JH, Kim JY, Carvalho AF, Seeman MV, Correll CU, Fusar-Poli P. Age at onset of mental disorders worldwide: large-scale meta-analysis of 192 epidemiological studies. Mol Psychiatry. 2022;27(1):281–295. doi: 10.1038/s41380-021-01161-7. https://europepmc.org/abstract/MED/34079068

- 83.Mouchabac S, Conejero I, Lakhlifi C, Msellek I, Malandain L, Adrien V, Ferreri F, Millet B, Bonnot O, Bourla A, Maatoug R. Improving clinical decision-making in psychiatry: implementation of digital phenotyping could mitigate the influence of patient's and practitioner's individual cognitive biases. Dialogues Clin Neurosci. 2021;23(1):52–61. doi: 10.1080/19585969.2022.2042165. https://europepmc.org/abstract/MED/35860175

- 84.Hartmann R, Schmidt FM, Sander C, Hegerl U. Heart rate variability as indicator of clinical state in depression. Front Psychiatry. 2019;9:735. doi: 10.3389/fpsyt.2018.00735. https://europepmc.org/abstract/MED/30705641

- 85.Lesnewich LM, Conway FN, Buckman JF, Brush CJ, Ehmann PJ, Eddie D, Olson RL, Alderman BL, Bates ME. Associations of depression severity with heart rate and heart rate variability in young adults across normative and clinical populations. Int J Psychophysiol. 2019;142:57–65. doi: 10.1016/j.ijpsycho.2019.06.005. https://europepmc.org/abstract/MED/31195066

- 86.Kane JM, Aguglia E, Altamura AC, Gutierrez JLA, Brunello N, Fleischhacker WW, Gaebel W, Gerlach J, Guelfi JD, Kissling W, Lapierre YD, Lindström E, Mendlewicz J, Racagni G, Carulla LS, Schooler NR. Guidelines for depot antipsychotic treatment in schizophrenia. European Neuropsychopharmacology Consensus Conference in Siena, Italy. Eur Neuropsychopharmacol. 1998;8(1):55–66. doi: 10.1016/s0924-977x(97)00045-x.

- 87.Brietzke E, Hawken ER, Idzikowski M, Pong J, Kennedy SH, Soares CN. Integrating digital phenotyping in clinical characterization of individuals with mood disorders. Neurosci Biobehav Rev. 2019;104:223–230. doi: 10.1016/j.neubiorev.2019.07.009.

- 88.Barnett I, Torous J, Staples P, Sandoval L, Keshavan M, Onnela JP. Relapse prediction in schizophrenia through digital phenotyping: a pilot study. Neuropsychopharmacology. 2018;43(8):1660–1666. doi: 10.1038/s41386-018-0030-z. https://europepmc.org/abstract/MED/29511333

- 89.Onnela JP, Rauch SL. Harnessing smartphone-based digital phenotyping to enhance behavioral and mental health. Neuropsychopharmacology. 2016;41(7):1691–1696. doi: 10.1038/npp.2016.7. https://europepmc.org/abstract/MED/26818126