Abstract

The human brain is a complex system with many functional units interacting with each other. This interacting relationship, known as the functional connectivity network (FCN), is critical for brain functions. To learn the FCN, machine learning algorithms can be built based on brain signals captured by sensing technologies such as EEG and fMRI. Past research has revealed that the FCN is altered in neurological diseases. Moreover, for a specific disease, some part of the FCN, i.e., a sub-network, can be more susceptible than other parts. However, current knowledge about disease-specific sub-networks is limited. We propose a novel Discriminant Subgraph Learner (DSL) to identify a functional sub-network that best differentiates patients with a specific disease from healthy controls based on brain sensory data. We develop an integrated optimization framework for DSL to simultaneously learn the FCN of each class and identify the discriminant sub-network. Further, we develop tractable and converging algorithms to solve the optimization. We apply DSL to identify a functional sub-network that best differentiates patients with episodic migraine (EM) from healthy controls based on an fMRI dataset. DSL achieved the best accuracy compared to five state-of-the-art competing algorithms.

1. Introduction

The human brain is a complex system with many functional units interacting with each other. This interacting relationship, known as the functional connectivity network (FCN), is critical for brain functions (Van Den Heuvel and Pol, 2010). To learn the FCN, brain sensory technologies such as EEG and functional MRI (fMRI) provide relevant data. By applying statistical machine learning algorithms to the data, the FCN can be inferred (Van Den Heuvel and Pol, 2010; Huang et al., 2012).

The brain is susceptible to various types of diseases and injuries such as migraine, Alzheimer’s disease, and concussion. Past research has revealed that the FCN is altered in patients with these diseases and injuries (Silva et al., 2019). Also, because each type of disease has its specific pathological underpinning and phenotypic presentation, the FCN is altered in different ways for different diseases. Focusing on a specific disease, some part of the FCN, i.e., a sub-network, may be more susceptible than other parts. These findings provide the physiological foundation for the importance of identifying disease-specific sub-networks, which would allow for better detection or classification of the disease.

A motivating example:

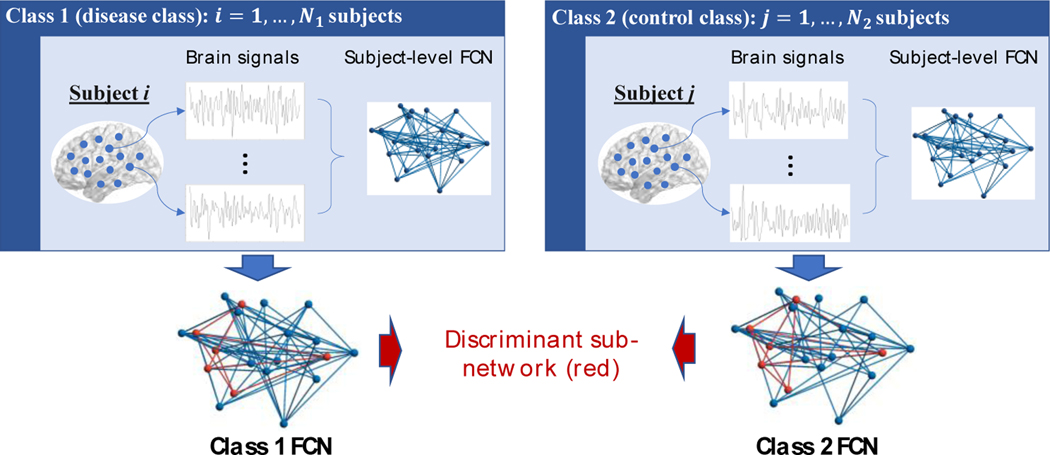

In the study of migraine, past research has revealed that the sub-networks involved in pain processing are likely to be impacted by the disease (Russo et al., 2012). However, the current knowledge about disease-specific sub-networks is limited, qualitative, or incomplete (Bogdanov et al., 2017). Fortunately, due to the rapid advance of brain sensory technologies, quantitative data can be collected, which makes it possible to develop machine learning algorithms to identify the sub-networks. For example, fMRI is an imaging technology that allows for the collection of dynamic functional activity data from different regions of the brain. Based on fMRI data, it is possible to identify disease-specific sub-networks using machine learning. However, existing machine learning algorithms fall short of providing a suitable solution. While graphical models seem conceptually similar to our problem of sub-network identification, graphical modelling algorithms are not designed to find the "discriminant" sub-network that differentiates patients with migraine from healthy controls. Thus, these algorithms, by design, do not have discrimination or classification capacities. Another related research area is graph classification. However, a fundamental difference between graph classification and our objective is that the former assumes that the graphs are given (i.e., graphs are input to the algorithm), whereas our objective is to learn the discriminant sub-network/subgraph from data (i.e., the subgraph is the output of our algorithm). Due to these limitations of existing methods, we propose a novel Discriminant Subgraph Learner (DSL) to identify the disease-specific sub-network based on brain sensory data, which allows for classification of the disease with high accuracy (Fig. 1).

Figure 1.

Schematic overview of the learning objective of the proposed DSL: DSL simultaneously learns class FCNs and identifies the discriminant sub-network from brain sensory data

The basic idea of DSL is briefly introduced here. To capture the variability of the FCN at both the subject and the class levels, we propose a Bayesian hierarchical model, which assumes that the FCN of each subject, represented by the inverse covariance (IC) matrix of the subject's brain sensory data, shares a common FCN/IC at the class level. Further, we propose an integrated optimization formulation that learns the IC of each class and simultaneously identifies the discriminant subgraph within the IC. The proposed formulation also uses sparsity-inducing penalties to address the challenge of learning ICs from high-dimensional datasets. To provide a tractable solution for the optimization, we develop an iterative algorithm that alternates through subsets of the parameters with a convergence guarantee. Additionally, to address the challenge of learning the class-level ICs within the iterative algorithm, we introduce latent variables and develop an Expectation-Maximization (EM) algorithm integrated with Block Coordinate Descent (BCD). The contributions of this paper are summarized as follows:

Contribution to statistical machine learning:

The proposed DSL model is related to two research areas in machine learning: graphical models and graph classification. However, as explained previously, neither of these research areas provides the same capability as DSL. The novel design of DSL includes an integrated optimization formulation that simultaneously achieves two goals: (1) learning the FCN/IC of each class, and (2) identifying the discriminant subgraph within the IC. Additionally, we develop a tractable and converging algorithm to solve the optimization problem of DSL.

Contribution to the application domain:

DSL makes it possible to identify the functional sub-network that best differentiates patients with a certain disease from healthy controls based on functional brain sensory data. Specifically, in this paper we apply DSL to identify a functional sub-network that best differentiates patients with episodic migraine (EM) from controls based on fMRI data. Classification of EM versus controls based on neuroimaging data is more challenging than classification of chronic migraine versus controls (Schwedt et al., 2015). We also compare DSL with four state-of-the-art machine learning algorithms, and DSL outperforms all of them.

The remainder of this paper is organized as follows: Sec. 2 reviews the related work. Sec. 3 presents the development of the proposed DSL model. Sec. 4 provides simulation experiments. Sec. 5 presents a real-data application. Sec. 6 is the conclusion.

2. Related work

2.1. Graphical models

A graphical model includes nodes to represent variables or features and edges to characterize relationships between the variables. The edges can be undirected or directed. One of the most popular types of undirected graphical models is the Gaussian Graphical Model (GGM), in which the variables represented by the nodes are assumed to jointly follow a multivariate Gaussian distribution. Directed graphical models are also known as Bayesian networks (Jordan and Weiss, 2002). In this paper, our methodological development is based upon GGM. Thus, we focus on reviewing the existing research on GGM in this section.

In a GGM, the weight of the edge between two variables is related to the partial covariance or inverse covariance (IC). Therefore, learning a GGM amounts to learning the IC matrix from the data. This is a challenging task with high-dimensional features but limited sample sizes. To tackle the challenge, researchers have proposed various formulations that include sparsity-inducing penalties in the IC estimation to control the model complexity. Friedman et al. (Friedman et al., 2007) developed a coordinate descent algorithm for sparse IC estimation under the lasso penalty, known as graphical lasso. Hirose et al. (Hirose, Fujisawa and Sese, 2017) proposed the γ-lasso to obtain a robust sparse IC estimate based on the γ-divergence. Huang et al. (Huang et al., 2012) proposed to learn the ICs of multiple tasks using a Bayesian framework that allows knowledge transfer when learning task-specific ICs.
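As a concrete reference point for the penalized formulations above, the sketch below evaluates the graphical lasso objective, log det Θ − tr(SΘ) − λ‖Θ‖₁, for a candidate IC matrix Θ and a sample covariance matrix S. It only evaluates the objective and is not a solver. The function name is hypothetical. Following the original graphical lasso, the ℓ1 penalty here is applied to all entries, although some variants exclude the diagonal.

```python
import numpy as np

def glasso_objective(theta: np.ndarray, S: np.ndarray, lam: float) -> float:
    """Penalized Gaussian log-likelihood (up to constants) used by graphical lasso:
    log det(theta) - tr(S @ theta) - lam * sum(|theta_jk|).

    theta is assumed symmetric; it must be positive definite to be a valid IC matrix.
    """
    try:
        chol = np.linalg.cholesky(theta)  # raises LinAlgError if theta is not positive definite
    except np.linalg.LinAlgError:
        return -np.inf
    logdet = 2.0 * np.sum(np.log(np.diag(chol)))  # log det from the Cholesky factor
    return float(logdet - np.trace(S @ theta) - lam * np.abs(theta).sum())
```

With `lam = 0`, the objective is maximized at the inverse of `S` whenever `S` is invertible. Increasing `lam` trades likelihood for sparsity.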

2.2. Graph classification

Graph classification has become a popular research area in recent years due to the emergence of graph data in various domains. Graph classification assumes that the graph of each sample is known, rather than having to be learned from data, and uses the graphs as input to differentiate between classes.

The existing graph classification methods fall into two general categories: similarity-based and subgraph-feature-based approaches. Similarity-based approaches learn the global similarity between each pair of graphs, which is then used by conventional classification algorithms such as SVM to classify the graphs. Global similarity is measured by graph kernels or graph embedding. Schölkopf, Tsuda and Vert (2003) introduced a unified account of a family of kernels that are defined via label sequences for handling graph data. Other types of kernels have been proposed to measure graph similarity, such as kernels between vertex and/or edge label histograms (Gärtner, Flach and Wrobel, 2003), graphlet kernels (Shervashidze et al., 2009), random walk kernels (Sugiyama and Borgwardt, 2015), and the Weisfeiler-Lehman graph kernel (Shervashidze et al., 2011). Graph embedding has also been used in similarity-based approaches. Riesen and Bunke (2009) proposed a graph classification system using Lipschitz embedded graphs. One limitation of similarity-based graph classification methods is that the similarity is computed based on the global structure of graphs. However, some sub-structures may not have discriminant power, and including them in the computation of graph similarity may therefore reduce the classification accuracy. This limitation is better addressed by the other category of graph classification methods, based on subgraph features.

The basic idea of subgraph-feature-based approaches is to identify discriminative sub-structures of graphs (a.k.a. subgraphs) and put the subgraphs into a vector-format feature set to which conventional classifiers can be applied. Yan and Han (Yan and Han, 2002) proposed a method called gSpan, which mines frequent subgraphs via a lexicographic order. A LEAP algorithm was developed by Yan et al. (Yan et al., 2008) to exploit correlations between structure similarity and significance similarity by identifying dissimilar graph patterns. Saigo et al. (Saigo et al., 2009) proposed a gBoost method that progressively collects informative patterns and selects subgraphs from the whole subgraph space via a branch-and-bound pattern search algorithm. Vogelstein et al. (Vogelstein et al., 2012) proposed a joint graph/class model to identify class-conditional signals encoded in a subset of edges. Pan et al. (Pan et al., 2015) proposed an MTG algorithm that combines two norm-based regularization terms under a multitask learning formulation and incrementally selects subgraph features. Thanikaivelan and Gandhi (Thanikaivelan and Gandhi, 2017) considered selecting optimal subgraphs through Principal Component Analysis (PCA) combined with a pruning technique.
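To illustrate what a similarity-based graph kernel computes, here is a minimal, unnormalized Weisfeiler-Lehman subtree kernel in pure Python. It is a sketch of the general technique from Shervashidze et al. (2011), not the implementation used in our experiments. The function name and the adjacency-list input format are assumptions. At each iteration, every node is relabeled by its own label plus the sorted multiset of its neighbors' labels. The kernel adds up, over iterations, the dot products of the two graphs' label-count vectors.

```python
from collections import Counter

def wl_subtree_kernel(g1, g2, labels1, labels2, iterations=2):
    """Unnormalized Weisfeiler-Lehman subtree kernel between two labeled graphs.

    g1, g2: adjacency lists {node: [neighbors]}.
    labels1, labels2: initial node labels {node: label}.
    """
    compress = {}  # shared relabeling table so that new labels are comparable across graphs
    l1, l2 = dict(labels1), dict(labels2)
    value = 0
    for it in range(iterations + 1):
        c1, c2 = Counter(l1.values()), Counter(l2.values())
        # Dot product of the label-count vectors at this iteration
        value += sum(count * c2[label] for label, count in c1.items())
        if it == iterations:
            break
        new1, new2 = {}, {}
        for graph, old, new in ((g1, l1, new1), (g2, l2, new2)):
            for v in graph:
                signature = (old[v], tuple(sorted(old[u] for u in graph[v])))
                new[v] = compress.setdefault(signature, len(compress))
        l1, l2 = new1, new2
    return value
```

For example, a triangle and a 3-node path with identical node labels agree at iteration 0 but diverge from iteration 1 onward, because their neighborhoods differ.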

2.3. Gaps of the existing research

Neither graphical models nor graph classification algorithms provide a direct solution to our targeted problem. Graphical models learn variable relationships from data, but they do not provide discrimination or classification capacities. Graph classification algorithms aim for classification, but they assume that the graph of each subject is given. To solve our problem, one may suggest a two-step approach: 1) applying graphical models to learn the graph (i.e., functional network) of each subject from his/her brain sensory data; 2) applying graph classification algorithms to the graphs learned in 1). The limitation of the two-step approach is that the uncertainty in learning the graphs in step 1) propagates to step 2) and affects the classification accuracy. In contrast, DSL integrates the two steps into a single optimization framework and thus effectively tackles the uncertainty propagation. Our simulation and real-data experiments also demonstrate the advantage of DSL over the two-step approach.

3. Development of the proposed discriminant subgraph learner (DSL)

We will provide the model formulation for a two-class classification problem. The proposed model works generally for different types of functional brain sensory data such as EEG and fMRI. We use fMRI here to present the model formulation as this may be a less familiar data type to the readers.

3.1. Mathematical formulation of DSL

The fMRI of each subject is a 4-D object, composed of 3-D brain images taken at a series of n time points. Each brain image includes many voxels as the basic units. At each voxel, the fMRI scan produces a time series measuring the dynamics of functional activity at that location, known as the blood oxygen level dependent (BOLD) signal. When studying a particular disease, it is common to focus on a set of Regions of Interest (ROIs) of the brain related to the disease. The voxel-wise BOLD signals within each ROI can then be averaged into an ROI-wise signal. In our case study, for example, the number of ROIs is 33, based on a meta study of the existing migraine literature (Chong et al., 2017). For each subject included in the study, the BOLD signals of all the ROIs form a matrix with one row per time point and one column per ROI, so the number of rows equals the signal length n.
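The preprocessing step described above can be sketched as follows. The sketch averages voxel-wise BOLD signals into ROI-wise signals and then computes a subject's sample covariance matrix. It assumes that the BOLD data are already arranged as a time-by-voxel array and that each voxel carries an integer ROI label (−1 for voxels outside every ROI). These names and this encoding are hypothetical, and real pipelines perform additional fMRI preprocessing first.

```python
import numpy as np

def roi_signals(bold: np.ndarray, roi_labels: np.ndarray, n_rois: int) -> np.ndarray:
    """Average voxel-wise BOLD signals within each ROI.

    bold: array of shape (n_timepoints, n_voxels).
    roi_labels: array of shape (n_voxels,), an ROI index in [0, n_rois) or -1.
    Returns an (n_timepoints, n_rois) matrix: one row per time point, one column per ROI.
    Every ROI is assumed to contain at least one voxel.
    """
    out = np.empty((bold.shape[0], n_rois))
    for r in range(n_rois):
        out[:, r] = bold[:, roi_labels == r].mean(axis=1)
    return out

def sample_covariance(X: np.ndarray) -> np.ndarray:
    """ROI-by-ROI sample covariance (columns are variables; np.cov divides by n - 1)."""
    return np.cov(X, rowvar=False)
```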

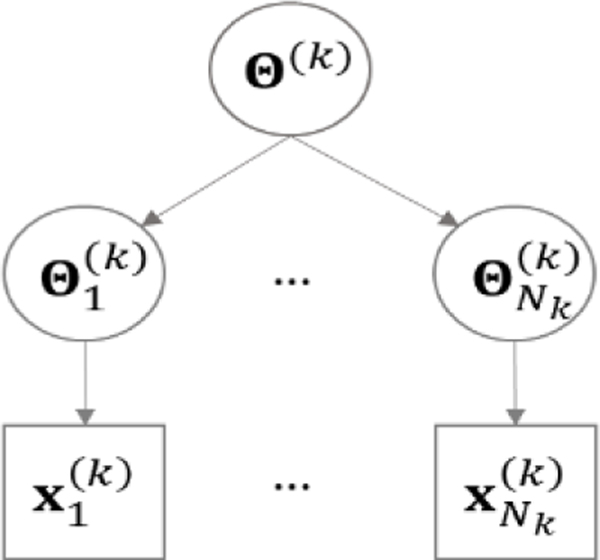

Consider two classes, each consisting of a number of subjects. For example, the two classes can correspond to EM patients and healthy controls, respectively. As the subjects are nested within each class, we propose to use a Bayesian hierarchical model (BHM) to characterize the data generating process (Fig. 2). Focus on class 1 and the data of each subject in this class. Recall that each subject's data form a matrix with one row per time point, each row consisting of the BOLD measurements of all the ROIs at that time point. Assume each row follows a multivariate Gaussian distribution whose inverse covariance (IC) matrix is specific to the subject; this IC matrix has been used in previous research to characterize the functional connectivity among the ROIs (Huang et al., 2012). Further assume that the IC matrices of all the subjects in class 1 are generated from a common Wishart distribution that involves the IC matrix of class 1, i.e., the class-level FCN. The hyper-parameters of this Wishart distribution are known as the inverse scale matrix and the degree of freedom.

Figure 2.

BHM for each class
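To make the generative process of the BHM concrete, the following sketch simulates one class. The exact Wishart parameters of Fig. 2 are not reproduced here, so the parameterization is an assumption: the subject-level IC follows a Wishart distribution whose scale is the class-level IC divided by the degree of freedom, which makes its mean equal to the class-level IC. Each row of a subject's data is then drawn from a Gaussian whose inverse covariance is that subject's IC. The function names are hypothetical. Wishart draws are generated as a sum of outer products of Gaussian vectors, which is valid for an integer degree of freedom of at least the number of ROIs.

```python
import numpy as np

def sample_wishart(scale: np.ndarray, df: int, rng: np.random.Generator) -> np.ndarray:
    """Draw W ~ Wishart(scale, df) for integer df as a sum of df outer products."""
    z = rng.multivariate_normal(np.zeros(scale.shape[0]), scale, size=df)  # shape (df, p)
    return z.T @ z

def simulate_class(theta: np.ndarray, df: int, n_subjects: int, n_time: int, seed: int = 0):
    """Hypothetical generator for one class under the assumed BHM parameterization.

    Subject ICs: Omega_i ~ Wishart(theta / df, df), so E[Omega_i] = theta.
    Data: each of the n_time rows of X_i ~ N(0, inv(Omega_i)).
    """
    rng = np.random.default_rng(seed)
    p = theta.shape[0]
    data = []
    for _ in range(n_subjects):
        omega = sample_wishart(theta / df, df, rng)  # subject-level IC
        cov = np.linalg.inv(omega)
        cov = (cov + cov.T) / 2.0  # remove round-off asymmetry before sampling
        data.append(rng.multivariate_normal(np.zeros(p), cov, size=n_time))
    return data
```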

Based on the proposed BHM in Fig. 2, we can derive the likelihood function of the IC matrix of class 1 as follows:

| (1) |

where each subject's sample covariance matrix is computed from that subject's data; the first equality holds because the likelihood depends on the data only through the sample covariance matrices; the second equality follows from the hierarchical structure of the BHM. We can further derive the two probabilities in the integral as follows:

| (2) |

according to the BHM. A commonly used distribution for a sample covariance matrix is the Wishart distribution, which has the probability density function

| (3) |

Furthermore, we can derive the second probability in the integral of (1) as

| (4) |

according to the BHM structure. Using the Wishart distribution of the subject-level IC matrices described previously, we can write the probability density function:

| (5) |

Next, inserting (3) and (5) into (1), the likelihood function becomes:

| (6) |

In a similar way, we can derive the likelihood function of the IC matrix of class 2.
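Both probabilities in the integral of (1) are Wishart densities, and the multivariate gamma function they contain reappears in the classifier of Section 3.3. The sketch below evaluates the standard Wishart log-density with scale matrix V and degree of freedom ν, which requires ν > p − 1. The parameterization that (3) and (5) use is not reproduced here, so matching V and ν to them is left as an assumption. As a standard fact, if the n rows of a data matrix are i.i.d. N(0, Σ), then the sum of the outer products of the rows follows Wishart(Σ, n). The function names are hypothetical.

```python
import math
import numpy as np

def log_multigamma(a: float, p: int) -> float:
    """log Gamma_p(a) = p(p-1)/4 * log(pi) + sum_{j=1..p} log Gamma(a + (1 - j)/2)."""
    return p * (p - 1) / 4.0 * math.log(math.pi) + sum(
        math.lgamma(a + (1 - j) / 2.0) for j in range(1, p + 1)
    )

def wishart_logpdf(W: np.ndarray, V: np.ndarray, df: float) -> float:
    """log density of W ~ Wishart_p(V, df) with scale matrix V and df > p - 1."""
    p = W.shape[0]
    _, logdet_W = np.linalg.slogdet(W)
    _, logdet_V = np.linalg.slogdet(V)
    quad = np.trace(np.linalg.solve(V, W))  # tr(V^{-1} W) without forming the inverse
    return float(
        0.5 * (df - p - 1) * logdet_W
        - 0.5 * quad
        - 0.5 * df * p * math.log(2.0)
        - 0.5 * df * logdet_V
        - log_multigamma(0.5 * df, p)
    )
```

A quick sanity check: for p = 1 and V = 1, the Wishart distribution reduces to a chi-square distribution with ν degrees of freedom.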

Furthermore, recall that in this paper we focus on the situation in which the two classes differ in a sub-matrix (a.k.a. subgraph) of their IC matrices, which involves only a subset of the ROIs. We represent the subgraph by an indicator matrix, i.e., a diagonal matrix with 1 or 0 on its diagonal to indicate whether each ROI is included in the subgraph. The indicator matrix is unknown, so it needs to be learned from data. One approach is to learn the IC matrix of each class using its respective data, and then compare the learned ICs to identify the indicator matrix. The limitation of this sequential approach is that errors in learning the IC matrix of each class in the first step propagate to the identification of the indicator matrix in the second step. When the sample size is limited, it is difficult to learn an accurate IC for each class, and consequently it is difficult to identify the subgraph accurately. To overcome this limitation, we propose the DSL model to learn the indicator matrix and the two class-level ICs jointly. DSL solves the optimization problem in (7), which simultaneously balances learning of the IC for each class and identifying the subgraph that best distinguishes the two classes.

| (7) |

Specifically, the first term in the objective function of (7) aims to maximize the difference between the sub-matrices of the IC matrices of the two classes, measured by the ℓ1-norm (the sum of the absolute values of the entries) of their difference. To see the meaning of this term more clearly, consider a simple example of three ROIs whose indicator matrix has the diagonal (1, 0, 1), i.e., only the first and third ROIs are included in the subgraph. Then the first term in the objective function of (7) becomes the sum of the absolute differences between the two class-level ICs at entries (1,1), (1,3), (3,1), and (3,3). Furthermore, the second and third terms in the objective function of (7) are the likelihood functions of the two class-level ICs given the data in the respective classes. The fourth and fifth terms are two penalties that impose sparsity on the class-level ICs, in order to control the model complexity under limited sample sizes. Essentially, optimizing the five terms jointly in the objective function of (7) balances learning of the IC for each class and identifying the subgraph that best distinguishes the two classes. Tuning parameters control the trade-off between the different terms. Additionally, the optimization problem in (7) has several constraints: one bounds the size of the subgraph by a prespecified number of ROIs, and the other two guarantee that the learned IC matrices are valid, i.e., positive definite.
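The first term of (7) can be evaluated numerically. The sketch below assumes that the norm is the entrywise ℓ1-norm, represents the indicator matrix by its 0/1 diagonal vector z, and uses a hypothetical function name. It also shows that this term equals the quadratic form zᵀ|Θ1 − Θ2|z, which is the link to the quadratic program used below to solve for the indicator matrix.

```python
import numpy as np

def subgraph_difference(theta1: np.ndarray, theta2: np.ndarray, z) -> float:
    """Entrywise l1-norm of Z (theta1 - theta2) Z with Z = diag(z), z in {0, 1}^p."""
    Z = np.diag(np.asarray(z, dtype=float))
    return float(np.abs(Z @ (theta1 - theta2) @ Z).sum())

# Three-ROI example: only the first and third ROIs are in the subgraph.
theta1 = np.array([[1.0, 2.0, 3.0], [2.0, 5.0, 6.0], [3.0, 6.0, 9.0]])
theta2 = np.zeros((3, 3))
z = np.array([1.0, 0.0, 1.0])
term = subgraph_difference(theta1, theta2, z)       # |1| + |3| + |3| + |9|
quadratic = z @ np.abs(theta1 - theta2) @ z          # same value as a quadratic form in z
```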

3.2. Optimization algorithm for parameter estimation of DSL

There is no analytical solution to the optimization problem in (7). We propose an Alternating Optimization (AO) algorithm that solves for one parameter with the other two fixed and iterates over the sub-optimizations of the three parameters until convergence. In what follows, we present the sub-optimizations and the methods for solving each one. We then summarize the iterative steps over the three sub-optimizations to solve (7) using AO. After presenting the AO algorithm, we discuss its convergence and optimality.

The three sub-optimizations for the three parameters are:

Given the two class-level ICs, the sub-optimization with respect to the indicator matrix is:

| (8) |

Given the indicator matrix and the IC of class 2, the sub-optimization with respect to the IC of class 1 is:

| (9) |

Given the indicator matrix and the IC of class 1, the sub-optimization with respect to the IC of class 2 is:

| (10) |

Next, we will discuss how to solve these sub-optimizations:

Solving the optimization in (8)

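Consider the absolute difference matrix, whose entries are the absolute differences between the corresponding entries of the two class-level ICs, and the vector containing the diagonal elements of the indicator matrix. Then, (8) is equivalent to the quadratic programming problem below: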

| (11) |

Because the decision variables are binary, this optimization can be solved using a standard quadratic programming solver that supports binary variables, such as CPLEX.
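For small problems, the binary quadratic program can be checked by exhaustive enumeration. The sketch below assumes the form max zᵀDz subject to Σz ≤ s and z ∈ {0,1}ᵖ, where D is the (nonnegative) absolute difference matrix and s is the subgraph size bound; the function name is hypothetical. It is a substitute reference method, not the CPLEX-based approach. Enumeration grows combinatorially in p: with 33 ROIs and a bound of 10, it would already need tens of millions of subsets, which is why a dedicated solver is used in practice.

```python
import itertools
import numpy as np

def best_subgraph_bruteforce(D: np.ndarray, s: int):
    """Exhaustively maximize z^T D z over z in {0,1}^p with 1 <= sum(z) <= s.

    Only feasible for small p; intended as a reference check for a QP solver.
    """
    p = D.shape[0]
    best_val, best_z = -np.inf, None
    for k in range(1, s + 1):
        for subset in itertools.combinations(range(p), k):
            z = np.zeros(p)
            z[list(subset)] = 1.0
            val = float(z @ D @ z)
            if val > best_val:  # keep the first maximizer found
                best_val, best_z = val, z
    return best_z, best_val
```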

Solving the optimizations in (9) and (10)

(9) and (10) have the same structure and can be solved in a similar way. It is not hard to see that both optimizations can be converted to the following unified format:

| (12) |

Solving (12) is equivalent to solving (9) when four quantities in (12) are replaced by their class-1 counterparts, and equivalent to solving (10) when they are replaced by their class-2 counterparts. (12) can be considered a penalized maximum likelihood estimation. The discussion hereafter focuses on how to solve the optimization in (12).

Using the likelihood function in (6), the optimization becomes

| (13) |

(13) is difficult to solve because it involves determinants of the unknown parameter. We propose to introduce latent variables and develop an EM algorithm to solve this optimization. EM is a well-known iterative approach to finding maximum likelihood estimates of model parameters when it is difficult to obtain them directly (Wu, 1983). The E step finds the expectation of the complete log-likelihood function with respect to the latent variables, given the observed data and the parameter estimates in the current iteration. The M step maximizes this expectation to update the parameter estimates. The two steps iterate until convergence. Next, we present the EM algorithm developed to solve the optimization in (12). In the proposed EM algorithm, the subject-level ICs, the subjects' sample covariance matrices, and the class-level IC are treated as the latent variables, the observed data, and the parameter to be estimated, respectively. The complete log-likelihood function is:

| (14) |

E step

At each iteration of the EM algorithm, denote the current estimate of the class-level IC as the parameter estimate. The E step then finds the expectation of (14) with respect to the latent subject-level ICs, given the observed sample covariance matrices and the current parameter estimate. Denote this expectation by

| (15) |

The first step in finding the explicit form of this expectation is to find the parametric form of the conditional distribution of the latent variables, which is summarized in Proposition 1 below. Please see the proof in Appendix A.

Proposition 1.

The conditional distribution of the latent subject-level ICs, given the observed data and the current parameter estimate, is a product of Wishart distributions.

Using the result in Proposition 1, we can further derive the explicit form of the expectation in (15), which is given in Proposition 2. Please see the proof in Appendix B.

Proposition 2.

The expectation in (15) is proportional to

| (16) |

M step

In the M step, we solve an optimization that maximizes the expectation in (16) together with the two penalties on the class-level IC adopted from (12), i.e.,

| (17) |

Proposition 3 gives an equivalent form of (17) that is easier to solve. The proof of this proposition is straightforward and is omitted due to space limitations.

Proposition 3.

The optimization problem in Equation (17) is equivalent to:

| (18) |

where

| (19) |

(18) is not convex: its objective is the sum of a convex function and a concave function. We propose to use a BCD algorithm to solve the optimization in (18); details of the algorithm are given in Appendix C. Note that we did not explicitly consider the positive definiteness constraint in the BCD algorithm. Proposition 4 shows that positive definiteness is automatically guaranteed. Please see the proof in Appendix D.

Proposition 4.

The optimal solution obtained from the BCD algorithm, , is positive definite if the initial value, , is positive definite.

The initial value of the BCD algorithm can be set to be , which is positive definite. Then according to Proposition 4, the optimal solution will be positive definite and therefore it is a valid IC matrix. Another reason for choosing the initial values to be is that it is an unbiased estimator for . Because of the Wishart distributions of and , we can get , which is an unbiased estimator for .

Finally, the entire procedure for solving the DSL optimization in (7) is summarized as follows:

Algorithm for solving the DSL optimization in (7)

| Step | Operation |
| --- | --- |
| Input | Data of the two classes; stopping criteria; tuning parameters. |
| Output | Solutions for the indicator matrix and the two class-level ICs. |
| 1. | Compute the sample covariance matrices of the subjects in the two classes |
| 2. | Initialize: |
| 3. | Repeat |
| 4. | Compute the indicator matrix by solving the quadratic programming in (11); |
| 5. | Compute the IC of class 1 using the proposed EM algorithm: |
| 5.1 | Input , and |
| 5.2 | Initialize ; |
| 5.3 | Repeat |
| 5.4 | E step: derive the expectation in (15) using Proposition 2; |
| 5.5 | M step: compute the updated estimate using BCD; |
| 5.6 | |
| 5.7 | Until |
| 5.8 | ; |
| 6. | Compute the IC of class 2 by following similar steps under 5; |
| 7. | ; |
| 8. | Until |

Algorithm convergence

This is an AO algorithm that iteratively solves for the indicator matrix and the two class-level ICs. The sub-optimization with respect to the indicator matrix in (8) is a binary quadratic programming problem. According to Lemma 1 in a previous paper (Yuan and Ghanem, 2016), this problem can be transformed into an equivalent continuous optimization. The sub-optimizations with respect to the two class-level ICs are solved using EM, which converges to a stationary point under regularity conditions (Wu, 1983). In the M step, the optimization is solved by BCD, whose convergence is presented in Appendix C. Finally, the iterations over the sub-optimizations in the algorithm converge to a first-order stationary point under mild conditions (Li, Zhu and Tang, 2019). In our experiments, we observed a steady increase of the objective function over the iterations, and the algorithm converged quickly.
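The monotone behavior described above is a general property of exact block-wise maximization. The toy example below illustrates it. It is not DSL: it alternates exact maximizations of a simple concave function of two scalar blocks, f(x, y) = −(x − 1)² − (y − 2)² − (x − y)², and records the objective after each sweep. Because each block update is an exact maximizer with the other block fixed, the recorded objective can never decrease. The function names and the stopping rule (a change in objective below a tolerance) are illustrative assumptions.

```python
def toy_objective(x: float, y: float) -> float:
    """Toy concave objective standing in for the DSL objective (illustration only)."""
    return -(x - 1.0) ** 2 - (y - 2.0) ** 2 - (x - y) ** 2

def alternating_maximization(x: float, y: float, tol: float = 1e-10, max_iter: int = 1000):
    """Maximize toy_objective by exact block updates, one block at a time."""
    history = [toy_objective(x, y)]
    for _ in range(max_iter):
        x = (1.0 + y) / 2.0  # argmax over x with y fixed (x-derivative set to zero)
        y = (2.0 + x) / 2.0  # argmax over y with x fixed (y-derivative set to zero)
        history.append(toy_objective(x, y))
        if abs(history[-1] - history[-2]) < tol:  # stop when the objective stabilizes
            break
    return x, y, history
```

Starting from (0, 0), the iterates approach the stationary point (4/3, 5/3).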

Time complexity

The algorithm iterates over solving and solving the IC of each class. Solving is a standard quadratic programming problem, for which the worst-case complexity is . is the number of variables. Solving the IC uses EM and the M-step is an optimization solved by BCD, for which the worst-case complexity is . N is the sample size. It typically takes 10–15 iterations for the E- and M-step to converge, and takes 3–6 iterations for the AO to converge, which have been consistently observed in our simulation and real-data experiments.

Tuning parameter selection

DSL has six tuning parameters. In practice, we can reasonably set the corresponding tuning parameters of the two classes to be equal, imposing similar amounts of regularization on the two classes; this reduces the number of tuning parameters to four. The degree of freedom of the Wishart distribution is a hyper-parameter to which the results are not sensitive, so it only needs to be roughly tuned. To tune the remaining parameters, a grid search can be performed, and model training under each combination of parameter settings can be done in parallel. The optimal tuning parameters are those that maximize the cross-validation classification accuracy.

3.3. Classification on new samples

After solving the DSL optimization in (7) on a training dataset, we can use the optimal solutions, i.e., the indicator matrix and the two class-level ICs, to classify new subjects based on their fMRI data. Specifically, given the fMRI data of a new subject, we first compute its sample covariance matrix. Next, we extract the sub-matrix of this sample covariance matrix that corresponds to the variables in the subgraph indicated by the optimal indicator matrix. In the same way, we extract the corresponding sub-matrices of the two class-level ICs. Then, we can use a simple likelihood-based classifier to classify the new subject, i.e., the new subject belongs to class 1 if

| (20) |

The probability function in (20) can be computed as:

| (21) |

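The key to deriving (21) is to know the distributions of the sub-matrices of the sample covariance matrix and of the subject-level IC. To obtain them, we use a useful property of Wishart distributions: they are closed under marginalization, i.e., a principal sub-matrix of a Wishart-distributed matrix is again Wishart-distributed, with the corresponding sub-matrix of the scale matrix and the same degree of freedom (Dawid, 1981). Specifically, recall the Wishart distributions of the sample covariance matrix and of the subject-level IC in the BHM. By this property, the corresponding sub-matrices also follow Wishart distributions. Plugging the probability density functions of these Wishart distributions into (21), after some algebra we obtain: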

| (22) |

where , and is the multivariate generalization of the gamma function.
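The marginalization property used above can be checked numerically. The sketch below extracts principal sub-matrices with a 0/1 selection vector, in the same way the classifier extracts the subgraph, and then performs a Monte Carlo check of the first moment. If W ~ Wishart(Σ, ν), the selected block of W should have mean ν times the selected block of Σ, which is consistent with that block being Wishart with the marginalized scale. This verifies only the mean, not the full distribution, and the function names are hypothetical.

```python
import numpy as np

def submatrix(M: np.ndarray, z) -> np.ndarray:
    """Principal sub-matrix of M on the variables selected by the 0/1 vector z."""
    idx = np.flatnonzero(np.asarray(z))
    return M[np.ix_(idx, idx)]

def marginal_mean_check(scale: np.ndarray, df: int, z, n_draws: int = 20000, seed: int = 0):
    """Compare the Monte Carlo mean of the selected block of Wishart(scale, df) draws
    with df * (selected block of scale)."""
    rng = np.random.default_rng(seed)
    g = rng.multivariate_normal(np.zeros(scale.shape[0]), scale, size=(n_draws, df))
    draws = np.einsum("ndi,ndj->nij", g, g)  # each draw is a sum of df outer products
    empirical = submatrix(draws.mean(axis=0), z)
    return empirical, df * submatrix(scale, z)
```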

4. SIMULATION STUDY

4.1. Simulation setup

In this section, we assess the performance of DSL using simulation data, in comparison with several competing methods. The data generation process includes five steps:

1) Construct the IC matrix for class 1. We generate each entry of this matrix from a distribution and set entries that meet a prespecified condition to zero, in order to make the IC matrix sparse.

2) Construct the IC for class 2 through the following sub-steps:

(2.1) Initialize the IC of class 2 as a copy of the IC of class 1.

(2.2) Select a subset of variables to be involved in the discriminant subgraph, and take the sub-matrix of the class-2 IC that corresponds to these variables. Construct the true indicator matrix as a diagonal matrix with ones for the selected variables and zeros otherwise.

(2.3) Randomly pick 50% of the non-zero entries in this sub-matrix and change them to zero. Randomly pick the same number of zero entries in the sub-matrix and change them to non-zero. Sample each non-zero entry of the sub-matrix from a distribution.

(2.4) For the remaining entries of the class-2 IC that are outside the sub-matrix, resample each positive entry and each negative entry from their respective distributions. Although this part of the matrix is not what primarily differentiates class 2 from class 1, we resample its non-zero entries so that the class-2 IC differs from the class-1 IC even beyond the discriminant subgraph, which creates a more general case.

3) Rescale the ICs of the two classes generated in 1) and 2) to ensure that they are positive definite. The rescaling first sums the absolute values of the off-diagonal entries in each row, then divides each off-diagonal entry by 1.5 times that sum, and finally averages the resulting matrix with its transpose to produce a symmetric matrix.

4) Construct the IC for each subject within class 1 by sampling from the Wishart distribution of the BHM, with the class-level IC set to the rescaled IC of class 1 obtained in step 3). Note that an IC sampled in this way is not sparse. We further sparsify it following a simple and efficient method proposed by Kuismin and Sillanpää (2016), which iteratively thresholds the smallest entries of the original non-sparse matrix. This entire process is repeated to construct the IC for each subject within class 2.

5) Generate the data for each subject in class 1 from a multivariate Gaussian distribution with zero mean and the subject's IC matrix from step 4). Generate the data for each subject in class 2 in a similar way.
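The rescaling in step 3) can be sketched in a few lines. The text does not state how the diagonal is set, so the sketch assumes a unit diagonal; the function name is hypothetical. After the division, the absolute off-diagonal entries of each non-empty row sum to 1/1.5. Symmetrizing by averaging with the transpose does not guarantee diagonal dominance in every case, so a Cholesky factorization is a cheap way to confirm positive definiteness for any particular draw.

```python
import numpy as np

def rescale_ic(A: np.ndarray, factor: float = 1.5) -> np.ndarray:
    """Rescale a random sparse matrix as described in step 3).

    Assumption (not stated in the text): the diagonal is set to 1.
    """
    B = np.array(A, dtype=float)
    np.fill_diagonal(B, 0.0)
    row_sums = np.abs(B).sum(axis=1, keepdims=True)
    row_sums[row_sums == 0] = 1.0  # leave all-zero rows unchanged
    B = B / (factor * row_sums)  # off-diagonal |entries| of each row now sum to 1/factor
    np.fill_diagonal(B, 1.0)
    return (B + B.T) / 2.0  # average with the transpose to make it symmetric
```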

In the first experiment, we generate simulation data of 50 variables and 50 samples in each class. This is a challenging case because the sample size is the same as the number of variables. In addition, we consider several different sizes of the discriminant subgraph. This simulation setup is comparable to the real-data case study presented in the next section. The real data include 50 and 49 samples in the two classes, respectively, and a total of 33 ROIs, which is smaller than the 50 variables in the simulation and thus represents a relatively easier case. Even though we do not know the size of the subgraph in the real data, the simulation setup includes a wide range of possible sizes, from 20% to 100% of the total number of variables, which can reasonably be expected to cover the subgraph size in the real data. In the second experiment, we increase the training sample size to 100 and keep all other settings the same as in the first experiment. We use 10-fold cross validation to choose the optimal tuning parameters. Then, the trained model is applied to a separate test set of 50 samples per class to compute the Area Under the ROC Curve (AUC). We repeat this whole process 30 times and report the average AUC over the 30 simulation runs.
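For reference, the AUC of a test set can be computed directly from classifier scores as the probability that a randomly chosen class-1 subject scores higher than a randomly chosen class-2 subject, with ties counting one half. This is the normalized Mann-Whitney U statistic. The sketch assumes that each test subject receives a real-valued score (for instance, the difference between the two class log-likelihoods in a likelihood-based rule), and the function name is hypothetical. The pairwise comparison is quadratic in the number of subjects, which is negligible for 50 subjects per class.

```python
import numpy as np

def auc_rank(scores_pos, scores_neg) -> float:
    """AUC = P(score of a positive > score of a negative) + 0.5 * P(tie)."""
    pos = np.asarray(scores_pos, dtype=float)[:, None]
    neg = np.asarray(scores_neg, dtype=float)[None, :]
    wins = (pos > neg).sum() + 0.5 * (pos == neg).sum()
    return float(wins / (pos.size * neg.size))
```

Averaging `auc_rank` over the repeated simulation runs gives the reported average AUC.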

4.2. Competing methods

We compare DSL with a collection of state-of-the-art competing methods. The first competing method is DSL without subgraph selection, referred to as DSL\subgraph. The second to fifth are existing algorithms in graph classification. These algorithms are not directly comparable to our method because they assume that the graphs are known. To make them applicable, we use graphical lasso (Friedman et al., 2007) to learn the IC of each subject. Then, the ICs are used as input to the graph classification algorithms. The following list summarizes the competing methods:

“DSL\subgraph”: DSL without subgraph selection

"Vectorized SVM": Elements of the IC graph of each subject are put into a feature vector, and an SVM classifier is built on the feature vectors (Friedman, Hastie and Tibshirani, 2001).

“Similarity-based”: This is one of the two categories of graph classification algorithms reviewed in Section 2. Different kernels have been proposed to measure graph similarity. We choose to report the best accuracy based on four well-studied kernels in the literature: kernels between vertex and/or edge label histograms (Gärtner, Flach and Wrobel, 2003), graphlet kernels (Shervashidze et al., 2009), random walk kernels (Sugiyama and Borgwardt, 2015), and the Weisfeiler-Lehman graph kernel (Shervashidze et al., 2011).

“gBoost”: The other category of graph classification algorithms is subgraph-feature-based. gBoost is a representative algorithm in this category and has been used as a benchmark in other papers (Saigo et al., 2009).

“MTG”: A more recent subgraph-feature-based algorithm (Pan et al., 2015).
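As a concrete illustration of the “Vectorized SVM” input, the sketch below turns each subject's estimated IC matrix into a feature vector. It assumes the per-subject ICs have already been estimated (e.g., by the graphical lasso) and keeps only the upper triangle including the diagonal, since the IC matrix is symmetric. Whether the baseline used the full matrix or one triangle is not stated, so this choice is an assumption, and the function names are hypothetical. The resulting matrix could then be passed to any SVM implementation.

```python
import numpy as np


def vectorize_ic(ic):
    """Flatten a symmetric p x p IC matrix into its p*(p+1)/2 upper-triangular entries."""
    ic = np.asarray(ic, dtype=float)
    if ic.ndim != 2 or ic.shape[0] != ic.shape[1]:
        raise ValueError("expected a square matrix")
    rows, cols = np.triu_indices(ic.shape[0])  # k=0 by default, so the diagonal is included
    return ic[rows, cols]


def build_feature_matrix(ic_list):
    """Stack one feature vector per subject into an (n_subjects, p*(p+1)/2) array."""
    return np.vstack([vectorize_ic(ic) for ic in ic_list])
```

For the 33 ROIs in the real data, each subject would yield 33 * 34 / 2 = 561 features under this convention.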

4.3. Classification accuracies of different methods

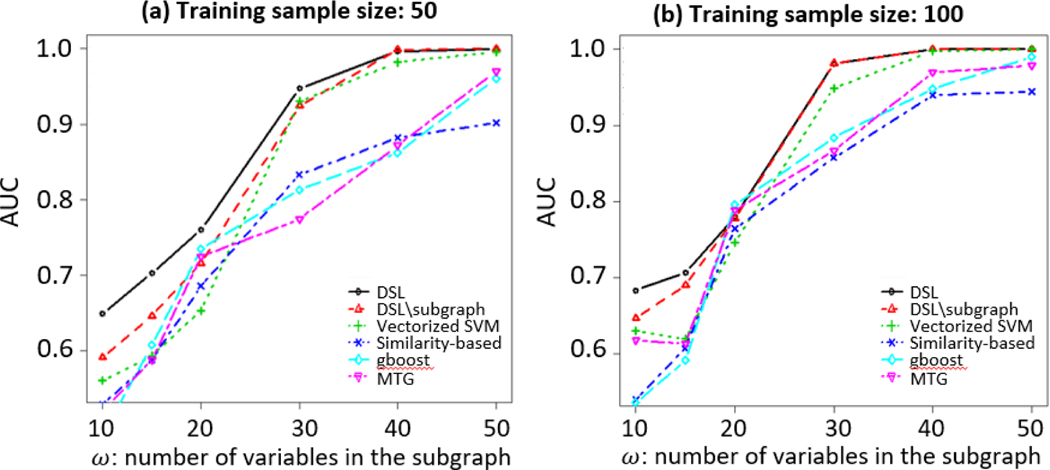

Fig. 3 shows the average test AUC of DSL and the competing methods for different subgraph sizes under two training sample sizes, 50 and 100. Several observations can be made: 1) In general, DSL and DSL\subgraph outperform the other competing methods. 2) DSL outperforms DSL\subgraph when the subgraph contains fewer variables, which confirms the importance of finding the discriminant subgraph. 3) With a smaller training sample size, the advantage of DSL over DSL\subgraph is more pronounced.

Figure 3.

AUCs of DSL and competing methods on a test dataset of 50 samples per class

4.4. Accuracy of DSL in IC estimation and subgraph identification

Because the DSL optimization simultaneously estimates the IC of each class and identifies the discriminant subgraph, we use two metrics to evaluate DSL: 1) structural accuracy of the IC estimation, defined as the accuracy of identifying the truly zero (and non-zero) entries of the IC matrix, averaged over the two classes; 2) subgraph identification accuracy, defined as the number of vertices in the true subgraph that are also identified by DSL. Table 1 summarizes the results. Several observations can be made: (i) In general, the ICs are estimated well, and, as expected, the accuracy is higher with a larger training size. (ii) Subgraph identification accuracy improves with larger subgraph sizes and with a larger training size. For a fixed training size, a smaller subgraph means less difference between the two classes, making them harder to differentiate. This inherent difficulty hurts the subgraph identification performance of DSL. As the subgraph size increases, the difference between the two classes grows and the subgraph identification accuracy naturally improves. Taken together, Table 1 and Fig. 3 are consistent: a larger subgraph size leads to a higher accuracy in identifying the subgraph by DSL and, subsequently, a higher AUC when the identified subgraph is used for classification.
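The two evaluation metrics can be written down directly. The sketch below is one plausible reading of the definitions above, not the authors' evaluation code: it assumes an entry counts as non-zero when its magnitude exceeds a small tolerance `tol`, and it scores only off-diagonal entries (the diagonal of a p.d. IC matrix is always non-zero). Both choices, and all function names, are assumptions.

```python
import numpy as np


def structural_accuracy(est_ic, true_ic, tol=1e-8):
    """Fraction of off-diagonal entries whose zero/non-zero status matches the true IC."""
    est = np.abs(np.asarray(est_ic, dtype=float)) > tol
    true = np.abs(np.asarray(true_ic, dtype=float)) > tol
    off_diag = ~np.eye(est.shape[0], dtype=bool)
    return float(np.mean(est[off_diag] == true[off_diag]))


def mean_structural_accuracy(est_ics, true_ics, tol=1e-8):
    """Structural accuracy averaged over the classes (two in the DSL setting)."""
    return float(np.mean([structural_accuracy(e, t, tol) for e, t in zip(est_ics, true_ics)]))


def subgraph_hits(selected_vertices, true_subgraph):
    """Number of true-subgraph vertices that the method also selected."""
    return len(set(selected_vertices) & set(true_subgraph))
```

Table 1 reports the second metric as hits over subgraph size, so a string such as 14/20 would correspond to `f"{subgraph_hits(selected, true)}/{len(true)}"`.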

Table 1.

Accuracy of DSL

| Subgraph size | Structural accuracy of IC estimation (training size = 50) | Subgraph identification accuracy (training size = 50) | Structural accuracy of IC estimation (training size = 100) | Subgraph identification accuracy (training size = 100) |
|---|---|---|---|---|
| 10 | 0.89 | 5/10 | 0.96 | 6/10 |
| 15 | 0.88 | 9/15 | 0.96 | 10/15 |
| 20 | 0.88 | 14/20 | 0.96 | 17/20 |
| 30 | 0.89 | 26/30 | 0.96 | 28/30 |
| 40 | 0.89 | 38/40 | 0.96 | 39/40 |
| 50 | 0.89 | 49/50 | 0.96 | 50/50 |

4.5. Computational time

For the most time-consuming case across all simulation and real-data experiments, i.e., the setting with 50 variables and 100 samples per class, the wall-clock training time in each parallel thread was around 290 seconds. The experiments were run in R version 4.0.2 on a PC with an Intel Core i7-10610U 2.30 GHz CPU (4 cores, 8 logical processors) and 16 GB of RAM.

5. Real data application

In this section, we present an application of resting-state fMRI to classifying EM patients versus healthy controls. The data of 50 EM patients and 49 age-matched controls were provided by our collaborators at Mayo Clinic Arizona. The dataset is not publicly available for privacy reasons. All migraine patients were diagnosed according to the criteria of the International Classification of Headache Disorders (ICHD-II). Patients were excluded if they had neurological diseases other than migraine. Healthy controls were included if they had never developed headaches or had only occasional tension-type headaches, fewer than three per month.

Imaging was conducted on 3-Tesla Siemens scanners using FDA-approved sequences. Before the imaging session, each subject was instructed to stay awake with eyes closed, i.e., in the resting state. Ten minutes of resting-state fMRI data were collected for each subject. Each fMRI dataset is 4-D: three spatial coordinates index each basic unit (called a voxel) of the 3-D brain image, and the fourth dimension is time. In our study, the time series of each voxel contains over 200 time points, with slight differences between subjects. Standard fMRI pre-processing steps were followed (Chong et al., 2017). We selected 33 ROIs based on findings in the pain and migraine literature; these regions have consistently been shown to participate in pain processing. The 33 ROIs include 16 in each hemisphere and one midline region. Table 2 lists the names and center coordinates of the ROIs. Each ROI was an 8-mm sphere drawn around its center coordinates, and the average time series over the voxels within each ROI was computed. The 33 ROI-level time series were used as input to DSL and the competing algorithms.
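The ROI extraction step above (a sphere around each center, averaged over its voxels) can be sketched with NumPy. This is an illustrative sketch, not the preprocessing pipeline used in the study. It assumes a preprocessed 4-D array indexed as (x, y, z, t), a 4 x 4 affine that maps voxel indices to millimetre coordinates in the same space as the Table 2 centers (as stored in NIfTI headers), and it treats "8mm" as the sphere radius, which the text does not specify. The function name is hypothetical.

```python
import numpy as np


def roi_time_series(data, affine, center_mm, radius_mm=8.0):
    """Average voxel time series inside a sphere around an ROI center.

    data:      4-D array indexed as (x, y, z, t).
    affine:    4 x 4 matrix mapping voxel indices (i, j, k, 1) to millimetre coordinates.
    center_mm: ROI center in the same millimetre space, e.g. (38, 19, -3).
    radius_mm: sphere radius; reading "8mm sphere" as an 8-mm radius is an assumption.
    """
    data = np.asarray(data, dtype=float)
    affine = np.asarray(affine, dtype=float)
    nx, ny, nz, _ = data.shape
    ii, jj, kk = np.meshgrid(np.arange(nx), np.arange(ny), np.arange(nz), indexing="ij")
    vox = np.stack([ii, jj, kk, np.ones_like(ii)], axis=-1)  # homogeneous voxel indices
    mm = vox @ affine.T  # (nx, ny, nz, 4) world coordinates
    dist = np.linalg.norm(mm[..., :3] - np.asarray(center_mm, dtype=float), axis=-1)
    mask = dist <= radius_mm
    if not mask.any():
        raise ValueError("the sphere contains no voxels")
    return data[mask].mean(axis=0)  # shape (t,): ROI-level time series
```

Calling this once per ROI and stacking the results column-wise would give a (T, 33) matrix of ROI-level time series for each subject.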

Table 2.

Names of the ROIs (odd/even numbers represent the right/left hemisphere; 33 is a midline region). The center coordinates of each ROI are given in parentheses after its name. The ROIs in the subgraph found by DSL are in bold.

| ROI | Name (center coordinates) | ROI | Name (center coordinates) | ROI | Name (center coordinates) |
|---|---|---|---|---|---|
| 1, 2 | anterior insula (+/−38, 19, −3) | 3, 4 | anterior cingulate cortex (+/−6, 28, 24) | 5, 6 | mid cingulate cortex (+/−10, −7, 46) |
| 7, 8 | posterior insula (+/−40, −14, 1) | 9, 10 | posterior cingulate cortex (+/−8, −48, 39) | 11, 12 | thalamus (+/−8, −21, 7) |
| 13, 14 | primary somatosensory cortex (+/−46, −24, 47) | 15, 16 | dorsolateral prefrontal cortex (+/−40, 39, 24) | 17, 18 | inferior lateral parietal (+/−57, −48, 30) |
| 19, 20 | ventromedial prefrontal cortex (+/−6, 36, −14) | 21, 22 | second somatosensory cortex (+/−52, −28, 21) | 23, 24 | supplementary motor area (+/−6, 1, 68) |
| 25, 26 | temporal pole (+/−41, 10, −32) | 27, 28 | amygdala (+/−22, −1, −22) | 29, 30 | middle temporal gyrus (+/−60, −26, −5) |
| 31, 32 | caudate (+/−14, 13, 11) | 33 | periaqueductal gray matter (−1, −26, −11) | | |

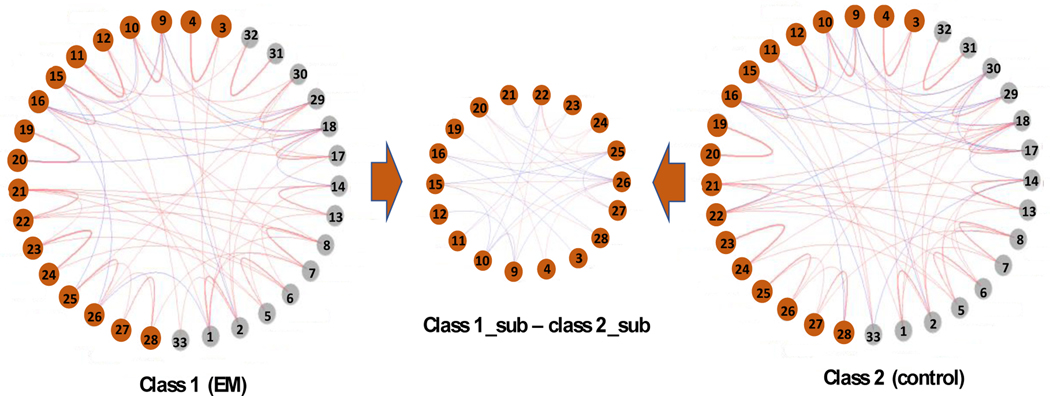

Using the fMRI data of the 33 ROIs, we compute the sample correlation matrix for each subject, which is used as input to our DSL model. Tuning parameters are selected to maximize the leave-one-out cross-validated (LOOCV) AUC. The subgraph found by DSL includes 18 ROIs, highlighted in bold in Table 2. As shown in Table 3, DSL achieves 0.81 AUC, 0.82 sensitivity, and 0.79 specificity, substantially outperforming the competing methods. DSL\subgraph has the second-best AUC (0.72), but its specificity is low (0.59). The other competing methods have lower AUCs, ranging from 0.63 to 0.70. Fig. 4 shows the output of DSL, including the estimated IC matrix of each class converted to a partial correlation matrix, and the difference between the two classes in the partial correlations of the identified subgraph. Partial correlation matrices are easier to interpret than the original IC matrices because their elements are bounded between −1 and 1.
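Two computations in this paragraph are easy to make concrete: forming each subject's sample correlation matrix from the ROI time series, and converting an estimated IC (precision) matrix Θ into partial correlations through the standard identity ρ_ij = −Θ_ij / sqrt(Θ_ii Θ_jj). The sketch below assumes the time series are arranged as a (T, n_ROI) array; it is illustrative rather than the authors' code, and the function names are hypothetical. The last helper mimics the display rule of Fig. 4, which hides edges with |ρ| < 0.05.

```python
import numpy as np


def sample_correlation(roi_ts):
    """Sample correlation matrix of ROI time series given as a (T, n_rois) array."""
    return np.corrcoef(np.asarray(roi_ts, dtype=float), rowvar=False)


def ic_to_partial_correlation(ic):
    """Partial correlations from an inverse covariance (precision) matrix.

    rho_ij = -Theta_ij / sqrt(Theta_ii * Theta_jj) for i != j; the diagonal is set to 1.
    """
    ic = np.asarray(ic, dtype=float)
    d = np.sqrt(np.diag(ic))  # positive for any p.d. matrix
    pcor = -ic / np.outer(d, d)
    np.fill_diagonal(pcor, 1.0)
    return pcor


def hide_weak_edges(pcor, threshold=0.05):
    """Zero out off-diagonal entries with |rho| < threshold (display only)."""
    shown = np.where(np.abs(pcor) < threshold, 0.0, pcor)
    np.fill_diagonal(shown, 1.0)
    return shown
```

Note the sign flip: a negative off-diagonal precision entry corresponds to a positive partial correlation, which is why the IC matrices themselves are harder to read than the converted matrices.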

Table 3.

LOOCV accuracy of different methods on migraine data

| Method | AUC | Sensitivity | Specificity |
|---|---|---|---|
| DSL | 0.81 | 0.82 | 0.79 |
| DSL\subgraph | 0.72 | 0.80 | 0.59 |
| Vectorized SVM | 0.63 | 0.78 | 0.51 |
| Similarity-based | 0.67 | 0.68 | 0.60 |
| gBoost | 0.69 | 0.69 | 0.66 |
| MTG | 0.70 | 0.68 | 0.69 |

Figure 4.

Partial correlation matrices of EM (left) and controls (right) converted from the ICs learned by DSL. Edges with partial correlation magnitude < 0.05 are not shown for visual clarity. Red/blue edges represent positive/negative partial correlations. The middle graph shows the difference between the partial correlation matrices of EM and controls on the subgraph identified by DSL (red/blue edges represent positive/negative differences).

Interpretations:

From Fig. 4, the partial correlation matrix of each class shows strong positive correlations between the left and right hemisphere counterparts of the same ROI. This phenomenon has been previously reported for both healthy and diseased brains (Chong et al., 2019). Some of these correlations do not differ significantly between the EM and control classes, while others do. Significant differences are also observed between other ROIs. Furthermore, several ROIs in the subgraph found by DSL are, interestingly, part of well-known functional networks or anatomical regions. For example, the left and right anterior cingulate cortex (3, 4), the left and right posterior cingulate cortex (9, 10), and the bilateral ventromedial prefrontal cortex (19, 20) are all regions that comprise the default mode network (DMN). This functional network shows synchronous activity when a person is at rest and not actively engaged in a task. The DMN is involved in self-reflection and mind-wandering (Raichle et al., 2001), and several imaging studies have shown abnormal functional connectivity among regions of the DMN in patients with migraine (Tessitore et al., 2013; Faragó et al., 2017; Yu et al., 2012). Other ROIs that are important in our model include regions of the limbic system, such as the left and right amygdala (27, 28) and the left and right thalamus (11, 12). In accordance with our results, Hadjikhani et al. found stronger functional connectivity between the thalamus and the amygdala in migraineurs relative to patients with other chronic pain disorders, indicating that aberrant functional connectivity between these regions might be unique to migraine patients (Hadjikhani et al., 2013).

6. Conclusion

We proposed a novel DSL model to learn a sub-network within the FCN that best differentiates patients with a specific disease from healthy controls based on brain sensory data. DSL was demonstrated in an application of identifying the functional sub-network that best differentiates patients with EM from controls based on resting-state fMRI data, where it significantly outperformed the competing methods in classification accuracy. The proposed method has several limitations. DSL is relatively slow to train, and more efficient optimization solvers could be developed in future research. The current formulation of DSL focuses on binary classification; an extension to more than two classes would address a broader range of problems. Future research may also explore applications of DSL to other neurological diseases and other types of functional brain sensory data.

Supplementary Material

Acknowledgment

This work is partially supported by NIH K23NS070891, NSF CMMI CAREER 1149602, and NSF DMS-1903135.

Appendix A: Proof of Proposition 1

| (23) |

We know that and . Inserting the probability density functions of the two Wishart distributions into Equation (23), we get:

| (24) |

Each term in the product in Eq. (24) is a Wishart distribution with scale matrix and degrees of freedom . ■

Appendix B: Proof of Proposition 2

| (25) |

where

Since is a linear operator, can become , where , which is proportional to the mean of a Wishart distribution for with the degrees of freedom and scale matrix . That is, , where as defined above.

Thus, .

Removing the constants in (25), we obtain

■

Appendix C: Derivation of the BCD algorithm

For notational simplicity, we rewrite (18) as (26), i.e.,

| (26) |

The proposed BCD algorithm iteratively updates one column and one row of at a time while fixing the other entries of . Here, we discuss only the update of one column/row, i.e., the -th column/row, because all other updates are analogous. Specifically, partition into:

| (27) |

Similarly, , and are partitioned in the same way, i.e.,

| (28) |

Putting the partitioned and back into (26), the four terms in (26) become:

where . We can know and under the constraint . Therefore, the optimization in (26) becomes:

| (29) |

and can be solved in alternation. With fixed, (29) becomes a univariate optimization problem:

| (30) |

where

| (31) |

Furthermore, with fixed, (29) becomes:

| (32) |

where and . (32) is an unconstrained non-convex optimization problem, which can be solved efficiently by a coordinate descent algorithm (Tseng, 2001) with the coordinate-descent update for each element in vector . With the estimates of and , we can update by

| (33) |

This completes the updating on the -th column/row. Finally, we summarize the proposed BCD algorithm in Algorithm 1.

Algorithm 1.

BCD Algorithm for solving the optimization in (26) in the M-step of the proposed EM framework

| Step | Description |
|---|---|
| Input | for each subject, ; from the -th EM iteration; and ; tuning parameters , , ; stopping criterion, |
| Output | updated . |
| 1. | Initialize: |
| 2. | Compute using Equation (19); |
| 3. | Repeat |
| 4. | Let ; |
| 5. | for to do |
| 6. | Partition , , and for -th column/row according to (27) - (28), respectively; |
| 7. | Solve the optimization in (29) to get and ; |
| 8. | Compute using (33) and ; |
| 9. | Update the -th column/row of by and ; |
| 10. | End for |
| 11. | ; |
| 12. | until |
| 13. | . |

Algorithm 1 convergence:

This is a BCD algorithm for solving the optimization in (26), whose 3rd and 4th terms are nondifferentiable but separable. Its convergence can be analyzed following Tseng (2001). Specifically, although (32) is an unconstrained non-convex optimization problem, under the condition of the coordinate-descent update for each element in vector has a global minimum. Then, combining (32) and (30), (26) has a unique minimum at each coordinate block, satisfying the conditions of Part C in Theorem 4.1 of Tseng (2001). This indicates that Algorithm 1 converges to a coordinate-wise minimum point. Furthermore, because the Gateaux differentials of (32) exist, (32) is regular according to Lemma 3.1 of Tseng (2001). This implies that each coordinate-wise minimum point is a stationary point. In summary, when i.e., and in (7), the convergence of Algorithm 1 is guaranteed.
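To make the Repeat / for / until structure of Algorithm 1 and the notion of a coordinate-wise minimum concrete, the sketch below applies cyclic coordinate descent to a toy problem: minimizing the strictly convex quadratic f(x) = ½ xᵀAx − bᵀx with A symmetric positive definite. It is a generic illustration under that assumption, not the DSL column/row update in (29) through (33). Here each block is a single coordinate, each block subproblem has a closed-form minimizer, and because f is strictly convex, the coordinate-wise minimum the iteration reaches is the global minimizer A⁻¹b. The function name is hypothetical.

```python
def cyclic_coordinate_descent(A, b, tol=1e-10, max_sweeps=1000):
    """Minimize f(x) = 0.5 * x^T A x - b^T x (A symmetric p.d.) one coordinate at a time."""
    n = len(b)
    x = [0.0] * n  # initialization
    for _ in range(max_sweeps):  # "Repeat"
        x_old = list(x)  # previous iterate, kept for the stopping test
        for i in range(n):  # "for" loop over blocks
            # Exact minimizer of f over x[i] with all other coordinates held fixed.
            off_diag = sum(A[i][j] * x[j] for j in range(n) if j != i)
            x[i] = (b[i] - off_diag) / A[i][i]
        # "until": stop when no coordinate moved by more than tol during the sweep.
        if max(abs(new - old) for new, old in zip(x, x_old)) < tol:
            break
    return x
```

For example, with A = [[4, 1], [1, 3]] and b = [1, 2], the iterates approach (1/11, 7/11), the solution of Ax = b.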

Appendix D: Proof of Proposition 4

Because Algorithm 1 is an iterative algorithm, we only need to prove is positive definite (p.d.) given that is p.d. If this holds, it guarantees that the at convergence is p.d. To prove the p.d. of , we need to prove remains p.d. after the update of each column/row with the initial , since the BCD algorithm proceeds iteratively.

Let be the obtained after the update on the -th column/row by the BCD algorithm with the initial . We only need to prove . To prove , we will use the property that the determinant of a p.d. matrix must be greater than zero. Using the decomposition in (27), we can write as:

| (34) |

Then, as long as we can prove , the proof of this proposition is complete. It is obvious that because is the upper-left submatrix of the p.d. matrix . Let . According to Equation (30), it is obvious that . Then we have . ■
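The determinant argument above rests on a standard block-matrix identity, stated here in generic notation (the symbols below are chosen for illustration and are not the paper's). For a symmetric matrix partitioned with a scalar last row/column,

$$
\mathbf{A}=\begin{pmatrix}\mathbf{A}_{11} & \mathbf{a}_{12}\\ \mathbf{a}_{12}^{\top} & a_{22}\end{pmatrix},
\qquad
\det(\mathbf{A})=\det(\mathbf{A}_{11})\,\bigl(a_{22}-\mathbf{a}_{12}^{\top}\mathbf{A}_{11}^{-1}\mathbf{a}_{12}\bigr).
$$

Hence, if $\mathbf{A}_{11}$ is p.d., then $\mathbf{A}$ is p.d. if and only if the Schur complement $a_{22}-\mathbf{a}_{12}^{\top}\mathbf{A}_{11}^{-1}\mathbf{a}_{12}$ is positive, which is equivalent to $\det(\mathbf{A})>0$.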

References

- Bogdanov P, Dereli N, Dang X-H, Bassett DS, Wymbs NF, Grafton ST, and Singh AK (2017) Learning about learning: Mining human brain sub-network biomarkers from fMRI data. PLoS One, 12(10), e0184344.

- Chong CD, Gaw N, Fu Y, Li J, Wu T, and Schwedt TJ (2017) Migraine classification using magnetic resonance imaging resting-state functional connectivity data. Cephalalgia, 37(9), 828–844.

- Chong CD, Wang L, Wang K, Traub S, and Li J. (2019) Homotopic region connectivity during concussion recovery: A longitudinal fMRI study. PLoS One, 14(10), e0221892.

- Dawid AP (1981) Some matrix-variate distribution theory: Notational considerations and a Bayesian application. Biometrika, 68(1), 265–274.

- Faragó P, Szabó N, Tóth E, Tuka B, Király A, Csete G, Párdutz Á, Szok D, Tajti J, and Ertsey C. (2017) Ipsilateral alteration of resting state activity suggests that cortical dysfunction contributes to the pathogenesis of cluster headache. Brain Topography, 30(2), 281–289.

- Friedman J, Hastie T, Höfling H, and Tibshirani R. (2007) Pathwise coordinate optimization. The Annals of Applied Statistics, 1(2), 302–332.

- Friedman J, Hastie T, and Tibshirani R. (2001) The Elements of Statistical Learning, Springer Series in Statistics, New York.

- Gärtner T, Flach P, and Wrobel S. (2003) On graph kernels: Hardness results and efficient alternatives, in Handbook of Learning Theory and Kernel Machines, Schölkopf B, and Warmuth MK (eds), Springer, Berlin, Heidelberg, pp. 129–143.

- Hadjikhani N, Ward N, Boshyan J, Napadow V, Maeda Y, Truini A, Caramia F, Tinelli E, and Mainero C. (2013) The missing link: Enhanced functional connectivity between amygdala and visceroceptive cortex in migraine. Cephalalgia, 33(15), 1264–1268.

- Hirose K, Fujisawa H, and Sese J. (2017) Robust sparse Gaussian graphical modeling. Journal of Multivariate Analysis, 161, 172–190.

- Huang S, Li J, Chen K, Wu T, Ye J, Wu X, and Yao L. (2012) A transfer learning approach for network modeling. IIE Transactions, 44(11), 915–931.

- Jordan MI, and Weiss Y. (2002) Graphical models: Probabilistic inference, in Handbook of Brain Theory and Neural Networks, Arbib MA (ed.), MIT Press, pp. 490–496.

- Kuismin M, and Sillanpää MJ (2016) Use of Wishart prior and simple extensions for sparse precision matrix estimation. PLoS One, 11(2), e0148171.

- Li Q, Zhu Z, and Tang G. (2019) Alternating minimizations converge to second-order optimal solutions, in Proceedings of International Conference on Machine Learning, Long Beach, California, pp. 3935–3943.

- Pan S, Wu J, Zhu X, Zhang C, and Philip SY (2015) Joint structure feature exploration and regularization for multi-task graph classification. IEEE Transactions on Knowledge and Data Engineering, 28(3), 715–728.

- Raichle ME, MacLeod AM, Snyder AZ, Powers WJ, Gusnard DA, and Shulman GL (2001) A default mode of brain function. Proceedings of the National Academy of Sciences, 98(2), 676–682.

- Riesen K, and Bunke H. (2009) Graph classification by means of Lipschitz embedding. IEEE Transactions on Systems, Man, and Cybernetics, Part B (Cybernetics), 39(6), 1472–1483.

- Russo A, Tessitore A, Esposito F, Marcuccio L, Giordano A, Conforti R, Truini A, Paccone A, d’Onofrio F, and Tedeschi G. (2012) Pain processing in patients with migraine: An event-related fMRI study during trigeminal nociceptive stimulation. Journal of Neurology, 259(9), 1903–1912.

- Saigo H, Nowozin S, Kadowaki T, Kudo T, and Tsuda K. (2009) gBoost: A mathematical programming approach to graph classification and regression. Machine Learning, 75(1), 69–89.

- Schölkopf B, Tsuda K, and Vert J. (2003) Kernel Methods in Computational Biology. MIT Press.

- Schwedt TJ, Chong CD, Wu T, Gaw N, Fu Y, and Li J. (2015) Accurate classification of chronic migraine via brain magnetic resonance imaging. Headache: The Journal of Head and Face Pain, 55(6), 762–777.

- Shervashidze N, Schweitzer P, van Leeuwen EJ, Mehlhorn K, and Borgwardt KM (2011) Weisfeiler-Lehman graph kernels. Journal of Machine Learning Research, 12(9), 2539–2561.

- Shervashidze N, Vishwanathan SVN, Petri T, Mehlhorn K, and Borgwardt K. (2009) Efficient graphlet kernels for large graph comparison, in Proceedings of the 12th International Conference on Artificial Intelligence and Statistics, Clearwater Beach, Florida, pp. 488–495.

- Silva AR, Magalhães R, Arantes C, Moreira PS, Rodrigues M, Marques P, Marques J, Sousa N, and Pereira VH (2019) Brain functional connectivity is altered in patients with Takotsubo Syndrome. Scientific Reports, 9(1), 1–11.

- Sugiyama M, and Borgwardt K. (2015) Halting in random walk kernels. Advances in Neural Information Processing Systems, 28, 1639–1647.

- Tessitore A, Russo A, Giordano A, Conte F, Corbo D, De Stefano M, Cirillo S, Cirillo M, Esposito F, and Tedeschi G. (2013) Disrupted default mode network connectivity in migraine without aura. The Journal of Headache and Pain, 14(1), 89.

- Thanikaivelan S, and Rajiv Gandhi K. (2017) Efficient subgraph selection using principal component analysis with pruning methods in multitask graph classification. International Journal of Control Theory and Applications, 10(19), 195–210.

- Tseng P. (2001) Convergence of a block coordinate descent method for nondifferentiable minimization. Journal of Optimization Theory and Applications, 109(3), 475–494.

- Van Den Heuvel MP, and Pol HEH (2010) Exploring the brain network: A review on resting-state fMRI functional connectivity. European Neuropsychopharmacology, 20(8), 519–534.

- Vogelstein JT, Roncal WG, Vogelstein RJ, and Priebe CE (2012) Graph classification using signal-subgraphs: Applications in statistical connectomics. IEEE Transactions on Pattern Analysis and Machine Intelligence, 35(7), 1539–1551.

- Wu CFJ (1983) On the convergence properties of the EM algorithm. The Annals of Statistics, 11(1), 95–103.

- Yan X, Cheng H, Han J, and Yu PS (2008) Mining significant graph patterns by leap search, in Proceedings of the 2008 ACM SIGMOD International Conference on Management of Data, Vancouver, Canada, pp. 433–444.

- Yan X, and Han J. (2002) gSpan: Graph-based substructure pattern mining, in Proceedings of 2002 IEEE International Conference on Data Mining, Maebashi City, Japan, pp. 721–724.

- Yu D, Yuan K, Zhao L, Zhao L, Dong M, Liu P, Wang G, Liu J, Sun J, and Zhou G. (2012) Regional homogeneity abnormalities in patients with interictal migraine without aura: A resting-state study. NMR in Biomedicine, 25(5), 806–812.

- Yuan G, and Ghanem B. (2017) An exact penalty method for binary optimization based on MPEC formulation, in Proceedings of the AAAI Conference on Artificial Intelligence, San Francisco, California, pp. 2867–2875.
