Abstract

A great deal of interest has recently focused on conducting inference on the parameters in a high-dimensional linear model. In this paper, we consider a simple and very naïve two-step procedure for this task, in which we (i) fit a lasso model in order to obtain a subset of the variables, and (ii) fit a least squares model on the lasso-selected set. Conventional statistical wisdom tells us that we cannot make use of the standard statistical inference tools for the resulting least squares model (such as confidence intervals and p-values), since we peeked at the data twice: once in running the lasso, and again in fitting the least squares model. However, in this paper, we show that under a certain set of assumptions, with high probability, the set of variables selected by the lasso is identical to the one selected by the noiseless lasso and is hence deterministic. Consequently, the naïve two-step approach can yield asymptotically valid inference. We utilize this finding to develop the naïve confidence interval, which can be used to draw inference on the regression coefficients of the model selected by the lasso, as well as the naïve score test, which can be used to test the hypotheses regarding the full-model regression coefficients.

Keywords: confidence interval, lasso, p-value, post-selection inference, significance testing

1. INTRODUCTION

In this paper, we consider the linear model

| (1.1) |

where is an deterministic design matrix, is a vector of independent and identically distributed errors with and , and is a. p-vector of coefficients. Without loss of generality, we assume that the columns of are centered and standardized, such that and for .

When the number of variables is much smaller than the sample size , estimation and inference for the vector are straightforward. For instance, estimation can be performed using ordinary least squares, and inference can be conducted using classical approaches (see, e.g., Gelman and Hill, 2006; Weisberg, 2013).

As the scope and scale of data collection have increased across virtually all fields, there is an increase in data sets that are high dimensional, in the sense that the number of variables, , is larger than the number of observations, . In this setting, classical approaches for estimation and inference of cannot be directly applied. In the past 20 years, a vast statistical literature has focused on estimating in high dimensions. In particular, penalized regression methods, such as the lasso (Tibshirani, 1996),

| (1.2) |

can be used to estimate . However, the topic of inference in the high-dimensional setting remains relatively less explored, despite promising recent work in this area. Roughly speaking, recent work on inference in the high-dimensional setting falls into two classes: (i) methods that examine the null hypothesis ; and (ii) methods that make inference based on a sub-model. We will review these two classes of methods in turn.

First, we review methods that examine the null hypothesis , i.e. that the variable is unassociated with the outcome , conditional on all other variables. It might be tempting to estimate using the lasso (1.2), and then (for instance) to construct a confidence interval around . Unfortunately, such an approach is problematic, because is a biased estimate of . To remedy this problem, we can apply a one-step adjustment to , such that under appropriate assumptions, the resulting debiased estimator is asymptotically unbiased for . This is similar to the idea of two-step estimation in nonparametric and semiparametric inference (see, e.g., Hahn, 1998; Hirano et al., 2003). With the one-step adjustment, p-values and confidence intervals can be constructed around this debiased estimator. Such an approach is taken by the low dimensional projection estimator (LDPE; Zhang and Zhang, 2014; van de Geer et al., 2014), the debiased lasso test with unknown population covariance (SSLasso; Javanmard and Montanari, 2013, 2014a), the debiased lasso test with known population covariance (SDL; Javanmard and Montanari, 2014b), and the decorrelated score test (dScore; Ning and Liu, 2017). See Dezeure et al. (2015) for a review of such procedures. In what follows, we will refer to these and related approaches for testing as debiased lasso tests.

Next, we review recent work that makes statistical inference based on a sub-model. Recall that the challenge in high dimensions stems from the fact that when , classical statistical methods cannot be applied; for instance, we cannot even perform ordinary least squares (OLS). This suggests a simple approach: given an index set , let denote the columns of indexed by . Then, we can consider performing inference based on the sub-model composed only of the features in the index set . That is, rather than considering the model (1.1), we consider the sub-model

| (1.3) |

In (1.3), the notation and emphasizes that the true regression coefficients and corresponding noise are functions of the set .

Now, provided that , we can perform estimation and inference on the vector using classical statistical approaches. For instance, we can consider building confidence intervals such that for any ,

| (1.4) |

At first blush, the problems associated with high dimensionality have been solved!

Of course, there are some problems with the aforementioned approach. The first problem is that the coefficients in the sub-model (1.3) typically are not the same as the coefficients in the original model (1.1) (Berk et al., 2013). Roughly speaking, the problem is that the coefficients in the model (1.1) quantify the linear association between a given variable and the response, conditional on the other variables, whereas the coefficients in the sub-model (1.3) quantify the linear association between a variable and the response, conditional on the other variables in the sub-model. The true regression coefficients in the sub-model are of the form

| (1.5) |

Thus, unless . To see this more concretely, consider the following example with deterministic variables. Let

and set . The above design matrix does not satisfy the strong irrepresentable condition needed for selection consistency of lasso (Zhao and Yu, 2006). Thus, if we take to equal the support of the lasso estimate, i.e.,

| (1.6) |

then it is easy to verify that for some , in which case

The second problem that arises in restricting our attention to the sub-model (1.3) is that in practice, the index set is not pre-specified. Instead, it is typically chosen based on the data. The problem is that if we construct the index set based on the data, and then apply classical inference approaches on the vector , the resulting p-values and confidence intervals will not be valid (see, e.g., Pötscher, 1991; Kabaila, 1998; Leeb and Pötscher, 2003, 2005, 2006a,b, 2008; Kabaila, 2009; Berk et al., 2013). This is because we peeked at the data twice: once to determine which variables to include in , and then again to test hypotheses associated with those variables. Consequently, an extensive recent body of literature has focused on the task of performing inference on in (1.3) given that was chosen based on the data. Cox (1975) proposed the idea of sample-splitting to break up the dependence of variable selection and hypothesis testing. Wasserman and Roeder (2009) studied sample-splitting in application to the lasso, marginal regression and forward step-wise regression. Meinshausen et al. (2009) extended the single-splitting proposal of Wasserman and Roeder (2009) to multi-splitting, which improved statistical power and reduced the number of falsely selected variables. Berk et al. (2013) instead considered simultaneous inference, which is universally valid under all possible model selection procedures without sample-splitting. More recently, Lee et al. (2016) and Tibshirani et al. (2016) studied the geometry of the lasso and sequential regression, respectively, and proposed exact post-selection inference methods conditional on the random set of selected variables. See Taylor and Tibshirani (2015) for a review of post-selection inference procedures.

The procedures outlined above successfully address the second problem arising from restricting the attention to a sub-model, namely the randomness of the set . However, they do not address the first problem regarding the difference in the target of inference (1.5) unless . In the case of lasso, valid inference for can be obtained if . However, among other conditions, this requires the strong irrepresentable condition, which is known to be a restrictive condition that is not likely to hold in high dimensions (Zhao and Yu, 2006).

In a recent Statistical Science paper, Leeb et al. (2015) performed a simulation study, in which they obtained a set using variable selection, and then calculated “naïve” confidence intervals for using ordinary least squares, without accounting for the fact that the set was chosen based on the data. Of course, conventional wisdom dictates that the resulting confidence intervals will be much too narrow. In fact, this is what Leeb et al. (2015) found, when they used best subset selection to construct the set . However, surprisingly, when the lasso was used to construct the set , the confidence intervals induced by (1.4) had approximately correct coverage. This is in stark contrast to the existing literature!

In this paper, we present a theoretical justification for the empirical finding in Leeb et al. (2015). The main idea of our paper is to establish selection consistency of the lasso estimate with respect to its noiseless counterpart. This result allows us to perform valid inference for the support of the noiseless lasso without needing post-selection or sample splitting strategies. Furthermore, we use our theoretical findings to also develop the naïve score test, a simple procedure for testing the null hypothesis for all .

The rest of this paper is organized as follows. In Sections 2 and 3, we focus on post-selection inference: we seek to perform inference on in (1.3), where is selected based on the lasso, i.e., (1.6). In Section 2, we point out a previously overlooked scenario in selection consistency theory: although (1.6) is random, with high probability it is equal to the support of the noiseless lasso under relatively mild regularity conditions. This result implies that we can use classical methods for inference on , when . In Section 3, we provide empirical evidence in support of these theoretical findings. In Sections 4 and Section 5, we instead focus on the task of performing inference on in (1.1). We propose the naïve score test in Section 4, and study its empirical performance in Section 5. We end with a discussion of future research directions in Section 6. Technical proofs are relegated to the online Supplementary Materials.

We now introduce some notation that will be used throughout the paper. We use“”to denote equalities by definition, and “” for the asymptotic order. We use for the indicator function; “” and “” denote the maximum and minimum of two real numbers, respectively. For any real number . Given a set , denotes its cardinality and denotes its complement. We use bold upper case fonts to denote matrices, bold lower case fonts for vectors, and normal fonts for scalars. We use symbols with a superscript “*”, e.g., and , to denote the true population parameters associated with the full linear model (1.1); we use symbols superscripted by a set in the parentheses, e.g., , to denote quantities related to the sub-model (1.3). Symbols subscripted by “” and with a hat, e.g., and , denote parameter estimates from the lasso estimator (1.2) with tuning parameter ; symbols subscripted by “” and without a hat, e.g., and , are associated with the noiseless lasso estimator (van de Geer and Bühlmann, 2009; van de Geer, 2017),

| (1.7) |

For any vector , matrix , and index sets and , we use to denote the subvector of comprised of elements of , and to denote the sub-matrix of with rows in and columns in .

2. THEORETICAL JUSTIFICATION FOR NAÏVE CONFIDENCE INTERVALS

Recall that was defined in (1.5). The simulation results of Leeb et al. (2015) suggest that if we perform ordinary least squares using the variables contained in the support set of the lasso, , then the classical confidence intervals associated with the least squares estimator,

| (2.1) |

have approximately correct coverage, where correct coverage means that for all ,

| (2.2) |

We reiterate that in (2.2), is the confidence interval output by standard least squares software applied to the data . This goes against our statistical intuition: it seems that by fitting a lasso model and then performing least squares on the selected set, we are peeking at the data twice, and thus we would expect the confidence interval to be much too narrow.

In this section, we present a theoretical result that suggests that, in fact, this “double-peeking” might not be so bad. Our key insight is as follows: under certain assumptions, the set of variables selected by the lasso is deterministic and non-data-dependent with high probability. Thus, fitting a least squares model on the variables selected by the lasso does not really constitute peeking at the data twice: effectively, with high probability, we are only peeking at it once. That means that the naïve confidence intervals obtained from ordinary least squares will have approximately correct coverage, in the sense of (2.2).

We first introduce the required conditions for our theoretical result.

(M1) The design matrix is deterministic, with columns in general position (Rosset et al., 2004; Zhang, 2010; Dossal, 2012; Tibshirani, 2013). Columns of are standardized, i.e., for any . The error in (1.1) has independent entries and sub-Gaussian tails. The sample size , dimension and tuning parameter satisfy

(E) Let . For any index set with , let be any index set such that , . Then for all that satisfy , and ,

In addition, the restricted sparse eigenvalue,

(M2) Recall that . Let with defined in (1.7). The signal strength in satisfies

| (2.3) |

and the signal strength outside of satisfies

| (2.4) |

(T) The strong irrepresentable condition with respect to and holds, i.e., there exists such that

| (2.5) |

Condition (M1) is mild and standard in literature. Note that in (M1), we require the lasso tuning parameter to approach zero at a slightly slower rate than to control the randomness of the error . Most standard literature requires for some constant , which our condition also satisfies.

In (M2), the requirement that indicates that the noiseless lasso regression coefficients are either 0, or asymptotically no smaller than , which is larger than . Note that this assumption concerns variables chosen by the noiseless lasso and not the true model. In the second part of (M2), implies that the total signal strength of weak signal variables that are not selected by the noiseless lasso cannot be too large to be distinguishable from the sub-Gaussian noise. As shown in the proof of Proposition 2.1, the condition is important in showing that , whereas is instrumental in showing .

Condition (E) manifests the behavior of the eigenvalues of . Specifically, the first part of (E) is the restricted eigenvalue condition (Bickel et al., 2009; van de Geer and Bühlmann, 2009), except that instead of requiring as in Bickel et al. (2009) and van de Geer and Bühlmann (2009), we here require . The second part of (E) is the sparse Reisz, or sparse eigenvalue condition (Zhang and Huang, 2008; Belloni and Chernozhukov, 2013). Both the restricted eigenvalue and sparse Reisz conditions are standard and mild conditions in the literature. (E) implies that .

Condition (T) is the strong irrepresentable condition with respect to and . Condition (T) is likely weaker than the classical irrepresentable condition with respect to and , proposed in Zhao and Yu (2006), because the classical irrepresentable condition implies that with large , in which case (T) holds as it becomes identical to the classical irrepresentable condition. Lemma 2.1 shows a sufficient condition for (T), which is proven in Section S2 in the Supplementary Materials.

Lemma 2.1.

Suppose conditions (M1), (M2) and (E) hold. Condition (T) holds if

| (2.6) |

Condition (2.6) allows to diverge to infinity at a slower rate than . A more stringent version of condition (2.6) is presented in Voorman et al. (2014).

We use (T) to prove , where is the stationary condition of (1.7). Note that is the required condition for Proposition 2.1, and (T) might be sufficient but unncessary. However, (T) is more standard and understandable than the condition .

We now present Proposition 2.1, which is proven in Section S1 of the online Supplementary Materials.

PROPOSITION 2.1.

Suppose conditions (M1), (M2), (E) and (T) hold. Then, we have , where , with defined in (1.7).

The proof of Proposition 2.1 is in the same flavor as Meinshausen and Bühlmann (2006); Zhao and Yu (2006); Tropp (2006) and Wainwright (2009), and is based on absorbing the contribution of the weak signals into the noise vector. However, variable selection consistency asserts that , whereas Proposition 2.1 states that . Consequently, variable selection consistency requires the irrepresentable and signal strength conditions with respect to , whereas Proposition 2.1 requires the irrepresentable and signal strength conditions with respect to . These conditions are likely much milder than those for the variable selection consistency of the lasso, as confirmed in our simulations in Section 3. In these simulations, we estimate in 36 settings with two choices of . As shown in Table 4, in most settings, with high probability, especially in cases with large . We also estimated in the same 36 settings, with the same two choices of , as well as an additional choice of that minimizes the cross-validated MSE; we found that in all 36 × 3 settings.

TABLE 4.

The proportion of that equals , under the scale-free graph and stochastic block model settings with tuning parameters and . In the simulation, , sample size , dimension and signal-to-noise ratio .

|

SNR |

0.1 | 100 0.3 |

0.5 | 0.1 | 0.2 300 0.3 |

0.5 | 0.1 | 500 0.3 |

0.5 |

|

| |||||||||

| Scale-free | 0.958 | 0.725 | 0.928 | 0.649 | 1.000 | 1.000 | 0.971 | 0.999 | 0.998 |

| Scale-free | 0.417 | 0.665 | 0.829 | 0.567 | 0.955 | 0.940 | 0.804 | 0.976 | 0.964 |

|

| |||||||||

| Stochastic block | 0.964 | 0.736 | 0.931 | 0.678 | 1.000 | 1.000 | 0.964 | 1.000 | 1.000 |

| Stochastic block | 0.394 | 0.661 | 0.815 | 0.582 | 0.964 | 0.957 | 0.582 | 0.559 | 0.964 |

|

| |||||||||

|

SNR |

0.1 | 100 0.3 |

0.5 | 0.1 | 0.6 300 0.3 |

0.5 | 0.1 | 500 0.3 |

0.5 |

|

| |||||||||

| Scale-free | 0.952 | 0.713 | 0.924 | 0.687 | 1.000 | 1.000 | 0.968 | 0.999 | 0.998 |

| Scale-free | 0.396 | 0.657 | 0.799 | 0.572 | 0.950 | 0.941 | 0.779 | 0.976 | 0.963 |

|

| |||||||||

| Stochastic block | 0.950 | 0.750 | 0.935 | 0.687 | 1.000 | 1.000 | 0.967 | 1.000 | 0.999 |

| Stochastic block | 0.445 | 0.661 | 0.806 | 0.559 | 0.959 | 0.948 | 0.805 | 0.987 | 0.974 |

Based on Proposition 2.1, we could build asymptotically valid confidence intervals, as shown in Theorem 2.1.

Proposition 2.1 suggests that asymptotically, we “pay no price” for peeking at our data by performing the lasso: we should be able to perform downstream analyses on the subset of variables in as though we had obtained that subset without looking at the data. This intuition will be formalized in Theorem 2.1.

Theorem 2.1, which is proven in Section S3 in the online Supplementary Materials, shows that in (2.1) is asymptotically normal, with mean and variance suggested by classical least squares theory: that is, the fact that was selected by peeking at the data has no effect on the asymptotic distribution of . This result requires that be chosen in a non-data-adaptive way. Otherwise, will be affected by the random error through , which complicates the distribution of . Theorem 2.1 also requires Condition (W), which is used to apply the Lindeberg-Feller Central Limit Theorem. This condition can be relaxed if the noise is normally distributed.

(W) and are such that , where

and is the row vector of length with the entry corresponding to equal to one, and zero otherwise.

Theorem 2.1.

Suppose and (W) holds. Then, for any ,

| (2.7) |

where is defined in (2.1) and in (1.5), and is the variance of in (1.1).

The error standard deviation in (2.7) is usually unknown. It can be estimated using various high-dimensional estimation methods, e.g., the scaled lasso (Sun and Zhang, 2012), cross-validation (CV) based methods (Fan et al., 2012) or method-of-moments based methods (Dicker, 2014); see a comparison study of high dimensional error variance estimation methods in Reid et al. (2016). Alternatively, Theorem 2.2 shows that we could also consistently estimate the error variance using the post-selection OLS residual sum of square (RSS).

Theorem 2.2.

Suppose and , where . Then

| (2.8) |

where .

Theorem 2.2 is proven in Section S4 in the online Supplementary Materials. In (2.8), is the fitted OLS residual on the sub-model (1.3). Also, is a weak condition: since is satisfied if .

To summarize, in this section, we have provided a theoretical justification for a procedure that seems, intuitively, to be statistically unjustifiable:

Perform the lasso in order to obtain the support set ;

Use least squares to fit the sub-model containing just the features in ;

Use the classical confidence intervals from that least squares model, without accounting for the fact that was obtained by peeking at the data.

Theorem 2.1 guarantees that the naïve confidence intervals in Step 3 will indeed have approximately correct coverage, in the sense of (2.2).

3. NUMERICAL EXAMINATION OF NAÏVE CONFIDENCE INTERVALS

In this section, we perform simulation studies to examine the coverage probability (2.2) of the naïve confidence intervals obtained by applying standard least squares software to the data .

Recall from Section 1 that (2.2) involves the probability that the confidence interval contains the quantity , which in general does not equal the population regression coefficient vector . Inference for is discussed in Sections 4 and 5.

The results in this section complement simulation findings in Leeb et al. (2015).

3.1. Methods for Comparison

Following Theorem 2.1, for defined in (2.1), and for each , the 95% naïve confidence interval takes the form

| (3.1) |

In order to obtain the set , we must apply the lasso using some value of . By (M1) we need , which is slightly larger than the prediction optimal rate, (Bickel et al., 2009; van de Geer and Bühlmann, 2009). A data-driven way to obtain a larger tuning parameter it to use , which is the largest value of for which the 10-fold CV prediction mean squared error (PMSE) is within one standard error of the minimum CV PMSE (see Section 7.10.1 in Hastie et al., 2009). However, the selected by cross validation depends on and may induce additional randomness in the set of selected coefficients, . This randomness can also impact the exact post-selection procedure of Lee et al. (2016). To address this issue, the authors proposed , where . As an alternative to , we also evaluate , where we simulate and approximate the expectation based on the average of 1000 replicates. We compare the confidence intervals for with those based on the exact lasso post-selection inference procedure of Lee et al. (2016), which is implemented in the package selectiveInference.

In both approaches, the standard deviation of errors, in (1.1), is estimated either using the scaled lasso (Sun and Zhang, 2012) or by applying Theorem 2.2. However, we do not examine the combination of with error variance estimated based on Theorem 2.2, because requires an estimate of the error standard deviation based on Theorem 2.2, whereas Theorem 2.2 requires a suitable choice of to be valid.

3.2. Simulation Set-Up

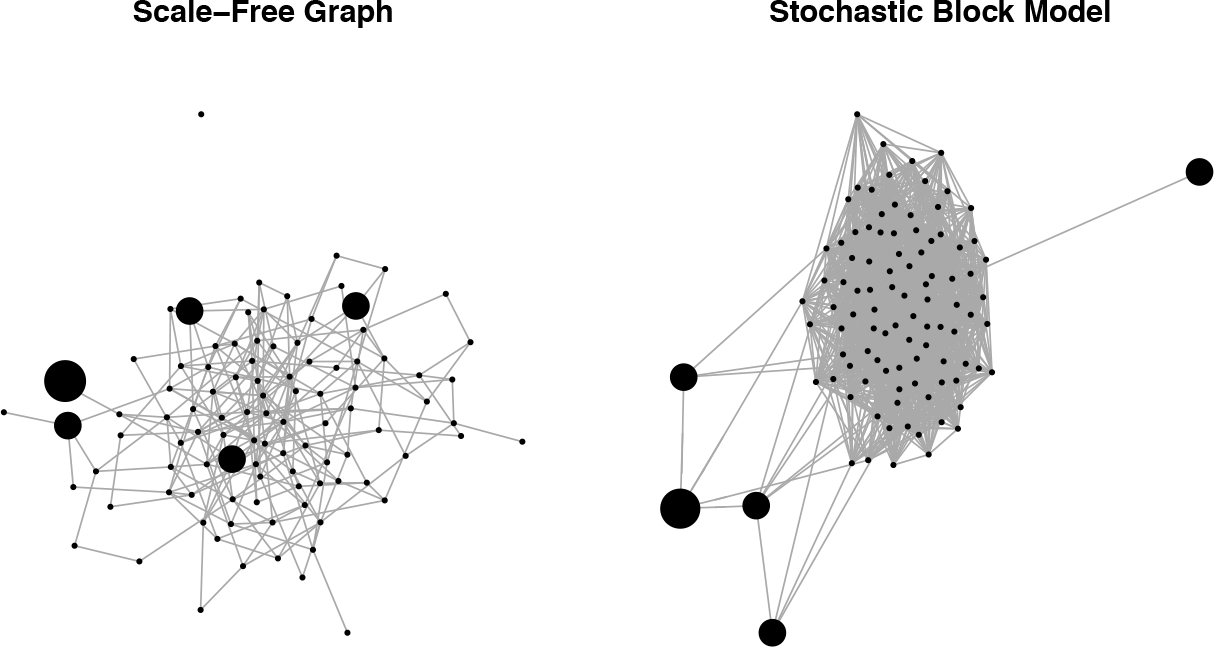

For the simulations, we consider two partial correlation settings for , generated based on (i) a scale-free graph and (ii) a stochastic block model (see, e.g., Kolaczyk, 2009), each containing nodes. These settings are relaxations of the simple orthogonal and block-diagonal settings, and are displayed in Figure 1.

FIG 1.

The scale-free graph and stochastic block model settings. The size of a given node indicates the magnitude of the corresponding element of .

In the scale-free graph setting, we used the igraph package in R to simulate an undirected, scale-free network with power-law exponent parameter , and edge density 0.05. Here, is the set of nodes in the graph, and is the set of edges. This resulted in a total of edges in the graph. We then order the indices of the nodes in the graph so that the first, second, third, fourth, and fifth nodes correspond to the 10th, 20th, 30th, 40th, and 50th least-connected nodes in the graph.

In the stochastic block model setting, we first generate two dense Erdős-Rényi graphs (Erdős and Rényi, 1959; Gilbert, 1959) with five and 95 nodes, respectively. In each graph, the edge density is 0.3. We then add edges randomly between these two graphs to achieve an inter-graph edge density of 0.05. The indices of the nodes are ordered so that the nodes in the five-node graph precede the remaining nodes.

Next, for both graph settings, we define the weighted adjacency matrix, , as follows:

| (3.2) |

where . We then set , and standardize so that , for all . We simulate observations , and generate the outcome , where

A range of error variances are used to produce signal-to-noise ratios, .

Throughout the simulations, and are held fixed over repetitions of the simulation study, while and vary.

3.3. Simulation Results

We calculate the average length and coverage proportion of the 95% naïve confidence intervals, where the coverage proportion is defined as

| (3.3) |

where and are the set of variables selected by the lasso in the bth repetition, and the 95% naïve confidence interval (3.1) for the jth variable in the bth repetition, respectively. Recall that was defined in (1.5). In order to calculate the average length and coverage proportion associated with the exact lasso post selection procedure of Lee et al. (2016), we replace in (3.3) with the confidence interval output by the selectiveInference R package.

Tables 1 and 2 show the coverage proportion and average length of 95% naïve confidence intervals and 95% exact lasso post-selection confidence intervals under the scale-free graph and stochastic block model settings, respectively. The result shows that the coverage probability of the exact post-selection confidence interval is more correct than that of the naïve confidence interval when the data are small and signal is weak. But when the data are large and/or signal is relatively strong, both confidence intervals have approximately correct coverage. This corroborates the findings in Leeb et al. (2015), in which the authors consider settings with and . The coverage probability of the naïve confidence intervals with tuning parameter is a bit smaller than the desired level, especially when the signal is weak. This may be due to the randomness in . The naïve approach of error variance estimation as in Theorem 2.2 works similarly compared to the scaled lasso across all settings. In addition, Tables 1 and 2 also show that naïve confidence intervals are substantially narrower than exact lasso post-selection confidence intervals, especially when the signal is weak.

TABLE 1.

Coverage proportions (Cov) and average lengths (Len) of 95% naïve confidence intervals with tuning parameters and , and 95% exact post-selection confidence intervals under the scale-free graph setting with partial correlation , sample size , dimension and signal-to-noise ratio . The error variance is estimated either through the scaled lasso (Sun and Zhang, 2012) (SL) or Theorem 2.2 (NL).

|

SNR |

0.1 | 100 0.3 |

0.5 | 0.1 | 0.2 300 0.3 |

0.5 | 0.1 | 500 0.3 |

0.5 | |

|

| ||||||||||

| exact SL | 0.905 | 0.942 | 0.936 | 0.949 | 0.953 | 0.953 | 0.946 | 0.949 | 0.949 | |

| Cov | naïve SL | 0.683 | 0.961 | 0.944 | 0.951 | 0.952 | 0.951 | 0.971 | 0.948 | 0.947 |

| naïve SL | 0.596 | 0.876 | 0.887 | 0.905 | 0.917 | 0.910 | 0.944 | 0.935 | 0.932 | |

| naïve NL | 0.587 | 0.870 | 0.883 | 0.905 | 0.919 | 0.911 | 0.945 | 0.939 | 0.933 | |

|

| ||||||||||

| exact SL | 4.260 | 1.634 | 0.823 | 1.907 | 0.437 | 0.330 | 0.814 | 0.327 | 0.255 | |

| Len | naïve SL | 1.122 | 0.693 | 0.552 | 0.717 | 0.421 | 0.326 | 0.562 | 0.325 | 0.253 |

| naïve SL | 1.199 | 0.705 | 0.555 | 0.718 | 0.420 | 0.326 | 0.560 | 0.326 | 0.253 | |

| naïve NL | 1.201 | 0.711 | 0.559 | 0.723 | 0.424 | 0.329 | 0.563 | 0.328 | 0.255 | |

|

| ||||||||||

|

SNR |

0.1 | 100 0.3 |

0.5 | 0.1 | 0.6 300 0.3 |

0.5 | 0.1 | 500 0.3 |

0.5 | |

|

| ||||||||||

| exact SL | 0.956 | 0.938 | 0.945 | 0.922 | 0.944 | 0.945 | 0.948 | 0.945 | 0.947 | |

| Cov | naïve SL | 0.625 | 0.943 | 0.953 | 0.942 | 0.942 | 0.942 | 0.964 | 0.944 | 0.944 |

| naïve SL | 0.643 | 0.873 | 0.876 | 0.918 | 0.934 | 0.929 | 0.954 | 0.941 | 0.935 | |

| naïve NL | 0.639 | 0.870 | 0.873 | 0.919 | 0.934 | 0.929 | 0.957 | 0.944 | 0.938 | |

|

| ||||||||||

| exact SL | 6.433 | 1.616 | 0.770 | 1.892 | 0.429 | 0.328 | 0.787 | 0.326 | 0.253 | |

| Len | naïve SL | 1.108 | 0.692 | 0.553 | 0.713 | 0.420 | 0.326 | 0.560 | 0.325 | 0.252 |

| naïve SL | 1.200 | 0.705 | 0.554 | 0.720 | 0.420 | 0.326 | 0.561 | 0.326 | 0.253 | |

| naïve NL | 1.203 | 0.709 | 0.557 | 0.725 | 0.423 | 0.329 | 0.564 | 0.327 | 0.254 | |

TABLE 2.

Coverage proportions (Cov) and average lengths (Len) of 95% naïve confidence intervals with tuning parameters and , and 95% exact post-selection confidence intervals under the stochastic block model setting with partial correlation , sample size , dimension and signal-to-noise ratio . The error variance is estimated either through the scaled lasso (Sun and Zhang, 2012) (SL) or Theorem 2.2 (NL).

|

SNR |

0.1 | 100 0.3 |

0.5 | 0.1 | 0.2 300 0.3 |

0.5 | 0.1 | 500 0.3 |

0.5 | |

|

| ||||||||||

| exact SL | 0.921 | 0.934 | 0.948 | 0.951 | 0.934 | 0.933 | 0.953 | 0.958 | 0.958 | |

| Cov | naïve SL | 0.540 | 0.955 | 0.960 | 0.953 | 0.931 | 0.930 | 0.966 | 0.957 | 0.957 |

| naïve SL | 0.585 | 0.850 | 0.858 | 0.911 | 0.922 | 0.917 | 0.961 | 0.944 | 0.940 | |

| naïve NL | 0.586 | 0.854 | 0.865 | 0.913 | 0.924 | 0.918 | 0.961 | 0.945 | 0.942 | |

|

| ||||||||||

| exact SL | 4.721 | 1.692 | 0.857 | 1.873 | 0.425 | 0.325 | 0.758 | 0.325 | 0.252 | |

| Len | naïve SL | 1.111 | 0.682 | 0.545 | 0.709 | 0.416 | 0.323 | 0.559 | 0.323 | 0.251 |

| naïve SL | 1.188 | 0.703 | 0.552 | 0.715 | 0.416 | 0.323 | 0.557 | 0.323 | 0.251 | |

| naïve NL | 1.195 | 0.710 | 0.558 | 0.721 | 0.420 | 0.326 | 0.560 | 0.325 | 0.253 | |

|

| ||||||||||

|

SNR |

0.1 | 100 0.3 |

0.5 | 0.1 | 0.6 300 0.3 |

0.5 | 0.1 | 500 0.3 |

0.5 | |

|

| ||||||||||

| exact SL | 0.923 | 0.945 | 0.949 | 0.959 | 0.956 | 0.958 | 0.943 | 0.948 | 0.948 | |

| Cov | naïve SL | 0.569 | 0.959 | 0.964 | 0.964 | 0.955 | 0.956 | 0.963 | 0.946 | 0.946 |

| naïve SL | 0.568 | 0.841 | 0.852 | 0.922 | 0.933 | 0.927 | 0.947 | 0.946 | 0.941 | |

| naïve NL | 0.561 | 0.841 | 0.850 | 0.922 | 0.935 | 0.929 | 0.950 | 0.947 | 0.943 | |

|

| ||||||||||

| exact SL | 4.744 | 1.662 | 0.832 | 1.987 | 0.424 | 0.325 | 0.807 | 0.324 | 0.251 | |

| Len | naïve SL | 1.119 | 0.690 | 0.545 | 0.712 | 0.416 | 0.323 | 0.557 | 0.322 | 0.250 |

| naïve SL | 1.194 | 0.704 | 0.554 | 0.713 | 0.416 | 0.323 | 0.555 | 0.322 | 0.250 | |

| naïve NL | 1.194 | 0.707 | 0.557 | 0.718 | 0.420 | 0.326 | 0.558 | 0.324 | 0.252 | |

In addition, to evaluate whether is deterministic, among repetitions that (there is no confidence interval if ), we also calculate the proportion of , where is the most common . The result is summarized in Table 3, which shows that is almost deterministic with tuning parameter . With , due to the randomness in the tuning parameter, is less deterministic, which may explain the smaller coverage probability than the desired level in this case. We also examined the proportion of , which is shown in Table 4. The observation is similar to that in Table 3. As mentioned in Section 1, in all the settings considered.

TABLE 3.

Among repetitions that , the proportion of that equals the most common , under the scale-free graph and stochastic block model settings with tuning parameters and . In the simulation, , sample size , dimension and signal-to-noise ratio ..

|

SNR |

0.1 | 100 0.3 |

0.5 | 0.1 | 0.2 300 0.3 |

0.5 | 0.1 | 500 0.3 |

0.5 |

|

| |||||||||

| Scale-free; | 0.000 | 0.999 | 0.993 | 1.000 | 0.999 | 0.998 | 1.000 | 0.999 | 0.996 |

| Scale-free; | 0.832 | 0.958 | 0.851 | 0.961 | 0.948 | 0.934 | 0.988 | 0.980 | 0.966 |

|

| |||||||||

| Stochastic block; | 1.000 | 0.997 | 0.992 | 0.998 | 1.000 | 0.999 | 1.000 | 1.000 | 1.000 |

| Stochastic block; | 0.838 | 0.839 | 0.831 | 0.964 | 0.957 | 0.946 | 0.992 | 0.979 | 0.968 |

|

| |||||||||

|

SNR |

0.1 | 100 0.3 |

0.5 | 0.1 | 0.6 300 0.3 |

0.5 | 0.1 | 500 0.3 |

0.5 |

|

| |||||||||

| Scale-free; | 0.995 | 0.996 | 0.996 | 0.999 | 0.998 | 0.998 | 0.999 | 1.000 | 1.000 |

| Scale-free; | 0.854 | 0.869 | 0.855 | 0.964 | 0.947 | 0.935 | 0.994 | 0.981 | 0.962 |

|

| |||||||||

| Stochastic block; | 0.998 | 0.998 | 0.998 | 1.000 | 1.000 | 1.000 | 1.000 | 0.999 | 0.999 |

| Stochastic block; | 0.834 | 0.843 | 0.826 | 0.969 | 0.976 | 0.968 | 0.988 | 0.979 | 0.971 |

4. INFERENCE FOR WITH THE NAÏVE SCORE TEST

Sections 2 and 3 focused on the task of developing confidence intervals for in (1.3), where , the set of variables selected by the lasso. However, recall from (1.5) that typically , where was introduced in (1.1).

In this section, we shift our focus to performing inference on . We will exploit Proposition 2.1 to develop a simple approach for testing , for .

Recall that in the low-dimensional setting, the classical score statistic for the hypothesis is proportional to , where is the vector of fitted values that results from least squares regression of onto the features . In order to adapt the classical score test statistic to the high-dimensional setting, we define the naïve score test statistic for testing as

| (4.1) |

where

and

is the orthogonal projection matrix onto the set of variables in is defined in (2.1). In (4.1), the notation represents the set in (1.6) with removed, if . If , then .

In Theorem 4.1, we will derive the asymptotic distribution of under . We first introduce two new conditions.

First, we require that the total signal strength of variables not selected by the noiseless lasso, (1.7), is small.

(M2* ) Recall that . Let with defined in (1.7). The signal strength in satisfies

| (4.2) |

and the signal strength outside of satisfies

| (4.3) |

Condition (M2*) closely resembles (M2), which was required for Theorem 2.1 in Section 2. The only difference between the two is that (M2*) requires , whereas (M2) requires only that . Recall that in Section 2, we consider inference for the parameters in the sub-model (1.3). In other words, testing the population regression parameter in (1.1) requires more stringent assumptions than constructing confidence intervals for the parameters in the sub-model (1.3).

The following condition, required to apply the Lindeberg-Feller Central Limit Theorem, can be relaxed if the noise in (1.1) is normally distributed.

(S) and satisfy , where .

We now present Theorem 4.1, which is proven in Section S5 of the online Supplementary Materials.

Theorem 4.1.

Suppose (M2*) and (S) hold and . For any , under the null hypothesis ,

| (4.4) |

where was defined in (4.1), and where is the variance of in (1.1).

Theorem 4.1 states that the distribution of the naïve score test statistic is asymptotically the same as if were a fixed set, as opposed to being selected by fitting a lasso model on the data. Based on (4.4), we reject the null hypothesis at level if , where is the quantile function of the standard normal distribution function.

We emphasize that Theorem 4.1 holds for any variable , and thus can be used to test , for all . (This is in contrast to Theorem 2.1, which concerns confidence intervals for the parameters in the sub-model (1.3) consisting of the variables in , and hence holds only for )

5. NUMERICAL EXAMINATION OF THE NAÏVE SCORE TEST

In this section, we compare the performance of the naïve score test (4.1) to three recent proposals from the literature for testing : namely, LDPE (Zhang and Zhang, 2014; van de Geer et al., 2014), SSLasso (Javanmard and Montanari, 2014a), and the decorrelated score test (dScore; Ning and Liu, 2017). (Since with high probability , we do not include the exact post-selection procedure in this comparison.) code for SSLasso, and dScore was provided by the authors; LDPE is implemented in the R package hdi. For the naïve score test, we estimate , the standard deviation of the errors in (1.1), using the scaled lasso (Sun and Zhang, 2012) or Theorem 2.2.

All four of these methods require us to select the value of the lasso tuning parameter. For LDPE, SSLasso, and dScore, we use 10-fold cross-validation to select the tuning parameter value that produces the smallest cross-validated mean square error, . As in the numerical study of the naïve confidence intervals in Section 3, we implement the naïve score test using the tuning parameter value and . Unless otherwise noted, all tests are performed at a significance level of 0.05.

In Section 5.1, we investigate the powers and type-I errors of the above tests in simulation experiments. Section 5.2 contains an analysis of a glioblastoma gene expression dataset.

5.1. Power and Type-I Error

5.1.1. Simulation Set-Up

In this section, we adapt the scale-free graph and the stochastic block model presented in Section 3.2 to have .

In the scale-free graph setting, we generate a scale-free graph with , edge density 0.05, and nodes. The resulting graph has edges. We order the nodes in the graph so that th node is the th least-connected node in the graph, for . For example, the 4th node is the 120th least-connected node in the graph.

In the stochastic block model setting, we generate two dense Erdős-Rényi graphs with ten nodes and 490 nodes, respectively; each has an intra-graph edge density of 0.3. The node indices are ordered so that the nodes in the smaller graph precede those in the larger graph. We then randomly connect nodes between the two graphs in order to obtain an inter-graph edge density of 0.05.

Next, for both graph settings, we generate as in (3.2), where . We then set , and standardize so that , for all . We simulate observations , and generate the outcome , where

A range of error variances are used to produce signal-to-noise ratios,

We hold and fixed over repetitions of the simulation, while and vary.

5.1.2. Simulation Results

For each test, the average power on the strong signal variables, the average power on the weak signal variables, and the average type-I error rate are defined as

| (5.1) |

| (5.2) |

| (5.3) |

respectively. In (5.1)–(5.3), is the p-value associated with null hypothesis in the bth simulated data set. In the simulations, the graphs and are held fixed over repetitions of the simulation study, while and vary.

Tables 5 and 6 summarize the results in the two simulation settings. Naïve score test with has slightly worse control of type-1 error rate and better power than the other four methods, which have approximate control over the type-I error rate and comparable power. The performance with the scaled lasso is similar to the performance of Theorem 2.2.

TABLE 5.

Average power and type-I error rate for the hypotheses for , as defined in (5.1)—(5.3), under the scale-free graph setting with . Results are shown for various values of , SNR. Methods for comparison include LDPE, SSLasso, dScore, and the naïve score test with tuning parameter , and . The error variance is estimated either through the scaled lasso (Sun and Zhang, 2012) (S-Z) or Theorem 2.2 (T2.2). Note that as mentioned in Section 3, we do not combine with T2.2

|

SNR |

0.1 | 100 0.3 |

0.5 | 0.1 | 0.2 200 0.3 |

0.5 | 0.1 | 400 0.3 |

0.5 | |

|

| ||||||||||

| LDPE S-Z | 0.400 | 0.773 | 0.910 | 0.627 | 0.973 | 1.000 | 0.923 | 1.000 | 1.000 | |

| SSLasso S-Z | 0.410 | 0.770 | 0.950 | 0.650 | 0.970 | 1.000 | 0.910 | 1.000 | 1.000 | |

| Powerstrong | dScore S-Z | 0.330 | 0.643 | 0.857 | 0.547 | 0.957 | 1.000 | 0.887 | 1.000 | 1.000 |

| nScore S-Z | 0.403 | 0.847 | 0.960 | 0.727 | 0.990 | 0.997 | 0.940 | 1.000 | 1.000 | |

| nScore S-Z | 0.427 | 0.763 | 0.893 | 0.677 | 0.977 | 1.000 | 0.957 | 1.000 | 1.000 | |

| nScore T2.2 | 0.357 | 0.763 | 0.890 | 0.680 | 0.977 | 1.000 | 0.910 | 1.000 | 1.000 | |

|

| ||||||||||

| LDPE S-Z | 0.064 | 0.083 | 0.056 | 0.054 | 0.059 | 0.079 | 0.070 | 0.079 | 0.113 | |

| SSLasso S-Z | 0.081 | 0.087 | 0.060 | 0.066 | 0.061 | 0.086 | 0.069 | 0.086 | 0.113 | |

| Powerweak | dScore S-Z | 0.044 | 0.056 | 0.036 | 0.039 | 0.039 | 0.060 | 0.046 | 0.056 | 0.093 |

| nScore S-Z | 0.061 | 0.091 | 0.109 | 0.070 | 0.109 | 0.107 | 0.097 | 0.103 | 0.114 | |

| nScore S-Z | 0.080 | 0.077 | 0.059 | 0.060 | 0.061 | 0.061 | 0.083 | 0.076 | 0.101 | |

| nScore T2.2 | 0.067 | 0.070 | 0.070 | 0.054 | 0.071 | 0.069 | 0.079 | 0.094 | 0.123 | |

|

| ||||||||||

| LDPE S-Z | 0.051 | 0.052 | 0.051 | 0.049 | 0.051 | 0.047 | 0.050 | 0.051 | 0.049 | |

| SSLasso S-Z | 0.056 | 0.056 | 0.056 | 0.054 | 0.055 | 0.053 | 0.053 | 0.054 | 0.054 | |

| T1 Error | dScore S-Z | 0.035 | 0.040 | 0.040 | 0.033 | 0.036 | 0.034 | 0.035 | 0.037 | 0.034 |

| nScore S-Z | 0.069 | 0.082 | 0.095 | 0.064 | 0.083 | 0.079 | 0.065 | 0.068 | 0.050 | |

| nScore S-Z | 0.061 | 0.057 | 0.048 | 0.056 | 0.055 | 0.040 | 0.060 | 0.046 | 0.046 | |

| nScore T2.2 | 0.049 | 0.052 | 0.049 | 0.054 | 0.053 | 0.050 | 0.056 | 0.049 | 0.050 | |

|

| ||||||||||

|

SNR |

0.1 | 100 0.3 |

0.5 | 0.1 | 0.6 200 0.3 |

0.5 | 0.1 | 400 0.3 |

0.5 | |

|

| ||||||||||

| LDPE S-Z | 0.330 | 0.783 | 0.947 | 0.627 | 0.980 | 1.000 | 0.887 | 1.000 | 1.000 | |

| SSLasso S-Z | 0.347 | 0.790 | 0.957 | 0.623 | 0.987 | 1.000 | 0.867 | 1.000 | 1.000 | |

| Powerstrong | dScore S-Z | 0.270 | 0.673 | 0.883 | 0.533 | 0.960 | 0.993 | 0.863 | 1.000 | 1.000 |

| nScore S-Z | 0.430 | 0.790 | 0.933 | 0.707 | 0.977 | 1.000 | 0.923 | 1.000 | 1.000 | |

| nScore S-Z | 0.357 | 0.767 | 0.887 | 0.677 | 0.980 | 0.997 | 0.937 | 1.000 | 1.000 | |

| nScore T2.2 | 0.340 | 0.697 | 0.907 | 0.637 | 0.973 | 1.000 | 0.950 | 1.000 | 1.000 | |

|

| ||||||||||

| LDPE S-Z | 0.031 | 0.046 | 0.063 | 0.064 | 0.074 | 0.076 | 0.054 | 0.077 | 0.119 | |

| SSLasso S-Z | 0.047 | 0.063 | 0.076 | 0.063 | 0.090 | 0.099 | 0.053 | 0.076 | 0.121 | |

| Powerweak | dScore S-Z | 0.021 | 0.037 | 0.047 | 0.036 | 0.060 | 0.044 | 0.034 | 0.050 | 0.083 |

| nScore S-Z | 0.071 | 0.089 | 0.136 | 0.081 | 0.121 | 0.104 | 0.114 | 0.113 | 0.123 | |

| nScore S-Z | 0.039 | 0.060 | 0.050 | 0.076 | 0.074 | 0.066 | 0.070 | 0.067 | 0.104 | |

| nScore T2.2 | 0.056 | 0.070 | 0.073 | 0.093 | 0.087 | 0.080 | 0.107 | 0.096 | 0.111 | |

|

| ||||||||||

| LDPE S-Z | 0.050 | 0.051 | 0.051 | 0.050 | 0.049 | 0.051 | 0.051 | 0.050 | 0.047 | |

| SSLasso S-Z | 0.056 | 0.056 | 0.056 | 0.054 | 0.055 | 0.053 | 0.053 | 0.054 | 0.054 | |

| T1 Error | dScore S-Z | 0.033 | 0.036 | 0.034 | 0.031 | 0.031 | 0.035 | 0.036 | 0.035 | 0.033 |

| nScore S-Z | 0.065 | 0.080 | 0.093 | 0.064 | 0.084 | 0.088 | 0.070 | 0.071 | 0.054 | |

| nScore S-Z | 0.056 | 0.060 | 0.045 | 0.061 | 0.051 | 0.040 | 0.058 | 0.048 | 0.047 | |

| nScore T2.2 | 0.049 | 0.053 | 0.051 | 0.060 | 0.061 | 0.049 | 0.066 | 0.049 | 0.048 | |

TABLE 6.

Average power and type-I error rate for the hypotheses for , as defined in (5.1)—(5.3), under the stochastic block model setting with . Details are as in Table 5.

|

SNR |

0.1 | 100 0.3 |

0.5 | 0.1 | 0.2 200 0.3 |

0.5 | 0.1 | 400 0.3 |

0.5 | |

|

| ||||||||||

| LDPE S-Z | 0.370 | 0.793 | 0.937 | 0.687 | 0.990 | 1.000 | 0.914 | 1.000 | 1.000 | |

| SSLasso S-Z | 0.393 | 0.803 | 0.933 | 0.687 | 0.990 | 1.000 | 0.892 | 1.000 | 1.000 | |

| Powerstrong | dScore S-Z | 0.333 | 0.783 | 0.917 | 0.693 | 0.993 | 1.000 | 0.905 | 1.000 | 1.000 |

| nScore S-Z | 0.473 | 0.857 | 0.953 | 0.697 | 0.993 | 0.993 | 0.943 | 1.000 | 1.000 | |

| nScore S-Z | 0.400 | 0.797 | 0.903 | 0.713 | 0.997 | 1.000 | 0.910 | 1.000 | 1.000 | |

| nScore T2.2 | 0.437 | 0.787 | 0.923 | 0.720 | 0.987 | 1.000 | 0.940 | 1.000 | 1.000 | |

|

| ||||||||||

| LDPE S-Z | 0.041 | 0.044 | 0.051 | 0.057 | 0.050 | 0.071 | 0.050 | 0.093 | 0.071 | |

| SSLasso S-Z | 0.054 | 0.056 | 0.074 | 0.071 | 0.056 | 0.089 | 0.071 | 0.101 | 0.101 | |

| Powerweak | dScore S-Z | 0.037 | 0.044 | 0.057 | 0.060 | 0.046 | 0.077 | 0.056 | 0.101 | 0.094 |

| nScore S-Z | 0.059 | 0.071 | 0.107 | 0.043 | 0.083 | 0.070 | 0.069 | 0.094 | 0.106 | |

| nScore S-Z | 0.047 | 0.059 | 0.060 | 0.059 | 0.047 | 0.059 | 0.062 | 0.106 | 0.105 | |

| nScore T2.2 | 0.057 | 0.064 | 0.067 | 0.047 | 0.069 | 0.086 | 0.043 | 0.079 | 0.113 | |

|

| ||||||||||

| LDPE S-Z | 0.051 | 0.049 | 0.048 | 0.050 | 0.050 | 0.050 | 0.051 | 0.050 | 0.049 | |

| SSLasso S-Z | 0.057 | 0.056 | 0.058 | 0.054 | 0.054 | 0.054 | 0.054 | 0.053 | 0.054 | |

| T1ER | dScore S-Z | 0.043 | 0.040 | 0.041 | 0.041 | 0.044 | 0.042 | 0.042 | 0.042 | 0.041 |

| nScore S-Z | 0.064 | 0.074 | 0.090 | 0.059 | 0.076 | 0.076 | 0.060 | 0.060 | 0.049 | |

| nScore S-Z | 0.062 | 0.058 | 0.048 | 0.056 | 0.052 | 0.040 | 0.054 | 0.047 | 0.046 | |

| nScore T2.2 | 0.052 | 0.050 | 0.050 | 0.050 | 0.050 | 0.049 | 0.049 | 0.047 | 0.047 | |

|

| ||||||||||

|

SNR |

0.1 | 100 0.3 |

0.5 | 0.1 | 0.6 200 0.3 |

0.5 | 0.1 | 400 0.3 |

0.5 | |

|

| ||||||||||

| LDPE S-Z | 0.327 | 0.827 | 0.960 | 0.700 | 0.983 | 0.997 | 0.968 | 1.000 | 1.000 | |

| SSLasso S-Z | 0.350 | 0.853 | 0.957 | 0.687 | 0.990 | 0.997 | 0.945 | 0.996 | 1.000 | |

| Powerstrong | dScore S-Z | 0.297 | 0.787 | 0.937 | 0.697 | 0.987 | 0.993 | 0.968 | 0.996 | 1.000 |

| nScore S-Z | 0.420 | 0.870 | 0.957 | 0.720 | 0.987 | 1.000 | 0.947 | 1.000 | 1.000 | |

| nScore S-Z | 0.350 | 0.800 | 0.927 | 0.717 | 0.980 | 1.000 | 0.968 | 1.000 | 1.000 | |

| nScore T2.2 | 0.373 | 0.797 | 0.890 | 0.653 | 0.980 | 1.000 | 0.917 | 1.000 | 1.000 | |

|

| ||||||||||

| LDPE S-Z | 0.043 | 0.049 | 0.046 | 0.041 | 0.077 | 0.063 | 0.053 | 0.066 | 0.083 | |

| SSLasso S-Z | 0.061 | 0.054 | 0.070 | 0.053 | 0.086 | 0.083 | 0.067 | 0.099 | 0.105 | |

| Powerweak | dScore S-Z | 0.044 | 0.047 | 0.046 | 0.040 | 0.081 | 0.069 | 0.063 | 0.077 | 0.098 |

| nScore S-Z | 0.054 | 0.073 | 0.093 | 0.063 | 0.093 | 0.094 | 0.061 | 0.094 | 0.096 | |

| nScore S-Z | 0.059 | 0.056 | 0.044 | 0.054 | 0.087 | 0.074 | 0.067 | 0.086 | 0.103 | |

| nScore T2.2 | 0.046 | 0.054 | 0.060 | 0.059 | 0.064 | 0.084 | 0.051 | 0.087 | 0.116 | |

|

| ||||||||||

| LDPE S-Z | 0.049 | 0.049 | 0.049 | 0.049 | 0.050 | 0.049 | 0.049 | 0.047 | 0.048 | |

| SSLasso S-Z | 0.057 | 0.056 | 0.056 | 0.053 | 0.054 | 0.054 | 0.053 | 0.053 | 0.053 | |

| T1 Error | dScore S-Z | 0.033 | 0.039 | 0.036 | 0.031 | 0.033 | 0.033 | 0.032 | 0.030 | 0.031 |

| nScore S-Z | 0.063 | 0.079 | 0.089 | 0.062 | 0.077 | 0.075 | 0.060 | 0.062 | 0.048 | |

| nScore S-Z | 0.057 | 0.051 | 0.047 | 0.056 | 0.049 | 0.039 | 0.055 | 0.045 | 0.046 | |

| nScore T2.2 | 0.051 | 0.051 | 0.051 | 0.049 | 0.048 | 0.046 | 0.047 | 0.045 | 0.045 | |

5.2. Application to Glioblastoma Data

We investigate a glioblastoma gene expression data set previously studied in Horvath et al. (2006). For each of 130 patients, a survival outcome is available; we removed the twenty patients who were still alive at the end of the study. This resulted in a data set with observations. The gene expression measurements were normalized using the method of Gautier et al. (2004). We limited our analysis to highly-connected genes (Zhang and Horvath, 2005; Horvath and Dong, 2008). The normalized data can be found at the website of Dr. Steve Horvath of UCLA Biostatistics. We log-transformed the survival response and centered it to have mean zero. Furthermore, we log-transformed the expression data, and then standardized each gene to have mean zero and standard deviation one across the observations.

Our goal is to identify individual genes whose expression levels are associated with survival time, after adjusting for the other 3599 genes in the data set. With family-wise error rate (FWER) controlled at level 0.1 using the Holm procedure (Holm, 1979), the naïve score test identifies three such genes: CKS2, H2AFZ, and RPA3. You et al. (2015) observed that CKS2 is highly expressed in glioma. Vardabasso et al. (2014) found that histone genes, of which H2AFZ is one, are related to cancer progression. Jin et al. (2015) found that RPA3 is associated with glioma development. As a comparison, SSLasso finds two genes associated with patient survival: PPAP2C and RGS3. LDPE and dScore identify no genes at FWER of 0.1.

6. DISCUSSION

In this paper, we examined a very naïve two-step approach to high-dimensional inference:

Perform the lasso in order to select a small set of variables, .

Fit a least squares regression model using just the variables in , and make use of standard regression inference tools. Make no adjustment for the fact that was selected based on the data.

It seems clear that this naïve approach is problematic, since we have peeked at the data twice, but are not accounting for this double-peeking in our analysis.

In this paper, we have shown that under certain assumptions, converges with high probability to a deterministic set, . A similar result for random design matrix is presented in Zhao et al. (2019). This key insight allows us to establish that the confidence intervals resulting from the aforementioned naïve two-step approach have asymptotically correct coverage, in the sense of (1.4). This constitutes a theoretical justification for the recent simulation findings of Leeb et al. (2015). Furthermore, we used this key insight in order to establish that the score test that results from the naïve two-step approach has asymptotically the same distribution as though the selected set of variables had been fixed in advance; thus, it can be used to test the null hypothesis .

Our simulation results corroborate our theoretical findings. In fact, we find essentially no difference between the empirical performance of these naïve proposals, and a host of other recent proposals in the literature for high-dimensional inference (Javanmard and Montanari, 2014a; Zhang and Zhang, 2014; van de Geer et al., 2014; Lee et al., 2016; Ning and Liu, 2017).

From a bird’s-eye view, the recent literature on high-dimensional inference falls into two camps. The work of Wasserman and Roeder (2009); Meinshausen et al. (2009); Berk et al. (2013); Lee et al. (2016); Tibshirani et al. (2016) focuses on performing inference on the sub-model (1.3), whereas the work of Javanmard and Montanari (2013, 2014a,b); Zhang and Zhang (2014); van de Geer et al. (2014); Zhao and Shojaie (2016); Ning and Liu (2017) focuses on testing hypotheses associated with (1.1). In this paper, we have shown that the confidence intervals that result from the naïve approach can be used to perform inference on the sub-model (1.3), whereas the score test that results from the naïve approach can be used to test hypotheses associated with (1.1).

In the era of big data, simple analyses that are easy to apply and easy to understand are especially attractive to scientific investigators. Therefore, a careful investigation of such simple approaches is worthwhile, in order to determine which ones have the potential to yield accurate results, and which do not. We do not advocate applying the naïve two-step approach described above in most practical data analysis settings: we are confident that in practice, our intuition is correct, and this approach will perform poorly when the sample size is small or moderate, and/or the assumptions, which are unfortunately unverifiable, are not met. However, in very large data settings, our results suggest that this naïve approach may indeed be viable for high-dimensional inference, or at least warrants further investigation.

When choosing among existing inference procedures based on lasso, the target of inference should be taken into consideration. The target of inference can either be the population parameters, in (1.1), or the parameters induced by the sub-model chosen by lasso, in (1.5). Sample-splitting (Wasserman and Roeder, 2009; Meinshausen et al., 2009) and exact post selection (Lee et al., 2016; Tibshirani et al., 2016) methods provide valid inferences for . The latter is a particularly appealing choice for inference on , as it provides non-asymptotic confidence intervals under minimal assumptions. However, as we discussed in Section 1, is, in general, different from . A set of sufficient conditions for is the irrepresentable condition together with a beta-min condition. Unfortunately, these assumptions are unverifiable and may not hold in practice. Our theoretical analysis and empirical studies suggests that the naïve two-step approach described above facilitates inference for under less stringent assumptions and without any conditioning or sample splitting. However, this method is also asymptotic and relies on unverifiable assumptions. Debiased lasso tests (Zhang and Zhang, 2014; van de Geer et al., 2014; Javanmard and Montanari, 2013, 2014a; Ning and Liu, 2017) provide asymptotically valid inference for entries of , without requiring a beta-min or irrepresentable condition. However, they require more restrictive sparsity of , as well as sparsity of the inverse covariance matrix of covariates, , which are also unverifiable. These limitations underscore the importance of recent efforts to relax these sparsity assumptions (e.g. Zhu et al., 2018; Wang et al., 2020).

We close with some suggestions for future research. One reviewer brought up an interesting point: methods with folded-concave penalties (e.g., Fan and Li, 2001; Zhang, 2010) require milder conditions to achieve variable selection consistency, i.e., , than the lasso. Inspired by this observation, we wonder whether the proposal of Fan and Li (2001) and Zhang (2010) also require milder conditions to achieve . If so, then we could replace the lasso with a folded-concave penalty in the variable selection step, and improve the robustness of the naïve approaches. We believe this could be a fruitful area of future research. In addition, extending the proposed theory and methods to generalized linear models and M-estimators may also be promising areas for future research.

Supplementary Material

ACKNOWLEDGEMENTS

We thank the Editor, Associate Editor, and four anonymous reviewers for their incredibly insightful comments, which led to substantial improvements of the manuscript. We thank the authors of Javanmard and Montanari (2014a) and Ning and Liu (2017) for providing code for their proposals. We are grateful to Joshua Loftus, Jonathan Taylor, Robert Tibshirani and Ryan Tibshirani for helpful responses to our inquiries.

Contributor Information

Sen Zhao, 1600Amphitheatre Parkway, Mountain View, California 94043, USA.

Daniela Witten, University of Washington, Health Sciences Building, Box 357232, Seattle, Washington 98195, USA.

Ali Shojaie, University of Washington, Health Sciences Building, Box 357232, Seattle, Washington 98195, USA.

REFERENCES

- Belloni A and Chernozhukov V (2013). Least squares after model selection in high-dimensional sparse models. Bernoulli, 19(2):521–547. [Google Scholar]

- Berk R, Brown L, Buja A, Zhang K, and Zhao L (2013). Valid post-selection inference. The Annals of Statistics, 41(2):802–837 [Google Scholar]

- Bickel P, Ritov Y, and Tsybakov A (2009). Simultaneous analysis of Lasso and Dantzig selector. The Annals of Statistics, 37(4):1705–1732. [Google Scholar]

- Cox D (1975). A note on data-splitting for the evaluation of significance levels. Biometrika, 62(2):441–444. [Google Scholar]

- Dezeure R, Bühlmann P, Meier L, and Meinshausen N (2015). High-dimensional inference: Confidence intervals, p-values and R-software hdi. Statistical Science, 30(4):533–558. [Google Scholar]

- Dicker LH (2014). Variance estimation in high-dimensional linear models. Biometrika, 101(2):269–284. [Google Scholar]

- Dossal C (2012). A necessary and sufficient condition for exact sparse recovery by minimization. Comptes Rendus Mathematique, 350(1–2):117–120. [Google Scholar]

- Erdős P and Rényi A (1959). On random graphs I. Publicationes Mathematicae, 6:290–297. [Google Scholar]

- Fan J, Guo S, and Hao N (2012). Variance estimation using refitted cross-validation in ultrahigh dimensional regression. Journal of the Royal Statistical Society: Series B, 74(1):37–65. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Fan J and Li R (2001). Variable selection via nonconcave penalized likelihood and its oracle properties. Journal of the American Statistical Association, 96(456):1348–1360. [Google Scholar]

- Gautier L, Cope L, Bolstad BM, and Irizarry RA (2004). affy – analysis of Affymetrix GeneChip data at the probe level. Bioinformatics, 20(3):307–315. [DOI] [PubMed] [Google Scholar]

- Gelman A and Hill J (2006). Data Analysis Using Regression and Multilevel/Hierarchical Models. Analytical Methods for Social Research Cambridge University Press, New York, NY, USA. [Google Scholar]

- Gilbert EN (1959). Random graphs. Annals of Mathematical Statistics, 30(4):1141–1144. [Google Scholar]

- Hahn J (1998). On the role of the propensity score in efficient semiparametric estimation of average treatment effects. Econometrica, 66(2):315–331. [Google Scholar]

- Hastie T, Tibshirani R, and Friedman J (2009). The Elements of Statistical Learning. Springer Series in Statistics. Springer-Verlag New York, New York, NY, USA. [Google Scholar]

- Hirano K, Imbens GW, and Ridder G (2003). Efficient estimation of average treatment effects using the estimated propensity score. Econometrica, 71(4):1161–1189. [Google Scholar]

- Holm S (1979). A simple sequentially rejective multiple test procedure. Scandinavian Journal of Statistics, 6(2):65–70. [Google Scholar]

- Horvath S and Dong J (2008). Geometric interpretation of gene co-expression network analysis. PLOS Computational Biology, 4(8):e1000117. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Horvath S, Zhang B, Carlson M, Lu KV, Zhu S, M, F. R., Laurance MF., Zhao W., Qi S., Chen Z., Lee Y., Scheck AC., Liau LM., Wu H., Geschwind DH., Febbo PG., Kornblum HI., Cloughesy TF., Nelson SF., and Mischel PS. (2006). Analysis of oncogenic signaling networks in glioblastoma identifies aspm as a molecular target. Proceedings of the National Academy of Sciences, 103(46):17402–17407. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Javanmard A and Montanari A (2013). Confidence intervals and hypothesis testing for high-dimensional statistical models. In Burges CJC., Bottou L., Welling M, Ghahramani Z, and Weinberger KQ, editors, Advances in Neural Information Processing Systems 26, pages 1187–1195. Curran Associates, Inc. [Google Scholar]

- Javanmard A and Montanari A (2014a). Confidence intervals and hypothesis testing for high-dimensional regression. Journal of Machine Learning Research, 15(Oct):2869–2909. [Google Scholar]

- Javanmard A and Montanari A (2014b). Hypothesis testing in high-dimensional regression under the gaussian random design model: Asymptotic theory. IEEE Transaction on Information Theory, 60(10):6522–6554. [Google Scholar]

- Jin T, Wang Y, Li G, Du S, Yang H, Geng T, Hou P, and Gong Y (2015). Analysis of difference of association between polymorphisms in the XRCC5, RPA3 and RTEL1 genes and glioma, astrocytoma and glioblastoma. American Journal of Cancer Research, 5(7):2294–2300. [PMC free article] [PubMed] [Google Scholar]

- Kabaila P (1998). Valid confidence intervals in regression after variable selection. Econometric Theory, 14(4):463–482. [Google Scholar]

- Kabaila P (2009). The coverage properties of confidence regions after model selection. International Statistical Review, 77(3):405–414. [Google Scholar]

- Kolaczyk ED (2009). Statistical Analysis of Network Data: Methods and Models. Springer Series in Statistics. Springer Science+Business Media, New York, NY, USA. [Google Scholar]

- Lee JD, Sun DL, Sun Y, and Taylor JE (2016). Exact post-selection inference, with application to the lasso. The Annals of Statistics, 44(3):907–927. [Google Scholar]

- Leeb H and Pötscher BM (2003). The finite-sample distribution of post-model-selection estimators and uniform versus nonuniform approximations. Econometric Theory, 19(1):100–142. [Google Scholar]

- Leeb H and Pötscher BM (2005). Model selection and inference: Facts and fiction. Econometric Theory, 21(1):21–59. [Google Scholar]

- Leeb H and Pötscher BM (2006a). Can one estimate the conditional distribution of post-model-selection estimators? The Annals of Statistics, 34(5):2554–2591. [Google Scholar]

- Leeb H and Pötscher BM (2006b). Performance limits for estimators of the risk or distribution of shrinkage-type estimators, and some general lower risk-bound results. Econometric Theory, 22(1):69–97. [Google Scholar]

- Leeb H and Pötscher BM (2008). Can one estimate the unconditional distribution of post-model-selection estimators? Econometric Theory, 24(2):338–376. [Google Scholar]

- Leeb H, Pötscher BM, and Ewald K (2015). On various confidence intervals post-model-selection. Statistical Science, 30(2):216–227. [Google Scholar]

- Meinshausen N and Bühlmann P (2006). High dimensional graphs and variable selection with the lasso. The Annals of Statistics, 34(3):1436–1462. [Google Scholar]

- Meinshausen N, Meier L, and Bühlmann P (2009). p-values for high-dimensional regression. Journal of the American Statistical Association, 104(488):1671–1681. [Google Scholar]

- Ning Y and Liu H (2017). A general theory of hypothesis tests and confidence regions for sparse high dimensional models. The Annals of Statistics, 45(1):158–195. [Google Scholar]

- Pötscher BM (1991). Effects of model selection on inference. Econometric Theory, 7(2):163–185. [Google Scholar]

- Reid S, Tibshirani R, and Friedman J (2016). A study of error variance estimation in Lasso regression. Statistica Sinica, 26(1):35–67. [Google Scholar]

- Rosset S, Zhu J, and Hastie T (2004). Boosting as a regularized path to a maximum margin classifier. Journal of Machine Learning Research, 5:941–973. [Google Scholar]

- Sun T and Zhang C-H (2012). Scaled sparse linear regression. Biometrika, 99(4):879–898. [Google Scholar]

- Taylor J and Tibshirani RJ (2015). Statistical learning and selective inference. Proceedings of National Academy of Sciences, 112(25):7629–7634. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Tibshirani R (1996). Regression shrinkage and selection via the lasso. Journal of the Royal Statistical Society, Series B, 58(1):267–288. [Google Scholar]

- Tibshirani RJ (2013). The lasso problem and uniqueness. Electronic Journal of Statistics, 7:1456–1490. [Google Scholar]

- Tibshirani RJ, Taylor J, Lockhart R, and Tibshirani R (2016). Exact post-selection inference for sequential regression procedures. Journal of the American Statistical Association, 111(514):600–620. [Google Scholar]

- Tropp J (2006). Just relax: Convex programming methods for identifying sparse signals in noise. IEEE Transaction on Information Theory, 52(3):1030–1051. [Google Scholar]

- van de Geer S (2017). Some exercises with the lasso and its compatibility constant. arXiv preprint arXiv:1701.03326. [Google Scholar]

- van de Geer S and Bühlmann P (2009). On the conditions used to prove oracle results for the Lasso. Electronic Journals of Statistics, 3:1360–1392. [Google Scholar]

- van de Geer S, Bühlmann P, Ritov Y, and Dezeure R (2014). On asymptotically optimal confidence regions and tests for high-dimensional models. The Annals of Statistics, 42(3):1166–1202. [Google Scholar]

- Vardabasso C, Hasson D, Ratnakumar K, Chung C-Y, Duarte LF, and Bernstein E (2014). Histone variants: emerging players in cancer biology. Cellular and Molecular Life Sciences, 71(3):379–404. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Voorman A, Shojaie A, and Witten DM (2014). Inference in high dimensions with the penalized score test. arXiv preprint arXiv:1401.2678. [Google Scholar]

- Wainwright MJ (2009). Sharp thresholds for high-dimensional and noisy sparsity recovery using -constrained quadratic programmming (lasso). IEEE Transaction on Information Theory, 55(5):2183–2202. [Google Scholar]

- Wang J, He X, and Xu G (2020). Debiased inference on treatment effect in a high-dimensional model. Journal of the American Statistical Association, 115(529):442–454. [Google Scholar]

- Wasserman L and Roeder K (2009). High-dimensional variable selection. The Annals of Statistics, 37(5A):2178–2201. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Weisberg S (2013). Applied Lienar Regression. Wiley Series in Probability and Statistics. John Wiley & Sons, Hoboken, NJ, USA. [Google Scholar]

- You H, Lin H, and Zhang Z (2015). CKS2 in human cancers: Clinical roles and current perspectives (review). Molecular and Clinical Oncology, 3(3):459–463. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Zhang B and Horvath S (2005). A general framework for weighted gene co-expression network analysis. Statistical Applications in Genetics and Molecular Biology, 4(Article 17). [DOI] [PubMed] [Google Scholar]

- Zhang C-H (2010). Nearly unbiased variable selection under minimax concave penalty. The Annals of Statistics, 38(2):894–942. [Google Scholar]

- Zhang C-H and Huang J (2008). The sparsity and bias of the lasso selection in high-dimensional linear regression. The Annals of Statistics, 36(4):1567–1594. [Google Scholar]

- Zhang C-H and Zhang SS (2014). Confidence intervals for low dimensional parameters in high dimensional linear models. Journal of the Royal Statistical Society: Series B, 76(1):217–242. [Google Scholar]

- Zhao P and Yu B (2006). On model selection consistency of lasso. Journal of Machine Learning Research, 7(Nov):2541–2563. [Google Scholar]

- Zhao S, Ottinger S, Peck S, Mac Donald C, and Shojaie A (2019). Network differential connectivity analysis. arXiv preprint arXiv:1909.13464. [Google Scholar]

- Zhao S and Shojaie A (2016). A significance test for graph-constrained estimation. Biometrics, 72(2):484–493. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Zhu Y, Bradic J, et al. (2018). Significance testing in non-sparse high-dimensional linear models. Electronic Journal of Statistics, 12(2):3312–3364. [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.