Abstract

Objective

To describe visual acuity data representation in the American Academy of Ophthalmology Intelligent Research in Sight (IRIS) Registry and present a data-cleaning strategy.

Design

Reliability and validity study.

Participants

Patients with visual acuity records from 2018 in the IRIS Registry.

Methods

Visual acuity measurements and metadata were identified and characterized from 2018 IRIS Registry records. Metadata, including laterality, assessment method (distance, near, and unspecified), correction (corrected, uncorrected, and unspecified), and flags for refraction or pinhole assessment were compared between Rome (frozen April 20, 2020) and Chicago (frozen December 24, 2021) versions. We developed a data-cleaning strategy to infer patients’ corrected distance visual acuity in their better-seeing eye.

Main Outcome Measures

Visual acuity data characteristics in the IRIS Registry.

Results

The IRIS Registry Chicago data set contains 168 920 049 visual acuity records among 23 001 531 unique patients and 49 968 974 unique patient visit dates in 2018. Visual acuity records were associated with refraction in 5.3% of cases, and with pinhole in 11.0%. Mean (standard deviation) of all measurements was 0.26 (0.41) logarithm of the minimum angle of resolution (logMAR), with a range of −0.3 to 4.0. A plurality of visual acuity records were labeled corrected (corrected visual acuity [CVA], 39.1%), followed by unspecified (37.6%) and uncorrected (uncorrected visual acuity [UCVA], 23.4%). Corrected visual acuity measurements were paradoxically worse than same day UCVA 15% of the time. In aggregate, mean and median values were similar for CVA and unspecified visual acuity. Most visual acuity measurements were at distance (59.8%, vs. 32.1% unspecified and 8.2% near). Rome contained more duplicate visual acuity records than Chicago (10.8% vs. 1.4%). Near visual acuity was classified with Jaeger notation and (in Chicago only) also assigned logMAR values by Verana Health. LogMAR values for hand motion and light perception visual acuity were lower in Chicago than in Rome. The impact of data entry errors or outliers on analyses may be reduced by filtering and averaging visual acuity per eye over time.

Conclusions

The IRIS Registry includes similar visual acuity metadata in Rome and Chicago. Although fewer duplicate records were found in Chicago, both versions include duplicate and atypical measurements (i.e., CVA worse than UCVA on the same day). Analyses may benefit from using algorithms to filter outliers and average visual acuity measurements over time.

Financial Disclosure(s)

Proprietary or commercial disclosure may be found in the Footnotes and Disclosures at the end of this article.

Keywords: Epidemiology, Intelligent Research in Sight, IRIS Registry, Visual acuity

The American Academy of Ophthalmology (Academy) conceived and implemented the IRIS® Registry (Intelligent Research in Sight) to drive quality improvement, enable United States-wide population health studies, and enhance scientific knowledge.1 The IRIS Registry was designed to be comprehensive, collecting data from ophthalmology electronic health records (EHRs), including patient demographics, medications, diagnosis and procedure codes, clinical metrics, such as visual acuity (VA) and intraocular pressure, and other data contained in EHRs. Since its launch in 2014, the IRIS Registry has become the largest single clinical specialty registry in the world.2 The vast majority of ophthalmology practices submit clinical data to the IRIS Registry; it contains information on > 73 million distinct patients receiving care from > 15 000 ophthalmologists and other clinicians in their practices, and > 440 million patient visits.3,4

In addition to its value for quality improvement and reporting activities, the IRIS Registry has value for answering research questions in ophthalmology at a larger scale. The Academy has developed a limited, expert-deidentified version for research purposes, and researchers have answered a variety of research questions, such as rates of endophthalmitis after cataract surgery,5 strabismus surgery reoperation rates,6 and prevalence of myopic choroidal neovascularization.7 Researchers have also utilized IRIS Registry VA data to investigate outcomes. For example, Parke et al8 compared VA outcomes of patients who did and did not require second surgeries after macular hole or epiretinal membrane repair, and Willis et al7 investigated whether patients with myopic choroidal neovascularization who received anti-VEGF treatment had improved vision compared with patients who received no treatment.9

One of the unique features of IRIS Registry data is the inclusion of VA measurements, which are critical as an outcome measure in ophthalmology but unavailable through traditional administrative data, such as insurance claims records (the previous standard for national-scale analyses reflecting actual clinical practice). Each VA record is associated with multiple metadata fields. These fields provide additional context for each record; however, they also present researchers with challenges requiring reasonable methodologies for cleaning, presenting, and interpreting the data. As a consequence, researchers have deployed variable filtering techniques.7, 8, 9 For example, some authors have filtered the IRIS Registry database to only use corrected VA (CVA, VA with correction) data.8

The quality and reproducibility of any analysis is contingent on the quality of the underlying data. In this work, we summarize characteristics and challenges of IRIS Registry VA data, and quantify evidence for data inconsistency including number and consistency of measurements per visit and unlikely relationships between CVA and uncorrected VA (UCVA) measures. A variety of data-cleaning techniques can be deployed to strengthen rigor and reproducibility, such as removing duplicates, filtering outliers, and imputing missing data10; here, we present a data-cleaning strategy for the IRIS Registry community to strengthen accuracy and reproducibility of analyses that include VA measures.

Methods

Data Source

Intelligent Research in Sight Registry analyses are performed using immutable snapshots of the database to enable reproducibility. The IRIS Registry now maintains 2 separate schemas sequentially made available to centers in the IRIS Registry Analytic Center Consortium (Consortium). The first snapshot available to researchers, “Rome,” was frozen on April 20, 2020. It is currently being phased out and replaced with “Chicago” (frozen on December 24, 2021, includes records added after April 2020). These deidentified data sets were sequentially made available to the Consortium; the Rome extract was specifically for the Consortium. Most early published analyses from the Consortium utilized Rome. In 2021, the Consortium members began to transition to Chicago, with Chicago planned as a common data set utilized for all research analyses going forward, including those performed by Consortium members as well as other users. We utilized 2018 VA, patient, and visit data from each version.

The study was reviewed and deemed exempt by the Stanford University institutional review board, and the research adhered to the Declaration of Helsinki. Data collection, deidentification, and aggregation methods have previously been described, some details of which were proprietary to the registry ingestion vendor and are anticipated to become more transparent and reliable once ingestion and analytics are fully transitioned to the same vendor in an end-to-end pipeline.1 To ensure that the Rome database does not expose protected health information, the database was carefully deidentified using the expert determination method and does not contain any fields that could link clinical records to a patient’s identity. Specifically, a third-party vendor statistically assesses the risk of reidentifying patients based on direct or indirect identifiers. If patients are deemed to have a greater than small risk of being reidentified, the data are iteratively modified to reduce this risk. For example, 3-digit zip codes with a population < 20 000 may be recoded or merged with a neighboring town. Patient records, including codes that may indicate birth, death, or residency, are also obfuscated. For example, if a patient has a diagnosis code for “imprisonment and other incarceration” and lives in a 3-digit zip code with only 1 prison, it is possible to identify their address from the prison's address. This information would therefore be redacted.

VA Data

Each VA measurement in the IRIS Registry data set has associated metadata, including laterality, method of assessment (distance, near, and unspecified), correction of measurement (labeled corrected, uncorrected, and unspecified), and flags for whether measurements were obtained with refraction or pinhole assessment. Refraction, pinhole, and “no improvement” (NI) flags were assigned based on labels in the EHR, which may vary based on EHR platform and practice-specific customization. For example, a VA measurement may be flagged as refraction-associated when recorded in the same structured field area as a refraction, as pinhole when “pinhole” is checked off or a structured field for pinhole acuity is used, and as NI when “NI” is either checked off or otherwise explicitly documented. There was no distinction for cycloplegic versus noncycloplegic refraction. Each VA measurement in the database may be reported as Snellen, modified Snellen, logarithm of the minimum angle of resolution (logMAR), and modified logMAR. Near vision values are reported as Jaeger notation, extracted from text when present, and reported both as Jaeger values and with logMAR conversion (if performed, variable between Rome and Chicago data sets). Visual acuity data were queried from structured data fields or text for inclusion. Logarithm of the minimum angle of resolution measurements were calculated from Snellen measurements, and modified measurements took into account +/− VA modifiers for additional letters identified or missed (e.g., 20/40 + 2 or 20/80 − 1). The algorithm for mapping modifier values to logMAR consists of rounding up or down to the logMAR equivalent of the nearest commonly-used Snellen value, in a relationship depending on the magnitude of the modifier value and the starting Snellen line (logMAR value; Supplemental Appendix, available at www.ophthalmologyscience.org).
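As a worked illustration of the Snellen-to-logMAR relationship described above, the following Python sketch applies the standard definition, logMAR = log10(denominator / numerator). The function name is hypothetical. The sketch does not reproduce the registry's modifier-rounding algorithm, which is specified only in the Supplemental Appendix.

```python
import math


def snellen_to_logmar(numerator: float, denominator: float) -> float:
    """Convert a Snellen fraction (e.g., 20/40) to logMAR.

    The minimum angle of resolution (MAR) for a Snellen fraction is
    denominator / numerator; logMAR is its base-10 logarithm.
    """
    if numerator <= 0 or denominator <= 0:
        raise ValueError("Snellen numerator and denominator must be positive")
    return math.log10(denominator / numerator)


# Illustrative Snellen lines only; these are not registry data.
for den in (10, 20, 40, 200):
    print(f"20/{den}: logMAR = {snellen_to_logmar(20, den):.2f}")
```

Under this definition, lower logMAR corresponds to better vision, and 20/10 maps to approximately −0.3, which is consistent with the lower bound of the logMAR range reported for the registry.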

Visual acuity measurements reported in a format other than Snellen or Jaeger notation (e.g., neonates with blinks-to-light, fix and follow, or central, steady, maintained fixation) were filtered out, with the exception of counting fingers (CF), hand motion (HM), light perception (LP), and no light perception (NLP). These may be documented in different ways (e.g., CF @5ft, FC5, 20/CF5’, etc.), all curated to a common descriptor (CF in this case, detailed curation methods not available from the registry vendor). For ETDRS, some practices had records that were labeled (by EHR column name) as ETDRS and documented as Snellen. Those results were included as Snellen values and also converted to an estimated logMAR value. There were a few patients with records labeled ETDRS who had ETDRS letters recorded, and these records were filtered out.

The Rome data set includes a denormalized VA table with metadata and columns for logMAR and modified_logMAR. By contrast, the Chicago schema normalized the VA data and migrated to an observation table (which includes VA, intraocular pressure, and cup-to-disc ratio) and an observation_modifier table, which includes the same metadata in addition to logMAR.

Inherent to database construction, VA measurements were linked to patient ID and provider ID but not linked to a specific visit. However, both patient visit records and VA records include a deidentified, consistently-shifted date with day-level granularity. Because visits and VA cannot be directly joined, we inferred that VA measurements were associated with a visit occurring on the same date, assuming that a patient has at most 1 visit to a given provider in a single day and considering all VA records on a specific date to be related to the same visit.
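The same-date inference described above amounts to grouping VA records on patient and shifted date. The following Python sketch illustrates that grouping under stated assumptions: the record layout and field order are hypothetical and do not reflect the actual Rome or Chicago schemas.

```python
from collections import defaultdict
from datetime import date

# Hypothetical VA records: (patient_id, shifted_date, eye, logmar).
va_records = [
    ("p1", date(2018, 3, 1), "right", 0.10),
    ("p1", date(2018, 3, 1), "left", 0.30),
    ("p1", date(2018, 6, 9), "right", 0.18),
    ("p2", date(2018, 3, 1), "right", 0.00),
]


def group_by_visit_date(records):
    """Treat all VA records for a patient on one date as a single inferred visit."""
    visits = defaultdict(list)
    for patient_id, visit_date, eye, logmar in records:
        visits[(patient_id, visit_date)].append((eye, logmar))
    return dict(visits)


visits = group_by_visit_date(va_records)
records_per_visit = {key: len(rows) for key, rows in visits.items()}
print(len(visits), "inferred patient visit dates")
```

If a patient could see more than one provider on the same day, the provider ID could be added to the grouping key; the sketch omits it for brevity.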

Statistical Analysis

We summarized the number and proportions of VA records, patients, and visits and compared differences between logMAR and modified logMAR fields in the Rome data set and calculated logMAR values in the Chicago data set. For each associated metadata, we analyzed the frequency of missingness in VA records and determined descriptive statistics (mean and median) for each VA metadata value with nonmissing data. For patients with multiple VA measurement records on the same day, we compared metadata values. We developed an example data-cleaning strategy to infer corrected distance VA in the better-seeing eye at the patient level in 2018.

Data were queried using PostgreSQL 8.0.2. Statistics were generated using Python 3.0 (Python Software Foundation).

Results

Study Sample, Visits, and Characteristics

The Rome data set contains 187 805 383 (136 158 960 with nonmissing logMAR) VA records among 22 329 528 (22 067 228 with nonmissing logMAR) unique patients in 2018, including duplicate measurements (same value) and multiple measurements (different values) on the same date. The Chicago data set contains 168 920 049 (168 530 763 with nonmissing logMAR) VA records among 23 001 531 (22 821 606 with nonmissing logMAR) unique patients in 2018. Measurement characteristics, including laterality and measurements associated with refraction or pinhole are shown in Tables 1 and 2.

Table 1.

Frequency and Characteristics of VA Measurements in 2018 American Academy of Ophthalmology IRIS® Registry (Intelligent Research In Sight) Rome Version∗,†,‡

| | Individual VA Records in 2018 | | | Unique Patient Visit Dates in 2018 | | | Unique Patients in 2018 | | |
|---|---|---|---|---|---|---|---|---|---|
| | N (%) | Mean (SD)§ | Median (IQR)§ | N (%) | Mean (SD)§ | Median (IQR)§ | N (%) | Mean (SD)§ | Median (IQR)§ |
| All | 136 158 960 (100.0) | 0.28 (0.45) | 0.18 (0.00–0.30) | 46 480 974 (100.0) | 0.29 (0.39) | 0.18 (0.05–0.35) | 22 067 228 (100.0) | 0.22 (0.32) | 0.14 (0.05–0.28) |
| Laterality | | | | | | | | | |
| Right | 67 126 234 (49.3) | 0.27 (0.45) | 0.18 (0.00–0.30) | 43 961 059 (94.6) | 0.28 (0.47) | 0.16 (0.00–0.30) | 21 649 430 (98.1) | 0.22 (0.39) | 0.11 (0.00–0.27) |
| Left | 67 165 803 (49.3) | 0.28 (0.45) | 0.18 (0.00–0.30) | 43 961 589 (94.6) | 0.29 (0.47) | 0.18 (0.05–0.30) | 21 678 382 (98.2) | 0.22 (0.39) | 0.12 (0.00–0.28) |
| Both | 971 833 (0.7) | 0.13 (0.25) | 0.00 (0.00–0.18) | 838 048 (1.8) | 0.11 (0.21) | 0.00 (0.00–0.18) | 622 633 (2.8) | 0.10 (0.19) | 0.00 (0.00–0.15) |
| Unspecified | 895 090 (0.7) | 0.35 (0.53) | 0.18 (0.10–0.40) | 529 021 (1.1) | 0.33 (0.44) | 0.20 (0.09–0.40) | 272 585 (1.2) | 0.28 (0.39) | 0.18 (0.05–0.33) |
| Type | | | | | | | | | |
| CVA | 52 208 797 (38.3) | 0.26 (0.43) | 0.10 (0.00–0.30) | 25 930 757 (55.8) | 0.26 (0.36) | 0.15 (0.05–0.33) | 14 045 257 (63.7) | 0.21 (0.31) | 0.14 (0.05–0.27) |
| UCVA | 32 744 110 (24.1) | 0.37 (0.51) | 0.18 (0.10–0.48) | 17 016 153 (36.6) | 0.38 (0.46) | 0.24 (0.10–0.48) | 8 292 934 (37.6) | 0.34 (0.41) | 0.23 (0.10–0.43) |
| Unspecified | 51 206 053 (37.6) | 0.24 (0.41) | 0.10 (0.00–0.30) | 22 501 329 (48.4) | 0.24 (0.36) | 0.14 (0.02–0.30) | 13 469 176 (61.0) | 0.19 (0.30) | 0.10 (0.00–0.24) |
| Method | | | | | | | | | |
| Distance | 89 653 937 (65.9) | 0.28 (0.44) | 0.18 (0.00–0.30) | 34 443 898 (74.1) | 0.29 (0.38) | 0.18 (0.07–0.36) | 16 438 363 (74.5) | 0.23 (0.31) | 0.14 (0.05–0.30) |
| Near∥ | 0 (0)† | | | 0 (0)† | | | 0 (0)† | | |
| Unspecified | 46 505 023 (34.2) | 0.27 (0.46) | 0.10 (0.00–0.30) | 17 530 837 (37.7) | 0.28 (0.42) | 0.16 (0.05–0.35) | 9 076 245 (41.1) | 0.22 (0.35) | 0.13 (0.03–0.28) |
| Refraction | 6 618 549 (4.9) | 0.13 (0.27) | 0.00 (0.00–0.18) | 3 449 817 (7.4) | 0.13 (0.23) | 0.05 (0.00–0.18) | 2 321 081 (10.5) | 0.11 (0.21) | 0.04 (0.00–0.14) |
| Pinhole | 16 283 667 (12.0) | 0.22 (0.29) | 0.18 (0.00–0.30) | 10 246 326 (22.0) | 0.24 (0.27) | 0.18 (0.07–0.30) | 6 321 618 (28.7) | 0.20 (0.25) | 0.14 (0.03–0.30) |

CVA = corrected visual acuity; IQR = interquartile range; logMAR = logarithm of the minimum angle of resolution; SD = standard deviation; UCVA = uncorrected visual acuity; VA = visual acuity.

∗ Version frozen on April 20, 2020.

† The minimum and maximum logMAR values for total, patient, and visits were −0.3 to 4.0 for all VA subtypes.

‡ Under Unique Patient Visit Dates in 2018 and Unique Patients in 2018, percentages total to > 100% because, for example, often both right eye and left eye VAs were present.

§ Visual acuity values reported as modified logMAR, with the exception of near VA measurements. Records with missing modified logMAR data were excluded.

∥ All 11 066 330 near VA records in 2018 had null logMAR values. Near VA values were recorded in Jaeger notation, with the majority of nonmissing records having Jaeger 1 (54.24%).

Table 2.

Frequency and Characteristics of VA Measurements in 2018 American Academy of Ophthalmology IRIS® Registry (Intelligent Research In Sight) Chicago Version∗,†,‡

| | Individual VA Records in 2018 | | | Unique Patient Visit Dates in 2018 | | | Unique Patients in 2018 | | |
|---|---|---|---|---|---|---|---|---|---|
| | N (%) | Mean (SD)§ | Median (IQR)§ | N (%) | Mean (SD)§ | Median (IQR)§ | N (%) | Mean (SD)§ | Median (IQR)§ |
| All | 168 530 763 (100.0) | 0.26 (0.41) | 0.10 (0.00–0.30) | 49 707 013 (100.0) | 0.28 (0.36) | 0.18 (0.05–0.35) | 22 821 606 (100.0) | 0.21 (0.29) | 0.13 (0.05–0.27) |
| Laterality | | | | | | | | | |
| Right | 79 417 999 (47.1) | 0.26 (0.41) | 0.10 (0.00–0.30) | 46 379 658 (93.3) | 0.27 (0.43) | 0.15 (0.03–0.30) | 22 195 382 (97.3) | 0.21 (0.36) | 0.10 (0.00–0.25) |
| Left | 79 617 069 (47.2) | 0.26 (0.41) | 0.10 (0.00–0.30) | 46 498 340 (93.5) | 0.27 (0.44) | 0.15 (0.03–0.30) | 22 313 880 (97.8) | 0.21 (0.36) | 0.10 (0.00–0.26) |
| Both | 1 980 667 (1.2) | 0.11 (0.24) | 0.00 (0.00–0.10) | 1 518 329 (3.1) | 0.11 (0.21) | 0.00 (0.00–0.10) | 1 124 163 (4.9) | 0.09 (0.20) | 0.00 (0.00–0.10) |
| Unspecified | 7 515 028 (4.5) | 0.28 (0.44) | 0.18 (0.00–0.30) | 3 529 975 (7.1) | 0.28 (0.39) | 0.18 (0.05–0.35) | 1 807 181 (7.9) | 0.22 (0.33) | 0.13 (0.03–0.28) |
| Type | | | | | | | | | |
| CVA | 65 888 408 (39.1) | 0.24 (0.40) | 0.10 (0.00–0.30) | 28 946 271 (58.2) | 0.25 (0.33) | 0.14 (0.05–0.30) | 15 243 584 (66.8) | 0.19 (0.28) | 0.11 (0.03–0.24) |
| UCVA | 39 355 985 (23.4) | 0.35 (0.47) | 0.18 (0.10–0.48) | 18 516 852 (37.3) | 0.37 (0.43) | 0.24 (0.10–0.47) | 8 879 231 (38.9) | 0.32 (0.39) | 0.21 (0.09–0.41) |
| Unspecified | 63 286 370 (37.6) | 0.23 (0.38) | 0.10 (0.00–0.30) | 24 436 629 (49.2) | 0.23 (0.33) | 0.14 (0.03–0.30) | 14 338 148 (62.8) | 0.18 (0.28) | 0.10 (0.00–0.24) |
| Method | | | | | | | | | |
| Distance | 100 696 572 (59.8) | 0.28 (0.42) | 0.18 (0.00–0.30) | 36 829 432 (74.1) | 0.29 (0.35) | 0.18 (0.07–0.36) | 17 115 049 (75.0) | 0.22 (0.29) | 0.14 (0.05–0.29) |
| Near∥ | 13 763 728 (8.2) | 0.11 (0.17) | 0.00 (0.00–0.18) | 5 977 881 (12.0) | 0.10 (0.15) | 0.00 (0.00–0.15) | 4 372 042 (19.2) | 0.09 (0.14) | 0.00 (0.00–0.12) |
| Unspecified | 54 070 463 (32.1) | 0.27 (0.44) | 0.10 (0.00–0.30) | 19 258 887 (38.7) | 0.28 (0.39) | 0.16 (0.05–0.35) | 9 757 086 (42.8) | 0.22 (0.32) | 0.13 (0.03–0.28) |
| Refraction | 8 868 286 (5.3) | 0.12 (0.26) | 0.00 (0.00–0.18) | 4 277 090 (8.6) | 0.12 (0.23) | 0.05 (0.00–0.18) | 2 969 897 (13.0) | 0.10 (0.21) | 0.00 (0.00–0.14) |
| Pinhole | 18 648 406 (11.1) | 0.22 (0.28) | 0.18 (0.00–0.30) | 11 523 973 (23.2) | 0.24 (0.26) | 0.18 (0.07–0.30) | 6 923 268 (30.3) | 0.20 (0.24) | 0.14 (0.03–0.30) |

CVA = corrected visual acuity; IQR = interquartile range; logMAR = logarithm of the minimum angle of resolution; SD = standard deviation; UCVA = uncorrected visual acuity; VA = visual acuity.

∗ Version frozen on December 24, 2021.

† The minimum and maximum logMAR values for total, patient, and visits were −0.3 to 4.0 for all VA subtypes.

‡ Under Unique Patient Visit Dates in 2018 and Unique Patients in 2018, percentages total to > 100% because, for example, often both right eye and left eye VAs were present.

§ Visual acuity values reported as modified logMAR, with the exception of near VA measurements. Records with missing modified logMAR data were excluded.

∥ Of 14 153 014 near VA records in 2018, 97.25% (13 763 728) had non-null logMAR values. Those records with recorded near logMAR values ranged from 0.0 to 1.0. Near VA values were recorded in Jaeger notation, with the majority of nonmissing records having Jaeger 1 (55.4%).

There were 47 135 899 unique patient visit dates with associated VA data in 2018 in the Rome data set, and 49 968 974 corresponding unique patient visit dates in the Chicago data set. We identified an unexpectedly wide spread in the number of VA records per visit, ranging from 1 to 59 in Rome and from 1 to 57 in Chicago, with an average of 4.04 in Rome (3.38 in Chicago) and a standard deviation (SD) of 2.39 in Rome (2.31 in Chicago).

Notably, 1.4% (n = 663 451) of visit dates in Rome contained > 10 VA measurements, including duplicate entries. In total, 10.8% of all Rome records (n = 20 248 080) shared identical metadata (patient ID, date, eye laterality, VA correction, VA method, and refraction/pinhole/NI flags) with another VA record, and 2.7% shared identical metadata and logMAR with another record. In Chicago, 1.6% (n = 817 867) of visit dates contained > 10 VA measurements and only 1.4% of all records (n = 2 381 998) shared identical metadata (patient ID, date, eye laterality, VA correction, VA method, and refraction/pinhole/NI flags) with another VA record. More visit dates contained > 10 records in Chicago despite Chicago containing fewer duplicate records.
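The two duplicate definitions used above (identical metadata, and identical metadata plus logMAR) can be sketched in Python as follows. The field names and record layout are hypothetical stand-ins for the registry columns, and the sketch is only an illustration of the counting logic, not the query used in this study.

```python
from collections import Counter

# Hypothetical field names standing in for the metadata listed in the text.
METADATA_FIELDS = (
    "patient_id", "date", "laterality", "correction",
    "method", "refraction", "pinhole", "ni",
)


def _meta_key(record):
    return tuple(record[field] for field in METADATA_FIELDS)


def duplicate_fractions(records):
    """Return (share of records sharing metadata with another record,
    share sharing metadata and logMAR with another record)."""
    if not records:
        return 0.0, 0.0
    meta_counts = Counter(_meta_key(r) for r in records)
    full_counts = Counter(_meta_key(r) + (r["logmar"],) for r in records)
    shared_meta = sum(meta_counts[_meta_key(r)] > 1 for r in records)
    shared_full = sum(full_counts[_meta_key(r) + (r["logmar"],)] > 1 for r in records)
    return shared_meta / len(records), shared_full / len(records)


# Hypothetical records: two exact duplicates, one same-metadata record with a
# different value, and one record for the other eye.
base = {
    "patient_id": "p1", "date": "2018-03-01", "laterality": "right",
    "correction": "corrected", "method": "distance",
    "refraction": False, "pinhole": False, "ni": False,
}
example = [
    {**base, "logmar": 0.1},
    {**base, "logmar": 0.1},
    {**base, "logmar": 0.3},
    {**base, "laterality": "left", "logmar": 0.1},
]
meta_share, full_share = duplicate_fractions(example)
```

In this toy example, three of four records share metadata with another record, but only two are exact duplicates; the third represents a repeated measurement with a different value, which is the distinction drawn in the Rome percentages above.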

VA Values

Rome

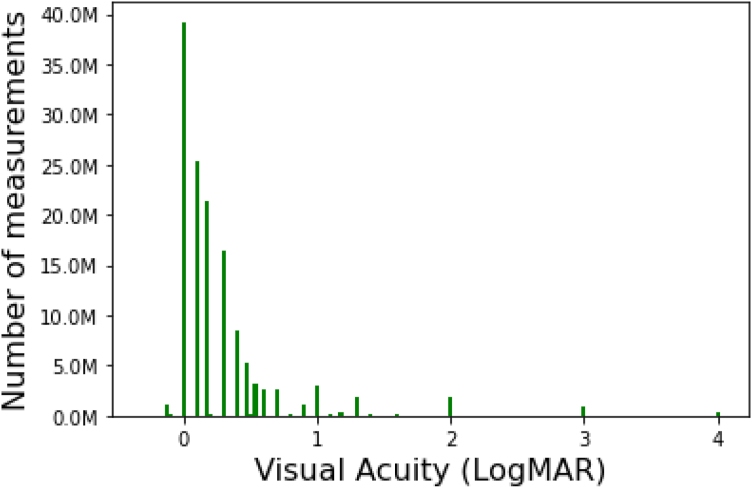

Qualitative VA values are reported using standard terminology for Snellen measurements and also assigned numeric values for logMAR and modified logMAR in the Rome data set (logMAR values in Table 1). Both logMAR and modified logMAR values ranged from −0.3 to 4.0, with 92.5% of Rome values falling between 0 and 1, with the exception of 999, which denotes unspecified (Fig 1). Snellen values ranged from 20/10 to no light perception (NLP), and modified Snellen and modified logMAR values demonstrated good concordance (Table S3, available at www.ophthalmologyscience.org). CF was assigned logMAR = 2, HM logMAR = 3, LP logMAR = 3, and NLP logMAR = 4.

Figure 1.

Distribution of modified logarithm of the minimum angle of resolution (logMAR) visual acuity measurements in 2018 American Academy of Ophthalmology IRIS® Registry (Intelligent Research in Sight) in Rome.

The mean (SD) of logMAR and modified logMAR were the same (0.28 and 0.45, respectively). Only 0.7% of records had different values for logMAR and modified logMAR for a patient on a given visit date, with the average difference being −0.00051 (SD, 0.00953). Median VA was notably better (lower logMAR) than mean VA, reflecting a distribution of VA among patients that skews toward better vision (Table 1; Fig 1).
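Because 999 denotes an unspecified value in the logMAR fields, summary statistics need to exclude it before averaging. The Python sketch below shows one way to do so and illustrates why the median falls below the mean in a distribution skewed toward better vision. The sample values and function name are hypothetical and are not registry data.

```python
from statistics import mean, median

MISSING_SENTINEL = 999  # per the text, 999 denotes an unspecified value


def summarize_logmar(values):
    """Drop missing values and the 999 sentinel, then summarize."""
    valid = [v for v in values if v is not None and v != MISSING_SENTINEL]
    if not valid:
        return None
    return {"n": len(valid), "mean": mean(valid), "median": median(valid)}


# Hypothetical sample: mostly good vision plus one count-fingers value (2.0).
sample = [0.0, 0.0, 0.1, 0.18, 0.3, 2.0, 999, None]
summary = summarize_logmar(sample)
print(summary)
```

A single poor-vision value pulls the mean well above the median, which mirrors the pattern reported in Table 1.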

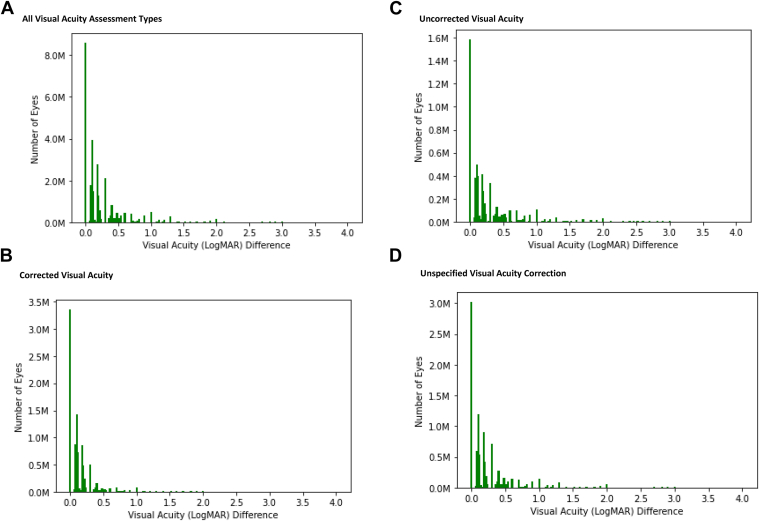

The mean spread in VA measurements per patient within each eye (difference between best and worst VA measurements) was 0.17 logMAR across all assessment corrections in 2018, but was 0.06 logMAR for CVA measurements (Fig 2). For patient visit dates with both right and left eye VA documented, the mean (SD) difference in VA between the 2 eyes was 0.24 (0.49) logMAR. However, in aggregate the mean and median VA values for right and left eyes were similar across the data set.

Figure 2.

Difference between best and worst visual acuity measurements per patient, per eye in 2018 American Academy of Ophthalmology IRIS® Registry (Intelligent Research in Sight) data in Rome. A, All visual acuity assessment types. B, Corrected visual acuity. C, Uncorrected visual acuity. D, Unspecified visual acuity correction. logMAR = logarithm of the minimum angle of resolution.
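The per-eye spread summarized in this figure is the difference between the worst (highest) and best (lowest) logMAR recorded for each patient and eye. A minimal Python sketch of that calculation follows; the tuple layout and sample values are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def spread_per_eye(records):
    """records: iterable of (patient_id, eye, logmar).

    Returns worst-minus-best logMAR for each (patient_id, eye).
    """
    values = defaultdict(list)
    for patient_id, eye, logmar in records:
        values[(patient_id, eye)].append(logmar)
    return {key: max(v) - min(v) for key, v in values.items()}


# Hypothetical measurements over one year.
records = [
    ("p1", "right", 0.10),
    ("p1", "right", 0.30),
    ("p1", "right", 0.18),
    ("p1", "left", 0.00),
]
spreads = spread_per_eye(records)
mean_spread = mean(spreads.values())
```

An eye with a single measurement has a spread of 0, so the mean spread depends on how many eyes were measured only once.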

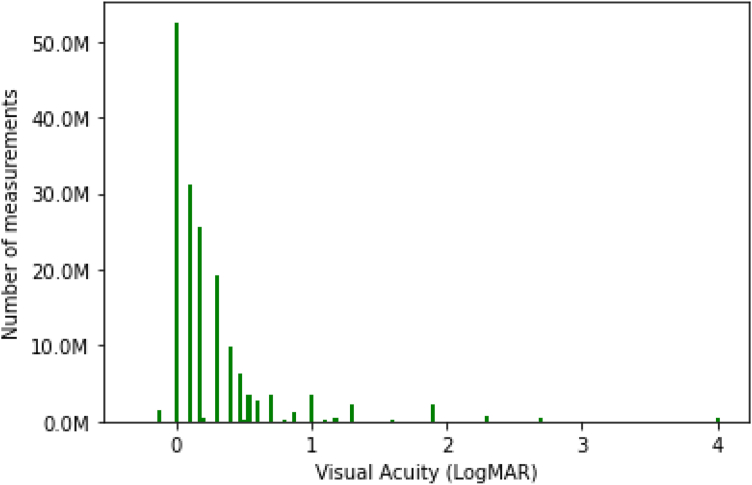

Chicago

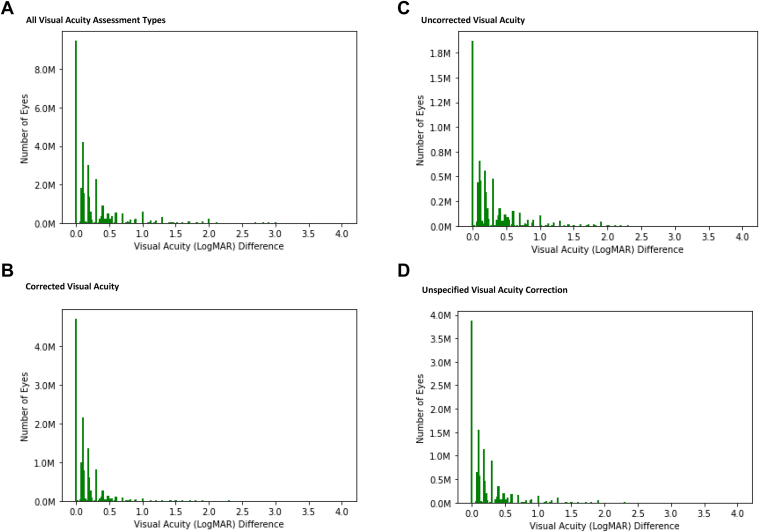

In Chicago, CF was assigned logMAR = 1.9, HM logMAR = 2.3, LP logMAR = 2.7, and NLP logMAR = 4. The vast majority of VA records (99.8%, or 168 530 763) were associated with a logMAR value. Chicago logMAR values were similar to recorded values in Rome; 98.9% of Chicago logMAR values ranged from −0.1 to 4.0 (Fig 3), 94.8% between 0 and 1. Median VA was better (lower logMAR) than mean VA (Table 2; Fig 3) and mean spread in VA measurements per patient within each eye (difference between best and worst VA measurements) was 0.19 logMAR across all assessment corrections in 2018 and 0.08 logMAR within only corrected VA measurements (Fig 4). For patient visit dates with both right and left eye VA documented, the mean (SD) difference in VA between the 2 eyes was 0.23 (0.45) logMAR. However, in aggregate the mean and median VA values for right and left eyes were similar across the Chicago data set, as in Rome.

Figure 3.

Distribution of logarithm of the minimum angle of resolution (logMAR) visual acuity measurements in 2018 American Academy of Ophthalmology IRIS® Registry (Intelligent Research in Sight) in Chicago.

Figure 4.

Difference between best and worst visual acuity measurements per patient, per eye in 2018 American Academy of Ophthalmology IRIS® Registry (Intelligent Research in Sight) data in Chicago. A, All visual acuity assessment types. B, Corrected visual acuity. C, Uncorrected visual acuity. D, Unspecified visual acuity correction. logMAR = logarithm of the minimum angle of resolution.
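The qualitative VA assignments reported for the two versions can be collected in a small lookup, which makes the version differences explicit for analyses that pool or compare Rome and Chicago. The Python sketch below uses only the values stated in the text; the dictionary and function names are hypothetical.

```python
# logMAR values assigned to qualitative VA descriptors, as reported in the text.
QUALITATIVE_LOGMAR = {
    "Rome": {"CF": 2.0, "HM": 3.0, "LP": 3.0, "NLP": 4.0},
    "Chicago": {"CF": 1.9, "HM": 2.3, "LP": 2.7, "NLP": 4.0},
}


def qualitative_to_logmar(descriptor: str, version: str) -> float:
    """Look up the logMAR value for CF, HM, LP, or NLP in a given version."""
    return QUALITATIVE_LOGMAR[version][descriptor.upper()]


# Difference (Rome minus Chicago) for each descriptor, rounded to 1 decimal.
differences = {
    d: round(QUALITATIVE_LOGMAR["Rome"][d] - QUALITATIVE_LOGMAR["Chicago"][d], 1)
    for d in QUALITATIVE_LOGMAR["Rome"]
}
print(differences)
```

Because the same clinical finding maps to different numeric values in the two versions, means that include qualitative VA are not directly comparable across versions without harmonizing these assignments first.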

VA Assessment Correction

Visual acuity correction was classified as CVA, UCVA, or unspecified. Corrected VA refers to habitual correction (usually noted as “cc”). Out of all VA records in 2018, in both Rome and Chicago, a plurality was CVA, followed by slightly fewer unspecified, and the smallest fraction were UCVA. Measurement characteristics are shown in Tables 1, 2, 4, and 5.

Table 4.

Frequency of Visual Acuity Measurement Method and Type in 2018 American Academy of Ophthalmology IRIS® Registry (Intelligent Research In Sight) Rome Version∗

| Method | All (100.0%) | Type: CVA (31.33%) | Type: UCVA (18.96%) | Type: Unspecified (49.71%) |
|---|---|---|---|---|
| Distance | 89 653 937 (48.3%) | 36 398 894 (62.6%) | 26 094 692 (74.2%) | 27 160 351 (29.4%) |
| Near | 11 066 330 (6.0%) | 5 950 459 (10.2%) | 2 444 336 (6.9%) | 2 671 535 (2.9%) |
| Unspecified | 84 915 304 (45.7%) | 15 809 903 (27.2%) | 6 649 418 (18.9%) | 62 455 983 (67.7%) |

CVA = corrected visual acuity (visual acuity with correction); UCVA = uncorrected visual acuity

Version frozen on April 20, 2020. Values reported as N (%). Percentages are per column.

Table 5.

Frequency of Visual Acuity Measurement Method and Type in 2018 American Academy of Ophthalmology IRIS® Registry (Intelligent Research In Sight) Chicago Version∗

| Method | All (100.00%) | Type: CVA (39.06%) | Type: UCVA (23.41%) | Type: Unspecified (37.53%) |
|---|---|---|---|---|
| Distance | 100 696 572 (59.6%) | 40 131 958 (60.8%) | 28 966 865 (73.3%) | 31 597 749 (49.8%) |
| Near | 14 153 014 (8.4%) | 7 186 123 (10.9%) | 2 993 807 (7.6%) | 3 973 084 (6.3%) |
| Unspecified | 54 070 463 (32.0%) | 18 669 813 (28.3%) | 7 578 178 (19.2%) | 27 822 472 (43.9%) |

CVA = corrected visual acuity (visual acuity with correction); UCVA = uncorrected visual acuity

Version frozen on December 24, 2021. Values reported as N (%). Percentages are per column.

In aggregate, the mean of nonmissing modified logMAR measurements was similar for CVA and unspecified VA measurements in both Rome and Chicago (approximately 0.25 for each, corresponding to approximately 20/40 Snellen VA). Uncorrected VA was worse (mean, 0.35). Median logMAR was the same in both Rome and Chicago.

Notably, among patient visit dates where both CVA and UCVA were recorded for the same eye, CVA was better than UCVA in the majority (> 60%) of cases in both Rome and Chicago. It was tied in approximately 20% to 25% of cases and worse than UCVA in 10% to 15% of cases (Table 6). Among patient visit dates where both CVA and unspecified VA were recorded for the same eye, CVA was most commonly tied or slightly worse than unspecified VA (Table 6).

Table 6.

Differences in Visual Acuity Measurements in the Same Eye on the Same Day, based on Measurement Type and Method in 2018 American Academy of Ophthalmology IRIS® Registry (Intelligent Research In Sight)

| | Rome N (%) | Chicago N (%) |
|---|---|---|
| CVA versus UCVA | | |
| CVA better than UCVA | 3 332 460 (64.3) | 5 943 414 (62.3) |
| CVA same as UCVA | 1 239 915 (23.9) | 2 176 533 (22.8) |
| CVA worse than UCVA | 614 146 (11.8) | 1 417 713 (14.9) |
| CVA versus unspecified correction | | |
| CVA better than unspecified | 3 187 433 (14.1) | 6 482 994 (17.8) |
| CVA same as unspecified | 9 428 438 (41.8) | 14 368 639 (39.5) |
| CVA worse than unspecified | 9 931 952 (44.0) | 15 542 319 (42.7) |
| Distance versus unspecified distance/near | | |
| Distance better than unspecified | 5 554 632 (37.6) | 7 167 775 (34.5) |
| Distance same as unspecified | 4 025 703 (27.2) | 6 259 216 (30.1) |
| Distance worse than unspecified | 5 195 903 (35.2) | 7 358 935 (35.4) |
| Refraction-associated versus unrefracted | | |
| Refraction better than unrefracted | 5 139 578 (50.7) | 10 200 264 (50.3) |
| Refraction same as unrefracted | 4 293 141 (42.4) | 8 220 001 (40.6) |
| Refraction worse than unrefracted | 702 198 (6.9) | 1 849 873 (9.1) |
| Pinhole versus nonpinhole | | |
| Pinhole better than nonpinhole | 13 866 597 (68.8) | 17 629 140 (62.2) |
| Pinhole same as nonpinhole | 4 445 754 (22.1) | 6 800 762 (24.0) |
| Pinhole worse than nonpinhole | 1 844 468 (9.2) | 3 912 848 (13.8) |

CVA = corrected visual acuity (visual acuity with correction); UCVA = uncorrected visual acuity
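The same-eye, same-day comparisons in Table 6 reduce to classifying each pair of measurements as better, same, or worse, where lower logMAR means better vision. The Python sketch below illustrates this for CVA versus UCVA and recomputes the Rome percentages from the counts in Table 6. It assumes one CVA and one UCVA value per eye and date; how multiple values per day were paired is not described here, and the function names are hypothetical.

```python
from collections import Counter


def classify_pair(cva_logmar: float, ucva_logmar: float) -> str:
    """Compare CVA with UCVA for one eye on one date (lower logMAR is better)."""
    if cva_logmar < ucva_logmar:
        return "CVA better"
    if cva_logmar == ucva_logmar:
        return "same"
    return "CVA worse"


def percentages(counts):
    """Convert category counts to percentages rounded to 1 decimal place."""
    total = sum(counts.values())
    return {label: round(100 * n / total, 1) for label, n in counts.items()}


# Hypothetical (CVA, UCVA) pairs for illustration.
pairs = [(0.10, 0.30), (0.18, 0.18), (0.40, 0.30)]
tally = Counter(classify_pair(cva, ucva) for cva, ucva in pairs)

# Rome counts for CVA versus UCVA, taken from Table 6.
rome_counts = {"CVA better": 3_332_460, "same": 1_239_915, "CVA worse": 614_146}
rome_pct = percentages(rome_counts)
print(rome_pct)
```

Recomputing the percentages from the published counts is a simple consistency check that can be applied to each block of the table.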

VA Assessment Method

Visual acuity measurements were documented in the registry as obtained at distance, near, or unspecified. Among all 2018 nonmissing logMAR VA records, the majority were documented as distance measurements, followed by unspecified. Most CVA and UCVA measurements were documented at distance; however, the majority of unspecified correction measurements were also unspecified method (distance versus near) (Tables 4, 5).

There were no logMAR or modified logMAR values for “near” in Rome; in these cases, modified logMAR and Snellen were always 999, indicating all near logMAR data were missing. However, the near value field was populated with Jaeger notation, with 48.0% (n = 10 228 548) having missing data, and among nonmissing data, the most frequent record was Jaeger 1 (J1; 54.2%, n = 6 001 698). Values ranged from J1 to J16, and 80.2% of values were either J1, J2, or J3. In Chicago, near measurements with associated logMAR values ranged from 0 to 1, with 2.8% (n = 389 286) recorded as 999. One hundred percent (14 153 014) of near VA records were associated with specific labeled Jaeger notation VAs. Of these near Jaeger records in Chicago, 0% were associated with distant or unspecified VAs. Jaeger values in Chicago also ranged from J1 to J16; 55.4% (7 835 559) of values were J1 and 80.7% (11 426 085) of values were either J1, J2, or J3.

In aggregate, distance VA measurements were similar to unspecified VA measurements. When comparing patient visit dates where both a distance and unspecified VA measurement were recorded in the same eye, distance VA was better than, the same as, or worse than unspecified VA in roughly equal proportions (approximately one-third in each category, for both Rome and Chicago) (Table 6). However, the difference between mean distance and mean unspecified measurements across all 2018 nonmissing records was small (only 0.02 logMAR in Rome and 0.01 logMAR in Chicago).

VA Measurements Obtained with Refraction

A “refraction” flag was defined as true or false for each VA measurement in both Rome and Chicago. Among all 2018 VA measurements, approximately 5% were associated with a refraction flag (Tables 1, 2). Notably, among patient visit dates where both refraction-associated and unrefracted VA measurements were recorded for the same eye, refraction-associated VA was better than unrefracted VA in over half of cases in both Rome and Chicago, and it was seldom worse (Table 6). Unrefracted VA measurements could include corrected as well as uncorrected or unspecified VA measurements.

VA Measurements Associated with Pinhole Assessment

A “pinhole” flag was defined as true or false for each VA measurement in Rome and Chicago. Among all 2018 VA measurements, > 11% were obtained with pinhole (Tables 1, 2). Pinhole-associated VA was better than without pinhole in > 60% of cases in both Rome and Chicago (Table 6).

A “no improvement” (NI) flag was also defined as true or false for each VA measurement in Rome. However, only 1788 VA measurements (< 0.01%) were labeled as NI, all with logMAR of 2, 3, or 4. The NI flag was not included in Chicago.

VA Refinement Algorithm

To develop a data-cleaning algorithm, we sought to identify representative corrected distance VA in the better-seeing eye at the patient level in 2018, as the closest representation of best-corrected VA in actual clinical practice (other than limiting analysis only to VA measurements associated with a refraction). We first excluded VA measurements obtained at near. Rome contained no near logMAR values, and the near values in Chicago (8.2% of records) were outliers relative to the distance values (almost 3 × lower logMAR). Including them would bias results toward better vision for the proportion of patients with near VA values. Additionally, despite uncorrected values comprising approximately 23% to 24% of records, we also excluded these measurements because their average logMAR was 0.11 less than corrected VA and would underestimate patients’ habitual vision. The algorithm retained refraction-associated and pinhole-flagged VA measurements. Unspecified VA measurements were evaluated and found to yield VA results similar to those for CVA measurements. Because these accounted for the plurality of VA correction and method measurements and distance was assumed to be the default method of VA assessment, unspecified VA measurements were also retained. The PostgreSQL code for this filter can be found in the Supplemental Appendix (available at www.ophthalmologyscience.org) for both Rome and Chicago data sets. The code: (1) required each VA to be of type corrected/unspecified or a refraction-associated VA; (2) required each VA measurement method to be distance or unspecified; and (3) did not filter on pinhole or NI.
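The three filter rules can be illustrated with a short Python sketch. It is not the PostgreSQL code from the Supplemental Appendix; the dictionary keys and category labels are hypothetical, and dropping records whose logMAR is absent or coded 999 is an added assumption based on the missing-value sentinel described for near VA.

```python
MISSING = 999  # assumed sentinel for a missing logMAR value


def keep_record(rec):
    """Return True if a VA record passes the three filter rules."""
    # Assumption: records without a usable logMAR are dropped before filtering.
    if rec["logmar"] is None or rec["logmar"] == MISSING:
        return False
    # Rule 1: corrected or unspecified correction, or any refraction-associated VA.
    correction_ok = rec["correction"] in {"corrected", "unspecified"} or rec["refraction"]
    # Rule 2: distance or unspecified method, so near measurements are excluded.
    method_ok = rec["method"] in {"distance", "unspecified"}
    # Rule 3: pinhole and NI flags are deliberately not consulted.
    return bool(correction_ok and method_ok)


# Illustrative records only; the values are not drawn from the registry.
records = [
    {"correction": "corrected", "method": "distance", "refraction": False, "pinhole": False, "logmar": 0.1},
    {"correction": "uncorrected", "method": "distance", "refraction": False, "pinhole": True, "logmar": 0.4},
    {"correction": "uncorrected", "method": "unspecified", "refraction": True, "pinhole": False, "logmar": 0.0},
    {"correction": "unspecified", "method": "near", "refraction": False, "pinhole": False, "logmar": 0.1},
    {"correction": "unspecified", "method": "unspecified", "refraction": False, "pinhole": True, "logmar": 0.2},
    {"correction": "corrected", "method": "distance", "refraction": False, "pinhole": False, "logmar": 999},
]

kept = [r for r in records if keep_record(r)]
print(len(kept), "of", len(records), "records retained")
```

In this sample the uncorrected record with a refraction flag is retained under rule 1, whereas the uncorrected pinhole record is dropped because the pinhole flag plays no role in the decision.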

To validate our filter, we compared results to those with no filter. Approximately 19% of records were filtered in both Rome and Chicago data sets (n = 16 619 111 out of 88 692 944 in Rome, and 18 739 599 out of 98 262 368 in Chicago). Application of the filter reduced the impact of high-outlier VA measurements; on average in Rome after filtering, the mean logMAR measurement per visit date per eye was 0.25, whereas the average maximum logMAR measurement per visit date per eye was 0.33. In Chicago, on average the mean logMAR measurement per visit date per eye was 0.24 with our filter, compared with 0.32 when based on the maximum logMAR measurement per visit date per eye. Similarly, the SD of VA measurements was empirically narrower with filter application (0.47 prefilter vs. 0.23 postfilter in Rome, and 0.43 prefilter vs. 0.41 postfilter in Chicago).

To estimate representative VA in the better-seeing eye, we limited to the best VA measurement on each visit date in 2018 for each eye, condensed VA measurements to the mean of best per visit date values for each eye in 2018, and identified the better of the 2 eyes’ mean VA measurements.
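The aggregation steps above can be sketched as follows for a single patient. This is a hedged illustration in Python rather than the authors' implementation; the tuple layout and sample values are hypothetical, and the input is assumed to have already passed the filter.

```python
from collections import defaultdict
from statistics import mean


def better_eye_va(rows):
    """rows: iterable of (visit_date, eye, logmar) for one patient, already filtered.

    Returns the better-seeing eye's mean logMAR and the per-eye means.
    """
    # Step 1: best (lowest logMAR) measurement per eye per visit date.
    best_per_visit = {}
    for date, eye, logmar in rows:
        key = (eye, date)
        best_per_visit[key] = min(logmar, best_per_visit.get(key, logmar))

    # Step 2: mean of the best-per-visit values for each eye over the year.
    per_eye = defaultdict(list)
    for (eye, _date), logmar in best_per_visit.items():
        per_eye[eye].append(logmar)
    eye_means = {eye: mean(values) for eye, values in per_eye.items()}

    # Step 3: the better of the 2 eyes' means (lower logMAR is better).
    return min(eye_means.values()), eye_means


# Illustrative measurements for one hypothetical patient in 2018.
rows = [
    ("2018-01-10", "OD", 0.3),
    ("2018-01-10", "OD", 0.1),
    ("2018-06-02", "OD", 0.3),
    ("2018-01-10", "OS", 0.5),
    ("2018-06-02", "OS", 0.7),
    ("2018-06-02", "OS", 2.0),
]

best, by_eye = better_eye_va(rows)
print(round(best, 2), {eye: round(value, 2) for eye, value in by_eye.items()})
```

Taking the minimum within a visit date discards the 2.0 logMAR entry in the left eye, so a single poor or erroneous same-day value does not enter the yearly mean.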

Discussion

The IRIS Registry offers considerable research potential and has already enabled a variety of research questions, ranging from prevalence of rare disease7 to treatment patterns9 and surgical complication rates.5 The inclusion of VA measurements, which are not available from other large national clinical data sources such as insurance claims, is essential for studying visual impact and outcomes, which are of critical importance in ophthalmology. However, analysis and interpretation of VA data require careful consideration of data limitations and metadata fields. To date, studies have used variable methodologies for cleaning VA data, and there is a lack of published data to help researchers and readers.8,9 Furthermore, there are 2 versions of the database: Rome, which was available to researchers through 2021, and Chicago, which became available mid-2021. It is important to understand how VA information is recorded and interpreted in IRIS Registry-based analyses; analyses may benefit from development of targeted algorithms to evaluate specific VA information.

As expected for a database derived from clinical practice, we found considerable evidence for inconsistencies in VA data. For example, an appreciable fraction of visit dates had duplicate VA records. Among Rome VA records, 10.8% shared identical metadata, which vastly outnumbered the 1.4% of Chicago records with duplicate metadata. On the other hand, Rome had slightly fewer visit dates with > 10 VA records (1.4%) compared with 1.6% in Chicago. In Rome, when both logMAR and modified logMAR were present, the difference between the 2 was negligible. In Chicago, only logMAR was present.
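One way to quantify and collapse duplicate records is sketched below in Python. The definition used here (a record counts as a duplicate when every listed field matches at least one other record) is an assumption, as are the field names; the text does not specify exactly how the duplicate percentages were computed.

```python
from collections import Counter

# Hypothetical metadata fields that together identify a VA record.
KEYS = ("patient_id", "visit_date", "eye", "correction", "method", "logmar")


def signature(record, keys=KEYS):
    return tuple(record[k] for k in keys)


def duplicate_fraction(records, keys=KEYS):
    """Fraction of records sharing all key fields with at least one other record."""
    if not records:
        return 0.0
    counts = Counter(signature(r, keys) for r in records)
    duplicated = sum(1 for r in records if counts[signature(r, keys)] > 1)
    return duplicated / len(records)


def deduplicate(records, keys=KEYS):
    """Keep the first occurrence of each distinct signature, preserving order."""
    seen, unique = set(), []
    for r in records:
        sig = signature(r, keys)
        if sig not in seen:
            seen.add(sig)
            unique.append(r)
    return unique


# Illustrative records only.
base = {"patient_id": "p1", "visit_date": "2018-04-02", "eye": "OD",
        "correction": "corrected", "method": "distance", "logmar": 0.1}
records = [
    base,
    dict(base),                       # exact duplicate of the first record
    dict(base, logmar=0.2),           # same visit, different value
    dict(base, eye="OS"),             # fellow eye
    dict(base, visit_date="2018-09-15"),
]

print(duplicate_fraction(records), len(deduplicate(records)))
```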

Although we anticipated CVA to generally be better than UCVA when measurements were obtained on the same day, we were surprised to find CVA measurements worse than UCVA 12% of the time and worse than unspecified VA measurements 44% of the time in Rome (15% and 43% of the time in Chicago, respectively). This may reflect documentation errors, ingestion errors, difficult refraction exams, CVA collected after only autorefraction, confounders, and/or refraction performed late in a patient’s visit or after other examination, testing, or treatment. There may also be differences in VA assessment method (e.g., a visit may have CVA recorded without pinhole refinement and UCVA recorded based on pinhole), and we suspect that many VA measurements recorded as unspecified actually reflect CVA, given similarities in aggregate characteristics of the 2 groups. Particularly because most unspecified correction measurements also had unspecified distance versus near measurement method, it is likely that some EHR systems do not retain correction status and measurement method in structured data fields. Furthermore, relationships between data may not always be as expected. For example, among visit dates with both refraction-associated and nonrefraction VA measurements, 7% (Rome) and 9% (Chicago) of nonrefracted VA measurements were better than refracted; among visit dates with both pinhole and nonpinhole measurements, 9% (Rome) and 14% (Chicago) of the nonpinhole VAs were better than pinhole.

Of note, there were no major differences between the Rome and Chicago databases for 2018 VA data aggregate statistics. Chicago average VAs were slightly lower than in Rome, likely because: (1) near VAs were also associated with logMARs and the average near logMAR was 0.11, significantly lower than the overall average logMAR of 0.26; and (2) HM and LP were assigned logMAR values of 2.3 and 2.7 in Chicago versus 3.0 for each in Rome, causing a leftward shift in the mean Chicago logMAR.

Rome contained more VA records; however, Chicago contained more VA records with nonmissing VA data. Chicago also contained slightly more visit dates and patients. Mean, SD, and median logMAR for all laterality, correction, distance, pinhole, and refraction measurements were nearly identical between Rome and Chicago, as expected, supporting the fidelity of the IRIS Registry data set. Chicago contained fewer duplicate values but still had a nontrivial number of duplicate records that required data cleaning. The frequency of atypical pairings (i.e., some unrefracted VA better than refracted VA in the same eye on the same day, or some nonpinhole values better than pinhole values on the same day) was similar between Rome and Chicago.

Based on these caveats, we propose applying selective algorithms to filter VA values based on study purpose. For example, because most analyses of visual outcomes emphasize corrected and distance VA, we suggest selecting the best logMAR value from a filtered subset of CVA/unspecified measurements obtained at distance/unspecified, including refractions and not filtering on pinhole or NI flags. Unspecified VA measurements were very similar to CVA in aggregate. We suggest excluding UCVA (or evaluating UCVA measurements on a case-by-case basis) because when UCVA and CVA were not identical, UCVA was worse than CVA 84% (Rome) and 81% (Chicago) of the time. We also suggest excluding near VA measurements because in Rome all corresponding logMARs are null and in Chicago Jaeger charts may demonstrate too much variability (empirically demonstrating much lower vision than distance values).11,12 Finally, we suggest neither including nor excluding records based on the presence of pinhole and NI flags, because of clinical relevance and measurement consistency as well as record sparsity (pinhole flags were present in 12% [Rome] and 11% [Chicago], and NI flags were present in < 1% [Rome] and 0% [Chicago]). Issues of data duplicates or misclassification can be addressed by selecting the best VA measurement in the filtered subset and flattening an arbitrary number of VA measurements to a single entry per visit date per eye.

Where possible, depending on the research question, we suggest reducing the impact of data entry errors or outliers by measuring VA per eye over a span of time rather than at a single time point. For example, one can run a data selection algorithm per date per eye, identify the best VA measurement in each eye per visit date (e.g., corrected or unspecified), and retain the mean of these values. Unlike median, utilizing mean values: (1) considers measurements that are better or worse, giving weight to notable decline or improvement in vision; (2) smooths out the impact of each individual measurement; and (3) is less susceptible to outliers than minimum or maximum filters. We recently used this approach for an epidemiologic study assessing for blindness over a calendar year (Brant, unpublished data). Approaches may be more complex for research questions evaluating clinical outcomes or changes over time that require reliable repeat measurements, necessitating more careful validation of VA measurements. Currently IRIS Registry VA data are specific to a given date, but not a particular visit, information that may be difficult to glean even from EHR review (e.g., multiple measurements on the same date could represent encounters with different providers in a multispecialty group, or appointments for a provider visit and imaging/testing, respectively). However, for most analyses, data scientists will need to map VA measurements into a composite value for analysis. We expect that most IRIS research questions would benefit from availability of standardized filtered eye-level VA data in addition to the more detailed, unfiltered VA data. We have shared our algorithm, recognizing that site-specific implementation may yield inconsistencies. Current Verana Health efforts to improve the reliability of IRIS Registry VA data may support the eventual integration of standardized filtered VA in the IRIS Registry research extracts.
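A small numerical illustration of the trade-off among mean, median, minimum, and maximum is given below in Python. The values are invented for the example: five best-per-visit logMAR measurements for one eye, one of which is much worse than the rest.

```python
from statistics import mean, median

# Hypothetical best-per-visit logMAR values for one eye across five 2018 visit dates.
# The fourth value stands in for a data entry error or a transient drop in vision.
per_visit_best = [0.1, 0.1, 0.2, 1.0, 0.1]


def summarize(values):
    """Return the candidate per-eye summaries discussed in the text."""
    return {
        "mean": mean(values),
        "median": median(values),
        "min": min(values),
        "max": max(values),
    }


summary = summarize(per_visit_best)
for name, value in summary.items():
    print(f"{name}: {value:.2f}")
```

Here the median and minimum ignore the poor measurement entirely, the maximum is determined by it alone, and the mean registers it while damping its influence.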

The etiology of data errors and inconsistencies in IRIS Registry VA measurements is likely multifactorial, reflecting many of the expected challenges inherent to data reflecting actual clinical practice, including ophthalmic technician variability, data entry errors, patient inconsistency, and ingestion pipeline bugs or value classification errors. Current work to integrate data extraction and transformation under a single entity, the same registry vendor as for data analysis (Verana Health), will improve transparency and reliability of data ingestion and transformation going forward. Although large data sets enable smoothing of noise, if the distribution of data is shifted due to underlying biases, smoothing and aggregation algorithms may still propagate the bias. Because the IRIS Registry contains data for most United States ophthalmology patients, most studies have large sample size, and analysis of thousands or millions of patient records lessens the impact of individual entry errors. However, to further minimize the influence of erroneous data and strengthen data interpretability, the IRIS Registry data set is actively curated with implementation of continuous enhancements to data-cleaning methods, algorithms to filter outliers, and application of clinical logic to understand results. In addition, researchers can and should employ targeted analysis methods to infer the VA records of interest for a particular research question. We recommend that researchers prefilter and aggregate VAs using an approach as described above. Our suggested approach further ameliorates the influence of outliers.

In summary, the IRIS Registry offers the unique opportunity to study vision for millions of United States patients. Given the complexity of documentation, VA data are subject to expected challenges. Although these considerations do not eclipse the overall unprecedented strengths of the data set to answer research questions, particular care and attention are required to design studies and clean the data to ensure consistency and reliability of analyses. We propose a data filtering strategy and present an example algorithm for excluding outliers and aggregating duplicate data, informed by the underlying caveats of the data set. We recommend that future researchers standardize and document their methodology to improve accuracy and reproducibility of IRIS Registry analyses. This is a topic that warrants careful attention, and because VA coding, logic, and content are likely to evolve in the future, it also warrants iterative review.

Manuscript no. XOPS-D-22-00139R2.

Footnotes

Supplemental material available at www.ophthalmologyscience.org.

Disclosures:

All authors have completed and submitted the ICMJE disclosures form.

The authors have made the following disclosures: A.B.: Consultant – Google Inc., Healthcare AI.

N.K.: Consultant – AbbVie/Allergan; Grants – AbbVie/Allergan, Guardion Health Services Inc, Equinox, Nicox, Olleyes, Santen, Glaukos, Diopsys, Aerie.

S.P.: Consulting fees/equity – Acumen, LLC, Verana Health (outside the submitted work)

J.H.: Consulting fees – KalVista, Novartis, Spark Therapeutics, Lowy Medical Research Institute, Bionic Sight LLC; Data safety monitoring – Aura Bioscience; Chair – Janssen; Director – Bristol-Myers Squibb

A.L.: Grants – Santen, Carl Zeiss Meditec, Novartis; Personal fees – Genentech, Topcon, Verana Health (outside of the submitted work)

A.Lorch: Consulting fees – Regeneron

J.M.: Consulting fees – KalVista Pharmaceuticals, Sunovion, Heidelberg Engineering, ONL Therapeutics; Royalties – Valeant Pharmaceuticals/Mass Eye and Ear; Patents/intellectual property – Valeant Pharmaceuticals/Mass Eye and Ear, ONL Therapeutics, Drusolv Therapeutics; Stock options/equity – ONL Therapeutics, Aptinyx, Inc., Ciendias Bio

Supported by departmental support from Research to Prevent Blindness and National Eye Institute (grant no.: P30-EY026877). The funding organizations had no role in the design or conduct of this research.

HUMAN SUBJECTS: The study was reviewed and deemed exempt by the Stanford University institutional review board and the research adhered to the Declaration of Helsinki.

No animal subjects were included in this study.

Author Contributions:

Conception and design: Brant, Kolomeyer, Goldberg, Hyman, Pershing

Data collection: Brant, Hyman, Pershing

Analysis and interpretation: Brant, Kolomeyer, Goldberg, Haller, C.Lee, A.Lee, Lorch, Miller, Hyman, Pershing

Obtained funding: N/A

Overall responsibility: Brant, Kolomeyer, Goldberg, Haller, C.Lee, A.Lee, Lorch, Miller, Hyman, Pershing

References

- 1. Chiang M.F., Sommer A., Rich W.L., et al. The 2016 American Academy of Ophthalmology IRIS Registry (Intelligent Research in Sight) database: characteristics and methods. Ophthalmology. 2018;125:1143–1148. doi: 10.1016/j.ophtha.2017.12.001.

- 2. Parke D.W., II, Rich W.L., III, Sommer A., Lum F. The American Academy of Ophthalmology's IRIS Registry (Intelligent Research in Sight clinical data): a look back and a look to the future. Ophthalmology. 2017;124:1572–1574. doi: 10.1016/j.ophtha.2017.08.035.

- 3. Resnikoff S., Felch W., Gauthier T.M., Spivey B. The number of ophthalmologists in practice and training worldwide: a growing gap despite more than 200,000 practitioners. Br J Ophthalmol. 2012;96:783–787. doi: 10.1136/bjophthalmol-2011-301378.

- 4. IRIS Registry Data Analysis. https://www.aao.org/iris-registry/data-analysis/requirements

- 5. Pershing S., Lum F., Hsu S., et al. Endophthalmitis after cataract surgery in the United States: a report from the Intelligent Research in Sight Registry, 2013–2017. Ophthalmology. 2020;127:151–158. doi: 10.1016/j.ophtha.2019.08.026.

- 6. Repka M.X., Lum F., Burugapalli B. Strabismus, strabismus surgery, and reoperation rate in the United States: analysis from the IRIS Registry. Ophthalmology. 2018;125:1646–1653. doi: 10.1016/j.ophtha.2018.04.024.

- 7. Willis J.R., Vitale S., Morse L., et al. The prevalence of myopic choroidal neovascularization in the United States: analysis of the IRIS Data Registry and NHANES. Ophthalmology. 2016;123:1771–1782. doi: 10.1016/j.ophtha.2016.04.021.

- 8. Parke D.W., III, Lum F. Return to the operating room after macular surgery: IRIS Registry analysis. Ophthalmology. 2018;125:1273–1278. doi: 10.1016/j.ophtha.2018.01.009.

- 9. Cantrell R.A., Lum F., Chia Y., et al. Treatment patterns for diabetic macular edema: an Intelligent Research in Sight (IRIS) registry analysis. Ophthalmology. 2020;127:427–429. doi: 10.1016/j.ophtha.2019.10.019.

- 10. Chu X., Ilyas I.F., Krishnan S., Wang J. Proceedings of the 2016 International Conference on Management of Data - SIGMOD ’16. ACM Press; 2016. Data cleaning; pp. 2201–2206.

- 11. Radner W. Reading charts in ophthalmology. Graefes Arch Clin Exp Ophthalmol. 2017;255:1465–1482. doi: 10.1007/s00417-017-3659-0.

- 12. Colenbrander A., Runge P. Can Jaeger Numbers Be Standardized. Invest Ophthalmol Vis Sci. 2007;48:3563.
