Abstract

Introduction:

The increasing use of Artificial Intelligence (AI) in medicine has raised ethical concerns, such as patient autonomy, bias, and transparency. Recent studies suggest a need for teaching AI ethics as part of medical curricula. This scoping review aimed to represent and synthesize the literature on teaching AI ethics as part of medical education.

Methods:

The PRISMA-ScR guidelines and JBI methodology guided a literature search in four databases (PubMed, Embase, Scopus, and Web of Science) for the past 22 years (2000–2022). To account for the release of AI-based chat applications, such as ChatGPT, the literature search was updated to include publications until the end of June 2023.

Results:

A total of 1384 publications were originally identified and, after screening titles and abstracts, the full text of 87 publications was assessed. Following the assessment of the full text, 10 publications were included for further analysis. The updated literature search identified two additional relevant publications from 2023, which were included in the analysis. All 12 publications recommended teaching AI ethics in medical curricula due to the potential implications of AI in medicine. Anticipated ethical challenges such as bias were identified as the recommended basis for teaching content in addition to basic principles of medical ethics. Case-based teaching using real-world examples in interactive seminars and small groups was recommended as a teaching modality.

Conclusion:

This scoping review reveals a scarcity of literature on teaching AI ethics in medical education, with most of the available literature being recent and theoretical. These findings emphasize the importance of more empirical studies and foundational definitions of AI ethics to guide the development of teaching content and modalities. Recognizing the significant impact of AI on medicine, additional research on the teaching of AI ethics in medical education is needed to best prepare medical students for future ethical challenges.

Introduction

The use of artificial intelligence (AI) in medicine is expected to have a significant impact on patient care, medical research, and the entire healthcare system. The term ‘artificial intelligence’ was first coined in the 1950s by McCarthy et al. as ‘… the basis of the conjecture that every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it’ [1]. AI is typically associated with the field of computer science and can be divided into so-called ‘strong AI’ and ‘weak AI’ [2]. Strong AI aims to develop a general AI with capabilities comparable to those of humans, while weak AI focuses on creating systems that can perform very specific tasks [3]. Weak AI is further divided into ‘symbolic AI’ and ‘statistical AI’, each with distinctive approaches to problem-solving and data analysis. While ‘symbolic AI’ is commonly based on predefined rules, as in the expert-based clinical decision support systems (CDSS) currently used in medicine, ‘statistical AI’ includes the field of ‘machine learning (ML)’, where numeric functions are used to establish patterns and correlations from the data [3].

Because of their ability to precisely analyze and process large amounts of data, applications based on ML are being successfully used in various medical fields, such as radiology, pathology, or dermatology [4,5,6,7]. Various other benefits are expected from the use of AI, such as increased accuracy in diagnosis, the possibility of personalized treatments, or a reduction in the workload of medical staff [7,8,9]. A more recent application of AI based on machine learning is the large language model (LLM), which forms the core of AI-based chat applications such as ChatGPT by OpenAI. First released to the public in November 2022, ChatGPT can be considered the first consumer-grade and broadly used AI application [10]. Owing to the capabilities of ChatGPT in the medical field, for example, being able to pass the written portion of the US medical licensing examination (USMLE) [11], AI-based chat applications are expected to have a significant impact on medical practice [10,12].

One use case of symbolic AI in medicine is CDSS, which are applied to recommend treatment options based on predefined rules and expert knowledge [13]. Moreover, the use of AI in medicine is expected to reduce health-related expenditures and improve the accessibility of health services for patients [8,14,15].

Despite the many potential benefits expected from the use of AI, possible disadvantages should not be neglected. In addition to essential questions of user liability in the event of any errors in the use of AI-based applications in patient care or the security of patient data, ethical challenges are expected to be the most important [16,17]. In this context, for example, there is the possibility of bias due to a lack of representativeness of the data used to train AI, potentially leading to the under- or overtreatment of patients [17,18]. Both symbolic and statistical AI can contribute to these ethical challenges [18]. For instance, CDSS as part of symbolic AI rely on predefined rules and decision trees that may carry over existing biases from the developers and experts involved in the development process [18,19]. Similarly, statistical AI algorithms may amplify biases in the data on which they are trained, further reinforcing inequalities [20]. Ethical challenges also include issues related to patient autonomy, data privacy, and transparency [5,14,21].

Owing to the potential challenges posed by using AI in medicine, current research on the topic recommends consistent and early teaching of future users [22,23,24]. In addition to imparting knowledge and fostering an understanding of AI in general, teaching about the ethics of AI is broadly recommended [24,25,26]. Recent studies further suggest that medical students anticipate significant ethical challenges posed by AI in medicine [27,28]. To ensure the best possible education for medical students, knowledge of the current literature regarding the teaching of AI ethics as part of medical curricula is necessary.

AI ethics definition

The inconsistency in the scientific definition of AI is also reflected in the attempt to define AI ethics [29]. The interdisciplinary nature of the field, involving computer science and philosophy as well as different associated schools of thought such as humanism or transhumanism, further complicates the issue [30].

One prominent definition of AI ethics was provided by Leslie in 2019, stating that ‘AI ethics is a set of values, principles, and techniques that employ widely accepted standards of right and wrong to guide moral conduct in the development and use of AI technologies’ [31]. Whittlestone et al. also emphasize the importance of principles and standards regarding the use and development of AI and define AI ethics as ‘the emerging field of practical AI ethics, which focuses on developing frameworks and guidelines to ensure the ethical use of AI in society (analogous to the field of biomedical ethics, which provides practical frameworks for ethical practice in medicine.)’ [32]. Both definitions are consistent with current scientific and governmental efforts, including the European Commission’s High-Level Expert Group on Artificial Intelligence, to develop AI ethics guidelines for the ethical implementation and use of AI [33,34,35].

The analysis of current guidelines indicates that the effort is significantly influenced by the fundamental principles of medical ethics formulated by Beauchamp and Childress [36]. In addition to the principles of autonomy, non-maleficence, beneficence, and justice, recurring themes of AI ethics include, for example, transparency, explainability, accountability, and fairness [37,38]. Despite the focus on defining suitable principles and values for the development and use of AI, the guidelines lack a clear definition of AI ethics [38].

Given the broad definitions formulated by Leslie and Whittlestone et al., we propose a more specific definition of ‘medical AI ethics’ that can be used for teaching and implementing AI ethics in medical education. We define ‘medical AI ethics’ as an interdisciplinary subfield of AI ethics concerned with the application of ethical principles and standards to the research, development, implementation, and use of AI technologies within the practice of medicine. Our definition emphasizes the importance of ethical principles and standards, following current efforts, such as from the World Health Organization (WHO), and reflects the importance of the established principles of medical ethics from Beauchamp and Childress [36,39]. By providing a narrower definition, we hope to reduce the complexity and scope of the topic of AI ethics in medical education and promote consistency in scientific publications in this field.

Based on the significant ethical challenges expected from the use of AI in medicine and the associated demand for teaching AI ethics within medical education, this study aims to synthesize and comprehensively overview the existing scientific literature on the teaching of AI ethics in medical education. Specifically, the current AI ethics teaching content and methods will be explored and discussed, with the goal of identifying areas for future research and providing the necessary groundwork to enhance the education of medical students.

Methods

The present research was conducted based on the PRISMA-ScR guidelines as well as the methodological guidance for scoping reviews (JBI methodology) [40,41]. PubMed, Embase, Scopus, and Web of Science databases were searched. The initial search period was limited to the last 22 years (2000–2022). No scoping review protocol was published in advance of the research. The publication and all associated research have been approved by the ethical committee of the UMIT TIROL – Private University for Health Sciences and Health Technology.

Inclusion criteria

Only publications that could be found in the databases mentioned above were included in the search. Furthermore, only publications written in English or German and that had an accessible abstract were considered for analysis.

Search strategy

The following Medical Subject Headings (MeSH) terms and keywords were used for the database search: ethic* AND artificial intelligence OR ai AND medical school OR medical education OR medical curriculum OR medical students.
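The search string above is given without parentheses, and search interfaces may interpret a mix of AND and OR differently when no grouping is supplied. The following is a minimal sketch of one plausible grouping, assuming the three concept blocks (ethics, AI, medical education) were meant to be combined with AND and the synonyms within each block with OR. The helper name `build_query`, the quoting of phrases, and the parenthesization are illustrative assumptions, not the exact strings submitted to each database.

```python
def build_query(concept_blocks):
    """Join synonyms with OR inside each block, then join blocks with AND."""
    grouped = []
    for terms in concept_blocks:
        # Quote multi-word phrases so they are treated as a unit.
        quoted = [f'"{t}"' if " " in t else t for t in terms]
        grouped.append("(" + " OR ".join(quoted) + ")")
    return " AND ".join(grouped)


# Terms taken from the search strategy; the grouping is an assumption.
concept_blocks = [
    ["ethic*"],
    ["artificial intelligence", "ai"],
    ["medical school", "medical education", "medical curriculum", "medical students"],
]

query = build_query(concept_blocks)
print(query)
```

Making the grouping explicit in this way would allow the same logical query to be adapted to each database's own syntax.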

Study selection

After database searching and removal of duplicates using literature management software (Mendeley; Elsevier), the results were evaluated for thematic relevance. In this context, the title and abstract of the respective publications were assessed for their suitability. Unsuitable publications, for example, due to lack of thematic relevance, were excluded from further evaluation. For further estimation of the suitability of the publications, an assessment of the full text took place. If there was thematic relevance concerning the objective of the present research, the publications were included in the study.

Data extraction

During data extraction, data from all included publications were transferred to a table using Microsoft Excel (version 16.66). For each publication, the following data were recorded: First author’s name, year of publication, title, study type, the rationale for teaching AI ethics as part of medical curricula, recommendations on potential teaching content, recommendations on teaching modalities, and integration of teaching into medical curricula.
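As an illustration of the extraction table described above, the following sketch represents one row as a typed record. The class name `ExtractionRecord` and the field names are hypothetical labels for the eight recorded items; the study itself used a Microsoft Excel sheet, and the free-text fields are left empty here rather than filled with invented content.

```python
from dataclasses import dataclass, asdict, fields


@dataclass
class ExtractionRecord:
    # One field per item recorded during data extraction.
    first_author: str
    year: int
    title: str
    study_type: str
    rationale: str = ""               # rationale for teaching AI ethics
    teaching_content: str = ""        # recommended teaching content
    teaching_modalities: str = ""     # recommended teaching modalities
    curriculum_integration: str = ""  # integration into medical curricula


# Example row using bibliographic details from Table 1 (reference [49]).
record = ExtractionRecord(
    first_author="Quinn",
    year=2021,
    title="Readying Medical Students for Medical AI: The Need to Embed AI Ethics Education",
    study_type="Viewpoint",
)

columns = [f.name for f in fields(ExtractionRecord)]
row = asdict(record)
print(columns)
```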

Updated literature search

Due to the release of AI-based chat applications such as ChatGPT, Bard, or Bing Chat, a new literature search was performed in July 2023 using the original search strategy to account for any additional scientific literature published until the 30th of June.

Results

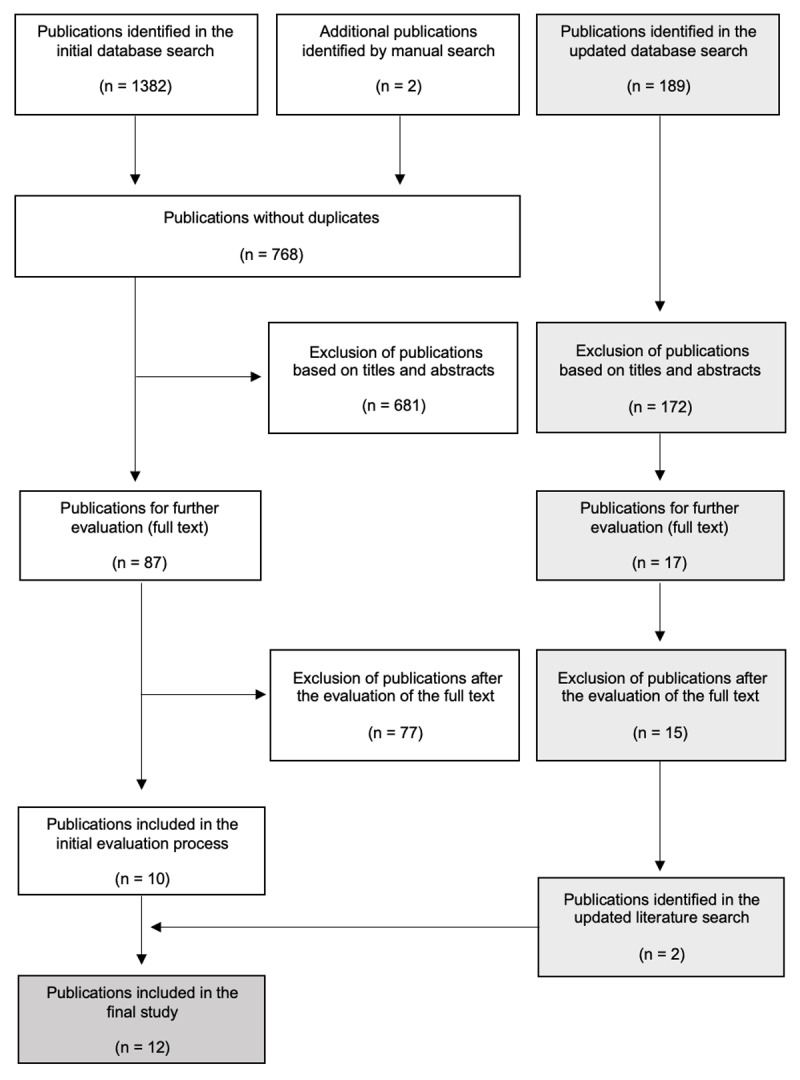

The initial database search (January 2023) retrieved 1382 publications. By manual search, two additional publications were identified and included in the selection process. After removing duplicates (n = 616) and screening titles and abstracts, the full texts of 87 publications were assessed, of which 10 publications remained. In the updated literature search (July 2023), 189 additional publications were identified. After reviewing the titles and abstracts, the full texts of 17 articles were further evaluated. Based on the prespecified exclusion criteria, 15 publications were excluded owing to a lack of thematic focus (e.g., publications regarding the impact of ChatGPT on medical education). Consequently, two additional publications were included in the subsequent analysis, resulting in a total of 12 publications. See Figure 1 for the flowchart of the search and selection process.

Figure 1.

Flow chart of the search and selection process (adapted from PRISMA) [40].
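The counts in the selection process can be cross-checked with simple arithmetic. The sketch below uses only figures reported in this review (the 87 full texts are taken from the abstract); the two exclusion counts are derived by subtraction under the assumption that every non-duplicate record was screened, and they are not figures reported in the text.

```python
# Counts reported in the abstract and results of this review.
initial_database_hits = 1382
initial_manual_hits = 2
duplicates_removed = 616
initial_full_texts_assessed = 87
initial_included = 10

update_hits = 189
update_full_texts_assessed = 17
update_full_texts_excluded = 15

identified = initial_database_hits + initial_manual_hits
screened = identified - duplicates_removed

# Derived by subtraction (assumption: all non-duplicates were screened).
excluded_on_title_abstract = screened - initial_full_texts_assessed
excluded_on_full_text = initial_full_texts_assessed - initial_included

update_included = update_full_texts_assessed - update_full_texts_excluded
total_included = initial_included + update_included

print(identified, screened, excluded_on_title_abstract,
      excluded_on_full_text, update_included, total_included)
```

The totals of 1384 identified and 12 included publications agree with the figures stated in the abstract.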

Study characteristics

Despite the initial search period of 22 years (2000–2022), all publications included in the first analysis were published between 2018 and 2022 (2018: n = 1; 2019: n = 2; 2020: n = 1; 2021: n = 4; 2022: n = 2). Two of these publications were published by the same first author [42,43]. In addition to two reviews [43,44] and one cross-sectional study among medical students [45], commentaries or ‘viewpoints’ were the predominant study design among the included studies [22,23,42,46,47,48,49]. Except for two studies [47,49], the publications identified during the initial literature search focused on the integration and teaching of AI within medical curricula, without an emphasis on AI ethics. In the updated literature search, two additional publications published in 2023 were identified and included in the analysis. The included publications are presented in Table 1.

Table 1.

Results of the literature search and selection process (included publications).

| TITLE (YEAR) | METHODOLOGY | KEY FINDINGS |
|---|---|---|
| Grounded in reality: artificial intelligence in medical education (2023) [50] | Development, delivery, and assessment of an online, AI-integrated multidisciplinary course | |
| Commentary: The desire of medical students to integrate artificial intelligence into medical education: An opinion article (2023) [51] | Commentary | |
| Needs, Challenges, and Applications of Artificial Intelligence in Medical Education Curriculum (2022) [42] | Viewpoint | |
| Artificial intelligence in medical education: a cross-sectional needs assessment (2022) [45] | Cross-sectional multi-center study | |
| The need for health AI ethics in medical school education (2021) [47] | Reflection | |
| Readying Medical Students for Medical AI: The Need to Embed AI Ethics Education (2021) [49] | Viewpoint | |
| Educating Future Physicians in Artificial Intelligence (AI): An Integrative Review and Proposed Changes (2021) [43] | Integrative review | |
| Artificial Intelligence in Undergraduate Medical Education: A Scoping Review (2021) [44] | Scoping review | |
| What do medical students actually need to know about artificial intelligence? (2020) [22] | Commentary | |
| Reimagining Medical Education in the Age of AI (2019) [23] | Viewpoint | |
| Introducing Artificial Intelligence Training in Medical Education (2019) [46] | Viewpoint | |
| Machine learning and medical education (2018) [48] | Perspective | |

The rationale for teaching ethical aspects of AI as part of medical education

There is unanimity among the authors regarding the expected significant impact that the use of AI in medicine will bring. The expected significant impact of AI in medicine also brings various ethical challenges, such as the potential loss of empathy in the doctor-patient relationship and changes in the structure of trust [42,45]. Given the lack of guidelines on the use of AI in medicine and the associated ethical challenges, it is recommended that medical education consistently include teaching on the ethical aspects of AI [43,46,51]. The potential for bias due to unrepresentative data and the resulting disadvantage for certain populations is a frequently cited reason for the need to integrate or expand the teaching of AI ethics in medical education, as well as recommended teaching content [22,42,45,47,49,50].

Recommendations for teaching content

Four publications do not specify possible teaching content for AI ethics [43,46,48,50]. Instead, three of the four publications propose broadly addressing general ethical problems and challenges that the use of AI in medicine may pose [43,46,48]. The authors of these publications do not provide further information about their definition of general ethical problems or challenges [43,46,48]. The fourth publication proposes that a discussion on ethics regarding the use of AI be taught in the clinical years but provides no further specification [50].

In contrast, half of the included publications recommend teaching data protection and its potential impact on patient care when using AI in clinical practice [22,44,45,47,49,51]. Furthermore, four publications emphasized the importance of teaching the ethical aspects of user liability when AI is used in a clinical context [22,44,45,49].

Wartman et al. highlight empathy as a cornerstone of teaching and curriculum development on AI [23]. Rethinking the teaching of ethics is recommended to prepare medical students for the complex ethical issues that may arise between patients, caregivers, and AI [23]. The anticipated ethical implications of using AI in medicine, including issues related to bias and patient and physician autonomy, are identified as the basis for developing detailed teaching content on AI ethics in three of the included publications, which are examined in more detail in the following paragraphs [22,47,49].

In their publication, McCoy et al. recommend the promotion of an understanding of fairness, transparency, and responsibility regarding the use of AI, similar to the established principles of medical ethics outlined by Beauchamp and Childress, including beneficence, justice, autonomy, and non-maleficence [22,36]. Four of the 12 included publications also recommend these principles as the foundation for teaching AI ethics [44,45,47,49].

Two publications that focus on teaching ethics on AI as part of medical education were identified. Katznelson et al. not only illustrate the relevance of teaching ethics on AI but also present six specific ethical challenges (‘informed consent’, ‘bias’, ‘safety’, ‘transparency’, ‘patient privacy’, and ‘allocation’) that should be addressed as part of medical school teaching and student training [47]. Quinn et al. also echo the possible teaching content defined by Katznelson et al. on the ethics of AI as part of medical school teaching and illustrate its relevance. In addition, Quinn et al. cite other possible teaching content based on fundamental ethical challenges and issues that may arise from the use of AI such as the ethical issues that may arise from overreliance on AI by users and potential interference with patient autonomy [49]. Quinn et al. further emphasize the need to understand the impact of AI on existing basic principles of medical ethics. Teaching as part of medical curricula should continue to address the ethical aspects that may arise from incorrect, absent, or abusive use of AI in medicine [49].

Table 2 provides a more detailed overview of the recommended teaching content for AI ethics in medical education identified in the publications reviewed.

Table 2.

Recommended artificial intelligence (AI) ethics teaching content.

| MAIN TEACHING RECOMMENDATION | DETAILED AI ETHICS TEACHING CONTENT RECOMMENDATIONS | PUBLICATIONS |
|---|---|---|
| Ethical challenges and issuesᵃ | | [22,42,43,45,46,47,48,49,50] |
| Data protectionᵃ | | [22,44,45,47,49,51] |
| Liabilityᵃ | | [22,44,45,46,49] |
| Ethical values and principlesᵃ | | [22,23,42,44,45,47,49] |

ᵃ Associated with the use of AI in medicine.

Recommendations on teaching modalities and integration of teaching content

Recommendations on teaching modalities and the procedure for integrating the teaching of ethical aspects of AI vary across the included publications [43,48]. Wartman et al. advocate for a major overhaul of current medical curricula, emphasizing empathy and compassion and focusing on knowledge management rather than information acquisition and retention [23]. This approach would involve a fundamental and radical rethinking of teaching in medicine to prepare for the expected impact of AI on the field [23]. Quinn et al. present a much less disruptive approach, which is intended not only to allow the integration of teaching ethical aspects of AI without significant changes to existing medical curricula, but also to allow simultaneous teaching of the ethical and technical background of AI [49]. These authors propose four steps to integrate AI ethics teaching into medical curricula [49]. In the first step, ‘formulation’, teaching content should be defined based on potential ethical problems and challenges. In the second step, ‘readying lessons’, previously defined teaching content needs to be aligned with existing modules in the field of ethics. The third step, ‘readying staff’, provides ethics instructors with the technical knowledge to effectively communicate potential ethical issues and challenges posed by AI. The fourth and final step, ‘readying students’, is intended to teach medical students. The presented approach is expected to allow a timely integration of teaching on the ethics of AI without the costly accreditation processes that a complete restructuring of medical curricula would often require [49]. While Quinn et al. present concrete steps for integrating the teaching of AI ethics in their publication, there is no specification of possible teaching modalities concerning the respective teaching units.

Case-based teaching using real-world examples of AI in clinical contexts and the associated ethical challenges is recommended in five publications [22,42,45,46,47]. In this context, three of the publications recommend teaching in small groups as well as interactive seminars [22,44,45]. Linking theoretical teaching with practical self-application of AI is a preferred teaching method in three of the publications [22,45,48]. Of the two publications identified in the updated literature search, one lacks specification on teaching modalities or the integration of AI ethics teaching content [51], while the other proposes teaching the ethics of AI in the clinical years based on discussions [50].

Discussion

This scoping review synthesizes the current literature, largely comprising commentaries and viewpoints, on AI ethics in medical education, with a focus on 12 publications published within the past five years (2018–2023). Compared to recent reviews on the teaching of AI as part of medical curricula, fewer publications could be included, due to a narrower focus on AI ethics [24,43,44]. Of the 12 publications included, only two specifically focused on the teaching of AI ethics in medical curricula [47,49]. The remaining ten publications emphasized the relevance of AI ethics in medical education but varied in their specification of possible teaching content and modalities [22,23,42,43,44,45,46,48,50,51]. Both publications that focused on teaching AI ethics within medical education were published in 2021, which, in addition to indicating recent research interest, may also imply limited awareness within the scientific community [47,49].

Recommended teaching content

This review highlights the considerable need for research regarding the teaching of AI ethics as part of medical education. Although all publications included in the evaluation emphasize the relevance of teaching the ethics of AI, possible teaching content was only concretized in three publications [43,46,48]. The lack of concretization might be attributable not only to the divergent focus on the general integration of AI within the scientific community but also to the missing content on AI ethics in general [35,52].

The inconsistency in the definition of AI within the evaluated publications further limits the comparability of current literature and scientific research efforts regarding the definition of AI ethics-related teaching content [43,44,45]. As the authors’ understanding of AI and AI ethics is crucial to interpret the results of the respective studies, such as the recommended teaching contents, this review highlights the need for a common and clear understanding of these terms in the context of medical education. This requires disclosing the knowledge and understanding that the publications are based on. Out of 12 publications included in this review, only one provided a definition of AI ethics [47].

The publication by Katznelson et al. (2021) was identified as the first to not only focus on the definition of AI ethics and the integration of AI in medical education but also to formulate specific recommendations for teaching content by anticipating ethical challenges and problems that may arise from the use of AI in medicine [47]. Similarly, Quinn et al. (2021) defined anticipated ethical challenges as the foundation of potential teaching content [49].

The principles of medical ethics defined by Beauchamp and Childress are widely cited as the essential foundation of any teaching content [36,44,45,47,49]. While utilizing expected ethical challenges from AI in medicine, and Beauchamp and Childress’s principles of medical ethics, as a foundation for AI ethics teaching content seems beneficial, the practicability and implications for teaching AI ethics have yet to be assessed in subsequent research.

The inconsistency of the publications regarding possible teaching content on AI ethics, as well as the general definition of AI and AI ethics, highlights the significant need for further research in the field. However, the definition of medical AI ethics presented in this review can serve as a basis for establishing more uniform teaching content on AI ethics. By addressing the recommendations from the authors of the publications included in this review, the definition reflects the growing importance of ethical considerations in the development and use of AI in medicine.

Recommended teaching modalities

The authors’ recommendations for the best possible integration of teaching on the ethics of AI range from a fundamental restructuring of medical school teaching [23] to an integrative teaching of technical and ethical content on AI without the need for significant changes to existing curricula [49].

The publication by Quinn et al. was the only identified publication presenting a concrete concept regarding the teaching and integration of AI ethics within medical education [49]. A widely anticipated difficulty in teaching AI ethics, or AI in general, as part of medical education, is the lack of sufficient teaching staff or knowledge on the part of existing teaching staff [42,46,49,50,51,53,54]. Other significant challenges include overloaded medical curricula with no dedicated time for AI ethics, and a lack of established standards for this emerging field of study [49,50]. Although the complexity of AI and the novelty of the field of AI ethics seem to be the leading factors in the difficulty of finding sufficiently trained teaching staff, without standardization in terms of AI ethics and related teaching content, the preparation of teaching staff and students will be significantly more difficult. While establishing clear terminology regarding AI, AI ethics, and teaching content can contribute to a shared academic understanding, it may not directly address the current limitations of faculty expertise in this area. This lack of expertise can likely be linked to the novelty of AI in medical education and the rapidly evolving nature of the field. Furthermore, the current lack of consistency in teaching AI ethics, often viewed as a challenge, may also reflect the necessary diversity native to ethics. This diversity allows for a range of perspectives and facilitates interdisciplinarity, which could be particularly valuable in such a rapidly evolving field. Nonetheless, reaching some level of agreement on the core elements of AI ethics and related teaching content, at least at the national level, could contribute to the comparability and standardization of medical education beyond individual institutions.

Five of the evaluated publications recommend case-based learning, which aligns with the current efforts for competence-oriented and evidence-based teaching in medical education [22,42,45,46,47,55,56]. Furthermore, the evaluated publications recommend the integration of practical and theoretical teaching content on AI ethics as well as teaching in interactive small groups and seminars [22,45,48]. Given the emphasis on case-based teaching by nearly half of the publications, the lack of concrete examples for teaching AI ethics becomes apparent [42,43,46].

Analogous to the heterogeneity of the results regarding the recommended teaching modalities of AI ethics, the question of who is ideally qualified to instruct AI ethics remains largely undetermined.

Quinn et al. proposed the idea of incorporating the technical aspects of AI into the medical ethics segment of the curriculum, where ethicists, once trained in the basics of AI, would serve as educators [49]. Additional research is required to assess the viability of this approach in an educational setting and to determine whether traditionally trained ethicists could adequately deliver not only content on AI ethics but also general instruction on AI in medicine.

Further divergence exists regarding the optimal time for implementing AI ethics into the medical education curriculum. While the majority of authors do not explicitly suggest when AI instruction should be implemented, there are varied opinions among those who do. Some propose introducing AI ethics in the preclinical phase in line with the conventional schedule for medical ethics instruction [43,44,46,49], while others advocate for its introduction during the clinical years, reasoning that students would have a better comprehension of the potential challenges and issues at this later stage [50].

Updated literature search

In response to the release of AI-based chat applications, such as ChatGPT, in November 2022, an updated literature search was conducted identifying two new publications [50,51]. Although both of these works were published after November 2022 and, thus, after the launch of ChatGPT, neither specifically mentions AI-based chat applications or ChatGPT. Both publications underscore the significance of ethics in the context of AI usage in medicine, with references to potential bias [50] and ethical principles concerning AI data utilization [51]; however, they fail to provide a clear definition of AI ethics or specific teaching content. The lack of precise recommended teaching content in these two newly identified publications aligns with the results of the initial literature search. For instance, while Krive et al. proposed a four-week elective course on AI in medicine to be incorporated into the preclinical years of medical education, they suggested teaching AI ethics in clinical years without providing specifics.

During the updated literature search, articles discussing the use of AI-based chat applications, such as ChatGPT, in medical education were discovered. Although these publications did not meet the predefined selection criteria due to insufficient focus on AI ethics, they underscored the anticipated substantial impact of AI-based chat applications on medical education [57,58,59]. The adoption of AI-based chat applications, such as ChatGPT, in medical education highlights the need for additional research on AI ethics in this context [59]. Notably, unlike medical products or applications specifically developed for use in healthcare, which must comply with ethical standards and principles during their development and implementation processes, AI-based chat applications such as ChatGPT do not need to comply with the same strict and formal requirements, as they are not explicitly designed for medical use. Therefore, the utilization of ChatGPT and similar AI-based chat applications by medical students during their education poses novel ethical challenges [59]. These include, but are not limited to, the transparency and explainability of the provided medical information and the accessibility of the applications [58]. This further emphasizes the necessity for future physicians to be acutely aware of the ethical challenges inherent to AI utilization in medicine. This awareness should be fostered during their education to prepare them to navigate this increasingly complex landscape.

Limitations

This study is limited by the small number of search terms and databases used, which may have caused relevant publications to be missed. Additionally, only publications written in English were included in the evaluation (no publications in German could be identified). Furthermore, the literature search identified only 12 publications, of which only two focused specifically on teaching AI ethics in medical education [47,49]. Although an updated literature search was performed to account for the release of AI-based chat applications, such as ChatGPT, the results of this study are likely to change substantially as AI technologies continue to develop rapidly.

Conclusion

This scoping review aimed to synthesize and present the current scientific literature on the teaching of AI ethics in medical education. The findings underscore the recency, theoretical nature, and scarcity of the available literature on this topic. AI is predicted to significantly impact medicine, which makes the teaching of AI ethics an indispensable part of medical education. However, there is a notable lack of empirical studies and evaluations of existing educational programs, and only two publications specifically focus on the teaching of AI ethics in medical curricula. These findings highlight an urgent need for further research, particularly empirical and practice-based studies, to successfully integrate teaching on the ethics of AI into medical curricula. Moreover, the results suggest that a foundational definition of AI ethics is currently lacking; such a definition could help guide the creation of teaching content and modalities. Because such definitions will need to be adapted in response to advancements in AI technologies and our evolving understanding of the associated ethical implications, continuous dialogue and further research within the field will be essential.

Ethics and consent

The publication and all associated research have been approved by the ethical committee of the UMIT TIROL – Private University for Health Sciences and Health Technology.

Competing Interests

The authors have no competing interests to declare.

References

- 1. McCarthy J, et al. A Proposal for the Dartmouth Summer Research Project on Artificial Intelligence. http://jmc.stanford.edu/articles/dartmouth/dartmouth.pdf (accessed 02 August 2023).

- 2. McCarthy J. What is Artificial Intelligence? http://www-formal.stanford.edu/jmc/whatisai.pdf (accessed 02 August 2023).

- 3. Russell S, Norvig P. Artificial Intelligence (A Modern Approach). London: Pearson; 2010.

- 4. Esteva A, Kuprel B, Novoa R, et al. Dermatologist-level classification of skin cancer with deep neural networks. Nature. 2017; 542: 115–8. DOI: 10.1038/nature21056

- 5. Topol E. High-performance medicine: the convergence of human and artificial intelligence. Nature medicine. 2019; 25: 44–56. DOI: 10.1038/s41591-018-0300-7

- 6. Panayides A, Amini A, Filipovic N, et al. AI in Medical Imaging Informatics: Current Challenges and Future Directions. IEEE J Biomed Health Inform. 2020; 24(7): 1837–57. DOI: 10.1109/JBHI.2020.2991043

- 7. Amisha P, Pathania M, Rathaur V. Overview of artificial intelligence in medicine. J Family Med Prim Care. 2019; 8(7): 2328–31. DOI: 10.4103/jfmpc.jfmpc_440_19

- 8. Jiang F, Jiang Y, Zhi H, et al. Artificial intelligence in healthcare: Past, present and future. Stroke Vasc Neurol. 2017; 2: 230–43. DOI: 10.1136/svn-2017-000101

- 9. Hamet P, Tremblay J. Artificial intelligence in medicine. Metabolism. 2017; 69: 36–40. DOI: 10.1016/j.metabol.2017.01.011

- 10. Gilson A, Safranek CW, Huang T, et al. How Does ChatGPT Perform on the United States Medical Licensing Examination? The Implications of Large Language Models for Medical Education and Knowledge Assessment. JMIR Med Educ. 2023; 9: e45312. DOI: 10.2196/45312

- 11. Kung TH, Cheatham M, Medenilla A, et al. Performance of ChatGPT on USMLE: Potential for AI-assisted medical education using large language models. PLOS Digital Health. 2023; 2: e0000198. DOI: 10.1371/journal.pdig.0000198

- 12. Hosseini M, Gao CA, Liebovitz DM, et al. An exploratory survey about using ChatGPT in education, healthcare, and research. medRxiv; 2023. DOI: 10.1101/2023.03.31.23287979

- 13. Zhou L, Sordo M. Expert systems in medicine. Artificial Intelligence in Medicine. 2021; 75–100. DOI: 10.1016/B978-0-12-821259-2.00005-3

- 14. Briganti G, le Moine O. Artificial Intelligence in Medicine: Today and Tomorrow. Front Med (Lausanne). 2020; 7: 27. DOI: 10.3389/fmed.2020.00027

- 15. Park C, Seo S, Kang N, et al. Artificial Intelligence in Health Care: Current Applications and Issues. J Korean Med Sci. 2020; 2: 35–42. DOI: 10.3346/jkms.2020.35.e379

- 16. Price WN 2nd, Gerke S, Cohen IG. Potential Liability for Physicians Using Artificial Intelligence. JAMA. 2019; 322(18): 1765–6. DOI: 10.1001/jama.2019.15064

- 17. Char D, Shah N, Magnus D. Implementing Machine Learning in Health Care – Addressing Ethical Challenges. N Engl J Med. 2018; 378(11): 981–3. DOI: 10.1056/NEJMp1714229

- 18. Challen R, Denny J, Pitt M, et al. Artificial intelligence, bias and clinical safety. BMJ Qual Saf. 2019; 28(3): 231–7. DOI: 10.1136/bmjqs-2018-008370

- 19. Parikh R, Teeple S, Navathe A. Addressing Bias in Artificial Intelligence in Health Care. JAMA. 2019; 322(24): 2377–8. DOI: 10.1001/jama.2019.18058

- 20. Cirillo D, Catuara-Solarz S, Morey C, et al. Sex and gender differences and biases in artificial intelligence for biomedicine and healthcare. NPJ Digit Med. 2020; 3: 81. DOI: 10.1038/s41746-020-0288-5

- 21. Hamet P, Tremblay J. Artificial intelligence in medicine. Metabolism. 2017; 69: 36–40. DOI: 10.1016/j.metabol.2017.01.011

- 22. McCoy L, Nagaraj S, Morgado F, et al. What do medical students actually need to know about artificial intelligence? NPJ Digit Med. 2020; 3: 86. DOI: 10.1038/s41746-020-0294-7

- 23. Wartman S, Combs C. Reimagining Medical Education in the Age of AI. AMA J Ethics. 2019; 21(2): 146–52. DOI: 10.1001/amajethics.2019.146

- 24. Chan K, Zary N. Applications and Challenges of Implementing Artificial Intelligence in Medical Education: Integrative Review. JMIR Med Educ. 2019; 5(1): e13930. DOI: 10.2196/13930

- 25. van der Niet A, Bleakley A. Where medical education meets artificial intelligence: ‘Does technology care?’ Med Educ. 2021; 55(1): 30–6. DOI: 10.1111/medu.14131

- 26. Webster C. Artificial intelligence and the adoption of new technology in medical education. Med Educ. 2021; 55(1): 6–7. DOI: 10.1111/medu.14409

- 27. Pinto Dos Santos D, Giese D, Brodehl S, et al. Medical students’ attitude towards artificial intelligence: a multicentre survey. Eur Radiol. 2019; 29(4): 1640–6. DOI: 10.1007/s00330-018-5601-1

- 28. Mehta N, Harish V, Bilimoria K, et al. Knowledge and Attitudes on Artificial Intelligence in Healthcare: A Provincial Survey Study of Medical Students. MedEdPublish; 2021. DOI: 10.15694/mep.2021.000075.1

- 29. Christoforaki M, Beyan O. AI Ethics—A Bird’s Eye View. Applied Sciences. 2022; 12: 4130. DOI: 10.3390/app12094130

- 30. Coeckelbergh M. AI Ethics. The MIT Press; 2020. DOI: 10.7551/mitpress/12549.001.0001

- 31. Leslie D. Understanding artificial intelligence ethics and safety. 2019. DOI: 10.1145/3306618.3314289

- 32. Whittlestone J, Alexandrova A, Nyrup R, et al. The role and limits of principles in AI ethics: Towards a focus on tensions. In: AIES 2019 – Proceedings of the 2019 AAAI/ACM Conference on AI, Ethics, and Society. Association for Computing Machinery. 2019; 195–200. DOI: 10.1145/3306618.3314289

- 33. European Commission’s High-Level Expert Group on Artificial Intelligence. Ethics guidelines for trustworthy AI. https://ec.europa.eu/newsroom/dae/document.cfm?doc_id=60419 (accessed 02 August 2023).

- 34. IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems. Ethically Aligned Design: A Vision for Prioritizing Human Well-Being with Autonomous and Intelligent Systems. https://standards.ieee.org/wp-content/uploads/import/documents/other/ead_v2.pdf (accessed 02 August 2023).

- 35. Hagendorff T. The Ethics of AI Ethics: An Evaluation of Guidelines. Minds & Machines. 2020; 30: 99–120. DOI: 10.1007/s11023-020-09517-8

- 36. Beauchamp T, Childress J. Principles of biomedical ethics. Oxford University Press; 2021.

- 37. Fjeld J, Achten N, Hilligoss H, et al. Principled Artificial Intelligence: Mapping Consensus in Ethical and Rights-Based Approaches to Principles for AI. SSRN Electronic Journal; 2020. DOI: 10.2139/ssrn.3518482

- 38. Stahl BC. From computer ethics and the ethics of AI towards an ethics of digital ecosystems. AI Ethics. 2022; 2: 65–77. DOI: 10.1007/s43681-021-00080-1

- 39. World Health Organization (WHO). Ethics and Governance of Artificial Intelligence for Health: WHO guidance; 2021.

- 40. Tricco AC, Lillie E, Zarin W, et al. PRISMA Extension for Scoping Reviews (PRISMA-ScR): Checklist and Explanation. Ann Intern Med. 2018; 169(7): 467–73. DOI: 10.7326/M18-0850

- 41. von Elm E, Schreiber G, Haupt C. Methodische Anleitung für Scoping Reviews (JBI-Methodologie). Z Evid Fortbild Qual Gesundhwes. 2019; 143: 1–7. German. DOI: 10.1016/j.zefq.2019.05.004

- 42. Grunhut J, Marques O, Wyatt A. Needs, Challenges, and Applications of Artificial Intelligence in Medical Education Curriculum. JMIR Med Educ. 2022. DOI: 10.2196/35587

- 43. Grunhut J, Wyatt AT, Marques O. Educating Future Physicians in Artificial Intelligence (AI): An Integrative Review and Proposed Changes. J Med Educ Curric Dev. 2021; 8: 23821205211036836. DOI: 10.1177/23821205211036836

- 44. Lee J, Wu A, Li D, et al. Artificial Intelligence in Undergraduate Medical Education: A Scoping Review. Acad Med. 2021; 96(11S): 62–70. DOI: 10.1097/ACM.0000000000004291

- 45. Civaner M, Uncu Y, Bulut F, et al. Artificial intelligence in medical education: a cross-sectional needs assessment. BMC Med Educ. 2022; 22: 772. DOI: 10.1186/s12909-022-03852-3

- 46. Paranjape K, Schinkel M, Panday R. Introducing artificial intelligence training in medical education. JMIR Med Educ. 2019. DOI: 10.2196/16048

- 47. Katznelson G, Gerke S. The need for health AI ethics in medical school education. Adv Health Sci Educ Theory Pract. 2021; 26(4): 1447–58. DOI: 10.1007/s10459-021-10040-3

- 48. Kolachalama V, Garg P. Machine learning and medical education. npj Digital Med. 2018; 1: 54. DOI: 10.1038/s41746-018-0061-1

- 49. Quinn T, Coghlan S. Readying Medical Students for Medical AI: The Need to Embed AI Ethics Education. 2021. DOI: 10.48550/arXiv.2109.02866

- 50. Krive J, Isola M, Chang L, et al. Grounded in reality: artificial intelligence in medical education. JAMIA Open. 2023. DOI: 10.1093/jamiaopen/ooad037

- 51. Zhong J, Fischer N. Commentary: The desire of medical students to integrate artificial intelligence into medical education: An opinion article. Front. Digit. Health. 2023; 5: 1151390. DOI: 10.3389/fdgth.2023.1151390

- 52. Jobin A, Ienca M, Vayena E. The global landscape of AI ethics guidelines. Nature machine intelligence. 2019; 1(9): 389–99. DOI: 10.1038/s42256-019-0088-2

- 53. Ötleş E, James C, Lomis K, et al. Teaching artificial intelligence as a fundamental toolset of medicine. Cell Reports Medicine. 2022; 3(12). DOI: 10.1016/j.xcrm.2022.100824

- 54. European Parliament: Panel for the Future of Science and Technology (STOA). Artificial intelligence in healthcare. 2022. DOI: 10.2861/568473

- 55. Lehane E, Leahy-Warren P, O'Riordan C, et al. Evidence-based practice education for healthcare professions: an expert view. BMJ Evid Based Med. 2019; 24(3): 103–8. DOI: 10.1136/bmjebm-2018-111019

- 56. Marienhagen J, Brenner W, Buck A, et al. Entwicklung eines kompetenzbasierten Lernzielkatalogs Nuklearmedizin für das Studium der Humanmedizin in Deutschland [Development of a national competency-based learning objective catalogue for undergraduate medical education in Germany]. Nuklearmedizin. 2018; 57(4): 137–45. German. DOI: 10.3413/Nukmed-0969-18-03

- 57. Moldt J-A, Festl-Wietek T, Madany Mamlouk A, et al. Chatbots for future docs: exploring medical students’ attitudes and knowledge towards artificial intelligence and medical chatbots. Med Educ Online; 2023. DOI: 10.1080/10872981.2023.2182659

- 58. Eysenbach G. The Role of ChatGPT, Generative Language Models, and Artificial Intelligence in Medical Education: A Conversation with ChatGPT and a Call for Papers. JMIR Med Educ. 2023; 9: e46885. DOI: 10.2196/46885

- 59. Karabacak M, Ozkara BB, Margetis K, et al. The Advent of Generative Language Models in Medical Education. JMIR Med Educ. 2023; 9: e48163. DOI: 10.2196/48163