Abstract

Artificial intelligence and machine learning techniques have progressed dramatically and become powerful tools required to solve complicated tasks, such as computer vision, speech recognition, and natural language processing. Since these techniques have provided promising and evident results in these fields, they emerged as valuable methods for applications in human physiology and healthcare. General physiological recordings are time-related expressions of bodily processes associated with health or morbidity. Sequence classification, anomaly detection, decision making, and future status prediction drive the learning algorithms to focus on the temporal pattern and model the non-stationary dynamics of the human body. These practical requirements give birth to the use of recurrent neural networks, which offer a tractable solution in dealing with physiological time series and provide a way to understand complex time variations and dependencies. The primary objective of this article is to provide an overview of current applications of recurrent neural networks in the area of human physiology for automated prediction and diagnosis within different fields. Lastly, we highlight some pathways of future recurrent neural network developments for human physiology.

Index Terms—: Deep learning, human physiology, recurrent neural network, signal processing

I. Introduction

MODERN artificial intelligence and machine learning techniques have significantly impacted a wide range of applications, and such powerful learning tools have dramatically improved results. Several ambitious goals have already been achieved: the early triumph of AlphaGo from DeepMind and a recent version of OpenAI that beat the top human players in Dota2 (a sophisticated video game)[1]. In terms of the existing achievements of machine learning, a natural question is raised: how can such an advanced technique serve human health? One answer is deep learning-assisted biomedical image processing, which can adapt Convolutional Neural Networks (CNNs) to analyze spatial information[2].

In another scenario, physiological recordings refer to sequential data rather than images. Such data sets commonly have the following characteristics: (1). They refer to collective electrical/mechanical signals representing physical variables of interest, such as electrical activity produced by the brain or skeletal muscles; (2). These data reflect the status variation of a subject/subjects in a given period of time; (3). They are naturally in the format of time-related recordings (e.g., time series), and latent causality governs two (or more) successive occurrences. In practice, detecting an event in real-time or the future is critical, and the results might be sensitive to the temporal dynamics determined by physiological conditions. Our literature survey found that most sensors used for signal acquisition were non-invasive. For example, electrocardiography (ECG) or electroencephalogram (EEG) signals were collected from electrodes attached to the skin. The data collection procedures are patient-friendly and ubiquitous for practical healthcare systems. However, interpreting these signals is not an easy task. The underlying complexity within the signals and actual physiological mechanisms are generally not visible or easy to understand. Therefore, it is challenging to predict outcomes solely based on a human expert’s experience since the physiological interactions are multidimensional, highly nonlinear, stochastic, time-variant, and patient-specific.

Artificial networks may offer solutions to the problems mentioned above. Neural networks can mathematically describe the underlying relationship. The “Universal Approximation Theorem” tells us that the neural network with one hidden layer can approximate a particular class of functions, which are large enough to capture processes of practical concern. In other words, all the members of the neural network family can approximate the nonlinear characteristics of a given system and explore the relationship from the inputs and corresponding labels, although this process is affected by many factors, such as network structures and learning algorithms. Basic feed-forward neural networks (or deep neural network, DNN) and convolutional neural networks have inherent limits in dealing with time series. DNNs cannot model the system dynamics, which describes the transitions (or time-dependencies) between states in a time sequence. Additionally, in most situations, samples always have variant lengths, which are unfeasible for DNNs to process. The CNNs are good at finding local patterns of temporal sequences, but it’s hard to discover the long-term dependency[3].

Besides the DNNs and CNNs, the Recurrent Neural Networks (RNNs), another deep learning architecture, are more suitable tools for physiological applications with sequential data or signals. RNN presents a class of artificial neural networks, which possess many of the qualities required for tackling the physiological problems: they possess both current and past features of the temporal sequences, adapt to the long-term historical changes in the data, store the past information to solve context-dependent tasks, and make predictions simultaneously with existing observations. Although RNNs were typically used to deal with sequential data like music or language, there have been attempts at applying RNNs to the area of physiology.

In computational physiology, designing a machine-learning algorithm aims to transform electrical recordings from the human cardiovascular, nervous, muscular, and other systems into computer computation in order to predict or identify events, monitor body activities, and detect anomalies[4]. For example, the ECG signal analysis focuses on classifying different types of heartbeat, thus assisting the cardiologist in achieving an accurate diagnosis for the patient; the EEG signal is a critical measure to evaluate many human functions, such as emotion and sleep qualities. It is also widely used to assess cerebral disorders, such as seizures and stroke. All these modalities carry human body information to create the solutions that transform healthcare delivery. As mentioned before, these recordings or signals are commonly presented in sequential manners, and the RNNs are thus successful paradigms in modeling complex physiological processes.

For machine learning practitioners, another goal is to improve the model performance. However, it is greatly constrained by specific conditions of physiological applications, such as feature extraction, data structure, model implementation, and subject issues. In this review, we first briefly introduce the RNN structures and highlight the model constructions according to two types of labels. Meanwhile, regarding the data collection from human subjects, we summarize the currently adopted validation strategies from a deep learning perspective, and discuss how they could affect the performance in later sections. We also present a variety of physiological applications with most representative studies and show that the RNN-based models outperform the other types of architectures, such as support vector machine (SVM) and CNN models. Furthermore, we summarize the existing issues in this field and propose possible solutions for future work.

II. RNN in general

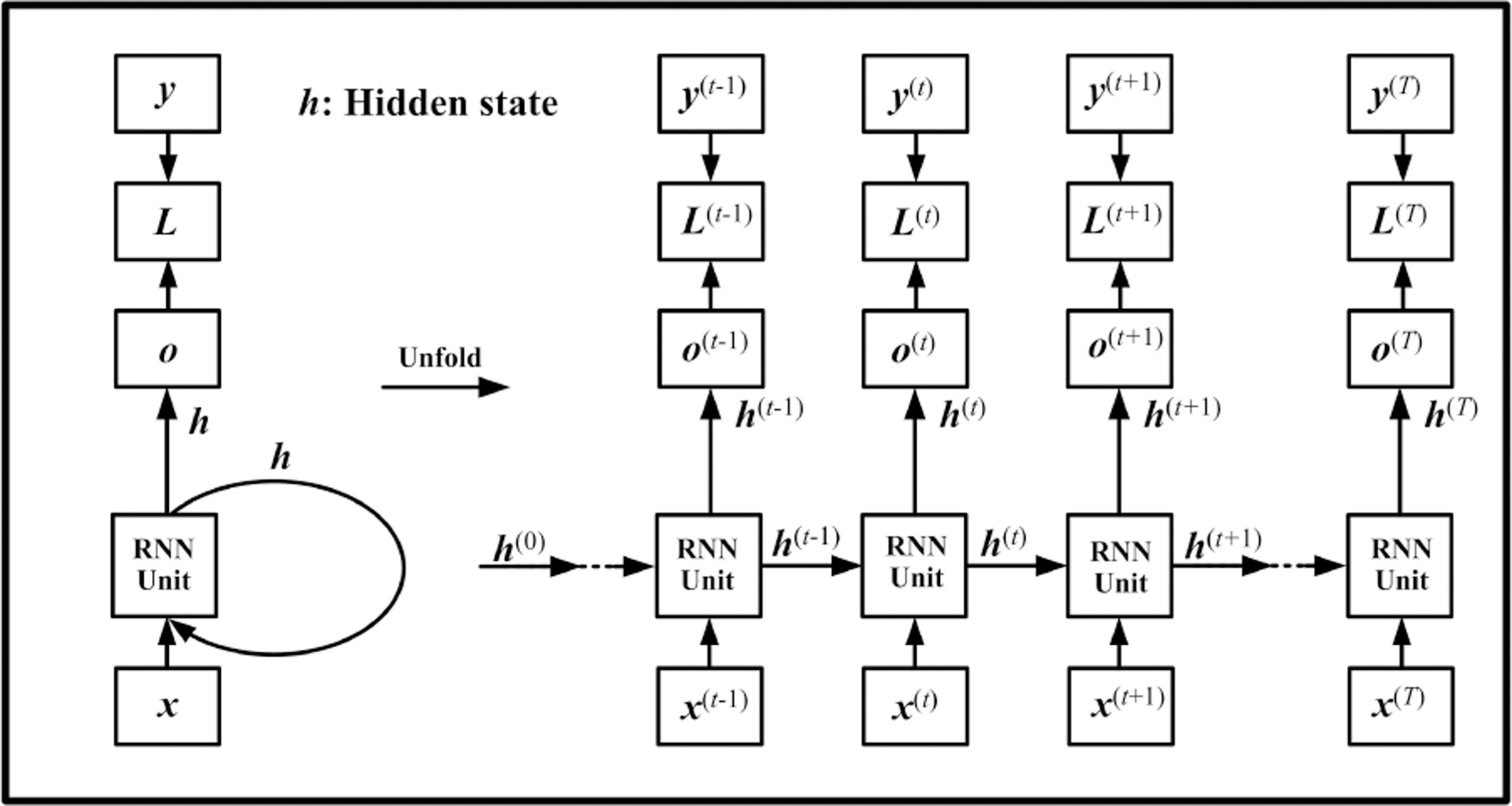

RNN is a kind of network specifically designed for processing time dependent sequential data. Given an input sequence, , a basic RNN architecture maps the input to a target sequence with a hidden layer, as shown in Fig.1. This hidden layer aims to learn the state-wise time dependency, which is modeled as:

| (1) |

where the RNN unit is a class of functions, which will be introduced later. Based on Eq.(1), the RNN structure models the relationship between adjacent hidden states and thus has the capability to process temporal information. This is the main difference between the RNN and CNN. Moreover, the RNNs have many characteristics benefiting the physiological activities and resultant multi-channel signals: (1) For the RNN models, the sequential examples do not necessarily have the same length[5], [6]. This is another difference between the RNN and CNN, because the CNNs request all the input samples have the same dimension;(2) the mapping process keeps the time consistency between the input and output; (3) the ith element could be multi-dimensional; (4) the hidden states described by the recurrent units can be stacked as a deeper structure [7], [8]. In practice, the training process and model performance are greatly affected by the construction of the RNN unit.

Fig. 1.

Computational graph of RNN. is the RNN output, and presents the difference between the RNN output and the desired output (target or label). is commonly used for calculating the loss function.

A. Structures of the RNN unit

1). Elman RNN:

The Elman RNN, which was named after Jeffrey Locke Elman, is the most basic RNN unit (sometimes called “Vanilla RNN”, meaning that it doesn’t have any extra features)[9]. The hidden state is calculated as:

| (2) |

where , are weights matrices, and is a bias term.

Elman RNN is one dense layer structure augmented by the inclusion of edges that span adjacent time steps[3]. The nonlinearity is introduced by using the activation function to transfer the hidden state dynamically.

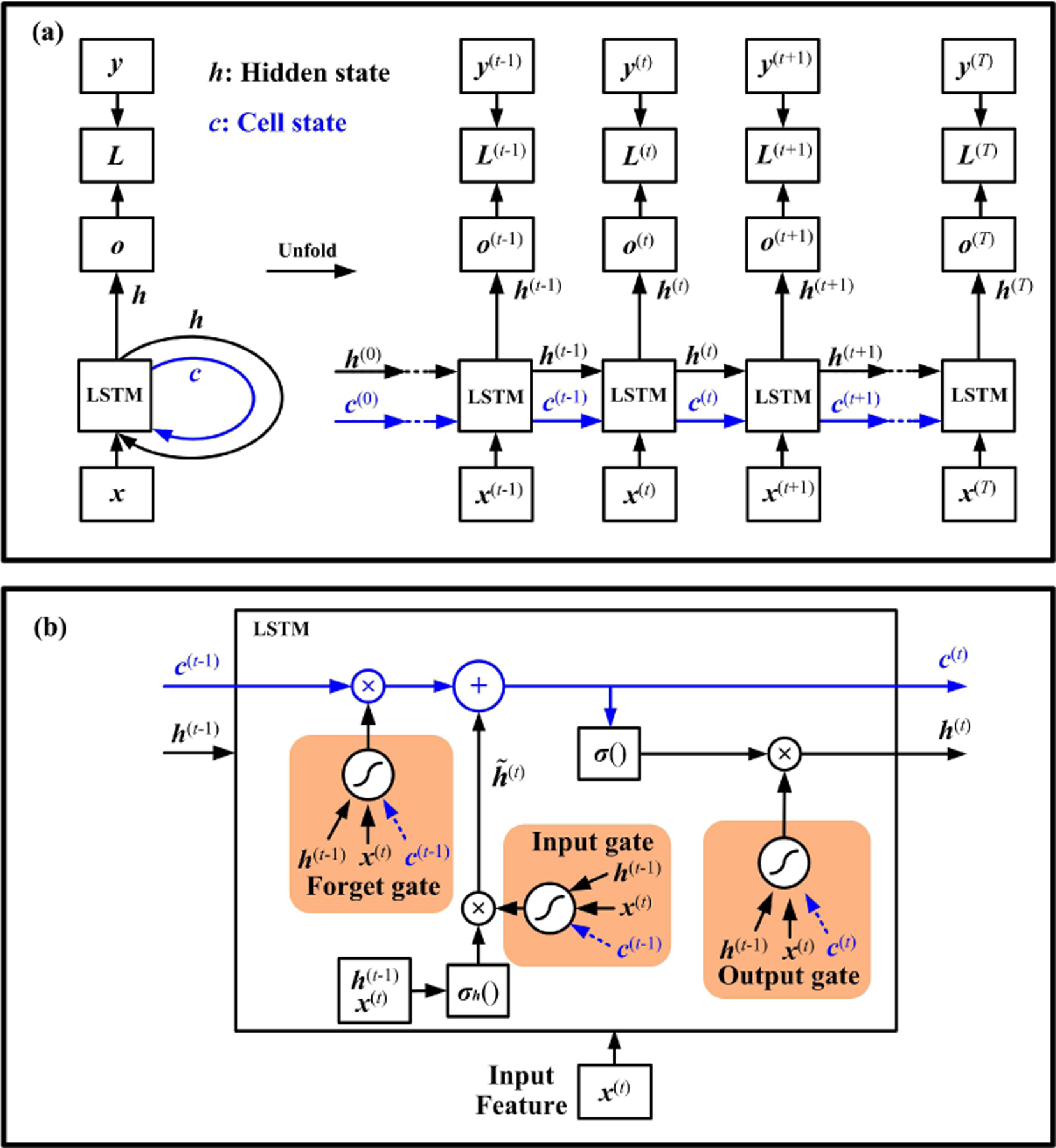

2). Long-Shot Term Memory (LSTM):

Compared with Elman RNN, LSTM contains an external cell structure. It delivers the information of input states through the entire time chain and forms a shortcut connection for the hidden states, as shown in Fig. 2. Since the cell state won’t be transferred to the next layer, it is also considered as a self-loop. Moreover, in LSTM, three gate components control the information flow: input gate, output gate, and forget gate. At each time step, the cell state updates itself by two actions: 1. gathering new information from the current input and hidden state, and 2. choosing old information from the past cell state.

Fig. 2.

LSTM recurrent neural network. The computational graph is shown in (a). The LSTM has an extra pathway for the cell state. A recurrent unit of LSTM is shown in (b). The arrows in blue represent the internal cell state.

The input feature and the previous hidden state are used to compute an intermediate state with an activation function . This procedure is similar to that of the Elman RNN (Eq.(2)). The state can accumulate into the cell state , if the input gate allows it. The cell state is controlled by the forget gate to drop irrelevant parts of the previous cell. Meanwhile, the input gate and forget gate determine how much information is chosen from the current and past time steps for updating the cell state. Moreover, the output state can be shut off by the output gate to limit the information passed to the next hidden state. The cell state can also act as an extra input to these gating units, as shown in Fig. 2(b).

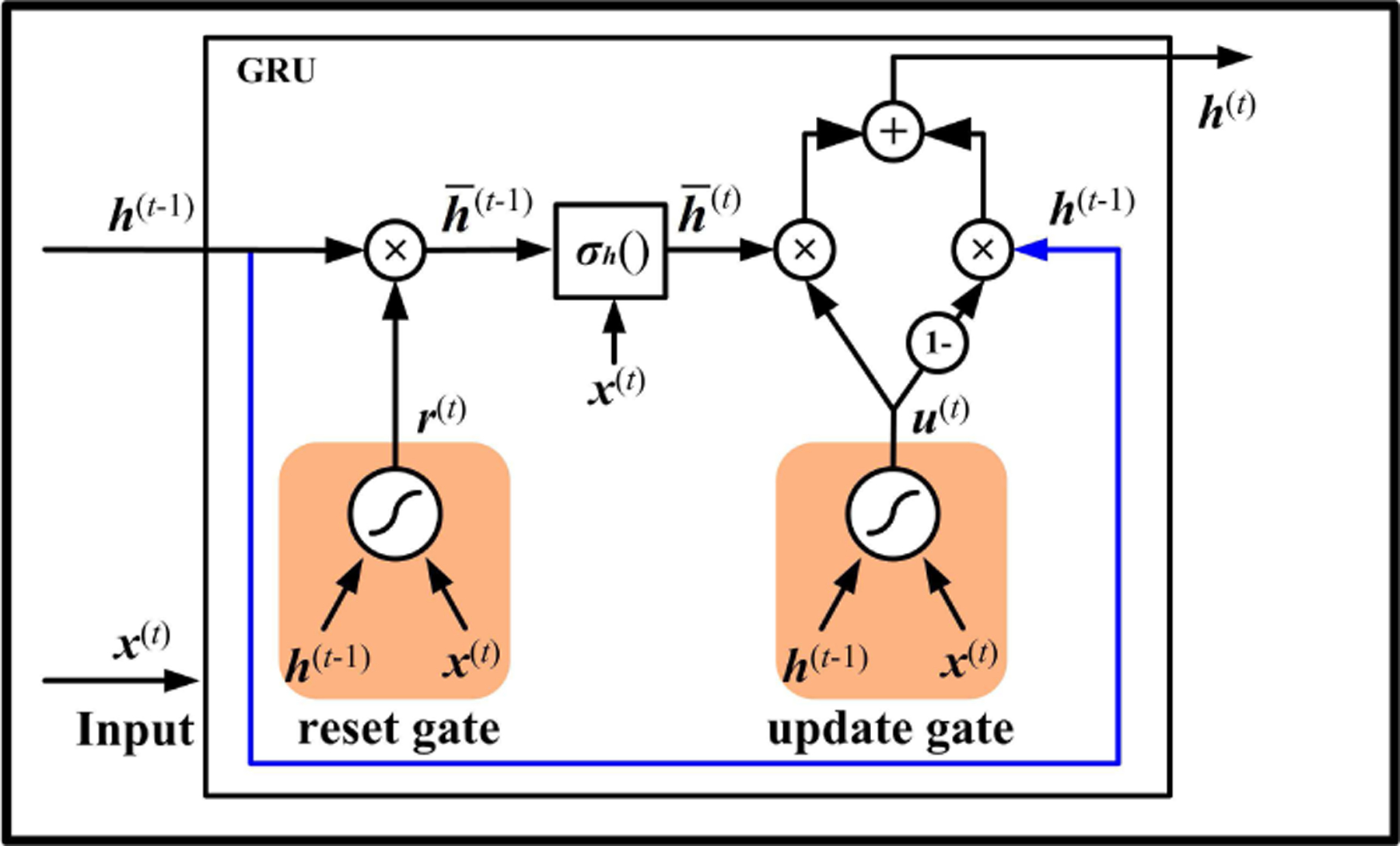

3). Gated Recurrent Unit(GRU):

GRU is another successful RNN unit design, as shown in Fig. 3 [5]. It contains two gating units: a reset gate and an update gate, as shown in Fig.3. The reset gate determines how much information is removed from the previous hidden state and generates a new state . Similar to Elman RNN (Eq.(2)), the input feature and the new state are used to compute a intermediate state with an activation function .

Fig. 3.

The unit structure of GRU.

If the reset gate outputs zero, the unit only involves the information of current input . To calculate the hidden state , the unit needs to select the meaningful information from the intermediate state by an update gate. Meanwhile, to make the output quickly react to the previous state , GRU also includes a shortcut connection, as delineated with blue lines in Fig. 3. The useful part of can also be controlled by the update gate, which acts like a two-way switch and simultaneously controls both forget and output information.

In these three RNN units, the Elman RNN employs the simplest structure, and it was thus widely applied in various physiological studies. However, training Elman RNN would raise issues known as exploding gradient and vanishing gradient with the first-order optimization method (like Gradient Descent)[10]. Compared to the Elman unit, both LSTM and GRU establish shortcut paths with gate components. The primary advantage of these two units is that they efficiently address the gradient vanishing problem when the time lag is extremely long[3]. Therefore, they have the capability of learning long-term dependency for time sequences. Besides, the GRU uses fewer parameters than the LSTM and thus reduces the computational time in the training and inference processes. In physiology, which kind of RNN unit is the most appropriate for a given task is still an open question. We will offer more discussion with physiological studies in Section V-B.

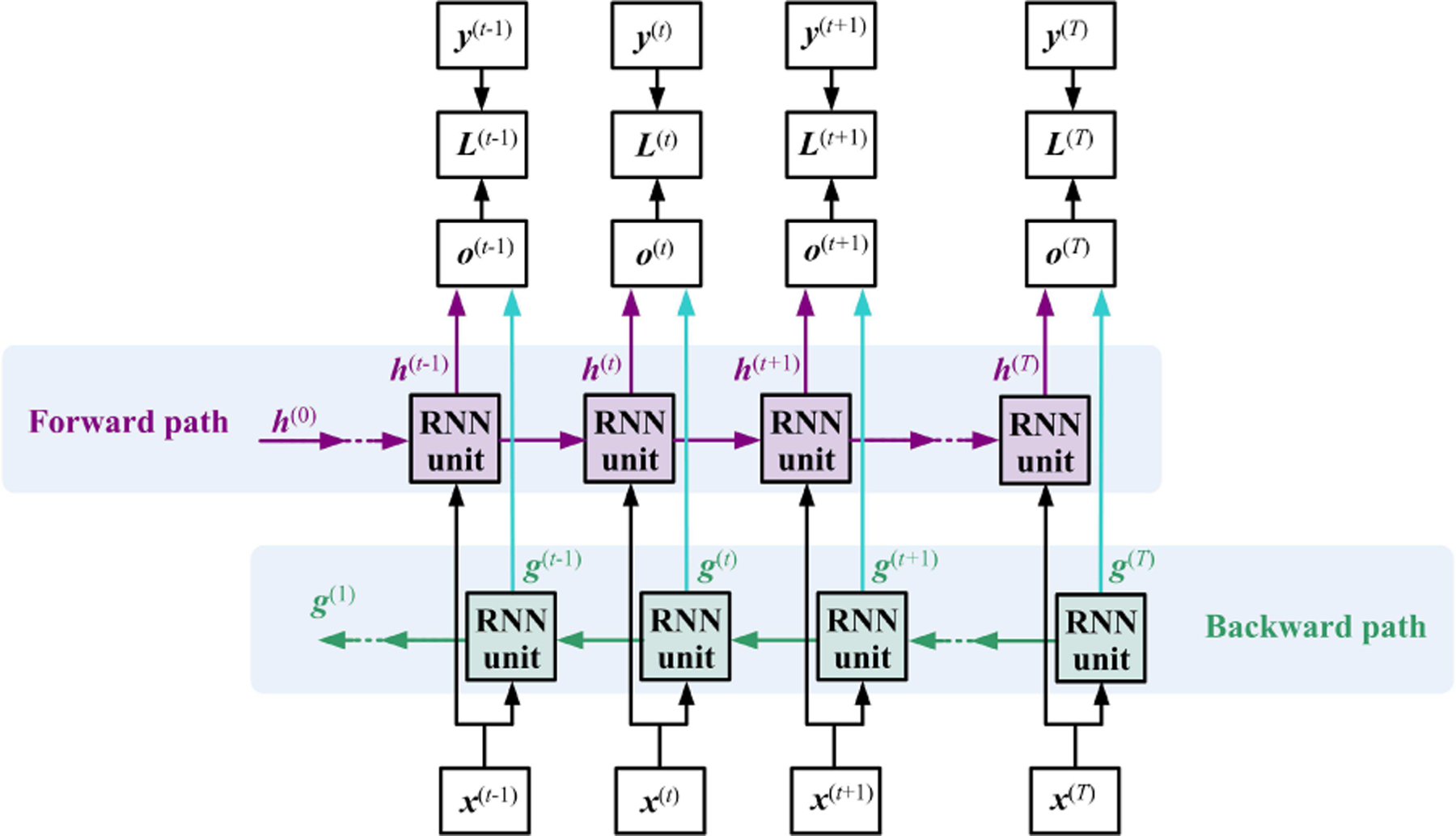

B. Bidirectional RNN

The previously discussed RNN computational graph (shown in Fig. 1) learns information from the previous time steps, meaning that RNNs are causal systems from a control theory perspective. In some cases, the RNN also needs to model the temporal dependency from the future to get better representations of the entire signal. Such a function can be conducted by simply adding an extra backward path to form a bidirectional RNN, as shown in Fig.4. The bidirectional RNN performs well on language-related tasks because the semantic analysis requires the previous and future words or sounds. Intuitively, bidirectional RNNs are not suitable for the physiological signal analysis, especially when online detection or classification is desired, because the future input is unobserved. A feasible solution is to specify a fixed size window (or buffer) around the current time step when the classification results are highly sensitive to the upcoming input. In abnormal heartbeat detection and emotion classification tasks, some studies attempted to use bidirectional RNNs, since the future observation on physiological signals may give us more evidence for decision-making at the current time point[11], [12], [7], [13].

Fig. 4.

The general bidirectional RNN has two time flow paths. The variables and present the hidden state for the sub-RNN moving forward and backward, respectively.

III. Experiment design with RNN

In physiological applications, Supervised learning is commonly used because the expected output (label) always exists. Besides, some Unsupervised learning techniques, such as autoencoder and clustering, may also help the physiological studies, which will be introduced in Section IV with examples. The experimental design depends on practical requirements and special considerations, which are sometimes beyond the scope of computer science or engineering. We will discuss the experiment design from two levels of view.

A. Model implementation

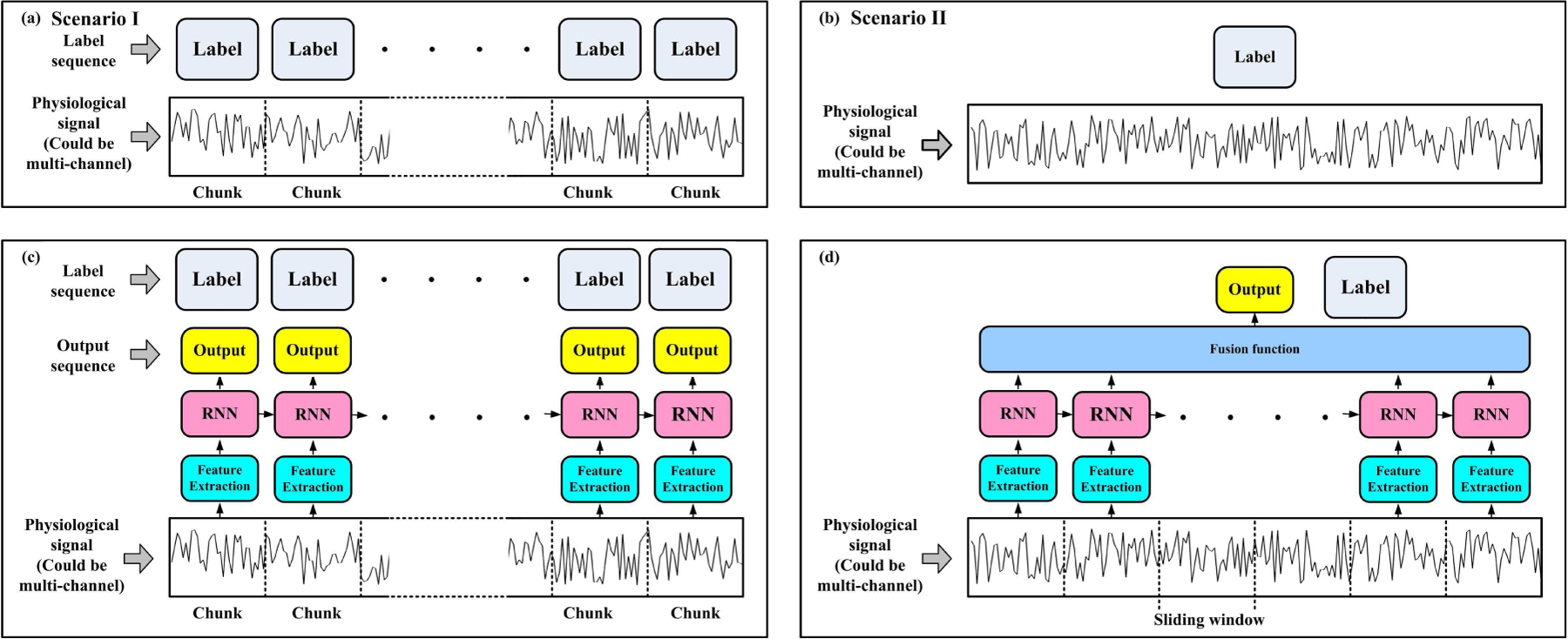

For model implementation, one should first analyze the data structure on hand. The physiological signals, which are always used as input data, are time sequences. As shown in Fig. 5(a) and (b), the annotated label structure would lead to two scenarios. Scenario I is that a sequence sample is annotated with a sequential label, framed as a ‘Many-to-Many’ problem, such as the study of sleep stage classification[14]. The model output was also a sequence with the same length as the label sequence. Another scenario II is that a sequence sample is annotated with a single label. For example, the ECG signal in a segment has only one label[15], [16]. This scenario is also described as a ‘Many-to-One’ problem since the considered output is a scalar value for each sequential input.

Fig. 5.

The implementations of RNN models are determined by the label structure of each signal sample. (a) shows a signal sequence with a sequential label. The general applied RNN could be designed in (c). Sometimes a signal sequence could only have one annotated label, as shown in (b), and the RNN could be designed in the form of (d). Although (c) and (d) show one-layer unidirectional RNN, multiple stacked layers or bidirectional RNN are also adoptable.

1). Model output construction:

The RNN model structures have slight differences at the final output part in these two scenarios. For scenario I, one can use the structure shown in Fig.1, Fig.2(a), and Fig.4, since the output in these figures is also sequential. For scenario II, there would be several ways to construct a fusion function (layer) to obtain the output, as shown in Fig.5(d).

A. The easiest way to construct the output is to use the hidden state at the last step with one or more dense layers. For example, to diagnose arrhythmia based on ECG signal, Oh et al. fed the LSTM’s hidden state at last time step to 3-dense layers for final output[17]. Chang et al. and Hofmann et al. also applied a similar configuration [18], [19]. For the bidirectional RNNs, one can concatenate the last hidden states of both forward and backward paths into a signal vector, and then feed it into dense layers for final output, as introduced in the studies of Lynn et al. and Supratak et al.[20], [21].

B. Another way of designing a fusion function is to employ an attention layer and then a dense layer as the final output. [22], [11], [23], [14]. The attention layer is calculated by a weighted sum of all the hidden state vectors from the RNN. A study from Shashikumar et al. suggested that the attention mechanism could improve the accuracy of classifying paroxysmal atrial fibrillation[11].

C. The third way of constructing the output also uses the information of all the hidden states. They could be flattened first and concatenated into a 1-D vector, then fed to dense layer(s) for the final output. For example, the studies reported by Yildirim and Liu et al. applied such a way for arrhythmia classification[24], [25], [26], [27]; Xing et al. also designed one dense layer with all hidden states as input for emotion recognition from EEG[28].

There could be other designs for the fusion function, such as sparse projection on hidden states[29] or averaging all the hidden states[30]. Method B and C may not be compatible with variant length samples since the concatenated vector should have a uniform length. Padding zeros might be a solution. However, more studies are needed to discuss the padding effects.

2). Input construction:

TThe input constructions of the two scenarios shown in Fig.5(a) and (b) are also slightly different. In scenario I, the signals are manually divided into consecutive chunks (or slices, epochs, segments) with clinical or other practical purposes, as shown in Fig.5(a). To construct an input sequence, a feature extraction module might be needed, which is also called ‘epoch processing block’ in the study of Phan et al.[31]. This module forms a vector representing each chunk’s information, and then vectors from all the chunks connect into an input sequence, as shown in Fig.5(c).

In each chunk, there are several ways to design a feature extraction module: 1. Directly flatten the chunk-wise data into a 1-D vector. The data lengths in all the chunks should be the same. 2. Knowledge-based features. Such as the R-R interval of the ECG signal[32], [33], [34]. 3. Handcraft features with engineering methods, including the statistic features. For example, mean value, standard deviation, frequency-domain features, and spectral features [35], [36], [27]. 4. Deep learning method to form a end-to-end system. For instance, CNN-1D, autoencoder, or even lower level RNN[21], [14], [22].

The sequential data in scenario II can also be sliced into chunks with sliding windows, and then the designer can apply the feature extraction module in each window to form the input sequence for RNN. The most straightforward design is that the window size is one and skip the feature extraction. In this case, the raw signal or recording is directly fed into the RNN as an input sequence, as introduced in [37]. Similar to scenario I, designers can also use the handcrafted features with overlapped windows[38], [11]. One typical design applies the Short-Time Fourier Transform (STFT) or Continuous Wavelet Transform (CWT) on the signals to form a spectral image, as introduced in [18], [39], [38]. Such an image composes a sequence of feature vectors. The CNN-1D layers can also be treated as a sliding window, in which the filter size determines the window size and stride determines how large two adjacent windows are overlapped[40], [41], [25], [17], [21]. More sophisticated designs could combine the two ways: calculate the spectral image and then apply CNN-1D on each frequency vector or CNN-2D on the spectral image[30], [42], [43].

Sliding windows can also serve the model design in scenario I. The chunks at a time could use as an input sequence for the label at , as suggested in [14]. The feature extraction module is critical for constructing the input sequence for RNN model. It can employ very flexible and complicated structures. The later sections will introduce more about this module.

B. Subject issue

Unlike other prevalent deep learning tasks, such as image classification or natural language processing, the physiological application has a very peculiar issue related to the subjects (persons, patients, participants, or users). One subject could offer more than one training pair in data collection, and all the data samples may not be independent of each other. Assigning of training, validation, and testing sets must consider the subject effect. Based on our survey, Cross-subject (Inter-subject) prediction and Within-subject (Intra-subject) prediction are commonly used strategies in physiological applications.

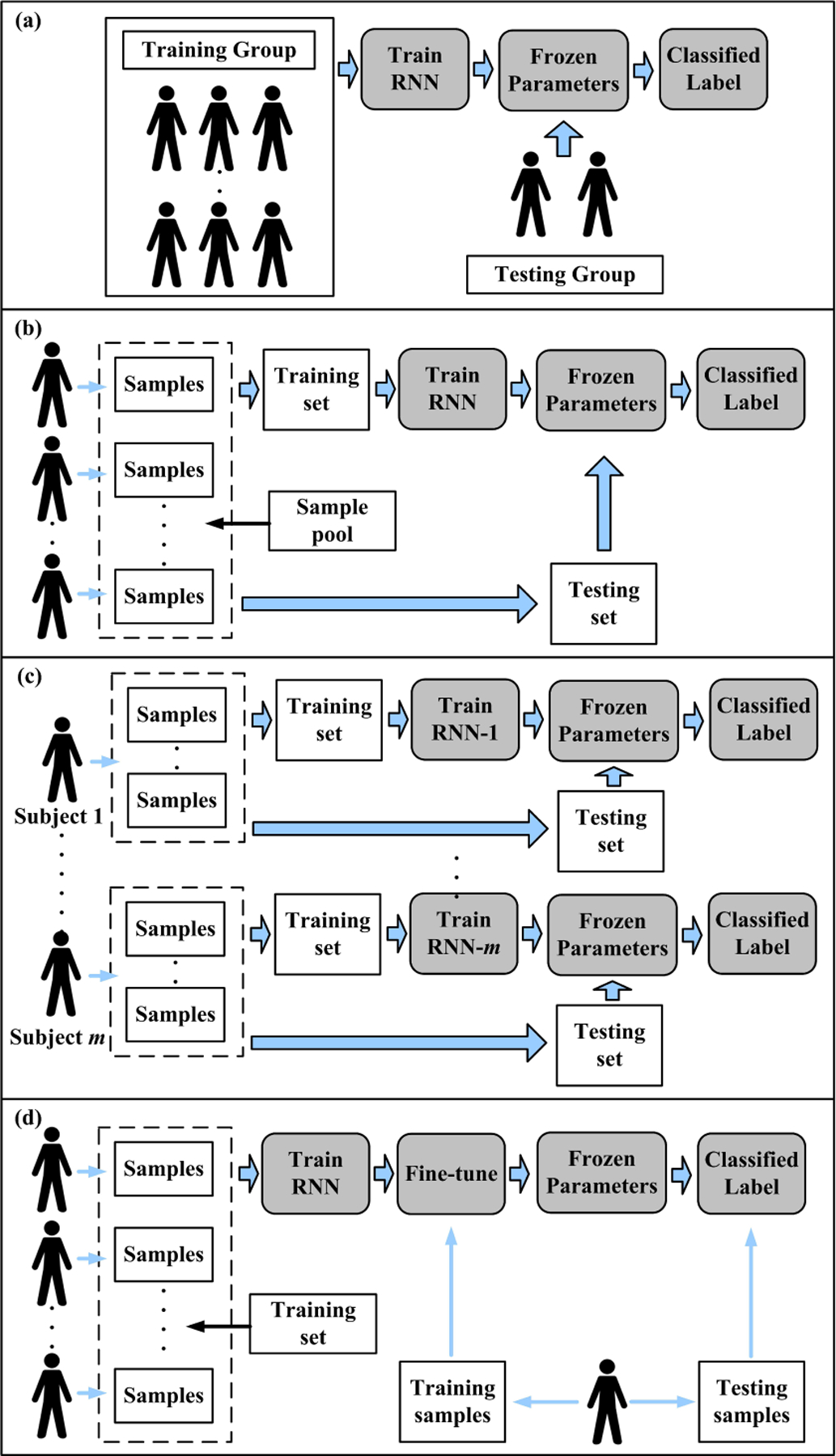

1). Cross-subject prediction:

Based on the samples acquired from certain subjects, it is desired for an RNN to model the universal pattern and predict the event of interest on the unseen subjects. The common practice of examining the model generalization is leave-one-subject-out, or k-fold subjects cross-validation, as shown in Fig 6(a). For example, Joseph Futoma et al. trained an RNN to detect sepsis on an unseen participant[44]. Chang et al., Hou et al., and Shashikumar et al. also conducted cross-subject experiments for the ECG classification studies[18], [45], [11]. The basic assumption of such a design is that the physiological information shared in the training group can benefit the testing group. Compared to the within-subject prediction, the cross-subject prediction is substantially challenging because human characteristics are subject-specific, and inter-subject variability can seriously degrade the performance[46].

Fig. 6.

The experiment designs in computational physiology. (a)Cross-subject prediction; (b), (c), and (d) describe three different strategies of within-subject prediction. (b): the mixed manner; (c) patient-specific manner; (d) fine-tuning manner

Diagnosing an unseen patient from the experience of diagnosing former patients is attractive for both clinical and technical investigators. To obtain robust RNNs, gathering data from more subjects is helpful, although it is costly or sometimes infeasible. We will discuss this issue in Section V-C.

2). Within-subject prediction:

In some studies, the RNN-based models were trained by some samples (or clinical attempts) collected from one/some subject(s) and validated/tested by the samples collected from the same subject(s). The validation/testing pairs were unseen for the model, but the subject(s) information was partially seen in the model. In practice, there are mainly three ways to implement within-subject studies.

1. Mixed manner.

Each subject provided several samples, and the whole sample pool was collected from multiple subjects. All the samples were mixed and randomly divided into training, validation, and testing sets, as shown in Fig. 6(b). This manner assumed that all the samples were independent and identically distributed regardless of the subject effect. Some ECG classification studies adopted this manner[37], [24], [17], [26]. In the applications of emotion recognition with EEG signals, some studies also applied ”trial-oriented” recognition, which was similar to mixed manner[30], [47], [28].

2. Subject-specific (subject-dependent) manner.

This manner tended to train a specific model for just one subject due to the variability among the subjects[32]. The training and validation sets were collected from the same subject for just one model training, and the participant’s group thus required multiple models, as shown in Fig6(c). This manner assumed that only the samples collected from the same subject share an identical pattern. Some epileptic seizures prediction studies preferred to use this manner, such as the studies reported in [27], [48].

3. Fine-tuning manner.

This manner attempted to balance the information of other subjects and the testing subject. The model could be first trained by the data collected from other subjects, and then fine-tuned by partitions data of the tested subject (also known as target domain). Such a manner believed that the training set collected from training group helped model the common patterns, but it has insufficient personalized information of unseen subjects due to the interuser differences. To build up the blood glucose prediction model, Dong et al. used this manner to train a model on multiple patients and then fine-tuned the model for one patient [49]. Similarly, Phan et al. fine-tuned SeqSleepNet[14] and DeepSleepNet[21] models, which are well-developed RNN-based models in sleep stage classification[31].

The strategy choice is greatly determined by the study purposes, practical requirement, data structure, and physiological considerations. However, different strategies on the same dataset could lead to significantly different results. We will discuss this issue in Section V-C.

IV. Applications in physiology

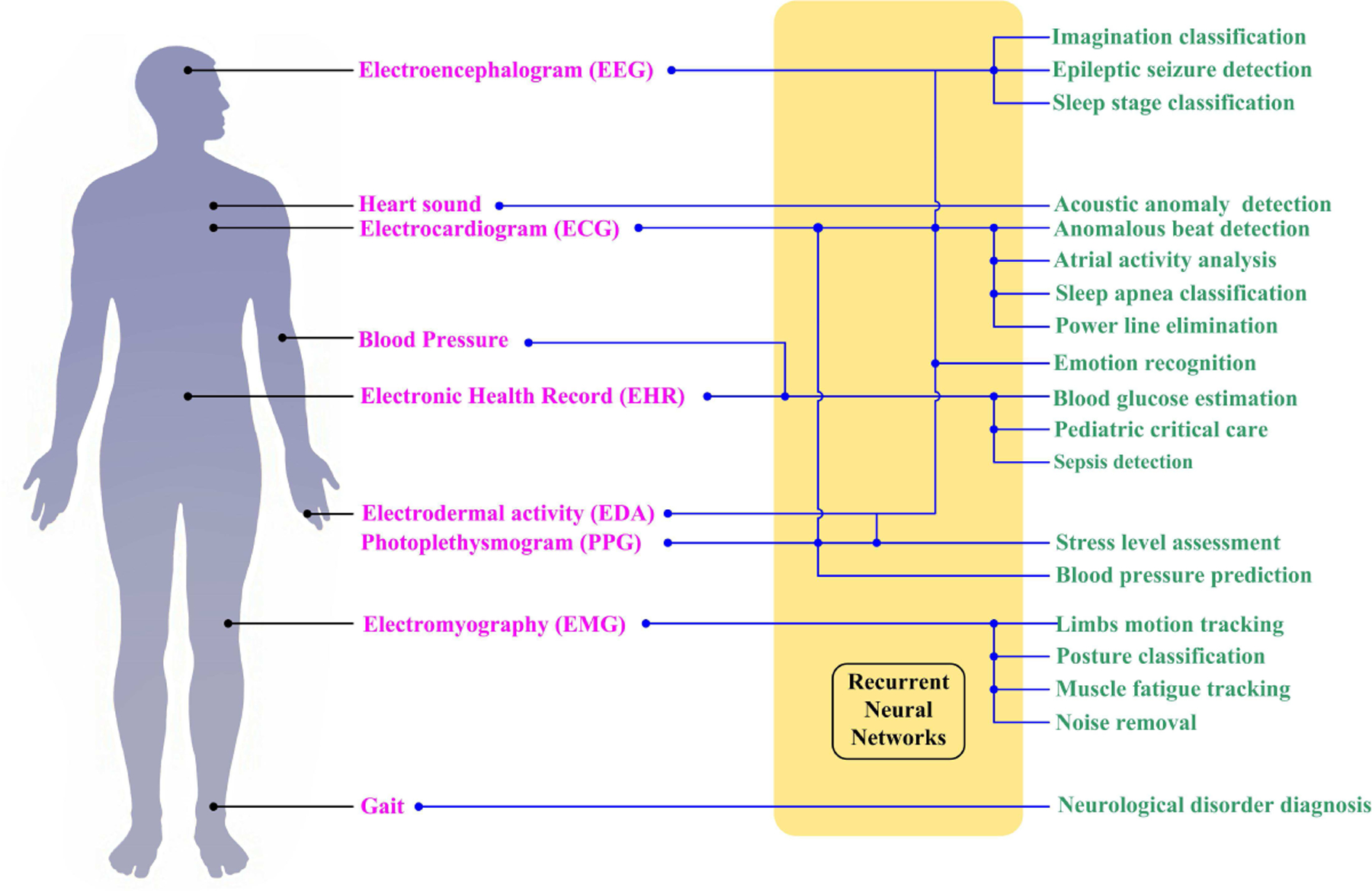

The main machine learning task in physiology is to develop an automatic diagnostic or patient status monitoring system. Analyzing the disorder, predicting the onset of a seizure, classifying a subjects’ state, and even forecasting a possible disease from the time-related signals are all desired tasks. RNNs are the top options for dealing with temporal information and learning the relationship between the signals and the symptoms. As mentioned before, such relationships are generally not well understood and are hard to evaluate with existing human knowledge. The RNN framework endows physiological data analysis with a highly flexible, inductive, nonlinear modeling ability. Based on our survey, we found that the RNNs have already served human physiology from the top (brain) to the bottom (gait), as shown in Fig.7.

Fig. 7.

Representative applications of RNN in the human body for diagnosis and event detection.

The works cited in this review were found by use of aggregate research databases including PubMed (MEDLINE), Springer Link, Google Scholar, and IEEE Xplore. Keyword searches were conducted through these databases with search terms such as ‘recurrent neural network’, ‘long short-term memory’ and ‘gated recurrent unit’ (and their acronyms) with the combination of ‘physiology’, ‘electrocardiogram’, ‘electromyography’, ‘electroencephalogram’, ‘photoplethysmogram’, ‘epileptic seizure’, ‘emotion recognition’, ‘sleep stage’, ‘blood glucose’ and ‘gait’ (and their acronyms). In the following sections, we will summarize the majority of studies in ECG classification, emotion recognition, epileptic seizure detection, sleep stage classification, and blood glucose level prediction with RNNs. To present up-to-date studies in these applications, we focus on the papers published after 2015. Besides, we also summarize the studies in other physiological fields from the most representative studies published after 2010. We mainly cover the human-subject studies that applied RNN for analyzing the physiological time sequence. Additional studies, such as biomedical image processing and document/tabular analysis, will not be included.

A. Electrocardiographic signal analysis

The ECG plays a significant role in the diagnosis of cardiovascular status[32]. It has become a focus of investigations since it consists of unobtrusive, effective, non-invasive, low cost, and widely available procedures using sensors (or electrodes). As a physiological measure, the ECG represents the sequential cardiac electrical activities, such as depolarization and repolarization of the cardiac muscle. When the deep learning models are applied, The ECG signal could be directly fed into the network without any elaborate preprocessing[50]. Alternatively, knowledge-based features, such as morphology (or shape) features, heartbeat interval (R-R interval), and heart rate, could also be used as input[45]. It is worth noticing that all of these features are also variant over time, which offers us a way to detect events of interest with RNNs.

The tasks of ECG classification are usually ‘Many to One’ problems, namely classifying the clinical label based on the signal segment in each beat. Therefore, most studies would consider segment-level classification. Some studies also attempted to model the dependency between successive heartbeats, such as the studies reported by Shashikumar et al., Chang et al., and Mousavi et al[18], [11], [54]. The detailed applications of RNNs in diagnosing abnormalities or analyzing signals are listed in Table I.

TABLE I.

Summary of works carried out using RNN structure with ECG Signals

| Author | Heartbeat typesa | Model architecture | Performance | Datasetb |

|---|---|---|---|---|

| Zhang et al. 2017[51] | N, VEB, SVEB and F. | The raw ECG signal segment was fed into a 2-layer stacked LSTM. | The accuracies were 99.6% and 99.0% for detecting SVEB and VEB, respectively. | MITDB |

| Maknickas et al. 2017[52] | N, AF, O, and noise. | • 23 handcraft features were extracted from each ECG segment and then connected in the time order to form an input sequence. • The model was composed of 3-layer LSTM and followed by a dense layer. |

Macro-averaging F1-score was 0.78. | CinC |

| Schwab et al. 2017[22] | N, AF, O, and noise. | • Handcrafted and autoencoder calculated features were extracted from beat-wise ECG segments and then connected in the time order to form an input sequence. • An ensemble model contained 15 RNNs and 4 Hidden Semi-Markov models. The outputs of sub-models were concatenated as an input for a dense layer to obtain the final result. |

Macro-averaging F1-score was 0.79. | CinC |

| Warrick et al. 2017[40] | N, AF, O, and noise. | The ECG signal segment was firstly fed into one CNN-1D layer. Three layers of LSTM were then applied at the top of the CNN layer | Macro-averaging F1-score was 0.80. | CinC |

| Zihlmann et al. 2017[39] | N, AF, O, and noise. | • Proposed an ensemble model with 5 deep networks, either CNN only models or CNN and RNN models. • The authors first calculated the logarithmic time-frequency spectrogram as an input image. • The RNN part was composed of a 3-layer bidirectional LSTM. |

Macro-averaging F1-score was 0.821 | CinC |

| Xiong et al. 2018[41] | N, AF, O, and noise. | • The ECG signal segment was first fed into a CNN part composed of 16 residual blocks with CNN-1D layers. • Three layers of Elman RNN were then applied at top of CNN parts. |

Macro-averaging F1-score was 0.864. | CinC |

| Singh et al. 2018[37] | N and A. | The authors applied three kinds of RNNs with Elman, LSTM, and GRU. Each RNN had three stacked layers, and the ECG signal segment was used as an input sequence. | LSTM showed the best accuracy of 88.1%. | MITDB |

| Shashikumar et al. 2018[11] | AF and O. | • The wavelet power spectrum was calculated in 30s non-overlapping windows. 5-layer CNN was applied on each spectrum to extract local features and thus form a feature sequence in 10 mins. • The feature sequence was fed into a bidirectional Elman RNN with a soft attention layer, followed by a dense layer for decision-making. |

• Testing accuracy was 96%. • Transfer learning was conducted on PPG recordings as input data and obtained 97% accuracy. |

Collected from 2850 patients |

| Yildirim. 2018[24] | N, LBBB, RBBB, PB and VPC | • The detailed coefficients were calculated from 4 levels of the discrete wavelet transform. These coefficients with original signals were connected into a 5-channel time sequence as input. • The sequence was fed into 2-layer unidirectional and bidirectional LSTMs separately. |

• For the unidirectional LSTM, the accuracy was 99.25%. • For the bidirectional LSTM, the accuracy was 99.39% |

MITDB |

| Oh et al. 2018[17] | N, LBBB, RBBB, APB and VPC | • The ECG signal segment was first fed into the CNN part, composed of 3 CNN-1D layers. • The CNN part was followed by 1-layer LSTM. The last time step’s output was fed to 3 dense layers for final classification. |

The accuracy was 98.10% | MITDB |

| Chang et al. 2018[18] | N and AF | • The author calculated the time-frequency spectrum on each ECG recording, which contained multiple heartbeats. • Fed the spectrum into the LSTM model according to the time order. |

The accuracies were 98.3% and 87.0% for within- and cross-subject experiments | MITDB, CinC and self recorded data set |

| Tan et al. 2018[12] | N and CAD | • Each ECG segment was sliced into multiple shorter segments with overlapping windows. Then they were put together into a 2D matrix as an input. • The input was first fed into 2-layer CNN, and 3-layer LSTM was designed on the top of CNN. |

• For non-patient specific task, the accuracy was 99.85%. • For patient specific task, the accuracy was 95.76%. |

INCARTDB |

| Yildirim et al. 2019[25] | N, LBBB, RBBB, APB and VPC | • The authors applied a CNN-based autoencoder to extract the feature from each ECG segment. • The feature sequence was fed into 1-layer LSTM. |

The accuracy was 99.11% | MITDB |

| Hou et al. 2019[45] | Two tasks: 1.N, LBBB, RBBB, APB and VPC; 2. AAMI standardc |

• The authors applied an RNN-based autoencoder to extract the features from each ECG signal segment. • Both encoder and decoder were designed with 1-layer LSTM. • An SVM was used for the final decision-making based on the features. |

• For task 1, the accuracy was 99.74%. • For task 2, the accuracy was 99.45% |

MITDB |

| Wang et al. 2019[32] | N, VEB, SVEB and F | • The ECG signal segment was fed into the RNN as a morphological vector. • The RR interval features were fed into a dense layer as temporal input with an extra clinical label. • RNN and dense layer outputs were combined and fed to another dense layer for final decision-making. |

• Accuracies of detection SVEB on three datasets were: 99.7%, 99.0% and 99.9%. • Accuracies of detection VEB on three datasets were: 99.7%, 99.8% and 99.4% |

MITDB, SVDB and INCARTDB |

| Liu et al. 2019[26] | N, VPC, RBBB and APB | • An ensemble model with 3 CNNs and 3 RNNs was proposed. • The signal was decomposed into 6 levels of intrinsic mode functions (IMF)with empirical mode decomposition. • Three low IMFs were fed into three bidirectional LSTMs, and three high IMFs were fed into three CNNs • The sub-models were first trained separately, and an SVM was used as a fusion layer. |

The accuracy was 99.1% | INCARTDB |

| Saadatnejad et al. 2019[53] | VEB vs. non-VEB and SVEB vs. non-SVEB | • The authors proposed an ensemble model with two sub-RNN models. • The original signal, RR-interval features, and wavelet features were combined as pairs for the inputs of sub-RNN models. |

Evaluated the model with three different sub-set of MITDB. The average accuracies for those two tasks were 99.4% and 98.6% | MITDB |

| Mousavi et al. 2019[54] | NA, SA, VA, FA, and QA | • A Seq2seq model was designed. The input was a sequence of multiple beats, and the output was sequential labels. • The ECG signals were first fed into CNN-1D layers to extract feature sequences, which were then fed into an encoder. • Both encoder and decoder were bidirectional Elman RNN. |

Intra-patient (within-subject) paradigm achieved 99.92% accuracy. Inter-patient (cross-subject) paradigm achieved 99.53% accuracy. | MITDB |

N: Normal rhythm; A: Arrhythmia; AF: Atrial fibrillation rhythm; APB: Atrial premature beats or atrial premature complex; CAD: Coronary artery disease; F: Fusion of a ventricular ectopic beat and a normal beat; LBBB: Bundle branch block; PB: Paced beat; RBBB: Right bundle branch block; VEB: Ventricular ectopic beat; VPC: Ventricular premature contraction; SVPC: Supraventricular premature contraction; SVEB: Supraventricular ectopic beat; O: Other types of rhythms.

CinC: A database provided by Computing in Cardiology 2017 challenge[15]; MITDB: MIT-BIH arrhythmia database[16]; SVDB: MIT-BIH Supraventricular Arrhythmia Database; INCARTDB: St. Petersburg Institute of Cardiological Technics 12-lead Arrhythmia Database[15]

AAMI standard includes 5 classes: NA: Normal beat, LBBB, RBBB, atrial escape beat, Nodal escape beat; SA: APB, SVPC, Aberrated atrial premature beat, Nodal premature beat; VA: VPC, VEB; FA: Fusion of normal and ventricular beat; QA: PB, Fusion of paced and normal beat, unclassified beat.

The LSTM is becoming more popular in recent years for ECG-based heart disorder diagnosis, although the Elman RNN is still competitive due to its computational efficiency[41]. Besides the RNN method, many studies attempted to apply CNN solely for the ECG classification problems. Moreover, the CNN models were much deeper than the proposed RNN models, as listed in Table I. Acharya et al. proposed an 11-layer deep structure with one-dimensional CNN to detect coronary artery disease and achieved 95% accuracy[55]. Kachuee et al. also attempted residual blocks with CNN-1D layers in a 13-layer deep learning model, which obtained 93.4% accuracy on the MIT-BIH database[56]. Jun et al. designed also designed an 11-layer deep structure with 2-dimensional CNN layers for arrhythmia classification on the same database, and the beat waveform was treated as a gray-scale image for input construction[57]. They achieved 99.05% accuracy, which was slightly lower than RNN-based models, such as the study presented by Hou et al[45].

B. Emotion Recognition via RNN

Human emotions influence all aspects of our diurnal experience. Automatic emotion recognition has been an active research field for decades from psychology, cognitive science, and engineering. Physiological signals are strongly correlated with emotion and offer more objective evidence, since emotion leaded physiological reactions are involuntary. Among all the modalities, such as EEG, ECG, electrooculography (EOG), temperature, blood volume pressure, electromyography (EMG), electrodermal activity(EDA), the EEG signal has drawn particular attention as it comes directly from the human brain[58], [59], [47]. EEG signals are recorded by electrodes placed on the participants’ scalp and reflect the electrical activity of the brain signals. The EEG-based assessment method is superior in the neuroscience domain due to its non-invasive ability to detect deep brain structures. The recent studies of emotion recognition with EEG and RNN are listed in Table II.

TABLE II.

A summary of contributions dealing with the applications of RNNS to emotion recognition with EEG signals.

| Author | Emotion classesa | Model architecture | Performance | Datasetb |

|---|---|---|---|---|

| Li, Xiang et al. 2016[30] | Low/high arousal and low/high valence. | • The authors calculated a scalogram (a time-frequency representation) in each channel by CWTc, then stacked the scalograms from all the channels to form a frame sequence. • Each frame was fed into 2 CNN-1D layers, followed by one LSTM layer. • The average values of all hidden states were fed to a softmax layer for final output. |

Mean accuracies were 72.06% and 74.12% on valence and arousal classification, respectively. | DEAP |

| Li,Youjun et al. 2017 [47] | Low/high arousal and low/high valence. | • The 32 leads conduction was first mapped to a 9*9 matrix. The PSD features from the raw EEG signal were filled into this matrixc. • The matrix was then interpolated into a 200*200 image at each time step. • The images were first fed into 2layer CNN with max pooling and one dense layer. • An LSTM and a dense layer were used for final decision-making with the feature sequence obtained from the last time step. |

The average accuracy was 75.21% | DEAP |

| Alhagry et al. 2017[64] | Low/high arousal, low/high valence, and low/high liking. | • Raw signal in each segment was used as input for two-layer LSTM. | For the arousal, valence, and liking classes, average accuracies were 85.65%, 85.45%, and 87.99% . | DEAP |

| Yang et al. 2018[65] | Low/high arousal and low/high valence. | • The authors designed a parallel model constructed with RNN and CNN. • The signal was first fed into one dense layer and two stacked LSTM layers. The last hidden state was fed to another dense layer. • Single vector at single time step was transferred into 2D frame according to the position of the electrodes, and the frame sequences were fed into 3 CNN layers. • Both CNN and RNN outputs were concatenated for final decision-making. |

Mean accuracies were 90.80% and 91.03% on valence and arousal classification tasks, respectively | DEAP |

| Hofmann et al. 2018[19] | Low/high arousal. | • Raw signals were decomposed with either Spatio-Spectral decomposition or Source Power Comodulation. • In the 1s segment, the decomposed signal with 250 samples was fed to 2-layer LSTM, and 2 dense layers were used for final classification. |

• Model with Spatio -Spectral decomposition got 63.4% accuracy. • Model with Source Power Comodulation got 62.3% accuracy. |

Self-collected EEG data from 45 participants. |

| Li, Yang et al. 2018[62] | Positive, Neutral and Negative emotion. | • Both left and right hemispheres had 31 channels of EEG signals. • The authors calculated DEcin 9s signals with 1s window and 5 frequency bands to form a time sequence. • LSTM was applied as a feature extractor for sequence. • Domain Adversarial Neural Networks was used based on the LSTM. |

• When conducted subject-specific experiment, the accuracy was 92.38% • When leave-one-subject-out cross-validation was considered, the accuracy was 83.28% |

SEED |

| Li, Yang et al. 2018[63] | Positive, Neutral and Negative emotion. | Proposed an extension version of [62] by adding a subject discriminator. | Achieved 84.14% accuracy. | SEED |

| Zhang et al. 2018[29] | Positive, Neutral and Negative emotion. | • The authors extracted DEcfeatures in 1s for all 62 electrodes. • In a single time step, Elman RNN was applied to model the spatial dependency from 4 directions. • The outputs of the spatial model at each time step were formed as sequences and fed into bi-directional Elman RNN. |

Achieved 89.5% accuracy. | SEED |

| Xing et al. 2019[28] | Low/high arousal and low/high valence. | • A stacked DNN autoencoder was applied for obtaining the sequence representation with the raw signal as input. • A Hanning window is used for segmenting the sequence representation, and frequency band power features and correlation coefficients were extracted in each window. • The feature sequence was fed to 1layer LSTM, and all the hidden states were fed into a dense layer for final decision-making. |

Mean accuracies were 81.10% and 74.38% on valence and arousal classification tasks, respectively. | DEAP |

| Li, Xiang et al. 2020[66] | Positive and Negative for SEED. Low/high arousal and low/high valence for DEAP | • Different types of autoencoder (Traditional, Restricted Boltzmann machine, and variational autoencoder) were first applied to obtain the latent sequences. • The latent sequence was fed into LSTM as an input, and 2 dense layers were used for final decision-making. |

• For DEAP, mean accuracies were 76.23% and 79.89% on valence and arousal classification tasks, respectively. • For SEED, the accuracy was 85.81%. |

DEAP and SEED |

Valence, arousal, and dominance space is a dimensional representation of emotions. Valence ranges from unpleasant to pleasant; arousal ranges from calm to activated and can describe the emotional intensity[67];

SEED: Shanghai Jiao Tong University Emotion EEG Dataset, which contains 15 subjects’ EEG data with 62 channels sorted according to 10–20 systems. In SEED, there are three categories of emotions (positive, neutral, and negative)[61]. DEAP: a Database for Emotion Analysis using Physiological signals, which contains EEG signals from 32 subjects, and the EEG signals were collected with 32 channels according to 10–20 system. Each participant rated the emotion in terms of arousal, valence, like/dislike, dominance, and familiarity[58].

DE: differential entropy; PSD: power spectral density; CWT: continuous wavelet transform.

Most analyses focused on the time-frequency representation for features extraction from the EEG signals since some studies suggested the correlation between valence/arousal and the frequency bands. For example, pictures and music-induced higher arousal associates decreased alpha oscillatory power[60]. Considering these characteristics of EEG signals, the power spectrum densities, continuous wavelet transformation analysis, or differential entropy features offers promising features for investigating the patterns of brain activities for specific emotion [61], [29], [62], [63], [47]. For the dataset SEED, DEAP, and other 10–20 system constructed EEG data, an additional concern is how to represent not only the temporal dependency but also the spatial connection of the multi-channel signals. The EEG components from different brain regions may also correlate with emotions. Collaborating with RNN, some studies listed in Table. II attempted to model the spatially-adjacent dependency according to the positions of electrodes. For example, the studies reported in [47] mapped the multi-channel signals to 2-dimensional image sequences and further extracted deep features by CNN layers, thus improving the accuracy compared with the study in [30].

Although various approaches have been proposed for EEG-based emotion recognition, most experimental results cannot be compared directly for different setups of experiments. Recently, we have several publicly available emotional EEG datasets, but there is still a lack of standard protocol for evaluating the performance. For example, the studies of Li, Youjun et al., and Li, Xiang et al. employed the trial-oriented 5-fold cross-validation (mixed manner) to implement their model[30], [47]. In contrast, Alhagry et al. and Yang et al. trained the model in a subject-specific manner. Nevertheless, some studies compared their performances with non-RNN methods under similar experiment setups. Li, Xiang et al. proposed C-RNN architecture achieved better performance than the random forest and support vector machine[30]. On both DEAP and SEED datasets, Li, Xiang et al. also suggested RNN with variational autoencoder outperformed support vector machine, random forest, k-nearest neighbors, sample logistic regression, naive Bayes classifier, and DNN[66].

C. Epileptic Seizure Detection

Epilepsy is a chronic neurological disorder caused by abnormal excessive or synchronous neuronal activities in the patient’s brain[68]. Based on the EEG recording, the study of the brain activity and the neurodynamic behavior of epileptic seizures provides required clinical diagnostic information. However, EEG analysis is time-consuming for the neurologist through qualitative visual inspection of raw data. Current studies in automatically detecting epileptic seizures have already utilized the merit of RNN, which helps to explore the characteristics of EEG, as summarized in Table. III.

TABLE III.

A summary of contributions describing the application of RNNS for epileptic seizure detection.

| Author | Learning taska | Model architecture | Performance | Datasetb |

|---|---|---|---|---|

| Thodoroff et al. 2016[69] | Whether a 30-second segment of EEG signal contains a seizure or not. | • In each 30s segment, the multi-channel signal was first coded into 3-channel images with 1s windows by spatial 2D projection and fast Fourier transform. • The image sequences were fed into CNN layers to extract local features. • The feature sequence was fed into a bidirectional LSTM, and a dense layer was applied on the RNN output through all the time steps for final decision-making. |

Average sensitivity was 85% and positive rate was 0.8/hour. | CHB-MIT |

| Raghu et al. 2017 [70] | Three tasks: 1. Normal vs. pre-ictal; 2. normal vs. epileptic; 3. pre-ictal vs. epileptic. | • A Weiner filter first removed the 50Hz power line noise. • In the 1s segment, log energy entropy, wavelet packet log energy entropy, and wavelet packet norm entropy were calculated as input features. • Elman RNN with two hidden layers was used as a classifier. |

The accuracies of three tasks were: 99.70%, 99.70% and 99.85%. | Uni Bonn |

| Ahmedt-Aristizabal et al. 2018 [71] | normal vs. inter-ictal vs. ictal | The raw EEG signals were directly fed to 2-layer LSTM, in which the output at the last step was fed into a dense layer for final decision-making. | The accuracy and average AUC were 95.54% and 0.9582. | Uni Bonn |

| Tsiouris et al. 2018[27] | pre-ictal vs. inter-ictal. | • First extracted 643 features in 5s chunk and then formed an input feature sequence for each signal segment. • Fed the sequence into 2-layer LSTM, followed by fully connected layers for final output. |

The sensitivity and specificity were 99.28% and 99.60%. | CHB-MIT |

| Daoud et al. 2019[48] | pre-ictal vs. inter-ictal. | • Multi-channel raw signals were fed into the CNN layers or a pre-trained encoder to extract features. • The feature sequences were then fed into bidirectional LSTM, and the outputs at last step were concatenated for final decision-making. |

Both models obtained 99.66% accuracy, 99.72% sensitivity, and 99.60% specificity. | CHB-MIT |

| Huang et al. 2019[72] | Seizure or not seizure. | • A channel dropout layer was first applied to the multi-channel EEG signals. The signals were then fed to the multi-scale CNN layers to extract multi-scale features, followed by an attention model. • The feature sequences were fed into bidirectional LSTM and GRU as two streams. The outputs of the RNN were concatenated as inputs for a dense layer at each time step. Then, the dense layer’s output sequences were fed into a global average pooling layer for final output. |

Specificity and Sensitivity were 93.94% and 92.88%, respectively. | CHB-MIT |

| Abbasi et al. 2019[73] | Four tasks: 1. pre-ictal vs. ictal; 2. pre-ictal vs. inter-ictal; 3. inter-ictal vs. ictal; 4. pre-ictal vs. inter-ictal vs. ictal. | • The raw EEG signal was divided into five components by discrete cosine transform. • Hurst exponent and auto-regressive–moving-average features were extracted for each component. • The features were used as input of 2-layer LSTM for final output. |

The accuracies for the four tasks were 99.17%, 97.78%, 97.78% and 94.81%. | Uni Bonn |

| Hussein et al. 2019[74] | Four tasks: 1. normal vs. ictal; 2. non-ictal vs. ictal; 3. normal vs. inter-ictal vs. ictal; 4. 5 type ictal states classificationc. | • The 4096*1 raw signal was first reshaped into 2048*2, and then fed into an LSTM with 2048 steps. • A dense layer was applied to the RNN output at all the time steps. • The output of the dense layer was fed into an averaging pooling layer, and a softmax layer was used for final decision-making. |

All tasks reached 100% accuracy | Uni Bonn |

For the epileptic seizure studies, there are several states: normal state, which describes healthy subjects’ EEG signal without any seizure history; pre-ictal state, which is defined by the period just before the seizure; ictal state, which is during the seizure occurrence, post-ictal state, that is assigned to the period after the seizure took place; and post-ictal state, that is assigned to the period after the seizure took place.[75].

CHB-MIT: CHB( Children’s Hospital Boston)-MIT(Massachusetts Institute of Technology) Scalp EEG Database, contains 23 patients divided among 24 cases (a patient has two recordings). The dataset consists of 969 Hours of scalp EEG recordings with 173 seizures[15]. Uni Bonn: data samples were collected in the Department of Epileptology at Bonn University[76]. This database was divided into five sets named A, B, C, D, and E. Sets A and B included surface EEG signals collected from five healthy participants. Set A was recorded from the five participants when they were awake and rested with their eyes open, while set B was recorded when their eyes were closed. Sets C, D, and E included signals collected from the cerebral cortex of five epileptic patients. Set E was taken from those patients while experiencing active seizures, and sets C and D were recorded throughout the seizure-free interims. The electrodes of set D and set C were implanted within the brain epileptogenic zone and the hippocampal formation of the obverse cerebral hemisphere, respectively.

The 5 types were A∼E sets provided by Uni Bonn dataset.

Almost all the studies in Table. III adopted the with-in subject strategy, leading to relatively fair comparisons. Only the study carried out by Thodoroff et al. considered cross-subject detection[69]. With a similar reason to the EEG-based emotion classification tasks, some studies constructed handcraft features from time-frequency representations[69], [70], [27]. Alternatively, deep models, such as CNN and autoencoder, were also applied to learn the lower-level features[48], [72].

On the CHB-MIT dataset, the model designed by Daoud et al. gave the state-of-art performance (99.72% accuracy) when the CNN layers and pre-trained encoder were used to extract the features[48]. Their model outperformed the CNN and DNN models. On the Uni Bonn dataset, a state-of-art performance was achieved by directly feeding the reshaped raw signal into RNN. The accuracy reached 100%[74], suggesting that the handcraft features were not robust enough. Other studies also applied non-RNN-based methods on the same dataset, but they did not exceed the best performance. For example, Acharya et al. conducted a 13-layer deep CNN, and obtained 88.7% accuracy[77]. Lu and Triesch proposed a deep CNN with residual structure and the system gave 99.0% accuracy[78].

D. Sleep Stage Classification

Sleep plays a vital role in human health. Abnormalities in sleep timing and circadian rhythm are common comorbidities in numerous disorders, such as apnea, insomnia, and narcolepsy[79]. Automatically monitoring the sleep stage would significantly benefit the clinical research and practice for evaluating a subject’s neurocognitive performance. Many studies have been trying to automate sleep stage scoring based on multi-channel signals from electrodes. These signals are generally called polysomnogram (PSG), typically consisting of EEG, EOG (electrooculogram), EMG, and ECG. Most sleep stage classification problems could be described as ‘Many-to-Many’ problems1, since the labels were commonly in the sequential form synchronized with the PSG signals. Meanwhile, as described in Fig.5(a), each signal chunk had one corresponding label annotated by the human expert2.

The current studies for sleep staging are summarized in TABLE.IV. The RNN was indispensable for this task when deep learning methods were used. Similar to the task of emotion recognition, some studies for sleep staging used the frequency domain features for constructing the input, such as the log-power spectrum, based on the frequency bands of the rhythms of EEG signals. Meanwhile, according to the American Academy of Sleep Medicine (AASM) standard, the five sleeping stages are highly characterized by the frequency bands[86], [81]. Most of the studies applied a cross-subject strategy. For example, Suparatak et al. applied k-fold subjects cross-validation, and Phan et al. used leave-one-subject-out cross-validation[21], [23]. The DeepSleepNet developed by Supratak et al. obtained higher overall accuracy than the non-RNN sparse autoencoder[87] and CNN-based method[88]. Based on LSTM, Phan et al. further improved the accuracy with SeqSleepNet with learned features. They suggested that such a model worked better than the CNN-only[88], [89], DNN-only, and regular machine learning methods, such as SVM and random forest[38].

TABLE IV.

A summary of contributions describing the application of RNNS for sleep stage classification.

| Author | Model architecture | Dataseta,b | Performance |

|---|---|---|---|

| Supratak et al. 2017[21] | • The authors proposed a hierarchical structure called DeepSleepNet with CNN and RNN parts. • Each signal chunk was first fed into a CNN part composed of two parallel CNN-1Ds with different filter sizes, and the two outputs were then concatenated as a chunk-level feature. • All chunk features were connected as temporal sequences and fed into a 2-layer bidirectional LSTM with a residual connection. |

MASS and Sleep-EDF | For MASS, the accuracy and macro F1-score were 86.2% and 0.817; for Sleep-EDF, the accuracy and macro F1-score were 82.0% and 0.769. |

| Dong et al. 2017[38] | • In each signal chunk, engineering features were extracted.. • Each feature was first fed into 2 dense-layers. The outputs of all the chunks in each recording were then connected into a feature sequence. • The feature sequence was fed into an LSTM as an input vector. |

MASS | The accuracy and macro F1-score were 85.92% and 0.805 |

| Phan et al. 2018[23] | • The authors first calculated the log-power spectral coefficients in each chunk as model input. • A DNN model was used as a filter bank for feature extraction and dimension reduction. • A two-layer bidirectional GRU with an attention layer was then applied on the top of the DNN part. The attention vector was fed to a softmax layer for final output. • After the model training, the softmax layer was replaced by an SVM for final decision-making. |

Sleep-EDF Expanded | When in-bed part was considered only, the accuracy and macro F1-score were 79.1% and 0.698; when before and after sleep periods were also considered, the accuracy and macro F1-score were 82.5% and 0.72. |

| Michielli et al. 2018[81] | • Each 30s signal chunk was divided into 30 smaller chunks with 1s length, and 55 features were extracted in each chunk. • The authors designed a 2-level LSTM-based classifier. After feature selection, the feature sequences were fed into the first-level classifier. It classified 4-classes, in which the stages N1 and REM were merged into a single class. • The samples identified as N1 stage/REM were then fed to the second binary classifier. |

Sleep-EDF | The accuracy was 86.7% |

| Phan et al. 2019[14] | • The authors proposed a hierarchical structure called SeqSleepNet with filter bank layers and two levels of RNNs. • Raw data had 3 channels (EEG, EOG and EMG). In each chunk, power spectrums image was calculated in each channel. The filter bank layer was applied to these images. • Connected the time point features as chunk-level sequence, which was fed into first level RNN (bidirectional GRU) with attention layer. • The authors connected the features as a chunk-level sequence, which was fed into bidirectional GRU with an attention layer. |

MASS | The accuracy and macro F1-score were 87.1% and 0.833 |

| Phan et al. 2019[31] | • The authors proposed a transfer learning strategy. • The author conducted either the CNN part from DeepSleepNet or the RNN part from SeqSleepNet to extract chunk-level features in each chunk. • The chunk-level features were connected as feature sequences and fed into a 2-layer bidirectional LSTM with residual connection. |

The model was trained on MASS, and fine- turned on Sleep-EDF-SC, Sleep-EDF-ST, Surrey-cEEGGrid, and Surrey-PSG | The accuracy and macro F1-score obtained from transfer learning outperfomed directly training on all the four datasets. |

| Phan et al. 2019[82] | The authors proposed a Fusion model, which was composed of DeepSleepNet and SeqSleepNet with slight modifications. | MASS | The accuracy and macro F1-score were 88.0% and 0.843. |

| Mousavi et al. 2019[83] | • The authors proposed a Seq2seq model called SleepEEGNet. • The signal was first fed into CNN layers for extracting the feature sequence, which was used as input for an encoder.. • Both encoder and decoder were constructed with bidirectional LSTM and attention mechanism. |

Sleep-EDF Expanded (version 1 and 2) | The accuracy and macro F1-score were 84.26% and 0.7966 for version 1; 80.03% and 0.7355 for version 2. |

MASS: Montreal Archive of Sleep Studies[84]; Sleep-EDF: a database in European Data Format[15]; Sleep-EDF Expanded: An expanded version of Sleep-EDF[15]; Sleep-EDF-SC: the Sleep Cassette, a subset of the Sleep-EDF Expanded dataset; Sleep-EDF-ST: the Sleep Telemetry, a subset of the Sleep-EDF Expanded dataset; Surrey-cEEGGrid and Surrey-PSG: collected at the University of Surrey using the behind-the-ear electrodes and PSG electrodes respectively [85].

Sleeping stages are commonly categorized into five classes: rapid eye movement, three sleep stages corresponding to different depths of sleep (N1∼N3), and wakefulness.

E. Blood glucose level prediction

Diabetes mellitus is a common public health issue, and the prevalence of diabetes diagnoses has increased substantially over the past 30 years among adults in the U.S[90]. The culprit of this metabolic disorder is insulin release or action, which leads to hyperglycemia. Managing the blood glucose (BG) levels of a diabetic patient can benefit glycaemic control and reduce costly complications[91].

Continuous subcutaneous glucose monitoring is becoming the most popular tool with a micro-invasive sensor to measure BG, such as an adhesive patch. One primary task for machine learning algorithms is to forecast abnormal changes in glucose concentration to take preventive action in time and avoid life-threatening risks[92]. To implement this, there are two types of inputs: using the past BG concentration only, or using the past BG concentration and external factors, such as food, drug, insulin intake, and activity, as shown in Table.V. The features are commonly extracted from physiological models when the external factors were included as input modalities[91], [92]. All these features describe the effects of carbohydrate, insulin, exercise, sleep, and glucose dynamics, and they are characterized as glucose-related variables.

TABLE V.

A summary of existing studies applying RNNS for BG level prediction.

| Author | Dataset | Input modalities | Model architecture | Performance |

|---|---|---|---|---|

| Gu et al. 2017[91] | 35 non-diabetic subjects, 38 type I and 39 type II diabetic patients | The past BG data, meal, drug, insulin intake, physical, activity, and sleep quality. | • The features were conducted from physiological and temporal perspectives based on the external factors. • This study proposed an Md3RNN model, which had three divisions: 1. A grouped input layer with three different sets of weights corresponding to non-diabetic, type I, and type II diabetic patients; 2. shared staked LSTM; 3. personalized output layers assigned for individual users. |

The average accuracy was 82.14% |

| Fox et al. 2018[95] | 40 patients with type 1 diabetes over three years. | The past BG data only. | The authors proposed four models with Seq2seq structures. All the encoders used GRUs, and only the decoder parts were different: • DeepMO: Fully connected layers were used for prediction. • SeqMO: GRU was used for decoder. • PolyMO: Multiple fully connected layers were used to learn the coefficients of a polynomial model. • PolySeqMO: the latent vector was first fed into a GRU, and the hidden states were used to learn the coefficients of a polynomial model. |

PolySeqMO achieved the lowest absolute percentage error, which was 4.87. |

| Dong et al. 2019[49] | 40 type I diabetic patients. | The past BG data only. | • Raw data sequence was directly fed into a GRU layer. The output at the last step was fed to 2 dense layers for final output. • Train a model on multiple patients and then fine tune for one patient. |

The mean square error at 30 and 45 min were 0.419 and 0.594 (MMOL/L)2, respectively. |

| Dong et al. 2019[93] | 40 type I and 40 type II diabetic patients.. | The past BG data only. | • The 1-day sequential BG data were first assigned into different clusters by a k-mean method. • The authors designed parallel dense layers for different clusters. • Each sequence was first fed into the dense layer according to the corresponding cluster, and the outputs of all parallel layers were fed into a shared LSTM for final output. |

For type I, the mean square errors were 0.104, 0.318, and 0.556 (MMOL/L)2 at 30 min, 45 min, and 60 min prediction, respectively; for type II, the mean square errors were 0.060, 0.143 and 0.306 (MMOL/L)2 at 30 min, 45 min, and 60 min prediction, respectively. |

| He et al. 2020[92] | 112 subjects, including non-diabetic people, type I and type II diabetic patients. | The past BG data, food, drug intake, activity, sleep quality, time, and other personal configurations. | • The feature sequence was conducted from physiological models and auto-correlation encoder. • The input sequence was first fed into a personal characteristic dense layer, in which the weights and bias were uniquely assigned for each subject. • All the subject-specific dense layers shared a GRU layer for final output. |

The root mean square errors were 0.29, 0.47, and 0.91 (MMOL/L) at 15 min, 30 min, and 60 min prediction, respectively |

| Zhu et al. 2020[94] | OhioT1DM [96] and silicon dataset from the UVA-Padova simulator[97]. | The past BG recording, insulin bolus, meal intake and time index. | • The electronic health record in a sliding window was directly fed into the dilated RNN. • The dilated RNN was structured by 3-layer Elman RNN. The second and third layers had skipped connection through time. The last step was used for final decision-making. • The model was first trained on both datasets and then fine-tuned for the specific subject. |

The root-mean-square error was 18.9 mg/dL at 30 min prediction. |

Predicting the value at a future step is traditionally an auto-regressive problem. When RNN is applied, this problem could be re-formulated as a ”Many-to-One” scenario. For the prediction with multiple steps, such as predicting the BL in 15, 30, and 60 min, one can construct the model output as a multi-dimensional vector[93], [94]. Most RNN-based BL prediction studies have proven that the RNN models offered better performance (lower errors) than the other methods, such as the autoregressive model and support vector regression[92], [95], [94], indicating that RNN was more robust in searching the historical information with sufficient non-linearities. Besides, one innovative design structured this prediction problem into the seq2seq model adapting RNN-based autoencoder, as introduced in the study of Foxet al[95]. Their comparative studies suggested that the ‘Many-to-One’ structure (DeepMO) could not offer the best performance. Instead, the PolySeqMO outperformed all other structures. More details could be found in Table.V.

A critical challenge for the BG studies is modeling the typical pattern among all the subjects while considering the subject-specific characteristic. To address this issue, the practitioners in BG prediction generally design the model with 2 (or more) divisions: one learned the personalized pattern with different weights and biases, and the other one learned the common dynamics by shared RNN[93], [92], [91]. Other studies also attempt to apply the idea of fine-tuning manner, as shown in Fig. 6(c)[49], [94]. Glucose metabolism in the human body is a long-term process. For example, the effect of insulin intake can be present for more than ten hours, and measuring BGL variation demands days. Therefore, it is challenging to obtain a large number of samples. Meanwhile, within-subject experiment is the main strategy in the existing studies since the inter-patient variability makes it hard to find a generic model. We will discuss more in Section V-C.

F. Other applications

Besides the applications mentioned above, the RNN also exhibited its power in other physiological fields. Singh et al. attempted to classify automotive drivers’ stress levels based on the Galvanic Skin Response and Photoplethysmography signals[98]. Their study compared the traditional DNN and the Elman RNN, and pointed out that the RNN was the most optimal structure for stress level detection. Futoma et al. applied an LSTM combined with the Multitask Gaussian Process to detect the onset of sepsis[44]. Mastorocostas et al. designed a block-diagonal RNN, a modified version of the Elman RNN, to analyze lung sounds[99]. Cheng et al. used a deep LSTM to detect the obstruction of sleep apnea based on ECG signals[100]. Su et al. used ECG and PPG to predict blood pressure[34]. Their study applied a res-RNN architecture with a residual connection similar to the ResNet based on the CNNs[101]. Liu et al. involved historical blood pressure records, heart rate, and temperature in predicting future blood pressure with LSTMs[102]. More importantly, they used the subject’s profile as an extra input vector to address the cross-subject issue, and we will discuss it in Section V-C3. Hussain et al investigated the preterm prediction for pregnant women using Electrohysterography (EHG) technique [36]. Bahrami Rad et al. also used PSG to detect non-apneic and non-hypopneic arousals with 3-layers bidirectional LSTM[103]. Yang et al. detected heartbeat anomalies based on heart sounds with a two-layer GRU[35].

The EEG signals are also valuable measures for stroke detection and rehabilitation, and an increasing number of studies attempted to analyze such a disease with RNNs. Choi et al. designed a hybrid model with CNN and bidirectional LSTM for early stroke detection[104]. Fawaz et al. proposed a learnable Fast Fourier Transform method collaborating with LSTM RNN to classify stroke/non-stroke patients[105]. To identify post-stroke patients based on EEG, Sansiagi et al. applied a 3-layer LSTM with discrete wavelet representations[106].

Another physiological application of RNN is the pain assessment, which is challenging to complete in clinical practice. One method to objectively assess the pain level is to detect the protective behaviors by wearable motion sensors and the surface EMG signals. Based on these modalities, Wang et al. applied a 3-layer LSTM model to identify the patients with chronic lower back pain[107]; Li et al. proposed a similar structure with extra dense layers[108]; Yuan et al. constructed an LSTM-based autoencoder to extract the latent features, and then applied attention mechanism to the feature sequences[109]. Another physiological modality is functional near-infrared spectroscopy, which measures the hemodynamic response in the brain. Rojas et al. employed this modality and bidirectional LSTM to classify thermal-induced pain perceptions[110].

Some studies have also attempted to use RNN in EMG signals analysis. These signals reflect the muscular electrical activities and offer a widely adopted method for evaluating the neuromuscular status and identifying body movement. Xia et al. proposed an EMG-based forearm movement estimation system with LSTM [43]. By analyzing the EMG collected from seven upper body muscles, Bengoetxea et al. identified different figure-eight movements[111]. Wang et al. classified the left-hand postures using LSTM [42]. Li et al. built the relationship between EMG and stimulated muscular torque with a NARX strategy [46].

Some physiological signals can also use for identification with the help of RNNs. Salloum et al. and Lynn et al. used ECG signals to conduct biometric identification with RNN, and the accuracies were more than 98% [20], [112]. Moreover, Zhang et al. used ballistocardiogram as input for a similar task[113]. The above studies all compared the accuracies between GRU and LSTM and reported that there were no significant differences between these two units. More discussion will be presented in Section V-B.

Recently, some studies are attempting to measure the swallowing-induced events with on-neck sensors signals and RNN models. Mao et al. applied a multi-layer Elman RNN to track the hyoid bone movement during swallowing[114]. They then combined CNN-1D and GRUs to identify the laryngeal vestibule status (opening or closure)[115]. Importantly, they pointed out that the RNN-based model performed better than the CNN model. Khalifa et al. proposed a hybrid CNN-RNN model to detect the upper esophageal sphincter opening with swallowing acceleration signals. The proposed model used a GRU-based RNN for modeling time dependencies after time-localized feature extraction from raw signals using CNN[116], [117].

Another critical physiological task is human gait analysis, as the data can hold information about medical and neurodegenerative disorders. Zhao et al. applied LSTMs on force-sensitive resistors signals to identify neurodegenerative diseases, such as Parkinson’s disease, Huntington’s disease, and amyotrophic lateral sclerosis[118]. Zhen et al. used LSTM with accelerometer signals collected from the thigh, calf, and foot to identify swing and stance phases in the gait circles[119]. GAO et al. proposed a structure that combined LSTM and 1D CNN to classify the abnormal gait with wearable inertial measurement units[120]. Tortora et al. attempted to decode the gait patterns from EEG signals using LSTM[121]. In this case, the input was the sequential features of EEG, and the labels are swing or stance phase. The application of RNNs in human gait analysis still has a long way to go in physiology since the related studies are relatively limited.

V. Existing issues and future work

Although many studies have reported using RNNs to solve a wide range of problems, as introduced above, there remain several issues facing the further development of RNNs in physiological applications.

A. Finding features for RNN models

1). Knowledge-based feature engineering:

The principal idea of feature extraction is selecting the meaningful components of sequential data to predict events of interest. These features are supposed to be related to these events, and feature extraction requires external knowledge. Involving this knowledge in the RNN model design is a natural methodology, especially when some typical applications’ signal features have been previously explored. For example, in the epilepsy detection studies, wavelet-based methods were prevalent in constructing features from EEG signals, because wavelet transforms were extensively studied and well established to analyze brain activity[70], [122]. The study by Schwab et al. aimed to classify cardiac arrhythmias based on ECG and manually extracted features from engineering and clinical perspectives, such as the amplitude of R point and QRS duration in the ECG waveform[22]. All these features were widely studied biomarkers for cardiac disorders. They designed a 5-layer GRU or bidirectional LSTM with a Markov Model and attention mechanism. Although it was considerably complicated, such a sophisticated structure indeed provided state-of-the-art performance. All the previously reported studies in features extraction will help the RNNs’ design, especially in ECG and EEG-related tasks. However, seeking features might be intractable when the domain knowledge is insufficient[123].

2). Finding features through deep RNNs:

Besides extracting the features by human knowledge, scholars were also aware of the merit in deep learning: it is possible to seek features via the deep architecture itself. In computer vision, deep CNN architecture has been historically successful by generating “feature maps” in intermediate layers. However, the situations were more complicated in dealing with physiological data. If seeking features from the raw data is desirable, increasing the model capacity may be needed. Chauhan and Vig first attempted to feed raw electrocardiographic signals into a three-layer LSTM RNN to conduct anomaly detection[124]. It was quite a deep structure in processing the physiological temporal data. Qiu et al. proposed a three-layer LSTM to remove the power line interference in ECG, in which the input was also raw data[125].

The drawback of the raw signal input is the number of time steps through which the error signal of RNNs has to propagate[22]. The LSTM and GRU are specifically designed to solve the long time dependency problems, and they are not hardware friendly due to the difficulties in parallelized computation[126]. Therefore, the design of hardware accelerators is a path for future work. An alternative way is the modification of the entire deep RNN structures to reduce the calculation of back-propagation through time, as introduced in the next section.

3). Finding features through deep structures:

From 2018, there was a tendency to combine convolutional networks with RNNs (C-RNN) for physiological application[11], [12], [41], [43]. Although the purpose of each network in these studies was different, the deep structures were similar: the CNN layers aimed to extract the local features, and the RNN connected the temporal relationship among these features. Shashikumar et al. treated the 1-D ECG signals as 2-D pictures by calculating the wavelet power spectrum[11]. Based on the spectral “image”, they implemented a 5-layers CNN, at the top of which was a one-layer bidirectional Elman RNN. Unlike Shashikumar’s work, Tan et al. and Andrea et al. used a 1-D CNN as the bottom layer to extract the features of 1-D signals[12]. Andrea et al. also proposed a “siamese architecture” besides the C-RNN to improve accuracy. The structure reported by Xiong et al. was more advanced: they applied the residual block and the batch normalization techniques to cardiac arrhythmias detection with an Elman RNN[41]. These ideas are prevalent in image-related tasks and have been transferred to physiological studies. In the above studies, the CNN layers provide short-term local features and are easy to parallelize in computation. Additionally, when a convolutional layer is introduced, the pooling technique is also applicable to reduce the signal length or time steps, and the computation is therefore simplified. The studies of hybrid structures just started in physiological applications, but they created new ideas in future studies.