Summary

Ischemic retinal diseases (IRDs) are a group of common blinding diseases whose diagnosis and treatment depend on accurate interpretation of fundus fluorescein angiography (FFA) images. We developed an artificial intelligence system (Ai-Doctor) to interpret FFA images. Ai-Doctor performed well in image phase identification (area under the curve [AUC] range, 0.991–0.999), diagnosis of diabetic retinopathy (DR) and branch retinal vein occlusion (BRVO) (AUC, 0.979–0.992), and segmentation (Dice similarity coefficient [DSC], 89.7%–90.1%) and quantification of non-perfusion areas. The segmentation model was extended to previously unencountered IRDs (central RVO and retinal vasculitis), achieving DSCs of 89.2% and 83.6%, respectively. We proposed a clinically applicable ischemia index (CAII) to evaluate the degree of ischemia; CAII values above 0.17 in BRVO and 0.08 in DR may be associated with an increased likelihood of requiring laser therapy. Ai-Doctor may enable accurate FFA image interpretation for IRDs, potentially reducing reliance on retinal specialists.

Keywords: artificial intelligence, ischemic retinal diseases, fundus fluorescein angiography, diabetic retinopathy, retinal vein occlusion

Graphical abstract

Highlights

- Ai-Doctor is a multi-tasking artificial intelligence system
- Ai-Doctor can assist the whole process of FFA image interpretation
- Ai-Doctor may reduce the reliance on retinal specialists in FFA examination

Zhao et al. develop a multi-tasking artificial intelligence system (Ai-Doctor) capable of automated identification of image phases, diagnosis of ischemic retinal diseases, and segmentation of ischemic areas using fundus fluorescein angiography images, and they propose a clinically applicable ischemia index, potentially improving the whole management process of ischemic retinal diseases.

Introduction

Ophthalmology was among the first medical specialties to explore the potential applications of artificial intelligence (AI).1,2 However, previous efforts have largely been limited to the screening or diagnosis of ocular diseases.3,4,5 A comprehensive AI model that reasons like a doctor, assists in every step from diagnosis to treatment suggestion, and extends to a group of diseases with shared characteristics could greatly improve the equity and efficiency of eye care services.

Ischemic retinal diseases (IRDs) are a group of ocular diseases that share characteristics caused by retinal ischemia, including diabetic retinopathy (DR), retinal vein occlusion (RVO), and retinal vasculitis.6,7,8,9 Their accurate evaluation depends on fundus fluorescein angiography (FFA), a well-recognized standard imaging tool,10 which reliably visualizes retinal microvascular features, including non-perfusion areas (NPAs), neovascularization, and leakage.11,12 These features are all important indicators of disease progression, treatment effectiveness, and the extent of regression after treatment in IRDs.13 Recently, optical coherence tomography angiography (OCTA) has been used to detect similar features of retinal diseases.9,14,15 However, its clinical use has only just begun, is largely confined to provincial capitals and economically developed cities, and is limited by high cost, narrow fields of view, and greater demands on patient cooperation.9,14 FFA therefore remains the most commonly used tool for evaluating retinal diseases, especially in less developed areas.15 Yet among all current types of ophthalmic images, FFA images are the most challenging to interpret, requiring ophthalmologists with extensive clinical experience or retinal specialists. As a result, some hospitals that offer FFA examinations nevertheless fail to produce qualified FFA reports because they lack ophthalmologists competent to interpret the images reliably.16 Furthermore, interpreting the FFA images and writing the report for one patient takes at least 5–10 min, adding to the burden on an already strained workforce. AI technology that bridges this gap and relieves this pressure is therefore practical and urgently needed to promote easy, equitable, and accessible eye care.

However, AI for the automated detection of retinal diseases using FFA images is still in its infancy. Although several studies have achieved detection or segmentation of fluorescein features of retinal diseases in FFA images,13,17,18,19,20,21,22 most have focused on a single disease, such as DR or RVO,13,17,18,19,20,21 rather than a group of diseases. Furthermore, FFA image interpretation should also include identification of image phases and treatment suggestions. Existing AI models therefore do little to reduce ophthalmologist involvement, which limits their application in real-world clinical settings. Although several studies have begun developing single AI systems for automated detection of multiple retinal diseases, such systems require large numbers of images of each disease.5,23 To date, no AI system can simultaneously detect and segment a group of IRDs after training on images of only one or two typical diseases; such a system would greatly improve the efficiency of AI development and its broad clinical application.

To maximize the assistance AI can offer eye doctors, we aimed to develop a comprehensive AI system (Ai-Doctor) for automated identification of FFA image phases, diagnosis of common IRDs (including DR and branch RVO [BRVO]), and segmentation of ischemic areas using FFA images. Automated NPA segmentation was then extended to previously unencountered IRDs, including central RVO (CRVO) and retinal vasculitis. Furthermore, we proposed a modified clinically applicable ischemia index (CAII), calculated from the segmented FFA images, and explored its optimal threshold for suggesting laser therapy.

Results

The study design is shown in Figure 1. A total of 24,316 images were used to develop and validate Ai-Doctor. In the classification task, the training, internal validation (Zhongshan Ophthalmic Center [ZOC]), external test 1 (Shenzhen Eye Hospital [SEH]), and external test 2 (Foshan Second People’s Hospital [FSPH]) datasets contained 14,058, 6,262, 1,432, and 2,564 images, respectively. In the segmentation task, 1,295 images (750 BRVO and 545 DR images) were used to develop the segmentation model for NPAs and BRVO areas, with 850, 232, 122, and 91 images in the training, internal validation (ZOC), external test 1 (SEH), and external test 2 (FSPH) datasets, respectively. Furthermore, 278 images with CRVO and retinal vasculitis were used to test the generalizability of the segmentation model. Details of all datasets are summarized in Table 1.

Figure 1.

Study design and workflow for Ai-Doctor development

The development of Ai-Doctor consisted of four steps: phase identification (A), disease diagnosis (B), segmentation of NPAs and BRVO areas (C), and CAII threshold exploration for laser therapy suggestion (D). AI, artificial intelligence; BRVO, branch retinal vein occlusion; CAII, clinically applicable ischemia index; CRVO, central retinal vein occlusion; DR, diabetic retinopathy; FFA, fundus fluorescein angiography; NPA, non-perfusion area; ResNet, residual network; ROC, receiver operating characteristic.

Table 1.

Summary of datasets

| Items | ZOC dataset | SEH dataset | FSPH dataset | Total |
|---|---|---|---|---|
| Images | 20,320 | 1,432 | 2,564 | 24,316 |
| Eyes | 3,236 | 342 | 469 | 4,047 |
| Participants | 2,107 | 232 | 349 | 2,688 |
| Age (SD), years | 54.1 (13.7) | 57.0 (10.6) | 59.1 (12.5) | N/A |

| Items | Training set | Validation set | External test set 1 | External test set 2 | Total |
|---|---|---|---|---|---|
| Image phases | | | | | |
| Venous phase | 7,393 | 3,150 | 800 | 1,200 | 12,543 |
| Arterial phase | 3,970 | 1,650 | 343 | 764 | 6,727 |
| Non-FFA | 2,695 | 1,184 | 289 | 600 | 4,768 |
| Disease diagnosis | | | | | |
| BRVO | 1,812 | 806 | 242 | 432 | 3,292 |
| DR without NPAs | 772 | 326 | 150 | 87 | 1,335 |
| DR with NPAs | 1,641 | 666 | 246 | 257 | 2,810 |
| Normal | 3,168 | 1,352 | 162 | 424 | 5,106 |
| Segmentation | | | | | |
| BRVO | 508 | 127 | 65 | 50 | 750 |
| DR with NPAs | 342 | 105 | 57 | 41 | 545 |
| CRVO | N/A | 111 | N/A | N/A | 111 |
| Retinal vasculitis | N/A | 167 | N/A | N/A | 167 |
| CAII validation | | | | | |
| BRVO | N/A | N/A | N/A | 202 | 202 |
| DR | N/A | N/A | N/A | 290 | 290 |

The ZOC dataset was randomly divided into training and internal validation sets at a 7:3 ratio for the classification task and an 8:2 ratio for the segmentation task. Participants at FSPH were significantly older than those at ZOC (p < 0.001), whereas ages did not differ significantly between SEH and ZOC (p = 0.16) (Kruskal-Wallis test). BRVO, branch retinal vein occlusion; CRVO, central retinal vein occlusion; DR, diabetic retinopathy; FFA, fundus fluorescein angiography; FSPH, Foshan Second People’s Hospital; NPA, non-perfusion area; SEH, Shenzhen Eye Hospital; ZOC, Zhongshan Ophthalmic Center; CAII, clinically applicable ischemia index; N/A, not applicable; SD, standard deviation.
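The random division of the ZOC data can be sketched as follows. This is a minimal illustration, not the authors' code: the image identifiers are hypothetical, and the text does not state whether the split was made at the image, eye, or participant level, so this sketch splits individual images. Grouping all images of a participant into the same set would be a stricter alternative that keeps the same eye out of both training and validation.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def random_split(items: Sequence[T], train_fraction: float, seed: int = 0) -> Tuple[List[T], List[T]]:
    """Shuffle a copy of `items` and cut it into (training, validation) lists."""
    if not 0.0 < train_fraction < 1.0:
        raise ValueError("train_fraction must lie strictly between 0 and 1")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # fixed seed makes the split reproducible
    cut = round(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


# Hypothetical identifiers standing in for ZOC images.
image_ids = [f"img_{i:05d}" for i in range(1000)]
train_cls, val_cls = random_split(image_ids, 0.7)  # classification task, 7:3
train_seg, val_seg = random_split(image_ids, 0.8)  # segmentation task, 8:2
print(len(train_cls), len(val_cls), len(train_seg), len(val_seg))  # 700 300 800 200
```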

Model performance of image phase identification and disease diagnosis

The mean areas under the curve (AUCs) for image phase identification (non-FFA, arterial phase, and venous phase) in the internal validation (ZOC), external test 1 (SEH), and external test 2 (FSPH) datasets were 0.999 (95% confidence interval [CI]: 0.999–1.000), 0.991 (0.986–0.995), and 0.998 (0.996–1.000), respectively. The mean AUCs for common IRD diagnosis (BRVO, DR without NPAs, and DR with NPAs) in the same three datasets were 0.992 (95% CI: 0.989–0.995), 0.979 (0.969–0.988), and 0.986 (0.980–0.993), respectively. Detailed values for each category, including AUC, accuracy, recall, and precision, are shown in Table 2. The receiver operating characteristic (ROC) curves and confusion matrices for image phase identification and disease diagnosis are shown in Figure 2. Human-machine comparison suggested that the disease diagnosis model performed comparably to the expert (Figure S1).
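A mean (macro-averaged) one-vs-rest AUC with a 95% CI can be computed as sketched below in plain Python. The text does not say how its CIs were obtained; the percentile bootstrap used here is an assumed, commonly used choice, and the toy scores are hypothetical.

```python
import random
from typing import List, Sequence, Tuple


def binary_auc(scores: Sequence[float], labels: Sequence[int]) -> float:
    """AUC as P(random positive scores above random negative), counting ties as 0.5."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative sample")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def macro_ovr_auc(probs: Sequence[Sequence[float]], y_true: Sequence[int]) -> float:
    """Mean of one-vs-rest AUCs; probs[i][k] is the model's score for class k on image i."""
    n_classes = len(probs[0])
    aucs = [binary_auc([p[k] for p in probs], [int(y == k) for y in y_true])
            for k in range(n_classes)]
    return sum(aucs) / n_classes


def bootstrap_ci(probs, y_true, n_boot: int = 200, alpha: float = 0.05,
                 seed: int = 0) -> Tuple[float, float]:
    """Percentile bootstrap interval for macro_ovr_auc, resampling images with replacement."""
    n_classes = len(probs[0])
    if len(set(y_true)) < n_classes:
        raise ValueError("every class must occur in y_true")
    rng = random.Random(seed)
    n = len(y_true)
    stats: List[float] = []
    while len(stats) < n_boot:
        idx = [rng.randrange(n) for _ in range(n)]
        ys = [y_true[i] for i in idx]
        if len(set(ys)) < n_classes:
            continue  # a resample missing a class has no one-vs-rest AUC for it
        stats.append(macro_ovr_auc([probs[i] for i in idx], ys))
    stats.sort()
    return stats[int(alpha / 2 * n_boot)], stats[int((1 - alpha / 2) * n_boot) - 1]


# Toy scores for classes 0 = non-FFA, 1 = arterial, 2 = venous (hypothetical).
probs = [[0.90, 0.05, 0.05], [0.80, 0.10, 0.10], [0.10, 0.80, 0.10],
         [0.20, 0.70, 0.10], [0.10, 0.10, 0.80], [0.05, 0.15, 0.80]]
y_true = [0, 0, 1, 1, 2, 2]
print(macro_ovr_auc(probs, y_true), bootstrap_ci(probs, y_true))
```

With perfectly separated toy scores every resample gives an AUC of 1.0, so the interval collapses to (1.0, 1.0). The pairwise loop is quadratic in the number of images; for thousands of images, a rank-based implementation such as scikit-learn's `roc_auc_score` with `multi_class="ovr"` would be more practical.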

Table 2.

Performance of Ai-Doctor in image phase identification and disease diagnosis (classification task)

| Category | AUC (95% CI) | Accuracy (95% CI) | Precision (95% CI) | Recall (95% CI) |
|---|---|---|---|---|
| Phase identification | | | | |
| ZOC dataset | | | | |
| Venous phase | 0.999 (0.998–1.000) | 0.995 (0.993–0.997) | 0.997 (0.987–0.993) | 0.990 (0.987–0.993) |
| Arterial phase | 0.999 (0.997–1.000) | 0.984 (0.978–0.990) | 0.990 (0.985–0.995) | 0.980 (0.973–0.987) |
| Non-FFA | 0.999 (0.997–1.000) | 0.998 (0.995–1.000) | 1.000 (1.000–1.000) | 1.000 (1.000–1.000) |
| SEH dataset | | | | |
| Venous phase | 0.985 (0.977–0.993) | 0.962 (0.950–0.974) | 0.999 (0.997–1.000) | 0.960 (0.947–0.973) |
| Arterial phase | 0.980 (0.965–0.995) | 0.880 (0.846–0.914) | 0.900 (0.868–0.932) | 0.880 (0.846–0.914) |
| Non-FFA | 0.992 (0.982–1.000) | 1.000 (1.000–1.000) | 0.910 (0.877–0.943) | 1.000 (1.000–1.000) |
| FSPH dataset | | | | |
| Venous phase | 0.998 (0.996–1.000) | 0.996 (0.992–1.000) | 0.970 (0.961–0.979) | 1.000 (1.000–1.000) |
| Arterial phase | 0.995 (0.990–1.000) | 0.948 (0.932–0.964) | 0.990 (0.983–0.997) | 0.950 (0.935–0.965) |
| Non-FFA | 0.996 (0.991–1.000) | 1.000 (1.000–1.000) | 0.990 (0.982–0.998) | 1.000 (1.000–1.000) |
| Disease diagnosis | | | | |
| ZOC dataset | | | | |
| BRVO | 0.985 (0.977–0.993) | 0.932 (0.915–0.949) | 0.970 (0.958–0.982) | 0.930 (0.912–0.948) |
| DR without NPAs | 0.970 (0.951–0.989) | 0.883 (0.848–0.918) | 0.820 (0.778–0.862) | 0.880 (0.845–0.915) |
| DR with NPAs | 0.979 (0.968–0.990) | 0.901 (0.879–0.925) | 0.920 (0.899–0.941) | 0.900 (0.877–0.923) |
| Normal | 0.988 (0.982–0.994) | 0.989 (0.983–0.995) | 0.970 (0.961–0.979) | 0.990 (0.985–0.995) |
| SEH dataset | | | | |
| BRVO | 0.963 (0.940–0.986) | 0.921 (0.888–0.954) | 0.950 (0.923–0.977) | 0.920 (0.887–0.953) |
| DR without NPAs | 0.927 (0.885–0.969) | 0.851 (0.794–0.908) | 0.730 (0.658–0.802) | 0.850 (0.792–0.908) |
| DR with NPAs | 0.940 (0.911–0.969) | 0.803 (0.754–0.852) | 0.880 (0.840–0.920) | 0.800 (0.751–0.849) |
| Normal | 0.996 (0.987–1.000) | 0.979 (0.959–0.999) | 0.940 (0.906–0.974) | 0.980 (0.960–1.000) |
| FSPH dataset | | | | |
| BRVO | 0.972 (0.956–0.988) | 0.933 (0.909–0.957) | 0.960 (0.942–0.978) | 0.930 (0.906–0.954) |
| DR without NPAs | 0.953 (0.909–0.997) | 0.885 (0.818–0.952) | 0.660 (0.560–0.760) | 0.890 (0.824–0.956) |
| DR with NPAs | 0.945 (0.917–0.973) | 0.809 (0.761–0.857) | 0.950 (0.923–0.977) | 0.810 (0.762–0.858) |
| Normal | 0.997 (0.992–1.000) | 0.995 (0.988–1.000) | 0.950 (0.929–0.971) | 1.000 (1.000–1.000) |

AUC, area under the curve; CI, confidence interval; BRVO, branch retinal vein occlusion; CRVO, central retinal vein occlusion; DR, diabetic retinopathy; FFA, fundus fluorescein angiography; FSPH, Foshan Second People’s Hospital; NPA, non-perfusion area; SEH, Shenzhen Eye Hospital; ZOC, Zhongshan Ophthalmic Center.
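Table 2 reports per-class accuracy, precision, and recall. The text does not define per-class accuracy for a multi-class problem; the sketch below assumes the common one-vs-rest convention, in which each class is scored as positive against all others. The confusion-matrix counts are hypothetical.

```python
from typing import Dict, Sequence


def per_class_metrics(cm: Sequence[Sequence[int]],
                      class_names: Sequence[str]) -> Dict[str, Dict[str, float]]:
    """Per-class precision, recall, and one-vs-rest accuracy.

    cm[i][j] counts images whose reference label is class i and predicted label is class j.
    """
    total = sum(sum(row) for row in cm)
    results: Dict[str, Dict[str, float]] = {}
    for k, name in enumerate(class_names):
        tp = cm[k][k]
        fn = sum(cm[k]) - tp                  # class k predicted as another class
        fp = sum(row[k] for row in cm) - tp   # another class predicted as class k
        tn = total - tp - fn - fp
        results[name] = {
            "precision": tp / (tp + fp) if tp + fp else 0.0,
            "recall": tp / (tp + fn) if tp + fn else 0.0,
            "accuracy": (tp + tn) / total,
        }
    return results


# Hypothetical counts; rows and columns ordered as in `names`.
names = ["non-FFA", "arterial phase", "venous phase"]
cm = [[50, 0, 0],
      [0, 45, 5],
      [0, 2, 98]]
for name, m in per_class_metrics(cm, names).items():
    print(f"{name}: precision {m['precision']:.3f}, recall {m['recall']:.3f}, "
          f"accuracy {m['accuracy']:.3f}")
```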

Figure 2.

Performance of Ai-Doctor in image phase identification and disease diagnosis (classification task)

(A) ROC curves and confusion matrices for the identification of non-FFA, arterial, and venous phases in internal (ZOC) and external (SEH and FSPH) datasets.

(B) ROC curves and confusion matrices for the diagnosis of normal, BRVO, DR without NPAs, and DR with NPAs in internal (ZOC) and external (SEH and FSPH) datasets. Both the ROC curves and the confusion matrices indicate strong performance of the classification models. AI, artificial intelligence; BRVO, branch retinal vein occlusion; DR, diabetic retinopathy; FFA, fundus fluorescein angiography; FSPH, Foshan Second People’s Hospital; NPA, non-perfusion area; ROC, receiver operating characteristic; SEH, Shenzhen Eye Hospital; ZOC, Zhongshan Ophthalmic Center.

Heatmap visualization for deep-learning explainability

Heatmaps were generated to highlight the image regions that drove the models' predictions (Figure S2). In non-FFA and normal images, the heatmaps highlighted the entire visible retinal area. In arterial- and venous-phase FFA images, they highlighted the retinal vessels. In images diagnosed as BRVO, DR without NPAs, and DR with NPAs, the heatmaps highlighted BRVO areas, mild DR lesions (microaneurysms), and NPAs, respectively, indicating that the AI models accurately identified important regions with typical features. Heatmaps of some misclassified images showed that the models tended to focus on more obvious or brighter features.
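Assuming the heatmaps were produced with Grad-CAM (the Key resources table lists pytorch-grad-cam), its core weighting step is sketched below. In practice the activations and gradients are taken from a network layer through library hooks; here they are supplied as plain nested lists so that only the combination step is shown, and upsampling the map to image resolution is omitted.

```python
from typing import List

Matrix = List[List[float]]


def grad_cam(activations: List[Matrix], gradients: List[Matrix]) -> Matrix:
    """Grad-CAM map from K feature maps (each H x W) and the class-score gradients for each map."""
    h, w = len(activations[0]), len(activations[0][0])
    # Channel weight: spatial average of the gradient of the class score for that map.
    weights = [sum(sum(row) for row in g) / (h * w) for g in gradients]
    cam = [[0.0] * w for _ in range(h)]
    for a_k, w_k in zip(activations, weights):
        for i in range(h):
            for j in range(w):
                cam[i][j] += w_k * a_k[i][j]
    cam = [[max(v, 0.0) for v in row] for row in cam]  # ReLU: keep positive evidence only
    peak = max(max(row) for row in cam)
    if peak > 0:
        cam = [[v / peak for v in row] for row in cam]  # divide by the maximum for display
    return cam


# Two hypothetical 2 x 2 feature maps: the first supports the class, the second opposes it.
acts = [[[1.0, 0.0], [0.0, 0.0]], [[0.0, 0.0], [0.0, 1.0]]]
grads = [[[1.0, 1.0], [1.0, 1.0]], [[-1.0, -1.0], [-1.0, -1.0]]]
print(grad_cam(acts, grads))  # [[1.0, 0.0], [0.0, 0.0]]
```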

Model performance of NPA and BRVO area segmentations

We evaluated two models, Unet-visual geometry group (VGG)16 and Unet-Swin Transformer (ST), for segmenting NPAs and BRVO areas. The Unet-VGG16 model outperformed Unet-ST and was therefore used for further analysis (Table S1). In the internal validation set, the segmentation model (Unet-VGG16) achieved a Dice similarity coefficient (DSC) of 89.7% (95% CI: 85.8%–93.6%) for NPA segmentation and 94.4% (90.4%–98.4%) for BRVO area segmentation. In external test sets 1 (SEH) and 2 (FSPH), the model also performed robustly, with DSCs of 90.1% (95% CI: 86.3%–93.9%) and 90.0% (86.1%–93.9%) for NPA segmentation and 92.5% (87.9%–97.1%) and 91.7% (86.9%–96.5%) for BRVO area segmentation, respectively. Detailed values for NPA and BRVO area segmentation, including DSCs, intersection over union (IoU) values, and F1 scores, are shown in Table 3.

Table 3.

Performance of Ai-Doctor in the segmentation of NPAs and BRVO areas (segmentation task)

| Segmented areas | DSC % (95% CI) | IoU % (95% CI) | F1 score % (95% CI) |
|---|---|---|---|
| ZOC dataset | | | |
| NPAs | 89.7 (85.8–93.6) | 81.3 (76.3–86.3) | 80.0 (74.9–85.1) |
| BRVO areas | 94.4 (90.4–98.4) | 89.4 (84.0–94.8) | 92.0 (87.3–96.7) |
| SEH dataset | | | |
| NPAs | 90.1 (86.3–93.9) | 82.0 (77.1–86.9) | 82.3 (77.4–87.2) |
| BRVO areas | 92.5 (87.9–97.1) | 86.0 (80.0–92.0) | 89.4 (84.0–94.8) |
| FSPH dataset | | | |
| NPAs | 90.0 (86.1–93.9) | 81.8 (76.8–86.8) | 81.5 (76.5–86.5) |
| BRVO areas | 91.7 (86.9–96.5) | 84.7 (78.4–91.0) | 88.3 (82.7–93.9) |
| Expansion to other diseases (NPAs) | | | |
| CRVO | 89.2 (83.4–95.0) | 80.6 (73.2–88.0) | 84.0 (77.2–90.8) |
| Retinal vasculitis | 83.6 (78.0–89.2) | 71.9 (65.1–78.7) | 73.0 (66.3–79.7) |

BRVO, branch retinal vein occlusion; CRVO, central retinal vein occlusion; CI, confidence interval; DR, diabetic retinopathy; DSC, Dice similarity coefficient; FSPH, Foshan Second People’s Hospital; IoU, intersection over union; NPA, non-perfusion area; SEH, Shenzhen Eye Hospital; ZOC, Zhongshan Ophthalmic Center.
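The DSC and IoU in Table 3 can be computed from binary masks as sketched below (hypothetical masks; 1 marks a lesion pixel). For a single pair of masks, IoU = DSC / (2 - DSC), and the IoU values in Table 3 agree with this identity to within 0.1 percentage point. For a single pair of binary masks, pixel-wise F1 equals the DSC; the text does not specify how its F1 scores were aggregated.

```python
from typing import Sequence, Tuple

Mask = Sequence[Sequence[int]]


def dice_and_iou(pred: Mask, truth: Mask) -> Tuple[float, float]:
    """Pixel-wise Dice similarity coefficient and IoU for two binary masks (1 = lesion)."""
    inter = pred_sum = truth_sum = 0
    for p_row, t_row in zip(pred, truth):
        for p, t in zip(p_row, t_row):
            inter += p & t
            pred_sum += p
            truth_sum += t
    if pred_sum + truth_sum == 0:
        return 1.0, 1.0  # both masks empty: scored as perfect agreement by convention
    union = pred_sum + truth_sum - inter
    return 2 * inter / (pred_sum + truth_sum), inter / union


def iou_from_dice(dsc: float) -> float:
    """IoU implied by a DSC for the same pair of masks: IoU = DSC / (2 - DSC)."""
    return dsc / (2 - dsc)


pred = [[1, 1, 0], [0, 1, 0]]
truth = [[1, 0, 0], [0, 1, 1]]
print(dice_and_iou(pred, truth))             # (0.6666666666666666, 0.5)
print(round(iou_from_dice(0.897) * 100, 1))  # 81.3, the ZOC NPA IoU in Table 3
```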

Moreover, the NPA segmentation model of Ai-Doctor was trained with FFA images of two typical IRDs (DR and BRVO), and it performed better than a model trained with BRVO images alone. Detailed values, including DSCs, IoU values, and F1 scores, are shown in Table S2.

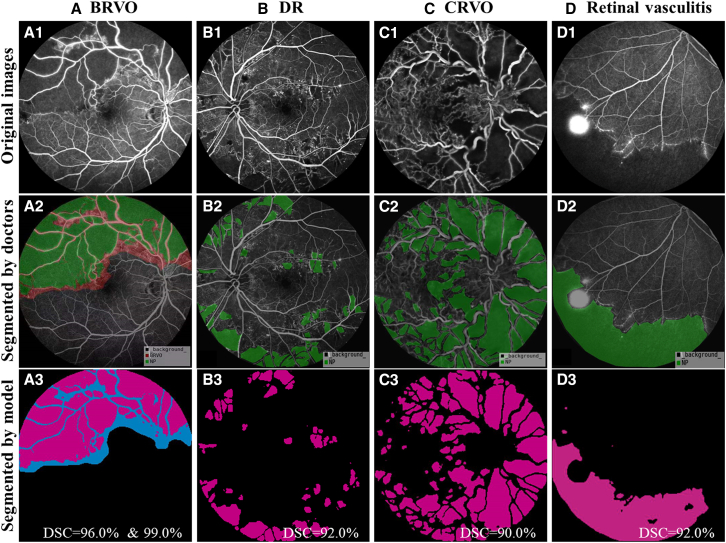

The DSCs for segmenting NPAs in CRVO and retinal vasculitis (two previously unencountered IRDs) were 89.2% (95% CI: 83.4%–95.0%) and 83.6% (78.0%–89.2%), respectively (Table 3). Figure 3 shows that the AI model accurately predicted the target areas in all four IRDs. Further validation using images of patients with DR and BRVO with laser scars also suggested robust model performance (DSC, 84.1% [95% CI: 75.8%–92.4%]).

Figure 3.

Comparison of segmentation results in NPAs and BRVO areas between Ai-Doctor and doctors

The first row displays the original images (A1–D1). The second row displays images segmented by doctors. For the image diagnosed as BRVO (A2), both NPAs (green areas) and BRVO areas (green plus red areas) were segmented. For images diagnosed as DR (B2), CRVO (C2), or retinal vasculitis (D2), the NPAs (green areas) were segmented. The third row displays images segmented by the AI model. For the image diagnosed as BRVO (A3), both NPAs (magenta areas) and BRVO areas (magenta plus blue areas) were segmented, with DSCs of 96.0% and 99.0%, respectively. For images diagnosed as DR (B3), CRVO (C3), or retinal vasculitis (D3), the NPAs (magenta areas) were segmented, with the corresponding DSCs indicated in the lower right. AI, artificial intelligence; BRVO, branch retinal vein occlusion; CRVO, central retinal vein occlusion; DR, diabetic retinopathy; DSC, Dice similarity coefficient; NPA, non-perfusion area.

CAII threshold analysis

We proposed a CAII based on segmentation results from 55°-viewing field FFA images (the most commonly used modality) acquired in multiple orientations. For eyes with DR, the mean CAII was calculated from five representative FFA images (posterior, superior, nasal, temporal, and inferior orientations); for eyes with BRVO, it was calculated from two representative images (one posterior image and one peripheral image showing the most obvious lesion area). CAII values were significantly lower in the groups not requiring laser therapy than in the groups requiring laser therapy, both in eyes with BRVO (0.07 [range, 0.02–0.12] vs. 0.29 [range, 0.20–0.37], p < 0.001) and in eyes with DR (0.03 [range, 0.02–0.06] vs. 0.17 [range, 0.10–0.28], p < 0.001) (Table S3). We explored the optimal CAII threshold for laser therapy suggestion using empirical ROC curves and selected the cutoff with the highest Youden index as the optimal threshold. The AUC was 0.955 in BRVO and 0.919 in DR; at the selected cutoffs, sensitivity and specificity were 86.24% and 93.83% in BRVO and 86.29% and 86.05% in DR, respectively (Figure 4). Hence, the CAII thresholds for BRVO and DR were preliminarily set at 0.17 and 0.08, respectively, suggesting that laser therapy may be considered when the CAII exceeds 0.17 in eyes with BRVO or 0.08 in eyes with DR.
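The eye-level averaging and the preliminary thresholds described above can be sketched as follows. The per-image ischemia values are taken as inputs because this section does not give the per-image formula; the image labels and function names are hypothetical. Only the image counts per disease (five orientations for DR, one posterior plus one peripheral image for BRVO) and the thresholds (0.17 and 0.08) come from the text.

```python
from statistics import mean
from typing import Dict

# Preliminary thresholds from the text: laser therapy may be considered above these values.
CAII_THRESHOLDS = {"BRVO": 0.17, "DR": 0.08}
# Five representative orientations used for DR eyes.
DR_ORIENTATIONS = ("posterior", "superior", "nasal", "temporal", "inferior")


def eye_level_caii(per_image: Dict[str, float], disease: str) -> float:
    """Mean of per-image ischemia values over one eye's representative images.

    `per_image` maps a (hypothetical) image label to a per-image value derived from the
    segmentation output; how that value is computed is not specified in this section.
    """
    if disease == "DR":
        missing = [o for o in DR_ORIENTATIONS if o not in per_image]
        if missing:
            raise ValueError(f"DR eyes need five orientations; missing {missing}")
        values = [per_image[o] for o in DR_ORIENTATIONS]
    elif disease == "BRVO":
        # One posterior image plus the peripheral image with the most obvious lesion.
        if set(per_image) != {"posterior", "peripheral"}:
            raise ValueError("BRVO eyes need one posterior and one peripheral image")
        values = list(per_image.values())
    else:
        raise ValueError(f"no CAII protocol for {disease!r}")
    return mean(values)


def suggest_laser(caii: float, disease: str) -> bool:
    """True when the eye-level CAII exceeds the preliminary disease-specific threshold."""
    return caii > CAII_THRESHOLDS[disease]


dr_eye = {"posterior": 0.05, "superior": 0.10, "nasal": 0.12, "temporal": 0.08, "inferior": 0.15}
brvo_eye = {"posterior": 0.10, "peripheral": 0.20}
print(suggest_laser(eye_level_caii(dr_eye, "DR"), "DR"))        # True  (mean 0.10 > 0.08)
print(suggest_laser(eye_level_caii(brvo_eye, "BRVO"), "BRVO"))  # False (mean 0.15 <= 0.17)
```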

Figure 4.

Exploring optimal CAII thresholds for laser therapy in patients with BRVO and DR

(A and B) Analyses for BRVO and DR, respectively. CAII values were used as the decision variable for laser therapy suggestions. ROC curve analysis was used to explore the optimal CAII threshold for laser therapy (first column). The second column displays sensitivity and specificity at the corresponding thresholds. The cutoff with the highest Youden index was selected as the optimal threshold. CAII values in groups not requiring laser therapy (untreated groups) were significantly lower than those in groups requiring laser therapy (treated groups) (third column, Mann-Whitney test). BRVO, branch retinal vein occlusion; CAII, clinically applicable ischemia index; DR, diabetic retinopathy; ROC, receiver operating characteristic.
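Selecting the cutoff with the highest Youden index (J = sensitivity + specificity - 1) from an empirical ROC curve can be sketched as below. The rule that an eye is test-positive when its CAII is greater than or equal to the cutoff, the tie-breaking toward the lowest cutoff, and the toy values are assumptions for illustration.

```python
from typing import Sequence, Tuple


def youden_threshold(values: Sequence[float], treated: Sequence[int]) -> Tuple[float, float, float]:
    """Return (cutoff, sensitivity, specificity) maximizing J = sensitivity + specificity - 1.

    An eye counts as test-positive when its value >= cutoff (assumed rule). Every observed
    value is tried as a cutoff; on ties the lowest cutoff is kept.
    """
    n_pos = sum(treated)
    n_neg = len(treated) - n_pos
    if n_pos == 0 or n_neg == 0:
        raise ValueError("need both treated and untreated eyes")
    best = None
    for cut in sorted(set(values)):
        tp = sum(1 for v, t in zip(values, treated) if v >= cut and t == 1)
        tn = sum(1 for v, t in zip(values, treated) if v < cut and t == 0)
        sens, spec = tp / n_pos, tn / n_neg
        j = sens + spec - 1
        if best is None or j > best[0]:
            best = (j, cut, sens, spec)
    _, cut, sens, spec = best
    return cut, sens, spec


# Hypothetical eye-level CAII values; 1 = laser therapy required.
values = [0.02, 0.05, 0.07, 0.12, 0.20, 0.25, 0.30, 0.37]
treated = [0, 0, 0, 1, 0, 1, 1, 1]
print(youden_threshold(values, treated))  # (0.12, 1.0, 0.75)
```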

We further validated the CAII thresholds using an independent external dataset of 101 eyes with BRVO and 58 eyes with DR. With a threshold of 0.17, 82 of 101 eyes with BRVO were correctly classified (sensitivity, 76%; specificity, 96.15%); with a threshold of 0.08, 48 of 58 eyes with DR were correctly classified (sensitivity, 72.41%; specificity, 93.10%). The corresponding confusion matrices are provided in Figure S3.
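The external-validation figures are internally consistent, which a short calculation makes explicit. For BRVO, 76% sensitivity, 96.15% specificity, and 82 of 101 correctly classified eyes imply 75 eyes requiring laser therapy and 26 not requiring it (57/75 and 25/26); for DR, 72.41%, 93.10%, and 48 of 58 imply 29 eyes in each group (21/29 and 27/29). These cell counts are back-calculated, not reported in the text.

```python
def binary_summary(tp: int, fn: int, tn: int, fp: int) -> dict:
    """Sensitivity, specificity, and number correct from the cells of a 2 x 2 confusion matrix."""
    return {
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "correct": tp + tn,
        "total": tp + fn + tn + fp,
    }


# Cell counts (tp, fn, tn, fp) back-calculated from the reported percentages.
for name, cells in {"BRVO": (57, 18, 25, 1), "DR": (21, 8, 27, 2)}.items():
    s = binary_summary(*cells)
    print(f"{name}: sensitivity {s['sensitivity']:.2%}, specificity {s['specificity']:.2%}, "
          f"{s['correct']}/{s['total']} correct")
# BRVO: sensitivity 76.00%, specificity 96.15%, 82/101 correct
# DR: sensitivity 72.41%, specificity 93.10%, 48/58 correct
```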

Clinical application of Ai-Doctor (visualization of AI reports)

To facilitate use in clinical settings, Ai-Doctor can automatically generate a detailed AI report for FFA image interpretation. The report describes the image phase, disease diagnosis, ischemic area segmentation, and CAII calculation (Figure S4). The total running time of Ai-Doctor from image phase identification to ischemic area segmentation was about 8 s, suggesting that it could be highly efficient in future clinical application.

Discussion

Ai-Doctor functions across the whole process of FFA image interpretation, including image phase identification, common IRD diagnosis, ischemic area segmentation, and CAII calculation. The image phase identification model first selects the venous-phase images. In the disease diagnosis model, images classified as DR without NPAs are flagged for follow-up, whereas images classified as DR with NPAs or BRVO are passed to the segmentation model for ischemic area segmentation and CAII calculation. Furthermore, the image phase identification model performed excellently on several unencountered fundus diseases (Figure S5), and the segmentation model also segmented the NPAs of other unencountered retinal diseases with satisfactory DSCs, indicating generalizability to other fundus diseases. Ai-Doctor is expected to provide IRD diagnoses and treatment suggestions based on CAII values, offering substantial potential for reliable patient triage. Combining these indispensable tasks may maximize the usefulness of Ai-Doctor for automated FFA image interpretation and thereby minimize the involvement of clinicians.
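The routing logic described above can be sketched as follows. The three model callables are hypothetical stand-ins for the trained networks, and the handling of images classified as normal is an assumption, since the text does not describe it.

```python
from typing import Callable, Dict, Optional

# Hypothetical stand-ins for the trained models; each takes an image path.
PhaseModel = Callable[[str], str]       # "non-FFA", "arterial" or "venous"
DiagnosisModel = Callable[[str], str]   # "normal", "BRVO", "DR without NPAs" or "DR with NPAs"
CaiiModel = Callable[[str], float]      # segments ischemic areas, returns a per-image index


def route_image(path: str, phase_model: PhaseModel, diagnosis_model: DiagnosisModel,
                caii_model: CaiiModel) -> Dict[str, Optional[object]]:
    """Pass one image through phase identification, diagnosis and, if indicated, segmentation."""
    report: Dict[str, Optional[object]] = {
        "phase": phase_model(path), "diagnosis": None, "caii": None, "next_step": None}
    if report["phase"] != "venous":
        report["next_step"] = "not passed to diagnosis"  # only venous-phase images continue
        return report
    diagnosis = diagnosis_model(path)
    report["diagnosis"] = diagnosis
    if diagnosis == "DR without NPAs":
        report["next_step"] = "follow-up"
    elif diagnosis in ("DR with NPAs", "BRVO"):
        report["caii"] = caii_model(path)
        report["next_step"] = "segmentation and CAII"
    else:
        # Assumption: the text does not describe how images classified as normal are handled.
        report["next_step"] = "no ischemic lesion reported"
    return report


print(route_image("eye_001.png", lambda p: "venous", lambda p: "BRVO", lambda p: 0.21))
```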

Owing to their relatively low cost and irreplaceable advantages in evaluating ischemic lesions, FFA devices have been widely used in general hospitals in recent decades.9,15 However, developing countries have only one ophthalmologist per 110,000 people, a tenth of the ophthalmologist-to-population ratio in developed countries,24 and even fewer retinal specialists. Some ophthalmologists can determine whether a patient with retinal disease needs FFA examination but cannot accurately interpret the FFA images, which poses a great challenge to service coverage. Ai-Doctor may help bridge this gap.

In primary hospitals without enough experienced ophthalmologists, Ai-Doctor is expected to produce expert-level reports covering everything from disease diagnosis to quantification of ischemic degree, thereby reducing the cost and inconvenience of traveling to tertiary hospitals and helping local ophthalmologists build experience.5,24 In tertiary hospitals, Ai-Doctor is expected to save clinicians time in writing FFA reports, leaving them more time for difficult cases.25,26 Patients may therefore spend less time at the clinic, reducing the cost of long stays away from home. The segmentation and quantification capabilities of the segmentation model also give researchers tools for various clinical analyses. Ai-Doctor may therefore benefit technicians, junior doctors, and researchers alike.

Recently, AI technology has been applied to the diagnosis and treatment of retinal diseases using FFA images with different viewing fields. Using 30°-FFA images, Ye et al.13,15,17 developed multiple deep-learning systems for automated evaluation of DR, and Chen et al.18 detected leakage in patients with central serous chorioretinopathy using 2,014 images from 291 eyes. Li et al.19 developed a model for image quality assessment using 3,935 ultra-wide-field FFA images. Tang et al.9 developed a model for RVO segmentation using 161 FFA images with a 55°-viewing field. However, most of these single-task models only detected fluorescein features or segmented ischemic areas, using relatively small numbers of FFA images. Furthermore, these models were limited to a single disease, such as DR or RVO, although some lesions, such as NPAs, may appear across a range of retinal diseases.13,17,18 Such single-task models therefore cannot meet the complex clinical needs of FFA image interpretation, and multi-task systems are urgently needed. Ai-Doctor detects a group of common IRDs and supports the whole process from diagnosis to treatment suggestion with strong performance. Moreover, the NPA segmentation model trained with FFA images of two typical IRDs (DR and BRVO) outperformed one trained with BRVO images alone, making it a suitable and reproducible tool for a range of IRDs. We also used images with laser scars to further validate the NPA segmentation model, and the satisfactory DSCs further indicated its generalizability (Figure S6). In addition, although both NPAs and fluorescence blockage appear as hypofluorescent areas in FFA images, the segmentation model distinguished NPAs accurately, owing to differences in pixel-level features (Figure S6).

Ai-Doctor was developed using FFA images with a 55°-viewing field (the most widely used modality, compared with 30°- or 200°-viewing fields), making it broadly applicable in clinical settings. Ultra-wide-field FFA, which is not yet widely used worldwide, captures a larger field of view in a single image but risks distorting the peripheral retina through unequal magnification, potentially leading to overestimation of lesion size.8,27 In contrast, 55°-viewing field FFA images captured with a higher-resolution lens clearly and reliably visualize retinal lesions and their sizes in multiple orientations, which makes them particularly valuable for accurate, quantitative assessment of retinal lesions in clinical practice.28

An increased ischemia index is associated with neovascularization and macular edema in patients with RVO and DR29,30,31 and is considered an important indicator for laser therapy.32,33 However, this index must be evaluated over a panretinal field of view, which substantially limits its clinical application.34 Clinicians most commonly use 55°-viewing field FFA images to estimate the ischemic degree of retinal diseases subjectively when considering laser therapy.35 A modified, clinically applicable index is therefore needed for objective and specific evaluation. We proposed the CAII, which can be calculated automatically from two or five representative 55°-viewing field FFA images within a few seconds. The CAII not only quantifies the ischemic degree but can also track the progression of ischemia or treatment effectiveness by comparing CAII values across time points. In addition, quantitative data may help clarify the practical relevance of these associations.36 We preliminarily explored CAII thresholds for laser therapy; patients with a CAII above 0.17 in BRVO or above 0.08 in DR may be more likely to require laser therapy. External validation of the CAII thresholds further supported their potential to assist clinicians in decision-making.

Limitations of the study

When interpreting the results of this study, the following limitations should be considered. First, only some types of IRDs were included. Nevertheless, DR, RVO, and retinal vasculitis, which were included, are the most common and representative of these diseases, so Ai-Doctor may meet basic clinical demands. Second, despite these technological advances in IRD diagnosis and treatment suggestion, prospective multicenter studies in more populations are warranted to further validate the utility of Ai-Doctor in real-world clinical settings. Third, the preliminary CAII thresholds were calculated from representative orientations of FFA images in existing data from several clinical settings. Images in different orientations may overlap slightly during CAII calculation, so further refinement with better CAII calculation methods and multicenter data is required. Nevertheless, the segmentation model and the method we proposed for CAII threshold exploration provide a tool for objective and quantitative assessment of the degree of retinal ischemia.

In summary, this study developed a comprehensive AI system, Ai-Doctor, using the largest number of FFA images to date; it may achieve automated FFA image interpretation and quantification of ischemic degree and may be particularly useful in clinical settings with a shortage of experienced retinal specialists.

STAR★Methods

Key resources table

| REAGENT or RESOURCE | SOURCE | IDENTIFIER |
|---|---|---|
| Software and algorithms | | |
| Python 3.7.16 | Python | https://www.python.org |
| Pytorch 1.11.0+cu113 | Pytorch | https://pytorch.org |
| Torchvision 0.12.0+cu113 | Pytorch | https://pytorch.org/vision/stable/ |
| Tensorflow 1.14.0 | Tensorflow | https://www.tensorflow.org |
| Opencv-python 4.5.5.64 | Opencv | https://docs.opencv.org/4.5.5/s |
| Matplotlib 3.5.1 | Matplotlib | https://matplotlib.org |
| Pillow 9.0.1 | Pillow | https://pillow.readthedocs.io |
| Numpy 1.22.3 | Numpy | https://numpy.org |
| Pytorch-grad-cam 1.4.6 | Github | https://github.com/jacobgil/pytorch-grad-cam |

Resource availability

Lead contact

Further information and requests for resources should be directed to and will be fulfilled by the lead contact, Duoru Lin (lindr5@mail.sysu.edu.cn).

Materials availability

This study did not generate new unique reagents.

Experimental model and subject details

Participants were included if they were over 18 years of age and were diagnosed with DR, BRVO, CRVO, or retinal vasculitis, or had normal fundi, at ZOC from January 2016 to September 2021. Participants diagnosed with other fundus diseases and those with media opacity caused by cataract or by pre-retinal or vitreous hemorrhage were excluded, as were participants allergic to the contrast agent. Repeated images with the same phase and orientation were excluded to reduce overfitting when developing Ai-Doctor. Images of clinically acceptable quality (clear refractive media, with the entire retinal area and vessels easily discriminated) were included. All diagnoses were confirmed by retinal specialists based on the corresponding fundus and FFA images, with comprehensive consideration of medical record data (including medical history and electronic records), textbooks, published literature,37,38,39,40 and clinical experience. Diagnosis of BRVO was based mainly on the following criteria: branch retinal vein compression at an arteriovenous crossing outside the optic disc, upstream venous congestion, intraretinal hemorrhage, edema, cotton wool spots, and similar findings.38 DR without NPAs and DR with NPAs were defined according to FFA image features in patients with DR: images without NPAs showed only microaneurysms and/or intraretinal microvascular abnormalities, whereas images with NPAs also showed NPAs and/or leakage. Images without any pathological features in the viewing field were defined as normal. In total, 24,316 images from 2,688 participants were included in the development and validation of Ai-Doctor. For development and internal validation, 20,320 qualified images (3,236 eyes of 2,107 participants) were included (Table 1). For the external tests, 1,432 images (342 eyes of 232 participants) from SEH and 2,564 images (469 eyes of 349 participants) from FSPH, acquired from September 2020 to October 2021, were collected and analyzed.

This study was approved by the Institutional Review Board of ZOC, Sun Yat-sen University (IRB-ZOC-SYSU, 2021KYPJ195). All participants provided written informed consent before examination, and all procedures followed the tenets of the Declaration of Helsinki. All images were de-identified prior to the analysis.

Method details

Image collection and interpretation process

Experienced technicians captured all FFA images after pupil dilation, using a Spectralis HRA+OCT (Heidelberg Engineering, Heidelberg, Germany) with a 55° field of view. Imaging began immediately after the contrast agent was injected into the antecubital vein. The following image types were generally produced: red-free, fundus autofluorescence, and FFA images in the pre-arterial, arterial, arterial-venous, venous, and late-venous phases. For clinical application and research purposes, all images were grouped into three categories: non-FFA (red-free and fundus autofluorescence images), arterial phase (pre-arterial, arterial, and arterial-venous phases), and venous phase (venous and late-venous phases). All participating hospitals used the same image capture protocol.
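
The grouping of capture types into three categories is a fixed lookup. The sketch below encodes it in Python. The image-type strings are hypothetical labels, and they are not the names used by the Spectralis software.

```python
# Hypothetical capture-type labels mapped to the three simplified categories
# described in the text.
SIMPLIFIED_CATEGORY = {
    "red-free": "non-FFA",
    "fundus autofluorescence": "non-FFA",
    "pre-arterial": "arterial phase",
    "arterial": "arterial phase",
    "arterial-venous": "arterial phase",
    "venous": "venous phase",
    "late-venous": "venous phase",
}


def simplify(image_type: str) -> str:
    """Return the simplified category for a captured image type."""
    try:
        return SIMPLIFIED_CATEGORY[image_type]
    except KeyError:
        raise ValueError(f"unknown image type: {image_type!r}") from None


print(simplify("late-venous"))  # venous phase
```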

Image phase identification, disease diagnosis, and lesion segmentation

We established a labeling team of nine authorized ophthalmologists: six specialists (>10 years of clinical experience) and three retinal experts (>20 years of clinical experience). Before taking part in classification labeling and segmentation annotation, all of them received standardized training and testing.

In the classification tasks (image phase identification and IRD diagnosis), three assigned specialists independently classified each image. If all three agreed, the label was accepted as conclusive. If their classifications differed, the three specialists discussed the image to reach a consensus, and if disagreement persisted, a retinal expert arbitrated. The final results served as the ground truth for training and evaluating the classification models (Figure S7A). Every image was classified as non-FFA, arterial phase, or venous phase. Venous-phase images were further diagnosed as normal, BRVO, DR without NPAs, or DR with NPAs (Figure S8). The scheme was designed mainly to keep the clinical data balanced. DR patients account for the largest proportion of FFA examinations in clinical practice, and subdividing DR helps guide follow-up. BRVO patients, especially those without NPAs, account for a smaller proportion, so further subdivision could cause overfitting owing to the unbalanced data distribution. The BRVO data were therefore not subdivided. Phase identification and IRD diagnosis for all enrolled images were based on the corresponding FFA image characteristics (representative FFA images of BRVO and DR are shown in Figure S9). They also drew on medical record data (including medical history and electronic records), textbooks, published literature,37,38,39,40 and the clinical experience of retinal experts.
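
The three-step labeling rule can be summarized as a small function. In this Python sketch, `discuss` and `arbitrate` are hypothetical hooks standing in for the human discussion and the expert's decision. It shows only the order in which the steps apply and is not software used in the study.

```python
from typing import Callable, Optional, Sequence


def consensus_label(
    votes: Sequence[str],
    discuss: Callable[[Sequence[str]], Optional[str]],
    arbitrate: Callable[[Sequence[str]], str],
) -> str:
    """Unanimity first, then discussion, then expert arbitration.

    votes     -- independent labels from the three assigned specialists
    discuss   -- hypothetical hook: the agreed label, or None if disagreement persists
    arbitrate -- hypothetical hook: the retinal expert's final decision
    """
    if len(set(votes)) == 1:      # all specialists agree: label is conclusive
        return votes[0]
    agreed = discuss(votes)       # specialists discuss to reach consensus
    if agreed is not None:
        return agreed
    return arbitrate(votes)       # a retinal expert settles remaining disputes
```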

For the segmentation task, FFA images classified as BRVO or as DR with NPAs were randomly selected to develop the segmentation model for NPAs and BRVO areas. In images diagnosed as DR with NPAs, NPAs were annotated. In images diagnosed as BRVO, both NPAs and BRVO areas were annotated, or only BRVO areas if no NPAs were present. Two assigned specialists independently annotated each selected FFA image using Labelme, an open-source annotation tool.41 Each specialist delineated the boundaries of each lesion type (NPAs or BRVO areas). The intersection over union (IoU) between the two specialists' annotations measured their agreement. When the IoU was greater than 0.85, the annotation from the more experienced specialist was adopted. When the IoU was below 0.85, the two specialists discussed and adjusted their annotations. If the IoU remained below 0.85 after reannotation, a retinal expert inspected and fine-tuned the reannotations. These annotations served as the final ground truth for developing and evaluating the segmentation models (Figure S7B).
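
The IoU check between two annotators can be sketched with NumPy boolean masks, as below. The sketch assumes that each annotation has already been rasterized into a mask of the same shape. It also treats two empty masks as perfect agreement, which is our own convention, and the function names are hypothetical.

```python
import numpy as np

IOU_THRESHOLD = 0.85  # agreement threshold stated in the text


def iou(mask_a: np.ndarray, mask_b: np.ndarray) -> float:
    """Intersection over union of two same-shaped binary masks."""
    a, b = mask_a.astype(bool), mask_b.astype(bool)
    union = np.logical_or(a, b).sum()
    if union == 0:
        return 1.0  # both masks empty: treated as full agreement (assumption)
    return float(np.logical_and(a, b).sum() / union)


def adjudicate(senior_mask: np.ndarray, junior_mask: np.ndarray):
    """Accept the more experienced annotator's mask when IoU > 0.85;
    otherwise flag the image for discussion and reannotation."""
    if iou(senior_mask, junior_mask) > IOU_THRESHOLD:
        return "accept", senior_mask
    return "reannotate", None
```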

We also tested whether the segmentation model generalizes to other IRDs, using FFA images of CRVO and retinal vasculitis. Neither ischemic disease was encountered during Ai-Doctor development. In addition, images with laser scars were selected to further validate model performance.

Development of Ai-Doctor

Ai-Doctor has two models: Model 1 for classification (image phase identification and common IRD diagnosis) and Model 2 for segmentation (NPAs and BRVO areas). Model 1 cascades two classification networks. The first identifies the image phase (non-FFA, arterial phase, or venous phase). The second diagnoses common IRDs (BRVO, DR without NPAs, and DR with NPAs) from venous-phase images. Model 1 uses a residual network (ResNet-152),42 and Model 2 uses Unet43,44 (Figure 1). ResNet-152 and Unet are among the state-of-the-art convolutional neural networks (CNNs).45,46 The ResNet-152 weights were initialized by transfer learning to speed up convergence.42 Predictions were produced through successive convolution and pooling operations. After the training iterations, the best-performing model was saved and tested.
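
The cascade in Model 1 is simple control flow: only images identified as venous phase reach the diagnosis network. In this Python sketch, the two networks are hypothetical callables that return a class name. It does not reproduce the trained ResNet-152 models.

```python
from typing import Callable, Optional, Tuple

# Hypothetical stand-ins for the two trained networks: image -> class name.
PhaseClassifier = Callable[[object], str]
DiagnosisClassifier = Callable[[object], str]


def cascade_predict(
    image: object,
    phase_net: PhaseClassifier,
    diagnosis_net: DiagnosisClassifier,
) -> Tuple[str, Optional[str]]:
    """Stage 1 identifies the phase; only venous-phase images go on to
    stage 2 for IRD diagnosis."""
    phase = phase_net(image)
    if phase != "venous phase":
        return phase, None  # non-FFA and arterial-phase images stop here
    return phase, diagnosis_net(image)
```

Because stage 2 only ever sees venous-phase images, its training data and its inputs at inference time come from the same phase.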

Unet has an encoder-decoder structure with shortcut connections linking each encoder layer to the corresponding decoder layer.47 These connections combine image features from the encoding path with those from the decoding path, which yields a better output.44 VGG-16 is a common, classical CNN that has been used in previous studies.48 ST, a vision transformer module, is gaining prominence in computer vision.49 For comparison, VGG and ST were also used as backbones of Unet.47,48,49
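
The following NumPy sketch shows the tensor bookkeeping behind a single Unet shortcut connection, using 2 × 2 max pooling and nearest-neighbour upsampling. It deliberately omits the convolutions, so it is not a working Unet, and the feature sizes are arbitrary.

```python
import numpy as np


def max_pool_2x2(x: np.ndarray) -> np.ndarray:
    """Halve the spatial size of a (C, H, W) array by 2x2 max pooling."""
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).max(axis=(2, 4))


def upsample_2x(x: np.ndarray) -> np.ndarray:
    """Double the spatial size of a (C, H, W) array by nearest-neighbour repetition."""
    return x.repeat(2, axis=1).repeat(2, axis=2)


def skip_connect(encoder_feat: np.ndarray, decoder_feat: np.ndarray) -> np.ndarray:
    """Concatenate encoder features with upsampled decoder features along the
    channel axis, as a Unet shortcut does before the next convolution."""
    up = upsample_2x(decoder_feat)
    if up.shape[1:] != encoder_feat.shape[1:]:
        raise ValueError("spatial sizes must match")
    return np.concatenate([encoder_feat, up], axis=0)


enc = np.random.rand(64, 32, 32)        # encoder features at full resolution
bottleneck = max_pool_2x2(enc)          # (64, 16, 16) after downsampling
merged = skip_connect(enc, bottleneck)  # (128, 32, 32): fine detail + context
```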

Input pixel values were normalized to the range 0 to 1, and images were resized to 512 × 512 pixels. The experiments ran on a computer with a 2.5 GHz Intel Xeon Gold 6248 CPU (Intel Corporation, Santa Clara, CA, USA), four Nvidia Tesla V100 GPUs, and 500 GB of RAM.
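
Below is a minimal preprocessing sketch. It assumes 8-bit grayscale input, so dividing by 255 maps pixel values to [0, 1], and it uses nearest-neighbour resizing because the original interpolation method is not reported. The function name is hypothetical.

```python
import numpy as np

TARGET_SIZE = 512  # input size stated in the text


def preprocess(image: np.ndarray) -> np.ndarray:
    """Resize an 8-bit grayscale image to 512 x 512 and scale it to [0, 1].

    Assumptions: uint8 input and nearest-neighbour resizing.
    """
    if image.dtype != np.uint8 or image.ndim != 2:
        raise ValueError("expected a 2-D uint8 image")
    h, w = image.shape
    rows = np.arange(TARGET_SIZE) * h // TARGET_SIZE  # source row for each output row
    cols = np.arange(TARGET_SIZE) * w // TARGET_SIZE  # source column for each output column
    resized = image[rows[:, None], cols[None, :]]
    return resized.astype(np.float32) / 255.0
```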

Heatmaps were generated to highlight the image regions that drove the decisions of ResNet-152. They were built from the weights of the last layer, visualizing the target region just before the model output,16 and thus give some explainability for how the deep learning model discriminates images. Redder areas mark features that contributed more strongly to the classification.
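
Heatmaps built from the weights of the last layer match class activation mapping (CAM), which we assume is the technique meant here. ResNet-152 ends in global average pooling followed by a fully connected layer, which is the setting CAM was designed for. The NumPy sketch below weights the final convolutional feature maps by one class's fully connected weights. Upsampling the map to image size is omitted.

```python
import numpy as np


def class_activation_map(features: np.ndarray, fc_weights: np.ndarray, class_idx: int) -> np.ndarray:
    """Class activation map (CAM) for one class.

    features   -- (K, H, W) feature maps from the last convolutional layer
    fc_weights -- (num_classes, K) weights of the final fully connected layer
    Returns an (H, W) map scaled to [0, 1]; higher values ("redder") mark
    regions that raised the score for class_idx.
    """
    cam = np.tensordot(fc_weights[class_idx], features, axes=([0], [0]))  # weighted sum over K
    cam = np.maximum(cam, 0)  # keep positive evidence only
    peak = cam.max()
    return cam / peak if peak > 0 else cam
```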

Clinically applicable ischemia index (CAII) calculation

The ischemia index is a useful indicator for identifying patients at higher risk of complications.9,50 However, calculating it requires a panretinal field of view, which is difficult to achieve in clinical practice. We therefore proposed a CAII based on segmentation results from multiple orientations of 55°-field FFA images, the most commonly used modality. For eyes with DR, the mean CAII was calculated from five representative FFA images (posterior, superior, nasal, temporal, and inferior orientations), chosen according to the physiological and anatomical structure of the retina. For eyes with BRVO, it was calculated from two representative FFA images: one posterior image and the peripheral image with the most obvious lesion area. The CAII was defined as the proportion of NPA to the total retinal area within the visible field of each FFA image. CAII development included 1433 FFA images, from 167 eyes with DR (835 images) and 299 eyes with BRVO (598 images). Detailed principles for image selection and CAII calculation are shown in Figure S9.
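
The CAII definition above translates into a per-image ratio followed by a per-eye mean. The sketch below assumes that each image comes with an NPA mask from the segmentation model and a mask of the visible retina. How the visible retinal area is delineated is not described in this section, and the function names are hypothetical.

```python
from typing import Sequence, Tuple

import numpy as np


def image_caii(npa_mask: np.ndarray, retina_mask: np.ndarray) -> float:
    """NPA pixels as a fraction of visible retinal pixels in one FFA image."""
    retina = retina_mask.astype(bool)
    visible = retina.sum()
    if visible == 0:
        raise ValueError("no visible retina in this image")
    return float(np.logical_and(npa_mask.astype(bool), retina).sum() / visible)


def eye_caii(images: Sequence[Tuple[np.ndarray, np.ndarray]]) -> float:
    """Mean per-image CAII over one eye's representative images
    (five orientations for DR; posterior plus one peripheral for BRVO)."""
    return float(np.mean([image_caii(npa, retina) for npa, retina in images]))
```

The thresholds reported in the Summary (0.17 for BRVO and 0.08 for DR) would apply to this eye-level value.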

Three assigned specialists first divided all enrolled eyes into a group requiring laser therapy and a group not requiring it. They based this on FFA image characteristics, medical record data (such as blood pressure and blood glucose), clinical experience, and guidelines for DR37,51,52 and BRVO.53,54 In the group requiring laser therapy, BRVO eyes mainly had ischemic BRVO with macular oedema and/or neovascularization, and DR eyes mainly had severe nonproliferative or proliferative DR. The group not requiring laser therapy mainly comprised eyes whose disease had not reached this severity. A retinal expert with more than 20 years of clinical experience then rechecked, adjusted, and finalized the grouping. The segmentation model quantified the CAII of each enrolled eye objectively. Each CAII value was then used as a candidate threshold for suggesting laser therapy. The sensitivity and specificity at each threshold were used to construct an empirical receiver operating characteristic (ROC) curve, from which the optimal threshold was explored. The optimal thresholds were further validated on an independent external dataset from FSPH, comprising 101 eyes with BRVO and 58 eyes with DR.
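
The sketch below builds an empirical ROC curve by sweeping every observed CAII value as a threshold. It makes two assumptions. First, an eye is flagged for laser therapy when its CAII is at or above the threshold. Second, the "optimal" point is taken as the one that maximizes Youden's J (sensitivity + specificity - 1). The text does not state which criterion was used.

```python
from typing import List, Sequence, Tuple


def empirical_roc(scores: Sequence[float], needs_laser: Sequence[bool]) -> List[Tuple[float, float, float]]:
    """(threshold, sensitivity, specificity) for every observed score,
    predicting 'laser therapy suggested' when score >= threshold."""
    pos = sum(needs_laser)
    neg = len(needs_laser) - pos
    if pos == 0 or neg == 0:
        raise ValueError("need eyes from both groups")
    points = []
    for t in sorted(set(scores)):
        tp = sum(s >= t and y for s, y in zip(scores, needs_laser))
        tn = sum(s < t and not y for s, y in zip(scores, needs_laser))
        points.append((t, tp / pos, tn / neg))
    return points


def youden_threshold(scores: Sequence[float], needs_laser: Sequence[bool]) -> float:
    """Threshold maximizing Youden's J = sensitivity + specificity - 1 (assumed criterion)."""
    return max(empirical_roc(scores, needs_laser), key=lambda p: p[1] + p[2] - 1)[0]
```

Separate thresholds would be derived for BRVO and DR by calling `youden_threshold` on each disease's eyes.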

Quantification and statistical analysis

Classification performance was evaluated using accuracy, recall, and precision, together with ROC curves and the area under the ROC curve (AUC). In this study, mean AUC refers to the weighted mean AUC. Segmentation performance was evaluated using the Dice similarity coefficient (DSC), IoU, and F1 score. All of these indicators were calculated from true positives (TP), true negatives (TN), false positives (FP), and false negatives (FN), and 95% confidence intervals (CIs) were calculated for each. Each metric is a standard function of these four counts.
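
The Python sketch below computes the metrics from the four counts using their standard definitions. For a binary pixel mask, the DSC and the F1 score reduce to the same expression, so they always agree on a single image. The sketch does not guard against empty denominators and does not compute confidence intervals.

```python
from dataclasses import dataclass


@dataclass
class Counts:
    tp: int
    fp: int
    fn: int
    tn: int


def metrics(c: Counts) -> dict:
    """Standard confusion-matrix metrics from TP, FP, FN, and TN counts."""
    total = c.tp + c.fp + c.fn + c.tn
    precision = c.tp / (c.tp + c.fp)
    recall = c.tp / (c.tp + c.fn)
    return {
        "accuracy": (c.tp + c.tn) / total,
        "precision": precision,
        "recall": recall,
        "f1": 2 * precision * recall / (precision + recall),
        "dsc": 2 * c.tp / (2 * c.tp + c.fp + c.fn),  # equals F1 for binary masks
        "iou": c.tp / (c.tp + c.fp + c.fn),
    }
```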

The DSC and IoU measure the overlap between the area segmented by the model and the ground truth. In their formulas, A and B denote two sets of pixels in the retinal image: A is the area segmented by the model and B is the ground truth.55
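
The DSC and IoU formulas themselves do not appear in this copy of the text. The standard set-based definitions are given below and are assumed to be the ones intended. They are shown alongside their pixel-count forms, which follow from |A ∩ B| = TP, |A| = TP + FP, and |B| = TP + FN.

```latex
\begin{aligned}
\mathrm{DSC} &= \frac{2\,|A \cap B|}{|A| + |B|} = \frac{2\,TP}{2\,TP + FP + FN},\\
\mathrm{IoU} &= \frac{|A \cap B|}{|A \cup B|} = \frac{TP}{TP + FP + FN},\\
\mathrm{DSC} &= \frac{2\,\mathrm{IoU}}{1 + \mathrm{IoU}}.
\end{aligned}
```

For a single pair of masks, the last identity means that DSC is never smaller than IoU and that both rank segmentations in the same order.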

Empirical ROC curves were analyzed to explore the optimal CAII threshold for suggesting laser therapy. CAII values in the two groups were compared with the Mann–Whitney test, and these data are given as medians and ranges. Ages of participants in the different datasets were compared with the Kruskal–Wallis test, and these data are given as means and standard deviations (SDs). Statistical analyses were conducted using GraphPad Prism (v8.0; GraphPad Software Inc.).
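
The analyses were run in GraphPad Prism. The pure-Python sketch below is only an illustration of the Mann–Whitney comparison of CAII values between two groups. It uses average ranks for ties and the large-sample normal approximation without tie or continuity correction, so its p-values may differ from Prism's, especially for small groups.

```python
import math
from typing import List, Sequence, Tuple


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1  # positions i..j hold ranks i+1..j+1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def mann_whitney_u(x: Sequence[float], y: Sequence[float]) -> Tuple[float, float]:
    """U statistic for x and a two-sided p-value (normal approximation)."""
    n1, n2 = len(x), len(y)
    ranks = average_ranks(list(x) + list(y))
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2
    mu = n1 * n2 / 2
    sigma = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (u1 - mu) / sigma
    p = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail probability
    return u1, p
```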

Acknowledgments

This study was supported by the National Natural Science Foundation of China (82271099, 82171035, and 82000946); the Natural Science Foundation of Guangdong Province (2022A1515010355 and 2021A1515012238); a Research Grant from Guangzhou Municipal Science and Technology Bureau in China (202201020507); Guangdong Natural Science Funds for Distinguished Young Scholar (2023B1515020100); the Science and Technology Program of Guangzhou (202201020522); the Guangdong Science and Technology Program (2021B1111610006); and the Hainan Province Clinical Medical Center.

Author contributions

Conception and design, X.Z., X.L., H.L., D.L., S.Y., and Z.L.; funding acquisition, X.L., D.L., H.L., Y.X., and S.Y.; provision of study data, X.L., G.Z., S.Z., and Y.L.; collection and assembly of data, X.Z., Z.L., J.X., D.L., Y.X., K.C., and C.-K.T.; data analysis and interpretation, X.Z., D.L., S.Y., Z.L., L.X., L.Z., and X.L.; manuscript writing, X.Z., D.L., Z.L., and S.Y.; manuscript revision, D.L., X.L., and H.L.

Declaration of interests

The authors declare no competing interests.

Published: September 20, 2023

Footnotes

Supplemental information can be found online at https://doi.org/10.1016/j.xcrm.2023.101197.

Contributor Information

Haotian Lin, Email: linht5@mail.sysu.edu.cn.

Xiaoling Liang, Email: liangxlsums@qq.com.

Duoru Lin, Email: lindr5@mail.sysu.edu.cn.


Data and code availability

- All data reported in this paper will be shared by the lead contact upon request.

- This paper does not report original code.

- Any additional information required to reanalyze the data reported in this paper is available from the lead contact upon request.

References

1. Ting D.S.W., Cheung C.Y.L., Lim G., Tan G.S.W., Quang N.D., Gan A., Hamzah H., Garcia-Franco R., San Yeo I.Y., Lee S.Y., et al. Development and validation of a deep learning system for diabetic retinopathy and related eye diseases using retinal images from multiethnic populations with diabetes. JAMA. 2017;318:2211–2223. doi: 10.1001/jama.2017.18152.

2. Long E., Lin H., Liu Z., Wu X., Wang L., Jiang J., An Y., Lin Z., Li X., Chen J., et al. An artificial intelligence platform for the multihospital collaborative management of congenital cataracts. Nat. Biomed. Eng. 2017;1.

3. Gargeya R., Leng T. Automated identification of diabetic retinopathy using deep learning. Ophthalmology. 2017;124:962–969. doi: 10.1016/j.ophtha.2017.02.008.

4. Grassmann F., Mengelkamp J., Brandl C., Harsch S., Zimmermann M.E., Linkohr B., Peters A., Heid I.M., Palm C., Weber B.H.F. A deep learning algorithm for prediction of age-related eye disease study severity scale for age-related macular degeneration from color fundus photography. Ophthalmology. 2018;125:1410–1420. doi: 10.1016/j.ophtha.2018.02.037.

5. Lin D., Xiong J., Liu C., Zhao L., Li Z., Yu S., Wu X., Ge Z., Hu X., Wang B., et al. Application of comprehensive artificial intelligence retinal expert (CARE) system: a national real-world evidence study. Lancet Digit. Health. 2021;3:e486–e495. doi: 10.1016/S2589-7500(21)00086-8.

6. Rogers S., McIntosh R.L., Cheung N., Lim L., Wang J.J., Mitchell P., Kowalski J.W., Nguyen H., Wong T.Y., International Eye Disease Consortium. The prevalence of retinal vein occlusion: pooled data from population studies from the United States, Europe, Asia, and Australia. Ophthalmology. 2010;117:313–319.e1. doi: 10.1016/j.ophtha.2009.07.017.

7. Li Z., Keel S., Liu C., He Y., Meng W., Scheetz J., Lee P.Y., Shaw J., Ting D., Wong T.Y., et al. An automated grading system for detection of vision-threatening referable diabetic retinopathy on the basis of color fundus photographs. Diabetes Care. 2018;41:2509–2516. doi: 10.2337/dc18-0147.

8. Wang X., Ji Z., Ma X., Zhang Z., Yi Z., Zheng H., Fan W., Chen C. Automated grading of diabetic retinopathy with ultra-widefield fluorescein angiography and deep learning. J. Diabetes Res. 2021;2021. doi: 10.1155/2021/2611250.

9. Tang Z., Zhang X., Yang G., Zhang G., Gong Y., Zhao K., Xie J., Hou J., Hou J., Sun B., Wang Z. Automated segmentation of retinal nonperfusion area in fluorescein angiography in retinal vein occlusion using convolutional neural networks. Med. Phys. 2021;48:648–658. doi: 10.1002/mp.14640.

10. Gu C., Chen J., Su T., Qiu Q. Time-lapse angiography of the ocular fundus: a new video-angiography. BMC Med. Imag. 2019;19:98. doi: 10.1186/s12880-019-0398-1.

11. Wessel M., Aaker G., Parlitsis G., Cho M., D'Amico D., Kiss S. Ultra-wide-field angiography improves the detection and classification of diabetic retinopathy. Retina. 2012;32:785–791.

12. Nunez do Rio J.M., Sen P., Rasheed R., Bagchi A., Nicholson L., Dubis A.M., Bergeles C., Sivaprasad S. Deep learning-based segmentation and quantification of retinal capillary non-perfusion on ultra-wide-field retinal fluorescein angiography. J. Clin. Med. 2020;9. doi: 10.3390/jcm9082537.

13. Pan X., Jin K., Cao J., Liu Z., Wu J., You K., Lu Y., Xu Y., Su Z., Jiang J., et al. Multi-label classification of retinal lesions in diabetic retinopathy for automatic analysis of fundus fluorescein angiography based on deep learning. Graefes Arch. Clin. Exp. Ophthalmol. 2020;258:779–785. doi: 10.1007/s00417-019-04575-w.

14. Alibhai A.Y., De Pretto L.R., Moult E.M., Or C., Arya M., McGowan M., Carrasco-Zevallos O., Lee B., Chen S., Baumal C.R., et al. Quantification of retinal capillary nonperfusion in diabetics using wide-field optical coherence tomography angiography. Retina. 2020;40:412–420. doi: 10.1097/IAE.0000000000002403.

15. Gao Z., Pan X., Shao J., Jiang X., Su Z., Jin K., Ye J. Automatic interpretation and clinical evaluation for fundus fluorescein angiography images of diabetic retinopathy patients by deep learning. Br. J. Ophthalmol. 2022. doi: 10.1136/bjo-2022-321472.

16. Li Z., Jiang J., Chen K., Chen Q., Zheng Q., Liu X., Weng H., Wu S., Chen W. Preventing corneal blindness caused by keratitis using artificial intelligence. Nat. Commun. 2021;12:3738. doi: 10.1038/s41467-021-24116-6.

17. Jin K., Pan X., You K., Wu J., Liu Z., Cao J., Lou L., Xu Y., Su Z., Yao K., Ye J. Automatic detection of non-perfusion areas in diabetic macular edema from fundus fluorescein angiography for decision making using deep learning. Sci. Rep. 2020;10. doi: 10.1038/s41598-020-71622-6.

18. Chen M., Jin K., You K., Xu Y., Wang Y., Yip C.C., Wu J., Ye J. Automatic detection of leakage point in central serous chorioretinopathy of fundus fluorescein angiography based on time sequence deep learning. Graefes Arch. Clin. Exp. Ophthalmol. 2021;259:2401–2411. doi: 10.1007/s00417-021-05151-x.

19. Li H.H., Abraham J.R., Sevgi D.D., Srivastava S.K., Hach J.M., Whitney J., Vasanji A., Reese J.L., Ehlers J.P. Automated quality assessment and image selection of ultra-widefield fluorescein angiography images through deep learning. Transl. Vis. Sci. Technol. 2020;9:52. doi: 10.1167/tvst.9.2.52.

20. Ding L., Bawany M.H., Kuriyan A.E., Ramchandran R.S., Wykoff C.C., Sharma G. A novel deep learning pipeline for retinal vessel detection in fluorescein angiography. IEEE Trans. Image Process. 2020;29:6561–6573. doi: 10.1109/TIP.2020.2991530.

21. Rageh A., Ashraf M., Fleming A., Silva P.S. Automated microaneurysm counts on ultrawide field color and fluorescein angiography images. Semin. Ophthalmol. 2021;36:315–321. doi: 10.1080/08820538.2021.1897852.

22. Li W., Fang W., Wang J., He Y., Deng G., Ye H., Hou Z., Chen Y., Jiang C., Shi G. A weakly supervised deep learning approach for leakage detection in fluorescein angiography images. Transl. Vis. Sci. Technol. 2022;11:9. doi: 10.1167/tvst.11.3.9.

23. Cen L.P., Ji J., Lin J.W., Ju S.T., Lin H.J., Li T.P., Wang Y., Yang J.F., Liu Y.F., Tan S., et al. Automatic detection of 39 fundus diseases and conditions in retinal photographs using deep neural networks. Nat. Commun. 2021;12:4828. doi: 10.1038/s41467-021-25138-w.

24. Cao J., You K., Zhou J., Xu M., Xu P., Wen L., Wang S., Jin K., Lou L., Wang Y., Ye J. A cascade eye diseases screening system with interpretability and expandability in ultra-wide field fundus images: a multicentre diagnostic accuracy study. EClinicalMedicine. 2022;53. doi: 10.1016/j.eclinm.2022.101633.

25. Guo Y., Camino A., Wang J., Huang D., Hwang T.S., Jia Y. MEDnet, a neural network for automated detection of avascular area in OCT angiography. Biomed. Opt Express. 2018;9:5147–5158. doi: 10.1364/BOE.9.005147.

26. Wilson M., Chopra R., Wilson M.Z., Cooper C., MacWilliams P., Liu Y., Wulczyn E., Florea D., Hughes C.O., Karthikesalingam A., et al. Validation and clinical applicability of whole-volume automated segmentation of optical coherence tomography in retinal disease using deep learning. JAMA Ophthalmol. 2021;139:964–973. doi: 10.1001/jamaophthalmol.2021.2273.

27. Son G., Kim Y.J., Sung Y.S., Park B., Kim J.G. Analysis of quantitative correlations between microaneurysm, ischaemic index and new vessels in ultrawide-field fluorescein angiography images using automated software. Br. J. Ophthalmol. 2019;103:1759–1764. doi: 10.1136/bjophthalmol-2018-313596.

28. Li S., Wang J.J., Li H.Y., Wang W., Tian M., Lang X.Q., Wang K. Performance evaluation of two fundus oculi angiographic imaging system: Optos 200Tx and Heidelberg Spectralis. Exp. Ther. Med. 2021;21:19. doi: 10.3892/etm.2020.9451.

29. Khayat M., Williams M., Lois N. Ischemic retinal vein occlusion: characterizing the more severe spectrum of retinal vein occlusion. Surv. Ophthalmol. 2018;63:816–850. doi: 10.1016/j.survophthal.2018.04.005.

30. Tsui I., Kaines A., Havunjian M.A., Hubschman S., Heilweil G., Prasad P.S., Oliver S.C.N., Yu F., Bitrian E., Hubschman J.P., et al. Ischemic index and neovascularization in central retinal vein occlusion. Retina. 2011;31:105–110. doi: 10.1097/IAE.0b013e3181e36c6d.

31. Prasad P.S., Oliver S.C.N., Coffee R.E., Hubschman J.P., Schwartz S.D. Ultra wide-field angiographic characteristics of branch retinal and hemicentral retinal vein occlusion. Ophthalmology. 2010;117:780–784. doi: 10.1016/j.ophtha.2009.09.019.

32. Fan W., Wang K., Ghasemi Falavarjani K., Sagong M., Uji A., Ip M., Wykoff C.C., Brown D.M., van Hemert J., Sadda S.R. Distribution of nonperfusion area on ultra-widefield fluorescein angiography in eyes with diabetic macular edema: DAVE study. Am. J. Ophthalmol. 2017;180:110–116. doi: 10.1016/j.ajo.2017.05.024.

33. Franco-Cardenas V., Shah S.U., Apap D., Joseph A., Heilweil G., Zutis K., Trucco E., Hubschman J.P. Assessment of ischemic index in retinal vascular diseases using ultra-wide-field fluorescein angiography: single versus summarized image. Semin. Ophthalmol. 2017;32:353–357. doi: 10.3109/08820538.2015.1095304.

34. Ehlers J.P., Wang K., Vasanji A., Hu M., Srivastava S.K. Automated quantitative characterisation of retinal vascular leakage and microaneurysms in ultra-widefield fluorescein angiography. Br. J. Ophthalmol. 2017;101:696–699. doi: 10.1136/bjophthalmol-2016-310047.

35. Li X., Xie J., Zhang L., Cui Y., Zhang G., Wang J., Zhang A., Chen X., Huang T., Meng Q. Differential distribution of manifest lesions in diabetic retinopathy by fundus fluorescein angiography and fundus photography. BMC Ophthalmol. 2020;20:471. doi: 10.1186/s12886-020-01740-2.

36. Yu G., Aaberg M.T., Patel T.P., Iyengar R.S., Powell C., Tran A., Miranda C., Young E., Demetriou K., Devisetty L., Paulus Y.M. Quantification of retinal nonperfusion and neovascularization with ultrawidefield fluorescein angiography in patients with diabetes and associated characteristics of advanced disease. JAMA Ophthalmol. 2020;138:680–688. doi: 10.1001/jamaophthalmol.2020.1257.

37. Wilkinson C.P., Ferris F.L., 3rd, Klein R.E., Lee P.P., Agardh C.D., Davis M., Dills D., Kampik A., Pararajasegaram R., Verdaguer J.T., Global Diabetic Retinopathy Project Group. Proposed international clinical diabetic retinopathy and diabetic macular edema disease severity scales. Ophthalmology. 2003;110:1677–1682. doi: 10.1016/S0161-6420(03)00475-5.

38. Bertelsen M., Linneberg A., Rosenberg T., Christoffersen N., Vorum H., Gade E., Larsen M. Comorbidity in patients with branch retinal vein occlusion: case-control study. BMJ. 2012;345. doi: 10.1136/bmj.e7885.

39. Stem M.S., Talwar N., Comer G.M., Stein J.D. A longitudinal analysis of risk factors associated with central retinal vein occlusion. Ophthalmology. 2013;120:362–370. doi: 10.1016/j.ophtha.2012.07.080.

40. Agarwal A., Karkhur S., Aggarwal K., Invernizzi A., Singh R., Dogra M.R., Gupta V., Gupta A., Do D.V., Nguyen Q.D. Epidemiology and clinical features of inflammatory retinal vascular occlusions: pooled data from two tertiary-referral institutions. Clin. Exp. Ophthalmol. 2018;46:62–74. doi: 10.1111/ceo.12997.

41. Russell B.C., Torralba A., Murphy K.P., Freeman W.T. LabelMe: a database and web-based tool for image annotation. Int. J. Comput. Vis. 2008;77:157–173.

42. Shao L., Zhu F., Li X. Transfer learning for visual categorization: a survey. IEEE Transact. Neural Networks Learn. Syst. 2015;26:1019–1034. doi: 10.1109/TNNLS.2014.2330900.

43. Lee H., Kang K.E., Chung H., Kim H.C. Automated segmentation of lesions including subretinal hyperreflective material in neovascular age-related macular degeneration. Am. J. Ophthalmol. 2018;191:64–75. doi: 10.1016/j.ajo.2018.04.007.

44. Sun G., Liu X., Yu X. Multi-path cascaded U-net for vessel segmentation from fundus fluorescein angiography sequential images. Comput. Methods Progr. Biomed. 2021;211. doi: 10.1016/j.cmpb.2021.106422.

45. Wiedemann S., Muller K.R., Samek W. Compact and computationally efficient representation of deep neural networks. IEEE Transact. Neural Networks Learn. Syst. 2020;31:772–785. doi: 10.1109/TNNLS.2019.2910073.

46. Paluru N., Dayal A., Jenssen H.B., Sakinis T., Cenkeramaddi L.R., Prakash J., Yalavarthy P.K. Anam-Net: anamorphic depth embedding-based lightweight CNN for segmentation of anomalies in COVID-19 chest CT images. IEEE Transact. Neural Networks Learn. Syst. 2021;32:932–946. doi: 10.1109/TNNLS.2021.3054746.

47. Wang F., Xing L., Bagshaw H., Buyyounouski M., Han B. Deep learning applications in automatic needle segmentation in ultrasound-guided prostate brachytherapy. Med. Phys. 2020;47:3797–3805. doi: 10.1002/mp.14328.

48. Ghosh S., Chaki A., Santosh K.C. Improved U-Net architecture with VGG-16 for brain tumor segmentation. Phys. Eng. Sci. Med. 2021;44:703–712. doi: 10.1007/s13246-021-01019-w.

49. Chen Y., Zhang P., Kong T., Li Y., Zhang X., Qi L., Sun J., Jia J. Scale-aware automatic augmentations for object detection with dynamic training. IEEE Trans. Pattern Anal. Mach. Intell. 2022;45:2367–2383. doi: 10.1109/TPAMI.2022.3166905.

50. Fan W., Nittala M.G., Velaga S.B., Hirano T., Wykoff C.C., Ip M., Lampen S.I.R., van Hemert J., Fleming A., Verhoek M., Sadda S.R. Distribution of nonperfusion and neovascularization on ultrawide-field fluorescein angiography in proliferative diabetic retinopathy (RECOVERY Study): report 1. Am. J. Ophthalmol. 2019;206:154–160. doi: 10.1016/j.ajo.2019.04.023.

51. Ghanchi F., Diabetic Retinopathy Guidelines Working Group. The Royal College of Ophthalmologists' clinical guidelines for diabetic retinopathy: a summary. Eye (Lond). 2013;27:285–287. doi: 10.1038/eye.2012.287.

52. Royle P., Mistry H., Auguste P., Shyangdan D., Freeman K., Lois N., Waugh N. Pan-retinal photocoagulation and other forms of laser treatment and drug therapies for non-proliferative diabetic retinopathy: systematic review and economic evaluation. Health Technol. Assess. 2015;19:1–248. doi: 10.3310/hta19510.

53. Hayreh S.S. Photocoagulation for retinal vein occlusion. Prog. Retin. Eye Res. 2021;85. doi: 10.1016/j.preteyeres.2021.100964.

54. An S.H., Jeong W.J. Early-scatter laser photocoagulation promotes the formation of collateral vessels in branch retinal vein occlusion. Eur. J. Ophthalmol. 2020;30:370–375. doi: 10.1177/1120672119827857.

55. Dai L., Wu L., Li H., Cai C., Wu Q., Kong H., Liu R., Wang X., Hou X., Liu Y., et al. A deep learning system for detecting diabetic retinopathy across the disease spectrum. Nat. Commun. 2021;12:3242. doi: 10.1038/s41467-021-23458-5.
