Abstract

People have a unique ability to represent other people’s internal thoughts and feelings – their mental states. Mental state knowledge has a rich conceptual structure, organized along key dimensions, such as valence. People use this conceptual structure to guide social interactions. How do people acquire their understanding of this structure? Here we investigate an underexplored contributor to this process: observation of mental state dynamics. Mental states – including both emotions and cognitive states – are not static. Rather, the transitions from one state to another are systematic and predictable. Drawing on prior cognitive science, we hypothesize that these transition dynamics may shape the conceptual structure that people learn to apply to mental states. Across nine behavioral experiments (N = 1439), we tested whether the transition probabilities between mental states causally shape people’s conceptual judgements of those states. In each study, we found that observing frequent transitions between mental states caused people to judge them to be conceptually similar. Computational modeling indicated that people translated mental state dynamics into concepts by embedding the states as points within a geometric space. The closer two states are within this space, the greater the likelihood of transitions between them. In three neural network experiments, we trained artificial neural networks to predict real human mental state dynamics. The networks spontaneously learned the same conceptual dimensions that people use to understand mental states. Together these results indicate that mental state dynamics – and the goal of predicting them – shape the structure of mental state concepts.

Keywords: theory of mind, emotion, concepts, prediction, artificial neural network

People have a powerful ability to represent other people’s internal thoughts and feelings – their mental states. Mental state knowledge has a rich conceptual structure (Cowen & Keltner, 2017; Posner et al., 2005; Russell, 1980; Thornton & Tamir, 2020a). This conceptual structure can be largely summarized using a small set of psychological dimensions. For example, the dimension of valence captures how positive or negative a state is, and this structural dimension reflects people’s understanding of those experiences (Russell, 1980). Certain basic dimensions, such as valence, seem to be widely shared across cultures, though the number and importance of dimensions may vary (Jackson et al., 2019; Russell & Lewicka, 1989; Thornton et al., 2022). This raises a fundamental question: where does this shared structure come from?

Here we investigated the informational origins of people’s mental state concepts. It has long been assumed that this conceptual structure reflects solely the static features of mental states, such as the similar facial expressions stereotypically elicited by surprise and fear. However, mental states – including both emotions and cognitive states – are not static. We thus challenge this assumption: we suggest that static features alone cannot explain the structure of mental state representation. Instead, we propose that a complete account of mental state concepts must also consider how people dynamically transition from one thought or feeling to another. We hypothesize that observation of these transition dynamics shapes the structure of people’s mental state concepts.

What is a mental state concept?

The definition of mental state varies considerably in the affective science and social psychological literatures. Our operational definition of “mental state” is an umbrella term that refers to any hidden state that occupies the mind. By hidden, we mean that mental states must be latent, rather than manifest: one can infer that another person is happy, but not directly sense it in the same way that one could observe the motion of a body in the physical state of running. This definition of mental states includes what are often termed affective states: moods, emotions, and feelings. It also includes cognitive states, such as remembering the past, imagining or planning the future, making decisions, reasoning, or performing mental calculations. Specific instances of a mental state often have propositional content. For example, one might be happy “that their friend got a job” or be thinking “about what to have for lunch”; one could also just feel happy. Although the propositional content of mental states is doubtless important to theory of mind (Saxe & Kanwisher, 2003), here we abstract away from this specific content, and instead focus on the more general context-agnostic conceptualizations of mental states.

A static account of mental state concepts

We propose that mental state dynamics play an important role in shaping the conceptual structure of mental states. However, there is another clear, intuitive account: that mental state concepts are defined by “static features.” Under this static account, each state is associated with a different set of features: the racing heart of excitement, the scrunched face of disgust, the social implications of gratitude, and so forth. People use these features to identify what state another person is in. For instance, people use perceptual features, such as facial expressions and tone of voice, to infer others’ emotions (Zaki et al., 2009): a smiling person is likely feeling happy. People also use behavioral and contextual features to infer others’ states (Barrett et al., 2011; Frijda, 2004); a person throwing punches is likely angry, and a person at a rave is likely excited.

When we think about why people hold the mental state concepts that they do, it is easy to look to these static features for an answer. A pair of states with many features in common – such as joy and gratitude – will be considered conceptually similar, while a pair of states with little in common – such as surprise and sleepiness – will be considered conceptually dissimilar. Indeed, there is evidence that people use static features as a basis for understanding mental state concepts. For example, research on the bodily representation of mental states (Nummenmaa et al., 2018) shows that states that people feel in similar parts of the body (e.g., the head, limbs, or gut) are considered more conceptually similar. In addition, Skerry and Saxe (2015) examined contextual features of states (e.g., Who caused them? Are they associated with safety or danger?) and found that these features likewise shape state representations, as measured by neural pattern similarity (Skerry & Saxe, 2015).

A static account of mental state concepts is compatible with both a basic emotions perspective and a constructivist perspective on emotions (Adolphs et al., 2019). From a basic emotions perspective, emotions are evolutionarily determined responses to specific situations, such as feeling fear in the face of danger (Ekman, 1992). As such, emotion concepts are innate and tied to fixed features. However, one could also arrive at a somewhat different static account of mental state concepts from a constructivist perspective (Barrett, 2017b). Constructivists generally eschew the notion that certain static features are deterministically associated with specific states (Barrett et al., 2019; Gendron et al., 2014). For example, one does not smile 100% of the time that one is happy. Nevertheless, more probabilistic and contextual associations between emotions and features are still argued to be the basis for the formation of emotion concepts. For instance, a recent perspective on the cultural evolution of emotions suggests that, over the course of development, people learn about the existence of relatively high-density (i.e., frequently occurring) clusters of affective experiences in certain regions of feature space (Lindquist et al., 2022). The number and locations of these clusters are determined flexibly by culture, but within a culture they eventually come to acquire verbal labels. Under this version of the static account, the proximity in feature space between these labeled clusters of affective experience shapes our emotion concepts. However, constructivist accounts can also incorporate dynamics, as we discuss later.

By virtue of its compatibility with both basic and constructivist perspectives, the static account of emotion concepts has come to reflect a widespread default assumption among many psychologists. However, we believe that it is worth questioning this implicit orthodoxy and considering whether dynamic influences on mental state concepts have been overlooked. Importantly, these dynamic and static accounts are not mutually exclusive. Both static features and dynamics could contribute to the conceptual structure of mental states. Indeed, we explore potential complementarity between them in this investigation. Nonetheless, this static account serves as a useful foil against which to compare the dynamic account we propose.

The dynamic account of mental state concepts

Despite the evidence supporting a static account of mental state concepts, we suggest that this account cannot provide a complete explanation for people’s structured knowledge of this domain. Mental states are not static. A mental state does not exist as an isolated event, independent of the other states before or after it. Mental states are dynamic. States ebb and flow over time and transition from one to the next with regularity. Indeed, states are defined in part by the sequences in which they occur. Confidence followed by sadness seems to us to tell a very different story than confidence followed by joy. Understanding someone’s mental states without considering the sequence in which those states arrived would be as difficult as understanding a sentence without knowing the order of the words. We suggest that these dynamics are key to understanding the structure of mental state concepts.

We propose that the conceptual structure of mental states serves an important goal of the social cognitive system: to make social predictions. Mental states are the hidden movers of the social world. They help people predict which actions a person will take (Frijda, 2004; Hudson et al., 2016): angry people aggress, tired people rest, hungry people eat, and happy people laugh. Mental states also predict other mental states. Experience-sampling studies have demonstrated that people transition between emotions in highly systematic ways (Thornton & Tamir, 2017). For example, someone feeling happy is more likely to next feel relaxed rather than agitated. These dynamics are key to understanding the structure of mental state concepts because state dynamics inform inferences about the social future (Tamir & Thornton, 2018).

The information people take in about states is, by its nature, dynamic: people sample others’ states and experience their own states in a serial manner. In other domains of knowledge, data about dynamics shapes associated concepts. For example, high-level neural representations of objects are shaped by how often they co-occur in people’s natural experience, and not just by their visual features (Bonner & Epstein, 2021). Objects that co-occur frequently are represented by similar patterns of brain activity, even if they look quite different from each other. There is a clear analog in the maxim of Hebbian learning: just as neurons that fire together wire together, representations of objects that appear together come to resemble each other. Similar results have been observed for learning abstract events and motor sequences: transitions between stimuli create event boundaries, shape neural pattern similarity, and give rise to abstract representations of event structure (Kahn et al., 2018; Lynn et al., 2020; Schapiro et al., 2013). Complex networks of associations between such stimuli become manifest in how people predict sequences will unfold. We suggest that the dynamics of mental states may likewise shape the conceptual structure we apply to them. By applying this general learning principle to the specific domain of mental state representation, we may gain new insight into the computational goals of human socio-affective systems, and the information they draw upon.

A growing body of literature suggests that the structure of mental state concepts is at least correlated with – though not necessarily caused by – state dynamics. The more likely one state is to precede or follow another, the more conceptually similar people judge them to be (Thornton & Tamir, 2017). That is, conceptual similarity reflects the temporal relation between each state and the other states that are likely to precede or follow it. This association between transition probability and similarity ratings is whopping: in previous research, we estimated a Spearman rank order correlation of ρ = 0.97 across a sample of hundreds of state pairs (Thornton & Tamir, 2017). This association is high enough that some might consider the two measures to reflect the same construct.

How might we account for this close association between mental state transitions and mental state concepts? There are three simple possibilities. First, people may not know the actual transition probabilities between mental states. When asked to judge how likely a state is to follow another, they might instead report their similarity. However, this explanation is inconsistent with existing data showing that people can report emotion transition likelihoods with high accuracy (Thornton & Tamir, 2017). Similarity and experienced transition dynamics seem unlikely to share the same structure by coincidence.

Second, common causes may shape both the transition dynamics and conceptual similarity. For example, heart rate is a key indicator of arousal, an important conceptual dimension of affect (Russell, 1980). Human physiology also constrains how quickly one’s heart rate can change (Borst et al., 1982). This may make it more likely that a person will transition from a high arousal state to another high arousal state than from a high arousal state to a low arousal state. High arousal states thus would have high transition probabilities and high static featural similarity. In this way, the nature of the circulatory system may be a common cause of both static and dynamic features of mental states. If common causes shape both the static and dynamic features of mental states, this would lead to both correlations between these different types of features, and two discrete, albeit correlated, pathways for shaping state concepts. The current work tests whether such common causes are necessary for explaining the correspondence between dynamics and concepts.

The final possible explanation of the correlation between mental state dynamics and concepts is that dynamics causally shape concepts. This point of view is supported by prior neuroimaging research on this topic. In a functional magnetic resonance imaging (fMRI) study of mental state representation, we found that neural representations of mental states systematically resemble the neural representation of likely future states (Thornton, Weaverdyck, et al., 2019). That is, merely thinking of a state like anger reflexively elicits neural activity associated with likely subsequent states, like regret. This relation cannot be explained solely by conceptual similarity because asymmetries in transition probabilities (e.g., tiredness following excitement more than vice versa) were uniquely associated with asymmetric neural representations. That is, the brain spontaneously encodes mental state dynamics over and above any shared variance with conceptual similarity. This result suggests that prediction is the brain’s priority, and that we form concepts as a downstream product of optimizing our representations for mental state prediction.

The conceptual structure of mental states

If mental state dynamics do shape mental state concepts, how are the former translated into the latter? The brain tends to seek out representations of the world that are not only accurate but also efficient (Olshausen & Field, 1996). Representing the transition probability between every pair of mental states might maximize accuracy, but it would be a highly inefficient way to capture mental state dynamics, because the transition probability matrix grows with the square of the number of states. Instead, a geometric representation offers a more parsimonious route: a mind that represents mental states as points within a geometric space, such that transitions are more likely between closer states, has access to a highly efficient representation of state dynamics using just a few conceptual dimensions. Geometric similarity spaces have a long history in psychology (Shepard, 1987), closely tied to the need to learn and generalize efficiently. Thus, this proposition represents a logical application of a general cognitive principle to the specific case of mental states.
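As an illustration of how few numbers a geometric representation needs, the Python sketch below places six states on a circle and derives a full transition matrix from their pairwise distances. The specific rule (a softmax over negative squared Euclidean distance, with self-transitions excluded), the `temperature` parameter, the coordinates, and the function name `geometric_transitions` are all hypothetical choices made for this example, not a model fitted in this investigation.

```python
import numpy as np

def geometric_transitions(coords, temperature=1.0):
    """Map state coordinates to a row-stochastic transition matrix.

    Hypothetical rule: P(j | i) is proportional to exp(-d_ij**2 / temperature)
    for j != i, so closer states are more likely to follow one another.
    """
    coords = np.asarray(coords, dtype=float)
    diffs = coords[:, None, :] - coords[None, :, :]
    sq_dist = np.sum(diffs ** 2, axis=-1)          # pairwise squared distances
    weights = np.exp(-sq_dist / temperature)
    np.fill_diagonal(weights, 0.0)                  # no repeats of the same state
    return weights / weights.sum(axis=1, keepdims=True)

# Six hypothetical states evenly spaced on a unit circle.
angles = np.linspace(0, 2 * np.pi, 6, endpoint=False)
coords = np.column_stack([np.cos(angles), np.sin(angles)])
T = geometric_transitions(coords, temperature=0.5)
print(np.round(T, 3))
```

Twelve coordinates and one parameter here stand in for the 30 off-diagonal cells of a 6 × 6 matrix. Because the unnormalized weights depend only on distance, any asymmetry this particular rule produces comes solely from normalizing each row; for the evenly spaced states above, the resulting matrix is symmetric.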

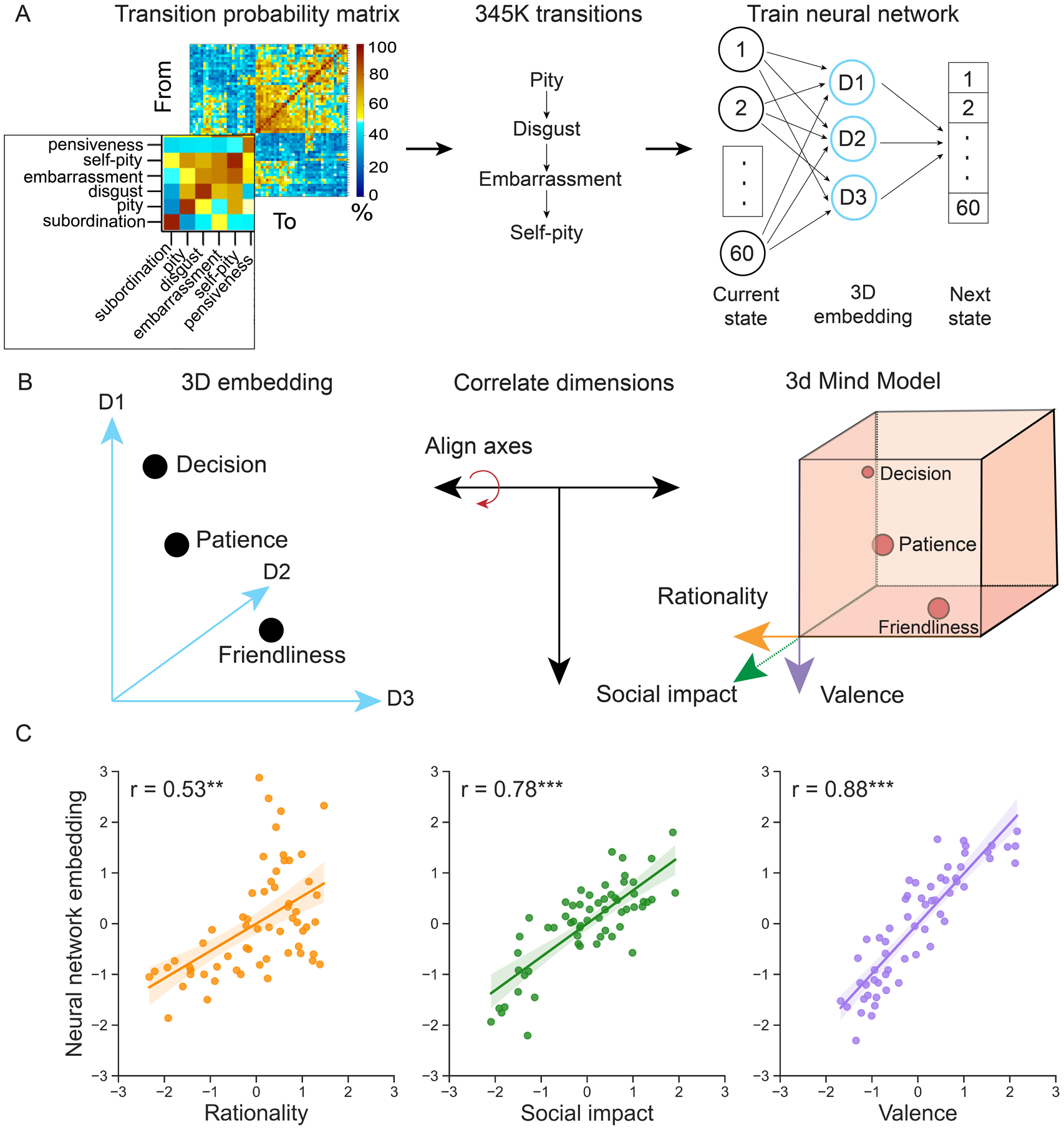

There is also empirical evidence that the brain employs a geometric approach to represent real-world mental state concepts. A variety of different geometric models have been proposed and tested in this domain. For example, the Circumplex Model (Posner et al., 2005; Russell, 1980) suggests that emotion concepts are organized by two psychological dimensions: valence (pleasant vs. unpleasant) and intensity (high vs. low arousal). More recently, the 3d Mind Model suggests that mental states – inclusive of both affective and cognitive states – are organized by three psychological dimensions (Tamir et al., 2016; Thornton et al., 2022; Thornton & Tamir, 2020a): rationality (vs. emotionality), social impact (the extent to which a state affects other people), and valence (positive vs. negative). The 3d Mind Model offers a good description of the conceptual structure of mental states and also predicts their dynamics, by encoding transition probability in terms of distance along its dimensions (Thornton, Weaverdyck, et al., 2019; Thornton & Tamir, 2017). These theories offer further grounding for the proposition that people translate the transition dynamics of real-world mental states into a low-dimensional geometric space.

The present investigation

The present investigation had four main goals. First, we tested whether mental state dynamics shape mental state concepts. In nine behavioral studies, participants first observed transitions between mental states, and then judged their conceptual similarity. We expected that states with higher transition probabilities between them would be rated as more conceptually similar. Studies 1a-f introduced participants to state transitions, using novel states with no meaningful static features. This design allowed us to test whether state dynamics can play any role in state concepts. In Study 1g, participants observed both reliable state transitions and an orthogonal static feature, allowing us to test whether dynamics can shape concepts even when competing with a salient static feature.

Second, we sought to understand the mechanism by which people translate mental state dynamics into concepts. We expected that participants would efficiently represent transition probabilities using a low-dimensional geometric state space. Within this space, closer mental states should be more likely to precede or follow one another in time and, in turn, be judged to be more conceptually similar. Study 1f tested this hypothesis using mental state transitions arranged in an easily interpretable geometric pattern. In this context, the geometric account made predictions that were clearly distinguishable from those of alternative accounts.

Third, in Studies 2a-b, we generalized the findings from Study 1 to a scenario that more closely matches mental state inference in everyday life. Here, participants had to infer latent states from static features rather than observe them directly, and the latent states traversed a continuous rather than discrete state space. This allowed us to test the effects of dynamics on mental state concepts in a more naturalistic context.

Finally, in Study 3, we sought to test whether real-world mental state dynamics can explain real-world mental state concepts. To this end, we trained artificial neural networks on realistic sequences of mental states. The networks were trained specifically with the goal of prediction: each network used a compressed representation of the current state to predict the next state in a sequence. After training, we compared the representations that the networks had learned to known conceptual dimensions of mental state representation from both the Circumplex Model and the 3d Mind Model. If the artificial neural networks spontaneously learned a similar structure, this would indicate that human mental state dynamics – and the goal of predicting them – suffice to explain the real-world structure of mental state concepts.
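The prediction objective can be sketched in a few lines of NumPy. This toy model is an illustration under stated assumptions, not the networks used in Study 3: it gives each state a learned two-dimensional embedding (the compressed representation), maps that embedding linearly to a softmax over next states, and trains by gradient descent on cross-entropy against a hypothetical six-state ring of transition probabilities. The function name, learning rate, and number of steps are arbitrary choices.

```python
import numpy as np

def train_bottleneck_predictor(T, n_dims=2, lr=0.5, n_steps=2000, seed=0):
    """Learn to predict the next state through a low-dimensional bottleneck.

    Each current state s has an embedding E[s]; logits = E @ W + b give a
    softmax over next states. The loss is the cross-entropy against the rows
    of T (the expected loss over next states), averaged over current states.
    """
    rng = np.random.default_rng(seed)
    n = T.shape[0]
    E = rng.normal(scale=0.1, size=(n, n_dims))   # compressed state codes
    W = rng.normal(scale=0.1, size=(n_dims, n))   # readout weights
    b = np.zeros(n)                               # readout bias
    losses = []
    for _ in range(n_steps):
        logits = E @ W + b
        logits -= logits.max(axis=1, keepdims=True)   # numerical stability
        P = np.exp(logits)
        P /= P.sum(axis=1, keepdims=True)
        losses.append(-np.sum(T * np.log(P + 1e-12)) / n)
        G = (P - T) / n                 # gradient of the loss w.r.t. the logits
        grad_W, grad_E, grad_b = E.T @ G, G @ W.T, G.sum(axis=0)
        W -= lr * grad_W
        E -= lr * grad_E
        b -= lr * grad_b
    return E, losses

# Hypothetical ring-structured transitions: 4/11 to each neighbor, 1/11 otherwise.
n = 6
T = np.full((n, n), 1 / 11)
np.fill_diagonal(T, 0.0)
for i in range(n):
    T[i, (i + 1) % n] = 4 / 11
    T[i, (i - 1) % n] = 4 / 11
E, losses = train_bottleneck_predictor(T)
print(round(losses[0], 3), round(losses[-1], 3))
```

After training, the rows of `E` are the kind of learned representation that could then be compared with known conceptual dimensions; a realistic version would train on observed mental state sequences rather than a hand-built matrix.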

Study 1

Across Studies 1a-g, we tested the hypothesis that people learn mental state concepts by observing state dynamics. We manipulated mental state dynamics – in the form of transition probabilities – and then tested for corresponding effects on the conceptual structure of those states. These experiments used variations of the same task (Figure 1). Participants played the role of xenopsychologists on a mission to understand the emotions of an alien creature. They first observed the creature experience novel emotions in a sequential learning task, and then rated the conceptual similarity between the emotions. We controlled the statistical regularities of the state sequences they observed, and predicted that these regularities would shape their subsequent conceptual judgements.

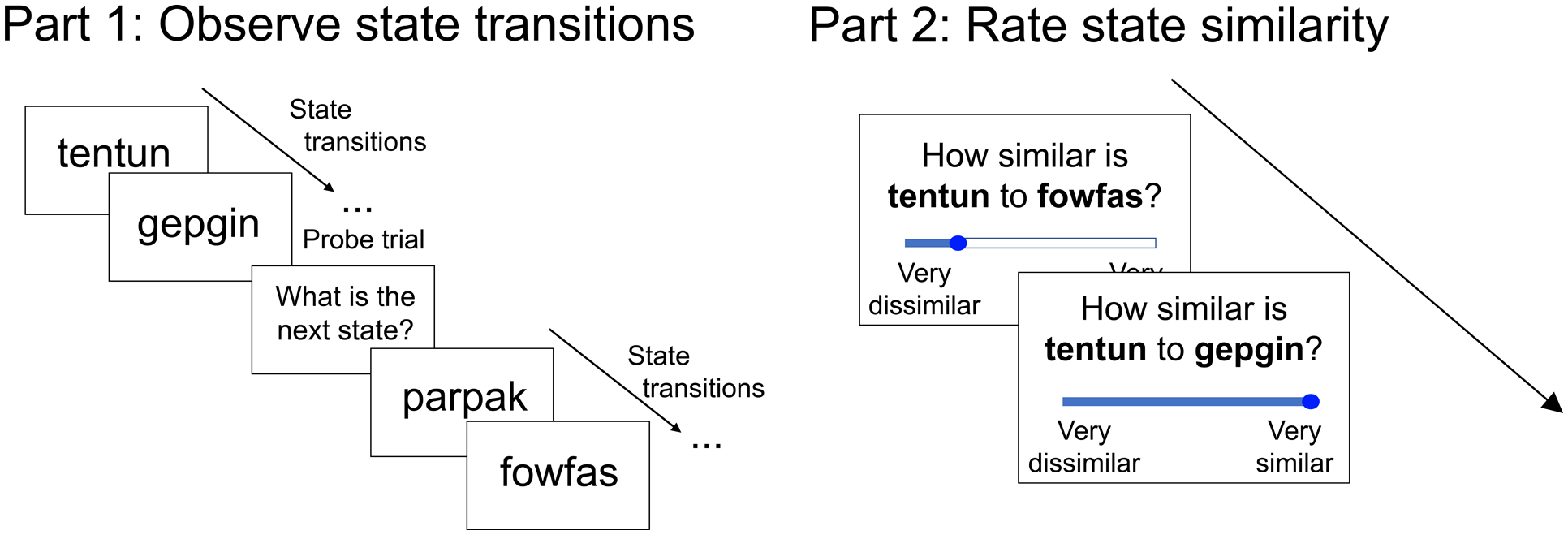

Figure 1. Task schematic for Studies 1a-g.

In Part 1, participants viewed a sequence of 50–100 states drawn from a specified transition probability matrix. They were probed during this training phase to predict the next state in the sequence or recall the previous state. In Part 2, participants rated the conceptual similarity between each pair of states.

Studies 1f and 1g also examined the mechanism and robustness of the observed effects, respectively. Study 1f compared several accounts of how dynamics might translate into concepts: (i) a naïve account, in which conceptual similarity perfectly mirrors transition probabilities; (ii) a successor representation account (Dayan, 1993; Momennejad et al., 2017), in which concepts reflect not only the next likely state, but also a weighted combination of more distant future states; and (iii) a geometric account, in which transition probabilities are encoded by the proximity between states within a low-dimensional space. Study 1g tested whether dynamics would still shape concepts even when competing with static features. We paired each alien emotion with a different eye color, creating a static feature along which people could potentially judge the similarity between these states. We then tested whether the transition dynamics predicted concepts even with such a salient alternative available.
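To show how the naïve and successor representation accounts diverge, the Python sketch below builds an asymmetric ring like the one described for Study 1f (80% to the next state clockwise, 5% to each other state) and derives a similarity matrix under each account. The successor representation uses its standard closed form, M = (I − γT)⁻¹; the discount γ = 0.9, the choice to symmetrize by averaging a matrix with its transpose, and the helper names are illustrative assumptions rather than the fitted model. The geometric account requires fitting an embedding and is not shown.

```python
import numpy as np

def asymmetric_ring_matrix(n=6, p_clockwise=0.8):
    """Likely move one step 'clockwise'; equal low probability to every other state."""
    p_other = (1.0 - p_clockwise) / (n - 2)
    T = np.full((n, n), p_other)
    np.fill_diagonal(T, 0.0)
    for i in range(n):
        T[i, (i + 1) % n] = p_clockwise
    return T

def naive_similarity(T):
    # One-step transitions only, averaged over both directions because
    # similarity ratings are undirected.
    return (T + T.T) / 2

def successor_representation(T, gamma=0.9):
    # M[i, j] = expected discounted number of future visits to j starting from i,
    # i.e. the sum over t of gamma**t times the matrix power T^t, = (I - gamma*T)^-1.
    return np.linalg.inv(np.eye(T.shape[0]) - gamma * T)

T = asymmetric_ring_matrix()
S_naive = naive_similarity(T)
M = successor_representation(T)
S_sr = (M + M.T) / 2  # symmetrized successor similarity
print(np.round(S_sr[0], 3))
```

In this example the naïve account gives every pair that is not adjacent on the ring the same similarity (5%), whereas the successor representation grades those pairs by how soon, and how often, one state tends to follow the other over several steps.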

Methods

Overview.

Across all seven experiments in Study 1, participants observed a sequence of the mental states of an alien creature. We manipulated the transition probabilities between the alien’s mental states, such that certain states were more or less likely to precede or follow each other. After observing the alien’s states, participants rated the similarity between each pair of the alien’s states. Our primary prediction in all studies was that transition probabilities would causally shape conceptual similarity, such that states with higher transition probabilities between them would be judged as more similar. The main substantive difference between the different variants of the experimental paradigm across Studies 1a-g was the structure of the transition probability matrices that generated the state sequences that participants observed. These different structures allowed us to ensure the generalizability of our findings, and to test different mechanisms by which transition dynamics might be translated into concepts. The latter was the particular focus of Study 1f, which sought to distinguish between several different computational accounts of this process. Additionally, in Study 1g, we introduced a static feature – eye color – to accompany each alien state. This allowed us to determine whether dynamics would still influence concepts in the presence of a clear alternative.

Transparency and openness.

Of the seven experiments in Study 1, six (b-g) were preregistered on the Open Science Framework (https://osf.io/4m9kw/). Deviations from our preregistered plans are detailed where applicable. For all studies, we report how we determined sample size, all data exclusions, all manipulations, and all measures. All statistical tests were two-tailed. All data and code from this investigation are freely available (Thornton, Vyas, et al., 2019) on the Open Science Framework (https://osf.io/4m9kw/).

Participants.

We recruited a total of 1,012 participants across Studies 1a-g. Eleven participants were excluded due to unanticipated issues with data recording and demographics (see Supplemental Material), leaving 1,001 for analysis. Table 1 reports the distribution of these participants across studies, gender, and age. See Supplemental Material for additional demographic information. All participants were recruited from Amazon Mechanical Turk using TurkPrime (Litman et al., 2017), with study availability limited to workers from the USA with at least 95% positive feedback. Within Studies 1a-c, and separately within Studies 1d-g, participants who had taken part in an earlier experiment in the same set were excluded. Participants in Studies 1a-c were not prohibited from participating in Studies 1d-g due to the significant time gap between these sets of studies (5+ months) and the different stimuli used. Participants in all studies provided informed consent in a manner approved by the Princeton University Institutional Review Board.

Table 1.

Participant breakdown for Studies 1a-g.

| Study | N | Women | Men | Other gender | Gender not stated | Age mean | Age SD | Age minimum | Age maximum |
|---|---|---|---|---|---|---|---|---|---|
| 1a | 20 | 8 | 11 | 1 | 0 | 33.55 | 10.48 | 22 | 55 |
| 1b | 42 | 18 | 24 | 0 | 0 | 36.00 | 10.36 | 21 | 60 |
| 1c | 92 | 34 | 57 | 1 | 0 | 34.32 | 8.94 | 19 | 62 |
| 1d | 213 | 69 | 143 | 0 | 1 | 34.45 | 10.01 | 19 | 73 |
| 1e | 212 | 98 | 112 | 2 | 0 | 35.13 | 10.03 | 20 | 72 |
| 1f | 213 | 86 | 125 | 1 | 1 | 35.30 | 10.69 | 20 | 72 |
| 1g | 209 | 73 | 134 | 1 | 1 | 36.35 | 9.86 | 21 | 70 |

Study 1a was an initial pilot study, and the target sample size was arbitrarily set to 20. The results from Study 1a informed a parametric power analysis which we used to determine the target sample size for Study 1b. We estimated the effect size (Cohen’s d) for this power analysis by correlating transition probabilities and similarity ratings within each participant, Fisher z-transforming them, and dividing the mean by the standard deviation. The power analysis – conducted using the one-sample t-test function from the ‘pwr’ package (Champely et al., 2018) in R (R Core Team, 2015) – targeted 95% power with α = .05. This process was repeated using Study 1b results to estimate sample size for Study 1c, and Study 1c results to estimate the sample size for Study 1d. We retained the target sample size based on the Study 1c data (n = 212) for Studies 1d-g, as similar power analyses based on later studies indicated that this sample size was more than adequate to achieve the desired power. Over the course of Studies 1a-d, we also increased the length of the learning phase of the paradigm and the size of the manipulation in order to improve statistical power (details below).
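The effect-size and power calculations can be sketched in Python. The first function follows the procedure described above under two assumptions the text leaves open: that Pearson correlations were computed over the off-diagonal cells of each participant's matrices, and that the standard deviation uses n − 1. The power function uses SciPy's noncentral t distribution and should closely match the two-sided one-sample calculation in pwr; the helper names are hypothetical.

```python
import numpy as np
from scipy import stats

def participant_effect_size(trans_mats, sim_mats):
    """Cohen's d: mean / SD of within-participant Fisher-z correlations."""
    zs = []
    for T, S in zip(trans_mats, sim_mats):
        T, S = np.asarray(T, float), np.asarray(S, float)
        off_diag = ~np.eye(T.shape[0], dtype=bool)   # diagonal excluded
        r = np.corrcoef(T[off_diag], S[off_diag])[0, 1]
        zs.append(np.arctanh(r))                     # Fisher z-transform
    zs = np.asarray(zs)
    return zs.mean() / zs.std(ddof=1)

def one_sample_power(d, n, alpha=0.05):
    """Power of a two-sided one-sample t-test with effect size d and n participants."""
    df = n - 1
    t_crit = stats.t.ppf(1 - alpha / 2, df)
    ncp = d * np.sqrt(n)                             # noncentrality parameter
    return stats.nct.sf(t_crit, df, ncp) + stats.nct.cdf(-t_crit, df, ncp)

def required_n(d, power=0.95, alpha=0.05, max_n=100_000):
    """Smallest whole number of participants that reaches the target power."""
    n = 2
    while one_sample_power(d, n, alpha) < power:
        n += 1
        if n > max_n:
            raise ValueError("target power not reached; effect size too small")
    return n

print(required_n(0.5))
```

Rounding up to a whole participant mirrors how a non-integer n from a power solver would be used in practice.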

Experimental Paradigm.

Participants were forwarded from TurkPrime to a custom JavaScript-based experiment. After consenting to participate, they were introduced to the study narrative: participants read that they would play the role of a xenopsychologist on an expedition to explore a new world. An advance team had already landed on the planet and identified an interesting alien creature (pictured in the instructions) which they believed to be capable of several different mental states. Participants were instructed that their mission as a xenopsychologist was to understand the alien creature’s mental states so that the expedition could be continued in a manner which was safe – and ideally, beneficial – for both the explorers and the aliens.

The advance team did not yet understand the aliens’ mental states but had labeled them for ease of reference: parpak, bembit, tentum, niznel, fowfas, and gepgin. These labels were 2-syllable nonsense words that we generated to be pronounceable but meaningless. The set listed above was used for Studies 1a-c. We switched the syllables across words to produce a new set for Studies 1d-f. This was repeated to produce another new set for Study 1g.

In Part 1 of the experiment, participants learned about the alien’s states by observing them over time. This observation period constituted the training portion of our experiment (Figure 1). During the training period, participants observed a sequence of 50 (Study 1a) or 100 (Studies 1b-g) mental states. Participants could advance through the sequence at their own pace. The sequence would periodically be interrupted by a probe trial. In Studies 1a-b, the probe trials asked participants to indicate which of the six possible states was least likely to come next in the sequence. In Study 1c, the probe trials asked participants which state had occurred just previously (i.e., 1-back). In Studies 1d-g, the probe trials asked participants to indicate which of five states (other than the current one) was most likely to come next in the sequence. Probe trials were presented at regular 10-trial intervals in Studies 1a-b and 1d-g. In Study 1c, the probe trials were randomly distributed within each set of 10 trials, subject to the constraint that there could not be two probe trials in a row.
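The Study 1c scheduling constraint can be made concrete with a short Python sketch. It assumes one probe per block of 10 trials (consistent with the 10 probe trials reported for Study 1c) and enforces the no-consecutive-probes rule by resampling; the function name and the rejection approach are hypothetical, not the experiment's actual JavaScript.

```python
import random

def study_1c_probe_positions(n_blocks=10, block_size=10, seed=None):
    """Hypothetical schedule: one probe at a random position in each block,
    resampled whenever it would immediately follow the previous probe."""
    rng = random.Random(seed)
    positions = []
    for block in range(n_blocks):
        start = block * block_size
        while True:
            pos = start + rng.randrange(block_size)
            # Two probes can only touch across a block boundary.
            if not positions or pos != positions[-1] + 1:
                break
        positions.append(pos)
    return positions

print(study_1c_probe_positions(seed=3))
```

With one probe per block, a clash is possible only when the previous probe was the last trial of its block and the new one is the first trial of the next, so the resampling loop ends quickly.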

Due to a programming issue in Studies 1a-c, the state shown after each probe trial was shown twice. In Study 1a, there were 5 probe trials, so as a result of this bug, we showed a sequence of 55 states instead of the planned 50 states. In Study 1b, there were 10 probe trials, so we showed a sequence of 110 states instead of the planned 100 states. Finally, in Study 1c, there were 10 probe trials, so we showed a sequence of 100 states instead of the planned 90 states. This error did not adversely affect our analyses because we modeled only the off-diagonal structure of the transition and similarity matrices, and the error only systematically increased the probability of transitions along the diagonal.

The sequences of states presented in each study were independently randomly sampled from 6 × 6 transition probability matrices (Figure 2 & Figure S2). Studies 1a, 1b, and 1g used a two-cluster structure to approximate the actual structure of emotion transitions observed from experience sampling in previous research (Thornton & Tamir, 2017). Transition probabilities were high (22%, 22%, and 40%, respectively) within clusters, and low (11%, 11%, and 6.67%) across clusters. Study 1c used a three-cluster structure (within-cluster probability = 33% vs. across-cluster probability = 8.3%). Study 1d used a ‘ring’ structure in which states could transition clockwise or counterclockwise with high probability (36.4%) but only crossed the “face” of the clock with low probability (9.1%). This was meant to emulate the structure of the circumplex model of affect (Russell, 1980). Study 1e used a complex structure that approximately orthogonalized transition probabilities from transition profile similarity (see Supplemental Material), with the highest probability being 44% and the lowest non-zero probability being 17%. Study 1f used an asymmetric ring structure, in which high-probability transitions occurred only in the “clockwise” direction (80%) and all other transitions had low probability (5%). This allowed us to examine asymmetric state transitions and disentangle the successor representation and geometric representation of the state dynamics. In Studies 1a-c, “self” transitions (i.e., repetitions of the same state) were allowed. In Studies 1d-g, states could only transition to other states, and repetitions were not allowed. We generally used larger differences between high and low transition probabilities as the experiments progressed in order to maximize effect sizes. Note that, in all studies, the nonsense words were assigned to positions on the transition probability matrix randomly for each participant to avoid any confound that might result from the letters in the words.
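The sketch below shows, in Python with NumPy, how matrices like those described above can be built and how a sequence can be sampled from them. The exact fractions (e.g., 2/9 for 22% and 1/15 for 6.67%) are our reading of the rounded percentages, chosen because they make each row sum to 1; the uniform choice of starting state and the helper names are assumptions. As in the studies, labels are shuffled onto matrix positions for each simulated participant (here using the Studies 1a-c label set).

```python
import numpy as np

def two_cluster_matrix(p_within, p_between, allow_self):
    """6 x 6 matrix with clusters {0, 1, 2} and {3, 4, 5}."""
    cluster = np.array([0, 0, 0, 1, 1, 1])
    same = cluster[:, None] == cluster[None, :]
    T = np.where(same, p_within, p_between).astype(float)
    if not allow_self:
        np.fill_diagonal(T, 0.0)  # repetitions of the same state not allowed
    return T

def symmetric_ring_matrix(p_neighbor, p_other, n=6):
    """Ring: each state moves to either neighbor with high probability."""
    T = np.full((n, n), p_other)
    np.fill_diagonal(T, 0.0)
    for i in range(n):
        T[i, (i + 1) % n] = p_neighbor
        T[i, (i - 1) % n] = p_neighbor
    return T

def sample_sequence(T, length, rng):
    """Draw a state sequence, starting from a uniformly chosen state."""
    n = T.shape[0]
    state = int(rng.integers(n))
    seq = [state]
    for _ in range(length - 1):
        state = int(rng.choice(n, p=T[state]))
        seq.append(state)
    return seq

study_1a = two_cluster_matrix(2 / 9, 1 / 9, allow_self=True)   # ~22% vs ~11%
study_1g = two_cluster_matrix(0.4, 1 / 15, allow_self=False)   # 40% vs ~6.67%
study_1d = symmetric_ring_matrix(4 / 11, 1 / 11)               # ~36.4% vs ~9.1%

# Shuffle the nonsense labels onto matrix positions for one simulated participant.
rng = np.random.default_rng(0)
labels = rng.permutation(["parpak", "bembit", "tentum", "niznel", "fowfas", "gepgin"])
seq = [str(labels[s]) for s in sample_sequence(study_1a, 50, rng)]
print(seq[:10])
```

Because each participant's sequence is a finite random draw, the transition frequencies they actually observe will differ somewhat from these idealized matrices.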

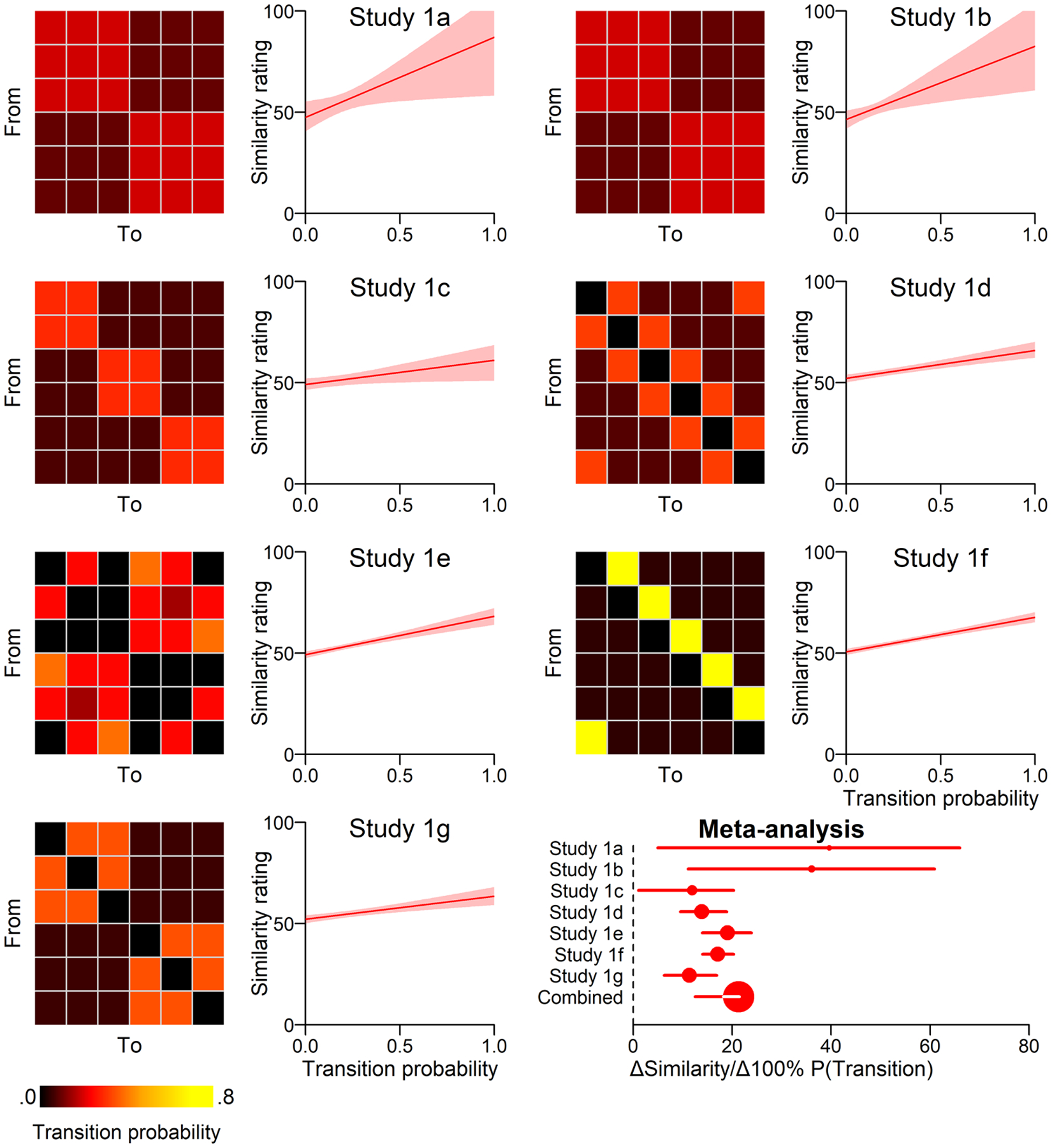

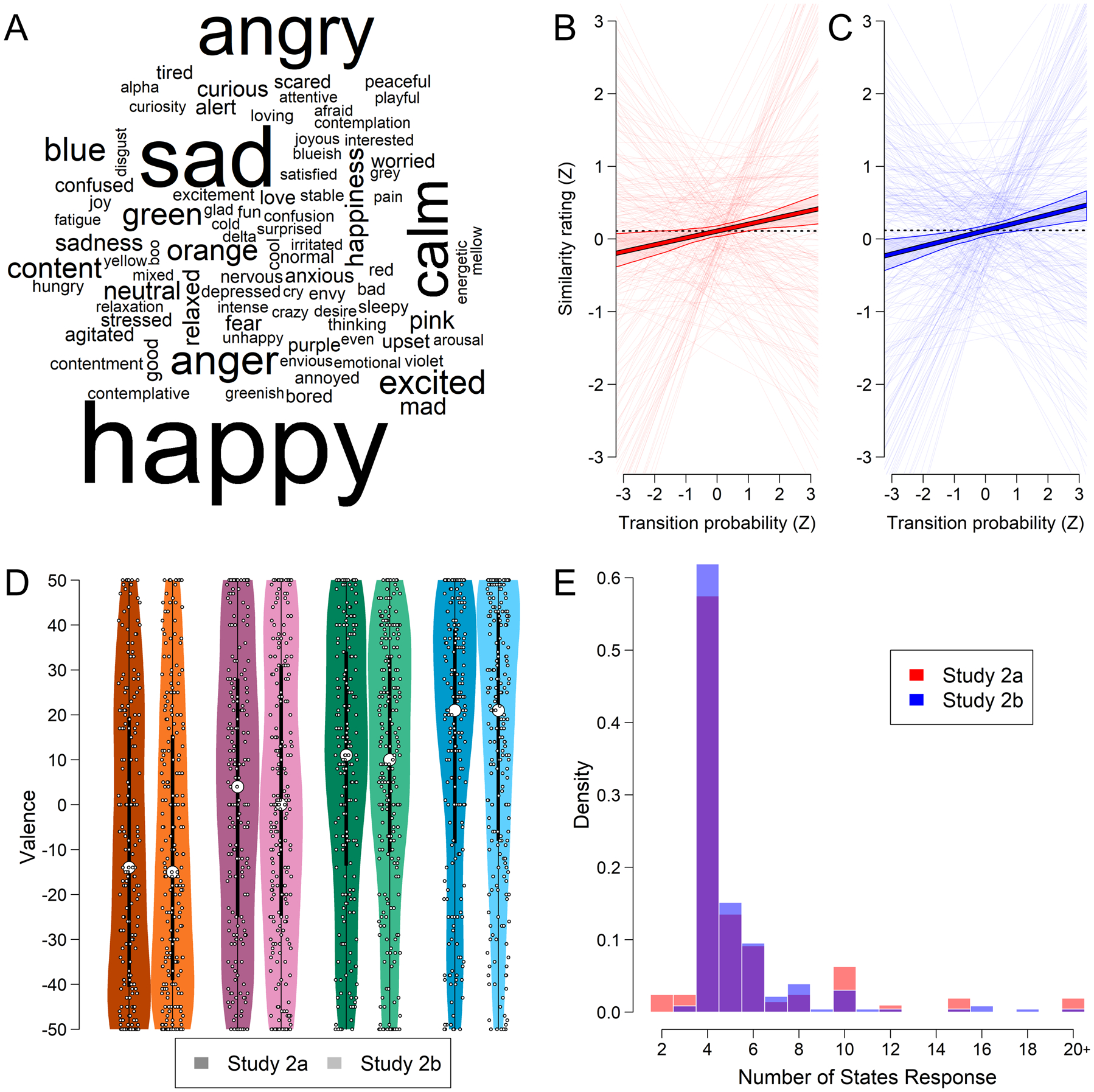

Figure 2. Mental state transition probabilities predict similarity ratings.

In Studies 1a-g, participants observed a sequence of mental states determined by a predefined transition matrix (heatmaps), and then rated the conceptual similarity between each pair of states. Mental state concepts were significantly predicted by the observed transition probabilities (line graphs) in all studies. Multilevel bootstrapping was used to estimate a meta-analytic effect size and confidence interval (circle radius reflects sample size). The transition probability matrices represent the ideal from which each participant’s sequence was independently sampled; the transition probabilities each participant actually observed differed due to random sampling.

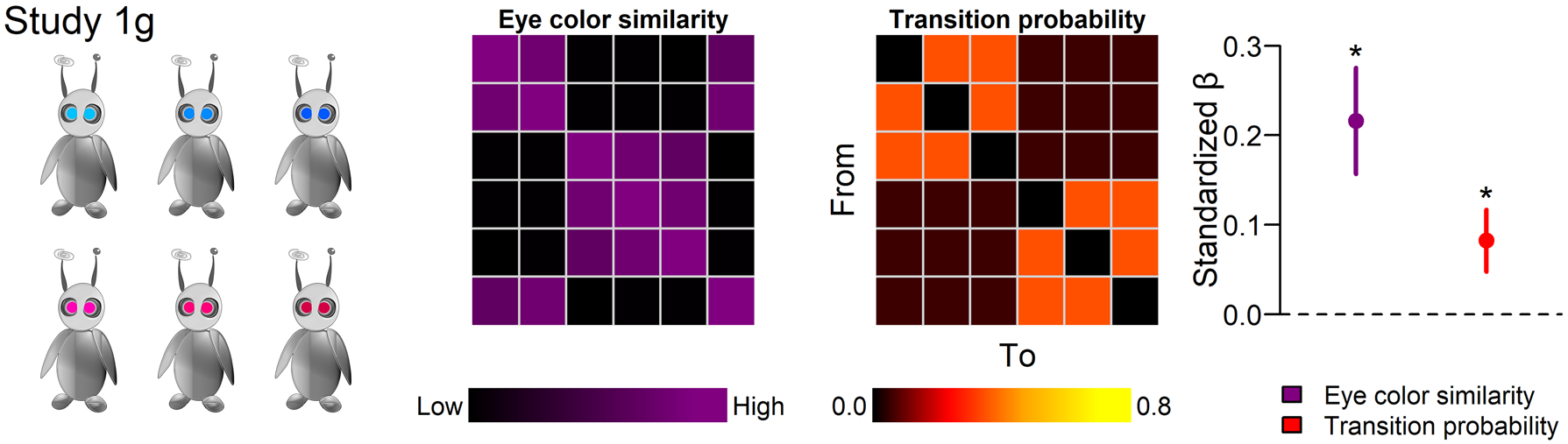

Study 1g featured an additional manipulation. Each mental state was paired with an eye color in a drawing of the alien, in addition to the mental state label. The six states fell into two clusters of eye color: reds and blues (Figure 3). We manipulated the shades of reds and blues such that the ratio of within-to-between cluster distance in CIELAB space matched the ratio of within-to-between cluster transition probabilities in the transition probability matrix. The color clusters and the transition clusters contained different sets of states. As a result, the eye color similarity matrix was approximately orthogonal to the transition probability matrix (Figure 3). This allowed us to independently assess the effects of the static feature of eye color and the dynamics of transition probabilities. The eye colors were paired with the state labels throughout training, but not in the subsequent similarity rating phase of the experiment, where participants saw only the labels.

Figure 3. Static and dynamic mental state features jointly shape mental state concepts.

Study 1g manipulated a static feature of mental states (associated eye color) independently of transition dynamics. Heatmaps visualize the competing predictions about state similarity made by eye color and transition probabilities. Both eye color and transition probabilities were statistically significant predictors of similarity judgements, as indicated by standardized betas with confidence intervals in the dot plot.

In Part 2 of the study, after the training phase, participants rated the similarity between pairs of states using a continuous line scale (Figure 1). With six states, there were 15 unique pairs for participants to rate – in random order – in Studies 1a-b. Starting in Study 1c, participants rated both “directions” of similarity (e.g., “How similar is state A to state B?” and “How similar is state B to state A?”) in two blocks, with randomized order within each. This increased the amount of data available for analysis and allowed us to study asymmetric transitions. We never asked participants to rate how similar a state was to itself. Following the similarity rating task, participants reported their demographics and were debriefed.

Statistical analysis.

Effect of dynamics on conceptual structure.

The primary hypothesis examined across all studies was that mental state dynamics causally shape the structure of mental state concepts. To test this hypothesis, we fit linear mixed effects models in which participants’ judgements of the conceptual similarity between states were predicted by the transition probabilities between those states, which we manipulated. Specifically, we computed the transition probabilities that each participant actually observed and used these as the independent variable. Diagonal components of the transition probability matrix, which represent a state recurring immediately after an instance of the same state, were excluded from the model.
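One way to compute a participant's observed transition probabilities is to row-normalize the transition counts in their sequence and then keep only the off-diagonal cells. Row normalization and the helper names here are assumptions made for illustration.

```python
from collections import Counter


def observed_transition_probs(seq, n_states):
    """Row-normalized transition probabilities observed in one participant's sequence."""
    counts = Counter(zip(seq, seq[1:]))
    probs = [[0.0] * n_states for _ in range(n_states)]
    for i in range(n_states):
        row_total = sum(counts[(i, j)] for j in range(n_states))
        if row_total == 0:
            continue  # state i was never followed by another state
        for j in range(n_states):
            probs[i][j] = counts[(i, j)] / row_total
    return probs


def off_diagonal_cells(probs):
    """Map each ordered pair (i, j), i != j, to its transition probability."""
    n = len(probs)
    return {(i, j): probs[i][j] for i in range(n) for j in range(n) if i != j}


seq = [0, 1, 0, 2, 2, 1, 0]
P = observed_transition_probs(seq, n_states=3)
cells = off_diagonal_cells(P)  # the diagonal (e.g., 2 -> 2) is dropped
```

Each entry of `cells` would then be paired with the corresponding similarity rating and entered, with subject-level random effects, into the mixed effects model (fit in the study with lme4 in R).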

In addition to the fixed effect of transition probability, we included a random intercept for subject, and a random slope for transition probability within subject. Study 1 included random effects to account for the nonsense words used to label each mental state. These effects included random intercepts for each of the two states being compared and for the interaction between them. However, we observed that these item effects accounted for zero variance, so they were dropped in subsequent studies.

We ran these linear mixed effects models with the “lme4” package (Bates et al., 2015) in R (R Core Team, 2015). Statistical significance was computed using the Satterthwaite approximation for degrees of freedom implemented in the “lmerTest” package (Kuznetsova et al., 2017). In Studies 1a-d, we preregistered this analysis as our primary confirmatory hypothesis test. Several additional exploratory analyses were also listed (see Supplemental Materials).

Testing the effect of dynamics on geometric representations.

Study 1f examined three potential mechanisms by which mental state dynamics might be translated into mental state concepts. We hypothesized that people translate mental state dynamics into concepts by constructing a geometric representational space. Such a space could be constructed by moving states close to states to which they tend to transition. Proximity within this space would efficiently encode dynamics while also serving as the basis for conceptual judgements. Encoding dynamics via proximity also distinguishes this geometric account from more generic spatial representations, since any matrix can be represented spatially given sufficient dimensions.

The transition probability matrix we used in Study 1f was well suited to testing this geometric account. The matrix could be represented geometrically by a ring structure (i.e., a circle), in which transitions were likely around the circle, but not across its face (Figure 4). To quantify the predictions of the geometric account, we computed the Euclidean distances between the six points on this circle, which represented the six alien mental states. To aid model convergence and comparison, we amended our registered plans and rescaled these distances to a [0, 1] interval. We then fit a mixed effects model containing this geometric distance predictor as well as the observed transition probabilities (together with random slopes for each predictor within participant and a participant random intercept, as in all the other mixed effects models). If the geometric predictor in this model was significant, this would corroborate the hypothesis that people translate mental state dynamics into concepts by using the dynamics to construct a low-dimensional representational space.
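A sketch of the geometric predictor for six states evenly spaced on a circle. The text reports only that distances were rescaled to [0, 1]. Dividing by the largest distance (the diameter) and converting to proximity (1 minus scaled distance, so that larger values mean closer states) are assumptions made here for illustration.

```python
import math


def ring_proximity(n_states=6):
    """Proximity between states placed evenly around a unit circle.

    Euclidean (chord) distances are divided by the largest distance (the
    diameter) so they fall in [0, 1]; proximity = 1 - scaled distance, so
    larger values mean closer, and predicted-more-similar, states.
    """
    points = [(math.cos(2 * math.pi * k / n_states), math.sin(2 * math.pi * k / n_states))
              for k in range(n_states)]
    dist = [[math.dist(a, b) for b in points] for a in points]
    d_max = max(max(row) for row in dist)
    return [[1 - d / d_max for d in row] for row in dist]


prox = ring_proximity()
print([round(p, 3) for p in prox[0]])  # [1.0, 0.5, 0.134, 0.0, 0.134, 0.5]
```

Only the off-diagonal part of `prox` would enter the model, since self-similarity was never rated.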

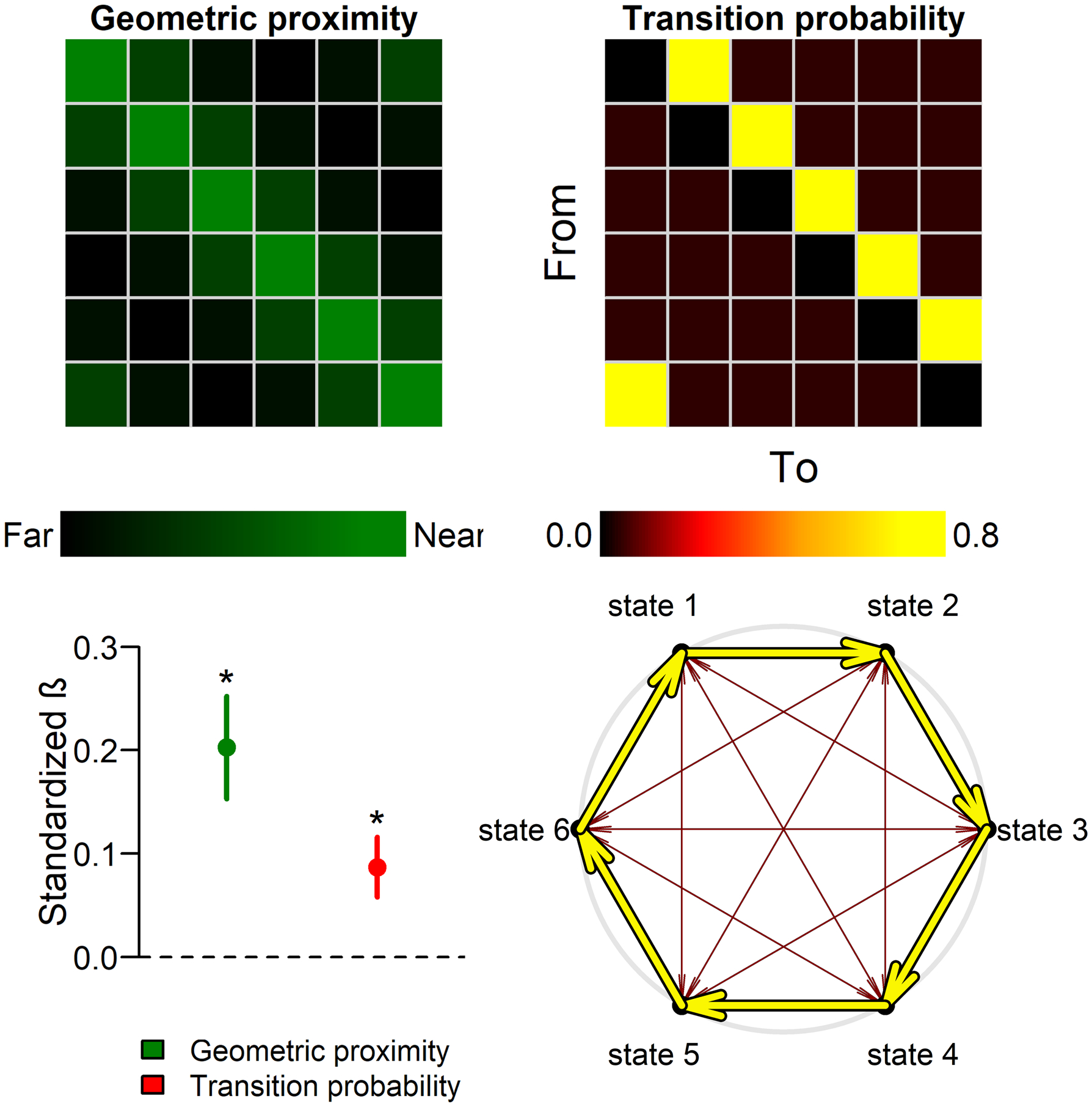

Figure 4. People use a geometric space to translate state dynamics into concepts.

Study 1f tested the hypothesis that dynamics are translated into concepts by embedding states in a geometric space where proximity encodes transition probabilities (bottom right). This model (top left) predicted conceptual similarity more effectively than either the successor representation (not pictured) or the raw transition probabilities (top right).

We also considered two potential alternative models. First, we considered the possibility that participants directly convert transition probabilities into conceptual similarity without any alteration. The analyses described in the previous section – performed for all studies, including 1f – tested whether transition probabilities and conceptual similarity are correlated. Rejection of the null in those analyses would thus corroborate the ‘direct translation’ account (i.e., that there is a direct, linear association between transition probabilities and conceptual similarity). The analysis described in the previous paragraph provides a second test of this direct translation account: if only the transition probabilities, and not the geometric predictor, significantly predicted conceptual similarity, then this would further corroborate the direct translation account. We also conducted a third analysis to test the direct translation account. We hypothesized that this account would fail if participants symmetrized their conceptual similarity judgements. Transition probabilities in this study were highly asymmetric. For example, state A might always precede state B (but never the reverse). The geometric account would predict that participants would judge A similar to B and that they would judge B similar to A. In contrast, the direct translation account would predict asymmetric similarity judgements.

To test for this symmetrizing effect, we mirror-reflected the observed transition probabilities across the diagonal of the transition probability matrix. For example, if the original probabilities were p(A → B) = 100% and p(B → A) = 0%, then in the mirror version p(A → B) = 0% and p(B → A) = 100%. This mirror term was included in a mixed effects model alongside the original transition probabilities. We tested each coefficient against zero using the Satterthwaite approximation. If the mirror term was significant, this would indicate that participants were indeed symmetrizing the transitions, thereby both further supporting the geometric account and falsifying the direct translation account. We compared the mirrored and unmirrored coefficients to each other using bootstrapping. Because all bootstrapped estimates shared the same sign, we used percentile bootstrapping rather than the bias-corrected and accelerated bootstrapping we had planned. This analysis allowed us to test whether people symmetrized the transitions when they translated them into concepts. We also conducted an exploratory analysis using a different approach to symmetrizing the transition probabilities (see Supplemental Materials).
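The mirror predictor is the transpose of the transition matrix. A minimal sketch using the idealized Study 1f matrix (80% clockwise, 5% otherwise); in the analysis, the mirror would be taken of each participant's observed matrix, and the function names are hypothetical.

```python
def asymmetric_ring(n=6, p_clockwise=0.80, p_other=0.05):
    """Study 1f-style matrix: high probability only to the next state clockwise."""
    return [[0.0 if i == j else (p_clockwise if j == (i + 1) % n else p_other)
             for j in range(n)]
            for i in range(n)]


def mirror(matrix):
    """Reflect a square matrix across its diagonal: mirror[i][j] = matrix[j][i]."""
    return [list(column) for column in zip(*matrix)]


T = asymmetric_ring()
M = mirror(T)
print(T[0][1], M[0][1])  # 0.8 0.05
print(T[1][0], M[1][0])  # 0.05 0.8
```

For each ordered pair (i, j), the model then receives both T[i][j] and M[i][j] = T[j][i]; a reliable weight on the second predictor means that judgements of how similar i is to j also track transitions from j to i.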

We planned to test a third account for how dynamics might translate into concepts, the successor representation account (Momennejad et al., 2017). This account proposes that people cache the long-run likelihood of moving from one state to another, rather than just the 1-step transition probabilities. If people used such a representation, we would expect that pairs of states linked together by a third state would be similar to each other, even if there were never any direct transitions between them. For example, if A often led to B, and B often led to C, then the successor representation would predict that people judge A similar to C. However, the optimal successor representation proved to be degenerate: the optimal decay parameter caused the successor representation to converge on the raw transition probabilities. It was therefore not distinguishable from the direct translation account. As a result, we did not proceed with analyses comparing this account to others (see Supplemental Materials). We did, however, conduct an exploratory analysis in which we tested a symmetrized version of the successor representation, and it performed substantially better (see Supplemental Materials).
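A sketch of the successor representation with decay parameter gamma. As an assumption consistent with the description above, it is written as the discounted sum of multi-step transition matrices starting at one step, M = T + γT² + γ²T³ + …, truncated after a fixed number of steps. With γ = 0 only the one-step term survives, so M equals the raw transition matrix, which is the degeneracy described above.

```python
def matmul(A, B):
    """Multiply two square matrices given as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def successor_representation(T, gamma, n_steps=50):
    """Truncated SR: sum over t = 1..n_steps of gamma**(t - 1) * T**t."""
    n = len(T)
    M = [[0.0] * n for _ in range(n)]
    power = T  # T**1
    for t in range(1, n_steps + 1):
        weight = gamma ** (t - 1)  # 0.0 ** 0 == 1.0, so gamma = 0 keeps T**1 only
        M = [[m + weight * p for m, p in zip(m_row, p_row)] for m_row, p_row in zip(M, power)]
        power = matmul(power, T)
    return M


# Idealized Study 1f matrix: 80% to the next state clockwise, 5% to each other state.
T = [[0.0 if i == j else (0.80 if j == (i + 1) % 6 else 0.05) for j in range(6)]
     for i in range(6)]

sr_zero = successor_representation(T, gamma=0.0)
sr_half = successor_representation(T, gamma=0.5)
print(sr_zero == T)             # True: with gamma = 0 the SR collapses to the 1-step matrix
print(sr_half[0][2] > T[0][2])  # True: two-step paths such as 0 -> 1 -> 2 now add weight
```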

Dynamic vs. static effects.

Study 1g tested the independent influence of static and dynamic features on people’s mental state concepts. As described above, we assigned eye colors to the alien’s mental states to create a static feature space for the mental states. We first tested whether this static feature had any effect on mental state concepts. We computed the similarity between eye colors as the negative of the distance between them. Amending our analysis plans, we normalized this measure to a [0, 1] scale for easier comparison with the transition probabilities. We entered the color similarity into a mixed effects model predicting similarity ratings, with a random intercept for subject and a random slope for color similarity within subject. If the fixed effect of color was significant, this would indicate that this static feature influences the conceptual similarity between states.
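A sketch of the color-similarity predictor, assuming similarity is the negated Euclidean distance between colors in CIELAB space (the CIE76 ΔE*ab formula), min-max scaled to [0, 1] over the off-diagonal pairs. The Lab coordinates below are hypothetical reds and blues, not the study's stimuli.

```python
import math


def color_similarity(lab_colors):
    """Negated Euclidean (CIE76) distance between Lab colors, min-max scaled to [0, 1]."""
    n = len(lab_colors)
    dist = {(i, j): math.dist(lab_colors[i], lab_colors[j])
            for i in range(n) for j in range(n) if i != j}
    d_min, d_max = min(dist.values()), max(dist.values())
    # Closest pair -> 1.0, farthest pair -> 0.0
    return {pair: (d_max - d) / (d_max - d_min) for pair, d in dist.items()}


# Hypothetical (L*, a*, b*) coordinates: three reds (0-2) and three blues (3-5)
eye_colors = [(50, 60, 40), (50, 65, 45), (50, 55, 45),
              (50, 0, -50), (50, 5, -45), (50, -5, -45)]
sim = color_similarity(eye_colors)
print(sim[(0, 1)] > sim[(0, 3)])  # True: two reds are more similar than a red and a blue
```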

Next, we tested whether mental state dynamics still influence mental state concepts when competing with salient static features. We fit a second mixed effects model, in which we included both the eye color similarities and the transition similarities, with corresponding random effects. If the transition similarity predictor in this model was significant, it would indicate that mental state dynamics causally shape mental state concepts even in the presence of a salient static feature. In addition to testing the significance of each coefficient in this model, we compared the standardized coefficients to each other via bootstrapping of linear regressions. These regressions featured the same predictors as the mixed effects model but excluded random effects to accelerate computation. We deviated from our registered plans by using percentile bootstrapping instead of bias-corrected accelerated bootstrapping.
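A sketch of comparing two standardized coefficients with a percentile bootstrap. It assumes ordinary least squares without random effects (as described above) and resamples individual observations; the actual analysis could instead have resampled participants. Standardized coefficients for two predictors are computed from the pairwise correlations, and the simulated data and all names are hypothetical.

```python
import math
import random
import statistics


def pearson(x, y):
    """Pearson correlation of two equal-length sequences."""
    mx, my = statistics.fmean(x), statistics.fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def standardized_betas(y, x1, x2):
    """Standardized OLS coefficients for y ~ x1 + x2."""
    r_y1, r_y2, r_12 = pearson(y, x1), pearson(y, x2), pearson(x1, x2)
    denom = 1 - r_12 ** 2
    return (r_y1 - r_y2 * r_12) / denom, (r_y2 - r_y1 * r_12) / denom


def percentile_ci_beta_diff(y, x1, x2, n_boot=2000, alpha=0.05, seed=0):
    """Percentile bootstrap CI for beta(x1) - beta(x2), resampling rows with replacement."""
    rng = random.Random(seed)
    n = len(y)
    diffs = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]
        b1, b2 = standardized_betas([y[i] for i in idx],
                                    [x1[i] for i in idx],
                                    [x2[i] for i in idx])
        diffs.append(b1 - b2)
    diffs.sort()
    lower = diffs[int(alpha / 2 * n_boot)]
    upper = diffs[int((1 - alpha / 2) * n_boot) - 1]
    return lower, upper


# Hypothetical data in which color matters more than transitions
rng = random.Random(1)
color = [rng.random() for _ in range(300)]
transition = [rng.random() for _ in range(300)]
rating = [40 * c + 10 * t + rng.gauss(0, 10) for c, t in zip(color, transition)]
ci = percentile_ci_beta_diff(rating, color, transition, n_boot=500)
print(ci)
```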

Results

Mental state transitions shape conceptual similarity.

The primary goal of Studies 1a-g was to test for a causal effect of mental state dynamics on mental state concepts. Participants played the role of xenopsychologists on an expedition to understand the emotions of an alien creature. They first observed a sequence of this alien’s mental states, and then rated the conceptual similarity between these states. If mental state dynamics shape mental state concepts, then transition probability should be positively associated with similarity. This is precisely what we observed across all seven experiments (Figure 2 & Figure S3).

In Studies 1a-b, transitions were structured around two clusters of three states each, with high transition probabilities within each cluster and low transition probabilities across clusters. This structure mimicked the two-cluster structure of emotion transitions observed in human experience sampling data (Thornton & Tamir, 2017). A mixed effects model indicated a statistically significant effect of dynamics on concepts in both Study 1a (b = 39.59, β = .17, t(15.81) = 2.72, p = .015) and Study 1b (b = 36.09, β = .11, t(36.90) = 2.86, p = .0069). Study 1c varied the transition structure, using three clusters of two states instead of two clusters of three. The findings replicated the effects observed in the first two studies (b = 11.93, β = .061, t(86.86) = 2.49, p = .015). Study 1d varied the transition structure again, using a ring-shaped transition structure inspired by the Circumplex Model of affect (Russell, 1980). States had a high probability of transitioning “clockwise” or “counterclockwise” around this ring, but a low probability of transitioning to nonadjacent states across the “face” of the clock. Once again, we observed a significant effect of dynamics on concepts (b = 13.82, β = .090, t(200.25) = 6.06, p = 6.64 × 10−9). In Study 1e, we used a complex transition structure tailored to test a hypothesis regarding second-order transition statistics (see Supplemental Materials; Figure S1). This study also replicated the main effect of dynamics on concepts (b = 19.06, β = .14, t(208.71) = 7.43, p = 2.75 × 10−12). In Study 1f, we returned to the ring structure of Study 1d, this time with the additional constraint that states could transition with high probability only in the “clockwise” direction. Again, we observed a significant effect of transition probabilities on conceptual similarity judgements (b = 17.08, β = .20, t(213.49) = 10.71, p = 1.07 × 10−21). In Study 1g, participants observed a two-cluster structure of emotion transitions, similar to Studies 1a-b. We again replicated the effect of state dynamics on concepts (b = 11.32, β = .073, t(212.18) = 4.11, p = 5.61 × 10−5).

Together, the results of Studies 1a-g provide unanimous support for the hypothesis that mental state dynamics shape mental state concepts. In all seven experiments, we observed statistically significant effects of transition probabilities on similarity ratings. To precisely estimate the size of this effect, we conducted a post-hoc meta-analysis across studies (Figure 2). This analysis indicated that, on average, changing the transition probability from 0% (impossible transition) to 100% (certain transition) would produce a change in similarity of 21.27 on a 100-point continuous line scale (95% bootstrap CI = [12.40, 21.49]). The high consistency of this effect across seven studies – six of them preregistered – provides strong evidence in favor of a dynamic account of mental state concepts.
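Because the meta-analytic slope is expressed per unit of transition probability (0 to 1), the similarity difference it implies between two specific pairs of states is the slope times their difference in transition probability. An illustrative calculation, not a reported result, using the idealized Studies 1a-b values of 2/9 (about 22%) within clusters and 1/9 (about 11%) between clusters:

```python
b_meta = 21.27  # similarity points (100-point scale) per unit of transition probability
p_within, p_between = 2 / 9, 1 / 9  # idealized Studies 1a-b probabilities

predicted_gap = b_meta * (p_within - p_between)
print(round(predicted_gap, 2))  # 2.36
```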

Mental state dynamics are represented in a geometric space.

Studies 1a-g support the hypothesis that mental state dynamics shape mental state concepts. Next, we asked how transitions translate into conceptual similarity. Study 1f examined three possible mechanisms for this translation. The first account – suggested by our previous research – was that people translate mental state dynamics into concepts by embedding states in a low-dimensional geometric space, such that proximity encodes transition probabilities. To test this geometric account, we entered a predictor based on the Euclidean distance between states on a hypothetical ring into a mixed effects model. The results indicated robust support for the geometric account (b = 15.74, β = .25, t(212.00) = 9.98, p = 1.75 × 10−19). Geometric proximity remained a significant predictor when the transition probabilities were included in the model (b = 12.58, β = .20, t(210.90) = 8.06, p = 5.67 × 10−14). This indicates that the geometric account offers unique explanatory power over and above the transition probabilities themselves (Figure 4). This result does not necessarily mean that participants’ representational space was two-dimensional – they could have represented the same geometry in higher dimensions – but it does suggest that their representations can be projected onto a two-dimensional manifold. The transition probabilities also remained a statistically significant predictor, albeit smaller in magnitude (b = 7.26, β = .087, t(234.03) = 5.93, p = 1.07 × 10−8).

The second account we considered was a “direct translation” account in which people would convert transition probabilities directly to similarity ratings without any systematic alteration. Under this account, we would expect similarity ratings to be a direct, linear function of transition probabilities – our default assumption in Studies 1a-e. The primary analyses of all seven studies provided initial support for this account: transition probabilities causally shaped similarity judgements in this manner. However, the asymmetric transition probability matrix used in Study 1f permitted a stronger test of this hypothesis. Specifically, we tested whether people symmetrized their similarity judgements relative to the asymmetric transitions. To do so, we entered both the transition probabilities and their mirror reflection into a mixed effects model. We found that both the transitions (b = 20.25, β = .24, t(212.02) = 10.73, p = 9.53 × 10−22) and their mirror image (b = 13.57, β = .163, t(212.74) = 8.00, p = 8.03 × 10−14) were significant predictors of similarity ratings. The statistical significance of the mirror image predictor indicates that people do indeed symmetrize their conceptual similarity judgements relative to the raw transition probabilities. As a result, the similarity judgements do not reflect unaltered transition probabilities, falsifying the direct translation account.

In addition to falsifying the direct translation account, these analyses provide further insight into the geometric account. Euclidean distance is symmetric – just like people’s similarity judgements – so the geometric account performed better in part because it naturally symmetrized the data. However, the symmetrization was not complete. The raw transition probabilities predicted similarity judgements significantly better than their mirror image (Δb = 6.78, 95% CI = [4.26, 9.24]). This indicates that the conceptual similarity judgements retained some of the asymmetries present in the transition probabilities. This helps to explain why the transition probabilities remained significant – if less important – predictors of similarity when included in a model alongside the geometric distances. Because the geometric account predicted purely symmetric conceptual similarity, it left room for the (asymmetric) transition probabilities to explain the residual asymmetry, allowing them to remain significant.

Importantly, this symmetrization does not completely explain the success of the geometric account. In an exploratory analysis, we tested the geometric account while controlling for both the raw transition probabilities and their mirror image. Even with the mirror image transitions included, the geometric account remained a significant predictor of similarity (b = 9.45, β = .152, t(197.71) = 3.771, p = .00022). This indicates that the geometric account extends beyond the first off-diagonals of the transition matrix: in other words, it explains variance in similarity between mental states that are not directly adjacent to one another on the ring, even though there was no systematic variation in the transition probabilities between nonadjacent states. This provides further, and more specific, evidence for the geometric account.

The third account we considered was the successor representation, which proposes that people cache the long-run likelihood of moving from one state to another, rather than just the 1-step transition probabilities (Dayan, 1993; Momennejad et al., 2017). Like the direct translation account, this account makes asymmetric predictions, but it also predicts a graded drop-off in the similarity between states in the direction of likely transition around the ring. A decay parameter determines how heavily to weigh near-term vs. far-term transitions when generating a successor representation. We fit this decay parameter to the similarity ratings but found that the optimal value was zero (see Supplemental Materials). This indicates that only the 1-step transitions should be included in the successor representation, and not any later steps. As a result, the successor representation was identical to the direct translation account described above. We therefore did not test this account separately, since the result would have been redundant.

Together, the results of Study 1f support the hypothesis that people efficiently encode mental state dynamics by arranging states within a geometric space. However, they also demonstrate that people retain additional dynamic information – such as asymmetries – that such a space cannot fully encode on its own.

The effect of state dynamics is robust to static features.

Study 1g tested whether dynamics shape concepts even when forced to compete with a strong signal from a static state feature: alien eye color. Each state was associated with a unique color, in addition to its specific transition profile (Figure 3). The manipulations of transitions and eye color were approximately orthogonal, allowing us to test the independent influence of both dynamic and static features of states on concepts. A mixed effects model containing both predictors indicated significant effects of both transitions (b = 12.64, β = .082, t(210.36) = 4.69, p = 4.98 × 10−6) and eye color (b = 15.35, β = .22, t(208.08) = 7.18, p = 1.19 × 10−11) on similarity judgements. Eye color was a significantly stronger predictor of similarity ratings than transitions (Δβ = .13, 95% CI = [.10, .16]). Static features thus had a significant effect, supporting the implicit assumption in the field that such features shape mental state concepts. At the same time, the results of Study 1g show that dynamic influences on mental state concepts can coexist with even highly salient static features.

Discussion

Studies 1a-g establish three important findings. First, all seven experiments corroborate the primary hypothesis that mental state dynamics causally shape the structure of mental state concepts. Second, Study 1f provides insight into the mechanism by which observed dynamics translate into conceptual representations. We found evidence that the mind represents these states using a geometric space, within which more proximal states have higher transition probabilities between them. Study 1f does not exhaust all of the possible mechanisms by which people could translate dynamics into concepts, but it does suggest that conceptual similarity is not purely a linear function of transition probabilities. Third, Study 1g tested whether the dynamic account of mental state concepts could survive the introduction of salient, reliable static cues to mental state similarity. Such static features are doubtless present in the real world, so it is important to test whether they completely override dynamic information. We found that dynamics continued to influence mental state concepts, even in the face of this competing static influence (which also shaped conceptual similarity). Together, these findings present strong evidence that the way we think about thoughts and feelings may be shaped, at least in part, by how those states typically change over time.

Studies 1a-g all used variants of the same basic experimental paradigm. This paradigm granted us complete experimental control over participants’ experiences with the mental states they learned about. The paradigm included an engaging scenario to increase participant “buy-in,” as well as an illustration of the alien that participants were learning about, to give them something to imagine. This sort of social-affective framing is common and effective across a wide range of established paradigms. For example, in the false-belief/false-photo task commonly used in fMRI research on theory of mind, the experimental and control conditions are deliberately identical in logical structure, and similar in terms of low-level features such as word lengths (Dodell-Feder et al., 2011; Saxe & Kanwisher, 2003). The only difference between these conditions is the social framing of the false-belief condition versus the nonsocial framing of the false-photo condition. Despite this minimal difference, this task is so reliable that it is routinely used as a localizer for social brain regions. More generally, research on anthropomorphism illustrates how readily humans will spontaneously imbue even clearly mindless systems with a human-like mind, in an attempt to better understand their environment (Waytz et al., 2010). This established literature thus supports the face validity of the social framing in these studies.

A deeper form of validity was established by the statistical structures we imposed on the task. In Studies 1a, 1b, and 1g, we created a two-cluster transition probability matrix that closely resembles the true structure of emotion transitions observed from experience sampling (Thornton & Tamir, 2017). In Studies 1d and 1f, we used a ring-based transition structure that closely resembles the conceptual structure of the Circumplex Model (Russell, 1980). Thus, in addition to the social framing of the task, we also specifically examined participants’ ability to learn concepts from mental state transition structures similar to those that they might actually encounter in their everyday lives.

Despite these efforts toward naturalism, Studies 1a-g lacked many characteristics of perceiving emotions in everyday life. For example, we used arbitrary words and a small number of featureless discrete states to represent the alien’s mental experiences. We presented these mental states to participants completely explicitly, but in the real world, people’s mental states are rarely so transparent. Rather, people must often use a combination of noisy indicators, such as facial expressions, tone of voice, and context, to infer what others may be thinking or feeling (Barrett et al., 2011; Zaki et al., 2009). Moreover – although the debate over the discrete vs. continuous nature of emotions continues (Barrett et al., 2018; Cowen & Keltner, 2018) – it seems likely that at least some aspects of affective experience are continuous, rather than discrete.

In principle, one could address these problems by running a fully naturalistic version of Study 1, in which participants observe other people’s emotions change over time. However, people’s prior knowledge and beliefs about emotions make it unlikely that we could manipulate the corresponding concepts through a short statistical learning task. Indeed, it would be worrying if we could substantially alter a person’s concept of happiness in a 20-minute online study. Due to this practical barrier, Studies 2 and 3 adopt different approaches to address these limitations and connect our findings back to the world of actual human thoughts and feelings. Study 2 does this by reintroducing many important features of human mental states into our xenopsychology paradigm. Study 3 complements this by examining real human mental state dynamics, but through the lens of an artificial, rather than human, perceiver.

Study 2

Study 2a – and its preregistered replication, Study 2b – introduced two key elements of real-world human mental states back into a modified version of the statistical learning task from Study 1. First, to better mirror the process of real-world mental state inference, we made the alien’s states latent, rather than directly observable. Specifically, the colors of the alien’s eyes became noisy indicators of its hidden mental states. Second, the alien’s latent states became continuous, rather than discrete. That is, the alien’s actual state was represented by numerical coordinates within a continuous state space, rather than by a discrete word. Within this space, the alien’s emotions followed nonlinear paths shaped by a combination of pull toward attractors within the space, momentum, and noise.
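The continuous dynamics can be sketched as a damped, noisy walk whose target attractor switches according to a two-cluster transition rule. Every numeric choice here is an assumption for illustration: the attractor coordinates in the CIELAB a*-b* plane, the switching probabilities, the fixed switching interval, and the gravity, momentum, and noise parameters. The sketch also omits the separate noisy observation process that produced the visible left and right eye colors.

```python
import random

# Hypothetical attractor locations (a*, b*) for four latent states.
# States 0 and 1 form one cluster, states 2 and 3 the other.
ATTRACTORS = [(-40.0, -30.0), (-40.0, 30.0), (40.0, 30.0), (40.0, -30.0)]
P_WITHIN, P_BETWEEN = 0.8, 0.1  # hypothetical; P_WITHIN + 2 * P_BETWEEN == 1


def next_attractor(current, rng):
    """Switch to the other state in the same cluster (likely) or to a state in the other cluster."""
    partner = current ^ 1  # 0 <-> 1, 2 <-> 3
    others = [k for k in range(4) if k not in (current, partner)]
    return rng.choices([partner] + others, weights=[P_WITHIN, P_BETWEEN, P_BETWEEN])[0]


def simulate(n_frames, switch_every, gravity=0.05, momentum=0.9, noise_sd=1.0, seed=0):
    """Latent trajectory: pull toward the active attractor, plus momentum and Gaussian noise."""
    rng = random.Random(seed)
    state = 0
    x, y = ATTRACTORS[state]
    vx = vy = 0.0
    path = []
    for frame in range(n_frames):
        if frame > 0 and frame % switch_every == 0:
            state = next_attractor(state, rng)
        tx, ty = ATTRACTORS[state]
        vx = momentum * vx + gravity * (tx - x) + rng.gauss(0.0, noise_sd)
        vy = momentum * vy + gravity * (ty - y) + rng.gauss(0.0, noise_sd)
        x, y = x + vx, y + vy
        path.append((state, x, y))
    return path


trajectory = simulate(n_frames=600, switch_every=60)
```

With momentum 0.9 and gravity 0.05 the update is a stable damped oscillator, so the latent position overshoots and curves toward each new attractor rather than jumping to it, producing nonlinear paths.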

These modifications to the paradigm allowed Study 2 to achieve three main goals. First, we aimed to replicate the findings from Study 1, showing that transitions between states causally shape mental state concepts when these more naturalistic elements are reintroduced. Second, we aimed to understand how state concepts come into being in the first place. We tested an account of how discrete state concepts could emerge from observation of the dynamics of a continuous underlying state space. Third, we aimed to understand how dynamic and static features of states complement each other in the development of mental state concepts. We hypothesized that static features may provide “orientation” (e.g., positive vs. negative valence) to the otherwise orientation-free conceptual space learned through transition dynamics. Achieving these goals would both strengthen and deepen the insights we arrived at in Study 1.

Methods

Transparency and openness.

Study 2a was a pilot study meant to establish a new behavioral paradigm. Study 2b was a direct replication of Study 2a, and was preregistered on the Open Science Framework (https://osf.io/96twz). Deviations from our preregistered plans are detailed where applicable. For all studies, we report how we determined sample size, all data exclusions, all manipulations, and all measures. All statistical tests were two-tailed. All data and code from these experiments are freely available on the Open Science Framework (https://osf.io/4m9kw/).

Participants.

In Study 2a we targeted the same sample size that we had converged on in Study 1: 212. Ultimately we recruited 207 (74 women and 133 men; mean age = 38.91; age range = 20–71) participants from Amazon Mechanical Turk using TurkPrime (Litman et al., 2017), with study availability limited to workers from the USA with 95%+ positive feedback and who passed TurkPrime’s quality filter.

Based on the results of Study 2a, we conducted a parametric power analysis in R (R Core Team, 2015). This power analysis used the participant-level Pearson correlations between participants’ state similarity judgements and the transition probabilities they observed. The correlations were Fisher z-transformed and then entered into the one-sample t-test function from the ‘pwr’ package (Champely et al., 2018). The results indicated that a sample size of 237 participants would provide greater than 99% power for our primary hypothesis test. An analogous power analysis targeting the correlation between transition probabilities and valence was also conducted, and a sample size of 237 was found to offer greater than 95% power for this hypothesis test as well. We therefore targeted a sample of 240 participants for Study 2b to allow for exclusions.
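A rough sketch of the power calculation described above. Per-participant correlations are Fisher z-transformed and converted to a one-sample effect size d (mean divided by standard deviation), and power is computed for a two-sided one-sample test. The ‘pwr’ package uses the noncentral t distribution; this standard-library sketch substitutes a normal approximation, which slightly overstates power at small n. The pilot correlations are hypothetical.

```python
import math
import statistics

STD_NORMAL = statistics.NormalDist()


def effect_size_from_correlations(rs):
    """Cohen's d for a one-sample test on Fisher z-transformed correlations."""
    zs = [math.atanh(r) for r in rs]
    return statistics.fmean(zs) / statistics.stdev(zs)


def approx_power(d, n, alpha=0.05):
    """Normal approximation to the power of a two-sided one-sample t-test."""
    z_crit = STD_NORMAL.inv_cdf(1 - alpha / 2)
    shift = d * math.sqrt(n)
    return STD_NORMAL.cdf(shift - z_crit) + STD_NORMAL.cdf(-shift - z_crit)


def smallest_n(d, target_power, alpha=0.05):
    """Smallest n whose approximate power reaches target_power."""
    if d == 0:
        raise ValueError("power never exceeds alpha when d = 0")
    n = 2
    while approx_power(d, n, alpha) < target_power:
        n += 1
    return n


pilot_rs = [0.35, 0.10, 0.60, -0.05, 0.30, 0.45, 0.20, 0.00]  # hypothetical
d = effect_size_from_correlations(pilot_rs)
print(round(d, 2), round(approx_power(d, 237), 4))
```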

We ultimately collected a sample of 236 participants from the same pool as Study 2a. Participants from Study 2a were prohibited from participating in Study 2b. After preregistered exclusions (see Supplemental Materials), we were left with a final sample size of 231 (102 women, 124 men, 1 non-binary, 1 other, and 3 preferred not to provide gender; mean age = 40.91; age range = 23–73). See Supplemental Materials for additional demographic information. Participants in both studies provided informed consent in a manner approved by the Dartmouth College Committee on the Use of Human Subjects.

Experimental Paradigm.

Participants were forwarded from TurkPrime to a custom JavaScript-based experiment. The framing of the experiment was almost identical to that used in Study 1: participants adopted the role of a xenopsychologist on a mission to an alien planet to learn about the mental states of the aliens. Unlike in Study 1, participants in Study 2 were not told in advance the names of the alien’s states, nor how many there were. Rather, they were instructed to pay attention to the alien’s changing eye colors to understand the states that it expressed.

During the learning phase of the experiment, participants watched ten 1-minute videos. Each video was framed as a recording of the alien over one day. These videos featured the same alien cartoon as in previous studies. Aliens experienced four mental states, each reflected as a location within color space (blue, green, orange, or pink; Figure 5). Participants needed to infer the latent state space model from eye color. The alien’s eye colors changed continuously, albeit noisily, transitioning between the four latent states as described in the Stimuli section below.

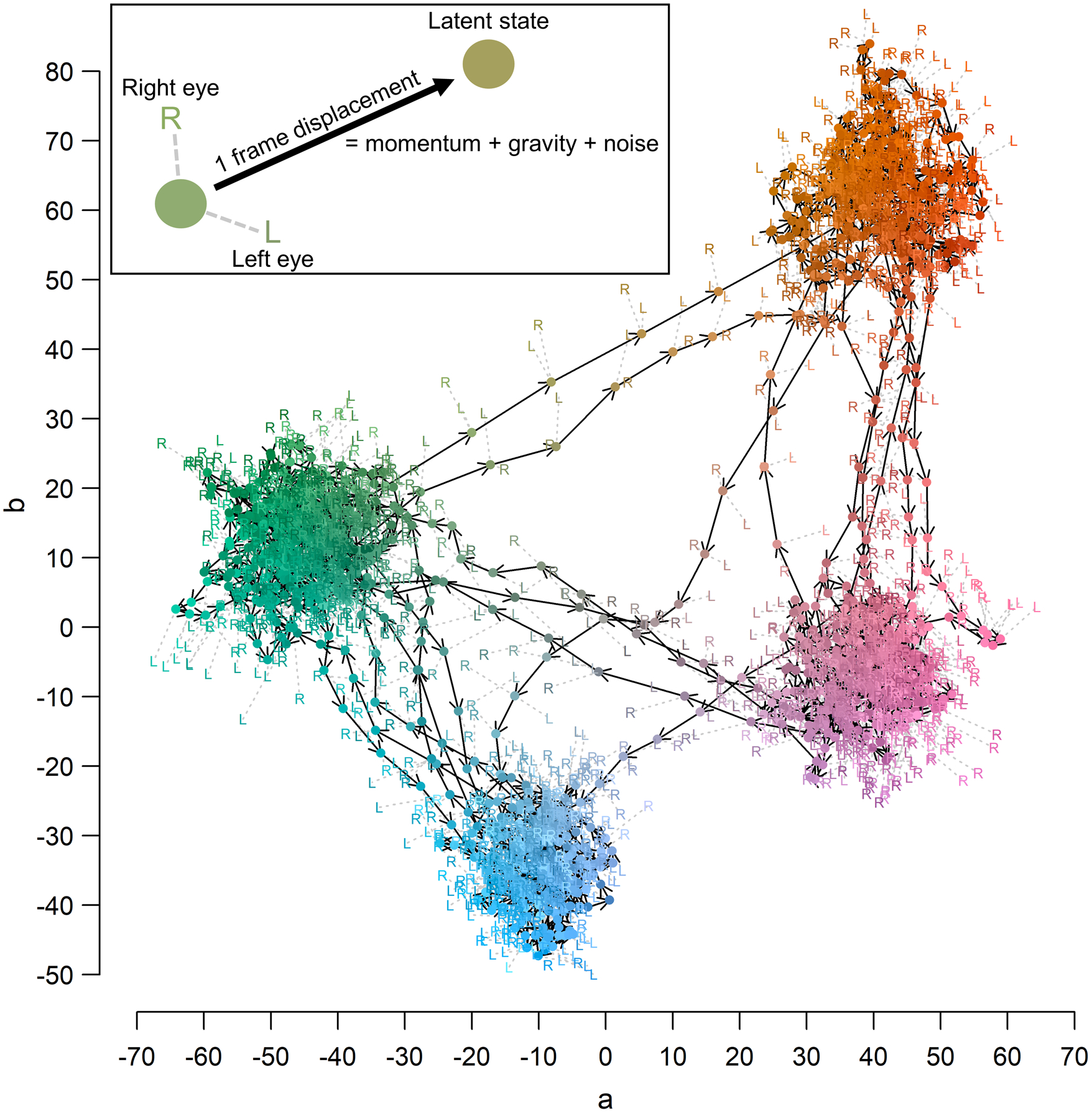

Figure 5. Illustration of an alien’s state space trajectory.

Aliens experienced four mental states, each reflected as a location within color space (blue, green, orange, or pink). States transitioned either to a ‘within cluster’ state (e.g., blue to green) with high probability or to a ‘between cluster’ state (e.g., blue to pink) with low probability. The alien’s latent location at each time point was determined by a combination of “gravitational” attraction to the currently active state color, momentum along its prior trajectory, and Gaussian noise. Each point in the diagram illustrates the alien’s true latent location in CIELAB space in one frame of one stimulus video. The x- and y-axes represent the a and b parameters of CIELAB space, respectively. Each point is rendered in the color corresponding to its position in this 3D space. The locations and colors of the “L”s and “R”s reflect the colors of the alien’s left and right eyes, as observed by participants. These colors are noisy indicators of the alien’s true latent position in the state space at the same time point (indicated by the circle linked to each “L” and “R” by a dashed grey line). The observable eye colors were semi-randomly displaced from the latent coordinates according to their own set of independent dynamics. Arrows indicate the direction of the alien’s movement in state space from one frame to the next.

After each video, participants completed a probe trial in which they were shown a still frame of the alien in one of the four attractor states, randomly selected. They were asked to select one of three other still frames, reflecting the other attractor states, to indicate which they thought would be most likely to follow the target eye color. Additionally, for all videos after the first, participants were asked to press the “1” key on their keyboard whenever they detected a state change during the video. Due to a data collection error, these response times were recorded only in Study 2a.

After completing the learning phase, participants completed a post-test probing how well they had learned the latent state space. First, we asked participants to indicate, in a free response box, how many states the alien had experienced. Next, we told participants that, after conferring with their xenopsychologist colleagues, the team had concluded that the aliens had four different states. We then asked participants to use slider bars to indicate how frequently each of those states occurred with each of the four attractor colors. This procedure allowed us to match the participants’ states to the underlying task states as closely as possible. Each participant state was matched with a task state based on its highest-rated color (e.g., if the slider bar for “green” was highest for the first state, that state was matched with the green attractor state in the task). Ties were broken using the tied states’ second-highest color ratings. For example, if two states were both rated 75% orange, but one state had green (65%) as its second-highest color and the other had pink (46%), then the first state would be matched with the green task state and the second state would be matched with the orange task state.
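The matching rule, including the tie-break illustrated in the example above, can be sketched as follows. This is a hypothetical illustration rather than the authors' code. It assumes that any two participant states sharing the same top-rated color count as tied, and it raises an error for cases the described rule does not cover (three-way ties, equal second-highest ratings, or a tie-break that produces a duplicate match).

```python
def match_states(ratings):
    """Match each participant-defined state to an attractor color.

    ratings: dict mapping state label -> dict mapping color -> slider rating.
    Returns a dict mapping state label -> matched color.
    """
    # Colors ordered from highest to lowest rating, for each state.
    ranked = {s: sorted(r, key=r.get, reverse=True) for s, r in ratings.items()}

    # Group states by their highest-rated color.
    by_top = {}
    for state, colors in ranked.items():
        by_top.setdefault(colors[0], []).append(state)

    assignment = {}
    for color, states in by_top.items():
        if len(states) == 1:
            assignment[states[0]] = color
            continue
        if len(states) != 2:
            raise ValueError(f"more than two states tied on {color!r}")
        a, b = states
        second_a = ratings[a][ranked[a][1]]
        second_b = ratings[b][ranked[b][1]]
        if second_a == second_b:
            raise ValueError(f"tie on {color!r} cannot be broken")
        # The state with the higher second-highest rating moves to that second
        # color; the other state keeps the shared top color.
        mover, keeper = (a, b) if second_a > second_b else (b, a)
        assignment[mover] = ranked[mover][1]
        assignment[keeper] = color

    if len(set(assignment.values())) != len(assignment):
        raise ValueError("matching is not one-to-one")
    return assignment
```

With the ratings from the example (two states at 75% orange, one with green at 65% and one with pink at 46%), the first state is matched with green and the second with orange.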

Using the participant-assigned labels and colors, participants then rated the pairwise similarity between all four states in both directions (i.e., A to B and B to A) on a continuous line scale. On each trial, participants saw the labels they had assigned to the two states in question (e.g., state “X” and state “Y”) and were asked to judge the similarity between them. To help participants remember which state was which, we colored the text of each state name according to the attractor state that had been matched with that name based on participants’ color ratings. This procedure may have increased the impact of the static feature (color) on similarity judgments, relative to the dynamics, because it made color highly salient when participants made these judgments.

Finally, participants answered several questions designed to test whether they treated the alien’s states as mental states per se, or as more domain-general states. First, having been told that the alien experienced four states, participants provided names for those states using free response boxes. Participants also rated the valence of each of the four states on a line scale from negative to positive. They then matched each of the alien’s states to one of four human states (Content, Relaxed, Rage, and Panic) via a drag-and-drop procedure. Finally, they completed a demographic questionnaire and received debriefing and payment information.

Stimuli.

The primary stimuli in the experiment consisted of videos of a grey cartoon alien (as shown in Figure 3) with varying eye colors (as shown in Figure 5). These videos were generated from a 3-dimensional continuous state space model. Within this space, we placed four “attractor” states. Each attractor was “on” for a period of 3 s, during which it exerted a gravitational pull on the alien’s state. After this period had elapsed, a new attractor state began pulling the alien’s state towards it instead, resulting in a transition. The probabilities of these transitions were determined by a predefined transition probability matrix. This transition matrix consisted of two clusters of two attractors each. There was a 60% chance of transitioning to the other attractor in the same cluster, and a 20% chance of transitioning to each of the other two attractors.
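The transition structure above can be written down and sampled as in the sketch below. The state names are hypothetical placeholders, and the sketch assumes that a 60-s video contains exactly 20 attractor epochs (60 s / 3 s), that attractors never transition to themselves (consistent with 60% + 20% + 20% = 100%), and that the first attractor is drawn uniformly at random.

```python
import random

# Hypothetical attractor labels: (A1, A2) form one cluster, (B1, B2) the other.
STATES = ["A1", "A2", "B1", "B2"]
CLUSTER = {"A1": "A", "A2": "A", "B1": "B", "B2": "B"}


def transition_matrix(p_within=0.6, p_between=0.2):
    """Nested dict P[src][dst] with no self-transitions."""
    P = {}
    for src in STATES:
        P[src] = {}
        for dst in STATES:
            if dst == src:
                P[src][dst] = 0.0
            elif CLUSTER[dst] == CLUSTER[src]:
                P[src][dst] = p_within
            else:
                P[src][dst] = p_between
    return P


def sample_sequence(P, n_epochs=20, rng=None):
    """Sample one video's attractor sequence (60 s / 3 s = 20 epochs)."""
    rng = rng or random.Random()
    seq = [rng.choice(STATES)]
    for _ in range(n_epochs - 1):
        row = P[seq[-1]]
        seq.append(rng.choices(list(row), weights=list(row.values()))[0])
    return seq
```

Calling `sample_sequence(transition_matrix())` twenty times would yield a pool of sequences analogous in structure to the 20 unique sequences described below.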

The alien’s latent state traversed the state space in a manner determined by three factors: “gravity” (attraction to whichever of the four attractor states was active, with an effect equal to 10% of the projected distance to that attractor); “momentum” (continuation along its prior trajectory, with an effect proportional to half of its velocity in the previous frame); and 3D Gaussian noise ~N(0, 2). After the alien’s state was initialized at the coordinates of one of the four attractors (randomly chosen), its movement through the space proceeded according to these dynamics until the 60-s (1,440-frame) duration of each video had elapsed (Figure 5).
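One plausible reading of these dynamics is a damped velocity update, sketched below. Several details are assumptions not stated in the text: the "projected distance" is taken to be the displacement vector from the current position to the active attractor, the 2 in N(0, 2) is treated as a standard deviation, the initial velocity is zero, and the video runs at 24 frames per second with 72 frames per attractor epoch.

```python
import random

FPS = 24
FRAMES_PER_VIDEO = 60 * FPS   # 1,440 frames
FRAMES_PER_EPOCH = 3 * FPS    # each attractor is active for 3 s (72 frames)


def simulate_latent(attractor_coords, sequence, gravity=0.1, momentum=0.5,
                    noise_sd=2.0, rng=None):
    """Return one (x, y, z) latent position per frame.

    attractor_coords: dict mapping attractor label -> 3D coordinates.
    sequence: 20 attractor labels, one per 3-s epoch.
    """
    rng = rng or random.Random()
    pos = list(attractor_coords[sequence[0]])  # start at the first attractor
    vel = [0.0, 0.0, 0.0]
    path = []
    for frame in range(FRAMES_PER_VIDEO):
        target = attractor_coords[sequence[frame // FRAMES_PER_EPOCH]]
        # New velocity = gravity toward the active attractor
        #              + momentum from the previous frame + Gaussian noise.
        vel = [gravity * (t - p) + momentum * v + rng.gauss(0.0, noise_sd)
               for t, p, v in zip(target, pos, vel)]
        pos = [p + v for p, v in zip(pos, vel)]
        path.append(tuple(pos))
    return path
```

Without noise, this update converges to the active attractor in a quickly damped spiral, so the noise term is what produces the irregular trajectories visible in Figure 5.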

The alien’s latent state was not directly observable in the videos. Rather, participants had to infer the latent state from the alien’s eyes, whose colors provided noisy manifest indicators of its true state. Both the latent state and these manifest indicators occupied positions in the same 3-dimensional space. On each frame of the video, the coordinates of each eye were independently and semi-randomly displaced from the coordinates of the alien’s latent state. This displacement was governed by its own set of dynamics, similar in concept to, but distinct from, the dynamics of the latent state described above. First, “gravity” attracted the eyes to the latent state, with a force proportional to 10% of the distance from the eye to the latent state. This gravity force kept the eyes bound to the latent state: without it, the eyes would have roamed the state space freely on their own. The gravity force was “unidirectional” (i.e., it attracted the eye to the latent state, but not vice versa). As a result, the latent state influenced the eyes, but the dynamics of the eyes did not influence the trajectory of the latent state. Second, an “inertia” factor provided continuity from one frame to the next: each eye tended to stay in the same location relative to the true state across frames. Finally, random 3D Gaussian noise ~N(0, 2) was added. This noise was independent across the two eyes, and also independent of the noise added to the trajectory of the latent state. The eyes were thus completely independent of each other when conditioned upon the latent state. Together, these features meant that representing the alien’s latent state with maximal accuracy required integrating information over time and across independent information channels. The eyes’ coordinates in the state space were translated into colors to make them directly observable to participants. 
Specifically, the three dimensions of the state space were translated to the three parameters of the CIELAB color space: L(uminance), a, and b. We used the CIELAB space because it is relatively perceptually uniform compared to other common color spaces such as RGB.
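The eye displacement and color rendering steps can be sketched as follows. The offset update is an assumed reading of the text: inertia keeps the previous offset, gravity shrinks it by 10% toward the latent state, and independent noise (2 treated as a standard deviation) is added. Latent coordinates are assumed to be ordered (L, a, b), and the CIELAB-to-sRGB conversion uses the standard D65 formulas with out-of-gamut values clipped; the clipping choice is an assumption, since the text does not say how out-of-gamut colors were handled.

```python
import random


def eye_offsets(n_frames, gravity=0.1, noise_sd=2.0, rng=None):
    """Offset of one eye from the latent state on each frame."""
    rng = rng or random.Random()
    offset = [0.0, 0.0, 0.0]
    out = []
    for _ in range(n_frames):
        # Inertia keeps the previous offset; gravity pulls it 10% back
        # toward the latent state; independent Gaussian noise is added.
        offset = [(1 - gravity) * o + rng.gauss(0.0, noise_sd) for o in offset]
        out.append(tuple(offset))
    return out


def lab_to_hex(L, a, b):
    """Convert a CIELAB color (D65 white point) to an sRGB hex string."""
    delta = 6 / 29

    def f_inv(t):
        return t ** 3 if t > delta else 3 * delta ** 2 * (t - 4 / 29)

    fy = (L + 16) / 116
    fx = fy + a / 500
    fz = fy - b / 200
    X, Y, Z = 0.95047 * f_inv(fx), 1.0 * f_inv(fy), 1.08883 * f_inv(fz)
    linear = (3.2406 * X - 1.5372 * Y - 0.4986 * Z,
              -0.9689 * X + 1.8758 * Y + 0.0415 * Z,
              0.0557 * X - 0.2040 * Y + 1.0570 * Z)

    def gamma(c):
        c = min(max(c, 0.0), 1.0)  # clip out-of-gamut values
        return 12.92 * c if c <= 0.0031308 else 1.055 * c ** (1 / 2.4) - 0.055

    return "#" + "".join(f"{round(255 * gamma(c)):02X}" for c in linear)


def eye_colors(latent_path, rng=None):
    """Left and right eye hex colors for each frame of a latent trajectory."""
    rng = rng or random.Random()
    left = eye_offsets(len(latent_path), rng=rng)
    right = eye_offsets(len(latent_path), rng=rng)
    frames = []
    for lat, lo, ro in zip(latent_path, left, right):
        frames.append((lab_to_hex(*[x + o for x, o in zip(lat, lo)]),
                       lab_to_hex(*[x + o for x, o in zip(lat, ro)])))
    return frames
```

Drawing separate offset sequences for the two eyes is what makes them conditionally independent given the latent state, as described above.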

Using the attractor state transition matrix described above, we generated 20 unique sequences of attractor states. We chose locations in the state space for each attractor by selecting four colors from a colorblind-friendly palette, yielding blue: #56B4E9, green: #009E73, orange: #D55E00, and pink: #CC79A7 (Wong, 2011). These attractor colors were randomized with respect to the transition probabilities of the attractors. For example, sometimes blue and green were in the same attractor cluster and had a high transition probability between them, as shown in Figure 5, and sometimes they were in different clusters and had a low transition probability between them. For each of the 20 unique sequences of attractor states, we generated 24 different videos, corresponding to all 24 possible permutations of the attractor colors with respect to the transition probability matrix. During the learning phase of the experiment, each participant saw only 1 of the 24 different permutations of attractor colors and transition probabilities. Within this permutation, they viewed 10 randomly selected videos from the 20 possible. As a result, each participant had a unique experience of the alien’s underlying dynamics. Although the attractor state transitions were identical in each of the 24 variants of the same sequence, the movement of the alien through state space was randomly different in each variant due to the different noise applied to the alien’s latent state trajectory, and the additional noise applied to its eye color displacements. Each frame of the video was rendered as an image based upon the alien illustration (as shown in Figure 3) with edited eye colors. The resulting frame images were rendered into mp4 video files with 500 × 1080 pixel resolution at 24 frames per second using ffmpeg (https://ffmpeg.org/). These videos were then viewed by participants in the experimental paradigm described in the previous section.
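The counterbalancing scheme can be illustrated with a short sketch. It assumes attractor slots 0 and 1 form one cluster and slots 2 and 3 the other; the function names are hypothetical. Enumerating all 4! = 24 color assignments shows that they collapse onto three distinct pairings of colors into high-probability clusters, each occurring in 8 of the 24 permutations.

```python
import random
from itertools import permutations

COLORS = ("blue", "green", "orange", "pink")
# Attractor slots in the transition matrix: slots 0-1 form one cluster, 2-3 the other.
CLUSTERS = ((0, 1), (2, 3))


def color_assignments():
    """All 4! = 24 ways of assigning the four colors to the four attractor slots."""
    return list(permutations(COLORS))


def within_cluster_pairs(assignment):
    """Color pairs sharing a cluster (high transition probability) under an assignment."""
    return frozenset(frozenset(assignment[i] for i in cl) for cl in CLUSTERS)


def participant_design(n_sequences=20, n_videos=10, rng=None):
    """One color permutation per participant, plus 10 of the 20 sequences."""
    rng = rng or random.Random()
    assignment = rng.choice(color_assignments())
    videos = rng.sample(range(n_sequences), n_videos)
    return assignment, videos
```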

Statistical analysis.

Testing dynamic and static influences on conceptual similarity.

To test our primary hypothesis, that mental state transition dynamics shape conceptual similarity, we fit a linear mixed effects model predicting participants’ similarity ratings from the transition probabilities that they had observed in the learning phase. This model included a random intercept for participant, and a random slope for transition probabilities within participant. As in Study 1, we expected higher transition probabilities to predict higher similarity ratings.
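A model of this form can be sketched in Python with statsmodels, used here as a stand-in for whatever mixed-model software the authors used. The data are simulated and hypothetical: 12 ordered state pairs per participant, with observed transition probabilities jittered around the nominal 60% and 20% values because sampled sequences never match the matrix exactly.

```python
import numpy as np
import pandas as pd
import statsmodels.formula.api as smf


def simulate_ratings(n_participants=40, seed=0):
    """Hypothetical long-format data: 12 ordered state pairs per participant."""
    rng = np.random.default_rng(seed)
    # Nominal probabilities for the 12 ordered pairs of 4 states (4 within, 8 between cluster).
    nominal = np.array([0.6, 0.2, 0.2] * 4)
    rows = []
    for pid in range(n_participants):
        intercept = 30 + rng.normal(0, 5)   # participant-specific baseline
        slope = 40 + rng.normal(0, 10)      # participant-specific effect of transitions
        observed = np.clip(nominal + rng.normal(0, 0.05, nominal.size), 0, 1)
        for tp in observed:
            rows.append({"participant": pid,
                         "transition_prob": tp,
                         "similarity": intercept + slope * tp + rng.normal(0, 10)})
    return pd.DataFrame(rows)


def fit_model(df):
    """Random intercept and random transition-probability slope per participant."""
    model = smf.mixedlm("similarity ~ transition_prob", df,
                        groups=df["participant"], re_formula="~transition_prob")
    return model.fit()
```

The fixed-effect estimate `fit_model(df).fe_params["transition_prob"]` corresponds to the population-level association tested here; a positive value indicates that higher observed transition probabilities go with higher similarity ratings.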

We also fit two variants of this model. In the first variant, we substituted the visual similarity between the attractor states in CIELAB space (measured via reverse-coded Euclidean distance) for the transition probabilities in the initial model. This model allowed us to test the impact of static features (i.e., eye colors) on conceptual similarity, replicating the results of Study 1g.
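The visual similarity predictor can be sketched by converting the four palette colors to CIELAB (standard sRGB to XYZ to Lab formulas, D65 white point) and taking negative Euclidean distances. Reverse coding by negation is an assumption; the text does not specify the exact transformation, though any linear reversal leaves the magnitude of a regression slope unchanged.

```python
import math
from itertools import permutations


def hex_to_lab(hex_color):
    """Convert an sRGB hex string to CIELAB (D65 white point)."""
    r, g, b = (int(hex_color[i:i + 2], 16) / 255 for i in (1, 3, 5))

    def linearize(c):
        return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

    r, g, b = linearize(r), linearize(g), linearize(b)
    X = 0.4124 * r + 0.3576 * g + 0.1805 * b
    Y = 0.2126 * r + 0.7152 * g + 0.0722 * b
    Z = 0.0193 * r + 0.1192 * g + 0.9505 * b
    delta = 6 / 29

    def f(t):
        return t ** (1 / 3) if t > delta ** 3 else t / (3 * delta ** 2) + 4 / 29

    fx, fy, fz = f(X / 0.95047), f(Y / 1.0), f(Z / 1.08883)
    return (116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz))


# Attractor colors from the Wong (2011) palette, as listed in the Stimuli section.
PALETTE = {"blue": "#56B4E9", "green": "#009E73", "orange": "#D55E00", "pink": "#CC79A7"}


def visual_similarity(palette=PALETTE):
    """Reverse-coded (negated) Euclidean distance in CIELAB for each ordered color pair."""
    lab = {name: hex_to_lab(h) for name, h in palette.items()}
    return {(a, b): -math.dist(lab[a], lab[b]) for a, b in permutations(lab, 2)}
```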

In the second variant, we included three additional covariates that we anticipated might affect similarity judgements: the visual similarity between the attractor state colors; how often each color actually co-occurred with each attractor state (these co-occurrences vary because of the noisy, nonlinear dynamics of the alien’s state trajectories); and the typical “times of day” (i.e., points within the video) at which each state occurred (i.e., the frame-by-state co-occurrence matrix). To make predictions about similarity ratings from each of these three covariates, we computed the reverse-coded Euclidean distances between the four attractor states with respect to each variable. The resulting linear mixed effects model contained these three covariates, plus transition probabilities, as fixed effects. The random effects included an intercept for participant, and slopes for transition probability and visual similarity within participant. An additional variant included a learning performance moderator (see Supplemental Materials). We expected that transition probabilities and visual color similarity would both continue to predict conceptual similarity, even when included in the same model as each other and the other covariates.
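The co-occurrence covariates can be sketched with one generic helper that turns any state-by-feature matrix into reverse-coded distances between states. The time-of-day profile below counts, for each state and frame index, how many of a participant's videos had that state's attractor active, assuming 72 frames per 3-s epoch and sequences of equal length; building the state-by-color matrix would additionally require classifying each frame's eye color, which is not shown. The formula strings are hypothetical and follow statsmodels-style syntax.

```python
import math
from itertools import permutations


def reverse_coded_distances(profiles):
    """Negated Euclidean distance between every ordered pair of state profiles.

    profiles: dict mapping state -> sequence of feature values
    (one row of a state-by-feature co-occurrence matrix).
    """
    return {(a, b): -math.dist(profiles[a], profiles[b])
            for a, b in permutations(profiles, 2)}


def time_of_day_profiles(sequences, frames_per_epoch=72):
    """State-by-frame co-occurrence counts across the videos a participant watched."""
    n_frames = len(sequences[0]) * frames_per_epoch
    states = sorted({s for seq in sequences for s in seq})
    counts = {s: [0] * n_frames for s in states}
    for seq in sequences:
        for frame in range(n_frames):
            counts[seq[frame // frames_per_epoch]][frame] += 1
    return counts


# Hypothetical fixed- and random-effects specifications for the covariate model.
FIXED_EFFECTS = ("similarity ~ transition_prob + visual_sim"
                 " + color_cooccurrence_sim + time_of_day_sim")
RANDOM_EFFECTS = "~transition_prob + visual_sim"
```

The same `reverse_coded_distances` helper applies unchanged to a state-by-color count matrix, e.g. `{"blue": [120, 30, 5, 2], ...}` with hypothetical counts, so all three covariates share one distance definition.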