Abstract

Introduction

Previous reviews on active learning in dental education have not comprehensively summarized the research activity on this topic, as they have largely focused on specific active learning strategies. This scoping review aimed to map the breadth and depth of the research activity on active learning strategies in undergraduate classroom dental education.

Methods

The review was guided by Arksey & O’Malley’s multi-step framework and followed the PRISMA Extension for Scoping Reviews (PRISMA-ScR) guidelines. The MEDLINE, ERIC, EMBASE, and Scopus databases were searched from January 2005 to October 2022. Peer-reviewed primary research articles published in English were selected. Reference lists of relevant studies were checked to improve the search. Trained researchers independently screened titles and abstracts (two reviewers) and full-text articles (three reviewers) for eligibility, and two researchers independently extracted the relevant data.

Results

In total, 93 studies were included in the review. All studies performed outcome evaluations, including reaction evaluation alone (n = 32; 34.4%), learning evaluation alone (n = 19; 20.4%), and reaction and learning evaluations combined (n = 42; 45.2%). Most studies used quantitative approaches (n = 85; 91.4%), performed post-intervention evaluations (n = 70; 75.3%), and measured student satisfaction (n = 73; 78.5%) and knowledge acquisition (n = 61; 65.6%) using direct and indirect (self-report) measures. Only 4 studies (4.3%) reported faculty data in addition to student data. Flipped learning, group discussion, problem-based learning, and team-based learning were the active learning strategies most frequently evaluated (≥6 studies each). Overall, most studies found, on the basis of both direct and indirect outcome measures, that active learning improved satisfaction and knowledge acquisition and was superior to traditional lectures.

Conclusion

Active learning has the potential to enhance student learning in undergraduate classroom dental education; however, robust process and outcome evaluation designs are needed to demonstrate its effectiveness in this educational context. Further research is warranted to evaluate the impact of active learning strategies on skill development and behavioral change in order to support the competency-based approach in dental education.

Introduction

Active learning (AL) has been broadly defined as a type of learning that demands the active gathering, processing, and application of information rather than the passive assimilation of knowledge [1]. This form of learning is well aligned with principles of adult learning, including self-direction, purposefulness, experience-based learning, ownership, problem orientation, mentorship, and intrinsic motivation [2]. Because students often enroll in dental programs as young adults after completing an undergraduate degree, active learning has been encouraged in dental education to help students gain knowledge and develop basic and advanced dental, cognitive, and social skills [3]. Active learning, along with curricular integration, early exposure to clinical care, and evidence-based teaching and assessment, is among the important reforms introduced in dental education to ensure that students develop the competencies they need to become entry-level general dentists in the 21st century [4].

Numerous teaching strategies have been developed to promote active learning across health professions education, including problem-based learning, case-based learning, flipped learning, team-based learning, and group discussion. Research suggests that students and instructors value active learning positively [5, 6]; however, evidence on its actual impact on knowledge acquisition, skill development, and attitudinal change in health sciences education remains inconclusive [7, 8].

Many studies have been conducted on active learning in dental education, especially in the last two decades. Some primary and review studies have found that active learning is well received by students and instructors and may be more effective than traditional lecture-based teaching in dental education [9, 10]. However, review studies in particular have fallen short of providing a comprehensive overview of the existing literature on active learning in dental education [9, 11, 12]. For example, they have largely focused on the outcomes of a few active learning strategies (e.g., problem-based learning, flipped learning), providing limited data on implementation and evaluation designs. These reviews have also failed to differentiate the scope, range, and nature of the research activity on active learning across learning environments, including classroom dental education. This learning environment has unique characteristics and is of particular importance because it provides the foundational knowledge that students are expected to apply in laboratory and clinical settings.

Our scoping review aimed to map the breadth and depth of the research activity on active learning strategies in undergraduate classroom dental education from January 2005 to October 2022. Mapping this extensive body of literature is important to inform future research directions on active learning in dental education.

Methods

The scoping review framework developed by Arksey & O’Malley (2005) guided the study design. This framework comprises five stages: (1) formulating research questions, (2) identifying potentially relevant studies, (3) selecting relevant studies, (4) charting the data, and (5) collating, summarizing, and reporting results [13]. Unlike systematic reviews, which typically synthesize the existing evidence on relationships between exposure and outcome variables, scoping reviews are well suited to mapping the breadth and depth of the research activity on complex topics and identifying gaps in the relevant literature [13]. Our review report followed the PRISMA Extension for Scoping Reviews (PRISMA-ScR) guidelines [14].

Stage 1: Formulating research questions

Our scoping review sought to answer the following questions:

What are the characteristics of the studies conducted on active learning in classroom dental education in the study period?

How were active learning strategies evaluated?

What were the main results of the studies conducted?

Stage 2: Identifying potentially relevant studies

Four databases (MEDLINE, ERIC, EMBASE, and Scopus) were searched from January 2005 to October 2022. This time frame was chosen because a preliminary search suggested that most studies on the topic had been published in the last two decades and that the quality of reporting had improved substantially over the same period. The search strategy for MEDLINE was developed by two authors (JG and AP) in consultation with a librarian at the University of Alberta and was then adapted for each of the other databases. Search terms used in each database are shown in Table 1. Reference lists of included studies and of articles selected in previous reviews on specific active learning strategies were checked to enhance the search and test its sensitivity.

Table 1. Details of search terms and search results.

| Database | Search terms | Records retrieved (n) | Search period |
|---|---|---|---|
| MEDLINE | `[active learn*.mp. OR problem based learning.mp. or exp Problem-Based Learning/ OR case based learning.mp. OR (Group adj2 discuss*).mp. OR (small adj2 group*).mp. OR (small adj2 group*).mp. OR (peer adj2 teach*).mp. OR (critical adj2 think*).mp. OR (role adj2 play*).mp. OR team based learning.mp. OR (peer adj2 learn*).mp. OR (flipped adj2 class*).mp. OR (flipped adj2 learn*).mp. OR (blended adj2 learn*).mp.] AND [class.mp. OR class*.mp. OR classes.mp. OR preclinical.mp. OR non-clinical.mp. OR in-class.mp. OR course.mp. OR courses.mp.] AND [exp Students, Dental/ OR exp Education, Dental/ OR (dental adj2 learn*).mp. OR ((dental or dentist*) adj2 (educat* or learn* or student* or teach* or instruct* or curricul*)).mp. OR exp Schools, Dental/]` | 422 | 2005–2022 |
| ERIC | `[exp Active Learning/ or active learn*.mp. OR Case based learning.mp. or exp "Case Method (Teaching Technique)"/ OR case-based learning.mp. OR problem based learning.mp. or exp Problem Based Learning/ OR problem-based learning.mp. OR (think* adj1 pair* adj1 share*).mp. OR (peer* adj2 learn*).mp. OR (critical adj2 think*).mp. OR exp Critical Thinking/ OR (role adj2 play).mp. OR exp Classrooms/ or class*.mp. OR discuss*.mp. or exp Discussion Groups/ or exp Discussion/ or exp Group Discussion/ OR reflection.mp. or exp Reflection/ OR teaching methods.mp. or exp Teaching Methods/] AND [((dental or dentist*) adj2 (educat* or learn* or student*)).mp. OR undergraduate dent*.mp. OR dental schools.mp. or exp Dental Schools/ OR exp Dentistry/ OR dental college.mp.]` | 132 | 2005–2022 |
| Scopus | `[active learn* OR Problem based Learn* OR Case based learn* OR Group discuss* OR think pair share OR Peer learn* OR "peer teach*" OR critical think* OR Role play* OR flipped learn* OR Flipped Class* OR blended learn*] AND [Class* OR preclinical OR non-clinical OR in-class OR course*] AND [dental school OR dentistry OR dental learn* OR dental educat* OR dental student* OR dental teach* OR dental instruct* OR dental curricul*]` | 464 | 2005–2022 |
| EMBASE | `[active learn*.mp. OR problem based learning.mp. or exp Problem Based Learning/ OR exp problem based learning/ OR case-based learning.mp. OR (Group adj2 discuss*).mp. OR (small adj2 group*).mp. OR (think* adj1 pair* adj1 share*).mp. OR (peer adj2 learn*).mp. OR (peer adj2 teach*).mp. OR (critical adj2 think*).mp. OR (role adj2 play*).mp. OR team based learning.mp. OR (peer adj2 learn*).mp. OR (flipped adj2 class*).mp. OR (flipped adj2 learn*).mp. OR teaching methods.mp. or exp teaching/ OR (blended adj2 learn*).mp.] AND [class*.mp. OR preclinical.mp. OR non-clinical.mp. OR in-class.mp. OR course*.mp.] AND [exp dental student/ OR exp dental education/ OR (dental adj2 learn*).mp. OR ((dental or dentist*) adj2 (educat* or learn* or student* or teach* or instruct* or curricul*)).mp. OR dental school.mp.]` | 1200 | 2005–2022 |

Stage 3: Selecting relevant studies

Inclusion and exclusion criteria were based on the research questions and refined during the screening process. Primary studies published in English were included if they (i) focused on undergraduate dental education in classroom settings, (ii) used at least one active learning strategy, (iii) involved dental students, and (iv) reported dental student data when students from other programs (e.g., medical students) were also involved. Studies were excluded if they were published in a language other than English, reported active learning in clinical or laboratory settings or at the program level, or were not available as full-text articles. Review studies and perspective articles were also excluded. No restrictions were set on research methods. All references were exported to Zotero, and duplicates were removed by JG. The remaining records were then exported to Rayyan. A training session was held to ensure a shared understanding of the inclusion and exclusion criteria and consistency in their application. Two researchers (JG and SGC) independently screened titles and abstracts, and three researchers (JG, SGC, and MM) independently reviewed the full texts of articles selected in the first phase of screening. Disagreements were resolved by discussion or by consulting a fourth reviewer (AP).

Stage 4: Charting the data

A piloted, literature-informed data collection form was used to extract data on publication (year and country of publication), study characteristics (research inquiry, research methodology, means of data collection), participant characteristics (type of student, sample size), intervention (content area, active learning strategy, comparator, and length of exposure), evaluation (type, level, and design of evaluation, and outcome of interest), and main findings. Data extraction was completed independently by two trained researchers (JG and MM), and the completed data extraction forms were compared. Disagreements were resolved by discussion or by consulting a third reviewer (AP). Authors of studies that did not report key items included in the data extraction form were contacted to provide that information; information that remained unavailable was categorized as “not reported.”

Stage 5: Collating, summarizing, and reporting results

Descriptive statistics were used to summarize quantifiable data using previously developed or data-driven classifications. Evaluation data such as level, outcomes (directly and indirectly measured), and results were summarized according to Kirkpatrick’s Model (1998) [15]. This model describes four levels of outcome evaluation: reaction (satisfaction and perceived outcomes), learning (direct measures of outcomes such as knowledge, skills, and attitudes), behavior (behavioral changes resulting from the intervention), and results (organizational changes resulting from the intervention) [15]. It is widely used to describe evaluations of educational interventions in a variety of contexts. Papers reporting more than one outcome level or more than one active learning strategy were counted once for each level or strategy they reported, to calculate the number of evaluations per level and per active learning strategy, respectively.
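The counting rule for papers that report several outcome levels or strategies can be made concrete with a short sketch. It assumes, hypothetically, that each included paper is coded as a record listing the Kirkpatrick levels and the active learning strategies it evaluated; the `Paper` record, the `tally_evaluations` function, and the example papers are illustrative only and do not reproduce the review's data.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass, field

# The four Kirkpatrick levels of outcome evaluation described in the text.
KIRKPATRICK_LEVELS = ("reaction", "learning", "behavior", "results")


@dataclass
class Paper:
    """Hypothetical coding record for one included paper."""

    paper_id: str
    levels: set[str] = field(default_factory=set)
    strategies: set[str] = field(default_factory=set)


def tally_evaluations(papers: list[Paper]) -> tuple[Counter, Counter]:
    """Count evaluations per Kirkpatrick level and per active learning strategy.

    A paper reporting several levels (or strategies) adds one evaluation to
    each of them, so the totals can exceed the number of papers.
    """
    per_level: Counter = Counter()
    per_strategy: Counter = Counter()
    for paper in papers:
        unknown = paper.levels - set(KIRKPATRICK_LEVELS)
        if unknown:
            raise ValueError(f"{paper.paper_id}: unknown level(s) {sorted(unknown)}")
        per_level.update(paper.levels)        # one count per reported level
        per_strategy.update(paper.strategies)  # one count per reported strategy
    return per_level, per_strategy


if __name__ == "__main__":
    # Three invented papers, for illustration only.
    example = [
        Paper("A", {"reaction"}, {"flipped learning"}),
        Paper("B", {"reaction", "learning"}, {"PBL"}),
        Paper("C", {"learning"}, {"TBL", "flipped learning"}),
    ]
    levels, strategies = tally_evaluations(example)
    print(dict(levels))
    print(dict(strategies))
```

In this invented example, paper B contributes to both the reaction and learning tallies and paper C to two strategy tallies, which is why per-level or per-strategy evaluation counts can sum to more than the number of papers.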

Results

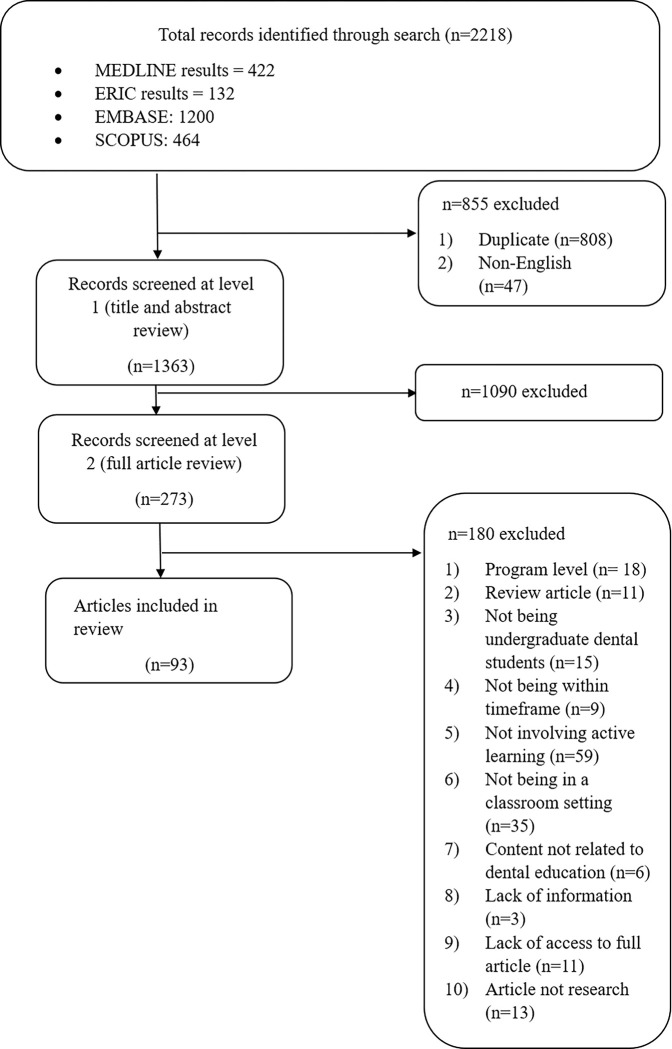

Searches in EMBASE (n = 1200), MEDLINE (n = 422), Scopus (n = 464), and ERIC (n = 132) generated 2,218 records. Duplicates (n = 808) and articles not published in English (n = 47) were removed. The screening of titles and abstracts yielded 273 potentially eligible articles, and the screening of full texts identified 93 eligible articles, which were included in this review (Fig 1). No additional articles were identified by checking the reference lists of eligible studies and of studies included in previous reviews. A total of 10,473 students and 199 faculty members were involved in the selected studies. Students were from dentistry (n = 10,297; 98.3%), medicine (n = 126; 1.2%), and dental hygiene (n = 50; 0.5%).
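The record counts above can be checked with simple arithmetic. The sketch below assumes that no records were removed between stages other than those stated in the text; under that assumption it derives the number of records screened by title and abstract and the number excluded at each screening stage. These derived figures are not stated in the text and should be checked against Fig 1. Constant and function names are illustrative.

```python
from __future__ import annotations

# Counts as reported in the Results text.
DATABASE_HITS = {"EMBASE": 1200, "MEDLINE": 422, "Scopus": 464, "ERIC": 132}
DUPLICATES = 808
NOT_ENGLISH = 47
ELIGIBLE_AFTER_TITLE_ABSTRACT = 273
INCLUDED = 93
STUDENTS = {"dentistry": 10_297, "medicine": 126, "dental hygiene": 50}


def selection_flow() -> dict[str, int]:
    """Derive the record count at each stage, assuming no unreported removals."""
    identified = sum(DATABASE_HITS.values())
    screened = identified - DUPLICATES - NOT_ENGLISH
    return {
        "identified": identified,
        "screened_title_abstract": screened,
        "excluded_title_abstract": screened - ELIGIBLE_AFTER_TITLE_ABSTRACT,
        "assessed_full_text": ELIGIBLE_AFTER_TITLE_ABSTRACT,
        "excluded_full_text": ELIGIBLE_AFTER_TITLE_ABSTRACT - INCLUDED,
        "included": INCLUDED,
    }


def percent_shares(counts: dict[str, int], decimals: int = 1) -> dict[str, float]:
    """Express each count as a rounded percentage of the counts' total."""
    total = sum(counts.values())
    return {name: round(100 * n / total, decimals) for name, n in counts.items()}


if __name__ == "__main__":
    for stage, n in selection_flow().items():
        print(f"{stage}: {n}")
    print(sum(STUDENTS.values()), percent_shares(STUDENTS))
```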

Fig 1. Flow diagram of the study selection process.

Characteristics of reviewed studies

As shown in Table 2, the selected studies originated from different geographical areas, including Asia (n = 46; 49.5%), North America (n = 29; 31.2%), Europe (n = 10; 10.8%), South America (n = 6; 6.5%), Australia (n = 1; 1.1%), and Africa (n = 1; 1.1%). Of the North American studies, 28 were conducted in the United States and 1 in Canada. Thirty-one studies were published between 2005 and 2014 and 62 between 2015 and 2022. Most studies reported active learning in clinical (n = 54; 58.1%) and basic (n = 25; 26.9%) sciences, and only 5 (5.4%) in behavioral and social sciences; 9 studies (9.7%) did not indicate the content area.
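The percentages in this section are shares of the 93 included studies, rounded to one decimal place. A minimal sketch of that calculation, which also checks that each classification accounts for every included study, is shown here; the function names are illustrative, and the region and content-area counts are those reported in the preceding paragraph.

```python
from __future__ import annotations

TOTAL_STUDIES = 93

# Counts reported in the text.
REGIONS = {
    "Asia": 46, "North America": 29, "Europe": 10,
    "South America": 6, "Australia": 1, "Africa": 1,
}
CONTENT_AREAS = {
    "clinical sciences": 54, "basic sciences": 25,
    "behavioral and social sciences": 5, "not reported": 9,
}


def percent(n: int, total: int = TOTAL_STUDIES, decimals: int = 1) -> float:
    """Share of studies as a percentage, rounded rather than truncated."""
    return round(100 * n / total, decimals)


def describe(counts: dict[str, int], total: int = TOTAL_STUDIES) -> dict[str, str]:
    """Format each category as 'n = k; p%' after checking the categories sum to total."""
    if sum(counts.values()) != total:
        raise ValueError(f"categories sum to {sum(counts.values())}, expected {total}")
    return {name: f"n = {n}; {percent(n, total)}%" for name, n in counts.items()}


if __name__ == "__main__":
    for table in (REGIONS, CONTENT_AREAS):
        for name, text in describe(table).items():
            print(f"{name}: {text}")
```

The partition check is a cheap safeguard: if a study were double-counted or left unclassified, `describe` would raise an error instead of producing percentages that silently fail to add up.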

Table 2. Summary of characteristics of reviewed studies.

| Authors, year | Country | Inquiry | Study design | Content area | Active learning strategy | Comparator (if any) | Level of evaluation |
|---|---|---|---|---|---|---|---|
| Mitchell & Brackett, 2017 [35] | USA | Quantitative | Not reported | Basic sciences | Flipped learning with TBL | Traditional lecture | Reaction |
| Omar, 2017 [36] | Saudi Arabia | Quantitative | Not reported | Clinical sciences | Group discussion | N/A | Reaction |
| Gali et al., 2015 [37] | India | Quantitative | RCT | Basic sciences | Group discussion | Traditional lecture | Reaction and Learning |
| Ihm et al., 2017 [38] | Korea | Quantitative | Not reported | Basic sciences | Flipped learning | Traditional lecture | Reaction |
| Kim et al., 2018 [39] | Korea | Quantitative | Not reported | Basic sciences | Flipped learning | Traditional lecture | Reaction and Learning |
| Luchi et al., 2017 [40] | Brazil | Quantitative | Not reported | Basic sciences | Game | Traditional lecture | Reaction and Learning |
| Almajed et al., 2016 [41] | Australia | Qualitative | Not reported | Not reported | Group discussion | Traditional lecture | Reaction |
| Ha-Ngoc & Park, 2015 [42] | USA | Quantitative | Not reported | Clinical sciences | Peer teaching | Traditional lecture | Reaction |
| Park et al., 2014 [43] | USA | Quantitative | Not reported | Clinical sciences | TBL | Individual learning | Learning |
| Miller et al., 2013 [44] | USA | Quantitative | Not reported | Basic sciences | Think-pair-share | Traditional lecture | Reaction and Learning |
| Khan, 2011 [45] | South Africa | Quantitative | Not reported | Clinical sciences | Group discussion | Active learning activities | Reaction |
| Kieser et al., 2008 [46] | New Zealand | Quantitative | Not reported | Clinical sciences | PBL | PBL | Reaction |
| Reich et al., 2007 [47] | Germany | Quantitative | Not reported | Clinical sciences | PBL | Traditional lecture | Reaction and Learning |
| Qutieshat et al., 2020 [48] | Jordan | Quantitative | Not reported | Clinical sciences | Flipped learning | Traditional lecture | Reaction and Learning |
| Ashwini et al., 2019 [49] | India | Quantitative | Not reported | Behavioral sciences | Flipped learning | Traditional lecture | Reaction |
| Kohli et al., 2019 [50] | Malaysia | Quantitative | Cohort | Clinical sciences | Flipped learning | Traditional lecture | Reaction and Learning |
| Tricio et al., 2019 [51] | Colombia | Mixed method | Not reported | Clinical sciences | Fishbowl | Traditional lecture | Reaction and Learning |
| Tauber et al., 2019 [52] | Czech Republic | Quantitative | Not reported | Basic sciences | Group discussion | Traditional lecture | Reaction and Learning |
| Himida et al., 2019 [53] | Scotland | Mixed method | Not reported | Behavioral sciences | Forum theatre | Traditional lecture | Reaction |
| Slaven et al., 2019 [54] | USA | Quantitative | Not reported | Clinical sciences | Flipped learning | Traditional lecture | Reaction and Learning |
| Park et al., 2019 [55] | USA | Quantitative | Not reported | Clinical sciences | TBL | Individual learning | Reaction and Learning |
| Yang et al., 2019 [56] | China | Quantitative | Not reported | Basic sciences | Group discussion | Traditional lecture | Reaction and Learning |
| Veeraiyan et al., 2019a [57] | India | Quantitative | Not reported | Basic sciences | TBL | Traditional lecture | Reaction and Learning |
| Veeraiyan et al., 2019b [58] | India | Quantitative | Retrospective | Clinical sciences | Flipped learning | Traditional lecture | Learning |
| Veeraiyan et al., 2019c [59] | India | Quantitative | Prospective | Clinical sciences | Flipped learning | Traditional lecture | Learning |
| Veeraiyan et al., 2019d [60] | India | Quantitative | Not reported | Clinical sciences | Flipped learning | Traditional lecture | Learning |
| Al-Madi et al., 2018 [61] | Saudi Arabia | Quantitative | Cross-sectional | Basic sciences | PBL | Traditional lecture | Reaction and Learning |
| Chutinan et al., 2018 [62] | USA | Mixed method | Not reported | Basic sciences | Flipped learning | Traditional lecture | Reaction and Learning |
| Jones, 2019 [63] | USA | Mixed method | Not reported | Clinical sciences | Group discussion | Traditional lecture | Reaction and Learning |
| Xiao et al., 2018 [64] | USA | Quantitative | Comparative | Basic sciences | Flipped learning | Traditional lecture | Reaction and Learning |
| Varthis & Anderson, 2018 [65] | USA | Quantitative | Not reported | Basic sciences | Blended learning | Traditional lecture | Reaction |
| Islam et al., 2018 [66] | Malaysia | Quantitative | Case-control | Clinical sciences | Flipped learning | Traditional lecture | Reaction and Learning |
| Lee & Kim, 2018 [67] | USA | Quantitative | Not reported | Clinical sciences | Flipped learning | Traditional lecture | Reaction and Learning |
| Costa-Silva et al., 2018 [68] | Brazil | Quantitative | Not reported | Basic sciences | Group discussion | Traditional lecture | Learning |
| AbdelSalam et al., 2017 [69] | Saudi Arabia | Quantitative | Not reported | Basic sciences | Peer teaching | Traditional lecture | Learning |
| Bai et al., 2017 [70] | China | Mixed method | RCT | Clinical sciences | PBL | Traditional lecture | Reaction and Learning |
| Nishigawa et al., 2017a [71] | Japan | Quantitative | Cohort | Clinical sciences | TBL | Traditional lecture | Learning |
| Gadbury-Amyot et al., 2017 [72] | USA | Quantitative | Not reported | Clinical sciences | Flipped learning | Traditional lecture | Reaction |
| Sagsoz et al., 2017 [73] | Turkey | Quantitative | Pre- and post-test | Clinical sciences | Jigsaw method | Traditional lecture | Learning |
| Nishigawa et al., 2017b [74] | Japan | Quantitative | Not reported | Clinical sciences | Flipped learning | TBL | Learning |
| Samuelson et al., 2017 [75] | USA | Quantitative | Crossover | Clinical sciences | Group discussion | Traditional lecture | Reaction and Learning |
| Eachempati et al., 2016 [76] | Malaysia | Qualitative | Cross-sectional | Clinical sciences | Blended learning with group learning | Traditional lecture | Reaction |
| Cardozo et al., 2016 [77] | Brazil | Quantitative | Not reported | Basic sciences | Game | Traditional lecture | Learning |
| Bohaty et al., 2016 [78] | USA | Quantitative | Not reported | Clinical sciences | Flipped learning | Traditional lecture | Reaction |
| Echeto et al., 2015 [79] | USA | Quantitative | Not reported | Clinical sciences | TBL | Traditional lecture | Learning |
| Park & Howell, 2015 [80] | USA | Quantitative | Not reported | Basic sciences | Flipped learning | Traditional lecture | Reaction |
| Takeuchi et al., 2015 [81] | Japan | Quantitative | Not reported | Clinical sciences | TBL | Traditional lecture | Reaction and Learning |
| Ilgüy et al., 2014 [82] | Turkey | Quantitative | Not reported | Clinical sciences | Group discussion | Traditional lecture | Learning |
| Guven et al., 2014 [83] | Turkey | Quantitative | Not reported | Basic sciences | PBL | Traditional lecture | Reaction and Learning |
| Du et al., 2013 [84] | China | Quantitative | Not reported | Clinical sciences | Group discussion | Traditional lecture | Reaction and Learning |
| Haj-Ali & Al Quran, 2013 [85] | United Arab Emirates | Quantitative | Not reported | Clinical sciences | TBL | Traditional lecture | Reaction and Learning |
| Ratzmann et al., 2013 [86] | Germany | Quantitative | Not reported | Clinical sciences | PBL | Traditional lecture | Reaction |
| McKenzie, 2013 [87] | USA | Quantitative | Pre- and post-test | Clinical sciences | Group discussion | Traditional lecture | Reaction |
| Kumar & Gadbury-Amyot, 2012 [88] | USA | Quantitative | Not reported | Clinical sciences | TBL | Traditional lecture | Reaction and Learning |
| Alcota et al., 2011 [89] | Chile | Quantitative | Not reported | Clinical sciences | PBL with debate and group discussion | Traditional lecture | Reaction and Learning |
| Romito & Eckert, 2011 [90] | USA | Quantitative | Not reported | Basic sciences | PBL | Traditional lecture | Learning |
| Obrez et al., 2011 [91] | USA | Quantitative | Not reported | Basic sciences | Group discussion | Traditional lecture | Reaction and Learning |
| Dantas et al., 2010 [92] | Brazil | Quantitative | Not reported | Clinical sciences | Group discussion | Text reading | Learning |
| Grady et al., 2009 [93] | UK | Quantitative | Not reported | Clinical sciences | Group discussion | Traditional lecture | Reaction |
| Moreno-López et al., 2009 [94] | Italy | Quantitative | Not reported | Clinical sciences | PBL | Traditional lecture | Reaction and Learning |
| Pileggi & O’Neill, 2008 [95] | USA | Quantitative | Not reported | Clinical sciences | TBL | Traditional lecture | Reaction and Learning |
| Park et al., 2007 [96] | USA | Quantitative | Retrospective | Clinical sciences | PBL with tutor expertise | PBL without tutor expertise | Reaction and Learning |
| Rich et al., 2005 [97] | USA | Quantitative | Not reported | Clinical sciences | PBL | Traditional lecture | Reaction |
| Croft et al., 2005 [98] | UK | Quantitative | Not reported | Behavioral sciences | Role play | Traditional lecture | Reaction |
| Deepak et al., 2019 [58] | India | Quantitative | Prospective | Clinical sciences | Flipped learning | Traditional lecture | Learning |
| Qutieshat et al., 2018 [99] | Jordan | Quantitative | Not reported | Clinical sciences | Debate | Reply speech | Reaction |
| Paul et al., 2019 [100] | Malaysia | Quantitative | Cross-sectional | Clinical sciences | Blended learning | Traditional lecture | Reaction and Learning |
| Youssef et al., 2012 [101] | Egypt | Quantitative | Not reported | Basic sciences | Group discussion | Traditional lecture | Reaction |
| Al Kawas & Hamdy, 2017 [102] | United Arab Emirates | Mixed method | Not reported | Not reported | TBL | Traditional lecture | Reaction |
| Nishigawa et al., 2017c [74] | Japan | Quantitative | Not reported | Clinical sciences | TBL and flipped learning | Flipped learning | Reaction and Learning |
| Khan et al., 2012 [103] | Malaysia | Quantitative | Not reported | Basic sciences | Debate | Traditional lecture | Reaction |
| Katsuragi, 2005 [104] | Japan | Quantitative | Not reported | Basic sciences | PBL | Traditional lecture | Reaction and Learning |
| Zhang et al., 2012 [105] | China | Quantitative | Not reported | Clinical sciences | PBL | Traditional lecture | Reaction and Learning |
| Zain-Alabdeen, 2017 [106] | Saudi Arabia | Quantitative | Not reported | Clinical sciences | Flipped learning | Traditional lecture | Reaction |
| Elledge et al., 2018 [107] | UK | Quantitative | Not reported | Clinical sciences | Flipped learning | Traditional lecture | Reaction and Learning |
| Richards & Inglehart, 2006 [108] | USA | Quantitative | Not reported | Clinical sciences | Group discussion | Traditional lecture | Reaction |
| Tack & Plasschaert, 2006 [109] | Netherlands | Quantitative | Not reported | Clinical sciences | PBL | Traditional lecture | Reaction and Learning |
| Markose et al., 2018 [110] | India | Quantitative | Comparative | Behavioral sciences | PBL | Traditional lecture | Reaction and Learning |
| Ahmadian et al., 2017 [111] | Iran | Quantitative | Interventional | Behavioral sciences | PBL | Role play | Reaction |
| Metz et al., 2015 [112] | USA | Quantitative | Not reported | Clinical sciences | Group discussion | Traditional lecture | Reaction and Learning |
| Shigli et al., 2017 [113] | India | Quantitative | Experiment | Clinical sciences | Group discussion | Traditional lecture | Reaction |
| Roopa et al., 2013 [114] | India | Quantitative | Not reported | Basic sciences | Peer teaching | Traditional lecture | Reaction |
| Rimal et al., 2015 [115] | Nepal | Quantitative | Not reported | Basic sciences | PBL | Traditional lecture | Reaction |
| Ihm et al., 2017 [116] | Korea | Quantitative | Not reported | Not reported | PBL | Traditional lecture | Learning |
| Chandelkar & Kulkarni, 2014 [117] | India | Quantitative | Not reported | Basic sciences | Peer teaching | Traditional lecture | Reaction and Learning |
| Huynh et al., 2022 [118] | USA | Quantitative | Not reported | Clinical sciences | Blended learning | Traditional lecture | Reaction |
| Özcan, 2022 [119] | USA | Quantitative | Not reported | Clinical sciences | Flipped learning | Traditional lecture | Reaction and Learning |
| Gallardo et al., 2022 [120] | Spain | Quantitative | Pre- and post-test | Clinical sciences | Flipped learning | Traditional lecture | Reaction |
| Alharbi et al., 2022 [121] | Saudi Arabia | Quantitative | Pre- and post-test | Not reported | Flipped learning | Traditional lecture | Reaction and Learning |
| Zhou et al., 2022 [122] | China | Quantitative | Pre- and post-test | Clinical sciences | Flipped learning | Traditional lecture | Reaction |
| Xiao et al., 2021 [123] | USA | Quantitative | Pre- and post-test | Basic sciences | Flipped learning | Traditional lecture | Learning |
| Veeraiyan et al., 2022 [124] | India | Quantitative | Not reported | Not reported | Multiple active learning strategies | N/A | Learning |
| Ganatra et al., 2021 [125] | Canada | Mixed method | Not reported | Clinical sciences | Think-pair-share | N/A | Reaction |

N/A: not applicable; PBL: problem-based learning; RCT: randomized controlled trial; TBL: team-based learning.

Methodologically, most studies (n = 85; 91.4%) were quantitative in nature; only a few used qualitative (n = 2; 2.2%) or mixed-methods (n = 6; 6.5%) approaches. Most studies (n = 67; 72.0%) did not explicitly report the methodology used. Of the remaining 26 studies (28.0%), 8 (8.6%) reported only features of the methodology employed (e.g., prospective, comparative), and 18 reported a specific design: pre- and post-tests (n = 6), randomized controlled trials (n = 4), cross-sectional studies (n = 3), cohort studies (n = 2), qualitative description (n = 1), case-control studies (n = 1), and experiments without randomization (n = 1). Of the 4 randomized controlled trials, 2 did not describe sequence generation, none reported allocation concealment details, and only 1 reported blinding of outcome assessors. The most common means of data collection were surveys (n = 74; 79.6%) and test scores (n = 59; 63.4%), used alone or in combination.

Evaluation types and designs

All studies performed outcome evaluations; no process evaluations were reported, either alone or combined with outcome evaluations. Outcomes evaluated included satisfaction (n = 73), knowledge acquisition (n = 61), skill development (e.g., clinical, problem-solving, and communication skills) (n = 3), and engagement (n = 2). Studies performed post-intervention (n = 70; 75.3%), pre- and post-intervention (n = 18; 19.4%), and during- and post-intervention (n = 5; 5.4%) evaluations.

Of all the evaluations performed (n = 93), post-intervention evaluations (n = 70) involved a single group exposed to one condition (n = 23; 24.7%) or to two compared conditions (n = 9; 9.7%); two compared groups exposed to two conditions, with (n = 10; 10.8%) or without (n = 21; 22.6%) randomization; or two or more non-compared groups exposed to one condition, with one (n = 6; 6.5%) or two (n = 1; 1.1%) evaluation points. With one evaluation point, the outcome variables of interest were assessed after the intervention; with two evaluation points, they were also assessed after the intervention, but participants were asked to rate those variables as they were both before and after the intervention. In both cases, the evaluation data of the study groups were aggregated. Pre- and post-intervention evaluations (n = 18) involved a single group exposed to one condition (n = 4; 4.3%) or to two compared conditions (n = 1; 1.1%); two compared groups exposed to two conditions, with (n = 8; 8.6%) or without (n = 4; 4.3%) randomization; or two or more non-compared groups exposed to one condition with one evaluation point (n = 1; 1.1%). During- and post-intervention evaluations (n = 5) involved a single group exposed to one condition (n = 1; 1.1%) or to two compared conditions (n = 1; 1.1%), or two compared groups exposed to two conditions, with (n = 1; 1.1%) or without (n = 2; 2.2%) randomization.
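Because the design categories above are nested (timing of evaluation first, then group and condition structure), it can help to tabulate them and confirm that the subtotals match. The sketch below simply transcribes the counts from the preceding paragraph into a nested mapping; the shortened category labels and the function names are illustrative, not terms used by the included studies.

```python
from __future__ import annotations

from collections import Counter

# Evaluation designs by timing, transcribed from the text (counts of evaluations).
DESIGNS: dict[str, dict[str, int]] = {
    "post-intervention": {
        "one group, one condition": 23,
        "one group, two compared conditions": 9,
        "two compared groups, randomized": 10,
        "two compared groups, not randomized": 21,
        "non-compared groups, one evaluation point": 6,
        "non-compared groups, two evaluation points": 1,
    },
    "pre- and post-intervention": {
        "one group, one condition": 4,
        "one group, two compared conditions": 1,
        "two compared groups, randomized": 8,
        "two compared groups, not randomized": 4,
        "non-compared groups, one evaluation point": 1,
    },
    "during- and post-intervention": {
        "one group, one condition": 1,
        "one group, two compared conditions": 1,
        "two compared groups, randomized": 1,
        "two compared groups, not randomized": 2,
    },
}

# Subtotals stated in the text for each evaluation timing.
REPORTED_SUBTOTALS = {
    "post-intervention": 70,
    "pre- and post-intervention": 18,
    "during- and post-intervention": 5,
}


def subtotals(designs: dict[str, dict[str, int]]) -> dict[str, int]:
    """Sum the design cells within each evaluation timing."""
    return {timing: sum(cells.values()) for timing, cells in designs.items()}


def totals_by_structure(designs: dict[str, dict[str, int]]) -> Counter:
    """Aggregate each group/condition structure across evaluation timings."""
    totals: Counter = Counter()
    for cells in designs.values():
        totals.update(cells)  # adds the counts of matching labels
    return totals


if __name__ == "__main__":
    print(subtotals(DESIGNS) == REPORTED_SUBTOTALS)
    print(sum(subtotals(DESIGNS).values()))
    print(totals_by_structure(DESIGNS).most_common())
```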

Evaluated active learning strategies

Studies evaluated several active learning strategies. The strategies evaluated frequently (more than 10 studies) or fairly frequently (6 to 10 studies) were flipped learning, group discussion, problem-based learning (PBL), and team-based learning (TBL). Blended learning, peer teaching, debate, and role play were evaluated occasionally (3 to 5 studies). Strategies evaluated seldom (1 or 2 studies) included games, think-pair-share, and others such as fishbowl and jigsaw. All outcome evaluations were performed at the reaction and learning levels, which is consistent with the present review's focus on classroom dental education. Thirty-two studies (34.4%) performed reaction evaluations alone, 19 (20.4%) learning evaluations alone, and 42 (45.2%) reaction and learning evaluations combined. Only 4 studies (4.3%) reported faculty data in addition to student data. The length of exposure to active learning ranged from one hour to three years.
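The frequency bands used in this section, and the reaction/learning profile assigned to each study, amount to two small classification rules. The sketch below states them explicitly; the function names are hypothetical, and the thresholds are the ones given in the text (more than 10, 6 to 10, 3 to 5, and 1 or 2 studies).

```python
from __future__ import annotations


def frequency_band(n_studies: int) -> str:
    """Map the number of studies evaluating a strategy to the band used in the text."""
    if n_studies < 1:
        raise ValueError("a strategy must be evaluated in at least one study")
    if n_studies > 10:
        return "frequently"
    if n_studies >= 6:
        return "fairly"
    if n_studies >= 3:
        return "occasionally"
    return "seldom"  # 1 or 2 studies


def evaluation_profile(levels: set[str]) -> str:
    """Classify a study by the Kirkpatrick levels it evaluated (reaction and/or learning)."""
    has_reaction = "reaction" in levels
    has_learning = "learning" in levels
    if has_reaction and has_learning:
        return "reaction and learning"
    if has_reaction:
        return "reaction alone"
    if has_learning:
        return "learning alone"
    raise ValueError("no reaction- or learning-level evaluation reported")


if __name__ == "__main__":
    for n in (1, 3, 6, 11):
        print(n, frequency_band(n))
    print(evaluation_profile({"reaction", "learning"}))
```

Under these rules, a strategy evaluated in exactly 10 studies falls in the "fairly" band and one evaluated in 11 in the "frequently" band, matching the boundaries stated in the text.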

Reaction-level evaluations, including self-reported learning

In total, 76 student reaction evaluations were conducted, either alone or combined with learning evaluations. In these evaluations, active learning was perceived to improve satisfaction in 66 studies (86.8%) and knowledge acquisition in 4 studies (5.3%). Of these evaluations, 65 compared active learning with lectures, 3 compared two active learning strategies, and 3 compared different forms of the same active learning strategy. Active learning was perceived as superior to lectures in 59 of the 65 lecture comparisons; 5 found no difference between active learning and lectures, and only 1 reported lectures as superior to active learning. Only 4 evaluations reported instructors’ reaction data, and in all of them instructors valued active learning positively.
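The percentages in this paragraph and the lecture comparisons rest on different denominators: all 76 reaction evaluations for the perceived improvements, but only the 65 lecture comparisons for the superiority verdicts. The short Python sketch below makes that arithmetic explicit. The variable names and the `pct` helper are hypothetical; the counts are those reported above.

```python
# Counts from the reaction-level results above; names are illustrative only.
REACTION_EVALUATIONS = 76

perceived_improvement = {"satisfaction": 66, "knowledge acquisition": 4}

comparisons = {
    "active learning vs lectures": 65,
    "two active learning strategies": 3,
    "forms of the same strategy": 3,
}

# Verdicts within the 65 active-learning-vs-lecture comparisons.
lecture_verdicts = {
    "active learning superior": 59,
    "no difference": 5,
    "lectures superior": 1,
}


def pct(part: int, whole: int) -> float:
    """Percentage of `whole`, rounded to one decimal place."""
    return round(100 * part / whole, 1)


if __name__ == "__main__":
    for outcome, n in perceived_improvement.items():
        print(f"{outcome}: {n}/{REACTION_EVALUATIONS} = {pct(n, REACTION_EVALUATIONS)}%")
    lecture_total = comparisons["active learning vs lectures"]
    for verdict, n in lecture_verdicts.items():
        print(f"{verdict}: {n}/{lecture_total} = {pct(n, lecture_total)}%")
```

The three comparison types sum to 71, so the remaining 5 reaction evaluations fall outside these comparison categories.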

Strategies evaluated frequently, fairly often, or occasionally (3 or more studies) using reaction-level data included flipped learning, PBL, group discussion, TBL, and blended learning. Peer teaching, role play, debate, games, and think-pair-share were seldom evaluated (1 or 2 studies) using reaction data. Flipped learning was perceived to improve satisfaction in 16 studies and was regarded as superior to lectures in 16 studies. PBL was viewed as effective in improving knowledge acquisition in 2 studies and satisfaction in 13, and was perceived as superior to lectures in 13. Group discussion was deemed effective for knowledge acquisition in 1 study and for satisfaction in 12, and was reported to be superior to lectures in 12. TBL was viewed as beneficial for knowledge acquisition in 1 study and for satisfaction in 7, and was considered more effective than lectures in 7. Blended learning was deemed to improve satisfaction and was regarded as superior to lectures in 4 studies each.

Learning-level evaluations

All studies in which learning was directly measured (n = 57), mostly through test scores, found that active learning improved knowledge acquisition. Forty-eight of these studies (84.2%) compared active learning with lectures, and 4 (7.0%) compared two active learning strategies. Of the 48 lecture comparisons, 39 found active learning superior to lectures in knowledge acquisition and 9 found no difference between active learning and traditional lectures.

Strategies evaluated frequently or fairly often using direct measures of learning included flipped learning, PBL, group discussion, and TBL. Blended learning, peer teaching, debate, games, and think-pair-share were rarely evaluated using such measures. Based on direct learning data, flipped learning was found to improve knowledge acquisition in 12 studies and to be more effective than lectures for this outcome in all 12. Similarly, PBL was found to enhance knowledge acquisition in 9 studies and to be superior to traditional teaching for this outcome in all 9. Direct learning data also supported the effectiveness of group discussion and TBL, which were found to improve knowledge acquisition in 5 and 7 studies, respectively, and were reported to be more effective than lectures for this outcome in the same numbers of studies.

Discussion

Most studies on active learning in classroom dental education were quantitative and published in the last decade. Most did not report their methodology, performed outcome evaluations using post-intervention designs, relied on student data, mainly measured satisfaction and knowledge acquisition, and focused on clinical and basic sciences. Our review also revealed that flipped learning, group discussion, problem-based learning, and team-based learning were the active learning strategies most frequently evaluated in classroom dental education. Based on both reaction and factual (direct measure) data, these strategies improved satisfaction and knowledge acquisition and were superior to traditional lectures in improving these outcomes. To our knowledge, this is the first attempt to map the literature on active learning strategies in classroom dental education. Our findings provide a much-needed overview of this body of literature, which previous strategy-specific reviews were not in a position to provide [10, 16, 17]. Such an overview is essential for describing the available evidence and informing future research on this topic.

Consistent with previous reviews, the number of studies on active learning in dental education has increased over time, especially within the last decade [9, 16, 18]. This growth suggests a positive response to repeated calls for transforming learning environments in dental education. The surge of publications is encouraging as a proxy for innovation in dental education and as a vehicle for knowledge dissemination among dental researchers and educators. However, more publications do not necessarily mean better research. Although scoping reviews are not intended to assess the quality of the included studies or the credibility of the evidence they generate [13], they can shed light on these issues through the research methods and designs employed and the nature of the evidence produced. The quality of research on educational innovations can also be inferred from the types of evaluations conducted.

Most studies included in our review did not explicitly state the methodology used, a gap also reported in previous review research in medical education [19]. This is a concern because methodologies should be deliberately chosen to inform study designs [20]. We did not assess whether the reported methodologies were correctly classified; however, misclassifications of study methodologies have been documented [21, 22]. Such misclassifications may stem from a lack of methodological understanding or from attempts to gain methodological credibility by claiming “more robust” designs than those actually employed [22]. Several recommendations have been made to help researchers frame their projects methodologically and conceptually, including engaging methodologists throughout the research process [19].

Many studies included in our review employed a post-intervention evaluation design with a single cohort. This design has well-known limitations, such as the inability to assess the magnitude of any improvement or to account for extraneous variables that may influence learning outcomes apart from the intervention. Additionally, none of the studies included in our review reported a process evaluation. This type of evaluation examines the extent to which an intervention was implemented as expected, met the parameters of effectiveness for the intervention (the conditions under which it works), and was aligned with the underlying principles of the type of learning (e.g., collaborative learning) it aimed to promote [23]. Process evaluations are particularly helpful for determining whether an intervention failed because the strategy itself was ineffective, because it was poorly implemented, or both. Failures to report process evaluations and to properly design and implement active learning strategies have been documented previously [6]. Such shortcomings can be misleading in two fundamental ways: they can suggest that a strategy is ineffective when it could be effective, and that it was delivered as intended when it was not.

Our findings highlight the importance of reporting not only the research approaches (e.g., quantitative, qualitative) and methodologies (e.g., cross-sectional, RCT) but also the specific evaluation designs employed in the studies. Since methodologies may not be reported or properly classified, the specific evaluation design used becomes the best proxy for the quality of the outcome evaluation performed. Reviewers should determine this design themselves because it may not be clearly defined in published papers. Our classification of evaluation designs can be used for this purpose, although further research may be needed to establish its usefulness.

Few studies in our review used qualitative or mixed-methods designs, even though best practices in curriculum evaluation at the course and program levels recommend them [24]. Such practices include using multiple evaluators, collecting and combining qualitative and quantitative data to provide a comprehensive evaluation, and using an evaluation framework (e.g., a logic model) to guide the evaluation process. Qualitative research is particularly suited to shedding light on the circumstances under which interventions work (why and how) and on the contextual factors shaping the outcomes of interventions and participants’ experiences [25].

Reviews on active learning in dental and medical education have revealed that active learning strategies are commonly evaluated using student feedback [6, 9]. Our study confirms that student feedback is the main source of evaluation data. Such feedback is useful for judging some aspects of teaching effectiveness, such as engagement and organization, but not others, such as the appropriateness of the pedagogical strategy used to achieve the learning objectives [26]. Faculty feedback is important for comprehensively evaluating active learning across health professions education and for ascertaining faculty members’ uptake and continued use of active learning strategies in classroom and clinical learning environments.

Similar to previous review research on active learning across health professions education [5], many studies included in our review used reaction and factual data to evaluate the impact of several active learning strategies on the outcomes of interest, especially knowledge acquisition. This is an important strength of the literature on active learning in classroom dental education. Reaction- and learning-level outcome evaluations serve slightly different purposes, but both are needed to establish whether students and faculty are satisfied with the active learning strategies used and what impact those strategies actually have on knowledge acquisition, skill development, and attitudinal change. Further research is needed to critically appraise the validity of the instruments used to collect direct measures of learning, especially when knowledge tests were not originally developed and validated for research purposes.

Satisfaction and knowledge acquisition were the main outcomes evaluated in the included studies, while skill development (e.g., critical thinking, problem-solving) was infrequently considered. Skill development is an important learning outcome in competency-based education, which has been strongly recommended in dental education [27]. The failure to measure whether active learning promotes such skills may reflect the length and nature of the exposures (interventions) needed to achieve these outcomes and the “inherent” difficulty of measuring higher-level outcomes [28].

Research on active learning in classroom dental education reflects the emphasis that traditional dental programs place on basic and clinical sciences. We identified only a few papers on active learning in the behavioral and social sciences, which are a key component of dental education. These sciences have broadened the understanding of disease beyond its biological determinants, and of treatment and management beyond clinical procedures [29]. Additionally, the behavioral sciences provide dental students with competencies in personalized care, inter-professional care, disease prevention and management, and the well-being of patients and care providers [30]. While integrating the behavioral science curriculum remains an important task [31], our findings suggest that research is warranted to demonstrate the effectiveness of active learning in delivering behavioral science content in dental education.

The active learning strategies most frequently evaluated in classroom dental education (flipped learning, group discussion, PBL, TBL) are similar to those commonly evaluated in dental and medical education [5, 9, 32]. Properly evaluated strategies give dental educators a menu of teaching options from which to choose the most suitable strategies for their learning objectives. However, other active learning strategies (e.g., peer teaching, role play, think-pair-share) need further evaluation in dental education, as they have proven effective in achieving certain learning objectives, alone or in combination with other strategies [33, 34].

Despite the diversity of research designs, populations, settings, and evaluated strategies, active learning in classroom dental education was positively valued by students and faculty, was perceived as beneficial, was ‘proven’ effective in promoting satisfaction and knowledge acquisition, and was found to be superior to traditional lectures for these outcomes. These findings are consistent with those of previous reviews in dental education and in health professions education in general [6, 9, 16]. Given the limitations of traditional lectures in promoting deep and meaningful learning, dental researchers are encouraged to compare active learning strategies that target similar generic and specific learning objectives in order to establish their relative effectiveness.

Our review also uncovered several reporting issues. These included not reporting or underreporting the research methodology, key aspects of the research design (e.g., allocation concealment), the characteristics of the data collection instruments and their validity evidence, the active learning strategies employed, and the length of exposure to those strategies. Sufficient detail on study design and conduct is needed to judge the quality of the studies and of the evidence they produce. For example, without knowing the actual length of exposure, it is not possible to determine whether expected learning outcomes were not achieved because the strategy was ineffective or because exposure to it was insufficient.

The limitations of our study include general limitations of scoping reviews and study-specific limitations. General limitations include the potential for publication bias (published literature often favors studies with significant findings over those with non-significant findings) and the absence of quality assessment of the included studies. Although such assessment is not required in scoping reviews, it is important to note that the research designs of most included studies do not offer sufficient evidence to demonstrate the effectiveness of active learning in dental education classrooms. Several study-specific limitations must also be acknowledged. We relied on authors’ classifications of research methodologies and active learning strategies, which may not reflect the methods and strategies actually used; misclassification of active learning strategies has been reported previously [17]. We excluded papers in languages other than English because of limited translation resources, which may limit the generalizability of our findings. However, given the number of papers included, we are confident that including this literature would not have changed the patterns observed in the extracted data. Finally, our summary of the main results of previous studies by level of outcome evaluation (reaction and learning) may not account for notable differences in study design, samples, settings, and measures across studies.

Conclusion

Although active learning strategies were positively valued and found effective based on reaction and factual data, robust evaluation designs are needed to further demonstrate their effectiveness in classroom dental education. Beyond questions of effectiveness, other issues remain to be elucidated, including for whom, how, when, and in what respects active learning may work in dental education. Future research should evaluate the impact of active learning strategies not only on satisfaction and knowledge acquisition but also on skill development, to support competency-based teaching and assessment in dental education. Similarly, active learning should be used and evaluated across all the main components of dental education, including the behavioral and social sciences. Dental education journals should encourage researchers to comply with evaluation and reporting standards for educational innovations to ensure that these innovations are designed, conducted, and reported as expected.

Supporting information

(DOCX)

Acknowledgments

The authors would like to thank Drs. Tania Doblanko and Tanushi Ambekar for their involvement in the study design and preliminary search for relevant articles.

Data Availability

All relevant data are within the manuscript and its Supporting Information files.

Funding Statement

The authors received funding (SDERF-02) for this work from the School of Dentistry at the University of Alberta. SG was the PI. The funder had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- 1. Bonwell CC, Eison JA. Active learning: Creating excitement in the classroom. 1991 ASHE-ERIC higher education reports. ERIC Clearinghouse on Higher Education, The George Washington University, One Dupont Circle, Suite 630, Washington, DC 20036–1183; 1991.

- 2. Knowles M. Andragogy in action: Applying modern principles of adult learning. San Francisco: Jossey-Bass; 1984.

- 3. Pyle M, Andrieu SC, Chadwick DG, et al. ADEA Commission on change and innovation in dental education. The case for change in dental education. J Dent Educ. 2006;70(9):921–924.

- 4. Palatta AM, Kassebaum DK, Gadbury-Amyot CC, Karimbux NY, Licari FW, Nadershahi NA, et al. Change Is Here: ADEA CCI 2.0-A Learning Community for the Advancement of Dental Education. J Dent Educ. 2017;81(6):640–648. doi: 10.21815/JDE.016.040

- 5. Reimschisel T, Herring AL, Huang J, Minor TJ. A systematic review of the published literature on team-based learning in health professions education. Med Teach. 2017;39(12):1227–1237. doi: 10.1080/0142159X.2017.1340636

- 6. Thistlethwaite JE, Davies D, Ekeocha S, Kidd JM, MacDougall C, Matthews P, et al. The effectiveness of case-based learning in health professional education. A BEME systematic review: BEME Guide No. 23. Med Teach. 2012;34(6):e421–44.

- 7. Hew KF, Lo CK. Flipped classroom improves student learning in health professions education: a meta-analysis. BMC Med Educ. 2018;18(1):38. doi: 10.1186/s12909-018-1144-z

- 8. Polyzois I, Claffey N, Mattheos N. Problem-based learning in academic health education. A systematic literature review. Eur J Dent Educ. 2010;14(1):55–64. doi: 10.1111/j.1600-0579.2009.00593.x

- 9. Vanka A, Vanka S, Wali O. Flipped classroom in dental education: A scoping review. Eur J Dent Educ. 2020;24(2):213–226. doi: 10.1111/eje.12487

- 10. Bassir SH, Sadr-Eshkevari P, Amirikhorheh S, Karimbux NY. Problem-based learning in dental education: a systematic review of the literature. J Dent Educ. 2014;78(1):98–109.

- 11. Eslami E, Bassir SH, Sadr-Eshkevari P. Current state of the effectiveness of problem-based learning in prosthodontics: a systematic review. J Dent Educ. 2014;78(5):723–34.

- 12. Huang B, Zheng L, Li C, Li L, Yu H. Effectiveness of problem-based learning in Chinese dental education: a meta-analysis. J Dent Educ. 2013;77(3):377–83.

- 13. Arksey H, O’Malley L. Scoping studies: towards a methodological framework. Int J Soc Res Methodol. 2005;8(1):19–32.

- 14. Tricco AC, Lillie E, Zarin W, et al. PRISMA extension for scoping reviews (PRISMA-ScR): checklist and explanation. Ann Intern Med. 2018;169:467–73. doi: 10.7326/M18-0850

- 15. Kirkpatrick DL. Evaluating Training Programs: The Four Levels. 2nd ed. San Francisco, CA: Berrett-Koehler Publishers; 1998.

- 16. Dong H, Guo C, Zhou L, Zhao J, Wu X, Zhang X, et al. Effectiveness of case-based learning in Chinese dental education: a systematic review and meta-analysis. BMJ Open. 2022;12(2):e048497. doi: 10.1136/bmjopen-2020-048497

- 17. Gianoni-Capenakas S, Lagravere M, Pacheco-Pereira C, Yacyshyn J. Effectiveness and Perceptions of Flipped Learning Model in Dental Education: A Systematic Review. J Dent Educ. 2019;83(8):935–945. doi: 10.21815/JDE.019.109

- 18. Woldt JL, Nenad MW. Reflective writing in dental education to improve critical thinking and learning: A systematic review. J Dent Educ. 2021;85(6):778–785. doi: 10.1002/jdd.12561

- 19. Webster F, Krueger P, MacDonald H, Archibald D, Telner D, Bytautas J, et al. A scoping review of medical education research in family medicine. BMC Med Educ. 2015;15:79. doi: 10.1186/s12909-015-0350-1

- 20. Crotty M. The foundations of social research. London: Sage Publications Ltd; 1998.

- 21. Esene IN, Mbuagbaw L, Dechambenoit G, Reda W, Kalangu KK. Misclassification of Case-Control Studies in Neurosurgery and Proposed Solutions. World Neurosurg. 2018;112:233–242. doi: 10.1016/j.wneu.2018.01.171

- 22. Sandelowski M. Whatever happened to qualitative description? Res Nurs Health. 2000;23(4):334–40.

- 23. Frye AW, Hemmer PA. Program evaluation models and related theories: AMEE guide no. 67. Med Teach. 2012;34(5):e288–99. doi: 10.3109/0142159X.2012.668637

- 24. Kogan JR, Shea JA. Course evaluation in medical education. Teach Teacher Educ. 2007;23(3):251–264.

- 25. Pope C, Mays N, editors. Qualitative research in health care. 3rd ed. Massachusetts: Blackwell Publishing; 2006.

- 26. Kuwaiti AA. Health Science students’ evaluation of courses and Instructors: the effect of response rate and class size interaction. Int J Health Sci (Qassim). 2015;9(1):51–60.

- 27. American Dental Education Association Commission on Change and Innovation in Dental Education. Beyond the crossroads: change and innovation in dental education [Internet]. Washington, DC: American Dental Education Association; 2009 [cited 2023 March 5]. Available from: https://www.adea.org/cci/old/

- 28. Prince M. Does active learning work? A review of the research. J Eng Educ. 2004;93(3):223–31.

- 29. McGrath C. Behavioral Sciences in the Promotion of Oral Health. J Dent Res. 2019;98(13):1418–1424. doi: 10.1177/0022034519873842

- 30. Centore L. Trends in Behavioral Sciences Education in Dental Schools, 1926 to 2016. J Dent Educ. 2017;81(8):eS66–eS73. doi: 10.21815/JDE.017.009

- 31. Perez A, Green JL, Ball GDC, Amin M, Compton SM, Patterson S. Behavioural change as a theme that integrates behavioural sciences in dental education. Eur J Dent Educ. 2022;26(3):453–458. doi: 10.1111/eje.12720

- 32. Trullàs JC, Blay C, Sarri E, Pujol R. Effectiveness of problem-based learning methodology in undergraduate medical education: a scoping review. BMC Med Educ. 2022;22(1):104. doi: 10.1186/s12909-022-03154-8

- 33. Zhang H, Liao AWX, Goh SH, Wu XV, Yoong SQ. Effectiveness of peer teaching in health professions education: A systematic review and meta-analysis. Nurse Educ Today. 2022;118:105499. doi: 10.1016/j.nedt.2022.105499

- 34. Gelis A, Cervello S, Rey R, Llorca G, Lambert P, Franck N, et al. Peer Role-Play for Training Communication Skills in Medical Students: A Systematic Review. Simul Healthc. 2020;15(2):106–111. doi: 10.1097/SIH.0000000000000412

- 35. Mitchell J, Brackett M. Dental Anatomy and Occlusion: Mandibular Incisors-Flipped Classroom Learning Module. MedEdPORTAL. 2017;13:10587. doi: 10.15766/mep_2374-8265.10587

- 36. Omar E. Perceptions of Teaching Methods for Preclinical Oral Surgery: A Comparison with Learning Styles. Open Dent J. 2017;11:109–119. doi: 10.2174/1874210601711010109

- 37. Gali S, Shetty V, Murthy NS, Marimuthu P. Bridging the gap in 1st year dental material curriculum: A 3 year randomized cross over trial. J Indian Prosthodont Soc. 2015;15(3):244–9. doi: 10.4103/0972-4052.161565

- 38. Ihm J, Choi H, Roh S. Flipped-learning course design and evaluation through student self-assessment in a predental science class. Korean J Med Educ. 2017;29(2):93–100. doi: 10.3946/kjme.2017.56

- 39. Kim M, Roh S, Ihm J. The relationship between non-cognitive student attributes and academic achievements in a flipped learning classroom of a pre-dental science course. Korean J Med Educ. 2018;30(4):339–346. doi: 10.3946/kjme.2018.109

- 40. Luchi KC, Montrezor LH, Marcondes FK. Effect of an educational game on university students’ learning about action potentials. Adv Physiol Educ. 2017;41(2):222–230. doi: 10.1152/advan.00146.2016

- 41. Almajed A, Skinner V, Peterson R, Winning T. Collaborative learning: Students’ perspectives on how learning happens. Interdisciplinary Journal of Problem-Based Learning. 2016;10(2):9.

- 42. Ha-Ngoc T, Park SE. Peer Mentorship Program in Dental Education. Journal of Curriculum and Teaching. 2015;4(2):104–9.

- 43. Park SE, Kim J, Anderson N. Evaluating a Team-Based Learning Method for Detecting Dental Caries in Dental Students. Journal of Curriculum and Teaching. 2014;3(2):100–5.

- 44. Miller CJ, McNear J, Metz MJ. A comparison of traditional and engaging lecture methods in a large, professional-level course. Adv Physiol Educ. 2013;37(4):347–55. doi: 10.1152/advan.00050.2013

- 45. Khan S. Effect of active learning techniques on students’ choice of approach to learning in Dentistry: a South African case study. South African Journal of Higher Education. 2011;25(3):491–509.

- 46. Kieser J, Livingstone V, Meldrum A. Professional storytelling in clinical dental anatomy teaching. Anat Sci Educ. 2008;1(2):84–9. doi: 10.1002/ase.20

- 47. Reich S, Simon JF, Ruedinger D, Shortall A, Wichmann M, Frankenberger R. Evaluation of two different teaching concepts in dentistry using computer technology. Adv Health Sci Educ Theory Pract. 2007;12(3):321–9. doi: 10.1007/s10459-006-9004-8

- 48. Qutieshat AS, Abusamak MO, Maragha TN. Impact of Blended Learning on Dental Students’ Performance and Satisfaction in Clinical Education. J Dent Educ. 2020;84(2):135–142. doi: 10.21815/JDE.019.167

- 49. Ashwini KM, Devi RG, Jyothipriya A. A survey on the student engagement in physiology education among dental students. Drug Invention Today. 2019;1666–1668.

- 50. Kohli S, Sukumar AK, Zhen CT, Yew ASL, Gomez AA. Dental education: Lecture versus flipped and spaced learning. Dent Res J (Isfahan). 2019;16(5):289–297.

- 51. Tricio J, Montt J, Orsini C, Gracia B, Pampin F, Quinteros C, et al. Student experiences of two small group learning-teaching formats: Seminar and fishbowl. Eur J Dent Educ. 2019;23(2):151–158. doi: 10.1111/eje.12414

- 52. Tauber Z, Cizkova K, Lichnovska R, Lacey H, Erdosova B, Zizka R, et al. Evaluation of the effectiveness of the presentation of virtual histology slides by students during classes. Are there any differences in approach between dentistry and general medicine students? Eur J Dent Educ. 2019;23(2):119–126. doi: 10.1111/eje.12410

- 53. Himida T, Nanjappa S, Yuan S, Freeman R. Dental students’ perceptions of learning communication skills in a forum theatre-style teaching session on breaking bad news. Eur J Dent Educ. 2019;23(2):95–100. doi: 10.1111/eje.12407

- 54. Slaven CM, Wells MH, DeSchepper EJ, Dormois L, Vinall CV, Douglas K. Effectiveness of and Dental Student Satisfaction with Three Teaching Methods for Behavior Guidance Techniques in Pediatric Dentistry. J Dent Educ. 2019;83(8):966–972. doi: 10.21815/JDE.019.091

- 55. Park SE, Salihoglu-Yener E, Fazio SB. Use of team-based learning pedagogy for predoctoral teaching and learning. Eur J Dent Educ. 2019;23(1):e32–e36. doi: 10.1111/eje.12396

- 56. Yang Y, You J, Wu J, Hu C, Shao L. The Effect of Microteaching Combined with the BOPPPS Model on Dental Materials Education for Predoctoral Dental Students. J Dent Educ. 2019;83(5):567–574. doi: 10.21815/JDE.019.068

- 57.Veeraiyan D, Rangalakshmi S, Subha M. Peer assisted team-based learning in undergraduate dental education. International Journal of Research in Pharmaceutical Sciences. 2019. a; 10. 607–611. doi: 10.26452/ijrps.v10i1.1890 [DOI] [Google Scholar]

- 58.Veeraiyan D, Manoharan, Subha M, Ramesh A. Conventional lectures vs the flipped classroom: Comparison of teaching models in undergraduate curriculum. International Journal of Research in Pharmaceutical Sciences. 2019. b; 10(1). 572–576. doi: 10.26452/ijrps.v10i1.1913 [DOI] [Google Scholar]

- 59.Veeraiyan D, Solete P, Subha M. Comparative study on conventional lecture classes versus flipped class in teaching conservative dentistry and endodontics. International journal of research in pharmaceutical sciences. 2019. c;10(1), 689‐693. doi: 10.26452/ijrps.v10i1.1904 [DOI] [Google Scholar]

- 60.Veeraiyan D, Abhinav, Subha M. Flipped classes and its effects on teaching oral and maxillofacial surgery. International Journal of Research in Pharmaceutical Sciences. 2019. d; 10. 677–680. doi: 10.26452/ijrps.v10i1.1902 [DOI] [Google Scholar]

- 61.Al-Madi EM, Celur SL, Nasim M. Effectiveness of PBL methodology in a hybrid dentistry program to enhance students’ knowledge and confidence. (a pilot study). BMC Med Educ. 2018;18(1):270. doi: 10.1186/s12909-018-1392-y [DOI] [PMC free article] [PubMed] [Google Scholar]

- 62.Chutinan S, Riedy CA, Park SE. Student performance in a flipped classroom dental anatomy course. Eur J Dent Educ. 2018. Aug;22(3):e343–e349. doi: 10.1111/eje.12300 [DOI] [PubMed] [Google Scholar]

- 63.Jones TA. Effect of Collaborative Group Testing on Dental Students’ Performance and Perceived Learning in an Introductory Comprehensive Care Course. J Dent Educ. 2019;83(1):88–93. doi: 10.21815/JDE.019.011 [DOI] [PubMed] [Google Scholar]

- 64.Xiao N, Thor D, Zheng M, Baek J, Kim G. Flipped classroom narrows the performance gap between low-and high-performing dental students in physiology. Advances in physiology education. 2018;42(4):586–92. doi: 10.1152/advan.00104.2018 [DOI] [PubMed] [Google Scholar]

- 65.Varthis S, Anderson OR. Students’ perceptions of a blended learning experience in dental education. Eur J Dent Educ. 2018;22(1):e35–e41. doi: 10.1111/eje.12253 [DOI] [PubMed] [Google Scholar]

- 66.Islam MN, Salam A, Bhuiyan M, Daud SB. A comparative study on achievement of learning outcomes through flipped classroom and traditional lecture instructions. International Medical Journal. 2018;25(5):314–7. [Google Scholar]

- 67.Lee C, Kim SW. Effectiveness of a Flipped Classroom in Learning Periodontal Diagnosis and Treatment Planning. J Dent Educ. 2018;82(6):614–620. doi: 10.21815/JDE.018.070

- 68.Costa-Silva D, Côrtes JA, Bachinski RF, Spiegel CN, Alves GG. Teaching Cell Biology to Dental Students with a Project-Based Learning Approach. J Dent Educ. 2018;82(3):322–331. doi: 10.21815/JDE.018.032

- 69.AbdelSalam M, El Tantawi M, Al-Ansari A, AlAgl A, Al-Harbi F. Informal Peer-Assisted Learning Groups Did Not Lead to Better Performance of Saudi Dental Students. Med Princ Pract. 2017;26(4):337–342. doi: 10.1159/000477731

- 70.Bai X, Zhang X, Wang X, Lu L, Liu Q, Zhou Q. Follow-up assessment of problem-based learning in dental alveolar surgery education: a pilot trial. Int Dent J. 2017;67(3):180–185. doi: 10.1111/idj.12275

- 71.Nishigawa K, Hayama R, Omoto K, Okura K, Tajima T, Suzuki Y, et al. Validity of Peer Evaluation for Team-Based Learning in a Dental School in Japan. J Dent Educ. 2017;81(12):1451–1456. doi: 10.21815/JDE.017.106

- 72.Gadbury-Amyot CC, Redford GJ, Bohaty BS. Dental Students’ Study Habits in Flipped/Blended Classrooms and Their Association with Active Learning Practices. J Dent Educ. 2017;81(12):1430–1435. doi: 10.21815/JDE.017.103

- 73.Sagsoz O, Karatas O, Turel V, Yildiz M, Kaya E. Effectiveness of Jigsaw learning compared to lecture-based learning in dental education. Eur J Dent Educ. 2017;21(1):28–32. doi: 10.1111/eje.12174

- 74.Nishigawa K, Omoto K, Hayama R, Okura K, Tajima T, Suzuki Y, et al. Comparison between flipped classroom and team-based learning in fixed prosthodontic education. J Prosthodont Res. 2017;61(2):217–222. doi: 10.1016/j.jpor.2016.04.003

- 75.Samuelson DB, Divaris K, De Kok IJ. Benefits of Case-Based versus Traditional Lecture-Based Instruction in a Preclinical Removable Prosthodontics Course. J Dent Educ. 2017;81(4):387–394. doi: 10.21815/JDE.016.005

- 76.Eachempati P, Kiran Kumar KS, Sumanth KN. Blended learning for reinforcing dental pharmacology in the clinical years: A qualitative analysis. Indian J Pharmacol. 2016;48(Suppl 1):S25–S28. doi: 10.4103/0253-7613.193315

- 77.Cardozo LT, Miranda AS, Moura MJ, Marcondes FK. Effect of a puzzle on the process of students’ learning about cardiac physiology. Adv Physiol Educ. 2016;40(3):425–31. doi: 10.1152/advan.00043.2016

- 78.Bohaty BS, Redford GJ, Gadbury-Amyot CC. Flipping the Classroom: Assessment of Strategies to Promote Student-Centered, Self-Directed Learning in a Dental School Course in Pediatric Dentistry. J Dent Educ. 2016;80(11):1319–1327.

- 79.Echeto LF, Sposetti V, Childs G, Aguilar ML, Behar-Horenstein LS, Rueda L, et al. Evaluation of Team-Based Learning and Traditional Instruction in Teaching Removable Partial Denture Concepts. J Dent Educ. 2015;79(9):1040–8.

- 80.Park SE, Howell TH. Implementation of a flipped classroom educational model in a predoctoral dental course. J Dent Educ. 2015;79(5):563–70.

- 81.Takeuchi H, Omoto K, Okura K, Tajima T, Suzuki Y, Hosoki M, et al. Effects of team-based learning on fixed prosthodontic education in a Japanese School of Dentistry. J Dent Educ. 2015;79(4):417–23.

- 82.Ilgüy M, Ilgüy D, Fişekçioğlu E, Oktay I. Comparison of case-based and lecture-based learning in dental education using the SOLO taxonomy. J Dent Educ. 2014;78(11):1521–7.

- 83.Guven Y, Bal F, Issever H, Can Trosala S. A proposal for a problem-oriented pharmacobiochemistry course in dental education. Eur J Dent Educ. 2014;18(1):2–6. doi: 10.1111/eje.12049

- 84.Du GF, Li CZ, Shang SH, Xu XY, Chen HZ, Zhou G. Practising case-based learning in oral medicine for dental students in China. Eur J Dent Educ. 2013;17(4):225–8. doi: 10.1111/eje.12042

- 85.Haj-Ali R, Al Quran F. Team-based learning in a preclinical removable denture prosthesis module in a United Arab Emirates dental school. J Dent Educ. 2013;77(3):351–7.

- 86.Ratzmann A, Wiesmann U, Proff P, Kordaß B, Gedrange T. Student evaluation of problem-based learning in a dental orthodontic curriculum—a pilot study. GMS Z Med Ausbild. 2013;30(3):Doc34. doi: 10.3205/zma000877

- 87.McKenzie CT. Dental student perceptions of case-based educational effectiveness. J Dent Educ. 2013;77(6):688–94.

- 88.Kumar V, Gadbury-Amyot CC. A case-based and team-based learning model in oral and maxillofacial radiology. J Dent Educ. 2012;76(3):330–7.

- 89.Alcota M, Muñoz A, González FE. Diverse and participative learning methodologies: a remedial teaching intervention for low marks dental students in Chile. J Dent Educ. 2011;75(10):1390–5.

- 90.Romito LM, Eckert GJ. Relationship of biomedical science content acquisition performance to students’ level of PBL group interaction: are students learning during PBL group? J Dent Educ. 2011;75(5):653–64.

- 91.Obrez A, Briggs C, Buckman J, Goldstein L, Lamb C, Knight WG. Teaching clinically relevant dental anatomy in the dental curriculum: description and assessment of an innovative module. J Dent Educ. 2011;75(6):797–804.

- 92.Dantas AK, Shinagawa A, Deboni MC. Assessment of preclinical learning on oral surgery using three instructional strategies. J Dent Educ. 2010;74(11):1230–6.

- 93.Grady R, Gouldsborough I, Sheader E, Speake T. Using innovative group-work activities to enhance the problem-based learning experience for dental students. Eur J Dent Educ. 2009;13(4):190–8. doi: 10.1111/j.1600-0579.2009.00572.x

- 94.Moreno-López LA, Somacarrera-Pérez ML, Díaz-Rodríguez MM, Campo-Trapero J, Cano-Sánchez J. Problem-based learning versus lectures: comparison of academic results and time devoted by teachers in a course on Dentistry in Special Patients. Med Oral Patol Oral Cir Bucal. 2009;14(11):e583–7. doi: 10.4317/medoral.14.e583

- 95.Pileggi R, O’Neill PN. Team-based learning using an audience response system: an innovative method of teaching diagnosis to undergraduate dental students. J Dent Educ. 2008;72(10):1182–8.

- 96.Park SE, Susarla SM, Cox CK, Da Silva J, Howell TH. Do tutor expertise and experience influence student performance in a problem-based curriculum? J Dent Educ. 2007;71(6):819–24.

- 97.Rich SK, Keim RG, Shuler CF. Problem-based learning versus a traditional educational methodology: a comparison of preclinical and clinical periodontics performance. J Dent Educ. 2005;69(6):649–62.

- 98.Croft P, White DA, Wiskin CM, Allan TF. Evaluation by dental students of a communication skills course using professional role-players in a UK school of dentistry. Eur J Dent Educ. 2005;9(1):2–9. doi: 10.1111/j.1600-0579.2004.00349.x

- 99.Qutieshat A, Maragha T, Abusamak M, Eldik OR. Debate as an Adjunct Tool in Teaching Undergraduate Dental Students. Med Sci Educ. 2018;29(1):181–187. doi: 10.1007/s40670-018-00658-1

- 100.Paul SA, Priyadarshini HR, Fernandes B, Abd Muttalib K, Western JS, Dicksit DD. Blended classroom versus traditional didactic lecture in teaching oral surgery to undergraduate students of dentistry program: A comparative study. Journal of International Oral Health. 2019;11(1):36.

- 101.Youssef MH, Nassef NA, Rayan Ael R. Towards active learning case study at the end of the physiology course in dental student. Saudi Med J. 2012;33(4):452–4.

- 102.Al Kawas S, Hamdy H. Peer-assisted learning associated with team-based learning in dental education. Health Professions Education. 2017;3(1):38–43.

- 103.Khan SA, Omar H, Babar MG, Toh CG. Utilization of debate as an educational tool to learn health economics for dental students in Malaysia. J Dent Educ. 2012;76(12):1675–83.

- 104.Katsuragi H. Adding problem-based learning tutorials to a traditional lecture-based curriculum: a pilot study in a dental school. Odontology. 2005;93(1):80–5. doi: 10.1007/s10266-005-0054-9

- 105.Zhang Y, Chen G, Fang X, Cao X, Yang C, Cai XY. Problem-based learning in oral and maxillofacial surgery education: the Shanghai hybrid. J Oral Maxillofac Surg. 2012;70(1):e7–e11. doi: 10.1016/j.joms.2011.03.038

- 106.Zain-Alabdeen EH. Perspectives of undergraduate oral radiology students on flipped classroom learning. Perspectives. 2017;6(3):135–9.

- 107.Elledge R, Houlton S, Hackett S, Evans MJ. "Flipped classrooms" in training in maxillofacial surgery: preparation before the traditional didactic lecture? Br J Oral Maxillofac Surg. 2018;56(5):384–387. doi: 10.1016/j.bjoms.2018.04.006

- 108.Richards PS, Inglehart MR. An interdisciplinary approach to case-based teaching: does it create patient-centered and culturally sensitive providers? J Dent Educ. 2006;70(3):284–91.

- 109.Tack CJ, Plasschaert AJ. Student evaluation of a problem-oriented module of clinical medicine within a revised dental curriculum. Eur J Dent Educ. 2006 May;10(2):96–102. doi: 10.1111/j.1600-0579.2006.00403.x

- 110.Markose J, Eshwar S, Rekha K, Naganandini S. Problem-based learning vs lectures—comparison of academic performances among dental undergraduates in India: A pilot study. World Journal of Dentistry. 2013;8(1):59–66.

- 111.Ahmadian M, Khami MR, Ahamdi AE, Razeghi S, Yazdani R. Effectiveness of two interactive educational methods to teach tobacco cessation counseling for senior dental students. Eur J Dent. 2017;11(3):287–292. doi: 10.4103/ejd.ejd_352_16

- 112.Metz MJ, Miller CJ, Lin WS, Abdel-Azim T, Zandinejad A, Crim GA. Dental student perception and assessment of their clinical knowledge in educating patients about preventive dentistry. Eur J Dent Educ. 2015;19(2):81–6. doi: 10.1111/eje.12107

- 113.Shigli K, Aswini YB, Fulari D, Sankeshwari B, Huddar D, Vikneshan M. Case-based learning: A study to ascertain the effectiveness in enhancing the knowledge among interns of an Indian dental institute. J Indian Prosthodont Soc. 2017;17(1):29–34. doi: 10.4103/0972-4052.194945

- 114.Roopa S, Geetha MB, Rani A, Chacko T. What type of lectures students want?—a reaction evaluation of dental students. J Clin Diagn Res. 2013;7(10):2244–6. doi: 10.7860/JCDR/2013/5985.3482

- 115.Rimal J, Paudel BH, Shrestha A. Introduction of problem-based learning in undergraduate dentistry program in Nepal. Int J Appl Basic Med Res. 2015;5(Suppl 1):S45–9. doi: 10.4103/2229-516X.162276

- 116.Ihm JJ, An SY, Seo DG. Do Dental Students’ Personality Types and Group Dynamics Affect Their Performance in Problem-Based Learning? J Dent Educ. 2017;81(6):744–751. doi: 10.21815/JDE.017.015

- 117.ChandelkarUK RP, Kulkarni MS. Assessment of the impact of small group teaching over didactic lectures and self-directed learning among second-year BDS students in general and dental pharmacology in GOA Medical College. Pharmacologyonline. 2014;3:51–7.

- 118.Huynh AV, Latimer JM, Daubert DM, Roberts FA. Integration of a new classification scheme for periodontal and peri-implant diseases through blended learning. J Dent Educ. 2022;86(1):51–56. doi: 10.1002/jdd.12740

- 119.Özcan C. Flipped Classroom in Restorative Dentistry: A First Test Influenced by the Covid-19 Pandemic. Oral Health Prev Dent. 2022;20(1):331–338. doi: 10.3290/j.ohpd.b3276187

- 120.Gallardo NE, Caleya AM, Sánchez ME, Feijóo G. Learning of paediatric dentistry with the flipped classroom model. Eur J Dent Educ. 2022;26(2):302–309. doi: 10.1111/eje.12704

- 121.Alharbi F, Alwadei SH, Alwadei A, Asiri S, Alwadei F, Alqerban A, et al. Comparison between two asynchronous teaching methods in an undergraduate dental course: a pilot study. BMC Med Educ. 2022;22(1):488. doi: 10.1186/s12909-022-03557-7

- 122.Zhou Y, Zhang D, Guan X, Pan Q, Deng S, Yu M. Application of WeChat-based flipped classroom on root canal filling teaching in a preclinical endodontic course. BMC Med Educ. 2022;22(1):138. doi: 10.1186/s12909-022-03189-x

- 123.Xiao N, Thor D, Zheng M. Student preferences impact outcome of flipped classroom in dental education: students favoring flipped classroom benefited more. Education Sciences. 2021;11(4):150.

- 124.Veeraiyan DN, Varghese SS, Rajasekar A, Karobari MI, Thangavelu L, Marya A, et al. Comparison of Interactive Teaching in Online and Offline Platforms among Dental Undergraduates. Int J Environ Res Public Health. 2022;19(6):3170. doi: 10.3390/ijerph19063170